Abstract

With the increasing penetration of renewable energy, uncertainty has become the main challenge of power systems operation. Fortunately, system operators could deal with the uncertainty by adopting stochastic optimization (SO), robust optimization (RO) and distributionally robust optimization (DRO). However, choosing a good decision takes much experience, which can be difficult when system operators are inexperienced or there are staff shortages. In this paper, a decision-making approach containing robotic assistance is proposed. First, advanced clustering and reduction methods are used to obtain the scenarios of renewable generation, thus constructing a scenario-based ambiguity set of distributionally robust unit commitment (DR-UC). Second, a DR-UC model is built according to the above time-series ambiguity set, which is solved by a hybrid algorithm containing improved particle swarm optimization (IPSO) and mathematical solver. Third, the above model and solution algorithm are imported into robots that assist in decision making. Finally, the validity of this research is demonstrated by a series of experiments on two IEEE test systems.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The target of unit commitment (UC) is to reduce the operation cost of power systems while ensuring high power supply reliability (Egbue et al., 2022). For many years, UC has been regarded as one of the most important control process of power systems. Nowadays, with the concern of environmental issues, renewable energy generation has received great attention. For example, the wind power installed capacity in China has reached 26GW in 2019, accounting for 44 percent of newly installed global capacity (Zhu et al., 2022). However, renewable generation like wind power and photovoltaic (PV) power usually shows strong intermittence and randomness, which brings significant uncertainty and challenge to the economic and reliable operation of power systems (Yuan et al., 2021).

In order to solve the above problem, scholars mainly use point forecast, interval forecast, probabilistic forecast and scenario generation to handle the uncertainty of renewable power, and adopt stochastic optimization (SO) and robust optimization (RO) to solve the UC problem. Stochastic unit commitment (SUC) takes numerous possibilities of uncertain information into account, and the solution aims to make the overall performance of the objective function best under various scenarios. Liu et al. proposed a SUC model for electric-gas coupling system and used the improved progressive hedging (PH) algorithm to accelerate the optimization of SUC (2021) (Liu et al., 2021). Asensio et al. added a CVaR constraint to the traditional SUC model to effectively quantify risks and make reasonable decisions according to risks (2016) (Asensio & Contreras, 2016).

Generally, SO can reduce the cost of power generation on the premise of improving the reliability of system operation, but it may not guarantee system reliability under extreme situation, which makes the risk of SUC solution difficult to measure. Therefore, RO is introduced as a better way to alleviate the above shortcomings. Based on the analysis of power systems under various uncertainties, the UC schedule generated by RO performs well in the worst case (Chen et al., 2022). In the literature (Gupta & Anderson, 2019), robust unit commitment (RUC) method based on the feature sorting algorithm used in the traditional pattern recognition problem was proposed, which is flexible on the basis of considering all cases. Lee et al. considered both unit and transmission line failure rates, then used N-K criterion to establish a generation cost minimization model in the worst scenario (Lee et al., 2015). However, RO tends to pay more attention on low probability events, making the generated schedule too conservative (Lin et al., 2021).

By contrast, distributionally robust optimization (DRO) combines the characteristics of SO and RO, and has attracted great attention in recent years (Bian et al., 2015). Instead of setting exact type and parameters of the probability distribution of uncertain information, DRO establishes the so-called ambiguity set based on available data, and makes UC decisions on the worst-case probability distribution within the set. Essentially, DRO ensures the robustness of system operation compared with SO, and improves the economy of UC decision compared with RO.

It is worth noting that, constructing ambiguity sets is the key and prerequisite of DRO. In the literature, there are many ways to construct an ambiguity set, including the moment-based method (Zhang et al., 2017) and the distance-based method (Zhu et al., 2019). The statistical moment reflects the statistical information of random variables.

A. Constants | |

Cg | Energy generation cost of thermal units |

\(C_{g}^{SU}\) | Start-up cost of thermal units |

\({C_{g}^{U}}({C_{g}^{D}})\) | Upward (downward) reserve capacity procurement cost |

\(C_{g}^{+}(C_{g}^{-})\) | Upward (downward) reserve deployment offer cost |

Cshed | Penalty cost of involuntary load shedding |

\({u_{g}^{0}}\) | Initial commitment status of unit g |

\({L_{g}^{U}}({L_{g}^{D}})\) | Number of generators g must be online (offline) |

from the beginning of the scheduling horizon | |

UTg | Minimum up time for generator g |

DTg | Minimum down time for generator g |

\({R_{g}^{U}}({R_{g}^{D}})\) | Upward (downward) ramping limit |

\(\underline {P}_{g}(\overline {P}_{g})\) | Minimum (maximum) generation bound |

\(R_{g}^{+}(R_{g}^{-})\) | Upward (downward) reserve capacity |

Wj | Wind power capacity |

\(W_{jt\omega }^{*}\) | Wind power production in scenario ω |

\(\hat {f}_{l}\) | Day-ahead network power flows |

Fl | Transmission capacity limits |

D | Ambiguity set |

B. Variables | |

ugt | On/off status of unit g at hour t |

ygt | Status indicator of unit g at hour t for the startup process |

zgt | Status indicator of unit g at hour t for the shutdown process |

pgt | Setting value of power output by unit g at hour t |

\(r_{gt}^{+}(r_{gt}^{-})\) | Amount of upward (downward) reserve capacity of unit g at hour t |

ωjt | Wind power dispatch under scenario j at hour t |

\(\hat {f}_{lt}\) | Network power flows |

\(\hat {f}_{lt\omega }\) | Real-time power flows |

\(p_{gt\omega }^{+}\) | Upward reserves of unit g at hour t under scenario ω |

\(p_{gt\omega }^{-}\) | Downward reserves of unit g at hour t under scenario ω |

\(\omega _{jt\omega }^{spill}\) | Amount of wind power production under scenario ω at hour t |

\(l_{nt\omega }^{shed}\) | Allowable load shedding at each node |

Compared with the specific probability distribution function, the moment information is easier to obtain. For instance, Zhang et al. constructed an ambiguity set based on moment information of wind power uncertain variable (2019) (Zhang et al., 2019). However, because different random variables may have the same statistical moment, the ambiguity sets based on statistical moment may contain probability distributions that deviate greatly from the empirical distribution. By contrast, distance-based method is more widely used in DRO UC. Essentially, the distance method first obtains the empirical probability distribution of random variables based on historical data, and then constructs the ambiguity set by defining the distance between other probability distributions and the empirical distribution. Therefore, the measurement of distance and the boundary of ambiguity set are two important parts of the distance method. In existing studies, norm and Kullback-Leibler (KL) divergence are commonly used distance measurement functions, and the boundary is usually selected according to a given formula or expert experience. For example, Ding et al. used the 1 norm and infinite norm to measure the distance, and obtained the boundary of the proposed ambiguity set according to certain formula (2019) (Ding et al., 2019). Chen et al. constructed an ambiguity set based on KL divergence, and the boundary was selected according to the experience of decision makers (2018) (Chen et al., 2018).

Although the above studies provide promising ways to solve DRO UC, all the ambiguity sets were constructed under a single time horizon, which ignores the time series features of uncertain information over the scheduling horizons. By contrast, renewable generation scenarios contain spatial-temporal correlation, therefore some scholars have also carried out relevant studies. For an instance, Zhang et al. screened historical data according to real-time information, then obtained a series of scenarios through clustering and constructed ambiguity set based on the 1-norm and the infinite norm (2021) (Zhang et al., 2021). Nonetheless, the construction methods based on norm only assume that the probability value of each scenario is variable. Considering the strong randomness of renewable generation and the limitation of historical data, there will also be some error in the power generation of each period in each scenario. Besides, in the face of different data sets, many existing studies use the same formula to obtain the boundary of ambiguity sets, which makes the effectiveness and objectivity of such methods questionable. Therefore, constructing time series ambiguity sets based on scenarios still has a lot of room for improvement.

Apart from constructing ambiguity sets, the solution methods of DRO UC is also significant. In order to solve the DRO problem, many scholars employ mathematical optimization methods that transform the infinite dimensional subproblems in the model based on linear decision programming and duality theory, then use mathematical solvers to handle the main problem and subproblems repeatedly. For example, a DRO framework of an electric-gas coupling system was proposed in Sayed et al. (2021), and at the same time, the model was solved by using nested columns and constraint generation algorithm. Liang et al. proposed an alternate iteration method based on bender decomposition to solve a min-max bi-level DRO model (2022) (Liang et al., 2022). Although mathematical solvers can guarantee the solution optimality, the model transformation process is rather complicated, and sometimes impossible to achieve. Besides, the use of linear decision rules in the transformation process may also make the solution deviate from the original model.

In addition, system operators need to evaluate and select UC decision, which often requires much experience and makes scheme selection highly subjective. Especially in some new energy power plants in bad environment, system operators can not be stationed in large numbers. Nowadays, the rapid development of robots has brought great convenience and help to many aspects of people’s life. Some tasks that require precision or are dangerous can be assisted by robots. Chen et al. used robots to explore unknown environments based on deep learning (2022) (Chen et al., 2022). Besides, artificial intelligence plays an important role in fighting the COVID-19 pandemic, including robotic assistance in isolation area (Piccialli et al., 2021). However, there is still much room for robotic assistance in the field of renewable energy generation.

Therefore, to mitigate the above defects, this paper proposes a scenario-based distributionally robust unit commitment (S-DR-UC) model handled by an improved hybrid solution algorithm, which contains robotic assistance. Specifically, this study firstly conducts clustering, classification and scenario reduction on the collected actual renewable power data and point forecast renewable power data to obtain the empirical distribution of ambiguity set. Then, the historical distribution method (Jabr, 2020) and bootstrap method (Luo et al., 2019) are used to get the boundary of the ambiguity set. After that, a S-DR-UC model based on the time series ambiguity set is established, and a hybrid solution algorithm containing improved particle swarm optimization (PSO), mathematical solver and parallel computing is designed to handle the complicated nonlinear S-DR-UC model, which are imported into robots that assist in decision making. Therefore, the contributions of this study can be summarized as follows:

-

(1)

Scenario-based ambiguity set: Compared with existing studies, scenario can better integrate the temporal correlation between uncertain information and directly serve the optimization scheduling of power systems. In addition, by using the historical method and bootstrap method, the scenario-based ambiguity set proposed in this study is more comprehensive in considering uncertainty, and more objective in setting the boundary of ambiguity sets.

-

(2)

Improved PSO: Compared with classical PSO, cosine similarity is introduced to measure the similarity between empirical distributions for temporal ambiguity set, thus dynamically adjusts weight to mitigates the local convergence problem.

-

(3)

An improved hybrid solution algorithm for S-DR-UC model: A novel algorithm containing PSO, mathematical solver and parallel computing is designed to handle S-DR-UC model. Compared with existing studies, our algorithm avoids complex transformations and conduces to assure the physical meaning of the original model. Besides, our method can achieve a satisfied solution of the model within an acceptable runtime cost.

-

(4)

Robotic assistance: Compared with the traditional UC decision for prediction results, this study introduces robots into the decision-making process. By importing models and algorithms into multiple robots, helping to reduce the subjectivity of scheme selection.

The rest of the paper is arranged as follows: Section 2 explains the way to construct the scenario-based ambiguity set. Section 3 provides the S-DR-UC formulation which includes the objective function and constraints considered. Section 4 introduces the hybrid solution algorithm which integrates an improved PSO, mathematical solver and parallel computing. In Section 5, the effectiveness of the research is justified on modified IEEE RTS-96 and RTS-24 systems. Finally, Section 6 concludes the work.

2 Ambiguity Set Construction Based on Renewable Generation Scenarios

In this section, the method to construct the scenario-based ambiguity set is explained. The ambiguity sets in most existing studies has been constructed by taking certain probability distribution e.g., Gaussian distribution as the empirical distribution, which ignores the temporal correlation among the scheduling horizons. By contrast, the method proposed in this study constructs the ambiguity set based on a number of scenarios with temporal attributes. The architecture of the overall process is shown in Fig. 1. We first introduce the way to obtain the empirical distribution of the ambiguity set, i.e., a set of renewable generation scenarios based on clustering and reduction techniques, then the method to construct the ambiguity set is explained.

2.1 Acquiring the Scenario Set

Nowadays, it is possible to collect both actual generation (AG) data and point forecast (PF) data of renewable power from several database. Taking wind power as an example, PF data and AG data of desired years are easily obtained from certain database like NERL (Hodge, 2016), which mostly refer to the wind power value with a time interval of five minutes. Since PF data and actual AG data have huge volume that cannot be directly applied to UC scheduling and this study considers day-head hourly UC optimization, the data is processed on an hourly basis to obtain daily scenarios. In detail, the data is divided on hours first, and the mode in the data of each hour is selected to represent the forecast and actual value of the hour. After that, these data are classified on a daily basis to obtain daily scenarios.

Obviously, the original scenarios are numerous and diversified, thus we need to carefully select a number of special scenarios among them for the day to be scheduled. In this research, a data clustering method named clustering by fast search and find of density peaks (CFSFDP) is adopted to process the original scenarios to obtain different categories of scenario sets, each of which contains daily PF scenarios with statistical similarity.

CFSFDP was first proposed in Rodriguez and Laio (2014). Generally, compared with traditional clustering methods such as k-means, CFSFDP avoids repeated iterative operation, which is greatly time-saving. In detail, we define Ssum = {s1,...,sm,...,sM} as a total set of scenarios of PF data, and each daily scenario \(s_{i}=\{{s^{1}_{i}},...,{s^{t}_{i}},...,{s^{T}_{i}}\}\) has T dimensions that correspond to the T time intervals of a day, and in this study T is equal to 24. The distance between scenarios si and sj is measured by Euclidean distance.

After deciding the truncation distance distcutoff based on adjustment of parameters, ρi and δi can be calculated by Eqs. 1.2 and 1.3 for scenario si, where ε is the unit step function and ρi represents the density of scenario si and δi represents the minimum distance between scenario si and other scenario with higher density, which are seen as coordinates to draw a decision graph. In detail, ρi is the X-axis and δ is the Y-axis. After setting the minimum of ρ and δ, the point i.e., scenario on the upper right of the decision graph is the center point of the cluster, and the remaining points will be grouped into the cluster with the nearest cluster center.

Then a number of daily scenario sets of PF are obtained and AG scenarios are separated into corresponding AG scenario sets according to PF sets. Since the number of scenarios in these AG sets could be greatly different, reduction and consolidation are performed to obtain AG sets including the specified number of scenarios. In detail, scenarios that have high ρ and δ are retained for large sets, while small sets are consolidated with similar classes. Besides, AG scenarios are classified corresponding to each AG scenario set according to Euclidean distance, and the initial probability value of each scenario is its corresponding quantity divides by total number of AG scenarios. So far, the scenario set i.e., the empirical distribution is obtained for the sake of subsequent ambiguity set constructing and its form is as follows.

where \({s_{i}^{t}}\) is wind power value at hour t of scenario si in the scenario set and pi is initial probability value of scenario si.

2.2 Construction of Ambiguity Set

In this part, the ambiguity set is constructed according to the scenario set obtained in Section 2.1. Apart from the fluctuation range of power value in each scenario, the fluctuation range of probability of each scenario is also taken into consideration. Specially, the historical distribution method is adopted to determine the fluctuation range of power value of scenarios, while the bootstrap method helps to find the fluctuation range of scenario probability, which is explained as follows respectively.

As the name suggests, historical distribution method is based on reliable historical distribution to determine the fluctuation range, so it is of importance to find several representative historical distributions. Firstly, the upper and lower bounds of the scenario si at hour t in scenario set S are denoted as \(\xi _{i,t}^{up}\) and \(\xi _{i,t}^{low}\). As can be seen from the previous section, the scenarios with the high ρ and δ are closer to the center. So we select z such scenarios in all the classes, corresponding to the set of scenarios that make up the z historical distribution and denote the upper and lower bounds of the ith scenario at hour t in historical scenario set as \([\xi _{i,t}^{up}]_{z}\) and \([\xi _{i,t}^{low}]_{z}\). Therefore, the fluctuation of upper and lower bounds of scenario are expressed as follows.

So far, the scenario set S is transformed into the following form.

Secondly, the bootstrap method is used to calculate the fluctuation range of each scenario probability. Based on the above form, the probability of the scenario si in S is written as pi, while the set including all AG scenarios is written as H and the number of scenarios in AG scenario set is equal to M. Then these AG scenarios are divided into AG scenario sets based on Euclidean distance between scenarios in H and scenarios in AG scenario sets. Generally, the number of AG scenarios corresponding to the category of the ith scenario in set is recorded as \({m_{i}^{0}}\), so the initial probability \(p_{i} = {m_{i}^{0}}/M\). Later sampling with replacement is performed on set H for Q times and the qth new scenario set is denoted as Hq. By performing the above classification operation on these Q scenario sets, it can be obtained that the number of scenarios corresponding to scenario i in the S in scenario set Hq is \({m_{i}^{q}}\). Denoting \(\delta {m_{i}^{q}}={m_{i}^{q}}-{m_{i}^{0}}\), and \(\delta {m_{i}^{q}}\) is arranged in ascending order. The μth and the (Q − μ)th \(\delta {m_{i}^{q}}\) are respectively expressed as \(\delta m_{i}^{*}\left (1-\frac {\mu }{Q}\right )\) and \(\delta m_{i}^{*}\left (\frac {\mu }{Q}\right )\). \(\left (100*\frac {Q-2*\mu }{Q}\right )\%\) bootstrap confidence interval is \(\left [p_{i}-\frac {\delta m_{i}^{*}(1-\frac {\mu }{Q})}{m},p_{i}-\frac {\delta m_{i}^{*}(\frac {\mu }{Q})}{m}\right ]\). In conclusion,the ambiguity set D is as follows.

It is worth noting that the left part refers to the fluctuation range of the scenario value, while the right part refers to the fluctuation range of the scenario probability.

3 Problem Formulation

In this section, the mathematical formulation of S-DR-UC is provided, which involves two stages of decision making. The objective function and operation constraints are described as follows.

3.1 Objective Function

The proposed S-DR-UC aims to minimize the total operation cost that comprises both day-ahead and real-time components as follows:

where the operation cost in the day-ahead part accounts for the production and start-up costs of thermal units in the wind power prediction base case i.e., the empirical distribution obtained by Eq. 1.4. The operation cost in real-time is the expectation of re-dispatch cost under the worst-case distribution of wind power, where πω represents the probability of distribution ω.

3.2 System Constraints

The power system constraints in the wind power empirical distribution include (2.2)-(2.17), which ensure that the day-ahead schedule respects the technical limits of power systems. The value range of first stage variable is \(\{u_{gt},y_{gt},z_{gt}=\{0,1\}, p_{g}t,r_{gt}^{+/-}\geq 0,\forall g,t; \omega _{jt}\geq 0,\forall j,t; \tilde {\delta }_{nt}\ free,\forall n,t; \hat {f}_{lt}\ free, \forall l,t\}\) Specifically, constraint (2.2) is the initial state of the units in the beginning of the scheduling horizon, where the resulting allowable start-up and shut-down actions are imposed through constraints (2.3) and (2.4), respectively.

Constraints (2.5) and (2.6) model the transition from start-up to shut-down state. Constraint (2.7) states whether an unit can start up or shut down at time period t.

Constraints (2.8) and (2.9) respectively enforce the upward ramping limit and the downward ramping limit. Constraints (2.10) and (2.11) give the minimum and maximum generation bounds. Constraints (2.12) and (2.13) limit the procurement of upward and downward reserves by the corresponding capacity offers.

Constraint (2.14) bounds wind power dispatch to the installed wind power capacity.

Constraint (2.15) is the nodal power balance, which considers the day-ahead network power flows constraints (2.16) and (2.17) based on a DC flow approximation.

3.3 Real-Time Dispatch Constraints

The real-time dispatch constraints (2.18)-(2.24) model the balancing recourse actions under the worst-case distribution of wind power. The value range of real-time variable is \(\{p_{gt\omega }^{*}\geq 0,\forall g,t,\omega ; \omega _{jt\omega }^{spill}\geq 0,\forall j,t; \tilde {f}_{lt\omega }\ free,\forall l,t,\omega ; l_{nt\omega }^{shed},\hat {\delta }_{nt\omega } \ free,\forall n,t,\omega \}\) Constraint (2.18) ensures that conventional generation, wind power production and load are properly re-dispatched such that the whole system remains in balance.

Constraints (2.19) and (2.20) ensure that the upstream and downstream reserve deployments are within the range of the corresponding purchase quantity in the day-ahead period.

Constraints (2.21) and (2.22) are node requirements, limiting the amount of wind power that can overflow from each node and the amount of wind power that can be realized under each scenario. The transmission capacity limits on the real-time power flow are expressed in constraints (2.23) and (2.24)

Finally, the complete model of S-DR-UC is built by taking (2.1) as the objective function, and (2.2)-(2.24) as the constraints.

4 Solution Algorithm

Generally, S-DR-UC is difficult to be solved by existing methods due to the complicated nature of DRO and the introduction of scenario-based ambiguity set. Therefore in this section, a hybrid solution algorithm named improved PSO-mathematical solver (IPSO-MS) for the proposed model is designed, which combines an improved PSO algorithm, a mathematical optimization solver and parallel computing. In what follows, the basic knowledge of classical PSO is firstly introduced, followed by our improvements on the algorithm itself. Then the way to combine PSO with mathematical solver and implementation of parallel computing is explained.

4.1 Classical PSO

PSO was first proposed by Kennedy and Eberhart (1995). The basic idea of PSO is to find the optimal solution through the cooperation and information sharing among individuals in the group. Basically, each particle Pi has only two properties, i.e., speed vi and position xi. Each particle searches for its optimal solution in its search space as individual extreme values pbesti, and then finds the optimal solution among these individual extreme values as the global optimal solution gbesti. All particles are then varied according to individual and global extremes. The formulas that update the velocity and position of each particle are as follows:

where c1 and c2 are learning factors, usually taking the value of 2. Besides, ω is a non-negative inertia parameter, which is used to adjust the global and local optimization ability of each particle. Basically, ω is positively correlated with the global optimization ability, and negatively correlated with the local optimization ability.

4.2 Improved PSO with Cosine Similarity-Based Nonlinear Inertia Weight

It can be found that the inertia weight ω in Eq. 3.1 has a direct impact on the velocity as well as the position of each particle. In most existing PSO algorithms, ω is treated as a fixed value. A large ω value is unfavourable for local search of each particle, especially in the later iterations, while a small ω value will deteriorate the global search ability of the swarm. In recent years, there have been many researches on the improvement of PSO (Zhao et al., 2017). For example, Alkhraisat et al. proposed a dynamic inertia weight particle swarm optimization (DIW-PSO) algorithm in (2016) (Alkhraisat & Rashaideh, 2016), which updated the inertia weight value through the law of linear decline. The update formula of inertia value is as follows:

where ωmin and ωmax are the minimum value and maximum value of the range of inertia change respectively. itmax is the maximum number of iterations. i represents the current iteration. In this way, the iteration speed of particles with different iterations is adjusted, and the imbalance between global and local optimization of classical PSO algorithm is improved to some extent. However, the above improvement cannot be used directly in this study. The reason is that the ambiguity set of this research is scenario-based, and each particle position represents a possible realization of the worst-case scenario set whose form is shown in Eq. 3.4 within the ambiguity set.

In order to dynamically adjust the global optimization capability, the similarity among each particle position, i.e., different realizations of Eq. 3.4 should be taken into account. Fortunately, an effective measurement named cosine similarity can reflect the directional consistency of two vectors, and cosine similarity of vector a and vector b is defined as follows:

In this paper, cosine similarity is introduced to measure the similarity of the optimal scenario set and other scenario sets. By constructing the matrix between the current scenario set and the optimal scenario set, cosine similarity is used as the distribution index. The similarity of particle p and particle q is defined as follows:

According to the above formula, when the scenario distribution difference between particle p and current global optimum is great, R(Sp,gbesti) will take a small value. It means that the particle p needs to jump out of the current region and therefore the inertia weight in the next iteration should be lager. While if the particle is close to the optimal particle, the optimization would need to be conducted in a local small range, so the inertia weight of the particle in the next iteration should be small. Therefore, according to the above definition of particle similarity value, the weight function is updated as:

The dynamic inertia weight adjustment method based on cosine similarity can update the evolution speed of particles according to the difference between particles and the optimal particle, and better adapt to each stage of the optimization process.

In additions, multiprocess module is used to create several processes at the same time, and the whole particle swarm is separated into some processes in a certain number for parallel computation, which should greatly reduce the runtime cost of the entire algorithm.

4.3 Proposed IPSO-MS

Based on the above improvement, a hybrid algorithm IPSO-MS is designed to solve the two-stage S-DR-UC in a hierarchical way. Specifically, the upper layer algorithm tries to extract the worst scenario set of the ambiguity set, while the lower layer is a mathematical solver that minimizes the objective function under the scenario set given by the upper layer. Based on the above knowledge, the detailed IPSO-MS to solve S-DR-UC problem is as follows:

Firstly, the S-DR-UC model can be summarized as follows, which includes two stages.

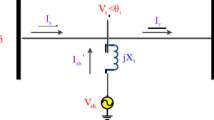

The front portion i.e., \({\min \limits } C^{day-ahead}\) is the setting of the day-ahead stage, accounting for the energy production and start-up costs of all conventional units. In order to avoid the complex solution brought by the three-layer optimization problem, we optimize this stage directly and focus on the optimization of the real-time stage. Since the real-time phase is a max-min problem, IPSO-MS adopts the mathematical solver Gurobi to solve the lower min problem that minimizes re-dispatch cost, where the upper improved PSO is used to find the worst-case distribution of wind power uncertainty. The flow chart of IPSO-MS is depicted in Fig. 2, which can be summarized as follows.

Step 1: Initialization of particles. According to the ambiguity set D, a specified number of particles and their corresponding probability are obtained by taking a random value within the fluctuation range. Probability of scenarios is normalized and will be adjusted if there is probability that oversteps the fluctuation range. A certain proportion of scenario values are selected as the initial value and boundary of particle velocity.

Step 2: Evaluating the fitness of particles. Multiprocess module is adopted to created several processes and mathematical solver is called to solve the min problem based on the given particles i.e., scenario sets. Finally, the operation cost obtained by the mathematical solver is taken for the fitness value of each particle.

Step 3: Updating the position and velocity of each particle. Local optimum pbest and global optimum gbest are determined according to fitness of each particle. Position and velocity of each particle are updated according to Eqs. (3.10) and (3.11), so is probability of scenario. Then the position and velocity of particles are judged and adjusted to ensure that the scenario set and its corresponding probability and velocity are within the specified range.

Step 4: Algorithm iteration. If the condition that the number of iterations reaches the maximum is satisfied, algorithm stops iterating, records the current optimal particle position i.e., the worst-case distribution and returns the scheduling scheme and scheduling cost for the worst-case distribution. Otherwise, algorithm goes to step 2.

5 Case Study

In this section, the effectiveness of scenario-based ambiguity set, S-DR-UC model as well as the hybrid solution algorithm IPSO-MS is demonstrated by a number of experiments on two modified IEEE test systems. Firstly, the performance of ambiguity set obtained by scenario clustering and reduction techniques is evaluated and the impact of ambiguity set fluctuation is analyzed. Then, the performance of the S-DR-UC model is discussed by comparing with existing SUC and RUC. Finally, the effectiveness of IPSO-MS algorithm is demonstrated on the modified IEEE RTS-24 system and IEEE 3-Area RTS-96 system. Node data of IEEE RTS-24 comes from Ordoudis et al. (2016) and that of IEEE 3-Area RTS-96 is provided in Pandzic et al. (2015).

The numerical experiments carried out on both test systems were performed on a server with an intel(R) Xeon(R) E5-2650 CPU with 2 processors clocking at 2.20GHz. All test cases were implemented and solved in python with Gurobi as the MILP mathematical solver. The parallelization of the particles was realized using the Joblib Python library with a multi-processing scheme.

5.1 Performance of Scenario-Based Ambiguity Set Construction

The clustering algorithm and ambiguity set are evaluated respectively in Sections 5.1.1 and 5.1.2.

5.1.1 Evaluation of Clustering Algorithm

The wind power data used in this paper comes from NREL database, which includes the AG data and the corresponding day-ahead PF data from May 2003 to September 2007 in USA. Firstly, PF and AG data is transformed into PF and AG daily scenarios. Then CFSFDP is adopted for scenarios clustering. According to the principle of CFSFDP, after calculating the truncation distance ρ and density δ of each scenario, a decision graph is obtained, as shown in Fig. 3(a). After the experiments of adjusting parameters, the minimum values of ρ and δ are respectively set as 2 and 3.5, resulting in 22 scenario sets, which is shown in Fig. 3(b). Since the number of objects in different categories are greatly different, two categories whose object quantity is huge are chosen to evaluate the clustering algorithm. In addition, only part of two categories is shown in Fig. 3(c)-(d) for ease of display. As can be seen, scenarios in the same category are closely related to each other with similar trends, while the trend of objects in different categories varies significantly. Therefore, CFSFDP can quickly and efficiently cluster scenarios without the need for repeated iterations, which effectively reduces the time of the data processing part of the overall algorithm.

5.1.2 Evaluation of Ambiguity Set

So far, a series of PF scenario sets have been obtained by using the clustering algorithm CFSFDP. Then AG scenarios are separated into corresponding sets to obtain AG scenario sets. Obviously, due to the geographical features of farm, the number of scenarios in each set is different, which may be either larger or smaller than our expectation. Usually, we hope to obtain a specified number of scenarios to conduct the subsequent UC optimization. Therefore, if the number exceeds our expectation, scenarios whose ρ and δ are both high will be retained, while sets whose number of scenarios is too small will be merged according to Euclidean distance. Finally, 20 AG sets of 20 scenarios are obtained. In addition, for the sake of universality of study, the set corresponding to the largest scenario sets is chosen for subsequent experiments.

The initial probability value of each scenario in the scenario sets is determined based on Euclidean distance between objects in AG scenario sets and AG scenarios, which is the number of the corresponding AG scenarios divided by the total number. Then the scenario sets are converted to ambiguity sets based on historical distribution and bootstrap methods. In detail, scenarios slashed of initial set with high ρ and δ are chosen as representative historical distributions. When more historical distributions are introduced, the fluctuation range of scenarios is larger. In our study, 2, 5 and 8 historical distributions are introduced and ambiguity sets are constructed respectively, which are shown in Fig. 4. After that, sampling with replacement is performed for several times and partial frequency values are selected to construct the specified confidence interval on the basis of Eq. 1.8. Specially in this study, sampling process is repeated for 100 times and the 5th and 95th frequency values in descending order are selected to obtain a 90% confidence interval.

In what follows, experiments based on different ambiguity sets are performed to evaluate effect of different fluctuation. As can be seen in Fig. 4(a)-(c), the introduction of more historical distributions leads to a great diversity of scenarios and a larger range of ambiguity sets. Generally, large ambiguity sets could contain more extreme worst-case distributions, resulting in higher operation cost of DRO UC scheme. Our model can effectively reflect the above phenomenon, which is shown in Fig. 4(d). Hence, it is significant to choose the fluctuation range reasonably. In particular, the subsequent experiment adopts ambiguity set D2.

5.2 Performance of the S-DR-UC Model and the IPSO-MS Algorithm

Firstly, the performance of the S-DR-UC is evaluated compared with SUC and RUC. Besides, the numerical experiments of IPSO-MS algorithm were performed to prove its validity in Section 5.2.2.

5.2.1 Evaluation of the S-DR-UC

In this section, experiments based on ambiguity sets i.e., D1, D2 and D3 obtained by Section 5.1 are performed on IEEE 3-Area RTS-96 to evaluate the robustness and economy of the S-DR-UC model. SUC takes the numerous possibilities of uncertain information into account, and the scheduling results performs the best considering all the scenarios. In this study, we initialized 60 scenario sets and called Gurobi one by one to solve, and regarded the minimum solution as the solution of SUC. By contrast, RUC aims to performs the best considering the worst case of all the scenarios. Given the worst scenario set in this study, RUC modeling is carried out on the original model, and Gurobi is called to solve it, and the obtained solution is regarded as the solution of RUC.

The operation costs of the SUC and RUC models are $2.445 × 106 and $2.913 × 106, respectively. The comparison of the S-DR-UC based on three ambiguity sets with SUC and RUC is shown in Fig. 5. The proposed S-DR-UC outperforms both the SUC and RUC models. In detail, take D2 as an example, the proposed model is able to see all distributions in the ambiguity set, therefore it tackles more wind uncertainty and finds the 7.1% worse worst-case distribution compared with SUC. Besides, compared with the RUC model, the proposed model provides a less conservative decision whose operation cost reduces 10.2%. Based on the above discussion, the proposed model has better performance in balancing the robustness and economy of the dispatch scheme than the SUC and RUC models.

5.2.2 Evaluation of IPSO-MS

In this section, the validity of IPSO-MS is evaluated on both test systems. In addition, the parameters of PSO are confirmed through random search (Do and Ohsaki, 2021), which are shown in Table 1.

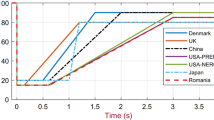

First of all, in order to assess the improvement of PSO algorithm, experiments on IEEE RTS-24 system are conducted by changing the number of particles and controlling the maximum number of iterations. Nonlinear weights and cosine similarity in Section 3 are introduced to improve the fast convergence of traditional particle swarm optimization algorithm. Therefore, the experiments of 400 iterations were carried out for 40, 60 and 80 sets containing 20 scenarios respectively. And then the results are compared in terms of the worse-case distribution found by each algorithm, which is expressed by the corresponding operation cost as shown in Fig. 6. The experimental result shows that the proposed improved scheme performs well under different particle number conditions. At the same time, compared with the classical PSO, it avoids excessive convergence, and the classical PSO basically converges in 200 iterations. However, the improved scheme proposed by us converges around the 300 iterations, and at the same time finds the worse worst-case distribution i.e., scenario set that makes the cost value larger, effectively avoiding local convergence.

Therefore, in the subsequent experiment on the IEEE 3-Area RTS-96 system, the number of particles is selected as 60 and the maximum iteration number is set as 300. Besides, to facilitate the evaluation, we include one improvement at a time in the IPSO-MS algorithm and compare the results in terms of calculation time and worst-case found.

-

(1)

PSO-mathematical solver (PSO-MS): In order to establish a benchmark to evaluate the improvement of our IPSO-MS, we try to combine classical PSO and mathematical solver to handle the S-DR-UC model and the process is as follows. After initializing the particle swarm, mathematical solver Gurobi is called to solve problem based on particles i.e., scenario sets one by one, and the solved value is regarded as particle swarm fitness, and iterated according to the iterative nature of the classical PSO.

-

(2)

DIW-PSO-MS: On the basis of the version of the PSO-MS algorithm, dynamic inertia weight is introduced to improve PSO. The rest of the process is the same as PSO-MS.

-

(3)

IPSO-MS: On the basis of the version of the DIW-PSO-MS, cosine similarity is adopted to measure the similarity between scenarios. Besides, 10 processes are created to realize parallel computation

Table 2 summarizes the different improved performance of PSO-MS, DIW-PSO-MS and IPSO-MS and compares the final running time and worst-case found and the convergence process of the three ways is also shown in Fig. 7. It can be clearly seen that, compared with the classical PSO algorithm, both DIW-PSO-MS and IPSO-MS can mitigate the premature local convergence and find more extreme scenarios distributions within the ambiguity set, thus resulting in higher operation costs. Besides, the introduction of cosine similarity indeed helps to find worse case than DIW-PSO-MS and parallel computing makes our method save 81% computing time.

5.3 Evaluation of Robotic Assistance

A complete forecasting process often takes a lot of time, which also leads to few options for system operators. In this study, robots are introduced to help make decisions. Specifically, the model and algorithm designed above are imported into robots R1, R2 and R3, and the decision results are output together to provide reference for the decision P already made.

As can be seen from Fig. 8, the assistance of robots effectively helps verify the rationality of the system operators’ choice. In some areas having bad environment but suitable for the construction of new energy power plants, power plants are often unable to station many stationed staff. Robotic assistance can effectively reduce the burden of system operators, and in the future it may be possible to make some simple decisions alone.

6 Conclusions

In this paper, a scenario-based distributionally robust unit commitment model was proposed to advance the temporal sequence feature of existing DRO models, and a hybrid algorithm IPSO-MS which combines an improved PSO with mathematical solver was designed to improve the effectiveness of existing solution algorithms, and robots were introduced to assist decision-making. Especially, ambiguity set is constructed with scenario based on historical and bootstrap methods. Besides, nonlinear inertia weight, cosine similarity and parallel computing were introduced to improve the shortcomings of classical PSO algorithm. Experiments on IEEE RTS-24 system and IEEE RTS-96 system show that the S-DR-UC model has good reliability and economy and IPSO-MS algorithm can be used to solve S-DR-UC problems with high efficiency. The assistance of robots provides more options and also helps verify the rationality of the system operators’ choices.

Future research will focus more on human-robot cooperation and interaction. Besides, the decision-making ability of robots could be trained and improved.

References

Alkhraisat, H., & Rashaideh, H. (2016). Dynamic inertia weight particle swarm optimization for solving nonogram puzzles. International Journal of Advanced Computer Science and Applications, 7(10), 277–280.

Asensio, M., & Contreras, J. (2016). Stochastic unit commitment in isolated systems with renewable penetration under CVaR assessment. IEEE Transactions on Smart Grid, 7 (3), 1356–1367. https://doi.org/10.1109/TSG.2015.2469134.

Bian, Q., Xin, H., Wang, Z., Gan, D., & Wong, K. (2015). Distributionally robust solution to the reserve scheduling problem with partial information of wind power. IEEE Transactions on Power Systems, 30 (5), 2822–2823. https://doi.org/10.1109/TPWRS.2014.2364534.

Chen, Y., Guo, Q., Sun, H., Li, Z., Wu, W., & Li, Z. (2018). A distributionally robust optimization model for unit commitment based on Kullback-Leibler divergence. IEEE Transactions on Power System, 33(5), 5147–5160. https://doi.org/10.1109/TPWRS.2018.2797069.

Chen, S., He, Q., & Lai, C. (2022). Correction to: Deep reinforcement learning-based robot exploration for constructing map of unknown environment Information Systems Frontiers. https://doi.org/10.1007/s10796-021-10218-5.

Chen, L., Tang, H., Wu, J., Li, C., & Wang, Y. (2022). A robust optimization framework for energy management of CCHP users with integrated demand response in electricity market. International Journal of Electrical Power & Energy Systems, 141, 108181. https://doi.org/10.1016/j.ijepes.2022.108181.

Do, B., & Ohsaki, M. (2021). A random search for discrete robust design optimization of linear-elastic steel frames under interval parametric uncertainty. Computers & Structures, 249, 106506. https://doi.org/10.1016/j.compstruc.2021.106506.

Ding, T., Yang, Q., Liu, X., Huang, C., Yang, Y., Wang, M., & Blaabjerg, F. (2019). Duality-free decomposition based data-driven stochastic security-constrained unit commitment. IEEE Transactions on Sustainable Energy, 10(1), 82–93. https://doi.org/10.1109/TSTE.2018.2825361.

Egbue, O., Uko, C., Aldubaisi, A., & Santi, E. (2022). A unit commitment model for optimal vehicle-to-grid operation in a power system. International Journal of Electrical Power & Energy Systems, 141, 108094. https://doi.org/10.1016/j.ijepes.2022.108094.

Gupta, A., & Anderson, C. (2019). Statistical bus ranking for flexible robust unit commitment. IEEE Transactions on Power Systems, 34(1), 236–245. https://doi.org/10.1109/TPWRS.2018.2864131.

Hodge, B.M. (2016). Final report on the creation of the wind integration national dataset (wind) toolkit and api: October 1 2013-september 30, 2015, NREL (National Renewable Energy Laboratory (NREL), Golden, CO (United States)), Tech. Rep.

Jabr, R.A. (2020). Distributionally Robust CVaR constraints for power flow optimization. IEEE Transactions on Power Systems, 35(5), 3764–3773. https://doi.org/10.1109/TPWRS.2020.2971684.

Kennedy, J., & Eberhart, R. (1995). Particle swarm optimization. Proceedings of ICNN’95 - International Conference on Neural Networks, 4, 1942–1948.

Liang, W., Lin, S., Lei, S., Xie, Y., Tang, Z., & Liu, M. (2022). Distributionally robust optimal dispatch of CCHP campus microgrids considering the time-delay of pipelines and the uncertainty of renewable energy. Energy, 239, 122200. https://doi.org/10.1016/j.energy.2021.122200.

Lee, C., Liu, C., Mehrotra, S., & Shahidehpour, M. (2015). Modeling transmission line constraints in two-stage robust unit commitment problem. IEEE Transactions on Power Systems, 29(3), 1221–1231. https://doi.org/10.1109/PESGM.2015.7285975.

Lin, Z., Chen, H., Wu, Q., Huang, J., Li, M., & Ji, T. (2021). A data-adaptive robust unit commitment model considering high penetration of wind power generation and its enhanced uncertainty set. International Journal of Electrical Power and Energy Systems, 129, 106797. https://doi.org/10.1016/j.ijepes.2021.106797.

Liu, H., Shen, X., Guo, Q., Sun, H., Shahidehpour, M., Zhao, W., & Zhao, X. (2021). Application of modified progressive hedging for stochastic unit commitment in electricity-gas coupled systems. CSEE Journal of Power and Energy Systems, 7(4), 840–849. https://doi.org/10.17775/CSEEJPES.2020.04420.

Luo, X., Zhu, X., & Lim, E.G. (2019). A parametric bootstrap algorithm for cluster number determination of load pattern categorization. Energy, 180, 50–60. https://doi.org/10.1016/j.energy.2019.04.089.

Ordoudis, C., Pinson, P., Gonzalez, J., & Zugno, M. (2016). An updated version of the IEEE RTS 24-bus system for electricity market and power system operation studies Technical University of Denmark.

Pandzic, H., Dvorkin, Y., Qiu, T., Wang, Y., & Kirschen, D. (2015). Unit commitment under uncertainty - GAMS models. Library of the Renewable Energy Analysis Lab (REAL), University of Washington, Seattle.

Piccialli, F., Cola, V., Giampaolo, F., & Cuomo, S. (2021). The role of artificial intelligence in fighting the COVID-19 pandemic. Information Systems Frontiers, 23, 1467–1497.

Rodriguez, A., & Laio, A. (2014). Clustering by fast search and find of density peaks. Science, 344(6191), 1492–1496.

Sayed, A., Wang, C., Chen, S., Shang, C., & Bi, T. (2021). Distributionally robust day-ahead operation of power systems with two-stage gas contracting. Energy, 231, 120840. https://doi.org/10.1016/j.energy.2021.120840.

Yuan, R., Wang, B., Mao, Z., & Watada, J. (2021). Multi-objective wind power scenario forecasting based on PG-GAN. Energy, 226, 120379. https://doi.org/10.1016/j.energy.2021.120379.

Zhang, Y., Shen, S., & Erdogan, A. (2017). Distributionally robust appointment scheduling with moment-based ambiguity set. Operations Research Letters, 45(2), 139–144. https://doi.org/10.1016/j.orl.2017.01.010.

Zhang, Y., Liu, Y., Shu, S., Zheng, F., & Huang, Z. (2021). A data-driven distributionally robust optimization model for multi-energy coupled system considering the temporal-spatial correlation and distribution uncertainty of renewable energy sources. Energy, 216, 119171. https://doi.org/10.1016/j.energy.2020.119171.

Zhao, W., Zeng, Q., Zheng, G., & Yang, L. (2017). The resource allocation model for multi-process instances based on particle swarm optimization. Information Systems Frontiers, 19, 1057–1066.

Zhu, R., Wei, H., & Bai, X. (2019). Wasserstein Metric based distributionally robust approximate framework for unit commitment. IEEE Transactions on Power Systems, 34 (4), 2991–3001. https://doi.org/10.1109/TPWRS.2019.2893296.

Zhang, Y., Le, J., Zheng, F., Zhang, Y., & Liu, K. (2019). Two-stage distributionally robust coordinated scheduling for gas-electricity integrated energy system considering wind power uncertainty and reserve capacity configuration. Renewable Energy, 135, 122–135. https://doi.org/10.1016/j.renene.2018.11.094.

Zhu, M., Qi, Y., & Hultman, N. (2022). Low-carbon energy transition from the commanding heights: How state-owned enterprises drive China’s wind powermiracle. Energy Research & Social Science, 85, 102392. https://doi.org/10.1016/j.erss.2021.102392.

Funding

This work was supported by the National Natural Science Foundation of China (Grant No. 61603176).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of Interests

None.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Song, X., Wang, B., Lin, PC. et al. Scenario-Based Distributionally Robust Unit Commitment Optimization Involving Cooperative Interaction with Robots. Inf Syst Front 26, 9–23 (2024). https://doi.org/10.1007/s10796-022-10335-9

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10796-022-10335-9