Abstract

Change detection is of fundamental importance when analyzing data streams. Detecting changes both quickly and accurately enables monitoring and prediction systems to react, e.g., by issuing an alarm or by updating a learning algorithm. However, detecting changes is challenging when observations are high-dimensional. In high-dimensional data, change detectors should not only be able to identify when changes happen, but also in which subspace they occur. Ideally, one should also quantify how severe they are. Our approach, ABCD, has these properties. ABCD learns an encoder-decoder model and monitors its accuracy over a window of adaptive size. ABCD derives a change score based on Bernstein’s inequality to detect deviations in terms of accuracy, which indicate changes. Our experiments demonstrate that ABCD outperforms its best competitor by up to 20% in F1-score on average. It can also accurately estimate changes’ subspace, together with a severity measure that correlates with the ground truth.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Data streams are open-ended, ever-evolving sequences of observations from some process. They pose unique challenges for analysis and decision-making. One crucial task is to detect changes, i.e., shifts in the observed data, that may indicate a change in the underlying process. Change detection has been an active research area. However, the high-dimensional setting, in which observations contain a large number of simultaneously measured quantities, did not receive enough attention. Yet, it may yield useful insights in environmental monitoring (de Jong and Bosman 2019), human activity recognition (Vrigkas et al. 2015), network traffic monitoring (Naseer et al. 2020), automotive (Liu et al. 2019), predictive maintenance (Zhao et al. 2018), and biochemical engineering (Mowbray et al. 2021):

Example

(Biofuel production) The production of fuel from biomass is a complex process comprising many interdependent process steps. Those include pyrolysis, synthesis, distillation, and separation. Many steps rely on (by-)products of other steps as reactants, leading to a highly interconnected system with many process parameters. A monitoring system tracks the process parameters to detect failures in the plant: (i) The system must detect changes in a large (i.e., high-dimensional) vector of process parameters, which may indicate failures. (ii) The system must find out which process parameters are affected by the change to allow for a targeted reaction. Since the system is very complex and has many interconnected components, change is often evident only when considering correlations between process parameters. An example would be the correlation between temperature and concentration fluctuations. So it is insufficient to monitor each process parameter in isolation. (iii) There can exist slight changes which only require minor adjustments and more severe ones that require immediate intervention to avoid a shutdown of the plant. The monitoring system should provide an estimate of the severity of change.

The example illustrates three requirements for modern change detectors:

-

R1: Change point The primary task of change detectors is to identify that the data stream has changed and when it occurred.

-

R2: Change subspace A change may only concern a subset of dimensions—the change subspace. Change detectors for high-dimensional data streams should be able to identify such subspaces.

-

R3: Change severity Quantifying relative change severity to distinguish between changes of different importance is essential to react appropriately.

Prior works already acknowledge the relevance of the above requirements (Lu et al. 2019; Webb et al. 2018). However, fulfilling R1–R3 in combination remains challenging since they depend on each other: on the one hand, detecting changes in high-dimensional data is difficult because changes typically only affect few dimensions. Unaffected dimensions “dilute” a change (i.e., a change occuring in a subspace appears to be less severe in the full space). This might make changes harder to detect in all dimensions. On the other hand, detecting the change subspace should occur after detecting a change, since monitoring all possibles subspaces is intractable. Last, one should restrict computation of change severity to the change subspace to eliminate dilution.

Existing methods for change detection, summarized in Table 1, either are univariate (UV), multivariate (MV), or specifically designed for high-dimensional data (HD); the latter claim efficiency w.r.t. high-dimensionality or resilience against the “curse of dimensionality”. However, they do not fulfill R1–R3 in combination sufficiently well as Sect. 2 describes.

Thus, we propose the Adaptive Bernstein Change Detector (ABCD), which addresses R1–R3 in combination. We articulate our contributions as follows:

(i) Problem Definition We formalize the problem of detecting changes in high-dimensional data streams such that R1-R3 can be tackled in combination. (ii) Adaptive Bernstein Change Detector We present ABCD, a change detector for high-dimensional data, that satisfies R1–R3. It monitors the loss of an encoder-decoder model using an adaptive window size and statistical testing. Adaptive windows enable ABCD to detect severe changes quickly and, over a longer period, identify hard-to-detect changes that would typically require a large window size. (iii) Bernstein change score Our approach applies a statistical test based on Bernstein’s inequality. This limits the probability of false alarms. (iv) Online computation We propose an efficient method for computing the change score in adaptive windows and discuss design choices leading to constant time and memory. (v) Benchmarking We conduct experiments on 10 data streams based on real-world and synthetic data with many dimensions and compare ABCD with recent approaches. The results indicate that ABCD outperforms its competitors consistently w.r.t. R1–R3, is robust to high-dimensional data and is useful in domains including human activity recognition, gas detection, and image processing. We also study ABCD’s parameter sensitivity. Our codeFootnote 1 follows the popular Scikit-Multiflow API (Montiel et al. 2018), so it is easy to use in future research.

2 Related work

2.1 Change detector types

Most existing change detectors are supervised, i.e., they focus on detecting changes in the relationship between input data and a target variable (Iwashita and Papa 2019). However, class labels are rarely available in reality, which limits their applicability. On the contrary, the unsupervised change detectors aim to detect changes only in the input data. Our approach belongs to this category, so we restrict our review to unsupervised approaches.

Most existing approaches detect changes whenever a measure of discrepancy between newer observations (the current window) and older observations (the reference window) exceeds a threshold. Some approaches, e.g., D3 (Gözüaçık et al. 2019) or PCA-CD (Qahtan et al. 2015), implement the reference and current window as two contiguous sliding windows. Other approaches, such as IBDD (de Souza et al. 2020), IKS (dos Reis et al. 2016) or WATCH (Faber et al. 2021) use a fixed reference window. A major problem is to choose the appropriate size for the window; thus Bifet and Gavaldà (2007) propose windows of adaptive size, that grow while the stream remains unchanged and shrink otherwise. Several work leverage this principle, e.g. (Sun et al. 2016; Khamassi et al. 2015; Fouché et al. 2019; Suryawanshi et al. 2022). We also use adaptive windows to lower the number of parameters of ABCD.

2.2 Univariate change detection

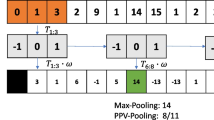

There exist many approaches for change detection in univariate (UV) data streams. Two of them, Adaptive Windowing (ADWIN) (Bifet and Gavaldà 2007) and SeqDrift2 (Pears et al. 2014), share some similarity with our approach. Like ADWIN, ABCD relies on an adaptive window. Like SeqDrift2, it uses Bernstein’s inequality (Bernstein 1924). But unlike ADWIN and SeqDrift2, ABCD can handle high-dimensional data while fulfilling R1-R3.

2.3 Multivariate change detection

To detect changes in multivariate (MV) data, some approaches apply univariate algorithms in each dimension of the stream. Faithfull et al. (2019) propose to use one ADWIN detector per dimension (with k dimensions). They declare a change whenever a certain fraction of the detectors agree. We call this approach AdwinK later on. Similarly, IKS (dos Reis et al. 2016) uses an incremental variant of the Kolmogorov–Smirnov test deployed in each dimension. Unlike AdwinK, IKS issues an alarm if at least one dimension changes.

There also exist approaches specifically designed for multivariate (Jaworski et al. 2020; Ceci et al. 2020; Qahtan et al. 2015; Gözüaçık et al. 2019; Dasu et al. 2006), or even high-dimensional (HD) data (Faber et al. 2021; de Souza et al. 2020).

Similar to ABCD, Jaworski et al. (2020) and Ceci et al. (2020) use dimensionality-reduction methods to capture the relationships between dimensions. However, our approach is computationally more efficient, limits the probability of false alarms, identifies change subspace, and estimates change severity. D3 (Gözüaçık et al. 2019) uses the AUC-ROC score of a discriminative classifier that tries to distinguish the data in two sliding windows. It reports a change if the AUC-ROC score exceeds a pre-defined threshold. PCA-CD (Qahtan et al. 2015) first maps observations in two windows to fewer dimensions using PCA. Then the approach estimates the KL-divergence between both windows for each principal component. PCA-CD detects a change if the maximum observed KL-divergence exceeds a threshold. However, Goldenberg and Webb (2019) point out that this technique is limited to linear transformations and ignores combined change in multiple dimensions. LDD-DSDA (Liu et al. 2017) measures the degree of local drift that describes regional density changes in the input data. The approach proposed by Dasu et al. (2006) structures observations from two windows (sliding or fixed) in a kdq-tree. For each node, they measure the KL-divergence between observations from both windows. However, Qahtan et al. (2015) show experimentally that this approach is not suitable for high-dimensional data.

IBDD (de Souza et al. 2020) and WATCH (Faber et al. 2021) specifically address challenges arising from high-dimensional data. The former monitors the mean squared deviation between two equally sized windows. The latter monitors the Wasserstein distance between a reference and a sliding window. However, both cannot detect change subspaces or measure severity.

2.4 Offline change point detection

Offline change point detection, also known as signal segmentation, divides time series of a given length into K homogeneous segments (Truong et al. 2020). Many of the respective algorithms are not suitable for data streams: Some require specifying K a priori (Bai and Perron 2003; Harchaoui and Cappe 2007; Lung-Yut-Fong et al. 2015); others (Killick et al. 2012; Lajugie et al. 2014; Matteson and James 2014; Chakar et al. 2017; Garreau and Arlot 2018) scale superlinearly with time. WATCH (Faber et al. 2021), discussed above, is the state of the art extension of offline change point detection to data streams.

2.5 Change subspace

The notion of a change subspace is different from the existing notion of change region (Lu et al. 2019). The former describes a subset of dimensions that changed, the latter identifies density changes in some local region, e.g., a hyper-rectangle or cluster (Liu et al. 2017). Our definition of change subspaces is related to marginal change magnitude (Webb et al. 2018), but is more general since it can also accomodate changes in a subspace’s joint distribution.

Because high-dimensional spaces are typically sparse (due to the curse of dimensionality), identifying density changes in them is not effective. On the other hand, knowing that a change affected a specific set of dimensions can help identify the cause of the change, as we have motivated in our introductory example. Thus, we focus on detecting change subspaces in this work.

In the domain of statistical process control, some approaches extend well-known methods, such as Cusum (Page 1954) or Shewhart charts (Shewhart 1930), to multiple dimensions. They address the problem of identifying change subspaces to some extent, however, they often make unrealistic assumptions: they focus on Gaussian or sub-Gaussian data (Chaudhuri et al. 2021; Xie et al. 2020), require that different dimensions are initially independent (Chaudhuri et al. 2021), require subspace changes to be of low rank (Xie et al. 2020), or assume that the size of the change subspace is known a priori (Jiao et al. 2018).

From the approaches reviewed in Sect. 2.3 only AdwinK and IKS identify the corresponding change subspace. However, both approaches do not find changes that hide in subspaces, e.g., correlation changes, because they monitor each dimension in isolation. In contrast, our approach aims to learn the relationships between different dimensions so that it can detect such changes. Next, AdwinK cannot identify subspaces with fewer than k dimensions.

2.6 Change severity

According to Lu et al. (2019), change severity is a positive measure of the discrepancy between the data observed before and after the change. One can either measure the divergence between distributions directly, as done by kdq-Tree (Dasu et al. 2006), LDD-DSDA (Liu et al. 2017), and WATCH (Faber et al. 2021), or indirectly with a score that correlates with change severity, as done by D3 (Gözüaçık et al. 2019). Following this reasoning, an approach that satisfies R3 should compute a score that depends on the change severity (Gözüaçık et al. 2019; Dasu et al. 2006; de Souza et al. 2020; Qahtan et al. 2015; Faber et al. 2021), i.e., the higher the score, the higher the severity. Finally, hypothesis-testing-based approaches, such as ADWIN (Bifet and Gavaldà 2007), SeqDrift2 (Pears et al. 2014), AdwinK (Faithfull et al. 2019), or IKS (dos Reis et al. 2016), do not quantify change severity: a slight change observed over a longer time can lead to the same p-value as a severe change observed over a shorter time, hence p is not informative about change severity.

2.7 Pattern based change detection

A related line of research, pattern-based change detection, deals with identifying changes in temporal graphs (Loglisci et al. 2018; Impedovo et al. 2019, 2020a, b). In particular, Loglisci et al. (2018) detect changes in the graph, identify the affected subgraphs, and quantify the amount of change for these subgraphs. This is similar to our methodology. However, these methods work well with graph data, but we are dealing with vector data. To apply these methods in our context, one would need to create a graph, e.g., by representing each dimension as a node and indicating pairwise correlations with edges. However, constructing such a graph becomes impractical for high-dimensional observations because of the exponentially growing number of subspaces.

2.8 Competitors

In our experiments, we compare to AdwinK, IKS, D3, IBDD, and WATCH. IBDD, WATCH, and D3 are recent change detectors for multivariate and high-dimensional data that fulfill R3. AdwinK extends the ADWIN algorithm to the multivariate case and fulfills R2. Finally, IKS is the only approach employing a non-parametric two-sample test for change detection while also satisfying R2.

3 Preliminaries

We are interested in finding changes in the last t observations \(S=(x_1, x_2, \ldots , x_t)\) from a stream of data. Each \(x_i\) is a d-dimensional vector independently drawn from a (unknown) distribution \(F_i\). We assume without loss of generality that each vector coordinate is bounded in [0, 1], i.e., \(x_i \in [0,1]^d\).

Definition 1

(Change) A change occurs at time point \(t^*\) if the data-generating distribution changes after \(t^*\): \(F_{t^*} \ne F_{t^*+1}\).

In high-dimensional data, changes typically affect only a subset of dimensions, which we call the change subspace. Let \(D=\{1,2,\dots ,d\}\) be the set of dimensions and \(F^{D'}_i\) be the joint distribution of \(F_i\) observed in the subspace \(D' \subseteq D\) at time step i. We define the change subspace as follows:

Definition 2

(Change subspace) The change subspace \(D^*\) at time \(t^*\) is the union of all \(D' \subseteq D\) in which the joint distribution \(F^{D'}\) changed and which does not contain a subspace \(D''\) for which \(F^{D''}_{t^*} \ne F^{D''}_{t^*+1}\).

If the dimensions in \(D^*\) are uncorrelated, then changes will be visible on the marginal distributions, i.e., all \(D'\) are of size 1. However, changes may only be detectable w.r.t the joint distribution of \(D^*\) or the union of its subspaces of size greater than 1, which our definition accommodates. Note that the definition can also handle multiple co-occurring changes and considers them as one single change. Last, change severity measures the difference between \(F^{D^*}_{t^*}\) and \(F^{D^*}_{t^*+1}\):

Definition 3

(Change severity) The severity of a change is a positive function \(\Delta \) of the mismatch between \(F^{D^*}_{t^*}\) and \(F^{D^*}_{t^*+1}\).

Since we do not know the true distributions \(F_{t^*}\) and \(F_{t^*+1}\), the best we can do is detecting changes and their characteristics based on the observed data.

4 Approach

4.1 Principle of ABCD

Direct comparison of high-dimensional distributions is impractical as it requires many samples (Gretton et al. 2012). Yet the number of variables required to describe such data with high accuracy is often much smaller than d (Lee and Verleysen 2007). Dimensionality reduction techniques let us encode observations in fewer dimensions. The more information encodings retain, the better one can reconstruct (decode) the original data. However, if the distribution changes, the reconstruction will degrade and produce higher errors.

We leverage this principle in ABCD by monitoring the reconstruction loss of an encoder-decoder model \(\psi \circ \phi \) for some encoder function \(\phi \) and decoder function \(\psi \). Figure 1 illustrates this. Specifically, we first learn \(\phi : [0,1]^d \rightarrow [0,1]^{d'}\) with \(d' = \lfloor \eta d\rfloor < d\), \(\eta \in ({1}/{d},1)\), mapping the data to fewer dimensions, and \(\psi : [0,1]^{d'} \rightarrow [0,1]^d\). Then, we monitor the loss between each \(x_t\) and its reconstruction \(\hat{x}_t = \psi \circ \phi (x_t) = \psi (\phi (x_t))\):

We hypothesize that distribution changes lead to outdated encoder-decoder models—see for example (Jaworski et al. 2020) for empirical evidence. Hence, we assume that changes in the reconstruction affect the mean \(\mu _{t^*+1}\) of the loss, because the model can no longer accurately reconstruct the input:

We can now replace the definition of change in high-dimensional data with an easier-to-evaluate, univariate proxy:

It allows detecting arbitrary changes in the original (high-dimensional) distribution as long as they affect the average reconstruction loss of the encoder-decoder. Since the true \(\mu _{t^*}\) and \(\mu _{t^*+1}\) are unknown, we estimate them from the stream:

4.2 Detecting the change point

ABCD detects a change at \(t^*\) if \({\hat{\mu }}_{1,t^*}\) differs significantly from \({\hat{\mu }}_{t^*+1,t}\). To quantify this, we derive a test based on Bernstein’s inequality (Bernstein 1924). It is often tighter than more general alternatives like Hoeffding’s inequality (Boucheron et al. 2013). Let \({\hat{\mu }}_1, {\hat{\mu }}_2\) be the averages of two independent samples from two univariate random variables. One wants to evaluate if both random variables have the same expected values: The null hypothesis \(H_0\) is \(\mu _1 = \mu _2\). Based on the two samples, one rejects \(H_0\) if \(\Pr \left( |{\hat{\mu }}_1 - {\hat{\mu }}_2|\ \ge \epsilon \right) \le \delta \) where \(\delta \) is a preset significance level. The following theorem allows evaluating Eq. (3) based on Bernstein’s inequality.

Theorem 1

(Bound on \(\Pr \left( |\hat{\mu }_1 - \hat{\mu }_2|\ge \epsilon \right) \)) Given two independent samples \(X_1,X_2\) of size \(n_1\) and \(n_2\) from two random variables with unknown expected values \(\mu _1, \mu _2\) and variances \(\sigma ^2_1, \sigma ^2_2\). Let \(\hat{\mu }_1, \hat{\mu }_2\) denote the sample means and let \(|\mu _1-x_i|< M\) for all \(x_i\in X_1\) and \(|\mu _2-x_i|< M\) for all \(x_i\in X_2\) respectively. Assuming \(\mu _1 = \mu _2\), we have:

Proof

We follow the same steps as in Bifet and Gavaldà (2007) and Pears et al. (2014).

Recall Bernstein’s inequality: Let \(x_1, \ldots , x_n\) be independent random variables with sample mean \({\hat{\mu }} = 1/n\sum x_i\) and expected value \(\mu \) s.th. \(\forall x_i: |x_i - \mu |\le M\). Then, for all \(\epsilon > 0\),

We apply the union bound to \(\Pr \left( |\hat{\mu }_1 - \hat{\mu }_2|\ge \epsilon \right) \). For all \(\kappa \in [0,1]\), we have:

Substituting above with Bernstein’s inequality completes the proof. \(\square \)

With regard to change detection, one can use Eq. (5) to evaluate for a time point k if a change occurred. The question is, however, how to choose \(\epsilon \) to limit the probability of false alarm at any time t to a maximum \(\delta \).

Our approach is to set \(\epsilon \) to the observed \(|\hat{\mu }_{1,k} - \hat{\mu }_{k+1,t}|\) and to set \(n_1 = k\), \(n_2 = t-k\). The result bounds the probability of observing \(|\hat{\mu }_{1,k} - \hat{\mu }_{k+1,t}|\) between two independent samples of sizes k and \(t-k\) under \(H_0\). If this probability is very low, the distributions must have changed at k. Then, we search for changes at multiple time points k in the current window. Hence, we obtain multiple such probability estimates; our change score is their minimum:

The corresponding change point \(t^*\) splits \((L_1, L_2, \ldots , L_t)\) into the two subwindows with the statistically most different mean.

4.2.1 Choice of parameter \(\kappa \)

The bound in Eq. (5) holds for any \(\kappa \in [0,1]\). A good choice, however, provides a tighter estimate, resulting in faster change detection for a given rate of allowed false alarms \(\delta \). Bifet and Gavaldà (2007) suggest to choose \(\kappa \) s.th. \(Pr(|\hat{\mu }_1 - \mu _1 |\ge \kappa \epsilon ) \approx Pr(|\hat{\mu }_2 - \mu _2|\ge (1- \kappa )\epsilon )\), that approximately minimizes the upper bound. Substituting both sides with Bernstein’s inequality, we get

Setting \(n_1 = rn_2\) and simplifying, we have

To solve for \(\kappa \), note that \(|\hat{\mu }_{1,k} - \hat{\mu }_{k+1,t}|\approx 0\) for large enough k and \(t-k\) while there is no change. This leads to a change score \(p \gg \delta \) for any choice of \(\kappa \). Hence, choosing \(\kappa \) optimal is irrelevant while there is no change.

In contrast, if a change occurs, the change in the model’s loss dominates the variance in both subwindows, leading to \( M\epsilon \gg \sigma _1^2, \sigma _2^2\). In that case, the influence of \(\sigma _1^2, \sigma _2^2\) is negligible for sufficiently large \(\kappa \) and \(1-\kappa \):

Solving Eq. (11) for \(\kappa \) results in our recommendation for \(\kappa \) (Eq. (12) which we restrict to \([\kappa _{min}, 1-\kappa _{min}]\) with \(\kappa _{min}=0.05\).

4.2.2 Minimum sample sizes and outlier sensitivity

This section investigates the conditions under which ABCD detects changes.

We derive a minimum size of the first window above which ABCD detects a change. It bases on the fact that the number of observations before an evaluated time point k remains fixed while the number of observations after k grows with t. Those counts are \(n_1=k\) and \(n_2=t-k\) in Eq. (5). Also, since we consider bounded random variables, their variance is bounded as well. Hence, the second term in Eq. (5) approaches 0 for any \(\epsilon > 0\). With this, solving Eq. (5) for \(n_1\) yields:

By setting \(\epsilon = |{\hat{\mu }}_1 - {\hat{\mu }}_2 |\) we see that the required size of the first window decreases the larger the change in the average reconstruction error. For example, with \(M=1\), \(\epsilon = \sigma _1 = 0.1\), and \(\delta = 0.05\) our approach requires \(n_1 \ge 32\).

Since ABCD detects changes in the average reconstruction loss of a bounded vector, it is stable with respect to outliers as long as they are reasonably rare. To see this, assume w.l.o.g. that window 1 contains \(n_{out}\) outliers and that \(\epsilon >0\). One can show that the average of the outliers, \({\hat{\mu }}_{out}\), must exceed the average of the remaining inliers, \({\hat{\mu }}_{in}\), by \(n_1\epsilon /n_{out}\). In the example above, a single outlier would thus have to exceed \({\hat{\mu }}_{in}\) by \(n_1\epsilon =3.2\). This, however, is impossible because \(M=1\) bounds the reconstruction loss.

4.3 Detecting the change subspace

After detecting a change, we identify the change subspace. Restricting the encoding size to \(d' < d\) forces the model to learn relationships between different input dimensions. As a result, the loss observed for dimension j contains not only information about the change in that dimension (i.e., the marginal distribution in j changes), but also about correlations influencing dimension j. Hence, we can detect changes in the marginal- and joint-distributions by evaluating in which dimensions the loss changed the most.

Algorithm 1 describes how we identify change subspaces. For each dimension j, we compute the average reconstruction loss (the squared error in dimension j) before and after \(t^*\), denoted \(\hat{\mu }^j_{1,t^*},\hat{\mu }^j_{t^*+1,t}\) (lines 5 and 6), and the standard deviation \(\sigma ^j_{1,t^*}, \sigma ^j_{t^*+1,t}\) (lines 6 and 7). We then evaluate Eq. (5), returning an upper bound on the p-value in the range (0, 4] for dimension j (line 9). If \(p_j < \tau \in [0,4]\), an external parameter for which we give a recommendation later on, we add j to the change subspace (lines 10 and 11).

4.4 Quantifying change severity

ABCD provides a measure of change severity in the affected subspace, based on the assumption that the loss in the change subspace increases with severity. Hence, we compute the average reconstruction loss observed in \(D^*\) before and after the change,

and the standard deviation observed before the change:

We then standard-normalize the average reconstruction loss \(\hat{\mu }_{t^*+1}^{D^*}\) observed after the change:

Intuitively, \(\Delta \) is the standard deviation of model’s loss on the new distribution.

4.5 Working with windows

In comparison to most approaches, ABCD evaluates multiple possible change points within an adaptive time interval \([1, \ldots , t]\). This frees the user from choosing the window size a-priori and allows to detect changes at variable time scales. Next, we discuss how to efficiently evaluate those time points.

4.5.1 Maintaining loss statistics online

To avoid recomputing average reconstruction loss values and their variance for multiple time points every time new observations arrive, we store Welford aggregates \(A_{1,k}\) summarizing the stream in the interval \([1, \ldots , k]\). Each aggregate \(A_{1,k}\) is a tuple containing the average reconstruction loss \({\hat{\mu }}_{1,k}\) and the sum of squared differences \(ssd_{1,k} = k^{-1} \sum _{j = 1}^k L_j\). We store these aggregates for the time interval \([1, \ldots , t]\).

Creating a new aggregate Every time a new observation with loss \(L_{t}\) arrives, we create a new aggregate based on the previous aggregate \(A_{1,t-1} = (\hat{\mu }_{1,t-1}, ssd_{1,t-1})\) in \(\mathcal {O}(1)\) using Welford’s algorithm (Knuth 1997):

Computing the statistics Two aggregates \(A_{1,k}\) and \(A_{1,t}\), \(t>k\) overlap in the time interval \([1, \ldots , k]\). We leverage this overlap to derive an aggregate \(A_{k+1,t} = (\hat{\mu }_{k+1,t}, ssd_{k+1,t})\) representing the time interval \([k+1, \ldots , t]\). Equations (19) and (20) are based on Chan’s method for combining variance estimates of non-overlapping samples (Chan et al. 1982).

From \(ssd_{1,k}\) and \(ssd_{k+1,t}\) we can compute the sample variances as follows:

Derivation

Given two non-overlapping samples \(A = \{x_1, \ldots , x_m\}\) and \(B= \{x_1, \ldots , x_n\}\) of a real random variable. Let \(T_A = \sum _{i=1}^m x_i\) and \(T_B = \sum _{i=1}^n x_i\) be the sums of the samples and \(ssd_A = \sum _{i=1}^m (x_i - m^{-1}T_A)^2\) and \(ssd_B = \sum _{i=1}^n (x_i - n^{-1}T_B)^2\) be the sums of squared distances from the mean.

For the union of both sets \(AB = A \cup B\) we have \(T_{AB} = T_A + T_B\), which is equivalent to \((m+n){\hat{\mu }}_{AB} = m{\hat{\mu }}_A + n{\hat{\mu }}_B\). Solving for \({\hat{\mu }}_B\) gives

Substituting \(n=t-k\), \(m=k\), \({\hat{\mu _A}} = {\hat{\mu }}_{1,k}\), \({\hat{\mu _B}} = {\hat{\mu }}_{k+1,t}\), and \({\hat{\mu }}_{1,t} = {\hat{\mu }}_{AB}\) gives Eq. (19); next we derive Eq. (20). Chan et al. (1982) state:

which is equivalent to

Solving for \(ssd_B\), applying the former substitutions, and setting \(ssd_A = ssd_{1,k}\), \(ssd_B = ssd_{k+1,t}\), and \(ssd_{1,t} = ssd_{AB}\) results in Eq. (20).

4.6 Implementation

Algorithm

One can implement ABCD as a recursive algorithm, see Algorithm 2, which restarts every time a change occurs. We keep a data structure W that contains the aggregates, instances, and reconstructions. W can either be empty, or, in the case of a recursive execution, already contain data from the previous run.

Prior to execution, our algorithm must first obtain a model of the current data from an initial sample of size \(n_{min}\). If necessary, ABCD allows enough instances to arrive (lines 5–7). Larger choices of \(n_{min}\) allow for better approximations of the current distribution but delay change detection. Hence our recommendation is to set \(n_{min}\) as small as possible to still learn the current distribution; a default of \(n_{min} = 100\) has worked well for us.

Afterwards, the algorithm trains the model using the instances in W (lines 8–9). ABCD can in principle work with various encoder-decoder models; thus we deal with tuning the model only on a high level. Nonetheless, we give recommendations in our sensitivity study later on.

After model training, ABCD detects changes. It reconstructs each new observation \(x_{t+1}\) (line 11), creates a new aggregate \(A_{1,t+1}\) (line 12), and adds \(w_{t+1} :=(A_{1,t+1}, \hat{x}_{t+1}, x_{t+1})\) to W (lines 13–14). Our approach then computes change score p and change point \(t^*\) (lines 15–16). If \(p < \delta \), it detects a change.

Once ABCD detects a change, it identifies the corresponding subspace and evaluates its severity (lines 21–22). Then it adapts W by dropping the outdated part of the window (line 23), including all information obtained with the outdated model. At last, we restart ABCD with the adapted window (line 24).

Discussion

In the worst case our approach consumes linear time and memory because W grows linearly with t. However, we can simply restrict the size of W to \(n_{max}\) items for constant memory or evaluate only \(k_{max}\) window splits for constant runtime. In the latter case we split W at every \(t/k_{max}\)th time point. Regarding \(n_{max}\), it is beneficial that the remaining aggregates still contain information about all observations in \((1, \ldots , t)\). Hence, ABCD considers the entire past since the last change even though one restricts the size of W.

ABCD can work with any encoder-decoder model, such as deep neural networks. However, handling a high influx of new observations faster than the model’s processing capability can be challenging. Assuming that \(\psi \circ \phi \in \mathcal {O}(g(d))\) for some function g of dimensionality d, the processing time of a single instance during serial execution is in \(\mathcal {O}\left( g(d) + k_{max}\right) \). Nevertheless, both the deep architecture components and the computation of the change score (cf. Eq. 8) can be executed in parallel using specialized hardware.

Dimensionality reduction techniques are often already present in data stream mining pipelines, for example as a preprocessing step to improve the accuracy of a classifier (Yan et al. 2006). Reusing an existing dimensionality reduction model makes it is easy to integrate ABCD into an existing pipeline.

Bernstein’s inequality holds for zero-centered bounded random variables that take absolute values of at maximum M almost surely. While \(M=1\) serves as a theoretical upper limit of the zero-centered reconstruction error \(L_t - \mathbb {E}[L_t]\) for \(x_t\in [0,1]^d\), we observe that this theoretical limit is very conservative in practice (cf. “Reconstruction loss over time” section in “Appendix”). In fact, observing an error of 1 corresponds to an instance and reconstruction of \(x = [0]^d\) and \({\hat{x}} = [1]^d\). This leads us to use \(M=0.1\) in our experiments.

5 Experiments

This section describes our experiments and results. We first describe the experimental setting (Sect. 5.1). Then we analyze ABCD’s change detection performance (Sect. 5.3), its ability to find change subspaces and quantify change severity (Sect. 5.4), and its parameter sensitivity (Sect. 5.5).

5.1 Algorithms

We evaluate ABCD with different encoder-decoder models: (1) Principal Component Analysis (PCA) (\(d'=\eta d\)), (2) Kernel-PCA (\(d' = \eta d\), RBF-kernel), and (3) a standard fully-connected autoencoder model with one hidden ReLU layer (\(d'=\eta d\)) and an output layer with sigmoid activation. For (1) and (2), we rely on the default scikit-learn implementations. We implement the autoencoder (3) in pytorch and train it through gradient descent using E epochs and an Adam optimizer with default parameters according to Kingma and Ba (2015); see “Training of autoencoder” section in “Appendix” for pseudocode of the autoencoder training procedure.

We compare ABCD with AdwinK, IKS, IBDD, WATCH, and D3 (c.f. Section 2). We evaluate for each approach a large grid of parameters, shown in Table 2. Whenever possible, the evaluated grids of hyperparameters for competitors base on recommendations in respective papers. Otherwise, we choose them based on preliminary experiments. For ABCD, we evaluate larger and smaller values for \(\delta \), \(\eta \) and E to observe our approach’s sensitivity to those parameters. The choice of \(\tau =2.5\) is our recommended default based on our sensitivity study in Sect. 5.5. Last, we set \(n_{min}=100\) and \(k_{max}=20\), minimum values that have worked well in preliminary experiments.

5.2 Datasets

There are not many public benchmark data streams for change detection. Thus we generate our own from seven real-world (rw) and synthetic (syn) classification datasets, similar to Faber et al. (2021) and Faithfull et al. (2019). We simulate changing data streamsFootnote 2 by sorting the data by label, unless stated otherwise. If the label changes, a change has occurred. In real-world data streams, the number of observations between changes depends on each dataset, reported below. In the synthetic streams, we introduce changes every 2000 observations, which is a relatively large interval, to assess whether some approaches generate many false alarms. The generators base on the following datasets:

-

HAR (rw) The dataset Human Activity Recognition with Smartphones (Anguita et al. 2013) (\(d=561\)) bases on smartphone accelerometer and gyroscope readings for different actions a person performs. A change occurs on average every 1768 observations.

-

GAS (rw) This data set (Vergara et al. 2011) (\(d=128\)) contains data from 16 sensors exposed to 6 gases at various concentrations. A change occurs on average every 2265 observations.

-

LED (syn) The LED generator samples instances representing a digit on a seven segment display. It contains 17 additional random dimensions. We add changes by varying the probability of bit-flipping in the relevant dimensions.

-

RBF (syn) The RBF generator (Bifet et al. 2010) starts by drawing a fixed number of centroids. For each new instance, the generator chooses a centroid at random and adds Gaussian noise. To create changes, we increment the seed of the generator resulting in different centroids. We then use samples from the new generator in a subspace of random size.

-

MNIST, FMNIST, and CIFAR (syn) Those data generators sample from the image recognition datasets MNIST (LeCun et al. 1998), Fashion MNIST (FMNIST) (Xiao et al. 2017) (\(d=784)\), and CIFAR (Krizhevsky et al. 2009) (\(d=1024\), grayscale).

Changes can occur rapidly (“abrupt” or “sudden”) or in time intervals (“gradual” or “incremental”). The shorter the interval, the more sudden the change. We vary the interval size between 1 and 300 unless stated otherwise. Real-world and image data do not have a ground truth for change subspaces and severity. Thus we generate three additional data streams:

-

HSphere (syn) This generator draws from a \(d^*\)-dimensional hypersphere bound to [0, 1] and adds \(d-d^*\) random dimensions. We vary the radius and center of the hypersphere to introduce changes. The change subspace contains those dimensions that define the hypersphere.

-

Normal-M/V (syn) These generators sample from a \(d^*\)-dimensional normal distribution and add \(d-d^*\) random dimensions. For type M, changes affect the distribution’s mean, for V we change the distribution’s variance.

5.3 Change point detection

We use precision, recall, and F1-score to evaluate the performance of the approaches at detecting changes. We define true positives (TP), false positives (FP) and false negatives (FN) as follows:

-

TP A change was detected before the next change.

-

FN A change was not detected before the next change.

-

FP A change was detected although no change occurred.

Also, we report the mean time until detection (MTD) indicating the average number of instances until a change is detected.

Figure 2 shows F1-score, precision, recall, and MTD for all datasets and algorithm, as well as a column “Average” that summarizes across datasets. Each box contains the results for the grid of hyperparameters shown in Table 2. We see that our approach outperforms its competitors w.r.t. F1-score and precision. It also is competitive in terms of recall, though it loses against IKS, IBDD, and WATCH. These approaches seem overly sensitive. The results also indicate that ABCD works well for a wide range of hyperparameters. One reason is that ABCD uses adaptive windows, thereby eliminating the effect of a window size parameter (demonstrated in Sect. 5.6). Another reason is that ABCD detects changes in reconstruction loss irrespective of the actual quality of the reconstructions. For instance, Kernel PCA and PCA produce reconstructions of different accuracy in our experiments. However, for both models, the average accuracy changes when the stream changes, which is what our algorithm detects. Refer to “Reconstruction loss over time” section “Appendix” for an illustration of the models’ reconstruction loss over time. Hence, our reported results do not yield information about the actual accuracy of the underlying encoder-decoder models.

ABCD has a higher MTD than D3, IBDD, and IKS, i.e., it requires more data to detect changes. However, those competitors are much less conservative and detect many more changes than exist in the data. Hence they have low precision but high recall—this leads to a lower MTD.

Table 3 reports the results of all approaches with their best hyperparameters. WATCH and D3 achieve relatively high F1-score and precision. In fact, those approaches are our strongest competitors although we still outperform them by at least 3 %. Further, WATCH has an MTD of 626, which is more than ABCD while D3 and ABCD have a comparable MTD.

ABCD has much higher precision than its competitors. We assume this is because ABCD (1) leverages the relationships between dimensions, in comparison to AdwinK, IKS, or IBDD, and (2) learns those relationships more effectively than, say, D3 or WATCH. For example, we observed in our experiments that WATCH was frequently unable to accurately approximate the Wasserstein distance in high-dimensional data.

ABCD has lower recall than most competitors, partly due to their over-sensitivity. In this regard, our approach might benefit from application-specific encoder-decoder models that leverage structure in the data, such as spacial relationships between the pixels of an image, more effectively.

5.4 Change subspace and severity

We now evaluate change subspace identification and change severity estimation. We set \(d=\{24, 100, 500\}\) and vary the change subspace size \(d^*\) randomly in [1, d] (except for LED, here the subspace always contains dimensions 1–7). We set the ground truth for the severity to the absolute difference between the parameters that define the concepts, e.g., the hypersphere-radius in HAR before and after the change. We report an approach’s subspace detection accuracy (SAcc.), where true positives (true negatives) represent those dimensions that were correctly classified as being member (not being member) of the change subspace. We use Spearman’s correlation between the detected severity and the ground truth. We also report the F1-score for detecting change points.

Figure 3 shows our results. As before, each box summarizes the results for the grid of evaluated hyperparameters.

Comparing the two approaches, AdwinK and IKS, that monitor each dimension separately, we see that the former can only detect changes that affect the mean of the marginal distributions (i.e., on Norm-M, LED). At the same time, the latter can also detect other changes (e.g., changes in variance). This is expected since AdwinK compares the mean in two windows while IKS compares the empirical distributions.

Regarding subspace detection, our approach achieves an accuracy of 0.72 for PCA, 0.78 for autoencoders, and 0.79 for Kernel PCA. AdwinK performs similarly well when changes affect the mean of the marginal distributions. Except on LED, IKS performs worse than ABCD and AdwinK, presumbably because IKS issues an alarm as soon as a single dimension changed.

The estimates of our approach correlate more strongly with the ground truth than those of competitors, with an average of 0.31 for PCA, 0.36 for Kernel PCA and 0.37 for Autoencoders. However, we expect more specialized models to better than our tested models. On LED, PCA-based models appear to struggle to separate patterns from noise, resulting in poor noise level estimates and low correlation scores.

5.5 Parameter sensitivity of ABCD

Sensitivity to \(\eta \)

Figure 4a plots F1 for different datasets over \(\eta \). We observe that the size of the bottleneck does not significantly impact the change detection performance of ABCD (ae) and ABCD (kpca). For PCA, however, too large bottlenecks seem to inhibit change detection on CIFAR, Gas, and MNIST. For those datasets, we assume that the change occurs along the retained main components, rendering it undetectable; see “Detectable and undetectable change for ABCD (pca)” section “Appendix” for an illustration. Figure 4b shows the subspace detection accuracy and Spearman’s \(\rho \). The influence of \(\eta \) on both metrics is low. As mentioned earlier, we assume that a change in reconstruction loss, rather than the quality of reconstruction itself, is crucial for ABCD. An exception is the LED dataset, on which PCA and Kernel-PCA are unable to provide a measure that positively correlates with change severity. We hypothesize that those methods struggle to separate patterns from noise, resulting in poor noise level estimates and low correlation scores.

Sensitivity to E

Fig. 4c plots our approach’s performance for different choices of E. Overall, our approach seems to be robust to the choice of E. On LED, however, larger choices of E lead to substantial improvements in F1-score. The reason may be that the autoencoder does not converge to a proper representation of the data for small E. To avoid this, we recommend choosing \(E \ge 50\) and to increase the value if one observes that the model has not yet converged sufficiently.

Sensitivity to \(\tau \)

Fig. 4d investigates how the choice of \(\tau \) affects the performance of ABCD at detecting subspaces. Since the change score in Eq. (5) provides an upper bound on the probability that a change occurred, the function can return values greater than 1, i.e,. in the range (0, 4]. Hence we vary \(\tau \) in that range and record the obtained subspace detection accuracy. For all approaches we achieve optimal accuracy at \(\tau \approx 2.5\). This is probably because some dimensions could change more severely than others, resulting in variations of the change scores observed in the different dimensions of the change subspace. Based on our findings we recommend \(\tau =2.5\) as default.

5.6 Ablation study on window types

Next, we investigate the effect of different window types on change detection performance. We evaluate those commonly found in change detection literature (and in our competitors) and couple them with encoder-decoder models and the probability bound in Eq. (5). In particular, we compare: (1) Adaptive windows (AW), as in ADWIN, AdwinK, and our approach, (2) fixed reference windows (RW), as in IKS, (3) sliding windows (SW), as in WATCH, and (4) jumping windows (JW), as in D3. The latter “jump” every \(\rho |W |\) instances.

We evaluate the hyperparameters mentioned in Table 2. For example, because D3 uses jumping windows, we include the evaluated hyperparameters for D3 in our evaluation of jumping windows. In addition, we extend the grid with other reasonable choices since we already preselected those in Table 2 for our competitors in a preliminary study. For ABCD we use \(\eta =0.5\) and \(E=50\).

Table 4 reports the average over all hyperparameter combinations. AWs yield higher F1-score and recall than other techniques, while precision remains high (\(\ge 0.95\)). SWs have a lower MTD than AWs and hence seem to require a fewer instances until they detect a change. This is expected: in contrast to sliding windows, adaptive windows allow the detection of even slight changes after a longer period of time, resulting in both higher MTD and recall.

5.7 Runtime analysis

5.7.1 Comparison with competitors

Figure 5a shows the mean time per observation (MTPO) of ABCD and its competitors for \(d\in \{10, 100, 1000, 10,000\}\) running single-threaded. The results are averaged over all evaluated parameters (Table 2). ABCD (id) replaces the encoder-decoder model with the identity which does not cause overhead. This allows measuring how much the encoder-decoder model influences ABCD’s runtime. The results confirm that the runtime of ABCD alone, i.e, without the encoding-decoding-process, remains unaffected by a stream’s dimensionality.

We observe that our approach is able to process around 10,000 observations per second for \(d\le 100\). This is more than IKS, WATCH and AdwinK (except at \(d=10\)) but slower than D3 and IBDD. The reason is that our approach evaluates \(k_{max}\) possible change points in each time step. In high-dimensional data, our competitors’ MTPO grows faster than ABCD with PCA or KPCA; in fact, ABCD (pca) is second fastest after D3 for \(d\ge 1000\). An exception is WATCH at \(d=10000\). This is due to an iteration cap for approximating the Wasserstein distance restricting the approach’s MTPO.

5.7.2 Runtime depending on window size

Next, we investigate ABCD’s runtime for different choices of \(k_{max}\) and \(\eta \). We run this experiment on a single CPU thread. For all three evaluated models, the encoding-decoding of an observation has a time complexity of \(\mathcal {O}(\eta d^2)\); hence, ABCD’s processing time of one instance is in \(\mathcal {O}(\eta d^2 + k_{max})\). We therefore expect a quadratic increase in execution time with dimensionality and a linear increase with \(\eta \) and \(k_{max}\) when running on a single core.

The results in Fig. 5b show the influence of \(k_{max}\) on the execution time: \(k_{max}\) effectively restricts the MTPO as soon as \(|W |= k_{max}\). Afterwards, MTPO remains unaffected by \(|W|\). This also confirms that one can evaluate different possible change points in constant time using the proposed aggregates.

We show the runtime for different choices of bottleneck-size \(\eta \) in Fig. 5c. \(\eta \) has little influence on the runtime of ABCD with PCA and Kernel-PCA. However, coupled with an autoencoder (implemented in pytorch) we observe the expected linear increase in execution time from 0.1 ms for \(\eta = 0.3\) to 0.3 ms for \(\eta = 0.7\). Considering that change detection performance has shown to remain stable even for smaller choices of \(\eta \), we recommend \(\eta \le 0.5\) as default.

6 Conclusion

We presented a change detector for high-dimensional data streams, called ABCD, that monitors the reconstruction loss of an encoder-decoder-model in an adaptive window with a change score based on Bernstein’s inequality. Our approach identifies changes and change subspaces, and provides a severity measure that correlates with the ground truth. Since encoder-decoder models are already used in many domains (Rani et al. 2022), our approach is widely applicable. In the future, it would thus be interesting to test ABCD with application or data specific encoder-decoder models. For example, one might observe even better performance on streams of image data when applying convolutional autoencoders. Last, ABCD could also benefit from a theoretical analysis of the relationship between changes in data distribution and the loss of different encoder-decoder models.

References

Anguita D, Ghio A, Oneto L et al (2013) A public domain dataset for human activity recognition using smartphones. In: ESANN. https://www.esann.org/sites/default/files/proceedings/legacy/es2013-84.pdf

Bai J, Perron P (2003) Critical values for multiple structural change tests. Econometr J 6(1):72–78. https://doi.org/10.1111/1368-423X.00102

Bernstein SN (1924) On a modification of Chebyshev’s inequality and of the error formula of Laplace. Ann Sci Inst Sav Ukraine Sect Math

Bifet A, Gavaldà R (2007) Learning from time-changing data with adaptive windowing. In: Proceedings of the seventh SIAM international conference on data mining. SIAM, pp 443–448. https://doi.org/10.1137/1.9781611972771.42

Bifet A, Holmes G, Pfahringer B (2010) Leveraging bagging for evolving data streams. In: ECML PKDD, lecture notes in computer science, vol 6321. Springer, pp 135–150. https://doi.org/10.1007/978-3-642-15880-3_15

Boucheron S, Lugosi G, Massart P (2013) Concentration inequalities: a nonasymptotic theory of independence. Oxford University Pressm. https://doi.org/10.1093/acprof:oso/9780199535255.001.0001

Ceci M, Corizzo R, Japkowicz N et al (2020) ECHAD: embedding-based change detection from multivariate time series in smart grids. IEEE Access 8:156,053-156,066. https://doi.org/10.1109/ACCESS.2020.3019095

Chakar S, Lebarbier E, Lévy-Leduc C et al (2017) A robust approach for estimating change-points in the mean of an \(\operatorname{AR}(1)\) process. Bernoulli 23(2):1408–1447. https://doi.org/10.3150/15-BEJ782

Chan TF, Golub GH, LeVeque RJ (1982) Updating formulae and a pairwise algorithm for computing sample variances. Technical report, Heidelberg

Chaudhuri A, Fellouris G, Tajer A (2021) Sequential change detection of a correlation structure under a sampling constraint. In: ISIT, pp 605–610. https://doi.org/10.1109/ISIT45174.2021.9517736

Dasu T, Krishnan S, Venkatasubramanian S et al (2006) An information-theoretic approach to detecting changes in multi-dimensional data streams. In: Proceedings of the symposium on the interface of statistics, computing science, and applications (interface)

de Jong KL, Bosman AS (2019) Unsupervised change detection in satellite images using convolutional neural networks. In: IJCNN 2019. IEEE, pp 1–8. https://doi.org/10.1109/IJCNN.2019.8851762

de Souza VMA, Chowdhury FA, Mueen A (2020) Unsupervised drift detection on high-speed data streams. In: BigData. IEEE, pp 102–111. https://doi.org/10.1109/BigData50022.2020.9377880

dos Reis DM, Flach PA, Matwin S et al (2016) Fast unsupervised online drift detection using incremental Kolmogorov-Smirnov test. In: SIGKDD. ACM, pp 1545–1554. https://doi.org/10.1145/2939672.2939836

Faber K, Corizzo R, Sniezynski B et al (2021) WATCH: Wasserstein change point detection for high-dimensional time series data. In: Big data. IEEE, pp 4450–4459. https://doi.org/10.1109/BigData52589.2021.9671962

Faithfull WJ, Diez JJR, Kuncheva LI (2019) Combining univariate approaches for ensemble change detection in multivariate data. Inf Fusion 45:202–214. https://doi.org/10.1016/j.inffus.2018.02.003

Fouché E, Komiyama J, Böhm K (2019) Scaling multi-armed bandit algorithms. In: SIGKDD. ACM, pp 1449–1459. https://doi.org/10.1145/3292500.3330862

Garreau D, Arlot S (2018) Consistent change-point detection with kernels. Electron J Stat 12(2):4440–4486

Goldenberg I, Webb GI (2019) Survey of distance measures for quantifying concept drift and shift in numeric data. Knowl Inf Syst 60(2):591–615. https://doi.org/10.1007/s10115-018-1257-z

Gözüaçık O, Büyükçakır A, Bonab H et al (2019) Unsupervised concept drift detection with a discriminative classifier. In: CIKM. ACM, pp 2365–2368. https://doi.org/10.1145/3357384.3358144

Gretton A, Borgwardt KM, Rasch MJ et al (2012) A kernel two-sample test. J Mach Learn Res 13:723–773. https://doi.org/10.5555/2503308.2188410

Harchaoui Z, Cappe O (2007) Retrospective multiple change-point estimation with kernels. In: IEEE/SP 14th workshop on statistical signal processing, pp 768–772. https://doi.org/10.1109/SSP.2007.4301363

Impedovo A, Loglisci C, Ceci M et al (2020) Condensed representations of changes in dynamic graphs through emerging subgraph mining. Eng Appl Artif Intell 94(103):830. https://doi.org/10.1016/j.engappai.2020.103830

Impedovo A, Ceci M, Calders T (2019) Efficient and accurate non-exhaustive pattern-based change detection in dynamic networks. Lecture notes in computer science. Springer, vol 11828, pp 396–411. https://doi.org/10.1007/978-3-030-33778-0_30

Impedovo A, Mignone P, Loglisci C et al (2020b) Simultaneous process drift detection and characterization with pattern-based change detectors. Lecture notes in computer science. Springer, vol 12323, pp 451–467. https://doi.org/10.1007/978-3-030-61527-7_30

Iwashita AS, Papa JP (2019) An overview on concept drift learning. IEEE Access 7:1532–1547. https://doi.org/10.1109/ACCESS.2018.2886026

Jaworski M, Rutkowski L, Angelov P (2020) Concept drift detection using autoencoders in data streams processing. In: ICAISC. Springer, pp 124–133. https://doi.org/10.1007/978-3-030-61401-0_12

Jiao Y, Chen Y, Gu Y (2018) Subspace change-point detection: a new model and solution. IEEE J Sel Top Signal Process 12(6):1224–1239. https://doi.org/10.1109/JSTSP.2018.2873147

Khamassi I, Sayed Mouchaweh M, Hammami M et al (2015) Self-adaptive windowing approach for handling complex concept drift. Cogn Comput 7(6):772–790. https://doi.org/10.1007/s12559-015-9341-0

Killick R, Fearnhead P, Eckley IA (2012) Optimal detection of changepoints with a linear computational cost. J Am Stat Assoc 107(500):1590–1598. https://doi.org/10.1080/01621459.2012.737745

Kingma DP, Ba J (2015) Adam: a method for stochastic optimization. In: ICLR. arXiv:1412.6980

Knuth DE (1997) The art of computer programming: seminumerical algorithms, vol 2. Addison-Wesley

Krizhevsky A, Hinton G et al (2009) Learning multiple layers of features from tiny images. Technical report

Lajugie R, Bach FR, Arlot S (2014) Large-margin metric learning for constrained partitioning problems. In: ICML, JMLR workshop and conference proceedings, vol 32. JMLR.org, pp 297–305. http://proceedings.mlr.press/v32/lajugie14.html

LeCun Y, Cortes C, Burges C (1998) The MNIST database of handwritten digits. http://yann.lecun.com/exdb/mnist/

Lee JA, Verleysen M (2007) Nonlinear dimensionality reduction. Springer. https://doi.org/10.1007/978-0-387-39351-3

Liu A, Song Y, Zhang G et al (2017) Regional concept drift detection and density synchronized drift adaptation. In: IJCAI. ijcai.org, pp 2280–2286. https://doi.org/10.24963/ijcai.2017/317

Liu P, Wang J, Wang Z et al (2019) High-dimensional data abnormity detection based on improved Variance-of-Angle (VOA) algorithm for electric vehicles battery. In: 2019 IEEE energy conversion congress and exposition (ECCE), pp 5072–5077. https://doi.org/10.1109/ECCE.2019.8912777

Loglisci C, Ceci M, Impedovo A et al (2018) Mining microscopic and macroscopic changes in network data streams. Knowl Based Syst 161:294–312. https://doi.org/10.1016/j.knosys.2018.07.011

Lu J, Liu A, Dong F et al (2019) Learning under concept drift: a review. IEEE Trans Knowl Data Eng 31(12):2346–2363. https://doi.org/10.1109/TKDE.2018.2876857

Lung-Yut-Fong A, Lévy-Leduc C, Cappé O (2015) Homogeneity and change-point detection tests for multivariate data using rank statistics. Journal de la Société Française de Statistique 156(4):133–162

Matteson DS, James NA (2014) A nonparametric approach for multiple change point analysis of multivariate data. J Am Stat Assoc 109(505):334–345. https://doi.org/10.1080/01621459.2013.849605

Montiel J, Read J, Bifet A et al (2018) Scikit-multiflow: A multi-output streaming framework. JMLR 19(1):2915–2914

Mowbray M, Savage T, Wu C et al (2021) Machine learning for biochemical engineering: a review. Biochem Eng J 172:108,054

Naseer S, Ali RF, Dominic PDD et al (2020) Learning representations of network traffic using deep neural networks for network anomaly detection: a perspective towards oil and gas IT infrastructures. Symmetry 12(11):1882. https://doi.org/10.3390/sym12111882

Page ES (1954) Continuous inspection schemes. Biometrika 41(1–2):100–115. https://doi.org/10.1093/biomet/41.1-2.100

Pears R, Sakthithasan S, Koh YS (2014) Detecting concept change in dynamic data streams. Mach Learn 97(3):259–293. https://doi.org/10.1007/s10994-013-5433-9

Qahtan AA, Alharbi B, Wang S et al (2015) A PCA-based change detection framework for multidimensional data streams: Change detection in multidimensional data streams. In: SIGKDD. ACM, New York, NY, USA, pp 935–944. https://doi.org/10.1145/2783258.2783359

Rani R, Khurana M, Kumar A et al (2022) Big data dimensionality reduction techniques in IoT: review, applications and open research challenges. Clust Comput 25(6):4027–4049. https://doi.org/10.1007/s10586-022-03634-y

Shewhart WA (1930) Economic quality control of manufactured product, vol 9. https://doi.org/10.1002/j.1538-7305.1930.tb00373.x

Sun Y, Wang Z, Liu H et al (2016) Online ensemble using adaptive windowing for data streams with concept drift. Int J Distrib Sens Netw 12(5):4218,973:1-4218,973:9. https://doi.org/10.1155/2016/4218973

Suryawanshi S, Goswami A, Patil P et al (2022) Adaptive windowing based recurrent neural network for drift adaption in non-stationary environment. J Amb Intell Hum Comput 10:10. https://doi.org/10.1007/s12652-022-04116-0

Truong C, Oudre L, Vayatis N (2020) Selective review of offline change point detection methods. Signal Process. https://doi.org/10.1016/j.sigpro.2019.107299

Vergara A, Huerta R, Ayhan T et al (2011) Gas sensor drift mitigation using classifier ensembles. In: Proceedings of the fifth international workshop on knowledge discovery from sensor data. ACM, SensorKDD ’11, pp 16–24. https://doi.org/10.1145/2003653.2003655

Vrigkas M, Nikou C, Kakadiaris IA (2015) A review of human activity recognition methods. Front Robot AI 2:28. https://doi.org/10.3389/frobt.2015.00028

Webb GI, Lee LK, Goethals B et al (2018) Analyzing concept drift and shift from sample data. Data Min Knowl Discov 32(5):1179–1199. https://doi.org/10.1007/s10618-018-0554-1

Xiao H, Rasul K, Vollgraf R (2017) Fashion-MNIST: a novel image dataset for benchmarking machine learning algorithms. CoRR arXiv:1708.07747

Xie L, Xie Y, Moustakides GV (2020) Sequential subspace change point detection. Seq Anal 39(3):307–335. https://doi.org/10.1080/07474946.2020.1823191

Yan J, Zhang B, Liu N et al (2006) Effective and efficient dimensionality reduction for large-scale and streaming data preprocessing. IEEE Trans Knowl Data Eng 18(3):320–333. https://doi.org/10.1109/TKDE.2006.45

Zhao X, Wu J, Shi Y et al (2018) Fault diagnosis of motor in frequency domain signal by stacked de-noising auto-encoder. Comput Mater Contin 57(2):223–242

Acknowledgements

This work was supported by the German Research Foundation (DFG) Research Training Group GRK 2153: Energy Status Data—Informatics Methods for its Collection, Analysis and Exploitation.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Additional information

Responsible editor: Charalampos Tsourakakis.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

1.1 Training of autoencoder

Algorithm 3 describes the training of the autoencoder model as done in our experiments. First, we collect the training data from the current window W (line 2). Afterwards we perform gradient descent on \(X_{train}\) for E epochs at a learning rate of lr.

1.2 Detectable and undetectable change for ABCD (pca)

This section illustrates under which conditions one can use principal component analysis to detect change. Figure 6 shows data from two distributions: black points (e.g., before the change) plus the associated main principle component, and blue points (e.g., after the change). On the left, the change affects the correlation between Dim. 1 and Dim. 2. This leads to an increased reconstruction error for the points highlighted in blue. On the right, the change occurs along the main principle component. I.e., the variance along the main principle component has increased. Such kind of change is undetectable by ABCD (pca) as the reconstruction error remains unchanged.

1.3 Reconstruction loss over time

Figure 7 shows the reconstruction loss of the evaluated encoder-decoder models over the length of the stream. We observe that indeed the reconstruction loss decreases with increasing bottleneck size (controlled by \(\eta \)), and with increasing number of training epochs E (first three columns). Further, we see that regardless of E, \(\eta \), or the type of model, the reconstruction loss typically changes after a change point. After the change was detected, ABCD learns the new concept, which mostly leads to a decrease in reconstruction loss. Last, we observe that the theoretical limit of \(M=1\) for the absolute difference between the reconstruction loss and its expected value is overly conservative. A value of \(M=0.1\) seems to be a more realistic choice.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Heyden, M., Fouché, E., Arzamasov, V. et al. Adaptive Bernstein change detector for high-dimensional data streams. Data Min Knowl Disc 38, 1334–1363 (2024). https://doi.org/10.1007/s10618-023-00999-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10618-023-00999-5