Abstract

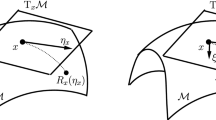

This paper considers the problem of minimizing the sum of a differentiable function and a nonsmooth function on a Riemannian manifold. In recent years, the proximal gradient method and its variants have been generalized to the Riemannian setting for solving such problems. Different approaches to generalizing the proximal mapping to the Riemannian setting lead to different versions of Riemannian proximal gradient methods. However, their convergence analyses all rely on solving the Riemannian proximal mapping exactly, which is either too expensive or impracticable. In this paper, we study the convergence of an inexact Riemannian proximal gradient method. It is proven that if the proximal mapping is solved sufficiently accurately, then global convergence and a local convergence rate based on the Riemannian Kurdyka–Łojasiewicz property can be guaranteed. Moreover, practical conditions on the accuracy for solving the Riemannian proximal mapping are provided. As a byproduct, the proximal gradient method on the Stiefel manifold proposed in Chen et al. [SIAM J Optim 30(1):210–239, 2020] can be viewed as an inexact Riemannian proximal gradient method provided the proximal mapping is solved to a certain accuracy. Finally, numerical experiments on sparse principal component analysis are conducted to test the proposed practical conditions.

Data availability

The data that support the findings of this study are available from the corresponding author upon request.

Notes

The commonly-used update expression is \(x_{k+1}=\arg \min _x\langle \nabla f(x_k),x-x_k\rangle _2+\frac{L}{2}\Vert x-x_k\Vert _2^2+g(x)\). We reformulate it equivalently for the convenience of the Riemannian formulation given later.
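As a minimal sketch of the update expression above, assume \(g(x) = \lambda \Vert x\Vert _1\) (an illustrative choice; the note does not fix \(g\)). The minimizer then has a closed form: soft-thresholding applied to a gradient step. The names `soft_threshold` and `prox_grad_step`, and the quadratic \(f\) used in the example, are hypothetical.

```python
import numpy as np

def soft_threshold(v, tau):
    """Proximal mapping of tau * ||.||_1: shrink each entry toward zero by tau."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def prox_grad_step(x_k, grad_f, L, lam):
    """One Euclidean proximal gradient step for f(x) + lam * ||x||_1.

    Minimizing <grad f(x_k), x - x_k> + (L/2) ||x - x_k||^2 + lam * ||x||_1
    over x is equivalent to soft-thresholding the point x_k - grad f(x_k) / L
    with threshold lam / L.
    """
    return soft_threshold(x_k - grad_f(x_k) / L, lam / L)

# Illustrative smooth part (an assumption): f(x) = 0.5 * ||x - b||^2, so L = 1.
b = np.array([3.0, -0.2, 0.5])
grad_f = lambda x: x - b
x_next = prox_grad_step(np.zeros(3), grad_f, L=1.0, lam=1.0)
```

In this example the step keeps only the first entry, since the other two entries of \(b\) have magnitude below the threshold \(\lambda / L = 1\).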

Note that if \(\Psi (\Lambda ) \ne 0\), then \(\eta\) defined by (42) may not be in \(\mathrm {T}_x \mathcal {M}\). Therefore, we add an orthogonal projection.

References

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2(1), 183–202 (2009). https://doi.org/10.1137/080716542

Beck, A.: First-Order Methods in Optimization. Society for Industrial and Applied Mathematics, Philadelphia, PA (2017). https://doi.org/10.1137/1.9781611974997

Darzentas, J.: Problem Complexity and Method Efficiency in Optimization (1983)

Nesterov, Y.E.: A method for solving the convex programming problem with convergence rate \(O(1/k^{2})\). Dokl. Akad. Nauk SSSR (In Russian) 269, 543–547 (1983)

Attouch, H., Bolte, J.: On the convergence of the proximal algorithm for nonsmooth functions involving analytic features. Math. Program. 116, 5–16 (2009)

Li, H., Lin, Z.: Accelerated proximal gradient methods for nonconvex programming. In: International Conference on Neural Information Processing Systems (2015)

Ghadimi, S., Lan, G.: Accelerated gradient methods for nonconvex nonlinear and stochastic programming. Math. Program. 59–99 (2016)

Ozolinš, V., Lai, R., Caflisch, R., Osher, S.: Compressed modes for variational problems in mathematics and physics. Proc. Natl. Acad. Sci. 110(46), 18368–18373 (2013). https://doi.org/10.1073/pnas.1318679110

Zou, H., Hastie, T., Tibshirani, R.: Sparse principal component analysis. J. Comput. Graph. Stat. 15(2), 265–286 (2006)

Huang, W., Wei, K.: An extension of fast iterative shrinkage-thresholding algorithm to Riemannian optimization for sparse principal component analysis. Numer. Linear Algebra Appl. (2021). https://doi.org/10.1002/nla.2409

Ulfarsson, M.O., Solo, V.: Sparse variable PCA using geodesic steepest descent. IEEE Trans. Signal Process. 56(12), 5823–5832 (2008). https://doi.org/10.1109/TSP.2008.2006587

Cai, T.T., Ma, Z., Wu, Y.: Sparse PCA: optimal rates and adaptive estimation. Ann. Stat. 41(6), 3074–3110 (2013). https://doi.org/10.1214/13-AOS1178

Xiao, N., Liu, X., Yuan, Y.: Exact penalty function for \(\ell _{2,1}\) norm minimization over the Stiefel manifold. Optimization Online (2020)

Ye, J., Zhao, Z., Wu, M.: Discriminative k-means for clustering. In: Platt, J., Koller, D., Singer, Y., Roweis, S. (eds.) Advances in Neural Information Processing Systems, vol. 20. Curran Associates, Inc. (2008). https://proceedings.neurips.cc/paper/2007/file/a5cdd4aa0048b187f7182f1b9ce7a6a7-Paper.pdf

Liang, X., Ren, X., Zhang, Z., Ma, Y.: Repairing sparse low-rank texture. In: Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C. (eds.) Computer Vision—ECCV 2012, pp. 482–495. Springer, Berlin (2012)

Mishra, A., Dey, D.K., Chen, K.: Sequential co-sparse factor regression. J. Comput. Graph. Stat. 26(4), 814–825 (2017)

Zhang, T., Ghanem, B., Liu, S., Xu, C., Ahuja, N.: Low-rank sparse coding for image classification. In: 2013 IEEE International Conference on Computer Vision, pp. 281–288 (2013)

Shi, J., Qi, C.: Low-rank sparse representation for single image super-resolution via self-similarity learning. In: 2016 IEEE International Conference on Image Processing (ICIP), pp. 1424–1428 (2016). https://doi.org/10.1109/ICIP.2016.7532593

Chen, S., Ma, S., So, A.M.-C., Zhang, T.: Proximal gradient method for nonsmooth optimization over the Stiefel manifold. SIAM J. Optim. 30(1), 210–239 (2020). https://doi.org/10.1137/18M122457X

Huang, W., Wei, K.: Riemannian proximal gradient methods. Math. Program. (2021). https://doi.org/10.1007/s10107-021-01632-3

Le, O.Y., Zhang, X.F., Yan, H.: Sparse regularized low-rank tensor regression with applications in genomic data analysis. Pattern Recogn. 107(502), 107516 (2020)

Hsieh, C.-J., Sustik, M., Dhillon, I., Ravikumar, P.: QUIC: quadratic approximation for sparse inverse covariance estimation. J. Mach. Learn. Res. 15, 2911–2947 (2014)

Combettes, P.L.: Solving monotone inclusions via compositions of nonexpansive averaged operators. Optimization 53(5–6), 475–504 (2004). https://doi.org/10.1080/02331930412331327157

Fadili, J.M., Peyré, G.: Total variation projection with first order schemes. IEEE Trans. Image Process. 20(3), 657–669 (2011). https://doi.org/10.1109/TIP.2010.2072512

Schmidt, M., Roux, N., Bach, F.: Convergence rates of inexact proximal-gradient methods for convex optimization. In: Shawe-Taylor, J., Zemel, R., Bartlett, P., Pereira, F., Weinberger, K.Q. (eds.) Advances in Neural Information Processing Systems, vol. 24. Curran Associates, Inc. (2011). https://proceedings.neurips.cc/paper/2011/file/8f7d807e1f53eff5f9efbe5cb81090fb-Paper.pdf

Villa, S., Salzo, S., Baldassarre, L., Verri, A.: Accelerated and inexact forward-backward algorithms. SIAM J. Optim. 23(3), 1607–1633 (2013). https://doi.org/10.1137/110844805

Bonettini, S., Prato, M., Rebegoldi, S.: Convergence of inexact forward-backward algorithms using the forward-backward envelope. SIAM J. Optim. 30(4), 3069–3097 (2020). https://doi.org/10.1137/19M1254155

Boothby, W.M.: An Introduction to Differentiable Manifolds and Riemannian Geometry, 2nd edn. Academic Press, Cambridge (1986)

Absil, P.-A., Mahony, R., Sepulchre, R.: Optimization Algorithms on Matrix Manifolds. Princeton University Press, Princeton (2008)

Hosseini, S., Huang, W., Yousefpour, R.: Line search algorithms for locally Lipschitz functions on Riemannian manifolds. SIAM J. Optim. 28(1), 596–619 (2018)

Lee, J.M.: Introduction to Riemannian Manifolds. Graduate Texts in Mathematics, vol. 176, 2nd edn. Springer, Berlin (2018)

do Carmo, M.P.: Riemannian Geometry. Mathematics: Theory & Applications (1992)

Rockafellar, R.T.: Monotone operators and the proximal point algorithm. SIAM J. Control. Optim. 14(5), 877–898 (1976). https://doi.org/10.1137/0314056

Boumal, N., Absil, P.-A., Cartis, C.: Global rates of convergence for nonconvex optimization on manifolds. IMA J. Numer. Anal. 39(1), 1–33 (2018). https://doi.org/10.1093/imanum/drx080

Attouch, H., Bolte, J., Redont, P., Soubeyran, A.: Proximal alternating minimization and projection methods for nonconvex problems: An approach based on the Kurdyka–Łojasiewicz inequality. Math. Oper. Res. 35(2), 438–457 (2010). https://doi.org/10.1287/moor.1100.0449

Attouch, H., Bolte, J., Svaiter, B.: Convergence of descent methods for semi-algebraic and tame problems: proximal algorithms, forward-backward splitting, and regularized Gauss–Seidel methods. Math. Program. 137, 91–129 (2013)

Bolte, J., Sabach, S., Teboulle, M.: Proximal alternating linearized minimization for nonconvex and nonsmooth problems. Math. Program. 146(1–2), 459–494 (2014)

Kurdyka, K., Mostowski, T., Parusiński, A.: Proof of the gradient conjecture of R. Thom. Ann. Math. 152, 763–792 (2000)

Bento, G.C., de Cruz Neto, J.X., Oliveira, P.R.: Convergence of inexact descent methods for nonconvex optimization on Riemannian manifold. arXiv preprint arXiv:1103.4828 (2011)

Qian, Y., Pan, S., Xiao, L.: Error bound and exact penalty method for optimization problems with nonnegative orthogonal constraint (2022)

Huang, W., Wei, K.: Riemannian Proximal Gradient Methods (extended version). arXiv:1909.06065 (2019)

Qi, H., Sun, D.: A quadratically convergent Newton method for computing the nearest correlation matrix. SIAM J. Matrix Anal. Appl. 28(2), 360–385 (2006). https://doi.org/10.1137/050624509

Zhao, X.-Y., Sun, D., Toh, K.-C.: A Newton-CG augmented Lagrangian method for semidefinite programming. SIAM J. Optim. 20(4), 1737–1765 (2010). https://doi.org/10.1137/080718206

Xiao, X., Li, Y., Wen, Z., Zhang, L.: A regularized semi-smooth Newton method with projection steps for composite convex programs. J. Sci. Comput. 76(1), 364–389 (2018). https://doi.org/10.1007/s10915-017-0624-3

Clarke, F.H.: Optimization and Nonsmooth Analysis. Classics in Applied Mathematics of SIAM (1990)

Tseng, P., Yun, S.: A coordinate gradient descent method for nonsmooth separable minimization. Math. Program. 117, 387–423 (2009). https://doi.org/10.1007/s10107-007-0170-0

Zhou, Z., So, M.C.: A unified approach to error bounds for structured convex optimization problems. Math. Program. 165(2), 689–728 (2017)

Huang, W., Gallivan, K.A., Absil, P.-A.: A Broyden class of quasi-Newton methods for Riemannian optimization. SIAM J. Optim. 25(3), 1660–1685 (2015). https://doi.org/10.1137/140955483

Huang, W., Absil, P.-A., Gallivan, K.A.: A Riemannian symmetric rank-one trust-region method. Math. Program. 150(2), 179–216 (2015)

Boumal, N.: An Introduction to Optimization on Smooth Manifolds. Available online http://www.nicolasboumal.net/book (2020)

Huang, W., Absil, P.-A., Gallivan, K.A., Hand, P.: ROPTLIB: an object-oriented C++ library for optimization on Riemannian manifolds. ACM Trans. Math. Softw. 44(4), 43:1–43:21 (2018)

Jolliffe, I.T., Trendafilov, N.T., Uddin, M.: A modified principal component technique based on the Lasso. J. Comput. Graph. Stat. 12(3), 531–547 (2003)

Huang, W., Absil, P.-A., Gallivan, K.A.: Intrinsic representation of tangent vectors and vector transport on matrix manifolds. Numer. Math. 136(2), 523–543 (2016). https://doi.org/10.1007/s00211-016-0848-4

Acknowledgements

The authors would like to thank Liwei Zhang for discussions on perturbation analysis for optimization problems. Wen Huang was partially supported by National Natural Science Foundation of China (NO. 12001455) and the Fundamental Research Funds for the Central Universities (NO. 20720190060). Ke Wei was partially supported by the NSFC Grant 11801088 and the Shanghai Sailing Program 18YF1401600.

Ethics declarations

Conflicts of interest

The authors declare that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: Implementations of \(B_x^T\) and \(B_x\)

In this section, the implementations of the functions \(B_x^T: \mathbb {R}^n \rightarrow \mathbb {R}^{n - d}\) and \(B_x: \mathbb {R}^{n - d} \rightarrow \mathbb {R}^n\) are given for the Grassmann manifold, the manifold of fixed-rank matrices, the manifold of symmetric positive semi-definite matrices, and products of manifolds. Note that the Riemannian metric is chosen to be the Euclidean metric in this section.

Grassmann manifold. We consider the representation of the Grassmann manifold by

\[ \mathrm {Gr}(p, n) = \{[X] : X \in \mathrm {St}(p, n)\}, \]

where \([X] = \{X O : O^T O = I_p\}\). The ambient space of \(\mathrm {Gr}(p, n)\) is \(\mathbb {R}^{n \times p}\), and the orthogonal complement of the horizontal space \(\mathcal {H}_X\) at \(X \in \mathrm {St}(p, n)\) is given by

\[ \mathcal {H}_X^{\perp } = \{X M : M \in \mathbb {R}^{p \times p}\}. \]

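Therefore, we have

\[ B_X^T: \mathbb {R}^{n \times p} \rightarrow \mathbb {R}^{p \times p}: Z \mapsto X^T Z, \qquad B_X: \mathbb {R}^{p \times p} \rightarrow \mathbb {R}^{n \times p}: M \mapsto X M. \]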
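A minimal NumPy sketch of the Grassmann case follows. It assumes the mappings act as \(Z \mapsto X^T Z\) and \(M \mapsto X M\), which is consistent with the orthogonal complement of the horizontal space being \(\{X M\}\) when \(X\) has orthonormal columns. The function names `B_T` and `B` and the random test data are hypothetical.

```python
import numpy as np

def B_T(X, Z):
    """Coordinates of the component of Z in {X M}: Z -> X^T Z (a p x p array)."""
    return X.T @ Z

def B(X, M):
    """Map coordinates back into the ambient space: M -> X M (an n x p array)."""
    return X @ M

rng = np.random.default_rng(0)
n, p = 6, 2
X, _ = np.linalg.qr(rng.standard_normal((n, p)))  # X has orthonormal columns
Z = rng.standard_normal((n, p))
M = rng.standard_normal((p, p))

# Subtracting B(B_T(Z)) removes the component of Z outside the horizontal space.
horizontal_part = Z - B(X, B_T(X, Z))
```

Under these assumptions, `B_T(X, B(X, M))` returns `M`, and `X.T @ horizontal_part` vanishes up to rounding.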

Manifold of fixed-rank matrices. The manifold is given by

\[ \mathbb {R}_r^{m \times n} = \{X \in \mathbb {R}^{m \times n} : \mathrm {rank}(X) = r\}. \]

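The ambient space is therefore \(\mathbb {R}^{m \times n}\). Given \(X \in \mathbb {R}_r^{m \times n}\), let \(X = U S V^T\) be a thin singular value decomposition. The normal space at X is given by

\[ \mathrm {N}_X \mathbb {R}_r^{m \times n} = \{U_{\perp } M V_{\perp }^T : M \in \mathbb {R}^{(m - r) \times (n - r)}\}, \]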

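where \(U_{\perp } \in \mathbb {R}^{m \times (m - r)}\) forms an orthonormal basis of the orthogonal complement of \(\mathrm {span}(U)\) and \(V_{\perp } \in \mathbb {R}^{n \times (n - r)}\) forms an orthonormal basis of the orthogonal complement of \(\mathrm {span}(V)\). It follows that

\[ B_X^T: \mathbb {R}^{m \times n} \rightarrow \mathbb {R}^{(m - r) \times (n - r)}: Z \mapsto U_{\perp }^T Z V_{\perp }, \qquad B_X: \mathbb {R}^{(m - r) \times (n - r)} \rightarrow \mathbb {R}^{m \times n}: M \mapsto U_{\perp } M V_{\perp }^T. \]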

Note that it is not necessary to form the matrices \(U_{\perp }\) and \(V_{\perp }\). One can use [53, Algorithms 4 and 5] to implement the actions of \(U_{\perp }\), \(U_{\perp }^T\), \(V_{\perp }\), and \(V_{\perp }^T\).
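For illustration only, the sketch below forms \(U_{\perp }\) and \(V_{\perp }\) explicitly from a full SVD, which is exactly what the referenced algorithms avoid; it is meant to show what the actions compute, not how to implement them efficiently. It assumes the mappings act as \(Z \mapsto U_{\perp }^T Z V_{\perp }\) and \(M \mapsto U_{\perp } M V_{\perp }^T\). The helper names `complements`, `B_T`, and `B` are hypothetical.

```python
import numpy as np

def complements(X, r):
    """Orthonormal bases of the complements of span(U) and span(V) for rank-r X.

    Uses a full SVD, so both bases are formed explicitly (illustration only).
    """
    U_full, _, Vt_full = np.linalg.svd(X, full_matrices=True)
    U_perp = U_full[:, r:]       # m x (m - r)
    V_perp = Vt_full[r:, :].T    # n x (n - r)
    return U_perp, V_perp

def B_T(U_perp, V_perp, Z):
    """Normal-space coordinates of an ambient matrix Z."""
    return U_perp.T @ Z @ V_perp

def B(U_perp, V_perp, M):
    """Ambient matrix corresponding to normal-space coordinates M."""
    return U_perp @ M @ V_perp.T

rng = np.random.default_rng(0)
m, n, r = 5, 4, 2
X = rng.standard_normal((m, r)) @ rng.standard_normal((r, n))  # a rank-r matrix
U_perp, V_perp = complements(X, r)
M = rng.standard_normal((m - r, n - r))
```

Matrices of the form \(X W + Y X\) are tangent at \(X\), so `B_T` applied to them should return zero up to rounding, while `B_T(U_perp, V_perp, B(U_perp, V_perp, M))` should return `M`.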

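Manifold of symmetric positive semi-definite matrices. The manifold is

\[ \mathbb {S}_r^{n \times n} = \{X \in \mathbb {R}^{n \times n} : X = X^T, X \succeq 0, \mathrm {rank}(X) = r\}. \]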

The ambient space is \(\mathbb {R}^{n \times n}\). Given \(X \in \mathbb {S}_r^{n \times n}\), let \(X = H H^T\), where \(H \in \mathbb {R}^{n \times r}\) is full rank. The normal space at X is

where \(H_{\perp } \in \mathbb {R}^{n \times (n - r)}\) forms an orthonormal basis of the perpendicular space of \(\mathrm {span}(H)\). Therefore, we have

where \(\mathrm {vec}(M) = (M_{11}, M_{22}, \ldots , M_{ss}, \sqrt{2} M_{12}, \sqrt{2} M_{13}, \ldots , \sqrt{2} M_{1s}, \ldots , \sqrt{2} M_{(s - 1) s})^T\) for a symmetric matrix \(M \in \mathbb {R}^{s \times s}\), and \(\mathrm {vec}^{-1}\) is the inverse function of \(\mathrm {vec}\).
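The \(\sqrt{2}\) scaling makes \(\mathrm {vec}\) an isometry: the Euclidean inner product of \(\mathrm {vec}(M)\) and \(\mathrm {vec}(N)\) equals \(\mathrm {trace}(M N)\) for symmetric \(M\) and \(N\). A minimal NumPy sketch of \(\mathrm {vec}\) and \(\mathrm {vec}^{-1}\) with the ordering stated above (diagonal first, then the strict upper triangle row by row) is given below; the names `sym_vec` and `sym_vec_inv` are hypothetical.

```python
import numpy as np

def sym_vec(M):
    """vec of a symmetric s x s matrix: diagonal, then sqrt(2) * upper triangle."""
    s = M.shape[0]
    rows, cols = np.triu_indices(s, k=1)  # (0,1), (0,2), ..., (s-2, s-1)
    return np.concatenate([np.diag(M), np.sqrt(2.0) * M[rows, cols]])

def sym_vec_inv(v):
    """Inverse of sym_vec: rebuild the symmetric matrix from its coordinates."""
    v = np.asarray(v, dtype=float)
    # Recover s from len(v) = s (s + 1) / 2.
    s = int(round((np.sqrt(8 * len(v) + 1) - 1) / 2))
    M = np.diag(v[:s])
    rows, cols = np.triu_indices(s, k=1)
    off_diagonal = v[s:] / np.sqrt(2.0)
    M[rows, cols] = off_diagonal
    M[cols, rows] = off_diagonal
    return M

M = np.array([[1.0, 2.0], [2.0, 3.0]])
N = np.array([[0.5, -1.0], [-1.0, 4.0]])
v = sym_vec(M)  # entries: M_11, M_22, sqrt(2) * M_12
```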

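Product of manifolds. Let the product manifold \(\mathcal {M}\) be denoted by \(\mathcal {M}_1 \times \mathcal {M}_2 \times \cdots \times \mathcal {M}_t\). Let the ambient space of \(\mathcal {M}_i\) be \(\mathbb {R}^{n_i}\) and the dimension of \(\mathcal {M}_i\) be \(d_i\). For any \(X = (X_1, X_2, \ldots , X_t) \in \mathcal {M}\), the mappings \(B_X^T\) and \(B_X\) are given by

\[ B_X^T: (z_1, \ldots , z_t) \mapsto (B_{X_1}^T z_1, \ldots , B_{X_t}^T z_t) \ \text {for } z_i \in \mathbb {R}^{n_i}, \qquad B_X: (v_1, \ldots , v_t) \mapsto (B_{X_1} v_1, \ldots , B_{X_t} v_t), \]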

where \(B_{X_i}^T\) and \(B_{X_i}\) denote the mappings for manifold \(\mathcal {M}_i\) at \(X_i\), and \(v_i \in \mathbb {R}^{n_i - d_i}\), \(i = 1, \ldots , t\).
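A minimal sketch of the product construction, assuming the mappings act component by component. The unit sphere is used for the factors purely as an illustration (it is not one of the manifolds treated in this appendix); its normal space at \(x\) is \(\mathrm {span}\{x\}\), so \(n_i - d_i = 1\). All function names are hypothetical.

```python
import numpy as np

def sphere_maps(x):
    """Return (B_x^T, B_x) for the unit sphere at x, whose normal space is span{x}."""
    B_T = lambda z: np.array([x @ z])  # R^n -> R^1
    B = lambda v: v[0] * x             # R^1 -> R^n
    return B_T, B

def product_B_T(maps, Z):
    """Apply each factor's B^T to the matching ambient component."""
    return [B_T_i(z_i) for (B_T_i, _), z_i in zip(maps, Z)]

def product_B(maps, V):
    """Apply each factor's B to the matching coordinate component."""
    return [B_i(v_i) for (_, B_i), v_i in zip(maps, V)]

x1 = np.array([1.0, 0.0, 0.0])  # point on the unit sphere in R^3
x2 = np.array([0.6, 0.8])       # point on the unit circle in R^2
maps = [sphere_maps(x1), sphere_maps(x2)]

V = [np.array([2.0]), np.array([-1.0])]
Z = product_B(maps, V)          # ambient components, one per factor
V_back = product_B_T(maps, Z)   # recovers V because each x_i has unit norm
```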

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Huang, W., Wei, K. An inexact Riemannian proximal gradient method. Comput Optim Appl 85, 1–32 (2023). https://doi.org/10.1007/s10589-023-00451-w

DOI: https://doi.org/10.1007/s10589-023-00451-w