Abstract

We discuss a pointwise numerical differentiation formula on multivariate scattered data, based on the coefficients of local polynomial interpolation at Discrete Leja Points, written in the monomial basis of Taylor's formula. Error bounds are provided for the approximation of partial derivatives of any order compatible with the regularity of the function, together with estimates of the sensitivity to functional perturbations, in terms of the inverse Vandermonde coefficients that are active in the differentiation process. Several numerical tests are presented to show the accuracy of the approximation.


1 Introduction

Let \(\varPi _{d}\left( {\mathbb {R}}^{s}\right) \) be the space of polynomials of total degree at most d in the variable \({\mathbf {x}}=\left( \xi _{1},\dots ,\xi _{s}\right) \). In multi-index notation, a basis for this space is given by the monomials \({\mathbf {x}}^{\alpha }=\xi _{1}^{\alpha _{1}}\cdots \xi _{s}^{\alpha _{s}}\), where \(\alpha =\left( \alpha _{1},\dots ,\alpha _{s}\right) \in {\mathbb {N}} _{0}^{s}\) and \(\left| \alpha \right| =\alpha _{1}+\dots +\alpha _{s}\le d\); therefore \(\dim \varPi _{d}\left( {\mathbb {R}}^{s}\right) =\left( {\begin{array}{c} d+s\\ s\end{array}}\right) \). We introduce a total order on the set of all multi-indices \(\alpha \). More precisely, we set \(\alpha <\beta \) if \(\left| \alpha \right| <\left| \beta \right| \); if \(\left| \alpha \right| =\left| \beta \right| \), we follow the lexicographic (dictionary) order of the words of \(\left| \alpha \right| \) letters over the ordered alphabet \(\left\{ \xi _{1},\dots ,\xi _{s}\right\} \), in which each letter \(\xi _{i}\) may be repeated, but only in consecutive positions (for example, \(\left( 2,1,0\right) \) corresponds to the word \(\xi _{1}\xi _{1}\xi _{2}\)). For instance, if \(s=3\) and \(\left| \alpha \right| =3\), we have \(\left( 3,0,0\right)<\left( 2,1,0\right)<\left( 2,0,1\right)<\left( 1,2,0\right)<\left( 1,1,1\right)<\left( 1,0,2\right)<\left( 0,3,0\right)<\left( 0,2,1\right)<\left( 0,1,2\right) <\left( 0,0,3\right) \). Further details on multivariate polynomials and the related multi-index notation can be found in [6, Ch. 4].
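The ordering above can be generated mechanically. The following Python sketch (the function name `multi_indices` is ours, not from the paper) groups the multi-indices by total degree and, within each degree, sorts the exponent tuples in decreasing lexicographic order, which coincides with the dictionary order of the corresponding words; it reproduces the example for \(s=3\), \(\left| \alpha \right| =3\).

```python
from itertools import product
from math import comb


def multi_indices(s, d):
    """Return all alpha in N_0^s with |alpha| <= d, in the order of Sect. 1.

    Multi-indices are grouped by total degree |alpha|; within each group the
    tuples are sorted in decreasing lexicographic order, which matches the
    dictionary order of the words xi_1^alpha_1 ... xi_s^alpha_s.
    """
    ordered = []
    for n in range(d + 1):
        same_degree = [a for a in product(range(n + 1), repeat=s) if sum(a) == n]
        ordered.extend(sorted(same_degree, reverse=True))
    return ordered


# Degree-3 block for s = 3 (compare with the example in the text)
print(multi_indices(3, 3)[-10:])
# The number of monomials equals dim Pi_d(R^s) = binom(d + s, s)
print(len(multi_indices(3, 3)), comb(3 + 3, 3))
```

Since columns of the Vandermonde matrices below follow this order, the first \(\left( {\begin{array}{c}k+s\\ s\end{array}}\right) \) columns always span \(\varPi _{k}\left( {\mathbb {R}}^{s}\right) \).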

Let us consider a set

\[\sigma =\left\{ {\mathbf {x}}_{1},\dots ,{\mathbf {x}}_{m}\right\} \qquad (1)\]

of \(m=\left( {\begin{array}{c}d+s\\ s\end{array}}\right) \) pairwise distinct points in \( {\mathbb {R}} ^{s}\), and let us assume that they are unisolvent for Lagrange interpolation in \(\varPi _{d}\left( {\mathbb {R}}^{s}\right) \), that is, for any choice of \( y_{1},\dots ,y_{m}\in {\mathbb {R}}\) there exists a unique \(p\in \varPi _{d}\left( {\mathbb {R}}^{s}\right) \) satisfying

\[p\left( {\mathbf {x}}_{i}\right) =y_{i},\qquad i=1,\dots ,m. \qquad (2)\]

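An equivalent condition [6, Ch. 1] is the nonsingularity of the Vandermonde matrix

\[V\left( \sigma \right) =\left[ {\mathbf {x}}_{i}^{\alpha }\right] _{i=1,\dots ,m;\ \left| \alpha \right| \le d},\]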

where the row index i refers to the points, while the multi-index \(\alpha \), which refers to the powers, increases with the column index according to the order introduced above. Denoting by \(\overline{{\mathbf {x}}} \) any point in \({\mathbb {R}}^{s}\) and fixing the basis

\[\left\{ \left( {\mathbf {x}}-\overline{{\mathbf {x}}}\right) ^{\alpha }\right\} _{\left| \alpha \right| \le d}, \qquad (3)\]

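the Vandermonde matrix centered at \(\overline{{\mathbf {x}}}\)

\[V_{\overline{{\mathbf {x}}}}\left( \sigma \right) =\left[ \left( {\mathbf {x}}_{i}-\overline{{\mathbf {x}}}\right) ^{\alpha }\right] _{i=1,\dots ,m;\ \left| \alpha \right| \le d} \qquad (4)\]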

is nonsingular as well [6, Theorem 3, Ch. 5]. Therefore, for any choice of the vector

\[{\mathbf {y}}=\left[ y_{1},\dots ,y_{m}\right] ^{T}\in {\mathbb {R}}^{m}, \qquad (5)\]

the solution \(p\left[ {\mathbf {y}},\sigma \right] \left( {\mathbf {x}}\right) \) of the interpolation problem (2) in the basis (3) can be obtained by solving the linear system

\[V_{\overline{{\mathbf {x}}}}\left( \sigma \right) {\mathbf {c}}={\mathbf {y}} \qquad (6)\]

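and by setting, in matrix notation,

\[p\left[ {\mathbf {y}},\sigma \right] \left( {\mathbf {x}}\right) =\left[ \left( {\mathbf {x}}-\overline{{\mathbf {x}}}\right) ^{\alpha }\right] _{\left| \alpha \right| \le d}{\mathbf {c}}=\sum _{\left| \alpha \right| \le d}c_{\alpha }\left( {\mathbf {x}}-\overline{{\mathbf {x}}}\right) ^{\alpha }, \qquad (7)\]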

where \({\mathbf {c}}=\left[ c_{\alpha }\right] _{\left| \alpha \right| \le d}^{T}\in {\mathbb {R}}^{m}\) is the solution of the system (6). This approach, for \(s=2\) and \(\overline{{\mathbf {x}}}\) the barycenter of the node set \(\sigma \), has been recently proposed in [12] in connection with the use of the \(PA=LU\) factorization of the matrix \(V_{\overline{{\mathbf {x}}}}\left( \sigma \right) \).
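As an illustration of (6) and (7), here is a minimal NumPy sketch (helper names are ours) that builds \(V_{\overline{{\mathbf {x}}}}\left( \sigma \right) \) with the column order of Sect. 1 and solves (6). Because interpolation of degree d reproduces polynomials of degree at most d, interpolating a hypothetical quadratic at \(m=6\) random (hence, almost surely, unisolvent) points in the plane should return its Taylor coefficients \(D^{\alpha }q\left( \overline{{\mathbf {x}}}\right) /\alpha !\).

```python
import numpy as np
from itertools import product


def multi_indices(s, d):
    """Multi-indices with |alpha| <= d in the graded order of Sect. 1."""
    out = []
    for n in range(d + 1):
        out += sorted((a for a in product(range(n + 1), repeat=s) if sum(a) == n),
                      reverse=True)
    return out


def shifted_vandermonde(X, xbar, d, h=1.0):
    """[((x_i - xbar)/h)^alpha]: one row per point, one column per multi-index."""
    U = (np.atleast_2d(X) - xbar) / h              # shifted (and scaled) points, shape (N, s)
    A = np.array(multi_indices(U.shape[1], d))     # exponents, shape (m, s)
    return np.prod(U[:, None, :] ** A[None, :, :], axis=2)


def interpolate(X, y, xbar, d):
    """Coefficients c of p[y, sigma] in the basis (3), i.e. the solution of (6)."""
    return np.linalg.solve(shifted_vandermonde(X, xbar, d), y)


def evaluate(c, Z, xbar, d):
    """Evaluate the interpolant (7) at the rows of Z."""
    return shifted_vandermonde(Z, xbar, d) @ c


# Hypothetical test: q(x, y) = 1 + 2x + 3y^2 + xy, degree d = 2, s = 2
q = lambda P: 1 + 2 * P[:, 0] + 3 * P[:, 1] ** 2 + P[:, 0] * P[:, 1]
rng = np.random.default_rng(0)
X = rng.random((6, 2))                 # m = binom(2 + 2, 2) = 6 random nodes
xbar = np.array([0.5, 0.5])
c = interpolate(X, q(X), xbar, 2)
# Column order (0,0), (1,0), (0,1), (2,0), (1,1), (0,2): Taylor coefficients at xbar
print(np.round(c, 8))                  # close to [3, 2.5, 3.5, 0, 1, 3]
```

Reading \(D^{\nu }q\left( \overline{{\mathbf {x}}}\right) =\nu !\,c_{\nu }\) off the coefficient vector is exactly the idea exploited in the rest of the paper.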

The main goal of the paper is to provide a pointwise numerical differentiation method for a target function f sampled at scattered points, by locally using the interpolation formula (7). The key tools are the connection to Taylor's formula via the shifted monomial basis (3), suitably preconditioned by a local scaling that reduces the condition number of the Vandermonde matrix, together with the extraction of Leja-like local interpolation subsets from the scattered sampling set via basic numerical linear algebra. Our approach is complementary to other existing techniques, based on least-squares approximation or on different function spaces; see for example [2, 7, 8, 16] and the references cited therein. In Sect. 2 we provide error bounds for the approximation of function and derivative values at a given point \(\overline{{\mathbf {x}}}\), as well as estimates of the sensitivity to perturbations of the function values, and in Sect. 3 we conduct some numerical experiments to show the accuracy of the proposed method.

2 Error bounds and sensitivity estimates

In the following we assume that \(\varOmega \subset {\mathbb {R}} ^{s}\) is a convex body containing \(\sigma \) and that the sampled function \( f:\varOmega \rightarrow {\mathbb {R}} \) is of class \(C^{d,1}\left( \varOmega \right) \), that is, \(f\in C^{d}\left( \varOmega \right) \) and all its partial derivatives of order d

\[D^{\nu }f=\frac{\partial ^{\left| \nu \right| }f}{\partial \xi _{1}^{\nu _{1}}\cdots \partial \xi _{s}^{\nu _{s}}},\qquad \left| \nu \right| =d, \qquad (8)\]

are Lipschitz continuous in \(\varOmega \). Let \(K\subseteq \varOmega \) be a compact convex set; we equip the space \(C^{d,1}\left( K \right) \) with the semi-norm [13]

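and we denote by \(T_{d}\left[ f,\overline{{\mathbf {x}}}\right] \left( {\mathbf {x}}\right) \) the truncated Taylor expansion of f of order d centered at \( \overline{{\mathbf {x}}}\in \varOmega \)

\[T_{d}\left[ f,\overline{{\mathbf {x}}}\right] \left( {\mathbf {x}}\right) =\sum _{\left| \alpha \right| \le d}\frac{D^{\alpha }f\left( \overline{{\mathbf {x}}}\right) }{\alpha !}\left( {\mathbf {x}}-\overline{{\mathbf {x}}}\right) ^{\alpha },\qquad \alpha !=\alpha _{1}!\cdots \alpha _{s}!, \qquad (10)\]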

and by \(R_{T}\left[ f,\overline{{\mathbf {x}}}\right] \left( {\mathbf {x}}\right) \) the corresponding remainder term in integral form [19]

where [6, Ch. 4]

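with the multi-indices \(\beta \) following the order specified in Sect. 1. Let us denote by \(\ell _{i}\left( {\mathbf {x}}\right) \) the \(i^{th}\) fundamental Lagrange polynomial of interpolation at \(\sigma \). Since

\[\ell _{i}\left( {\mathbf {x}}_{j}\right) =\delta _{ij},\qquad i,j=1,\dots ,m,\]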

by setting, for each \(i=1,\dots ,m\),

\[\delta ^{i}=\left[ \delta _{ij}\right] _{j=1,\dots ,m}^{T}\in {\mathbb {R}}^{m},\]

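and by solving the linear system \(V_{\overline{{\mathbf {x}}}}\left( \sigma \right) {\mathbf {a}}^{i}=\delta ^{i}\), we get the expression of \(\ell _{i}\left( {\mathbf {x}}\right) \) in the translated canonical basis (3), that is

\[\ell _{i}\left( {\mathbf {x}}\right) =\sum _{\left| \alpha \right| \le d}a_{\alpha }^{i}\left( {\mathbf {x}}-\overline{{\mathbf {x}}}\right) ^{\alpha }, \qquad (13)\]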

where \({\mathbf {a}}^{i}=\left[ a_{\alpha }^{i}\right] _{\left| \alpha \right| \le d}^{T}\).
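Since \(V_{\overline{{\mathbf {x}}}}\left( \sigma \right) {\mathbf {a}}^{i}=\delta ^{i}\) for every i, the vectors \({\mathbf {a}}^{i}\) are exactly the columns of \(V_{\overline{{\mathbf {x}}}}\left( \sigma \right) ^{-1}\), so that \({\mathbf {c}}=\sum _{i}y_{i}{\mathbf {a}}^{i}\) and \(p\left[ {\mathbf {y}},\sigma \right] =\sum _{i}y_{i}\ell _{i}\). The NumPy sketch below checks this on hypothetical random nodes; the two small helpers (names are ours) are included so that the block runs on its own.

```python
import numpy as np
from itertools import product


def multi_indices(s, d):
    out = []
    for n in range(d + 1):
        out += sorted((a for a in product(range(n + 1), repeat=s) if sum(a) == n),
                      reverse=True)
    return out


def shifted_vandermonde(X, xbar, d, h=1.0):
    U = (np.atleast_2d(X) - xbar) / h
    A = np.array(multi_indices(U.shape[1], d))
    return np.prod(U[:, None, :] ** A[None, :, :], axis=2)


rng = np.random.default_rng(2)
d, xbar = 3, np.array([0.5, 0.5])
X = rng.random((10, 2))                  # m = binom(3 + 2, 2) = 10 hypothetical nodes
V = shifted_vandermonde(X, xbar, d)
Acoef = np.linalg.inv(V)                 # column i is a^i, the coefficients of ell_i in (13)

# (V @ Acoef)[j, i] = sum_alpha a^i_alpha (x_j - xbar)^alpha = ell_i(x_j)
ell_at_nodes = V @ Acoef
# Since p = sum_i y_i ell_i, its coefficient vector is sum_i y_i a^i = Acoef @ y
y = np.sin(X[:, 0]) * np.cos(X[:, 1])
c = Acoef @ y
# Reproduction of constants: sum_i ell_i(z) = 1 at any point z
Z = rng.random((4, 2))
ell_at_Z = shifted_vandermonde(Z, xbar, d) @ Acoef
print(np.allclose(ell_at_nodes, np.eye(10)), np.allclose(ell_at_Z.sum(axis=1), 1.0))
```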

Remark 1

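Denoting by

\[h=\max _{i=1,\dots ,m}\left\| {\mathbf {x}}_{i}-\overline{{\mathbf {x}}}\right\| _{2}, \qquad (14)\]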

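in order to control the conditioning, it is useful to consider the scaled canonical polynomial basis centered at \(\overline{{\mathbf {x}}}\)

\[\left\{ \left( \frac{{\mathbf {x}}-\overline{{\mathbf {x}}}}{h}\right) ^{\alpha }\right\} _{\left| \alpha \right| \le d}, \qquad (15)\]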

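so that \(\left( {\mathbf {x}}-\overline{{\mathbf {x}}}\right) /h\) belongs to the unit ball (the unit disk when \(s=2\), cf. [12]), and (13), in the scaled basis (15), becomes

\[\ell _{i}\left( {\mathbf {x}}\right) =\sum _{\left| \alpha \right| \le d}a_{\alpha ,h}^{i}\left( \frac{{\mathbf {x}}-\overline{{\mathbf {x}}}}{h}\right) ^{\alpha }, \qquad (16)\]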

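where \(a_{\alpha ,h}^{i}=a_{\alpha }^{i}h^{\left| \alpha \right| }\). The interpolation polynomial \(p\left[ {\mathbf {y}},\sigma \right] \) in (7) can also be expressed in the basis (15) as

\[p\left[ {\mathbf {y}},\sigma \right] \left( {\mathbf {x}}\right) =\sum _{\left| \alpha \right| \le d}c_{\alpha ,h}\left( \frac{{\mathbf {x}}-\overline{{\mathbf {x}}}}{h}\right) ^{\alpha }, \qquad (17)\]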

where \({\mathbf {c}}_{h}=\left[ c_{\alpha ,h}\right] _{\left| \alpha \right| \le d}^{T}\), with \(c_{\alpha ,h}=c_{\alpha }h^{\left| \alpha \right| }\).

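In the following we denote by \(V_{\overline{{\mathbf {x}}},h}(\sigma )\) the Vandermonde matrix in the scaled basis (15)

\[V_{\overline{{\mathbf {x}}},h}\left( \sigma \right) =\left[ \left( \frac{{\mathbf {x}}_{i}-\overline{{\mathbf {x}}}}{h}\right) ^{\alpha }\right] _{i=1,\dots ,m;\ \left| \alpha \right| \le d}\]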

and by \(B_{h}\left( \mathbf {\overline{{\mathbf {x}}}}\right) \) the ball of radius h centered at \(\mathbf {\overline{{\mathbf {x}}}}\).
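The effect of the scaling in Remark 1 can be checked numerically. Since \(\left( {\mathbf {x}}_{i}-\overline{{\mathbf {x}}}\right) ^{\alpha }=h^{\left| \alpha \right| }\left( \left( {\mathbf {x}}_{i}-\overline{{\mathbf {x}}}\right) /h\right) ^{\alpha }\), we have \(V_{\overline{{\mathbf {x}}}}\left( \sigma \right) =V_{\overline{{\mathbf {x}}},h}\left( \sigma \right) D\) with \(D=\mathrm {diag}\left( h^{\left| \alpha \right| }\right) \), hence \(c_{\alpha ,h}=c_{\alpha }h^{\left| \alpha \right| }\). The entries of \(V_{\overline{{\mathbf {x}}},h}\left( \sigma \right) \) lie in \([-1,1]\), whereas a column of degree d of the unscaled matrix has entries of size at most \(h^{d}\); comparing it with the column of ones gives \({{\,\mathrm{cond}\,}}_{1}\left( V_{\overline{{\mathbf {x}}}}\left( \sigma \right) \right) \ge h^{-d}\). A minimal sketch with hypothetical nodes in a small disk (helper names are ours):

```python
import numpy as np
from itertools import product


def multi_indices(s, d):
    out = []
    for n in range(d + 1):
        out += sorted((a for a in product(range(n + 1), repeat=s) if sum(a) == n),
                      reverse=True)
    return out


def shifted_vandermonde(X, xbar, d, h=1.0):
    U = (np.atleast_2d(X) - xbar) / h
    A = np.array(multi_indices(U.shape[1], d))
    return np.prod(U[:, None, :] ** A[None, :, :], axis=2)


rng = np.random.default_rng(3)
d, xbar = 4, np.array([0.5, 0.5])
m = (d + 1) * (d + 2) // 2                      # binom(d + 2, 2) = 15
# Hypothetical nodes: m random points in the disk of radius 0.05 around xbar
rho, theta = 0.05 * np.sqrt(rng.random(m)), 2 * np.pi * rng.random(m)
X = xbar + np.column_stack((rho * np.cos(theta), rho * np.sin(theta)))

h = np.max(np.linalg.norm(X - xbar, axis=1))   # h as in (14)
V = shifted_vandermonde(X, xbar, d)            # basis (3)
Vh = shifted_vandermonde(X, xbar, d, h)        # scaled basis (15)
deg = np.array([sum(a) for a in multi_indices(2, d)])   # |alpha| for each column

y = np.exp(X[:, 0] - X[:, 1])
c, ch = np.linalg.solve(V, y), np.linalg.solve(Vh, y)
print(f"h = {h:.4f}")
print(f"cond_1 unscaled = {np.linalg.cond(V, 1):.2e}, scaled = {np.linalg.cond(Vh, 1):.2e}")
print("c_h == c * h^|alpha|:", np.allclose(ch, c * h ** deg, atol=1e-6))
```

The column scaling does not change the interpolant, only the conditioning of the linear system that produces its coefficients.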

Proposition 1

Let \(\overline{{\mathbf {x}}}\in \varOmega \) and \( f\in C^{d,1}\left( \varOmega \right) \). Then for any \({\mathbf {x}}\in K=B_h(\overline{{\mathbf {x}}})\cap \varOmega \) and for any \(\nu \in {\mathbb {N}} _{0}^{s}\) such that \(\left| \nu \right| \le d\), we have

where \(k_{j}=\frac{s^{j}}{\left( j-1\right) !}\) for \(j>0\), \(k_{0}=1\). In particular, for \({\mathbf {x}}=\overline{{\mathbf {x}}}\), we have

Proof

Since

by (16), we have

In line with [1, Equation (1-5)], we expand \(f\left( {\mathbf {x}}\right) \) and \(f\left( {\mathbf {x}} _{i}\right) =y_{i} \) in truncated Taylor series of order d centered at \( \overline{{\mathbf {x}}}\) (10) with integral remainder (11), and we obtain

On the other hand

since interpolation of degree d at the nodes in \(\sigma \) reproduces exactly polynomials of total degree less than or equal to d. Therefore by (12) we have

Consequently, by substituting (22) in (21), we get

By applying the differentiation operator \(D^{\nu }\) to the expression (23) and using the triangle inequality, we obtain

where [13, Lemma 2.1]

Consequently,

\(\blacksquare \)

The estimates in Proposition 1 can be written in terms of the Lebesgue constant of interpolation at the node set \(\sigma \), defined by

Proposition 2

Let \(f\in C^{d,1}\left( \varOmega \right) \) and \(B_{h}\left( \mathbf {\overline{{\mathbf {x}}}}\right) \subset \varOmega \). Then for any \({\mathbf {x}}\in K=B_{h}\left( \mathbf {\overline{{\mathbf {x}}}} \right) \) and for any \(\nu \in {\mathbb {N}} _{0}^{s}\) such that \(\left| \nu \right| \le d\), we have

where \(k_{j}=\frac{s^{j}}{\left( j-1\right) !}\) for \(j>0\), \(k_{0}=1\), and

In particular, for \({\mathbf {x}}=\overline{{\mathbf {x}}}\), the following inequality holds

Proof

Since \(\sum \limits _{i=1}^{m}R_{T}\left[ f,\overline{{\mathbf {x}}} \right] \left( {\mathbf {x}}_{i}\right) \ell _{i}\left( {\mathbf {x}}\right) \) is a polynomial of total degree less than or equal to d, by repeatedly applying the Markov inequality [20] for a ball with radius h in the form

where \(\varPi _{n}(B_h(\overline{{\mathbf {x}}}))\) denotes the space of polynomials of total degree at most n in s variables restricted to the ball \(B_h(\overline{{\mathbf {x}}})\), and recalling that each partial derivative lowers the degree by one, we easily obtain

by using [13, Lemma 2.1] we get

Consequently

Based on the inequality (19) in Proposition 1, we have

and since \({\mathbf {x}}\in B_{h}\left( \mathbf {\overline{{\mathbf {x}}}}\right) \), it follows that

while (27) follows easily by evaluating (30) at \(\overline{{\mathbf {x}}}\). \(\blacksquare \)

Proposition 3

Let \(\overline{{\mathbf {x}}}\in \varOmega \) and \(f\in C^{d,1}\left( \varOmega \right) \). Then for any \(\mathbf { x}\in K=B_h(\overline{{\mathbf {x}}})\cap \varOmega \) and for any \(\nu \in {\mathbb {N}} _{0}^{s}\) such that \(\left| \nu \right| \le d\), we have

where \(k_{j}=\frac{s^{j}}{\left( j-1\right) !}\) for \(j>0\), \(k_{0}=1\). The inequalities between multi-indices in this case are interpreted componentwise, that is \( \alpha \le \beta \) if and only if \(\alpha _{i}\le \beta _{i}\), \(i=1,\dots ,s\). In particular, for \({\mathbf {x}}=\overline{{\mathbf {x}}}\), the following inequality holds

Proof

By using the expression of the fundamental Lagrange polynomial \(\ell _{i}\left( {\mathbf {x}}\right) \) in the scaled basis (16) and by applying the differentiation operator \(D^{\nu }\) to the expression (23), we obtain

where

By taking the modulus of both sides of (33) and using the triangle inequality, we get

Therefore, (24), (34) and (28) imply

Since \({\mathbf {x}}\in B_{h}\left( \mathbf {\overline{{\mathbf {x}}}}\right) \), we get

Evaluating (36) at \(\overline{{\mathbf {x}}}\) yields (32). \(\blacksquare \)

It is worth noting that the analysis developed in [14] for estimating the error of Hermite interpolation in \({\mathbb {R}}^{s}\) can be used to obtain bounds analogous to (31) and (32). To this end, following [14], for an integer \(l\in {\mathbb {N}}\) we denote by \(D^{l}f\left( {\mathbf {x}}\right) \) the l-th derivative of f at a point \( {\mathbf {x}}\in \varOmega \), that is, the l-linear operator

which acts on the canonical basis element \(\big( \underbrace{{\mathbf {e}}_{1},\dots ,{\mathbf {e}}_{1}}_{\nu _{1}\text { times}},\underbrace{{\mathbf {e}}_{2},\dots ,{\mathbf {e}}_{2}}_{\nu _{2}\text { times}},\dots ,\underbrace{{\mathbf {e}}_{s},\dots ,{\mathbf {e}}_{s}}_{\nu _{s}\text { times}}\big) \), \(\nu =\left( \nu _{1},\nu _{2},\dots ,\nu _{s}\right) \in {\mathbb {N}} _{0}^{s}\), \(\left| \nu \right| =l\), as follows

where we use the notation for partial derivatives introduced in (8). As an l-linear operator, \( D^{l}f\left( {\mathbf {x}}\right) \) has the standard operator norm

and therefore, for each \(\nu \in {\mathbb {N}}_{0}^{s}\), \(\left| \nu \right| =l\), we have

By introducing the Sobolev semi-norm

which is meaningful for functions \(f\in W^{d+1,p}\left( \varOmega \right) \) for each \(d+1\ge l\). Taking \( p=+\infty \) in [14, Theorem 2.1] and using (38), we get

for any \(\overline{{\mathbf {x}}}\in \varOmega \), \({\mathbf {x}}\in K=B_{h}\left( \overline{{\mathbf {x}}}\right) \cap \varOmega \) and for any multi-index \(\nu \) of length \(\left| \nu \right| =l\le d\). To point out the links between the proof of Proposition 3 and that of [14, Theorem 2.1], we start from the inequality

which is stated in [14, p. 414, line 9] for the general case of Hermite interpolation. By the linearity of the operator \(D^{l}\) with respect to its function argument and the expression (16) of the Lagrange polynomials, we get

We can explicitly compute \(D^{l}\left( {\mathbf {x}}- \overline{{\mathbf {x}}}\right) ^{\alpha }\) applied to a vector \(\left( {\mathbf {u}}_{1},\dots ,{\mathbf {u}}_{l}\right) \in \left( {\mathbb {R}} ^{s}\right) ^{l}\). To simplify the notation, following [14, Section 2], we assume that

and, denoting by \(\left\{ \varepsilon _{1},\varepsilon _{2},\dots ,\varepsilon _{s}\right\} \) the canonical basis of \( {\mathbb {R}} ^{s}\), we set

and

Therefore

From the bound (39), the equality (42) and by recalling that [13, Lemma 2.1]

we obtain

which is a slightly different version of the bound (31) given in Proposition 3. To obtain an analogous version of the bound (32), we evaluate the estimate (43) at \( \overline{{\mathbf {x}}}\) and consequently get

We notice that the s-tuple \(\varepsilon _{\lambda ,l}\) in the internal sum depends on \(\alpha \), but its length is equal to \(l\le d\) and therefore we get

Although the bounds (32) and (45) are similar, which of them is sharper depends on the signs of the coefficients \(a_{\alpha ,h}^{i}\), \(\left| \alpha \right| =l\), since the sum \(\sum \limits _{\left| \alpha \right| =l}\alpha !a_{\alpha ,h}^{i}\) contains the term \(\nu !a_{\nu ,h}^{i}\).

In line with [12] we can write the bounds (31) and (32) in Proposition 3 in terms of the 1-norm condition number, say \( {{\,\mathrm{cond}\,}}_h\left( \sigma \right) \), of the Vandermonde matrix \(V_{\overline{\mathbf { x}},h}\left( \sigma \right) \).

Corollary 1

Let \(\overline{{\mathbf {x}}}\in \varOmega \) and \(f\in C^{d,1}\left( \varOmega \right) \). Then for any \({\mathbf {x}}\in K=B_{h}\left( \mathbf {\overline{{\mathbf {x}}}} \right) \cap \varOmega \) and for any \(\nu \in {\mathbb {N}} _{0}^{s}\) such that \(\left| \nu \right| \le d\), we have

where \(k_{j}=\frac{s^{j}}{\left( j-1\right) !}\) for \(j>0\), \(k_{0}=1\). As in Proposition 3, the inequalities between multi-indices are interpreted componentwise. In particular, for \({\mathbf {x}}=\overline{{\mathbf {x}}}\), the following inequality holds

Proof

By applying the triangle inequality to (36), we get

where \({\mathbf {a}}_{h}^{i}=\left[ a_{\alpha ,h}^{i}\right] _{\left| \alpha \right| \le d}^{T}\). Based on the expression of \(\ell _{i}\left( {\mathbf {x}}\right) \) (16), we have [12]

and then

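Moreover, the column of \(V_{\overline{{\mathbf {x}}},h}\left( \sigma \right) \) corresponding to \(\alpha =0\) consists of ones, while all its other entries have modulus at most 1 (the nodes lie in \(B_{h}\left( \overline{{\mathbf {x}}}\right) \)); hence \(\left\| V_{\overline{{\mathbf {x}}},h}\left( \sigma \right) \right\| _{1}=m\). Since the vectors \({\mathbf {a}}_{h}^{i}\) are the columns of \(V_{\overline{{\mathbf {x}}},h}\left( \sigma \right) ^{-1}\), we get \(\sum \limits _{i=1}^{m}\left\| {\mathbf {a}}_{h}^{i}\right\| _{1}\le m\left\| V_{\overline{{\mathbf {x}}},h}\left( \sigma \right) ^{-1}\right\| _{1}={{\,\mathrm{cond}\,}}_{h}\left( \sigma \right) \), and therefore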

Since \({\mathbf {x}}\in B_{h}\left( \mathbf {\overline{{\mathbf {x}}}}\right) \), it follows that

By evaluating (48) at \(\overline{{\mathbf {x}}}\), we obtain (47). \(\blacksquare \)
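The two facts used in the proof of Corollary 1, \(\left\| V_{\overline{{\mathbf {x}}},h}\left( \sigma \right) \right\| _{1}=m\) and \(\sum _{i}\left\| {\mathbf {a}}_{h}^{i}\right\| _{1}\le {{\,\mathrm{cond}\,}}_{h}\left( \sigma \right) \), are easy to verify numerically. The sketch below uses hypothetical random nodes around an arbitrary center; the helpers (names are ours) are repeated so that the block is self-contained.

```python
import numpy as np
from itertools import product


def multi_indices(s, d):
    out = []
    for n in range(d + 1):
        out += sorted((a for a in product(range(n + 1), repeat=s) if sum(a) == n),
                      reverse=True)
    return out


def shifted_vandermonde(X, xbar, d, h=1.0):
    U = (np.atleast_2d(X) - xbar) / h
    A = np.array(multi_indices(U.shape[1], d))
    return np.prod(U[:, None, :] ** A[None, :, :], axis=2)


rng = np.random.default_rng(4)
d, xbar = 5, np.array([0.3, 0.7])
m = (d + 1) * (d + 2) // 2                          # 21 nodes for s = 2
X = xbar + 0.1 * (2 * rng.random((m, 2)) - 1)      # hypothetical nodes near xbar
h = np.max(np.linalg.norm(X - xbar, axis=1))       # (14): all nodes lie in B_h(xbar)
Vh = shifted_vandermonde(X, xbar, d, h)
Ainv = np.linalg.inv(Vh)                           # column i is a_h^i

norm1 = np.linalg.norm(Vh, 1)                      # maximum absolute column sum
sum_a = np.abs(Ainv).sum()                         # sum_i ||a_h^i||_1
cond_h = norm1 * np.linalg.norm(Ainv, 1)           # 1-norm condition number
print(norm1, m)                                    # the column of ones attains the maximum
print(sum_a, "<=", cond_h)
```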

The results of Table 1 in Sect. 3 show that the bounds (27) and (47) are much larger than (32), which is only based on the “active coefficients” in the differentiation process. Therefore, in the analysis of the sensitivity to the perturbation of the function values, we use only the “active coefficients”.

Proposition 4

Let \(\widetilde{{\mathbf {y}}}={\mathbf {y}}+\varDelta {\mathbf {y}}\), where \(\varDelta {\mathbf {y}}=\left[ \varDelta y_{i}\right] _{i=1,\dots ,m}\) corresponds to the perturbation on the function values \({\mathbf {y}}= \left[ y_{i}\right] _{i=1,\dots ,m}\). Then for any \({\mathbf {x}}\in K=B_{h}\left( \mathbf {\overline{{\mathbf {x}}}}\right) \cap \varOmega \), for any \(\nu \in {\mathbb {N}} _{0}^{s}\) such that \(\left| \nu \right| \le d\) and for any \(\varDelta {\mathbf {y}} \) with \(\left| \varDelta {\mathbf {y}}\right| \le \varepsilon \), we have

where \(k_{j}=\frac{s^{j}}{\left( j-1\right) !}\) for \(j>0\), \(k_{0}=1\). As above, the inequalities between multi-indices are interpreted componentwise. In particular, for \({\mathbf {x}}=\overline{{\mathbf {x}}}\), the following inequality holds

Fig. 1. The interpolation points (in red) in the ball of radius \(r=1/4\) centered at (0.5, 0.5), selected from 1000 (left), 2000 (center) and 4000 (right) Halton points at degrees \(d=17,25,35\), respectively, corresponding to the minimal errors in Fig. 2

Fig. 2. Relative errors (54)-(56) for the function \(f_1\), using the subsets of 1000, 2000 and 4000 Halton points lying in \(B_r\left( \overline{{\mathbf {x}}}\right) \), where \( \overline{{\mathbf {x}}}=\left( 0.5,0.5\right) \) and \(r=\frac{1}{2},\frac{3}{8}, \frac{1}{4},\frac{1}{8}\), on a sequence of degrees. Shorter sequences (missing marks) are due to a lack of points for interpolation of higher degree

Fig. 3. As in Fig. 2, starting from uniform random points

Proof

Denoting by \(\widetilde{{\mathbf {y}}}={\mathbf {y}}+\varDelta {\mathbf {y}}\), where \(\varDelta {\mathbf {y}}=\left[ \varDelta y_{i} \right] _{i=1,\dots ,m}\) is the perturbation of the function values \({\mathbf {y}}=\left[ y_{i}\right] _{i=1,\dots ,m}\), by (20) we get

Then for any \(\varDelta {\mathbf {y}}\) such that \(\left| \varDelta {\mathbf {y}}\right| \le \varepsilon \),

Since, using (34), we have

then

For \({\mathbf {x}}=\overline{{\mathbf {x}}}\), it follows that

\(\blacksquare \)

Remark 2

It is worth observing that the quantity defined as

is the “stability constant” of pointwise differentiation via local polynomial interpolation, namely the value at the center of the “stability function” for the ball, that is

Notice also that, in view of (32) and (51), the overall numerical differentiation error in the presence of perturbations of the function values of size not exceeding \(\varepsilon \) can be estimated as

For the purpose of illustration, in Table 2 and Fig. 14 of Sect. 3 we show the magnitude of the stability constant (51) relative to some numerical tests.
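Evaluating (16) at the center gives \(D^{\nu }\ell _{i}\left( \overline{{\mathbf {x}}}\right) =\nu !\,a_{\nu ,h}^{i}/h^{\left| \nu \right| }\), because \(D^{\nu }\left( \left( {\mathbf {x}}-\overline{{\mathbf {x}}}\right) /h\right) ^{\alpha }\) vanishes at \(\overline{{\mathbf {x}}}\) unless \(\alpha =\nu \). Hence a perturbation \(\varDelta {\mathbf {y}}\) changes \(D^{\nu }p\left( \overline{{\mathbf {x}}}\right) \) by \(\left( \nu !/h^{\left| \nu \right| }\right) \sum _{i}\varDelta y_{i}\,a_{\nu ,h}^{i}\), whose modulus is at most \(\varepsilon \,\nu !\,h^{-\left| \nu \right| }\sum _{i}\left| a_{\nu ,h}^{i}\right| \), with equality for \(\varDelta y_{i}=\varepsilon \,\mathrm {sign}\left( a_{\nu ,h}^{i}\right) \). The sketch below takes this quantity as our reading of the stability constant (51) (the normalization in the original display may differ) and checks the bound on hypothetical nodes; all function names are ours.

```python
import numpy as np
from itertools import product
from math import factorial


def multi_indices(s, d):
    out = []
    for n in range(d + 1):
        out += sorted((a for a in product(range(n + 1), repeat=s) if sum(a) == n),
                      reverse=True)
    return out


def shifted_vandermonde(X, xbar, d, h=1.0):
    U = (np.atleast_2d(X) - xbar) / h
    A = np.array(multi_indices(U.shape[1], d))
    return np.prod(U[:, None, :] ** A[None, :, :], axis=2)


def nu_factorial(nu):
    return float(np.prod([factorial(n) for n in nu]))


rng = np.random.default_rng(5)
d, xbar, eps = 4, np.array([0.5, 0.5]), 1e-6
idx = multi_indices(2, d)
m = len(idx)
X = xbar + 0.2 * (2 * rng.random((m, 2)) - 1)    # hypothetical nodes
h = np.max(np.linalg.norm(X - xbar, axis=1))      # (14)
Vh = shifted_vandermonde(X, xbar, d, h)
Ainv = np.linalg.inv(Vh)                          # entry [k, i] is a^i_{alpha_k, h}


def dnu_p(y, nu):
    """D^nu p[y, sigma](xbar) = nu!/h^|nu| * c_{nu,h}, cf. (17)."""
    ch = np.linalg.solve(Vh, y)
    return nu_factorial(nu) / h ** sum(nu) * ch[idx.index(nu)]


def stability_constant(nu):
    """nu!/h^|nu| * sum_i |a^i_{nu,h}|  (our reading of (51))."""
    return nu_factorial(nu) / h ** sum(nu) * np.abs(Ainv[idx.index(nu), :]).sum()


nu = (1, 0)
S = stability_constant(nu)
dy_rand = rng.uniform(-eps, eps, size=m)                 # noise as in the experiments
dy_worst = eps * np.sign(Ainv[idx.index(nu), :])        # perturbation attaining the bound
# D^nu p is linear in y, so the effect of a perturbation dy is D^nu p[dy, sigma](xbar)
print(abs(dnu_p(dy_rand, nu)), "<=", eps * S)
print(abs(dnu_p(dy_worst, nu)), "==", eps * S)
```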

Remark 3

The previous results are useful to estimate the approximation error of several scattered data interpolation processes that use polynomials as local interpolants, for example the triangular Shepard [4, 11], hexagonal Shepard [10] and tetrahedral Shepard methods [5]. They are also crucial for extending those methods to higher dimensions [9].

3 Numerical experiments

In this section we provide some numerical tests to support the above theoretical results in approximating function, gradient and second order derivative values. We fix \(s=2\), \(\varOmega =[0,1]^2\) and we take different positions for the point \(\overline{{\mathbf {x}}}\) in \(\varOmega \): at the center, and near/on a side and a corner of the square. We use different distributions of scattered points in \(\varOmega \), namely Halton points [15] and uniform random points. We focus on the scattered points in the ball \(B_r(\overline{{\mathbf {x}}})\) centered at \( \overline{{\mathbf {x}}}\) for different radii, from which we extract an interpolation subset \(\sigma \) of \(m=\left( {\begin{array}{c}d+2\\ 2\end{array}}\right) \) Discrete Leja Points computed through the algorithm proposed in [3] (see Fig. 1).

The reason for adopting Discrete Leja Points is twofold. We recall that they are extracted from a finite set of points (in this case, the scattered points in the ball) by LU factorization with row pivoting of the corresponding rectangular Vandermonde matrix. Indeed, Gaussian elimination with row pivoting performs a sort of greedy optimization of the Vandermonde determinant: at each step it selects the new row (that is, the new interpolation point) so that the modulus of the augmented determinant is maximized. In addition, if the polynomial basis is ordered by increasing total degree (as in Sect. 1), the Discrete Leja Points form a sequence, that is, the first \({\left( {\begin{array}{c}k+s\\ s\end{array}}\right) }\) of them are the Discrete Leja Points for interpolation of degree k in s variables, \(1\le k\le d\); see [3] for a comprehensive discussion.

Then, on the one hand, Discrete Leja Points provide a unisolvent interpolation set at a low computational cost, since a nonzero Vandermonde determinant is automatically sought. On the other hand, since they are computed by a greedy maximization, one can expect, as a qualitative guideline, that the elements of the corresponding inverse Vandermonde matrix (which are cofactors divided by the Vandermonde determinant), and thus also the relevant sum in the error bound (32) as well as the condition number, do not grow rapidly. These results are in line with those shown in [12, Table 1]. In addition, using Discrete Leja Points also tends to keep small the sup-norm of the fundamental Lagrange polynomials \(\ell _i\) (which, as is well known, can be written as ratios of determinants, cf. [3]) and thus the Lebesgue constant, which is relevant to the estimate (27). Nevertheless, it is clear from Table 1 that the bounds involving the Lebesgue constant and the condition number are much larger than (32), which rests only on the “active coefficients” in the differentiation process. Further numerical experiments show that, as r decreases, for each value of \( \left| \nu \right| \) the first and third rows in Table 1 remain of the same order of magnitude thanks to the scaling of the basis, for all feasible degrees (interpolation of degree d is unisolvent only as long as there are enough scattered points in the ball).
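The extraction of Discrete Leja Points described above can be sketched in a few lines: Gaussian elimination with row (partial) pivoting is applied to the rectangular \(N\times m\) Vandermonde matrix of the candidate points, and the first m pivot rows are kept. This is our own minimal implementation, not the code of [3]; it assumes the candidates are scaled by the ball radius r and uses the graded ordering of Sect. 1, so the selected points should form a nested sequence across degrees (checked below on hypothetical uniform random data).

```python
import numpy as np
from itertools import product


def multi_indices(s, d):
    out = []
    for n in range(d + 1):
        out += sorted((a for a in product(range(n + 1), repeat=s) if sum(a) == n),
                      reverse=True)
    return out


def shifted_vandermonde(X, xbar, d, h=1.0):
    U = (np.atleast_2d(X) - xbar) / h
    A = np.array(multi_indices(U.shape[1], d))
    return np.prod(U[:, None, :] ** A[None, :, :], axis=2)


def discrete_leja_points(Y, xbar, d, r):
    """Indices of m = binom(d+s, s) Discrete Leja Points among the rows of Y.

    LU factorization with row pivoting of the N x m Vandermonde matrix in the
    scaled basis ((x - xbar)/r)^alpha; the pivot rows are the selected points.
    """
    A = shifted_vandermonde(Y, xbar, d, r)
    N, m = A.shape
    if N < m:
        raise ValueError("not enough candidate points for degree d")
    perm = np.arange(N)
    for k in range(m):
        p = k + np.argmax(np.abs(A[k:, k]))          # greedy choice of the new point
        A[[k, p]] = A[[p, k]]                         # swap rows k and p
        perm[[k, p]] = perm[[p, k]]
        if A[k, k] == 0.0:
            raise np.linalg.LinAlgError("candidate set is not unisolvent")
        A[k + 1:, k] /= A[k, k]                       # multipliers
        A[k + 1:, k + 1:] -= np.outer(A[k + 1:, k], A[k, k + 1:])
    return perm[:m]


rng = np.random.default_rng(6)
xbar, r = np.array([0.5, 0.5]), 0.25
P = rng.random((2000, 2))                                       # scattered points
Y = P[np.linalg.norm(P - xbar, axis=1) <= r]                    # candidates in B_r(xbar)
sel = discrete_leja_points(Y, xbar, 6, r)
print(len(Y), len(sel))                                         # candidates, m = 28
```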

For simplicity, from now on we set

and, to measure the error of approximation, we compute the relative errors

and

using the following bivariate test functions

where \(f_{1}\) is the well-known Franke's function and \(f_{3}\) is an oscillating function (see Fig. 6), both from Renka's test set [17], whereas \(f_{2}\) is the composition of the univariate exponential with an inner product, and is therefore constant on the parallel hyperplanes \(x+y=q\), \(q\in {\mathbb {R}}\) (a ridge function). For each test function we approximate \(D^{\nu }f\left( \overline{ {\mathbf {x}}}\right) \) by

\[D^{\nu }f\left( \overline{{\mathbf {x}}}\right) \approx D^{\nu }p\left[ {\mathbf {y}},\sigma \right] \left( \overline{{\mathbf {x}}}\right) =\frac{\nu !}{h^{\left| \nu \right| }}\,c_{\nu ,h}, \qquad (58)\]

where \(c_{\nu ,h}\), with \(h\le r\) defined in (14), are the coefficients of the interpolating polynomial (17) centered at \(\overline{{\mathbf {x}}}\). Interpolation is performed at Discrete Leja Points in \(B_r(\overline{{\mathbf {x}}})\) for \(r=\frac{1}{2},\frac{3}{8}, \frac{1}{4},\frac{1}{8}\) and a sequence of degrees d. We stress that, for a fixed radius r, unisolvent interpolation is possible only for finitely many degrees, that is, as long as there are enough scattered points in the ball.
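Putting the pieces together, the approximation \(D^{\nu }f\left( \overline{{\mathbf {x}}}\right) \approx \nu !\,c_{\nu ,h}/h^{\left| \nu \right| }\), obtained by differentiating (17) at the center, can be sketched end to end: select the scattered points in \(B_r(\overline{{\mathbf {x}}})\), extract Discrete Leja Points by LU with row pivoting, rescale with h from (14), solve, and read off the coefficients. This is a minimal sketch with our own helper names, not the authors' code; the test function is a hypothetical cubic (not one of \(f_1\), \(f_2\), \(f_3\)), whose derivatives must be reproduced up to rounding for \(d\ge 3\).

```python
import numpy as np
from itertools import product
from math import factorial


def multi_indices(s, d):
    out = []
    for n in range(d + 1):
        out += sorted((a for a in product(range(n + 1), repeat=s) if sum(a) == n),
                      reverse=True)
    return out


def shifted_vandermonde(X, xbar, d, h=1.0):
    U = (np.atleast_2d(X) - xbar) / h
    A = np.array(multi_indices(U.shape[1], d))
    return np.prod(U[:, None, :] ** A[None, :, :], axis=2)


def leja_indices(Y, xbar, d, r):
    """Discrete Leja Points: LU with row pivoting of the rectangular Vandermonde matrix."""
    A = shifted_vandermonde(Y, xbar, d, r)
    perm = np.arange(A.shape[0])
    for k in range(A.shape[1]):
        p = k + np.argmax(np.abs(A[k:, k]))
        A[[k, p]] = A[[p, k]]
        perm[[k, p]] = perm[[p, k]]
        A[k + 1:, k] /= A[k, k]
        A[k + 1:, k + 1:] -= np.outer(A[k + 1:, k], A[k, k + 1:])
    return perm[:A.shape[1]]


def derivatives_at_center(P, f, xbar, r, d, orders):
    """Approximate D^nu f(xbar) by nu!/h^|nu| * c_{nu,h} for each nu in orders."""
    Y = P[np.linalg.norm(P - xbar, axis=1) <= r]          # scattered points in B_r(xbar)
    sigma = Y[leja_indices(Y, xbar, d, r)]                 # local interpolation subset
    h = np.max(np.linalg.norm(sigma - xbar, axis=1))       # (14), h <= r
    ch = np.linalg.solve(shifted_vandermonde(sigma, xbar, d, h), f(sigma))
    idx = multi_indices(P.shape[1], d)
    return {nu: np.prod([factorial(n) for n in nu]) / h ** sum(nu) * ch[idx.index(nu)]
            for nu in orders}


# Hypothetical cubic q(x, y) = x^3 - 2xy^2 + y + 1
q = lambda Z: Z[:, 0] ** 3 - 2 * Z[:, 0] * Z[:, 1] ** 2 + Z[:, 1] + 1
P = np.random.default_rng(7).random((1000, 2))
est = derivatives_at_center(P, q, np.array([0.5, 0.5]), 0.25, 4,
                            [(1, 0), (0, 1), (2, 0), (1, 1), (0, 2)])
# Should be close to the exact values 0.25, 0, 3, -2, -2
print(est)
```

Replacing `q` with a non-polynomial function gives genuine approximations, whose accuracy depends on d, r and the point distribution, as discussed in the experiments.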

In the first experiment we start from 1000, 2000 and 4000 Halton and uniform random points and we set \(\overline{{\mathbf {x}}}=\left( 0.5,0.5\right) \) (see Fig. 1). For the test function \(f_{1}\), the numerical results are displayed in Figs. 2, 3.

In the second experiment, we start from 2000 Halton points for the test function \(f_2\), again with \(\overline{{\mathbf {x}}}=\left( 0.5,0.5\right) \). The numerical results are displayed in Fig. 4.

In the third experiment, for the test function \(f_3\), we start from 4000 Halton points choosing \(\overline{{\mathbf {x}}}\) at the center, then close to the right side and finally close to the north-east corner (see Figures 5, 6). The numerical results are displayed in Figs. 7, 8 and 10. We repeat the same experiments choosing \(\overline{{\mathbf {x}}}\) on the right side and at the north-east corner and we report the results in Figs. 9 and 11.

Fig. 13. Relative sensitivity (60) for the gradient of p computed with perturbed function values (gs) and its estimate (61) involving the stability constant of the gradient (gse) for the functions \(f_1\) (top left), \(f_2\) (top right) and \( f_3\) (bottom), using 1000 Halton points with \(\overline{{\mathbf {x}}} =(0.5,0.5)\)

Fig. 14. The stability constant (51) for degrees \(d=5,10,15\) and \(\vert \nu \vert =0\) (left), \(\vert \nu \vert =1\) (center) and \(\vert \nu \vert =2\) (right), computed using 4000 Halton points with \(r=1/2\) and \(\overline{{\mathbf {x}}}\) ranging over 101 equispaced points on the horizontal line \(y=0.5\) (top) and on the diagonal \(y=x\) (bottom)

Fig. 16. As in Fig. 15, using 10000 uniform random points

In the last experiment, for each test function \(f_{k}\), \(k=1,2,3\), we include a random noise in the m function values, namely

where \(U(-\varepsilon ,\varepsilon )\) denotes the multivariate uniform distribution in \([-\varepsilon ,\varepsilon ]^{m}\). In Figure 12 we display the relative error

for the gradient at \(\overline{{\mathbf {x}}}=\left( 0.5,0.5\right) \), computed using 1000 Halton points with exact function values (55) and perturbed function values (59) for \( \varepsilon =10^{-6}\). In Figure 13 we display the relative sensitivity in computing the gradient of the interpolating polynomial p under the perturbation of the function values (\( \varepsilon =10^{-6}\))

together with its estimate involving the stability constant of the gradient (51)

Notice that the relative errors gep in Fig. 12 and the sensitivity gs in Fig. 13 are of the same order of magnitude once the errors ge become negligible with respect to gs. Moreover, gse turns out to be a slight overestimate of the relative sensitivity gs. As Table 2 shows for \(\overline{{\mathbf {x}}}=(0.5,0.5)\), this is due to the relatively small values of the stability constant, which varies slowly as the radius r decreases or the degree of the interpolating polynomial increases.

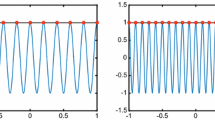

Finally, it is worth stressing that the stability constant (51) is a function of \(\overline{{\mathbf {x}}}\) and therefore depends on the position of \(\overline{{\mathbf {x}}}\) in the square. More precisely, for a fixed interpolation degree d, it varies slowly except in a neighborhood of the boundary, where it increases rapidly, especially near the vertices, as can be observed in Fig. 14, where for clarity we restrict the stability constant to lines. Moreover, as the degree increases, the stability constant tends to increase, much more rapidly at the boundary. This behavior, which explains the loss of accuracy at the boundary (see Figs. 8–9) and at the vertex (see Figs. 10–11) of the square, can be ascribed to the fact that the stability functions (52) increase rapidly near the boundary of the local interpolation domains \(B_h(\overline{ {\mathbf {x}}}) \cap \varOmega \). This phenomenon, which resembles the boundary behavior of the Lebesgue functions of univariate interpolation at equispaced points (cf. e.g. [18]), deserves further investigation.

We stress that our method is not restricted to dimension 2. Indeed, in Figs. 15–16 we show the accuracy of derivative approximation for the 3D version of the function \(f_2\), using 10000 Halton and uniform random points, respectively. The error behavior is quite similar to the 2D case with Halton points (see Fig. 4). Notice that for the smallest radius the maximum interpolation degree is lower than in the 2D case, since there are not enough interpolation points in the ball (reaching the same maximum degree would require around 56000 points).
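The point count quoted above can be roughly reproduced by a back-of-the-envelope estimate. The assumptions are ours: the number of scattered points in \(B_r(\overline{{\mathbf {x}}})\) is about N times the volume of the ball, the smallest radius is \(r=1/8\), and the 2D reference is the 2000-point Halton experiment of Fig. 4. Degree d requires at least \(\left( {\begin{array}{c}d+s\\ s\end{array}}\right) \) nodes in the ball.

```python
from math import comb, pi


def max_degree(n_points, s):
    """Largest d such that binom(d + s, s) <= n_points."""
    d = 0
    while comb(d + 1 + s, s) <= n_points:
        d += 1
    return d


r = 1 / 8                                   # assumed smallest radius
vol2 = pi * r ** 2                          # area of B_r (inside the unit square)
vol3 = 4 / 3 * pi * r ** 3                  # volume of B_r (inside the unit cube)
n2 = 2000 * vol2                            # expected points in B_r, 2000 points in 2D
n3 = 10000 * vol3                           # expected points in B_r, 10000 points in 3D
d2, d3 = max_degree(n2, 2), max_degree(n3, 3)
needed = comb(d2 + 3, 3) / vol3             # 3D points giving binom(d2 + 3, 3) nodes in B_r
print(f"2D: ~{n2:.0f} points in the ball, max degree {d2}")
print(f"3D: ~{n3:.0f} points in the ball, max degree {d3}")
print(f"3D points needed for degree {d2}: ~{needed:.0f}")
```

Under these assumptions the estimate is close to the figure of about 56000 points given in the text; the actual maximum degrees depend on the exact counts of points in each ball.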

References

[1] Arcangeli, R., Gout, J.L.: Sur l’évaluation de l’erreur d’interpolation de Lagrange dans un ouvert de \({\mathbb{R}}^n\). ESAIM Math. Model. Numer. Anal. (Modélisation Mathématique et Analyse Numérique) 10(R1), 5–27 (1976)

[2] Belward, J.A., Turner, I.W., Ilić, M.: On derivative estimation and the solution of least squares problems. J. Comput. Appl. Math. 222(2), 511–523 (2008)

[3] Bos, L., De Marchi, S., Sommariva, A., Vianello, M.: Computing multivariate Fekete and Leja points by numerical linear algebra. SIAM J. Numer. Anal. 48(5), 1984–1999 (2010)

[4] Cavoretto, R., De Rossi, A., Dell’Accio, F., Di Tommaso, F.: Fast computation of triangular Shepard interpolants. J. Comput. Appl. Math. 354, 457–470 (2019)

[5] Cavoretto, R., De Rossi, A., Dell’Accio, F., Di Tommaso, F.: An efficient trivariate algorithm for tetrahedral Shepard interpolation. J. Sci. Comput. 82(3), 1–15 (2020)

[6] Cheney, E.W., Light, W.A.: A Course in Approximation Theory, vol. 101. American Mathematical Soc., Washington (2009)

[7] Davydov, O., Schaback, R.: Error bounds for kernel-based numerical differentiation. Numer. Math. 132(2), 243–269 (2016)

[8] Davydov, O., Schaback, R.: Minimal numerical differentiation formulas. Numer. Math. 140(3), 555–592 (2018)

[9] Dell’Accio, F., Di Tommaso, F.: Rate of convergence of multinode Shepard operators. Dolomites Res. Notes Approx. 12(1) (2019)

[10] Dell’Accio, F., Di Tommaso, F.: On the hexagonal Shepard method. Appl. Numer. Math. 150, 51–64 (2020)

[11] Dell’Accio, F., Di Tommaso, F., Hormann, K.: On the approximation order of triangular Shepard interpolation. IMA J. Numer. Anal. 36(1), 359–379 (2016)

[12] Dell’Accio, F., Di Tommaso, F., Siar, N.: On the numerical computation of bivariate Lagrange polynomials. Appl. Math. Lett. 106845 (2020)

[13] Farwig, R.: Rate of convergence of Shepard’s global interpolation formula. Math. Comp. 46(174), 577–590 (1986)

[14] Gout, J.: Estimation de l’erreur d’interpolation d’Hermite dans \({\mathbb{R}}^n\). Numer. Math. 28(4), 407–429 (1977)

[15] Kocis, L., Whiten, W.J.: Computational investigations of low-discrepancy sequences. ACM Trans. Math. Software 23(2), 266–294 (1997)

[16] Ling, L., Ye, Q.: On meshfree numerical differentiation. Anal. Appl. (Singap.) 16(05), 717–739 (2018)

[17] Renka, R.J., Brown, R.: Algorithm 792: accuracy test of ACM algorithms for interpolation of scattered data in the plane. ACM Trans. Math. Software 25(1), 78–94 (1999)

[18] Trefethen, L.N.: Approximation Theory and Approximation Practice, Extended Edition. SIAM, New Delhi (2019)

[19] Waldron, S.: Multipoint Taylor formulæ. Numer. Math. 80(3), 461–494 (1998)

[20] Wilhelmsen, D.R.: A Markov inequality in several dimensions. J. Approx. Theory 11(3), 216–220 (1974)

Acknowledgements

This research was carried out as part of RITA “Research ITalian network on Approximation” and was supported by the GNCS-INdAM 2020 Projects “Interpolation and smoothing: theoretical, computational and applied aspects with emphasis on image processing and data analysis” and “Multivariate approximation and functional equations for numerical modelling”. The third author’s research was supported by the National Center for Scientific and Technical Research (CNRST-Morocco) as part of the Research Excellence Awards Program (No. 103UIT2019). The fourth author was partially supported by the DOR funds and the biennial project BIRD 192932 of the University of Padova. The authors thank the anonymous referees for their useful and very interesting suggestions, which helped to improve the paper.

Funding

Open access funding provided by Università della Calabria within the CRUI-CARE Agreement.


Additional information

Communicated by Tom Lyche.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dell’Accio, F., Di Tommaso, F., Siar, N. et al. Numerical differentiation on scattered data through multivariate polynomial interpolation. BIT Numer. Math. 62, 773–801 (2022). https://doi.org/10.1007/s10543-021-00897-6


DOI: https://doi.org/10.1007/s10543-021-00897-6