Abstract

The two-dimensional advection–diffusion equation in a stochastically varying geometry is considered. The varying domain is transformed into a fixed one, and the numerical solution is computed using a high-order finite difference formulation in summation-by-parts form with weakly imposed boundary conditions. Statistics of the solution are computed non-intrusively using quadrature rules given by the probability density functions of the random variables. As a quality control, we prove that the continuous problem is strongly well-posed and that the semi-discrete problem is strongly stable, and we verify the accuracy of the scheme. The technique is applied to a heat transfer problem in incompressible flow. Statistical properties such as confidence intervals and the variance of the solution in terms of two functionals are computed and discussed. We show that there is a decreasing sensitivity to geometric uncertainty as we gradually lower the frequency and amplitude of the randomness. The results are less sensitive to variations in the correlation length of the geometry.



1 Introduction

When solving partial differential equations, uncertain geometry of the computational domain may arise for many reasons. Examples include irregular materials, inaccurate Computer-Aided Design (CAD) software, imprecise manufacturing machines and imperfect mesh generators. We study the effects of this uncertainty by imposing the boundary conditions at stochastically varying positions in space. Related techniques include boundary perturbation [26], the Lagrangian approach [1] and isoparametric mapping [5]. Other techniques for dealing with geometric uncertainty include polynomial chaos with remeshing of the geometry [8, 9] as well as chaos collocation methods with fictitious domains [3, 17].

We transform the stochastically varying domain into a fixed one. This procedure has previously been used by Xiu et al. [25] for elliptic problems, and in this article it is extended to the time-dependent advection–diffusion equation. If an analytical transformation of the geometry is unavailable, numerical techniques can be employed [4]. The continuous problem is analyzed using the energy method, and strong well-posedness is proved [14, 15].

We discretize using high-order finite difference methods in summation-by-parts form with weakly imposed boundary conditions, and prove strong stability [23, 24]. Statistics of the solution, such as the mean, variance and confidence intervals, are computed non-intrusively using quadrature rules for the given stochastic distributions [10, 12]. As an application, we analyze the heat transfer at rough surfaces in incompressible flow [2, 18, 22].

The paper proceeds as follows. In Sect. 2, we define the continuous problem in two space dimensions, transform it to the unit square using curvilinear coordinates and derive energy estimates that lead to well-posedness. In Sect. 3, we formulate a finite difference scheme for the continuous problem and prove stability. In Sect. 4, we verify the accuracy of the scheme and consider a heat transfer problem in incompressible flow. Finally, in Sect. 5 we draw conclusions.

2 The continuous problem

Consider the advection–diffusion problem on the stochastically varying domain \(\Omega (\mathbf {\theta })\)

In (2.1), \(\bar{u}\) and \(\bar{v}\) are the known mean velocities in the x- and y-directions, satisfying the divergence relation \(\bar{u}_x + \bar{v}_y = 0\) that stems from an incompressible Navier–Stokes solution. Furthermore, \(\epsilon = \epsilon (x,y,t)\) is a positive diffusion coefficient, \(u = u(x, \, y, \, t, \, \mathbf {\theta })\) is the solution, and \(\mathbf {\theta } = (\theta _1, \, \theta _2,\dots )\) is a vector of random variables describing the geometry of the domain. The data F, g and f are the forcing function, the boundary data and the initial data, respectively. The goal of this study is to investigate the effects of imposing the boundary condition \(Hu = g\) at the stochastically varying boundary \(\partial \Omega (\mathbf {\theta })\).

2.1 The transformation

We transform the stochastically varying domain \(\Omega \) into the unit square by the transformation,

where \(0 \le \xi ,\,\eta \le 1\). The Jacobian matrix of the transformation is given by,

By applying the chain rule to (2.1) and multiplying by \(J = x_{\xi } y_{\eta } - x_{\eta } y_{\xi } > 0\), we obtain

The final formulation of the transformed problem is

where

and \(\Phi = [0, \, 1] \times [0, \, 1]\). A more complete derivation of the transformed problem is given in “Appendix A”. In (2.4), we have used the notation \(\nabla = (\frac{\partial }{\partial x}, \frac{\partial }{\partial y})^\mathrm{T}\). The transformed fixed domain, including the outward normal vectors, is shown in Fig. 1. Note that the wave speeds \(\tilde{a}\) and \(\tilde{b}\) depend on the stochastic variables \(\mathbf {\theta }\).
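To make the transformation concrete, the sketch below evaluates the metric terms, the Jacobian J and the transformed wave speeds on a discrete grid. It is a minimal illustration under assumptions not taken from the text: a hypothetical mapping \(x = \xi\), \(y = y_S(\xi) + \eta (1 - y_S(\xi))\) with a wavy south boundary \(y_S(\xi) = 0.1 \sin (2\pi \xi )\), the divergence-free field \(\bar{u} = \sin (x) \cos (y)\), \(\bar{v} = -\cos (x)\sin (y)\) from Sect. 4.1, and the contravariant form \(\tilde{a} = J(\bar{u}\xi _x + \bar{v}\xi _y)\), \(\tilde{b} = J(\bar{u}\eta _x + \bar{v}\eta _y)\) for the wave speeds. Derivatives are approximated with NumPy's second-order `np.gradient`, not with SBP operators. The residual of \(\tilde{a}_{\xi } + \tilde{b}_{\eta }\), which vanishes in the continuous setting, should decrease under grid refinement.

```python
import numpy as np


def metrics(x, y, h_xi, h_eta):
    """Jacobian and inverse metrics of the map (xi, eta) -> (x, y); arrays indexed [i, j]."""
    x_xi, x_eta = np.gradient(x, h_xi, h_eta, edge_order=2)
    y_xi, y_eta = np.gradient(y, h_xi, h_eta, edge_order=2)
    J = x_xi * y_eta - x_eta * y_xi            # must stay positive for a valid mapping
    # Inverse metrics obtained by inverting the 2x2 Jacobian matrix
    xi_x, xi_y = y_eta / J, -x_eta / J
    eta_x, eta_y = -y_xi / J, x_xi / J
    return J, xi_x, xi_y, eta_x, eta_y


def transformed_speeds(N, amplitude=0.1, freq=2.0):
    """J, a_tilde, b_tilde on an (N+1) x (N+1) grid for a hypothetical wavy domain."""
    xi = np.linspace(0.0, 1.0, N + 1)
    XI, ETA = np.meshgrid(xi, xi, indexing="ij")
    y_south = amplitude * np.sin(freq * np.pi * XI)   # hypothetical south boundary y_S(xi)
    x = XI
    y = y_south + ETA * (1.0 - y_south)               # north boundary fixed at y = 1
    h = 1.0 / N
    J, xi_x, xi_y, eta_x, eta_y = metrics(x, y, h, h)
    u_bar = np.sin(x) * np.cos(y)                     # divergence-free field of Sect. 4.1
    v_bar = -np.cos(x) * np.sin(y)
    a_tilde = J * (u_bar * xi_x + v_bar * xi_y)       # assumed contravariant wave speeds
    b_tilde = J * (u_bar * eta_x + v_bar * eta_y)
    return J, a_tilde, b_tilde, h


def divergence_residual(N):
    """max |a_xi + b_eta| on the grid; tends to zero under refinement."""
    _, a_tilde, b_tilde, h = transformed_speeds(N)
    a_xi = np.gradient(a_tilde, h, axis=0, edge_order=2)
    b_eta = np.gradient(b_tilde, h, axis=1, edge_order=2)
    return np.max(np.abs(a_xi + b_eta))


print("min J on 41x41 grid:", transformed_speeds(40)[0].min())
for N in (20, 40, 80):
    print(N, divergence_residual(N))
```

Computing the inverse metrics from the inverse of the Jacobian matrix guarantees \(\xi _x x_{\xi } + \xi _y y_{\xi } = 1\) pointwise; only the derivative approximations introduce the residual.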

2.2 The energy method

We multiply the transformed problem (2.3) by u, integrate over the domain \(\Phi \) (ignoring the forcing function F) and apply the Green–Gauss theorem. This yields

where \(\bar{A} = (\tilde{a}, \tilde{b}) \cdot n\), \(\bar{F} = (\tilde{f}, \tilde{g}) \cdot n\) and n is the outward pointing normal vector from \(\partial \Phi \), see Fig. 1. In (2.5), \(\left\| u \right\| _{J}^2 = \int _{\Phi } u^2 J \, d \xi \, d \eta = \int _{\Omega } u^2 \, d x \, d y\) is the \(L_2\)-norm, while

where

The right-hand side (RHS) of (2.5) can be expanded as

where for example the fluxes at the boundaries \(\xi = 1\) and \(\eta = 1\) are

respectively. Further, we note that \(\tilde{f}\) and \(\tilde{g}\) can also be written in terms of \(u_{\xi }\) and \(u_{\eta }\) as

In matrix form, (2.6) can be written as

The matrices in (2.7) are symmetric, and hence they can be diagonalized as

for \(\tilde{a}, \tilde{b} \ne 0\). By imposing the boundary conditions

where

the RHS of (2.8) is bounded by data, which gives an energy estimate.
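The requirement of a minimal number of boundary conditions can be illustrated on a scalar model of a single boundary term. As a simplified, hypothetical analogue of (2.7), assume that the boundary contribution on one side is \(-\bar{A}u^2 + 2u\bar{F} = w^\mathrm{T} M w\) with \(w = (u, \bar{F})^\mathrm{T}\) and \(M = \begin{bmatrix} -\bar{A} & 1 \\ 1 & 0 \end{bmatrix}\). Since \(\det M = -1\), M has exactly one positive and one negative eigenvalue for every \(\bar{A}\), including \(\bar{A} = 0\), so one boundary condition per boundary is needed to bound the term. The sketch diagonalizes M with `numpy.linalg.eigh` and counts the positive eigenvalues.

```python
import numpy as np


def boundary_matrix(A_bar):
    """Hypothetical boundary form -A_bar*u**2 + 2*u*F_bar written as w^T M w, w = (u, F_bar)."""
    return np.array([[-A_bar, 1.0], [1.0, 0.0]])


def required_boundary_conditions(A_bar, tol=1e-14):
    """Number of positive eigenvalues of M, i.e. conditions needed to bound w^T M w by data."""
    eigenvalues, eigenvectors = np.linalg.eigh(boundary_matrix(A_bar))
    # eigh returns ascending eigenvalues and orthonormal eigenvectors: M = X diag(lam) X^T
    return int(np.sum(eigenvalues > tol)), eigenvalues, eigenvectors


for A_bar in (-2.0, 0.0, 0.5, 3.0):
    count, lam, _ = required_boundary_conditions(A_bar)
    print(f"A_bar = {A_bar:+.1f}: eigenvalues = {lam}, conditions needed = {count}")
```

In this model the eigenvectors combine u and \(\bar{F}\), so the condition that controls the positive eigenvalue involves both the solution and the normal flux.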

2.3 Weak imposition of boundary conditions

As a preparation for the numerical approximation, we now impose the boundary conditions weakly using penalty terms. This gives

To illustrate the procedure, we assume \(\tilde{a} \big |_{\xi = 0}^{\xi = 1} > 0\) and \(\tilde{b}\big |_{\eta = 0}^{\eta = 1} > 0\) and impose the boundary conditions using the operators in (2.10), which yields

The indefinite terms in (2.12) are canceled by letting

By using (2.13) in (2.12) we obtain

which leads directly to an energy estimate.

For general \(\tilde{a}\) and \(\tilde{b}\), the choices

bound the RHS of (2.11) in a similar way. The special case \(\tilde{a} = \tilde{b} = 0\) is treated analogously, see “Appendix B”.

We can now prove

Proposition 2.1

The problem (2.3) with the boundary conditions (2.9) and the penalty coefficients in (2.15) is strongly well-posed.

Proof

Consider the specific case in (2.14). For other values of \(\tilde{a}\) and \(\tilde{b}\), the same general procedure is used. Time integration (from 0 to T) of (2.14) results in

In (2.16), the boundary terms with zero data all give a non-positive contribution, and hence the solution is bounded by data. The bound leads directly to uniqueness, and existence is guaranteed by the fact that we use the correct (i.e., minimal) number of boundary conditions.

3 The semi-discrete formulation

In this section, we consider the numerical approximation of (2.3) formulated using Summation-By-Parts (SBP) operators with Simultaneous Approximation Terms (SAT), the so-called SBP-SAT technique [24]. First, we rewrite our variable coefficient continuous problem (2.3) using the splitting technique described in [13] to obtain

In (3.1), the lower-order terms vanish since \(\tilde{a}_{\xi } + \tilde{b}_{\eta } = 0\). The corresponding semi-discrete version of (3.1), including penalty terms for the boundary conditions, is

where

In (3.2) and (3.3), \(P_{\xi , \eta }^{-1} Q_{\xi , \eta }\) are the finite difference operators, \(P_{\xi , \eta }\) are diagonal positive definite matrices, and \(Q_{\xi , \eta }\) are almost skew-symmetric matrices satisfying \(Q_{\xi , \eta } + Q_{\xi , \eta }^\mathrm{T} = B = diag[-1,0,\dots ,0,1]\).
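The SBP property \(Q + Q^\mathrm{T} = B\) can be made concrete with the simplest SBP first-derivative operator, which is second-order accurate in the interior. On a uniform grid with N+1 points and spacing h, it has \(P = h\,\mathrm{diag}(1/2, 1, \dots , 1, 1/2)\) and a Q with \(\pm 1/2\) on the off-diagonals, \(Q_{00} = -1/2\) and \(Q_{NN} = 1/2\). The sketch builds these matrices and checks the SBP property and that \(D = P^{-1}Q\) differentiates linear functions exactly; the higher-order operators used in Sect. 4 have wider boundary closures and are not reproduced here.

```python
import numpy as np


def sbp_first_derivative(N, length=1.0):
    """Second-order SBP operator D = P^{-1} Q on N+1 uniformly spaced points."""
    h = length / N
    P = h * np.eye(N + 1)
    P[0, 0] = P[N, N] = h / 2                          # trapezoidal-rule norm
    Q = 0.5 * (np.eye(N + 1, k=1) - np.eye(N + 1, k=-1))
    Q[0, 0], Q[N, N] = -0.5, 0.5                        # boundary closure
    D = np.linalg.solve(P, Q)
    return P, Q, D


N = 10
P, Q, D = sbp_first_derivative(N)
B = np.zeros((N + 1, N + 1))
B[0, 0], B[N, N] = -1.0, 1.0
print(np.allclose(Q + Q.T, B))                       # Q is almost skew-symmetric
x = np.linspace(0.0, 1.0, N + 1)
print(np.allclose(D @ (3.0 * x + 2.0), 3.0))        # exact for linear functions
```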

The vector U contains the numerical solution \(U_{i,j}\), which approximates \(u(\xi _i, \eta _j)\), ordered as

The indices \(i = 0,1,\dots ,N\) and \(j = 0,1,\dots ,M\) correspond to the grid points in the \(\xi \)- and \(\eta \)-directions, respectively.

To ease the notation, we denote the discrete derivatives with respect to \(\xi \) and \(\eta \) by \(U_{\xi } = (P_{\xi }^{-1} Q_{\xi }\otimes I_{\eta })U\) and \(U_{\eta } = (I_{\xi } \otimes P_{\eta }^{-1} Q_{\eta })U\). The matrices \(E_{0N}\) and \(E_{0M}\), of sizes \((N+1) \times (N+1)\) and \((M+1) \times (M+1)\), are zero except for the first diagonal element, which equals one. Similarly, \(E_{NN}\) and \(E_{MM}\), of sizes \((N+1) \times (N+1)\) and \((M+1) \times (M+1)\), are zero except for the last diagonal element, which equals one. \(I_{\xi }\), \(I_{\eta }\) and \(I_{\xi \eta }\) denote the identity matrices of sizes \((N+1) \times (N+1)\), \((M+1) \times (M+1)\) and \((M+1)(N+1) \times (M+1)(N+1)\), respectively. \(\tilde{A}\), \(\tilde{B}\), \(\tilde{F}\), \(\tilde{G}\), \(\tilde{\xi }_x\), \(\tilde{\xi }_y\), \(\tilde{\eta }_x\), \(\tilde{\eta }_y\), \(\tilde{\epsilon }\) and \(\tilde{J}\) are diagonal matrices approximating \(\tilde{a}\), \(\tilde{b}\), \(\tilde{f}\), \(\tilde{g}\), \(\xi _x\), \(\xi _y\), \(\eta _x\), \(\eta _y\), \(\epsilon \) and J pointwise.
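The Kronecker-product notation can be checked with a few lines of NumPy. The sketch assumes that U stores \(U_{i,j}\) with the \(\eta \)-index running fastest, which is the ordering under which \((D_{\xi } \otimes I_{\eta })U\) differentiates along \(\xi \); the second-order SBP operator stands in for \(P_{\xi }^{-1}Q_{\xi }\) and \(P_{\eta }^{-1}Q_{\eta }\), and the helper `sbp_d1` is hypothetical. It verifies both directional derivatives on a linear function and shows how \(E_{0N} \otimes I_{\eta }\) extracts the west boundary values.

```python
import numpy as np


def sbp_d1(n_intervals):
    """Second-order SBP first-derivative operator D = P^{-1} Q on [0, 1] (hypothetical helper)."""
    h = 1.0 / n_intervals
    p = np.full(n_intervals + 1, h)
    p[0] = p[-1] = h / 2                              # diagonal of the norm P
    Q = 0.5 * (np.eye(n_intervals + 1, k=1) - np.eye(n_intervals + 1, k=-1))
    Q[0, 0], Q[-1, -1] = -0.5, 0.5
    return Q / p[:, None]                             # row-wise division applies P^{-1}


N, M = 6, 4
D_xi, D_eta = sbp_d1(N), sbp_d1(M)
I_xi, I_eta = np.eye(N + 1), np.eye(M + 1)

xi = np.linspace(0.0, 1.0, N + 1)
eta = np.linspace(0.0, 1.0, M + 1)
grid = np.add.outer(2.0 * xi, 5.0 * eta)            # u = 2 xi + 5 eta, grid[i, j] = u(xi_i, eta_j)
U = grid.ravel()                                     # eta index runs fastest

U_xi = np.kron(D_xi, I_eta) @ U                      # derivative along xi
U_eta = np.kron(I_xi, D_eta) @ U                     # derivative along eta
print(np.allclose(U_xi, 2.0), np.allclose(U_eta, 5.0))
print(np.allclose(U_xi.reshape(N + 1, M + 1), D_xi @ grid))

E_0N = np.zeros((N + 1, N + 1))
E_0N[0, 0] = 1.0                                     # selects the west boundary xi = 0
west_values = (np.kron(E_0N, I_eta) @ U).reshape(N + 1, M + 1)[0]
print(np.allclose(west_values, grid[0]))
```

With this ordering, \((A \otimes B)U\) equals the row-major flattening of \(A X B^\mathrm{T}\), where X is U reshaped to an \((N+1) \times (M+1)\) array, so the operators never need to be formed explicitly in practice.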

The discrete boundary operators \(\mathbf {H}_E^-\), \(\mathbf {H}_W^-\), \(\mathbf {H}_N^-\) and \(\mathbf {H}_S^-\) are defined as

which correspond to the continuous counterparts in (2.10). Finally, the penalty matrices \(\varvec{\varSigma }_E, \varvec{\varSigma }_W, \varvec{\varSigma }_N\) and \(\varvec{\varSigma }_S\) are chosen such that the numerical scheme (3.2) becomes stable. For more details on the SBP-SAT technique, see [24].

3.1 Stability

To prove stability (we only consider the west boundary, as the treatment of the other boundaries is similar), we multiply (3.2) by \(U^\mathrm{T} (P_{\xi } \otimes P_{\eta })\) from the left, add the transpose of the outcome and define the discrete norm \(\left\| U \right\| _{J(P_{\xi } \otimes P_{\eta })}^2 = U^\mathrm{T} \tilde{J} (P_{\xi } \otimes P_{\eta }) U\) to obtain

By observing that \(\mathbf{{H}_W^-} U = U - \tilde{A}^{-1} \tilde{F}\), \(Q_{\xi }+Q_{\xi }^\mathrm{T} = E_{NN} - E_{0N}\), \(Q_{\eta }+Q_{\eta }^\mathrm{T} = E_{MM} - E_{0M}\), and ignoring the contribution from the other boundaries (the terms including \(E_{NN}, E_{MM}\) and \(E_{0M}\)) we can rewrite (3.4) as

where

The remaining derivations leading to the discrete energy estimate

are included in “Appendix C”.

We can now prove

Proposition 3.1

The numerical approximation (3.2) using the penalty coefficients

is strongly stable.

Proof

For ease of presentation we prove the special case when \(\tilde{a}, \tilde{b} > 0\). By integrating (3.6) in time, considering also the remaining boundaries and using the penalty parameters in (3.7) we find

As in the continuous energy estimate (2.14), the RHS of (3.8) consists of boundary data and negative semi-definite dissipative boundary terms which result in a strongly stable numerical approximation.
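The mechanism behind Proposition 3.1 can be checked numerically on a one-dimensional analogue; this is an illustrative sketch, not the scheme (3.2). It discretizes \(u_t + a u_x = \epsilon u_{xx}\) on [0, 1] with \(a > 0\) using the second-order SBP operator, imposes \(a u - \epsilon u_x = g_W\) at the inflow boundary \(x = 0\) (in the spirit of \(\mathbf {H}_W^- U = U - \tilde{A}^{-1}\tilde{F}\)) and \(\epsilon u_x = g_E\) at the outflow boundary \(x = 1\) by SATs, and uses the assumed penalty coefficients \(\sigma _W = \sigma _E = -1\). With zero data, the SBP property gives \(\frac{d}{dt}\Vert u \Vert _P^2 = -a u_0^2 - a u_N^2 - 2\epsilon \Vert D u \Vert _P^2 \le 0\), so the symmetric matrix \(PA + A^\mathrm{T} P\) should be negative semi-definite. Dropping the west SAT destroys this property.

```python
import numpy as np


def sbp_operators(N):
    """Second-order SBP operators on [0, 1] with N+1 points: norm P and D = P^{-1} Q."""
    h = 1.0 / N
    P = h * np.eye(N + 1)
    P[0, 0] = P[N, N] = h / 2
    Q = 0.5 * (np.eye(N + 1, k=1) - np.eye(N + 1, k=-1))
    Q[0, 0], Q[N, N] = -0.5, 0.5
    return P, np.linalg.solve(P, Q)


def semi_discrete_matrix(N, a, eps, sigma_w=-1.0, sigma_e=-1.0):
    """Matrix A in u_t = A u for the 1D SBP-SAT model problem with zero boundary data."""
    P, D = sbp_operators(N)
    P_inv = np.diag(1.0 / np.diag(P))
    e0, eN = np.zeros(N + 1), np.zeros(N + 1)
    e0[0], eN[N] = 1.0, 1.0
    A = -a * D + eps * D @ D
    # West (inflow) SAT penalizing a*u - eps*u_x at x = 0
    A += sigma_w * P_inv @ np.outer(e0, a * e0 - eps * D[0])
    # East (outflow) SAT penalizing eps*u_x at x = 1
    A += sigma_e * P_inv @ np.outer(eN, eps * D[N])
    return P, A


def max_energy_rate_eigenvalue(N, a, eps, **penalties):
    """Largest eigenvalue of P A + A^T P; a value <= 0 means ||u||_P cannot grow."""
    P, A = semi_discrete_matrix(N, a, eps, **penalties)
    return np.linalg.eigvalsh(P @ A + A.T @ P).max()


print(max_energy_rate_eigenvalue(40, a=1.0, eps=0.01))               # non-positive up to round-off
print(max_energy_rate_eigenvalue(40, a=1.0, eps=0.01, sigma_w=0.0))  # positive without west SAT
```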

Remark 3.1

Note the similarity between the discrete energy estimate (3.8) and its continuous counterpart (2.16).

Remark 3.2

The possibility of applying the SBP-SAT technique to the coupled PDEs resulting from the use of polynomial chaos in combination with a stochastic Galerkin projection is shown in Pettersson et al. [19, 20].

4 Numerical results

We start with a quality control, using the method of manufactured solutions [16, 21] to verify the accuracy and stability of the scheme.

4.1 Rate of convergence for the deterministic case

We use \(\bar{u} = \sin (x) \cos (y)\) and \(\bar{v} = -\cos (x)\sin (y)\), which satisfy the incompressibility condition \(\bar{u}_x + \bar{v}_y = 0\), together with \(\epsilon = 0.01\). The rate of convergence is verified by computing the order of accuracy p defined as

In (4.1), \(u_h\) is the numerical solution, using the grid spacing h, and the manufactured solution is

The order of accuracy, computed for different numbers of grid points and SBP operators, is shown in Table 1. For time integration, the classical 4th-order Runge–Kutta method with 5000 grid points in time was used. The results in Table 1 confirm the expected accuracy of the 2nd-, 3rd-, 4th- and 5th-order SBP-SAT schemes [23].
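Since the definition (4.1) is not reproduced here, the sketch below assumes the common two-grid estimate \(p = \log (\Vert u - u_{h_1}\Vert / \Vert u - u_{h_2}\Vert ) / \log (h_1/h_2)\). The errors are synthetic values decaying like \(h^4\), used only to check the formula; they are not the data of Table 1.

```python
import math


def observed_order(err_coarse, err_fine, h_coarse, h_fine):
    """Observed order p from errors on two grids, assuming err ~ C * h**p."""
    return math.log(err_coarse / err_fine) / math.log(h_coarse / h_fine)


def observed_orders(grid_sizes, errors):
    """Orders between successive grids with grid_sizes[k] points on [0, 1]."""
    hs = [1.0 / (n - 1) for n in grid_sizes]
    return [observed_order(errors[k], errors[k + 1], hs[k], hs[k + 1])
            for k in range(len(errors) - 1)]


# Synthetic errors decaying exactly like h**4 (illustrative, not data from Table 1)
sizes = [21, 41, 81]
errors = [2.0 * (1.0 / (n - 1)) ** 4 for n in sizes]
print(observed_orders(sizes, errors))
```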

4.2 Heat transfer at rough surfaces

Equipped with a provably stable scheme, we will now investigate the stochastic properties of a heat distribution problem in incompressible flow. The problem in two dimensions is of the form

where we specify the following boundary conditions

In (4.2), T is the temperature, \((\bar{u}, \bar{v})\) the given velocity field, \(\epsilon \) the viscosity. The boundary conditions in (4.3) are a well-posed subset of the general ones derived previously.

To simulate a boundary layer, the quantities \(\bar{u}, \bar{v}\) and \(\epsilon \) are chosen as

where \(T_{\infty } = \sqrt{\epsilon }\) and \(\frac{\partial T}{\partial n} = n \cdot \nabla T\). The velocity field is generated on the unit square (Fig. 2) and then injected at the corresponding grid points of the varying domain. The simplified velocity field in (4.4) satisfies the divergence relation \(\bar{u}_x + \bar{v}_y = 0\) and has a boundary layer.

4.3 Statistical results

In the calculations below, the 4th-order Runge–Kutta method is used together with 3rd-order SBP operators on a grid with 50 and 100 grid points in the x- and y-directions, respectively, and 9000 grid points in time to keep the time discretization error small.
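The domain integrals used as output functionals in this section can be evaluated on the fixed grid with the SBP norm as quadrature, since \(\int _{\Omega } T \, dx \, dy = \int _{\Phi } T J \, d\xi \, d\eta \), mirroring the J-weighted norm in (2.5). The sketch is a simplification: it uses the second-order (trapezoidal) SBP norm rather than the 3rd-order operators above, and the hypothetical mapping \(x = \xi\), \(y = y_S(\xi) + \eta (1 - y_S(\xi))\) with \(y_S(\xi) = 0.1 \sin (2\pi \xi )\), for which \(J = 1 - y_S(\xi)\). With \(T \equiv 1\), the first functional returns the area of the domain.

```python
import numpy as np


def trapezoid_norm(n_intervals):
    """Diagonal of the second-order SBP norm P on [0, 1]."""
    p = np.full(n_intervals + 1, 1.0 / n_intervals)
    p[0] = p[-1] = 0.5 / n_intervals
    return p


def domain_functionals(T, J):
    """Approximate (int_Omega T dA, int_Omega T^2 dA) as 1^T (P_xi kron P_eta) J T."""
    N, M = T.shape[0] - 1, T.shape[1] - 1
    weights = np.outer(trapezoid_norm(N), trapezoid_norm(M)) * J   # quadrature weights times J
    return np.sum(weights * T), np.sum(weights * T**2)


# Hypothetical mapping x = xi, y = y_S(xi) + eta * (1 - y_S(xi)), so that J = 1 - y_S(xi)
N, M = 50, 100
xi = np.linspace(0.0, 1.0, N + 1)
y_south = 0.1 * np.sin(2.0 * np.pi * xi)
J = np.repeat((1.0 - y_south)[:, None], M + 1, axis=1)
area, _ = domain_functionals(np.ones((N + 1, M + 1)), J)
print(area)   # close to 1, since the sine term integrates to zero over one period
```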

We start by imposing the following stochastic variation on the south boundary of the geometry (see Fig. 3)

where \(\theta _1 \sim N(-1,1)\) and \(\theta _2 \sim U(2,10)\) are stochastic variables controlling the amplitude and frequency of the periodic variation, respectively. To study the influence of different correlation lengths, we use

where we let \(y_{S_{short}}\) and \(y_{S_{long}}\) represent short and long correlation lengths respectively.

As measures of the results, we compute statistics of the integrals of the solution and of the squared solution over the domain, that is

To compute the integrals in the stochastic analysis, we use 20 quadrature points in each of the \(\theta _1\)- and \(\theta _2\)-directions. For high-dimensional problems, adaptive sparse grid techniques or multilevel Monte Carlo methods can be used to improve the efficiency of such calculations, see for example [6, 11]. In this particular case, however, with only two stochastic dimensions, straightforward quadrature is efficient enough.
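The non-intrusive quadrature step can be sketched as follows. The sketch assumes Gauss–Hermite nodes for \(\theta _1 \sim N(-1,1)\) (NumPy's `hermegauss`, weight function \(e^{-x^2/2}\)) and Gauss–Legendre nodes for \(\theta _2 \sim U(2,10)\) (`leggauss`), 20 nodes each as stated above, combined in a tensor product. The function `toy_functional` is a hypothetical stand-in for a functional of the PDE solution, which in practice requires one deterministic solve per node pair (400 solves here).

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss
from numpy.polynomial.legendre import leggauss


def normal_rule(n, mean, std):
    """Gauss-Hermite nodes/weights for N(mean, std**2), weights normalized to sum to 1."""
    x, w = hermegauss(n)                 # weight function exp(-x**2 / 2)
    return mean + std * x, w / np.sqrt(2.0 * np.pi)


def uniform_rule(n, a, b):
    """Gauss-Legendre nodes/weights for U(a, b), weights normalized to sum to 1."""
    x, w = leggauss(n)                   # weight function 1 on [-1, 1]
    return a + 0.5 * (b - a) * (x + 1.0), 0.5 * w


def mean_and_variance(quantity, n=20):
    """Tensor-product quadrature over theta_1 ~ N(-1, 1) and theta_2 ~ U(2, 10)."""
    t1, w1 = normal_rule(n, mean=-1.0, std=1.0)
    t2, w2 = uniform_rule(n, 2.0, 10.0)
    values = np.array([[quantity(a, b) for b in t2] for a in t1])   # one solve per node pair
    weights = np.outer(w1, w2)
    mean = np.sum(weights * values)
    variance = np.sum(weights * (values - mean) ** 2)
    return mean, variance


# Hypothetical stand-in for a functional of the PDE solution
def toy_functional(theta1, theta2):
    return theta1 + 0.1 * theta2


print(mean_and_variance(toy_functional))
```

For this linear stand-in, the exact mean is \(-1 + 0.1 \cdot 6 = -0.4\) and the exact variance is \(1 + 0.01 \cdot 64/12\), both recovered to round-off because Gauss quadrature with 20 nodes is exact for polynomials of degree up to 39.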

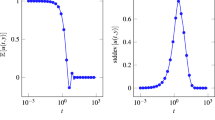

Figures 4 and 5 show the variance with respect to \(\theta _2\) of the integral of the solution and of the squared solution, respectively, as a function of time for different realizations of \(\theta _1\), with \(y_S\) as the south boundary. Both figures show that the variance increases with increasing amplitude, as expected.

Figures 6 and 7 depict the variance with respect to \(\theta _1\) of the integral of the solution and of the squared solution, respectively, as a function of time for fixed values of \(\theta _2\), using \(y_S\) as the south boundary. As can be seen, an increased frequency leads to an increased variance. Hence, high-frequency random variation in the geometry affects the solution more than low-frequency random variation.
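The variances in Figs. 4–7 are taken with respect to one random variable while the other is held fixed. A minimal sketch for the \(\theta _2\)-direction, assuming Gauss–Legendre quadrature for \(\theta _2 \sim U(2,10)\) and a hypothetical functional \(\theta _1 \sin \theta _2\) in place of the PDE output, shows the amplitude effect in its simplest form: because this functional scales linearly with \(\theta _1\), its variance scales with \(\theta _1^2\).

```python
import numpy as np
from numpy.polynomial.legendre import leggauss


def variance_over_theta2(quantity, theta1, n=20, a=2.0, b=10.0):
    """Variance w.r.t. theta_2 ~ U(a, b) of quantity(theta1, theta_2) with theta_1 held fixed."""
    x, w = leggauss(n)
    nodes = a + 0.5 * (b - a) * (x + 1.0)
    weights = 0.5 * w                                   # normalized: sums to 1
    values = np.array([quantity(theta1, t2) for t2 in nodes])
    mean = np.dot(weights, values)
    return np.dot(weights, (values - mean) ** 2)


# Hypothetical functional whose theta_2-sensitivity grows with the amplitude |theta_1|
def toy_functional(theta1, theta2):
    return theta1 * np.sin(theta2)


for theta1 in (-0.5, -1.0, -2.0):
    print(theta1, variance_over_theta2(toy_functional, theta1))
```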

Figures 8 and 9 illustrate the effects of correlation length on the variance. The variance as a function of time is shown for two different correlation lengths (one short \(y_{S_{short}}\) and one long \(y_{S_{long}}\)). The figures show no significant difference between the two cases, and we conclude that the correlation length has a minor impact on the variance of the solution.

5 Conclusions and future work

We have studied how the solution to the advection–diffusion equation is affected by imposing boundary data on a stochastically varying geometry. The problem was transformed to the unit square resulting in a formulation with stochastically varying wave speeds. Strong well-posedness and strong stability were proven.

As an application, the two-dimensional heat transfer problem in incompressible flow with a given velocity field was studied. One of the boundaries was assumed to be stochastically varying. The geometry of the boundary was prescribed to have a periodic behaviour with stochastic variations in both amplitude and frequency.

The variances were computed for different fixed realizations of \(\theta _1\) (when varying \(\theta _2\)) and of \(\theta _2\) (when varying \(\theta _1\)), which control the amplitude and frequency, respectively. A tentative conclusion is that an increased frequency of the randomness in the geometry leads to an increased variance in the solution. Also, as expected, the variance of the solution grows as the amplitude of the randomness in the geometry increases. Finally, the computational results suggest that the correlation length of the geometry has no significant impact on the variance of the solution.

In the next paper we will extend the analysis using polynomial chaos combined with stochastic Galerkin projection to incompletely parabolic systems, including calculations using the Navier–Stokes equations.

References

[1] Agarwal, N., Aluru, N.R.: A stochastic Lagrangian approach for geometrical uncertainties in electrostatics. J. Comput. Phys. 226(1), 156–179 (2007)

[2] Antohe, B., Lage, J.: A general two-equation macroscopic turbulence model for incompressible flow in porous media. Int. J. Heat Mass Transf. 40(13), 3013–3024 (1997)

[3] Canuto, C., Kozubek, T.: A fictitious domain approach to the numerical solution of PDEs in stochastic domains. Numer. Math. 107(2), 257–293 (2007)

[4] Chakravarthy, S., Anderson, D.: Numerical conformal mapping. Math. Comput. 33(147), 953–969 (1979)

[5] Chauviere, C., Hesthaven, J.S., Lurati, L.: Computational modeling of uncertainty in time-domain electromagnetics. SIAM J. Sci. Comput. 28, 751–775 (2006)

[6] Cliffe, K.A., Giles, M.B., Scheichl, R., Teckentrup, A.L.: Multilevel Monte Carlo methods and applications to elliptic PDEs with random coefficients. Comput. Vis. Sci. 14(1), 3 (2011)

[7] Farhat, C., Geuzaine, P., Grandmont, C.: The discrete geometric conservation law and the nonlinear stability of ALE schemes for the solution of flow problems on moving grids. J. Comput. Phys. 174, 669–694 (2001)

[8] Hosder, S., Walters, R.W., Perez, R.: A non-intrusive polynomial chaos method for uncertainty propagation in CFD simulations. AIAA Paper 2006-891 (2006)

[9] Lin, G., Su, C.H., Karniadakis, G.E.: Stochastic modeling of random roughness in shock scattering problems: theory and simulations. Comput. Methods Appl. Mech. Eng. 197, 3420–3434 (2008)

[10] Lin, G., Tartakovsky, A.M., Tartakovsky, D.M.: Uncertainty quantification via random domain decomposition and probabilistic collocation on sparse grids. J. Comput. Phys. 229, 6995–7012 (2010)

[11] Liu, M., Gao, Z., Hesthaven, J.S.: Adaptive sparse grid algorithms with applications to electromagnetic scattering under uncertainty. Appl. Numer. Math. 61(1), 24–37 (2011)

[12] Mendes, M.A.A., Ray, S., Pereira, J.M.C., Pereira, J.C.F., Trimis, D.: Quantification of uncertainty propagation due to input parameters for simple heat transfer problems. Int. J. Therm. Sci. 60, 94–105 (2012)

[13] Nordström, J.: Conservative finite difference formulations, variable coefficients, energy estimates and artificial dissipation. J. Sci. Comput. 29(3), 375–404 (2006)

[14] Nordström, J., Eriksson, S., Eliasson, P.: Weak and strong wall boundary procedures and convergence to steady-state of the Navier–Stokes equations. J. Comput. Phys. 231(14), 4867–4884 (2012)

[15] Nordström, J., Svärd, M.: Well-posed boundary conditions for the Navier–Stokes equations. SIAM J. Numer. Anal. 43(3), 1231–1255 (2005)

[16] Nordström, J., Wahlsten, M.: Variance reduction through robust design of boundary conditions for stochastic hyperbolic systems of equations. J. Comput. Phys. 282, 1–22 (2015)

[17] Parussini, L., Pediroda, V., Poloni, C.: Prediction of geometric uncertainty effects on fluid dynamics by polynomial chaos and fictitious domain method. Comput. Fluids 39, 137–151 (2010)

[18] Patankar, S.V., Spalding, D.B.: A calculation procedure for heat, mass and momentum transfer in three-dimensional parabolic flows. Int. J. Heat Mass Transf. 15(10), 1787–1806 (1972)

[19] Pettersson, P., Iaccarino, G., Nordström, J.: Numerical analysis of the Burgers' equation in the presence of uncertainty. J. Comput. Phys. 228(22), 8394–8412 (2009)

[20] Pettersson, P., Nordström, J., Doostan, A.: A well-posed and stable stochastic Galerkin formulation of the incompressible Navier–Stokes equations with random data. J. Comput. Phys. 306, 92–116 (2016)

[21] Roache, P.J.: Code verification by the method of manufactured solutions. Trans. Am. Soc. Mech. Eng. J. Fluids Eng. 124(1), 4–10 (2002)

[22] Seban, R.A.: Skin-friction and heat-transfer characteristics of a laminar boundary layer on a cylinder in axial incompressible flow. J. Aeronaut. Sci. 18, 671–675 (1951)

[23] Svärd, M., Nordström, J.: On the order of accuracy for difference approximations of initial-boundary value problems. J. Comput. Phys. 218, 333–352 (2006)

[24] Svärd, M., Nordström, J.: Review of summation-by-parts schemes for initial-boundary-value problems. J. Comput. Phys. 268, 17–38 (2014)

[25] Xiu, D., Shen, J., et al.: An efficient spectral method for acoustic scattering from rough surfaces. Commun. Comput. Phys. 1, 54–72 (2007)

[26] Xiu, D., Tartakovsky, D.M.: Numerical methods for differential equations in random domains. SIAM J. Sci. Comput. 28, 1167–1185 (2006)

Acknowledgements

The UMRIDA project has received funding from the European Union’s Seventh Framework Programme for research, technological development and demonstration under Grant Agreement No. ACP3-GA-2013-605036.


Additional information

Communicated by Jan Hesthaven.

Appendices

Appendix A: Transformation

Using the product rule, (2.2) becomes

We use the Geometric Conservation Law (GCL), \((J \xi _x)_{\xi } + (J \eta _x)_{\eta } = 0\) and \((J \xi _y)_{\xi } + (J \eta _y)_{\eta } = 0\) (see [7] for more details), and note that \(J \bar{u}_x = J \xi _x \bar{u}_{\xi } + J \eta _x \bar{u}_{\eta }\) and \(J \bar{v}_y = J \xi _y \bar{v}_{\xi } + J \eta _y \bar{v}_{\eta }\) in (A.1), resulting in

Finally, the divergence relation \(\bar{u}_x + \bar{v}_y = 0\) is applied to (A.2).

Appendix B: Boundary conditions for the case \(\tilde{a} = \tilde{b} = 0\)

By considering (2.7) with \(\tilde{a} = \tilde{b} = 0\) we obtain

Due to symmetry, the matrices in (B.1) can be diagonalized as

To bound the RHS of (B.2) we impose the following boundary conditions

where

Imposing (B.4) weakly in (B.1) gives

We expand the boundary terms \(W_{E,W,N,S}^-\) to obtain

To cancel the indefinite terms in (B.5), we choose

Inserting the choices (B.6) into (B.5) gives

From (B.7), we note as in (2.14) that the solution is bounded by data.

Proposition B.1

The problem (2.3) with the boundary conditions (B.3) and the penalty coefficients in (B.6) is strongly well-posed.

Proof

Time integration (from 0 to T) of (B.7) results in

Similarly to Proposition 2.1, the solution is bounded by data.

Appendix C: Stability

The matrix \(I_2\) is the identity matrix of size \(2 \times 2\). Note that \(\overline{DI}\) is positive semi-definite and mimics its continuous counterpart in (2.5).

By rewriting (3.5) we find

As in the continuous case (see (2.15)), we cancel the indefinite terms by the choice

The use of (C.2) in equation (C.1) results in

By adding and subtracting \(g^\mathrm{T} (E_{0N} \otimes P_{\eta }) \tilde{A} g\) in (C.3) one obtains

and hence the RHS of (3.6) is bounded by data under the assumption (as in the continuous case) that \(\tilde{A} > 0\). Note the resemblance between (C.4) and the related continuous estimate (2.14) when only the west boundary is considered.

When considering all the boundaries, the following choices

give us a discrete energy estimate.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Wahlsten, M., Nordström, J. The effect of uncertain geometries on advection–diffusion of scalar quantities. Bit Numer Math 58, 509–529 (2018). https://doi.org/10.1007/s10543-017-0676-7


Keywords

- Incompressible flow

- Advection–diffusion

- Uncertainty quantification

- Uncertain geometry

- Boundary conditions

- Parabolic problems

- Variable coefficient

- Temperature field

- Heat transfer