Abstract

The fundamental objective of earthquake engineering is to protect lives and livelihoods through the reduction of seismic risk. Directly or indirectly, this generally requires quantification of the risk, for which quantification of the seismic hazard is required as a basic input. Over the last several decades, the practice of seismic hazard analysis has evolved enormously, firstly with the introduction of a rational framework for handling the apparent randomness in earthquake processes, which also enabled risk assessments to consider both the severity and likelihood of earthquake effects. The next major evolutionary step was the identification of epistemic uncertainties related to incomplete knowledge, and the formulation of frameworks for both their quantification and their incorporation into hazard assessments. Despite these advances in the practice of seismic hazard analysis, it is not uncommon for the acceptance of seismic hazard estimates to be hindered by invalid comparisons, resistance to new information that challenges prevailing views, and attachment to previous estimates of the hazard. The challenge of achieving impartial acceptance of seismic hazard and risk estimates becomes even more acute in the case of earthquakes attributed to human activities. A more rational evaluation of seismic hazard and risk due to induced earthquakes may be facilitated by adopting, with appropriate adaptations, the advances in risk quantification and risk mitigation developed for natural seismicity. While such practices may provide an impartial starting point for decision making regarding risk mitigation measures, the most promising avenue to achieve broad societal acceptance of the risks associated with induced earthquakes is through effective regulation, which needs to be transparent, independent, and informed by risk considerations based on both sound seismological science and reliable earthquake engineering.

1 Introduction

The study of earthquakes serves many noble purposes, starting with humankind’s need to understand the planet on which we live and the causes of these calamitous events that challenge the very idea of residing on terra firma. Throughout history, peoples living in seismically active regions have formulated explanations for earthquakes, attributing their occurrence to the actions of disgruntled deities, mythical creatures or, later on, the Aristotelian view that earthquakes are caused by winds trapped and heated within a cavernous Earth (which is echoed in Shakespeare’s Henry IV, Part 1). While it is easy for us to look on these worldviews as quaint or pitifully ignorant, our modern understanding of earthquakes and their origins is very recent (when my own father studied geology as part of his civil engineering education, the framework of plate tectonics for understanding geological events had yet to be formulated and published). The discipline of seismology has advanced enormously during the last century or so, and our understanding of earthquakes continues to grow. The study of seismicity was instrumental in understanding plate tectonics and the analysis of seismic waves recorded on sensitive instruments all over the world has revealed, like global X-rays, the interior structure of our planet. As well as such advances in science, the development of seismology has also brought very tangible societal benefits, one of the most laudable being to distinguish the signals generated by underground tests of nuclear weapons from those generated by earthquakes, which made a comprehensive test ban treaty possible (Bolt 1976).

The most compelling reason to study earthquakes, however, must now be to mitigate their devastating impacts on people and on societies. A great deal of effort has been invested in developing predictions of earthquakes, since with sufficient prior warning, evacuations could prevent loss of life and injury. There have been some remarkable successes, most notably the prediction of the February 1975 Haicheng earthquake in China (Adams 1976); however, the following year, the Tangshan earthquake on 28 July occurred without warning and took the lives of several hundreds of thousands of people. More recently, there has been a focus on earthquake early warning systems (e.g., Gasparini et al. 2007), which can provide between seconds and tens of seconds of advance warning that can allow life-saving actions to be taken. However, whether strong ground shaking is predicted a few seconds or even a few days ahead of time, the built environment will still be exposed to the effects of the earthquake. Consequently, the most effective and reliable approach to protecting individuals and societies from the impact of earthquakes is through seismically resistant design and construction.

To be cost effective in the face of limited resources, earthquake-resistant design first requires quantification of the expected levels of loading due to possible future earthquakes. Although not always made explicit, to demonstrate that the design is effective in providing the target levels of safety requires the analysis of the consequences of potential earthquake scenarios, for which the expected shaking levels are also required. The practice of assessing earthquake actions has progressed enormously over the last half century, especially in terms of identifying and quantifying uncertainties related to the location, magnitude, and frequency of future earthquakes, and to the levels of ground shaking that these will generate at a given location. The benefit of incorporating these uncertainties into the estimates of ground shaking levels is that the uncertainty can be taken into account in the definition of the design accelerations. This is not to say that seismic safety relies entirely on estimating the ‘correct’ level of seismic loading: additional margin is included in structural design, as has been clearly demonstrated by the safe performance of three different nuclear power plants in recent years. In July 2007, the magnitude 6.6 Niigata Chūetsu earthquake in western Japan occurred very close to the Kashiwazaki-Kariwa nuclear power plant (NPP). At all seven reactor units, recorded accelerations exceeded the design motions (Fig. 1) without leading to any loss of radioactive containment. The magnitude 9.0 Tōhoku earthquake in March 2011 on the opposite coast of Japan generated motions at the Fukushima Daiichi NPP that also exceeded the design accelerations (Grant et al. 2017); the ensuing tsunami led to a severe nuclear accident at the plant, but the plant withstood the ground shaking without distress. A few months later, motions recorded at the North Anna NPP due to the M 5.8 Mineral, Virginia, USA earthquake also exceeded design acceleration levels without causing damage (Graizer et al. 2013).

Seismic safety in critical structures such as NPPs depends therefore on both the margins of resistance above the nominal design accelerations and the degree to which the estimates of the site demand, to which the design motions are referenced, reflect the uncertainty in their assessment. For a nuclear regulator, capture of uncertainty in the assessment of seismic shaking levels consequently provides assurance regarding the provision of adequate safety. However, the inclusion of large degrees of uncertainty can be viewed quite differently by other groups. For example, since inclusion of uncertainty generally leads to higher estimates of the accelerations (in theory broader uncertainty bands could lead to lower accelerations, but in practice they tend to push estimates in the opposite direction), owners and operators of these facilities may be averse to the inclusion of large intervals of uncertainty, especially if these are viewed as unnecessarily wide. For the public, capture of broad ranges of uncertainty in the estimates of earthquake hazard could be interpreted either way: on the one hand, it could be viewed positively as nuclear safety being enhanced through consideration of events that are stronger than what has been previously observed, whereas on the other hand, it could be seen as evidence that the science is too unsure to inform rational decision making and, in the face of such unknowns, safety cannot be guaranteed. The challenge therefore is two-fold: to develop impartial quantification of earthquake hazard and risk, and for these estimates to then be objectively accepted as the baseline for decision making regarding the management of the risk. This article discusses important advances in the estimation of earthquake hazard, and also explores, with concrete examples from practice, why impartial hazard estimates are sometimes met with stern, or even belligerent, resistance.

In recent years, earthquakes related to human activities, generally referred to as induced seismicity, have attracted a great deal of scientific and societal attention. This has been driven primarily by more frequent occurrence of earthquakes of anthropogenic origin, a prime example being the remarkable increase in seismicity in the states of Oklahoma, Kansas, and Texas, which has been related to hydrocarbon production (Fig. 2). However, the profile of induced seismicity in public debate, the media, and government policy has also been heightened by the controversy related to some of the industrial activities that have been shown to cause induced earthquakes, particularly hydraulic fracturing or fracking.

Increase in seismicity in the Central and Eastern United States from 2009 to 2015 related to hydrocarbon production (Rubinstein and Babaie Mahani 2015)

The seismic hazard (shaking levels) and risk (damage) due to induced seismicity can be estimated using the procedures that have been developed for natural seismicity, with appropriate adjustments for the distinct characteristics of induced earthquakes. The frameworks that have been developed for estimating seismic hazard due to natural earthquakes should be taken advantage of in the field of induced seismicity given that the controversy surrounding these cases often makes it imperative to correctly identify the degrees of uncertainty. Equally important, however, is to bring into the quantification of induced seismic hazard an engineering perspective that relates the hazard to risk. I make the case in this article that to date the assessment of induced seismic hazard has often not quantified uncertainty well and, perhaps more importantly, has failed to relate the hazard to a rational quantification of risk. These shortcomings are particularly important because the challenges of the hazard estimates being accepted by different groups are often particularly acute, much more so than is the case of natural seismicity. A key question that the article sets out to address is whether it is possible for robust estimates of seismic hazard associated with potential induced earthquakes to be adopted at face value. This leads to the question of whether the hazard estimates can be used as a starting point in discussions surrounding the rational management of the associated risk and its balance with the benefits of the industrial activity with the potential to cause seismic activity. This article discusses a number of case histories in which such objectivity was glaringly absent, and also explores options that might facilitate the impartial acceptance of estimates of induced seismic hazard.

The focus of this paper, as its title indicates, is to promote objectivity in the assessment of seismic hazard and risk for both natural and induced earthquakes. Assessment therefore refers to two different processes, reflecting the focus of this article on the balance of these two aspects noted above: (1) the estimation of possible or expected levels of earthquake shaking; and (2) the interpretation or evaluation of these estimates as a reliable basis for risk mitigation. Despite this deliberate ambiguity in the use of the word assessment, clear and consistent terminology is actually of great importance, for which reason the article starts with brief definitions of the key concepts embedded in the title: the meaning of hazard and risk (Sect. 1.1), and then the nature of uncertainty (Sect. 1.2). This introduction then concludes with a brief overview of the paper (Sect. 1.3).

1.1 Seismic hazard and seismic risk

Seismic risk refers to undesirable consequences of earthquakes, which include death, injury, physical damage to buildings and infrastructure, interruption of business and social activities, and the direct and indirect costs associated with such outcomes. In a generic sense, risk can be defined as the possibility of such consequences occurring at a given location due to potential future earthquakes. In a more formal probabilistic framework, seismic risk is quantified by both the severity of a given metric of loss and the annual frequency or probability of that level of loss being exceeded.

Seismic hazard refers to the potentially damaging effects of earthquakes, the primary example being strong ground shaking (the full range of earthquake effects is discussed in Sect. 2). Again, in a generic sense, seismic hazard can be thought of as the possibility of strong shaking—measured, for example, by a specific level of peak ground acceleration (PGA)—occurring at a given location. In a probabilistic framework, the hazard is the probability or annual frequency of exceedance of different levels of the chosen measure of the vibratory ground motion.

Seismic hazard does not automatically create seismic risk: an earthquake in an entirely unpopulated region or in the middle of the ocean (remote from any submarine cables) will not constitute a risk, except potentially to any passing marine vessel (Ambraseys 1985). Risk only arises when there are buildings or infrastructure (such as transport networks, ports and harbours, energy generation and distribution systems, dams, pipelines, etc.) present at the locations affected by the shaking. The elements of the built environment that could be affected by earthquakes are referred to collectively as the exposure.

For a given element of exposure, the seismic risk is controlled in the first instance by the degree of damage that could be inflicted by an earthquake. This depends on the strength of the possible ground shaking at the site (the hazard) and how much damage the structure is likely to suffer under different levels of ground shaking, which is referred to as the fragility. Damage is generally defined by discrete damage states, such as those specified in the European Macroseismic Scale (Grünthal 1998): DS1 is negligible to slight (slight non-structural damage, no structural damage), DS2 is moderate (slight structural damage, moderate non-structural damage), DS3 is substantial to heavy (moderate structural damage, heavy non-structural damage), DS4 is very heavy (heavy structural damage, very heavy non-structural damage), and DS5 is extensive (very heavy structural damage or collapse). An example set of fragility functions for a given building type is shown in Fig. 3.

Fragility curves for a specific type of building, indicating the probability of exceeding different damage states as a function of spectral acceleration at a period of 2 s (Edwards et al. 2021)
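To make the notion of fragility concrete, the following minimal sketch evaluates a set of fragility curves for the five damage states. It assumes a lognormal functional form, which is a common choice but is not stated in the text above, and the medians, the dispersion `BETA`, and all function names are hypothetical values chosen purely for illustration; they are not taken from Edwards et al. (2021) or any other study.

```python
import math

def lognormal_cdf(x, median, beta):
    """P(X <= x) for a lognormal variable with the given median and log-standard deviation."""
    if x <= 0.0:
        return 0.0
    z = (math.log(x) - math.log(median)) / beta
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

# Hypothetical fragility parameters: median spectral acceleration (g) per damage state
# and a single dispersion shared by all states (so the curves cannot cross).
STATES = ["DS1", "DS2", "DS3", "DS4", "DS5"]
FRAGILITY_MEDIANS_G = {"DS1": 0.10, "DS2": 0.20, "DS3": 0.35, "DS4": 0.55, "DS5": 0.80}
BETA = 0.5

def prob_exceed(sa_g):
    """Probability of reaching or exceeding each damage state at shaking level sa_g."""
    return {ds: lognormal_cdf(sa_g, FRAGILITY_MEDIANS_G[ds], BETA) for ds in STATES}

def prob_in_state(sa_g):
    """Probability of ending up in exactly each damage state (DS0 means no damage)."""
    exceed = prob_exceed(sa_g)
    probs = {"DS0": 1.0 - exceed["DS1"]}
    for i, ds in enumerate(STATES):
        next_exceed = exceed[STATES[i + 1]] if i + 1 < len(STATES) else 0.0
        probs[ds] = exceed[ds] - next_exceed
    return probs

if __name__ == "__main__":
    for sa in (0.05, 0.2, 0.5):
        print(sa, {k: round(v, 3) for k, v in prob_in_state(sa).items()})
```

The difference between successive exceedance curves gives the probability of being in each individual damage state, which is the quantity that a consequence function would then act upon.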

Risk is generally quantified by metrics that more readily communicate the impact than the degree of structural and non-structural damage, such as the number of injured inhabitants or the direct costs of the damage. To translate the physical damage into other metrics requires a consequence function. Figure 4 shows examples of such functions that convert different damage states to costs, defined by damage ratios or cost ratios that are simply the cost of repairing the damage normalised by the cost of replacing the building. In some risk analyses, the fragility and consequence functions are merged so that risk metrics such as cost ratios or loss of life are predicted directly as a function of the ground shaking level; such functions are referred to as vulnerability curves. The choice to use fragility or vulnerability curves depends on the purpose of the risk study: to design structural strengthening schemes, insight is required regarding the expected physical damage, whereas for insurance purposes, the expected costs of earthquake damage may suffice.

Examples of consequence functions that translate damage states to damage or cost ratios, from (a) Italy, (b) Greece, (c) Turkey and (d) California (Silva et al. 2015)
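The merging of fragility and consequence functions into a vulnerability curve can be sketched as follows. The example assumes lognormal fragility curves and a single damage ratio per damage state; every numerical value (medians, dispersion, damage ratios) is hypothetical and is not drawn from Silva et al. (2015) or any of the models cited above.

```python
import math

def lognormal_cdf(x, median, beta):
    """P(X <= x) for a lognormal variable with the given median and log-standard deviation."""
    if x <= 0.0:
        return 0.0
    z = (math.log(x) - math.log(median)) / beta
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

STATES = ["DS1", "DS2", "DS3", "DS4", "DS5"]
# Hypothetical fragility medians (g) and common dispersion.
MEDIANS_G = {"DS1": 0.10, "DS2": 0.20, "DS3": 0.35, "DS4": 0.55, "DS5": 0.80}
BETA = 0.5
# Hypothetical consequence function: repair cost divided by replacement cost.
DAMAGE_RATIO = {"DS1": 0.02, "DS2": 0.10, "DS3": 0.40, "DS4": 0.80, "DS5": 1.00}

def mean_damage_ratio(sa_g):
    """Vulnerability curve: expected cost ratio as a direct function of shaking level."""
    exceed = [lognormal_cdf(sa_g, MEDIANS_G[ds], BETA) for ds in STATES] + [0.0]
    # Probability of being in each state times the cost ratio attached to that state.
    return sum((exceed[i] - exceed[i + 1]) * DAMAGE_RATIO[ds] for i, ds in enumerate(STATES))

if __name__ == "__main__":
    for sa in (0.05, 0.1, 0.2, 0.4, 0.8, 1.6):
        print(f"Sa = {sa:4.2f} g -> mean damage ratio = {mean_damage_ratio(sa):.3f}")
```

The resulting function no longer reports physical damage states, which is why a vulnerability curve may suffice for insurance purposes but not for the design of strengthening schemes.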

Referring back to the earlier discussion, earthquake engineering for natural (or tectonic) seismicity generally seeks to reduce seismic risk to acceptable levels by first quantifying the hazard and then providing sufficient structural resistance to reduce the fragility (i.e., move the curves to the right, as shown in Fig. 5) such that the convolution of hazard and fragility will result in tolerable levels of damage. This does not necessarily mean no damage since designing all structures to resist all levels of earthquake loading without structural damage would be prohibitively expensive. The structural performance targets will generally be related to the consequences of structural damage or failure: single-family dwellings are designed to avoid collapse and preserve life safety; hospitals and other emergency services to avoid damage that would interrupt their operation; and nuclear power plants to avoid any structural damage that could jeopardise the containment of radioactivity. Earthquake engineering in this context is a collaboration between Earth scientists (engineering seismologists) who quantify the hazard and earthquake engineers (both structural and geotechnical) who then provide the required levels of seismic resistance in design. Until now, the way that the risk due to induced seismicity has been managed is very different and has been largely driven by Earth science: implicit assumptions are made regarding the exposure and its fragility, and the risk is then mitigated through schemes to reduce the hazard at the location of the buildings, either by relocating the operations (i.e., changing the exposure) or by controlling the induced seismicity. These two contrasting approaches are illustrated schematically in Fig. 6.

Illustration of the effect of seismic strengthening measures on fragility curves for a specific building type and damage state (Bommer et al. 2015a)

Schematic illustration of the classical approaches for mitigating seismic risk due to natural and induced earthquakes by controlling different elements of the risk; in practice, explicit consideration of the exposure and its fragility has often been absent in the management of induced seismicity, replaced instead by vague notions of what levels of hazard are acceptable
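The convolution of hazard and fragility mentioned above can be illustrated numerically. The sketch below assumes a power-law hazard curve and a lognormal fragility curve, both of which are simplifying assumptions with hypothetical parameters (`k0`, `k`, the medians and `beta`), and it integrates the fragility against the decrements of the hazard curve to obtain an annual rate of reaching the damage state. Strengthening is represented simply by a larger median capacity, i.e., a fragility curve shifted to the right.

```python
import math

def lognormal_cdf(x, median, beta):
    """P(X <= x) for a lognormal variable with the given median and log-standard deviation."""
    if x <= 0.0:
        return 0.0
    z = (math.log(x) - math.log(median)) / beta
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def hazard_rate(sa_g, k0=1e-4, k=2.5):
    """Hypothetical hazard curve: annual frequency of exceedance of shaking level sa_g."""
    return k0 * sa_g ** (-k)

def annual_damage_rate(median_g, beta=0.5, sa_min=0.01, sa_max=5.0, n=2000):
    """Sum over shaking bins of P(damage | shaking) times the annual rate of that shaking."""
    log_min, log_max = math.log(sa_min), math.log(sa_max)
    step = (log_max - log_min) / n
    rate = 0.0
    for i in range(n):
        lo = math.exp(log_min + i * step)
        hi = math.exp(log_min + (i + 1) * step)
        mid = math.sqrt(lo * hi)  # geometric mid-point of the bin
        # Annual rate of shaking falling inside the bin is the drop in the hazard curve.
        rate += lognormal_cdf(mid, median_g, beta) * (hazard_rate(lo) - hazard_rate(hi))
    return rate

if __name__ == "__main__":
    existing = annual_damage_rate(median_g=0.3)      # hypothetical as-built capacity
    strengthened = annual_damage_rate(median_g=0.6)  # hypothetical strengthened capacity
    print(f"annual rate, existing:     {existing:.2e}")
    print(f"annual rate, strengthened: {strengthened:.2e}")
```

The same integral responds equally to a reduction of the hazard curve, which is the route that has generally been taken for induced seismicity, so the sketch can be used to compare both mitigation strategies on a common risk metric.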

1.2 Randomness and uncertainty

The assessment of earthquake hazard and risk can never be an exact science. Tectonic earthquakes are the result of geological processes that unfold over millennia, yet we have detailed observations covering just a few decades. The first seismographs came into operation around the turn of the twentieth century, but good global coverage by more sensitive instruments came many decades later. This has obvious implications for models of future earthquake activity that are based on extrapolations from observations of the past. Historical studies can extend the earthquake record back much further in time in some regions, albeit with reduced reliability regarding the characteristics of the events, and geological studies can extend the record for larger earthquakes over much longer intervals at specific locations. The first recordings of strong ground shaking were obtained in California in the early 1930s, but networks of similar instruments were installed much later in other parts of the world—the first European strong-motion recordings were registered more than three decades later. Even in those regions where such recordings are now abundant, different researchers derive models that yield different predictions. Consequently, seismic hazard analysis is invariably conducted with appreciable levels of uncertainty, and the same applies to risk analysis since there are uncertainties in every element of the model.

Faced with these uncertainties, there are two challenges for earthquake hazard and risk assessment: on the one hand, to gather data and to derive models that can reduce (or eliminate) the uncertainty, and, on the other hand, to ensure that the remaining uncertainty is identified, quantified, and incorporated into the hazard and risk analyses. In this regard, it is very helpful to distinguish those uncertainties that can, at least in theory, be reduced through the acquisition of new information, and those uncertainties that are effectively irreducible. The former are referred to as epistemic uncertainties, coming from the Greek word ἐπιστήμη which literally means science or knowledge, as they are related to our incomplete knowledge. The term uncertainty traditionally referred to this type of unknown, but the adjective epistemic is now generally applied to avoid ambiguity since the term uncertainty has often also been applied to randomness. Randomness, now usually referred to as aleatory variability (from alea, Latin for dice), is thought of as inherent to the process or phenomenon and, consequently, irreducible. In reality, it is more accurate to refer to apparent randomness since it is always characterised by the distribution of data points relative to a specific model (e.g., Strasser et al. 2009; Stafford 2015), and consequently can be reduced by developing models that include the dependence of the predicted parameter on other variables. Consider, for example, a model that predicts ground accelerations as a function of earthquake size (magnitude) and the distance of the recording site from the source of the earthquake. The residuals of the recorded accelerations relative to the predictions define the aleatory variability in the predictions, but this variability will be appreciably reduced if the nature of the surface geology at the recording sites is taken into account, even if this is just a simple distinction between rock and soil sites (Boore 2004). 
In effect, such a modification to the model isolates an epistemic uncertainty—the nature of the recording site and its influence on the ground acceleration—and thus removes it from the apparent randomness; this, in turn, creates the necessity, when applying the model, to obtain additional information, namely the nature of the surface geology at the target site.
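The reduction of apparent randomness through the addition of a site term can be demonstrated with synthetic data. In the sketch below, the ground-motion model, its coefficients, the rock and soil offsets, and the scatter of 0.5 natural-log units are all hypothetical, and the "recordings" are simulated rather than real; the point is only that the standard deviation of the residuals shrinks once the mean residual of each site class is removed.

```python
import math
import random
import statistics

random.seed(0)  # fixed seed so the synthetic data set is reproducible

def predicted_ln_pga(magnitude, distance_km):
    """Hypothetical model in terms of magnitude and distance only (no site term)."""
    return -3.0 + 1.0 * magnitude - 1.3 * math.log(distance_km + 10.0)

# Hypothetical systematic offsets of rock and soil sites from the average prediction.
TRUE_SITE_TERM = {"rock": -0.3, "soil": 0.3}

# Simulate recordings: model prediction + site effect + remaining random scatter.
records = []
for _ in range(2000):
    m = random.uniform(4.0, 7.5)
    r = random.uniform(1.0, 100.0)
    site = random.choice(["rock", "soil"])
    obs = predicted_ln_pga(m, r) + TRUE_SITE_TERM[site] + random.gauss(0.0, 0.5)
    records.append((m, r, site, obs))

# Residuals relative to the model that ignores surface geology.
residuals = [(site, obs - predicted_ln_pga(m, r)) for m, r, site, obs in records]
sigma_no_site = statistics.pstdev([res for _, res in residuals])

# Adding a site term amounts to removing the mean residual of each site class.
site_mean = {
    s: statistics.mean([res for site, res in residuals if site == s])
    for s in TRUE_SITE_TERM
}
sigma_with_site = statistics.pstdev([res - site_mean[site] for site, res in residuals])

if __name__ == "__main__":
    print(f"sigma without site term: {sigma_no_site:.3f}")
    print(f"sigma with site term:    {sigma_with_site:.3f}")
```

Applying the refined model then requires the site class at the target location to be known, which is the additional information burden described above.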

Aleatory variability is generally measured from residuals of data relative to the selected model and is characterised by a statistical distribution. The quantification of epistemic uncertainty requires expert judgement (as discussed in Sect. 6) and is represented in the form of alternative models or distributions of values for model parameters. As is explained in Sect. 3, aleatory variability and epistemic uncertainty are handled differently in seismic hazard analysis and also influence the results in quite distinct ways. What is indispensable is that both types be recognised, quantified and incorporated into the estimation of earthquake hazard and risk.

1.3 Overview of the paper

Following this Introduction, the paper is structured in two parts that deal with natural earthquakes and induced seismicity, with the focus in both parts being the quest for objectivity in the assessment of their associated hazard.

Part I addresses natural earthquakes of tectonic origin, starting with a brief overview of the hazards associated with earthquakes (Sect. 2) followed by an overview of seismic hazard assessment, explaining how it incorporates aleatory variability in earthquake processes, as well as highlighting how hazard is always defined, explicitly or implicitly, in the context of risk (Sect. 3). Section 4 then discusses features of good practice in seismic hazard analysis that can be expected to facilitate acceptance of the result, emphasising especially the importance of capturing epistemic uncertainties. Section 5 discusses the construction of input models for seismic hazard analysis, highlighting recent developments that facilitate the representation of epistemic uncertainty in these inputs. Section 6 then discusses the role of expert judgement in the characterisation of epistemic uncertainty and the evolution of processes to organise multiple expert assessments for this objective. Part I concludes with a discussion of cases in which the outcomes of seismic hazard assessments have met with opposition (Sect. 7), illustrating that undertaking an impartial and robust hazard analysis does not always mean that the results will be treated objectively.

Part II addresses induced seismicity, for which objectivity in hazard and risk assessments can be far more elusive. The discussion begins with a brief overview of induced seismicity and some basic definitions, followed by a discussion of how induced earthquakes can be distinguished from natural earthquakes (Sect. 8), including some examples of when making this distinction has become controversial. Section 9 discusses seismic hazard and risk analysis for induced earthquakes through adaptation of the approaches that have been developed for natural seismicity, including the characterisation of uncertainties. Section 10 then discusses the mitigation of induced seismic risk, explaining the use of traffic light protocols (TLP) as the primary tool used in the scheme illustrated in Fig. 6, but also making the case for induced seismic risk to be managed in the same way as seismic risk due to tectonic earthquakes. Section 11 addresses the fact that for induced seismicity, there is often concern and focus on earthquakes of magnitudes that would generally be given little attention were they of natural origin, by reviewing the smallest tectonic earthquakes that have been known to cause damage. This then leads into Sect. 12 and four case histories of induced earthquakes that did have far-reaching consequences, despite their small magnitude. In every case it is shown that the consequences of the induced seismicity were not driven by physical damage caused by the ground shaking but by other non-technical factors, each one illustrating a failure to objectively quantify and rationally manage the perceived seismic risk. Part II closes with a discussion of the implications of the issues and case histories presented in terms of achieving objective and rational responses to earthquake risk arising from induced seismicity. A number of ideas are put forward that could contribute to a more balanced and objective response to induced earthquakes.

The paper then closes with a brief Discussion and Conclusions section that brings together the key messages from both Part I and Part II.

Finally, a few words are in order regarding the audience to which the paper is addressed. The article is addressed in the first instance to seismologists and engineers, since both of these disciplines are vital to the effective mitigation of earthquake risk (and, I shall argue, the contribution from earthquake engineering to confronting the challenges of induced seismicity has been largely lacking to date). However, if both impartial quantification of earthquake hazard and risk, and objective evaluation of hazard and risk estimates in the formulation of policy are to be achieved, other players need to be involved in the discussions, particularly regulators and operators from the energy sector, who may not have expertise in the field of Earth sciences or earthquake engineering. Consequently, the paper begins with a presentation of some fundamentals so that it can be read as a standalone document by non-specialists, as well as the usual readership of the Bulletin of Earthquake Engineering. Readers in the latter category may therefore wish to jump over Sects. 2 and 3 (and may feel that they should have been given a similar warning regarding Sect. 1.1 and 1.2).

Part I: Natural Seismicity

2 Earthquakes and seismic hazards

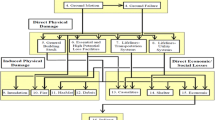

An earthquake is the abrupt rupture of a geological fault, initiating at a point referred to as the focus or hypocentre, the projection of which on the Earth’s surface is the epicentre. The displacement of the fault relaxes the surrounding crustal rocks, releasing accumulated strain energy that radiates from the fault rupture in the form of seismic waves whose passage causes ground shaking. Figure 7 illustrates the different hazards that can result from the occurrence of an earthquake.

Earthquake processes and their interaction with the natural environment (ellipses) and the resulting seismic hazard (rectangles); adapted from Bommer and Boore (2005)

2.1 Fault ruptures

As illustrated in Fig. 7, there are two important hazards directly associated with the fault rupture that is the source of the earthquake: surface fault rupture and tsunami.

2.1.1 Surface rupture

The dimensions of fault ruptures grow exponentially with earthquake magnitude, as does the slip on the fault that accompanies the rupture (e.g., Wells and Coppersmith 1994; Strasser et al. 2010; Leonard 2014; Skarlatoudis et al. 2015; Thingbaijam et al. 2017). Similarly, the probability of the rupture reaching the ground surface—at which point it can pose a very serious threat to any structure that straddles the fault trace—also grows with magnitude (e.g., Youngs et al. 2003). The sense of the fault displacement is controlled by the fault geometry and the tectonic stress field in the region: predominantly vertical movement is dip-slip and horizontal motion is strike-slip. Vertical motion is referred to as normal in regions of tectonic extension (Fig. 8) and reverse in regions of compression (Fig. 9).

Normal-faulting scarp created by the 2006 Machaze M 7 earthquake in Mozambique, which occurred towards the southern end of the East African Rift (Fenton and Bommer 2006). The boy is standing on the hanging wall (i.e., the fault dips under his feet) that has moved downwards in the earthquake

Reverse-faulting scarp in Armenia following the Spitak earthquake of 1988, in the Caucasus mountains (Bommer and Ambraseys 1989). The three people to the left of the figure are on the foot wall (the fault dips away from them) and the hanging wall has moved upwards

The risk objective in the assessment of surface rupture hazard is generally to avoid locations where this hazard could manifest (in other words, to mitigate the risk by changing the exposure). For safety-critical structures such as nuclear power plants (NPPs), the presence of a fault capable of generating surface rupture would normally be an exclusionary criterion that would disqualify the site. Meehan (1984) relates the story of several potential NPP sites in California that were eventually abandoned when excavations for their foundations revealed the presence of active geological faults. For extended lifeline infrastructure, however, such as roads, bridges, and pipelines, it is often impossible to avoid crossing active fault traces and in such circumstances the focus moves to quantifying the sense and amplitude of potential surface slip, and to allowing for this in the design. An outstanding example of successful structural design against surface fault rupture is the Trans-Alaskan Oil Pipeline, a story brilliantly recounted by the late Lloyd Cluff in his Mallet-Milne lecture of 2011. The pipeline crosses the Denali fault and was designed to accommodate up to 6 m of horizontal displacement and 1.5 m of vertical offset. The design was tested in November 2002 by an M 7.9 earthquake associated with a 336-km rupture on the Denali fault, with a maximum slip of 8.8 m. In the area where the pipeline crosses the fault trace, it was freely supported on wide sleepers to allow it to slip and thus avoid the compressional forces that would have been induced by the right-lateral strike-slip motion (Fig. 10). No damage occurred and not a drop of oil was spilt, so a major environmental disaster was avoided: the pipeline transports 2.2 million barrels of crude oil a day. Failure of the pipeline would also have had severe economic consequences since at the time it transported 17% of US crude oil supply and accounted for 80% of Alaska’s economy.

There are also numerous examples of earth dams built across fault traces (the favourable topography allowing the creation of a reservoir often being the consequence of the faults) and designed to accommodate future fault offset (e.g., Allen and Cluff 2000; Mejía 2013). There have also been some spectacular failures caused by fault rupture, such as the Shih-Kang dam that was destroyed by the fault rupture associated with the 1999 Chi-Chi earthquake in Taiwan (e.g., Faccioli et al. 2006).

Accommodating vertical offset associated with dip-slip faults can be even more challenging, but innovative engineering solutions can be found. Figure 11, for example, shows a detail of a high-pressure gas pipeline in Greece at a location where it crosses the trace of a dip-slip fault, and design measures have been added to allow the pipeline to accommodate potential fault slip without compromising the integrity of the conduit.

Construction of high pressure gas pipeline from Megara to Corinth, Greece: where the pipeline crosses active faults, it is encased to prevent damage due to fault slip (Image courtesy of Professor George Bouckovalas, NTUA http://users.ntua.gr/gbouck/proj-photos/megara.html)

2.1.2 Tsunami

When a surface fault rupture occurs in the seabed, and especially for a reverse or thrust (a reverse fault of shallow dip) rupture typical of subduction zones, the displacement of a large body of water above the fault can create a gravity wave of small amplitude and great wavelength that travels across the ocean surface at a velocity equal to \(\sqrt{gd}\), where g is the acceleration due to gravity (9.81 m/s²) and d is the depth of the ocean. As the wave approaches the shore, the speed of the wave reduces with the water depth and the wave height grows to maintain the momentum, creating what is called a tsunami, which is a Japanese word meaning ‘harbour wave’. Tsunamis can be the most destructive of all earthquake effects, as was seen in the 2004 Boxing Day M 9.2 earthquake that originated off the coast of Indonesia (e.g., Fujii and Satake 2007) and caused loss of life as far away as East Africa (Obura 2006), and the tsunami that followed the 2011 Tōhoku M 9.0 earthquake in Japan (e.g., Saito et al. 2011), which caused the loss of 20,000 lives. As indicated in Fig. 7, tsunamis can also be generated by submarine landslides (e.g., Ward 2001; Harbitz et al. 2006; Gusman et al. 2019), an outstanding example of which was the Storegga slide in the North Sea, assumed to have been triggered by an earthquake, that generated a tsunami that inundated areas along the east coast of Scotland (e.g., Dawson et al. 1988).
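The dependence of the wave speed on water depth can be illustrated with a minimal sketch of the \(\sqrt{gd}\) relation quoted above. The function name and the three water depths are assumptions chosen for illustration and do not come from any specific case study.

```python
import math

G = 9.81  # acceleration due to gravity, m/s^2


def tsunami_speed(depth_m: float) -> float:
    """Long-wave speed sqrt(g * d) in m/s for a water depth d in metres."""
    if depth_m < 0:
        raise ValueError("depth must be non-negative")
    return math.sqrt(G * depth_m)


# Illustrative depths only: open ocean, continental shelf, near shore.
for depth in (4000.0, 100.0, 10.0):
    speed = tsunami_speed(depth)
    print(f"d = {depth:6.0f} m -> {speed:6.1f} m/s ({speed * 3.6:5.0f} km/h)")
```

The loop shows the slowing of the wave as it moves into shallower water, which is the mechanism behind the growth in wave height near the shore.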

The estimation of tsunami hazard generally focuses on potential wave heights and run-up, the latter referring to the highest elevation on land to which the water rises. Such parameters can inform design or preventative measures, including elevated platforms and evacuation routes. Insufficient sea wall height at the Fukushima Daiichi NPP in Japan led to inundation of the plant due to the tsunami that followed the Tōhoku earthquake, leading to a severe nuclear accident despite the fact that the plant had survived the preceding ground shaking without serious damage. There can be significant scope for reducing loss of life due to tsunami through early warning systems that alert coastal populations to an impending wave arrival following a major earthquake (e.g., Selva et al. 2021); because the lead times for tsunami can be much longer than those available for ground shaking, such systems can be of great benefit.

2.2 Ground shaking

On a global scale, most earthquake destruction is caused by the strong shaking of the ground associated with the passage of seismic waves, and this shaking is also the trigger for the collateral geotechnical hazards discussed in Sect. 2.3. The focus of most seismic hazard assessments is to quantify possible levels of ground shaking, which provides the basis for earthquake-resistant structural design.

2.2.1 Intensity

Macroseismic intensity is a parameter that reflects the strength of the ground shaking at a given location, inferred from observations rather than instrumental measurements. There are several scales of intensity, the most widely used defining 12 degrees of intensity (Musson et al. 2010), such as the European Macroseismic Scale, or EMS (Grünthal 1998). For the lower degrees of intensity, the indicators are primarily related to the response of humans and to the movement of objects during the earthquakes; as the intensity increases, the indicators are increasingly related to the extent of damage in buildings of different strength. The intensity assigned to a specific location should be based on the modal observation and is often referred to as an intensity data point (IDP). Contours can be drawn around IDPs and these are called isoseismals, which enclose areas of equal intensity. The intensity is generally written as a Roman numeral, which reinforces the notion that it is an index and should be treated as an integer value. An isoseismal map, such as the one shown in Fig. 12, conveys both the maximum strength of the earthquake shaking and the area over which the earthquake was felt, and provides a very useful overview of an earthquake. Intensity can be very useful for a number of purposes, including the inference of source location and size for earthquakes that occurred prior to the dawn of instrumental seismology (e.g., Strasser et al. 2015). However, for the purposes of engineering design to mitigate seismic risk, intensity is of little use and recourse is made to instrumental recordings of the strong ground shaking.

Isoseismal map for an earthquake in South Africa (Midzi et al. 2013). The IDPs for individual locations are shown in Arabic numerals

2.2.2 Accelerograms and ground-motion parameters

The development and installation of instruments capable of recording the strong ground shaking caused by earthquakes was a very significant step in the evolution of earthquake engineering since it allowed the detailed characterisation of these motions as input to structural analysis and design. The instruments are called accelerographs since they generate a record of the ground acceleration against time, which is known as an accelerogram. Many different parameters are used to characterise accelerograms, each of which captures a different feature of the shaking. The most widely used parameter is the peak ground acceleration, PGA, which is simply the largest absolute amplitude on the accelerogram. Integration of the accelerogram over time generates the velocity time-history, from which the peak ground velocity, PGV, is measured in the same way (Fig. 13). In many ways, PGV is a superior indicator of the strength of the shaking to PGA (Bommer and Alarcón 2006).

The acceleration and velocity time-series from the recording at the CIG station of the M 5.7 San Salvador, El Salvador, earthquake of October 1986. The upper plot shows the accumulation of Arias intensity and the significant duration (of 0.96 s) based on the interval between obtaining 5% and 75% of the total Arias intensity

Another indicator of the strength of the shaking is the Arias intensity, which is proportional to the integral of the acceleration squared over time (Fig. 13). Arias intensity has been found to be a good indicator of the capacity of ground shaking to trigger instability in both natural and man-made slopes (Jibson and Keefer 1993; Harper and Wilson 1995; Armstrong et al. 2021).

The duration of shaking or number of cycles of motion can also be important parameters to characterise the shaking. Numerous definitions have been proposed for the measurement of both of these parameters (Bommer and Martinez-Pereira 1999; Hancock and Bommer 2005). The most commonly used measure of duration is called the significant duration and it is based on the accumulation of Arias intensity, defined as the time elapsed between reaching 5% and 75% or 95% of the total. Figure 13 illustrates this measure of duration.
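The parameters introduced so far (PGA, PGV, Arias intensity and significant duration) can all be computed directly from a digitised accelerogram. The following is a minimal sketch rather than a production processing routine: it assumes a uniformly sampled, already filtered and baseline-corrected record in m/s², uses the conventional constant \(\pi /(2g)\) for Arias intensity, and is demonstrated on a hypothetical synthetic record. All function and variable names are illustrative.

```python
import numpy as np

G = 9.81  # acceleration due to gravity, m/s^2


def cumulative_trapezoid(y, dt):
    """Running trapezoidal integral of y, starting from zero."""
    increments = 0.5 * (y[1:] + y[:-1]) * dt
    return np.concatenate(([0.0], np.cumsum(increments)))


def intensity_measures(acc, dt, lower=0.05, upper=0.75):
    """PGA, PGV, Arias intensity and significant duration of a record.

    acc is in m/s^2 and dt in s; lower and upper are the fractions of the
    total Arias intensity that bound the significant duration.
    """
    acc = np.asarray(acc, dtype=float)
    time = np.arange(acc.size) * dt
    vel = cumulative_trapezoid(acc, dt)
    # Build-up of Arias intensity with time (often called a Husid plot).
    husid = np.pi / (2.0 * G) * cumulative_trapezoid(acc**2, dt)
    arias = husid[-1]
    # First samples at which the lower and upper fractions are reached.
    i_lo = np.searchsorted(husid, lower * arias)
    i_hi = np.searchsorted(husid, upper * arias)
    return {
        "PGA": float(np.max(np.abs(acc))),
        "PGV": float(np.max(np.abs(vel))),
        "Arias": float(arias),
        "D_sig": float(time[i_hi] - time[i_lo]),
    }


# Hypothetical record: a 1 Hz sine burst under a Gaussian envelope.
dt = 0.01
t = np.arange(0.0, 20.0, dt)
acc = 2.0 * np.exp(-((t - 5.0) / 1.5) ** 2) * np.sin(2.0 * np.pi * t)
print(intensity_measures(acc, dt))               # 5-75% duration
print(intensity_measures(acc, dt, upper=0.95))   # 5-95% duration
```

Passing upper=0.95 instead of the default 0.75 switches between the two common variants of the significant duration mentioned above.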

The response of a structure to earthquake shaking depends to a large extent on the natural vibration frequency of the structure and the frequency content of the motion. As a crude rule-of-thumb, the natural vibration period of a reinforced concrete structure can be estimated as the number of storeys divided by 10, although this can also be calculated more accurately considering the height and other characteristics of the structure (Crowley and Pinho 2010). The response spectrum is a representation of the maximum response experienced by single-degree-of-freedom oscillators with a given level of damping (usually assumed to be 5% of critical) to a specific earthquake motion. The concept of the response spectrum is illustrated in Fig. 14. The response spectrum is the basic representation of ground motions used in all seismic design, and all seismic design codes specify a response spectrum as a function of location and site characteristics. The response spectrum can be scaled for damping ratios other than the nominal 5% of critical although the scaling factors depend not only on the target damping value, but also on the duration or number of cycles of motion (Bommer and Mendis 2005; Stafford et al. 2008a).
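The construction of a response spectrum can be sketched numerically. The example below assumes linear single-degree-of-freedom oscillators of unit mass and integrates their equation of motion with the Newmark average-acceleration scheme, which is one of several suitable time-stepping methods and is my choice here for illustration. The input record is hypothetical, and the function returns pseudo-spectral acceleration (the peak relative displacement multiplied by the square of the circular frequency).

```python
import numpy as np


def response_spectrum(acc, dt, periods, damping=0.05):
    """Pseudo-spectral acceleration for each oscillator period.

    Solves u'' + 2*z*w*u' + w^2*u = -a_g(t) with the Newmark
    average-acceleration method, vectorised over the periods.
    """
    acc = np.asarray(acc, dtype=float)
    omega = 2.0 * np.pi / np.asarray(periods, dtype=float)
    k = omega**2                      # stiffness for unit mass
    c = 2.0 * damping * omega         # viscous damping for unit mass
    k_hat = k + 2.0 * c / dt + 4.0 / dt**2
    a_coef = 4.0 / dt + 2.0 * c
    p = -acc                          # effective force per unit mass
    u = np.zeros_like(omega)          # relative displacement
    v = np.zeros_like(omega)          # relative velocity
    a = np.full_like(omega, p[0])     # relative acceleration at t = 0
    peak_u = np.zeros_like(omega)
    for i in range(acc.size - 1):
        dp_hat = (p[i + 1] - p[i]) + a_coef * v + 2.0 * a
        du = dp_hat / k_hat
        dv = 2.0 * du / dt - 2.0 * v
        da = 4.0 * du / dt**2 - 4.0 * v / dt - 2.0 * a
        u, v, a = u + du, v + dv, a + da
        peak_u = np.maximum(peak_u, np.abs(u))
    return k * peak_u


# Hypothetical record: a 1 Hz sine burst under a Gaussian envelope (m/s^2).
dt = 0.005
t = np.arange(0.0, 20.0, dt)
acc = 2.0 * np.exp(-((t - 5.0) / 1.5) ** 2) * np.sin(2.0 * np.pi * t)
periods = np.array([0.02, 0.1, 0.5, 1.0, 2.0, 10.0])
for period, psa in zip(periods, response_spectrum(acc, dt, periods)):
    print(f"T = {period:5.2f} s -> PSA = {psa:.3f} m/s^2")
```

For very stiff oscillators the spectral ordinate should approach the PGA of the record, and the largest amplification should appear near the dominant period of the input, which provides a simple sanity check on any implementation.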

The concept of the acceleration response spectrum: structures (lowest row) are represented as equivalent single-degree-of-freedom oscillators characterised by their natural period of vibration and equivalent viscous damping (middle row), which are then excited by the chosen accelerogram and the response of the mass calculated. The maximum response is plotted against the period of the oscillator and the complete response spectrum of the accelerogram is constructed by repeating for a large number of closely-spaced periods; building photographs from Spence et al. (2003)

2.2.3 Ground-motion prediction models

An essential element of any seismic hazard assessment is a model to estimate the value of the ground-motion parameter of interest at a particular location as a result of a specified earthquake scenario. The models reflect the influence of the source of the earthquake (the energy release), the path to the site of interest (the propagation of the seismic waves), and the characteristics of the site itself (soft near-surface layers will modify the amplitude and frequency of the waves). The parameters that are always included in such a model are magnitude (source), distance from the source to the site (path), and a characterisation of the site. Early models used distance from the epicentre (Repi) or the hypocentre (Rhyp) but these distance metrics ignore the dimensions of the fault rupture and therefore are not an accurate measure of the separation from the source for sites close to larger earthquakes associated with extended fault ruptures. More commonly used metrics in modern models are the distance to the closest point on the fault rupture (Rrup) or the shortest horizontal distance to the projection of the fault rupture onto the Earth’s surface, which is known as the Joyner-Boore distance (Joyner and Boore 1981) or Rjb. Site effects were originally represented by classes, sometimes as simple as distinguishing between ‘rock’ and ‘soil’, but nowadays are generally represented by explicit inclusion of the parameter VS30, which is the shear-wave velocity (which is a measure of the site stiffness) corresponding to the travel time of vertically propagating shear waves over the uppermost 30 m at the site. The reference depth of 30 m was selected because of the relative abundance of borehole data to this depth rather than any particular geophysical significance. The modelling of site effects has sometimes included additional parameters to represent the depth of sediments, such as Z1.0 or Z2.5 (the depths at which shear-wave velocities of 1.0 and 2.5 km/s are encountered). 
The more advanced models also include the non-linear response of soft soil sites for large-amplitude motions, often constrained by site response models developed separately (Walling et al. 2008; Seyhan and Stewart 2014). Another parameter that is frequently included is the style-of-faulting, SoF (e.g., Bommer et al. 2003). Figure 15 shows an example of predictions from a model for PGV, showing the influence of magnitude, distance, site classification and style-of-faulting.
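Because VS30 is defined through the travel time of shear waves over the uppermost 30 m, it is a time-averaged rather than a thickness-averaged velocity. A minimal sketch of the calculation for a layered profile is given below; the layer thicknesses and velocities are hypothetical and the function name is illustrative.

```python
def vs30(thicknesses_m, velocities_ms):
    """Time-averaged shear-wave velocity (m/s) over the top 30 m.

    Layers are listed from the surface downwards; any part of the
    profile below 30 m depth is ignored.
    """
    remaining = 30.0      # thickness still to be covered, m
    travel_time = 0.0     # vertical shear-wave travel time, s
    for thickness, velocity in zip(thicknesses_m, velocities_ms):
        if thickness <= 0 or velocity <= 0:
            raise ValueError("thicknesses and velocities must be positive")
        used = min(thickness, remaining)
        travel_time += used / velocity
        remaining -= used
        if remaining == 0.0:
            break
    if remaining > 0.0:
        raise ValueError("profile is shallower than 30 m")
    return 30.0 / travel_time


# Hypothetical profile: 10 m of soft soil over stiffer material.
print(vs30([10.0, 50.0], [200.0, 400.0]))
```

In this example the soft layer occupies only a third of the depth but accounts for half of the travel time, so the result is lower than a thickness-weighted average of the two velocities would suggest.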

Predictions of PGV as a function of distance for two magnitudes showing the influence of site classification (left) and style-of-faulting (right) (Akkar and Bommer 2010)

Acceleration response spectra predicted by five European models and one from California for sites with (a) VS30 = 270 m/s and (b) VS30 = 760 m/s for an earthquake of M 7 at 10 km (Douglas et al. 2014a)

By developing a series of predictive models for response spectral accelerations at a number of closely spaced oscillator periods, complete response spectra can be predicted for a given scenario. Figure 16 shows predicted response spectra for rock and soil sites at 10 km from a magnitude M 7 earthquake obtained from a suite of predictive models derived for Europe and the Mediterranean region, compared with the predictions from the Californian model of Boore and Atkinson (2008), which was shown to provide a good fit to European strong-motion data (Stafford et al. 2008b). The range of periods for which reliable response spectral ordinates can be generated depends on the signal-to-noise ratio of the accelerograms, especially for records obtained by older, analogue instruments, although processing is generally still required for modern digital recordings as well (Boore and Bommer 2005). The maximum usable response period of a processed record depends on the filters applied to remove those parts of the signal that are considered excessively noisy (Akkar and Bommer 2006).

There are many different approaches to developing predictive models for different ground-motion parameters (Douglas and Aochi 2008) but the most commonly used are regression on empirical datasets of ground-motion recordings, and stochastic simulations based on seismological theory (e.g., Boore 2003). The former is generally used in regions with abundant datasets of accelerograms, whereas simulations are generally used in regions with sparse data, where recordings from smaller earthquakes are used to infer the parameters used in the simulations. Stochastic simulations can also be used to adjust empirical models developed in a data-rich region for application to another region with less data, which preserves the advantages of empirical models (see Sect. 5.2). A common misconception regarding empirical models is that their objective is to reproduce as accurately as possible the observational data. The purpose of the models is rather to provide reliable predictions for all magnitude-distance combinations that may be considered in seismic hazard assessments, including those that represent extrapolations beyond the limits of the data. The empirical data provides vital constraint on the models, but the model derivation may also invoke external constraints obtained from simulations or independent analyses.

At this point, a note is in order regarding terminology. Predictive models for ground-motion parameters were originally referred to as attenuation relations (or even attenuation laws), which is no longer considered an appropriate name since the models describe the scaling of ground-motion amplitudes with magnitude as well as the attenuation with distance. This recognition prompted the adoption of the term ground motion prediction equations or GMPEs. More recently, there has been a tendency to move to the use of ground motion prediction models (GMPMs) or simply ground motion models (GMMs); in the remainder of this article, GMM is used.

Predicted curves such as those shown in Figs. 15 and 16 paint an incomplete picture of GMMs. When an empirical GMM is derived, the data always displays considerable scatter with respect to the predictions (Fig. 17). For a given model, this scatter is interpreted as aleatory variability. When the regressions are performed on the logarithmic values of the ground-motion parameter, the residuals (observed minus predicted values) are found to be normally distributed (e.g., Jayaram and Baker 2008). The distribution of the residuals can therefore be characterised by the standard deviation of these logarithmic residuals, which is generally represented by the Greek letter \(\sigma \) (sigma). Consequently, GMMs do not predict unique values of the chosen ground-motion parameter, Y, for a given scenario, but rather a distribution of values:

\[\log (Y)=f(M,R,{V}_{S30},SoF)+\varepsilon \sigma \]

where \(\varepsilon \) is the number of standard deviations above or below the mean (Fig. 17). If \(\varepsilon \) is set to zero, the GMM predicts median values of Y, which have a 50% probability of being exceeded for the specified scenario; setting \(\varepsilon =1\) yields the mean-plus-one-standard deviation value, which will be appreciably higher and have only a 16% probability of being exceeded.
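The relationship between \(\varepsilon \), the predicted amplitude and the probability of exceedance can be illustrated with a short sketch. It assumes that the logarithmic residuals follow a normal distribution and that \(\sigma \) is expressed in natural-log units; the median PGA and the value of \(\sigma \) are hypothetical and chosen only for illustration.

```python
import math
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()  # zero mean, unit standard deviation


def exceedance_probability(epsilon):
    """Probability that the motion exceeds the epsilon-level prediction."""
    return 1.0 - STANDARD_NORMAL.cdf(epsilon)


def ground_motion(median, sigma_ln, epsilon):
    """Amplitude lying epsilon standard deviations from the median (log space)."""
    return median * math.exp(epsilon * sigma_ln)


median_pga = 0.20  # g, hypothetical median prediction for some scenario
sigma_ln = 0.65    # hypothetical standard deviation of ln(PGA)
for eps in (-1.0, 0.0, 1.0, 2.0):
    pga = ground_motion(median_pga, sigma_ln, eps)
    prob = exceedance_probability(eps)
    print(f"epsilon = {eps:+.1f}: PGA = {pga:.3f} g, P(exceedance) = {prob:.3f}")
```

Because the distribution is defined on the logarithm of the parameter, each unit of \(\varepsilon \) multiplies the amplitude by a constant factor rather than adding a constant increment.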

Typical values of the standard deviation of logarithmic ground-motion residuals are generally such that 84-percentile values of motion are between 80 and 100% larger than the median predictions. The expansion of ground-motion datasets and the development of more sophisticated models has not resulted in any marked reduction of sigma values (Strasser et al. 2009); indeed, the values associated with recent models are often larger than those that were obtained for earlier models (e.g., Joyner and Boore 1981; Ambraseys et al. 1996) but this may be the result of early datasets being insufficiently large to capture the full distribution of the residuals. Progress in reducing sigma values has been made by decomposition of the variability into different components, which begins with separating the total sigma into between-event (\(\tau \)) and within-event (\(\phi \)) components, which are related by the following expression:

\[\sigma =\sqrt{{\tau }^{2}+{\phi }^{2}}\]

The first term corresponds to how the average level of the ground motions varies from one earthquake of a given magnitude to another, whereas the latter reflects the spatial variability of the motions. The concepts are illustrated schematically in Fig. 18: \(\tau \) is the standard deviation of the \(\delta B\) residuals and \(\phi \) the standard deviation of the \(\delta W\) residuals. Additional decomposition of these two terms is then possible, in which it is possible to identify and separate elements that in reality correspond to epistemic uncertainties (i.e., repeatable effects that can be constrained through data acquisition and modelling) rather than aleatory variability; such decomposition of sigma is discussed further in Sect. 5.
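As a small numerical illustration, and assuming that the between-event and within-event components are independent so that they combine in quadrature, the total sigma and the corresponding ratio of 84-percentile to median amplitudes can be computed as follows. The values of \(\tau \) and \(\phi \) are hypothetical and expressed in natural-log units.

```python
import math


def total_sigma(tau, phi):
    """Total standard deviation from between- and within-event components."""
    return math.hypot(tau, phi)  # sqrt(tau**2 + phi**2)


def ratio_84th_to_median(sigma_ln):
    """Factor by which the 84-percentile motion exceeds the median."""
    return math.exp(sigma_ln)


tau, phi = 0.35, 0.55  # hypothetical components, natural-log units
sigma = total_sigma(tau, phi)
print(f"sigma = {sigma:.3f}, 84th percentile / median = {ratio_84th_to_median(sigma):.2f}")
```

Total sigma values of roughly 0.6 to 0.7 in natural-log units correspond to 84-percentile motions some 80 to 100% larger than the median.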

Conceptual illustration of between-event and within-event residuals (Al Atik et al. 2010)

Several hundred GMMs, which predict all of the ground-motion parameters described in Sect. 2.2 and are derived for application to many different regions of the world, have been published. Dr John Douglas has provided excellent summaries of these models (Douglas 2003; Douglas and Edwards 2016), and also maintains a very helpful online resource that allows users to identify all currently published GMMs (www.gmpe.org.uk).

2.3 Geotechnical hazards

While the single most important contributor to building damage caused by earthquakes is ground shaking, damage and disruption to transportation networks and utility lifelines is often the result of earthquake-induced landslides and liquefaction (Bird and Bommer 2004).

2.3.1 Landslides

Landslides are frequently observed following earthquakes and can be a major contributor to destruction and loss of life (Fig. 19).

Major landslide triggered by the El Salvador earthquake of January 2001 (Bommer and Rodriguez 2002); another landslide triggered in Las Colinas by this earthquake killed around 500 people

The extent of this collateral hazard depends on the strength of the earthquake as reflected by the magnitude (e.g., Keefer 1984; Rodríguez et al. 1999), but it also depends strongly on environmental factors such as topography, slope geology, and antecedent rainfall. Assessment of the hazard due to earthquake-induced landslides begins with assessment of shaking hazard since this is the basic trigger. In a sense, it can be compared with risk assessment as outlined in Sect. 1.1, with the exposure represented by the presence of slopes, and the fragility by the susceptibility of the slopes to become unstable due to earthquakes (which is reflected by their static factor of safety against sliding). Indeed, Jafarian et al. (2021) present fragility functions for seismically induced slope failures characterised by different levels of slope displacement as a function of measures of the ground shaking intensity.

2.3.2 Liquefaction

Liquefaction triggering is a phenomenon that occurs in saturated sandy soils during earthquake shaking, which involves the transfer of overburden stress from the soil skeleton to the pore fluid, with a consequent increase in pore water pressure and reduction in effective stress. This stress transfer is due to the contractive tendencies of the soil skeleton during earthquake shaking. Once liquefied, the shear resistance of the soil drastically reduces and the soil effectively behaves like a fluid, which can result in structures sinking into the ground. Where there is a free face such as a river or shoreline, liquefaction can lead to lateral spreading (Fig. 20). Liquefaction can result in buildings becoming uninhabitable and can also cause extensive disruption, especially to port and harbour facilities. However, there are no documented cases of fatalities resulting from soil liquefaction, unless one includes flow liquefaction (e.g., de Lima et al. 2020).

Lateral spreading on the bank of the Lempa River in El Salvador due to liquefaction triggered by the M 7.7 subduction-zone earthquake of January 2001; notice the collapsed railway bridge in the background due to the separation of the piers caused by the spreading (Bommer et al. 2002)

As with landslide hazard assessment, the assessment of liquefaction triggering hazard can also be compared to risk analysis, with the shaking once again representing the hazard, the presence of liquefiable soils the exposure, and the susceptibility of these deposits to liquefaction the fragility. In the widely used simplified procedures (e.g., Seed and Idriss 1971; Whitman 1971; Idriss and Boulanger 2008; Boulanger and Idriss 2014), the ground motion is represented by PGA and a magnitude scaling factor, MSF, which is a proxy for the number of cycles of motion.

Geyin and Maurer (2020) present fragility functions for the severity of liquefaction effects as a function of a parameter that quantifies the degree of liquefaction triggering. Structural fragility functions can be derived in terms of the resulting soil displacement (Bird et al. 2006) or another measure of the liquefaction severity (Di Ludovico et al. 2020), so that liquefaction effects can be incorporated into seismic risk analyses although this requires in situ geotechnical data and information regarding the foundations of buildings in the area of interest (Bird et al. 2004).

3 Seismic hazard and risk analysis

In this section, I present a brief overview of seismic hazard assessment, focusing exclusively on the hazard of ground shaking, highlighting what I view to be an inextricable link between hazard and risk, and also emphasising the issue of uncertainty, which is a central theme of this paper. For reasons of space, the description of hazard and risk analysis is necessarily condensed, and I would urge the genuinely interested reader to consider three textbooks for more expansive discussions of the fundamentals. Earthquake Hazard Analysis: Issues and Insights by Reiter (1990) remains a very readable and engaging overview of the topic and as such is an ideal starting point. The monograph Seismic Hazard and Risk Analysis by McGuire (2004) provides a succinct and very clear overview of these topics. For an up-to-date and in-depth treatment of these topics, I strongly recommend the book Seismic Hazard and Risk Analysis by Baker et al. (2021)—I have publicly praised this tome in a published review (Bommer 2021) and I stand by everything stated therein.

3.1 Seismic hazard analysis

The purpose of a seismic hazard assessment is to determine the ground motions to be considered in structural design or in risk estimation. Any earthquake hazard assessment consists of two basic components: a model for the source of future earthquakes and a model to estimate the ground motions at the site due to each hypothetical earthquake scenario. Much has been made over the years of the choice between deterministic and probabilistic approaches to seismic hazard assessment. In a paper written some 20 years ago (Bommer 2002), I described the vociferous exchanges between the proponents of deterministic seismic hazard analysis (DSHA) and probabilistic seismic hazard analysis (PSHA) as “an exaggerated and obstructive dichotomy”. While I would probably change many features of that article if it were being written today, I think this characterisation remains valid for the simple reason that it is practically impossible to avoid probability in seismic hazard analysis. Consider the following case: imagine an important structure very close (< 1 km) to a major geological fault that has been found to generate earthquakes of M 7 on average every ~ 600 years (this is actually the situation for the new Pacific locks on the Panama Canal, as described in Sect. 7.2). Assuming the structure has a nominal design life in excess of 100 years, it would be reasonable to assume that the fault will generate a new earthquake during the operational lifetime (especially if the last earthquake on the fault occurred a few centuries ago, as is the case in Panama) and therefore the design basis would be a magnitude 7 earthquake at a distance of 1 km. However, to calculate the design response spectrum a decision needs to be made regarding the exceedance level at which the selected GMM should be applied: if the median motions are adopted (setting \(\varepsilon =0\)), then in the event of the earthquake occurring, there is a 50% probability that the design accelerations will be exceeded. 
If instead the 84-percentile motions are used (mean plus one standard deviation), there will be a 1-in-6 chance of the design accelerations being exceeded. The owner of the structure would need to choose the level commensurate with the desired degree of safety, and this may require more than one standard deviation on the GMM. Whatever the final decision, the hazard assessment now includes a probabilistic element (ignoring the variability in the GMM and treating it as a deterministic model, which implies a 50% probability of exceedance, does not make the variability disappear).

If a probabilistic framework is adopted, the decision regarding the value of \(\varepsilon \) would take into account the recurrence interval of the design earthquake (in this case, 600 years) to choose the appropriate GMM exceedance level: the median level of acceleration would have a return period of 1,200 (600/0.5) years, whereas for the 84-percentile motions, the return period would be 3,600 years. If the target return period were selected as 10,000 years, say, then the response spectrum would need to be obtained by including 1.55 standard deviations of the GMM, yielding accelerations at least 2.5 times larger than the median spectral ordinates.
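The arithmetic in this example can be reproduced with a short sketch that assumes normally distributed logarithmic residuals and a single source with a fixed average recurrence interval. The function names are illustrative, and the value of \(\sigma \) used to convert \(\varepsilon \) into an amplification factor is a hypothetical 0.6 in natural-log units.

```python
import math
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()


def return_period(recurrence_interval_years, epsilon):
    """Return period of the epsilon-level motion from a single scenario."""
    p_exceed = 1.0 - STANDARD_NORMAL.cdf(epsilon)
    return recurrence_interval_years / p_exceed


def epsilon_for_return_period(recurrence_interval_years, target_years):
    """Epsilon needed for the motion to have the target return period."""
    p_exceed = recurrence_interval_years / target_years
    if not 0.0 < p_exceed < 1.0:
        raise ValueError("target must exceed the recurrence interval")
    return STANDARD_NORMAL.inv_cdf(1.0 - p_exceed)


interval = 600.0  # years, the average recurrence of the M 7 earthquake
print(return_period(interval, 0.0))   # median motions
print(return_period(interval, 1.0))   # 84-percentile motions
eps = epsilon_for_return_period(interval, 10_000.0)
sigma_ln = 0.6                        # hypothetical, natural-log units
print(eps, math.exp(eps * sigma_ln))  # epsilon and amplification of the median
```

Using the exact normal probability for \(\varepsilon =1\) (about 0.16) rather than the rounded 1-in-6 figure gives a return period for the 84-percentile motions that is slightly longer than 3,600 years.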

In practice, most seismic design situations are considerably more complex in terms of the seismic sources and the earthquakes contributing to the hazard than the simple case described above. For example, the site hazard could still be dominated by a single geological fault, located a few kilometres away from the site at its closest approach, but of considerable length (such that individual earthquakes do not rupture the full length of the fault and will thus not necessarily occur on the section of the fault closest to the site), and which is capable of generating earthquakes of different magnitudes, the larger earthquakes occurring less frequently (i.e., having longer average recurrence intervals) than the smaller events. A deterministic approach might propose to assign the largest magnitude that the fault is considered capable of producing to a rupture adjacent to the target site. However, this would ignore two important considerations. The first is that the smaller earthquakes are more frequent (as a rule-of-thumb, there is a tenfold increase in the earthquake rate for every unit reduction in magnitude) and more frequent earthquakes can be expected to sample higher values of \(\varepsilon \); expressed another way, the more earthquakes of a particular size that occur, the more likely they are to generate higher-than-average levels of ground shaking. The second consideration is that ground-motion amplitudes do not increase linearly with increasing earthquake magnitude, as shown in Fig. 21. Consequently, more frequent scenarios of M 6, sampling higher \(\varepsilon \) values, could result in higher motions at the site than scenarios of M 7. Of course, the rate could simply be ignored, and a decision could be taken to base the design on the largest earthquake, but the rationale, which is sometimes invoked by proponents of DSHA, would be that by estimating the hazard associated with the worst-case scenario one effectively envelops the various possibilities.
However, for this to be true, the scenario would need to correspond to the genuine upper bound of all scenarios, which would mean placing the largest earthquake the fault could possibly produce at the least favourable location, and then calculating the ground motions at least 3 or 4 standard deviations above the median. In most cases, such design motions would be prohibitive and in practice seismic hazard assessment always backs away from such extreme scenarios.

The scenario of a single active fault dominating all hazard contributions is a gross simplification in most cases since there will usually be several potential sources of future earthquakes that can influence the hazard at the site. Envisage, for example, a site in a region with several seismogenic faults, including smaller ones close to the site and a large major structure at greater distance, all having different slip rates. A classical DSHA would simply estimate the largest earthquake that could occur on each fault (thus defining the magnitude, M) and associate it with a rupture located as close to the site as possible (which then determines the distance R); for each M-R pair, the motions at the site would then be calculated with an arbitrarily chosen value of \(\varepsilon \) and the final design basis would be the largest accelerations (although for different ground-motion parameters, including response spectral ordinates at different periods, different sources may dominate). In early practice, \(\varepsilon \) was often set to zero, whereas more recently it became standard practice to adopt a value of 1. If one recognises that the appropriate value of this parameter should reflect the recurrence rate of the earthquakes, and also takes account of the highly non-linear scaling of accelerations with magnitude (Fig. 21), identifying the dominant scenario that should control the hazard becomes considerably more challenging.

An additional complication that arises in practice is that it is usually impossible to assign all observed seismicity to mapped geological faults, even though every seismic event can be assumed to have originated from rupture of a geological fault. This situation arises both because of the inherent uncertainty in the location of earthquake hypocentres and the fact that not all faults are detected, especially smaller ones and those embedded in the crust that do not reach the Earth’s surface. Consequently, some sources of potential future seismicity are modelled simply as areas of ‘floating’ earthquakes that can occur at any location within a defined region. The definition of both the location and the magnitude of the controlling earthquake in DSHA then becomes an additional challenge: if the approach genuinely is intended to define the worst-case scenario, in many cases this will mean that the largest earthquake that could occur in the area would be placed directly below the site, but this is rarely, if ever, done in practice. Instead, the design earthquake is placed at some arbitrarily selected distance (in the US, where DSHA was used to define the design basis for most existing NPPs, this was sometimes referred to as the ‘shortest negotiated distance’), to which the hazard estimate can be very sensitive because of the swift decay of ground motions with distance from the earthquake source (Fig. 22).

Median PGA values predicted by the European GMM of Akkar et al. (2014) at rock sites (VS30 = 760 m/s) plotted against distance for a magnitude M 6.5 strike-slip earthquake; both plots show exactly the same information but the left-hand frame uses the conventional logarithmic axes whereas the right-hand frame uses linear axes and perhaps conveys more clearly how swiftly the amplitudes decay with distance

The inspired insight of Allin C. Cornell and Luis Esteva was to propose an approach to seismic hazard analysis, now known as PSHA, that embraced the inherent randomness in the magnitude and location of future earthquakes by treating both M and R as random variables (Esteva 1968; Cornell 1968). The steps involved in executing a PSHA are illustrated schematically in Fig. 23.

Illustration of the steps involved in a PSHA (adapted from USNRC 2018)

A key feature of PSHA is a model for the average rate of earthquakes of different magnitudes, generally adopting the recurrence relationship of Gutenberg and Richter (1944):

\[{\mathrm{log}}_{10}(N)=a-bM \tag{3}\]

where N is the average number of earthquakes of magnitude ≥ M per year, and a and b are coefficients found using the maximum likelihood method (e.g., Weichert 1980); least squares fitting is not appropriate since for a cumulative measure such as N, the data points are not independent. The coefficient a reflects the activity rate and is higher in regions with greater seismicity, whereas b reflects the relative proportions of small and large earthquakes (and often, but not always, takes a value close to 1.0). The recurrence relation is truncated at an upper limit, Mmax, which is the largest earthquake considered to be physically possible within the source of interest. The estimation of Mmax is discussed further in Sect. 9.2.
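
As a minimal sketch of how these recurrence parameters are used, the Python snippet below evaluates the Gutenberg-Richter relation and estimates b by maximum likelihood. It uses the simple estimator of Aki (1965) rather than the method of Weichert (1980), so it assumes a catalogue that is complete above a single threshold magnitude over one observation period. The function names, the completeness magnitude `m_c` and the synthetic catalogue are illustrative assumptions.

```python
import math


def gr_annual_rate(m, a, b):
    """Average annual number of earthquakes with magnitude >= m."""
    return 10.0 ** (a - b * m)


def aki_b_value(magnitudes, m_c, bin_width=0.0):
    """Maximum-likelihood b-value (Aki 1965 estimator).

    Assumes the catalogue is complete for magnitudes >= m_c. A half-bin
    correction is applied when magnitudes are reported in bins of bin_width.
    """
    complete = [m for m in magnitudes if m >= m_c]
    if not complete:
        raise ValueError("no events at or above the completeness magnitude")
    mean_excess = sum(complete) / len(complete) - (m_c - bin_width / 2.0)
    return math.log10(math.e) / mean_excess


# Synthetic (hypothetical) catalogue: evenly spaced quantiles of an
# exponential magnitude distribution with a known b-value.
b_true, m_c, n_events = 1.0, 3.0, 10000
beta = b_true * math.log(10.0)
catalogue = [m_c - math.log(1.0 - (i + 0.5) / n_events) / beta
             for i in range(n_events)]

b_hat = aki_b_value(catalogue, m_c)
print(f"estimated b = {b_hat:.3f}")
print(f"annual rate of M >= 5 for a = 4, b = 1: {gr_annual_rate(5.0, 4.0, 1.0):.3f}")
```

With a = 4 and b = 1, each unit increase in magnitude reduces the annual rate by a factor of ten, which is the sense in which b controls the relative proportions of small and large earthquakes.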

Rather than an abrupt truncation of the recurrence relationship at Mmax, it is common to use a form of the recurrence relationship that produces a gradual transition to the limiting magnitude:

\[\nu (M)=\nu ({M}_{lower})\,\frac{\mathrm{exp}[-\beta (M-{M}_{lower})]-\mathrm{exp}[-\beta ({M}_{max}-{M}_{lower})]}{1-\mathrm{exp}[-\beta ({M}_{max}-{M}_{lower})]} \tag{4}\]

where \(\nu (M)\) is the annual rate of earthquakes of magnitude ≥ M, Mlower is the lower magnitude limit, \(\nu ({M}_{lower})\) is the annual rate of earthquakes of at least that magnitude, and \(\beta =b\,\mathrm{ln}(10)\). For faults, it is common to adopt instead a characteristic recurrence model, since it has been observed that large faults tend to generate large earthquakes with an average recurrence rate that is far higher than what would be predicted from extrapolation of the recurrence statistics of smaller earthquakes (e.g., Wesnousky et al. 1983; Schwartz and Coppersmith 1984; Youngs and Coppersmith 1985). Whereas the Gutenberg-Richter recurrence parameters are generally determined from analysis of the earthquake catalogue for a region, the parameterisation of the characteristic model is generally based on geological evidence.
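
The gradual transition to Mmax can be illustrated with a short Python sketch. It assumes the commonly used doubly truncated exponential form of the recurrence relationship, in which the rate of events of magnitude ≥ M falls smoothly to zero at Mmax; the function name and the parameter values are hypothetical and chosen only for illustration.

```python
import math


def truncated_exponential_rate(m, rate_lower, b, m_lower, m_max):
    """Annual rate of earthquakes with magnitude >= m.

    Assumes a doubly truncated exponential model bounded by m_lower and m_max,
    where rate_lower is the annual rate of events with magnitude >= m_lower.
    """
    if m < m_lower:
        raise ValueError("model is not defined below m_lower")
    if m >= m_max:
        return 0.0
    beta = b * math.log(10.0)
    tail = math.exp(-beta * (m_max - m_lower))
    return rate_lower * (math.exp(-beta * (m - m_lower)) - tail) / (1.0 - tail)


# Hypothetical source: 0.5 events per year with M >= 4, b = 1, Mmax = 7.
for magnitude in (4.0, 5.0, 6.0, 6.9, 7.0):
    truncated = truncated_exponential_rate(magnitude, 0.5, 1.0, 4.0, 7.0)
    unbounded = 0.5 * 10.0 ** (-1.0 * (magnitude - 4.0))
    print(f"M >= {magnitude:.1f}: truncated {truncated:.3e}, unbounded {unbounded:.3e}")
```

Well below Mmax the two rates are almost identical; the truncated rate only departs appreciably from the unbounded relation as M approaches Mmax.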

In publications that followed the landmark paper of Cornell (1968), the variability in the GMM was also added as another random variable in PSHA calculations (see McGuire 2008). Consequently, PSHA is an integration over three variables: M, R and \(\varepsilon \). Rather than identifying a single scenario to characterise the earthquake hazard, PSHA considers all possible scenarios that could affect the site in question, calculating the rate at which different levels of ground motion would be exceeded at the site of interest. For a given value of the ground-motion parameter of interest (say, PGA = 0.2 g), earthquakes of all possible magnitudes are considered at all possible locations within the seismic sources, and the value of \(\varepsilon \) required to produce a PGA of 0.2 g at the site is calculated in each case. The annual frequency at which this PGA is exceeded at the site due to each earthquake scenario is the frequency of events of this magnitude (determined from the recurrence relationship) multiplied by the probability of exceeding the required \(\varepsilon \) value (obtained from the standard normal distribution). By assuming that all the earthquake scenarios are independent (for which reason foreshocks and aftershocks are removed from the earthquake catalogue before calculating the recurrence parameters, a process known as de-clustering), the frequencies can be summed to obtain the total frequency of exceedance of 0.2 g. Repeating the exercise for different values of PGA, a hazard curve can be constructed, as in the lower right-hand side of Fig. 23. The hazard curve allows rational selection of appropriate design levels on the basis of the annual exceedance frequency (or its reciprocal, the return period): return periods used to define the design motions for normal buildings are usually in the range from 475 to 2,475 years, whereas for NPPs the return periods are in the range 10,000 to 100,000 years.
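
The summation described above can be sketched in a few lines of Python. This is a toy calculation under stated assumptions, not a usable hazard model: the ground-motion model `toy_gmm_ln_pga`, its coefficients, the value of `SIGMA_LN`, the source activity rate and the discrete distance distribution are all hypothetical and chosen only to make the book-keeping visible.

```python
import math

SIGMA_LN = 0.6  # assumed standard deviation of ln(PGA); illustrative only


def toy_gmm_ln_pga(m, r_km):
    """Hypothetical median ln(PGA in g); NOT a published ground-motion model."""
    return -3.5 + 0.9 * m - 1.2 * math.log(r_km + 10.0)


def prob_epsilon_exceeded(eps):
    """Probability that a standard normal variable exceeds eps."""
    return 0.5 * math.erfc(eps / math.sqrt(2.0))


def magnitude_bin_rates(rate_mmin, b, m_min, m_max, dm=0.1):
    """Annual rates of events per magnitude bin (truncated exponential model)."""
    beta = b * math.log(10.0)
    tail = math.exp(-beta * (m_max - m_min))

    def cumulative(m):
        return rate_mmin * (math.exp(-beta * (m - m_min)) - tail) / (1.0 - tail)

    n_bins = round((m_max - m_min) / dm)
    return [(m_min + (i + 0.5) * dm,
             cumulative(m_min + i * dm) - cumulative(m_min + (i + 1) * dm))
            for i in range(n_bins)]


def annual_exceedance_frequency(pga_g, mag_rates, distance_weights):
    """Sum the contributions of all M-R pairs to the exceedance of pga_g."""
    total = 0.0
    for m, rate in mag_rates:
        for r_km, weight in distance_weights:
            # epsilon needed for this scenario to produce the target PGA
            eps = (math.log(pga_g) - toy_gmm_ln_pga(m, r_km)) / SIGMA_LN
            total += rate * weight * prob_epsilon_exceeded(eps)
    return total


# Hypothetical source: 0.05 events/year with M >= 5, b = 1, Mmax = 7.5,
# and an assumed discrete probability distribution of source-to-site distance.
mag_rates = magnitude_bin_rates(rate_mmin=0.05, b=1.0, m_min=5.0, m_max=7.5)
distance_weights = [(10.0, 0.1), (30.0, 0.3), (60.0, 0.6)]

hazard_curve = [(pga, annual_exceedance_frequency(pga, mag_rates, distance_weights))
                for pga in (0.05, 0.1, 0.2, 0.4, 0.8)]
for pga, frequency in hazard_curve:
    print(f"PGA > {pga:.2f} g: {frequency:.3e} per year "
          f"(return period {1.0 / frequency:,.0f} years)")
```

Each term in the double loop is one M-R scenario weighted by its rate and by the probability of the required \(\varepsilon \) being exceeded; a real PSHA does the same thing with many more scenarios, several sources and a properly derived GMM.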

Since PSHA calculations are effectively a book-keeping exercise that sums the contributions of multiple M-R-\(\varepsilon \) triplets to the site hazard, for a selected annual exceedance frequency the process can be reversed to identify the scenarios that dominate the hazard estimates, a process that is referred to as disaggregation (e.g., McGuire 1995; Bazzurro and Cornell 1999). An example of a hazard disaggregation is shown in Fig. 24; to represent this information in a single scenario, one can use the modal or mean values of the variables, each of which has its own merits and shortcomings (Harmsen and Frankel 2001).
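
The reversal of the book-keeping can be illustrated with a small Python sketch. It assumes that the PSHA has already stored, for one ground-motion level, the exceedance rate contributed by each M-R bin; the bin values below are hypothetical numbers rather than results from any real study, and the function name is an illustrative choice.

```python
def disaggregate(contributions):
    """Normalise M-R contributions and summarise them as single scenarios.

    contributions: list of (magnitude, distance_km, annual_exceedance_rate).
    Returns the fractional contributions, the modal (M, R) pair and the
    contribution-weighted mean (M, R) pair.
    """
    total = sum(rate for _, _, rate in contributions)
    fractions = [(m, r, rate / total) for m, r, rate in contributions]
    modal = max(fractions, key=lambda item: item[2])
    mean_m = sum(m * f for m, _, f in fractions)
    mean_r = sum(r * f for _, r, f in fractions)
    return fractions, (modal[0], modal[1]), (mean_m, mean_r)


# Hypothetical contributions from a nearby source of moderate earthquakes
# and a distant source of large earthquakes.
contributions = [
    (5.5, 15.0, 4.0e-5),
    (6.0, 15.0, 2.0e-5),
    (7.0, 120.0, 1.0e-5),
    (7.5, 120.0, 3.0e-5),
]

fractions, modal_scenario, mean_scenario = disaggregate(contributions)
print("modal (M, R):", modal_scenario)
print("mean (M, R):", mean_scenario)
```

In a bimodal case such as this one, the mean scenario falls between the two sources and may not correspond to any earthquake that the source model can actually generate, whereas the modal scenario depends on how the bins are defined; this is one way of seeing why neither summary is without shortcomings.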

Disaggregation of the hazard in terms of spectral accelerations at 1.0 s for an annual exceedance frequency of \({10}^{-4}\) showing the relative contributions of different M-R-\(\varepsilon \) combinations (Almeida et al. 2019)

Since PSHA is an integration over three random variables, it is necessary to define upper and lower limits on each of these, as indicated in Fig. 25. The upper limit on magnitude has already been discussed; the lower limit on magnitude, Mmin, is discussed in Sect. 3.2. For distance, the minimum value will usually correspond to an earthquake directly below the site (unlike the situation shown in the upper left-hand panel of Fig. 23, the site is nearly always located within a seismic source zone, referred to as the host zone), whereas the upper limit, usually on the order of 200–300 km, is controlled by the farthest sources that contribute materially to the hazard (and can be greater if the site region is relatively quiet and there is a very active seismic source, such as a major fault or a subduction zone, at greater distance). For the ground-motion residuals, standard practice is to truncate the distribution at an upper limit on \(\varepsilon \) such as 3 standard deviations; the lower limit on \(\varepsilon \) is unimportant. There is neither a physical nor a statistical justification for such a truncation (Strasser et al. 2008) but it will generally only affect the hazard estimates for very long return periods in regions with high seismicity rates (Fig. 26).

Illustration of integration limits in PSHA in terms of (a) seismic source zones, (b) recurrence relations, and (c) GMMs (Bommer and Crowley 2017)

3.2 Seismic risk as the context for PSHA

In my view, seismic hazard assessment cannot, and should not, be separated from considerations of seismic risk. Leaving aside hazard sensitivity calculations undertaken for research purposes, all seismic hazard assessments have a risk goal, whether this is explicitly stated or only implicit in the use of the results. When I have made this point in the past, one counter argument given was that one might conduct a PSHA as part of the design of a strong-motion recording network, but in that case I would argue that the ‘risk’ would be installing instruments that yield no or few recordings. To be meaningful, hazard must be linked to risk, either directly in risk analysis or through seismic design to mitigate risk. In the previous section I referred to return periods commonly used as the basis for seismic design, but in themselves these return periods do not determine the risk level; the risk target is also controlled by the performance criteria that the structure should meet under the specified loading condition, such as the ‘no collapse’ criterion generally implicit in seismic design codes as a basis for ensuring life safety. For an NPP, the performance target will be much more demanding, usually related to the first onset of inelastic deformation. In effect, the return period defines the hazard, and the performance targets the fragility, both chosen in accordance with the consequences of failure to meet the performance criterion. For NPPs, the structural strength margins (see Fig. 1) mean that the annual probability of inelastic deformations will be about an order of magnitude lower than the annual exceedance frequency of the design motions, and additional structural capacity provides another order of magnitude against the release of radiation: design against a 10,000-year ground motion will therefore lead to an annual chance of radiation release of about 1 in 1,000,000.

One way in which risk considerations are directly linked to PSHA is in the definition of the minimum magnitude, Mmin, considered in the hazard integrations. This is not the same as the smallest magnitude, Mlower, used in the derivation of the recurrence relation in Eq. (4), but rather it is the smallest earthquake that is considered capable of contributing to the risk (and is therefore application specific). This can be illustrated by considering how seismic risk could be calculated in the most rigorous way possible, for a single structure. For every possible earthquake scenario (defined by its magnitude and location), a suite of acceleration time-histories could be generated or selected from a very large database; collectively, the time-histories would sample the range of possible ground motions for such a scenario in terms of amplitude, frequency content, and duration or number of cycles. Non-linear structural analyses would then be performed using all these records, and the procedure repeated for all possible scenarios. For a given risk metric, such as a specified level of damage, the rate can be determined by the proportion of analyses leading to structural damage above the defined threshold, which can then be combined with the recurrence rate of the earthquake scenarios to estimate annual rates of exceeding the specified damage level (Fig. 27).

Schematic illustration of rigorous risk assessment for a single structure and a defined response condition or limit state; (a) for each earthquake scenario, a suite of accelerograms is generated and used in dynamic analyses of a structural model, and (b) the results are used to determine the rate at which damage occurs (Bommer and Crowley 2017)

For any given structure, there will be a magnitude level below which the ground motions never cause damage, regardless of their distance from the site. The usual interpretation of such a result is that the short-duration motions from these smaller earthquakes lack the required energy to cause damage. Now, in practice, such an approach to seismic risk analysis would be prohibitively intensive in terms of computational demand, for which reason several simplifications are made. Firstly, the earthquake scenarios and resulting acceleration time-histories are represented by the results of hazard analyses, and secondly the dynamic analyses are summarised in a fragility function. Usually, the hazard is expressed in terms of a single ground-motion parameter that is found to be sufficient to act as an indicator of the structural response; it is also possible, however, to define the fragility in terms of a vector of ground-motion parameters (e.g., Gehl et al. 2013). In a Monte Carlo approach to risk assessment, individual earthquake scenarios are still generated, but for each one the chosen ground-motion parameter is estimated rather than generating suites of accelerograms. If the hazard is expressed in terms of a simple hazard curve, the risk can be obtained by direct convolution of the hazard and fragility curves (Fig. 28). However, in this simplified approach it is necessary to avoid inflation of the risk through inclusion of hazard contributions from the small-magnitude events that are effectively screened out in the more rigorous approach. This is the purpose of the lower magnitude limit, Mmin, imposed on the hazard integral, although there has been a great deal of confusion regarding the purpose and intent of this parameter (Bommer and Crowley 2017). 
In an attempt to address these misunderstandings, Bommer and Crowley (2017) proposed the following definition: “Mmin is the lower limit of integration over earthquake magnitudes such that using a smaller value would not alter the estimated risk to the exposure under consideration.” The imposition of Mmin can modify the hazard (in fact, if it did not, it would be pointless) but it should not change the intended risk quantification. For NPPs, typical values for Mmin are on the order of 5.0 (e.g., McCann and Reed 1990).
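
The direct convolution of a hazard curve with a fragility curve can be sketched numerically as follows. The sketch assumes a power-law hazard curve and a lognormal fragility function, both with hypothetical parameter values that do not represent any real site or structure. For this particular pair of assumptions a well-known closed-form solution exists, which is used here only as a check on the numerical integration.

```python
import math


def hazard_rate(pga_g, ref_rate=1.0e-4, ref_pga=0.3, slope_k=3.0):
    """Hypothetical hazard curve: annual frequency of exceeding pga_g."""
    return ref_rate * (pga_g / ref_pga) ** (-slope_k)


def fragility(pga_g, median_g=0.9, beta=0.4):
    """Hypothetical lognormal fragility: P(limit state exceeded | PGA)."""
    z = math.log(pga_g / median_g) / beta
    return 0.5 * math.erfc(-z / math.sqrt(2.0))


def annual_failure_rate(a_min=0.01, a_max=20.0, n_bins=2000):
    """Convolve hazard and fragility over logarithmically spaced PGA bins."""
    log_step = math.log(a_max / a_min) / n_bins
    total = 0.0
    for i in range(n_bins):
        lower = a_min * math.exp(i * log_step)
        upper = a_min * math.exp((i + 1) * log_step)
        # rate of ground motions falling inside this PGA bin
        rate_in_bin = hazard_rate(lower) - hazard_rate(upper)
        total += fragility(math.sqrt(lower * upper)) * rate_in_bin
    return total


numerical = annual_failure_rate()
# Closed form for power-law hazard and lognormal fragility:
# H(median) * exp(0.5 * k^2 * beta^2), with k = 3.0 and beta = 0.4 as above.
closed_form = hazard_rate(0.9) * math.exp(0.5 * (3.0 * 0.4) ** 2)
print(f"numerical: {numerical:.3e} per year, closed form: {closed_form:.3e} per year")
```

In such a calculation the hazard curve is the only carrier of information about the earthquakes themselves, which is why the contributions of small-magnitude events have to be excluded through Mmin at the hazard stage rather than in the convolution.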

Illustration of seismic risk assessment starting with (a) a seismic hazard curve in terms of PGA and then (b) combining this hazard curve with a fragility function so that (c) the convolution of the two yields the total probability of collapse (Bommer and Crowley 2017)