Abstract

Accurately assessing suicide risk is a critical concern in mental health care. Traditional methods, which often rely on self-reporting and clinical interviews, are limited by their subjective nature and may overlook non-verbal cues. This study introduces an innovative approach to suicide risk assessment using facial image analysis. The Suicidal Visual Indicators Prediction (SVIP) Framework leverages EfficientNetb0 and ResNet architectures, enhanced through Bayesian optimization techniques, to detect nuanced facial expressions indicating mental state. The models’ interpretability is improved using GRADCAM, Occlusion Sensitivity, and LIME, which highlight significant facial regions for predictions. Using datasets DB1 and DB2, which consist of full and cropped facial images from social media profiles of individuals with known suicide outcomes, the method achieved 67.93% accuracy with EfficientNetb0 on DB1 and up to 76.6% accuracy with a Bayesian-optimized Support Vector Machine model using ResNet18 features on DB2. This approach provides a less intrusive, accessible alternative to video-based methods and demonstrates the substantial potential for early detection and intervention in mental health care.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Annually, 703,000 individuals lose their lives to suicide, with countless others making attempts. Each suicide is a heart-wrenching tragedy that casts a profound shadow over families, communities, and entire nations, leaving lasting scars on those who remain. Suicide knows no age boundaries, and in 2019, it stood as the fourth major cause of mortality among individuals aged 15–29 on a global scale (WHO 2023).

This tragic phenomenon is frequently intertwined with mental health challenges, encompassing conditions like depression, anxiety, and substance misuse. It is noteworthy that a substantial portion of those who succumb to suicide grapple with underlying mental health disorders. Consequently, the implementation of robust suicide prevention measures and the provision of comprehensive mental health support are of paramount importance. In response to this public health concern, numerous entities, including governmental bodies and various organizations, have launched extensive campaigns and initiatives to tackle the issue head-on.

Preventing suicide has risen to the forefront of global priorities, inspiring extraordinary endeavors focused on raising awareness, advancing research, and improving access to care. Despite these advancements, suicide remains a leading cause of mortality, accompanied by inherent complexities in its prevention. While there is a wealth of well-studied screening and assessment tools available to clinicians, researchers, and educators, the absence of a consensus on a gold-standard for suicide risk assessment and management, coupled with the lack of standardized terminology, presents challenges in accurately identifying risks and effectively preventing suicide outcomes (Silverman et al. 2007).

In our modern, interconnected world, digital platforms, particularly social media, have become the primary means for individuals to express themselves, including their emotions and experiences. However, this shift also brings forth a significant concern: identifying mental health issues, particularly those related to suicidal thoughts and emotional distress, from the images shared on these platforms. This issue is of great importance due to the vast amount of visual content available online, which may contain subtle yet crucial indicators of emotional turmoil and self-harm contemplation. Detecting these signs is complex, often relying on meticulous human observation. Given the sheer volume of online content, there is an urgent need for automated solutions to address this challenge.

Conducting clinical assessments for individuals at risk of self-harm, whether fatal or non-fatal, represents the initial step in suicide prevention efforts. Guidelines have been created to assist healthcare professionals in this demanding task (Jacobs et al. 2010), and assessment tools can complement these clinical evaluations (Bernert et al. 2014; Fochtmann and Jacobs 2015). Several recent evaluations have explored the predictive accuracy of suicide assessment tools, revealing subpar performance in anticipating future suicide attempts and suicides (Herrman et al. 2022). However, these assessments lacked detailed descriptions of selection processes and neglected to consider potential biases.

Furthermore, in recent years, the domain of deep learning has emerged as an indispensable tool, particularly in the realm of face image classification. Deep learning, powered by architectures such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), has showcased exceptional proficiency in extracting facial features, recognizing emotional expressions, and the precise differentiation of individuals. This confluence of deep learning’s capabilities with the intricate domain of face image classification opens up novel horizons in technology and applications. It holds the promise of delivering systems that are not only more accurate and efficient but also exceptionally versatile in the domains of face recognition and image analysis.Recent advancements in AI and Machine Learning have significantly impacted the field of facial medicine, demonstrating the potential for these technologies to enhance diagnostic accuracy and patient outcomes. For instance, Umirzakova et al. (2023) presented a deep learning-driven diagnosis approach for segmenting stroke and Bell’s palsy, showcasing the utility of AI in medical diagnostics.

The scope and implications of this research extend far beyond its technical contributions. It underscores the potential of artificial intelligence, particularly deep learning, in bolstering mental health support and suicide prevention. In a world where digital platforms continue to reshape the landscape of human connection and sharing, it is our imperative duty to harness technology to preserve lives and extend timely intervention where it is most needed.

This study presents a novel approach to suicide risk assessment through the analysis of facial features using deep learning techniques. Unlike previous studies that have relied on video and verbal cues, this work is pioneering in its focus on static images, which presents a unique set of challenges and opportunities. By leveraging advanced machine learning models and Bayesian optimization, the study aims to extract meaningful patterns from facial expressions that could indicate suicidal tendencies. Our principal objective is to provide a scalable, proactive, and effective solution for detecting suicide risk through image analysis within the digital landscape. The contributions of this study can be summarized as follows:

-

Unprecedented Research Point: Emphasize the originality of the research, focusing on the rarity of studies addressing suicide detection through facial analysis. Highlight the limited literature on this subject, making our work a pioneering contribution.

-

Novel Public Dataset Creation: Elaborate on creating an exclusive public dataset containing 319 images drawn from social media sources. Highlight the dataset’s remarkable diversity and delve into the intricacies encountered during the collection process, with a specific emphasis on ensuring image authenticity and rigorous case verification.

-

Introducing Suicidal Visual Indicators Prediction (SVIP) Framework: Present the SVIP framework designed to predict suicidal behavior through facial analysis. Explain the two dataset versions and the primary approaches, combining pre-trained deep learning and machine learning models to maximize predictive accuracy.

-

Feature extraction: Implement feature extraction techniques using state-of-the-art neural networks such as ResNet18 and EfficientNetb0, which are instrumental in identifying subtle facial cues associated with suicidal tendencies.

-

Hyperparameter Optimization: Employment of Bayesian optimization for fine-tuning Support Vector Machine (SVM) models has achieved state-of-the-art accuracy in classification tasks.

-

Automated Machine Learning: The incorporation of Automated Machine Learning (AutoML) techniques, such as Lazy Predict, TPOT (Tree-based Pipeline Optimization Tool), and Orange AutoML, to streamline the model selection process and enhance predictive performance without extensive manual intervention.

-

Enhancing Model Interpretability: Utilization of explainable AI techniques, such as Gradient-weighted Class Activation Mapping (Grad-CAM), Local Interpretable Model-agnostic Explanations (LIME), and Occlusion Sensitivity, to provide insights into the decision-making process of the models, enhancing the interpretability of the predictions.

-

Performance Evaluation: Comprehensive performance evaluation using two distinct datasets, demonstrating the robustness and effectiveness of the proposed approach across different data scenarios. A comparative analysis with existing state-of-the-art techniques showcasing the superior performance of the proposed method in the context of suicide risk assessment through facial analysis.

The paper’s structure is as follows: Sect. 2 delves into the relevant literature. Section 3 provides background. Section 4 outlines the methodology applied and in-depth description of the materials employed. Section 5 presents the results obtained using the proposed approach. Section 6 compares the proposed method with state-of-the-art approaches. Section 7 offers the concluding remarks and future work.

2 Literature review

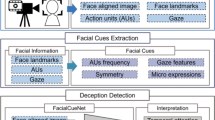

As Fig. 1 depicts, suicide analysis can be conducted using both verbal and non-verbal methods in the quest to understand and prevent self-harm and suicidal tendencies comprehensively. An overview of these dual approaches is as follows:

2.1 Verbal analysis can be categorized into:

-

Text-Based Analysis: This involves the scrutiny of written or spoken content like social media posts, text messages, or speech transcripts. Its purpose is to identify linguistic cues and sentiments associated with suicidal thoughts.

-

Natural Language Processing (NLP): Leveraging NLP techniques, experts uncover patterns and sentiments in the language that may signal suicidal ideation.

-

Sentiment Analysis: The emotional tenor and sentiment conveyed in written or spoken text are analyzed to unveil signs of depression, hopelessness, or suicidal ideation.

-

Topic Modeling: Common themes or topics within textual data, especially those tied to distress, self-harm, or suicide, are identified.

2.2 Non-verbal analysis can be categorized into:

-

Facial Analysis: By utilizing computer vision techniques, facial expressions are evaluated, shedding light on emotions like sadness, hopelessness, or agitation, which are indicative of emotional distress.

-

Eye Movement Analysis: Eye movement patterns and gaze direction are explored, offering insights into signs of discomfort or avoidance when discussing suicide-related subjects.

-

Voice Analysis: Acoustic features in speech, such as pitch, tone, and speech rate, are scrutinized to detect variations linked to distress or suicidal ideation.

-

Physiological Measurements: Monitoring physiological cues, including heart rate, skin conductance, and pupil dilation, helps identify emotional states associated with suicidal tendencies.

Utilizing machine learning and data analysis methodologies enables the creation of models for early detection and intervention, potentially saving lives. Nevertheless, it is of utmost importance to maintain a conscientious awareness of ethical and privacy considerations while dealing with sensitive mental health and suicide-related data. The following subsections delve into the research directions within verbal and non-verbal analysis.

2.3 Verbal analysis research directions

In recent years, there has been a considerable focus on understanding the intricate interplay between language usage and mental health, intending to shed light on the early detection and prevention of suicidal ideation. The following sections provide an extensive review of research related to verbal analysis for suicide prediction, encompassing the evaluation of suicide notes and other pertinent studies in this domain.

Well-established linguistic features commonly employed in the field of psychiatry, such as LIWC (Robinson and Lumontod 2020), emotion-based indicators (Masuda et al. 2013), and insights from suicide notes (Pestian et al. 2010). While these linguistic approaches offer valuable tools for analyzing language in isolation, it’s important to recognize their limitations when dealing with extensive and diverse datasets. Nobles et al. (2018) developed a model to identify periods of suicidality by analyzing text messages from individuals with a history of suicidal thoughts. Their unique SMS dataset allowed them to identify distinct communication patterns preceding suicide attempts. They used a deep neural network (DNN) to model these patterns, achieving notable performance with accuracy, F1-Score, Recall, and Precision rates of 70.0, 75.0, 81.0, and 71.4, respectively.

Jingcheng et al. (2018) used deep learning and transfer learning to identify psychiatric stressors related to suicide in Twitter data. They employed a convolutional neural network (CNN) for the binary classification of suicide-related tweets. Recurrent neural networks (RNN) were used for psychiatric stressor recognition. Deep learning surpassed traditional methods, with CNN achieving 78% precision and an F-1 measure of 83%. The RNN-based recognition achieved an F-1 measure of 53.25% (exact match) and 67.94% (inexact match). Transfer learning from clinical notes improved the F-1 measure to 54.9% (exact match). Tadesse et al. (2019) aimed to detect suicidal ideation in social media posts, focusing on early identification. They utilized deep learning and machine learning, working with Reddit content. Their model combined Long Short-Term Memory (LSTM) and Convolutional Neural Network (CNN) components, outperforming other classification models. The LSTM-CNN architecture, especially when integrated with word embeddings, achieved remarkable performance with accuracy, F1-Score, Recall, and Precision rates of 93.8, 93.4, 94.1, and 93.2, respectively.

Aldhyani et al. (2022) developed a machine-learning system to detect suicidal ideation and behavioral changes in social media posts. They used Reddit datasets, utilizing TF-IDF and Word2Vec for text representation. Their approach combined deep learning (CNN-BiLSTM) and machine learning (XGBoost) algorithms. In two experiments, the CNN-BiLSTM model outperformed XGBoost, achieving 95% accuracy for suicidal ideation detection, surpassing XGBoost’s 91.5%. Conversely, using LIWC features, XGBoost outperformed CNN-BiLSTM. Baghdadi et al. (2022) proposed tracking depression and mental disorders in Arabic social media data. This presented unique challenges due to the Arabic language’s wide usage and complex syntax. The study involved scraping and annotating Arabic tweets, introducing a classification framework for categories like Normal or Suicidal. They presented an Arabic tweet preprocessing algorithm, comparing lemmatization, stemming, and various lexical analysis methods. The study conducted experiments on Twitter data with five annotators and reported various performance metrics. The best-performing model achieved a WSM of 95.26% with Arabic BERT models.

Renjith et al. (2022) developed an automated method for detecting behavioral shifts by analyzing social media interactions, employing deep learning and machine learning, including LSTM and CNN models. Their LSTM-Attention-CNN model achieved 90.3% accuracy and a 92.6% F1-score, surpassing baseline models.

Chadha and Kaushik (2022) tokenized a dataset of 20,000 Reddit posts using word2vec techniques and proposed the ACL (Attention Convolution Long Short-Term Memory) model, achieving an 88.48% accuracy, 87.36% precision, 90.82% F1 score, and 79.23% specificity. The ACL model with Random embedding reached a 94.94% recall. Ghosal and Jain (2023) presented a framework to differentiate depression-related content and suicidal risk, utilizing fastText embeddings, TF-IDF vectors, and the XGBoost classifier. Their approach achieved an accuracy of 71.05%, an AUC of 78%, and a weighted F1-score of 71% on a Reddit dataset.

Burkhardt et al. (2023) developed an innovative model using Bidirectional Encoder Representations from Transformers (BERT) to identify suicide risk signals in social media posts. Their multi-stage transfer learning approach optimized the model’s performance and introduced a novel metric for assessing its ability to expedite response times for distressed individuals. This method achieved an enhanced F1 score of 79.7% from the initial 73.4%. Implementing this model could reduce response times by 15 min for urgent messages.

2.4 Non-verbal analysis research directions

Suicidal intent is strongly associated with co-occurring mental health conditions, particularly depression and anxiety. Individuals displaying suicidal tendencies exhibit symptoms commonly linked to depression and anxiety, such as elevated stress levels, persistent fatigue, and a tendency to withdraw from social interactions Waern et al. (2016). Furthermore, advanced research involving statistical analyses and machine learning-based assessments of facial and ocular movement patterns has unearthed specific patterns. For instance, patients diagnosed with depression frequently manifest narrower eye openings, extended blink durations, and slower, less frequent head movements, which may be indicative of fatigue and a preference for avoiding eye contact (Alghowinem et al. 2013a; Waxer 1977; Alghowinem et al. 2013b). These nuanced findings offer valuable insights into the intricate relationship between suicidal ideation and these concurrent mental health conditions. An expanding body of research in mental disorder detection has demonstrated that facial appearance and expressions can convey meaningful non-verbal cues (Dhelim et al. 2023). These cues can be effectively analyzed to evaluate a range of mental disorders, including depression (Pampouchidou et al. 2017), bipolar disorder (Venn et al. 2004), and social anxiety (Silvia et al. 2006).

In a study by Laksana et al. (2017), facial behaviors in interviews from three hospitals were analyzed to differentiate patients with suicidal ideation and mental health disorders. Significant differences were observed, particularly in Duchenne smile occurrences. The study also noted the influence of interview stages on these behaviors. Facial expressions hold promise as markers for identifying such issues. Three predictive models were compared: SVM, Random Forest, and Multinomial Naïve Bayes, with Random Forest achieving the highest accuracy at 39.4%.

In their study, Eigbe et al. (2018) explored dynamics in smiles to differentiate genuine from fake smiles in individuals with suicidal ideation and investigated smile frequency across various conversational contexts. They also assessed gaze aversion as a potential behavioral marker. Analyzing 74 interviews with hospital patients, they developed predictive models that showed promise in distinguishing between mental health conditions, particularly identifying those with suicidal tendencies. This research underscores the potential of behavioral cues in detecting mental health conditions during clinical interviews, achieving 69% accuracy in classification.

Shah and colleagues (Shah et al. 2019) investigated behavioral markers associated with expressed suicidal intent in social media videos using a unique annotated dataset. Their research involved statistical hypothesis testing to validate hypotheses from the literature. Employing multimodal predictive modeling, they identified significant markers like silences, slouched shoulders, rapid hand movements, and profanity indicating suicidal intent. Combining visual, acoustic, and language elements, the trimodal approach achieved an AUC of around 71%."

Liu et al. (2022) explore suicide risk assessment and nonverbal behavioral interpretation using statistical analysis, feature selection, and machine learning. They analyze unique data on eye and head signals, discovering that high-risk individuals display psychomotor retardation, anxiety, and depression symptoms as behavioral cues like eye contact avoidance, slower blinks, and downward eye gaze. Classification methods effectively distinguish levels of suicide risk, consistently achieving over 98% accuracy. Table 1 compares conducted research studies on suicide risk detection.

2.5 Research gap and motivation

In the field of suicide prevention, the bulk of research has primarily focused on verbal and behavioral indicators, with substantial attention given to the analysis of language patterns and non-verbal behaviors such as facial expressions and head movements. Despite the valuable insights yielded by these studies, there is a discernible research gap in the investigation of suicide prediction through static facial image analysis, as opposed to dynamic videos or interactive interviews. This gap presents an untapped opportunity to devise new methods that can function with less data-intensive inputs, like single-frame images, which are more accessible and pose fewer privacy concerns.

Existing studies are heavily weighted toward verbal cues, analyzing text messages and social media posts with various deep-learning models to predict suicidal tendencies. While these methods are promising, several challenges are commonly encountered, such as:

-

There is a shortage of research that employs static facial images for suicide prediction, which could offer a subtler and more readily available data source than videos or text, potentially enabling earlier and less intrusive interventions.

-

Non-verbal indicators in the current literature are mostly obtained from video analyses or patient interviews, which might not always be practical or ethical to collect, given the subject’s sensitive nature.

-

The potential for machine learning models, especially in extracting features from static images to predict suicidal ideation, remains underexplored. This represents an unexplored path for research that could supplement the existing verbal and behavioral analysis techniques.

-

Innovative approaches incorporating explainable AI are needed to provide transparent interpretations of the predictive features used by machine learning models, thereby increasing the research’s trustworthiness and practical application in real-world settings.

-

There is a notable lack of dedicated public datasets specifically tailored for suicide prediction research, which restricts the ability of researchers to train, test, and improve their models on diverse populations.

-

Cultural variability significantly affects facial expressions and their interpretations, which introduces complexity in developing universally applicable models and raises concerns about algorithmic bias.

-

The interdisciplinary complexity of combining psychological insights with advanced machine learning techniques is a significant hurdle, necessitating collaboration across fields to ensure the development of ethically sound and scientifically valid predictive tools.

To address these challenges, we have significantly expanded our dataset to include 319 images from diverse cultural backgrounds, enhancing generalizability. Our research employs a three-phase approach, utilizing eleven deep neural networks for classification and feature extraction alongside auto-machine learning techniques for comprehensive analysis of suicide-related images. We enhance model performance using the Bayesian Optimization Algorithm (BOA) for hyperparameter tuning, improving accuracy and computational efficiency in suicide prediction. This holistic approach not only boosts accuracy but also advances our understanding of and capacity to prevent suicidal behavior. By conducting a thorough analysis of suicide-related images using deep learning and machine learning techniques, our research contributes to the broader goal of suicide prevention.

3 Background

This section details the materials, methodologies, and techniques employed in our comprehensive approach to suicide prediction. Our study leverages diverse data sources, cutting-edge technologies, and well-established methodologies to construct a robust framework for the early detection of suicidal tendencies. By carefully selecting and processing data, employing advanced machine learning and deep learning models, and implementing ethical considerations, our methodology encompasses a multifaceted approach designed to address the complex challenges of suicide prediction effectively. The subsequent sections provide a systematic breakdown of our dataset, preprocessing steps, artificial intelligent suicide detection models, and performance metrics, offering a comprehensive insight into our research methodology.

3.1 Dataset utilization in suicide detection

In suicide detection, the dataset is undeniably the linchpin upon which the development and assessment of effective models hinge. This section is dedicated to the critical exploration of dataset utilization in the context of suicide detection, where researchers rely on a diverse array of meticulously curated datasets. These datasets offer invaluable insights into the behavioral indicators that are instrumental in assessing suicide risk. By scrutinizing the role of datasets in the multifaceted field of suicide detection, we endeavor to illuminate the intricacies and subtleties inherent to this vital area of research. Furthermore, this examination underscores the essential role of data-driven approaches in the pursuit of innovative solutions that have the potential to save lives. To provide a comprehensive overview, Table 2 offers a catalog of datasets employed for suicide detection, encompassing pertinent information such as the data source, type, number of classes, distribution, and the number of instances for each dataset.

3.2 Artificial intelligent suicide detection models

In suicide detection, artificial intelligence (AI) models have become crucial tools for assessing and predicting suicide risk. Two main categories of AI models play a significant role in this field: deep learning and machine learning. These models encompass various algorithms and techniques, offering valuable insights into the complex factors influencing mental and emotional well-being. In this section, we explore and compare the roles of deep learning and machine learning models in suicide detection, highlighting their strengths and limitations.

In suicide detection, deep learning models play a pivotal role due to their ability to extract intricate patterns from complex data, particularly in image analysis. The prominent deep learning models frequently employed in suicide detection, shedding light on their distinctive features and applications are as follows:

-

1.

GoogleNet (Inception Network): GoogleNet is celebrated for its depth and the incorporation of inception modules. Its innovative design makes it adept at efficiently and accurately extracting features. Suicide detection tasks often leverage GoogleNet to discern subtle facial expressions and visual cues indicating emotional distress (Simonyan and Zisserman 2014).

-

2.

ResNet18 and ResNet50: ResNet, characterized by its deep architecture with residual blocks, simplifies the training of highly complex networks. These models are particularly useful for capturing nuanced features within facial images, leading to improved recognition of emotional cues associated with suicide risk (He et al. 2016).

-

3.

DarkNet19: DarkNet is a renowned open-source neural network framework acknowledged for its speed and efficiency. DarkNet 19, a variation of this framework, is employed in suicide detection, especially in real-time video analysis where swift processing is essential (Redmon and Farhadi 2017).

-

4.

EfficientNet: EfficientNet is a highly efficient and accurate deep learning model widely acclaimed for its performance in computer vision tasks (Tan and Le 2019).

-

5.

NasNet Mobile: NasNet Mobile is a lightweight yet powerful neural network architecture, making it well-suited for mobile applications. Its adaptability and exceptional feature extraction capabilities make it a valuable asset for image classification tasks (Zoph et al. 2018).

-

6.

DenseNet: DenseNet, short for Densely Connected Convolutional Networks, is renowned for its interconnected architecture that promotes efficient feature reuse. This characteristic makes it a strong candidate for image analysis and classification (Huang et al. 2017).

-

7.

VGG, or Visual Geometry Group: VGG is a classic deep neural network model lauded for its straightforward yet effective design. Its utilization of small convolutional filters within a deep architecture has cemented its status as a fundamental component in various image-related applications (Simonyan and Zisserman 2014).

In addition, we discuss three different optimizers to train the models and to determine which model produces the best results (Mehedi et al. 2021). A brief description of the optimizers is given below:

Root Mean Square Propagation (RMSprop), introduced by Geoffrey Hinton, aims to mitigate oscillations and automatically adapt the learning rate. RMSprop tailors a distinct learning rate for each parameter, and updates are computed as in Eqs. (1)–(3).

where \(\mu \) is the initial learning, \(v_t\) is the exponential average of squares of gradients, and \(g_t\) is the gradient at a time along \(w_j\).

Adam optimizer, combines the heuristics of both momentum and RMSprop. The Eqs. (3) and (4)–(6) describe the updating each parameter \(w_t\).

where \(s_t\) is the exponential average of squares of gradients along \(w_j\) and \(\beta _1\) and \( \beta _2\) are the hyperparameters.

The fine-tuned pre-trained models utilized the Softmax activation function to generate class probabilities, ranging between 0 and 1, from the input images. Applying the Softmax activation function at the end of a CNN model to transform outcome scores into a normalized probability distribution is a well-recognized and established practice. The mathematical representation of the Softmax function is described in Eq. (7).

where \(\textbf{Z}\) is an input vector, \(z_i\) are the elements in \(\textbf{Z}\), \(e^{z_i}\) is the exponential function, and \(\sum _{j=1}^{k}e^{z_j}\) is the normalization term.

A wide array of deep learning models provides versatility, allowing researchers to select models that suit their needs. These needs range from attaining superior accuracy to resource-efficient processing or real-time analysis. The selection of a model hinges on factors like the characteristics of the available data, computational capabilities, and the specific nuances of the suicide detection mission.

3.3 Automated machine learning (AutoML) in data science

Automated Machine Learning (AutoML) stands as a transformative innovation within data science, simplifying and expediting critical facets of the machine learning pipeline. Covering data understanding, engineering, feature selection, model training, and hyperparameter optimization, AutoML streamlines the process, making machine learning more approachable. It assumes the role of automating the development and implementation of machine learning models, alleviating decision-making pressures on researchers and engineers, and even extending to complex tasks such as neural network architecture design. This section explores the AutoML frameworks (Rashed et al. 2023) adopted in this study.

3.4 Lazy predict

Lazy Predict is a robust AutoML framework lauded for its proficiency in crafting a diverse array of fundamental models sourced from libraries like scikit-learn and similar resources, all achieved with minimal coding requirements. This framework plays a pivotal role in assessing model performance and illuminating the models that exhibit superior results without delving into intricate parameter tuning. Lazy Predict is invaluable for expeditiously evaluating multiple solutions simultaneously, primarily focusing on preprocessing and model training, bypassing the complexities of fine-tuning (Rashed et al. 2023).

3.5 Tree-based Pipeline Optimization Tool (TPOT)

The TPOT represents another outstanding AutoML framework that optimizes machine learning pipelines via genetic programming. It effectively merges the strengths of hyperopt-sklearn for fine-tuning and auto-sklearn for data preprocessing. Distinguishing itself, TPOT emphasizes the simplicity and interpretability of models. Unlike its counterparts, TPOT avoids complex model ensembling, prioritizing the maintenance of straightforward models while delivering high-performance results. It is invaluable for advanced modeling tasks and streamlined, interpretable solutions (Rashed et al. 2023).

3.6 Orange AutoML

Additionally, it’s essential to recognize the pivotal role of the Orange AutoML framework within the AutoML landscape. Orange AutoML provides a user-friendly environment that streamlines the entire machine-learning process, encompassing everything from data preprocessing to model selection and evaluation. It empowers data scientists and analysts, offering the potential to leverage AutoML’s capabilities while retaining transparency and control over the process. With a wide array of machine learning algorithms and comprehensive model assessment features, Orange AutoML proves to be a versatile and invaluable asset within the AutoML arena (Rashed et al. 2023).

Collectively, these AutoML frameworks contribute significantly to democratizing machine learning, enabling a wider audience to harness the potential of data science without requiring extensive coding or manual intervention. As the AutoML field evolves, these frameworks remain indispensable tools for data professionals searching for efficient and effective solutions for complex machine-learning challenges.

3.6.1 The Bayesian Optimization Algorithm (BOA)

The Bayesian Optimization Algorithm (BOA) (Pelikan et al. 1999) is a genetic algorithm-based methodology that harnesses estimated probability distributions to generate novel candidate solutions. BOA effectively employs Bayesian networks to identify and recombine building blocks, free from any reliance on variable ordering within solution strings and independent of prior problem-specific information. Preliminary experiments have consistently showcased BOA’s superior performance compared to straightforward genetic algorithms, even as problem sizes grow and complexities in building blocks increase. What distinguishes Bayesian Optimization from Random Search and Grid Search is its ability to optimize the search process using historical performance data. Unlike the other methods, Bayesian Optimization considers past evaluations, mirroring the iterative nature of manual hyperparameter tuning. This adaptive feature incorporates insights from prior performance to make informed decisions, rendering it a highly efficient approach compared to Random Search and Grid Search, which lack this adaptability. Bayesian Optimization offers a streamlined approach to hyperparameter tuning, building a probabilistic model of the objective function (typically the validation error as a function of hyperparameters). This model guides the selection of hyperparameters to evaluate next, driven by an acquisition function that balances exploration (evaluating hyperparameters with uncertain outcomes) and exploitation (evaluating hyperparameters where the model predicts low validation error). This approach surpasses traditional grid search or random search techniques in several ways. It identifies superior hyperparameters with fewer evaluations, substantially reducing the time and computational resources needed for hyperparameter tuning. Additionally, by incorporating uncertainty into the decision-making process, Bayesian Optimization effectively avoids being trapped in local minima and has a heightened chance of locating the global optimum (Pelikan et al. 1999). At its core, Bayesian Optimization represents a systematic approach for optimizing intricate, black-box functions guided by a probabilistic objective function model. The process commences with formulating of a Gaussian Process (GP) prior, serving as a surrogate model for the objective function and represented mathematically in Eq. (8).

Where,

-

f(x) is the objective function we want to optimize.

-

m(x) represents the mean function.

-

\(k(x,x^{'})\) is the kernel function, capturing the covariance structure between points x and \(x^{'})\).

-

The GP prior reflects our prior belief about the objective function and its associated uncertainty.

Bayesian Optimization proceeds iteratively, with each iteration acquiring new data to update the GP model. This is done using a GP posterior model as in Eq. (9).

In Eq. (9):

-

\(D_n\) represents the set of observed data up to the current iteration n.

-

\(m_n(x)\) represents the mean function of the Gaussian process (GP) at input point "x" given the data \(D_n\).

-

The posterior GP model refines our understanding of the objective function based on the available data.

To guide the selection of the next sampling point, Bayesian Optimization employs an acquisition function, often denoted as \(\alpha (x)\). This function quantifies the utility of sampling at a given point x and is calculated using the current posterior distribution. One common choice for the acquisition function is the Expected Improvement (EI), which can be expressed as:

where \(x_{best}\) represents the best-observed point so far. The next sampling point, \(x_n\), is selected by maximizing the acquisition function:

Once \(x_n\) is chosen, the true objective value is observed at that point:

The iteration then continues to increment n, and this process repeats until a predetermined stopping criterion is met. The final solution selection can be based on different criteria, such as maximizing the posterior mean, finding the point with the largest EI, or choosing the point with the maximum observed value. This comprehensive approach to Bayesian Optimization efficiently explores the objective function landscape, harnessing the power of Gaussian Processes and acquisition functions, and it has applications in a wide range of fields, including hyperparameter tuning, experimental design, and automated machine learning. The basic pseudo-code for Bayesian optimization is depicted in Algorithm 1.

3.7 Performance metrics

This study utilizes various performance metrics to assess the effectiveness of deep learning (DL) and machine learning classifiers. These metrics provide a holistic evaluation, considering imbalanced datasets and predictive accuracy (Rashed Amr et al. 2024). The detailed formulations of these performance metrics is shown in Table 3 and can be defined as follows:

-

Accuracy measures the overall correctness of predictions, including true positives and true negatives, suitable for balanced datasets. Precision quantifies the accuracy of positive predictions, emphasizing true positives and helping minimize false positives, which is crucial in medical diagnoses.

-

Precision is a metric that precisely quantifies the number of accurate positive predictions, effectively gauging the accuracy concerning the minority class.

-

Recall, or true positive rate, assesses true positives in the context of actual positive instances, crucial for reducing false negatives as in fraud detection. The F1-score combines precision and recall into a single value, balancing these metrics.

-

F1-score is a statistical metric that balances precision and recall, providing a single value to assess a model’s accuracy, particularly in situations with imbalanced datasets or the need to minimize both false positives and false negatives.

-

Specificity, the false positive rate, evaluates the model’s ability to correctly identify negative cases, which is especially important for such instances.

-

AUC, the Area Under the ROC Curve, provides a comprehensive measure of the model’s ability to distinguish between different classes.

4 Materials and methods

In this section, a comprehensive overview of the materials and methodologies employed in this study is presented, outlining the critical steps and considerations involved in developing the Suicidal Visual Indicators Prediction (SVIP) Framework for automated suicide risk detection through facial image analysis. This includes a detailed examination of the datasets used, emphasizing their diversity and the ethical considerations undertaken to ensure the responsible use of image-based data in suicide research. The process of dataset preprocessing is discussed, highlighting the techniques applied to enhance image quality and consistency, which are vital for the robust performance of our deep-learning models. Following this, an in-depth description of the proposed SVIP framework is provided, elucidating its architecture, the integration of advanced machine learning techniques, and the interpretability tools used to provide insights into the model’s decision-making processes. This structured approach aims to offer a clear and thorough understanding of the research methodologies and the underlying principles guiding this study, ensuring the reliability and ethical integrity of the findings.

4.1 Used datasets

In this study, with a primary objective of predicting suicidal behavior through facial analysis, we’ve carefully curated an extensive dataset, encompassing approximately 319 images thoughtfully sourced from various social media platforms. These images represent individuals from diverse cultural backgrounds who, tragically, have lost their lives to suicide. Our dataset is thoughtfully divided into two versions: Dataset 1, containing raw and unprocessed images, and Dataset 2, consisting of meticulously cropped images.The images in our datasets, DB1 and DB2, were collected from public sources and published as news on social media platforms, and websites. The dataset contains a total of 319 images, with 199 images of people who died by suicide and 120 images of people who died normally (non-suicide). Table 4 provides a detailed breakdown of demographic information for normal (non-suicide) and suicide cases, categorized by gender, age group, and country. In terms of gender, there are more males than females in both categories, with 74 males and 46 females in the non-suicide group and 138 males and 61 females in the suicide group. Age distribution shows a higher concentration of suicide cases in the 20–29, 30–39, 60–69, 70–79, 80–89, and 90–99 age ranges, with the most significant number in the 90–99 group. Country-wise, America has the highest number of cases in both categories, followed by specific mentions of South Korea and Japan in the suicide group, and the rest categorized under “Other.”

The distribution of nationalities among suicide cases encompasses 28 different countries, with the highest number of cases from America (65 cases), followed by South Korea (17 cases) and Japan (10 cases). While the distribution among non-suicide cases involves 22 different countries, with the highest number of cases also from America (44 cases), followed by British (8 cases), and Argentine & Dutch (4 cases each). The inclusion of people from different nationalities in the dataset is commendable, as it provides a diverse representation of suicide from around the world. This diverse representation from around the world invites further research into the cultural, economic, and healthcare factors that might influence these patterns. It is important to declare that the authors consider the ethical and moral implications of working with such sensitive data. Additionally, the authors use the dataset to respect the privacy and dignity of the individuals included.

4.2 Ethical considerations and methodology in utilizing image-based data for suicide research

In the realm of suicide research, the use of image-based data presents unique opportunities and challenges. Our research leverages a dataset of images sourced primarily from news articles and websites, encompassing individuals who have tragically taken their own lives, as well as those who have not. The primary objective of this data collection is to apply neural network algorithms for classification purposes, aiming to identify patterns and features that may contribute to a deeper understanding of suicide.

However, the sensitive nature of this subject necessitates strict adherence to ethical considerations and personal rights. To ensure fair and unbiased model performance across diverse demographic groups, our datasets, DB1 and DB2, incorporate a wide range of facial images representing various ethnic backgrounds. This diversity is essential to mitigate the risk of model bias towards specific facial features or ethnicities. By including a broad spectrum of facial characteristics, we train our model to recognize and interpret a wide array of facial cues, thereby reducing the potential bias that may result from a homogeneous dataset. Moreover, this inclusion of diverse facial features is anticipated to improve the model’s overall accuracy and performance. By increasing the representation of images from different ethnic backgrounds, we aim to decrease the rates of false positives and false negatives. This approach ensures that our model can reliably assess suicide risk across individuals from diverse ethnic and cultural backgrounds.

Incorporating diverse datasets not only addresses ethical concerns but also enhances the model’s robustness and reliability. This comprehensive strategy highlights our commitment to developing an ethical and fair predictive model for effective application across various populations, ultimately improving the safety and efficacy of suicide risk assessments through facial image analysis.

We have taken every measure to ensure that our research respects the rights and dignity of the individuals involved. No actual images of the individuals were displayed or used directly in the research. Instead, we focused on extracting certain common features from these images, which were then used for classification.

The features extracted were those that were predominantly consistent across the images or those that could serve as a basis for classification. This approach ensures that the privacy and dignity of the individuals in the images are respected while still allowing for meaningful research to be conducted.

We believe in the importance of maintaining the highest ethical standards in research, especially when dealing with sensitive subjects such as suicide. Every precaution has been taken to ensure that our research respects the rights and dignity of the individuals involved. We are committed to upholding these standards in all future endeavors, ensuring that our research contributes positively to the field while respecting the individuals it seeks to understand.

4.3 Dataset preprocessing

To ensure the reliability of model predictions and address the variability and potential noise in images obtained from social media, several comprehensive image preprocessing techniques were implemented. These methods enhance the quality and consistency of the dataset, crucial for improving the performance and robustness of deep learning models used in this study. All images were resized to match the input size requirements of pre-trained models such as EfficientNetb0 and ResNet, standardizing image dimensions and ensuring compatibility and optimal performance. For the DB2 dataset, images were cropped to focus on relevant facial regions, removing unnecessary background elements and standardizing the area of interest, which helps the models concentrate on critical facial features indicative of suicide risk. Pixel values across all images were normalized to achieve uniform intensity levels, mitigating biases that may arise from variations in lighting and exposure. This normalization promotes consistency within the dataset, enhancing the accuracy of the model’s predictions. To further increase the dataset’s diversity and improve the model’s ability to generalize, augmentation techniques such as random rotations, flips, and shifts were applied. These augmentations artificially expand the dataset and help the models adapt to different orientations and conditions, making them more robust to variations in the input data. Cross-validation was utilized to split the data into multiple subsets, ensuring a reliable and robust evaluation of the model’s performance. This approach allows for consistent testing across different segments of the data, validating the models’ robustness and generalizability

The cropping process is critical to the data preparation strategy, allowing us to eliminate irrelevant background noise and focus solely on the essential data features. Importantly, we’ve intentionally refrained from using data augmentation techniques on either dataset to ensure data authenticity and minimize the risk of unintentional biases.

The face detection and cropping process, illustrated in Fig. 2, is skillfully executed through a cascade object detector, effectively using the Viola-Jones algorithm for recognizing facial components. This detector relies on a pre-trained cascade classification model known as ’FrontalFaceCART,’ tailored for identifying upright and forward-facing faces. This model comprises weak classifiers based on classification and regression tree analysis (CART) and utilizes Haar features to encode intricate facial characteristics. During the model’s training, we used an image size of [20*20], providing a solid foundation for its performance. Our research uses pre-trained image classification neural networks to harness the powerful transfer learning technique. These networks have undergone extensive training on a subset of the ImageNet database, equipping them with proficiency in various tasks, including classification and feature extraction. Feature extraction is especially pivotal for suicide detection, particularly with smaller datasets, as it offers an efficient alternative to training complete neural networks.

4.4 The proposed Suicidal Visual Indicators Prediction (SVIP) framework

Our Suicidal Visual Indicators Prediction (SVIP) Framework comprises three distinct phases, each meticulously designed to advance our research goals. The preprocessing step takes center stage in all these phases, a critical component that ensures the data is appropriately prepared for analysis. This preprocessing includes image resizing, customized to the pre-trained network’s specifications, and rigorous image inspection to verify compliance with the RGB format. For grayscale images, this process entails replicating the image three times to meet the RGB format’s three-channel requirement. Furthermore, pixel value normalization is fundamental, aligning values within the [0, 1] or [− 1, 1] range, ensuring uniformity, and mitigating potential model biases stemming from pixel intensity variations.

As shown in Fig. 3, the first phase or transfer learning approach involves evaluating twelve state-of-the-art pre-trained models across both datasets. These models encompass ResNet18, ResNet50, GoogleNet, NasNet Mobile, VGG16, DarkNet19, and DenseNet201, each evaluated under two optimizers: ADAM and RMSPROP, and determine which model produces the best performance. The process unfolds in four key phases: “Load Pre-trained Network,” “Replace final Layers,” “Model Training and testing,” and “visual explanation.” These steps collectively validate the transfer learning approach’s potential for identifying suicidal visual indicators through facial analysis. In the fine-tuning process of the pre-trained models, the final layer is substituted with a classifier that can classify two objects since the dataset consists of images with two classes (normal and suicide). Hence, the models are fine-tuned at the top layers. In the fine-tuning process, the last three layers of the models are substituted with (i) a fully connected layer, (ii) a Softmax activation layer, and (iii) a classification layer (cross-entropy). Table 5 presents the standard hyperparameter configurations used during the training and evaluation of the models.

Furthermore, we implement a fivefold cross-validation strategy in the model training and evaluation process. This method involves splitting the dataset into five subsets and conducting five training and testing rounds, each subset serving as training and testing data once. Cross-validation enhances the accuracy of model performance estimation, minimizes variance, and streamlines effective parameter tuning, ultimately contributing to a more dependable and steady diagnostic system. Afterward, we enter the evaluation stage, where the effectiveness and robustness of our models are meticulously examined. Here, we leverage a diverse array of performance metrics, encompassing accuracy, precision, recall, F1-score, and more, to gain a comprehensive understanding of our models’ performance and their ability to identify suicidal behavior accurately. This multifaceted evaluation process ensures that our models meet the highest standards for suicide risk detection. Ultimately, the synergy of occlusion specificity, Grad-CAM, and Lime techniques augments our model’s comprehension of its output. This flexible and scalable approach eradicates the need for manual hyperparameter adjustments and opens up possibilities for the early detection of suicidal tendencies. By integrating Grad-CAM, occlusion specificity, and Lime methods into our analysis, we delve deeper into the feature extraction capabilities of our models. These methodologies facilitate a meticulous examination of the models’ proficiency in identifying and leveraging essential dataset features, thereby boosting both their performance and interpretive capacities.

In the second phase, we rank the adopted deep learning networks based on their performance results, selecting the best-performing ones for feature extraction across both datasets. This step leverages the strength of deep learning to uncover intricate patterns and subtle cues in the data, which are critical for identifying suicide risk indicators. Subsequently, we seamlessly integrate AutoML tools, such as Lazy Predict, TPOT, and Orange, into our pipeline, streamlining model development and enabling parallel exploration of multiple models. We achieve several key advantages by integrating these AutoML tools into our pipeline. Firstly, it significantly expedites the model development process by automating the generation of multiple models, saving us time and effort. Secondly, it allows us to explore and evaluate many models in parallel, optimizing the subsequent phases of our analysis. This parallel exploration of models enhances our ability to select the best-performing models and ensures we can identify suicidal visual indicators with the highest accuracy and reliability. As we transition to the training and testing phase, these AutoML-generated models undergo meticulous fine-tuning, ensuring their alignment with the specific task of identifying suicidal visual indicators in our datasets. This fine-tuning is pivotal in refining the models’ performance, making them adept at accurate and reliable predictions. Finally, we enter the evaluation stage and apply the same metrics in the first phase.

In the third phase, we continue the methodical progression of our analysis by selecting the most suitable machine learning techniques and performing hyperparameter optimization. This phase is pivotal to refining and optimizing our predictive models for identifying suicidal visual indicators ensuring their accuracy, reliability, and robustness. Building on the foundations laid in the previous phases, we begin by selecting the best deep-learning networks based on the performance results achieved in the feature extraction phase. These networks have consistently proven their efficiency in capturing intricate patterns and cues within the datasets, making them the ideal choice for feature selection. The next crucial step involves ranking the machine-learning techniques employed in the previous phase. These techniques are assessed based on performance, allowing us to discern which ones have demonstrated superior capabilities in identifying suicidal visual indicators. We select the best-performing techniques to ensure the highest possible accuracy in our predictions. One of the key advantages of this phase is the incorporation of hyperparameter optimization, specifically utilizing Bayesian optimization techniques. Bayesian optimization, a probabilistic model-based optimization approach, enables us to systematically search the hyperparameter space to find the most effective configurations. This process is invaluable in fine-tuning our models, ensuring that they are precisely adjusted to the specific requirements of the task. The subsequent train and test step is where the selected machine learning techniques, enhanced by hyperparameter optimization, are rigorously tested. The models are trained on the prepared datasets, and their performance is evaluated using rigorous testing methodologies to ensure their accuracy and reliability. Finally, in the evaluation step, the effectiveness of our models is comprehensively assessed using the same adopted metrics in the first phase.

The advantages of this third phase are multifold. First, we ensure our models are built on a strong foundation by selecting the most efficient machine-learning techniques. The integration of hyperparameter optimization significantly enhances the models’ performance by fine-tuning them to deliver the highest accuracy and reliability. This phase not only contributes to the overall robustness of our models but also plays a vital role in our mission to provide an effective and efficient solution for the early detection of suicidal tendencies.

5 Results and discussion

In this section, we present a detailed exploration of the results and engage in a comprehensive discussion of our research findings in the domain of suicide detection through facial analysis. Our study has encompassed various intricate phases, from meticulously curating the dataset and developing advanced models to subjecting them to rigorous evaluation. Here, we unveil the performance metrics and delve into an in-depth analysis, collectively shedding light on the effectiveness and potential of our groundbreaking Suicidal Visual Indicators Prediction (SVIP) Framework. Throughout this journey, our primary goal is to underscore our significant progress in early suicide risk detection, reaffirming our work’s pioneering contribution in this critical field.

5.1 Performance evaluation of the first phase

In the initial phase, which centered around fine-tuning and transfer learning, the outcomes are detailed in Table 6. This table provides an overview of the distribution of normal and suicide cases within the training and test sets throughout the five folds. Each fold’s training set comprised 96 normal cases and 158 or 159 suicide cases, while the corresponding test set contained 24 normal cases and 40 or 39 suicide cases. This consistent distribution was meticulously upheld to preserve a balanced representation of both classes within each fold’s training and test sets.

5.1.1 Performance evaluation results using the original dataset (DB1)

Table 7 displays the performance evaluation outcomes of different models using the unaltered original dataset (DB1) without any augmentation. These models underwent training with two distinct training functions: ADAM and RMSPROP. The performance metrics assessed encompass Area Under the Curve (AUC), Accuracy, Sensitivity, Specificity, Precision, Recall, and F-measure. Among these models, the EfficientNetb0 model trained with the RMSPROP optimization function exhibited the highest level of performance, achieving an accuracy of 67.93%. Notably, ResNet18 also delivered competitive results.

5.1.2 Performance evaluation results for dataset after cropping images (DB2)

Table 8 illustrates the performance evaluation results of various deep learning models using the image-cropped dataset (DB2) without augmentation. These models underwent training with two distinct optimization functions: ADAM and RMSPROP. The data in the table reveals that the ResNet Mobile model, trained with the RMSPROP optimizer, and the ResNet50 model, also trained with RMSPROP, demonstrated the highest performance levels. The NasNet Mobile model achieved an accuracy of 65.1%, while the ResNet50 model reached an accuracy of 64.16%. These findings underscore the critical role of both model selection and optimizer choice in achieving high accuracy in suicide prediction through facial analysis.

Furthermore, in Fig. 4, you can observe the learning curve for the NasNet Mobile model trained with the RMSprop optimizer on the DB2 dataset. This curve demonstrates a consistent increase in accuracy over time, indicative of effective learning during the training process. The model’s convergence suggests a good fit to the data without indications of overfitting or underfitting.

As presented in Table 9, in the case of the DB1 dataset, the EfficientNetb0 model, when trained with the RMSPROP optimizer, exhibited the highest accuracy, reaching 0.6793. Following closely, the ResNet1 8 model, using the ADAM optimizer, achieved an accuracy of 0.6699. Shifting to the DB2 dataset, the NasNet Mobile model, with the RMSPROP optimizer, outperformed other models by achieving an accuracy of 0.651. Notably, the ResNet50 model, whether using the ADAM or RMSPROP optimizers, also delivered competitive performance, yielding an accuracy of 0.6478.

Figure 5 presents heat map visualizations utilizing GRADCAM, Occlusion Sensitivity, and LIME techniques. To enhance the interpretability of the model’s predictions, several visualization techniques are employed, providing valuable insights into the specific facial areas that influence the output. GRADCAM reveals that the model primarily focuses on a circular area encompassing the eyes and mouth. This concentration underscores the significance of these facial features, as they are crucial for conveying emotional expressions, which are integral to the model’s decision-making process. Occlusion Sensitivity heat maps highlight the importance of the nose’s opening. This finding suggests that subtle cues in this region play a significant role in the model’s predictions, indicating that less obvious facial features can also be critical for assessing emotional states. LIME visualizations indicate that the model adopts a more holistic approach, taking into account various parts of the face and their interplay. This method shows that the model does not rely solely on specific features but considers a broader context of facial regions and their interactions when making predictions. These visualization tools collectively emphasize the complexity of facial analysis in predicting emotional states. They demonstrate that multiple facial features and regions must be considered to understand the nuances of the model’s decision-making process fully. By employing GRADCAM, Occlusion Sensitivity, and LIME, the study provides a comprehensive view of how the model interprets different facial cues, enhancing the transparency and reliability of its predictions.

5.2 Performance evaluation of the second phase

Table 9 highlights our observations from the first phase, indicating that EfficientNetB0 and ResNet18 excel in DB1, while NasNet Mobile and ResNet50 lead in DB2. Therefore, these models were chosen for the second phase to extract features from both datasets, creating eight feature sets (as outlined in Table 10). Specifically, ResNet18 yielded 512 features, ResNet50 produced 2048 features, EfficientNetB0 generated 1280 features, and NasNet Mobile provided 1056 features from the input images.

Table 10 presents the accuracy of different deep learning models paired with TPOT AutoML on both DB1 and DB2 datasets. Notably, for the DB1 dataset, the ResNet18 extracted features combined with MultinomialNB achieved an impressive accuracy of 70.2%, while for the DB2 dataset, the ResNet18 extracted features paired with ExtraTreesClassifier achieved a notable accuracy of 70.12%. These results underscore the effectiveness of leveraging a combination of deep learning and TPOT AutoML techniques for suicide prediction using facial analysis. The careful selection of the deep learning model for feature extraction and the TPOT AutoML model for classification can significantly influence overall performance.

Table 11 presents the Lazy Predict results for ResNet18 extracted features on the DB1 and DB2 datasets. The top three models vary between the two datasets, given the evaluation of 27 different models. For the DB1 dataset, the BernoulliNB model excelled, achieving an accuracy of 70.31%. Conversely, for the DB2 dataset, the BaggingClassifier model achieved an accuracy of 65.63%. These findings emphasize the diverse effectiveness of various machine learning models when dealing with ResNet18 to extract features for suicide prediction. It underscores that the choice of model can significantly impact performance and should be thoughtfully selected based on the specific requirements and constraints of the task.

Table 12 displays the Lazy Predict results for ResNet50 extracted features on both the DB1 and DB2 datasets, revealing distinct top-performing models for each dataset among the 27 models evaluated. In the case of the DB1 dataset, the GaussianNB model demonstrated superior performance with an accuracy of 68.75%. On the other hand, for the DB2 dataset, both the BernoulliNB and SGDClassifier models achieved an accuracy of 65.63%. These results underscore the varying effectiveness of different machine learning models in dealing with ResNet50 to extract features for suicide prediction, highlighting the importance of model selection based on specific task requirements and constraints.

Table 13 displays the Lazy Predict results for the EfficientNetb0 extracted features on both the DB1 and DB2 datasets, revealing distinct top-performing models for each dataset among the 27 models evaluated. For the DB1 dataset, the LGBMClassifier model exhibited the highest accuracy of 0.6563. In contrast, on the DB2 dataset, the LGBMClassifier model outperformed others with an accuracy of 0.7656. These findings emphasize the varying effectiveness of different machine learning models in handling EfficientNetb0 to extract features for suicide prediction. The LGBM Classifier model consistently performs strongly on both datasets, underscoring its reliability in this context.

In Table 14, the Lazy Predict results for the NasNet Mobile extracted features on the DB1 and DB2 datasets reveal varying top-performing models from the pool of 27 evaluated models. For the DB1 dataset, the Passive Aggressive Classifier, Nearest Centroid, and BernoulliNB models achieved accuracies of 62.50%, 64.06%, and 64.06%, with the NearestCentroid and BernoulliNB models achieving the highest accuracy of 64.06%. On the DB2 dataset, the ExtraTreesClassifier, BernoulliNB, and LGBMClassifier models achieved accuracies of 65.63%, 59.38%, and 60.94%, respectively, with the ExtraTreesClassifier model surpassing others with an accuracy of 65.63%. These results underscore the varying effectiveness of different machine learning models in handling NasNet Mobile to extract features for suicide prediction, with model choice impacting performance significantly.

Table 15 presents the results obtained using the Orange tool for the ResNet18 extracted features on both the DB1 and DB2 datasets, with the highest accuracy highlighted for each dataset. In the case of the DB1 dataset, the Neural Network model achieved the highest accuracy of 66.67%. On the DB2 dataset, the SVM model surpassed other models with the highest accuracy of 68.87%. These findings emphasize the critical role of model selection in achieving high accuracy in suicide prediction tasks when working with ResNet18 to extract features.

Table 16 presents the results obtained using the Orange tool for the ResNet50 extracted features on both the DB1 and DB2 datasets, with the highest accuracy highlighted for each dataset. In the case of the DB1 dataset, both the SVM and Tree models achieved the highest accuracy of 63.52%. On the DB2 dataset, the Naïve Bayes model outperformed the other models with the highest accuracy of 65.09%. These findings emphasize the critical role of model selection in achieving high accuracy in suicide prediction tasks when working with ResNet50 to extract features.

Table 17 presents the results from the Orange tool for the EfficientNetb0 extracted features on the DB1 and DB2 datasets. The model with the highest accuracy is highlighted for each dataset. For the DB1 dataset, the SVM model achieved the highest accuracy of 62.58%. For the DB2 dataset, the SVM model outperformed the other models with the highest accuracy of 67.30%. These results underscore the importance of model selection in achieving high accuracy in suicide prediction tasks using the EfficientNetb0 to extract features.

Table 18 showcases the outcomes obtained with the Orange tool for the NasNet Mobile extracted features on the DB1 and DB2 datasets, focusing on the model achieving the highest accuracy in each dataset. In the case of the DB1 dataset, the SVM model attained the highest accuracy of 63.84%. Conversely, for the DB2 dataset, the SVM model surpassed the other models, securing the highest accuracy of 65.41%. These results emphasize the critical role of thoughtful model selection in achieving elevated accuracy in suicide prediction tasks employing NasNet Mobile to extract features.

Table 19 summarizes the highest accuracy results obtained during the second phase of our SVIP framework, which involved the combination of deep learning for feature extraction and Automated Machine Learning (AutoML) techniques. The results are indeed promising. Specifically, for the DB1 dataset using ResNet18 extracted features, the LazyPredict technique identified the BernoulliNB model as the top performer, achieving an accuracy of 70.31%. Meanwhile, for the DB2 dataset, the LazyPredict technique applied to EfficientNetb0 extracted features saw the BernoulliNB model achieve an even higher accuracy of 75.00%. These outcomes underscore the effectiveness of amalgamating deep learning and AutoML techniques in the context of suicide prediction. It’s essential to recognize that the choice of feature extraction method and model can significantly impact overall performance and should be carefully considered.

5.3 Performance evaluation of the third phase

In the third phase of our SVIP framework, deep learning is employed for feature extraction, followed by SVM or NB classifiers optimized using Bayesian optimization. This phase involves the fine-tuning of the SVM classifier’s hyperparameters, including parameters like the regularization parameter C, the kernel type, degree for the polynomial kernel, gamma value, coef0 value, shrinking parameter, probability parameter, tolerance for stopping criteria, and maximum number of iterations. The selected ranges for these hyperparameters are thoughtfully based on typical values and the specific demands of our task. Bayesian optimization, known for its efficiency in hyperparameter tuning, employs a probabilistic model to predict the classifier’s performance under various hyperparameter configurations, ultimately selecting the most promising ones for evaluation. This method significantly enhances the SVM classifier’s performance, resulting in more accurate suicide prediction. You can find an overview of the hyperparameter ranges used for tuning the SVM classifier with Bayesian Optimization during the third phase in Table 20.

The Bayesian optimization process was executed across all feature datasets with 2400 iterations for each dataset and a summary of the results is presented in Table 21:

-

1.

In the DB1 dataset, utilizing the ResNet18 model for feature extraction, the SVM model with an RBF kernel achieved an impressive accuracy of 76.56%. The optimal hyperparameters for this configuration were determined as follows: C=0.995166, degree=2.274785, gamma=0.022941, coef0=0.456272, shrinking=0.537733, probability=0.96351, tol=0.004642, and max_iter=572.3271.

-

2.

Within the DB1 dataset, leveraging the EfficientNetb0 model for feature extraction, the SVM model with a sigmoid kernel achieved the second-highest accuracy of 76.56%. The associated optimal hyperparameters were specified as C=3.576271, degree=1.182956, gamma=0.009256, coef0=0.576053, shrinking=0.960926, probability=0.914704, tol=0.004164, and max_iter=458.424.

-

3.

In the DB2 dataset, utilizing the ResNet18 model for feature extraction, the SVM model with a poly kernel achieved an impressive accuracy of 75%. The optimal hyperparameters for this configuration were determined as follows: C=10; Degree=2.580493; Gama=0.001; Coef0=1; Shrinking=0; Prob=0; Tol=10; MaxIter=885.7897.

-

4.

These findings underscore the efficacy of employing Bayesian optimization for refining the SVM model’s hyperparameters in the context of suicide prediction using diverse feature extraction techniques. It is evident that the choice of kernel type and the specific values assigned to hyperparameters can significantly influence the SVM model’s performance.”

The Bayesian optimization process was applied to the Naïve Bayes (NB) model across all feature datasets with 55 iterations for each dataset, and the results are summarized in Table 22:

-

1.

In the DB1 dataset, employing the ResNet18 model for feature extraction, the NB model achieved an accuracy of 65.11%. The optimal hyperparameter for this setting was determined as alpha=0.9956744800903781.

-

2.

Within the DB2 dataset, utilizing the ResNet50 model for feature extraction, the NB model achieved the second-highest accuracy of 67.31%. The associated optimal hyperparameter was specified as alpha=4.706837441420677.

These findings emphasize Bayesian optimization’s effectiveness in fine-tuning the NB model’s hyperparameters for suicide prediction, particularly when different feature extraction methods are employed. Notably, the alpha hyperparameter, responsible for controlling the model’s smoothing, can significantly influence the NB model’s performance.

The significance of hyperparameters for the SVM model was assessed using the RReliefF algorithm, and the results are summarized in Table 23. Notably, the top five influential hyperparameters exhibited variability depending on the dataset and feature sets under consideration. For instance, when working with the DB1 dataset and utilizing the ResNet18 model for feature extraction, the hyperparameter ’MaxIter’ emerged as the most influential. In contrast, when working with the DB2 dataset and employing the NasNet Mobile model for feature extraction, the pivotal hyperparameter was ’Probability.’ This observation underscores the pivotal role of hyperparameter tuning in enhancing the SVM model’s performance. It also highlights the need to tailor tuning strategies to the specific characteristics of the dataset and features at hand.

Figure 6 provides insight into the frequency of each hyperparameter appearing in the top five rankings across all feature datasets. Notably, ’Coef0’ emerges as the most frequently influential hyperparameter, appearing eight times, underscoring its significant impact on the SVM model’s performance in various scenarios. ’Degree’ and ’Gamma’ follow closely, each making six appearances in the top rankings. ’MaxIter’ is observed five times, while ’Tol’ and ’Probability’ each appear four times. ’Shrinking’ demonstrates influence three times, and ’C’ and ’Kernel’ have the least frequent presence, appearing only twice. These findings highlight the consistent importance of ’Coef0,’ ’Degree,’ and ’Gamma’ across different datasets and features, suggesting their prioritization in hyperparameter optimization efforts.

Based on the findings, a subsequent experiment was conducted. This experiment involved the selection of the top three most frequently occurring hyperparameters from the top five rankings across all feature datasets for Support Vector Machine (SVM) classification, namely ’coef0,’ ’degree,’ and ’gamma.’ The remaining hyperparameters were maintained at their default values. Following 600 iterations of this experiment applied to the most promising feature dataset, which comprises ResNet18 features extracted from DB1, we achieved a maximum accuracy of 0.765625. The hyperparameters that led to this optimal performance were identified as follows: ’coef0’ with a value of 0.4260177602253441, ’degree’ with a value of 1.7740598538582124, and ’gamma’ with a value of 0.02230850966205276. These results signify that optimizing the most frequently occurring hyperparameters is sufficient to achieve the best classification performance.

6 Comparison with state-of-the-art techniques

We have compared our findings with the most relevant research, and to the best of our knowledge, we are the first to focus on classifying suicide behavior using facial images. A comparison with state-of-the-art techniques is presented in Table 24, highlighting several advantages of this research:

-

1.

We emphasize image classification over video analysis, as videos may not always be available for victims, potentially saving more lives.

-

2.

Unlike some of the compared research, such as Laksana et al. (2017); Eigbe et al. (2018); Liu et al. (2022), which rely on interviews with individuals from specific nationalities and lack dataset diversity, our dataset is collected from diverse cultural backgrounds who have tragically taken their own lives. This extensive dataset mitigates the constraints of limited dataset sizes and the lack of generalizability in earlier studies.