Abstract

Research interest in autonomous control of unmanned aerial vehicles (UAVs) has increased rapidly over the past decade. They are now widely used in civilian, military, and private areas. Applications include surveillance, search and rescue, and delivery tasks. More broadly, they excel at solving problems where a significant amount of space must be covered and traveled. However, using UAVs to solve navigation problems with full autonomy necessitates the mastering of complex subtasks. A solution that includes control, planning, localization, and mapping remains an open challenge. Object-goal navigation involves the same navigation problems, with the added main objective of reaching a target object. The search and identification of this target are central to the vehicle’s navigation. This requires an understanding of what the target is and where it can be located in order to move around the scene. This paper presents a systematic literature review on object-goal navigation and its subtasks, using autonomous UAVs. Survey taxonomies were found for the tasks and methods behind navigation and target localization problems using UAVs. The review analyzed 67 articles found between 2011 and 2022 in the ACM, IEEE Xplore, WebOfScience, Science Direct, and Scopus databases. This review revealed essential issues related to autonomous navigation task dependencies. Moreover, it highlighted gaps in UAV development and framework standardization. Open challenges for autonomous UAV control in object-goal navigation call for research on methods that address problems such as autonomy level and comparison metrics, while considering safety, ethics, and legal implications.

1 Introduction

Unmanned aerial vehicles (UAVs) were first conceptualized at the beginning of the 20th century with the appearance of world conflicts (Mátyás and Máté 2019). When World War I began, aircraft were no longer used only to carry passengers; they also carried bombs. The first UAVs appeared that could fly to a target location, release the cargo, and return to base for reuse. Then, during World War II, UAVs were reduced in size, improving their distance range and control methods. In the Cold War, UAVs were used for remote sensing of distant areas, taking pictures, and sensing and jamming radio frequencies. After these historical milestones, and until the end of the ’90s, UAV technologies were improved with better and newer sensor ranges, new communication interfaces such as satellite links, and greater computational power.

In this century, many advances have improved UAV hardware technology, increasing their presence for civilian, educational, and commercial uses, among many others (Mohsan et al. 2022). An interesting research area is that focused on autonomous UAVs, where the implementation of the method is tightly constrained by their application, UAV type, and onboard sensors. For example, quadrotors are more efficient in agriculture due to their maneuverability and available visual sensors; nevertheless, carrying a payload becomes challenging (del Cerro et al. 2021). Another application for UAVs is in the Internet of Things, where communication extensions or computer power can be provided to the present devices (Liu et al. 2020).

UAVs present a significant advantage in many applications, improving a system’s performance by performing tasks autonomously (Miranda et al. 2022). Additionally, the use of UAVs improves processes where a large area must be dealt with. A well-designed autonomous system must therefore allow a vehicle to move around safely and efficiently. First, an aerial vehicle flight over permitted areas without collision requires a navigation system. This system is responsible for offering suitable trajectories using sensory information. It allows the vehicle to move around the environment, ensuring travel from point A to point B. A movement strategy that considers travel speed and safety for the vehicle and the environment is important. Additionally, the vehicle must often carry out a given mission. In that case, the navigation system must also choose the point sequence that the vehicle must follow. Finally, a navigation system able to operate autonomously requires decision-making ability. It should be capable of determining suitable trajectories without human intervention.

The current literature has outlined different challenges in achieving autonomous navigation systems (ANS) (Yasuda et al. 2020). An ANS should solve problems such as control, localization, mapping, and path planning. Previous applications would occasionally include monitoring. As such, the sensing problem should also be addressed. In this regard and for simplicity, this document defines that an ANS must carry out four principal tasks: vehicle control, path planning, environment mapping, and surrounding perception.

Vehicle control is responsible for understanding how to apply force to move the vehicle at a desired velocity. For example, Song et al. (2022) use a PID error feedback controller. The PID controller is named after its three component terms, which use output feedback to measure an error value. Proportional, integral, and derivative terms are applied to the error, that is, the difference between the current output and the desired set point. The PID controls the vehicle’s direction along three axes, using the difference between current and desired location. This, enhanced with the Newton-Euler second-order derivative equations, can be used to control motor perturbations using position information.
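The error feedback idea can be illustrated with a minimal single-axis sketch. It is not the controller of Song et al. (2022): the gains, the unit point-mass model, and the names `PID` and `simulate_axis` are assumptions chosen only to show how the three terms act on the difference between set point and measurement.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Single-axis PID controller (illustrative gains, not from the cited work)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        error = setpoint - measurement                  # difference to the set point
        self.integral += error * dt                     # integral term accumulates error
        derivative = (error - self.prev_error) / dt     # derivative term tracks error change
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_axis(pid: PID, target: float, steps: int = 2000, dt: float = 0.01) -> float:
    """Drive a hypothetical unit point mass along one axis; the PID output is the force."""
    position, velocity = 0.0, 0.0
    for _ in range(steps):
        force = pid.update(target, position, dt)
        velocity += force * dt      # unit mass, so acceleration equals force
        position += velocity * dt
    return position


final_position = simulate_axis(PID(kp=12.0, ki=8.0, kd=6.0), target=1.0)
```

In a three-axis setting, one such controller per axis would be a natural extension, each with its own gains to tune.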

Another challenge is path planning. This consists of evaluating the current vehicle state and defining where to go next. It proposes the next steps in a trajectory to reach its final location. Nitsche et al. (2020) outline a two-stage method for path planning. The first stage creates a map using UAV orientation as a graph. In the second stage, a trajectory is created based on the map. This trajectory is proposed after comparing the current UAV location against the map. The proposed path encourages the UAV to follow the same trajectory presented on the map.

Path planning and mapping go hand in hand. Spatial representation is essential for planning, and the map is used to identify and locate objects within space. One of the objects can be the vehicle itself, as it is also part of the spatial representation. As such, localization problems are also considered mapping tasks. For this task, Kumar et al. (2020) proposed an occupancy grid map method. It used cells to divide an area, each with a corresponding probability of an object being present. Additionally, it implements Simultaneous Localization and Mapping (SLAM) to locate the UAV in a given map. SLAM is widely used to perform both tasks cost-efficiently. It provides a spatial representation of the environment and UAV attitude.
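As an illustration of an occupancy grid, the sketch below stores one occupancy belief per cell and updates it with the common textbook log-odds rule. This is an assumed formulation for illustration, not necessarily the one used by Kumar et al. (2020); the class name `OccupancyGrid` and the sensor probabilities are hypothetical.

```python
import math


def logit(p: float) -> float:
    """Convert a probability into log-odds."""
    return math.log(p / (1.0 - p))


class OccupancyGrid:
    """Grid of cells, each holding the log-odds that the cell is occupied."""

    def __init__(self, width: int, height: int, prior: float = 0.5):
        self.prior_log_odds = logit(prior)
        self.log_odds = [[self.prior_log_odds] * width for _ in range(height)]

    def update(self, row: int, col: int, p_occupied: float) -> None:
        """Fuse one measurement: p_occupied is the (assumed) inverse sensor model output."""
        self.log_odds[row][col] += logit(p_occupied) - self.prior_log_odds

    def probability(self, row: int, col: int) -> float:
        """Recover the occupancy probability of a cell from its log-odds."""
        return 1.0 - 1.0 / (1.0 + math.exp(self.log_odds[row][col]))


grid = OccupancyGrid(width=3, height=3)
grid.update(1, 1, 0.7)   # first "hit" on the centre cell
grid.update(1, 1, 0.7)   # second "hit" raises the belief further
```

Working in log-odds turns repeated Bayesian updates into additions, which keeps the per-cell update cheap.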

Finally, the perception task includes all the sensory data processing required by ANS methods. For example, Mumuni et al. (2022) proposed a vision-aided navigation system. It used three deep learning methods to process image depth, vehicle ego-motion, and camera optical flow. It is therefore capable of improving depth and object-level perception in dynamic, real-world environments. The resulting representation of the environment is used for control, obstacle avoidance, mapping, and planning. Most current papers address autonomous navigation as a point-driven or area-based problem. The vehicle must reach a defined coordinate point or area, respectively.

As mentioned above, an ANS handles the vehicle’s trajectory from point A to point B. Object-goal navigation extends an ANS by seeking a target object while the vehicle travels around the scene (Batra et al. 2020). Therefore, identifying the target object is an additional problem beyond those presented in ANS. It requires object recognition and detection, which are necessary to identify the target’s location. This kind of problem is commonly solved through computer vision (CV) techniques. However, the inclusion of CV methods in ANS mainly consists of adding the detection method as an independent process. Hence, the addition of CV increases the computational cost by processing visual sensor data solely for feature acquisition.

A notable challenge in object-goal navigation is the trade-off between navigation and exploration. Since all decisions should prioritize trajectories to areas with a high probability of finding the target object, the navigation behavior will travel around the known part of the scene. In contrast, if the known part does not contain the target object, the exploration behavior should select unknown locations with a high probability of finding it. In this regard, object-goal navigation methods should focus on the target’s characteristics and where it could be found. Only a few papers implement UAVs for object-goal navigation, addressing this as an object search problem. This scarcity of UAV-based implementation for object-goal navigation is the main reason for conducting this systematic literature review (SLR).

This SLR seeks to identify the state-of-the-art object-goal navigation methods using UAVs for an emergency response scenario (Agrawal and Cleland-Huang 2021). Two main UAV types were found. The first is small and lightweight, able to fly faster with less noise. The other is large and capable of carrying a payload. A lightweight vehicle that can cover an area in a short time is desirable for monitoring emergencies. For example, in a fire monitoring task, the main objective is gathering visual information to identify the fire’s location. Likewise, a larger vehicle is desirable for transporting objects that enable early assistance in a search and rescue task. Delivering medicines and/or food becomes essential if a person is found in a zone of difficult access. In both cases, the vehicle navigation is centered on finding the target object, a fire in the first example, and a person in the second.

This document also presents a well-defined methodology. It describes the search strategy, exclusion and inclusion criteria, information extraction, and quality criteria for article evaluation (Kitchenham and Charters 2007). This SLR attempts to classify, evaluate, and interpret the available research articles on object-goal navigation using UAVs. To the best of our knowledge, no previous works were found during the review’s execution addressing object-goal navigation with UAVs. Only two surveys for UAV control regarding landing (Maitra et al. 2016) and obstacle avoidance (Sarmiento and Murphy 2018) were identified. Nevertheless, an SLR on visual navigation for mobile robots (Yasuda et al. 2020) was found during manual searching. That task shares some aspects and diagnoses presented in this review. A main common issue is the lack of standardization in the experimental setup reported by the included papers.

The rest of the document is organized as follows: Section 2 introduces the methodology used for this systematic review. It is followed by the results in Section 3 with the taxonomies discovered through the research questions. Subsequently, Section 4 discusses the literature and open challenges, with the conclusions and final remarks outlined in Section 5.

2 Methodology

The research methodology used for the systematic literature review was based on the guidelines written by Kitchenham and Charters (2007). Its main purpose was to ensure reproducibility. Additionally, the SLR aimed to add scientific value by following a search strategy. This way, all relevant aspects of the research topic should be discovered. The review protocol itself was designed to find papers addressing object-goal navigation methods using aerial vehicles. First, the main research question was defined to guide the manuscript search and establish the review’s context and limitations: “Which characteristics and methods are required to solve an object-goal navigation problem using an unmanned aerial vehicle to explore, search, and recognize a target autonomously?” From this question, some specific research questions were also formulated, as detailed in Table 1. The entire process is illustrated in Fig. 1. More details about the review protocol and execution can be found in Appendix A.

Fig. 1 Overall SLR method to obtain relevant papers and assess quality. On the left are the raw data, or papers obtained directly from the digital libraries. On the right are the selected papers, which were analyzed one by one. The results presented were obtained from quality questions and extracted metadata analysis of each paper

3 UAV-based autonomous object-goal navigation

No systematic literature review was found on UAV-based autonomous object-goal navigation between 2011 and 2022. A total of 67 articles included in this review were processed to extract relevant data. Two of them were topic-related article surveys. The first is on bio-inspired methods for autonomous landing (Maitra et al. 2016). The second is on small UAVs’ obstacle avoidance system (Sarmiento and Murphy 2018), inspired by animalistic flying behavior. Navigation-related papers and others focusing on navigation subproblems were found. The navigation-based papers try to automate the UAV’s behavior, solving the necessary tasks to move from one given point to another. Other articles address autonomous tasks such as mapping, planning, controlling, and detection. Additionally, other tasks were found that complement the previous four, as detailed next.

The evolution of research interest in the topic was the first data to be processed. Figure 2 shows an increase in interest in recent years. Most of the papers between 2020 and 2022 used a quadcopter vehicle type (38), followed by fixed-wing (19), multicopters (4), helicopters (3), and blimps (1). Some papers applied to more than one type of vehicle, as presented in Table 2.

The main difference between each UAV is based on its motion dynamics and thrust vector. Motion dynamics encompass all the principles that govern the motion of the vehicles, including the laws of physics. The vehicle’s engine produces a thrust vector on the vehicle frame, which must be tilted to translate the vehicle. Initially, the thrust vector is parallel to the earth’s surface for fixed-wing UAVs and blimps. However, the thrust vector is perpendicular to the earth’s surface for rotor-propelled vehicles. As shown in Fig. 3, each vehicle frame can rotate its orientation, illustrated by roll (\(\phi \)), pitch (\(\theta \)), and yaw (\(\psi \)) angles. The global frame is illustrated by \(E_x\), \(E_y\), and \(E_z\), representing the three-dimensional axes.
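The effect of tilting the vehicle frame on the thrust direction can be sketched with a rotation from the vehicle frame to the global frame. The Z-Y-X (yaw, pitch, roll) Euler convention, the z-up global frame, and the function names below are assumptions made for illustration; the reviewed papers may use other conventions.

```python
import math


def rotation_matrix(roll: float, pitch: float, yaw: float):
    """Body-to-global rotation R = Rz(yaw) * Ry(pitch) * Rx(roll), angles in radians."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def body_to_global(vector, roll: float, pitch: float, yaw: float):
    """Express a vehicle-frame vector in the global frame (E_x, E_y, E_z)."""
    rot = rotation_matrix(roll, pitch, yaw)
    return tuple(sum(rot[i][j] * vector[j] for j in range(3)) for i in range(3))


# Rotor vehicle: unit thrust along the body z-axis, perpendicular to the ground at rest.
hover_thrust = body_to_global((0.0, 0.0, 1.0), roll=0.0, pitch=0.0, yaw=0.0)
# A small pitch tilts part of that thrust onto E_x, which translates the vehicle.
tilted_thrust = body_to_global((0.0, 0.0, 1.0), roll=0.0, pitch=0.1, yaw=0.0)
# Fixed-wing vehicle: unit thrust along the body x-axis, redirected by a 90-degree yaw.
fixed_wing_thrust = body_to_global((1.0, 0.0, 0.0), roll=0.0, pitch=0.0, yaw=math.pi / 2)
```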

The countries with the most research output on UAV-based autonomous object-goal navigation are China (21), the USA (13), and Germany (5). Other countries and their number of publications are presented in Fig. 6. Processing this metadata allows for a better understanding of interest in the research topic. Additionally, it introduces the context for a more detailed analysis of the research questions. The following subsections present the findings of the processed data, classified into a grouping taxonomy for easier understanding.

3.1 Tasks involved

The first research question (RQ1) on the tasks involved in the object-goal navigation problems requires two primary behaviors, i.e., navigation and exploration. The main difference between these behaviors is the objective behind them. Navigation behavior was defined as the problem of displacing the vehicle from point A to point B in a fast and safe manner, while exploration behavior was defined as acquiring information from unvisited areas of the environment. As such, exploration must displace the vehicle to a more advantageous location. Only eleven articles included the described exploration behavior in their proposed method.

Fig. 3 Diagram of the principal unmanned aerial vehicle types found in the literature, with movement axes represented. \(E_x, E_y, E_z\) refer to the global frame. Roll \((\phi )\), pitch \((\theta )\), and yaw \((\psi )\) represent the vehicle frame orientation. The red arrow thrust indicates each vehicle’s initial force direction vector, with its changes allowing motion in the global frame

Fig. 4 The relationships between the main tasks required to achieve autonomous UAV navigation. The top row has the low-level tasks that must be executed in real-time for the UAV to operate safely in static or dynamic environments. The bottom row contains the high-level tasks that do not have to be processed in real-time. Nevertheless, they present information that can be insightful for the designed mission. The arrows indicate the dependencies of each task, e.g., the control task depends on perception and planning

Four main tasks were defined within those two main behaviors. These are presented in Fig. 4:

- Vehicle control regulates the actuators’ movement according to current pose and target coordinates. It requires real-time processing to ensure safety. The methods vary according to the vehicle’s motion and degree of freedom.

- Path planning determines the series of points that the vehicle must reach. Succeeding coordinates establish a trajectory for exploration or navigation behaviors. The trajectory definition should ensure safe travel to the target location.

- Scene mapping represents the vehicle’s surroundings, tagging free space, unexplored areas, and objects. This task is executed simultaneously with localization to identify the current vehicle’s pose. Methods found use different sensorial information to create a map representation.

- Environment perception processes all sensor data for proper surrounding and vehicle descriptors. It is responsible for delivering insightful information for the other tasks. Proper environment details are essential to achieve the mission’s goal.

The main tasks defined here matched those reported by Yasuda et al. (2020) in their SLR on visual navigation for mobile robots. The primary difference lies in the task of localization, which was included here as a mapping sub-task. A perception task was also included, which deals with all of the vehicle’s sensor data.

Localization was included as a sub-task because, as presented by Peake et al. (2020), object-goal navigation can be solved using just the representation of the surrounding environment. This method was based on deep reinforcement learning and used sub-regions from an environment map. The reported results show it to be more efficient than a hard-coded zigzag navigation policy.

Many applications perform localization and mapping simultaneously. SLAM presents the advantage of locating the UAV in the environment map, providing the vehicle’s positional information. Localization then represents the vehicle’s pose within the environment map. A UAV’s localization can then be interpreted as the body frame coordinates and the map as the global frame coordinates. Both are representations of the environment from different perspectives. The purpose and detail level of the representation depends directly on the task’s complexity. All the identified tasks are explained below.

As shown in Table 3, 50 papers present the control task in their proposal. In 29 papers, the vehicle must act on its actuators or motors for navigation only. In fixed-wing cases, the vehicle manipulates the wind to change the thrust direction. For rotor UAVs, different propeller velocities influence the degree of thrust used to achieve movement. The presence of obstacles or structures can present a navigational challenge to vehicle displacement.

Specific AV control tasks such as landing and aerial refueling were found. Only two papers addressed aerial refueling, where the UAV must approach a second AV. The approach maneuver must ensure the docking of a probe. Additionally, the UAV control must be steady and synchronized with the tanker. The tanker position and velocity are essential to ensure the refueling process. This task was considered only for fixed-wing UAVs.

The landing control task was found in nine papers, where the UAV is required to reach a ground position. In this task, a major difference exists between rotor-propelled and fixed-wing UAVs. The first group only needs a landing position because its thrust vector is vertical. The second group needs a landing path for touchdown, reducing the horizontal thrust vector. Therefore, the vehicle’s maneuvering should ensure the correct positioning in the landing path. Once positioned, it will reduce the thrust vector until it settles down.

Eleven papers deal with the control under exploration task. The control behavior must provide safe trajectories when in unknown environments. Therefore, vehicle control must reach undiscovered areas safely. The general purpose of exploration is to cover all available flight areas. Optionally, it can be used to search for interesting objects or areas.

The control tasks solved in four papers that address competition challenges rely upon other tasks. The perception or mapping tasks aid in locating the target’s position. This is very helpful for landing and aerial refueling. Nevertheless, for exploration, planning tasks are essential.

Only 32 articles addressed path planning tasks, as shown in Table 4. Thirteen papers present a waypoint-based path planning. The vehicle must reach a series of predefined location points. A waypoint is commonly used when the map is known or when prior information is available. This planning relies on positional information to mark a route point as visited.
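A waypoint-based plan can be sketched as a loop that heads toward the next predefined point and marks it as visited once the vehicle's position falls within an acceptance radius. The 2-D kinematic model, the step size, and the name `follow_waypoints` are hypothetical and serve only to illustrate how positional information is used to tick off route points.

```python
import math


def follow_waypoints(start, waypoints, step=0.5, acceptance_radius=0.25, max_steps=10_000):
    """Move a point vehicle through waypoints in order; return visited points and final position."""
    x, y = start
    pending = list(waypoints)
    visited = []
    for _ in range(max_steps):
        if not pending:
            break
        wx, wy = pending[0]
        dist = math.hypot(wx - x, wy - y)
        if dist <= acceptance_radius:
            # Positional information marks the route point as visited.
            visited.append(pending.pop(0))
            continue
        move = min(step, dist)          # never overshoot the waypoint
        x += move * (wx - x) / dist
        y += move * (wy - y) / dist
    return visited, (x, y)


visited, final_pose = follow_waypoints((0.0, 0.0), [(1.0, 0.0), (1.0, 1.0)])
```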

Thirteen articles perform actual trajectory planning, considering sensory inputs or a populated map. The planner then decides which location or coordinate the vehicle must reach. The main idea is to create a path to reach a target location. The trajectory chosen must be safe and as short as possible.

Seven articles deal with path planning for exploration purposes. Besides being safe, the chosen trajectory must balance unknown and known places. When the UAV revisits a previous place, it can improve the resolution or details of known objects. While going to unknown places, it can detect objects not sensed before. Surrounding spatial information is therefore helpful for successful path planning.
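One common way to express the boundary between known and unknown places is a frontier: a known free cell that touches unknown space. The sketch below finds such cells on a small grid. The cell encoding, the 4-neighbour rule, and the name `find_frontiers` are assumptions for illustration and are not taken from any specific reviewed paper.

```python
UNKNOWN, FREE, OCCUPIED = -1, 0, 1


def find_frontiers(grid):
    """Return (row, col) of every free cell with at least one unknown 4-neighbour."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            neighbours = ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
            if any(
                0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN
                for nr, nc in neighbours
            ):
                frontiers.append((r, c))
    return frontiers


example_map = [
    [FREE, FREE, UNKNOWN],
    [FREE, OCCUPIED, UNKNOWN],
    [FREE, FREE, FREE],
]
frontier_cells = find_frontiers(example_map)
```

An exploration planner could then pick among these cells, for instance the nearest one, while a navigation planner would stay within the free cells already known.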

Regarding the spatial tasks shown in Table 5, 34 papers addressed mapping or localization tasks. The mapping task creates a common spatial representation to provide surrounding information. The localization task identifies the vehicle pose within this spatial representation. A map representation requires global frame coordinates.

Only three articles presented a mapping-only task, representing coordinates for environment objects. That information is created in parallel with all other tasks, improving performance. Mapping constructs a spatial representation, with other tasks then querying that representation.

Similarly, sixteen papers deal with localization only. The main idea of localization is to infer relative coordinates from raw sensory data. Relative coordinates demand sensor landmarks to compare against, similar to direction finding. Sensed reference points enable the relative positioning of a given landmark.

Fifteen articles deal with mapping and localization simultaneously. The mapping task creates a representation of the surroundings, while the localization task determines the vehicle’s pose within that representation. This is not necessarily the same as the simultaneous localization and mapping method (SLAM). The SLAM method performs other processes to make both localization and mapping reliable. A good representation of the surroundings and vehicle localization depend on the perception task.

The perception task shown in Table 6 is essential for autonomous vehicle operations. Obstacles and targets are the two main objects that a UAV must recognize. Only 31 articles reported obstacle or target-aware methods. A two-class distinction between perception only and perception for control was found. Eight articles solve only the recognition of a given target or surrounding obstacles. The main challenge is on the target recognition side. An autonomous system needs specific descriptors to differentiate one object from another. Additionally, it requires accurate sensor data to produce these descriptors. The same challenge holds for obstacles, although descriptors can be more generic.

Twenty-three papers include the obstacles and target features in the control pipeline. Delivering insightful descriptors therefore becomes part of the vehicle state. Ideally, the perception task must deliver position or distance information, and deal with known and unknown targets and obstacles, in addition to environmental noise that can make it difficult to identify surrounding objects. Hence, the perception task requires a higher-order understanding of the environment.

The task relationship presented in Fig. 4 shows that perception is the most essential task. The perception task manages and processes all sensory data. Proper information must be delivered regardless of the environment. Aerial vehicle sensor data can range from one-dimensional to multi-dimensional. The more dimensions present, the more computationally expensive it becomes. Sometimes, better descriptors arise from multi-dimensional data. For example, visual sensors can determine the internal vehicle control state, objects in the scene, or the structure of the surroundings. Different papers address perception through diverse methods. However, most of them are probabilistic models that aim to obtain sensor information robust to noise. The richer the sensory data information flow, the better performance can be achieved.

3.2 Current methods

Answering RQ2, different methods were found that solve the tasks mentioned above. However, only a few articles addressed the navigation problem autonomously, while others did not address all of the necessary tasks. Additionally, some papers do not clarify what methods were used to solve some tasks. For example, in cases where the control method was omitted, the authors mention only the onboard control platform.

3.2.1 Control methods

Only 50 papers in Table 7 provided the implemented control method. Twenty-two of them reported the use of autopilot-based control. Pixhawk autopilot is a vehicle control hardware platform that facilitates UAV development. It has easy-to-connect peripherals and mission management. Hardware-level control, executed in real-time, ensures the vehicle’s safety and stability. Because the board is control-specific, most control tasks are solved at the hardware level. However, the board cannot process tasks that have a high computational demand. As such, this kind of solution is optimal for vehicle control only. All other high-level tasks must be implemented on another computer board. Five papers use the PX4 software and the Pixhawk 4 advanced autopilot hardware. PX4 is a software application developed for vehicle operation, performing low-level control. It usually performs a vehicle’s initial configuration and motor calibration. It can also execute more complex tasks such as mission planning.

Fourteen other articles used feedback error propagation methods, such as a Proportional-Integral-Derivative controller (PID controller) or Linear Quadratic Regulator (LQR). Two additional articles (de Plinval et al. 2017; Delamer et al. 2021) used a custom feedback controller implementation. Moreover, other articles combine the PID controller with future value prediction through transition models (Tsiourva and Papachristos 2020; Dang et al. 2020), non-linear models (Mansouri et al. 2020b, a), and artificial neural networks (Fuentes et al. 2014). Generally, an error feedback method tries to minimize or maximize the control variable until it reaches a set point value. The difference between the input and the reference value is modified according to the implemented method. In the case of the PID, the proportional, integral, and derivative operations are applied to calculate an output value. It is common to use two PID controllers to implement UAV control. One controller regulates movement along the x-axis, and the other, the y-axis. The controller’s output is always used as motor velocity. According to the error feedback method, the operations will be applied to the difference. These kinds of controllers are widely used and their hyperparameters are easily tuned. However, more than one controller is required to achieve smooth flight behaviors, which linearly increases the number of hyperparameters that must also be tuned.
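As a complement to the PID description, the LQR idea can be sketched for the simplest possible case: a scalar discrete-time system \(x_{k+1} = a x_k + b u_k\) with cost \(\sum_k (q x_k^2 + r u_k^2)\). The gain is obtained by iterating the scalar Riccati recursion until it settles. The system values and the name `scalar_dlqr` are assumptions for illustration; real UAV implementations work with multi-dimensional state vectors and matrix equations.

```python
def scalar_dlqr(a: float, b: float, q: float, r: float, iterations: int = 500):
    """Iterate the scalar discrete-time Riccati recursion; return (gain, cost-to-go weight)."""
    p = q
    for _ in range(iterations):
        p = q + a * a * p - (a * b * p) ** 2 / (r + b * b * p)
    gain = a * b * p / (r + b * b * p)   # feedback law: u = -gain * x
    return gain, p


# Hypothetical error dynamics: the error persists (a = 1) unless the control acts on it.
gain, cost_weight = scalar_dlqr(a=1.0, b=1.0, q=1.0, r=1.0)
closed_loop_pole = 1.0 - 1.0 * gain      # a - b * gain, must lie inside (-1, 1)
```

Unlike a PID, whose three gains are tuned directly, the LQR gain follows from the weights `q` and `r`, which trade tracking error against control effort.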

The other eight papers address the control task with methods such as reinforcement learning (Fu et al. 2022; Zhao et al. 2021b) and fuzzy algorithms (Al-Jarrah et al. 2013). These methods aim to develop a suitable control policy to move the vehicle around the environment. These learning methods try to map the environment observation into a desired control output. The control behavior achieved by the UAV depends directly on the cost function used. In the case of reinforcement learning, the cost function is known as the reward function, a value that models the problem’s goal. The policy obtained will depend directly on the control problem modeling. Most policies learn to go from one point to another as an end-to-end solution. Applying learning methods for UAV control has demonstrated that high-level commands can be used to achieve movement. However, the main issue is the number of samples required to solve the problem. Most of the papers found propose methods to reduce learning time and improve performance.
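To make the role of the reward function concrete, the following sketch scores one step of a point-to-point navigation episode. It is a hypothetical shaping scheme, not the reward used by any of the cited papers: the weights, the goal radius, and the name `navigation_reward` are all assumptions.

```python
import math


def navigation_reward(
    prev_pos,
    pos,
    goal,
    collided: bool,
    goal_radius: float = 0.5,
    progress_weight: float = 1.0,
    step_penalty: float = 0.01,
    collision_penalty: float = 10.0,
    goal_bonus: float = 10.0,
) -> float:
    """Reward one transition: penalize collisions, reward progress and reaching the goal."""
    if collided:
        return -collision_penalty
    dist_before = math.dist(prev_pos, goal)
    dist_after = math.dist(pos, goal)
    if dist_after <= goal_radius:
        return goal_bonus
    # Positive when the vehicle moved closer; the step penalty discourages loitering.
    return progress_weight * (dist_before - dist_after) - step_penalty


step_reward = navigation_reward((0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (5.0, 0.0, 1.0), collided=False)
```

Changing these terms changes the behavior the learned policy converges to, which is why the policy depends so directly on how the control problem is modeled.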

Eight articles present a predictive or probabilistic method for control tasks. These methods process the vehicle’s state to aid the vehicle control algorithm. Yousuf et al. (2022) implement a probability of density hypothesis to filter possible objects’ locations. Tsiourva and Papachristos (2020) propose a visual-thermal saliency model to enhance control inside an underground mine. Mansouri et al. (2020b) present a state estimator to infer the next state, constraining control inside an underground mine. Interesting dual control cycle method implementations were found in Helgesen et al. (2019) and Lugo-Cárdenas et al. (2017). The first is focused on vehicle stability and attitude control, and the second manages high-level movements. Both methods control the UAV’s behavior to reach a defined target point. They also improve vehicle movement, providing a smooth and safe trajectory. However, the application is constrained to specific environments, presenting a low generalization.

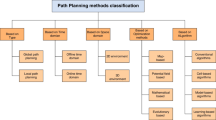

3.2.2 Planning methods

The planning methods listed in Table 8 differ with regard to the objective of each behavior. Twelve papers address the planning method as a waypoint-following problem. In this case, the vehicle must correct its pose to reach the next waypoint in the route. Most waypoint-following papers present a navigation-only behavior.

Ten papers focused on the planning method as a graph-based approach. They use a cost function over the graph’s vertices to determine which point to reach. Among the graph-based methods, several tree-search algorithms were found. They were Rapidly-exploring Random Tree (RRT) (Dang et al. 2018; Prophet and Trommer 2020), its improved version RRT* (Alarcon et al. 2021), the Dijkstra algorithm (Bershadsky and Johnson 2013), and frontier-based exploration (Bachrach et al. 2010). The main idea behind graph-based methods is to go from one node to another, traveling the shortest obstacle-free path based on vertex cost analysis between the required nodes.
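The shortest obstacle-free path idea can be sketched with the Dijkstra algorithm on a small grid graph. The 4-connected grid, the unit edge cost, and the name `dijkstra_grid` are assumptions for illustration; the cited implementations use their own graph constructions and cost functions.

```python
import heapq


def dijkstra_grid(grid, start, goal):
    """Shortest 4-connected path on a grid (0 = free, 1 = obstacle); None if unreachable."""
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0}
    parent = {}
    queue = [(0, start)]
    while queue:
        cost, cell = heapq.heappop(queue)
        if cell == goal:
            break
        if cost > dist[cell]:
            continue                      # stale queue entry, a cheaper route was found
        r, c = cell
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1       # unit cost per move (assumed)
                if new_cost < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)] = new_cost
                    parent[(nr, nc)] = cell
                    heapq.heappush(queue, (new_cost, (nr, nc)))
    if goal not in dist:
        return None
    path = [goal]
    while path[-1] != start:              # walk back from goal to start
        path.append(parent[path[-1]])
    return path[::-1]


obstacle_map = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
route = dijkstra_grid(obstacle_map, start=(0, 0), goal=(2, 0))
```

Replacing the unit cost with a term that penalizes proximity to obstacles or unexplored cells would bias the same search toward safer or more informative routes.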

Other proposed methods use genetic (Tymochko et al. 2020; Song et al. 2022) or optimization (Delamer et al. 2021; Jia et al. 2022) algorithms. An interesting approach was presented by Zhao et al. (2021b), with intermediate goals to reach the final known point. The methods in the Others category include custom implementations and mathematical models.

In summary, all planning methods use the UAV position and the problem objective to plan task executions, such as a position, velocity, or future state that must be reached. The planning methods focus on optimizing a score value given a function or constraints, to find the shortest path to a target location. Most of the planning implementations use information such as UAV position, pose, and velocity to reach a target state. However, when the target state includes the object’s location, a planning method must choose or infer where the object could be.

3.2.3 Mapping methods

Most planning methods require prior spatial information to propose a movement scheme. The mapping task solves the spatial information problem through three main types of methods. As shown in Table 9, ten papers treat the mapping task as reconstruction. They fuse sensory data to create a spatial projection for each element in the scene. Simultaneous localization and mapping (SLAM) (Bachrach et al. 2010; Bershadsky and Johnson 2013; Dang et al. 2020; Zhao et al. 2021b), a widely used navigation method, solves this reconstruction problem. It fuses internal and external sensor information to create a map with structural information such as corridors, objects, and the vehicle itself. Another method is called iterative closest point (ICP) (Li et al. 2018; Narazaki et al. 2022), which aims to improve approaches that connect a point cloud to an image obtained from sensors. This relation adds visual depth information to the map. Other map reconstruction methods are quadtree decomposition (Peake et al. 2020), oblique photogrammetry (Yang et al. 2021), and a laser-sensor-based implementation (Tsiourva and Papachristos 2020). These methods, like ICP, aim to fuse visual and depth sensor data to create a map that includes distance information about the surrounding environment structure and the objects within it.

Another mapping task category was defined as representation, with seven papers employing this method. The major difference between the representation and reconstruction methods introduced earlier is how they use sensor data. Reconstruction creates a map that includes all surrounding elements, while representation uses that mapped information to form a spatial projection of the environment. In simple terms, the representation of a map is simpler and contains only the information vital to the navigation problem. The map representation methods commonly use occupancy grid maps, which divide the scene into cells. Variations of occupancy maps add a cell priority according to a computed score (Kusnur et al. 2021) or use OctoMap (Dang et al. 2018), which relies on the octree data structure for efficiency. Other variations were implemented with SLAM for sensory data fusion (Dang et al. 2020; Zhao et al. 2021b).
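To make the occupancy grid idea concrete, the sketch below keeps one log-odds value per cell and updates it with each observation. The log-odds formulation and the 0.7/0.3 sensor model are assumptions chosen for illustration. The reviewed papers do not necessarily use these values or this update rule.

```python
import math

L_OCC = math.log(0.7 / 0.3)   # log-odds increment for a "hit" (assumed sensor model)
L_FREE = math.log(0.3 / 0.7)  # log-odds increment for a "miss" (assumed sensor model)

class OccupancyGrid:
    def __init__(self, rows, cols):
        # Log-odds 0.0 corresponds to probability 0.5 (unknown).
        self.log_odds = [[0.0] * cols for _ in range(rows)]

    def update(self, row, col, occupied):
        """Accumulate evidence for one observed cell."""
        self.log_odds[row][col] += L_OCC if occupied else L_FREE

    def probability(self, row, col):
        """Convert the stored log-odds back to an occupancy probability."""
        return 1.0 - 1.0 / (1.0 + math.exp(self.log_odds[row][col]))

grid = OccupancyGrid(2, 2)
grid.update(0, 1, occupied=True)
grid.update(0, 1, occupied=True)
grid.update(1, 0, occupied=False)
```

Repeated hits push a cell toward probability 1, misses push it toward 0, and unobserved cells stay at 0.5. A planner can then treat cells above a chosen threshold as obstacles.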

A final category for the mapping task is the reference map. These methods use environment information as a reference for spatial information. This mapping approach lets a vehicle know its position after sensing the reference information. Only two methods were found with this kind of mapping. The first (Nitsche et al. 2020) used visual landmarks to locate an interesting spatial region in the scene. The second used a radio frequency (RF) signal (Wang et al. 2017) to find objects of interest, creating a signal fingerprint of the distance between the vehicle and different objects’ beacons in the environment.

3.2.4 Localization methods

Many mapping methods, such as SLAM-based ones, require the current vehicle pose to create correct spatial information that considers distance and coordinates. However, localization relies mainly on odometry sensor data and vehicle control state to estimate the current vehicle information. Four main localization categories were found in the literature review, which are presented in Table 10.

One category, called probabilistic, included seventeen articles. They process the vehicle sensor data with probability techniques to infer an expected state. As the SLAM technique processes sensor data to correct the current vehicle pose (Bachrach et al. 2010; Bershadsky and Johnson 2013; Cocchioni et al. 2014; Dang et al. 2020; Narazaki et al. 2022; Kumar et al. 2020; Zhao et al. 2021b), it was included in this category. In addition to SLAM, some methods complemented it with probability methods such as Kalman filters. There are also variations such as extended Kalman filters (EKF) (Konrad et al. 2017; Li and Hu 2021; Mansouri et al. 2020b; Meng et al. 2019) and the Kalman consensus filter (Li et al. 2018), a non-linear observer (Helgesen et al. 2019), particle filters (Wang et al. 2017), and Monte Carlo processes (Delamer et al. 2021; Kusnur et al. 2021). The probabilistic method’s central idea is to infer the vehicle’s control state for accurate pose interpretation. This inference is optimized during execution, constantly updating over time to be noise-robust.
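The recursive estimation idea behind Kalman-type filters can be sketched with a scalar filter. This is a deliberately simplified linear, one-dimensional case with a constant-state model and illustrative noise variances. The EKF variants cited above handle non-linear, multi-dimensional vehicle states.

```python
def kalman_step(x, p, z, q=0.01, r=0.25):
    """One predict/update cycle of a scalar Kalman filter.

    x, p: prior state estimate and its variance
    z:    new measurement
    q, r: process and measurement noise variances (illustrative values)
    """
    # Predict: constant-state model, so only the uncertainty grows.
    p = p + q
    # Update: blend prediction and measurement using the Kalman gain.
    k = p / (p + r)
    x = x + k * (z - x)
    p = (1.0 - k) * p
    return x, p

# Noisy readings of a quantity whose true value is assumed to be near 1.0.
x, p = 0.0, 1.0
for z in [1.2, 0.9, 1.1, 1.0]:
    x, p = kalman_step(x, p, z)
```

Each cycle reduces the estimate variance `p` while pulling `x` toward the measurements. This matches the constant updating over time described above.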

Another category was named reference localization. This is where the vehicle pose is estimated using signal strength sensor data to define a target pose. In this category, eight articles were found. Three of them used visual references for state estimation, to reach a target state for aerial refueling (Sun et al. 2019; Zhu et al. 2018; Lee and Kim 2022). Another method used a matrix barcode (Yu et al. 2017) observed by a camera to estimate pose. The 2D barcode was created to represent an aerial vehicle’s six degrees of freedom. Similarly, two other papers use 2D ArUco markers as a visual reference to identify the target direction (Capi et al. 2021) and the landing pad position (Cho et al. 2022). Another paper presented a radio frequency-based method (Perez-Grau et al. 2021) to infer current vehicle position by processing each object’s signal strength. Each signal presents a custom identification, allowing differentiation of one beacon signal from another for correct position triangulation. Custom localization proposals were also found (Xu et al. 2013), integrating thermal images to locate points of interest used as a reference for autonomous landing. Another article presented a pose target as a reference (de Plinval et al. 2017) to obtain the current relative vehicle pose and reach the objective point.

The third localization method category was that of visual-based approaches. They process image data with or without additional odometry information to estimate current vehicle pose. Seven articles were included in this category. One custom proposal (Mao et al. 2020) uses landmark recognition and a confidence score to know the current position while traveling from one point to another. The other three articles fuse the visual and inertial information to achieve accurate state estimation. This latter method can track the vehicle’s position under motion without a map or any prior information. A final category with only one paper (Alarcon et al. 2021) relies on the autopilot system, which delegates the localization method to the onboard system.

3.2.5 Perception methods

The perception methods identified during the review were classified into four categories in Table 11. A category with nineteen papers is computer vision (CV) based approaches. CV methods use hand-crafted features to interpret sensor data, mainly from visual sensors. Hand-crafted features or descriptors were captured after being processed by different CV techniques. Descriptors highlight aspects such as color, border, textures, intensity changes, and motion. Nakamura et al. (2016) present a CV method with EKF to improve the accuracy of state estimations under a noise-robust setup. A saliency map was also used with CV techniques by Tsiourva and Papachristos (2020), highlighting the zone of interest from the visual field. Narazaki et al. (2022) proposed a Structure from Motion (SfM) method with computer vision to capture geometrical information on the surroundings.

More elaborate systems require more than one sensor processing method. For example, Bhusal et al. (2022) use CV to locate the 3D position of a flock of birds after detecting movement using convolutional neural networks (CNN). Abdulov et al. (2022) use CV methods in simple mark recognition and object detection algorithms to obtain the coordinates of specific objects. In this category, Zhang and Duan (2014) proposed a target detection method through template matching. Template matching relies on prior knowledge of the target’s features to compare against visual sensor information, aiming to locate the target correctly within the image. In this regard, Fuentes et al. (2014) proposed an entropy-based value to check how many possible interest objects are in the scene. Furthermore, they processed the image with computer vision methods to identify landmarks.
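Template matching, as described above, can be sketched by sliding the template over the image and scoring each position. The sum of squared differences used here is one common similarity measure and is an assumption of this example. The cited work may use a different score.

```python
def match_template(image, template):
    """Return ((row, col), score) of the best match by sum of squared differences."""
    ih, iw = len(image), len(image[0])
    th, tw = len(template), len(template[0])
    best_score, best_pos = None, None
    for r in range(ih - th + 1):
        for c in range(iw - tw + 1):
            # Compare the template with the image patch anchored at (r, c).
            score = sum(
                (image[r + i][c + j] - template[i][j]) ** 2
                for i in range(th)
                for j in range(tw)
            )
            if best_score is None or score < best_score:
                best_score, best_pos = score, (r, c)
    return best_pos, best_score

# Toy grayscale image with the target pattern embedded at row 1, column 1.
image = [
    [0, 0, 0, 0],
    [0, 9, 8, 0],
    [0, 7, 9, 0],
    [0, 0, 0, 0],
]
template = [
    [9, 8],
    [7, 9],
]
position, score = match_template(image, template)
```

A score of zero means an exact match. In practice, lighting and scale changes make the minimum non-zero, which is why prior knowledge of the target’s appearance matters.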

Ten articles were classified as using probabilistic methods to process sensory information. Techniques such as EKF obtain a proper state estimation from current captured sensor data. Nakamura et al. (2017) use EKF with a particle filter to achieve correct landing control.

Another category includes thirteen papers with learning methods based on the gradient-descent algorithm. These methods use artificial neural networks (ANN) to transform sensor data into another latent representation. Neural networks can learn noise-robust sensor representations, depending on the training process. Two main kinds of ANN were found: shallow fully-connected networks and convolutional neural networks (CNN). Both can process any numerical input data; however, CNNs are widely used to capture features from higher-dimensional data. Among the learning methods, Li and Hu (2021) implement a two-hierarchy CNN to achieve autonomous landing from a ground-based vision sensor. Mansouri et al. (2020b) include geometric vector representation in the CNN processing pipeline to acquire useful sensor information on the surroundings. Other methods use the YOLO detector to locate and identify different surrounding elements. Dang et al. (2018) and Alarcon et al. (2021) used YOLO (Redmon et al. 2016) mainly to identify the target object under autonomous navigation. However, in Dang et al. (2020), the detector was aided with Bayesian filters to improve the object identification and depth-related information.

A final category of custom implementations includes four papers. One uses a tracking-only method (Fu et al. 2013) to report the target’s position from visual input. Other custom implementations use the Density-Based Spatial Clustering of Applications with Noise (DBSCAN) approach (Chen and Lu 2022; Prophet and Trommer 2020) to produce surrounding information from laser data. Another method processes the sensor data as spatial-structure information (Zhao et al. 2021b) to solve the autonomous navigation problem.

3.3 Sensory vehicle information

During the review it was discovered that different authors use different sensor data for the control, perception, mapping, and planning tasks. Nevertheless, it was possible to identify that most methods use visual sensors, followed by reference, range, and position sensors. The visual sensors use any visible or invisible light spectra. The control task is the one in which more sensor types were reported, followed by localization, planning, mapping, perception (obstacle and target recognition), and exploration.

3.3.1 Sensors classification

The first sensor category, presented in Table 12, was named visual sensors. 42 articles use image signals as primary input data for their proposals. Most use RGB images in a monocular setup. Some papers combined RGB with depth image information (Chen and Lu 2022; Li et al. 2018; Zaki et al. 2017; Zhao et al. 2021b), or used RGB with thermal image information (Cocchioni et al. 2014; Dang et al. 2020). Others use a stereo vision setup combined with depth (Alarcon et al. 2021) or thermal images (Tsiourva and Papachristos 2020). Finally, other papers use only thermal images (Meng et al. 2019; Xu et al. 2013; Helgesen et al. 2019). These visual sensors were used mainly to identify landmarks, objects, or points of interest around the vehicle.

Table 13 presents the second sensor category, called reference sensors. 34 articles used sensor data that depends on a reference framework to obtain the different measurements. Almost all of these papers used inertial measurement units (IMU), which measure and report a body’s specific force. An IMU combines accelerometer and gyroscope sensors to sense motion along an aerial vehicle’s six degrees of freedom. However, Capi et al. (2021) used an accelerometer and magnetometer, while Bhusal et al. (2022) used a barometer only. In this category, only Meng et al. (2019) and Nakamura et al. (2016) complement the IMU sensor with an altimeter or magnetometer. This improves localization in autonomous landings. The altimeter measures the vehicle’s altitude in relation to sea level using atmospheric pressure as a reference. Likewise, the barometer measures the air pressure in a particular environment. The magnetometer uses magnetic north as a reference, measuring the vehicle’s heading angle offset from north. The reference sensors are important for the vehicle’s attitude control, allowing stability, localization information, and next position estimations.

A third category is that of range sensors, which are mentioned in 22 articles, presented in Table 14. Range sensors measure distance by assessing the time required for an emitted signal to return. Most articles in this category present the Light Detection and Ranging (LiDAR) sensor. The LiDAR sensor uses laser technology to measure the time the reflected light takes to return to the receiver. Other articles use Sound Navigation and Ranging (Sonar), which uses sound propagation to measure distances. Sonar technology is commonly used in maritime vehicles such as submarines. Bershadsky and Johnson (2013) and Kumar et al. (2020) combine LiDAR and Sonar sensors to achieve autonomous navigation. He et al. (2017), You et al. (2020), and Hu et al. (2022) use Radio Detection and Ranging (Radar), where radio waves determine the distance, angle, and radial velocity. These kinds of sensors were used in two main cases. The first was to identify solid objects such as walls or obstacles in the surroundings, aiming to avoid them. The second was to add or improve depth measurement from visual input. Fusing range sensors with visual input aids in accurately locating the object in the scene and projecting it into a new spatial representation.
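The time-of-flight principle shared by these range sensors reduces to a single relation: the signal travels to the reflector and back, so the range is half the round-trip time multiplied by the propagation speed. The sketch below applies it with the speed of light for LiDAR or Radar and an assumed speed of sound in air of 343 m/s for Sonar. The echo times are made-up example values.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s, for LiDAR and Radar
SPEED_OF_SOUND = 343.0          # m/s in air at about 20 °C (assumed), for Sonar

def range_from_echo(round_trip_time_s, wave_speed):
    """Distance to the reflector: the signal travels there and back."""
    return wave_speed * round_trip_time_s / 2.0

lidar_range = range_from_echo(100e-9, SPEED_OF_LIGHT)  # 100 ns echo
sonar_range = range_from_echo(0.02, SPEED_OF_SOUND)    # 20 ms echo
```

The large gap between the two propagation speeds explains why optical and radio sensors need timing electronics with nanosecond resolution, while acoustic sensors can work with millisecond timing.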

Finally, a fourth category represents the position sensors, as shown in Table 15. In this category, ten papers presented a sensor based on geo-positional information. Most depend on the Global Positioning System (GPS) to obtain the vehicle’s current position. Other papers (Delamer et al. 2021; Konrad et al. 2017) did not specify which positional system was used, defining it only as a Global Navigation Satellite System (GNSS) sensor. A GPS sensor is a specific type of GNSS sensor that relies on a satellite constellation to measure position in latitude and longitude. This kind of sensor depends on satellite availability and signal strength, requiring signals from several satellites (at least four for a three-dimensional position fix) to work.

3.3.2 Vehicle control state

Some articles reported vehicle state information using the sensor data mentioned above. These are presented in Table 16. In 34 of these articles there were no details regarding the state, or it was impossible to determine. Another fourteen papers did not require state information because they presented a no-control proposal. Only 30 articles successfully described the vehicle state.

Seventeen articles include the vehicle position as two- or three-axis coordinates in an absolute system or calculated from a reference system. Another eight papers considered the use of vehicle orientation as essential, indicating the vehicle pose with a reference system. Movement information was found in fourteen papers, where the authors used the velocity, acceleration, or attitude data in the control state. Additional relative information was reported in ten papers with data such as gyroscope, acceleration, or heading angle. This relative information was measured in relation to a central defined value. Furthermore, five articles used information relative to the target, such as distance or direction.

Grando et al. (2020) use laser rays to achieve a collision-free proposal. In Li and Hu (2021), the authors compute the 3D coordinates of the aerial vehicle from ground cameras. For the proposal of Delamer et al. (2021), the problem was defined as a Markov decision process with two types of states. The first state is fully observable and obtained from sensory data. The second is partially observable and obtained from the vehicle’s attitude estimation. For Peake et al. (2020), the state’s definition is obtained according to the vehicle’s behavior. Both the target search and region exploration behaviors use probabilistic target detection. Additionally, there is an interesting use of the gravity direction in the state definition presented by Nitsche et al. (2020). Finally, Kusnur et al. (2021) include the timestamp as a state value.

3.4 Comparison benchmarks

For research question RQ4, distinct benchmark metrics and environment tests were found. Most papers performed a quantitative evaluation. One kind of benchmark metric found used an error rate measure. The error calculation methods found were root mean square (RMS), mean absolute error (MAE), and relative pose error (RPE). A few articles presented a custom error calculation. For example, Zhang et al. (2018) present a custom cost function, used in an optimization algorithm as a quality metric. Mao et al. (2020) proposed a bio-inspired method that compares the path integration value against a proposed path. They used MAE to quantify the difference between the proposed trajectory and the one produced by the method in three different cases. The MAE can be formalized as follows,

\[ \mathrm{MAE} = \frac{1}{n} \sum_{i=1}^{n} | e_i | \]

with \(| e_i | = | y_i - x_i |\), where \(y_i\) and \(x_i\) are the predicted and true values for \(n\) samples.

He et al. (2017) used the RMS to compare the range estimation error within a given time window. The estimation error was obtained from the difference between a baseline path and the one inferred by the methods. The RMS can be formalized as follows,

\[ \mathrm{RMS} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} e_i^2} \]

with \(n\) as the number of errors and \(e_i\) the \(i\)-th error value.
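The MAE and RMS error metrics described above can be computed directly from paired predicted and reference values. The sketch below uses the standard definitions of both metrics. The sample values are invented for illustration and do not come from any reviewed paper.

```python
import math

def mean_absolute_error(predicted, actual):
    """Average of the absolute differences |y_i - x_i|."""
    errors = [abs(y - x) for y, x in zip(predicted, actual)]
    return sum(errors) / len(errors)

def root_mean_square(errors):
    """Square root of the mean of the squared errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))

predicted = [1.0, 2.0, 4.0]  # e.g. estimated positions along one axis
actual = [1.0, 3.0, 2.0]     # e.g. baseline positions
mae = mean_absolute_error(predicted, actual)
rms = root_mean_square([y - x for y, x in zip(predicted, actual)])
```

Because RMS squares each error before averaging, it penalizes large deviations more strongly than MAE. The two metrics can therefore rank the same methods differently.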

The Nitsche et al. (2020) proposal uses the RPE metric to evaluate a visual odometry method. This evaluation is performed by comparing the proposed vehicle trajectory against a baseline path. The error value represents the vehicle pose’s drift.

The trajectory-related metrics are commonly implemented together with SLAM. As presented previously, SLAM can identify the vehicle pose to get the current trajectory (Prokhorov et al. 2019). Other quantitative benchmark metrics used were success ratio (Bhusal et al. 2022) and execution time (Zhao et al. 2021b; Perez-Grau et al. 2021). The success ratio indicates how often the vehicle achieves the expected behavior. The execution time indicates how long it takes to solve the problem in simulated and real-world scenarios.

A learning curve was used for articles with learning-based methods (Zhao et al. 2021a; Grando et al. 2020; Peake et al. 2020; Delamer et al. 2021), illustrating the outcome’s evolution over time. Other learning methods use deep learning metrics for problems such as classification (Zaki et al. 2017) and object detection (Albanese et al. 2022; Narazaki et al. 2022). As the methods implemented differ, the error rate was applied to different quantities, such as the expected trajectory, heading angle, velocity, and acceleration.

In contrast, other articles include a qualitative evaluation. They highlight the aspects where the proposal performed well and those where it did not. The qualitative assessment discussed aspects such as the performed trajectory, the visual recognition characteristics, and overall behavior stability. Lee and Kim (2022) enhanced the metric results by describing the flight route, vehicle, and target velocity in three different situations. A hyperparameter value was scaled in each situation, showing the control behavior for a refueling problem. Kumar et al. (2020) described the situation when the vehicle’s camera recognizes the object of interest. In general terms, the qualitative comments allowed for an evaluation of each method’s scope and limitations.

3.4.1 Experimental environment

Regardless of the type of metrics, each paper presented a custom experimental setup. This review divides the environments into Mathematical, Indoor, and Outdoor spaces. These are further subdivided into simulated and real-world scenarios. The mathematical space was interesting, with twelve papers that modeled the environment with Cartesian coordinates (Delamer et al. 2021; Zhao et al. 2021a). This kind of environment establishes area limits and the obstacles’ positions. Under this kind of experimental setup, how well the vehicle can avoid an obstacle and reach a given point can be observed. Other environments were in outdoor and indoor configurations, with 37 and 25 articles, respectively. The indoor scenarios are more structured environments with corridors and doors, as in Prophet and Trommer (2020) and Perez-Grau et al. (2021). Those scenarios presented a trajectory shaped like an L or an S. Conversely, in the outdoor scenarios, the trajectory depends on the altitude of flight and other landscape characteristics (Bhusal et al. 2022; Fu et al. 2022). For example, a forest area differs greatly from a city, or from other scenarios between those two. A summary of the environments can be found in Table 17.

Regarding the experimental setup, 28 papers used simulated and real-world scenarios. Most of them only described the main aspects, such as dimension, initial vehicle position, and target position. A brief description of the size and building characteristics was included for the real-world scenes. In terms of the vehicle, the brand, the model, and a brief description of commercial drones (Bachrach et al. 2010; Dang et al. 2020; Fuentes et al. 2014; Konrad et al. 2017; Li et al. 2018; Nakamura et al. 2016, 2017; Perez-Grau et al. 2021; Tsiourva and Papachristos 2020; Yu et al. 2017) were included. All articles with real-world tests include the drone sensors’ brand and model. Nevertheless, many drones were custom-built with specific parts for research purposes. Only seven articles (Al-Jarrah et al. 2013; Bachrach et al. 2010; Bhusal et al. 2022; Cho et al. 2022; Cocchioni et al. 2014; Fuentes et al. 2014; Mansouri et al. 2020b) mention the WiFi standard communication interface to transfer data between the vehicle and a ground station. Additionally, only Bachrach et al. (2010) mention the battery lifetime of the vehicle, with a 6000 mAh lithium polymer battery for 15 minutes of flying autonomy.

Nevertheless, there is no common configuration in the environment organization or extension. In this regard, an application type of the environment was categorized in Table 18 to understand the method’s objective better. The arbitrary environments are those presented for method testing purposes only, without specific details. These environments are commonly designed without obstacles or structures, and only present the target location. A structured environment is also arbitrary but with some defined characteristics like walls or corridors, limiting the navigation to certain areas. A known environment means a complete map is presented as prior information to the method. Pathway environments are those where the vehicle must follow a trajectory to reach target points. Other environments are landmark-based, from which some reference visual information is used to orient the vehicle. Dynamic and static environments were also defined as categories. A dynamic environment has different moving objects inside the scene, such as humans or other vehicles. Static environments are those where the only moving object is the flying vehicle. In this regard, only seven papers (Bhusal et al. 2022; Chen and Lu 2022; Cho et al. 2022; Lee and Kim 2022; Mumuni et al. 2022; Narazaki et al. 2022; Hu et al. 2022) report that the environment was dynamic, and all the other environments were considered static.

In general terms, no standard method or error metric application was found across the papers. Each proposal presented its own environment for a specific application. The wide variety of aspects makes it difficult to compare each approach fairly. The only exceptions were the proposals implemented in existing competitions with real-world tests, such as the International Aerial Robotics Competition 2009 (Bachrach et al. 2010) and 2011 (Bershadsky and Johnson 2013), the American Helicopter Society MAV Challenge (Nakamura et al. 2016), and the most recent AEROBOT-2021 UAV Challenge (Abdulov et al. 2022).

As with the experimental setup, there was no standard software used across all articles to run the simulated experiments, with each task presenting unique simulation requirements. Some papers used the RotorS simulation software, a Gazebo-based software focused on developing aerial vehicle research. The Gazebo software has been widely used in different robotic simulation projects. It is a simulator with broad community support, including for the development of simulated environments. However, most proposals used custom-developed or competition simulation software (Bershadsky and Johnson 2013; Nakamura et al. 2016) to execute their performance tests. Table 19 shows the main software categories found.

For object-goal navigation using UAVs, the simulation software must correctly mimic real-world physics and vehicle dynamics. The simulation software must also provide a library to gather simulated sensor data and control the simulation. The use of competition problems makes it possible to standardize the simulation scenario. Competitions define the information availability, system dynamics, and aspects evaluated. As such, a simulation software’s main requirement is the capacity to mimic a real-world problem. The imitated details and simulation complexity must remain within what the available computing system can execute.

Simulation software allows for the computation of a replica of the real world. The auxiliary software included in the papers allows for communication between the proposed method and the simulated environment. One of the most common software applications is MATLAB, a programming and numeric computing platform with an integrated development environment. The programming language used depends highly on the simulation software employed. This language makes it possible to read the sensor data and operate through the simulated actuators. Another common software is the Robot Operating System (ROS), a communication middleware widely used in developing robotics solutions. The main advantage of ROS is the encapsulation of pieces of code in different programming languages under a single communication interface. This grants modularity to the system design, even allowing for parallel communication between simulated and real environments.

Simulated environments are sometimes sufficient to develop methods for solving further real-world problems. In most cases, they must be refined or aided with other methods in the real-world environment. Therefore, some papers used specific software to run their tests, such as Google Earth (Michaelsen et al. 2011, 2012) and MAV3DSim (Lugo-Cárdenas et al. 2017). Regarding software, Song et al. (2022) use the Unreal Engine as the physics simulation engine, the same used by Fu et al. (2022) within the AirSim simulation platform. The mentioned software improves the visual representation of the simulated environment. Regardless of how much it may differ from the real environment, a simulation will improve the development of robotics solutions. Additionally, synthetic data has also been used to reduce the gap in implementing real-world solutions (Ju et al. 2022).

3.5 Differences over methods

This research question (RQ5) was mainly focused on finding differences in each paper’s proposed methods. Tables 20 and 21 show a comparison of the main proposals for the papers that solve the four tasks. This restriction was applied because these are essential tasks in UAV-based autonomous object-goal navigation. Only thirteen papers solved the control, planning, mapping, and perception tasks. The UAV column indicates the UAV type. All methods use a quadcopter. The RW? column indicates whether or not the paper presented a real-world implementation with “Y”es or “N”o. The Env & Goal column indicates the environment and goal types. An “(S)” or “(D)” indicates whether it was applied to a static or dynamic environment, respectively. The planning methods with an “(E)” represent an exploration-based approach, while the mapping methods with an “(L)” indicate a localization-based approach.

Most studies preferred to use classical feedback controllers such as PID, sophisticated model predictive controllers (MPC), and hardware-based Autopilot boards in their control methods. Classical feedback controllers are simple with an intuitive concept implemented in various applications that have low computational overhead. Nevertheless, they are unsuitable for complex systems with multiple inputs, outputs, and constraints. MPC can deal with complex systems by predicting future system behaviors and handling constraints, disturbances, and uncertainties. However, these controllers require an accurate system dynamics model and are more computationally demanding than PID. Finally, Autopilot boards such as PX4 can perform precise and accurate control under real-time constraints. Their implementation, however, is costly and complex, requiring specific hardware components and specialized knowledge.
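The low computational overhead of classical feedback control can be seen in a minimal PID sketch. The gains, the time step, and the toy plant, in which the controller output is applied directly as a climb rate, are assumptions chosen for illustration. They do not model a real UAV or any controller from the reviewed papers.

```python
class PID:
    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.previous_error = None

    def step(self, setpoint, measurement, dt):
        """Return the control output for one sampling period."""
        error = setpoint - measurement
        self.integral += error * dt
        # No derivative term on the first call, when no previous error exists.
        if self.previous_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.previous_error) / dt
        self.previous_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative

# Toy altitude hold: the command is treated directly as a climb rate (assumption).
controller = PID(kp=1.2, ki=0.1, kd=0.05)
altitude, target, dt = 0.0, 10.0, 0.1
for _ in range(600):
    climb_rate = controller.step(target, altitude, dt)
    altitude += climb_rate * dt
```

Each update costs only a few arithmetic operations and uses a single input and output. This illustrates both the simplicity noted above and the reason such controllers struggle with coupled, constrained multi-variable systems.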

For planning, some studies used the simplest hand-designed waypoint following, where a sequence of coordinates that the vehicle must reach has to be specified. Other studies used mainly graph- or tree-based methods, such as Dijkstra or Rapidly-exploring Random Tree (RRT). While it is true that both methods can be efficient and flexible in discrete or continuous domains, graph-based methods can be memory intensive in large state spaces, and tree-based methods can get stuck in local minima. Additionally, in cases where the target location is unknown, neither type of method guarantees a globally optimal path because they rely on heuristic guidance.

Most mapping methods create a surrounding representation by dividing the observed space and indicating the occupancy level of each cell or unit of representation. Occupancy grids and grid maps are the simplest types, and they can efficiently update and query occupancy information. Nevertheless, they may not be able to capture sufficiently detailed information and are unsuitable for varying resolutions or irregular environments. On the contrary, Voxblox and quadtree decomposition can create multi-resolution mapping, with the latter being adaptive. However, both require significant computational resources and may suffer from discretization artifacts or perform poorly in dynamic environments with varying occupancy. In general, all of these methods will require a higher amount of memory for large mapping spaces. Additionally, the Simultaneous Localization and Mapping (SLAM) technique can be implemented over any of those methods to locate the UAV on the spatial representation. Most of the time, SLAM is aided by visual-inertial methods for tracking the UAV path within the map.

Most perception methods use computer vision (CV) techniques to extract useful features about the surroundings. Some use handcrafted features extracted by specifically designed kernels, similar to spatial-structure information. These methods can deliver enough information about the processed sensor data; however, developing such kernels requires considerable expertise. Others use pre-trained classifiers or object detection models such as YOLO to deliver insightful information for the mapping or planning stages. The main advantage of this is that the extraction of useful information can be learned from previously presented data; nevertheless, that data must be prepared and labeled to correctly extract useful features.

Another aspect was the problem formulation presented in Table 22. Most of the articles tackle UAV control as a navigation-only problem, where the vehicle must move around the environment safely. However, this does not mean that the proposed methods solve the problem autonomously. Aerial-only formulation problems, such as landing and aerial refueling, were also found. In aerial-only problems, the proposed methods use visual sensors to identify the landing or fueling location they must reach to accomplish the mission. Formulating the problem as an object search was the most suitable method for object-goal navigation. Not all methods deal with the problem autonomously, although they can navigate an area searching for objects of interest. A few papers formulated the problem as exploration, attempting to move around in unknown areas. They aim to gather information on the surroundings and to better choose where the target might be. Another problem formulation includes no-control methods, mainly to recognize different elements or objects within the scene, such as landmarks or targets. Finally, other variant problem formulations were identified, such as algorithm optimization (Zhang et al. 2018), city mapping (Yang et al. 2021), position stabilization (de Plinval et al. 2017), runway detection (Nagarani et al. 2020), state estimation (Konrad et al. 2017), battery lifetime extension (Cocchioni et al. 2014), and remote guidance (Louali et al. 2017).

Another difference found between each proposal was the definition of the objective. While most proposals formulate the problem as a navigation problem, three main categories of objective definition were found. The first was point goal, which defines the target as a landmark at specific coordinates that the vehicle must reach. The second was object goal, where an object is defined as a target, and the vehicle’s final destination is the object’s location. The articles which fit into each category are shown in Table 23. However, most of the articles did not specify the type of goal employed, while some did not include vehicle control or the authors did not report it.

4 Challenges and future strategies

The research questions from this SLR allow for a better understanding of the problem. However, a future steps analysis is essential to understand what is still missing. Future studies reported in the literature identify two main scopes. One scope refers to how to improve the presented method, and another is about how to extend it. Because other studies did not mention future steps, an extension inference was made.

Only ten papers explicitly mention enhancement and extension of the proposal presented. The most recent ones wanted to address computational complexities to be more efficient (Mumuni et al. 2022; Narazaki et al. 2022). Improvements to initial solutions and data-efficient methods are also considered (Delamer et al. 2021; You et al. 2020). Other authors reported that improvements in sensor data are required (Bhusal et al. 2022). Processing better state estimation considers robust sensor data for new real-world flight tests (Prophet and Trommer 2020). Additionally, robust visual-based methods are also required in aggressive flight behavior (Bachrach et al. 2010).

Eight papers point out future method enhancements, almost all sensor-related. Among the most recent, Lee and Kim (2022) reported velocity information acquisition for temporarily unavailable data. Additionally, Cho et al. (2022) reported enhancing non-GPS-based methods for velocity and position information acquisition. Chen and Lu (2022) reported future improvements in visual-based state estimation during aggressive flight with data fusion. For Fu et al. (2022), refining the UAV kinematics characteristics should improve flight smoothness. Other authors referred to addressing limitations in sensors (Al-Jarrah et al. 2013; Bershadsky and Johnson 2013) and computational requirements (Yu et al. 2017; Xu et al. 2013).

In 26 articles, the authors discussed how to extend their proposals. Eight of these papers would like to use more complex scenarios (Hu et al. 2022; Capi et al. 2021; Mansouri et al. 2020b; Wang et al. 2017). Tests in 3D spaces (Yousuf et al. 2022; Li et al. 2018), in unknown scenarios (Perez-Grau et al. 2021), and in scenarios with more elements (Peake et al. 2020) are also included. Another eight papers want to add real-world test cases (Michaelsen et al. 2012; Sun et al. 2019; Song et al. 2022), including a real fixed-wing UAV (Lugo-Cárdenas et al. 2017). Other cases want to increase the complexity of applications that are already real-world (Alarcon et al. 2021). Others want to improve generalization across vehicle kinds (Grando et al. 2020) or include dynamic elements (Louali et al. 2017).

Among the remaining papers, some authors also suggested sensor processing extensions. Two of them seek to improve the pose estimation through certain adjustments. Li and Hu (2021) indicate using visual key points for position to pose autolanding. Nitsche et al. (2020) indicate a pose uncertainty reduction for improving map-adjustment accuracy. Alternatively, Kusnur et al. (2021) and Mao et al. (2020) recommend dealing with sensor history and multi-sensor sources. Tymochko et al. (2020) and Tsiourva and Papachristos (2020) presented interesting recommendations. The first is about an alternative to prior information in vision-based methods. In contrast, the second is about better prior information on stationary surrounding objects. Finally, Cocchioni et al. (2014) recommend the use of more positioning beacon sensors for real-time performance.

Final extensions suggest exploring more efficient models for the required tasks. For example, Albanese et al. (2022) mention DroNet and EdgeNet for navigation and surrounding perception. Jia et al. (2022) point out the multi-agent approach in dynamic environments and real-time path planning. Fuentes et al. (2014) and Fu et al. (2013) mention extending with object detection methods and other tracker algorithms, respectively. Mansouri et al. (2020b) address payload, flight time, and communication distance in real-world scenes.

Extra challenges were extrapolated from the SLR findings. It is noteworthy that only a few papers address the object-goal navigation problem. All papers were included in at least one category for macro-comparison. This SLR recommends addressing the four main tasks of control, planning, mapping, and perception under a methodology that allows the methods of each task to reuse the processed sensor information. The unified methodology should improve the computational cost and the generalization of autonomous UAV applications. A secondary recommendation addresses developing a framework for UAV navigation solutions, which should include standard comparison metrics and an environment. Additionally, it must ensure experimental replication while considering safety, ethical, and legal aspects. An extra challenge is to measure the autonomy level between different proposals.

4.1 Autonomous control

The autonomous UAV concept implies a power of self-governance in the performance of the control function. In this regard, there is a difference between automatic and autonomous flight control, with the latter presenting a higher degree of reasoning. Automatic flight provides the necessary control to operate in normal scenarios. However, it requires manual adjustment in an unstructured environment. Conversely, an autonomous flight must detect and respond to unexpected events and conditions during control. Bio-inspired methods have been proven to achieve complex behavior. Nevertheless, this does not ensure full autonomy (Millán-Arias et al. 2022).

According to Chen et al. (2009), an autonomous control architecture presents three hierarchy levels following the principle of Increasing Precision with Decreasing Intelligence (IPDI) (Saridis 1989). The lowest is the execution level, whose control algorithms have the least intelligence. In the middle is the coordination level, which acts as an interface between the other two levels, processing sensor data. At the top is the organization level, with the highest intelligence and lowest precision, including all of the decision-making methods.

Autonomous control for object-goal navigation expands these hierarchy levels into five subproblems that must be solved, as presented in Fig. 5. Each subproblem involves more than one task. For example, the SLAM method combines localization and mapping to recognize objects and the vehicle location within the environment. The subproblems must be addressed in a cascade fashion, starting from the simplest, which must be solved in real-time, and finishing with the most complex, which requires semantic information processing.

4.1.1 Spatial interpretation

The spatial interpretation problem, as an extension of localization, was defined as recognizing the vehicle’s current position using internal information only. As mentioned earlier, perception is the fundamental task that processes all sensory data to provide insightful information to the other tasks. Hence, the perception task must process specific types of sensors to deliver correct information so that the localization task can achieve an accurate position estimation. Estimating the current position facilitates the computation of how the state control must change to reach the next desired position.

The localization concept is then expanded into spatial interpretation, because the vehicle position can be interpreted as either absolute coordinates or as a relative distance. Therefore, spatial interpretation must allow the vehicle’s movement to be observed through the sensor data measurements and by recognizing the difference between one location and another within an environment. From the SLR, it can be inferred that the IMU and magnetometer are suitable sensors to solve this problem. However, further research must be carried out to confirm this. Additionally, metrics are required to measure how well this problem has been solved, and ground-truth data may need to be obtained to compare against experimental results.
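As one possible way to quantify spatial interpretation against ground truth, the sketch below computes the root-mean-square position error between an estimated and a reference trajectory, together with the relative displacement between two locations. The choice of RMSE and the function names are assumptions for illustration; the review itself does not prescribe a specific metric.

```python
import math


def position_rmse(estimated, ground_truth):
    """Root-mean-square Euclidean error between paired position samples."""
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and of equal length")
    squared_errors = [
        sum((e - g) ** 2 for e, g in zip(est, gt))
        for est, gt in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def relative_displacement(p_from, p_to):
    """Position of p_to expressed relative to p_from (component-wise)."""
    return tuple(b - a for a, b in zip(p_from, p_to))
```

The first function treats positions as absolute coordinates, whereas the second expresses one location relative to another, mirroring the two interpretations discussed above.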

4.1.2 State codification

Because the control task requires state variables, state codification becomes another relevant problem for achieving autonomous behavior. Again, the perception task plays an essential role in processing the sensor data and attempting to negotiate a unified codification of state variables with the control task. This codification must be suitable for use by the control task, which will trigger the various vehicle actuators or motors. The state codification should contain all the information required by the control task to achieve movement. The control task requires information on the current and the target states in order to achieve any movement. If no goal state is given, the vehicle must keep still.
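The requirement that the control task receives both the current and the target state, and that the vehicle keeps still when no goal is given, can be sketched as follows. The `State` fields (a 3D position and a yaw angle) and the function `control_error` are hypothetical simplifications chosen for this example; a real codification would depend on the vehicle and its sensors.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class State:
    """Assumed minimal state codification: position in meters, yaw in radians."""
    position: Tuple[float, float, float]
    yaw: float


def control_error(current: State, target: Optional[State]):
    """Return (dx, dy, dz, dyaw) that the control task should drive to zero."""
    if target is None:
        # No goal state given: zero error, so the vehicle keeps still.
        return (0.0, 0.0, 0.0, 0.0)
    dx, dy, dz = (t - c for c, t in zip(current.position, target.position))
    # Wrap the yaw difference into [-pi, pi) to take the shorter rotation.
    dyaw = (target.yaw - current.yaw + math.pi) % (2 * math.pi) - math.pi
    return (dx, dy, dz, dyaw)
```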

Sensors and metrics need to be defined to measure how well this problem is being solved and how efficient the solution is. From the state codifications found in this SLR, the UAV’s movement and position sensor data appear to be suitable for solving this problem. In terms of metrics, the state codification must be evaluated against a baseline trajectory. However, further experiments are required.

4.1.3 Future state prediction

For the vehicle to perform any movement, it requires a target state given by the planning task and further processed by the control task. This future state estimation must be addressed to allow the vehicle to move around, maximizing its efficiency in traveling from one point to another. With regard to the navigation behavior, the travel plan must reach a given location quickly and safely. For exploration behavior, the travel trajectory should select unknown locations to explore the environment. A state codification system interprets where the vehicle is in the environment. With this information, the planning task must ensure that the control task reaches all states defined in the routing schema until a final state is reached.

The sensor data required to solve this problem of state codification and spatial interpretation should capture the UAV’s current location and infer future states from it. An assessment metric for this problem must analyze the similarities between the proposed trajectories, and how far the vehicle traveled to reach a given location.
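The two quantities named above, how far the vehicle traveled and how similar the flown trajectory is to the proposed one, could be measured as in the following sketch. The nearest-waypoint deviation used here is only one simple similarity measure chosen for illustration, and both function names are hypothetical.

```python
import math


def path_length(points):
    """Total Euclidean length of a polyline given as a list of points."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def mean_deviation(flown, planned):
    """Mean distance from each flown point to its nearest planned waypoint."""
    if not flown or not planned:
        raise ValueError("both trajectories must be non-empty")
    return sum(min(math.dist(p, q) for q in planned) for p in flown) / len(flown)
```

A lower deviation indicates that the control task followed the planned states more closely, while the path length allows proposals to be compared on travel efficiency.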

4.1.4 Environment recognition

Another important problem that must be solved is the recognition of all possible elements within the environment. This problem should be addressed by the perception and mapping tasks, which process the sensor information to identify the objects and their locations. First, the perception task must recognize the object features and their labels, which can then be further processed by the mapping task. This mapping process uses the perception task’s information to assign the object data to a reference method, combining it with the current vehicle location and heading angle.

Object recognition in navigation requires visual data to identify an object’s visual characteristics, assigning a label to what is being observed. Depth information is also required to correctly identify how far the object is from the vehicle’s current position. Metrics that can measure the quality of the solution to this problem include object detection metrics, such as the mean average precision, or distance error measurements.
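Mean average precision is built on the intersection over union (IoU) between predicted and reference bounding boxes, so a minimal sketch of that building block, together with a simple distance error, is given below. The box format (x_min, y_min, x_max, y_max) and the function names are assumptions for this example.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def distance_error(estimated_m, true_m):
    """Absolute error between estimated and true object distance in meters."""
    return abs(estimated_m - true_m)
```

A detection is typically counted as correct when its IoU with a reference box exceeds a chosen threshold, and the distance error complements it by assessing the depth estimate.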

4.1.5 Target search

The most important problem to be solved by a vehicle under object-goal navigation is the location of the target. For this, the mapping and planning tasks must work towards processing the target location. The mapping task must deliver useful information about the vehicle’s surroundings to the planning task. The planning task will then trace a route to an area where the target is likely to be found. Selecting an area can be challenging because a wrong direction or semantic understanding will cause the vehicle to move away from the objective’s location. In both indoor and outdoor environments, it will drain the UAV’s battery, limiting the available search time. Another problem relates to how the vehicle will recognize which object must be found.

The sensory data required to solve this problem are those related to the environment recognition and spatial interpretation problems. A metric for quality measurement of this problem must be investigated, one possibility being the final sum of all of the aforementioned problems.

4.2 Comparison metrics