Abstract

This paper provides a systematic survey of artificial intelligence (AI) models proposed over the past decade to screen retinal diseases, which can cause severe visual impairment or even blindness. The paper covers both the clinical and technical perspectives of using AI models in hospitals to help ophthalmologists identify retinal diseases promptly in their early stages. It also evaluates various methods for identifying structural abnormalities and diagnosing retinal diseases, and it identifies future research directions based on a critical analysis of the existing literature. This comprehensive study, which reviews both conventional and state-of-the-art methods for screening retinopathy across different modalities, is unique in its scope. Additionally, this paper serves as a helpful guide for researchers who wish to work in the field of retinal image analysis.


1 Introduction

The sense of sight is one of the most important human senses. According to a 2012 report by the World Health Organization (WHO), an estimated 285 million people had visual impairment (VI) (Pascolini and Mariotti 2012). Of these, 246 million had low vision (LV) and 39 million were blind. The WHO's 2019 estimate, which also counts near-vision impairment, put the number of people with vision impairment at 2.2 billion (see Figure 1). Of these 2.2 billion cases, approximately one billion involved impairment that could have been prevented or has yet to be addressed, for example through timely detection and treatment in the early stages (World Health Organization, accessed December 24, 2022). The leading causes of visual impairment and blindness include eye diseases such as trachoma, diabetic retinopathy, corneal opacities, and glaucoma. Retinal diseases often progress slowly, and their symptoms may not be apparent to the patient in the early stages. In the United States, for example, half of the patients were found to be unaware of their retinal condition (Tham et al. 2014). Because retinal diseases are strongly associated with blindness, considerable clinical research has focused on screening for them in the early stages. Over the past decade, researchers have also introduced computer-aided screening methods. These methods help clinicians objectively extract retinal lesions and retinal layers, support reliable and accurate diagnosis of retinal diseases, and help prevent blindness.

In this paper, we review the clinical pathophysiology of different retinal diseases and how they are examined using various modalities. We also provide insight into the development of artificial intelligence (AI) methods designed to screen and grade retinal diseases using different retinal modalities, with the aim of aiding ophthalmologists in their clinical practice.

1.1 Contributions

The main contributions of this paper are two-fold:

1. To the best of our knowledge, this is the first attempt to compile a comprehensive review of clinical and machine learning methods related to screening and grading different retinal diseases, such as age-related macular degeneration (AMD), diabetic retinopathy (DR), diabetic macular edema (DME), and glaucoma.

2. This paper presents a detailed account of the acquisition principles of the fundus and optical coherence tomography (OCT) modalities in ophthalmology. It also describes how these non-invasive imaging schemes are used in clinical practice to visualize retinal abnormalities in the early stages.

The rest of the paper is organized as follows:

- Section 2 presents the related works.
- Section 3 describes the methods used to identify and discuss state-of-the-art works that satisfied the eligibility criteria.
- Section 4 describes the anatomy of the eye.
- Section 5 discusses retinal imaging modalities in detail.
- Section 6 discusses the most common retinal diseases.
- Section 7 presents the benefits of AI for health economics.
- Section 8 discusses the role of digital technologies in ophthalmology.
- Section 9 presents clinical studies related to screening retinal diseases.
- Section 10 discusses technical studies on designing AI models for screening retinal diseases.
- Section 11 highlights advanced deep learning models recently proposed to screen retinal diseases robustly and reliably.
- Section 12 sheds light on the retinal image datasets that have been publicly released to support the research community working in retinal image analysis.
- Lastly, Section 13 presents a detailed discussion of the retinal image analysis works introduced over the past decade and outlines future directions for researchers in the field.

The complete organizational framework of the paper is shown in Figure 2.

2 Related works

Retinal image analysis is a widely researched topic in which clinicians and researchers have proposed numerous methods for screening retinal diseases, especially in the early stages. Most of these works formulate clinical and machine learning/AI-based methods for screening specific retinal diseases. They also analyze the pros and cons of different modalities for accurate retinal image analysis. These works are discussed in detail in the subsequent sections. A timeline of the major contributions toward designing robust AI models for screening different retinal diseases across multi-modal imagery is shown in Figure 3.

2.0.1 Disease-specific studies

Nicholson et al. (2013) performed a systematic review of the pathogenesis of central serous chorioretinopathy (CSR).

Khalil et al. (2014) surveyed methods for detecting glaucomatous changes in fundus images, covering preprocessing, feature extraction, feature selection, and machine learning (ML) techniques.

Gupta and Karandikar (2015) surveyed automated techniques for DR diagnosis and compared algorithms that detect the various structural changes in fundus images. A total of 13 studies were included.

Das et al. (2016) reviewed the management of diabetic macular edema (DME) in Indian subjects. Muramatsu et al. (2018) surveyed the treatment of DME in Japanese subjects.

Naveed et al. (2017) presented a clinical and technical review of glaucoma diagnosis through fundus and OCT images.

Pead et al. (2019) evaluated ML and deep learning (DL) techniques for automated drusen detection in the context of AMD. The review included only studies that detected drusen in color fundus photography. A total of 14 articles were reviewed, and the comparison was limited to the ML and DL methods presented in those studies.

Araki et al. (2019) surveyed the effect of steroids in Japanese patients with CSR. Another clinical review (Van Rijssen et al. 2019) investigated different treatments for CSR, including photodynamic therapy, laser treatment, and pharmacological treatment.

Lakshminarayanan et al. (2021) reviewed automated techniques, including ML and DL approaches, for detecting DR in fundus and OCT images over a five-year period, from 2016 to 2021. A total of 114 papers from the open literature were comprehensively reviewed.

Abdullah et al. (2021) compared automated ML techniques for detecting structural changes in fundus images. The authors also discussed various public and private fundus datasets.

Sarki et al. (2020) comprehensively reviewed state-of-the-art approaches for detecting diabetic and glaucomatous changes in fundus images, including image processing, ML, and DL techniques. The authors also reported the available datasets.

Bala et al. (2021b) presented a clinical and technical survey of glaucoma diagnosis, reporting DL techniques for detecting pathological changes in fundus and OCT images.

Shahriari et al. (2022) discussed how AI is being used to screen, diagnose, and categorize DME.

2.0.2 Modality-specific studies

Abramoff et al. (2010) presented a clinical review of retinal imaging trends. The paper also summarized the most prevalent causes of blindness, including AMD, DR, and glaucoma, and it covered both 2-D fundus imaging and 3-D OCT imaging techniques.

Das and Malathy (2018) presented a clinical review of fundus imaging for detecting retinal diseases.

The study by Kafieh et al. (2013) is modality-specific. It reviewed image segmentation methods for retinal OCT images and classified them into five categories:

- methods applicable to A-scans;
- methods applicable to B-scans;
- active contour approaches;
- AI-based methods;
- methods based on 3-D graphs constructed from volumetric OCT images.

Baghaie et al. (2015) reported the major issues in OCT image analysis and discussed techniques for noise reduction, image segmentation, and registration.

Usman et al. (2017) provided an exhaustive review of classical image processing and computer vision techniques for detecting glaucoma, DR, and pathological myopia. The authors also reported the causes, symptoms, and pathological alterations of these diseases in OCT images, which can aid the development of automated systems for detecting retinal disorders. They compared the examined methods in terms of the precision of their algorithms.

Khan et al. (2019) presented a survey that is also modality-specific, covering automated techniques for extracting retinal vessels in fundus images. The techniques are categorized into supervised and unsupervised groups. Supervised approaches are further divided into ensemble classification and neural network-based approaches. Unsupervised techniques are grouped into four classes: matched filtering, mathematical morphology, multi-scale techniques, and region-growing methods.
The survey also compared the techniques that had been evaluated on publicly available datasets.

Nuzzi et al. (2021) presented a clinical review of state-of-the-art AI applications in ophthalmology, giving clinicians an overview of emerging trends.

Badar et al. (2020) also focused on a single modality, reviewing DL techniques for retinal analysis in fundus images. The review included automated disease classification methods based on retinal pathological landmarks. These methods were evaluated on publicly available datasets using accuracy, F score, sensitivity, specificity, and area under the ROC curve.

Angiography has gained popularity in ophthalmology for diagnosing ocular diseases. Boned-Murillo et al. (2022) surveyed OCT-A in diabetic subjects and reported deep learning techniques for detecting retinal vascularization.

Stolte and Fang (2020) performed a comprehensive survey of DR diagnosis covering both clinical and technical aspects. The paper also described publicly available fundus and OCT datasets and reviewed ML and DL frameworks for the detection and classification of DR. However, the fundus literature was reviewed more critically than the OCT and OCT-A literature.

The relationships between the diseases and modalities covered by the clinical and technical studies in this systematic review are illustrated as a chord diagram in Figure 4.

Many survey articles report either modality-specific studies (Vujosevic et al. 2023; Ye et al. 2023) or disease-specific studies (Iannucci et al. 2023; Srivastava et al. 2023). However, to the best of our knowledge, comprehensive surveys remain scarce. Such a survey would report the clinical and machine learning methods for screening and grading different retinal diseases, such as AMD, DR, DME, and glaucoma, across different retinal examination modalities (Table 1).

Chord diagram showing the interactions between categories in a systematic review of clinical and technical studies across multiple diseases and modalities. The diagram highlights the relationships between subcategories of diseases (e.g., glaucoma, DR, AMD, DME, Cataract, and CSR) and modalities (e.g., Fundus, OCT, OCT-A, Adaptive optics, and FFA). The thickness of the chords represents the strength of the connections between the categories, with thicker chords indicating stronger connections.

3 Methods

3.1 Timeline

This paper presents a comprehensive review of works on retinal image analysis from the past decade (i.e., from January 2013 to January 2023).

3.2 Eligibility criteria

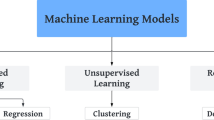

The inclusion criteria we followed were:

1. the presence of clinical or experimental findings related to retinal diseases, such as DR, glaucoma, AMD, and DME;
2. the formulation of machine learning models to extract retinal layers and biomarkers related to retinopathy;
3. machine learning models developed to detect retinal abnormalities from modalities such as fundus photography, fundus fluorescein angiography (FFA), optical coherence tomography (OCT), and OCT angiography (OCT-A).

Studies not related to the above-mentioned diseases and modalities were excluded.

3.3 Search strategy

The works presented in this paper were retrieved from internet sources, scientific reports, conference proceedings, and journal articles. The articles were searched for in public repositories such as PubMed, ScienceDirect, IEEE Xplore Digital Library, SpringerLink, and Google Scholar. The search used combinations of keywords such as diabetic retinopathy (DR), glaucoma, age-related macular degeneration (AMD), automated detection, mathematical retinal modeling, machine learning, deep learning, and advanced deep learning schemes. The search criteria were intentionally kept broad in order to encompass all peer-reviewed articles and reports that potentially met the eligibility criteria.
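Keyword combinations of this kind are usually expressed as boolean queries. The sketch below is a hypothetical illustration of how such a query might be assembled. The grouping of terms into a disease group and a method group, and the helper names `or_group` and `build_query`, are our assumptions; this is not the exact query used for this review.

```python
# Hypothetical keyword groups: the review lists these terms but does not
# publish the exact boolean combination that was used.
DISEASE_TERMS = ["diabetic retinopathy", "glaucoma",
                 "age-related macular degeneration"]
METHOD_TERMS = ["automated detection", "mathematical retinal modeling",
                "machine learning", "deep learning"]


def or_group(terms):
    """Join terms into a parenthesised OR group, quoting multi-word phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(*groups):
    """AND together one OR group per keyword group."""
    return " AND ".join(or_group(g) for g in groups)


if __name__ == "__main__":
    print(build_query(DISEASE_TERMS, METHOD_TERMS))
```

Each repository has its own query syntax (field tags, truncation, phrase handling), so a string like this would still need adapting per database.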

3.4 Study selection process

Articles found in the primary search were evaluated for eligibility based on their relevance to the research question. We followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines, as shown in Figure 5.

3.5 Data extraction and synthesis

After a thorough reading and summary of the selected publications, the key points and arguments from each paper were extracted and synthesized in a separate file. From clinical or experimental articles we extracted pathological associations; from the technical literature we extracted techniques, datasets, and results. Results include evaluation metrics such as accuracy, sensitivity, specificity, precision, recall, F1 score, and intersection over union (IoU). However, the data extracted for some of the discussed studies are limited, as we could not access their full papers.
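The reviewed studies report their results with different metrics, so it may help to see how these quantities relate. The sketch below computes them from the four counts of a binary confusion matrix, where "positive" means a diseased image or lesion pixel. The `Confusion` container and `metrics` function are illustrative names of our own, not taken from any surveyed work. Sensitivity and recall are the same quantity. For segmentation masks, IoU equals TP / (TP + FP + FN).

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    """Image- or pixel-level counts for a binary task (positive = diseased)."""
    tp: int
    fp: int
    tn: int
    fn: int


def safe_div(num, den):
    # Return 0.0 instead of raising when a denominator is zero.
    return num / den if den else 0.0


def metrics(c: Confusion) -> dict:
    sensitivity = safe_div(c.tp, c.tp + c.fn)   # identical to recall
    specificity = safe_div(c.tn, c.tn + c.fp)
    precision = safe_div(c.tp, c.tp + c.fp)
    return {
        "accuracy": safe_div(c.tp + c.tn, c.tp + c.fp + c.tn + c.fn),
        "sensitivity": sensitivity,
        "recall": sensitivity,
        "specificity": specificity,
        "precision": precision,
        # Harmonic mean of precision and recall.
        "f1": safe_div(2 * precision * sensitivity, precision + sensitivity),
        # IoU of predicted and reference masks: |A ∩ B| / |A ∪ B|.
        "iou": safe_div(c.tp, c.tp + c.fp + c.fn),
    }


example = metrics(Confusion(tp=80, fp=10, tn=90, fn=20))
```

For these toy counts, accuracy is 0.85, sensitivity 0.8, and specificity 0.9.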

4 Eye anatomy

Vision depends on the eyes. The eye is a sensory organ that perceives visual information and sends it to the brain for interpretation. It is one of the most complex structures in the human body; it is roughly spherical and consists of three layers (Willoughby et al. 2010).

The outermost layer of the eye is made up of the sclera and cornea (see Figure 6). The sclera is composed of connective tissue, which helps maintain the shape of the eye and protects the whole eyeball. The anterior-most part of the eye is the cornea, a transparent protective membrane that covers the iris and pupil. The human cornea has average horizontal and vertical diameters of 11.5 mm and 10.5 mm, respectively.

Beneath the sclera lies the choroid, which contains blood vessels that supply oxygen and nourishment to the eye (Hassan et al. 2016b). The eye's middle layer comprises the choroid, the ciliary body, and the iris. The ciliary body supports the lens and produces the aqueous humor. The iris regulates the size of the pupil, controlling the amount of light that enters the eye through contraction and relaxation (Hassan et al. 2015).

The third and innermost layer of the eyeball is the retina, which contains the light-sensitive tissue responsible for producing vision. The retina comprises two main regions: the macular region (also known as the macula) and the peripheral region (Hassan et al. 2018a). Light enters the eye through the pupil, is focused by the biconvex lens, and lands on the retina. There, photoreceptors in the macula, particularly the densely packed cones at its center (the fovea), produce central vision. The peripheral region is responsible for side vision (Raja et al. 2020c). The retina has ten layers that help translate visual data into electrical signals that are sent to the brain.
These electrical signals are transmitted from the retina to the brain through the optic nerve, which leaves the eye at the optic nerve head (ONH).

The visual information from the retina is transmitted to the brain as neural impulses, from which the brain interprets the context of the objects being viewed. There are ten layers in the retina in total (see Figure 7), and each layer has a specific function, such as transforming light into electrical signals.

The inner limiting membrane (ILM), which is made up of astrocytes and Müller cells, is the first retinal layer beneath the vitreous body. The axons of retinal ganglion cells (RGCs) form the retina's second layer, the retinal nerve fiber layer (RNFL). The approximately 1.5 million RGC axons in the human eye converge at the optic nerve head (ONH), travel through the inner and outer neural canals, and finally exit the eye and enter the brain (Medeiros et al. 2012). The lamina cribrosa (LC) and Bruch's membrane opening (BMO) refer to the inner and outer neural canals, respectively. The LC is the innermost layer of the ONH. It is a 3-D network of elastic, porous connective tissue that also contains capillaries providing nourishment to the RGCs (Park and Park 2013). Its fenestrated trabeculae create a pathway for the egress of RGC axons and vascular tissue.

The ganglion cell layer (GCL) follows the RNFL and contains the cell bodies of the ganglion cells. The inner plexiform layer (IPL) lies posterior to the GCL and contains the synaptic connections between the dendrites of ganglion, amacrine, and bipolar cells. The fifth retinal layer is the inner nuclear layer (INL), which contains the cell bodies of amacrine, bipolar, and horizontal cells. The next layer is the outer plexiform layer (OPL), a dense network of synapses between the dendrites of horizontal and bipolar cells (from the INL) and the photoreceptor cells. The outer nuclear layer (ONL) contains the rod and cone nuclei responsible for visual phototransduction.
The human retina contains approximately 7 million cones and 75–150 million rods. Cones mediate photopic (daylight) vision, whereas rods are responsible for scotopic (low-light) vision. Cones are concentrated in the fovea, while rods are distributed throughout the retina except at the fovea.

In response to light, rods and cones undergo a photochemical transformation that generates electrical signals. These signals travel first through bipolar and horizontal cells, then through amacrine and ganglion cells, and finally along the optic nerve fibers to the brain. These neural layers process the incoming image data. The photoreceptors supply unprocessed signals from individual points, which the downstream layers combine to identify more complex features, including shapes, colors, contrasts, and motion.

The nuclei and inner segments of the photoreceptor cells are separated by the outer limiting membrane (OLM). The inner and outer segments of each photoreceptor cell are found in the IS/OS layer. The retinal pigment epithelium (RPE), located between the IS/OS layer and the choroid, is the outermost layer of the retina.
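Because these layers can be delineated in OCT B-scans, segmented boundaries are commonly converted into layer-thickness measurements. The sketch below assumes that a segmentation model has already produced, for each A-scan (column) of a B-scan, the depth in pixels of each boundary. The boundary names, the toy depths, and the 4.0 µm axial pixel spacing are hypothetical placeholders, since spacing depends on the scanner. The example measures the ganglion cell complex (GCC), which spans the RNFL, GCL, and IPL and therefore runs from the ILM to the IPL/INL boundary (see Section 6.3).

```python
import numpy as np


def layer_thickness(depths_px, upper, lower, axial_um_per_px):
    """Per-A-scan and mean thickness (in µm) between two segmented boundaries.

    depths_px: dict mapping a boundary name to a 1-D sequence of row indices,
               one per A-scan (column), with depth increasing from the
               vitreous towards the choroid.
    axial_um_per_px: axial pixel spacing of the scanner (device-specific).
    """
    top = np.asarray(depths_px[upper], dtype=float)
    bottom = np.asarray(depths_px[lower], dtype=float)
    if top.shape != bottom.shape:
        raise ValueError("boundaries must have one depth per A-scan")
    if np.any(bottom < top):
        raise ValueError(f"{lower} lies above {upper} in some A-scans")
    profile = (bottom - top) * axial_um_per_px
    return float(profile.mean()), profile


# Toy boundary depths for three A-scans (illustrative values, not real data).
# The GCC spans RNFL + GCL + IPL, i.e. from the ILM to the IPL/INL boundary.
depths = {"ILM": [100, 102, 104], "IPL/INL": [130, 131, 135]}
gcc_mean_um, gcc_profile_um = layer_thickness(depths, "ILM", "IPL/INL",
                                              axial_um_per_px=4.0)
```

Returning the full thickness profile, not just the mean, allows regional thinning to be examined, which matters because glaucomatous damage is often localized.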

5 Retinal imaging modalities

Several examination schemes have been proposed to identify retinal diseases and track their progression. Fundoscopy, also known as fundus photography, is a primary non-invasive retinal imaging scheme used by ophthalmologists to examine the retinal fundus. The key areas of interest that can be evaluated through fundus photography are the macula, the optic disc region, and the central and peripheral retina.

A fundus image, however, does not reveal pathological changes or early disease development within the individual retinal layers. For this purpose, imaging techniques such as OCT/OCT-A, scanning laser polarimetry (SLP), and confocal scanning laser ophthalmoscopy (CSLO) are typically used in clinical practice. Unlike CSLO, SLP, and other examination methods, OCT assesses both the retinal layers and the ONH. OCT is also widely used to identify structural retinal abnormalities and track the progression of retinal diseases.

Some of the commonly used retinal imaging modalities are discussed in detail in the subsequent sections and summarized in Table 2.

5.1 Fundus imagery

Fundus photography is used to image the fundus of the eye (Tran et al. 2012). It relies on specialized fundus cameras, which consist of a specialized low-power microscope connected to a flash-enabled camera. The optical principle of fundus cameras is based on monocular indirect ophthalmoscopy (Fundus Photography Overview, 2022). A fundus camera typically captures a 30° to 50° view of the retinal fundus with a wide-angle lens.

Fundus photography can be performed with chromatic filters or with contrast agents such as fluorescein and indocyanine green (Fundus Photography Overview, 2022). A color fundus photograph (CFP) is acquired when the retina is illuminated with white light. Red-free fundus photography uses a filter that removes red wavelengths, so that superficial lesions and certain vascular anomalies in the retina and surrounding tissue can be clearly observed. Ophthalmologists widely use fundus images to screen for retinal diseases such as DR, glaucoma, AMD, and DME (Son et al. 2022). Because a fundus photograph is two-dimensional, it cannot visualize retinal cross-sections or the individual retinal layers.
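Red-free photography applies an optical filter at acquisition time. In retinal image analysis, a common computational analogue is to work with the green channel of a CFP, where vessels and hemorrhages usually show the highest contrast.

The sketch below assumes an RGB image stored as a NumPy array of shape (H, W, 3) in R, G, B channel order. Some libraries, such as OpenCV, load images in B, G, R order. The percentile-based contrast stretch and the function name `pseudo_red_free` are our own illustrative choices. This is a preprocessing step, not a substitute for an optically filtered photograph.

```python
import numpy as np


def pseudo_red_free(rgb, low_pct=1.0, high_pct=99.0):
    """Green channel of a color fundus image, contrast-stretched to [0, 1].

    rgb: uint8 or float array of shape (H, W, 3), channels ordered R, G, B.
    low_pct / high_pct: percentiles mapped to 0 and 1 (outliers are clipped).
    """
    rgb = np.asarray(rgb)
    if rgb.ndim != 3 or rgb.shape[2] != 3:
        raise ValueError("expected an (H, W, 3) RGB array")
    green = rgb[..., 1].astype(float)        # index 1 = green in RGB order
    lo, hi = np.percentile(green, [low_pct, high_pct])
    if hi <= lo:                             # flat image: nothing to stretch
        return np.zeros_like(green)
    return np.clip((green - lo) / (hi - lo), 0.0, 1.0)
```

Clipping at percentiles rather than at the absolute minimum and maximum keeps a few saturated pixels, such as specular reflections, from compressing the contrast of the rest of the image.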

5.2 OCT imagery

OCT is a non-invasive imaging modality that uses low-coherence light to produce high-resolution cross-sectional images of the retina (Drexler and Fujimoto 2008). Apart from ophthalmology, OCT has a wide range of clinical applications in cardiology, dermatology, oncology, and gastroenterology (Adam et al. 2007). OCT has become an essential tool for obtaining 3-D cross-sectional representations of the retina and is one of the most commonly used retinal examination methods in clinical practice.

OCT scans are acquired with a Michelson interferometer. The echo delay of light reflected from tissue is too short to time directly. Instead, the interferometer infers the depth of reflecting structures from the interference between near-infrared light returning from the sample and light returning from a reference arm. A superluminescent diode is commonly preferred as the light source because it emits a broad spectrum. The coherence length of the emitted light, which is inversely proportional to its spectral bandwidth, determines the axial resolution of the OCT scans.

There are two common ways of acquiring OCT images. The first is time-domain OCT (TD-OCT). In TD-OCT, the depth range is sampled one point at a time by shifting the position of the reference mirror, which builds a longitudinal scan, also known as an axial scan (A-scan). Each A-scan therefore requires one full mechanical displacement cycle of the reference mirror.

The use of the Fourier transform enabled the transition from conventional TD-OCT to spectral-domain OCT (SD-OCT). In SD-OCT, the reference mirror is kept fixed. A spectrometer, instead of a single detector, measures the spectral modulations caused by interference between the reference and sample reflections (Huang 2009). A Fourier transform then converts these spectral modulations into depth information to produce each A-scan.
The working schematics of both TD-OCT and SD-OCT are shown in Figure 8 (A and B), respectively.
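The SD-OCT principle can be illustrated numerically. The sketch below simulates the spectral interferogram of a single reflector and recovers its depth from the peak of the Fourier transform. It relies on several simplifying assumptions:

- the spectrum is sampled uniformly in wavenumber k;
- the source spectrum is flat across the band;
- only the interference term cos(2kz) is kept.

The block also evaluates the standard expression for axial resolution in air for a Gaussian source spectrum, l_c = (2 ln 2 / π) · λ0² / Δλ, which shows why a broadband source gives finer depth resolution. The 840 nm center wavelength and 50 nm bandwidth are illustrative values, not taken from a specific device.

```python
import numpy as np


def axial_resolution_um(center_nm, bandwidth_nm):
    """Axial resolution in air, (2 ln2 / pi) * lambda0**2 / delta_lambda,
    for a Gaussian source spectrum with FWHM bandwidth delta_lambda.
    Divide by the tissue's group refractive index for the in-tissue value."""
    return (2 * np.log(2) / np.pi) * center_nm**2 / bandwidth_nm / 1000.0


def simulate_ascan(depth_um, center_nm=840.0, bandwidth_nm=50.0, n_samples=2048):
    """Simulate the spectrum of one reflector and reconstruct its A-scan by FFT.

    depth_um: path-length offset of the reflector from the zero-delay position.
    Returns (depth_axis_um, ascan_magnitude).
    """
    lam_short = (center_nm - bandwidth_nm / 2) / 1000.0   # µm
    lam_long = (center_nm + bandwidth_nm / 2) / 1000.0    # µm
    # Assume the spectrometer output has been resampled to be linear in k.
    k = np.linspace(2 * np.pi / lam_long, 2 * np.pi / lam_short, n_samples)
    # Interference term only: DC and autocorrelation terms are omitted,
    # and the source spectrum is assumed flat across the band.
    spectrum = np.cos(2 * k * depth_um)
    ascan = np.abs(np.fft.rfft(spectrum * np.hanning(n_samples)))
    dk = k[1] - k[0]
    # A fringe cos(2 k z) has frequency z*dk/pi cycles per sample,
    # so FFT bin m corresponds to depth z = pi * m / (n_samples * dk).
    depth_axis = np.pi * np.arange(ascan.size) / (n_samples * dk)
    return depth_axis, ascan


z_axis, ascan = simulate_ascan(300.0)
estimated_depth_um = z_axis[np.argmax(ascan)]   # close to 300 µm
```

The FFT bin spacing, π/(N·dk), is approximately π divided by the sampled wavenumber span, or roughly λ0²/(2Δλ) (about 7 µm here). This is another way of seeing that spectral bandwidth limits depth resolution.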

5.3 Optical coherence tomography angiography

Optical coherence tomography angiography (OCT-A) is another non-invasive retinal examination modality. It is a dye-free, OCT-based technique that provides volumetric visualization of the retinal and choroidal vasculature (Park et al. 2016). OCT-A relies on repeated scans of the same area to detect motion, chiefly the movement of blood cells within vessels. OCT-A was first described by Makita et al. (2006) using an SD-OCT device with an A-scan rate of 18.7 kHz. With subsequent improvements in OCT hardware, higher-quality angiograms with fewer image artifacts can now be generated.

OCT-A is a novel variant of OCT that can generate high-resolution 3-D angiograms of the retina and choroid. It can also detect subretinal neovascular vessels. Ophthalmologists are increasingly turning to OCT-A to analyze changes associated with various retinal diseases. The main advantage of OCT-A over other modalities is its ability to visualize the retinal microvasculature. This greatly helps in screening diseases such as choroidal neovascularization (CNV) (Roisman and Goldhardt 2017), glaucoma (Igarashi et al. 2017), and DR (Schaal et al. 2019).
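The motion-contrast idea behind OCT-A can be sketched with an amplitude-decorrelation measure. The measure is computed over N repeated B-scans acquired at the same location:

- static tissue gives nearly identical amplitudes across repeats, so decorrelation is near 0;
- flowing blood changes the speckle pattern between repeats, so decorrelation is higher.

The formula used below is the full-spectrum amplitude decorrelation that underlies split-spectrum amplitude-decorrelation angiography (SSADA). The split-spectrum step, motion correction, and thresholding used by actual devices are omitted, and the synthetic data are purely illustrative.

```python
import numpy as np


def amplitude_decorrelation(bscans, eps=1e-12):
    """Decorrelation map from N >= 2 repeated OCT amplitude B-scans.

    bscans: array of shape (N, Z, X), acquired at the same position.
    Returns a (Z, X) array: 1 - mean over consecutive pairs of
    A_n * A_{n+1} / ((A_n**2 + A_{n+1}**2) / 2), which lies in [0, 1].
    """
    a = np.asarray(bscans, dtype=float)
    if a.ndim != 3 or a.shape[0] < 2:
        raise ValueError("need an (N, Z, X) stack with N >= 2")
    prev, nxt = a[:-1], a[1:]
    ratio = (prev * nxt) / (0.5 * (prev**2 + nxt**2) + eps)
    return 1.0 - ratio.mean(axis=0)


rng = np.random.default_rng(0)
static = np.full((4, 8, 8), 5.0)               # identical repeats: static tissue
flowing = rng.rayleigh(1.0, size=(4, 8, 8))    # independent speckle: blood flow
d_static = amplitude_decorrelation(static)     # values near 0
d_flow = amplitude_decorrelation(flowing)      # clearly above 0
```

The product-over-mean-square ratio never exceeds 1 for non-negative amplitudes, so the map is bounded. It is also insensitive to the overall brightness of a pixel, which is why decorrelation rather than raw amplitude difference is used for flow contrast.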

5.4 Multi-modal AI systems

The field of ophthalmology has recently made great strides with the development of multi-modal AI systems for retinal image processing, which are transforming the diagnosis and treatment of retinal diseases. These systems integrate data from multiple imaging modalities, including fundus photography, angiography, and optical coherence tomography (OCT). In doing so, they provide a more nuanced and comprehensive view of the complex structures of the human retina (Zhang 2023). These holistic approaches not only aid in the early diagnosis of retinal disorders but also permit a more precise characterization of diseases such as diabetic retinopathy, glaucoma, and age-related macular degeneration.

Another important development in the diagnosis and monitoring of retinal diseases is the increased accuracy of multi-modal retinal layer segmentation methods. Multi-modal AI systems can capture fine details of the retinal architecture, enabling a more thorough evaluation of the underlying pathology. These systems can also detect early indicators of pathology that clinicians may miss, for example under heavy workloads. Likewise, integrating multi-modal data can help clinicians reach a reliable retinal diagnosis and enable treatment regimens tailored to each patient's needs (Wang 2022).

The predictive capabilities of multi-modal AI systems also support a proactive approach to maintaining retinal health. By evaluating longitudinal data and identifying subtle changes over time, these methods help predict the course of a disease and its response to treatment. They also facilitate patient care and support ongoing efforts to provide preventive measures against retinal disorders (Li 2024). Overall, recent advances in multi-modal AI systems for retinal image processing are promising for ushering in a new era of precision medicine in ophthalmology and for advancing both clinical practice and research.
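One simple way to combine modalities is late fusion. Each modality is processed by its own classifier, and the per-class probabilities are then merged. The sketch below shows weighted late fusion of a fundus-model output and an OCT-model output. The class list, weights, and probability values are hypothetical. Many published multi-modal systems instead fuse learned features earlier in the network, which this sketch does not cover.

```python
import numpy as np

CLASSES = ["normal", "DR", "AMD", "glaucoma"]   # hypothetical label set


def late_fusion(prob_by_modality, weights=None):
    """Weighted average of per-modality class-probability vectors.

    prob_by_modality: dict modality -> 1-D class probabilities, all in the
                      same class order.
    weights: optional dict modality -> non-negative weight (equal if omitted).
    """
    names = list(prob_by_modality)
    probs = np.stack([np.asarray(prob_by_modality[m], dtype=float) for m in names])
    w = np.array([1.0 if weights is None else float(weights[m]) for m in names])
    if np.any(w < 0) or w.sum() == 0:
        raise ValueError("weights must be non-negative and not all zero")
    fused = (w[:, None] * probs).sum(axis=0) / w.sum()
    return fused / fused.sum()   # renormalise in case inputs do not sum to 1


# Illustrative model outputs; the OCT model is (arbitrarily) trusted twice as much.
fused = late_fusion({"fundus": [0.5, 0.3, 0.1, 0.1],
                     "oct": [0.2, 0.1, 0.6, 0.1]},
                    weights={"fundus": 1.0, "oct": 2.0})
predicted = CLASSES[int(np.argmax(fused))]   # "AMD" for these inputs
```

Late fusion keeps each single-modality model independent, so a missing modality can simply be left out of the dictionary. The trade-off is that it cannot learn interactions between modalities at the feature level.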

6 Major retinal diseases

6.1 Diabetic retinopathy

Diabetic retinopathy (DR) is a pathological condition of the eye in which elevated blood glucose levels lead to abnormalities in the retina (Klein et al. 1984). DR is a leading cause of visual impairment and blindness worldwide. It is a chronic, degenerative disease that poses a significant challenge because it is asymptomatic in its early stages. The severity of DR is determined by the number and types of lesions observable on the retinal surface. The human retina comprises diverse constituents, including blood vessels, the fovea, the macula, and the optic disc (OD).

DR is commonly categorized into two stages: non-proliferative DR (NPDR) and proliferative DR (PDR). NPDR is characterized by damage to the retinal blood vessels, which leak fluid into the retina and cause it to swell (Crick and Khaw 2003). NPDR may present with various manifestations, including microaneurysms (MAs), hemorrhages (HMs), exudates (both hard and soft), and intraretinal microvascular abnormalities (IRMA) (Robert 1995). PDR is a severe form of DR in which new, abnormal blood vessels sprout in various parts of the retina, potentially causing complete blindness.

As shown in Figure 9, NPDR lesions can be MAs, HMs, or exudates (EXs). MAs are the earliest detectable sign of DR. They form as small outpouchings of the weakened walls of the retina's tiny capillaries and appear as small, round, red dots. The rupture of MA walls results in HMs. Blot HMs are larger red lesions, while dot HMs appear as small red dots (Sjølie et al. 1997). EXs are yellow spots on the retina caused by the leakage of lipids and proteins from damaged vessels. If lipids accumulate on or close to the macula, they can cause severe and potentially permanent loss of vision.
Both MAs and HMs are classified as dark (red) lesions, while EXs are considered bright lesions (Robert 1995).
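To make the link between lesion findings and severity concrete, the sketch below encodes a simplified version of the International Clinical Diabetic Retinopathy severity scale. That scale comes from the clinical literature, not from any method surveyed here. The sketch assumes the findings come from an upstream lesion detector or a human grader, and the `DRFindings` field names are hypothetical. Real grading also considers macular edema and other details, which this sketch ignores.

```python
from dataclasses import dataclass


@dataclass
class DRFindings:
    """Lesion findings for one eye (hypothetical schema)."""
    microaneurysms: int = 0
    hemorrhages_per_quadrant: tuple = (0, 0, 0, 0)   # intraretinal HMs
    hard_exudates: bool = False
    cotton_wool_spots: bool = False
    venous_beading_quadrants: int = 0     # quadrants with definite venous beading
    prominent_irma_quadrants: int = 0     # quadrants with prominent IRMA
    neovascularization: bool = False
    vitreous_or_preretinal_hemorrhage: bool = False


def grade_dr(f: DRFindings) -> str:
    """Simplified International Clinical DR severity grade (edema ignored)."""
    if f.neovascularization or f.vitreous_or_preretinal_hemorrhage:
        return "PDR"
    # Severe NPDR: any one of the "4-2-1" features, with no signs of PDR.
    if (all(h > 20 for h in f.hemorrhages_per_quadrant)
            or f.venous_beading_quadrants >= 2
            or f.prominent_irma_quadrants >= 1):
        return "severe NPDR"
    # Moderate NPDR: more than microaneurysms alone, but short of severe.
    if (any(h > 0 for h in f.hemorrhages_per_quadrant) or f.hard_exudates
            or f.cotton_wool_spots or f.venous_beading_quadrants > 0):
        return "moderate NPDR"
    if f.microaneurysms > 0:
        return "mild NPDR"                 # microaneurysms only
    return "no apparent DR"
```

The checks run from most to least severe, so each eye receives the highest grade whose criteria it meets.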

6.2 Age-related macular degeneration

Age-related macular degeneration (AMD) is a chronic retinal condition that typically affects both eyes and arises from a metabolic disorder (de Jong et al. 2020). The condition manifests in the macula, the region of the retina responsible for sharp central vision. The etiology of this maculopathy, which ranks second in prevalence, is not yet fully understood.

Experts believe that macular degeneration develops when the highly energy-intensive metabolic processes in the retina's sensory cells are disrupted. Normally, the body handles these reactions and eliminates their metabolic byproducts. If it is unable to process these compounds, however, they accumulate as deposits called drusen, which deprive the retina of sufficient oxygen and nutrients. The growth of drusen behind the retina causes AMD, which typically affects elderly people. As the drusen cause the RPE layer to thin or atrophy, the patient's central vision becomes blurred and straight lines appear distorted. Pathological signs of AMD on fundus and OCT images are depicted in Figure 10. AMD can cause significant visual defects or even irreversible loss of central vision, but it does not on its own cause complete blindness (Seltman 2021).

Clinically, AMD is divided into two sub-types: dry AMD and wet AMD. In dry AMD, also known as non-exudative AMD, drusen form under the retina. In the early stages of the disease, small drusen do not impair vision. Nevertheless, they promote RPE atrophy and scarring, both of which contribute to the gradual dimming and distortion of central vision as the disease advances. If not treated, dry AMD may progress to wet AMD, also known as exudative AMD.
Wet AMD is characterized by choroidal neovascularization (CNV), in which abnormal blood vessels from the choroid leak fluid and blood into the retina near the macula. The fluid leakage causes central blind spots and makes straight lines appear wavy (Table 3).
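Whether drusen are a sign of normal ageing or of AMD depends partly on their size. This sketch assumes drusen diameters have already been measured, for example from a segmented fundus or OCT image. The hypothetical helper `drusen_size_category` sorts drusen into size classes using the 63 µm and 125 µm cutoffs of the Beckman clinical classification:

- small drusen alone are regarded as normal ageing;
- medium drusen indicate early AMD;
- large drusen indicate intermediate AMD.

Other grading protocols treat the exact boundary values slightly differently.

```python
def drusen_size_category(diameter_um):
    """Return the size class of a single druse from its diameter in micrometres.

    Cutoffs follow the Beckman clinical classification (63 and 125 micrometres);
    boundary handling differs slightly between grading protocols.
    """
    if diameter_um <= 63:
        return "small"   # small drusen ("drupelets"), typical of normal ageing
    if diameter_um <= 125:
        return "medium"  # medium drusen: early AMD
    return "large"       # large drusen: intermediate AMD

# Hypothetical diameters, for illustration only
print([drusen_size_category(d) for d in (40, 100, 200)])
# ['small', 'medium', 'large']
```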

6.3 Glaucoma

Glaucoma is a complex, multifactorial eye disease that can cause irreversible vision loss if not treated in time. It is typically attributed to elevated intraocular pressure (IOP) exceeding 24 mmHg, although it can also occur in eyes with IOP within the normal range of less than 20 mmHg. Elevated IOP results from obstructed fluid outflow or from narrowing of the drainage angle. When the trabecular meshwork is obstructed, fluid accumulates in the anterior chamber and the increased pressure is transmitted to the posterior chamber. There, the vitreous body compresses the nerve fibers, eventually causing the loss of ganglion cells. This loss thins the ganglion cell complex (GCC) and enlarges the optic cup, as shown in Figure 11. Glaucoma can therefore be detected from the thickness profiles of the RNFL, GCL, and IPL, which together make up the GCC, as illustrated in Figure 11 (B).
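Because the GCC comprises the RNFL, GCL, and IPL, its thickness in each sector can be computed as the sum of the three layer thicknesses and compared with a reference value. This minimal sketch assumes the layer thicknesses are already available from OCT layer segmentation. The helper names, sector names, thickness values, and the 80 µm cutoff are all hypothetical; real systems compare against device-specific normative databases.

```python
def gcc_thickness(rnfl_um, gcl_um, ipl_um):
    """GCC thickness (micrometres) as the sum of RNFL, GCL, and IPL thicknesses."""
    return rnfl_um + gcl_um + ipl_um

def flag_thin_sectors(sectors, cutoff_um):
    """Return the names of sectors whose GCC thickness is below cutoff_um.

    sectors: dict mapping sector name -> (rnfl, gcl, ipl) thicknesses in micrometres.
    cutoff_um: hypothetical cutoff; in practice derived from normative data.
    """
    return sorted(
        name for name, (rnfl, gcl, ipl) in sectors.items()
        if gcc_thickness(rnfl, gcl, ipl) < cutoff_um
    )

# Hypothetical per-sector layer thicknesses (not real patient data)
sectors = {
    "superior": (30.0, 40.0, 35.0),  # GCC = 105 um
    "inferior": (18.0, 28.0, 26.0),  # GCC = 72 um
}
print(flag_thin_sectors(sectors, cutoff_um=80.0))  # ['inferior']
```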

6.4 Pathologic myopia

Pathologic myopia, commonly referred to as degenerative or malignant myopia, is an advanced and progressive form of nearsightedness characterized by elongation of the eyeball (Ohno-Matsui 2021). This elongation causes several structural changes within the eye, such as thinning of the sclera (the outer white covering of the eye) and stretching of the retina. Pathologic myopia matters clinically because it can cause permanent damage to the eye and, ultimately, loss of vision. Unlike regular myopia, it cannot easily be corrected with glasses or contact lenses, and it poses a greater risk to eye health (Ikuno 2020).

Myopic macular degeneration is a significant consequence of pathologic myopia. It arises when retinal thinning and elongation lead to the growth of abnormal blood vessels in the macula, the central region of the retina responsible for sharp vision. These abnormal vessels can leak fluid and blood, leading to scar tissue and substantial vision impairment (Ikuno 2020). Individuals with pathologic myopia may experience progressive deterioration of their central vision, leading to difficulties with tasks such as reading and recognizing faces (Ikuno 2020).

In addition, the elongation of the eyeball in pathologic myopia can cause the retina to detach from the underlying tissue, which is a medical emergency. Untreated retinal detachment causes sudden and severe impairment of vision and can lead to permanent blindness. The retinal changes associated with pathologic myopia make individuals more vulnerable to this vision-threatening condition (Xu 2019).

Early detection and management are crucial in addressing pathologic myopia. Regular eye examinations, especially for individuals with a family history of severe myopia, allow clinicians to monitor the progression of the condition and intervene to minimize its impact (Ohno-Matsui 2021). Depending on severity, treatment options may include corrective lenses, prescription medications, or surgical procedures. Understanding and managing pathologic myopia is therefore important for preserving vision and preventing consequences that can lead to blindness (Xu 2019).

6.5 Retinopathy of prematurity (ROP)

Retinopathy of Prematurity (ROP) is a potentially blinding retinal condition that primarily affects premature newborns, especially those born before 31 weeks of gestation and weighing less than 1500 g at birth (Smith 2021). The disease arises from incompletely developed blood vessels in the retina. During a full-term pregnancy, retinal blood vessels grow in the womb, and their growth is typically complete by the time the baby is born (Garcia 2020). In premature infants, however, the development of these vessels is disrupted, which initiates ROP.
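The risk profile above can be written as a simple screening flag. This is a minimal sketch: the defaults mirror the thresholds quoted in the text (born before 31 weeks, under 1500 g). Combining the two criteria with OR, so that either one triggers an examination, is an assumption of the sketch. Real screening guidelines define their own cutoffs and rules, and `meets_rop_risk_profile` is a hypothetical name.

```python
def meets_rop_risk_profile(gestational_age_weeks, birth_weight_g,
                           ga_cutoff_weeks=31.0, weight_cutoff_g=1500.0):
    """Return True if an infant falls inside the ROP risk profile quoted in the text.

    Defaults mirror the thresholds above (born before 31 weeks, under 1500 g).
    Using OR, so that either criterion triggers an eye examination, is an
    assumption of this sketch; local screening guidelines set their own rules.
    """
    born_early = gestational_age_weeks < ga_cutoff_weeks
    low_weight = birth_weight_g < weight_cutoff_g
    return born_early or low_weight

print(meets_rop_risk_profile(28, 1100))  # True
print(meets_rop_risk_profile(35, 2400))  # False
print(meets_rop_risk_profile(33, 1400))  # True (low birth weight alone)
```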

ROP usually evolves in two phases. In the first, the vaso-proliferative phase, the body responds to the underdeveloped blood vessels by growing new, abnormal vessels. Although this response aims to supply more oxygen, it frequently produces fragile, abnormal vessels that may bleed or pull the retina away from the underlying tissue. The second phase, the fibrovascular proliferation phase, is characterized by the growth of scar tissue, which, if left untreated, may cause significant visual impairment or even blindness (Jones 2022).

Risk factors for ROP include low birth weight, premature birth, supplemental oxygen therapy, and other conditions that upset the delicate balance of oxygen delivery to the developing retina. Prompt screening and management are essential to mitigate the effects of ROP (Jones 2022), and ophthalmologists should routinely examine the eyes of premature babies. To prevent further complications and preserve vision, treatment options may include surgery or laser therapy (Smith 2021). Despite advances in diagnosis and treatment, ROP remains a major problem, highlighting the need for specialist care for premature newborns at risk of developing this disorder.

7 Benefits of AI on health economics and on screening retinal diseases

From a health-economics perspective, artificial intelligence (AI) models can fundamentally transform the identification and prediction of retinal diseases, which in turn can significantly reduce the socioeconomic consequences of blindness worldwide. By using AI algorithms to screen retinal disorders accurately in their early stages, healthcare organizations could greatly reduce the financial burden of advanced cases that require more extensive and costly treatment (Ting 2017). AI-based screening enables prompt detection of abnormalities, offering a cost-efficient way to start preventive measures and therapeutic interventions before symptoms escalate.

An important socioeconomic advantage of AI models in retinal disease screening is their ability to improve access to healthcare services, particularly in underserved populations (Keel et al. 2019). AI technologies can be deployed in remote or resource-constrained regions where conventional healthcare infrastructure is lacking. Widely available early detection tools allow people from various socioeconomic backgrounds to receive timely and effective interventions (Ting 2017). This can prevent a significant number of cases of blindness that would otherwise increase healthcare costs and social burden.

AI-based screening can also reduce the long-term healthcare costs associated with blindness. By enabling prompt intervention, it can lead to more manageable treatment strategies and improved patient outcomes. Consequently, the burden on healthcare systems is reduced, including the costs of rehabilitation, long-term care, and disability assistance. The socioeconomic benefit is therefore twofold: it can improve both individual welfare and the efficiency with which healthcare resources are allocated (Keel et al. 2019).

Nevertheless, ethical considerations, data privacy problems, and possible disparities in access to AI-powered healthcare solutions must be addressed. Realizing the full socioeconomic benefits of breakthroughs in retinal disease screening requires equitable distribution of AI technology and appropriate deployment strategies (Ting 2017). As AI and health economics converge, inclusion and ethics must be prioritized so that the benefits for blindness prevention are accessible and useful to diverse groups worldwide (Ting 2017).

8 Involvement of digital technologies in ophthalmology

International healthcare organizations aim to provide action-oriented, results-driven approaches for advancing health equity by improving the quality of care for minority and other underserved communities (Hill-Briggs et al. 2021). Healthcare organizations increasingly acknowledge disparities across race/ethnicity and socioeconomic status, but far fewer have made health equity for diverse patients a priority (Chin 2016). The lack of financial incentives is a major barrier to achieving health equity. Healthcare organizations now focus on reporting clinical findings by race, ethnicity, and socioeconomic status in order to provide preventive and primary care facilities worldwide. As the healthcare system shifts toward population health outcomes and value-based care, social determinants of health (SDOH) have emerged as a primary focus of intervention in the pursuit of health equity. Clinical ophthalmology studies have recently turned to SDOH to better understand and promote opportunities for improving community health.

9 Current clinical practices in screening retinal diseases

In recent years, extensive clinical research has aimed to improve diagnostic capabilities, covering risk factors, phenotypes, therapies, and drug management strategies for treating retinal diseases. The current clinical practices for screening the major retinal diseases, namely DR, AMD, and glaucoma, are reviewed in the following sections.

9.1 Current clinical practices in screening DR

Diabetes carries additional social and economic costs when patients become unable to work. Understanding and reducing the effects of SDOH in diabetes is a top priority given the incidence of the disease, its economic cost, and its disproportionate burden on certain populations (Haire-Joshu and Hill-Briggs 2019; Hill et al. 2013). Hill-Briggs et al. (2021) presented a review of the associations between SDOH and diabetes risk and outcomes, and of the results of programs designed to address SDOH and their effect on diabetes outcomes. The article also briefly introduces key terms and several SDOH frameworks. Blindness due to DR occurs mostly in people aged 20 to 60 years. Mistry et al. (2022) assessed etiologic factors in a birth cohort and technology use among children, and evaluated the drug management of type 2 diabetes in adolescents. Pacaud et al. (2016) used an international database to characterize the population of children with various non-type 1 forms of diabetes. They concluded that type 2 diabetes is the more common of these forms but remains difficult to diagnose worldwide. Better management and outcomes for patients with uncommon kinds of diabetes may be achieved through collaboration with eye hospitals and clinics. Tosur and Philipson (2022) summarized the history of maturity-onset diabetes of the young (MODY) and how it can be treated effectively.

Lipopolysaccharide (LPS), a component of the outer membrane of gram-negative bacteria, triggers the host immune response, leading to systemic inflammation and cellular apoptosis. Patients with advanced diabetes have been reported to have high serum LPS levels, most likely as a result of increased intestinal permeability and dysbiosis. There is substantial evidence that systemic LPS challenge is closely linked to the prognosis of DR. Although the underlying molecular mechanisms are not yet fully understood, LPS-related events in the retina may aggravate the vasculopathy and neurodegeneration of DR. Qin and Zou (2022) reviewed how LPS affects the development of DR, particularly its effects on the blood-retinal barrier and on glial activation. They also summarized recent therapeutic strategies for blocking the effects of LPS, which show good clinical promise for treating DR. It has been suggested that intestinal dysbiosis contributes to the development of type 2 diabetes (T2D) (Sharma and Tripathi 2019). Yang et al. (2021) reviewed the gut microbiota in T2D and associated diseases, focusing on its possible roles and molecular pathways in intestinal barrier breakdown, metabolic abnormalities, and chronic inflammation. The authors concluded by summarizing therapeutic strategies that target intestinal microecology, particularly the gut bacteria, to slow the malignant progression of T2D and related disorders. Pasini et al. (2019) investigated how long-term exercise affects the gut flora and intestinal permeability ("leaky gut") in people with stable T2D. Exercise helps control blood glucose by changing the composition and function of the gut microbiota. These data indicate an additional mechanism by which exercise works and suggest that modulating the gut flora could be a key part of personalized treatments for T2D.
Al Mamun et al. (2021) reviewed in detail the putative roles of pyroptosis-signaling pathways in the pathophysiology and development of DR. They also briefly described pharmacological agents that might be useful in the future treatment and management of DR.

The vascular endothelial growth factor (VEGF) family consists of five ligands for the VEGF receptors (VEGFRs): VEGF-A, VEGF-B, VEGF-C, VEGF-D, and placental growth factor (PlGF). VEGF-A binds both VEGFR1 and VEGFR2, while VEGF-B and PlGF bind only VEGFR1. Much research has focused on the main role of VEGFR2 in retinal diseases. Recent work, however, has shown that VEGFR1 and its ligands also play important roles in microinflammatory cascades, vascular permeability, and angiogenesis in the retina (Uemura et al. 2021). VEGFR1 signaling alone leads to the pathological changes seen in DR, retinopathy of prematurity, retinal vascular occlusions, and AMD. Anti-VEGF medicines have shown remarkable clinical efficacy in various diseases, and their effect on VEGFR1 signaling remains a fertile area for future research. Upregulation of VEGF-A in the diabetic eye has been linked to DR progression. Singh et al. (2019) reviewed the anti-VEGF treatments for DR approved for use in the USA. A two-step improvement on the DR severity scale developed for the Early Treatment Diabetic Retinopathy Study (ETDRS) is regarded as clinically meaningful. After one year of treatment with ranibizumab or aflibercept, about one-third of patients with DR and DME achieve this level of improvement. Huang et al. (2022a) presented novel concepts for the prevention and treatment of DR.
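The responder criterion above is a simple count over paired baseline and follow-up gradings. The ETDRS level codes themselves (10, 20, 35, 43, ...) are not consecutive numbers, so this minimal sketch assumes each eye's grade has already been mapped to an ordinal step position, with higher values meaning more severe. An eye counts as a responder if it improves by two or more steps. The helper names and the gradings are hypothetical and do not reproduce any trial result.

```python
def steps_improved(baseline_step, followup_step):
    """Steps of improvement on an ordinal severity scale (higher step = more severe)."""
    return baseline_step - followup_step

def responder_rate(eyes, min_steps=2):
    """Fraction of eyes whose severity improves by at least `min_steps` steps.

    eyes: list of (baseline_step, followup_step) ordinal positions,
    not raw ETDRS level codes.
    """
    if not eyes:
        raise ValueError("need at least one eye")
    responders = sum(1 for b, f in eyes if steps_improved(b, f) >= min_steps)
    return responders / len(eyes)

# Hypothetical gradings for five eyes (invented, not trial data)
eyes = [(7, 5), (7, 7), (6, 3), (8, 7), (5, 5)]
print(responder_rate(eyes))  # 0.4
```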

Ongoing research is also examining the associations of diabetes and its risk factors with other medical conditions. Xiong et al. (2022b) investigated IOP changes and the risk of acute angle closure (AAC) in diabetic patients after pupil dilatation. Diabetic patients had a reduced risk of AAC after pupil dilatation, and an increase in post-dilation IOP was associated with a lower pre-dilation IOP. Kjærsgaard et al. (2022) examined whether DR is associated with, and predictive of, primary open-angle glaucoma (POAG), and found no significant association between DR and either the prevalence or the incidence of POAG. In a large cohort study, Vergroesen et al. (2022) assessed whether diabetes medication is associated with the common eye disorders AMD, open-angle glaucoma (OAG), and cataract, and estimated the cumulative lifetime risks of these diseases. Diabetes itself was clearly associated with cataract, but diabetes medication was not. Metformin treatment was associated with a lower risk of OAG, and other diabetes medications with a lower risk of AMD. Interventional clinical trials are required to demonstrate the efficacy of these treatments. Other published studies (Cui et al. 2022; Zhang et al. 2022f; Jiang et al. 2022; Yongpeng et al. 2022; Cao et al. 2022b; Kulshrestha et al. 2022; Peled et al. 2022; Eton et al. 2022) have also investigated the connection between diabetes and other ocular disorders. The rapid spread of the coronavirus disease 2019 (COVID-19) pandemic has also prompted research into the effects of common comorbidities such as diabetes.
Although diabetes does not appear to raise the incidence of COVID-19 infection, hyperglycemia of any degree has been shown to predispose patients to worse outcomes. These include more severe respiratory involvement, ICU admission, the need for mechanical ventilation, and death. COVID-19 infection has also been linked to new-onset diabetes and hyperglycemia, as well as to worsening glycemic control in pre-existing diabetes (Xiong et al. 2022b). Researchers have hypothesized that this is related to direct viral damage to the pancreas, the body's stress response to infection, and the use of diabetogenic medicines such as corticosteroids to treat severe COVID-19. Patients with mild COVID-19 may continue most of their diabetes medications, whereas switching to insulin is the treatment of choice for those with severe disease.

Diabetes and periodontal disease share a similar pattern of inflammation. If left untreated, both can trigger a cytokine storm that spreads pro-inflammatory substances throughout the body (Stoica et al. 2022). Periodontitis has recently been described as the sixth complication of diabetes, and current studies point to a clear relationship between the two disorders. Recent research suggests that managing periodontal health with appropriate and timely treatment may improve glucose control in patients with diabetes. Chen et al. (2022b) reported new evidence of central visual system damage in patients with diabetes. Diabetes can damage both the peripheral sensory organs and the central visual system, which can reduce color vision.

Adhesive capsulitis (AC) occurs more frequently and lasts longer in diabetic patients than in patients with idiopathic AC. Gordon et al. (2022) examined how gene expression differs in AC with and without diabetes mellitus. RNA-sequencing data showed that 66 genes were differentially expressed between non-diabetic and diabetic patients with AC. By contrast, only three genes were differentially expressed between control patients with and without diabetes. In addition, 286 genes were differentially expressed in patients with idiopathic AC, and 61 genes in patients with diabetic AC. These genes may explain the differences in disease progression and offer potential therapeutic targets for alternative treatment strategies in the two groups. Ribonucleic acid (RNA) sequencing and analytics have also been used to examine gene expression in alveolar bone in healthy and diabetic subjects.

Zhu et al. (2020) investigated candidate genes involved in T2D. The gene expression profile GSE26168 was obtained from the Gene Expression Omnibus (GEO) database. Differentially expressed genes (DEGs) were identified with the online tool GEO2R. Metascape was used for annotation, visualization, and integrated discovery, including Kyoto Encyclopedia of Genes and Genomes (KEGG) pathway and Gene Ontology (GO) term enrichment analysis. Cytoscape was used to build the protein-protein interaction (PPI) network of the DEGs and to identify candidate genes and key pathways. A total of 981 DEGs were identified in T2D, comprising 301 upregulated and 680 downregulated genes. Six candidate genes (PIK3R1, RAC1, GNG3, GNAI1, CDC42, and ITGB1) were selected from the PPI network. Other studies have also investigated genes affecting diabetes (Sufyan et al. 2021; Lei et al. 2021; Pujar et al. 2022; Prashanth et al. 2021; Dieter et al. 2021; Chen et al. 2022c; Oraby et al. 2022).

Nair et al. (2020) aimed to identify a robust new set of features that would allow DR screening to be performed automatically. They developed and tested a new data-driven deep learning diagnostic tool for automated DR detection. The system processed color fundus images and graded them according to whether or not they showed DR, so that medically relevant cases could easily be identified for referral.

Although the complications of DR have been studied extensively, less attention has been given to the impact of diabetes on ocular surface health. Diabetic keratopathy can seriously threaten eyesight, but it can also be used as a diagnostic and therapeutic tool for other systemic complications of diabetes. Bu et al. (2022) reviewed current knowledge of diabetic ocular surface disease, including neuropathy, dry eye, and other corneal morphological alterations. They also addressed topics that have received less attention in the literature. These include ocular surface problems in pre-diabetic stages and differences in ocular surface pathology between human diabetics and animal models of diabetes. The authors highlighted recent breakthroughs in experimental models of diabetic ocular surface disease and analyzed the latest approaches to its diagnosis, therapy, and monitoring. Among future research prospects, they described protein microarrays, a recently developed technique with potential for the diagnosis and management of diabetic ocular surface disease. Dilated ophthalmoscopy has traditionally been used for the initial screening of diabetic eye disease. However, because DR is a progressive disease, fundus examination alone does not reveal the structural changes within the retina.
Technological improvements in retinal imaging have enabled more accurate diagnosis and treatment of DR. Saleh et al. (2022) reviewed the imaging techniques that can be used to detect, diagnose, and grade DR. Schreur et al. (2022) reviewed the current imaging modalities for DR diagnosis (CFC, OCT, OCT-A, FFA, and UWFP). They suggested that integrating data from multiple imaging techniques could lead to more precise diagnosis, treatment planning, and disease monitoring.
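Zhu et al. (2020) report 981 DEGs in T2D, split into 301 upregulated and 680 downregulated genes (301 + 680 = 981). That split comes from a thresholding step on each gene's log fold change and multiple-testing-adjusted p-value. The sketch below shows only this final step, using the Benjamini-Hochberg false discovery rate adjustment. It does not reproduce the limma statistics that GEO2R computes. The thresholds (adjusted p < 0.05, |log2 FC| ≥ 1) and gene values are hypothetical, not those used in the study.

```python
def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted p-values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices by ascending p
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, keeping adjusted values monotone and <= 1
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted

def split_degs(genes, alpha=0.05, min_abs_logfc=1.0):
    """Split genes into (upregulated, downregulated) DEG name lists.

    genes: list of (name, log2_fold_change, raw_p_value).
    alpha and min_abs_logfc are hypothetical thresholds.
    """
    adjusted = benjamini_hochberg([p for _, _, p in genes])
    up, down = [], []
    for (name, logfc, _), q in zip(genes, adjusted):
        if q < alpha and abs(logfc) >= min_abs_logfc:
            (up if logfc > 0 else down).append(name)
    return up, down

# Hypothetical genes, for illustration only
genes = [("G1", 2.1, 0.0001), ("G2", -1.5, 0.002), ("G3", 0.4, 0.001),
         ("G4", -2.0, 0.30), ("G5", 1.2, 0.01)]
up, down = split_degs(genes)
print(up, down)  # ['G1', 'G5'] ['G2']
```

G3 passes the significance test but is excluded by the fold-change filter, and G4 has a large fold change but is not significant. Requiring both filters is a common convention rather than a fixed rule.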

Summary DR is the leading cause of blindness in people of working age, and its burden is growing as the number of people with diabetes rises. Imaging modalities used for DR screening and diagnosis include CFC, OCT, and OCT-A. Existing treatments for DR target inflammation, angiogenesis, and oxidation, but none of them cures the disease completely. Researchers continue to search for new genes and risk factors associated with DR.

9.2 Current clinical practices in screening AMD

Population aging has contributed in recent years to a rise in the number of patients diagnosed with ocular disorders. AMD is one of the most prevalent of these and, if left untreated, can cause severe, irreversible loss of central vision. Heesterbeek et al. (2020) reviewed previous research on the phenotypic, demographic, environmental, genetic, and molecular risk factors for the development of AMD. The progression of the disease has been measured in different ways. Vision loss might seem a natural measure of AMD progression in natural history studies or clinical trials. However, visual acuity is often a poor endpoint because vision loss can take years to develop. For this reason, most AMD studies rely on anatomical endpoints to track the disease over shorter periods. Geographic atrophy (GA) and choroidal neovascularization (CNV) are the two main anatomical markers used to diagnose late AMD (Schaal et al. 2016). GA, the advanced form of dry AMD, is characterized by the loss of photoreceptors, RPE, and choriocapillaris, which results in a gradual loss of vision over time. CFP, FAF, and OCT are the three main imaging modalities used for GA detection. On CFP, early signs of GA and its margins can be difficult to identify reliably, whereas FAF and OCT are better suited to this task and more likely to produce accurate results. In neovascular AMD (nAMD), CNV consists of new vessels that grow from the choroid and expand into the retina, causing leakage and/or haemorrhage. This can lead to serous RPE detachment with a rapid loss of vision and, eventually, a macular scar that threatens the patient's vision.
CFP, OCT, and fluorescein angiography (FA) can identify exudative nAMD from fluid leakage and haemorrhage. In some cases, however, CNV can be detected before exudation occurs using indocyanine green angiography (ICGA) and OCT-A imaging (Treister et al. 2018), because these modalities are more sensitive to CNV than CFP, OCT, and FA. Drusen can be a result of aging or an early sign of AMD, depending on their number, size, shape, distribution, and morphology. Domalpally et al. (2022) determined the prevalence of drusen outside the macula and their contribution to the development of AMD. Drusen size, area, and location were analysed relative to the macular grid using 30-degree, wide-angle colour photographs of the third baseline field. Drusen found outside the macula were compared with those found inside it. Drusen outside the macula were common in eyes with AMD and became more frequent as the number of drusen in the macula increased. However, extramacular drusen did not add risk beyond previously recognized risk factors for progression from intermediate to late AMD. A systematic review and meta-analysis by Salehi et al. (2022) suggested that patients with AMD have significantly lower values than the general population for several OCT measurements. These include subfoveal choroidal thickness (CT), average peripapillary RNFL (pRNFL) thickness, and average macular GCC thickness. Quantifying the relative ellipsoid zone reflectivity (rEZR) could provide a structural surrogate marker of early AMD development (Saßmannshausen et al. 2022). Linear mixed-effects models were used to examine rEZR in relation to pigmentary abnormalities, the presence of reticular pseudodrusen (RPD), and the volume of the retinal pigment epithelium drusen complex (RPEDC).
The results showed an association between rEZR and both the high-risk features of intermediate AMD (iAMD) and increasing disease severity. Hyperreflective foci (HF) seen on OCT scans are associated with ectopic RPE and hence represent a risk factor for the development of advanced AMD (Cao et al. 2021). HF was found to be not a predictor but rather a marker of disease severity. The gain and loss of function begins with individual in-layer RPE cells and extends to all aberrant phenotypes. Evidence of RPE transdifferentiation, possibly caused by ischemia, supports the concept of an epithelial–mesenchymal transition.
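Once GA has been segmented, for example as the hypoautofluorescent region on FAF, its extent is usually summarized as an area in mm². Progression is then expressed as the change in area between visits. This minimal sketch assumes a binary segmentation mask and known pixel spacing. The mask, the coarse 0.5 mm spacing (chosen only to keep the arithmetic simple), and the helper names are hypothetical; real pixel spacing depends on the device and scan settings.

```python
def lesion_area_mm2(mask, pixel_width_mm, pixel_height_mm):
    """Area covered by a binary lesion mask (1 = lesion, 0 = background), in mm^2."""
    lesion_pixels = sum(sum(row) for row in mask)
    return lesion_pixels * pixel_width_mm * pixel_height_mm

def growth_rate_mm2_per_year(area_start_mm2, area_end_mm2, years):
    """Mean change in lesion area per year between two visits."""
    if years <= 0:
        raise ValueError("years must be positive")
    return (area_end_mm2 - area_start_mm2) / years

# Hypothetical GA segmentation (5 lesion pixels), for illustration only
mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
area = lesion_area_mm2(mask, 0.5, 0.5)
print(area)                                      # 1.25
print(growth_rate_mm2_per_year(area, 2.0, 0.5))  # 1.5
```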

Inflammation plays a major role in the pathophysiology and etiology of AMD. Humanin G (HNG), a mitochondria-derived peptide (MDP), has been shown to be cytoprotective in AMD, defending against the mitochondrial and cellular stress caused by damaged mitochondria. Nashine et al. (2022) tested the hypothesis that inflammation-related marker proteins are elevated in AMD and that HNG treatment lowers their levels. HNG decreased inflammatory protein production in stressed or injured cells, which may be relevant to the development of AMD. Notably, HNG had no deleterious effects on healthy cells with normal homeostasis. Bhandari et al. (2022) examined whether patients who underwent incident cataract surgery were at increased risk of developing late AMD. Late AMD was defined as neovascular AMD or geographic atrophy seen on annual stereoscopic fundus photographs or documented in medical records, including intravitreal injections of anti-VEGF medication. Over up to 10 years of follow-up, cataract surgery did not increase the risk of developing late AMD. Chua et al. (2022) investigated the associations of air pollution with self-reported AMD and with in vivo measurements of retinal layer thickness. Greater exposure to PM2.5 was associated with more self-reported AMD, while differences in retinal layer thickness were associated with PM2.5, PM2.5 absorbance, PM10, NO2, and NOx. Polypoidal choroidal vasculopathy is common in Asia and is considered a form of neovascular AMD.
Cardiovascular disease (CVD), another complex age-related condition, is a leading cause of morbidity and mortality. Previous work (Ikram et al. 2012; Hu et al. 2010) has shown that patients with AMD have a higher risk of CVD, suggesting a "common soil." Smoking, poor diet, and lack of physical activity are risk factors for CVD that also contribute to the development of AMD (Mauschitz and Finger 2022).

A review of cohort studies in the general population found that high levels of physical activity appear to protect against the onset of early AMD (Mauschitz et al. 2022). These findings suggest that physical activity is a modifiable risk factor for AMD and can help guide future efforts to reduce the public health burden of this condition. Several pharmacologic treatments are available for neovascular AMD, but there is currently no approved therapy that appreciably slows the progression of dry AMD. Both dry and neovascular AMD therefore still have unmet medical needs for viable treatment options. Innovative drug delivery methods are needed to increase drug concentration and pharmacological effect at the target sites. Jiménez-Gómez et al. (2022b) summarized the pathophysiology of AMD and its existing therapies, focusing on sustained ocular drug delivery techniques undergoing clinical trials. Although AMD cannot currently be cured, its symptoms can be controlled. Current treatments for AMD fall into four categories: device-based therapy, anti-inflammatory drugs, anti-vascular endothelial growth factor agents, and natural products (Cho et al. 2022). All of these treatments have side effects. Early AMD therapy with natural products nevertheless has many benefits, because at safe doses these products can stop RPE cell apoptosis. Death of RPE cells is associated with oxidative stress, inflammation, and carbonyl stress, as well as a lack of essential cell components. Certain natural products have antioxidant, anti-inflammatory, and anti-carbonylation properties. Drug candidates derived from natural products effectively reduce RPE cell death and could be used to prevent early (dry) AMD.
RPE cell transplantation aims to halt or reverse vision loss by preventing the death of photoreceptor cells, and it is regarded as one of the most promising stem cell therapy applications in regenerative medicine. Recent studies have focused on transplanting RPE cells derived from human pluripotent stem cells (hPSCs) (O’Neill et al. 2020). Early clinical trial data indicate that transplantation of hPSC-derived RPE cells is safe and can improve vision in AMD subjects. Unfortunately, the techniques currently used to generate hPSC-RPE cells for clinical studies are inefficient. Transplantation outcomes could be improved by delivering RPE cells on a thin porous membrane for better integration into the retina, or by controlling immune rejection and inflammatory reactions. Cohn et al. (2021) summarised the key findings from pre-clinical studies on how different laser interventions might induce beneficial changes in the RPE, Bruch’s membrane, and choriocapillaris. They also summarised the most important takeaways from clinical trials of laser treatment for AMD as laser technology has progressed toward short-pulse, non-thermal delivery, such as the nanosecond laser. Csaky et al. (2022) discussed a range of other treatment approaches for AMD.

Summary. AMD is considered one of the most prevalent retinal diseases and, if left untreated, can lead to blindness. CFP, FAF, OCT, and OCT-A are the imaging modalities used for AMD diagnosis and progression tracking, and among them OCT is widely used by ophthalmologists to detect the structural changes caused by AMD. Advances in multimodal imaging and functional testing tools, together with continued exploration of key disease pathways, have set the stage for future well-conducted randomized trials of nanosecond and other subthreshold short-pulse lasers in AMD.

9.3 Current clinical practices in screening glaucoma

Current technologies for evaluating disease activity can describe the functional and anatomical changes that occur in glaucomatous eyes in considerable detail. However, innovative diagnostic tools are still needed to detect glaucoma earlier and more precisely (Wu et al. 2022). Screening tests can identify glaucoma, and although treatment has been associated with a lower risk of glaucoma development, there is still no evidence that it improves visual outcomes or quality of life (Chou et al. 2022). Aspberg et al. (2021) evaluated how population screening affects the rate of blindness caused by OAG, in what was the longest follow-up of any OAG screening project at the time, spanning more than 20 years. They found that the prevalence of bilateral blindness in the screened population was reduced by 50%. Munteanu et al. (2022) assessed risk factors and symptom indicators in POAG versus non-glaucoma (NG) patients, and in POAG patients with high versus normal intraocular pressure, in the context of tertiary prevention. After controlling for potential confounders such as gender, place of residence, and marital status, only age remained statistically significant (F = 2.381, df = 40, p < 0.001). Karaconji et al. (2022) reviewed the most common forms of pediatric glaucoma, including juvenile open-angle glaucoma (JOAG) and primary congenital glaucoma (PCG), together with their diagnosis and treatment, based on the Childhood Glaucoma Research Network (CGRN) classification. They also examined glaucoma associated with non-acquired ocular anomalies (Peters anomaly, Axenfeld-Rieger anomaly, and aniridia) and with systemic diseases (neurofibromatosis and Sturge-Weber syndrome). Early diagnosis of structural changes enables earlier therapy and can slow disease progression.
Screening for glaucoma with tonometry has high false-positive and false-negative rates, whereas screening based on assessment of the optic disc is likely to identify the majority of glaucoma cases. Karvonen et al. (2020) evaluated the screening performance of OCT, scanning laser polarimetry (GDx), and scanning laser ophthalmoscopy (Heidelberg Retinal Tomograph, HRT) and found that all three tools performed similarly. Because each evaluated parameter had only moderate accuracy, screening with any of these parameters alone does not produce reliable results. In a prospective study, Yu et al. (2016) demonstrated that OCT event- and trend-based progression analysis programmes perform comparably to linear mixed modeling (which does not rely on a normative database) and detect progression earlier than standard automated perimetry (SAP). Because OCT can detect RNFL damage before visual field abnormalities appear on SAP, RNFL thickness assessment is a useful screening tool for glaucoma (Kuang et al. 2015). Vazquez et al. (2021) summarized current studies on the relevance of OCT parameters for the diagnosis and monitoring of glaucoma, showing that ONH, RNFL, and macular parameters all have significant diagnostic ability. According to Wanza et al. (2010), the maximum between-visit difference in RNFL thickness attributable to measurement variability is 4 µm; thinning of more than 4 µm is therefore classified as a statistically significant progressive change from baseline. Aksoy et al. (2020) evaluated the accuracy of SD-OCT segmentation software in differentiating early glaucoma from ocular hypertension and healthy eyes, and compared macular layer thicknesses among the three groups. They concluded that combining pRNFL analysis with macular segmentation provides a more accurate early diagnosis of glaucoma.
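The 4 µm criterion attributed to Wanza et al. (2010) amounts to a simple fixed-threshold rule. The sketch below is a hypothetical illustration, not a validated clinical tool. It assumes that RNFL thickness is given in micrometres, that every follow-up visit is compared against the first (baseline) visit, and that thinning of exactly 4 µm is not counted as progression. The function names `is_significant_rnfl_thinning` and `flag_progression` are invented for this example.

```python
# Hypothetical sketch of a fixed-threshold RNFL progression rule.
# Assumptions: thickness values are in micrometres (µm), each follow-up is
# compared with the baseline (first) visit, and only thinning strictly
# greater than the threshold is flagged as significant progression.

RNFL_THRESHOLD_UM = 4.0  # between-visit difference cited in the text


def is_significant_rnfl_thinning(baseline_um: float, followup_um: float,
                                 threshold_um: float = RNFL_THRESHOLD_UM) -> bool:
    """Return True if RNFL thinning from baseline exceeds the threshold."""
    thinning = baseline_um - followup_um  # positive value means the RNFL got thinner
    return thinning > threshold_um


def flag_progression(visits_um: list[float],
                     threshold_um: float = RNFL_THRESHOLD_UM) -> list[bool]:
    """Flag each follow-up visit whose thinning from baseline exceeds the threshold."""
    if not visits_um:
        raise ValueError("at least one visit (the baseline) is required")
    baseline = visits_um[0]
    return [is_significant_rnfl_thinning(baseline, v, threshold_um)
            for v in visits_um[1:]]


if __name__ == "__main__":
    # Made-up illustrative thickness values in µm, not patient data.
    visits = [92.0, 90.5, 88.0, 86.5]
    print(flag_progression(visits))  # [False, False, True]
```

Comparing against a fixed baseline mirrors the "change from the baseline" wording in the text. Trend-based analyses such as those cited above instead fit a slope across all visits.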
Mansoori et al. (2011) evaluated the efficacy of SD-OCT RNFL thickness measurements for diagnosing glaucoma and found that SD-OCT could help identify glaucoma in elderly patients. In early to moderate glaucoma, RNFL loss is a more sensitive indicator of progression than GCIPL loss (Hammel et al. 2017). In advanced glaucoma, however, the RNFL approaches its measurement floor while the GCIPL remains above it, which makes macular analysis the more promising method for detecting progression (Bowd et al. 2017). In addition to GCIPL, ONH-related metrics can be used to monitor progression in advanced stages (Chen 2009).

Despite developments in imaging technology, perimetry still plays a vital role in the diagnosis and management of glaucoma. Prager et al. (2021) reviewed recent developments in perimetry methods and improvements in collecting and analyzing visual field data. In research settings, artificial intelligence has significantly aided the diagnosis and characterization of glaucomatous visual field damage, and tablet-based techniques and virtual reality headsets show potential for screening and remotely monitoring glaucoma patients. Research has shown that the lamina cribrosa (LC) plays a crucial role in the pathophysiology of glaucoma development and progression, and it is therefore considered an anatomic site of glaucomatous optic nerve injury (Czerpak et al. 2021). The most significant findings were decreased LC thickness, posterior LC displacement, and localized LC defects (Bastelica et al. 2022). High-resolution OCT devices, most notably enhanced depth imaging OCT (EDI-OCT) and swept-source OCT (SS-OCT), have made it possible to evaluate LC features in vivo in both normal and glaucomatous eyes. Kim et al. (2022) investigated whether LC curvature changes when IOP is lowered with eye drops in subjects with normal-tension glaucoma (NTG). Topical glaucoma treatment reduced IOP from 15.7 ± 2.5 mmHg at baseline to 11.2 ± 1.7 mmHg. Other clinical studies (Kim et al. 2019a; Czerpak et al. 2022; Bastelica et al. 2022; Guan et al. 2022; Glidai et al. 2022; Mochida et al. 2022) also highlight the significance of the LC for glaucoma diagnosis and progression tracking. Despite these advances in imaging technology, accurate analysis of the LC remains challenging (Andrade et al. 2022; Kim et al. 2020).
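For context, the mean IOP values reported by Kim et al. (2022), 15.7 mmHg at baseline and 11.2 mmHg after topical treatment, can be converted into an absolute and a relative reduction. The sketch below is illustrative only. The helper name `iop_reduction` is hypothetical, and the standard deviations are ignored. Also, the reduction of the group means is not necessarily equal to the mean of per-eye percentage reductions reported in a study.

```python
# Illustrative arithmetic only: reduction computed from group means, ignoring SDs.

def iop_reduction(baseline_mmhg: float, treated_mmhg: float) -> tuple[float, float]:
    """Return (absolute reduction in mmHg, relative reduction in percent)."""
    if baseline_mmhg <= 0:
        raise ValueError("baseline IOP must be positive")
    absolute = baseline_mmhg - treated_mmhg
    relative_pct = 100.0 * absolute / baseline_mmhg
    return absolute, relative_pct


# Mean IOP values quoted in the text (Kim et al. 2022).
abs_drop, rel_drop = iop_reduction(15.7, 11.2)
print(f"{abs_drop:.1f} mmHg, {rel_drop:.1f}%")  # 4.5 mmHg, 28.7%
```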

Increased IOP and/or glaucomatous optic neuropathy have been linked to a wide range of systemic conditions, including renal disease and hemodialysis, neurologic disorders, primary familial amyloidosis, endocrine disorders, vascular disease, collagen vascular disease, hematologic disorders, irradiation, systemic viral disease, and dermatologic disorders (Funk et al. 2022). Patients with elevated IOP should therefore be evaluated for an underlying systemic illness. Wändell et al. (2022) examined the prevalence of OAG among people in Region Stockholm in relation to other somatic comorbidities. Fully adjusted odds ratios (ORs, with 95% confidence intervals) for women and men, respectively, were elevated for cancer, 1.175 (1.120–1.233) and 1.106 (1.048–1.166); hypertension, 1.372 (1.306–1.440) and 1.243 (1.179–1.311); and diabetes, 1.138 (1.074–1.207) and 1.216 (1.148–1.289). The authors concluded that glaucoma is more likely to develop in people with certain somatic disorders, most notably diabetes, hypertension, and cancer. The risk of glaucoma was also higher in neighborhoods with higher socioeconomic status than in those with lower socioeconomic status. Ro et al. (2022) used a nationally representative cohort to study the risk of OAG during the 12 years following a diagnosis of chronic kidney disease (CKD). CKD was found to be a major contributor to the development of OAG, and the risk of OAG increased with CKD severity. Kolli et al. (2022) investigated whether genetic risk for POAG influences the associations between cardiopulmonary diseases and glaucoma indicators. They analyzed the history of common cardiopulmonary conditions and cardiopulmonary measurements, together with glaucoma history, in the UK Biobank.
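The ORs reported by Wändell et al. (2022) can be read with a standard rule of thumb. If the 95% confidence interval lies entirely above or entirely below OR = 1, the association is statistically significant at the two-sided 5% level. The sketch below applies this check to the values quoted above. The `OddsRatio` type and the `excludes_null` helper are hypothetical names introduced for illustration.

```python
from typing import NamedTuple


class OddsRatio(NamedTuple):
    estimate: float
    lower: float  # lower bound of the 95% confidence interval
    upper: float  # upper bound of the 95% confidence interval


def excludes_null(odds_ratio: OddsRatio) -> bool:
    """True if the 95% CI lies entirely above or entirely below OR = 1."""
    return odds_ratio.lower > 1.0 or odds_ratio.upper < 1.0


# Fully adjusted ORs quoted in the text (Wändell et al. 2022): (women, men).
reported = {
    "cancer": (OddsRatio(1.175, 1.120, 1.233), OddsRatio(1.106, 1.048, 1.166)),
    "hypertension": (OddsRatio(1.372, 1.306, 1.440), OddsRatio(1.243, 1.179, 1.311)),
    "diabetes": (OddsRatio(1.138, 1.074, 1.207), OddsRatio(1.216, 1.148, 1.289)),
}

for condition, (women, men) in reported.items():
    # Every quoted interval has a lower bound above 1, so each check prints True.
    print(condition, excludes_null(women), excludes_null(men))
```

An interval that straddles 1, such as a hypothetical OR of 1.05 (0.95–1.16), would fail this check. It would then not support an association at the 5% level.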
Within decile 1 of POAG genetic risk, the prevalence of diabetes (17.5% vs 6.5%), CKD (6.7% vs 2.0%), and dyslipidaemia (31.2% vs 18.3%) was greater in glaucoma patients than in controls (adjusted p ≤ 0.0013 for each). A contrast test p-value for difference < 0.05 indicated that the strength of the association between glaucoma and diabetes, CKD, and glycated haemoglobin differed between deciles 1 and 10.

Mauschitz et al. (2022) assessed retinal layers as biomarkers of brain atrophy by investigating the relationship between segmented retinal layers and various cerebral parameters derived from magnetic resonance imaging (MRI). Multiple linear regression was used to analyze the relationships between retinal measurements and volumetric brain measures, as well as fractional anisotropy (FA) as a marker of the microstructural integrity of white matter (WM). Inner retinal volumes were correlated with total brain and grey matter (GM) volumes, and even more strongly with WM volumes and FA. Both the inner and outer retina were associated with hippocampal size, whereas the outer retina was most strongly associated with GM volume.