Abstract

We study the long-time behaviour of a run and tumble model, a kinetic-transport equation describing bacterial movement under the effect of a chemical stimulus. Experiments suggest that non-uniform tumbling kernels are physically relevant, as opposed to the uniform tumbling kernel, which is widely used in the literature to reduce the complexity of the mathematical analysis. We consider two cases: (i) the tumbling kernel depends on the angle between pre- and post-tumbling velocities, (ii) the velocity space is unbounded and the post-tumbling velocities follow the Maxwellian velocity distribution. We prove that the probability density distribution of bacteria converges to an equilibrium distribution with explicit (exponential for (i) and algebraic for (ii)) convergence rates, for any probability measure initial data. To the best of our knowledge, these are the first results concerning the long-time behaviour of run and tumble equations with non-uniform tumbling kernels.

1 Introduction

We consider a kinetic-transport equation which describes the movement of biological microorganisms biased towards a chemoattractant. The model is called the run and tumble equation and was introduced in [1, 22], based on experimental observations [3] of the bacterium Escherichia coli (E. coli). The equation is given by

where \(f:=f (t,x,v) \geq 0\) is the density distribution of microorganisms at time \(t \geq 0\) at a position \(x \in \mathbb{R}^{d}\), moving with a velocity \(v \in \mathcal{V} \subseteq \mathbb{R}^{d}\).

The term \(\lambda (v' \cdot \nabla _{x} M(x)) \kappa (v, v')\) is called the tumbling frequency where \(\lambda : \mathbb{R} \to [0, \infty ) \) is the tumbling rate. The tumbling rate \(\lambda \) depends on the gradient of the external signal \(M\) along the direction of the velocity \(v\), and it is defined by

\[ M(x) = m_{0} + \log \big ( S(x) \big ), \]

where \(m_{0} \in \mathbb{R}^{+}_{0}\) represents the external signal in the absence of a chemical stimulus and \(S(x)\) is a given function for the density of the chemoattractant. In Eq. (1), the tumbling or turning kernel \(\kappa (v,v') \) is a probability distribution on the space \(\mathcal{V}\) and gives the probability of moving from velocity \(v\) to velocity \(v'\), i.e. \(\int _{\mathcal {V}} \kappa (v,v') \,\mathrm{d}v' = 1\).

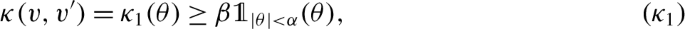

In the case of peritrichous bacteria such as E. coli and Salmonella typhimurium, experiments conducted in [3, 17] suggest that \(\kappa \) depends only on the relative angle \(\theta \) between the pre- and post-tumbling velocities \(v\) and \(v'\), respectively. In particular, for E. coli, the tumbling kernel \(\kappa \) is given by

where \(g(\theta )\) is a sixth order polynomial satisfying \(g(0) = g(\pi ) =0\) (see [4, 21] for more details). The exact form of \(g\) is provided in [14] by polynomial fitting to the data of [3]. Here we will work in a bounded velocity space.

In our previous paper [13] we studied this equation under the assumption that \(\kappa \) was uniformly bounded above and below. However, this assumption is not realistic as the bacteria are not able to turn a full half circle. We aim to extend our previous work to the case where the maximum turning angle of the bacteria may be bounded.

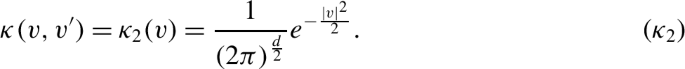

We are also interested in unbounded velocity spaces, i.e., \(v \in \mathcal {V} =\mathbb{R}^{d}\). In this setting, we consider that the tumbling kernel is given by the Maxwellian distribution on the post-tumbling velocities independently from the pre-tumbling velocities, i.e.,

To the best of our knowledge, we provide the first results concerning the long-time behaviour of the run and tumble equation with these non-uniform tumbling kernels which are more physically relevant in terms of modelling the chemotactic bacterial motion. We believe the main reason for this is the fact that the classical hypocoercivity techniques such as [9] cannot be used for the run and tumble equation even though it is a linear, conservative kinetic equation. On the other hand, Harris-type theorems (see e.g. [7, 24]) proved very effective for obtaining quantitative hypocoercivity results, especially for kinetic equations arising from applied sciences where classical techniques provide limited results. We elaborated on this fact in our previous paper [13] in detail and a brief explanation can be found below in the paragraph Motivation and novelty.

Summary of Previous Results

Previous important works on the linear run and tumble equation include [5, 6, 19–21]. In [20, 21], the authors study the diffusion approximation to a linear run and tumble equation and the diffusion limit of this equation to obtain macroscopic chemotaxis equations, respectively. Using the \(L^{2}\) hypocoercivity techniques developed in [9], the authors of [6] show the existence of a unique equilibrium and exponential decay towards it in dimension \(d=1\). That paper works under the assumption that the tumbling rate \(\lambda \) takes two values, depending on whether the bacterium is travelling up or down the gradient of the chemoattractant density. Though it is expressed differently in [6], the tumbling rate can be written as

where \(\chi \) is called the chemotactic sensitivity. In [19], the authors extended this result to higher dimensions \(d\geq 1\) considering

The result in [19] works under the assumption that the concentration of the chemoattractant \(S(x)\) is radially symmetric and decreasing in \(x\) such that \(S(x) \to 0\) as \(|x| \to \infty \). In our previous paper [13], we improve the result in [19] by proving exponential convergence to a unique equilibrium without requiring \(S(x)\) to be radially symmetric. Our result is valid in an arbitrary dimension \(d \geq 1\) and the tumbling rate \(\lambda \) can take a much more general form. Most importantly, we show that existence and convergence to a steady state hold when \(\lambda \) is a Lipschitz function. Our techniques in [13] are based on Harris-type theorems coming from the ergodic theory of Markov processes. They differ from the techniques used in [19], which are based on the Krein-Rutman theorem. Moreover, in [13] we also consider a non-linear run and tumble model for which we can prove exponential decay to a unique equilibrium. In [5], the author studies a non-linear coupled run and tumble equation in dimension \(d=1\). Even though [5] is mainly concerned with the travelling wave solutions of the non-linear equation, as an intermediate step, the author shows the existence of steady states for the linear equation.

All these previous works deal with situations where the tumbling kernel, which tells us how the post-tumbling velocities depend on the pre-tumbling velocities, is essentially uniform, i.e., \(\kappa \equiv 1\), and on a bounded velocity space. The main goal of this work is to look at physically more realistic cases where the tumbling kernel is not uniform and the bacteria are not able to turn to every angle in one tumbling event.

Motivation and Novelty

In our previous paper [13] we studied Eq. (1) in the case where the velocity space was a ball and the post-tumbling velocities were uniformly distributed so that \(\kappa \equiv 1\). We showed exponential convergence to equilibrium in suitable weighted total variation distances. The result was built around probabilistic techniques called Harris-type theorems coming from the theory of Markov processes. The goal of this paper is to extend the previous result to a wider class of tumbling kernels that are non-uniform and physically more relevant for modelling the motion of chemotactic bacteria.

The typical tools for showing convergence to equilibrium for kinetic equations come from hypocoercivity. It is important to note that “classical” hypocoercivity techniques such as [9, 23] cannot be applied without strong a priori knowledge of the steady state which we do not have for the run and tumble equation. This is the main reason for scarce results on the long-time behaviour with arbitrary dimensions \(d \geq 1\) even though the run and tumble equation is widely studied. Harris-type theorems (see, e.g., [7, 15, 16] and references therein) provide the existence and uniqueness of the steady state as a by-product while simultaneously showing convergence to equilibrium. Using these tools, one can obtain quantitative hypocoercivity results in weighted total variation (or in weighted \(L^{1}\)) distances independently from the initial data (see, e.g., [8, 24]).

Harris-type theorems are based on verifying two hypotheses: minorisation and geometric drift conditions. The minorisation condition requires providing a quantifiable positive lower bound on the process. Therefore, non-uniform tumbling kernels pose additional challenges compared to the case with a uniform tumbling kernel when using Harris-type theorems. This is because we need to prove lower bounds on the law that are uniform over a large set of initial conditions. This means that we need to find some possible paths a bacterium can take when moving from one point in phase space to another. The fact that the tumbling angle is bounded means that bacteria may only be able to travel between two points along paths involving many tumbling events. Tracking bounds on the probabilities of these complex paths (and paths close to them) is challenging and required us to develop new technical tools.

1.1 Assumptions and Main Results

The two main results of our paper concern angularly dependent tumbling kernels and unbounded velocity spaces. In both cases, we will make the following assumptions on the tumbling rate, \(\lambda \), and the logarithm of the chemoattractant concentration, \(M\).

(H1) The tumbling rate \(\lambda (m): \mathbb{R} \to (0, \infty )\) is a function of the form

\[ \lambda (m) = 1 - \chi \psi (m), \qquad \chi \in (0,1), \]

where \(\psi \) is a bounded (with \(\|\psi \|_{\infty }\leq 1\)), odd, increasing function and \(m\psi (m) \in W^{1, \infty}_{loc}(\mathbb{R})\).

(H2) There exists a strictly positive integer \(b>0\) such that for every \(B>0\), there exists \(c > 0\) depending on \(B\) so that

for \(|m| \leq B\). We note that this holds if \(\psi \) is the sign function or if it is odd and differentiable around zero with strictly positive \(k\)th derivative for some \(k \geq 1\).

(H3) We suppose that \(M(x) \to -\infty \) as \(|x| \to \infty \), \(|\nabla _{x} M(x)|\) is bounded and that there exist \(R\geq 0\) and \(m_{*} >0\) such that whenever \(|x|>R\) we have

Moreover, we suppose that \(\operatorname{Hess}(M)(x) \to 0\) as \(|x|\to \infty \) and \(|\operatorname{Hess}(M) (x)|\) is bounded.

The following assumptions concern the tumbling kernels we work with.

(H4) We assume that \(\mathcal{V} = V_{0} \mathbb{S}^{d-1}\) and there exist \(\alpha , \beta >0\) such that

where \(\theta = \arccos \left ( \dfrac{v \cdot v'}{V_{0}^{2}} \right )\) and \(\kappa _{1}\) is a decreasing function of \(|\theta |\) (similar arguments work if \(\kappa _{1}\) is even and bounded below by a decreasing function of \(|\theta |\)).

(H5) We assume that \(\mathcal{V} = \mathbb{R}^{d}\) and the tumbling kernel is given by the Maxwellian distribution on the post-tumbling velocities independently from the pre-tumbling velocities,

After the assumptions, we state the main results of the paper below.

Theorem 1.1

Angularly dependent tumbling kernel (\(\kappa _{1}\))

Suppose that \(t \mapsto f_{t} \) is the solution of Equation (1) with initial data \(f_{0} \in \mathcal{P} (\mathbb{R}^{2} \times \mathbb{S}^{1} )\). We suppose that hypotheses (H1), (H2), (H3) and (H4) are satisfied. Then there exist positive constants \(C\), \(\sigma \) (independent of \(f_{0}\)) such that

where \(f_{\infty}\) is the unique steady state solution to Equation (1). The norm \(\|\cdot \|_{*}\) is the weighted total variation norm defined by

where \(\gamma , A, C_{\kappa }> 0\) are positive constants that can be computed explicitly and will be chosen so that \(\|\cdot \|_{*}\) is indeed a norm, and \(\mu \) is a finite measure.

Remark 1.2

We believe that Theorem 1.1 works in arbitrary dimension \(d \geq 1\). The reason for stating Theorem 1.1 in dimension \(d=2\) is that we provide the proof of Proposition 2.7 only in \(d=2\). We do not believe that there is a major mathematical obstacle in proving it in higher dimensions. However, even in \(d=2\), the computations become delicate, and the notations get intricate. Therefore, we decided to provide it in \(d=2\) to keep the exposition of our ideas clear.

Theorem 1.3

Unbounded velocity space with tumbling kernel (\(\kappa _{2}\))

Suppose that \(t \mapsto f_{t}\) is the solution of Equation (1) with initial data \(f_{0} \in \mathcal{P}( \mathbb{R}^{d} \times \mathbb{R}^{d})\). We suppose that hypotheses (H1), (H2), (H3) and (H5) are satisfied and assume further that \(M \operatorname{Hess}(M)\) is bounded. Then there exists a positive constant \(C>0\) such that

where

where \(A >0\) is a constant that can be computed explicitly and is sufficiently large so that \(M_{f_{0}}>0\).

Even though we study the long-time behaviour of the run and tumble equation (1) with the non-uniform tumbling kernels in this paper, we would like to briefly comment on the Cauchy theory for these equations.

Cauchy Theory for Equation (1)

As Eq. (1) is a linear integro-differential equation with bounded coefficients, showing the existence and uniqueness of global-in-time, measure-valued solutions is relatively standard. One can either use Picard iteration arguments to construct short-time solutions and then use the fact that \(\lambda \) is bounded to show that these can be glued together globally in time, or one can directly write down a Markov process whose law satisfies Eq. (1). We briefly explain how to do the latter. We generate a Poisson process with intensity \((1+\chi )\) and call its jump times \(J_{1}, J_{2}, \dots \). We also generate a sequence of thinning variables \(U_{1}, U_{2}, \dots \), independent and all having the uniform law on \([0, 1+ \chi ]\). We take an initial point \((X_{0}, V_{0})\) having law \(f_{0}\) and set \(J_{0} = 0\). For \(t \in (J_{i}, J_{i+1})\) we write \(X_{t} = X_{J_{i}} + (t-J_{i})V_{J_{i}}\) and \(V_{t} = V_{J_{i}}\). For \(t = J_{i+1}\) we set \(X_{t} = X_{J_{i}} + (J_{i+1}- J_{i})V_{J_{i}}\). Then, if \(U_{i+1} \leq \lambda (V_{J_{i}} \cdot \nabla _{x} M(X_{t}))\), we generate \(V_{J_{i+1}}\) as a new random variable having law \(\kappa (V_{J_{i}}, \cdot )\), and if \(U_{i+1} > \lambda (V_{J_{i}} \cdot \nabla _{x} M(X_{t}))\), we set \(V_{J_{i+1}} = V_{J_{i}}\).
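The thinning construction described above can be sketched in a few lines of Python. This is only an illustration in dimension \(d=2\) under assumptions that are not taken from the paper: the tumbling rate is taken to be \(\lambda (m) = 1 - \chi \tanh (m)\), the signal is \(M(x) = -\sqrt{1+|x|^{2}}\), and the turning angle is drawn uniformly from \([-\alpha , \alpha ]\). The names `CHI`, `ALPHA`, `SPEED`, `grad_M`, `tumbling_rate` and `simulate` are hypothetical.

```python
import math
import random

CHI = 0.5     # hypothetical chemotactic sensitivity, chosen in (0, 1)
ALPHA = 0.6   # hypothetical maximal turning angle in radians
SPEED = 1.0   # hypothetical constant speed (velocities lie on a circle)


def grad_M(x, y):
    """Gradient of the illustrative signal M(x, y) = -sqrt(1 + x^2 + y^2)."""
    norm = math.sqrt(1.0 + x * x + y * y)
    return (-x / norm, -y / norm)


def tumbling_rate(m):
    """Assumed rate 1 - CHI * tanh(m); its values lie in [1 - CHI, 1 + CHI]."""
    return 1.0 - CHI * math.tanh(m)


def simulate(x, y, theta, t_end, rng):
    """Run the thinned jump process up to t_end; return (x, y, theta, tumbles)."""
    t, tumbles = 0.0, 0
    while True:
        wait = rng.expovariate(1.0 + CHI)   # gap to the next candidate jump
        if t + wait > t_end:
            wait = t_end - t                # transport up to the final time
            x += wait * SPEED * math.cos(theta)
            y += wait * SPEED * math.sin(theta)
            return x, y, theta, tumbles
        t += wait
        x += wait * SPEED * math.cos(theta)  # free transport between jumps
        y += wait * SPEED * math.sin(theta)
        gx, gy = grad_M(x, y)
        m = SPEED * (math.cos(theta) * gx + math.sin(theta) * gy)
        u = rng.uniform(0.0, 1.0 + CHI)      # thinning variable U_{i+1}
        if u <= tumbling_rate(m):            # accepted: draw a new direction
            theta += rng.uniform(-ALPHA, ALPHA)
            tumbles += 1
        # otherwise the candidate jump is rejected and the velocity is kept
```

Calling `simulate(0.0, 0.0, 0.0, 50.0, random.Random(0))` returns one sample of the state at time 50. Averaging an observable over many independent samples approximates its integral against \(f_{t}\) for this illustrative choice of \(\lambda \), \(M\) and \(\kappa \).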

Plan of the Paper

After listing our assumptions and stating our main results in Sect. 1.1, in the following section (Sect. 1.2), we describe our methodology. In particular, we state Harris’s theorem in geometric and subgeometric settings, Theorems 1.4 and 1.6 respectively. Then, Sects. 2 and 3 are dedicated to proving Theorems 1.1 and 1.3 for the cases (i) angularly dependent tumbling kernels and (ii) unbounded velocity spaces respectively.

1.2 Methodology

This paper is an extension of the work in [13] and as such is built on Harris’s theorem from Markov process theory. More precisely, Harris-type theorems are used in showing geometric (exponential) or sub-geometric (algebraic) rates of convergence to a unique equilibrium solution for equations that can be described as Markov processes. Harris-type theorems rely on verifying two hypotheses: a Foster-Lyapunov condition and a uniform minorisation condition.

We briefly introduce the functional setting and some notations below and then we provide the statements of the theorems both in geometric and sub-geometric settings in the spirit of [7, 16, 18] and the references therein. We skip the proofs of these theorems.

Notations

We consider a measurable space \((\Omega , \Sigma )\) where \(\Omega \) is a Polish space endowed with a probability measure. We denote the space of probability measures on \(\Omega \) by \(\mathcal {P} (\Omega )\). Note that in our setting \(\Omega = \mathbb{R}^{d} \times \mathcal {V}\) so that \((x, v) \in \mathbb{R}^{d} \times \mathcal {V} = \Omega \). We sometimes use the notation \(z := (x,v)\).

We define the weighted total variation (or weighted \(L^{1}\)) distance by

\[ \|\mu \|_{\phi } := \int _{\Omega } \phi (z) \, |\mu | (\mathrm{d}z), \]

where \(\mu \) is a finite measure or a measurable function and \(\phi : \Omega \to [1, +\infty ) \) is a measurable weight function.
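As a small illustration of a weighted total variation norm, the following Python sketch evaluates \(\int \phi \, \mathrm{d}|\mu |\) for a finite signed combination of Dirac masses. It assumes the standard definition of the weighted norm, and the weight \(\phi (x,v) = 1 + |x|^{2}\) is a hypothetical choice made only for the example; the function names are not from the paper.

```python
def weighted_tv_norm(masses, points, phi):
    """Return sum_i phi(z_i) * |m_i| for the signed measure sum_i m_i * delta_{z_i}.

    The points z_i are assumed to be distinct, so that |mu| = sum_i |m_i| delta_{z_i}.
    """
    return sum(phi(z) * abs(m) for m, z in zip(masses, points))


def phi(z):
    """Illustrative weight phi(x, v) = 1 + x^2 with values in [1, infinity)."""
    x, _v = z
    return 1.0 + x * x


# Distance between delta_{(0, 1)} and delta_{(2, 1)}: encode the difference
# of the two probability measures as a signed measure with masses +1 and -1.
points = [(0.0, 1.0), (2.0, 1.0)]
distance = weighted_tv_norm([1.0, -1.0], points, phi)
```

With the constant weight \(\phi \equiv 1\) the same function returns the unweighted total variation of the signed measure.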

We call \((S_{t})_{t \geq 0}\) a Markov (or stochastic) semigroup if it is a linear semigroup conserving mass and positivity. Remark that if \(f\) solves Eq. (1), then \(f(t,x,v) = S_{t}f_{0}(x,v)\) and \((S_{t})_{t \geq 0}\) is a Markov semigroup since Eq. (1) is positivity- and mass-preserving. Moreover, we also use the notation \({\partial} _{t} f = \mathcal {L} [f]\) equivalently to Eq. (1). If Eq. (1) has a stationary solution \(f_{\infty}\), this means that \({\partial} _{t} f_{\infty }=\mathcal {L} [f_{\infty}] = 0 \) and \(f_{\infty}\) is an invariant measure for the semigroup \(S_{t}\), i.e., \(S_{t} f_{\infty }= f_{\infty}\).

Theorem 1.4

Harris’s Theorem

Let \((S_{t})_{t \geq 0}\) be a Markov semigroup satisfying the following hypotheses:

Hypothesis 1 (Foster-Lyapunov condition). There exist positive constants \(\zeta \), \(D\) and a continuous, measurable function \(\phi : \Omega \to [1, +\infty )\) such that

Hypothesis 2 (Minorisation condition). There exist a probability measure \(\nu \), a constant \(\beta \in (0,1)\) and some time \(\tau >0 \) such that

where \(\mathcal{C} = \{ z \colon \phi (z) \leq R \}\) for some \(R > \frac{2D (1-e^{-\zeta \tau})}{\zeta (1-\alpha )}\).

Then \((S_{t})_{t \geq 0}\) has a unique invariant measure \(\mu _{\infty}\) and for any \(\mu \in \mathcal {P} (\Omega )\) there exist some constants \(C>1\), \(\sigma >0\) such that for all \(t \geq 0\) we have

Remark 1.5

The constants \(C\), \(\sigma \) can be computed explicitly in terms of \(D\), \(\tau \), \(\alpha \), \(\beta \), \(\zeta \) (see Remark 3.10 in [15] or Remark 2 in [24]).

There are versions of Harris’s Theorem adapted to weaker Lyapunov conditions, providing subgeometric convergence results, see, e.g., [2, 10–12]. Here, we state and use a version which is found in [7, 15, 24]. We refer the reader, e.g., to [11] (Theorems 3.10 and 3.12) or to [2] (Theorem 1.2) for different versions of this theorem.

Theorem 1.6

Subgeometric Harris’s Theorem

Let \((S_{t})_{t \geq 0}\) be a Markov semigroup satisfying the following hypotheses:

Hypothesis 3 (Weaker Foster-Lyapunov condition). There exist constants \(\zeta > 0\), \(D \geq 0\) and a continuous function \(\phi : \Omega \to [1, +\infty )\) with pre-compact sub-level sets such that

where \(h: \mathbb{R}_{+} \to \mathbb{R}\) is a strictly concave, positive, increasing function and \(\lim _{u \to +\infty} h'(u)=0\).

Hypothesis 4 (Minorisation condition). For every \(R>0\), there exist a probability measure \(\nu \), a constant \(\beta \in (0,1)\) and some time \(\tau >0 \) such that

where \(\mathcal{C} = \{ z \colon \phi (z) \leq R \}\).

Then \((S_{t})_{t \geq 0}\) has a unique invariant measure \(\mu _{\infty}\) satisfying

and there exists a constant \(C\) such that

holds for every \(\mu (\phi ) = \int \phi (z) \mu (\,\mathrm{d}z)\) where the function \(H_{h}\) is defined by

The proofs of these theorems can be found in [7, 11, 15]. In [11, 15], the authors make a weaker assumption, namely \(h(u) \leq u\) for any \(u \geq 1\), instead of the one which is stated above \(\lim _{u \to +\infty} h'(u)=0\). Their assumption allows for linear growth at infinity, whereas \(\lim _{u \to +\infty} h'(u)=0\) essentially requires \(h\) to be flat at infinity.

For the case of angularly dependent jump kernels, we are able to recover exponential convergence using Harris’s theorem (Theorem 1.4). The Foster-Lyapunov condition is proven in a similar, though more intricate, way to the one in our previous paper [13]. The minorisation condition is considerably more challenging because we need to track the dynamics over many more jumps to produce a lower bound. Therefore, we present the proof that the minorisation condition holds in dimension \(d=2\) to simplify the computations of the lower bound. Thus, the convergence result is stated in \(d=2\) in Theorem 1.1. However, we believe that there is no serious obstacle to generalising it to higher dimensions.

For the case of unbounded velocity spaces, we are only able to show subgeometric rates of convergence. We do this via the subgeometric version of Harris’s theorem (Theorem 1.6). Here, we are able to use exactly the same uniform minorisation condition as in our previous paper [13] in the linear case. The Foster-Lyapunov condition is substantially different and this is reflected in the subgeometric rate of convergence.

Before verifying these hypotheses for Eq. (1) we would like to add some comments on the Foster-Lyapunov condition. In order to show that (FL1) holds true for Eq. (1), we look for a function \(\phi (z)\) with \(\phi (z) \rightarrow \infty \) as \(|z| \rightarrow \infty \) and constants \(\tau >0\), \(C>0\) and \(\alpha \in (0,1)\) such that

for any initial data \(f_{0}(x,v) \in \mathcal{P}(\mathbb{R}^{d}\times \mathcal{V})\). This is because for \(f\) satisfying \(\partial _{t} f = \mathcal{L}[ f]\), (7) is equivalent to showing that

where \(\mathcal{L}^{*}\) is the formal adjoint of \(\mathcal{L}\) and \(\zeta = \frac{\log \alpha}{\tau}\) and \(D = C \frac{\log \alpha}{\tau (1+\alpha )}\). In our case we have

and therefore,

2 Angle-Dependent Tumbling Kernel

This section is dedicated to the long-time behaviour of the linear run and tumble equation, Eq. (1) with the angularly dependent tumbling kernel (\(\kappa _{1}\)). The following two sections are dedicated to verifying the two hypotheses of Harris’s theorem (Theorem 1.4). At the end of this section, we provide a proof of Theorem 1.1.

2.1 Minorisation Condition

In this section, we prove that Hypothesis 2, (M1), is satisfied for Eq. (1) with the angularly dependent tumbling kernel (\(\kappa _{1}\)). Our overall strategy is as follows: we show that the solution \(f \equiv f_{t}\) to Eq. (1) attains a lower bound by using Duhamel’s formula and bounding \(f_{t}\) below by the part of it corresponding to a particular number of jumps.

To keep the exposition of our strategy clear, we provide the following computations for only \(d=2\). The very same approach can be used to extend the results to higher dimensions.

Denoting \(v_{\theta }:= (\cos \theta , \sin \theta )\) and \(\nabla _{x,y} g:= (\partial _{x} g, \partial _{y} g)\) for any differentiable function \(g\), Eq. (1) becomes

Next, we define the semigroup \((T_{t})_{t\geq 0}\) accounting for the transport part,

and the operator \(\tilde{L}\),

Then we have the following lemma

Lemma 2.1

Suppose that  . Then for any \(n \geq 1\) we have


Proof

We have that

Therefore writing \(\Lambda (t,x,y,\theta ) = \int _{0}^{t} \lambda ( v_{\theta }\cdot \nabla _{x,y} M(x-s\cos \theta , y - s\sin \theta ))\,\mathrm{d}s\) we obtain

Changing variables and using the fact that \(1-\chi \leq \lambda \leq 1+\chi \), we obtain

Then using the assumption on \(b\) we have

We note that the second term above is positive, so we have

Then, we can plug this into the integral term to obtain

We then continue substituting this into the integral term \(n\) times to obtain the result for any \(n \geq 1\). This finishes the proof. □

Next, we want to show that we can find an \(n \geq 1\) and a range of admissible times for which we can provide a lower bound on the term \(T_{t-t_{n}} \tilde{L} T_{t_{n} - t_{n-1}} \dots \tilde{L} T_{t_{3}-t_{2}} \tilde{L} T_{t_{2}-t_{1}} \tilde{L} T_{t_{1}} f_{0}\).

Let us define \(P_{t} g := \tilde{L} T_{t} {g}\) for any probability measure \(g\). Then we write \(P_{t_{1},t_{2},t_{3}}^{3} := P_{t_{1}} P_{t_{2}} P_{t_{3}}\) and note that for any measure \(g\), \(P_{t_{1},t_{2},t_{3}}^{3} g\) has a density. In particular, \(P_{t_{1},t_{2},t_{3}}^{3} \delta _{x_{0}} \delta _{y_{0}} \delta _{ \theta _{0}} (x,y, \theta )\) is bounded below by a uniform measure of a set with non-empty interior. Hence, we can bound it below by a constant times the indicator function of a ball in the position variable \(x\) with a centre at a point which we can compute. We can then use this computation to show that \(P^{3}_{t_{1}, t_{2}, t_{3}}\) acting on the indicator function of a ball is bounded below by a constant times the indicator function of another ball with a slightly larger radius and a different centre. By tracking how these centres move and how the radii of the balls grow, we can then get a bound below in \((x,y)\)-space by the indicator function of a ball whose centre is at the origin (rather than depending on the initial point).

We then need to prove that we can subsequently reach all possible angles (i.e., velocities) while maintaining a uniform lower bound in position. We show this by looking at repeated jumps in a small time period so that we reach all angles without moving too far. This allows us to reach all possible angles at the cost of slightly shrinking the ball we found in the lower bound for the spatial variables.

Therefore, in the following lemma, we look at \(P_{t_{1},t_{2},t_{3}}^{3} \delta _{x_{0}} \delta _{y_{0}} \delta _{ \theta _{0}} (x,y, \theta )\) and show that it is bounded below by a constant times the indicator function of a set which contains a ball whose radius and centre we can compute.

Lemma 2.2

Let \(\alpha < \dfrac{\pi}{2}\), let \(r_{1}, r_{2}, r_{3} >0 \) be a set of times, let \(\varepsilon >0\) and suppose that \(|s_{i}-r_{i}| < \varepsilon \) for each \(i \in \{1,2,3\}\). Then if \(\varepsilon \) is sufficiently small we have

where

Proof

Since we have

and

applying \(T_{s_{2}}\) once more we obtain,

Iterating this yields

We perform a change of variables,

then we have

and

The Jacobian of this change of variables is \(\mathrm{d} \tilde{x} \,\mathrm{d}\tilde{y} = s_{2} s_{3} \sin (\theta _{2}) \,\mathrm{d}\theta _{1} \,\mathrm{d}\theta _{2}\).

Moreover, defining \(\tilde{\theta} := \theta _{1} + \theta _{2} +\theta _{3}\) and then \(\mathrm{d}\tilde{\theta} = \mathrm{d}\theta \), we obtain

where \(S(x_{0},y_{0},\theta _{0},s_{1},s_{2},s_{3})\) is the set of possible values of \(x_{0} + s_{1} \cos (\theta _{0}) +\tilde{x}\), \(y_{0} + s_{1} \sin ( \theta _{0}) +\tilde{y}\), and \(\theta _{0} + \tilde{\theta}\), (see Fig. 1). Then, as \(|\sin (\theta _{2}) | \leq 1\) we have

Next, we want to show that \(S(x_{0},y_{0},\theta _{0}, s_{1}, s_{2}, s_{3})\) contains the ball mentioned in the statement of the lemma. We notice that

We choose \(\theta _{2} = \pm \frac{\alpha}{2}\) and \(\theta _{1} = \pm \arctan \left ( \frac{s_{3} \sin (\alpha /2)}{s_{2} +s_{3}\cos (\alpha /2)} \right )\) and this will give the centre of the ball.

Fig. 1 Illustration of the set \(S\) (dotted region) mentioned in the proof of Lemma 2.2 for fixed values of \(r_{2}\), \(r_{3}\), \(\alpha \). The ball found inside the set \(S\) is coloured grey.

Now for a given \(\theta _{2}\), we choose \(\beta = \arctan \left ( \frac{s_{3} \sin (\theta _{2})}{s_{2} +s_{3}\cos (\theta _{2})} \right )\) then we can write

So we can set \(\tilde{x} = R\cos (\omega )\) and \(\tilde{y}=R \sin (\omega )\) as long as we can choose

We can see that for \(\theta _{1}\) and \(\theta _{2}\) small we have

Thus, we expect to be able to choose \(|\beta |\) up to \(\frac{s_{3} \alpha}{s_{2}+s_{3}}\) which is at least as large as it is needed to cover the ball given that \(\alpha \) is small. Then since all the computations depend continuously on \(s_{1}\), \(s_{2}\), \(s_{3}\) and the ball is strictly contained inside the set \(S\), we can replace \(s_{1}\), \(s_{2}\), \(s_{3}\) with \(r_{1}\), \(r_{2}\), \(r_{3}\) provided that \(\varepsilon \) is small enough. This concludes the proof. □
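The Jacobian factor \(s_{2} s_{3} \sin (\theta _{2})\) and the polar form involving the angle \(\beta \) used in the proof above can be checked numerically. The Python sketch below assumes the parametrisation \(\tilde{x} = s_{2} \cos \theta _{1} + s_{3} \cos (\theta _{1}+\theta _{2})\), \(\tilde{y} = s_{2} \sin \theta _{1} + s_{3} \sin (\theta _{1}+\theta _{2})\) with \(\theta _{0} = 0\), which is our reading of the change of variables and is consistent with the stated formula for \(\beta \); the function names are hypothetical.

```python
import math


def displacement(theta1, theta2, s2, s3):
    """Displacement after two runs of lengths s2, s3 with turns theta1, theta2."""
    x = s2 * math.cos(theta1) + s3 * math.cos(theta1 + theta2)
    y = s2 * math.sin(theta1) + s3 * math.sin(theta1 + theta2)
    return x, y


def jacobian_fd(theta1, theta2, s2, s3, h=1e-6):
    """Central finite-difference determinant of d(x, y) / d(theta1, theta2)."""
    xp, yp = displacement(theta1 + h, theta2, s2, s3)
    xm, ym = displacement(theta1 - h, theta2, s2, s3)
    dx1, dy1 = (xp - xm) / (2 * h), (yp - ym) / (2 * h)
    xp, yp = displacement(theta1, theta2 + h, s2, s3)
    xm, ym = displacement(theta1, theta2 - h, s2, s3)
    dx2, dy2 = (xp - xm) / (2 * h), (yp - ym) / (2 * h)
    return dx1 * dy2 - dx2 * dy1


def polar_form(theta1, theta2, s2, s3):
    """Radius and angle of the displacement, valid when s2 + s3*cos(theta2) > 0."""
    radius = math.sqrt(s2 * s2 + s3 * s3 + 2 * s2 * s3 * math.cos(theta2))
    beta = math.atan(s3 * math.sin(theta2) / (s2 + s3 * math.cos(theta2)))
    return radius, theta1 + beta


# Compare the finite-difference determinant with s2 * s3 * sin(theta2).
t1, t2, s2, s3 = 0.3, 0.4, 1.5, 0.7
error = abs(jacobian_fd(t1, t2, s2, s3) - s2 * s3 * math.sin(t2))
```

In this parametrisation the determinant vanishes exactly when \(\theta _{2} = 0\), that is, when the last two runs are collinear, which is why the lower bound is obtained away from that configuration.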

Using Lemma 2.2 we then prove the following:

Lemma 2.3

Let \(r_{1}, r_{2}, r_{3} >0\) be a set of times and let \(R\), \(\alpha \) be as in Lemma 2.2. Then we have

where

and \(\gamma \) a constant that can be computed explicitly. Let us define the map \(F\),

which tracks how the centres of the balls move.

Proof

We have

where ∫ represents the triple integral, \(\int _{\mathbb{R}^{2} \times \mathbb{R}^{2}} \,\mathrm{d}x' \,\mathrm{d}y' \int _{-\alpha}^{\alpha} \,\mathrm{d}\theta '\). Now applying three times \(T_{t}\) and \(\tilde{L}\) yields,

In the last line above, we fixed \(\theta '\) and considered the integral in \(x'\) and \(y'\) which will measure the size of the overlap between the balls \(B((x-(r_{1}+R) \cos \theta ', y-(r_{1}+R) \sin \theta ') ; r)\) and \(B((x_{0},y_{0}); \tilde{r})\).

If \((x, y) \in B((x_{0}+(r_{1}+R)\cos \theta ', y_{0} +(r_{1}+R) \sin \theta '); \tilde{r} + \frac{r}{2})\) then we can bound the size of the overlap below by \(\frac{\pi r^{2}}{4}\). We recall from Lemma 2.2 that \(r = r_{2} r_{3} \left (1- \cos (\frac{\alpha}{2})\right )\), so we have

Therefore the lower bound becomes, denoting \(C(r_{2},r_{3}, \alpha ) : = \frac{\pi r_{2} r_{3}}{4} \left (1- \cos \left ( \frac{\alpha}{2} \right ) \right )^{2}\) (a constant depending on \(r_{2}\), \(r_{3}\), \(\alpha \)),

Thus, if we impose that \((x_{0}-x, y_{0} - y) \in B \big ( ( (R+r_{1})\cos (\theta _{0} + \delta \theta ), (R+r_{1}) \sin (\theta _{0} + \delta \theta ) ); \tilde{r} + \frac{r}{4} \big )\) then we can bound the last integral above by

where \(\gamma \) is a constant depending on \(r_{1}\), \(r_{2}\), \(r_{3}\) and is bounded uniformly in terms of upper and lower bounds on \(r_{1}\), \(r_{2}\), \(r_{3}\). Roughly we can compute this constant \(\gamma \approx \frac{\pi r_{2}^{2} r_{3}^{2} \alpha ^{2}}{32(r_{1}+R)}\). □

As we iterate the process that moves the centre of the balls, we can find a radius \(\hat{R} >0\) so that the path of centres stays inside \(B((x_{0},y_{0}); \hat{R})\) forever. We prove this in the following lemma.

Lemma 2.4

Let \(k \geq 1\) and consider a sequence of maps \((x_{k}, y_{k}, \theta _{k}) = F^{k}(x_{0}, y_{0}, \theta _{0})\) where \(F\) is defined in Lemma 2.3. Then there exists a constant \(\hat{R}>0\) depending on \(r_{1}\), \(r_{2}\), \(r_{3}\) such that \((x_{k}, y_{k}) \in B((x_{0}, y_{0});\hat{R})\) for every \(k \geq 1\).

Proof

We have

and \(y_{k}\) is defined similarly. The points \((x_{k}, y_{k})\) are illustrated in Fig. 2 below.

Fig. 2 The iterates of the map \(F\) in \((x,y)\)-space. Each triangle formed by the points \(C\), \((x_{k}, y_{k})\), \((x_{k+1}, y_{k+1})\) is an isosceles triangle and a rotation of the triangle formed by the points \(C\), \((x_{0}, y_{0})\), \((x_{1}, y_{1})\). We can then compute the circle that they all lie on and the point at the centre \(C\) using standard tools in trigonometry.

We can then compute the circle on which all the points lie; its radius \(\hat{R}\) and centre \(C\) are given by

This finishes the proof. □
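The geometric fact behind this proof, that steps of constant length with a constant turning angle stay on a circle, can be illustrated numerically. The Python sketch below uses a hypothetical stand-in for \(F\), namely \((x, y, \theta ) \mapsto (x + L\cos (\theta + \delta ), y + L \sin (\theta +\delta ), \theta + \delta )\), where the step length `L` and the turn `delta` are assumptions for illustration and not the paper's explicit formula.

```python
import math


def step(x, y, theta, L, delta):
    """Hypothetical stand-in for F: turn by delta, then move a distance L."""
    theta_new = theta + delta
    return x + L * math.cos(theta_new), y + L * math.sin(theta_new), theta_new


def circle_through_iterates(x0, y0, theta0, L, delta):
    """Signed radius and centre of the circle containing all iterates of `step`."""
    rho = L / (2.0 * math.sin(delta / 2.0))
    cx = x0 - rho * math.sin(theta0 + delta / 2.0)
    cy = y0 + rho * math.cos(theta0 + delta / 2.0)
    return rho, (cx, cy)


def iterates(x0, y0, theta0, L, delta, n):
    """Return the positions (x_k, y_k) for k = 0, ..., n."""
    pts, state = [(x0, y0)], (x0, y0, theta0)
    for _ in range(n):
        state = step(*state, L, delta)
        pts.append(state[:2])
    return pts


rho, centre = circle_through_iterates(0.5, -1.0, 0.2, 1.3, 0.4)
path = iterates(0.5, -1.0, 0.2, 1.3, 0.4, 200)
```

Since every iterate lies on a circle of radius \(|\rho |\) passing through the starting point, all iterates stay within distance \(2|\rho |\) of \((x_{0}, y_{0})\), which is the kind of bound the lemma needs.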

In the next lemma, we prove that after \(\frac{24\hat{R}}{r}\) steps, the \((x, y)\) marginal is bounded below by a constant times the indicator function of a ball of radius \(\hat{R}\).

Lemma 2.5

If we let \(\tilde{n} = \big \lceil \frac{24\hat{R}}{r} \big \rceil \), then there exists some constant \(\tilde{\gamma}>0\) such that

Proof

By Lemma 2.4 we can choose a path such that \((x_{k},y_{k}) \in B((x_{0},y_{0}); \hat{R})\) and writing \(k=\frac{\tilde{n}}{3}\) Lemma 2.3 yields

Since we also have \(\left (1+\frac{k-1}{4} \right )r \geq 2 \hat{R}\), we obtain (13) with \(\tilde{\gamma}= \gamma ^{k}\). □
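The arithmetic in the last step can be checked directly: with \(\tilde{n} = \lceil 24\hat{R}/r \rceil \) and \(k = \tilde{n}/3\) we have \(k \geq 8\hat{R}/r\), hence \(\left (1+\frac{k-1}{4}\right ) r = \frac{3r}{4} + \frac{kr}{4} \geq 2\hat{R}\). The short Python sketch below, with hypothetical function names, evaluates both sides for a few sample values.

```python
import math


def steps_needed(R_hat, r):
    """Number of steps n_tilde = ceil(24 * R_hat / r) used in Lemma 2.5."""
    return math.ceil(24.0 * R_hat / r)


def radius_after(k, r):
    """Radius (1 + (k - 1) / 4) * r reached after k applications of Lemma 2.3."""
    return (1.0 + (k - 1.0) / 4.0) * r


def covers_target(R_hat, r):
    """Check that the radius after k = n_tilde / 3 applications is at least 2 * R_hat."""
    k = steps_needed(R_hat, r) / 3.0
    return radius_after(k, r) >= 2.0 * R_hat


checks = [covers_target(R_hat, r) for R_hat, r in [(1.0, 0.1), (5.0, 0.37), (0.2, 1.0)]]
```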

Next, we look at the angles and prove the following lemma:

Lemma 2.6

Let \(n_{*}= \left \lceil \frac{4\pi}{\alpha} \right \rceil \) and suppose that \(t_{1} +t_{2} + \cdots + t_{n_{*}} \leq l \). Then we have

uniform in \(\theta \).

Proof

First, let us look at

We can keep iterating this to obtain

for some constant \(C\) that does not depend on \(\theta _{0}\) or \(\theta \). Therefore,

Hence we can write

where \(\mu ( (x,y) \mid \theta , (x_{0}, y_{0}, \theta _{0}))\) is the conditional law of the position variables given \(\theta \) and the initial point \((x_{0}, y_{0})\) so we have that

We can also see that

as the \(x\) and \(y\) variables can have travelled a distance of at most \(l\). Then

Then using the translation invariance of the transport map we can write

so we have

Above, we use the fact that if \((x, y) \in B((0,0);\hat{R}-2l)\) then we will be integrating over the full support of \(\mu ((-x',-y') \mid \theta , (-x, -y, \theta '))\). □

Using the previous lemmas, we can prove that the minorisation condition (M1) for Eq. (1) with the angularly dependent tumbling kernel is satisfied.

Proposition 2.7

Let \(f_{0} (x,y, \theta )= \delta _{x_{0}} \delta _{ y_{0}} \delta _{ \theta _{0}} (x,y,\theta )\) with \((x_{0}, y_{0}) \in B \big ((0,0); \frac{\hat{R}}{2} \big )\) then, after \(\big \lceil \frac{24 \hat{R}}{r} \big \rceil + n_{*}\) steps, provided that the times are chosen suitably, we have

for some \(C\) and \(\hat{R}\) that can be computed explicitly.

Proof

Suppose that the first \(n_{**}\) inter-jump times are within \(\varepsilon \) of \(r_{1}\), \(r_{2}\), \(r_{3}\), with \(\varepsilon \) small enough that Lemma 2.2 applies, and that \(\gamma \) is chosen such that Lemma 2.5 holds with constant \(\gamma \) for any inter-jump times in this range. Suppose further that the sum of the last \(n_{*}\) jump times is less than \(l\). Then, using the previous result we have

We then take \(m = \frac{\hat{R}}{8}\) and substitute this into Lemma 2.1 and integrate over the admissible possible jump times and obtain

where \(\hat{\gamma}\) is a constant we could, in principle, compute. This verifies (M1) for (11) with the angularly dependent kernel (\(\kappa _{1}\)). □

2.2 Foster-Lyapunov Condition

In this section, we verify the Foster-Lyapunov condition (FL1) for Eq. (1) for the case (\(\kappa _{1}\)). We remark that the minorisation condition in the previous section was given in dimension \(d=2\) to keep the exposition clear. The Foster-Lyapunov condition we prove in this section is valid for arbitrary dimensions \(d\geq 1\). We start by proving the following lemma.

Lemma 2.8

Suppose that \(\kappa \) is a collision kernel (\(\kappa _{1}\)) satisfying the hypothesis (H4) then

where \(C_{\kappa }\leq 1\) is a constant that only depends on the form of the collision kernel.

Proof

We perform a change of variables and write \(v' = \cos (\theta ) v + \sin (\theta ) w\) where \(w \in S_{v}\) ranges over the sphere of dimension \(d-2\) lying in the hyperplane perpendicular to \(v\). This gives us

Since \(\kappa _{1}\) is an even function, integrating first in \(\theta \) yields

where \(|S_{v}|\) is the size of \(S_{v}\), similarly for \(|\mathbb{S}^{d-2}|\). Therefore we obtain (14) with

□
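The role of evenness in the \(\theta \)-integration can be illustrated in dimension \(d=2\). Writing \(v' = \cos (\theta ) v + \sin (\theta ) w\), the \(\sin (\theta )\) component integrates to zero against any even density, leaving a multiple of \(v\) whose factor \(\int \kappa _{1}(\theta ) \cos (\theta ) \,\mathrm{d}\theta \) is at most 1. The sketch below uses a hypothetical even kernel \(\kappa _{1}(\theta ) = (1 + a\cos \theta )/(2\pi )\), which is not taken from the paper, and hypothetical function names.

```python
import math


def kappa1(theta, a=0.8):
    """Hypothetical even probability density on [-pi, pi], 0 <= a <= 1."""
    return (1.0 + a * math.cos(theta)) / (2.0 * math.pi)


def periodic_integral(g, n=2000):
    """Midpoint rule on [-pi, pi]; very accurate for smooth periodic g."""
    h = 2.0 * math.pi / n
    return h * sum(g(-math.pi + (j + 0.5) * h) for j in range(n))


def mean_post_tumble_velocity(v_angle, a=0.8):
    """Integral of kappa1(theta) * v' with v' = cos(theta) v + sin(theta) w.

    Returns the mean vector and the factor multiplying v.
    """
    v = (math.cos(v_angle), math.sin(v_angle))
    w = (-math.sin(v_angle), math.cos(v_angle))  # unit vector orthogonal to v
    cos_part = periodic_integral(lambda t: kappa1(t, a) * math.cos(t))
    sin_part = periodic_integral(lambda t: kappa1(t, a) * math.sin(t))
    mean = (cos_part * v[0] + sin_part * w[0],
            cos_part * v[1] + sin_part * w[1])
    return mean, cos_part


mean, factor = mean_post_tumble_velocity(v_angle=1.1, a=0.8)
total_mass = periodic_integral(kappa1)
```

For this particular kernel the factor equals \(a/2\), so `factor` should be close to 0.4 and `mean` should be parallel to \(v\).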

Lemma 2.9

Suppose that \(\kappa \) is a collision kernel (\(\kappa _{1}\)) and that hypotheses (H2) and (H4) are satisfied. Then we have

Proof

Let us first prove the lemma when \(d=2\) which is the application of Theorem 1.1. Performing the same change of variables as in Lemma 2.8, we have

Then, using the assumption (\(m\)) we can bound this integral below by

where \(\alpha \) is the angle between \(v\) and \(\nabla _{x} M\). If we write

then we can compute that

Changing variables gives \(F'(\alpha ) = b \int _{-\pi}^{\pi }\kappa _{1}(\theta +\alpha ) | \cos ^{2}(\theta )|^{\frac{b}{2}-1} \cos (\theta )\sin (\theta ) \,\mathrm{d}\theta \), and changing variables back we have

Therefore, \(F'(\alpha ) = 0\) when \(\alpha \in \left \{0, \pm \frac{\pi}{2}, \pm \pi \right \}\) and in the case \(\alpha = \pm \frac{\pi}{2}\) we have \(F''(\alpha ) < 0\) as

which is negative when \(\kappa _{1}'(\theta ) \sin (\theta ) \geq 0\) for all \(\theta \) which will be the case if \(\kappa _{1}\) is a decreasing function of \(|\theta |\). Therefore, for all \(\alpha \) we have

In the case where we have \(d >2\), let us make the change of variables

where \(u\) is the unit vector in the direction \(\nabla _{x}{M} - \left (\nabla _{x} M \cdot v\right ) v\) and \(p\) is a variable vector that ranges over the sphere of vectors of norm 1 perpendicular to both \(v\) and \(u\) (which we call \(S_{v,u}\)). The Jacobian of this change of variables is \(|\sin (\theta )|^{d-2}\). Therefore, we want to find a lower bound for the integral

The integral \(\mathrm{d} p\) just gives us a constant factor. To evaluate the rest, we write \(\phi _{v}\) to be the angle between \(\nabla _{x}M\) and \(v\). Then we have

In the last line above, we have used similar considerations to the case \(d=2\). □

Now, we can move on to the proof of the Foster-Lyapunov condition (FL1).

Proposition 2.10

If hypotheses (H1), (H2), (H3) and (H4) are satisfied then we can choose a constant \(A\) so that the function

verifies a Foster-Lyapunov condition (FL1) for Eq. (1).

Proof

First, we notice that if \(A\) is sufficiently small then

so we can choose

and this ensures

Now, we differentiate the separate parts of the Lyapunov function, remembering (10) and using Lemmas 2.8 and 2.9,

and

Now, we can put this all together in a Lyapunov functional for positive \(A\) sufficiently small

Then, choosing \(A \leq \frac{\chi}{1+\chi}\) we have

Now, we know that we can choose \(R_{*}\) such that if \(|x|>R_{*}\) then,

then if we choose \(\gamma \) small enough so that \(\gamma C_{2} V_{0}^{2} \leq A(1-\chi ) \dfrac{m_{*}^{b}}{4}\), then we will have

for some constant \(C_{3}\). Finally, using (15) we have

This verifies (FL1) with \(\zeta = \gamma A (1-\chi ) m_{*}^{b}\) and \(D = C_{4}\). □

Proof of Theorem 1.1

We verify the two hypotheses of Harris’s theorem in Propositions 2.7 and 2.10. The contraction in the \(\phi \)-weighted total variation norm and the existence of a unique steady state follow by Harris’s theorem (Theorem 1.4). □

3 Unbounded Velocity Spaces

This section is dedicated to the long-time behaviour of the linear run and tumble equation, Eq. (1), posed in an unbounded velocity space \(\mathcal {V} = \mathbb{R}^{d}\) with a tumbling kernel (\(\kappa _{2}\)). The following two subsections are dedicated to verifying the two hypotheses of the subgeometric version of Harris’s theorem (Theorem 1.6). At the end of this section, we provide a proof of Theorem 1.3.

3.1 Minorisation Condition

For unbounded velocities, our minorisation part is essentially identical to that in our previous paper [13]. For the sake of completeness, we include a proof here. Let us again write \((T_{t} f) (t,x,v) = f(t,x- vt, v)\) for the transport semigroup and define

Then we have the following lemmas.

Lemma 3.1

There exists a constant \(C\) such that

Proof

The proof is exactly the same as in [13] after observing that there exists some \(\tilde{C}>0\) such that

□

Lemma 3.2

For every \(R_{*}>0\), we can take \(t = 3 +\frac{R_{*}}{V_{0}}\) such that any solution of Eq. (1) with initial data \(f_{0} \in \mathcal{P}(\mathbb{R}^{d} \times \mathbb{R}^{d})\) with \(\int _{|x|\leq R_{*}} \int _{B(0; V_{0})}f_{0}(x,v) \,\mathrm{d}x \,\mathrm{d}v =1\) satisfies

Proof

We take \(f_{0} (x,v) := \delta _{(x_{0}, v_{0})} \) where \((x_{0},v_{0}) \in \mathbb{R}^{d} \times B(0; V_{0})\) is an arbitrary point with an arbitrary velocity. We only need to consider \(x_{0} \in B(0; R_{*})\); the bound we obtain then depends on \(R_{*}\). First, we have that

Applying \(\hat{L}\) to this we obtain

Performing a change of variables we have

Therefore we have

Applying the transport operator once more we obtain

Moreover, we have

which is implied by \(|x-v(t-s)-x_{0} -rv_{0}| \leq (s-r) V_{0}\) since all the velocities are smaller than \(V_{0}\). Then, if we ensure that \((s-r) \geq 2 + \frac{R_{*}}{V_{0}}\), \(r \leq \frac{1}{2}\) and that \((t-s) \leq \frac{1}{2}\), we will obtain

Therefore, setting \(t = 3+\frac{R_{*}}{V_{0}}\) and restricting the time integrals to \(r \in \big (0,\frac{1}{2} \big)\), \(s \in \big (\frac{5}{2}+ \frac{R_{*}}{V_{0}}, 3 + \frac{R_{*}}{V_{0}} \big )\), we obtain

This gives the uniform lower bound and verifies the minorisation condition (M2). We can extend this result from the Dirac delta function initial data to general initial data by using the fact that the associated semigroup is Markov. □
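The choice of time windows in the proof of Lemma 3.2 can be sanity-checked directly: with \(t = 3+\frac{R_{*}}{V_{0}}\), \(r \in (0,\frac{1}{2})\) and \(s \in (\frac{5}{2}+\frac{R_{*}}{V_{0}}, 3+\frac{R_{*}}{V_{0}})\), the three requirements \(s-r \geq 2+\frac{R_{*}}{V_{0}}\), \(r \leq \frac{1}{2}\) and \(t-s \leq \frac{1}{2}\) all hold. The following sketch samples the windows at random; the function names, the sample values of \(R_{*}\) and \(V_{0}\), and the small floating-point tolerance are our own choices.

```python
import random


def time_windows(r_star, v0):
    """Final time t and the windows for r and s used in the proof."""
    t = 3.0 + r_star / v0
    r_window = (0.0, 0.5)
    s_window = (2.5 + r_star / v0, t)
    return t, r_window, s_window


def constraints_hold(r, s, t, r_star, v0, tol=1e-12):
    """Check s - r >= 2 + R*/V0, r <= 1/2 and t - s <= 1/2 (up to rounding)."""
    return (s - r >= 2.0 + r_star / v0 - tol
            and r <= 0.5 + tol
            and t - s <= 0.5 + tol)


def sample_check(r_star, v0, n_samples=1000, seed=0):
    """Draw (r, s) uniformly from the two windows and test every pair."""
    rng = random.Random(seed)
    t, r_window, s_window = time_windows(r_star, v0)
    return all(
        constraints_hold(rng.uniform(*r_window), rng.uniform(*s_window),
                         t, r_star, v0)
        for _ in range(n_samples)
    )


# Arbitrary sample values of (R*, V0).
all_ok = all(sample_check(r_star, v0)
             for r_star, v0 in [(1.0, 1.0), (10.0, 0.5), (0.2, 3.0)])
```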

3.2 Foster-Lyapunov Condition

In this section, we verify the Foster-Lyapunov condition (FL2) for Eq. (1) for the case (\(\kappa _{2}\)). Thus, we prove the following lemma.

Lemma 3.3

If hypotheses (H1), (H2), (H3), (H5) are satisfied and assuming that \(M \operatorname{Hess}(M)\) is bounded, then the function

verifies a weaker Foster-Lyapunov condition (FL2) for a constant \(A\) sufficiently large and with computable constants \(C>0\), \(\Lambda >0\) so that

Proof

In this proof, it is useful to remember that \(M\) is negative for \(|x|\) sufficiently large. Since it is only defined up to a constant, let us choose \(M < 0\). Similar to the previous case, we look at how the adjoint \(\mathcal {L}^{*}\) (defined by (10)) acts on different terms. Precisely, we have

Then we have, for any \(c>0\)

Summing these up and choosing \(c = \frac{2\chi}{1+\chi}\) we have

Now we also have

Therefore choosing

we have

Lastly, choosing \(A\) sufficiently large ensures that \(\phi >0\), and for \(A\) sufficiently large, \(\phi \) is comparable to \(M^{2}+v^{2}\). □

3.3 Subgeometric Convergence Rates

We can now combine the results of the two previous sections to get a proof of Theorem 1.3.

Proof of Theorem 1.3

We have verified the hypotheses of the subgeometric Harris’s theorem with the Foster-Lyapunov function being

and the function \(h(t) = \sqrt{t}\). We can, therefore, compute that \(H_{h}^{-1}(t) = \big (\frac{t}{2}+1 \big )^{2}\) and \(h \circ H_{h}^{-1}(t) = \big (\frac{t}{2} +1 \big )\). Hence, applying the conclusion of Theorem 1.6 gives the existence of a steady state \(f_{\infty}\) and that

This proves the result. □
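The rate functions used in the proof of Theorem 1.3 can be verified numerically. The sketch below assumes the usual convention \(H_{h}(u) = \int _{1}^{u} \frac{\mathrm{d}s}{h(s)}\), which is consistent with the stated inverse but is not restated in this section; for \(h(t)=\sqrt{t}\) it gives \(H_{h}(u) = 2(\sqrt{u}-1)\). Function names are hypothetical.

```python
import math


def h(u):
    """The function h(t) = sqrt(t) from the proof of Theorem 1.3."""
    return math.sqrt(u)


def H(u, n=100000):
    """Midpoint-rule approximation of the integral of 1/h(s) over [1, u].

    The lower limit 1 is an assumed convention for H_h.
    """
    step = (u - 1.0) / n
    return step * sum(1.0 / h(1.0 + (j + 0.5) * step) for j in range(n))


def H_inverse(t):
    """Claimed inverse H_h^{-1}(t) = (t / 2 + 1)^2."""
    return (t / 2.0 + 1.0) ** 2


def rate(t):
    """The composition h(H_h^{-1}(t)), claimed to equal t / 2 + 1."""
    return h(H_inverse(t))


# Compare the numerical integral with t at a few arbitrary times.
times = [0.5, 1.0, 4.0, 10.0]
inverse_errors = [abs(H(H_inverse(t)) - t) for t in times]
rate_errors = [abs(rate(t) - (t / 2.0 + 1.0)) for t in times]
```

Both error lists should be small, which reflects the linear growth of \(h \circ H_{h}^{-1}\) and hence the algebraic nature of the rate.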

References

Alt, W.: Biased random walk models for chemotaxis and related diffusion approximations. J. Math. Biol. 9(2), 147–177 (1980)

Bakry, D., Cattiaux, P., Guillin, A.: Rate of convergence for ergodic continuous Markov processes: Lyapunov versus Poincaré. J. Funct. Anal. 254(3), 727–759 (2008)

Berg, H.C., Brown, D.A.: Chemotaxis in Escherichia coli analysed by three-dimensional tracking. Nature 239, 500–504 (1972)

Brosilow, B.J., Ford, R.M., Sarman, S., Cummings, P.T.: Numerical solution of transport equations for bacterial chemotaxis: effect of discretization of directional motion. SIAM J. Appl. Math. 56(6), 1639–1663 (1996)

Calvez, V.: Chemotactic waves of bacteria at the mesoscale. J. Eur. Math. Soc. 22, 593–668 (2019)

Calvez, V., Raoul, G., Schmeiser, C.: Confinement by biased velocity jumps: aggregation of Escherichia coli. Kinet. Relat. Models 8(4), 651–666 (2015)

Cañizo, J.A., Mischler, S.: Harris-type results on geometric and subgeometric convergence to equilibrium for stochastic semigroups. J. Funct. Anal. 284(7), 109830 (2021)

Cañizo, J.A., Cao, C., Evans, J., Yoldaş, H.: Hypocoercivity of linear kinetic equations via Harris’s theorem. Kinet. Relat. Models 13(1), 97–128 (2020)

Dolbeault, J., Mouhot, C., Schmeiser, C.: Hypocoercivity for linear kinetic equations conserving mass. Trans. Am. Math. Soc. 367(6), 3807–3828 (2015)

Douc, R., Fort, G., Moulines, E., Soulier, P.: Practical drift conditions for subgeometric rates of convergence. Ann. Appl. Probab. 14(3), 1353–1377 (2004)

Douc, R., Fort, G., Guillin, A.: Subgeometric rates of convergence of \(f\)-ergodic strong Markov processes. Stoch. Process. Appl. 119(3), 897–923 (2009)

Down, D.G., Meyn, S.P., Tweedie, R.L.: Exponential and uniform ergodicity of Markov processes. Ann. Probab. 23(4), 1671–1691 (1995)

Evans, J., Yoldaş, H.: On the asymptotic behaviour of a run and tumble equation for bacterial chemotaxis. SIAM J. Math. Anal. 55(6), 7635–7664 (2023)

Frymier, P.D., Ford, R.M., Cummings, P.T.: Cellular dynamics simulations of bacterial chemotaxis. Chem. Eng. Sci. 48(4), 687–699 (1993)

Hairer, M.: Convergence of Markov Processes. Lecture Notes (2016)

Hairer, M., Mattingly, J.C.: Yet another look at Harris’ ergodic theorem for Markov chains. In: Seminar on Stochastic Analysis, Random Fields and Applications VI, vol. 63, pp. 109–117. Birkhäuser, Basel (2011)

Macnab, R.M.: Sensing the environment: bacterial chemotaxis. In: Goldberger, R.F. (ed.) Biological Regulation and Development, vol. 2, pp. 377–412. Springer, Boston (1980)

Mischler, S., Scher, J.: Spectral analysis of semigroups and growth-fragmentation equations. Ann. Inst. Henri Poincaré, Anal. Non Linéaire 33(3), 849–898 (2016)

Mischler, S., Weng, Q.: On a linear runs and tumbles equation. Kinet. Relat. Models 10(3), 799–822 (2017)

Othmer, H.G., Hillen, T.: The diffusion limit of transport equations derived from velocity-jump processes. SIAM J. Appl. Math. 61(3), 751–775 (2000)

Othmer, H.G., Hillen, T.: The diffusion limit of transport equations II: Chemotaxis equations. SIAM J. Appl. Math. 62(4), 1222–1250 (2002)

Stroock, D.W.: Some stochastic processes which arise from a model of the motion of a bacterium. Z. Wahrscheinlichkeitstheor. Verw. Geb. 28(4), 305–315 (1974)

Villani, C.: Hypocoercivity. Mem. Am. Math. Soc. 202(950), iv+141 (2009)

Yoldaş, H.: On quantitative hypocoercivity estimates based on Harris-type theorems. J. Math. Phys. 64, 031101 (2023)

Acknowledgements

The authors would like to thank Emeric Bouin for helpful discussions and for suggesting to look at unbounded velocity spaces.

Funding

The authors would like to thank the Isaac Newton Institute for Mathematical Sciences for support and hospitality during the programme “Frontiers in kinetic theory: connecting microscopic to macroscopic scales - KineCon 2022” when work on this paper was undertaken. This work was supported by EPSRC Grant Number EP/R014604/1.

J. Evans acknowledges partial support from the Leverhulme Trust, Grant ECF-2021-134. H. Yoldaş was partially supported by the Vienna Science and Technology Fund (WWTF) with a Vienna Research Groups for Young Investigators project, grant VRG17-014 (until October 2022).

Ethics declarations

Competing Interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Evans, J., Yoldaş, H. Trend to Equilibrium for Run and Tumble Equations with Non-uniform Tumbling Kernels. Acta Appl Math 191, 6 (2024). https://doi.org/10.1007/s10440-024-00657-y
