Abstract

We study the computational complexity of (deterministic or randomized) algorithms based on point samples for approximating or integrating functions that can be well approximated by neural networks. Such algorithms (most prominently stochastic gradient descent and its variants) are used extensively in the field of deep learning. One of the most important problems in this field concerns the question of whether it is possible to realize theoretically provable neural network approximation rates by such algorithms. We answer this question in the negative by proving hardness results for the problems of approximation and integration on a novel class of neural network approximation spaces. In particular, our results confirm a conjectured and empirically observed theory-to-practice gap in deep learning. We complement our hardness results by showing that error bounds of a comparable order of convergence are (at least theoretically) achievable.

1 Introduction

The use of data-driven classification and regression algorithms based on deep neural networks—coined deep learning—has had a major impact on artificial intelligence, machine learning, and data analysis and has led to a number of breakthroughs in diverse areas, including image classification [24, 29, 32, 47], natural language processing [53], game playing [34, 45, 46, 51], and symbolic mathematics [31, 42].

More recently, these methods have been applied to problems from the natural sciences where data-driven approaches are combined with physical models. Example applications in this field—called scientific machine learning—include the development of drugs [33], molecular dynamics [18], high-energy physics [5], protein folding [43], or numerically solving inverse problems and partial differential equations (PDEs) [4, 17, 26, 37, 40].

For this wide variety of different application areas, one can summarize the underlying computational problem as approximating an unknown function f (or a quantity of interest depending on f) based on possibly noisy and random samples \((f(x_i))_{i=1}^m\). In deep learning this is being done by fitting a neural network to these samples using stochastic optimization algorithms. While there is still no convincingly comprehensive explanation for the empirically observed success (or failure) of this methodology, its success critically hinges on the properties

A. that f can be well approximated by neural networks, and

B. that f (or a quantity of interest depending on f) can be efficiently and accurately reconstructed from a relatively small number of samples \((f(x_i))_{i=1}^m\).

In other words, the validity of both A and B constitutes a necessary condition for a deep learning approach to be efficient. This is especially true in applications related to scientific machine learning where often a guaranteed high accuracy is required and where obtaining samples is computationally expensive.

To date, most theoretical contributions have focused on Property A, namely studying which functions can be well approximated by neural networks. It is now well understood that neural networks are superior approximators compared to virtually all classical approximation methods, including polynomials, finite elements, wavelets, or low rank representations; see [15, 22] for two recent surveys. Beyond that, it was recently shown that neural networks can approximate solutions of high dimensional PDEs without suffering from the curse of dimensionality [21, 27, 30]. In light of these results it becomes clear that neural networks are a highly expressive and versatile function class whose theoretical approximation capabilities vastly outperform classical numerical function representations.

On the other hand, the question of whether Property B holds, namely to which extent these superior approximation properties can be harnessed by an efficient algorithm based on point samples, remains one of the most relevant open questions in the field of deep learning. At present, almost no theoretical results exist in this direction. On the empirical side, Adcock and Dexter [1] recently performed a careful study finding that the theoretical approximation rates are in general not attained by common algorithms, meaning that the convergence rate of these algorithms does not match the theoretically postulated approximation rates. In [1] this empirically observed phenomenon is coined the theory-to-practice gap of deep learning. In this paper we prove the existence of this gap.

1.1 Description of Results

To provide an appropriate mathematical framework for understanding Properties A and B, we introduce neural network spaces which classify functions \(f:[0,1]^d \rightarrow \mathbb {R}\) according to how rapidly the error of approximation by neural networks with n weights decays as \(n\rightarrow \infty \). Specifically, we consider neural networks using the rectified linear unit (ReLU) activation function, i.e., functions of the form

\[ \Phi : \mathbb {R}^d \rightarrow \mathbb {R}, \qquad \Phi = T_L \circ \varrho \circ T_{L-1} \circ \varrho \circ \cdots \circ \varrho \circ T_1, \tag{1.1} \]

where

\[ T_\ell : \mathbb {R}^{N_{\ell -1}} \rightarrow \mathbb {R}^{N_\ell }, \quad x \mapsto A_\ell \, x + b_\ell \qquad (\ell = 1,\dots ,L, \ N_0 = d, \ N_L = 1) \tag{1.2} \]

are affine mappings and \(\varrho \bigl ( (x_1,\dots ,x_n)\bigr ) = \bigl (\max \{x_1,0\},\dots ,\max \{x_n,0\}\bigr )\). Referring to L as the depth of the neural network (1.1) and to the total number of nonzero coefficients of the matrix-vector pairs \((A_\ell ,b_\ell )_{\ell =1}^L\) in (1.2) as the number of weights of the neural network, we can formalize the property of being well approximable by neural networks as follows.

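For \(\alpha > 0\) let

\[ U^\alpha := \Bigl \{ f : [0,1]^d \rightarrow \mathbb {R} \;:\; \text{for every } n \in \mathbb {N} \text{ there is a network } \Phi \text{ of the form (1.1) with depth } L \text{ and at most } n \text{ nonzero weights, all bounded in absolute value by } 1, \text{ such that } \Vert f - \Phi \Vert _{L^\infty ([0,1]^d)} \le n^{-\alpha } \Bigr \}. \]
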
In words, the sets \(U^\alpha \) consist of all functions that are approximable by neural networks with depth L and at most n uniformly bounded coefficients to within uniform accuracy \(\lesssim n^{-\alpha }\). For the remainder of the introduction we will say that f can be approximated at rate \(\alpha \) by depth L neural networks if \(f\in U^\alpha \).

We emphasize that all our results apply to much more general approximation spaces than the sets \(U^\alpha \) (which is in fact the unit ball of some approximation space), incorporating more complex constraints on the approximating neural network while considering approximation with respect to arbitrary \(L^p\) norms; see Sect. 2.2 for more details. In any case, for the current discussion it is sufficient to note that membership of f in such a space for large \(\alpha \) simply means that Property A is satisfied.

For the mathematical formalization of Property B we employ the formalism of Information Based Complexity (more precisely we will study sampling numbers of neural network approximation spaces), as for example presented in [25]. This theory provides a general framework for studying the complexity of approximating a given solution mapping \(S : U \rightarrow Y\), with \(U \subset C([0,1]^d)\) bounded, and Y a Banach space, under the constraint that the approximating algorithm is only allowed to access point samples of the functions \(f \in U\). Formally, a (deterministic) algorithm using m point samples is determined by a set of sample points \(\varvec{x}= (x_1,\dots ,x_m) \in ([0,1]^d)^m\) and a map \(Q : \mathbb {R}^m \rightarrow Y\) such that

The set of all such algorithms is denoted \({\text {Alg}}_m (U,Y)\) and we define the optimal order for (deterministically) approximating \(S : U \rightarrow Y\) using point samples as the best possible convergence rate with respect to the number of samples:

In a similar way one can define randomized algorithms and consider the optimal order \(\beta _*^{\textrm{ran}} (U, S)\) for approximating S using randomized algorithms based on point samples; see Sect. 2.4.2 below. We emphasize that all currently used deep learning algorithms, such as stochastic gradient descent (SGD) [44] and its variants (such as ADAM [28]) are of this form.
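To make the abstract definition concrete, here is a minimal sketch, assuming d = 1, of a deterministic algorithm in \({\text {Alg}}_m\): the sample points form an equispaced grid and the reconstruction map Q is piecewise-linear interpolation (a choice made purely for illustration, not a method from this paper). The randomized variant draws the interior sample points at random; it is only one simple instance, and the formal definition in Sect. 2.4.2 may be more general. All function names are hypothetical.

```python
import numpy as np


def deterministic_algorithm(m: int):
    """A(f) = Q(f(x_1), ..., f(x_m)) with fixed equispaced points in [0, 1], m >= 2."""
    xs = np.linspace(0.0, 1.0, m)

    def A(f):
        values = f(xs)                               # the only access to f
        return lambda t: np.interp(t, xs, values)    # Q: piecewise-linear interpolation
    return A


def randomized_algorithm(m: int, rng: np.random.Generator):
    """Same reconstruction map Q, but the m - 2 interior points are drawn at random."""
    interior = rng.random(m - 2)
    xs = np.sort(np.concatenate(([0.0, 1.0], interior)))

    def A(f):
        values = f(xs)
        return lambda t: np.interp(t, xs, values)
    return A


t = np.linspace(0.0, 1.0, 2001)
g = deterministic_algorithm(33)(np.sin)
print(np.max(np.abs(g(t) - np.sin(t))))  # sup-norm error on a fine grid
```

Both variants see f only through m point values, which is exactly the restriction imposed by \({\text {Alg}}_m (U,Y)\).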

In this paper we derive bounds for the optimal orders \(\beta _*^{\textrm{det}} (U, S)\) and \(\beta _*^{\textrm{ran}} (U, S)\) for the unit ball \(U=U^\alpha \) and the following solution mappings:

1. The embedding into \(C([0,1]^d)\), i.e., \(S = \iota _{\infty }\) for \(\iota _\infty : U \rightarrow C([0,1]^d), f \mapsto f\),

2. The embedding into \(L^2([0,1]^d)\), i.e., \(S = \iota _2\) for \(\iota _2 : U \rightarrow L^2([0,1]^d), f \mapsto f\), and

3. The definite integral, i.e., \(S = T_{\int }\) for \(T_{\int } : U \rightarrow \mathbb {R}, f \mapsto \int _{[0,1]^d} f(x) \, d x\).
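The three solution mappings can be illustrated numerically. The sketch below assumes d = 1 and replaces the exact sup norm, \(L^2\) norm, and integral by the maximum and mean over a fine grid; `empirical_rate` reads an observed order off a log-log least-squares fit. Such a fit only measures the error decay for individual inputs, whereas \(\beta _*\) concerns the worst case over all of U. All helper names are hypothetical.

```python
import numpy as np

# Fine evaluation grid on [0, 1] (d = 1); grid averages stand in for exact norms.
GRID = np.linspace(0.0, 1.0, 100_001)


def sup_error(f, g) -> float:
    """Grid approximation of ||f - g|| in C([0,1]) (solution map iota_inf)."""
    return float(np.max(np.abs(f(GRID) - g(GRID))))


def l2_error(f, g) -> float:
    """Grid approximation of ||f - g|| in L^2([0,1]) (solution map iota_2)."""
    return float(np.sqrt(np.mean((f(GRID) - g(GRID)) ** 2)))


def integral(f) -> float:
    """Grid approximation of the definite integral of f over [0,1] (T_int)."""
    return float(np.mean(f(GRID)))


def empirical_rate(ms, errors) -> float:
    """Observed order beta from a least-squares fit log(err) = log(C) - beta * log(m)."""
    slope, _intercept = np.polyfit(np.log(ms), np.log(errors), 1)
    return float(-slope)
```

For instance, errors \(10^{-2}, 10^{-4}, 10^{-6}\) at \(m = 10, 100, 1000\) give an observed order of 2.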

1.1.1 Approximation with Respect to the Uniform Norm

We first consider the solution mapping \(S = \iota _{\infty }\) operating on \(U=U^\alpha \), i.e., the problem of approximation with respect to the uniform norm. Then the property \(\beta _*^{\textrm{ran}} (U, \iota _\infty )=\alpha \) would imply that the theoretical approximation rate \(\alpha \) with respect to the uniform norm can in principle be realized by a (randomized) algorithm such as SGD and its variants. On the other hand, if \(\beta _*^{\textrm{ran}} (U, \iota _\infty )<\alpha \), then there cannot exist any (randomized) algorithm based on point samples that realizes the theoretical approximation rate \(\alpha \) with respect to the uniform norm—that is, there exists a theory-to-practice gap. We now present (a slightly simplified version of) our first main result establishing such a gap for \(\iota _\infty \).

Theorem 1.1

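(special case of Theorems 4.2 and 5.1) We have

\[ \beta _*^{\textrm{det}} \bigl ( U^\alpha , \iota _\infty \bigr ) = \beta _*^{\textrm{ran}} \bigl ( U^\alpha , \iota _\infty \bigr ) = \frac{1}{d} \cdot \frac{\alpha }{\lfloor L /2\rfloor + \alpha }. \]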

Theorem 1.1 states that for every \(\beta < \frac{1}{d} \cdot \frac{\alpha }{\lfloor L /2\rfloor + \alpha }\) and for every \(m\in \mathbb {N}\) there exists an algorithm using m point samples such that every function \(f\in U^\alpha \) (i.e., every f that can be approximated at rate \(\alpha \) by depth L neural networks) can be reconstructed to within \(L^\infty \) error \(\lesssim m^{-\beta }\). Conversely, no rate exceeding this value is achievable. Note the large discrepancy between the approximation rate \(\alpha \) and the maximally achievable reconstruction rate \(\frac{1}{d} \cdot \frac{\alpha }{\lfloor L /2\rfloor + \alpha }\), especially for large input dimensions d. The term “gap” is arguably a vast understatement for the difference between the theoretical approximation rate \(\alpha \) and the rate \(\beta _*\le \min \{ \frac{1}{d},\frac{\alpha }{d}\}\) that can actually be realized by a numerical algorithm. A particular consequence of Theorem 1.1 is that if all one knows is that a function f is well approximated by neural networks (no matter how rapidly the approximation error decays), then any conceivable numerical algorithm based on function samples (such as SGD and its variants) requires at least \(\Theta (\varepsilon ^{-d})\) many samples to guarantee an error \(\varepsilon >0\) with respect to the uniform norm. Since evaluating f takes a certain minimum amount of time, any such algorithm must have a worst-case runtime scaling at least as \(\Theta (\varepsilon ^{-d})\) to guarantee an error \(\varepsilon >0\) with respect to the uniform norm, irrespective of how well f can be theoretically approximated by neural networks. In particular:

- Any conceivable numerical algorithm based on function samples (such as SGD and its variants) suffers from the curse of dimensionality—even if neural network approximations exist that do not.

- On the class of all functions well approximable by neural networks, it is impossible to realize these high convergence rates for uniform approximation with any conceivable numerical algorithm based on function samples (such as SGD and its variants).

- If the number of layers is unbounded, it is impossible to realize any positive convergence rate on the class of all functions well approximable by neural networks for the problem of uniform approximation with any conceivable numerical algorithm based on function samples (such as SGD and its variants).
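The optimal order in Theorem 1.1 and its consequences can be tabulated directly. The helpers below (hypothetical names) evaluate \(\frac{1}{d}\cdot \frac{\alpha }{\lfloor L/2\rfloor + \alpha }\) and the order of magnitude \(m \approx \varepsilon ^{-1/\beta }\) of samples needed for error \(\varepsilon \) at rate \(\beta \), ignoring constants and the arbitrarily small losses hidden in the definition of \(\beta _*\).

```python
import math


def uniform_rate(alpha: float, L: int, d: int) -> float:
    """Optimal order (1/d) * alpha / (floor(L/2) + alpha) for uniform approximation."""
    return (1.0 / d) * alpha / (L // 2 + alpha)


def samples_needed(eps: float, beta: float) -> float:
    """Order of magnitude m ~ eps**(-1/beta) of samples for error eps at rate beta."""
    return math.pow(eps, -1.0 / beta)


for alpha in (1.0, 10.0, 1000.0):
    beta = uniform_rate(alpha, L=4, d=10)
    print(f"alpha={alpha:7.1f}  beta={beta:.4f}  m(eps=0.1) ~ {samples_needed(0.1, beta):.2e}")
```

Even for \(\alpha = 1000\) the rate stays below \(1/d\), so with \(d = 10\) and \(\varepsilon = 0.1\) roughly \(10^{10}\) samples are needed; letting L grow drives the rate to 0.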

Our findings disqualify deep learning-based methods for problems where high uniform accuracy is desired, at least if the only available information is that the function of interest is well approximated by neural networks.

1.1.2 Approximation with Respect to the \(L^2\) Norm

Next we consider the solution mapping \(S = \iota _{2}\) operating on \(U=U^\alpha \), i.e., the problem of approximation with respect to the \(L^2\) norm. Also in this case we establish a considerable theory-to-practice gap, albeit not as severe as in the case of \(S = \iota _{\infty }\). A slightly simplified version of our main result is as follows.

Theorem 1.2

(special case of Theorems 6.3 and 7.1) We have

We see again that it is impossible to realize a high convergence rate with any conceivable algorithm based on point samples, no matter how high the theoretically possible approximation rate \(\alpha \) may be. Indeed, the theorem easily implies \( \beta _*^{\textrm{ran}} \bigl ( U^\alpha , \iota _2 \bigr ), \beta _*^{\textrm{det}} \bigl ( U^\alpha , \iota _2 \bigr ) \le \frac{3}{2}, \) irrespective of \(\alpha \). This means that any conceivable (possibly randomized) numerical algorithm based on function samples (such as SGD and its variants) must have a worst-case runtime scaling at least as \(\Theta (\varepsilon ^{-2/3})\) to guarantee an \(L^2\) error \(\varepsilon >0\)—irrespective of how well the function of interest can be theoretically approximated by neural networks. On the positive side, there is a uniform lower bound of \(\frac{1}{2+\frac{2}{\alpha }}\) for the optimal rate, which means that there exist algorithms (in the sense defined above) that almost realize an error bound of \(\mathcal {O}(m^{-1/2})\), given m point samples, for \(\alpha \) sufficiently large. Note however that the existence of such an algorithm by no means implies the existence of an efficient algorithm, say, with runtime scaling linearly or even polynomially in m.
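A similar sketch (hypothetical helper names, constants ignored) shows how the two bounds quoted above behave: the guaranteed order \(\frac{1}{2+\frac{2}{\alpha }}\) approaches \(\frac{1}{2}\) as \(\alpha \) grows, while the \(\alpha \)-independent upper bound \(\frac{3}{2}\) forces on the order of \(\varepsilon ^{-2/3}\) samples for an \(L^2\) error \(\varepsilon \).

```python
import math

L2_RATE_UPPER = 1.5  # alpha-independent upper bound on the optimal L^2 order


def l2_rate_lower_bound(alpha: float) -> float:
    """Guaranteed L^2 order 1 / (2 + 2/alpha); tends to 1/2 as alpha grows."""
    return 1.0 / (2.0 + 2.0 / alpha)


def min_samples_l2(eps: float) -> float:
    """Order of magnitude eps**(-2/3) of samples needed for L^2 error eps."""
    return math.pow(eps, -1.0 / L2_RATE_UPPER)


for alpha in (1.0, 2.0, 10.0, 100.0):
    print(alpha, l2_rate_lower_bound(alpha))
```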

Our findings disqualify deep learning-based methods for problems where a high convergence rate of the \(L^2\) error is desired, at least if the only available information is that the function of interest is well approximated by neural networks. On the other hand, deep learning-based methods may be a viable option for problems where a low—but dimension independent—convergence rate of the \(L^2\) error is sufficient.

1.1.3 Integration

Finally we consider the solution mapping \(S = T_{\int }\) operating on \(U=U^\alpha \). The question of estimating \(\beta _*^{\textrm{ran}}\bigl (U^\alpha ,T_{\int }\bigr )\) and \(\beta _*^{\textrm{det}} \bigl (U^\alpha , T_{\int }\bigr )\) can be equivalently stated as the question of determining the optimal order of (Monte Carlo or deterministic) quadrature on neural network approximation spaces. Again we exhibit a significant theory-to-practice gap that we summarize in the following simplified version of our main result.

Theorem 1.3

(special case of Theorems 9.1, 9.4, 8.1 and 8.4) We have

We see in particular that there are no (deterministic or Monte Carlo) quadrature schemes achieving a convergence order greater than 2. Further, if the number of layers is unbounded, there are no (deterministic or Monte Carlo) quadrature schemes achieving a convergence order greater than 1. On the other hand, there exist Monte Carlo algorithms that almost realize a rate 1 for \(\alpha \) sufficiently large. Again, this does not imply the existence of an efficient algorithm with this convergence rate. However, since it is well known that the error bound \(\mathcal {O}(m^{-1/2})\) can be efficiently realized by standard Monte Carlo integration, Theorem 1.3 implies that there is not much room for improvement.
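Standard Monte Carlo integration, mentioned above, averages f over m i.i.d. uniform points in \([0,1]^d\). The sketch below (hypothetical function names, NumPy's `Generator` API) also estimates the root-mean-square error over repeated trials; for square-integrable f this error equals \(\sigma (f)/\sqrt{m}\), where \(\sigma (f)\) is the standard deviation of \(f(X)\) for uniform X, i.e., it decays at order \(\frac{1}{2}\) independently of d.

```python
import numpy as np


def monte_carlo_integral(f, d: int, m: int, rng: np.random.Generator) -> float:
    """Plain Monte Carlo estimate of the integral of f over [0, 1]^d."""
    x = rng.random((m, d))        # m i.i.d. uniform points, one per row
    return float(np.mean(f(x)))   # unbiased estimator of the integral


def rms_error(f, exact: float, d: int, m: int, trials: int,
              rng: np.random.Generator) -> float:
    """Root-mean-square error of the estimator over independent repetitions."""
    errs = [monte_carlo_integral(f, d, m, rng) - exact for _ in range(trials)]
    return float(np.sqrt(np.mean(np.square(errs))))


def coordinate_sum(x: np.ndarray) -> np.ndarray:
    """f(x) = x_1 + ... + x_d; its integral over [0, 1]^d is d / 2."""
    return x.sum(axis=1)


rng = np.random.default_rng(0)
for m in (100, 10_000):
    print(m, rms_error(coordinate_sum, 2.5, d=5, m=m, trials=200, rng=rng))
```

Increasing m by a factor of 100 should shrink the RMS error by roughly a factor of 10.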

1.1.4 General Comments

We close the overview of our results with the following general comments.

- Our results for the first time shed light on the question of which problem classes can be efficiently tackled by deep learning methods and which problem classes might be better handled using classical methods such as finite elements. These findings enable informed choices regarding the use of these methods. Concretely, we find that it is not advisable to use deep learning methods for problems where a high convergence rate and/or uniform accuracy is needed. In particular, no high order (approximation or quadrature) algorithms exist, provided that the only available information is that the function of interest is well approximated by neural networks.

- As another contribution, we exhibit the exact impact of the choice of the architecture, such as the number of layers and the magnitude of the coefficients. In particular, we show that allowing the number of layers to be unbounded adversely affects the optimal rate \(\beta _*\).

- Our hardness results hold universally across virtually all choices of network architectures. Concretely, all hardness results of Theorems 1.1, 1.2 and 1.3 hold true whenever at least 3 layers are used. This means that limiting the number of layers will not help. In this context we also note that it is known that at least \(\lfloor \alpha /2d \rfloor \) layers are needed for ReLU neural networks to achieve the (essentially) optimal approximation rate \(\frac{\alpha }{d}\) for all \(f\in C^{\alpha }([0,1]^d)\); see [36, Theorem C.6].

- Our hardness results hold universally across all size constraints on the magnitudes of the approximating network weights. Furthermore, a careful analysis of our proofs reveals that our hardness results qualitatively remain true if analogous constraints are put on the \(\ell ^2\) norms of the weights of the approximating networks. Such constraints constitute a common regularization strategy, termed weight decay [23]. This means that applying standard regularization strategies—such as weight decay—will not help.

- In many machine learning problems one assumes that one only has access to inexact (noisy) samples of a given function. Since this noise can be incorporated into the stochasticity of a randomized algorithm, our hardness results also hold for the case of noisy samples.

1.2 Related Work

To put our results in perspective we discuss related work.

1.2.1 Information-Based Complexity and Classical Function Spaces

The study of optimal rates \(\beta _*\) for approximating a given solution map based on point samples or general linear samples has a long tradition in approximation theory, function space theory, spectral theory and information based complexity. It is closely related to the so-called Gelfand numbers of linear operators, a classical and well-studied concept in function space theory and spectral theory [38, 39]. It is instructive to compare our findings to these classical results, for example for U the unit ball in a Sobolev space \(W_\infty ^\alpha ([0,1]^d)\) and \(S=\iota _\infty \). These Sobolev spaces can be (not quite but almost, see for example [49, Theorem 5.3.2] and [16, Theorem 12.1.1]) characterized by the property that their elements can be approximated by polynomials of degree \(\le n\) to within \(L^\infty \) accuracy \(\mathcal {O}(n^{-\alpha })\). Since the set of polynomials of degree \(\le n\) in dimension d possesses \(\asymp n^d\) degrees of freedom, this approximation rate can be fully harnessed by a deterministic (resp. randomized) algorithm based on point samples if \(\beta _*^{\textrm{det}} \bigl (U, S\bigr ) = \alpha /d\) (resp. \(\beta _*^{\textrm{ran}} \bigl (U, S\bigr ) = \alpha /d\)). It is a classical result that this is indeed the case; see [25, Theorem 6.1]. This fact implies that there is no theory-to-practice gap in polynomial approximation and can be considered the basis of any high order (approximation or quadrature) algorithm in numerical analysis.
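The degree-of-freedom count behind the rate \(\alpha /d\) can be checked directly. Assuming total degree (coordinate-wise degree gives the same order \(n^d\)), the polynomials of degree \(\le n\) in d variables form a space of dimension \(\binom{n+d}{d} \asymp n^d\); with \(m \asymp n^d\) samples, i.e., \(n \asymp m^{1/d}\), the error \(n^{-\alpha }\) becomes \(m^{-\alpha /d}\). The helper name is hypothetical.

```python
from math import comb


def poly_dim(n: int, d: int) -> int:
    """Dimension of polynomials of total degree <= n in d variables: C(n + d, d)."""
    return comb(n + d, d)


# Doubling the degree multiplies the number of coefficients by roughly 2**d.
for d in (1, 2, 3):
    print(d, poly_dim(2000, d) / poly_dim(1000, d))
```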

In the case of classical function spaces it is the generic behavior that the optimal rate \(\beta _*\) increases (linearly) with the underlying smoothness \(\alpha \), at least for fixed dimension d. On the other hand, our results show that neural network approximation spaces have the peculiar property that the optimal rate \(\beta _*\) is always uniformly bounded, regardless of the underlying “smoothness” \(\alpha \).

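To put our results in a somewhat more abstract context we can compare the optimal rate \(\beta _*\) to other complexity measures of a function space. A well studied example is the metric entropy related to the covering numbers \(\textrm{Cov}(V,\varepsilon )\) of sets \(V \subset C([0,1]^d)\). The associated entropy exponent is

\[ s_*(U) := \sup \Bigl \{ s > 0 \;:\; \exists \, C > 0 \ \forall \, \varepsilon \in (0,1) : \ \ln \textrm{Cov}(U,\varepsilon ) \le C \cdot \varepsilon ^{-1/s} \Bigr \}, \]
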
which, roughly speaking, determines the theoretically optimal rate \(\mathcal {O}(m^{-s_*})\) at which an arbitrary element of U can be approximated from a representation using at most m bits. On the other hand, \(\beta _*\) determines the optimal rate \(\mathcal {O}(m^{-\beta _*})\) that can actually be realized by an algorithm using m point samples of the input function \(f\in U\). For a solution mapping S to be efficiently computable from point samples, one would therefore expect that \(\beta _*= s_*\) or at least that \(\beta _*\) grows linearly with \(s_*\). For example, for U the unit ball in a Sobolev space \(W_\infty ^\alpha ([0,1]^d)\) and \(S=\iota _\infty \) we have \( s_{*}(U) = \beta _*^{\textrm{det}} ( U, \iota _\infty ) = \beta _*^{\textrm{ran}} ( U, \iota _\infty ) =\frac{\alpha }{d} \). In contrast, the unit ball \(U^\alpha \) satisfies  according to Lemma 6.2, while Theorem 1.1 shows \(\beta _*^{\textrm{ran}} ( U^\alpha , \iota _\infty ) \le \frac{1}{d}\) independent of \(\alpha \), and even \(\beta _*= 0\) if the number of layers is unbounded. This is yet another manifestation of the wide theory-to-practice gap in neural network approximation.
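The link between the entropy exponent and bit representations can be made explicit by a one-line computation. Assuming \(s_*\) is defined through bounds of the form \(\ln \textrm{Cov}(U,\varepsilon ) \le C\,\varepsilon ^{-1/s}\), choosing the accuracy \(\varepsilon _m\) below makes at most \(2^m\) balls suffice, so every \(f\in U\) lies within \(\varepsilon _m \asymp m^{-s}\) of a center that can be indexed with m bits:

```latex
\ln \mathrm{Cov}(U,\varepsilon) \le C\,\varepsilon^{-1/s}
\quad\Longrightarrow\quad
\mathrm{Cov}(U,\varepsilon_m) \le \exp\bigl(C\,\varepsilon_m^{-1/s}\bigr) = 2^m
\quad\text{for}\quad
\varepsilon_m := \Bigl(\frac{C}{m \ln 2}\Bigr)^{s} \asymp m^{-s}.
```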

1.2.2 Other Hardness Results for Deep Learning

While we are not aware of any work addressing the optimal sampling complexity on neural network spaces, there exist a number of different approaches to establishing various “hardness” results for deep learning. We comment on some of them.

A prominent and classical research direction considers the computational complexity of fitting a neural network of a fixed architecture to given (training) samples. It is known that this can be an NP-complete problem for certain specific architectures and samples; see [9] for the first result in this direction, which has inspired a large body of follow-up work. This line of work does not, however, consider the full scope of the problem, namely the relation between theoretically possible approximation rates and algorithmically realizable rates. Our results, in contrast, do not take the computational efficiency of algorithms into account at all. They are stronger in the sense that they show that even if there were an efficient algorithm for fitting a neural network to samples, one would need to access too many samples to achieve efficient runtimes.

Another research direction considers the existence of convergent algorithms that only have access to inexact information about the samples, as is commonly the case when computing in floating point arithmetic. Specifically, [3] identifies various problems in sparse approximation that cannot be algorithmically solved based on inputs with finite precision using neural networks. The deeper underlying reason is that these problems cannot be solved by any algorithm based on inexact measurements. Thus, the results of [3] are not really specific to neural networks. In contrast, our hardness results are highly specific to the structure of neural networks and do not occur for most other computational approaches.

A different kind of hardness results appears in the neural network approximation theory literature. There, typically lower bounds are provided for the number of network weights and/or number of layers that a neural network needs to have in order to reach a desired accuracy in the approximation of functions from various classical smoothness spaces [10, 36, 48, 52]. Yet these bounds exclusively concern theoretical approximation rates for classical smoothness spaces, while our results provide bounds for the realizability of these rates based on point samples.

1.2.3 Other Work on Neural Network Approximation Spaces

Our definition of neural network approximation spaces is inspired by [20], where such spaces were first introduced and some structural properties, such as embedding theorems into classical function spaces, were investigated. The neural network approximation spaces introduced in the present work differ from those spaces in that we also take the size of the network weights into account. This is important, as such bounds on the weights are often enforced in applications through regularization procedures. Another construction of neural network approximation spaces can be found in [7] for the purpose of providing a calculus on functions that can be approximated by neural networks without the curse of dimensionality. While all these works focus on aspects related to theoretical approximability of functions, our main focus concerns the algorithmic realization of such approximations.

1.3 Notation

For \(n \in \mathbb {N}\), we write \(\underline{n} := \{ 1,2,\dots ,n \}\). For any finite set \(I \ne \varnothing \) and any sequence \((a_i)_{i \in I} \subset \mathbb {R}\), we define  . The expectation of a random variable X will be denoted by \(\mathbb {E}[X]\).

For a subset \(M \subset X\) of a metric space X, we write \(\overline{M}\) for the closure of M and \(M^\circ \) for the interior of M. In particular, this notation applies to subsets of \(\mathbb {R}^d\). We write \(\varvec{\lambda }(M)\) for the Lebesgue measure of a (measurable) set \(M \subset \mathbb {R}^d\).

1.4 Structure of the Paper

Section 2 formally introduces the neural network approximation spaces and furthermore provides a review of the most important notions and definitions from information based complexity. The basis for all our hardness results is developed in Sect. 3, where we show that the unit ball in the approximation space contains a large family of “hat functions”, depending on the precise properties of the functions \(\varvec{\ell },\varvec{c}\) and on \(\alpha > 0\).
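To give a feeling for the kind of function meant, the following sketch writes the standard one-dimensional hat function (supported on [0, 1], peak value 1 at 1/2) as a two-layer ReLU network with 8 nonzero weights of magnitude at most 2. This is only an illustration; the construction in Sect. 3 (in dimension d, with rescaled hats) may differ. Variable names are hypothetical.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


# h(x) = relu(2x) - 2 relu(2x - 1) + relu(2x - 2) as T_2(relu(T_1(x))).
A1 = np.array([[2.0], [2.0], [2.0]])
b1 = np.array([0.0, -1.0, -2.0])
A2 = np.array([[1.0, -2.0, 1.0]])
b2 = np.array([0.0])


def hat(x: np.ndarray) -> np.ndarray:
    """Evaluate the hat network at points x of shape (k,)."""
    hidden = relu(A1 @ x[None, :] + b1[:, None])   # shape (3, k)
    return (A2 @ hidden + b2[:, None])[0]           # shape (k,)
```

A rescaled copy \(x \mapsto h((x-a)/w)\) computed the same way has first-layer coefficients of size \(2/w\), so narrow hats built like this require large weights; this is one way in which a bound on the coefficients can matter.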

The remaining sections develop error bounds and hardness results for the problems of uniform approximation (Sects. 4 and 5), approximation in \(L^2\) (Sects. 6 and 7), and numerical integration (Sects. 8 and 9). Several technical proofs and results are deferred to Sect. A.

2 The Notion of Sampling Complexity on Neural Network Approximation Spaces

In this section, we first formally introduce the neural network approximation spaces and then review the framework of information based complexity, including the notion of randomized algorithms and the concept of the optimal order of convergence based on point samples.

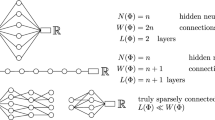

2.1 The Mathematical Formalization of Neural Networks

In our analysis, it will be helpful to distinguish between a neural network \(\Phi \) as a set of weights and the associated function \(R_\varrho \Phi \) computed by the network. Thus, we say that a neural network is a tuple \({\Phi = \big ( (A_1,b_1), \dots , (A_L,b_L) \big )}\), with \(A_\ell \in \mathbb {R}^{N_\ell \times N_{\ell -1}}\) and \(b_\ell \in \mathbb {R}^{N_\ell }\). We then say that \({\varvec{a}(\Phi ) := (N_0,\dots ,N_L) \in \mathbb {N}^{L+1}}\) is the architecture of \(\Phi \), \(L(\Phi ) := L\) is the number of layers of \(\Phi \), and \({W(\Phi ) := \sum _{j=1}^L (\Vert A_j \Vert _{\ell ^0} + \Vert b_j \Vert _{\ell ^0})}\) denotes the number of (nonzero) weights of \(\Phi \). The notation \(\Vert A \Vert _{\ell ^0}\) used here denotes the number of nonzero entries of a matrix (or vector) A. Finally, we write \(d_{\textrm{in}}(\Phi ) := N_0\) and \(d_{\textrm{out}}(\Phi ) := N_L\) for the input and output dimension of \(\Phi \), and we set \(\Vert \Phi \Vert _{\mathcal{N}\mathcal{N}} := \max _{j = 1,\dots ,L} \max \{ \Vert A_j \Vert _{\infty }, \Vert b_j \Vert _{\infty } \}\), where \({\Vert A \Vert _{\infty } := \max _{i,j} |A_{i,j}|}\).

To define the function \(R_\varrho \Phi \) computed by \(\Phi \), we need to specify an activation function. In this paper, we will only consider the so-called rectified linear unit (ReLU) \({\varrho : \mathbb {R}\rightarrow \mathbb {R}, x \mapsto \max \{ 0, x \}}\), which we understand to act componentwise on \(\mathbb {R}^n\), i.e., \(\varrho \bigl ( (x_1,\dots ,x_n)\bigr ) = \bigl (\varrho (x_1),\dots ,\varrho (x_n)\bigr )\). The function \(R_\varrho \Phi : \mathbb {R}^{N_0} \rightarrow \mathbb {R}^{N_L}\) computed by the network \(\Phi \) (its realization) is then given by

\[ R_\varrho \Phi := T_L \circ (\varrho \circ T_{L-1}) \circ \cdots \circ (\varrho \circ T_1), \qquad \text{where } T_\ell : \mathbb {R}^{N_{\ell -1}} \rightarrow \mathbb {R}^{N_\ell }, \ x \mapsto A_\ell \, x + b_\ell . \]
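The quantities just defined translate directly into code. In this minimal sketch (NumPy; hypothetical function names), a network \(\Phi \) is a list of \((A_\ell , b_\ell )\) pairs; the realization applies the ReLU after every affine map except the last, while \(W(\Phi )\) counts nonzero entries and \(\Vert \Phi \Vert _{\mathcal{N}\mathcal{N}}\) takes the largest absolute coefficient.

```python
import numpy as np


def realize(phi, x: np.ndarray) -> np.ndarray:
    """R_rho(Phi)(x): ReLU after every affine map except the last one."""
    y = x
    for ell, (A, b) in enumerate(phi, start=1):
        y = A @ y + b
        if ell < len(phi):
            y = np.maximum(y, 0.0)
    return y


def architecture(phi) -> tuple:
    """a(Phi) = (N_0, ..., N_L), read off the matrix shapes."""
    return (phi[0][0].shape[1],) + tuple(A.shape[0] for A, _ in phi)


def num_weights(phi) -> int:
    """W(Phi): number of nonzero entries of all A_j and b_j."""
    return sum(int(np.count_nonzero(A) + np.count_nonzero(b)) for A, b in phi)


def nn_norm(phi) -> float:
    """||Phi||_NN: largest absolute value of any coefficient."""
    return float(max(max(np.max(np.abs(A)), np.max(np.abs(b))) for A, b in phi))


# Example: Phi(x) = relu(x_1 - x_2) + 0.5, with L(Phi) = len(phi) = 2 layers.
phi = [(np.array([[1.0, -1.0]]), np.array([0.0])),
       (np.array([[1.0]]), np.array([0.5]))]
print(realize(phi, np.array([3.0, 1.0])), num_weights(phi), architecture(phi))
```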

2.2 Neural Network Approximation Spaces

Approximation spaces [14] classify functions according to how well they can be approximated by a family \(\varvec{\Sigma } = (\Sigma _n)_{n \in \mathbb {N}}\) of certain “simple functions” of increasing complexity n, as \(n \rightarrow \infty \). Common examples consider the case where \(\Sigma _n\) is the set of polynomials of degree n, or the set of all linear combinations of n wavelets. The notion of neural network approximation spaces was originally introduced in [20], where \(\Sigma _n\) was taken to be a family of neural networks of increasing complexity. However, [20] does not impose any restrictions on the size of the individual network weights, which plays an important role in practice and—as we shall see—also influences the possible performance of algorithms based on point samples.

For this reason, we introduce a modified notion of neural network approximation spaces that also takes the size of the individual network weights into account. Precisely, given an input dimension \(d \in \mathbb {N}\) (which we will keep fixed throughout this paper) and non-decreasing functions \({\varvec{\ell }: \mathbb {N}\rightarrow \mathbb {N}_{\ge 2} \cup \{ \infty \}}\) and \(\varvec{c}: \mathbb {N}\rightarrow \mathbb {N}\cup \{ \infty \}\) (called the depth-growth function and the coefficient growth function, respectively), we define

\[ \Sigma _n^{\varvec{\ell },\varvec{c}} := \bigl \{ R_\varrho \Phi \;:\; \Phi \text{ a neural network with } d_{\textrm{in}}(\Phi ) = d, \ d_{\textrm{out}}(\Phi ) = 1, \ W(\Phi ) \le n, \ L(\Phi ) \le \varvec{\ell }(n), \ \Vert \Phi \Vert _{\mathcal{N}\mathcal{N}} \le \varvec{c}(n) \bigr \}. \]

Then, given a measurable subset \(\Omega \subset \mathbb {R}^d\), \(p \in [1,\infty ]\), and \(\alpha \in (0,\infty )\), for each measurable \(f : \Omega \rightarrow \mathbb {R}\), we define

\[ \Gamma _{\alpha ,p}(f) := \max \Bigl \{ \Vert f \Vert _{L^p(\Omega )}, \ \sup _{n \in \mathbb {N}} \bigl [ n^\alpha \cdot d_p\bigl (f, \Sigma _n^{\varvec{\ell },\varvec{c}}\bigr ) \bigr ] \Bigr \} \in [0,\infty ], \]

where \(d_{p}(f, \Sigma ) := \inf _{g \in \Sigma } \Vert f - g \Vert _{L^p(\Omega )}\).

The remaining issue is that since the set \(\Sigma _n^{\varvec{\ell },\varvec{c}}\) is in general neither closed under addition nor under multiplication with scalars, \(\Gamma _{\alpha ,p}\) is not a (quasi)-norm. To resolve this issue, taking inspiration from the theory of Orlicz spaces (see e.g. [41, Theorem 3 in Section 3.2]), we define the neural network approximation space quasi-norm as

\[ \Vert f \Vert _{A^{\alpha ,p}_{\varvec{\ell },\varvec{c}}(\Omega )} := \inf \bigl \{ \theta \in (0,\infty ) \;:\; \Gamma _{\alpha ,p}(f/\theta ) \le 1 \bigr \} \in [0,\infty ], \]

giving rise to the approximation space

\[ A^{\alpha ,p}_{\varvec{\ell },\varvec{c}}(\Omega ) := \bigl \{ f : \Omega \rightarrow \mathbb {R} \text{ measurable} \;:\; \Vert f \Vert _{A^{\alpha ,p}_{\varvec{\ell },\varvec{c}}(\Omega )} < \infty \bigr \}. \]

The following lemma summarizes the main elementary properties of these spaces.

Lemma 2.1

Let \(\varnothing \ne \Omega \subset \mathbb {R}^d\) be measurable, let \(p \in [1,\infty ]\) and \(\alpha \in (0,\infty )\). Then,  satisfies the following properties:

1.  is a quasi-normed space. Precisely, given arbitrary measurable functions \(f,g : \Omega \rightarrow \mathbb {R}\), it holds that  for \(C := 17^\alpha \).

2. We have  for \(c \in [-1,1]\).

3.  if and only if  .

4.  if and only if  .

5.  . Furthermore, if \(\Omega \subset \overline{\Omega ^\circ }\), then  , where \(C_b (\Omega )\) denotes the Banach space of continuous functions that are bounded and extend continuously to the closure \(\overline{\Omega }\) of \(\Omega \).

Proof

See Sect. A.1. \(\square \)

2.3 Quantities Characterizing the Complexity of the Network Architecture

To conveniently summarize those aspects of the growth behavior of the functions \(\varvec{\ell }\) and \(\varvec{c}\) most relevant to us, we introduce three quantities that will turn out to be crucial for characterizing the sample complexity of the neural network approximation spaces. First, we set

Furthermore, we define

Remark 2.2

Clearly, \(\gamma ^{\flat }(\varvec{\ell },\varvec{c}) \le \gamma ^{\sharp }(\varvec{\ell },\varvec{c})\). Furthermore, since we will only consider settings in which \(\varvec{\ell }^*\ge 2\), we always have \(\gamma ^{\sharp }(\varvec{\ell },\varvec{c}) \ge \gamma ^{\flat }(\varvec{\ell },\varvec{c}) \ge 1\). Next, note that if \(\varvec{\ell }^*= \infty \) (i.e., if \(\varvec{\ell }\) is unbounded), then \(\gamma ^{\flat }(\varvec{\ell },\varvec{c}) = \gamma ^{\sharp }(\varvec{\ell },\varvec{c}) = \infty \). Finally, we remark that if \(\varvec{\ell }^*< \infty \) and if \(\varvec{c}\) satisfies the natural growth condition \(\varvec{c}(n) \asymp n^\theta \cdot (\ln (2 n))^{\kappa }\) for certain \(\theta \ge 0\) and \(\kappa \in \mathbb {R}\), then \( \gamma ^{\flat }(\varvec{\ell },\varvec{c}) = \gamma ^{\sharp }(\varvec{\ell },\varvec{c}) = \theta \cdot \varvec{\ell }^*+ \lfloor \varvec{\ell }^*/ 2 \rfloor . \) Thus, in most natural cases—but not always—\(\gamma ^{\flat }\) and \(\gamma ^{\sharp }\) agree.

An explicit example where \(\gamma ^{\flat }\) is not identical to \(\gamma ^{\sharp }\) is as follows: Define \(c_1 := c_2 := c_3 := 1\) and for \(n,m \in \mathbb {N}\) with \(2^{2^m} \le n < 2^{2^{m+1}}\), define \(c_n := 2^{2^m}\). Then, assume that \(\gamma _1,\gamma _2 \in [0,\infty )\) and \(\kappa _1,\kappa _2 > 0\) satisfy \(\kappa _1 \, n^{\gamma _1} \le c_n \le \kappa _2 \, n^{\gamma _2}\) for all \(n \in \mathbb {N}\). Applying the upper estimate for arbitrary \(m \in \mathbb {N}\) and \(n = n_m = 2^{2^m}\), we see \(n = c_n \le \kappa _2 \, n^{\gamma _2}\); since \(n_m = 2^{2^m} \rightarrow \infty \) as \(m \rightarrow \infty \), this is only possible if \(\gamma _2 \ge 1\). On the other hand, if we apply the lower estimate for arbitrary \(m \in \mathbb {N}\) and \(n = n_m = 2^{2^{m+1}} - 1\), we see because of \(c_n = 2^{2^m} = 2^{2^{m+1} / 2} = \sqrt{2^{2^{m+1}}} = \sqrt{n+1}\) that \( \kappa _1 \, n^{\gamma _1} \le c_n = \sqrt{n+1} . \) Again, since \(n_m = 2^{2^{m+1}} - 1 \rightarrow \infty \) as \(m \rightarrow \infty \), this is only possible if \(\gamma _1 \le \frac{1}{2}\).
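The two subsequences in this example are easy to inspect numerically. The following Python sketch evaluates the sequence \(c_n\) defined above. The function name `c_seq` is ours, chosen for illustration. The sketch prints \(\ln c_n / \ln n\) along \(n_m = 2^{2^m}\), where the ratio is 1, and along \(n_m = 2^{2^{m+1}} - 1\), where it approaches \(\frac{1}{2}\). This mirrors the gap between \(\gamma _1 \le \frac{1}{2}\) and \(\gamma _2 \ge 1\) derived above.

```python
import math


def c_seq(n: int) -> int:
    """Sequence from Remark 2.2: c_1 = c_2 = c_3 = 1, and c_n = 2**(2**m)
    whenever 2**(2**m) <= n < 2**(2**(m+1)) for some m >= 1."""
    if n < 4:
        return 1
    m = 1
    # Move m forward until n lies in the block [2**(2**m), 2**(2**(m+1))).
    while 2 ** (2 ** (m + 1)) <= n:
        m += 1
    return 2 ** (2 ** m)


for m in range(1, 6):
    start = 2 ** (2 ** m)            # left end of a block: c_n = n
    end = 2 ** (2 ** (m + 1)) - 1    # right end of a block: c_n = sqrt(n + 1)
    print(m, math.log(c_seq(start)) / math.log(start), math.log(c_seq(end)) / math.log(end))
```

Python's arbitrary-precision integers keep the block boundaries exact even for large m.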

Given these considerations, it is easy to see for \(\varvec{\ell } \equiv L \in \mathbb {N}_{\ge 2}\) and \(\varvec{c}(n) := c_n\) that \(\gamma ^{\flat }(\varvec{\ell },\varvec{c}) \le \frac{L}{2} + \lfloor \frac{L}{2} \rfloor \), while \(\gamma ^{\sharp }(\varvec{\ell },\varvec{c}) \ge L + \lfloor \frac{L}{2} \rfloor \). In particular, \(\gamma ^{\flat }(\varvec{\ell },\varvec{c}) < \gamma ^{\sharp }(\varvec{\ell },\varvec{c})\). \(\triangle \)

2.4 The Framework of Sampling Complexity

Let \(d \in \mathbb {N}\), let \(\varnothing \ne U \subset C([0,1]^d)\) be bounded, and let Y be a Banach space. We are interested in numerically approximating a given solution mapping \(S : U \rightarrow Y\), where the numerical procedure is only allowed to access point samples of the functions \(f \in U\). The procedure can be either deterministic or probabilistic (Monte Carlo). In this short section, we discuss the mathematical formalization of this problem, based on the setup of numerical complexity theory, as for instance outlined in [25, Section 2].

The reader should keep in mind that we are mostly interested in the setting where U is the unit ball in the neural network approximation space  , i.e.,

and where the solution mapping is one of the following:

1. The embedding into \(C([0,1]^d)\), i.e., \(S = \iota _{\infty }\) for \(\iota _\infty : U \rightarrow C([0,1]^d), f \mapsto f\),

2. The embedding into \(L^2([0,1]^d)\), i.e., \(S = \iota _2\) for \(\iota _2 : U \rightarrow L^2([0,1]^d), f \mapsto f\), or

3. The definite integral, i.e., \(S = T_{\int }\) for \(T_{\int } : U \rightarrow \mathbb {R}, f \mapsto \int _{[0,1]^d} f(x) \, d x\).

2.4.1 The Deterministic Setting

A (potentially nonlinear) map \(A : U \rightarrow Y\) is called a deterministic method using \(m \in \mathbb {N}\) point measurements (written \(A \in {\text {Alg}}_m (U,Y)\)) if there exist \(\varvec{x}= (x_1,\dots ,x_m) \in ([0,1]^d)^m\) and a map \(Q : \mathbb {R}^m \rightarrow Y\) such that

Given a (solution) mapping \(S : U \rightarrow Y\), we define the error of A in approximating S as

The optimal error for (deterministically) approximating \(S : U \rightarrow Y\) using m point samples is then

Finally, the optimal order for (deterministically) approximating \(S : U \rightarrow Y\) using point samples is
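As a concrete instance of this definition, consider the tensor-product midpoint rule, which is a deterministic method for \(S = T_{\int }\). It is an illustration only and is not a method used in the paper. The points \(x_1,\dots ,x_m\) are fixed in advance, and Q averages the sampled values. The Python sketch below assumes \(m = k^d\) for some \(k \in \mathbb {N}\). The helper names `midpoint_nodes`, `Q` and `A` are hypothetical.

```python
import itertools
from typing import Callable, Sequence

Point = tuple[float, ...]


def midpoint_nodes(k: int, d: int) -> list[Point]:
    """Fixed sample points x_1, ..., x_m (m = k**d): midpoints of a uniform grid on [0,1]^d."""
    grid = [(i + 0.5) / k for i in range(k)]
    return list(itertools.product(grid, repeat=d))


def Q(samples: Sequence[float]) -> float:
    """Reconstruction map Q: R^m -> R; here it simply averages the samples."""
    return sum(samples) / len(samples)


def A(f: Callable[[Point], float], nodes: list[Point]) -> float:
    """Deterministic method A(f) = Q(f(x_1), ..., f(x_m)): f is accessed only via point samples."""
    return Q([f(x) for x in nodes])


nodes = midpoint_nodes(k=8, d=2)                    # m = 64 fixed points
print(len(nodes), A(lambda x: x[0] * x[1], nodes))  # exact integral over [0,1]^2 is 1/4
```

Here Q happens to be linear, but the definition of \({\text {Alg}}_m(U,Y)\) allows arbitrary nonlinear Q. The fixed choice of points is the essential restriction.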

2.4.2 The Randomized Setting

A randomized method using \(m \in \mathbb {N}\) point measurements (in expectation) is a tuple \((\varvec{A},\varvec{m})\) consisting of a family \(\varvec{A}= (A_\omega )_{\omega \in \Omega }\) of (potentially nonlinear) maps \(A_\omega : U \rightarrow Y\) indexed by a probability space \((\Omega ,\mathcal {F},\mathbb {P})\) and a measurable function \(\varvec{m}: \Omega \rightarrow \mathbb {N}\) with the following properties:

1. for each \(f \in U\), the map \(\Omega \rightarrow Y, \omega \mapsto A_\omega (f)\) is measurable (with respect to the Borel \(\sigma \)-algebra on Y),

2. for each \(\omega \in \Omega \), we have \(A_\omega \in {\text {Alg}}_{\varvec{m}(\omega )}(U,Y)\),

3. \(\mathbb {E}_{\omega } [\varvec{m}(\omega )] \le m\).

We write \((\varvec{A}, \varvec{m}) \in {\text {Alg}}^{\textrm{ran}}_m (U,Y)\) if these conditions are satisfied. We say that \((\varvec{A},\varvec{m})\) is strongly measurable if the map \(\Omega \times U \rightarrow Y, (\omega ,f) \mapsto A_\omega (f)\) is measurable, where \(U \subset C([0,1]^d)\) is equipped with the Borel \(\sigma \)-algebra induced by \(C([0,1]^d)\).
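A standard example of a randomized method in this sense is plain Monte Carlo integration for \(S = T_{\int }\). Here \(\omega \) determines m i.i.d. uniform points in \([0,1]^d\), and \(\varvec{m}(\omega ) \equiv m\). The Python sketch below illustrates this setup under these assumptions; it is not one of the algorithms constructed in the paper. For each fixed random state, the sketch is a deterministic method with m point samples. It then estimates the expected error by averaging over independent repetitions.

```python
import random
from typing import Callable

Point = tuple[float, ...]


def monte_carlo(f: Callable[[Point], float], m: int, d: int, rng: random.Random) -> float:
    """One realisation A_omega(f), where omega is the state of `rng`: draw m i.i.d.
    uniform points in [0,1]^d and average the samples. Here m(omega) = m for every
    omega, so E[m(omega)] <= m holds trivially."""
    points = [tuple(rng.random() for _ in range(d)) for _ in range(m)]
    return sum(f(x) for x in points) / m


def f(x: Point) -> float:
    return x[0]  # S f = integral of x_1 over [0,1]^3 = 1/2


rng = random.Random(0)  # fixed seed only to make the run reproducible
errors = [abs(monte_carlo(f, m=1000, d=3, rng=rng) - 0.5) for _ in range(20)]
print(sum(errors) / len(errors))  # empirical estimate of the expected error
```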

Remark

In most of the literature (see e.g. [25, Section 2]), randomized algorithms are always assumed to be strongly measurable. All randomized algorithms that we construct will have this property. On the other hand, all our hardness results apply to arbitrary randomized algorithms satisfying Properties 1–3 from above. Thus, with the terminology just introduced, we obtain stronger results than we would with the usual definition.

The expected error of a randomized algorithm \((\varvec{A},\varvec{m})\) for approximating a (solution) mapping \(S : U \rightarrow Y\) is defined as

The optimal randomized error for approximating \(S : U \rightarrow Y\) using m point samples (in expectation) is

Finally, the optimal randomized order for approximating \(S : U \rightarrow Y\) using point samples is

The remainder of this paper is concerned with deriving upper and lower bounds for the exponents \(\beta _*^{\textrm{det}}(U,S)\) and \(\beta _*^{\textrm{ran}}(U,S)\), where  is the unit ball in  , and S is either the embedding of  into \(C([0,1]^d)\), the embedding into \(L^2([0,1]^d)\), or the definite integral \(S f = \int _{[0,1]^d} f(t) \, d t\).

To derive upper bounds on these exponents (i.e., hardness bounds) for randomized algorithms, we will frequently use the following lemma, which is a slight adaptation of [25, Proposition 4.1]. In a nutshell, the lemma shows that a hardness result for deterministic algorithms in the average case implies a hardness result for randomized algorithms.

Lemma 2.3

Let \(\varnothing \ne U \subset C([0,1]^d)\) be bounded, let Y be a Banach space, and let \(S : U \rightarrow Y\). Assume that there exist \(\lambda \in [0,\infty )\), \(\kappa > 0\), and \(m_0 \in \mathbb {N}\) such that for every \(m \in \mathbb {N}_{\ge m_0}\) there exists a finite set \(\Gamma _m \ne \varnothing \) and a family of functions \((f_{\gamma })_{\gamma \in \Gamma _m} \subset U\) satisfying

Then \(\beta _*^{\textrm{det}}(U,S),\beta _*^{\textrm{ran}}(U,S) \le \lambda \).

Proof

Step 1 (proving \(\beta _*^{\textrm{det}}(U,S) \le \lambda \)): For every \(A \in {\text {Alg}}_m(U,Y)\), Eq. (2.5) implies because of \(f_{\gamma } \in U\) that

Since this holds for every \(m \in \mathbb {N}_{\ge m_0}\) and every \(A \in {\text {Alg}}_m(U,Y)\), with \(\kappa \) independent of A, m, this easily implies \(e_{m}^{\textrm{det}}(U,S) \ge \kappa \, m^{-\lambda }\) for all \(m \in \mathbb {N}_{\ge m_0}\), and then \(\beta _{*}^{\textrm{det}}(U,S) \le \lambda \).

Step 2 (proving \(\beta _*^{\textrm{ran}}(U,S) \le \lambda \)): Let \(m \in \mathbb {N}_{\ge m_0}\) and let \((\varvec{A},\varvec{m}) \in {\text {Alg}}_{m}^{\textrm{ran}}(U,Y)\) be arbitrary, with \(\varvec{A}= (A_\omega )_{\omega \in \Omega }\) for a probability space \((\Omega ,\mathcal {F},\mathbb {P})\). Define \(\Omega _0 := \{ \omega \in \Omega :\varvec{m}(\omega ) \le 2 m \}\) and note \(m \ge \mathbb {E}_\omega [\varvec{m}(\omega )] \ge 2 m \cdot \mathbb {P}(\Omega _0^c)\), which shows \(\mathbb {P}(\Omega _0^c) \le \frac{1}{2}\) and hence \(\mathbb {P}(\Omega _0) \ge \frac{1}{2}\).
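The estimate \(m \ge \mathbb {E}_\omega [\varvec{m}(\omega )] \ge 2 m \cdot \mathbb {P}(\Omega _0^c)\) is an instance of Markov's inequality. It uses only \(\varvec{m} \ge 0\) and \(\varvec{m}(\omega ) > 2m\) for \(\omega \in \Omega _0^c\). Written out in full:

$$\begin{aligned} m \ge \mathbb {E}_\omega [\varvec{m}(\omega )] \ge \mathbb {E}_\omega \bigl [\varvec{m}(\omega ) \, \mathbf {1}_{\Omega _0^c}(\omega )\bigr ] \ge 2 m \cdot \mathbb {P}(\Omega _0^c) , \qquad \text {so that} \qquad \mathbb {P}(\Omega _0) = 1 - \mathbb {P}(\Omega _0^c) \ge \tfrac{1}{2} . \end{aligned}$$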

Note that \(A_\omega \in {\text {Alg}}_{2m} (U, Y)\) for each \(\omega \in \Omega _0\), so that Eq. (2.5) (with 2m instead of m) shows  for a constant \(\widetilde{\kappa } = \widetilde{\kappa }(\kappa ,\lambda ) > 0\). Therefore,

and hence \( e_m^{\textrm{ran}} \big ( U, S \big ) \ge \frac{\widetilde{\kappa }}{2} \cdot m^{-\lambda } , \) since Eq. (2.6) holds for any randomized algorithm \((\varvec{A},\varvec{m}) \in {\text {Alg}}_m^{\textrm{ran}} (U, Y)\). Finally, since \(m \in \mathbb {N}_{\ge m_0}\) can be chosen arbitrarily, we see as claimed that \( \beta _*^{\textrm{ran}}(U, S) \le \lambda . \) \(\square \)

3 Richness of the Unit Ball in the Spaces

In this section, we show that ReLU networks with a limited number of neurons and bounded weights can approximate several different functions of “hat-function type” well, as shown in Fig. 1. The fact that this is possible implies that the unit ball  is quite rich; this will be the basis of all of our hardness results.

Fig. 1: A plot of the “hat function” \(\Lambda _{M,y}\) formally defined in Eq. (3.1).

We begin by considering the most basic “hat function” \({\Lambda _{M,y} : \mathbb {R}\rightarrow [0,1]}\), defined for \(M > 0\) and \(y \in \mathbb {R}\) by

For later use, we note that \(\int _{\mathbb {R}} \Lambda _{M,y}(x) \, d x = M^{-1}\). Furthermore, we “lift” \(\Lambda _{M,y}\) to a function on \(\mathbb {R}^d\) by setting \(\Lambda _{M,y}^*: \mathbb {R}^d \rightarrow \mathbb {R}, x = (x_1,\dots ,x_d) \mapsto \Lambda _{M,y}(x_1)\). The following lemma gives a bound on how economically sums of the functions \(\Lambda _{M,y}\) can be implemented by ReLU networks.
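The display (3.1) is not reproduced here, so the following Python sketch assumes the triangular form \(\Lambda _{M,y}(x) = \max \{0, 1 - M|x-y|\}\). This form has all the properties used below:

- values in [0,1], with \(\Lambda _{M,y}(y) = 1\);
- Lipschitz constant M;
- vanishing for \(|x-y| \ge M^{-1}\);
- integral \(M^{-1}\).

The sketch also checks the three-neuron ReLU identity \(M^{-1} \Lambda _{M,y}(x) = \varrho (x - y + M^{-1}) - 2 \varrho (x-y) + \varrho (x - y - M^{-1})\). This is one standard way to realize a hat function with a shallow ReLU network. Whether the matrices in the proof of Lemma 3.1 use exactly this form is not shown here.

```python
def relu(t: float) -> float:
    return max(t, 0.0)


def hat(x: float, M: float, y: float) -> float:
    """Assumed closed form of Lambda_{M,y}: height 1 at y, zero for |x - y| >= 1/M."""
    return max(0.0, 1.0 - M * abs(x - y))


def hat_relu(x: float, M: float, y: float) -> float:
    """Same function from three ReLU neurons with inner weights 1:
    Lambda_{M,y}(x) = M * [relu(s + 1/M) - 2 relu(s) + relu(s - 1/M)], s = x - y."""
    s, h = x - y, 1.0 / M
    return M * (relu(s + h) - 2.0 * relu(s) + relu(s - h))


def hat_lifted(x: tuple[float, ...], M: float, y: float) -> float:
    """Lambda*_{M,y}(x) = Lambda_{M,y}(x_1): only the first coordinate matters."""
    return hat(x[0], M, y)


# Midpoint rule over the support [y - 1/M, y + 1/M]. K is even, so the kink at y
# is a grid node and the rule is exact on each linear piece (up to rounding).
M, y, K = 8.0, 0.25, 100_000
a, width = y - 1.0 / M, (2.0 / M) / K
integral = sum(hat(a + (k + 0.5) * width, M, y) for k in range(K)) * width
print(integral, 1.0 / M)
```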

Lemma 3.1

Let \(\varvec{\ell }: \mathbb {N}\rightarrow \mathbb {N}_{\ge 2} \cup \{ \infty \}\) and \(\varvec{c}: \mathbb {N}\rightarrow \mathbb {N}\cup \{ \infty \}\) be non-decreasing. Let \(M \ge 1\), \(n \in \mathbb {N}\), and \(0 < C \le \varvec{c}(n)\), as well as \(L \in \mathbb {N}_{\ge 2}\) with \(L \le \varvec{\ell }(n)\).

Then

Proof

Let \(\varepsilon _1,\dots ,\varepsilon _n \in [-1,1]\) and \(y_1,\dots ,y_n \in [0,1]\). Let \(e_1 := (1,0,\dots ,0) \in \mathbb {R}^{1 \times d}\) and define

as well as

Finally, set \(E := (C \mid -C) \in \mathbb {R}^{1 \times 2}\) and

Note that \( \Vert A \Vert _{\infty }, \Vert B \Vert _{\infty }, \Vert D \Vert _{\infty }, \Vert E \Vert _{\infty }, \Vert A_1 \Vert _{\infty }, \Vert A_2 \Vert _{\infty }, \Vert A_2^{(0)} \Vert _{\infty } \le C . \) Furthermore, since \(y_j \in [0,1]\) and \(M \ge 1\), we also see \(\Vert b_1 \Vert _{\infty } \le C\). Next, note that \(\Vert A_1 \Vert _{\ell ^0}, \Vert A_2^{(0)} \Vert _{\ell ^0}, \Vert b_1 \Vert _{\ell ^0} \le 3n\), \(\Vert A_2 \Vert _{\ell ^0} \le 6 n\), \(\Vert A \Vert _{\ell ^0}, \Vert B \Vert _{\ell ^0}, \Vert D \Vert _{\ell ^0} \le 2n\), and \(\Vert E \Vert _{\ell ^0} \le 2 \le 2 n\).

For brevity, set \(\gamma := \frac{C^L \, n^{\lfloor L/2 \rfloor }}{4 n M}\) and \(\Xi := \sum _{i=1}^n \varepsilon _i \Lambda _{M,y_i}^*\), so that \(\Xi : \mathbb {R}^d \rightarrow \mathbb {R}\). Before we describe how to construct a network \(\Phi \) implementing \(\gamma \cdot \Xi \), we collect a few auxiliary observations. First, a direct computation shows that

Based on this, it is easy to see

By definition of \(A_2\), this shows \(F(x) = \frac{C^2}{4 M} \bigl (\varrho (\Xi (x)) , \varrho (-\Xi (x))\bigr )^T\) for all \(x \in \mathbb {R}^d\), for the function \(F := \varrho \circ A_2 \circ \varrho \circ (A_1 \bullet + b_1) : \mathbb {R}^d \rightarrow \mathbb {R}^2\).

A further direct computation shows for \(x,y \in \mathbb {R}\) that

Thus, setting \( G := B \circ \varrho \circ A: \mathbb {R}^2 \rightarrow \mathbb {R}^2\), we see \(G(x,y) = C^2 n \bigl (\varrho (x), \varrho (y)\bigr )^T\). Therefore, denoting by \(G^j := G \circ \cdots \circ G\) the j-fold composition of G with itself, we see \(G^j (x,y) = (C^2 n)^j \cdot \bigl (\varrho (x), \varrho (y)\bigr )^T\) for \(j \in \mathbb {N}\), and hence

where the case \(j = 0\) (in which it is understood that \(G^j = \textrm{id}_{\mathbb {R}^2}\)) is easy to verify separately.
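The identity for \(G^j\) is the mechanism that lets bounded weights produce large outputs. Each application of G uses only two layers and weights bounded by C, yet multiplies the input by \(C^2 n\). The displays defining A and B are not reproduced here, so the Python sketch below assumes one natural choice consistent with the stated bounds:

- \(A \in \mathbb {R}^{2n \times 2}\) has n rows \((C,0)\) and n rows \((0,C)\);
- \(B \in \mathbb {R}^{2 \times 2n}\) sums each group of n neurons with weight C.

This choice is hypothetical. The sketch then verifies \(G^j(x,y) = (C^2 n)^j (\varrho (x), \varrho (y))^T\) for one input.

```python
def relu(t: float) -> float:
    return max(t, 0.0)


def matvec(W: list[list[float]], v: list[float]) -> list[float]:
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def make_A_B(n: int, C: float) -> tuple[list[list[float]], list[list[float]]]:
    """Hypothetical A (2n x 2) and B (2 x 2n): every nonzero entry equals C and each
    matrix has exactly 2n nonzero entries."""
    A = [[C, 0.0] for _ in range(n)] + [[0.0, C] for _ in range(n)]
    B = [[C] * n + [0.0] * n, [0.0] * n + [C] * n]
    return A, B


def G(v: list[float], A: list[list[float]], B: list[list[float]]) -> list[float]:
    """G = B o relu o A: two layers mapping (x, y) to C^2 n (relu(x), relu(y))."""
    return matvec(B, [relu(t) for t in matvec(A, v)])


n, C, j = 5, 2.0, 3
A, B = make_A_B(n, C)
v = [0.5, -1.25]
out = v
for _ in range(j):  # j-fold composition G^j
    out = G(out, A, B)
print(out, [(C * C * n) ** j * relu(t) for t in v])
```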

In a similar way, we see for \(H := D \circ \varrho \circ A : \mathbb {R}^2 \rightarrow \mathbb {R}\) that

Now, we prove the claim of the lemma, distinguishing three cases regarding \(L \in \mathbb {N}_{\ge 2}\).

Case 1 (\(L = 2\)): Define \(\Phi := \big ( (A_1, b_1), (A_2^{(0)}, 0) \big )\). Then Eq. (3.2) shows \(R_\varrho \Phi = \frac{C^2}{4 M} \Xi \). Because of \(\frac{C^L \, n^{\lfloor L/2 \rfloor }}{4 n M} = \frac{C^2}{4 M}\) for \(L = 2\), this implies the claim, once we note that

as well as \(W(\Phi ) \le 9 n \le (2 L + 8) n\), since \(L = 2\).

Case 2 (\(L \ge 4\) is even): In this case, define

and note for \(j := \frac{L - 4}{2}\) that \(j+1 = \frac{L-2}{2} = \lfloor L/2 \rfloor - 1\), so that a combination of Eqs. (3.5) and (3.4) shows

since \(\varrho (\varrho (z)) = \varrho (z)\) and \(\varrho (z) - \varrho (-z) = z\) for all \(z \in \mathbb {R}\). Finally, we note as in the previous case that \(L(\Phi ) = L \le \varvec{\ell }( (2 L + 8) n)\) and \(\Vert \Phi \Vert _{\mathcal{N}\mathcal{N}} \le C \le \varvec{c}( (2L + 8) n)\), and furthermore that

Overall, we see also in this case that  , as claimed.

Case 3 (\(L \ge 3\) is odd): In this case, define

Then, setting \(j := \frac{L-3}{2}\) and noting \(j = \lfloor L/2 \rfloor - 1\), we see thanks to Eq. (3.4) and because of \(E = (C \mid -C)\) that

It remains to note as before that \(L(\Phi ) = L \le \varvec{\ell }( (2L + 8) n)\) and \(\Vert \Phi \Vert _{\mathcal{N}\mathcal{N}} \le C \le \varvec{c}( (2L + 8) n)\), and finally that \( W(\Phi ) \le 3 n + 3 n + 6 n + \frac{L-3}{2} (2 n + 2 n) + 2 = 2 + 6n + 2 L n \le (8 + 2 L) n, \) so that indeed  also in this case. \(\square \)

As an application of Lemma 3.1, we now describe a large class of functions contained in the unit ball of the approximation space  .

Lemma 3.2

Let \(\alpha > 0\) and let \(\varvec{c}: \mathbb {N}\rightarrow \mathbb {N}\cup \{ \infty \}\) and \(\varvec{\ell }: \mathbb {N}\rightarrow \mathbb {N}_{\ge 2} \cup \{ \infty \}\) be non-decreasing. Let \(\sigma \ge 2\), \(0< \gamma < \gamma ^{\flat }(\varvec{\ell },\varvec{c})\), \(\theta \in (0,\infty )\) and \(\lambda \in [0,1]\) with \(\theta \lambda \le 1\) be arbitrary and define

Then there exists a constant \(\kappa = \kappa (\alpha ,\theta ,\lambda ,\gamma ,\sigma ,\varvec{\ell },\varvec{c}) > 0\) such that for every \(m \in \mathbb {N}\), the following holds:

Setting \(M := 4 m\) and \(z_j := \frac{1}{4 m} + \frac{j-1}{2 m}\) for \(j \in \underline{2m}\), the functions \(\bigl (\Lambda _{M,z_j}^*\bigr )_{j \in \underline{2m}}\) are supported in \([0,1]^d\) and have disjoint supports, up to a null-set. Furthermore, for any \(\varvec{\nu }= (\nu _j)_{j \in \underline{2m}} \in [-1,1]^{2m}\) and \(J \subset \underline{2 m}\) satisfying \(|J| \le \sigma \cdot m^{\theta \lambda }\), we have

Proof

Since \(\gamma < \gamma ^{\flat }(\varvec{\ell },\varvec{c})\), we see by definition of \(\gamma ^{\flat }\) that there exist \(L = L(\gamma ,\varvec{\ell },\varvec{c}) \in \mathbb {N}_{\le \varvec{\ell }^*}\) and \(C_1 = C_1(\gamma ,\varvec{\ell },\varvec{c}) > 0\) such that \(n^\gamma \le C_1 \cdot (\varvec{c}(n))^L \cdot n^{\lfloor L/2 \rfloor }\) for all \(n \in \mathbb {N}\). Because of \(\varvec{\ell }\ge 2\), we can assume without loss of generality that \(L \ge 2\). Furthermore, since \(L \le \varvec{\ell }^*\), we can choose \(n_0 = n_0(\gamma ,\varvec{\ell },\varvec{c}) \in \mathbb {N}\) satisfying \(L \le \varvec{\ell }(n_0)\).

Let \(m \in \mathbb {N}\) and let \(\varvec{\nu }\) and J be as in the statement of the lemma. For brevity, define \({f_{\varvec{\nu },J}^{(0)} := \sum _{j \in J} \nu _j \Lambda _{M,z_j}^*}\). We note that \(\Lambda _{M,z_j}^*\) is continuous with \(0 \le \Lambda _{M,z_j}^*\le 1\) and

This shows that the supports of the functions \(\Lambda _{M,z_j}^*\) are contained in \([0,1]^d\) and are pairwise disjoint (up to null-sets), which then implies \(\big \Vert f_{\varvec{\nu },J}^{(0)} \big \Vert _{L^\infty } \le 1\).
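With \(M = 4m\), the interval \([z_j - M^{-1}, z_j + M^{-1}]\), outside of which \(\Lambda _{M,z_j}\) vanishes, is exactly \([\frac{j-1}{2m}, \frac{j}{2m}]\). These 2m intervals therefore tile [0,1] and overlap only at endpoints. The Python sketch below checks this in exact rational arithmetic. It concerns the first coordinate \(x_1\) only, since \(\Lambda _{M,z_j}^*\) does not depend on the other coordinates. The function name is illustrative.

```python
from fractions import Fraction


def hat_supports(m: int) -> list[tuple[Fraction, Fraction]]:
    """Intervals [z_j - 1/M, z_j + 1/M] (in the coordinate x_1) outside of which
    Lambda*_{M, z_j} vanishes, for M = 4m and z_j = 1/(4m) + (j-1)/(2m), j = 1..2m."""
    M = 4 * m
    centres = [Fraction(1, 4 * m) + Fraction(j - 1, 2 * m) for j in range(1, 2 * m + 1)]
    return [(z - Fraction(1, M), z + Fraction(1, M)) for z in centres]


S = hat_supports(3)
print([(str(lo), str(hi)) for lo, hi in S])
```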

Next, since \(\theta \lambda \le 1\), we have \(\lceil m^{\theta \lambda } \rceil \le \lceil m \rceil = m \le 2 m\). Thus, by possibly enlarging the set \(J \subset \underline{2m}\) and setting \(\nu _j := 0\) for the added elements, we can without loss of generality assume that \(|J| \ge \lceil m^{\theta \lambda } \rceil \ge 1\). Note that the extended set still satisfies \(|J| \le \sigma \cdot m^{\theta \lambda }\) since \(\lceil m^{\theta \lambda } \rceil \le 2 m^{\theta \lambda }\) and \(\sigma \ge 2\).

Now, define \(N := n_0 \cdot \big \lceil m^{(1-\lambda ) \theta } \big \rceil \) and \(n := N \cdot |J|\), noting that \(n \ge n_0\). Furthermore, writing \({J = \{ i_1,\dots ,i_{|J|} \}}\), define

By choice of \(C_1\), we have \(n^\gamma \le C_1 \cdot (\varvec{c}(n))^L \cdot n^{\lfloor L/2 \rfloor }\), so that we can choose \(0 < C \le \varvec{c}(n)\) satisfying \(n^\gamma \le C_1 \cdot C^L \cdot n^{\lfloor L/2 \rfloor }\). Since we also have \(L \ge 2\) and \(L \le \varvec{\ell }(n_0) \le \varvec{\ell }(n)\), Lemma 3.1 shows that

here the final equality comes from our choice of \(\varepsilon _1,\dots ,\varepsilon _n\) and \(y_1,\dots ,y_n\).

To complete the proof, we first collect a few auxiliary estimates. First, we see because of \({|J| \ge m^{\theta \lambda }}\) that \(n \ge n_0 \, m^{(1-\lambda ) \theta } \, m^{\theta \lambda } \ge m^\theta \).

Thus, setting \(C_2 := 16 \sigma C_1\) and recalling that \({\omega \le \theta \cdot (\gamma - \lambda ) - 1}\) by choice of \(\omega \), we see for any \(0 < \kappa \le C_2^{-1}\) that

Here, we used in the last step that \(|J| \le \sigma \, m^{\theta \lambda }\), which implies \( \frac{N}{n} = |J|^{-1} \ge \sigma ^{-1} m^{-\theta \lambda } . \) Thus, noting that \(c \Sigma _{t}^{\varvec{\ell },\varvec{c}} \subset \Sigma _{t}^{\varvec{\ell },\varvec{c}}\) for \(c \in [-1,1]\), we see  as long as \(0 < \kappa \le C_2^{-1}\).

Finally, set \(C_3 := \max \bigl \{ 1, \,\, C_2, \,\, (2 L + 8)^\alpha \, (2 n_0 \sigma )^\alpha \bigr \}\). We claim that  for \(\kappa := C_3^{-1}\). Once this is shown, Lemma 2.1 will show that  as well. To see  , first note that \(\big \Vert \kappa \, m^\omega \, f_{\varvec{\nu },J}^{(0)} \big \Vert _{L^\infty } \le \Vert f_{\varvec{\nu },J}^{(0)} \Vert _{L^\infty } \le 1\) since \(\omega < 0\) and \(\kappa = C_3^{-1} \le 1\). Furthermore, for \(t \in \mathbb {N}\) there are two cases. For \(t \ge (2 L + 8) n\), we have shown above that  and hence \(t^\alpha \, d_\infty (\kappa \, m^\omega \, f_{\varvec{\nu },J}^{(0)}, \Sigma _{t}^{\varvec{\ell },\varvec{c}}) = 0 \le 1\). On the other hand, if \(t \le (2 L + 8) n\), then we see because of \( \big \lceil m^{(1-\lambda ) \theta } \big \rceil \le 1 + m^{(1-\lambda ) \theta } \le 2 \cdot m^{(1-\lambda ) \theta } \) and \(|J| \le \sigma \, m^{\theta \lambda }\) that \(n \le 2 n_0 \sigma \, m^\theta \). Since we also have \(\omega \le - \theta \alpha \), this implies

All in all, this shows  . As seen above, this completes the proof. \(\square \)
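The two-sided bound \(m^\theta \le n \le 2 n_0 \sigma \, m^\theta \) used in this proof is pure bookkeeping. The Python sketch below recomputes \(n = N \cdot |J|\) for the smallest and the largest admissible size of J. It tests both inequalities for a few parameter choices with \(\theta \lambda \le 1\) and \(\sigma \ge 2\). The function names are illustrative, and the small relative slack only absorbs floating-point rounding.

```python
import math


def n_value(m: int, theta: float, lam: float, n0: int, size_J: int) -> int:
    """n = N * |J| with N = n0 * ceil(m^{(1 - lam) theta}), as in the proof of Lemma 3.2."""
    return n0 * math.ceil(m ** ((1 - lam) * theta)) * size_J


def bounds_hold(m: int, theta: float, lam: float, n0: int, sigma: float) -> bool:
    """Check m^theta <= n <= 2 n0 sigma m^theta for the extreme admissible sizes of J."""
    base = m ** (theta * lam)
    smallest = math.ceil(base)                      # |J| >= ceil(m^{theta lam}) after enlarging J
    largest = min(2 * m, math.floor(sigma * base))  # |J| <= sigma m^{theta lam} and J in {1..2m}
    slack = 1 + 1e-12                               # absorbs floating-point rounding
    ok = True
    for size_J in (smallest, largest):
        n = n_value(m, theta, lam, n0, size_J)
        ok = ok and m ** theta <= n * slack and n <= 2 * n0 * sigma * m ** theta * slack
    return ok


print(bounds_hold(m=10, theta=1.5, lam=0.5, n0=2, sigma=2.0))
```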

For later use, we also collect the following technical result. It shows how to select a large number of “hat functions” as in Lemma 3.2 that vanish at every point of a given set of sampling points.

Lemma 3.3

Let \(m \in \mathbb {N}\) and let \(M = 4 m\) and \(z_j = \frac{1}{4 m} + \frac{j-1}{2 m}\) as in Lemma 3.2. Given arbitrary points \(\varvec{x}= (x_1,\dots ,x_m) \in ([0,1]^d)^m\), define

Then \(|I_{\varvec{x}}| \ge m\).
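The proof below uses only one property of \(I_{\varvec{x}}\): it consists of those \(i \in \underline{2m}\) for which \(\Lambda _{M,z_i}^*\) vanishes at every sample point \(x_1,\dots ,x_m\). The display is not reproduced here, so the Python sketch assumes exactly this definition, together with the triangular form of \(\Lambda _{M,y}\). The sketch checks \(|I_{\varvec{x}}| \ge m\) in two situations:

- for random points;
- for the extreme configuration in which every sample sits at the center of a different hat.

The function name `annihilated` is hypothetical.

```python
import random

Point = tuple[float, ...]


def hat(t: float, M: float, y: float) -> float:
    # Assumed triangular form of Lambda_{M,y}.
    return max(0.0, 1.0 - M * abs(t - y))


def annihilated(points: list[Point]) -> set[int]:
    """Assumed definition of I_x: indices i in {1, ..., 2m} such that Lambda*_{M, z_i}
    vanishes at every sample point, where m = len(points), M = 4m, z_i = 1/(4m) + (i-1)/(2m)."""
    m = len(points)
    M = 4 * m
    centres = {i: 1 / (4 * m) + (i - 1) / (2 * m) for i in range(1, 2 * m + 1)}
    return {i for i, z in centres.items() if all(hat(x[0], M, z) == 0.0 for x in points)}


rng = random.Random(1)
m, d = 7, 3
samples = [tuple(rng.random() for _ in range(d)) for _ in range(m)]
print(len(annihilated(samples)), ">=", m)
```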

Proof

Let \(I_{\varvec{x}}^c := \underline{2m} \setminus I_{\varvec{x}}\). For each \(i \in I_{\varvec{x}}^c\), there exists \(n_i \in \underline{m}\) satisfying \(\Lambda _{M,z_i}^*(x_{n_i}) \ne 0\). The map \(I_{\varvec{x}}^c \rightarrow \underline{m}, i \mapsto n_i\) is injective, since \(\Lambda _{M,z_i}^*\Lambda _{M,z_\ell }^*\equiv 0\) for \(i \ne \ell \) (see Lemma 3.2). Therefore, \(|I_{\varvec{x}}^c| \le m\) and hence \(|I_{\varvec{x}}| = 2m - |I_{\varvec{x}}^c| \ge m\). \(\square \)

The function \(\Lambda _{M,y}^*: \mathbb {R}^d \rightarrow \mathbb {R}\) has a controlled support with respect to the first coordinate of x, but unbounded support with respect to the remaining variables. For proving more refined hardness bounds, we shall therefore use the following modified construction of a function of “hat-type” with controlled support. As we will see in Lemma 3.5 below, this function can also be well implemented by ReLU networks, provided one can use networks with at least two hidden layers.

Lemma 3.4

Given \(d \in \mathbb {N}\), \(M > 0\) and \(y \in \mathbb {R}^d\), define

Then the function \(\vartheta _{M,y}\) has the following properties:

a) \(\vartheta _{M,y}(x) = 0\) for all \(x \in \mathbb {R}^d \setminus \bigl (y + M^{-1} (-1,1)^d\bigr )\);

b) \(\Vert \vartheta _{M,y} \Vert _{L^p (\mathbb {R}^d)} \le (2 / M)^{d/p}\) for arbitrary \(p \in (0,\infty ]\);

c) For any \(p \in (0,\infty ]\) there is a constant \(C = C(d,p) > 0\) satisfying

$$\begin{aligned} \Vert \vartheta _{M,y} \Vert _{L^p([0,1]^d)} \ge C \cdot M^{-d/p}, \qquad \forall \, y \in [0,1]^d \text { and } M \ge \tfrac{1}{2d} . \end{aligned}$$
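The displayed definition of \(\vartheta _{M,y}\) is not reproduced here. The proofs below use \(\vartheta _{M,y} = \theta \circ \Delta _{M,y}\) with \(\Delta _{M,y}(x) = \sum _{j=1}^d \Lambda _{M,y_j}(x_j) - (d-1)\). Fig. 2 shows a non-decreasing \(\theta \) with \(\theta = 0\) on \((-\infty ,0]\) and \(\theta = 1\) on \([1,\infty )\).

The Python sketch rests on two assumptions:

- \(\theta (t) = \varrho (t) - \varrho (t-1)\), called `clip01` in the code. This choice has the properties above and matches the way the last layer \(D = C \cdot (1,-1)\) combines multiples of \(\Delta _{M,y}\) and \(\Delta _{M,y} - 1\) in the proof of Lemma 3.5.
- The hat function has the triangular form used in the earlier sketches.

The sketch samples random points to check Property a) and the bound \(\vartheta _{M,y} \ge \frac{1}{2}\) on \(y + [-T,T]^d\) with \(T = \frac{1}{2dM}\), taken from the proof of Part c).

```python
import random


def relu(t: float) -> float:
    return max(t, 0.0)


def hat(t: float, M: float, y: float) -> float:
    # Assumed triangular form of Lambda_{M,y}.
    return max(0.0, 1.0 - M * abs(t - y))


def clip01(t: float) -> float:
    # Assumed form of theta: relu(t) - relu(t - 1), i.e. 0 on (-inf, 0], t on [0, 1], 1 on [1, inf).
    return relu(t) - relu(t - 1.0)


def vartheta(x: list[float], M: float, y: list[float]) -> float:
    """vartheta_{M,y}(x) = theta(Delta_{M,y}(x)), Delta_{M,y}(x) = sum_j Lambda_{M,y_j}(x_j) - (d - 1)."""
    delta = sum(hat(xj, M, yj) for xj, yj in zip(x, y)) - (len(y) - 1)
    return clip01(delta)


def sample_check(d: int, M: float, trials: int, seed: int) -> tuple[bool, bool]:
    """Random test of Property a) and of vartheta >= 1/2 on y + [-T, T]^d, T = 1/(2dM)."""
    rng = random.Random(seed)
    y = [rng.random() for _ in range(d)]
    T = 1.0 / (2 * d * M)
    zero_outside = half_inside = True
    for _ in range(trials):
        u = [rng.uniform(-3.0 / M, 3.0 / M) for _ in range(d)]
        if max(abs(uj) for uj in u) > 1.01 / M:  # clearly outside y + M^{-1}(-1, 1)^d
            zero_outside &= vartheta([a + b for a, b in zip(y, u)], M, y) == 0.0
        v = [yj + rng.uniform(-T, T) for yj in y]  # a point of y + [-T, T]^d
        half_inside &= vartheta(v, M, y) >= 0.5 - 1e-12
    return zero_outside, half_inside


print(sample_check(d=3, M=5.0, trials=1000, seed=0))
```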

Proof of Lemma 3.4

Ad a) For \(x \in \mathbb {R}^d \setminus \big ( y + M^{-1} (-1,1)^d \big )\), there exists \(\ell \in \underline{d}\) with \(|x_\ell - y_\ell | \ge M^{-1}\) and hence \(\Lambda _{M,y_\ell }(x_\ell ) = 0\); see Fig. 1. Because of \(0 \le \Lambda _{M,y_j} \le 1\), this implies

By elementary properties of the function \(\theta \) (see Fig. 2), this shows \(\vartheta _{M,y}(x) = \theta (\Delta _{M,y}(x)) = 0\).

Ad b) Since \(0 \le \theta \le 1\), we also have \(0 \le \vartheta _{M,y} \le 1\). Combined with Part a), this implies \( \Vert \vartheta _{M,y} \Vert _{L^p} \le \bigl [\varvec{\lambda }(y + M^{-1}(-1,1)^d)\bigr ]^{1/p} = (2/M)^{d/p} , \) as claimed.

Ad c) Set \(T := \frac{1}{2 d M} \in (0,1]\) and \(P := y + [-T,T]^d\). For \(x \in P\) and arbitrary \(j \in \underline{d}\), we have \(|x_j - y_j| \le \frac{1}{2 d M}\). Since \(\Lambda _{M,y_j}\) is Lipschitz with \({\text {Lip}}(\Lambda _{M,y_j}) \le M\) (see Fig. 1) and \(\Lambda _{M,y_j}(y_j) = 1\), this implies

Since this holds for all \(j \in \underline{d}\), we see \(\Delta _{M,y}(x) = \sum _{j=1}^d \Lambda _{M,y_j}(x_j) - (d \!-\! 1) \ge d \!\cdot \! (1 \!-\! \frac{1}{2 d}) - (d \!-\! 1) = \frac{1}{2} , \) and hence \(\vartheta _{M,y}(x) = \theta (\Delta _{M,y}(x)) \ge \theta (\frac{1}{2}) = \frac{1}{2}\), since \(\theta \) is non-decreasing.

Finally, Lemma A.2 shows for \(Q = [0,1]^d\) that \(\varvec{\lambda }(Q \cap P) \ge 2^{-d} T^d \ge C_1 \cdot M^{-d}\) with \(C_1 = C_1(d) > 0\). Hence, \( \Vert \vartheta _{M,y} \Vert _{L^p([0,1]^d)} \ge \frac{1}{2} [\varvec{\lambda }(Q \cap P)]^{1/p} \ge \frac{1}{2} C_1^{1/p} M^{-d/p} , \) which yields the claim with \(C := \frac{1}{2} C_1^{1/p}\). \(\square \)

Fig. 2: A plot of the function \(\theta \) appearing in Lemma 3.4. Note that \(\theta \) is non-decreasing and satisfies \(\theta (x) = 0\) for \(x \le 0\) as well as \(\theta (x) = 1\) for \(x \ge 1\).

The next lemma shows how well the function \(\vartheta _{M,y}\) can be implemented by ReLU networks. We emphasize that the lemma requires using networks with \(L \ge 3\), i.e., with at least two hidden layers.

Lemma 3.5

Let \(\varvec{\ell }: \mathbb {N}\rightarrow \mathbb {N}_{\ge 2} \cup \{ \infty \}\) and \(\varvec{c}: \mathbb {N}\rightarrow \mathbb {N}\cup \{ \infty \}\) be non-decreasing. Let \(M \ge 1\), \(n \in \mathbb {N}\) and \(0 < C \le \varvec{c}(n)\), as well as \(L \in \mathbb {N}_{\ge 3}\) with \(L \le \varvec{\ell }(n)\). Then

Proof

Let \(y \in [0,1]^d\) be fixed. For \(j \in \underline{d}\), denote by \(e_j \in \mathbb {R}^{d \times 1}\) the j-th standard basis vector. Define \(A_1 \in \mathbb {R}^{4 n d \times d}\) and \(b_1 \in \mathbb {R}^{4 n d}\) by

Furthermore, set \(b_2 := 0 \in \mathbb {R}^2\) and \(b_3 := 0 \in \mathbb {R}^n\), let \(\zeta := -\frac{1}{M}\frac{d-1}{d}\) and \(\xi := -\frac{1}{M}\), and define \(A_2 \in \mathbb {R}^{2 \times 4 n d}\) and \(A_3 \in \mathbb {R}^{n \times 2}\) by

Finally, set \(A := C \cdot (1,\dots ,1) \in \mathbb {R}^{1 \times n}\), \(B := C \cdot (1,\dots ,1)^T \in \mathbb {R}^{n \times 1}\), and \(D := C \cdot (1 , -1) \in \mathbb {R}^{1 \times 2}\), as well as \(E := (C) \in \mathbb {R}^{1 \times 1}\). Note that \( \Vert A_1 \Vert _{\infty }, \Vert A_2 \Vert _{\infty }, \Vert A_3 \Vert _{\infty }, \Vert A \Vert _{\infty }, \Vert B \Vert _{\infty }, \Vert D \Vert _{\infty }, \Vert E \Vert _{\infty } \le C \) and \(\Vert b_1 \Vert _{\infty }, \Vert b_2 \Vert _{\infty } \le C\), since \(M \ge 1\) and \(y \in [0,1]^d\). Furthermore, note \(\Vert A_1 \Vert _{\ell ^0} \le 3 d n\), \(\Vert A_2 \Vert _{\ell ^0} \le 8 d n\), \(\Vert A_3 \Vert _{\ell ^0} \le 2 n\), \(\Vert A \Vert _{\ell ^0}, \Vert B \Vert _{\ell ^0} \le n\), \(\Vert D \Vert _{\ell ^0} \le 2\), and finally \(\Vert b_1 \Vert _{\ell ^0} \le 4 d n\) and \(\Vert b_2 \Vert _{\ell ^0} = 0\). Furthermore, note \(C \le \varvec{c}(n) \le \varvec{c}(15 (d + L) n)\) and likewise \(L \le \varvec{\ell }(n) \le \varvec{\ell }(15 (d+L) n)\) thanks to the monotonicity of \(\varvec{c},\varvec{\ell }\).

A direct computation shows that

Combined with the positive homogeneity of the ReLU (i.e., \(\varrho (t x) = t \varrho (x)\) for \(t \ge 0\)), this shows

In the same way, it follows that \(\bigl (A_2 \, \varrho (A_1 x + b_1) + b_2\bigr )_2 = \frac{C^2 n}{4 M} \cdot (\Delta _{M,y}(x) - 1)\). We now distinguish three cases:

Case 1: \(L=3\). In this case, set \(\Phi := \big ( (A_1,b_1), (A_2,b_2), (D,0) \big )\). Then the calculation from above, combined with the positive homogeneity of the ReLU, shows

Furthermore, it is straightforward to see \(W(\Phi ) \le 3 d n + 4 d n + 8 d n + 2 \le 2 + 15 d n \le 15 (L + d) n\). Combined with our observations from above, and noting \(\lfloor \frac{L}{2} \rfloor = 1\), we thus see as claimed that  .
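The Case 1 computation can be checked in exact arithmetic. As stated above, the two second-layer outputs are \(s \, \Delta _{M,y}(x)\) and \(s \, (\Delta _{M,y}(x) - 1)\) with \(s = \frac{C^2 n}{4M}\). Assume in addition \(\theta (t) = \varrho (t) - \varrho (t-1)\), which is consistent with Fig. 2. Then the last layer \(D = C \cdot (1,-1)\) returns \(C s \, \theta (\Delta _{M,y}(x)) = \frac{C^3 n}{4M} \vartheta _{M,y}(x)\), the claimed scaling for \(L = 3\). The Python sketch below verifies this with `Fraction` on a grid of values of \(\Delta _{M,y}(x)\). The function names are illustrative.

```python
from fractions import Fraction


def relu(t: Fraction) -> Fraction:
    return max(t, Fraction(0))


def last_layer_case1(delta: Fraction, C: Fraction, n: int, M: Fraction) -> Fraction:
    """D = C * (1, -1) applied to relu of the second-layer outputs s*Delta and s*(Delta - 1),
    where s = C^2 n / (4M)."""
    s = C * C * n / (4 * M)
    return C * (relu(s * delta) - relu(s * (delta - 1)))


def claimed(delta: Fraction, C: Fraction, n: int, M: Fraction, L: int = 3) -> Fraction:
    """C^L n^{floor(L/2)} / (4M) * theta(Delta), assuming theta(t) = relu(t) - relu(t - 1)."""
    return C**L * n ** (L // 2) / (4 * M) * (relu(delta) - relu(delta - 1))


grid = [Fraction(k, 4) for k in range(-8, 13)]  # Delta from -2 to 3 in steps of 1/4
C0, n_ex, M0 = Fraction(3, 2), 5, Fraction(7, 2)
print(all(last_layer_case1(t, C0, n_ex, M0) == claimed(t, C0, n_ex, M0) for t in grid))
```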

Case 2: \(L \ge 4\) is even. In this case, define

Similar arguments as in Case 1 show that \( \bigl ( A_3 \, \varrho \bigl ( A_2 \, \varrho (A_1 x + b_1) + b_2 \bigr ) + b_3 \bigr )_j = \frac{C^3 n}{4 M} \, \vartheta _{M,y}(x) \) for all \(j \in \underline{n}\). Since \(\vartheta _{M,y} \ge 0\), this yields \(A \circ \varrho \circ A_3 \circ \varrho \circ A_2 \circ \varrho \circ (A_1 \bullet + b_1) = \frac{C^4 n^2}{4 M} \, \vartheta _{M,y}\). Furthermore, using similar arguments as in Eq. (3.3), we see for \(z \in [0,\infty )\) that \(A (\varrho (B z)) = C^2 n z\). Combining all these observations, we see

Since also \(W(\Phi ) \le 3 d n + 4 d n + 8 d n + 2 n + n + \frac{L-4}{2} \cdot 2 n \le 15 (d + L) n\), we see overall as claimed that  .

Case 3: \(L \ge 5\) is odd. In this case, define

A variant of the arguments in Case 2 shows that \( R_\varrho \Phi = C \cdot (C^2 \, n)^{(L-5)/2} \cdot \frac{C^4 n^2}{4 M} \vartheta _{M,y} = \frac{C^L \cdot n^{\lfloor L/2 \rfloor }}{4 M} \vartheta _{M,y} \) and \(W(\Phi ) \le 15 d n + 2 n + \frac{L-5}{2} \cdot 2 n + 1 \le 15 (d + L) n\), and hence  also in this last case. \(\square \)
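The scaling factors and weight counts quoted in Cases 2 and 3 can be sanity-checked in the same way. The Python sketch below only re-evaluates the formulas stated in the text; it does not rebuild the networks. It confirms that both scaling expressions equal \(\frac{C^L n^{\lfloor L/2 \rfloor }}{4M}\) and that both weight bounds stay below \(15 (d+L) n\). The function names are illustrative.

```python
from fractions import Fraction


def target(C: Fraction, n: int, M: Fraction, L: int) -> Fraction:
    return C**L * n ** (L // 2) / (4 * M)


def scale_case2(C: Fraction, n: int, M: Fraction, L: int) -> Fraction:
    # Even L >= 4: C^4 n^2 / (4M) after four layers, then (L - 4)/2 blocks z -> C^2 n z.
    return C**4 * n**2 / (4 * M) * (C * C * n) ** ((L - 4) // 2)


def scale_case3(C: Fraction, n: int, M: Fraction, L: int) -> Fraction:
    # Odd L >= 5: the expression quoted in Case 3.
    return C * (C * C * n) ** ((L - 5) // 2) * C**4 * n**2 / (4 * M)


def weights_case2(d: int, n: int, L: int) -> int:
    # Weight bound quoted in Case 2.
    return 3 * d * n + 4 * d * n + 8 * d * n + 2 * n + n + (L - 4) // 2 * 2 * n


def weights_case3(d: int, n: int, L: int) -> int:
    # Weight bound quoted in Case 3.
    return 15 * d * n + 2 * n + (L - 5) // 2 * 2 * n + 1


C, n, M = Fraction(3, 2), 4, Fraction(5)
print([scale_case2(C, n, M, L) == target(C, n, M, L) for L in (4, 6, 8)],
      [scale_case3(C, n, M, L) == target(C, n, M, L) for L in (5, 7, 9)])
```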

Lemma 3.6

Let \(\varvec{c}: \mathbb {N}\rightarrow \mathbb {N}\cup \{ \infty \}\) and \(\varvec{\ell } : \mathbb {N}\rightarrow \mathbb {N}_{\ge 2} \cup \{ \infty \}\) be non-decreasing with \(\varvec{\ell }^*\ge 3\). Let \(d \in \mathbb {N}\), \(\alpha \in (0,\infty )\), and \(0< \gamma < \gamma ^{\flat }(\varvec{\ell },\varvec{c})\). Then there exists a constant \(\kappa = \kappa (\gamma ,\alpha ,d,\varvec{\ell },\varvec{c}) > 0\) such that for any \(M \in [1,\infty )\) and \(y \in [0,1]^d\), we have

Proof

Since \(\gamma < \gamma ^{\flat }(\varvec{\ell },\varvec{c})\), there exist \(L = L(\gamma ,\varvec{\ell },\varvec{c}) \in \mathbb {N}_{\le \varvec{\ell }^*}\) and \(C_1 = C_1 (\gamma ,\varvec{\ell },\varvec{c}) > 0\) satisfying \(n^\gamma \le C_1 \cdot (\varvec{c}(n))^L \cdot n^{\lfloor L/2 \rfloor }\) for all \(n \in \mathbb {N}\). Since \(\varvec{\ell }^*\ge 3\), we can assume without loss of generality that \(L \ge 3\). Furthermore, since \(L \le \varvec{\ell }^*\), there exists \(n_0 = n_0(\gamma ,\varvec{\ell },\varvec{c}) \in \mathbb {N}\) satisfying \(L \le \varvec{\ell }(n_0)\).

Given \(M \in [1,\infty )\) and \(y \in [0,1]^d\), set \(n := n_0 \cdot \big \lceil M^{1/(\alpha +\gamma )} \big \rceil \), noting that \(n \ge n_0\). Since \(n^\gamma \le C_1 \cdot (\varvec{c}(n))^L \cdot n^{\lfloor L/2 \rfloor }\), there exists \(0 < C \le \varvec{c}(n)\) satisfying \(n^\gamma \le C_1 \cdot C^L n^{\lfloor L/2 \rfloor }\).

Set \(\kappa := \min \{ (15 (d+L))^{-\alpha } (2 n_0)^{-\alpha }, \, (4 \, C_1)^{-1} \} > 0\) and note \(\kappa = \kappa (d,\alpha ,\gamma ,\varvec{\ell },\varvec{c})\). Furthermore, note that \(n \ge M^{1/(\alpha + \gamma )}\) and hence \( \kappa \, M^{-\frac{\alpha }{\alpha +\gamma }} = \frac{\kappa }{M} \, M^{\frac{\gamma }{\alpha +\gamma }} \le \kappa \, \frac{n^\gamma }{M} \le 4 C_1 \, \kappa \, \frac{C^L \, n^{\lfloor L/2 \rfloor }}{4 M} \le \frac{C^L \, n^{\lfloor L/2 \rfloor }}{4 M} . \) Combining this with the inclusion  for \(c \in [-1,1]\), we see from Lemma 3.5 and because of \(3 \le L \le \varvec{\ell }(n_0) \le \varvec{\ell }(n)\) that


We claim that  . To see this, first note \(\Vert g_{M,y} \Vert _{L^\infty } \le \Vert \vartheta _{M,y} \Vert _{L^\infty } \le 1\). Furthermore, for \(t \in \mathbb {N}\), there are two cases: For \(t \ge 15 (d+L) n\), we have  , and hence  . On the other hand, if \(t \le 15 (d+L) n\), then, since \(\lceil x \rceil \le 1 + x\) and \(M \ge 1\) imply \(n \le n_0 + n_0 \, M^{1/(\alpha +\gamma )} \le 2 n_0 \, M^{1/(\alpha +\gamma )}\), we see that

Overall, this shows  , so that Lemma 2.1 shows as claimed that  . \(\square \)
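The choices of \(n\) and \(\kappa\) in the proof of Lemma 3.6 can be sanity-checked numerically for a concrete, purely hypothetical example: \(\varvec{c}(n) = 1\) and \(\varvec{\ell }(n) = 3\) for all \(n\), with \(\gamma = 0.9\). For this example the estimate \(n^\gamma \le C_1 (\varvec{c}(n))^L n^{\lfloor L/2 \rfloor }\) that the proof extracts from \(\gamma < \gamma ^{\flat }\) holds with \(L = 3\), \(C_1 = 1\), \(n_0 = 1\) and \(C = 1\). The sketch uses only that estimate and does not evaluate Eq. (2.2) itself. It then checks \(n \ge M^{1/(\alpha +\gamma )}\), \(n \le 2 n_0 M^{1/(\alpha +\gamma )}\) and \(\kappa M^{-\alpha /(\alpha +\gamma )} \le C^L n^{\lfloor L/2 \rfloor }/(4M)\).

```python
import math


def lemma36_quantities(M, d, alpha, gamma, L=3, C1=1.0, n0=1, C=1.0):
    """Quantities from the proof of Lemma 3.6 for one value of M >= 1.

    Defaults encode the hypothetical example c(n) = 1, l(n) = 3 described above.
    """
    n = n0 * math.ceil(M ** (1.0 / (alpha + gamma)))          # n := n0 * ceil(M^{1/(alpha+gamma)})
    kappa = min((15 * (d + L)) ** (-alpha) * (2 * n0) ** (-alpha), 1.0 / (4 * C1))
    lhs = kappa * M ** (-alpha / (alpha + gamma))              # kappa * M^{-alpha/(alpha+gamma)}
    rhs = C ** L * n ** (L // 2) / (4 * M)                     # C^L n^{floor(L/2)} / (4M)
    return n, kappa, lhs, rhs


d, alpha, gamma = 2, 1.5, 0.9
for M in (1.0, 2.0, 10.0, 1e3, 1e6):
    n, kappa, lhs, rhs = lemma36_quantities(M, d, alpha, gamma)
    print(M, n, lhs <= rhs, n <= 2 * M ** (1 / (alpha + gamma)))
```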

4 Error Bounds for Uniform Approximation

In this section, we derive an upper bound on how many point samples of a function  are needed in order to uniformly approximate f up to error \(\varepsilon \in (0,1)\). The crucial ingredient will be the following estimate of the Lipschitz constant of functions  . The bound in the lemma is one of the reasons for our choice of the quantities \(\gamma ^{\flat }\) and \(\gamma ^{\sharp }\) introduced in Eq. (2.2).

Lemma 4.1

Let \(\varvec{\ell }: \mathbb {N}\rightarrow \mathbb {N}\cup \{ \infty \}\) and \(\varvec{c}: \mathbb {N}\rightarrow [1,\infty ]\) be non-decreasing. Let \(n \in \mathbb {N}\) and assume that \(L := \varvec{\ell }(n)\) and \(C := \varvec{c}(n)\) are finite. Then each  satisfies


Proof

Step 1: For any matrix \(A \in \mathbb {R}^{k \times m}\), define \(\Vert A \Vert _{\infty } := \max _{i,j} |A_{i,j}|\) and denote by \(\Vert A \Vert _{\ell ^0}\) the number of nonzero entries of A. In this step, we show that

To prove the first part, note for arbitrary \(x \in \mathbb {R}^m\) and any \(i \in \underline{k}\) that

showing that \(\Vert A x \Vert _{\ell ^\infty } \le \Vert A \Vert _{\infty } \, \Vert x \Vert _{\ell ^1}\). To prove the second part, note for arbitrary \(x \in \mathbb {R}^m\) that

Step 2 (Completing the proof): Let  be arbitrary, so that \(F = R_\varrho \Phi \) for a network \(\Phi = \big ( (A_1,b_1),\dots ,(A_{\widetilde{L}},b_{\widetilde{L}}) \big )\) satisfying \(\widetilde{L} \le \varvec{\ell }(n) = L\) and \(\Vert A_j \Vert _{\infty } \le \Vert \Phi \Vert _{\mathcal{N}\mathcal{N}} \le \varvec{c}(n) = C\), as well as \(\Vert A_j \Vert _{\ell ^0} \le W(\Phi ) \le n\) for all \(j \in \underline{\widetilde{L}}\).


Set \(p_j := 1\) if j is even and \(p_j := \infty \) otherwise, for \(j \in \{0,\dots ,\widetilde{L}\}\). Choose \(N_0,\dots ,N_{\widetilde{L}}\) such that \(A_j \in \mathbb {R}^{N_j \times N_{j-1}}\), and define \(T_j \, x := A_j \, x + b_j\). By Step 1, we then see that \( T_j : \bigl ( \mathbb {R}^{N_{j-1}}, \Vert \cdot \Vert _{\ell ^{p_{j-1}}} \bigr ) \rightarrow \bigl ( \mathbb {R}^{N_j}, \Vert \cdot \Vert _{\ell ^{p_j}} \bigr ) \) is Lipschitz with

Next, a straightforward computation shows that the “vector-valued ReLU” is 1-Lipschitz as a map \(\varrho : (\mathbb {R}^k, \Vert \cdot \Vert _{\ell ^p}) \rightarrow (\mathbb {R}^k, \Vert \cdot \Vert _{\ell ^p})\), for arbitrary \(p \in [1,\infty ]\) and any \(k \in \mathbb {N}\). As a consequence, we see that

is Lipschitz continuous as a composition of Lipschitz maps, with overall Lipschitz constant

where we used the notation \(n_j := n\) if j is even and \(n_j := 1\) otherwise. Furthermore, we used in the last step that \(C \ge 1\). The final claim of the lemma follows from the elementary estimate \(\Vert x \Vert _{\ell ^1} \le d \cdot \Vert x \Vert _{\ell ^\infty }\) for \(x \in \mathbb {R}^d\). \(\square \)
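Both steps of the proof can be illustrated numerically. The sketch below, with hypothetical helper names, does two things. First, it checks the Step 1 inequalities \(\Vert A x \Vert _{\ell ^\infty } \le \Vert A \Vert _\infty \Vert x \Vert _{\ell ^1}\) and \(\Vert A x \Vert _{\ell ^1} \le \Vert A \Vert _{\ell ^0} \Vert A \Vert _\infty \Vert x \Vert _{\ell ^\infty }\) on random matrices. Second, it builds random ReLU networks that satisfy the per-layer hypotheses used in Step 2, namely \(\Vert A_j \Vert _\infty \le C\) with \(C \ge 1\) and \(\Vert A_j \Vert _{\ell ^0} \le n\). It then compares observed difference quotients with respect to \(\Vert \cdot \Vert _{\ell ^\infty }\) against \(d \cdot C^{\widetilde{L}} \cdot n^{\lfloor \widetilde{L}/2 \rfloor }\). A random search only produces a lower estimate of the true Lipschitz constant, so this is a sanity test and not a proof.

```python
import numpy as np


def random_sparse_relu_net(widths, n, C, rng):
    """Random network ((A_1,b_1),...,(A_L,b_L)) with widths N_0,...,N_L.

    Each A_j has at most n nonzero entries, all of modulus <= C.
    """
    layers = []
    for n_in, n_out in zip(widths[:-1], widths[1:]):
        A = np.zeros((n_out, n_in))
        k = min(n, n_out * n_in)
        idx = rng.choice(n_out * n_in, size=k, replace=False)
        A.flat[idx] = rng.uniform(-C, C, size=k)
        b = rng.uniform(-1.0, 1.0, size=n_out)
        layers.append((A, b))
    return layers


def realize(layers, x):
    """R_rho(Phi)(x): ReLU after every affine map except the last one."""
    for A, b in layers[:-1]:
        x = np.maximum(A @ x + b, 0.0)
    A, b = layers[-1]
    return A @ x + b


def check_step1(A, x, tol=1e-12):
    """Step 1: ||Ax||_inf <= ||A||_inf ||x||_1 and ||Ax||_1 <= ||A||_0 ||A||_inf ||x||_inf."""
    a_max = np.max(np.abs(A))
    nnz = np.count_nonzero(A)
    Ax = A @ x
    ok_inf = np.max(np.abs(Ax)) <= a_max * np.sum(np.abs(x)) * (1 + tol) + tol
    ok_one = np.sum(np.abs(Ax)) <= nnz * a_max * np.max(np.abs(x)) * (1 + tol) + tol
    return bool(ok_inf and ok_one)


def lipschitz_bound(d, C, n, L):
    """Lipschitz bound w.r.t. the sup-norm on the input: d * C^L * n^floor(L/2)."""
    return d * C**L * n ** (L // 2)


def empirical_lipschitz(layers, d, rng, trials=2000):
    """Largest observed |F(x) - F(x')| / ||x - x'||_inf over random nearby pairs."""
    best = 0.0
    for _ in range(trials):
        x = rng.uniform(0.0, 1.0, size=d)
        x2 = x + rng.uniform(-1e-2, 1e-2, size=d)
        den = np.max(np.abs(x - x2))
        if den > 0:
            num = abs(realize(layers, x)[0] - realize(layers, x2)[0])
            best = max(best, num / den)
    return best


rng = np.random.default_rng(0)
d, n, C = 3, 6, 1.5
layers = random_sparse_relu_net([d, 4, 4, 1], n, C, rng)  # three affine maps
print(empirical_lipschitz(layers, d, rng), "<=", lipschitz_bound(d, C, n, len(layers)))
```

The alternation \(\ell ^1 \to \ell ^\infty \to \ell ^1 \to \cdots \) is what makes only every second layer pay the factor \(n\). This alternation is the source of the exponent \(\lfloor L/2 \rfloor \).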

Based on the preceding lemma, we can now prove an error bound for the computational problem of uniform approximation on the neural network approximation space  .


Theorem 4.2

Let \(\varvec{c}: \mathbb {N}\rightarrow \mathbb {N}\cup \{ \infty \}\) and \(\varvec{\ell }: \mathbb {N}\rightarrow \mathbb {N}_{\ge 2} \cup \{ \infty \}\) be non-decreasing and suppose that \(\gamma ^{\sharp }(\varvec{\ell },\varvec{c}) < \infty \). Let \(d \in \mathbb {N}\) and \(\alpha \in (0,\infty )\) be arbitrary, and let  as in Eq. (2.3). Furthermore, let  . Then, we have

Remark

a) The proof shows that choosing the uniform grid \(\{ 0, \frac{1}{N}, \dots , \frac{N-1}{N} \}^d\) as the set of sampling points (with \(N \sim m^{1/d}\)) yields an essentially optimal sampling scheme.

b) It is well-known (see [25, Proposition 3.3]) that the error of an optimal randomized algorithm is at most two times the error of an optimal deterministic algorithm. Therefore, the theorem also implies that

Proof

Since \(\gamma ^{\sharp }(\varvec{\ell },\varvec{c}) < \infty \), Remark 2.2 shows that \(L := \varvec{\ell }^*< \infty \). Let \(\gamma > \gamma ^{\sharp }(\varvec{\ell },\varvec{c}) \ge 1\) be arbitrary. By definition of \(\gamma ^{\sharp }(\varvec{\ell },\varvec{c})\), it follows that there exists some \(\gamma ' \in \bigl (\gamma ^{\sharp }(\varvec{\ell },\varvec{c}), \gamma \bigr )\) and a constant \(C_0 = C_0(\gamma ', \varvec{\ell },\varvec{c}) = C_0(\gamma ,\varvec{\ell },\varvec{c}) > 0\) satisfying \({ (\varvec{c}(n))^L \cdot n^{\lfloor L/2 \rfloor } \le C_0 \cdot n^{\gamma '} \le C_0 \cdot n^{\gamma } }\) for all \(n \in \mathbb {N}\). Let \(m \in \mathbb {N}\) be arbitrary and choose

Furthermore, let \(I := \bigl \{ 0, \frac{1}{N}, \dots , \frac{N-1}{N} \bigr \}^d \subset [0,1]^d\) and set \(C := \varvec{c}(n)\) and \(\mu := d \cdot C^L \cdot n^{\lfloor L/2 \rfloor }\), noting that \(\mu \le d \, C_0 \, n^{\gamma } =: C_1 \, n^{\gamma }\) and \(|I| = N^d \le m\).
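The sampling set \(I\) and the grid point \(i(x)\) used later in the proof can be sketched as follows. The sketch assumes \(N := \lfloor m^{1/d} \rfloor \). This choice is consistent with \(|I| = N^d \le m\) and with the estimate \(m^{1/d} \le 1 + N\) used at the end of the proof. The helper names are hypothetical. The integer root is computed with integer corrections, since a float expression such as `64 ** (1/3)` evaluates slightly below 4.

```python
import itertools
import math


def integer_root_floor(m, d):
    """Largest N >= 1 with N**d <= m (assumed choice N = floor(m^(1/d)))."""
    N = max(1, int(round(m ** (1.0 / d))))
    while N ** d > m:
        N -= 1
    while (N + 1) ** d <= m:
        N += 1
    return N


def sampling_grid(m, d):
    """Grid I = {0, 1/N, ..., (N-1)/N}^d with |I| = N^d <= m."""
    N = integer_root_floor(m, d)
    points = [tuple(j / N for j in idx) for idx in itertools.product(range(N), repeat=d)]
    return N, points


def nearest_grid_point(x, N):
    """A grid point i(x) with ||x - i(x)||_inf <= 1/N for x in [0,1]^d."""
    return tuple(min(math.floor(N * xk), N - 1) / N for xk in x)


N, I = sampling_grid(100, 2)
print(N, len(I), nearest_grid_point((0.37, 1.0), N))
```

Clamping at \(N-1\) handles the right edge \(x_k = 1\), which has distance exactly \(1/N\) from \((N-1)/N\).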

Next, set  and define \(S := \Omega (B)\) for


For each \(y = (y_i)_{i \in I} \in S\), choose some \(f_y \in B\) satisfying \(y = \Omega (f_y)\). Note by Lemma 2.1 that  ; by definition of  , we can thus choose  satisfying \(\Vert f_y - F_y \Vert _{L^\infty } \le 2 \cdot n^{-\alpha }\). Given this choice, define

We claim that \(\Vert f - Q (\Omega (f)) \Vert _{L^\infty } \le C_2 \cdot m^{-\alpha / (d \cdot (\gamma + \alpha ))}\) for all \(f \in B\), for a suitable constant \(C_2 = C_2(d,\gamma ,\varvec{\ell },\varvec{c})\). Once this is shown, it follows that \( \beta _*^{\textrm{det}} (U, \iota _\infty ) \ge \frac{1}{d} \frac{\alpha }{\gamma + \alpha } , \) which then implies the claim of the theorem, since \(\gamma > \gamma ^{\sharp }(\varvec{\ell },\varvec{c})\) was arbitrary.

Thus, let \(f \in B\) be arbitrary and set \(y := \Omega (f) \in S\). By the same arguments as above, there exists  satisfying \(\Vert f - F \Vert _{L^\infty } \le 2 \cdot n^{-\alpha }\). Now, we see for each \(i \in I\) because of \(f(i) = (\Omega (f))_i = y_i = (\Omega (f_y))_i = f_y(i)\) that


Furthermore, Lemma 4.1 shows that \(F - F_y : (\mathbb {R}^d, \Vert \cdot \Vert _{\ell ^\infty }) \rightarrow (\mathbb {R}, |\cdot |)\) is Lipschitz continuous with Lipschitz constant at most \(2 \mu \). Now, given any \(x \in [0,1]^d\), we can choose \(i = i(x) \in I\) satisfying \(\Vert x - i \Vert _{\ell ^\infty } \le N^{-1}\). Therefore, \( |(F - F_y)(x)| \le \frac{2 \mu }{N} + |(F - F_y)(i)| \le \frac{2 \mu }{N} + 4 \, n^{-\alpha } . \) Overall, we have thus shown \(\Vert F - F_y \Vert _{L^\infty } \le \frac{2 \mu }{N} + 4 \, n^{-\alpha }\), which finally implies because of \(Q(\Omega (f)) = Q(y) = F_y\) that

It remains to note that our choice of N and n implies \(m^{1/d} \le 1 + N \le 2 N\) and hence \(\frac{1}{N} \le 2 m^{-1/d}\) and furthermore \(n \le 1 + m^{1/(d \cdot (\gamma + \alpha ))} \le 2 \, m^{1/(d \cdot (\gamma + \alpha ))}\). Hence, recalling that \(\mu \le C_1 \, n^{\gamma }\), we see

Furthermore, since \(n \ge m^{1/(d \cdot (\gamma +\alpha ))}\), we also have \(n^{-\alpha } \le m^{-\frac{\alpha }{d \cdot (\gamma + \alpha )}}\). Combining all these observations, it is easy to see that \({\Vert f - Q(\Omega (f)) \Vert _{L^\infty } \le C_2 \cdot m^{-\frac{\alpha }{d \cdot (\gamma +\alpha )}}}\), for a suitable constant \(C_2 = C_2(d,\gamma ,\varvec{\ell },\varvec{c}) > 0\). Since \(f \in B\) was arbitrary, this completes the proof. \(\square \)
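The constant \(C_2\) is left implicit above. Spelling out the bookkeeping gives one admissible choice. This uses only the bounds \(\Vert f - F \Vert _{L^\infty } \le 2 n^{-\alpha }\), \(\Vert F - F_y \Vert _{L^\infty } \le \frac{2\mu }{N} + 4 n^{-\alpha }\), \(Q(\Omega (f)) = F_y\), \(\mu \le C_1 n^\gamma \), \(\frac{1}{N} \le 2 m^{-1/d}\) and \(m^{1/(d(\gamma +\alpha ))} \le n \le 2 m^{1/(d(\gamma +\alpha ))}\) stated in the proof. The result is \(C_2 := 2^{\gamma +2} C_1 + 6\). This is not claimed to be the authors' constant:

```latex
\begin{align*}
\Vert f - Q(\Omega(f)) \Vert_{L^\infty}
  &\le \Vert f - F \Vert_{L^\infty} + \Vert F - F_y \Vert_{L^\infty}
   \le \frac{2\mu}{N} + 6\, n^{-\alpha}, \\
\frac{2\mu}{N}
  &\le 2 C_1 n^{\gamma} \cdot 2 m^{-1/d}
   \le 4 C_1 \bigl( 2 m^{1/(d(\gamma+\alpha))} \bigr)^{\gamma} m^{-1/d}
   = 2^{\gamma+2} C_1 \, m^{\frac{\gamma}{d(\gamma+\alpha)} - \frac{1}{d}}
   = 2^{\gamma+2} C_1 \, m^{-\frac{\alpha}{d(\gamma+\alpha)}}, \\
6\, n^{-\alpha}
  &\le 6\, m^{-\frac{\alpha}{d(\gamma+\alpha)}} .
\end{align*}
```

The exponents combine because \(\frac{\gamma }{d(\gamma +\alpha )} - \frac{1}{d} = \frac{\gamma - (\gamma +\alpha )}{d(\gamma +\alpha )} = -\frac{\alpha }{d(\gamma +\alpha )}\). This shows that the choice \(n \approx m^{1/(d(\gamma +\alpha ))}\) balances the Lipschitz term against the approximation term.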

5 Hardness of Uniform Approximation

In this section, we show that the error bound for uniform approximation provided by Theorem 4.2 is optimal, at least in the common case where \(\gamma ^{\flat }(\varvec{\ell },\varvec{c}) = \gamma ^\sharp (\varvec{\ell },\varvec{c})\) and \(\varvec{\ell }^*\ge 3\). This latter condition means that the approximation for defining the approximation space  is performed using networks with at least two hidden layers. We leave it as an interesting question for future work whether a similar result even holds for approximation spaces associated to shallow networks.


Theorem 5.1

Let \(\varvec{\ell }: \mathbb {N}\rightarrow \mathbb {N}_{\ge 2} \cup \{ \infty \}\) and \(\varvec{c}: \mathbb {N}\rightarrow \mathbb {N}\cup \{ \infty \}\) be non-decreasing with \(\varvec{\ell }^*\ge 3\). Given \(d \in \mathbb {N}\) and \(\alpha \in (0,\infty )\), let  as in Eq. (2.3) and consider the embedding  . Then

Proof

Set \(K := [0,1]^d\) and  .


Step 1: Let \(0< \gamma < \gamma ^{\flat }(\varvec{\ell },\varvec{c})\). Let \(m \in \mathbb {N}\) be arbitrary and \(\Gamma _m := \underline{2k}^d \times \{ \pm 1 \}\), where \(k := \big \lceil m^{1/d} \big \rceil \). In this step, we show that there is a constant \(\kappa = \kappa (d,\alpha ,\gamma ,\varvec{\ell },\varvec{c}) > 0\) (independent of m) and a family of functions \((f_{\ell ,\nu })_{(\ell ,\nu ) \in \Gamma _m} \subset U\) which satisfies

To see this, set \(M := 4 k\), and for \(\ell \in \underline{2k}^d\) define \(y^{(\ell )} := \frac{(1,\dots ,1)}{4 k} + \frac{\ell - (1,\dots ,1)}{2 k} \in \mathbb {R}^d\). Then, we have

which shows that the functions \(\vartheta _{M,y^{(\ell )}}\), \(\ell \in \underline{2k}^d\), (with \(\vartheta _{M,y}\) as defined in Lemma 3.4), have disjoint supports contained in \([0,1]^d\). Furthermore, Lemma 3.6 yields a constant \(\kappa _1 = \kappa _1(\gamma ,\alpha ,d,\varvec{\ell },\varvec{c}) > 0\) such that \( f_{\ell ,\nu } := \kappa _1 \cdot M^{-\alpha /(\alpha +\gamma )} \cdot \nu \cdot \vartheta _{M,y^{(\ell )}} \in U \) for arbitrary \((\ell ,\nu ) \in \Gamma _m\).
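The geometry behind the disjoint supports can be checked with exact rational arithmetic. Lemma 3.4 is not restated in this section, so the sketch rests on a loudly labelled assumption: that \(\vartheta _{M,y}\) is supported in the cube \(y + [-1/M, 1/M]^d\). With \(M = 4k\), the centers \(y^{(\ell )}\) then produce cubes of side \(1/(2k)\). These cubes lie in \([0,1]^d\), have pairwise disjoint interiors, and have total volume 1, so they tile the unit cube. The function names are hypothetical.

```python
from fractions import Fraction
import itertools


def centers(k, d):
    """Yield (l, y^(l)) with y^(l) = (1,...,1)/(4k) + (l - (1,...,1))/(2k), l in {1,...,2k}^d."""
    for l in itertools.product(range(1, 2 * k + 1), repeat=d):
        yield l, tuple(Fraction(1, 4 * k) + Fraction(li - 1, 2 * k) for li in l)


def support_box(y, M):
    """ASSUMED support of theta_{M,y}: the cube y + [-1/M, 1/M]^d (hypothetical)."""
    r = Fraction(1, M)
    return tuple((yi - r, yi + r) for yi in y)


def interiors_disjoint(box_a, box_b):
    """Axis-parallel boxes have disjoint interiors iff they are separated in some coordinate."""
    return any(a_hi <= b_lo or b_hi <= a_lo
               for (a_lo, a_hi), (b_lo, b_hi) in zip(box_a, box_b))


def volume(box):
    v = Fraction(1)
    for lo, hi in box:
        v *= hi - lo
    return v


def check_tiling(k, d):
    """Cubes for M = 4k lie in [0,1]^d, have disjoint interiors, and have total volume 1."""
    M = 4 * k
    boxes = [support_box(y, M) for _, y in centers(k, d)]
    inside = all(0 <= lo and hi <= 1 for box in boxes for lo, hi in box)
    disjoint = all(interiors_disjoint(a, b) for a, b in itertools.combinations(boxes, 2))
    return inside and disjoint and sum(volume(b) for b in boxes) == 1


print(check_tiling(2, 2))
```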

To prove Eq. (5.1), let \(A \in {\text {Alg}}_m (U, C([0,1]^d))\) be arbitrary. By definition, there exist \(\varvec{x}= (x_1,\dots ,x_m) \in K^m\) and a function \(Q : \mathbb {R}^m \rightarrow C([0,1]^d)\) satisfying \(A(f) = Q(f(x_1),\dots ,f(x_m))\) for all \(f \in U\). Define \( I := I_{\varvec{x}} := \big \{ \ell \in \underline{2k}^d :\forall \, n \in \underline{m} : \vartheta _{M,y^{(\ell )}} (x_n) = 0 \big \} . \) For each \(\ell \in I^c = \underline{2k}^d \setminus I\), there exists \(n_\ell \in \underline{m}\) such that \(\vartheta _{M,y^{(\ell )}}(x_{n_\ell }) \ne 0\). The map \(I^c \rightarrow \underline{m}, \ell \mapsto n_\ell \) is injective, since \(\vartheta _{M,y^{(\ell )}} \, \vartheta _{M,y^{(t)}} = 0\) for \(t,\ell \in \underline{2k}^d\) with \(t \ne \ell \). Therefore, \(|I^c| \le m\), and hence \(|I| \ge (2k)^d - m \ge m\), because \(k \ge m^{1/d}\) implies \((2k)^d \ge 2^d m \ge 2m\).
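The counting argument is a pigeonhole bound and can be simulated. The sketch assumes, as in the tiling check for the bump functions, that each \(\vartheta _{M,y^{(\ell )}}\) can be nonzero only in the interior of the \(\ell \)-th cube of side \(1/(2k)\). Under that assumption, a sample point \(x_n\) makes at most one bump nonzero. Charging each point to the cube \(\lfloor 2k x \rfloor \) therefore over-counts the touched cubes, so the computed number of untouched cubes is a lower bound for \(|I|\). Helper names are hypothetical, and \(k = \lceil m^{1/d} \rceil \) is computed with integer corrections.

```python
import math
import random


def integer_root_ceil(m, d):
    """Smallest k >= 1 with k**d >= m, i.e. k = ceil(m^(1/d)) without float rounding issues."""
    k = max(1, int(round(m ** (1.0 / d))))
    while k ** d < m:
        k += 1
    while k > 1 and (k - 1) ** d >= m:
        k -= 1
    return k


def untouched_cells(points, k, d):
    """Cells of the (2k)^d partition of [0,1]^d that receive none of the points.

    Each point is charged to the cell floor(2k x), clamped at the upper edge, so it
    touches at most one cell; the result is therefore >= (2k)^d - len(points).
    """
    touched = {tuple(min(math.floor(2 * k * xi), 2 * k - 1) for xi in x) for x in points}
    return (2 * k) ** d - len(touched)


random.seed(0)
m, d = 50, 2
k = integer_root_ceil(m, d)
pts = [[random.random() for _ in range(d)] for _ in range(m)]
print(k, untouched_cells(pts, k, d), ">=", (2 * k) ** d - m, ">=", m)
```

However the \(m\) points are placed, at least \(m\) of the \((2k)^d\) bumps remain invisible to the algorithm. This is the source of the lower bound.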