Abstract

The paper discusses systems with viscoelastic elements whose eigenvalue problem has repeated eigenvalues. The mechanical behavior of the viscoelastic elements can be described using classical rheological models as well as models involving fractional derivatives. Formulas are derived for the first- and second-order sensitivities of repeated eigenvalues and their corresponding eigenvectors. The special case in which the first derivatives of the eigenvalues are also repeated is examined as well. Calculating derivatives of eigenvectors associated with repeated eigenvalues is complicated because these eigenvectors are not unique. To compute their derivatives, appropriate adjacent eigenvectors must be identified so that changes in the eigenvectors can be controlled in a stable manner. The eigenvector derivatives are obtained by splitting them into particular and homogeneous solutions. In addition, a scaling factor is introduced into the coefficient matrix to reduce its condition number. The examples validate the derived formulas and provide a more detailed analysis of the behavior of structures with viscoelastic elements when a single design parameter is altered or several parameters are changed simultaneously.



1 Introduction

Sensitivity analysis of eigenvalues and their associated eigenvectors is an important research topic in engineering and the natural sciences. As modern technologies and increasingly complex projects evolve, engineers increasingly need to understand how alterations in design parameters influence the dynamic characteristics of the systems under study. Sensitivity analysis is a vital tool in many domains, including structural design [1], model updating [2], damage detection, optimization procedures [3], identification [4], and the treatment of uncertain design parameters [5].

An overview of sensitivity analysis methods is available in the review paper [6]. One of the tools used in sensitivity analysis, also employed in this article, is the computation of derivatives of the quantities that describe the structural response. An early work in which first-order derivatives were computed for undamped systems with symmetric matrices is [7]; its authors applied the modal expansion technique. Nelson [8] presented a method for obtaining derivatives of eigenvectors for distinct eigenvalues, in which the derivative is expressed as the sum of a particular and a homogeneous solution. Methods subsequently developed from this approach are referred to as Nelson-type methods. In [9], the method was extended to systems with repeated eigenvalues. Repeated eigenvalues are relatively common in typical engineering systems, especially symmetric ones, but they make the calculation of eigenvector derivatives more difficult. Eigenvectors associated with a repeated eigenvalue are not unique, since any linear combination of them is also an eigenvector, and computing their derivatives requires identifying the appropriate adjacent eigenvectors to ensure stable control of eigenvector changes. Papers [10, 11] improved and modified the method proposed in [9] by incorporating second-order sensitivity analysis of eigenvalues. Further research extended the method to non-self-adjoint systems [12]. In [13], the authors introduced a new normalization condition for complex eigenvectors with both distinct and repeated eigenvalues. The paper [14] extends the determination of eigenvector sensitivities through particular and homogeneous solutions to cases where the first derivatives of the eigenvalues are also repeated, using information obtained by differentiating the eigenvalue problem three times.
Such a specific case, where both eigenvalues and their first derivatives are repeated, was also explored in [15,16,17]. Typically, the sensitivity of eigenvalues is calculated by solving an additional eigenvalue problem, and its solution provides the transformation matrix for obtaining new eigenvectors. In the case of repeated derivatives of eigenvalues, the transformation matrix is not unique, necessitating the use of additional algorithms to determine the eigenvector sensitivity.

The work in [18] presents a generalization of the method for linear, non-viscously damped systems with repeated eigenvalues. The authors introduced a normalization condition for eigenvectors in such systems because, in this context, the eigenvectors do not satisfy the classical orthogonality condition. The work also considers non-self-adjoint systems.

In addition to Nelson-type methods, various other approaches have been developed for calculating derivatives of eigenvectors for repeated eigenvalues. In [19], a singular value decomposition approach was applied to determine the required basis of eigenvector space for computing derivatives of eigenvectors for repeated eigenvalues. In [20], the authors introduced a method suitable for both distinct and repeated eigenvalues, although it primarily focuses on the calculation of eigenvalue sensitivities. This method is based on the characteristic polynomial of the considered eigenvalue problem and, unlike the Nelson method, does not require an eigenvector. In [21], a numerical method using an iterative procedure was proposed, considering both distinct and repeated eigenvalues. Lee [22] introduced the application of the adjoint method for calculating sensitivities of repeated eigenvalues and their corresponding eigenvectors. The advantage of this method is that it does not necessitate determining second-order eigenvalue sensitivities or linear combinations of eigenvectors. On the other hand, in [23], a method was proposed to calculate eigenvector sensitivities for repeated eigenvalues without the need for using adjacent eigenvectors.

In the works [24, 25], the sensitivities of eigenvalues and their associated eigenvectors were calculated by solving a system of linear algebraic equations, for distinct [24] and repeated [25] eigenvalues, respectively. In [26], a method was introduced to determine particular solutions for eigenvector derivatives that significantly reduces the condition number of the coefficient matrix; that work also identified an error in the derivative of the normalization condition derived in [25].

In [27], sensitivities of defective repeated eigenvalues and their corresponding eigenvectors were considered for the quadratic eigenvalue problem. Sensitivity analysis for the quadratic eigenvalue problem is also discussed in [28], and in [29] for non-self-adjoint systems.

Although researchers mostly focus on first-order sensitivities, these are sometimes insufficient for accurately predicting changes in the structural response, and second-order sensitivities must then be determined. Formulas for the second-order sensitivities of repeated eigenvalues and their corresponding eigenvectors for damped systems can be found in [30].

Li et al. proposed an algorithm in [31] for non-self-adjoint systems, enabling the independent computation of left and right eigenvector derivatives. This method was applied to both distinct and repeated eigenvalues.

The work in [32] extended the method of computing particular and homogeneous solutions of eigenvector derivatives for repeated eigenvalues to systems that lead to quadratic eigenvalue problems. In [33], this method was enhanced to significantly reduce the condition number of the coefficient matrix required for calculating the particular solutions, and in [34] the approach was extended to non-viscously damped systems.

The work in [35] generalizes this method to the general nonlinear eigenproblem, for both distinct and repeated eigenvalues. In [36], the authors introduced a new normalization condition and derived formulas for second-order sensitivities, but only for distinct eigenvalues; for repeated eigenvalues, only first-order sensitivities of eigenvalues and eigenvectors were derived. The systems considered there involve damping that leads to a quadratic eigenvalue problem.

The work in [37] provides a solution for calculating complex eigenvector derivatives for both distinct and repeated eigenvalues, with a specific focus on asymmetric systems. The solution suggests the imposition of consistent normalization conditions on the left and right eigenvectors and the computation of sensitivities using the chain rule.

The sensitivities of eigenvalues and eigenvectors are essential in various applications, including optimization. In [38], the authors analyzed an eigenmode optimization problem, including cases with repeated eigenvalues, and used eigenvector sensitivities within a gradient-based optimization algorithm. An important aspect of systems with repeated eigenvalues is that objective functions or constraints may be non-differentiable, so optimization methods able to handle such problems are needed; conventional optimization methods may encounter difficulties due to the lack of differentiability. Numerical methods for non-differentiable problems are presented in [39], where the authors analyzed a clamped–clamped column and introduced a structural optimization method that overcomes the challenges associated with the non-differentiability of repeated eigenvalues. In [40], the mass of a truss was optimized subject to frequency constraints using a particle swarm optimization algorithm, which does not require gradients of the objective function. In [41], a genetic algorithm based on Kirsch's approximations was used for structural optimization with frequency constraints. The study [42] addresses topology optimization, proposing the bundle method for the topology optimization of continuum structures with distinct and repeated eigenvalues.

This work focuses on systems containing viscoelastic elements. The equation of motion of such systems, from which the eigenvalue problem follows, is:

where \({\mathbf{q}}\left( t \right)\) is the displacement vector, \({\mathbf{M}}\) is the mass matrix, \({\mathbf{K}}\) is the stiffness matrix, \({\mathbf{C}}\) is the damping matrix associated with structural damping, and \({\tilde{\mathbf{G}}}\left( {t - \tau } \right)\) is the matrix of damping kernel functions. In structural dynamics, damped systems can consist of multiple mechanical components with different levels of energy dissipation, so describing their behavior may require several damping models. The form of \({\tilde{\mathbf{G}}}\left( {t - \tau } \right)\) depends on the damping model chosen for the viscoelastic material. The models described in the literature include, among others, the exponential damping model [43, 44], the Biot model [45, 46], the Golla–Hughes–McTavish (GHM) model [47, 48], and the anelastic displacement field (ADF) model [49, 50]. Classical and fractional rheological models are described in [51,52,53]. A review of non-viscous damping functions expressed in the frequency domain can be found in [54, 55]. In this study, a single damping model is assumed to describe the damping, which, as shown in [52], is sufficient to describe the behavior of viscoelastic dampers. However, the proposed approach can also be applied to systems in which more than one damping model is used.

Works [56, 57] presented methods for calculating second-order sensitivities in systems with viscoelastic elements; however, they did not consider cases where the eigenvalue problem has repeated eigenvalues. The present work is devoted to precisely these cases, offering a detailed analysis of the sensitivities of repeated eigenvalues and their corresponding eigenvectors in systems with viscoelastic damping elements. Sensitivity analysis is a very useful tool in engineering design because it shows how changes in design parameters affect the dynamic characteristics of the studied systems, which is essential in structural design, model updating, damage detection, and optimization. The article analyzes both first- and second-order sensitivities. The latter are particularly useful in scenarios such as the analysis of systems with large uncertainty ranges [58], where first-order analysis may not yield sufficiently accurate results. The application of higher-order eigenvalue sensitivity to the harmonic analysis of viscoelastically damped systems is also demonstrated in [59].

The generalization of the formulation to systems with viscoelastic elements presented here has not previously appeared in the literature. The eigenvalue sensitivities are obtained by solving an additional eigenvalue problem, while the eigenvector sensitivities are calculated using Nelson's formulation. Compared with previous works, the presented method addresses particular cases that occur with repeated eigenvalues. The analysis covers both classical rheological models of viscoelastic behavior and models involving fractional derivatives, which is a novel aspect of this work. Another novel aspect is the analysis of a system with damping elements in which the eigenvalue sensitivities are also repeated. In addition, for the systems discussed, this work presents for the first time formulas for the second-order sensitivities of repeated eigenvalues and their corresponding eigenvectors, together with examples in which second-order sensitivity proves essential for obtaining accurate results. Finally, because the coefficient matrix in the considered problem is ill-conditioned, an additional scaling factor is introduced, which significantly improves the results, as the examples confirm.

The article is organized as follows. Sect. 2 presents sensitivity analysis for systems with viscoelastic elements, focusing on the case where the sensitivities of repeated eigenvalues are distinct; Sect. 3 addresses the case where the eigenvalue sensitivities are also repeated; Sect. 4 describes the application of the method to structures with viscoelastic elements; Sect. 5 presents examples and a comparison of the condition numbers of the problem; and Sect. 6 presents the conclusions. The appendices contain the stability proof of the method, its applicability to distinct eigenvalues, and a diagram illustrating the use of the formulas to determine sensitivities.

2 Sensitivity analysis of repeated eigenvalues and corresponding eigenvectors – distinct derivatives of eigenvalues

2.1 Sensitivity of the first order

By applying the Laplace transform with zero initial conditions, Eq. (1) can be written in the following form:

where s is the Laplace variable, \({\mathbf{G}}\left( s \right)\) is a matrix whose structure depends on the adopted model of the viscoelastic element (detailed matrix forms are given in Sect. 5), \({\overline{\mathbf{q}}}\left( s \right)\) is the Laplace transform of \({\mathbf{q}}\left( t \right)\), and \({\mathbf{D}}\left( s \right) = s^{2} {\mathbf{M}} + s{\mathbf{C}} + {\mathbf{G}}\left( s \right) + {\mathbf{K}}\) is the dynamic stiffness matrix. If the system is under-critically damped and has n degrees of freedom, the solution of the eigenvalue problem (2) consists of 2n eigenvalues, which form complex conjugate pairs, and their corresponding eigenvectors; some of these eigenvalues may be repeated. For any eigenvalue \(\lambda_{i}\), the eigenvector \({\overline{\mathbf{q}}}_{i}\) is defined by Eq. (2) as:

and the normalization condition can be expressed as:

where \(\frac{{\partial {\mathbf{D}}\left( {\lambda_{i} } \right)}}{\partial s} = 2\lambda_{i} {\mathbf{M}} + {\mathbf{C}} + \frac{{\partial {\mathbf{G}}\left( {\lambda_{i} } \right)}}{\partial s}\).
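The dynamic stiffness matrix, its derivative with respect to s, and the normalization can be evaluated numerically once \({\mathbf{G}}\left( s \right)\) is known. The Python/NumPy sketch below is illustrative only: it assumes a hypothetical single-kernel form \({\mathbf{G}}\left( s \right) = g\left( s \right){\mathbf{K}}_{v}\) with \(g\left( s \right) = cs/\left( {s + \mu } \right)\) (not one of the paper's models), and it assumes that the normalization (4) reads \({\overline{\mathbf{q}}}_{i}^{T} \frac{{\partial {\mathbf{D}}\left( {\lambda_{i} } \right)}}{\partial s}{\overline{\mathbf{q}}}_{i} = 1\) with a plain (non-conjugated) transpose. The parameter values and function names are hypothetical.

```python
import numpy as np

# Hypothetical kernel parameters, for illustration only.
C_V, MU_V = 300.0, 10.0


def g(s):
    """Hypothetical scalar kernel g(s) = c*s/(s + mu), so that G(s) = g(s) * Kv."""
    return C_V * s / (s + MU_V)


def dg_ds(s):
    """Derivative d/ds [c*s/(s + mu)] = c*mu/(s + mu)**2."""
    return C_V * MU_V / (s + MU_V) ** 2


def dyn_stiffness(s, M, C, K, Kv):
    """D(s) = s^2 M + s C + G(s) + K."""
    return s**2 * M + s * C + g(s) * Kv + K


def dyn_stiffness_ds(s, M, C, Kv):
    """dD/ds = 2 s M + C + dG/ds."""
    return 2 * s * M + C + dg_ds(s) * Kv


def normalize_columns(Q, lam, M, C, Kv):
    """Scale each column q of Q so that q^T (dD/ds) q = 1 (plain transpose)."""
    Ds = dyn_stiffness_ds(lam, M, C, Kv)
    return Q / np.sqrt(np.einsum("ij,ik,kj->j", Q, Ds, Q))


# Two identical, uncoupled DOFs: every root of d(s) = s^2 + k + g(s) is a double eigenvalue.
n, k = 2, 1000.0
M, C, K, Kv = np.eye(n), np.zeros((n, n)), k * np.eye(n), np.eye(n)
# Multiplying d(s) = 0 by (s + mu) gives s^3 + mu s^2 + (k + c) s + k mu = 0.
roots = np.roots([1.0, MU_V, k + C_V, k * MU_V])
lam = roots[np.argmax(roots.imag)]  # keep the root with positive imaginary part
Q = normalize_columns(np.eye(n, dtype=complex), lam, M, C, Kv)
```

In this toy case, \({\mathbf{Q}}^{T} \frac{{\partial {\mathbf{D}}}}{\partial s}{\mathbf{Q}} = {\mathbf{I}}\) holds exactly because the two columns are decoupled; for a general repeated pair, the off-diagonal terms would also have to be eliminated, for example by a Gram–Schmidt step in the same transpose-based inner product.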

In the cases where the eigenproblem has repeated eigenvalues \(\lambda_{1} = \lambda_{2} = \ldots = \lambda_{m} = \lambda\), the relationship (2) can be rewritten in the following form:

where \({\mathbf{D}}\left( \lambda \right) = \lambda^{2} {\mathbf{M}} + \lambda {\mathbf{C}} + {\mathbf{K}} + {\mathbf{G}}\left( \lambda \right)\) is the dynamic stiffness matrix evaluated at the repeated eigenvalue, \({{\varvec{\Lambda}}} = \mathrm{diag}\left[ {\lambda_{1} , \lambda_{2} , \ldots ,\lambda_{m} } \right]\) is the diagonal matrix of the repeated eigenvalues, and \({\mathbf{Q}} = \left[ {{\overline{\mathbf{q}}}_{1} ,{\overline{\mathbf{q}}}_{2} , \ldots ,{\overline{\mathbf{q}}}_{m} } \right]\) is the matrix of their associated eigenvectors. Based on (4), the normalization condition for the matrix \({\mathbf{Q}}\) reads:

where \({\mathbf{I}}\) is the identity matrix of dimension \(m \times m\), and \(\frac{{\partial {\mathbf{D}}\left( \lambda \right)}}{\partial s} = 2\lambda {\mathbf{M}} + {\mathbf{C}} + \frac{{\partial {\mathbf{G}}\left( \lambda \right)}}{\partial s}\).

A characteristic feature of the solution of Eq. (5) for repeated eigenvalues is that the corresponding eigenvectors are not unique. Therefore, when their derivatives are needed, appropriate adjacent eigenvectors must be found that allow the changes in the eigenvectors to be controlled. These adjacent eigenvectors can be defined as:

where \({\varvec{\upbeta}}_{1}\) is the transformation matrix that satisfies the condition:

The new matrix \({{\varvec{\Phi}}}\) is also a solution of the eigenvalue problem (5) and must satisfy the normalization condition (6). Equations (5) and (6) can now be written as:

The next step is to find the transformation matrix \({\varvec{\beta}}_{1}\). To this end, we differentiate Eq. (9) with respect to the design parameter \(p_{k}\):

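and then left-multiply the result by \({\mathbf{Q}}^{T}\). Since \({\mathbf{D}}\left( \lambda \right)\) is symmetric and \({\mathbf{D}}\left( \lambda \right){\mathbf{Q}} = {\mathbf{0}}\), the term containing the eigenvector derivatives vanishes; using Eq. (7) and the normalization (6) then leads to the sub-eigenvalue problem: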

where \({\overline{\mathbf{D}}} = - {\mathbf{Q}}^{T} \frac{{\partial {\mathbf{D}}\left( \lambda \right)}}{{\partial p_{k} }}{\mathbf{Q}}\).
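The sub-eigenvalue problem (12) can be solved with a standard dense eigensolver. The sketch below assumes that \({\mathbf{Q}}\) is already normalized so that \({\mathbf{Q}}^{T} \frac{{\partial {\mathbf{D}}}}{\partial s}{\mathbf{Q}} = {\mathbf{I}}\), that \(\frac{{\partial {\mathbf{D}}}}{{\partial p_{k} }}\) is symmetric, and that the eigenvalue derivatives are distinct. Because \({\overline{\mathbf{D}}}\) is then complex symmetric rather than Hermitian, the columns of \({\varvec{\beta}}_{1}\) are rescaled so that \({\varvec{\beta}}_{1}^{T} {\varvec{\beta}}_{1} = {\mathbf{I}}\) with a plain transpose; this form of condition (8) is an assumption made by analogy with the condition stated for \({\varvec{\upbeta}}_{2}\) in Sect. 3. The function names and toy data are hypothetical.

```python
import numpy as np


def transpose_normalize(V):
    """Scale columns so that v^T v = 1 (plain transpose, as for complex symmetric matrices)."""
    return V / np.sqrt(np.sum(V * V, axis=0))


def rotate_repeated_basis(Q, dD_dp):
    """Solve Dbar beta = beta dLam with Dbar = -Q^T (dD/dp) Q.

    Assumes Q^T (dD/ds) Q = I and distinct eigenvalue derivatives (Sect. 2).
    Returns dLam (1-D array), beta with beta^T beta = I, and Phi = Q beta.
    """
    Dbar = -Q.T @ dD_dp @ Q
    dlam, beta = np.linalg.eig(Dbar)
    beta = transpose_normalize(beta)
    return dlam, beta, Q @ beta


# Toy data: a repeated pair living in the first two coordinates of a 3-DOF model.
d1p = 2.0 + 0.5j                                 # assumed value of d'(lambda) on that block
Q = np.eye(3, 2, dtype=complex) / np.sqrt(d1p)   # then Q^T (dD/ds) Q = I
dD_dp = np.array([[2.0, 1.0, 0.3],
                  [1.0, 2.0, 0.2],
                  [0.3, 0.2, 1.0]])              # hypothetical dK/dp
dlam, beta, Phi = rotate_repeated_basis(Q, dD_dp)
```

For this toy matrix the eigenvalue derivatives are \(-3/d'\) and \(-1/d'\), and the rotated basis diagonalizes \(-{{\varvec{\Phi}}}^{T} \frac{{\partial {\mathbf{D}}}}{{\partial p_{k} }}{{\varvec{\Phi}}}\).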

The eigenvalues of the sub-eigenvalue problem (12) are the eigenvalue sensitivities, and its eigenvectors form the transformation matrix. To determine the eigenvector sensitivities, Eq. (11) is rewritten as:

Because the matrix \({\mathbf{D}}\left( \lambda \right)\) in Eq. (13) is singular, the eigenvector sensitivities \(\frac{{\partial {{\varvec{\Phi}}}}}{{\partial p_{k} }}\) cannot be determined directly. Following [8], the eigenvector sensitivities for repeated eigenvalues can be expressed as:

where \({\mathbf{V}}_{1}\) is a particular solution of Eq. (13), and \({\mathbf{C}}_{1}\) is the coefficient matrix of the homogeneous solution. Each column of \({\mathbf{V}}_{1}\) is a linear combination of all eigenvectors except those collected in \({{\varvec{\Phi}}}\). Hence, based on Eq. (10), the particular solution must satisfy the condition [34]:

Substituting Eq. (14) into (13) results in:

Equations (15) and (16) together form a system of equations that can be written in matrix form as:

where \({\mathbf{S}}_{1} = - \frac{{\partial {\mathbf{D}}\left( \lambda \right)}}{{\partial p_{k} }}{{\varvec{\Phi}}}\). The solution of system (17) consists of the particular solution \({\mathbf{V}}_{1}\) and the eigenvalue sensitivities \(\frac{{\partial {{\varvec{\Lambda}}}}}{{\partial p_{k} }}\). Appendix A proves that the matrix on the left-hand side of Eq. (17) is non-singular. The factor κ is introduced because the elements of the coefficient matrix differ by orders of magnitude. Following [14], it is taken as the ratio of the largest element of the matrix \({\mathbf{D}}\left( \lambda \right)\) to the largest element of \(\frac{{\partial {\mathbf{D}}\left( \lambda \right)}}{\partial s}\). This significantly reduces the condition number of the matrix, as shown in Sect. 5.5.
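The bordered system (17) can be assembled and solved directly. Because the exact placement of κ in Eq. (17) is not reproduced here, the sketch assumes one symmetric arrangement: κ multiplies both border blocks and the eigenvalue-derivative unknowns are divided by κ, so that the solution is unchanged and only the conditioning is affected. It also assumes that Eq. (15) reads \({{\varvec{\Phi}}}^{T} \frac{{\partial {\mathbf{D}}}}{\partial s}{\mathbf{V}}_{1} = {\mathbf{0}}\) and that Eq. (16) reads \({\mathbf{D}}\left( \lambda \right){\mathbf{V}}_{1} + \frac{{\partial {\mathbf{D}}}}{\partial s}{{\varvec{\Phi}}}\frac{{\partial {{\varvec{\Lambda}}}}}{{\partial p_{k} }} = {\mathbf{S}}_{1}\), which is what substituting Eq. (14) into Eq. (13) gives. The diagonal toy data are hypothetical and deliberately badly scaled.

```python
import numpy as np


def first_order_particular(D, Ds, Dp, Phi, use_kappa=True):
    """Solve the bordered system for V1 and dLam/dp.

    Assumed layout: [[D, kappa*Ds Phi], [kappa*Phi^T Ds, 0]] [V1; dLam/kappa] = [S1; 0],
    with S1 = -(dD/dp) Phi and kappa = max|D_ij| / max|(dD/ds)_ij|.
    """
    n, m = Phi.shape
    kappa = np.abs(D).max() / np.abs(Ds).max() if use_kappa else 1.0
    B = Ds @ Phi                                    # n x m border block
    A = np.block([[D, kappa * B],
                  [kappa * B.T, np.zeros((m, m))]])  # B.T = Phi^T Ds (Ds symmetric)
    rhs = np.vstack([-Dp @ Phi, np.zeros((m, m))])
    sol = np.linalg.solve(A, rhs)
    V1, dLam = sol[:n], kappa * sol[n:]             # undo the scaling of the unknowns
    return V1, dLam, np.linalg.cond(A)


# Toy data: D(lambda) = diag(0, 0, d3), repeated pair in the first two coordinates.
d1p, d3, d3p = 2.0 + 0.5j, 5000.0 + 10.0j, 3.0 - 0.2j   # assumed values
D = np.diag([0, 0, d3])
Ds = np.diag([d1p, d1p, d3p])
Dp = np.array([[2.0, 1.0, 0.3], [1.0, 2.0, 0.2], [0.3, 0.2, 1.0]])
beta = np.array([[1.0, 1.0], [1.0, -1.0]]) / np.sqrt(2)  # eigenvectors of the toy Dbar
Phi = np.eye(3, 2) @ beta / np.sqrt(d1p)

V1, dLam, cond_k = first_order_particular(D, Ds, Dp, Phi)
_, dLam_plain, cond_plain = first_order_particular(D, Ds, Dp, Phi, use_kappa=False)
```

With these numbers the unscaled matrix has a condition number of about 3.5·10³, while the κ-scaled one is about 2.1; both give the same \({\mathbf{V}}_{1}\) and the same diagonal \(\frac{{\partial {{\varvec{\Lambda}}}}}{{\partial p_{k} }}\).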

The computation of the coefficients of the matrix \({\mathbf{C}}_{1}\) will be carried out in two steps. In the first step, the off-diagonal coefficients will be calculated, and then the diagonal coefficients will be determined. To calculate the off-diagonal coefficients of the matrix \({\mathbf{C}}_{1}\), it is necessary to differentiate (9) twice and then left-multiply by \({{\varvec{\Phi}}}^{T}\). As a result, we obtain:

where \({\mathbf{R}}_{1} = - \frac{1}{2}\left[ {{{\varvec{\Phi}}}^{T} \frac{{\partial^{2} {\mathbf{D}}\left( \lambda \right)}}{{\partial p_{k}^{2} }}{{\varvec{\Phi}}} + 2{{\varvec{\Phi}}}^{T} \frac{{\partial {\mathbf{D}}\left( \lambda \right)}}{{\partial p_{k} }}{\mathbf{V}}_{1} + 2{{\varvec{\Phi}}}^{T} \frac{{\partial^{2} {\mathbf{D}}\left( \lambda \right)}}{{\partial s\partial p_{k} }}{{\varvec{\Phi}}}\frac{{\partial {{\varvec{\Lambda}}}}}{{\partial p_{k} }} + {{\varvec{\Phi}}}^{T} \frac{{\partial^{2} {\mathbf{D}}\left( \lambda \right)}}{{\partial s^{2} }}{{\varvec{\Phi}}}\left( {\frac{{\partial {{\varvec{\Lambda}}}}}{{\partial p_{k} }}} \right)^{2} } \right]\).

Let the elements of the matrix \({\mathbf{C}}_{1}\) be denoted as \(c_{1ij}\), and the elements of the matrix \({\mathbf{R}}_{1}\) as \(r_{1ij}\). Then, the off-diagonal elements of the matrix \({\mathbf{C}}_{1}\) can be calculated using the formula:

Since the matrix \(\frac{{\partial^{2} {{\varvec{\Lambda}}}}}{{\partial p_{k}^{2} }}\) is diagonal, it does not enter the equations for the off-diagonal elements. Note that Eq. (19) can be used only when the eigenvalue sensitivities are distinct; the case where they are equal is more complex and is discussed in Sect. 3.

To determine the diagonal elements, we differentiate Eq. (10) and substitute Eq. (14) into the result. This leads to:

where \({\mathbf{R}}_{2} = - \left[ {{{\varvec{\Phi}}}^{T} \frac{{\partial^{2} {\mathbf{D}}\left( \lambda \right)}}{{\partial s^{2} }}{{\varvec{\Phi}}}\frac{{\partial {{\varvec{\Lambda}}}}}{{\partial p_{k} }} + {{\varvec{\Phi}}}^{T} \frac{{\partial^{2} {\mathbf{D}}\left( \lambda \right)}}{{\partial s\partial p_{k} }}{{\varvec{\Phi}}}} \right]\).

In a generalized form similar to (19), it is possible to calculate the elements on the diagonal as:
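The two-step computation of \({\mathbf{C}}_{1}\) can be sketched as follows. The formulas used, \(c_{1ij} = r_{1ij} /\left( {\frac{{\partial \lambda_{j} }}{{\partial p_{k} }} - \frac{{\partial \lambda_{i} }}{{\partial p_{k} }}} \right)\) for \(i \ne j\) and \(c_{1ii} = r_{2ii} /2\), are what one obtains by differentiating Eq. (9) twice and Eq. (10) once with \({\mathbf{R}}_{1}\) and \({\mathbf{R}}_{2}\) as defined above, assuming \({{\varvec{\Phi}}}^{T} \frac{{\partial {\mathbf{D}}}}{\partial s}{{\varvec{\Phi}}} = {\mathbf{I}}\), \({{\varvec{\Phi}}}^{T} \frac{{\partial {\mathbf{D}}}}{\partial s}{\mathbf{V}}_{1} = {\mathbf{0}}\) and \({{\varvec{\Phi}}}^{T} \frac{{\partial {\mathbf{D}}}}{{\partial p_{k} }}{{\varvec{\Phi}}} = - \frac{{\partial {{\varvec{\Lambda}}}}}{{\partial p_{k} }}\). Since Eqs. (18) to (21) are not reproduced here, the signs should be checked against them. The function name, tolerance, and test data are hypothetical.

```python
import numpy as np


def homogeneous_coefficients(R1, R2, dlam, rtol=1e-8):
    """Coefficient matrix C1 in dPhi/dp = V1 + Phi @ C1.

    Off-diagonal: c_ij = r1_ij / (dlam_j - dlam_i)   (needs distinct dlam)
    Diagonal:     c_ii = r2_ii / 2
    """
    dlam = np.asarray(dlam)
    m = dlam.size
    gap = dlam[None, :] - dlam[:, None]        # gap[i, j] = dlam_j - dlam_i
    off = ~np.eye(m, dtype=bool)
    if np.any(np.abs(gap[off]) <= rtol * np.abs(dlam).max()):
        raise ValueError("repeated eigenvalue derivatives; use the Sect. 3 procedure")
    C1 = np.zeros((m, m), dtype=complex)
    C1[off] = R1[off] / gap[off]
    C1[~off] = 0.5 * np.diag(R2)               # diagonal entries, in row-major order
    return C1


# Usage with arbitrary (hypothetical) R1, R2 and distinct derivatives:
rng = np.random.default_rng(0)
R1 = rng.normal(size=(3, 3)) + 1j * rng.normal(size=(3, 3))
R2 = rng.normal(size=(3, 3)) + 1j * rng.normal(size=(3, 3))
dlam = np.array([1.0 + 0.1j, -2.0 + 0.3j, 0.5 - 1.0j])
C1 = homogeneous_coefficients(R1, R2, dlam)
# With V1 and Phi from the bordered solve, the sensitivity is dPhi_dp = V1 + Phi @ C1.
```

The off-diagonal step divides by differences of eigenvalue derivatives, which is why the sketch refuses to proceed when two derivatives coincide.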

2.2 Sensitivity of the second order

To determine the second-order sensitivities of repeated eigenvalues and their associated eigenvectors, a similar analysis to that in Sect. 2.1 will be conducted. It is necessary to differentiate Eq. (11) once again, leading to:

The solution to Eq. (22) can be presented similarly to the case of Eq. (14):

where \({\mathbf{V}}_{2}\) is the particular solution to Eq. (22), and \({\mathbf{C}}_{2}\) is the coefficient matrix for the homogeneous solution of the equation. Substituting (23) into (22), we obtain the following formula:

where \({\mathbf{S}}_{2} = - \left[ {\frac{{\partial^{2} {\mathbf{D}}\left( \lambda \right)}}{{\partial p_{k}^{2} }}{{\varvec{\Phi}}} + 2\frac{{\partial^{2} {\mathbf{D}}\left( \lambda \right)}}{{\partial s\partial p_{k} }}{{\varvec{\Phi}}}\frac{{\partial {{\varvec{\Lambda}}}}}{{\partial p_{k} }} + 2\frac{{\partial {\mathbf{D}}\left( \lambda \right)}}{{\partial p_{k} }}\frac{{\partial {{\varvec{\Phi}}}}}{{\partial p_{k} }} + \frac{{\partial^{2} {\mathbf{D}}\left( \lambda \right)}}{{\partial s^{2} }}{{\varvec{\Phi}}}\left( {\frac{{\partial {{\varvec{\Lambda}}}}}{{\partial p_{k} }}} \right)^{2} + 2\frac{{\partial {\mathbf{D}}\left( \lambda \right)}}{\partial s}\frac{{\partial {{\varvec{\Phi}}}}}{{\partial p_{k} }}\frac{{\partial {{\varvec{\Lambda}}}}}{{\partial p_{k} }}} \right]\).

Similar to the first-order analysis, the particular solution must satisfy the condition:

Equations (24) and (25) can be expressed in matrix form as:

The solution of system (26) consists of the particular solution \({\mathbf{V}}_{2}\) and the second-order sensitivities of the repeated eigenvalues \(\frac{{\partial^{2} {{\varvec{\Lambda}}}}}{{\partial p_{k}^{2} }}\). System (26) has the same structure as the first-order system (17), and its coefficient matrix is identical; therefore, only the right-hand side has to be assembled anew, and a factorization of the coefficient matrix obtained for Eq. (17) can be reused.
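Because systems (17) and (26) share the coefficient matrix, one LU factorization can serve both solves. The SciPy sketch below uses `scipy.linalg.lu_factor` and `lu_solve`; `build_rhs2` is a hypothetical callable standing in for the assembly of the right-hand side of Eq. (26) from the first-order results, and κ is assumed to rescale the eigenvalue-derivative unknowns in the same way in both systems. The test matrix is random and hypothetical.

```python
import numpy as np
from scipy.linalg import lu_factor, lu_solve


def solve_first_and_second(A, rhs1, build_rhs2, kappa, n):
    """Solve A x = rhs1 and then A x = build_rhs2(V1, dLam) with one LU factorization.

    The first n rows of each solution are the particular solution (V1 or V2);
    the remaining rows, multiplied by kappa, are the eigenvalue derivatives.
    """
    lu_piv = lu_factor(A)                          # O(N^3) work, done once
    sol1 = lu_solve(lu_piv, rhs1)                  # O(N^2) per additional solve
    V1, dLam = sol1[:n], kappa * sol1[n:]
    sol2 = lu_solve(lu_piv, build_rhs2(V1, dLam))  # reuse the same factors
    V2, d2Lam = sol2[:n], kappa * sol2[n:]
    return V1, dLam, V2, d2Lam


# Usage with random (hypothetical) data: n = 3 DOFs, m = 2 repeated eigenvalues.
rng = np.random.default_rng(1)
n, m = 3, 2
A = (rng.normal(size=(n + m, n + m)) + 1j * rng.normal(size=(n + m, n + m))
     + 5 * np.eye(n + m))
rhs1 = rng.normal(size=(n + m, m)) + 0j


def build_rhs2(V1, dLam):
    # Placeholder for the assembly of the right-hand side of Eq. (26).
    return np.vstack([2.0 * V1, dLam])


V1, dLam, V2, d2Lam = solve_first_and_second(A, rhs1, build_rhs2, 1.0, n)
```

The second solve cannot be batched with the first because its right-hand side depends on \({\mathbf{V}}_{1}\) and \(\frac{{\partial {{\varvec{\Lambda}}}}}{{\partial p_{k} }}\), but it costs only a pair of triangular solves.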

The coefficient matrix \({\mathbf{C}}_{2}\) will be determined in a similar manner to the matrix \({\mathbf{C}}_{1}\). First, we differentiate Eq. (22), and then left-multiply by \({{\varvec{\Phi}}}^{T}\). Using Eq. (25), we obtain:

where

The off-diagonal elements can be determined using the formula:

The obtained form of the solution is very similar to Eq. (19). The elements on the diagonal are also determined in a similar way to the first-order sensitivity. In the first step, we differentiate Eq. (10) twice, and then, after substituting the solution (23), we get:

where

The elements on the main diagonal can be calculated using the equation:

To calculate the second-order sensitivities of eigenvectors, it is necessary to first compute the first-order sensitivities of eigenvalues and their associated eigenvectors. As before, the condition that the sensitivities of repeated eigenvalues are distinct must be met. The next section will consider the case where eigenvalue sensitivities are the same.
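In floating-point arithmetic, whether the eigenvalue derivatives are distinct has to be decided with a tolerance. A hypothetical helper that groups numerically coincident derivatives, so that any group with more than one member can be routed to the procedure of Sect. 3, might look like this; the relative tolerance is an assumption and should be chosen with the accuracy of the computed derivatives in mind.

```python
import numpy as np


def group_repeated(dlam, rtol=1e-6):
    """Group indices of eigenvalue derivatives that coincide within rtol * max|dlam|."""
    dlam = np.asarray(dlam)
    scale = max(np.abs(dlam).max(), np.finfo(float).tiny)   # avoid a zero scale
    groups = []
    for i, d in enumerate(dlam):
        for group in groups:
            if abs(d - dlam[group[0]]) <= rtol * scale:
                group.append(i)
                break
        else:                                   # no matching group found
            groups.append([i])
    return groups


groups = group_repeated([1.0 + 1.0j, 1.0 + 1.0j + 1e-10, 2.0])
needs_sect3 = any(len(grp) > 1 for grp in groups)
```

Here the first two derivatives fall into one group, so the repeated-derivative procedure would be required for them.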

3 Sensitivity analysis of repeated eigenvalues and corresponding eigenvectors – repeated derivatives of eigenvalues

When the eigenvalue sensitivities are also repeated, the transformation matrix \({\varvec{\beta}}_{1}\) obtained from Eq. (12) is not uniquely determined, because any linear combination of its columns associated with a repeated sensitivity is also a solution. As a result, Eq. (7) does not yield unique adjacent eigenvectors \({{\varvec{\Phi}}}\). Therefore, a new set of adjacent eigenvectors must be determined [14]:

The matrix \({\varvec{\upbeta}}_{2}\) must satisfy the condition \({\varvec{\upbeta}}_{2}^{T} {\varvec{\upbeta}}_{2} = {\mathbf{I}}\). The new eigenvectors defined by (31) must satisfy the eigenvalue problem (5), which now takes the form:

and the normalization condition (6), expressed as:

Differentiating the eigenvalue problem (32) with respect to the design parameter gives:

Based on this, the sensitivity of the eigenvectors can be expressed in a similar form as before:

The particular solution \({\mathbf{V}}_{3}\) in Eq. (35) must satisfy:

Using Eq. (31), the eigenvector sensitivities can also be expressed as:

Substituting Eq. (37) into (35) and considering Eq. (14), we obtain the relation:

To derive the transformation matrix \({\varvec{\upbeta}}_{2}\), differentiate (34) once more, left-multiply it by \({{\varvec{\Phi}}}^{T}\), and, after substituting (14), (31), and (37) and performing transformations, the following subeigenproblem is obtained:

where

The solution of problem (39) consists of the second-order eigenvalue sensitivities \(\frac{{\partial^{2} {{\varvec{\Lambda}}}}}{{\partial p_{k}^{2} }}\) and the transformation matrix \({{\varvec{\upbeta}}}_{2}\). Once \({{\varvec{\upbeta}}}_{2}\) is known, the vectors \({{\varvec{\Psi}}}\) can be calculated. However, to find their derivatives, the matrices \({\mathbf{C}}_{3}\) and \({\mathbf{V}}_{3}\) must be computed. First, we differentiate (32) twice:

The solution to Eq. (40) can be presented in a similar form as before:

After substituting (35) and (41) into Eq. (40), we obtain:

where

Equations (36) and (42) form a system of equations:

If we express the system of Eq. (43) in the form:

then

where

To determine the vector \({\mathbf{V}}\), the inverse of the matrix \({\mathbf{A}}\) is needed, which can be partitioned as:

The dimensions of the individual component matrices are as follows: \({\overline{\mathbf{A}}}_{11} \left[ {n \times n} \right]\), \({\overline{\mathbf{A}}}_{12} \left[ {n \times m} \right]\), \({\overline{\mathbf{A}}}_{21} \left[ {m \times n} \right]\), and \({\overline{\mathbf{A}}}_{22} \left[ {m \times m} \right]\). Utilizing Eqs. (44), (45), and (47), we can write the expression to determine the particular solution \({\mathbf{V}}_{4}\) as follows:

To find the solution \({\mathbf{V}}_{4}\), it is necessary to determine the elements of matrix \({\mathbf{C}}_{3}\). In cases where the derivatives of eigenvalues are distinct, the elements of matrices \({\mathbf{C}}_{1}\) and \({\mathbf{C}}_{2}\) on the diagonal and off the diagonal can be determined independently. However, when sensitivities of eigenvalues are repeated, this is not possible. In such cases, one should first calculate the elements of matrix \({\mathbf{C}}_{3}\) on the diagonal. Afterward, using the known values of \(c_{3ii}\), the off-diagonal elements can be determined.
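The block partition of \({\mathbf{A}}^{ - 1}\) used in Eqs. (47) and (48) can be obtained as in the sketch below. Since the entries of Eq. (44) are not reproduced here, \({\mathbf{A}}\) stands for any nonsingular \(\left( {n + m} \right) \times \left( {n + m} \right)\) matrix, and the random test matrix is hypothetical. Forming the explicit inverse is acceptable for small systems such as those in the examples; for large models, solving with a factorization is generally preferable.

```python
import numpy as np


def inverse_blocks(A, n):
    """Partition A^{-1} into blocks of sizes n x n, n x m, m x n and m x m."""
    Ainv = np.linalg.inv(A)
    return Ainv[:n, :n], Ainv[:n, n:], Ainv[n:, :n], Ainv[n:, n:]


rng = np.random.default_rng(2)
n, m = 4, 2
A = rng.normal(size=(n + m, n + m)) + 4 * np.eye(n + m)   # hypothetical test matrix
A11, A12, A21, A22 = inverse_blocks(A, n)
```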

The first step is to calculate the elements on the diagonal. To do this, differentiate the condition (33), and after substituting the relationship (35), the following formula is obtained:

where

The diagonal elements can be determined from the following formula, where \(c_{3ii}\) and \(r_{5ii}\) denote the diagonal elements of the matrices \({\mathbf{C}}_{3}\) and \({\mathbf{R}}_{5}\), respectively:

To determine the off-diagonal elements, we need to differentiate the eigenvalue problem (32) three times and multiply it from the left by \({{\varvec{\Psi}}}^{T}\). Then, using (35) and (41), we obtain the following formula:

where

Since the sensitivity matrices of the second and third orders have a diagonal structure, using the values of the elements of the matrix \(c_{3ii}\) already computed from Eq. (50), the values of the \({\mathbf{C}}_{3}\) matrix off the diagonal can be calculated by solving a system of linear equations. In the case where the number m is equal to 2, the coefficients \(c_{3ij}\) can be calculated using the formula:

The second-order eigenvalue sensitivities are obtained from problem (39); to obtain the second-order eigenvector sensitivities, the procedure outlined in this section must be repeated.

4 Application to calculating sensitivities for structures with viscoelastic elements

Equation (2) describes an eigenvalue problem that arises in systems with viscoelastic elements. The matrix \({\mathbf{G}}\left( s \right)\) depends on the viscoelastic element model, as well as on the type of structure under consideration and the placement of viscoelastic elements. It can be expressed in a general form:

where r is the number of viscoelastic elements, \({\mathbf{K}}_{v,e}\) is a matrix that accounts for the position of viscoelastic element e, and \(g_{e} \left( s \right)\) is a function describing its material properties. Detailed descriptions can be found, for example, in [60] for frames with viscoelastic dampers, [61] for beams with viscoelastic layers, [62] for plates with viscoelastic layers, and [63] for plates on viscoelastic supports. The eigenvalue problem for the considered systems with viscoelastic elements is solved using a continuation method [60]. Its solutions consist of pairs of complex conjugate eigenvalues:

where \(\mu_{i}\) and \(\eta_{i}\) are the real and imaginary parts of the eigenvalues, and \({\text{i}} = \sqrt { - 1}\). Based on this, further dynamic characteristics can be determined, such as natural frequencies \(\left( {\omega_{i} } \right)\) and non-dimensional damping ratios \(\left( {\gamma_{i} } \right)\):
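As a sketch of how these quantities follow from the eigenvalues, the snippet below assumes the usual definitions \(\omega_{i} = \left| {\lambda_{i} } \right| = \sqrt {\mu_{i}^{2} + \eta_{i}^{2} }\) and \(\gamma_{i} = - \mu_{i} /\omega_{i}\) (the corresponding equation is not reproduced here). Applied to the repeated eigenvalue reported in Example 1, it gives \(\omega \approx 44.785\) rad/s and \(\gamma \approx 3.18 \cdot 10^{ - 5}\).

```python
import numpy as np


def frequency_and_damping(lam):
    """Natural frequency omega = |lambda| and damping ratio gamma = -Re(lambda)/omega,
    for lambda = mu + i*eta (assumed definitions)."""
    lam = np.asarray(lam, dtype=complex)
    omega = np.abs(lam)
    gamma = -lam.real / omega
    return omega, gamma


# Repeated eigenvalue reported in Example 1 (Sect. 5.1)
omega, gamma = frequency_and_damping(-1.4249e-3 + 44.785j)
```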

Sensitivities of natural frequencies and non-dimensional damping ratios can be determined using the formulas [56]:

for the first-order sensitivity and

for the second-order sensitivity, if we express first- and second-order sensitivities of eigenvalues as:

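The computed sensitivities are used to predict the response quantities of the structure, denoted generally as \(f\left( p \right)\), after a change \(\Delta p_{k}\) of the design parameter. The new values are obtained by expanding the function in a Taylor series around the parameter \(p_{k}\):

\[f\left( {p_{k} + \Delta p_{k} } \right) \approx f\left( {p_{k} } \right) + \frac{{\partial f}}{{\partial p_{k} }}\Delta p_{k}\]

for the first-order sensitivity and

\[f\left( {p_{k} + \Delta p_{k} } \right) \approx f\left( {p_{k} } \right) + \frac{{\partial f}}{{\partial p_{k} }}\Delta p_{k} + \frac{1}{2}\frac{{\partial^{2} f}}{{\partial p_{k}^{2} }}\Delta p_{k}^{2}\]

for the second-order sensitivity.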
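The chain from eigenvalue sensitivities to the sensitivities of \(\omega_{i}\) and \(\gamma_{i}\), followed by the Taylor prediction, can be sketched as below. This is a minimal illustration under stated assumptions, not the author's implementation: the derivative expressions are obtained here by differentiating \(\omega^{2} = \mu^{2} + \eta^{2}\) and \(\gamma\omega = -\mu\) once and twice with respect to the design parameter, rather than copied from [56], and the function names and the eigenvalue path `lam_path` are hypothetical.

```python
def modal_characteristics(lam):
    """Natural frequency and non-dimensional damping ratio of lam = mu + i*eta."""
    omega = abs(lam)            # omega = sqrt(mu^2 + eta^2)
    gamma = -lam.real / omega   # gamma = -mu / omega
    return omega, gamma


def characteristic_sensitivities(lam, dlam, d2lam):
    """First- and second-order sensitivities of omega and gamma.

    Derived by differentiating omega^2 = mu^2 + eta^2 and gamma*omega = -mu
    once and twice with respect to the design parameter p.
    """
    mu, eta = lam.real, lam.imag
    dmu, deta = dlam.real, dlam.imag
    d2mu, d2eta = d2lam.real, d2lam.imag
    omega, gamma = modal_characteristics(lam)
    domega = (mu * dmu + eta * deta) / omega
    d2omega = (dmu**2 + mu * d2mu + deta**2 + eta * d2eta - domega**2) / omega
    dgamma = (-dmu - gamma * domega) / omega
    d2gamma = (-d2mu - 2.0 * dgamma * domega - gamma * d2omega) / omega
    return (domega, d2omega), (dgamma, d2gamma)


def taylor_predict(f0, df, d2f, dp, order=2):
    """Predict f(p + dp) with a first- or second-order Taylor expansion."""
    value = f0 + df * dp
    if order == 2:
        value += 0.5 * d2f * dp**2
    return value


# Hypothetical eigenvalue path lam(p), used only to exercise the functions.
def lam_path(p):
    return complex(-1.0 + 0.1 * p, 10.0 + 2.0 * p + 0.3 * p**2)


# Values of lam, d(lam)/dp and d^2(lam)/dp^2 of lam_path at p = 0.
lam0, dlam0, d2lam0 = lam_path(0.0), complex(0.1, 2.0), complex(0.0, 0.6)
omega0, gamma0 = modal_characteristics(lam0)
(dw, d2w), (dg, d2g) = characteristic_sensitivities(lam0, dlam0, d2lam0)

dp = 0.1
exact_omega = modal_characteristics(lam_path(dp))[0]
first_order = taylor_predict(omega0, dw, d2w, dp, order=1)
second_order = taylor_predict(omega0, dw, d2w, dp, order=2)
```

For this smooth hypothetical path the second-order prediction of \(\omega\) is closer to the exact value than the first-order one, mirroring the behavior reported in the examples below.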

5 Examples

5.1 Example 1 – four-degree-of-freedom system

To validate the derived formulas and verify the computer implementation, the analysis begins with the four-degree-of-freedom system shown in Fig. 1, which has previously been studied in [35], among others. It is a mass-spring system with five damping elements described by the Biot model, for which the components of the \({\mathbf{G}}\left( s \right)\) matrix are as follows:

The matrices \({\mathbf{M}}\) and \({\mathbf{K}}\) have the following forms:

The computations were carried out with the following data: \(k = 1000\; {\text{N}}/{\text{m}}\), \(k_{1} = 1000\; {\text{N}}/{\text{m}}\), \(m = 1 \;{\text{kg}}\), \(c = 0.3\), \(\mu = 10\). Solving the eigenvalue problem (2) yields a double (repeated) eigenvalue \(\lambda_{1} = \lambda_{2} = - 1.4249 \cdot 10^{ - 3} + 44.785{\text{i}}\). Table 1 compares the first-order sensitivities of the eigenvalues and their associated eigenvectors with the results reported in [35]. Since the results in [35] were verified using two finite difference methods (the forward difference method and the central difference method), they are suitable for a comparative analysis.

The results agree well, which confirms the correctness of the derived formulas and of the computer code.
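The finite-difference verification mentioned above can be illustrated on a small system. The sketch below is not the model of Fig. 1: it assumes a hypothetical two-degree-of-freedom system with viscous damping, \({\mathbf{D}}(s) = s^{2}{\mathbf{M}} + s{\mathbf{C}} + {\mathbf{K}}\), a distinct eigenvalue, and the design parameter \(p = K_{11}\). For symmetric matrices, differentiating \({\mathbf{D}}(\lambda){\mathbf{q}} = {\mathbf{0}}\) and left-multiplying by \({\mathbf{q}}^{T}\) (plain transpose) gives \(\partial\lambda/\partial p = -{\mathbf{q}}^{T}(\partial{\mathbf{D}}/\partial p){\mathbf{q}} / {\mathbf{q}}^{T}(\partial{\mathbf{D}}/\partial s){\mathbf{q}}\), which is compared here with forward and central differences. All names and data are illustrative assumptions.

```python
import numpy as np


def quadratic_eigenpairs(M, C, K):
    """Eigenpairs of (s^2 M + s C + K) q = 0 via the state vector [q, s q]."""
    n = M.shape[0]
    Minv = np.linalg.inv(M)
    A = np.block([[np.zeros((n, n)), np.eye(n)],
                  [-Minv @ K, -Minv @ C]])
    lam, V = np.linalg.eig(A)
    return lam, V[:n, :]  # the upper half of each state vector is q


def eigenvalue_sensitivity(lam, q, M, C, dK):
    """d(lam)/dp for a distinct eigenvalue when only K depends on p.

    Uses q^T (not the conjugate transpose) because D(s) is complex symmetric.
    """
    dD_dp = dK
    dD_ds = 2.0 * lam * M + C
    return -(q @ dD_dp @ q) / (q @ dD_ds @ q)


def tracked_eigenvalue(M, C, K, target):
    """Eigenvalue of the perturbed problem closest to the unperturbed one."""
    lam, _ = quadratic_eigenpairs(M, C, K)
    return lam[np.argmin(np.abs(lam - target))]


# Hypothetical 2-DOF data (not taken from the paper).
M = np.diag([1.0, 2.0])
K = np.array([[300.0, -100.0], [-100.0, 200.0]])
C = 0.002 * K
dK = np.array([[1.0, 0.0], [0.0, 0.0]])  # p = K[0, 0]

lam, Q = quadratic_eigenpairs(M, C, K)
i = int(np.argmax(lam.imag))
analytic = eigenvalue_sensitivity(lam[i], Q[:, i], M, C, dK)

h = 1e-2
lam_plus = tracked_eigenvalue(M, C, K + h * dK, lam[i])
lam_minus = tracked_eigenvalue(M, C, K - h * dK, lam[i])
forward = (lam_plus - lam[i]) / h
central = (lam_plus - lam_minus) / (2.0 * h)
```

The forward difference has an \(O(h)\) truncation error and the central difference an \(O(h^{2})\) error, which is why the central scheme is usually the tighter reference. For repeated eigenvalues, a finite-difference check additionally requires tracking which perturbed eigenvalue belongs to which branch.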

5.2 Example 2 – 3D-frame with dampers

As a second example, consider a spatial frame with four dampers described using the fractional Zener model (Fig. 2). The elements of the matrix \({\mathbf{G}}\left( s \right)\) for one damper can now be expressed as follows:

where \({\mathbf{L}}_{e}\) is the damper location matrix. Additionally, the matrix \({\mathbf{K}}_{0}\) is formed as follows:

which is added to the structural stiffness matrix.

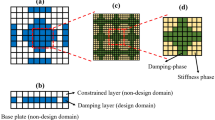

The structural data are as follows. Each floor plate has a mass of \(m=27{,}000\; \text{kg}\) and plan dimensions of 6 × 6 m. The columns are spaced at 5 × 5 m, have a cross-section of 0.4 × 0.4 m and a height of 4 m. They are assumed to be made of concrete with Young's modulus \(E=31\; \text{GPa}\) and Poisson's ratio \(\nu=0.2\). The positions of the columns and dampers are shown in Fig. 3; the dampers are located on all walls of the second floor. The calculations are based on a model with rigid slabs, in which each floor has three degrees of freedom in its horizontal plane; consequently, the frame has six dynamic degrees of freedom. All dampers are assumed to be identical, with the following parameters: spring stiffnesses \(k_{0} = 9000\; {\text{kN}}/{\text{m}}\) and \(k_{1} = 6000\; {\text{kN}}/{\text{m}}\), damping coefficient \(c_{1} = 700\; {\text{kNs}}^{\alpha } /{\text{m}}\), and fractional derivative order \(\alpha = 0.6\). The dynamic characteristics of the system are given in Tables 2 and 3.

Two cases of changing the design parameters of damper 1 (D1 in Fig. 3b) were considered: in the first case, the damping coefficient \({c}_{\text{1,1}}\) changes, and in the second case, the fractional derivative order \({\alpha }_{1}\) changes. The sensitivities of the eigenvalues and eigenvectors are presented in Tables 4, 5 and 6.
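The two cases above require the derivatives of the damper function with respect to \(c_{1}\) and \(\alpha\). The sketch below assumes, since the element formulas are not reproduced here, that the fractional Zener damper is a spring \(k_{0}\) in parallel with a fractional Maxwell branch (spring \(k_{1}\) in series with a springpot \(c_{1}, \alpha\)), and that \(k_{0}\) is carried by the separate matrix \({\mathbf{K}}_{0}\). Under that assumption the frequency-dependent part is \(g(s) = k_{1}c_{1}s^{\alpha}/(k_{1} + c_{1}s^{\alpha})\). The function names and the evaluation point \(s = 30{\text{i}}\) are hypothetical; the damper data are those of Example 2 in SI units.

```python
import cmath


def zener_branch(s, k1, c1, alpha):
    """Assumed frequency-dependent part g(s) of a fractional Zener damper.

    Spring k1 in series with a springpot (c1, alpha); the parallel spring k0
    is assumed to be carried by the separate matrix K0.
    """
    x = s**alpha  # principal branch of s^alpha
    return k1 * c1 * x / (k1 + c1 * x)


def zener_branch_derivatives(s, k1, c1, alpha):
    """Partial derivatives of zener_branch with respect to c1 and alpha."""
    x = s**alpha
    den = (k1 + c1 * x) ** 2
    dg_dc1 = k1**2 * x / den
    # d(s^alpha)/d(alpha) = s^alpha * ln(s), principal logarithm
    dg_dalpha = k1**2 * c1 * x * cmath.log(s) / den
    return dg_dc1, dg_dalpha


# Damper data from Example 2 in SI units; s is a hypothetical evaluation point.
k1, c1, alpha = 6000e3, 700e3, 0.6
s = 30.0j
g = zener_branch(s, k1, c1, alpha)
dg_dc1, dg_dalpha = zener_branch_derivatives(s, k1, c1, alpha)
```

The same pattern (differentiate \(g_{e}(s)\) analytically, then assemble \(\partial{\mathbf{G}}/\partial p\) through the location matrix) applies to other rheological models; the limits \(g \to 0\) for \(s \to 0\) and \(g \to k_{1}\) for large \(|s|\) are a quick sanity check.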

It is worth noting that, for the eigenvector sensitivities with respect to the parameter α, the second-order sensitivities are of similar magnitude to, and in some cases even larger than, the first-order sensitivities. This shows that neglecting second-order sensitivities when predicting the response of a structure to parameter variations can lead to erroneous results. The correctness of the derived sensitivities was verified using Eqs. (60) and (61): exact solutions, obtained by solving the eigenvalue problem with the changed parameter, were compared with approximate results calculated using Eq. (60) for first-order sensitivities and Eq. (61) for second-order sensitivities. The results are presented in Tables 7 and 9 for natural frequencies and in Tables 8 and 10 for non-dimensional damping ratios. The first-order sensitivities were calculated from Eq. (56), and the second-order sensitivities from Eqs. (57) and (58).

The results presented in Tables 7, 8, 9 and 10 show that, in most cases, the calculated sensitivities provide a very accurate prediction of the structural response after a parameter change, and that second-order sensitivities give results closer to the exact solution. The results for the non-dimensional damping ratio \({\gamma }_{4}\) under a change in the parameter \({\alpha }_{1}\) deserve particular attention. The first- and second-order sensitivities are \(\frac{\partial {\gamma }_{4}}{\partial \alpha }=0.002975\) and \(\frac{{\partial }^{2}{\gamma }_{4}}{\partial {\alpha }^{2}}=-0.121009\). The positive first-order sensitivity indicates that an increase in the parameter value leads to an increase in the non-dimensional damping ratio. However, this holds only for a 5% change; for larger changes of 15% and 30% of the parameter value, the non-dimensional damping ratio decreases. Therefore, only the inclusion of the second-order sensitivity allows these values to be determined accurately, which highlights the importance of second-order sensitivity in such analyses.
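The sign change described above can be reproduced from the two quoted sensitivities alone. The sketch assumes that the percentage change refers to the nominal value \(\alpha_{1} = 0.6\) and evaluates only the predicted change \(\Delta\gamma_{4}\), so the base value of \(\gamma_{4}\) is not needed. Because \(\Delta\gamma_{4} \approx \Delta\alpha\,(\gamma_{4}' + \tfrac{1}{2}\gamma_{4}''\Delta\alpha)\), the second-order prediction changes sign at \(\Delta\alpha = -2\gamma_{4}'/\gamma_{4}'' \approx 0.049\), i.e., at roughly 8% of \(\alpha_{1}\), which lies between the 5% and 15% cases.

```python
D1 = 0.002975     # d(gamma_4)/d(alpha_1), quoted in the text
D2 = -0.121009    # d^2(gamma_4)/d(alpha_1)^2, quoted in the text
ALPHA = 0.6       # nominal fractional order (assumed reference for the % change)


def delta_gamma(fraction, order):
    """Predicted change of gamma_4 for a relative change `fraction` of alpha_1."""
    dp = fraction * ALPHA
    change = D1 * dp
    if order == 2:
        change += 0.5 * D2 * dp**2
    return change


for fraction in (0.05, 0.15, 0.30):
    print(f"{fraction:4.0%}  first order: {delta_gamma(fraction, 1):+.3e}  "
          f"second order: {delta_gamma(fraction, 2):+.3e}")

# Change of alpha_1 at which the second-order prediction changes sign.
crossover = -2.0 * D1 / D2
```

The first-order prediction stays positive for all three changes, whereas the second-order prediction is positive only for 5%, consistent with the behavior described in the text.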

Tables 11, 12, 13 and 14 compare the eigenvectors after 5% and 30% changes in the parameter \({c}_{\text{1,1}}\).

These results show that the proposed method accurately predicts the eigenvectors corresponding to repeated eigenvalues. In many cases, the second-order sensitivity gives a significantly better approximation than the first-order sensitivity, particularly for large parameter changes such as 30%.

5.3 Example 3 – 3D-truss with dampers

A spatial truss with dampers is analyzed next (Fig. 4). The dampers are described by the fractional Kelvin model (Fig. 5), for which the elements of the matrix \({\mathbf{G}}\left( s \right)\) take the form:

As for the Zener model, an additional matrix \({\mathbf{K}}_{0}\), described by Eq. (64), needs to be created. The rods are assumed to have a hollow circular cross-section with dimensions 10 cm × 20 mm. The rod length is 2 m, and Young's modulus of steel is taken as \(E = 210\; {\text{GPa}}\). The linear mass of the rods is \(m = 13.6\; {\text{kg}}/{\text{m}}\). All dampers have the same parameters: \(k_{0} = 4000\; {\text{kN}}/{\text{m}}\), \(c_{0} = 500\;{\text{kNs}}^{\alpha } /{\text{m}}\), \(\alpha = 0.6\). The system has 19 dynamic degrees of freedom, and the solution consists of 9 distinct eigenvalues and 5 double (repeated) eigenvalues. The accuracy of the solution is assessed for the eigenvalue \(\lambda = - 2.5659 + 550.2747{\text{i}}\). Sensitivities with respect to the parameters \(c_{0}\), \(k_{0}\) and \(\alpha\) are presented in Table 15. Using Eqs. (60) and (61), predicted values were calculated with first- and second-order sensitivities for parameter variations of up to 30% and compared with the exact solution in Figs. 6, 7, 8 and 9; the legend for all plots is given in Fig. 6. Figure 9 presents the comparison for simultaneous changes in the two parameters \({\alpha }_{1}\) and \({c}_{\text{0,1}}\). In this case, Eqs. (60) and (61) take the following forms:

\[f\left( {{\mathbf{p}} + \Delta {\mathbf{p}}} \right) \approx f\left( {\mathbf{p}} \right) + \sum\limits_{k} {\frac{{\partial f}}{{\partial p_{k} }}\Delta p_{k} }\]

for the first-order sensitivity and

\[f\left( {{\mathbf{p}} + \Delta {\mathbf{p}}} \right) \approx f\left( {\mathbf{p}} \right) + \sum\limits_{k} {\frac{{\partial f}}{{\partial p_{k} }}\Delta p_{k} } + \frac{1}{2}\sum\limits_{k} {\frac{{\partial^{2} f}}{{\partial p_{k}^{2} }}\Delta p_{k}^{2} }\]

for the second-order sensitivity, where only the elements on the main diagonal of the Hessian matrix are considered [57].
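The multi-parameter expansions can be sketched as a small helper. This is a hypothetical illustration: the test function `f_exact` is invented and deliberately contains a mixed term \(0.2\,p_{1}p_{2}\) to show exactly what the diagonal-Hessian approximation omits.

```python
def predict_multi(f0, grad, hess_diag, dps, order=2):
    """Taylor prediction for simultaneous parameter changes.

    Only the diagonal of the Hessian is used, so mixed second derivatives
    (d^2 f / dp_j dp_k, j != k) are neglected.
    """
    value = f0 + sum(g * dp for g, dp in zip(grad, dps))
    if order == 2:
        value += 0.5 * sum(h * dp**2 for h, dp in zip(hess_diag, dps))
    return value


def f_exact(p1, p2):
    # Hypothetical response with a mixed term that the diagonal Hessian ignores.
    return 1.0 + 2.0 * p1 + 3.0 * p2 + p1**2 + 0.5 * p2**2 + 0.2 * p1 * p2


# At p = (0, 0): f = 1, gradient (2, 3), Hessian diagonal (2, 1).
dps = (0.1, 0.2)
first = predict_multi(1.0, (2.0, 3.0), (2.0, 1.0), dps, order=1)
second = predict_multi(1.0, (2.0, 3.0), (2.0, 1.0), dps, order=2)
exact = f_exact(*dps)
```

Here the first- and second-order predictions are 1.8 and 1.83, while the exact value is 1.834; the remaining difference of 0.004 is exactly the neglected mixed term \(0.2 \cdot 0.1 \cdot 0.2\). This is why the diagonal approximation is expected to degrade when the parameters interact strongly.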

All the plots show that including second-order sensitivities gives better agreement with the exact solution. However, for some parameters (\({c}_{\text{0,1}}\) and \({k}_{\text{0,1}}\)), the first-order sensitivity yields sufficiently accurate values even for large parameter variations, so calculating second-order sensitivities is unnecessary. In contrast, for the parameter α, the first-order sensitivity gives results close to the exact solution only for small variations, up to about 5%; for larger variations, the second-order sensitivity should be used to determine the changes in the structural response accurately. The derived formulas also allow the change in the structural response to be assessed when several parameters change simultaneously. Figure 9 shows that, even when one of the changing parameters is α, good agreement with the exact solution is obtained for changes of up to about 15%.

5.4 Example 4 – system with equal eigenvalue sensitivities

To illustrate the formulas derived in Sect. 3, a three-degree-of-freedom system was considered. It is based on the examples analyzed in [14] and [16], modified by adding damping elements (Fig. 10).

The following values were used for the calculations: \(m_{1} = 1\; {\text{kg}}\), \(m_{2} = 4\; {\text{kg}}\), \(m_{3} = 1\; {\text{kg}}\), \(k_{1} = 8\; {\text{N}}/{\text{m}}\), \(k_{2} = 2\; {\text{N}}/{\text{m}}\), \(k_{3} = 2\; {\text{N}}/{\text{m}}\), \(k_{4} = 1\; {\text{N}}/{\text{m}}\), \(c_{1} = 4\; {\text{Ns}}/{\text{m}}\), \(c_{2} = 1\; {\text{Ns}}/{\text{m}}\), \(c_{3} = 1\; {\text{Ns}}/{\text{m}}\), \(c_{4} = 0.5\; {\text{Ns}}/{\text{m}}\). The matrices \({\mathbf{M}}\), \({\mathbf{K}}\), and \({\mathbf{G}}\left( s \right)\) are as follows:

To calculate the sensitivities, the design parameter was chosen as a combination of the parameters k1 and k4, whose stiffnesses change in the ratio 12:1. The derivatives of the matrices \({\mathbf{M}}\), \({\mathbf{K}}\), and \({\mathbf{G}}\left( s \right)\) are therefore as follows:

The eigenvalues of the problem are \(s_{1} = s_{2} = - 1.0 + 1.7321{\text{i}}\) and \(s_{3} = - 0.25 + 0.9682{\text{i}}\), the first two being repeated. The sensitivities of the eigenvalues s1 and s2 and of their associated eigenvectors were then calculated. After solving the additional eigenproblem (12), the first-order sensitivities of the repeated eigenvalue were found to be equal as well: \(\frac{{\partial s_{1} }}{\partial p} = \frac{{\partial s_{2} }}{\partial p} = 0.5774{\text{i}}\). Second-order sensitivities were computed by solving a further additional eigenproblem (39): \(\frac{{\partial^{2} s_{1} }}{{\partial p^{2} }} = 0.3333 + 0.1925{\text{i}}\) and \(\frac{{\partial^{2} s_{2} }}{{\partial p^{2} }} = 0.0 + 0.0{\text{i}}\). Using the transformation matrix \({{\varvec{\upbeta}}}_{2}\), the basis eigenvectors were determined:

The sensitivities of the elements of the eigenvector, calculated based on Eq. (35), are as follows:

To verify the calculations, the selected design parameter was assumed to change by 5%. The exact solution for the modified stiffnesses \({k}_{1}\) and \({k}_{4}\) was compared with the solution obtained from the Taylor series expansions (60) and (61). The results are presented in Table 16.

The comparison in Table 16 shows that the obtained sensitivities are accurate and provide a good approximation of the dynamic characteristics of systems in which both the eigenvalues and their sensitivities are repeated. Note, however, that for the eigenvalues the first-order sensitivity gives an incorrect result, suggesting that the eigenvalues remain repeated after the design parameter changes; only the second-order sensitivity, which differs for s1 and s2, allows a correct assessment. The eigenvector sensitivities, in contrast, provide a very good approximation of the new eigenvectors.
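The eigenvalues reported for this example admit a quick consistency check. In the data above, every damping coefficient is half of the corresponding stiffness (\(c_{i}/k_{i} = 0.5\)). Assuming, since Fig. 10 is not reproduced here, that each damper acts in parallel with the spring of the same index, the damping is stiffness-proportional, \({\mathbf{C}} = 0.5{\mathbf{K}}\), so each undamped mode satisfies \(s^{2} + 0.5\,\omega_{n}^{2}s + \omega_{n}^{2} = 0\). The values \(\omega_{n}^{2} = 4\) (double) and \(\omega_{n}^{2} = 1\) used below are inferred from the reported eigenvalues, not given in the text; the function name is hypothetical.

```python
import cmath

BETA = 0.5  # c_i / k_i, identical for all four spring-damper pairs in Example 4


def proportional_eigenvalue(omega_n_sq, beta=BETA):
    """Root with positive imaginary part of s^2 + beta*omega_n^2*s + omega_n^2 = 0."""
    b = beta * omega_n_sq
    return (-b + cmath.sqrt(b * b - 4.0 * omega_n_sq)) / 2.0


s_repeated = proportional_eigenvalue(4.0)  # close to -1.0 + 1.7321i
s_distinct = proportional_eigenvalue(1.0)  # close to -0.25 + 0.9682i
```

Under this assumption, proportional damping preserves the undamped mode shapes, so a double undamped frequency carries over to the double eigenvalue \(s_{1} = s_{2}\). Changing \(k_{1}\) and \(k_{4}\) without changing the dampers breaks the proportionality, which is consistent with the eigenvalues separating once second-order terms are included.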

5.5 Discussion on condition numbers

To improve the conditioning of the coefficient matrices, a special factor κ has been introduced. It is calculated as the ratio of the largest element (in absolute value) of the matrix \(\mathbf{D}\left(\lambda \right)\) to the largest element (in absolute value) of \(\frac{\partial \mathbf{D}\left(\lambda \right)}{\partial s}\) (see Eq. 17). Table 17 lists the condition numbers of the coefficient matrices for all examples.

The results in Table 17 show that introducing the additional factor κ significantly improves the condition number in most cases. For Example 4 the difference is small, but there the condition number of the coefficient matrix is low even without the factor κ.
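The effect of κ can be illustrated on a toy bordered matrix. The coefficient matrix of the method is defined by Eq. (17) and is not reproduced here; the sketch assumes a generic bordered form \(\left[ {{\mathbf{D}},\;\kappa {\mathbf{B}};\;\kappa {\mathbf{B}}^{T},\;{\mathbf{0}}} \right]\) with \({\mathbf{B}} = (\partial {\mathbf{D}}/\partial s){{\varvec{\Phi}}}\), matching in size the \((n+m) \times (n+m)\) matrix of Appendix A, and uses invented real diagonal data. The function names are hypothetical.

```python
import numpy as np


def kappa_factor(D, dD_ds):
    """Ratio of the largest |entry| of D(lambda) to the largest |entry| of dD/ds."""
    return np.max(np.abs(D)) / np.max(np.abs(dD_ds))


def bordered_matrix(D, dD_ds, Phi, kappa=1.0):
    """Hypothetical bordered matrix [[D, kappa*B], [kappa*B^T, 0]] with B = dD/ds @ Phi."""
    B = kappa * (dD_ds @ Phi)
    m = Phi.shape[1]
    return np.block([[D, B], [B.T, np.zeros((m, m))]])


# Toy data: D is singular with a two-dimensional null space (a double eigenvalue).
D = np.diag([0.0, 0.0, 5e6, 1e7])
dD_ds = 2.0 * np.eye(4)
Phi = np.eye(4)[:, :2]  # basis of the null space of D

kappa = kappa_factor(D, dD_ds)
cond_plain = np.linalg.cond(bordered_matrix(D, dD_ds, Phi))
cond_scaled = np.linalg.cond(bordered_matrix(D, dD_ds, Phi, kappa))
```

For these toy numbers κ = 5·10⁶, and the condition number drops from 5·10⁶ to 2: without scaling, the border entries (of order 2) are tiny compared with the entries of \({\mathbf{D}}\) (up to 10⁷), and κ brings both blocks to the same scale.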

6 Conclusions

The paper introduces a method for calculating the sensitivities of repeated eigenvalues and their associated eigenvectors for systems with viscoelastic elements. The mechanical behavior of these elements is described using rheological models, both classical ones and those involving fractional derivatives. The eigenvalue sensitivities are determined by solving an additional eigenvalue problem, while the eigenvector sensitivities are computed by splitting the derivatives into particular and homogeneous solutions. Formulas are derived for both first- and second-order sensitivities, marking the first time such calculations have been presented for the systems discussed. It is also shown that the proposed method can be applied to the special case in which the eigenvalue sensitivities are also repeated.

An additional factor is introduced into the coefficient matrix to improve its condition number. The examples show that this factor substantially improves the condition number in most cases.

The examples confirm that the derived formulas can be applied to systems with viscoelastic elements. They also show that, in some cases, second-order sensitivity analysis is necessary to predict the behavior of the analyzed structure accurately.

References

Haftka, R.T., Adelman, H.M.: Recent developments in structural sensitivity analysis. Struct. Optim. 1, 137–151 (1989). https://doi.org/10.1007/BF01637334

Mottershead, J.E., Link, M., Friswell, M.I.: The sensitivity method in finite element model updating: a tutorial. Mech. Syst. Signal Process. 25, 2275–2296 (2011). https://doi.org/10.1016/j.ymssp.2010.10.012

Haftka, R.T., Gürdal, Z.: Elements of structural optimization, 3rd edn. Springer Science+Business Media B.V. (1992). https://doi.org/10.1007/978-94-011-2550-5

Adhikari, S.: Structural Dynamics with Generalized Damping Models: Identification. Wiley-ISTE, Hoboken & London (2013). https://doi.org/10.1002/9781118862971

Song, H., Chen, Z., Zhang, J.: Sensitivity analysis of statistical energy analysis models based on interval perturbation approach. Acta Mech. 231, 3989–4001 (2020). https://doi.org/10.1007/s00707-020-02744-1

Van Keulen, F., Haftka, R.T., Kim, N.H.: Review of options for structural design sensitivity analysis. Part 1: Linear systems. Comput. Methods Appl. Mech. Eng. 194, 3213–3243 (2005). https://doi.org/10.1016/j.cma.2005.02.002

Fox, R.L., Kapoor, M.P.: Rates of change of eigenvalues and eigenvectors. AIAA J. 6(12), 2426–2429 (1968). https://doi.org/10.2514/3.5008

Nelson, R.B.: Simplified calculation of eigenvector derivatives. AIAA J. 14(9), 1201–1205 (1976). https://doi.org/10.2514/3.7211

Ojalvo, I.U.: Efficient computation of modal sensitivities for systems with repeated frequencies. AIAA J. 26(3), 361–366 (1988). https://doi.org/10.2514/3.9897

Mills-Curran, W.C.: Calculation of eigenvector derivatives for structures with repeated eigenvalues. AIAA J. 26(7), 867–871 (1988). https://doi.org/10.2514/3.9980

Dailey, R.L.: Eigenvector derivatives with repeated eigenvalues. AIAA J. 27(4), 486–491 (1989). https://doi.org/10.2514/3.10137

Tang, J., Wang, W.L.: On calculation of sensitivity for non-defective eigenproblems with repeated roots. J. Sound Vib. 225(4), 611–631 (1999). https://doi.org/10.1006/jsvi.1999.2098

Xu, Z., Wu, B.: Derivatives of complex eigenvectors with distinct and repeated eigenvalues. Int. J. Numer. Methods Eng. 75(8), 945–963 (2008). https://doi.org/10.1002/nme.2280

Long, X.Y., Jiang, C., Han, X.: New method for eigenvector-sensitivity analysis with repeated eigenvalues and eigenvalue derivatives. AIAA J. 53(5), 1226–1235 (2015). https://doi.org/10.2514/1.J053362

Shaw, J., Jayasuriya, S.: Modal sensitivities for repeated eigenvalues and eigenvalue derivatives. AIAA J. 30(3), 850–852 (1992). https://doi.org/10.2514/3.10999

Friswell, M.I.: The derivatives of repeated eigenvalues and their associated eigenvectors. J. Vib. Acoust. 118, 390–397 (1996). https://doi.org/10.1115/1.2888195

Van Der Aa, N.P., Ter Morsche, H.G., Mattheij, R.M.: Computation of eigenvalue and eigenvector derivatives for a general complex-valued eigensystem. Electron. J. Linear Algebra 16, 300–314 (2007). https://doi.org/10.13001/1081-3810.1203

Adhikari, S., Friswell, M.I.: Calculation of eigensolution derivatives for nonviscously damped systems using Nelson’s method. AIAA J. 44(8), 1799–1806 (2006). https://doi.org/10.2514/1.20049

Lim, K.B., Juang, J.N.: Eigenvector derivatives of repeated eigenvalues using singular value decomposition. J. Guid. Control Dyn. 12(2), 282–283 (1989). https://doi.org/10.2514/3.20405

Prells, U., Friswell, M.I.: Calculating derivatives of repeated and nonrepeated eigenvalues without explicit use of eigenvectors. AIAA J. 38(8), 1426–1436 (2000). https://doi.org/10.2514/2.1119

Lee, I.W., Jung, G.H., Lee, J.W.: Numerical method for sensitivity analysis of eigensystems with non-repeated and repeated eigenvalues. J. Sound Vib. 195(1), 17–32 (1996). https://doi.org/10.1006/jsvi.1996.9989

Lee, T.H.: Adjoint method for design sensitivity analysis of multiple eigenvalues and associated eigenvectors. AIAA J. 45(8), 1998–2004 (2007). https://doi.org/10.2514/1.25347

Yoon, G.H., Donoso, A., Bellido, J.C., Ruiz, D.: Highly efficient general method for sensitivity analysis of eigenvectors with repeated eigenvalues without passing through adjacent eigenvectors. Int. J. Numer. Methods Eng. 121(20), 4473–4492 (2020). https://doi.org/10.1002/nme.6442

Lee, I.W., Jung, G.H.: An efficient algebraic method for the computation of natural frequency and mode shape sensitivities – Part I. Distinct natural frequencies. Comput. Struct. 62(3), 429–435 (1997). https://doi.org/10.1016/S0045-7949(96)00206-4

Lee, I.W., Jung, G.H.: An efficient algebraic method for the computation of natural frequency and mode shape sensitivities – Part II. Multiple natural frequencies. Comput. Struct. 62(3), 437–443 (1997). https://doi.org/10.1016/S0045-7949(96)00207-6

Wu, B., Xu, Z., Li, Z.: A note on computing eigenvector derivatives with distinct and repeated eigenvalues. Commun. Numer. Methods Eng. 23(3), 241–251 (2007). https://doi.org/10.1002/cnm.895

Wang, P., Yang, X.: Eigensensitivity of damped system with defective multiple eigenvalues. J. Vibroeng. 18(4), 2331–2342 (2016). https://doi.org/10.21595/jve.2016.15791

Xie, H.Q., Dai, H.: Derivatives of repeated eigenvalues and corresponding eigenvectors of damped systems. Appl. Math. Mech. (Engl. Ed.) 28(6), 837–845 (2007). https://doi.org/10.1007/s10483-007-0614-4

Xie, H., Dai, H.: Calculation of derivatives of multiple eigenpairs of unsymmetrical quadratic eigenvalue problems. Int. J. Comput. Math. 85(12), 1815–1831 (2008). https://doi.org/10.1080/00207160701581525

Choi, K.M., Cho, S.W., Ko, M.G., Lee, I.W.: Higher order eigensensitivity analysis of damped systems with repeated eigenvalues. Comput. Struct. 82, 63–69 (2004). https://doi.org/10.1016/j.compstruc.2003.08.001

Li, L., Hu, Y., Wang, X.: A parallel way for computing eigenvector sensitivity of asymmetric damped systems with distinct and repeated eigenvalues. Mech. Syst. Signal Process. 30, 30–77 (2012). https://doi.org/10.1016/j.ymssp.2012.01.008

Wang, P., Dai, H.: Calculation of eigenpair derivatives for asymmetric damped systems with distinct and repeated eigenvalues. Int. J. Numer. Methods Eng. 103(7), 501–515 (2015). https://doi.org/10.1002/nme.4901

Wang, P., Wu, J., Yang, X.: An improved method for computing eigenpair derivatives of damped system. Math. Probl. Eng. 2018, 1–8 (2018). https://doi.org/10.1155/2018/8050132

Wang, P., Dai, H.: Eigensensitivity analysis for symmetric nonviscously damped systems with repeated eigenvalues. J. Vibroeng. 16(8), 4065–4076 (2014)

Li, L., Hu, Y., Wang, X.: A study on design sensitivity analysis for general nonlinear eigenproblems. Mech. Syst. Signal Process. 34, 88–105 (2013). https://doi.org/10.1016/j.ymssp.2012.08.011

Li, L., Hu, Y., Wang, X., Ling, L.: Eigensensitivity analysis of damped systems with distinct and repeated eigenvalues. Finite Elem. Anal. Des. 72, 21–34 (2013). https://doi.org/10.1016/j.finel.2013.04.006

Phuor, T., Yoon, G.: Eigensensitivity of damped system with distinct and repeated eigenvalues by chain rule. Int. J. Numer. Methods Eng. 124, 4687–4717 (2023). https://doi.org/10.1002/nme.7331

Ruiz, D., Bellido, J.C., Donoso, A.: Eigenvector sensitivity when tracking modes with repeated eigenvalues. Comput. Methods Appl. Mech. Eng. 326, 338–357 (2017). https://doi.org/10.1016/j.cma.2017.07.031

Choi, K.K., Haug, E.J., Lam, H.L.: A numerical method for distributed parameter structural optimization problems with repeated eigenvalues. J. Struct. Mech. 10(2), 191–207 (1982)

Gomes, H.M.: Truss optimization with dynamic constraints using a particle swarm algorithm. Expert Syst. Appl. 38, 957–968 (2011). https://doi.org/10.1016/j.eswa.2010.07.086

Zuo, W., Xu, T., Zhang, H., Xu, T.: Fast structural optimization with frequency constraints by genetic algorithm using adaptive eigenvalue reanalysis methods. Struct. Multidisc. Optim. 43, 799–810 (2010). https://doi.org/10.1007/s00158-010-0610-y

Zhou, P., Du, J., Lü, Z.: Topology optimization of freely vibrating continuum structures based on nonsmooth optimization. Struct. Multidisc. Optim. 56, 603–618 (2017). https://doi.org/10.1007/s00158-017-1677-5

Wagner, N., Adhikari, S.: Symmetric state-space method for a class of nonviscously damped systems. AIAA J. 41(5), 951–956 (2003). https://doi.org/10.2514/2.2032

Adhikari, S., Wagner, N.: Analysis of asymmetric nonviscously damped linear dynamic systems. J. Appl. Mech. 70(6), 885–893 (2003). https://doi.org/10.1115/1.1601251

Biot, M.A.: Theory of stress-strain relations in anisotropic viscoelasticity and relaxation phenomena. J. Appl. Phys. 25(11), 1385–1391 (1954). https://doi.org/10.1063/1.1721573

Biot, M.A.: Variational principles in irreversible thermodynamics with application to viscoelasticity. Phys. Rev. 97(6), 1463–1469 (1955). https://doi.org/10.1103/PhysRev.97.1463

Golla, D.F., Hughes, P.C.: Dynamics of viscoelastic structures—a time domain finite element formulation. J. Appl. Mech. 52(4), 897–906 (1985). https://doi.org/10.1115/1.3169166

McTavish, D.J., Hughes, P.C.: Modeling of linear viscoelastic space structures. J. Vib. Acoust. 115(1), 103–110 (1993). https://doi.org/10.1115/1.2930302

Lesieutre, G.A.: Finite element modeling of frequency-dependent material properties using augmented thermodynamic fields. AIAA J. 28(6), 1040–1050 (1990). https://doi.org/10.2514/3.20577

Lesieutre, G.A.: Finite elements for dynamic modeling of uniaxial rods with frequency-dependent material properties. Int. J. Solids Struct. 29(12), 1567–1579 (1992). https://doi.org/10.1016/0020-7683(92)90134-F

Lewandowski, R., Bartkowiak, A., Maciejewski, H.: Dynamic analysis of frames with viscoelastic dampers: a comparison of dampers models. Struct. Eng. Mech. 41(1), 113–137 (2012). https://doi.org/10.12989/sem.2012.41.1.113

Park, S.W.: Analytical modeling of viscoelastic dampers for structural and vibration control. Int. J. Solids Struct. 38(44–45), 8065–8092 (2001). https://doi.org/10.1016/S0020-7683(01)00026-9

Chang, T.S., Singh, M.P.: Seismic analysis of structures with a fractional derivative model of viscoelastic dampers. Earthq. Eng. Eng. Vib. 1, 251–260 (2002). https://doi.org/10.1007/s11803-002-0070-5

Adhikari, S., Pascual, B.: Eigenvalues of linear viscoelastic systems. J. Sound Vib. 325, 1000–1011 (2009). https://doi.org/10.1016/j.jsv.2009.04.008

Li, L., Hu, Y.: State-Space method for viscoelastic systems involving general damping model. AIAA J. 54(10), 3290–3295 (2016). https://doi.org/10.2514/1.J054180

Lewandowski, R., Łasecka-Plura, M.: Design sensitivity analysis of structures with viscoelastic dampers. Comput. Struct. 164, 95–107 (2016). https://doi.org/10.1016/j.compstruc.2015.11.011

Łasecka-Plura, M.: A comparative study of the sensitivity analysis for systems with viscoelastic elements. Arch. Mech. Eng. 70(1), 5–25 (2023). https://doi.org/10.24425/ame.2022.144077

Łasecka-Plura, M., Lewandowski, R.: Dynamic characteristics and frequency response function for frame with dampers with uncertain design parameters. Mech. Based Des. Struct. Mach. 45(3), 286–312 (2017). https://doi.org/10.1080/15397734.2017.1298043

Martinez-Agirre, M., Elejabarrieta, M.J.: Higher order eigensensitivities-based numerical method for the harmonic analysis of viscoelastically damped structures. Int. J. Numer. Methods Eng. 88(12), 1280–1296 (2011). https://doi.org/10.1002/nme.3222

Pawlak, Z., Lewandowski, R.: The continuation method for the eigenvalue problem of structures with viscoelastic dampers. Comput. Struct. 125, 53–61 (2013). https://doi.org/10.1016/j.compstruc.2013.04.021

Lewandowski, R., Baum, M.: Dynamic characteristics of multilayered beams with viscoelastic layers described by the fractional Zener model. Arch. Appl. Mech. 85(12), 1793–1814 (2015). https://doi.org/10.1007/s00419-015-1019-2

Lewandowski, R., Litewka, P., Wielentejczyk, P.: Free vibrations of laminate plates with viscoelastic layers using the refined zig-zag theory – Part 1. Theoretical background. Compos. Struct. 278, 114547 (2021). https://doi.org/10.1016/j.compstruct.2021.114547

Kamiński, M., Lenartowicz, A., Guminiak, M., Przychodzki, M.: Selected problems of random free vibrations of rectangular thin plates with viscoelastic dampers. Materials 15(19), 6811 (2022). https://doi.org/10.3390/ma15196811

Funding

This study was funded by Politechnika Poznańska, internal grant No. 0411/SBAD/0010.

Ethics declarations

Conflicts of interest

The author declares no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by the author.

Appendices

Appendix A. Proof of the non-singularity of the coefficient matrix [14]

The coefficient matrix is expressed as follows:

If the considered system has an eigenvalue λ of multiplicity m, the dimension of the matrix \({\mathbf{M}}_{C}\) is \(\left( {n + m} \right) \times \left( {n + m} \right)\). The solution of the following equation is analyzed:

The equation can be expressed in the following form:

After multiplying Eq. (A.2) from the left by \({{\varvec{\Phi}}}^{T}\), we obtain

Upon substituting (A.4) into Eq. (A.2), we obtain:

From Eq. (A.5), it follows that the vector Y is an eigenvector associated with the eigenvalue λ, and thus we can write:

where \({{\varvec{\Phi}}}\) is the matrix of eigenvectors associated with λ, and \({\mathbf{b}}\) is a vector of constant coefficients. Substituting (A.6) into (A.3) yields the following equation:

This implies that \({\mathbf{b}} = 0\), and from (A.6), we have:

From Eqs. (A.4) and (A.7), Eq. (A.1) has only the trivial (zero) solution, which means that the coefficient matrix \({\mathbf{M}}_{C}\) is nonsingular (of full rank). This guarantees that the system of equations with this coefficient matrix has a unique solution, which is a prerequisite for the numerical stability of the presented method.

Appendix B. Sensitivity of distinct eigenvalues

The presented method can also be applied when the eigenvalues are distinct. In this case, \(m=1\), and a distinct eigenvalue is treated as a special case of a repeated eigenvalue with multiplicity one. The first-order sensitivity of the eigenvalue can be calculated from a formula obtained by differentiating Eq. (2) and left-multiplying the result by the vector \({\overline{\mathbf{q}} }_{i}^{T}\):

The sensitivity of the eigenvectors can be calculated based on Eq. (14), which takes the following form in the case of distinct eigenvalues:

The particular solution can be obtained from the solution of the system of equations:

where \({\tilde{\mathbf{S}}}_{1} = - \frac{{\partial {\mathbf{D}}\left( \lambda \right)}}{{\partial p_{k} }}{\overline{\mathbf{q}}}_{i}\).

Note that for a distinct eigenvalue, \(\tilde{c}_{1}\) is a single (scalar) coefficient, calculated as follows:

The second-order sensitivity of an eigenvalue can be computed by differentiating Eq. (2) twice and left-multiplying it by \({\overline{\mathbf{q}}}_{i}^{T}\):

The second-order sensitivity of the eigenvectors is calculated based on Eq. (23), which now takes the following form:

The particular solution is obtained by solving the system of equations:

where

The coefficient \({\widetilde{c}}_{2}\) is calculated using the formula:

Appendix C. The scheme for determining first- and second-order sensitivities

The provided scheme (Fig. 11) outlines the order of applying the derived formulas based on whether distinct or repeated eigenvalues and their derivatives are obtained, providing a clear and systematic approach for practical implementation.
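One possible reading of the decision logic described for Fig. 11 is sketched below. The tolerance-based grouping and the function names are hypothetical: in floating-point arithmetic, repeated eigenvalues (or repeated first derivatives) coincide only to within round-off, so some tolerance is needed to decide which branch applies. The mapping to Appendix B and Sect. 3 follows the text; the relative tolerance is an arbitrary assumption. The data are the eigenvalues and first derivatives reported in Example 4.

```python
def cluster_values(values, rel_tol=1e-6):
    """Group indices of numerically coincident complex values (tolerance heuristic)."""
    clusters = []
    for i, v in enumerate(values):
        for cluster in clusters:
            ref = values[cluster[0]]
            if abs(v - ref) <= rel_tol * max(abs(ref), 1.0):
                cluster.append(i)
                break
        else:  # no existing cluster matched: start a new one
            clusters.append([i])
    return clusters


def choose_procedure(multiplicity, first_derivatives_repeated=False):
    """Map an eigenvalue cluster to the branch of the scheme that applies."""
    if multiplicity == 1:
        return "distinct eigenvalue: formulas of Appendix B"
    if first_derivatives_repeated:
        return "repeated eigenvalue with repeated first derivatives: Sect. 3"
    return "repeated eigenvalue with distinct first derivatives"


# Eigenvalues and first derivatives (of s1 and s2) reported in Example 4.
eigs = [-1.0 + 1.7321j, -1.0 + 1.7321j, -0.25 + 0.9682j]
derivs = [0.5774j, 0.5774j]

clusters = cluster_values(eigs)
derivative_clusters = cluster_values(derivs)
procedure_repeated = choose_procedure(len(clusters[0]), len(derivative_clusters) == 1)
procedure_distinct = choose_procedure(len(clusters[1]))
```

In practice the tolerance should reflect the accuracy of the eigensolver (here, the continuation method), since a value chosen too small would misclassify a numerically repeated eigenvalue as two distinct ones.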

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Łasecka-Plura, M. Comprehensive sensitivity analysis of repeated eigenvalues and eigenvectors for structures with viscoelastic elements. Acta Mech (2024). https://doi.org/10.1007/s00707-024-03967-2

DOI: https://doi.org/10.1007/s00707-024-03967-2