Abstract

In this paper, a surrogate merit function (SMF) is proposed as a replacement for the traditional merit functions (i.e., the penalized weight of the structure). The standard format of the conventional merit functions requires several expensive trial-and-error tuning processes to improve convergence quality, retuning for each new structural model configuration, and a final manual local search whenever the optimization converges to an infeasible point in the vicinity of the global optimum. In contrast, SMF has no tuning factor, shows statistically stable performance across different models, converges directly to high-quality feasible points, and offers further advantages such as fewer iterations to reach convergence. In other words, the new function is easy to apply and yields robust numerical results. SMF may therefore be a significant step toward commercializing design optimization in the real-world construction market.

1 Introduction

Structural optimization is a constrained optimization problem in which the objective function F(\(\overrightarrow{X}\)) is the weight of the structure W(\(\overrightarrow{X}\)) (\(\overrightarrow{X}\)=\(\overrightarrow{A}\), where \(\overrightarrow{A}\) is the vector of cross sections of the beams and columns) and the constraints C(\(\overrightarrow{X}\)) are the design criteria determined from the design codes (see Table 1). However, most current optimization algorithms are built for unconstrained problems, so a merit function or fitness function, which combines the objective function F(\(\overrightarrow{X}\)) and the constraints C(\(\overrightarrow{X}\)), is required to handle the constraints properly (for consistent terminology, the term merit function is used in the rest of this paper). Penalty methods are the traditional approach in structural engineering. However, because of the hassles of using them (discussed in Sect. 1.3.1) and the lack of other effective methods, the authors propose a new one.

The main goal is to provide a user-friendly concept as simple as a merit function formula that is general and valid for every structural model, straightforward to use, and more efficient than penalties. Since no commercial software (e.g., ETABS or SAP2000) offers an optimization option because of the penalty hassles, the authors expect SMF to be a breakthrough, or at least a first step, toward making an optimization option available in commercial software.

1.1 Traditional penalty-based format

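The standard format of the traditional merit function for structural optimization is the weight of the structure \(W(\overrightarrow{\mathrm{X}})\) multiplied by a penalty rule (called the "constraint-handling technique"), so that the merit function takes the form:

\[\mathrm{Merit}(\overrightarrow{X}) = W(\overrightarrow{X}) \times P(\overrightarrow{X}, \overrightarrow{C}, \alpha , \beta , \varphi , \dots , a, b, c, \dots ) \quad (1)\]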

where \(\overrightarrow{X}\) is a candidate solution vector proposed by the optimization algorithm (e.g., a suggested cross-section list for a steel frame design), and \(\overrightarrow{C} = [{g}_{1}, {g}_{2}, {g}_{3},\ldots , {g}_{n}]\) is the vector of constraints, in which each \({{g}}_{{i}}\) is a constraint of the problem to be satisfied. The constraint-handling rule \(P(\overrightarrow{X}, \overrightarrow{C}, \alpha , \beta , \varphi , \dots , a, b, c, \dots )\) is the key to composing the merit function, and factors such as α, β, φ, a, b, and c are included in the formulas as tuning factors that control convergence quality. For example, the formula by Joines and Houck (1994) includes α, β, and c as tuning factors:

Here, n is the number of constraints of the problem, and t is the current iteration number of the optimization process.
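As a minimal sketch of how such a dynamic penalty behaves, the snippet below uses the additive form in which the rule of Joines and Houck (1994) is commonly cited, merit = F + (c·t)^α Σ v_i^β. The function name, the default factor values, the violation measure v_i = max(0, C_i − 1) for normalized constraints, and the sample numbers are assumptions made for illustration; the exact variant used by the authors may differ.

```python
def dynamic_penalty_merit(weight, constraints, t, c=0.5, alpha=2.0, beta=2.0):
    """Penalized weight with an iteration-dependent penalty (illustrative only)."""
    # Assumed violation measure for normalized constraints C_i <= 1.
    violations = [max(0.0, ci - 1.0) for ci in constraints]
    # The multiplier (c * t) ** alpha grows with the iteration number t.
    penalty = (c * t) ** alpha * sum(v ** beta for v in violations)
    return weight + penalty


if __name__ == "__main__":
    ratios = [0.8, 1.2, 1.5]  # hypothetical normalized constraint values
    for t in (1, 10, 100):    # the same design is penalized more as t grows
        print(t, dynamic_penalty_merit(100.0, ratios, t))
```

The three factors c, α, and β all change the scale of the penalty, which is why each of them typically has to be tuned per structure.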

For structural engineers, F(\(\overrightarrow{X}\)) is usually the weight of the structure, W(\(\overrightarrow{X}\)). Engineers also take the design criteria of the codes as the constraints \({g}_{i}(\overrightarrow{X})\) and write them in normalized format for structural optimization (for simplicity, \({g}_{i}(\overrightarrow{X})\) is denoted as \({\mathrm{C }}_{\mathrm{i}}\) in the remainder of the text). For example, the most common criterion for members is the strength capacity check of the AISC (2010) provisions, stated as

which is already written in a normalized format in the design code. However, some other criteria need to be rewritten in normalized format. For instance, for story s, the inter-story drift criterion is stated as Eq. 4:

\[\frac{{\Delta }_{s+1}-{\Delta }_{s}}{{h}_{s}}\le RI \quad (4)\]

where \({\Delta }_{s}\) and \({\Delta }_{s+1}\) are the lateral displacements of a reference point on stories s and s + 1, respectively, \({h}_{s}\) is the height of story s, and RI is the code limit. Engineers rewrite this criterion in normalized format before using it in structural optimization. In this paper, the capacity index (CI) and drift index (DI) are selected as the main design constraints and implemented in the optimization process.
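The two normalized constraints can be sketched as follows. The capacity check is written in the axial-flexure interaction form of AISC 360-10 (Eqs. H1-1a and H1-1b), which is assumed here to be the check the authors refer to. The drift index simply divides the inter-story drift ratio by its limit RI. All function and argument names are hypothetical, and the absolute value in the drift is an added convention.

```python
def capacity_index(pu, phi_pn, mux, phi_mnx, muy, phi_mny):
    """Axial-flexure interaction ratio in the AISC 360-10 H1-1a/H1-1b form (assumed)."""
    axial = pu / phi_pn                       # required over available axial strength
    bending = mux / phi_mnx + muy / phi_mny   # sum of the two flexural demand ratios
    if axial >= 0.2:
        return axial + (8.0 / 9.0) * bending  # H1-1a
    return axial / 2.0 + bending              # H1-1b


def drift_index(delta_s, delta_s1, h_s, ri):
    """Inter-story drift ratio divided by the code limit RI; DI <= 1 means feasible."""
    return abs(delta_s1 - delta_s) / (h_s * ri)


if __name__ == "__main__":
    print(capacity_index(100.0, 400.0, 30.0, 100.0, 10.0, 100.0))  # hypothetical member
    print(drift_index(0.010, 0.025, 3.0, 0.005))                   # hypothetical story
```

With both indices on the same scale, a value of at most one marks a satisfied constraint regardless of the physical quantity behind it.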

1.2 Three factual strategies to develop SMF

In the current paper, SMF is developed in two steps based on three factual strategies.

Step 1: Initiating Formulation Based on Fuzzy Logic

First factual strategy: use a fuzzy platform to develop the formula. SMF must have a logical format that does not clash with the nature of the search space in order to perform well. Fortunately, the combinatorial search space of structures is a fuzzy one; thus, a fuzzy membership value can be attributed to each candidate structure in it (as described in Sects. 3.1, 3.2, and 3.3). Since this fuzziness is an intrinsic property of the search space, a fuzzy membership format appears to be the best platform for the formulation. However, it must be converted to a proper merit function afterward.

Step 2: Converting Fuzzy Membership Function into a Useful Merit Function

The second and third factual strategies convert the fuzzy membership function into a proper merit function that is efficient in structural optimization.

Second factual strategy: set the minimum of SMF on the feasibility boundary. An efficient merit function must help the algorithm push the exploration away from overdesigned structures toward on-the-edge feasible structures in order to reach the global optimum. The authors' strategy is to set the minimum of SMF on the feasibility boundary by assigning zero to on-the-edge feasible elements and a positive value to all others.

Third factual strategy: secure a feasible exploration with a feasibility factor. Preserving the feasibility of the exploration over the structural search space is necessary, since the optimization algorithms are not built for constrained problems and cannot, on their own, stay on a feasible path through the search space. To address this, a feasibility factor is defined and embedded in the SMF formula in Sect. 3.5; it equals one for a 100% feasible structure and takes values higher than one otherwise, according to the feasibility level of the structure.

These three factual strategies are discussed in detail in Sect. 3, where SMF is developed.

1.3 Motivation behind proposing SMF

The motivation for the current research originates from two main sources: first, the difficulties of using the traditional penalty-based formats; second, the reviewed literature.

1.3.1 The hassles of using penalty-based merit functions in structural optimization problems

The value produced by penalty formulas is sensitive to the tuning factors and requires several rounds of trial and error to adjust. These rounds are expensive because of the analysis time of finite element models of structures with a high number of degrees of freedom. In addition, the settings of the tuning factors must usually be readjusted when they are applied to another structure. Moreover, when an optimization process converges and stops, especially with metaheuristic algorithms, the resulting point is in the vicinity of the feasible global optimum but is sometimes infeasible. The user then needs to conduct a short manual local search (e.g., for steel frames, the engineer adjusts the converged result, removes the slight infeasibility among the elements, and obtains a feasible lightweight structure with some effort).

All these drawbacks prevent structural optimization from being commercialized, and none of the current software packages includes it. To the authors' knowledge, penalties have been the common concept used by structural engineers, and research on this topic remains largely unexplored. From this viewpoint, the structural engineering field lacks a proper research record.

1.3.2 Inspiration from the literature review

A comprehensive literature review on constraint-handling techniques is provided in Sect. 2. There, the importance of constraint handling in structural optimization is reviewed and the main review articles on constraint-handling techniques are listed (Sect. 2.1), available approaches are briefly described (Sect. 2.2), other miscellaneous ideas are covered (Sect. 2.3), studies with a focus on structures are cited (Sect. 2.4), and finally the resulting inspiration is discussed (Sect. 2.5). From that review, the authors concluded that those other ideas, even though effective in other ways, are not suitable enough to remove the hassles of penalty tuning, and that the best choice is to replace the penalties with a new surrogate merit function.

1.4 Conceptual comparison with related works

Table 2 lists the conceptual differences and advances of SMF that are not available in similar works.

2 Comprehensive literature review

2.1 Constraint-handling background and available review articles

As mentioned in the Introduction, structural optimization is a constrained problem in which the constraints are the design criteria of the related codes. In contrast, most optimization methods are developed to explore the search space for extrema of objective functions with no constraints. Genetic algorithm (GA) (Holland 1975), differential evolution (DE) (Storn and Price 1997), particle swarm optimization (PSO) (Eberhart and Kennedy 1995), bat algorithm (BA) proposed by Yang (2016), ant colony optimization algorithm (ACO) (Dorigo and Stützle 2004), cuckoo search (CS) (Yang and Deb 2009), tabu search (Glover 1989 and 1990), imperialist competitive algorithm (ICA) (Gargari and Lucas 2007), big bang-big crunch (BB-BC) (Erol and Eksin 2006), artificial bee-colony algorithm (ABC) (Karaboğa 2005), harmony search (HS) (Geem et al. 2001), charged system search algorithm (CSS) (Kaveh and Talatahari 2010a, b), and chaos game optimization (CGO) (Talatahari and Azizi 2020) all work in this way. Handling design criteria has therefore been of utmost interest among structural engineers, who, like other researchers, face difficulties when solving constrained optimization problems. As a result, many miscellaneous techniques applicable to all or to limited types of problems have been developed. Coello (2001) and Montes and Coello (2011) provide comprehensive surveys of constraint-handling techniques. Typically, three main groups of constraint-handling techniques are available in the literature: (a) penalty-based techniques, (b) modified evolutionary algorithms (EAs) or hybrid algorithms such as co-evolutionary algorithms (CoEAs), and (c) miscellaneous techniques.

2.2 Available approaches

The most well-known methods are penalty techniques, which take different forms such as static, dynamic, death, and adaptive penalties. Other researchers have focused on modified versions of the algorithms that embed a constraint-handling technique directly into the algorithm's logic. Yousefi et al. (2012) improved ICA with an embedded feasibility-based ranking for handling the design constraints of heat-transferring plates. Nema et al. (2011) presented a hybrid co-evolutionary version of PSO combined with gradient search and used an augmented Lagrangian method as the constraint-handling technique. Mun and Cho (2012) described a modified HS algorithm with an embedded fitness priority-based ranking method (FPBRM) to handle design constraints. Zade et al. (2017) introduced a cuckoo search hybridized with the box-complex method to handle design constraints and to increase the convergence rate and computation speed. Mendez and Coello (2009) incorporated a selection mechanism into a DE algorithm to handle constraints. Liu et al. (2018) presented a modified PSO that uses a subset constrained boundary narrower (SCBN) method together with sequential quadratic programming to find near-boundary feasible solutions of engineering problems. Lee and Kang (2015) handled constraints in water resources optimization problems by modifying an EA called shuffled complex evolution (SCE) with an adaptive penalty. Stripinis et al. (2019) presented a constraint-handling technique, called the direct type constraint handling technique, that incorporates a two-step selection procedure and a penalty function. Although efficient and valuable, these algorithms usually have sophisticated logic or demand exhausting programming and might not be readily available to users.

2.3 Other miscellaneous ideas

Other miscellaneous ideas are available in the literature. Chehouri et al. (2016) presented the so-called violation constraint handling (VCH) technique for GAs, which uses a violation factor without tunable parameters and displays consistent performance. Guan et al. (2008) developed a repairing procedure added to GAs for handling constraints in water resources optimization problems. Mallipeddi and Suganthan (2010) combined four constraint-handling techniques, superiority of feasible solutions (SF), self-adaptive penalty (SP), ε-constraint (EC), and stochastic ranking (SR), which cooperate according to some outlined rules; the resulting ensemble of constraint handling techniques (ECHT) outperforms each of the four techniques used individually. Leguizamón and Coello (2009) developed a boundary-search constraint-handling technique that explores at both local and global levels, based on an ant colony metaphor.

2.4 Studies with focus on structures

Some studies have focused on optimizing skeletal structures. For example, Kaveh and Zolghadr (2012) applied a penalty function to handle natural frequency constraints on some small- and medium-scale structures, and their algorithm outperformed the results previously reported in the literature. The exterior penalty function used by Gholizadeh and Barzegar (2012) sequentially provides an unconstrained situation for handling natural frequency constraints. The method presented by Kim et al. (2010) is an improved version of PSO that makes the problem unconstrained. Pholdee and Bureerat (2014) compared the results of a fuzzy-set penalty function by Cheng and Li (1997) with those of three other techniques. In another work by the same authors (Bureerat and Pholdee 2016), a more powerful penalty is introduced that outperforms the work by Kaveh and Zolghadr (2012). Their new penalty function shows the best standard deviation among all former techniques, while the mean, maximum, and minimum of the statistical study are within the range of the others.

2.5 Concluding an inspiration

All the concepts mentioned above are undoubtedly excellent works, but they are not readily available and mostly not specific to structural design problems; the authors of these studies did not adopt a structural design point of view, and their techniques are mostly inspired by heuristics or artificial intelligence. In other words, such techniques manipulate exploration and exploitation in the optimization process and do not build the merit function from the routines, logic, and specific properties of the studied problem (the structural design optimization problem in this paper). Moreover, none of these techniques comes with statistical evidence of reliable, stable performance on large-scale structural design problems. Because their vision is general, trial and error for tuning remains the main drawback and hinders user convenience, especially in the real market. Hasançebi and Erbatur (2000) tried to avoid the tunable factors of an older penalty technique and eliminated its shortcomings through a reformulation that improved performance; but again, the design point of view is not considered because of the basic vision of their research.

The lack of a design-vision-based technique specific to structural optimization motivated the authors to provide a new reliable constraint-handling technique. At first glance, one may believe that reducing the computational cost of the optimization process would be a better way than a fitness-based technique to ease trial-and-error attempts. However, the available works on improving computational efficiency by leading researchers show that computation-acceleration methods are not yet at a level that makes such attempts convenient and user-friendly. Here are some examples: Kaveh and Talatahari (2010a, b) presented an improved ACO that uses the sub-optimization mechanism (SOM) to reduce the size of the pheromone vector, decision vector, search space, and number of fitness evaluations in order to expedite the optimization process. Hasançebi (2008) attempted to improve the computational performance of adaptive evolution strategies and increase the algorithm’s efficiency with an adaptive penalty function. Azad et al. (2013), Azad and Hasançebi (2013), Azad et al. (2014), and Hasançebi and Azad (2015) all strive to improve the efficiency of various algorithms for large-scale structures by applying a strategy called the Upper Bound Strategy (UBS). Also, Azad and Hasançebi (2015) used a guided stochastic search (GSS) technique based on the principle of virtual work to enhance computational efficiency. Kambampati et al. (2018) presented a sparse hierarchical data structure called the volumetric dynamic grid (VDG), combined with the fast-sweeping method and a multi-threaded algorithm, for faster convergence in the topology optimization of structures by the level set method (LSM). Dunning et al. (2016) addressed the computationally difficult and expensive eigenmodes of buckling constraints by reusing available eigenvectors, optimal shift estimates, and some other ideas to deal effectively with many buckling modes in topology optimization. Duarte et al. (2015) and Sanders et al. (2018) introduced two versions of efficient software for computationally fast analysis of polygonal finite element meshes with many degrees of freedom. An investigation of these references shows that such accelerations do not eliminate tedious tuning. Venkataraman and Haftka (2004) illustrate that optimization complexity grows as computational capabilities improve, so eliminating such costs becomes more and more challenging and remains out of reach.

Ultimately, the only way forward is to eliminate the need for tuning with a strategy that fundamentally does not require it. The techniques mentioned above may expedite the process, but they bring other hassles, such as hard programming, algorithm manipulation, or analysis improvement concepts, which do not seem to be user-friendly.

3 Factual development of SMF

3.1 A discussion on combinatorial search space

Based on the three factual strategies outlined in Sect. 1.2, the new formula is developed in this section. First, let us elaborate on the combinatorial search space of the structure itself. As shown in Fig. 1, a schematic example of a 3D steel frame may have different sets of sections randomly selected from the list of standard W-shaped sections available in the construction market. Ten sets are shown in Fig. 1, but if 267 sections are available in the market, or can be accessed in the section-properties list of well-known software such as ETABS or SAP2000, and the frame has n elements, then the size of the combinatorial search space is \({267}^{n}\) and the last member of this search space is Sn. For example, the 3D steel frame in Fig. 1 has 8 beams and columns, so its search space size is equal to \({267}^{8}\). This number is large and gives the reader a sense of the size of the search space of larger-scale structures such as the 3D numerical examples studied in Sect. 5, which have search spaces of size \({267}^{1026}\), \({267}^{3860}\), and \({267}^{8272}\) (i.e., almost \({10}^{2489}\), \({10}^{9366}\), and \({10}^{20000}\)).
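The orders of magnitude quoted above can be checked with a few lines of Python. This is only an illustrative calculation; the function name is hypothetical, and the exponent is obtained as n·log10(267) to avoid forming the huge integers.

```python
import math

N_SECTIONS = 267  # number of candidate W-shaped sections, as stated in the text


def search_space_exponent(n_elements, n_sections=N_SECTIONS):
    """Return log10 of n_sections ** n_elements."""
    return n_elements * math.log10(n_sections)


if __name__ == "__main__":
    # Element counts taken from the text: the schematic frame and the three examples.
    for n in (8, 1026, 3860, 8272):
        exponent = math.floor(search_space_exponent(n))
        print(f"{n} elements: about 10^{exponent} candidate structures")
```

Even the schematic 8-element frame already has a 20-digit number of candidates, which rules out exhaustive enumeration.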

3.2 A discussion on fuzzy logic

Zadeh (1965) presented the idea of a fuzzy point of view on truth values. This logic says that the truth value of a variable may be any real number between 0 and 1 (i.e., a logical response in \([0, 1]\)). Figures 2 and 3 show the difference between binary and fuzzy logic.

In Fig. 2, circle (a) is entirely red, circle (b) is entirely green, and circle (c) is entirely orange, so one can state exactly how each circle is colored. This is binary logic: a statement such as "the circle is green" receives a clear truth value equal to 0 or 1.

However, in Fig. 3, each circle has several colors, and a distinct statement about its color is impossible; we can only say that each circle is to some degree red, green, and orange. Figure 3a is 28% red, 47% green, and 22% orange. Figure 3b and c also have different degrees of each color. This is the fuzzy point of view. The main benefit of this logic is that a fuzzy membership value can be attributed to each circle, and the circles can be distinguished from each other by those percentages.

In mathematics and logic, a set whose members follow this logic is called a fuzzy set. Zadeh also developed the "fuzzy-set" theory alongside "fuzzy logic." The segments of each fuzzy member of a fuzzy set are called fuzzy segments, as each of them takes a partial degree of the membership. For example, the areas with different colors in Fig. 3 are the fuzzy areas of each circle.

In the next section, the fuzziness of the combinatorial search space of structures is discussed, and the initial format of SMF is founded on it.

3.3 Step 1: initiating formulation platform by first factual strategy

When external loading is applied to the structure (e.g., dead, live, earthquake, and wind load cases) and the structure is analyzed, civil engineering practice always states the response of the structure in terms of feasible (green) or infeasible (red) elements, marked by colors as in Fig. 4.

The corresponding feasibility vector \(\overrightarrow{V}\) is stated with colors: elements with violated constraints (\({{C}}_{{i}}>1\)), colored red, are not acceptable and are marked as infeasible with the value zero, \({V}_{i}=0\), whereas elements with satisfied constraints \(({C}_{i}\le 1)\), colored green, take the value one, \({V}_{i}=1\).

The structure is thus represented by degrees of feasibility and infeasibility. Therefore, the combinatorial search space can be called a fuzzy set, because all its members (Si, Sj, Sk, etc.) can potentially be distinguished by a fuzzy membership value. Si, Sj, Sk, etc. are called fuzzy members, and the structural elements (beams, columns, and braces) of each fuzzy member are fuzzy segments (because they take values according to their feasibility or infeasibility). In other words, this fuzzy property of the combinatorial search space provides a format platform for a potential SMF.

Because the vector \(V\) is so simple and is based on the number of feasible and infeasible elements, it might represent several candidates Si, Sj, Sk, etc. in the combinatorial search space. In terms of structural mechanics, the stiffness matrix K in the static equation F = Kd is the unique identity of a structure; it has a distribution (which may be represented by the weight distribution vector \(\frac{{{w}}_{{i}}}{{W}}\)) and a scale (which may be represented by the absolute weight W). A factor related to the constraints is also required, and the C vector is available for this purpose.
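The point that V alone cannot distinguish candidates can be illustrated with a small sketch. The two candidate structures below are hypothetical three-element examples, and the function names are assumptions; only the definitions of V_i and w_i/W come from the text.

```python
def feasibility_vector(constraints):
    """V_i = 1 when C_i <= 1 (feasible element), otherwise V_i = 0."""
    return [1 if ci <= 1.0 else 0 for ci in constraints]


def weight_distribution(element_weights):
    """Return w_i / W for every element, where W is the total weight."""
    total = sum(element_weights)
    return [w / total for w in element_weights]


if __name__ == "__main__":
    # Two hypothetical candidates that share the same feasibility pattern.
    c_a, w_a = [0.6, 0.9, 1.3], [2.0, 3.0, 5.0]
    c_b, w_b = [0.2, 1.0, 2.1], [4.0, 4.0, 2.0]
    print(feasibility_vector(c_a), feasibility_vector(c_b))    # identical V vectors
    print(weight_distribution(w_a), weight_distribution(w_b))  # different w_i / W
```

Both candidates map to the same V, while their C vectors and weight distributions differ, which is why the finer descriptors are preferred for a membership value.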

As shown in Fig. 5, the C vector and the weight distribution vector distinguish the candidates Si in the combinatorial search space more uniquely than the V vector presented in Fig. 4. Therefore, we have:

None of the three factors, the weight distribution (\(\frac{{{w}}_{{i}}}{{W}}\)), the absolute weight W, and C, is unique to a structure on its own, but at most one of them can coincide between two structures while the others certainly differ. For example, structures with the same weight W have different weight distributions \(\frac{{{w}}_{{i}}}{{W}}\) and accordingly different C vectors, while structures with the same weight distribution have different weights, are just physical scalings of each other, and accordingly have different C vectors. As a result, at least two of the factors must be used in the membership function to make sure that their product is unique. In this paper, the authors selected the weight distribution (\(\frac{{{w}}_{{i}}}{{W}}\)) and the C vector and dropped the absolute weight W, since this factor is used later in the feasibility factor in Sect. 3.5 and embedding it twice in the final SMF formula would make no sense. The initial version of the formula is given in Eq. 6:

This formula is still a fuzzy membership function and does not yet have the properties required of a merit function. In the following sections, this membership is converted to a merit function in two steps: first, by setting the function’s minimum on the on-the-edge feasible structures (i.e., the feasibility boundary) and, second, by controlling the feasibility of the exploration with a feasibility factor.

3.4 Step 2: converting fuzzy membership function into an SMF

3.4.1 Second factual strategy: set the minimum of SMF on the feasibility boundary

To set the minimum of SMF, first, we define overdesign and on-the-edge feasible structures.

An overdesign structure is a structure Si in the combinatorial search space that has excessive material or stiffness and may lose some of its weight while remaining feasible.

An on-the-edge feasible structure is a structure in the combinatorial search space in which any slight loss of material in any element leads to a loss of mechanical resistance such that at least one infeasible element emerges. These structures form the boundary of the feasible subset of the combinatorial search space.

A schematic of the entirely feasible area (structures with 100% feasibility), the edge structures (feasibility boundary), and structures with other degrees of feasibility is shown in Fig. 6.

Accordingly, the exploration may reach the global optimum if the minimum of the fuzzy membership is set on the edges, so that the algorithms' exploration is attracted toward the critical designs. Therefore, the most critical case, C = 1 for all the elements, is taken to represent the boundary in Eq. 6 and to set the optimum of the fuzzy membership function, which brings it one step closer to a proper merit function. The modified formula may be stated as:

The set of constraints C may be divided into feasible and infeasible subsets, Cf and Cinf. To boost the exploration, it is possible to add a power (e.g., 2) to Cf, which pushes the algorithm to stay near the feasibility boundary and away from overdesigned Si in the combinatorial search space. Therefore, if the structure has some elements with Cf (\(\mathrm{satisfied ~constraints}\)) and the remaining elements with Cinf (\(\mathrm{violated~ constraints}\)), while \(nfeasible+nInfeasible=n\) (n is the total number of structural elements):

As another booster, we may remove the term −1 for Cinf so that infeasible elements take even higher values, which further discourages the algorithm from exploring the infeasible side of the feasibility edge. Then we have:

It is worth mentioning that the minimum of the function is still located at C = 1 (the feasibility boundary); the changes in Eqs. 8 and 9 do not affect this property of the formula. The minimum of the function is now set, but the feasibility of the exploration is not yet assured; this is provided by the feasibility factor described in Sect. 3.5.

3.5 Third factual strategy: securing a feasible exploration by feasibility factor

The second important property of SMF is that it must handle the feasibility of the exploration carried out by the algorithms. As already discussed, the optimization algorithms cannot detect feasibility and must be supported by handling ideas embedded in the merit function. The authors propose a simple and intuitive feasibility factor, as follows:

where wf is the cumulative weight of all feasible elements and nf is the number of feasible elements in the structure. Other formats for this factor, such as \(\frac{W}{{w}_{f}}\) or \(\frac{n}{{n}_{f}}\), are also available, and the choice of the one in Eq. 10 over them is deliberate. This factor reaches its minimum value of one when the structure is 100% feasible (i.e., all the members are feasible). The more infeasible parts the structure has, the larger this factor becomes, which pushes the algorithms' exploration toward a higher level of feasibility. Therefore, we can state that:

and:

Figure 7 shows an unconstrained exploration and an exploration handled by the feasibility factor.
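The behavior described for the feasibility factor can be sketched with the two alternative ratio forms named in the text, W/w_f and n/n_f. This is not the authors' Eq. 10, which is not reproduced here; the function name, the sample values, and the convention of returning infinity when no element is feasible are assumptions.

```python
import math


def feasibility_ratios(element_weights, constraints):
    """Return the two ratio forms named in the text: (W / w_f, n / n_f)."""
    feasible = [w for w, ci in zip(element_weights, constraints) if ci <= 1.0]
    n_f, w_f = len(feasible), sum(feasible)
    if n_f == 0:
        # Assumed convention: a structure with no feasible element is maximally penalized.
        return math.inf, math.inf
    return sum(element_weights) / w_f, len(constraints) / n_f


if __name__ == "__main__":
    weights = [2.0, 3.0, 5.0]                            # hypothetical element weights
    print(feasibility_ratios(weights, [0.6, 0.9, 0.8]))  # fully feasible structure
    print(feasibility_ratios(weights, [0.6, 0.9, 1.3]))  # heaviest element infeasible
```

Both ratios equal one for a fully feasible structure and grow as more of the weight or more of the elements become infeasible, which is the property the text requires of the factor.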

Therefore, the final version of the merit function may be the product of the feasibility factor and the fuzzy membership function, as stated in Eq. 13:

The feasibility factor assures the feasibility of the exploration and prevents the algorithm from wasting iterations on infeasible areas of the combinatorial search space. The membership function is now fully converted to a merit function. The performance of SMF is tested on three large-scale examples, and the results are reported in the following sections.

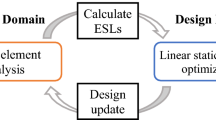

4 Numerical evaluation material

4.1 Optimization methods

Several algorithms are used in this paper to verify the performance of SMF: GA (Holland 1975), ACO (Dorigo and Stützle 2004), PSO (Eberhart and Kennedy 1995), CSS (Kaveh and Talatahari 2010a, b), imperialist competitive algorithm (ICA) (Gargari and Lucas 2007), firefly algorithm (FA) (Yang 2008, 2009), symbiotic organisms search (SOS) (Cheng and Prayogo 2014), upgraded whale optimization algorithm (WOA) (Azizi et al. 2019), and interior search algorithm (ISA) (Gandomi 2014). The constant parameters of these algorithms are set to the values of the standard versions given in the main references, as presented in Table 3. Each algorithm performed thirty optimization runs to obtain statistical outputs.

4.2 Utilized penalty functions to be compared with SMF

The list of all penalty methods applied to the examples is summarized in Table 4.

4.3 Output clarification

Initially, the method of Hoffmeister and Sprave (1996) is implemented as the penalty to optimize the first and second examples with nine evolutionary algorithms; the statistical results are compared with those of SMF to show that SMF is usable in practice. Afterward, with one algorithm (CSS), the results obtained by SMF are statistically compared with the results obtained by the other penalty methods listed in Table 4.

5 Numerical examples and results

5.1 Initial information

This section presents the details of frame structures as well as the numerical results of optimization obtained by different methods. AISC (2010) and ASCE (2000) are the codes for designing and loading of the examples, respectively. In addition, normalized design constraints, the \(CI\) and \(DI\), are selected as criteria.

The proposed technique is tested on the optimization of three frames: a 10-story frame (Azad et al. 2013), an X-braced 20-story tall frame (Azad et al. 2014), and a 60-story mega-braced tubed frame (Talatahari et al. 2022). The first and second frames are both benchmark examples in the literature. The possible sections for the structural members of the examples were taken from 267 W-shaped standard profiles. The properties of the steel material were \(\rho =7850\) kg/m³, \(E=200\) GPa, and \({F}_{y}= 248.2\) MPa for the mass density, modulus of elasticity, and yield stress, respectively. The 10-story and 20-story frames are loaded by dead, live, and seismic loads, while the tubed frame is loaded by dead, live, and wind loads. In the following, each example is described, and the numerical results are presented afterward.

5.2 10-story frame

This model, the first example, is shown in Fig. 8 and was first introduced by Azad et al. (2013). This frame contains 1026 elements, 580 beams, 350 columns, and 26 X-type braces. The braces are applied only along the x-axis, in all bays of the first story and in the side bays of the other stories. Beams and columns are joined by moment-resisting connections, and braces by pin connections. Thirty-two member groups are used, repeated every three stories from the 2nd story upward; 5 column groups, 2 groups for outer and inner beams, and 1 group for bracings are considered. All the story nodes are modeled as rigid diaphragms, so 10 story diaphragms are modeled for this example. In addition, the effective length factors for buckling stability of columns, braces, and the major bending of beams are all taken equal to one. However, for bending of the beams in the weak bending plane, namely the plane orthogonal to the floor layer, this factor is taken equal to 0.01 since the beams are fully braced by the floors. The loads applied on the floors are 20 kN/m dead and 12 kN/m live, while 15 kN/m and 7 kN/m are the dead and live loads applied on the roof story. In this paper, the self-weight of each story is considered as the effective story weight for calculating the related seismic lateral load, and it is updated as the algorithms explore new designs. According to ASCE (2000), the lateral seismic load at story s, with the sum taken over all stories i, results as

\[{F}_{s}=\frac{{w}_{s}{h}_{s}^{k}}{\sum_{i}{w}_{i}{h}_{i}^{k}}\,V\]

where \({w}_{s}\) and \({h}_{s}\) are the effective weight and height of story s, respectively; \(k\) is a function of the fundamental period of the structure; and \({F}_{s}\) is the lateral seismic load assigned to the mass center of story s. For this example, the seismic base shear \(V\) was taken equal to 10 percent of the weight of the structure \(W\) (i.e., \(V = 0.1W\)). According to ASCE (2000), the fundamental period of the structure can be computed by

\[T={C}_{T}{H}^{3/4}\]

where \({C}_{T}\) is taken equal to 0.0853 and \(H\) is the height of the structure, equal to 36.5 m in this example. According to this formula, the fundamental period of this structure, T, is equal to 1.267 s. According to the ASCE (2000) guideline, the k factor for this value of the fundamental period is equal to 1.38. Additionally, 10 load combinations with different load coefficients are considered for all the examples, as presented in Table 5.
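As a quick numerical check of the quoted period and k factor, the following minimal sketch evaluates \(T={C}_{T}{H}^{3/4}\) and distributes a base shear over the stories in proportion to \({w}_{s}{h}_{s}^{k}\). The exponent 3/4 and the rule for k (k = 1 for T ≤ 0.5 s, k = 2 for T ≥ 2.5 s, linear interpolation in between) are assumptions based on the usual ASCE 7 provisions; they reproduce the values quoted in the text. The function names and the three-story input in the usage note are hypothetical.

```python
def fundamental_period(c_t: float, height_m: float) -> float:
    """Approximate period T = C_T * H**(3/4); the exponent is an assumption."""
    return c_t * height_m ** 0.75


def k_exponent(period_s: float) -> float:
    """Distribution exponent: 1 for T <= 0.5 s, 2 for T >= 2.5 s, linear in between."""
    if period_s <= 0.5:
        return 1.0
    if period_s >= 2.5:
        return 2.0
    return 1.0 + (period_s - 0.5) / 2.0


def story_forces(weights, heights, k, base_shear):
    """Distribute the base shear: F_s = w_s * h_s**k / sum(w_i * h_i**k) * V."""
    terms = [w * h ** k for w, h in zip(weights, heights)]
    total = sum(terms)
    return [base_shear * t / total for t in terms]


if __name__ == "__main__":
    # C_T and H are the values quoted in the text for the two seismic examples.
    for name, c_t, h in (("10-story", 0.0853, 36.5), ("20-story", 0.0488, 70.0)):
        t = fundamental_period(c_t, h)
        print(f"{name}: T = {t:.3f} s, k = {k_exponent(t):.3f}")
```

A call such as `story_forces([100.0, 100.0, 80.0], [3.0, 6.0, 9.0], 1.0, 28.0)` (hypothetical story weights and heights) returns story forces that sum to the base shear, which is the property the distribution must preserve.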

5.3 X-braced 20-story frame

This tall building consists of 8 bays in the x-direction and 6 bays in the y-direction, but the number of bays in the x-direction reduces to 6 from the 7th story. Bays are 6 m and 5 m long in the x- and y-directions, respectively. The structure contains 3860 members: 1836 beams, 1064 columns, and 960 bracing elements. This moment frame is braced with X-shaped braces in both the x- and y-directions. Braces are placed in every other frame; namely, grids 1, 3, 5, and 7 are braced parallel to the x-direction and grids b, d, f, and h are braced parallel to the y-direction, as shown in Fig. 9. For ease of construction, members are divided into 73 groups, defined every two stories: columns are divided into five groups, beams into two groups (inner and outer), and braces into a single group for both stories. The effective length factor and unbraced length factor are the same as in the first example. This example was optimized by Azad et al. (2014). The applied gravity loads, i.e., dead and live loads, are assigned as distributed loads on the beams of each floor. For the floors, 14 kN/m and 10 kN/m were applied as dead load and live load, respectively; 12 kN/m and 7 kN/m were applied on the roof story as dead and live loads, respectively. As a reminder, the self-weight of the structure is also added to the dead load. Like the first example, the base shear is equal to 10 percent of the effective weight of the structure; \({C}_{T}\) is taken equal to 0.0488; and H, the height of the structure, is equal to 70 m for this example. The fundamental period of this structure, T, is equal to 1.181 s, and the k factor for this value of the fundamental period is equal to 1.341. Load combinations are the same as in the first example.

5.4 60-story Mega-braced tubed-frame

This high-rise building is made up of four rectangular independent tubed frames. As shown in Fig. 10, tube A has 4 bays in the x-direction and 2 bays in the y-direction; tube B has 6 bays in the x-direction and 4 bays in the y-direction. Both tubes A and B continue to the top story. Tube C has 8 bays in the x-direction and 6 bays in the y-direction, and tube D has 10 bays in the x-direction and 8 bays in the y-direction in plan. Tubes C and D continue to stories 42 and 24, respectively. The outer tube D has mega-braces on each of its four elevations, which are lozenge-shaped braces repeated every 6 stories. As depicted in Fig. 8, every lozenge brace consists of 4 independent elements. Tubes B and C, however, have bracings only in the extreme right and extreme left bays of each of their four elevations. This structure contains 8272 frame members: 3960 beams, 3960 columns, and 352 braces. It is noteworthy that the tubes are connected by in-plane beam elements hinged to the joints of the two tubes; therefore, the gravity loads of the floor system are applied to these elements. However, since the floor joints are rigid-body diaphragms, these elements use predetermined sections, play no role in structural optimization, and are therefore excluded from the structural members and the optimization process. All the members are divided into 103 groups; each group covers six successive stories; in the plan of a story, each tube has its own beam group, and the columns are assigned to corner and side groups. Every 6 adjacent stories have their own bracing group as well. The typical story height is 3 m for all stories. Like the former example, the dead and live loads applied on the story beams are 14 kN/m and 10 kN/m, respectively; for the roof story, these values are 12 kN/m and 7 kN/m, respectively. Because of the high-rise nature of this structure, the wind load is assumed to be the major lateral load. According to ASCE (2000), the wind load may be computed as

in which \({P}_{w}\) is the wind pressure on the structural surface in kN/m²; \({K}_{z}\) is the velocity exposure factor; \({K}_{zt}\) is the topographic factor; \({K}_{d}\) is the wind directionality factor; V is the wind speed; G denotes the gust factor; and \({C}_{p}\) denotes the external pressure coefficient. In this example, the wind speed was set to 85 mph and the exposure type to B; \({K}_{zt}\) is set to 1, \({K}_{d}\) equals 0.85, and G is 0.85; \({C}_{p}\) equals 0.8 and 0.45 for the windward and leeward faces of the building, respectively.
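For orientation, a wind pressure of this kind can be sketched with the ASCE 7 velocity-pressure form q = 0.613 Kz Kzt Kd V² I (in N/m², with V in m/s) multiplied by G Cp. The importance factor I = 1, the neglect of internal pressure, the value Kz = 1.0 used in the usage note, and the function name are assumptions made for illustration; they are not values taken from the paper.

```python
MPH_TO_MS = 0.44704  # exact conversion from miles per hour to metres per second


def wind_pressure_kn_m2(k_z, c_p, v_mph=85.0, k_zt=1.0, k_d=0.85,
                        gust=0.85, importance=1.0):
    """Wind pressure in kN/m^2 from p = q * G * Cp.

    q = 0.613 * Kz * Kzt * Kd * V**2 * I is the velocity pressure in N/m^2
    with V in m/s. Defaults follow the values quoted in the text; the
    importance factor of 1.0 is an assumption.
    """
    v_ms = v_mph * MPH_TO_MS
    q = 0.613 * k_z * k_zt * k_d * v_ms ** 2 * importance  # N/m^2
    return q * gust * c_p / 1000.0  # convert N/m^2 to kN/m^2


if __name__ == "__main__":
    # Kz depends on height and exposure; 1.0 is a hypothetical placeholder.
    for face, c_p in (("windward", 0.8), ("leeward", 0.45)):
        print(f"{face}: {wind_pressure_kn_m2(1.0, c_p):.3f} kN/m^2")
```

In a full model, Kz would be evaluated per story height for exposure B, so the pressure profile would vary over the height of the building rather than being a single value per face.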

5.5 Discussion on results

5.5.1 Convergence results

Tables 6 and 7 present the results for the 10-story frame using the SMF and a penalty method, respectively, to handle the design criteria. All nine algorithms are used with both constraint-handling methods. The best weight, found by the CSS algorithm with the SMF (Table 6), is 543.02 tons, which is 8% lighter than the result reported in the literature (584.93 tons in Azad et al. (2013)), as mentioned in Table 7. Since some results contain constraint violations, the total weight is modified to a feasible weight, referred to as the practical weight, to allow a fair comparison. That is, the final sections reported by the algorithm are modified to turn an infeasible result into a feasible one; no modification is needed for results that are already feasible. The reason is that results handled by penalties in structural design optimization are usually not feasible, as seen in our results, so manual manipulation of the final sections is necessary to obtain a feasible solution in engineering practice. This process usually increases the total weight of the structure. As seen in Table 7, five of the nine reported solutions, obtained by GA, CSS, ISA, SOS, and WOA with weights of 591.34, 549.24, 563.19, 549.51, and 558.05 tons, are infeasible, and their sections need modification. The new total weights are GA: 612.08 tons, CSS: 561.13 tons, ISA: 585.74 tons, SOS: 558.23 tons, and WOA: 569.55 tons. All these manipulated designs experienced an increase in total weight, from a minimum of about 2% for SOS and WOA to a maximum of 4% for ISA. Moreover, such manipulation is a tedious process for designers. One superior feature demonstrated by the results in Table 6 is that the SMF converges to feasible solutions in almost all cases, unlike the penalty methods. All the solutions in Table 6 are feasible, and some of them (FA, ICA, CSS, ISA) even have a DI equal or close to one, which is the governing design criterion for this example. This means that the SMF precisely detects infeasibility and performs better than the penalty method near the boundary.
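The weight increases caused by the manual modification can be recomputed from the figures quoted in this paragraph. The short sketch below does so; the dictionary and function names are illustrative only, and the weights are the ones stated in the text.

```python
# Weights in tons quoted in the text for the five infeasible penalty-based designs.
reported = {"GA": 591.34, "CSS": 549.24, "ISA": 563.19, "SOS": 549.51, "WOA": 558.05}
# Practical (feasible) weights after manual modification of the sections.
practical = {"GA": 612.08, "CSS": 561.13, "ISA": 585.74, "SOS": 558.23, "WOA": 569.55}


def percent_increase(before: float, after: float) -> float:
    """Relative increase of `after` over `before`, in percent."""
    return 100.0 * (after - before) / before


increases = {name: percent_increase(reported[name], practical[name]) for name in reported}

# List the algorithms from the smallest to the largest increase.
for name, inc in sorted(increases.items(), key=lambda item: item[1]):
    print(f"{name}: {reported[name]:.2f} -> {practical[name]:.2f} tons (+{inc:.1f}%)")
```

The smallest increase belongs to SOS and the largest to ISA, in line with the range of roughly 2% to 4% stated above.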

For the second example, Tables 8 and 9 present the results for the X-braced 20-story frame using the SMF and the penalty method, respectively. Again, the best weight in both tables belongs to CSS, with a weight of 2713.57 tons found with the SMF, which is much better than the results obtained by the same algorithms with the penalty method and 24% lighter than the result mentioned by Azad and Hasançebi (2015). Again, all the SMF results are feasible, while many of the penalty-method results needed manual manipulation. The increase in weight after modification is presented in Table 9. As in the 10-story example, the SMF shows robust performance in discovering solutions near or on the boundary. Unlike the 10-story example, capacity is the governing design criterion, and four of the nine designs found by the SMF lie exactly on the boundary, with a maximum CI near or equal to one.

Figures 11 and 12 show the convergence histories of the best solutions found via the SMF for the two examples. For the first example, all the algorithms stop exploring after a minimum of 13,500 analyses (270 iterations), while a minimum of 25,000 analyses (500 iterations) is required by most of the chosen penalty techniques. The corresponding numbers for the second example are 17,500 (350 iterations) and 25,000 (500 iterations), respectively. Hence, using the SMF yields a tangible 46% and 30% reduction in the number of required analyses for the first and second examples, respectively.
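The quoted reductions follow directly from the analysis counts. A minimal sketch of the arithmetic, with a hypothetical helper name, is:

```python
def reduction_percent(smf_analyses: int, penalty_analyses: int) -> float:
    """Saving in structural analyses of the SMF relative to the penalty method, in percent."""
    return 100.0 * (penalty_analyses - smf_analyses) / penalty_analyses


# Analysis counts quoted in the text: (SMF, penalty) for each example.
counts = {"10-story": (13_500, 25_000), "20-story": (17_500, 25_000)}

for name, (smf, penalty) in counts.items():
    print(f"{name}: {reduction_percent(smf, penalty):.0f}% fewer analyses")

# The iteration counts imply the same number of analyses per iteration in all cases.
analyses_per_iteration = {13_500 // 270, 17_500 // 350, 25_000 // 500}
```

All three pairs of analysis and iteration counts correspond to 50 analyses per iteration, so the comparison is made on equal terms.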

The best results obtained for the first example using the SMF, belonging to the CSS and SOS algorithms, are 543.02 and 550.61 tons, respectively. ISA, WOA, ICA, FA, ACO, PSO, and GA rank third to ninth, with weights of 559.32, 560.26, 568.48, 576.45, 578.96, 581.05, and 598.27 tons, respectively. The order of the SMF results for the second example is mostly the same as for the first: CSS, SOS, ISA, WOA, ICA, FA, PSO, ACO, GA, with weights of 2713.57, 2775.39, 2817.29, 2921.28, 2913.79, 3036.29, 2993.51, 3112.13, and 3614.55 tons, respectively. This consistency can be interpreted as stability of performance when applying the SMF. For the penalty method, however, the order of the resulting weights is irregular: for the 10-story example the order is CSS, SOS, WOA, ISA, ICA, GA, PSO, ACO, FA, while for the 20-story frame it is CSS, SOS, ISA, WOA, PSO, FA, ICA, ACO, GA. This disorder shows that the penalty used in this research needs tuning to assure high-quality convergence.
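One possible way to put a number on this consistency is the Spearman rank correlation between the algorithm orders of the two examples. This measure is not used in the paper; it is offered here only as an illustrative sketch, applied to the four orders listed above.

```python
def spearman_rho(order_a, order_b):
    """Spearman rank correlation between two rankings of the same items (no ties)."""
    n = len(order_a)
    rank_b = {name: i for i, name in enumerate(order_b)}
    d2 = sum((i - rank_b[name]) ** 2 for i, name in enumerate(order_a))
    return 1.0 - 6.0 * d2 / (n * (n * n - 1))


# Algorithm orders (best to worst) as listed in the text.
smf_10 = ["CSS", "SOS", "ISA", "WOA", "ICA", "FA", "ACO", "PSO", "GA"]
smf_20 = ["CSS", "SOS", "ISA", "WOA", "ICA", "FA", "PSO", "ACO", "GA"]
pen_10 = ["CSS", "SOS", "WOA", "ISA", "ICA", "GA", "PSO", "ACO", "FA"]
pen_20 = ["CSS", "SOS", "ISA", "WOA", "PSO", "FA", "ICA", "ACO", "GA"]

rho_smf = spearman_rho(smf_10, smf_20)
rho_penalty = spearman_rho(pen_10, pen_20)
print(f"SMF: rho = {rho_smf:.3f}, penalty: rho = {rho_penalty:.3f}")
```

With these orders, the SMF rankings differ only by one swap of adjacent algorithms, whereas the penalty rankings differ in several positions, so the correlation is visibly higher for the SMF.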

The DIs of all stories of the first example for the results of the three best algorithms, i.e., CSS, SOS, and ISA, are shown in Fig. 13, comparing the results of the SMF and the penalty method. As can be seen, the distribution of displacement among the stories is smoother for the SMF results, while the results obtained by the penalty method mostly show an uneven distribution that leads to slightly infeasible solutions. As this structure is not braced in the y-direction, the CI is not the governing criterion; therefore, as shown in Fig. 13, both the SMF and the penalty method satisfy the CI. The opposite situation holds for the 20-story frame: the CI is the governing criterion since the structure is braced in both the X and Y global directions. Figure 13 presents the values of the DIs for the second example; all DIs are feasible. As shown in Fig. 13, the CIs of all the designs by the SMF are feasible, and the result of SOS (Fig. 13d) lies on the boundary where the CI equals one for each element, while, like the 10-story example, two of the three designs found by the penalty method have some violated CIs (Fig. 13).

For the third example, some common penalty techniques (as described in Coello (2001)) are utilized. Table 10 shows the best result found by CSS for this example, with a weight of 6779.56 tons. The maximum DI and maximum CI of this design are 0.98 and 0.87, respectively, which are feasible and were found directly by the algorithm with no manual modification (no local search); the quality of the result shows that it is an on-the-boundary solution, like the two other examples. Figure 14 shows the DIs of all stories and the CIs of all elements for this structure. Figure 15 shows the convergence curve of the mega-braced tubed frame.

5.5.2 Statistical results

Table 11 shows a statistical comparison between the SMF and the penalty method for the first two examples, extracted from 30 independent optimization runs for each algorithm. For the 20-story frame, all the best results of the SMF are lighter than those of the penalty method, while for the 10-story frame, GA (penalty method: 591.34 against SMF: 598.28), WOA (penalty method: 558.05 against SMF: 560.26), and SOS (penalty method: 549.51 against SMF: 550.61) have provided slightly better results with the penalty method; however, the SMF is still competitive since five other algorithms have found lighter results compared to the penalties. Compared with the literature, only GA has found a heavier result (598.27 tons via the SMF) than Azad et al. (2013) with 584.93 tons for the first example, and all others are lighter. Similarly, all the best results are lighter than the one reported by Azad and Hasançebi (2015) (3539.83 tons) for the second example, but the other ones are competitive. In most cases, the mean of the final converged results of the independent runs by the SMF is clearly superior to that of the utilized penalty method; only WOA has shown a slightly opposite trend (by only about 5 tons, and just for the first example).
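The comparison for the 10-story frame changes once feasibility is taken into account. The sketch below uses only weights quoted in this section for the five algorithms whose penalty-based designs were infeasible, and it checks which method is lighter before and after the manual modification. The variable names are illustrative, and the GA value of 598.27 tons is one of the two roundings that appear in the text.

```python
# Best weights in tons for the 10-story frame, as quoted in the text.
smf = {"GA": 598.27, "CSS": 543.02, "ISA": 559.32, "SOS": 550.61, "WOA": 560.26}
penalty_reported = {"GA": 591.34, "CSS": 549.24, "ISA": 563.19, "SOS": 549.51, "WOA": 558.05}
penalty_practical = {"GA": 612.08, "CSS": 561.13, "ISA": 585.74, "SOS": 558.23, "WOA": 569.55}

# Algorithms for which the penalty method gives the lighter design.
lighter_before = sorted(a for a in smf if penalty_reported[a] < smf[a])
lighter_after = sorted(a for a in smf if penalty_practical[a] < smf[a])

print("Penalty lighter (as reported, infeasible):", lighter_before)
print("Penalty lighter (practical, feasible):", lighter_after)
```

On the reported weights the penalty method is lighter for GA, SOS, and WOA, but on the practical weights none of the five penalty-based designs remains lighter than its SMF counterpart.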

Table 12 presents the statistical comparison between the penalty techniques and the SMF using the CSS algorithm for all examples, obtained from 30 independent runs. As is clear from Table 12, the SMF attains small values of both the best weight and the standard deviation. An unstable order of performance was seen in the first two examples, and poor statistical performance is also seen with the different penalty techniques. For the first and second examples, the standard deviations of the penalty results are very large, and only the penalty by Michalewicz and Attia (1994) has shown a better, reasonable performance. Also, just three and two answers among the penalty results are feasible for the 10-story and X-braced frames, respectively. For the third example, only three out of ten results in Table 9 have weights less than 6780 tons: SMF, Michalewicz and Attia (1994), and Hoffmeister and Sprave (1996), with weights equal to 6779.56, 6720.45, and 6453.09 tons, respectively; all others have weights of more than 6930 tons, which are severely over-designed. The penalties by Deb (2000), Skalak and Shonkwiler (1998), and Bean and Hadj-Alouane (1992) have found the most over-designed structures among all. Although the result obtained by Smith and Tate (1993) is on the boundary, interestingly, it is again over-designed in weight. The best converged results among 30 runs by some of the penalty methods (i.e., Michalewicz and Attia (1994), Hoffmeister and Sprave (1996), and Joines and Houck (1994)) are infeasible and need manual modification by trial and error. For the third example, the standard deviations show that the penalties have produced a widely scattered distribution of answers, since the deviation is about 6% and 13% for the Joines and Houck (1994) and Morales and Quezada (1998) methods, respectively. However, the deviation for the SMF as well as for Michalewicz and Attia (1994) is very satisfactory, equal to 3% and 2%, respectively. Moreover, the answer offered by the SMF is directly feasible, unlike the one obtained by Michalewicz and Attia (1994).
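The statistics discussed here (best weight, mean, standard deviation, and deviation as a percentage) can be obtained from the final weights of the independent runs along the following lines. This is a sketch under assumptions: the text does not state whether the sample or the population standard deviation is used, nor whether the percentage is taken relative to the mean, and the run weights below are hypothetical placeholders rather than results from the paper.

```python
from statistics import mean, stdev


def summarize_runs(weights):
    """Best, mean, sample standard deviation, and relative deviation (%) of run results."""
    best = min(weights)
    avg = mean(weights)
    sd = stdev(weights)  # sample standard deviation (n - 1); an assumption
    return {"best": best, "mean": avg, "std": sd, "std_percent": 100.0 * sd / avg}


if __name__ == "__main__":
    # Hypothetical final weights (tons) of a few runs; not data from the paper.
    example_runs = [100.0, 102.0, 98.0, 101.0, 99.0]
    print(summarize_runs(example_runs))
```

Expressing the deviation relative to the mean makes the scatter comparable across examples whose weights differ by an order of magnitude, such as the 10-story and 60-story frames.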

6 Concluding remarks

The advantages of the SMF are as follows:

(1) It is a new merit function specified for structural optimization for the first time.

(2) It requires no tuning factors, so no tedious trial and error is needed.

(3) No time-consuming programming is required for the practical use of this method.

(4) Simple versions of meta-heuristics are usable and straightforward, and there is no necessity for utilizing co-evolutionary versions.

(5) When the SMF is used, the results remain stable and reliable regardless of the topology of the structure, the type of the moment-resisting system, and the type of design criteria governing the design process.

(6) The final manual manipulation (or local search), which is a tedious task, is eliminated, and the final converged results are directly feasible.

(7) Last but not least, a valuable speed-up in convergence can be observed, which reduces the computational effort of the optimization process.

A prospective list of future works might be as follows: (a) applying this technique to large-scale truss and concrete structures, since the types of design variables and design criteria are somewhat different, (b) developing and investigating different forms of formulation for the SMF technique, and (c) applying this technique to continuous problems instead of skeletal structures.

Data availability

Enquiries about data availability should be directed to the authors.

References

AISC (2010) Specification for structural steel buildings (ANSI/AISC 360-10), Chicago, IL: American Institute of Steel Construction

ASCE 7–98 (2000) Minimum design loads for buildings and other structures: Revision of ANSI/ASCE 7–95, American Society of Civil Engineers

Azad SK, Hasançebi O (2015) Computationally efficient discrete sizing of steel frames via guided stochastic search heuristic. Comput Struct 156:12–28

Azad SK, Hasançebi O, Azad SK (2014) Computationally efficient optimum design of large-scale steel frames. Int J Optim Civil Eng 4(2):233–259

Azad SK, Hasançebi O, Azad SK (2013) Upper bound strategy for metaheuristic-based design optimization of steel frames. Adv Eng Softw 57:19–32

Azad SK, Hasançebi O (2013) Improving computational efficiency of particle swarm optimization for optimal structural design. Int J Optim Civil Eng 3(4):563–574

Azizi M, Ejlalia RG, Ghasemia SAM, Talatahari S (2019) Upgraded Whale Optimization Algorithm for fuzzy logic based vibration control of nonlinear steel structure. Eng Struct 192:53–70

Bean JC, Hadj-Alouane AB (1992) A dual genetic algorithm for bounded integer programs. Technical Report TR 92–53, Department of Industrial and Operations Engineering, The University of Michigan

Bureerat S, Pholdee N (2016) Optimal truss sizing using an adaptive differential evolution algorithm. J Comput Civil Eng 30(2):04015019

Chehouri A, Younes R, Perron J, Ilinca A (2016) A constraint-handling technique for genetic algorithms using a violation factor. J Comput Sci 12(7):350–362

Cheng MY, Prayogo D (2014) Symbiotic Organisms Search: a new metaheuristic optimization algorithm. Comput Struct 139:98–112

Cheng FY, Li D (1997) Fuzzy set theory with genetic algorithms in constrained structural optimization. In: ASCE proceeding of us-japan joint seminar on structural optimization. Advances in structural optimization, New York, pp 55–56

Coello CAC (2001) Theoretical and numerical constraint-handling techniques used with evolutionary algorithms: a survey of the state of the art. Comput Methods Appl Mech Eng 191(11–12):1245–1287

Deb K (2000) An efficient constraint handling method for genetic algorithms. Comput Methods Appl Mech Eng 186(2–4):311–338

Dorigo M, Stützle T (2004) Ant colony optimization. MIT Press. ISBN 0-262-04219-3

Duarte LS, Celes W, Pereira A, Menezes IFM, Paulino GH (2015) PolyTop++: an efficient alternative for serial and parallel topology optimization on CPUs & GPU. Struct Multidiscip Optim 52(5):845–859

Dunning PD, Ovtchinnikov E, Scott J, Kim HA (2016) Level-set topology optimization with many linear buckling constraints using an efficient and robust eigensolver. Int J Numer Meth Eng 107(12):1029–1053

Eberhart RC, Kennedy J (1995) A new optimizer using particle swarm theory. In: Proceedings of the sixth international symposium on micro machine and human science. Nagoya, Japan

Erol OK, Eksin I (2006) New optimization method: Big Bang-Big crunch. Adv Eng Softw 37(2):106–111

Gandomi AH (2014) Interior search algorithm (ISA): a novel approach for global optimization. ISA Trans 53(4):1168–1183

Gargari EA, Lucas C (2007) Imperialist competitive algorithm: an algorithm for optimization inspired by imperialistic competition. In: IEEE Congress on Evolutionary Computation, Singapore

Geem ZW, Kim JH, Loganathan GV (2001) A new heuristic optimization algorithm: harmony search. SIMULATION 76(2):60–68

Gholizadeh S, Barzegar A (2012) Shape optimization of structures for frequency constraints by sequential harmony search algorithm. Eng Optim 45:627–646

Glover F (1989) Tabu search—part i. ORSA J Comput 1(3):190–206

Glover F (1990) Tabu search—part II. ORSA J Comput 2(1):4–32

Guan J, Kentel E, Aral MM (2008) Genetic algorithm for constrained optimization models and its application in groundwater resources management. J Water Resour Plan Manag 134(1):64–72

Hasançebi O, Erbatur F (2000) Constraint handling in genetic algorithm integrated structural optimization. Acta Mech 139(1–4):15–31

Hasançebi O (2008) Adaptive evolution strategies in structural optimization: Enhancing their computational performance with applications to large-scale structures. Comput Struct 86(1–2):119–132

Hasançebi O, Azad SK (2015) Improving computational efficiency of bat-inspired algorithm in optimal structural design. Adv Struct Eng 18(7):1003–1015

Hoffmeister F, Sprave J (1996) Problem-independent handling of constraints by use of metric penalty functions. In: Proceedings of the fifth annual conference on evolutionary programming, MIT Press, San Diego, CA, pp 289–294

Holland JH (1975) Adaptation in natural and artificial systems. University of Michigan Press, Ann Arbor

Joines J, Houck C (1994) On the use of non-stationary penalty functions to solve nonlinear constrained optimization problems with GAs. In: Fogel D (ed) Proceedings of the first IEEE conference on evolutionary computation, IEEE Press, Florida, USA, pp 579–584

Karaboğa D (2005) An idea based on honey bee swarm for numerical optimization

Kambampati S, Jauregui C, Museth K, Kim HA (2018) Fast level set topology optimization using a hierarchical data structure. In: Multidisciplinary analysis and optimization conference

Kim TH, Maruta I, Sugie T (2010) A simple and efficient constrained particle swarm optimization and its application to engineering design problems. Proc Inst Mech Eng C J Mech Eng Sci 224(2):389–400

Kaveh A, Talatahari S (2010a) A novel heuristic optimization method: charged system search. Acta Mech 213(3–4):267–289

Kaveh A, Zolghadr A (2012) Truss optimization with natural frequency constraints using a hybridized CSS-BBBC algorithm with trap recognition capability. Comput Struct 102:14–27

Kaveh A, Talatahari S (2010b) An improved ant colony optimization for constrained engineering design problems. Eng Comput 27(1):155–182

Lee S, Kang T (2015) Analysis of constrained optimization problems by the SCE-UA with an adaptive penalty function. J Comput Civ Eng. https://doi.org/10.1061/(ASCE)CP.1943-5487.0000493

Leguizamón G, Coello CAC (2009) Boundary search for constrained numerical optimization problems with an algorithm inspired by the ant colony metaphor. IEEE Trans Evol Comput 3(2):350–368

Liu Zh, Li Z, Ping Zh, Chen W (2018) A parallel boundary search particle swarm optimization algorithm for constrained optimization problems. Struct Multidiscip Optim

Mallipeddi R, Suganthan PN (2010) Ensemble of constraint handling techniques. IEEE Trans Evol Comput 14(4):561–579

Mendez AM, Coello CAC (2009) A new proposal to hybridize the Nelder-mead method to a differential evolution algorithm for constrained optimization. In: IEEE Congress on evolutionary computation, Trondheim, Norway

Michalewicz Z, Attia NF (1994) Evolutionary optimization of constrained problems. In: Proceedings of the 3rd annual conference on evolutionary programming, World Scientific, Singapore, pp 98–108

Montes EM, Coello CAC (2011) Constraint-handling in nature-inspired numerical optimization: past, present and future. Swarm Evol Comput 1(4):173–194

Morales AK, Quezada CV (1998) A universal eclectic genetic algorithm for constrained optimization. In: Proceedings of the 6th european congress on intelligent techniques and soft computing, Aachen, Germany; pp 518–522

Mun S, Cho Y (2012) Modified harmony search optimization for constrained design problems. Expert Syst Appl 39:419–423

Nema S, Goulermas JY, Sparrow G, Helman P (2011) A hybrid cooperative search algorithm for constrained optimization. Struct Multidiscip Optim 43(1):107–119

Pholdee N, Bureerat S (2014) Comparative performance of meta-heuristic algorithms for mass minimisation of trusses with dynamic constraints. Adv Eng Softw 75:1–13

Sanders ED, Pereira A, Aguiló MA, Paulino GH (2018) PolyMat: an efficient Matlab code for multi-material topology optimization. Struct Multidiscip Optim 58(6):2727–2759

Skalak SC, Shonkwiler R (1998) Annealing a genetic algorithm over constraints. In: IEEE international conference on systems, man, and cybernetics, CA, USA

Smith AE, Tate DM (1993) Genetic optimization using a penalty function. In: Forrest S (Ed.), Proceedings of the fifth international conference on genetic algorithms, University of Illinois at Urbana-Champaign, Morgan Kaufmann, San Mateo, CA; pp 499–503

Storn R, Price K (1997) Differential evolution - a simple and efficient heuristic for global optimization over continuous spaces. J Global Optim 11(4):341–359

Stripinis L, Paulavičius R, Žilinskas J (2019) Penalty functions and two-step selection procedure based DIRECT-type algorithm for constrained global optimization. Struct Multidiscip Optim 59(6):2155–2175

Talatahari S, Azizi M, Toloo M et al (2022) Optimization of large-scale frame structures using fuzzy adaptive quantum inspired charged system search. Int J Steel Struct. https://doi.org/10.1007/s13296-022-00598-y

Talatahari S, Azizi M (2020) Optimization of constrained mathematical and engineering design problems using chaos game optimization. Comput Ind Eng 145:106560

Venkataraman S, Haftka RT (2004) Structural optimization complexity: what has Moore’s law done for us? Struct Multidiscip Optim 28(6):375–387

Yang XS (2016) A new metaheuristic bat-inspired algorithm. In: Nature inspired cooperative strategies for optimization (NISCO) studies in computational intelligence, vol 284, pp 65–74. http://arxiv.org/abs/1004.4170. Bibcode:2010arXiv1004.4170Y.

Yang XS, Deb S (2009) Cuckoo search via levy flights. In: World congress on nature and biologically inspired computing; pp 210–214

Yang XS (2009) Firefly algorithms for multimodal optimization. In: Watanabe O, Zeugmann T (Eds.), Fifth Symposium on stochastic algorithms, foundation and applications (SAGA), LNCS, 5792, pp 169–178

Yang XS (2008) Nature-inspired metaheuristic algorithms, 1st edn. Luniver Press, Frome

Yousefi M, Yousefi M, Darus A (2012) A modified imperialist competitive algorithm for constrained optimization of plate-fin heat exchangers. In: Proceedings of the institution of mechanical engineers, part a: journal of power and energy, originally, pp 226–1050

Zade A, Patel N, Padhiyar N (2017) Effective constrained handling by hybridized cuckoo search algorithm with box complex method. IFAC PapersOnLine 50(2):209–214

Zadeh LA (1965) Fuzzy sets. Inf Control 8:338–353

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions. The authors have not disclosed any funding.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sadrekarimi, N., Talatahari, S., Azar, B.F. et al. A surrogate merit function developed for structural weight optimization problems. Soft Comput 27, 1533–1563 (2023). https://doi.org/10.1007/s00500-022-07453-6

DOI: https://doi.org/10.1007/s00500-022-07453-6