Abstract

We investigate some topological properties of random geometric complexes and random geometric graphs on Riemannian manifolds in the thermodynamic limit. In particular, for random geometric complexes we prove that the normalized counting measure of connected components, counted according to isotopy type, converges in probability to a deterministic measure. More generally, we also prove similar convergence results for the counting measure of types of components of each k-skeleton of a random geometric complex. As a consequence, in the case of the 1-skeleton (i.e., for random geometric graphs) we show that the empirical spectral measure associated to the normalized Laplace operator converges to a deterministic measure.


1 Introduction

1.1 Random Geometric Complexes

The subject of random geometric complexes has recently attracted a lot of attention, with a special focus on the expected values of topological quantities of these complexes [5, 6, 31, 39, 43, 53] (e.g., the number of connected components or, more generally, the Betti numbers). In a recent paper [1], Auffinger, Lerario, and Lundberg imported methods from [38, 48] to study finer properties of these random complexes, namely the distribution of the homotopy types of the connected components of the complex. Before moving to the content of the current paper, we discuss the main ideas from [1] and introduce some terminology.

Let (M, g) be a compact, Riemannian manifold of dimension m. We normalize the metric g in such a way that

We denote by \({\hat{B}}(x,r)\subset M\) the Riemannian ball centered at x of radius \(r>0\), and we construct a random M-geometric complex in the thermodynamic regime as follows. We let \(\{p_1,\ldots ,p_n\}\) be a set of points independently sampled from the uniform distribution on M, we fix a positive number \(\alpha >0\), and we consider

$$r:=\alpha n^{-1/m}\qquad \text {and}\qquad {\mathcal {U}}_n:=\bigcup _{i=1}^n{\hat{B}}(p_i,r). \tag{1.1}$$

The choice of such r defines the so-called critical or thermodynamic regime, which is the regime where the topology is richest [1, 31]. We say that \({\mathcal {U}}_n\) is a random M-geometric complex; the name is motivated by the fact that, for n large enough, \({\mathcal {U}}_n\) is homotopy equivalent to its Čech complex, as we shall see in Lemma 2.4 below.
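As an illustration of this construction, the following Python sketch samples a random M-geometric complex in the special case where M is taken to be the flat unit torus \({\mathbb {R}}^m/{\mathbb {Z}}^m\) (a compact manifold of volume 1; this choice of M is our assumption, made only for the example) and computes \(b_0({\mathcal {U}}_n)\). It relies on the elementary fact that, in a complete Riemannian manifold, two closed geodesic balls of radius r intersect if and only if the distance between their centers is at most 2r, so the components of \({\mathcal {U}}_n\) are the components of the corresponding intersection graph. All function names are hypothetical.

```python
import math
import random


def torus_distance(p, q):
    """Geodesic distance between two points of the flat unit torus R^m / Z^m."""
    total = 0.0
    for a, b in zip(p, q):
        d = abs(a - b)
        d = min(d, 1.0 - d)  # wrap around in each coordinate
        total += d * d
    return math.sqrt(total)


class UnionFind:
    """Disjoint-set forest used to merge overlapping balls."""

    def __init__(self, n):
        self.parent = list(range(n))

    def find(self, i):
        while self.parent[i] != i:
            self.parent[i] = self.parent[self.parent[i]]  # path halving
            i = self.parent[i]
        return i

    def union(self, i, j):
        ri, rj = self.find(i), self.find(j)
        if ri != rj:
            self.parent[ri] = rj


def b0_random_complex(n, m, alpha, seed=0):
    """Number of components of U_n on the flat torus, with r = alpha * n^(-1/m)."""
    rng = random.Random(seed)
    r = alpha * n ** (-1.0 / m)  # thermodynamic radius
    points = [tuple(rng.random() for _ in range(m)) for _ in range(n)]
    uf = UnionFind(n)
    for i in range(n):
        for j in range(i + 1, n):
            # closed balls of radius r meet iff the centres are at distance <= 2r
            if torus_distance(points[i], points[j]) <= 2 * r:
                uf.union(i, j)
    return len({uf.find(i) for i in range(n)})
```

For instance, `b0_random_complex(2000, 2, 0.5) / 2000` gives a one-sample Monte Carlo value of the ratio \(b_0({\mathcal {U}}_n)/n\), whose limit is discussed in Sect. 1.4. The quadratic pair loop keeps the sketch short; a spatial grid would be the natural optimization for large n.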

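Auffinger, Lerario, and Lundberg [1] proved that, in the case when \({{\,\mathrm{vol}\,}}M=1\), the normalized counting measure of connected components of such complexes, counted according to homotopy type, converges in probability to a deterministic measure. That is,

$${\tilde{\Theta }}_n:=\frac{1}{b_0({\mathcal {U}}_n)}\sum _{u}\delta _{[u]}\xrightarrow [n\rightarrow \infty ]{{\mathbb {P}}}{\tilde{\Theta }}, \tag{1.2}$$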

where the sum is over all connected components u of \({\mathcal {U}}_n\), [u] denotes their homotopy type and \(b_0\) is the zeroth Betti number, therefore \(b_0({\mathcal {U}}_n)\) is the number of connected components of \({\mathcal {U}}_n\). In (1.2) the measure \({\tilde{\Theta }}_n\) is a random probability measure on the countable set of all possible homotopy types of connected geometric complexes and the convergence is in probability with respect to the total variation distance (see Sect. 4 for more precise definitions). The support of the limiting deterministic measure \({\tilde{\Theta }}\) equals the set of all homotopy types for Euclidean geometric complexes of dimension \(m = \dim M\). Roughly speaking, (1.2) tells us that, for every fixed homotopy type [u] of connected geometric complexes, denoting by \({\mathcal {N}}_n([u])\) the random variable “number of connected components of \({\mathcal {U}}_n\) that are in the homotopy equivalence class [u]”, there is a convergence of the random variable \({\mathcal {N}}_n([u])/b_0({\mathcal {U}}_n)\) to a constant \(c_{[u]}\) as \(n\rightarrow \infty \) (the convergence is in \(L^1\) and \(c_{[u]}>0\) if and only if [u] contains an \({\mathbb {R}}^m\)-geometric complex).

1.2 Isotopy Classes of Geometric Complexes

We now move to the content of the current paper. Our first goal is to include the results of [1] in a more general framework that allows even more refined counts (e.g., according to the type of embedding of the components, the structure of their skeleta, or the property of containing a given motif). The first result we prove is that (1.2) still holds if we consider isotopy classes instead of homotopy classes: intuitively, two complexes are isotopic if the vertices of one can be moved continuously to the vertices of the other without ever changing the combinatorics of the intersection of the corresponding balls (see Definition 2.5). From now on we will always assume that our complexes are nondegenerate, i.e., that the boundaries of the balls defining them intersect transversely (see Definition 2.3); our random geometric complexes are nondegenerate with probability one, and the notion of isotopic nondegenerate complexes coincides with the one from differential topology. In Theorem 4.2 we show that

$$\Theta _n\xrightarrow [n\rightarrow \infty ]{{\mathbb {P}}}\Theta ,$$

where \(\Theta _n\) is defined analogously to \({\tilde{\Theta }}_n\) above, with isotopy classes instead of homotopy classes. Interestingly, the limiting measure depends only on \(\alpha \) and on the dimension of M. To appreciate the difference with the results from [1]: the unknot and the trefoil knot in \({\mathbb {R}}^3\) (Fig. 1) are homotopy equivalent but not isotopic, and with positive probability there are connected M-geometric complexes whose embeddings look like these two knots (see Proposition 4.5 below); Theorem 4.2 is able to distinguish between them, whereas the construction from [1] is not.

1.3 A Cascade of Measures

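Theorem 4.2 contains, in a sense, the richest possible information on the topological structure of our geometric complexes, and the convergence of many other counting measures can be deduced from it. To explain this idea, we consider the set \({\mathcal {G}}/{\cong }\) of isotopy classes of connected, nondegenerate M-geometric complexes (see Definition 2.7),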

and we put an equivalence relation \(\rho \) on \({\mathcal {G}}/{\cong }\) (for example, two isotopy classes may be declared equivalent if their k-skeleta are isomorphic, or if they contain the same number of copies of a given motif). Then the natural map \(\psi :({\mathcal {G}}/{\cong })\rightarrow ({\mathcal {G}}/{\cong })/{\rho }\) defines the random pushforward measure \(\psi _*\Theta _n\) on \(({\mathcal {G}}/{\cong })/{\rho }\), and Theorem 4.2 implies that \(\psi _*\Theta _n\rightarrow \psi _*\Theta \).
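The pushforward construction can be made concrete on a toy example. The Python sketch below builds the normalized counting measure of a finite list of component classes (each component contributes mass \(1/b_0\)) and pushes it forward along a map \(\psi \): the new mass of a class v is the total mass of its fibre \(\psi ^{-1}(v)\). The string labels "point", "unknot", "trefoil", and "S1" are hypothetical stand-ins for isotopy and homotopy classes, chosen to echo the knots of Fig. 1; the function names are also hypothetical.

```python
from collections import Counter
from fractions import Fraction


def empirical_measure(component_classes):
    """Normalized counting measure: each component contributes mass 1 / b_0 to its class."""
    counts = Counter(component_classes)
    b0 = sum(counts.values())
    return {w: Fraction(c, b0) for w, c in counts.items()}


def pushforward(measure, psi):
    """psi_* mu on a countable set: (psi_* mu)({v}) = mu(psi^{-1}(v))."""
    out = {}
    for w, mass in measure.items():
        v = psi(w)
        out[v] = out.get(v, Fraction(0)) + mass
    return out


# Hypothetical forgetful map: isotopy label -> homotopy label.
TO_HOMOTOPY = {"point": "point", "unknot": "S1", "trefoil": "S1"}

theta = empirical_measure(["point", "point", "unknot", "trefoil"])
print(pushforward(theta, TO_HOMOTOPY.get))
```

The unknot and the trefoil carry separate masses upstairs but are merged by the forgetful map, which is exactly the information lost when passing from isotopy to homotopy classes.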

This idea can be used to produce a “cascade” of random relevant measures. Consider the following diagram of maps and spaces:

where the spaces are

and the maps are the natural “forgetful” maps. For example, the map \(\varphi \) takes the isotopy class of a nondegenerate complex and associates to it its homotopy class; the map \(\varphi ^{(k)}\) associates to it the isomorphism class of its k-skeleton (it is well defined since isotopic complexes have isomorphic Čech complexes). Then for all the pushforward measures defined by these maps we have convergence in probability with respect to the total variation distance (see Sect. 4), and as \(n\rightarrow \infty \),

1.4 Random Geometric Graphs

Of special interest is the case of random geometric graphs: vertices of a random M-geometric graph \(\Gamma _n\) are the points \(\{p_1,\ldots ,p_n\}\) and we put an edge between \(p_i\) and \(p_j\) if and only if \(i\ne j\) and \({\hat{B}}(p_i,r)\cap {\hat{B}}(p_j,r)\ne \emptyset \). Using the above language, a random M-geometric graph is the 1-skeleton of the Čech complex associated to the complex \({\mathcal {U}}_n\).

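To every random M-geometric graph \(\Gamma _n\) we can associate the following measure, which converges in probability as \(n\rightarrow \infty \):

$$\frac{1}{b_0(\Gamma _n)}\sum _{\gamma }\delta _{\gamma }\xrightarrow [n\rightarrow \infty ]{{\mathbb {P}}}\varphi ^{(1)}_{*}\Theta , \tag{1.3}$$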

where the sum is over all connected components of \(\Gamma _n\) and \(\gamma \) denotes their isomorphism class (as graphs). There is an interesting fact regarding the random variable \(b_0(\Gamma _n)\) appearing in (1.3): it is the same random variable as \(b_0({\mathcal {U}}_n)\) (the number of components of the random graph and of the random complex are the same), and in [1] it is proven that there exists a constant \(\beta \) (depending on the parameter \(\alpha \) in (1.1)) such that

The existence of this limit also follows from [24], where the authors establish a limit law in the thermodynamic regime for Betti numbers of random geometric complexes built over possibly inhomogeneous Poisson point processes in Euclidean space, including the case when the point process is supported on a submanifold. Moreover, for a related model of random graphs (the Poisson model on \({\mathbb {R}}^m\), see Sect. 1.6 below), Penrose [43] proved that the normalized component count converges in \(L^2\) to a constant \(\beta \) (depending on the parameter \(\alpha \) in (1.1)). In fact, as we will see below, our M-geometric model has a corresponding \({\mathbb {R}}^m\)-geometric model, which is in a sense the rescaled limit of the Riemannian one, and the limit constants for the two models are the same.

Similarly, the limit measure \(\varphi ^{(1)}_{*}\Theta \) comes from the rescaled Euclidean limit and is supported on connected \({\mathbb {R}}^m\)-geometric graphs. For general m the set of such graphs is not easy to describe, but in the case \(m=1\) they can be characterized by a result of Roberts [45], and from this result we can deduce a description of the support of the limit measure in (1.3) (see Corollary 5.4 and Sect. 5 for more details).
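For small components, the empirical measure in (1.3) can be computed directly from an edge list. The Python sketch below is only illustrative: it identifies the isomorphism class of each component by a brute-force canonical form (the lexicographically smallest relabelled edge list over all vertex orderings). This is a complete isomorphism invariant, but it costs k! for a component with k vertices, so it is practical only for small components. The function names are hypothetical.

```python
from collections import Counter
from fractions import Fraction
from itertools import permutations


def connected_components(n, edges):
    """Vertex lists of the components of a simple graph on {0, ..., n-1}, plus adjacency."""
    adj = {v: set() for v in range(n)}
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    seen, comps = set(), []
    for s in range(n):
        if s in seen:
            continue
        seen.add(s)
        stack, comp = [s], []
        while stack:  # depth-first search from s
            u = stack.pop()
            comp.append(u)
            for w in adj[u]:
                if w not in seen:
                    seen.add(w)
                    stack.append(w)
        comps.append(comp)
    return comps, adj


def canonical_form(comp, adj):
    """Smallest relabelled edge list over all orderings: equal iff isomorphic."""
    best = None
    for perm in permutations(range(len(comp))):
        label = dict(zip(comp, perm))
        edges = sorted(
            tuple(sorted((label[u], label[w]))) for u in comp for w in adj[u] if u < w
        )
        key = (len(comp), tuple(edges))
        if best is None or key < best:
            best = key
    return best


def component_type_measure(n, edges):
    """(1 / b_0) * sum over components of the Dirac mass at their isomorphism class."""
    comps, adj = connected_components(n, edges)
    counts = Counter(canonical_form(c, adj) for c in comps)
    return {g: Fraction(k, len(comps)) for g, k in counts.items()}
```

In a random geometric graph most components are small in the thermodynamic regime, which is why a brute-force invariant is enough for experiments; a dedicated graph-isomorphism library would be the natural replacement for larger components.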

Remark 1.1

(Related work on random geometric graphs) The general theory of random graphs was founded in 1959 by Erdős and Rényi, who proposed a model of random graph G(n, p) where the number of vertices is fixed to be n and each pair of distinct vertices is joined by an edge with probability p, independently of other edges [12, 14,15,16,17]. Later on, other models were proposed in the literature [11], for instance the Barabási–Albert scale-free network model [2] and the Watts–Strogatz small-world network model [51]. For general references on random graphs, the reader is referred to [7,8,9,10, 13, 18, 29, 33, 42]. Here we focus on the random geometric graph model, and we refer to [23, 43, 50] for more literature on this topic. Applications of random geometric graphs can be found, for instance, in the contexts of wireless networks, epidemic spreading, city growth, power grids, and protein-protein interaction networks [11].

1.5 The Spectrum of a Random Geometric Graph

When talking about a graph, a natural associated object to look at is its normalized Laplace operator, see Sect. 6. It is known that the spectrum of the (symmetric) normalized Laplace operator encodes important information about a graph [8]. For example, it tells us how many connected components a graph has; it tells us whether a graph is bipartite and whether it is complete; it tells us how difficult it is to partition the vertex set of a graph into two disjoint sets \(V_1\) and \(V_2\) such that the number of edges between \(V_1\) and \(V_2\) is as small as possible while the volume of both \(V_1\) and \(V_2\) (i.e., the sum of the degrees of their vertices) is as large as possible. Therefore, the normalized Laplace operator gives a partition of graphs into families, and isospectral graphs share important common features. Since, furthermore, the eigenvalues can be computed with tools from linear algebra, this operator is a very powerful and useful tool in graph theory and data analytics.

In the context of random M-geometric graphs, the convergence of the counting measure in (1.3) can be used to deduce the existence of a limit measure for the spectrum of the normalized Laplace operator for random geometric graphs. More specifically, we define the empirical spectral measure of a graph as the normalized counting measure of eigenvalues of the normalized Laplace operator and we prove that there exists a deterministic measure \(\mu \) on the real line such that (Theorem 7.4)

Here, \(\lambda _1(\Gamma _n),\ldots ,\lambda _n(\Gamma _n)\) are the eigenvalues of the normalized Laplace operator of \(\Gamma _n\) and the convergence in (1.4) means that for every continuous function \(f:[0,2]\rightarrow {\mathbb {R}}\) we have

The measure \(\mu \) in (1.4) is far from trivial and we do not yet have a clear understanding of it: we know it is supported on the interval [0, 2], but, for example, it is not absolutely continuous with respect to the Lebesgue measure (in fact, \(\mu (\{0\})=\beta >0\)).
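The empirical spectral measure of a small graph can be computed exactly by diagonalizing its normalized Laplace operator. The Python sketch below assumes the convention \({\hat{L}}=I-D^{-1/2}AD^{-1/2}\) with diagonal entry 0 at isolated vertices, so that every component, isolated vertices included, contributes exactly one zero eigenvalue (consistent with \(\mu (\{0\})=\beta \)); the paper's own definition is the one given in Sect. 6, and this convention is our assumption. Eigenvalues are computed with the cyclic Jacobi method so that only the standard library is needed; the function names are hypothetical.

```python
import math


def normalized_laplacian(n, edges):
    """Symmetric normalized Laplacian of a simple graph; rows of isolated vertices are zero."""
    adj = [[0.0] * n for _ in range(n)]
    for u, v in edges:
        adj[u][v] = adj[v][u] = 1.0
    deg = [sum(row) for row in adj]
    lap = [[0.0] * n for _ in range(n)]
    for u in range(n):
        if deg[u] > 0:
            lap[u][u] = 1.0
        for v in range(n):
            if adj[u][v]:
                lap[u][v] = -1.0 / math.sqrt(deg[u] * deg[v])
    return lap


def jacobi_eigenvalues(sym, tol=1e-20, max_sweeps=100):
    """Eigenvalues of a real symmetric matrix by cyclic Jacobi rotations A <- J^T A J."""
    a = [row[:] for row in sym]
    n = len(a)
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                # rotation angle chosen so that the new (p, q) entry vanishes
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.hypot(theta, 1.0))
                c = 1.0 / math.hypot(t, 1.0)
                s = t * c
                for k in range(n):  # A <- A J (columns p and q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # A <- J^T A (rows p and q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return sorted(a[i][i] for i in range(n))


def spectral_integral(f, eigenvalues):
    """Integral of f against the empirical spectral measure (1/n) sum_i delta_{lambda_i}."""
    return sum(f(x) for x in eigenvalues) / len(eigenvalues)
```

For a path on three vertices together with a disjoint edge, the spectrum is \(\{0,0,1,2,2\}\): the two zeros give mass \(2/5=b_0/n\) at the origin, and the eigenvalue 2 reflects the bipartite components. For large graphs, a LAPACK-based routine such as `numpy.linalg.eigvalsh` is the natural replacement for the Jacobi loop.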

Remark 1.2

Interestingly, [12] studies the convergence of \(\mu _{\Gamma _n}\) as \(n\rightarrow \infty \) in the case where \(\Gamma _n\) is a G(n, p) random graph and the eigenvalues are those of the non-normalized Laplacian or of the adjacency matrix. In particular, it is shown that, under suitable conditions, \(\mu _{\Gamma _n}\) converges to the semicircle law if the eigenvalues are those of the adjacency matrix, and to the free convolution of the semicircle law and the standard normal distribution if they are those of the non-normalized Laplacian.

Remark 1.3

In [25], Gu, Jost, Liu, and Stadler introduce the notion of spectral class of a family of graphs. Given a Radon measure \(\rho \) on [0, 2] and a sequence \((\Gamma _n)_{n\in {\mathbb {N}}}\) of graphs with \(\#\,(V(\Gamma _n))=n\), they say that this sequence belongs to the spectral class \(\rho \) if \(\mu _{\Gamma _n}\overset{*}{\rightharpoonup }\rho \) as \(n\rightarrow \infty \). We can interpret (1.4) as saying that our family of random geometric graphs \((\Gamma _n)_n\) belongs to the spectral class \(\mu \) (in a probabilistic sense).

Remark 1.4

(Related work on spectral theory) Besides the spectrum of the normalized Laplace operator, the spectra of the non-normalized Laplacian matrix (defined in Sect. 6) and of the adjacency matrix have also been widely studied. We refer the reader to [8, 44] for general references on spectral graph theory, to [3, 30] for applications of spectral graph theory in chemistry, and to [19,20,21, 37, 40, 46, 47] for applications in theoretical physics and quantum mechanics. For references on spectral graph theory of (not necessarily geometric) random graphs, we refer to [9, 11, 12, 41]. In [11], in particular, the eigenvalues of the adjacency matrix for random geometric graphs are studied using numerical and statistical methods. Remarkably, it is shown that random geometric graphs are statistically very similar to the other random graph models mentioned above: Erdős–Rényi random graphs, Barabási–Albert scale-free networks, and Watts–Strogatz small-world networks. On the other hand, [41] shows that symmetric structures occur abundantly in random geometric graphs, while the same does not hold for the other random graph models. Our main results on spectral graph theory for random geometric graphs, Theorem 7.4 and Proposition 7.5 below, follow the same general idea as [11] and [41], in the sense that we are interested in the limiting spectrum of large random geometric graphs. The main difference is that [11] focuses on the adjacency matrix and [41] on the non-normalized Laplacian, while we focus on the normalized Laplacian; the resulting conclusions therefore differ considerably.

It is worth mentioning the unpublished work [4], where the authors also derive convergence of the spectral measure of random \({\mathbb {R}}^m\)-geometric graphs in the thermodynamic regime. The results from [4] are slightly different from ours: the authors of [4] consider \({\mathbb {R}}^m\)-geometric graphs sampled from a general bounded density, whereas in the Euclidean case we only consider the Poisson distribution. On the other hand, our Theorem 7.4 also holds for general compact Riemannian manifolds and establishes the universality of the limiting measures. Moreover, we believe the technique presented here is more general and can be used beyond the context of geometric graphs, for instance to study Laplacians of random geometric complexes.

Finally, we remark that there is an interesting connection between the spectrum of random geometric graphs on a compact Riemannian manifold and the spectrum of the Laplacian of the manifold, but in a regime different from the one of the current paper. It is possible to prove “convergence” of one spectrum to the other as \(n\rightarrow \infty \) if the size of the balls is of the order \(n^{-1/m}\log n\); see [22] for more details. However, the results from [22] do not apply in our case: they correspond to a “denser” set of points than in the thermodynamic regime.

1.6 The Euclidean Poisson Model

As we already observed, in [1], the proof of (1.2) is based on a rescaling limit idea. Namely, one can fix \(R>0\) and a point \(p\in M\), and study the limit structure of the random complexes inside the ball \({\hat{B}}(p,Rn^{-1/m})\). The random geometric complex obtained as \(n\rightarrow \infty \) can then be described as follows. Let \(P:=\{p_1,p_2,\ldots \}\) be a set of points sampled from the standard spatial Poisson distribution in \({\mathbb {R}}^m\). For \(\alpha >0\), let

and let

For the random complex \({\mathcal {P}}_R\) one can define completely analogous measures, where the parameter is now \(R>0\); all of the above discussion applies to this model as well, and we treat it in parallel throughout the paper.
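A sample of the standard (intensity one) spatial Poisson process restricted to \(B(0,R)\) can be drawn in two steps: the number of points is Poisson distributed with mean \({{\,\mathrm{vol}\,}}B(0,R)\), and, given that number, the points are independent and uniform in the ball. The Python sketch below implements this two-step recipe, sampling the Poisson count by counting unit-rate exponential arrivals and the uniform points by rejection from the cube. It only produces the point set \(P\cap B(0,R)\); the balls are then placed around these points as in the definitions above. The function names are hypothetical.

```python
import math
import random


def ball_volume(m, R):
    """Lebesgue measure of the Euclidean ball of radius R in R^m."""
    return math.pi ** (m / 2) / math.gamma(m / 2 + 1) * R ** m


def poisson(mean, rng):
    """Poisson(mean) variable: number of unit-rate exponential arrivals in [0, mean]."""
    count, t = 0, rng.expovariate(1.0)
    while t <= mean:
        count += 1
        t += rng.expovariate(1.0)
    return count


def uniform_in_ball(m, R, rng):
    """Uniform point in B(0, R) by rejection from the cube [-R, R]^m."""
    while True:
        x = [rng.uniform(-R, R) for _ in range(m)]
        if sum(c * c for c in x) <= R * R:
            return tuple(x)


def poisson_points_in_ball(m, R, rng):
    """One sample of P ∩ B(0, R) for the standard Poisson process on R^m."""
    n = poisson(ball_volume(m, R), rng)
    return [uniform_in_ball(m, R, rng) for _ in range(n)]
```

Rejection sampling is simple but its acceptance rate decays quickly with m; for higher dimensions one would instead normalize a Gaussian vector and rescale its length.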

1.7 Structure of the Paper

This paper is structured as follows. In Sect. 2 we discuss (deterministic) M-geometric complexes; in particular, we define the set \({\mathcal {G}}/{\cong }\) of isotopy classes of connected, nondegenerate M-geometric complexes and establish some of its properties. In Sect. 3 we discuss random M-geometric complexes, and in Sect. 4 we prove (1.2). Moreover, in Sect. 5 we define geometric graphs and establish some of their properties; in Sect. 6 we recall the definition of the normalized Laplace operator \({\hat{L}}\) for graphs and prove some properties of the spectral measure in the case of geometric graphs. Finally, in Sect. 7 we prove (1.4).

2 Geometric Complexes

Throughout this paper we fix a Riemannian manifold (M, g) of dimension m.

Definition 2.1

(M-geometric complex and its skeleta) Let \(p_1,\ldots ,p_n\) be points in M and fix \(r\ge 0\). We define an M-geometric complex as

For \({\mathcal {U}}:={\mathcal {U}}(\{p_1,\ldots ,p_n\},r)\), we also let

In particular, we call \({\check{C}}^{(1)}(\{p_1,\ldots ,p_n\},r)\) an M-geometric graph.

Remark 2.2

In order to avoid unnecessary complications, in the sequel we will always assume that the injectivity radius \({\text {inj}}M\) of M is strictly positive (which is true if M is compact or if \(M={\mathbb {R}}^m\) with the flat metric) and that

This requirement ensures that for every point \(p\in M\) the set

is smooth (in fact it is the image of the sphere of radius r in the tangent space at p under the exponential map, which is a diffeomorphism on \(B_{T_pM}(0,{\text {inj}}M)\)). Observe also that for \(r\le {\text {inj}}M\) the ball \({\widehat{B}}(p,r)\) is contractible, but not necessarily geodesically convex.

For \(1\le l\le n\), we consider the set of all l-tuples \(\{j_1, \ldots , j_l\}\) of distinct elements from the set \(\{1, \ldots , n\}\).

Definition 2.3

We say that \({\mathcal {U}}(\{p_1,\ldots ,p_n\},r)\) is nondegenerate if for each l-tuple \(\{j_1,\ldots ,j_l\}\) as above the intersection \(\bigcap _{k=1}^l{\hat{B}}(p_{j_k},r)\) is transversal, i.e., at a point of intersection both the interiors of the balls and their boundaries intersect transversely, in the sense of differential topology; see [27, Chaps. 1 and 3].

The next result is classical and relates the homotopy of a geometric complex to the one of its associated Čech complex.

Lemma 2.4

(Nerve Lemma) If M is compact, there exists \(\rho >0\) such that, for each \(r\le \rho \),

i.e., they are homotopy equivalent.

Proof

For the proof in this setting, see [1, Lem. 6.1]. \(\square \)

Definition 2.5

(Isotopy classes of connected geometric complexes) Let \(p_1,\ldots ,p_n\) and \(q_1,\ldots ,q_n\) be points in M and let \(r_0,r_1\ge 0\) be such that

are nondegenerate M-geometric complexes. We say that \({\mathcal {U}}_0\) and \({\mathcal {U}}_1\) are (rigidly) isotopic and we write \({\mathcal {U}}_0\cong {\mathcal {U}}_1\) if there exists an isotopy of diffeomorphisms \(\varphi _t:M\rightarrow M\) with \(\varphi _0={{\,\mathrm{id}\,}}_M\) and a continuous function \(r(t)>0\), for \(t\in [0,1]\), such that

-

for each \(t\in [0,1]\), \({\mathcal {U}}\bigl (\{\varphi _t(p_1),\ldots ,\varphi _t(p_n)\},r(t)\bigr )\) is nondegenerate,

-

\(r(0)=r_0\), \(r(1)=r_1\), and

-

\(\varphi _1(p_1)=q_1,\ldots ,\varphi _1(p_n)=q_n\).

Remark 2.6

(Isotopy classes and discriminants) The definition of two complexes being (rigidly) isotopic is very reminiscent of the notion of rigid isotopy from algebraic geometry, where the “regular” deformations are those which do not intersect some discriminant. We can make this analogy more precise. For every \(n\in {\mathbb {N}}\) consider the smooth manifold

together with the discriminant

The set \(\Sigma _{n}\) is closed, since its complement \(R_{n}:=H_{n}\backslash \Sigma _{n}\) is defined by finitely many transversality conditions, each of which is open. Adopting this point of view, isotopy classes of nondegenerate M-geometric complexes built using n balls are labeled by the connected components of \(R_{n}\) (the complement of a discriminant). In fact, given a nondegenerate complex \({\mathcal {U}}(\{p_1, \ldots , p_n\}, r)\), the point \((p_1, \ldots , p_n, r)\) lies in \(R_{n}\) (because the complex is nondegenerate) and, vice versa, every point in \(R_{n}\) corresponds to a nondegenerate complex. Moreover, a nondegenerate isotopy between two nondegenerate complexes defines a curve between the corresponding points of \(R_{n}\) that is entirely contained in \(R_{n}\), so the two corresponding parameters lie in the same connected component of \(R_{n}\). Conversely, since connectedness and path-connectedness are equivalent for open subsets of a manifold, any two points in the same component of \(R_{n}\) can be joined by an arc contained in \(R_{n}\) and therefore give rise to isotopic complexes.

Definition 2.7

We define the set

and use the notation \({\mathcal {G}}:={\mathcal {G}}(M)\) when M is given. We also let

Remark 2.8

Observe that, by definition, each class \([{\mathcal {U}}]\in {\mathcal {G}}/{\cong }\) also retains the information on how \({\mathcal {U}}\) is embedded in M. In particular, two nondegenerate M-geometric complexes \({\mathcal {U}}_1\) and \({\mathcal {U}}_2\) may have isomorphic Čech complexes \({\check{C}}({\mathcal {U}}_1)\simeq {\check{C}}({\mathcal {U}}_2)\) without being rigidly isotopic (see Fig. 2).

Remark 2.9

For each M of dimension m, \({\mathcal {G}}(M)/{\cong }\,\supset \,{\mathcal {G}}({\mathbb {R}}^m)/{\cong }\).

Theorem 2.10

\({\mathcal {G}}/{\cong }\) is a countable set.

Proof

We first partition \({\mathcal {G}}/{\cong }\) into countably many sets. For every \(n\in {\mathbb {N}}\) we consider the set

we need to prove that this set is countable. We have already seen (Remark 2.6) that isotopy classes of nondegenerate complexes built using n balls are in one-to-one correspondence with the connected components of \(R_{n}=H_{n}\backslash \Sigma _{n}\) (these sets are defined by (2.1) and (2.2)). The function “number of connected components of a nondegenerate complex” is constant on each component of \(R_{n}\); consequently, the number of isotopy classes of connected nondegenerate complexes (i.e., the cardinality of \({\mathcal {G}}_{n}/{\cong }\)) is at most the number of components of \(R_{n}\):

We are therefore reduced to proving that \(R_{n}\) has at most countably many components. To this end we write \(R_{n}\) as the disjoint union of its components (each of which is an open set in \(H_{n}\)):

We now cover the manifold \(H_{n}\) with countably many charts \(\{(V_j, \varphi _j)\}_{j\in {\mathbb {N}}}\) with \(\varphi _j:V_j{\mathop {\longrightarrow }\limits ^{\sim }} {\mathbb {R}}^N\), where \(N:=\dim H_{n}\). For every \(j\in {\mathbb {N}}\) we also consider the decomposition of the open set \(R_{n}\cap V_j\) into its connected components:

Since each \(C_\alpha \) from (2.3) is the union of elements of the form

with the index j running over the countable set \(N_\alpha \subset {\mathbb {N}}\), and since distinct \(C_\alpha \) are disjoint and therefore contain distinct such pieces, it is enough to prove that for every \(j\in {\mathbb {N}}\) the index set \(B_{j}\) is countable, i.e., that \(R_{n}\cap V_j\) has countably many connected components. Since \(\varphi _j\) is a diffeomorphism between \(V_j\) and \({\mathbb {R}}^N\), the number of connected components of \(R_{n}\cap V_j\) equals the number of connected components of \(\varphi _j(R_{n}\cap V_j)\), which is an open subset of \({\mathbb {R}}^N\). Since in each component of \(\varphi _j(R_{n}\cap V_j)\) we can pick a point with rational coordinates, and distinct components yield distinct points, the number of such components is countable; this concludes the proof. \(\square \)

Remark 2.11

Observe that the key point of the proof of Theorem 2.10 is showing that the number of connected components of an open set in a differentiable manifold is countable.

Definition 2.12

We let

In particular,

Remark 2.13

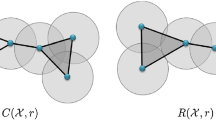

In order to appreciate the difference between the classes in \(({\mathcal {G}}/{\cong })\), \(({\mathcal {G}}/{\sim })\), and \(({\mathcal {G}}^{(k)}/{\simeq })\), we look at Fig. 2. Here, we have three \({\mathbb {R}}^3\)-geometric complexes, given by the union of balls in \({\mathbb {R}}^3\), that have three different shapes. All three complexes are homotopy equivalent to each other and they are all pairwise not isotopic. Moreover, while the first one and the second one have isomorphic 1-skeleta, the 1-skeleton of the third complex is not isomorphic to the other ones.

Fig. 2: The three \({\mathbb {R}}^3\)-geometric complexes described in Remark 2.13. From left to right, we call them \({\mathcal {U}}_1\), \({\mathcal {U}}_2\), and \( {\mathcal {U}}_3\), and we assume that the number of balls and their combinatorics needed to describe the first two are the same. Then \([{\mathcal {U}}_1]=[{\mathcal {U}}_2]=[{\mathcal {U}}_3]\) in \({\mathcal {G}}/{\sim }\), because they are all homotopy equivalent; \([{\mathcal {U}}_1]=[{\mathcal {U}}_2]\ne [{\mathcal {U}}_3]\) in \({\mathcal {G}}/{\simeq }\) (the first two give rise to the same Čech complex, which is however different from the last one); and \([{\mathcal {U}}_1]\ne [{\mathcal {U}}_2]\ne [{\mathcal {U}}_3]\ne [{\mathcal {U}}_1]\) in \({\mathcal {G}}/{\cong }\) (they are all pairwise non-isotopic).

Remark 2.14

There are natural forgetful maps

Definition 2.15

(Component counting function) Given a nondegenerate geometric complex \({\mathcal {U}}\subset M\), a topological subspace \(Y\subset M\), and a class \(w\in {\mathcal {G}}/{\cong }\), we define

In [38], Nazarov and Sodin introduced a powerful tool (the “integral geometry sandwich”) for localizing the count of the components of the zero set of random waves in a Riemannian manifold. This tool was used by Sarnak and Wigman [48] to study the distribution of the types of the components of the zero set of random waves on a Riemannian manifold, and it was adapted to geometric complexes in [1]. We recall this tool here, stated in the language of this paper.

Theorem 2.16

(Analogue of the Integral Geometry Sandwich) The following two estimates are true:

-

(1)

(The local case) Let \({\mathcal {U}}\) be a generic geometric complex in \({\mathbb {R}}^m\) and fix \(w\in {\mathcal {G}}({\mathbb {R}}^m)/{\cong }\). Then for \(0<r<R\),

$$\begin{aligned} \int _{B_{R-r}}\frac{{\mathcal {N}}({\mathcal {U}},B(x,r);w)}{{{\,\mathrm{vol}\,}}B_r}\,\mathrm {d}x\le {\mathcal {N}}({\mathcal {U}},B_R;w)\le \int _{B_{R+r}}\!\!\frac{{\mathcal {N}}^*({\mathcal {U}},B(x,r);w)}{{{\,\mathrm{vol}\,}}B_r}\,\mathrm {d}x. \end{aligned}$$

-

(2)

(The global case) Let \({\mathcal {U}}\) be a generic geometric complex in a compact Riemannian manifold M and fix \(w\in {\mathcal {G}}(M)/{\cong }\). Then for every \(\varepsilon >0\) there exists \(\eta >0\) such that for every \(r<\eta \),

$$\begin{aligned}&(1-\varepsilon )\int _{M}\frac{{\mathcal {N}}({\mathcal {U}},B(x,r);w)}{{{\,\mathrm{vol}\,}}B_r}\,\mathrm {d}x \le {\mathcal {N}}({\mathcal {U}},M;w)\\&\qquad \qquad \le \,(1+\varepsilon )\int _{M}\frac{{\mathcal {N}}^*({\mathcal {U}},B(x,r);w)}{{{\,\mathrm{vol}\,}}B_r}\,\mathrm {d}x. \end{aligned}$$

Proof

The proof of both statements is exactly the same as in [48], after noticing that the only property of the counting functions needed there is that the topological spaces considered have only finitely many components, and that these components are counted according to a specific type (here they are selected according to isotopy type, but we could instead consider any function that partitions the set of components and count only the components belonging to a given class). \(\square \)
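The local estimate (1) can be checked exactly in dimension \(m=1\), where every component of a union of intervals is an interval and both integrals reduce to lengths of intervals. The Python sketch below assumes the usual Nazarov–Sodin conventions for the counting functions of Definition 2.15: \({\mathcal {N}}({\mathcal {U}},Y;w)\) counts the components of type w contained in Y, and \({\mathcal {N}}^*({\mathcal {U}},Y;w)\) counts those that intersect Y. A component [a, b] lies in \(B(x,r)\) exactly when \(x\in [b-r,a+r]\), and it meets \(B(x,r)\) exactly when \(x\in [a-r,b+r]\), so integrating these indicators over \(B_{R\mp r}\) gives the two bounds. The type w is modelled by an arbitrary predicate on components (e.g., the number of balls), in line with the remark at the end of the proof. All names are hypothetical.

```python
import random


def interval_components(centers, rho):
    """Components of the union of closed intervals [c - rho, c + rho], as (a, b, #balls)."""
    comps = []
    for c in sorted(centers):
        a, b = c - rho, c + rho
        if comps and a <= comps[-1][1]:  # overlaps the current component: merge
            pa, pb, k = comps[-1]
            comps[-1] = (pa, max(pb, b), k + 1)
        else:
            comps.append((a, b, 1))
    return comps


def overlap(lo, hi, lo2, hi2):
    """Length of [lo, hi] ∩ [lo2, hi2] (zero if empty)."""
    return max(0.0, min(hi, hi2) - max(lo, lo2))


def sandwich(centers, rho, r, R, of_type=lambda comp: True):
    """Exact (lower integral, N(U, B_R; w), upper integral) for m = 1, where vol B_r = 2r."""
    comps = [c for c in interval_components(centers, rho) if of_type(c)]
    lower = sum(overlap(b - r, a + r, -(R - r), R - r) for a, b, _ in comps) / (2 * r)
    count = sum(1 for a, b, _ in comps if -R <= a and b <= R)
    upper = sum(overlap(a - r, b + r, -(R + r), R + r) for a, b, _ in comps) / (2 * r)
    return lower, count, upper


rng = random.Random(0)
centers = [rng.uniform(-8.0, 8.0) for _ in range(60)]
print(sandwich(centers, 0.05, 0.5, 5.0, of_type=lambda comp: comp[2] == 1))
```

A single component of length \(2\rho \) contributes \(1-\rho /r\) to the lower integral and \(1+\rho /r\) to the upper one, which shows why both bounds become sharp once the localization radius r is large compared with the size of the components.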

3 Random Geometric Complexes (Thermodynamic Regime)

Definition 3.1

(Riemannian case) Let M be a compact Riemannian manifold of dimension m and consider a set of points \(\{p_1,\ldots ,p_n\}\) independently sampled from the uniform distribution on M. Fix a positive number \(\alpha >0\), let \(r:=\alpha n^{-1/m}\) and

We say that \({\mathcal {U}}_n\) is a random M-geometric complex. The choice of such r is what defines the so-called critical or thermodynamic regime.

Definition 3.2

(Euclidean Poisson case) Let \(P:=\{p_1,p_2,\ldots \}\) be a set of points sampled from the standard spatial Poisson distribution in \({\mathbb {R}}^m\). For \(\alpha >0\), let

and, for \(R>0\), let

Remark 3.3

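With probability 1, we have that \(\#\,(P\cap B(0,R))\) is finite. To see this, observe that

$${\mathbb {E}}\,\#\,(P\cap B(0,R))={{\,\mathrm{vol}\,}}B(0,R)<\infty ,$$

so the nonnegative random variable \(\#\,(P\cap B(0,R))\) is almost surely finite.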

From now on, we will only consider nondegenerate complexes, without further mentioning this assumption. This is not restrictive, since our random complexes are nondegenerate with probability one.

4 Random Measures

We fix the following notation. Given a set A with a fixed \(\sigma \)-algebra (omitted in the notation), we denote by

Definition 4.1

Let \({\mathcal {U}}\subset M\) be a finite geometric complex and let

be its decomposition into connected components. We define \(\Theta _{\mathcal {U}}\in {\mathcal {M}}^1({\mathcal {G}}/{\cong })\) as

Observe that the measure \(\Theta _{{\mathcal {U}}}\) just defined is a probability measure. We also endow \({\mathcal {M}}^1({\mathcal {G}}/{\cong })\) with the total variation distance:

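When \({\mathcal {U}}\) is a random geometric complex, \(\Theta _{{\mathcal {U}}}\) is a random variable with values in the metric space \(({\mathcal {M}}^1({\mathcal {G}}/{\cong }),d_{{{\,\mathrm{tv}\,}}})\). In this context, recall the notion of convergence in probability: for random variables \(X_n\), X with values in this metric space,

$$X_n\xrightarrow [n\rightarrow \infty ]{{\mathbb {P}}}X\quad \text {if and only if}\quad \lim _{n\rightarrow \infty }{\mathbb {P}}\bigl (d_{{{\,\mathrm{tv}\,}}}(X_n,X)\ge \varepsilon \bigr )=0\quad \text {for every }\varepsilon >0.$$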

Using the previous notation, we set

Theorem 4.2

There exists a probability measure \(\Theta \in {\mathcal {M}}^1({\mathcal {G}}/{\cong })\) such that

-

(1)

\(\Theta _n\xrightarrow [n\rightarrow \infty ]{{\mathbb {P}}}\Theta \) and \(\Theta _R\xrightarrow [R\rightarrow \infty ]{{\mathbb {P}}}\Theta \),

-

(2)

\({\text {supp}}\Theta ={\mathcal {G}}({\mathbb {R}}^m)/{\cong }\).

We shall see the proof of Theorem 4.2 in Sect. 4.1. As a first corollary we recover the results from [1].

Corollary 4.3

([1, Thms. 1.1 and 1.3]) Consider the forgetful map

We have that

Also, \({\text {supp}}\phi _*\Theta ={\mathcal {G}}({\mathbb {R}}^m)/{\sim }\).

Proof

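We give the proof in the case of the measures \(\phi _*\Theta _n\); the other case is identical. By definition we have \(\phi _*\Theta _n\xrightarrow {{\mathbb {P}}} \phi _*\Theta \) if and only if

$$\lim _{n\rightarrow \infty }{\mathbb {P}}\bigl (d_{{{\,\mathrm{tv}\,}}}(\phi _*\Theta _n,\phi _*\Theta )\ge \varepsilon \bigr )=0\quad \text {for every }\varepsilon >0.$$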

Observe now that

In particular, the inequality \(d_{{{{\,\mathrm{tv}\,}}}}(\phi _*\Theta _n, \phi _*\Theta )\ge \varepsilon \) implies the inequality \(d_{{{{\,\mathrm{tv}\,}}}}(\Theta _n, \Theta )\ge \varepsilon \) and therefore

As a second corollary, since Theorem 4.2 keeps track of fine properties of the geometric complex, we can use other forgetful maps and obtain information on the limit distribution of the component types of each k-skeleton.

Corollary 4.4

For each \(k\in {\mathbb {N}}\), consider the forgetful map from Remark 2.14,

We have that

Also, \({\text {supp}}\varphi ^{(k)}_*\Theta ={\mathcal {G}}^{(k)}({\mathbb {R}}^m)/{\simeq }\).

Proposition 4.5

(Existence of all isotopy types) Let \({\mathcal {U}}\) be a nondegenerate geometric complex in \({\mathbb {R}}^m\) and let \(\alpha >0\). Let \(({\mathcal {U}}_n)_n\) be a sequence of random M-geometric complexes constructed using \(\alpha \). There exist \(n_0\), R, \(c>0\) (depending on \({\mathcal {U}}\) and \(\alpha \) but not on M) such that for every \(p\in M\) and for every \(n\ge n_0\),

Proof

The proof is similar to that of [1, Prop. 1.2]. We sketch it here for the sake of completeness, pointing out the main difference from [1].

Assume that \({\mathcal {U}}\subset B(0, R')\) is constructed using balls of radius \(r=1\):

set \(R=\alpha R'\) and consider the sequence of maps:

For n large enough the map \(\psi _n\) becomes a diffeomorphism, and we denote by \(\varphi _n\) its inverse. [1, Prop. 6.2] implies that there exist \(\varepsilon _0>0\) and \(n_0>0\) such that, if \(\Vert \tilde{y}_k-y_k\Vert \le \varepsilon _0\) for every \(k=1, \ldots , \ell \), then for \(n\ge n_0\) the two complexes \(\bigcup _{k=1}^\ell {\hat{B}}(\varphi _n({\tilde{y}}_k), \alpha n^{-1/m})\) and \({\mathcal {U}}\) are isomorphic. In fact, since \({\mathcal {U}}\) is nondegenerate and belonging to a given rigid isotopy class is an open condition, after possibly shrinking \(\varepsilon _0>0\) we can make sure that the complex \(\bigcup _{k=1}^\ell {\hat{B}}(\varphi _n({\tilde{y}}_k), \alpha n^{-1/m})\) belongs to the same rigid isotopy class as \({\mathcal {U}}\).

One then proceeds by considering the event

Observe that

and in particular, in order to get the conclusion, it is enough to estimate from below the probability of \(E_n\). This is done in the last lines of the proof of [1, Prop. 1.2]. \(\square \)

Example 4.6

Let \(C_{137}\) be the cycle with 137 vertices and 137 edges. By Proposition 4.5 there exist \(n_0\), R, and \(c>0\) (depending on \(C_{137}\) and \(\alpha \) but not on M) such that for every \(p\in M\) and for every \(n\ge n_0\),
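As a quick sanity check that \(C_{137}\) does arise as a geometric graph, here is a minimal Python sketch under assumptions of our own (not taken from the text): m=2, two vertices are adjacent exactly when the closed balls of radius r around them meet (distance at most 2r), and the points are placed at the vertices of a regular 137-gon. Choosing 2r strictly between the side length and the distance between vertices two steps apart yields exactly the cycle. The helper names are hypothetical.

```python
import itertools
import math

def closed_ball_graph(points, r):
    """Edges (i, j), i < j, such that the closed balls of radius r around
    points[i] and points[j] meet, i.e. their distance is at most 2r."""
    return {(i, j) for i, j in itertools.combinations(range(len(points)), 2)
            if math.dist(points[i], points[j]) <= 2 * r}

def regular_polygon(n, rho=1.0):
    """Vertices of a regular n-gon inscribed in the circle of radius rho."""
    return [(rho * math.cos(2 * math.pi * k / n), rho * math.sin(2 * math.pi * k / n))
            for k in range(n)]

n = 137
pts = regular_polygon(n)
side = 2 * math.sin(math.pi / n)        # distance between consecutive vertices
second = 2 * math.sin(2 * math.pi / n)  # distance between vertices two steps apart
r = (side + second) / 4                 # hence side < 2r < second
edges = closed_ball_graph(pts, r)
cycle = {tuple(sorted((k, (k + 1) % n))) for k in range(n)}
print(edges == cycle)  # True
```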

4.1 Proof of Theorem 4.2

We split the statement of Theorem 4.2 into two parts. The first part, Theorem 4.8, states that the random measure \(\Theta _R\) converges in probability to a deterministic measure \(\Theta \in {\mathcal {M}}^1({\mathcal {G}}/{\cong })\) supported on the set \({\mathcal {G}}({\mathbb {R}}^m)/{\cong }\). The second part, Theorem 4.12, states that the random measure \(\Theta _n\) also converges in probability to \(\Theta \).

4.1.1 The Local Model

Proposition 4.7

For every \(w\in {\mathcal {G}}({\mathbb {R}}^m)/{\cong }\) there exists a constant \(c_w>0\) such that the random variable

converges to \(c_w\) in \(L^1\) and almost surely as \(R\rightarrow \infty \).

Proof

Following the proof of [1, Prop. 2.1] with \({\mathcal {N}}({\mathcal {P}}_R,\tau ,w)\) instead of \({\mathcal {N}}({\mathcal {P}}_R,\tau ,\gamma )\) and with the application of Theorem 2.16 instead of [1, Thm. 6.6], one proves that there exists a constant \(c_w\) such that

Since \({\mathcal {P}}_R\subset B(0,R)\), this implies that

We now have to prove that \(c_w>0\). Since \(w\in {\mathcal {G}}({\mathbb {R}}^m)/{\cong }\), given \({\mathcal {U}}\in w\) there exist \(\beta >0\) and \(y_1,\ldots ,y_n\in {\mathbb {R}}^m\) such that

Let \(R_1\) be such that \({\mathcal {U}}\subset B(0,R_1)\) and choose r such that \(r\beta =\alpha \), where \(\alpha \) is the constant that we used for constructing the random complex \({\mathcal {P}}\). Rescaling by the factor r, we obtain a copy of \({\mathcal {U}}\) contained in \(B(0,rR_1)\) and constructed with balls of radius \(\alpha \). Now, since \({\mathcal {U}}\) is nondegenerate, there exists \(\varepsilon >0\) such that, if for every i we have \(\Vert y_i-y'_i\Vert <\varepsilon \), then the complex

is isotopic to \({\mathcal {U}}\). Take \(K_R=k\cdot {{\,\mathrm{vol}\,}}B(0, R)\) disjoint balls \(\{B(y_j,rR_1)\}_{j=1,\ldots ,K_R}\) inside B(0, R), for some constant \(k>0\). Then

Therefore

since the balls are disjoint and the random variables \({\mathcal {N}}({\mathcal {P}}_R,B(y_j,rR_1);w)\) are identically distributed. Now,

and

Therefore, \(c_w>0\). \(\square \)

Proposition 4.7 allows us to deduce the following theorem.

Theorem 4.8

There exists \(\Theta \in {\mathcal {M}}^1({\mathcal {G}}/{\cong })\) such that

Also, \({\text {supp}}\Theta ={\mathcal {G}}({\mathbb {R}}^m)/{\cong }\).

Proof

The proof is the same as that of [1, Thm. 1.3], replacing the homotopy type counting function with the isotopy type counting function. \(\square \)

4.1.2 Riemannian Case

Theorem 4.9

Let \(p\in M\). For every \(\delta >0\) and for \(R>0\) sufficiently large there exists \(n_0\) such that for every \(w\in {\mathcal {G}}({\mathbb {R}}^m)/{\cong }\) and for \(n\ge n_0\),

Proof

The proof is the same as that of [1, Thm. 3.1], with the following differences:

- At point (3), instead of considering the homotopy equivalence between the unions of the balls, we consider the isotopy between their k-skeleta. This is allowed because, at the end of the proof of (3), it is proven that the two covers have the same combinatorics.

- Once point (1), point (2), and the modified point (3) are established, the k-skeleta of the two unions of balls are isotopic, and so are the k-skeleta of the unions of all the components entirely contained in B(0, R) (respectively \({\hat{B}}(p,Rn^{-1/m})\)). In particular, with probability at least \(1-\delta \), the number of components of a given isotopy class w is the same for both sets.\(\square \)

Corollary 4.10

For each \(w\in {\mathcal {G}}({\mathbb {R}}^m)/{\cong }\), \(\alpha >0\), \(x\in M\), and \(\varepsilon >0\), we have

Proof

The proof is the same as that of [1, Cor. 3.2], using Theorem 4.9 and Proposition 4.7 instead of [1, Thm. 3.1] and [1, Prop. 2.1]. \(\square \)

Theorem 4.11

For every \(w\in {\mathcal {G}}({\mathbb {R}}^m)/{\cong }\), the random variable

converges in \(L^1\) to \(c_w\cdot {{\,\mathrm{vol}\,}}M\), where \(c_w\) is the constant appearing in Proposition 4.7. In particular, this implies that the random variable

i.e., when we consider all components with no restriction on their type, converges in \(L^1\) to c, where \(c=\sum _{w\in {\mathcal {G}}({\mathbb {R}}^m)/{\cong }}c_w>0\).

Proof

The proof is the same as that of [1, Thm. 4.1], using Theorem 2.16 instead of [1, Thm. 6.7] and Corollary 4.10 instead of [1, Cor. 3.2]. \(\square \)

Theorem 4.12

The measure \(\Theta \in {\mathcal {M}}^1({\mathcal {G}}/{\cong })\) appearing in Theorem 4.8 is such that

Proof

The proof is the same as that of [1, Thm. 1.1], with the following differences:

5 Geometric Graphs

We specialize the previous discussion to the case \(k=1\), and consider

Remark 5.1

The set of M-geometric graphs defined using closed balls equals the set of M-geometric graphs defined using open balls. To see this, assume that a geometric graph \(\Gamma =(V(\Gamma ),E(\Gamma ))\) is defined using closed balls of radius r. Then, for each pair of distinct vertices \((p_i,p_j)\),

Now, choose \(\varepsilon >0\) small enough that, for each pair of distinct vertices \((p_i,p_j)\),

Therefore, \(\Gamma \) can be constructed as an M-geometric graph using open balls of radius \(r+\varepsilon \). The converse implication is analogous.
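The argument of Remark 5.1 can be checked numerically. The Python sketch below is a minimal planar illustration with hypothetical helper names: it builds the graph from closed balls (adjacent when the distance is at most 2r) and from open balls (distance strictly less than 2r), choosing \(\varepsilon \) as a quarter of the smallest gap \(d-2r\) over non-adjacent pairs. Any positive \(\varepsilon \) up to half of that gap would do; the quarter only leaves room for rounding errors.

```python
import itertools
import math
import random

def closed_graph(points, r):
    """i ~ j iff the closed balls of radius r meet, i.e. dist <= 2r."""
    return {(i, j) for i, j in itertools.combinations(range(len(points)), 2)
            if math.dist(points[i], points[j]) <= 2 * r}

def open_graph(points, r):
    """i ~ j iff the open balls of radius r meet, i.e. dist < 2r."""
    return {(i, j) for i, j in itertools.combinations(range(len(points)), 2)
            if math.dist(points[i], points[j]) < 2 * r}

def open_radius_for(points, r):
    """Radius r + eps such that open balls of radius r + eps give the same graph
    as closed balls of radius r (eps = a quarter of the smallest gap d - 2r)."""
    gaps = [math.dist(p, q) - 2 * r for p, q in itertools.combinations(points, 2)
            if math.dist(p, q) > 2 * r]
    eps = min(gaps) / 4 if gaps else 1.0  # any eps > 0 works if there are no gaps
    return r + eps

random.seed(1)
pts = [(random.random(), random.random()) for _ in range(50)]
r = 0.08
print(closed_graph(pts, r) == open_graph(pts, open_radius_for(pts, r)))  # True
```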

In the case \(M={\mathbb {R}}^m\), the problem of describing the set \({\mathcal {G}}^{(1)}({\mathbb {R}}^m)\) is equivalent to asking which graphs are realizable as \({\mathbb {R}}^m\)-geometric graphs in a given dimension m. There is a vast literature on this problem: geometric graphs realizable in dimension m are commonly called m-sphere graphs, and the minimal dimension m such that a given graph is an m-sphere graph is called its sphericity. In [35] it is proven that every graph has finite sphericity; in [32] the authors prove that the problem of deciding, given a graph \(\Gamma \), whether \(\Gamma \) is an m-sphere graph is NP-hard for all \(m>1\). We can also observe that, for each \(m>0\), there are graphs that are not m-sphere graphs. To see this, consider the kissing number k(m) in dimension m, defined as the maximal number of non-overlapping unit spheres that can be arranged so that each of them touches a common unit sphere. Consider the star graph with a central vertex connected to n external vertices, where \(n>k(m)\). A realization of this graph in dimension m would require a central sphere touching n spheres that do not touch each other. Since \(n>k(m)\), this is not possible.
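To make the notion of an m-sphere graph concrete, here is a Python sketch for m=2, assuming (as in the rest of this section) that two vertices are adjacent exactly when the closed balls of radius r around them intersect, i.e. their distance is at most 2r. It tests whether one specific placement of points realizes a star graph. Five equally spaced leaves realize \(S_5\), while six equally spaced leaves at distance at most 2r from the centre are also at distance at most 2r from their neighbours, so extra leaf-leaf edges appear. The failure of this particular placement does not by itself prove that \(S_6\) is not a 2-sphere graph. The function names are hypothetical.

```python
import itertools
import math

def geometric_graph(points, r):
    """Edges (i, j), i < j, such that the closed balls B(points[i], r) and
    B(points[j], r) intersect, i.e. the points are at distance <= 2r."""
    return {(i, j) for i, j in itertools.combinations(range(len(points)), 2)
            if math.dist(points[i], points[j]) <= 2 * r}

def star_configuration(n, rho):
    """Centre at the origin (vertex 0) and n leaves (vertices 1..n)
    equally spaced on the circle of radius rho."""
    leaves = [(rho * math.cos(2 * math.pi * k / n), rho * math.sin(2 * math.pi * k / n))
              for k in range(n)]
    return [(0.0, 0.0)] + leaves

def star_edges(n):
    """Edge set of the star graph S_n: the centre 0 joined to the leaves 1..n."""
    return {(0, k) for k in range(1, n + 1)}

r = 1.0
# Five leaves at distance 1.9 <= 2r from the centre; neighbouring leaves are
# 2 * 1.9 * sin(36 deg), approx. 2.23 > 2r, apart: this placement realizes S_5.
print(geometric_graph(star_configuration(5, 1.9), r) == star_edges(5))  # True
# Six leaves: neighbouring leaves are 1.9 <= 2r apart, so extra edges appear.
print(geometric_graph(star_configuration(6, 1.9), r) == star_edges(6))  # False
```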

Example 5.2

In dimension 2, the kissing number is 6, as shown in Fig. 3. Therefore, any star graph \(S_n\) on \(n+1\) vertices, with \(n>6\), is not realizable in dimension 2 as a sphere graph.

In the particular case \(m=1\), 1-sphere graphs are called indifference graphs or unit interval graphs, and there are many characterizations of such graphs [26, 28, 34, 36, 45, 52]. A classical characterization, due to Roberts and Wegner [28, 45, 52], describes unit interval graphs in terms of forbidden induced subgraphs; it is recalled in Theorem 5.3.

Theorem 5.3

(Roberts and Wegner) A graph is a unit interval graph, i.e., it is an element of \({\mathcal {G}}^{(1)}({\mathbb {R}})\), if and only if it contains neither a cycle of length at least four nor any of the graphs shown in Fig. 4 as an induced subgraph.

As a consequence, we get the following corollary.

Corollary 5.4

For \(m=1\), the support of the measure \(\varphi ^{(1)}_*\Theta \) defined in Corollary 4.4 consists of all graphs that contain neither a cycle of length at least four nor any of the graphs shown in Fig. 4 as an induced subgraph.

6 Normalized Laplacian of a Graph and Its Spectrum

We now fix a graph \(\Gamma \) on n vertices \(v_1,\ldots ,v_n\) and recall the definition of the (symmetric) normalized Laplace matrix, together with other common matrices associated to graphs. We shall then define the spectrum and the spectral measure associated to these matrices, and show some properties.

Definition 6.1

(Matrices associated to a graph) Let A be the adjacency matrix of \(\Gamma \); let \(D:={{\,\mathrm{diag}\,}}{(\deg v_1,\ldots ,\deg v_n)}\) be the degree matrix; let \(L:=D-A\) be the non-normalized Laplacian matrix and let \({\hat{L}}:=I_n-D^{-1/2}AD^{-1/2}\) be the symmetric normalized Laplacian matrix.
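For readers who want to experiment, the following Python/NumPy sketch builds the four matrices of Definition 6.1 from an edge list, with vertices labelled 0 to n-1 (the function name is hypothetical). It assumes that the graph has no isolated vertices, so that \(D^{-1/2}\) is defined, and it also checks the identity \({\hat{L}}=D^{-1/2}LD^{-1/2}\), which follows from \(L=D-A\).

```python
import numpy as np

def graph_matrices(n, edges):
    """Return A, D, L = D - A and L_hat = I - D^{-1/2} A D^{-1/2} for a simple
    graph on vertices 0..n-1. Raises if some vertex is isolated."""
    A = np.zeros((n, n))
    for i, j in edges:
        A[i, j] = A[j, i] = 1.0
    deg = A.sum(axis=1)
    if np.any(deg == 0):
        raise ValueError("isolated vertex: D^{-1/2} is undefined")
    D = np.diag(deg)
    L = D - A
    D_inv_sqrt = np.diag(1.0 / np.sqrt(deg))
    L_hat = np.eye(n) - D_inv_sqrt @ A @ D_inv_sqrt
    return A, D, L, L_hat

# Path on 3 vertices: 0 - 1 - 2.
A, D, L, L_hat = graph_matrices(3, [(0, 1), (1, 2)])
D_inv_sqrt = np.diag(1.0 / np.sqrt(np.diag(D)))
print(np.allclose(L_hat, D_inv_sqrt @ L @ D_inv_sqrt))  # True
print(np.linalg.eigvalsh(L_hat))  # approximately [0, 1, 2]
```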

Definition 6.2

(Spectrum of a matrix) Given \(Q\in {{\,\mathrm{Sym}\,}}(n,{\mathbb {R}})\) let \({{\,\mathrm{spec}\,}}(Q)\) be the spectrum of Q, i.e., the collection of its eigenvalues repeated with multiplicity,

We define the empirical spectral measure of Q as

Definition 6.3

(Spectrum of a graph) We define spectrum of \(\Gamma \), \({{\,\mathrm{spec}\,}}(\Gamma )\), as the spectrum of \({\hat{L}}\) and we write it as \(\lambda _1(\Gamma )\le \ldots \le \lambda _n(\Gamma )\). We also define

and the spectral measure of \(\Gamma \) as

Recall that, for every \(i=1,\ldots ,n\), \(\lambda _i(\Gamma )\in [0,2]\) [8, Eq. (1.1) and Lem. 1.7]. In particular, this implies that \(s(\Gamma )\in {\mathcal {M}}([0,2])\) and \(\mu _\Gamma \in {\mathcal {M}}^1([0,2])\).
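Assuming that the spectral measure of Definition 6.3 is the normalized counting measure \(\mu _\Gamma =\frac{1}{n}\sum _{i=1}^n\delta _{\lambda _i(\Gamma )}\) (an assumption of this sketch), integrating a function against \(\mu _\Gamma \) amounts to averaging it over the spectrum. The Python sketch below, with hypothetical function names and for graphs without isolated vertices, illustrates this on the complete graph \(K_4\), whose spectrum is 0 with multiplicity one and 4/3 with multiplicity three: the spectrum lies in [0, 2], and the mean eigenvalue equals \({\text {tr}}\,{\hat{L}}/n=1\).

```python
import numpy as np

def normalized_laplacian_spectrum(n, edges):
    """Eigenvalues, in increasing order, of I - D^{-1/2} A D^{-1/2}
    (graph on vertices 0..n-1, assumed to have no isolated vertices)."""
    A = np.zeros((n, n))
    for i, j in edges:
        A[i, j] = A[j, i] = 1.0
    d_inv_sqrt = 1.0 / np.sqrt(A.sum(axis=1))
    L_hat = np.eye(n) - d_inv_sqrt[:, None] * A * d_inv_sqrt[None, :]
    return np.linalg.eigvalsh(L_hat)

def integrate_spectral_measure(n, edges, f):
    """Integral of f against (1/n) * sum_i delta_{lambda_i}."""
    return float(np.mean(f(normalized_laplacian_spectrum(n, edges))))

K4 = [(i, j) for i in range(4) for j in range(i + 1, 4)]
lam = normalized_laplacian_spectrum(4, K4)
print(lam.min() >= -1e-12 and lam.max() <= 2 + 1e-12)  # True: spectrum in [0, 2]
print(integrate_spectral_measure(4, K4, lambda x: x))   # approximately 1.0
```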

In order to state the next theorem, let us denote by \(C^{0}_c({\mathbb {R}},{\mathbb {R}})\) the space of continuous functions \(f:{\mathbb {R}}\rightarrow {\mathbb {R}}\) with compact support.

Theorem 6.4

Let \((\Gamma _{1,n})_n\) and \((\Gamma _{2,n})_n\) be two sequences of graphs such that, for every n, \(\Gamma _{1,n}\) and \(\Gamma _{2,n}\) are graphs on the same n nodes that differ by at most c edges, where c does not depend on n. Denote by \(\mu _{1,n}\) and \(\mu _{2,n}\) the spectral measures associated to the same one of the matrices \(A,D,L,{\hat{L}}\). Then

where \(\overset{*}{\rightharpoonup }\) denotes weak-* convergence, i.e., for each \(f\in C^{0}_c({\mathbb {R}},{\mathbb {R}})\)

We shall prove Theorem 6.4 in Sect. 6.1.

Remark 6.5

In the case of \({\hat{L}}\), the convergence even holds in total variation distance for the “connected sum” of two complete graphs, but not for paths, as we shall see in Sect. 6.2.

Remark 6.6

[25, Thm. 2.8] states that, if two families \((\Gamma _{1,n})_n\) and \((\Gamma _{2, n})_n\) differ by at most c edges and their corresponding spectral measures have weak limits, then they belong to the same spectral class (the notion of spectral class of a family of graphs is recalled in Remark 1.3). In this sense, Theorem 6.4 can be considered an analogue of [25, Thm. 2.8]: the difference of the spectral measures of two families of graphs \((\Gamma _{1,n})_n\) and \((\Gamma _{2, n})_n\) differing by at most a finite number c of edges goes to zero weakly, without assuming that the corresponding spectral measures have weak limits.

6.1 Proof of Theorem 6.4

6.1.1 Preliminaries

Given \(Q\in {{\,\mathrm{Sym}\,}}(n,{\mathbb {R}})\), we define the Schatten 1-norm (or trace norm) of Q as

The Wielandt–Hoffman inequality [49, Exercise 1.3.6] holds:

We will also need the Frobenius norm, defined for a symmetric matrix Q by

The Frobenius norm and the Schatten norm are orthogonally invariant. Moreover, the square of the Frobenius norm equals the sum of the squares of the entries of the matrix: \(\Vert Q\Vert _F^2=\sum _{ij}Q_{ij}^2\).
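The following NumPy sketch (hypothetical helper names, random test matrices) illustrates the norms above. It checks the Wielandt–Hoffman-type bound in the form \(\sum _i|\lambda _i(Q_1)-\lambda _i(Q_2)|\le \Vert Q_1-Q_2\Vert _{S^1}\), with both spectra listed in increasing order (our reading of (6.1)); the identity \(\Vert Q\Vert _F^2=\sum _i\lambda _i(Q)^2\) coming from orthogonal invariance; and the Cauchy–Schwarz bound \(\Vert Q\Vert _{S^1}\le \sqrt{{\text {rank}}\,Q}\,\Vert Q\Vert _F\), which is convenient for matrices with few nonzero eigenvalues, such as those in Lemma 6.8.

```python
import numpy as np

def schatten1(Q):
    """Schatten 1-norm (trace norm) of a symmetric matrix: sum of |eigenvalues|."""
    return float(np.abs(np.linalg.eigvalsh(Q)).sum())

def frobenius(Q):
    """Frobenius norm: square root of the sum of the squared entries."""
    return float(np.sqrt((Q ** 2).sum()))

def random_symmetric(rng, n):
    """A random symmetric n x n matrix with Gaussian entries."""
    X = rng.standard_normal((n, n))
    return (X + X.T) / 2

rng = np.random.default_rng(0)
Q1, Q2 = random_symmetric(rng, 8), random_symmetric(rng, 8)

# eigvalsh returns eigenvalues in increasing order, as required in the bound.
lhs = float(np.abs(np.linalg.eigvalsh(Q1) - np.linalg.eigvalsh(Q2)).sum())
print(lhs <= schatten1(Q1 - Q2) + 1e-12)  # True

# Orthogonal invariance: ||Q||_F^2 equals the sum of the squared eigenvalues.
print(np.isclose(frobenius(Q1) ** 2, (np.linalg.eigvalsh(Q1) ** 2).sum()))  # True

# Cauchy-Schwarz on the nonzero eigenvalues: ||Q||_S1 <= sqrt(rank Q) * ||Q||_F.
v = rng.standard_normal((8, 2))
low_rank = v @ v.T  # symmetric, rank 2
print(schatten1(low_rank) <= np.sqrt(2) * frobenius(low_rank) + 1e-12)  # True
```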

Proposition 6.7

Let \(Q_1,Q_2\in {{\,\mathrm{Sym}\,}}(n,{\mathbb {R}})\) be such that

Then, for each \(f\in C^0_c({\mathbb {R}},{\mathbb {R}})\) and for each \(\varepsilon >0\), there exists \(\delta >0\) such that

Proof

Denote by \(\{\lambda _i^{(1)}\}_{i=1}^n\) and \(\{\lambda _i^{(2)}\}_{i=1}^n\) the eigenvalues of \(Q_1\) and \(Q_2\) respectively. Then

therefore

Now, since \(f\in C^0_c({\mathbb {R}},{\mathbb {R}})\), f is uniformly continuous and, given \(\varepsilon >0\), there exists \(\delta =\delta (f,\varepsilon )>0\) such that \(|\lambda _1-\lambda _2|\le \delta \) implies \(|f(\lambda _1)-f(\lambda _2)|\le \varepsilon \). Therefore, since by (6.1) and by hypothesis we have

it follows that

Therefore,
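The role of \(\delta \) in this argument can be made quantitative. Assuming (our reading, not a quotation of the text) that \(\mu _Q=\frac{1}{n}\sum _{i=1}^n\delta _{\lambda _i(Q)}\) and that (6.1) reads \(\sum _{i=1}^n|\lambda _i^{(1)}-\lambda _i^{(2)}|\le \Vert Q_1-Q_2\Vert _{S^1}\), at most \(\Vert Q_1-Q_2\Vert _{S^1}/\delta \) indices i satisfy \(|\lambda _i^{(1)}-\lambda _i^{(2)}|>\delta \), while on the remaining indices uniform continuity gives \(|f(\lambda _i^{(1)})-f(\lambda _i^{(2)})|\le \varepsilon \). Hence

$$\begin{aligned} \Bigl|\int f\,\mathrm {d}\mu _{Q_1}-\int f\,\mathrm {d}\mu _{Q_2}\Bigr| &\le \frac{1}{n}\sum _{i=1}^n\bigl|f(\lambda _i^{(1)})-f(\lambda _i^{(2)})\bigr|\\ &\le \varepsilon +\frac{2\sup |f|}{n}\,\#\bigl\{i:|\lambda _i^{(1)}-\lambda _i^{(2)}|>\delta \bigr\}\\ &\le \varepsilon +\frac{2\sup |f|}{n\delta }\,\Vert Q_1-Q_2\Vert _{S^1}. \end{aligned}$$

With the bounds of Lemma 6.8, the last term is of order \(1/n\) for A, D, L and of order \(1/\sqrt{n}\) for \({\hat{L}}\), so it vanishes as \(n\rightarrow \infty \).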

6.1.2 Applications to Graphs

Lemma 6.8

Let \(\Gamma _1,\Gamma _2\) be two graphs with \(V(\Gamma _1)=V(\Gamma _2)\) that differ by at most C edges. Then,

Proof

Observe that any of the matrices

consists of all zeros except for at most 4C entries, all of which are bounded in absolute value by a constant (1 for \(\Delta _1\) and C for \(\Delta _2\) and \(\Delta _3\)). Therefore, each \(\Delta _i\in {{\,\mathrm{Sym}\,}}(n,{\mathbb {R}})\), \(i=1,2,3\), has rank at most 4C, so all but at most 4C of its eigenvalues are zero. It follows that, for \(i=1,2,3\),

where

Similarly, \(\Delta _4:={\hat{L}}_1-{\hat{L}}_2\) consists of all zeros except for at most \(2C(n-1)\) entries, all of which are bounded by 1, and it has rank at most 4C. Therefore,
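The bounds used in the proof of Theorem 6.4, namely \(\Vert \Delta _i\Vert _{S^1}\le c_i\) for \(i=1,2,3\) and \(\Vert \Delta _4\Vert _{S^1}\le c_4\sqrt{n-1}\) with \(c_1=4C\), \(c_2=c_3=4C^2\), \(c_4=2C\sqrt{2}\), can be checked numerically on a small example. In the Python sketch below we take \(\Delta _1,\Delta _2,\Delta _3\) to be the differences of the adjacency, degree and Laplacian matrices (our assumption); \(\Gamma _1\) is the cycle on 30 vertices and \(\Gamma _2\) is obtained by adding C=3 chords (a hypothetical test case, with hypothetical helper names). The observed norms are well within the bounds, which are not claimed to be sharp.

```python
import numpy as np

def graph_matrices(n, edges):
    """A, D, L = D - A and L_hat = I - D^{-1/2} A D^{-1/2} (no isolated vertices)."""
    A = np.zeros((n, n))
    for i, j in edges:
        A[i, j] = A[j, i] = 1.0
    deg = A.sum(axis=1)
    D = np.diag(deg)
    s = 1.0 / np.sqrt(deg)
    return A, D, D - A, np.eye(n) - s[:, None] * A * s[None, :]

def schatten1(Q):
    """Sum of the absolute values of the eigenvalues of a symmetric matrix."""
    return float(np.abs(np.linalg.eigvalsh(Q)).sum())

n, C = 30, 3
cycle = [(k, (k + 1) % n) for k in range(n)]
chords = [(0, 10), (5, 20), (12, 25)]  # the C edges by which the graphs differ
M1 = graph_matrices(n, cycle)
M2 = graph_matrices(n, cycle + chords)

bounds = [4 * C, 4 * C ** 2, 4 * C ** 2, 2 * C * np.sqrt(2) * np.sqrt(n - 1)]
for name, Q1, Q2, b in zip(["A", "D", "L", "L_hat"], M1, M2, bounds):
    norm = schatten1(Q1 - Q2)
    print(f"{name}: ||Delta||_S1 = {norm:.3f}, bound = {b:.3f}")
```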

As a corollary, we can prove Theorem 6.4.

Proof of Theorem 6.4

We prove that, for each \(f\in C^{0}_c({\mathbb {R}},{\mathbb {R}})\) and for each \(\varepsilon >0\),

Let \(C:=c\) be the number of edges in the statement, and set \(c_1:=4C\), \(c_2=c_3:=4C^2\) and \(c_4:=2C\sqrt{2}\). By Lemma 6.8, we have \(\Vert \Delta _i\Vert _{S^1}\le c_i\) for each \(i=1,2,3\). By Proposition 6.7, there exists \(\delta >0\) such that

Therefore,

Similarly, by Lemma 6.8 we have that \(\Vert \Delta _4\Vert _{S^1}\le c_4\sqrt{n-1}\). By Proposition 6.7, there exists \(\delta >0\) such that

Therefore,

6.2 Strong Convergence for Complete Graphs

Lemma 6.9

Given \(N\in {\mathbb {N}}^+\), let \(K_N\) and \(K'_N\) be two complete graphs on N nodes. Let \(K_N\sqcup K'_N\) be their disjoint union and let \(\Gamma _N:=K_N\cup _{\text {c\,edges}}K'_N\) be their union together with c edges \((v_i,v'_i)\) where \(v_i\in K_N\) and \(v'_i\in K'_N\), for \(i=1,\ldots ,c\). Let also \(\mu _{K_N\sqcup K'_N}\) and \(\mu _{\Gamma _N}\) be the spectral measures of these two graphs. Then,

for some \(a_i\in (0,2)\).

Remark 6.10

In order to prove Lemma 6.9, we make the following observation. The spectrum of the symmetric normalized Laplacian matrix \({\hat{L}}=I_n-D^{-1/2}AD^{-1/2}\) equals the spectrum of the random walk normalized Laplacian matrix \(\tilde{L}:=I_n-D^{-1}A\), since the two matrices are similar: \(\tilde{L}=D^{-1/2}{\hat{L}}D^{1/2}\). Moreover, for a graph with vertex set V, \(\tilde{L}\) can be seen as an operator from the set \(\{f:V\rightarrow {\mathbb {R}}\}\) to itself. We shall work with this operator to prove Lemma 6.9 and, for a graph \(\Gamma \), we shall use the simplified notation \(L^{\Gamma }\) for the random walk normalized Laplace operator of \(\Gamma \).
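The similarity \(\tilde{L}=D^{-1/2}{\hat{L}}D^{1/2}\) behind Remark 6.10 is easy to check numerically. The NumPy sketch below does so for a small hypothetical example, a triangle with a pendant vertex; any graph without isolated vertices would do.

```python
import numpy as np

# Hypothetical example: triangle 0-1-2 with a pendant vertex 3 attached to 2.
n = 4
A = np.zeros((n, n))
for i, j in [(0, 1), (1, 2), (0, 2), (2, 3)]:
    A[i, j] = A[j, i] = 1.0
deg = A.sum(axis=1)
D_half, D_minus_half = np.diag(deg ** 0.5), np.diag(deg ** -0.5)

L_sym = np.eye(n) - D_minus_half @ A @ D_minus_half  # symmetric normalized Laplacian
L_rw = np.eye(n) - np.diag(1.0 / deg) @ A             # random walk normalized Laplacian

# L_rw = D^{-1/2} L_sym D^{1/2}, so the two matrices are similar.
print(np.allclose(L_rw, D_minus_half @ L_sym @ D_half))  # True
# Hence they have the same (real) eigenvalues.
print(np.allclose(np.linalg.eigvalsh(L_sym), np.sort(np.linalg.eigvals(L_rw).real)))  # True
```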

Proof of Lemma 6.9

Since the spectrum of \(K_N\sqcup K'_N\) is given by 0 with multiplicity 2 and \({N}/({N-1})\) with multiplicity \(2(N-1)\), we have that

In order to prove the second part of the lemma, we shall find \(2(N-1-c)\) functions on \(V(\Gamma _N)\) that are eigenfunctions for the normalized Laplace operator with eigenvalue \({N}/({N-1})\) and are orthogonal to each other. In particular, by the symmetry of \(\Gamma _N\), it suffices to find \(N-1-c\) such functions that are 0 on the vertices of \(K'_N\).

Observe that \(K_N\setminus \{v_1,\ldots ,v_c\}\) is a complete graph \(K_{N-c}\), whose largest eigenvalue \({(N-c)}/({N-c-1})\) has an eigenspace of dimension \(N-1-c\); let \(f_1,\ldots ,f_{N-1-c}\) be an orthogonal basis of this eigenspace. These functions are orthogonal to each other and orthogonal to the constants, therefore

for each \(i,j\in \{1,\ldots ,N-1-c\}\). Now, for \(i\in \{1,\ldots ,N-1-c\}\), let \(\tilde{f}_i\) be the function on \(V(K_N)\) that is equal to zero on \(v_1,\ldots ,v_c\) and is equal to \(f_i\) otherwise. Then, \(\tilde{f}_1,\ldots ,\tilde{f}_{N-1-c}\) are orthogonal to each other and orthogonal to the constants because

Since for complete graphs any function that is orthogonal to the constants is an eigenfunction for \({N}/({N-1})\), we have that \(\tilde{f}_1,\ldots ,\tilde{f}_{N-1-c}\) are (pairwise orthogonal) eigenfunctions for \({N}/({N-1})\).

Analogously, for \(i\in \{1,\ldots ,N-1-c\}\), let now \({\hat{f}}_i\) be the function on \(V(\Gamma _N)\) that is equal to zero on \(K_N'\cup \{v_1,\ldots ,v_c\}\) and is equal to \(f_i\) otherwise. These functions are also orthogonal to each other and orthogonal to the constants. Now, for each i and for each \(v\in \Gamma _N\) with \({\hat{f}}_i(v)\ne 0\), we have that \(v\in K_{N-c}\), therefore

This proves that the functions \({\hat{f}}_i\) are \(N-1-c\) orthogonal eigenfunctions of the Laplace operator in \(\Gamma _N\) for the eigenvalue \({N}/({N-1})\). Since they are all 0 on \(K'_N\), by symmetry we can also get \(N-1-c\) eigenfunctions for \({N}/({N-1})\) on \(\Gamma _N\) that are 0 on \(K_N\) and therefore are orthogonal to the first \(N-1-c\) functions. This implies that the multiplicity of \({N}/({N-1})\) for \(\Gamma _N\) is at least \(2(N-1-c)\). Therefore,

for some \(a_i\in (0,2)\). \(\square \)

Corollary 6.11

The total variation distance between the probability measures \(\mu _{K_N\sqcup K'_N}\) and \(\mu _{\Gamma _N}\) defined in the previous lemma is

In particular, if \(c=o(N)\), the total variation distance tends to zero for \(N\rightarrow \infty \).
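The following NumPy sketch illustrates Lemma 6.9 and Corollary 6.11 numerically, with hypothetical function names and with the choice \(v_i=i\), \(v'_i=N+i\). It counts the eigenvalues of \({\hat{L}}\) for \(\Gamma _N\) equal to \(N/(N-1)\) up to a tolerance, and computes the total variation distance with the convention \(\sup _B|\mu (B)-\nu (B)|\), i.e., one minus the total overlap of the atoms (other conventions differ by a factor 2). For fixed c, the computed distance tends to zero as N grows.

```python
import numpy as np

def cliques_joined(N, c):
    """Adjacency of K_N (vertices 0..N-1) and K'_N (N..2N-1) joined by the c
    edges (i, N + i); c = 0 gives the disjoint union."""
    A = np.zeros((2 * N, 2 * N))
    A[:N, :N] = 1.0
    A[N:, N:] = 1.0
    np.fill_diagonal(A, 0.0)
    for i in range(c):
        A[i, N + i] = A[N + i, i] = 1.0
    return A

def normalized_spectrum(A):
    """Eigenvalues of I - D^{-1/2} A D^{-1/2} (no isolated vertices)."""
    s = 1.0 / np.sqrt(A.sum(axis=1))
    return np.linalg.eigvalsh(np.eye(len(A)) - s[:, None] * A * s[None, :])

def atoms(eigs, tol=1e-8):
    """Atoms [value, mass] of (1/n) sum_i delta_{eigs[i]}, merging values within tol."""
    out = []
    for x in np.sort(eigs):
        if out and x - out[-1][0] <= tol:
            out[-1][1] += 1.0 / len(eigs)
        else:
            out.append([x, 1.0 / len(eigs)])
    return out

def tv_distance(eigs1, eigs2, tol=1e-8):
    """sup_B |mu_1(B) - mu_2(B)| = 1 - sum over common atoms of min(masses)."""
    a2 = atoms(eigs2, tol)
    overlap = sum(min(m1, m2) for x1, m1 in atoms(eigs1, tol)
                  for x2, m2 in a2 if abs(x1 - x2) <= tol)
    return 1.0 - overlap

c = 3
for N in (10, 20, 40):
    spec_union = normalized_spectrum(cliques_joined(N, 0))
    spec_joined = normalized_spectrum(cliques_joined(N, c))
    mult = int(np.sum(np.abs(spec_joined - N / (N - 1)) < 1e-8))
    print(N, mult >= 2 * (N - 1 - c), round(tv_distance(spec_union, spec_joined), 4))
```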

Example 6.12

The conclusion of the previous corollary does not hold for arbitrary graphs. As a counterexample, take two copies of the path on N vertices, \(P_N\) and \(P'_N\). Joining an endpoint of \(P_N\) to an endpoint of \(P'_N\) by one external edge yields the path on 2N vertices, and

The total variation distance between these two measures does not tend to zero for \(N\rightarrow \infty \).
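The contrast with Corollary 6.11 can be observed numerically. The NumPy sketch below (hypothetical helper names; total variation distance taken as \(\sup _B|\mu (B)-\nu (B)|\), i.e., one minus the overlap of the atoms) compares the spectral measures of \(P_N\sqcup P'_N\) and \(P_{2N}\). Since the normalized Laplacian eigenvalues of the path \(P_k\) are \(1-\cos (\pi j/(k-1))\), \(j=0,\ldots ,k-1\), the two measures share only the atoms 0 and 2, and the computed distance is \(1-1/N\), which tends to 1 rather than to 0.

```python
import numpy as np

def path_adjacency(k):
    """Adjacency matrix of the path 0 - 1 - ... - (k-1)."""
    A = np.zeros((k, k))
    for i in range(k - 1):
        A[i, i + 1] = A[i + 1, i] = 1.0
    return A

def normalized_spectrum(A):
    """Eigenvalues of I - D^{-1/2} A D^{-1/2} (no isolated vertices)."""
    s = 1.0 / np.sqrt(A.sum(axis=1))
    return np.linalg.eigvalsh(np.eye(len(A)) - s[:, None] * A * s[None, :])

def atoms(eigs, tol=1e-8):
    """Atoms [value, mass] of (1/n) sum_i delta_{eigs[i]}, merging values within tol."""
    out = []
    for x in np.sort(eigs):
        if out and x - out[-1][0] <= tol:
            out[-1][1] += 1.0 / len(eigs)
        else:
            out.append([x, 1.0 / len(eigs)])
    return out

def tv_distance(eigs1, eigs2, tol=1e-8):
    """sup_B |mu_1(B) - mu_2(B)| = 1 - sum over common atoms of min(masses)."""
    a2 = atoms(eigs2, tol)
    overlap = sum(min(m1, m2) for x1, m1 in atoms(eigs1, tol)
                  for x2, m2 in a2 if abs(x1 - x2) <= tol)
    return 1.0 - overlap

def union_vs_long_path(N):
    """TV distance between the spectral measures of P_N + P'_N and P_2N."""
    P = np.zeros((N, N))
    P[:, :] = path_adjacency(N)
    union = np.block([[P, np.zeros((N, N))], [np.zeros((N, N)), P]])  # disjoint union
    return tv_distance(normalized_spectrum(union), normalized_spectrum(path_adjacency(2 * N)))

for N in (10, 20, 40):
    print(N, round(union_vs_long_path(N), 6), round(1 - 1 / N, 6))
```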

7 Random Geometric Graphs

Specializing Corollary 4.4 to the case \(k=1\), we get

with \({\text {supp}}\varphi ^{(1)}_*\Theta ={\mathcal {G}}^{(1)}({\mathbb {R}}^m)/{\simeq }\).

Definition 7.1

We define the random geometric graphs

and we associate the empirical spectral measures

Since the spectrum of a graph \(\Gamma \) is finite, we can rewrite the two random measures \(\mu _n\) and \(\mu _R\) above as follows. First, the set of all possible isomorphism classes of graphs is countable and therefore there exists a sequence \(\{x_\ell \}_{\ell \in {\mathbb {N}}}\subset [0,2]\) such that

where the random variables \(c_{\ell , n}\) and \(c_{\ell , R}\) are defined by

In the above definitions of the coefficients \(c_{\ell , n}\) and \(c_{\ell , R}\), the components should be counted “with multiplicity”: if \(x_\ell \) belongs to the spectrum of \(\Gamma _{n, j}\) (respectively \(\Gamma _{R, j}\)) with some multiplicity \(m(x_\ell )\), then the component \(\Gamma _{n, j}\) (respectively \(\Gamma _{R, j}\)) is counted \(m(x_\ell )\) times.
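The following NumPy sketch simulates one sample of the random measure \(\mu _n\) in a hypothetical setting: M is taken to be the flat torus \([0,1)^2\) (volume 1), the radius is \(r=\alpha n^{-1/m}\), two points are joined when the closed balls of radius r around them meet, and \(\alpha =0.3\), \(n=400\) are arbitrary choices. It checks that the spectrum of the whole graph is the union, with multiplicity, of the spectra of its components, which is what allows \(\mu _n\) to be written through the coefficients \(c_{\ell ,n}\). Isolated vertices are handled with the convention, used for instance in [8], that they contribute a zero row and hence the eigenvalue 0 (an assumption of this sketch), so that the number of zero eigenvalues equals the number of components. Function names are hypothetical.

```python
import numpy as np

def random_geometric_graph_torus(n, alpha, m=2, seed=0):
    """n uniform points on the flat torus [0,1)^m; i ~ j iff the closed balls of
    radius r = alpha * n**(-1/m) meet, i.e. the torus distance is at most 2r."""
    rng = np.random.default_rng(seed)
    pts = rng.random((n, m))
    diff = np.abs(pts[:, None, :] - pts[None, :, :])
    diff = np.minimum(diff, 1.0 - diff)  # periodic boundary conditions
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    A = (dist <= 2 * alpha * n ** (-1.0 / m)).astype(float)
    np.fill_diagonal(A, 0.0)
    return A

def normalized_laplacian(A):
    """I - D^{-1/2} A D^{-1/2}, where an isolated vertex gets a zero row."""
    deg = A.sum(axis=1)
    s = np.zeros_like(deg)
    s[deg > 0] = 1.0 / np.sqrt(deg[deg > 0])
    return np.diag((deg > 0).astype(float)) - s[:, None] * A * s[None, :]

def components(A):
    """Connected components (lists of vertices), by iterative depth-first search."""
    seen = np.zeros(len(A), dtype=bool)
    comps = []
    for v in range(len(A)):
        if seen[v]:
            continue
        seen[v] = True
        stack, comp = [v], []
        while stack:
            u = stack.pop()
            comp.append(u)
            for w in np.flatnonzero(A[u]):
                if not seen[w]:
                    seen[w] = True
                    stack.append(w)
        comps.append(comp)
    return comps

n, alpha = 400, 0.3
A = random_geometric_graph_torus(n, alpha)
comps = components(A)
eig_whole = np.linalg.eigvalsh(normalized_laplacian(A))
eig_parts = np.sort(np.concatenate(
    [np.linalg.eigvalsh(normalized_laplacian(A[np.ix_(c, c)])) for c in comps]))

print(np.allclose(eig_whole, eig_parts))               # True: same spectrum with multiplicity
print(np.sum(np.abs(eig_whole) < 1e-8) == len(comps))  # True: one zero eigenvalue per component
# One sample of mu_n([0, 1/2]): fraction of eigenvalues in that interval.
print(np.mean((eig_whole > -1e-8) & (eig_whole <= 0.5)))
```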

We will need the following lemma.

Lemma 7.2

For every \(\delta >0\) there exists \(L>0\) such that

Proof

Let \(A\subset \{x_\ell \}_{\ell \in {\mathbb {N}}} \) be any subset. Then

In particular, the two series \({\mathbb {E}}\sum _{\ell }c_{\ell ,n}\) and \({\mathbb {E}}\sum _{\ell }c_{\ell ,R}\) converge and therefore the existence of such L is clear (as the tails of the series must be arbitrarily small). \(\square \)

Corollary 7.3

For every \(\ell \in {\mathbb {N}}\) there exists a constant \(c_\ell \ge 0\) such that we have the following convergence of random variables:

The constants \(c_\ell \) are positive if and only if \(x_\ell \) belongs to the spectrum of an \({\mathbb {R}}^m\)-geometric graph. Moreover, the measure

is a probability measure on \({\mathbb {R}}\) with support contained in [0, 2].

Proof

The convergence in \(L^1\) of the random variables \(c_{\ell , n}\) and \(c_{\ell , R}\) follows from their description as “number of components whose spectrum contains \(x_\ell \)” (counted with multiplicity): in fact, for every \(\ell \ge 0\) we can introduce the counting function

With this notation we have

The convergence of \(c_{\ell , n}\) can be argued as in the proof of Theorem 4.11, and the convergence of \(c_{\ell , R}\) proceeds as in Proposition 4.7. The measure \(\mu \) is well defined and the fact that it is a probability measure follows from the same proof as [1, Prop. 6.4], using Lemma 7.2 above as a substitute for [1, Lem. 6.3]. \(\square \)

Theorem 7.4

Let \(\mu \in {\mathcal {M}}^1([0,2])\) be the measure defined in (7.1). Then

i.e., for every \(f\in C^0([0,2],{\mathbb {R}})\) we have \({\mathbb {E}}\int f\,\mathrm {d}\mu _n\rightarrow \int f\,\mathrm {d}\mu \) and \({\mathbb {E}}\int f\,\mathrm {d}\mu _R\rightarrow \int f\,\mathrm {d}\mu \).

Proof

The proof of the statement for \(\mu _n\) and \(\mu _R\) is the same: we do it for \(\mu _R\). Let \(f\in C^{0}([0,2], {\mathbb {R}})\) and fix \(\varepsilon >0\). Apply Lemma 7.2 with the choice of \(\delta =\varepsilon /{\text {sup}|f|}\), and get the corresponding set \(F=\{\ell _1, \ldots , \ell _a\}\subset {\mathbb {N}}\). Also, from the convergence of the series \(\sum c_\ell \) we get the existence of a finite set \(F'\) such that \(\sum _{\ell \notin F'}c_\ell <\varepsilon /{\text {sup}|f|}\). Define \(F''=F\cup F'\) (this is still a finite set) and

where in the last line we have used the \(L^1\) convergence of \(c_{\ell , R}\rightarrow c_\ell \). Since this is true for every \(\varepsilon >0\), it follows that

\(\square \)

Proposition 7.5

The measure \(\mu \) appearing in Theorem 7.4 has the following properties:

(1) \(\mu \) is not absolutely continuous with respect to Lebesgue;

(2) \(\displaystyle \lim _{n\rightarrow \infty }{\mathbb {E}}\,\#\{\hbox {eigenvalues of}\,\Gamma _n\,\hbox {in}\,[a,b]\}/n=\mu ([a,b])>0\);

(3) \(\mu (\{0\})=\beta \).

Proof

We start with (3): we have that \(\mu (\{0\})=c_{\ell _0}\) where \(0=x_{\ell _0}\) and \(c_{\ell _0}\) is the \(L^1\)-limit of \(c_{\ell _0, R}\), which is the random variable

and therefore (3) follows from the definition of \(\beta \).

Item (1) also follows immediately: since \(\beta >0\), the measure \(\mu \) gives positive mass to the set \(\{0\}\), which has Lebesgue measure zero; hence \(\mu \) cannot be absolutely continuous with respect to Lebesgue measure.

For the proof of (2) we argue exactly as in the proof of Theorem 7.4, by replacing f with \(\chi _{[a,b]}\) (the characteristic function of the interval [a, b]) and observing that the only property of f that we have used is its boundedness. \(\square \)

Notes

This list is by no means complete, see [5] for a survey and a more complete set of references!

Quoting the introduction from [1]: random geometric complexes are studied within three main phases or regimes based on the relation between density of points and radius of the neighborhoods determining the complex: the subcritical regime (or “dust phase”) where there are many connected components with little topology, the critical regime (or “thermodynamic regime”) where topology is the richest (and where the percolation threshold appears), and the supercritical regime where the connectivity threshold appears. The thermodynamic regime is seen to have the most intricate topology.

A motif in a graph (or more generally in a complex) is a recurrent and statistically significant sub-graph or pattern.

In the rest of the paper we will consider \(\alpha \) as fixed from the very beginning and omit it in the notation; the study of the dependence on \(\alpha >0\) of various objects is an interesting problem, on which for now we cannot say much.

Recall that the injectivity radius \({\text {inj}}_pM\) of M at one point p is defined to be the largest radius of a ball in the tangent space \(T_pM\) on which the exponential map \(\exp _p:T_{p}M\rightarrow M\) is a diffeomorphism and the injectivity radius of M is defined as the infimum of the injectivity radii at all points:

$$\begin{aligned} {\text {inj}}M=\inf _{p\in M}{\text {inj}}_pM. \end{aligned}$$

References

Auffinger, A., Lerario, A., Lundberg, E.: Topologies of random geometric complexes on Riemannian manifolds in the thermodynamic limit (2018). arXiv:1812.09224 (to appear in IMRN)

Barabási, A.-L., Albert, R.: Emergence of scaling in random networks. Science 286(5439), 509–512 (1999)

Biggs, N.L., Lloyd, E.K., Wilson, R.J.: Graph Theory 1736–1936. Clarendon Press, Oxford (1976)

Blackwell, P., Edmondson-Jones, M., Jordan, J.: Spectra of adjacency matrices of random geometric graphs (2006). http://www.jonathanjordan.staff.shef.ac.uk/rgg.pdf

Bobrowski, O., Kahle, M.: Topology of random geometric complexes: a survey. J. Appl. Comput. Topol. 1(3–4), 331–364 (2018)

Bobrowski, O., Mukherjee, S.: The topology of probability distributions on manifolds. Prob. Theory Relat. Fields 161(3–4), 651–686 (2015)

Bollobás, B.: Random Graphs. Academic Press, London (1985)

Chung, F.R.K.: Spectral Graph Theory. CBMS Regional Conference Series in Mathematics, vol. 92. American Mathematical Society, Providence (1997)

Chung, F., Lu, L.: Complex Graphs and Networks. CBMS Regional Conference Series in Mathematics, vol. 107. American Mathematical Society, Providence (2006)

Colin de Verdière, Y.: Spectres de Graphes. Cours Spécialisés, vol. 4. Société Mathématique de France, Paris (1998)

Dettmann, C.P., Georgiou, O., Knight, G.: Spectral statistics of random geometric graphs. Europhys. Lett. 118(1), # 18003 (2017)

Ding, X., Jiang, T.: Spectral distributions of adjacency and Laplacian matrices of random graphs. Ann. Appl. Probab. 20(6), 2086–2117 (2010)

Durrett, R.: Random Graph Dynamics. Cambridge Series in Statistical and Probabilistic Mathematics, vol. 20. Cambridge University Press, Cambridge (2007)

Erdős, P., Rényi, A.: On random graphs. I. Publ. Math. Debrecen 6, 290–297 (1959)

Erdős, P., Rényi, A.: On the evolution of random graphs. Magyar Tud. Akad. Mat. Kutató Int. Közl. 5, 17–61 (1960)

Erdős, P., Rényi, A.: On the evolution of random graphs. Bull. Inst. Int. Stat. 38, 343–347 (1961)

Erdős, P., Rényi, A.: On the strength of connectedness of a random graph. Acta Math. Acad. Sci. Hung. 12, 261–267 (1961)

Erdős, P., Spencer, J.: Probabilistic Methods in Combinatorics. Probability and Mathematical Statistics, vol. 17. Academic Press, New York–London (1974)

Evangelou, S.N.: Quantum percolation and the Anderson transition in dilute systems. Phys. Rev. B 27(2), 1397–1400 (1983)

Evangelou, S.N.: A numerical study of sparse random matrices. J. Stat. Phys. 69(1–2), 361–383 (1992)

Evangelou, S.N., Economou, E.N.: Spectral density singularities, level statistics, and localization in sparse random matrices. Phys. Rev. Lett. 68(3), 361–364 (1992)

García Trillos, N., Gerlach, M., Hein, M., Slepčev, D.: Error estimates for spectral convergence of the graph Laplacian on random geometric graphs toward the Laplace-Beltrami operator. Found. Comput. Math. 20, 827–887 (2020)

Gilbert, E.N.: Random plane networks. J. Soc. Ind. Appl. Math. 9(4), 533–543 (1961)

Goel, A., Trinh, K.D., Tsunoda, K.: Strong law of large numbers for Betti numbers in the thermodynamic regime. J. Stat. Phys. 174(4), 865–892 (2019)

Gu, J., Jost, J., Liu, S., Stadler, P.F.: Spectral classes of regular, random, and empirical graphs. Linear Algebra Appl. 489, 30–49 (2016)

Gutierrez, M., Oubiña, L.: Metric characterizations of proper interval graphs and tree-clique graphs. J. Graph Theory 21(2), 199–205 (1996)

Hirsch, M.W.: Differential Topology. Graduate Texts in Mathematics, vol. 33. Springer, New York (1994)

Jackowski, Z.: A new characterization of proper interval graphs. Discrete Math. 105(1–3), 103–109 (1992)

Janson, S., Łuczak, T., Rucinski, A.: Random Graphs. Wiley-Interscience Series in Discrete Mathematics and Optimization. Wiley, New York (2000)

Jost, J., Mulas, R.: Hypergraph Laplace operators for chemical reaction networks. Adv. Math. 351, 870–896 (2019)

Kahle, M.: Random geometric complexes. Discrete Comput. Geom. 45(3), 553–573 (2011)

Kang, R.J., Müller, T.: Sphere and dot product representations of graphs. Discrete Comput. Geom. 47(3), 548–568 (2012)

Kolchin, V.F.: Random Graphs. Encyclopedia of Mathematics and its Applications, vol. 53. Cambridge University Press, Cambridge (1999)

Lekkerkerker, C.G., Boland, J.C.: Representation of a finite graph by a set of intervals on the real line. Fund. Math. 51, 45–64 (1962/1963)

Maehara, H.: Space graphs and sphericity. Discrete Appl. Math. 7(1), 55–64 (1984)

Mertzios, G.B.: A matrix characterization of interval and proper interval graphs. Appl. Math. Lett. 21(4), 332–337 (2008)

Mirlin, A.D., Fyodorov, Y.V.: Universality of level correlation function of sparse random matrices. J. Phys. A 24(10), 2273–2286 (1991)

Nazarov, F., Sodin, M.: Asymptotic laws for the spatial distribution and the number of connected components of zero sets of Gaussian random functions. Zh. Mat. Fiz. Anal. Geom. 12(3), 205–278 (2016)

Niyogi, P., Smale, S., Weinberger, S.: Finding the homology of submanifolds with high confidence from random samples. Discrete Comput. Geom. 39(1–3), 419–441 (2008)

Novikov, S.P.: Schrödinger operators on graphs and symplectic geometry. In: Proceedings of the Arnoldfest (Toronto 1997). Fields Inst. Commun., vol. 24, pp. 397–413. American Mathematical Society, Providence (1999)

Nyberg, A., Gross, T., Bassler, K.E.: Mesoscopic structures and the Laplacian spectra of random geometric graphs. J. Complex Netw. 3(4), 543–551 (2015)

Palmer, E.M.: Graphical Evolution. Wiley-Interscience Series in Discrete Mathematics. Wiley, Chichester (1985)

Penrose, M.: Random Geometric Graphs. Oxford Studies in Probability, vol. 5. Oxford University Press, Oxford (2003)

Puppe, T.: Spectral Graph Drawing. Diploma work, Universität Konstanz (2005)

Roberts, F.S.: Indifference graphs. In: Proof Techniques in Graph Theory (2nd Ann Arbor Graph Theory Conf., Ann Arbor 1968), pp. 139–146. Academic Press, New York (1969)

Rodgers, G.J., Bray, A.J.: Density of states of a sparse random matrix. Phys. Rev. B 37(7), 3557–3562 (1988)

Rodgers, G.J., De Dominicis, C.: Density of states of sparse random matrices. J. Phys. A 23(9), 1567–1573 (1990)

Sarnak, P., Wigman, I.: Topologies of nodal sets of random band-limited functions. Commun. Pure Appl. Math. 72(2), 275–342 (2019)

Tao, T.: Topics in Random Matrix Theory. Graduate Studies in Mathematics, vol. 132. American Mathematical Society, Providence (2012)

Walters, M.: Random geometric graphs. In: Surveys in Combinatorics 2011. London Math. Soc. Lecture Note Ser., vol. 392, pp. 365–401. Cambridge University Press, Cambridge (2011)

Watts, D.J., Strogatz, S.H.: Collective dynamics of ‘small-world’ networks. Nature 393(6684), 440–442 (1998)

Wegner, G.: Eigenschaften der Nerven homologisch-einfacher Familien im \({\mathbb{R}}^n\). PhD thesis, Göttingen University (1967)

Yogeshwaran, D., Subag, E., Adler, R.J.: Random geometric complexes in the thermodynamic regime. Prob. Theory Relat. Fields 167(1–2), 107–142 (2017)

Acknowledgements

Open access funding provided by Scuola Internazionale Superiore di Studi Avanzati - SISSA within the CRUI-CARE Agreement. We are grateful to Bernd Sturmfels, because without him this paper would not exist. We are grateful to Fabio Cavalletti, Jürgen Jost, Matthew Kahle, Erik Lundberg, Leo Mathis, and Michele Stecconi for helpful comments, discussions, and forbidden graphs. We are grateful to the anonymous referees for the constructive comments.

Additional information

Editor in Charge: János Pach

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lerario, A., Mulas, R. Random Geometric Complexes and Graphs on Riemannian Manifolds in the Thermodynamic Limit. Discrete Comput Geom 66, 1072–1104 (2021). https://doi.org/10.1007/s00454-020-00238-4

DOI: https://doi.org/10.1007/s00454-020-00238-4