Abstract

Recent studies show promising potential for artificial intelligence (AI) to assist healthcare providers (HCPs) in skin cancer care. The aim of this study is to explore the views of dermatologists and general practitioners (GPs) on the successful implementation of AI to assist HCPs in skin cancer care. We performed a qualitative focus group study, consisting of six focus groups with 16 dermatologists and 17 GPs who varied in prior knowledge of and experience with AI, gender, and age. An in-depth inductive thematic content analysis was performed. Perceived benefits, barriers, and preconditions were identified as main themes. Dermatologists and GPs perceive substantial benefits of AI, particularly improved health outcomes and an improved care pathway between primary and secondary care. Doubts about accuracy, the risk of health inequalities, and fear of replacement were among the most emphasized barriers. Essential preconditions included adequate algorithm content, sufficient usability, and accessibility of AI. In conclusion, dermatologists and GPs perceive significant benefits from implementing AI in skin cancer care. However, to implement AI successfully, key barriers need to be addressed. Efforts should focus on ensuring algorithm transparency, validation, accessibility for all skin types, and adequate regulation of algorithms. Simultaneously, improving knowledge about AI could reduce the fear of replacement.

Introduction

Artificial intelligence (AI) has become a popular topic in medicine in recent years. In dermatology, deep neural networks (DNNs) have been reported to match, and even exceed, the accuracy of dermatologists when classifying images of skin lesions [1, 2]. Although previous studies mainly focused on AI algorithms as a potential substitute for clinicians in skin cancer detection [1,2,3], recent studies also demonstrate promising potential for this technology to assist rather than replace clinicians in skin cancer-related clinical decision making, also referred to as augmented intelligence (AuI) [4,5,6]. Experts have hypothesized that the use of DNNs in skin cancer care can facilitate skin cancer screening in primary care and allow rapid triage of difficult skin lesion cases in both primary care and dermatological practice [7].

The promises of AI to improve skin cancer detection are impressive, but successful implementation by healthcare providers (HCPs) in routine care will ultimately determine the benefits of this novel technology. Data on the current implementation of AI in skin cancer care are lacking. However, recent survey studies among dermatologists in the United States, Europe, and China have revealed a positive attitude towards the potential of AI within dermatology [8,9,10]. In contrast, general practitioners (GPs) see only limited potential for AI in primary care in general. This is mainly attributed to a perceived narrow scope of AI use cases in primary care and to cynicism about the ability of AI to support diagnosis [11]. However, their views on AI specifically in skin cancer care remain unclear.

A critical step in the implementation of complex interventions is to gain an in-depth understanding of potential users’ views [12]. Nevertheless, an exploration of HCPs’ views on AI used in the hands of physicians in skin cancer care (i.e., AuI) is currently lacking. This qualitative study aims to fill this knowledge gap by exploring the views of Dutch dermatologists and GPs regarding the successful implementation of assistive AI for HCPs in skin cancer care. The results can be used to improve strategies for the future implementation of AI in skin cancer care, and to align the development of medical devices that use DNNs for skin cancer detection with the expectations of GPs and dermatologists.

Materials and methods

Study design

A qualitative study design was chosen as it is ideally suited to provide an in-depth picture of participants’ opinions, thoughts, and experiences [13, 14]. Focus groups (FGs) were considered most appropriate because group dynamics stimulate participants to reflect on each other’s views, resulting in more diverse conversations than individual interviews [15]. Due to COVID-19, the FGs were hosted online using Microsoft Teams. The reporting of this study followed the Standards for Reporting Qualitative Research (SRQR) [16].

Selection of participants

Dutch dermatologists and GPs were eligible for participation in the FGs. Purposive sampling was used to obtain a varied sample of participants in terms of prior knowledge of and/or experience with AI, gender, and age. Participants were recruited via social media (LinkedIn, Facebook, and WhatsApp/Telegram group chats for dermatologists and GPs) and via email. In addition, participants were invited through the newsletter of the Dutch Society of Dermatology and Venereology. The invitations included an information leaflet, and participants were offered a €30 gift card. Participants applied via a web form.

Data collection

A prespecified topic guide (supplement 1), based on existing literature on the acceptance and implementation of new technology in healthcare and on previous experiences of our multidisciplinary research group [13, 17, 18], was used to guide a semi-structured discussion during the FGs. Written informed consent and a completed demographic questionnaire were obtained from participants. Before starting the FGs, the moderators explicitly explained that no consensus had to be reached. The 90-min sessions were moderated by two medical doctors (MDs) (TS, FM), one of whom had previous experience with qualitative research, under the supervision of an experienced qualitative researcher (ML).

Data analysis

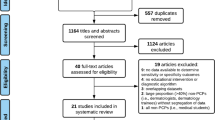

We performed a thorough inductive thematic content analysis using elements from Grounded Theory (Fig. 1), embedded in a constructivist paradigm [19]. All FGs were audio-recorded and transcribed verbatim. The transcripts were analyzed using NVivo v.12. Open coding of the first four FGs was performed independently by two researchers (TS, FM), resulting in an unstructured list of codes [19]. Next, these codes were axially coded, i.e., grouped into more abstract codes, resulting in a coding scheme of first concepts. This coding scheme was then discussed and refined by the multidisciplinary team of researchers (TS, FM, ML). Two additional FGs with GPs and dermatologists were organized because data saturation had not yet been reached. The transcripts of the additional FGs were simultaneously coded openly and axially by two researchers (TS, FM) using this structured coding scheme, and were discussed with a senior qualitative researcher (ML). Based on this, the coding scheme was further refined and initial main themes and sub-themes were identified. Data saturation, meaning that no new insights emerged during the analysis [19], was reached after analyzing six FGs. After selective coding, i.e., the stage in which more abstract and analytical categorization is performed, the final main themes and sub-themes were determined through discussion within the multidisciplinary research team (TS, FM, ML, MW). Demographic characteristics were analyzed using SPSS Statistics v.15.

Ethical considerations

The Erasmus MC medical ethical committee waived the need for ethical approval after reviewing the study protocol (MEC-2020-764).

Results

Characteristics of participating dermatologists and GPs are presented in Table 1, and individual characteristics in supplement 2 (eTable 1). In total, three main themes comprising 13 sub-themes were identified (Fig. 2).

Perceived benefits

According to dermatologists and GPs, a first important benefit of the use of AI in skin cancer care is that it is expected to improve health outcomes for skin cancer patients in primary and secondary care. This improvement was mainly attributed to improved diagnostic accuracy when dermatologists and GPs use AI for skin cancer detection, leading to fewer missed skin cancer diagnoses and fewer unnecessary biopsies and excisions of benign skin lesions. As a result of this improved detection accuracy, GPs expected to feel more confident when using AI in the management of suspicious skin lesions.

“I thought: ‘Oh this is really a harmless mole’ and it turned out to be a melanoma that was flagged by that app. I got a warning notification and called the patient and said: ‘Well, go to the dermatologist today’. It was a young man of about 24 years with a melanoma which I would have missed otherwise”. (GP, FG 6).

Besides improved diagnostic accuracy and confidence, dermatologists and GPs expected AI to improve the follow-up of skin lesions by making it possible to standardize the analysis and storage of dermoscopic and clinical images of skin lesions. If AI could also provide a lesion description with treatment advice, GPs expected to save time and, at the same time, to make the way skin lesions are described and analyzed more uniform. Dermatologists also considered AI useful for comparing images of a lesion over time. By comparing lesion changes over time with AI guidance, they expected to make more scientifically grounded decisions regarding the management of skin lesions, ultimately leading to improved patient outcomes.

The second identified benefit of implementing AI in skin cancer care is an improved care pathway between primary and secondary care. Both dermatologists and GPs expected the use of AI during the assessment of skin lesions to substantially reduce the number of unnecessary referrals for benign skin lesions from primary to secondary care. In addition, dermatologists and GPs expected AI to facilitate the substitution of low-risk skin cancer care (e.g., low-risk basal cell carcinomas, actinic keratoses) from the dermatologist to the GP practice. Furthermore, the improved care pathway was expected to reduce the costs of skin cancer care, owing to fewer unnecessary referrals and the substitution of skin cancer care from secondary to primary care.

‘I would like to see that used in practice. Particularly in order to separate out certain patient groups so that you provide tailored care and don't try and waste a lot of time on things that don't really matter.’ (Dermatologist, FG 3).

Finally, dermatologists considered AI useful for discharging patients and reducing the number of follow-up visits. They proposed that this could be achieved either by enabling GPs to classify suspicious skin lesions accurately with AI, or by providing patients with smartphone applications that use AI to classify skin lesions.

The educational function of AI was identified as a third benefit. According to GPs, AI could provide insight into why a lesion is considered suspicious or benign, thereby enhancing their knowledge of dermatology. Dermatologists also recognized this educational aspect, describing AI as a way to train GPs that would relieve dermatologists of time spent on the education of GPs.

‘I would like to receive feedback from the application and compare it to my own interpretation to see if I’m right. I think you can learn a lot in that way.’ (GP, FG 5).

Perceived barriers

The first main barrier to the use of AI in skin cancer care perceived by GPs and dermatologists was doubt about the accuracy of AI. These doubts were primarily related to a perceived lack of integration of clinical findings into an algorithm’s assessment. Participants considered palpation and medical history essential for an accurate skin cancer diagnosis, whereas AI algorithms were thought to focus on visual information from a lesion photo. Moreover, according to dermatologists, comparing a lesion (especially a nevus) with other lesions on the patient’s skin is another crucial component of skin lesion evaluation, and one in which an algorithm falls short. They believed it to be nearly impossible for AI to identify ‘ugly duckling’ lesions based solely on a lesion photo, without an overview of the other lesions on the skin. The second reason for dermatologists and GPs to doubt the accuracy of AI was a perceived lack of algorithm transparency. Algorithms were considered ‘black boxes’, and both GPs and dermatologists found it difficult to understand on what grounds an algorithm’s output is based. This made it unclear when an algorithm’s assessment is accurate and when it is not.

‘You want to see such a thing in the light of what kind of patient you have in front of you, with what kind of skin type, sun exposure, eye color etc. So, I'm just missing a whole lot to comfortably rely on an algorithm.’ (Dermatologist, FG 3).

The second main barrier was the risk of health inequalities. Concerns were raised that a bias towards lighter skin types in algorithm training data could cause health inequalities based on skin type. Moreover, dermatologists and GPs were concerned about differences in accuracy between hospitals and GP practices that do and do not use AI for skin cancer detection, leading to health inequalities depending on which hospital or GP practice a patient visits.

‘I think it is also important to check whether it [AI] works on people with a black skin, for a lot of AI performs well on people with a white skin in particular.’ (GP, FG 5).

A third barrier, mentioned by dermatologists, was fear of being replaced by AI. Participants worried that dermatologists may become less necessary once AI can make diagnostic decisions on par with a dermatologist. Others, however, were confident that AI should be seen as an assistive tool for dermatologists rather than a replacement. GPs and dermatologists both expected a shift of clinical tasks from a diagnostic role towards a more treatment-based role. A perceived crucial pitfall of using AI for skin cancer diagnostics, especially for young dermatologists, was following AI advice without critical clinical evaluation. Hence, concerns were raised that experience in skin cancer recognition might decline, so that in the longer term AI would replace clinicians’ own diagnostic capabilities.

‘… such an algorithm is based on knowledge, on input from dermatologists, but when you start as a young dermatologist, or as a resident, or as a general practitioner, then you don't have that knowledge, so then you are more likely to blindly rely on such an algorithm and I don't think that would be good.’ (Dermatologist, FG 3).

GPs mentioned the extra time needed to use AI as a fourth barrier. They considered it laborious to become acquainted with AI software, to master it, and to take pictures with a medical device (e.g., an algorithm-enhanced dermoscope) during already limited consultation time.

Dermatologists and GPs also raised concerns about commercialization and associated privacy issues, which were identified as the fifth main barrier. These concerns related to the costs that could be associated with commercially available AI tools for skin cancer diagnosis. Elaborating on this, GPs reported being suspicious about the storage of patient data and questioned whether their patients’ privacy would be protected when data are shared with these companies.

Preconditions for implementation

The first precondition for successfully implementing AI in skin cancer care expressed by dermatologists and GPs was adequate algorithm content, consisting of three elements. First, participants expected AI to perform with sufficient accuracy, although they found it difficult to state a minimum acceptable accuracy; suggested thresholds ranged from 75% to 95% sensitivity. Nevertheless, participants agreed that accuracy will never reach 100%. Second, algorithms were expected to be transparent, meaning that a clinician can understand how an algorithm’s output is calculated. Third, participants expressed a need for binary advice from AI (e.g., perform a biopsy or do not perform a biopsy), rather than other forms of advice (e.g., an estimated percentage of malignancy).
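To make the suggested thresholds concrete: sensitivity is the proportion of true skin cancers that an algorithm correctly flags. The worked example below is illustrative only; it assumes a hypothetical set of 100 histologically confirmed skin cancers and is not based on data from this study. Here TP (true positives) denotes skin cancers correctly flagged and FN (false negatives) denotes skin cancers missed.

$$
\text{sensitivity} = \frac{TP}{TP + FN}, \qquad 0.75 = \frac{75}{75 + 25}, \qquad 0.95 = \frac{95}{95 + 5}
$$

Under this assumption, the range suggested by participants corresponds to an algorithm missing between 25 and 5 of every 100 skin cancers it assesses.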

The second precondition was sufficient usability and accessibility of AI. GPs expressed that AI software should be easy to learn and use. Dermatologists stressed that AI needs to be compatible with existing patient administration systems and should allow data to be shared effortlessly with colleagues. In addition, GPs mentioned the need for patient data to be stored securely when using a medical device that applies AI to the assessment of skin lesions. In terms of accessibility, dermatologists and GPs considered equal functionality of AI across all skin types essential.

The need for adequate validation and regulation of AI algorithms was identified as the third precondition by both dermatologists and GPs. Research performed by an independent organization, similar to efficacy studies of therapeutics, was considered adequate validation. Moreover, dermatologists reported a preference to validate the accuracy of an algorithm for skin cancer detection themselves. In addition to proper validation, adequate regulation was considered a prerequisite for ensuring the continued safety of algorithms once implemented in skin cancer care. In particular, the possibility of frequently updating AI algorithms was considered a potential challenge for regulators, which should be addressed before successful implementation can take place.

‘AI that the dermatologist uses, and so really makes a prediction whether it [a lesion] is good or bad, should really be tested by an authoritative organization with independent research, at least two preferably. So just like you register a drug.’ (Dermatologist, FG 4).

Fourth, dermatologists and GPs expected endorsement by national medical societies. GPs considered it essential that, before they implement AI in their own practice, national skin cancer guidelines recommend its use and indicate which algorithm or medical device is accurate and safe to use. They also considered it appropriate for government regulatory agencies to take an interest in validation, provided that these agencies are independent and transparent. Positive feedback from other GPs on specific AI technologies was also mentioned as a possible form of validation. Dermatologists expected the national association of dermatology to recommend which AI is accurate, and stressed that AI should be reviewed regularly to maintain this confidence. Furthermore, they expressed a wish to be involved in the design and implementation of AI applications.

Clear liability was identified as the fifth and final precondition. Both dermatologists and GPs expressed a need for clear liability regulations regarding the use of AI applications. Some GPs believed they would be insured for mistakes made by AI if the specific AI software were endorsed in the national guideline, whereas others thought that a mistake made by AI would be their own responsibility or that of the developer. Dermatologists urged caution in trusting organizations that validate AI, because in the end liability is always one’s own responsibility.

‘… what I do worry about is indeed liability, as to what extent are you fully responsible, or is there a part in which the application itself or the developer is responsible.’ (GP, FG 1).

Discussion

The convergence of human and artificial intelligence in medicine offers the potential to profoundly transform skin cancer care in the coming decades [7, 20]. This in-depth qualitative study reveals key benefits, barriers, and essential preconditions for the successful implementation of AI in skin cancer care as perceived by dermatologists and GPs.

An important finding was the perceived benefit of AI in improving the care pathway between primary and secondary care. Both dermatologists and GPs expected AI to reduce unnecessary referrals, enable substitution of low-risk care, and lower costs, and thereby to improve the care pathway. In theory, AI could indeed bring dermatologist-level skin cancer detection accuracy to primary care, which would be a substantial improvement on the currently low sensitivity of GPs in detecting skin cancer [21]. Although research on AI in primary care is still scarce, a recent study showed that primary care physicians’ diagnostic accuracy improved when they used an algorithm in a teledermatology setting [6]. Real-world studies are needed to confirm this improvement in clinical practice.

A second important and related benefit, stressed by both dermatologists and GPs, was the educational function of AI in training GPs in skin cancer management. This is a significant finding, as limited education in skin cancer management has previously been identified as a barrier for GPs to treat skin cancer in primary care [22,23,24]. In line with this, post-graduate skin cancer education for GPs has been shown to improve the diagnostic accuracy of primary care physicians [25,26,27]. However, post-graduate training of GPs typically relies on dermatologists to provide education, which can be labor-intensive and costly, and refresher material seems critical to maintaining acquired skills [28]. AI may be a useful educational tool complementary to human teaching, which could help scale post-graduate education of GPs and could be used according to their individual needs. Nevertheless, prospective studies are needed to determine to what extent AI is effective in educating GPs.

A critical barrier to the implementation of AI in skin cancer care, perceived by both dermatologists and GPs, was doubt about its accuracy. This was particularly related to the lack of integration of clinical findings, such as a complete skin examination, in an algorithm’s assessment. Consistent with this, a recent survey among US dermatologists reported the inability to perform a total body skin examination (TBSE) to be AI’s greatest weakness [8]. Several solutions have already been proposed to address these perceived accuracy-related limitations. A recent study reported an algorithm that compares lesions on an overview photo taken with a smartphone and recognizes suspicious lesions with high accuracy [29]. Moreover, several algorithms have been shown to integrate lesion symptoms, patient characteristics, and other risk factors [30]. Yet, in clinician-computer collaboration (i.e., AuI), it could also be argued that it is in fact the clinician's role to integrate clinical findings and patient preferences with the algorithm’s assessment [4]. Currently, the optimal collaboration between clinicians and computers is still poorly understood. Future studies should explore HCPs’ views on the optimal division of tasks between humans and computers during the assessment of a patient with a suspicious skin lesion.

A second key barrier identified in this study, which applied only to dermatologists, was the perceived fear of replacement by AI, which was also reported in three previous surveys among dermatologists [8,9,10]. This fear has also been found in other medical specialties, including radiology and pathology [31, 32]. However, it has been argued that this fear is unfounded, because AI will change, rather than replace, the role of medical specialties [33]. Furthermore, as argued by Topol, the use of AI and AuI may make medicine less artificial and more ‘human’ by allowing clinicians to spend less time on diagnostics and more on empathy [34]. In line with this, participants in our study expected the role of dermatologists and GPs to shift from diagnostics towards a more treatment-based role. Research among radiologists shows that fear of replacement is associated with limited AI-specific knowledge [31], which may apply to dermatologists as well. Hence, improving dermatologists’ knowledge about AI could be an effective strategy to reduce fears of replacement.

Both types of HCPs regarded adequate algorithm content and sufficient usability and accessibility of AI as essential preconditions for successful implementation. Transparency plays a crucial role in meeting both preconditions, and may be challenging to achieve. For example, the black-box nature of AI, which obscures the explainability of algorithm decisions, remains a critical challenge [35,36,37]. Although several techniques exist to gain insight into an algorithm’s output, e.g., heatmaps, Gradient-weighted Class Activation Mapping (Grad-CAM), and Local Interpretable Model-agnostic Explanations (LIME), these techniques are prone to confirmation bias when clinicians use them to interpret the algorithm output [35, 38,39,40]. Some researchers have even hypothesized that a satisfactory level of explainable AI may never be reached, and that proper validation of algorithms may instead be the only alternative to algorithm transparency [35]. While this may seem a viable alternative, a recent review revealed that not only the algorithms themselves but also the published research on their training and validation lack transparency [41]. Hence, aside from continued efforts to open the black box of algorithms, researchers play a crucial role in meeting the precondition of adequate algorithm content through transparent research reporting, which can be achieved by sharing data sets, clearly describing data set characteristics, and reducing data label noise [41, 42].

Strengths and limitations

A strength of this study is its comprehensive scope, including the perspectives of both dermatologists and GPs and thereby providing a broad overview of key stakeholders’ views on the implementation of AI in skin cancer care. Moreover, by conducting separate FGs, we were able to compare the views of both groups. Our results show that the views of dermatologists and GPs largely overlap, with only a few notable differences, as described above.

This study also has some limitations. Although qualitative research is always context-specific to some extent [19], focusing only on HCPs within the Dutch healthcare system, in which the GP acts as gatekeeper to specialized care, may limit the generalizability of our findings to other countries. While the benefits of an improved care pathway may differ somewhat in other countries, benefits such as improved skin cancer detection accuracy, barriers such as fear of replacement, and preconditions such as the need for adequate validation and regulation are more generally applicable. Another limitation is that we explored the views of HCPs before actual implementation, so the results mainly reflect the views of HCPs without any experience of using AI in skin cancer care. While qualitative research before actual implementation is crucial [12], views may change during implementation, which warrants follow-up qualitative research in the future. Finally, our study did not explore HCPs’ perspectives on the use of AI by laypersons for skin cancer screening (i.e., via smartphone applications). Although previous research has explored the views of patients and the general public [43], future research is needed to study the perspectives of HCPs.

Conclusion

In conclusion, the results of this study indicate that HCPs perceive significant benefits from implementing AI in skin cancer care. To implement AI successfully, key barriers such as doubts about its accuracy and the risk of health inequalities need to be addressed. Efforts should focus on ensuring algorithm transparency, validation, accessibility for all skin types, and adequate regulation of algorithms. Concurrently, the unrealistic fear of replacement needs to be addressed by improving knowledge about AI. As such, these findings can contribute to an optimized strategy for integrating AI into physicians’ practice in the coming years.

Data availability statement

Participants in this study did not consent to public sharing of their data, so supporting data are not available.

References

Haenssle HA, Fink C, Schneiderbauer R, Toberer F, Buhl T, Blum A et al (2018) Man against machine: diagnostic performance of a deep learning convolutional neural network for dermoscopic melanoma recognition in comparison to 58 dermatologists. Ann Oncol 29(8):1836–1842

Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM et al (2017) Dermatologist-level classification of skin cancer with deep neural networks. Nature 542(7639):115–118

Tschandl P, Codella N, Akay BN, Argenziano G, Braun RP, Cabo H et al (2019) Comparison of the accuracy of human readers versus machine-learning algorithms for pigmented skin lesion classification: an open, web-based, international, diagnostic study. Lancet Oncol 20(7):938–947

Tschandl P, Rinner C, Apalla Z, Argenziano G, Codella N, Halpern A et al (2020) Human–computer collaboration for skin cancer recognition. Nat Med 26(8):1229–1234

Han SS, Park I, Eun Chang S, Lim W, Kim MS, Park GH et al (2020) Augmented intelligence dermatology: deep neural networks empower medical professionals in diagnosing skin cancer and predicting treatment options for 134 skin disorders. J Investig Dermatol 140(9):1753–1761

Jain A, Way D, Gupta V, Gao Y, de Oliveira MG, Hartford J et al (2021) Development and assessment of an artificial intelligence-based tool for skin condition diagnosis by primary care physicians and nurse practitioners in teledermatology practices. JAMA Netw Open 4(4):e217249

Esteva A, Topol E (2019) Can skin cancer diagnosis be transformed by AI? Lancet 394(10211):1795

Nelson CA, Pachauri S, Balk R, Miller J, Theunis R, Ko JM et al (2021) Dermatologists’ perspectives on artificial intelligence and augmented intelligence — a cross-sectional survey. JAMA Dermatol. https://doi.org/10.1001/jamadermatol.2021.1685

Polesie S, Gillstedt M, Kittler H, Lallas A, Tschandl P, Zalaudek I et al (2020) Attitudes towards artificial intelligence within dermatology: an international online survey. Br J Dermatol 183(1):159–161

Shen C, Li C, Xu F, Wang Z, Shen X, Gao J et al (2020) Web-based study on Chinese dermatologists’ attitudes towards artificial intelligence. Ann Transl Med 8(11):698

Blease C, Kaptchuk TJ, Bernstein MH, Mandl KD, Halamka JD, DesRoches CM (2019) Artificial intelligence and the future of primary care: exploratory qualitative study of UK general practitioners’ views. J Med Internet Res 21(3):e12802

Skivington K, Matthews L, Simpson SA, Craig P, Baird J, Blazeby JM et al (2021) A new framework for developing and evaluating complex interventions: update of medical research council guidance. BMJ 374:n2061

Green J, Thorogood N (2018) Qualitative methods for health research, 4th edn. SAGE Publishing Ltd, pp 3–28

Pope C, Mays N (1995) Qualitative research: reaching the parts other methods cannot reach: an introduction to qualitative methods in health and health services research. BMJ 311(6996):42–45

Green J, Thorogood N (2018) Qualitative methods for health research, 4th edn. SAGE Publishing Ltd, pp 147–172

O’Brien BC, Harris IB, Beckman TJ, Reed DA, Cook DA (2014) Standards for reporting qualitative research: a synthesis of recommendations. Acad Med 89(9):1245–1251

Yarbrough AK, Smith TB (2007) Technology acceptance among physicians: a new take on TAM. Med Care Res Rev 64(6):650–672

Sangers TE, Wakkee M, Kramer-Noels EC, Nijsten T, Lugtenberg M (2021) Views on mobile health apps for skin cancer screening in the general population: an in-depth qualitative exploration of perceived barriers and facilitators. Br J Dermatol 185(5):961–969

Green J, Thorogood N (2018) Qualitative methods for health research, 4th edn. SAGE Publishing Ltd, pp 285–311

Topol EJ (2019) High-performance medicine: the convergence of human and artificial intelligence. Nat Med 25(1):44–56

Jones O, Jurascheck L, van Melle M, Hickman S, Burrows N, Hall P et al (2019) Dermoscopy for melanoma detection and triage in primary care: a systematic review. BMJ Open 9(8):e027529

Noels EC, Wakkee M, van den Bos RR, Bindels PJE, Nijsten T, Lugtenberg M (2019) Substitution of low-risk skin cancer hospital care towards primary care: a qualitative study on views of general practitioners and dermatologists. PLoS One 14(3):e0213595

Hayes SF (2009) Early detection of melanoma is key, so let’s teach it. BMJ 338:a3138

van Rijsingen MCJ, van Bon B, van der Wilt GJ, Lagro-Janssen ALM, Gerritsen MJP (2014) The current and future role of general practitioners in skin cancer care: an assessment of 268 general practitioners. Br J Dermatol 170(6):1366–1368

Bedlow C, Melia M, Seyan H (2000) Impact of skin cancer education on general practitioners’ diagnostic skills. Clin Exp Dermatol 25(2):115–118

Gerbert B, Bronstone A, Wolff M, Maurer T, Berger T, Pantilat S et al (1998) Improving primary care residents’ proficiency in the diagnosis of skin cancer. J Gen Intern Med 13(2):91–97

Youl PH, Raasch BA, Janda M, Aitken JF (2007) The effect of an educational programme to improve the skills of general practitioners in diagnosing melanocytic/pigmented lesions. Clin Exp Dermatol 32(4):365–370

Harkemanne E, Baeck M, Tromme I (2021) Training general practitioners in melanoma diagnosis: a scoping review of the literature. BMJ Open 11(3):e043926

Soenksen LR, Kassis T, Conover ST, Marti-Fuster B, Birkenfeld JS, Tucker-Schwartz J et al (2021) Using deep learning for dermatologist-level detection of suspicious pigmented skin lesions from wide-field images. Sci Transl Med 13(581):eabb3652

Höhn J, Hekler A, Krieghoff-Henning E, Kather JN, Utikal JS, Meier F et al (2021) Integrating patient data into skin cancer classification using convolutional neural networks: systematic review. J Med Internet Res 23(7):e20708

Huisman M, Ranschaert E, Parker W, Mastrodicasa D, Koci M, de Santos DP et al (2021) An international survey on AI in radiology in 1041 radiologists and radiology residents part 1: fear of replacement, knowledge, and attitude. Eur Radiol 31(9):7058–7066

Sarwar S, Dent A, Faust K, Richer M, Djuric U, Van Ommeren R et al (2019) Physician perspectives on integration of artificial intelligence into diagnostic pathology. npj Digit Med 2(1):28

Jha S, Topol EJ (2016) Adapting to artificial intelligence: radiologists and pathologists as information specialists. JAMA 316(22):2353–2354

Topol E (2019) Deep medicine: how artificial intelligence can make healthcare human again. Basic Books, Inc

Ghassemi M, Oakden-Rayner L, Beam AL (2021) The false hope of current approaches to explainable artificial intelligence in health care. Lancet Digit Health 3(11):e745–e750

Price WN (2018) Big data and black-box medical algorithms. Sci Transl Med 10(471):eaao5333

Watson DS, Krutzinna J, Bruce IN, Griffiths CE, McInnes IB, Barnes MR et al (2019) Clinical applications of machine learning algorithms: beyond the black box. BMJ 364:l886

Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D (2020) Grad-CAM: visual explanations from deep networks via gradient-based localization. Int J Comput Vision 128(2):336–359

Samek W, Binder A, Montavon G, Lapuschkin S, Müller K (2017) Evaluating the visualization of what a deep neural network has learned. IEEE Trans Neural Netw Learn Syst 28(11):2660–2673

Ribeiro MT, Singh S, Guestrin C (2016) “Why should I trust you?”: explaining the predictions of any classifier. arXiv:1602.04938

Daneshjou R, Smith MP, Sun MD, Rotemberg V, Zou J (2021) Lack of transparency and potential bias in artificial intelligence data sets and algorithms: a scoping review. JAMA Dermatol 157(11):1362–1369

Esteva A, Chou K, Yeung S, Naik N, Madani A, Mottaghi A et al (2021) Deep learning-enabled medical computer vision. npj Digit Med 4(1):5

Nelson CA, Pérez-Chada LM, Creadore A, Li SJ, Lo K, Manjaly P et al (2020) Patient perspectives on the use of artificial intelligence for skin cancer screening: a qualitative study. JAMA Dermatol 156(5):501–512

Funding

This study was initiated by the Erasmus MC Cancer Institute and was supported by an unrestricted research grant from SkinVision. SkinVision was not involved in the design of the study, data collection, data analysis, manuscript preparation or in the decision to publish this study.

Author information

Contributions

Conceptualization, TS, MW, and ML; methodology, TS and ML; validation, TS, FM, and ML; formal analysis, TS, FM, and ML; investigation, TS, MW, FM, TN, and ML; resources, TS, FM, MW, TN, and ML; data curation, TS and FM; writing (original draft preparation), TS; writing (review and editing), MW, FM, TN, and ML; visualization, TS and ML; supervision, TN and ML; project administration, TS; funding acquisition, TN. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Conflict of interest

The Department of Dermatology of the Erasmus MC Cancer Institute has received an unrestricted research grant from SkinVision. Tamar Nijsten serves on the SkinVision advisory board and has equity in the company. There was no input from SkinVision on any aspect of data collection, data analysis or manuscript preparation.

Ethical approval

The need for ethical approval was waived by the medical ethical committee of the Erasmus MC University Medical Center after review of the study design (MEC-2020-764). Written informed consent was obtained from all participants.

Supplementary Information

Electronic supplementary material accompanies the online version of this article.

About this article

Cite this article

Sangers, T.E., Wakkee, M., Moolenburgh, F.J. et al. Towards successful implementation of artificial intelligence in skin cancer care: a qualitative study exploring the views of dermatologists and general practitioners. Arch Dermatol Res 315, 1187–1195 (2023). https://doi.org/10.1007/s00403-022-02492-3

DOI: https://doi.org/10.1007/s00403-022-02492-3