Abstract

A phase field approach for structural topology optimization with application to additive manufacturing is analyzed. The main novelty is the penalization of overhangs (regions of the design that require underlying support structures during construction) by means of anisotropic energy functionals. Convex and non-convex examples of anisotropies are provided, with the latter exhibiting oscillatory behavior along the object boundary, termed the dripping effect in the literature. We provide a rigorous mathematical analysis of the structural topology optimization problem with convex anisotropies that are not continuously differentiable, and derive the first-order necessary optimality conditions using subdifferential calculus. Via formal matched asymptotic expansions, we connect our approach with previous works in the literature based on a sharp interface shape optimization description. Finally, we present several numerical results to demonstrate the advantages of our proposed approach in penalizing overhang developments.

1 Introduction

Additive manufacturing (AM) is an innovative building technique that produces objects in a layer-by-layer fashion by fusing or binding raw materials in powder or resin form. Since its introduction in the 1970s, it has shown great versatility, allowing for the creation of highly complex geometries as well as immediate modifications and redesigns, which makes it an ideal process for rapid prototyping and testing. Despite such advantages over traditional manufacturing technologies, AM has not yet seen widespread integration in serial production, and many limitations have yet to be overcome. For an overview of the technologies involved and the challenges encountered by AM, we refer the reader to the review article [1] as well as the report [76].

In this work, we focus on a recurring issue encountered when practitioners employ AM to construct objects. Some categories of AM technologies, such as Fused Deposition Modeling and Laser Metal Deposition, construct objects in an upward direction (hereafter referred to as the build direction) by repeatedly depositing and then fusing a new layer of raw material on the surface of the object. Overhangs are regions of the constructed object that, when placed in a certain orientation, extend outwards without any underlying support. For example, the top horizontal bar of a vertically placed T-shaped structure is classified as an overhang. The main issue with overhangs is that they can deform under their own weight or due to thermal residual stresses from the construction process; if they are not supported from below, the object itself may display unintended deformations and the entire printing process can fail.

One natural solution is to identify, prior to printing, orientations of the object that minimize its overhang regions, and to choose the build direction accordingly. Going back to the T-shaped structure example, we can simply rotate the shape by \(180^{\circ }\) so that there are no overhang regions (see, e.g., [56, Fig. 5]). However, in general there is no guarantee that orientations without overhang regions exist. Along these lines, we mention the works [72, 93], which incorporate geometric information such as contact surface area and support volume, expressed as functions of the build direction, within an optimization procedure. On the other hand, simultaneous optimization of build direction and topology has been considered in [90].

Another remedy is to employ support structures, built concurrently with the object, whose purpose is to reduce the potential deformation of overhang regions caused by mechanical loads or thermal residual stresses. These supplementary structures act like scaffolding for overhangs and, after a successful print, are removed in a post-processing step. Besides increasing material costs and printing time, the removal step carries the risk of damaging delicate features of the object. Nevertheless, for certain AM technologies such as the aforementioned Fused Deposition Modeling, some form of support structure will always be necessary to mitigate potential undesirable deformations of the finished object. Thus, recent mathematical research has concentrated on optimizing support structures in an effort to reduce material waste and minimize the contact area with the object. Many such works explore optimal supports in the framework of shape and topology optimization [3, 50, 58, 62, 69], while others propose sparse cellular designs with low solid volume and contact area in the form of lattices [55], honeycombs [66], and trees [89]. Further details can be found in the review article [56].

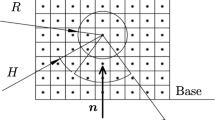

A related approach is to allow some modifications to the object, as long as the altered design retains the intended functionality of the original, leading to the creation of self-supporting objects that do not require support structures at all [32, 63,64,65]. Our present work falls roughly into this category: we employ a well-known phase field methodology in structural topology optimization with the aim of identifying optimal designs that fulfill the so-called overhang angle constraint. In mathematical terms, let us consider an object \(\Omega _1 \subset {\mathbb {R}}^{d}\), \(d=2,3,\) being built within a hold-all rectangular domain \(\Omega = [0,1]^d\) in a layer-by-layer fashion with build direction \({\varvec{e}}_{d} = (0,\dots ,0, 1)^{\top }\). The base plate, on which \(\Omega _1\) is assembled, is the region \(\mathcal {B} := [0,1]^{d-1}\times \{0\}\). For any \({\varvec{p}}\in \partial \Omega _1 \setminus \mathcal {B}\), the overhang angle \(\alpha \) is defined as the angle from the base plate to the tangent line at \({\varvec{p}}\) (denoted as \(\alpha _1\) and \(\alpha _2\) in Fig. 1).

Then, there exists a critical threshold angle \(\psi \) such that a portion of \(\Omega _1\) is self-supporting (resp. requires support structures) if its overhang angle is greater (resp. smaller) than \(\psi \). Conventional wisdom among practitioners puts the critical angle \(\psi \) at \(45^{\circ }\) [36, 62, 84], reasoning that every layer then has approximately 50% contact with the previous layer and is thus well-supported from below, although some authors propose smaller values for \(\psi \) (e.g., \(40^{\circ }\) in [63, 64]). The exact value of this critical angle also depends on the settings of the 3D printer as well as on the physical properties of the raw materials used. We mention that other works [69] consider an alternative definition, namely the angle from the vertical build direction to the outward unit normal of \(\partial \Omega _1\). Denoting this angle by \(\beta \), a simple calculation shows that \(\beta = 180^{\circ } - \alpha \). Then, regions where \(\beta > 180^{\circ } - \psi \) (i.e., \(\beta > 135^{\circ }\) for the typical choice \(\psi = 45^{\circ }\)) require support structures.

In this work we adopt the latter definition, but choose to measure the overhang angle from the negative build direction \(-{\varvec{e}}_d\) to the outward unit normal; see Fig. 2 and also [4, 32]. Then, given a critical angle threshold \(\psi \) (now expressed in radians), the overhang angle constraint in the current context of shape and topology optimization demands that an optimal geometry for the object \(\Omega _1\) keeps the boundary regions whose overhang angles do not lie in the interval \([\psi , 2\pi - \psi ]\) as small as possible (i.e., \(\Omega _1\) should be self-supporting as much as possible), in addition to satisfying other mechanical considerations such as minimal compliance.

Fig. 2 The definition of the overhang angle used in this work. (Left) A structure with four angles measured from the negative build direction \(-{\varvec{e}}_d\) to the outward unit normal on the boundary. (Right) Visualization of the angles on the unit circle, where the angle \(\phi _i\) is associated with the unit normal \({\varvec{n}}_i\).
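The angle convention of Fig. 2 and the resulting self-support test can be sketched in a few lines of Python for the planar case \(d = 2\). This is a minimal illustration under stated assumptions, not the discretization used in this work: it assumes that a unit outward normal \({\varvec{n}} = (n_1, n_2)\) is available at a boundary point, and the function names `overhang_angle` and `is_self_supporting` are hypothetical.

```python
import math


def overhang_angle(n):
    """Angle in [0, 2*pi) from -e_2 = (0, -1) to the 2D unit normal n, measured anticlockwise."""
    n1, n2 = n
    # atan2 measures from the positive x-axis; -e_2 sits at -pi/2 on that scale,
    # so adding pi/2 measures from -e_2 instead. The modulo maps into [0, 2*pi).
    phi = (math.atan2(n2, n1) + math.pi / 2) % (2 * math.pi)
    return 0.0 if phi >= 2 * math.pi else phi  # guard against round-off landing on 2*pi


def is_self_supporting(n, psi, tol=1e-12):
    """True if the overhang angle of n lies in [psi, 2*pi - psi], up to a small tolerance."""
    phi = overhang_angle(n)
    return psi - tol <= phi <= 2 * math.pi - psi + tol


if __name__ == "__main__":
    psi = math.pi / 4  # the common 45-degree threshold, in radians
    normals = {
        "downward-facing": (0.0, -1.0),
        "vertical wall": (1.0, 0.0),
        "upward-facing": (0.0, 1.0),
        "30 degrees from -e_2": (math.sin(math.pi / 6), -math.cos(math.pi / 6)),
    }
    for name, n in normals.items():
        print(f"{name}: phi = {overhang_angle(n):.4f}, "
              f"self-supporting = {is_self_supporting(n, psi)}")
```

A downward-facing normal has angle \(0\) and is flagged as an overhang, while vertical walls (\(\pi /2\), \(3\pi /2\)) and upward-facing surfaces (\(\pi \)) pass the test for \(\psi = \pi /4\).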

For structural topology optimization we employ the phase field methodology proposed in [30], which has since been extended by many authors to other applications such as multi-material structural topology optimization [24, 91], compliance optimization [26, 80], topology optimization with local stress constraints [31], nonlinear elasticity [75], elastoplasticity [6], eigenfrequency maximization [47, 80], graded-material design [34], and shape optimization in fluid flow [44,45,46], as well as to resolution strategies for some inverse identification problems [23, 59]. The key idea is to cast the structural topology optimization problem as a constrained minimization problem for a phase field variable \(\varphi \), where the geometry of an optimal design \(\Omega _1\) for the object is realized as a certain level set of \(\varphi \). In the language of optimal control theory, \(\varphi \) acts as a control and influences a response function, e.g., the elastic displacement \({\varvec{u}}\) of the object, which is the solution to a system of partial differential equations (see (2.2) below). With an appropriate objective functional, for instance a weighted sum of the mean compliance and a phase field formulation of the overhang constraint (see (2.6) below), we analyze the PDE-constrained minimization problem for an optimal design variable \(\varphi \).

In the above references, the authors employ an isotropic Ginzburg–Landau functional that has the effect of perimeter penalization (see Sect. 3 for more details); in the current context, this means that no direction is preferred or discouraged in the optimization process. In this work we follow the ideas first proposed in [4, 36], which use an anisotropic perimeter functional as a geometric constraint for overhangs (see also similar ideas in [2] for support structures), and introduce a suitable anisotropic Ginzburg–Landau functional for the phase field optimization problem to enforce the overhang angle constraint. Thus, our first novelty is the generalization of earlier works in phase field structural topology optimization to anisotropic energies. The precise formulation and details of the problem are given in the next sections. For more details regarding the use of anisotropy in phase field models we refer the reader to [17, 18, 48, 83].

Our second novelty is the analysis of the anisotropic phase field optimization problem. We establish the existence of minimizers (i.e., optimal designs) and derive first-order necessary optimality conditions. It turns out that our proposed approach to the overhang angle constraint requires an anisotropy function \(\gamma \) that is not continuously differentiable, which makes the derivation of the associated necessary optimality conditions non-standard. We overcome this difficulty with subdifferential calculus and provide a characterization result for the subdifferential of convex functionals whose arguments are weak gradients. Then, we perform a formal matched asymptotic analysis for the optimization problem with a differentiable \(\gamma \) to infer the corresponding sharp interface limit.

Lastly, let us provide a non-exhaustive overview of related works that integrate the overhang angle constraint within a topology optimization procedure. Our closest counterpart is the work [4], which employs an anisotropic perimeter functional in a shape optimization framework implemented numerically with the level-set method. The authors observed that oscillations along the object boundary develop even though the design complies with the angle constraint. This so-called dripping effect is attributed to instabilities of the anisotropic perimeter functional caused by the non-convexity of the anisotropy used in [4]; see also Example 3.3 and Fig. 10 below and the discussion in [54, Sect. 7.3]. An alternative mechanical constraint based on modeling the layer-by-layer construction process is then proposed; it treats overhangs differently and appears to suppress the dripping effect (see also [12] for similar ideas), but it is computationally much more demanding. These boundary oscillations were also observed earlier in [77], which used a Heaviside projection based integral to encode the overhang angle constraint within a density based topology optimization framework, and they can be suppressed by means of an adaptive anisotropic filter introduced in [68]. Similar projection techniques for density filtering are used in [51], where they are combined with an edge detection algorithm to evaluate feasible and non-feasible contours during the optimization iterations. In [52], control of overhang angles is achieved by filtering with a wedge-shaped support, while in [94] an explicit quadratic function of the design density is used to formulate the self-support constraint. A different approach was proposed in [60, 61] using a spatial filter that checks element densities row by row and removes all elements not supported by the previous row. This idea was then extended in [62, 74, 85] to construct new filtering schemes that include other relevant manufacturing constraints. Another method was proposed in [53] using the frameworks of moving morphable components and moving morphable voids to provide a more explicit and geometric treatment of the problem; it is capable of simultaneously optimizing structural topology and build orientation. Finally, let us mention [87, 88], where a filter based on front propagation in unstructured meshes is developed that offers flexibility in enforcing arbitrary overhang angles. Focusing only on overhang angles in enclosed voids, [67] combined a nonlinear virtual temperature method for the identification of enclosed voids with a logarithmic-type function to constrain the area of overhang regions to zero.

The rest of this paper is organized as follows: in Sect. 2 we formulate the phase field structural optimization problem to be studied, and in Sect. 3 we describe our main idea of introducing anisotropy, along with some examples and relevant choices, as well as a useful characterization of the subdifferential of the anisotropic functional. The analysis of the structural optimization problem is carried out in Sect. 4, where we establish analytical results concerning minimizers and optimality conditions. The connection between our work and that of [4] is explored in Sect. 5 where we look into the sharp interface limit, and, finally, in Sect. 6 we present the numerical discretization and several simulations of our approach.

2 Problem Formulation

In a bounded domain \(\Omega \subset {\mathbb {R}}^d\) with Lipschitz boundary \(\Gamma :=\partial \Omega \) that exhibits a decomposition \(\Gamma = \Gamma _D \, \cup \, \Gamma _g \, \cup \, \Gamma _0\), we consider a linear elastic material that does not fully occupy \(\Omega \). We describe the material location with the help of a phase field variable \(\varphi : \Omega \rightarrow [-1,1]\). In the phase field methodology, we use the level set \(\{\varphi = -1\} = \{{{\varvec{x}}\in \Omega : \varphi ({\varvec{x}}) =- 1}\}\) to denote the region occupied by the elastic material, and \(\{\varphi = 1\}\) to denote the complementary void region. These two regions are separated by a diffuse interface layer \(\{|\varphi |<1\}\) whose thickness is proportional to a small parameter \(\varepsilon > 0\). Since \(\varphi \) describes the material distribution within \(\Omega \), complete knowledge of \(\varphi \) allows us to determine the shape and topology of the elastic material.

Notation. For a Banach space X, we denote its topological dual by \(X^*\) and the corresponding duality pairing by \(\langle \cdot ,\cdot \rangle _X\). For any \(p \in [1,\infty ]\) and \(k >0\), the standard Lebesgue and Sobolev spaces over \(\Omega \) are denoted by \(L^p := L^p(\Omega )\) and \(W^{k,p} := W^{k,p}(\Omega )\) with the corresponding norms \(\Vert \cdot \Vert _{L^p}\) and \(\Vert \cdot \Vert _{W^{k,p}}\). In the special case \(p = 2\), these become Hilbert spaces and we employ the notation \(H^k := H^k(\Omega ) = W^{k,2}(\Omega )\) with the corresponding norm \(\Vert \cdot \Vert _{H^k}\). For convenience, the norm and inner product of \(L^2(\Omega )\) are simply denoted by \(\Vert \cdot \Vert \) and \((\cdot ,\cdot )\), respectively. For our subsequent analysis, we introduce the space

$$\begin{aligned} H^1_D(\Omega , {\mathbb {R}}^d) := \big \{ {\varvec{v}}\in H^1(\Omega , {\mathbb {R}}^d) \, : \, {\varvec{v}}= {\varvec{0}} \text { on } \Gamma _D \big \}. \end{aligned}$$

Let us remark that, although we employ bold symbols to denote vectors, matrices, and vector- or matrix-valued functions, we do not introduce a special notation for the corresponding Lebesgue and Sobolev spaces. Thus, when such terms occur in estimates, the corresponding norm is to be understood in its natural setting.

2.1 State System

To obtain a mathematical formulation that can be further analyzed, we employ the ersatz material approach, see [5], and model the complementary void region as a very soft elastic material. This allows us to consider a notion of elastic displacement on the entirety of \(\Omega \), leading to the displacement vector \({\varvec{u}}: \Omega \rightarrow {\mathbb {R}}^d\) and the associated linearized strain tensor

$$\begin{aligned} \mathcal {E}({\varvec{u}}) := \tfrac{1}{2}\big (\nabla {\varvec{u}}+ (\nabla {\varvec{u}})^{\top }\big ). \end{aligned}$$

Let \(\mathcal {C}_0\) and \(\mathcal {C}_1\) be the fourth order elasticity tensors corresponding to the ersatz soft material and the linear elastic material, respectively, that satisfy the standard symmetry conditions

and assume there exist positive constants \(\theta \) and \(\Lambda \) such that for any non-zero symmetric matrices \(\mathbf{{A}}\), \(\mathbf{{B}}\in {\mathbb {R}}^{d \times d}\):

where \(\mathbf{{A}}: \mathbf{{B}}= \sum _{i,j=1}^d \mathbf{{A}}_{ij} \mathbf{{B}}_{ij}\) denotes the Frobenius inner product between two matrices. With the help of the phase field variable \(\varphi \), we define an interpolation fourth order elasticity tensor \(\mathcal {C}(\varphi )\) as

$$\begin{aligned} \mathcal {C}(\varphi ) := \tfrac{1}{2}\big (1+g(\varphi )\big )\, \mathcal {C}_0 + \tfrac{1}{2}\big (1-g(\varphi )\big )\, \mathcal {C}_1, \end{aligned} \tag{2.1}$$

where \(g:{\mathbb {R}}\rightarrow {{\mathbb {R}}}\) is a monotone function satisfying \(g(-1) = -1\) and \(g(1) = 1\). Notice that \(\mathcal {C}(\varphi )\) yields a positive definite tensor whenever \(\varphi \in [-1,1]\). Clearly, in the region \(\{\varphi = -1\}\) (resp. \(\{\varphi = 1\}\)) we obtain the elasticity tensor \(\mathcal {C}_1\) (resp. \(\mathcal {C}_0\)) by substituting \(\varphi = -1\) (resp. \(\varphi = 1\)) into the definition of \(\mathcal {C}(\varphi )\). Let \({\varvec{f}}: \Omega \rightarrow {\mathbb {R}}^d\) denote a body force and \({\varvec{g}}: \Gamma _g \rightarrow {\mathbb {R}}^d\) denote a surface traction force. On \(\Gamma _D \subset \Gamma \) we assign a zero Dirichlet boundary condition for the displacement \({\varvec{u}}\), and on \(\Gamma _0\) we assign a traction-free boundary condition. This leads to the following elasticity system for the displacement \({\varvec{u}}\), representing the state system of the problem:

$$\begin{aligned} -\textrm{div}\big (\mathcal {C}(\varphi )\mathcal {E}({\varvec{u}})\big )&= {\mathbb {h}}(\varphi ){\varvec{f}}&&\text {in } \Omega ,\\ {\varvec{u}}&= {\varvec{0}}&&\text {on } \Gamma _D,\\ \mathcal {C}(\varphi )\mathcal {E}({\varvec{u}}){\varvec{n}}&= {\varvec{g}}&&\text {on } \Gamma _g,\\ \mathcal {C}(\varphi )\mathcal {E}({\varvec{u}}){\varvec{n}}&= {\varvec{0}}&&\text {on } \Gamma _0, \end{aligned} \tag{2.2}$$

where \({\varvec{n}}\) denotes the outward unit normal to \(\Gamma = \Gamma _D \cup \Gamma _g \cup \Gamma _0\), and the function \({\mathbb {h}}: {\mathbb {R}}\rightarrow {\mathbb {R}}\), defined as \({\mathbb {h}}(\varphi ) = \frac{1}{2}(1-\varphi )\), is introduced so that the body force \({\varvec{f}}\) acts only on the elastic material \(\{\varphi = -1\}\) (where \({\mathbb {h}}(\varphi ) = 1\)) and not on the ersatz material \(\{\varphi = 1\}\) (where \({\mathbb {h}}(\varphi ) = 0\)).

The well-posedness of (2.2) is a simple consequence of [24, Thms. 3.1, 3.2], which we summarize as follows:

Lemma 2.1

For any \(\varphi \in L^\infty (\Omega )\) and \(({\varvec{f}}, {\varvec{g}}) \in L^2(\Omega , {\mathbb {R}}^d) \times L^2(\Gamma _g, {\mathbb {R}}^d)\), there exists a unique solution \({\varvec{u}}\in H^1_D(\Omega , {\mathbb {R}}^d)\) to (2.2) in the following sense

$$\begin{aligned} \int _\Omega \mathcal {C}(\varphi )\mathcal {E}({\varvec{u}}) : \mathcal {E}({\varvec{v}}) \, \textrm{dx}= \int _\Omega {\mathbb {h}}(\varphi ) {\varvec{f}}\cdot {\varvec{v}}\, \textrm{dx}+ \int _{\Gamma _g} {\varvec{g}}\cdot {\varvec{v}}\, \textrm{d}\mathcal {H}^{d-1} \quad \text { for all } {\varvec{v}}\in H^1_D(\Omega , {\mathbb {R}}^d), \end{aligned} \tag{2.3}$$

where \(\mathcal {H}^{d-1}\) denotes the \((d-1)\)-dimensional Hausdorff measure. Moreover, there exists a positive constant C, independent of \(\varphi \), such that

In addition, let \(M>0\) and \(\varphi _i \in L^\infty (\Omega )\) with \(\Vert \varphi _i\Vert _{L^\infty } \le M\), \(i=1,2,\) and \({\varvec{u}}_i\) be the associated solution to (2.3). Then, there also exists a positive constant C, depending on the data of the system and M, but independent of the difference \(\varphi _1-\varphi _2\), such that

The above result provides a notion of a solution operator, also referred to as the control-to-state operator, \({\varvec{S}}: \varphi \mapsto {\varvec{u}}\) where \({\varvec{u}}\in H^1_D(\Omega , {\mathbb {R}}^d)\) is the unique solution to (2.3) corresponding to \(\varphi \in L^\infty (\Omega )\). As such, we may view the phase field variable \(\varphi \) as a design variable that encodes the elastic response of the associated material distribution through the operator \({\varvec{S}}\) and seek an optimal material distribution \({\overline{\varphi }}\) that fulfills suitable constraints and minimizes some cost functional.
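The interpolation of the elasticity tensor and the weight \({\mathbb {h}}(\varphi )\) from Sect. 2.1 can be illustrated pointwise in Python. The sketch below assumes that \(\mathcal {C}(\varphi )\) is the convex combination \(\tfrac{1}{2}(1+g(\varphi ))\mathcal {C}_0 + \tfrac{1}{2}(1-g(\varphi ))\mathcal {C}_1\), that \(d = 2\), that both materials are isotropic with hypothetical Lamé pairs, and that \(g\) is the identity; the helper names `isotropic_apply`, `interpolated_stress` and `body_force_weight` are illustrative only.

```python
def isotropic_apply(A, lam, mu):
    """Apply an isotropic elasticity tensor, C A = 2*mu*A + lam*tr(A)*I, to a 2x2 matrix A."""
    tr = A[0][0] + A[1][1]
    return [[2.0 * mu * A[i][j] + (lam * tr if i == j else 0.0) for j in range(2)]
            for i in range(2)]


def interpolated_stress(phi, A, lame0, lame1, g=lambda s: s):
    """Sketch of C(phi) A with C(phi) = 0.5*(1 + g(phi)) C_0 + 0.5*(1 - g(phi)) C_1.

    lame0 = (lambda_0, mu_0): soft ersatz material (phi = 1), hypothetical values.
    lame1 = (lambda_1, mu_1): elastic material (phi = -1), hypothetical values.
    g defaults to the identity, an assumed choice with g(-1) = -1 and g(1) = 1.
    """
    if not -1.0 <= phi <= 1.0:
        raise ValueError("phi must lie in [-1, 1]")
    w0 = 0.5 * (1.0 + g(phi))  # weight of the ersatz material
    w1 = 0.5 * (1.0 - g(phi))  # weight of the elastic material
    s0 = isotropic_apply(A, *lame0)
    s1 = isotropic_apply(A, *lame1)
    return [[w0 * s0[i][j] + w1 * s1[i][j] for j in range(2)] for i in range(2)]


def body_force_weight(phi):
    """h(phi) = (1 - phi) / 2: equals 1 in the material {phi = -1} and 0 in the void {phi = 1}."""
    return 0.5 * (1.0 - phi)


if __name__ == "__main__":
    A = [[1.0, 0.0], [0.0, 0.0]]            # a sample symmetric strain
    soft, stiff = (1e-4, 1e-4), (1.0, 1.0)  # hypothetical Lame pairs (lambda, mu)
    print(interpolated_stress(-1.0, A, soft, stiff))  # [[3.0, 0.0], [0.0, 1.0]]
    print(interpolated_stress(0.0, A, soft, stiff))   # halfway between both materials
```

Because the weights \(w_0, w_1\) are non-negative and sum to one whenever \(g(\varphi ) \in [-1,1]\), the interpolated tensor stays between the two material tensors, which is the positive definiteness property noted in Sect. 2.1.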

2.2 Cost Functional and Design Space

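We define the design space, i.e., the set of admissible design variables, as

$$\begin{aligned} \mathcal {V}_m := \Big \{ \varphi \in H^1(\Omega ) \, : \, |\varphi | \le 1 \text { a.e. in } \Omega , \ \int _\Omega \varphi \, \textrm{dx}= m |\Omega | \Big \}, \end{aligned}$$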

where \(m \in (-1,1)\) is a fixed constant for the mass constraint, and motivated by the context of additive manufacturing, we propose the following cost functional to be minimized:

Here, \(0 < \varepsilon \ll 1\) is the interfacial parameter associated to the phase field \(\varphi \), whereas \({\varvec{u}}\) is the associated displacement obtained as a solution of the state system (2.2). In Sect. 5.2 we perform a formal asymptotic analysis as \(\varepsilon \rightarrow 0\), where in order for the region occupied by the ersatz material to become void, we consider the elasticity tensor \(\mathcal {C}(\varphi )\) defined as (2.1) with

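In the case of isotropic materials, one can choose, for any symmetric matrix \(\mathbf{{A}}\in {\mathbb {R}}^{d \times d}\),

$$\begin{aligned} \mathcal {C}_1 \mathbf{{A}}= 2 \mu _1 \mathbf{{A}}+ \lambda _1 \, \textrm{tr}(\mathbf{{A}}) \, \textbf{I}, \end{aligned}$$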

with identity matrix \(\textbf{I}\) and Lamé constants \(\lambda _1\) and \(\mu _1\). Examples for the interpolation function g in the definition of \(\mathcal {C}(\varphi )\) are

In (2.6), the second term, premultiplied by \(\beta > 0\), is the mean compliance functional, while the first term, premultiplied by \({\widehat{\alpha }} > 0\), is an anisotropic Ginzburg–Landau functional with anisotropy function \(\gamma \). We defer the detailed discussion of \(\gamma \) to the next section and remark here that, in the isotropic case \(\gamma ({\varvec{x}}) = |{\varvec{x}}|\), \({\varvec{x}}\in {\mathbb {R}}^d\), the Ginzburg–Landau functional is well known to approximate the perimeter functional. Therefore, (2.6) can be viewed as a weighted sum of an (anisotropic) perimeter penalization and the mean compliance.

Lastly, for the non-negative potential function \(\Psi \) in (2.6), we require that it has \(\pm 1\) as its global minimizers. While many choices are available, in light of the design space \(\mathcal {V}_m\), we consider \(\Psi \) as the double obstacle potential

$$\begin{aligned} \Psi (s) := \Psi _0(s) + {\mathbb {I}}_{[-1,1]}(s), \qquad {\mathbb {I}}_{[-1,1]}(s) := {\left\{ \begin{array}{ll} 0 & \text {if } s \in [-1,1],\\ +\infty & \text {otherwise}, \end{array}\right. } \end{aligned}$$

where \(\Psi _0(s):=\frac{1}{2}(1-s^2)\) and \({\mathbb {I}}_{[-1,1]}\) is the indicator function of \([-1,1]\). Note that \(\Psi (\varphi ) = \Psi _0(\varphi )\) for \(\varphi \in \mathcal {V}_m\). Then, the structural optimization problem we study can be expressed as follows:

3 Anisotropic Ginzburg–Landau Functional

In the phase field methodology, the (isotropic) Ginzburg–Landau functional reads as

where \(\Psi \) is a non-negative double-well potential with minima at \(\pm 1\), i.e., \(\{s \in {\mathbb {R}}\, : \, \Psi (s) = 0 \} = \{\pm 1\}\). Heuristically, minimizers of (3.1) in \(H^1(\Omega )\) (e.g., subject to a mass constraint) take nearly constant values close to \(\pm 1\) in large regions of the domain \(\Omega \), which are separated by thin interfacial regions of thickness scaling with \(\varepsilon \), across which the functions transition smoothly from one value to the other. Formally, in the limit \(\varepsilon \rightarrow 0\), these minimizers converge to functions that only take values in \(\{\pm 1\}\). This is made rigorous in the framework of \(\Gamma \)-convergence by the seminal work of Modica and Mortola [70, 71].

To facilitate the forthcoming discussion, we review some basic properties of functions of bounded variation. For a more detailed introduction we refer the reader to [8, 38]. A function \(u \in L^1(\Omega )\) is a function of bounded variation in \(\Omega \) if its distributional gradient \(\textrm{D}u\) is a finite Radon measure. The space of all such functions is denoted by \(\textrm{BV}(\Omega )\), and it is endowed with the norm \(\Vert \cdot \Vert _{\textrm{BV}(\Omega )} = \Vert \cdot \Vert _{L^1(\Omega )} + \textrm{TV}(\cdot )\), where for \(u \in \textrm{BV}(\Omega )\) its total variation \(\textrm{TV}(u)\) is defined as

$$\begin{aligned} \textrm{TV}(u) := \sup \Big \{ \int _\Omega u \, \textrm{div}\, {\varvec{\eta }} \, \textrm{dx}\, : \, {\varvec{\eta }} \in C^1_c(\Omega , {\mathbb {R}}^d), \ \Vert {\varvec{\eta }}\Vert _{L^\infty } \le 1 \Big \} = |\textrm{D}u|(\Omega ). \end{aligned}$$

The space \(\textrm{BV}(\Omega , \{a,b\})\) denotes the space of all \(\textrm{BV}(\Omega )\) functions taking values in \(\{a,b\}\). We say that a set \(U \subset \Omega \) is a set of finite perimeter, or a Caccioppoli set, if its characteristic function \(\chi _U\), where \(\chi _U({\varvec{x}}) = 1\) if \({\varvec{x}}\in U\) and \(\chi _U({\varvec{x}}) = 0\) if \({\varvec{x}}\notin U\), belongs to \(\textrm{BV}(\Omega ,\{0,1\})\). The perimeter of a set of finite perimeter U in \(\Omega \) is defined as

$$\begin{aligned} \mathcal {P}_\Omega (U) := \textrm{TV}(\chi _U) = |\textrm{D}\chi _U|(\Omega ), \end{aligned}$$

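while its reduced boundary \(\partial ^*U\) is the set of all points \({\textbf{y}}\in {\mathbb {R}}^d\) such that \(|\textrm{D}\chi _{U}|(B_r({\textbf{y}})) > 0\) for all \(r > 0\), with \(B_r({\textbf{y}})\) denoting the ball of radius r centered at \({\textbf{y}}\), and

$$\begin{aligned} {\varvec{\nu }}_U({\textbf{y}}) := \lim _{r \rightarrow 0} \frac{\textrm{D}\chi _U(B_r({\textbf{y}}))}{|\textrm{D}\chi _U|(B_r({\textbf{y}}))} \ \text { exists and } \ |{\varvec{\nu }}_U({\textbf{y}})| = 1. \end{aligned}$$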

The unit vector \({\varvec{\nu }}_U({\textbf{y}})\) is called the measure theoretical unit inner normal to U at \({\textbf{y}}\), and a theorem by De Giorgi yields the connection \(\mathcal {P}_\Omega (U) = \mathcal {H}^{d-1}(\partial ^* U)\), see, e.g., [8]. Then, the result of Modica and Mortola [70, 71] can be expressed as follows: The \(\Gamma \)-limit of the extended functional

is equal to

with the constant \(c_\Psi := \int _{-1}^1 \sqrt{2\Psi (s)} ds\).
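As a quick numerical sanity check of the constant \(c_\Psi \), the sketch below evaluates \(\int _{-1}^1 \sqrt{2\Psi (s)}\, ds\) with the midpoint rule. It uses \(\Psi _0(s) = \frac{1}{2}(1-s^2)\), the smooth part of the double obstacle potential from Sect. 2.2, for which \(c_\Psi = \int _{-1}^1 \sqrt{1-s^2}\, ds = \pi /2\), and, for comparison only, the smooth quartic double well \(\frac{1}{4}(1-s^2)^2\), an illustrative choice not taken from this section, for which \(c_\Psi = 2\sqrt{2}/3\). The function names are hypothetical.

```python
import math


def c_psi(potential, n=100_000):
    """Midpoint-rule approximation of c_Psi = int_{-1}^{1} sqrt(2 * Psi(s)) ds."""
    h = 2.0 / n
    total = 0.0
    for k in range(n):
        s = -1.0 + (k + 0.5) * h  # midpoints stay strictly inside (-1, 1)
        total += math.sqrt(2.0 * potential(s))
    return total * h


def double_obstacle(s):
    """Psi_0(s) = (1 - s^2) / 2, the smooth part of the double obstacle potential on [-1, 1]."""
    return 0.5 * (1.0 - s * s)


def quartic_well(s):
    """Illustrative smooth double well (1 - s^2)^2 / 4 (not the potential used in this work)."""
    return 0.25 * (1.0 - s * s) ** 2


if __name__ == "__main__":
    print(c_psi(double_obstacle), math.pi / 2)        # both approximately 1.5708
    print(c_psi(quartic_well), 2 * math.sqrt(2) / 3)  # both approximately 0.9428
```

The midpoint rule avoids evaluating the integrand at \(s = \pm 1\), where \(\sqrt{1-s^2}\) has unbounded derivative; the resulting error still decays fast enough for a check of this kind.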

Remark 3.1

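For \(u \in \textrm{BV}(\Omega , \{-1,1\})\), setting \(A := \{u = 1\}\) leads to the relation \(u({\varvec{x}}) = 2 \chi _{A}({\varvec{x}}) - 1\), and hence

$$\begin{aligned} \textrm{TV}(u) = |\textrm{D}u|(\Omega ) = 2\, |\textrm{D}\chi _A|(\Omega ) = 2\, \mathcal {P}_\Omega (A). \end{aligned}$$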

Of particular interest is the following property of \(\Gamma \)-convergence, which states that if (i) \(\mathcal {E}_0\) is the \(\Gamma \)-limit of \(\mathcal {E}_\varepsilon \), (ii) \(u_\varepsilon \) is a minimizer to \(\mathcal {E}_\varepsilon \) for every \(\varepsilon > 0\), (iii) \(\{u_\varepsilon \}_{\varepsilon > 0}\) is a precompact sequence, then every limit of a subsequence of \(\{u_\varepsilon \}_{\varepsilon > 0}\) is a minimizer for \(\mathcal {E}_0\). This provides a methodology to construct minimizers of \(\mathcal {E}_0\) as limits of minimizers to \(\mathcal {E}_\varepsilon \), provided the associated \(\Gamma \)-limit is precisely \(\mathcal {E}_0\).

Returning to our problem in additive manufacturing, it was proposed in [4] to use anisotropic perimeter functionals to model the overhang angle constraint. In our notation, for a set U of finite perimeter with \({\varvec{\nu }}_U\) as the measure theoretical inward unit normal, these functionals take the form

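with a \(C^1\) function \({\hat{\gamma }}: {\mathbb {S}}^{d-1}\rightarrow {\mathbb {R}}\), where \({\mathbb {S}}^{d-1}\) denotes the \((d-1)\)-dimensional unit sphere. For later use, we denote by \(\gamma : {\mathbb {R}}^d \rightarrow {\mathbb {R}}\) the positively 1-homogeneous extension of \({\hat{\gamma }}\) (see Sect. 3.1):

$$\begin{aligned} \gamma ({\varvec{p}}) := {\left\{ \begin{array}{ll} |{\varvec{p}}| \, {\hat{\gamma }}\big ({\varvec{p}}/|{\varvec{p}}|\big ) & \text {if } {\varvec{p}}\ne {\varvec{0}},\\ 0 & \text {if } {\varvec{p}}= {\varvec{0}}. \end{array}\right. } \end{aligned}$$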

In [4], two choices were suggested:

where \(\psi \) is a fixed angle threshold, \({\varvec{e}}_d\) denotes the build direction, and \(\psi _i:{\mathbb {R}}^d \rightarrow {\mathbb {R}}\), for \(i = 1, \dots , m\), are given pattern functions with \({\varvec{\nu }}_{\psi _i} := \nabla \psi _i/|\nabla \psi _i|\). The first choice \({{\hat{\gamma }}}_a\) penalizes the regions of the boundary \(\partial ^* U\) where the angle between the outward normal \((-{\varvec{\nu }}_U)\) and the negative build direction \((-{\varvec{e}}_d)\) is smaller than \(\psi \), while the second choice \({{\hat{\gamma }}}_b\) compels the unit normal \({\varvec{\nu }}_U\) to be close to at least one of the directions \({\varvec{\nu }}_{\psi _i}\).

A phase field approximation of the anisotropic perimeter functional (3.2) is the following anisotropic Ginzburg–Landau functional

where, as before, \(\Psi \) is a double-well potential with minima at \(\pm 1\). In the case that \(\gamma \) is convex (see (A3)), one has the analogue of the result by Modica and Mortola for anisotropic energies (see [21, 22, 29, 73], and also Lemma 5.1 below), that is

where the extended functionals \(\mathcal {E}_{\gamma ,\varepsilon }\) and \(\mathcal {E}_{\gamma ,0}\) are defined as

This motivates our consideration of the objective functional (2.6) and of the study of the related minimization problem for the overhang angle constraint. Furthermore, let us formally state the corresponding sharp interface limit \((\varepsilon \rightarrow 0)\) of the structural optimization problem as:

where \(\textrm{BV}_m(\Omega , \{-1,1\}) = \{ f \in \textrm{BV}(\Omega , \{-1,1\}) \, : \, \int _\Omega f \, \textrm{dx}= m |\Omega | \}\) and

Note that by Lemma 2.1, the solution operator \({\varvec{S}}: \varphi \mapsto {\varvec{u}}\) is well-defined for \(\varphi \in \textrm{BV}(\Omega , \{-1,1\})\). The connection between (P) and (\(\textbf{P}_0\)) will be explored in Sect. 5.

3.1 Anisotropy Function, Wulff Shape and Frank Diagram

Consider an anisotropic density function \(\gamma :{\mathbb {R}}^d \rightarrow {\mathbb {R}}\) satisfying

-

(A1) \(\gamma \) is positively homogeneous of degree one:

$$\begin{aligned} \gamma (\lambda {\varvec{q}}) = \lambda \gamma ({\varvec{q}}) \quad \text { for all } {\varvec{q}}\in {\mathbb {R}}^d \setminus \{{\varvec{0}}\}, \, \lambda \ge 0, \end{aligned}$$which, taking \(\lambda = 0\), immediately implies \(\gamma ({\varvec{0}}) = 0\).

-

(A2) \(\gamma \) is positive for non-zero vectors:

$$\begin{aligned} \gamma ({\varvec{q}}) >0 \quad \text { for all } {\varvec{q}}\in {\mathbb {R}}^d \setminus \{{\varvec{0}}\}. \end{aligned}$$

-

(A3) \(\gamma \) is convex:

$$\begin{aligned} \gamma (s {\varvec{p}}+ (1-s) {\varvec{q}}) \le s \gamma ({\varvec{p}}) + (1-s) \gamma ({\varvec{q}}) \quad \text { for all } {\varvec{p}}, {\varvec{q}}\in {\mathbb {R}}^d, \, s \in [0,1]. \end{aligned}$$

Note that it is sufficient to assign values of \(\gamma \) on the unit sphere \({{\mathbb {S}}^{d-1}}\) in \({\mathbb {R}}^d\), since by the one-homogeneity property (A1) we can define for any \({\varvec{p}}\ne {\varvec{0}}\) with \(\hat{{\varvec{p}}} = {\varvec{p}}/ |{\varvec{p}}|\)

$$\begin{aligned} \gamma ({\varvec{p}}) = |{\varvec{p}}| \, \gamma (\hat{{\varvec{p}}}). \end{aligned}$$

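A consequence of the convexity assumption (A3) is that \(\gamma \) is continuous (in fact, it is even locally Lipschitz continuous, see, e.g., [7, E4.6, p. 129]). Then, from (A2) we have that \(\gamma \) attains a positive minimum \({\tilde{c}}\) on the compact set \(\partial B_1({\varvec{0}})\), and consequently, by (A1), \(\gamma ({\varvec{q}})=\gamma \big (|{\varvec{q}}| \hat{{\varvec{q}}} \big )=|{\varvec{q}}|\gamma \big ( \hat{{\varvec{q}}}\big )\ge {\tilde{c}}|{\varvec{q}}|\) for \({\varvec{q}}\ne {\varvec{0}}\). Similarly, \(\gamma ({\varvec{q}}) \le C |{\varvec{q}}|\) with \(C := \max _{\partial B_1({\varvec{0}})} \gamma \), and combining this with the triangle inequality shown below gives \(|\gamma ({\varvec{p}}) - \gamma ({\varvec{q}})| \le C |{\varvec{p}}- {\varvec{q}}|\), so that \(\gamma \) is even globally Lipschitz continuous. Thus, (A1)–(A3) yield the following property: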

-

(A4) \(\gamma \) is Lipschitz continuous and there exists a constant \({{\tilde{c}}} > 0\) such that

$$\begin{aligned} \gamma ({\varvec{q}}) \ge {{\tilde{c}}} |{\varvec{q}}| \quad \text { for all } {\varvec{q}}\in {\mathbb {R}}^d. \end{aligned}$$
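Property (A4) can be checked numerically for a concrete anisotropy. The sketch below uses the \(\ell ^1\)-type density \(\gamma ({\varvec{q}}) = |q_1| + |q_2|\) in \({\mathbb {R}}^2\), a hypothetical example chosen because it satisfies (A1)–(A3) while not being differentiable along the coordinate axes. Sampling \(\gamma \) on the unit circle estimates \({\tilde{c}}\) (here \({\tilde{c}} = 1\), attained on the axes) and, by (A1), the maximum gives a constant with \(\gamma ({\varvec{q}}) \le C|{\varvec{q}}|\) (here \(C = \sqrt{2}\), attained on the diagonals). The function names are illustrative.

```python
import math


def gamma_l1(q):
    """Illustrative anisotropy gamma(q) = |q_1| + |q_2|; it satisfies (A1)-(A3)."""
    return abs(q[0]) + abs(q[1])


def extremes_on_unit_circle(gamma, n=3600):
    """Sample gamma at n equally spaced points of the unit circle and return (min, max).

    The minimum estimates the constant c~ in (A4); by 1-homogeneity (A1),
    the maximum C yields the upper bound gamma(q) <= C |q| for all q.
    """
    values = [gamma((math.cos(2 * math.pi * k / n), math.sin(2 * math.pi * k / n)))
              for k in range(n)]
    return min(values), max(values)


if __name__ == "__main__":
    c_min, c_max = extremes_on_unit_circle(gamma_l1)
    print(c_min, c_max)  # approximately 1.0 and sqrt(2)
    for q in [(3.0, -4.0), (0.5, 0.25), (-2.0, 7.0)]:
        r = math.hypot(*q)
        print(q, c_min * r <= gamma_l1(q) <= c_max * r)
```

Sampling only gives estimates in general; for this particular \(\gamma \) the extremal directions lie on the sample grid, so the computed values match the exact constants up to round-off.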

Moreover, with \(\lambda = 2\) in (A1) and \(s = \frac{1}{2}\) in (A3), it is not difficult to verify that \(\gamma \) satisfies the triangle inequality. Hence, provided that \(\gamma ({\varvec{q}})=\gamma (-{\varvec{q}})\) for all \({\varvec{q}}\in {\mathbb {R}}^d\), any \(\gamma \) satisfying (A1)–(A3) defines a norm on \({\mathbb {R}}^d\).
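For completeness, the triangle inequality follows in one line from (A1) with \(\lambda = 2\) and (A3) with \(s = \frac{1}{2}\). For \({\varvec{p}}, {\varvec{q}}\in {\mathbb {R}}^d\) with \({\varvec{p}}+ {\varvec{q}}\ne {\varvec{0}}\) (the case \({\varvec{p}}+ {\varvec{q}}= {\varvec{0}}\) being trivial, since \(\gamma ({\varvec{0}}) = 0\) and \(\gamma \ge 0\) by (A2)),

$$\begin{aligned} \gamma ({\varvec{p}}+{\varvec{q}}) = \gamma \Big (2\big (\tfrac{1}{2}{\varvec{p}}+\tfrac{1}{2}{\varvec{q}}\big )\Big ) \overset{\text {(A1)}}{=} 2\,\gamma \big (\tfrac{1}{2}{\varvec{p}}+\tfrac{1}{2}{\varvec{q}}\big ) \overset{\text {(A3)}}{\le } 2\Big (\tfrac{1}{2}\gamma ({\varvec{p}})+\tfrac{1}{2}\gamma ({\varvec{q}})\Big ) = \gamma ({\varvec{p}})+\gamma ({\varvec{q}}). \end{aligned}$$

If, in addition, \(\gamma ({\varvec{q}}) = \gamma (-{\varvec{q}})\), then (A1) extends to \(\gamma (\lambda {\varvec{q}}) = |\lambda |\gamma ({\varvec{q}})\) for all \(\lambda \in {\mathbb {R}}\), and together with (A2) and the triangle inequality this gives all the norm axioms.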

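For such anisotropy density functions and smooth hypersurfaces \(\Gamma \) with normal vector field \({\varvec{\nu }}\), we define the anisotropic interfacial energy as

$$\begin{aligned} \mathcal {F}^\gamma (\Gamma ) := \int _\Gamma \gamma ({\varvec{\nu }}) \, \textrm{d}\mathcal {H}^{d-1}. \end{aligned}$$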

Then, the isoperimetric problem consists in finding a hypersurface \(\Gamma ^*\) that minimizes \(\mathcal {F}^\gamma \) under a volume constraint. This problem has been studied extensively (see for instance [41, 42, 81, 82] and [54] and the references therein), and its solution is given by the boundary of the region called the Wulff shape [92]

$$\begin{aligned} W = \{ {\varvec{q}}\in {\mathbb {R}}^d \, : \, \gamma ^*({\varvec{q}}) \le 1 \}, \end{aligned}$$

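where \(\gamma ^*\) is the dual function of \(\gamma \) defined as

$$\begin{aligned} \gamma ^*({\varvec{q}}) = \sup _{{\varvec{p}}\in {\mathbb {R}}^d \setminus \{{\varvec{0}}\}} \frac{{\varvec{p}}\cdot {\varvec{q}}}{\gamma ({\varvec{p}})}. \end{aligned}$$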

The dual function \(\gamma ^*\) also satisfies (A1)–(A3), and hence we can view the Wulff shape W as the unit ball of \(\gamma ^*\). Besides the Wulff shape, another region of interest that is used to visualize the effects of the anisotropy is the Frank diagram [40], which is defined as the unit ball of \(\gamma \):

$$\begin{aligned} F = \{ {\varvec{q}}\in {\mathbb {R}}^d \, : \, \gamma ({\varvec{q}}) \le 1 \}, \end{aligned}$$

which, due to (A3), is always a convex subset of \({\mathbb {R}}^d\). To see how the shape of the boundary of F determines which directions on the unit sphere in \({\mathbb {R}}^d\) are preferred by the anisotropy density function \(\gamma \), let us consider three examples.

Example 3.1

(Isotropic case) Consider \(\gamma ({\varvec{q}}) = |{\varvec{q}}|\) for \({\varvec{q}}\in {\mathbb {R}}^d\). Then, the associated Frank diagram is just the unit ball in \({\mathbb {R}}^d\), with boundary \(\{\gamma ({\varvec{q}}) = |{\varvec{q}}| = 1\}\). As all points on the boundary are equidistant to the origin, all directions of the unit sphere in \({\mathbb {R}}^d\) are equally preferable.
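The dual function \(\gamma ^*\), and hence the Wulff shape \(W = \{\gamma ^* \le 1\}\), can be approximated in two dimensions by taking the supremum in its definition over finely sampled unit vectors only, which suffices by the one-homogeneity (A1). The minimal sketch below (the function name `dual` and the sample size are our own choices) confirms that W coincides with the unit disk in the isotropic case and, for contrast, evaluates \(\gamma ^*\) for the convex anisotropy \(\gamma _\alpha \) of Sect. 3.2.1, written here as \(\max \{|{\varvec{p}}|, -p_2/\alpha \}\), which agrees with its piecewise definition.

```python
import numpy as np

def dual(gamma, q, n=200_000):
    """Approximate gamma*(q) = sup_{p != 0} (p . q) / gamma(p) in 2D.

    By the one-homogeneity (A1) of gamma, the supremum can be taken over unit vectors.
    """
    t = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)
    P = np.column_stack([np.cos(t), np.sin(t)])        # sampled unit vectors
    return np.max(P @ np.asarray(q, dtype=float) / gamma(P))

isotropic = lambda P: np.linalg.norm(P, axis=1)
alpha = 0.5
# convex anisotropy of Sect. 3.2.1, written as max(|p|, -p_2/alpha)
gamma_alpha = lambda P: np.maximum(np.linalg.norm(P, axis=1), -P[:, 1] / alpha)

print(dual(isotropic, [3.0, 4.0]))     # close to 5: gamma* = |.|, so W = F = unit disk
print(dual(gamma_alpha, [0.0, 1.0]))   # close to 1
print(dual(gamma_alpha, [0.0, -1.0]))  # close to alpha: W extends down to (0, -1/alpha)
```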

Example 3.2

(Convex example) Consider the Frank diagram shown on the left of Fig. 3, whose boundary is composed of a circular arc C and a horizontal line segment L. The black dot denotes the origin in \({\mathbb {R}}^2\). For any unit vector in \({\mathbb {R}}^2\), we denote by \(\phi \in [0,2\pi )\) the angle it makes with the negative y-axis, measured anticlockwise (see also Fig. 2). Then, there exists \(\theta > 0\) such that the rays spanned by unit vectors with angle in \([0,\theta ] \cup [2\pi - \theta , 2\pi )\) meet the boundary of the Frank diagram on the horizontal line segment L in Fig. 3, while the rays spanned by unit vectors with angle in \((\theta , 2 \pi - \theta )\) meet it on the circular arc C. Notice that if the origin lies on L, then \(\theta = \frac{\pi }{2}\) and C is the upper semicircle.

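Let \({\varvec{p}}\in C\) and \({\varvec{q}}\in L\) be arbitrary, and let \({\hat{{\varvec{p}}}} = \frac{{\varvec{p}}}{|{\varvec{p}}|}\), \({\hat{{\varvec{q}}}} = \frac{{\varvec{q}}}{|{\varvec{q}}|}\) denote the corresponding unit vectors. It is clear from the figure that \(|{\varvec{p}}| \ge |{\varvec{q}}|\), and since \(\gamma ({\varvec{p}}) = \gamma ({\varvec{q}}) = 1\), by (A1) we see that

$$\begin{aligned} |{\varvec{p}}| \, \gamma ({\hat{{\varvec{p}}}}) = \gamma ({\varvec{p}}) = 1 = \gamma ({\varvec{q}}) = |{\varvec{q}}| \, \gamma ({\hat{{\varvec{q}}}}). \end{aligned}$$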

This implies that \(\gamma ({\hat{{\varvec{p}}}}) \le \gamma ({\hat{{\varvec{q}}}})\), and from the viewpoint of minimizing the interfacial energy \(\mathcal {F}^\gamma \) in (3.5), directions \({\hat{{\varvec{p}}}}\) are preferable to directions \({\hat{{\varvec{q}}}}\). Consequently, from the Frank diagram in Fig. 3 we can see that the associated anisotropy density function \(\gamma \) prefers directions with angle in \((\theta , 2\pi - \theta )\) over directions with angle in \([0,\theta ] \cup [2 \pi - \theta , 2 \pi )\).
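To make the angle convention concrete: a unit vector making the angle \(\phi \) with the negative \(x_2\)-axis, measured anticlockwise, is \((\sin \phi , -\cos \phi )\). The minimal sketch below evaluates the convex anisotropy \(\gamma _\alpha \) of Sect. 3.2.1 (our implementation; \(\alpha = 0.3\) is an arbitrary choice) along the unit circle and checks the conclusion of this example with \(\theta = \cos ^{-1}(\alpha )\): \(\gamma \) equals 1 on the preferred directions \(\phi \in [\theta , 2\pi - \theta ]\) and exceeds 1 on the remaining (overhang) directions.

```python
import numpy as np

def gamma_alpha(x, alpha):
    """Convex anisotropy of Sect. 3.2.1 (our reading of the piecewise definition)."""
    r = np.linalg.norm(x)
    return r if x[-1] >= -alpha * r else -x[-1] / alpha

def unit_vector(phi):
    """Unit vector whose angle with the negative x2-axis, measured anticlockwise, is phi."""
    return np.array([np.sin(phi), -np.cos(phi)])

alpha = 0.3
theta = np.arccos(alpha)
phis = np.linspace(0.0, 2.0 * np.pi, 720, endpoint=False)   # 0.5 degree steps
vals = np.array([gamma_alpha(unit_vector(p), alpha) for p in phis])
on_arc = (phis >= theta) & (phis <= 2.0 * np.pi - theta)

print(np.allclose(vals[on_arc], 1.0))   # preferred directions: gamma = 1
print(np.all(vals[~on_arc] > 1.0))      # remaining (overhang) directions: gamma > 1
print(vals[0])                          # downward direction phi = 0: gamma = 1/alpha
```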

Example 3.3

(Non-convex example) Consider the Frank diagram shown on the right of Fig. 3, whose boundary encloses a non-convex set. Let \({\varvec{r}}_1\) and \({\varvec{r}}_2\) denote the two unit vectors pointing to the two endpoints of the circular arc, with angles \(\theta \) and \(2 \pi - \theta \), respectively. By the preceding discussion, the anisotropy density function \(\gamma \) prefers directions with angles in \((\theta , 2 \pi - \theta )\).

With such \(\gamma \), consider two spatial points \({\varvec{x}}_1\) and \({\varvec{x}}_2\) at the same height, see Fig. 4. Connecting them by a horizontal straight line is energetically expensive, since the normal of this line has angle zero, which corresponds to the point on the boundary of the Frank diagram closest to the origin and hence to the largest value of \(\gamma \) among all unit vectors. An energetically more favorable connection is a zigzag path from \({\varvec{x}}_1\) to \({\varvec{x}}_2\) whose normal vectors oscillate between \({\varvec{r}}_1\) and \({\varvec{r}}_2\). This resembles the behavior termed “dripping effect” in [4] (see also [77, Fig. 15]), namely the tendency of shapes to develop oscillatory boundaries in order to meet the overhang angle constraints.

Connection between two spatial points with non-convex anisotropic density function \(\gamma \) whose Frank diagram is shown on the right of Fig. 3
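The energetic argument can be quantified with a short computation. The minimal sketch below uses our own implementation of the two-dimensional non-convex anisotropy \(\gamma _{\alpha ,\lambda }\) constructed in Sect. 3.2.2 and compares the anisotropic length \(\int \gamma ({\varvec{\nu }})\) of a horizontal segment of length \(\ell \) (downward normal) with that of a zigzag path whose legs have normals \({\varvec{r}}_1\) and \({\varvec{r}}_2\); the parameter values and helper names are arbitrary choices of ours. Each leg has \(\gamma = 1\), while its length exceeds its horizontal extent by the factor \(1/\alpha \), so the zigzag costs \(\ell /\alpha \), compared with \(\ell /(\lambda \alpha )\) for the straight segment. For \(\lambda < 1\) the zigzag is cheaper, whereas in the convex limit \(\lambda = 1\) both costs coincide.

```python
import numpy as np

def gamma_nonconvex(x, alpha, lam):
    """Non-convex anisotropy of Sect. 3.2.2 in 2D (our reading of the construction)."""
    x1, x2 = float(x[0]), float(x[1])
    r = np.hypot(x1, x2)
    if x2 >= -alpha * r:                   # circular arc of the Frank diagram
        return r
    s = np.sqrt(1.0 - alpha**2)            # line segments L1, L2 below the origin
    return abs((1.0 - lam) * alpha * abs(x1) + s * x2) / (lam * alpha * s)

def path_energy(points, gamma):
    """Anisotropic length sum_k |segment_k| * gamma(nu_k) of a polyline.

    The path is traversed from left to right and nu_k = (t2, -t1) is the unit
    normal pointing below it, i.e. towards the unsupported side.
    """
    pts = np.asarray(points, dtype=float)
    energy = 0.0
    for a, b in zip(pts[:-1], pts[1:]):
        t = b - a
        length = np.linalg.norm(t)
        t = t / length
        energy += length * gamma(np.array([t[1], -t[0]]))
    return energy

alpha, ell, teeth = 0.5, 1.0, 10
h = ell / (2 * teeth)                        # horizontal extent of one zigzag leg
rise = h * np.sqrt(1.0 - alpha**2) / alpha   # slope chosen so the leg normals are r_1, r_2
xs = np.linspace(0.0, ell, 2 * teeth + 1)
ys = np.where(np.arange(2 * teeth + 1) % 2 == 0, 0.0, -rise)
zigzag = np.column_stack([xs, ys])
straight = np.array([[0.0, 0.0], [ell, 0.0]])

results = {}
for lam in (0.5, 1.0):                       # lam = 1 recovers the convex gamma_alpha
    gam = lambda x, lam=lam: gamma_nonconvex(x, alpha, lam)
    results[lam] = (path_energy(straight, gam), path_energy(zigzag, gam))
    print(lam, results[lam])
# lam = 0.5: straight about 1/(lam*alpha) = 4 versus zigzag about ell/alpha = 2
# lam = 1.0: both energies are about 2, so the zigzag brings no gain
```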

3.2 Relevant Examples of Anisotropic Density Function

Motivated by the above discussion, in this subsection we provide examples of \(\gamma \) that produce the Frank diagrams shown in Fig. 3.

3.2.1 An Example of a Convex Anisotropy

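We begin by introducing an anisotropy function \(\gamma \) whose Frank diagram is the convex set shown on the left of Fig. 3. To fix ideas, we first treat the two-dimensional case. For a fixed constant \(\alpha \in (0,1)\), we consider the function

$$\begin{aligned} \gamma ({\varvec{x}}) = {\left\{ \begin{array}{ll} |{\varvec{x}}| & \text { if } {\varvec{x}}\in V, \\ -\dfrac{x_2}{\alpha } & \text { if } {\varvec{x}}\in V^c, \end{array}\right. } \end{aligned}$$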

where the set \(V{\subseteq {\mathbb {R}}^2}\) will be determined in the following. To achieve the boundary of the Frank diagram F shown on the left of Fig. 3, we notice that \(\partial F \cap V\) is a circular arc of radius 1 centered at the origin, while on \(\partial F \cap V^c\) the condition \(\gamma ({\varvec{x}}) = 1\) means \(x_2 = - \alpha \), which describes a horizontal line segment at height \(-\alpha \). Following the convention in Fig. 2, where angles are measured anticlockwise from the negative \(x_2\)-axis, we can parameterize the circular arc by \((\sin \phi , -\cos \phi )\) for \(\phi \in [\theta , 2 \pi -\theta ]\), where \(\theta := \cos ^{-1}(\alpha )\), see Fig. 3. Hence, the set V in the definition (3.9) can be characterized as

$$\begin{aligned} V = \big \{ r (\sin \phi , -\cos \phi )^{\top } \, : \, r \ge 0, \ \phi \in [\theta , 2\pi - \theta ] \big \}. \end{aligned}$$

Remark 3.2

Note that in the limiting case \(\alpha =1\), the above set V is the entire plane \({\mathbb {R}}^2\). Hence we have the isotropic case \(\gamma ({\varvec{x}}) = |{\varvec{x}}|\) for all \({\varvec{x}}\in {\mathbb {R}}^2\), and \(\partial F\) is simply the unit circle.

For a Cartesian characterization of the set V, let \(c := \frac{\alpha }{\sqrt{1-\alpha ^2}}\) and consider the two straight lines \(\{x_2 = c x_1\}\) and \(\{x_2 = -c x_1\}\), which together with the coordinate axes divide \({\mathbb {R}}^2\) into eight regions (see Fig. 5), labeled Region \(1, 2, \dots , 8\) in an anticlockwise direction starting from the positive \(x_1\)-axis. Then, the horizontal straight line portion \(\partial F \cap V^c\) of the Frank diagram at height \(x_2 = - \alpha \) is contained in Regions 6 and 7, whose union is described by the set \(\{x_2 < -c |x_1|\}\), while the circular arc portion \(\partial F \cap V\) is contained in Regions \(1, \dots , 5\) and 8, whose union is described by the set \(\{x_2 \ge -c |x_1|\}\). Hence, the set V in (3.9) can be written as

$$\begin{aligned} V = \big \{ {\varvec{x}}= (x_1, x_2)^{\top } \in {\mathbb {R}}^2 \, : \, x_2 \ge -c |x_1| \big \}. \end{aligned}$$

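Generalizing to the d-dimensional case, we obtain

$$\begin{aligned} \gamma _{\alpha }({\varvec{x}}) = {\left\{ \begin{array}{ll} |{\varvec{x}}| & \text { if } x_d \ge -\alpha |{\varvec{x}}|, \\ -\dfrac{x_d}{\alpha } & \text { if } x_d < -\alpha |{\varvec{x}}|, \end{array}\right. } \quad {\varvec{x}}\in {\mathbb {R}}^d. \end{aligned}$$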

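From (3.10) we see that \(\gamma _{\alpha } \in C^0({\mathbb {R}}^d)\), but it is not continuously differentiable at the points where \(x_d = - \alpha |{\varvec{x}}|\). Furthermore, since \(-x_d/\alpha \le |{\varvec{x}}|\) if and only if \(x_d \ge -\alpha |{\varvec{x}}|\), we can write \(\gamma _{\alpha }({\varvec{x}}) = \max \{ |{\varvec{x}}|, -x_d/\alpha \}\); hence \(\gamma _{\alpha }\) is convex as the maximum of two convex functions, and it is clear that \(\gamma _{\alpha }\) satisfies the assumptions (A1)–(A3) and hence (A4).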
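These properties can also be checked numerically. The minimal sketch below (our own helper, with \(d = 3\) and \(\alpha = 0.4\) chosen arbitrarily) compares the piecewise definition with the representation \(\gamma _\alpha ({\varvec{x}}) = \max \{|{\varvec{x}}|, -x_d/\alpha \}\), checks that the two-dimensional description \(\{x_2 \ge -c|x_1|\}\) of V agrees with \(\{x_2 \ge -\alpha |{\varvec{x}}|\}\), and computes one-sided difference quotients along \({\varvec{e}}_d\) at a point with \(x_d = -\alpha |{\varvec{x}}|\). These approach \(-\alpha \) and \(-1/\alpha \), respectively, so \(\gamma _\alpha \) has no derivative there.

```python
import numpy as np

def gamma_alpha(x, alpha):
    """Piecewise definition: |x| if x_d >= -alpha|x|, else -x_d / alpha."""
    x = np.asarray(x, dtype=float)
    r = np.linalg.norm(x)
    return r if x[-1] >= -alpha * r else -x[-1] / alpha

alpha = 0.4
rng = np.random.default_rng(0)

# (1) max-representation: gamma_alpha = max(|x|, -x_d/alpha), a maximum of convex functions
X = rng.normal(size=(1000, 3))
max_form = np.maximum(np.linalg.norm(X, axis=1), -X[:, -1] / alpha)
print(np.allclose([gamma_alpha(x, alpha) for x in X], max_form))

# (2) in 2D, {x_2 >= -c|x_1|} with c = alpha / sqrt(1 - alpha^2) is the same set V
Y = rng.normal(size=(1000, 2))
c = alpha / np.sqrt(1.0 - alpha**2)
print(np.array_equal(Y[:, 1] >= -c * np.abs(Y[:, 0]),
                     Y[:, 1] >= -alpha * np.linalg.norm(Y, axis=1)))

# (3) one-sided difference quotients along e_d at a kink point (x_d = -alpha|x|)
x0 = np.array([np.sqrt(1.0 - alpha**2), 0.0, -alpha])    # |x0| = 1
e_d, h = np.array([0.0, 0.0, 1.0]), 1e-6
right = (gamma_alpha(x0 + h * e_d, alpha) - gamma_alpha(x0, alpha)) / h
left = (gamma_alpha(x0, alpha) - gamma_alpha(x0 - h * e_d, alpha)) / h
print(right, left)   # close to -alpha and -1/alpha: no derivative at x0
```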

3.2.2 An Example of a Non-convex Anisotropy

For completeness, we provide an example of an anisotropic function \(\gamma \) that yields a non-convex Frank diagram as seen in the right of Fig. 3. Referring to the construction of the previous example, we present the two-dimensional case first. Fix \(\lambda \in (0,1)\) and let \((0,-\lambda \alpha )^{\top }\) denote the intersection of the two straight line segments (which we call \(L_1\) and \(L_2\) respectively) just below the origin. Denoting by \({\varvec{p}}_-\) the endpoint of the left line segment \(L_1\) and by \({\varvec{p}}_{+}\) the endpoint of the right line segment \(L_2\) that connects \((0,-\lambda \alpha )^{\top }\) to the circular arc of radius 1, a short calculation shows that \({\varvec{p}}_{\pm } = (\pm \sqrt{1-\alpha ^2}, -\alpha )^{\top }\).

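A choice of tangent vector for \(L_1\) is \({\varvec{\tau }} = (-\sqrt{1-\alpha ^2}, (\lambda - 1)\alpha )^{\top }\), so that a normal vector for \(L_1\) is \({\varvec{n}} = ((1-\lambda )\alpha , -\sqrt{1-\alpha ^2})^{\top }\). Consider, for some constant \(b>0\) to be determined,

$$\begin{aligned} \gamma ({\varvec{x}}) = b \, |{\varvec{n}}\cdot {\varvec{x}}| = b \, \big | (1-\lambda )\alpha x_1 - \sqrt{1-\alpha ^2} \, x_2 \big | \end{aligned}$$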

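for \({\varvec{x}}= (x_1, x_2) \in \{x_1 \le 0, x_2 \le \frac{\alpha }{\sqrt{1-\alpha ^2}}x_1\}\). This set corresponds to Region 6 on the right of Fig. 5, which contains the line segment \(L_1\); the latter can be parameterized as

$$\begin{aligned} L_1 = \big \{ (0, -\lambda \alpha )^{\top } + t {\varvec{\tau }} \, : \, t \in [0,1] \big \}. \end{aligned}$$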

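Notice that \(\gamma \) satisfies (A1)–(A2) (due to the modulus), and, since \({\varvec{n}}\cdot {\varvec{\tau }} = 0\), a short calculation shows that for \({\varvec{x}}= (0,-\lambda \alpha )^{\top } + t{\varvec{\tau }} \in L_1\),

$$\begin{aligned} \gamma ({\varvec{x}}) = b \, \big | {\varvec{n}}\cdot (0,-\lambda \alpha )^{\top } + t \, {\varvec{n}}\cdot {\varvec{\tau }} \big | = b \lambda \alpha \sqrt{1-\alpha ^2}. \end{aligned}$$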

Hence, choosing \(b = (\lambda \alpha \sqrt{1-\alpha ^2})^{-1} > 0\) yields that \(\gamma ({\varvec{x}}) = 1\) for \({\varvec{x}}\in L_1\). Similarly, a choice of tangent vector for \(L_2\) is \({\varvec{\tau }} = (\sqrt{1-\alpha ^2}, (\lambda - 1)\alpha )^{\top }\), so that a normal vector for \(L_2\) is \({\varvec{n}} = ((\lambda - 1)\alpha , -\sqrt{1-\alpha ^2})^{\top }\). We consider

$$\begin{aligned} \gamma ({\varvec{x}}) = b \, |{\varvec{n}}\cdot {\varvec{x}}| = b \, \big | (\lambda - 1)\alpha x_1 - \sqrt{1-\alpha ^2} \, x_2 \big | \end{aligned}$$

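for \({\varvec{x}}\in \{x_1 \ge 0, x_2 \le - \frac{\alpha }{\sqrt{1-\alpha ^2}} x_1\}\), which corresponds to Region 7 in Fig. 5 and contains the line segment \(L_2\). Then, a short calculation shows that \(\gamma ({\varvec{x}}) = 1\) for \({\varvec{x}}\in L_2\). Since \((1-\lambda )\alpha x_1 = -(1-\lambda )\alpha |x_1|\) in Region 6 and \((\lambda -1)\alpha x_1 = -(1-\lambda )\alpha |x_1|\) in Region 7, both formulas can be combined, and an example of an anisotropic function \(\gamma \) that gives rise to a Frank diagram whose boundary is shown on the right of Fig. 3 is

$$\begin{aligned} \gamma _{\alpha ,\lambda }({\varvec{x}}) = {\left\{ \begin{array}{ll} |{\varvec{x}}| & \text { if } x_2 \ge -\frac{\alpha }{\sqrt{1-\alpha ^2}} |x_1|, \\ \dfrac{\big | (1-\lambda )\alpha |x_1| + \sqrt{1-\alpha ^2} \, x_2 \big |}{\lambda \alpha \sqrt{1-\alpha ^2}} & \text { otherwise}, \end{array}\right. } \end{aligned}$$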

for \(\alpha \in (0,1)\), \(\lambda \in (0,1)\) and \({\varvec{x}}= (x_1, x_2) \in {\mathbb {R}}^2\). Notice that in the limit \(\lambda \rightarrow 1\), we recover the convex anisotropic function \(\gamma _{\alpha }\) defined in (3.10).
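The construction can be double-checked numerically. The minimal sketch below implements \(\gamma _{\alpha ,\lambda }\) in two dimensions as we read it from the formulas above (helper names and the values \(\alpha = 0.6\), \(\lambda = 0.5\) are ours). It confirms that \(\gamma _{\alpha ,\lambda } = 1\) along \(L_1\) and \(L_2\), that convexity fails (\(\gamma _{\alpha ,\lambda }({\varvec{p}}_\pm ) = 1\), while the midpoint \((0,-\alpha )\) of \({\varvec{p}}_-\) and \({\varvec{p}}_+\) has \(\gamma _{\alpha ,\lambda } = 1/\lambda > 1\)), and that \(\lambda \rightarrow 1\) recovers \(\gamma _\alpha \).

```python
import numpy as np

def gamma_convex(x, alpha):
    """gamma_alpha of Sect. 3.2.1 in 2D."""
    r = np.hypot(x[0], x[1])
    return r if x[1] >= -alpha * r else -x[1] / alpha

def gamma_nonconvex(x, alpha, lam):
    """gamma_{alpha,lambda} in 2D: |x| on V, b|n . x| on Regions 6 and 7."""
    r = np.hypot(x[0], x[1])
    if x[1] >= -alpha * r:
        return r
    s = np.sqrt(1.0 - alpha**2)
    return abs((1.0 - lam) * alpha * abs(x[0]) + s * x[1]) / (lam * alpha * s)

alpha, lam = 0.6, 0.5
s = np.sqrt(1.0 - alpha**2)
apex = np.array([0.0, -lam * alpha])
p_minus, p_plus = np.array([-s, -alpha]), np.array([s, -alpha])

ts = np.linspace(0.0, 1.0, 11)
on_L1 = [gamma_nonconvex(apex + t * (p_minus - apex), alpha, lam) for t in ts]
on_L2 = [gamma_nonconvex(apex + t * (p_plus - apex), alpha, lam) for t in ts]
print(np.allclose(on_L1, 1.0), np.allclose(on_L2, 1.0))   # gamma = 1 on L1 and L2

mid = 0.5 * (p_minus + p_plus)                 # midpoint (0, -alpha) of p_- and p_+
print(gamma_nonconvex(mid, alpha, lam))        # about 1/lam = 2 > (1 + 1)/2: not convex

rng = np.random.default_rng(1)
X = rng.normal(size=(500, 2))
limit_gap = max(abs(gamma_nonconvex(x, alpha, 1.0 - 1e-9) - gamma_convex(x, alpha)) for x in X)
print(limit_gap)                               # tiny: lam -> 1 recovers gamma_alpha
```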

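To generalize to the d-dimensional case, we notice that the lines \(L_1\) and \(L_2\) in the above discussion are now replaced by the lateral surface S of a cone with apex \((0,0,\dots , 0,-\lambda \alpha ) \in {\mathbb {R}}^d\), which can be parameterized as

$$\begin{aligned} S = \Big \{ \big ( t \sqrt{1-\alpha ^2} \, {\varvec{\omega }}, \, -\lambda \alpha - t(1-\lambda )\alpha \big ) \, : \, {\varvec{\omega }}\in {\mathbb {S}}^{d-2}, \ t \in [0,1] \Big \}. \end{aligned}$$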

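Then, by arguments similar to those leading to (3.11), we obtain the function

$$\begin{aligned} \gamma _{\alpha ,\lambda }({\varvec{x}}) = {\left\{ \begin{array}{ll} |{\varvec{x}}| & \text { if } x_d \ge -\alpha |{\varvec{x}}|, \\ \dfrac{\big | (1-\lambda )\alpha |{\tilde{{\varvec{x}}}}| + \sqrt{1-\alpha ^2} \, x_d \big |}{\lambda \alpha \sqrt{1-\alpha ^2}} & \text { otherwise}, \end{array}\right. } \end{aligned}$$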

for \({\varvec{x}}= ({\tilde{{\varvec{x}}}}, x_d) \in {\mathbb {R}}^d\), \({\tilde{{\varvec{x}}}} \in {\mathbb {R}}^{d-1}\), where we can verify that \(\gamma _{\alpha ,\lambda }({\varvec{x}}) = 1\) for \({\varvec{x}}\in S\). In Fig. 6 we display the Frank diagrams for non-convex anisotropy functions of the form (3.11) with \(\lambda = 0.5\) and \(\alpha {= 0.7, 0.5, 0.3}\).

Frank diagrams for non-convex anisotropy (3.11) with \(\lambda =0.5\). From left to right: \(\alpha = 0.7\), 0.5 and 0.3
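In the same spirit, the minimal sketch below (our own implementation, with \(d = 3\), \(\alpha = \lambda = 0.5\) and the helper name `gamma_nonconvex_d` chosen by us) samples points on the lateral surface S, written as the segments joining the apex \((0, \dots , 0, -\lambda \alpha )\) to the sphere \(\{|{\tilde{{\varvec{x}}}}| = \sqrt{1-\alpha ^2}, \ x_d = -\alpha \}\), and checks that \(\gamma _{\alpha ,\lambda } = 1\) there.

```python
import numpy as np

def gamma_nonconvex_d(x, alpha, lam):
    """d-dimensional non-convex anisotropy (our reading of Sect. 3.2.2), x = (x_tilde, x_d)."""
    x = np.asarray(x, dtype=float)
    r = np.linalg.norm(x)
    if x[-1] >= -alpha * r:                       # spherical part: gamma = |x|
        return r
    s = np.sqrt(1.0 - alpha**2)                   # conical part below the origin
    num = abs((1.0 - lam) * alpha * np.linalg.norm(x[:-1]) + s * x[-1])
    return num / (lam * alpha * s)

alpha, lam, d = 0.5, 0.5, 3
s = np.sqrt(1.0 - alpha**2)
rng = np.random.default_rng(2)

# points of S: segments from the apex (0, ..., 0, -lam*alpha) to {|x_tilde| = s, x_d = -alpha}
omega = rng.normal(size=(200, d - 1))
omega /= np.linalg.norm(omega, axis=1, keepdims=True)     # directions in S^{d-2}
t = rng.uniform(0.0, 1.0, size=(200, 1))
S = np.column_stack([t * s * omega, -lam * alpha - t[:, 0] * (1.0 - lam) * alpha])
vals = np.array([gamma_nonconvex_d(p, alpha, lam) for p in S])
print(np.allclose(vals, 1.0))
```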

Let us stress that, when \(\gamma \) is non-convex, the associated energy functional \({{\mathcal {F}}}^\gamma \) in (3.5) is no longer lower semicontinuous (cf. [8,9,10]). The same conclusion applies to the phase field approximation \({E}_{\gamma ,\varepsilon }\) in (3.3), and so the associated minimization problem (P) may be ill-posed (see also Fig. 10).

3.3 Subdifferential Characterization

The analysis of the structural optimization problem (P) largely follows that of [24]; the major difference is the anisotropic Ginzburg–Landau functional in (2.6). As the examples of \(\gamma \) discussed in the previous subsection show, we are interested in anisotropy density functions that are convex and continuous, but not necessarily in \(C^1({\mathbb {R}}^d)\), which requires a non-trivial modification of the analysis performed in [24]. In this subsection we focus only on the gradient part of (2.6) and investigate its subdifferential in preparation for the first-order necessary optimality conditions for (P) (cf. Theorem 4.2).

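We define \(A: {\mathbb {R}}^d \rightarrow [0,\infty )\) as

$$\begin{aligned} A({\varvec{p}}) = \frac{1}{2} \gamma ({\varvec{p}})^2, \quad {\varvec{p}}\in {\mathbb {R}}^d, \end{aligned}$$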

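and from (A1)–(A4) it readily follows that A is convex (as the composition of the convex, nonnegative function \(\gamma \) with the map \(s \mapsto \frac{1}{2}s^2\), which is convex and nondecreasing on \([0,\infty )\)), continuous with \(A({\varvec{0}}) = 0\), positive for non-zero vectors, and positively homogeneous of degree two:

$$\begin{aligned} A(\lambda {\varvec{p}}) = \lambda ^2 A({\varvec{p}}) \quad \text { for all } {\varvec{p}}\in {\mathbb {R}}^d, \, \lambda > 0, \end{aligned}$$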

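and there exist positive constants c and C such that

$$\begin{aligned} c |{\varvec{p}}|^2 \le A({\varvec{p}}) \le C |{\varvec{p}}|^2 \quad \text { for all } {\varvec{p}}\in {\mathbb {R}}^d. \end{aligned}$$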

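Associated with such a function A, we consider the integral functional

$$\begin{aligned} \mathcal {F}(\varphi ) = \int _\Omega A(\nabla \varphi ) \, \textrm{dx}, \quad \varphi \in H^1(\Omega ). \end{aligned}$$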

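Convexity of A immediately implies that \(\mathcal {F}\) is convex, proper and weakly lower semicontinuous in \(H^1(\Omega )\), see, e.g., [37, Thm. 1, §8.2.2]. Consequently, its subdifferential \(\partial \mathcal {F}: H^1(\Omega ) \rightarrow 2^{H^1(\Omega )^*}\), defined as

$$\begin{aligned} \partial \mathcal {F}(\varphi ) = \big \{ v \in H^1(\Omega )^* \, : \, \mathcal {F}(\zeta ) - \mathcal {F}(\varphi ) \ge \langle v, \zeta - \varphi \rangle _{H^1} \ \text { for all } \zeta \in H^1(\Omega ) \big \}, \end{aligned}$$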

is a maximal monotone operator from \(H^1(\Omega )\) to \(H^1(\Omega )^*\) (see [15, Thm. 2.43]), which is equivalent to the property that, for any \(f \in H^1(\Omega )^*\), there exists at least one solution \(\varphi _0 \in D(\partial \mathcal {F})\) with \(\xi _0 \in \partial \mathcal {F}(\varphi _0)\) such that

Let us now provide a useful lemma that characterizes the subdifferential \(\partial \mathcal {F}(\varphi )\) in terms of elements of \(\partial A(\nabla \varphi )\) (see also [15, p. 146, Problem 2.7]). Heuristically, elements of \(\partial \mathcal {F}(\varphi )\) are the negative weak divergences of elements of \(\partial A(\nabla \varphi )\).
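For the convex anisotropy \(\gamma _\alpha \) of Sect. 3.2.1, the set-valued nature of \(\partial A\) can be made explicit. Since \(\gamma _\alpha ({\varvec{q}}) = \max \{|{\varvec{q}}|, -q_d/\alpha \}\) and \(\gamma _\alpha \ge 0\), one has \(A({\varvec{q}}) = \max \{\frac{1}{2}|{\varvec{q}}|^2, \frac{1}{2}((-q_d/\alpha )^+)^2\}\). At a point \({\varvec{q}}\ne {\varvec{0}}\) with \(q_d = -\alpha |{\varvec{q}}|\) both pieces are active, and by the standard formula for the subdifferential of a maximum of finitely many convex \(C^1\) functions, \(\partial A({\varvec{q}})\) is the segment between the gradients \({\varvec{q}}\) and \((q_d/\alpha ^2){\varvec{e}}_d\). The minimal sketch below (with \(\alpha = 0.5\), \(d = 3\) and sample sizes chosen by us) checks the subgradient inequality \(A({\varvec{r}}) - A({\varvec{q}}) \ge {\varvec{\xi }}\cdot ({\varvec{r}}- {\varvec{q}})\) on random points for elements of this segment, shows that a point beyond the segment violates it, and verifies the two-sided bound \(\frac{1}{2}|{\varvec{q}}|^2 \le A({\varvec{q}}) \le \frac{1}{2\alpha ^2}|{\varvec{q}}|^2\), which follows from \(1 \le \gamma _\alpha \le 1/\alpha \) on the unit sphere.

```python
import numpy as np

alpha = 0.5

def A(q):
    """A(q) = gamma_alpha(q)^2 / 2 with gamma_alpha(q) = max(|q|, -q_d / alpha)."""
    q = np.asarray(q, dtype=float)
    return 0.5 * max(np.linalg.norm(q), -q[-1] / alpha) ** 2

s = np.sqrt(1.0 - alpha**2)
q = np.array([s, 0.0, -alpha])                 # kink point: q_d = -alpha |q|, |q| = 1
g1 = q.copy()                                  # gradient of |q|^2 / 2
g2 = np.array([0.0, 0.0, q[-1] / alpha**2])    # gradient of (q_d / alpha)^2 / 2 for q_d < 0

rng = np.random.default_rng(4)
R = q + rng.normal(scale=0.5, size=(2000, 3))  # random comparison points r

def min_gap(xi):
    """min over the samples r of A(r) - A(q) - xi . (r - q); >= 0 if xi is a subgradient."""
    return min(A(r) - A(q) - xi @ (r - q) for r in R)

for mu in (0.0, 0.3, 1.0):                     # points of the segment [g1, g2]
    print(mu, min_gap((1.0 - mu) * g1 + mu * g2) >= -1e-12)

v = g2 - g1                                    # moving beyond g2 leaves the subdifferential
xi_bad = g1 + 1.5 * v
print(A(q + 1e-4 * v) - A(q) - xi_bad @ (1e-4 * v) < 0)   # True: inequality violated

Q = rng.normal(size=(1000, 3))                 # two-sided bound c|q|^2 <= A <= C|q|^2
print(all(0.5 * x @ x <= A(x) * (1 + 1e-12) and A(x) <= 0.5 / alpha**2 * x @ x * (1 + 1e-12)
          for x in Q))
```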

Lemma 3.1

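Consider the map \(\mathcal {G}: H^1(\Omega ) \rightarrow 2^{H^1(\Omega )^*}\) defined by

$$\begin{aligned} \mathcal {G}(\varphi ) = \big \{ v \in H^1(\Omega )^* \, : \, \exists \, {\varvec{\xi }}\in L^2(\Omega , {\mathbb {R}}^d) \text { with } {\varvec{\xi }}({\varvec{x}}) \in \partial A(\nabla \varphi ({\varvec{x}})) \text { for a.e. } {\varvec{x}}\in \Omega \text { and } \langle v, p \rangle _{H^1} = ({\varvec{\xi }}, \nabla p) \ \forall p \in H^1(\Omega ) \big \}. \end{aligned}$$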

Then, for \(\varphi \in D(\partial \mathcal {F})\), it holds that

$$\begin{aligned} \partial \mathcal {F}(\varphi ) = \mathcal {G}(\varphi ). \end{aligned}$$

Remark 3.3

If \(A :{\mathbb {R}}^d \rightarrow [0,\infty )\) is smooth, convex, coercive with bounded second derivatives, i.e., there exists \(C> 0\) such that \(|A_{p_i,p_j}({\varvec{p}})| \le C\) for all \({\varvec{p}}\in {\mathbb {R}}^d\) and \(1 \le i,j \le d\), then the corresponding characterization of the subdifferential of \(\mathcal {F}\) can be found in [37, Thm. 4, §9.6.3]. Namely, \(\partial \mathcal {F}(\varphi )\) is single-valued and \(\partial \mathcal {F}(\varphi ) = \{- \sum _{i=1}^d \partial _{x_i} [A_{p_i}(\nabla \varphi )] \}\).
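The smooth case of Remark 3.3 has a transparent discrete counterpart. The minimal sketch below uses a hypothetical one-dimensional integrand \(A(p) = \frac{1}{2}p^2 + \log \cosh p\), which is smooth, convex and coercive with \(1 \le A'' \le 2\), on a uniform grid of \([0,1]\) (grid size and test function are arbitrary). It compares the gradient of the discrete energy with respect to the nodal values, computed by central differences and divided by the mesh size, with a discrete version of \(-(A'(\varphi '))'\). The zero fluxes at the end points reflect the natural boundary condition contained in the weak formulation on \(H^1(\Omega )\).

```python
import numpy as np

def A(p):
    """Hypothetical smooth convex integrand with bounded second derivative (1 <= A'' <= 2)."""
    return 0.5 * p**2 + np.log(np.cosh(p))

def dA(p):
    return p + np.tanh(p)

n = 50
h = 1.0 / n
x = np.linspace(0.0, 1.0, n + 1)

def F_h(phi):
    """Discrete analogue of F(phi) = int_0^1 A(phi') dx, using forward differences."""
    return h * np.sum(A(np.diff(phi) / h))

def minus_div(phi):
    """Nodal values of a discrete -(A'(phi'))'; zero flux outside [0, 1] (natural BC)."""
    flux = dA(np.diff(phi) / h)                     # A'(phi') on the n cells
    padded = np.concatenate([[0.0], flux, [0.0]])
    return -(padded[1:] - padded[:-1]) / h

phi = np.sin(3.0 * x)
eps = 1e-6
# gradient of F_h with respect to the nodal values (central differences), divided by h
grad = np.array([(F_h(phi + eps * e) - F_h(phi - eps * e)) / (2.0 * eps)
                 for e in np.eye(n + 1)]) / h
err = np.max(np.abs(grad - minus_div(phi)))
print(err)   # small: only finite-difference and rounding errors remain
```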

Proof of Lemma 3.1

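To begin, fix \(\varphi \in H^1(\Omega )\), \(v \in \mathcal {G}(\varphi )\) and let \({\varvec{\xi }}\in L^2(\Omega , {\mathbb {R}}^d)\) with \({\varvec{\xi }}({\varvec{x}}) \in \partial A(\nabla \varphi ({\varvec{x}}))\) for almost every \({\varvec{x}}\in \Omega \) satisfying \(\langle v,p \rangle _{H^1} = ({\varvec{\xi }}, \nabla p)\) for all \(p \in H^1(\Omega )\). By the definition of the subdifferential \(\partial A(\nabla \varphi ({\varvec{x}}))\), we have

$$\begin{aligned} A({\varvec{q}}) - A(\nabla \varphi ({\varvec{x}})) \ge {\varvec{\xi }}({\varvec{x}}) \cdot ({\varvec{q}}- \nabla \varphi ({\varvec{x}})) \quad \text { for all } {\varvec{q}}\in {\mathbb {R}}^d \text { and a.e. } {\varvec{x}}\in \Omega . \end{aligned}$$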

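Choosing \({\varvec{q}}= \nabla \phi ({\varvec{x}})\), where \(\phi \in H^1(\Omega )\) is arbitrary, and integrating over \(\Omega \), we infer that

$$\begin{aligned} \mathcal {F}(\phi ) - \mathcal {F}(\varphi ) \ge ({\varvec{\xi }}, \nabla \phi - \nabla \varphi ) = \langle v, \phi - \varphi \rangle _{H^1}. \end{aligned}$$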

This shows \(v \in \partial \mathcal {F}(\varphi )\) and hence the inclusion \(\mathcal {G}(\varphi ) \subset \partial \mathcal {F}(\varphi )\).

For the converse inclusion, we show that \(\mathcal {G}: H^1(\Omega ) \rightarrow 2^{H^1(\Omega )^*}\) is a maximal monotone operator. Since \(\mathcal {G}(\varphi ) \subset \partial \mathcal {F}(\varphi )\) and \(\partial \mathcal {F}\) is monotone, \(\partial \mathcal {F}\) is a monotone extension of \(\mathcal {G}\), and the maximality of \(\mathcal {G}\) then yields \(\mathcal {G}(\varphi ) = \partial \mathcal {F}(\varphi )\) for all \(\varphi \in D(\partial \mathcal {F})\). As \(\mathcal {G}\) is monotone (its graph is contained in that of \(\partial \mathcal {F}\)), it suffices to study the solvability of the following problem: For \(f \in H^1(\Omega )^*\), find a pair \((\varphi , {\varvec{\xi }}) \in H^1(\Omega ) \times L^2(\Omega ,{\mathbb {R}}^d)\) such that \({\varvec{\xi }}\in \partial A(\nabla \varphi )\) almost everywhere in \(\Omega \) and

This result can be obtained by following a strategy outlined in [14, Thm. 2.17]. First, recalling the properties of A in (3.13), we deduce that for \({\varvec{\xi }}= (\xi _1, \dots , \xi _d)^{\top } \in \partial A({\varvec{q}})\),

where the first inequality is obtained from applying the relation \(A({\varvec{r}}) - A({\varvec{q}}) \ge {\varvec{\xi }}\cdot ({\varvec{r}}- {\varvec{q}})\) with the choice \({\varvec{r}}= {\varvec{0}}\), and the last inequality is obtained from the second property of A in (3.13) with the choice \({\varvec{r}}= {\varvec{q}}+ {{\varvec{e}}}_i\) where \({{\varvec{e}}}_i\) is the canonical i-th unit vector in \({\mathbb {R}}^d\). Taking the maximum over \(i \in \{1, \dots , d\}\) and then the supremum over all elements \({\varvec{\xi }}\in \partial A({\varvec{q}})\), the second inequality in (3.16) implies

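Next, for \(\lambda > 0\), we introduce the Moreau–Yosida approximation \(B_\lambda \) of \(B := \partial A\) as

$$\begin{aligned} B_\lambda := \frac{1}{\lambda } \big ( I - (I + \lambda B)^{-1} \big ), \end{aligned}$$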

where I denotes the identity map. The maximal monotonicity of B guarantees that \((I + \lambda B)^{-1}\) is a well-defined operator and it is bounded independently of \(\lambda \) (see [37, p. 524, Thms. 1 and 2]). It is well-known that \(B_\lambda \) is single-valued, Lipschitz continuous with Lipschitz constant \(\frac{1}{\lambda }\), and \(B_\lambda ({{\varvec{s}}})\) is an element of \(B(( I + \lambda B)^{-1} {{\varvec{s}}})\). Then, by (3.16) and (3.17) (cf. [14, p. 84, (2.143)-(2.144)]), we infer from the identity \({\varvec{q}}= (I + \lambda B)^{-1} {\varvec{q}}+ \lambda B_\lambda ({\varvec{q}})\) that for all \({\varvec{q}}\in {\mathbb {R}}^d\) and \(\lambda \in (0,\lambda _0)\), \(\lambda _0 := \frac{1}{c}\) with constant c from (3.13),

with constants independent of \(\lambda \). We now consider the approximation problem: For \(f \in H^1(\Omega )^*\), \(\lambda \in (0,\lambda _0),\) find \(\varphi _\lambda \in H^1(\Omega )\) such that

It is not difficult to verify that \(T_\lambda \) is monotone, demicontinuous (i.e., \(\varphi _n \rightarrow \varphi \) in \(H^1(\Omega )\) implies \(T_\lambda \varphi _n \rightharpoonup T_\lambda \varphi \) in \(H^1(\Omega )^*\) as \(n\rightarrow \infty \)), and coercive (i.e., \(\langle T_\lambda \varphi , \varphi \rangle _{H^1} \ge c_0 \Vert \varphi \Vert _{H^1}^2\) for some \(c_0 > 0\) independent of \(\lambda \)), and so by standard results (see, e.g., [14, p. 37, Cor. 2.3]) there exists at least one solution \(\varphi _\lambda \in H^1(\Omega )\) to \(T_\lambda \varphi _\lambda = f\) in \(H^1(\Omega )^*\).

We then establish uniform estimates for \(\{\varphi _\lambda \}_{\lambda \in (0,\lambda _0)}\) whose weak limit in \(H^1(\Omega )\) will be a solution to (3.15), thereby verifying the maximal monotonicity of \(\mathcal {G}\) and hence completing the proof. Choosing \(\zeta = \varphi _\lambda \) in (3.19) and recalling the identity \({\varvec{q}}= (I+\lambda B)^{-1} {\varvec{q}}+ \lambda B_\lambda ({\varvec{q}})\), we obtain

From (3.18) we immediately infer

which provides a uniform estimate for \(\varphi _\lambda \) in \(H^1(\Omega )\) with respect to \(\lambda \). On the other hand, also from (3.18), particularly \(B_\lambda ({\varvec{q}}) \cdot {\varvec{q}}\ge c |(I +\lambda B)^{-1} {\varvec{q}}|^2\), we observe that

which implies, along a non-relabeled subsequence \(\lambda \rightarrow 0\),

for some limit functions \(\varphi \) and \({\varvec{\xi }}\) satisfying

To show that \({\varvec{\xi }}({\varvec{x}}) \in \partial A(\nabla \varphi ({\varvec{x}}))\) for almost every \({\varvec{x}}\in \Omega \), for arbitrary \({{\varvec{\phi }}} \in D(\partial A)\) and \({{\varvec{\zeta }}} \in B({{\varvec{\phi }}}) \subset L^2(\Omega , {\mathbb {R}}^d)\), we use the inequality

obtained from the relation \(B_\lambda (\nabla \varphi _\lambda ) \in B(( I + \lambda B)^{-1} \nabla \varphi _\lambda )\) and the monotonicity of B. Then, passing to the limit \(\lambda \rightarrow 0\) with (3.21), as well as

we arrive at

Picking \({{\varvec{\phi }}} = (I+B)^{-1}({\varvec{\xi }}+ \nabla \varphi )\), so that \({{\varvec{\zeta }}}:= {\varvec{\xi }}+ \nabla \varphi - {{\varvec{\phi }}} \in B({{\varvec{\phi }}})\), we see that the above reduces to

which implies \(\nabla \varphi = {{\varvec{\phi }}}\) and \({{\varvec{\zeta }}}= {\varvec{\xi }}\in B(\nabla \varphi ) = \partial A(\nabla \varphi )\) almost everywhere in \(\Omega \). This shows that \((\varphi , {\varvec{\xi }}) \in H^1(\Omega ) \times L^2(\Omega , {\mathbb {R}}^d)\) is a solution to (3.15) and concludes the proof. \(\square \)
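The Moreau–Yosida approximation used in the proof can be made explicit in the simplest non-smooth scalar case. As an illustrative stand-in for \(B = \partial A\) (not the operator of the proof), take \(B = \partial |\cdot |\) on \({\mathbb {R}}\): the resolvent \((I + \lambda B)^{-1}\) is soft thresholding and \(B_\lambda (s) = \textrm{clip}(s/\lambda , -1, 1)\). The minimal sketch below (function names and \(\lambda = 0.1\) are ours) checks the properties quoted in the proof: \(B_\lambda \) is single-valued and \(\frac{1}{\lambda }\)-Lipschitz, \(B_\lambda (s) \in B((I + \lambda B)^{-1}s)\), and \(s = (I + \lambda B)^{-1}s + \lambda B_\lambda (s)\).

```python
import numpy as np

def resolvent(s, lam):
    """(I + lam * B)^{-1}(s) for B = d|.|: soft thresholding at level lam."""
    return np.sign(s) * np.maximum(np.abs(s) - lam, 0.0)

def yosida(s, lam):
    """Moreau-Yosida approximation B_lam = (I - (I + lam B)^{-1}) / lam."""
    return (s - resolvent(s, lam)) / lam

def in_subdifferential_of_abs(xi, z, tol=1e-12):
    """Pointwise test of xi in d|.|(z): xi = sign(z) if z != 0, |xi| <= 1 if z = 0."""
    return np.where(z != 0.0, np.abs(xi - np.sign(z)) <= tol, np.abs(xi) <= 1.0 + tol)

lam = 0.1
s = np.linspace(-1.0, 1.0, 2001)
J, B = resolvent(s, lam), yosida(s, lam)

print(np.allclose(B, np.clip(s / lam, -1.0, 1.0)))     # closed form of B_lam
print(np.all(in_subdifferential_of_abs(B, J)))         # B_lam(s) lies in B((I + lam B)^{-1} s)
print(np.allclose(s, J + lam * B))                      # s = (I + lam B)^{-1} s + lam B_lam(s)
slopes = np.abs(np.diff(B)) / np.diff(s)
print(slopes.max() <= 1.0 / lam + 1e-9)                 # Lipschitz constant 1/lam
```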

4 Analysis of the Structural Optimization Problem

By Lemma 2.1 the solution operator \({\varvec{S}}: L^\infty (\Omega ) \rightarrow H^1_D(\Omega ,{\mathbb {R}}^d)\), \({\varvec{S}}(\varphi ) = {\varvec{u}}\) where \({\varvec{u}}\) is the unique solution to (2.2) corresponding to the design variable \(\varphi \), is well-defined. This allows us to consider the reduced functional \(\mathcal {J}: \mathcal {V}_m \rightarrow {\mathbb {R}}\),

in the structural optimization problem (P). Invoking [37, Thm. 1, §8.2.2], the convexity of \(\gamma \), and hence of \(A(\cdot ) = \frac{1}{2} |\gamma (\cdot )|^2\) (recall that \(\gamma \ge 0\)), yields that the gradient term in (2.6) is weakly lower semicontinuous in \(H^1(\Omega )\). Then, following the proof of [24, Thm. 4.1], we obtain the existence of an optimal design for (P).

Theorem 4.1

Suppose that (A1)–(A3) hold, and let \(({\varvec{f}}, {\varvec{g}}) \in L^2(\Omega , {\mathbb {R}}^d) \times L^2(\Gamma _g, {\mathbb {R}}^d)\). Then, (P) admits a minimizer \({\overline{\varphi }}\in \mathcal {V}_m\).

Proof

Since the proof is now rather standard, we only sketch the essential details. Using the bound (2.4) we infer that the reduced functional \(\mathcal {J}\) is bounded from below in \(\mathcal {V}_m\). This allows us to consider a minimizing sequence \(\{\phi _n\}_{n \in {\mathbb {N}}} \subset \mathcal {V}_m\) such that \(\mathcal {J}(\phi _n) \rightarrow \inf _{\zeta \in \mathcal {V}_m} \mathcal {J}(\zeta )\) as \(n\rightarrow \infty \). Then, again by (2.4) and also (A4), we deduce that \(\{\phi _n\}_{n \in {\mathbb {N}}}\) is uniformly bounded in \(H^1(\Omega )\cap L^\infty (\Omega )\), from which we extract a non-relabeled subsequence converging weakly to \({\overline{\varphi }}\) in \(H^1(\Omega )\). As \(\mathcal {V}_m\) is convex and closed, hence weakly sequentially closed, we also infer \({\overline{\varphi }}\in \mathcal {V}_m\). Taking the limit inferior of \(\mathcal {J}(\phi _n)\), and employing the weak lower semicontinuity of the gradient term in (2.6) as well as the weak convergence \({\varvec{S}}(\phi _n) \rightharpoonup {\varvec{S}}({\overline{\varphi }})\) in \(H^1(\Omega , {\mathbb {R}}^d)\), we infer that \(\mathcal {J}({\overline{\varphi }}) = \inf _{\zeta \in \mathcal {V}_m} \mathcal {J}(\zeta )\), which implies that \({\overline{\varphi }}\) is a solution to (P). \(\square \)

To derive the first-order optimality conditions, we first take note of the following result concerning the Fréchet differentiability of the solution operator \({\varvec{S}}\), which can be inferred from [24, Thm. 3.3]:

Lemma 4.1

Let \(({\varvec{f}}, {\varvec{g}}) \in L^2(\Omega , {\mathbb {R}}^d) \times L^2(\Gamma _g, {\mathbb {R}}^d) \) and \({\overline{\varphi }}\in L^\infty (\Omega )\). Then \({\varvec{S}}\) is Fréchet differentiable at \({\overline{\varphi }}\) as a mapping from \(L^\infty (\Omega )\) to \(H^1_D(\Omega , {\mathbb {R}}^d)\). Moreover, \({\varvec{S}}'({\overline{\varphi }}) \in \mathcal {L}(L^\infty (\Omega ); H^1_D(\Omega , {\mathbb {R}}^d))\) and, for every \(\zeta \in L^\infty (\Omega )\),

$$\begin{aligned} {\varvec{S}}'({\overline{\varphi }})[\zeta ] = {{\varvec{w}}}, \end{aligned}$$

where \({{\varvec{w}}}\in H^1_D(\Omega , {\mathbb {R}}^d)\) is the unique solution to the linearized system

for all \({\varvec{\eta }}\in H^1_D(\Omega , {\mathbb {R}}^d),\) with \({\overline{{\varvec{u}}}}= {\varvec{S}}({\overline{\varphi }})\), where \({\mathbb {h}}'=-\frac{1}{2}\) is the derivative of the affine linear function \({\mathbb {h}}\) introduced in Sect. 2.1.

Then, we introduce the adjoint system for the adjoint variable \({\varvec{p}}\) associated to \({\overline{\varphi }}\), whose structure is similar to the state equation (2.2) for \({\varvec{u}}\):

Via a similar argument (see also [24, Thm. 4.3]), the well-posedness of the adjoint system is straightforward:

Lemma 4.2

For any \({\overline{\varphi }}\in L^\infty (\Omega )\) and \(({\varvec{f}}, {\varvec{g}}) \in L^2(\Omega , {\mathbb {R}}^d) \times L^2(\Gamma _g, {\mathbb {R}}^d)\), there exists a unique solution \({\varvec{p}}\in H^1_D(\Omega , {\mathbb {R}}^d)\) to (4.2) satisfying

for all \({\varvec{v}}\in H^1_D(\Omega , {\mathbb {R}}^d)\). In fact, if \({\overline{{\varvec{u}}}}= {\varvec{S}}({\overline{\varphi }}) \in H^1_D(\Omega , {\mathbb {R}}^d)\) is the unique solution to (2.3), then \({\varvec{p}}= \beta {\overline{{\varvec{u}}}}\).

Our main result is the following first-order optimality conditions for the structural optimization problem with anisotropy (P):

Theorem 4.2

Suppose (A1)–(A3) hold. Let \(({\varvec{f}}, {\varvec{g}}) \in L^2(\Omega , {\mathbb {R}}^d) \times L^2(\Gamma _g,{\mathbb {R}}^d)\) and \({\overline{\varphi }}\in \mathcal {V}_m\) be an optimal design variable with associated state \({\overline{{\varvec{u}}}}= {\varvec{S}}({\overline{\varphi }})\). Then, there exists \({\varvec{\xi }}\in L^2(\Omega , {\mathbb {R}}^d)\) with \({\varvec{\xi }}\in \partial A(\nabla {\overline{\varphi }})\) almost everywhere in \(\Omega \) such that

for all \(\phi \in \mathcal {V}_m\).

Proof

Recalling the definition of the functional \(\mathcal {F}\) in (3.14), we introduce

so that the reduced functional \(\mathcal {J}\) can be expressed as \(\mathcal {J}(\phi ) = {{\widehat{\alpha }} }\varepsilon \mathcal {F}(\phi ) + \mathcal {K}(\phi )\). Using the differentiability of \({\varvec{S}}\) we infer that \(\mathcal {K}\) is also Fréchet differentiable with derivative at \({\overline{\varphi }}\in \mathcal {V}_m\) in direction \(\zeta \in \mathcal {V}= \{ f \in H^1(\Omega ) \, : \, f({{\varvec{x}}}) \in [-1,1] \text { a.e.~in } \Omega \}\) given as

where \({{\varvec{w}}}= {\varvec{S}}'({\overline{\varphi }})[\zeta ]\) is the unique solution to the linearized system (4.1) and \({\overline{{\varvec{u}}}}= {\varvec{S}}({\overline{\varphi }})\). We can simplify this using the adjoint system by testing (4.3) with \({\varvec{v}}= {{\varvec{w}}}\) and testing (4.1) with \({\varvec{\eta }}= {\varvec{p}}\) to obtain the identity

so that, together with the relation \({\varvec{p}}= \beta {\overline{{\varvec{u}}}}\), (4.6) becomes

On the other hand, the convexity of \(\mathcal {F}\) and the optimality of \({\overline{\varphi }}\in \mathcal {V}_m\) imply that

holds for all \(t \in [0,1]\) and arbitrary \(\phi \in \mathcal {V}_m\). Dividing by t and passing to the limit \(t \rightarrow 0\) yields

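The above inequality allows us to interpret \({\overline{\varphi }}\in \mathcal {V}_m\) as a solution to the convex minimization problem

$$\begin{aligned} \min _{\phi \in H^1(\Omega )} \Big \{ {{\widehat{\alpha }} }\varepsilon \mathcal {F}(\phi ) + \mathcal {K}'({\overline{\varphi }})[\phi ] + {\mathbb {I}}_{\mathcal {V}_m}(\phi ) \Big \}, \end{aligned}$$

where \({\mathbb {I}}_{\mathcal {V}_m}\) denotes the indicator function of the set \(\mathcal {V}_m\). Since \({\mathcal {F}}\) and \({\mathcal {K}}'({\overline{\varphi }})[\cdot ]\) are defined on the whole of \(H^1(\Omega )\), we can use the well-known sum rule for subdifferentials of convex functions, see, e.g., [15, Cor. 2.63], so that the inequality (4.8) can be interpreted as

$$\begin{aligned} 0 \in {{\widehat{\alpha }} }\varepsilon \, \partial \mathcal {F}({\overline{\varphi }}) + \mathcal {K}'({\overline{\varphi }}) + \partial {\mathbb {I}}_{\mathcal {V}_m}({\overline{\varphi }}), \end{aligned}$$

where \(\partial \) denotes the subdifferential mapping in \(H^1(\Omega )\). This implies the existence of elements \(v \in \partial \mathcal {F}({\overline{\varphi }})\) and \(\psi \in \partial {\mathbb {I}}_{\mathcal {V}_m}({\overline{\varphi }})\) such that

$$\begin{aligned} {{\widehat{\alpha }} }\varepsilon \, v + \mathcal {K}'({\overline{\varphi }}) + \psi = 0 \quad \text { in } H^1(\Omega )^*. \end{aligned}$$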

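For arbitrary \(\phi \in \mathcal {V}_m\), by definition of \(\partial {\mathbb {I}}_{\mathcal {V}_m}({\overline{\varphi }})\) we have

$$\begin{aligned} \langle \psi , \phi - {\overline{\varphi }} \rangle _{H^1} \le {\mathbb {I}}_{\mathcal {V}_m}(\phi ) - {\mathbb {I}}_{\mathcal {V}_m}({\overline{\varphi }}) = 0. \end{aligned}$$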

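Then, using Lemma 3.1, there exists \({\varvec{\xi }}\in L^2(\Omega , {\mathbb {R}}^d)\) with \({\varvec{\xi }}\in \partial A(\nabla {\overline{\varphi }})\) almost everywhere in \(\Omega \) and

$$\begin{aligned} \langle v, \zeta \rangle _{H^1} = ({\varvec{\xi }}, \nabla \zeta ) \quad \text { for all } \zeta \in H^1(\Omega ). \end{aligned}$$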

The optimality condition (4.4) is then a consequence of the above and (4.7) with \(\zeta = \phi - {\overline{\varphi }}\). \(\square \)

Remark 4.1

An alternate formulation of the optimality condition (4.4) is as follows: There exist \({\varvec{\xi }}\in \partial A(\nabla {\overline{\varphi }})\), \(\theta \in \partial {\mathbb {I}}_{[-1,1]}({\overline{\varphi }})\), and a Lagrange multiplier \(\mu \in {\mathbb {R}}\) for the mass constraint such that

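for all \(\zeta \in H^1(\Omega )\). In the above, the subdifferential of the indicator function \({\mathbb {I}}_{[-1,1]}\) has the explicit characterization (understood pointwise almost everywhere for functions)

$$\begin{aligned} \partial {\mathbb {I}}_{[-1,1]}(s) = {\left\{ \begin{array}{ll} (-\infty , 0] & \text { if } s = -1, \\ \{0\} & \text { if } s \in (-1,1), \\ {[}0, \infty ) & \text { if } s = 1, \\ \emptyset & \text { if } |s| > 1. \end{array}\right. } \end{aligned}$$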

Indeed, instead of (4.9) we can interpret \({\overline{\varphi }}\in \mathcal {V}_m\) as a solution to the minimization problem

which then yields the existence of elements \(\theta \in \partial {\mathbb {I}}_{[-1,1]}({\overline{\varphi }})\) and \(\eta \in \partial {\mathbb {I}}_{m}({\overline{\varphi }})\) such that

For arbitrary \(\zeta \in H^1(\Omega )\), we test the above equality with \(\zeta - (\zeta )_\Omega \), where \((\zeta )_\Omega = \frac{1}{|\Omega |} \int _\Omega \zeta \, \textrm{dx}\), and on noting that

this yields (4.11) with Lagrange multiplier \(\mu := - |\Omega |^{-1} (\mathcal {K}'({\overline{\varphi }})[1] + \theta )\).
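At the discrete level, the structure of Remark 4.1, with a scalar multiplier for the mass constraint and a pointwise multiplier for the box constraint, reappears in the Euclidean projection onto the discrete analogue \(\{{\varvec{v}}\in [-1,1]^N : \textrm{mean}({\varvec{v}}) = m\}\) of \(\mathcal {V}_m\): the projection of \({\varvec{y}}\) is \(\textrm{clip}({\varvec{y}}- \mu , -1, 1)\) for a scalar \(\mu \) fixed by the mass constraint. The minimal sketch below is a standard construction included for illustration only, not necessarily the scheme behind the numerical results of this work; the function name, the sizes and \(m = 0.2\) are ours. It computes \(\mu \) by bisection and checks the variational inequality that characterizes the projection.

```python
import numpy as np

def project_box_mean(y, m, tol=1e-12, max_iter=200):
    """Euclidean projection of y onto {v in [-1, 1]^N : mean(v) = m}, for |m| < 1.

    The projection has the form clip(y - mu, -1, 1) for a scalar multiplier mu;
    since mean(clip(y - mu, -1, 1)) is continuous and nonincreasing in mu,
    mu can be found by bisection.
    """
    y = np.asarray(y, dtype=float)
    lo, hi = y.min() - 1.0, y.max() + 1.0      # the mean equals +1 at lo and -1 at hi
    for _ in range(max_iter):
        mu = 0.5 * (lo + hi)
        if np.clip(y - mu, -1.0, 1.0).mean() > m:
            lo = mu                            # mass too large: increase mu
        else:
            hi = mu
        if hi - lo < tol:
            break
    return np.clip(y - 0.5 * (lo + hi), -1.0, 1.0)

rng = np.random.default_rng(5)
m = 0.2
y = 2.0 * rng.normal(size=500)
v = project_box_mean(y, m)
print(v.min() >= -1.0, v.max() <= 1.0, abs(v.mean() - m))

# the projection is characterized by (y - v) . (w - v) <= 0 for all feasible w
W = [project_box_mean(2.0 * rng.normal(size=500), m) for _ in range(20)]
print(max((y - v) @ (w - v) for w in W))      # nonpositive up to rounding
```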

5 Sharp Interface Asymptotics

In this section we study the behavior of solutions in the sharp interface limit \(\varepsilon \rightarrow 0\), which connects our phase field approach with the shape optimization approach of [4].

5.1 \(\Gamma \)-Convergence of the Anisotropic Ginzburg–Landau Functional

We begin with the \(\Gamma \)-convergence of the extended anisotropic Ginzburg–Landau functional (3.4a) to the extended anisotropic perimeter functional (3.4b), which is formulated as follows:

Lemma 5.1

Let \(\Omega \subset {\mathbb {R}}^d\) be an open bounded domain with Lipschitz boundary, let \(\gamma : {\mathbb {R}}^d \rightarrow {\mathbb {R}}\) satisfy (A1)–(A3), let \(\Psi : {\mathbb {R}}\rightarrow {\mathbb {R}}_{\ge 0}\) be a double-well potential with minima at \(\pm 1\), and define \(c_{\Psi } = \int _{-1}^1 \sqrt{2 \Psi (s)} \, ds\). Then the following assertions hold:


(i)

If \(\{u_\varepsilon \}_{\varepsilon >0} \subset \mathcal {V}_m\) is a sequence such that \(\liminf _{\varepsilon \rightarrow 0} \mathcal {E}_{\gamma ,\varepsilon }(u_\varepsilon ) < \infty \) and \(u_\varepsilon \rightarrow u_0\) strongly in \(L^1(\Omega )\), then \(u_0 \in \textrm{BV}_m(\Omega , \{-1,1\})\) with \(\mathcal {E}_{\gamma ,0}(u_0) \le \liminf _{\varepsilon \rightarrow 0} \mathcal {E}_{\gamma ,\varepsilon }(u_\varepsilon )\).


(ii)

Let \(u_0 \in \textrm{BV}(\Omega , \{-1,1\})\). Then, there exists a sequence \(\{u_\varepsilon \}_{\varepsilon > 0}\) of Lipschitz continuous functions on \(\Omega \) such that \(u_\varepsilon ({\varvec{x}}) \in [-1,1]\) a.e. in \(\Omega \), \(u_\varepsilon \rightarrow u_0\) strongly in \(L^1(\Omega )\), \(\int _\Omega u_\varepsilon ({\varvec{x}}) \, \textrm{dx}= \int _\Omega u_0({\varvec{x}}) \, \textrm{dx}\) for all \(\varepsilon > 0\), and \(\limsup _{\varepsilon \rightarrow 0} \mathcal {E}_{\gamma ,\varepsilon }(u_\varepsilon ) \le \mathcal {E}_{\gamma ,0}(u_0)\).


(iii)

Let \(\{u_\varepsilon \}_{\varepsilon > 0} \subset \mathcal {V}_m\) be a sequence satisfying \(\sup _{\varepsilon > 0} \mathcal {E}_{\gamma ,\varepsilon }(u_\varepsilon ) < \infty \). Then, there exists a non-relabeled subsequence \(\varepsilon \rightarrow 0\) and a limit function u such that \(u_\varepsilon \rightarrow u\) strongly in \(L^1(\Omega )\) with \(\mathcal {E}_{\gamma ,0}(u)< \infty \).

The first and second assertions are known as the liminf and limsup inequalities, respectively, while the third assertion is the compactness property. In the following, we outline how to adapt the proofs of the \(\Gamma \)-convergence results for the multi-phase case in [22, Thms. 3.1 and 3.4]; our present setting corresponds to the case \(N = 2\) in their notation.
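The constant \(c_\Psi = \int _{-1}^1 \sqrt{2\Psi (s)} \, ds\) in Lemma 5.1 is elementary to evaluate. For instance, for the potential \(\frac{1}{2}(1-s^2)\) on \([-1,1]\) (the choice of \(\Psi _0\) that appears at the end of this section) one obtains \(c_\Psi = \int _{-1}^1 \sqrt{1-s^2} \, ds = \frac{\pi }{2}\), while for the smooth quartic double well \(\frac{1}{4}(1-s^2)^2\), included only for comparison, \(c_\Psi = \frac{2\sqrt{2}}{3}\). The minimal sketch below (function and variable names are ours) confirms both values; the substitution \(s = \sin t\) removes the square-root behavior of the integrand at \(s = \pm 1\), so that Gauss–Legendre quadrature in t converges quickly.

```python
import numpy as np

def c_psi(psi, n=64):
    """c_Psi = int_{-1}^{1} sqrt(2 Psi(s)) ds, via s = sin(t) and Gauss-Legendre in t."""
    x, w = np.polynomial.legendre.leggauss(n)       # nodes and weights on [-1, 1]
    t = 0.5 * np.pi * x                             # map the nodes to [-pi/2, pi/2]
    integrand = np.sqrt(2.0 * psi(np.sin(t))) * np.cos(t)
    return 0.5 * np.pi * np.dot(w, integrand)

psi0 = lambda s: 0.5 * (1.0 - s**2)            # potential on [-1, 1]
quartic = lambda s: 0.25 * (1.0 - s**2) ** 2   # smooth double well, for comparison

print(c_psi(psi0), np.pi / 2)                  # agree
print(c_psi(quartic), 2.0 * np.sqrt(2.0) / 3.0)  # agree
```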

Proof

For arbitrary \(u \in \mathcal {V}_m\), we introduce the associated vector \({\varvec{w}} = (w_1, w_2)\) where \(w_1 := \frac{1}{2}(1-u)\) and \(w_2 := \frac{1}{2}(1+u)\). Then, \({\varvec{w}}\) takes values in the Gibbs simplex \(\Sigma = \{ (w_1, w_2) \in {\mathbb {R}}^2 \, : \, w_1, w_2 \ge 0, \, w_1 + w_2 = 1\}\). Setting \({\varvec{\alpha }}^1 := (0,1)^{\top }\) and \({\varvec{\alpha }}^2:= (1,0)^{\top }\), we consider a multiple-well potential \(W: \Sigma \rightarrow [0,\infty )\) satisfying \(W^{-1}(0) = \{{\varvec{\alpha }}^1, {\varvec{\alpha }}^2\}\) and

where the latter relation connects W to \(\Psi _0\) defined in (2.9). Denoting by \(M^{2 \times d}\) the set of 2-by-d matrices, we consider a function \(f: \Sigma \times M^{2 \times d} \rightarrow [0,\infty )\) defined as \(f({\varvec{w}}, {\varvec{X}}) = 2|\gamma (w_1 {\varvec{X}}_2 - w_2 {\varvec{X}}_1) |^2\), where \(\gamma \) satisfies (A1)–(A3), and \({\varvec{X}}_i\) denotes the i-th row of \({\varvec{X}}\). Taking \({\varvec{X}} = \nabla {\varvec{w}}\) for \({\varvec{w}}\) with values in \(\Sigma \) yields that \({\varvec{X}}_1 + {\varvec{X}}_2 = \nabla w_1 + \nabla w_2 = 0\) and

$$\begin{aligned} f({\varvec{w}}, \nabla {\varvec{w}}) = 2 |\gamma ((w_1 + w_2) \nabla w_2)|^2 = 2 |\gamma (\nabla w_2)|^2 = \frac{1}{2} |\gamma (\nabla u)|^2 \end{aligned}$$

by the relation \(\nabla w_2 = \frac{1}{2} \nabla u\) and the one-homogeneity of \(\gamma \). Assumptions (A1)–(A3) on \(\gamma \) ensure the function f defined above fulfills the corresponding assumptions in [22, p. 80], and thus by [22, Thm. 3.1], the extended functional

\(\Gamma \)-converges in \(L^1(\Omega ; \Sigma )\) to a limit functional

where the definitions of the set \(S_{{\varvec{w}}}\), the normal \({\varvec{\nu }}\) and the function \(\varphi \) can be found in [22, p. 78, p. 81]. It is clear that \(\mathcal {E}_{\gamma ,\varepsilon }(u) = G_\varepsilon ((\frac{1}{2}(1-u),\frac{1}{2}(1+u)))\) for \(u \in \mathcal {V}_m\), and our aim is to show \(\mathcal {E}_{\gamma ,0}(u) = \mathcal {G}((\frac{1}{2}(1-u),\frac{1}{2}(1+u)))\) for \(u \in \textrm{BV}(\Omega , \{-1,1\})\). For a fixed vector \({\varvec{\nu }}\in {\mathbb {S}}^{d-1}\), let \(\mathcal {C}_\lambda \) denote the \((d-1)\)-dimensional cube of side length \(\lambda \), centered at the origin and lying in the orthogonal complement \({\varvec{\nu }}^{\perp }\). Following [22], a function \({\varvec{w}}: {\mathbb {R}}^d \rightarrow \Sigma \) is called \(\mathcal {C}_\lambda \)-periodic if \({\varvec{w}}({\varvec{x}}+ \lambda {\varvec{e}}_i) = {\varvec{w}}({\varvec{x}})\) for every \({\varvec{x}}\) and every \(i = 1, \dots , d-1\), where \({\varvec{e}}_1, \dots , {\varvec{e}}_{d-1}\) denote the directions of the sides of \(\mathcal {C}_\lambda \). Setting \(Q_{\lambda ,\infty }^{{\varvec{\nu }}} = \{ {\varvec{z}} + t {\varvec{\nu }} \, : \, {\varvec{z}} \in \mathcal {C}_\lambda , \, t \in {\mathbb {R}}\}\), we denote by \(\mathcal {X}(Q_{\lambda ,\infty }^{{\varvec{\nu }}})\) the class of all functions \({\varvec{w}} \in W^{1,2}_{\textrm{loc}}({\mathbb {R}}^d; \Sigma )\) which are \(\mathcal {C}_\lambda \)-periodic and satisfy \(\lim _{\langle {\varvec{x}}, {\varvec{\nu }} \rangle \rightarrow - \infty } {\varvec{w}}({\varvec{x}}) = {\varvec{\alpha }}^1\) and \(\lim _{\langle {\varvec{x}}, {\varvec{\nu }} \rangle \rightarrow + \infty } {\varvec{w}}({\varvec{x}}) = {\varvec{\alpha }}^2\). Then, by [22, (6)], the integrand in the \(\Gamma \)-limit functional \(\mathcal {G}\) has the representation formula

where \(G_1({\varvec{w}},A) = \int _A f({\varvec{w}}, \nabla {\varvec{w}}) + W({\varvec{w}}) \, \textrm{dx}\). To simplify the above expression, for a fixed \({\varvec{\nu }}\in {\mathbb {S}}^{d-1}\), consider a function \(u: {\mathbb {R}}^d \rightarrow [-1,1]\) of the form \(u({\varvec{x}}) = \psi ({\varvec{x}}\cdot {\varvec{\nu }}/\gamma ({\varvec{\nu }}))\), where \({\psi } : {\mathbb {R}}\rightarrow [-1,1]\) is a monotone function such that \(\lim _{s \rightarrow \pm \infty } \psi (s) = \pm 1\) and \(\psi ''(s) = \Psi _0'(\psi (s))\) wherever \(|\psi (s)| < 1\). For the choice \(\Psi _0(s) = \frac{1}{2}(1-s^2)\) we have the explicit solution (also known as the optimal profile)
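
\[ \psi (s) = \begin{cases} -1 & \text {if } s \le -\frac{\pi }{2}, \\ \sin (s) & \text {if } |s| < \frac{\pi }{2}, \\ 1 & \text {if } s \ge \frac{\pi }{2}. \end{cases} \]
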
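As a quick numerical sanity check of the optimal profile, the following Python sketch assumes, as in the text, \(\Psi _0(s) = \frac{1}{2}(1-s^2)\) restricted to \([-1,1]\), and takes the truncated sine \(\psi (s) = \sin (s)\) for \(|s| < \frac{\pi }{2}\), extended by \(\pm 1\) outside. It checks on a grid that \(\psi \) is monotone with far-field values \(\pm 1\), that \(\psi '' = \Psi _0'(\psi ) = -\psi \) inside the layer (via central differences), and that the equipartition \(\frac{1}{2}|\psi '|^2 = \Psi _0(\psi )\) holds everywhere. The grid and tolerances are arbitrary illustrative choices.

```python
import numpy as np

def psi0(s):
    """Potential Psi_0(s) = (1 - s^2)/2, used only for s in [-1, 1]."""
    return 0.5 * (1.0 - s**2)

def optimal_profile(s):
    """Truncated sine: -1 for s <= -pi/2, sin(s) for |s| < pi/2, +1 for s >= pi/2."""
    return np.sin(np.clip(s, -np.pi / 2, np.pi / 2))

def optimal_profile_derivative(s):
    """psi'(s) = cos(s) inside the layer |s| < pi/2 and 0 outside."""
    return np.where(np.abs(s) < np.pi / 2, np.cos(s), 0.0)

s = np.linspace(-3.0, 3.0, 2001)
h = s[1] - s[0]
psi = optimal_profile(s)
dpsi = optimal_profile_derivative(s)

# Monotonicity of the profile.
is_monotone = bool(np.all(np.diff(psi) >= 0.0))

# psi'' = Psi_0'(psi) = -psi, checked by central differences away from the kinks at +-pi/2.
psi_ss = (psi[2:] - 2.0 * psi[1:-1] + psi[:-2]) / h**2
interior = np.abs(s[1:-1]) < np.pi / 2 - 2.0 * h
ode_residual = float(np.max(np.abs(psi_ss[interior] + psi[1:-1][interior])))

# Equipartition of energy: (1/2)|psi'|^2 = Psi_0(psi) for every grid point.
equipartition_residual = float(np.max(np.abs(0.5 * dpsi**2 - psi0(psi))))

print(is_monotone, psi[0], psi[-1], ode_residual, equipartition_residual)
```

The second-difference residual is dominated by the \(\mathcal {O}(h^2)\) truncation error, while the equipartition holds up to rounding.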
Furthermore, multiplying the equality \(\psi ''(s) = \Psi '_0(\psi (s))\) by \(\psi '(s)\) and integrating yields the so-called equipartition of energy \(\frac{1}{2}|\psi '(s)|^2 = \Psi _0(\psi (s))\) for all \(s \in {\mathbb {R}}\). Then, it is clear that \({\varvec{w}} = (\frac{1}{2}(1-u), \frac{1}{2}(1+u)) \in \mathcal {X}(Q_{\lambda ,\infty }^{{\varvec{\nu }}})\). By (A1), (5.1) and (5.2), as well as Fubini’s theorem, for \(u({\varvec{x}}) = {\psi }({\varvec{x}}\cdot {\varvec{\nu }}/ \gamma ({\varvec{\nu }}))\) we have \(\nabla u({\varvec{x}}) = {\psi }'({\varvec{x}}\cdot {\varvec{\nu }}/ \gamma ({\varvec{\nu }})) {\varvec{\nu }}/ \gamma ({\varvec{\nu }})\), and

after a change of variables \(r = \psi (s)\) and using the equipartition of energy \(\frac{1}{2}|\psi '(s)|^2 = \Psi _0(\psi (s))\). Hence, we obtain the identification \(\varphi ({\varvec{\alpha }}^1, {\varvec{\alpha }}^2, {\varvec{\nu }}) = c_{\Psi } \gamma ({\varvec{\nu }})\) with constant \(c_{\Psi } = \int _{-1}^1 \sqrt{2 \Psi _0(r)} \, \textrm{d}r = \int _{-1}^1 \sqrt{1 - r^2} \, \textrm{d}r = \frac{\pi }{2}\). Then, for \({\varvec{w}} = (w_1, w_2) \in \textrm{BV}(\Omega , \{ {\varvec{\alpha }}^1, {\varvec{\alpha }}^2 \})\), we define \(u \in \textrm{BV}(\Omega , \{-1,1\})\) via the relation \(u = w_2 - w_1\), which allows us to identify \(S_{{\varvec{w}}} = \partial ^* \{u = 1\}\) and consequently \(\mathcal {G}({\varvec{w}}) = \mathcal {E}_{\gamma ,0}(u)\). The liminf, limsup and compactness properties then follow directly from [22, Thms. 3.1 and 3.4]. \(\square \)
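The two computations this proof rests on can be checked numerically. The Python sketch below is illustrative only: it assumes \(\Psi _0(s) = \frac{1}{2}(1-s^2)\) on \([-1,1]\), the truncated sine profile, and a hypothetical anisotropy \(\gamma ({\varvec{p}}) = \sqrt{{\varvec{p}}^{\top } G {\varvec{p}}}\) with \(G = \textrm{diag}(1,2)\) (only positive one-homogeneity is used). It verifies the reduction \(f({\varvec{w}}, \nabla {\varvec{w}}) = \frac{1}{2}|\gamma (\nabla u)|^2\) for \({\varvec{w}} = (\frac{1}{2}(1-u), \frac{1}{2}(1+u))\), that \(\int _{-1}^1 \sqrt{2\Psi _0(r)} \, \textrm{d}r\) equals the area of half the unit disc, \(\frac{\pi }{2}\), and that the energy per unit cross-section of \(u({\varvec{x}}) = \psi ({\varvec{x}}\cdot {\varvec{\nu }}/\gamma ({\varvec{\nu }}))\) along \({\varvec{\nu }}\) equals \(c_\Psi \gamma ({\varvec{\nu }})\).

```python
import numpy as np

G = np.diag([1.0, 2.0])  # hypothetical anisotropy matrix (illustration only)

def gamma(P):
    """Hypothetical anisotropy gamma(p) = sqrt(p^T G p); works on arrays of shape (..., 2)."""
    return np.sqrt(np.einsum("...i,ij,...j->...", P, G, P))

def psi0(r):
    """Potential Psi_0(r) = (1 - r^2)/2 on [-1, 1]."""
    return 0.5 * (1.0 - r**2)

def trapezoid(y, x):
    """Composite trapezoidal rule, written out to avoid NumPy version differences."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))

# 1) f(w, grad w) = 2 |gamma(w1 X2 - w2 X1)|^2 reduces to (1/2) |gamma(grad u)|^2.
rng = np.random.default_rng(0)
f_residual = 0.0
for _ in range(20):
    u = rng.uniform(-1.0, 1.0)
    grad_u = rng.normal(size=2)
    w1, w2 = 0.5 * (1.0 - u), 0.5 * (1.0 + u)   # a point of the Gibbs simplex
    X1, X2 = -0.5 * grad_u, 0.5 * grad_u        # rows of grad w
    f_val = 2.0 * gamma(w1 * X2 - w2 * X1) ** 2
    f_residual = max(f_residual, abs(f_val - 0.5 * gamma(grad_u) ** 2))

# 2) c_Psi = int_{-1}^{1} sqrt(2 Psi_0(r)) dr.
r = np.linspace(-1.0, 1.0, 200001)
c_psi = trapezoid(np.sqrt(2.0 * psi0(r)), r)

# 3) Cell energy along nu for u(x) = psi(x . nu / gamma(nu)), with t = x . nu.
nu = np.array([np.cos(0.3), np.sin(0.3)])
t = np.linspace(-5.0, 5.0, 200001)
s = t / gamma(nu)
psi = np.sin(np.clip(s, -np.pi / 2, np.pi / 2))
dpsi = np.where(np.abs(s) < np.pi / 2, np.cos(s), 0.0)
grad_u_line = (dpsi / gamma(nu))[:, None] * nu[None, :]   # grad u at each t
cell_energy = trapezoid(0.5 * gamma(grad_u_line) ** 2 + psi0(psi), t)

print(f_residual, c_psi, cell_energy, c_psi * gamma(nu))
```

The quadrature for \(c_\Psi \) converges more slowly than usual because \(\sqrt{1-r^2}\) has unbounded derivatives at \(r = \pm 1\); the fine grid keeps the error well below the tolerance used here.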

An immediate consequence of the above lemma is the following result on the convergence of minimizers as \(\varepsilon \rightarrow 0\).

Corollary 5.1

For each \(\varepsilon > 0\), let \({\overline{\varphi }}_\varepsilon \in \mathcal {V}_m\) denote a minimizer of the extended reduced cost functional \(\mathcal {J}_\varepsilon (\phi ) = \widehat{\alpha } \mathcal {E}_{\gamma ,\varepsilon }(\phi ) + \beta G(\phi )\), where

Then, there exist a non-relabeled subsequence \(\varepsilon \rightarrow 0\) and a limit function \({\overline{\varphi }}_0 \in \textrm{BV}(\Omega , \{-1,1\})\) such that \({\overline{\varphi }}_\varepsilon \rightarrow {\overline{\varphi }}_0\) strongly in \(L^1(\Omega )\) and \(\lim _{\varepsilon \rightarrow 0} \mathcal {J}_\varepsilon ({\overline{\varphi }}_\varepsilon ) = \mathcal {J}_0({\overline{\varphi }}_0)\), where, recalling (3.4b),

and \({\overline{\varphi }}_0\) is a minimizer of \(\mathcal {J}_0\).

The proof relies on the stability of \(\Gamma \)-convergence under continuous perturbations, and the fact that \(G(\phi _k) \rightarrow G(\phi )\) whenever \(\phi _k \rightarrow \phi \) in \(L^1(\Omega )\) thanks to the continuity of the solution operator \({\varvec{S}}\) from Lemma 2.1. We refer the reader to [45, proof of Thm. 2] and [59, proof of Thm. 5.2] for more details.

5.2 Formally Matched Asymptotic Analysis

In this section we consider an anisotropy function \(\gamma \in C^2({{\mathbb {R}}^d\setminus \{{\varvec{0}}\}})\cap W^{1,\infty }({\mathbb {R}}^d)\) satisfying (A1)–(A3) in the structural optimization problem (P), as well as a more regular body force \({\varvec{f}}\in H^1(\Omega , {\mathbb {R}}^d)\). The differentiability of \(\gamma \) implies the characterization of the subdifferential \(\partial A(\nabla \varphi )\) as the singleton set \(\{ [\gamma \textrm{D}\gamma ](\nabla \varphi ) \}\), where

Then, the corresponding optimality condition for a minimizer \(({\overline{\varphi }}_\varepsilon , {\overline{{\varvec{u}}}}_\varepsilon )\) to (P) becomes

and its strong formulation reads as (see (4.11))

with Lagrange multiplier \(\mu _\varepsilon \) for the mass constraint. Our aim is to perform a formally matched asymptotic analysis as \(\varepsilon \rightarrow 0\), similar to [27, Sect. 5], in order to infer the sharp interface limit of (5.5). The method proceeds as follows (see [33]). We formally assume that the domain \(\Omega \) admits a decomposition \(\Omega = \Omega _\varepsilon ^+ \cup \Omega _\varepsilon ^- \cup \Omega _\varepsilon ^{\mathrm{{I}}}\), where \(\Omega _\varepsilon ^{\mathrm{{I}}}\) is an annular domain and \({\overline{\varphi }}_\varepsilon \in \mathcal {V}_m\) satisfies

The zero level set \(\Lambda _\varepsilon := \{ {\varvec{x}}\in \Omega \, : \, {\overline{\varphi }}_\varepsilon ({\varvec{x}}) = 0\}\) is assumed to converge to a smooth hypersurface \(\Lambda \) as \(\varepsilon \rightarrow 0\), and \(({\overline{\varphi }}_\varepsilon , {\overline{{\varvec{u}}}}_\varepsilon )\) are assumed to admit an outer expansion in \(\Omega _\varepsilon ^{\pm }\) and an inner expansion in \(\Omega _\varepsilon ^{\mathrm{{I}}}\). We substitute these expansions (in powers of \(\varepsilon \)) into (2.2) and (5.5), collect terms of the same order in \(\varepsilon \) and, with the help of suitable matching conditions connecting the two expansions, deduce an equation posed on \(\Lambda \). For an introduction to and a more detailed discussion of this methodology we refer to [39, 49].

To start, let us collect some useful relations. Since \(\gamma \) is positively homogeneous of degree one, differentiating the relation \(\gamma (t {\varvec{p}}) = t \gamma ({\varvec{p}})\), valid for \(t> 0\) and \({\varvec{p}}\in {\mathbb {R}}^d \setminus \{{\varvec{0}}\}\), with respect to t and with respect to \({\varvec{p}}\) leads to
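
\[ \textrm{D}\gamma ({\varvec{p}}) \cdot {\varvec{p}} = \gamma ({\varvec{p}}), \qquad \textrm{D}\gamma (t {\varvec{p}}) = \textrm{D}\gamma ({\varvec{p}}) \quad \text {for all } t > 0, \]
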
with the latter relation showing that \(\textrm{D}\gamma \) is positively homogeneous of degree zero. Consequently, \(\gamma \textrm{D}\gamma \) is positively homogeneous of degree one. Next, from (3.12), we see that A is positively homogeneous of degree two. Differentiating the relation \(A(t {\varvec{p}}) = t^2 A({\varvec{p}})\) twice with respect to t leads to
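
\[ \textrm{D}(\gamma \textrm{D}\gamma )(t {\varvec{p}}) \, {\varvec{p}} \cdot {\varvec{p}} = 2 A({\varvec{p}}) \quad \text {for all } t > 0, \]
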
where \(\textrm{D}(\gamma \textrm{D}\gamma ) = \gamma \textrm{D}^2 \gamma + \textrm{D}\gamma \otimes \textrm{D}\gamma \) with \(\textrm{D}^2 \gamma \) denoting the Hessian matrix of \(\gamma \). Lastly, differentiating the relation \([\gamma \textrm{D}\gamma ](t {\varvec{p}}) = t [\gamma \textrm{D}\gamma ]({\varvec{p}})\) with respect to t and setting \(t = 1\) yields
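
\[ \textrm{D}(\gamma \textrm{D}\gamma )({\varvec{p}}) \, {\varvec{p}} = [\gamma \textrm{D}\gamma ]({\varvec{p}}). \]
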
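The homogeneity relations collected above lend themselves to a numerical check. The Python sketch below uses the \(\ell ^4\) norm \(\gamma ({\varvec{p}}) = (\sum _i p_i^4)^{1/4}\) as a hypothetical smooth anisotropy (being a norm it is positive, convex and positively one-homogeneous, and it is smooth away from the origin), and it assumes \(A({\varvec{p}}) = \frac{1}{2}|\gamma ({\varvec{p}})|^2\), which is consistent with \(\partial A = \{\gamma \textrm{D}\gamma \}\) but is an assumption of this sketch. Derivatives of \(\textrm{D}\gamma \) and \(\gamma \textrm{D}\gamma \) are approximated by central finite differences with an arbitrary step size.

```python
import numpy as np

def gamma(p):
    """Hypothetical anisotropy: the l^4 norm, smooth away from the origin."""
    return float(np.sum(p**4) ** 0.25)

def grad_gamma(p):
    """D gamma(p) = p^3 / gamma(p)^3 (componentwise cube), valid for p != 0."""
    return p**3 / gamma(p) ** 3

def gamma_grad_gamma(p):
    """[gamma D gamma](p) = gamma(p) D gamma(p)."""
    return gamma(p) * grad_gamma(p)

def A(p):
    """Assumed form A(p) = |gamma(p)|^2 / 2 (illustration only)."""
    return 0.5 * gamma(p) ** 2

def fd_gradient(F, p, h=1e-6):
    """Central finite-difference gradient of a scalar function F at p."""
    return np.array([(F(p + h * e) - F(p - h * e)) / (2 * h) for e in np.eye(p.size)])

def fd_jacobian(F, p, h=1e-6):
    """Central finite-difference Jacobian of a vector field F at p (column j = dF/dp_j)."""
    return np.column_stack([(F(p + h * e) - F(p - h * e)) / (2 * h) for e in np.eye(p.size)])

p = np.array([0.7, -1.3, 0.4])
t = 2.5
J = fd_jacobian(gamma_grad_gamma, p)   # approximates D(gamma D gamma)(p)
H = fd_jacobian(grad_gamma, p)         # approximates the Hessian D^2 gamma(p)

residuals = {
    "D gamma(p) . p = gamma(p)": abs(grad_gamma(p) @ p - gamma(p)),
    "D gamma(t p) = D gamma(p)": np.max(np.abs(grad_gamma(t * p) - grad_gamma(p))),
    "DA = gamma D gamma": np.max(np.abs(fd_gradient(A, p) - gamma_grad_gamma(p))),
    "D(gamma D gamma) = gamma D^2 gamma + D gamma (x) D gamma":
        np.max(np.abs(J - (gamma(p) * H + np.outer(grad_gamma(p), grad_gamma(p))))),
    "D(gamma D gamma)(p) p = [gamma D gamma](p)": np.max(np.abs(J @ p - gamma_grad_gamma(p))),
    "D(gamma D gamma)(p) p . p = 2 A(p)": abs(p @ J @ p - 2.0 * A(p)),
}
for name, value in residuals.items():
    print(f"{name}: {value:.2e}")
```

All residuals should be at the level of the finite-difference error; any other smooth, positively one-homogeneous \(\gamma \) could be substituted for the \(\ell ^4\) norm.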
To compare our results with [4], in this section we consider \(\mathcal {C}_0 = \varepsilon ^2 \mathcal {C}_1\) in the definition of the elasticity tensor:

and recall that \(g(-1) = -1\), \(g(1) = 1\), \({\mathbb {h}}(-1) = 1\), \({\mathbb {h}}(1) = 0\) and \({\mathbb {h}}' = - \frac{1}{2}\).

5.2.1 Outer Expansions

For points \({\varvec{x}}\) in \(\Omega _\varepsilon ^{\pm }\), we assume an outer expansion of the form

where all functions are sufficiently smooth and the summations converge. From (5.6) we deduce that

Setting \(\Omega _+ = \{{\overline{\varphi }}_0 = 1\}\) and \(\Omega _{-} = \{{\overline{\varphi }}_0 = -1\}\), it holds that \((\partial \Omega _+ \cap \partial \Omega _-)\cap \Omega = \Lambda \). Then, substituting the outer expansion into (2.2), we obtain to order \(\mathcal {O}(1)\) the following system of equations

Note that no equations are inferred for \(\Omega _+\), since, owing to the scaling \(\mathcal {C}_0 = \varepsilon ^2 \mathcal {C}_1\), the elasticity tensor vanishes there at leading order. Moreover, from the definition of the Lagrange multiplier \(\mu _\varepsilon \) we see that

It remains to derive the boundary conditions for (5.10) holding on \(\Lambda \), which can be achieved with the inner expansions.

5.2.2 Inner Expansions

We assume the outer boundary \(\Gamma _\varepsilon ^+\) and inner boundary \(\Gamma _\varepsilon ^-\) of the annular region \(\Omega _\varepsilon ^{\mathrm{{I}}}\) can be parameterized over the smooth hypersurface \(\Lambda \). Let \({\varvec{\nu }}\) denote the unit normal of \(\Lambda \) pointing from \(\Omega _-\) to \(\Omega _+\), and we choose a spatial parameter domain \(U \subset {\mathbb {R}}^{d-1}\) with a local parameterization \({\varvec{r}}: U \rightarrow {\mathbb {R}}^d\) of \(\Lambda \). Let d denote the signed distance function of \(\Lambda \) with \(d({\varvec{x}}) > 0\) if \({\varvec{x}}\in \Omega _+\), and denote by \(z = \frac{d}{\varepsilon }\) the rescaled signed distance. Then, by the smoothness of \(\Lambda \), there exists \(\delta _0 > 0\) such that for all \({\varvec{x}}\in \{|d({\varvec{x}})| < \delta _0\}\), we have the representation

where \({\varvec{s}}= (s_1, \dots , s_{d-1}) \in U\). In particular, \({\varvec{r}}({\varvec{s}}) \in \Lambda \) is the projection of \({\varvec{x}}\) onto \(\Lambda \) along the normal direction. This representation allows us to infer the following expansions for gradients and divergences [49]:

for scalar functions \(b({\varvec{x}}) = \hat{b}({\varvec{s}}({\varvec{x}}), z({\varvec{x}}))\) and vector functions \({\varvec{j}}({\varvec{x}}) = \widehat{{\varvec{j}}}({\varvec{s}}({\varvec{x}}), z({\varvec{x}}))\). In the above \(\nabla _\Lambda \) is the surface gradient on \(\Lambda \) and \(\textrm{div}_\Lambda \) is the surface divergence. Analogously, for a vector function \({\varvec{j}}\), we find that

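To make the inner coordinates concrete, consider the simplest case in which \(\Lambda \) is a circle of radius R in two dimensions, so that the signed distance \(d({\varvec{x}}) = |{\varvec{x}}| - R\), the normal \({\varvec{\nu }} = {\varvec{x}}/|{\varvec{x}}|\) and the projection \({\varvec{r}}({\varvec{s}}) = R{\varvec{\nu }}\) are explicit. The Python sketch below, with hypothetical values of R and \(\varepsilon \) and a hypothetical inner profile B depending on z only, checks the decomposition \({\varvec{x}} = {\varvec{r}}({\varvec{s}}) + \varepsilon z {\varvec{\nu }}({\varvec{s}})\) and that, for \(b({\varvec{x}}) = B(d({\varvec{x}})/\varepsilon )\), a finite-difference gradient agrees with the leading-order term \(\varepsilon ^{-1} \partial _z \hat{b} \, {\varvec{\nu }}\) of the gradient expansion; the surface-gradient contribution vanishes here because \(\hat{b}\) does not depend on \({\varvec{s}}\).

```python
import numpy as np

R, eps = 1.0, 0.05  # hypothetical radius of Lambda and interface parameter

def signed_distance(x):
    """d(x) = |x| - R, positive outside the circle (the side playing the role of Omega_+)."""
    return np.linalg.norm(x) - R

def unit_normal(x):
    """Normal nu at the projection of x onto the circle, pointing outwards."""
    return x / np.linalg.norm(x)

def B(z):
    """Hypothetical inner profile depending only on z."""
    return np.sin(np.clip(z, -np.pi / 2, np.pi / 2))

def dB(z):
    """Derivative of B with respect to z."""
    return np.cos(z) if abs(z) < np.pi / 2 else 0.0

def b(x):
    """b(x) = B(d(x)/eps), a function of the rescaled signed distance only."""
    return B(signed_distance(x) / eps)

x = (R + 0.3 * eps) * np.array([0.6, 0.8])   # a point inside the interfacial layer
z = signed_distance(x) / eps                  # rescaled signed distance
r = R * unit_normal(x)                        # projection r(s) onto Lambda
decomposition_error = float(np.linalg.norm(r + eps * z * unit_normal(x) - x))

# Central finite differences versus the leading-order term (1/eps) dB/dz * nu.
h = 1e-7
grad_fd = np.array([(b(x + h * e) - b(x - h * e)) / (2 * h) for e in np.eye(2)])
grad_leading = dB(z) / eps * unit_normal(x)
gradient_error = float(np.linalg.norm(grad_fd - grad_leading))

print(z, decomposition_error, gradient_error)
```

Note the factor \(\varepsilon ^{-1}\): gradients in the normal direction are large inside the layer, which is why terms of different orders separate when the expansions are substituted into the equations.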
For points close to \(\Lambda \) in \(\Omega _\varepsilon ^{\mathrm{{I}}}\), we assume an expansion of the form

For later use, we note that the inner expansions of \(g(\varphi )\) and \(\mathcal {C}(\varphi )\) are

Defining \({\mathbb {C}}(s) = \frac{1}{2}(1 - g(s)) \mathcal {C}_1\) we notice that

Furthermore, from the positive definiteness of \(\mathcal {C}_1\) and the fact that \(|g(s)| < 1\) for \(|s| < 1\), we infer that \({\mathbb {C}}(\Phi _0)\) is coercive for \(|\Phi _0| < 1\).
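A small Python sketch of this coercivity claim under illustrative assumptions: \(\mathcal {C}_1\) is taken isotropic, \(\mathcal {C}_1 {\varvec{E}} = 2\mu {\varvec{E}} + \lambda \, \textrm{tr}({\varvec{E}}) {\varvec{I}}\), with hypothetical Lamé parameters \(\lambda = 1\), \(\mu = 0.5\), and g is replaced by the hypothetical interpolation \(g(s) = \frac{1}{2}(3s - s^3)\), which satisfies \(g(\pm 1) = \pm 1\) and \(|g(s)| < 1\) for \(|s| < 1\). The smallest eigenvalue of \({\mathbb {C}}(s)\) on symmetric matrices is then \(\frac{1}{2}(1 - g(s)) \min (2\mu , 2\mu + d\lambda )\), positive for \(|s| < 1\) and vanishing at \(s = 1\).

```python
import numpy as np
from itertools import combinations_with_replacement

def sym_basis(d):
    """Orthonormal basis of symmetric d x d matrices for the inner product A : B."""
    basis = []
    for i, j in combinations_with_replacement(range(d), 2):
        E = np.zeros((d, d))
        if i == j:
            E[i, i] = 1.0
        else:
            E[i, j] = E[j, i] = 1.0 / np.sqrt(2.0)
        basis.append(E)
    return basis

def isotropic_C1(E, lam, mu):
    """Hypothetical isotropic stiffness: C_1 E = 2 mu E + lam tr(E) I."""
    return 2.0 * mu * E + lam * np.trace(E) * np.eye(E.shape[0])

def g(s):
    """Hypothetical interpolation with g(-1) = -1, g(1) = 1 and |g(s)| < 1 for |s| < 1."""
    return 0.5 * (3.0 * s - s**3)

def coercivity_constant(s, lam=1.0, mu=0.5, d=2):
    """Smallest eigenvalue of CC(s) = (1 - g(s))/2 * C_1 on symmetric d x d matrices."""
    basis = sym_basis(d)
    M = np.array([[np.sum(isotropic_C1(Ei, lam, mu) * Ej) for Ej in basis] for Ei in basis])
    return 0.5 * (1.0 - g(s)) * np.linalg.eigvalsh(M).min()

for s in (-1.0, -0.5, 0.0, 0.5, 0.9, 1.0):
    print(s, coercivity_constant(s))
```

The constant degenerates as \(s \rightarrow 1\), which reflects that \({\mathbb {C}}(1) = {\varvec{0}}\) at leading order in the void phase.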

5.2.3 Matching Conditions

In a tubular neighborhood of \(\Lambda \), we assume the outer expansions and the inner expansions hold simultaneously. Since \(\Gamma _\varepsilon ^\pm \) are assumed to be graphs over \(\Lambda \), we introduce the functions \(Y_\varepsilon ^{\pm }({\varvec{s}})\) such that \(\Gamma _{\varepsilon }^{\pm } = \{ z = Y_{\varepsilon }^{\pm }({\varvec{s}}) \, : \, {\varvec{s}}\in U \}\). Furthermore, we assume an expansion of the form

is valid. While \(\Gamma _\varepsilon ^{\pm }\) converge to \(\Lambda \) as \(\varepsilon \rightarrow 0\), their leading-order positions \(Y_0^{\pm }({\varvec{s}})\) in the rescaled variable z will be deduced in the computations below. Comparing the two expansions in this intermediate region yields matching conditions, which relate the outer expansions to the inner expansions and provide boundary conditions for the outer expansions. For a scalar function \(b({{\varvec{x}}})\) admitting an outer expansion \(\sum _{k=0}^\infty \varepsilon ^k b_k({{\varvec{x}}})\) and an inner expansion \(\sum _{k=0}^\infty \varepsilon ^k B_k({{\varvec{s}}},z)\), it holds that (see [33] and [49, Appendix D])

for \({\varvec{x}}\in \Lambda \). Consequently, we denote the jump of a quantity b across \(\Lambda \) as

5.2.4 Analysis of the Inner Expansions

We introduce the notation \(\big ( {\varvec{B}} \big )^{\textrm{sym}} = \frac{1}{2}({\varvec{B}} + {\varvec{B}}^{\top })\) for a second order tensor \({\varvec{B}}\). Substituting the inner expansions into the state equation (2.2), we find to leading order \(\mathcal {O}(\frac{1}{\varepsilon ^2})\) that

Taking the scalar product with \({\varvec{U}}_0\) and using the symmetry of \({\mathcal {C}_1}\), we obtain the relation

Integrating over z, integrating by parts, and using \(\partial _z {\varvec{\nu }}= {\varvec{0}}\) together with the matching condition \(\lim _{z \rightarrow Y_0^{\pm }} \partial _z {\varvec{U}}_0 = {\varvec{0}}\) leads to

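The coercivity of \({{\mathbb {C}}}(\Phi _0)\) yields \(\big ( \partial _z {\varvec{U}}_0 \otimes {\varvec{\nu }} \big )^{\textrm{sym}} = {\varvec{0}}\), and hence \(\partial _z {\varvec{U}}_0({\varvec{s}},z) = {\varvec{0}}\), since \(|({\varvec{a}} \otimes {\varvec{\nu }})^{\textrm{sym}}|^2 = \frac{1}{2}(|{\varvec{a}}|^2 + ({\varvec{a}} \cdot {\varvec{\nu }})^2)\) for any vector \({\varvec{a}}\) and unit vector \({\varvec{\nu }}\). Then, to the next order \(\mathcal {O}(\frac{1}{\varepsilon })\) we obtain from the state equation (2.2)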

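The step from the vanishing of \(( \partial _z {\varvec{U}}_0 \otimes {\varvec{\nu }})^{\textrm{sym}}\) to \(\partial _z {\varvec{U}}_0 = {\varvec{0}}\) rests on the elementary identity \(|({\varvec{a}} \otimes {\varvec{\nu }})^{\textrm{sym}}|^2 = \frac{1}{2}(|{\varvec{a}}|^2 + ({\varvec{a}} \cdot {\varvec{\nu }})^2)\) for a unit vector \({\varvec{\nu }}\), which shows that \({\varvec{a}} \mapsto ({\varvec{a}} \otimes {\varvec{\nu }})^{\textrm{sym}}\) is injective with smallest singular value \(1/\sqrt{2}\). The Python sketch below checks both facts numerically for random vectors in \(d = 3\); the random seed, sample size and the particular \({\varvec{\nu }}\) are arbitrary choices.

```python
import numpy as np

def sym_outer(a, nu):
    """Symmetric part of the dyad a (x) nu, i.e. (a nu^T + nu a^T) / 2."""
    return 0.5 * (np.outer(a, nu) + np.outer(nu, a))

rng = np.random.default_rng(1)
d = 3

# Identity |(a (x) nu)^sym|^2 = (|a|^2 + (a . nu)^2) / 2 for unit nu (Frobenius norm).
max_identity_gap = 0.0
for _ in range(100):
    a = rng.normal(size=d)
    nu = rng.normal(size=d)
    nu /= np.linalg.norm(nu)
    lhs = np.sum(sym_outer(a, nu) ** 2)
    rhs = 0.5 * (a @ a + (a @ nu) ** 2)
    max_identity_gap = max(max_identity_gap, abs(lhs - rhs))

# Matrix of the linear map a -> (a (x) nu)^sym; a positive smallest singular value means
# the map is injective, so (a (x) nu)^sym = 0 forces a = 0.
nu = np.array([0.0, 0.6, 0.8])
L = np.column_stack([sym_outer(e, nu).ravel() for e in np.eye(d)])
smallest_singular_value = np.linalg.svd(L, compute_uv=False).min()

print(max_identity_gap, smallest_singular_value)
```

Combined with the coercivity of \({\mathbb {C}}(\Phi _0)\), the identity gives a lower bound proportional to \(|\partial _z {\varvec{U}}_0|^2\), which is exactly what the argument needs.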
Integrating over z and using the matching condition

as well as the observations \({\mathbb {C}}(-1) = \mathcal {C}_1\), \({\mathbb {C}}(1) = {\varvec{0}}\), we obtain