Abstract

We prove that \({{\,\textrm{poly}\,}}(t) \cdot n^{1/D}\)-depth local random quantum circuits with two-qudit nearest-neighbor gates on a D-dimensional lattice with n qudits are approximate t-designs in various measures. These include the “monomial” measure, meaning that the monomials of a random circuit from this family have expectation close to the value that would result from the Haar measure. Previously, the best bound was \({{\,\textrm{poly}\,}}(t)\cdot n\) due to Brandão–Harrow–Horodecki (Commun Math Phys 346(2):397–434, 2016) for \(D=1\). We also improve the “scrambling” and “decoupling” bounds for spatially local random circuits due to Brown and Fawzi (Scrambling speed of random quantum circuits, 2012). One consequence of our result is that, assuming the polynomial hierarchy (\({{\,\mathrm{\textsf{PH}}\,}}\)) is infinite and that certain counting problems are \(\#{\textsf{P}}\)-hard “on average”, sampling within small total variation distance from these circuits is hard for classical computers. Previously, exact sampling from the outputs of even constant-depth quantum circuits was known to be hard for classical computers under these assumptions. However, the standard strategy for extending this hardness result to approximate sampling requires the quantum circuits to have a property called “anti-concentration”, meaning roughly that the output has near-maximal entropy. Unitary 2-designs have the desired anti-concentration property. Our result improves the required depth for this level of anti-concentration from linear depth to a sub-linear value, depending on the geometry of the interactions. This is relevant to a recent experiment by the Google Quantum AI group to perform such a sampling task with 53 qubits on a two-dimensional lattice (Arute et al. in Nature 574(7779):505–510, 2019; Boixo et al. in Nat Phys 14(6):595–600, 2018) (and related experiments by USTC), and confirms their conjecture that \(O(\sqrt{n})\) depth suffices for anti-concentration.
The proof is based on a previous construction of t-designs by Brandão et al. (2016), an analysis of how approximate designs behave under composition, and an extension of the quasi-orthogonality of permutation operators developed by Brandão et al. (2016). Different versions of the approximate design condition correspond to different norms, and part of our contribution is to introduce the norm corresponding to anti-concentration and to establish equivalence between these various norms for low-depth circuits. For random circuits with long-range gates, we use different methods to show that anti-concentration happens at circuit size \(O(n\ln ^2 n)\) corresponding to depth \(O(\ln ^3 n)\). We also show a lower bound of \(\Omega (n \ln n)\) for the size of such circuits in this case. Finally, we prove that anti-concentration is possible in depth \(O(\ln n \ln \ln n)\) (size \(O(n \ln n \ln \ln n)\)) using a different model.

1 Introduction

Random unitaries are central resources in quantum information science. They appear in many applications including algorithms, cryptography, and communication. Moreover, they are important toy models for random chaotic systems, capturing phenomena like thermalization or scrambling of quantum information.

An idealized model of a random unitary is the uniform distribution over the unitary group, also known as the Haar measure. However, the Haar measure is an unrealistic model for large systems because the number of random coins and gates needed to generate an element of the Haar distribution scales exponentially with the size of the system (i.e. polynomially with dimension, meaning exponentially in the number of qubits or independent degrees of freedom). To resolve this dilemma, approximate t-designs have been proposed as physically and computationally realistic alternatives to the Haar measure. They approximate the behavior of the Haar measure when one only cares about the first t moments.

Several constructions of t-designs have been proposed based on either random or structured circuits. While structured circuits can in some cases be more efficient [22, 25, 48], random quantum circuits have other advantages. They are plausible models for chaotic random processes in nature, such as scrambling in black holes [17, 55], or the spread of entanglement in condensed matter systems [46, 47], growth of quantum complexity [12], and decoupling [18]. Moreover, they are practical candidates to benchmark computational advantage for quantum computation over classical models, since they seem to capture the power of a generic polynomial-size unitary circuit. Indeed, the Google quantum AI group has recently run a random unitary circuit on a 53-qubit superconducting device and has argued that this should be hard to simulate classically [5, 8] (see Fig. 1 for a demonstration of their proposal). Here the random gates are useful not only for the 2-design property, specifically “anti-concentration”, but also for evading the sort of structure which would lend itself to easy simulation, such as being made of Clifford gates.

All previous random circuit based constructions for t-designs required the circuits to have linear depth. In this paper, we show that certain random circuit models with small depth are approximate t-designs. We consider two models of random circuits. The first one is nearest-neighbor local interactions on a D-dimensional lattice. In this model, we apply random \(\text {U}(d^2)\) gates on neighboring qudits of a D-dimensional lattice in a certain order.

Depending on the application we want, we can define convergence to the Haar measure in different ways. For example, for scrambling [17] we measure convergence w.r.t. the norm \({\mathbb {E}}_C \Vert \rho _S(s) - \frac{1}{2^{|S|}} \Vert ^2_1\), where \(\rho _S(s)\) is the density matrix \(\rho (s)\) reduced to a subset S of qudits and \(\rho (s)\) is the quantum state that results from s steps of the random process. But for anti-concentration, which corresponds loosely to a claim that typical circuit outputs have nearly maximal entropy, we use a norm related to \({\mathbb {E}}_C\sum _x |\langle x|C|0\rangle |^4\). For other measures of convergence to the Haar measure see [42] or Sect. 2.4. In general, these measures are equivalent but moving between them involves factors that are exponentially large in the number of qudits, i.e., if one norm converges to \(\epsilon \) the translation implies that another norm converges to \(2^{O(n)} \epsilon \). Some of the known size/depth bounds for designs are of the form \(O(f(n,t)(n + \ln 1/\epsilon ))\) (e.g. [13]) and in 1-D simple arguments yield an \(\Omega (n + \ln (1/\epsilon ))\) lower bound [17]. In this case, replacing \(\epsilon \) with \(2^{-O(n)}\epsilon \) will not change the asymptotic scaling. [13] defined a strong notion of convergence which implies all the mentioned definitions.

However, in D-dimensional lattices the natural lower bound is \(\Omega (n^{1/D}+\ln (1/\epsilon ))\). Our main challenge in this work is to show that this depth bound is asymptotically achievable, and along the way, we need to deal with the fact that we can no longer freely pay norm-conversion costs of \(2^{O(n)}\). We are able to achieve the desired \({{\,\textrm{poly}\,}}(t)(n^{1/D} + \ln (1/\epsilon ))\) in many operationally relevant norms, but due in part to the difficulty of converting between norms, we do not establish it in all cases. The asymptotic dependency on t in our result for \(D =2\) is \(O(t \ln t)\) times the best asymptotic dependency on t for the \(D=1\) architecture, according to the strong measure defined in [13]. The original bound given in [13] was \(t^{10.5}\). Recently this bound was improved to \(t^{5 + o_t(1)}\) by Haferkamp [31]. The dependency on t in our result is hence \(t^{6 + o_t(1)} \ln t\).

Approximate unitary designs We will consider several notions of approximate designs in this paper. First, we will introduce some notation. A degree-(t, t) monomial in \(C\in \text{ U }(({\mathbb {C}}^d)^{\otimes n})\) is degree t in the entries of C and degree t in the entries of \(C^*\). We can collect all these monomials into a single matrix of dimension \(d^{2nt}\) by defining \(C^{\otimes t,t}:= C^{\otimes t} \otimes C^{*\otimes t}\). We say that \(\mu \) is an exact [unitary] t-design if expectations of all t, t moments of \(\mu \) match those of the Haar measure. We can express this succinctly in terms of the operator

\[ G_\mu ^{(t)}:= {\mathbb {E}}_{C \sim \mu }\left[ C^{\otimes t,t}\right] . \tag{1} \]

Then \(\mu \) is an exact t-design iff \(G_\mu ^{(t)} = G_{\text {Haar}}^{(t)}\). Since \(G_{\text {Haar}}^{(t)}\) is a projector, we sometimes call \(G_\mu ^{(t)}\) a quasi-projector operator and we will later use the fact that it can sometimes be shown to be very close to a projector.

Most definitions of approximate designs demand that some norm of \(G_\mu ^{(t)} - G_{\text {Haar}}^{(t)}\) be small. Three norms that we will consider are based on viewing \(G_{\mu }^{(t)}\) as either a vector of length \(d^{4nt}\), a matrix of dimension \(d^{2nt}\) or a quantum operation acting on a space of dimension \(d^{nt}\). In each case, one can show that the t-design property implies the \(t'\)-design property for \(1\le t'\le t\).

Definition 1

(Monomial definition of t-designs). \(\mu \) is a monomial-based \(\epsilon \)-approximate t-design if any monomial has expectation within \(\epsilon d^{-nt}\) of that resulting from the Haar measure. In other words,

\[ \left\| \textsf{vec}\left[ G_\mu ^{(t)} - G_{\text {Haar}}^{(t)}\right] \right\| _\infty \le \frac{\epsilon }{d^{nt}}. \tag{2} \]

Here \(\textsf{vec}(A)\) is a vector consisting of the elements of matrix A (in the computational basis) and \(\Vert \cdot \Vert _\infty \) refers to the vector \(\ell _\infty \) norm.

The monomial measure is natural when studying anti-concentration, since a sufficient condition for anti-concentration is that \({\mathbb {E}}_C |\langle 0|C|0\rangle |^4\) is close to the quantity that arises from the Haar measure, namely \( \frac{2}{2^n(2^n+1)}\). This is achieved by [monomial measure] 2-designs.

If the operator-norm distance between \(G_\mu ^{(t)}\) and \(G_\text {Haar}^{(t)}\) is small then instead of calling \(\mu \) an approximate design we call it a t-tensor product expander [36]. This controls the rate at which certain nonlinear (i.e. degree-t polynomial) functions of the state converge to the average value they would have under the Haar measure. We can also measure the distance between \(G_\mu ^{(t)}\) and \(G_\text {Haar}^{(t)}\) in the 1-norm (i.e. trace norm) and this notion of approximate designs has been considered before [4, 54], although it does not have direct operational meaning. We will show \({{\,\textrm{poly}\,}}(t)(n^{1/D}+\ln (1/\epsilon ))\)-depth convergence in each of these measures.

Finally, we can consider \(G_\mu ^{(t)}\) to be a superoperator using the following canonical map. Define \(\text {Ch}\left[ \sum _i X_i \otimes Y_i^T\right] \) by \( \text {Ch}\left[ \sum _i X_i \otimes Y_i^T\right] (Z):= \sum _i X_i Z Y_i\). Thus

\[ \text {Ch}\left[ G_\mu ^{(t)}\right] (Z) = {\mathbb {E}}_{C \sim \mu }\left[ C^{\otimes t}\, Z\, (C^{\dagger })^{\otimes t}\right] . \tag{3} \]

Note that \(\text {Ch}\left[ G_{\mu }\right] \) is completely positive and trace preserving, i.e., a quantum channel. For superoperators \({\mathcal {M}},{\mathcal {N}}\) we say that \({\mathcal {M}}\preceq {\mathcal {N}}\) if \({\mathcal {N}}-{\mathcal {M}}\) is a completely positive (cp) map. Based on this ordering, a strong notion of being an approximate design was proposed by Andreas Winter and first appeared in [13].
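
The canonical map above can be checked numerically on a single sample. The following is a minimal sketch, not part of the original construction: the helper names `apply_ch`, `moment_operator_sample` and `random_unitary` are hypothetical, and the sketch relies on the row-major identity \(\textsf{vec}(XZY) = (X \otimes Y^T)\,\textsf{vec}(Z)\) to evaluate \(\text {Ch}[M](Z)\) for a matrix M.

```python
import numpy as np

def random_unitary(dim, rng):
    """Some unitary matrix from a QR decomposition (illustration only, not claimed Haar)."""
    z = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    q, _ = np.linalg.qr(z)
    return q

def moment_operator_sample(C, t):
    """One sample C^{(x)t,t} = C^{(x)t} (x) C^{*(x)t} of the operator averaged in G_mu^{(t)}."""
    Ct = np.eye(1)
    for _ in range(t):
        Ct = np.kron(Ct, C)
    return np.kron(Ct, Ct.conj())

def apply_ch(M, Z):
    """Evaluate Ch[M](Z) where M = sum_i X_i (x) Y_i^T, so that Ch[M](Z) = sum_i X_i Z Y_i.

    With row-major flattening, (X (x) Y^T) vec(Z) = vec(X Z Y), so a single
    matrix-vector product followed by a reshape implements the map.
    """
    Z = np.asarray(Z)
    return (M @ Z.reshape(-1)).reshape(Z.shape)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    C = random_unitary(2, rng)
    t = 2
    Z = rng.normal(size=(4, 4)) + 1j * rng.normal(size=(4, 4))
    Ct = np.kron(C, C)
    lhs = apply_ch(moment_operator_sample(C, t), Z)
    rhs = Ct @ Z @ Ct.conj().T
    print("max deviation:", np.max(np.abs(lhs - rhs)))
```

Averaging `moment_operator_sample(C, t)` over circuits drawn from \(\mu \) would give an estimate of \(G_\mu ^{(t)}\); each sample already acts as conjugation by \(C^{\otimes t}\), which is why the averaged map is trace preserving.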

Definition 2

(Strong definition of t-designs). A distribution \(\mu \) is a strong \(\epsilon \)-approximate t-design if

\[ (1-\epsilon )\, \text {Ch}\left[ G_{\text {Haar}}^{(t)}\right] \preceq \text {Ch}\left[ G_\mu ^{(t)}\right] \preceq (1+\epsilon )\, \text {Ch}\left[ G_{\text {Haar}}^{(t)}\right] . \tag{4} \]

Circuit models The result of [13] constructs t-designs in the strong measure (Definition 2) for \(D=1\) and linear depth, and we generalize this result to construct weak monomial designs for arbitrary D and \(O(n^{1/D})\) depth. We also show that the same construction converges to the Haar measure in other norms: diamond, infinity and trace norm. Our proof techniques do not seem to yield t-designs in the strong measure. We do not even know whether the construction of “strong” t-designs in sub-linear depth is possible.

The second model we consider is circuits with long-range two-qubit interactions. In this model, at each step, we pick a pair of qubits uniformly at random and apply a random \(\text {U}(4)\) gate on them. This model is the standard one when considering bounded-depth circuit classes, such as \(\textsf{QNC}\). Physically, it could model chaotic systems with long-range interactions. Following Oliveira, Dahlsten and Plenio [51] (see also [17, 18, 34]), we can map the \(t=2\) moments of this process onto a simple random walk on the points \(\{1,2,\ldots ,n\}\). We map this random walk to the classical (and exactly solvable) Ehrenfest model, meaning a random walk with a linear bias towards the origin. Further challenges are that this mapping introduces random and heavy-tailed delays and that the norm used for anti-concentration is exponentially sensitive to some of the probabilities. However, we are able to show (in Sect. 4) that after \(O(n \ln ^2 n)\) rounds of this process the resulting distribution over the unitary group converges to the Haar measure in the mentioned norm.

For a distribution p its collision probability is defined as \(\text {Coll}(p) = \sum _x p_x^2\). If \(\text {Coll}(p)\) is large (\(\Omega (1)\)) then the support of p is concentrated around a constant number of outcomes, and if it is small (\(\approx 1/2^n\)) then it is anti-concentrated. The norm that we consider for anti-concentration is basically the expected collision probability of the output distribution of a random circuit. The expected collision probability for the Haar measure is \(\frac{2}{2^n+1}\) and our result shows that a typical circuit of size \(O(n \ln ^2 n)\) outputs a distribution with expected collision probability \(\frac{2}{2^n} \left( 1+\frac{1}{{{\,\textrm{poly}\,}}(n)}\right) \). Along with the Paley–Zygmund anti-concentration inequality this result proves that these circuits have the following anti-concentration property:

\[ \Pr _{C \sim \mu }\left[ |\langle x|C|0\rangle |^2 \ge \frac{\alpha }{2^n}\right] \ge \beta \quad \text {for some constants } \alpha , \beta > 0. \tag{5} \]

Here \(\mu \) is the distribution of random circuits we consider, and x is any n-bit string. This bound is related to the hardness of classical simulation for random circuits. We furthermore show that sub-logarithmic depth quantum circuits in this model have expected collision probability \(\frac{2}{2^n+1} \omega (1)\). The best anti-concentration depth bound we get from this model is \(O(\ln ^2 n)\). However, we are able to construct a natural family of random circuits with depth \(O(\ln n \ln \ln n)\) that are anti-concentrated.
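
The Haar value \(\frac{2}{2^n+1}\) of the expected collision probability can be checked by Monte Carlo. The sketch below is illustrative only: the function names are hypothetical, and it samples Haar-random states directly as normalized complex Gaussian vectors rather than running any of the circuit families studied here.

```python
import numpy as np

def collision_probability(p):
    """Coll(p) = sum_x p_x^2 for a probability vector p."""
    p = np.asarray(p, dtype=float)
    return float(np.sum(p ** 2))

def haar_random_state(n_qubits, rng):
    """A normalized complex Gaussian vector is distributed as C|0> for Haar-random C."""
    dim = 2 ** n_qubits
    v = rng.normal(size=dim) + 1j * rng.normal(size=dim)
    return v / np.linalg.norm(v)

def mean_collision_probability(n_qubits, samples, seed=0):
    """Monte Carlo estimate of the expected collision probability under the Haar measure."""
    rng = np.random.default_rng(seed)
    total = 0.0
    for _ in range(samples):
        psi = haar_random_state(n_qubits, rng)
        total += collision_probability(np.abs(psi) ** 2)
    return total / samples

if __name__ == "__main__":
    n = 4
    print("estimate :", mean_collision_probability(n, samples=4000))
    print("2/(2^n+1):", 2 / (2 ** n + 1))
    print("uniform  :", 1 / 2 ** n)
```

The estimate sits near twice the uniform value \(1/2^n\), which is the sense in which a Haar-random output is anti-concentrated but not flat.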

The organization of this paper The rest of this introductory section states the basic results, ideas and implications related to the main results. In particular, in Sect. 1.1 we explain the connections between our results and the experiments performed by groups such as the Google AI group that aim to demonstrate the superiority of quantum computing over classical computing on specific tasks. In Sect. 1.2, we describe the models we consider in this paper. In Sect. 1.3, we state the main results of this paper, including proof sketches and basic ideas. We then give a brief overview of the previous works related to this paper in Sect. 1.4 and several open questions in Sect. 1.5.

The organization of the rest of this paper is as follows. In Sect. 2 we introduce the preliminary concepts, definitions and tools needed for our proofs. In Sect. 3 we give detailed proofs of how we obtain approximate t-designs on D-dimensional lattices. In Sect. 4 we give detailed proofs related to anti-concentration bounds from circuits with all-to-all interactions. In Sect. 5 we provide alternative proofs for anti-concentration via low-depth D-dimensional lattices and in Sect. 6 we provide improvements on the existing scrambling and decoupling bounds. Appendix A gives a proof of Theorem 3 about the implications of the anti-concentration bound we obtain for the computational difficulty of simulating low-depth random quantum circuits. Finally, Appendix B gives background on the basic properties of Krawtchouk polynomials, which we use in Sect. 4.

1.1 Connections with quantum computational supremacy experiments

Outperforming classical computers, even for an artificial problem such as sampling from the output of an ideal quantum circuit would be a significant milestone for early quantum computers which has recently been called “quantum computational supremacy” [35, 52]. The reason to study quantum computational supremacy in its own right (as opposed to general quantum algorithms) is that it appears to be a distinctly easier task than full-scale quantum computing and even various non-universal forms [2, 3, 8, 14, 15, 29] of quantum computing can be shown to be hard to simulate classically. For example, the outputs of constant-depth quantum circuits cannot be simulated exactly by classical computers unless the \({{\,\mathrm{\textsf{PH}}\,}}\) collapses [56]. In general, families of quantum circuits have this property if they are universal under postselection, meaning that after measuring all the qubits at the end of the circuit and producing a string of bits, we condition on the values of some of these bits and use the other bits for the output.

However, these hardness results are not robust under noise and error in measurements. A central open question in the theory of quantum computational supremacy is whether simulating these distributions to within constant or \(1/{{\,\textrm{poly}\,}}(n)\) total variation distance would still be hard. It is plausible to conjecture that if such a robust hardness of sampling is true, it would also hold for generic circuits [1, 3] (although see [49] for a counterexample). A standard approach to proving such a robust hardness result for generic circuits has been to prove that “anti-concentration” holds, and to use this to relate additive error approximation to average-case relative error approximation; see e.g. [16]. Here “anti-concentration” means having near-maximal entropy in the output of a quantum circuit, which implies that any fixed output probability of a quantum circuit is likely to be \(\ge \frac{\Omega (1)}{2^n}\). This property implies that the complexity of estimating these probabilities additively (within \(\pm \frac{1}{{{\,\textrm{poly}\,}}(n) \cdot 2^n}\)) is on average as hard as computing them within inverse polynomial relative error. This lets us turn an assumption about the average-case hardness of relative-error approximation of the amplitudes into a hardness result for the sampling problems. Approximate t-designs (and even approximate 2-designs) have the desired “anti-concentration” property.

For experimental verification of quantum computational supremacy we can consider the following sampling task: let \(\mu \) be a distribution over random circuits that satisfies

\[ \Pr _{C \sim \mu }\left[ |\langle x|C|0\rangle |^2 \ge \frac{\alpha }{2^n}\right] \ge \beta \tag{6} \]

(which we call the anti-concentration property). Let \({\mathcal {C}}_x\) be the family of circuits constructed by first applying a circuit \(C \sim \mu \) and then an X gate to each qubit with probability 1/2 (and identity with probability 1/2). A line of reasoning similar to that of Bremner-Montanaro-Shepherd (see Theorems 6 and 7 of [16]) implies the following.

Theorem 3

Fix \(\epsilon >0\) and \(0<\delta <1/8\). Let \(\mu \) be a \(\frac{1}{{{\,\textrm{poly}\,}}(n)}\)-approximate 2-design. If there exists a \({{\,\mathrm{\textsf{BPP}}\,}}\) machine which takes \(C \sim \mu \) as input and for at least \(1-\delta \) fraction of such inputs outputs a probability distribution that is within \(\epsilon \) total variation distance from the probability distribution \(p_x = |\langle x | C| 0\rangle |^2\), then there exists an \({{\,\mathrm{\textsf{FBPP}}\,}}^{{{\,\mathrm{\textsf{NP}}\,}}}\) algorithm that succeeds with probability \(1-\delta \) and computes the value \(|\langle 0|C'|0\rangle |^2\) within multiplicative error \(\frac{2(\epsilon + 1/{{{\,\textrm{poly}\,}}(n)})}{\delta }\) for \(1/8 -\frac{1}{{{\,\textrm{poly}\,}}(n)}\) fraction of circuits \(C' \sim {\mathcal {C}}_x\).

This theorem is proved in Appendix A. If we further conjecture that the \({{\,\mathrm{\textsf{PH}}\,}}\) is infinite and that amplitudes of the random circuits in Theorem 3 are \(\#{\textsf{P}}\)-hard to approximate on average, then this implies that classical computers cannot efficiently sample from any distribution close to the ones generated by these circuits. At the moment, it is only known that nearly exact computation of these amplitudes is hard for \(\# {\textsf{P}}\) [10, 44, 45]. It is an open question whether the approximation task remains \(\#{\textsf{P}}\)-hard on average.

The linear to sub-linear improvement of the depth required for anti-concentration provided in this paper is likely to be significant for near-term quantum computers that will be constrained both in terms of the number of qubits (n) and noise rate per gate (\(\delta \)). Due to the constraints in the number of qubits (say 50-100), quantum computational supremacy will only be possible without the overhead of error correction, since even the most efficient known schemes for fault-tolerant quantum computation reduce the number of qubits by more than a factor of two [21]. Thus a quantum circuit with S gates will have an expected \(S\delta \) errors. Recent work due to Yung and Gao [57] and the Google group [9] states that noisy random quantum circuits with \(O(\ln n)\) random errors output distributions that are nearly uniform, and thus are trivially classically simulable. Thus S can be at most \(\ln (n)/\delta \). In proposed near-term quantum devices [6, 8, 26, 50] we can expect \(n\sim 10^2\) and \(\delta \sim 10^{-2}\). Thus the \(S=O(n \ln ^2 n)\) for long-range interactions or \( S = O(n \sqrt{n})\) bound for 2-D lattices from our work is much closer to being practical than the previous \(S=O(n^2)\). (This assumes that the constants are reasonable. We have not made an effort to calculate them rigorously but for the case of long-range interactions we do present a heuristic that suggests that in fact \(\approx \frac{5}{6} n\ln n\) gates are necessary and sufficient.)
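
To make the comparison concrete, the quantities in the paragraph above can be evaluated for \(n\sim 10^2\) and \(\delta \sim 10^{-2}\). This is a back-of-the-envelope sketch only: the function name and dictionary keys are hypothetical, and every asymptotic bound is evaluated with its hidden constant set to 1, which the text explicitly does not establish.

```python
import math

def gate_budgets(n, noise_rate):
    """Evaluate the gate counts discussed in the text with all hidden constants set to 1.

    n          : number of qubits
    noise_rate : error probability per gate (delta in the text)
    """
    ln_n = math.log(n)
    return {
        # Largest S for which the expected number of errors S*delta stays below ln(n).
        "max_gates_before_noise": ln_n / noise_rate,
        # S = O(n ln^2 n) for long-range interactions.
        "long_range": n * ln_n ** 2,
        # Heuristic (5/6) n ln n mentioned for long-range interactions.
        "long_range_heuristic": 5.0 / 6.0 * n * ln_n,
        # S = O(n sqrt(n)) for 2-D lattices.
        "lattice_2d": n * math.sqrt(n),
        # Previous S = O(n^2) from linear-depth constructions.
        "previous_linear_depth": float(n ** 2),
    }

if __name__ == "__main__":
    for name, value in gate_budgets(100, 1e-2).items():
        print(f"{name:>24}: {value:10.1f}")
```

With these inputs the noise budget is roughly 461 gates, against roughly 2121, 1000 and 10000 gates for the three bounds and roughly 384 for the heuristic estimate. Only the heuristic falls below the budget here, which is why the size of the constants matters.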

1.2 Our models

We consider two models of random quantum circuits. The first involves nearest-neighbor local interactions on a D-dimensional lattice and the second involves long-range random two-qubit gates. The order of gates in the first model has some structure but in the second model it is chosen at random. Hence, we can view the second model as the natural dynamics of an n-qubit system, connected as a complete graph.

We first define the following random circuit model for \(D=1\) which was also considered in [13]:

Definition 4

(Random circuits on one-dimensional lattices). \(\mu ^{\text {lattice}, n}_{1, s}\) is the distribution over unitary circuits resulting from the following random process.

This definition assumes that n is even but we modify it in the obvious way when n is odd. Another modification which would not change our results would be to put the qudits on a ring so that sites n and 1 are connected.

Building on this, we define the following distribution of random circuits on a two-dimensional lattice.

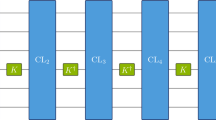

Fig. 1 The architecture proposed by the quantum AI group at Google to demonstrate quantum supremacy consists of a 2D lattice of superconducting qubits. This figure depicts two illustrative timesteps in this proposal. At each timestep, 2-qubit gates (blue) are applied across some pairs of neighboring qubits.

Definition 5

(Random circuits on two-dimensional lattices). Consider a two-dimensional lattice with n qudits. Let \(r_{\alpha ,i}\) be the i\(^{\text {th}}\) row of the lattice in direction \(\alpha \in \{1,2\}\), for \(1 \le i \le \sqrt{n}\). For each \(\alpha \in \{1,2\}\) let \(\text {SampleAllRows}(\alpha )\) denote the following procedure (see Fig. 2):

Now define \(\mu ^{\text {lattice}, n}_{2, c, s}\) to be the distribution over unitary circuits resulting from the following random process:

This distribution has depth \((2c+1)2s\) and is related but not identical to the Google AI group’s experiment [5, 8], see Fig. 1. For our results on t-designs, we will take c to be \({{\,\textrm{poly}\,}}(t)\) and s to be \({{\,\textrm{poly}\,}}(t) \cdot \sqrt{n}\). We believe that our result can be extended to any natural family of circuits with nearest-neighbor interactions. We also assume for convenience that \(\sqrt{n}\) is an integer, but believe that this assumption is not fundamentally necessary.

Next, we give a recursive definition for our random circuits model on arbitrary D-dimensional lattices. We view a D-dimensional lattice as a collection of \(n^{1/D}\) sub-lattices of size \(n^{1-1/D}\), labeled as \(\xi _{1}, \ldots , \xi _{n^{1/D}}\). We label the rows of the lattice in the D-th direction by \(r_1,\ldots , r_{n^{1-1/D}}\).

Definition 6

(Random circuits on D-dimensional lattices). \(\mu ^{\text {lattice}, n}_{D,c,s}\) is the distribution resulting from the following random process.

Next, we define the model with long-range interactions on a complete graph.

Definition 7

(Random circuit models on complete graphs). \(\mu ^{\text {CG}}_s\) is the distribution over unitary circuits resulting from the following random process.

The size of the circuits in this ensemble is s.
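
As an illustration of the complete-graph model, the following sketch simulates the process described in the introduction: at each of s steps, pick a pair of qubits uniformly at random and apply a Haar-random \(\text {U}(4)\) gate to them. It is a small state-vector simulation under stated assumptions, not the authors' code. All function names are hypothetical, and the ordered-pair sampling is one reading of "uniformly at random".

```python
import numpy as np

def haar_unitary(dim, rng):
    """Haar-random unitary via QR of a complex Gaussian matrix with phase correction."""
    z = (rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))) / np.sqrt(2)
    q, r = np.linalg.qr(z)
    d = np.diag(r)
    return q * (d / np.abs(d))  # rescale column k by the phase of r[k, k]

def apply_two_qubit_gate(state, gate, i, j, n):
    """Apply a 4x4 gate to qubits i and j (qubit 0 is the most significant bit)."""
    psi = state.reshape((2,) * n)
    psi = np.moveaxis(psi, (i, j), (0, 1))        # bring the two target axes to the front
    shape = psi.shape
    psi = (gate @ psi.reshape(4, -1)).reshape(shape)
    psi = np.moveaxis(psi, (0, 1), (i, j))        # restore the original axis order
    return psi.reshape(-1)

def sample_complete_graph_state(n, s, rng):
    """Output state C|0^n> for a circuit C of size s on the complete graph."""
    state = np.zeros(2 ** n, dtype=complex)
    state[0] = 1.0
    for _ in range(s):
        i, j = rng.choice(n, size=2, replace=False)  # a uniformly random pair of distinct qubits
        state = apply_two_qubit_gate(state, haar_unitary(4, rng), int(i), int(j), n)
    return state

def mean_collision_probability(n, s, circuits, seed=0):
    """Average of sum_x |<x|C|0>|^4 over independently sampled circuits."""
    rng = np.random.default_rng(seed)
    vals = [np.sum(np.abs(sample_complete_graph_state(n, s, rng)) ** 4) for _ in range(circuits)]
    return float(np.mean(vals))

if __name__ == "__main__":
    n = 6
    for s in (5, 20, 80):
        print(s, mean_collision_probability(n, s, circuits=200), "Haar:", 2 / (2 ** n + 1))
```

Running the loop for increasing s shows the expected collision probability approaching the Haar value, which is the quantity the anti-concentration results for this model control.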

1.3 Our results

Our first result is the following.

Theorem 8

Let \(s, c,n > 0\) be positive integers with \(\mu ^{\text {lattice},n}_{2,c,s}\) defined as in Definition 5.

1. \(s = {{\,\textrm{poly}\,}}(t) \left( \sqrt{n} + \ln \frac{1}{\delta }\right) ,\ c = O\left( t \ln t + \frac{\ln (1/\delta )}{\sqrt{n}}\right) \implies \left\| \textsf{vec}\left[ G_{\mu ^{\text {lattice},n}_{2,c,s}}^{(t)}-G_\text {Haar}^{(t)}\right] \right\| _\infty \le \frac{\delta }{d^{nt}}\).

2. \(s = {{\,\textrm{poly}\,}}(t) \left( \sqrt{n} + \ln \frac{1}{\delta }\right) ,\ c = O\left( t \ln t + \frac{\ln (1/\delta )}{\sqrt{n}}\right) \implies \left\| \text {Ch}\left[ G_{\mu ^{\text {lattice},n}_{2,c,s}}^{(t)}-G_\text {Haar}^{(t)}\right] \right\| _\diamond \le \delta \).

3. \(s = {{\,\textrm{poly}\,}}(t) \left( \sqrt{n} + \ln \frac{1}{\delta } \right) ,\ c = O\left( t \ln t + \frac{\ln (1/\delta )}{\sqrt{n}}\right) \implies \left\| G_{\mu ^{\text {lattice},n}_{2,c,s}}^{(t)} - G_\text {Haar}^{(t)} \right\| _1 \le \delta \).

4. \(\left\| G_{\mu ^{\text {lattice},n}_{2,c,s}}^{(t)} - G_\text {Haar}^{(t)} \right\| _\infty \le c \cdot \sqrt{n} \cdot e^{-s/{{\,\textrm{poly}\,}}(t)} + \frac{1}{d^{O( c \sqrt{n} )}}\).

Fig. 2 The random circuit model in Definition 5. Each black circle is a qudit and each blue link is a random \(\text {SU}(d^2)\) gate. The model does \(O(\sqrt{n}{{\,\textrm{poly}\,}}(t))\) rounds alternating between applying (1) and (2). Then for \(O(\sqrt{n}{{\,\textrm{poly}\,}}(t))\) rounds it alternates between (3) and (4). This entire loop is then repeated \(O({{\,\textrm{poly}\,}}(t))\) times.

The four norms in the above theorem are the vector \(\ell _\infty \) norm, the superoperator diamond norm \(\Vert \cdot \Vert _\diamond \) (see Sect. 2.1), the trace norm \(\Vert \cdot \Vert _1\) and the operator \(S_\infty \) norm, also known simply as the operator norm.

Proof sketch for part 1

We first give a brief overview of the proof in [13] and explain why their construction requires a circuit to have linear depth. Let \(G_{i,i+1}\) be the projector operator for a random two-qudit gate applied to qudits i and \(i+1\), and let \(G = \frac{1}{n-1} \sum _i G_{i,i+1}\). Therefore \(G_s = G^s\) is the quasi-projector corresponding to a 1-D random circuit with size s. [13] observed that \(G - G_{\text {Haar}}\) corresponds to a certain local Hamiltonian and \(\epsilon = 1- \Vert G - G_{\text {Haar}}\Vert _\infty \) is its spectral gap. The central technical result of [13] is the bound \(\epsilon \ge \frac{1}{n\cdot {{\,\textrm{poly}\,}}(t)}\). As a result, \(\Vert G_s - G_{\text {Haar}}\Vert _\infty = (1 - \frac{1}{n\cdot {{\,\textrm{poly}\,}}(t)})^s\). In general \(G - G_{\text {Haar}}\) has rank \(e^{O(n)}\), and in order to construct a strong approximate t-design (Definition 2), one needs to apply a sequence of expensive changes of norm that lose factors polynomial in the overall dimension of G, i.e., \(e^{O(nt)}\). Thereby in order to compensate for such exponentially large factors one needs to choose \(s = O(n^2\cdot {{\,\textrm{poly}\,}}(t))\), meaning depth growing linearly with n. Brown and Fawzi [17] furthermore observed that if G is the projector corresponding to one step of a random circuit on a 2-D lattice, the spectral gap still remains \(1-\Vert G - G_{\text {Haar}}\Vert _\infty = O\big (\frac{1}{n\cdot {{\,\textrm{poly}\,}}(t)}\big )\), and using the same proof strategy one needs linear depth.
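
The size estimate in the preceding paragraph can be spelled out in a few lines. This is a sketch under assumptions that are not in the original: \(\Delta \) renames the spectral gap (called \(\epsilon \) above), \(p(t)\) stands for the unspecified \({{\,\textrm{poly}\,}}(t)\) factor, \(\epsilon '\) is the target error, and the norm-conversion cost is written as \(e^{a n t}\) for some constant a.

```latex
% Assumptions (not from the source): \Delta is the spectral gap, p(t) the poly(t) factor,
% \epsilon' the target error, e^{a n t} the cost of changing norms.
\begin{align*}
\left\| G^s - G_{\text{Haar}} \right\|_\infty
  &= \left\| G - G_{\text{Haar}} \right\|_\infty^{\,s}
   = (1-\Delta)^s \le e^{-s\Delta},
  \qquad \Delta \ge \frac{1}{n\, p(t)}, \\
e^{a n t}\, e^{-s/(n\, p(t))} \le \epsilon'
  &\quad\text{holds as soon as}\quad
  s \ge n\, p(t) \left( a n t + \ln \frac{1}{\epsilon'} \right)
  = O\!\left( n^2\, \mathrm{poly}(t) \right)
  \text{ for fixed } \epsilon'.
\end{align*}
```

Since about n gates fit in one layer, a size of order \(n^2\,{{\,\textrm{poly}\,}}(t)\) corresponds to depth of order \(n\,{{\,\textrm{poly}\,}}(t)\), which is the linear depth referred to in the text.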

The new ingredient we contribute is to show that if \(s = O(\sqrt{n})\) one can replace \(G^{(t)}_{\mu ^{\text {lattice},n}_{2,1,s}}\) with a certain quasi-projector \(G'\), such that

- (1) \(G'-G_{\text {Haar}}\) has rank \(t!^{O(\sqrt{n})}\),

- (2) \(\Vert G' -G_{\text {Haar}}\Vert _\infty \approx 1/e^{\Omega (\sqrt{n})}\), and

- (3) \(G^{(t)}_{\mu ^{\text {lattice},n}_{2,1,s}} \approx G'\) in various norms.

We first use (1) to relate the monomials definition of t-designs to the infinity norm and then use (2) to bound the infinity norm

For \(c = t \ln t\) the error bound is \(1/e^{\Omega (\sqrt{n})} \frac{1}{d^{nt}}\). As a result using (3)

This step requires a certain change of norm for which we only have to pay a factor like \(e^{O(\sqrt{n})}\), which we justify by bounding the ranks of the right intermediate operators. The factor of \(1/d^{nt}\) comes from the fact that the Haar measure itself has monomial expectation values on this order (in fact as large as \(t!/d^{nt}\), but we are suppressing the t-dependence in this proof sketch).

We now briefly describe the construction of \(G'\). Let \(G_R\) (and \(G_C\)) be the projector operators corresponding to applying a Haar unitary to each row (and column) independently. Then \(G' = G_R G_C\). \(G'\) has rank \(t!^{O(\sqrt{n})}\) because \(G_R\) and \(G_C\) are each tensor products of \(\sqrt{n}\) Haar projectors each with rank t!. Let \(V_R\), \(V_C\), and \(V_\text {Haar}\) be respectively the subspaces that \(G_R\), \(G_C\) and \(G_{\text {Haar}}\) project onto. In order to prove (2), in Sect. 3.6.1 we first use the fact that our circuits are computationally universal to argue that \(V_C \cap V_R = V_\text {Haar}\). We then prove that the angle between \(V_R \cap V_\text {Haar}^\perp \) and \(V_C \cap V_\text {Haar}^\perp \) is very close to \(\pi /2\), i.e., \(\approx \pi /2 \pm \frac{1}{d^{\sqrt{n}}}\). This implies that \(G_C G_R = G_{\text {Haar}} + P\), where P is a small matrix in the sense that \(\Vert P\Vert _{\infty } \approx 1/d^{\sqrt{n}}\). Choosing \(c = {{\,\textrm{poly}\,}}(t)\) we obtain (2). For (1), it is not hard to see that the rank of \(G' - G_{\text {Haar}}\) is indeed \(e^{O(\sqrt{n})}\). For (3) we use the construction of t-designs from [13]. In particular, our random circuits model first applies an \(O(\sqrt{n})\) depth circuit to each row and then an \(O(\sqrt{n})\) depth circuit to each column and repeats this for \({{\,\textrm{poly}\,}}(t)\) rounds. The result of [13] implies that each of these rounds is effectively the same as applying a strong approximate t-design to the rows or columns of the lattice. We then analyze how these designs behave under composition in various norms and prove (3). \(\square \)

Our second result generalizes Theorem 8 to arbitrary dimensions.

Theorem 9

There exists a value \(\delta = 1/d^{\Omega (n^{1/D})}\) such that for some large enough c depending on D and t:

1. \(s > c \cdot n^{1/D} \implies \left\| \textsf{vec}\left[ G_{\mu ^{\text {lattice},n}_{D,c,s}}^{(t)}-G_\text {Haar}^{(t)}\right] \right\| _\infty \le \frac{\delta }{d^{nt}}\).

2. \(s > c \cdot n^{1/D} \implies \left\| \text {Ch}\left[ G_{\mu ^{\text {lattice},n}_{D,c,s}}^{(t)}-G_\text {Haar}^{(t)}\right] \right\| _\diamond \le \delta \).

3. \(s > c \cdot n^{1/D} \implies \left\| G_{\mu ^{\text {lattice},n}_{D,c,s}}^{(t)} - G_\text {Haar}^{(t)} \right\| _\infty \le \delta \).

4. \(s > c \cdot n^{1/D} \implies \left\| G_{\mu ^{\text {lattice},n}_{D,c,s}}^{(t)} - G_\text {Haar}^{(t)} \right\| _1 \le \delta \).

In order to understand the implication of this result for anti-concentration, we first define the following.

Definition 10

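(Anti-concentration). We say a family of circuits \(\mu \) satisfies the \((\alpha ,\beta )\) anti-concentration property if for any \(x \in \{0,1\}^n\)

$$\begin{aligned} \Pr _{C \sim \mu } \left[ |\langle x|C|0^n\rangle |^2 \ge \frac{\alpha }{2^n} \right] \ge \beta . \end{aligned}$$(5)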

As mentioned before, unitary 2-designs imply a strong anti-concentration bound. In particular:

Theorem 11

Let \(\mu \) be an \(\epsilon \)-approximate 2-design in the monomial measure. Then \(\mu \) satisfies the \((\alpha , \beta )\) anti-concentration property for \(\alpha = \delta (1-\epsilon )\), \(\beta = \frac{(1-\delta )^2 (1-\epsilon )^2}{2 (1+\epsilon )}\) and \(0 \le \delta \le 1\).

Proof

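(See Appendix A and also Theorem 5 of [32]). The proof is based on the Paley–Zygmund anti-concentration inequality: for a non-negative random variable X with finite second moment and \(0 \le \delta \le 1\) we have

$$\begin{aligned} \Pr \left[ X \ge \delta \, {\mathbb {E}}[X] \right] \ge (1-\delta )^2 \frac{{\mathbb {E}}[X]^2}{{\mathbb {E}}[X^2]}. \end{aligned}$$(10)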

\(\square \)
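To make the parameters of Theorem 11 concrete, the following minimal sketch evaluates \(\alpha \) and \(\beta \) for a given design error \(\epsilon \) and threshold \(\delta \). The function name `anticoncentration_params` is ours and is introduced only for illustration.

```python
def anticoncentration_params(eps: float, delta: float) -> tuple[float, float]:
    """Return (alpha, beta) as stated in Theorem 11.

    eps   : error of the approximate 2-design in the monomial measure
    delta : Paley-Zygmund threshold, 0 <= delta <= 1
    """
    if not 0.0 <= delta <= 1.0:
        raise ValueError("delta must lie in [0, 1]")
    if not 0.0 <= eps < 1.0:
        raise ValueError("eps is assumed to lie in [0, 1)")
    alpha = delta * (1.0 - eps)
    beta = (1.0 - delta) ** 2 * (1.0 - eps) ** 2 / (2.0 * (1.0 + eps))
    return alpha, beta


if __name__ == "__main__":
    # An exact 2-design (eps = 0) with delta = 1/2.
    print(anticoncentration_params(0.0, 0.5))
```

For an exact 2-design and \(\delta = 1/2\) this gives \(\alpha = 1/2\) and \(\beta = 1/8\): at least an eighth of the circuits assign a given outcome at least half of its uniform probability.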

We remark that based on the result of [24], while sufficient, the 2-design property is not necessary for anti-concentration. In Sect. 5 we give an alternative proof for anti-concentration in \(O(D \cdot n^{\frac{1}{D}})\) depth based on different ideas. The method directly implies anti-concentration and not the approximate 2-design property.

For these spatially local circuits we also improve on some bounds in [17] and [18] about scrambling and decoupling, removing polylogarithmic factors. Here we give an informal statement of the result with full details and definitions found in Sect. 6.

Theorem 12

(Informal). Random quantum circuits acting on D-dimensional lattices composed of n qubits are scramblers and decouplers in the sense of [17] and [18] after \(O(D \cdot n^{1/D})\) steps.

Our last result concerns the fully connected model. If \(s = O(n \ln ^2 n)\) and \(d=2\) then \(\mu ^{\text {CG}}_{s}\) satisfies the anti-concentration criterion according to Definition 10 for constant \(\alpha \) and \(\beta \), i.e., (5). We phrase our result in terms of the expected “collision probability” of the output distribution of \(C \sim \mu ^{\text {CG}}_s\), from which a bound similar to the one in Theorem 11 will follow using the Paley–Zygmund inequality (10). In particular, if C is a quantum circuit on n qubits, starting from \(|0^n\rangle \) the collision probability is

$$\begin{aligned} \text {Coll}(C) := \sum _{x \in \{0,1\}^n} |\langle x|C|0^n\rangle |^4 . \end{aligned}$$

For the Haar measure \({\mathbb {E}}_{C \sim \textrm{ Haar}}\text {Coll}(C) = \frac{2}{2^n+1}\), and for the uniform distribution this value is \(1/2^n\). In contrast, a depth-1 random circuit has expected collision probability \( (\sqrt{\frac{2}{5}} )^n\), which is exponentially larger than what we expect from the Haar measure.
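The three reference values quoted above can be compared exactly with rational arithmetic. The sketch below is only illustrative: the function names are ours, and the depth-1 value is computed under the reading that a depth-1 circuit consists of \(n/2\) independent Haar two-qubit gates on disjoint pairs, each contributing the two-qubit Haar value \(2/(2^2+1)\).

```python
from fractions import Fraction


def haar_collision(n: int) -> Fraction:
    """Expected collision probability under the n-qubit Haar measure."""
    return Fraction(2, 2**n + 1)


def uniform_collision(n: int) -> Fraction:
    """Collision probability of the uniform distribution on n bits."""
    return Fraction(1, 2**n)


def depth_one_collision(n: int) -> Fraction:
    """(2/5)^(n/2), i.e. (sqrt(2/5))^n, for even n.

    Assumption: n/2 independent Haar two-qubit gates on disjoint pairs.
    """
    if n % 2 != 0:
        raise ValueError("n is assumed to be even")
    return Fraction(2, 5) ** (n // 2)


if __name__ == "__main__":
    for n in (2, 10, 20):
        ratio = depth_one_collision(n) / haar_collision(n)
        print(n, float(ratio))
```

For \(n=2\) the depth-1 and Haar values coincide, as they must, and the ratio grows exponentially with n thereafter.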

Theorem 13

There exists a c such that when \(s > c n \ln ^2 n\),

Moreover if \(t \le \frac{1}{3 c'} n \ln n\) for some large enough \(c'\), then

Proof Sketch

For the upper bound, we translate the convergence time of the expected collision probability to the mixing time of a certain classical Markov chain (which we call \(X_0,X_1,\ldots \)). This Markov chain has also been considered in previous work [18, 34, 51]. Part of our contribution is to analyze this Markov chain in a new norm. The Markov chain has n sites labeled as \(1,\ldots ,n\), and at each site x it will move only to \(x-1\), x or \(x+1\). Such chains are known as “birth and death” chains, and in our case it results from representing the state of the system by a Pauli operator and then taking x to be the Hamming weight of that Pauli operator. It is known [51] that the probability of moving to site \(x+1\) is \(\approx \frac{6}{5} \frac{x(n-x)}{n^2}\) and the probability of moving to site \(x-1\) is \(\approx \frac{2}{5} \frac{x(x-1)}{n^2}\). The major difficulty in proving mixing for this Markov chain is that the norm which we have to prove mixing in is exponentially sensitive to small fluctuations (measured in either the 1-norm or the 2-norm). Indeed, given starting condition

we would like to show that

is \(\le O(2^{-n})\). We can think of (15) as a weighted 1-norm on probability distributions.

Our proof will compute the distribution of \(X_t\) for \(t = O(n \ln ^2 n)\) nearly exactly. One distinctive feature of this chain is that when \(k/n \ll 1\), the probability of moving is O(k/n) and the chain is strongly biased to move towards the right. When k/n reaches \(\Theta (1)\), the chain becomes more like the standard discrete Ehrenfest chain, which is a random walk with a linear bias towards (in this case) \(k=\frac{3}{4} n\). Thus the small-k region needs to be handled separately. This is especially true for anti-concentration because of the \(1/3^k\) term in (15): even a small probability of waiting for a long time in this region can have a large effect on the collision probability.
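The qualitative behaviour described above can be explored numerically. The following sketch evolves the Hamming-weight distribution exactly under the approximate transition probabilities quoted in the proof sketch (up with probability \(\frac{6}{5}\frac{x(n-x)}{n^2}\), down with probability \(\frac{2}{5}\frac{x(x-1)}{n^2}\)). It treats these leading-order expressions as exact, which is an assumption made for illustration, and the function names are ours.

```python
def step_probabilities(x: int, n: int) -> tuple[float, float, float]:
    """Return (down, stay, up) for the birth-and-death chain at site x.

    Uses the approximate rates from the text as if they were exact.
    """
    up = 1.2 * x * (n - x) / n**2
    down = 0.4 * x * (x - 1) / n**2
    return down, 1.0 - up - down, up


def evolve(dist: list[float], n: int, steps: int) -> list[float]:
    """Apply `steps` transitions to dist, where dist[x] = Pr[X = x], x = 0..n."""
    for _ in range(steps):
        new = [0.0] * (n + 1)
        for x, p in enumerate(dist):
            if p == 0.0:
                continue
            down, stay, up = step_probabilities(x, n)
            new[x] += p * stay
            if x > 0:
                new[x - 1] += p * down
            if x < n:
                new[x + 1] += p * up
        dist = new
    return dist


def mean(dist: list[float]) -> float:
    """Expected Hamming weight under dist."""
    return sum(x * p for x, p in enumerate(dist))


if __name__ == "__main__":
    n = 40
    start = [0.0] * (n + 1)
    start[1] = 1.0  # a single non-identity Pauli
    for steps in (10, 100, 1000, 4000):
        print(steps, round(mean(evolve(start, n, steps)), 3))
```

Starting from weight 1, the mean barely moves at first (the probability of leaving small k is O(k/n)) and then relaxes towards \(\frac{3}{4} n\), in line with the bias described in the text.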

The approach of [18, 27, 34] has been to relate the original Markov chain to an “accelerated” chain which is conditioned on moving at each step. The status of the original chain can be recovered from the accelerated chain by adding a geometrically distributed “wait time” at each step. Then standard tools from the analysis of Markov chains, such as comparison theorems and log-Sobolev inequalities, can be used to bound the convergence rate of the accelerated chain. Finally, it can be related back to the original chain by arguing that the accelerated chain is unlikely to spend too long on small values of k, allowing us to bound the wait time. For our purposes, this process does not produce sharp enough bounds, due to the heavy-tailed wait times combined with fairly weak bounds on how quickly the accelerated chain converges and leaves the small-k region.

We will sharpen this approach by incompletely accelerating; i.e., we will couple the original chain to a chain that moves with a carefully chosen (but always \(\Omega (1)\)) probability. In particular, we will introduce a chain where the probabilities of moving from x to \(x-1\), x or \(x+1\) are each affine functions of x. In fact our new “accelerated” chain is only accelerated for \(x< \frac{5}{6}n\) and is actually more likely to stand still for \(x\ge \frac{5}{6}n\). This will allow us to exactly solve for the probability distribution of the accelerated chain after any number of steps, using a method of Kac to relate this distribution to the solution of a differential equation. Our solution can be expressed simply in terms of Krawtchouk polynomials, which have appeared in other exact solutions to random processes on the hypercube. We relate this back to the original chain with careful estimates of the mean and large-deviation properties of the wait time. This ends up showing only that the collision probability is small for t in some interval \([t_1,t_2]\), and to show that it is small for a specific time, we need to prove that the collision probability decreases monotonically when we start in the state \(|0^n\rangle \). A further subtlety is that (15) technically only applies when all qubits have been hit by gates and we need to extend this analysis to include the non-negligible probability that some qubits have never been acted on by a gate.

Because previous work achieved quantitatively less sharp bounds, it could omit some of these steps. For example, [27, 34] used \(O(n^2)\) gates, which meant that the probability of most bad events was exponentially small. By contrast, with only \(O(n\ln ^2(n))\) gates, there is probability \(n^{-O(\ln n)}\) of missing at least one qubit and so we cannot afford to let this be an additive correction to our target collision probability of \(\text {constant} \cdot 2^{-n}\). Likewise, [18] used only \(O(n \ln ^2(n))\) gates but achieved a collision probability of \(2^{\epsilon n-n}\) for small constant \(\epsilon \), which allowed them to use a simpler version of the accelerated chain whose convergence they bounded using generic tools from the theory of Markov chains.
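The \(n^{-O(\ln n)}\) estimate can be checked with a short calculation. In the complete-graph model each gate acts on a uniformly random pair, so a fixed qubit is avoided by one gate with probability \(1 - 2/n\). The sketch below (function names are ours) evaluates the resulting probability and a union bound over qubits.

```python
import math


def prob_qubit_missed(n: int, s: int) -> float:
    """Probability that one fixed qubit is untouched by s gates,
    each acting on a uniformly random pair of the n qubits."""
    return (1.0 - 2.0 / n) ** s


def union_bound_any_missed(n: int, s: int) -> float:
    """Union bound on the probability that at least one qubit is untouched."""
    return min(1.0, n * prob_qubit_missed(n, s))


if __name__ == "__main__":
    for n in (16, 64, 256):
        s = int(n * math.log(n) ** 2)      # s = n ln^2 n gates
        print(n, s, union_bound_any_missed(n, s), n * n ** (-2 * math.log(n)))
```

Since \((1-2/n)^s \le e^{-2s/n}\), taking \(s = c\, n \ln ^2 n\) gives a per-qubit miss probability of at most \(n^{-2c \ln n}\), which is tiny but not exponentially small in n.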

For the lower bound we just consider the event that the initial Hamming weight does not change throughout the process. The initial state with Hamming weight k has probability mass \(\Pr [X_0=k]=\frac{{n\atopwithdelims ()k}}{2^n-1}\). Starting with Hamming weight k, the probability of not moving in each step is \(e^{-O(k/n)}\), so if \(t= c n \ln n\) for \(c \ll 1\) then we have \(\Pr [X_t=k | X_0=k] \ge e^{-O(k t/n)}\). Hence

\(\square \)

A natural question is whether there is a common generalization of our Theorems 9 and 13. In physics, the \(D\rightarrow \infty \) limit is often considered a good proxy for the fully connected model. This raises the question of whether we needed Theorem 13 to handle the fully connected case, or whether it would be enough to use Theorem 9 in the large D limit. However, Theorem 9 works only for \(D = O(\ln n/ \ln \ln n)\), and the best depth bound we can get from this theorem is \(e^{O(\ln n/\ln \ln n)}\), which is far above the \(O(\ln ^2(n))\) achievable by Theorem 13. On the other hand, in Sect. 5 we give an alternative proof for anti-concentration of outputs via circuits on D-dimensional lattices with \(t=2\) and \(D = O(\ln n)\). Using that approach we can make the depth as small as \(O(\ln n \ln \ln n)\). We conjecture that \(O(\ln n)\) depth should also be possible.

In order to establish rigorous bounds, our results involve some inequalities that are not always tight. As a result, the upper bound on collision probability in Theorem 13 has a factor of 29 rather than the \(2+o(1)\) that we would expect and the bound on the number of gates required may be too high by a factor of \(\ln (n)\). Since determining the precise number of gates needed for anti-concentration may have utility in near-term quantum hardware, we also undertake a heuristic analysis of what depth seems to be required to achieve anti-concentration. Here we ignore the possibility of large fluctuations in the wait time, for example, and simply set it equal to its expected value. We also freely make the continuum approximation for the biased random walk that ignores wait time, obtaining the Ornstein–Uhlenbeck process. The resulting analysis (found in Sect. 4.6) suggests that \(\frac{5}{6} n \ln n + o(n \ln n)\) gates are needed to achieve anti-concentration comparable to the Haar measure.

This result can also be useful for understanding the near-term power of certain variational quantum algorithms, such as VQE and QAOA. [20, 43] show that when a gate sequence is drawn from a 2-design, the gradients used for optimizing VQE and other algorithms become exponentially small. This is called the “barren plateau” phenomenon. Our result would suggest that this occurs in 2-D circuits once the depth is \(\gtrsim \sqrt{n}\).

1.4 Previous work

The time evolution of the 2nd moments of random quantum circuits was first studied by Oliveira, Dahlsten and Plenio [51], who investigated their entanglement properties. This was extended by [27, 34] to show that after linear depth, random circuits on the complete graph yield approximate 2-designs. In [13] Brandão-Harrow-Horodecki (BHH) extended this result and showed that for a 1D-lattice after depth \(t^{10.5} \cdot O(n + \ln \frac{1}{\epsilon })\) these random quantum circuits become \(\epsilon \)-approximate t-designs. This result was subsequently improved to \(t^{5 + o_t(1)} O(n + \ln \frac{1}{\epsilon })\) by Haferkamp [31]. All of these results (except [51]) directly imply anti-concentration after the mentioned depths. The construction of t-designs in [13] is in a stronger measure than the one in HL [34]. The gap of the second-moment operator was calculated exactly for \(D=1\) and fully connected circuits by Žnidarič [58] and a heuristic estimate for the \(t^{\text {th}}\) moment operator was given by Brown and Viola for fully connected circuits [19].

In [17, 18] Brown and Fawzi considered “scrambling” and “decoupling” with random quantum circuits. In particular, they showed for a D-dimensional lattice scrambling occurs in depth \(O(n^{1/D} {\text {polylog}}(n))\), and for complete graphs, they showed that after polylogarithmic depth these circuits demonstrate both decoupling and scrambling. For the case of D-dimensional lattices they showed that for the Markov chain K, after depth \(n^{1/D} {\text {polylog}}(n)\), a string of Hamming weight 1 gets mapped to a string with linear Hamming weight with probability \(1-1/{\text {poly}}(n)\). While this result is related to ours, it does not seem to yield the results we need e.g. for anti-concentration, due to the powers of Hilbert space dimension that are lost when changing norms.

In [46, 47] Nahum, Ruhman, Vijay and Haah considered operator spreading for random quantum circuits on D-dimensional lattices. They considered the case when a single Pauli operator starts from a certain point on the lattice and they analyze the probability that after a certain time a non-identity Pauli operator appears at an arbitrary point on the lattice. For \(D=1\) they showed that this probability function satisfies a biased diffusion equation. Their result in this case is exact. For \(D=2\) they explained, both numerically and theoretically, that this probability function spreads as an almost circular wave whose front satisfies the one dimensional Kardar-Parisi-Zhang equation. They moreover explained: 1) the bulk of the occupied region is in equilibrium, 2) fluctuations appear at the boundary of this region with \(\sim t^{1/3}\), and 3) the area of the occupied region grows like \(t^2\), where t is the depth of the circuit. As far as we understand this result does not directly lead to the construction of t-designs and rigorous bounds on the quality of the approximations made in that paper are not known.

If we assume that qudits have infinite local dimension (\(d \rightarrow \infty \)) then the evolution of Pauli strings on a 2-D lattice is closely related to Eden’s model [28]. Here, Eden has found certain explicit solutions. However, apart from the \(d\rightarrow \infty \) limit, his model also differs from ours in that he considers only starting with a single occupied site and running for a time much less than the graph diameter (or equivalently, considering an infinitely large 2-D lattice), while we consider the initial distribution obtained by starting in the \(|0^n\rangle \) state.

After the first preprint version of this paper was posted online, [24] improved on our results in several ways. Contrary to our expectation, they proved that random quantum circuits acting on linear chains or complete graphs anti-concentrate after depth \(\Theta (\ln n)\). It is left as an important open question whether the same bound holds for \(D = 2,3, \ldots \). They also proved one of the conjectures of this paper, namely that the constant factor for the depth bound for the complete graph model is 5/6. The initial presentation of this result had a mistake in the heuristic reasoning and predicted the constant factor to be 5/3. This was pointed out and corrected in [24].

1.5 Open questions

-

1.

Is it possible to construct “strong” t-designs (Definition 2) using sub-linear depth random circuits? If we can show that the off-diagonal moments (see Definition 36) of the distribution, which have expectation zero according to the Haar measure, become smaller than \(1/d^{3nt}\) in sub-linear depth, then our construction of monomial designs implies the construction of strong designs. On the other hand, we cannot rule out the possibility that strong designs require linear depth.

-

2.

How large are the constant factors in bounds reported in this paper? Based on a heuristic argument in Sect. 4.6 for the complete graph architecture we conjecture that such random circuits of size \(s = \frac{5}{6} n (\ln n + \epsilon )\) are \(O(\epsilon )\)-approximate 2-designs. See Conjecture 1 for a precise conjectured bound for obtaining 2-designs. In work appearing after the first version of our paper, Ref. [24] proved this conjecture for anti-concentration. Our result had achieved an upper-bound of \(O(n \ln ^2 n)\).

-

3.

We believe our dependence on n is essentially optimal. But our depth scales with t as \(t^\alpha \) for some \(\alpha \gtrsim 5\) that is almost certainly not optimal. At the moment the best lower bound is \(\Omega (t\ln n)\) depth for any circuit, or \(\Omega (n^{1/D})\) in D dimensions. Indeed, very recently [37] provided strong analytical evidence that \(\alpha =1\) for the one-dimensional architecture (\(D=1\)). The argument, however, contains uncontrolled approximations and is not known to extend even to \(D=2\), although such an extension seems plausible. Intriguingly, also for constant n and with a different gate model, some results are known that are completely independent of t [11].

-

4.

If we pick an arbitrary graph and apply random gates on the edges of this graph, after what depth do these circuits become t-designs? We conjecture that if the graph has large expansion and diameter l, then the answer is O(l). However, if the graph has a tight bottleneck (like a binary tree), then even though the graph has small diameter, we suspect that certain measures of t-designs (including the monomial measure) require linear depth. Ideally, the t-design time for any graph could be related to other properties of the graph such as mixing time, cover time, etc.

-

5.

Can we prove a comparison lemma for random circuits, i.e., can we show that if two random circuits are close to each other, then they become t-designs after roughly the same amount of time? Such a comparison lemma may imply that other natural families of low-depth circuits are approximate t-designs. A related question is whether deleting random gates from a circuit family can ever speed up convergence to being a t-design. Such a bound has been called a “censoring” inequality in the Markov-chain literature.

-

6.

Our results do not say much about the actual constants that appear in the asymptotic bounds for the required size for anti-concentration. We conjecture the leading term in the anti-concentration time for random circuits on complete graphs is \(\frac{5}{6} n \ln n\). For the D-dimensional case our bounds inherit constant factors from [13]. Simple numerical simulation and also the analysis of [8, 46, 47] suggest that the constant should be \(\approx 1\).

-

7.

For the case of D-dimensional circuits, our result does not say much about the dynamics of the distribution when depth is \(\ll n^{1/D}\). Such a result may explain the dynamics of entanglement in random circuits. [46, 47] consider this problem for the case when a single Pauli operator starts at the middle of the lattice; however, their result does not apply to arbitrary initial operators.

-

8.

The best anti-concentration lower bound we are able to prove is \(\Omega (\ln n)\). For D-dimensional lattices one would expect a lower bound of \(\Omega (n^{1/D})\) based on the following intuition for circuits of depth \(s < n^{1/D}\): for \(s \ll n^{1/D}\), we expect any two non-overlapping clusters of \(s^D\) qubits will be close to Haar random. Hence, a crude model for such circuits would be \(n/s^D\) copies of Haar-random unitaries each on \(s^D\) qubits. In this case we would expect the collision probability to be \( \approx \frac{2^{n/s^D}}{2^n}\). Very interestingly, the recent result [24] refutes this intuition for \(D=1\) and shows an upper bound of \(O(\ln n)\) for the depth at which anti-concentration is achieved. It seems plausible that at \(D = 2,3, \ldots \) we would also have anti-concentration in depth \(O(\ln n)\) since it holds both for \(D=1\) and for fully connected circuits.

2 Preliminaries

2.1 Basic definitions

We need the following norms:

Definition 14

For a superoperator \({\mathcal {E}}\) the diamond norm [40] is defined as \(\Vert {\mathcal {E}}\Vert _\diamond := \sup _d \Vert {\mathcal {E}} \otimes \textrm{id}_d\Vert _{1 \rightarrow 1}\), where for a superoperator A the \(1 \rightarrow 1\) norm is defined as \( \Vert A\Vert _{1 \rightarrow 1}:= \sup _{X \ne 0} \frac{\Vert A (X)\Vert _1}{\Vert X\Vert _1}\).

A matrix is called positive semi-definite (psd) if it is Hermitian and has all non-negative eigenvalues. A superoperator \({\mathcal {A}}\) is called completely positive (cp) if for any \(d \ge 0\), \({\mathcal {A}} \otimes \text {id}_d\) maps psd matrices to psd matrices. A superoperator is called trace-preserving completely positive (tpcp) if it preserves the trace and is furthermore cp.

Let S be a set of qudits, then

Definition 15

\(\text {Haar}(S)\) is the Haar measure on \(U (({\mathbb {C}}^d)^{\otimes |S|})\). We refer to \(\text {Haar}(i,j)\) as the two qudit Haar measure on qudits indexed by i and j and also if m is an integer, the notation \(\text {Haar}(m)\) means Haar measure on m qudits.

We now define expected monomials, moment superoperators and quasi-projectors for a distribution \(\mu \) over the unitary group:

Definition 16

Let \(n,t >0\) be positive integers and \(\mu \) be any distribution over the n-qudit unitary group \(\text{ U }(({\mathbb {C}}^d)^{\otimes n})\). Then \(G_\mu ^{(t)}:= {\mathbb {E}}_{C\sim \mu } \left[ C^{\otimes t,t} \right] \) is the quasi-projector of \(\mu \). Here \(C^{\otimes t,t} = C^{\otimes t} \otimes C^{*\otimes t}\). Also \(G^{(t)}_{(i,j)} = G^{(t)}_{\text {Haar}(i,j)}\). Using this definition we will also use the following quantities:

-

1.

Let \(i_1, j_1, \ldots , i_t, j_t, k_1, l_1, \ldots , k_t, l_t \in [d]^n\) be any 2t-tuple of words \(\in [d]^n\). Then the \(i_1,\ldots ,l_t\) monomial is the expected value of a balanced monomial of \(\mu \) defined as

$$\begin{aligned} \mathop {{\mathbb {E}}}\limits _{C \sim \mu } \left[ C_{i_1, j_1} \ldots C_{i_t, j_t} C^*_{k_1, l_1} \ldots C^*_{k_t, l_t} \right] = \langle i_1, \ldots , j_t|G_\mu ^{(t)}|k_1, \ldots , l_t\rangle \end{aligned}$$(17)

where \(C_{a,b}\) is the (a, b) entry of the unitary matrix C.

-

2.

Let \(\textrm{ad}_X (\cdot ):= X (\cdot ) X^\dagger \). Then \(\text {Ch}\left[ G^{(t)}_\mu \right] := {\mathbb {E}}_{C \sim \mu } \left[ \textrm{ ad}_{C^{\otimes t}} \right] \) is the \(t^{\text {th}}\) moment superoperator of \(\mu \).
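As a small illustration of the quasi-projector, the sketch below computes \(G^{(1)}_\mu = {\mathbb {E}}_{C \sim \mu }[C \otimes C^*]\) for one qubit when \(\mu \) is uniform over the four Pauli matrices. This ensemble is our choice for the example (it is an exact 1-design, so the average coincides with the Haar value); the helper names are ours.

```python
def kron(a, b):
    """Kronecker product of two square matrices given as nested lists."""
    return [[a[i][j] * b[k][l] for j in range(len(a)) for l in range(len(b))]
            for i in range(len(a)) for k in range(len(b))]


def conj(a):
    """Entrywise complex conjugate (no transpose)."""
    return [[complex(x).conjugate() for x in row] for row in a]


PAULIS = [
    [[1, 0], [0, 1]],       # I
    [[0, 1], [1, 0]],       # X
    [[0, -1j], [1j, 0]],    # Y
    [[1, 0], [0, -1]],      # Z
]


def quasi_projector_t1(ensemble):
    """Average of C (x) C^* over a finite, uniformly weighted ensemble."""
    dim = len(ensemble[0]) ** 2
    total = [[0j] * dim for _ in range(dim)]
    for c in ensemble:
        term = kron(c, conj(c))
        for i in range(dim):
            for j in range(dim):
                total[i][j] += term[i][j] / len(ensemble)
    return total


if __name__ == "__main__":
    for row in quasi_projector_t1(PAULIS):
        print([round(x.real, 3) for x in row])
```

The result is the rank-one projector onto \(\frac{1}{\sqrt{2}}(|00\rangle + |11\rangle )\), the unique vector (up to scale) invariant under \(C \otimes C^*\) for all unitaries C.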

Next, we define the building blocks of our t-design constructions.

Definition 17

(Rows of a lattice). For \(1 \le i \le n^{1-1/D}\), \(r_{\alpha ,i}\) is the i-th row of a D-dimensional lattice in the \(\alpha \)-th direction. We will label the qubits in row i by \((\alpha ,i,1),\ldots ,(\alpha ,i,n^{1/D})\). Assume for convenience that \(n^{1/D}\) is an even integer and define the sets of pairs \(E_{\alpha ,i}:= \{((\alpha ,i,1),(\alpha ,i,2)),\ldots , ((\alpha ,i,n^{1/D}-1), (\alpha ,i,n^{1/D}))\}\) and \(O_{\alpha ,i}:= \{((\alpha ,i,2),(\alpha ,i,3)),\ldots , ((\alpha ,i,n^{1/D}-2), (\alpha ,i,n^{1/D}-1))\}\).
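The two pair sets of Definition 17 can be enumerated directly. In the sketch below, only the position within a row is tracked (the row labels \(\alpha \) and i are dropped), m stands for the row length \(n^{1/D}\), and the function names are ours.

```python
def pairs_E(m: int) -> list[tuple[int, int]]:
    """Pairs (1,2), (3,4), ..., (m-1, m) within a row of even length m."""
    if m < 2 or m % 2 != 0:
        raise ValueError("row length is assumed to be an even integer >= 2")
    return [(j, j + 1) for j in range(1, m, 2)]


def pairs_O(m: int) -> list[tuple[int, int]]:
    """Pairs (2,3), (4,5), ..., (m-2, m-1) within a row of even length m."""
    if m < 2 or m % 2 != 0:
        raise ValueError("row length is assumed to be an even integer >= 2")
    return [(j, j + 1) for j in range(2, m - 1, 2)]


if __name__ == "__main__":
    print(pairs_E(6))
    print(pairs_O(6))
```

Within each set the pairs are disjoint, so all gates of one set can be applied in a single layer, and together the two sets cover every nearest-neighbour pair of the row.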

Definition 18

(Elementary random circuits). The elementary quasi-projector in direction \(\alpha \) is

For the 2-D lattice we use \(g_R\) and \(g_C\) for \(g_1\) and \(g_2\), respectively.

The following defines the moment superoperator and quasi-projector of the Haar measure on the rows of a D-dimensional lattice in a specific direction.

Definition 19

(Idealized model with Haar projectors on rows). Let \(1\le \alpha \le D\) be one of the directions of a D-dimensional lattice then

For a 2-D lattice we use \(G_R\) and \(G_C\) for \(G_1\) and \(G_2\), respectively.

Next, we define moment operators and projectors corresponding to the Haar measure on the sub-lattices of a D-dimensional lattice. We view a D-dimensional lattice as a collection of \(n^{1/D}\) smaller lattices each with dimension \(D-1\), composed of \(n^{1-1/D}\) qudits. We label these sub-lattices with \(\text{ Planes }(D):= \{p_1, \ldots , p_{n^{1/D}}\}\).

Definition 20

(Haar measure on sub-lattices). \(G_{\text {Planes}(D)} = \bigotimes _{p \in \text {Planes}(D)} G^{(t)}_{\text {Haar}(p)}\equiv G^{(t)\otimes n^{1/D}}_{\text {Haar}(n^{1-1/D})}\).

Definition 21

For \(d=2\), \(t=2\) and a superoperator \({\mathcal {A}}\) define

In particular, for a distribution \(\mu \) over circuits of size s the expected collision probability is defined as

Remark 1

For \(d=2\), \(t=2\) and when \(\nu \) is the Haar measure on \(\text {U}(4)\), \(\text {Ch}\left[ G^{(2)}_{(i,j)}\right] \) is the following map in the Pauli basis:

More generally, if S is a collection of qubits, and \(p, q \in \{0,1,2,3\}^S\), then

when \(p,q \in \{0,1,2,3 \}^S\).

See [34, 51] for the proof of these remarks.

2.2 Operator definitions of the models

Definition 22

(Random circuits on a two-dimensional lattice). The quasi-projector of \(\mu ^{\text {lattice},n}_{2,c,s}\) is \(G_{{\mu ^{\text {lattice},n}_{2,c,s}}}^{(t)} = g^s_R (g^s_C g^s_{R} )^c\).
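The operator product in Definition 22 corresponds to a simple schedule of row and column rounds. The sketch below lists that schedule; the labels "R" and "C" stand for one application of \(g_R\) and \(g_C\) respectively, and the function name is ours.

```python
def lattice_schedule(c: int, s: int) -> list[str]:
    """Sequence of elementary rounds for g_R^s (g_C^s g_R^s)^c.

    The product is palindromic in its blocks, so reading it left to right
    or right to left gives the same order: R, then c repetitions of (C, R),
    with each block repeated s times.
    """
    if c < 0 or s < 0:
        raise ValueError("c and s must be non-negative")
    blocks = ["R"] + ["C", "R"] * c
    return [label for label in blocks for _ in range(s)]


if __name__ == "__main__":
    print(lattice_schedule(c=1, s=2))
    print(len(lattice_schedule(c=3, s=5)))  # (2c + 1) * s rounds
```

The schedule contains \((2c+1)s\) elementary rounds, so with \(s = O(\sqrt{n})\) and \(c = {{\,\textrm{poly}\,}}(t)\) the total depth is \({{\,\textrm{poly}\,}}(t) \cdot O(\sqrt{n})\), up to the constant number of layers inside each elementary round.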

The generalization of this definition to arbitrary D dimensions is according to:

Definition 23

(Recursive definition for random circuits on D-dimensional lattices). The quasi-projector of \(\mu ^{\text {lattice},n}_{D,c,s}\) is specified by the recursive formula:

It will be useful to our proofs to also define:

-

1.

\({\tilde{G}}_{n,D,c} = \left( {\tilde{G}}^{\otimes n^{1/D}}_{n^{1-1/D}, D-1, c} G_{\text {Rows}(D,n)} {\tilde{G}}^{\otimes n^{1/D}}_{n^{1-1/D}, D-1, c} \right) ^c\)

-

2.

\({{\hat{G}}}_{n,D,c,s} = G_{\text {Rows}(D,n)} {\tilde{G}}^{\otimes n^{1/D}}_{n^{1-1/D}, D-1, c,s} G_{\text {Rows}(D,n)}\)

In particular, \({\tilde{G}}_{n,D,c,s}\) is the same as \(G_{\mu ^{\text {lattice},n}_{D,c,s}}\) except that we have replaced \(g_{\text {Rows}(D,n)}^s\) with \(G_{\text {Rows}(D,n)}\). Definition 22 is a special case of Definition 23, but we included both of them for convenience.

Definition 24

\(G^{(t)}_{\mu ^{\text {CG}}_{s}} = \left( \frac{1}{{n \atopwithdelims ()2}} \sum _{i \ne j} G^{(t)}_{(i,j)} \right) ^s\).

2.2.1 Summary of the definitions.

See below for a summary of the definitions:

| Notation | Definition | Reference |
|---|---|---|
| \(\Vert \cdot \Vert _\diamond \) | superoperator diamond norm | Definition 14 |
| \(\Vert \cdot \Vert _p\) | Schatten p-norm of a matrix for \(p \in [1, \infty ]\) | Section 2.3 |
| \(\text {Haar}\) | the Haar measure | Definition 15 |
| \(\text {Haar}(S)\) | Haar measure on subset S of qudits | Definition 15 |
| \(\text {Haar}(i,j)\) | Haar measure on qudits i and j | Definition 15 |
| \(C^{\otimes t,t}\) | \(C^{\otimes t} \otimes C^{*\otimes t}\) | Definition 16 |
| \(G^{(t)}_\mu \) | average of \(C^{\otimes t,t}\) over \(C\sim \mu \) | Definition 16 |
| \(G^{(t)}_\text {Haar}\) | projects onto vectors invariant under \(C^{\otimes t,t}\) | Definition 16 |
| \(G^{(t)}_{(i,j)}\) | Haar projector of order t on qudits i and j | Definition 16 |
| \(\langle i,j\vert G^{(t)}_\mu \vert k,l\rangle \) | moment of order t: \({\mathbb {E}}_{C\sim \mu } [C_{i_1, j_1} \ldots C_{i_t, j_t} C^*_{k_1, l_1} \ldots C^*_{k_t, l_t}]\) | Definition 16 |
| \(\text {Ch}[G^{(t)}_\mu ]\) | moment superoperator, equal to \({\mathbb {E}}_{C \sim \mu } [\textrm{ ad}_{C^{\otimes t}} ]\) | Definition 16 |
| \(r_{\alpha ,i}\) | i-th row in the \(\alpha \) direction with \(i\in [n^{1-1/D}], \alpha \in [D]\) | Definition 17 |
| \(\text {Rows}(\alpha ,n)\) | the collection of rows of a lattice (with n points) in the \(\alpha \) direction | Definition 17 |
| \(g_{\text {Rows}(\alpha ,n)}\) | two-qudit gates applied to even then odd neighbors in each row in the \(\alpha \) direction | Definition 18 |
| \(g_{r(\alpha ,i)}\) | two-qudit gates applied to even then odd neighbors in the i-th row in the \(\alpha \) direction | Definition 18 |
| \(g_{R}\) and \(g_C\) | \(g_{\text {Rows}(1,n)}\) and \(g_{\text {Rows}(2,n)}\) when \(D=2\) | Definition 18 |
| \(G_{\text {Rows}(\alpha ,n)}\) | Haar projector applied to each row in the \(\alpha ^{\text {th}}\) direction | Definition 19 |
| \(G_R\) (\(G_C\)) | Haar projector applied to each row (column) of a 2D lattice | Definition 19 |
| \(G_{\text {Planes}(\alpha )}\) | Haar projector applied to each plane perpendicular to the direction \(\alpha \) | Definition 20 |
| \(\text {Coll}({\mathcal {A}})\) | collision probability from superoperator \({\mathcal {A}}\) | Definition 21 |
| \(\text {Coll}_s\) | the expected collision probability of a random circuit after s steps | Definition 21 |
| \(\mu ^{\text {lattice},n}_{D,c,s}\) | the distribution over D-dimensional circuits with n qudits | Definition 23 |
| \({{{\tilde{G}}}}_{n,D,c}\) | same as \(G^{(t)}_{\mu ^{\text {lattice},n}_{D,c,s}}\) except that we replace \(g^s_{\text {Rows} (\alpha ,n)}\) with \(G_{\text {Rows} (\alpha ,n)}\) | Definition 23 |
| \({{{\hat{G}}}}_{n,D,c,s}\) | one block of \({{{\tilde{G}}}}_{n,D,c}\) defined as \(G_{\text {Rows}(D,n)} {\tilde{G}}^{\otimes n^{1/D}}_{n^{1-1/D}, D-1, c,s} G_{\text {Rows}(D,n)}\) | Definition 23 |
| \(\mu ^{\text {CG}}_{s}\) | the distribution over circuits with s random two-qubit gates | Definition 24 |
| \(\measuredangle (A,B)\) | \(\cos ^{-1}\max _{x\in A, y \in B} \langle x,y\rangle \) is the angle between two vector spaces A and B | Section 3.6.1 |

2.3 Elementary tools

If A is a matrix and \(\sigma _i\) are the singular values of A, then for \(p \in [1,\infty )\) the Schatten p-norm of A is defined as \(\Vert A\Vert _p:= (\sum _i \sigma ^p_i)^{1/p}\). The \(\infty \)-norm of A is \(\Vert A\Vert _\infty := \max _i \sigma _i\). The 1-norm is related to the \(\infty \)-norm by \(\Vert A\Vert _1 \le {{\,\textrm{rank}\,}}(A) \cdot \Vert A\Vert _\infty \). Moreover, for \(p \in [1, \infty ]\) and any two matrices A and B, \(\Vert A \otimes B\Vert _p = \Vert A\Vert _p\cdot \Vert B\Vert _p\).
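Both facts follow in one line from the singular values. As a brief sketch, write \(\tau _j\) (our notation) for the singular values of B and recall that the singular values of \(A \otimes B\) are the products \(\sigma _i \tau _j\). Then, for \(p \in [1,\infty )\),

$$\begin{aligned} \Vert A\Vert _1&= \sum _{i=1}^{{{\,\textrm{rank}\,}}(A)} \sigma _i \le {{\,\textrm{rank}\,}}(A) \cdot \max _i \sigma _i = {{\,\textrm{rank}\,}}(A) \cdot \Vert A\Vert _\infty , \\ \Vert A \otimes B\Vert _p^p&= \sum _{i,j} (\sigma _i \tau _j)^p = \Big ( \sum _i \sigma _i^p \Big ) \Big ( \sum _j \tau _j^p \Big ) = \Vert A\Vert _p^p \cdot \Vert B\Vert _p^p , \end{aligned}$$

where the first sum runs over the non-zero singular values only. The case \(p = \infty \) is analogous, since \(\max _{i,j} \sigma _i \tau _j = (\max _i \sigma _i)(\max _j \tau _j)\).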

If \({\mathcal {A}}\) and \({\mathcal {B}}\) are superoperators, then \(\Vert {\mathcal {A}}\otimes {\mathcal {B}}\Vert _\diamond = \Vert {\mathcal {A}}\Vert _\diamond \cdot \Vert {\mathcal {B}}\Vert _\diamond \).

\(\text {Ch}\left[ \cdot \right] \) is the linear map from matrices to superoperators such that for any two equally sized matrices A and B, \(\text {Ch}\left[ A\otimes B^*\right] = A [\cdot ] B^\dagger \). Note that \(\text {Ch}\left[ \cdot \right] \) is multiplicative in the sense that \(\text {Ch}\left[ A \otimes B^*\right] \circ \text {Ch}\left[ C \otimes D^*\right] = \text {Ch}\left[ A C \otimes B^*D^*\right] \), for any equally sized matrices A, B, C, D.
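The composition rule can be checked on small matrices. The sketch below represents \(\text {Ch}[A \otimes B^*]\) as the map \(X \mapsto A X B^\dagger \) and verifies that composing two such maps gives \(X \mapsto (AC) X (BD)^\dagger \), which is \(\text {Ch}[AC \otimes (BD)^*]\). The helper names and the test matrices are ours.

```python
def matmul(a, b):
    """Product of two matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def dagger(a):
    """Conjugate transpose."""
    return [[complex(a[j][i]).conjugate() for j in range(len(a))]
            for i in range(len(a[0]))]


def ch(a, b):
    """Return the superoperator X -> A X B^dagger, i.e. Ch[A (x) B^*]."""
    return lambda x: matmul(matmul(a, x), dagger(b))


if __name__ == "__main__":
    A = [[1, 2], [3, 4]]
    B = [[0, 1j], [1, 1]]
    C = [[2, 0], [1, 1]]
    D = [[1, 1], [0, 1j]]
    X = [[1, 0], [2, 3]]
    lhs = ch(A, B)(ch(C, D)(X))              # Ch[A (x) B^*] o Ch[C (x) D^*]
    rhs = ch(matmul(A, C), matmul(B, D))(X)  # Ch[AC (x) (BD)^*]
    print(lhs == rhs)
```

The check relies only on \((BD)^\dagger = D^\dagger B^\dagger \); the entries are Gaussian integers, so the floating-point comparison is exact.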

Consider the Haar measure over \(\text{ U }(d)\). \(\text {Ch}\left[ G^{(t)}_\text {Haar}\right] \) (defined in the previous section) is the projector onto the matrix vector space of permutation operators (permuting length t words over the alphabet [d]). In particular, for any matrix \(X \in {\mathbb {C}}^{d^t \times d^t}\) we can write

where \(V(\pi )\) is the permutation matrix \(\sum _{(i_1, \ldots , i_t) \in [d]^t} |i_1, \ldots , i_t\rangle \langle i_{\pi (1)}, \ldots , i_{\pi (t)}|\), and \(Wg(\pi )\) is a linear combination of permutations. Specifically

Here the coefficients \(\alpha (\cdot )\) are known as Weingarten functions (see [23]). If \(\mu ,\nu \in S_t\) then let \(\textsf{dist}(\mu ,\nu )\) denote the number of transpositions needed to generate \(\mu ^{-1}\nu \) from the identity permutation. Then we can define \(\alpha (\cdot )\) by the following relation.

Note that \(\alpha (\pi )\) is always real and \(|\alpha (\lambda )| = O(1/d^{t+\textsf{dist}(\lambda )})\). Thus for large d, \(Wg(\pi ) \approx V(\pi )/d^t\).

Furthermore,

Let A, B be matrices. For the superoperator \({\mathcal {D}}\equiv B {\textrm{Tr}}[A \cdot ]\) we use the notation \({\mathcal {D}}= B A^*\). We need the following observation:

where \(|\psi _\pi \rangle = (I \otimes V(\pi )) \frac{1}{\sqrt{d^t}} \sum _{i \in [d]^t} |i\rangle |i\rangle \).

We need the following lemma:

Lemma 25

If A is a (possibly rectangular) matrix, then \(A A^\dagger \) and \(A^\dagger A\) have the same spectra.

Lemma 26

If A and B are matrices and \(\Vert \cdot \Vert _*\) is a unitarily invariant norm, then \(\Vert A B\Vert _*\le \Vert A\Vert _*\Vert B\Vert _\infty \).

Proof

This lemma can be viewed as a consequence of the Russo–Dye theorem, which states that the extreme points of the unit ball for \(\Vert \cdot \Vert _\infty \) are the unitary matrices. Thus we can write \(B = \Vert B\Vert _\infty \sum _i p_i U_i\) for \(\{p_i\}\) a probability distribution and \(\{U_i\}\) a set of unitary matrices. We use this fact along with the triangle inequality and then unitary invariance to obtain

\(\square \)

A similar argument applies to superoperators.

Lemma 27

If \({\mathcal {A}}\) is a superoperator and \({\mathcal {B}}\) is a tpcp superoperator then \(\Vert {\mathcal {A}}{\mathcal {B}}\Vert _\diamond \le \Vert {\mathcal {A}}\Vert _\diamond \).

Proof

Let d be at least the input dimension of both \({\mathcal {A}}\) and \({\mathcal {B}}\). Then \(\Vert {\mathcal {A}}\Vert _\diamond = \max _{\Vert X\Vert _1 \le 1} \Vert ({\mathcal {A}}\otimes {{\,\textrm{id}\,}}_d)(X)\Vert _1\) and \(\Vert {\mathcal {A}}{\mathcal {B}}\Vert _\diamond = \max _{\Vert X\Vert _1 \le 1} \Vert ({\mathcal {A}}\otimes {{\,\textrm{id}\,}}_d)({\mathcal {B}}\otimes {{\,\textrm{id}\,}}_d)(X)\Vert _1\). Since \({\mathcal {B}}\) is a tpcp superoperator, \(\Vert ({\mathcal {B}}\otimes {{\,\textrm{id}\,}}_d)(X)\Vert _1 \le 1\) whenever \(\Vert X\Vert _1 \le 1\), and so the maximization defining \(\Vert {\mathcal {A}}{\mathcal {B}}\Vert _\diamond \) ranges over a subset of the set maximized over in \(\Vert {\mathcal {A}}\Vert _\diamond \). \(\square \)

These give rise to the following well-known bound, which is often called “the hybrid argument.”

Lemma 28

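Let \(\Vert \cdot \Vert _*\) be a unitarily invariant norm. If \(A_1, \ldots ,A_t\) and \(B_1, \ldots , B_t\) have \(\infty \)-norm \(\le 1\), then

$$\begin{aligned} \Vert A_1 \cdots A_t - B_1 \cdots B_t\Vert _*\le \sum _{i=1}^t \Vert A_i - B_i\Vert _*. \end{aligned}$$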

This is also true for superoperators and the diamond norm, if each superoperator is a tpcp map.
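A brief sketch of why the hybrid argument works, written for matrices (the ordering of the product \(A_1 \cdots A_t\) is a notational choice made here and is immaterial to the argument). The difference of the two products telescopes:

$$\begin{aligned} A_1 \cdots A_t - B_1 \cdots B_t = \sum _{i=1}^t A_1 \cdots A_{i-1} \, (A_i - B_i) \, B_{i+1} \cdots B_t . \end{aligned}$$

By the triangle inequality it suffices to bound each summand. Lemma 26 removes the factors \(B_{i+1}, \ldots , B_t\) on the right, and its mirror image \(\Vert A B\Vert _*\le \Vert A\Vert _\infty \Vert B\Vert _*\) (which follows by taking adjoints, since a unitarily invariant norm depends only on singular values) removes the factors \(A_1, \ldots , A_{i-1}\) on the left. Each summand is therefore at most \(\Vert A_i - B_i\Vert _*\). For superoperators, Lemma 27 handles the tpcp factors on the right, and submultiplicativity of the diamond norm, together with the fact that tpcp maps have diamond norm 1, handles those on the left.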

We will need a similar bound for tensor products.

Lemma 29

Suppose \(\Vert A - B\Vert _*\le \epsilon \) for some norm \(\Vert \cdot \Vert _*\) that is multiplicative under tensor product. Then for any integer \(M > 0\)

The same holds for superoperators and the diamond norm. In particular \(\Vert A^{\otimes M} - B^{\otimes M}\Vert _*\le 2\,M \Vert B\Vert _*^M \epsilon \) for \(\epsilon \le \frac{1}{2\,M}\).

We need the following definition and lemma:

Definition 30

Let X and Y be two real-valued random variables on the same totally ordered sample space \(\Omega \). Then we say X is stochastically dominated by Y if for all \(x \in \Omega \), \(\Pr [X \ge x] \le \Pr [Y \ge x]\). We denote this by \(X \preceq Y\).

Lemma 31

(Coupling). \(X \preceq Y\) if and only if there exists a coupling (a joint probability distribution) between X and Y such that the marginals of this coupling are exactly X and Y and that with probability 1, \(X\le Y\).
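For finitely supported distributions, one direction of the coupling lemma can be made explicit. The sketch below is an illustration rather than the construction used in the proofs: it builds the monotone (quantile) coupling by matching probability mass in increasing order of the values. The helper names and the dictionary representation of a distribution (value mapped to probability) are assumptions made for this example.

```python
from fractions import Fraction


def is_dominated(px, py):
    """Check Pr[X >= x] <= Pr[Y >= x] at every point of the joint support."""
    points = sorted(set(px) | set(py))
    return all(
        sum(p for v, p in px.items() if v >= x)
        <= sum(p for v, p in py.items() if v >= x)
        for x in points
    )


def monotone_coupling(px, py):
    """Pair up mass of X and Y in increasing order of their values.

    Returns {(x, y): probability}. The marginals are px and py by
    construction; if X is stochastically dominated by Y, every pair
    in the support satisfies x <= y.
    """
    xs, ys = sorted(px), sorted(py)
    rem_x = [px[x] for x in xs]  # unmatched mass at each value of X
    rem_y = [py[y] for y in ys]  # unmatched mass at each value of Y
    joint = {}
    i = j = 0
    while i < len(xs) and j < len(ys):
        mass = min(rem_x[i], rem_y[j])
        if mass > 0:
            key = (xs[i], ys[j])
            joint[key] = joint.get(key, 0) + mass
        rem_x[i] -= mass
        rem_y[j] -= mass
        if rem_x[i] == 0:
            i += 1
        if rem_y[j] == 0:
            j += 1
    return joint


px = {0: Fraction(1, 2), 1: Fraction(1, 2)}
py = {0: Fraction(1, 4), 1: Fraction(1, 4), 2: Fraction(1, 2)}
coupling = monotone_coupling(px, py)
```

Exact rational arithmetic is used so that the test for exhausted mass is not affected by floating-point rounding.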

2.4 Various measures of convergence to the Haar measure

Definition 32

Let \(\mu \) be a distribution over n-qudit gates. Let \(\epsilon \) be a positive real number.

1. (Strong designs) \(\mu \) is a strong \(\epsilon \)-approximate t-design if

$$\begin{aligned} (1-\epsilon ) \cdot \text {Ch}\left[ G^{(t)}_{\text {Haar}}\right] \preceq \text {Ch}\left[ G^{(t)}_{\mu }\right] \preceq (1+\epsilon ) \cdot \text {Ch}\left[ G^{(t)}_{\text {Haar}}\right] , \end{aligned}$$(33)

or equivalently if

$$\begin{aligned} (1-\epsilon )\cdot \left( \text {Ch}\left[ G^{(t)}_{\text {Haar}}\right] \otimes \textrm{id} \right) \Phi ^{\otimes t}_{d^n}&\preceq \left( \text {Ch}\left[ G^{(t)}_{\mu }\right] \otimes \textrm{id} \right) \Phi _{d^n}^{\otimes t}\\ &\preceq (1+\epsilon ) \cdot \left( \text {Ch}\left[ G^{(t)}_{\text {Haar}}\right] \otimes \textrm{id} \right) \Phi _{d^n}^{\otimes t}. \end{aligned}$$(34)

The ordering \(\preceq \) in (33) is the cp ordering and in (34) it is the psd ordering.

2. (Monomial definition) \(\mu \) is a monomial based \(\epsilon \)-approximate t-design if every balanced monomial m(C) of degree at most t has expectation within \(\epsilon /d^{nt}\) of its Haar value, that is, if

$$\begin{aligned} \left\| \textsf{vec}\left[ G^{(t)}_\mu \right] -\textsf{vec}\left[ G^{(t)}_\text {Haar}\right] \right\| _\infty \le \frac{\epsilon }{d^{nt}}. \end{aligned}$$(35)

Here for a matrix A, \(\textsf{vec}(A)\) is a vector consisting of the entries of A (in the computational basis).

3. (Diamond definition) \(\mu \) is an \(\epsilon \)-approximate t-design in the diamond measure if

$$\begin{aligned} \left\| \text {Ch}\left[ G^{(t)}_{\mu }\right] - \text {Ch}\left[ G^{(t)}_{\text {Haar}}\right] \right\| _\diamond \le \epsilon . \end{aligned}$$(36)

4. (Trace definition) \(\mu \) is an \(\epsilon \)-approximate t-design in the trace measure if

$$\begin{aligned} \left\| G_{\mu }^{(t)} - G_{\text {Haar}}^{(t)}\right\| _1 \le \epsilon . \end{aligned}$$(37)

5. (TPE) \(\mu \) is a \((d,\epsilon ,t)\) t-copy tensor product expander (TPE) if

$$\begin{aligned} \left\| G_{\mu }^{(t)} - G_{\text {Haar}}^{(t)}\right\| _\infty \le \epsilon . \end{aligned}$$(38)

6. (Anti-concentration) \(\mu \) is an \(\epsilon \)-approximate anti-concentration design if

$$\begin{aligned} \mathop {{\mathbb {E}}}\limits _{C\sim \mu } |\langle 0|C|0\rangle |^4 \le \mathop {{\mathbb {E}}}\limits _{C\sim \text {Haar}} |\langle 0|C|0\rangle |^4 \cdot (1+\epsilon ). \end{aligned}$$(39)

7. (Approximate scramblers) \(\mu \) is an \(\epsilon \)-approximate scrambler if for any density matrix \(\rho \) and subset S of qubits with \(|S| \le n/3 \)

$$\begin{aligned} \mathop {{\mathbb {E}}}\limits _{C \sim \mu } \left\| \rho _S(C) - \frac{I}{2^{|S|}} \right\| ^2_1 \le \epsilon , \end{aligned}$$(40)

where \(\rho _S(C) = {\textrm{Tr}}_{\backslash S}C \rho C^\dagger \) and \({\textrm{Tr}}_{\backslash S}\) is the trace over the subset of qubits that is complementary to S.

8. (Weak approximate decouplers) Let \(M, M', A, A'\) be systems composed of \(m, m, n-m\) and \(n-m\) qubits, respectively, and let \(\phi _{MM'}\), \(\phi _{AA'}\) and \(\psi _{A'}\) be, respectively, a maximally entangled state on \(MM'\), a maximally entangled state on \(AA'\) and a pure state on \(A'\). \(\mu \) is an \((m,\alpha ,\epsilon )\)-approximate weak decoupler if for any subsystem S of \(M'A'\) with size \(\le \alpha \cdot n\), when \(\mu \) is applied to \(M'A'\),

$$\begin{aligned} \mathop {{\mathbb {E}}}\limits _{C \sim \mu }\left\| \rho _{MS}(C) - \frac{I}{2^{m}} \otimes \frac{I}{2^{|S|}}\right\| _1\le \epsilon . \end{aligned}$$(41)

We consider two definitions. In the first definition the initial state is \(\phi _{MM'} \otimes \phi _{AA'}\) and in the second it is \(\phi _{MM'} \otimes \psi _{A'}\). Here \(\rho _{MS}(C)\) is the reduced density matrix on MS after the application of \(C \sim \mu \).
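To make the anti-concentration condition (39) concrete, recall the standard Haar value \(\mathop {{\mathbb {E}}}_{C\sim \text {Haar}} |\langle 0|C|0\rangle |^4 = 2/(N(N+1))\) on a space of dimension \(N = d^n\). The sketch below evaluates the condition exactly for a toy ensemble, namely uniformly random permutation matrices. That ensemble is a hypothetical example chosen for illustration and is not one of the circuit models studied here; the function names are likewise illustrative.

```python
from fractions import Fraction


def haar_second_moment(dim):
    """E_{C~Haar} |<0|C|0>|^4 on U(dim), i.e. 2 / (dim * (dim + 1))."""
    return Fraction(2, dim * (dim + 1))


def is_anticoncentration_design(second_moment, dim, eps):
    """Condition (39): the moment is at most (1 + eps) times the Haar value."""
    return second_moment <= haar_second_moment(dim) * (1 + eps)


def permutation_second_moment(dim):
    """Toy ensemble: a uniformly random permutation matrix C.

    |<0|C|0>|^2 equals 1 with probability 1/dim and 0 otherwise,
    so E |<0|C|0>|^4 = 1/dim.
    """
    return Fraction(1, dim)


dim = 2 ** 4  # n = 4 qubits
ratio = permutation_second_moment(dim) / haar_second_moment(dim)  # (dim + 1) / 2
```

The ratio \((N+1)/2\) grows with the dimension, so this toy ensemble satisfies (39) only for \(\epsilon \ge (N-1)/2\); this illustrates the kind of behaviour that the definition rules out for small \(\epsilon \).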

3 Approximate t-Designs by Random Circuits with Nearest-Neighbor Gates on D-Dimensional Lattices

In this section we prove Theorems 8 and 9, which state that our random circuit models defined for D-dimensional lattices (Definition 5) form approximate t-designs in several measures.

We begin in Sect. 3.1 by outlining some basic utility lemmas. The technical core of the proof is contained in the lemmas in Sect. 3.2 in which we bound various norms of products of Haar projectors onto overlapping sets of qubits. These are proved in Sects. 3.5 and 3.6 respectively. We show how to use these lemmas to prove our main theorems in Sect. 3.3 (for a 2-D grid) and in Sect. 3.4 (for a lattice in \(D>2\) dimensions).

3.1 Basic lemmas

In this section we state some utility lemmas which are largely independent of the details of our circuit models.

3.1.1 Comparison lemma for random quantum circuits.

Definition 33

A superoperator \({\mathcal {C}}\) is completely positive (cp) if for any psd matrix X, \(({\mathcal {C}}\otimes \textrm{id})(X)\) is also psd. For superoperators \({\mathcal {A}}\) and \({\mathcal {B}}\), \({\mathcal {A}}\preceq {\mathcal {B}}\) if \({\mathcal {B}}-{\mathcal {A}}\) is cp.

Our comparison lemma is simply the following:

Lemma 34

(Comparison). Suppose we have the following cp ordering between superoperators \({\mathcal {A}}_1 \preceq {\mathcal {B}}_1, \ldots ,{\mathcal {A}}_t \preceq {\mathcal {B}}_t\). Then \({\mathcal {A}}_t \ldots {\mathcal {A}}_1 \preceq {\mathcal {B}}_t \ldots {\mathcal {B}}_1\).
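A sketch of the argument for \(t = 2\), under the assumption (not stated explicitly in the lemma, but satisfied in our applications) that each \({\mathcal {A}}_i\) and \({\mathcal {B}}_i\) is itself cp:

$$\begin{aligned} {\mathcal {B}}_2 {\mathcal {B}}_1 - {\mathcal {A}}_2 {\mathcal {A}}_1 = {\mathcal {B}}_2 ({\mathcal {B}}_1 - {\mathcal {A}}_1) + ({\mathcal {B}}_2 - {\mathcal {A}}_2) {\mathcal {A}}_1 . \end{aligned}$$

Each summand is a composition of two cp maps and is therefore cp, and a sum of cp maps is cp, so \({\mathcal {A}}_2 {\mathcal {A}}_1 \preceq {\mathcal {B}}_2 {\mathcal {B}}_1\). The general case follows by induction on t.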

Corollary 35

(Overlapping designs). If \(K_1, \ldots , K_t\) are respectively the moment superoperators of \(\epsilon _1, \ldots , \epsilon _t\)-approximate strong k-designs each on a potentially different subset of qudits, then

3.1.2 Bound on the value of off-diagonal monomials.

We first formally define an off-diagonal monomial.

Definition 36

(Off-diagonal monomials). A diagonal monomial of balanced degree t of a unitary matrix C is a balanced monomial that can be written as a product of absolute squares of entries, i.e., \(|C_{a_1,b_1}|^2 \ldots |C_{a_t,b_t}|^2\). A monomial is off-diagonal if it is balanced and not diagonal.

We now define the set of diagonal indices as \({\mathcal {D}}= \{|i,j\rangle \langle i',j'|: i=i',j=j', i,i',j,j' \in [d]^{nt}\}\) and the set of off-diagonal indices as \({\mathcal {O}}= \{|i,j\rangle \langle i',j'|: i\ne i' \text { or } j\ne j', i,i',j,j' \in [d]^{nt}\}\). We note that a diagonal monomial can be written as \({\textrm{Tr}}(C^{\otimes t,t} x)\) for some \(x \in {\mathcal {D}}\) and similarly, an off-diagonal monomial can be written as \({\textrm{Tr}}(C^{\otimes t,t} x)\) for some \(x \in {\mathcal {O}}\).
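The distinction can be illustrated with a short sketch. It assumes a monomial is represented by two lists of (row, column) index pairs, one for the factors \(C_{a,b}\) and one for the conjugated factors \(C^{*}_{a',b'}\); this representation and the function names are chosen for the example only. Because the factors commute, a balanced monomial is diagonal exactly when the two lists agree as multisets.

```python
from collections import Counter


def is_balanced(entries, conj_entries):
    """Balanced: as many factors C_{a,b} as conjugated factors C*_{a',b'}."""
    return len(entries) == len(conj_entries)


def is_diagonal(entries, conj_entries):
    """Diagonal: the factors pair up into |C_{a,b}|^2 terms,
    i.e. the two index multisets coincide."""
    return Counter(entries) == Counter(conj_entries)


def is_off_diagonal(entries, conj_entries):
    """Off-diagonal: balanced but not diagonal."""
    return is_balanced(entries, conj_entries) and not is_diagonal(entries, conj_entries)


# |C_{0,0}|^2 |C_{0,1}|^2, with the conjugated factors listed in a different order
diagonal_example = ([(0, 0), (0, 1)], [(0, 1), (0, 0)])
# C_{0,0} C_{1,1} C*_{0,1} C*_{1,0}
off_diagonal_example = ([(0, 0), (1, 1)], [(0, 1), (1, 0)])
```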

We relate the strong definition of designs to the monomial definition via the following lemma.

Lemma 37

Let \(\delta > 0\). Assume that \(\text {Ch}\left[ G_\mu ^{(t)}\right] \) and \(\text {Ch}\left[ G_\nu ^{(t)}\right] \) are two moment superoperators that satisfy the following completely positive ordering

Let \({\mathcal {O}}\) and \({\mathcal {D}}\) be respectively the set of off-diagonal and diagonal indices for monomials. Then

3.1.3 Bound on the moments of the Haar measure.

We need the following bound on the t-th monomial moment of the Haar measure. Assume we have m qudits.

Lemma 38

(Moments of the Haar measure). Let \(G_{\text {Haar}(m)}^{(t)}\) be the quasi-projector operator for the Haar measure on m qudits. Then

Here the maximization is taken over matrix elements in the computational basis of the form \(y= |i_1,\ldots , i_t, i'_1,\ldots , i'_t\rangle \langle j_1,\ldots , j_t, j'_1,\ldots , j'_t|\), where each label (e.g. \(i_j\)) is in \([d]^m\).

3.2 Gap bounds for the product of overlapping Haar projectors

We will later need the following results, with proofs deferred until Sect. 3.6.

Lemma 39

\(\Vert G_C G_R -G_\text {Haar}^{(t)}\Vert _\infty \le 1/d^{\Omega (\sqrt{ n})}\).

Lemma 40

Let \(D = O(\ln n / \ln \ln n)\) with a small enough constant factor. Then \(\Vert G_{\text {Planes}(D)} G_{\text {Rows}(D,n)} - G_{\text {Haar}} \Vert _\infty \le 1/d^{\Omega (n^{1-1/D})}\).

Lemma 41

Let \(|x\rangle \) and \(|y\rangle \) be two computational basis states. For small enough \(D = O(\ln n / \ln \ln n)\) and large enough c, \(|\langle x | {\tilde{G}}_{n,D,c} -G_\text {Haar}| y\rangle | \le \frac{\epsilon }{d^{nt}}\) for some \(\epsilon = 1/d^{\Omega (n^{1/D})}\).

Lemma 42

For large enough c, \(\left\| \text {Ch}\left[ ( G_R G_C G_R )^{c} -G_\text {Haar}^{(t)}\right] \right\| _\diamond = \frac{t^{O(\sqrt{n} t)}}{d^{\Omega (c \sqrt{n})}}\).

Lemma 43

For small enough \(D = O(\ln n / \ln \ln n)\) and large enough c,

In these last two lemmas, we see that c will need to grow with t. We believe that a sharper analysis could reduce this dependence, but since we already have a \({{\,\textrm{poly}\,}}(t)\) dependence in s, improving Lemmas 42 and 43 would not make a big difference. In fact, even in 1-D, [13] found a sharp n dependence but their factor of \({{\,\textrm{poly}\,}}(t)\) (which we inherit) is probably not optimal.

3.3 Proof of Theorem 8; t-designs on two-dimensional lattices

Theorem

(Restatement of Theorem 8). Let \(s, c,n > 0\) be positive integers with \(\mu ^{\text {lattice},n}_{2,c,s}\) defined as in Definition 5.

1. \(s = {{\,\textrm{poly}\,}}(t) \left( \sqrt{n} + \ln \frac{1}{\delta } \right) , c = O\left( t \ln t + \frac{\ln (1/\delta )}{\sqrt{n}}\right) \implies \left\| \textsf{vec}\left[ G_{\mu ^{\text {lattice},n}_{2,c,s}}^{(t)}-G_\text {Haar}^{(t)}\right] \right\| _\infty \le \frac{\delta }{d^{nt}}\).

2. \(s = {{\,\textrm{poly}\,}}(t) \left( \sqrt{n} + \ln \frac{1}{\delta }\right) , c = O\left( t \ln t + \frac{\ln (1/\delta )}{\sqrt{n}}\right) \implies \left\| \text {Ch}\left[ G_{\mu ^{\text {lattice},n}_{2,c,s}}^{(t)}-G_\text {Haar}^{(t)}\right] \right\| _\diamond \le \delta \).

3. \(s = {{\,\textrm{poly}\,}}(t) \left( \sqrt{n} + \ln \frac{1}{\delta } \right) , c = O\left( t \ln t + \frac{\ln (1/\delta )}{\sqrt{n}}\right) \implies \left\| G_{\mu ^{\text {lattice},n}_{2,c,s}}^{(t)} - G_\text {Haar}^{(t)} \right\| _1 \le \delta \).

4. \(\left\| G_{\mu ^{\text {lattice},n}_{2,c,s}}^{(t)} - G_\text {Haar}^{(t)} \right\| _\infty \le c \cdot \sqrt{n} \cdot e^{-s/{{\,\textrm{poly}\,}}(t)} + \frac{1}{d^{O( c \sqrt{n} )}}\).

Proof

1. This item corresponds to convergence of the individual moments to their Haar values. A balanced moment of a distribution \(\mu \) can be written as

$$\begin{aligned} \mathop {{\mathbb {E}}}\limits _{C \sim \mu } [C_{i_1, j_1} \ldots C_{i_t,j_t} C^{*}_{i'_1, j'_1} \ldots C^{*}_{i'_t,j'_t} ] =\langle i, i'| G_\mu ^{(t)} | j,j'\rangle = {\textrm{Tr}}[G_\mu ^{(t)} \cdot |j,j'\rangle \langle i,i'|] \end{aligned}$$(47)

where \(|i\rangle := |i_1,\ldots ,i_t\rangle \) and so on for \(|i'\rangle ,|j\rangle ,|j'\rangle \). The same moment can also be written as