Abstract

In this paper we prove a quantitative multilinear limited range extrapolation theorem which allows us to extrapolate from weighted estimates that include the cases where some of the exponents are infinite. This extends the recent extrapolation result of Li, Martell, and Ombrosi. We also obtain vector-valued estimates including \(\ell ^\infty \) spaces and, in particular, we are able to reprove all the vector-valued bounds for the bilinear Hilbert transform obtained through the helicoidal method of Benea and Muscalu. Moreover, our result is quantitative and, in particular, allows us to extend quantitative estimates obtained from sparse domination in the Banach space setting to the quasi-Banach space setting. Our proof does not rely on any off-diagonal extrapolation results and we develop a multilinear version of the Rubio de Francia algorithm adapted to the multisublinear Hardy–Littlewood maximal operator. As a corollary, we obtain multilinear extrapolation results for some upper and lower endpoint estimates in weak-type and \({{\,\mathrm{BMO}\,}}\) spaces.

1 Introduction

An essential tool in the theory of singular operators is extrapolation. In one of its forms, the classical extrapolation theorem of Rubio de Francia [14] says that if an operator T satisfies \(L^q(w)\) boundedness for a fixed \(q\in (1,\infty )\) and for all weights w in the Muckenhoupt class \(A_q\), then T is in fact bounded on \(L^p(w)\) for all \(p\in (1,\infty )\) and all \(w\in A_p\).

Many variations of Rubio de Francia’s extrapolation theorem have appeared over the years adapted to various situations. A multilinear version of the extrapolation result was found by Grafakos and Martell [17]. Another version provided by Auscher and Martell [1] dealt with operators bounded only for a limited range of p rather than for all \(p\in (1,\infty )\). Combining these approaches, it was shown by Cruz-Uribe and Martell [7] that if there are \(0\le r_j<s_j\le \infty \) and \(q_j\in [r_j,s_j]\), \(q_j\ne 0,\infty \), such that an m-linear operator T satisfies

\[\Vert T(f_1,\ldots ,f_m)\Vert _{L^q(w^q)}\le c\prod _{j=1}^m\Vert f_j\Vert _{L^{q_j}(w_j^{q_j})} \tag{1.1}\]

for all weights \(w_j^{q_j}\) in the restricted Muckenhoupt and Reverse Hölder class \(A_{q_j/r_j}\cap RH_{(s_j/q_j)'}\), where \(w=\prod _{j=1}^m w_j\), \(\frac{1}{q}=\sum _{j=1}^m\frac{1}{q_j}\), then T satisfies the same boundedness for all \(p_j\in (r_j,s_j)\) and all \(w_j^{p_j}\in A_{p_j/r_j}\cap RH_{(s_j/p_j)'}\), as well as certain vector-valued bounds.

In the linear setting, operators satisfying weighted bounds need not be bounded on \(L^\infty \); the Hilbert transform is an example. In particular, it is impossible to extrapolate estimates to this endpoint. This is in contrast to what happens in the multilinear setting, where it may very well occur that singular integral operators satisfy boundedness as in (1.1), but with some of the \(q_j\) being equal to \(\infty \). This raises the question of whether it is possible to extrapolate to bounds that include these endpoint cases \(p_j=\infty \), starting from an initial weighted estimate where the \(q_j\) are also allowed to be infinite. In this work we develop a method that does include these cases based on a multilinear Rubio de Francia algorithm. To facilitate this we give a natural extension of the definition of the weight classes to include these cases, see Definition 2.1 below. We point out that it is also possible to obtain these endpoint cases through off-diagonal extrapolation methods [32].

As an application for the theory, one can consider the bilinear Hilbert transform \({{\,\mathrm{BHT}\,}}\) given by

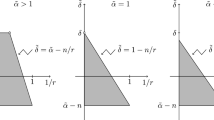

which plays a central role in the theory of time-frequency analysis. It was shown by Lacey and Thiele [25] that \({{\,\mathrm{BHT}\,}}\) is bounded \(L^{p_1}\times L^{p_2}\rightarrow L^p\) with \(\frac{1}{p}=\frac{1}{p_1}+\frac{1}{p_2}\) if \(1<p_1,p_2\le \infty \) and \(\frac{2}{3}<p<\infty \). Through the helicoidal method of Benea and Muscalu [2, 4], vector-valued bounds of the form \(L^{p_1}(\ell ^{q_1})\times L^{p_2}(\ell ^{q_2})\rightarrow L^p(\ell ^q)\) were established in this range of \(p_1\), \(p_2\), p for various choices of \(1<q_1,q_2\le \infty \), \(\frac{2}{3}<q<\infty \) with \(\frac{1}{q}=\frac{1}{q_1}+\frac{1}{q_2}\). However, they left open the problem of whether one can obtain vector-valued bounds for all \(q_1\), \(q_2\), q in the same range as Lacey and Thiele’s theorem, i.e., for all \(1<q_1,q_2\le \infty \) with \(\frac{2}{3}<q<\infty \). While \({{\,\mathrm{BHT}\,}}\) satisfies weighted bounds as well as more general sparse bounds, see [3, 8], the extrapolation result by Cruz-Uribe and Martell [7] does not allow one to cover the full range of exponents. In particular, their result cannot retrieve any of the vector-valued bounds involving \(\ell ^\infty \) spaces. Such bounds also fall outside of the extrapolation result of Lorist and the author [35] where vector-valued extensions of multilinear operators were considered in the setting of \({{\,\mathrm{UMD}\,}}\) Banach spaces, since \(\ell ^\infty \) does not satisfy the \({{\,\mathrm{UMD}\,}}\) property. The problem seems to be that the multilinear nature of the problem is not completely utilized when one imposes individual conditions on the weights rather than involving an interaction between the various weights.

In the recent work [33] by Li, Martell, and Ombrosi an extrapolation result was presented where they work with a limited range version of the multilinear weight condition introduced by Lerner, Ombrosi, Pérez, Torres, and Trujillo-González [30] which also appears in [3] and, in the bilinear case, in [8]. Indeed, such weight classes are characterized by boundedness of the multi-sublinear Hardy–Littlewood maximal operator as well as by boundedness of sparse forms, meaning the theory can be applied to important operators such as multilinear Calderón-Zygmund operators as well as the bilinear Hilbert transform. They introduced the weight class \(A_{\vec {p},\vec {r}}\) where \(\vec {p}=(p_1,\ldots ,p_m)\), \(\vec {r}=(r_1,\ldots ,r_{m+1})\) and \(1\le r_j\le p_j<\infty \) and \(r'_{m+1}>p\) with \(\frac{1}{p}=\sum _{j=1}^m\frac{1}{p_j}\) and \(\vec {w}=(w_1,\ldots ,w_m)\in A_{\vec {p},\vec {r}}\) if

They showed that if (1.1) holds for a \(\vec {q}\) with \(1\le r_j\le q_j<\infty \), \(r'_{m+1}>q\) and all \((w_1^{q_1},\ldots ,w_m^{q_m})\in A_{\vec {q},\vec {r}}\), then T satisfies the same boundedness for all \(\vec {p}\) and \((w_1^{p_1},\ldots ,w_m^{p_m})\in A_{\vec {p},\vec {r}}\) with \(r_j<p_j<\infty \) and \(r'_{m+1}>p\). Furthermore, their result extends and reproves some of the vector-valued bounds found by Benea and Muscalu [4] for \({{\,\mathrm{BHT}\,}}\). This class of weights does seem to be adapted to the situation even when \(p_j= \infty \), but one needs to be careful in how the constant is interpreted in this case. Similar to the proof of the extrapolation result of Cruz-Uribe and Martell, their proof of this extrapolation result is based upon an off-diagonal extrapolation result, but in their work they left open exactly what happens in the case that some of the exponents are infinite. They announced a paper in which these cases were treated which had not appeared yet when our paper was first posted, but is available now [32]. Here they show that, as a feature of off-diagonal extrapolation, it is also possible to obtain estimates that include the cases of infinite exponents.

In this work we again prove an extrapolation result using the multilinear weight classes, and our result includes these endpoint cases which, in particular, include the possibility of extrapolating from the cases where in the initial assumption the exponents can be infinite. Our proof is new and does not rely on any off-diagonal extrapolation result. Rather, we generalize the Rubio de Francia algorithm to a multilinear setting adapted to the multi-sublinear Hardy–Littlewood maximal operator. As a corollary, we are able to obtain vector-valued extensions of operators to spaces including \(\ell ^\infty \) spaces. Thus, applying this to \({{\,\mathrm{BHT}\,}}\) allows us to recover these endpoint bounds that were obtained earlier through the helicoidal method [4].

Our construction is quantitative in the sense that it allows us to track the dependence of the bounds on the weight constants. Such quantitative versions of extrapolation results were first formalized by Dragičević, Grafakos, Pereyra, and Petermichl in the linear setting in [11], but are completely new in the multilinear setting. In the linear setting this result is based on Buckley’s sharp weighted bound for the Hardy–Littlewood maximal operator. This bound has been generalized to the multi-sublinear Hardy–Littlewood maximal operator by Damián, Lerner, and Pérez [10] to a sharp mixed-type \(A_{\vec {p}}-A_{\infty }\) estimate, and a sharp \(A_{\vec {p}}\) bound is found in [34]. We give a different proof of this result for the limited range version of this maximal operator by generalizing a proof of Lerner [28].

Finally, we also show how our quantitative extrapolation result recovers and extends a bound obtained for multi-(sub)linear sparsely dominated operators, generalizing the bound of Hytönen’s \(A_2\) Theorem [20]. More precisely, sparse domination yields sharp bounds for an operator for exponents \(p_1,\ldots ,p_m\) only if \(\frac{1}{p}=\sum _{j=1}^m\frac{1}{p_j}\le 1\) so that we may appeal to duality. Our extrapolation result allows us to show that this same control in terms of the weight also holds when \(\frac{1}{p}>1\).

1.1 Symmetry in Muckenhoupt weight classes

To facilitate our results, we heavily rely on the symmetric structure of the Muckenhoupt classes.

For \(p\in (1,\infty )\), a standard method of obtaining weighted \(L^p\) estimates with a weight w is by using the duality \((L^p(w))^*=L^{p'}(w^{1-p'})\) given through the integral pairing

\[\langle f,g\rangle =\int _{\mathbf {R}^n}\!fg\,\mathrm {d}x.\]

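Moreover, the Muckenhoupt \(A_p\) class is defined in terms of these two weights w and \(w^{1-p'}\) through

\[[w]_{A_p}:=\sup _Q\left( \frac{1}{|Q|}\int _Q\!w\,\mathrm {d}x\right) \left( \frac{1}{|Q|}\int _Q\!w^{1-p'}\,\mathrm {d}x\right) ^{p-1}<\infty ,\]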

where the supremum is taken over all cubes \(Q\subseteq \mathbf {R}^n\). One way to understand this definition better is by noting that we can relate the weights w and \(w^{1-p'}\) through \(w^{\frac{1}{p}}(w^{1-p'})^{\frac{1}{p'}}=1\). One can also make sense of this condition if \(p=1\) through

\[[w]_{A_1}:=\sup _Q\left( \frac{1}{|Q|}\int _Q\!w\,\mathrm {d}x\right) \Big ({{{\,\mathrm{ess\,sup}\,}}}_{x\in Q}w(x)^{-1}\Big )<\infty ,\]

and one usually defines \(A_\infty :=\bigcup _{p\in [1,\infty )}A_p\).

When we replace the weight w by the weight \(w^p\) we find, using the averaging notation \(\langle h\rangle _{q,Q}:=\left( \frac{1}{|Q|}\int _Q\!|h|^q\,\mathrm {d}x\right) ^{\frac{1}{q}}\), that

\[[w^p]_{A_p}^{\frac{1}{p}}=\sup _Q\langle w\rangle _{p,Q}\langle w^{-1}\rangle _{p',Q}\]

for \(p\in (1,\infty )\). The symmetry in this condition is much more apparent, and this condition seems to be more naturally adapted to the weighted \(L^p\) theory. Indeed, defining

\[[w]_p:=\sup _Q\langle w\rangle _{p,Q}\langle w^{-1}\rangle _{p',Q},\]

we note that \([w]_p=[w^{-1}]_{p'}\). If we denote the Hardy–Littlewood maximal operator by M and if we define the bi-sublinear Hardy–Littlewood maximal operator \(M_{(1,1)}\) by

\[M_{(1,1)}(f,g)(x):=\sup _{Q\ni x}\langle f\rangle _{1,Q}\langle g\rangle _{1,Q},\]

then we have the remarkable equivalences

where the implicit constant depends only on the dimension, see Proposition 2.7 and Proposition 2.14 below.

Another way of thinking of these equivalences is by setting \(w_1:=w\), \(w_2:=w^{-1}\) and \(p_1:=p\), \(p_2:=p'\) so that we have the relations

Then one can impose a symmetric weight condition

and note that

The equivalences in (1.3) can now be thought of as

We can even make sense of these expressions when \(p_1=1\) and \(p_2=\infty \) or \(p_1=\infty \) and \(p_2=1\), given that we use the correct interpretation and this is what allows us to extrapolate using such classes. Indeed, one can think of \(f\in L^p(w^p)\) as the condition \(\Vert fw\Vert _{L^p}<\infty \), which makes sense even when \(p=\infty \) by requiring that the function fw is essentially bounded. Using the interpretation \(\langle h\rangle _{\infty ,Q}={{{\,\mathrm{ess\,sup}\,}}}_{x\in Q}|h(x)|\), we see that the condition \([w_1]_1<\infty \) is equivalent to the usual \(A_1\) condition imposed on the weight \(w_1=w\), while the condition \([w_1]_\infty <\infty \) is equivalent to the condition \(w_2=w^{-1}\in A_1\). We emphasize here that our condition \([w]_\infty <\infty \) is not equivalent to the condition \(w\in A_\infty =\bigcup _{p\in [1,\infty )}A_p\) and these notions should not be confused. The condition \(w^{-1}\in A_1\) seems to be a natural upper endpoint condition and one can show that this is equivalent to the boundedness

see Proposition 2.14 below. It also turns out that this condition allows us to extrapolate away from weighted \(L^\infty \) estimates. We point out that this idea has already been used in the endpoint extrapolation result of Harboure, Macías and Segovia [19, Theorem 3].
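The symmetry of the quantity \(\sup _Q\langle w\rangle _{p,Q}\langle w^{-1}\rangle _{p',Q}\) and its endpoint interpretations at \(p=1\) and \(p=\infty \) can be checked numerically on a toy example. The following Python sketch is only an illustration under simplifying assumptions: the weight is constant on equal cells of a single interval, the supremum runs over the dyadic subintervals of that interval rather than over all cubes, and the helper names `average`, `conjugate`, `dyadic_blocks` and `symmetric_constant` are hypothetical.

```python
import math


def average(values, q):
    """Discrete analogue of <h>_{q,Q} for h constant on equal cells of Q.

    For q = infinity this is the (essential) supremum of |h| on Q.
    """
    if math.isinf(q):
        return max(abs(v) for v in values)
    return (sum(abs(v) ** q for v in values) / len(values)) ** (1.0 / q)


def conjugate(p):
    """Hoelder conjugate p', with the conventions 1' = infinity and infinity' = 1."""
    if p == 1:
        return math.inf
    if math.isinf(p):
        return 1.0
    return p / (p - 1.0)


def dyadic_blocks(n_cells):
    """Yield the index ranges of all dyadic subintervals (n_cells a power of two)."""
    size = n_cells
    while size >= 1:
        for start in range(0, n_cells, size):
            yield start, start + size
        size //= 2


def symmetric_constant(w, p):
    """Maximum of <w>_{p,Q} <w^{-1}>_{p',Q} over the dyadic subintervals Q."""
    inverse = [1.0 / v for v in w]
    p_conj = conjugate(p)
    return max(
        average(w[a:b], p) * average(inverse[a:b], p_conj)
        for a, b in dyadic_blocks(len(w))
    )
```

Swapping \(w\) with \(w^{-1}\) and \(p\) with \(p'\) leaves each product unchanged, so `symmetric_constant(w, p)` and `symmetric_constant(inverse, conjugate(p))` agree up to rounding, including at the endpoints \(p=1\) and \(p=\infty \).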

We wish to view our symmetric weight condition in the context of extrapolation. In proving Rubio de Francia’s extrapolation theorem, one usually starts with a pair of functions (h, f) and assumes that one has the inequality

for some \(q\in [1,\infty ]\) and all weights w satisfying \([w]_q<\infty \). The idea is then that given a \(p\in (1,\infty )\) and a weight w satisfying \([w]_p<\infty \), one can construct a weight W, possibly depending on f, h, and w, so that W satisfies \([W]_q<\infty \) as well as some additional properties to ensure that we can use (1.5) with W to conclude that

Applying this with \(h=Tf\) then gives the desired boundedness for an operator T. For the proof one usually splits into two cases, namely the case where \(p<q\) and the case where \(p>q\). In the former case one can apply Hölder’s inequality to move from \(L^p\) to \(L^q\) and in the latter case one uses duality and a similar trick to move from \(L^{p'}\) to \(L^{q'}\). The point is that both of these cases are essentially the same, but due to the notation we use we have to deal with the cases separately. Here, we wish to come up with a formalization to avoid this redundancy.

The extrapolation theorem is essentially a consequence of the following proposition:

Proposition

Suppose we are given \(p_1, p_2\in (1,\infty )\) satisfying \(\frac{1}{p_1}+\frac{1}{p_2}=1\) and weights \(w_1,w_2\) satisfying \(w_1w_2=1\) and \([(w_1,w_2)]_{(p_1,p_2)}<\infty \). Moreover, assume we have two functions \(f_1\in L^{p_1}(w_1^{p_1})\) and \(f_2\in L^{p_2}(w_2^{p_2})\) and \(q_1, q_2\in [1,\infty ]\) with \(\frac{1}{q_1}+\frac{1}{q_2}=1\). Then there are weights \(W_1\), \(W_2\) satisfying \(W_1W_2=1\),

and

Indeed, the result of the extrapolation theorem follows by applying the proposition with \(f_1:=f\), \(q_1:=q\), \(q_2:=q'\), \(p_1:=p\), \(p_2:=p'\), \(w_1:=w\), \(w_2:=w^{-1}\) and \(W_1:=W\), \(W_2:=W^{-1}\) so that, by (1.5), we have

Thus, by duality, we obtain (1.6), as desired.

The proof of the proposition uses the classical construction using the Rubio de Francia algorithm and the novelty here is our symmetric formulation. A proof can be found in this work, as it is a special case of Theorem 3.1. The case \(p<q\) in the proposition takes the form \(p_1<q_1\) and \(p_2>q_2\) while the case \(p>q\) takes the form \(p_1>q_1\) and \(p_2<q_2\). The fact that the proposition is formulated completely symmetrically in terms of the parameters indexed over \(\{1,2\}\), where we note that \([(w_1,w_2)]_{(p_1,p_2)}=[(w_2,w_1)]_{(p_2,p_1)}\), means that these respective cases can be proven using precisely the same argument, up to a permutation of the indices. Thus, without loss of generality, one only needs to prove one of the two cases.

These symmetries become especially important in the m-linear setting where we are dealing with parameters indexed over \(\{1,\ldots ,m+1\}\) and the number of cases we have to consider increases. Thanks to our formulation, we will be able to reduce these multiple cases back to a single case in our arguments again by permuting the indices.

We wish to point out here that to facilitate our symmetric formulation and to use the duality argument involving the Rubio de Francia algorithm as above, we need to essentially restrict ourselves to the Banach range \(\frac{1}{p}\le 1\). However, in the m-linear setting one also has to deal with the quasi-Banach range \(\frac{1}{p}>1\). This means that to employ our multilinear Rubio de Francia algorithm, we must first reduce to the case where \(\sum _{j=1}^m\frac{1}{p_j}=\frac{1}{p}\le 1.\) In this case we can set \(\frac{1}{p_{m+1}}:=1-\frac{1}{p}\ge 0\) and \(\sum _{j=1}^{m+1}\frac{1}{p_j}=1\), which places us in the setting of Theorem 3.1. This is not a problem however, as reducing to this case is facilitated by the rescaling properties of the multilinear weight classes, see also Remark 2.3. In conclusion, even though our multilinear Rubio de Francia algorithm is applied in the Banach range \(\frac{1}{p}\le 1\), our result also includes the quasi-Banach range \(\frac{1}{p}>1\).
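The passage from an m-tuple of exponents in the Banach range to an \(m+1\)-tuple with \(\sum _{j=1}^{m+1}\frac{1}{p_j}=1\) is simple bookkeeping, which the following Python sketch makes explicit. It works with the reciprocals \(\frac{1}{p_j}\) as exact fractions, so that \(p_j=\infty \) is encoded by 0. The function name `dual_reciprocal` is hypothetical, and the sketch only detects the quasi-Banach range; it does not carry out the rescaling.

```python
from fractions import Fraction


def dual_reciprocal(reciprocals):
    """Return 1/p_{m+1} := 1 - 1/p, where 1/p is the sum of the given 1/p_j.

    The input lists 1/p_1, ..., 1/p_m as Fractions (0 encodes p_j = infinity).
    A ValueError signals the quasi-Banach range 1/p > 1, in which one first
    has to reduce to the Banach range before this step applies.
    """
    inv_p = sum(reciprocals, Fraction(0))
    if inv_p > 1:
        raise ValueError("quasi-Banach range: 1/p > 1")
    return 1 - inv_p
```

For instance, for \(m=2\) and \(p_1=p_2=3\) one has \(\frac{1}{p}=\frac{2}{3}\) and the sketch returns \(\frac{1}{p_3}=\frac{1}{3}\), while \(p_1=p_2=\frac{3}{2}\) gives \(\frac{1}{p}=\frac{4}{3}>1\) and raises the error.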

This article is organized as follows:

- In Sect. 2 we state our main result and give an overview of the multilinear weight classes, proving some important properties as well as proving new quantitative estimates with respect to the multisublinear maximal operator as well as sparse forms.
- In Sect. 3 we prove the main result.
- In Sect. 4 we apply the extrapolation result for weak type bounds and certain \({{\,\mathrm{BMO}\,}}\) type bounds as well as for vector-valued bounds. Moreover, we give an application of our results to the bilinear Hilbert transform.

2 Multilinear weight classes

2.1 Setting and main result

We work in \(\mathbf {R}^n\) equipped with the Lebesgue measure \(\mathrm {d}x\). This is mostly for notational convenience and our results also hold in the more general setting of doubling quasimetric measure spaces, provided one uses the right notion of dyadic cubes in this setting, see [21]. For a measurable set E we denote its Lebesgue measure by |E|. A measurable function \(w:\mathbf {R}^n\rightarrow (0,\infty )\) is called a weight. We can identify w with a measure by \(w(E):=\int _E\!w\,\mathrm {d}x\). For \(p\in (0,\infty ]\), a weight w, and a measurable function f on \(\mathbf {R}^n\) we say that \(f\in L^p(w^p)\) provided that \(\Vert f\Vert _{L^p(w^p)}:=\Vert fw\Vert _{L^p}<\infty \). Moreover, for a measurable set \(E\subseteq \mathbf {R}^n\) with \(0<|E|<\infty \) we write

\[\langle f\rangle _{p,E}:=\left( \frac{1}{|E|}\int _E\!|f|^p\,\mathrm {d}x\right) ^{\frac{1}{p}}\]

when \(0<p<\infty \) and \(\langle f\rangle _{\infty ,E}:={{{\,\mathrm{ess\,sup}\,}}}_{x\in E}|f(x)|\).

We will use the notation \(A\lesssim B\) if there is a constant \(c>0\), independent of the important parameters, such that \(A\le cB\). Moreover, we write \(A\simeq B\) if \(A\lesssim B\) and \(B\lesssim A\).

Let \(m\in \mathbf {N}\) and let \(r_1,\ldots ,r_m\in (0,\infty )\), \(s\in (0,\infty ]\). For \(p_1,\ldots ,p_m\in (0,\infty ]\), writing \(\vec {r}=(r_1,\ldots ,r_m)\) and similarly for \(\vec {p}\), we write \(\vec {r}\le \vec {p}\) if \(r_j\le p_j\le \infty \) for all \(j\in \{1,\ldots ,m\}\). Moreover, we write \((\vec {r},s)\le \vec {p}\) if \(\vec {r}\le \vec {p}\) and \(p\le s\), where p is defined by

\[\frac{1}{p}=\sum _{j=1}^m\frac{1}{p_j}.\]

Similarly, we write \(\vec {r}<\vec {p}\) if \(r_j<p_j\) for all \(j\in \{1,\ldots ,m\}\) and we write \((\vec {r},s)<\vec {p}\) if \(\vec {r}<\vec {p}\) and \(p<s\).
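Since exponents may be infinite, the relations \((\vec {r},s)\le \vec {p}\) and \((\vec {r},s)<\vec {p}\) are conveniently checked on reciprocals, where \(r_j\le p_j\) becomes \(\frac{1}{p_j}\le \frac{1}{r_j}\) and \(p\le s\) becomes \(\frac{1}{s}\le \frac{1}{p}\). The following Python sketch does this with exact fractions, encoding an infinite exponent by the reciprocal 0. The function names `leq` and `less` are hypothetical.

```python
from fractions import Fraction


def leq(r_recips, s_recip, p_recips):
    """Check (r, s) <= p, i.e. r_j <= p_j for all j and p <= s.

    All arguments are reciprocals of the exponents, with 0 encoding infinity,
    and 1/p is the sum of the entries of p_recips.
    """
    inv_p = sum(p_recips, Fraction(0))
    componentwise = all(pr <= rr for rr, pr in zip(r_recips, p_recips))
    return componentwise and s_recip <= inv_p


def less(r_recips, s_recip, p_recips):
    """Check (r, s) < p, i.e. r_j < p_j for all j and p < s."""
    inv_p = sum(p_recips, Fraction(0))
    componentwise = all(pr < rr for rr, pr in zip(r_recips, p_recips))
    return componentwise and s_recip < inv_p
```

With \(\vec {r}=(1,1)\) and \(s=\infty \), the choice \(\vec {p}=(2,\infty )\) satisfies both relations, whereas \(\vec {p}=(\infty ,\infty )\) satisfies only the non-strict one, since then \(p=s=\infty \).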

Definition 2.1

Let \(r_1,\ldots ,r_m\in (0,\infty )\), \(s\in (0,\infty ]\), and \(p_1,\ldots ,p_m\in (0,\infty ]\) with \((\vec {r},s)\le \vec {p}\). Let \(w_1,\ldots , w_m\) be weights and write \(w=\prod _{j=1}^m w_j\), \(\vec {w}=(w_1,\ldots ,w_m)\). We say that \(\vec {w}\in A_{\vec {p},(\vec {r},s)}\) if

\[[\vec {w}]_{\vec {p},(\vec {r},s)}:=\sup _Q\Big (\prod _{j=1}^m\langle w_j^{-1}\rangle _{\frac{1}{\frac{1}{r_j}-\frac{1}{p_j}},Q}\Big )\langle w\rangle _{\frac{1}{\frac{1}{p}-\frac{1}{s}},Q}<\infty ,\]

where the supremum is taken over all cubes \(Q\subseteq \mathbf {R}^n\).

As a point of comparison, we note here that, for finite \(p_j\), our condition \(\vec {w}\in A_{\vec {p},(\vec {r},s)}\) is equivalent to the condition \((w_1^{p_1},\ldots ,w_m^{p_m})\in A_{\vec {p},(r_1,\ldots ,r_m,s')}\), where the latter condition considers the weight class of Li, Martell, and Ombrosi defined in (1.2). Thus, in this range their extrapolation result [33] considers the same weights as we do.

Our main theorem is as follows:

Theorem 2.2

(Quantitative multilinear limited range extrapolation) Let \((f_1,\ldots ,f_m,h)\) be an \(m+1\)-tuple of measurable functions and let \(r_1,\ldots ,r_m\in (0,\infty )\), \(s\in (0,\infty ]\). Suppose that for some \(q_1,\ldots ,q_m\in (0,\infty ]\) with \(\vec {q}\ge (\vec {r},s)\) there is an increasing function \(\phi _{\vec {q}}\) such that

for all \(\vec {w}\in A_{\vec {q},(\vec {r},s)}\).

Then for all \(p_1,\ldots ,p_m\in (0,\infty ]\) with \(\vec {p}>(\vec {r},s)\) there is an increasing function \(\phi _{\vec {p},\vec {q},\vec {r},s}\) such that

for all \(\vec {w}\in A_{\vec {p},(\vec {r},s)}\). More explicitly, we can take

where \(\frac{1}{r}=\sum _{j=1}^m\frac{1}{r_j}\).

We note that if there is equality in one of the components in \(\vec {q}\ge (\vec {r},s)\), i.e., if \(q=s\) or \(q_j=r_j\) for some \(j\in \{1,\ldots ,m\}\), then we may also include the respective cases with \(p=s\) or \(p_j=r_j\) to the conclusion of the extrapolation result. In this case one should respectively use the interpretation \(\frac{\frac{1}{q}-\frac{1}{s}}{\frac{1}{p}-\frac{1}{s}}=1\) or \(\frac{\frac{1}{r_j}-\frac{1}{q_j}}{\frac{1}{r_j}-\frac{1}{p_j}}=1\). To see this, one need only note that the proof we give of the theorem already accounts for the respective cases when \(\frac{1}{p}=\frac{1}{q}\) or \(\frac{1}{p_j}=\frac{1}{q_j}\).

Our result is stronger than that in [33] in the sense that we do not have to restrict our exponents to the case where they are finite, i.e., in the initial assumption we include all the cases where \(q_j=\infty \) and in the conclusion we similarly obtain all the cases where \(p_j=\infty \), see also [32]. We emphasize here that we use the interpretation \(\Vert f_j\Vert _{L^{q_j}(w_j^{q_j})}=\Vert f_jw_j\Vert _{L^\infty }\) in the case where \(q_j=\infty \) and we need to impose the weight condition from Definition 2.1 with \(\frac{1}{q_j}=0\). For example, in the case \(m=1\), \(r=1\) and \(q=s=\infty \), one has to use the condition \(w\in A_{\infty ,(1,\infty )}\) in the initial estimate (2.1) which, following our definition, is equivalent to the condition \(w^{-1}\in A_1\). This stronger result is possible due to our use of a multilinear Rubio de Francia algorithm, fully utilizing the multilinear nature of the problem. Our result also implies vector-valued estimates in these ranges and we refer the reader to Sect. 4 where we elaborate on this further.

Next we make some remarks on the quantitative result (2.3).

Usually in applications, the increasing function will be of the form \(\phi _{\vec {q}}(t)=c t^{\alpha }\) for some \(c,\alpha >0\). Then we find from (2.3) that

In the case \(m=1\), \(r=1\), \(s=\infty \), this means that we have

and this coincides with the bound obtained in [11]. This result was used in Hytönen’s \(A_2\) theorem [20] to reduce proving the sharp estimate

for Calderón-Zygmund operators T to only having to prove the linear \(A_2\) bound

Indeed, noting that \([w]_{p,(1,\infty )}=[w^p]_{A_p}^{\frac{1}{p}}\), we find that (2.5) follows from (2.4) by taking \(\alpha =2\) and \(q=2\).

The fact that we need to extrapolate from \(q=2\) to obtain the sharp bounds for Calderón-Zygmund operators speaks to their nature as operators revolving around their properties in \(L^2\). As a contrast, we note that the estimate

is central, for example, when T is the Hardy–Littlewood maximal operator M. Indeed, by (2.4) with \(q=\infty \) and \(\alpha =1\) and by noting that \([w]_{\infty ,(1,\infty )}=[w^{-1}]_{A_1}\), this estimate extrapolates to the estimate

for \(p\in (1,\infty ]\), which is precisely Buckley’s sharp bound obtained for M. We point out here that this argument is actually circular when \(T=M\), since the proof of the quantitative estimate in the extrapolation result makes use of Buckley’s sharp bound. Nonetheless, we think this example is heuristically interesting, since it exhibits how one can extrapolate away from weighted \(L^\infty \) estimates. Multilinear versions of Buckley’s sharp bound have been found in [10, 34] and can be recovered in a similar way, see also Theorem 4.12.
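The two examples above, the \(A_2\) theorem and Buckley’s bound, can be checked with a few lines of exact arithmetic. The Python sketch below assumes that in the case \(m=1\), \(r=1\), \(s=\infty \) the extrapolated bound carries the power \(\alpha \max \big \{\frac{1-\frac{1}{q}}{1-\frac{1}{p}},\frac{\frac{1}{q}}{\frac{1}{p}}\big \}\) of \([w]_{p,(1,\infty )}\). This is our reading of the linear bound of [11] in the present normalization and should be treated as an assumption of the sketch, as should the function names. It requires \(1<p<\infty \).

```python
from fractions import Fraction


def linear_exponent(alpha, q_recip, p_recip):
    """Assumed power of [w]_{p,(1,infty)} after extrapolating from q to p.

    Arguments are alpha and the reciprocals 1/q in [0, 1] and 1/p in (0, 1),
    given as Fractions (1/q = 0 encodes q = infinity).
    """
    return alpha * max((1 - q_recip) / (1 - p_recip), q_recip / p_recip)


def ap_exponent(alpha, q_recip, p_recip):
    """The same power expressed through [w^p]_{A_p}.

    Uses the identity [w]_{p,(1,infty)} = [w^p]_{A_p}^{1/p} from the text.
    """
    return linear_exponent(alpha, q_recip, p_recip) * p_recip
```

Under this assumption, starting from \(q=2\), \(\alpha =2\) gives the power \(\max \{1,\frac{1}{p-1}\}\) of \([w^p]_{A_p}\), and starting from \(q=\infty \), \(\alpha =1\) gives the power \(\frac{1}{p-1}\), in line with the sharp bounds discussed above.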

The remainder of this section will be dedicated to a discussion on the quantitative properties of the multilinear weight classes. We split this into two separate cases. In the first case we adopt the symmetric notation from the introduction and think in terms of \(m+1\)-tuples of weights and parameters satisfying a symmetric relation. In the second case we adopt the more classical approach of thinking in terms of m-tuples and we prove some key results for our main theorem.

2.2 Quantitative properties of multilinear weight classes: the \(m+1\)-tuple case

Let \(r_1,\ldots ,r_m\in (0,\infty )\), \(s\in (0,\infty ]\), and \(p_1,\ldots ,p_m\in (0,\infty ]\) and let \(w_1,\ldots , w_m\) be weights, with \(w:=\prod _{j=1}^m w_j\). In terms of symmetries, the definition of the weight class

seems to be best suited to the case where \(\frac{1}{p}\le 1\). Indeed, if we set \(\frac{1}{p_{m+1}}:=1-\frac{1}{p}\ge 0\), \(\frac{1}{r_{m+1}}:=1-\frac{1}{s}\) and \(w_{m+1}:=w^{-1}\), then we have

The condition \((\vec {r},s)\le \vec {p}\) is equivalent to \(r_j\le p_j\) for all \(j\in \{1,\ldots ,m+1\}\) and the constant for the weight class now takes the form

where the last equality follows from the fact that the term involving the product weight in the \(m+1\)-linear weight class is equal to 1. The symmetry of this last expression also emphasizes a certain permutational invariance. Indeed, if \(\pi \in S_{m+1}\) is a permutation, then, since

we have

and this will be used in the proof of our extrapolation theorem.

Remark 2.3

While we restrict ourselves to the Banach range \(\frac{1}{p}\le 1\) in this section, we point out that our main results also apply in the cases where \(\frac{1}{p}>1\). This is facilitated by the rescaling property

which, in our arguments, allows us to reduce back to the case where \(\frac{1}{p}\le 1\), see also the proof of Theorem 2.2.

It will sometimes also be useful to redefine \(v_j:=w_j^{-\frac{1}{\frac{1}{r_j}-\frac{1}{p_j}}}\) for \(j\in \{1,\ldots ,m+1\}\) so that

These weight classes are governed by a certain maximal operator, see also [30].

Definition 2.4

Given \(r_1,\ldots ,r_m\in (0,\infty )\), we define the m-sublinear Hardy–Littlewood maximal operator

\[M_{\vec {r}}(f_1,\ldots ,f_m)(x):=\sup _{Q\ni x}\prod _{j=1}^m\langle f_j\rangle _{r_j,Q}\]

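for \(f_j\in L^{r_j}_{{{\,\mathrm{loc}\,}}}\), where the supremum is taken over all cubes \(Q\subseteq \mathbf {R}^n\) containing x. Moreover, for a dyadic grid \(\mathscr {D}\) we define

\[M^{\mathscr {D}}_{\vec {r}}(f_1,\ldots ,f_m)(x):=\sup _{\begin{array}{c} Q\in \mathscr {D}\\ Q\ni x \end{array}}\prod _{j=1}^m\langle f_j\rangle _{r_j,Q}\]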

for \(f_j\in L^{r_j}_{{{\,\mathrm{loc}\,}}}\).

For the relevant definitions and results regarding dyadic grids we refer the reader to [29]. A property we need is the fact that there exist \(3^n\) dyadic grids \((\mathscr {D}^\alpha )_{\alpha =1}^{3^n}\) such that for each cube \(Q\subseteq \mathbf {R}^n\) there is an \(\alpha \) and a cube \({\tilde{Q}}\in \mathscr {D}^\alpha \) such that \(Q\subseteq {\tilde{Q}}\) and \(|{\tilde{Q}}|\le 6^n|Q|\). This implies the following:

Lemma 2.5

Let \(r_1,\ldots ,r_m\in (0,\infty )\). Then there exist \(3^n\) dyadic grids \((\mathscr {D}^\alpha )_{\alpha =1}^{3^n}\) such that

See also [29].
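The covering property of the dyadic grids used above can be made concrete in dimension \(n=1\). The Python sketch below assumes the shifted grids \(\mathscr {D}^\alpha =\{2^{-k}([0,1)+m+(-1)^k\alpha ):k,m\in \mathbf {Z}\}\) with \(\alpha \in \{0,\frac{1}{3},\frac{2}{3}\}\). This is one standard choice from the literature and not necessarily the construction of [29]; the function name `covering_interval` is hypothetical. Given an interval \([a,b)\), it picks a scale \(2^{-k}\) with \(3(b-a)\le 2^{-k}<6(b-a)\) and searches the three grids for an interval of that length containing \([a,b)\).

```python
from fractions import Fraction
from math import floor

# Shifts of the three assumed grids in dimension one.
SHIFTS = (Fraction(0), Fraction(1, 3), Fraction(2, 3))


def covering_interval(a, b):
    """Return (grid index, left, right) with [a, b) inside [left, right).

    The interval [left, right) belongs to one of the three shifted dyadic
    grids and has length 2^{-k}, where 3(b - a) <= 2^{-k} < 6(b - a).
    """
    a, b = Fraction(a), Fraction(b)
    length = b - a
    assert length > 0
    k = 0
    while Fraction(2) ** (-k) < 3 * length:
        k -= 1
    while Fraction(2) ** (-k) >= 6 * length:
        k += 1
    scale = Fraction(2) ** (-k)
    sign = -1 if k % 2 else 1
    for index, alpha in enumerate(SHIFTS):
        shift = sign * alpha
        # Grid interval of this scale whose left endpoint is the largest one <= a.
        m = floor(a / scale - shift)
        left = scale * (m + shift)
        right = left + scale
        if b <= right:
            return index, left, right
    raise AssertionError("no covering interval found")
```

The search succeeds because the endpoints of the three grids at scale \(2^{-k}\) together form a lattice of spacing \(\frac{2^{-k}}{3}\ge b-a\), so at most one of the grids can have an endpoint strictly inside \([a,b)\).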

Definition 2.6

A collection of cubes \(\mathscr {S}\) in a dyadic grid is called sparse if there is a pairwise disjoint collection of measurable sets \((E_Q)_{Q\in \mathscr {S}}\) such that \(E_Q\subseteq Q\) and \(|Q|\le 2|E_Q|\).

Given \(r_1,\ldots ,r_m\in (0,\infty )\), for a sparse collection of cubes \(\mathscr {S}\) we define the sparse operator

and the sparse form

We point out here that the sparsity constant 2 appearing in the estimate \(|Q|\le 2|E_Q|\) is not too important and in most situations it can be replaced by any other constant greater than 1. Note however, that we will be considering the form \(\sup _{\mathscr {S}}\Lambda _{\vec {r},\mathscr {S}}\) and here it is important that one only considers sparse collections in this supremum with the same sparsity constant. See [29] for further properties and results regarding sparse collections of cubes.

Since this section contains results involving both m-tuples and \(m+1\)-tuples with the same parameters, it is convenient to separate these notationally. We will use the following convention: for \(m+1\) parameters \(\alpha _1,\ldots ,\alpha _{m+1}\) we shall use the boldface notation \(\pmb {\alpha }=(\alpha _1,\ldots ,\alpha _{m+1})\) for \(m+1\)-tuples while we will use the arrow notation \(\vec {\alpha }=(\alpha _1,\ldots ,\alpha _m)\) for m-tuples.

The main result for this section is the following:

Proposition 2.7

Let \(r_1,\ldots ,r_{m+1}\in (0,\infty )\), \(p_1,\ldots ,p_{m+1}\in (0,\infty ]\) satisfy \(\frac{1}{p_j}<\frac{1}{r_j}\) for all \(j\in \{1,\ldots ,m+1\}\) and \(\sum _{j=1}^{m+1}\frac{1}{p_j}=1\). Moreover, let \(w_1,\ldots , w_{m+1}\) be weights satisfying \(\prod _{j=1}^{m+1}w_j=1\). Then the following are equivalent:

- (i) \(\pmb {w}\in A_{\pmb {p},(\pmb {r},\infty )}\);
- (ii) \(\Vert M_{\pmb {r}}\Vert _{L^{p_1}(w_1^{p_1})\times \cdots \times L^{p_{m+1}}(w_{m+1}^{p_{m+1}})\rightarrow L^{1,\infty }}<\infty \);
- (iii) \(\Vert M_{\pmb {r}}\Vert _{L^{p_1}(w_1^{p_1})\times \cdots \times L^{p_{m+1}}(w_{m+1}^{p_{m+1}})\rightarrow L^1}<\infty \);
- (iv) \(\Vert \sup _{\mathscr {S}}\Lambda _{\pmb {r},\mathscr {S}}\Vert _{L^{p_1}(w_1^{p_1})\times \cdots \times L^{p_{m+1}}(w_{m+1}^{p_{m+1}})\rightarrow \mathbf {R}}<\infty \).

Moreover, we have

where the implicit constants depend only on the dimension, and

where the implicit constant depends on the dimension and

Remark 2.8

We again point out that the condition \(\pmb {w}\in A_{\pmb {p},(\pmb {r},\infty )}\) is equivalent to the condition \(\vec {w}\in A_{\vec {p},(\vec {r},r_{m+1}')}\), with equal constants. Moreover, the results containing the sparse forms are formulated with the supremum taken inside of the norm. One can equivalently put the supremum outside of the norm which follows from the fact that there is a single sparse form that dominates all the other sparse forms, see [27, Section 4].

In the case \(m=1\), \(r_1=r_2=1\), the equivalence (2.6) takes the more familiar form

which appeared in the introduction.

We note that the estimate (2.8) was already obtained in [8] in the case \(m=2\).

For \(r_1=r\), \(r_2=s'\) the estimate (2.8) takes the form

and when \(r=1\) and \(s=\infty \) we reobtain the sharp bound from the \(A_2\) theorem. We wish to compare (2.9) to the bound obtained in [5]. For their main result they prove that

Our result implies that

see also [24], and this recovers the estimate (2.10).

Finally, we point out here that the estimate (2.8) already appears in [33, p. 12] for the particular choice \(\frac{1}{p_j}=\frac{1}{r_j}\frac{1}{\sum _{j=1}^{m+1}\frac{1}{r_j}}\), and it seems like this choice of \(p_j\) is central for the theory of these sparse forms, see also the proof of Corollary 4.2.

For the proof of the proposition we will require several preparatory lemmata.

Lemma 2.9

Let \(0<r_1,\ldots ,r_m<\infty \). Then for each dyadic grid \(\mathscr {D}\) and all \(f_j\in L^{r_j}\) there is a sparse collection \(\mathscr {S}\subseteq \mathscr {D}\) such that

pointwise almost everywhere, where \(\frac{1}{r}=\sum _{j=1}^m\frac{1}{r_j}\). In particular we have

pointwise almost everywhere.

The proof is essentially the same as that of the well-known result in the case \(m=1\), \(r=1\).

Proof

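For \(k\in \mathbf {Z}\) we define

\[\Omega _k:=\left\{ x\in \mathbf {R}^n:M^{\mathscr {D}}_{\vec {r}}(f_1,\ldots ,f_m)(x)>2^{\frac{n+1}{r}k}\right\} .\]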

By taking the maximal cubes Q in \(\Omega _k\) we obtain a pairwise disjoint collection \(\mathcal {Q}_k\subseteq \mathscr {D}\) such that \(\Omega _k=\bigcup _{Q\in \mathcal {Q}_k}Q\) and

for all \(Q\in \mathcal {Q}_k\). We define \(\mathscr {S}:=\cup _{k\in \mathbf {Z}}\mathcal {Q}_k\) and claim that \(\mathscr {S}\) is a sparse collection of cubes. Indeed, for \(Q\in \mathcal {Q}_k\) it follows from (2.11) that for any \(Q'\in \mathcal {Q}_{k+1}\) we have

Thus, by maximality of \(\mathcal {Q}_k\) and Hölder’s inequality with \(\sum _{j=1}^m\frac{r}{r_j}=1\), we have

Thus, defining \(E_Q:=Q\backslash \Omega _{k+1}\), we have \(|Q|\le 2|E_Q|\).

To conclude that \(\mathscr {S}\) is sparse, it remains to check that \((E_Q)_{Q\in \mathscr {S}}\) is pairwise disjoint. Let \(Q,Q'\in \mathscr {S}\) be such that \(E_Q\cap E_{Q'}\ne \emptyset \). If \(Q\in \mathcal {Q}_k\) and \(Q'\in \mathcal {Q}_{k'}\), we have \(E_Q\subseteq \Omega _k\backslash \Omega _{k+1}\) and \(E_{Q'}\subseteq \Omega _{k'}\backslash \Omega _{k'+1}\). Since \((\Omega _k\backslash \Omega _{k+1})_{k\in \mathbf {Z}}\) is pairwise disjoint, this means that we must have \(k=k'\). Since \(Q\cap Q'\ne \emptyset \), it follows from maximality of \(\mathcal {Q}_k\) that \(Q=Q'\), as desired.

Finally, if \(x\in \mathbf {R}^n\) and \(M^{\mathscr {D}}_{\vec {r}}(f_1,\ldots ,f_m)(x)\ne 0\), then there is a unique \(k\in \mathbf {Z}\) such that \(2^{\frac{n+1}{r}k}<M^{\mathscr {D}}_{\vec {r}}(f_1,\ldots ,f_m)(x)\le 2^{\frac{n+1}{r}(k+1)}\). Hence, \(x\in \Omega _k\backslash \Omega _{k+1}\) and thus there is a cube \(Q\in \mathcal {Q}_k\) so that \(x\in Q\backslash \Omega _{k+1}=E_Q\) and

This proves the assertion. \(\square \)
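The construction in this proof can be tried out numerically. The following is a minimal sketch in dimension \(n=1\), assuming that the \(f_j\) are constant on the \(2^{\texttt{depth}}\) cells of \([0,1)\) and vanish elsewhere, that \(\mathscr {D}\) is the standard dyadic grid, and that the pointwise bound has the form \(M^{\mathscr {D}}_{\vec {r}}(f_1,\ldots ,f_m)\le 2^{\frac{n+1}{r}}\sum _{Q\in \mathscr {S}}\prod _{j}\langle f_j\rangle _{r_j,Q}\chi _{E_Q}\), which is what the last step of the proof suggests. The names `dyadic_averages` and `sparse_domination` are hypothetical helpers introduced only for illustration.

```python
import math
import random

def dyadic_averages(f, r, depth):
    """Averages of |f|^r over dyadic intervals; level l has intervals of length 2^-l.
    f is constant on the 2^depth cells of [0,1) and vanishes elsewhere.
    Level -1 holds the single ancestor [0,2)."""
    level = [abs(v) ** r for v in f]
    table = {depth: level}
    for l in range(depth - 1, -1, -1):
        level = [(level[2 * i] + level[2 * i + 1]) / 2 for i in range(2 ** l)]
        table[l] = level
    table[-1] = [level[0] / 2]
    return table

def sparse_domination(fs, rs, depth):
    """Stopping-time construction with thresholds 2^{(n+1)k/r}, n = 1.
    Assumes no f_j vanishes identically. Returns the dyadic maximal function,
    the sparse bound, the number of sets E_Q covering each cell, and a
    flag recording whether |Q| <= 2|E_Q| held for every selected cube."""
    inv_r = sum(1 / r for r in rs)                      # 1/r = sum_j 1/r_j
    tables = [dyadic_averages(f, r, depth) for f, r in zip(fs, rs)]

    def P(l, i):                                        # prod_j <f_j>_{r_j,Q} for Q = (l, i)
        return math.prod(t[l][i] ** (1 / r) for t, r in zip(tables, rs))

    def thr(k):
        return 2.0 ** (2 * k * inv_r)

    def maximal(l, i, t):                               # maximal cubes with P > t inside (l, i)
        if P(l, i) > t:
            return [(l, i)]
        if l == depth:
            return []
        kids = [(0, 0)] if l == -1 else [(l + 1, 2 * i), (l + 1, 2 * i + 1)]
        return [q for c in kids for q in maximal(c[0], c[1], t)]

    cells = 2 ** depth
    M = [max(P(l, c >> (depth - l)) for l in range(depth + 1)) for c in range(cells)]
    top = max(M)
    k = math.floor(math.log2(P(0, 0)) / (2 * inv_r))
    while thr(k) >= P(0, 0):                            # first level with thr(k) < P([0,1)) <= thr(k+1)
        k -= 1
    while thr(k + 1) < P(0, 0):
        k += 1
    bound, hits, sparse = [0.0] * cells, [0] * cells, True
    while thr(k) < top:
        for (l, i) in maximal(-1, 0, thr(k)):
            size = 2 ** (depth - l)                     # |Q| measured in cells
            first = 0 if l == -1 else i * size
            E = [c for c in range(first, min(first + size, cells)) if M[c] <= thr(k + 1)]
            measure_E = len(E) + (cells if l == -1 else 0)  # [1,2) lies outside Omega_{k+1}
            sparse = sparse and size <= 2 * measure_E
            for c in E:
                bound[c] += 2.0 ** (2 * inv_r) * P(l, i)
                hits[c] += 1
        k += 1
    return M, bound, hits, sparse

random.seed(0)
depth = 6
fs = [[random.random() ** 4 for _ in range(2 ** depth)] for _ in range(2)]
M, bound, hits, sparse = sparse_domination(fs, [1.0, 2.0], depth)
```

In this sketch each cell of \([0,1)\) should lie in exactly one set \(E_Q\), mirroring the disjointness argument above, and the single ancestor \([0,2)\) is included only because it is the one cube outside \([0,1)\) that can be maximal for the levels considered.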

The following result is a reformulation of the definition of the weight class.

Lemma 2.10

Let \(r_1,\ldots ,r_{m+1}\in (0,\infty )\), \(p_1,\ldots ,p_{m+1}\in (0,\infty ]\) satisfy \(\frac{1}{p_j}<\frac{1}{r_j}\) for all \(j\in \{1,\ldots ,m+1\}\) and \(\sum _{j=1}^{m+1}\frac{1}{p_j}=1\). Moreover, let \(w_1,\ldots , w_{m+1}\) be weights satisfying \(\prod _{j=1}^{m+1}w_j=1\) and define \(v_j:=w_j^{-\frac{1}{\frac{1}{r_j}-\frac{1}{p_j}}}\). Then \(\pmb {w}\in A_{\pmb {p},(\pmb {r},\infty )}\) if and only if \(v_1,\ldots ,v_{m+1}\) are locally integrable and there is a constant \(c>0\) such that for all cubes Q we have

In this case, the optimal constant c in this inequality is given by \([\pmb {w}]_{\pmb {p},(\pmb {r},\infty )}\).

The following lemma allows us to deal with weighted estimates involving sparse forms.

Lemma 2.11

Let \(r_1,\ldots ,r_{m+1}\in (0,\infty )\), \(p_1,\ldots ,p_{m+1}\in (0,\infty ]\) satisfy \(\frac{1}{p_j}<\frac{1}{r_j}\) for all \(j\in \{1,\ldots ,m+1\}\) and \(\sum _{j=1}^{m+1}\frac{1}{p_j}=1\). Moreover, let \(w_1,\ldots , w_{m+1}\) be weights satisfying \(\prod _{j=1}^{m+1}w_j=1\) with \(\pmb {w}\in A_{\pmb {p},(\pmb {r},\infty )}\) and define \(v_j:=w_j^{-\frac{1}{\frac{1}{r_j}-\frac{1}{p_j}}}\). Let Q be a cube and let \(E\subseteq Q\) such that \(|Q|\le 2|E|\). Then

Remark 2.12

Having Lemma 2.10 in mind, it seems that the larger power of the weight constant in (2.12) comes from the fact that we are passing from the weighted measure of the set Q to the measure of the smaller set E. In fact, it seems that we use the full weight condition \(\pmb {w}\in A_{\pmb {p},(\pmb {r},\infty )}\) only once and are left with an estimate of the form

where the implicit constant depends on the weights. This estimate seems to only require the weaker Fujii-Wilson \(A_\infty \) condition satisfied by the weight \(v_j\), but we do not pursue this further here. We refer the reader to [22] where quantitative estimates involving this condition first appeared. We also point out that estimates of this type for the limited range sparse operator in the case \(m=1\) have been studied in [13, 31]. This condition has also been considered in the multilinear case in [10].

Proof

We set \(\gamma :=\max _{j=1,\ldots ,m+1}\left\{ \frac{\frac{1}{r_j}}{\frac{1}{r_j}-\frac{1}{p_j}}\right\} \) and

so that \(\beta _j\le 0\) for all \(j\in \{1,\ldots ,m+1\}\). Thus, since \(\langle v_j\rangle _{1,E}\le 2\langle v_j\rangle _{1,Q}\) by the assumptions on E, we have \(\langle v_j\rangle _{1,Q}^{\beta _j}\le 2^{-\beta _j}\langle v_j\rangle _{1,E}^{\beta _j}\). Then

Next, set \(\alpha :=\sum _{j=1}^{m+1}\left( \frac{1}{r_j}-\frac{1}{p_j}\right) >0\) and \(k_j:=\alpha \left( \frac{1}{r_j}-\frac{1}{p_j}\right) ^{-1}\). Then

and

so that

Thus, since \(\prod _{j=1}^{m+1}v_j^{\frac{1}{r_j}-\frac{1}{p_j}}=\prod _{j=1}^{m+1}w_j=1\), it follows from Hölder’s inequality that

By combining this estimate with (2.13), we obtain (2.12). The assertion follows. \(\square \)

Proof of Proposition 2.7

We set \(v_j:=w_j^{-\frac{1}{\frac{1}{r_j}-\frac{1}{p_j}}}\) for \(j\in \{1,\ldots ,m+1\}\).

The strategy for the proof will be as follows: We will prove the equivalence of (i) and (ii) by proving (2.6) and we will prove the equivalence of (iii) and (iv) by proving (2.7). Then, noting that the implication (iii)\(\Rightarrow \)(ii) is clear, we conclude the proof by showing that (i)\(\Rightarrow \)(iv) through (2.8).

For (2.6), for the first inequality we note that it follows from Lemma 2.5 that it suffices to consider the estimate for \(M^{\mathscr {D}}_{\pmb {r}}\) for a dyadic grid \(\mathscr {D}\). First consider a finite collection \(\mathscr {F}\subseteq \mathscr {D}\). Let \(\lambda >0\), \(f_j\in L^{p_j}(w_j^{p_j})\) and, defining \(M^{\mathscr {F}}_{\pmb {r}}\) as \(M^{\mathscr {D}}_{\pmb {r}}\) but with the supremum taken over all \(Q\in \mathscr {F}\), we set

and similarly for \(\Omega ^{\mathscr {D}}_\lambda \).

Let \(\mathscr {P}\) denote the collection of those cubes \(Q\in \mathscr {F}\) such that \(\prod _{j=1}^{m+1}\langle f_j\rangle _{r_j,Q}>\lambda \) that have no dyadic ancestors in \(\mathscr {F}\). Using the rule

where \(\langle h \rangle ^u_{r,Q}:=\left( \frac{1}{u(Q)}\int _Q\!|h|^r u\,\mathrm {d}x\right) ^{\frac{1}{r}}\), it follows from Lemma 2.10 and the fact that \(\mathscr {P}\) gives a decomposition of \(\Omega ^{\mathscr {F}}_\lambda \), that

where in the fourth step we used Hölder’s inequality with \(r_j\le p_j\) and in the last step we used Hölder’s inequality on the sum.

By considering an exhaustion of \(\mathscr {D}\) of finite sets it follows from monotonicity of the measure and by taking a supremum over \(\lambda >0\) that

For the converse inequality, fix a cube Q. Assuming for the moment that the \(v_j\) are locally integrable, we let \(0<\lambda <\prod _{j=1}^{m+1}\langle v_j\rangle ^{\frac{1}{r_j}}_{1,Q}\). Setting \(f_j:=v_j^{\frac{1}{r_j}}\chi _Q\), we obtain

for all \(x\in Q\) so that \(Q\subseteq \{M_{\pmb {r}}(f_1,\ldots ,f_{m+1})>\lambda \}\). Thus,

Taking a supremum over such \(\lambda \), we conclude that

Thus, it follows from Lemma 2.10 that

proving (2.6). To prove our initial assumption that the \(v_j\) are locally integrable, we repeat the above argument with the weights replaced by \((v_j^{-1}+\varepsilon )^{-1}\) for \(\varepsilon >0\). As these weights are bounded, they are locally integrable. An appeal to the Monotone Convergence Theorem as \(\varepsilon \downarrow 0\) after a rearrangement of (2.14) yields the desired conclusion.

For (2.7), let \(f_j\in L^{p_j}(w_j^{p_j})\) and let \(\mathscr {D}\) be a dyadic grid. By Lemma 2.9 there exists a sparse collection \(\mathscr {S}\subseteq \mathscr {D}\) such that

Thus, it follows from Lemma 2.5 that

For the converse inequality, we estimate

As this estimate is uniform in \(\mathscr {S}\), this proves (2.7) and thus the equivalence of (iii) and (iv).

To prove (2.8) and thus the implication (i)\(\Rightarrow \)(iv), we note that it follows from Lemma 2.11 that for a sparse collection \(\mathscr {S}\) in a dyadic grid \(\mathscr {D}\) and for \(\gamma =\max _{j=1,\ldots ,m+1}\left\{ \frac{\frac{1}{r_j}}{\frac{1}{r_j}-\frac{1}{p_j}}\right\} \) we have

where in the last step we used the fact that the weighted dyadic maximal operator \(M^{u,\mathscr {D}}_rh:=\sup _{Q\in \mathscr {D}}\langle h\rangle _{r,Q}^u\chi _Q\) is bounded on \(L^q(u)\) for \(q>r\) with constant bounded by \(\left[ \frac{\frac{1}{r}}{\frac{1}{r}-\frac{1}{q}}\right] ^{\frac{1}{r}}\), uniformly in the weight u. As this estimate is uniform in the sparse collection \(\mathscr {S}\), this proves (2.8). The assertion follows. \(\square \)

2.3 Quantitative properties of multilinear weight classes: the m-tuple case

It is sometimes convenient to emphasize this separation of the parameter s from the \(r_j\), as it often plays a different role from the other parameters in the proofs. The following lemma provides a way to deal with this parameter.

Lemma 2.13

(Translation lemma) Let \(r_1,\ldots ,r_m\in (0,\infty )\), \(s\in (0,\infty ]\) and \(p_1,\ldots ,p_m\in (0,\infty ]\) with \((\vec {r},s)\le \vec {p}\) and let \(w_1,\ldots ,w_m\) be weights with \(w=\prod _{j=1}^mw_j\). Then \(\vec {w}\in A_{\vec {p},(\vec {r},s)}\) if and only if there are \(\frac{1}{s_1},\ldots ,\frac{1}{s_m}\) satisfying \(\frac{1}{s_j}\le \frac{1}{p_j}\), \(\sum _{j=1}^m\frac{1}{s_j}=\frac{1}{s}\), and \(\vec {w}\in A_{\vec {p}(s),(\vec {r}(s),\infty )}\), where

Moreover, in this case we have

Proof

We have

it remains to note that

Taking a supremum over all cubes Q yields (2.15), proving the assertion. \(\square \)

We point out that the choice of the \(\frac{1}{s_j}\) in the lemma is not necessarily unique if \(m\ne 1\). One could, for example, take \(\frac{1}{s_j}=\frac{p}{p_j}\frac{1}{s}\), but a different choice will be made later in the proof of the main result. We also note that this lemma can be used even if \(\frac{1}{s}=0\). In this case it can occur that some of the \(\frac{1}{s_j}\) are negative, but this does not seem to cause any problems.
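As a quick check of the example choice \(\frac{1}{s_j}=\frac{p}{p_j}\frac{1}{s}\), one can verify the requirements of the lemma directly. The sketch below assumes that \(\frac{1}{p}=\sum _{j=1}^m\frac{1}{p_j}>0\) and that the shifted exponents are given by \(\frac{1}{p_j(s)}:=\frac{1}{p_j}-\frac{1}{s_j}\) and \(\frac{1}{r_j(s)}:=\frac{1}{r_j}-\frac{1}{s_j}\); the latter is an assumption on our part, chosen because it is consistent with the identity \(\frac{1}{p(s)}=\frac{1}{p}-\frac{1}{s}\) used in Sect. 3.

$$\begin{aligned} \sum _{j=1}^m\frac{1}{s_j}&=\frac{p}{s}\sum _{j=1}^m\frac{1}{p_j}=\frac{p}{s}\cdot \frac{1}{p}=\frac{1}{s},\qquad \frac{1}{s_j}=\frac{p}{s}\cdot \frac{1}{p_j}\le \frac{1}{p_j}\quad \text {whenever } \frac{1}{s}\le \frac{1}{p},\\ \frac{1}{r_j(s)}-\frac{1}{p_j(s)}&=\left( \frac{1}{r_j}-\frac{1}{s_j}\right) -\left( \frac{1}{p_j}-\frac{1}{s_j}\right) =\frac{1}{r_j}-\frac{1}{p_j}. \end{aligned}$$

Under these assumptions the gaps \(\frac{1}{r_j}-\frac{1}{p_j}\) are unchanged by the translation, which suggests why the parameter s can be moved into the exponents without affecting the powers of the weights.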

Having reduced to the case where \(s=\infty \), the following proposition is the main result for this subsection.

Proposition 2.14

Let \(r_1,\ldots ,r_m\in (0,\infty )\), \(p_1,\ldots ,p_m\in (0,\infty ]\) with \((\vec {r},\infty )\le \vec {p}\) and let \(w_1,\ldots ,w_m\) be weights with \(w=\prod _{j=1}^mw_j\). Then the following are equivalent:

(i) \(\vec {w}\in A_{\vec {p},(\vec {r},\infty )}\);

(ii) \(\Vert M_{\vec {r}}\Vert _{L^{p_1}(w_1^{p_1})\times \cdots \times L^{p_m}(w_m^{p_m})\rightarrow L^{p,\infty }(w^p)}<\infty \).

In this case we have

Moreover, if \(\vec {r}<\vec {p}\), then (i) and (ii) are equivalent to

(iii) \(\Vert M_{\vec {r}}\Vert _{L^{p_1}(w_1^{p_1})\times \cdots \times L^{p_m}(w_m^{p_m})\rightarrow L^p(w^p)}<\infty \)

and we have

where the implicit constant depends on the dimension and

Moreover, the power of the weight constant in (2.17) is the smallest possible one.

Remark 2.15

The equivalence (2.16) is also contained in the full range version in [30, Theorem 3.3] in the case where the \(p_j\) are finite, and the limited range version is proven in [3, Proposition 21], but there the cases \(p_j=\infty \) are only treated when \(w_j=1\).

For our result here we use the interpretation that for \(q=\infty \) and a weight u we have \(\Vert h\Vert _{L^q(u^q)}=\Vert h\Vert _{L^{q,\infty }(u^q)}=\Vert hu\Vert _{L^\infty }\).

Remark 2.16

The estimate (2.17) is a generalization of Buckley’s sharp weighted bound for the Hardy–Littlewood maximal operator. It can be proven using the sparse domination we obtained in Lemma 2.9, but we present an altogether different proof which generalizes an approach due to Lerner [28]. This construction is important, as it turns out to be key for our multilinear Rubio de Francia algorithm.

In the case \(r_1=\cdots =r_m=1\), the sharp bound (2.17) recovers the sharp bound obtained by Li, Moen, and Sun in [34], where sparse domination techniques were used. To see this, note that, given weights \(w_1,\ldots ,w_m\) and setting \(v_{\vec {w}}:=\prod _{j=1}^m w_j^{\frac{p}{p_j}}\), the multilinear weight constant they used is defined as

Writing \(\vec {1}=(1,\ldots ,1)\), the sharp result they prove is

for all \(p_1,\ldots ,p_m\in (1,\infty )\) and \(\vec {w}\in A_{\vec {p}}\). To compare this to our result, we replace the \(w_j\) by \(w_j^{p_j}\) and note that \(v_{\vec {w}}=\prod _{j=1}^m(w_j^{p_j})^{\frac{p}{p_j}}=w^p\), \([(w_1^{p_1},\ldots ,w_m^{p_m})]_{A_{\vec {p}}}=[\vec {w}]^p_{\vec {p},(\vec {1},\infty )}.\) Thus, (2.19) coincides with our bound found in (2.17) when \(\vec {r}=\vec {1}\).
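To make the exponents concrete, the following minimal sketch evaluates the power \(\max _{j}\left\{ \frac{1/r_j}{1/r_j-1/p_j}\right\} \) of the weight constant and a constant of the form \(\prod _{j}\left[ \frac{1/r_j}{1/r_j-1/p_j}\right] ^{1/r_j}\). The function names are hypothetical, the inputs are the reciprocals \(\frac{1}{r_j}\), \(\frac{1}{p_j}\) (so that \(p_j=\infty \) is simply the value 0), and the formula for the constant is the one displayed in Lemma 3.2; that it is the same constant as in (2.17) is an assumption here.

```python
from fractions import Fraction
import math

def weight_exponent(inv_r, inv_p):
    """max_j (1/r_j) / (1/r_j - 1/p_j); requires 1/p_j < 1/r_j for every j."""
    return max(Fraction(a) / (Fraction(a) - Fraction(b)) for a, b in zip(inv_r, inv_p))

def maximal_constant(inv_r, inv_p):
    """prod_j [(1/r_j) / (1/r_j - 1/p_j)]^(1/r_j), returned as a float."""
    return math.prod(
        float(Fraction(a) / (Fraction(a) - Fraction(b))) ** float(a)
        for a, b in zip(inv_r, inv_p)
    )

# Full-range case r = (1, 1) with p = (2, 4): the exponent is max(p_1', p_2') = max(2, 4/3).
gamma = weight_exponent([1, 1], [Fraction(1, 2), Fraction(1, 4)])
const = maximal_constant([1, 1], [Fraction(1, 2), Fraction(1, 4)])
# An infinite exponent p_1 = infinity contributes the factor 1.
gamma_inf = weight_exponent([1, 1], [0, Fraction(1, 2)])
```

When \(\vec {r}=\vec {1}\) each quotient \(\frac{1}{1-1/p_j}\) is the Hölder conjugate \(p_j'\), so in the full-range case the exponent is simply \(\max _j p_j'\).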

Lemma 2.17

Let \(r_1,\ldots ,r_m\in (0,\infty )\), \(p_1,\ldots ,p_m\in (0,\infty ]\) with \(\vec {r}<\vec {p}\) and let \(w_1,\ldots ,w_m\) be weights with \(w=\prod _{j=1}^mw_j\) and \(\vec {w}\in A_{\vec {p},(\vec {r},\infty )}\). Then there exist sublinear operators \(N_{p_j,r_j,\vec {w}}:L^{p_j}(w_j^{p_j})\rightarrow L^{p_j}(w_j^{p_j})\) so that for any \(f_j\in L^{p_j}(w_j^{p_j})\) we have

Moreover, \(N_{p_j,r_j,\vec {w}}\) satisfies

Proof

We first prove this result for the dyadic maximal operator \(M_{\vec {r}}^{\mathscr {D}}\) for a dyadic grid \(\mathscr {D}\) to obtain the appropriate operators \(N^{\mathscr {D}}_{p_j,r_j,\vec {w}}\). Then it follows from Lemma 2.5 that

The result then follows by setting

where c is an appropriate constant determined by the implicit constant in (2.21).

Now, fix a dyadic grid \(\mathscr {D}\). Let \(\gamma :=\max _{j=1,\ldots ,m}\left\{ \frac{\frac{1}{r_j}}{\frac{1}{r_j}-\frac{1}{p_j}}\right\} \), let \(Q\in \mathscr {D}\), and set \(v_j:=w_j^{-\frac{1}{\frac{1}{r_j}-\frac{1}{p_j}}}\). Since \(\prod _{j=1}^m w_j^{-1}w^{\frac{\frac{1}{p_j}}{\frac{1}{p}}}=\left( \prod _{j=1}^m w_j^{-1}\right) w=1\), it follows from Hölder’s inequality that

This implies that

Thus, for \(f_j\in L^{p_j}(w_j^{p_j})\) and any \(x\in Q\), we have

Setting

and by taking a supremum over all Q containing x in (2.22) we have proven (2.20) in the dyadic case. We remark here that in the case that \(\frac{1}{p_j}=0\), we use the interpretation

Noting that

for the case \(\frac{1}{p_j}>0\), we compute

and for the case \(\frac{1}{p_j}=0\), we compute

The assertion follows. \(\square \)

Proof of Proposition 2.14

We will prove the equivalence of (i) and (ii) by proving (2.16).

For \(``\lesssim ''\), we note that it follows from Lemma 2.5 that it suffices to prove the estimate for \(M_{\vec {r}}^{\mathscr {D}}\) for a fixed dyadic grid \(\mathscr {D}\). Note that by Hölder’s inequality we have \(\langle f_j\rangle _{r_j,Q}\le \langle f_jw_j\rangle _{p_j,Q}\langle w_j^{-1}\rangle _{\frac{1}{\frac{1}{r_j}-\frac{1}{p_j}},Q}\) for a cube Q, so that

Thus, by Hölder’s inequality for weak type Lebesgue spaces, we have

where we used the fact that the weighted dyadic maximal operator \(M^{u,\mathscr {D}}_q\) is bounded \(L^q(u)\rightarrow L^{q,\infty }(u)\) with constant uniform in q and the weight u. Thus, we have shown that

For the converse inequality, fix a cube Q and let \(f_j\in L^{p_j}(w_j^{p_j})\). Letting \(0<\lambda <\prod _{j=1}^m\langle f_j\rangle _{r_j,Q}\), we have

for all \(x\in Q\) so that \(Q\subseteq \{M_{\vec {r}}(f_1,\ldots ,f_m)>\lambda \}\). Hence,

Taking a supremum over such \(\lambda \) and by replacing \(f_j\) with \(\chi _Q f_j\), we conclude that

Now set \(f_j=w_j^{-\frac{\frac{1}{r_j}}{\frac{1}{r_j}-\frac{1}{p_j}}}\) and assume for the moment that \(f_j^{r_j}=f_j^{p_j}w_j^{p_j}=w_j^{-\frac{1}{\frac{1}{r_j}-\frac{1}{p_j}}}\) is locally integrable. Then the product on the right-hand side of (2.23) is positive and finite so that we may take it to the left-hand side. This yields

and taking a supremum over all cubes Q yields (2.16). To prove that \(w_j^{-\frac{1}{\frac{1}{r_j}-\frac{1}{p_j}}}\) is indeed locally integrable, we choose \(f_j\) such that \(f_j^{p_j}w_j^{p_j}=(w_j^{\frac{1}{\frac{1}{r_j}-\frac{1}{p_j}}}+\varepsilon )^{-1}\) for \(\varepsilon >0\), the latter expression being bounded and thus locally integrable. Again taking the product on the right-hand side of (2.23) to the left, an appeal to the Monotone Convergence Theorem as \(\varepsilon \downarrow 0\) yields (2.24). The assertion follows.

Since the implication (iii)\(\Rightarrow \)(ii) is clear, we may finish the proof of the equivalences by showing (i)\(\Rightarrow \)(iii) through (2.17).

By Lemma 2.17, it follows from Hölder’s inequality that

as desired.

Finally, we prove optimality of (2.17). Let \(\alpha \ge 0\) denote the smallest possible constant in the estimate

We have shown that \(\alpha \le \max _{j=1,\ldots ,m}\left\{ \frac{\frac{1}{r_j}}{\frac{1}{r_j}-\frac{1}{p_j}}\right\} \) and it remains to prove the lower bound. We assume that we are in dimension \(n=1\), the general case following mutatis mutandis. Moreover, we assume without loss of generality that the maximum \(\max _{j=1,\ldots ,m}\left\{ \frac{\frac{1}{r_j}}{\frac{1}{r_j}-\frac{1}{p_j}}\right\} \) is attained for \(j=1\), the other cases following similarly by permuting the indices. For \(0<\varepsilon <1\) we define

Then, by Hölder’s inequality and a computation, we have

Moreover, one computes

and

Setting \(f(x):=\prod _{j=1}^m f_j(x)w_j(x)=|x|^{-\frac{1-\varepsilon }{p}}\chi _{(0,1)}(x)\), we find that

and

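Letting \(\varepsilon \downarrow 0\) shows that we must have \(\alpha \left( \frac{1}{p_1}-\frac{1}{r_1}\right) -\frac{1}{p}-(-\frac{1}{r_1}-\frac{1}{p})\le 0\), i.e.,

$$\begin{aligned} \alpha \ge \frac{\frac{1}{r_1}}{\frac{1}{r_1}-\frac{1}{p_1}}=\max _{j=1,\ldots ,m}\left\{ \frac{\frac{1}{r_j}}{\frac{1}{r_j}-\frac{1}{p_j}}\right\} . \end{aligned}$$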

Thus, we have \(\alpha =\max _{j=1,\ldots ,m}\left\{ \frac{\frac{1}{r_j}}{\frac{1}{r_j}-\frac{1}{p_j}}\right\} \) and the assertion follows. \(\square \)

Remark 2.18

We point out that in the unweighted case we actually have an equivalence \(\Vert M_{\vec {r}}\Vert _{L^{p_1}\times \cdots \times L^{p_m}\rightarrow L^p}\simeq c_{\vec {p},\vec {r}}\), which follows from a calculation similar to the one above, with \(f_j(x):=|x|^{-\frac{1-\varepsilon }{p_j}}\chi _{(0,1)}(x)\) for all \(j\in \{1,\ldots ,m\}\).

3 Proof of the main result

The proof of the main theorem essentially follows from the theorem below. In this theorem we deal with \(m+1\)-tuples as well as m-tuples of the same parameters, which can be notationally confusing. To circumvent this problem, we shall use the earlier established convention that for \(m+1\) parameters \(\alpha _1,\ldots ,\alpha _{m+1}\) we shall use the boldface notation \(\pmb {\alpha }=(\alpha _1,\ldots ,\alpha _{m+1})\) for \(m+1\)-tuples while we will use the arrow notation \(\vec {\alpha }=(\alpha _1,\ldots ,\alpha _m)\) for m-tuples, see also Sect. 2.2.

We again point out that even though this result is formulated for the Banach range \(\frac{1}{p}\le 1\), it can be used to obtain results in the range including the cases \(\frac{1}{p}>1\), see also Remark 2.3 and the proof of Theorem 2.2.

Theorem 3.1

Let \(\frac{1}{r_1},\ldots ,\frac{1}{r_{m+1}}\in (0,1]\) and suppose we are given \(\frac{1}{p_1},\ldots ,\frac{1}{p_{m+1}}\in [0,1]\) satisfying \(\frac{1}{p_j}<\frac{1}{r_j}\) for all \(j\in \{1,\ldots ,m+1\}\) and \(\sum _{j=1}^{m+1}\frac{1}{p_j}=1\). Assume moreover that we are given weights \(w_1,\ldots w_{m+1}\) satisfying \(\prod _{j=1}^{m+1}w_j=1\) and \(\pmb {w} \in A_{\pmb {p},(\pmb {r},\infty )}\).

Suppose we are given functions \(f_j\in L^{p_j}(w_j^{p_j})\) and \(\frac{1}{q_1},\ldots ,\frac{1}{q_{m+1}}\in [0,1]\) satisfying \(\frac{1}{q_j}\le \frac{1}{r_j}\) and \(\sum _{j=1}^{m+1}\frac{1}{q_j}=1\). Then there are weights \(W_1,\ldots , W_{m+1}\) satisfying \(\prod _{j=1}^{m+1}W_j=1\) and \(\pmb {W}\in A_{\pmb {q},(\pmb {r},\infty )}\) such that

and

The proof of this theorem relies on a multilinear generalization of the Rubio de Francia algorithm.

Lemma 3.2

(Multilinear Rubio de Francia algorithm) Let \(r_1,\ldots ,r_m,p_1,\ldots ,p_m\in (0,\infty )\) with \(\vec {r}<\vec {p}\). Then for each \(\vec {w}\in A_{\vec {p},(\vec {r},\infty )}\) there exist operators \(R_{p_j,r_j,\vec {w}}:L^{p_j}\left( w_j^{p_j}\right) \rightarrow L^{p_j}\left( w_j^{p_j}\right) \) satisfying

(i) \(|f_j|\le R_{p_j,r_j,\vec {w}}f_j\);

(ii) \(\Vert R_{p_j,r_j,\vec {w}}f_j\Vert _{L^{p_j}(w_j^{p_j})}\le 2\Vert f_j\Vert _{L^{p_j}(w_j^{p_j})}\);

(iii) \(\displaystyle \prod _{j=1}^m\langle R_{p_j,r_j,\vec {w}}f_j\rangle _{r_j,Q}\lesssim c_{\vec {p},\vec {r}}[\vec {w}]^{\max _{j=1,\ldots ,m}\left\{ \frac{\frac{1}{r_j}}{\frac{1}{r_j}-\frac{1}{p_j}}\right\} }_{\vec {p},(\vec {r},\infty )} \inf _{y\in Q}\prod _{j=1}^mR_{p_j,r_j,\vec {w}}f_j(y)\) for all cubes Q, where the implicit constant depends on the dimension and

$$\begin{aligned} c_{\vec {p},\vec {r}}=\prod _{j=1}^m\left[ \frac{\frac{1}{r_j}}{\frac{1}{r_j}-\frac{1}{p_j}}\right] ^{\frac{1}{r_j}}. \end{aligned}$$

Proof

Letting \(N_{p_j,r_j,\vec {w}}\) be as in Lemma 2.17, we define

where \(N^0_{p_j,r_j,\vec {w}}(f_j):=|f_j|\) and \(N^{k}_{p_j,r_j,\vec {w}}(f_j):=N_{p_j,r_j,\vec {w}}(N^{k-1}_{p_j,r_j,\vec {w}}(f_j))\).

To prove property (i), it suffices to note that the \(k=0\) term in the sum is equal to \(|f_j|\).

For (ii) we have

To prove (iii), we first note that

Thus, it follows from Lemma 2.17 that

as desired. The assertion follows. \(\square \)
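The mechanism behind properties (i) and (ii) can be illustrated on a toy model. The sketch below assumes the classical form of the Rubio de Francia series, \(Rf=\sum _{k\ge 0}\frac{N^kf}{(2B)^k}\), truncated after finitely many terms, where B is any upper bound for the operator norm of N. The operator `local_max` (a three-point maximal operator on finite sequences, bounded on \(\ell ^1\) by 3) is a hypothetical stand-in for the operators \(N_{p_j,r_j,\vec {w}}\) of Lemma 2.17 and is not taken from the text.

```python
def local_max(f):
    """Toy sublinear operator: maximum of |f| over each index and its neighbours."""
    n = len(f)
    return [max(abs(f[j]) for j in range(max(0, i - 1), min(n, i + 2))) for i in range(n)]

def rubio_de_francia(N, bound, f, terms):
    """Partial sum of sum_k N^k f / (2 * bound)^k, where N^0 f = |f|."""
    iterate = [abs(v) for v in f]
    total = [0.0] * len(f)
    for k in range(terms):
        total = [t + v / (2 * bound) ** k for t, v in zip(total, iterate)]
        iterate = N(iterate)
    return total

f = [0.0, 3.0, -1.0, 0.0, 0.0, 2.0, 0.0, 0.0]
B = 3.0                                   # l^1 bound for local_max (an upper bound, not the exact norm)
K = 30
R = rubio_de_francia(local_max, B, f, K)
R_next = rubio_de_francia(local_max, B, f, K + 1)
norm_f = sum(abs(v) for v in f)
```

In this toy setting `R` dominates \(|f|\) pointwise, its \(\ell ^1\) norm is at most \(2\Vert f\Vert _{\ell ^1}\), and sublinearity gives `local_max(R)` \(\le 2B\,\)`R_next` pointwise, which is the one-function analogue of the self-improving estimate used for property (iii).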

Proof of Theorem 3.1

The proof will consist of two steps. In the first step we prove the result for very specific \(\pmb {q}\). In the second step we iterate the first step to obtain the desired result.

Step 1. In this step we assume that there is some \(j_0\in \{1,\ldots ,m+1\}\) such that

Since none of the statements in the formulation of the theorem depend on the order of the indices, we may assume without loss of generality that \(j_0=m+1\). More precisely, we can let \(\pi \in S_{m+1}\) be the transposition given by \(\pi (j)=j\) for \(j\ne j_0,m+1\) and \(\pi (j_0)=m+1\), \(\pi (m+1)=j_0\). Replacing the index j by \(\pi (j)\) everywhere then indeed allows us to reduce to the case \(j_0=m+1\).

We define \(\frac{1}{s}:=1-\frac{1}{r_{m+1}}\ge 0\), \(\frac{1}{p}:=1-\frac{1}{p_{m+1}}>0\), \(\frac{1}{q}:=1-\frac{1}{q_{m+1}}\ge 0\), and \(w:=w_{m+1}^{-1}\) so that \(w=\prod _{j=1}^m w_j\). For an \(m+1\)-tuple \((\alpha _1,\ldots ,\alpha _{m+1})\) we will use the notation \(\vec {\alpha }=(\alpha _1,\ldots ,\alpha _m)\) so that the arrow notation will always refer to an m-tuple. Thus, we have now reduced the problem to proving that there exist m weights \(\vec {W}\in A_{\vec {q},(\vec {r},s)}\) such that \(f_j\in L^{q_j}(W_j^{q_j})\), \(f_{m+1}\in L^{q'}(W^{-q'})\), where \(W:=\prod _{j=1}^m W_j\), with

and

Indeed, the result then follows by setting \(W_{m+1}:=W^{-1}\) and by noting that

The construction of the m weights \(W_1,\ldots , W_m\) relies on the multilinear Rubio de Francia algorithm as well as on a careful use of the translation lemma to deal with the parameter s. Setting

we have

with equality if and only if \(\frac{1}{q_j}=\frac{1}{p_j}\) and so that \(\frac{1}{s_j}\le \frac{1}{q_j}\le \frac{1}{p_j}\), and

We set

and \(\frac{1}{p(s)}:=\sum _{j=1}^m\frac{1}{p_j(s)}=\frac{1}{p}-\frac{1}{s}\), \(\vec {p}(s):=(p_1(s),\ldots ,p_m(s))\), and similarly for \(\frac{1}{q(s)}\), \(\vec {q}(s)\), and \(\vec {r}(s)\).

We emphasize here that \(\frac{1}{p_j(s)}=0\) if and only if \(\frac{1}{p_j}=\frac{1}{q_j}\) and we encourage the reader to verify that the remaining steps in this proof remain valid in this particular case.

We may compute

We set \(g_j:=|f_j|^{\frac{\frac{1}{p_j(s)}}{\frac{1}{p_j}}}w_j^{-\frac{\frac{1}{s_j}}{\frac{1}{p_j}}}\) so that

and, using the notation from Lemma 3.2, we set

Unwinding the definitions, it follows from (3.5) and property (i) of our multilinear Rubio de Francia algorithm that

Next, it follows from (3.5), Hölder’s inequality, and property (ii) that

By combining this estimate with (3.6), we have proven (3.3).

Finally, we prove (3.4). Noting that

it follows from Hölder’s inequality and (iii) that for a cube Q we have

Moreover, we have

By combining this with (3.7) we find that

By the translation lemma, Lemma 2.13, we have \([\vec {w}]_{\vec {p}(s),(\vec {r}(s),\infty )}=[\vec {w}]_{\vec {p},(\vec {r},s)}\) and, moreover, by using (3.5) we compute

which we interpret as being equal to 1 when \(\frac{1}{q_j}=\frac{1}{p_j}=\frac{1}{r_j}\), so that

Hence, (3.4) follows by taking a supremum over all cubes Q in (3.8). This concludes Step 1.

Step 2. Now suppose \(\pmb {q}\) is arbitrary. For each j we either have \(\frac{1}{p_j}<\frac{1}{q_j}\) or \(\frac{1}{p_j}\ge \frac{1}{q_j}\). Assume without loss of generality that there is a \(j_1\in \{1,\ldots ,m\}\) such that

Indeed, if this is not the case then, just as in Step 1, we may permute the indices to reduce back to this case.

The strategy will be to construct the \(m+1\) weights \(\pmb {W}\) in \(m-j_1+1\) steps through repeated application of Step 1.

We define

so that \(0=\theta _0\le \theta _1\le \cdots \le \theta _{m-j_1+1}=1\). Thus, defining

we have

Now, we define

First we will check that the reciprocals of the coordinates of these \(m+1\)-tuples sum to 1. Indeed, using \(\sum _{j=1}^{m+1}\frac{1}{p_j}=\sum _{j=1}^{m+1}\frac{1}{q_j}=1\), we have

so that

as desired.

Now, for \(k\in \{1,\ldots ,m-j_1+1\}\) we define

which should be interpreted as being equal to 1 when \(\frac{1}{q^k_j}=\frac{1}{r_j}\), and we write \(\vec {q}^k=(q_1^k,\ldots ,q_m^k)\) for the m-tuple given by the first m coordinates of \(\pmb {q}^k\), with \(\frac{1}{q^k}:=\sum _{j=1}^m\frac{1}{q_j^k}\).

We may apply Step 1 with \(j_0=j_1+1\) to obtain weights \(\pmb {W}^{m-j_1}=(W^{m-j_1}_1,\ldots ,W^{m-j_1}_{m+1})\) such that

and

Next we apply Step 1 with \(j_0=j_1+2\) to obtain weights \(\pmb {W}^{m-j_1-1}\) with

and

Combining these estimates with (3.10) and (3.11) we obtain

and

Continuing this process, applying Step 1 with \(j_0=j_1+k\) for \(k=3,\ldots ,m-j_1+1\), we conclude, setting \(\pmb {W}:=\pmb {W}^0\), that

and

Since \((2^m)^{m-j_1+1}\le 2^{m^2}\), we note that (3.1) now follows from (3.12). Finally, we note that (3.2) follows from (3.13), provided we can show that

Note that by our initial assumption (3.9), this maximum is attained at some \(j_2\in \{1,\ldots ,j_1\}\).

We claim that

for all \(k\in \{1,\ldots ,m-j_1+1\}\). Assuming for the moment that the claim is true, we find that

proving (3.14).

To prove the claim, we compute

so that

where

We note that proving the claim is equivalent to proving the equality

The inequality

is clear. To prove the converse inequality, it suffices to show that \(f_k\) is an increasing function for all \(k\in \{1,\ldots ,m-j_1+1\}\). Computing

we have proven the desired result. This concludes Step 2. The assertion follows. \(\square \)

Proof of Theorem 2.2

The result essentially follows from an application of Theorem 3.1. However, in order to use this result we must reduce to a case where \(\frac{1}{p}\le 1\) so that we may set \(\frac{1}{p_{m+1}}=1-\frac{1}{p}\ge 0\). To reduce to this case, we employ a general rescaling trick that also appears in the proof of the case \(m=1\) given by Auscher and Martell in [1, Theorem 4.9].

First we will show that we may assume that \(\frac{1}{r}:=\sum _{j=1}^m\frac{1}{r_j}=1\). Indeed, assuming we have shown the result for \(\frac{1}{r}=1\), we consider the \(m+1\)-tuple \((|f_1|^r,\ldots ,|f_m|^r,|h|^r)\). Then, since

we find that for all \(\vec {w}\in A_{\frac{\vec {q}}{r},(\frac{\vec {r}}{r},\frac{s}{r})}\) we have

Thus, since \(\sum _{j=1}^m \frac{r}{r_j}=1\), applying the extrapolation result with \(\vec {r}\) replaced by \(\frac{\vec {r}}{r}\), \(\vec {q}\) replaced by \(\frac{\vec {q}}{r}\), and s replaced by \(\frac{s}{r}\), we find that for any \(\frac{\vec {p}}{r}\) with \(\frac{\vec {p}}{r}>\frac{\vec {r}}{r}\) and \(\frac{p}{r}<\frac{s}{r}\), or equivalently, for all \(\vec {p}>(\vec {r},s)\), we have

for all \(\vec {w}\in A_{\vec {p},(\vec {r},s)}\), with

as desired.
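The rescaling rests on an elementary identity for the weighted norms. As a sketch, assuming that passing to the rescaled exponents goes together with replacing a weight w by \(w^r\) (our reading of the argument above), one has for \(0<p<\infty \)

$$\begin{aligned} \left\| |f|^r\right\| _{L^{\frac{p}{r}}\left( (w^r)^{\frac{p}{r}}\right) }=\left( \int _{\mathbf {R}^n}\!|f|^pw^p\,\mathrm {d}x\right) ^{\frac{r}{p}}=\Vert f\Vert ^r_{L^p(w^p)}, \end{aligned}$$

while for \(p=\infty \) both sides are equal to \(\Vert fw\Vert _{L^\infty }^r\) under the interpretation of Remark 2.15. Thus an estimate for the rescaled tuple with exponents \(\frac{p_j}{r}\) is the r-th power of the corresponding estimate for the original tuple with exponents \(p_j\).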

Now that we have reduced to the case where \(\frac{1}{r}=1\), we have \(\frac{1}{s}\le \frac{1}{p}\le \sum _{j=1}^m\frac{1}{r_j}=1\). Thus, we may set \(\frac{1}{p_{m+1}}:=1-\frac{1}{p}\ge 0\), \(\frac{1}{q_{m+1}}:=1-\frac{1}{q}\ge 0\), \(\frac{1}{r_{m+1}}:=1-\frac{1}{s}\ge 0\) and \(w_{m+1}:=w^{-1}\).

Let \(f_{m+1}\in L^{p_{m+1}}(w_{m+1}^{p_{m+1}})\) and let \(\pmb {W}=(W_1,\ldots ,W_{m+1})\) be the weights obtained from Theorem 3.1. Setting \(\vec {W}=(W_1,\ldots ,W_m)\) and \(W:=\prod _{j=1}^mW_j\) we find, using the assumption (2.2) and property (3.1) of \(\pmb {W}\), that

Moreover, it follows from (3.2) that

By combining this estimate with (3.15) and by noting that

the assertion follows. \(\square \)

4 Applications of the extrapolation theorem

In applying extrapolation theorems, one can obtain further results by making appropriate choices in the \(m+1\)-tuples. We provide some applications in this section.

4.1 Boundedness of operators through extrapolation

Given an operator T defined on m-tuples of functions, one can apply the extrapolation result to the \(m+1\)-tuples \((f_1,\ldots ,f_m,T(f_1,\ldots ,f_m))\) to obtain the following extension result:

Theorem 4.1

Let T be an m-linear or a positive valued m-sublinear operator and suppose that there exist \(r_1,\ldots ,r_m\in (0,\infty )\), \(s\in (0,\infty ]\) and \(q_1,\ldots ,q_m\in (0,\infty ]\) with \(\vec {q}\ge (\vec {r},s)\) and an increasing function \(\phi _{\vec {q}}\) such that

for all \(\vec {w}\in A_{\vec {q},(\vec {r},s)}\).

Then for all \(p_1,\ldots ,p_m\in (0,\infty ]\) with \(\vec {p}>(\vec {r},s)\) and all weights \(\vec {w}\in A_{\vec {p},(\vec {r},s)}\) the operator T extends to a bounded operator \(L^{p_1}(w_1^{p_1})\times \cdots \times L^{p_m}(w_m^{p_m})\rightarrow L^p(w^p)\). Moreover, T satisfies the bound

where \(\frac{1}{r}=\sum _{j=1}^m\frac{1}{r_j}\).

Proof

Let \(f_1,\ldots ,f_m\) be simple functions. By (4.1) we have

for all \(\vec {w}\in A_{\vec {q},(\vec {r},s)}\). Thus, by applying Theorem 2.2 to the \(m+1\)-tuple \((f_1,\ldots ,f_m,T(f_1,\ldots ,f_m))\) we find that for all \(p_1,\ldots ,p_m\in (0,\infty ]\) with \(\vec {p}>(\vec {r},s)\) and all weights \(\vec {w}\in A_{\vec {p},(\vec {r},s)}\) we have

with \(\phi _{\vec {p},\vec {q},\vec {r},s}\) given by (2.3). Since this estimate holds for all simple functions \(f_1,\ldots ,f_m\), the assumptions on T allow us to conclude the results through density. \(\square \)

The initial estimate (4.1) is often obtained through sparse domination. Once we have an estimate of the form

it follows from duality and Proposition 2.7 that for \(p_1,\ldots ,p_m\in (0,\infty ]\) with \(\vec {p}>(\vec {r},s)\) and \(\frac{1}{p}<1\), we have

We are, however, still missing the cases outside of the reflexive range \(\frac{1}{p}<1\). One can reach these cases through extrapolation, see [32, 33]. The novelty in our result is that we also obtain a quantitative weighted bound in this range through Theorem 4.1.

Corollary 4.2

Let T be an m-linear or a positive valued m-sublinear operator and suppose that there exist \(r_1,\ldots ,r_m\in (0,\infty )\), \(s\in [1,\infty ]\) such that for all bounded compactly supported \(f_1,\ldots ,f_m,g\) we have

where the supremum runs over all sparse collections \(\mathscr {S}\) with a fixed sparsity constant. Then for all \(p_1,\ldots ,p_m\in (0,\infty ]\) with \(\vec {p}>(\vec {r},s)\) and all weights \(\vec {w}\in A_{\vec {p},(\vec {r},s)}\) the operator T extends to a bounded operator \(L^{p_1}(w_1^{p_1})\times \cdots \times L^{p_m}(w_m^{p_m})\rightarrow L^p(w^p)\). Moreover, T satisfies the bound

Proof

We set \(\frac{1}{\tau }:=\frac{1}{s'}+\sum _{j=1}^m\frac{1}{r_j}\). Assuming the set of \(\vec {p}\) satisfying \(\vec {p}>(\vec {r},s)\) is non-empty, we have \(\tau <1\). Indeed, for such a \(\vec {p}\) we have

Setting \(\frac{1}{q_j}:=\frac{\tau }{r_j}<\frac{1}{r_j}\), we have

so that

Then by (4.2) with this specific choice of \(q_j\) we obtain

for all \(\vec {w}\in A_{\vec {q},(\vec {r},s)}\). Thus, it follows from Theorem 4.1 that for all \(p_1,\ldots ,p_m\in (0,\infty ]\) with \(\vec {p}>(\vec {r},s)\) and all weights \(\vec {w}\in A_{\vec {p},(\vec {r},s)}\) we have

Noting that

the estimate (4.3) now follows from (4.4). \(\square \)

Remark 4.3

We note that in particular the quantitative bound (4.3) extends the bound (4.2) obtained from the sparse form, even though the proof only used the sparse bound for the particular values \(\frac{1}{p_j}=\frac{\tau }{r_j}\) with \(\frac{1}{\tau }=\frac{1}{s'}+\sum _{j=1}^m\frac{1}{r_j}\). It seems that these values are, in some sense, central for the sparse form and the quantitative bound for these values has already appeared in [33], but giving a quantitative bound for the whole range of \(\vec {p}>(\vec {r},s)\) is new. In the case \(m=1\) this value becomes \(p=r(\frac{1}{s'}+\frac{1}{r})=1+\frac{r}{s'}\) which is the value central in the main theorem of [5]. In particular when \(r=1\), \(s=\infty \) we have \(p=2\) which is central in the theory of Calderón-Zygmund operators.
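The central exponents are easy to tabulate. The following minimal sketch computes \(\tau \) and the values \(\frac{1}{p_j}=\frac{\tau }{r_j}\) from the reciprocals \(\frac{1}{r_j}\) and \(\frac{1}{s}\), using exact rational arithmetic; the function name `central_exponents` is hypothetical, and \(s=\infty \) is encoded as \(\frac{1}{s}=0\).

```python
from fractions import Fraction

def central_exponents(inv_r, inv_s):
    """Return (tau, [1/p_1, ..., 1/p_m]) with 1/tau = 1/s' + sum_j 1/r_j and 1/p_j = tau / r_j."""
    inv_s_prime = 1 - Fraction(inv_s)                 # 1/s' = 1 - 1/s
    tau = 1 / (inv_s_prime + sum(Fraction(x) for x in inv_r))
    return tau, [tau * Fraction(x) for x in inv_r]

# m = 1, r = 1, s = infinity: the central exponent is p = 2.
tau_cz, inv_p_cz = central_exponents([1], 0)
# m = 2, r_1 = r_2 = 1, s = infinity: p_1 = p_2 = m + 1 = 3.
tau_bi, inv_p_bi = central_exponents([1, 1], 0)
# m = 1, r = 2, s = 4: p = 1 + r / s' = 1 + 2 * (3/4) = 5/2.
tau_lr, inv_p_lr = central_exponents([Fraction(1, 2)], Fraction(1, 4))
```

Together with \(\frac{1}{p_{m+1}}:=\frac{\tau }{s'}\) these reciprocals sum to 1, which matches the choice \(\frac{1}{p_j}=\frac{1}{r_j}\frac{1}{\sum _{j=1}^{m+1}\frac{1}{r_j}}\) with \(r_{m+1}=s'\) mentioned after Proposition 2.7.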

In the full-range case, i.e., when \(r_1=\cdots =r_m=1\), \(s=\infty \), the particular case we consider becomes \(p_1=\cdots =p_m=m+1\) and in [10] a bound in this case for multilinear Calderón-Zygmund operators was found. Using the sparse domination result of [10], this result was extended by Li, Moen, and Sun in [34] to the range of \(p_j\in (1,\infty )\) with \(\frac{1}{p}\le 1\). They showed that for a multilinear Calderón-Zygmund operator T, all \(p_1,\ldots ,p_m\in (1,\infty )\) with \(\frac{1}{p}\le 1\) and all weights \(\vec {w}\in A_{\vec {p}}\) we have

where the class \(A_{\vec {p}}\) is defined through the constant in (2.18), and \(v_{\vec {w}}:=\prod _{j=1}^m w_j^{\frac{p}{p_j}}\). They proved that this same bound holds even in the case \(\frac{1}{p}>1\) for multilinear sparse operators, leading them to conjecture that the bound (4.5) should also extend to the case \(\frac{1}{p}>1\). This conjecture was independently proven to be true by Conde-Alonso and Rey [6] and Lerner and Nazarov [29] for kernels satisfying \(\log \)–Dini conditions. We also refer the reader to [23, 26], where the weaker Dini condition was considered in the linear case. The Dini condition was used in the multilinear setting by Damián, Hormozi and Li [9] where, in addition, quantitative mixed multilinear \(A_{\vec {p}}\)–\(A_\infty \) bounds were considered.

Our result yields another proof of the extension of the bound to the case \(\frac{1}{p}>1\). To see this, we note that by replacing the \(w_j\) by \(w_j^{p_j}\) we have \(v_{\vec {w}}=\prod _{j=1}^m(w_j^{p_j})^{\frac{p}{p_j}}=w^p\) and

Thus, the result (4.5) takes the equivalent form

which precisely corresponds to the bound (4.2). By applying our extrapolation result we can now extend (4.5), proving the following:

Corollary 4.4

Let T be an m-linear Calderón-Zygmund operator. Then for all \(p_1,\ldots ,p_m\in (1,\infty )\) we have

As in Corollary 4.2, our result actually yields weighted bounds for multilinear Calderón-Zygmund operators in the more general case \(p_1,\ldots ,p_m\in (1,\infty ]\) with \(\frac{1}{p}>0\).

4.2 Vector-valued extrapolation

By Fubini’s Theorem we are able to extend the extrapolation theorem to a vector-valued setting. In the following result we are considering spaces of the form \(L^p(w^p;L^q(\Omega ))\) for \(p,q\in (0,\infty ]\), a weight w, and \(\Omega \) a \(\sigma \)-finite measure space. Such spaces consist of functions \(f:\mathbf {R}^n\rightarrow L^q(\Omega )\) such that the function \(\Vert f\Vert _{L^q(\Omega )}\) lies in \(L^p(w^p)\), with \(\Vert f\Vert _{L^p(w^p;L^q(\Omega ))}:=\big \Vert \Vert f\Vert _{L^q(\Omega )}\big \Vert _{L^p(w^p)}\). In the case when \(p=q\), we can use Fubini’s Theorem to find that

valid for any \(q\in (0,\infty ]\), allowing us to carry over scalar-valued estimates to the vector-valued setting.
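For finite \(q\), the interchange of norms behind this remark is a direct application of Fubini's Theorem for non-negative functions. As a sketch, writing \(\mu \) for the measure on \(\Omega \) (a symbol introduced here only for this computation) and using that \(\Vert g\Vert _{L^q(w^q)}^q=\int _{\mathbf {R}^n}|g|^qw^q\,\mathrm {d}x\), one has

$$\begin{aligned} \Vert f\Vert _{L^q(w^q;L^q(\Omega ))}^q=\int _{\mathbf {R}^n}\Big (\int _\Omega |f(x,\omega )|^q\,\mathrm {d}\mu (\omega )\Big )w(x)^q\,\mathrm {d}x=\int _\Omega \Big (\int _{\mathbf {R}^n}|f(x,\omega )|^qw(x)^q\,\mathrm {d}x\Big )\,\mathrm {d}\mu (\omega )=\int _\Omega \Vert f(\cdot ,\omega )\Vert _{L^q(w^q)}^q\,\mathrm {d}\mu (\omega ). \end{aligned}$$

The case \(q=\infty \) is understood with essential suprema in place of the integrals.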

Theorem 4.5

(Vector-valued extrapolation) Let \(r_1,\ldots ,r_m\in (0,\infty )\), \(s\in (0,\infty ]\). Let \(\Omega \) be a \(\sigma \)-finite measure space, let \(q_1,\ldots ,q_m\in (0,\infty ]\) and \(\vec {q}\ge (\vec {r},s)\), and let \((f_1,\ldots ,f_m,h)\) be an \(m+1\)-tuple of measurable functions on \(\mathbf {R}^n\times \Omega \). Assume that there is an increasing function \(\phi _{\vec {q},\vec {r},s}\) such that the inequality

holds pointwise a.e. in \(\Omega \) for all \(\vec {w}\in A_{\vec {q},(\vec {r},s)}\).

Then for all \(p_1,\ldots ,p_m\in (0,\infty ]\) with \(\vec {p}>(\vec {r},s)\) there is an increasing function \(\phi _{\vec {p},\vec {q},\vec {r},s}\) such that

for all \(\vec {w}\in A_{\vec {p},(\vec {r},s)}\). More explicitly, we can take

where \(\frac{1}{r}=\sum _{j=1}^m\frac{1}{r_j}\).

Proof

Set \({\tilde{f}}_j:=\Vert f_j\Vert _{L^{q_j}(\Omega )}\), \({\tilde{h}}:=\Vert h\Vert _{L^q(\Omega )}\), which, by Fubini’s Theorem, are measurable functions on \(\mathbf {R}^n\). Then by Fubini’s Theorem, the assumption (4.6), and Hölder’s inequality, we have

Thus, we may apply Theorem 2.2 to the \(m+1\)-tuple \(({\tilde{f}}_1,\ldots ,{\tilde{f}}_m,{\tilde{h}})\), proving the result. \(\square \)

By iterated uses of Fubini’s Theorem, a similar argument also allows us to extrapolate to vector-valued bounds with iterated \(L^q\)-spaces which were considered by Benea and Muscalu through their helicoidal method [4], but we do not detail this here.

We emphasize here that our extrapolation result goes through even if we have \(q_j=\infty \) for some \(j\in \{1,\ldots ,m\}\) in (4.6). The conclusion of our result then yields vector-valued estimates in the mixed normed spaces \(L^{p_j}(L^\infty )\).

If we take \(\Omega =\mathbf {N}\) with the counting measure, we obtain vector-valued bounds for \(\ell ^q\)-spaces. Given an m-linear operator T and sequences of measurable functions \((f^1_k)_{k\in \mathbf {N}},\ldots ,(f^m_k)_{k\in \mathbf {N}}\), we may define

By combining the vector-valued extrapolation theorem with Corollary 4.2, we obtain the following:

Corollary 4.6

Let T be an m-linear operator and suppose that there exist \(r_1,\ldots ,r_m\in (0,\infty )\), \(s\in [1,\infty ]\) such that for all bounded compactly supported \(f_1,\ldots ,f_m,g\) we have

where the supremum runs over all sparse collections \(\mathscr {S}\) with a fixed sparsity constant.

Then for all \(p_1,\ldots ,p_m,q_1,\ldots ,q_m\in (0,\infty ]\) with \(\vec {p},\vec {q}>(\vec {r},s)\), the operator T has a bounded extension \(L^{p_1}(w_1^{p_1};\ell ^{q_1})\times \cdots \times L^{p_m}(w_m^{p_m};\ell ^{q_m})\rightarrow L^p(w^p;\ell ^q)\) given by (4.7). Moreover, there is an increasing function \(\phi _{\vec {p},\vec {q},\vec {r},s}\) such that

for all \(\vec {w}\in A_{\vec {p},(\vec {r},s)}\). More explicitly, we can take

Proof

For each \(j\in \{1,\ldots ,m\}\), let \((f^j_k)_{k\in \mathbf {N}}\) be a sequence of simple functions with at most finitely many non-zero entries. Setting \(f_j(x,k):=f_k^j(x)\) and \(h(x,k):=T(f_k^1,\ldots ,f_k^m)(x)\), it follows from Corollary 4.2 that (4.6) is satisfied with

The assertion now follows from Theorem 4.5 and density. \(\square \)

Remark 4.7

If one can use an argument where extrapolation is only required once, then we may be able to replace the exponent in (4.8) by the smaller exponent

which no longer depends on the exponents of the \(\ell ^{q_j}\) spaces. One way of doing this is by considering a vector-valued sparse domination rather than a scalar one. Such a sparse domination for the bilinear Hilbert transform is obtained in [3]. See also [18] where such ideas are used for vector-valued Calderón-Zygmund operators.

4.3 The bilinear Hilbert transform

The bilinear Hilbert transform

$$\begin{aligned} {{\,\mathrm{BHT}\,}}(f_1,f_2)(x):=\mathrm {p.v.}\int _{\mathbf {R}}f_1(x-t)f_2(x+t)\,\frac{\mathrm {d}t}{t} \end{aligned}$$

is an integral operator falling outside of the theory of bilinear Calderón-Zygmund operators. It was introduced by A. Calderón, who asked whether it is bounded as an operator from \(L^2\times L^\infty \) to \(L^2\). This question was answered affirmatively by Lacey and Thiele, who showed that \({{\,\mathrm{BHT}\,}}\) is bounded \(L^{p_1}\times L^{p_2}\rightarrow L^p\) for all \(p_1,p_2\in (1,\infty ]\) with \(\frac{2}{3}<p<\infty \), \(\frac{1}{p}=\frac{1}{p_1}+\frac{1}{p_2}\), see [25]. It is an open problem whether the condition \(\frac{1}{p}<\frac{3}{2}\) can be removed. However, in this range several weighted bounds and vector-valued extensions have been obtained, some of which we detail here.

Let \(r_1,r_2,s\in (1,\infty )\). Then, under certain conditions on \(r_1\), \(r_2\), and s, the sparse domination

was shown in [8]. These conditions can be formulated in the following equivalent ways:

Lemma 4.8

Let \(r_1,r_2,s\in (1,\infty )\). Then the following conditions are equivalent:

(i) We have \(\max \left( \frac{1}{r_1},\frac{1}{2}\right) +\max \left( \frac{1}{r_2},\frac{1}{2}\right) +\max \left( \frac{1}{s'},\frac{1}{2}\right) <2\);

(ii) There exist \(\theta _1,\theta _2,\theta _3\in [0,1)\) with \(\theta _1+\theta _2+\theta _3=1\) so that

$$\begin{aligned} \frac{1}{r_1}<\frac{1+\theta _1}{2},\qquad \frac{1}{r_2}<\frac{1+\theta _2}{2},\qquad \frac{1}{s}>\frac{1-\theta _3}{2}. \end{aligned}$$

The sparse domination in terms of characterization (i) was obtained by Culiuc, Di Plinio and Ou in [8] and characterization (ii) was used in [3] where more general vector-valued sparse domination results were obtained.
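One way to see the equivalence of (i) and (ii) is sketched below. The abbreviations \(a_1:=\frac{1}{r_1}\), \(a_2:=\frac{1}{r_2}\), \(a_3:=\frac{1}{s'}\in (0,1)\) are introduced here only for this purpose. Since \(\frac{1}{s}>\frac{1-\theta _3}{2}\) is the same as \(\frac{1}{s'}<\frac{1+\theta _3}{2}\), condition (ii) asks for \(\theta _i\in [0,1)\) summing to 1 with \(\theta _i>2a_i-1\), while condition (i) can be rewritten as

$$\begin{aligned} \sum _{i=1}^3\max \left( a_i,\frac{1}{2}\right)<2\quad \Longleftrightarrow \quad \sum _{i=1}^3\max \left( 2a_i-1,0\right) <1. \end{aligned}$$

If the right-hand inequality holds, then one may take \(\theta _i:=\max (2a_i-1,0)+\frac{\delta }{3}\) with \(\delta :=1-\sum _{i=1}^3\max (2a_i-1,0)>0\). Conversely, summing the inequalities \(\theta _i\ge \max (2a_i-1,0)\), which are strict whenever \(2a_i-1\ge 0\), gives the right-hand inequality. As an illustrative example, for \(r_1=\frac{4}{3}\), \(r_2=2\), \(s=3\) the sum in (i) equals \(\frac{3}{4}+\frac{1}{2}+\frac{2}{3}=\frac{23}{12}<2\), and (ii) holds with \(\theta _1=\frac{7}{12}\), \(\theta _2=\frac{1}{24}\), \(\theta _3=\frac{3}{8}\).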

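Note that if we have \(r_1,r_2,s\in (1,\infty )\) satisfying one of the equivalent conditions (i) or (ii) and we have \(p_1,p_2\in (1,\infty ]\) with \(\vec {p}>(\vec {r},s)\), then

$$\begin{aligned} \frac{1}{p}=\frac{1}{p_1}+\frac{1}{p_2}<\frac{1}{r_1}+\frac{1}{r_2}<\frac{1+\theta _1}{2}+\frac{1+\theta _2}{2}=\frac{3-\theta _3}{2}\le \frac{3}{2}, \end{aligned}$$

so that we are still in the range of Lacey and Thiele.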

From the sparse domination result for \({{\,\mathrm{BHT}\,}}\), it was deduced in [8] that we have the weighted bounds \({{\,\mathrm{BHT}\,}}:L^{p_1}(w_1^{p_1})\times L^{p_2}(w_2^{p_2})\rightarrow L^{p}(w^p)\) for all \(p_1,p_2\in (1,\infty )\) with \(\vec {p}>(\vec {r},s)\) in the Banach range \(p>1\) and for all \(\vec {w}\in A_{\vec {p},(\vec {r},s)}\). These weighted bounds were used in [7] to obtain weighted and vector-valued estimates in the range \(p\le 1\) through extrapolation using products of \(A_p\) classes. This result was extended in [33] where the full multilinear weight classes were used, but only the cases for finite \(p_j\) were treated. However, their methods can be used to also obtain the cases with \(p_j=\infty \) [32]. By applying Corollaries 4.2 and 4.6 we obtain the following result:

Corollary 4.9

Let \(r_1,r_2,s\in (1,\infty )\) satisfy one of the equivalent conditions in Lemma 4.8. Then for all \(p_1,p_2\in (1,\infty ]\) with \(\vec {p}>(\vec {r},s)\) we have

for all \(\vec {w}\in A_{\vec {p},(\vec {r},s)}\).

Moreover, for all \(p_1,p_2,q_1,q_2\in (1,\infty ]\) with \(\vec {p},\vec {q}>(\vec {r},s)\) there is an increasing function \(\phi _{\vec {p},\vec {q},\vec {r},s}\) such that

for all \(\vec {w}\in A_{\vec {p},(\vec {r},s)}\).

While Corollary 4.6 gives us an expression for the increasing function \(\phi _{\vec {p},\vec {q},\vec {r},s}\) in (4.9), this estimate will not be sharp in general, see also Remark 4.7. Rather, a better quantitative estimate can be obtained if one applies our extrapolation result to weighted bounds that can be obtained from the vector-valued sparse domination result obtained in [3, Theorem 1], but we do not pursue this further here.

Our result should be compared with [33, Corollary 2.17] and [4, Theorem 3]. Qualitatively, we completely recover the results on weighted boundedness in [33, Corollary 2.17] and extend them to the cases where either \(p_1\) or \(p_2\) is equal to \(\infty \) and where either \(q_1\) or \(q_2\) is equal to \(\infty \), although this can also be done through their methods [32]. If, for example, \(p_1=\infty \), then we have \(p_2=p\) and our scalar bound takes the form

for all \(p\in (r_2,s)\) and all weights \(w_1\), \(w_2\) satisfying

This is also slightly more general than the weighted bounds in [3, Corollary 3] in this endpoint case since they only formulate their result in the case \(p_1=\infty \) when \(w_1=1\) (or more generally, \(p_j=\infty \) when \(w_j=1\)), but their methods do allow for this more general case.

The result [4, Theorem 3] asserts that if \(p_1,p_2,q_1,q_2\in (1,\infty ]\) satisfy \(\vec {p},\vec {q}>(\vec {r},s)\) for \(r_1,r_2,s\in (1,\infty )\) satisfying one of the equivalent properties of Lemma 4.8, then we have

This result is completely recovered in Corollary 4.9 in the unweighted version of (4.9).

By again extrapolating from the weighted vector-valued bounds we can also consider iterated \(\ell ^q\) spaces in our results. For example, by applying Theorem 4.5 to the weighted vector-valued bounds (4.9), one can obtain

for all \(p_1,p_2\in (1,\infty ]\) with \(\frac{2}{3}<p<\infty \). Such bounds were already obtained in [2] through the helicoidal method, but could not be obtained through earlier extrapolation results. More precisely, to obtain this result through extrapolation one needs to be able to extrapolate away from weighted \(L^\infty \) estimates, which is one of our novelties. These types of multiple vector-valued bounds can be applied to prove boundedness results for operators such as the tensor product of \({{\,\mathrm{BHT}\,}}\) and paraproducts, and we refer the reader to [2] for an overview of such operators.

4.4 Endpoint extrapolation results

Finally, we shall discuss some of the endpoint estimates one can extrapolate from.

The following is an extrapolation result involving weak-type estimates. The trick used to obtain this result is well known and can already be found in [17].

Theorem 4.10

(Weak type extrapolation) Let \((f_1,\ldots ,f_m,h)\) be an \(m+1\)-tuple of measurable functions and let \(r_1,\ldots ,r_m\in (0,\infty )\), \(s\in (0,\infty ]\). Suppose that for some \(q_1,\ldots ,q_m\in (0,\infty ]\) with \(\vec {q}\ge (\vec {r},s)\) there is an increasing function \(\phi _{\vec {q}}\) such that

for all \(\vec {w}\in A_{\vec {q},(\vec {r},s)}\).

Then for all \(p_1,\ldots ,p_m\in (0,\infty ]\) with \(\vec {p}>(\vec {r},s)\) there is an increasing function \(\phi _{\vec {p},\vec {q},\vec {r},s}\) such that

for all \(\vec {w}\in A_{\vec {p},(\vec {r},s)}\). More explicitly, we can take

where \(\frac{1}{r}=\sum _{j=1}^m\frac{1}{r_j}\).

Proof

Let \(\lambda >0\) and set \(E_\lambda :=\{x\in \mathbf {R}^n:|h(x)|>\lambda \}\). We define