Abstract

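We derive a priori bounds for the \(\Phi ^4\) equation in the full sub-critical regime using Hairer’s theory of regularity structures. The equation is formally given by

$$\begin{aligned} (\partial _t-\Delta )\phi =-\phi ^3+\infty \phi +\xi , \qquad (*) \end{aligned}$$
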
where the term \(+\infty \phi \) represents infinite terms that have to be removed in a renormalisation procedure. We emulate fractional dimensions \(d<4\) by adjusting the regularity of the noise term \(\xi \), choosing \(\xi \in C^{-3+\delta }\). Our main result states that if \(\phi \) satisfies this equation on a space–time cylinder \(D= (0,1) \times \{ |x| \leqslant 1 \}\), then away from the boundary \(\partial D\) the solution \(\phi \) can be bounded in terms of a finite number of explicit polynomial expressions in \(\xi \). The bound holds uniformly over all possible choices of boundary data for \(\phi \) and thus relies crucially on the super-linear damping effect of the non-linear term \(-\phi ^3\). A key part of our analysis consists of an appropriate re-formulation of the theory of regularity structures in the specific context of (*), which allows us to couple the small-scale control one obtains from this theory with a suitable large-scale argument. Along the way we make several new observations and simplifications: we reduce the number of objects required compared to Hairer’s work. Instead of a model \((\Pi _x)_x\) and the family of translation operators \((\Gamma _{x,y})_{x,y}\) we work with just a single object \((\mathbb {X}_{x, y})\) which acts on itself for translations, very much in the spirit of Gubinelli’s theory of branched rough paths. Furthermore, we show that in the specific context of (*) the hierarchy of continuity conditions which constitute Hairer’s definition of a modelled distribution can be reduced to the single continuity condition on the “coefficient on the constant level”.

1 Introduction

The theory of regularity structures was introduced in Hairer’s groundbreaking work [23] and has since been developed into an impressive machinery [6, 8, 12] that systematically yields existence and uniqueness results for a whole range of singular stochastic partial differential equations from mathematical physics. Examples include the KPZ equation [15, 22], the multiplicative stochastic heat equation [26], as well as reversible Markovian dynamics for the Euclidean \(\Phi ^4\) theory in three dimensions [23], in “fractional dimension \(d<4\)” [6], for the Sine-Gordon model [13, 28], for the Brownian loop measure on a manifold [7] and for the \(d = 3\) Yang–Mills theory [11].

A serious limitation of this theory so far is that these existence and uniqueness results only hold for a short time, and this existence time typically depends on the specific realisation of the random noise term in the equation. Most applications are furthermore limited to a compact spatial domain such as a torus. The reason for this limitation is that the whole machinery is set up as the solution theory for a mild formulation in terms of a fixed-point problem, and that specific features of the non-linearity, such as damping effects or conserved quantities, are not taken into account. With this method, global-in-time solutions can only be obtained in special situations, for example if all non-linear terms are globally Lipschitz [24] or if extra information on an invariant measure is available [14, 25].

This article is part of a programme to derive a priori bounds within the regularity structures framework in order to go beyond short time existence and compact spatial domains. We focus on the \(\Phi ^4\) dynamics which are formally given by the stochastic reaction diffusion equation

$$\begin{aligned} (\partial _t-\Delta )\phi =-\phi ^3+\infty \phi +\xi , \end{aligned}\tag{1.1}$$

where \(\xi \) is a Gaussian space–time white noise over \(\mathbb {R}\times \mathbb {R}^{d}\). A priori bounds for this equation have recently been derived by several groups for the two-dimensional case \(d=2\) [32, 34] and the more difficult case \(d=3\) [2, 18, 19, 30, 31]. In this article we obtain bounds throughout the entire sub-critical regime, formally dealing with all “fractional dimensions” up to (but excluding) the critical dimension \(d=4\). Here we follow the convention of [6] and emulate fractional dimensions \(d<4\) by adjusting the regularity assumption on \(\xi \): we only assume that it can be controlled in a distributional parabolic Besov–Hölder space of regularity \(-3+\delta \) for an arbitrarily small \(\delta >0\). Connecting back to the \(\Phi ^4\) dynamics driven by space–time white noise, \(\delta = 0-\) mimics the scaling of the equation with \(d=4\), and \(\delta = 1/2-\) recovers the equation with \(d=3\).
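The correspondence between \(\delta \) and \(d\) is a one-line power count. The sketch below assumes the standard fact that space–time white noise on \(\mathbb {R}\times \mathbb {R}^{d}\) has parabolic regularity just below \(-(d+2)/2\) (space–time has parabolic dimension \(d+2\)); matching \(-(d+2)/2=-3+\delta \) gives \(\delta =(4-d)/2\). The arbitrarily small loss written as "\(-\)" in the text is dropped. The function names are hypothetical and only serve as illustration.

```python
from fractions import Fraction


def delta_for_dimension(d):
    """Noise-regularity parameter delta emulating spatial dimension d.

    Assumes white noise on R x R^d has parabolic regularity -(d + 2)/2
    (up to an arbitrarily small loss) and solves -(d + 2)/2 = -3 + delta.
    """
    d = Fraction(d)
    return 3 - (d + 2) / 2


def dimension_for_delta(delta):
    """Inverse map: the 'fractional dimension' d emulated by a given delta."""
    return 4 - 2 * Fraction(delta)


print(delta_for_dimension(3))               # 1/2: the three-dimensional case
print(delta_for_dimension(4))               # 0: the critical dimension
print(dimension_for_delta(Fraction(1, 3)))  # 10/3, i.e. d = 3 1/3
```

Exact rational arithmetic is used so that thresholds such as \(\delta =1/3\) (which appears again in Sect. 2.3) are not blurred by floating-point rounding.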

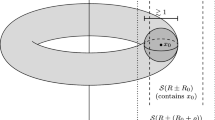

Our analysis is based on the method developed in the \(d=3\) context in [30], where it was shown that if \(\phi \) solves (1.1) on a parabolic cylinder, say on

$$\begin{aligned} D=(0,1)\times \{ |x| \leqslant 1 \} , \end{aligned}$$

where \(|x| = \max \{ |x_1|, \ldots , |x_d| \} \) denotes the supremum norm on \(\mathbb {R}^d\), then it can be bounded on any smaller cylinder \(D_R = (R^2,1) \times \{|x|<1-R\}\) only in terms of the distance R and the realisation of \(\xi \) when restricted to a small neighbourhood of D. This bound holds uniformly over all possible choices for \(\phi \) on the parabolic boundary of D, thus leveraging the full strength of the non-linear damping term \(-\phi ^3\). This makes the estimate extremely useful when studying the large scale behaviour of solutions, because given a realisation of the noise, any local function of the solution (for example a localised norm or testing against a compactly supported test-function) can be controlled in a completely deterministic way by objects that depend on the noise realisation on a compact set, without taking the behaviour of the solution elsewhere into account.

Our main result is the exact analogue valid throughout the entire sub-critical regime.

Theorem 1.1

(Theorem 9.1 below) Let \(\delta \in (0, \frac{1}{2})\) and let \(\xi \) be of regularity \(-3+\delta \). Let \(\{ \mathbb {X}_{\bullet } \tau :\tau \in \mathcal {W} \cup \mathcal {V}\}\) be a local product lift of \(\xi \).

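Let \(\phi \) solve

$$\begin{aligned} (\partial _t-\Delta )\phi =-\phi ^{\circ _{\mathbb {X}}3}+\xi \quad \text {in } D, \end{aligned}\tag{1.3}$$
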
where \(\phi ^{\circ _{\mathbb {X}}3}\) refers to the renormalised cube sub-ordinate to \(\mathbb {X}\).

Then \(v:= \phi - \sum _{\tau \in \mathcal {W}} \mathbb {X}_{\bullet } \mathcal {I}(\tau )\) satisfies

uniformly in the choice of the local product, where \(\Vert \bullet \Vert _{D_R}\) denotes the supremum norm on \(D_R\) and \(m_{\scriptscriptstyle \Xi }(\tau )\) is the number of noises in \(\tau \), and the set of trees \(\mathcal {T}_{\Delta }\) is defined in (4.2).

Here the “local product” denotes a finite number of functions/distributions \(\mathbb {X}_{\bullet } \tau \), each of which is constructed as a polynomial of degree \(m_{\scriptscriptstyle \Xi }(\tau )\) in \(\xi \), see Sect. 3. Local products correspond to models [23, Definition 2.17] in the theory of regularity structures, but we use them slightly differently and hence prefer a different name and notation. The functions / distributions \(\mathbb {X}_{\bullet } \tau \) are indexed by two sets \(\mathcal {W}\) and \(\mathcal {V}\). Here \(\mathcal {W}\) contains the most irregular terms so that after their subtraction the remainder v can be bounded in a positive regularity norm. The semi-norms \([\mathbb {X};\tau ]\) are defined in (5.11) and they correspond to the order bounds on models [23, Equation (2.15)]. The renormalised cube sub-ordinate to a local product is defined in Definition 7.1. This notion corresponds exactly to the reconstruction with respect to a model / local product \(\mathbb {X}_{\bullet }\) of the abstract cube in [23].

When analysing an equation within the theory of regularity structures, one proceeds in two steps. In a probabilistic step, a finite number of terms in a perturbative approximation of the solution are constructed; these terms make up the model already mentioned above. The terms in this expansion are just as irregular as \(\phi \) itself, and their construction a priori poses the same problem of defining non-linear operations. However, they are given by explicit polynomial expressions in the Gaussian noise \(\xi \) and can thus be analysed using stochastic moment calculations. It turns out that in many situations the necessary non-linear operations on the model can be defined despite the low regularity, thanks to stochastic cancellations. However, this construction does require renormalisation with infinite counterterms.

In the second analytic step the remainder of the perturbative expansion is bounded. The key criterion for this procedure to work is a scaling condition, which is called sub-criticality in [23], and which corresponds to super-renormalisability in Quantum Field Theory. This condition states, roughly speaking, that on small scales the non-linearity is dominated by the interplay of noise and linear operator. As mentioned above, in the context of (1.1) this condition is satisfied precisely for \(\xi \in C^{-3+\delta }\) if \(\delta >0\). Sub-criticality ensures that only finitely many terms in the expansion are needed to yield a remainder that is small enough to close the argument.

It is important to note that while sub-criticality ensures that the number of terms needed in the model is finite, this number can still be extremely large and typically diverges as one approaches the threshold of criticality. A substantial part of [6, 8, 12] is thus dedicated to a systematic treatment of the algebraic relations between all of these terms and their interaction, as well as the effect of renormalising the model on the original equation. The local-in-time well-posedness theory for (1.1) for all sub-critical \(\xi \in C^{-3+\delta }\), which was developed in [6], was one of the first applications of the complete algebraic machinery.

The three dimensional analysis in [30] was the first work that used regularity structures to derive a priori bounds. All of the previous works mentioned above [2, 18, 19, 31] were set in an alternative technical framework, the theory of paracontrolled distributions developed in [20]. These two theories are closely related: both theories were developed to understand the small scale behaviour of solutions to singular SPDEs, and both separate the probabilistic construction of finitely many terms in a perturbative expansion from the deterministic analysis of a remainder. Furthermore, many technical arguments in the theory of regularity structures have a close correspondent in the paracontrolled distribution framework. However, up to now paracontrolled distributions have only been used to deal with equations with a moderate number of terms in the expansion (for example (1.1) for \(d \leqslant 3\) [10] or the KPZ equation [21]). Despite efforts by several groups (see for example [3, 4]) this method has not yet been extended to allow for expansions of arbitrary order. Thus for some of the most interesting models mentioned above, for example the Sine-Gordon model for \(\beta ^{2}\) just below \(8\pi \), the reversible dynamics for the Brownian loop measure on a manifold, the three-dimensional Yang–Mills theory, or the \(\Phi ^4\) model close to critical dimension considered here, even a short time existence and uniqueness theory is currently out of reach of the theory of paracontrolled distributions.

The analysis in [30] was based on the idea that the large and small scale behaviour of solutions to singular SPDEs should be controlled by completely different arguments: for large scales the irregularity of \(\xi \) is essentially irrelevant and bounds follow from the strong damping effect of the non-linearity \(-\phi ^3\). The small scale behaviour is controlled using the smoothing properties of the heat operator. This philosophy was implemented by working with a suitably regularised equation which could be treated with a maximum principle and by bounding the error due to the regularisation using regularity structures.

However, this analysis did not make use of the full strength of the regularity structure machinery. In fact, the three-dimensional \(\Phi ^4\) equation is by now considered as one of the easiest examples of a singular SPDE, because the model only contains a moderate number of terms, only five different non-trivial products need to be defined using stochastic arguments and only two different divergences must be renormalised. The interplay of these procedures is not too complex and no advanced algebraic machinery is needed to deal with it. Instead, in [30] the few algebraic relations were simply treated explicitly “by hand”. The main contribution of the present article is thus to implement a similar argument when the number of terms in the model is unbounded, combining the analytic ideas from [30] with the algebraic techniques of [6, 8]. For this it turns out to be most convenient to re-develop the necessary elements of the theory of regularity structures in the specific context of (1.1), leading to bounds that are tailor-made as input for the large-scale analysis.

Along the way, we encounter several substantial simplifications and new observations which are interesting in their own right:

- As already hinted at in Theorem 1.1, we make systematic use of the “generalised Da Prato–Debussche trick” [6, 14]. This means that instead of working with \(\phi \) directly, we remove the most irregular terms of the expansion, leading to a function-valued remainder. This was already done in [6], but only in order to avoid a technical problem concerning the initial conditions. For us the remainder v is the more natural object, since for all values of \(\delta >0\) it solves an equation of the form

  $$\begin{aligned} (\partial _t-\Delta )v&=-v^3 + \cdots \end{aligned}\tag{1.4}$$

  where \(\ldots \) represents a large number of terms (the number diverges as \(\delta \downarrow 0\)) which involve renormalised products of either 1, v or \(v^2\) with various irregular “stochastic terms”. For each \(\delta >0\), v takes values in a Hölder space of positive regularity (that is, it is a function), and so an un-renormalised damping term \(-v^3\) appears on the right hand side. Of course, the Hölder regularity of v is not enough to control many of the products appearing in \(\ldots \), and a local expansion of v is required to control these terms. However, we are able to show that for each fixed value of \(\delta \) all of these terms are ultimately of lower order relative to \((\partial _t-\Delta )v\) and \(v^3\).

- One of the key ideas in the theory of regularity structures is positive renormalisation and the notion of order. For most of the analysis, the functions/distributions from the model need to be centered around a base-point x, that is, one works with functions/distributions that depend on the usual “running variable” as well as on the base-point. In Hairer’s work, these objects are denoted by \(\Pi _x\). A good description of their behaviour under a change of base-point x is key to the analysis, and in Hairer’s framework this is encoded in a family of translation operators \(\Gamma _{x,y}\). There is a close relationship between these \(\Pi _{x}\) and \(\Gamma _{x,y}\) maps, and some generic identities relating them were found in [5]. Our observation is that, at least in the context of Equation (1.4), most of the matrix entries for \(\Gamma _{x,y}\) coincide with entries for \(\Pi _x\) evaluated at y. Therefore we can work with just a single object \(\mathbb {X}_{\bullet }\) (corresponding to \(\mathbf {\Pi }\) in [23]) and its re-centered version \(\mathbb {X}_{\bullet ,\bullet }\) that acts on itself for translation. A price to pay for this is that some care is needed for trees that involve derivatives. With this choice our framework is highly reminiscent of Gubinelli’s work on branched rough paths [17], the only real difference being the introduction of some (linear) polynomials, first-order derivatives, and the flexibility to allow for non-canonical products.

- As in [23] we use the model/local product to build a local approximation of v around any base-point x. This takes the form

  $$\begin{aligned} v(y) \approx \sum _{\tau \in \mathcal {V}} \Upsilon _x(\tau ) \mathbb {X}_{y,x} \mathcal {I}(\tau ), \end{aligned}$$

  with a well-controlled error as y approaches x. In order to use this local expansion to control non-linearities, two key analytic ingredients are needed: the first is the order bound discussed above, and the second is a suitable continuity condition on the coefficients \(\Upsilon _x(\tau )\). In [23] these conditions are encoded in a family of model-dependent semi-norms, which make up the core of the definition of a modelled distribution [23, Definition 3.1]. It turns out, however, that the coefficients \(\Upsilon _x(\tau )\) that appear in the expansion of the solution v are far from generic: up to signs and combinatoric factors they can only be either 1, v(x), \(v(x)^2\), or \(v_{\textbf{X}}(x)\) (a generalised derivative of v). Furthermore, there is a simple criterion (Lemma 6.8) to see which of these is associated to a given tree \(\tau \). This fact was already observed in [6] and was called coherence there. Here we observe that the various semi-norms in the definition of a modelled distribution are in fact all truncations of the single continuity condition on the first coefficient \(\Upsilon (\textbf{1}) = v\). This observation is key for our analysis, as this particular semi-norm is precisely the output of our Schauder Lemma.

- Our deterministic theory cleanly separates the issues of positive and negative renormalisation in the context of (1.1). Indeed, we can derive a priori bounds under extremely general assumptions on the specific choice of the local product \(\mathbb {X}\), a class which seems considerably larger and simpler than the space of models given in [8]. The key information contained in \(\mathbb {X}\) is how certain a priori unbounded products should be interpreted. Our definition of a local product allows for these interpretations to be completely arbitrary! We can then always define the centered version of \(\mathbb {X}\) (or path), and the only assumption in which the various functions interact is that these centered products satisfy the correct order bound. We do, however, include Sect. 8, in which we introduce a specific class of local products for which the renormalised product \(\phi ^{\circ _{\mathbb {X}} 3}\) appearing in (1.3) is still a local polynomial in \(\phi \) and its spatial derivatives. Our approach in this section is to apply a recursive negative renormalisation that commutes with positive renormalisation, similar to [5]. Finally, the class of local products described in Sect. 8 also contains local products that correspond to the BPHZ renormalised model [8, 12].

1.1 Conventions

Throughout we will work with functions/distributions defined on (subsets of) \(\mathbb {R}\times \mathbb {R}^{d}\) for an arbitrary \(d \geqslant 1\). We measure regularity in Hölder-type norms that reflect the parabolic scaling of the heat operator. For example, we set

and for \(\alpha \in (0,1)\), we define the (local) Hölder semi-norm \([\bullet ]_\alpha \) accordingly as

Distributional norms, that is, Hölder-type norms for negative regularity \(\alpha <0\), play an important role throughout. These norms are defined in terms of the behaviour under convolution with rescaled versions of a suitable compactly supported kernel \(\Psi \). For example, for \(\alpha <0\) we set

where \(\Vert \bullet \Vert \) refers to the supremum norm on \(\mathbb {R}\times \mathbb {R}^{d}\) and the operator \((\bullet )_L\) denotes convolution with a compactly supported smooth kernel \(\Psi _L(x)=L^{-d-2}\Psi \Big ( \frac{x_0}{L^2}, \frac{\bar{x}}{L} \Big ) \), where \(x=(x_0,\bar{x})\). Just as in [30] we work with a specific choice of \(\Psi \), but this is only relevant in the proof of the Reconstruction Theorem, Lemma A.1. These topics are discussed in detail in Appendix A.
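Since the displayed definitions are not reproduced above, the following is only a hedged numerical illustration of the general shape of such norms, not the paper's definitions. It assumes one common parabolic distance, \(d(x,y)=|x_0-y_0|^{1/2}+|\bar{x}-\bar{y}|\), a positive Hölder semi-norm of the form \(\sup |u(x)-u(y)|/d(x,y)^\alpha \), and a negative norm of the form \(\sup _{L\leqslant 1} L^{-\alpha }\Vert \xi _L\Vert \). The kernel `bump` is a hypothetical stand-in for \(\Psi \), and, for simplicity, the mollification acts on periodic data in a single variable only, ignoring the parabolic \(L^2\) scaling in time.

```python
import math


def parabolic_distance(p, q):
    """Assumed parabolic distance between space-time points p = (t, x), q = (s, y) in R x R."""
    return math.sqrt(abs(p[0] - q[0])) + abs(p[1] - q[1])


def holder_seminorm(u, points, alpha):
    """Largest value of |u(p) - u(q)| / d(p, q)^alpha over distinct sample points."""
    best = 0.0
    for i, p in enumerate(points):
        for q in points[i + 1:]:
            dist = parabolic_distance(p, q)
            if dist > 0:
                best = max(best, abs(u(p) - u(q)) / dist ** alpha)
    return best


def bump(s):
    """Hypothetical smooth kernel supported in (-1, 1), standing in for Psi."""
    return math.exp(-1.0 / (1.0 - s * s)) if abs(s) < 1 else 0.0


def mollify(values, h, L):
    """Convolve periodic grid data (spacing h) with the rescaled, normalised kernel at scale L."""
    n = len(values)
    r = int(L / h)  # kernel half-width in grid points
    offsets = range(-r, r + 1)
    weights = [bump(k * h / L) for k in offsets]
    total = sum(weights)  # normalise so that constants are preserved
    return [sum(w * values[(i + k) % n] for k, w in zip(offsets, weights)) / total
            for i in range(n)]


def negative_norm(values, h, alpha, scales):
    """Approximate sup over the given scales L <= 1 of L^(-alpha) * sup|f_L|, for alpha < 0."""
    assert alpha < 0
    return max(L ** (-alpha) * max(abs(v) for v in mollify(values, h, L)) for L in scales)


h = 0.002
grid = [i * h for i in range(500)]
const = [1.0 for _ in grid]
print(negative_norm(const, h, -0.5, [0.1, 0.5, 1.0]))  # 1.0 (attained at L = 1)
osc = [math.cos(2 * math.pi * 50 * x) for x in grid]
print(negative_norm(osc, h, -0.5, [0.1, 0.5, 1.0]))    # small, although sup |osc| = 1
```

The second example shows why such norms are natural for the noise: a fast oscillation of size one is almost invisible after averaging at scale L, so its negative-regularity norm is small even though its supremum norm is not.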

In the case of space–time white noise, the quantity in (1.7) is almost surely not finite, but since our analysis only depends on the noise locally, a space–time cut-off can be introduced. Throughout the paper we also make the qualitative assumption that \(\xi \) and all other functions are smooth. This corresponds to introducing a regularisation of the noise term \(\xi \) (for example by convolution with a regularising kernel at some small scale; in field theory this is called an ultra-violet cut-off). This is very convenient, because it allows us to avoid unnecessary discussions about how certain objects have to be interpreted and in which sense partial differential equations hold. We stress however that our main result, Theorem 9.1, is a bound only in terms of those low-regularity norms (Definition 5.7) which can be controlled when the regularisation is removed in the renormalisation procedure. Even though all functions involved are smooth, we will freely use the term “distribution” to refer to a smooth function that can only be bounded in a negative regularity norm.

2 Overview

As stated in the introduction, a large part of our analysis consists of a suitable re-formulation of elements of the theory of regularity structures. The key notions we require are local products, the renormalised product sub-ordinate to a local product, as well as the relevant norms that permit us to bound these renormalised products. We start our exposition with an overview of these notions and how they are interconnected. The exposition in this section is meant to be intuitive and rather “bottom up”. The actual analysis begins in Sect. 3.

2.1 Subcriticality

The starting point of our analysis is a simple scaling consideration: assume \(\phi \) solves

$$\begin{aligned} (\partial _t-\Delta )\phi =-\phi ^3+\xi \end{aligned}\tag{2.1}$$

for \(\xi \in C^{-3+\delta }\). Schauder theory suggests that the solution \(\phi \) is not better than \(C^{-1 + \delta }\). In this low regularity class no bounds on \(\phi ^3\) are available, but as we will see below, the notion of product we will work with has the property that negative regularities add under multiplication. Therefore we will obtain a control on (a renormalised version of) \(\phi ^3\) as a distribution in \(C^{-3+3\delta }\). Despite this very low regularity, for \(\delta >0\), the term \(\phi ^3\) is still more regular than the noise \(\xi \). This observation is the core of Hairer’s notion of sub-criticality (see [23, Assumption 8.3]) and suggests that the small-scale behaviour of \(\phi \) and \(\phi ^3\) can ultimately be well understood by building a perturbative expansion based on the linearised equation.
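Written out with the two rules just quoted (Schauder theory gains two derivatives, and negative regularities add under multiplication), the scaling heuristic amounts to the following short computation; it is bookkeeping on exponents, not a proof.

$$\begin{aligned} \xi \in C^{-3+\delta }\;&\Longrightarrow \;\phi \in C^{(-3+\delta )+2}=C^{-1+\delta },\\ \phi \in C^{-1+\delta }\;&\Longrightarrow \;\phi ^3\in C^{3(-1+\delta )}=C^{-3+3\delta },\\ (-3+3\delta )-(-3+\delta )&=2\delta >0\iff \delta >0 . \end{aligned}$$

At \(\delta =0\) the cube and the noise have the same regularity \(-3\), which is the critical case \(d=4\) discussed in the introduction.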

2.2 Trees

We follow Hairer’s convention to index the terms in this expansion by a set of trees. This is not only a convenient notation that allows us to keep track of which term corresponds to which operation, but also allows for an efficient organisation of the relations between these terms. We furthermore follow the convention to view trees as abstract symbols which form the basis of a finite-dimensional vector space. The trees are built from a generator symbol \(\Xi \) (which represents the noise \(\xi \) and graphically corresponds to the leaves of the tree), by applying the operator \(\mathcal {I}(\cdot )\) (which represents solving the heat equation and graphically corresponds to the edges of the tree), and by taking products of trees (which represents some choice of pointwise product and graphically corresponds to joining two trees at their root). To carry out the localisation procedure discussed in Sect. 2.4 below, additional generators \(\{\textbf{1},\textbf{X}_{1},\ldots ,\textbf{X}_{d}\}\) are used alongside \(\Xi \) in our construction of trees.

We associate concrete meaning to trees via an operator \(\mathbb {X}_{\bullet }\) which we call a “local product”, see Definition 3.8. Even though this may seem somewhat bulky initially, it turns out to be extremely convenient, because the concrete definition of \(\mathbb {X}_{\bullet }\) on the same tree may change during the renormalisation procedure, and because the local product also appears in a centered form denoted by \(\mathbb {X}_{\bullet , \bullet }\); see Sect. 2.6 below.

2.3 Subtracting the most irregular terms

The first step of our analysis consists of subtracting a finite number of terms from \(\phi \) to obtain a remainder v which is regular enough to be bounded in a positive Hölder norm. The regularity analysis in Sect. 2.1 suggests that the regularity of \(\phi \) can be improved by removing \(\xi \) from the right hand side of (2.1). We introduce the first graph, \(\mathcal {I}(\Xi )\), and impose that \(\mathbb {X}_{\bullet }\) acts on this symbol yielding a function that satisfies

$$\begin{aligned} (\partial _t-\Delta )\mathbb {X}_{\bullet }\mathcal {I}(\Xi )=\xi . \end{aligned}\tag{2.2}$$

We set \(\tilde{v}=\phi -\mathbb {X}_{\bullet }\mathcal {I}(\Xi )\) so that \(\tilde{v}\) solves

$$\begin{aligned} (\partial _t-\Delta )\tilde{v}=-\big (\mathbb {X}_{\bullet }\mathcal {I}(\Xi )\big )^3-3\big (\mathbb {X}_{\bullet }\mathcal {I}(\Xi )\big )^2\tilde{v}-3\,\mathbb {X}_{\bullet }\mathcal {I}(\Xi )\,\tilde{v}^2-\tilde{v}^3. \end{aligned}\tag{2.3}$$

Of course the problem of controlling the cube of a distribution of regularity \(-1+\delta \) has not disappeared, but instead of \(\phi ^3\) one now has to control \((\mathbb {X}_{\bullet }\mathcal {I}(\Xi ))^3\) and \((\mathbb {X}_{\bullet }\mathcal {I}(\Xi ))^2\). At this point one has to make use of the fact that \(\mathbb {X}_{\bullet }\mathcal {I}(\Xi )\) is known much more explicitly than the solution \(\phi \), and can thus be analysed using explicit covariance calculations. We do not discuss these calculations here, but rather view these products as part of the given data: we introduce two additional symbols \( \mathcal {I}(\Xi ) \mathcal {I}(\Xi ) \mathcal {I}(\Xi )\) and \( \mathcal {I}(\Xi ) \mathcal {I}(\Xi ) \), and assume that \( \mathbb {X}\) acts on these additional symbols yielding distributions which are controlled in \(C^{-3+3\delta }\) and \(C^{-2+2\delta }\), respectively. We stress that only the control on these norms enters the proof of our a priori bound, and no relation to \((\mathbb {X}_{\bullet }\mathcal {I}(\Xi ))^3\) or \((\mathbb {X}_{\bullet }\mathcal {I}(\Xi ))^2\) needs to be imposed (see however Sect. 8 below). Instead of (2.3) we thus consider

$$\begin{aligned} (\partial _t-\Delta )\tilde{v}=-\mathbb {X}_{\bullet }\big (\mathcal {I}(\Xi )\mathcal {I}(\Xi )\mathcal {I}(\Xi )\big )-3\,\mathbb {X}_{\bullet }\big (\mathcal {I}(\Xi )\mathcal {I}(\Xi )\big )\tilde{v}-3\,\mathbb {X}_{\bullet }\mathcal {I}(\Xi )\,\tilde{v}^2-\tilde{v}^3. \end{aligned}\tag{2.4}$$

Note that the most irregular term on the right hand side is \(\mathbb {X}_{\bullet }(\mathcal {I}(\Xi )\mathcal {I}(\Xi )\mathcal {I}(\Xi ))\), so that we can expect \(\tilde{v} \in C^{-1+3\delta }\); that is, we have gained \(2 \delta \) differentiability with respect to \(\phi \). We mention at this point that we will always work with interior Schauder regularity estimates, which permits us to largely avoid estimating the behaviour near the boundary. For \(\delta >\frac{1}{3}\) (which corresponds to dimensions “\(d< 3 \frac{1}{3}\)”), \(\tilde{v}\) is thus controlled in a positive order Hölder norm. For smaller \(\delta \) we subtract an additional term to again remove the most irregular term from the right hand side as above. We define a new symbol \(\mathcal {I}( \mathcal {I}(\Xi ) \mathcal {I}(\Xi ) \mathcal {I}(\Xi ))\) and postulate that \(\mathbb {X}_{\bullet } \) acts on this symbol yielding a distribution which solves

$$\begin{aligned} (\partial _t-\Delta )\mathbb {X}_{\bullet }\mathcal {I}\big (\mathcal {I}(\Xi )\mathcal {I}(\Xi )\mathcal {I}(\Xi )\big )=\mathbb {X}_{\bullet }\big (\mathcal {I}(\Xi )\mathcal {I}(\Xi )\mathcal {I}(\Xi )\big ), \end{aligned}\tag{2.5}$$

and define a new remainder \(\tilde{v}+\mathbb {X}_{\bullet }\mathcal {I}(\mathcal {I}(\Xi )\mathcal {I}(\Xi )\mathcal {I}(\Xi ))\), which takes values in \(C^{-1+5 \delta }\). In general, for any \(\delta >0\) we denote by \(\mathcal {W}\) the set of trees of order \(< -2\) (for these trees, order is the same as the regularity of the local product on this tree; in Sect. 2.6 below we will encounter additional trees for which these notions differ) and define

where \(m(w)\) denotes the number of “leaves” of the tree w (all trees in \(\mathcal {W}\) have an odd number of leaves, see Sect. 3). Then v takes values in a Hölder space of positive regularity. The remainder equation then turns into

For the constraint in the last sum, note that if \(\tau = \mathcal {I}(w_1) \mathcal {I}(w_2) \mathcal {I}(w_3) \in \mathcal {W}\), one would have removed \(\mathcal {I}(\tau )\) from the remainder v in (2.6).
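For a given \(\delta \), the set \(\mathcal {W}\) can be enumerated mechanically. The sketch below uses only the rules stated in this section: \(\Xi \) has order \(-3+\delta \), \(\mathcal {I}\) raises the order by 2, orders add under products, and non-trivial trees have the form \(\mathcal {I}(w_1)\mathcal {I}(w_2)\mathcal {I}(w_3)\) with \(w_i\in \mathcal {W}\). It ignores signs, combinatorial factors and the additional trees of Sect. 2.6, and the encoding of trees as nested tuples is a hypothetical choice made here. By induction, a tree with m leaves then has order \(-3+m\delta \), so \(\mathcal {W}\) consists of the trees with \(m<1/\delta \) leaves. The helper `remainder_regularity` returns the heuristic value \(\kappa =-1+m_0\delta \), where \(m_0\) is the smallest odd number of leaves of a tree outside \(\mathcal {W}\) (for generic \(\delta \)); this matches the regularities \(C^{-1+3\delta }\) and \(C^{-1+5\delta }\) found above.

```python
import math
from fractions import Fraction
from itertools import combinations_with_replacement

XI = "Xi"  # the noise symbol; I(w1)I(w2)I(w3) is encoded as a sorted tuple (w1, w2, w3)


def order(tree, delta):
    """Order of a tree: |Xi| = -3 + delta, I(.) adds 2, products add orders."""
    if tree == XI:
        return -3 + delta
    return sum(order(w, delta) + 2 for w in tree)


def leaves(tree):
    """Number of noises m(tree)."""
    return 1 if tree == XI else sum(leaves(w) for w in tree)


def trees_in_W(delta):
    """All trees of order < -2 generated from Xi via tau = I(w1)I(w2)I(w3), w_i in W."""
    found = [XI]
    grew = True
    while grew:
        grew = False
        for triple in combinations_with_replacement(list(found), 3):
            tree = tuple(sorted(triple, key=repr))  # canonical form of the multiset
            if tree not in found and order(tree, delta) < -2:
                found.append(tree)
                grew = True
    return found


def remainder_regularity(delta):
    """Heuristic kappa = -1 + m0*delta, m0 the smallest odd m with -3 + m*delta >= -2."""
    m0 = math.ceil(1 / delta)
    if m0 % 2 == 0:
        m0 += 1
    return -1 + m0 * delta


delta = Fraction(1, 8)
for tree in trees_in_W(delta):
    print(leaves(tree), order(tree, delta), tree)
print(remainder_regularity(delta))  # 1/8, i.e. v in C^{-1 + 9 delta}
```

Rational arithmetic keeps the comparison with \(-2\) exact, which matters precisely at the thresholds \(\delta =1/m\) where the number of trees in \(\mathcal {W}\) jumps.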

We stress that the structure of this equation is always the same, in the sense that \( (\partial _t-\Delta )v =-v^3\) is perturbed by a large number of irregular terms (the number actually diverges as \(\delta \rightarrow 0\)). Bounding these irregular terms forces us to introduce additional trees, as we will see below, but ultimately we will show that all of these terms are of lower order with respect to \((\partial _t-\Delta )v\) and \(v^3\).

2.4 Iterated freezing of coefficients

We now discuss the remainder equation (2.7) in more detail, writing it as

where we are isolating the most irregular terms in each of the three sums appearing on the right hand side of (2.7). Up to combinatorial prefactors, the most irregular term in the sum on the second line of (2.7) is \(v^2\,\mathbb {X}_{\bullet }\mathcal {I}(\Xi )\) and the most irregular term in the third line is \(v\,\mathbb {X}_{\bullet }(\mathcal {I}(\Xi )\mathcal {I}(\Xi ))\). For the last line, the precise form of the most irregular term depends on \(\delta \) and there could be multiple terms of the same low regularity. Here we just keep track of one of them, denote it simply by \( \mathbb {X}_\bullet \tau _{0}\) and also leave the combinatorial prefactor \(\Upsilon (\tau _{0})\) implicit. We remark that \( \mathbb {X}_\bullet \tau _{0}\) is always a distribution of regularity \(C^{-2+\kappa }\) for some \(\kappa \in (0, 2 \delta )\). To simplify the exposition we disregard all of the (many) additional terms hidden in the ellipses \(\ldots \) for the moment.

We recall the standard multiplicative inequality

for \(\alpha , \beta >0\), which holds if and only if \(\alpha - \beta >0\). In view of the regularity \(\mathbb {X}_{\bullet }(\mathcal {I}(\Xi )\mathcal {I}(\Xi )) \in C^{-2+2\delta }\) we would thus require \(v \in C^\gamma \) for \(\gamma > 2-2\delta \) in order to have a classical interpretation of the product \(v\,\mathbb {X}_{\bullet }(\mathcal {I}(\Xi )\mathcal {I}(\Xi ))\) on the right hand side of (2.8). Unfortunately, v is much more irregular: it is governed by the irregularity of the term \( \mathbb {X}_\bullet \tau _{0}\) of regularity \(C^{-2+\kappa }\) on the right hand side of the equation, and therefore by Schauder theory we can only expect v to be of class \(C^\kappa \).

The solution to this difficulty presented in [23] amounts to an “iterated freezing of coefficients” procedure that yields a good local description of v around a fixed base-point: we fix a space–time point x and rewrite the third, most irregular, term on the right hand side of (2.8) as

and use this to rewrite the equation (2.8) as

where we have introduced new symbols  and \( \mathcal {I}( \tau _{0}) \) and postulated that \(\mathbb {X}\) acts on these symbols to yield solutions of the inhomogeneous heat equation with right hand sides  and \(\mathbb {X}_{\bullet } \tau _{0}\), respectively. The worst term on the right hand side is now  , so that the left hand side can at best be of regularity \(2\delta \). However, near the base-point we can use the smallness of the pre-factor \(|v(\bullet ) - v(x)| \lesssim [v]_\kappa d(\bullet , x)^\kappa \) to get the better estimate

where we have used the short-hand notation

This bound can in turn be used to obtain an even better approximation in (2.9): we write

At this point two additional non-classical products appear in the second and third terms on the right hand side, and as before they are treated as part of the assumed data: we introduce two additional symbols  and \(\mathcal {I}(\tau _{0})\mathcal {I}(\Xi ) \mathcal {I}(\Xi ) \) and assume that \(\mathbb {X}\) acts on these symbols yielding distributions which we interpret as playing the roles of the products  and  . Similarly, we introduce the base-point dependent versions as

Our full prescription defining base-point dependent trees will require the algebraic framework given in Sect. 4, but for now we motivate the formulae above as follows: for products of trees we only need to recenter “branches” of positive degree. For instance, the first line above can be written  .

With these recenterings defined, (2.13) is re-interpreted as

The last two terms on the right hand side can now again be moved to the left hand side of the equation suggesting that near x we can improve the approximation (2.10) of v(y) by considering

where

with the improved estimate \(|\tilde{U}(y,x)| \lesssim d(y,x)^{4\delta +\kappa }\), thus gaining another \(2\delta \) with respect to U(y, x).

The whole procedure can now be iterated: in each step an improved approximation of v is plugged into the product  , which in turn yields an even better local approximation of v near x. At some point, additional terms have to be added:

-

In order to get a local description of order \(>1\), “generalised derivatives” \(v_{\textbf{X}_i}\) of v appear, that is, a term \(\sum _{i=1}^d v_{\textbf{X}_i}(x)(y_i - x_i)\) has to be included.

-

The term  on the right hand side of the remainder equation (2.8) has regularity \(-1+\delta \), so once one wishes to push the expansion of v to a level \(>1+\delta \), one also has to “freeze the coefficient” \(v^2\), that is, write

leading to additional terms on the left hand side.

-

Of course, the various terms which were hidden in \(\ldots \) in (2.8) above have to be treated in a similar way leading to (many) additional terms in the local description of v.

Ultimately, we iterate this scheme until we have a local description of order \(\gamma \), which has to satisfy \( \gamma > 2-2\delta \). The threshold is determined by the product  : namely,  is of regularity \(-2+2\delta \) and the constraint is that \(\gamma -2 +2\delta \) has to be positive. Note that this corresponds exactly to the regularity of v that would be classically required to control  .
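The regularity count behind this threshold can be spelled out using the multiplicative inequality recalled earlier, which requires \(\alpha - \beta > 0\). Here \(\zeta \) is only a placeholder name, introduced for this illustration, for the distribution of regularity \(-2+2\delta \) that multiplies v; it is not a symbol used elsewhere in the text.

```latex
% zeta: placeholder for the distribution of regularity -2+2\delta multiplying v
\begin{aligned}
&v \in C^{\gamma}, \qquad \zeta \in C^{-2+2\delta}, \qquad \alpha = \gamma, \quad \beta = 2 - 2\delta, \\
&\alpha - \beta > 0 \iff \gamma - 2 + 2\delta > 0 \iff \gamma > 2 - 2\delta .
\end{aligned}
```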

2.5 Renormalised products

The previous discussion thus suggests that we have a Taylor-like approximation of v near the base-point x

for coefficients \(\Upsilon _{x}\) and with an error bounded by a constant times \(d(x,y)^{\gamma }\). Here \(\mathcal {V}_{\textsc {prod}}\) denotes the set of trees appearing in the recursive construction described above. We unify our notation by also writing the first two terms with “trees” and set

which allows us to rewrite (2.18) as

where \(\mathcal {V}=\mathcal {V}_{\textsc {prod}}\cup \{\textbf{1},\textbf{X}_{1},\ldots ,\textbf{X}_{d}\}\).

Of course, up to now our reasoning was purely formal, because it relied on all of the ad hoc products of singular distributions that were simply postulated along the way. We now turn this formal reasoning into a definition of the products, subordinate to the choices made in the local product \(\mathbb {X}\). More precisely, we define renormalised products such as

Our main a priori bound in Theorem 9.1 holds for the remainder equation interpreted in this sense, under very general assumptions on the local product \(\mathbb {X}\). However, under these very general assumptions it is not clear (and in general not true) that the renormalised products are in any simple relationship to the usual products. In Sect. 8 we discuss a class of local products for which the renormalised products can be re-expressed as explicit local functionals of usual products. In particular, for those local products we always have

for real parameters \(a,b,c, d_i\). This class of local products contains the examples that can actually be treated using probabilistic arguments.

2.6 Positive renormalisation and order

One of the key insights of the theory of regularity structures is that the renormalised products defined above can be controlled quantitatively in a process called reconstruction, and the most important ingredients for that process are suitable notions of regularity / continuity for the local products \(\mathbb {X}\) and the coefficients \(\Upsilon \). We start with the local products.

The base-point dependent, or centered, versions \(\mathbb {X}_{y,x}\) of the local product that appear naturally in the expansions above (for example in (2.12), (2.14), (2.17)) are in fact much more than a notational convenience. The key observation is that their behaviour as the running argument y approaches the base-point x is well controlled by the so-called order bound. For  defined in (2.12) we have

which amounts to the Hölder regularity of  . The order bounds become more interesting in more complex examples: for  defined in (2.17) we have

The remarkable observation here is that the function  is itself only of regularity \(2\delta \), so that this estimate expresses that the second term  exactly compensates the roughest small scale fluctuations. The exponent \(4\delta \) is defined as the order of the tree  , simply denoted by  . Analogously, for the tree  defined in (2.14) we have the order  , exceeding the regularity of the distribution  , which is only \(-2+2\delta \), the same as the regularity of  . As these quantities are distributions, the order bound now has to be interpreted by testing against the rescaled kernel \(\Psi _T\)

This notion of order of trees has the crucial property that it is additive under multiplication, just like the regularity of distributions discussed above. This property is what guarantees that for sub-critical equations the number of trees with order below any fixed threshold is always finite.

2.7 Change of base-point

As sketched in the discussion above, the base-point dependent centered local products \(\mathbb {X}_{\bullet , \bullet }\) are defined recursively from the un-centered ones. For what follows, we require a good algebraic framework to describe the centering operation and the behaviour under change of base-point. It turns out that both operations can be formulated conveniently using a combinatorial operation called the coproduct \(\Delta \) (note that this \(\Delta \) has nothing to do with the Laplace operator; it will always be clear from the context which object we refer to). This coproduct associates to each tree a finite sum of pairs \((\tau ^{(1)}, \tau ^{(2)})\), where \(\tau ^{(1)}\) is a tree and \(\tau ^{(2)}\) is a finite list of trees. Equivalently, the coproduct can be seen as a linear map

where \(\mathcal {T}_{\Delta }\) and \(\mathcal {T}_{\textrm{cen}}\) are sets of trees that we will define later (see (4.2) for the former and Sect. 4.3 for the latter), \(\textrm{Vec}(A)\) is the free vector space generated by a set A, and \(\textrm{Alg}(A)\) is the free non-commutative unital algebra generated by A (that is, \(\textrm{Alg}(A)\) consists of linear combinations of words in elements of A, where the product on words is concatenation and the unit is given by the empty word).
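A minimal sketch of \(\textrm{Alg}(A)\) as a data structure, in Python, assuming only that the generators are hashable (for instance encodings of trees). An element is stored as a dictionary from words (tuples of generators) to coefficients; the product concatenates words and is extended bilinearly, and the empty word is the unit. The helper names `word`, `add` and `mul` are hypothetical, and the coproduct itself is not implemented, since its defining formulas are not reproduced here.

```python
from collections import defaultdict

# An element of Alg(A): a dict {word: coefficient}, a word being a tuple of generators.
UNIT = {(): 1}  # the empty word is the unit


def word(*letters):
    """Basis element of Alg(A) given by a single word."""
    return {tuple(letters): 1}


def add(p, q):
    """Sum of two elements, dropping zero coefficients."""
    out = defaultdict(int)
    for w, c in list(p.items()) + list(q.items()):
        out[w] += c
    return {w: c for w, c in out.items() if c != 0}


def mul(p, q):
    """Product in Alg(A): concatenation of words, extended bilinearly."""
    out = defaultdict(int)
    for w1, c1 in p.items():
        for w2, c2 in q.items():
            out[w1 + w2] += c1 * c2
    return {w: c for w, c in out.items() if c != 0}


print(mul(word("a"), word("b")))  # {('a', 'b'): 1}
print(mul(word("b"), word("a")))  # {('b', 'a'): 1}, a different element
```

Storing words as tuples makes non-commutativity automatic: \(ab\) and \(ba\) are different keys.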

This coproduct is defined recursively, reflecting exactly the recursive positive renormalisation described above in Sect. 2.4. For example

so that for example the first definitions of (2.12) and (2.16) turn into

that is, the different terms in the coproduct correspond to the different terms appearing in the positive renormalisation, and for each pair \(\tau ^{1} \otimes \tau ^{2}\), the first tree \(\tau ^{1}\) corresponds to the “running variable y” and \(\tau ^{2}\) to the base-point. Here \(\mathbb {X}_{x}^\textrm{cen}: \textrm{Alg}(\mathcal {T}_{\textrm{cen}}) \rightarrow \mathbb {R}\) is a multiplicative map that associates to a given \(\tau \in \mathcal {T}_{\textrm{cen}}\) a corresponding quantity for recentering about x. These quantities can be defined recursively to match this definition, for example

This way of encoding the relation between the centered and un-centered local products is useful, for example when analysing the effect of the renormalisation procedure (Sect. 8), but, even more importantly, it gives an efficient way to describe how \(\mathbb {X}_{y,x} \) behaves under change of base-point. It turns out that we obtain the remarkable formula, valid for all \(\tau \in \mathcal {T}_{\textsc {RHS}}\cup \mathcal {T}_{\textsc {LHS}}\),

that is, the centered object \(\mathbb {X}_{y,z}\) acts on itself as a translation operator!

2.8 Continuity of coefficients

With this algebraic formalism in hand, we are now ready to describe the correct continuity condition on the coefficients. This continuity condition is formulated in terms of the concrete realisation of the local product, in that an “adjoint” of the translation operator appears. In order to formulate it, we introduce another combinatorial notation \(C_+(\bar{\tau },\tau )\), which is defined recursively to ensure that

We argue below that the correct family of semi-norms for the various coefficients \(\Upsilon (\tau )\) is given by

The Reconstruction Theorem (see Lemma A.1 for our formulation) implies that the renormalised products (2.20) can be controlled in terms of the semi-norms (2.25) and the order bounds (for example (2.23)). Reconstruction takes as input the whole family of semi-norms (2.25), but it turns out that in our case, it suffices to deal with a single semi-norm on the coefficients: the coefficients \(\Upsilon _{x}(\tau )\) that appear in the recursive freezing of coefficients described in Sect. 2.4 are far from arbitrary. It is very easy to see that (up to combinatorial coefficients and signs) the only possible coefficients we encounter are v, \(v^2\), \(v_\textbf{X}\), and 1. It then turns out that all of the semi-norms (2.25) are in fact truncations of the single continuity condition on the coefficient v itself. This semi-norm can then be easily seen to be

which measures precisely the quality of the approximation (2.19) at the starting point of this discussion.

2.9 Outline of paper

A large part of this article is concerned with providing the details of the arguments sketched above in a streamlined “top-down” way: the set of trees, their order and local products are defined in Sect. 3, while Sect. 4 provides a systematic treatment of combinatorial properties of the coproduct. The centering of local products and the change of base-point formula (2.24) are discussed in Sect. 5, while Sect. 6 contains the detailed discussion of the coefficients \(\Upsilon \) sketched above in Sect. 2.5. The renormalised products in the spirit of (2.20) are defined in Sect. 7. As already announced above, Sect. 8 contains the discussion of a special class of local products for which the renormalised product can be expressed in a simple form. The actual large-scale analysis only starts in Sect. 9, where the main result is stated. This section also contains a detailed outline of the strategy of proof. The various technical lemmas that constitute this proof can then be found in Sect. 10. Finally, we provide two appendices in which some known results are collected: Appendix A discusses norms on spaces of distributions in the context of the reconstruction theorem, and Appendix B collects different variants of classical Schauder estimates.

3 Tree Expansion and Local Products

The objects we refer to as trees will be built from

-

a set of generators \(\{\textbf{1},\textbf{X}_{1},\ldots , \textbf{X}_{d},\Xi \}\), which can be thought of as the set of possible types of leaf nodes of the tree

-

applications of an operator \(\mathcal {I}\): the map \(\tau \mapsto \mathcal {I}(\tau )\) adds a new root vertex to \(\tau \), which is connected to the old root by an edge,

-

a tree product, which joins roots of trees at a common node.

As an example, we have

In particular, when drawing our trees pictorially we decorate the leaf nodes with a \(\bullet \) for an instance of \(\Xi \), 0 for an instance of \(\textbf{1}\), and \(j \in \{1,\ldots ,d\}\) for an instance of \(\textbf{X}_{j}\). Notice that we do not decorate internal (non-leaf) nodes and have the root node at the bottom.

Our tree product is non-commutative which in terms of our pictures means that we distinguish between the ways a tree can be embedded in the plane. For example, the following trees are treated as distinct from the trees above:

Remark 3.1

We work with a non-commutative tree product to remove nearly all combinatorial factors from key algebraic relations in our framework; this greatly simplifies their statements and proofs.

Whenever we map trees to concrete functions and/or distributions, this mapping will treat identically any two trees that coincide when one imposes commutativity of the tree product.
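To make the three building blocks and the non-commutativity of the tree product concrete, here is a minimal Python sketch of a tree data type. The class and function names (`Planted`, `Product`, `I`, `prod`, `show`) and the strings "Xi", "1", "X1", … used for the generators are hypothetical choices made for this illustration only. A product stores its factors as an ordered tuple, so permuting them yields a different tree, in line with the convention of Remark 3.1.

```python
from dataclasses import dataclass
from typing import Tuple, Union

# Generators are plain strings (hypothetical encoding): "Xi" for the noise
# symbol, "1" for the symbol 1 and "X1", ..., "Xd" for X_1, ..., X_d.
Leaf = str


@dataclass(frozen=True)
class Planted:
    """I(child): a new root vertex joined by an edge to the root of `child`."""
    child: "Tree"


@dataclass(frozen=True)
class Product:
    """Tree product joining the roots of `factors` at a common node.

    The factors are an ordered tuple, so the product is non-commutative.
    """
    factors: Tuple["Tree", ...]


Tree = Union[Leaf, Planted, Product]


def I(tree: Tree) -> Planted:
    return Planted(tree)


def prod(*factors: Tree) -> Product:
    return Product(tuple(factors))


def show(tree: Tree) -> str:
    """Linear notation for a tree, e.g. "I(Xi)I(Xi)I(1)"."""
    if isinstance(tree, str):
        return tree
    if isinstance(tree, Planted):
        return f"I({show(tree.child)})"
    return "".join(show(f) for f in tree.factors)


a = prod(I("Xi"), I("Xi"), I("1"))
b = prod(I("1"), I("Xi"), I("Xi"))
print(show(a))  # I(Xi)I(Xi)I(1)
print(a == b)   # False: the two planar arrangements are distinct trees
```

Frozen dataclasses are hashable, so such trees can later serve as dictionary keys or as generators of a free algebra.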

We say a tree \(\tau \) is planted if it is of the form \(\tau = \mathcal {I}(\tilde{\tau })\) for some other tree \(\tilde{\tau }\); some examples are

We take a moment to describe the intuition behind these trees. The symbol \(\Xi \) will represent the driving noise, we will often call nodes of type \(\Xi \) noise leaves/nodes. Regarding the operator \(\mathcal {I}\), when applied to trees different from \(\{\textbf{1},\textbf{X}_{1},\ldots ,\textbf{X}_{d}\}\), \(\mathcal {I}\) will represent solving the heat equation, that is

However, we think of the trees as algebraic objects so such an equation is only given here as a mnemonic and will be made concrete when we associate functions to trees in Sect. 3.2.

The symbols \(\{\textbf{1},\textbf{X}_{1},\ldots ,\textbf{X}_{d}\}\) themselves will not correspond to any analytic object, but the trees \(\{\mathcal {I}(\textbf{1}),\mathcal {I}(\textbf{X}_{1}),\ldots ,\mathcal {I}(\textbf{X}_{d})\}\) will play the role of the classical monomials, that is \(\mathcal {I}(\textbf{1})\) corresponds to 1 and \(\mathcal {I}(\textbf{X}_{j})\) corresponds to the monomial \(z_{j}\).

Remark 3.2

Encoding polynomials as branches of a tree, instead of the standard convention of treating them as node decorations, is more consistent with our non-commutative approach: for example, we want to treat

as different trees. If we treated \(\textbf{X}_{1}\) as a decoration at the root of the tree then both trees would be the same. This convention is also compatible with viewing every unplanted tree as a tree product of three factors, and in later inductive algebraic arguments it is convenient to think of the \( \{\textbf{1},\textbf{X}_{1},\ldots ,\textbf{X}_{d}\}\) as leaves.

We define \(\mathcal {T}_{\textsc {RHS}}^{\textsc {all}}\) to be the smallest set of trees containing \(\{\textbf{1},\textbf{X}_{1},\ldots , \textbf{X}_{d},\Xi \}\) and such that for every \(\tau _{1}, \tau _{2}, \tau _{3} \in \mathcal {T}_{\textsc {RHS}}^{\textsc {all}}\) one also has \(\mathcal {I}(\tau _{1})\mathcal {I}(\tau _{2})\mathcal {I}(\tau _{3}) \in \mathcal {T}_{\textsc {RHS}}^{\textsc {all}}\). The trees in \(\mathcal {T}_{\textsc {RHS}}^{\textsc {all}}{\setminus } \{\textbf{1}, \textbf{X}_{1},\ldots ,\textbf{X}_{d}\}\) will be used to write expansions for the right hand side of (1.1). We remark that non-leaf nodes in \(\mathcal {T}_{\textsc {RHS}}^{\textsc {all}}\) have three offspring, for instance \(\mathcal {I}(\Xi )^{2}\mathcal {I}(\textbf{1}) \in \mathcal {T}_{\textsc {RHS}}^{\textsc {all}}\) but  . However, the three different permutations of \(\mathcal {I}(\Xi )^{2}\mathcal {I}(\textbf{1})\) will play the role that  did in expressions like (2.4), and, as an example of how this simplifies our combinatorics, this allows us to forget about the factor “3” that appears in (2.4).

We also define a corresponding set of planted trees \(\mathcal {T}_{\textsc {LHS}}^{\textsc {all}}= \{ \mathcal {I}(\tau ): \tau \in \mathcal {T}_{\textsc {RHS}}^{\textsc {all}}\} \). The planted trees in \(\mathcal {T}_{\textsc {LHS}}^{\textsc {all}}\) will be used to describe an expansion of the solution \(\phi \) to (1.1).

At certain points of our argument the roles of the planted trees of \(\mathcal {T}_{\textsc {LHS}}^{\textsc {all}}\) and the unplanted trees of \(\mathcal {T}_{\textsc {RHS}}^{\textsc {all}}\) will be quite different. For this reason we will reserve the use of the Greek letter \(\tau \) (and \(\bar{\tau }\), \(\tilde{\tau }\), etc.) for elements of \(\mathcal {T}_{\textsc {RHS}}^{\textsc {all}}\). If we want to refer to a tree that could belong to either \(\mathcal {T}_{\textsc {LHS}}^{\textsc {all}}\) or \(\mathcal {T}_{\textsc {RHS}}^{\textsc {all}}\) we will use the Greek letter \(\sigma \).

3.1 The order of a tree and truncation

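We give a recursive definition of the order \(|\cdot |\) on \(\mathcal {T}_{\textsc {RHS}}^{\textsc {all}}\cup \mathcal {T}_{\textsc {LHS}}^{\textsc {all}}\) as follows. Given \(\mathcal {I}(\tau ) \in \mathcal {T}_{\textsc {LHS}}^{\textsc {all}}\) we set \(|\mathcal {I}(\tau )| = |\tau | +2 \). For \(\tau \in \mathcal {T}_{\textsc {RHS}}^{\textsc {all}}\) we set, recursively,

\[ |\Xi | = -3+\delta , \quad |\textbf{1}| = -2, \quad |\textbf{X}_{i}| = -1, \quad |\mathcal {I}(\tau _{1})\mathcal {I}(\tau _{2})\mathcal {I}(\tau _{3})| = |\mathcal {I}(\tau _{1})| + |\mathcal {I}(\tau _{2})| + |\mathcal {I}(\tau _{3})|. \]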

The values of \(-2\) and \(-1\) for homogeneities of the trees \(\textbf{1}\) and \(\textbf{X}_{i}\) may seem a bit odd but this is just due to the convention that it is \(\mathcal {I}(\textbf{1})\) and \(\mathcal {I}(\textbf{X}_{i})\) that actually play the role of the classical monomials and we want \(|\mathcal {I}(\textbf{1})| = 0\) and \(|\mathcal {I}(\textbf{X}_{i})| = 1\).
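The recursive definition translates directly into code. The sketch below is a hypothetical illustration; its encoding of trees is ours, not the text's: a leaf is one of the strings "Xi", "1", "X1", …, "Xd", and a 3-tuple (t1, t2, t3) stands for the tree product \(\mathcal {I}(t_1)\mathcal {I}(t_2)\mathcal {I}(t_3)\). Using exact rational arithmetic, it checks \(|\mathcal {I}(\textbf{1})| = 0\) and \(|\mathcal {I}(\textbf{X}_{1})| = 1\). It also compares the recursive order with the closed form \(|\tau | = -3 + \delta \, m_{\scriptscriptstyle \Xi }(\tau ) + m_{\textbf{1}}(\tau ) + 2 m_{\textbf{x}}(\tau )\) in terms of leaf counts, which follows by induction because a tree with n product nodes has \(2n+1\) leaves and \(3n\) applications of \(\mathcal {I}\).

```python
from fractions import Fraction


def is_poly_leaf(tau):
    """True for the leaves "X1", ..., "Xd" standing for X_1, ..., X_d."""
    return isinstance(tau, str) and tau.startswith("X") and tau[1:].isdigit()


def order(tau, delta):
    """Order |tau| for tau in T_RHS^all, computed recursively."""
    if tau == "Xi":
        return Fraction(-3) + delta
    if tau == "1":
        return Fraction(-2)
    if is_poly_leaf(tau):
        return Fraction(-1)
    if isinstance(tau, str):
        raise ValueError(f"unknown leaf {tau!r}")
    # |I(t)| = |t| + 2, and the order is additive over the three planted factors
    return sum(order(t, delta) + 2 for t in tau)


def leaf_counts(tau):
    """Return (m_1, m_x, m_Xi): numbers of 1-leaves, X_i-leaves and noise leaves."""
    if tau == "Xi":
        return (0, 0, 1)
    if tau == "1":
        return (1, 0, 0)
    if is_poly_leaf(tau):
        return (0, 1, 0)
    counts = [leaf_counts(t) for t in tau]
    return tuple(sum(c[k] for c in counts) for k in range(3))


delta = Fraction(1, 2)
tau = (("Xi", "Xi", "Xi"), "1", "X2")   # I(I(Xi)I(Xi)I(Xi)) I(1) I(X2)
m1, mx, mxi = leaf_counts(tau)
print(order(tau, delta))                # 3/2
print(-3 + delta * mxi + m1 + 2 * mx)   # 3/2, the closed form agrees
```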

It will be helpful throughout this article to have notation for counting the number of occurrences of a certain leaf type in a tree. We define the functions \(m_{\textbf{1}},\ m_{\textbf{x}_{i}},\ m_{\scriptscriptstyle \Xi }: \mathcal {T}_{\textsc {RHS}}^{\textsc {all}}\rightarrow \mathbb {Z}_{\geqq 0}\) which count, on any given tree, the number of occurrences of \(\textbf{1}\), \(\textbf{X}_{i}\) and \(\Xi \) as leaves in the tree.

We also set \(m_{\textbf{x}}= \sum _{i=1}^{d} m_{\textbf{x}_{i}}\) for the function that returns the total number of \(\{\textbf{X}_{1},\ldots ,\textbf{X}_{d}\}\) leaves and \(m=m_{\textbf{1}}+m_{\textbf{x}}+m_{\scriptscriptstyle \Xi }\) which returns the total number of leaves of the given tree.

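One can easily check that, for \(\tau \in \mathcal {T}_{\textsc {RHS}}^{\textsc {all}}\),

\[ |\tau | = -3 + \delta \, m_{\scriptscriptstyle \Xi }(\tau ) + m_{\textbf{1}}(\tau ) + 2 m_{\textbf{x}}(\tau ). \qquad (3.1) \]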

We will only work with a finite set of trees in our analysis, and we clarify the different roles that they play in our construction by organizing them into various subsets. We define the following subsets of \(\mathcal {T}_{\textsc {RHS}}^{\textsc {all}}\):

As a mnemonic, \(\mathcal {W}_{\textsc {prod}}\) (resp. \(\mathcal {V}_{\textsc {prod}}\)) is the set of those trees in \(\mathcal {W}\) (resp. \(\mathcal {V}\)) which are themselves the tree product of three planted trees.

The infinite sets \(\mathcal {T}_{\textsc {RHS}}^{\textsc {all}}\) and \(\mathcal {T}_{\textsc {LHS}}^{\textsc {all}}\) will not appear in what follows and we instead work with the finite subsets

where above and in what follows we write \(\mathcal {I}(A) = \{\mathcal {I}(\tau ):\ \tau \in A\}\).

We now describe the roles of the sets \(\textrm{Poly}\), \(\mathcal {W}\), and \(\mathcal {V}\) introduced above.

The set \(\mathcal {W}\) consists of those trees that appear in our expansion of the right hand side of (1.1) that have the lowest orders. When “subtracting the most irregular terms” as described in Sect. 2.3 we will be subtracting the trees of \(\mathcal {I}(\mathcal {W})\) which are all of negative order themselves. In particular, the trees of \(\mathcal {W}\) will appear in tree expansions for the right hand side of (1.1) but will not appear by themselves on the right hand side of the remainder equation. Other than possibly requiring renormalisation, the trees of \(\mathcal {W}_{\textsc {prod}}\) behave simply in our algebraic framework because they do not require any recentering. We will see that they appear with a constant coefficient in our expansion of the right hand side of (1.1) and do not include any of the generators in \(\textrm{Poly}\). The letter chosen for this set refers to the fact that these trees are seen in the more classical “Wild expansion” for the right hand side of (1.1), that is a naive iterative perturbation expansion.

On the other hand, the trees of \(\mathcal {V}_{\textsc {prod}}\) will appear on the right hand side of expansions of both (1.1) and the remainder equation (which is why we call this set \(\mathcal {V}\)). We do not include trees with \(|\tau | > 0\) in \(\mathcal {V}_{\textsc {prod}}\), since we only need to expand the right hand side of the remainder equation up to order 0.

While the trees in \(\mathcal {V}\) are less singular than those in \(\mathcal {W}\), their behaviour in our algebra is more complicated; in particular, all the trees in \(\mathcal {V}_{\textsc {prod}}\) will require recentering.

Our remainder will then be described by an expansion in terms of trees of \(\mathcal {I}(\mathcal {V})\). As mentioned earlier, the trees of \(\textrm{Poly}\) will not, by themselves, play a role in our expansions, but in our expansion of the remainder the trees in \(\mathcal {I}(\textrm{Poly})\) will come with unknown, space–time dependent coefficients which we think of as “generalised derivatives”, and the trees in \(\mathcal {I}(\mathcal {V})\) come with coefficients which are monomials in these generalised derivatives.

Assumption 3.3

For the rest of the paper, we treat \(\delta > 0\) as fixed, and assume, without loss of generality for the purposes of our main theorem, that \(\delta \) has been chosen so that \(\{|\tau |: \tau \in \mathcal {W}\cup \mathcal {V}_{\textsc {prod}}\}\) does not contain any integers.

We have the following straightforward lemma.

Lemma 3.4

The sets \(\mathcal {W}\) and \(\mathcal {V}\) are both finite.

Proof

From the formula (3.1), one can see that \(\tau \in \mathcal {W}\) if and only if \(m_{\textbf{1}}(\tau )=m_{\textbf{x}}(\tau )=0\) and \(m_{\scriptscriptstyle \Xi }(\tau )<\delta ^{-1}\). Similarly for \(\tau \in \mathcal {V}_{\textsc {prod}}\), one has

and \(m_{\scriptscriptstyle \Xi }(\tau )<\delta ^{-1}(3-m_{\textbf{1}}(\tau )-2m_{\textbf{x}}(\tau ))\). \(\square \)
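The finiteness asserted in Lemma 3.4 can also be observed by direct enumeration. The hypothetical helper `trees_below` uses our own encoding (leaves "Xi", "1", "X1", …; a 3-tuple stands for \(\mathcal {I}(t_1)\mathcal {I}(t_2)\mathcal {I}(t_3)\)) and collects all trees of order below a threshold t by closing the set of admissible leaves under ordered three-fold products. The closure is exhaustive provided \(0 < \delta \leqslant 1\). In that case every tree has order at least \(-3+\delta \), so \(|\mathcal {I}(\tau _1)\mathcal {I}(\tau _2)\mathcal {I}(\tau _3)| < t\) forces \(|\tau _1| < t - 6 + 2(3-\delta ) = t - 2\delta < t\), and likewise for \(\tau _2, \tau _3\). With \(t = -2\) the output consists of the trees with \(m_{\textbf{1}} = m_{\textbf{x}} = 0\) and \(m_{\scriptscriptstyle \Xi } < \delta ^{-1}\), the characterisation of \(\mathcal {W}\) used in the proof above. With \(t = 0\) it lists all trees of negative order.

```python
from fractions import Fraction
from itertools import product


def order(tau, delta):
    """Order of tau: leaves "Xi", "1", "X1", ...; 3-tuples encode I(t1)I(t2)I(t3)."""
    if tau == "Xi":
        return Fraction(-3) + delta
    if tau == "1":
        return Fraction(-2)
    if isinstance(tau, str):  # one of the X_i leaves
        return Fraction(-1)
    return sum(order(t, delta) + 2 for t in tau)


def trees_below(threshold, delta, d):
    """All trees of T_RHS^all (in our encoding) with order < threshold."""
    if not 0 < delta <= 1:
        raise ValueError("need 0 < delta <= 1; for delta = 0 the set is infinite")
    leaves = ["Xi", "1"] + [f"X{i}" for i in range(1, d + 1)]
    found = {t for t in leaves if order(t, delta) < threshold}
    while True:
        # Try every ordered triple, since the tree product is non-commutative.
        new = {
            triple
            for triple in product(found, repeat=3)
            if triple not in found and order(triple, delta) < threshold
        }
        if not new:
            return found
        found |= new


W = trees_below(-2, Fraction(3, 20), d=1)
print(len(W))  # 5: Xi, I(Xi)^3 and three arrangements of I(I(Xi)^3)I(Xi)I(Xi)
```

For \(\delta = 0\) the loop would never terminate, which mirrors Remark 3.5.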

Remark 3.5

Clearly Lemma 3.4 would be false for \(\delta = 0\), that is when the equation is critical.

We also have the following lemma describing the trees in \(\mathcal {W}_{\textsc {prod}}\).

Lemma 3.6

For any \(\tau \in \mathcal {T}_{\textsc {RHS}}^{\textsc {all}}\setminus \{\Xi \}\), \(|\tau | \geqq -3 + 3\delta > |\Xi | = -3 + \delta \). Moreover, for any \(w \in \mathcal {W}_{\textsc {prod}}\), one has

where \(w_{1},w_{2},w_{3} \in \mathcal {W}\).

Proof

The first statement about \(|\tau |\) is a simple consequence of (3.1) and the constraint that \(m_{\scriptscriptstyle \Xi }(\tau ) \geqq 3\). For the second statement, we write \(w = \mathcal {I}(\tau _{1})\mathcal {I}(\tau _{2})\mathcal {I}(\tau _{3})\) with \(\tau _{1},\tau _{2},\tau _{3} \in \mathcal {T}_{\textsc {RHS}}^{\textsc {all}}\). Then we have, by bounding the orders of \(\tau _{2}\) and \(\tau _{3}\) from below,

\[ |w| = |\tau _{1}| + |\tau _{2}| + |\tau _{3}| + 6 \geqq |\tau _{1}| + 2(-3+\delta ) + 6 = |\tau _{1}| + 2\delta . \]

Then, the condition that \(|w| \leqq -2\) forces \(|\tau _{1}| \leqq -2-2\delta < -2\), so we have \(\tau _{1} \in \mathcal {W}\), and clearly the same argument applies for \(\tau _{2},\tau _{3}\). \(\square \)

Our various tree expansions will be linear combinations of trees in \(\mathcal {T}_{\textsc {RHS}}\) or \(\mathcal {T}_{\textsc {LHS}}\), and we define tree products of such linear combinations by linearity. However, here we implement a truncation convention that will be in place for the rest of the paper. Namely, given \(\sigma _{1},\sigma _{2},\sigma _{3} \in \mathcal {T}_{\textsc {LHS}}\), if \(|\sigma _{1}| + |\sigma _{2}| + |\sigma _{3}| > 0\), we enforce that

\[ \sigma _{1}\sigma _{2}\sigma _{3} = 0. \qquad (3.4) \]

Imposing (3.4) is important for analytic reasons (since one does not expect infinite tree expansions to converge), but it is not needed for the algebra: the key algebraic identities for coproducts we prove (such as Lemma 4.5) hold whether or not one makes the truncation (3.4), which is a consequence of how our coproducts behave with respect to degrees (see (4.7)).

In particular, with these conventions an important identity for us will be

Above, on the left, the \((\bullet )^{3}\) indicates a three-fold tree product. To verify the identity above, note that for \(\tau _{1},\tau _{2},\tau _{3} \in \mathcal {V}\sqcup \mathcal {W}\), one has \(\tau = \mathcal {I}(\tau _1) \mathcal {I}(\tau _2) \mathcal {I}(\tau _3) \in \mathcal {V}_{\textsc {prod}}\cup \mathcal {W}_{\textsc {prod}}\) if and only if \(|\tau | \leqslant 0\). The non-commutativity of the tree product means we see no combinatorial coefficients in (3.5).
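The truncation convention and the absence of combinatorial factors can be illustrated as follows. In this hypothetical sketch (our own string/tuple encoding of trees), a linear combination of planted trees is a dictionary mapping \(\tau \) to the coefficient of \(\mathcal {I}(\tau )\). The function `truncated_product` multiplies three such combinations by linearity and drops every term whose three planted factors have total order \(> 0\), as in (3.4). Since ordered triples are distinct keys, each planar arrangement keeps its own coefficient and no factor such as 3 is produced.

```python
from fractions import Fraction
from itertools import product


def order(tau, delta):
    """|tau|: leaves "Xi", "1", "X1", ...; 3-tuples encode I(t1)I(t2)I(t3)."""
    if tau == "Xi":
        return Fraction(-3) + delta
    if tau == "1":
        return Fraction(-2)
    if isinstance(tau, str):  # an X_i leaf
        return Fraction(-1)
    return sum(order(t, delta) + 2 for t in tau)


def truncated_product(a, b, c, delta):
    """Tree product of three linear combinations of planted trees, truncated.

    Each argument maps tau to the coefficient of the planted tree I(tau);
    the result maps (t1, t2, t3), encoding I(t1)I(t2)I(t3), to its coefficient.
    """
    result = {}
    for (t1, c1), (t2, c2), (t3, c3) in product(a.items(), b.items(), c.items()):
        # |I(t)| = |t| + 2; the term is set to zero if the planted orders sum to > 0
        total = sum(order(t, delta) + 2 for t in (t1, t2, t3))
        if total > 0:
            continue
        key = (t1, t2, t3)  # ordered, since the tree product is non-commutative
        result[key] = result.get(key, 0) + c1 * c2 * c3
    return result


delta = Fraction(2, 5)
s = {"Xi": 1, "1": 1, "X1": 1}  # I(Xi) + I(1) + I(X1)
cube = truncated_product(s, s, s, delta)
print(len(cube))  # 11 of the 27 ordered triples survive the truncation
```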

3.2 Local products

In this section we begin to specify how trees are mapped into analytic expressions. Our starting point for this will be what we call a local product and will be denoted by \(\mathbb {X}\). Each local product \(\mathbb {X}\) should be thought of as a (minimal) description of how products of planted trees should be interpreted at a concrete level.

We will view local products as being defined on a relatively small set of trees and then canonically extended to all of \(\mathcal {T}_{\textsc {RHS}}\cup \mathcal {T}_{\textsc {LHS}}\) (and in the sequel, to larger sets of trees that will appear).

Definition 3.7

We define \(\mathcal {Q}\subset \mathcal {V}_{\textsc {prod}}\cup \mathcal {W}_{\textsc {prod}}\) to consist of all trees

satisfying the following properties:

-

\(\tau _{1},\tau _{2},\tau _{3} \not \in \{\textbf{X}_{1},\ldots ,\textbf{X}_{d}\}\).

-

At most one of the trees \(\tau _{1},\tau _{2},\tau _{3}\) is equal to \(\textbf{1}\).

Note that \(\mathcal {W}_{\textsc {prod}}\subset \mathcal {Q}\). The set \(\mathcal {Q}\) includes all “non-trivial” products of trees, namely those corresponding to classically ill-defined products of distributions. Our philosophy is that once a local product \(\mathbb {X}\) is specified on the noise \(\Xi \) and all these non-trivial products then we are able to define all other products that appear in our analysis—see the section immediately following. Describing a minimal set of data as above will be useful when we describe the renormalisation of local products in Sect. 8.3.

We impose the first of the two constraints stated above because multiplication by the tree \( \mathcal {I}(\textbf{X}_{i})\) corresponds to multiplication of a distribution/function by \(z_{i}\), which is always well-defined, so we enforce that this product is not deformed. We impose the second constraint since a tree of the form \(\mathcal {I}(\textbf{1})\mathcal {I}(\tau )\mathcal {I}(\textbf{1})\) (or some permutation thereof) does not represent a new non-trivial product, because each factor \(\mathcal {I}(\textbf{1})\) corresponds to the classical monomial 1.
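The two conditions of Definition 3.7 amount to a simple test on the three factors of a product. The sketch below is hypothetical: the encoding (leaves "Xi", "1", "X1", …; a 3-tuple for \(\mathcal {I}(t_1)\mathcal {I}(t_2)\mathcal {I}(t_3)\)) and the names `is_poly_leaf` and `in_Q` are ours. It assumes that its input already lies in \(\mathcal {V}_{\textsc {prod}}\cup \mathcal {W}_{\textsc {prod}}\), so only the two extra conditions are checked.

```python
def is_poly_leaf(t):
    """True for the leaves "X1", ..., "Xd" (but not for the noise leaf "Xi")."""
    return isinstance(t, str) and t.startswith("X") and t[1:].isdigit()


def in_Q(tau):
    """Membership test for Q, following the two conditions of Definition 3.7.

    tau = (t1, t2, t3) encodes I(t1)I(t2)I(t3) and is assumed to lie in
    V_prod or W_prod already.
    """
    no_x_factor = not any(is_poly_leaf(t) for t in tau)    # no factor is some X_i
    at_most_one_unit = sum(1 for t in tau if t == "1") <= 1  # at most one factor is 1
    return no_x_factor and at_most_one_unit


print(in_Q(("Xi", "Xi", "1")))  # True
print(in_Q(("1", "Xi", "1")))   # False: two factors equal to 1
```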

There is a natural equivalence relation \(\sim \) on \(\mathcal {T}_{\textsc {RHS}}\cup \mathcal {T}_{\textsc {LHS}}\) where \(\tau \sim \tau '\) if and only if \(\tau \) and \(\tau '\) are the same modulo non-commutativity. More precisely, \(\sim \) is the smallest equivalence relation on \(\mathcal {T}_{\textsc {RHS}}\cup \mathcal {T}_{\textsc {LHS}}\) with the properties that \(\tau \sim \bar{\tau } \rightarrow \mathcal {I}(\tau ) \sim \mathcal {I}(\bar{\tau })\) and, for any permutation \(\theta \) on three elements, \(\sigma _{1} \sigma _{2} \sigma _{3} \sim \sigma _{\theta (1)}\sigma _{\theta (2)}\sigma _{\theta (3)}\).
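In computations the relation \(\sim \) can be decided with the standard canonical-form technique for unordered trees: canonicalise the factors of every product node recursively and then sort them, so that two trees are equivalent exactly when their canonical forms coincide. This is a hypothetical sketch using our own encoding (leaves "Xi", "1", "X1", …; a 3-tuple for \(\mathcal {I}(t_1)\mathcal {I}(t_2)\mathcal {I}(t_3)\)), not a construction taken from the text.

```python
from itertools import permutations


def canonical(tau):
    """Representative of the ~-class of tau.

    Factors are canonicalised recursively and then sorted, which forgets the
    planar order of the three factors at every product node.
    """
    if isinstance(tau, str):
        return tau
    factors = [canonical(t) for t in tau]
    # repr gives a deterministic total order on mixed strings and tuples
    return tuple(sorted(factors, key=repr))


def equivalent(tau, other):
    return canonical(tau) == canonical(other)


cherry_one = ("Xi", "Xi", "1")  # I(Xi)I(Xi)I(1)
classes = {canonical(p) for p in permutations(cherry_one)}
print(len(classes))  # 1: all arrangements of the factors are equivalent
```

A local product respecting \(\sim \) may therefore be stored as a function of `canonical(tau)` alone.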

Definition 3.8

A local product is a map \(\mathbb {X}: \mathcal {Q}\cup \{\Xi \} \rightarrow C^{\infty }(\mathbb {R}\times \mathbb {R}^{d})\), which we write \(\tau \mapsto \mathbb {X}_{\bullet }\tau \).

We further enforce that if \(\tau , \bar{\tau } \in \mathcal {Q}\cup \{\Xi \}\) satisfy \(\tau \sim \bar{\tau }\), then \(\mathbb {X}_{\bullet } \tau = \mathbb {X}_{\bullet } \bar{\tau }\).

3.3 Extension of local products

We now describe how any local product \(\mathbb {X}\) is extended to \(\mathcal {T}_{\textsc {RHS}}\cup \mathcal {T}_{\textsc {LHS}}\); this procedure will involve induction on \(m_{e}(\sigma ) + m_{\textbf{x}}(\sigma )\), where \(m_{e}(\sigma )\) is the number of edges of \(\sigma \).

We start by defining, for any function \(f: \mathbb {R}\times \mathbb {R}^{d} \rightarrow \mathbb {R}\), \((\mathcal {L}^{-1}f)\) to be the unique bounded solution u of

where \(\rho \) is a smooth cutoff function that equals 1 on a neighbourhood of the parabolic cylinder D (defined in (1.2) above) and vanishes outside of \(\{z;\ d(z,0)<2\}\).
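One explicit choice of such a cutoff can be written down with the standard construction based on \(e^{-1/s}\). The Python sketch below assumes, for illustration only, that the parabolic distance is \(d((t,x),0)=\max (|t|^{1/2},|x|)\); the function names are hypothetical. It composes a smooth step with the smooth quantity \(s=t^{2}+|x|^{4}\): on the closure of \(D\) one has \(s\leqslant 2\), the sketch returns 1 wherever \(s\leqslant 3\), and if \(d(z,0)\geqslant 2\) then \(|t|\geqslant 4\) or \(|x|\geqslant 2\), so \(s\geqslant 16\) and the cutoff vanishes.

```python
import math
from typing import Sequence


def _f(s: float) -> float:
    # exp(-1/s) for s > 0 and 0 otherwise: smooth on R, all derivatives vanish at 0.
    return math.exp(-1.0 / s) if s > 0 else 0.0


def smooth_step(s: float) -> float:
    """Smooth function equal to 0 for s <= 0 and to 1 for s >= 1."""
    # The denominator never vanishes: at least one of s, 1 - s is positive.
    return _f(s) / (_f(s) + _f(1.0 - s))


def rho(t: float, x: Sequence[float]) -> float:
    """Hypothetical cutoff in the variable s = t^2 + |x|^4.

    Equals 1 where s <= 3 (a neighbourhood of the closure of D) and 0 where
    s >= 15, which contains the set where max(|t|^(1/2), |x|) >= 2.
    """
    s = t * t + sum(xi * xi for xi in x) ** 2
    return 1.0 - smooth_step((s - 3.0) / 12.0)


if __name__ == "__main__":
    print(rho(0.5, [0.5]), rho(3.0, [0.0]), rho(5.0, [0.0]))  # 1.0 0.5 0.0
```

Using \(t^{2}+|x|^{4}\) instead of the maximum in the distance keeps the composition smooth while respecting the parabolic scaling.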

We now describe how we extend \(\mathbb {X}\) to \(\mathcal {V}_{\textsc {prod}}{\setminus } \mathcal {Q}\). If \(\tau = \mathcal {I}(\tau _{1}) \mathcal {I}(\tau _{2}) \mathcal {I}(\tau _{3}) \in \mathcal {V}_{\textsc {prod}}{\setminus } \mathcal {Q}\), then precisely one of the following conditions holds:

(1) Exactly one of \(\tau _{1}\), \(\tau _{2}\), \(\tau _{3}\) belongs to the set \(\{\textbf{X}_{i}\}_{i=1}^{d}\).

(2) Two of the factors \(\tau _{1},\tau _{2},\tau _{3}\) are equal to \(\textbf{1}\).
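Deciding which of the two cases applies, and reordering the factors into the position assumed without loss of generality below, is a purely combinatorial step. The Python sketch below uses a hypothetical string encoding ("1" for the constant symbol, "X1", ..., "Xd" for the monomials, any other label for a general tree) and a hypothetical function name; it assumes, as with the \(\sim \)-invariance imposed on local products, that reordering the three factors is harmless. It does not check membership in \(\mathcal {V}_{\textsc {prod}}{\setminus } \mathcal {Q}\), on which exactly one case is guaranteed to hold.

```python
from typing import Sequence, Tuple


def normal_form(factors: Sequence[str], d: int) -> Tuple[int, Tuple[str, ...]]:
    """Return (case, reordered factors) for tau = I(tau1) I(tau2) I(tau3).

    Hypothetical encoding: "1" is the constant symbol, "X1", ..., "Xd" are the
    monomials X_i, and any other label stands for another tree.
    """
    if len(factors) != 3:
        raise ValueError("expected exactly three factors")
    monomials = {f"X{i}" for i in range(1, d + 1)}
    mono = [k for k, s in enumerate(factors) if s in monomials]
    ones = [k for k, s in enumerate(factors) if s == "1"]
    if len(mono) == 1:
        # Case (1): move the unique monomial X_i into the first slot.
        k = mono[0]
        rest = tuple(s for j, s in enumerate(factors) if j != k)
        return 1, (factors[k],) + rest
    if len(ones) == 2:
        # Case (2): move the two copies of 1 into the first two slots.
        rest = tuple(s for s in factors if s != "1")
        return 2, ("1", "1") + rest
    raise ValueError("neither case (1) nor case (2) applies")


if __name__ == "__main__":
    print(normal_form(["tau", "X2", "sigma"], d=3))  # (1, ('X2', 'tau', 'sigma'))
    print(normal_form(["1", "tau", "1"], d=3))       # (2, ('1', '1', 'tau'))
```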

In the first case above we can assume without loss of generality that \(\tau _{1} = \textbf{X}_{i}\), in which case we set

In the second case above we can assume without loss of generality that \(\tau _{1} = \tau _{2} = \textbf{1}\), in which case we set

Next we extend any local product \(\mathbb {X}\) to \(\mathcal {T}_{\textsc {LHS}}\) by setting, for any \(\mathcal {I}(\tau ) \in \mathcal {T}_{\textsc {LHS}}\),

Finally, we extend by linearity to allow \(\mathbb {X}\) to act on linear combinations of elements of \(\mathcal {T}_{\textsc {RHS}}\cup \mathcal {T}_{\textsc {LHS}}\). Adopting the language of rough path theory and regularity structures, given smooth noise \(\xi :\mathbb {R}\times \mathbb {R}^{d} \rightarrow \mathbb {R}\), we say that a local product \(\mathbb {X}\) is a lift of \(\xi \) if \(\mathbb {X}_{z}\Xi = \xi (z)\) for all \(z \in \mathbb {R}\times \mathbb {R}^{d}\). Without additional constraints, lifts are not unique.

Definition 3.9

We say a local product \(\mathbb {X}\) is multiplicative if, for every

one has

where on the right hand side we are using the extension of \(\mathbb {X}\) to planted trees.

The following lemma is then straightforward to prove.

Lemma 3.10

Given any smooth \(\xi :\mathbb {R}\times \mathbb {R}^{d} \rightarrow \mathbb {R}\) there is a unique multiplicative lift of \(\xi \) into a local product, up to the choice of cut-off function \(\rho \).

Multiplicative local products will not play a special role in our analysis, but we will use them at several points to compare our solution theory for (1.1) with the classical solution theory.

4 The Coproduct

As discussed in Sect. 2.4, local products \(\mathbb {X}_{\bullet }\) enter our analysis in a centered form which depends on the choice of a basepoint x. The construction of these centered objects is given in Sect. 5. As a preliminary step, in this section we define a combinatorial operation on trees, called the coproduct, which plays a central role in this construction.

4.1 Derivative edges

For trees \(\sigma \) with \(|\sigma | \in (1,2)\) we will need the centering procedure to generate first-order Taylor expansions in spatial directions. To encode these derivatives at the level of our algebraic symbols, we introduce a new set of edges \(\mathcal {I}^{+}_{i}\), \(i=1,\ldots ,d\), and define the sets of trees

As a mnemonic, \(\mathcal {T}_{\Delta }\) is the set of trees to which we will apply \(\Delta \). Given \(1 \leqq i \leqq d\) and \(\tau \in \mathcal {V}_{> 1}\cup \{\textbf{X}_{j}\}_{j=1}^{d}\), we also call \(\mathcal {I}_{i}^{+}(\tau )\) a planted tree. The order of these new planted trees is given by \(|\mathcal {I}_i^+(\tau )|=|\tau |+1\). We also adopt the shorthand that, for \(1 \leqq i \leqq d\),

We emphasise that these new edges will only ever appear as the bottom edge of a planted tree. Graphically, we distinguish these edges by writing an index next to them. For example,

At an analytic level, the role of \(\mathcal {I}^{+}_{i}(\textbf{X}_{j})\) is the same as that of \(\mathcal {I}(\textbf{1})\), but distinguishing these symbols will be important (see Remark 6.11).

4.2 Algebras and vector spaces of trees

We now give some notation for describing the codomain of our coproduct \(\Delta \). Given a set of trees T we write \(\textrm{Vec}(T)\) for the vector space (over \(\mathbb {R}\)) generated by T.

Given a set of planted trees T, we write \(\textrm{Alg}(T)\) for the unital non-commutative algebra (again over \(\mathbb {R}\)) generated by T. We will distinguish between the tree product introduced in Sect. 3 and the product that makes \(\textrm{Alg}(T)\) an algebra, calling the latter product the “forest product” (note that the truncation (3.4) is applied to the tree product but not to the forest product). While both the tree product and the forest product are non-commutative, the roles they play are quite different; see Remark 4.2.

We will write \(\cdot \) to denote the forest product when using algebraic variables for trees; that is, given \(\sigma , \tilde{\sigma } \in T\), we write \(\sigma \cdot \tilde{\sigma }\) for the forest product of \(\sigma \) and \(\tilde{\sigma }\). As a real vector space, \(\textrm{Alg}(T)\) is spanned by products \(\sigma _{1} \cdot \sigma _{2} \cdots \sigma _{n}\) with \(\sigma _{1},\ldots ,\sigma _{n} \in T\). We call such a product \(\sigma _{1} \cdot \sigma _{2} \cdots \sigma _{n}\) a “forest”. The unit for the forest product is given by the “empty” forest and is denoted by 1.
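Concretely, \(\textrm{Alg}(T)\) can be modelled as finite linear combinations of ordered tuples of planted trees, with the forest product given by concatenation extended bilinearly and the empty tuple as the unit 1. The Python sketch below is a minimal illustration under that assumption; planted trees are replaced by hypothetical string labels, and, in line with the remark above, no truncation is applied to the forest product.

```python
from collections import defaultdict
from typing import Dict, Tuple

# A forest sigma_1 . sigma_2 ... sigma_n is an ordered tuple of (hypothetical)
# planted-tree labels; the empty tuple () is the unit 1. An element of Alg(T)
# is a finite linear combination {forest: coefficient}.
Forest = Tuple[str, ...]
Element = Dict[Forest, float]

UNIT: Element = {(): 1.0}


def forest_product(a: Element, b: Element) -> Element:
    """Bilinear extension of concatenation; non-commutative, no truncation."""
    out: Dict[Forest, float] = defaultdict(float)
    for fa, ca in a.items():
        for fb, cb in b.items():
            out[fa + fb] += ca * cb
    # Drop terms whose coefficients cancelled.
    return {f: c for f, c in out.items() if c != 0.0}


if __name__ == "__main__":
    print(forest_product({("a",): 1.0}, {("b",): 2.0}))  # {('a', 'b'): 2.0}
```

Since tuples are ordered, \(\sigma \cdot \tilde{\sigma }\) and \(\tilde{\sigma }\cdot \sigma \) are different basis elements, which matches the non-commutativity of the forest product.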

Graphically, we will represent forest products just by drawing the corresponding planted trees side by side, for instance writing

4.3 Coproduct

We define another set of trees

As a mnemonic, \(\mathcal {T}_{\textrm{cen}}\) is the set of trees used to implement recentering. Our coproduct will be a map

Our definition of \(\Delta \) will be recursive. The base cases of this recursive definition are the trees \(w\in \mathcal {W}\), the planted trees \(\mathcal {I}(w)\) with \(w\in \mathcal {W}\), and the elementary trees \(\mathcal {I}(\textbf{1})\), \(\mathcal {I}(\textbf{X}_i)\) and \(\mathcal {I}^+_i(\textbf{X}_i)\):

Note that in the last line, the 1 appearing is the unit element of the algebra and should not be mistaken for \(\textbf{1}\in \textrm{Poly}\). The first and third definitions are compatible with the fact that \(\mathcal {I}(\textbf{1})\) and \(\mathcal {I}^{+}_{i}(\textbf{X}_{i})\) represent the constant function 1, so the coproduct acts trivially on them. For the second line, we note that to recenter a linear monomial one subtracts the same monomial evaluated at the basepoint (the first term on the RHS), while the second term gives the monomial evaluated at the active space–time argument (\(\mathcal {I}^{+}_{i}(\textbf{X}_{i})\) being a way to represent the constant 1 again, see Remark 6.11). Finally, the last line of (4.4) comes from the fact that the trees in \(\mathcal {W}\), as they are built up iteratively from smaller trees, never involve trees of positive degree; from the algebraic point of view they behave just like the noise \(\Xi \).

The recursive part of our definition is then given by

where, on the right-hand side of the last line above, we are referring to the natural product \([\textrm{Vec}(\mathcal {T}_{\textsc {LHS}}) \otimes \textrm{Alg}(\mathcal {T}_{\textrm{cen}})]^{\otimes 3} \rightarrow \textrm{Vec}(\mathcal {T}_{\textsc {RHS}}) \otimes \textrm{Alg}(\mathcal {T}_{\textrm{cen}})\). This product is given by setting

and then extending by linearity. We also note that in the first and second lines of (4.5) we use the Einstein summation convention: since i does not appear on the left-hand side, the index i on the right-hand side is summed from 1 to d.

In the first (resp. second) line of (4.5), the first two terms (resp. the first term) on the RHS recenter the outermost integration, while the last term on the RHS recursively attempts to recenter earlier integrations.

We also note that if a term \(\sigma ' \otimes f\) appears in the expansion of \(\Delta \sigma \), then one has

One can verify that \(\Delta \) indeed maps \(\mathcal {T}_{\Delta }\) into \(\textrm{Vec}(\mathcal {T}_{\Delta }) \otimes \textrm{Alg}(\mathcal {T}_{\textrm{cen}})\) by checking inductively, using (4.4) for the base cases and (4.5) for the inductive step. We also have the following lemma on how \(\Delta \) acts on subsets of \(\mathcal {T}_{\Delta }\).

Lemma 4.1

For \(X = \mathcal {T}_{\textsc {LHS}},\; \mathcal {T}_{\textsc {RHS}},\; \mathcal {V}_{\textsc {prod}}\,\) or \(\mathcal {T}_{\textrm{cen}}\) the map \(\Delta \) maps X into \(\textrm{Vec}(X) \otimes \textrm{Alg}(\mathcal {T}_{\textrm{cen}})\).

Proof

The first two statements are immediate consequences of our definitions. We turn to proving the third statement, where we proceed by induction on the number of edges of \(\tau \in \mathcal {V}\). The base cases, where \(\tau \) has three edges, are easily verified by hand. For the inductive step, we write \(\tau = \mathcal {I}(\tau _{1})\mathcal {I}(\tau _{2})\mathcal {I}(\tau _{3})\). If \(\tau _{1},\tau _{2},\tau _{3} \in \mathcal {W}\), one can check that the last line of (4.5) gives \(\Delta \tau = \tau \otimes 1\) and we are done. If, on the other hand, \(\tau _{i} \in \mathcal {V}\) for some i, then \(\Delta \mathcal {I}(\tau _{i}) \in \textrm{Vec}(\mathcal {I}(\mathcal {V})) \otimes \textrm{Alg}(\mathcal {T}_{\textrm{cen}})\), and we are done by combining the last line of (4.5) with (3.3).

Finally, the fourth statement is immediate by inspection for planted trees in \(\mathcal {T}_{\textrm{cen}}\setminus \mathcal {I}(\mathcal {V}_{\textsc {prod}})\), while for planted trees \(\mathcal {I}(\tau ) \in \mathcal {I}(\mathcal {V}_{\textsc {prod}})\) it follows from applying the third statement to \(\tau \) in the first line of (4.5). \(\square \)

We extend \(\Delta \) to sums of trees by linearity, so that \(\Delta : \textrm{Vec}(\mathcal {T}_{\Delta }) \rightarrow \textrm{Vec}(\mathcal {T}_{\Delta }) \otimes \textrm{Alg}(\mathcal {T}_{\textrm{cen}})\).

Remark 4.2

The two products we have introduced on trees, the tree product and the forest product, play different roles in our framework. The tree product represents a pointwise product of functions/distributions which may not be defined canonically; therefore, we do not require local products to act multiplicatively with respect to the tree product. On the other hand, any map on trees that is applied to a forest is extended multiplicatively: we do not allow any flexibility in how forest products are interpreted at a concrete level. In particular, the trees in the forests that \(\Delta \) produces in the right factor of its codomain are all of non-negative order and should be thought of as being associated to products of basepoint-dependent constants rather than to a pointwise product of space–time functions/distributions.

Example 4.3

We show one pictorial example. The value of our parameter \(\delta \) influences the definition of the set \(\mathcal {V}_{\textsc {prod}}\) and whether \(\mathcal {I}^{+}_{i}(\tau )\) vanishes for \(\tau \in \mathcal {V}_{\textsc {prod}}\); therefore, most non-trivial computations of \(\Delta \) we could present are valid only for a certain range of the parameter \(\delta \).

For the example we present below, we restrict to \(\frac{1}{3}<\delta <\frac{3}{7}\) and therefore  . We then have, using Einstein’s convention for the index \(i\in \{1,\ldots ,d\}\) (when an index i appears twice on one side of an equation, it denotes a summation over \(i=1,\ldots ,d\))

Now we have  , along with  and

Putting this all together gives

We now show an example of an unplanted tree in the case \(\delta <\frac{1}{3}\), and therefore  . On the other hand, we always have  . With Einstein’s convention for the index \(j\in \{1,\ldots ,d\}\)

Remark 4.4

The last formula in (4.5) for \(\tau \in \mathcal {V}_{\textsc {prod}}\) is also valid for \(\tau \in \mathcal {W}_{\textsc {prod}}\) where it is trivial. The first formula of (4.5) can also be extended to \(\tau \in \mathcal {V}\) if one adopts the convention that \(\Delta \textbf{X}_{i} = \Delta \textbf{1}= 0\). We chose not to do this since the trees of \(\textrm{Poly}\) do not, by themselves, play a role in our algebraic expansions and analysis except when they appear in a larger tree.

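We extend \(\Delta \) to forests of planted trees by setting \(\Delta 1 = 1 \otimes 1\) and, for any forest \(\sigma _{1} \cdots \sigma _{n}\), \(n \geqq 1\),

\[\Delta (\sigma _{1} \cdot \sigma _{2} \cdots \sigma _{n}) = (\Delta \sigma _{1}) \cdot (\Delta \sigma _{2}) \cdots (\Delta \sigma _{n}),\]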

where on the right-hand side we use the forest product to multiply all the factors component-wise. We extend to sums of forests of planted trees by linearity, so that \(\Delta : \textrm{Alg}(\mathcal {T}_{\textsc {LHS}}) \rightarrow \textrm{Alg}(\mathcal {T}_{\textsc {LHS}}) \otimes \textrm{Alg}(\mathcal {T}_{\textrm{cen}})\) and, by Lemma 4.1, we also have that \(\Delta \) maps \(\textrm{Alg}(\mathcal {T}_{\textrm{cen}})\) into \(\textrm{Alg}(\mathcal {T}_{\textrm{cen}}) \otimes \textrm{Alg}(\mathcal {T}_{\textrm{cen}})\).
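The multiplicative and linear extension of \(\Delta \) from planted trees to forests is mechanical once \(\Delta \) is known on single planted trees. The Python sketch below illustrates it under stated assumptions: elements of \(\textrm{Alg}\otimes \textrm{Alg}\) are dictionaries keyed by pairs of forests (ordered tuples), the component-wise product concatenates left factors with left factors and right factors with right factors, and the table `TOY_DELTA` is a hypothetical stand-in for the values given by (4.4) and (4.5), not the actual coproduct.

```python
from collections import defaultdict
from typing import Callable, Dict, Tuple

Forest = Tuple[str, ...]
Tensor = Dict[Tuple[Forest, Forest], float]  # element of Alg (x) Alg


def tensor_product(a: Tensor, b: Tensor) -> Tensor:
    """(f1 (x) g1)(f2 (x) g2) = (f1 . f2) (x) (g1 . g2), extended bilinearly."""
    out: Dict[Tuple[Forest, Forest], float] = defaultdict(float)
    for (fa, ga), ca in a.items():
        for (fb, gb), cb in b.items():
            out[(fa + fb, ga + gb)] += ca * cb
    return {k: c for k, c in out.items() if c != 0.0}


def delta_forest(forest: Forest, delta_tree: Callable[[str], Tensor]) -> Tensor:
    """Delta(s1 ... sn) = Delta(s1) ... Delta(sn), with Delta(1) = 1 (x) 1."""
    result: Tensor = {((), ()): 1.0}
    for sigma in forest:
        result = tensor_product(result, delta_tree(sigma))
    return result


def delta_sum(element: Dict[Forest, float], delta_tree: Callable[[str], Tensor]) -> Tensor:
    """Linear extension of Delta to finite sums of forests."""
    out: Dict[Tuple[Forest, Forest], float] = defaultdict(float)
    for forest, c in element.items():
        for k, v in delta_forest(forest, delta_tree).items():
            out[k] += c * v
    return {k: v for k, v in out.items() if v != 0.0}


# Hypothetical toy values on planted trees (not the coproduct of (4.4)-(4.5)):
TOY_DELTA: Dict[str, Tensor] = {
    "p": {(("p",), ()): 1.0},
    "q": {(("q",), ()): 1.0, (("p",), ("r",)): 1.0},
}

if __name__ == "__main__":
    print(delta_forest(("p", "q"), TOY_DELTA.__getitem__))
    # {(('p', 'q'), ()): 1.0, (('p', 'p'), ('r',)): 1.0}
```

The empty tuple plays the role of the unit 1 in both tensor factors, so starting the loop from \(1\otimes 1\) implements \(\Delta 1 = 1\otimes 1\) as well.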

While a single application of \(\Delta \) will be used for centering objects around a basepoint, we will see in Sect. 5 that a double application of \(\Delta \) will be used for describing the behaviour when changing this basepoint. This is our reason for also defining \(\Delta \) on the planted trees of \(\mathcal {T}_{\textrm{cen}}\setminus \mathcal {T}_{\textsc {LHS}}\). It will be important below to know that the two ways of “applying \(\Delta \) twice” agree; this is encoded in the following lemma:

Lemma 4.5

\(\Delta \) satisfies a co-associativity property: for any \(\sigma \in \mathcal {T}_{\textsc {RHS}}\cup \mathcal {T}_{\textsc {LHS}}\),

\[(\Delta \otimes \textrm{id})\Delta \sigma = (\textrm{id} \otimes \Delta )\Delta \sigma ,\]

where both sides are seen as elements of \(\textrm{Vec}(\mathcal {T}_{\textsc {RHS}}\cup \mathcal {T}_{\textsc {LHS}}) \otimes \textrm{Alg}(\mathcal {T}_{\textrm{cen}}) \otimes \textrm{Alg}(\mathcal {T}_{\textrm{cen}})\).
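Co-associativity compares two ways of applying a coproduct twice: once more on the left tensor factor, or once more on the right one. The Python sketch below makes this comparison mechanical for any coproduct given on basis elements. As a stand-in for \(\Delta \), whose defining formulas are (4.4) and (4.5), it uses the deconcatenation coproduct on words, \(w\mapsto \sum _{w=uv} u\otimes v\), a standard example of a co-associative coproduct; words, labels and function names are illustrative assumptions.

```python
from collections import defaultdict
from typing import Callable, Dict, Tuple

Word = Tuple[str, ...]
Coproduct = Callable[[Word], Dict[Tuple[Word, Word], int]]


def deconcatenation(w: Word) -> Dict[Tuple[Word, Word], int]:
    """Toy coproduct: sum over all splittings w = u v of u (x) v."""
    return {(w[:k], w[k:]): 1 for k in range(len(w) + 1)}


def delta_then_left(delta: Coproduct, w: Word) -> Dict[Tuple[Word, Word, Word], int]:
    """(Delta (x) id) Delta w: apply Delta again to the left tensor factor."""
    out: Dict[Tuple[Word, Word, Word], int] = defaultdict(int)
    for (u, v), c in delta(w).items():
        for (a, b), c2 in delta(u).items():
            out[(a, b, v)] += c * c2
    return {k: c for k, c in out.items() if c != 0}


def delta_then_right(delta: Coproduct, w: Word) -> Dict[Tuple[Word, Word, Word], int]:
    """(id (x) Delta) Delta w: apply Delta again to the right tensor factor."""
    out: Dict[Tuple[Word, Word, Word], int] = defaultdict(int)
    for (u, v), c in delta(w).items():
        for (a, b), c2 in delta(v).items():
            out[(u, a, b)] += c * c2
    return {k: c for k, c in out.items() if c != 0}


def coassociative_on(delta: Coproduct, w: Word) -> bool:
    return delta_then_left(delta, w) == delta_then_right(delta, w)


if __name__ == "__main__":
    w = ("a", "b", "c")
    # Both sides equal the sum of a (x) b (x) c over the 10 splittings w = abc.
    print(coassociative_on(deconcatenation, w), len(delta_then_left(deconcatenation, w)))  # True 10
```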

Proof