Abstract

We give a systematic description of a canonical renormalisation procedure of stochastic PDEs containing nonlinearities involving generalised functions. This theory is based on the construction of a new class of regularity structures which comes with an explicit and elegant description of a subgroup of their group of automorphisms. This subgroup is sufficiently large to be able to implement a version of the BPHZ renormalisation prescription in this context. This is in stark contrast to previous works where one considered regularity structures with a much smaller group of automorphisms, which led to a much more indirect and convoluted construction of a renormalisation group acting on the corresponding space of admissible models by continuous transformations. Our construction is based on bialgebras of decorated coloured forests in cointeraction. More precisely, we have two Hopf algebras in cointeraction, coacting jointly on a vector space which represents the generalised functions of the theory. Two twisted antipodes play a fundamental role in the construction and provide a variant of the algebraic Birkhoff factorisation that arises naturally in perturbative quantum field theory.


1 Introduction

In a series of celebrated papers [10,11,12,13] Kuo-Tsai Chen discovered that, for any finite alphabet A, the family of iterated integrals of a smooth path \(x:\mathbf{R}_+\rightarrow \mathbf{R}^A\) has a number of interesting algebraic properties. Writing \(T(\mathbf{R}^A)\) for the tensor algebra on \(\mathbf{R}^A\), which we identify with the space spanned by all finite words \(\{(a_1\ldots a_n)\}_{n \ge 0}\) with letters in A, we define the family of functionals \(\mathbb {X}_{s,t}\) on \(T(\mathbf{R}^A)\) inductively by

\[\mathbb {X}_{s,t}() := 1, \qquad \mathbb {X}_{s,t}(a_1\ldots a_n) := \int _s^t \mathbb {X}_{s,u}(a_1\ldots a_{n-1})\, \dot{x}^{a_n}_u \,\mathrm{d}u,\]

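where \(0\le s\le t\). Chen showed that this family yields for fixed s, t a character on \(T(\mathbf{R}^A)\) endowed with the shuffle product \(\sqcup \!\sqcup \), namely

\[\mathbb {X}_{s,t}(v \sqcup \!\sqcup w) = \mathbb {X}_{s,t}(v)\, \mathbb {X}_{s,t}(w),\]

which furthermore satisfies the flow relation

\[(\mathbb {X}_{s,r}\otimes \mathbb {X}_{r,t})\, \Delta \tau = \mathbb {X}_{s,t}\, \tau , \qquad s\le r\le t,\]

where \(\Delta : T(\mathbf{R}^A)\rightarrow T(\mathbf{R}^A)\otimes T(\mathbf{R}^A)\) is the deconcatenation coproduct

\[\Delta (a_1\ldots a_n) = \sum _{k=0}^n (a_1\ldots a_k)\otimes (a_{k+1}\ldots a_n).\]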

In other words, we have a function \((s,t)\mapsto \mathbb {X}_{s,t}\) which takes values in the characters on the algebra \((T(\mathbf{R}^A),\sqcup \!\sqcup )\) and satisfies the Chen relation

\[\mathbb {X}_{s,r}\star \mathbb {X}_{r,t} = \mathbb {X}_{s,t}, \qquad s\le r\le t, \tag{1.2}\]

where \(\star \) is the product dual to \(\Delta \). Note that \(T(\mathbf{R}^A)\), endowed with the shuffle product and the deconcatenation coproduct, is a Hopf algebra.
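The character property and the Chen relation (1.2) can be checked numerically on a piecewise linear path. The sketch below relies on the standard fact that, on a straight segment with increment \(v\), the iterated integral over the word \(a_1\ldots a_n\) equals \(v_{a_1}\cdots v_{a_n}/n!\). The function names `segment`, `star` and `shuffles`, as well as the two increments, are illustrative assumptions and are not taken from the text.

```python
from itertools import product
from math import factorial, isclose

def segment(v, depth):
    """Iterated integrals of a straight segment with increment v,
    indexed by all words (tuples of letters) of length <= depth."""
    sig = {}
    for n in range(depth + 1):
        for word in product(range(len(v)), repeat=n):
            value = 1.0
            for letter in word:
                value *= v[letter]
            sig[word] = value / factorial(n)
    return sig

def star(f, g):
    """Product dual to deconcatenation:
    (f * g)(w) = sum over all splittings w = w1 w2 of f(w1) g(w2)."""
    return {w: sum(f[w[:k]] * g[w[k:]] for k in range(len(w) + 1)) for w in f}

def shuffles(u, v):
    """All shuffles of the words u and v, listed with multiplicity."""
    if not u:
        return [v]
    if not v:
        return [u]
    return ([(u[0],) + w for w in shuffles(u[1:], v)]
            + [(v[0],) + w for w in shuffles(u, v[1:])])

depth = 3
first = segment((1.0, 2.0), depth)    # path on [s, r]
second = segment((3.0, -1.0), depth)  # path on [r, t]
X = star(first, second)               # Chen relation: X_{s,t} = X_{s,r} * X_{r,t}

u, v = (0, 1), (1,)
lhs = sum(X[w] for w in shuffles(u, v))  # X evaluated on the shuffle product of u and v
rhs = X[u] * X[v]
print(lhs, rhs)
```

Here the Chen relation is used as the definition of the iterated integrals of the concatenated path, and the shuffle identity is then verified on one pair of words; both sides agree up to rounding.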

These two remarkable properties do not depend explicitly on the differentiability of the path \((x_t)_{t\ge 0}\). They can therefore serve as an important tool if one wants to consider non-smooth paths and still build a consistent calculus. This intuition was at the heart of Terry Lyons’ definition [46] of a geometric rough path as a function \((s,t)\mapsto \mathbb {X}_{s,t}\) satisfying the two algebraic properties above and with a controlled modulus of continuity, for instance of Hölder type

\[|\mathbb {X}_{s,t}(a_1\ldots a_n)| \le C\, |t-s|^{n\gamma },\]

with some fixed \(\gamma >0\) (although the original definition involved rather a p-variation norm, which is natural in this context since it is invariant under reparametrisation of the path x, just like the definition of \(\mathbb {X}\)). Lyons realised that this setting would allow one to build a robust theory of integration and of associated differential equations. For instance, in the case of stochastic differential equations of Stratonovich type

\[\mathrm{d}X_t = \sigma (X_t)\circ \mathrm{d}W_t,\]

with \(W:\mathbf{R}_+\rightarrow \mathbf{R}^d\) a d-dimensional Brownian motion and \(\sigma :\mathbf{R}^d\rightarrow \mathbf{R}^d\otimes \mathbf{R}^d\) smooth, one can build rough paths \(\mathbb {X}\) and \({\mathbb {W}}\) over X, respectively W, such that the map \({\mathbb {W}}\mapsto \mathbb {X}\) is continuous, while in general the map \(W\mapsto X\) is simply measurable.
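The failure of continuity at the level of paths can be made tangible with a classical deterministic example. The sketch below uses our own choice of path and discretisation, neither of which is taken from the text: the planar loops \(x^n_t = n^{-1}(\cos (n^2 t),\sin (n^2 t))\) converge uniformly to zero, yet the antisymmetric part of their second iterated integral over \([0,2\pi ]\) (the Lévy area) remains equal to \(\pi \).

```python
import math

def circle_path(n, steps):
    """Samples of x^n_t = (cos(n^2 t), sin(n^2 t)) / n at steps + 1 points of [0, 2*pi]."""
    times = (2 * math.pi * k / steps for k in range(steps + 1))
    return [(math.cos(n * n * t) / n, math.sin(n * n * t) / n) for t in times]

def sup_norm(path):
    """Largest Euclidean norm attained by the sampled path."""
    return max(math.hypot(x, y) for x, y in path)

def levy_area(path):
    """(1/2) * integral of (x dy - y dx), taken relative to the starting point,
    computed exactly for the polygonal path through the samples."""
    x0, y0 = path[0]
    area = 0.0
    for (xa, ya), (xb, yb) in zip(path, path[1:]):
        area += 0.5 * ((xa - x0) * (yb - ya) - (ya - y0) * (xb - xa))
    return area

for n in (1, 4, 8):
    path = circle_path(n, steps=20000)
    print(n, sup_norm(path), levy_area(path))
```

The sup norm decays like \(1/n\) while the area stays close to \(\pi \), so a map that depends on the area cannot be continuous in the uniform topology on paths alone; this is the information a rough path lift records.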

The Itô stochastic integration was included in Lyons’ theory although it cannot be described in terms of geometric rough paths. A few years later Gubinelli [29] introduced the concept of a branched rough path as a function \((s,t)\mapsto \mathbb {X}_{s,t}\) taking values in the characters of an algebra \(\mathscr {H}\) of rooted forests, satisfying the analogue of the Chen relation (1.2) with respect to the Grossman-Larsson \(\star \)-product, dual of the Connes-Kreimer coproduct, and with a regularity condition

\[|\mathbb {X}_{s,t}(\tau )| \le C\, |t-s|^{\gamma |\tau |}, \tag{1.4}\]

where \(|\tau |\) counts the number of nodes in the forest \(\tau \) and \(\gamma >0\) is fixed. Again, this framework allows for a robust theory of integration and differential equations driven by branched rough paths. Moreover \(\mathscr {H}\), endowed with the forest product and Connes-Kreimer coproduct, turns out to be a Hopf algebra.
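For readers who prefer to see the Connes-Kreimer coproduct in action, here is a minimal sketch on undecorated rooted trees. It uses the usual recursive description (a tree either is cut off entirely, or the coproduct of the forest of its subtrees is regrafted onto the root). The encoding of trees as sorted tuples of subtrees, the convention of placing the pruned forest in the left factor, and all function names are assumptions made for this illustration.

```python
from collections import Counter

def tree(*children):
    """A rooted tree encoded as the sorted tuple of its subtrees; tree() is a single node."""
    return tuple(sorted(children))

def size(forest):
    """Number of nodes of a forest, given as a tuple of trees."""
    return sum(1 + size(t) for t in forest)

def coproduct_tree(t):
    """Coproduct of one tree as a Counter {(pruned forest, trunk forest): multiplicity}."""
    terms = Counter({((t,), ()): 1})  # the whole tree is pruned, nothing is left
    for (pruned, trunk), mult in coproduct_forest(t).items():
        # regraft what is left of the subtrees onto the root
        terms[(pruned, (tree(*trunk),))] += mult
    return terms

def coproduct_forest(forest):
    """The coproduct is multiplicative with respect to the forest product."""
    terms = Counter({((), ()): 1})
    for t in forest:
        new_terms = Counter()
        for (p1, r1), m1 in terms.items():
            for (p2, r2), m2 in coproduct_tree(t).items():
                key = (tuple(sorted(p1 + p2)), tuple(sorted(r1 + r2)))
                new_terms[key] += m1 * m2
        terms = new_terms
    return terms

dot = tree()
ladder = tree(dot)       # two nodes in a line
cherry = tree(dot, dot)  # a root with two leaves
cherry_terms = coproduct_tree(cherry)
for (pruned, trunk), mult in cherry_terms.items():
    print(mult, pruned, trunk)
```

Every term preserves the total number of nodes, which is the grading \(|\tau |\) entering the regularity condition above.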

The theory of regularity structures [32], due to the second named author of this paper, arose from the desire to apply the above ideas to (stochastic) partial differential equations (SPDEs) involving non-linearities of (random) space–time distributions. Prominent examples are the KPZ equation [23, 27, 31], the \(\Phi ^4\) stochastic quantization equation [1, 7, 21, 32, 43, 45], the continuous parabolic Anderson model [26, 36, 37], and the stochastic Navier–Stokes equations [20, 53].

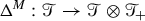

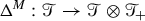

One apparent obstacle to the application of the rough paths framework to such SPDEs is that one would like to allow for the analogue of the map \(s\mapsto \mathbb {X}_{s,t}\tau \) to be a space–time distribution for some  . However, the algebraic relations discussed above involve products of such quantities, which are in general ill-defined. One of the main ideas of [32] was to replace the Hopf-algebra structure with a comodule structure: instead of a single space

. However, the algebraic relations discussed above involve products of such quantities, which are in general ill-defined. One of the main ideas of [32] was to replace the Hopf-algebra structure with a comodule structure: instead of a single space  , we have two spaces

, we have two spaces  and a coaction

and a coaction  such that

such that  is a right comodule over the Hopf algebra

is a right comodule over the Hopf algebra  . In this way, elements in the dual space

. In this way, elements in the dual space  of

of  are used to encode the distributional objects which are needed in the theory, while elements of

are used to encode the distributional objects which are needed in the theory, while elements of  encode continuous functions. Note that

encode continuous functions. Note that  admits neither a product nor a coproduct in general.

admits neither a product nor a coproduct in general.

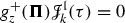

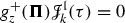

However, the comodule structure allows to define the analogue of a rough path as a pair: consider a distribution-valued continuous function

as well as a continuous function

The analogue of the Chen relation (1.2) is then given by

where the first \(\star \)-product is the convolution product on  , while the second \(\star \)-product is given by the dual of the coaction \(\Delta ^{\!+}\). This structure guarantees that all relevant expressions will be linear in the \(\Pi _y\), so we never need to multiply distributions. To compare this expression to (1.2), think of

, while the second \(\star \)-product is given by the dual of the coaction \(\Delta ^{\!+}\). This structure guarantees that all relevant expressions will be linear in the \(\Pi _y\), so we never need to multiply distributions. To compare this expression to (1.2), think of  for

for  as being the analogue of \(z \mapsto \mathbb {X}_{z,y}(\tau )\). Note that the algebraic conditions (1.5) are not enough to provide a useful object: analytic conditions analogous to (1.4) play an essential role in the analytical aspects of the theory. Once a model\(\mathbb {X}= (\Pi ,\gamma )\) has been constructed, it plays a role analogous to that of a rough path and allows to construct a robust solution theory for a class of rough (partial) differential equations.

as being the analogue of \(z \mapsto \mathbb {X}_{z,y}(\tau )\). Note that the algebraic conditions (1.5) are not enough to provide a useful object: analytic conditions analogous to (1.4) play an essential role in the analytical aspects of the theory. Once a model\(\mathbb {X}= (\Pi ,\gamma )\) has been constructed, it plays a role analogous to that of a rough path and allows to construct a robust solution theory for a class of rough (partial) differential equations.

In various specific situations, the theory yields a canonical lift of any smoothened realisation of the driving noise for the stochastic PDE under consideration to a model \(\mathbb {X}^\varepsilon \). Another major difference with what one sees in the rough paths setting is the following phenomenon: if we remove the regularisation as \(\varepsilon \rightarrow 0\), neither the canonical model \(\mathbb {X}^\varepsilon \) nor the solution to the regularised equation converge in general to a limit. This is a structural problem which reflects again the fact that some products are intrinsically ill-defined.

This is where renormalisation enters the game. It was already recognised in [32] that one should find a group \(\mathfrak {R}\) of transformations on the space of models and elements \(M_\varepsilon \) in \(\mathfrak {R}\) in such a way that, when applying \(M_\varepsilon \) to the canonical lift \(\mathbb {X}^\varepsilon \), the resulting sequence of models converges to a limit. Then the theory essentially provides a black box, allowing to build maximal solutions for the stochastic PDE in question.

One aspect of the theory developed in [32] that is far from satisfactory is that while one has in principle a characterisation of \(\mathfrak {R}\), this characterisation is very indirect. The methodology pursued so far has been to first make an educated guess for a sufficiently large family of renormalisation maps, then verify by hand that these do indeed belong to \(\mathfrak {R}\) and finally show, again by hand, that the renormalised models converge to a limit. Since these steps did not rely on any general theory, they had to be performed separately for each new class of stochastic PDEs.

The main aim of the present article is to define an algebraic framework allowing to build regularity structures which, on the one hand, extend the ones built in [32] and, on the other hand, admit sufficiently many automorphisms (in the sense of [32, Def. 2.28]) to cover the renormalisation procedures of all subcritical stochastic PDEs that have been studied to date.

Moreover our construction is not restricted to the Gaussian setting and applies to any choice of the driving noise with minimal integrability conditions. In particular this allows to recover all the renormalisation procedures used so far in applications of the theory [32, 38,39,40, 42, 51]. It reaches however far beyond this and shows that the BPHZ renormalisation procedure belongs to the renormalisation group of the regularity structure associated to any class of subcritical semilinear stochastic PDEs. In particular, this is the case for the generalised KPZ equation which is the most natural stochastic evolution on loop space and is (formally!) given in local coordinates by

\[\partial _t u^\alpha = \partial _x^2 u^\alpha + \Gamma ^\alpha _{\beta \gamma }(u)\, \partial _x u^\beta \, \partial _x u^\gamma + \sigma _i^\alpha (u)\, \xi _i,\]

where the \(\xi _i\) are independent space–time white noises, \(\Gamma ^\alpha _{\beta \gamma }\) are the Christoffel symbols of the underlying manifold, and the \(\sigma _i\) are a collection of vector fields with the property that \(\sum _i L_{\sigma _i}^2 = \Delta \), where \(L_{\sigma }\) is the Lie derivative in the direction of \(\sigma \) and \(\Delta \) is the Laplace-Beltrami operator. Another example is given by the stochastic sine-Gordon equation [41] close to the Kosterlitz-Thouless transition. In both of these examples, the relevant group describing the renormalisation procedures is of very large dimension (about 100 in the first example and arbitrarily large in the second one), so that the verification “by hand” that it does indeed belong to the “renormalisation group” as done for example in [32, 39], would be impractical.

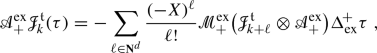

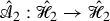

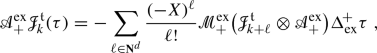

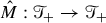

In order to describe the renormalisation procedure of SPDEs we introduce a new construction of an associated regularity structure, that will be called extended since it contains a new parameter which was not present in [32], the extended decoration. As above, this yields spaces  , such that

, such that  is a Hopf algebra and

is a Hopf algebra and  a right comodule over

a right comodule over  . The renormalisation procedure of distributions coded by

. The renormalisation procedure of distributions coded by  is then described by another Hopf algebra

is then described by another Hopf algebra  and coactions

and coactions  and

and  turning both

turning both  and

and  into left comodules over

into left comodules over  . This construction is, crucially, compatible with the comodule structure of

. This construction is, crucially, compatible with the comodule structure of  over

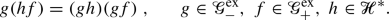

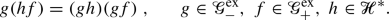

over  in the sense that \(\Delta ^{\!-}_\mathrm {ex}\) and \(\Delta ^{\!+}_\mathrm {ex}\) are in cointeraction in the terminology of [25], see formulae (3.48)–(5.26) and Remark 3.28 below. Once this structure is obtained, we can define renormalised models as follows: given a functional

in the sense that \(\Delta ^{\!-}_\mathrm {ex}\) and \(\Delta ^{\!+}_\mathrm {ex}\) are in cointeraction in the terminology of [25], see formulae (3.48)–(5.26) and Remark 3.28 below. Once this structure is obtained, we can define renormalised models as follows: given a functional  and a model \(\mathbb {X}= (\Pi ,\gamma )\), we construct a new model \(\mathbb {X}^g\) by setting

and a model \(\mathbb {X}= (\Pi ,\gamma )\), we construct a new model \(\mathbb {X}^g\) by setting

The cointeraction property then guarantees that \(\mathbb {X}^g\) satisfies again the generalised Chen relation (1.5). Furthermore, the action of  on

on  and

and  is such that, crucially, the associated analytical conditions automatically hold as well.

is such that, crucially, the associated analytical conditions automatically hold as well.

All the coproducts and coactions mentioned above are a priori different operators, but we describe them in a unified framework as special cases of a contraction/extraction operation of subforests, as arising in the BPHZ renormalisation procedure/forest formula [3, 24, 35, 52]. It is interesting to remark that the structure described in this article is an extension of that previously described in [8, 14, 15] in the context of the analysis of B-series for numerical ODE solvers, which is itself an extension of the Connes-Kreimer Hopf algebra of rooted trees [16, 18] arising in the abovementioned forest formula in perturbative QFT. It is also closely related to incidence Hopf algebras associated to families of posets [49, 50].

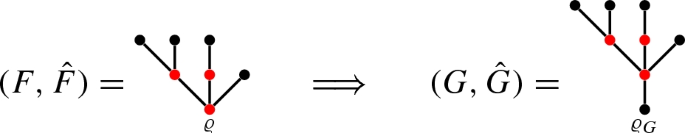

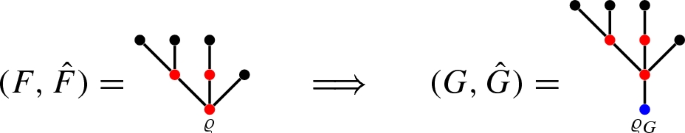

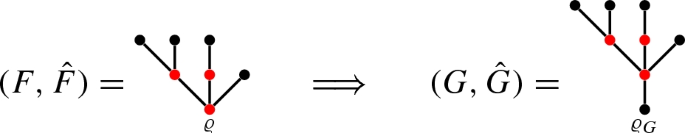

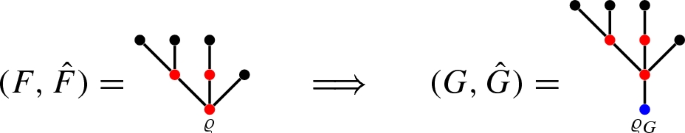

There are however a number of substantial differences with respect to the existing literature. First we propose a new approach based on coloured forests; for instance we shall consider operations of colouring, extraction and contraction of subforests. Further, the abovementioned articles deal with two spaces in cointeraction, analogous to our Hopf algebras \(\mathcal {T}^\mathrm {ex}_-\) and \(\mathcal {T}^\mathrm {ex}_+\), while our third space \(\mathcal {T}^\mathrm {ex}\) is the crucial ingredient which allows for distributions in the analytical part of the theory. Indeed, one of the main novelties of regularity structures is that they allow one to study random distributional objects in a pathwise sense rather than through Feynman path integrals/correlation functions and the space \(\mathcal {T}^\mathrm {ex}\) encodes the fundamental bricks of this construction. Another important difference is that the structure described here does not consist of simple trees/forests, but they are decorated with multiindices on both their edges and their vertices. These decorations are not inert but transform in a non-trivial way under our coproducts, interacting with other operations like the contraction of sub-forests and the computation of suitable gradings.

In this article, Taylor sums play a very important role, just as in the BPHZ renormalisation procedure, and they appear in the coactions of both \(\mathcal {T}^\mathrm {ex}_-\) (the renormalisation) and \(\mathcal {T}^\mathrm {ex}_+\) (the recentering). In both operations, the group elements used to perform such operations are constructed with the help of a twisted antipode, providing a variant of the algebraic Birkhoff factorisation that was previously shown to arise naturally in the context of perturbative quantum field theory, see for example [16, 18, 19, 22, 30, 44].

In general, the context for a twisted antipode/Birkhoff factorisation is that of a group G acting on some vector space A which comes with a valuation. Given an element of A, one then wants to renormalise it by acting on it with a suitable element of G in such a way that its valuation vanishes. In the context of dimensional regularisation, elements of A assign to each Feynman diagram a Laurent series in a regularisation parameter \(\varepsilon \), and the valuation extracts the pole part of this series. In our case, the space A consists of stationary random linear maps \(\varvec{\Pi }:\mathcal {T}^\mathrm {ex}\rightarrow \mathcal {C}^\infty \) and we have two actions on it, by the group of characters \(\mathcal {G}^\mathrm {ex}_\pm \) of \(\mathcal {T}^\mathrm {ex}_\pm \), corresponding to two different valuations. The renormalisation group \(\mathcal {G}^\mathrm {ex}_-\) is associated to the valuation that extracts the value of \(\mathbf{E}(\varvec{\Pi }\tau )(0)\) for every homogeneous element \(\tau \in \mathcal {T}^\mathrm {ex}\) of negative degree. The structure group \(\mathcal {G}^\mathrm {ex}_+\) on the other hand is associated to the valuations that extract the values \((\varvec{\Pi }\tau )(x)\) for all homogeneous elements \(\tau \in \mathcal {T}^\mathrm {ex}\) of positive degree.

We show in particular that the twisted antipode related to the action of \(\mathcal {G}^\mathrm {ex}_+\) is intimately related to the algebraic properties of Taylor remainders. Also in this respect, regularity structures provide a far-reaching generalisation of rough paths, expanding Massimiliano Gubinelli’s investigation of the algebraic and analytic properties of increments of functions of a real variable achieved in the theory of controlled rough paths [28].

1.1 A general renormalisation scheme for SPDEs

Regularity Structures (RS) were introduced in [32] in order to solve singular SPDEs of the form

\[\partial _t u = \Delta u + F(u,\nabla u,\xi ),\]

where \(u=u(t,x)\) with \(t\ge 0\) and \(x\in \mathbf{R}^d\), \(\xi \) is a random space–time Schwartz distribution (typically stationary and approximately scaling-invariant at small scales) driving the equation and the non-linear term \(F(u,\nabla u,\xi )\) contains some products of distributions which are not well-defined by classical analytic methods. We write this equation in the customary mild formulation

\[u = G * F(u,\nabla u,\xi ),\]

where G is the heat kernel and we suppose for simplicity that \(u(0,\cdot )=0\).

If we regularise the noise \(\xi \) by means of a family of smooth mollifiers \((\varrho ^\varepsilon )_{\varepsilon >0}\), setting \(\xi ^\varepsilon :=\varrho ^\varepsilon *\xi \), then the regularised PDE

\[u^\varepsilon = G * F(u^\varepsilon ,\nabla u^\varepsilon ,\xi ^\varepsilon )\]

is well-posed under suitable assumptions on F. However, if we want to remove the regularisation by letting \(\varepsilon \rightarrow 0\), we do not know whether \(u^\varepsilon \) converges. The problem is that \(\xi ^\varepsilon \rightarrow \xi \) in a space of distributions with negative (say) Sobolev regularity, and in such spaces the solution map \(\xi ^\varepsilon \mapsto u^\varepsilon \) is not continuous.
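The simplest manifestation of this loss of continuity is that products of the regularised noise diverge. The sketch below works under assumptions that are ours and not the text's: \(\xi \) is one-dimensional white noise, so that \(\mathbf{E}[\xi ^\varepsilon (x)^2]\) equals the squared \(L^2\) norm of \(\varrho ^\varepsilon \), and the mollifier is \(\varrho ^\varepsilon (x) = \varepsilon ^{-1}\varrho (x/\varepsilon )\) with \(\varrho \) the standard Gaussian density. The function name and the quadrature parameters are likewise illustrative.

```python
import math

def mollifier_l2_norm_sq(eps, half_width=10.0, steps=20000):
    """Midpoint-rule approximation of the integral of rho_eps(x)^2 over the real line,
    where rho_eps(x) = rho(x / eps) / eps and rho is the standard Gaussian density.
    The integral is truncated to [-half_width * eps, half_width * eps]."""
    left = -half_width * eps
    h = 2.0 * half_width * eps / steps
    total = 0.0
    for i in range(steps):
        x = left + (i + 0.5) * h
        rho = math.exp(-0.5 * (x / eps) ** 2) / (eps * math.sqrt(2.0 * math.pi))
        total += rho * rho * h
    return total

for eps in (1.0, 0.1, 0.01):
    variance = mollifier_l2_norm_sq(eps)
    print(eps, variance, eps * variance)
```

Under these assumptions the variance grows like \(1/\varepsilon \) (the rescaled value `eps * variance` stays constant), so even the square of \(\xi ^\varepsilon \) has no limit unless a diverging constant is subtracted.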

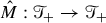

The theory of RS allows one to solve this problem for a class of equations, called subcritical. The general approach is as in Rough Paths (RP): the discontinuous solution map

\[\xi ^\varepsilon \mapsto u^\varepsilon \]

is factorised as the composition of two maps:

\[\xi ^\varepsilon \mapsto {\mathbb {X}}^\varepsilon \mapsto \Phi ({\mathbb {X}}^\varepsilon ) = u^\varepsilon , \qquad {\mathbb {X}}^\varepsilon \in \mathscr {M},\]

where \((\mathscr {M},\mathrm{d})\) is a metric space that we call the space of models. The main point is that the map \(\Phi \) can be chosen in such a way that it is continuous, even though \(\mathscr {M}\) is sufficiently large to allow for elements exhibiting a local scaling behaviour compatible with that of \(\xi \). Of course this means that \(\xi ^\varepsilon \mapsto {\mathbb {X}}^\varepsilon \) is discontinuous in general. In RP, the analogue of the model \({\mathbb {X}}^\varepsilon \) is the lift of the driving noise as a rough path, the map \(\Phi \) is called the Itô-Lyons map, and its continuity (due to T. Lyons [46]) is the cornerstone of the theory. The construction of \(\Phi \) in the general context of subcritical SPDEs is one of the main results of [32].

The construction of \(\Phi \), although a very powerful tool, does not solve alone the aforementioned problem, since it turns out that the most natural choice of \({\mathbb {X}}^\varepsilon \), which we call the canonical model, does in general not converge as we remove the regularisation by letting \(\varepsilon \rightarrow 0\). It is necessary to modify, namely renormalise, the model \({\mathbb {X}}^\varepsilon \) in order to obtain a family \(\hat{\mathbb {X}}^\varepsilon \) which does converge in \(\mathscr {M}\) as \(\varepsilon \rightarrow 0\) to a limiting model \(\hat{\mathbb {X}}\). The continuity of \(\Phi \) then implies that \(\hat{u}^\varepsilon :=\Phi (\hat{\mathbb {X}}^\varepsilon )\) converges to some limit \(\hat{u}:=\Phi (\hat{\mathbb {X}})\), which we call the renormalised solution to our equation, see Fig. 1. A very important fact is that \(\hat{u}^\varepsilon \) is itself the solution of a renormalised equation, which differs from the original equation only by the presence of additional local counterterms, the form of which can be derived explicitly from the starting SPDE, see [2].

Fig. 1. In this figure we show the factorisation of the map \(\xi ^\varepsilon \mapsto u^\varepsilon \) into \(\xi ^\varepsilon \mapsto {\mathbb {X}}^\varepsilon \mapsto \Phi ({\mathbb {X}}^\varepsilon )=u^\varepsilon \). We also see that in the space of models \(\mathscr {M}\) we have several possible lifts of \(\xi ^\varepsilon \in {\mathcal {S}}'(\mathbf{R}^d)\), e.g. the canonical model \({\mathbb {X}}^\varepsilon \) and the renormalised model \(\hat{\mathbb {X}}^\varepsilon \); it is the latter that converges to a model \(\hat{\mathbb {X}}\), thus providing a lift of \(\xi \). Note that \(\hat{u}^\varepsilon =\Phi (\hat{\mathbb {X}}^\varepsilon )\) and \(\hat{u}=\Phi (\hat{\mathbb {X}})\).

The transformation \({\mathbb {X}}^\varepsilon \mapsto \hat{\mathbb {X}}^\varepsilon \) is described by the so-called renormalisation group. The main aim of this paper is to provide a general construction of the space of models \(\mathscr {M}\) together with a group of automorphisms which allows one to describe the renormalised model \(\hat{\mathbb {X}}^\varepsilon =S_\varepsilon {\mathbb {X}}^\varepsilon \) for an appropriate choice of \(S_\varepsilon \) in this group.

Starting with the \(\varphi ^4_3\) equation and the Parabolic Anderson Model in [32], several equations have already been successfully renormalised with regularity structures [34, 36, 37, 39,40,41,42, 51]. In all these cases, the construction of the renormalised model and its convergence as the regularisation is removed are based on ad hoc arguments which have to be adapted to each equation. The present article, together with the companion “analytical” article [9] and the work [2], completes the general theory initiated in [32] by proving that virtually every subcritical equation driven by a stationary noise satisfying some natural bounds on its cumulants can be successfully renormalised by means of the following scheme:

- Algebraic step: Construction of the space of models \((\mathscr {M},\mathrm{d})\) and renormalisation of the canonical model \(\mathscr {M}\ni {\mathbb {X}}^\varepsilon \mapsto \hat{\mathbb {X}}^\varepsilon \in \mathscr {M}\), this article.
- Analytic step: Continuity of the solution map \(\Phi :\mathscr {M}\rightarrow {\mathcal {S}}'(\mathbf{R}^d)\), [32].
- Probabilistic step: Convergence in probability of the renormalised model \(\hat{\mathbb {X}}^\varepsilon \) to \(\hat{\mathbb {X}}\) in \((\mathscr {M},\mathrm{d})\), [9].
- Second algebraic step: Identification of \(\Phi (\hat{\mathbb {X}}^\varepsilon )\) with the classical solution map for an equation with local counterterms, [2].

We stress that this procedure works for very general noises, far beyond the Gaussian case.

1.2 Overview of results

We now describe in more detail the main results of this paper. Let us start from the notion of a subcritical rule. A rule, introduced in Definition 5.7 below, is a formalisation of the notion of a “class of systems of stochastic PDEs”. More precisely, given any system of equations of the type (1.7), there is a natural way of assigning to it a rule (see Sect. 5.4 for an example), which keeps track of which monomials (of the solution, its derivatives, and the driving noise) appear on the right hand side for each component. The notion of a subcritical rule, see Definition 5.14, translates to this general context the notion of subcriticality of equations which was given more informally in [32, Assumption 8.3].

Suppose now that we have fixed a subcritical rule. The first aim is to construct an associated space of models \(\mathscr {M}^\mathrm {ex}\). The superscript ‘\(\mathrm {ex}\)’ stands for extended and is used to distinguish this space from the restricted space of models \(\mathscr {M}\), see Definition 6.24, which is closer to the original construction of [32]. The space \(\mathscr {M}^\mathrm {ex}\) extends \(\mathscr {M}\) in the sense that there is a canonical continuous injection \(\mathscr {M}\hookrightarrow \mathscr {M}^\mathrm {ex}\), see Theorem 6.33. The reason for considering this larger space is that it admits a large group \(\mathcal {G}^\mathrm {ex}_-\) of automorphisms in the sense of [32, Def. 2.28] which can be described in an explicit way. Our renormalisation procedure then makes use of a suitable subgroup \(\mathcal {G}_-\subset \mathcal {G}^\mathrm {ex}_-\) which leaves \(\mathscr {M}\) invariant. The reason why we do not describe its action on \(\mathscr {M}\) directly is that although it acts by continuous transformations, it no longer acts by automorphisms, making it much more difficult to describe without going through \(\mathscr {M}^\mathrm {ex}\).

To define \(\mathscr {M}^\mathrm {ex}\), we construct a regularity structure \(\mathscr {T}^\mathrm {ex}=(A^\mathrm {ex},\mathcal {T}^\mathrm {ex},\mathcal {G}^\mathrm {ex}_+)\) in the sense of [32, Def. 2.1]. This is done in Sect. 5, see in particular Definitions 5.26–5.35 and Proposition 5.39. The corresponding structure group \(\mathcal {G}^\mathrm {ex}_+\) is constructed as the character group of a Hopf algebra \(\mathcal {T}^\mathrm {ex}_+\), see (5.23), Proposition 5.34 and Definition 5.36. The vector space \(\mathcal {T}^\mathrm {ex}\) is a right-comodule over \(\mathcal {T}^\mathrm {ex}_+\), namely there are linear operators

\[\Delta ^{\!+}_\mathrm {ex}:\mathcal {T}^\mathrm {ex}\rightarrow \mathcal {T}^\mathrm {ex}\otimes \mathcal {T}^\mathrm {ex}_+, \qquad \Delta ^{\!+}_\mathrm {ex}:\mathcal {T}^\mathrm {ex}_+\rightarrow \mathcal {T}^\mathrm {ex}_+\otimes \mathcal {T}^\mathrm {ex}_+,\]

such that the identity

\[(\Delta ^{\!+}_\mathrm {ex}\otimes \mathrm {id})\, \Delta ^{\!+}_\mathrm {ex} = (\mathrm {id}\otimes \Delta ^{\!+}_\mathrm {ex})\, \Delta ^{\!+}_\mathrm {ex} \tag{1.8}\]

holds both between operators on \(\mathcal {T}^\mathrm {ex}\) and on \(\mathcal {T}^\mathrm {ex}_+\). The fact that the two operators have the same name but act on different spaces should not generate confusion since the domain is usually clear from context. When it isn’t, as in (1.8), then the identity is assumed by convention to hold for all possible meaningful interpretations.

Next, the renormalisation group is defined as the character group of the Hopf algebra

is defined as the character group of the Hopf algebra  , see (5.23), Proposition 5.35 and Definition 5.36. The vector spaces

, see (5.23), Proposition 5.35 and Definition 5.36. The vector spaces  and

and  are both left-comodules over

are both left-comodules over  , so that

, so that  acts on the left on

acts on the left on  and on

and on  . Again, this means that we have operators

. Again, this means that we have operators

such that

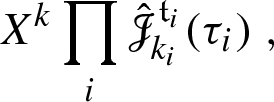

The action of \(\mathcal {G}^\mathrm {ex}_-\) on the corresponding dual spaces is given by

Crucially, these separate actions satisfy a compatibility condition which can be expressed as a cointeraction property, see (5.26) in Theorem 5.37, which implies the following relation between the two actions above:

see Proposition 3.33 and (5.27). This result is the algebraic linchpin of Theorem 6.16, where we construct the action of  on the space \(\mathscr {M}^\mathrm {ex}\) of models.

The next step is the construction of the space of smooth models of the regularity structure  . This is done in Definition 6.7, where we follow [32, Def. 2.17], with the additional constraint that we consider smooth objects. Indeed, we are interested in the canonical model associated to a (regularised) smooth noise, constructed in Proposition 6.12 and Remark 6.13, and in its renormalised versions, namely its orbit under the action of  , see Theorem 6.16.

Finally, we restrict our attention to a class of models which are random, stationary and have suitable integrability properties, see Definition 6.17. In this case, we can define a particular deterministic element of  that gives rise to what we call the BPHZ renormalisation, by analogy with the corresponding construction arising in perturbative QFT [3, 24, 35, 52], see Theorem 6.18. We show that the BPHZ construction yields the unique element of  such that the associated renormalised model yields a centered family of stochastic processes on the finite family of elements in  with negative degree. This is the algebraic step of the renormalisation procedure.

This is the point where the companion analytical paper [9] starts, and then goes on to prove that the BPHZ renormalised model does converge in the metric \(\mathrm{d}\) on \(\mathscr {M}\), thus achieving the probabilistic step mentioned above and thereby completing the renormalisation procedure.

The BPHZ functional is expressed explicitly in terms of an interesting map that we call negative twisted antipode by analogy to [17], see Proposition 6.6 and (6.25). There is also a positive twisted antipode, see Proposition 6.3, which plays a similarly important role in (6.12). The main point is that these twisted antipodes encode in the compact formulae (6.12) and (6.25) a number of nontrivial computations.

How are these spaces and operators defined? Since the analytic theory of [32] is based on generalised Taylor expansions of solutions, the vector space  is generated by a basis which codes the relevant generalised Taylor monomials, which are defined iteratively once a rule (i.e. a system of equations) is fixed. Definitions 5.8, 5.13 and 5.26 ensure that  is sufficiently rich to allow one to rewrite (1.7) as a fixed point problem in a space of functions with values in our regularity structure. Moreover  must also be invariant under the actions of  . This is the aim of the construction in Sects. 2, 3 and 4, which we now describe.

The spaces which are constructed in Sect. 5 depend on the choice of a number of parameters, like the dimension of the coordinate space, the leading differential operator in the equation (the Laplacian being just one of many possible choices), the non-linearity and the noise. In the previous sections we have built universal objects with nice algebraic properties which depend on none of these choices, except for the dimension of the space, namely an (arbitrary) integer d fixed once and for all.

The spaces  ,  and  are obtained by considering repeatedly suitable subsets and suitable quotients of two initial spaces, called \(\mathfrak {F}_1\) and \(\mathfrak {F}_2\) and defined in and after Definition 4.1; more precisely, \(\mathfrak {F}_1\) is the ancestor of  and  , while \(\mathfrak {F}_2\) is the ancestor of  . In Sect. 4 we represent these spaces as linearly generated by a collection of decorated forests, on which we can define suitable algebraic operations like a product and a coproduct, which are later inherited by  ,  and  (through other intermediary spaces which are called  ,  and  ). An important difference between  and  is that the former is linearly generated by a family of forests, while the latter is linearly generated by a family of trees; this difference extends to the algebra structure:  is endowed with a forest product which corresponds to the disjoint union, while  is endowed with a tree product whereby one considers a disjoint union and then identifies the roots.

The content of Sect. 4 is based on a specific definition of the spaces \(\mathfrak {F}_1\) and \(\mathfrak {F}_2\). In Sects. 2 and 3 however we present a number of results on a family of spaces \((\mathfrak {F}_i)_{i\in I}\) with \(I\subset \mathbf{N}\), which are supposed to satisfy a few assumptions; Sect. 4 is therefore only a particular example of a more general theory, which is outlined in Sects. 2 and 3. In this general setting we consider spaces \(\mathfrak {F}_i\) of decorated forests, and vector spaces \({\langle \mathfrak {F}_i\rangle }\) of infinite series of such forests. Such series are not arbitrary but adapted to a grading, see Sect. 2.3; this is needed since our abstract coproducts of Definition 3.3 contain infinite series and might be ill-defined if we were to work on arbitrary formal series.

The family of spaces \((\mathfrak {F}_i)_{i\in I}\) is introduced in Definition 3.12 on the basis of families of admissible forests \(\mathfrak {A}_i\), \(i\in I\). If \((\mathfrak {A}_i)_{i\in I}\) satisfy Assumptions 1, 2, 3, 4, 5 and 6, then the coproducts \(\Delta _i\) of Definition 3.3 are coassociative and moreover \(\Delta _i\) and \(\Delta _j\) for \(i<j\) are in cointeraction, see (3.27). As already mentioned, the cointeraction property is the algebraic formula behind the fundamental relation (1.9) between the actions of  and  on  . “Appendix A” contains a summary of the relations between the most important spaces appearing in this article, while “Appendix B” contains a symbolic index.

2 Rooted forests and bigraded spaces

Given a finite set S and a map \(\ell :S \rightarrow \mathbf{N}\), we write

and we define the corresponding binomial coefficients accordingly. Note that if \(\ell _1\) and \(\ell _2\) have disjoint supports, then \((\ell _1 + \ell _2)! = \ell _1!\,\ell _2!\). Given a map \(\pi :S \rightarrow \bar{S}\), we also define \(\pi _\star \ell :\bar{S} \rightarrow \mathbf{N}\) by \(\pi _\star \ell (x) = \sum _{y \in \pi ^{-1}(x)} \ell (y)\).
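As an illustration, the two operations above can be sketched in Python. This is only a sketch under an assumption: we take \(\ell !\) to mean the product of the factorials \(\ell (x)!\) over \(x \in S\), which is the convention consistent with the multiplicativity property just stated. The names `map_factorial` and `pushforward` are ours and do not appear elsewhere in the article.

```python
from collections import defaultdict
from math import factorial, prod


def map_factorial(ell):
    """Assumed convention: ell! is the product of ell(x)! over all x in S."""
    return prod(factorial(value) for value in ell.values())


def pushforward(pi, ell):
    """(pi_* ell)(x) is the sum of ell(y) over the fibre pi^{-1}(x).

    Points outside the image of pi are omitted; their value is the empty sum 0.
    """
    out = defaultdict(int)
    for y, value in ell.items():
        out[pi[y]] += value
    return dict(out)


# Two maps on S = {a, b, c} with disjoint supports.
ell1 = {"a": 2, "b": 0, "c": 0}
ell2 = {"a": 0, "b": 3, "c": 1}
total = {x: ell1[x] + ell2[x] for x in ell1}

# A hypothetical map pi from S to {u, v}.
pi = {"a": "u", "b": "u", "c": "v"}
pushed = pushforward(pi, total)
```

Here `map_factorial(total)` agrees with `map_factorial(ell1) * map_factorial(ell2)`, as expected for maps with disjoint supports.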

For \(k,\ell :S \rightarrow \mathbf{N}\) we define

with the convention \(\left( {\begin{array}{c}k\\ \ell \end{array}}\right) = 0\) unless \(0\le \ell \le k\), which will be used throughout the paper. With these definitions at hand, one has the following slight reformulation of the classical Chu–Vandermonde identity.

Lemma 2.1

(Chu–Vandermonde) For every \(k :S \rightarrow \mathbf{N}\), one has the identity

where the sum runs over all possible choices of \(\ell \) such that \(\pi _\star \ell \) is fixed. \(\square \)
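The identity can be checked numerically on small examples. The sketch below assumes the statement in its classical form, namely that the sum of \(\left( {\begin{array}{c}k\\ \ell \end{array}}\right) \) over all \(\ell \) with prescribed \(\pi _\star \ell \) equals \(\left( {\begin{array}{c}\pi _\star k\\ \pi _\star \ell \end{array}}\right) \), with binomial coefficients of maps taken as products of pointwise binomial coefficients. The helper names are hypothetical.

```python
from collections import defaultdict
from itertools import product
from math import comb, prod


def binom(k, ell):
    """Product of pointwise binomials; comb returns 0 when ell(x) > k(x)."""
    return prod(comb(k[x], ell[x]) for x in k)


def pushforward(pi, ell):
    """(pi_* ell)(x) is the sum of ell(y) over the fibre pi^{-1}(x)."""
    out = defaultdict(int)
    for y, value in ell.items():
        out[pi[y]] += value
    return dict(out)


def chu_vandermonde_sides(k, pi, target):
    """Return (left-hand side, right-hand side) for a prescribed pi_* ell."""
    keys = list(k)
    lhs = 0
    # Only 0 <= ell <= k contributes, by the convention on binomials.
    for values in product(*(range(k[x] + 1) for x in keys)):
        ell = dict(zip(keys, values))
        if pushforward(pi, ell) == target:
            lhs += binom(k, ell)
    rhs = binom(pushforward(pi, k), target)
    return lhs, rhs


k = {"a": 2, "b": 3, "c": 2}
pi = {"a": "u", "b": "u", "c": "v"}
sides = chu_vandermonde_sides(k, pi, {"u": 2, "v": 1})
```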

Remark 2.2

These notations are also consistent with the case where the maps k and \(\ell \) are multi-index valued under the natural identification of a map \(S \rightarrow \mathbf{N}^d\) with a map \(S \times \{1,\ldots ,d\} \rightarrow \mathbf{N}\) given by \(\ell (x)_i \leftrightarrow \ell (x,i)\).

2.1 Rooted trees and forests

Recall that a rooted tree T is a finite tree (a finite connected simple graph without cycles) with a distinguished vertex, \(\varrho =\varrho _T\), called the root. Vertices of T, also called nodes, are denoted by \(N=N_T\) and edges by \(E=E_T\subset N^2\). Since we want our trees to be rooted, they need to have at least one node, so that we do not allow for trees with \(N_T = \varnothing \). We do however allow for the trivial tree consisting of an empty edge set and a vertex set with only one element. This tree will play a special role in the sequel and will be denoted by \(\bullet \). We will always assume that our trees are combinatorial meaning that there is no particular order imposed on edges leaving any given vertex.

Given a rooted tree T, we also endow \(N_T\) with the partial order \(\le \) where \(w \le v\) if and only if w is on the unique path connecting v to the root, and we orient edges in \(E_T\) so that if \((x,y) = (x \rightarrow y) \in E_T\), then \(x \le y\). In this way, we can always view a tree as a directed graph.
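The partial order and the induced orientation are easy to make concrete. The following sketch encodes a rooted tree by a parent map (an encoding chosen here purely for illustration) and tests \(w \le v\) by walking from v to the root.

```python
def path_to_root(parent, v):
    """Nodes on the unique path from v to the root, starting at v."""
    path = [v]
    while parent[path[-1]] is not None:
        path.append(parent[path[-1]])
    return path


def leq(parent, w, v):
    """w <= v if and only if w lies on the path connecting v to the root."""
    return w in path_to_root(parent, v)


def oriented_edges(parent):
    """Edges (x, y) oriented away from the root, so that x <= y."""
    return {(p, child) for child, p in parent.items() if p is not None}


# A small tree with root r, children a and b, and c a child of a.
parent = {"r": None, "a": "r", "b": "r", "c": "a"}
edges = oriented_edges(parent)
```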

Two rooted trees T and \(T'\) are isomorphic if there exists a bijection \(\iota :E_T \rightarrow E_{T'}\) which is coherent in the sense that there exists a bijection \(\iota _N :N_T \rightarrow N_{T'}\) such that \(\iota (x,y) = (\iota _N(x),\iota _N(y))\) for any edge \((x,y) \in E_T\) and such that the roots are mapped onto each other.

We say that a rooted tree is typed if it is furthermore endowed with a function \(\mathfrak {t}:E_T \rightarrow \mathfrak {L}\), where \(\mathfrak {L}\) is some finite set of types. We think of \(\mathfrak {L}\) as being fixed once and for all and will sometimes omit to mention it in the sequel. In particular, we will never make explicit the dependence on the choice of \(\mathfrak {L}\) in our notations. Two typed trees \((T,\mathfrak {t})\) and \((T',\mathfrak {t}')\) are isomorphic if T and \(T'\) are isomorphic and \(\mathfrak {t}\) is pushed onto \(\mathfrak {t}'\) by the corresponding isomorphism \(\iota \) in the sense that \(\mathfrak {t}'\circ \iota = \mathfrak {t}\).

Similarly to a tree, a forest F is a finite simple graph (again with nodes \(N_F\) and edges \(E_F \subset N_F^2\)) without cycles. A forest F is rooted if every connected component T of F is a rooted tree with root \(\varrho _T\). As above, we will consider forests that are typed in the sense that they are endowed with a map \(\mathfrak {t}:E_F \rightarrow \mathfrak {L}\), and we consider the same notion of isomorphism between typed forests as for typed trees. Note that while a tree is non-empty by definition, a forest can be empty. We denote the empty forest by either \(\mathbf {1}\) or \(\varnothing \).

Given a typed forest F, a subforest \(A \subset F\) consists of subsets \(E_A \subset E_F\) and \(N_A \subset N_F\) such that if \((x,y) \in E_A\) then \(\{x,y\}\subset N_A\). Types in A are inherited from F. A connected component of A is a tree whose root is defined to be the minimal node in the partial order inherited from F. We say that subforests A and B are disjoint, and write \(A \cap B = \varnothing \), if one has \(N_A \cap N_B = \varnothing \) (which also implies that \(E_A\cap E_B = \varnothing \)). Given two typed forests F, G, we write \(F\sqcup G\) for the typed forest obtained by taking the disjoint union (as graphs) of the two forests F and G and adjoining to it the natural typing inherited from F and G. If furthermore \(A \subset F\) and \(B \subset G\) are subforests, then we write \(A \sqcup B\) for the corresponding subforest of \(F \sqcup G\).

We fix once and for all an integer \(d\ge 1\), dimension of the parameter-space \(\mathbf{R}^d\). We also denote by \(\mathbf{Z}(\mathfrak {L})\) the free abelian group generated by \(\mathfrak {L}\).

2.2 Coloured and decorated forests

Given a typed forest F, we want now to consider families of disjoint subforests of F, denoted by \((\hat{F}_i, i>0)\). It is convenient for us to code this family with a single function \(\hat{F}:E_F \sqcup N_F \rightarrow \mathbf{N}\) as given by the next definition.

Definition 2.3

A coloured forest is a pair \((F,\hat{F})\) such that

1. \(F = (E_F,N_F,\mathfrak {t})\) is a typed rooted forest

2. \(\hat{F} :E_F \sqcup N_F \rightarrow \mathbf{N}\) is such that if \(\hat{F}(e) \ne 0\) for \(e=(x,y) \in E_F\) then \(\hat{F}(x) = \hat{F}(y) = \hat{F}(e)\).

We say that \(\hat{F}\) is a colouring of F. For \(i > 0\), we define the subforest of F

as well as \(\hat{E} = \bigcup _{i > 0} \hat{E}_i\). We denote by \(\mathfrak {C}\) the set of coloured forests.

The condition on \(\hat{F}\) guarantees that every \(\hat{F}_i\) is indeed a subforest of F for \(i>0\) and that they are all disjoint. On the other hand, \(\hat{F}^{-1}(0)\) is not supposed to have any particular structure and 0 is not counted as a colour.
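The condition on \(\hat{F}\) can be checked mechanically. The sketch below stores a colouring as a dictionary on nodes and edges (our own encoding, with types omitted) and extracts \(\hat{F}_i\) as the preimage \(\hat{F}^{-1}(i)\), which is how we read the definition above.

```python
def is_colouring(edges, colour):
    """Check: a coloured edge forces the same colour on both endpoints."""
    for edge in edges:
        x, y = edge
        if colour[edge] != 0 and not (colour[x] == colour[y] == colour[edge]):
            return False
    return True


def coloured_part(edges, nodes, colour, i):
    """Edges and nodes of colour i, read as the subforest hat F_i for i > 0."""
    return (
        {e for e in edges if colour[e] == i},
        {v for v in nodes if colour[v] == i},
    )


nodes = {"r", "a", "b"}
edges = {("r", "a"), ("r", "b")}
good = {"r": 1, "a": 1, "b": 0, ("r", "a"): 1, ("r", "b"): 0}
# Invalid: the edge (r, b) has colour 2 but its endpoints do not.
bad = {"r": 1, "a": 1, "b": 0, ("r", "a"): 1, ("r", "b"): 2}
part_one = coloured_part(edges, nodes, good, 1)
```

Note that an edge of colour 0 may join two nodes of the same non-zero colour; the check imposes nothing in that case, in line with the remark that \(\hat{F}^{-1}(0)\) carries no particular structure.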

Example 2.4

This is an example of a forest with two colours: red for 1 and blue for 2 (and black for 0)

We then have \(\hat{F}_1= \hat{F}^{-1}(1) = A_1\sqcup A_3 \) and \(\hat{F}_2=\hat{F}^{-1}(2) = A_2\sqcup A_4\).

The set \(\mathfrak {C}\) is a commutative monoid under the forest product

where colourings defined on one of the forests are extended to the disjoint union by setting them to vanish on the other forest. The neutral element for this associative product is the empty coloured forest \(\mathbf {1}\).

We add now decorations on the nodes and edges of a coloured forest. For this, we fix throughout this article an arbitrary “dimension” \(d \in \mathbf{N}\) and we give the following definition.

Definition 2.5

We denote by \(\mathfrak {F}\) the set of all 5-tuples \((F,\hat{F},\mathfrak {n},\mathfrak {o},\mathfrak {e})\) such that

1. \((F,\hat{F})\in \mathfrak {C}\) is a coloured forest in the sense of Definition 2.3.

2. One has \(\mathfrak {n}:N_F \rightarrow \mathbf{N}^d\)

3. One has \(\mathfrak {o}:N_F \rightarrow \mathbf{Z}^d\oplus \mathbf{Z}(\mathfrak {L})\) with \(\text {supp}\mathfrak {o}\subset \text {supp}\hat{F}\).

4. One has \(\mathfrak {e}:E_F \rightarrow \mathbf{N}^d\) with \(\text {supp}\mathfrak {e}\subset \{e\in E_F :\, \hat{F}(e)=0\}=E_F\setminus \hat{E}\).

Remark 2.6

The reason why \(\mathfrak {o}\) takes values in the space \(\mathbf{Z}^d\oplus \mathbf{Z}(\mathfrak {L})\) will become apparent in (3.33) below when we define the contraction of coloured subforests and its action on decorations.

We identify \((F,\hat{F},\mathfrak {n},\mathfrak {o},\mathfrak {e})\) and \((F',\hat{F}',\mathfrak {n}',\mathfrak {o}',\mathfrak {e}')\) whenever F is isomorphic to \(F'\), the corresponding isomorphism maps \(\hat{F}\) to \(\hat{F}'\) and pushes the three decoration functions onto their counterparts. We call elements of \(\mathfrak {F}\) decorated forests. We will also sometimes use the notation \((F,\hat{F})^{\mathfrak {n},\mathfrak {o}}_\mathfrak {e}\) instead of \((F,\hat{F},\mathfrak {n},\mathfrak {o},\mathfrak {e})\).

Example 2.7

Let us consider the decorated forest \( (F,\hat{F},\mathfrak {n},\mathfrak {o},\mathfrak {e}) \) given by

In this figure, the edges in \(E_F\) are labelled with the numbers from 1 to 13 and the nodes in \(N_F\) with the letters \(\{a,b,c,d,e,f,g,h,i,j,k,l,m,p\}\). We set \(\hat{F}^{-1}(1)=\{b,d,e,j,k\}\sqcup \{3,4,9\}\) (red subforest), \(\hat{F}^{-1}(2)=\{a,c,f,g,l,m\}\sqcup \{2,5,6,11,12\}\) (blue subforest), and on all remaining (black) nodes and edges \(\hat{F}\) is set equal to 0. Every edge has a type \(\mathfrak {t}\in \mathfrak {L}\), but only black edges have a possibly non-zero decoration \(\mathfrak {e}\in \mathbf{N}^d\). All nodes have a decoration \(\mathfrak {n}\in \mathbf{N}^d\), but only coloured nodes have a possibly non-zero decoration \(\mathfrak {o}\in \mathbf{Z}^d\oplus \mathbf{Z}(\mathfrak {L})\).

Example 2.7 is continued in Examples 3.2, 3.4 and 3.5.

Definition 2.8

For any coloured forest \((F,\hat{F})\), we define an equivalence relation \(\sim \) on the node set \(N_F\) by saying that \(x \sim y\) if x and y are connected in \(\hat{E}\); this is the smallest equivalence relation for which \(x \sim y\) whenever \((x,y) \in \hat{E}\).

Definition 2.8 will be extended to a decorated forest \((F,\hat{F},\mathfrak {n},\mathfrak {o},\mathfrak {e})\) in Definition 3.18 below.
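The equivalence classes of \(\sim \) are the connected components of the graph \((N_F,\hat{E})\), so they can be computed with a standard union-find pass over the coloured edges. The sketch below is one possible way to do this; the function name and the encoding of edges as pairs of node labels are our own choices.

```python
def equivalence_classes(nodes, coloured_edges):
    """Classes of the smallest equivalence relation containing the coloured edges."""
    parent = {v: v for v in nodes}

    def find(v):
        # Follow parent pointers to the representative, compressing the path.
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    for x, y in coloured_edges:
        parent[find(x)] = find(y)

    groups = {}
    for v in nodes:
        groups.setdefault(find(v), set()).add(v)
    return {frozenset(group) for group in groups.values()}


# Five nodes; only the edges (a, b) and (b, c) are coloured.
classes = equivalence_classes({"a", "b", "c", "d", "e"}, [("a", "b"), ("b", "c")])
```

Nodes that meet no coloured edge, such as d and e here, form singleton classes.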

Remark 2.9

We want to show the intuition behind decorated forests. We think of each \(\tau =(F, \hat{F},\mathfrak {n},\mathfrak {o},\mathfrak {e})\) as defining a function on \((\mathbf{R}^d)^{N_F}\) in the following way. We associate to each type \(\mathfrak {t}\in \mathfrak {L}\) a kernel \(\varphi _\mathfrak {t}:\mathbf{R}^d\rightarrow \mathbf{R}\) and we define the domain

where \(\sim \) is the equivalence relation of Definition 2.8. Then we set  ,

where, for \(x=(x^1,\ldots ,x^d)\in \mathbf{R}^d\), \(n=(n^1,\ldots ,n^d)\in \mathbf{N}^d\) and

In this way, a decorated forest encodes a function: every node in \(N_F/\sim \) represents a variable in \(\mathbf{R}^d\), every uncoloured edge of a certain type \(\mathfrak {t}\) a function \( \varphi _{\mathfrak {t}(e)}\) of the difference of the two variables sitting at each one of its nodes; the decoration \(\mathfrak {n}(v)\) gives a power of \(x_v\) and \(\mathfrak {e}(e)\) a derivative of the kernel \(\varphi _{\mathfrak {t}(e)}\).

In this example the decoration \(\mathfrak {o}\) plays no role; we shall see below that it allows one to encode some additional information relevant for the various algebraic manipulations we wish to subject these functions to, see Remarks 3.7, 3.19, 5.38 and 6.26 below for further discussions.

Remark 2.10

Every forest \(F=(N_F,E_F)\) has a unique decomposition into non-empty connected components. This property naturally extends to decorated forests \((F, \hat{F},\mathfrak {n},\mathfrak {o},\mathfrak {e})\), by considering the connected components of the underlying forest F and restricting the colouring \(\hat{F}\) and the decorations \(\mathfrak {n},\mathfrak {o},\mathfrak {e}\).

Remark 2.11

Starting from Sect. 4 we are going to consider a specific situation where there are only two colours, namely \(\hat{F}\rightarrow \{0,1,2\}\); all examples throughout the paper are in this setting. However the results of Sects. 2 and 3 are stated and proved in the more general setting \(\hat{F}\rightarrow \mathbf{N}\) without any additional difficulty.

2.3 Bigraded spaces and triangular maps

It will be convenient in the sequel to consider a particular category of bigraded spaces as follows.

Definition 2.12

For a collection of vector spaces \(\{V_n\,:\, n \in \mathbf{N}^2\}\), we define the vector space

as the space of all formal sums \(\sum _{n \in \mathbf{N}^2} v_n\) with \(v_n \in V_n\) and such that there exists \(k \in \mathbf{N}\) such that \(v_n= 0\) as soon as \(n_2 > k\). Given two bigraded spaces V and W, we write \(V {\hat{\otimes }}W\) for the bigraded space

One has a canonical inclusion \(V \otimes W \subset V {\hat{\otimes }}W\) given by

However in general \(V {\hat{\otimes }}W\) is strictly larger since its generic element has the form

Note that all tensor products we consider are algebraic.

Definition 2.13

We introduce a partial order on \(\mathbf{N}^2\) by

Given two such bigraded spaces V and \(\bar{V}\), a family \(\{A_{mn}\}_{m,n \in \mathbf{N}^2}\) of linear maps \(A_{mn}:V_n\rightarrow \bar{V}_m\) is called triangular if \(A_{mn} = 0\) unless \(m\ge n\).

Lemma 2.14

Let V and \(\bar{V}\) be two bigraded spaces and \(\{A_{mn}\}_{m,n \in \mathbf{N}^2}\) a triangular family of linear maps \(A_{mn}:V_n\rightarrow \bar{V}_m\). Then the map

is well defined from V to \(\bar{V}\) and linear. We call \(A:V\rightarrow \bar{V}\) a triangular map.

Proof

Let \(v=\sum _nv_n\in V\) and \(k\in \mathbf{N}\) such that \(v_n=0\) whenever \(n_2>k\).

First we note that, for fixed \(m\in \mathbf{N}^2\), the family \((A_{mn}v_n)_{n\in \mathbf{N}^2}\) is zero unless \(n\in [0,m_1]\times [0,k]\); indeed if \(n_2>k\) then \(v_n=0\), while if \(n_1>m_1\) then \(A_{mn}=0\). Therefore the sum \(\sum _n A_{mn}v_n\) is well defined and equal to some \(\bar{v}_m\in \bar{V}_m\).

We now prove that \(\bar{v}_m=0\) whenever \(m_2>k\), so that indeed  . Let \(m_2> k\); for \(n_2>k\), \(v_n\) is 0, while for \(n_2\le k\) we have \(n_2< m_2\) and therefore \(A_{mn}=0\) and this proves the claim. \(\square \)

A linear function \(A:V\rightarrow \bar{V}\) which can be obtained as in Lemma 2.14 is called triangular. The family \((A_{mn})_{m,n\in \mathbf{N}^2}\) defines an infinite lower triangular matrix and composition of triangular maps is then simply given by formal matrix multiplication, which only ever involves finite sums thanks to the triangular structure of these matrices.

Remark 2.15

The notion of bigraded spaces as above is useful for at least two reasons:

1. The operators \(\Delta _i\) built in (3.7) below turn out to be triangular in the sense of Definition 2.13 and are therefore well-defined thanks to Lemma 2.14, see Remark 2.15 below. This is not completely trivial since we are dealing with spaces of infinite formal series.

2. Some of our main tools below will be spaces of multiplicative functionals, see Sect. 3.6 below. Had we simply considered spaces of arbitrary infinite formal series, their dual would be too small to contain any non-trivial multiplicative functional at all. Considering instead spaces of finite series would cure this problem, but unfortunately the coproducts \(\Delta _i\) do not make sense there. The notion of bigrading introduced here provides the best of both worlds by considering bi-indexed series that are infinite in the first index and finite in the second. This yields spaces that are sufficiently large to contain our coproducts and whose dual is still sufficiently large to contain enough multiplicative linear functionals for our purpose.

Remark 2.16

One important remark is that this construction behaves quite nicely under duality in the sense that if V and W are two bigraded spaces, then it is still the case that one has a canonical inclusion \(V^* \otimes W^* \subset (V {\hat{\otimes }}W)^*\), see e.g. (3.46) below for the applications we have in mind. Indeed, the dual \(V^*\) consists of formal sums \(\sum _n v^*_n\) with \(v_n^* \in V_n^*\) such that, for every \(k \in \mathbf{N}\) there exists f(k) such that \(v^*_n = 0\) for every \(n \in \mathbf{N}^2\) with \(n_1 \ge f(n_2)\).

The set \({\mathfrak {F}}\), see Definition 2.5, admits a number of different useful gradings and bigradings. One bigrading that is well adapted to the construction we give below is

where

and \(|F \setminus (\hat{F} \cup \varrho _F)|\) denotes the number of edges and vertices on which \(\hat{F}\) vanishes that aren’t roots of F.

For any subset \(A\subseteq \mathfrak {F}\) let now \({\langle A\rangle }\) denote the space built from A with this grading, namely

where \({\mathrm {Vec}}\, S\) denotes the free vector space generated by a set S. Note that in general \({\langle A\rangle }\) is larger than \({\mathrm {Vec}}\, A\).

The following simple fact will be used several times in the sequel. Here and throughout this article, we use as usual the notation \(f {\upharpoonright }A\) for the restriction of a map f to some subset A of its domain.

Lemma 2.17

Let  be a bigraded space and let \(P:V\rightarrow V\) be a triangular map preserving the bigrading of V (in the sense that there exist linear maps \(P_n :V_n \rightarrow V_n\) such that \(P {\upharpoonright }V_n = P_n\) for every n) and satisfying \(P\circ P=P\). Then, the quotient space \(\hat{V} = V/\ker P\) is again bigraded and one has canonical identifications

3 Bialgebras, Hopf algebras and comodules of decorated forests

In this section we want to introduce a general class of operators on spaces of decorated forests and show that, under suitable assumptions, one can construct in this way bialgebras, Hopf algebras and comodules.

We recall that  is a bialgebra if:

- H is a vector space over \(\mathbf{R}\)

- there are a linear map  (product) and an element \(\mathbf {1}\in H\) (identity) such that  is a unital associative algebra, where \(\eta :\mathbf{R}\rightarrow H\) is the map \(r\mapsto r\mathbf {1}\) (unit)

- there are linear maps \(\Delta :H\rightarrow H\otimes H\) (coproduct) and \(\mathbf {1}^\star :H\rightarrow \mathbf{R}\) (counit), such that \((H,\Delta ,\mathbf {1}^\star )\) is a counital coassociative coalgebra, namely

$$\begin{aligned} (\Delta \otimes \mathrm {id})\Delta =(\mathrm {id}\otimes \Delta )\Delta , \qquad (\mathbf {1}^\star \otimes \mathrm {id})\Delta = (\mathrm {id}\otimes \mathbf {1}^\star )\Delta =\mathrm {id}\end{aligned}$$(3.1)

- the coproduct and the counit are homomorphisms of algebras (or, equivalently, multiplication and unit are homomorphisms of coalgebras).

A Hopf algebra is a bialgebra  endowed with a linear map  such that

A left comodule over a bialgebra  is a pair \((M,\psi )\) where M is a vector space and \(\psi :M\rightarrow H\otimes M\) is a linear map such that

Right comodules are defined analogously.

For more details on the theory of coalgebras, bialgebras, Hopf algebras and comodules we refer the reader to [6, 47].

3.1 Incidence coalgebras of forests

Denote by \(\mathcal {P}\) the set of all pairs (G; F) such that F is a typed forest and G is a subforest of F and by \({\mathrm {Vec}}({\mathcal {P}})\) the free vector space generated by \(\mathcal {P}\). Suppose that for all \((G;F)\in {\mathcal {P}}\) we are given a (finite) collection \(\mathfrak {A}(G;F)\) of subforests A of F such that \(G\subseteq A\subseteq F\). Then we define the linear map \(\Delta :{\mathrm {Vec}}({\mathcal {P}})\rightarrow {\mathrm {Vec}}({\mathcal {P}})\otimes {\mathrm {Vec}}({\mathcal {P}})\) by

We also define the linear functional \(\mathbf {1}^\star :{\mathrm {Vec}}({\mathcal {P}})\rightarrow \mathbf{R}\) by  . If \(\mathfrak {A}(G;F)\) is equal to the set of all subforests A of F containing G, then it is a simple exercise to show that \(({\mathrm {Vec}}({\mathcal {P}}),\Delta ,\mathbf {1}^\star )\) is a coalgebra, namely (3.1) holds. In particular, since the inclusion \(G\subseteq F\) endows the set of typed forests with a partial order, \(({\mathrm {Vec}}({\mathcal {P}}),\Delta ,\mathbf {1}^\star )\) is an example of an incidence coalgebra, see [49, 50]. However, if \(\mathfrak {A}(G;F)\) is a more general class of subforests, then coassociativity is not granted in general and holds only under certain assumptions.
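The coassociativity claim can be tested by brute force on a toy case. The sketch below makes two assumptions that should be read as ours: the coproduct is taken in the usual incidence form, sending (G; F) to the sum over admissible A of \((G;A)\otimes (A;F)\), and F is a forest consisting of isolated nodes only, so that its subforests are exactly the subsets of its node set. Tensors are stored as `Counter` objects keyed by tuples of pairs.

```python
from collections import Counter
from itertools import combinations


def between(G, F):
    """Yield every node set A with G <= A <= F."""
    extra = sorted(F - G)
    for size in range(len(extra) + 1):
        for chosen in combinations(extra, size):
            yield G | frozenset(chosen)


def coproduct(G, F):
    """Assumed form: (G;F) maps to the sum over A of (G;A) tensor (A;F)."""
    return Counter(((G, A), (A, F)) for A in between(G, F))


def delta_tensor_id(G, F):
    """Apply the coproduct to the left factor of coproduct(G, F)."""
    out = Counter()
    for (left, right), c in coproduct(G, F).items():
        for (p, q), c2 in coproduct(*left).items():
            out[(p, q, right)] += c * c2
    return out


def id_tensor_delta(G, F):
    """Apply the coproduct to the right factor of coproduct(G, F)."""
    out = Counter()
    for (left, right), c in coproduct(G, F).items():
        for (p, q), c2 in coproduct(*right).items():
            out[(left, p, q)] += c * c2
    return out


G = frozenset()
F = frozenset({"x", "y"})
lhs = delta_tensor_id(G, F)
rhs = id_tensor_delta(G, F)
```

Both sides enumerate the chains \(G\subseteq A\subseteq B\subseteq F\), which is why they agree when every intermediate subforest is admissible; restricting the admissible class can break this symmetry.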

Suppose now that, given a typed forest F, we want to consider not one but several disjoint subforests \(G_1,\ldots ,G_n\) of F. A natural way to code \((G_1,\ldots ,G_n;F)\) is to use a coloured forest \((F,\hat{F})\) where

Then, in the notation of Definition 2.3, we have \(\hat{F}_i=G_i\) for \(i>0\) and \(\hat{F}^{-1}(0)=F\setminus (\cup _i G_i)\).

In order to define a generalisation of the operator \(\Delta \) of formula (3.3) to this setting, we fix \(i>0\) and assume the following.

Assumption 1

Let \(i>0\). For each coloured forest \((F, \hat{F})\) as in Definition 2.3 we are given a collection \(\mathfrak {A}_i(F,\hat{F})\) of subforests of F such that for every \(A \in \mathfrak {A}_i(F,\hat{F})\)

1. \(\hat{F}_i \subset A\) and \(\hat{F}_{j} \cap A=\varnothing \) for every \(j>i\),

2. for all \(0<j<i\) and every connected component T of \(\hat{F}_{j}\), one has either \(T \subset A\) or \(T \cap A=\varnothing \).

We also assume that \(\mathfrak {A}_i\) is compatible with the equivalence relation \(\sim \) given by forest isomorphisms described above in the sense that if \(A \in \mathfrak {A}_i(F,\hat{F})\) and \(\iota :(F,\hat{F}) \rightarrow (G, \hat{G})\) is a forest isomorphism, then \(\iota (A) \in \mathfrak {A}_i(G,\hat{G})\).

It is important to note that colours are denoted by positive integer numbers and are therefore ordered, so that the forests \(\hat{F}_j\), \(\hat{F}_i\) and \(\hat{F}_k\) can play different roles in Assumption 1 if \(j<i<k\). This becomes crucial in our construction below, see Proposition 3.27 and Remark 3.29.

Lemma 3.1

Let \((F, \hat{F})\in \mathfrak {C}\) be a coloured forest and \(A \in \mathfrak {A}_i(F,\hat{F})\). Write

- \(\hat{F}{\upharpoonright }A\) for the restriction of \(\hat{F}\) to \(N_A\sqcup E_A\)

- \(\hat{F} \cup _i A\) for the function on \(E_F\sqcup N_F\) given by

$$\begin{aligned} (\hat{F} \cup _i A)(x) = \left\{ \begin{array}{ll} i &{} \text {if }x \in E_A \sqcup N_A, \\ \hat{F}(x) &{} \text {otherwise.} \end{array}\right. \end{aligned}$$

Then, under Assumption 1, \((A,\hat{F}{\upharpoonright }A)\) and \((F, \hat{F} \cup _i A)\) are coloured forests.

Proof

The claim is elementary for \((A,\hat{F}{\upharpoonright }A)\); in particular, setting \(\hat{G}{\mathop {=}\limits ^{ \text{ def }}}\hat{F}{\upharpoonright }A\), we have \(\hat{G}_j=\hat{F}_j\cap A\) for all \(j>0\). We prove it now for \((F, \hat{F} \cup _i A)\). We must prove that, setting \(\hat{G}{\mathop {=}\limits ^{ \text{ def }}}\hat{F} \cup _i A\), the sets \(\hat{G}_j{\mathop {=}\limits ^{ \text{ def }}}\hat{G}^{-1}(j)\) define subforests of F for all \(j>0\). We have by the definitions

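$$\begin{aligned} \hat{G}_i = \hat{F}_i \cup A, \qquad \hat{G}_j = \hat{F}_j \setminus A, \quad j\ne i,\ j>0, \end{aligned}$$

and these are subforests of F by the properties 1 and 2 of Assumption 1. \(\square \)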
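The two colourings appearing in Lemma 3.1 can be experimented with on toy data. The following is a minimal sketch, assuming (hypothetically) that a colouring is stored as a dictionary from node and edge labels to colours and that A is given as a set of labels; the names `restrict`, `recolour` and `colour_class` are ours and do not appear in the text, and the sketch does not check that A is a subforest or that Assumption 1 holds.

```python
def restrict(colouring, A):
    """Restriction of the colouring to the labels in A."""
    return {x: c for x, c in colouring.items() if x in A}

def recolour(colouring, A, i):
    """Colouring equal to i on A and unchanged elsewhere."""
    return {x: (i if x in A else c) for x, c in colouring.items()}

def colour_class(colouring, j):
    """Set of labels carrying colour j."""
    return {x for x, c in colouring.items() if c == j}

# Hypothetical toy data: five labels, colour 1 on 'b', colour 2 on 'c' and 'd'.
F_hat = {"a": 0, "b": 1, "c": 2, "d": 2, "e": 0}
A = {"c", "d", "e"}  # contains the colour-2 class and avoids the colour-1 class
i = 2
G_hat = recolour(F_hat, A, i)

# The colour-i class grows to include A; the other positive classes lose A.
print(colour_class(G_hat, 2) == colour_class(F_hat, 2) | A)
print(colour_class(G_hat, 1) == colour_class(F_hat, 1) - A)
```

On this data both comparisons hold, in line with the description of the colour classes of \(\hat{F} \cup _i A\) that follows directly from its definition.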

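We denote by \({\mathrm {Vec}}(\mathfrak {C})\) the free vector space generated by all coloured forests. This allows us to define, for fixed \(i>0\), the following operator \(\Delta _i:{\mathrm {Vec}}(\mathfrak {C})\rightarrow {\mathrm {Vec}}(\mathfrak {C})\otimes {\mathrm {Vec}}(\mathfrak {C})\):

$$\begin{aligned} \Delta _i (F,\hat{F}) {\mathop {=}\limits ^{ \text{ def }}}\sum _{A \in \mathfrak {A}_i(F,\hat{F})} (A,\hat{F}{\upharpoonright }A) \otimes (F, \hat{F} \cup _i A). \end{aligned}$$(3.4)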

Note that if \(i=1\) and \(\hat{F}\le 1\) then we can identify

- the coloured forest \((F,\hat{F})\) with the pair of subforests \((\hat{F}_1;F)\in {\mathcal {P}}\),

- \(\mathfrak {A}(\hat{F}_1;F)\) with \(\mathfrak {A}_1(F,\hat{F})\).

Example 3.2

Let us continue Example 2.7, forgetting the decorations but keeping the same labels for the nodes and in particular for the leaves. We recall that \(\hat{F}\) is equal to 1 on the red subforest, to 2 on the blue subforest and to 0 elsewhere. Then

A valid example of \( A \in \mathfrak {A}_{2}(F,\hat{F}) \) could be such that

Note that in this example, one has \(\hat{F}_2 \subset A\), so that \(A\notin \mathfrak {A}_{1}(F,\hat{F}) \) since A violates the first condition of Assumption 1. A valid example of \( B \in \mathfrak {A}_{1}(F,\hat{F}) \) could be such that

In the rest of this section we state several assumptions on the family \(\mathfrak {A}_i(F,\hat{F})\) yielding nice properties for the operator \(\Delta _i\) such as coassociativity, see e.g. Assumption 2. However, one of the main results of this article is the fact that such properties then automatically also hold at the level of decorated forests with a non-trivial action on the decorations which will be defined in the next subsection.

3.2 Operators on decorated forests

The set \(\mathfrak {F}\), see Definition 2.5, is a commutative monoid under the forest product

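$$\begin{aligned} (F,\hat{F},\mathfrak {n},\mathfrak {o},\mathfrak {e}) \cdot (G,\hat{G},\mathfrak {n}',\mathfrak {o}',\mathfrak {e}') = (F \sqcup G,\hat{F} + \hat{G},\mathfrak {n}+ \mathfrak {n}',\mathfrak {o}+ \mathfrak {o}',\mathfrak {e}+ \mathfrak {e}'), \end{aligned}$$(3.5)

where decorations defined on one of the forests are extended to the disjoint union by setting them to vanish on the other forest. This product is the natural extension of the product (2.1) on coloured forests and its identity element is the empty forest \(\mathbf {1}\).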

Note that

$$\begin{aligned} |\tau \cdot \sigma |_{\mathrm {bi}} = |\tau |_{\mathrm {bi}} + |\sigma |_{\mathrm {bi}} \end{aligned}$$(3.6)

for any \(\tau ,\sigma \in \mathfrak {F}\), where \(|\cdot |_{\mathrm {bi}}\) is the bigrading defined in (2.4) above. Whenever M is a submonoid of \(\mathfrak {F}\), as a consequence of (3.6) the forest product \(\cdot \) defined in (3.5) can be interpreted as a triangular linear map from \({\langle M\rangle }{\hat{\otimes }}{\langle M\rangle }\) into \({\langle M\rangle }\), thus turning \(({\langle M\rangle },\cdot )\) into an algebra in the category of bigraded spaces as in Definition 2.12; this is in particular the case for \(M=\mathfrak {F}\). We recall that \({\langle M\rangle }\) is defined in (2.5).

We generalise now the construction (3.4) to decorated forests.

Definition 3.3

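The triangular linear maps \(\Delta _i :{\langle \mathfrak {F}\rangle } \rightarrow {\langle \mathfrak {F}\rangle }{\hat{\otimes }}{\langle \mathfrak {F}\rangle }\) are given for \(\tau = (F, \hat{F},\mathfrak {n},\mathfrak {o},\mathfrak {e})\) by

$$\begin{aligned} \Delta _i \tau {\mathop {=}\limits ^{ \text{ def }}}\sum _{A \in \mathfrak {A}_i(F,\hat{F})} \sum _{\varepsilon _A^F,\mathfrak {n}_A} \frac{1}{\varepsilon _A^F!} \binom{\mathfrak {n}}{\mathfrak {n}_A}&(A,\hat{F}{\upharpoonright }A,\mathfrak {n}_A+\pi \varepsilon _A^F, \mathfrak {o}{\upharpoonright }N_A,\mathfrak {e}{\upharpoonright }E_A) \\&\otimes (F,\hat{F} \cup _i A,\mathfrak {n}- \mathfrak {n}_A,\ \mathfrak {o}+\mathfrak {n}_A+\pi (\varepsilon _A^F-\mathfrak {e}_\varnothing ^A),\mathfrak {e}_A^F + \varepsilon _A^F), \end{aligned}$$(3.7)

where

(a) For \(A\subseteq B \subseteq F\) and \(f:E_F\rightarrow \mathbf{N}^d\), we use the notation \(f_A^B {\mathop {=}\limits ^{ \text{ def }}}f\,\mathbb {1}_{E_B\setminus E_A}\).

(b) The sum over \(\mathfrak {n}_A\) runs over all maps \(\mathfrak {n}_A:N_F \rightarrow \mathbf{N}^d\) with \(\text {supp}\mathfrak {n}_A\subset N_A\).

(c) The sum over \(\varepsilon _A^F\) runs over all \(\varepsilon _A^F:E_F \rightarrow \mathbf{N}^d\) supported on the set of edges

$$\begin{aligned} \partial (A,F) {\mathop {=}\limits ^{ \text{ def }}}\left\{ (e_+,e_-) \in E_F\setminus E_{A} \,:\, e_+ \in N_A \right\} , \end{aligned}$$(3.8)

that we call the boundary of A in F. This notation is consistent with point (a).

(d) For all \(\varepsilon : E_F\rightarrow \mathbf{Z}^d\) we denote

$$\begin{aligned} \pi \varepsilon :N_F\rightarrow \mathbf{Z}^d, \qquad \pi \varepsilon (x){\mathop {=}\limits ^{ \text{ def }}}\sum _{e=(x,y)\in E_F} \varepsilon (e). \end{aligned}$$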

We will henceforth use these notational conventions for sums over node/edge decorations without always spelling them out in full.
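To make the boundary \(\partial (A,F)\) of (3.8) and the map \(\pi \) of point (d) concrete, here is a minimal sketch in Python. It assumes, purely for illustration, that \(d=1\), that edges are stored as ordered pairs \((e_+,e_-)\) and that decorations are dictionaries; the function names `boundary` and `pi` and the toy data are hypothetical and not taken from the text.

```python
def boundary(E_F, E_A, N_A):
    """Edges (e_plus, e_minus) of F that are not in E_A and have e_plus in N_A."""
    return {e for e in E_F if e not in E_A and e[0] in N_A}

def pi(eps, E_F, N_F):
    """(pi eps)(x): sum of eps(e) over all edges e = (x, y) in E_F (case d = 1)."""
    out = {x: 0 for x in N_F}
    for (x, y) in E_F:
        out[x] += eps.get((x, y), 0)
    return out

# Hypothetical toy data: four nodes, three edges written as (e_plus, e_minus).
N_F = {"r", "u", "v", "w"}
E_F = {("r", "u"), ("u", "v"), ("u", "w")}
N_A = {"r", "u"}
E_A = {("r", "u")}

bd = boundary(E_F, E_A, N_A)
eps = {("u", "v"): 2, ("u", "w"): 1}  # an edge decoration supported on bd
pi_eps = pi(eps, E_F, N_F)
print(sorted(bd))
print(pi_eps["u"])
```

In this toy case \(\pi \varepsilon \) vanishes outside \(N_A\), since every boundary edge has its first endpoint in \(N_A\); this is what allows \(\mathfrak {n}_A+\pi \varepsilon _A^F\) to serve as a node decoration on A.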

Example 3.4

We continue Examples 2.7 and 3.2, by showing how decorations are modified by \(\Delta _i\). We consider first \(i=2\), corresponding to a blue subforest \(A\in \mathfrak {A}_2(F,\hat{F})\). Then we have that \( (A,\hat{F}{\upharpoonright }A,\mathfrak {n}_A+\pi \varepsilon _A^F, \mathfrak {o}{\upharpoonright }N_A,\mathfrak {e}{\upharpoonright }E_A) \) is equal to

while \((F,\hat{F} \cup _2 A,\mathfrak {n}- \mathfrak {n}_A,\ \mathfrak {o}+\mathfrak {n}_A+\pi (\varepsilon _A^F-\mathfrak {e}_\varnothing ^A),\mathfrak {e}_A^F + \varepsilon _A^F)\) becomes

Note that \(\varepsilon _A^F\) is supported on \(\partial (A,F)=\{7,8,10,13\}\), where we refer to the labelling of edges and nodes fixed in Example 2.7, and

Note that the edge 1 was black in \((F,\hat{F})\) and becomes blue in \((F,\hat{F} \cup _2 A)\); accordingly, in \((F,\hat{F} \cup _2 A,\mathfrak {n}- \mathfrak {n}_A,\ \mathfrak {o}+\mathfrak {n}_A+\pi (\varepsilon _A^F-\mathfrak {e}_\varnothing ^A),\mathfrak {e}_A^F + \varepsilon _A^F)\) the value of \(\mathfrak {e}\) on 1 is set to 0 and \(\mathfrak {e}(1)\) is subtracted from \(\mathfrak {o}(a)\). In accordance with Assumption 1, \(A\in \mathfrak {A}_2(F,\hat{F})\) contains one of the two connected components of \(\hat{F}_1\) and is disjoint from the other one.

Example 3.5

We continue Example 3.4 for the choice of B made in Example 3.2 and for \(i=1\), corresponding to a red subforest \(B\in \mathfrak {A}_1(F,\hat{F})\). Then \( (B,\hat{F}{\upharpoonright }B,\mathfrak {n}_B+\pi \varepsilon _B^F, \mathfrak {o}{\upharpoonright }N_B,\mathfrak {e}{\upharpoonright }E_B) \) is equal to

while \((F,\hat{F} \cup _1 B,\mathfrak {n}- \mathfrak {n}_B,\ \mathfrak {o}+\mathfrak {n}_B+\pi (\varepsilon _B^F-\mathfrak {e}_\varnothing ^B),\mathfrak {e}_B^F + \varepsilon _B^F)\) becomes

Here we have that \(\partial (B,F)=\{7\}\), where we refer to the labelling of edges and nodes fixed in Example 2.7. Therefore \(\pi \varepsilon _B^F(d)=\varepsilon _B^F(7)\). Note that the edge 8 was black in \((F,\hat{F})\) and becomes red in \((F,\hat{F} \cup _1 B)\); accordingly, in \((F,\hat{F} \cup _1 B,\mathfrak {n}- \mathfrak {n}_B,\ \mathfrak {o}+\mathfrak {n}_B+\pi (\varepsilon _B^F-\mathfrak {e}_\varnothing ^B),\mathfrak {e}_B^F + \varepsilon _B^F)\) the value of \(\mathfrak {e}\) on 8 is set to 0 and \(\mathfrak {e}(8)\) is subtracted from \(\mathfrak {o}(d)\). In accordance with Assumption 1, \(B\in \mathfrak {A}_1(F,\hat{F})\) is disjoint from the blue subforest \(\hat{F}_2\) and, accordingly, all decorations on \(\hat{F}_2\) are unchanged. Finally, note that the edge 1 is not in \(\partial (B,F)\) since it is equal to (a, b) with \(b\in B\) and \(a\notin B\).

Remark 3.6

From now on, in expressions like (3.7) we are going to use the simplified notation

$$\begin{aligned} (A,\hat{F}{\upharpoonright }A,\mathfrak {n}_A+\pi \varepsilon _A^F, \mathfrak {o},\mathfrak {e}) {\mathop {=}\limits ^{ \text{ def }}}(A,\hat{F}{\upharpoonright }A,\mathfrak {n}_A+\pi \varepsilon _A^F, \mathfrak {o}{\upharpoonright }N_A,\mathfrak {e}{\upharpoonright }E_A), \end{aligned}$$

namely the restrictions of \(\mathfrak {o}\) and \(\mathfrak {e}\) will not be made explicit. This should generate no confusion, since by Definition 2.5 in \((A,\hat{A},\mathfrak {n}', \mathfrak {o}',\mathfrak {e}')\) we have \(\mathfrak {o}' :N_A \rightarrow \mathbf{Z}^d\oplus \mathbf{Z}(\mathfrak {L})\) and \(\mathfrak {e}' :E_A \rightarrow \mathbf{N}^d\). On the other hand, the notation \(\hat{F}{\upharpoonright }A\) refers to a slightly less standard operation, see Lemma 3.1 above, and will therefore be made explicit throughout. Note also that \(\mathfrak {n}_A\) is not defined as the restriction of \(\mathfrak {n}\) to \(N_A\).

Remark 3.7

It may not be obvious why Definition 3.3 is natural, so let us try to offer an intuitive explanation of where it comes from. First note that (3.7) reduces to (3.4) if we drop the decorations and the combinatorial coefficients.

If we go back to Remark 2.9, and we recall that a decorated forest encodes a function of a set of variables in \(\mathbf{R}^d\) indexed by the nodes of the underlying forest, then we can realise that the operator \(\Delta _i\) in (3.7) is naturally motivated by Taylor expansions.

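Let us consider first the particular case of \(\tau =(F,\hat{F},0,\mathfrak {o},\mathfrak {e})\). Then \(\mathfrak {n}_A\) has to vanish because of the constraint \(0\le \mathfrak {n}_A\le \mathfrak {n}\) and (3.7) becomes

$$\begin{aligned} \Delta _i \tau = \sum _{A \in \mathfrak {A}_i(F,\hat{F})} \sum _{\varepsilon _A^F} \frac{1}{\varepsilon _A^F!}\, (A,\hat{F}{\upharpoonright }A,\pi \varepsilon _A^F, \mathfrak {o},\mathfrak {e}) \otimes (F,\hat{F} \cup _i A,0,\ \mathfrak {o}+\pi (\varepsilon _A^F-\mathfrak {e}_\varnothing ^A),\mathfrak {e}_A^F + \varepsilon _A^F). \end{aligned}$$

Consider a single term in this sum and fix an edge \(e=(v,w)\in \partial (A,F)\). Then, in the expression

$$\begin{aligned} (F,\hat{F} \cup _i A,0,\ \mathfrak {o}+\pi (\varepsilon _A^F-\mathfrak {e}_\varnothing ^A),\mathfrak {e}_A^F + \varepsilon _A^F) \end{aligned}$$

the decoration of e is changing from \(\mathfrak {e}(e)\) to \(\mathfrak {e}(e)+\varepsilon _A^F(e)\). Recalling (2.2), this should be interpreted as differentiating \(\varepsilon _A^F(e)\) times the kernel encoded by the edge e. At the same time, in the expression

$$\begin{aligned} (A,\hat{F}{\upharpoonright }A,\pi \varepsilon _A^F, \mathfrak {o},\mathfrak {e}) \end{aligned}$$

the term \(\pi \varepsilon _A^F(v)\) is a sum of several contributions, among which \(\varepsilon _A^F(e)\). If we take into account the factor \(1/\varepsilon _A^F(e)!\), we recognise a (formal) Taylor sum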

If \(\mathfrak {n}\) is not zero, then we have a similar Taylor sum given by

The role of the decoration \( \mathfrak {o}\) is still mysterious at this stage: we ask the reader to wait until the Remarks 3.19, 5.38 and 6.26 below for an explanation. The connection between our construction and Taylor expansions (more precisely, Taylor remainders) will be made clear in Lemma 6.10 and Remark 6.11 below.

Remark 3.8

Note that, in (3.7), for each fixed A the decoration \(\mathfrak {n}_A\) runs over a finite set because of the constraint \(0\le \mathfrak {n}_A\le \mathfrak {n}\).

On the other hand, \(\varepsilon _A^F\) runs over an infinite set, but the sum is nevertheless well defined as an element of \({\langle \mathfrak {F}\rangle }{\hat{\otimes }}{\langle \mathfrak {F}\rangle }\), even though it does not belong to the algebraic tensor product \({\langle \mathfrak {F}\rangle }\otimes {\langle \mathfrak {F}\rangle }\). Indeed, since \(|\mathfrak {e}{\upharpoonright }E_A| + |\mathfrak {e}_A^F + \varepsilon _A^F| = |\mathfrak {e}| + |\varepsilon _A^F| \ge |\mathfrak {e}|\) and

it is the case that if \(|\tau |_{\mathrm {bi}}= n\), then the degree of each term appearing on the right hand side of (3.7) is of the type \((n_1+k_1,n_2-k_2)\) with \(k_i \ge 0\). Since furthermore the sum is finite for any given value of \(|\varepsilon _A^F|\), this is indeed a triangular map on \({\langle \mathfrak {F}\rangle }\), see Remark 2.15 above.

There are many other ways of bigrading \(\mathfrak {F}\) to make the \(\Delta _i\) triangular, but the one chosen here has the advantage that it behaves nicely with respect to the various quotient operations of Sects. 3.5 and 4.1 below.
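As a hedged illustration of the two finiteness statements in this remark, one can count the terms explicitly. The count below assumes that \(|\varepsilon _A^F|\) denotes the unscaled sum of all components of \(\varepsilon _A^F\); this is an assumption made only for the purpose of the count, and a scaled norm would change the number but not its finiteness. The symbols m and N are introduced here for illustration.

$$\begin{aligned} \#\{\mathfrak {n}_A \,:\, 0\le \mathfrak {n}_A\le \mathfrak {n},\ \text {supp}\,\mathfrak {n}_A\subset N_A\}&= \prod _{x\in N_A}\prod _{j=1}^d \bigl (\mathfrak {n}(x)_j+1\bigr ), \\ \#\{\varepsilon _A^F \,:\, |\varepsilon _A^F| = m\}&= \binom{m+N-1}{N-1}, \qquad N = d\,|\partial (A,F)| \ge 1. \end{aligned}$$

For instance, if \(N_A\) consists of a single node x with \(\mathfrak {n}(x)=(2,1)\), there are \(3\cdot 2=6\) admissible \(\mathfrak {n}_A\); and if \(d=1\) and \(\partial (A,F)\) has two edges, there are exactly three choices of \(\varepsilon _A^F\) with \(|\varepsilon _A^F|=2\), namely (2, 0), (1, 1) and (0, 2).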

Remark 3.9

The coproduct \(\Delta _i\) defined in (3.7) does not look like that of a combinatorial Hopf algebra since for \(\varepsilon _A^F\) the coefficients are not necessarily integers. This could in principle be rectified easily by a simple change of basis: if we set

then we can write (3.7) equivalently as

for \(\tau = (F, \hat{F},\mathfrak {n},\mathfrak {o},\mathfrak {e})_\circ \). Note that with this notation it is still the case that

However, since this lengthens some expressions, does not seem to create any significant simplifications, and completely destroys compatibility with the notations of [32], we prefer to stick to (3.7).

Remark 3.10

As already remarked, the grading \(|\,\cdot \,|_{\mathrm {bi}}\) defined in (3.6) is not preserved by the \(\Delta _i\). This should be considered a feature, not a bug! Indeed, the fact that the first component of our bigrading is not preserved is precisely what allows us to have an infinite sum in (3.7). A more natural integer-valued grading in that respect would have been given for example by

which would be preserved by both the forest product \(\cdot \) and \(\Delta _i\). However, since \(\mathfrak {e}\) can take arbitrarily large values, this grading is no longer positive. A grading very similar to this will play an important role later on, see Definition 5.3 below.

3.3 Coassociativity

Assumption 2

For each coloured forest \((F, \hat{F})\) as in Definition 2.3, the collection \(\mathfrak {A}_i(F,\hat{F})\) of subforests of F satisfies the following properties.

1. One has

$$ \begin{aligned} \mathfrak {A}_i(F\sqcup G,\hat{F} + \hat{G}) = \{C \sqcup D \,:\, C \in \mathfrak {A}_i(F,\hat{F})\; \& \; D \in \mathfrak {A}_i(G,\hat{G})\}\;. \end{aligned}$$(3.14)

2. One has

$$ \begin{aligned} A \in \mathfrak {A}_i(F,\hat{F})\quad \& \quad B \in \mathfrak {A}_i(F,\hat{F} \cup _i A)\;, \end{aligned}$$(3.15a)

if and only if

$$ \begin{aligned} B \in \mathfrak {A}_i(F,\hat{F})\quad \& \quad A \in \mathfrak {A}_i(B,\hat{F}{\upharpoonright }B). \end{aligned}$$(3.15b)

Assumption 2 is precisely what is required so that the “undecorated” versions of the maps \(\Delta _i\), as defined in (3.4), are both multiplicative and coassociative. The next proposition shows that the definition (3.7) is such that this automatically carries over to the “decorated” counterparts.

Proposition 3.11

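Under Assumptions 1 and 2, the maps \(\Delta _i\) are coassociative and multiplicative on \({\langle \mathfrak {F}\rangle }\), namely the identities

$$\begin{aligned} (\Delta _i \otimes \mathrm {id})\Delta _i \tau = (\mathrm {id}\otimes \Delta _i)\Delta _i \tau \end{aligned}$$(3.16a)

$$\begin{aligned} \Delta _i (\tau \cdot \sigma ) = (\Delta _i \tau )\cdot (\Delta _i \sigma ) \end{aligned}$$(3.16b)

hold for all \(\tau ,\sigma \in \mathfrak {F}\).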

Proof

The multiplicativity property (3.16b) is an immediate consequence of property 1 in Assumption 2 and the fact that the factorial factorises for functions with disjoint supports, so we only need to verify (3.16a).
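The factorisation used in this step can be spelled out as follows. This is a sketch under the assumption that the factorial of a decoration \(\ell \) is the product \(\ell ! = \prod _{x}\prod _{j=1}^d \ell (x)_j!\) over its domain, and similarly for binomial coefficients; the symbols \(S_1\), \(S_2\), \(\varepsilon _1\), \(\varepsilon _2\), \(\mathfrak {n}_C\), \(\mathfrak {n}_D\) are introduced here for illustration. If \(\varepsilon =\varepsilon _1+\varepsilon _2\), where \(\varepsilon _1\) and \(\varepsilon _2\) are supported on disjoint sets \(S_1\) and \(S_2\), then, since \(0!=1\),

$$\begin{aligned} \varepsilon ! = \prod _{e\in S_1}\prod _{j=1}^d \varepsilon _1(e)_j!\ \prod _{e\in S_2}\prod _{j=1}^d \varepsilon _2(e)_j! = \varepsilon _1!\,\varepsilon _2!\;. \end{aligned}$$

In the same way, if \(\mathfrak {n},\mathfrak {n}_C\) are supported on \(N_F\) and \(\mathfrak {n}',\mathfrak {n}_D\) on \(N_G\), then on \(N_F\sqcup N_G\)

$$\begin{aligned} \binom{\mathfrak {n}+\mathfrak {n}'}{\mathfrak {n}_C+\mathfrak {n}_D} = \binom{\mathfrak {n}}{\mathfrak {n}_C}\binom{\mathfrak {n}'}{\mathfrak {n}_D}\;, \end{aligned}$$

because each node contributes a factor coming from exactly one of the two forests.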