Abstract

We examine infinite horizon decision problems with arbitrary bounded payoff functions in which the decision maker uses finitely additive behavioral strategies. Since we only assume that the payoff function is bounded, it is well-known that these behavioral strategies generally do not induce unambiguously defined expected payoffs. Consequently, it is not clear how to compare behavioral strategies and define optimality. We address this problem by finding conditions on the payoff function that guarantee an unambiguous expected payoff regardless of which behavioral strategy the decision maker uses. To this end, we systematically consider various alternatives proposed in the literature on how to define the finitely additive probability measure on the set of infinite plays induced by a behavioral strategy.

1 Introduction

We examine infinite horizon decision problems in which the decision maker’s payoff is a bounded function of the infinite sequence of the actions chosen. Within our framework, probability measures are so-called charges: they are finitely additive, albeit not necessarily countably additive. We assume that the decision maker uses behavioral strategies. A behavioral strategy assigns a charge on the available actions depending on the actions chosen in the past. In order to assign an expected payoff to each behavioral strategy, it is necessary to define the charge induced by the behavioral strategy on the set of infinite sequences of actions.

Following established literature, we explore four distinct algebras on the set of infinite sequences of actions, and correspondingly, we define the charge induced by a behavioral strategy on each of these algebras.

Yet, because the payoff function is only assumed to be bounded, it may not be measurable with respect to these algebras. Consequently, to calculate expected payoffs through integrals, we need to extend the charge induced by a behavioral strategy from these algebras to encompass the entire power set. However, the extension of these charges is generally not unique, and thus the expected payoff under a behavioral strategy might be ambiguous.

This naturally gives rise to the question: what conditions ensure that the expected payoff is unambiguous? We address this question by finding conditions on the payoff function that guarantee an unambiguous expected payoff regardless of which behavioral strategy the decision maker adopts. Most of these conditions have a topological nature. We illustrate the results by several examples.

Related literature. Charges were advocated by de Finetti (1975), Savage (1972), and Dubins and Savage (2014). They facilitate constructions such as a uniform probability distribution over the natural numbers (cf. Schirokauer and Kadane (2007)), and avoid the problem of measure (cf. Aliprantis and Border (2005)). For a summary of the history of charges, we refer to Bingham (2010).

In decision theory, charges have been used in various models, notably in de Finetti (1975) and Savage (1972), and they are also regularly used to model beliefs (e.g. Gilboa and Marinacci (2016)). Models building on these ideas can be found in Al-Najjar (2009) and Pomatto et al. (2014). Sudderth (2016) writes about finitely additive dynamic programming, where the payoff is some type of aggregation of daily payoffs. Charges also gained recognition in game theoretic models, such as in Maitra and Sudderth (1993), Marinacci (1997), Maitra and Sudderth (1998), Harris et al. (2005), Capraro and Scarsini (2013), Al-Najjar et al. (2014), Flesch et al. (2017), Milchtaich (2020) and Cerreia-Vioglio et al. (2022).

The same type of decision problems as ours are studied in Dubins and Savage (2014), Dubins (1974), Purves and Sudderth (1976) and Flesch et al. (2019), and the algebras we consider on the infinite sequences of actions already appear in these papers. We will mention the specific connections throughout the paper.

Structure of the paper. In Sect. 2, we discuss some preliminaries on charges. In Sect. 3, we introduce the model and the main question. In Sect. 4, we discuss induced charges by behavioral strategies and the corresponding expected payoffs. In Sect. 5, as a preparation, we define a few classes of payoff functions. In Sect. 6, we present our main results: conditions for an unambiguously defined expected payoff. In Sect. 7, we illustrate our results with examples. In Sect. 8, we provide some concluding remarks. For convenience, an overview of the most important notation can be found before the Appendices.

The remaining sections contain technical details and the proofs: Sect. 9 contains further properties of the algebras and expected payoffs, Sect. 10 contains most of the proofs, and Sect. 11 contains an additional example.

2 Preliminaries on charges

In this section we provide a brief summary on charges. For further reading, we refer to Rao and Rao (1983) and Dunford and Schwartz (1964).

Let X be a nonempty set. A collection \(\mathscr {P}\) of subsets of X is called an algebra if it has the following properties: (1) \(X \in \mathscr {P}\), (2) if \(E, F \in \mathscr {P}\), then \(E \cup F \in \mathscr {P}\), (3) if \(E\in \mathscr {P}\), then \(X{\setminus } E \in \mathscr {P}\). It follows that an algebra is closed under taking finite unions. An algebra is called a sigma-algebra, if it is even closed under taking countable unions.

Let \(\mathscr {P}\) be an algebra on X. A finitely additive probability measure, also called a charge, on \((X, \mathscr {P})\) is a function \(\mu :\mathscr {P}\rightarrow [0,1]\) such that \(\mu (X) = 1\) and for all disjoint sets \(E, F \in \mathscr {P}\) it holds that \(\mu (E \cup F) = \mu (E) + \mu (F)\). We denote the set of all charges on \((X, \mathscr {P})\) by \({\mathcal {C}}(X,\mathscr {P})\). For \(x \in X\), we denote the Dirac charge on x by \(\delta _x\), i.e., for every set \(B \in \mathscr {P}\), we have \(\delta _x(B) =1\) if \(x \in B\) and \(\delta _x(B)=0\) if \(x \notin B\).

The following statement follows from Theorem 2 in Łoś and Marczewski (1949) together with Zorn's Lemma, and is also shown in Theorem C.3 in Flesch et al. (2017). If \(\mathscr {P}\) is an algebra, and \(\mu\) is a charge on \(\mathscr {P}\), then \(\mu\) can be extended to a charge on \(2^X\). That is, there exists a charge \(\nu\) on \((X, 2^X)\) such that \(\nu (E)=\mu (E)\) for all \(E \in \mathscr {P}\). The extension \(\nu\) is generally not unique.

Let \(\mathscr {P}\) be an algebra on X. A function \(s :X \rightarrow \mathbb {R}\) is called a \(\mathscr {P}\)-measurable simple function if there are \(c_1, \ldots , c_m \in \mathbb {R}\) and a partition \(\{B_1, \ldots , B_m\}\) of X with \(B_1, \ldots , B_m \in \mathscr {P}\) such that \(s = \sum _{i=1}^m c_i \mathbb {I}_{B_i}\), where \(\mathbb {I}_{B_i}\) is the characteristic function of the set \(B_i\). Let \(\mu\) be a charge on \((X, \mathscr {P})\). The integral of s with respect to the charge \(\mu\) is defined by \(\int _{x\in X} s(x)\,\mu (dx) = \sum _{i=1}^m c_i \cdot \mu (B_i)\).
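For a finite set X, the definitions above can be checked mechanically. The following Python sketch is only an illustration under that finiteness assumption; the helper names `is_algebra`, `is_charge` and `integrate_simple` are hypothetical and introduced here for illustration. It verifies the algebra axioms, verifies finite additivity, and evaluates the integral of a simple function as \(\sum _i c_i \cdot \mu (B_i)\).

```python
from fractions import Fraction
from itertools import combinations

def is_algebra(X, P):
    """Check the three algebra axioms for a family P of frozensets over X."""
    X = frozenset(X)
    P = set(P)
    if X not in P:                                   # (1) X belongs to P
        return False
    if any(X - E not in P for E in P):               # (3) closed under complements
        return False
    return all(E | F in P for E in P for F in P)     # (2) closed under unions

def is_charge(X, P, mu):
    """Check mu(X) = 1, values in [0, 1], and additivity on disjoint pairs."""
    X = frozenset(X)
    if mu[X] != 1 or any(not 0 <= mu[E] <= 1 for E in P):
        return False
    return all(mu[E | F] == mu[E] + mu[F]
               for E, F in combinations(P, 2) if not (E & F))

def integrate_simple(terms, mu):
    """Integral of s = sum_i c_i * 1_{B_i}, given as (c_i, B_i) pairs."""
    return sum(c * mu[B] for c, B in terms)

# Toy data (an assumption for illustration): X = {1, 2, 3} with atoms {1} and {2, 3}.
X = {1, 2, 3}
P = [frozenset(), frozenset({1}), frozenset({2, 3}), frozenset(X)]
mu = {P[0]: Fraction(0), P[1]: Fraction(1, 4), P[2]: Fraction(3, 4), P[3]: Fraction(1)}
s = [(Fraction(2), P[1]), (Fraction(6), P[2])]       # s = 2 on {1} and 6 on {2, 3}
value = integrate_simple(s, mu)                      # 2 * 1/4 + 6 * 3/4
```

On a finite set every charge is countably additive, so this sketch cannot exhibit the purely finitely additive phenomena studied in the paper; it only makes the bookkeeping of the definitions concrete.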

Let \(\mu\) be a charge on \((X, 2^X)\). For every bounded function \(f :X \rightarrow \mathbb {R}\) and every \(\varepsilon >0\), there exists\(^{1}\) a (\(2^X\)-measurable) simple function s such that \(s \le f \le s + \varepsilon\). Let \(f :X \rightarrow \mathbb {R}\) be a bounded function. The integral \(\int _{x\in X} f(x)\,\mu (dx)\) is defined as the supremum of all real numbers \(\int _{x\in X} s(x)\,\mu (dx)\), where s is a simple function with \(s \le f\). Since f is bounded, the integral is finite. The integral is linear over the set of bounded real-valued functions. We remark that the integral \(\int _{x\in X} f(x)\,\mu (dx)\) is equal to the infimum of all real numbers \(\int _{x\in X} s(x)\,\mu (dx)\), where s is a simple function and \(s \ge f\).

When X is countably infinite,\(^{2}\) we say that a charge \(\mu \in {\mathcal {C}}(X, 2^X)\) is diffuse (also called purely finitely additive) if \(\mu ( \{ x \} ) = 0\) for every \(x \in X\). Assuming the Axiom of Choice, diffuse charges exist. Note that diffuse charges are not countably additive.

3 The model and the main question

In the entire paper, we assume the Axiom of Choice.

The decision problem. Let A be an action set, having at least two elements. Let H denote the set of finite sequences in A, including the empty sequence ø. Elements of A are called actions, elements of H are called histories and elements of \(A^\mathbb {N}\) are called plays. Let \(u:A^\mathbb {N}\rightarrow \mathbb {R}\) be a bounded function, called the payoff function.

Consider the following decision problem. At each period \(t=1,2,\ldots\), the decision maker chooses\(^{3}\) an action \(a_t\) from A, knowing his previous choices \((a_1,\ldots ,a_{t-1})\in H\). This induces a play \(\vec a=(a_1,a_2,\ldots )\). The payoff of the decision maker is \(u(\vec a)\).

Behavioral strategy. A behavioral strategy is a function \(b:H\rightarrow {\mathcal {C}}(A,2^A)\). The interpretation is that if history h arises during the decision problem, then the strategy b recommends the decision maker to choose an action according to the charge b(h).

Main question. To explain our main question informally: suppose that an algebra \(\mathscr {P}\) is given on the set \(A^\mathbb {N}\) of plays, and suppose also that for each behavioral strategy b the induced charge \(\mathbb {P}^\mathscr {P}_b\) on \(\mathscr {P}\) is known. We would like to investigate which payoff functions have an unambiguous expected payoff for every \(\mathbb {P}^\mathscr {P}_b\).

More precisely, following the literature, we will consider specific algebras \(\mathscr {P}\) on the set \(A^\mathbb {N}\) of plays. Given any such algebra \(\mathscr {P}\), we will formally define a charge \(\mathbb {P}_b^\mathscr {P}\) on \((A^\mathbb {N},\mathscr {P})\) for each behavioral strategy b (cf. Section 4). Intuitively, \(\mathbb {P}_b^\mathscr {P}\) is the charge that the behavioral strategy b induces on the algebra \(\mathscr {P}\); that is, for each set \(Q\in \mathscr {P}\) of plays, \(\mathbb {P}_b^\mathscr {P}(Q)\) is the probability under b that the realized play belongs to Q.

Since the payoff function u may not be measurable with regard to \(\mathscr {P}\), but u is bounded and therefore always measurable with regard to the power set of \(A^\mathbb {N}\), we extend the induced charges \(\mathbb {P}_b^\mathscr {P}\) to the power set of \(A^\mathbb {N}\). More precisely, we denote by \([\mathbb {P}_b^\mathscr {P}]\) the set of charges on the power set of \(A^\mathbb {N}\) that extend \(\mathbb {P}_b^\mathscr {P}\) from the algebra \(\mathscr {P}\). For each charge \(B\in [\mathbb {P}_b^\mathscr {P}]\), we obtain an expected payoff

$$\begin{aligned} \int _{\vec a\in A^\mathbb {N}} u(\vec a)\,B(d\vec a). \end{aligned}$$(1)

Hence, the set of possible expected payoffs for the behavioral strategy b, with respect to \(\mathscr {P}\), is

$$\begin{aligned} [u^\mathscr {P}(b)]\;=\;\Big \{\int _{\vec a\in A^\mathbb {N}} u(\vec a)\,B(d\vec a):\;B\in [\mathbb {P}_b^\mathscr {P}]\Big \}. \end{aligned}$$(2)

We say that a behavioral strategy b induces an unambiguous expected payoff with respect to \(\mathscr {P}\), if the set \([u^\mathscr {P}(b)]\) is a singleton.

Our main question is to identify conditions on the payoff function u under which all behavioral strategies induce an unambiguous expected payoff, i.e., \([u^\mathscr {P}(b)]\) is a singleton for each behavioral strategy b. This question depends heavily on the chosen algebra \(\mathscr {P}\) and on the way in which the induced charges \(\mathbb {P}_b^\mathscr {P}\) for the behavioral strategies are specified.
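To build intuition for why the set \([u^\mathscr {P}(b)]\) can fail to be a singleton, consider a finite toy analogue (an assumption made purely for illustration; the paper's setting is infinite). If the algebra is generated by a finite partition into atoms, every extension of a charge to the power set distributes the mass of each atom among its points, so the possible integrals of a function u fill exactly the interval between the "atomwise minimum" and "atomwise maximum" sums. The function name `payoff_range` and the data below are hypothetical.

```python
from fractions import Fraction

def payoff_range(atoms, mu, u):
    """Smallest and largest integral of u over all extensions of mu.

    atoms: list of frozensets partitioning a finite set
    mu:    dict mapping each atom to its mass (masses sum to 1)
    u:     dict mapping each point to its payoff
    """
    lo = sum(mu[B] * min(u[x] for x in B) for B in atoms)
    hi = sum(mu[B] * max(u[x] for x in B) for B in atoms)
    return lo, hi

atoms = [frozenset({1}), frozenset({2, 3})]
mu = {atoms[0]: Fraction(1, 4), atoms[1]: Fraction(3, 4)}

# u is not constant on the atom {2, 3}: the expected payoff is ambiguous.
ambiguous = payoff_range(atoms, mu, {1: 0, 2: 0, 3: 1})

# u is constant on every atom: the expected payoff is unambiguous.
unambiguous = payoff_range(atoms, mu, {1: 0, 2: 1, 3: 1})
```

In this toy case the expected payoff is unambiguous exactly when the two endpoints coincide, that is, when u is constant on every atom of positive mass.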

4 Algebras on the set of plays, induced charges on the algebras, and expected payoff

In this section, we define four different algebras, and for each algebra and each behavioral strategy, we define the charge induced by this behavioral strategy on the algebra. By extending these induced charges to the entire power set of plays, we define expected payoffs under behavioral strategies.

The topology on the set of plays.\(^{4}\) We endow the action set A with the discrete topology and the set \(A^\mathbb {N}\) of plays with the induced product topology, denoted by \({\mathcal {T}}\). The elements of \({\mathcal {T}}\) are called the open sets, and their complements are called the closed subsets of \(A^\mathbb {N}\). Thus, a subset of \(A^\mathbb {N}\) is open exactly when it is a union of cylinder sets, where the cylinder set corresponding to a history h is the set of plays that have h as their initial segment (see p.16 for a formal definition). A subset of \(A^\mathbb {N}\) that is both open and closed is called clopen.

The topological space \((A^\mathbb {N}, {\mathcal {T}})\) is completely metrizable, for instance by the metric \(d:A^\mathbb {N}\times A^\mathbb {N}\rightarrow \mathbb {R}\) defined as: if \(\vec a=\vec a'\) then \(d(\vec a,\vec a')=0\), and otherwise \(d(\vec a,\vec a')=2^{-k(\vec a,\vec a')}\) where \(k(\vec a,\vec a') \in \mathbb {N}\) is the first period at which \(\vec a\) and \(\vec a'\) differ. Thus, a sequence of plays \((\vec a_n)_{n \in \mathbb {N}}\) converges to a play \(\vec a\) if for every \(k \in \mathbb {N}\) there exists \(N_k \in \mathbb {N}\) such that for every \(n \ge N_k\) the first k coordinates of \(\vec a_n\) coincide with those of \(\vec a\). The Borel algebra \(\mathcal {R}\) on \(A^\mathbb {N}\) is the smallest algebra of subsets of \(A^\mathbb {N}\) that contains all open sets.
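The metric d can be sketched in Python as follows. This is an illustration only: a play is represented as a hypothetical callable mapping a period \(t \ge 1\) to an action, and, since an infinite play cannot be inspected in full, the comparison stops at a finite `horizon`. Plays that agree up to the horizon are reported at distance 0, which is an approximation and not part of the definition above.

```python
def first_difference(play_a, play_b, horizon):
    """Return the first period t <= horizon at which the plays differ, else None."""
    for t in range(1, horizon + 1):
        if play_a(t) != play_b(t):
            return t
    return None

def distance(play_a, play_b, horizon=1000):
    """Approximate d(a, a') = 2^(-k), with k the first period of disagreement."""
    k = first_difference(play_a, play_b, horizon)
    return 0.0 if k is None else 2.0 ** (-k)

def always_c(t):
    return "c"

def stop_at_3(t):
    # Plays "s" in period 3 and "c" in every other period.
    return "s" if t == 3 else "c"

d = distance(always_c, stop_at_3)   # the plays first differ in period 3
```

Two plays are close in this metric precisely when they share a long initial segment, which is the convergence criterion stated above.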

The charge induced by a behavioral strategy on the Borel algebra. For each behavioral strategy b, we define the induced charge \(\mathbb {P}_b\) on the Borel algebra \(\mathcal {R}\). Our definition is in accordance with the literature, in particular with Dubins and Savage (2014) and Dubins (1974). For the formal definition, see Theorem 4.1 below.

We first need a bit of terminology and notation. Consider a decision problem G, a period \(k \in \mathbb {N}\) and a history \(h \in A^k\). We define the subproblem that starts at history h. This subproblem is played as follows: At periods \(n\ge k+1\), the decision maker chooses an action \(a_n \in A\), which induces a play \((a_{k+1},a_{k+2},\ldots )\) and a corresponding payoff \(u|_h(a_{k+1},a_{k+2},\ldots )=u(h,a_{k+1},a_{k+2},\ldots )\). The subproblem that starts at h is denoted by \(G|_h\).\(^{5}\) A behavioral strategy b in decision problem G induces a behavioral strategy \(b|_h\) in the subproblem \(G|_h\) as follows: \(b|_h(a_{k+1},a_{k+2},\ldots , a_{k'})=b(h,a_{k+1},a_{k+2},\ldots , a_{k'})\), for every \(k'\ge k\). The strategy \(b|_h\) is called the continuation strategy of b at history h.\(^{6}\)

A specification is a mapping \(\psi\) that to each behavioral strategy b assigns a charge \(\psi (b)\) on the Borel algebra \(\mathcal {R}\) of \(A^\mathbb {N}\). As is shown\(^{7}\) in Theorem 2.8.1 of Dubins and Savage (2014) and Theorem 2 in Dubins (1974), there is a unique specification \(\psi\) that satisfies two natural conditions.

Theorem 4.1

(Dubins and Savage (2014), and Dubins (1974)) There is a unique specification \(\psi\) with the following two conditions:

1. Consistency for clopen (closed and open) sets: for every behavioral strategy b, for every history \(h\in A^{k-1}\) at any period k and for every clopen set \(Q\in {\mathcal {T}}\) it holds that

$$\begin{aligned} \psi (b|_h)(Q|_h) \;=\; \int _{a \in A} \ \psi (b|_{ha})(Q|_{ha}) \ b(h) (da) , \end{aligned}$$(3)

where \(Q|_h=\{(a_k,a_{k+1},\ldots ):(h,a_k,a_{k+1},\ldots )\in Q\}\) is the continuation of Q after h, and similarly \(Q|_{ha}\) is the continuation of Q after the history ha.

2. Regularity for open sets: for every behavioral strategy b and for every open set \(O\in {\mathcal {T}}\)

$$\begin{aligned} \psi (b)(O)\;=\;\sup \,\{\psi (b)(Q):\text {clopen}\;Q\in {\mathcal {T}}\text { and }\;Q\subseteq O\}. \end{aligned}$$(4)

Remark. Condition 1 (consistency) was first proposed by Dubins and Savage (2014).\(^{8}\) The intuition behind this condition is the following. Consider a behavioral strategy b, a history h and a clopen set \(Q\in {\mathcal {T}}\). The specification \(\psi\) assigns to the continuation strategy \(b|_h\) a charge \(\psi (b|_h)\), and for each action \(a\in A\) it also assigns to the continuation strategy \(b|_{ha}\) a charge \(\psi (b|_{ha})\).\(^{9}\) The left-hand side of (3) considers the subproblem at history h, and the right-hand side of (3) considers the subproblem at each history ha. Thus, Condition 1 requires the following consistency property between the charges \(\psi (b|_h)\) and \(\psi (b|_{ha})\), where \(a\in A\): the probability of \(Q|_h\) under the charge \(\psi (b|_h)\) should be equal to the expectation of the probability of \(Q|_{ha}\) at the next period under \(\psi (b|_{ha})\), where a is the action chosen after history h.

Condition 2 (regularity) was proposed by Dubins (1974). It requires inner regularity for the probabilities of open sets: the probability of each open set is equal to the supremum of the probabilities of the clopen sets it contains. \(\Diamond\)

Now, for each behavioral strategy b, the induced charge is \(\mathbb {P}_b=\psi (b)\) where \(\psi\) is the unique specification in Theorem 4.1. By \([u(b)]\) we denote the set of possible expected payoffs, which is calculated according to (2) (with \(\mathscr {P}\) being the Borel algebra \(\mathcal {R}\)).\(^{10}\)
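In a simple special case, the consistency condition of Theorem 4.1 reduces to ordinary backward recursion. The sketch below assumes (these are our simplifying assumptions, not the general setting) that every charge b(h) has finite support, so the integral over actions becomes a finite sum, and that the set Q is determined by the first n actions, given by a hypothetical predicate `in_Q` on prefixes of length n. The function name `prob_finite_horizon` is likewise hypothetical.

```python
from fractions import Fraction

def prob_finite_horizon(b, in_Q, n, h=()):
    """Probability, after history h, that the first n actions of the play satisfy in_Q.

    b:    maps a history (tuple of actions) to a dict {action: probability}
    in_Q: predicate on prefixes of length n
    """
    if len(h) == n:
        # Membership in Q is decided once n actions have been chosen.
        return Fraction(1) if in_Q(h) else Fraction(0)
    # Consistency: average the continuation probabilities over the next action.
    return sum(p * prob_finite_horizon(b, in_Q, n, h + (a,))
               for a, p in b(h).items())

def uniform(h):
    # Chooses between the two actions with probability 1/2 each, at every history.
    return {"a": Fraction(1, 2), "a'": Fraction(1, 2)}

def contains_a(prefix):
    return "a" in prefix

p = prob_finite_horizon(uniform, contains_a, n=3)   # 1 - (1/2)^3
```

For charges without finite support, and in particular for diffuse charges, the sum must be replaced by the finitely additive integral, which this sketch does not attempt to compute.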

Smaller algebras. As mentioned earlier, from a conceptual point of view the literature has also considered three alternative algebras, instead of the Borel algebra \(\mathcal {R}\). All three algebras are included in \(\mathcal {R}\).

-

I

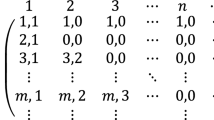

The finite horizon algebra: This algebra, denoted by \(\mathcal {R}^{I}\), consists of all subsets Q of \(A^\mathbb {N}\) such that we already know at some period whether or not the induced play belongs to Q. Formally, \(Q\subseteq A^\mathbb {N}\) belongs to \(\mathcal {R}^{I}\) exactly when Q satisfies the following property: there is a period n such that for all plays \(\vec a, \vec a'\) that coincide up to period n, either both \(\vec a,\vec a'\) belong to Q or both \(\vec a,\vec a'\) belong to \(A^\mathbb {N}{\setminus } Q\). This is a small but very natural algebra. This algebra is examined in Flesch et al. (2019), but Dubins and Savage (2014) in Sect. 2.6 also refer to events that only depend on finitely many coordinates.

-

II

The clopen algebra: This algebra, denoted by \(\mathcal {R}^{II}\), consists of all clopen subsets of \(A^\mathbb {N}\). This algebra is examined in detail in Dubins and Savage (2014).

-

III

The clopen+singleton algebra: This algebra, denoted by \(\mathcal {R}^{III}\), is the smallest algebra that contains all clopen subsets of \(A^\mathbb {N}\) plus the singleton sets \(\{\vec a\}\), for all \(\vec a \in A^\mathbb {N}\). This algebra is examined in detail in Flesch et al. (2019).

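We obviously have

$$\begin{aligned} \mathcal {R}^{I}\;\subseteq \;\mathcal {R}^{II}\;\subseteq \;\mathcal {R}^{III}\;\subseteq \;\mathcal {R}. \end{aligned}$$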

For each \(i=I,II,III\) and for each behavioral strategy b, we denote by \(\mathbb {P}_b^i\) the restriction of the charge \(\mathbb {P}_b\) from the Borel algebra \(\mathcal {R}\) to the algebra \(\mathcal {R}^i\). Due to the previous observation, we have \([\mathbb {P}^I_b]\;\supseteq \; [\mathbb {P}^{II}_b]\;\supseteq \; [\mathbb {P}^{III}_b]\;\supseteq \; [\mathbb {P}_b]\).

With regard to \(\mathcal {R}^i\), we can define the set of possible expected payoffs for each behavioral strategy b according to (2), and we denote this set by \([u^i(b)]\). We then have \([u^I(b)]\;\supseteq \; [u^{II}(b)]\;\supseteq \; [u^{III}(b)]\;\supseteq \; [u(b)]\). We say that a behavioral strategy b induces an unambiguous expected payoff with respect to \(\mathcal {R}^i\) if \([u^i(b)]\) is a singleton.

Details and further discussions on these three smaller algebras are deferred to Sect. 9. In particular, Proposition 9.2 shows that the algebras \(\mathcal {R}^I\), \(\mathcal {R}^{II}\), \(\mathcal {R}^{III}\) and \(\mathcal {R}\) are all essentially different; that is, our main question of unambiguous expected payoffs for all behavioral strategies is a different question for each of these algebras.

5 Classes of payoff functions

In order to be able to present our main results (cf. Sect. 6), we define several classes of payoff functions. We discuss the relation between these classes at the end of this section.

Uniformly approachable payoff functions. Let \(\mathscr {P}\) be an algebra on \(A^\mathbb {N}\). A payoff function \(u: A^\mathbb {N}\rightarrow \mathbb {R}\) is uniformly \(\mathscr {P}\)-approachable, if for every \(\varepsilon >0\) there exists a \(\mathscr {P}\)-measurable simple function \(u':A^\mathbb {N}\rightarrow \mathbb {R}\) such that \(|u(\vec a)-u'(\vec a)|\le \varepsilon\) for every \(\vec a\in A^\mathbb {N}\).

Semicontinuous, continuous and uniformly continuous payoff functions. A payoff function \(u: A^\mathbb {N}\rightarrow \mathbb {R}\) is called upper semicontinuous if for every \(r \in \mathbb {R}\) the set \(u^{-1}([r,\infty ))\) is closed.\(^{11}\) Similarly, u is called lower semicontinuous if for every \(r \in \mathbb {R}\) the set \(u^{-1}((-\infty ,r])\) is closed.\(^{12}\) A function is continuous if and only if it is both upper and lower semicontinuous. The payoff function u is called uniformly continuous if for every \(\varepsilon >0\) there exists \(\delta >0\) such that for all plays \(\vec a,\vec a'\in A^\mathbb {N}\) with \(d(\vec a,\vec a')< \delta\) we have \(|u(\vec a)-u(\vec a')|<\varepsilon\).

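Tame payoff functions. The oscillation of u at the play \(\vec a\) is defined as

$$\begin{aligned} o_u(\vec a)\;=\;\inf _{\varepsilon >0}\ \sup \,\{|u(\vec a')-u(\vec a'')|:\vec a',\vec a''\in {\mathcal {N}}_\varepsilon (\vec a)\}, \end{aligned}$$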

where \({\mathcal {N}}_\varepsilon (\vec a)=\{\vec a' \in A^\mathbb {N}: d(\vec a, \vec a')< \varepsilon \}\) is the \(\varepsilon\)-neighbourhood of \(\vec a\). We call the payoff function u weakly tame if for every \(r > 0\) the set \(\{\vec a \in A^\mathbb {N}: o_u(\vec a) \ge r\}\) is finite.

We say that the payoff function \(u: A^\mathbb {N}\rightarrow \mathbb {R}\) has a limit at a play \(\vec a\in A^\mathbb {N}\) if there is \(\ell \in \mathbb {R}\) with the following property: \(u(\vec a_n)\) converges to \(\ell\) for each sequence \(\vec a_n\) in \(A^\mathbb {N}\) such that (1) \(\vec a_n\) converges to \(\vec a\) as \(n\rightarrow \infty\), and (2) \(\vec a_n\ne \vec a\) for all \(n\in \mathbb {N}\). If u has a limit at \(\vec a\), we denote it by \(L_u(\vec a)\).

For the payoff function u, let \(D_u\) denote the set of plays at which u is not continuous. We say that a discontinuity \(\vec a \in D_u\) is removable if u has a limit at \(\vec a\). We call the payoff function u strongly tame if the following two conditions hold: u is weakly tame, and each discontinuity in \(D_u\) is removable.\(^{13}\) For an illustration of these concepts, we refer to Example 11.1 in Sect. 11.

Relation between classes of payoff functions. If u is continuous, then by definition, u is both upper and lower semicontinuous. If u is uniformly continuous, then u is also continuous, and if A is finite, then by compactness of \(A^\mathbb {N}\) the converse also holds.

Note that u is continuous at \(\vec a\) if and only if u has a limit at \(\vec a\) and \(L_u(\vec a)=u(\vec a)\). Also, u is continuous at \(\vec a\) if and only if \(o_u(\vec a)=0\). A continuous function is clearly strongly tame.

6 Main results

In this section we present our main results. The proofs can be found in Sect. 10.

The first theorem identifies a condition that guarantees that the expected payoffs are unambiguous under behavioral strategies with respect to any of the algebras.

Theorem 6.1

Let \(\mathscr {P}\) be any of the algebras \(\mathcal {R}^{I},\mathcal {R}^{II},\mathcal {R}^{III},\mathcal {R}\). Suppose that the payoff function u is uniformly \(\mathscr {P}\)-approachable. Then, with respect to \(\mathscr {P}\), each behavioral strategy b induces an unambiguous expected payoff.

We remark that Marinacci (1997) and Harris et al. (2005) also consider uniformly approachable functions, but in a very different setting. They consider one-shot multi-player simultaneous-move games in which the players’ strategies are charges. In their setting each strategy profile induces an unambiguous expected payoff if the payoff function is uniformly approachable with respect to a specific algebra on the set of action profiles. Our Theorem 6.1 is set in infinite horizon decision problems, and hence it does not follow from their results.

The reverse implication of Theorem 6.1 also holds for the algebras \(\mathcal {R}^I\) and \(\mathcal {R}^{II}\), as is shown respectively in Theorems 6.2 and 6.3 below. However, Example 11.2 will demonstrate that the reverse implication is no longer valid for the algebras \(\mathcal {R}^{III}\) and \(\mathcal {R}\).

The next theorems provide, for each algebra separately, connections between unambiguous expected payoffs under behavioral strategies and properties of the payoff function.

For the finite horizon algebra \(\mathcal {R}^{I}\), uniform continuity plays the crucial role.

Theorem 6.2

(for the finite horizon algebra \(\mathcal {R}^{I}\)) The following are equivalent:

1. With respect to \(\mathcal {R}^I\), each behavioral strategy b induces an unambiguous expected payoff.

2. The payoff function u is uniformly continuous.

3. The payoff function u is uniformly \(\mathcal {R}^{I}\)-approachable.

The following theorem, which follows\(^{14}\) from Theorem 2.8.5 in Dubins and Savage (2014), gives necessary and sufficient conditions for unambiguous expected payoffs for the clopen algebra \(\mathcal {R}^{II}\). One of these conditions is continuity of the payoff function.

Theorem 6.3

(for the clopen algebra \(\mathcal {R}^{II}\)) The following are equivalent:

1. With respect to \(\mathcal {R}^{II}\), each behavioral strategy b induces an unambiguous expected payoff.

2. The payoff function u is continuous.

3. The payoff function u is uniformly \(\mathcal {R}^{II}\)-approachable.

For the algebras \(\mathcal {R}^{III}\) and \(\mathcal {R}\) we obtain sufficient conditions for unambiguous expected payoffs.

Theorem 6.4

(for the clopen+singleton algebra \(\mathcal {R}^{III}\)) Suppose that the payoff function u is strongly tame. Then u is uniformly \(\mathcal {R}^{III}\)-approachable, and hence with respect to \(\mathcal {R}^{III}\) each behavioral strategy b induces an unambiguous expected payoff.

Regarding a converse of Theorem 6.4, we present in Example 11.2 a decision problem in which with respect to \(\mathcal {R}^{III}\) each behavioral strategy induces an unambiguous expected payoff, yet the payoff function is not uniformly \(\mathcal {R}^{III}\)-approachable, and hence not strongly tame either.

Theorem 6.5

(for the Borel algebra \(\mathcal {R}\)) The payoff function u is uniformly \(\mathcal {R}\)-approachable if any of the following conditions holds:

1. the function u is weakly tame,

2. the function u is upper semicontinuous,

3. the function u is lower semicontinuous.

Hence, under any of these conditions, with respect to \(\mathcal {R}\) each behavioral strategy b induces an unambiguous expected payoff.

7 Examples

In this section we discuss a number of illustrative and thought-provoking examples, with a focus on intuition and main ideas. In each example, the payoff function has an easy structure, and we look at the possible expected payoffs for focal behavioral strategies that highlight specific features of the payoff function at hand.

Example 7.1

(Play the largest number). Consider the decision problem with action space \(A=\mathbb {N}\) and the following payoff function: for a play \(\vec a=(a_1,a_2,\ldots )\in A^\mathbb {N}\), the payoff is \(u(\vec a)=\tfrac{n}{n+1}\) where \(n=a_1\). So, the payoff is determined after the first period. Intuitively, the decision maker would like to choose an action as large as possible at period 1.

The interesting question is what the expected payoff is under the following strategy b: at period 1 the decision maker uses a diffuse charge to choose an action (for the definition of a diffuse charge, cf. Section 2). The strategy b has an unambiguous expected payoff with respect to all four algebras \(\mathcal {R}^I\), \(\mathcal {R}^{II}\), \(\mathcal {R}^{III}\), \(\mathcal {R}\). Indeed, this follows by Theorem 6.2 as the payoff function u is uniformly continuous.

In fact, the behavioral strategy b induces the expected payoff of 1, and therefore it is optimal\(^{15}\) with respect to these algebras. To see this, note that, for each \(n\in \mathbb {N}\), the set \(\cup _{k=n}^\infty Q_k\) has probability 1, where \(Q_k\) is the set of plays that start with action k (cf. Lemma 10.2). This implies that, for each \(n\in \mathbb {N}\), the expected payoff is at least \(\frac{n}{n+1}\), which proves that the expected payoff under the strategy b is 1. \(\triangleleft\)
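A diffuse charge cannot be written down explicitly, so the value 1 in Example 7.1 cannot be computed directly. As a purely heuristic proxy (our assumption, not a construction used in the paper), one may look at the uniform distribution on \(\{1,\ldots ,N\}\), which is countably additive and not diffuse, and observe that the expected payoff \(\frac{1}{N}\sum _{n=1}^N \frac{n}{n+1}\) increases towards 1 as N grows. The function names below are hypothetical.

```python
from fractions import Fraction

def payoff(n):
    """Payoff of Example 7.1 when action n is chosen in period 1."""
    return Fraction(n, n + 1)

def uniform_expected_payoff(N):
    """Expected payoff when the first action is uniform on {1, ..., N}."""
    return sum(payoff(n) for n in range(1, N + 1)) / N

# Exact rational values for a few horizons; each stays strictly below 1.
values = [uniform_expected_payoff(N) for N in (1, 2, 10, 100)]
```

The proxy values never reach 1, whereas under a diffuse charge every tail \(\{n, n+1, \ldots \}\) carries probability 1, which is what yields the lower bound \(\frac{n}{n+1}\) for every n in the argument above.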

Example 7.2

(Repeat n times). Consider the decision problem with action space \(A=\mathbb {N}\) and the following payoff function: for a play \(\vec a=(a_1,a_2,\ldots )\in A^\mathbb {N}\), if \(a_1=\ldots =a_{n+1}=n\) where \(n\in \mathbb {N}\) then the payoff is \(u(\vec a)=\tfrac{n}{n+1}\) and otherwise \(u(\vec a)=0\). If action n is played at period 1, then the payoff is determined in period \(n+1\). Consequently, the payoff is determined in finite but unbounded time. Intuitively, the decision maker would like to choose an action n, as large as possible, at period 1 and repeat it at periods \(2,\ldots ,n+1\).

The behavioral strategy b we consider is similar to the one in Example 7.1. In order to choose a large action at period 1, the decision maker chooses the first action according to a diffuse charge. If action n is chosen at period 1, then he places probability 1 on action n at periods \(2,\ldots ,n+1\).

The strategy b has an unambiguous expected payoff with respect to the algebras \(\mathcal {R}^{II}\), \(\mathcal {R}^{III}\) and \(\mathcal {R}\), but b has an ambiguous expected payoff with respect to the algebra \(\mathcal {R}^{I}\). Indeed, this follows by Theorems 6.2 and 6.3 as the payoff function u is continuous but not uniformly continuous.\(^{16}\)

In fact, with respect to the algebras \(\mathcal {R}^{II}\), \(\mathcal {R}^{III}\) and \(\mathcal {R}\), the strategy b has an expected payoff of 1, and therefore it is optimal.\(^{17}\) Indeed, for each \(k\in \mathbb {N}\), let \(Q_k\) be the set of plays that start with \({(k,\ldots ,k)}\) till period \(k+1\). Then, for each \(n\in \mathbb {N}\), the set \(\cup _{k=n}^\infty Q_k\) is clopen, and it follows from Condition 1 of Theorem 4.1 that \(\mathbb {P}_b(\cup _{k=n}^\infty Q_k) =1\) for every \(n\in \mathbb {N}\). Hence, \(\mathbb {P}^{II}_b(\cup _{k=n}^\infty Q_k) =1\) for every \(n\in \mathbb {N}\). This implies that, for each \(n\in \mathbb {N}\), the expected payoff is at least \(\frac{n}{n+1}\), and therefore, the expected payoff under the strategy b is 1. \(\triangleleft\)
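The failure of uniform continuity in Example 7.2 can be made concrete with a small sketch. For each n we compare two plays that coincide during the first n periods: one repeats n through period \(n+1\), the other deviates in period \(n+1\). Their distance is \(2^{-(n+1)}\), yet their payoffs differ by \(\frac{n}{n+1}\). The function names are hypothetical, and plays are represented by finite prefixes that are long enough to determine the payoff.

```python
from fractions import Fraction

def payoff_72(prefix):
    """Payoff of Example 7.2, computed from a prefix of length at least prefix[0] + 1."""
    n = prefix[0]
    if len(prefix) < n + 1:
        raise ValueError("prefix too short to determine the payoff")
    repeated = all(a == n for a in prefix[:n + 1])
    return Fraction(n, n + 1) if repeated else Fraction(0)

def gap_at_horizon(n):
    """Payoff gap between two plays that agree on the first n periods."""
    repeat = (n,) * (n + 1)            # action n in periods 1, ..., n + 1
    deviate = (n,) * n + (n + 1,)      # action n in periods 1, ..., n, then n + 1
    return payoff_72(repeat) - payoff_72(deviate)

gaps = [gap_at_horizon(n) for n in (1, 9, 99)]
```

Since the gap tends to 1 while the distance between the two plays tends to 0, no single \(\delta\) works for small \(\varepsilon\), in line with the claim that u is continuous but not uniformly continuous.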

The next two examples are stopping problems. In these decision problems the decision maker has two actions, one of which could be interpreted as “continue” and the other as “stop”. The payoff is determined by the first period when the decision maker plays the latter action.

Example 7.3

(Continue indefinitely). Consider the decision problem with action space \(A=\{c,s\}\) and the following payoff function: for the play \(\vec c=(c, c, \ldots )\) let \(u(\vec c)=1\), and for all \(\vec a\in A^\mathbb {N}\setminus \{\vec c\}\) let \(u(\vec a)=0\).

Consider the strategy b that chooses action c with probability 1 at every period. The strategy b has an unambiguous expected payoff with respect to the algebras \(\mathcal {R}^{III}\) and \(\mathcal {R}\), but b has an ambiguous expected payoff with respect to the algebras \(\mathcal {R}^I\) and \(\mathcal {R}^{II}\). Indeed, this follows by Theorems 6.3 and 6.4 as the payoff function u is strongly tame but not continuous.\(^{18}\) In fact, b has an expected payoff of 1, and is therefore optimal, with respect to the algebras \(\mathcal {R}^{III}\) and \(\mathcal {R}\).\(^{19}\) \(\triangleleft\)

Example 7.4

(Repeat indefinitely). Consider the decision problem with action space \(A=\mathbb {N}\) and the following payoff function: for any \(n \in \mathbb {N}\), the play \((n, n, \ldots )\) has a payoff of \(u(n, n, \ldots )=\tfrac{n}{n+1}\), and otherwise the payoff is 0. Thus, the decision maker would like to choose an action, as large as possible, in period 1 and then to repeat it in each following period.

The interesting question is what the expected payoff is under the following strategy b: at period 1 the decision maker uses a diffuse charge to choose an action, and at every other period he places probability 1 on the action chosen in period 1.

The strategy b has an unambiguous expected payoff of 1 with respect to the Borel algebra \(\mathcal {R}\), which follows by Theorem 6.5 as the payoff function u is upper semicontinuous.

On the other hand, b has an ambiguous expected payoff with respect to the algebras \(\mathcal {R}^I\), \(\mathcal {R}^{II}\) and \(\mathcal {R}^{III}\). Indeed, u is not continuous, so this claim follows for \(\mathcal {R}^I\) and \(\mathcal {R}^{II}\) by Theorem 6.3 (see footnote 20). The proof for \(\mathcal {R}^{III}\) follows from Part 3 of Proposition 9.2. \(\triangleleft\)

The algebra \(\mathcal {R}\) is the largest algebra that we consider. As one might suspect, even using the algebra \(\mathcal {R}\), not all behavioral strategies have an unambiguous expected payoff. The following example is very similar to the example presented in Sections 4 and 5 in Purves and Sudderth (1976).

Example 7.5

(Do not play a infinitely many times). Consider a decision problem with action space \(A=\{a, a'\}\). Let Q be the set of plays \(\vec {a}\) such that action a appears only finitely many times in \(\vec {a}\). Note that Q is in the Borel sigma-algebra of \(A^\mathbb {N}\), but not in the algebra \(\mathcal {R}\). Consider the following payoff function: \(u(\vec {a})=1\) for every play \(\vec {a}\in Q\), and \(u(\vec {a})=0\) otherwise.

Consider the behavioral strategy b which chooses the action uniformly at random in every period: \(b(h)(a)=\tfrac{1}{2}\) and \(b(h)(a')=\tfrac{1}{2}\) for every history \(h \in H\). We briefly argue that b does not induce an unambiguous expected payoff with respect to \(\mathcal {R}\), that is, \([u(b)]\) is not a singleton.

Note that the only open set that is contained in Q is the empty set, and the only open set that contains Q is the entire set \(A^\mathbb {N}\). That is, inner approximations of Q by open sets give probability 0, and outer approximations of Q by open sets give probability 1. Similarly, inner approximations of \(A^\mathbb {N}\setminus Q\) by open sets give probability 0, and outer approximations of \(A^\mathbb {N}\setminus Q\) by open sets give probability 1. As one can show (by induction on the complexity of the sets in \(\mathcal {R}\)), this implies that inner approximations of Q by sets in \(\mathcal {R}\) all give probability 0, and the same for \(A^\mathbb {N}\setminus Q\). By Theorem C.3 in Flesch et al. (2017), there exists an extension \(B\in [\mathbb {P}_b]\) such that \(B(Q)=0\) and also there exists an extension \(B'\in [\mathbb {P}_b]\) such that \(B'(Q)=1\). This completes the proof. \(\triangleleft\)

8 Concluding remarks

We examined infinite horizon decision problems with arbitrary bounded payoff functions, in which the decision maker uses finitely additive behavioral strategies. Because we only assume that the payoff function is bounded, a behavioral strategy does not necessarily induce an unambiguous expected payoff. To address this ambiguity, we derived conditions on the payoff function that guarantee an unambiguous expected payoff regardless of the behavioral strategy adopted. Our approach involved a systematic exploration of various alternatives proposed in the literature on how to define the finitely additive probability measures on the set of infinite plays induced by behavioral strategies.

In the subsequent part of this section, we discuss extensions of our results.

Multiple players playing sequentially. If there are multiple players, each having her own payoff function, and these players play sequentially (that is, there are no simultaneous moves), then our analytic framework remains applicable. Indeed, a strategy profile, which consists of one strategy for each player, defines a charge on the set of actions at each history, just like a strategy in the case of a single decision maker. Consequently, each strategy profile induces an unambiguous expected payoff for a given player under exactly the same conditions on her payoff function as in the case of a single decision maker.

Multiple players playing simultaneously. When considering multiple players playing simultaneously, with finite action sets, our analytic framework remains applicable. The reason is that, in this case, under each strategy profile, there is a unique probability measure on the set of action profiles at each history. This situation contrasts starkly with instances when the action sets are infinite, because then a strategy profile can induce a set of possible charges on the set of action profiles. We refer to Flesch et al. (2017) for more details.

Extending the charge \(\mathbb {P}_b\), for a behavioral strategy b, from the Borel algebra \(\mathcal {R}\) to a larger algebra. Given a specific behavioral strategy b, Purves and Sudderth (1976) extended the charge \(\mathbb {P}_b\) from the algebra \(\mathcal {R}\) to an algebra \({\mathcal {A}}(b)\). The algebra \({\mathcal {A}}(b)\) depends on the behavioral strategy b, but always includes the Borel sigma-algebra on \(A^{\mathbb {N}}\). Even by considering these larger algebras, ambiguous expected payoffs still play a role though: Purves and Sudderth present an example with a specific payoff function and a behavioral strategy b under which the expected payoff is ambiguous.

9 Glossary

Let \(\mathbb {N}=\{1,2,\ldots \}\). We provide a table below containing the most frequently used symbols and notations with reference to the Section where they are defined (in the table, \(i\in \{I,II,III\}\)).

| Notation | Meaning | Section |
|---|---|---|
| A | Action set | 3 |
| H | Set of histories | 3 |
| \(A^\mathbb {N}\) | Set of plays | 3 |
| u | Payoff function | 3 |
| b | Behavioral strategy | 3 |
| d | Metric on \(A^\mathbb {N}\) | 4 |
| \(\mathcal {R}\) | Borel algebra on \(A^\mathbb {N}\) | 4 |
| \(\mathcal {R}^{I}\) | The finite horizon algebra on \(A^\mathbb {N}\) | 4 |
| \(\mathcal {R}^{II}\) | The clopen (closed and open) algebra on \(A^\mathbb {N}\) | 4 |
| \(\mathcal {R}^{III}\) | The clopen+singleton algebra on \(A^\mathbb {N}\) | 4 |
| \(\mathbb {P}_b\) and \(\mathbb {P}_b^i\) | Charge induced on \(\mathcal {R}\) resp. \(\mathcal {R}^{i}\) by the behavioral strategy b | 4 |
| [u(b)] and \([u^i(b)]\) | Set of possible expected payoffs when \(\mathbb {P}_b\) resp. \(\mathbb {P}_b^i\) is extended from \(\mathcal {R}\) resp. \(\mathcal {R}^i\) to the power set of \(A^\mathbb {N}\) | 4 |
| \(o_u\) | The oscillation of u | 5 |
| h(t) and \(\vec a(t)\) | Action in history h and respectively in play \(\vec a\) at period t | 10 |
| \(\preceq\) and \(\prec\) | Notations for one sequence extending another | 10 |
| [h] and [Q] | Set of plays extending the history h or a set Q of histories | 10 |

Notes

See p.272 in the appendix by WD Sudderth, D Gilat and R Purves in Dubins and Savage (2014). Indeed, consider the inverse images \(f^{-1} [z \varepsilon , (z+1) \varepsilon )\), where z is an integer. Since f is bounded, only finitely many of them are non-empty. If \(f^{-1} [z \varepsilon , (z+1) \varepsilon )\) is non-empty, then let s take value \(z \varepsilon\) on this set. It follows that \(s \le f \le s + \varepsilon\).

The definition of a diffuse charge can be generalized to sets that are uncountably infinite. However, in the general case the definition is more involved.

Note that the set of available actions does not depend on the history, and is always A.

In fact, \(G|_h\) is identical to the decision problem G, except for the payoff function (and for the inessential change that the first period has label \(k+1\)).

Note that formally \(b|_h\) is a mapping from H to \({\mathcal {C}}(A,2^A)\), so \(b|_h\) itself is also a behavioral strategy according to our definition.

The language and notation of Dubins and Savage are different from ours.

Formally, as mentioned earlier, each continuation strategy is itself a strategy in the original decision problem G, and therefore \(\psi\) assigns a charge to it.

For the Borel algebra \(\mathcal {R}\), we omit the superscript \(\mathcal {R}\) in \(\mathbb {P}_b\) and in \([u(b)]\), unlike for the other algebras that we derive later from \(\mathcal {R}\).

Equivalently, for every play \(\vec a\) and sequence of plays \(\vec a_n\) converging to \(\vec a\) we have \(\limsup _{n \rightarrow \infty } u(\vec a_n) \le u(\vec a)\).

Equivalently, for every play \(\vec a\) and sequence of plays \(\vec a_n\) converging to \(\vec a\) we have \(\liminf _{n \rightarrow \infty } u(\vec a_n) \ge u(\vec a)\).

If each discontinuity in \(D_u\) is removable, then the function \(L_u:A^\mathbb {N}\rightarrow \mathbb {R}\) is continuous; cf. Lemma 10.1.

The language and notation of Dubins and Savage are different from ours, and therefore we will provide a proof in Sect. 10.

In contrast, no countably additive strategy is optimal.

Theorem 6.2 only implies that there exists some behavioral strategy that induces an ambiguous expected payoff with respect to \(\mathcal {R}^{I}\). It is however clear from the example and from the proof of Theorem 6.2 that ambiguity of the expected payoff occurs at the behavioral strategy b in the example.

Similarly to Example 7.1, no countably additive strategy is optimal.

Similarly to footnote 16, ambiguity of the expected payoff with respect to \(\mathcal {R}^{II}\) occurs exactly at the behavioral strategy b in the example.

Indeed, it follows from Lemma 10.4 that \(\mathbb {P}^{III}_b(\vec c)=\ell (b)(\vec c)=1\).

Similarly to footnote 16, ambiguity of the expected payoff with respect to \(\mathcal {R}^{III}\) occurs exactly at the behavioral strategy b in the example.

The example is quite delicate. Consider the following related example: The action set is \(\{x,y\}\), and we define \(W^x\) as in the example, i.e., \(W^x=A^\mathbb {N}\setminus \{\vec y\}\). Now, in contrast with the example, \(W^x \in \mathcal {R}^{III}\) would hold.

References

Aliprantis D, Border KC (2005) Infinite dimensional analysis. Springer, Berlin

Al-Najjar NI (2009) Decision makers as statisticians: diversity, ambiguity, and learning. Econometrica 77(5):1371–1401

Al-Najjar N, Pomatto L, Sandroni A (2014) Claim validation. Am Econ Rev 104(11):3725–3736

Bingham NH (2010) Finite additivity versus countable additivity. Electronic Journal for History of Probability and Statistics 6(1)

Capraro V, Scarsini M (2013) Existence of equilibria in countable games: an algebraic approach. Games Econ Behav 79:163–180

Cerreia-Vioglio S, Maccheroni F, Schmeidler D (2022) Equilibria of nonatomic anonymous games. Games Econ Behav 135:110–131

Dubins LE (1974) On Lebesgue-like extensions of finitely additive measures. Ann Prob 2(3):456–463

Dubins LE, Savage LJ (2014) edited and updated by Sudderth, WD and Gilat, D, How to gamble if you must: inequalities for stochastic processes. Dover Publications, New York

Dunford N, Schwartz JT (1964) Linear operators, Part I: general theory. Interscience Publishers, New York

de Finetti B (1975) The theory of probability (2 volumes). J. Wiley and Sons, Chichester

Flesch J, Vermeulen D, Zseleva A (2017) Zero-sum games with charges. Games Econ Behav 102:666–686

Flesch J, Vermeulen D, Zseleva A (2019) On the equivalence of mixed and behavior strategies in finitely additive decision problems. J Appl Prob 56(3):810–829

Gilboa I, Marinacci M (2016) Ambiguity and the Bayesian paradigm. Readings in formal epistemology: sourcebook. Springer International Publishing, Cham, pp 385–439

Harris JH, Stinchcombe MB, Zame WR (2005) Nearly compact and continuous normal form games: characterizations and equilibrium existence. Games Econ Behav 50:208–224

Kechris AS (1995) Classical descriptive set theory. Springer-Verlag, New York

Łoś J, Marczewski E (1949) Extensions of measure. Fundam Math 36:267–276

Maitra A, Sudderth W (1993) Finitely additive and measurable stochastic games. Int J Game Theory 22:201–223

Maitra A, Sudderth W (1998) Finitely additive stochastic games with Borel measurable payoffs. Int J Game Theory 27:257–267

Marinacci M (1997) Finitely additive and epsilon Nash equilibria. Int J Game Theory 26(3):315–333

Milchtaich I (2020) Best-response equilibrium: An equilibrium in finitely additive mixed strategies. Bar-Ilan University, Research Institute for Econometrics and Economic Theory, Working Paper

Pomatto L, Al-Najjar N, Sandroni A (2014) Merging and testing opinions. Ann Stat 42(3):1003–1028

Purves R, Sudderth W (1976) Some finitely additive probability. Ann Prob 4:259–276

Rao KPSB, Rao B (1983) Theory of charges: a study of finitely additive measures. Academic Press, New York

Savage LJ (1972) The foundations of statistics. Dover Publications, New York

Schirokauer O, Kadane JB (2007) Uniform distributions on the natural numbers. J Theoretical Prob 20(3):429–441

Sudderth W (2016) Finitely additive dynamic programming. Math Oper Res 41:92–108


We would like to thank William Sudderth for a very helpful discussion. We also thank the editor and two anonymous reviewers for their very useful comments.

Appendices

Appendix: Additional properties of the algebras and the expected payoffs

In this section, we provide further properties of the algebras, the induced charges and expected payoffs. All the proofs can be found in Sect. 10.

The following proposition compares the algebras \(\mathcal {R}^{I}\), \(\mathcal {R}^{II}\), \(\mathcal {R}^{III}\), \(\mathcal {R}\) and the Borel sigma-algebra, and therefore extends (5).

Proposition 9.1

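The algebras have the following relationships:

$$\begin{aligned} \mathcal {R}^{I}\;\subseteq \;\mathcal {R}^{II}\;\subset \;\mathcal {R}^{III}\;\subset \;\mathcal {R}\;\subset \;\sigma (A^\mathbb {N}), \end{aligned}$$

where \(\sigma (A^\mathbb {N})\) is the Borel sigma-algebra on \(A^\mathbb {N}\). Moreover, \(\mathcal {R}^{I}=\mathcal {R}^{II}\) if and only if the action set A is finite.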

Given a behavioral strategy b, we defined the induced charges \(\mathbb {P}^{I}_b\) on the algebra \(\mathcal {R}^I\) and \(\mathbb {P}^{II}_b\) on the algebra \(\mathcal {R}^{II}\). By definition, \(\mathbb {P}^{II}_b\) restricted to the smaller algebra \(\mathcal {R}^I\) equals the charge \(\mathbb {P}^{I}_b\). The first part of the following proposition discusses the opposite direction: for certain behavioral strategies, \(\mathbb {P}^{II}_b\) is not the unique extension of \(\mathbb {P}^{I}_b\) from the algebra \(\mathcal {R}^I\) to the algebra \(\mathcal {R}^{II}\). This shows that working with \(\mathcal {R}^I\) is essentially different from working with \(\mathcal {R}^{II}\). The second part and the third part of the proposition discuss similar results for the other algebras.

Proposition 9.2

1. Assume that the action space A is infinite. Then there is a behavioral strategy b and a charge \(\mu\) on \(\mathcal {R}^{II}\) such that \(\mu (Z)\;=\;\mathbb {P}^{II}_b(Z)\) for every \(Z\in \mathcal {R}^{I}\), but \(\mu \ne \mathbb {P}^{II}_b\).

2. There is a behavioral strategy b and a charge \(\mu\) on \(\mathcal {R}^{III}\) such that \(\mu (Z)\;=\;\mathbb {P}^{III}_b(Z)\) for every \(Z\in \mathcal {R}^{II}\), but \(\mu \ne \mathbb {P}^{III}_b\).

3. Assume that the action space A is infinite. Then there is a behavioral strategy b and a charge \(\mu\) on \(\mathcal {R}\) such that \(\mu (Z)\;=\;\mathbb {P}_b(Z)\) for every \(Z\in \mathcal {R}^{III}\), but \(\mu \ne \mathbb {P}_b\).

In Part 1 of the above proposition, it is necessary to assume that A is infinite, in view of Proposition 9.1. We emphasize that Part 2 of the above proposition holds for any action space A, even when A is finite (under our assumption that \(|A|\ge 2\)).

Given a behavioral strategy, we defined the sets of expected payoffs \([u^I(b)]\), \([u^{II}(b)]\), \([u^{III}(b)]\) and \([u(b)]\) for the algebras \(\mathcal {R}^I\), \(\mathcal {R}^{II}\), \(\mathcal {R}^{III}\) and \(\mathcal {R}\), respectively. We compare these sets in more detail.

Proposition 9.3

The induced expected payoffs on the algebras have the following properties:

1. For each decision problem and each behavioral strategy b

   $$\begin{aligned}{}[u^I(b)]\;\supseteq \; [u^{II}(b)]\;\supseteq \; [u^{III}(b)]\;\supseteq \; [u(b)]. \end{aligned}$$(6)

2. Assume that the action set A is finite. Then, for each payoff function u and each behavioral strategy b we have \([u^I(b)]\;=\; [u^{II}(b)]\), and there is a payoff function u and a behavioral strategy b for which \([u^{II}(b)]\;\supset \; [u^{III}(b)]\).

3. Assume that the action set A is infinite. Then, for each inclusion in (6) there is a payoff function u and a behavioral strategy b under which this inclusion is strict.

Appendix: The proofs

In this section, we prove the results stated in the previous sections, but also make a few additional statements that we use for the proofs. We will often use the following notations:

- For a history \(h \in A^{n}\) and a period \(t \le n\), we denote by h(t) the action in h taken at period t. Similarly, for a play \(\vec a\in A^\mathbb {N}\) we denote by \(\vec {a}(t)\) the action in \(\vec a\) taken at period t.

- For a history \(h\in A^n\) and a play \(\vec a\in A^\mathbb {N}\), we use the notation \(h \prec \vec a\) if \(\vec a\) extends h, i.e., \(\vec {a}(t)=h(t)\) for all \(t \le n\). Similarly, for \(m\ge n\) and a history \(h' \in A^m\), we use the notation \(h \preceq h'\) if \(h(t)=h'(t)\) for all \(t \le n\), and use the notation \(h \prec h'\) if \(h\preceq h'\) and \(h \ne h'\).

- For a history \(h\in H\), we denote the set of plays extending h by \([h]=\{\vec a\in A^\mathbb {N}:h\prec \vec a\}\), also called the cylinder set corresponding to h. Also, if \(Q\subseteq H\), then we use the notation \([Q]=\cup _{h\in Q}[h]\).
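To make this notation concrete, the following minimal Python sketch models a history as a tuple of actions and a play as a function from periods \(t=1,2,\ldots\) to actions. This representation and all function names are illustrative assumptions, not part of the formal development. The sketch relies only on the fact that membership of a play in a cylinder set \([h]\) is determined by its first finitely many periods.

```python
from typing import Callable, Hashable, Iterable, Tuple

History = Tuple[Hashable, ...]      # finite sequence of actions (h in H)
Play = Callable[[int], Hashable]    # period t = 1, 2, ... -> action (a play in A^N)


def weakly_precedes(h: History, h2: History) -> bool:
    """h ⪯ h2: h2 is at least as long as h and agrees with h on its periods."""
    return len(h) <= len(h2) and h2[:len(h)] == h


def strictly_precedes(h: History, h2: History) -> bool:
    """h ≺ h2: h ⪯ h2 and h differs from h2."""
    return weakly_precedes(h, h2) and h != h2


def in_cylinder(h: History, play: Play) -> bool:
    """play ∈ [h]: the play agrees with h at periods 1, ..., len(h)."""
    return all(play(t) == h[t - 1] for t in range(1, len(h) + 1))


def in_cylinder_union(Q: Iterable[History], play: Play) -> bool:
    """play ∈ [Q]: the play extends at least one history in Q."""
    return any(in_cylinder(h, play) for h in Q)
```

For instance, the constant play \((c,c,\ldots )\) of Example 7.3 can be written as `lambda t: "c"`, and it belongs to every cylinder set \([(c,\ldots ,c)]\) but to no cylinder set of a history containing the action s.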

The following lemma about the function \(L_g\) will be used in the proofs. For the definition of the function \(L_g\), we refer to Sect. 5.

Lemma 10.1

Consider a function \(g:A^{\mathbb {N}}\rightarrow \mathbb {R}\). Assume that each discontinuity in \(D_g\) is removable. Then the function \(L_g:A^{\mathbb {N}}\rightarrow \mathbb {R}\) is continuous.

Proof

Take an arbitrary play \(\vec a \in A^\mathbb {N}\). Consider a sequence of plays \((\vec a_k)_{k \in \mathbb {N}}\) that converges to \(\vec a\). We show that \((L_g(\vec a_k))_{k \in \mathbb {N}}\) converges to \(L_g(\vec a)\).

By the definition of \(L_g\), for every \(k \in \mathbb {N}\) there exists a play \(\vec a^\diamond _k \in A^\mathbb {N}\) such that \(d(\vec a_k, \vec a^\diamond _k) < \tfrac{1}{k}\) and \(|L_g(\vec a_k)-g(\vec a^\diamond _k)| < \tfrac{1}{k}\). Because \(d(\vec a^\diamond _k,\vec a)\le d(\vec a^\diamond _k,\vec a_k)+d(\vec a_k,\vec a)\), we find that \(d(\vec a^\diamond _k,\vec a)\) converges to 0, i.e., the sequence \((\vec a^\diamond _k)_{k \in \mathbb {N}}\) converges to \(\vec a\) as well. Hence by the definition of \(L_g\), the sequence \((g(\vec a^\diamond _k))_{k \in \mathbb {N}}\) converges to \(L_g(\vec a)\). By using

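$$\begin{aligned} |L_g(\vec a_k)-L_g(\vec a)|\;\le \;|L_g(\vec a_k)-g(\vec a^\diamond _k)|+|g(\vec a^\diamond _k)-L_g(\vec a)|, \end{aligned}$$

we obtain that \(|L_g(\vec a_k)-L_g(\vec a)|\) converges to 0. \(\Box\)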

For the algebra \(\mathcal {R}^I\), which is the smallest algebra we consider, the charge \(\mathbb {P}_b^{I}\) takes a natural form. Indeed, based on the definition of \(\mathcal {R}^I\) in Sect. 4, for each set \(Q\in \mathcal {R}^I\) there is a period n such that at period n we already know if the induced play belongs to Q or not. Let n(Q) be the smallest such period. The following lemma follows from Theorem 4.1.

Lemma 10.2

Let \(Q\in \mathcal {R}^I\), and let \(n\ge n(Q)\). Then, under each behavioral strategy b, the probability of the set Q can be calculated through iterated integrals over the actions of the first n periods as follows:

The following characterization for the algebra \(\mathcal {R}^{III}\) will be used in the proof later.

Lemma 10.3

A subset \(Z\subseteq A^\mathbb {N}\) belongs to \(\mathcal {R}^{III}\) if and only if it differs in at most finitely many plays from a clopen set: there is a unique clopen set \(Q\subseteq A^\mathbb {N}\) and unique finite sets \(F_1\subseteq A^\mathbb {N}{\setminus } Q\) and \(F_2\subseteq Q\) such that

$$\begin{aligned} Z\;=\;(Q\cup F_1)\setminus F_2. \end{aligned}$$(8)

Proof

Part 1: We show that \(\mathcal {R}^{III}=\mathscr {P}\), where \(\mathscr {P}\) is the set of all subsets \(Z\subseteq A^{\mathbb {N}}\) of the form

$$\begin{aligned} Z\;=\;(Q\cup F_1)\setminus F_2, \end{aligned}$$(9)

where \(Q\subseteq A^\mathbb {N}\) is clopen and \(F_1,F_2\subseteq A^\mathbb {N}\) are finite. Note that (9) is weaker than the representation in (8). By the definition of \(\mathcal {R}^{III}\), we have \(\mathscr {P}\subseteq \mathcal {R}^{III}\). So we only need to verify that \(\mathcal {R}^{III}\subseteq \mathscr {P}\).

It is clear that (1) \(\mathcal {R}^{II}\subseteq \mathscr {P}\) and (2) \(\{\vec a\}\in \mathscr {P}\) for every \(\vec a\in A^{\mathbb {N}}\). We show that \(\mathscr {P}\) is an algebra. Then, by the definition of \(\mathcal {R}^{III}\), it will follow that \(\mathcal {R}^{III}\subseteq \mathscr {P}\).

Obviously, \(A^{\mathbb {N}}\in \mathscr {P}\), by choosing \(Q=A^\mathbb {N}\) and \(F_1=F_2=\emptyset\).

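Now we show that \(\mathscr {P}\) is closed under taking complements. Take an element \(Z=(Q\cup F_1)\setminus F_2\) of \(\mathscr {P}\) as in (9). The complement of Z is

$$\begin{aligned} A^{\mathbb {N}}\setminus Z\;=\;\big ((A^{\mathbb {N}}\setminus Q)\cup F_2\big )\setminus (F_1\setminus F_2). \end{aligned}$$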

Because \(Q\in \mathcal {R}^{II}\), we have \((A^{\mathbb {N}}\setminus Q)\in \mathcal {R}^{II}\) as well. Hence, \((A^{\mathbb {N}}\setminus Z)\in \mathscr {P}\).

Finally, we show that \(\mathscr {P}\) is closed under taking unions. Let \(Z,Z'\in \mathscr {P}\). Then Z and \(Z'\) are of the form \(Z=W\setminus V\) and \(Z'=W'{\setminus } V'\) where \(W=Q\cup F_1\) and \(W'=Q'\cup F'_1\), with \(Q,Q'\in \mathcal {R}^{II}\) and \(F_1\), \(F'_1\), V and \(V'\) being finite subsets of \(A^{\mathbb {N}}\). For the union of Z and \(Z'\) we have

$$\begin{aligned} Z\cup Z'\;=\;(W\cup W')\setminus \big (({\overline{W}}\cap V')\cup ({\overline{W}}'\cap V)\cup (V\cap V')\big ), \end{aligned}$$

where \({\overline{W}}=A^\mathbb {N}{\setminus } W\) and \(\overline{W}'=A^\mathbb {N}{\setminus } W'\). Note that \(W\cup W'=(Q\cup Q')\cup (F_1\cup F'_1)\). As \((Q \cup Q') \in \mathcal {R}^{II}\), and \(F_1 \cup F'_1\) is finite, and \(({\overline{W}}\cap V')\cup ({\overline{W}}'\cap V)\cup (V\cap V')\) is also finite, we have \((Z\cup Z')\in \mathscr {P}\).
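The two closure steps above rest on purely Boolean identities: for \(Z=(Q\cup F_1)\setminus F_2\), the complement of Z equals \(((A^\mathbb {N}\setminus Q)\cup F_2)\setminus (F_1\setminus F_2)\), and for \(Z=W\setminus V\) and \(Z'=W'\setminus V'\), the union \(Z\cup Z'\) equals \((W\cup W')\) minus \(({\overline{W}}\cap V')\cup ({\overline{W}}'\cap V)\cup (V\cap V')\). As a sanity check, the following Python sketch tests both identities on random subsets of a small finite universe. The finite universe is only a stand-in for \(A^\mathbb {N}\) (an assumption made for illustration); clopenness and finiteness are not modelled, and the function name is hypothetical.

```python
import random


def check_identities(trials: int = 200, universe_size: int = 12, seed: int = 0) -> bool:
    """Test the complement and union identities on random finite sets."""
    rng = random.Random(seed)
    universe = frozenset(range(universe_size))

    def random_subset() -> frozenset:
        return frozenset(x for x in universe if rng.random() < 0.5)

    for _ in range(trials):
        Q, F1, F2 = random_subset(), random_subset(), random_subset()
        Q2, F1b, F2b = random_subset(), random_subset(), random_subset()

        # Complement identity for Z = (Q ∪ F1) \ F2.
        Z = (Q | F1) - F2
        if universe - Z != ((universe - Q) | F2) - (F1 - F2):
            return False

        # Union identity for Z = W \ V and Z' = W' \ V'.
        W, V = Q | F1, F2
        W2, V2 = Q2 | F1b, F2b
        Z2 = W2 - V2
        removed = ((universe - W) & V2) | ((universe - W2) & V) | (V & V2)
        if Z | Z2 != (W | W2) - removed:
            return False
    return True
```

Such a check does not replace the proof, but it guards against sign or index slips in the set algebra.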

Part 2: We prove that for every \(Z\in \mathcal {R}^{III}\) there is a unique representation of the form (8).

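By Part 1, such a representation exists. Now assume that for some \(Z\in \mathcal {R}^{III}\) there are two representations

$$\begin{aligned} Z\;=\;(Q\cup F_1)\setminus F_2\;=\;(Q'\cup F'_1)\setminus F'_2 \end{aligned}$$(10)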

of the form (8). That is, \(Q,Q'\in \mathcal {R}^{II}\), and the following sets are finite: \(F_1\subseteq A^\mathbb {N}\setminus Q\), and \(F'_1\subseteq A^\mathbb {N}{\setminus } Q'\), and \(F_2\subseteq Q\), and \(F'_2\subseteq Q'\).

We first show that \(Q=Q'\). Let h be a history such that \([h]\subseteq Q\). We argue that \([h]\subseteq Q'\). Assume the opposite. Then, there is a play \(\vec a\in A^\mathbb {N}\) such that \(h\prec \vec a\) and \(\vec a\not \in Q'\). Since \(Q'\) is clopen and so \(A^\mathbb {N}{\setminus } Q'\) is open, there exists a history \(h'\) with \(h\preceq h'\prec \vec a\) and \([h']\cap Q'=\emptyset\). Thus, the set \([h']\) has the following properties: (1) \([h']\) is infinite because of our assumption that \(|A|\ge 2\), (2) \([h']\subseteq [h]\subseteq Q\) and (3) \([h']\cap Q'=\emptyset\). Then by using that \(F_1, F_1', F_2\) and \(F_2'\) are finite sets, \((Q\cup F_1){\setminus } F_2\) and \((Q'\cup F'_1){\setminus } F'_2\) cannot be equal, contradicting (10). Hence, \([h]\subseteq Q'\). Because this holds for all h such that \([h]\subseteq Q\), we conclude that \(Q\subseteq Q'\). For a similar reason, \(Q\supseteq Q'\). So, \(Q=Q'\).

Based on \(Q=Q'\), it follows by finiteness of \(F_1\), \(F_2\), \(F'_1\) and \(F'_2\) that \(F_1=F'_1\) and \(F_2=F'_2\). \(\Box\)

Using Lemma 10.3, the induced charges on the algebra \(\mathcal {R}^{III}\) can be described directly.

Lemma 10.4

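Let \(Z\in \mathcal {R}^{III}\) be of the form \(Z=(Q\cup F_1)\setminus F_2\), as given in (8). Then, for each behavioral strategy b we have

$$\begin{aligned} \mathbb {P}^{III}_b(Z)\;=\;\mathbb {P}^{II}_b(Q)+\sum _{\vec a\in F_1}\ell (b)(\vec a)-\sum _{\vec a\in F_2}\ell (b)(\vec a), \end{aligned}$$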

where \(\ell (b)(a_1,a_2,\ldots )\,=\,\prod _{n=1}^\infty \; b(a_1,\ldots ,a_{n-1})(a_n)\).

In the lemma above, \(\ell (b)(\vec a)\) is just the usual probability of a play, which is calculated by multiplying the probabilities at each period. Naturally, \(\ell (b)(\vec a)\,=\,\mathbb {P}_b(\vec a)\); this follows by using that the complement of \(\{\vec a\}\) is open and Theorem 4.1.
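As an illustration of the product \(\ell (b)(\vec a)\), the following Python sketch computes its finite truncations \(\prod _{n=1}^{N} b(a_1,\ldots ,a_{n-1})(a_n)\) with exact rational arithmetic. We assume, for the sake of the sketch only, that a behavioral strategy is given as a function returning the mass that \(b(h)\) places on a single action; the names `partial_product`, `always_c` and `uniform` are hypothetical. The two sample strategies are those of Examples 7.3 and 7.5.

```python
from fractions import Fraction
from typing import Callable, Hashable, Sequence, Tuple

History = Tuple[Hashable, ...]
# Assumed representation: strategy(h, a) is the mass b(h) places on action a.
Strategy = Callable[[History, Hashable], Fraction]


def partial_product(b: Strategy, play_prefix: Sequence[Hashable]) -> Fraction:
    """Product of b(a_1, ..., a_{n-1})(a_n) over the periods in play_prefix."""
    prob = Fraction(1)
    for n in range(len(play_prefix)):
        history = tuple(play_prefix[:n])   # actions chosen before this period
        prob *= b(history, play_prefix[n])
    return prob


def always_c(history: History, action: Hashable) -> Fraction:
    """Example 7.3: action c is chosen with probability 1 at every period."""
    return Fraction(1) if action == "c" else Fraction(0)


def uniform(history: History, action: Hashable) -> Fraction:
    """Example 7.5: each of the two actions has probability 1/2 at every period."""
    return Fraction(1, 2)
```

Under `always_c` every truncation along \((c,c,\ldots )\) equals 1, in line with \(\ell (b)(\vec c)=1\), whereas under `uniform` the truncation after N periods is \(2^{-N}\), so that \(\ell (b)(\vec a)=0\) for every play.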

Proof of Proposition 9.1: The weak set-inclusions in the proposition are immediate by (5).

Proof of \(\mathcal {R}^I = \mathcal {R}^{II}\) if and only if the action set A is finite:

\(\Longrightarrow\) Assume first that the action set A is infinite. We show that there exists a set \(U\in \mathcal {R}^{II}\setminus \mathcal {R}^{I}\). (The construction is related to Example 7.2.) Since A is infinite, by renaming the actions we can assume that \(\mathbb {N}\subseteq A\). For every \(n\in \mathbb {N}\), let \(h_n\) denote the history \((n,\ldots ,n)\) of length n. Define

$$\begin{aligned} U\;=\;\bigcup _{n\in \mathbb {N}}[h_n]. \end{aligned}$$

Thus, U is the set of plays that start with action n at the first n periods, for some \(n\in \mathbb {N}\).

We show \(U\in \mathcal {R}^{II}\setminus \mathcal {R}^{I}\). It is clear that \(U\not \in \mathcal {R}^{I}\). So it remains to verify that \(U\in \mathcal {R}^{II}\). Since \([h_n]\) is open for every \(n\in \mathbb {N}\), the set U is open. For every \(n \in \mathbb {N}\), let \(H_n\) be the set of histories of length n that start with action n. Since \([H_n\setminus \{h_n\}]\) is open for every \(n\in \mathbb {N}\) and \([A \backslash \mathbb {N}]\) is also open, the complement of U

$$\begin{aligned} A^\mathbb {N}\setminus U\;=\;[A \backslash \mathbb {N}]\;\cup \;\bigcup _{n\in \mathbb {N}}[H_n\setminus \{h_n\}] \end{aligned}$$

is open too. Hence, U is closed, and therefore \(U\in \mathcal {R}^{II}\) indeed.
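The following Python sketch illustrates why U, the set of plays that start with action n in the first n periods for some \(n\in \mathbb {N}\), is decidable after finitely many periods for each individual play, although no single horizon works for all plays. As an assumption for illustration, a play is modelled as a function from periods \(t=1,2,\ldots\) to actions, natural-number actions are Python integers, and the function names are hypothetical.

```python
from typing import Callable, Hashable

Play = Callable[[int], Hashable]   # period t = 1, 2, ... -> action


def periods_needed(play: Play) -> int:
    """Number of periods that must be observed to decide whether play ∈ U."""
    first = play(1)
    if isinstance(first, int) and first >= 1:
        return first               # must check periods 1, ..., n with n = play(1)
    return 1                       # first action lies outside N: play ∉ U already


def in_U(play: Play) -> bool:
    """play ∈ U iff its first action is some n ∈ N repeated in periods 1..n."""
    first = play(1)
    if not (isinstance(first, int) and first >= 1):
        return False
    return all(play(t) == first for t in range(1, first + 1))
```

The number of periods inspected equals the first action n, which is unbounded over the plays. This matches the claim that \(U\not \in \mathcal {R}^{I}\): the plays \((n,\ldots ,n,n,\ldots )\) and \((n,\ldots ,n,1,1,\ldots )\), where the latter deviates only from period n onward, agree in the first \(n-1\) periods, yet for \(n\ge 2\) only the former lies in U.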

\(\Longleftarrow\) Now assume that the action set A is finite. For proving \(\mathcal {R}^{I}=\mathcal {R}^{II}\), we only need to prove \(\mathcal {R}^{I}\supseteq \mathcal {R}^{II}\). Let \(Z\in \mathcal {R}^{II}\). To show \(Z\in \mathcal {R}^{I}\), it is sufficient to prove that there is \(n \in \mathbb {N}\) such that for every history \(h \in A^n\) we have either \([h] \subseteq Z\) or \([h] \subseteq A^\mathbb {N}\backslash Z\).

Suppose the opposite. Then there exists a sequence of histories \(h_n\) such that for every \(n \in \mathbb {N}\) we have \(h_n \in A^n\) and there exists a play \(\vec a_n \succ h_n\) and a play \(\vec a^{\diamond }_n \succ h_n\) with \(\vec a_n \in Z\) and \(\vec a^{\diamond }_n \in A^{\mathbb {N}} \backslash Z\). Because A is finite, \(A^{\mathbb {N}}\) is compact. Hence, there exists a subsequence \(n_k\), with index \(k\in \mathbb {N}\), converging to infinity, such that \(\vec a_{n_k}\) converges to some play \(\vec a\). Then there also exists a sub-subsequence \(n_{k_m}\) such that \(\vec a^{\diamond }_{n_{k_m}}\) also converges. Since \(\vec a_{n_{k_m}} \succ h_{n_{k_m}}\) and \(\vec a^{\diamond }_{n_{k_m}} \succ h_{n_{k_m}}\) for every \(m \in \mathbb {N}\), and the sequence \(\vec a_{n_{k_m}}\) converges to \(\vec a\), we can conclude that \(\vec a^{\diamond }_{n_{k_m}}\) also converges to \(\vec a\). However, because Z is clopen, \(\vec a \in Z\) as it is the limit of \(\vec a_{n_{k_m}}\) and \(\vec a \in A^{\mathbb {N}} \backslash Z\) as it is the limit of \(\vec a^{\diamond }_{n_{k_m}}\). This is a contradiction.

Proof of \(\mathcal {R}^{II} \subset \mathcal {R}^{III}\). Take a play \(\vec a \in A^{\mathbb {N}}\), and consider the singleton \(\{\vec a\}\). By definition, \(\{\vec a\} \in \mathcal {R}^{III}\). As the action space A is assumed to contain at least two actions, \(\{\vec a\}\) is not open, and hence \(\{\vec a\} \notin \mathcal {R}^{II}\).

Proof of \(\mathcal {R}^{III} \subset \mathcal {R}\). We construct a set \(Z\subset A^{\mathbb {N}}\) such that \(Z\in \mathcal {R}\) but \(Z\not \in \mathcal {R}^{III}\). Take two distinct actions a and \(a'\); recall that we assumed \(|A|\ge 2\). For every \(n\in \mathbb {N}\), let \(\vec {a}_n\) denote the play where action a is played at the first n periods, and action \(a'\) is played at all periods after that. Let \(Z=\{\vec {a}_n:n\in \mathbb {N}\}\).

First we argue that \(Z\not \in \mathcal {R}^{III}\). Assume by way of contradiction that \(Z\in \mathcal {R}^{III}\). Then by Part 2 of Lemma 10.3, there are \(Q\in \mathcal {R}^{II}\) and finite subsets \(F_1\) and \(F_2\) of \(A^{\mathbb {N}}\) such that \(Z\;=\;(Q\cup F_1){\setminus } F_2,\) and \(Q\cap F_1=\emptyset\), \(Q\supseteq F_2\) and \(F_1\cap F_2=\emptyset\). Therefore, \(Q\;=\;(Z{\setminus } F_1)\cup F_2\). Since Z is countably infinite and both \(F_1\) and \(F_2\) are finite, Q must be countably infinite too. This is however in contradiction with the fact that Q is open. Thus, \(Z\not \in \mathcal {R}^{III}\) as claimed.

Now we argue that \(Z\in \mathcal {R}\). Let \(\vec {a}_\infty =(a,a,\ldots )\). Note that the closure of Z is \(Z \cup \{\vec {a}_\infty \}\). Since \(Z \cup \{\vec {a}_\infty \}\) and \(\{\vec {a}_\infty \}\) are closed, they are elements of the algebra \(\mathcal {R}\). Thus \(Z \in \mathcal {R}\) as well.

Proof of \(\mathcal {R}\subset \sigma (A^{\mathbb {N}})\): Suppose first that there are only two actions: \(A=\{a,a'\}\). Consider the set Q of plays \(\vec {a}\) such that action \(a'\) only appears finitely many times in \(\vec {a}\). As Q is countable, Q belongs to the Borel sigma-algebra of \(A^{\mathbb {N}}\). It is well-known that \(Q\not \in \mathcal {R}\). (See also Example 7.5.) If the action set A is larger and \(A\supset \{a,a'\}\), the same set Q still satisfies the desired properties. \(\Box\)

Proof of Proposition 9.2:

Part 1: Since A is infinite, by renaming the actions we can assume that \(\mathbb {N}\subseteq A\). The proof is making use of the idea of Example 7.2.

Consider the following behavioral strategy b. The charge \(b (\text {\o })\) is such that \(b (\text {\o })(\{n\})=0\) for every \(n\in \mathbb {N}\) and \(b (\text {\o })(\mathbb {N})=1\) (essentially, \(b (\text {\o })\) is a diffuse charge on \(\mathbb {N}\)), and for any other history \(h=(a_1,\ldots ,a_t) \in H\) the charge b(h) is simply the Dirac charge on action \(a_1\). Consider also the set \(Z^*=\cup _{n\in \mathbb {N}}[(n,\ldots ,n)]\), where \([(n,\ldots ,n)]\) denotes the set of plays that start with action n at the first n periods.

Now we prove that there is a charge \(\mu\) on \(\mathcal {R}^{II}\) such that \(\mu (Z)\;=\;\mathbb {P}^{II}_b(Z)\) for every \(Z\in \mathcal {R}^{I}\), but \(\mu (Z^*)\;\ne \;\mathbb {P}^{II}_b(Z^*)\).

Let \(Z\in \mathcal {R}^I\) such that \(Z\subseteq Z^*\). Then, in period 1 of the plays in Z, only finitely many different actions can appear. As a consequence, \(\mathbb {P}^I_b(Z)=0\). So, inner approximation of \(Z^*\) by sets in \(\mathcal {R}^{I}\) yields

$$\begin{aligned} \sup \{\mathbb {P}^{I}_b(Z):Z\in \mathcal {R}^{I},\;Z\subseteq Z^*\}\;=\;0. \end{aligned}$$

Based on Theorem C.3 in Flesch et al. (2017) there exists an extension \(\mu\) of \(\mathbb {P}^I_b\) to the power set of \(A^\mathbb {N}\), i.e., \(\mu \in [\mathbb {P}^I_b]\), such that \(\mu (Z^*)=0\). Since \(\mathbb {P}^{II}_b(Z^*)=1 \ne 0 = \mu (Z^*)\), the proof of Part 1 is complete.

Part 2: The proof is making use of the idea of Example 7.3. Take an action \(a \in A\), and let \(\vec a=(a,a,\ldots )\in A^\mathbb {N}\). Consider the behavioral strategy b where \(b(h)(a)=1\) for every \(h\in H\). Let \(h_t\) denote the history at period t in which only action a is played. Then by Lemma 10.4

$$\begin{aligned} \mathbb {P}^{III}_b(\{\vec a\})\;=\;\ell (b)(\vec a)\;=\;\prod _{t=1}^\infty b(h_t)(a)\;=\;1. \end{aligned}$$

Now we prove that there is a charge \(\mu\) on \(\mathcal {R}^{III}\) such that \(\mu (Z)\,=\,\mathbb {P}^{III}_b(Z)\) for every \(Z\in \mathcal {R}^{II}\), but \(\mu (\{\vec a\})\;\ne \;\mathbb {P}^{III}_b(\{\vec a\})\).

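Note that the only set \(Z \in \mathcal {R}^{II}\) such that \(Z \subseteq \{\vec a\}\) is the empty set. So the inner approximation of \(\{\vec a\}\) by sets in \(\mathcal {R}^{II}\) yields

\[\sup \{\mathbb {P}^{II}_b(Z):\, Z\in \mathcal {R}^{II},\ Z\subseteq \{\vec a\}\}\;=\;\mathbb {P}^{II}_b(\emptyset )\;=\;0.\]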

Based on Theorem C.3 in Flesch et al. (2017) there exists an extension \(\mu\) of \(\mathbb {P}^{II}_b\) to the power set of \(A^\mathbb {N}\), i.e., \(\mu \in [\mathbb {P}^{II}_b]\), such that \(\mu (\{\vec a\})=0\). Since \(\mathbb {P}^{III}_b(\{\vec a\})=1 \ne 0 = \mu (\{\vec a\})\), the proof of Part 2 is complete.

Part 3: Since A is infinite, by renaming the actions we can assume that \(\mathbb {N}\subseteq A\). The proof uses the idea of Example 7.4.

Consider the following behavioral strategy b. The charge \(b (\text {\o })\) is such that \(b (\text {\o })(\{n\})=0\) for every \(n\in \mathbb {N}\) and \(b (\text {\o })(\mathbb {N})=1\) (essentially, \(b (\text {\o })\) is a diffuse charge on \(\mathbb {N}\)), and for any other \(h\in H\) the charge b(h) is simply the Dirac charge on action 1. For every \(n\in \mathbb {N}\), let \(\vec a_n\) be a play which starts with n at period 1, and at all other periods uses action 1. Let \(Z^*=\{\vec a_n:n \in \mathbb {N}\}\).

Now we prove that there is a charge \(\mu\) on \(\mathcal {R}\) such that \(\mu (Z)\;=\;\mathbb {P}_b(Z)\) for every \(Z\in \mathcal {R}^{III}\), but \(\mu (Z^*)\;\ne \;\mathbb {P}_b(Z^*)\).

One can verify that \(\mathbb {P}_b(Z^*)=1\); this follows as \(A^\mathbb {N}{\setminus } Z^*\) is open and then by Theorem 4.1 we have \(\mathbb {P}_b(A^\mathbb {N}\setminus Z^*)=0\).

Let \({\mathcal {W}}=\{Z \in \mathcal {R}^{III}: Z \subseteq Z^*\}\). Since \(Z^*\) is countable, by (8) the elements of \({\mathcal {W}}\) are finite sets. Take a \(Z \in {\mathcal {W}}\). Using \(\ell ^{III}(b)(\vec a)=0\) for every play \(\vec a \in Z\), we arrive at \(\mathbb {P}^{III}_b(Z)=\sum _{\vec a\in Z}\ell ^{III}(b)(\vec a)=0\). So the inner approximation of \(Z^*\) by sets in \(\mathcal {R}^{III}\) yields

\[\sup \{\mathbb {P}^{III}_b(Z):\, Z\in {\mathcal {W}}\}\;=\;0.\]

Based on Theorem C.3 in Flesch et al. (2017) there exists an extension \(\mu\) of \(\mathbb {P}^{III}_b\) to the power set of \(A^\mathbb {N}\), i.e., \(\mu \in [\mathbb {P}^{III}_b]\), such that \(\mu (Z^*)=0\). Since \(\mathbb {P}_b(Z^*)=1 \ne 0 = \mu (Z^*)\), the proof of Part 3 is complete. \(\Box\)

Proof of Proposition 9.3: Claim 1 and the first part of Claim 2 follow directly from Proposition 9.1. The second part of Claim 2 and Claim 3 follow from Proposition 9.2, by taking a behavioral strategy b and a set Z as in Proposition 9.2, and defining the payoff function u to be the indicator of the set Z. \(\Box\)

Proof of Theorem 6.1: Let \(\mathscr {P}\) and u be as in the theorem. Consider a behavioral strategy b, and let \(\mathbb {P}_b^\mathscr {P}\) denote the charge induced by b on \(\mathscr {P}\) (for example, \(\mathbb {P}_b^\mathscr {P}=\mathbb {P}_b^I\) if \(\mathscr {P}=\mathcal {R}^I\)).

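Let \(\varepsilon >0\). Since u is uniformly \(\mathscr {P}\)-approachable, there exist \(c_i \in \mathbb {R}\) and \(Q_i\in \mathscr {P}\) for each \(i=1,\ldots ,n\), where \(\{Q_i:i=1,\ldots ,n\}\) is a partition of \(A^\mathbb {N}\), such that for all \(\vec a \in A^\mathbb {N}\)

\[\sum _{i=1}^n c_i\,\mathbb {I}_{Q_i}(\vec a)-\varepsilon \ \le \ u(\vec a)\ \le \ \sum _{i=1}^n c_i\,\mathbb {I}_{Q_i}(\vec a)+\varepsilon . \tag{11}\]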

Let \(B\in [\mathbb {P}_b^\mathscr {P}]\). We will now integrate each part of (11) with respect to B. As \(Q_i\in \mathscr {P}\) we have \(B(Q_i)=\mathbb {P}_b^\mathscr {P}(Q_i)\), for every \(i=1,\ldots ,n\). Thus, by the definition of integration of a \(\mathscr {P}\)-measurable simple function and by (1)

\[\sum _{i=1}^n c_i\,\mathbb {P}_b^\mathscr {P}(Q_i)-\varepsilon \ \le \ u(B)\ \le \ \sum _{i=1}^n c_i\,\mathbb {P}_b^\mathscr {P}(Q_i)+\varepsilon .\]

This inequality also holds for \(u(B')\) for any \(B'\in [\mathbb {P}_b^\mathscr {P}]\), and hence \(-2 \varepsilon \ \le \ u(B)-u(B') \ \le \ 2\varepsilon\). As \(\varepsilon >0\) was arbitrarily chosen, it follows that \(u(B)=u(B')\). In conclusion, b induces an unambiguous expected payoff with respect to \(\mathscr {P}\). \(\Box\)
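The step from the two-sided bound to the \(2\varepsilon\) estimate can be spelled out as follows. This is only a sketch: the shorthand \(s=\sum _{i=1}^n c_i\,\mathbb {P}_b^\mathscr {P}(Q_i)\) is introduced here for illustration and is not part of the original notation. Assuming that both \(u(B)\) and \(u(B')\) lie in the interval \([s-\varepsilon ,s+\varepsilon ]\), we obtain

\[u(B)-u(B')\ \le \ (s+\varepsilon )-(s-\varepsilon )\ =\ 2\varepsilon \qquad \text {and}\qquad u(B)-u(B')\ \ge \ (s-\varepsilon )-(s+\varepsilon )\ =\ -2\varepsilon .\]

The value s depends only on \(\mathbb {P}_b^\mathscr {P}\) and not on the chosen extension, which is why the same interval applies to every element of \([\mathbb {P}_b^\mathscr {P}]\).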

The following lemma follows directly from the definitions of continuity and uniform continuity and from the topology on the set of plays.

Lemma 10.5

Consider a function \(g:A^{\mathbb {N}}\rightarrow \mathbb {R}\).

1. g is continuous at a play \(\vec a\in A^{\mathbb {N}}\) if and only if for every \(\varepsilon >0\), there is \(T \in \mathbb {N}\) such that if for a play \(\vec a^\diamond\) it holds that \(\vec a^\diamond (t)=\vec a(t)\) for all \(t \le T\), then \(|g(\vec a)-g(\vec a^\diamond )| < \varepsilon\).

2. g is uniformly continuous if and only if for every \(\varepsilon > 0\), there is \(T \in \mathbb {N}\) such that if for two plays \(\vec a\) and \(\vec a^\diamond\) it holds that \(\vec a(t)=\vec a^\diamond (t)\) for all \(t \le T\), then \(|g(\vec a)-g(\vec a^\diamond )| < \varepsilon\).

The following lemma is a combinatorial result on sequences of plays.

Lemma 10.6

Let \((\vec a_k)_{k\in \mathbb {N}}\) and \((\vec a^\diamond _k)_{k\in \mathbb {N}}\) be two sequences of plays such that, for every \(k\in \mathbb {N}\), it holds that \(\vec a_k\ne \vec a^\diamond _k\). For every \(k\in \mathbb {N}\), let \(h_k\) denote the longest history on which \(\vec a_k\) and \(\vec a^\diamond _k\) coincide, and assume that the length of \(h_k\) converges to infinity as \(k\rightarrow \infty\). Then, there exists an increasing sequence \((k_\ell )_{\ell \in \mathbb {N}}\) such that for the subsequences \((\vec a_{k_\ell })_{\ell \in \mathbb {N}}\) and \((\vec a^\diamond _{k_\ell })_{\ell \in \mathbb {N}}\) at least one of the following holds:

1. The subsequences \((\vec a_{k_\ell })_{\ell \in \mathbb {N}}\) and \((\vec a^\diamond _{k_\ell })_{\ell \in \mathbb {N}}\) converge to the same play as \(\ell \rightarrow \infty\).

2. There exists a history h and a sequence of actions \((a_\ell )_{\ell \in \mathbb {N}}\), with \(a_\ell \ne a_{\ell '}\) for all \(\ell ,\ell '\in \mathbb {N}\) with \(\ell \ne \ell '\), such that \(\vec a_{k_\ell }\succ (h,a_\ell )\) and \(\vec a^\diamond _{k_\ell }\succ (h,a_\ell )\) for every \(\ell \in \mathbb {N}\). Here, \((h,a_\ell )\) is the history that arises when at history h the action \(a_\ell\) is played.

Proof

For every \(n\in \mathbb {N}\), let \(\bar{A}_n\) denote the set of actions that are used at period n by at least one of the plays in either of the sequences \((\vec a_k)_{k\in \mathbb {N}}\) and \((\vec a^\diamond _k)_{k\in \mathbb {N}}\), i.e., \(\bar{A}_n=\cup _{k\in \mathbb {N}}\{\vec a_{k}(n),\vec a^\diamond _{k}(n)\}\). Thus, \(\vec a_k,\vec a^\diamond _k\in \times _{n=1}^\infty \bar{A}_n\) for every \(k\in \mathbb {N}\). We distinguish two cases.

First assume that \(\bar{A}_n\) is finite for every \(n\in \mathbb {N}\). Then, the set \(\times _{n=1}^\infty \bar{A}_n\) is a compact subset of \(A^\mathbb {N}\). This implies that there is an increasing sequence \((k_\ell )_{\ell \in \mathbb {N}}\) in \(\mathbb {N}\) such that the subsequence \((\vec a_{k_\ell })_{\ell \in \mathbb {N}}\) converges. Since the length of \(h_{k_\ell }\) tends to infinity as \(\ell \rightarrow \infty\), we conclude that both subsequences \((\vec a_{k_\ell })_{\ell \in \mathbb {N}}\) and \((\vec a^\diamond _{k_\ell })_{\ell \in \mathbb {N}}\) converge to the same play. Thus, property 1 holds.

Now assume that, for some \(n\in \mathbb {N}\), the sets \(\bar{A}_1,\ldots ,\bar{A}_{n-1}\) are finite, but \(\bar{A}_{n}\) is infinite. Since by assumption the length of \(h_k\) converges to infinity as \(k\rightarrow \infty\), there exists \(m\in \mathbb {N}\) such that the length of \(h_k\) is at least \(n+1\) for all \(k\ge m\). In particular, \(\vec a_{k}(n)=\vec a^\diamond _{k}(n)\) for all \(k\ge m\). Then, by finiteness of the set \(\bar{A}_1\times \cdots \times \bar{A}_{n-1}\) and because \(\bar{A}_n\) is infinite, there exists a history \(h\in \bar{A}_1\times \cdots \times \bar{A}_{n-1}\) such that the set of actions

\[\{\vec a_k(n):\, k\ge m \text { and } \vec a_k\succ h\}\]

is infinite. It follows that there exists an increasing sequence \((k_\ell )_{\ell \in \mathbb {N}}\) in \(\mathbb {N}\) such that \(k_1\ge m\), \(\vec a_{k_\ell }\succ h\) and thus \(\vec a^\diamond _{k_\ell }\succ h\) for all \(\ell \in \mathbb {N}\), and moreover the action \(\vec a_{k_\ell }(n)=\vec a^\diamond _{k_\ell }(n)\) is different for each value of \(\ell\). Thus, property 2 is satisfied. \(\square\)
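A small example may help to see when property 2 arises without property 1. It is only an illustrative sketch: it assumes that \(\mathbb {N}\subseteq A\), and the specific plays below are chosen here and do not appear elsewhere in the paper. For every \(k\in \mathbb {N}\), let

\[\vec a_k=(k,1,1,1,\ldots )\qquad \text {and}\qquad \vec a^\diamond _k=(k,\underbrace{1,\ldots ,1}_{k-1},2,1,1,\ldots ),\]

so that \(\vec a_k\) and \(\vec a^\diamond _k\) coincide exactly on the first k periods and differ at period \(k+1\). The length of \(h_k\) is then k, which tends to infinity. Here \(\bar{A}_1=\mathbb {N}\) is infinite, and property 2 holds with \(h=\text {\o }\), \(k_\ell =\ell\) and \(a_\ell =\ell\). Property 1 fails for every subsequence, because the action at period 1 never stabilizes.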

The following lemma will be used to define specific elements of \([\mathbb {P}_b^{II}]\) and \([\mathbb {P}_b^I]\).

Lemma 10.7

Let \((\vec a_k)_{k \in \mathbb {N}}\) be a sequence of plays that converges to a play \(\vec a=(a_1, a_2, \ldots )\). Let b be a behavioral strategy such that \(b(a_1, \ldots , a_{n-1})\) is the Dirac charge on \(a_n\) for each \(n\in \mathbb {N}\). Let \(\tau\) be a diffuse charge on \(\mathbb {N}\). Now, define a charge B on \(A^\mathbb {N}\) by letting, for every \(Z \subseteq A^\mathbb {N}\),

\[B(Z)\;=\;\int _{k \in \mathbb {N}} \delta _{\vec a_k} (Z)\,\tau (d k),\]

where \(\delta _{\vec a_k}\) denotes the Dirac charge on \(\vec a_k\). Then, \(B \in [\mathbb {P}_b^{II}]\), and hence \(B \in [\mathbb {P}_b^{I}]\).

Proof

We need to show that B coincides with the charge \(\mathbb {P}_b^{II}\) on the algebra \(\mathcal {R}^{II}\), i.e., \(B(Q)=\mathbb {P}_b^{II}(Q)\) for every \(Q\in \mathcal {R}^{II}\). Let \(Q \in \mathcal {R}^{II}\), i.e., \(Q\subseteq A^\mathbb {N}\) is clopen. We distinguish two cases.

Assume first that \(\vec a \in Q\). Then \(\mathbb {P}_b^{II}(Q)=1\). Moreover, as Q is open, \(\vec a_k\in Q\) for all sufficiently large \(k\in \mathbb {N}\). As \(\tau\) is a diffuse charge, we have \(\int _{k \in \mathbb {N}} \delta _{\vec a_k} (Q)\,\tau (d k)=1=\mathbb {P}_b^{II}(Q)\).

Assume now that \(\vec a \notin Q\). Then \(\mathbb {P}_b^{II}(Q)=0\). Moreover, as \(A^\mathbb {N}\setminus Q\) is open, \(\vec a_k\in A^\mathbb {N}\setminus Q\) for all sufficiently large \(k\in \mathbb {N}\). As \(\tau\) is a diffuse charge, we have \(\int _{k \in \mathbb {N}} \delta _{\vec a_k} (Q)\,\tau (d k)=0=\mathbb {P}_b^{II}(Q)\). \(\square\)
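The role of diffuseness in the first case can be made explicit. The following is a sketch in which the index K is introduced only for illustration: suppose \(K\in \mathbb {N}\) is such that \(\vec a_k\in Q\) for all \(k\ge K\). Since \(\tau (\{k\})=0\) for every \(k\in \mathbb {N}\), finite additivity gives

\[\int _{k \in \mathbb {N}} \delta _{\vec a_k} (Q)\,\tau (d k)\;=\;\tau (\{k\in \mathbb {N}:\vec a_k\in Q\})\;\ge \;\tau (\{K,K+1,\ldots \})\;=\;1-\sum _{k=1}^{K-1}\tau (\{k\})\;=\;1.\]

As the integral cannot exceed \(\tau (\mathbb {N})=1\), equality holds. The second case follows from the same computation applied to \(A^\mathbb {N}\setminus Q\).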

Proof of Theorem 6.2: We prove that (1) implies (2) and that (2) implies (3). It follows from Theorem 6.1 that (3) implies (1) as well.

(2) implies (3): Assume that u is uniformly continuous. Let \(\varepsilon > 0\). As we assume that u is bounded, there is a finite set \(K\subset \mathbb {R}\) such that for every play \(\vec a\in A^\mathbb {N}\) there exists \(k\in K\) with \(|u(\vec a)-k|\le \varepsilon /2\). Because u is uniformly continuous, by Lemma 10.5, there is \(T \in \mathbb {N}\) such that if for two plays \(\vec a\) and \(\vec a^\diamond\) it holds that \(\vec a(t)=\vec a^\diamond (t)\) for all \(t \le T\), then \(|u(\vec a)-u(\vec a^\diamond )| \le \varepsilon / 2\). This means that for every history \(h \in A^T\) there is a number \(z_h \in K\) such that for every play \(\vec a\succ h\) we have \(|u(\vec a) - z_h| \le \varepsilon\). Now we define a payoff function \(\bar{u}\) by setting \(\bar{u}(\vec a)=z_h\) if \(\vec a \succ h\) for \(h \in A^T\). Thus \(|u(\vec a) - \bar{u}(\vec a)| \le \varepsilon\) for every \(\vec a \in A^\mathbb {N}\). By construction, \(\bar{u}\) is an \(\mathcal {R}^{I}\)-measurable simple function. Indeed, \(\bar{u}\;=\; \sum _{k\in K} k\cdot \mathbb {I}_{[Q_k]},\) where \(Q_k\;=\;\{h\in A^T:z_h=k\}\) for every \(k\in K\), and thus u is uniformly \(\mathcal {R}^I\)-approachable.
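The existence of \(z_h\) rests on a triangle inequality, which can be sketched as follows. The reference play \(\vec a^h\) and the bound M are notation introduced here for illustration only. Fix \(h\in A^T\), pick any play \(\vec a^h\succ h\), and choose \(z_h\in K\) with \(|u(\vec a^h)-z_h|\le \varepsilon /2\). Then, for every play \(\vec a\succ h\),

\[|u(\vec a)-z_h|\ \le \ |u(\vec a)-u(\vec a^h)|+|u(\vec a^h)-z_h|\ \le \ \frac{\varepsilon }{2}+\frac{\varepsilon }{2}\ =\ \varepsilon .\]

One possible choice of the finite set, assuming \(|u(\vec a)|\le M\) for all plays \(\vec a\), is the grid \(K=\{m\varepsilon : m\in \mathbb {Z},\ |m\varepsilon |\le M+\varepsilon \}\), since every point of \([-M,M]\) lies within \(\varepsilon /2\) of some multiple of \(\varepsilon\).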

(1) implies (2): We prove the contrapositive of this implication. So assume that u is not uniformly continuous. Then, by Lemma 10.5, there exists \(\varepsilon >0\) such that, for every \(k\in \mathbb {N}\), there exist a history \(h_k\in A^k\) and plays \(\vec a_k\succ h_k\) and \(\vec a^\diamond _k\succ h_k\) such that \(u(\vec a_k)+\varepsilon <u(\vec a^\diamond _k)\). Consider the sequences of plays \((\vec a_k)_{k\in \mathbb {N}}\) and \((\vec a^\diamond _k)_{k\in \mathbb {N}}\). In view of Lemma 10.6, by taking subsequences if necessary, we can assume that at least one of the following holds:

1. The sequences \((\vec a_{k})_{k\in \mathbb {N}}\) and \((\vec a^\diamond _{k})_{k\in \mathbb {N}}\) converge to the same play as \(k\rightarrow \infty\).

2. There exists a history h and a sequence of actions \((a_k)_{k\in \mathbb {N}}\), with \(a_k\ne a_{k'}\) for all \(k,k'\in \mathbb {N}\) with \(k\ne k'\), such that \(\vec a_k\succ (h,a_k)\) and \(\vec a^\diamond _k\succ (h,a_k)\) for every \(k\in \mathbb {N}\).

When property 1 holds: In this case the sequences \((\vec a_{k})_{k\in \mathbb {N}}\) and \((\vec a^\diamond _{k})_{k\in \mathbb {N}}\) converge to the same play, say to \((a^*_1, a^*_2, \ldots )\). Consider a behavioral strategy b such that \(b (a^*_1, \ldots , a^*_{n-1})\) is the Dirac charge on action \(a^*_n\), for every \(n\in \mathbb {N}\). We show that b does not induce an unambiguous expected payoff with respect to \(\mathcal {R}^I\), i.e., \([u^I(b)]\) is not a singleton.

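Let \(\tau\) be a diffuse charge on \(\mathbb {N}\). Define two charges \(B, B^\diamond\) on \(A^\mathbb {N}\) by letting, for every \(Z \subseteq A^\mathbb {N}\),

\[B(Z)\;=\;\int _{k \in \mathbb {N}} \delta _{\vec a_k} (Z)\,\tau (d k)\qquad \text {and}\qquad B^\diamond (Z)\;=\;\int _{k \in \mathbb {N}} \delta _{\vec a^\diamond _k} (Z)\,\tau (d k),\]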

where \(\delta _{\vec a_k}\) and \(\delta _{\vec a^\diamond _k}\) denote the Dirac charges on \(\vec a_k\) and \(\vec a^\diamond _k\) respectively. It follows from Lemma 10.7 that \(B, B^\diamond \in [\mathbb {P}_b^I]\). Additionally, since \(u(\vec a_k)+\varepsilon <u(\vec a^\diamond _k)\) holds for every \(k\in \mathbb {N}\), we have \(u (B)+\varepsilon \le u(B^\diamond )\). Hence, \([u^I(b)]\) is not a singleton.
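The payoff comparison can be traced in one line. This is a sketch under the assumption that integrating u with respect to B amounts to integrating \(k\mapsto u(\vec a_k)\) with respect to \(\tau\), and likewise for \(B^\diamond\) with \(k\mapsto u(\vec a^\diamond _k)\):

\[u(B^\diamond )-u(B)\;=\;\int _{k \in \mathbb {N}} \big (u(\vec a^\diamond _k)-u(\vec a_k)\big )\,\tau (d k)\;\ge \;\int _{k \in \mathbb {N}} \varepsilon \,\tau (d k)\;=\;\varepsilon \,\tau (\mathbb {N})\;=\;\varepsilon .\]

The strict inequality that holds for each k becomes a weak one after integration, which still suffices to separate the two expected payoffs.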

When property 2 holds: Let h and \((a_k)_{k\in \mathbb {N}}\) be as in property 2, and let \(\tau\) be a diffuse charge on \(\mathbb {N}\). Consider a behavioral strategy b that prescribes the following: First, from the root, follow h with Dirac charges. Then, at h, choose an action from \(\{a_1, a_2, \ldots \}\) according to \(\tau\). Finally, if action \(a_k\) is chosen, follow the play \(\vec a_k\) with Dirac charges on the corresponding actions.

Define two charges \(B, B^\diamond\) on \(A^\mathbb {N}\) by letting, for every \(Z \subseteq A^\mathbb {N}\),