Abstract

One of the essential requirements of intelligent manufacturing is low-cost, reliable prediction of tool life during machining. Monitoring the condition of the cutting tool is crucial for cost-effective, high-quality machining. Tool condition monitoring (TCM) is essential for determining the remaining useful tool life, which ensures the uninterrupted machining that intelligent manufacturing requires. TCM can be performed with direct and indirect tool wear measurement and prediction techniques. In indirect methods, the data are acquired from sensors, which introduces ambiguity related to noise, reliability, and complexity. In direct methods, by contrast, the data are available as images, so with a proper data acquisition system the chances of ambiguity are significantly lower. Direct methods, which provide higher accuracy than indirect methods, collect images of worn tools at different stages of the machining process to predict tool life. In this context, a novel tool wear prediction system is proposed that examines progressive tool wear using an artificial neural network (ANN). Experiments were performed on AISI 4140 steel under dry cutting conditions with carbide inserts. The cutting speed, feed, depth of cut, and white pixel count are the input parameters of the proposed model, and the flank wear and remaining tool life are predicted as outputs. Images of the worn tool were captured with an industrial camera at regular intervals during the turning operation. The ANN was trained to predict the remaining useful tool life with two activation functions: the sigmoid function and the rectified linear unit (ReLU). The sigmoid function gave an accuracy of 86.5%, and the ReLU function gave an accuracy of 93.3% in predicting tool life. The maximum and minimum root mean square errors (RMSE) of the proposed model are 1.437 min and 0.871 min, respectively.
The outcomes demonstrate that image processing combined with ANN modeling is a promising approach for developing a low-cost industrial tool condition monitoring system that can measure tool wear and predict tool life in turning operations.

1 Introduction

With advancements in technology, there is increasing scope and demand for automated machining processes [1]. One of the vital tasks in automation is monitoring tool wear during machining. Tool failure is caused by fracture, adhesion, thermal stresses, or abrasion [2]. Fracture refers to an abrupt brittle failure of the tool brought on by excessive stresses, material flaws, intermittent cutting, or severe vibrations. Adhesive wear occurs when chip material clings to the tool and forms a built-up edge. Increased temperature at the interface between the workpiece and the tool creates thermal stresses that soften the tool material and distort the tool through plastic deformation. Crater wear occurs on the rake face of the tool because of the high cutting temperature and the high shear stresses between the rake face and the chips sliding over it. Crater wear is quantified by the wear depth and wear area, which can be measured with three-dimensional computer vision techniques. Failures due to temperature, adhesion, and fracture can be prevented by selecting an appropriate cutting tool material and appropriate cutting parameters. Abrasive wear results from friction between the workpiece and the tool's cutting edge. It cannot be avoided and limits the life of the cutting edge, since continued use blunts the edge. Flank wear, which occurs on the flank face adjacent to the cutting edge, is progressive; it is the most preferred mode of tool failure because it yields the longest tool life [3]. Developing reliable tool life prediction techniques is therefore critical to automating tool wear monitoring.

Estimating tool life is also crucial for minimizing tool cost by optimizing tool usage. Several authors have reviewed research on tool condition monitoring techniques. Dutta et al. [4] studied tool condition monitoring methods based on digital image processing and classified them into (i) direct methods and (ii) indirect methods. Pimenov et al. [5] highlighted the latest trends in applying artificial intelligence techniques to tool condition monitoring in machining operations, together with their relative merits and demerits. Indirect methods record specific physical characteristics of the machine, product, or process that are influenced by tool wear and correlate them with the wear. Kuntoğlu et al. [6] reviewed indirect techniques published over the last two decades to study the relationship between sensor data and tool wear in turning operations. Kuntoğlu et al. [7] also carried out a systematic review of the state of the art in sensors and tool health prognostics, including signal processing techniques, covering machining operations such as turning, milling, and grinding. Zhu et al. [8] reviewed wavelet transform analysis for monitoring tool wear and compared its effectiveness with that of Fourier analysis for analyzing tool wear progression. Mohanraj et al. [9] reviewed tool condition monitoring in milling operations using indirect techniques. Ambhore et al. [10] reviewed various indirect methods for classifying the severity of tool wear and identified physical parameters such as temperature, cutting force, surface roughness, torque/current, vibration, and acoustic emission for correlating with tool wear. Advances in artificial intelligence techniques have made it possible to predict the remaining useful life of the tool. Zhou et al. [11] reviewed the prediction models proposed in the literature for tool condition monitoring in milling processes.
The methods studied include neural networks, regression models, principal component analysis, support vector machines, fuzzy logic, and others.

Many researchers [12, 13, 14] have reported and discussed indirect methods for tool condition monitoring. In indirect tool wear measurement, various forms of data are collected by sensors such as optical microscope imaging sensors, impact sensors, vibration sensors, acoustic emission sensors, force sensors, and power sensors. Nath [12] and Bustillo et al. [13] correlated the deviation from flatness with tool wear and spindle drive power using different machine learning algorithms. They concluded that the synthetic minority over-sampling technique for data balancing, combined with the random forest technique, gave close predictions. Abu-Zahra and Yu [14] applied ultrasound waves to monitor tool wear during turning operations, using wavelet transforms to extract information from the sensor signals. Karam et al. [15] conducted experiments to estimate the percentage of tool life consumed by correlating it with features of the signals received from multiple sensors; the physical parameters monitored were vibration, cutting force, and acoustic emission. Bagga et al. [16] evaluated force and vibration signals in dry turning operations. The tool wear predicted by neural networks was compared with manual measurements, and close agreement was reported.

Computer vision techniques can identify tool wear automatically and can be implemented in industry as part of an intelligent manufacturing environment. The direct method detects changes in the tool's contour or dimensions using imaging sensors: the tool images are the input data source, and computer vision techniques are then applied to assess the tool condition. He et al. [17] performed an experimental investigation to validate a tool wear prediction model by analyzing data obtained from multiple sensors and extracting signal features in the time, frequency, and time-frequency domains. The developed system had a low root mean square error. Mikołajczyk et al. [18] used a CCD-based vision sensor to capture the tool wear region. They studied two image processing tasks, measuring existing tool wear and predicting tool wear from the previous behavior of similar tools, and observed a mean error of 0.4 min. Yu et al. [19] developed an image processing system to measure the wear of drill bits. Image pre-processing, segmentation, and feature extraction were performed on the drill images to obtain the number of pixels in the wear region, and the results agreed closely with manual measurements. Qian et al. [20] used machined surface texture analysis with the support vector machine algorithm for tool life prediction; the prediction model was based on the relationship between surface texture features and the observed tool wear. The performance of artificial neural networks was observed to be superior to that of a support vector machine with a genetic algorithm.

A single-category classifier for processing images of tool wear was proposed in [21]. Image features were extracted based on the brightness of the wear and non-wear areas, and flank wear was estimated with an absolute mean relative error of 6.7%. Similarly, nine geometric descriptors were identified to describe flank wear for feature extraction [22]. Three of them, namely the extent, eccentricity, and solidity of the wear region, provided 98.6% of the information required to classify the severity of tool wear. In [23], tool images were divided into regions, each characterized by its local binary pattern, and the tool was classified as usable or unusable based on the condition of these regions.

With recent developments and the adoption of Industry 4.0 concepts, considerable amounts of data are generated, and traditional prediction techniques are insufficient for monitoring tool wear. Under these conditions, researchers have studied various deep learning methods [24] [25] [26] for tool wear monitoring to improve accuracy. Serin et al. [24] summarized the theory of recent deep learning methods applied to tool condition monitoring for realizing the concept of Industry 4.0. Zhao et al. [25] reviewed deep learning research on machine health monitoring and discussed the relative merits and demerits of the methods. Martínez-Arellano et al. [26] developed a big data method based on deep learning for classifying tool wear. A deep multi-layer perceptron model was developed to predict three vital machining parameters, with average absolute errors of 1.5%, 0.44%, and 0.38% for material removal rate, specific energy consumption, and surface roughness, respectively [27]. Other techniques investigated include long short-term memory [28], convolutional neural networks [29] [30], and the deep heterogeneous gated recurrent unit network [31], which are among the most preferred deep learning methods in recent years. Bergs et al. [32] developed a deep learning method using image processing to monitor the state of tool wear. A convolutional neural network was first applied to classify the cutting tool, and then a fully convolutional network for wear severity classification was trained for each kind of tool. The tool type classification accuracy was about 95%.

Research on artificial neural networks for tool wear monitoring has been reported in the literature [33] [34] [35] [36]. An artificial neural network (ANN) is a traditional and practical artificial intelligence technique inspired by the neurons of the human brain [37]. As in the brain, neurons are interconnected to form a network arranged in layers, primarily the input, hidden, and output layers. D'Addona et al. [33] conducted an experimental investigation in which neural network models were trained on images of worn tools; the network estimated the level of tool wear from the images. Hesser and Markert [34] implemented a neural network model to classify the tool wear state in a retrofitted milling machine in order to integrate it into Industry 4.0. Corne et al. [35] evaluated the correlation of spindle power with tool wear and breakage in drilling. The spindle power data were fed into a neural network, and their reliability was studied by comparison with force data. The prediction error ranged from 0.8% to 18% for the spindle power data. Tool wear monitoring approaches are either (1) model-driven or (2) data-driven. Modeling tool wear with physics-based mathematical models is difficult because of the complexity of the tool condition monitoring system. Reference [36] proposed a combined physics-guided, data-driven technique based on a neural network to predict tool wear.

With advancements in computing capabilities, hardware, and computer vision techniques, tool condition monitoring using computer vision is becoming increasingly significant [12]. Tool wear measurement methods based on computer vision are more accurate than indirect techniques [24]. In addition, indirect methods, which correlate physical parameters with the degree of tool wear, require large amounts of data. In [38], image processing techniques were developed to study the burr and slot width in micro-milling of Inconel 718 alloy. The measurement system was developed in C++ with the Open Source Computer Vision (OpenCV) library, and the results obtained from image processing agreed very closely with manual measurements. Bagga et al. [39] conducted an experimental investigation of flank wear measurement using computer vision techniques, in which the images were pre-processed with median filtering to remove noise.

Morphological operations were performed on the tool wear region of the image to identify connected components, whose properties were used to determine the amount of wear. Peng et al. [40] developed a machine vision-based tool wear monitoring system; the relative error between manual measurement with a microscope and the developed method was 7.5%. A procedure for monitoring tool wear progression in micromachining by processing captured images was proposed in [41], with an error of about 5% between actual and predicted wear. Moldovan et al. [42] presented an experimental study on determining the tool wear state with computer vision techniques. The authors proposed three methods: one extracted features using image processing and then classified them with a neural network, while the other two applied neural networks directly to the image data without image processing.

From the literature reviewed above, it is evident that researchers have used different approaches to extract features for identifying and quantifying tool wear during machining, and that combining more than one technique is reported to improve the accuracy of tool condition monitoring systems. Most of the work relies on data acquired from sensors, which introduces ambiguity related to noise, reliability, and complexity. If the data are available as images, however, the chances of ambiguity are significantly reduced with a proper data acquisition system. Little work on computer vision techniques for tool life prediction has been reported in the literature, and issues related to illumination and magnification errors in image capture must still be addressed. Since most of the work uses data acquired through indirect methods to predict the amount of tool wear, there is a research gap in developing techniques for predicting tool wear with computer vision systems. To develop intelligent manufacturing systems, a robust tool life prediction system for uninterrupted machining is therefore necessary. In view of these facts, this work proposes a computer vision-based neural network architecture for predicting tool wear in machining. The results show improved prediction accuracy for the proposed approach. The method offers industrial benefits: tool condition can be monitored in real time with reasonable accuracy in turning operations, manual intervention can be eliminated, and the remaining tool life can be identified.

2 Background

Using computer vision techniques, the proposed work develops a system for measuring the wear of cutting tools used on a CNC lathe. The goal of the image processing stage is to identify the wear area in the image of the cutting tool insert captured during image acquisition. Image pre-processing steps are first performed to make the image suitable for the subsequent high-level region identification tasks. The pre-processing tasks identified are image denoising, histogram enhancement, and image thresholding. Image denoising aims to approximate the original image by removing noise from a noisy image. Image noise is caused by various internal (sensor) and external (environmental) factors that are difficult to prevent in real-world settings. It appears as random variation of brightness in the image, usually caused by electronic noise from the image sensor and circuitry of the digital camera, and cannot be avoided entirely. A bilateral filter is used to remove noise from the image. A bilateral filter is a non-linear smoothing filter that removes noise while keeping edges sharp: it replaces the intensity of each pixel with a weighted average of the intensities of nearby pixels. The weights can be computed from Gaussian distributions and depend both on the Euclidean distance between pixels and on the range difference, that is, radiometric differences such as color intensity or depth distance. As a result, sharp edges are preserved. Because the worn-out area is bounded mainly by sharp edges, this filter is well suited to removing noise from the tool images. Histogram equalization is a technique for improving contrast in digital images. It effectively spreads out the most frequent intensity values, stretching the intensity range of the image.
This approach usually increases the global contrast of images, especially when the usable data are represented by close contrast values, and it allows areas of lower local contrast to gain higher contrast.
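The bilateral filter described above can be sketched in a few lines of NumPy. This is a brute-force illustration, not the authors' implementation: the window radius and the two Gaussian widths `sigma_s` (spatial, in pixels) and `sigma_r` (range, in grey levels) are hypothetical values, and a production system would more likely call a library routine such as OpenCV's `cv2.bilateralFilter`.

```python
import numpy as np

def bilateral_filter(img, radius=2, sigma_s=2.0, sigma_r=20.0):
    """Brute-force bilateral filter for a 2-D greyscale image (float output).

    radius, sigma_s and sigma_r are illustrative defaults, not values from the study.
    """
    img = np.asarray(img, dtype=np.float64)
    padded = np.pad(img, radius, mode="reflect")
    h, w = img.shape
    out = np.zeros_like(img)
    norm = np.zeros_like(img)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # Spatial (domain) weight: Gaussian of the Euclidean distance of the offset.
            w_s = np.exp(-(dy * dy + dx * dx) / (2.0 * sigma_s ** 2))
            # Neighbour at offset (dy, dx) for every pixel at once.
            shifted = padded[radius + dy: radius + dy + h, radius + dx: radius + dx + w]
            # Range weight: Gaussian of the intensity difference to the centre pixel.
            w_r = np.exp(-((shifted - img) ** 2) / (2.0 * sigma_r ** 2))
            weight = w_s * w_r
            out += weight * shifted
            norm += weight
    # Normalise by the sum of weights (the centre pixel always contributes weight 1).
    return out / norm
```

Because the range weight collapses to almost zero across a large intensity step, pixels on either side of a wear-region boundary are averaged only with pixels on their own side, which is why the edge survives while the noise is smoothed.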

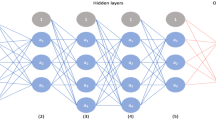

Wear region extraction is carried out next. In wear region extraction, labels are assigned to the pixels, and pixels with the same label are grouped because they share certain characteristics. In machine vision, the captured image is segmented into regions such as the background and foreground, the unworn part of the cutting tool insert, and the wear region. In the next step, the ANN model is applied to predict the wear value and the remaining tool life. An ANN is a data-processing algorithm or paradigm inspired by the densely interconnected, parallel structure of the human brain [43]. ANNs are interconnected arrays of mathematical models that mimic some observable features of biological nervous systems and rely on analogies with biological learning. The key element of the ANN paradigm is the structure of its information processing system: it comprises many highly interconnected processing elements that mimic neurons and are joined by weighted connections that work similarly to synapses, as shown in Fig. 1. The ANN must go through a learning (training) cycle to function correctly, and backpropagation is the most popular learning algorithm. Backpropagation training is an iterative gradient-descent method that minimizes the mean square error between the actual output of the network and the desired output. Activation (transfer) functions convert the weighted sum of a node's inputs into the node's output; the same activation function is applied in all hidden layers.
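A minimal sketch of backpropagation training, assuming a network with one sigmoid hidden layer and a linear output layer trained by full-batch gradient descent on the mean square error. The layer size, learning rate, epoch count, and weight initialisation are hypothetical choices, and the toy data in the test are synthetic, not the experimental data set.

```python
import numpy as np

def train_bpnn(X, Y, n_hidden=5, lr=0.1, epochs=2000, seed=0):
    """Batch gradient descent on mean squared error for a one-hidden-layer network.

    X: (n_samples, n_inputs), Y: (n_samples, n_outputs). Returns parameters and loss history.
    """
    rng = np.random.default_rng(seed)
    W1 = rng.normal(0.0, 0.5, (X.shape[1], n_hidden))
    b1 = np.zeros(n_hidden)
    W2 = rng.normal(0.0, 0.5, (n_hidden, Y.shape[1]))
    b2 = np.zeros(Y.shape[1])
    losses = []
    for _ in range(epochs):
        # Forward pass: sigmoid hidden layer, linear output layer.
        H = 1.0 / (1.0 + np.exp(-(X @ W1 + b1)))
        P = H @ W2 + b2
        E = P - Y
        losses.append(float(np.mean(E ** 2)))
        # Backward pass: chain rule from the MSE back to every weight and bias.
        dP = 2.0 * E / E.size
        dW2 = H.T @ dP
        db2 = dP.sum(axis=0)
        dH = dP @ W2.T
        dZ1 = dH * H * (1.0 - H)          # derivative of the sigmoid
        dW1 = X.T @ dZ1
        db1 = dZ1.sum(axis=0)
        # Gradient-descent update of all parameters.
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    return (W1, b1, W2, b2), losses
```

The recorded loss history is the kind of curve plotted as the training error per iteration; a falling curve indicates that the gradient steps are reducing the mean square error.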

The activation functions selected are non-linear and differentiable, because backpropagation neural networks (BPNN) need first-order derivatives to update the model weights from the derivative of the prediction error. Various activation functions have been proposed in the literature [45], but only a few are used in practice. Two activation functions are considered in this work: (1) the sigmoid activation function and (2) the rectified linear unit (ReLU) activation function. The sigmoid (logistic) function, given in Eq. 1, is the most commonly used non-linearity in the backpropagation algorithm; because its output ranges from zero to one, it is suitable for predicting a probability at the output.

\[ \sigma(x) = \frac{1}{1 + e^{-x}} \tag{1} \]

The sigmoid function is shown in Fig. 2(a). The ReLU function, defined in Eq. 2, is widely used in practice [38], including in convolutional neural networks and deep learning.

\[ \mathrm{ReLU}(x) = \max(0, x) \tag{2} \]

The ReLU function, shown in Fig. 2(b), has low computational complexity and can be used to train deep multi-layer backpropagation neural networks.
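The two activation functions and the first-order derivatives that backpropagation needs can be written as follows. This is an illustrative NumPy sketch; taking the ReLU derivative as 0 at x = 0 is an assumed convention, since ReLU is not differentiable there.

```python
import numpy as np

def sigmoid(x):
    """Logistic sigmoid, output in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))

def sigmoid_grad(x):
    """Derivative of the sigmoid: s * (1 - s), at most 0.25 (reached at x = 0)."""
    s = sigmoid(x)
    return s * (1.0 - s)

def relu(x):
    """Rectified linear unit: max(0, x) element-wise."""
    return np.maximum(0.0, x)

def relu_grad(x):
    """Derivative of ReLU: 1 for x > 0, 0 otherwise (0 at x = 0 by convention)."""
    return (np.asarray(x) > 0).astype(float)
```

The derivatives show the practical difference between the two choices: the sigmoid gradient never exceeds 0.25, so it shrinks when multiplied across layers, whereas the ReLU gradient is exactly 1 for every positive input.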

3 Materials and methods

3.1 Experimental details

The machining experiments were carried out on an HMT CNC turning lathe. AISI 4140 steel with an average hardness of 34 HRC was selected as the workpiece material; the workpieces were 250 mm long and 50 mm in diameter. It has high strength, excellent machinability, and excellent corrosion resistance. AISI 4140 is a low-alloy steel whose alloying elements improve its mechanical properties and corrosion resistance. It is used in a wide range of industries, such as oil and gas, manufacturing, automotive, and aerospace [44]. It is used in slewing bearings [45], which are found in large, slow-speed, heavily loaded machines such as wind turbines and cranes, and in automotive parts [46] that require high hardness, such as spindles, crankshafts, cams, gears, and axles. The elemental composition of AISI 4140 steel is presented in Table 1.

An initial cut was made to remove the rough outer surface so that the surfaces of all workpieces were smooth before the experiments. A WIDIA carbide insert with ISO designation SNMG120408 (cutting edge length 12.7 mm, rake angle −6°, clearance angle 0°, nose radius 0.8 mm) was used with a PSSLNR1616H12 tool holder. The cutting speed, feed, and depth of cut are the input machining parameters, as given in Table 2.

The camera sensor is the hardware interface between the object and its image. Industrial cameras are available in a wide range of configurations, with CMOS or CCD sensors. The imaging system comprises the camera sensor, the lens, and the illumination system, and the components are chosen according to the requirements of the tool condition monitoring system. To capture images of the cutting tool, the vision system hardware is mounted on the CNC lathe. A Baumer® EXG50 monochrome camera with a CMOS sensor, 2592 × 1944 pixel resolution, and a frame rate of 14 frames per second is used. It was selected for its compact design, rugged construction suited to an industrial environment, ease of mounting on the machine, short shutter time, and support for both hardware and software triggers. The images obtained from the camera are raw data, with no compression or image enhancement applied. This simplifies image processing, since the pixel intensity values have not been altered and the image data represent the true appearance of the object under study. A Computar® TEC-V10110MPW bi-telecentric lens with 1X magnification, a focal length of 110.2 mm, and 0.015% distortion is used. In a telecentric lens, the chief rays from the object are parallel to the optical axis, which eliminates perspective distortion. The illumination device is a 10 W white ring light with inner and outer diameters of 50 mm and 100 mm, respectively. The light is mounted on the lens, and its brightness is adjustable.

The camera mounting on the CNC machine holds the camera, lens, and ring light. After the tool completes a certain machining length, it returns to a position in front of the camera and lens, where the camera can conveniently capture an image of the tool's flank wear. The position of the system is constrained by the ability of the carousel to shift and rotate. The vision system is placed at one side of the spindle so that it does not physically interfere with the machining operation. A mounting stand for the camera, lens, and LED light was fabricated, and the vision system was mounted on it. Figure 3(a) shows a model of the mounting stand. Acrylic sheets 6 mm thick were used to build the final stand, shown in Fig. 3(b). The base plate support was fabricated from a 4 mm thick low-carbon steel plate, and the entire assembly was mounted on the CNC lathe.

The imaging setup is connected to a computer, which serves as the storage unit, frame grabber, and communication system for the machine vision system. The computer runs the Windows® operating system and has a 2.4 GHz Intel® Core 2 Duo processor and 8 GB of RAM (random access memory). Baumer® Camera Explorer software is used for camera triggering. Figure 4 shows the experimental setup used for the turning experiments. Tool wear is measured in the following steps:

- Step 1: perform the turning operation on the CNC lathe.
- Step 2: capture the tool images at the specified interval.
- Step 3: calculate the flank wear and predict the remaining tool life.

One turning pass is carried out along the full length of the workpiece. After each machining cycle, the tool is moved to a designated location in front of the camera, and an image of the tool wear region is taken. The tool position is specified in the CNC part program so that the tool wear region is imaged at the same location each time. The computer then stores the captured image. At the same time, the flank wear is measured manually with a toolmaker's microscope. The tool life, expressed in minutes, is recorded as the cutting time accumulated up to each measured wear value. The methodology adopted for the work is presented in Fig. 5.
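The tool life in minutes accumulates pass by pass. The sketch below assumes that each pass covers the full workpiece length at constant cutting speed, so the spindle speed is recomputed as the diameter shrinks by twice the depth of cut per pass, and it uses the standard turning relations N = 1000V/(πD) and t = L/(fN). The cutting conditions in the test are hypothetical, not the levels of Table 2.

```python
import math

def pass_time_min(length_mm, diameter_mm, speed_m_min, feed_mm_rev):
    """Cutting time (min) of one longitudinal turning pass.

    speed_m_min: cutting speed V in m/min; feed_mm_rev: feed f in mm/rev.
    """
    rpm = 1000.0 * speed_m_min / (math.pi * diameter_mm)   # spindle speed N
    return length_mm / (feed_mm_rev * rpm)                 # t = L / (f * N)

def cumulative_cutting_time(n_passes, length_mm, d0_mm, speed_m_min, feed_mm_rev, doc_mm):
    """Cumulative cutting time (min) after each pass.

    Assumes each pass reduces the diameter by 2 * depth of cut and that
    the cutting speed is held constant (hypothetical set-up).
    """
    times, total = [], 0.0
    for k in range(n_passes):
        d = d0_mm - 2.0 * doc_mm * k
        total += pass_time_min(length_mm, d, speed_m_min, feed_mm_rev)
        times.append(total)
    return times
```

For example, a 250 mm long, 50 mm diameter bar turned at a hypothetical 150 m/min and 0.2 mm/rev gives about 1.309 min for the first pass; pairing each cumulative time with the wear measured after that pass yields the wear-versus-time record used for tool life.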

3.2 Image processing method

Image processing techniques transform an image into useful data, such as pixel counts or intensity values. To minimize image processing time and memory requirements, processing of the captured image is limited to the region of interest. Figure 6 presents the steps of the algorithm for image processing and white pixel calculation.

As shown in Fig. 7, the camera sensor captures a greyscale image. Pixels in the worn region have high grey-level (intensity) values, which distinguishes the worn area from the rest of the insert. Based on this property, algorithms to identify the worn area were developed.

3.2.1 Image pre-processing

The following steps were performed for pre-processing of an image of a worn-out area: (1) image denoising, (2) image histogram enhancement, and (3) image thresholding. Figure 8 shows the result of the application of the bilateral filter to remove noise from the image.

Here, histogram equalization is used to separate the average grey level of the worn-out region from that of the unworn region: the original grey-level range is mapped linearly onto the fixed interval 0 to 255. Adaptive histogram equalization is also used. Unlike ordinary histogram equalization, which uses a single histogram for the whole image, the adaptive method computes several histograms, each corresponding to a distinct region of the image, and uses them to redistribute the brightness values, as shown in Fig. 9.

It is therefore well suited to increasing local contrast and enhancing edge definition in each region of the image.
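Global histogram equalization maps each grey level through the normalised cumulative histogram. The sketch below shows that mapping and, for comparison, a simplified adaptive variant that equalises each tile with its own histogram. The tile grid is a hypothetical choice, and the tiled version omits the contrast limiting and inter-tile interpolation of CLAHE; in practice OpenCV's `cv2.equalizeHist` and `cv2.createCLAHE` provide these operations.

```python
import numpy as np

def equalize_hist(img):
    """Global histogram equalisation of an 8-bit greyscale image."""
    img = np.asarray(img, dtype=np.uint8)
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]               # cdf value of the darkest grey level present
    if cdf[-1] == cdf_min:                  # constant image: nothing to equalise
        return img.copy()
    # Map the cumulative histogram linearly onto 0..255 (lookup table).
    lut = np.round((cdf - cdf_min) / (cdf[-1] - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[img]

def tiled_equalize(img, tiles=(4, 4)):
    """Simplified adaptive equalisation: each tile uses its own histogram.

    Assumes the tile grid is no finer than the image; unlike CLAHE there is
    no histogram clipping and no interpolation between neighbouring tiles.
    """
    img = np.asarray(img, dtype=np.uint8)
    out = np.empty_like(img)
    rows = np.array_split(np.arange(img.shape[0]), tiles[0])
    cols = np.array_split(np.arange(img.shape[1]), tiles[1])
    for r in rows:
        for c in cols:
            block = img[r[0]:r[-1] + 1, c[0]:c[-1] + 1]
            out[r[0]:r[-1] + 1, c[0]:c[-1] + 1] = equalize_hist(block)
    return out
```

A low-contrast image whose grey levels span only 100 to 110 is stretched to the full 0 to 255 range by `equalize_hist`, which is the effect exploited to separate the bright wear region from the unworn insert.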

3.2.2 Wear region extraction

The primary goal of image segmentation is to locate the wear area in the image of the cutting tool insert. Here, Otsu's thresholding method is applied. It is a binarization method that directly yields a binary image of the worn-out area. For a bimodal image, whose histogram has two peaks, Otsu's method uses the histogram to select a threshold value between the peaks that maximizes the between-class variance of the two resulting pixel classes. After Otsu thresholding, the image contains only two pixel values, 0 and 255; this binary image is the result of the thresholding process.
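Otsu's criterion can be evaluated for all 256 candidate thresholds at once from cumulative sums of the histogram. This NumPy sketch is illustrative rather than the authors' code; OpenCV offers the same operation through `cv2.threshold` with the `cv2.THRESH_OTSU` flag.

```python
import numpy as np

def otsu_threshold(img):
    """Threshold t maximising the between-class variance (class 0: levels <= t)."""
    img = np.asarray(img, dtype=np.uint8)
    hist = np.bincount(img.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()
    levels = np.arange(256)
    omega = np.cumsum(prob)            # probability of class 0 for each threshold
    mu = np.cumsum(prob * levels)      # cumulative mean up to each threshold
    mu_t = mu[-1]                      # global mean grey level
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        # sigma_b^2(t) = (mu_T * omega(t) - mu(t))^2 / (omega(t) * (1 - omega(t)))
        sigma_b = (mu_t * omega - mu) ** 2 / denom
    sigma_b[denom == 0] = 0.0          # thresholds leaving one class empty
    return int(np.argmax(sigma_b))

def binarize(img, t):
    """Binary image with values 0 and 255; pixels above t (worn area) become white."""
    return np.where(np.asarray(img) > t, 255, 0).astype(np.uint8)
```

For a cleanly bimodal image the criterion is flat between the two peaks and `np.argmax` returns the first maximising level, so any threshold in that gap separates the classes identically.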

After the image segmentation, there are only two values of pixels, one for black and the other for white pixels. As shown in Fig. 10, the white area is the worn-out area, so white pixels in the image are counted.
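Counting the white pixels of the binary image, optionally restricted to the rectangular region of interest mentioned in Sect. 3.2, reduces to a comparison and a count. The ROI is passed as hypothetical `(row0, row1, col0, col1)` bounds; the actual ROI coordinates used in this work are not given in the text.

```python
import numpy as np

def white_pixel_count(binary, roi=None):
    """Number of white (255) pixels, optionally inside a rectangular ROI.

    roi: hypothetical (row0, row1, col0, col1) bounds, end-exclusive like Python slices.
    """
    binary = np.asarray(binary)
    if roi is not None:
        r0, r1, c0, c1 = roi
        binary = binary[r0:r1, c0:c1]   # crop to the region of interest
    return int(np.count_nonzero(binary == 255))
```

The resulting count is the "white pixels" input fed to the neural network alongside the cutting speed, feed, and depth of cut.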

3.3 Model development using artificial neural networks

Here, a backpropagation neural network is used to predict the wear and tool life. The data used to create the predictive model were collected through the experimental work. The variables of a data set are of three types: independent variables are the inputs, dependent variables are the targets, and unused variables are neither inputs nor targets. Training samples are used to build the model, selection samples are used to select the optimal model order (network complexity), and testing samples are used to validate the performance of the model.

Here, each sample comprises the cutting speed, feed, depth of cut, white pixel count, remaining tool life (RTL), and wear amount. The first four are the inputs of the data set and neural network, and the remaining two are the targets that the neural network predicts after training. The use of the samples in the data set is depicted in the pie chart in Fig. 11. There are 150 samples in all. The numbers of training, selection, and testing samples are 80 (60.6%), 26 (19.7%), and 26 (19.7%), respectively; these percentages are relative to the 132 samples (80 + 26 + 26) assigned to the three uses. The remaining tool life and wear amount vary with the white pixel count, as shown in Fig. 12.
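The three-way split can be reproduced with a random permutation of sample indices. Random assignment is an assumption, since the text does not state how the samples were allocated; the counts in the test reproduce the stated 80/26/26 allocation, and the percentage arithmetic confirms that the quoted figures are relative to 132 samples.

```python
import numpy as np

def split_indices(n, n_train, n_sel, seed=0):
    """Randomly split n sample indices into training, selection and testing sets.

    The remaining n - n_train - n_sel indices form the testing set.
    """
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n)
    return idx[:n_train], idx[n_train:n_train + n_sel], idx[n_train + n_sel:]
```

Fixing the seed makes the split repeatable, so the sigmoid and ReLU networks can be compared on identical training, selection, and testing samples.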

The neural network represents the predictive model. In Neural Designer, neural networks support deep architectures, a form of universal approximator. The neural network architecture is built mainly from five types of layers: perceptron, scaling, un-scaling, bounding, and probabilistic layers. The most essential layers are the perceptron layers (densely connected layers), which enable the network to learn. A perceptron neuron receives the data as a sequence of numerical inputs \(x_1, \dots, x_n\), which are combined with a bias \(b\) and a sequence of weights \(w_1, \dots, w_n\) to produce a single numerical output \(y\). The bias and weights are the parameters of the neuron, and the calculation performed depends on the perceptron layer. The combination function converts the input values into a single combination (net input) value \(c\), as shown in Eq. 3.

\[ c = b + \sum_{i=1}^{n} w_i x_i \tag{3} \]

The activation function \(f\) (the sigmoid or ReLU function in this work) then determines the perceptron output from this combination, as shown in Eq. 4.

\[ y = f(c) \tag{4} \]

The activation functions of the perceptrons that make up each layer determine the function that the neural network represents. Two perceptron layers and a total of 11 neurons are used, and the sigmoid and ReLU functions were each used to activate the perceptrons. The sigmoid function is commonly used in BPNNs and gives a smoother output than the other functions. ReLU is also one of the most widely used activation functions in neural networks; it performs better than other functions because it mitigates the vanishing gradient problem [25]: its derivative is 1 for all positive inputs, whereas the derivative of the sigmoid never exceeds 0.25.

Figure 13 presents a schematic of the network architecture, which consists of a scaling layer, the perceptron layers, and an un-scaling layer. Scaling neurons are shown as yellow circles, perceptron neurons as blue circles, and un-scaling neurons as red circles. There are four inputs and two outputs.
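A forward pass through this scaling, perceptron, and un-scaling architecture can be sketched as below. This is not Neural Designer's implementation: mean/standard-deviation scaling, a linear output layer, and 9 hidden neurons (reading "11 neurons" as 9 hidden plus 2 output perceptrons) are assumptions, and the weights are untrained random values.

```python
import numpy as np

def _relu(c):
    return np.maximum(0.0, c)

def _sigmoid(c):
    return 1.0 / (1.0 + np.exp(-c))

class ScaledMLP:
    """Scaling layer -> hidden perceptron layer -> output perceptron layer -> un-scaling layer."""

    def __init__(self, X, Y, n_hidden=9, activation="relu", seed=0):
        rng = np.random.default_rng(seed)
        # Mean/standard-deviation scaling statistics from the data (assumed scaling method).
        self.x_mean, self.x_std = X.mean(axis=0), X.std(axis=0) + 1e-12
        self.y_mean, self.y_std = Y.mean(axis=0), Y.std(axis=0) + 1e-12
        # Untrained random parameters; backpropagation would adjust them.
        self.W1 = rng.normal(0.0, 0.5, (X.shape[1], n_hidden))
        self.b1 = np.zeros(n_hidden)
        self.W2 = rng.normal(0.0, 0.5, (n_hidden, Y.shape[1]))
        self.b2 = np.zeros(Y.shape[1])
        self.act = _relu if activation == "relu" else _sigmoid

    def predict(self, X):
        xs = (X - self.x_mean) / self.x_std     # scaling layer
        c = xs @ self.W1 + self.b1              # combination: b + sum(w * x), as in Eq. 3
        h = self.act(c)                         # activation of the hidden perceptrons
        ys = h @ self.W2 + self.b2              # linear output perceptrons (RTL, wear)
        return ys * self.y_std + self.y_mean    # un-scaling layer back to physical units
```

Scaling keeps inputs with very different magnitudes (cutting speed in m/min versus pixel counts in the thousands) on a comparable range, and un-scaling returns predictions in minutes and millimetres.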

The procedure used to carry out the learning process is called the training (or learning) strategy. Training searches for the parameters that make the neural network fit the data set with the lowest possible error. A BPNN reduces this error by propagating it backward through the network to update the weights and biases. To assess the performance of the neural network models with the sigmoid and ReLU activation functions, the root mean square error (RMSE) and mean absolute percentage error (MAPE) between the neural network outputs and the actual outputs are computed using Eqs. 5 and 6, respectively. In Eqs. 5 and 6, \(n\) is the number of instances, \({p}_{i}\) the predicted values, and \({a}_{i}\) the actual values.
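Assuming Eqs. 5 and 6 take their standard forms, \(\mathrm{RMSE} = \sqrt{\tfrac{1}{n}\sum_{i=1}^{n}(p_i - a_i)^2}\) and \(\mathrm{MAPE} = \tfrac{100}{n}\sum_{i=1}^{n}\left|\tfrac{a_i - p_i}{a_i}\right|\), both measures can be computed as in this sketch. The function names are illustrative.

```python
import math


def rmse(predicted, actual):
    """Root mean square error over n instances (same units as the data)."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(actual)
    return math.sqrt(sum((p - a) ** 2 for p, a in zip(predicted, actual)) / n)


def mape(predicted, actual):
    """Mean absolute percentage error in percent; actual values must be non-zero."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    n = len(actual)
    return 100.0 / n * sum(abs((a - p) / a) for p, a in zip(predicted, actual))
```

RMSE keeps the units of the output (minutes for RTL), whereas MAPE is unit-free, which is why both are reported side by side.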

The loss index defines the task that the neural network must perform and provides a measure of the quality of the representation to be learned. The loss index is composed of an error term and a regularization term. The regularization term depends on the values of the neural network's parameters. Adding it to the error term drives the weights and biases toward smaller values, which makes the solution smoother and helps avoid overfitting. L2 regularization is used in this instance; it is the sum of the squares of all the neural network's parameters. Figure 14 shows the training and selection errors at each iteration.
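A loss index of this form can be sketched as an error term plus a weighted L2 term. The mean squared error as the error term and the regularization weight of 0.01 are assumptions for illustration; the text does not specify either.

```python
import numpy as np


def l2_regularized_loss(predicted, actual, params, weight=0.01):
    """Mean squared error term plus a weighted L2 term (sum of squared parameters).

    `weight` (the regularization strength) is a hypothetical value.
    """
    predicted, actual = np.asarray(predicted, float), np.asarray(actual, float)
    error_term = np.mean((predicted - actual) ** 2)
    # L2 term: sum of the squares of every weight and bias array in `params`.
    regularization_term = sum(np.sum(np.asarray(p, float) ** 2) for p in params)
    return error_term + weight * regularization_term
```

Because large weights raise the loss even when the fit is perfect, minimizing this quantity trades a little training error for smaller parameters.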

4 Results and discussions

The linear regression of the scaled outputs for RTL obtained with the sigmoid and ReLU activation functions is shown in Figs. 15(a) and (b), respectively. The predicted values are plotted against the actual values, and the line shows the best linear fit.
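A regression plot of this kind is usually summarized by the slope and intercept of the best-fit line and a goodness-of-fit measure; a perfect predictor gives a slope of 1, an intercept of 0, and \(R^2 = 1\). The sketch below uses NumPy's least-squares `polyfit`; it is an illustration, not necessarily the statistic reported by the software used in the study.

```python
import numpy as np


def regression_fit(actual, predicted):
    """Least-squares line predicted = slope * actual + intercept, and its R^2."""
    actual, predicted = np.asarray(actual, float), np.asarray(predicted, float)
    slope, intercept = np.polyfit(actual, predicted, 1)
    fitted = slope * actual + intercept
    ss_res = np.sum((predicted - fitted) ** 2)
    ss_tot = np.sum((predicted - predicted.mean()) ** 2)
    return slope, intercept, 1.0 - ss_res / ss_tot
```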

Similarly, the linear regression of the scaled outputs for wear amount obtained with the sigmoid and ReLU activation functions is shown in Figs. 16(a) and (b), respectively. The predicted values (circles) are plotted against the actual values, and the line shows the best linear fit.

In both the backpropagation (sigmoid) ANN and the ReLU network, the ANN is trained with data collected from images of the cutting edges and the related cutting parameters, and values are then predicted from the experimental data. The image processing technique discussed in the previous section produces a binary image in which the wear area appears as white pixels with negligible noise. This wear area varies with the cutting parameters: feed, speed, and depth of cut. The neural network therefore has four inputs: feed, speed, depth of cut, and the white pixel count of the image. Measuring flank wear accurately by image processing involves more uncertainty than measuring the wear area. The output of image processing, the wear area expressed as a number of pixels, is therefore fed to the neural network together with the cutting parameters (speed, feed, and depth of cut), and the ANN quantifies the flank wear. The outputs are the wear amount and the remaining useful life of the tool. According to the neural network analysis, both outputs depend on the white pixel count, speed, depth of cut, and feed, each with a different weight. Two networks, a sigmoid ANN (BPNN) and a ReLU ANN, which differ in activation function and algorithm, are therefore used; both types are used in the literature to predict such target values precisely. The results of both networks for the same inputs are shown in Tables 3 and 4.

The two networks achieve similar accuracy on this data, although the ReLU network is more accurate for remaining tool life. Over nine different cutting conditions, the sigmoid ANN achieves 90.87% accuracy for remaining tool life and 93.077% for wear. The ReLU ANN achieves 95.27% accuracy for remaining tool life, which is higher than that of the sigmoid ANN, and 92.79% for wear, which is slightly lower. Table 3 shows the predicted and measured values of the output parameters, RTL and wear amount. Similarly, the output parameters obtained using the ReLU activation function are shown in Table 4.

The performance measures, RMSE and MAPE, are listed in Table 5. The RMSE of the sigmoid network is 1.437 min for RTL and 0.013 mm for wear amount. The RMSE of the ReLU network is 0.871 min for RTL and 0.006 mm for wear amount.

The ReLU function performs better than the sigmoid activation function for both wear amount and RTL. The sigmoid function gives a MAPE of 13.5% and 5.9% for RTL and wear amount, respectively, while the ReLU function gives 6.7% and 3.4%. Both performance measures therefore indicate that the ReLU activation function outperforms the sigmoid activation function in this neural network.
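The size of the improvement can be checked with simple arithmetic on the reported values. The sketch below computes the relative reduction from sigmoid to ReLU for each measure and reads accuracy as 100% minus MAPE; that reading is an assumed convention, though it reproduces the 86.5% and 93.3% tool life accuracies quoted in the abstract. The wear unit (mm) is also assumed.

```python
# Values reported in Table 5 and the text: (sigmoid, ReLU).
# RTL errors in min; wear errors assumed in mm.
reported = {
    "RMSE RTL": (1.437, 0.871),
    "RMSE wear": (0.013, 0.006),
    "MAPE RTL (%)": (13.5, 6.7),
    "MAPE wear (%)": (5.9, 3.4),
}


def relative_reduction(sigmoid_value, relu_value):
    """Percentage by which the ReLU value is lower than the sigmoid value."""
    return 100.0 * (sigmoid_value - relu_value) / sigmoid_value


def accuracy_from_mape(mape_percent):
    """Accuracy read as 100 % minus MAPE (an assumed convention)."""
    return 100.0 - mape_percent


if __name__ == "__main__":
    for name, (sig, rel) in reported.items():
        print(f"{name}: ReLU lower by {relative_reduction(sig, rel):.1f}%")
    sig_mape, relu_mape = reported["MAPE RTL (%)"]
    print(accuracy_from_mape(sig_mape), accuracy_from_mape(relu_mape))
```

On these figures, switching to ReLU lowers the RTL RMSE by about 39% and roughly halves the RTL MAPE.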

The cutting parameters and the output of image processing, that is, the number of white pixels, serve as inputs to the neural network prediction algorithm. This allows the proposed algorithm to be implemented in industry to predict tool life under varying cutting conditions. By contrast, the prediction algorithm proposed in [18] was tested with the cutting parameters held constant. Reference [48] used support vector regression and neural network techniques to analyze force data and predicted tool wear with a minimum mean absolute percentage error of 12.59%, which is higher than that of the proposed model. The RMSE of the proposed model is lower than that of the model proposed in [49] for both the sigmoid and ReLU activation functions.

The present experimental study has four sources of error: the machining environment, the image acquisition system, the image processing algorithm, and the prediction model. To address errors in the machining environment caused by uncontrollable variables, the experiments are repeated three times under the same cutting conditions, which quantifies the variability of the results and improves the accuracy of the estimates.
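The variability across the three repeated runs can be quantified with the mean, the sample standard deviation, and the coefficient of variation, as in this sketch. The readings in the usage example are hypothetical, not measurements from the study.

```python
import statistics


def summarize_replicates(values):
    """Mean, sample standard deviation and coefficient of variation (%) of repeated runs."""
    if len(values) < 2:
        raise ValueError("need at least two replicates")
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    return mean, sd, 100.0 * sd / mean


if __name__ == "__main__":
    # Hypothetical flank wear readings (mm) from three repeats of one cutting condition.
    print(summarize_replicates([0.21, 0.23, 0.22]))
```

Averaging the three replicates reduces the random component of the error, while the standard deviation shows how much of it remains.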

To make image acquisition repeatable, with consistent illumination throughout image capture, a ring light is used as the illumination source. The ring light, constructed from LED panels with a diffuser placed in front of the LEDs, provides diffused bright-field front illumination. A ring light significantly larger than the cutting tool being imaged is chosen to obtain a homogeneous light distribution. Diffused bright-field front lighting avoids shadows and specular reflections [50]. All the experiments are conducted under dry machining conditions. Before each image of the tool is captured, compressed air is used to clear metal chips from the tool wear zone. The distance between the camera lens and the cutting insert is kept fixed throughout image acquisition and is selected to prevent lens shadows.

Image processing algorithms are susceptible to errors caused by noise in the image. Although image pre-processing steps are performed to reduce noise and uncertainty, errors may remain in quantifying the flank wear. To reduce these uncertainties, a neural network model is applied to estimate the flank wear from the size of the wear zone and the cutting parameters.
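A pre-processing chain of this type (noise filtering, enhancement, thresholding, and white pixel counting) can be sketched in pure NumPy. The specific choices here are stand-ins, not the study's exact algorithm: a 3×3 median filter for noise removal, linear contrast stretching for enhancement, and Otsu's method for the threshold. The function names are hypothetical, and `sliding_window_view` requires NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def median_filter3(img):
    """3x3 median filter with edge padding; removes isolated noise pixels."""
    padded = np.pad(img, 1, mode="edge")
    windows = sliding_window_view(padded, (3, 3))
    return np.median(windows, axis=(-2, -1))


def stretch_contrast(img):
    """Linearly stretch intensities to the full 0-255 range (uint8)."""
    lo, hi = float(img.min()), float(img.max())
    return ((img - lo) * 255.0 / (hi - lo)).astype(np.uint8)


def otsu_threshold(img):
    """Otsu's threshold: level t maximizing between-class variance (classes < t, >= t)."""
    hist = np.bincount(img.ravel(), minlength=256).astype(float)
    levels = np.arange(256)
    total = hist.sum()
    best_t, best_var = 1, -1.0
    for t in range(1, 256):
        w0 = hist[:t].sum()
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        m0 = (hist[:t] * levels[:t]).sum() / w0
        m1 = (hist[t:] * levels[t:]).sum() / w1
        var = w0 * w1 * (m0 - m1) ** 2
        if var > best_var:
            best_var, best_t = var, t
    return best_t


def white_pixel_count(gray):
    """Filter, enhance and threshold a grayscale image, then count white pixels."""
    smooth = median_filter3(gray.astype(float))
    if smooth.max() == smooth.min():
        return 0  # uniform image: treated as containing no wear region
    enhanced = stretch_contrast(smooth)
    binary = enhanced >= otsu_threshold(enhanced)
    return int(np.count_nonzero(binary))
```

On a synthetic image, an isolated bright noise pixel is removed by the median filter, while a bright wear-like region survives apart from its four corner pixels, which a 3×3 median filter also erodes.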

The tool wear area is identified using computer vision techniques, and the ANN model is trained to forecast tool life. Although its error is higher, this fully automated system stays within the same limits as the actual measurements and satisfies industrial needs, particularly given how well it predicts high tool wear and remaining tool life values.

Deep learning algorithms can achieve higher accuracy than conventional ANNs. Despite their greater learning capability, however, deep neural networks require time-consuming training and depend on the availability of large data sets [51]; to perform well, they need a very large number of samples. Training is costly because of the complexity of the data models, and deep learning additionally requires expensive GPUs, which raises the cost of implementing deep neural networks [52]. CNN architectures have been found to be the most effective models for image processing applications, particularly object detection and classification [53]. Although deep learning models can extract features from an image, gathering a data set large enough to train them through experimental trials is time-consuming and costly. As a result, their use in computer vision-based tool condition monitoring is limited to straightforward classification problems, such as distinguishing flank wear, nose wear, and crater wear regions. Although a transfer learning method [54] classified tool failures with 95% accuracy, it produced many false positives and misclassifications. The model proposed in this paper is less accurate than deep learning algorithms, but the goal of the present research is to reduce the number of sensory inputs and to work with a limited experimental data set in order to meet industrial demands.

In most existing work on tool wear prediction, tool wear is measured from images acquired with a microscope and is therefore offline. In the proposed approach, however, the camera is mounted on the machine, so the method can be implemented as an online tool wear monitoring technique. Industrial cameras can be difficult to mount in machine settings such as CNC machines, where coolant is used to control the heat produced in metal-cutting operations; this is the biggest barrier to TCM using computer vision systems. In addition, during TCM data collection, blurring caused by coolant, light diffusion, and abrasive particles results in low-resolution tool wear images on most CCD/CMOS cameras. The non-uniform pixel intensity distribution, which obscures the tool wear region, is the main reason these images may not contain enough information to quantify tool wear. To compensate, an ANN is incorporated into the tool wear monitoring system for more precise tool wear measurement. The images are captured by the imaging sensor mounted on the CNC machine. Each time an image of the tool wear zone is to be captured, the tool is moved to a fixed position in front of the camera, a step included in the CNC program, and compressed air is blown onto the tool wear zone. Image capture is therefore online but intermittent. Although image capture consumes some time, the tool condition monitoring process is automated and can be implemented in industry. The presented approach is also time-effective and accurate compared with manual measurement of tool wear, in which the operation must be stopped, the tool unloaded from the machine, and the wear observed and measured under a microscope, making the operation intermittent and time-consuming.
The findings obtained from the proposed approach can be practically utilized in real-time machining operations.

5 Conclusions

The literature review provided insights into tool wear prediction in machining, both by using image processing to evaluate existing tool wear and by predicting wear from the preceding wear behavior of similar tools. This work develops a feasible solution for monitoring tool wear with a computer vision-based neural network that automates the prediction of tool life in turning. It proposes a two-step process: identifying wear with computer vision methods and predicting the remaining tool life of turning operations with neural network techniques. Based on the findings, the main points can be summarized as follows:

1. An experimental investigation is conducted on a CNC lathe, and the experimental data are obtained in dry turning with carbide tool inserts. Images of the tool wear zone are captured intermittently by an imaging system mounted on the CNC machine.

2. Image processing techniques are applied to identify the tool wear. Image pre-processing, including noise removal by filtering, image enhancement, and image thresholding, is carried out first to make the image suitable for further processing. The wear region is then identified, and the number of pixels in the wear area is calculated.

3. An ANN model is developed to predict the remaining tool life and wear amount, and two prediction models using different activation functions are compared. Both performance parameters, RMSE and MAPE, indicate better performance of the ANN with the ReLU activation function (MAPE of 6.7% and 3.4% for RTL and wear, respectively) than with the sigmoid activation function (MAPE of 13.5% and 5.9% for RTL and wear, respectively). The choice of activation function therefore affects how closely the predicted tool wear and tool life values match the actual values.

4. By combining artificial intelligence and machine vision, the presented approach enables more accurate and reliable evaluation of tool wear and prediction of tool life, with faster and more precise performance.

6 Future scope of work

Future research will focus on experimental investigations of tool wear at high cutting speeds in comparison with low speeds. Further work will also aim to improve the accuracy of this method: prediction model accuracy will be improved by evaluating alternative artificial intelligence models, such as regressor ensembles, and by expanding the ANN training set with new observations acquired under various machining conditions.

Data availability

Not applicable.

Code availability

Not applicable.

Abbreviations

- AISI: American Iron and Steel Institute
- ANN: Artificial neural network
- BPNN: Backpropagation neural network
- CNC: Computer numerical control
- RAM: Random access memory
- ReLU: Rectified linear unit
- RMSE: Root mean square error
- MAPE: Mean absolute percentage error
- RTL: Remaining tool life
- TCM: Tool condition monitoring
- \(t\): Tool life (processing time) (min)
- DOC: Cutting depth (mm)
- cs: Cutting speed (m/min)
- \(V_B\): Tool flank wear (mm)
- \(n\): Number of instances
- \({p}_{i}\): Predicted value
- \({a}_{i}\): Actual value

References

Lins RG, de Araujo PRM, Corazzim M (2020) In-process machine vision monitoring of tool wear for cyber-physical production systems. Robot Comput Integr Manuf 61:101859. https://doi.org/10.1016/j.rcim.2019.101859

Noordin MY, Venkatesh VC, Sharif S (2007) Dry turning of tempered martensitic stainless tool steel using coated cermet and coated carbide tools. J Mater Process Technol 185(1–3):83–90. https://doi.org/10.1016/j.jmatprotec.2006.03.137

Karandikar JM, Abbas AE, Schmitz TL (2014) Tool life prediction using Bayesian updating. Part 2: turning tool life using a Markov Chain Monte Carlo approach. Precis Eng 38(1):9–17. https://doi.org/10.1016/j.precisioneng.2013.06.007

Dutta S, Pal SK, Mukhopadhyay S, Sen R (2013) Application of digital image processing in tool condition monitoring: a review. CIRP J Manuf Sci Technol 6(3):212–232. https://doi.org/10.1016/j.cirpj.2013.02.005

Pimenov DY, Bustillo A, Wojciechowski S, Sharma VS, Gupta MK, Kuntoğlu M (2022) Artificial intelligence systems for tool condition monitoring in machining: analysis and critical review. J Intell Manuf 1–43

Kuntoğlu M et al (2020) A review of indirect tool condition monitoring systems and decision-making methods in turning: critical analysis and trends. Sensors 21(1):108

Kuntoğlu M, Salur E, Gupta MK, Sarıkaya M, Pimenov DY (2021) A state-of-the-art review on sensors and signal processing systems in mechanical machining processes. Int J Adv Manuf Technol 116(9–10):2711–2735. https://doi.org/10.1007/s00170-021-07425-4

Zhu KP, Wong YS, Hong GS (2009) Wavelet analysis of sensor signals for tool condition monitoring: a review and some new results. Int J Mach Tools Manuf 49(7–8):537–553. https://doi.org/10.1016/j.ijmachtools.2009.02.003

Mohanraj T, Shankar S, Rajasekar R, Sakthivel NR, Pramanik A (2019) Tool condition monitoring techniques in milling process - a review. J Mater Res Technol. https://doi.org/10.1016/j.jmrt.2019.10.031

Ambhore N, Kamble D, Chinchanikar S, Wayal V (2015) Tool condition monitoring system: a review. Materials Today: Proceedings 2:4–5. https://doi.org/10.1016/j.matpr.2015.07.317

Zhou Y, Xue W (2018) Review of tool condition monitoring methods in milling processes. Int J Adv Manuf Technol 96(5–8):2509–3532. https://doi.org/10.1007/s00170-018-1768-5

Nath C (2020) Integrated tool condition monitoring systems and their applications: a comprehensive review. Procedia Manuf 48:852–863. https://doi.org/10.1016/j.promfg.2020.05.123

Bustillo A, Pimenov DY, Mia M, Kapłonek W (2021) Machine-learning for automatic prediction of flatness deviation considering the wear of the face mill teeth. J Intell Manuf 32(3):895–912. https://doi.org/10.1007/s10845-020-01645-3

Abu-Zahra NH, Yu G (2003) Gradual wear monitoring of turning inserts using wavelet analysis of ultrasound waves. Int J Mach Tools Manuf 43(4):337–343. https://doi.org/10.1016/S0890-6955(02)00274-2

Karam S, Centobelli P, D’Addona DM, Teti R (2016) Online prediction of cutting tool life in turning via cognitive decision making. Procedia CIRP 41:927–932. https://doi.org/10.1016/j.procir.2016.01.002

Bagga PJ, Makhesana MA, Patel HD, Patel KM (2021) Indirect method of tool wear measurement and prediction using ANN network in machining process. Materials Today: Proceedings 44:1549–1554. https://doi.org/10.1016/j.matpr.2020.11.770

He Z, Shi T, Xuan J (2022) Milling tool wear prediction using multi-sensor feature fusion based on stacked sparse autoencoders. Measurement 190:110719. https://doi.org/10.1016/j.measurement.2022.110719

Mikołajczyk T et al (2018) Predicting tool life in turning operations using neural networks and image processing. Mech Syst Signal Process 104:503–513. https://doi.org/10.1016/j.ymssp.2017.11.022

Yu J, Cheng X, Lu L, Wu B (2021) A machine vision method for measurement of machining tool wear. Measurement 182:109683. https://doi.org/10.1016/j.measurement.2021.109683

Qian Y, Tian J, Liu L, Zhang Y, Chen Y (2010) A tool wear predictive model based on SVM. In: 2010 Chinese Control and Decision Conference (CCDC 2010), pp 1213–1217. https://doi.org/10.1109/CCDC.2010.5498161

Mikołajczyk T, Nowicki K, Kłodowski A, Pimenov DY (2017) Neural network approach for automatic image analysis of cutting edge wear. Mech Syst Signal Process 88:100–110. https://doi.org/10.1016/j.ymssp.2016.11.026

Castejón M, Alegre E, Barreiro J, Hernández LK (2007) On-line tool wear monitoring using geometric descriptors from digital images. Int J Mach Tools Manuf 47(12–13):1847–1853. https://doi.org/10.1016/j.ijmachtools.2007.04.001

García-Ordás MT, Alegre-Gutiérrez E, Alaiz-Rodríguez R, González-Castro V (2018) Tool wear monitoring using an online, automatic and low cost system based on local texture. Mech Syst Signal Process 112:98–112. https://doi.org/10.1016/j.ymssp.2018.04.035

Serin G, Sener B, Ozbayoglu AM, Unver HO (2020) Review of tool condition monitoring in machining and opportunities for deep learning. Int J Adv Manuf Technol 109:3–4. https://doi.org/10.1007/s00170-020-05449-w

Zhao R, Yan R, Chen Z, Mao K, Wang P, Gao RX (2019) Deep learning and its applications to machine health monitoring. Mech Syst Signal Process 115:213–237. https://doi.org/10.1016/j.ymssp.2018.05.050

Martínez-Arellano G, Terrazas G, Ratchev S (2019) Tool wear classification using time series imaging and deep learning. Int J Adv Manuf Technol 104(9–12):3647–3662. https://doi.org/10.1007/s00170-019-04090-6

Serin G, Gudelek MU, Ozbayoglu AM, Unver HO (2017) Estimation of parameters for the free-form machining with deep neural network. In: Proc 2017 IEEE Int Conf Big Data (Big Data 2017), pp 2102–2111. https://doi.org/10.1109/BigData.2017.8258158

Sun H, Zhang J, Mo R, Zhang X (2020) In-process tool condition forecasting based on a deep learning method. Robot Comput Integr Manuf 64:101924. https://doi.org/10.1016/j.rcim.2019.101924

Tsironi E, Barros P, Wermter S (2016) Gesture recognition with a convolutional long short-term memory recurrent neural network. In: ESANN 2016, 24th Eur Symp Artif Neural Networks, pp 213–218

Li X, Ding Q, Sun JQ (2018) Remaining useful life estimation in prognostics using deep convolution neural networks. Reliab Eng Syst Saf 172:1–11. https://doi.org/10.1016/j.ress.2017.11.021

Wang J, Yan J, Li C, Gao RX, Zhao R (2019) Deep heterogeneous GRU model for predictive analytics in smart manufacturing: application to tool wear prediction. Comput Ind 111(1):14. https://doi.org/10.1016/j.compind.2019.06.001

Bergs T, Holst C, Gupta P, Augspurger T (2020) Digital image processing with deep learning for automated cutting tool wear detection. Procedia Manuf 48:947–958. https://doi.org/10.1016/j.promfg.2020.05.134

D’Addona DM, Ullah AMMS, Matarazzo D (2017) Tool-wear prediction and pattern-recognition using artificial neural network and DNA-based computing. J Intell Manuf 28(6):1285–1301. https://doi.org/10.1007/s10845-015-1155-0

Hesser DF, Markert B (2019) Tool wear monitoring of a retrofitted CNC milling machine using artificial neural networks. Manuf Lett 19:1–4. https://doi.org/10.1016/j.mfglet.2018.11.001

Corne R, Nath C, El Mansori M, Kurfess T (2017) Study of spindle power data with neural network for predicting real-time tool wear/breakage during inconel drilling. J Manuf Syst 43:287–295. https://doi.org/10.1016/j.jmsy.2017.01.004

Wang J, Li Y, Zhao R, Gao RX (2020) Physics guided neural network for machining tool wear prediction. J Manuf Syst 57:298–310. https://doi.org/10.1016/j.jmsy.2020.09.005

Kaviani S, Sohn I (2021) Application of complex systems topologies in artificial neural networks optimization: an overview. Expert Syst Appl 180:115073. https://doi.org/10.1016/j.eswa.2021.115073

Akkoyun F et al (2021) Measurement of micro burr and slot widths through image processing: comparison of manual and automated measurements in micro-milling. Sensors 21(13):4432. https://doi.org/10.3390/s21134432

Bagga PJ, Makhesana MA, Patel KM (2021) A novel approach of combined edge detection and segmentation for tool wear measurement in machining. Prod Eng 15(3–4):519–533. https://doi.org/10.1007/s11740-021-01035-5

Ruitao Peng H, Pang HJ, Hu Y (2020) Study of tool wear monitoring using machine vision. Autom Control Comput Sci 54(3):259–270. https://doi.org/10.3103/S0146411620030062

Fernández-Robles L, Sánchez-González L, Díez-González J, Castejón-Limas M, Pérez H (2021) Use of image processing to monitor tool wear in micro milling. Neurocomputing 452:333–340. https://doi.org/10.1016/j.neucom.2019.12.146

Moldovan OG, Dzitac S, Moga I, Vesselenyi T, Dzitac I (2017) Tool-wear analysis using image processing of the tool flank. Symmetry (Basel) 9(12):1–18. https://doi.org/10.3390/sym9120296

Abiodun OI, Jantan A, Omolara AE, Dada KV, Mohamed NAE, Arshad H (2018) State-of-the-art in artificial neural network applications: a survey. Heliyon 4(11):e00938. https://doi.org/10.1016/j.heliyon.2018.e00938

Ruiz-Trabolsi PA et al (2022) A comparative analysis of the tribological behavior of hard layers obtained by three different hardened-surface processes on the surface of AISI 4140 steel. Crystals 12(2):298. https://doi.org/10.3390/cryst12020298

Huang Q, Wu C, Shi X, Xue Y, Zhang K (2022) Synergistic lubrication mechanisms of AISI 4140 steel in dual lubrication systems of multi-solid coating and oil lubrication. Tribol Int 169:107484. https://doi.org/10.1016/J.TRIBOINT.2022.107484

Rafighi M (2022) Effects of shallow cryogenic treatment on surface characteristics and machinability factors in hard turning of AISI 4140 steel. Proc Inst Mech Eng Part E J Process Mech Eng 095440892210834. https://doi.org/10.1177/09544089221083467

Shao H, Jiang H, Lin Y, Li X (2018) A novel method for intelligent fault diagnosis of rolling bearings using ensemble deep auto-encoders. Mech Syst Signal Process 102:278–297. https://doi.org/10.1016/j.ymssp.2017.09.026

Gebremariam MA, Azhari A, Yuan SX, Lemma TA (2017) Remaining tool life prediction based on force sensors signal. IMECE2017-70058, pp 1–8

Twardowski P, Wiciak-Pikula M (2019) Prediction of tool wear using artificial neural networks during turning of hardened steel. Materials (Basel) 12(19):3091. https://doi.org/10.3390/ma12193091

Forte PMF et al (2017) Exploring combined dark and bright field illumination to improve the detection of defects on specular surfaces. Opt Lasers Eng 88:120–128. https://doi.org/10.1016/j.optlaseng.2016.08.002

Siddhpura A, Paurobally R (2013) A review of flank wear prediction methods for tool condition monitoring in a turning process. Int J Adv Manuf Technol 65(1–4):371–393. https://doi.org/10.1007/s00170-012-4177-1

Liu P, Choo KKR, Wang L, Huang F (2017) SVM or deep learning? A comparative study on remote sensing image classification. Soft Comput 21(23):7053–7065. https://doi.org/10.1007/s00500-016-2247-2

Banda T, Akhavan A, Chuan F, Veronica L, Jauw L, Seong C (2022) Application of machine vision for tool condition monitoring and tool performance optimization – a review. Int J Adv Manuf Technol 121(11–12):7057–7086. https://doi.org/10.1007/s00170-022-09696-x

Banda T, Jie BYW, Farid AA, Lim CS (2022) Machine vision and convolutional neural networks for tool wear identification and classification. In: Recent Trends in Mechatronics Towards Industry 4.0. Springer, pp 737–747

Acknowledgements

The authors would like to acknowledge the resources and support provided by Nirma University in the form of the Minor Research Project grant with letter number “NU/DRI/MinResPrj/IT/21-22.”

Funding

This research was supported by the Nirma University minor research project grant.

Author information


Contributions

Prashant J. Bagga, Mayur A. Makhesana, Pranav P. Darji: writing—original draft, validation, resources, methodology, investigation, funding acquisition, formal analysis, and conceptualization. Kaushik M. Patel: writing—review and editing, methodology, investigation, and formal analysis. Danil Yu Pimenov: writing—review and editing, conceptualization. Khaled Giasin: writing—review and editing, formal analysis, and data curation. Navneet Khanna: writing—review and editing, formal analysis, and methodology.


Ethics declarations

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bagga, P.J., Makhesana, M.A., Darji, P.P. et al. Tool life prognostics in CNC turning of AISI 4140 steel using neural network based on computer vision. Int J Adv Manuf Technol 123, 3553–3570 (2022). https://doi.org/10.1007/s00170-022-10485-9
