Abstract

We exploit level-set topology optimization to find the optimal material distribution for metamaterial-based heat manipulators. The level-set function, geometry, and solution field are parameterized using Non-Uniform Rational B-Spline (NURBS) basis functions, which allow easy control of smoothness and continuity. In addition, NURBS approximations represent conic geometries exactly and provide higher efficiency for higher-order elements. The values of the level-set function at the control points (called expansion coefficients) serve as design variables. For optimization, we use an advanced mathematical programming technique, Sequential Quadratic Programming (SQP). Because our optimization problem has a large number of design variables and only a small number of constraints, the adjoint method is used to calculate the required sensitivities with respect to the design variables. The efficiency and robustness of the proposed method are demonstrated through three numerical examples. We also show that the method can handle different geometries and types of objective functions. In addition, regularization techniques such as Tikhonov regularization and volume regularization are explored to reduce unnecessary complexity and increase the manufacturability of the optimized topologies.


1 Introduction

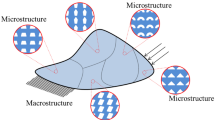

Owing to the special arrangement of their constituent materials, thermal metamaterials can achieve heat transfer capabilities superior to those of naturally occurring materials. Researchers have therefore proposed using artificially created thermal metamaterials to control heat fluxes. This concept has opened new research opportunities in heat transfer applications, one of which is the creation of thermal equivalents of resistors, capacitors, inductors, diodes, transistors, etc. Such devices are called heat manipulators, and several have already been proposed, such as the thermal cloak (Narayana and Sato 2012; Guenneau et al. 2012; Schittny et al. 2013; Han et al. 2014a, b; Sklan et al. 2016; Li et al. 2019; Fujii and Akimoto 2019b), thermal concentrator (Narayana and Sato 2012; Guenneau et al. 2012; Schittny et al. 2013; Li et al. 2019; Shen et al. 2016), thermal camouflage (Han et al. 2014a; Peng et al. 2020), and heat flux inverter (Narayana and Sato 2012). The spatial layout of the member materials of a metamaterial-based heat manipulator has a significant impact on its performance. Structural optimization is therefore a useful technique both for understanding the impact of the spatial arrangement of member materials and for developing superior designs. To the authors' knowledge, work on the optimization of metamaterial-based heat manipulators is limited (Fujii et al. 2018; Fujii and Akimoto 2020, 2019a). The authors previously published an article on isogeometric shape optimization combined with the gradient-free Particle Swarm Optimization (PSO) algorithm (Jansari et al. 2022a). The present work can be considered an extension of that work: here, we use topology optimization to avoid the limitations imposed by shape optimization and to explore an even larger design space.

The goal of structural optimization is to determine the material distribution in the design domain that yields the best performance of a device or structure. In its early days, structural optimization focused primarily on mechanical structures. Over time, it emerged as a more general numerical technique covering a wider range of physical problems, including fluids, optics, acoustics, and thermodynamics. Lately, topology optimization has become increasingly popular compared with other structural optimization techniques such as shape and size optimization, because it allows changes in topology during optimization and hence avoids the need for a close-to-optimal initial design. Several topology optimization approaches have been developed to date; the most common are density-based, level-set-based, phase-field-based, and evolutionary-algorithm-based approaches. Although these approaches define diverse directions for topology optimization, the conceptual differences between them are few. Owing to the lack of directly relevant comparisons, it is difficult to determine which technique is best for a given problem (Sigmund and Maute 2013). Moreover, overall performance depends not only on the choice of method but also on factors such as the optimizer, filters, and constraints. In the level-set-based approach, however, the interface is clearly defined throughout the optimization process. We therefore focus on level-set topology optimization, considering its potential advantage for imposing objective functions or constraints on the interface in future work (Sethian and Wiegmann 2000; Wang et al. 2003; Allaire et al. 2004; van Dijk et al. 2013). The remaining approaches are covered in detail in review articles (Sigmund and Maute 2013; Munk et al. 2015; Rozvany 2009; Deaton and Grandhi 2014).

The Level-Set Method (LSM), proposed by Osher and Sethian (1988), captures moving interfaces such as those in multi-phase flow. The idea of combining the LSM with topology optimization was suggested by Haber and Bendsoe (1998). Subsequently, several research groups developed and published level-set topology optimization methods (Sethian and Wiegmann 2000; De Ruiter and Van Keulen 2000). The shape-sensitivity-based level-set topology optimization framework was introduced in Osher and Santosa (2001), Allaire et al. (2004), Allaire et al. (2002), and Wang et al. (2003). For heat manipulators specifically, Fujii and Akimoto have explored level-set topology optimization, using it to optimize a thermal cloak (Fujii et al. 2018), a thermal cloak-concentrator (Fujii and Akimoto 2020), and a combined thermal-electric cloak (Fujii and Akimoto 2019a). Their work uses the finite element method for the boundary value problem and a stochastic evolution strategy for optimization. In contrast, we use isogeometric analysis for the boundary value problem and a gradient-based Sequential Quadratic Programming (SQP) method for optimization. The next few paragraphs discuss the level-set topology optimization method together with the other aspects of the optimization process.

In level-set topology optimization, the isocontours of a level-set function (LSF) implicitly define the interfaces between the material phases, so the topology changes as these isocontours move. Because the mesh remains unchanged, the cost of creating a new mesh at each iteration is eliminated.

Level-set topology optimization has three main components: the level-set function (LSF) parameterization (design space), an efficient numerical method to solve the boundary value problem, and the optimization strategy. In this work, NURBS basis functions are employed to parameterize the LSF, the geometry, and the solution field (i.e., the temperature distribution). Isogeometric analysis (IGA) (Hughes et al. 2005) is used to solve the thermal boundary value problem. Here, we decouple the LSF parameterization from the geometry and solution field by using two different NURBS bases. It would, however, be advantageous to decouple all three parameterizations using tools such as Geometry Independent Field approximaTion (GIFT) (Atroshchenko et al. 2018; Jansari et al. 2022b). For optimization, we use a mathematical programming approach, Sequential Quadratic Programming (SQP) (Nocedal and Wright 2006). The motivation behind these choices and their alternatives is discussed in the following paragraphs. Figure 1 shows an overview of the method with its components and the particular choice for each component (Schittkowski et al. 1994; Schittkowski and Zillober 2005; Lavezzi et al. 2022).

We first discuss the LSF parameterization. The LSF parameterization approximates the LSF over the whole design domain from a few point values, and these values at nodes/control points serve as design variables. The parameterization determines the design freedom, the level of detail, and the nature of the optimization problem, so the effort required for optimization depends strongly on it. In the present work, the LSF parameterization is decoupled from the geometry and solution field parameterizations. Decoupling secures the required solution accuracy without increasing the complexity of the optimization problem. This idea is standard in the LSM for free boundary problems, as discussed in Duddu et al. (2008).

The use of NURBS parameterizations and IGA provides several advantages over Lagrange parameterizations and the conventional Finite Element Method (FEM) (Wang et al. 2018; Gao et al. 2020): (i) easy control of smoothness and inter-element continuity, (ii) exact representation of conic geometries, and (iii) higher efficiency for higher-order elements. Hughes et al. (2005) proposed IGA based on the discretization of a Galerkin formulation using NURBS basis functions. Several variants of IGA have since been proposed, such as the Isogeometric Collocation method (IGA-C) (Auricchio et al. 2010), the Isogeometric Boundary Element Method (IGABEM) (Simpson et al. 2012, 2013), and Geometry Independent Field approximaTion (GIFT) (Atroshchenko et al. 2018; Jansari et al. 2022b). IGA and its variants have been successfully incorporated into shape and topology optimization frameworks (Wang et al. 2018; Gao et al. 2020; Lian et al. 2016, 2017).

In our work, we adopt the parameterized level-set topology optimization incorporating IGA proposed by Wang and Benson (2016). However, instead of the immersed boundary technique used by Wang and Benson (2016) to map the geometry onto the numerical model, we use point-wise density-based geometry mapping because it is relatively easy to implement. Density-based mapping also avoids the ill-conditioning issues common in immersed boundary techniques. In density-based mapping, no special treatment is required for numerical integration: the thermal conductivity at an integration point is defined directly from the LSF value at that point. However, this simplistic approach does not define the material very accurately near the interface. We therefore use a sufficiently fine solution mesh to ensure that the density-based mapping does not deteriorate the solution accuracy.

Lastly, we discuss the optimization procedure, which has two parts: (i) the update information and (ii) the update procedure. The update information usually consists of the sensitivities of the objective function and constraints with respect to the design variables. The most common methods for sensitivity analysis are (a) the direct method, (b) the finite difference method, (c) the semi-analytical method, and (d) the adjoint method (Wang et al. 2018). The direct method requires solving an additional system for each design variable, which makes it very costly for complex problems. Similarly, the forward finite difference method requires solving \({n+1}\) boundary value problems for n design variables, and its accuracy depends on the perturbation size. The semi-analytical approach is computationally efficient; however, its accuracy can be unsatisfactory in special cases owing to the incompatibility of the design sensitivity field with the structure (Barthelemy and Haftka 1990). The adjoint method requires solving \({n+1}\) systems (one state problem plus one adjoint problem per function) for n objective and constraint functions that depend on the field solution, independently of the number of design variables. It is therefore efficient for problems with a large number of design variables and a small number of constraints, which is our case. Consequently, in this work, the adjoint method (Allaire et al. 2005) is used to calculate the sensitivities.
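To make the cost argument concrete, the following Python sketch compares adjoint and forward-difference sensitivities on a hypothetical parameterized linear system \(\mathbf{K}(\mathbf{p})\mathbf{T}=\mathbf{F}\) with \(\mathbf{K}(\mathbf{p})=\mathbf{K}_0+\sum_i p_i\mathbf{K}_i\) and \(J=\tfrac12\lVert\mathbf{T}-\mathbf{T}_\text{ref}\rVert^2\). The matrices, the objective, and all function names are illustrative assumptions, not the discretization used in this paper. The adjoint gradient needs one state solve and one adjoint solve regardless of the number of variables, whereas the forward differences need one extra solve per variable.

```python
import numpy as np


def build_problem(n_dof=6, n_var=4, seed=0):
    """Random SPD base matrix plus symmetric PSD contributions (hypothetical toy data)."""
    rng = np.random.default_rng(seed)
    A = rng.standard_normal((n_dof, n_dof))
    K0 = A @ A.T + n_dof * np.eye(n_dof)
    Ks = []
    for _ in range(n_var):
        B = rng.standard_normal((n_dof, 2))
        Ks.append(B @ B.T)  # dK/dp_i, independent of p in this toy model
    F = rng.standard_normal(n_dof)
    T_ref = rng.standard_normal(n_dof)
    return K0, Ks, F, T_ref


def stiffness(p, K0, Ks):
    return K0 + sum(pi * Ki for pi, Ki in zip(p, Ks))


def objective(p, K0, Ks, F, T_ref):
    T = np.linalg.solve(stiffness(p, K0, Ks), F)
    return 0.5 * np.sum((T - T_ref) ** 2)


def adjoint_gradient(p, K0, Ks, F, T_ref):
    K = stiffness(p, K0, Ks)
    T = np.linalg.solve(K, F)            # state problem
    P = np.linalg.solve(K.T, T - T_ref)  # adjoint problem: K^T P = dJ/dT
    # dJ/dp_i = -P^T (dK/dp_i) T, because F does not depend on p
    return np.array([-P @ (Ki @ T) for Ki in Ks])


def forward_difference_gradient(p, K0, Ks, F, T_ref, h=1e-6):
    J0 = objective(p, K0, Ks, F, T_ref)  # one solve at the base point ...
    g = np.zeros(len(p))
    for i in range(len(p)):              # ... plus one solve per design variable
        q = np.array(p, dtype=float)
        q[i] += h
        g[i] = (objective(q, K0, Ks, F, T_ref) - J0) / h
    return g


K0, Ks, F, T_ref = build_problem()
p = np.full(4, 0.5)
g_adj = adjoint_gradient(p, K0, Ks, F, T_ref)
g_fd = forward_difference_gradient(p, K0, Ks, F, T_ref)
```

The two gradients agree to within the truncation error of the forward difference, which illustrates why the finite difference accuracy depends on the perturbation size `h`.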

The update procedure determines how the update information is used to advance the level-set interfaces. Two classes of update procedures are available in the literature: (a) Hamilton–Jacobi (HJ) equation-based procedures and (b) mathematical programming. In the first class, the problem is treated as a quasi-temporal problem, and the interface motion is computed by solving the HJ equation in pseudo-time (Burger and Osher 2005; Sethian 1999, 2001). The second class, mathematical programming (Haber 2004; Luo et al. 2007; Maute et al. 2011; Norato et al. 2004), is often equipped with sophisticated step selection and constraint handling strategies, as well as optimized speed and efficiency. In this work, Sequential Quadratic Programming (SQP) (Nocedal and Wright 2006) is chosen for its ability to accurately solve nonlinear constrained problems. In addition, MATLAB provides a built-in SQP algorithm in its ‘fmincon’ optimization tool, which is advantageous from an implementation point of view.

In the context of level-set topology optimization, our approach differs from typical methods that use the Hamilton–Jacobi equation together with variational shape sensitivity to drive interface evolution. Instead, our formulation uses the level-set function solely as a topology descriptor that defines the material distribution. In this regard, our approach is closely aligned with the parameterized level-set topology optimization method (Wang et al. 2003; Allaire et al. 2004) and with projection-based density topology optimization methods (Guest et al. 2004; Sigmund 2007; Xu et al. 2010). For a better understanding of the nomenclature, readers are referred to Section 3.1.3 of Sigmund and Maute (2013).

The key contributions of our work include:

- Design of thermal metamaterials for heat flux manipulation: Our work addresses the intricate task of creating thermal metamaterials that control and manipulate heat flux to achieve specific thermal objectives. The challenge involves finding the conductivity distribution necessary to create the required temperature or flux profile at the boundary. Conventionally, this problem has been explored by analytical methods such as transformation thermotics and the scattering cancellation method. In contrast, we introduce an approach that exploits topology optimization to design these thermal metamaterials efficiently.
- Enhanced design tool: We enhance the effectiveness of the design tool by implementing regularization techniques, which improve not only the convergence of the optimization but also the manufacturability of the resulting designs.
- Practical application: We verify the practicality of our tool by demonstrating its effectiveness in designing thermal cloaks and camouflages. Our approach can also handle diverse geometries, a capability beyond the reach of conventional analytical methods.

The remainder of the paper is organized as follows: Sect. 2 presents the numerical formulation of level-set topology optimization, including the boundary value problem formulation, the implicit interface representation, and the numerical approximations. The optimization problem, the sensitivity analysis, the SQP algorithm, and the regularization techniques are explained in Sect. 3. In Sect. 4, three numerical examples demonstrate the efficiency and robustness of the proposed method: a toy problem of an annular ring with known solutions for the state and adjoint boundary value problems (Sect. 4.1), the thermal cloak problem (Sect. 4.2), and the thermal camouflage problem (Sect. 4.3). Section 5 presents the main conclusions of this work.

2 Level-set topology optimization with isogeometric analysis

2.1 Boundary value problem description

As shown in Fig. 2, we consider a heat manipulator embedded in the domain \({\Omega } \subset {\mathbb {R}}^{2}\). Note that all formulations given here also hold in three-dimensional physical space. The domain is bounded externally by \({\Gamma }=\partial {\Omega }={\Gamma }_D \cup {\Gamma }_N\), where \({\Gamma }_D\) and \({\Gamma }_N\) are the parts of the boundary on which the Dirichlet and Neumann boundary conditions are applied, respectively, with \({\Gamma }_D \cap {\Gamma }_N =\emptyset\). The embedded heat manipulator divides the domain into three parts: the inside region \({\Omega }_{{\text {in}}}\), the heat manipulator region \({\Omega }_{\text{design}}\), and the outside region \({\Omega }_{\text{out}}\), such that \({\Omega }={\Omega }_{\text {in}} \cup {\Omega }_{\text{design}} \cup {\Omega }_{\text{out}}\). The internal boundaries between these parts are collectively denoted by \({\Gamma }_I={\Gamma }_{I_{{\text {in}}}} \cup {\Gamma }_{I_{\text{out}}}\).

In this work, we restrict our scope to metamaterials made of two isotropic member materials. Therefore, in addition to the above partition, \({\Omega }_{\text{design}}\) is divided into two parts, \({\Omega }_{\ell_1}\) and \({\Omega }_{\ell_2}\), representing the two member materials (\({\Omega }_{\text{design}}={\Omega }_{\ell _1}\cup {\Omega }_{\ell _2}\)). The interface \({\Gamma }_L\) between the member materials is implicitly defined by the level-set function; the level-set function and the corresponding interface \({\Gamma }_L\) are described in detail in Sect. 2.2. We simplify the problem with the following assumptions: the temperature and normal flux are continuous across \({\Gamma }_I\), heat conduction is the only mode of heat transfer, and there is no internal heat generation. The steady-state thermal boundary value problem for the temperature field T can then be written as,

where \(\varvec{\kappa }\) is the thermal conductivity matrix (for an isotropic material, \(\varvec{\kappa }=\kappa {\mathbf{I}_2}\), with \({\mathbf{I}_2}\) the identity matrix in \({\mathbb {R}}^{2}\)), \(Q_N\) is the flux applied on \({\Gamma }_N\), \(T_D\) is the prescribed temperature on \({\Gamma }_D\), \({\varvec{n}}\) is the unit normal on the boundary, \(\llbracket \cdot \rrbracket\) is the jump operator, and \(\nabla = {\left( { \frac{\partial }{\partial x},\frac{\partial }{\partial y}}\right) }\). On the internal boundary \({\Gamma }_I\), \({\varvec{n}}={\varvec{n}}^1= -{\varvec{n}}^2\), where 1 and 2 denote the two patches connected at \({\Gamma }_I\).

Domain description of the boundary value problem. \(\Omega _{\text{design}}\) represents the region of the heat manipulator, and \(\Omega _{{\text {in}}}\) and \(\Omega _{\text{out}}\) are, respectively, the inside and outside regions with respect to \(\Omega _{\text{design}}\), with \(\Omega =\Omega _{{\text {in}}}\cup \Omega _{\text{out}}\cup \Omega _\text{design}\). The solid black line shows the explicitly defined interface \(\Gamma_I\), while the broken black-and-white line shows the implicitly defined interface \(\Gamma_L\), which separates \(\Omega _\text{design}\) into two parts \(\Omega _{\ell_1}\) and \(\Omega _{\ell_2}\). The detailed view highlights the matching control points of the connecting patches at the interface \(\Gamma_I\)

To solve the boundary value problem, the strong form described in Eq. (1) is transformed into the weak form using the standard Bubnov–Galerkin formulation. The weak formulation is given as follows: Find \(T^h \in {\mathscr {T}}^h \subseteq {\mathscr {T}} = \big \lbrace T \in {\mathbb {H}}^1 ({{\Omega }}), T= T_D \hspace{0.15cm} {\text {on}} \ {{\Gamma }}_D \big \rbrace\) such that \(\forall S^h \in {\mathscr {S}}^h_0 \subseteq {\mathscr {S}}_0 = \left\{ S \in {\mathbb {H}}^1 ({{\Omega }}), S=0 \hspace{0.15cm} {\text {on}} \ {{\Gamma }}_D \right\}\),

with

The interface continuity conditions in Eqs. (1d)–(1e) are imposed using Nitsche’s method (Nguyen et al. 2014), which enforces the interface conditions weakly while preserving the coercivity and consistency of the bilinear form. Nitsche’s method can be viewed as lying between the Lagrange multiplier method and the penalty method; it is designed to overcome limitations of these conventional methods, such as over-sensitivity to the penalty parameter, inconsistency of the variational form, and restrictions imposed by stability conditions. Nitsche’s method modifies the bilinear form by replacing the Lagrange multipliers of the Lagrange multiplier method with their physical counterpart, i.e. the normal flux. As shown in Nguyen et al. (2014) and Hu et al. (2018), the modified bilinear form reads,

where \(\beta\) is the stabilization parameter and \(\{\cdot \}\) is the averaging operator defined as \(\{\theta \}=\gamma \theta ^1 + (1-\gamma )\theta ^2\), with \(\gamma\) the averaging parameter (\(0<\gamma <1\)) (Nguyen et al. 2014; Hu et al. 2018). In the current work, \(\beta =1\times 10^{12}\) and \(\gamma =0.5\). Nguyen et al. (2014) and Hu et al. (2018) also report that a large stabilization parameter might cause ill-conditioning of the system; however, we did not encounter any conditioning issues for our boundary value problem.
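A one-dimensional analogue may help show how the Nitsche terms enter the stiffness matrix. The sketch below is a hypothetical example, not the paper's implementation: it solves \(-(\kappa T')'=0\) on \((0,1)\) with \(T(0)=0\), \(T(1)=1\), \(\kappa=\kappa_1\) on \((0,0.5)\) and \(\kappa=\kappa_2\) on \((0.5,1)\). Each subdomain has its own linear finite element mesh with a duplicated node at \(x=0.5\), and the two meshes are glued with the symmetric Nitsche terms \(-\{\kappa T' n\}\llbracket S\rrbracket-\{\kappa S' n\}\llbracket T\rrbracket+\beta\llbracket T\rrbracket\llbracket S\rrbracket\), taking \(n=n^1=+1\). The symmetric variant and the choice \(\beta=100\,\max\kappa/\min h\) are assumptions for this toy problem (the paper uses \(\beta=10^{12}\)). Because the exact solution is piecewise linear, the consistent Nitsche formulation should reproduce it at the nodes up to round-off.

```python
import numpy as np


def solve_two_material_bar(k1=1.0, k2=10.0, n1=5, n2=7, gamma=0.5, beta=None):
    """Hypothetical 1D example: two linear-element patches joined at x = 0.5 by Nitsche's method."""
    h1, h2 = 0.5 / n1, 0.5 / n2
    N1, N2 = n1 + 1, n2 + 1
    N = N1 + N2                       # the interface node is duplicated
    K = np.zeros((N, N))
    ke = np.array([[1.0, -1.0], [-1.0, 1.0]])
    for e in range(n1):               # patch 1 occupies dofs 0 .. N1-1
        idx = [e, e + 1]
        K[np.ix_(idx, idx)] += k1 / h1 * ke
    for e in range(n2):               # patch 2 occupies dofs N1 .. N-1
        idx = [N1 + e, N1 + e + 1]
        K[np.ix_(idx, idx)] += k2 / h2 * ke

    a, b = N1 - 1, N1                 # interface dof on patch 1 and on patch 2
    jump = np.zeros(N)                # [[T]] = T^1 - T^2
    jump[a], jump[b] = 1.0, -1.0
    avg = np.zeros(N)                 # {k T' n} with n = n^1 = +1
    avg[a] += gamma * k1 / h1
    avg[a - 1] -= gamma * k1 / h1
    avg[b + 1] += (1.0 - gamma) * k2 / h2
    avg[b] -= (1.0 - gamma) * k2 / h2
    if beta is None:
        beta = 100.0 * max(k1, k2) / min(h1, h2)
    K -= np.outer(jump, avg)          # -{k T' n} [[S]]
    K -= np.outer(avg, jump)          # -{k S' n} [[T]]  (symmetric variant)
    K += beta * np.outer(jump, jump)  # +beta [[T]] [[S]]

    # strong Dirichlet conditions T(0) = 0 and T(1) = 1; no source term
    fixed = np.array([0, N - 1])
    values = np.array([0.0, 1.0])
    free = np.setdiff1d(np.arange(N), fixed)
    T = np.zeros(N)
    T[fixed] = values
    rhs = -K[np.ix_(free, fixed)] @ values
    T[free] = np.linalg.solve(K[np.ix_(free, free)], rhs)
    x = np.concatenate([np.linspace(0.0, 0.5, N1), np.linspace(0.5, 1.0, N2)])
    return x, T


x, T = solve_two_material_bar()
```

The duplicated interface values `T[5]` and `T[6]` coincide only weakly, through the penalty and flux terms; no constraint ties them together explicitly.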

2.2 Implicit boundary representation with level-set function

As described in Sect. 2.1, the interface \(\Gamma_L\) inside \(\Omega _{\text{design}}\) that separates the member materials is not explicitly defined in Eq. (1). Instead, it is defined by a level-set function, and its movement is the central mechanism of level-set topology optimization.

In the level-set method in \({\mathbb {R}}^d\), the interface between two materials is implicitly represented by an isosurface of a scalar function \(\varPhi :{\mathbb {R}}^{d} \rightarrow {\mathbb {R}}\), called the level-set function (LSF). Accordingly, in our work, the zero isosurface \(\varPhi =0\) of the LSF \(\varPhi :{\mathbb {R}}^{2} \rightarrow {\mathbb {R}}\) defines the interface \(\Gamma_{L}\) separating \(\Omega _{\ell_1}\) and \(\Omega _{\ell_2}\) in \(\Omega _{\text{design}}\), as shown in Fig. 3. The overall level-set representation can be given as,

During optimization, the movement of the interface is defined by the evolution of the isosurface \(\varPhi =0\), while the background Eulerian mesh remains fixed.

Level-set representation defining the material distribution. a The level-set function \(\varPhi\) in 3D; the height represents the value of \(\varPhi\). Regions where \(\varPhi\) lies above and below the plane \(\varPhi =0\) represent the two member materials (shown in two different colors). b The level-set function \(\varPhi\) in 2D. The material interface is given by the intersection of \(\varPhi\) with the plane \(\varPhi =0\)

In the level-set method, the geometric mapping defines how the LSF information is used in the numerical solution of the boundary value problem; through the accuracy of this numerical solution, the geometric mapping affects the optimization results. There are three common geometric mapping approaches (van Dijk et al. 2013): (a) conformal discretization, (b) immersed boundary techniques (Fries and Belytschko 2010; Duprez and Lozinski 2020), and (c) density-based mapping. In conformal discretization, the mesh conforms to the interface defined by the LSF. The method remains distinct from shape optimization because the LSF governs the changes in shape. This approach provides a crisp interface representation and the most accurate solution, but it becomes expensive owing to remeshing at each iteration. Immersed boundary techniques, on the other hand, allow a nonconforming mesh: the mesh remains fixed and the interface is captured in the numerical model through special treatment. They also provide a crisp interface representation and allow interface conditions to be enforced directly, but they require specialized code for numerical integration and field approximation in elements cut by level-set interfaces. Immersed boundary techniques sometimes suffer from noise and ill-conditioning caused by small intersections. The last approach, density-based mapping, is the most common because it is easy to implement; for the same reason, we use density-based geometric mapping.

We use point-wise density mapping; that is, the LSF value at an integration point defines the material density at that point. Hence, the thermal conductivity \(\varvec{\kappa }\) becomes a direct function of the LSF value, taking the following form,

which can be represented using the Heaviside function H as,

with

For the sensitivity analysis (see Sect. 3.2), we need the derivative of \(\varvec{\kappa }\) with respect to \(\varPhi\), and hence the derivative of the Heaviside function with respect to \(\varPhi\), i.e. the Dirac delta function \(\delta (\varPhi )\). Because the singularity of the Dirac delta function causes numerical issues when computing derivatives, both functions are usually replaced by smooth approximations. Several forms of the smoothed Heaviside function are available in the literature (van Dijk et al. 2013); here, we use a polynomial form given as,

where \(\alpha\) is a small non-negative parameter (here, \(\alpha =0\)) and \(\varDelta\) is the support bandwidth.

Consequently, the derivative of \(\varvec{\kappa }\) can be written as,

where one-dimensional Dirac delta function \(\delta (\varPhi )\) is approximated by,

The smoothed Heaviside and smoothed Dirac delta functions in the given polynomial form are shown in Fig. 4.
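The display equations for the smoothed functions are not reproduced in this version of the text, so the following Python sketch assumes the cubic polynomial form commonly cited from van Dijk et al. (2013): \(H(\varPhi)=\alpha\) for \(\varPhi<-\varDelta\), \(H(\varPhi)=\tfrac{3(1-\alpha)}{4}\left(\tfrac{\varPhi}{\varDelta}-\tfrac{\varPhi^3}{3\varDelta^3}\right)+\tfrac{1+\alpha}{2}\) for \(|\varPhi|\le\varDelta\), and \(H(\varPhi)=1\) for \(\varPhi>\varDelta\), together with its derivative \(\delta(\varPhi)\). The interpolation \(\kappa=\kappa_2+(\kappa_1-\kappa_2)H(\varPhi)\), i.e., material 1 where \(\varPhi>0\), is likewise a hypothetical convention chosen for illustration.

```python
import numpy as np


def smoothed_heaviside(phi, delta, alpha=0.0):
    """Assumed cubic smoothed Heaviside: alpha below -delta, 1 above +delta."""
    s = np.clip(np.asarray(phi, dtype=float) / delta, -1.0, 1.0)
    return 0.75 * (1.0 - alpha) * (s - s**3 / 3.0) + 0.5 * (1.0 + alpha)


def smoothed_dirac(phi, delta, alpha=0.0):
    """Derivative of smoothed_heaviside; zero outside the band |phi| <= delta."""
    phi = np.asarray(phi, dtype=float)
    inside = np.abs(phi) <= delta
    return np.where(inside, 0.75 * (1.0 - alpha) / delta * (1.0 - (phi / delta) ** 2), 0.0)


def conductivity(phi, kappa1, kappa2, delta):
    """Hypothetical convention: material 1 where phi > 0, material 2 where phi < 0."""
    return kappa2 + (kappa1 - kappa2) * smoothed_heaviside(phi, delta)


def conductivity_derivative(phi, kappa1, kappa2, delta):
    """d(kappa)/d(phi), used when assembling the sensitivity of the stiffness matrix."""
    return (kappa1 - kappa2) * smoothed_dirac(phi, delta)
```

Clipping \(\varPhi/\varDelta\) to \([-1,1]\) reproduces the two constant branches without explicit case distinctions, because the cubic equals \(\alpha\) at \(-1\) and 1 at \(+1\).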

It is noteworthy that density mapping is not without drawbacks. By using density mapping with a smoothed Heaviside function, we use information beyond the zero-level contour, and the level-set description therefore loses some of its crispness (Liu et al. 2005; Pingen et al. 2010; Kawamoto et al. 2011; Luo et al. 2012; Zhou and Zou 2008). Consequently, if the slope of the LSF within the support bandwidth is not controlled, the LSF can become too flat or too steep, which may ultimately deteriorate the convergence rate of the optimization. To address this issue, a regularization technique called LSF reinitialization is used in this work (see Sect. 3.4.1). Furthermore, mapping based on the smoothed Heaviside function requires a large number of closely spaced integration points to capture its strong nonlinearity accurately (Liu and Korvink 2008). To verify the accuracy of our approach, we conduct a mesh study, presented in Sect. 4.1.

2.3 Solution of the boundary value problem using isogeometric analysis

Following the implicit representation of the interface by the LSF, the weak form (Eq. (5)) is modified to accommodate the conductivity matrix functional \(\varvec{\kappa }(\varPhi )\). Since the LSF is defined in the domain \(\Omega _{\text {design}}\), which shares no boundary with \(\partial \Omega\), the applied boundary conditions do not depend on the LSF, and the linear form (Eq. (4)) therefore remains unchanged. The modified weak form becomes,

with

The next step is to define the geometry and solution approximations used to discretize the weak form. As mentioned in Sect. 1, we use NURBS basis functions for both. Here, we use standard IGA, in which the geometry and solution fields are approximated with the same NURBS basis functions. Let \({\varvec{x}}\in \Omega\) and let \(\varvec{\xi }\) be the corresponding point in the parametric domain, as shown in Fig. 5; then the domain is approximated using n NURBS functions \(N_{i}\) and n control points \(\mathbf{X}_i\) as,

and accordingly, the test and trial functions are also approximated with the same NURBS shape functions as,

Here, \(T_i\) and \(S_{i}\) are the temperature and the arbitrary temperature at the \(i\)th control point.
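As a small illustration of the NURBS basis functions \(N_i\) and of the exact conic representation mentioned in Sect. 1, the Python sketch below evaluates univariate NURBS basis functions with the Cox–de Boor recursion and checks that a quadratic NURBS curve with weights \((1,\sqrt{2}/2,1)\) traces an exact quarter of the unit circle. The function names are illustrative; the bivariate basis used for 2D patches would be the tensor product of such univariate functions.

```python
import numpy as np


def bspline_basis(i, p, knots, xi):
    """Cox-de Boor recursion for the i-th B-spline basis function of degree p at scalar xi."""
    if p == 0:
        if knots[i] <= xi < knots[i + 1]:
            return 1.0
        # close the last non-empty span so that xi equal to the final knot is covered
        if xi == knots[-1] and knots[i] < knots[i + 1] == knots[-1]:
            return 1.0
        return 0.0
    value = 0.0
    if knots[i + p] != knots[i]:
        value += (xi - knots[i]) / (knots[i + p] - knots[i]) * bspline_basis(i, p - 1, knots, xi)
    if knots[i + p + 1] != knots[i + 1]:
        value += ((knots[i + p + 1] - xi) / (knots[i + p + 1] - knots[i + 1])
                  * bspline_basis(i + 1, p - 1, knots, xi))
    return value


def nurbs_basis(p, knots, weights, xi):
    """Rational basis R_i = w_i N_i / sum_j w_j N_j."""
    N = np.array([bspline_basis(i, p, knots, xi) for i in range(len(weights))])
    wN = N * weights
    return wN / wN.sum()


def nurbs_curve(p, knots, weights, ctrl, xi):
    """Point on the curve: sum_i R_i(xi) X_i."""
    return nurbs_basis(p, knots, weights, xi) @ ctrl


# Quarter of the unit circle: degree 2, a single element, middle weight sqrt(2)/2
knots = [0, 0, 0, 1, 1, 1]
weights = np.array([1.0, np.sqrt(2.0) / 2.0, 1.0])
ctrl = np.array([[1.0, 0.0], [1.0, 1.0], [0.0, 1.0]])
```

With all weights equal to 1, `nurbs_basis` reduces to the polynomial B-spline basis, which cannot reproduce the circle exactly; the middle weight is what makes the conic exact.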

By substituting Eq. (16) in Eq. (13), a linear system is obtained,

where \(\mathbf{T}\) is the vector of unknown temperatures at the control points, \(\mathbf{K}\) is the global stiffness matrix and \(\mathbf{F}\) is the global flux vector. The detailed matrix formulation is given in Appendix 1.

2.4 Level-set function parameterization

The objective of the LSF parameterization is to approximate the LSF over the entire domain by means of basis functions (in our case, NURBS basis functions) and values at nodes/control points. These values, called expansion coefficients, are used as design variables for optimization. The choice of LSF parameterization also determines the design freedom and the level of detail of the level-set interface, and thus influences the optimization results. A finer LSF mesh yields a larger discretized optimization problem with a larger search space and more design variables, which usually requires more computational effort. On the other hand, the numerical analysis requires a fine mesh to ensure adequate solution accuracy. It is therefore advantageous to decouple the LSF parameterization from the structural mesh, so that the structural mesh can be refined without changing the design variables.

In this paper, we use two stages of refinement to achieve this decoupling. After creating the geometry on the coarsest possible NURBS mesh, we apply the first stage of refinement to obtain the required LSF parameterization (i.e., the required number of design variables). The mesh is then refined further in the second stage to ensure the required structural accuracy. The detailed refinement procedure is shown in Fig. 6. Degree elevation and knot insertion algorithms are used in both stages, and all refinements are uniform. In future work, it would be advantageous to define a refinement criterion (Bordas et al. 2008; Bordas and Duflot 2007; Duflot and Bordas 2008; Jansari et al. 2019) based on the LSF and to employ local refinement using advanced splines such as PHT-splines (Jansari et al. 2022b). The LSF \(\varPhi\) is parameterized using m NURBS basis functions \(R_i\) as,

where \(\varPhi _i\) is the expansion coefficient corresponding to the \(i\)th control point.
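Knot insertion, one of the two refinement operations used in both stages described above, adds basis functions (and hence control points, or design variables) without changing the geometry. The following Python sketch applies Boehm's single-knot insertion to a quarter-circle NURBS curve in homogeneous coordinates \((wx, wy, w)\) and checks that the refined curve coincides with the original. It is a univariate illustration under these assumptions; degree elevation and the bivariate case are not shown, and the function names are hypothetical.

```python
import numpy as np


def bspline_basis(i, p, knots, xi):
    """Cox-de Boor recursion (scalar xi), last span closed at the final knot."""
    if p == 0:
        if knots[i] <= xi < knots[i + 1]:
            return 1.0
        return 1.0 if (xi == knots[-1] and knots[i] < knots[i + 1] == knots[-1]) else 0.0
    value = 0.0
    if knots[i + p] != knots[i]:
        value += (xi - knots[i]) / (knots[i + p] - knots[i]) * bspline_basis(i, p - 1, knots, xi)
    if knots[i + p + 1] != knots[i + 1]:
        value += ((knots[i + p + 1] - xi) / (knots[i + p + 1] - knots[i + 1])
                  * bspline_basis(i + 1, p - 1, knots, xi))
    return value


def rational_point(p, knots, ctrl_w, xi):
    """Evaluate a NURBS curve from homogeneous control points (w*x, w*y, w)."""
    N = np.array([bspline_basis(i, p, knots, xi) for i in range(len(ctrl_w))])
    hom = N @ ctrl_w
    return hom[:2] / hom[2]


def insert_knot(p, knots, ctrl_w, u):
    """Boehm's algorithm: insert knot u once (knots[0] <= u < knots[-1])."""
    knots = np.asarray(knots, dtype=float)
    k = np.searchsorted(knots, u, side="right") - 1   # knots[k] <= u < knots[k+1]
    n = len(ctrl_w)
    new_ctrl = np.zeros((n + 1, ctrl_w.shape[1]))
    for i in range(n + 1):
        if i <= k - p:
            new_ctrl[i] = ctrl_w[i]                    # unaffected left part
        elif i <= k:
            a = (u - knots[i]) / (knots[i + p] - knots[i])
            new_ctrl[i] = (1.0 - a) * ctrl_w[i - 1] + a * ctrl_w[i]
        else:
            new_ctrl[i] = ctrl_w[i - 1]                # unaffected right part, shifted by one
    return np.insert(knots, k + 1, u), new_ctrl


w = np.sqrt(2.0) / 2.0
weights = np.array([1.0, w, 1.0])
ctrl = np.array([[1.0, 0.0], [1.0, 1.0], [0.0, 1.0]])
ctrl_w = np.column_stack([ctrl * weights[:, None], weights])   # homogeneous coordinates
knots = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]
new_knots, new_ctrl_w = insert_knot(2, knots, ctrl_w, 0.5)
```

Working in homogeneous coordinates lets the same affine combination refine both the geometry and the weights, which is why the rational curve is preserved exactly.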

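We often need the expansion coefficients corresponding to a predefined LSF \(\varPhi ^{0}\), for example, to initialize the optimization. To obtain these expansion coefficients \(\varPhi _i\), a simple linear mass system is solved. The mass system can be written as,

\[\mathbf{M}\varvec{\varPhi }=\mathbf {\Psi },\]

where \(\varvec{\varPhi }=[\varPhi _1\hspace{0.7em}\varPhi _2\hspace{0.7em}...\hspace{0.7em}\varPhi _m]^\text{T}\), and the mass matrix \(\mathbf{M}\) and the right-hand-side vector \(\mathbf {\Psi }\) are defined as

\[M_{ij}=\int _{\Omega _{\text{design}}} R_i R_j \,\text{d}\Omega , \qquad \Psi _i=\int _{\Omega _{\text{design}}} R_i \,\varPhi ^{0} \,\text{d}\Omega .\]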
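A minimal one-dimensional sketch of this projection follows, assuming unit weights (so the NURBS functions reduce to B-splines) and Gauss–Legendre quadrature on each knot span; the function names and the target function are hypothetical. Because a quadratic function lies in the quadratic spline space, the projection should reproduce it exactly, which makes a convenient check.

```python
import numpy as np


def bspline_basis(i, p, knots, xi):
    """Cox-de Boor recursion (scalar xi), last span closed at the final knot."""
    if p == 0:
        if knots[i] <= xi < knots[i + 1]:
            return 1.0
        return 1.0 if (xi == knots[-1] and knots[i] < knots[i + 1] == knots[-1]) else 0.0
    value = 0.0
    if knots[i + p] != knots[i]:
        value += (xi - knots[i]) / (knots[i + p] - knots[i]) * bspline_basis(i, p - 1, knots, xi)
    if knots[i + p + 1] != knots[i + 1]:
        value += ((knots[i + p + 1] - xi) / (knots[i + p + 1] - knots[i + 1])
                  * bspline_basis(i + 1, p - 1, knots, xi))
    return value


def l2_project(fun, p, knots, n_gauss=4):
    """Solve M Phi = Psi with M_ij = int R_i R_j and Psi_i = int R_i fun."""
    n_basis = len(knots) - p - 1
    gx, gw = np.polynomial.legendre.leggauss(n_gauss)
    M = np.zeros((n_basis, n_basis))
    Psi = np.zeros(n_basis)
    spans = np.unique(knots)
    for lo, hi in zip(spans[:-1], spans[1:]):            # loop over non-empty knot spans
        for x, wq in zip(gx, gw):
            xi = 0.5 * (hi - lo) * x + 0.5 * (hi + lo)    # map Gauss point from [-1, 1]
            jw = 0.5 * (hi - lo) * wq                      # quadrature weight times Jacobian
            R = np.array([bspline_basis(i, p, knots, xi) for i in range(n_basis)])
            M += jw * np.outer(R, R)
            Psi += jw * R * fun(xi)
    return np.linalg.solve(M, Psi)


def evaluate(coef, p, knots, xi):
    """LSF value sum_i R_i(xi) Phi_i."""
    return sum(c * bspline_basis(i, p, knots, xi) for i, c in enumerate(coef))


def target(x):
    """Hypothetical predefined LSF with its zero level at x = 0.5."""
    return x**2 - 0.25


p = 2
knots = [0.0, 0.0, 0.0, 0.25, 0.5, 0.75, 1.0, 1.0, 1.0]
coef = l2_project(target, p, knots)
```

For a target outside the spline space, such as a signed distance function `abs(x - 0.5) - 0.2`, the same routine returns the best approximation in the \(L^2\) sense rather than an exact reproduction.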

3 Optimization problem

3.1 Optimization problem description

In the level-set topology optimization method, the expansion coefficients introduced in Eq. (18) are used as design variables. The goal is to find the values of these design variables for which the corresponding topology yields the optimal value of the function of interest (called the objective function). In our case, \(\varvec{\varPhi }=[\varPhi _1\hspace{0.7em}\varPhi _2\hspace{0.7em}...\hspace{0.7em}\varPhi _{N_\text{var}}]^{\text{T}}\) is the vector of the \(N_{\text{var}}\) design variables, and J is the objective function. In general, \(N_{\text {var}}\) can differ from the number of basis functions m in Eq. (18); for most of the numerical examples in the next section, \(N_{\text {var}}\) is smaller than m because symmetry about the x and y axes is imposed. The topology optimization problem for a heat manipulator can be stated mathematically as,

with

such that the following constraints are satisfied,

where \(\varPhi _{i,\text{min}}\) and \(\varPhi _{i,\text{max}}\) are the lower and upper bounds of the design variable \(\varPhi _{i}\).
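The reduction from m coefficients to \(N_\text{var}\) design variables under symmetry can be expressed as an index map. The Python sketch below assumes, hypothetically, a tensor-product grid of \(m_x\times m_y\) expansion coefficients stored row by row and mirror symmetry about both axes. Each full coefficient copies one reduced variable, so by the chain rule the reduced sensitivity is the sum of the full sensitivities that share it.

```python
import numpy as np


def mirror_map_1d(m):
    """Index of the reduced variable for each of m coefficients under mirror symmetry."""
    idx = np.arange(m)
    return np.minimum(idx, m - 1 - idx)


def symmetry_map_2d(mx, my):
    """Hypothetical row-major (y outer, x inner) ordering of mx * my coefficients."""
    map_x, map_y = mirror_map_1d(mx), mirror_map_1d(my)
    nx_reduced = map_x.max() + 1
    return (map_y[:, None] * nx_reduced + map_x[None, :]).ravel()


def expand(reduced, mapping):
    """Full coefficient vector (length m) from the N_var design variables."""
    return np.asarray(reduced)[mapping]


def reduce_gradient(full_grad, mapping, n_var):
    """Chain rule: dJ/d(reduced_k) is the sum of dJ/d(full_i) over all i mapped to k."""
    return np.bincount(mapping, weights=full_grad, minlength=n_var)


mapping = symmetry_map_2d(5, 4)   # m = 20 coefficients
n_var = mapping.max() + 1         # N_var = 6 design variables
```

Bounds such as \(\varPhi_{i,\text{min}}\) and \(\varPhi_{i,\text{max}}\) carry over directly to the reduced variables, since each full coefficient equals exactly one of them.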

As mentioned in Sect. 1, the optimization problem is solved using a mathematical programming technique, Sequential Quadratic Programming (SQP). In SQP, the boundary value problem described in Sect. 2.1 is solved at each iteration. Since SQP is a gradient-based algorithm, the sensitivity of the objective function J with respect to each design variable \(\varPhi _i\) must be calculated (using the solution of the boundary value problem) at the end of each iteration. The sensitivities are then fed into the algorithm to generate new values of the design variables. The following two sections explain, respectively, the adjoint method used to calculate the sensitivities at each iteration and the SQP method in detail.
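The loop described above (state solve, adjoint sensitivity, update) can be sketched in Python with SciPy's SLSQP method, an SQP implementation analogous in spirit to, though not identical with, the MATLAB `fmincon` 'sqp' algorithm used in this work. The toy model \(\mathbf{K}(\mathbf{p})=\mathbf{K}_0+\sum_i p_i\mathbf{K}_i\), the bounds \([0,1]\), and the target temperature generated from a known \(\mathbf{p}\) are hypothetical; they only show how an objective returning both \(J\) and its adjoint gradient plugs into a bound-constrained SQP solver.

```python
import numpy as np
from scipy.optimize import minimize


def make_toy(n_dof=6, n_var=3, seed=1):
    """Hypothetical toy model K(p) = K0 + sum_i p_i K_i with SPD K0."""
    rng = np.random.default_rng(seed)
    A = rng.standard_normal((n_dof, n_dof))
    K0 = A @ A.T + n_dof * np.eye(n_dof)
    Ks = []
    for _ in range(n_var):
        B = rng.standard_normal((n_dof, 2))
        Ks.append(B @ B.T)
    F = 10.0 * rng.standard_normal(n_dof)
    return K0, Ks, F


def solve_state(p, K0, Ks, F):
    K = K0 + sum(pi * Ki for pi, Ki in zip(p, Ks))
    return K, np.linalg.solve(K, F)


def objective_and_gradient(p, K0, Ks, F, T_target):
    """Return J and its adjoint gradient: one state and one adjoint solve per call."""
    K, T = solve_state(p, K0, Ks, F)
    r = T - T_target
    P = np.linalg.solve(K.T, r)                     # adjoint problem K^T P = dJ/dT
    grad = np.array([-P @ (Ki @ T) for Ki in Ks])   # dJ/dp_i = -P^T (dK/dp_i) T
    return 0.5 * r @ r, grad


K0, Ks, F = make_toy()
p_true = np.array([0.2, 0.7, 0.4])                  # used only to build a reachable target
_, T_target = solve_state(p_true, K0, Ks, F)
res = minimize(objective_and_gradient, x0=np.full(3, 0.5), args=(K0, Ks, F, T_target),
               jac=True, method="SLSQP", bounds=[(0.0, 1.0)] * 3,
               options={"ftol": 1e-12, "maxiter": 200})
```

Passing `jac=True` tells SciPy that the objective returns the pair \((J, \nabla J)\), so the state and adjoint solves are shared between the function value and the gradient.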

3.2 Sensitivity analysis

In this section, we outline the sensitivity analysis method known as the adjoint method (Allaire et al. 2005; Wang et al. 2003; Luo et al. 2007). In the most general case, the objective function \(J(T,\varPhi )\) can be written as the sum of two terms, a volume integral and a surface integral, i.e.,

where \({\Omega _b}\) is the domain over which the volume term is calculated, and \({\Gamma _s}\) is the part of the boundary on which the surface term is calculated. In our numerical examples, only the volume term of the objective function is considered.

Next, the Lagrangian is obtained by augmenting the objective functional \(J(T,\varPhi )\) with the constraints that T must satisfy, namely the weak form of the state equation and the Dirichlet boundary condition, using the Lagrange multipliers P and \(\lambda\), respectively. It is defined as,

with

The optimality conditions of the minimization problem are derived as the stationary conditions of the Lagrangian. The detailed derivation of the stationary conditions, the corresponding adjoint problem, and the functional form of the sensitivity are given in Appendix 2.
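The structure of these stationary conditions is easiest to see in discrete form. The following sketch is a toy analogue, not the IGA implementation used here. It assumes a 1D bar discretized with linear finite elements, element conductivities \(k_e\) as the parameters, Dirichlet temperatures at both ends, and \(J=\int T^2\,{\text{d}}x\) evaluated with the trapezoidal rule. With the residual \(\mathbf{R}=\mathbf{K}_{ff}\mathbf{T}_f+\mathbf{K}_{fd}\mathbf{T}_d\), the adjoint vector solves \(\mathbf{K}_{ff}^{\text{T}}\mathbf{P}=-\partial J/\partial \mathbf{T}_f\). After that, \({\text{d}}J/{\text{d}}k_e=\mathbf{P}^{\text{T}}(\partial \mathbf{K}/\partial k_e)\mathbf{T}\) needs no further state solves. A central finite-difference check confirms the result. The function names are hypothetical.

```python
import numpy as np

def element_matrix(h):
    """Stiffness of a linear 1D conduction element with unit conductivity."""
    return np.array([[1.0, -1.0], [-1.0, 1.0]]) / h

def solve_state(k, h, T_left=0.0, T_right=100.0):
    """Assemble K(k) and solve K T = 0 with Dirichlet values at both ends."""
    n = len(k) + 1
    K = np.zeros((n, n))
    for e in range(len(k)):
        K[e:e + 2, e:e + 2] += k[e] * element_matrix(h)
    T = np.zeros(n)
    T[0], T[-1] = T_left, T_right
    free = slice(1, n - 1)
    # T[free] is still zero here, so -K[free, :] @ T equals -K_fd T_d
    T[free] = np.linalg.solve(K[free, free], -K[free, :] @ T)
    return T, K

def objective(T, h):
    """J = integral of T^2 (trapezoidal rule) and its partial derivative dJ/dT."""
    w = np.full(len(T), h)
    w[[0, -1]] = h / 2
    return np.sum(w * T**2), 2.0 * w * T

def adjoint_gradient(k, h):
    T, K = solve_state(k, h)
    J, dJdT = objective(T, h)
    free = slice(1, len(T) - 1)
    P = np.zeros(len(T))                     # adjoint vanishes on Dirichlet nodes
    P[free] = np.linalg.solve(K[free, free].T, -dJdT[free])
    Ke = element_matrix(h)
    # dJ/dk_e = P^T (dK/dk_e) T; dK/dk_e only touches the two nodes of element e
    grad = np.array([P[e:e + 2] @ (Ke @ T[e:e + 2]) for e in range(len(k))])
    return J, grad

def fd_gradient(k, h, eps=1e-6):
    """Central finite differences: two extra state solves per parameter."""
    g = np.zeros(len(k))
    for e in range(len(k)):
        kp, km = k.copy(), k.copy()
        kp[e] += eps
        km[e] -= eps
        Jp = objective(solve_state(kp, h)[0], h)[0]
        Jm = objective(solve_state(km, h)[0], h)[0]
        g[e] = (Jp - Jm) / (2.0 * eps)
    return g

n_el = 20
h = 1.0 / n_el
k = np.where(np.arange(n_el) < n_el // 2, 100.0, 10.0)  # two-material bar
J, g_adj = adjoint_gradient(k, h)
g_fd = fd_gradient(k, h)
```

The adjoint route costs one extra linear solve regardless of the number of parameters, whereas finite differences need two state solves per parameter. This is the reason for preferring the adjoint method when there are many design variables and few constraints.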

By employing the trial and test function approximations, the adjoint problem as defined in Eq. (63) is discretized into the following linear system,

where \(\mathbf{P}\) is the vector of adjoint temperatures at the control points, and \(\mathbf{F}_{\text{adj}}\) is the global adjoint flux vector, defined as,

Similarly, by employing the trial and test function approximations in Eq. (66), the parameterized sensitivity can be written as,

where \(\frac{{\text {d}} \mathbf{K}}{{\text {d}}\varPhi }\) is the derivative of the global stiffness matrix with respect to \(\varPhi\) (as given in Eq. (55)). Using the LSF parameterization (Eq. (18)), the sensitivity with respect to a particular expansion coefficient \(\varPhi _i\) is given as,

3.3 Update scheme—Sequential Quadratic Programming (SQP)

In the Sequential Quadratic Programming (SQP) approach, the optimization problem is approximated by a quadratic subproblem at each iteration (Nocedal and Wright 2006). The main challenge of the SQP method is to form a good quadratic subproblem that generates a suitable step. For our optimization problem, the quadratic subproblem in the \(m\)th iteration is modeled as,

where \(J_m\), \(\nabla J_m\) and \(\varvec{{\mathcal {H}}}_m\) are the objective function value, the gradient of the objective function, and the Hessian approximation of the Lagrangian \({\mathcal {L}}\) in the \(m\)th iteration, respectively. The minimizer of the subproblem, \({\varvec{p}}={\varvec{p}}_m\), is then used to obtain the next iterate \(\varvec{\varPhi }_{m+1}\) via a line search or a trust region algorithm. Note that equality constraints are excluded from the subproblem: since the temperature T is obtained by solving the linear system in Eq. (17), the equality constraints are satisfied explicitly at each iteration.
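For reference, the standard form of such a subproblem (Nocedal and Wright 2006) is given below. It is written under the assumption that only the bound constraints of the problem statement remain once the state equation has been eliminated. The exact subproblem used here may contain additional terms.

```latex
\begin{aligned}
\min_{\boldsymbol{p}\in\mathbb{R}^{N_\text{var}}}\quad
  & J_m + \nabla J_m^{\text{T}}\boldsymbol{p}
    + \tfrac{1}{2}\,\boldsymbol{p}^{\text{T}}\boldsymbol{\mathcal{H}}_m\,\boldsymbol{p}\\
\text{subject to}\quad
  & \varPhi_{i,\text{min}} \le \varPhi_{m,i} + p_i \le \varPhi_{i,\text{max}},
    \qquad i = 1,\dots,N_\text{var},
\end{aligned}
```

where \(\varPhi_{m,i}\) denotes the i-th design variable at iteration m. Because the model is quadratic, its minimizer follows from a linear system restricted to the variables whose bounds are inactive. This restriction is what an active-set strategy identifies.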

In this work, we use the ‘fmincon’ function of MATLAB's Optimization Toolbox with the ‘sqp’ algorithm option, which solves the above quadratic subproblem with an active-set strategy. At each iteration, the user provides the objective function value \(J_m\) and the gradient \(\nabla J_m\), computed with the sensitivity analysis of the previous section. The Hessian approximation \(\varvec{{\mathcal {H}}}_m\) is computed with a BFGS update. Once the solution \({\varvec{p}}_m\) of the subproblem is found, the step size \(\alpha _m\) is determined by a built-in line search, and the design variables are updated as \(\varvec{\varPhi }_{m+1}=\varvec{\varPhi }_m+\alpha _m{\varvec{p}}_m\).
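The same workflow can be sketched in Python with SciPy's SLSQP solver. That workflow consists of a user-supplied objective and gradient, a quasi-Newton (BFGS-type) Hessian, bound constraints, and a line search. SLSQP is a different SQP implementation from MATLAB's ‘fmincon’ and is used here only for illustration. The objective is a hypothetical stand-in for "solve the state problem, evaluate J, solve the adjoint problem". It is a quadratic whose unconstrained minimizer violates one upper bound, so the active-set behavior is visible.

```python
import numpy as np
from scipy.optimize import Bounds, minimize

# Hypothetical stand-in problem: unconstrained optimum at `target`,
# whose third component lies outside the box [-1, 1].
target = np.array([0.3, -0.2, 1.5])

def objective_and_gradient(phi):
    """Return (J, dJ/dphi); with jac=True SciPy uses this gradient directly,
    as fmincon does when the user supplies the (adjoint) sensitivities."""
    r = phi - target
    return float(r @ r), 2.0 * r

phi0 = np.zeros(3)
bounds = Bounds(lb=-np.ones(3), ub=np.ones(3))   # Phi_min <= Phi_i <= Phi_max
res = minimize(objective_and_gradient, phi0, jac=True, method="SLSQP",
               bounds=bounds, options={"maxiter": 100, "ftol": 1e-12})
```

The third variable ends on its upper bound while the other two reach their unconstrained values. This is the situation an active-set strategy is designed to handle.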

3.4 Regularizations

Optimization problems of this kind often suffer from a lack of well-posedness, numerical artifacts, slow convergence, and entrapment in local minima with poor performance. Regularization techniques can be used to overcome these issues, and they are sometimes also used to control geometrical features of the optimized designs. Generally, a single regularization technique addresses more than one of these problems. In our numerical examples, we explore three regularization techniques: LSF reinitialization, Tikhonov regularization, and volume regularization.

3.4.1 Level-set function reinitialization

Since the interfaces are defined by the zero-level contours of the LSF, only the regions near these contours are uniquely determined by the optimal solution. The LSF is therefore not unique, especially far from the interfaces. As a consequence of this non-uniqueness, the LSF sometimes becomes too flat or too steep during optimization, which can deteriorate the convergence rate. To alleviate this problem, the LSF is initialized as a signed distance function (\(||\nabla \varPhi ||_{2} = 1\)) and maintained as such during optimization. One way to maintain the signed-distance property is to reinitialize the LSF every few iterations while preserving the locations of the zero-level contours. Reinitialization can be performed implicitly, by solving a PDE that preserves the zero-level contour (Sethian 1999; Osher and Fedkiw 2003; Wang et al. 2003; Allaire et al. 2004; Challis and Guest 2009), or by explicit recalculation (Abe et al. 2007; Yamasaki et al. 2010). In the current work, we employ an explicit recalculation method, the geometry-based reinitialization method. However, it is generally not possible to retain the exact location of the discretized contour for curved boundaries and, at the same time, obtain \(||\nabla \varPhi ||_{2} = 1\) everywhere after reinitialization. Often, the LSF contours shift slightly after reinitialization, which introduces inconsistency into the optimization process (Osher and Fedkiw 2003; Hartmann et al. 2010). To alleviate this issue, inspired by Hartmann et al. (2010), we incorporate \(||\nabla \varPhi ||_{2} = 1\) as a constraint in the LSF reinitialization calculation. Additionally, applying reinitialization at every iteration hinders the potential emergence of new holes. Therefore, reinitialization is performed only periodically (Challis and Guest 2009; Challis 2010).

Here, we explain the geometry-based constrained reinitialization technique in detail; its steps are outlined in Fig. 7. Using the inverse of the LSF parameterization, we find a set of points on the interface, which we call interface points (Fig. 7a). The new LSF value at a point is then defined as the distance to the closest interface point, with the sign of the original LSF value retained (Fig. 7b). The number of interface points determines the accuracy of the reinitialization. To find the new expansion coefficients, we solve the mass matrix system of Eq. (19) using the new LSF values at the integration/collocation points (Fig. 7c). The only difference from the initialization system is that constraints enforcing zero LSF values at the interface points are added via the penalty method, so that the location of the interface is preserved. An example of the LSF before and after reinitialization is shown in Fig. 8.
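The three steps can be sketched with a discrete least-squares analogue of the mass-matrix system. The sketch makes several assumptions. First, a bilinear (hat-function) tensor basis stands in for NURBS. Second, equally weighted sample points replace the integration points. Third, the interface points of a circular zero contour are taken as known analytically instead of being found by inverting the LSF parameterization. The penalty weight and all function names are hypothetical.

```python
import numpy as np

def hat_matrix(x, nodes):
    """Linear hat functions on a uniform 1D grid of nodes, evaluated at x."""
    h = nodes[1] - nodes[0]
    return np.maximum(0.0, 1.0 - np.abs(x[:, None] - nodes[None, :]) / h)

def tensor_basis(xy, nodes):
    """Tensor-product basis N_i(x, y) evaluated at points xy of shape (n_pts, 2)."""
    Bx, By = hat_matrix(xy[:, 0], nodes), hat_matrix(xy[:, 1], nodes)
    return (Bx[:, :, None] * By[:, None, :]).reshape(len(xy), -1)

def reinitialize(sample_xy, phi_old, interface_xy, nodes, penalty=1e4):
    """Geometry-based reinitialization with a penalty keeping phi = 0 at interface points."""
    # Step 1: distance from every sample point to its closest interface point
    diff = sample_xy[:, None, :] - interface_xy[None, :, :]
    dist = np.linalg.norm(diff, axis=2).min(axis=1)
    # Step 2: keep the sign of the old LSF
    target = np.sign(phi_old) * dist
    # Step 3: penalized least squares  min |B c - target|^2 + penalty * |Bi c|^2
    B = tensor_basis(sample_xy, nodes)
    Bi = tensor_basis(interface_xy, nodes)
    A = B.T @ B + penalty * (Bi.T @ Bi)
    return np.linalg.solve(A, B.T @ target)

nodes = np.linspace(-1.0, 1.0, 21)
g = np.linspace(-1.0, 1.0, 81)
X, Y = np.meshgrid(g, g, indexing="ij")
sample_xy = np.column_stack([X.ravel(), Y.ravel()])
phi_old = X.ravel() ** 2 + Y.ravel() ** 2 - 0.25   # zero contour r = 0.5, not a distance
theta = np.linspace(0.0, 2.0 * np.pi, 200, endpoint=False)
interface_xy = 0.5 * np.column_stack([np.cos(theta), np.sin(theta)])
c = reinitialize(sample_xy, phi_old, interface_xy, nodes)

def phi_new(points):
    """Evaluate the reinitialized LSF at arbitrary points."""
    return tensor_basis(np.atleast_2d(points), nodes) @ c
```

At (0.8, 0) the old LSF equals 0.39, while the reinitialized one is close to the true distance 0.3. The penalty rows keep the LSF near zero on the interface points.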

3.4.2 Tikhonov regularization

The effect of Tikhonov regularization (Haber 2004; Tikhonov et al. 1995) is similar to that of perimeter regularization, in which the perimeter of the level-set interface is penalized. In Tikhonov regularization, an additional term penalizing the gradient of the LSF is added to the main objective function. Penalizing the LSF gradient steers the optimization towards a smoother LSF and, consequently, a smoother interface. The Tikhonov term and the corresponding sensitivity are written as,

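To implement Tikhonov regularization, the primary objective function and the sensitivities are augmented by the corresponding Tikhonov terms using a weighting parameter \(\chi\) as \(J_{\text{total}} = J + \chi J_{\text{Tknv}}\) and \(\frac{{\text {d}}J_{\text{total}}}{{\text {d}}\varPhi _i} = \frac{{\text {d}}J}{{\text {d}}\varPhi _i} + \chi \frac{{\text {d}}J_{\text{Tknv}}}{{\text {d}}\varPhi _i}\).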
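For a linear parameterization \(\varPhi(\mathbf{x})=\sum_i N_i(\mathbf{x})\varPhi_i\), a gradient penalty reduces to a quadratic form in the expansion coefficients. The sketch below assumes \(J_{\text{Tknv}}=\int_\Omega \Vert\nabla\varPhi\Vert^2\,{\text{d}}\Omega\); the exact form and constant factor of the term used here are not reproduced. It also uses 1D linear hat functions in place of NURBS. Then \(J_{\text{Tknv}}=\varvec{\varPhi}^{\text{T}}\mathbf{G}\varvec{\varPhi}\) with \(G_{ij}=\int N_i' N_j'\,{\text{d}}x\), and the sensitivity is \(2\mathbf{G}\varvec{\varPhi}\). The function names are hypothetical.

```python
import numpy as np

def gradient_gram_1d(n_nodes, length=1.0):
    """G_ij = integral of N_i'(x) N_j'(x) for linear hat functions on a uniform 1D mesh."""
    h = length / (n_nodes - 1)
    G = np.zeros((n_nodes, n_nodes))
    for e in range(n_nodes - 1):
        G[e:e + 2, e:e + 2] += np.array([[1.0, -1.0], [-1.0, 1.0]]) / h
    return G

def tikhonov(Phi, G):
    """Assumed term J_Tknv = integral of |dphi/dx|^2 = Phi^T G Phi, and its gradient 2 G Phi."""
    return Phi @ G @ Phi, 2.0 * (G @ Phi)

def augmented(J, dJ, Phi, G, chi):
    """J_total = J + chi * J_Tknv; the sensitivities are augmented the same way."""
    J_t, dJ_t = tikhonov(Phi, G)
    return J + chi * J_t, dJ + chi * dJ_t

x = np.linspace(0.0, 1.0, 101)
G = gradient_gram_1d(x.size)
smooth = x - 0.5                                   # |dphi/dx| = 1 everywhere
wiggly = x - 0.5 + 0.05 * np.sin(40 * np.pi * x)   # same trend plus small ripples
t_smooth, _ = tikhonov(smooth, G)
t_wiggly, _ = tikhonov(wiggly, G)
```

The rippled LSF is penalized much more heavily than the smooth one, even though the ripple amplitude is small. The weighting parameter \(\chi\) sets how strongly this pushes the optimizer towards smoother interfaces.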

3.4.3 Volume regularization

For the heat manipulator optimization, we propose one more regularization, called volume regularization. A metamaterial-based heat manipulator made of two member materials often combines a high-\(\kappa\) material and a low-\(\kappa\) material. The high-\(\kappa\) material acts as the medium through which the flux flows, while the low-\(\kappa\) material hinders the flow and guides it along the required path. Given these roles, regions where no flux flows (and the temperature gradient is zero or negligibly small) can be filled with the low-\(\kappa\) material. In other words, regions that do not contribute to the original objective can be filled with low-\(\kappa\) material, which makes them as homogeneous as possible and free of unnecessary complex features. Mathematically, this objective is defined through a volume term as,

This term measures the volume of the high-\(\kappa\) material, since the high-\(\kappa\) material is assigned to the positive side of the level-set interface. Because our optimization problem is a minimization problem, the term steers the optimization toward a larger volume of low-\(\kappa\) material. When this term is added to the main objective function through a weighting parameter, heat flux manipulation and volume filling can be achieved simultaneously.

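To implement volume regularization, the primary objective function and the sensitivities are augmented by the corresponding volume regularization terms using a weighting parameter \(\rho\) as \(J_{\text{total}} = J + \rho J_{\text{vol}}\) and \(\frac{{\text {d}}J_{\text{total}}}{{\text {d}}\varPhi _i} = \frac{{\text {d}}J}{{\text {d}}\varPhi _i} + \rho \frac{{\text {d}}J_{\text{vol}}}{{\text {d}}\varPhi _i}\).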
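The volume term and its sensitivity can be sketched with a smoothed Heaviside function \(H\) and its derivative \(\delta\). The sketch assumes \(J_{\text{vol}}=\int_\Omega H(\varPhi)\,{\text{d}}\Omega\) with sensitivity \(\int_\Omega \delta(\varPhi)N_i\,{\text{d}}\Omega\). It uses a common cubic smoothing with support bandwidth \(\varDelta\), which may differ from the form in Eq. (10). It also uses a 1D hat-function basis with dense trapezoidal quadrature in place of the NURBS discretization. The function names are hypothetical.

```python
import numpy as np

def smoothed_heaviside(phi, delta):
    """Assumed cubic smoothed Heaviside: 0 for phi < -delta, 1 for phi > delta."""
    s = np.clip(phi / delta, -1.0, 1.0)
    return 0.5 + 0.75 * (s - s**3 / 3.0)

def smoothed_delta(phi, delta):
    """Derivative of smoothed_heaviside; zero outside the band |phi| < delta."""
    s = phi / delta
    return np.where(np.abs(s) < 1.0, 0.75 / delta * (1.0 - s**2), 0.0)

def volume_term(Phi, B, w, delta):
    """J_vol = integral of H(phi) and dJ_vol/dPhi_i = integral of delta(phi) N_i, with phi = B @ Phi."""
    phi = B @ Phi
    return w @ smoothed_heaviside(phi, delta), B.T @ (w * smoothed_delta(phi, delta))

# 1D stand-in: hat-function design basis on [0, 1], dense trapezoidal quadrature
nodes = np.linspace(0.0, 1.0, 41)
xq = np.linspace(0.0, 1.0, 2001)
w = np.full(xq.size, xq[1] - xq[0])
w[[0, -1]] /= 2.0
B = np.maximum(0.0, 1.0 - np.abs(xq[:, None] - nodes[None, :]) / (nodes[1] - nodes[0]))
Phi = nodes - 0.3        # interface at x = 0.3; high-kappa material where phi > 0
delta = 0.05
J_vol, dJ_vol = volume_term(Phi, B, w, delta)

rho = 1e-3
J_main, dJ_main = 0.0, np.zeros_like(Phi)   # placeholders for the primary objective
J_total, dJ_total = J_main + rho * J_vol, dJ_main + rho * dJ_vol
```

Here \(J_{\text{vol}}\) recovers the length 0.7 of the positive region. Only coefficients whose basis functions overlap the band \(|\varPhi|<\varDelta\) receive a nonzero sensitivity, which is the confinement discussed for the support bandwidth.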

4 Numerical examples

In this section, we verify the proposed method and test its efficiency and effectiveness on several numerical examples. We denote by p and q the orders of the NURBS approximation in the two parametric directions of a NURBS patch, which for the given examples are the circumferential and radial directions, respectively. Since we use MATLAB's ‘fmincon’, several built-in stopping criteria are available: ‘OptimalityTolerance’ (tolerance on the first-order optimality measure), ‘StepTolerance’ (tolerance on the change in the design variables), ‘ObjectiveLimit’ (the iterations stop once the objective function falls below this value), ‘MaxFunctionEvaluations’ (maximum number of function evaluations), and ‘MaxIterations’ (maximum number of iterations). We set ‘ObjectiveLimit’=\(1\times 10^{-9}\), ‘StepTolerance’=\(1\times 10^{-8}\), and ‘OptimalityTolerance’=\(1\times 10^{-6}\). If LSF reinitialization is used, ‘MaxFunctionEvaluations’ and ‘MaxIterations’ serve to stop the optimization so that the LSF can be reinitialized. Moreover, the ‘StepTolerance’ criterion is often triggered by a poorly behaved LSF. Therefore, the optimization is terminated only when it stops on the ‘StepTolerance’ criterion four consecutive times; otherwise, the LSF is reinitialized and the optimization is restarted. The stopping criteria, tolerance values, and applied regularizations differ slightly between examples and are stated in the description of each example. Most of the examples are symmetric about the x- and y-axes. Therefore, x- and y-axis symmetry is applied in almost all numerical models, and any exception is stated explicitly. From here onward, the value of the objective function for each test case is given in the corresponding figure caption.
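The restart logic described above can be sketched as a small driver loop. This is an interpretation, not the authors' code. It assumes three things: stopping on ‘ObjectiveLimit’ or ‘OptimalityTolerance’ ends the whole run; any other stop triggers a reinitialization; and only consecutive ‘StepTolerance’ stops are counted. `run_optimizer` (standing in for one ‘fmincon’ call) and `reinitialize` are hypothetical callables. The reason strings are placeholders, not MATLAB exit flags.

```python
def optimize_with_reinitialization(phi0, run_optimizer, reinitialize,
                                   max_step_failures=4, max_cycles=100):
    """Alternate optimizer runs and LSF reinitialization.

    run_optimizer(phi) -> (phi_new, reason), where reason names the criterion
    that ended the run: "objective_limit", "optimality", "step_tolerance", or
    "max_iterations" (covering MaxIterations and MaxFunctionEvaluations).
    """
    phi = phi0
    step_failures = 0
    for cycle in range(1, max_cycles + 1):
        phi, reason = run_optimizer(phi)
        if reason in ("objective_limit", "optimality"):
            return phi, reason, cycle            # converged: stop the whole run
        if reason == "step_tolerance":
            step_failures += 1
            if step_failures >= max_step_failures:
                return phi, reason, cycle        # too many consecutive StepTolerance stops
        else:
            step_failures = 0                    # only consecutive failures count
        phi = reinitialize(phi)                  # restore the distance property, then restart
    return phi, "max_cycles", max_cycles

# Usage with stand-in callables that replay a fixed sequence of stopping reasons
replay = iter(["max_iterations", "step_tolerance", "max_iterations",
               "step_tolerance", "step_tolerance", "step_tolerance",
               "step_tolerance", "optimality"])
reinit_calls = []

def fake_optimizer(phi):
    return phi + 1, next(replay)

def fake_reinitialize(phi):
    reinit_calls.append(phi)
    return phi

phi_final, reason, cycles = optimize_with_reinitialization(0, fake_optimizer, fake_reinitialize)
```

In the replayed sequence, the isolated ‘StepTolerance’ stop in the second cycle is forgiven because a regular stop follows it. The run ends at the fourth consecutive one.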

It is noteworthy that, for each numerical example, choosing an appropriate support bandwidth is crucial. The support bandwidth defines the region with intermediate densities. A clear 0-1 design requires minimizing this intermediate-density region, which ensures higher numerical accuracy for the state and adjoint problems. On the other hand, the sensitivity is confined to this intermediate-density zone and is zero elsewhere (see Eq. (11)). Therefore, for a given design mesh and solution mesh, decreasing the support bandwidth compromises the sensitivity accuracy, because fewer integration points are available for the sensitivity calculation. Reducing the support bandwidth while maintaining sensitivity accuracy requires either more integration points or a finer solution mesh, both of which are limited by computational time. The value of the support bandwidth is therefore chosen as a trade-off among accuracy, stability, and computational effort. For the first numerical example, the annular ring problem, we also present a thorough study of the effect of the support bandwidth on the objective function value, the sensitivity, and the accuracy of the state and adjoint temperatures.

4.1 Annular ring problem

In this example, we consider a benchmark problem. To the authors' knowledge, no benchmark problems for multi-material optimization with heat conduction and the given interface conditions exist in the literature. The purpose of this test case is to verify the proposed method against an analytical solution. The domain \({\Omega }\) is an annular ring with inner radius \(R_{a}\) and outer radius \(R_b\). The domain is divided into two regions \({\Omega }_{a}\) and \({\Omega }_{b}\) by an implicitly defined interface \({\Gamma }_L\) (a circle with radius \(R_L\)), as shown in Fig. 9. Regions \({\Omega }_{a}\) and \({\Omega }_{b}\) are filled with isotropic materials of conductivity \(\kappa _{a}\) and \(\kappa _{b}\), respectively. For the state variable T, the boundary value problem is given as,

and the objective is to minimize the functional J(T),

By comparing it with Eq. (27), \(\Omega _b={\Omega }\), \(J_b=T^2\), and the surface term is absent.

In the simplest case, when the interface radius \(R_{L}\) is the only design variable, the adjoint problem for the adjoint state P can be constructed using Eq. (63) as,

For the given example, we take \(R_{a}=1, R_{b}=2, T_{a}=0, T_{b}=100, \kappa _{a}=100\), and \(\kappa _{b}=10\). The analytical solutions of the boundary value problem and the adjoint problem are given in Appendix 3. From the analytical solution, the optimal value \(J(T)=1.6094\times 10^4\) is attained at \(R_L\approx 1.80612\). This is the only minimum in the given design space and hence the unique solution of the optimization problem.
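The analytical optimum can be checked numerically. The sketch assumes \(J=\int_\Omega T^2\,{\text{d}}\Omega\), consistent with \(J_b=T^2\) and \(\Omega_b=\Omega\). It also assumes the standard two-region logarithmic solution of axisymmetric conduction, with continuity of temperature and radial flux at \(r=R_L\). The expressions of Appendix 3 are not reproduced here, and the function names are hypothetical. The sketch evaluates \(J(R_L)\) by quadrature and minimizes it over \(R_L\in[R_a,R_b]\).

```python
import numpy as np
from scipy.integrate import quad
from scipy.optimize import minimize_scalar

Ra, Rb = 1.0, 2.0        # inner / outer radius
Ta, Tb = 0.0, 100.0      # Dirichlet temperatures at r = Ra and r = Rb
ka, kb = 100.0, 10.0     # conductivity of Omega_a (Ra < r < RL) and Omega_b (RL < r < Rb)

def coefficients(RL):
    """T = Ta + A ln(r/Ra) in Omega_a and T = Tb + B ln(r/Rb) in Omega_b.
    Continuity of T and of the radial flux (ka*A = kb*B) at r = RL fix A and B."""
    A = (Tb - Ta) / (np.log(RL / Ra) + (ka / kb) * np.log(Rb / RL))
    return A, ka * A / kb

def J(RL):
    """J = integral over the annulus of T^2 = 2*pi * integral of T(r)^2 r dr."""
    A, B = coefficients(RL)
    inner = quad(lambda r: (Ta + A * np.log(r / Ra)) ** 2 * r, Ra, RL)[0]
    outer = quad(lambda r: (Tb + B * np.log(r / Rb)) ** 2 * r, RL, Rb)[0]
    return 2.0 * np.pi * (inner + outer)

res = minimize_scalar(J, bounds=(Ra, Rb), method="bounded", options={"xatol": 1e-8})
print(f"R_L* = {res.x:.5f}, J* = {res.fun:.6g}")
```

The minimizer should land close to the values quoted above. At \(R_L=R_a\) or \(R_L=R_b\) the annulus is homogeneous and \(J\approx 4.25\times 10^4\), so inserting the high-conductivity inner region lowers J substantially. This is consistent with J being minimized.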

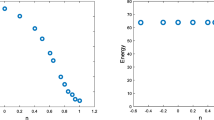

For the annular ring problem, variation of (a) the objective function J, (b) the sensitivity \(\frac{{\text {d}}J}{{\text {d}}R_L}\), (c) the interface perimeter \(Per_L\), (d) the relative \(L_2\)-error of the state variable T, and (e) the relative \(L_2\)-error of the adjoint variable P with respect to the interface radius \(R_{L}\) for different values of the support bandwidth \(\varDelta\) of the smoothed Heaviside function. The analytical results are shown in black. IGA provides better accuracy in the objective function with a smaller bandwidth; however, a bandwidth that is too small can produce unstable results with oscillations in the optimization

First, we compare the numerical results with the analytical results for different support bandwidths \(\varDelta\) of the smoothed Heaviside function in Eq. (10). Figure 10 shows the variation of the objective function J, the sensitivity \({\text {d}}J/{\text {d}}R_L\), the interface perimeter \(Per_L\), and the errors in the state and adjoint variables with respect to the interface radius \(R_L\) over the range \([R_a,R_b]\). A fixed mesh with 4389 degrees of freedom and 1089 expansion coefficients is used. For each value of \(R_L\), the expansion coefficients are defined using Eq. (19). The numerical sensitivity with respect to the interface radius is calculated as follows,

and the interface perimeter \(Per_L\) is calculated using the expansion coefficients as follows,

The support bandwidths considered are \(\varDelta =0.5, 0.1, 0.05\), 0.01, and 0.005. Figure 10 shows that IGA provides better accuracy in the objective function with a smaller bandwidth. However, a bandwidth that is too small can produce unstable results with oscillations in the optimization. The reason is inaccurate material information near the interface, since we use density-based geometric mapping. Oscillations can stop the optimization prematurely or make it behave erratically. In addition, these oscillations depend directly on the mesh size.
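The interplay between bandwidth and resolution can be reproduced in a few lines. The sketch assumes \(Per_L\approx\int_\Omega \delta(\varPhi)\Vert\nabla\varPhi\Vert\,{\text{d}}\Omega\) with the cubic smoothed Dirac delta \(\delta(\varPhi)=\frac{3}{4\varDelta}(1-\varPhi^2/\varDelta^2)\) for \(|\varPhi|<\varDelta\). The exact forms of Eq. (10) and of the perimeter formula are not reproduced here. It further assumes an exact signed distance \(\varPhi=r-R_L\) instead of a NURBS-parameterized LSF, and midpoint quadrature on a uniform Cartesian grid of spacing 0.01 over the annulus. `perimeter` is a hypothetical helper.

```python
import numpy as np

def smoothed_delta(phi, delta):
    """Assumed cubic smoothed Dirac delta; zero outside |phi| < delta."""
    s = phi / delta
    return np.where(np.abs(s) < 1.0, 0.75 / delta * (1.0 - s**2), 0.0)

def perimeter(RL, delta, n=400, Ra=1.0, Rb=2.0):
    """Per_L ~ integral over Omega of delta(phi) |grad phi| with phi = r - RL
    (so |grad phi| = 1), using midpoint quadrature on an n x n grid."""
    h = 2.0 * Rb / n
    c = -Rb + h * (np.arange(n) + 0.5)           # cell centres
    X, Y = np.meshgrid(c, c, indexing="ij")
    r = np.hypot(X, Y)
    inside = (r >= Ra) & (r <= Rb)               # quadrature points in the annulus
    return np.sum(smoothed_delta(r[inside] - RL, delta)) * h * h

exact = 2.0 * np.pi * 1.8
for delta in (0.5, 0.1, 0.05, 0.01, 0.005):
    print(f"delta={delta:<6} Per_L={perimeter(1.8, delta):.4f} exact={exact:.4f}")
```

On this grid, the band for \(\varDelta=0.5\) is cut off by the outer boundary \(r=R_b\) and underestimates \(2\pi R_L\), while \(\varDelta=0.05\) recovers it closely. The smallest bandwidths are resolved by only a handful of points across the band, so their accuracy depends on how the band happens to align with the grid.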

In Fig. 11, we show the effect of the mesh size on the numerical results. Figure 11a presents the fluctuation of the relative \(L_2\)-error in the state variable T over the range of \(R_L\) for four mesh sizes with degrees of freedom (DOF) = 105, 333, 1173, and 4389, with \(\varDelta =0.05\) in all four cases. The figure shows that accuracy and stability increase with mesh refinement, as expected. Because we use density-based geometry mapping, a finer mesh with a more precise material distribution improves the computed response. In addition, a finer mesh captures the smoothed Heaviside and Dirac delta functions more accurately, which yields stable results. Note, however, that a finer mesh increases the computational effort of the optimization, which sets a practical limit on the mesh size.

For the annular ring problem, the effect of the number of DOF on stability and accuracy: (a) variation in the relative \(L_2\)-error of the state variable T over the radius range as the mesh is refined, \(\varDelta =0.05\); (b) convergence of the objective function J, \(\varDelta =0.5,0.1,0.05,0.01,0.005\); (c) convergence of the state variable T and adjoint variable P, \(\varDelta =0.5,0.1,0.05,0.01,0.005\); (d) relation between the relative \(L_2\)-error in J and \(\varDelta /h_{\text{avg}}\), where \(h_{\text{avg}}\) is the average mesh size, \(\varDelta =0.5,0.1,0.05,0.01,0.005\). The errors in J, T, and P decrease with mesh refinement until they are limited by the support bandwidth

The relative \(L_2\)-errors in the objective function J and in the variables T and P with respect to DOF are plotted in Fig. 11b and c, respectively. Here, we consider the same five values of \(\varDelta\) as in Fig. 10. We observe a similar pattern: the errors in all three quantities decrease with mesh refinement until they are limited by the support bandwidth. In Fig. 11d, an approximate relation between the relative \(L_2\)-error in the objective function J and \(\varDelta /h_\text{avg}\), where \(h_{\text{avg}}\) is the average mesh size, is developed. The figure shows that the black line, \(\log _{10}(\vert \vert err_{J}\vert \vert _{2})=m\log _{10}(\varDelta /h_{\text{avg}})+C\) with \(m\approx 1.7008, C\approx -3.8495\), represents an approximate upper bound on the improvement obtainable by refinement for a given value of \(\varDelta\). On the right side of the line, refinement yields only limited improvement in accuracy. This empirical relation helps to choose an appropriate mesh size for a given \(\varDelta\) for use in optimization.

For the annular ring problem, initial and optimized topologies for three values of \(N_{\text{var}}=25, 42\) and 1089 with \(\varDelta =0.05\). Four initial topologies (samples I, II, III, and IV) are discretized with the corresponding design basis. All optimized topologies are close to the optimal topology with a circular interface at \(R_{L}\approx 1.80612\) with the objective function \(J=1.6098\times 10^4.\)

For the optimization, we take the expansion coefficients as design variables instead of the interface radius. Figure 12 shows the initial and optimized topologies for three values of \(N_{\text{var}}\): \(N_{\text{var}}=25, 42\) and 1089. The corresponding solution meshes have 4389, 4658, and 4389 DOF, respectively. For \(N_{\text{var}}=25, 1089\), we use \(p=2\) and \(q=1\), while for \(N_{\text{var}}=42\), we use \(p=3\) and \(q=2\). We choose \(\varDelta =0.05\) as a trade-off among accuracy, stability, and computational cost. We also consider four different LSFs (samples I, II, III, and IV) to define the initial topologies. For a given LSF, the initial topology can differ slightly between values of \(N_{\text{var}}\) because of the different parameterizations. The figure shows that every case yields a topology close to the analytical optimum regardless of the initial topology, which verifies the effectiveness of the proposed method. The objective function values are also within \(1\%\) of the optimal value. Interestingly, in all cases the optimization converged to the minimum corresponding to the global minimum of J in the design space parameterized by the single parameter \(R_L\). In the more general design space, we can only claim that it is a local minimum.

4.2 Thermal cloak problem

4.2.1 Problem description

Schematic design of (a) a base material (aluminium alloy) plate under a constant heat flux imposed by a high-temperature source on the left side and a low-temperature sink on the right side; (b) a circular insulated obstacle (\({\Omega }_{{\text {in}}}\)) embedded in the base material plate; (c) the obstacle and a surrounding metamaterial-based thermal cloak embedded in the base material plate. \({\Omega }_{\text{design}}\) is the domain of the cloak where the topology (the distribution of two materials, shown in pink and blue) is optimized, and \({\Omega }_{\text{out}}\) is the outside domain of remaining base material, where the temperature disturbance is to be reduced. \({\Omega } = {\Omega }_{{\text {in}}} \cup {\Omega }_{\text{design}}\cup {\Omega }_{\text{out}}.\)

In this example, a thermal cloak is optimized. The purpose of a thermal cloak is to reduce the temperature disturbance created by an obstacle and to produce the temperature distribution that would exist without the obstacle. The geometry is taken from Chen and Yuan Lei (2015); however, our focus is on a thermal cloak rather than a thermal concentrator as in Chen and Yuan Lei (2015). We consider a square plate of the base material, aluminum alloy 6063 (\(\kappa _{\text{Al}}=200\) W/mK), with a side length of 140 mm. A constant temperature difference is applied between the left side (300 K) and the right side (200 K), as shown in Fig. 13a. The thermally insulated boundary condition, \(\nabla T\cdot {\varvec{n}}=0\), is applied on the remaining two sides. The plate is then embedded with a circular obstacle, a thermal insulator with very low thermal conductivity, \(\kappa _\text{insulator} = 0.0001\) W/mK (see Fig. 13b). The insulator disturbs the temperature distribution. To reduce this disturbance, a thermal cloak made of a metamaterial is added around the obstacle (Fig. 13c). All dimensions of the problem are shown in Fig. 13. For the cloak, we choose a metamaterial made of copper and polydimethylsiloxane (PDMS), with thermal conductivities \(\kappa _{\text{copper}}=398\) W/mK and \(\kappa _{\text{PDMS}}=0.27\) W/mK, respectively.

4.2.2 Objective function

The thermal cloak aims to reduce the temperature disturbance in \({\Omega }_{\text{out}}\) created by the inner obstacle \({\Omega }_{{\text {in}}}\). Mathematically, the cloak objective function is defined as,

with the normalization value \({\widetilde{J}}_{\text{cloak}}\) given as,

Here, \({\overline{T}}\) represents the temperature field for the reference case when the entire domain is filled with the base material, and \({\widetilde{T}}\) is the temperature field when \({\Omega }_{\text{design}}\) is entirely filled with the insulator.

By comparison with Eq. (27), \(\varOmega _b={\Omega }_{\text{out}}\), \(J_b=\frac{1}{{\widetilde{J}}_{\text{cloak}}}~\vert ~T~-~{\overline{T}}~\vert ^2\), and the surface term is absent.
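The effect of the normalization can be illustrated with synthetic fields. The sketch assumes \({\widetilde{J}}_{\text{cloak}}=\int_{\Omega_{\text{out}}}\vert\widetilde{T}-\overline{T}\vert^2\,{\text{d}}\Omega\), since the expression is not reproduced above. The fields, the circular mask radius, and the function name are hypothetical illustrations, not simulation data. Under this assumption, \(J_{\text{cloak}}=0\) reproduces the reference case, and \(J_{\text{cloak}}=1\) is as poor as filling the design domain with insulator.

```python
import numpy as np

def cloak_objective(T, T_ref, T_ins, w, out):
    """Normalized disturbance in Omega_out (assumed normalization):
    J_cloak = sum_out w |T - T_ref|^2 / sum_out w |T_ins - T_ref|^2."""
    num = np.sum(w[out] * (T[out] - T_ref[out]) ** 2)
    den = np.sum(w[out] * (T_ins[out] - T_ref[out]) ** 2)
    return num / den

# Synthetic fields on a 140 mm x 140 mm plate (illustrative only)
s = np.linspace(0.0, 0.14, 141)
X, Y = np.meshgrid(s, s, indexing="ij")
w = np.full(X.shape, (s[1] - s[0]) ** 2)           # uniform cell-area weights
r = np.hypot(X - 0.07, Y - 0.07)
out = r > 0.03                                      # hypothetical Omega_out mask
T_ref = 300.0 - 100.0 * X / 0.14                    # homogeneous plate, 300 K -> 200 K
disturbance = 5.0 * np.exp(-(r / 0.05) ** 2)        # stand-in for the obstacle's effect
T_ins = T_ref + disturbance                         # design domain filled with insulator
T = T_ref + 0.01 * disturbance                      # a cloak leaving 1% of the disturbance
J_demo = cloak_objective(T, T_ref, T_ins, w, out)
```

Because \(J_{\text{cloak}}\) is a ratio of squared \(L_2\) norms, \(\sqrt{J_{\text{cloak}}}\) is the RMS disturbance relative to the uncloaked case. The value \(10^{-4}\) obtained here corresponds to a 1% residual disturbance, and \(J_{\text{cloak}}\approx 10^{-9}\) corresponds to roughly \(3\times 10^{-5}\).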

4.2.3 Results and discussion

For the thermal cloak problem, the convergence of the objective function \(J_{\text{cloak}}\) (with respect to the number of iterations) with and without LSF reinitialization. Black dots show the case without LSF reinitialization, while the remaining colors represent the case with LSF reinitialization. A change in color indicates that the LSF has been reinitialized at that iteration

For the thermal cloak problem, initial and optimized topologies for three values of \(N_{\text{var}}=25, 42\) and 1089 with \(\varDelta =0.0005\). Three initial topologies (samples I, II, and III) are discretized with the corresponding design basis. All optimized topologies reach the objective function value of order \(10^{-9}\)–\(10^{-10}.\)

Here, the values of \(N_{\text{var}}, p, q\) are the same as in the previous example, and \(\varDelta =0.0005\). Three initial topologies (samples I, II, and III) are considered, and the corresponding LSFs are discretized with \(N_{\text{var}}=25, 42\) and 1089. The solution meshes corresponding to \(N_{\text{var}}=25, 42\) and 1089 have 13167, 13974 and 13167 DOF, respectively. LSF reinitialization is additionally used to increase the convergence rate. For each knot span in a parametric direction, we use 20 isoparametric lines to find the interface points (as described in Sect. 3.4.1). The reinitialization is performed every 10 iterations or 100 function evaluations. To illustrate the convergence of the objective function with reinitialization, Fig. 14 shows the convergence history for sample III with \(N_{\text{var}}=1089\); reinitialization clearly improves the convergence rate substantially.

Figure 15 shows the initial and optimized topologies. The optimized topology depends on both the initial topology and the number of design variables. Nevertheless, the objective function reaches values of order \(10^{-9}\)–\(10^{-10}\) in all cases. A larger \(N_\text{var}\) provides more design freedom, which is clearly visible in the optimized topologies for \(N_{\text{var}}=1089\). A large \(N_{\text{var}}\) allows more detailed topologies to be explored, but it can also create unnecessary and complicated features. One solution to the cloak problem is the exact bilayer cloak proposed in Han et al. (2014a), in which the interface is a circle whose radius is uniquely determined by the available conductivities. Several of our optimized topologies (Fig. 15b, d, f, h, n) are close to a bilayer cloak.

For the thermal cloak problem, flux flow and temperature distribution for (left column) a homogeneous base material plate (reference case), (middle column) a base material plate embedded with a circular insulator obstacle, and (right column) a base material plate embedded with a circular insulator and surrounding thermal cloak (sample I and \(N_{\text{var}}=1089\)). The temperature and heat flux distributions are shown. The thermal cloak reduces the temperature disturbance in \({\Omega }_{\text{out}}.\)

To further illustrate the optimization results, Fig. 16 presents an optimized thermal cloak for sample I with \(N_{\text{var}} = 1089\). We compare the flux flow and temperature distribution among the three cases shown in Fig. 13: a background plate under constant heat flux, a plate with an obstacle, and a plate with an obstacle and the cloak. The temperature difference clearly shows that the thermal cloak decreases the temperature disturbance created by the obstacle. In the \(\Omega _{\text{out}}\) region, the temperature distribution mimics the reference case, and the flux remains undisturbed.

For the thermal cloak problem, initial topologies and optimized topologies without any regularization and with Tikhonov regularization for \(N_{\text{var}}=1089\) with \(\varDelta =0.0005\). Three initial topologies (samples I, II and III) are discretized with the corresponding design basis. Four values of the weighting parameter \(\chi\) are considered. Tikhonov regularization provides smoother optimized topologies with a slight compromise on the \(J_{\text{cloak}}\)-values

For the thermal cloak problem, initial topologies and optimized topologies without any regularization and with volume regularization for \(N_{\text{var}}=1089\) with \(\varDelta =0.0005\). Three initial topologies (samples I, II and III) are discretized with the corresponding design basis. Four values of the weighting parameter \(\rho\) are considered. Volume regularization fills the zero-flux area with PDMS material with a slight compromise on the \(J_{\text{cloak}}\)-values

For the thermal cloak problem, initial topologies and optimized topologies without any regularization and with combined Tikhonov and volume regularization for \(N_{\text{var}}=1089\) with \(\varDelta =0.0005\). Three initial topologies (samples I, II and III) are discretized with the corresponding design basis. Four sets of weighting parameters \(\chi\) and \(\rho\) are considered. Combined regularization can fill the zero-flux area with PDMS material and produce smoother topologies, with a slight compromise on the \(J_{\text{cloak}}\)-values

In this paragraph, we discuss how to avoid unnecessary complex features in optimized topologies, such as those in Fig. 15f, l, r, by using regularization techniques to produce smooth and less complex topologies. First, we explore Tikhonov regularization, with which the total objective function is \(J_{\text{total}}= J_{\text{cloak}} + \chi J_{\text{Tknv}}\). The optimization is performed with four values of \(\chi\): \(10^{-5}\), \(10^{-4}\), \(10^{-3}\), and \(10^{-2}\), and the results are presented in Fig. 17. For \(\chi =10^{-5}\) and \(10^{-4}\), the effect of regularization is very minor, and some of the small features are still present. For larger values of \(\chi\) (\(10^{-3}\), \(10^{-2}\)), the optimized topologies are smoother and have fewer complex features, as expected. However, this comes at the cost of slightly higher \(J_{\text{cloak}}\)-values. These values, which remain within the order of \(10^{-7}\), still provide the cloaking effect with sufficient accuracy.

Next, we explore the effect of another type of regularization, volume regularization, with which the total objective function is \(J_{\text{total}}= J_{\text{cloak}} + \rho J_{\text{vol}}\). Here, we use four values of \(\rho\): \(10^{-5}\), \(10^{-4}\), \(10^{-3}\) and \(10^{-2}\). Figure 18 shows the optimization results. Volume regularization with a higher \(\rho\) clearly fills the zero-flux area with PDMS, whereas for lower values of \(\rho\) the effect is not dominant. As with Tikhonov regularization, the \(J_{\text{cloak}}\)-values suffer to some extent but still remain within the order of \(10^{-6}\). Also, for sample II, the optimized topology without any regularization (Fig. 18h) is quite different from the bilayer cloak, whereas the topologies obtained with volume regularization, Fig. 18l–k, are close to it.

We then examine the combination of the two regularizations discussed in the last two paragraphs. We consider four combinations of \(\chi =10^{-4}, 10^{-3}\) and \(\rho =10^{-4}, 10^{-2}\), denoted by sets A to D. The optimization results are shown in Fig. 19. For sample I, all sets give similar results with negligible differences in topology. For samples II and III, sets C and D yield smoother topologies with smaller perimeters and fewer complex features than sets A and B. However, the \(J_{\text{cloak}}\)-values are slightly higher for sets C and D. Therefore, \(\chi\) and \(\rho\) should be chosen based on a trade-off between the smoothness of the topologies and the fulfillment of the cloaking objective. It is, however, difficult to predict appropriate values of \(\chi\) and \(\rho\) a priori, so they must be determined by trial and error. Other regularization techniques, such as perimeter regularization and sensitivity smoothing, can also be applied to obtain smoother geometries. Nonetheless, the lack of a priori knowledge of appropriate weighting parameters remains. Another way to avoid complex topologies is to impose geometric constraints, such as a minimum length scale, in the optimization problem. Geometry-constrained optimization, however, is beyond the scope of the current work and will be explored in future research.

Fig. 20 Configurations and dimensions of the different geometries considered for the thermal cloak problem: Config. I: square obstacle; Config. II: horizontal rectangular obstacle; Config. III: vertical rectangular obstacle; Config. IV: horizontal elliptical obstacle; Config. V: inclined elliptical obstacle; Config. VI: square cloak; Config. VII: horizontal rectangular cloak; Config. VIII: inclined square cloak

Fig. 21 For the thermal cloak problem, the initial topologies, the optimized topologies without regularization, and the optimized topologies with combined Tikhonov and volume regularization for the different geometries of the thermal cloak, with \(N_{\text{var}}=1089\) and \(\varDelta =0.0005\). The proposed method can handle different geometries.

In the next study, we explore several geometries of the obstacle and the thermal cloak for the given problem. The configurations under consideration and their dimensions are shown in Fig. 20; the plate dimensions are the same as in Fig. 13 and are therefore omitted. For all configurations except Config. V (inclined elliptical obstacle), we impose symmetry about the x- and y-axes as in the earlier cases; for Config. V, the symmetry condition is removed because the geometry itself is not symmetric. We perform the optimization for a single value, \(N_{\text{var}}=1089\), and Fig. 21 shows the corresponding initial topology, optimized topology without regularization, and optimized topology with combined Tikhonov and volume regularization. For the combined regularization, we present only one case per configuration, chosen because it gives a smoother topology with good accuracy; the corresponding weighting parameters \(\chi\) and \(\rho\) are given in Fig. 21. The results show that the proposed method handles different geometries and that, as before, regularization yields smoother geometries. In some cases, the objective function of the regularized problem is even lower than that of the unregularized problem. The reason is that the unregularized optimization can get stuck in a local minimum without exploring the whole design space, whereas regularization makes the optimization problem better posed for those cases, allowing the optimizer to reach better designs that were not found otherwise.
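Imposing symmetry about the x- and y-axes reduces the number of independent expansion coefficients. A minimal sketch, assuming the \(N_{\text{var}}=1089\) coefficients lie on a \(33\times 33\) tensor-product grid centred on the plate, so that a \(17\times 17\) quarter block determines the rest; `expand_quarter` and `fold_gradient` are hypothetical names. `fold_gradient` applies the chain rule so that sensitivities computed on the full grid can be passed to the optimizer for the reduced variables.

```python
import numpy as np

def expand_quarter(q):
    """Mirror an (m x m) quarter block into a (2m-1 x 2m-1) grid symmetric about both axes.

    q[0, 0] is assumed to sit at the plate centre; rows run along +y, columns along +x.
    """
    q = np.asarray(q)
    top = np.hstack([q[:, :0:-1], q])        # mirror about the y-axis (columns)
    return np.vstack([top[:0:-1, :], top])   # mirror about the x-axis (rows)

def fold_gradient(g, m):
    """Chain rule for expand_quarter: accumulate full-grid sensitivities onto the quarter block."""
    if m < 2:
        raise ValueError("quarter block must be at least 2 x 2")
    g = np.asarray(g, dtype=float)
    c = m - 1                                # index of the centre row/column
    r = g[c:, :].copy()                      # rows c..2c map to quarter rows 0..m-1
    r[1:, :] += g[c - 1::-1, :]              # mirrored rows c-1..0 map to quarter rows 1..m-1
    out = r[:, c:].copy()                    # same folding for the columns
    out[:, 1:] += r[:, c - 1::-1]
    return out
```

For an unsymmetric case such as Config. V, the full grid of coefficients would be used directly, without expansion.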

4.3 Thermal camouflage problem

4.3.1 Problem description

Fig. 22 Schematic of the thermal camouflage problem: (a) a base-material (aluminum alloy) plate embedded with two insulator sectors under a constant heat flux applied by a high-temperature source on the left side and a low-temperature sink on the right side; (b) a conductive object, a thermal camouflage surrounding the object, and two insulator sectors embedded in a base-material plate. \({\Omega }_{\text{sec}}\) is the region covered by the insulator sectors, \({\Omega }_{{\text {in}}}\) is the region covered by the object, \({\Omega }_{\text{design}}\) is the camouflage region where the topology is optimized, \({\Omega }_{\text{out}}\) is the remaining base-material region outside, and \({\Omega }={\Omega }_{{\text {in}}} \cup {\Omega }_{\text{design}}\cup {\Omega }_{\text{sec}}\cup {\Omega }_{\text{out}}\).

In this example, we explore the optimization of a thermal camouflage. Both thermal cloaks and thermal camouflages mimic the thermal signature of a reference scenario in the region of interest. A thermal cloak hides an object from outside detection; however, the object can still be detected from the inside, because the temperature signature in the inner region differs from that of the reference case. Thermal camouflage, on the other hand, mimics the heat signature of another object for both inside and outside detection. The schematics of the camouflage problem and the corresponding dimensions are shown in Fig. 22. Figure 22a shows the reference state, in which two insulating sectors are embedded in a square plate of the base material, aluminum alloy (grade 5457, \(\kappa _{\text{Al}}=177\) W/mK), with a side length of 100 mm. The thermal conductivity of the insulators is taken as 0.0001 W/mK. In Fig. 22b, a magnesium alloy (grade AZ91D) object with thermal conductivity \(\kappa _\text{Mg}=72.7\) W/mK is added at the center, between the two sectors, and a metamaterial-based thermal camouflage covers the area between the sectors and the object. As in the previous example, the camouflage metamaterial is made of copper and polydimethylsiloxane (PDMS), with thermal conductivities \(\kappa _\text{copper}=398\) W/mK and \(\kappa _{\text{PDMS}}=0.27\) W/mK. The boundary conditions are identical to those in the previous example.

4.3.2 Objective function

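The objective of thermal camouflage is to minimize the temperature difference with respect to the temperature signature of the reference state in \({\Omega }_{{\text {in}}} \cup {\Omega }_{\text{design}} \cup {\Omega }_{\text{out}}\). Mathematically, the camouflage function is defined as

\[ J_{\text{cmflg}} = \frac{1}{{\widetilde{J}}_{\text{cmflg}}} \int _{{\Omega }_{{\text {in}}} \cup {\Omega }_{\text{design}} \cup {\Omega }_{\text{out}}} \vert T-{\overline{T}}\vert ^2 \, \text{d}\Omega , \]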

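where \({\widetilde{J}}_{\text{cmflg}}\) is the normalization value, given as

\[ {\widetilde{J}}_{\text{cmflg}} = \int _{{\Omega }_{{\text {in}}} \cup {\Omega }_{\text{design}} \cup {\Omega }_{\text{out}}} \vert {\widetilde{T}}-{\overline{T}}\vert ^2 \, \text{d}\Omega . \]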

Here, \({\overline{T}}\) represents the temperature field for the reference case, when \({\Omega }_{{\text {in}}} \cup {\Omega }_{\text{design}} \cup {\Omega }_{\text{out}}\) is filled with the base material, and \({\widetilde{T}}\) is the temperature field when \({\Omega }_{\text{design}}\) is entirely filled with the insulator.

By comparison with Eq. (27), \(\Omega _b={{\Omega }_{{\text {in}}} \cup {\Omega }_{\text{design}} \cup {\Omega }_{\text{out}}}\), \(J_b=\frac{1}{{\widetilde{J}}_{\text{cmflg}}}~\vert ~T~-~{\overline{T}}~\vert ^2\), and the surface term is absent.
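For illustration, the normalized camouflage objective and its derivative with respect to the temperatures at the quadrature points can be evaluated as below. This is a sketch under assumptions: the three temperature fields are taken as already computed at the same quadrature points of \(\Omega _b\), the normalization is assumed to be the same squared-difference integral evaluated with \({\widetilde{T}}\), and `w` holds quadrature weights multiplied by Jacobian determinants. The function name is hypothetical.

```python
import numpy as np

def camouflage_objective(T, T_ref, T_ins, w):
    """Normalized camouflage objective by quadrature over Omega_in ∪ Omega_design ∪ Omega_out.

    T, T_ref, T_ins: temperatures at the quadrature points for the current design,
    the reference plate, and the design domain entirely filled with insulator.
    w: quadrature weights times Jacobian determinants.
    """
    J_norm = np.dot(w, (T_ins - T_ref) ** 2)        # normalization value
    J = np.dot(w, (T - T_ref) ** 2) / J_norm
    # Derivative w.r.t. the quadrature-point temperatures; assembling it with the
    # solution basis functions gives the load vector of the adjoint problem.
    dJ_dT = 2.0 * w * (T - T_ref) / J_norm
    return J, dJ_dT
```

With this normalization, the all-insulator design scores exactly 1 and a perfect camouflage scores 0, so values are comparable across meshes and geometries.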

4.3.3 Results and discussion

In this example, we use \(N_{\text{var}}=1089\) (with \(p=2\), \(q=1\) and a mesh of 17655 DOF) and \(\varDelta =0.001\). The LSF is reinitialized after every 10 iterations or 300 function evaluations. All other parameters are the same as in the previous example.
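The reinitialization rule can be expressed as a small scheduler. The text does not say how the two criteria combine, so this sketch assumes "whichever comes first"; the class and method names are hypothetical, and the reinitialization itself is left to the caller.

```python
class ReinitScheduler:
    """Decides when to reinitialize the LSF (hypothetical helper).

    Assumption: reinitialize every `every_iter` optimizer iterations, or earlier
    if `every_evals` function evaluations accumulate since the last reinitialization.
    """

    def __init__(self, every_iter=10, every_evals=300):
        self.every_iter = every_iter
        self.every_evals = every_evals
        self.iters = 0
        self.evals = 0

    def record_evaluation(self):
        """Call once per objective/constraint evaluation (including line-search trials)."""
        self.evals += 1

    def end_iteration(self):
        """Call after each optimizer iteration; returns True if the LSF should be reinitialized now."""
        self.iters += 1
        if self.iters >= self.every_iter or self.evals >= self.every_evals:
            self.iters = 0
            self.evals = 0
            return True
        return False
```

Counting evaluations as well as iterations guards against long line searches, during which many trial designs are evaluated without completing an iteration.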

Figure 23 shows the initial topologies, the optimized topologies without regularization (\(J_{\text{total}}=J_{\text{cmflg}}\)), and the optimized topologies with combined Tikhonov and volume regularization (\(J_{\text{total}}=J_\text{cmflg} + \chi J_{\text{Tknv}} + \rho J_{\text{vol}}\)). For the combined regularization, we consider four sets of parameters: set A (\(\chi =1\), \(\rho =10^{-2}\)), set B (\(\chi =1\), \(\rho =10^{-1}\)), set C (\(\chi =1\), \(\rho =1\)) and set D (\(\chi =1\), \(\rho =0\)). The optimized topologies form copper channels that allow the heat flux to flow as in the reference case. The widths of the channels depend on the numerical accuracy, the design freedom, and the regularization parameters. The optimized geometries without regularization are impractical because of their overly complex features, whereas the regularized designs have more practical topologies. Notably, their \(J_{\text{cmflg}}\) values are in the same range as those of the unregularized cases, and sometimes even smaller. The differences among the optimized topologies for sets A to D are negligible. As discussed in the previous example, the values of \(\chi\) and \(\rho\) are difficult to predict a priori and have to be chosen by trial and error according to the design requirements.
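Because the regularized objective is a weighted sum, its gradient is the same weighted sum of the individual gradients, so only the camouflage term needs an adjoint solve. A minimal sketch using the four parameter sets listed above; the function name is hypothetical, and the individual terms and their gradients are assumed to be supplied by the caller.

```python
import numpy as np

# Weighting parameters (chi, rho) of the four combined-regularization sets.
SETS = {"A": (1.0, 1e-2), "B": (1.0, 1e-1), "C": (1.0, 1.0), "D": (1.0, 0.0)}

def combined_objective(J_cmflg, g_cmflg, J_tknv, g_tknv, J_vol, g_vol, chi, rho):
    """J_total = J_cmflg + chi*J_Tknv + rho*J_vol and its gradient.

    Each g_* is the gradient of the corresponding term w.r.t. the expansion
    coefficients (for J_cmflg, obtained from the adjoint solution).
    """
    J = J_cmflg + chi * J_tknv + rho * J_vol
    g = np.asarray(g_cmflg) + chi * np.asarray(g_tknv) + rho * np.asarray(g_vol)
    return J, g
```

For example, `combined_objective(..., *SETS["C"])` evaluates set C; set D reduces to Tikhonov regularization alone since \(\rho =0\).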

In Fig. 24, we present the optimized topologies with and without regularization that achieve the desired camouflaging objective for sample I, and compare the flux flow, the temperature distribution, and the temperature difference with respect to the reference temperature distribution. The thermal camouflage reproduces the temperature signature of the reference case.

Fig. 23 For the thermal camouflage problem, the initial topologies, the optimized topologies without regularization, and the optimized topologies with combined Tikhonov and volume regularization for \(N_{\text{var}}=1089\) with \(\varDelta =0.001\). Three initial topologies (samples I, II and III) are discretized with the corresponding design basis. Four sets of weighting parameters \(\chi\) and \(\rho\) are considered.

Fig. 24 For the thermal camouflage problem, temperature and heat flux distributions for (left column) a base-material plate embedded with two insulator sectors (reference case); (middle column) a plate embedded with two insulator sectors, a conductive object at the center, and an optimized thermal camouflage without regularization surrounding the object (sample I); and (right column) the same configuration with an optimized thermal camouflage with regularization (sample I, set C). The thermal camouflage reduces the temperature disturbance in \({\Omega }_{{\text {in}}}\cup {\Omega }_{\text{design}}\cup {\Omega }_{\text{out}}\).

5 Conclusions

In the present article, we explore the level-set topology optimization method for the design of heat manipulators. NURBS basis functions are used to parameterize the geometry, the temperature field, and the level-set function, and the thermal boundary value problem is solved using isogeometric analysis. The optimization problem is solved with Sequential Quadratic Programming (SQP), a gradient-based mathematical programming technique, and the sensitivities are calculated with the adjoint method. Three numerical examples, an annular ring problem, a thermal cloak problem, and a thermal camouflage problem, corroborate the efficiency of the proposed method.
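The combination of SQP with adjoint sensitivities can be illustrated on a toy analogue. This sketch is not the authors' formulation: it replaces the NURBS/IGA discretization and the level-set parameterization with 1D linear finite elements whose element conductivities serve directly as design variables, and it uses SciPy's SLSQP as a stand-in SQP solver. The point it shows is that a single adjoint solve yields the full gradient, whatever the number of design variables.

```python
import numpy as np
from scipy.optimize import minimize

N_EL = 8                                          # number of linear elements on [0, 1]
H = 1.0 / N_EL                                    # element length
KE = np.array([[1.0, -1.0], [-1.0, 1.0]]) / H     # element matrix for unit conductivity

def solve(k, T_left=1.0, T_right=0.0):
    """Assemble and solve steady 1D conduction with Dirichlet ends; k holds element conductivities."""
    K = np.zeros((N_EL + 1, N_EL + 1))
    for e, ke in enumerate(k):
        K[e:e + 2, e:e + 2] += ke * KE
    T = np.zeros(N_EL + 1)
    T[0], T[-1] = T_left, T_right
    rhs = -K[1:-1, [0, -1]] @ T[[0, -1]]          # move prescribed temperatures to the right-hand side
    T[1:-1] = np.linalg.solve(K[1:-1, 1:-1], rhs)
    return T, K

def objective_and_gradient(k, T_target):
    """J = sum over interior nodes of (T - T_target)^2, and dJ/dk from one adjoint solve."""
    T, K = solve(k)
    r = T - T_target
    J = float(r[1:-1] @ r[1:-1])
    lam = np.zeros_like(T)                        # adjoint field, zero at Dirichlet nodes
    lam[1:-1] = np.linalg.solve(K[1:-1, 1:-1].T, 2.0 * r[1:-1])
    # dJ/dk_e = -lam^T (dK/dk_e) T, where dK/dk_e is KE placed on element e
    grad = np.array([-(lam[e:e + 2] @ KE @ T[e:e + 2]) for e in range(N_EL)])
    return J, grad

def optimize(T_target, k0):
    """Minimize J with SciPy's SLSQP (an SQP method), supplying the adjoint gradient."""
    return minimize(objective_and_gradient, k0, args=(T_target,), jac=True,
                    method="SLSQP", bounds=[(0.1, 10.0)] * N_EL)

T_target, _ = solve(np.linspace(1.0, 3.0, N_EL))   # synthetic target temperature field
res = optimize(T_target, np.ones(N_EL))
print(res.fun, res.nit)
```

In this 1D toy, only conductivity ratios affect the temperature field, so many conductivity distributions reproduce the target; this loosely mirrors the non-uniqueness observed for the heat manipulation problems.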

In the annular ring problem, the numerical results match the analytical results, which verifies the accuracy of the proposed method. The results indicate that,

- A smaller support bandwidth of the smoothed approximate Heaviside function improves the accuracy of the numerical solution and hence of the optimization results. However, too small a bandwidth degrades the numerical sensitivity calculation and produces oscillations in the optimization, which can stop the optimization prematurely or make it behave erratically.

- A remedy for the oscillations caused by small bandwidths is to refine the solution mesh; however, the amount of refinement is limited by the associated computational cost. Therefore, the solution mesh and the bandwidth of the smoothed Heaviside function are chosen as a compromise among accuracy, stability, and computational cost. For the benchmark problem, we developed an empirical lower bound on the required mesh size for a given support bandwidth as an estimate.

- Since the solution is unique in the given design space, the proposed optimization procedure generates topologies close to the analytical optimal topology irrespective of the initial topology, and the values of the objective function are within 1% of the optimal value.

In the thermal cloak problem, we optimized the topology of an annular thermal cloak. We also explored two regularizations (Tikhonov regularization and volume regularization) and their combination to generate smoother and more practical optimized topologies. Finally, we examined the efficacy of the current method for other geometries of the thermal cloak. The results indicate that,

- The problem is not convex and can have different optimized topologies depending on the initial topology and the number of design variables. Several optimized topologies are close to one known analytical solution: a bilayer cloak with a circular interface. The objective function reaches values of order \(10^{-9}\) to \(10^{-10}\) for all cases.

- With regularization during optimization, smoother and less complex topologies are generated at a slight cost in the objective function value.

- The values of the parameters \(\chi\) and \(\rho\), the weights of the Tikhonov and volume terms, respectively, are very difficult to predict a priori. They are chosen by trial and error, based on a trade-off between the complexity of the topologies and fulfillment of the cloaking objective.

- The proposed method can handle different geometries and shapes with the same effectiveness.

In the last example, we optimized a thermal camouflage that mimics the temperature signature of a reference scenario. As in the thermal cloak problem, we used regularization to generate smoother and more practical designs, with equally satisfactory results.

The proposed method is general and can be applied to any heat manipulator with varying geometries. Moreover, since heat flux manipulation problems often do not have unique solutions, the method can find alternative topologies for heat manipulators already developed with analytical methods such as transformation thermotics and the scattering cancelation method. In addition, because such problems often involve a large number of design variables, gradient-based optimization with adjoint sensitivities is faster than gradient-free optimization, since the adjoint method requires only one additional solve regardless of the number of design variables. However, in its current form the method cannot carry out geometry-constrained optimization; extending it to geometry constraints is left for future work. The optimization problem can also be extended to multiphysics problems such as thermomagnetic, thermoacoustic, and thermoelectric problems. In another direction, the computational time can be reduced using surrogate models for boundary value problems, acceleration techniques, and NURBS hyper-surfaces (Montemurro and Refai 2021; Costa et al. 2021).

References

Abe K, Kazama S, Koro K (2007) A boundary element approach for topology optimization problem using the level set method. Commun Numer Methods Eng 23:405–416

Allaire G, Jouve F, Toader A-M (2002) A level-set method for shape optimization. CR Math 334:1125–1130

Allaire G, Jouve F, Toader A-M (2004) Structural optimization using sensitivity analysis and a level-set method. J Comput Phys 194:363–393

Allaire G, de Gournay F, Jouve F, Toader A-M (2005) Structural optimization using topological and shape sensitivity via a level set method. Control Cybern 34:59–80