Abstract

Background

Intervention adaptation is often necessary to improve the fit between evidence-based practices/programs and implementation contexts. Existing frameworks describe intervention adaptation processes but do not provide detailed steps for prospectively designing adaptations, are designed for researchers, and require substantial time and resources to complete. A pragmatic approach to guide implementers through developing and assessing adaptations in local contexts is needed. The goal of this project was to develop Making Optimal Decisions for Intervention Flexibility during Implementation (MODIFI), a method for intervention adaptation that leverages human centered design methods and is tailored to the needs of intervention implementers working in applied settings with limited time and resources.

Method

MODIFI was iteratively developed via a mixed-methods modified Delphi process. Feedback was collected from 43 implementation research and practice experts. Two rounds of data collection gathered quantitative ratings of acceptability and inclusion (Round 1) and feasibility (Round 2), as well as qualitative feedback regarding MODIFI revisions analyzed using conventional content analysis.

Results

In Round 1, most participants rated all proposed components as essential but identified important avenues for revision, which were incorporated into MODIFI prior to Round 2. Round 2 emphasized feasibility, where ratings were generally high and fewer substantive revisions were recommended. Round 2 changes largely concerned operationalization of terms/processes and sequencing of content. Results include a detailed presentation of the final version of the three-step MODIFI method (Step 1: Learn about the users, local context, and intervention; Step 2: Adapt the intervention; Step 3: Evaluate the adaptation) along with a case example of its application.

Discussion

MODIFI is a pragmatic method that was developed to extend the contributions of other research-based adaptation theories, models, and frameworks while integrating methods that are tailored to the needs of intervention implementers. Guiding teams to tailor evidence-based interventions to their local context may extend for whom, where, and under what conditions an intervention can be effective.

Background

Decades of research have established a wide variety of evidence-based prevention and intervention practices (EBP) for use across a range of healthcare domains. However, studies have also documented a persistent implementation gap in which EBPs are infrequently delivered at scale or with sufficient intensity to have their intended effects [1,2,3]. Scholars have spent the past 15-20 years identifying implementation determinants (i.e., barriers and facilitators) and strategies [4,5,6], particularly at the intraorganizational and interorganizational levels [7, 8]. Recently, more attention has been devoted to optimizing implementation at the intervention level. Intervention-level factors (e.g., intervention design quality) reveal novel paths to achieving quality implementation.

Intervention adaptation

There is often a mismatch between EBPs and the providers, clients, and service settings they aim to support [9]. In response to this problem of contextual appropriateness or “fit,” EBPs are frequently modified to improve their functioning within a given implementation context [10]. According to Moore et al. [11], adaptations are intentional modifications made to EBPs to improve the intervention-implementation context fit, whereas modifications can be planned (e.g., changes made prior to intervention implementation) or reactive (e.g., intentional changes made in response to emergent need based on contextual fit of the EBP as delivered) [12, 13]. Though EBPs are routinely adapted to maximize fit with real-world settings, providers, and patients (e.g., [14]), such adaptations can be reactive in ways aligned more with implementers’ personal preferences for EBP use [15] than with the intervention’s goal [16], necessitating systematic ways to guide and understand the effectiveness of adaptations made across different phases of implementation.

As awareness of widespread adaptation has increased [17, 18], the need to develop a full science of adaptation has become clear. One approach is to populate an “adaptome” to compile information about adaptation types and their respective impacts on implementation and intervention outcomes [19]. Implementation science has developed a number of taxonomies, models, and frameworks to systematize and guide efforts to identify, log, and assess the impact of adaptations. Both the Framework for Reporting Adaptations and Modifications-Expanded (FRAME) [20] and the patient-centered medical home (PCMH) adaptations model [21] characterize the who, what, when, where, and how of adaptations. While similar in their approach, FRAME offers more guidance on culturally responsive modifications whereas the PCMH adaptations model integrates implementer perceptions of the impact of modifications on implementation outcomes. The Model for Adaptation Design and Impact (MADI) [12] extends previous taxonomies (e.g., [20, 22]) to propose associations between adaptation characteristics, mediating and/or moderating factors, and implementation and intervention outcomes. Crucially, the Patient-Centered Outcomes Research Institute’s (PCORI’s) standards for studying complex interventions [23] require defining the core functions and forms of an intervention [24] to ensure that adapting the intervention does not diminish its ability to achieve the intended effects [25]. Additional models are emerging to assist researchers and practitioners in making decisions regarding whether or not to adapt and how to evaluate the adaptation process as it evolves [13].

These theories, models, and frameworks provide tools to accumulate knowledge about when intervention modification is indicated, what types of adaptations are made to interventions, and how to evaluate possible impacts on outcomes. An important next step is to develop methods of adaptation that build upon this foundation. Some existing models provide a direction for how to approach the intervention adaptation process. For example, the Dynamic Adaptation Process (DAP) [26] supports thoughtful intervention adaptation during four phases of implementation. Similarly, RE-AIM (Reach, Effectiveness, Adoption, Implementation, and Maintenance) is a comprehensive implementation framework that can be used to guide adaptations [27]. The CENTER-IT (CENTERing multilevel partner voices in Implementation Theory) approach encourages researchers to incorporate stakeholder perspectives and consider the domains of the Consolidated Framework for Implementation Research (CFIR) [9] when exploring possible adaptations [28]. Similar to the DAP and RE-AIM, the ADAPT guidance [11] supports the entire implementation process and advances the science of adaptation by acknowledging adaptation as a crucial step and recommending important factors to consider when making adaptations (e.g., potential unintended consequences, costs and resources needed). Additionally, Intervention Mapping [29] and the related Implementation Mapping [30] are systematic processes that guide the development of multi-level interventions and implementation strategies. These models benefit from the important example set by the ADAPT-ITT model [31], which provides an eight-phase process for planning, designing, and testing intervention adaptations on a large scale.

All of these frameworks can support intervention adaptation, but they require time, resources, and adoption of a large-scale implementation planning process that are not feasible in many settings. Further, beyond recommending convening a team to oversee this process and monitoring the need for ad hoc adaptations during implementation, none provide clear, detailed steps for how to adapt. What remains unarticulated are methods that “zoom in” on intervention modification and outline specific procedures for designing adaptations. These methods are especially needed in applied settings that lack the time, personnel, and other resources to launch a large-scale research-funded implementation planning process.

Human centered design

The field of human centered design (HCD) offers methods that can augment existing adaptation processes. HCD is a field dedicated to bringing innovations into alignment with the users and settings where they will be deployed [32, 33]. HCD methods can be used in efficient ways to elevate user perspectives, needs, and strengths. Fundamental to HCD is the expectation that engaging stakeholders in development or redesign processes should result in products that are more accessible, parsimonious, and usable. HCD has commonalities with other methodological approaches relevant to implementation such as community-based participatory research (CBPR) [34], but in HCD user involvement is typically more targeted and time-limited [35]. HCD complements implementation science’s multilevel frameworks by contributing well-specified approaches for engaging stakeholders, understanding user experience, and redesigning products [10, 36]. Although HCD has been applied most commonly to the development of digital technologies, recent applications have made use of HCD principles and methods to improve the contextual fit of both psychosocial interventions and implementation strategies in adult and youth health services [18, 37,38,39].

Present study

The goal of this research was to leverage HCD and develop a method for intervention adaptation that is tailored to the needs of intervention implementers (e.g., clinicians) and intervention decision makers (e.g., supervisors, program/site leaders) working in applied settings. To accomplish this goal, we focused on creating a method that (1) presents a focused set of techniques rather than a comprehensive collection of all possible ones; (2) optimizes feasibility and time/resource efficiency while remaining as scientifically rigorous as possible; (3) favors locally relevant and actionable information over widely generalizable knowledge; and (4) includes techniques from industry and HCD that can be executed rapidly and which center the experience of the end user. To select a set of adaptation steps that would be widely applicable, we referenced the large-scale scoping study conducted by Escoffery et al. [40]. To ensure that the design process would not inadvertently modify an intervention in ways that eliminate its effectiveness, we included techniques to identify the original intervention’s core functions (purposes) and forms (activities), as conceptualized by Perez Jolles et al. [24] and demonstrated by Kirk et al. [25].

Methods

To develop our method, we identified specific HCD techniques that could achieve the objectives of intervention adaptation steps drawn from a recent scoping study of adaptation frameworks. Escoffery et al. [40] identified eight steps that were common across frameworks. Of these steps, the first and last author identified the three steps most focused on designing adaptations: 1) decide what needs to be adapted, 2) adapt the original program, 3) test the adapted materials. This focused identification of the fewest possible adaptation steps was performed with the goal of creating a method that would be different from already existing comprehensive and resource-intensive adaptation frameworks. Further method development was completed via a series of literature reviews, team discussion meetings, and iterative revision. When the first draft of the intervention adaptation method was complete, we titled it Making Optimal Decisions for Intervention Flexibility during Implementation (MODIFI). To obtain feedback from people working in intervention adaptation research and practice, MODIFI was presented to a panel of experts in a two-round modified Delphi process.

Participants

Following the precedent set by previous Delphi studies in implementation science (e.g., [6]), we employed a purposive snowball sampling procedure beginning with an initial list of experts generated by members of the study team. The initial list included people with expertise in intervention design, adaptation, and implementation, as identified by reviewing the existing literature. Efforts were made to recruit a sample of people with both research and applied professional roles; thus, other participant identification methods included contacting the members of the Society for Implementation Research Collaboration (SIRC) Practitioner Network of Expertise and Intermediary Network of Expertise. Potential participants were encouraged to identify peers with expertise in intervention adaptation and/or implementation science. Participants were offered group authorship on the paper publishing the final MODIFI method.

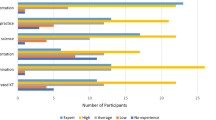

We recruited a panel of 43 experts who provided feedback on MODIFI, each of whom was invited to provide feedback again in Round 2 (32, or 74.4%, participated in Round 2). Participants were 65.12% female, the average age was 44.98 years, and 81.40% were White (86.05% not Latino/a). Most participants held a doctoral degree (95.35%). Strategies to recruit experts with both research and applied professional experience were successful: the great majority of participants (88%) had experience in both research and practice related to EBP implementation. In fact, 74% of participants had spent more than 50% of their professional years in implementation practice, either as part of a mixed practice/research role or in practice alone. Current professional roles were 48.84% professor, 20.93% researcher, 11.63% clinician, 9.30% program/center director, and 9.30% other.

Procedures

The Institutional Review Board at the first author’s institution approved all study procedures. This study employed a mixed-method design for the purpose of expansion, as qualitative methods were used to explain the results of quantitative methods [41]. Quantitative and qualitative data collection occurred simultaneously (quan + QUAL), and analysis occurred sequentially (quan → QUAL). The Delphi technique was used to build consensus among a panel of experts, achieving convergence of opinion through multiple rounds of feedback [42]. Figure 1 illustrates the steps of the modified Delphi process employed in this project.

The Round 1 survey first outlined the three phases of MODIFI (1. Decide what needs adaptation, 2. Adapt the EBP, 3. Pilot test the adapted EBP) and allowed participants to review MODIFI in its entirety, including the techniques situated within each phase. Then, feedback was solicited on whether the three phases were acceptable for a guide that spells out how to make adaptations to an EBP. For each step within each phase, participants were asked, “Do you think this step should be included in the adaptation how-to guide?” (answer choices: Essential, Optional, Inadvisable) and “Do you think this step is acceptable as it is currently written?” (answer choices: Yes, No [If not, what would you change to make this step acceptable?]). Participants could offer additional thoughts and/or feedback at the end of the survey.

Round 1 quantitative data were analyzed using descriptive statistics examining the proportion of responses across categories. Round 1 qualitative data were analyzed using conventional content analysis, with the aim of understanding the perspectives of panel members without imposing preconceived categories onto the feedback [43]. The first author reviewed all responses and identified key concepts within each response, then grouped together responses that conveyed similar concepts (i.e., themes). Findings were discussed with the last author, and MODIFI was revised based on discussions of themes derived from qualitative analysis and iterative MODIFI redesign. Following a series of meetings and MODIFI revisions, a new version of MODIFI was developed.

The Round 2 survey began by specifying the intended primary users for MODIFI (intervention implementers and intervention decision-makers working in applied settings), as determined based on Round 1 feedback, as well as the intended primary use (streamlined methods optimized for feasibility while still being as scientifically rigorous as possible). A summary of the Round 1 feedback that was used to revise MODIFI was presented. Participants were asked to narrow the focus of any remaining recommendations to those that were crucial to ensure MODIFI’s feasibility and effectiveness. The survey instructed participants to review the contents of the revised MODIFI method so they could provide feedback on each part with an awareness of the whole. Then, for each part of revised MODIFI, feedback was solicited on whether there were any further revisions that were crucial for MODIFI’s feasibility and effectiveness. The Round 2 quantitative ratings (feasibility) changed from the Round 1 ratings (inclusion and acceptability) to reflect the clarified priorities of the MODIFI method based on panelists’ feedback. For the detailed steps of MODIFI, participants were asked, “Regarding this step, what do you think is its feasibility of use in applied contexts?” (answer choices: Completely infeasible, Somewhat infeasible, Neither infeasible nor feasible, Somewhat feasible, Completely feasible). Participants were asked to offer additional thoughts and/or feedback at the end of the survey. Round 2 feedback was used to develop the final version of MODIFI for dissemination. Data analysis following Round 2 employed the same procedures as Round 1.

Results

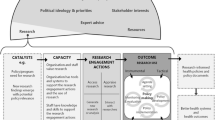

Below, we first summarize the results of each round of the modified Delphi process and how it supported MODIFI development (see Fig. 2). Then, we present the outcome of this development process—the final MODIFI method (see Additional File 1).

Round 1

The Round 1 survey focused on inclusion and acceptability. Most participants (61.4%) answered that the three MODIFI phases were acceptable for an adaptation how-to guide, while 38.6% answered that these overarching phases were unacceptable and provided written responses describing what they would change. For a full summary of the original MODIFI components and revision decisions based on Round 1 results, see Additional File 2.

For the specific MODIFI steps within each phase, participants rated inclusion favorably, with steps rated as “Essential” by 83.96% of participants on average, depending on the step (SD = 0.16). One step was rated as “Essential” by less than 50% of participants, and it was removed. In addition to inclusion, participants rated the acceptability of each specific MODIFI step. Steps were rated as “Acceptable” by 51.28% of participants on average, depending on the step (SD = 0.12). Given that almost half of participants considered most steps to be unacceptable as currently written, all steps were revised.
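The inclusion decision rule described above can be illustrated with a brief sketch. The step names and responses below are hypothetical and are not study data, and the function names are illustrative assumptions rather than the analysis code used in this study. The sketch tabulates the proportion of panelists rating each step as “Essential” and flags any step falling below the 50% threshold.

```python
from collections import Counter

# Hypothetical ratings for illustration only (not study data).
# Each list holds one panelist response per entry.
ratings = {
    "step_a": ["Essential", "Essential", "Optional", "Essential"],
    "step_b": ["Optional", "Inadvisable", "Essential", "Optional"],
}


def proportion_essential(responses):
    """Return the share of responses equal to 'Essential'."""
    counts = Counter(responses)
    return counts["Essential"] / len(responses)


def steps_to_remove(ratings, threshold=0.5):
    """Flag steps rated 'Essential' by less than `threshold` of panelists."""
    return [step for step, responses in ratings.items()
            if proportion_essential(responses) < threshold]


summary = {step: proportion_essential(r) for step, r in ratings.items()}
flagged = steps_to_remove(ratings)
print(summary)
print(flagged)
```

With these hypothetical responses, only the second step falls below the threshold and would be a candidate for removal.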

Acceptability ratings were further interpreted based on participants’ qualitative feedback. A summary of the Round 1 qualitative feedback can be viewed in Table 1. MODIFI was heavily revised based on this feedback, particularly the steps with lower ratings of acceptability. Following Round 1, MODIFI was streamlined, offering a narrower range of techniques. The revised version of MODIFI was designed to emphasize the creation of internally valid, locally actionable knowledge (which tends to be a more common focus in industry than in traditional academic research) and improve MODIFI’s feasibility for use in applied settings by intervention implementers (e.g., clinicians) and/or intervention decision-makers (e.g., supervisors, program/site leaders).

Round 2

For a summary of the revised MODIFI components and final revision decisions based on Round 2 results, see Additional File 3. The revised version of MODIFI participants rated in Round 2 was bookended by an introduction (MODIFI overview, definitions, prerequisites) and guidance for what users should do after completing the MODIFI process. Revised MODIFI comprised three steps: learn about the users, learn about the local context, and identify key information (Step 1); adapt the intervention (Step 2); and evaluate the adaptation (Step 3). After Step 3, MODIFI users were encouraged to return to earlier steps if the adaptation required revision to achieve the desired outcome.

Participants commented on a MODIFI phase or step if they believed it required additional revision. Higher rates of comments indicated where to apply additional revisions. Round 2 participants disproportionately commented on the introduction overview (59%) and definitions (66%), and two Step 1 components: learn about the users (53%) and identify key information about the intervention (78%). Additionally, participants rated each step’s feasibility of use in applied contexts, with all revised MODIFI components considered somewhat or completely feasible.

Similar to Round 1, Round 2 qualitative feedback was used to inform the final MODIFI revisions. A summary of the Round 2 qualitative feedback can be viewed in Table 2. Following Round 2, there were fewer recommended revisions, and these revisions were relatively minor in scope. Round 2 changes largely concerned operationalization of terms/processes and the sequencing of content. In response to the Round 2 feedback, the final MODIFI presents a simplified figure illustrating its overall process (Fig. 3); front-loads content regarding participatory co-design methods; includes newly clarified definitions of several key concepts (including an example of an intervention function/form table); instructs participants in how to consider and respond to potential unintended consequences of adaptation; and emphasizes iterative evaluation and development both within MODIFI and following its final steps. Additional small-scale changes were made in response to minor feedback.

The final MODIFI method

Round 2 feedback gave rise to the final version of MODIFI. The steps of MODIFI (see Fig. 3) are summarized below and presented in full in Additional File 1. MODIFI is used to make adaptations to part of an intervention, not to complete whole-intervention redesign. If the person/people applying MODIFI want to make multiple adaptations, it is recommended to complete the MODIFI steps for each adaptation (either one after another or at the same time in separate processes). This is particularly crucial when testing multiple adaptations together would not yield evidence that clarifies which adaptation(s) achieved the desired outcomes. However, to maximize feasibility and acknowledge real-world limitations, MODIFI does allow for multiple adaptations to be made at once if necessary. This is least problematic when multiple adaptations all target the same reason for adaptation (and thus would be tested by measuring the same target outcome).

MODIFI’s techniques can be carried out in many ways—to match the needs and resources of local settings, there are no prescribed numbers of participants, numbers of data collections, or time periods for data collection. MODIFI is a method that provides both structure and flexibility, because it is designed to be useful for a range of people and settings. For an illustrative case example describing what it looks like for people working in an applied setting to apply the MODIFI method for intervention adaptation, see Additional File 4. For a summary of the case example, see Table 3.

Unlike broader implementation frameworks, MODIFI is designed to “zoom in” on intervention modification and outline clear steps for how to design adaptations. Because MODIFI is a method to be used at a particular point in the implementation lifecycle, its application carries several preconditions. First, MODIFI users should already have selected an intervention to implement that addresses a problem for the population of focus, that relevant stakeholders believe is (or has the potential to be) appropriate, and ideally that has evidence for its effectiveness. Second, users have determined that adaptation is necessary [13, 44]—the original intervention cannot be implemented successfully due to potential problems with MODIFI outcomes (e.g., fit/appropriateness, usability, cultural responsiveness; see Fig. 4) and/or implementation outcomes (e.g., low fidelity, high cost). These outcomes are prioritized because they represent a causal chain. The goal of intervention adaptation is to improve the fit such that EBPs are more feasible, usable, culturally responsive, etc., and thus are more likely to be implemented with quality. Third, the user has formed a team to support intervention adaptation [11]. Adaptation teams work best when they contain a mix of people in different roles [26]; specifically, primary users, people with expertise in the intervention (or the topic/problem it addresses), and people with expertise in intervention adaptation methods. When this is infeasible, MODIFI may be used cautiously by an individual intervention implementer or decision-maker.

MODIFI step 1: Learn about the users

Step 1 of MODIFI has three components that can be completed in any order or simultaneously—learn about the users, learn about the local context, and identify key information about the intervention. Step 1 involves taking an applied anthropological approach [45]—listening, observing, and understanding experiences of the intended users within the local context—and additionally identifying the intervention’s functions and forms so the intervention can be adapted while maintaining effectiveness [25]. Learning about the users requires the person/people applying MODIFI to identify who will be the “users” of the adapted intervention (e.g., providers, service recipients) [18]. At times, the person/people applying MODIFI are primary users (interacting directly with the intervention; e.g., service providers and recipients) or secondary users (interacting indirectly with the intervention; e.g., organization leaders) themselves. Multiple users can be considered simultaneously by integrating information about their needs. After users have been identified, the person/people applying MODIFI conduct interviews asking users about their needs and assets related to the intervention and the topic/problem it addresses [46] (for strategies to effectively engage diverse stakeholders, see [47, 48]). Users might rank their unmet needs in order of priority to guide decision-making when designing adaptations. When needs conflict within or across user groups, needs should be prioritized in order of proximity to the intervention (e.g., primary users before secondary users). If needed, professional experience and research literature can be used to elaborate upon what is learned from users. After completing this component of Step 1, the person/people applying MODIFI should have a list of the highest priority unmet user needs to inform intervention adaptations in Step 2.
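The prioritization guidance above can be sketched as a simple ordering rule. The example below is a minimal illustration under stated assumptions: the needs, the user-provided ranks, and the encoding of proximity as a numeric order are all hypothetical and are not part of the MODIFI materials. Needs are ordered first by user proximity to the intervention (primary before secondary) and then by the users' own ranking.

```python
# Hypothetical needs for illustration only; ranks are user-provided
# (1 = highest priority within that user group).
needs = [
    {"need": "Shorter session materials", "user_type": "secondary", "rank": 1},
    {"need": "Materials in clients' first language", "user_type": "primary", "rank": 2},
    {"need": "Sessions that fit a 30-minute visit", "user_type": "primary", "rank": 1},
]

# Assumption: proximity to the intervention is encoded as a simple ordering.
PROXIMITY = {"primary": 0, "secondary": 1}


def prioritize(needs):
    """Order needs by user proximity first, then by the users' own ranking."""
    return sorted(needs, key=lambda n: (PROXIMITY[n["user_type"]], n["rank"]))


prioritized = prioritize(needs)
for item in prioritized:
    print(item["user_type"], item["rank"], item["need"])
```

In practice, a team would likely apply such a rule only when needs conflict, and would weigh it alongside professional experience and the research literature.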

MODIFI step 1: Learn about the local context

Another component of Step 1 is to learn about the local context. First, the person/people applying MODIFI identify which aspects of the context (e.g., workflow, routines) are most likely to impact the intervention’s implementation. Then, in the context where the adapted intervention will be implemented, observations are conducted to gather information about the identified factors [45]. What gets observed depends on the reasons that adaptation is needed to improve intervention-context fit, so the person/people applying MODIFI identify which aspects of the context they need to learn about in order to address the “WHY” of adaptation [49]. Observations can be conducted efficiently by selecting aspects of the context that are practical to observe (e.g., physical location, working hours). The goal is to remain unobtrusive without necessarily trying to be invisible; observers can be friendly, ask questions, reassure people that they are there to learn (not judge), and respect confidentiality [50]. After completing this component of Step 1, the person/people applying MODIFI should have a list of aspects of the local context that may interfere with intervention implementation.

MODIFI step 1: Identify key information about the intervention

Another component of Step 1 is to identify key information about the intervention. To adapt an intervention while retaining/maximizing its effectiveness, the person/people applying MODIFI must understand how it works. This can be accomplished via a function/form table that maps out how the intervention achieves its effects and is used to identify what can and cannot be changed during adaptation. Often core functions are not articulated by intervention developers but can be identified by creating a table with three columns: 1. Problems, 2. Functions, and 3. Forms [24, 25]. Using the intervention materials (e.g., manual, website), consultation with intervention developers and/or experts, professional experience, and the research literature, the columns are populated with information including the problems the intervention aims to solve, the intervention’s functions—the goals or ways the intervention solves each problem—and the form(s) that each function takes within the intervention (e.g., intervention activities; see Table 4). With the function/form table completed, the person/people applying MODIFI will have a list of intervention functions (how the intervention solves problems)—these should remain unchanged in Step 2, and intervention forms—these may be adapted in Step 2. In the adapted intervention, each function is represented in at least one form.
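To make the function/form table concrete, the sketch below represents it as a small data structure and checks that every function retains at least one form in a drafted adaptation. This is an illustration only: the rows are hypothetical (they are not drawn from Table 4), and the class and function names are assumptions introduced for this example.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FunctionFormRow:
    """One row of a function/form table: a problem, a function, and its forms."""
    problem: str
    function: str
    forms: List[str] = field(default_factory=list)


# Hypothetical rows for illustration only (not taken from Table 4).
table = [
    FunctionFormRow(
        problem="Clients forget skills between sessions",
        function="Reinforce skill practice between sessions",
        forms=["Paper homework worksheet"],
    ),
    FunctionFormRow(
        problem="Providers cannot see client progress",
        function="Monitor progress over time",
        forms=["Weekly symptom checklist"],
    ),
]


def missing_functions(table: List[FunctionFormRow],
                      adapted_forms: Dict[str, List[str]]) -> List[str]:
    """Return functions that have no form in the adapted intervention."""
    return [row.function for row in table
            if not adapted_forms.get(row.function)]


# A draft adaptation that swaps one form and accidentally drops another.
draft = {
    "Reinforce skill practice between sessions": ["Text-message practice prompts"],
    "Monitor progress over time": [],
}
gaps = missing_functions(table, draft)
print(gaps)
```

A non-empty result signals that the draft has removed every form of a function, so the adaptation would need revision before evaluation.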

MODIFI step 2

Step 2 of MODIFI uses a co-design method to adapt the intervention’s forms while leaving the functions intact. Co-design involves partnership between members of different groups to explore challenging problems and identify solutions [51]. First, the person/people applying MODIFI identify who will participate in the co-design sessions. If possible, they should include at least one person with each of these roles: primary user, expert in the intervention (or the topic/problem it addresses), and expert in intervention adaptation methods. When this is not feasible, they should consider which viewpoints may be absent from the team and do their best to elevate those viewpoints as they present the information gathered during Step 1. When the group is assembled, they engage in co-design sessions (in person or online), where they collaborate to (1) understand the problem(s) to be solved through adaptation, (2) generate possible solutions, (3) co-create adaptations that solve the identified problem(s), (4) consider possible unintended consequences, and (5) iterate until the adaptation is ready for evaluation. In MODIFI, each of these co-design aims is accompanied by a list of techniques. First, to understand the problem(s) to be solved, the person/people applying MODIFI present the information gathered in Step 1 (e.g., highest priority unmet user needs, aspects of the local context that may interfere with intervention implementation, and intervention functions), and the user/stakeholder members of the co-design sessions present information about their experiences. Then, to generate possible solutions, all co-design members contribute to brainstorming solutions to the problem(s) that they hope to solve through adaptation [52]. After brainstorming possible solutions, co-design members decide collaboratively which solutions (i.e., intervention adaptations) to select for co-creation.

To create adaptations that solve the identified problem(s), co-design members draft intervention adaptation(s), during which they make sure that each intervention function is represented in at least one form (referring to the function/form table from Step 1). Then, co-design members iterate, co-creating further adaptation drafts and building upon each version by asking themselves, “How could this solution be just a little bit better?” [52].

Next, co-design members reflect on potential impacts of the drafted adaptation(s) by discussing these questions [12]: Is this adaptation designed with specific goals in mind? Is this adaptation aligned with intervention core functions? And could there be unintended negative impacts of this adaptation (e.g., on adoption, cultural responsiveness, feasibility, cost)? Then, co-design members discuss possible negative impacts (e.g., increasing an EBP’s acceptability may reduce its effectiveness if the adaptation alters the EBP’s core functions) [12], the likelihood of negative impacts, and their severity, then consider whether these can be offset by positive impacts on other outcomes. Based on the findings of the impact analysis, further iteration may be warranted. In that case, the team co-creates further adaptation drafts. Finally, co-design members reach consensus by agreeing that the problem(s) have been solved well enough that the adaptation is ready for evaluation. After completing Step 2, the person/people applying MODIFI will know what adaptation is needed to match user needs/assets and local context realities.

MODIFI step 3

The goal of Step 3 is to generate evidence that is relevant to the identified users within the local context, not to collect evidence that is generalizable to other users and contexts [53]. Thus, efficient, feasible, and locally appropriate evaluation methods are recommended. The person/people applying MODIFI should begin this step by thinking about what data they need to understand whether the intervention adaptation works for the identified users within the local context. If possible, they should include both quantitative and qualitative indicators of success (e.g., ratings of acceptability, quotes about cultural responsiveness, implementation outcomes). Ultimately, they should make decisions about what data they collect based on what is feasible in their context, alongside what they learned from the users/context in Step 1 about the most important outcomes to maximize during intervention adaptation. Next, they should decide how they will measure the outcomes they have chosen, how often they will collect data, and what they need to see in order to conclude that the adaptation works for the identified users within the local context. These decisions are based on what they can actually track in their context. Data collection may be as narrowly scoped as a provider asking a service recipient if the adaptation is acceptable during each session while the adaptation is implemented or as complex as collecting data on multiple outcomes with multiple users over time before and after the adaptation is implemented.
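
As one illustration of deciding in advance "what they need to see," a team tracking a single quantitative indicator might write down a simple rule such as the one below. The rating scale, the threshold, and the use of a mean are assumptions chosen for this sketch; MODIFI does not prescribe any particular indicator or cut-off.

```python
from statistics import mean


def adaptation_meets_criterion(ratings, threshold):
    """Return True when the average rating reaches the locally chosen threshold."""
    if not ratings:
        raise ValueError("no ratings collected yet")
    return mean(ratings) >= threshold


# Hypothetical per-session acceptability ratings on a 1-5 scale.
session_ratings = [4, 5, 3, 4]

# The team decided beforehand that an average of 4 or higher would count
# as the adaptation working for the identified users.
print(adaptation_meets_criterion(session_ratings, threshold=4))
```

A rule like this covers only the quantitative side; qualitative indicators (e.g., quotes about cultural responsiveness) would still be reviewed alongside it.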

MODIFI next steps

Following the three steps of MODIFI, the person/people applying MODIFI should know whether the adapted intervention works for the identified users within the local context in a way that they find relevant and satisfying. If so, then they can implement the adapted intervention, and if resources allow, implement while collecting additional data (e.g., on the outcomes they have chosen, and/or on additional changes that occur during implementation). If the adapted intervention does not work for the identified users within the local context (or a subset of the identified users), the person/people applying MODIFI should either return to Step 1 if they need to learn more about the users, context, and/or intervention before further adaptation, or return to Step 2 if they know what further adaptation is needed, then continue from there.
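
The branching described above can be summarized in a short sketch. The function and argument names are hypothetical labels for this illustration, and the two yes/no inputs stand in for judgments that the person/people applying MODIFI make from their Step 3 evidence.

```python
def next_action(works_for_users, needs_more_learning):
    """Map the Step 3 conclusion to the next MODIFI action described in the text."""
    if works_for_users:
        # Implement, collecting additional data if resources allow.
        return "implement the adapted intervention"
    if needs_more_learning:
        # More must be learned about users, context, and/or intervention.
        return "return to Step 1"
    # The team already knows what further adaptation is needed.
    return "return to Step 2"


print(next_action(works_for_users=False, needs_more_learning=True))
```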

Discussion

We developed MODIFI in response to a gap in the availability of pragmatic methods with which community-based teams can conduct implementation-explicit intervention redesign. MODIFI involves the systematic, prospective adaptation of intervention components to address intervention-level determinants. MODIFI leverages methods from HCD to gather locally relevant, actionable information and design adaptations. We completed two Delphi rounds to gather feedback from experts in implementation science and HCD to refine the MODIFI method. In Round 1, most participants viewed all proposed components as essential but offered revisions to make them more acceptable. Round 2 emphasized feasibility, where ratings were generally high and fewer substantive revisions were recommended. Following Round 2, changes largely concerned the overall framing of MODIFI, operationalization of its terms/processes, and sequencing of content.

As the implementation field grows, there are increasing opportunities for gaps to form between its research and practice components [54, 55]. While researchers have developed several models and frameworks to characterize stages of the intervention adaptation process (e.g., [20, 21]), pragmatic guidance for how implementers can design adaptations is lacking. In the absence of such scaffolding, implementers are likely to develop reactive, as opposed to proactive, adaptations that may not maintain the core functions necessary for the intervention to have its intended effects. MODIFI bridges implementation research and practice by offering a pragmatic and flexible method to empower community-based teams to lead this work. MODIFI can be paired with guidance on how to understand and evaluate intervention adaptations (see [12]), thus allowing teams to engage in cycles of adaptation and evaluation to facilitate implementation of locally-tailored EBPs.

MODIFI extends the contributions of other adaptation theories, models, and frameworks while integrating methods that are tailored to the needs of intervention implementers. Most existing adaptation frameworks describe important considerations without “zooming in” with step-by-step instructions for how to design adaptations (e.g., [56]) or outline such a comprehensive adaptation process that it would be infeasible for many real-world practice settings to complete (e.g., [31]). As MODIFI prioritizes rapid, resource-efficient methods for designing adaptations, it represents an opportunity for implementation researchers to compare its effects with more resource-intensive models. A unique strength of MODIFI is its inclusion of HCD approaches for engaging stakeholders, understanding perspectives, and redesigning products in ways that elevate the voices of users. Increasingly, intervention and implementation researchers are drawing upon HCD concepts and techniques (e.g., [32, 35, 37, 57]), and MODIFI provides an HCD-informed method with which to structure the application of these techniques to real-world intervention implementation problems. Thus, MODIFI may offer researchers new ways to approach intervention adaptations by prioritizing time- and resource-efficiency and user perspectives.

Limitations

The limitations of this study include the lack of diversity on the panel of experts across multiple dimensions (65.12% female, 81.40% White, 95.35% Doctoral degree holders). Given the disproportionate number of people with advanced education and research-related work, we made efforts to elevate feedback regarding the perspectives and needs of clinicians and other professionals working in applied settings. Still, MODIFI would benefit from acceptability and feasibility testing with implementers who differ from the MODIFI expert panel with regard to educational background and professional setting. Another limitation may be the difference between ratings solicited in Round 1 (acceptability) versus Round 2 (feasibility) of the modified Delphi process. This reflected the shifting priorities of the MODIFI method but somewhat limited our ability to interpret differences in ratings across rounds. Finally, in response to feedback from stakeholders, MODIFI was developed to make adaptations to part of an intervention—not whole-intervention redesign—and to do so in efficient, cost-friendly ways. Because of this streamlining, it is not as comprehensive as other adaptation frameworks that involve a large-scale research-funded implementation planning process. User needs differ, and MODIFI may not meet the needs of users who prefer a more comprehensive compilation of techniques over a single list of steps optimized for feasibility. A clear future direction for MODIFI is to evaluate its applicability for making adaptations to implementation strategies.

Conclusions

Intervention-level determinants, including aspects of interventions that are not contextually acceptable, appropriate, or feasible, require more explicit attention in research and practice. To best meet the needs of local contexts, methods for identifying and addressing intervention-level barriers should be pragmatic and accessible to community practitioners. MODIFI empowers community-based teams with knowledge accumulated through rigorous implementation research by offering a pragmatic method that teams can use to proactively design adaptations to a prioritized EBP. Guiding teams to tailor EBPs to their local context could extend for whom, where, and under what conditions EBPs can be effective. While MODIFI will benefit from future applications across multiple service settings and EBPs, our hope is that MODIFI becomes a tool for community-based teams to offer the version of an EBP that best meets the needs of the populations they serve.

Availability of data and materials

The datasets used and/or analyzed during the current study are available from the corresponding author upon reasonable request.

Abbreviations

- MODIFI: Making Optimal Decisions for Intervention Flexibility during Implementation
- EBP: Evidence-based prevention and intervention practice
- FRAME: Framework for Reporting Adaptations and Modifications-Expanded
- PCMH: Patient-centered medical home
- MADI: Model for Adaptation Design and Impact
- DAP: Dynamic Adaptation Process
- RE-AIM: Reach, Effectiveness, Adoption, Implementation, and Maintenance
- CENTER-IT: CENTERing multilevel partner voices in Implementation Theory
- CFIR: Consolidated Framework for Implementation Research
- HCD: Human centered design
- Quan: Quantitative
- Qual: Qualitative
- TF-CBT: Trauma-Focused Cognitive Behavioral Therapy

References

Balas EA, Boren SA. Managing clinical knowledge for health care improvement. Yearb Med Inform. 2000;9:65–70. https://doi.org/10.1055/s-0038-1637943.

Rolls Reutz JA, Kerns SE, Sedivy JA, Mitchell C. Documenting the implementation gap, part 1: use of fidelity supports in programs indexed in the California evidence-based clearinghouse. J Fam Soc Work. 2020;23(2):114–32. https://doi.org/10.1080/10522158.2019.1694342.

Williams NJ, Beidas RS. Annual research review: the state of implementation science in child psychology and psychiatry: a review and suggestions to advance the field. J Child Psychol Psychiatry. 2019;60(4):430–50. https://doi.org/10.1111/jcpp.12960.

Cook CR, Lyon AR, Locke J, Waltz T, Powell BJ. Adapting a compilation of implementation strategies to advance school-based implementation research and practice. Prev Sci. 2019;20(6):914–35. https://doi.org/10.1007/s11121-019-01017-1.

Krause J, Van Lieshout J, Klomp R, Huntink E, Aakhus E, Flottorp S, Agarwal S. Identifying determinants of care for tailoring implementation in chronic diseases: an evaluation of different methods. Implement Sci. 2014;9(102):1–12. https://doi.org/10.1186/s13012-014-0102-3.

Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, Kirchner JE. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10(1):1–14. https://doi.org/10.1186/s13012-015-0209-1.

Dopp AR, Parisi KE, Munson SA, Lyon AR. Integrating implementation and user-centered design strategies to enhance the impact of health services: protocol from a concept mapping study. Health Res Policy Syst. 2019;17:1–11. https://doi.org/10.1186/s12961-018-0403-0.

Lewis CC, Mettert K, Lyon AR. Determining the influence of intervention characteristics on implementation success requires reliable and valid measures: results from a systematic review. Implement Res Pract. 2021;2:1–15. https://doi.org/10.1177/2633489521994197.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4(50):1–15. https://doi.org/10.1186/1748-5908-4-50.

Lyon AR, Bruns EJ. User-centered redesign of evidence-based psychosocial interventions to enhance implementation—hospitable soil or better seeds? JAMA Psychiat. 2019;76(1):3–4. https://doi.org/10.1001/jamapsychiatry.2018.3060.

Moore G, Campbell M, Copeland L, Craig P, Movsisyan A, Hoddinott P, Evans R. Adapting interventions to new contexts—the ADAPT guidance. BMJ. 2021;374(1679):1–10. https://doi.org/10.1136/bmj.n1679.

Kirk MA, Moore JE, Stirman SW, Birken SA. Towards a comprehensive model for understanding adaptations’ impact: the model for adaptation design and impact (MADI). Implement Sci. 2020;15(56):1–15. https://doi.org/10.1186/s13012-020-01021-y.

Miller CJ, Wiltsey-Stirman S, Baumann AA. Iterative Decision-making for Evaluation of Adaptations (IDEA): a decision tree for balancing adaptation, fidelity, and intervention impact. J Community Psychol. 2020;48(4):1163–77. https://doi.org/10.1002/jcop.22279.

Hall GCN, Ibaraki AY, Huang ER, Marti CN, Stice E. A meta-analysis of cultural adaptations of psychological interventions. Behav Ther. 2016;47:993–1014. https://doi.org/10.1016/j.beth.2016.09.005.

Stirman SW, Gutner CA, Crits-Christoph P, Edmunds J, Evans AC, Beidas RS. Relationships between clinician-level attributes and fidelity-consistent and fidelity-inconsistent modifications to an evidence-based psychotherapy. Implement Sci. 2015;10(115):1–10. https://doi.org/10.1186/s13012-015-0308-z.

Cooper BR, Shrestha G, Hyman L, Hill L. Adaptations in a community-based family intervention: replication of two coding schemes. J Prim Prev. 2016;37:33–52. https://doi.org/10.1007/s10935-015-0413-4.

Eisman AB, Kilbourne AM, Greene D, Walton M, Cunningham R. The user-program interaction: How teacher experience shapes the relationship between intervention packaging and fidelity to a state-adopted health curriculum. Prev Sci. 2020;21(6):820–9. https://doi.org/10.1007/s11121-020-01120-8.

Lyon AR, Munson SA, Renn BN, Atkins DC, Pullmann MD, Friedman E, Areán PA. Use of human-centered design to improve implementation of evidence-based psychotherapies in low-resource communities: protocol for studies applying a framework to assess usability. JMIR Res Protoc. 2019;8(10):e14990. https://doi.org/10.2196/14990.

Chambers DA, Norton WE. The adaptome: advancing the science of intervention adaptation. Am J Prev Med. 2016;51(4):S124–31. https://doi.org/10.1016/j.amepre.2016.05.011.

Stirman SW, Baumann AA, Miller CJ. The FRAME: an expanded framework for reporting adaptations and modifications to evidence-based interventions. Implement Sci. 2019;14(58):1–10. https://doi.org/10.1186/s13012-019-0898-y.

Hall TL, Holtrop JS, Dickinson LM, Glasgow RE. Understanding adaptations to patient-centered medical home activities: the PCMH adaptations model. Transl Behav Med. 2017;7(4):861–72. https://doi.org/10.1007/s13142-017-0511-3.

Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, Hensley M. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Mental Health. 2011;38:65–76. https://doi.org/10.1007/s10488-010-0319-7.

Patient-Centered Outcomes Research Institute (PCORI). Standards for studies of complex interventions. 2019. Available at: https://www.pcori.org/research-results/about-our-research/research-methodology/pcori-methodology-standards#Complex.

Perez Jolles M, Lengnick-Hall R, Mittman BS. Core functions and forms of complex health interventions: a patient-centered medical home illustration. J Gen Intern Med. 2019;34(6):1032–8. https://doi.org/10.1007/s11606-018-4818-7.

Kirk MA, Haines ER, Rokoske FS, Powell BJ, Weinberger M, Hanson LC, Birken SA. A case study of a theory-based method for identifying and reporting core functions and forms of evidence-based interventions. Transl Behav Med. 2021;11(1):21–33. https://doi.org/10.1093/tbm/ibz178.

Aarons GA, Green AE, Palinkas LA, Self-Brown S, Whitaker DJ, Lutzker JR, Chaffin MJ. Dynamic adaptation process to implement an evidence-based child maltreatment intervention. Implement Sci. 2012;7(32):1–9. https://doi.org/10.1186/1748-5908-7-32.

Glasgow RE, Battaglia C, McCreight M, Ayele RA, Rabin BA. Making implementation science more rapid: use of the RE-AIM framework for mid-course adaptations across five health services research projects in the veterans health administration. Front Public Health. 2020;8(194):1–13. https://doi.org/10.3389/fpubh.2020.00194.

Trivedi M, Hoque S, Shillan H, Seay H, Spano M, Gaffin J, Pbert L. CENTER-IT: a novel methodology for adapting multi-level interventions using the consolidated framework for implementation research—A case example of a school-supervised asthma intervention. Implement Sci Commun. 2022;3(33):1–12. https://doi.org/10.1186/s43058-022-00283-5.

Fernandez ME, Ruiter RAC, Markham CM, Kok G. Intervention mapping: theory-and evidence-based health promotion program planning: perspective and examples. Front Public Health. 2019;7(209). https://doi.org/10.3389/fpubh.2019.00209.

Fernandez ME, ten Hoor GA, van Lieshout S, Rodriguez SA, Beidas RS, Parcel G, Ruiter RAC, Markham CM, Kok G. Implementation mapping: using intervention mapping to develop implementation strategies. Front Public Health. 2019;7(158). https://doi.org/10.3389/fpubh.2019.00158.

Wingood GM, DiClemente RJ. The ADAPT-ITT model: a novel method of adapting evidence-based HIV Interventions. J Acquir Immune Defic Syndr. 2008;47(1):S40–6. https://doi.org/10.1097/QAI.0b013e3181605df1.

Lyon AR, Brewer SK, Areán PA. Leveraging human-centered design to implement modern psychological science: return on an early investment. Am Psychol. 2020;75(8):1067–79. https://doi.org/10.1037/amp0000652.

Norman DA, Draper SW. User centered system design: new perspectives on human-computer interaction. 1st ed. Hillsdale, NJ: CRC Press; 1986. https://doi.org/10.1201/b15703.

Oh A. Design thinking and community-based participatory research for implementation science. Dispatches from Implementation Science at the National Cancer Institute. 2018. Retrieved from: https://cancercontrol.cancer.gov/IS/blog/2018/09-design-thinking-and-community-based-participatory-research-for-implementation-science.html.

Lyon AR, Dopp AR, Brewer SK, Kientz JA, Munson SA. Designing the future of children’s mental health services. Adm Policy Mental Health. 2020;47(5):735–51. https://doi.org/10.1007/s10488-020-01038-x.

Chen E, Neta G, Roberts MC. Complementary approaches to problem solving in healthcare and public health: implementation science and human-centered design. Transl Behav Med. 2020;11:1115–21. https://doi.org/10.1093/tbm/ibaa079.

Haines ER, Dopp A, Lyon AR, Witteman HO, Bender M, Vaisson G, Birken S. Harmonizing evidence-based practice, implementation context, and implementation strategies with user-centered design: a case example in young adult cancer care. Implement Sci Commun. 2021;2(45):1–16. https://doi.org/10.1186/s43058-021-00147-4.

Lyon AR, Coifman J, Cook H, McRee E, Liu FF, Ludwig K, McCauley E. The Cognitive Walkthrough for Implementation Strategies (CWIS): a pragmatic method for assessing implementation strategy usability. Implement Sci Commun. 2021;2(78):1–16. https://doi.org/10.1186/s43058-021-00183-0.

Lyon AR, Koerner K, Chung J. Usability Evaluation for Evidence-Based Psychosocial Interventions (USE-EBPI): a methodology for assessing complex intervention implementability. Implement Res Pract. 2020;1:1–17. https://doi.org/10.1177/2633489520932924.

Escoffery C, Lebow-Skelley E, Udelson H, Böing EA, Wood R, Fernandez ME, Mullen PD. A scoping study of frameworks for adapting public health evidence-based interventions. Transl Behav Med. 2019;9(1):1–10. https://doi.org/10.1093/tbm/ibx067.

Palinkas LA. Qualitative and mixed methods in mental health services and implementation research. J Clin Child Adolesc Psychol. 2014;43(6):851–61. https://doi.org/10.1080/15374416.2014.910791.

Hsu CC, Sandford BA. The Delphi technique: making sense of consensus. Pract Assess Res Eval. 2007;12(10):1–8. https://doi.org/10.7275/pdz9-th90.

Hsieh HF, Shannon SE. Three approaches to qualitative content analysis. Qual Health Res. 2005;15(9):1277–88. https://doi.org/10.1177/1049732305276687.

Baumann AA, Powell BJ, Kohl PL, Tabak RG, Penalba V, Proctor EK, Cabassa L. Cultural adaptation and implementation of evidence-based parent-training: a systematic review and critique of guiding evidence. Children Youth Serv Rev. 2015;53:113–20. https://doi.org/10.1016/j.childyouth.2015.03.025.

Hamilton AB, Finley EP. Qualitative methods in implementation research: an introduction. Psychiatry Res. 2019;280:1–8. https://doi.org/10.1016/j.psychres.2019.112516.

Dopp AR, Parisi KE, Munson SA, Lyon AR. A glossary of user-centered design strategies for implementation experts. Transl Behav Med. 2019;9(6):1057–64. https://doi.org/10.1093/tbm/iby119.

O’Brien L, Marzano M, White RM. ‘Participatory interdisciplinary’: Towards the integration of disciplinary diversity with stakeholder engagement for new models of knowledge production. Sci Public Policy. 2013;40(1):51–61. https://doi.org/10.1093/scipol/scs120.

Rubin CL, Martinez LS, Chu J, Hacker K, Brugge D, Pirie A, Allukian N, Rodday AM, Leslie LK. Community-engaged pedagogy: A strengths-based approach to involving diverse stakeholders in research partnerships. Prog Community Health Partnersh. 2012;6(4):481–90. https://doi.org/10.1353/cpr.2012.0057.

Moore JE, Bustos T, Khan S. Map2Adapt: a practical roadmap to guide decision-making and planning for adaptations. The Center for Implementation. 2021. Retrieved from: https://thecenterforimplementation.com/map2adapt.

Dale C, Stanley E, Spencer F, Goodrich J, Robert G. Carrying out observations. EBCD: Experience-based co-design toolkit. 2013. Retrieved January 28, 2021, from: https://www.pointofcarefoundation.org.uk/resource/experience-based-co-design-ebcd-toolkit/step-by-step-guide/5-carrying-observations/.

Metz A, Boaz A, Robert G. Co-creative approaches to knowledge production: what next for bridging the research to practice gap? Evid Policy. 2019;15(3):331–7. https://doi.org/10.1332/174426419X15623193264226.

IDEO.org. Design kit methods. 2015. Retrieved January 28, 2021, from: https://www.designkit.org/methods.

Daleiden EL, Chorpita BF. From data to wisdom: quality improvement strategies supporting large-scale implementation of evidence-based services. Child Adolesc Psychiatr Clin. 2005;14(2):329–49. https://doi.org/10.1016/j.chc.2004.11.002.

Lyon AR, Comtois KA, Kerns SE, Landes SJ, Lewis CC. Closing the science–practice gap in implementation before it widens. In: Albers B, Shlonsky A, Mildon R, editors. Implementation Science 3.0. New York, NY: Springer; 2020. pp. 295–313.

Westerlund A, Nilsen P, Sundberg L. Implementation of implementation science knowledge: the research-practice gap paradox. Worldviews Evid-based Nurs. 2019;16(5):332–4. https://doi.org/10.1111/wvn.12403.

Association of Maternal & Child Health Programs (AMCHP). Adapting a program: Stoplight Model for Adaptation. 2023. Available at: https://amchp.org/resources/adapting-a-program-stoplight-model-for-adaptation/.

Munson SA, Friedman EC, Osterhage K, Allred R, Pullmann MD, Areán PA, Lyon AR. Usability issues in evidence-based psychosocial interventions and implementation strategies: cross-project analysis. J Med Internet Res. 2022;24(6):e37585. https://doi.org/10.2196/37585.

Acknowledgements

The MODIFI Expert Panel can be reached at modifi@uw.edu. This group is composed of experts in intervention design, adaptation, and implementation, including people with both research and applied professional roles. MODIFI Expert Panel group authors: Matthew Aalsma (Indiana University School of Medicine), William Aldridge (University of North Carolina at Chapel Hill), Patricia Arean (University of Washington), Maya Barnett (University of California, Santa Barbara), Melanie Barwick (The Hospital for Sick Children), Sarah Birken (Wake Forest University School of Medicine), Jacquie Brown (Jacquie Brown and Associates), Eric Bruns (University of Washington), Amber Calloway (University of Pennsylvania), Brittany Cooper (Washington State University), Torrey Creed (University of Pennsylvania), Doyanne Darnell (University of Washington), Alex Dopp (RAND Corporation), Cam Escoffery (Emory University), Kelly Green (University of Pennsylvania), Sarah Hunter (RAND Corporation), Nathaniel Jungbluth (Seattle Children’s Hospital), Sarah Kopelovich (University of Washington), Anna Lau (University of California, Los Angeles), Bryce McLeod (Virginia Commonwealth University), Maria Monroe-DeVita (University of Washington), Julia Moore (The Center for Implementation), Beth Prusaczyk (Washington University in St. Louis), Rachel Shelton (Columbia University), Cameo Stanick (Hathaway-Sycamores), Michael Williston (University of Pennsylvania), Sean Wright (Lutheran Community Services Northwest), Soo Jeong Youn (Harvard Medical School).

Funding

This work was funded by the National Institute of Mental Health (F32MH116623; P50MH115837).

Author information

Contributions

Conception: SKB, ARL; Design: SKB, ARL; Acquisition, Analysis, or Interpretation of Data: SKB, ARL; Drafted or Substantively Revised the Work: SKB, CMC, AAB, SWS, JMJ, MDP, ARL, MODIFI Expert Panel.

Ethics declarations

Ethics approval and consent to participate

All procedures were approved by the University of Washington IRB (STUDY00006407).

Consent for publication

Not applicable.

Competing interests

Ana Baumann is a member of the Editorial Board for Implementation Science Communications.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

43058_2024_592_MOESM1_ESM.pdf

Additional file 1. MODIFI: Making Optimal Decisions for Intervention Flexibility during Implementation. The final MODIFI method, including a description of MODIFI, definitions of key terms, necessary prerequisites, and a detailed step-by-step guide.

43058_2024_592_MOESM2_ESM.pdf

Additional file 2. Summary of original MODIFI components and revision decisions based on Round 1 results. Detailed descriptions of revisions made to each MODIFI component as informed by Round 1 results.

43058_2024_592_MOESM3_ESM.pdf

Additional file 3. Summary of revised MODIFI components and final revision decisions based on Round 2 results. Detailed descriptions of final revisions made to each MODIFI component as informed by Round 2 results.

43058_2024_592_MOESM4_ESM.pdf

Additional file 4. MODIFI Case Example. Case example of the final MODIFI method used by school-based mental health care providers to adapt an evidence-based intervention.

43058_2024_592_MOESM5_ESM.pdf

Additional file 5. Standards for Reporting Qualitative Research (SRQR). Completed checklist for qualitative research reporting standards.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Brewer, S.K., Corbin, C.M., Baumann, A.A. et al. Development of a method for Making Optimal Decisions for Intervention Flexibility during Implementation (MODIFI): a modified Delphi study. Implement Sci Commun 5, 64 (2024). https://doi.org/10.1186/s43058-024-00592-x

DOI: https://doi.org/10.1186/s43058-024-00592-x