Abstract

Background

There is a general scarcity of research on the key elements of implementation processes and the factors that influence implementation success. Implementation of healthcare interventions is a complex process. Tools to support implementation can facilitate this process and improve the effectiveness of interventions and clinical outcomes. Understanding the impact of implementation support tools is therefore a critical aspect of this process. The objective of this study was to solicit knowledge and agreement from relevant implementation science and knowledge translation experts in healthcare in order to develop a process model of the key elements of the implementation process.

Methods

A two-round, modified Delphi study involving international experts in knowledge translation and implementation (researchers, scientists, professors, decision-makers) was conducted. Participants rated and commented on all aspects of the process model, including its organization, content, scope, and structure. Delphi questions rated at 75% agreement or lower were reviewed and revised, and qualitative comments informed the restructuring and refinement of the model. The Round 2 survey followed the same process as Round 1.

Results

Fifty-four experts participated in Round 1, and 32 experts participated in Round 2. Twelve percent (n = 6) of the Round 1 questions did not reach agreement. Key themes for revision and refinement were: stakeholder engagement throughout the process; the iterative nature of the implementation process; the importance of context; and the importance of using guiding theories or frameworks. The process model was revised and refined based on the quantitative and qualitative data and reassessed by the experts in Round 2. Agreement was achieved on all items in Round 2, and the Delphi concluded. Additional feedback was obtained regarding terminology, target users and the definition of the implementation process.

Conclusions

High levels of agreement were attained for all sub-domains, elements, and sub-elements of the Implementation Process Model. This model will be used to develop an Implementation Support Tool to be used by healthcare providers to facilitate effective implementation and improved clinical outcomes.

Background

Healthcare and healthcare systems are constantly changing to incorporate new knowledge and evidence in order to improve health outcomes, patient experiences, system and process efficiencies, waste reduction and work experiences. Changing these processes through practice change interventions is a complex task.

A goal of implementation science is to understand the factors that determine why an evidence-based intervention may or may not be successful in a specific healthcare setting; this information can then be used to develop and test strategies to improve the speed, quantity and quality of uptake [1, 2]. A key area of implementation science is implementation support. Implementation support, such as tools, training, and facilitation, has been shown to improve implementation processes and support better intervention outcomes [3].

Although literature on implementation support exists [2,3,4,5,6] there is little consensus on the key elements of the implementation process that are essential to successful implementation. Identifying these elements will be valuable to healthcare providers actively implementing healthcare interventions. This study endeavoured to refine key elements of implementation processes, concluding with an Implementation Process Model upon which the development of Implementation Support Tools can be based.

Building on previous work

Our work builds on extensive work by Kastner and colleagues on developing evidence-based, user-friendly knowledge translation (KT) and implementation support resources. In 2018, Kastner and colleagues produced and evaluated the Knowledge-activated Tools (KaT) Framework, with the goal of detailing the steps and processes that support the optimized, rigorous and efficient development of KT strategies [6]. Subsequently, a Conceptual Implementation and Sustainability Guide (CISG) was drafted from the implementation and sustainability domains of the KaT Framework [6]. The process model on which the current study focuses was developed from elements of the CISG.

Study objectives

The objective of this study was to refine and obtain agreement on an implementation process model via feedback from relevant experts in healthcare knowledge translation and implementation. This model can be used to inform the development of implementation support tools for healthcare interventions. The study sought expert agreement for four aspects of the implementation process model: 1) operationalized domains, subdomains and elements; 2) structure and order; 3) labels/terminology; and 4) applicability to target users.

Methods

A Delphi process was used to refine and reach agreement on the key elements in healthcare implementation processes. Using a published framework as the basis for the study supported the goal of developing an evidence-based process model. The Delphi process consisted of two iterative rounds of ratings using an online survey. Aggregated results were distributed to participants after each round.

Rationale for Delphi approach

The classical Delphi method is an iterative approach used to solicit and distill the judgments of experts using a series of surveys and feedback [7]. This process narrows the wide range of answers and serves to converge the group answers until consensus is reached [8]. Delphi studies are particularly effective in investigating areas where empirical data are lacking [9] and where priority setting is desired [10].

Recruitment

A purposive sampling strategy was used to recruit a panel of international implementation science and knowledge translation experts. We updated the recruitment list produced for the KaT Framework Delphi study [6] to identify participants. This list included KT experts known or suggested by their project team; publicly available lists of individuals who have presented at implementation science, KT and health services research conferences and meetings (e.g., KT Canada, Alberta SPOR KT Platform, KT Connects, KT Scientific Meeting, Annual Science of Dissemination and Implementation conference, and Canadian Association for Health Services and Policy Research [CAHSPR]) and KT experts identified by other potential participants (snowball sampling). For the purpose of this study, expertise was defined as having knowledge or experience in KT or implementation science with the capacity to articulate informed opinion and provide relevant input about their area of expertise [10].

The recruitment strategy used email invitations containing a short description of the study, participation requirements, expectations of the participants, a request for referral of additional participants (snowball sampling) and a link to the online survey. We used an implied consent strategy whereby participants were informed that completion of the first survey was considered consent to participate in the study. Research ethics board approval was obtained from the University of Toronto in August 2019.

Inclusion criteria

The following eligibility criteria were developed to ensure the inclusion of international experts with experience in KT or implementation science and with knowledge of, or experience in, developing and using active interventions (such as data feedback, communications training and systems-level interventions) in a healthcare setting. Inclusion criteria: 1) being an academic, researcher or healthcare practitioner with experience in these areas and/or having published in these areas in the last 5 years; 2) having sufficient written English skills to contribute relevant input and communicate ideas effectively; and 3) being willing and available to complete up to three rounds of online surveys.

Sample

Research suggests that a panel of at least 15–20 experts is needed to ensure sufficient contributions in a Delphi study [11]. To account for the commonly high drop-out rate in Delphi studies, the recruitment target for Round 1 was set at 30–40 participants. This number would allow for the input of diverse views while accounting for expected attrition.
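The recruitment target follows from simple arithmetic: divide the minimum final panel size by the fraction of participants expected to remain. The sketch below assumes a 50% drop-out rate, a figure chosen here purely for illustration (the text does not state one); under that assumption the recommended 15–20 expert minimum translates into the 30–40 participant Round 1 target. `recruitment_target` is a hypothetical helper, not part of the study's methods.

```python
import math


def recruitment_target(min_panel: int, expected_attrition: float) -> int:
    """Smallest Round 1 panel that still leaves `min_panel` experts in the
    final round, given the fraction `expected_attrition` expected to drop out.

    Hypothetical helper for illustration; the attrition rate is an assumption.
    """
    if not 0 <= expected_attrition < 1:
        raise ValueError("expected_attrition must be in [0, 1)")
    # Round up: a fractional participant cannot be recruited.
    return math.ceil(min_panel / (1 - expected_attrition))


if __name__ == "__main__":
    # Assumed 50% drop-out applied to the recommended 15-20 expert minimum.
    for minimum in (15, 20):
        print(f"minimum {minimum} -> recruit {recruitment_target(minimum, 0.5)}")
```

Rounding up rather than truncating matters whenever the division is not exact: truncating would leave the expected final panel just below the intended minimum.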

Data collection

The Delphi study was conducted online over a four-month period to provide sufficient time to gather data, aggregate and communicate group responses, and build surveys step-wise as data were collected and analyzed. The surveys were developed and designed using SurveyMonkey, an online survey platform (www.surveymonkey.com). Prior to administration, the first survey was pre-tested by two volunteers for clarity and to estimate the average completion time, and it was revised as a result of the pre-test. A link to each survey was distributed via email to all participants, with follow-up emails sent as necessary. Data collection took place between October 2019 and January 2020.

Round 1 survey

The first survey consisted of 5-point Likert scale questions with comment fields, and free-text questions. The purpose of this round was to invite participants to: 1) rate the importance of the content and structure of the process model; 2) suggest additional elements/concepts they deemed important to the implementation process; and 3) recommend items to be removed from the process model.

The first-round survey also collected the following demographic information: age; gender; primary role; years of experience with KT science/practice, implementation, Integrated KT, dissemination and de-implementation; and years of experience with developing a KT framework or model and experience with implementing a KT framework or model. The process model was modified and refined based on the percent agreement rating and qualitative data.

After Round 1, participants received a summary of the results, including which questions did or did not reach agreement, and descriptive statistics (mean, standard deviation, median, interquartile range and percent agreement) for all questions. Consensus to include an item was defined as more than 75% of Delphi participants rating it 4 or higher on a 5-point Likert scale (1 = Strongly Disagree, 2 = Disagree, 3 = Neither Agree nor Disagree, 4 = Agree, 5 = Strongly Agree). Participants also received, via email, a table of the substantive changes made to the process model based on the Round 1 results, together with the refined version of the process model, to review in advance of the Round 2 survey.
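The consensus rule can be made concrete as a small function. This sketch assumes that "percent agreement" is the share of participants rating an item 4 (Agree) or 5 (Strongly Agree), and that an item at exactly 75% has not reached consensus, consistent with the Abstract's statement that questions rated at 75% agreement or lower were reviewed and revised. The function names and example ratings are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

AGREE_LEVELS = {4, 5}  # assumed: Agree (4) or Strongly Agree (5) count as agreement
THRESHOLD = 75.0       # consensus requires strictly more than 75% agreement


def percent_agreement(ratings: Sequence[int]) -> float:
    """Percentage of 1-5 Likert ratings that fall in AGREE_LEVELS."""
    if not ratings:
        raise ValueError("no ratings supplied")
    if any(r not in range(1, 6) for r in ratings):
        raise ValueError("ratings must be integers from 1 to 5")
    return 100 * sum(r in AGREE_LEVELS for r in ratings) / len(ratings)


def triage(items: Dict[str, Sequence[int]]) -> Tuple[List[str], List[str]]:
    """Split items into (reached consensus, to be reviewed and revised)."""
    retained, to_revise = [], []
    for name, ratings in items.items():
        target = retained if percent_agreement(ratings) > THRESHOLD else to_revise
        target.append(name)
    return retained, to_revise


if __name__ == "__main__":
    # Hypothetical ratings from eight panellists for two items.
    example = {
        "item A": [5, 4, 4, 5, 4, 4, 3, 5],  # 7/8 = 87.5% agree
        "item B": [5, 4, 4, 3, 5, 4, 2, 4],  # 6/8 = 75.0% agree -> not consensus
    }
    print(triage(example))  # (['item A'], ['item B'])
```

The sketch covers only the quantitative rule. As the Results note, qualitative comments could still lead the team to amend an item that had reached agreement, which a fixed threshold cannot capture.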

Round 2 survey

The Round 2 survey asked participants to review and rate the revisions made to the content and organization of the process model. Participants were also asked again to rate the comprehensiveness of the implementation process elements. Every question included the opportunity to provide comments or feedback. The second survey was designed to: 1) determine agreement on items revised based on the results of Round 1; 2) determine preliminary agreement on the new items generated in Round 1; and 3) elicit further comments and feedback. Participants again rated the questions on a 5-point Likert scale and used the free-text sections to explain the reasoning for their rating or provide additional comments.

After Round 2, participants received a summary of the results including descriptive statistics for all questions. Consensus was defined as higher than 75% agreement on a question. Participants also received a copy of the final Implementation Process Model.

Data analysis

Quantitative

Results were tabulated at the completion of each round and entered into an Excel spreadsheet. Descriptive statistics (mean, median, interquartile range [IQR], standard deviation, and percent agreement) were reported for each question in Round 1 and Round 2. Participants received the summary Round 1 results in advance of the Round 2 survey and were free to review and reflect on these results as they submitted their responses and feedback in Round 2.
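The per-question summary statistics can be reproduced with Python's standard `statistics` module. Two choices below are assumptions, because spreadsheet tools offer several variants: the sample standard deviation (the n − 1 form that Excel's `STDEV.S` computes) and the "inclusive" quartile method (which matches Excel's `QUARTILE.INC`). Percent agreement is again assumed to be the share of ratings of 4 or 5. The ratings are hypothetical.

```python
import statistics
from typing import Dict, Sequence


def describe(ratings: Sequence[int]) -> Dict[str, float]:
    """Descriptive statistics for one Likert question (needs >= 2 ratings)."""
    # Inclusive method: min and max are treated as the 0th and 100th percentiles.
    q1, _, q3 = statistics.quantiles(ratings, n=4, method="inclusive")
    return {
        "mean": statistics.mean(ratings),
        "sd": statistics.stdev(ratings),  # sample SD (n - 1 denominator)
        "median": statistics.median(ratings),
        "iqr": q3 - q1,
        # Assumed definition: share of ratings at 4 (Agree) or 5 (Strongly Agree).
        "pct_agree": 100 * sum(r >= 4 for r in ratings) / len(ratings),
    }


if __name__ == "__main__":
    summary = describe([5, 4, 4, 3, 5, 4, 2, 4])
    for key, value in summary.items():
        print(f"{key}: {value:.2f}")
```

For these eight hypothetical ratings the mean is 3.875, the median 4, the IQR 0.5 (Q1 = 3.75, Q3 = 4.25) and agreement 75%. Switching to `method="exclusive"` or to the population SD would change the IQR and SD, so the method should be stated alongside any reported values.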

Qualitative

The qualitative data were analysed using thematic analysis, following Braun and Clarke [12]. First, participant comments were read and re-read to gain familiarity with the data. Words, phrases and sentences were then coded and organized into themes, and the themes were reviewed against the coded sections and refined [12]. Codes and themes were reviewed independently by two team members (GP, MK) to cross-check the analysis and to ensure data quality, a consistent approach and transparent analytical decision-making. Differences in interpretation were resolved through discussion between the researchers.

Results

Participant characteristics

Five hundred and thirty-four survey links were sent via email; 88 recipients viewed the survey and 54 experts (10%) participated in the Round 1 survey. Participant characteristics are shown in Table 1. Most participants were women (59%), and the most common age range was 55 to 64 years (30%). Most participants (83%) were Researchers, Scientists or Professors, and the largest groups lived in Canada (41%), the United States (39%) and the United Kingdom (7.4%). The majority of participants rated their experience with Implementation (83%), KT Science (72%), Dissemination (72%), KT practice (63%) and Integrated KT (61%) as high or expert. De-implementation expertise was rated as high or expert by 23 participants (43%) (see Fig. 1).

Round 1

Survey questions pertained to the content of the elements, the order of the elements and the comprehensiveness of the sub-domains. In Round 1, participants reached agreement on 46 of the 52 content questions. The questions that did not reach agreement concerned the comprehensiveness of elements (n = 4; Engaging Stakeholders, Monitoring and Evaluation) and the order of elements within a sub-domain (n = 2; Developing the Implementation and Sustainability Plan, Monitoring and Evaluation). Mean scores ranged from 3.4 to 4.4, and standard deviations ranged from 0.5 to 1.2. Table 2 provides the results for Round 1, including the mean, standard deviation, median, IQR and percent agreement. Questions that reached greater than 75% agreement were retained unless the qualitative comments ran contrary and supported amending the item.

Participants also made recommendations in the comment sections regarding items for addition or removal. Thematic analysis of the recommendations and feedback provided in the Round 1 survey identified themes to be addressed and incorporated into the revisions for Round 2. The key themes identified in the Round 1 survey were:

Stakeholder engagement

Participants emphasized that stakeholder engagement should not happen at a specific point in the process, but rather is critical throughout the planning, implementation, monitoring and evaluation processes. One Delphi participant commented: “There is a ‘stream’ of stakeholder engagement work that cuts across all domains. Some of the work has a natural sequence and some might be done by different or the same stakeholders at roughly the same time period.”[P26].

Participants noted that engagement should be integrated throughout and must also involve accountability and responsibility for all parties. A participant noted: “There should be something added around ensuring meaningful engagement of stakeholder partners e.g., through building trusting relationships, valuing diverse expertise and knowledge, shared decision-making, shared goals, etc.”[P20] To address the feedback received, the process model was refined, and Engaging Stakeholders was included throughout the three sub-domains.

Context

The expert panel also felt that the importance of understanding, identifying and planning for the impact of context on the implementation process was underrepresented in the model. A number of participants stated that context, and the actions that address it, needed to be explicit in the process model: “I don’t see how the issue of context is highlighted; it may be implicit, but in my view since implementation is a function of the intervention by context interaction, context and potential interactions should be explicit.”[P42] In response to this feedback, context and the actions required to address it were explicitly added to elements of the process model. Context was made explicit in the first step of planning: Identify the purpose of the Implementation and Sustainability of the intervention/innovation. In addition, context was incorporated into four other elements where it was applicable.

Implementation as an iterative process

Many participants noted that implementation and sustainability are iterative, non-linear processes. Participants acknowledged the need for logical presentation and helpful heuristics when documenting implementation in a process model but asked that the non-linearity of implementation be highlighted. A participant stated: “… you need to be clear that these are steps to be covered, not steps to be followed. Iteration will often be necessary, and flexibility is required depending on the situation.”[P04].

One participant emphasized the impact of non-linearity on implementation efforts: “Planning allows us to prepare for contingencies, to form alliances, to gather resources. It allows us to articulate a clear statement of our intentions, and of the actions needed to achieve those intentions. However, when the plan is complete and action has begun, it is essential that we do not follow a rote, fixed implementation of the plan. Rather, we watch the plan as it unfolds, we notice what is working or not working, and we revise and adjust as we go. Each situation will be different, each social form will be characterized by unique affordances and constraints. We are firm in our intentions and flexible in our actions.” [P51] The guidance for the process model was amended to explicitly acknowledge that implementation is an iterative process and that the elements detailed in the model represent evidence-based components to consider and address to support implementation, but do not require a sequential completion.

Use of theories or frameworks

The value of using theory or frameworks to guide implementation was also highlighted by participants. One participant commented: “One always uses a framework or mental model. The only question is whether it is made explicit. And it should be.”[P42] Participants also discussed the importance of aligning theories or frameworks with the intervention. One participant noted: “[This Element] should state that the framework must be matched to the problem and determinants.” [P16] The selection of guiding frameworks was moved up in the model and additional guidance was added regarding selection and application of theories and frameworks.

Amendments as a result of round 1

Nineteen changes were made to the process model based on the responses received in Round 1. These involved relocating (n = 11), removing (n = 4) and adding (n = 4) sub-domains, elements or sub-elements. The changes were reported to participants in a table of substantive changes, together with a refined version of the process model.

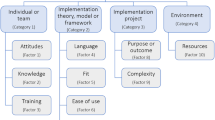

Round 2

For Round 2, 59% of Round 1 participants completed the survey (n = 32). The 19 amendments to the process model were represented in 23 survey questions, which were evaluated by the participants. Again, each question included a comment section for additional feedback. All 23 questions reached agreement in the Round 2 survey. Participants provided additional feedback on the need for consistent terminology and the need to further clarify the target users of the tool. Mean scores ranged from 3.8 to 4.8, and standard deviations ranged from 0.3 to 1.0. Table 3 provides the results for the Round 2 survey. Figure 2 illustrates the Delphi process, and Figure 3 presents the final Implementation Process Model.
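As an arithmetic check, the headline proportions reported in these Results can be recomputed from the raw counts given in the text: 534 invitations, 88 survey views, 54 Round 1 and 32 Round 2 participants, and 46 of 52 Round 1 questions reaching agreement. The `pct` helper is illustrative only.

```python
def pct(part: int, whole: int, digits: int = 1) -> float:
    """Percentage of `whole` represented by `part`, rounded to `digits` places."""
    return round(100 * part / whole, digits)


# Counts as reported in the Results section.
INVITED, VIEWED, ROUND1, ROUND2 = 534, 88, 54, 32
R1_QUESTIONS, R1_AGREED = 52, 46

checks = {
    # Reported as 10%.
    "Round 1 response rate (of invited)": pct(ROUND1, INVITED),
    "Invited who viewed the survey": pct(VIEWED, INVITED),
    # Reported as 59%.
    "Round 2 retention (of Round 1)": pct(ROUND2, ROUND1),
    # Reported as 12% (n = 6) in the Abstract.
    "Round 1 questions without agreement": pct(R1_QUESTIONS - R1_AGREED, R1_QUESTIONS),
}

for label, value in checks.items():
    print(f"{label}: {value}%")
```

The recomputed values (10.1%, 59.3% and 11.5%) agree with the rounded figures reported in the text; 16.5% of invitees viewed the survey.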

Discussion

We performed a rigorous, modified Delphi study involving an international panel of KT and implementation experts to organize, prioritize and evaluate the key elements of an implementation process model. This is the first study, to our knowledge, in which the domains, sub-domains, elements and sub-elements of intervention implementation processes were evaluated and refined to develop an implementation process model. Our findings confirm earlier work on identifying evidence-based elements in the complex process of implementing healthcare interventions [6].

Summary of key findings

Stakeholder engagement was identified as a critical component of the implementation process. The CISG identified stakeholder engagement as the first sub-domain in the process; as a result of this Delphi, stakeholder engagement was integrated throughout the process model. Stakeholder engagement has been defined as “an iterative process of actively soliciting the knowledge, experience, judgment and values of individuals selected to represent a broad range of interests in a particular issue, for the dual purposes of creating a shared understanding and making relevant, transparent and effective decisions” [13]. Research suggests that effective stakeholder engagement supports effective study design, data analysis and research prioritization [14]. In addition, and potentially most significantly, studies report that effective stakeholder engagement improved the perceived relevance and uptake of research findings [15]. The notion that stakeholder engagement should be integrated throughout and involve accountability and responsibility for all parties is prevalent in the literature, which reports that accountability should be interactive between researchers, practitioners and evaluators, with shared goals to achieve results [4, 16].

Delphi participants emphasized the importance of context in the implementation process. The impact of context on the implementation process is well documented in the literature, but, as Dryden-Palmer et al. noted, a thorough understanding of how context modifies or affects implementation is lacking [5]. The influence of context on implementation, and the need to adapt or tailor interventions to context, has been recognized as essential to implementation success. Context can be the environment, setting, or organizational structure and can act as either a barrier or a facilitator to implementation [5]. Making the impact and importance of context explicit in the process model matters because healthcare providers who are implementing interventions need support and guidance when adapting interventions to new settings and environments [4].

Aligning with our expert participants, the literature supports that implementation is a dynamic process which does not unfold in a linear fashion [17]. As a result of the Delphi, our process model acknowledges that moving evidence into practice is complex and often unpredictable and is influenced by many factors [18].

Nilsen noted that research with underused or misused theoretical perspectives makes it difficult to understand and explain how and why interventions succeed or fail, “thus restraining opportunities to identify factors that predict the likelihood of implementation success and develop better strategies to achieve more successful implementation” [19]. The need for theory has also been documented in two recent reviews of systematic reviews of the effectiveness of single and multifaceted interventions to change provider behaviour [20, 21]. The authors advocated for more research to develop a theoretical base for intervention selection or development and for tailoring interventions, based on identified barriers and facilitators, to increase their effectiveness [20, 21]. In addition, the importance of aligning theories or frameworks with the intervention is noted in the literature [17]. Research has identified that clinical outcomes are improved when theories or frameworks guide the implementation process, with specific attention paid to the fit with context [22]. These sentiments were expressed by participants and the process model was amended to reflect the significance and value added by using theories and frameworks to guide implementation.

Implications for policy and practice

Healthcare interventions are challenging to implement, and healthcare providers are often not experts in implementation and therefore need resources and support to succeed. Our findings offer a resource for providers and can inform tool development processes.

By evaluating and refining the elements of the implementation process, we have developed an evidence-based foundation for creating a simple, user-friendly tool intended to support both implementation effectiveness and improved clinical outcomes. The findings of this Delphi study confirm the results of previous work [6, 23] and underscore the importance of implementation support in facilitating effective, sustainable and improved outcomes for healthcare interventions.

Strengths and limitations

Our Delphi study has several strengths. Our international panel was composed of experts in KT and implementation science and practice, which helped to ensure that a high level and a diverse range of expertise contributed to the findings. Using the Delphi technique brought in more diverse expertise than any individual member or small related group could provide. By engaging this diverse group, we have been able to increase the generalizability and credibility of the results.

By providing the opportunity for free-text responses we ensured that participants could offer context to their ratings where they felt it necessary or helpful. This design helped to explicate the rationale and perspectives of the experts. In addition, the free-text entries allowed participants to address items and topics they felt were missing from the process model.

The anonymity of a Delphi study is both a strength and a limitation: it helps to reduce the influence of participants who may dominate an in-person session, but it also eliminates the opportunity for the discussion and discovery that can occur during in-person meetings. There may have been bias in the selection of elements presented to the participants; we minimized this through an extensive literature search and by giving participants the opportunity to add elements to the process model in both Delphi rounds. The potential influence of local factors, such as culture, healthcare systems or policy, on participants’ responses should be acknowledged. While the 10% response rate may limit the generalizability of our findings, the diversity and number of participants in our sample were representative. Finally, although we included clinicians in our invitation list, the Delphi sample turned out to be largely academic, and the process may have benefitted from additional participation by healthcare providers. We will be mindful of recruitment for the tool development project to ensure that more healthcare providers participate, as they will be the primary target users.

Conclusions

The Delphi survey questions covered a comprehensive range of aspects of the implementation process, from planning, through identifying barriers, to monitoring and evaluation. Using the Delphi process to gain agreement among a group of international experts, we produced an implementation process model that will be used to develop a user-friendly and evidence-based tool. This tool will be designed to support healthcare implementation efforts, with the goal of improving process and clinical outcomes.

Availability of data and materials

The datasets supporting the conclusions of this article are included within the article and its additional files.

Abbreviations

- KT: Knowledge translation
- KaT: Knowledge-activated Tools
- CISG: Conceptual Implementation and Sustainability Guide
- IQR: Interquartile range

References

Lobb R, Colditz G. Implementation science and its application to population health. Annu Rev Public Health. 2013;34(1):235–51. https://doi.org/10.1146/annurev-publhealth-031912-114444.

Moullin JC, Dickson KS, Stadnick NA, Albers B, Nilsen P, Broder-Fingert S, et al. Ten recommendations for using implementation frameworks in research and practice. Implementation Sci Commun. 2020;1:1–2.

Durlak JA, DuPre EP. Implementation matters: a review of research on the influence of implementation on program outcomes and the factors affecting implementation. Am J Community Psychol. 2008;41(3–4):327–50. https://doi.org/10.1007/s10464-008-9165-0.

Meyers D, Durlak J, Wandersman A. The quality implementation framework: a synthesis of critical steps in the implementation process. Am J Community Psychol. 2012;50(3–4):462–80. https://doi.org/10.1007/s10464-012-9522-x.

Dryden-Palmer K, Parshuram C, Berta W. Context, complexity and process in the implementation of evidence-based innovation: a realist informed review. BMC Health Serv Res. 2020;20(18):1–15.

Kastner M, Makarski J, Hayden L, Lai Y, Chan J, Treister V, et al. Improving KT tools and products: development and evaluation of a framework for creating optimized, knowledge-activated tools (KaT). Implementation Sci Commun. 2020;1(1):47. https://doi.org/10.1186/s43058-020-00031-7.

Mullen P. Delphi: myths and reality. J Health Organ Manag. 2003;17(1):37–52. https://doi.org/10.1108/14777260310469319.

Diamond IR, Grant RC, Feldman BM, Pencharz PB, Ling SC, Moore AM, et al. Defining consensus: a systematic review recommends methodologic criteria for reporting of Delphi studies. J Clin Epidemiol. 2014;67(4):401–9. https://doi.org/10.1016/j.jclinepi.2013.12.002.

Evans JM, Baker GR, Berta W, Barnsley J. A cognitive perspective on health systems integration: results of a Canadian Delphi study. BMC Health Serv Res. 2014;14(1):222. https://doi.org/10.1186/1472-6963-14-222.

Blaschke S, O'Callaghan CC, Schofield P. Identifying opportunities for nature engagement in cancer care practice and design: protocol for four-round modified electronic Delphi. BMJ Open. 2017;7(3):e013527. https://doi.org/10.1136/bmjopen-2016-013527.

Hasson F, Keeney S, McKenna H. Research guidelines for the Delphi survey technique. J Adv Nurs. 2000;32(4):1008–15.

Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol. 2006;3(2):77–101. https://doi.org/10.1191/1478088706qp063oa.

Deverka PA, Lavallee DC, Desai PJ, Esmail LC, Ramsey SD, Veenstra DL, et al. Stakeholder participation in comparative effectiveness research: defining a framework for effective engagement. J Comp Eff Res. 2012;1(2):181–94. https://doi.org/10.2217/cer.12.7.

Barger S, Sullivan SD, Bell-Brown A, Bott B, Ciccarella AM, Golenski J, et al. Effective stakeholder engagement implementation of a clinical trial (SVS1415CD) to improve cancer care. BMC Med Res Methodol. 2019;19(1):119. https://doi.org/10.1186/s12874-019-0764-2.

Concannon TW, Meissner P, Grunbaum JA, McElwee N, Guise J-M, Santa J, et al. A new taxonomy for stakeholder engagement in patient-centered outcomes research. J Gen Intern Med. 2012;27(8):985–91. https://doi.org/10.1007/s11606-012-2037-1.

Wandersman A, Florin P. Community interventions and effective prevention. Am Psychol. 2003;58(6–7):441–8. https://doi.org/10.1037/0003-066X.58.6-7.441.

Luig T, Asselin J, Sharma A, Campbell-Scherer D. Understanding implementation of complex interventions in primary care teams. J Am Board Fam Med. 2018;31(3):431–44. https://doi.org/10.3122/jabfm.2018.03.170273.

Estabrooks CA, Thompson DS, Lovely JJE, Hofmeyer A. A guide to knowledge translation theory. J Contin Educ Heal Prof. 2006;26(1):25–36. https://doi.org/10.1002/chp.48.

Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10(1):53. https://doi.org/10.1186/s13012-015-0242-0.

Lau R, Stevenson F, Ong BN, Dziedzic K, Treweek S, Eldridge S, et al. Achieving change in primary care—effectiveness of strategies for improving implementation of complex interventions: systematic review of reviews. BMJ Open. 2015;5(12):e009993.

Squires JE, Sullivan K, Eccles MP, Worswick J, Grimshaw JM. Are multifaceted interventions more effective than single-component interventions in changing health-care professionals' behaviours? An overview of systematic reviews. Implement Sci. 2014;9(1):152. https://doi.org/10.1186/s13012-014-0152-6.

Kastner M, Straus SE. Application of the knowledge-to-action and Medical Research Council frameworks in the development of an osteoporosis clinical decision support tool. J Clin Epidemiol. 2012;65(11):1163–70. https://doi.org/10.1016/j.jclinepi.2012.04.011.

Kastner M, Makarski J, Mossman K, Harris K, Hayden L, Giraldo M, Sharma D, Asalya M, Jussaume L, Eisen D, Wintemute K. An Idea Worth Sustaining: Evaluation of the sustainability potential of Choosing Wisely across Ontario Community Hospitals and Family Health Teams. Health Serv Res J. 2021. (In review).

Acknowledgements

Not applicable.

Funding

Not applicable.

Author information

Contributions

GP, MK and WB conceived the study. GP and MK designed the study. GP conducted the data collection and data analysis and drafted the manuscript. WB and MK assisted with data analysis, interpretation, and provided critical commentary on the manuscript. KB provided critical commentary on the manuscript. All authors read and approved the final manuscript.

Authors’ information

Not applicable.

Ethics declarations

Ethics approval and consent to participate

Approval for the study was obtained from the University of Toronto Research Ethics Board, Protocol #00037918. All methods were performed in accordance with the relevant guidelines and regulations. Participants were provided with privacy and consent information prior to participation. All participants provided their informed consent by completing the round one survey.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Parker, G., Kastner, M., Born, K. et al. Development of an Implementation Process Model: a Delphi study. BMC Health Serv Res 21, 558 (2021). https://doi.org/10.1186/s12913-021-06501-5

DOI: https://doi.org/10.1186/s12913-021-06501-5