Abstract

Background

Applying the knowledge gained through implementation science can support the uptake of research evidence into practice; however, those doing and supporting implementation (implementation practitioners) may face barriers to applying implementation science in their work. One strategy to enhance individuals’ and teams’ ability to apply implementation science in practice is to provide training and professional development opportunities (capacity-building initiatives). Although both demand for implementation practice capacity-building initiatives and the number on offer are increasing, there is no universal agreement on what content they should include. In this study, we aimed to explore what capacity-building developers and deliverers identify as essential training content for teaching implementation practice.

Methods

We conducted a convergent mixed-methods study with participants who had developed and/or delivered a capacity-building initiative focused on teaching implementation practice. Participants completed an online questionnaire to provide details on their capacity-building initiatives; took part in an interview or focus group to explore their questionnaire responses in depth; and offered course materials for review. We analyzed a subset of data that focused on the capacity-building initiatives’ content and curriculum. We used descriptive statistics for quantitative data and conventional content analysis for qualitative data, with the data sets merged during the analytic phase. We presented frequency counts for each category to highlight commonalities and differences across capacity-building initiatives.

Results

Thirty-three individuals from 17 teams, representing 20 capacity-building initiatives, participated. Study participants identified several core content areas included in their capacity-building initiatives: (1) taking a process approach to implementation; (2) identifying and applying implementation theories, models, frameworks, and approaches; (3) learning implementation steps and skills; and (4) developing relational skills. In addition, study participants described offering applied and pragmatic content (e.g., tools and resources) and tailoring and evolving the capacity-building initiative content to address emerging trends in implementation science. Study participants also highlighted some challenges learners face when acquiring and applying implementation practice knowledge and skills.

Conclusions

This study synthesized what experienced capacity-building initiative developers and deliverers identify as essential content for teaching implementation practice. These findings can inform the development, refinement, and delivery of capacity-building initiatives, as well as future research directions, to enhance the translation of implementation science into practice.


Background

With significant time lags between evidence production and implementation [1], there is a long-standing need to accelerate the uptake of research findings into practice to improve healthcare processes and outcomes. The growing implementation science literature provides information on effective methods for moving evidence into practice; however, this body of scientific knowledge is large and complex and may be challenging to apply. This has led to a paradoxical research–practice gap, whereby the evidence produced in implementation science is not being applied in real-world practice settings [2]. Thus, there have been recent calls to improve the mobilization of implementation science knowledge beyond the scientific community and into practice settings [3, 4].

Moving implementation science into practice requires a workforce of implementation practitioners who understand how to apply the science of implementation. In this paper, we define “implementation practitioners” as those who are “doing” the implementation of evidence-informed practices, as well as those who are supporting or facilitating implementation efforts [5]. This may include point-of-care staff, managers, quality improvement professionals, intermediaries, implementation support staff, and policymakers. Building this workforce requires training and professional development opportunities, which we call “capacity-building initiatives.” Although a growing number of implementation capacity-building initiatives are available [6, 7], these programs often focus on teaching researchers about implementation science, and fewer aim to teach how to apply implementation science to improve the implementation of evidence in practice settings (i.e., implementation practice) [7,8,9]. A recent systematic review [7] of the academic literature included 31 papers (reporting on 41 capacity-building initiatives) published between 2006 and 2019. The review found that many capacity-building initiatives were intended for researchers at a postgraduate or postdoctoral level, with fewer options for implementation practitioners working in practice settings.

Some practitioner-focused capacity-building initiatives have been described in the literature [10,11,12,13,14,15,16,17,18,19,20,21,22]; however, most such initiatives are developed and delivered in isolation and are not published in the academic or grey literature. In addition, a review of this literature revealed that most of these publications report evaluations of the short- and long-term outcomes of the capacity-building initiatives and provide only high-level details of the specific training content and the rationale for it. Competencies [23, 24] and frameworks [22] for implementation research and practice have been developed through primary studies, literature reviews, and expert convenings, but, to our knowledge, no consensus-building approach has been used to date. Thus, there is limited synthesized information on what content is currently included in implementation practitioner capacity-building initiatives and no universal agreement or guidance on what content should be included to effectively teach implementation practitioners.

The growing demand for, and supply of, implementation practice capacity-building initiatives provides an opportunity to synthesize and learn from the individuals and teams offering this training. Our research team, comprising implementation scientists, implementation practitioners, clinicians, health leaders, and trainees, conducted a mixed-methods study to explore the experiences of teams offering capacity-building initiatives focused on implementation practice, with the goal of informing the future development of high-quality training initiatives. The study had three aims. The first aim, which is the focus of this paper, was to describe what capacity-building initiative developers and deliverers identified as essential training content for teaching implementation practice. The other two aims (to be reported on elsewhere) were to describe and compare the similarities and differences between the capacity-building initiatives (e.g., structure, participants) and to explore the experiences of those developing and delivering capacity-building initiatives for practitioners.

Methods

We used the Good Reporting of a Mixed Methods Study (GRAMMS) checklist [25] to inform our reporting (Additional file 1).

Study design

The overall study was a convergent mixed-methods study [26] (cross-sectional survey and qualitative descriptive design [27]) that applied an integrated knowledge translation approach [28], in which all study participants were invited to contribute to the analysis, interpretation, and reporting of the study. Here, we report on one component of the larger study: a sub-set of the quantitative and qualitative data on the content and curriculum of the capacity-building initiatives.

Study participants

We enrolled English-speaking individuals who had experience developing and/or delivering a capacity-building initiative that focused on teaching learners how to apply implementation science knowledge and skills to improve the implementation of evidence-informed practices in practice settings. The capacity-building initiatives must have been offered in the last 10 years and could be offered in any geographical location or online. We excluded capacity-building initiatives that focused on training researchers or graduate students to undertake implementation research.

We used purposive sampling. First, using the professional networks of the study team, we compiled a list of capacity-building initiatives and the primary contact (e.g., training lead) for each. Second, three team members (JR, IDG, AM) independently screened the capacity-building initiatives included in Davis and D’Lima’s systematic review [7], consulting the full-text papers as needed to identify initiatives focused on implementation practice. Finally, we used snowball sampling to identify other individuals who had developed and/or delivered capacity-building initiatives. The first author (JR) invited the potential participants by email. If no response was received, an email reminder was sent 2 weeks and 4 weeks after the initial invitation.

Once the primary contact for a capacity-building initiative was enrolled, they had the opportunity to share the study invitation with their other team members. This resulted in some capacity-building initiatives having more than one person enrolled in the study, providing multiple perspectives on the development and delivery of the initiative. For simplicity, we refer to them as “teams” regardless of whether there was one person enrolled or multiple people enrolled.

Data collection

First, participants completed an online questionnaire developed by the study team, which included closed-ended and open-ended questions (Additional file 2 presents the sub-set of questions used in this analysis that focused on the content and curriculum). The questionnaire was piloted internally by two team members, and minor changes were made to improve functionality (e.g., branching logic), comprehensiveness (e.g., adding in open text boxes for respondents), and clarity (e.g., defining key terms used). We asked for one completed questionnaire per capacity-building initiative. When there were multiple team members enrolled in the study, they could nominate one person to complete the questionnaire on their behalf or complete the questionnaire together.

After completing the questionnaire, all participants were interviewed individually or in a focus group via videoconference to explore the questionnaire responses and discuss the capacity-building initiative in more detail. Individual interviews were used when only one team member was enrolled; focus groups were used when two or more team members were enrolled. The interviews and focus groups were facilitated by one of three research team members, all of whom identified as women and were trained in qualitative interviewing: JR is a master’s-prepared registered nurse; OD is a master’s-prepared speech-language pathologist with doctoral training in health rehabilitation sciences research and is a knowledge translation specialist; JL is a doctoral-prepared researcher with expertise in patient engagement. The first and senior authors (JR, IDG) developed a semi-structured question guide and shared it with the broader team. We used the team’s feedback to update the question guide, including adding new questions and probes, re-ordering the questions to improve flow, and refining the wording of the questions for clarity (Additional file 2 presents the sub-set of questions used in this analysis that focused on the content and curriculum).

Finally, we asked participants to share any capacity-building initiative materials that could provide further details (e.g., scientific or grey literature publications, website materials, training agendas, promotional materials). We collected only publicly available materials to minimize concerns about sharing or disclosing proprietary content.

The questionnaire and publicly available materials provided data on what content is currently included in the capacity-building initiatives. The interview and focus group data provided information on why certain content was included, as well as how and why content changed over time. Together, this provided information on what we have labeled “essential content,” which is a reflection of both what study participants have chosen to include in their training initiatives, and their views on priority content areas for implementation practitioners based on their own experiences developing and delivering the initiatives.

Data analysis

Closed-ended questionnaire responses were analyzed using descriptive statistics. Frequencies (counts and percentages) were calculated for nominal data. Medians and ranges were calculated for continuous data. The questionnaire responses, qualitative transcripts, and course materials shared by participants were uploaded to NVivo 12 Pro for data management [29]. The merged dataset was analyzed using conventional content analysis, with the codes emerging inductively from the data [30]. Two authors (JR, OD) started by independently reading the data and coding all segments that pertained to training content and curriculum. They met regularly to compare their coding, discuss and resolve differences, build and revise the coding scheme, and group codes into categories. Once the coding scheme was well developed and the coders were coding consistently (which occurred after coding data from one-third of the teams), the remaining data were coded by either JR or OD. The coding was then audited by one of seven members of the broader research team (HA, DMB, LBG, AMH, SCH, AEL, DS). These seven team members were “senior reviewers” with subject matter expertise in implementation science and practice [31]. They audited the coding and offered feedback on how the text segments were labeled and categorized. This feedback was discussed by the two primary coders (JR, OD) and the senior author (IDG). The review process resulted in (1) changes to which codes were applied to specific text segments, (2) changes to the coding structure, including splitting existing codes into more precise labels, and (3) re-organization of existing codes into new categories. The final coding scheme was then applied to the data. Finally, we categorized the identified theories, models, frameworks, and approaches (i.e., other methods in implementation) (TMFAs) [32] according to the three main aims described by Nilsen [33]: to guide, to understand or explain, or to evaluate implementation.
We also categorized the identified implementation steps and skills according to the three phases in the Implementation Roadmap [34]: issue identification and clarification; build solutions; and implement, evaluate, sustain.
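The descriptive statistics described above can be sketched in a few lines. This is a minimal illustration rather than the study's analysis procedure. The function names `frequency` and `median_and_range` are hypothetical, and the rounding rule for percentages (round half up to a whole number) is an assumption. The example counts are taken from the Results.

```python
from statistics import median


def frequency(count: int, denominator: int) -> str:
    """Format a nominal-data count as 'n = count/denominator, pct%'.

    Assumption: percentages are rounded half up to the nearest whole number.
    """
    if denominator <= 0 or not 0 <= count <= denominator:
        raise ValueError("count must lie between 0 and a positive denominator")
    # Round half up using integer arithmetic: floor(100 * count / denominator + 0.5).
    pct = (200 * count + denominator) // (2 * denominator)
    return f"n = {count}/{denominator}, {pct}%"


def median_and_range(values: list[float]) -> tuple[float, float, float]:
    """Return (median, minimum, maximum) for a list of continuous values."""
    if not values:
        raise ValueError("values must not be empty")
    return median(values), min(values), max(values)


# Team-level (n = 17) and participant-level (n = 33) counts from the Results.
print(frequency(12, 17))  # n = 12/17, 71%
print(frequency(17, 33))  # n = 17/33, 52%
```

The sketch uses integer arithmetic because Python's built-in `round()` rounds halves to the nearest even number. With `round()`, for example, 12.5% would be reported as 12% rather than 13%.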

Integration of quantitative and qualitative data

We used integration approaches at several levels. At the methods level, we used building, whereby interview probes were developed based on questionnaire responses [26]. We also used merging, bringing the questionnaire and interview/focus group data together for analysis [26] and giving both datasets equal priority. At the interpretation and reporting level, we used a narrative weaving approach to describe the categories informed by both datasets [26]. Integrating the quantitative and qualitative data expanded our understanding of the capacity-building initiative content [26]: the questionnaire helped identify what content is included, and the interview/focus group data provided the rationale for that content.

Strategies to enhance methodological rigor

Dependability and confirmability [35] were enhanced by maintaining a comprehensive audit trail including raw data (e.g., verbatim transcripts), iterations of coding and coding schemes, and notes from data analysis meetings. To enhance credibility and confirmability [35], 35% of the data were coded independently by two people. All study participants were sent a summary of their data prepared by the research team and were asked to review it for accuracy and comprehensiveness (i.e., member checking the data). In addition, having senior reviewers with content expertise audit the coding helped make sense of the different implementation concepts and terms in the data, ensuring that data were coded and categorized accurately. Finally, interested study participants were involved in the sense-making process through their involvement in writing and critically revising this manuscript. We aimed to facilitate an assessment of the transferability [35] of the findings by describing contextual information on the capacity-building initiatives and study participants.

Results

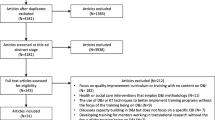

We enrolled 33 people (representing 17 teams) who had developed and/or delivered capacity-building initiatives focused on implementation practice. Collectively, these 33 study participants shared information on 20 unique capacity-building initiatives offered by their 17 teams (Fig. 1). We indicate the denominator throughout the results to make clear whether results refer to capacity-building initiative-level data (n = 20), which were largely collected through the questionnaire and the shared capacity-building initiative materials, or to team-level data (n = 17), which were largely collected through interviews and focus groups.

Summary of data collected. ᵃOne team reported on three capacity-building initiatives and one team reported on two; all other teams reported on one capacity-building initiative only. Teams participating in this study comprised between 1 and 6 people. ᵇFive study participants took part in two focus groups.

Between September 2021 and November 2022, we collected 20 questionnaire responses (i.e., one per capacity-building initiative) and conducted 10 online interviews and 7 online focus groups (i.e., one per team) (Fig. 1). The focus groups included between 2 and 6 people. Interviews lasted an average of 60 min (range = 51–77 min) and focus groups lasted an average of 68 min (range = 51–79 min). We received materials for 11 of the 20 capacity-building initiatives: 6 publications, 2 course agendas, 2 course advertisements, and 1 website.

Study participants

The 33 study participants represented a blend of research and practice experience. Just over half of the study participants (n = 17/33, 52%) currently identified as both a research professional (researcher or implementation scientist) and a practice-based professional (clinician or implementation practitioner). Nearly three-quarters of study participants (n = 24/33, 73%) were currently involved in implementation in practice settings (clinician, implementation practitioner, or manager/leader) (Table 1). Nearly all study participants reported having experience in implementation practice (n = 31/33, 94%). Among those with experience, the median was 9 years (range = 4–30 years).

Contextual information on capacity-building initiatives

The capacity-building initiatives (n = 20) had been offered a median of 4 times (range = 1–35 offerings) between 2009 and 2022 (Table 2).

Capacity-building initiative content

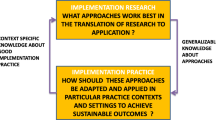

Nine of 17 teams (53%) explicitly described their capacity-building initiatives as introductory level. Study participants identified a variety of content areas included in their capacity-building initiatives, which we present according to four categories and 10 sub-categories, as well as the overarching categories of applied and pragmatic content and tailoring and evolving content (Fig. 2). Illustrative quotes are presented in Table 3.

Organization of study findings. The number of teams that discussed each category is indicated in brackets; teams could have identified or described the category in any or all of the data sources: questionnaire, interview or focus group, and shared capacity-building initiative materials. TMFAs, theories, models, frameworks, and approaches.

Taking a process approach to implementation

Twelve teams (n = 12/17, 71%) described the importance of teaching learners to take a process approach to implementation. Participants highlighted that because learners tend to be action-focused, teams needed to include content on the importance of taking a thoughtful approach rather than jumping in too quickly without a thorough plan. To do this, these teams included content on how to develop a comprehensive implementation plan. Teaching this process approach also required information on how long the process can take, its iterative nature, and the need to adapt as circumstances change.

Identifying and applying implementation TMFAs

All 17 teams reported that their capacity-building initiatives included two or more implementation TMFAs. In total, study participants identified 37 unique TMFAs that were introduced in their capacity-building initiatives (Table 4). The most common were the Knowledge-to-Action Framework (n = 14/20), Theoretical Domains Framework (n = 11/20), COM-B Model for Behavior Change (n = 9/20), RE-AIM (n = 9/20), Consolidated Framework for Implementation Research (n = 8/20), and the Behavior Change Wheel (n = 5/20). The remaining TMFAs were all used by four or fewer capacity-building initiatives.

Eleven capacity-building initiatives (n = 11/20, 55%) used a TMFA as the underpinning structure for the training content: nine were based on the Knowledge-to-Action Framework, one on the Behavior Change Wheel, and one on the Awareness-to-Adherence Model.

Of the 20 capacity-building initiatives, 16 (80%) included at least one TMFA that guides implementation, 16 (80%) included at least one TMFA that explains implementation, and 10 (50%) included at least one TMFA to evaluate implementation. Eight of the 20 capacity-building initiatives (40%) included TMFAs from all three aims; six (30%) included TMFAs from two aims (guide/explain = 4, explain/evaluate = 1, guide/evaluate = 1); and six (30%) included TMFAs from one aim only (guide = 3, explain = 3).
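A single initiative can include TMFAs for more than one aim, so the per-aim counts above overlap. As a worked check using only the counts reported above, each per-aim total is the sum of the three-aim initiatives, the relevant two-aim combinations, and the single-aim initiatives. The symbol $N_{\text{aim}}$ is notation introduced here for illustration; it denotes the number of initiatives that include at least one TMFA for that aim.

```latex
\begin{aligned}
N_{\text{guide}}    &= \underbrace{8}_{\text{all three}} + \underbrace{4}_{\text{guide/explain}} + \underbrace{1}_{\text{guide/evaluate}} + \underbrace{3}_{\text{guide only}} = 16 \\
N_{\text{explain}}  &= \underbrace{8}_{\text{all three}} + \underbrace{4}_{\text{guide/explain}} + \underbrace{1}_{\text{explain/evaluate}} + \underbrace{3}_{\text{explain only}} = 16 \\
N_{\text{evaluate}} &= \underbrace{8}_{\text{all three}} + \underbrace{1}_{\text{explain/evaluate}} + \underbrace{1}_{\text{guide/evaluate}} = 10 \\
N_{\text{total}}    &= 8 + (4 + 1 + 1) + (3 + 3) = 20
\end{aligned}
```

The per-aim totals sum to 42 rather than 20. This is because each three-aim initiative is counted three times and each two-aim initiative twice: 8 × 3 + 6 × 2 + 6 × 1 = 42.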

Nine teams (n = 9/17, 53%) described the importance of focusing on the “how,” showing learners the menu of options and helping them to understand how to appropriately select and apply TMFAs to the different stages of their implementation projects. One team described introducing tools to facilitate the selection of TMFAs (e.g., Dissemination & Implementation Models in Health [75], T-CaST [76, 77]).

Teams noted that the content on TMFAs was often challenging for learners, with one team describing it as “bamboozling” (Case M). Challenges arose from learners’ anxiety about the academic nature and language of TMFAs, as well as difficulties understanding how TMFAs can be applied to their work. To address these challenges, teams made their capacity-building initiative content less theoretical (i.e., less time spent describing theories), with an increased focus on how to apply theory in implementation projects. Other teams described including information to reinforce the flexible application of TMFAs, emphasizing that learners could try one out and revisit the choice if it was not meeting the project’s needs.

Learning implementation steps and skills

All 17 teams described how their training content focused on practical implementation skills to complete various steps in the process. Teams described seven core steps (Fig. 2).

Defining the problem and understanding context

Fifteen teams (n = 15/17, 88%) identified the importance of teaching learners to clearly define what problem the implementation project is aiming to address. Examples of this content included: clarifying what the problem is, understanding the context and current practice, using data to show the problem (evidence-practice gap), understanding the root cause of a problem, defining a problem that is specific and feasible to address, and understanding the problem from different perspectives.

Teams described spending a significant amount of time on this content because it is foundational to learning the subsequent steps in the implementation process. However, one participant cautioned that learners should be helped to define and understand their problem thoroughly without going so in-depth that they lose sight of what they are trying to accomplish within their implementation project.

Finding, appraising, and adapting evidence

Many teams (n = 12/17, 71%) described content about the evidence to be implemented as critical, including how to find, appraise, and adapt evidence for the context in which it is being implemented. Several teams described how learners could be quick to select the evidence to be implemented based on hearing about something “bright and shiny” (Team M), learnings from conferences and meetings, or papers reporting on a single study. Because of this, training content on how to conduct a more comprehensive search and appraisal of the evidence was essential.

Specifically, teams included content on the importance of ensuring there is evidence to support what is being implemented, how to search for research evidence, the importance of considering other forms of evidence such as staff and patient experiences, how to merge research evidence with experiential knowledge, considerations for ensuring the fit of the evidence to be implemented with the implementation setting, and understanding the appraised quality and levels of evidence (e.g., the evidence pyramid). Two teams (n = 2/17, 12%) acknowledged that even after learners acquired some knowledge and skills to search for and appraise evidence, they rarely had the time to undertake these tasks in their day-to-day professional roles. Therefore, making learners aware of resources to support this work was important.

Seven teams (n = 7/17, 41%) described training content related to adapting the evidence, practice, or innovation being implemented to fit with the local context. The concept of adaptation could be challenging for learners accustomed to working in a more “top-down” or directive model, where they assumed the evidence, practice, or innovation would be implemented as is. In these cases, teams identified that it was especially important to include information on how the organizational context and group needs should be considered to optimize the uptake and sustainability of the evidence, practice, or innovation being implemented.

Assessing barriers and facilitators

Fifteen teams (n = 15/17, 88%) discussed the fundamental importance of including content on how to systematically assess barriers and facilitators that are likely to influence implementation. Teams shared how learners may either skip directly from evidence selection to implementation solutions or erroneously believe that simply telling people a change is being made should be enough to result in behavior change. Teaching learners about the determinants that may influence the adoption (or lack of adoption) of evidence, and the process for identifying these determinants, was therefore identified as critical by nearly all teams. The content for this stage frequently included different TMFAs to guide the work (e.g., Theoretical Domains Framework [TDF] [37], Consolidated Framework for Implementation Research [CFIR] [41, 42]).

Selecting and tailoring implementation strategies

Fifteen teams (n = 15/17, 88%) highlighted the importance of teaching learners how to select implementation strategies using a structured approach that aligns with and addresses the identified barriers. Teams shared that learners may default to using familiar implementation strategies (such as education); therefore, teaching about the full range of implementation strategies was important. The capacity-building initiatives frequently included content and activities on how to map identified barriers to specific evidence-based implementation strategies and how to prioritize which ones to select. Again, teams described relevant resources (such as the Expert Recommendations for Implementing Change [ERIC] Taxonomy [78], the Behavior Change Technique [BCT] Taxonomy [79], and the Behavior Change Wheel [38]) that they used to help learners understand and apply the implementation strategy selection process.

Monitoring and evaluating

Fifteen teams (n = 15/17, 88%) described training content related to monitoring and evaluating implementation. Teams shared how they reinforced the importance of evaluating implementation projects to make course corrections and show the impact of their work. Three teams (n = 3/17, 18%) acknowledged that monitoring and evaluation can be unfamiliar and intimidating to learners and ensured that the content covered the “nuts and bolts” of monitoring the implementation process and conducting an outcome evaluation. Five capacity-building initiatives (n = 5/20, 25%) included logic models as a tool to plan for evaluations; other TMFAs included RE-AIM [39, 40] and Proctor’s implementation outcomes [51].

Sustaining and scaling

Eleven teams (n = 11/17, 65%) stated they included content on sustainability, such as tools for sustainability planning, determinants of sustainability, strategies for assessing and enhancing sustainability, and challenges with sustaining change over time. One team (n = 1/17, 6%) described including information on spread and scale. Although this content was often introduced near the end of the capacity-building initiative, teams reminded learners that sustainability needs to be considered at the beginning and throughout the implementation process.

Disseminating

Five teams (n = 5/17, 29%) included content on how to disseminate the findings of implementation projects. Content included strategies to disseminate project findings to interested and affected parties and decision-makers, as well as dissemination through scientific venues such as conference presentations and publications.

While all capacity-building initiatives (n = 20/20) focused on the implementation of evidence into practice, two teams (n = 2/17, 12%) also included information on how to undertake a dissemination project (e.g., developing a resource to share evidence). Teams also described the need to teach learners about the full spectrum of knowledge translation and the distinction between dissemination and implementation.

Developing relational skills

All teams (n = 17/17) discussed the importance of learning about the relational skills required throughout the implementation process, with one participant describing it as the “most neglected part of capacity building” (Case N).

Teams identified three main content areas for teaching these relational skills: forming and maintaining an implementation team, identifying and engaging interested and affected parties, and building implementation leadership and facilitation. Cutting across these three main areas were general examples of other relational content, including how to build trusting relationships, work inter-professionally, navigate power differences and hierarchies, and communicate effectively.

Forming and maintaining an implementation team

Nine teams (n = 9/17, 53%) discussed content on how to build an implementation team and define roles, how to manage team dynamics, and how to engage members throughout the implementation project.

Identifying and engaging interested and affected parties

All teams (n = 17/17) described content related to identifying and engaging interested and affected parties. Topics included the value of engagement, identifying and mapping key influencers, strategies for engagement, tailoring engagement approaches, and evaluating engagement.

Fourteen teams (n = 14/17, 82%) stated they included content on the importance of engaging health consumers (e.g., patients, families, caregivers). While some capacity-building initiatives only briefly discussed this, others described more detailed content, such as the rationale for and importance of consumer engagement, guidance for reimbursing health consumer partners, and strategies for working with health consumers. Two teams (n = 2/17, 12%) highlighted the importance of having this content delivered by health consumers themselves to showcase their experiences and stories.

Building implementation leadership and facilitation

Eleven teams (n = 11/17, 65%) included content on the knowledge and skills needed to facilitate the implementation process, including the role of the facilitator, effective leadership, change management, managing resistance, and motivating others. Because learners entering the capacity-building initiative may not recognize their ability to be implementation leaders, teams considered it important to include content that encouraged learners to reflect on their current attitudes and skills as leaders, work on their leadership development, and see themselves as leaders of implementation.

Offering applied and pragmatic content

All teams (n = 17/17) discussed the importance of applied content for teaching implementation practice. Teams acknowledged the growing and complex implementation science literature and highlighted the importance of content that effectively distills this literature into pragmatic and accessible formats for learners (e.g., top five tips, toolkits, case examples). Teams reported that including practical tools and resources in the capacity-building initiatives was important so that learners had something tangible they could apply in their practice. Thirteen teams (n = 13/17, 76%) named at least one additional resource that they shared with learners. Twenty-seven unique resources were identified (Table 5).

Tailoring and evolving capacity-building initiative content

Seven teams (n = 7/17, 41%) described the importance of tailoring the content to each group of learners. While some teams acknowledged that there is content that is “locked in” or “universal,” other content can be tailored to meet the specific needs of learners (for example, based on learners’ area of practice, implementation projects, baseline knowledge, and learning needs).

Of the 20 capacity-building initiatives, 17 (85%) had been offered more than one time. These teams described changes to their training content over time (Table 6). These content changes were prompted by feedback received via formal learner evaluation forms; informal check-ins with learners during the capacity-building initiative; observations of what learners are asking questions about or struggling with; and new developments in the fields of knowledge translation, implementation science, and adult education.

Teams shared emerging topics that are becoming increasingly important to include in their capacity-building initiatives. More recent offerings of the capacity-building initiatives have taught learners about taking an intersectionality lens, considerations for equity, diversity, and inclusion, and applying a principled approach to partnerships.

Discussion

This study aimed to describe what capacity-building initiative developers and deliverers identify as essential content for teaching implementation practice. Based on the experiences of 17 teams that delivered 20 capacity-building initiatives, we identified four categories of content: taking a process approach to implementation, implementation TMFAs, implementation steps and skills, and relational skills. We also identified two overarching categories: applied and pragmatic content, and tailored and evolving content. These findings provide an overview of the content covered by a variety of capacity-building initiatives worldwide and the rationale for this content. Learning about this rationale provided insights into some of the challenges current and aspiring implementation practitioners face, both in the learning process and in their practice settings. The findings also provide a foundation for building, refining, and researching capacity-building initiatives to further develop the implementation practice workforce, which is essential for scaling the implementation of evidence globally.

In this study, teams identified 37 different TMFAs and 27 additional resources that were introduced across the 20 capacity-building initiatives. While some of these were applied across a substantial number of capacity-building initiatives (e.g., the Knowledge-to-Action framework [36]), most were used infrequently. This finding signals a general lack of consensus about which TMFAs and resources to use, a finding also reported elsewhere [103]. A recent scoping review identified over 200 knowledge translation practice tools (i.e., tools that guide how to do knowledge translation) [104]. This proliferation has produced a potentially overwhelming number of TMFAs, many of which are used infrequently and/or inappropriately [105, 106], and many practitioners report a lack of confidence in choosing a framework [107]. It is worth reflecting on whether those developing and delivering capacity-building initiatives are perpetuating this challenge by sharing and endorsing so many TMFAs and resources, especially without equipping learners with the tools needed to select and apply appropriate TMFAs. Although some teams in our study described the importance of content on how to identify and select appropriate TMFAs, only one team reported using selection tools to facilitate this process. As more practice-based selection tools are developed and tested [104, 106], they may help implementation practitioners navigate the large number of potential TMFAs to apply in their work.

The capacity-building initiatives in this study aligned with current understanding of core pillars [22] and essential competencies for implementation practice [23, 108]. Leppin and colleagues [22] identified three core pillars: understanding evidence-based interventions and implementation strategies; using theories, models, and frameworks during the implementation process; and methods and approaches to implementation research. The content of the included capacity-building initiatives closely aligned with the first two pillars, with less emphasis on the third pillar of implementation research. Moore and Khan identified 37 competencies linked to nine core implementation activities: inspire stakeholders and develop relationships, build implementation teams, understand the problem, use evidence to inform all aspects of implementation, assess the context, facilitate implementation, evaluate, plan for sustainability, and broker knowledge [23]. The capacity-building initiatives in our study generally covered these nine activities, although some were described less frequently (e.g., building an implementation team, planning for sustainability). Although the depth of our data did not allow a direct comparison between capacity-building initiative content and the more detailed individual competencies, future work should explore how training content aligns with current and emerging competencies for implementation practice and science. For instance, novel competencies related to equity considerations in implementation science are emerging [109]. While some teams in our study described adding new content on equity to their capacity-building initiatives, further work is needed to explore how this content aligns with these emerging competencies, how effectively it develops implementation practitioners’ capacity to integrate equity considerations during implementation, and whether equity considerations differ between implementation research and implementation practice.

We identified several areas where, despite learning the relevant content in a capacity-building initiative, practitioners might experience challenges applying this knowledge in practice. First, although about 70% of the capacity-building initiatives in our study included content on how to find, appraise, and adapt evidence, there were concerns about whether learners could (or should) apply these skills in day-to-day practice, given how time-intensive they are. This concern aligns with a systematic review that identified “lack of time” as a top barrier to healthcare providers searching for, appraising, and learning from evidence [110]. Support from librarians has been shown to have positive outcomes (e.g., time savings for healthcare providers, more timely information for decision making) [111], although we acknowledge that librarians may not be easily accessible in all practice-based settings. Second, nearly all teams included content on monitoring and evaluation. However, based on the collective experiences of our team of implementation scientists and implementation practitioners, monitoring and evaluation are often not done (or not done in depth) in practice-based settings. Setting up effective data collection and monitoring systems has been identified as one of the top ten challenges to improving quality in healthcare, with settings often lacking the required expertise and infrastructure [112]. The high proportion of teams including monitoring and evaluation content in their training may be a response to this gap and an attempt to better equip learners to apply these skills effectively in their settings.

Fewer than two-thirds of the teams included content on sustainability. Given the growing attention to sustainability and scalability [113,114,115], this was surprising. There are several potential explanations. First, sustainability concepts may have been integrated throughout the other content and not explicitly articulated as a separate content area by study participants. Second, most of the capacity-building initiatives in our study were time-limited, introductory courses. While most capacity-building initiatives introduced process models (e.g., KTA framework [36], Quality Implementation Framework [52], EPIS [58, 59]), which encourage consideration of the full implementation process from planning to sustainability, the training may have focused on the earlier phases of these models, with less attention to the longer-term activities of sustainability and scalability. However, sustainability needs to be considered early and often [116, 117], and it is worth considering who bears this responsibility. Johnson et al. [118] raised a similar question and recommended sustainability planning be a “dynamic, multifaceted approach with the involvement of all those who have a stake in sustainability such as funders, researchers, practitioners, and program beneficiaries” [118] (p. 7). It is thus important to ensure that capacity-building initiatives equip learners with the knowledge and skills to enhance sustainability and scalability throughout the full implementation process.

All teams described the importance of relational skills in the implementation process, from forming and maintaining a core implementation team, to engaging interested and affected parties in the implementation process, to effectively leading and facilitating implementation. Relational skills are required to work effectively in implementation practice, with about half of the 37 core competencies for implementation practice being relational in nature [23]. In addition, respondents to an international survey of implementation experts most frequently identified collaboration knowledge and skills (e.g., interpersonal skills, networking and relationship building, teamwork and leadership skills, motivational skills, and ability to work with other disciplines and cultures) as the most helpful competency [24]. Our study also provided several examples of how this relational content is evolving in alignment with societal priorities and emerging areas in the fields of knowledge translation and implementation science, including integrated knowledge translation [28] and co-production [119] approaches; power differences and dynamics [120]; equity, diversity, and inclusion; intersectionality considerations [121,122,123,124,125,126]; and taking a principled approach to partnerships [127, 128]. It is promising that many teams offering capacity-building initiatives are staying abreast of these advances and priorities when developing the knowledge and skills of implementation practitioners.

Strengths and limitations

We used a comprehensive recruitment approach to enroll a geographically diverse sample of participants with a variety of implementation, clinical, and research experiences, providing an international perspective on implementation practice training. We used a recent systematic review [7] as one strategy to identify published capacity-building initiatives; however, we did not conduct a comprehensive review of the literature, and some capacity-building initiatives may have been missed. Furthermore, including only English-speaking participants may have limited the identification and participation of other capacity-building initiative developers and deliverers. In addition, the current study focused on capacity-building initiatives offered primarily in the health sector. Implementation science and practice span many fields, offering an opportunity to replicate this study design to examine commonalities and unique content needs across different regions and contexts.

Collecting primary data through multiple methods allowed us to gather in-depth information on what content is covered in the capacity-building initiatives as well as how and why this content is included. However, we received capacity-building initiative materials from only 11 of the 20 programs, which may have limited the comprehensiveness of the information on each initiative. In addition, the discussion guide asked participants about “critical content,” so participants may have highlighted only the “core” content in the time-limited interviews and focus groups. As such, while our findings provide an overview of what experts in the field identify as important training content, they likely do not reflect every topic covered across capacity-building initiatives. Furthermore, while study participants shared what they included in their initiatives and why those content areas are important, this may not represent the optimal training content for all settings. Because this study did not assess the outcomes of the capacity-building initiatives, we cannot ascertain whether specific content is associated with better learner or health-system outcomes, which is an important area for future work.

Although this work extends our knowledge of key training content for implementation practice, content and curriculum are just one component of designing and delivering effective implementation practice training programs. Our team is currently working to synthesize additional data to describe the structure, format, and evaluation approaches of the capacity-building initiatives, as well as describe the experiences of the teams who facilitate the training.

Conclusions

The results of this study highlight what experienced capacity-building initiative developers and deliverers identify as essential content for teaching implementation practice. These learnings may be informative to researchers, educators, and implementation practitioners working to develop, refine, and deliver capacity-building initiatives to enhance the translation of implementation science into practice. Future research is needed to better understand how the training content influences implementation outcomes.

Availability of data and materials

The interview and focus group transcripts analyzed in this study are not publicly available because they contain information that could compromise research participant privacy/consent. The questionnaire and data are available from the corresponding author on reasonable request.

Abbreviations

TMFAs: Theories, models, frameworks, and approaches

References

Morris ZS, Wooding S, Grant J. The answer is 17 years, what is the question: understanding time lags in translational research. J R Soc Med. 2011;104:510–20. https://doi.org/10.1258/jrsm.2011.110180.

Westerlund A, Sundberg L, Nilsen P. Implementation of implementation science knowledge: the research-practice gap paradox. Worldviews Evid Based Nurs. 2019;16:332–4.

Rapport F, Smith J, Hutchinson K, Clay-Williams R, Churruca K, Bierbaum M, et al. Too much theory and not enough practice? The challenge of implementation science application in healthcare practice. J Eval Clin Pract. 2022;28:991–1002.

Beidas RS, Dorsey S, Lewis CC, Lyon AR, Powell BJ, Purtle J, et al. Promises and pitfalls in implementation science from the perspective of US-based researchers: learning from a pre-mortem. Implement Sci. 2022;17:1–15. https://doi.org/10.1186/s13012-022-01226-3.

Albers B, Metz A, Burke K. Implementation support practitioners: a proposal for consolidating a diverse evidence base. BMC Health Serv Res. 2020;20:1–10.

Straus SE, Sales A, Wensing M, Michie S, Kent B, Foy R. Education and training for implementation science: our interest in manuscripts describing education and training materials. Implement Sci. 2015;10:136. https://doi.org/10.1186/s13012-015-0326-x.

Davis R, D’Lima D. Building capacity in dissemination and implementation science: a systematic review of the academic literature on teaching and training initiatives. Implement Sci. 2020;15(1):97. https://doi.org/10.1186/s13012-020-01051-6.

Kislov R, Waterman H, Harvey G, Boaden R. Rethinking capacity building for knowledge mobilisation: developing multilevel capabilities in healthcare organisations. Implement Sci. 2014;9:166. https://doi.org/10.1186/s13012-014-0166-0.

Proctor E, Chambers DA. Training in dissemination and implementation research: a field-wide perspective. Transl Behav Med. 2017;7:624–35.

Park JS, Moore JE, Sayal R, Holmes BJ, Scarrow G, Graham ID, et al. Evaluation of the “foundations in knowledge translation” training initiative: preparing end users to practice KT. Implement Sci. 2018;13:63.

Moore JE, Rashid S, Park JS, Khan S, Straus SE. Longitudinal evaluation of a course to build core competencies in implementation practice. Implement Sci. 2018;13:1–14.

Bennett S, Whitehead M, Eames S, Fleming J, Low S, Caldwell E. Building capacity for knowledge translation in occupational therapy: learning through participatory action research. BMC Med Educ. 2016;16(1):257. https://doi.org/10.1186/s12909-016-0771-5.

Eames S, Bennett S, Whitehead M, Fleming J, Low SO, Mickan S, et al. A pre-post evaluation of a knowledge translation capacity-building intervention. Aust Occup Ther J. 2018;65:479–93.

Young AM, Cameron A, Meloncelli N, Barrimore SE, Campbell K, Wilkinson S, et al. Developing a knowledge translation program for health practitioners: allied health translating research into practice. Front Health Serv. 2023;3:1103997. https://doi.org/10.3389/frhs.2023.1103997.

Provvidenza C, Townley A, Wincentak J, Peacocke S, Kingsnorth S. Building knowledge translation competency in a community-based hospital: a practice-informed curriculum for healthcare providers, researchers, and leadership. Implement Sci. 2020;15(1):54. https://doi.org/10.1186/s13012-020-01013-y.

Black AT, Steinberg M, Chisholm AE, Coldwell K, Hoens AM, Koh JC, et al. Building capacity for implementation—the KT Challenge. Implement Sci Commun. 2021;2(1):84. https://doi.org/10.1186/s43058-021-00186-x.

Mosson R, Augustsson H, Bäck A, Åhström M, von Thiele Schwarz U, Richter A, et al. Building implementation capacity (BIC): a longitudinal mixed methods evaluation of a team intervention. BMC Health Serv Res. 2019;19(1):287. https://doi.org/10.1186/s12913-019-4086-1.

Goodenough B, Fleming R, Young M, Burns K, Jones C, Forbes F. Raising awareness of research evidence among health professionals delivering dementia care: are knowledge translation workshops useful? Gerontol Geriatr Educ. 2017;38:392–406. https://doi.org/10.1080/02701960.2016.1247064.

Wyer PC, Umscheid CA, Wright S, Silva SA, Lang E. Teaching Evidence Assimilation for Collaborative Health Care (TEACH) 2009–2014: building evidence-based capacity within health care provider organizations. EGEMS. 2015;3(2):1165. https://doi.org/10.13063/2327-9214.1165.

Proctor E, Ramsey AT, Brown MT, Malone S, Hooley C, McKay V. Training in Implementation Practice Leadership (TRIPLE): evaluation of a novel practice change strategy in behavioral health organizations. Implement Sci. 2019;14:66. https://doi.org/10.1186/s13012-019-0906-2.

U.S. Department of Veterans Affairs. Implementation facilitation learning hub. 2023. Available from: https://www.queri.research.va.gov/training_hubs/default.cfm. Cited 2023 Oct 16.

Leppin AL, Baumann AA, Fernandez ME, Rudd BN, Stevens KR, Warner DO, et al. Teaching for implementation: a framework for building implementation research and practice capacity within the translational science workforce. J Clin Transl Sci. 2021;5:e147. https://doi.org/10.1017/cts.2021.809.

Moore JE, Khan S. Core competencies for implementation practice. 2020. Available from: https://static1.squarespace.com/static/62b608b9681f5f0b4f3c3659/t/638ea4ee882321609ab4b640/1670292724117/TCI+-+Core+Competencies+for+Implementation+Practice.pdf.

Schultes M-T, Aijaz M, Klug J, Fixsen DL. Competences for implementation science: what trainees need to learn and where they learn it. Adv Health Sci Educ Theory Pract. 2021;26(1):19–35. https://doi.org/10.1007/s10459-020-09969-8.

O’Cathain A, Murphy E, Nicholl J. The quality of mixed methods studies in health services research. J Health Serv Res Policy. 2008;13:92–8.

Fetters MD, Curry LA, Creswell JW. Achieving integration in mixed methods designs-principles and practices. Health Serv Res. 2013;48(6 Pt 2):2134–56. https://doi.org/10.1111/1475-6773.12117.

Sandelowski M. Whatever happened to qualitative description? Res Nurs Health. 2000;23:334–40.

Kothari A, McCutcheon C, Graham ID. Defining integrated knowledge translation and moving forward: a response to recent commentaries. Int J Health Policy Manag. 2017;6(5):299–300. https://doi.org/10.15171/ijhpm.2017.15.

QSR International Pty Ltd. NVivo qualitative data analysis software, version 12 Pro. Burlington: QSR International Pty Ltd; 2017. Available from: https://support.qsrinternational.com/nvivo/s/.

Hsieh HF, Shannon SE. Three approaches to qualitative content analysis. Qual Health Res. 2005;15:1277–88.

Giesen L, Roeser A. Structuring a team-based approach to coding qualitative data. Int J Qual Methods. 2020;19:1–7.

The Center for Implementation. TMFAs. 2023. Available from: https://twitter.com/TCI_ca/status/1676848534619058176. Cited 2023 Jul 6.

Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10:53. https://doi.org/10.1186/s13012-015-0242-0.

Harrison M, Graham ID. Knowledge translation in nursing and healthcare: a roadmap to evidence-informed practice. Hoboken: John Wiley & Sons, Inc.; 2021.

Lincoln YS, Guba E. Naturalistic inquiry. Beverly Hills: Sage Publications; 1985.

Graham ID, Logan J, Harrison MB, Straus SE, Tetroe J, Caswell W, et al. Lost in knowledge translation: time for a map? J Contin Educ Health Prof. 2006;26:13–24. https://doi.org/10.1002/chp.47.

Atkins L, Francis J, Islam R, O’Connor D, Patey A, Ivers N, et al. A guide to using the theoretical domains framework of behaviour change to investigate implementation problems. Implement Sci. 2017;12(1):77. https://doi.org/10.1186/s13012-017-0605-9.

Michie S, van Stralen MM, West R. The behaviour change wheel: a new method for characterising and designing behaviour change interventions. Implement Sci. 2011;6:42. https://doi.org/10.1186/1748-5908-6-42.

Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999;89(9):1322–7. https://doi.org/10.2105/ajph.89.9.1322.

RE-AIM. RE-AIM - Improving public health relevance and population health impact. 2023. Available from: https://re-aim.org/. Cited 2023 Oct 16.

Damschroder LJ, Reardon CM, Widerquist MAO, Lowery J. The updated consolidated framework for implementation research based on user feedback. Implement Sci. 2022;17(1):75. https://doi.org/10.1186/s13012-022-01245-0.

CFIR Research Team-Center for Clinical Management Research. Consolidated Framework for Implementation Research (CFIR). 2023. Available from: https://cfirguide.org/. Cited 2023 Oct 16.

Chambers DA, Glasgow RE, Stange KC. The dynamic sustainability framework: addressing the paradox of sustainment amid ongoing change. Implement Sci. 2013;8:117. https://doi.org/10.1186/1748-5908-8-117.

Maher L, Gustafson D, Evans A. Sustainability model and guide. 2010. Available from: https://ktpathways.ca/system/files/resources/2019-11/nhs_sustainability_model_-_february_2010_1_.pdf.

Wandersman A, Duffy J, Flaspohler P, Noonan R, Lubell K, Stillman L, et al. Bridging the gap between prevention research and practice: the interactive systems framework for dissemination and implementation. Am J Community Psychol. 2008;41(3–4):171–81. https://doi.org/10.1007/s10464-008-9174-z.

Kitson AL, Rycroft-Malone J, Harvey G, Mccormack B, Seers K, Titchen A. Evaluating the successful implementation of evidence into practice using the PARiHS framework: theoretical and practical challenges. Implement Sci. 2008;3:1. https://doi.org/10.1186/1748-5908-3-1.

Wandersman A, Chien VH, Katz J. Toward an evidence-based system for innovation support for implementing innovations with quality: tools, training, technical assistance, and quality assurance/quality improvement. Am J Community Psychol. 2012;50(3–4):445–59. https://doi.org/10.1007/s10464-012-9509-7.

Canadian Institutes of Health Research. Guide to knowledge translation planning at CIHR: integrated and end-of-grant approaches. Ottawa; 2012. Available from: https://cihr-irsc.gc.ca/e/documents/kt_lm_ktplan-en.pdf.

May C, Finch T. Implementing, embedding, and integrating practices: an outline of normalization process theory. Sociology. 2009;43:535–54. https://doi.org/10.1177/0038038509103208.

May C, Rapley T, Mair FS, Treweek S, Murray E, Ballini L, et al. Normalization process theory on-line users’ manual, toolkit and NoMAD instrument. 2015. Available from: http://www.normalizationprocess.org. Cited 2021 Dec 5.

Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. 2011;38(2):65–76. https://doi.org/10.1007/s10488-010-0319-7.

Meyers DC, Durlak JA, Wandersman A. The quality implementation framework: a synthesis of critical steps in the implementation process. Am J Community Psychol. 2012;50:462–80. https://doi.org/10.1007/s10464-012-9522-x.

Rogers EM. Diffusion of innovations. 5th ed. New York: Free Press; 2003.

Moore G, Campbell M, Copeland L, Craig P, Movsisyan A, Hoddinott P, et al. Adapting interventions to new contexts—the ADAPT guidance. BMJ. 2021;374:n1679. https://doi.org/10.1136/bmj.n1679.

Pathman DE, Konrad TR, Freed GL, Freeman VA, Koch GG. The awareness-to-adherence model of the steps to clinical guideline compliance. Med Care. 1996;34:873–89. https://doi.org/10.1097/00005650-199609000-00002.

Harrison MB, van den Hoek J, Graham ID. CAN-IMPLEMENT: planning for best-practice implementation. 1st ed. Philadelphia: Lippincott, Williams and Wilkins; 2014.

Braithwaite J, Churruca K, Long JC, Ellis LA, Herkes J. When complexity science meets implementation science: a theoretical and empirical analysis of systems change. BMC Med. 2018;16:63. https://doi.org/10.1186/s12916-018-1057-z.

Moullin JC, Dickson KS, Stadnick NA, Rabin B, Aarons GA. Systematic review of the Exploration, Preparation, Implementation, Sustainment (EPIS) framework. Implement Sci. 2019;14:1. https://doi.org/10.1186/s13012-018-0842-6.

EPIS Framework. The EPIS implementation framework. Available from: https://episframework.com/. Cited 2023 Oct 16.

Hawe P. Lessons from complex interventions to improve health. Annu Rev Public Health. 2015;36:307–23. https://doi.org/10.1146/annurev-publhealth-031912-114421.

Stirman SW, Baumann AA, Miller CJ. The FRAME: an expanded framework for reporting adaptations and modifications to evidence-based interventions. Implement Sci. 2019;14:58. https://doi.org/10.1186/s13012-019-0898-y.

Langley G, Moen R, Nolan K, Nolan T, Norman C, Provost L. The improvement guide: a practical approach to enhancing organizational performance. 2nd ed. San Francisco: Jossey-Bass Publishers; 2009.

Hilton K, Anderson A. IHI psychology of change framework to advance and sustain improvement. Boston: Institute for Healthcare Improvement; 2018. Available from: https://www.ihi.org/resources/Pages/IHIWhitePapers/IHI-Psychology-of-Change-Framework.aspx.

Parker G, Kastner M, Born K, Berta W. Development of an implementation process model: a Delphi study. BMC Health Serv Res. 2021;21:558. https://doi.org/10.1186/s12913-021-06501-5.

Iowa Model Collaborative, Buckwalter KC, Cullen L, Hanrahan K, Kleiber C, McCarthy AM, et al. Iowa model of evidence-based practice: revisions and validation. Worldviews Evid Based Nurs. 2017;14:175–82. https://doi.org/10.1111/wvn.12223.

Schiffer E. Net-Map toolbox: influence mapping of social networks. 2007. Available from: https://netmap.wordpress.com/about/. Cited 2023 Jul 11.

Valente TW, Palinkas LA, Czaja S, Chu K-H, Brown CH. Social network analysis for program implementation. PLoS ONE. 2015;10:e0131712. https://doi.org/10.1371/journal.pone.0131712.

Graham ID, Logan J. Innovations in knowledge transfer and continuity of care. Can J Nurs Res. 2004;36:89–103.

Domlyn AM, Scott V, Livet M, Lamont A, Watson A, Kenworthy T, et al. R = MC2 readiness building process: a practical approach to support implementation in local, state, and national settings. J Community Psychol. 2021;49:1228–48. https://doi.org/10.1002/jcop.22531.

Bausch KC. Soft systems theory. In: The emerging consensus in social systems theory. Boston: Springer US; 2001. p. 103–22. https://doi.org/10.1007/978-1-4615-1263-9_8.

Khalil H, Lakhani A. Using systems thinking methodologies to address health care complexities and evidence implementation. JBI Evid Implement. 2022;20:3–9. https://doi.org/10.1097/XEB.0000000000000303.

Flaspohler PD, Meehan C, Maras MA, Keller KE. Ready, willing, and able: developing a support system to promote implementation of school-based prevention programs. Am J Community Psychol. 2012;50:428–44. https://doi.org/10.1007/s10464-012-9520-z.

Francis J, Presseau J. Healthcare practitioner behaviour. In: Llewellyn C, Ayers S, McManus C, editors. Cambridge handbook of psychology, health and medicine. Cambridge: Cambridge University Press; 2019. p. 325–8.

Ajzen I. The theory of planned behavior. Organ Behav Hum Decis Process. 1991;50:179–211.

University of Colorado Denver. Dissemination & implementation models in health. 2023. Available from: https://dissemination-implementation.org/. Cited 2023 Apr 26.

North Carolina Translational and Clinical Sciences Institute. Theory, Model, and Framework Comparison and Selection Tool (T-CaST). 2023. Available from: https://impsci.tracs.unc.edu/tcast/. Cited 2023 Apr 26.

Birken SA, Rohweder CL, Powell BJ, Shea CM, Scott J, Leeman J, et al. T-CaST: an implementation theory comparison and selection tool. Implement Sci. 2018;13:143. https://doi.org/10.1186/s13012-018-0836-4.

Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10:21. https://doi.org/10.1186/s13012-015-0209-1.

Michie S, Richardson M, Johnston M, Abraham C, Francis J, Hardeman W, et al. The behavior change technique taxonomy (v1) of 93 hierarchically clustered techniques: building an international consensus for the reporting of behavior change interventions. Ann Behav Med. 2013;46:81–95. https://doi.org/10.1007/s12160-013-9486-6.

Knowledge Translation Program. Practicing knowledge translation: implementing evidence. Toronto: Achieving outcomes; 2016.

CADTH. CADTH Rx for change database. Available from: https://www.cadth.ca/rx-change. Cited 2023 Apr 26.

Centre for Healthcare Innovation. Methods of patient & public engagement: a guide. Winnipeg; 2020. Available from: https://umanitoba.ca/centre-for-healthcare-innovation/sites/centre-for-healthcare-innovation/files/2021-11/methods-of-patient-and-public-engagement-guide.pdf.

Bowen S. A guide to evaluation in health research. Ottawa; 2013. Available from: https://cihr-irsc.gc.ca/e/documents/kt_lm_guide_evhr-en.pdf.

Pipes T. Work more effectively and productively with the Eisenhower Matrix. Medium. 2017. Available from: https://medium.com/taking-note/work-more-effectively-and-productively-with-the-eisenhower-matrix-998091a14b3a. Cited 2023 Apr 26.

Effective Practice and Organisation of Care (EPOC). EPOC Taxonomy. 2015. Available from: https://epoc.cochrane.org/epoc-taxonomy.

RAND Corporation. Learn and use getting to outcomes®. 2023. Available from: https://www.rand.org/health-care/projects/getting-to-outcomes/learn-and-use.html. Cited 2023 Apr 26.

Health Consumers Queensland. Health Consumers Queensland. Available from: https://www.hcq.org.au/. Cited 2023 Apr 26.

International Association for Public Participation (IAP2). International Association for Public Participation (IAP2). Available from: https://www.iap2.org/mpage/Home. Cited 2023 Apr 26.

Hailey D, Grimshaw J, Eccles M, Mitton C, Adair CE, McKenzie E, et al. Effective dissemination of findings from research. Alberta; 2008. Available from: https://www.ihe.ca/publications/effective-dissemination-of-findings-from-research-a-compilation-of-essays.

Fernandez ME, ten Hoor GA, van Lieshout S, Rodriguez SA, Beidas RS, Parcel G, et al. Implementation mapping: using intervention mapping to develop implementation strategies. Front Public Health. 2019;7:158. https://doi.org/10.3389/fpubh.2019.00158.

King’s Improvement Science. KIS guide to evaluation resources. London; 2018. Available from: https://kingsimprovementscience.org/cms-data/resources/KIS_evaluation_guide_December_2018.pdf.

Barwick MA. Knowledge translation planning template. Toronto; 2008. Available from: https://www.sickkids.ca/en/learning/continuing-professional-development/knowledge-translation-training/knowledge-translation-planning-template-form/.

KTDRR (Center on Knowledge Translation for Disability & Rehabilitation Research). KT strategies database. 2023. Available from: https://ktdrr.org/ktstrategies/. Cited 2023 Apr 26.

NHS Institute for Innovation and Improvement. Sustainability guide. 2010.

National Implementation Research Network (NIRN). NIRN. Available from: https://nirn.fpg.unc.edu/. Cited 2023 Apr 26.

Ready, Set, Change! A readiness for change decision support tool. Available from: http://readiness.knowledgetranslation.ca/. Cited 2023 Apr 26.

Institute for Healthcare Improvement. SBAR Tool: Situation-Background-Assessment-Recommendation. 2023. Available from: https://www.ihi.org/resources/Pages/Tools/SBARToolkit.aspx. Cited 2023 Apr 26.

Washington University in St. Louis. Program Sustainability Assessment Tool (PSAT) and Clinical Sustainability Assessment Tool (CSAT). 2023. Available from: https://www.sustaintool.org/. Cited 2023 Apr 27.

Brown CH, Curran G, Palinkas LA, Aarons GA, Wells KB, Jones L, et al. An overview of research and evaluation designs for dissemination and implementation. Annu Rev Public Health. 2017;38:1–22. https://doi.org/10.1146/annurev-publhealth-031816-044215.

The Community Engagement Network. The engagement toolkit. Melbourne; 2005. Available from: https://www.betterevaluation.org/tools-resources/engagement-toolkit.

The Theory and Techniques Tool. Available from: https://theoryandtechniquetool.humanbehaviourchange.org/tool. Cited 2023 Apr 26.

Flottorp SA, Oxman AD, Krause J, Musila NR, Wensing M, Godycki-Cwirko M, et al. A checklist for identifying determinants of practice: a systematic review and synthesis of frameworks and taxonomies of factors that prevent or enable improvements in healthcare professional practice. Implement Sci. 2013;8:35. https://doi.org/10.1186/1748-5908-8-35.

Lynch EA, Mudge A, Knowles S, Kitson AL, Hunter SC, Harvey G. "There is nothing so practical as a good theory": a pragmatic guide for selecting theoretical approaches for implementation projects. BMC Health Serv Res. 2018;18:857. https://doi.org/10.1186/s12913-018-3671-z.

Bhuiya AR, Sutherland J, Boateng R, Bain T, Skidmore B, Perrier L, et al. A scoping review reveals candidate quality indicators of knowledge translation and implementation science practice tools. J Clin Epidemiol. 2023;S0895-4356(23)00281-0. https://doi.org/10.1016/j.jclinepi.2023.10.021.

Strifler L, Cardoso R, McGowan J, Cogo E, Nincic V, Khan PA, et al. Scoping review identifies significant number of knowledge translation theories, models, and frameworks with limited use. J Clin Epidemiol. 2018;100:92–102. https://doi.org/10.1016/j.jclinepi.2018.04.008.

Strifler L, Barnsley JM, Hillmer M, Straus SE. Identifying and selecting implementation theories, models and frameworks: a qualitative study to inform the development of a decision support tool. BMC Med Inform Decis Mak. 2020;20:91. https://doi.org/10.1186/s12911-020-01128-8.

Barrimore SE, Cameron AE, Young AM, Hickman IJ, Campbell KL. Translating research into practice: how confident are allied health clinicians? J Allied Health. 2020;49:258–62.

Metz A, Louison L, Burke K, Albers B, Ward C. Implementation support practitioner profile: guiding principles and core competencies for implementation practice. Chapel Hill; 2020. Available from: https://nirn.fpg.unc.edu/sites/nirn.fpg.unc.edu/files/resources/IS%20Practice%20Profile-single%20page%20printing-v10-October%202022.pdf.

Huebschmann AG, Johnston S, Davis R, Kwan BM, Geng E, Haire-Joshu D, et al. Promoting rigor and sustainment in implementation science capacity building programs: a multi-method study. Implement Res Pract. 2022;3:26334895221146261. https://doi.org/10.1177/26334895221146261.

Sadeghi-Bazargani H, Tabrizi JS, Azami-Aghdash S. Barriers to evidence-based medicine: a systematic review. J Eval Clin Pract. 2014;20:793–802. https://doi.org/10.1111/jep.12222.

Perrier L, Farrell A, Ayala AP, Lightfoot D, Kenny T, Aaronson E, et al. Effects of librarian-provided services in healthcare settings: a systematic review. J Am Med Inform Assoc. 2014;21:1118–24. https://doi.org/10.1136/amiajnl-2014-002825.

Dixon-Woods M, McNicol S, Martin G. Ten challenges in improving quality in healthcare: lessons from the Health Foundation’s programme evaluations and relevant literature. BMJ Qual Saf. 2012;21:876–84. https://doi.org/10.1136/bmjqs-2011-000760.

Straus SE. Implementation sustainability. In: Rapport F, Clay-Williams R, Braithwaite J, editors. Implement Sci Key Concepts. 1st ed. London: Routledge; 2022. p. 205–8.

Corôa RDC, Gogovor A, Ben Charif A, Ben Hassine A, Zomahoun HTV, McLean RKD, et al. Evidence on scaling in health and social care: an umbrella review. Milbank Q. 2023. https://doi.org/10.1111/1468-0009.12649.

Moore JE, Mascarenhas A, Bain J, Straus SE. Developing a comprehensive definition of sustainability. Implement Sci. 2017;12:110. https://doi.org/10.1186/s13012-017-0637-1.

Moore JE. Sustainability: What is it? Why is it important? How are readiness, context, and sustainability related? 2023. Available from: https://thecenterforimplementation.com/toolbox/5-components-of-sustainability. Cited 2023 Oct 16.

Kwan BM, Brownson RC, Glasgow RE, Morrato EH, Luke DA. Designing for dissemination and sustainability to promote equitable impacts on health. Annu Rev Public Health. 2022;43:331–53. https://doi.org/10.1146/annurev-publhealth-052220-112457.

Johnson AM, Moore JE, Chambers DA, Rup J, Dinyarian C, Straus SE. How do researchers conceptualize and plan for the sustainability of their NIH R01 implementation projects? Implement Sci. 2019;14:50. https://doi.org/10.1186/s13012-019-0895-1.

Graham ID, Rycroft-Malone J, Kothari A, McCutcheon C, editors. Research coproduction in healthcare. Hoboken: Wiley; 2022.

Finley EP, Closser S, Sarker M, Hamilton AB. Editorial: the theory and pragmatics of power and relationships in implementation. Front Health Serv. 2023;3:1168559. https://doi.org/10.3389/frhs.2023.1168559.

Presseau J, Kasperavicius D, Rodrigues IB, Braimoh J, Chambers A, Etherington C, et al. Selecting implementation models, theories, and frameworks in which to integrate intersectional approaches. BMC Med Res Methodol. 2022;22:212. https://doi.org/10.1186/s12874-022-01682-x.

Etherington C, Rodrigues IB, Giangregorio L, Graham ID, Hoens AM, Kasperavicius D, et al. Applying an intersectionality lens to the theoretical domains framework: a tool for thinking about how intersecting social identities and structures of power influence behaviour. BMC Med Res Methodol. 2020;20:169. https://doi.org/10.1186/s12874-020-01056-1.

Sibley KM, Kasperavicius D, Rodrigues IB, Giangregorio L, Gibbs JC, Graham ID, et al. Development and usability testing of tools to facilitate incorporating intersectionality in knowledge translation. BMC Health Serv Res. 2022;22:830. https://doi.org/10.1186/s12913-022-08181-1.

Tannenbaum C, Greaves L, Graham ID. Why sex and gender matter in implementation research. BMC Med Res Methodol. 2016;16:145. https://doi.org/10.1186/s12874-016-0247-7.

Brownson RC, Kumanyika SK, Kreuter MW, Haire-Joshu D. Implementation science should give higher priority to health equity. Implement Sci. 2021;16:28. https://doi.org/10.1186/s13012-021-01097-0.

Fort MP, Manson SM, Glasgow RE. Applying an equity lens to assess context and implementation in public health and health services research and practice using the PRISM framework. Front Health Serv. 2023;3:1139788. https://doi.org/10.3389/frhs.2023.1139788.

Gainforth HL, Hoekstra F, McKay R, McBride CB, Sweet SN, Martin Ginis KA, et al. Integrated knowledge translation guiding principles for conducting and disseminating spinal cord injury research in partnership. Arch Phys Med Rehabil. 2021;102:656–63. https://doi.org/10.1016/j.apmr.2020.09.393.

Hoekstra F, Trigo F, Sibley KM, Graham ID, Kennefick M, Mrklas KJ, et al. Systematic overviews of partnership principles and strategies identified from health research about spinal cord injury and related health conditions: a scoping review. J Spinal Cord Med. 2023;46:614–31. https://doi.org/10.1080/10790268.2022.2033578.

Acknowledgements

We thank Drs. Michael Brown, Gillian Harvey, T George Hornby, Alison Mudge, Peter Wyer, and Adrienne Young for their contributions to conceptualizing this study. We thank Megan Campbell, Prue McRae, Dr. Nina Meloncelli, Dr. Jan Egil Nordvik, and Dr. Michael Tresillian for their contribution to interpreting the data.

The Implementation Practice Capacity-Building Initiative (CBI) team:

• Sally Bennett, School of Health and Rehabilitation Sciences, University of Queensland, Australia

• Agnes T Black, Providence Health Care, Canada

• Ashley E Cameron, Office of the Chief Allied Health Officer, Queensland Health, Australia

• Rachel Davis, Patient-Centred Research Group, Implementation Science, Evidera, United Kingdom

• Shauna Kingsnorth, Evidence to Care, Holland Bloorview Kids Rehabilitation Hospital, Canada; Occupational Science & Occupational Therapy, University of Toronto, Canada

• Julia E Moore, The Center for Implementation, Canada

• Christine Provvidenza, Evidence to Care, Holland Bloorview Kids Rehabilitation Hospital, Canada

• Sharon E Straus, Knowledge Translation Program, Li Ka Shing Knowledge Institute, St. Michael’s Hospital, Unity Health Toronto, Canada; Department of Medicine, University of Toronto, Canada

• Ashleigh Townley, Evidence to Care, Holland Bloorview Kids Rehabilitation Hospital, Canada

Funding

This study was supported by a Canadian Institutes of Health Research (CIHR) Foundation Grant to IDG (FDN# 143237). JR is funded by a CIHR Vanier Canada Graduate Scholarship and has received awards from the Integrated Knowledge Translation Research Network (IKTRN) and the University of Ottawa. The funder had no role in the project.

Author information

Contributions

JR, EAL, JLM, DS, IDG, RD, JEM, and SES contributed to conceptualizing the study. JR, OD, and JL collected the study data. JR, OD, JL, HA, DMB, CEC, BEC, SID, LBG, AMH, SCH, EAL, JLM, MRR, WR, DS, and IDG contributed to analyzing and interpreting the data and writing the results. SB, ATB, AEC, RD, SK, JEM, CP, SES, and AT contributed to interpreting the findings. JR drafted the initial manuscript. IDG oversaw the overall conduct of the study. All authors critically reviewed and revised the manuscript and approved the final version.

Ethics declarations

Ethics approval and consent to participate

This study received approval from the Ottawa Health Science Network Research Ethics Board (20210274-01H). All participants provided written informed consent before the questionnaire and reconfirmed consent verbally prior to starting the interview or focus group.

Consent for publication

Not applicable.

Competing interests

HA, DMB, BEC, LBG, AMH, SCH, EAL, JLM, MRR, WR, DS, IDG, SB, ATB, AEC, SK, JEM, CP, SES, and AT were each involved in the development and/or delivery of one or more of the capacity-building initiatives included in this study.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

GRAMMS reporting checklist.

Additional file 2.

Survey and interview questions.
