Abstract

Background

Effective implementation of evidence-based practices (EBPs) remains a significant challenge. Numerous existing models and frameworks identify key factors and processes to facilitate implementation. However, there is a need to better understand how individual models and frameworks are applied in research projects, how they can support the implementation process, and how they might advance implementation science. This systematic review examines and describes the research application of a widely used implementation framework, the Exploration, Preparation, Implementation, Sustainment (EPIS) framework.

Methods

A systematic literature review was performed to identify and evaluate the use of the EPIS framework in implementation efforts. Citation searches in PubMed, Scopus, PsycINFO, ERIC, Web of Science, Social Sciences Index, and Google Scholar databases were undertaken. Data extraction included the objective, language, country, setting, sector, EBP, study design, methodology, level(s) of data collection, unit(s) of analysis, use of EPIS (i.e., purpose), implementation factors and processes, EPIS stages, implementation strategy, implementation outcomes, and overall depth of EPIS use (rated on a 1–5 scale).

Results

In total, 762 full-text articles were screened by four reviewers, resulting in inclusion of 67 articles, representing 49 unique research projects. All included projects were conducted in public sector settings. The majority of projects (73%) investigated the implementation of a specific EBP. The majority of projects (90%) examined inner context factors, 57% examined outer context factors, 37% examined innovation factors, and 31% bridging factors (i.e., factors that cross or link the outer system and inner organizational context). On average, projects measured EPIS factors across two of the EPIS phases (M = 2.02), with the most frequent phase being Implementation (73%). On average, the overall depth of EPIS inclusion was moderate (2.8 out of 5).

Conclusion

This systematic review enumerated multiple settings and ways the EPIS framework has been applied in implementation research projects, and summarized promising characteristics and strengths of the framework, illustrated with examples. Recommendations for future use include more precise operationalization of factors, increased depth and breadth of application, development of aligned measures, and broadening of user networks. Additional resources supporting the operationalization of EPIS are available.

Background

Effective implementation of evidence-based interventions, treatments, or innovations (hereafter referred to as evidence-based practices [EBPs]) to address complex and widespread public health issues remains a significant challenge. Our ability to effectively implement an EBP is as important as treatment effectiveness because failed implementation efforts are often the underlying reason for lack of EBP effectiveness or impact in health and social care systems and organizations [1,2,3]. There are numerous frameworks, models, and theories that identify key factors, and sometimes processes, to facilitate EBP implementation [4,5,6]. Such implementation frameworks are commonly used to help select and structure research questions, methods, strategies, measures, and results. While an increasing number of studies use implementation frameworks, the ways in which these frameworks are used or operationalized are not well described, and their theoretical and practical utility is often left unexamined [7].

The present study is a systematic review of one highly cited and widely used implementation framework, the Exploration, Preparation, Implementation, Sustainment (EPIS) framework [8]. Until recently, this comprehensive framework has had limited prescriptive guidance for its use. The EPIS framework was developed based on examination of the literature on implementation in public sector social and allied health service systems (e.g., mental health, substance use disorder treatment, social care, child welfare) in the USA, and has applicability in other countries and other settings. This study will determine how EPIS has been applied and how widely the framework has been disseminated, adopted, and implemented in diverse health, allied health, and social care sectors, and further afield.

The EPIS framework

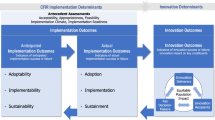

As shown in Fig. 1, EPIS has four key components: four well-defined phases that describe the implementation process; outer system and inner organizational contexts and their associated factors; innovation factors that relate to the characteristics of the innovation/EBP being implemented; and bridging factors, which capture the dynamics, complexity, and interplay of the outer and inner contexts [8].

The first key component of EPIS is the four phases of the implementation process, defined as Exploration, Preparation, Implementation, and Sustainment (EPIS). In the Exploration phase, a service system, organization, research group, or other stakeholder(s) consider the emergent or existing health needs of the patients, clients, or communities, work to identify the best EBP(s) to address those needs, and subsequently decide whether to adopt the identified EBP. In addition, consideration is given to what might need to be adapted at the system, organization, and/or individual level(s) and to the EBP itself. The Exploration phase begins when implementers and relevant stakeholders are aware of a clinical or public health need and are considering ways to address that need. The implementers move into the next phase, Preparation, upon deciding to adopt one or more EBPs or innovations. In the Preparation phase, the primary objectives are to identify potential barriers and facilitators of implementation, further assess needs for adaptation, and develop a detailed implementation plan to capitalize on implementation facilitators and address potential barriers. Critical within the Preparation phase is planning of implementation supports (e.g., training, coaching, audit, and feedback) to facilitate use of the EBP in the next two phases (Implementation and Sustainment) and to develop an implementation climate that indicates that EBP use is expected, supported, and rewarded [9]. In the Implementation phase, guided by the planned implementation supports from the Preparation phase, EBP use is initiated and instantiated in the system and/or organization(s). It is essential that ongoing monitoring of the implementation process is incorporated to assess how implementation is proceeding and to adjust implementation strategies accordingly. In the Sustainment phase, the outer and inner context structures, processes, and supports are ongoing so that the EBP continues to be delivered, with adaptation as necessary, to realize the resulting public health impact of the implemented EBP.

The second key component of the EPIS framework is the articulation of contextual levels and factors comprised of the outer system context and the inner organizational context. Within each phase, outer and inner context factors that could be considered as instrumental to the implementation process are highlighted, many of which apply across multiple implementation phases. The outer context describes the environment external to the organization and can include the service and policy environment and characteristics of the individuals who are the targets of the EBP (e.g., patients, consumers). The outer context also includes inter-organizational relationships between entities, including governments, funders, managed care organizations, professional societies, and advocacy groups, that influence and make the outer context dynamic. For example, collaboration between child welfare and mental health systems may occur, surrounding the development and implementation of a coordinated care program for youth served in both sectors. It is important to note that the outer context is dynamic. The inner context refers to the characteristics within an organization such as leadership, organizational structures and resources, internal policies, staffing, practices, and characteristics of individual adopters (e.g., clinicians or practitioners). Within the inner context, there are multiple levels that vary by organization or discipline and may include executive management, middle management, team leaders, or direct service providers (e.g., clinicians, practitioners). Together, the inner and outer contexts reflect the complex, multilayered, and highly interactive nature of the socioecological context of health and allied healthcare that is noted in many implementation frameworks [8, 10,11,12].

The third key component of EPIS is the set of factors that relate to the EBP or innovation itself. There is an emphasis on the fit of the EBP to be implemented with the system and patient/client population (outer context), as well as with the organization and provider (inner context). This implies that some adaptation to the EBP will likely be necessary. The aim is to maintain the core components of an EBP and adapt the periphery.

The fourth and final component of EPIS is the recognition of the interconnectedness and relationships between outer and inner context entities, which form part of what we refer to as bridging factors. The bridging factors are deemed to influence the implementation process because the inner context of organizations is influenced by the outer system in which the organization operates. For example, hospitals are subject to federal, state, and local policies that define certification and reporting requirements. Those influences are also reciprocal (e.g., industry lobbyists impacting pharmacy legislation, and direct to consumer marketing).

EPIS considers that adaptation (often involving implementation strategies) will likely be necessary in regard to the outer and inner contexts as well as to the EBP. This is supported by recent work identifying the need for a dynamic approach to adaptation that involves all relevant stakeholders through the four EPIS phases in order to capitalize on the knowledge and experience of the implementation team and maximize the ability to find solutions that are acceptable to all stakeholders [13]. Furthermore, this is consistent with calls for consideration of the need for adaptation in EBP sustainment [14, 15]. This emphasis on adaptation to improve fit within the EPIS framework is akin to what others have identified as fostering values-innovation fit [16, 17]. The values-innovation fit proposes that innovation implementation will be more successful if there is a high degree of fit between the values and needs of implementers and the characteristics of the innovation to be implemented [16]. For example, one implementation strategy may be to develop system and organizational climates that support such a values-innovation fit [18]. EPIS also explicitly identifies the importance of EBP characteristics and the role of EBP developers and purveyors/intermediaries (i.e., those who support the implementation process) throughout the process of implementation and demonstration of effectiveness. This is especially important when considering values-innovation fit and identifying potential adaptations to increase EBP fit within a specific setting while preserving fidelity to EBP core elements that are responsible for clinical or service outcomes.

The degree to which these varying components of EPIS are identified, operationalized, and studied in the published literature is unclear. To address this gap, we conducted a systematic review of the literature to describe how the EPIS implementation framework has been used in peer-reviewed, published studies. This review (1) describes EPIS use in implementation research to date and (2) makes recommendations for using the EPIS framework to advance implementation science theory, research, and practice.

Methods

A multi-step process was used to identify, review, and analyze the use of the EPIS framework.

Search strategy

A systematic search of the literature was executed in May 2017 to locate studies published in academic journals that utilized the EPIS framework. The search strategy was based on cited reference searching of the original EPIS article titled “Advancing a Conceptual Model of Evidence-Based Practice Implementation in Public Service Sectors” [8]. The title was used as the TITLE search term in each database. The following seven databases and search criteria were used: (1) PubMed single citation matcher (TITLE in ALL FIELDS CITED by), (2) Scopus: (TITLE in REFERENCES), (3) PsycINFO: (TITLE in REFERENCE and PEER REVIEWED), (4) ERIC: (TITLE in ANYWHERE), (5) Web of Science: (TITLE AND THE “TIMES CITED” LINK FOR FULL LIST OF CITATIONS OF THE ORIGINAL EPIS PAPER), (6) Social Sciences Index (TITLE in All Text Fields), and (7) Google Scholar: (TITLE Cited By).

Our initial search criteria were anchored to the original EPIS citation [8] to ensure complete identification of articles that had used the EPIS framework. We used the title of the original article rather than the more recently accepted acronym that follows from the EPIS phases. As such, all records were published between 2011 (when the EPIS framework was published) and 2017 (when the search was conducted).

Duplicates among the resulting articles were removed before the inclusion and exclusion criteria were assessed.

Inclusion and exclusion criteria

Inclusion criteria were as follows:

1. Report on an original (empirical) research study
2. Published in a peer-reviewed journal
3. Study design or implementation project focused on dissemination, implementation, or sustainment, including hybrid designs
4. Utilized the EPIS framework to guide study theory, design, data collection, measurement, coding, analysis, and/or reporting

Papers were excluded if they were conceptual (e.g., commentary, debate) rather than empirical research or a synthesis, or if EPIS was not used in a meaningful way (e.g., EPIS was cited as one of a list of frameworks, theories, or models).

Data collection

Four reviewers (JM, KD, NS, BR) assessed titles, abstracts, and full articles for inclusion. Each article was independently assessed by two reviewers. Papers on which the two reviewers disagreed regarding inclusion (n = 74; 9.7%) were assessed by a third reviewer (GA).

Data extraction

Each article was critically appraised by two reviewers independently. Reviewers extracted the data summarized in Table 1 from each included article. Refer to Additional file 1 for extracted data of each article.

Additional classifications of implementation factors from the original 2011 paper were added to the data extraction and EPIS framework figure. Factors regarding the innovation, and factors connecting the innovation and the two contexts, received limited emphasis in the original 2011 paper despite their large presence, which highlighted the need for additional terms to better represent factors that fall outside the inner and outer contexts. As such, we refer to these as Innovation factors (for example, innovation/EBP characteristics and innovation/EBP fit) and Bridging factors, or factors that span the inner and outer contexts (for example, interagency collaboration and community-academic partnerships).

Synthesis of results

Data were recorded in an extraction table (Additional file 1). Following data extraction, each reviewer met with the paired team member of each article to compare their results, reach consensus for areas that required further discussion or review, and combine extracted data into a single entry in the data extraction table. Finally, studies that had multiple articles were grouped together based on the parent project. In Additional file 1, projects that contained more than one article have a summary of the project reported first, followed by articles within each parent project listed in italics.

Results

Our search identified 2122 records (Fig. 2). After removal of duplicates, 762 articles were screened (title, abstract, and full-text review as needed), of which 67 initially met the inclusion criteria. Articles were then grouped by parent project (a project could have one or multiple articles), resulting in a total of 49 unique research projects (see Additional file 1). Further results are reported by project.

Fig. 2 PRISMA flow diagram of paper selection [62]

Projects utilizing EPIS were conducted in 11 countries that span high-, low-, and middle-income contexts: USA (39 projects, plus 2 in collaboration with other countries), Canada (1 project in collaboration with USA), Mexico (1 project), Sweden (1 project), Norway (1 project in collaboration with USA), Belgium (1 project), Australia (2 projects), UK (1 project), Brazil (1 project), the Bahamas (1 project), and South Africa (1 project). In 47% of projects, one or more authors had a professional connection (e.g., sponsor or mentor on a training grant) or research collaboration (e.g., co-investigator on a grant, co-author on a scholarly product) with the lead author of the original EPIS framework paper, Dr. Gregory Aarons; 6% and 4% had a professional connection or collaboration with the second (Hurlburt) and third (Horwitz) authors, respectively.

The included projects spanned a variety of public sectors: public health, child welfare, mental or behavioral health, substance use, rehabilitation, juvenile justice, education, and school-based nursing. The child welfare and mental health sectors had the highest representation across the projects (13 and 19 projects, respectively). The physical setting for the projects varied from whole service systems (e.g., Mental Health Department) to organizational level settings (e.g., schools, child welfare agencies, substance use treatment programs, community-based organizations, health clinics). The scale of the projects ranged from case studies of a few organizations to very large studies across hundreds of organizations or several states and counties. All projects were in public sector settings.

The majority of projects (n = 36, 73%) investigated the implementation of one specific EBP. Two projects (4%) offered sites a selection of EBPs, while 11 projects (22%) were implementing the concept of EBP. A health focus was reported in 8 projects (16%). These included (but were not limited to) maltreatment, behavioral problems, mental health or substance use, human immunodeficiency virus (HIV), Parkinson’s Disease, teen pregnancy, and workplace disability.

There was a reasonable division in the methodologies employed in the projects. Eleven projects (22%) used quantitative methods, 13 (27%) qualitative methods, and 26 (53%) mixed methods. Projects often produced separate articles focusing on qualitative and quantitative methodology, with 22 (32%) quantitative articles, 21 (31%) qualitative articles, and 25 (37%) mixed-methods articles. The data collected in the projects included assessment of multiple inner context levels (20 projects, 41%), followed by assessment of one inner context level (15 projects, 31%) and then assessment of multiple outer and inner levels (14 projects, 29%). Data analyses conducted in the projects were usually multilevel; only 12 projects (24%) were analyzed at a single level. Seven study protocols were included in the review; two used EPIS only in the study design, while three had subsequent results articles included. Seven projects employed EPIS only in analysis, coding, and/or reporting. Four projects used EPIS to frame the study and then in reporting, but not in study design, data collection, or measurement. Six projects (12%) used EPIS only as background to frame the study. The role of EPIS in the projects is summarized in Table 2.

In terms of the outer and inner context, innovation, and bridging factors, we found some variation in reporting. Factors associated with the outer and inner context were reported in 57% (n = 28) and 90% (n = 44) of projects, respectively. Roughly a third of projects included innovation factors (37%) and bridging factors that spanned the inner and outer contexts (31%).
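The project-level percentages in this section follow from simple proportions of the 49 included projects (and, for reviewer disagreement, of the 762 screened articles). The following is a minimal sketch, not part of the review's methods, that recomputes the percentages for which the text reports both a count and a denominator. The helper name `pct` and the dictionary labels are illustrative assumptions.

```python
def pct(count: int, total: int, digits: int = 0) -> float:
    """Return count/total as a percentage rounded to `digits` decimals."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * count / total, digits)


TOTAL_PROJECTS = 49  # unique research projects included in the review
SCREENED = 762       # articles screened after duplicate removal

# Counts below are taken from the text; labels are illustrative only.
recomputed = {
    "projects reporting outer context factors": pct(28, TOTAL_PROJECTS),
    "projects reporting inner context factors": pct(44, TOTAL_PROJECTS),
    "projects implementing one specific EBP": pct(36, TOTAL_PROJECTS),
    "screened papers sent to a third reviewer": pct(74, SCREENED, digits=1),
}

for label, value in recomputed.items():
    print(f"{label}: {value:g}%")
```

Recomputing proportions this way is a quick consistency check when extracting or reporting counts by project versus by article, since the two denominators differ.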

Regarding the EPIS phases, we noted variation in how explicitly projects identified the various phases (i.e., whether authors overtly included the name of the phases) and differences in which phases were included in projects. Table 3 summarizes the distribution of the EPIS phase(s) examined and whether the phase was explicitly versus implicitly used. The majority of the projects (73%) and articles (78%) explicitly focused on the Implementation stage, whereas a minority of the projects (29%) and articles (23%) explicitly focused on the Exploration stage. On average, projects included 2.02 out of the four phases (SD = 1.14). Table 4 reports the frequency of EPIS factors at each phase measured in the included articles. The most frequently measured factors across phases were organizational characteristics and individual adopter characteristics.

Finally, to quantify the overall coverage of the EPIS framework, a rating from 1 (conceptual use of EPIS) to 5 (operationalized use of EPIS) was assigned. The average rating of EPIS depth was 2.8 out of 5, indicating a moderate depth of EPIS application.

Discussion

This review describes how one of the most commonly used D&I frameworks [7], the EPIS framework, has been used in practice and reported in the literature since its first publication in 2011 until mid-2017. A total of 49 unique research projects using EPIS, published in 67 peer-reviewed articles, were identified. Projects were conducted in 11 countries, across a range of public sector settings. While the EPIS framework was developed based on the literature on implementation in public social and allied health service systems in the USA, it appears to have broad applicability in other countries and other health and/or allied health settings.

The promise of implementation science models and frameworks is that they may allow for cross-setting and cross-country comparison of factors associated with implementation, which can contribute to our understanding of optimal implementation strategies and generalizability of concepts and constructs, support the harmonization of measures and evaluation practices, and help advance the field of implementation science and implementation practice. This review shows the promise and utility of EPIS to guide studies in various settings, topic areas, geopolitical locations, and economically resourced regions. For example, our results demonstrate that EPIS has been used in high-, low-, and middle-income countries including Sweden [19], South Africa [20], and Mexico [21]. EPIS has also been used in other settings including public health [22], schools [23], and community health centers [24]. We encourage adaptation and use of EPIS outside of currently tested projects.

There may be some tendency for frameworks, models, and theories to be used by those in aligned information and professional networks [25]; however, it is likely that a given framework may have broad applicability across settings. The EPIS framework is a relatively young model compared to some other implementation models and frameworks in the field. It is natural that after initial introduction and application, the network of users will broaden [26]. This has already been observed with EPIS, as more than 50% of research projects included in this review had no direct affiliation with the first author of the EPIS framework (Aarons). We expect that this natural diffusion of EPIS will continue and will be enhanced as more diverse examples of its use emerge. Moreover, we anticipate that more comprehensive use of EPIS, including such aspects as inter-organizational networks, innovation fit at system, organization, provider, and patient levels, may be enhanced through the examples, recommendations, and resources described in this review [27,28,29,30].

EPIS was developed as a comprehensive, stand-alone implementation framework containing the core components [4] of implementation: the implementation process is divided into four phases, and the potential influencing factors enumerated for each phase across the multilevel context may be evaluated quantitatively and qualitatively, allowing for the testing of related implementation strategies. We reviewed the completeness and frequency with which the key components of EPIS have been used across research projects. The depth of EPIS inclusion was moderate, and we recommend more in-depth use in the articulation, application, and measurement of factors included in implementation frameworks. On the other hand, use of all components of a framework is not always feasible, practical, desirable, or necessary for a given implementation study or project [31], and many implementation frameworks do not include all the core components of implementation [4].

In terms of the process-related characteristics of the EPIS phases (i.e., moving through phases of Exploration, Preparation, Implementation, and Sustainment), we found variability in the use of process-related aspects of EPIS, with the most frequent phase being Implementation. Furthermore, the majority of the research projects had the Implementation phase as their main focus, with much less emphasis on the Exploration, Preparation, and Sustainment phases. This finding is consistent with other literature suggesting that thoughtful planning for implementation and sustainment, which could happen in the Exploration and Preparation phases, is infrequent although critical [4]. It is also documented in the literature that attention to sustainment is sparse but is imperative for ongoing and meaningful change, improvement in outcomes, and public health impact [32, 33]. We suggest that implementation researchers begin with sustainment in mind and as the ultimate goal of implementation efforts. This perspective does not preclude, and even embraces, the need for careful navigation through Exploration, Preparation, and Implementation phases and for adaptations of the outer or inner contexts, implementation strategies, and the EBP(s). Examples of the use of EPIS in the Exploration and Preparation phases include projects that examine service providers' and supervisors'/administrators' attitudes towards and knowledge of an EBP(s) to inform implementation strategies and adaptation efforts [34, 35].

Projects in this review varied in regard to the depth with which EPIS was described and operationalized, with only some cases of EPIS being applied throughout the implementation phases. For the most benefit, it is desirable that implementation models and frameworks are used to inform all phases of the research process from early development of implementation research questions through to presentation and dissemination of research findings. It is also true that frameworks might have diverse strengths and might be more appropriate to use for certain purposes than others. There are five broad categories that frameworks have been classified into based on their primary purpose: process models, determinant frameworks, classic theories, implementation frameworks, and evaluation frameworks [5, 36]. For example, the Reach, Effectiveness, Adoption, Implementation, and Maintenance Framework (RE-AIM) has historically been used as a planning and evaluation framework [37, 38], and the Consolidated Framework for Implementation Research (CFIR) is frequently used as a determinant framework to guide qualitative methods and analyses [39]. EPIS can be classified into several of these categories, as it may be used for the purpose of understanding process, determinants, implementation, and evaluation. By guiding multiple components of implementation, the EPIS framework may be used for several purposes, reducing the need for use of multiple frameworks.

It is critical to go beyond merely mentioning the framework in the introduction of a research grant or paper, or applying the framework only retrospectively during data analysis, without sufficient operationalization of the framework in the research process. A content review of U.S. National Institutes of Health grant proposal abstracts, funded and not funded, showed that one key characteristic of funded proposals was that the implementation framework(s) selected was better described and operationalized [40]. We recommend careful consideration and operationalization of components, and also using theory to test and advance knowledge of which aspects of implementation frameworks are more or less useful in driving the implementation process and advancing implementation science. Greater depth and breadth of EPIS use would include providing descriptions of the implementation plan, the factors included in the project, and how and when the specified factors and process are being assessed.

There was variability in the specific factors examined at each phase, although organizational and individual adopter characteristics were the most frequent factors across all phases. It is not surprising to see that inner context factors are most commonly assessed. The relatively higher frequency of measuring organizational and individual adopter characteristics may be influenced by the greater availability of quantitative measures of these factors in comparison to system level factors (refer to Table 5 for a list of associated measures). A recent publication in the journal Implementation Science highlighted the need to better define and develop well operationalized and pragmatic measures for the assessment of external implementation context and bridging factors [41]. Access to existing measures is provided through a number of resources and publications [42,43,44]. More specifically, measures for various EPIS factors have been developed and tested through a number of studies. Examples of these measures are provided in Table 5. Development and use of additional measures meeting these criteria is a high priority area for Implementation Science.

It is important to note that the role and relevance of factors within the inner and outer context might vary across phases. Some factors might be important throughout all phases (e.g., funding, organizational characteristics), while others might have heightened relevance during one or two of the phases (e.g., quality and fidelity monitoring/support during the Implementation and Sustainment phases). We also emphasize the importance of attending to the bridging factors and the dynamic interplay between inner and outer context factors. We encourage those using the EPIS framework to use theory or a logic model of their particular implementation strategy and context to decide what factors are likely to be critical and relevant in their study [45, 46]. Detailed and deep use of implementation models and frameworks to identify specific implementation determinants, targets, and processes of implementation can help to address these concerns. The model developed from the EPIS framework for the Interagency Collaborative Team (ICT) project provides an example of interconnectedness and relationships between and within outer and inner context entities [47]. In the ICT project, a community-academic partnership was formed to bridge the outer and inner contexts. Furthermore, interagency collaborative relationships within and across the contextual levels were formed, including between outer context policy makers and advocacy groups and community-based organizations contracted to provide home-based services with clients and families [48]. Outer context policies were instantiated through collaborative processes such as community stakeholder meetings, the use of negotiations, and procurement and contracting. Contracts that clearly specify the expectation to use EBPs communicate strong system level support (outer context) for a climate (inner context) where EBPs are expected, supported, and rewarded [49].

As discussed, EPIS includes levels across the socioecological context [12], touching on factors at the individual, organizational, and systems levels. A multi-level conceptualization of implementation processes, and the understanding that interactions across various levels need to be considered, has received increasing attention in the Implementation Science literature [10]. A strength of EPIS is that it draws attention to the complexities of this multi-level conceptualization, including its implications for data collection and data analysis. For example, when collecting qualitative data, the interviewer may ask about the respondent's experience at their own unit level (e.g., experience of supervisors in their team) or at other levels (e.g., the larger agency or system-level policies). It is important to specify hypotheses both within levels and across levels. As an example, interventions to improve leadership and organizational implementation climate may be intended to improve clinician attitudes towards EBP, and EBP adoption, use, and fidelity [18]. In this case, leadership and climate are at the higher unit level, while attitudes, adoption, and fidelity are at the individual clinician level.
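
As a hedged illustration of what a cross-level hypothesis of this kind might look like when written down, the following two-level random-intercept model is one common way to express it. The symbols are assumptions introduced here for illustration and are not taken from the reviewed studies: $Y_{ij}$ is an individual-level outcome (e.g., the EBP attitude score of clinician $i$ in unit $j$) and $W_j$ is a unit-level predictor (e.g., implementation climate of unit $j$).

```latex
% Level 1 (individual clinician i within unit j)
Y_{ij} = \beta_{0j} + r_{ij}, \qquad r_{ij} \sim N(0, \sigma^2)

% Level 2 (unit j)
\beta_{0j} = \gamma_{00} + \gamma_{01} W_j + u_{0j}, \qquad u_{0j} \sim N(0, \tau_{00})
```

Under these assumptions, the cross-level hypothesis is a statement about $\gamma_{01}$ (whether unit-level climate is associated with clinician-level attitudes), whereas within-level hypotheses would add clinician-level predictors to the level 1 equation.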

The multi-level contextual nature of EPIS lends itself to a variety and integration of methodologies using quantitative only, qualitative only, or mixed-method approaches. There is an increasing appreciation in Implementation Science for the need to use a combination of quantitative and qualitative methods, which allows for a more complete assessment of our often context-dependent, pragmatic research questions (i.e., for whom, under what circumstances, why, and how does an intervention or implementation strategy work) [50, 51]. In our review, we found a number of examples where mixed-methods approaches guided by the EPIS framework were able to provide a more comprehensive evaluation of an implementation research problem. For example, Gleacher and colleagues [52] used qualitative interview data from clinicians to augment quantitative utilization and implementation data to examine multilevel factors associated with adoption and implementation of measurement feedback systems in community mental health clinics. A critical challenge in the field is to find ways to publish findings from mixed-method studies; we found that two thirds of the mixed-method projects in this review published their quantitative and qualitative findings in separate papers. Space limitations and the orientation of various journals (i.e., a more qualitative or quantitative focus) might form barriers to publishing mixed-methods findings in an integrated manner. There are resources on how to apply mixed methods to Implementation Science research that give guidance and examples of integration of qualitative and quantitative conceptualization, data collection and analysis, and reporting [53,54,55].

Future directions of EPIS

The results from this systematic review have informed our recommendations for the future use of EPIS for (1) more precise operationalization of EPIS factors, (2) consideration of the interplay between inner and outer context through bridging factors, and (3) discussion of how EPIS can be consistently incorporated with greater depth and throughout the lifespan of an implementation project (breadth).

Recommendation no. 1: Precise operationalization of EPIS factors

The use of precise and operationalized definitions of EPIS factors is key to facilitate the successful application of this framework and guide appropriate measurement of factors. In this vein, we have refined definitions of the EPIS factors (see Table 6). The definitions are flexible to ensure applicability of EPIS factors across phases and multiple levels. For example, the inner context factor organizational characteristics is defined as “structures or processes that take place or exist in organizations that may influence the process of implementation.” Inherent within this definition is that this construct may be an important consideration within any of the four EPIS phases and at multiple levels (e.g., provider, team, supervisor). Moving forward, we encourage implementation scientists to utilize these definitions to inform their application and measurement of EPIS factors, as well as using the EPIS factors and relationships between factors to develop theoretical models for testing in implementation studies.

Recommendation no. 2: Consideration of the dynamic interplay between inner and outer context factors

In addition to inner and outer context factors, we also now explicitly highlight and define the integral role of bridging factors. These factors were previously conceptualized as those that interlace the inner and outer context factors but were not formally classified within the EPIS framework (see Fig. 1 of Aarons et al. 2011 [8]). In our current conceptualization, these factors and their interactions include Community Academic Partnerships and Purveyors/Intermediaries. For example, the Dynamic Adaptation Process [13] incorporates an explicit emphasis on these bridging factors to inform EBP adaptation in a planned, systematic way to increase its feasibility for implementation and sustainment. As our results suggest, these bridging factors are active ingredients to aid in understanding the interaction between outer and inner context factors and thus represent a key area of consideration in future work.

Recommendation no. 3: Increase EPIS depth and breadth

Our results show that more than one phase and level of EPIS have been considered in many implementation studies, highlighting the breadth of the EPIS framework. While this is encouraging, we recommend that future implementation efforts consider how EPIS can be applied longitudinally throughout all phases (i.e., Exploration, Preparation, Implementation and Sustainment) and levels (e.g., system, organization, provider) of the implementation process. We suggest that implementation efforts “begin with sustainment in mind.” This reflects the increasing emphasis within implementation science on explicit incorporation or acknowledgement of the sustainment phase from the outset of study planning and execution [56, 57]. Further, our results suggest that EPIS was most commonly used to inform the study design, report results, and frame the research endeavor. We recommend that EPIS, as a theoretical framework, be thoughtfully applied throughout a project from study framing to explicit identification of how EPIS was used within various levels of data collection and analysis and through reporting and interpretation of results. In a longitudinal study design, factors may be evaluated across multiple EPIS phases. Examples of quantitative measures are provided in Table 5 and definitions for qualitative analyses in Table 6.

Finally, the phases of the implementation process may be operationalized by defining and measuring movement through the phases. For example, when an organization is aware of or shows interest in using an EBP, they enter the Exploration phase. Subsequently if they make the decision to adopt the EBP then they would move into the Preparation phase. First use of the EBP would signify transition into the Implementation phase. Lastly, continued use over a designated period of time may be defined as being in Sustainment. These types of movements have been flagged for incorporation into guidelines such as PRECIS-2 [58].
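
The phase transitions described above can be sketched as a simple classification rule. This is a minimal illustration under stated assumptions, not a validated operationalization: the `SiteRecord` fields, the `epis_phase` function, the "Pre-Exploration" label, and the 12-month sustainment threshold are all hypothetical names and values chosen here, since the text leaves the "designated period of time" to each project.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold: the source only says "a designated period of time".
SUSTAINMENT_THRESHOLD_MONTHS = 12.0


@dataclass
class SiteRecord:
    """Hypothetical milestone data for one organization."""
    aware_or_interested: bool = False   # aware of or interested in the EBP
    decided_to_adopt: bool = False      # formal adoption decision made
    # Months of continued EBP use since first use; None means no use yet.
    months_of_continued_use: Optional[float] = None


def epis_phase(site: SiteRecord,
               threshold: float = SUSTAINMENT_THRESHOLD_MONTHS) -> str:
    """Return the EPIS phase implied by the most advanced milestone reached."""
    if site.months_of_continued_use is not None:
        # First use marks Implementation; continued use past the
        # designated period is treated as Sustainment.
        if site.months_of_continued_use >= threshold:
            return "Sustainment"
        return "Implementation"
    if site.decided_to_adopt:
        return "Preparation"
    if site.aware_or_interested:
        return "Exploration"
    # Assumed label for sites that have not yet reached any milestone.
    return "Pre-Exploration"


if __name__ == "__main__":
    site = SiteRecord(aware_or_interested=True, decided_to_adopt=True)
    print(epis_phase(site))
```

Checking the most advanced milestone first keeps the rule monotonic: a site that has begun using the EBP is never classified back into Exploration or Preparation, which matches the sequential description in the text. A more recursive or cyclical view of the phases would need a different rule.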

Exemplar of comprehensive use of EPIS framework: JJ-TRIALS

One example of meticulous and comprehensive use of EPIS is the US National Institute on Drug Abuse (NIDA) Juvenile Justice Translational Research on Interventions for Adolescents in the Legal System (JJ-TRIALS) project [59, 60]. In this major multicenter (six centers, 34 study sites) cluster randomized implementation trial, EPIS was used throughout the implementation phases and across contextual levels to stage the implementation process, select quantitative and qualitative measures, and identify important outcomes. JJ-TRIALS is probably the best and most explicit example of application of the EPIS framework. Indeed, JJ-TRIALS may be one of the best examples of a rigorous, deep, and thoughtful approach to applying an implementation science framework to a large-scale implementation initiative. For example, JJ-TRIALS is testing a bundled strategy that supports the implementation of data-driven decision-making using two different facilitation approaches (core and enhanced). JJ-TRIALS moves beyond implementation of a single EBP to allow for implementation of evidence-based process improvement efforts. Activities to move through the EPIS phases were mapped out along with implementation factors and appropriate measures and strategies to assess and address the key multilevel system and organizational issues. Ways to document and evaluate the implementation factors, implementation strategies, and movement through all of the EPIS phases were determined. In addition, there was a conceptual adaptation of EPIS itself based on input and perspectives of community partners, investigators, and NIH staff, wherein the framework was represented in a more recursive and cyclical manner consistent with improvement processes; this resulted in the development of the EPIS Wheel [59, 60]. As shown in Fig. 1, based on our current systematic review, we have also provided a depiction of the EPIS framework using a more cyclical perspective that also captures the key features of outer context, inner context, as well as the nature of the practice(s) to be implemented (innovation factors), and the interaction with intervention developers and purveyors that may foster appropriate adaptations of context and practice (bridging factors).

EPIS resources

EPIS website: episframework.com

The website https://EPISFramework.com provides a number of tools for planning and use of EPIS throughout the implementation process. The website is now available and is a living resource that will be continually updated and enhanced.

Limitations

There are several limitations of this systematic review. We limited the review to peer-reviewed, empirical articles citing Aarons et al. 2011. Ongoing or completed grant-funded studies or contracts that applied EPIS are not included. In addition, unpublished applications of EPIS would not have been included nor articles that do not directly cite Aarons et al. 2011, or articles without searchable reference citations. As such, our results likely do not reflect all implementation efforts that used EPIS and in particular the search strategy may have limited the inclusion of practitioners’ application of the framework for implementation practice. Our rating of the depth to which EPIS was used was based on one item that was developed by the study team. Although operationalized and internally consistent as used in this study, it was not a standardized measure of EPIS use.

Conclusion

The EPIS framework has great promise to serve as a multilevel, context-sensitive, broadly applicable framework for Implementation Science research and practice. Our review described the patterns of use to date, summarized promising characteristics and strengths of the EPIS framework, and illustrated those through examples. We also provide recommendations for future use, including more precise operationalization, increased depth and breadth of EPIS application, improved measures for a number of factors, and the ongoing broadening of networks of users, topics, and settings. Additional resources supporting the operationalization of EPIS are available and under development [61].

References

Griffith TL, Zammuto RF, Aiman-Smith L. Why new technologies fail: overcoming the invisibility of implementation. Ind Manage. 1999;41:29–34.

Klein KJ, Knight AP. Innovation implementation: overcoming the challenge. Curr Dir Psychol Sci. 2005;14:243–6.

Rizzuto TE, Reeves J. A multidisciplinary meta-analysis of human barriers to technology implementation. Consult Psychol J: Pract Res. 2007;59:226–40.

Moullin JC, Sabater-Hernández D, Fernandez-Llimos F, Benrimoj SI. A systematic review of implementation frameworks of innovations in healthcare and resulting generic implementation framework. Health Res Policy Syst. 2015;13:16.

Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10:53.

Tabak RG, Khoong EC, Chambers DA, Brownson RC. Bridging research and practice: models for dissemination and implementation research. Am J Prev Med. 2012;43:337–50.

Mazzucca S, Tabak RG, Pilar M, Ramsey AT, Baumann AA, Kryzer E, Lewis EM, Padek M, Powell BJ, Brownson RC. Variation in research designs used to test the effectiveness of dissemination and implementation strategies: a review. Front Public Health. 2018;6:32.

Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Adm Policy Ment Hlth. 2011;38:4–23.

Aarons GA, Ehrhart MG, Farahnak LR, Sklar M. Aligning leadership across systems and organizations to develop a strategic climate for evidence-based practice implementation. Annu Rev Public Health. 2014;35:255–74.

Brownson RC, Colditz GA, Proctor EK. Dissemination and implementation research in health: translating science to practice. USA: Oxford University Press; 2012.

Chambers DA. Commentary: increasing the connectivity between implementation science and public health: advancing methodology, evidence integration, and sustainability. Annu Rev Public Health. 2018;39:1–4.

Green L, Kreuter M. Health program planning: an educational and ecological approach. Boston: McGraw Hill; 2005.

Aarons GA, Green AE, Palinkas LA, Self-Brown S, Whitaker DJ, Lutzker JR, Silovsky JF, Hecht DB, Chaffin MJ. Dynamic adaptation process to implement an evidence-based child maltreatment intervention. Implement Sci. 2012;7:1–9.

Chambers DA, Glasgow RE, Stange KC. The dynamic sustainability framework: addressing the paradox of sustainment amid ongoing change. Implement Sci. 2013;8:117.

Stirman SW, Miller CJ, Toder K, Calloway A. Development of a framework and coding system for modifications and adaptations of evidence-based interventions. Implement Sci. 2013;8:65.

Klein KJ, Sorra JS. The challenge of innovation implementation. Acad Manag Rev. 1996;21:1055–80.

Greenhalgh T, Robert G, Macfarlane F, Bate P, Kyriakidou O, Peacock R. Storylines of research in diffusion of innovation: a meta-narrative approach to systematic review. Soc Sci Med. 2005;61:417–30.

Aarons GA, Ehrhart MG, Moullin JC, Torres EM, Green AE. Testing the Leadership and Organizational Change for Implementation (LOCI) intervention in substance abuse treatment: a cluster randomized trial study protocol. Implement Sci. 2017;12:29.

Leavy B, Kwak L, Hagströmer M, Franzén E. Evaluation and implementation of highly challenging balance training in clinical practice for people with Parkinson’s disease: protocol for the HiBalance effectiveness-implementation trial. BMC Neurol. 2017;17:27.

Peltzer K, Prado G, Horigian V, Weiss S, Cook R, Sifunda S, Jones D. Prevention of mother-to-child transmission (PMTCT) implementation in rural community health centres in Mpumalanga province, South Africa. J Psychol Afr. 2016;26:415–8.

Patterson TL, Semple SJ, Chavarin CV, Mendoza DV, Santos LE, Chaffin M, Palinkas L, Strathdee SA, Aarons GA. Implementation of an efficacious intervention for high risk women in Mexico: protocol for a multi-site randomized trial with a parallel study of organizational factors. Implement Sci. 2012;7:105.

de MNT M, de RMPF S, Filho DAM. Sustainability of an innovative school food program: a case study in the northeast of Brazil. SciELO Public Health. 2016;21:1899–908.

Willging CE, Green AE, Ramos MM. Implementing school nursing strategies to reduce LGBTQ adolescent suicide: a randomized cluster trial study protocol. Implement Sci. 2016;11:145.

Williams JR, Blais MPB, D, Dusablon T, Williams WO, Hennessy KD. Predictors of the decision to adopt motivational interviewing in community health settings. J Behav Health Serv Res. 2014;41:294–307.

Skolarus TA, Lehmann T, Tabak RG, Harris J, Lecy J, Sales AE. Assessing citation networks for dissemination and implementation research frameworks. Implement Sci. 2017;12:97.

Shoup JA, Gaglio B, Varda D, Glasgow RE. Network analysis of RE-AIM framework: chronology of the field and the connectivity of its contributors. Transl Behav Med. 2014;5:216–32.

Atkins L, Francis J, Islam R, O’Connor D, Patey A, Ivers N, Foy R, Duncan EM, Colquhoun H, Grimshaw JM. A guide to using the theoretical domains framework of behaviour change to investigate implementation problems. Implement Sci. 2017;12:77.

Stetler CB, Damschroder LJ, Helfrich CD, Hagedorn HJ. A guide for applying a revised version of the PARIHS framework for implementation. Implement Sci. 2011;6:99.

Consolidated Framework for Implementation Research (CFIR) [http://www.cfirguide.org/].

Reach Effectiveness Adoption Implementation Maintenance (RE-AIM) [http://www.re-aim.org/].

Birken SA, Powell BJ, Presseau J, et al. Combined use of the Consolidated Framework for Implementation Research (CFIR) and the Theoretical Domains Framework (TDF): a systematic review. Implement Sci. 2017;12:2.

Tricco AC, Ashoor HM, Cardoso R, MacDonald H, Cogo E, Kastner M, McKibbon A, Grimshaw JM, Straus SE. Sustainability of knowledge translation interventions in healthcare decision-making: a scoping review. Implement Sci. 2016;11:55.

Shelton RC, Cooper BR, Stirman SW. The sustainability of evidence-based interventions and practices in public health and health care. Annu Rev Public Health. 2018;39(1):55–76.

Gates LB, Hughes A, Kim DH. Influence of staff attitudes and capacity on the readiness to adopt a career development and employment approach to services in child welfare systems. J Publ Child Welfare. 2015;9:323–40.

Moore LA, Aarons GA, Davis JH, Novins DK. How do providers serving American Indians and Alaska Natives with substance abuse problems define evidence-based treatment? Psychol Serv. 2015;12:92–100.

Birken SA, Rohweder CL, Powell BJ. T-CaST: an implementation theory comparison and selection tool. Implement Sci. 2018;13:143.

Glasgow RE, Vogt T, Boles S. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999;89:1322–7.

Harden SM, Smith ML, Ory MG, Smith-Ray RL, Estabrooks PA, Glasgow RE. RE-AIM in clinical, community, and corporate settings: perspectives, strategies, and recommendations to enhance public health impact. Front Public Health. 2018;6:71.

Kirk MA, Kelley C, Yankey N, Birken SA, Abadie B, Damschroder L. A systematic review of the use of the consolidated framework for implementation research. Implement Sci. 2016;11:72.

Proctor EK, Powell BJ, Baumann AA, Hamilton AM, Santens RL. Writing implementation research grant proposals: ten key ingredients. Implement Sci. 2012;7:96.

Watson DP, Adams EL, Shue S, Coates H, McGuire A, Chesher J, Jackson J, Omenka OI. Defining the external implementation context: an integrative systematic literature review. BMC Health Serv Res. 2018;18:209.

Lewis CC, Stanick CF, Martinez RG, Weiner BJ, Kim M, Barwick M, Comtois KA. The Society for Implementation Research Collaboration Instrument Review Project: a methodology to promote rigorous evaluation. Implement Sci. 2015;10:2.

Rabin BA, Purcell P, Naveed S, Moser RP, Henton MD, Proctor EK, Brownson RC, Glasgow RE. Advancing the application, quality and harmonization of implementation science measures. Implement Sci. 2012;7:119.

Rabin BA, Lewis CC, Norton WE, Neta G, Chambers D, Tobin JN, Brownson RC, Glasgow RE. Measurement resources for dissemination and implementation research in health. Implement Sci. 2016;11:42.

Lewis CC, Stanick C, Lyon A, Darnell D, Locke J, Puspitasari A, Marriott BR, Dorsey CN, Larson M, Jackson C, et al. Proceedings of the fourth biennial conference of the Society for Implementation Research Collaboration (SIRC) 2017: implementation mechanisms: what makes implementation work and why? Part 1. In: Society for implementation research collaboration; 2018. Implementation science; 2017.

Proceedings from the 10th Annual Conference on the Science of Dissemination and Implementation. In Science of Dissemination and Implementation; 2018; Arlington, VA. Implementation Science; 2017: 728.

Hurlburt M, Aarons GA, Fettes D, Willging C, Gunderson L, Chaffin MJ. Interagency collaborative team model for capacity building to scale-up evidence-based practice. Child Youth Serv Rev. 2014;39:160–8.

Aarons GA, Fettes DL, Hurlburt MS, Palinkas LA, Gunderson L, Willging CE, Chaffin MJ. Collaboration, negotiation, and coalescence for interagency-collaborative teams to scale-up evidence-based practice. J Clin Child Adolesc Psychol. 2014;43:915–28.

Aarons GA, Farahnak LR, Ehrhart MG, Sklar M. Aligning leadership across systems and organizations to develop a strategic climate for evidence-based practice implementation. Annu Rev Public Health. 2014;35:255–74.

Palinkas LA, Cooper BR. Mixed methods evaluation in dissemination and implementation science. In: Brownson RC, Colditz G, Proctor EK, editors. Dissemination and implementation research in health: translating science to practice. New York: Oxford University Press; 2017.

Holtrop JS, Rabin BA, Glasgow RE. Qualitative approaches to use of the RE-AIM framework: rationale and methods. BMC Health Serv Res. 2018;18:177.

Gleacher AA, Olin SS, Nadeem E, Pollock M, Ringle V, Bickman L, Hoagwood K. Implementing a measurement feedback system in community mental health clinics: a case study of multilevel barriers and facilitators. Adm Policy Ment Hlth. 2016;43:1–15.

QUALRIS workgroup. Qualitative research in implementation science. National Cancer Institute; 2017.

Palinkas LA, Aarons GA, Horwitz S, Chamberlain P, Hurlburt M, Landsverk J. Mixed method designs in implementation research. Adm Policy Ment Hlth. 2011;38:44–53.

Aarons GA, Fettes DL, Sommerfeld DH, Palinkas LA. Mixed methods for implementation research: application to evidence-based practice implementation and staff turnover in community-based organizations providing child welfare services. Child Maltreatment. 2012;17:67–79.

Wiley T, Belenko S, Knight D, Bartkowski J, Robertson A, Aarons G, Wasserman G, Leukefeld C, DiClemente R, Jones D. Juvenile Justice-Translating Research Interventions for Adolescents in the Legal System (JJ-TRIALS): a multi-site, cooperative implementation science cooperative. Implement Sci. 2015;10:A43.

Stirman SW, Kimberly J, Cook N, Calloway A, Castro F, Charns M. The sustainability of new programs and innovations: a review of the empirical literature and recommendations for future research. Implement Sci. 2012;7:17.

Loudon K, Treweek S, Sullivan F, Donnan P, Thorpe KE, Zwarenstein M. The PRECIS-2 tool: designing trials that are fit for purpose. BMJ. 2015;350:h2147.

Knight DK, Belenko S, Wiley T, Robertson AA, Arrigona N, Dennis M, Bartkowski JP, McReynolds LS, Becan JE, Knudsen HK, et al. Juvenile Justice—Translational Research on Interventions for Adolescents in the Legal System (JJ-TRIALS): a cluster randomized trial targeting system-wide improvement in substance use services. Implement Sci. 2016;11:57.

Becan JE, Bartkowski JP, Knight DK, Wiley TRA, DiClemente R, Ducharme L, Welsh WN, Bowser D, McCollister K, Hiller N, Spaulding AC, Flynn PM, Swartzendruber DMF, Fisher JH, Aarons GA. A model for rigorously applying the exploration, preparation, implementation, sustainment (EPIS) framework in the design and measurement of a large scale collaborative multi-site study. Health Justice. 2017;6(1):9.

Ehrhart MG, Aarons GA, Farahnak LR. Going above and beyond for implementation: the development and validity testing of the implementation citizenship behavior scale (ICBS). Implement Sci. 2015;10.

Moher D, Liberati A, Tetzlaff J, Altman DG. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 2009;6:e1000097.

Mancini JA, Marek LI. Sustaining community-based programs for families: conceptualization and measurement. Fam Relat. 2004;53:339–47.

Caldwell DF, O’Reilly CA III. The determinants of team-based innovation in organizations: the role of social influence. Small Gr Res. 2003;34:497–517.

Ehrhart MG, Aarons GA, Farahnak LR. Assessing the organizational context for EBP implementation: the development and validity testing of the Implementation Climate Scale (ICS). Implement Sci. 2014;9:157.

Steckler A, Goodman RM, McLeroy KR, Davis S, Koch G. Measuring the diffusion of innovative health promotion programs. Am J Health Promot. 1992;6:214–25.

Patterson MG, West MA, Shackleton VJ, Dawson JF, Lawthom R, Maitlis S, Robinson DL, Wallace AM. Validating the organizational climate measure: links to managerial practices, productivity and innovation. J Organ Behav. 2005;26:379–408.

Glisson C. The organizational context of children's mental health services. Clin Child Fam Psych. 2002;5:233–53.

Lehman WEK, Greener JM, Simpson DD. Assessing organizational readiness for change. J Subst Abus Treat. 2002;22:197–209.

Glisson C, Landsverk J, Schoenwald S, Kelleher K, Hoagwood K, Mayberg S, Green P. Research network on youth mental health: assessing the organizational social context (OSC) of mental health services: implications for research and practice. Adm Policy Ment Hlth. 2008;35:98–113.

Siegel SM, Kaemmerer WF. Measuring the perceived support for innovation in organizations. J Appl Psychol. 1978;63:553–62.

Aarons G. Organizational climate for evidence-based practice implementation: development of a new scale. In: Proceedings of the Annual Association for Behavioral and Cognitive Therapies; November 2011; Toronto.

Anderson NR, West MA. Measuring climate for work group innovation: development and validation of the team climate inventory. J Organ Behav. 1998;19:235–58.

Holt DT, Armenakis AA, Feild HS, Harris SG. Readiness for organizational change: the systematic development of a scale. J Appl Behav Sci. 2007;43:232–55.

Aarons GA, Ehrhart MG, Farahnak LR. The Implementation Leadership Scale (ILS): development of a brief measure of unit level implementation leadership. Implement Sci. 2014;9:45.

Bass BM, Avolio BJ. The multifactor leadership questionnaire. Palo Alto, CA: Consulting Psychologists Press; 1989.

Beidas RS, Barmish AJ, Kendall PC. Training as usual: can therapist behavior change after reading a manual and attending a brief workshop on cognitive behavioral therapy for youth anxiety? Behavior Therapist. 2009;32:97–101.

Dimeff LA, Koerner K, Woodcock EA, Beadnell B, Brown MZ, Skutch JM, Paves AP, Bazinet A, Harned MS. Which training method works best? A randomized controlled trial comparing three methods of training clinicians in dialectical behavior therapy skills. Behav Res Ther. 2009;47:921–30.

Weersing VR, Weisz JR, Donenberg GR. Development of the therapy procedures checklist: a therapist-report measure of technique use in child and adolescent treatment. J Clin Child Adolesc. 2002;31:168–80.

McLeod BD, Weisz JR. The therapy process observational coding system for child psychotherapy-strategies scale. J Clin Child Adolesc Psychol. 2010;39:436–43.

Boyatzis RE, Goleman D, Rhee KS, Bar-On R, Parker JD. Handbook of Emotional Intelligence. In: Bar-On R, JDA P, editors. Clustering competence in emotional intelligence: insights from the emotional competence inventory. San Francisco: Jossey-Bass; 2000. p. 343–62.

Aarons GA. Mental health provider attitudes toward adoption of evidence-based practice: the evidence-based practice attitude scale (EBPAS). Ment Health Serv Res. 2004;6:61–74.

Stumpf RE, Higa-McMillan CK, Chorpita BF. Implementation of evidence-based services for youth: assessing provider knowledge. Behav Modif. 2009;33:48–65.

Funk SG, Champagne MT, Wiese RA, Tornquist EM. Barriers: the barriers to research utilization scale. Appl Nurs Res. 1991;4:39–45.

Schaufeli WB, Leiter MP. Maslach burnout inventory–general survey. In: The Maslach burnout inventory-test manual; 1996. p. 19–26.

Acknowledgements

Dr. Aarons is core faculty and Dr. Stadnick is a fellow with the Implementation Research Institute (IRI), at the George Warren Brown School of Social Work, Washington University in St. Louis, through an award from the National Institute of Mental Health (R25MH08091607) with additional support from the National Institute on Drug Abuse and the U.S. Department of Veterans Affairs.

Funding

This project was supported in part by the US National Institute of Mental Health R01MH072961 (Aarons), K23 MH115100 (Dickson), K23 MH110602 (Stadnick) and National Institute of Drug Abuse R01DA038466 (Aarons), and U01DA036233 (R. DiClemente). The opinions expressed herein are the views of the authors and do not necessarily reflect the official policy or position of the NIMH, NIDA, or any other part of the U.S. Department of Health and Human Services.

Availability of data and materials

Not applicable.

Author information

Contributions

GAA and JCM conceptualized this study. JCM, NAS, KSD, BAR, and GAA identified, reviewed, and evaluated articles, and drafted and edited the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Ethics approval was not required for this systematic review.

Consent for publication

Not applicable.

Competing interests

GAA is an Associate Editor of Implementation Science; all decisions on this paper were made by another editor. The authors declare that they have no other competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional file

Additional file 1:

Data Extraction. (PDF 1250 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Moullin, J.C., Dickson, K.S., Stadnick, N.A. et al. Systematic review of the Exploration, Preparation, Implementation, Sustainment (EPIS) framework. Implementation Sci 14, 1 (2019). https://doi.org/10.1186/s13012-018-0842-6

DOI: https://doi.org/10.1186/s13012-018-0842-6