Abstract

Education in implementation science, which involves the training of health professionals in how to implement evidence-based findings into health practice systematically, has become a highly relevant topic in health sciences education. The present study advances education in implementation science by compiling a competence profile for implementation practice and research and by exploring implementation experts’ sources of expertise. The competence profile is theoretically based on educational psychology, which implies the definition of improvable and teachable competences. In an online survey, an international, multidisciplinary sample of 82 implementation experts named competences that they considered most helpful for conducting implementation practice and implementation research. For these competences, they also indicated whether they had acquired them in their professional education, additional training, or by self-study and on-the-job experience. Data were analyzed using a mixed-methods approach that combined qualitative content analyses with descriptive statistics. The participants deemed collaboration knowledge and skills most helpful for implementation practice. For implementation research, they named research methodology knowledge and skills as most important. The participants had acquired most of the competences that they found helpful for implementation practice in self-study or by on-the-job experience. However, participants had learned most of their competences for implementation research in their professional education. The present results inform education and training activities in implementation science and serve as a starting point for a fluid set of interdisciplinary implementation science competences that will be updated continuously. Implications for curriculum development and the design of educational activities are discussed.

Background

Introduction

Implementation science, “the scientific study of methods to promote the systematic uptake of research findings and other evidence-based practices into routine practice, and, hence, to improve the quality and effectiveness of health services and care” (Eccles and Mittman 2006, p. 1), has become a highly relevant topic in the health sciences. Clinical practice often considerably lags behind research findings, leading to poor health outcomes and an ineffective use of resources (Kien et al. 2018). Therefore, numerous frameworks describe how the transfer of scientific knowledge to health practice and the implementation of evidence-based interventions can be carried out systematically (e.g., Damschroder et al. 2009; Fixsen et al. 2005; Proctor et al. 2011). The complexity of implementation frameworks shows that carrying out and studying implementation projects requires a comprehensive set of knowledge and skills. These imply not only an understanding of implementation theory and research, but also skills that are necessary for building constructive partnerships with project stakeholders in the health system.

As a good example of systematic implementation practice and research that is based on a scientific framework, Blanchard et al. (2017) describe how they improved the implementation of comprehensive medication management in primary care practices across the United States. Implementation capacity building in this project included needs and readiness assessments for each site, an identification of program champions, practitioner coaching as well as the installation of improvement cycles and communication structures between practice and policy. Implementation research comprised developing fidelity measures and assessing fidelity concerning adherence to the intervention content, contextual support for the intervention and the practitioners’ competences at practice sites.

To educate health specialists to become implementation specialists as well, it is essential to define the necessary competences for research and practice in implementation and to create opportunities for training in the respective knowledge and skills. Embracing the interdisciplinary nature of the field, perspectives from various disciplines that comprise implementation science (e.g., health sciences, social work, education) should be integrated in the definition of competence profiles. Furthermore, with implementation science being primarily a subject for continuing education of trainees from various backgrounds, trainees’ pre-education and experiences should be acknowledged in training concepts. Moreover, as a prerequisite for competence-based teaching, the concept of “competence” needs to be theoretically informed and consistently understood among experts who define competences for education in implementation science.

Approaches for education and training in implementation science

In recent years, the field of professional education in implementation science has grown rapidly (Meissner et al. 2013; Proctor et al. 2013; Straus et al. 2015). Having been predominantly a topic for short-term training or isolated classes in university curricula, implementation science has become the center of several certificate programs at universities across the globe (Osanjo et al. 2016; Chambers et al. 2017; Ullrich et al. 2017). Hence, there is an increasing number of opportunities for students and professionals in healthcare to advance their knowledge and skills in implementation research and practice. Still, education and training in implementation science do not follow particular competence models that define what trainees in the field need to learn.

Implementation science is an evolving interdisciplinary field with a variety of different procedures, frameworks and terminologies (Durlak and DuPre 2008; Meyers et al. 2012; Tabak et al. 2012; Moullin et al. 2015). Hence, it is not obvious what the topical foci of respective educational curricula should be. Topics for implementation science classes have previously been prioritized based on implementation frameworks (Straus et al. 2011), surveys of expert groups (Moore et al. 2018; Tabak et al. 2017) or interviews with early-stage investigators (Stamatakis et al. 2013). Moreover, implementation experts, students and teachers have rated predefined competences according to their importance (Ullrich et al. 2017), skill levels (Padek et al. 2015) and their difficulty of being incorporated into a training curriculum (Tabak et al. 2017). The groups who have defined educational contents were mostly focused on a specific discipline and concentrated on a particular geographical area. Accordingly, competence profiles for implementation science that are created by diverse, international and multidisciplinary groups are still missing. The present study seeks to address this gap by presenting an international, multidisciplinary perspective on education in implementation science.

Another gap in research on education in implementation science is that it has not yet addressed trainees’ previous knowledge or diverse learning sources. With implementation science being an interdisciplinary field, the audience for professional education and training comes from various backgrounds with highly differing pre-education, experiences and training needs (Chambers et al. 2017; Proctor and Chambers 2017). Depending on their educational backgrounds, students of implementation science training might already have advanced research knowledge and skills but are seeking to improve their knowledge of implementation frameworks, or vice versa. Training needs might also differ for trainees seeking education in implementation practice or research. However, with few exceptions (e.g., Moore et al. 2018), most implementation science courses have so far focused solely on implementation research (e.g., Ullrich et al. 2017), while there is little professional education in implementation practice (Proctor and Chambers 2017). The present study aims at contributing to an understanding of implementation experts’ sources of expertise and training needs while focusing on competences for both implementation practice and research.

A theoretical basis for implementation science competences

In medical education, just as in many other educational contexts, there exist multiple definitions and conceptions of the term “competence” (Fernandez et al. 2012). In order to obtain clear definitions of implementation science competences, we integrate our study into an educational framework that also clearly defines what constitutes a competence. As competences have been a major research focus in educational psychology in recent years (Koeppen et al. 2008), our competence approach relates implementation science to educational psychology (Fixsen et al. 2016).

With the emphasis on products of educational processes, educational psychology defines competences as “context-specific cognitive dispositions that are acquired and needed to successfully cope with certain situations or tasks in specific domains” (Koeppen et al. 2008, p. 62; Leutner et al. 2017, p. 2) or “abilities to do something successfully or efficiently based on a range of knowledge and skills” (Bergsmann et al. 2018, p. 2). Accordingly, competence-based medical education is “an approach to preparing physicians for practice that is fundamentally oriented to graduate outcome abilities and organized around competencies derived from an analysis of societal and patient needs. It de-emphasizes time-based training and promises greater accountability, flexibility, and learner-centredness” (Frank et al. 2010, p. 636), “(…) making it an individualized approach that is particularly applicable in workplace training” (ten Cate 2017, p. 1).

In a systematic review, Fernandez et al. (2012) found that most authors in medical education journals agree on knowledge and skills as constituting elements of competences. Other important characteristics of competences are that they refer to a specific domain and context and, most important for competence development, that they can be improved by training and deliberate practice (Koeppen et al. 2008; Shavelson 2013; Bergsmann et al. 2015). Often the terms “competence” and “competency” are used synonymously. However, the term competence is the broader, more holistic term (Blömeke et al. 2015), which seems to be more suitable for educational psychology as well as for implementation science.

Competence research has become especially important in educational psychology for implementing competence-based teaching in (higher) education. For that purpose, competence profiles for professionals working in particular fields as well as methods for assessing competences are needed. However, although competence-based curricula have a long tradition in health sciences education, aligning teaching and learning activities with competence profiles remains a challenge (Morcke et al. 2013). Accordingly, the implementation of competence-based teaching in higher education should be systematically guided and supported (Bergsmann et al. 2015). By relating to competence research from educational psychology, the present study aims at contributing a compendium of improvable and teachable competences for implementation practice and research. This compendium can build the basis for the development of education and training curricula in implementation science as well as for their evaluation and continuous improvement.

The present study

The present study contributes to the advancement of education in implementation science as an evolving field within the health sciences by following two aims: The first aim is to compile a competence profile for conducting implementation practice and research that is based on educational psychology and provides a multidisciplinary view on implementation science competences. The second aim is to gain information on implementation practitioners’ and researchers’ existing competences and to explore where they have acquired their most helpful competences in order to assess common training needs and competence sources.

The present study comprises a survey of an international, multidisciplinary group of implementation practitioners and researchers. In order to get a better understanding of the participants’ positions and experiences, we followed a predominantly qualitative approach in data collection that explores the participants’ own verbalizations of competences. Using a concurrent triangulation mixed methods design (Creswell et al. 2003), we combined qualitative content analyses with descriptive statistics in data analysis.

Methods

Participants

We invited all registered participants of the Global Implementation Conference 2017 to participate in the study via an online survey. The conference took place in Toronto, Canada, in June 2017 and hosted 514 international implementation researchers and practitioners from a variety of disciplines. The conference organizers sent out the link to the survey in August 2017, followed by two reminders one and two weeks after the initial invitation.

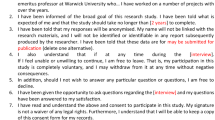

Ethics approval and consent to participate

The study was reviewed by the Office of Human Research Ethics of the University of North Carolina at Chapel Hill and was determined to be exempt from further review. Participation in the study was voluntary and anonymous.

Measures

The survey comprised demographic items on the participants’ area of work (e.g., practitioner, researcher) and their field of expertise (e.g., health, education). It was possible to select multiple answers and to provide other, open answers. Also, participants were asked to indicate their country of origin using a drop-down menu.

At the beginning of the survey, we provided the definition of competences by Bergsmann et al. (2018), emphasizing that competences comprise both knowledge and skills (see also Fernandez et al. 2012). An example from the implementation field followed the definition:

“We define competences as abilities to do something successfully or efficiently based on a range of knowledge and skills. An exemplary competence for both implementation research and practice would be ‘Defining the term implementation in a way which is understandable to the respective audience’.” The definition was shown on all following pages as a footnote.

Then, we asked participants to name competences that are helpful for conducting implementation practice (part A) and implementation research (part B) in several steps. The steps were identical in both parts of the survey. We framed implementation practice as “implementation capacity building”, as we assumed this wording to be more helpful when thinking of specific competences. In step one, we presented a vignette, asking participants to imagine they would hire a new co-worker who would be responsible for implementation capacity building (in part A) or implementation research (in part B). The participants were asked to name up to five competences that they would look for in applicants (general competences).

In step two, we asked the participants to indicate whether they had ever conducted implementation capacity building or implementation research themselves. If the answer was yes, they were asked to name up to five competences that had been helpful to them doing either of these activities (personal competences). In step three, the participants were presented with their personal competences and asked where they had acquired them (competence sources). They could assign each competence to “professional educational background”, “additional training” or “self-study or on-the-job experience”. Finally, the participants could name up to five competences that they would still like to expand or acquire.

The survey was piloted with a multidisciplinary group of eight implementation experts (from behavioral health, public health, pharmacy, psychology and education), whose feedback was integrated into the final version of the survey.

Data analysis

Following a concurrent triangulation mixed methods design (Creswell et al. 2003), we integrated descriptive statistics in qualitative analyses of our data. For compiling the competence profile, we conducted a qualitative content analysis (Forman and Damschroder 2007) using MAXQDA Version 12 (VERBI Software 2017). Due to the exploratory nature of our research questions, we decided to follow an inductive approach of analysis that was based on our data material. In particular, one researcher, who is trained and highly experienced in qualitative data analysis, developed an inductive coding scheme based on the qualitative data and coded all open answers. A second researcher with considerable experience in qualitative research coded the entirety of open answers using the same coding scheme. Afterwards, the two researchers discussed differences in coding as well as the clarity of categories and refined the coding scheme together. Based on the final coding scheme, the first researcher coded all open answers again, which led to the final results.

In order to link the qualitative data on competences to their respective competence sources, which had been recorded as dichotomous variables, we transferred the coded data to SPSS Version 24 (IBM 2016). We analyzed the data descriptively by using frequencies and cross-tabs.
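The descriptive step described above can be illustrated with a minimal sketch. It is not the SPSS procedure used in the study. The records, cluster labels and variable names below are hypothetical, and the sketch assumes that each coded competence carries three dichotomous (0/1) source indicators and that more than one source may be marked per competence.

```python
from collections import Counter

# Hypothetical names for the three dichotomous competence-source variables.
SOURCES = ("professional_education", "additional_training", "self_study_or_job")

# Hypothetical coded responses: one record per named competence,
# with its cluster code and a 0/1 flag for each source.
CODED_RESPONSES = [
    {"cluster": "collaboration", "professional_education": 0,
     "additional_training": 0, "self_study_or_job": 1},
    {"cluster": "collaboration", "professional_education": 0,
     "additional_training": 1, "self_study_or_job": 1},
    {"cluster": "research_methodology", "professional_education": 1,
     "additional_training": 0, "self_study_or_job": 0},
    {"cluster": "research_methodology", "professional_education": 1,
     "additional_training": 0, "self_study_or_job": 0},
    {"cluster": "implementation_science", "professional_education": 0,
     "additional_training": 1, "self_study_or_job": 0},
]


def cluster_frequencies(rows):
    """Count how often each competence cluster was coded."""
    return Counter(row["cluster"] for row in rows)


def crosstab(rows):
    """Cross-tabulate competence clusters against competence sources."""
    table = {}
    for row in rows:
        counts = table.setdefault(row["cluster"], dict.fromkeys(SOURCES, 0))
        for source in SOURCES:
            counts[source] += row[source]
    return table


if __name__ == "__main__":
    print(cluster_frequencies(CODED_RESPONSES))
    for cluster, counts in crosstab(CODED_RESPONSES).items():
        print(cluster, counts)
```

Each row of the resulting table corresponds to one competence cluster and each column to one competence source, which mirrors the layout of a frequency table by cluster and source.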

Results

Sample

A total of 82 delegates from the Global Implementation Conference 2017 participated in the survey, which corresponds to a return rate of 16 percent. Altogether, the 82 participants named 424 competences. The majority of the sample was from North America (66.7%), followed by Europe (19.8%), Australia (9.9%) and Africa (3.7%). The countries with the most participants were Canada (39.5%), the United States (27.2%) and Australia (9.9%). For defining their areas of work and fields of expertise, participants could provide multiple answers. Most participants defined themselves as researchers (46.3%) and/or practitioners (45.1%). Health (67.1%) and/or education (31.7%) were the most frequent choices for the field of expertise. Table 1 summarizes additional sample characteristics.
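The reported return rate can be checked with simple arithmetic. The sketch below assumes that all 514 conference attendees reported in the Participants section received the invitation. The mean number of competences per participant is a derived figure that the study does not report.

```python
# Figures taken from the text; treating all 514 attendees as invited is an assumption.
invited = 514
respondents = 82
competences_named = 424

return_rate = respondents / invited
mean_competences = competences_named / respondents

print(f"Return rate: {return_rate:.1%}")
print(f"Mean competences named per participant: {mean_competences:.2f}")
```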

Of the participants who defined themselves as researchers, most worked in health (30.5%), followed by education (17.1%). Similarly, most of the practitioners worked in health (29.3%) and/or education (20.7%).

Competence profile for implementation practice and research

The qualitative content analysis of all named competences led to nine competence clusters relevant to implementation practice and implementation research: Implementation science knowledge and skills, setting knowledge and skills, program evaluation knowledge and skills, management knowledge and skills, research methodology knowledge and skills, academic knowledge and skills, collaboration knowledge and skills, communication knowledge and skills as well as educational knowledge and skills. Each competence cluster consists of three to eight sub-competences (Table 2), which all had a frequency of at least three responses.

When asked for the most helpful competences for implementation practice, participants named collaboration knowledge and skills most frequently. Apart from that, a high number of competences could be assigned to the implementation science knowledge and skills cluster.

For conducting implementation research, participants most frequently named research methodology knowledge and skills as the most helpful competences. The second most important competence cluster was implementation science knowledge and skills (see Table 3 for frequencies of all competence clusters).

Participants working in health named collaboration knowledge and skills (27.3%) and educational knowledge and skills (16.4%) as their most helpful personal competences for implementation practice. For participants working in education, competences in the implementation science (27.5%) and collaboration knowledge and skills cluster (25%) were the most important. Social work professionals most often named collaboration knowledge and skills (26.5%), followed by implementation science knowledge and skills (20.6%).

For implementation research, participants from the health sector named research methodology knowledge and skills (22.2%) and collaboration knowledge and skills (18.5%) as their most helpful personal competences. Participants from the education field most often named collaboration (23.3%) and implementation science knowledge and skills (16.7%). For participants with a social work background, research methodology (44.4%) and implementation science knowledge and skills (16.7%) were most important.

The most frequently named sub-competences that were perceived helpful for implementation practice were knowledge of implementation theory and frameworks (8.8%), the ability to network and build relationships (7.7%) and the ability to collaborate and co-design (6.6%). For conducting implementation research, the ability to find and synthesize relevant information, knowledge of study designs and general measurement and data analysis skills (each 6.4%) were indicated as the most helpful sub-competences.

Some responses that appeared several times were not attributed to a particular competence cluster, as they represent general characteristics rather than specific competences. The most frequent (for implementation practice and research combined) were flexibility (n = 14 mentions), the ability and willingness to learn (n = 10) and analytical thinking (n = 9).

Competence sources and training needs

The participants had learned most of the competences that they found helpful for their implementation practice in self-study or by on-the-job experience, followed by their professional education and additional training. Concerning competences that were helpful for implementation research, participants had acquired most of them through professional education and self-study or on-the-job experience. Only a small percentage of implementation research competences had been acquired in additional training. Table 4 shows the frequencies of named competence sources for each competence cluster.

Of those competences that participants would still like to expand or acquire for their implementation practice, most fall into implementation science knowledge and skills (33.3%), followed by collaboration (18.8%) and research methodology knowledge and skills (16.7%). More specifically, participants indicated that they would like to expand their specialized implementation knowledge and skills (17.9%) and their knowledge of implementation frameworks (5.4%). Apart from that, participants would like to acquire leadership skills (8.9%) as well as general research knowledge and skills and presentation and facilitation knowledge and skills (each 5.4%).

For their implementation research, most participants would like to expand or acquire competences attributed to research methodology (35.3%), program evaluation (17.6%) and communication knowledge and skills (17.6%). The most frequently named sub-competences were general program evaluation knowledge and skills (14.3%), quantitative research skills and the ability to build shared understandings and agendas (both 9.5%).

Discussion

The present study aimed at advancing education in implementation science, and thus advancing the education of health professionals in how to implement evidence-based findings into health practice systematically. For that purpose, the present study compiled (1) a competence profile for implementation practice and implementation research and (2) competence sources and training needs for implementation science. In contrast to other competence studies in implementation science, we surveyed an international, multidisciplinary sample of implementation experts and theoretically based our study on educational psychology. Within this theoretical framework, competences are improvable and teachable and can be assessed in both trainees’ self-evaluations and evaluations of training programs and curricula.

The survey resulted in a competence profile including nine competence clusters. Although one highly relevant cluster comprised competences defining implementation science knowledge and skills, the remaining eight clusters mainly consisted of cross-sectional knowledge and skills. This result is similar to previous compendiums of implementation science competences (Tabak et al. 2012, 2017; Ullrich et al. 2017) that also emphasize the importance of a broad, interdisciplinary set of knowledge and skills. However, expanding the scope of other implementation science competence profiles, the present study explicitly assessed competences for both implementation practice and implementation research. When comparing results, competences from all nine clusters were relevant for both fields, but the priorities differed.

For implementation practice, competences belonging to the collaboration knowledge and skills cluster were named as most important. Certainly, these abilities are preconditions for successful implementation work in multi-professional and research-practice partnerships (Eriksson et al. 2014; Gagliardi et al. 2015). Well-functioning teams, communication and strong networks have been shown to facilitate the use of evidence-based practices in health (Jackson et al. 2018). Competences in areas such as communication, collaboration, and professional identity formation have also been named by the International Competency-Based Medical Education (ICBME) Collaborators as desired outcomes for training in medical education (Frank et al. 2017). Furthermore, implementation experts have often emphasized the importance of co-creation and constant feedback loops in particular (Fixsen et al. 2013; Metz and Albers 2014).

For conducting research on implementation, research methodology knowledge and skills, such as the ability to find and synthesize relevant information, were seen as the most important competences. These are also named in most other implementation science competence profiles (Padek et al. 2015; Ullrich et al. 2017; Tabak et al. 2017). Collaboration knowledge and skills were also listed frequently, which relates to an interview study with early career researchers (Stamatakis et al. 2013), who named “developing practice linkages” as one of the most important competences for conducting dissemination and implementation research. In this context, Luke et al. (2016) have shown that structured mentoring in implementation science significantly increases scientific collaboration.

The participants’ most important competence source for their implementation practice was their own on-the-job experience. This especially applied to their collaboration, communication and setting knowledge and skills. On the one hand, this result highlights the importance of practical experience for gaining implementation expertise. On the other hand, it indicates that collaboration and communication competences should become an integral part of implementation science curricula. This not only concerns teaching theoretical knowledge of how to build practice partnerships and models of communication but also applying collaborative teaching methods. The latter can include learning in peer groups, especially by jointly applying theoretical knowledge in real-life implementation projects as well as coaching and mentored supervision of practical implementation work. Methods like these include immediate feedback on practical activities while also providing a valuable professional network to trainees.

For building competences in implementation research, self-study and on-the-job experience were important as well, but participants had also acquired a considerable part of relevant competences in their professional education. The latter was an especially important source for the participants’ research methodology knowledge and skills. Hence, although knowledge of study designs and measurement issues as well as data analysis skills are important competences for conducting implementation research, most aspiring implementation experts might already be familiar with these topics from their former professional education. In order to get a valid estimate of this prior experience, screening participants’ competence levels in the content to be taught in an educational activity (Bergsmann et al. 2018) might be beneficial for both trainees and educators.

Since differences in learners’ pre-experiences are common in education and training in implementation science (Chambers et al. 2017), participants’ differing training needs can be met by building smaller groups within training sessions (Proctor and Chambers 2017). Another approach could be to offer implementation science curricula in a modular system, where trainees choose their modules according to the results of a competence assessment or the content’s relevance to their respective fields. Since a lot of continuing education activities in implementation science focus on particular topics, trainees can also arrange their educational curriculum according to their individual training needs. For that purpose, it is important to have an overview of learning and teaching resources in implementation science (Chambers et al. 2017), which can be easily accessed, e.g., in the form of a website (Proctor and Chambers 2017).

In terms of contents that institutions choose for their educational activities in implementation science, it makes sense to adapt general competence profiles to local needs, which also strengthens ownership among faculty (Hejri and Jalili 2013). This is especially relevant for the alignment of teaching and learning activities with desired learning outcomes. Bergsmann et al. (2015) provide a structured approach on how to define student competences for a curriculum in a participatory approach and how to align those with teaching methods and learning strategies. However, the benefit of competence profiles for teaching and learning in medical education still needs to be researched (Morcke et al. 2013). In this regard, an important aspect for discussion is the level of detail on which competences need to be defined, so that competence profiles are useful to both educators and trainees (Schultes et al. 2019).

Limitations

The current study aimed at gaining an international perspective on competences for implementation research and practice. However, similar to previous research on the topic, the sample mostly consisted of participants from North America. Moreover, in comparison to the participant number of the Global Implementation Conference 2017, the return rate of surveys was rather low. Also, it was not possible to analyze whether respondents differed from non-respondents since the survey was anonymous, and the same sociodemographic data was not available for conference participants in general and those who participated in the survey. Further studies should anticipate this problem, for example, by including participant characteristics in the survey that are known for all potential participants. However, our sample named a total of 424 competences, which still provided us with a large database for our qualitative data analyses.

Future studies that validate and refine the competence profile from the present exploratory study might follow a quantitative approach of data collection and analysis that requires larger sample sizes. In this case, we suggest surveying participants from several different implementation science conferences or members from different implementation networks. The present study serves as a starting point for a fluid set of interdisciplinary competences. Education and training activities in implementation science should not only teach relevant foundations but also keep up with developments in the field (Chambers et al. 2017). Hence, competence profiles can only be seen as temporary, and the results from our present study should be regularly updated including participants with a broad distribution of regional and professional foci.

To support a specific notion of relevant competences, the survey items concerning implementation practice were framed as “implementation capacity building”. This wording might have triggered a specific set of implementation practice activities. For further research on competences in implementation science, we still consider it important to use rather specific language, but the items should also be general enough to represent a large variety of implementation practice and research activities. Furthermore, it is important to refer explicitly to the knowledge and skill components of competences and to use examples referring to teachable and learnable competences. Overall, self-reported competences provide important information on participants’ experiences. Still, these should be complemented by other data sources, such as peer reports or surveys of other stakeholder groups from implementation studies (e.g., practice partners).

Since we followed an inductive approach to data analysis, the resulting sub-competences are closely aligned with the raw data and, as a consequence, not entirely consistent in their levels of abstraction. Although the competence clusters as a whole all comprise knowledge and skills in several sub-competences, not all of the sub-competences themselves consist of both knowledge and skill components. To create a more consistent competence profile for education and training in implementation science, the sub-competences resulting from this study can be aligned in their levels of abstraction in further studies and used as a deductive coding scheme.

Conclusions

Focusing on an emerging field in health sciences education, the present study compiled a competence profile for implementation science that includes a multidisciplinary perspective and is theoretically based on educational psychology. The competence profile comprises improvable and teachable competences that can be used for curriculum development in implementation science. Moreover, it can serve as a guide for choosing suitable training programs and for designing evaluations of education and training in implementation research and practice.

Since the present study showed that prior experiences and competence sources differ among implementation experts, trainees’ prior education should be considered when designing curricula and when allocating trainees to different training groups. With collaboration knowledge and skills being one of the most important competences for both implementation practice and research, the results of the present study also emphasize the importance of collaborative activities, such as learning in peer groups, coaching, or mentoring, in implementation science education. We encourage further research in competence development for implementation science that continuously updates the results of the present study and broadens our knowledge base for education in implementation science.

References

Bergsmann, E., Klug, J., Burger, C., Först, N., & Spiel, C. (2018). The competence screening questionnaire for higher education: Adaptable to the needs of a study programme. Assessment & Evaluation in Higher Education, 43, 537–554. https://doi.org/10.1080/02602938.2017.1378617.

Bergsmann, E., Schultes, M.-T., Winter, P., Schober, B., & Spiel, C. (2015). Evaluation of competence-based teaching in higher education: From theory to practice. Evaluation and Program Planning, 52, 1–9. https://doi.org/10.1016/j.evalprogplan.2015.03.001.

Blanchard, C., Livet, M., Ward, C., Sorge, L., Sorensen, T. D., & Roth McClurg, M. (2017). The active implementation frameworks: A roadmap for advancing implementation of comprehensive medication management in primary care. Research in Social and Administrative Pharmacy, 13, 922–929. https://doi.org/10.1016/j.sapharm.2017.05.006.

Blömeke, S., Gustafsson, J.-E., & Shavelson, R. J. (2015). Beyond dichotomies. Competence viewed as a continuum. Journal of Psychology, 223, 3–13. https://doi.org/10.1027/2151-2604/a000194.

Chambers, D. A., Proctor, E. K., Brownson, R. C., & Straus, S. E. (2017). Mapping training needs for dissemination and implementation research: Lessons from a synthesis of existing D&I research training programs. Translational Behavioral Medicine, 7, 593–601. https://doi.org/10.1007/s13142-016-0399-3.

Creswell, J. W., Plano Clark, V. L., Gutmann, M. L., & Hanson, W. E. (2003). Advanced mixed methods research designs. In A. Tashakkori & C. Teddlie (Eds.), Handbook of mixed methods in social and behavioral research (pp. 209–240). Thousand Oaks, CA: Sage Publications.

Damschroder, L. J., Aron, D. C., Keith, R. E., Kirsh, S. R., Alexander, J. A., & Lowery, J. C. (2009). Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science, 4, 50. https://doi.org/10.1186/1748-5908-4-50.

Durlak, J. A., & DuPre, E. P. (2008). Implementation matters: A review of research on the influence of implementation on program outcomes and the factors affecting implementation. American Journal of Community Psychology, 41, 327–350. https://doi.org/10.1007/s10464-008-9165-0.

Eccles, M. P., & Mittman, B. S. (2006). Welcome to implementation science. Implementation Science, 1, 1. https://doi.org/10.1186/1748-5908-1-1.

Eriksson, C. C.-G., Fredriksson, I., Fröding, K., Geidne, S., & Pettersson, C. (2014). Academic practice–policy partnerships for health promotion research: Experiences from three research programs. Scandinavian Journal of Public Health, 42, 88–95. https://doi.org/10.1177/1403494814556926.

Fernandez, N., Dory, V., Ste-Marie, L.-G., Chaput, M., Charlin, B., & Boucher, A. (2012). Varying conceptions of competence: An analysis of how health sciences educators define competence. Medical Education, 46, 357–365. https://doi.org/10.1111/j.1365-2923.2011.04183.x.

Fixsen, D., Blase, K., Metz, A., & Van Dyke, M. (2013). Statewide implementation of evidence-based programs. Exceptional Children, 79, 213–230. https://doi.org/10.1177/001440291307900206.

Fixsen, D. L., Naoom, S. F., Blase, K. A., Friedman, R. M., & Wallace, F. (2005). Implementation research: A synthesis of the literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, National Implementation Research Network. (FMHI Publication No. 231).

Fixsen, D. L., Schultes, M.-T., & Blase, K. A. (2016). Bildung-Psychology and implementation science. European Journal of Developmental Psychology, 13, 666–680. https://doi.org/10.1080/17405629.2016.1204292.

Forman, J., & Damschroder, L. (2007). Qualitative content analysis. In L. Jacoby & L. A. Siminoff (Eds.), Empirical methods for bioethics: A primer (pp. 39–62). Oxford: Elsevier.

Frank, J. R., Mungroo, R., Ahmad, Y., Wang, M., De Rossi, S., & Horsley, T. (2010). Toward a definition of competency-based education in medicine: A systematic review of published definitions. Medical Teacher, 32, 631–637. https://doi.org/10.3109/0142159X.2010.500898.

Frank, J. R., Snell, L., Englander, R., & Holmboe, E. S. (2017). Implementing competency-based medical education: Moving forward. Medical Teacher, 39, 568–573. https://doi.org/10.1080/0142159X.2017.1315069.

Gagliardi, A. R., Berta, W., Kothari, A., Boyko, J., & Urquhart, R. (2015). Integrated knowledge translation (IKT) in health care: A scoping review. Implementation Science, 11, 38. https://doi.org/10.1186/s13012-016-0399-1.

Hejri, S. M., & Jalili, M. (2013). Competency frameworks: Universal or local. Advances in Health Sciences Education, 18, 865–866. https://doi.org/10.1007/s10459-012-9426-4.

IBM. (2016). IBM SPSS Statistics for Macintosh, version 24.0. Armonk, NY: IBM Corp.

Jackson, C. B., Brabson, L. A., Quetsch, L. B., & Herschell, A. D. (2018). Training transfer: A systematic review of the impact of inner setting factors. Advances in Health Sciences Education, 24, 167–183. https://doi.org/10.1007/s10459-018-9837-y.

Kien, C., Schultes, M.-T., Szelag, M., Schoberberger, R., & Gartlehner, G. (2018). German language questionnaires for assessing implementation constructs and outcomes of psychosocial and health-related interventions: A systematic review. Implementation Science, 13, 150. https://doi.org/10.1186/s13012-018-0837-3.

Koeppen, K., Hartig, J., Klieme, E., & Leutner, D. (2008). Current issues in competence modeling and assessment. Journal of Psychology, 216, 61–73. https://doi.org/10.1027/0044-3409.216.2.61.

Leutner, D., Fleischer, J., Grünkorn, J., & Klieme, E. (2017). Competence assessment in education: An introduction. In D. Leutner, J. Fleischer, J. Grünkorn, & E. Klieme (Eds.), Competence assessment in education: Research, models and instruments (pp. 1–6). Cham: Springer.

Luke, D. A., Baumann, A. A., Carothers, B. J., Landsverk, J., & Proctor, E. K. (2016). Forging a link between mentoring and collaboration: A new training model for implementation science. Implementation Science, 11, 137. https://doi.org/10.1186/s13012-016-0499-y.

VERBI Software. (2017). MAXQDA version 12. Berlin: VERBI Software.

Meissner, H. I., Glasgow, R. E., Vinson, C. A., Chambers, D., Brownson, R. C., Green, L. W., et al. (2013). The U.S. training institute for dissemination and implementation research in health. Implementation Science, 8, 12. https://doi.org/10.1186/1748-5908-8-12.

Metz, A., & Albers, B. (2014). What does it take? How federal initiatives can support the implementation of evidence-based programs to improve outcomes for adolescents. Journal of Adolescent Health, 54, 92–96. https://doi.org/10.1016/j.jadohealth.2013.11.025.

Meyers, D. C., Durlak, J. A., & Wandersman, A. (2012). The quality implementation framework: A synthesis of critical steps in the implementation process. American Journal of Community Psychology, 50, 462–480. https://doi.org/10.1007/s10464-012-9522-x.

Moore, J. E., Rashid, S., Park, J. S., Khan, S., & Straus, S. E. (2018). Longitudinal evaluation of a course to build core competencies in implementation practice. Implementation Science, 13, 106. https://doi.org/10.1186/s13012-018-0800-3.

Morcke, A. M., Dornan, T., & Eika, B. (2013). Outcome (competency) based education: An exploration of its origins, theoretical basis, and empirical evidence. Advances in Health Sciences Education, 18, 851–863. https://doi.org/10.1007/s10459-012-9405-9.

Moullin, J. C., Sabater-Hernández, D., Fernandez-Llimos, F., & Benrimoj, S. I. (2015). A systematic review of implementation frameworks of innovations in healthcare and resulting generic implementation framework. Health Research Policy and Systems, 13, 16. https://doi.org/10.1186/s12961-015-0005-z.

Osanjo, G. O., Oyugi, J. O., Kibwage, I. O., Mwanda, W. O., Ngugi, E. N., Otieno, F. C., et al. (2016). Building capacity in implementation science research training at the University of Nairobi. Implementation Science, 11, 30. https://doi.org/10.1186/s13012-016-0395-5.

Padek, M., Colditz, G., Dobbins, M., Koscielniak, N., Proctor, E. K., Sales, A. E., et al. (2015). Developing educational competencies for dissemination and implementation research training programs: An exploratory analysis using card sorts. Implementation Science, 10, 114. https://doi.org/10.1186/s13012-015-0304-3.

Proctor, E., Silmere, H., Raghavan, R., Hovmand, P., Aarons, G., Bunger, A., et al. (2011). Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Administration and Policy in Mental Health and Mental Health Services Research, 38, 65–76. https://doi.org/10.1007/s10488-010-0319-7.

Proctor, E. K., & Chambers, D. A. (2017). Training in dissemination and implementation research: A field-wide perspective. Translational Behavioral Medicine, 7, 624–635. https://doi.org/10.1007/s13142-016-0406-8.

Proctor, E. K., Landsverk, J., Baumann, A. A., Mittman, B. S., Aarons, G. A., Brownson, R. C., et al. (2013). The implementation research institute: Training mental health implementation researchers in the United States. Implementation Science, 8, 105. https://doi.org/10.1186/1748-5908-8-105.

Schultes, M.-T., Mitchell, F., & Harvey, D. (2019). Implementing implementation competencies. Presentation at the Global Implementation Conference in Glasgow, Scotland.

Shavelson, R. J. (2013). On an approach to testing and modeling competence. Educational Psychologist, 48, 73–86. https://doi.org/10.1080/00461520.2013.779483.

Stamatakis, K. A., Norton, W. E., Stirman, S. W., Melvin, C., & Brownson, R. C. (2013). Developing the next generation of dissemination and implementation researchers: Insights from initial trainees. Implementation Science, 8, 29. https://doi.org/10.1186/1748-5908-8-29.

Straus, S. E., Brouwers, M., Johnson, D., Lavis, J. N., Légaré, F., Majumdar, S. R., et al. (2011). Core competencies in the science and practice of knowledge translation: Description of a Canadian strategic training initiative. Implementation Science, 6, 127. https://doi.org/10.1186/1748-5908-6-127.

Straus, S. E., Sales, A., Wensing, M., Michie, S., Kent, B., & Foy, R. (2015). Education and training for implementation science: Our interest in manuscripts describing education and training materials. Implementation Science, 10, 136. https://doi.org/10.1186/s13012-015-0326-x.

Tabak, R. G., Khoong, E. C., Chambers, D. A., & Brownson, R. C. (2012). Bridging research and practice. American Journal of Preventive Medicine, 43, 337–350. https://doi.org/10.1016/j.amepre.2012.05.024.

Tabak, R. G., Padek, M. M., Kerner, J. F., Stange, K. C., Proctor, E. K., Dobbins, M. J., et al. (2017). Dissemination and implementation science training needs: Insights from practitioners and researchers. American Journal of Preventive Medicine, 52, 322–329. https://doi.org/10.1016/j.amepre.2016.10.005.

Ten Cate, O. (2017). Competency-based postgraduate medical education: Past, present and future. GMS Journal for Medical Education, 34, 1–13. https://doi.org/10.3205/zma001146.

Ullrich, C., Mahler, C., Forstner, J., Szecsenyi, J., & Wensing, M. (2017). Teaching implementation science in a new master of science program in Germany: A survey of stakeholder expectations. Implementation Science, 12, 55. https://doi.org/10.1186/s13012-017-0583-y.

Acknowledgements

Open access funding provided by University of Vienna. We thank Evelyn Steinberg (formerly Bergsmann), Carrie Martin Blanchard, Donna Burton, Monika Finsterwald, Joumana Haidar and Herbert Peterson for their valuable feedback on a previous version of the online survey. We also thank Jen Schroeder and Kelly Huber for their support in distributing the survey to the participants of the Global Implementation Conference.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Schultes, MT., Aijaz, M., Klug, J. et al. Competences for implementation science: what trainees need to learn and where they learn it. Adv in Health Sci Educ 26, 19–35 (2021). https://doi.org/10.1007/s10459-020-09969-8

DOI: https://doi.org/10.1007/s10459-020-09969-8