Abstract

In the nonparametric data envelopment analysis literature, scale elasticity is evaluated in two alternative ways: using either the technical efficiency model or the cost efficiency model. This evaluation becomes problematic in several situations, for example (a) when input proportions change in the long run, (b) when inputs are heterogeneous, and (c) when firms face ex-ante price uncertainty in making their production decisions. To address these situations, a scale elasticity evaluation was performed using a value-based cost efficiency model. However, this alternative value-based scale elasticity evaluation is sensitive to the uncertainty and variability underlying input and output data. Therefore, in this study, we introduce a stochastic cost-efficiency model based on chance-constrained programming to develop a value-based measure of the scale elasticity of firms facing data uncertainty. An illustrative empirical application to the Indian banking industry comprising 71 banks for eight years (1998–2005) was made to compare inferences about their efficiency and scale properties. The key findings are as follows: First, both the deterministic model and our proposed stochastic model yield distinctly different results concerning the efficiency and scale elasticity scores at various tolerance levels of chance constraints. However, both models yield the same results at a tolerance level of 0.5, implying that the deterministic model is a special case of the stochastic model in that it reveals the same efficiency and returns to scale characterizations of banks. Second, the stochastic model generates higher efficiency scores for inefficient banks than its deterministic counterpart. Third, public banks exhibit higher efficiency than private and foreign banks. Finally, public and old private banks mostly exhibit either decreasing or constant returns to scale, whereas foreign and new private banks experience either increasing or decreasing returns to scale. Although the application of our proposed stochastic model is illustrative, it can be potentially applied to all firms in the information and distribution-intensive industry with high fixed costs, which have ample potential for reaping scale and scope benefits.

Similar content being viewed by others

Introduction

Performance analysis of multi-product firms has been conducted using several economic concepts, such as economies of scale (returns to scale), economies of scope, and marginal rates of technical substitutions. However, this study concentrates on the determination and measurement of returns to scale, as it has important implications for policy design and industry regulation. Scale elasticity is a quantitative measure of the returns-to-scale characterization of a firmFootnote 1 operating on the production frontier, and is used to determine improvement or deterioration in productivity by resizing their scales of operation.

In the literature, there are two analytical approaches to the empirical estimation of scale elasticity: the neoclassical and axiomatic approaches (Färe et al. 1988). While the former is usually estimated using parametric techniques such as stochastic frontier analysis (SFA), the latter is estimated nonparametric via data envelopment analysis (DEA). However, DEAFootnote 2 has distinct advantages over stochastic frontier estimation (Sahoo and Tone 2022). First, DEA avoids the choice of specific functional forms and the choice of the stochastic structure, which the stochastic frontier approach suffers from due to which it can confound the effects of misspecification of functional form with scale economies (Fusco et al. 2018; Sahoo and Acharya 2010; Sahoo et al. 2017; Sahoo and Gstach 2011). Second, contrary to the general belief, DEA is a full-fledged statistical methodology based on the characterization of firm efficiency as a stochastic variable. DEA estimators have desirable properties and provide a basis for constructing a wide range of formal statistical tests (Banker 1993; Banker and Natarajan 2004; Banker et al. 2022). Third, DEA reflects individual firm efficiency or inefficiency, which is particularly convenient for managerial decision making.

In the DEA literature, both qualitative and quantitative approaches are used to evaluate the returns-to-scale characterization of firms. While the former deals with the identification of the types of returns to scale (i.e., increasing, decreasing, or constant), the latter deals with the computation of scale elasticity (Førsund 1996; Sahoo et al. 1999, 2012; Tone and Sahoo 2003, 2004; Podinovski et al. 2009; Sahoo and Sengupta 2014; Sahoo and Tone 2015). Most studies that apply bespoke computational methods to evaluate scale economies are confined to specific DEA technologies under the constant returns to scale (CRS) specification of Charnes et al. (1978) and the variable returns to scale (VRS) specification of Banker et al. (1984). However, there is a large class of polyhedral technologies that include CRS and VRS technologies with production trade-offs and weight restrictions (Tone 2001; Atici et al. 2015; Podinovski 2004a; 2007, 2015, 2016), and weak and managerially disposable technologies (Kuosmanen 2005; Kuosmanen and Podinovski. 2009), hybrid returns to scale (Podinovski 2004b), technology with multiple component processes (Cook and Zhu 2011; Cherchye et al. 2013, 2015, 2016), and network technologies (Sahoo et al. 2014a, b), etc. See Podinovski et al. (2016) for a discussion of scale elasticity evaluation using a more general methodology.

Scale elasticity evaluation was also performed using the cost efficiency model of Färe et al. (1985) (Sueyoshi 1997; Tone and Sahoo 2005, 2006). The use of this model requires that input prices are exogenously given and measured with full certainty. However, in real-life situations, input prices are not exogenous but vary according to the actions of firms (Sahoo and Tone 2013). In addition, firms often face ex-ante price uncertaintyFootnote 3 when making production decisions (Camanho and Dyson 2005). Furthermore, input prices are synthetically constructed and hence represent average prices. Since decisions are made at the margin, the measure of allocative efficiency based on average prices can be distorted (Fukuyama and Weber 2008). Finally, the cost efficiency measure of Färe et al. (1985) reflects only input inefficiencies, but not market (price) inefficiencies (Camanho and Dyson 2008).Footnote 4 Therefore, to account for both input and market inefficiencies, Tone’s (2002) alternative cost-efficiency model can be used. Furthermore, the use of this value-based technology is more appropriate for computing the scale elasticity scores of real-life firms in several situations, for example (a) when input proportions change in the long run, (b) when inputs are heterogeneous, and (c) as the cost can be more easily related to economies of scale and size-specific costs due to indivisibility.

Scale elasticity evaluation in DEA has thus far been made using only deterministic technologies. These technologies were developed based on the premise that the inputs and outputs are precisely measured. However, in real-life situations, general production processes are often stochastic,Footnote 5 and the concepts of efficiency and scale elasticity are inextricably related to how firms deal with uncertainty underlying stochastic inputs and outputs. Therefore, when there are variations in inputs and outputs owing to uncertainty, the evaluation of efficiency and scale elasticity in a deterministic DEA setting becomes sensitive to such variations. Consequently, one expects to observe whether the efficiency and returns-to-scale characteristics of firms are subject to change in this stochastic environment.

To account for random variations in inputs and outputs underlying any production process, several authors (Banker and Morey 1986; Cooper et al. 2004, 2011, 1996, 1998; Jess et al. 2001; Lamb and Tee 2012; Olesen 2006; Sengupta 1990) have considered various approaches in DEA setting to compute efficiency. Sengupta (1982) and Cooper et al. (1996) were the first to introduce the theory of chance constraints in DEA to formulate an efficiency evaluation for firms. This formulation was later extended by several scholars (Sengupta and Sfeir 1988; Land et al. 1993, 1994; Li 1995a, b, 1998; Olesen and Petersen 1995, 2000; Grosskopf 1996; Morita and Seiford 1999; Huang and Li 2001; Kao and Liu 2014; Wei et al. 2014). Cooper et al. (2011), Simar and Wilson (2015), and Olesen and Petersen (2016) provided extensive reviews of the development of various stochastic DEA models.

To the best of our knowledge, no studies in the DEA literature has dealt with the estimation of scale elasticity using a technology setup that allows such stochastic variations in the data. Therefore, in this study, we focus on the estimation of scale elasticity when inputs and outputs are uncertain in stochastic form. The existence of random changes in the data permits the prediction of changes in the inputs and outputs. Because deterministic programs are sensitive to such variations, we need the technique of chance-constrained (CC) programmingFootnote 6 (see Charnes and Cooper (1959, 1962, 1963) for its early developments) because this method can potentially deal with the cases in which the constraints–the minimal (maximal) inputs (outputs)–are required to be no more (less) than the actual inputs (outputs) may be violated, but not too frequently. Although stochastic variation around the production function is allowed, most observations must fall below it.

To set up value-based measures of efficiency and scale elasticity, first, we formulate, using the CC-programming method, the stochastic version of the alternative cost efficiency model of Tone (2002), which is essentially a quadratic model. Second, we set up a deterministic equivalent of the stochastic quadratic model. Third, with the help of a one-factor assumption, we converted this deterministic quadratic model into a linear model using the goal programming theory of Charnes and Cooper (1977). Fourth, we solved this linear model to compute the value-based efficiency scores of firms at pre-determined tolerance levels of chance constraints. Finally, we set up the dual of this linear model to compute the value-based scale elasticity scores of firms at these tolerance levels.

The method employed to convert the quadratic model into a linear model is based on a simplified single-factor assumption, which has long been used in economics. This single-factor assumption is extremely convenient for finding solutions but requires some additional assumptions that the correlation coefficient between any two stochastic inputs/outputs and between input and output for any firm is always 1 (Kao and Liu 2019). Thus, there is a tradeoff between a quadratic (nonlinear) model, which is inconvenient to solve, and a linear model, which is easy to solve but requires some additional assumptions. However, we believe that this perfect correlation assumption between inputs/outputs is certainly important as it helps convert a quadratic model into a linear one, which not only removes the inconveniences associated with solving a quadratic model, but also helps compute the scale elasticity scores of firms using its dual.

To demonstrate the ready applicability of our proposed model, we exhibited an illustrative empirical application wherein we employ Indian banking data, which was used earlier by Sahoo and Tone (2009a, b). It is important to investigate the extent of economies of scale in the banking industry in general, and the banking industry of a fast-growing economy like India, in particular. The banking industry is technology-driven, and technical progress is scale augmenting (Berger 2003). The evaluation of scale economies in India’s banking industry during the post-reform period from 1998 to 2005 is considered important because this exercise has important implications for policy design and regulation due to changing regulatory environments and different ownership types (public, private, and foreign). The Indian financial sector had initially been operating in a closed and regulated environment until 1992 and then underwent a radical change during the nineties. To promote the efficiency and profitability of banks, in 1991–92 the Reserve Bank of India (RBI) initiated a process of liberalization through various reforms such as entry deregulation, branch de-licensing, deregulation of interest rates, allowing public banks to raise their equity in the capital markets, gradual reduction in cash reserve ratio, statutory liquidity ratio, and relaxation of several restrictions on the composition of their portfolios. All of these have given rise to heightened competitive pressure in the banking industry. In this scenario, we believe that banks were in the pursuit of enlarging their size using available scale economies to enhance their asset base and profit to create systemic financial efficiency and shareholder value. Furthermore, the introduction of several reform measures and technological advances has put the banking industry in a more challenging and volatile position, making the underlying bank production processes stochastic. In this environment, following Shiraz et al. (2018), stochasticity in the inputs and outputs is first introduced, and our proposed stochastic efficiency model is then applied to these stochastic input–output data to produce valid efficiency and scale elasticity estimates.

In our empirical illustration, we examine the efficiency and returns-to-scale characteristics of banking in India across three ownership types: public, private, and foreign. This enables us to investigate the economic linkage between ownership and efficiency performance by considering the property rights hypothesis (De Alessi 1980) and public choice theory (Levy 1987; Niskanen 1975). According to the property rights hypothesis, private enterprises should perform more efficiently than public enterprises because of the strong link between the markets for corporate control and the efficiency of private enterprises. While this argument may apply more to developed countries, testing for efficiency differentials across ownership types in the banking industry of a developing country such as India can yield insights into the success of the reform process.

Although our current empirical application to Indian banking is illustrative, our proposed chance-constraint efficiency model can potentially be applied to analyze the efficiency and scale properties of many real-life firms whose underlying production processes are stochastic. Examples of these firms can be found in many industries, for example agriculture, where unpredictability in weather makes the input–output relationship stochastic; manufacturing industries, where firms face considerable variation in the quality of their inputs and outputs produced; product development industries, where firms face uncertainty regarding their new designs; and high-technology industries, where firms face hyper (dynamic) completion in the new (Internet) economy.

Finally, Fig. 1 depicts the conceptual framework of our investigation, highlighting both the methodological and applied approaches.

The remainder of the paper is organized as follows: “A value-based measure of efficiency and scale elasticity in the deterministic case” section discusses the evaluation of value-based scale elasticity measures in a deterministic DEA technology defined in the cost-output space. In “A value-based measure of TE and SE in the stochastic case” section, we first set up the stochastic version of Tone’s (2002) value-based cost efficiency model and then propose its transformation in a deterministic setting for the computations of value-based measures of efficiency and scale elasticity scores. “The illustrative empirical application” section demonstrates an illustrative empirical application of our proposed stochastic efficiency model to data on 71 banks in India. “Discussions” section presents a discussion of the results. Finally, we conclude with remarks in “Concluding remarks” section, followed by the limitations of our study and future recommendations.

A value-based measure of efficiency and scale elasticity in the deterministic case

We suppose there are \(n\) firms to be evaluated and each firm uses \(m\) inputs to produce \(s\) outputs. Let \({x}_{j} = {\left({x}_{1j} , . . . , {x}_{mj} \right)}^{T}\in {\mathbb{R}}_{\ge 0}^{m}\) and \({y}_{j}={\left({y}_{1j}, . . . , {y}_{sj} \right)}^{T}\in {\mathbb{R}}_{\ge 0}^{s}\) be the input and output vectors of firm\(j\), respectively, \({w}_{j}=\left({w}_{1j}, . . . , {w}_{mj}\right)\in {\mathbb{R}}_{\ge 0}^{m}\) be its unit price vector, and \(J\) be the index set of all firms, that is, \(J = \{1, . . . , n\}\). We set up a value-based technology \({(T}^{V})\) set as

where \({c}_{j}=\sum_{i=1}^{m}{w}_{ij}{x}_{ij}.\) The \({\lambda =(\lambda }_{1}, {\lambda }_{2}, \dots , {\lambda }_{n})\) is an intensity vector of dimension n.

To produce the output vector \(\beta {y}_{o}\) by firm \(o\), its value-based measure of technical efficiency \((TE)\), \(\overline{\alpha }\left(\beta \right)\) is defined as

\(\beta\) is a user-defined value that reflects the proportional output change, and \(\overline{\alpha }(\beta )\) is defined for all \(\beta \in [0,\widehat{\beta }]\), where \(\widehat{\beta }\) is the largest proportion of the output vector \({y}_{o}\) found in units of technology \({T}^{V}\).

Based on the definition in (2), \(\overline{\alpha }\left(\beta \right)\) can be determined using the following linear program (LP):

\(s.t.\) \(\sum_{j=1}^{n}{\lambda }_{j}{c}_{j}\le \alpha {c}_{o},\) \(\sum_{j=1}^{n}{\lambda }_{j}{y}_{rj}\ge \beta {y}_{ro} \left(\forall r\right),\) \(\sum_{j=1}^{n}{\lambda }_{j}=1,\) \({\lambda }_{j}\ge 0 \left(\forall j\right),\mathrm{ and }\alpha :\mathrm{free}.\)

Program (3) was used to identify explicit real peer units for each unit under evaluation. As previously stated, \({\lambda =(\lambda }_{1}, {\lambda }_{2}, \dots , {\lambda }_{n})\) is an intensity vector in which each \({\lambda }_{j}\) shows the effect of \({DMU}_{j}\) on constructing the peer unit for \({DMU}_{o}\) under evaluation.

The dual formulation of the program (3) can be set up as

\(s. t.\) \(\sum_{r=1}^{s}{u}_{r} {y}_{rj}-v{c}_{j}+{\omega }_{0}\le 0,\) \(v{c}_{o}=1,\) \(v,u\ge 0.\)

Based on program (4) for firm \(o\), we derive its transformation function as

We assume that the transformation function (5) is differentiable. Differentiating (5) with respect to \(\beta\) yields.

In the spirit of Panzar and Willig (1977), Baumol et al. (1982), and Banker et al. (2004), we define the value-based scale elasticity \((SE)\) measure of firm \(o\), \(\varepsilon \left(\overline{\alpha }(\beta ){c}_{o}, \beta {y}_{o}\right)\) as the ratio of its marginal utilization of input \(\frac{\partial \overline{\alpha }(\beta )}{\partial \beta }\) to its average utilization of input \(\frac{\overline{\alpha }(\beta )}{\beta }\). In other words,

If the firm \(o\) is technically efficient, we have \(\beta =\overline{\alpha }\left(\beta \right)=1\) and \(\varepsilon \left({c}_{o}, {y}_{o}\right)=1-{\omega }_{o}\).

In the immediately following section, we extend these results to the stochastic case.

A value-based measure of \({\varvec{T}}{\varvec{E}}\) and \({\varvec{S}}{\varvec{E}}\) in the stochastic case

Stochastic value-based \({\varvec{T}}{\varvec{E}}\) measure

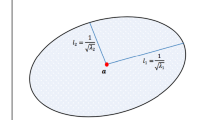

Let \({\widetilde{x}}_{j}={\left({\widetilde{x}}_{1j}, {\widetilde{x}}_{2j},\dots ,{\widetilde{x}}_{Mj}\right)}^{T}\in {\mathbb{R}}_{\ge 0}^{m}\) and \({\widetilde{y}}_{j}={\left({\widetilde{y}}_{1j}, {\widetilde{y}}_{2j},\dots ,{\widetilde{y}}_{Sj}\right)}^{T}\in {\mathbb{R}}_{\ge 0}^{s}\) be the random input and output vectors of firm \(j\), respectively. Furthermore, let \(\left({\widetilde{x}}_{j}\right)=\) \({x}_{j}\), \(E\left({\widetilde{y}}_{j}\right)={y}_{j}\), and all random inputs and outputs are jointly and normally distributed. The structure of our stochastic value-based technology set \({(T}^{V(S)})\) in the CC programming setup is related to the technology set employed in the BCC program. \({T}^{V(S)}\) is the union of confidence regions \({D}_{j}\left({1-\gamma }_{j}\right)\) with a probability level \((1-{\gamma }_{j})\) \((j=1,\dots ,n)\). In other words, \({T}^{V(S)}\) is the union of the input and output values that are probably observed for \({DMU}_{j} (j=1,\dots ,n)\) with probability \({1-\gamma }_{j}\). Using the axioms of free disposability, unbounded rays, convexity, minimal extrapolation, and inclusion of observations within confidence regions, \({T}^{V(S)}\) can be set up as follows:

where \({\widehat{c}}_{j}=\sum_{i=1}^{m}{w}_{ij}{\widehat{x}}_{ij}\) and \({D}_{j}\left({1-\gamma }_{j}\right)\) is defined as follows:

where \({\Lambda }_{j}^{-1}\) represents the inverse of the variance–covariance matrix of \(({\widetilde{x}}_{j}, {\widetilde{y}}_{j})\). Moreover, \({c}_{j}\) is determined by \({\mathbb{P}}\left({\chi }_{M+S}^{2}\le {c}_{j}^{2}\right)=1-{\gamma }_{j}\), and \({\chi }_{M+S}^{2}\) is the Chi-square random variable with \(M+S\) degrees of freedom.

Inspired by the deterministic value-based \(TE\) measure \(\overline{\alpha }\left(\beta \right)\) in program (3), we define its stochastic counterpart as the greatest possible radial contraction of \({\widetilde{c}}_{o}\) possible in \({T}^{V(S)}\) to produce \(\beta {\widetilde{y}}_{o}\). Mathematically, the stochastic value-based \(TE\) measure of firm \(o\) can be computed by solving Eq. (9), where \(\beta\) is a predefined parameter.

\({\lambda }_{j}\ge 0 \left(\forall j\right),\) and \(\alpha :\mathrm{free}.\)

In program (9), we used the “E-model” form of the marginal chance-constrained DEA program, which was introduced by Cooper et al. (2002) with the help of the BCC model of Banker et al. (1984). Therefore, we used two separate probability constraints: one for the input cost and the other for the set of outputs.

The objective of program (9) is to measure the stochastic value-based TE of any firm \(o\) \((o=\mathrm{1,2},\dots ,n)\) because its input and outputs are all assumed to be stochastic. This is achieved by minimizing the contraction factor \(\alpha\) under certain input and output constraints. On the input side, there is a chance constraint, that is, the best-practice (minimum) cost must not exceed the observed cost more than \(\gamma \%\) of the time. With regard to the output side, there are chance constraints that each observed output must not exceed its maximum by more than \(\gamma \%\) of the time. Finally, there is a convexity constraint, that is, the sum of the intensity coefficients equals 1. \(\gamma\) is interpreted as the tolerance level for the chance constraint.

In stochastic efficiency program (9), the random inputs and outputs of firm \(j\) are expressed as follows:

where the error terms \({a}_{ij}{\varepsilon }_{ij} \mathrm{and} {b}_{rj}{\eta }_{rj}\) represent the symmetric disturbances of inputs and outputs, respectively, which arise because of factors that are entirely outside the firm’s control. Furthermore, \({\varepsilon }_{ij}\) \(\left(\forall i\right)\) and \({\eta }_{rj}\) \(\left(\forall r\right)\) are all assumed to follow a multivariate normal distribution, with zero means, that is, \(E({\varepsilon }_{ij})=E({\eta }_{rj})=0 \left(\forall i,r\right)\), and unity variances, that is, \(Var({\varepsilon }_{ij})=Var({\eta }_{rj})=1 \left(\forall i,r\right)\). Then, for each firm \(j (j=1,\dots ,n)\), \({\widetilde{x}}_{ij}\sim N\left({x}_{ij},{a}_{ij}^{2}\right)\) and \({\widetilde{y}}_{rj}\sim N\left({y}_{rj},{b}_{rj}^{2}\right)\), where \({E({\widetilde{x}}_{ij})=x}_{ij}\) \(\left(\forall i\right)\), \({E({\widetilde{y}}_{rj})=y}_{ij}\) \(\left(\forall r\right)\), \(Var({\widetilde{x}}_{ij})={a}_{ij}^{2}\) \(\left(\forall i\right)\), and \(Var\left({\widetilde{y}}_{rj}\right)={b}_{rj}^{2}\) \(\left(\forall r\right)\).

The firm-specific symmetric disturbance terms—\({\varepsilon }_{ij}\) \(\left(\forall i\right)\) and \({\eta }_{rj}\) \(\left(\forall r\right)\) allow the technology set and its resulting efficiency frontier to vary arbitrarily across firms and capture the presence of measurement and specification errors, if any, in the data. It is also important to note that these errors vary with the confidence level specified by the user.

Using the CC-programming theory, Land et al. (1993, 1994) proposed a method to transform the stochastic efficiency program (9) into a nonlinear programming (NLP) problem. However, solving this NLP problem requires information on a substantial number of parameters in the covariance matrix between the input and output components. Therefore, to reduce the computational time involved in the estimation of such parameters, Cooper et al. (1996) suggested a linearization method using a simplified assumption that the components of the inputs and outputs are related only through a common relationship with a single factor, which has long been used in economics (Sharpe 1963; Kahane 1977; Huang and Li 1996, 2001; Li 1995a, b, 1998; Li and Huang 1996. Following Cooper et al. (1996), we use this single-factor assumption in our proposed stochastic approach. In other words, \({\widetilde{x}}_{ij}={x}_{ij}+{a}_{ij}\varepsilon \left(\forall i\right)\) and \({\widetilde{y}}_{rj}={y}_{rj}+{b}_{rj}\varepsilon \left(\forall r\right)\) where \(\varepsilon\) is a standard normal random variable with mean \(E\left(\varepsilon \right)=0\) and a constant (finite) standard deviation \(\sigma (\varepsilon )\).

The use of the single-factor assumption for linearization is not free. This simplifying assumption, as Cooper et al. (1996) rightly pointed out, requires further assumptions in the resulting linear program that the correlation coefficient between any two stochastic inputs/outputs, and between input and output, for any firm \(j\) is always 1, that is, \(\rho \left({\widetilde{{\varvec{x}}}}_{pj}, {\widetilde{{\varvec{x}}}}_{qj}\right)=1\) (\(p\ne q)\), \(\rho \left({\widetilde{{\varvec{y}}}}_{rj}, {\widetilde{{\varvec{y}}}}_{sj}\right)=1\) (\(r\ne s)\), and \(\rho \left({\widetilde{{\varvec{x}}}}_{pj}, {\widetilde{{\varvec{y}}}}_{sj}\right)=1\), which may not be easy to meet in practical applications. However, we believe that this convenient unit correlation coefficient assumption is important as it helps convert an NLP problem into an LP problem, which not only removes the inconveniences associated with solving an NLP problem but also helps one, as we will show later, compute the \(SE\) behavior of firms using its dual.

Using the single factor assumption, we express, for each firm j (\(j=1,\dots ,n)\), the constraint (9.1) as follows:

Hence, we can see that \(\widetilde{h}\sim N\left(\sum_{j=1}^{n}{\lambda }_{j}\left(\sum_{i=1}^{m}{w}_{io}{x}_{ij}\right)-\alpha \sum_{i=1}^{m}{w}_{io}{x}_{io}, {\left(\sum_{j=1}^{n}{\lambda }_{j}\left(\sum_{i=1}^{m}{w}_{io}{a}_{ij}\right)-\alpha \sum_{i=1}^{m}{w}_{io}{a}_{io}\right)}^{2}\right) .\)

Now, consider a new variable \(\widetilde{z}=\frac{\widetilde{h}-E(\widetilde{h})}{{\sigma }_{\widetilde{h}}}\) that follows a standard normal distribution. As per the Central Limit Theorem, we have \({\mathbb{P}}\left(\widetilde{z}\le \frac{-\left(\sum_{j=1}^{n}{\lambda }_{j}\left(\sum_{i=1}^{m}{w}_{io}{x}_{ij}\right)-\alpha \sum_{i=1}^{m}{w}_{io}{x}_{io}\right)}{\tilde{\sigma }\left|\sum_{j=1}^{n}{\lambda }_{j}\left(\sum_{i=1}^{m}{w}_{io}{a}_{ij}\right)-\alpha \sum_{i=1}^{m}{w}_{io}{a}_{io}\right|}\right)\ge 1-\gamma .\) Then, \(\Phi \left(\frac{-\left(\sum_{j=1}^{n}{\lambda }_{j}\left(\sum_{i=1}^{m}{w}_{io}{x}_{ij}\right)-\alpha \sum_{i=1}^{m}{w}_{io}{x}_{io}\right)}{\tilde{\sigma }\left|\sum_{j=1}^{n}{\lambda }_{j}\left(\sum_{i=1}^{m}{w}_{io}{a}_{ij}\right)-\alpha \sum_{i=1}^{m}{w}_{io}{a}_{io}\right|}\right)\ge 1-\gamma .\) Therefore, using the property of invertibility of \(\Phi (\bullet )\), we have \(\frac{-\left(\sum_{j=1}^{n}{\lambda }_{j}\left(\sum_{i=1}^{m}{w}_{io}{x}_{ij}\right)-\alpha \sum_{i=1}^{m}{w}_{io}{x}_{io}\right)}{\tilde{\sigma }\left|\sum_{j=1}^{n}{\lambda }_{j}\left(\sum_{i=1}^{m}{w}_{io}{a}_{ij}\right)-\alpha \sum_{i=1}^{m}{w}_{io}{a}_{io}\right|}\ge {\Phi }^{-1}\left(1-\gamma \right).\)

Consequently, the deterministic form of Constraint (9.1) is expressed as \(\sum_{j=1}^{n}{\lambda }_{j}\left(\sum_{i=1}^{m}{w}_{io}{x}_{ij}\right)-{\Phi }^{-1}(\gamma )\left|\sum_{j=1}^{n}{\lambda }_{j}\left(\sum_{i=1}^{m}{w}_{io}{a}_{ij}\right)-\alpha \sum_{i=1}^{m}{w}_{io}{a}_{io}\right|\le \alpha \sum_{i=1}^{m}{w}_{io}{x}_{io}.\) Similarly, Constraint (9.2) can be expressed as \(\sum_{j=1}^{n}{\lambda }_{j} {y}_{rj}+{\Phi }^{-1}\left(\gamma \right)\tilde{\sigma } \left|\sum_{j=1}^{n}{\lambda }_{j} {b}_{rj}-\beta {b}_{ro}\right|\ge \beta {y}_{ro} (\forall r).\)

Thus, we set up the deterministic version of program (9) as

\({\lambda }_{j}\ge 0 \left(\forall j\right),\) and \(\alpha :\mathrm{free}.\)

Program (11) is nonlinear owing to the existence of an absolute function. Using the goal programming theory of Charnes and Cooper (1977), we transformed it into a quadratic programming problem.Footnote 7 To proceed, we use the following transformations:

The existence of two constraints, − \({p}^{+}{p}^{-}=0 \mathrm{and } {q}_{r}^{+}{q}_{r}^{-}=0\), in our suggested transformations makes program (11) non-linear. However, if an LP problem exhibits an optimal solution vector, it also has an extreme optimal solution vector in which at least one of the variables from (\({p}^{+}, {p}^{-})\) and \(({q}_{r}^{+}, {q}_{r}^{-})\) is zero. Hence, the nonlinear constraints \({p}^{+}{p}^{-}=0\) and \({q}_{r}^{+}{q}_{r}^{-}=0\) can be safely ignored. Consequently, using the simplex algorithm, program (11) can be solved to find extreme optimal solutions.

Using the above transformations and notations, program (11) is converted into the following:

\(s.t\).\(\sum_{j=1}^{n}{\lambda }_{j}\left(\sum_{i=1}^{m}{w}_{io}{x}_{ij}\right)-{\Phi }^{-1}\left(\gamma \right)\tilde{\sigma }\left({p}^{+}+{p}^{-}\right)\le \alpha \sum_{i=1}^{m}{w}_{io}{x}_{io},\)

\(\lambda ,{p}^{+},{p}^{-},{q}^{+},{q}^{-}\ge 0,\) and \(\alpha :\mathrm{free}.\)

Stochastic value-based \({\varvec{S}}{\varvec{E}}\) measure

To compute the value-based measure of \(SE\) of firm \(o\), we set up the dual program (12) as

For each firm \(o\in \left\{1,\dots ,n\right\}\), its transformation function is considered as follows:

On differentiation of (14) with respect to \(\beta\) yields

The (input-oriented) value-based measure of \(SE\) of firm \(o\) can then be obtained as follows:

Because DEA technologies are not smooth at vertices, we face multiple optimal values for \({\omega }_{0}\). Therefore, to compute the maximum (minimum) value of \({\omega }_{0}\) for firm \(o\), we set the following program.

Using the optimal values of program (17), the (input-oriented) right- and left-hand value-based \(SE\) scores of firm \(o\) can be calculated as follows:

We present Theorem 1 to determine, using the formulae in (18), the returns-to-scale types at each confidence level given the values of \({\omega }_{0}^{-}\) and \({\omega }_{0}^{+}\).

Theorem 1

For every confidence level \(\gamma\), the (input-oriented) returns to scale of firm \(o\) are (a) decreasing (DRS) (i.e., \({\varepsilon }_{I}^{-}\left(\gamma \right)>1\)) if \({\omega }_{0}^{+}<0,\) (b) constant (CRS) (i.e., \({\varepsilon }_{I}^{-}\left(\gamma \right)\le 1\le {\varepsilon }_{I}^{+}\left(\gamma \right))\) if \({\omega }_{0}^{-}\le 0\le {\omega }_{0}^{+},\) and (c) increasing (IRS) (i.e., \({\varepsilon }_{I}^{+}\left(\gamma \right)<1\)) if \({\omega }_{0}^{-}>0.\)

Proof

Let \({v}^{*},{u}^{*}, {\vartheta }^{*},{\mu }^{*}\), and \({\omega }_{0}^{*}\) be optimal solutions of the LP program (13) for the radial efficient firm \(({\widetilde{x}}_{o}, {\widetilde{y}}_{o})\), which is defined according to (10) as \({\widetilde{x}}_{io}={x}_{io}+{a}_{io}{\varepsilon }_{io} (\forall i)\) and \({\widetilde{y}}_{ro}={y}_{ro}+{b}_{ro}{\eta }_{ro} \left(\forall r\right).\) So, \(\sum_{r=1}^{S}\left({u}_{r}^{*}{y}_{ro}+{\mu }_{r}^{*}{b}_{ro}\right)+{\omega }_{0}^{*}=\sum_{i=1}^{m}{w}_{io}\left({v}^{*}{x}_{io}-{\vartheta }^{*}{a}_{io}\right)=1\). Furthermore, \(\sum_{r=1}^{S}\left({u}_{r}^{*}{y}_{rj}+{\mu }_{r}^{*}{b}_{rj}\right)-\sum_{i=1}^{m}{w}_{io}\left({v}^{*}{x}_{ij}-{\vartheta }^{*}{a}_{ij}\right)+{\omega }_{0}^{*}=0\) is a supporting hyperplane to \({T}^{V(S)}\) in (7), and the corresponding value at \(\left(\overline{\alpha }\left(\beta \right), \beta \right)\) is presented in (14). Differentiating (14) for \(\beta\), the following measure of SE was used: \(\varepsilon \left(\gamma \right)=\frac{\overline{\alpha }\left(\beta \right)-{\omega }_{o}^{*}}{\overline{\alpha }\left(\beta \right)}\). The right- and left-hand SEs can then be easily computed as \({\varepsilon }^{+}\left(\gamma \right)=\frac{\overline{\alpha }\left(\beta \right)-{\omega }_{o}^{*-}}{\overline{\alpha }\left(\beta \right)} \mathrm{and} {\varepsilon }^{-}\left(\gamma \right)=\frac{\overline{\alpha }\left(\beta \right)-{\omega }_{o}^{*+}}{\overline{\alpha }\left(\beta \right)}\), respectively. It then immediately follows that

-

(a)

\({\varepsilon }^{-}\left(\gamma \right)>1\)if \({\omega }_{o}^{*+}<0\) and since \({\omega }_{o}^{*-}<{\omega }_{o}^{*+}\), then \({\varepsilon }^{+}\left(\gamma \right)>1\). Thus, returns to scale decrease (DRS).

-

(b)

If \({\omega }_{o}^{*-}<0\) then \({\varepsilon }^{+}\left(\gamma \right)>1\) and if \({\omega }_{o}^{*+}>0\) then \({\varepsilon }^{-}\left(\gamma \right)<1\). Therefore, \({\varepsilon }^{-}\left(\gamma \right)\le 1\le {\varepsilon }^{+}\left(\gamma \right)\) and returns to scale are constant (CRS).

-

(c)

If \({\omega }_{o}^{*-}>0\) then \({\varepsilon }^{+}\left(\gamma \right)<1\) and since \({\omega }_{o}^{*+}>{\omega }_{o}^{*-}\) then \({\varepsilon }^{-}\left(\gamma \right)<1\). Thus, returns to scale (IRS) are increasing.

$$\square$$

Regarding the returns-to-scale types between deterministic and stochastic cases, we present Theorem 2.

Theorem 2

For a predetermined significance level of \(\gamma =0.5\), the returns-to-scale types obtained from deterministic and stochastic programs were the same.

Proof

To prove this, it suffices to show that for \(\gamma =0.5\), programs (12) and (3) are equivalent. To this end, since programs (12) and (11) are equivalent and \({\Phi }^{-1}\left(0.5\right)=0\), replacing it in the constraints of program (11) yields program (3).

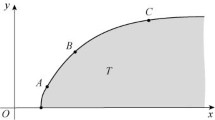

For the visual presentation of technology, we consider in Table 1 a hypothetical dataset of six firms, each producing one output using one input.

Based on the data in Table 1, we constructed the stochastic production frontiers in Fig. 2 by considering three different levels of tolerance (\(\gamma =0.1, 0.3,\mathrm{ and }0.5\)).Footnote 8

The piecewise linear line (L3) passing through the mean points of the centers of circles represents the production frontier at the tolerance level of 0.5 and according to Theorem 2, this stochastic frontier is the same as the deterministic frontier. Similarly, lines L2 and L1 represent the production frontiers at tolerance levels \(\mathrm{of} 0.3\) and 0.1, respectively. The TE scores at these tolerance levels are presented in Table 2. At each of these three tolerance levels, four firms, A, B, C, and D, are consistently found to be technically efficient. Of the remaining two firms, F becomes efficient at a tolerance level of 0.1, but inefficient at tolerance levels of \(0.3\) and 0.5. However, E was inefficient at all three tolerance levels. This finding suggests that a firm’s efficiency status in a deterministic environment can be subject to change in the stochastic case.

Input-oriented right- and left-hand SE scores were computed using value-based technology (7) in a stochastic environment \(({T}^{V(S)})\), the input-oriented right- and left-hand \(SE\) scores are computed. Table 2 presents the results.

At each of these three tolerance levels considered, out of the four stochastically technically efficient firms (A, B, C, and D), B is CCR-efficient and hence exhibits CRS because its left-hand SE scores are all less than 1, and the right-hand SE scores are all greater than 1. D is CCR-efficient only at tolerance levels of \(0.1\) and 0.3 and hence exhibits CRS at those tolerance levels, but IRS at the tolerance level of 0.5 (as its right-hand SE score is less than 1). Of the remaining two stochastically technically efficient firms, A exhibits IRS (as its right-hand SE score is less than 1) and C exhibits DRS (as its left-hand SE score is greater than 1) at each of the three tolerance levels. Finally, regarding the returns-to-scale types of inefficient firms, since E’s projection onto the frontier is located on the interior point of the facet DB (see Fig. 2), its right- and left-hand SE values are all equal, but all less than one, implying IRS. As is evident from Table 2, the efficiency status of F is the mix, that is, it is efficient at a tolerance level of 0.1, but inefficient at other tolerance levels. However, at all these tolerance levels, DRS is exhibited because its left-hand SE values are all greater than one. The finding that D exhibits IRS at the tolerance level of 0.5, but CRS at the other tolerance levels, indicates that the returns-to-scale characterizations of firms in a deterministic technology is subject to change in the stochastic case.

The illustrative empirical application

We now demonstrate an illustrative empirical application of our proposed stochastic efficiency program using a dataset used earlier by Sahoo and Tone (2009a, b) for analyzing capacity utilization and profit change behaviors of Indian banks for eight years (1998–2005).

The data

The dataset consists of 71 banks (26 public banks, 27 private banks, and 18 foreign banks), each using three inputs, borrowed funds \(\left({x}_{1}\right)\), fixed assets \(\left({x}_{2}\right)\), and labor \(\left({x}_{3}\right)\), to produce three outputs, investments \(\left({y}_{1}\right)\), performing loan assets \(\left({y}_{2}\right)\), and non-interest income \(\left({y}_{3}\right)\). All monetary values of the input and output quantities are deflated by the wholesale price index with a base of 1993–94 to obtain their present (implicit) quantities. All monetary values are measured for Indian rupees (Rs.) for crores (Rs. 1 crore = Rs. 10 million). Concerning the prices of inputs, the unit prices of \({x}_{1}\), \({x}_{2}\), and \({x}_{3}\) are measured as the average interest paid per rupee of \({x}_{1}\left({w}_{1}\right)\), non-labor operational cost per rupee amount of \({x}_{2}\) \(\left({w}_{2}\right)\), and average staff cost \(\left({w}_{3}\right)\), respectively. The input and output quantities are all assumed to be random, with each following a normal distribution with a known mean (presented in Table 3) and known standard deviation (shown in Table 4).

In DEA, if the number of DMUs (\(n\)) is less than the combined number of inputs (m) and outputs (s), many DMUs are efficient. Therefore, \(n\) must exceed (\(m\) + \(s\)). The rule of thumb in the DEA literature suggests that \(n\) ≥ max {\(m\) × \(s\), 3 × (\(m\) +\(s\))}. In our dataset, \(n\) = 71, and \(m\) = \(s\) = 3. Therefore, the thumb rule was satisfied at 71 ≥ max {9, 18}.

We used the GAMS software on a machine with the following specifications to compute the efficiency and scale elasticity scores of banks: CPU, Intel Pentium 4 at 2 GHz and RAM, 1024 MB.

Results

Stochastic value-based \(TE\) scores

Table 5 presents the value-based TE scores of 71 banks in the stochastic environment at the three different confidence levels considered. As observed, only 38 banks were efficient at all three confidence levels. It is also interesting to observe that inefficient banks tend to experience a decline in their efficiency performance with an increase in \(\gamma .\)

Stochastic value-based \(SE\) scores

The results for both the lower and upper bounds of the stochastic value-based \(SE\) scores for all 71 banks are presented in Tables 6 and 7 for \(\beta =0.99\) and \(\beta =1.01\), respectively.

It is well known that scale elasticity is defined only for efficient units, and for inefficient units, this computation can be performed only at their input- or output-oriented projections. However, in our case, we considered input-oriented projections. To interpret our results for \(\beta =0.99\) in Table 6, for example, for bank 20, which is efficient at all three tolerance levels, the lower-bound \(SE\) score is less than 1 and its upper-bound value is more than 1, that is, \({\varepsilon }^{-}<1<{\varepsilon }^{+}\), implying that its returns to scale are constant [as per Theorem 1 (b)]. For efficient bank 54, the upper bound \(SE\) scores are all less than 1 (i.e., \({\varepsilon }^{+}<1)\) at all three tolerance levels, implying that its returns to scale are increasing [as per Theorem 1 (c)]; for the remaining efficient banks such as 1, 2, and 3, since the lower bound of \(SE\) is greater than 1 at all three tolerance levels (i.e., \({\varepsilon }^{+}>1),\) it exhibits a decreasing return to scale [as per Theorem 1 (a)].

Note that for some stochastically inefficient banks, such as 4, 12, 14, and 23, both lower- and upper-bound \(SE\) scores are equal at all three tolerance levels, implying that their projections onto the boundary are all located on interior points of the facet. Of the 71 banks, we find 30 banks exhibiting DRS, 10 banks exhibiting CRS, and 11 banks exhibiting IRS, at all three tolerance levels. Two more interesting observations were made in this study. First, some banks exhibit returns to scales of different types at different tolerance levels. For example, for \(\beta =0.99\) in Table 6, inefficient bank 37 (at \(\gamma =0.1\mathrm{ and }0.3\)) exhibits DRS. This means that its projections onto the efficient frontier are all located on points of the facet exhibiting DRS. However, the projection of this inefficient bank at \(\gamma =0.5\) onto the efficient frontier exhibits IRS. Furthermore, bank 51 at \(\gamma =0.1\mathrm{ and }0.3\) is both VRS- and CRS-efficient and hence exhibits CRS. However, this bank was inefficient at \(\gamma =0.5\). Hence, its projection onto the efficient frontier exhibits IRS. In addition, the SE results for \(\beta =1.01\) in Table 7, can be interpreted analogously.

Furthermore, for \(\beta =0.99\) (Table 6), the upper-bound \(SE\) scores of some banks, such as 1, 6, and 10, are unbounded. This implies that any proportional increase in output lies outside the technology set. However, in Tables 6 and 7, both lower- and upper-bounds of \(SE\) s of some banks, such as 45 in Table 6 and 48 in Table 7, are indicated by a dashed line, implying that model (12) is infeasible because changing \(\beta\) from 1 to 0.99 or 1.01, these units appear outside the feasible region. Therefore, program (12) becomes infeasible when there is no data available outside the feasible region to determine returns to scale (Grosskopf 1996).

Deterministic value-based \(SE\) scores of the banks

In addition to program (17), we ran the valued-based LP (4) to compute the lower and upper bounds of the \(SE\) scores of 71 banks in a deterministic environment for \(\beta =0.99\) and \(\beta =1.01\), which are presented in Table 8. The results show that the 38 efficient banks that are found to be fully technically efficient in a stochastic environment are also efficient, and the remaining 33 banks are inefficient. Regarding their returns-to-scale characterizations, for the case of \(\beta =1.01\), we find six efficient banks (i.e., 5, 20, 31, 35, 49, and 69) exhibiting CRS. For the five efficient banks (i.e., 44, 45, 54, 64, and 68), returns to scale increase because their upper bounds of \(SE\) scores are all less than unity. Finally, for some efficient banks, such as 1, 6, 9, 10, 11, 13, 19, 21, 22, 30, 34, 48, 52, and 61, both lower- and upper-bound \(SE\) scores are indicated by dashed lines. Hence, as discussed in Sect. 4.3, no data are available to determine the returns-to-scale type. This is because, as we consider \(\beta\) from 1 to 0.99 or 1.01, these banks appear outside the feasible region. Therefore, program (3) becomes infeasible when there is no data available outside the feasible region to determine their returns-to-scale possibilities. The remaining 13 efficient banks operate under the DRS.

For \(\beta =0.99\), some efficient banks (1, 6, 9, and 13) exhibit DRS and some efficient banks (10, 19, 21, 34, and 61) exhibit CRS. However, as pointed out earlier, the returns-to-scale types of these banks for the case of \(\beta =1.01\) were not determined. Moreover, for bank 8, while returns to scale are constant for \(\beta =0.99\), they decrease for \(\beta =1.01\).

A comparison between the deterministic and stochastic methods

Regarding the comparison between deterministic and stochastic \(TE\) estimates, a few observations are noteworthy. First, both deterministic and stochastic methods are in complete agreement in declaring the same 38 banks to be fully technically efficient. Second, both methods yield the same value-based \(TE\) scores for banks \(\gamma =0.5\). This finding is consistent with our expectation (see Theorem 2), which leads us to conclude that deterministic technology is a special case of stochastic technology for \(\gamma =0.5\) in exhibiting the same \(TE\) scores. Third, the stochastic \(TE\) scores of inefficient states are higher than their deterministic counterparts. This finding is also expected, because the main purpose of our chance-constrained formulation is to allow the observed inputs and outputs of banks to cross the efficiency frontier, but not too often. For any given observed input–output vector, the efficiency frontier in the stochastic case is now located closer than before. Finally, the efficiency scores of banks increase with sharpening (decreasing) the tolerance level of chance constraints.

Regarding the comparison between deterministic and stochastic \(SE\) estimates, we find apparent differences in their returns-to-scale characterizations. First, while the stochastic method finds efficient bank 13 exhibiting DRS at all three tolerance levels considered, the deterministic method is unable to find its returns-to-scale status for \(\beta =1.01\) but finds the same DRS type for \(\beta =0.99\). Second, although the returns-to-scale types of the remaining banks are the same in both deterministic and stochastic methods, the degree of returns to scale (i.e., lower and upper bounds of the SE) vary to some degree. For example, consider bank 65. For the case of \(\beta =0.99,\) and \(\gamma\)= 0.1, the stochastic lower and upper bounds of the SE estimates suggest that a 1% increase in cost raises the output by 4.78% and 8.81%, respectively. However, for \(\beta =0.99\) in the deterministic case, the lower and upper \(SE\) bounds suggest that a 1% increase in cost yields the same 7.03% increase in output. Third, for \(\gamma =0.5,\) the value-based \(SE\) scores of banks were the same between the two methods. According to Theorem 2, this finding is not contrary to our expectations. Therefore, one can conclude that deterministic technology is again a special case of stochastic technology for \(\gamma =0.5\) in exhibiting the same returns-to-scale characterization of banks.

\(TE\) vis-à-vis ownership

We examine banks’ \(TE\) performance across ownership types. The results are presented in Table 9. As shown in Table 9, public banks exhibit higher \(TE\) scores than private and foreign banks at all three tolerance levels. The finding of higher efficiency accrual of public banks over private and foreign banks may be due to their long-held formal status, wherein these banks have constantly been facilitating their access to scarce resources such as credit, foreign exchange, licenses, and skilled labor, which are necessary for efficient production.

Returns to scale across ownership

Finally, we examined the distribution of returns-to-scale types across ownership types. For β = 0.99, out of 44 public and private banks, 28 (68%) operate under DRS at a confidence level γ of 0.1. As shown in Table 10, while public sector banks mostly exhibit DRS, foreign banks exhibit IRS. Returns-to-scale types of private banks are a mix that supports all types of returns-to-scale, that is, IRS, CRS, and DRS. This may be because private banks are of two types: old and new, with the former type following the tradition of public sector banks and the latter type following that of foreign banks.

In the next section, we discuss the results of our illustrative empirical application of Indian banks from 1998 to 2005.

Discussions

As observed from our empirical results, different values of \(\beta\) and \(\gamma\) lead to varying results.

for returns-to-scale bank types. In such cases, the relevant question arises as to how bank management makes an appropriate judgment for its scale decisions. As noted earlier, the possible values of \(\beta\) are user-defined values that reflect the proportional output change, and the value-based \(TE\) function \(\overline{\alpha }(\beta )\) for any bank \(o\) is defined for all \(\beta \in [0,\widehat{\beta }]\), where \(\widehat{\beta }\) is the largest proportion of its output vector \({y}_{o}\) found on \({T}^{V}\). However, the scale elasticity of any radial technically efficient bank \(\left({x}_{o}, {y}_{o}\right)\) is well defined at \(\beta =\alpha \left(\beta \right)=1\) as we determine its returns-to-scale status locally at the neighborhood of point \(\left({x}_{o}, {y}_{o}\right)\), where a derivative is to be evaluated. Since scale elasticity is a one-sided concept, one can therefore determine a bank’s (local) returns-to-scale type at its right or left by measuring the response to outputs by considering only a one percent increase or decrease in inputs, that is, \(\beta =1.01\) (right) and \(\beta =0.99\) (left) at a specific confidence level.Footnote 9

Regarding the values of tolerance level \(\gamma\), a pre-determined parameter (\(0<\gamma \le 0.5\)) makes the confidence level of each firm large or small, that is, the confidence level that forms an ellipse around the mean value of the inputs and outputs of each firm, increase or decrease. Consequently, a bank may experience a change in the returns-to-scale status for a change in \(\gamma\). Therefore, the relevant question for any bank facing uncertainty is at what level of \(\gamma\) should it consider making an appropriate judgment about its scale decision. Because our proposed stochastic approach generates only expected \(SE\) scores rather than distributions of random \(SE\) scores, we are at odds to answer this question. Our focus in this study is, however, on ex-post-facto analysis of already affected decisions, which can have certain uses in the control aspects of bank management where evaluations of returns-to-scale performance are required. We need to address the ex-ante (planning) problem of how to use this knowledge to arrive at the possible value of \(\gamma\), which can be used while affecting future-oriented decisions regarding whether to scale up or scale down operations.

Regarding the results concerning bank efficiency performance across ownership types, we find that public banks exhibit higher efficiency than private and foreign banks. This finding fails to provide empirical support for the property rights hypothesis that private enterprises should perform better than public enterprises, which precisely holds in a developed country where there is a strong link between the market for takeover and the efficiency of private enterprises. In India, public banks are known for their better organizational structure and greater penetration into the customer base. During the post-reform period of our study (1998–2005), government policies favored public banks in managing their expansionary activities well because of their age-old learning experience. In the absence of an active market for corporate control, substantial government ownership, and a relatively low level of technological advancement, conventional wisdom that private banks should perform better needs to be challenged.

The returns-to-scale results during our study period reveal that, while large public and old private banks mostly exhibit either DRSFootnote 10 or CRS, small foreign and new private banks experience returns-to-scale possibilities of all types. Therefore, the finding of large public and old private banks exhibiting DRS does not bode well with the perception that regulators consider very large-size banks “too-big-to-fall,” which would encourage large or excessive risk-taking to drive bank growth. On the contrary, the finding of new small- and medium-sized private and foreign banks exhibiting IRS suggests that these banks have enough opportunity to increase output by either increasing the scale or merging with other banks to improve their performance.

Concluding remarks

Using the CC programming method, value-based measures of efficiency and scale economies’ behavior of firms are investigated from the viewpoint of the economic theory of production in the possible presence of stochastic variability in the underlying input and output data. There is considerable variation in the results concerning both the efficiency and returns-to-scale characterizations of banks between the deterministic and stochastic models. These differences are precisely due to the existence of confidence regions for various tolerance levels of the chance constraints. However, deterministic technology is a special case of stochastic technology at a tolerance level of 0.5, exhibiting both the efficiency and returns-to-scale characterizations of firms. Regarding the key empirical findings of our illustrative application to the Indian banking industry during the post-reform period (1998–2005), we have a few key findings. First, as expected, the stochastic model generates higher efficiency scores for inefficient banks as compared to the deterministic model. Second, public banks are more efficient than private and foreign banks, which challenges the property rights hypothesis. However, this finding is not unusual because the Indian banking industry is characterized by the absence of an active market for corporate control and substantial government ownership. Third, large public and old private banks mostly exhibit either decreasing or constant returns to scale, whereas small foreign and new private banks experience either increasing or decreasing returns to scale. Finally, based on bank-specific scale properties, the management can decide on the expansion or contraction of their operations in the subsequent operation period, which provides a vision of how to change outputs for changing inputs to preserve efficiency status in the subsequent period of activities.

Limitations and future recommendations

The proposed stochastic model requires information on the joint probability distributions of random inputs and outputs, which are generally inferred from their respective frequency distributions. Because this information is often not available in practice, we are at a loss to estimate them empirically. Given the nature of the input and output data that we deal with in this study, they are all subject to uncertainties; therefore, the requirement of availability of data on their joint probability distributions should not constrain the use of our empirically appealing stochastic model.

However, our stochastic model cannot be used freely. First, the inputs and outputs are assumed to be well approximated by a normal distribution, although they are all subject to empirical testing. Second, the use of a single-factor assumption for linear transformation requires further assumptions in the resulting LP problem that there are perfect correlations between any two inputs/outputs, or between input and output. We strongly believe that in any real-life decision-making situation characterized by the presence of uncertainty, the benefit of using the stochastic model outweighs its cost. For example, if the underlying objective is to analyze the efficiency, returns to scale, and returns to growth behavior of technology-intensive firms in the new economy, one must resort to the use of a stochastic model because these firms are all characterized by the presence of uncertainty concerning inventory holdings, excess capacity, and organizational slack as contingencies against uncertain future developments.

Finally, we point to some avenues for future potential research subjects.

-

From our proposed stochastic model, the possible presence of input and output slack for individual banks can be explored. Unlike in the case of a deterministic model, the slacks here can presumably be interpreted as inventory (i.e., excess reserves), desired excess capacity, and organizational slack, which firms must hold as contingencies against uncertain future developments. This analysis will aid management in deciding the accumulation of optimal slack to sustain market competition and price uncertainty.

-

One could also extend our stochastic model to network production technologies to analyze the efficiency and returns-to-scale characterizations of firms.

-

Both the chance-constrained program and stochastic frontier analysis can be compared to analyze and contrast the efficiency and returns-to-scale classification of firms.

-

Because the linear transformation method used in our proposed stochastic model requires the assumption of perfect correlations between inputs/outputs, the desired future research involves the development of an alternative linear transformation method, which could assume such assumptions.

-

Our proposed stochastic approach yields the expected efficiencies rather than the distribution of random efficiencies. Therefore, one can use the probabilistic approach by Kao and Liu (2009, 2019) to generate distributions of efficiency and scale elasticity scores for each firm, which could be more informative for better decision-making.

-

Our focus in this study is on the ex-post-facto analysis of already affected decisions, which can be used in the control aspects of management. However, future research requires an ex-ante (planning) analysis of how to use the returns to scale results based on various possible pre-defined tolerance levels to arrive at the precise tolerance level using which future-oriented decisions regarding whether to scale up or scale down operations can be affected.

-

Our empirical application of 71 banks over eight years (1998–2005) is primarily for illustrative purposes. However, an extension of the data set to the year 2021 is required to conduct a detailed empirical investigation concerning whether the effects of global extreme events such as COVID-19 and internal events such as the adoption of the Insolvency and Bankruptcy Code (IBC) Bill influence banks’ efficiency and scale performance differentials. Further, since our findings are not clear as to whether scale economies still provide an impetus for banks to become larger, we expect future researchers to examine returns to scale from either revenue or profit perspectives, which can provide a more complete picture of scale economies in the Indian banking industry.

-

Although the application of our proposed chance-constraint efficiency model is in banking to analyze efficiency and scale properties, it can potentially be applied to a wide selection of areas studied earlier, such as deriving innovative carbon-reducing emission strategies to increase the performance of solar energy investment projects in the transportation sector (Kou et al. 2022), evaluating the credit risks of small and medium-sized enterprises (SMEs) using payment and transactional data (Kou et al. 2021), evaluating credit ratings of online peer-to-peer (P2P) loans to control default risk and improve profit for lenders and platforms (Wang et al. 2021), evaluating the performance of various clustering algorithms that are used to assess financial risk (Kou et al. 2014), and detecting clusters in financial data to infer users’ behaviors and identify potential risks (Li et al. 2021).

-

Although our current empirical application to Indian banking is illustrative, our proposed chance-constraint efficiency model can potentially be applied to analyze the efficiency and scale properties of real-life firms with stochastic underlying production processes. Examples of these firms can be found in industries such as agriculture, where unpredictability in weather makes the input–output relationship stochastic; manufacturing industry, where firms face considerable variation in the quality of their inputs and outputs produced; product development industry, where firms face uncertainty regarding their new designs; and high-technology industries, where firms face hyper (dynamic)completion in the new (Internet) economy.

Availability of data and materials

All data used in this paper are available per request.

Notes

The concepts—‘firm’, ‘decision-making unit, DMU’, and ‘production unit’—are used synonymously in this paper. However, they are conceptually different. The concept of a firm is much broader as it includes not only the technology of the production unit but also the entire gamut of organization, management, learning by doing, reorganization of inputs, and other capabilities of the firm.

DEA (Charnes et al. 1978; Banker et al. 1984) has also been widely used in various application areas, inter alia, economic efficiency performance of countries (Koengkan et al. 2022), resource and energy efficiency of countries (Kazemzadeh et al. 2022), human development index (Despotis 2005; Lozano and Gutierrez 2008), and strategy (Saen and Azadi 2009). See Emrouznejad et al. (2008) for an excellent survey on the scholarly literature on the evaluation of research in efficiency and productivity.

The stochastic production relationship in a DEA setting may arise in different situations, for example, when stochastic variations in inputs and outputs affect the production frontier; when inputs and outputs are faced with stochastic prices while measuring allocative efficiency; when the slacks obtained from the DEA efficiency frontier are analyzed in terms of their statistical distribution; when an economic method is applied to estimate the stochastic production frontier; etc. (Sengupta 1982, 1987, 1990).

Alternatively, one may consider using the semi-parametric stochastic frontier analysis (SFA) approach. The advantage of using this approach over the chance-constrained DEA (CC-DEA) approach is that it has a solid statistical foundation. However, the underlying problem here is that it is not well suited to accommodate the firm-specific distributions of noise and inefficiency, due to which the results may not be useful from an individual decision-making point of view in the short run. The CC-DEA approach, on the other hand, accommodates measurement error and management inefficiency in the technology by replacing the observed inputs and outputs with the firm-specific distributions of inputs and outputs.

To linearize the non-linear program (11), one can also use the properties of the absolute function.

Cooper et al. (2000) have suggested, in general, considering the following tolerance level of the chance constraints: $$0<\gamma \le 0.5$$ for which the $$TE$$ scores lie between 0 and 1. However, for data with large amounts of uncertainty where \(0.5<\gamma <1\), the efficiency frontier moves closer to the given observations, and many observations will automatically come with unity or close to unit TE scores. The results of the CC program may then be questioned. That is why most of the studies in the stochastic case have considered the values for γ between 0 and 0.5, i.e., 0 < γ ≤ 0.5.

However, following Banker et al. (2004), one can consider evaluating the returns-to-scale type of bank $$\left({x}_{o},\mathrm{ }{x}_{o}\right)$$ globally with respect to the most productive scale size by jointly maximizing the proportional increase in outputs and minimizing the proportional decrease in inputs.

This finding is at odds with Das and Das (2007) that there is no evidence of diseconomies of scale for larger banks. We believe that this contrasting finding is due the use of econometric method, which, we suspect, can confound the effects of misspecification of functional form with scale economies. Therefore, for a more stable picture of scale economies in the Indian banking industry, we recommend future researchers to consider an examination of returns to scale from either revenue or profit perspective, which we have indicated as one of the potential future research projects.

Abbreviations

- DEA:

-

Data envelopment analysis

- RTS:

-

Returns to scale

- CRS:

-

Constant returns to scale

- IRS:

-

Increasing returns to scale

- DRS:

-

Decreasing returns to scale

- VRS:

-

Variable returns to scale

- SE:

-

Scale elasticity

- TE:

-

Technical efficiency

- CE:

-

Cost efficiency

- LP:

-

Linear programming

- CC:

-

Chance-constrained

References

Atici KB, Podinovski VV (2015) Using data envelopment analysis for the assessment of technical efficiency of units with different specializations: an application to agriculture. Omega 54:72–83

Banker RD (1993) Maximum likelihood, consistency and data envelopment analysis: a statistical foundation. Manage Sci 39:1265–1273

Banker RD, Morey RC (1986) Efficiency analysis for exogenously fixed inputs and outputs. Oper Res 34:513–521

Banker RD, Natarajan R (2004) Statistical tests based on DEA efficiency scores. In: Cooper WW, Seiford LM, Zhu J (eds) Handbook on data envelopment analysis. Springer

Banker RD, Charnes A, Cooper WW (1984) Some models for estimating technical and scale inefficiencies in data envelopment analysis. Manage Sci 30:1078–1092

Banker RD, Cooper WW, Seiford LM, Thrall RM, Zhu J (2004) Returns to scale in different DEA models. Eur J Oper Res 154:345–362

Banker, R., Park, H. U., & Sahoo, B. (2022). A statistical foundation for the measurement of managerial ability. https://mpra.ub.uni-muenchen.de/111832/

Baumol WJ, Panzar JC, Willig RD (1982) Contestable markets and the theory of industry structure. Harcourt Brace Jovanovich, New York

Berger AN (2003) The economic effects of technological progress: evidence from the banking industry. J Money Credit Bank 35:141–176

Camanho AS, Dyson RG (2005) Cost efficiency measurement with price uncertainty: a DEA application to bank branch assessments. Eur J Oper Res 161:432–446

Camanho AS, Dyson RG (2008) A generalization of the Farrell cost-efficiency measure applicable to non-fully competitive settings. Omega 36:147–162

Charnes A, Cooper WW (1959) Chance-constrained programming. Manage Sci 6:73–79

Charnes A, Cooper W (1962) Chance constraints and normal deviates. J Am Stat Assoc 57:134–148

Charnes A, Cooper WW (1963) Deterministic equivalents for optimizing and satisficing under chance constraints. Oper Res 11:18–39

Charnes A, Cooper WW (1977) Goal programming and multiple objective optimizations: Part 1. Eur J Oper Res 1:39–54

Charnes A, Cooper WW, Rhodes E (1978) Measuring the efficiency of decision-making units. Eur J Oper Res 2:429–444

Cherchye L, Rock BD, Dierynck B, Roodhooft F, Sabbe J (2013) Opening the “black box” of efficiency measurement: input allocation in multioutput settings. Oper Res 61:1148–1165

Cherchye L, De Rock B, Walheer B (2015) Multi-output efficiency with good and bad outputs. Eur J Oper Res 240:872–881

Cherchye L, De Rock B, Walheer B (2016) Multi-output profit efficiency and directional distance functions. Omega 61:100–109

Cook WD, Zhu J (2011) Multiple variable proportionality in data envelopment analysis. Oper Res 59:1024–1032

Cooper W, Huang Z, Li SX (1996) Satisficing DEA models under chance constraints. Ann Oper Res 66:279–295

Cooper WW, Huang Z, Lelas V, Li SX, Olesen OB (1998) Chance constrained programming formulations for stochastic characterizations of efficiency and dominance in DEA. J Prod Anal 9:53–79

Cooper WW, Deng H, Huang Z, Li SX (2002) Chance constrained programming approaches to technical efficiencies and inefficiencies in stochastic data envelopment analysis. J Oper Res Soc 53:1347–1356

Cooper WW, Deng H, Huang Z, Li SX (2004) Chance constrained programming approaches to congestion in stochastic data envelopment analysis. Eur J Oper Res 155:487–501

Cooper WW, Seiford LM, Tone K (2000) Data envelopment analysis. In: Cooper WW, Seiford LM, Zhu J (eds) Handbook on data envelopment analysis, 1st edn, pp 1–40

Cooper WW, Huang Z, Li SX (2011) Chance-constrained DEA. In: Handbook on data envelopment analysis. Springer, Boston, MA, pp 211–240

Das A, Das S (2007) Scale economies, cost complementarities and technical progress in Indian banking: evidence from fourier flexible functional form. Appl Econ 39:565–580

De Alessi L (1980) The economics of property rights: a review of the evidence. In: Richard OZ (ed) Research in law and economics: a research annual, vol 2. Jai Press, Greenwich, pp 1–47

Despotis DK (2005) A reassessment of the human development index via data envelopment analysis. J Oper Res Soc 56:969–980

Emrouznejad A, Parker BR, Tavares G (2008) Evaluation of research in efficiency and productivity: a survey and analysis of the first 30 years of scholarly literature in DEA. Socioecon Plann Sci 42:151–157

Färe R, Grosskopf S, Lovell CAK (1985) The measurement of efficiency of production. Kluwer Nijhoff, Boston

Färe R, Grosskopf S, Lovell CAK (1988) Scale elasticity and scale efficiency. J Inst Theor Econ 144:721–729

Farrell MJ (1957) The measurement of productive efficiency. J R Stat Soc 120:253–290

FarzipoorSaen R, Azadi M (2009) The use of super-efficiency analysis for strategy ranking. Int J Soc Syst Sci 1:281–292

Førsund FR (1996) On the calculation of the scale elasticity in DEA models. J Prod Anal 7:283–302

Fukuyama H, Weber WL (2004) Economic inefficiency measurement of input spending when decision-making units face different input prices. J Oper Res Soc 55:1102–1110

Fukuyama H, Weber WL (2008) Profit inefficiency of Japanese securities firms. J Appl Econ 11:281–303

Fusco E, Vidoli F, Sahoo BK (2018) Spatial heterogeneity in composite indicator: a methodological proposal. Omega 77:1–14

Grosskopf S (1996) Statistical inference and nonparametric efficiency: a selective survey. J Prod Anal 7:161–176

Huang Z, Li SX (1996) Dominance stochastic models in data envelopment analysis. Eur J Oper Res 95:390–403

Huang Z, Li SX (2001) Stochastic DEA models with different types of input-output disturbances. J Prod Anal 15:95–113

Jess A, Jongen HT, Neralić L, Stein O (2001) A semi-infinite programming model in data envelopment analysis. Optimization 49:369–385

Kahane Y (1977) Determination of the product mix and the business policy of an insurance company—a portfolio approach. Manag Sci 23:1060–1069

Kao C, Liu ST (2009) Stochastic data envelopment analysis in measuring the efficiency of Taiwan commercial banks. Eur J Oper Res 196:312–322

Kao C, Liu ST (2014) Measuring performance improvement of Taiwanese commercial banks under uncertainty. Eur J Oper Res 235:755–764

Kao C, Liu ST (2019) Stochastic efficiency measures for production units with correlated data. Eur J Oper Res 273:278–287

Kazemzadeh E, Fuinhas JA, Koengkan M, Osmani F, Silva N (2022) Do energy efficiency and export quality affect the ecological footprint in emerging countries? A two-step approach using the SBM–DEA model and panel quantile regression. Environ Syst Decis 42:608–625

Koengkan M, Fuinhas JA, Kazemzadeh E, Osmani F, Alavijeh NK, Auza A, Teixeira M (2022) Measuring the economic efficiency performance in Latin American and Caribbean countries: an empirical evidence from stochastic production frontier and data envelopment analysis. Int Econ 169:43–54

Kou G, Peng Y, Wang G (2014) Evaluation of clustering algorithms for financial risk analysis using MCDM methods. Inf Sci 275:1–12

Kou G, Xu Y, Peng Y, Shen F, Chen Y, Chang K, Kou S (2021) Bankruptcy prediction for SMEs using transactional data and two-stage multiobjective feature selection. Decis Support Syst 140:113429

Kou G, Yüksel S, Dinçer H (2022) Inventive problem-solving map of innovative carbon emission strategies for solar energy-based transportation investment projects. Appl Energy 311:118680

Kuosmanen T (2005) Weak disposability in nonparametric production analysis with undesirable outputs. Am J Agric Econ 87:1077–1082

Lamb JD, Tee KH (2012) Resampling DEA estimates of investment fund performance. Eur J Oper Res 223:834–841

Land KC, Lovell CAK, Thore S (1993) Chance-constrained data envelopment analysis. Manag Decis Econ 14:541–554

Land KC, Lovell CAK, Thore S (1994) Productive efficiency under capitalism and state socialism: an empirical inquiry using chance-constrained data envelopment analysis. Technol Forecast Soc Chang 46:139–152

Levy B (1987) A theory of public enterprise behavior. J Econ Behav Organ 8:75–96

Li SX (1995a) A satisficing chance constrained model in the portfolio selection of insurance lines and investments. J Oper Res Soc 46:1111–1120

Li SX (1995b) An insurance and investment portfolio model using chance constrained programming. Omega 23:577–585

Li SX (1998) Stochastic models and variable returns to scales in data envelopment analysis. Eur J Oper Res 104:532–548

Li SX, Huang Z (1996) Determination of the portfolio selection for a property-liability insurance company. Eur J Oper Res 88:257–268

Li T, Kou G, Peng Y, Philip SY (2021) An integrated cluster detection, optimization, and interpretation approach for financial data. IEEE Trans Cybern

Lozano S, Gutiérrez E (2008) Data envelopment analysis of the human development index. Int J Soc Syst Sci 1:132–150

Morita H, Seiford LM (1999) Characteristics on stochastic DEA efficiency. J Oper Res Soc 42:389–404

Niskanen WA (1975) Bureaucrats and politicians. J Law Econ 18:617–643

Olesen OB (2006) Comparing and combining two approaches for chance-constrained DEA. J Prod Anal 26:103–119

Olesen OB, Petersen N (1995) Chance constrained efficiency evaluation. Manag Sci 41:442–457

Olesen OB, Petersen NC (2016) Stochastic data envelopment analysis—a review. Eur J Oper Res 251:2–21

Olesen OB, Petersen NC (2000) Foundation of chance constrained data envelopment analysis for Pareto-Koopmann efficient production possibility sets. In: International DEA symposium 2000, measurement and improvement in the 21st century. The University of Queensland, pp 313–349

Panzar JC, Willig RD (1977) Economies of scale in multi-output production. Quart J Econ, 481–493

Park KS, Cho J-W (2011) Pro-efficiency: data speak more than technical efficiency. Eur J Oper Res 215:301–308

Podinovski VV (2004a) Production trade-offs and weight restrictions in data envelopment analysis. J Oper Res Soc 55:1311–1322

Podinovski VV (2004b) Bridging the gap between the constant and variable returns-to-scale models: selective proportionality in data envelopment analysis. J Oper Res Soc 55:265–276

Podinovski VV (2007) Improving data envelopment analysis by the use of production trade-offs. J Oper Res Soc 58:1261–1270

Podinovski VV (2016) Optimal weights in DEA models with weight restrictions. Eur J Oper Res 254:916–924

Podinovski VV, Førsund FR, Krivonozhko VE (2009) A simple derivation of scale elasticity in data envelopment analysis. Eur J Oper Res 197:149–153

Podinovski VV, Chambers RG, Atici KB, Deineko ID (2016) Marginal values and returns to scale for nonparametric production frontiers. Oper Res 64:236–250

Podinovski, V. V. (2015). DEA models with production trade-offs and weight restrictions. In: Data envelopment analysis. Springer, Boston, pp 105–144.

Sahoo BK, Acharya D (2010) An alternative approach to monetary aggregation in DEA. Eur J Oper Res 204(3):672–682

Sahoo BK, Gstach D (2011) Scale economies in Indian commercial banking sector: evidence from DEA and translog estimates. Int J Inf Syst Soc Chang 2:13–30

Sahoo B, Sengupta J (2014) Neoclassical characterization of returns to scale in nonparametric production analysis. J Quant Econ 12:78–86

Sahoo BK, Tone K (2009a) Decomposing capacity utilization in data envelopment analysis: an application to banks in India. Eur J Oper Res 195:575–594

Sahoo BK, Tone K (2009b) Radial and non-radial decompositions of profit change: with an application to Indian banking. Eur J Oper Res 196:1130–1146

Sahoo BK, Tone K (2013) Non-parametric measurement of economies of scale and scope in non-competitive environment with price uncertainty. Omega 41:97–111

Sahoo BK, Tone K (2022) Evaluating the potential efficiency gains from optimal industry configuration: a case of life insurance industry of India. Manag Decis Econ 43:3996–4009

Sahoo BK, Mohapatra PKJ, Trivedi ML (1999) A comparative application of data envelopment analysis and frontier translog production function for estimating returns to scale and efficiencies. Int J Syst Sci 30:379–394

Sahoo BK, Kerstens K, Tone K (2012) Returns to growth in a nonparametric DEA approach. Int Trans Oper Res 19:463–486

Sahoo BK, Mehdiloozad M, Tone K (2014a) Cost, revenue and profit efficiency measurement in DEA: a directional distance function approach. Eur J Oper Res 237:921–931

Sahoo BK, Zhu J, Tone K, Klemen BM (2014c) Decomposing technical efficiency and scale elasticity in two-stage network DEA. Eur J Oper Res 233:584–594

Sahoo BK, Singh R, Mishra B, Sankaran K (2017) Research productivity in management schools of India during 1968–2015: a directional benefit-of-doubt model analysis. Omega 66:118–139

Sahoo BK, Tone K (2015) Scale elasticity in non-parametric DEA approach. In: Data envelopment analysis. Springer, Boston, pp 269–290

Sahoo BK, Zhu J, Tone K (2014a) Decomposing efficiency and returns to scale in two-stage network systems. In: Data envelopment analysis. Springer, Boston, pp 137–164

Sengupta JK (1982) Efficiency measurement in stochastic input-output systems. Int J Syst Sci 13:273–287

Sengupta JK (1987) Data envelopment analysis for efficiency measurement in the stochastic case. Comput Oper Res 14:117–129