Abstract

This paper proposes two numerical approaches for solving the coupled nonlinear time-fractional Burgers’ equations with initial and boundary conditions on the interval \([0, L]\). The first method is the non-polynomial B-spline method based on the L1-approximation of the time-fractional derivative and finite difference approximations for the spatial derivatives. The method is shown to be unconditionally stable by the Von Neumann technique. The second method is the shifted Jacobi spectral collocation method based on an operational matrix of fractional derivatives. The main feature of the proposed algorithms is that they convert the original problem into a nonlinear system of algebraic equations. The efficiency of these methods is demonstrated by applying them to several examples of time-fractional coupled Burgers’ equations. The error norms and figures show the effectiveness and reasonable accuracy of the proposed methods.


1 Introduction

Many phenomena in engineering and the applied sciences can be modeled successfully using fractional calculus, for example in anomalous diffusion, materials and mechanics, signal processing, biological systems, and finance (see, for instance, [1–7]). There is tremendous interest in fractional differential equations, as the theory of fractional derivatives and its applications have been intensively developed. Fractional differential equations can describe real-world phenomena more powerfully and systematically. In recent years, several researchers have studied differential equations of fractional order through diverse techniques [8–11].

The time-fractional Burgers’ equation is important since it is a kind of sub-diffusion convection equation. Several methods for solving it have been developed, such as the local fractional Riccati differential equation method [12], the homotopy analysis transform method [13], the finite difference method [14], and the variational iteration method [15]. The study of the coupled Burgers’ equations is also significant: the system is a simple model of sedimentation, or of the evolution of scaled volume concentrations of two types of particles in fluid suspensions or colloids under the influence of gravity. Many powerful methods have been developed to find analytic or numerical solutions of the coupled Burgers’ equations, such as the homotopy perturbation method [16], the differential transformation method [17], the non-polynomial spline method [18], the septic B-spline collocation method [19], the Galerkin quadratic B-spline method [20], the Adomian decomposition method [21], and the meshless radial point interpolation method [22].

Several analytical and numerical techniques have been proposed to solve the coupled nonlinear time-fractional Burgers’ equations (NLTFBEs). Prakash et al. [23] suggested an analytical algorithm based on the homotopy perturbation Sumudu transform method to investigate the coupled NLTFBEs. Hoda et al. [24] introduced the Laplace–Adomian decomposition method, the Laplace variational iteration method, and the reduced differential transformation method for solving the one-dimensional and two-dimensional fractional coupled Burgers’ equations. Liu and Hou [25] applied the generalized two-dimensional differential transform method to solve the coupled space- and time-fractional Burgers’ equations (STFBEs). Heydari and Avazzadeh [26] proposed an effective numerical method based on Hahn polynomials to solve the nonsingular variable-order time-fractional coupled Burgers’ equations. The authors of [27] suggested a hybrid spectral exponential Chebyshev approach based on a spectral collocation method to solve the coupled time-fractional Burgers’ equations (TFBEs). Veeresha and Prakasha [28] and Singh et al. [29] presented the q-homotopy analysis transform method to solve the coupled TFBEs and STFBEs, respectively. The coupled STFBEs have also been solved using the Adomian decomposition method by Chan and An [30]. Islam and Akbar [31] obtained exact general solutions of the coupled STFBEs by using the generalized \((G'/G)\)-expansion method with the assistance of the complex fractional transformation. Prakash et al. [32] proposed the fractional variational iteration method to solve the same problem. Hussein [33] proposed a continuous and discrete-time weak Galerkin finite element approach for solving two-dimensional time-fractional coupled Burgers’ equations. Hussain et al. [34] obtained numerical solutions of the coupled TFBEs using the meshfree spectral method.

In comparison with local methods, spectral methods are often more efficient and highly accurate. Speed of convergence is one of their great advantages, and they can attain a high level of precision (often so-called “exponential convergence”). The primary idea of all versions of spectral methods is to express the solution of the problem as a finite sum of certain basis functions and then to choose the coefficients so as to minimize the difference between the exact and approximate solutions. Recently, the classical spectral methods have been developed to obtain accurate solutions for linear and nonlinear fractional differential equations (FDEs). Spectral approaches with the assistance of operational matrices of orthogonal polynomials have been considered to approximate the solution of FDEs (see, for example, [35–39]).

One of the disadvantages of the non-polynomial B-spline method is that the time step size must be small enough. The main advantage of the proposed methods is that they are easy to implement. Also, solutions can be obtained with high accuracy using relatively few spatial grid nodes compared with other numerical techniques. For this reason, and because the current methods can be directly applied to other applications, we are motivated to apply these techniques to the coupled Burgers’ equations.

In this paper we develop two accurate numerical methods to approximate the numerical solutions of the coupled TFBEs. The first method is the non-polynomial B-spline method [8, 40–42] based on the L1-approximation and finite difference approximations for spatial derivatives. The second method is the shifted Jacobi spectral collocation method [43–45] with the assistance of the operational matrix of fractional and integer-order derivatives. The collocation approach proposed in this paper is somewhat different from those collocation methods commonly discussed in the literature. Now, we consider the time-fractional coupled Burgers’ equations of the form

subject to the initial and boundary conditions

where \(\alpha _{1}\) and \(\alpha _{2}\) are the orders of the fractional derivatives, x and t are the space and time variables, respectively, and u and v are the velocity components to be determined. \(f (x,t )\) and \(g (x,t )\) are continuous functions on their domains. The functions \(p(x)\), \(q(x)\), \(f_{1}(t)\), \(f_{2}(t)\), \(g_{1}(t)\), \(g_{2}(t)\) are sufficiently smooth. The fractional derivatives of order \(\alpha _{1}\) and \(\alpha _{2}\) in Eqs. (1) and (2) are taken in the Liouville–Caputo sense as defined by Oldham and Spanier [6]. In the case of \(\alpha _{1}=\alpha _{2}=1\), Eqs. (1) and (2) reduce to the classical coupled Burgers’ equations.

Definition 1 ([1])

A real function \(u(t)\), \(t > 0\), is said to be in the space \(C_{\varOmega }\), \(\varOmega \in \mathbb{R}\) if there exists a real number \(p >\varOmega \) such that \(u(t) = t^{p} u_{1}(t)\), where \(u_{1}(t) \in C(0,\infty )\), and it is said to be in the space \(C^{n}_{\varOmega }\) if and only if \(u^{(n)}\in C_{\varOmega }\), \(n\in \mathbb{N}\).

Definition 2 ([21])

The Liouville–Caputo fractional derivative of \(u\in C_{\varOmega }^{n}\) (\(\varOmega \geq -1\)) is defined as

2 Non-polynomial B-spline method

In this section, we take a spline function of the form: \(H_{3}=\operatorname{span}\{1,x,\sinh (\omega x),\cosh (\omega x)\}\), where ω is the frequency of the hyperbolic part of spline functions which will be used to raise the accuracy of the method.

2.1 Derivation of the numerical method

Consider \(x\in [a,b]\) and \(t\in [0,\tau ]\). Let \(a=x_{0}< x_{1}<\cdots<x_{N}<x_{N+1}=b\) and \(0=t_{0}< t_{1}<\cdots<t_{M}=\tau \) be the uniform meshes of the intervals \([a,b]\) and \([0,\tau ]\), where \(x_{i}=a+ih\), \(h=(b-a)/(N+1)\), and \(t_{n}=nk\), \(k=\tau /M\) for \(n=0,1,\ldots,M\) and \(i=0,1,\ldots,N+1\). Let \(U_{i}^{n}\) and \(V_{i}^{n}\) be an approximation to \(u(x_{i},t_{n})\) and \(v(x_{i},t_{n})\), respectively, obtained by the segment \(P_{i}(x,t_{n})\) of the mixed spline function passing through the points \((x_{i},U_{i}^{n})\) and \((x_{i+1},U_{i+1}^{n})\), \((x_{i},V_{i}^{n})\) and \((x_{i+1},V_{i+1}^{n})\). Each segment has the form [8, 42]

for each \(i=0,1,\ldots,N\). To express the coefficients of Eq. (4) in terms of \(U_{i}^{n}\), \(U_{i+1}^{n}\), \(V_{i}^{n}\), \(V_{i+1}^{n}\), \(S_{i}^{n}\), and \(S_{i+1}^{n}\), we impose the following conditions:

where \(P_{i}^{(2)}(x,t)=\frac{\partial ^{2}}{\partial x^{2}}P_{i}(x,t)\). Using Eqs. (4) and (5), we get

where \(a_{i}\equiv a_{i}(t_{n})\), \(b_{i}\equiv b_{i}(t_{n})\), \(c_{i}\equiv c_{i}(t_{n})\), \(d_{i}\equiv d_{i}(t_{n})\), and \(\theta =\omega h\).

Solving the last four equations, we obtain the following expressions:

Using the continuity condition of the first derivative at \(x=x_{i}\), that is, \(P_{i}^{\prime }(x_{i},t_{n})=P_{i-1}^{\prime }(x_{i},t_{n})\), we get the following equation:

Using Eq. (6), and after slight rearrangements, Eq. (7) becomes

Similarly, we get

where \(\gamma =\frac{h^{2}}{\theta ^{2} }-\frac{h^{2}}{\theta \sinh \theta }\), \(\beta = \frac{2h^{2}\cosh \theta }{\theta \sinh \theta }- \frac{2h^{2}}{\theta ^{2}}\), and \(\theta =\omega h \).
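The two parameters can be checked numerically. The following Python sketch evaluates \(\gamma\) and \(\beta\) from the formulas above and compares them with the small-\(\theta\) values \(h^{2}/6\) and \(4h^{2}/6\); the function name `spline_parameters` and the sample values of \(\omega\) and \(h\) are illustrative assumptions, not taken from the paper.

```python
import math

def spline_parameters(omega, h):
    """Return (gamma, beta) for frequency omega and mesh size h, with theta = omega * h."""
    theta = omega * h
    gamma = h**2 / theta**2 - h**2 / (theta * math.sinh(theta))
    beta = (2 * h**2 * math.cosh(theta) / (theta * math.sinh(theta))
            - 2 * h**2 / theta**2)
    return gamma, beta

h = 0.1
# Small frequency: theta = 1e-3, so (gamma, beta) should be close to (h^2/6, 4h^2/6)
gamma_small, beta_small = spline_parameters(omega=0.01, h=h)
# Moderate frequency: theta = 0.5
gamma_mid, beta_mid = spline_parameters(omega=5.0, h=h)

print(gamma_small / h**2, beta_small / h**2)
print(gamma_mid / h**2, beta_mid / h**2)
```

Both formulas subtract two large, nearly equal quantities when \(\theta\) is very small, so in floating-point arithmetic it is safer to switch to the limiting values below some threshold in \(\theta\).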

Remark 1

As \(\omega \longrightarrow 0\), that is, \(\theta \longrightarrow 0\), \(( \gamma ,\beta ) \longrightarrow ( \frac{h^{2}}{6},\frac{4h^{2}}{6} )\), and Eqs. (8) and (9) become as follows:

From Eqs. (1) and (2), we write \(S_{i}^{n}\) and \(\rho _{i}^{n}\) in the form

The time-fractional partial derivatives of order \(\alpha _{1}\) and \(\alpha _{2}\) in Eqs. (1) and (2) are taken in the Liouville–Caputo sense and can be approximated by means of the following lemma.

Lemma 1 ([46])

Suppose \(0<\alpha <1\) and \(g(t)\in C^{2}[0,t_{n}]\). Then it holds that

where \(\varphi _{q}^{\alpha }=(q+1)^{1-\alpha }-q^{1-\alpha }\), \(q\geq 0\).

Lemma 2 ([47])

Let \(0<\alpha <1\) and \(\varphi _{q}^{\alpha }=(q+1)^{1-\alpha }-q^{1-\alpha }\), \(q= 0, 1, \ldots\) . Then \(1=\varphi _{0}^{\alpha }>\varphi _{1}^{\alpha }>\cdots >\varphi _{q}^{\alpha }\rightarrow 0\) as \(q\rightarrow \infty \).

Using Lemma 1, the Liouville–Caputo fractional derivative can be approximated as follows:

where \(\sigma _{1}=\frac{k^{-\alpha _{1}}}{\Gamma (2-\alpha _{1})}\), \(\sigma _{2}=\frac{k^{-\alpha _{2}}}{\Gamma (2-\alpha _{2})}\).
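To make the role of the weights \(\varphi _{q}^{\alpha }\) and the factor \(\sigma\) concrete, here is a minimal Python sketch of the L1 approximation. It assumes the standard L1 form \(\sigma \sum_{q=0}^{n-1}\varphi _{q}^{\alpha } [g(t_{n-q})-g(t_{n-q-1})]\), which is algebraically a rearrangement of the usual history-sum form; the function names and the test function \(g(t)=t^{2}\) are illustrative choices, not the authors' code.

```python
import math

def l1_weights(alpha, n):
    """Weights phi_q = (q+1)^(1-alpha) - q^(1-alpha) for q = 0, ..., n-1."""
    return [(q + 1) ** (1 - alpha) - q ** (1 - alpha) for q in range(n)]

def l1_caputo(g, alpha, k, n):
    """L1 approximation of the Caputo derivative of order alpha (0 < alpha < 1) at t_n = n*k."""
    sigma = k ** (-alpha) / math.gamma(2 - alpha)
    phi = l1_weights(alpha, n)
    # Weighted sum of backward increments g(t_{n-q}) - g(t_{n-q-1})
    total = sum(phi[q] * (g((n - q) * k) - g((n - q - 1) * k)) for q in range(n))
    return sigma * total

alpha, k, n = 0.5, 1.0 / 512, 512               # t_n = 1, same step size as in Sect. 4
approx = l1_caputo(lambda t: t**2, alpha, k, n)
exact = math.gamma(3) / math.gamma(3 - alpha)   # Caputo derivative of t^2 at t = 1
print(abs(approx - exact))                      # expected to be of order k^(2 - alpha)

# The formula is exact for linear functions, up to rounding
linear = l1_caputo(lambda t: t, 0.3, 0.1, 10)
phi = l1_weights(alpha, 20)
```

The list `phi` can be used to check the monotonicity stated in Lemma 2: the weights start at 1 and decrease strictly towards 0.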

Substituting Eqs. (13) and (14) into Eqs. (10) and (11), the spatial derivatives \(S_{r}^{n}\) and \(\rho _{r} ^{n}\), \(r=i-1, i, i+1\), are discretized for \(n=1\) and \(n\geq 2\) as follows:

where \(\delta _{i}^{n}= ( V_{i}^{n}-2U_{i}^{n} ) \), \(\zeta _{i}^{n}= ( U_{i}^{n}-2V_{i}^{n} ) \), \(\eta _{i}^{n}=U_{i}^{n}\), and \(\xi _{i}^{n}=V_{i}^{n}\), \(i=1,2,\ldots,N\).

Substituting Eqs. (15) to (18) into Eqs. (8) and (9), after slight rearrangement, yields the following systems:

where \(i=1,2,\ldots,N\), \(n\geq 2\) and

The system obtained on simplifying Eqs. (19) to (22) consists of \(2N\) linear equations in the \(2N+4\) unknowns \(( U_{0},U_{1},\ldots,U_{N+1} ) \) and \(( V_{0},V_{1},\ldots,V_{N+1} ) \). Four additional constraints are necessary to achieve a unique solution of the resulting scheme. These are obtained from the boundary conditions as follows:

Eliminating \(U_{0}\), \(U_{N+1}\), \(V_{0}\), and \(V_{N+1}\), the system reduces to a matrix system of dimension \((2N)\times (2N)\), and the initial values are obtained from the initial conditions.

Remark 2

The local truncation errors (see [46]) \(T=[T_{1i},T_{2i}]\), \(i=1,2,\dots ,N\), can be written as follows:

Equations (23) and (24) yield two schemes, depending on the choice of the parameters β and γ:

1. If \(2\gamma +\beta =h^{2}\) and \(\gamma \neq \frac{h^{2}}{12}\), then \(T_{ji}=O(k^{2-\alpha _{2}}+h^{4})\), \(j=1,2\).

2. If \(2\gamma +\beta =h^{2}\) and \(\gamma = \frac{h^{2}}{12}\), then \(T_{ji}=O(k^{2-\alpha _{2}}+h^{6})\), \(j=1,2\).
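The spatial orders in the two cases can be observed numerically. The sketch below assumes that the spline relation has the three-point form \(U_{i-1}-2U_{i}+U_{i+1}=\gamma S_{i-1}+\beta S_{i}+\gamma S_{i+1}\) with \(S=u_{xx}\), which is consistent with the coefficients used in the stability analysis, and it measures the residual for the test function \(u=\sin x\). The time-discretization part of the truncation error is not included, and all names are hypothetical.

```python
import math

def spline_residual(h, gamma, beta, x=1.0):
    """Residual of u(x-h) - 2u(x) + u(x+h) = gamma*u''(x-h) + beta*u''(x) + gamma*u''(x+h), u = sin."""
    u = math.sin
    d2u = lambda s: -math.sin(s)
    lhs = u(x - h) - 2 * u(x) + u(x + h)
    rhs = gamma * d2u(x - h) + beta * d2u(x) + gamma * d2u(x + h)
    return abs(lhs - rhs)

def observed_order(gamma_factor, beta_factor, h=0.2):
    """Estimate p in residual ~ h^p by halving h, with gamma = gamma_factor*h^2, beta = beta_factor*h^2."""
    r1 = spline_residual(h, gamma_factor * h**2, beta_factor * h**2)
    h2 = h / 2
    r2 = spline_residual(h2, gamma_factor * h2**2, beta_factor * h2**2)
    return math.log(r1 / r2, 2)

order_case1 = observed_order(1 / 6, 4 / 6)    # 2*gamma + beta = h^2, gamma != h^2/12
order_case2 = observed_order(1 / 12, 5 / 6)   # 2*gamma + beta = h^2, gamma == h^2/12
print(order_case1, order_case2)               # close to 4 and 6, respectively
```

With \(\gamma =h^{2}/12\) and \(\beta =5h^{2}/6\) the leading \(h^{4}\) term of the residual cancels, which is why the second choice gains two orders in h.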

2.2 Convergence analysis

According to Remark 2, we have chosen \(2\gamma +\beta =h^{2}\), where \(\gamma =\frac{h^{2}}{12}\) and \(\beta =\frac{5h^{2}}{6}\). Let us rewrite Eqs. (21) and (22) as follows:

where \(R=[U,V]^{T}\), \(U=[U_{1}^{n},U_{2}^{n},\dots ,U_{N}^{n}]^{T}\), \(V=[V_{1}^{n},V_{2}^{n}, \dots ,V_{N}^{n}]^{T}\) and a matrix Q is given as a block matrix

where

and square matrices \(Q_{0}\), \(Q_{1}\), and \(Z_{x}, x=\delta ,\eta ,\xi ,\zeta \) are given by

A matrix \(P=[P_{1},P_{2}]^{T}\) where

Let \(\bar{U}=[u,v]^{T}\), \(u=[u_{1},u_{2},\dots ,u_{N}]^{T}\), and \(v=[v_{1},v_{2},\dots ,v_{N}]^{T}\) be the exact solutions of Eqs. (1) and (2) at nodal points \(x_{i}\), \(i=1,2,\dots ,N\), and then we have

where \(T=[T_{1i},T_{2i}]^{T}\) is the local truncation error described in Remark 2. From Eqs. (25) and (27), we can write the error equation as follows:

where \(E=[E_{1i},E_{2i}]^{T}\) is the error of discretization with \(E_{1i}=u_{i}-U_{i}^{n}\) and \(E_{2i}=v_{i}-V_{i}^{n}\).

For sufficiently small step h, the diagonal blocks \(Q_{11}\) and \(Q_{22}\) are invertible and the following condition holds:

According to [48], matrix Q is invertible. Moreover,

From Eq. (28) and norm inequalities, we have

Since

and from classifications of the matrices \(Q_{11}\), \(Q_{12}\), \(Q_{21}\), and \(Q_{22}\) defined in Eq. (26), we have

This shows that the scheme in Eq. (25) is second-order convergent in the case \(2\gamma +\beta =h^{2}\), \(\gamma \neq h^{2}/12\), and fourth-order convergent in the case \(2\gamma +\beta =h^{2}\), \(\gamma = h^{2}/12\).

2.3 Stability analysis of the method

The stability analysis of the difference schemes listed in Eqs. (19) to (22) is discussed by assuming the nonlinear terms \(\delta _{r}^{n}\) and \(\eta _{r}^{n}\), \(r=i-1, i, i+1\), as local constants D and E respectively.

Let \(\tilde{U}_{i}^{n}\) and \(\tilde{V}_{i}^{n}\) be the approximate solutions of Eqs. (19) to (22) and define

With the above definition and regarding Eqs. (19) and (21), we can get the following round-off error equations:

where \(a_{1}=1-\gamma \sigma _{1}+\frac{D}{2h} ( \beta +2\gamma )\), \(a_{2}=-2-\beta \sigma _{1}\), \(a_{3}=1-\gamma \sigma _{1}- \frac{D}{2h} ( \beta +2\gamma )\), \(a_{4}=\frac{E}{2h} ( \beta +2\gamma )\), \(a_{5}=0\), \(a_{6}=-\frac{E}{2h} ( \beta +2\gamma ) \), \(a_{7}=-\gamma \sigma _{1} \varphi _{n-1}^{\alpha _{1}}\), and \(a_{8}=-\beta \sigma _{1}\varphi _{n-1}^{\alpha _{1}}\).

The Von Neumann method assumes that

where \(I=\sqrt{-1}\).

Substituting Eqs. (34) and (35) into Eq. (32), we get

after some algebraic manipulation, we have

where \(\varphi =\sigma _{1} ( \beta +2\gamma \cos ( \phi h ) ) \), \(\eta =2 ( 1-\cos (\phi h) ) +\varphi \), and \(\psi =\frac{D+E}{h} (\beta +2\gamma ) \sin ( \phi h ) \).

Substituting Eqs. (34) and (35) into Eq. (33) results in

After some rearrangement we get

Using mathematical induction, we can prove that \(\vert \varsigma _{n} \vert \leq \vert \varsigma _{0} \vert \) as follows:

For \(n=2\),

Let \(k\in \mathbb{Z}_{+} \) be given and suppose \(\vert \varsigma _{n} \vert \leq \vert \varsigma _{0} \vert \) is true for \(n = k\). Then

By Lemma 2, we have \(0<\varphi _{q}^{\alpha _{1}}<\varphi _{q-1}^{\alpha _{1}}\), \(q=1,2,\ldots \) , and consequently \((\varphi _{k-q-1}^{\alpha _{1}}-\varphi _{k -q}^{\alpha _{1}} )>0\). Thus

Expanding the summation in the last equation, the intermediate terms cancel each other, and we are left with the term \(\varphi _{0}^{\alpha _{1}} \vert \varsigma _{0} \vert \). Thus, \(\vert \varsigma _{n} \vert \leq \vert \varsigma _{0} \vert \) holds for \(n\geq 1\), and we have stability for \(\beta ,\gamma > 0\).
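The cancellation used in this last step can be written out explicitly. The identity below is a sketch which assumes that the history terms carry the weights \(\varphi _{n-1}^{\alpha _{1}}\) and \((\varphi _{n-q-1}^{\alpha _{1}}-\varphi _{n-q}^{\alpha _{1}})\), as in the standard L1 formula; the index ranges are an assumption and may differ from those of the scheme by a shift.

```latex
\varphi_{n-1}^{\alpha_{1}}
  + \sum_{q=1}^{n-1}\bigl(\varphi_{n-q-1}^{\alpha_{1}}-\varphi_{n-q}^{\alpha_{1}}\bigr)
  = \varphi_{n-1}^{\alpha_{1}}
  + \bigl(\varphi_{0}^{\alpha_{1}}-\varphi_{n-1}^{\alpha_{1}}\bigr)
  = \varphi_{0}^{\alpha_{1}} = 1 .
```

Since every weight in the sum is positive by Lemma 2, bounding each \(\vert \varsigma _{q} \vert \) by \(\vert \varsigma _{0} \vert \) and applying this identity leaves a total weight of exactly one on \(\vert \varsigma _{0} \vert \).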

3 Shifted Jacobi spectral collocation method

This section introduces a numerical scheme based on the shifted Jacobi spectral collocation method (SJSCM) to obtain the approximate solution of the coupled Burgers system Eqs. (1) and (2).

The shifted Jacobi polynomials \(P_{L,j}^{(\mu ,\eta )}(x)\) of degree j, with \(\mu ,\eta > -1\) and \(x\in [0,L]\), constitute an orthogonal system with respect to the weight function \(\omega _{L}^{(\mu ,\eta )}(x)=x^{\eta }(L-x)^{\mu }\):

where \(\delta _{ij}\) is the Kronecker delta and

The shifted Jacobi polynomial can be obtained with the following three-term recurrence relation:

with \(P_{L,0}^{(\mu ,\eta )}(x)=1\) and \(P_{L,1}^{(\mu ,\eta )}(x)=\frac{1}{L}(\mu +\eta +2)x-(\eta +1)\), where

The analytic form of shifted Jacobi polynomial \(P_{L,j}^{(\mu ,\eta )}(x)\) is given by

The values of the shifted Jacobi polynomials at the boundary points are given by

Suppose that \(f(x)\) is a square-integrable function with respect to the shifted Jacobi weight function \(\omega _{L}^{(\mu ,\eta )}\) on the interval \((0,L)\), then \(f(x)\) can be written in terms of \(P_{L,j}^{(\mu ,\eta )}\) as follows:

where

The shifted Jacobi–Gauss quadrature is used to approximate the previous integral as follows:

where \(x_{G,L,k}^{(\mu ,\eta )}, k=0,1,\ldots ,N\), are the roots of the shifted Jacobi polynomial \(P_{L,N+1}^{(\mu ,\eta )}(x)\) of degree \(N+1\) and \(\omega _{G,L,k}^{(\mu ,\eta )}, k=0,1,\ldots ,N \), are the corresponding Christoffel numbers

Now, if we approximate \(f(x)\) in Eq. (39) by the first \((N+1)\)-terms, then

with \(A^{T}= [ a_{0}\enskip a_{1}\enskip \cdots \enskip a_{N}] \) and \(\Psi _{L,N}(x)= [ P_{L,0}^{(\mu ,\eta )}(x)\enskip P_{L,1}^{(\mu ,\eta )}(x)\enskip \cdots \enskip P_{L,N}^{( \mu ,\eta )}(x) ] ^{T}\).
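The basis vector \(\Psi _{L,N}(x)\) can be evaluated with the three-term recurrence. The following Python sketch uses the classical Jacobi recurrence on \([-1,1]\) composed with the map \(z=2x/L-1\), which reproduces the stated \(P_{L,0}^{(\mu ,\eta )}\) and \(P_{L,1}^{(\mu ,\eta )}\); it is an illustration under that assumption, and the function name is hypothetical rather than the authors' implementation.

```python
import math

def shifted_jacobi_basis(N, x, L=1.0, mu=0.0, eta=0.0):
    """Return [P_{L,0}(x), ..., P_{L,N}(x)] for mu, eta > -1 via the three-term recurrence."""
    z = 2.0 * x / L - 1.0                      # map [0, L] onto [-1, 1]
    values = [1.0]
    if N >= 1:
        # Equals (mu + eta + 2) * x / L - (eta + 1)
        values.append(0.5 * (mu + eta + 2.0) * z + 0.5 * (mu - eta))
    for n in range(1, N):
        s = 2 * n + mu + eta
        c_next = 2.0 * (n + 1) * (n + mu + eta + 1) * s
        c_curr = (s + 1) * ((s + 2) * s * z + mu**2 - eta**2)
        c_prev = 2.0 * (n + mu) * (n + eta) * (s + 2)
        values.append((c_curr * values[n] - c_prev * values[n - 1]) / c_next)
    return values

# Legendre case (mu = eta = 0) on [0, 1]: P_2(x) = 6x^2 - 6x + 1
legendre_mid = shifted_jacobi_basis(2, 0.5)[2]

# Values at the end points of [0, 3] for mu = 1, eta = 0.5
at_right = shifted_jacobi_basis(3, 3.0, L=3.0, mu=1.0, eta=0.5)
at_left = shifted_jacobi_basis(3, 0.0, L=3.0, mu=1.0, eta=0.5)
print(legendre_mid, at_right[3], at_left[3])
```

The end-point values agree with the classical formulas \(P_{L,j}^{(\mu ,\eta )}(L)=\Gamma (j+\mu +1)/(\Gamma (\mu +1)\,j!)\) and \(P_{L,j}^{(\mu ,\eta )}(0)=(-1)^{j}\Gamma (j+\eta +1)/(\Gamma (\eta +1)\,j!)\), which gives a convenient check of the recurrence.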

Similarly, in terms of the double shifted Jacobi polynomials, a function of two independent variables \(f(x, t)\) that is infinitely differentiable in \([0, L] \times [0, \tau ]\) can be expanded as follows:

which can be approximated by the first \((M+1)\times (N+1)\) terms with the truncation error \(\sum_{i=M+1}^{\infty }\sum_{j=N+1}^{\infty }a_{ij}P_{\tau ,i}^{(\mu , \eta )}(t) P_{L,j}^{(\mu ,\eta )}(x)\) as follows:

with the coefficient matrix A given by

where

Using the shifted Jacobi–Gauss quadrature formula [36, 49], we can approximate the coefficients \(a_{ij}\) as follows:

where \(x_{G,L,\zeta }^{(\mu ,\eta )}\), \(t_{G,\tau ,\kappa }^{(\mu ,\eta )}\), \(\omega _{G,\tau ,\kappa }^{(\mu ,\eta )}\), and \(\omega _{G,L,\zeta }^{(\mu ,\eta )}\) are defined by Eqs. (40) and (41).

Theorem 1 ([39])

Let \(\Psi _{\tau ,M}(t)\) be the shifted Jacobi vector defined in Eq. (42), and let \(\alpha > 0\). Then

where \(D_{\alpha }\) is the \((M + 1) \times (M + 1)\) operational matrix of derivatives of order α in the Liouville–Caputo sense and is defined by

where \(\lfloor \alpha \rfloor \) is the floor function and

Similarly, the fractional derivative of order α of \(\Psi _{L,N}(x)\) can be expressed as in Eq. (45):

where \(D_{\alpha }\) is the \((N + 1) \times (N + 1)\) operational matrix of derivatives of order α.
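Operational matrices of this kind are typically assembled by applying the Liouville–Caputo derivative term by term to the power form of each basis polynomial. The building block is the power rule for monomials, sketched below in Python; this illustrates that single ingredient only, not the entries of \(D_{\alpha }\) themselves, and the function name is hypothetical.

```python
import math

def caputo_monomial(k, alpha, x):
    """Caputo derivative of order alpha > 0 of x**k (k a non-negative integer) at x > 0."""
    if k < math.ceil(alpha):
        return 0.0                      # monomials of degree below ceil(alpha) are annihilated
    return math.gamma(k + 1) / math.gamma(k + 1 - alpha) * x ** (k - alpha)

half_of_square = caputo_monomial(2, 0.5, 1.0)   # Gamma(3) / Gamma(2.5)
first_of_cube = caputo_monomial(3, 1.0, 2.0)    # ordinary derivative 3 * x^2 at x = 2
constant_term = caputo_monomial(0, 0.5, 1.0)    # constants have zero Caputo derivative
print(half_of_square, first_of_cube, constant_term)
```

For integer α the rule reduces to ordinary differentiation, which is why a single construction can cover both the fractional and the integer-order derivative matrices.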

3.1 Time-fractional coupled Burgers’ equation

We are going to consider the time-fractional coupled Burgers’ Eqs. (1) and (2), which may be written as follows:

with the initial-boundary conditions

Using Eq. (43), we approximate \(u(x,t)\), \(v(x,t)\), \(f(x,t)\), and \(g(x,t)\) as follows:

where U and V are the unknown \((M+1)\times (N+1)\) coefficient matrices, while A and B are defined by Eq. (43), where

Using Theorem 1, we have

Substituting Eqs. (49) and (50) in Eqs. (47) and (48) and collocating at \((M+1)\times (N-1)\) points, we have

where \(x_{j}\), \(j=0,1,\ldots ,N-2\), are the roots of the shifted Jacobi polynomial \(P_{L,N-1}^{(\mu ,\eta )} (x )\) of degree \(N-1\) and \(t_{i}\), \(i=0,1,\ldots ,M\), are the roots of the shifted Jacobi polynomial \(P_{\tau ,M+1}^{(\mu ,\eta )} (t )\) of degree \(M+1\). System (51) consists of \(2(M+1)(N-1)\) algebraic equations in the unknown coefficients \(u_{ij}\) and \(v_{ij}\), \(i=0, 1,\ldots ,M\), \(j=0,1,\ldots ,N\). The remaining equations needed to obtain a unique solution of the resulting scheme are supplied by the four boundary conditions

System (51) can be combined with Eq. (52) to form a system of \(2(M + 1)(N +1)\) nonlinear algebraic equations in the \(2(M + 1)(N +1)\) unknown coefficients, which can be solved using Newton’s iterative method. Consequently, \(u_{M, N}(x, t)\) and \(v_{M,N}(x, t)\) can be calculated by the formulae given in Eq. (49).
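The Newton step for such a nonlinear algebraic system can be sketched as follows. This is a generic pure-Python illustration with a finite-difference Jacobian, applied to a hypothetical two-equation toy system; it is not the authors' Mathematica implementation, and the names `newton` and `solve_linear` are assumptions made for the example.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting (A is a list of rows)."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def newton(F, x0, tol=1e-12, max_iter=50, eps=1e-7):
    """Newton iteration for F(x) = 0 using a forward-difference Jacobian."""
    x = list(x0)
    n = len(x)
    for _ in range(max_iter):
        Fx = F(x)
        if max(abs(v) for v in Fx) < tol:
            break
        J = [[0.0] * n for _ in range(n)]
        for j in range(n):
            xp = x[:]
            xp[j] += eps
            Fp = F(xp)
            for i in range(n):
                J[i][j] = (Fp[i] - Fx[i]) / eps
        dx = solve_linear(J, [-v for v in Fx])
        x = [xi + di for xi, di in zip(x, dx)]
    return x

# Toy system (hypothetical, not from the paper): x^2 + y^2 = 4 and x = y
root = newton(lambda z: [z[0]**2 + z[1]**2 - 4.0, z[0] - z[1]], [1.0, 2.0])
print(root)
```

In the collocation setting, `F` would return the residuals of System (51) together with the boundary equations, and the unknown vector would stack the entries of U and V. An analytic Jacobian is usually preferable for larger M and N.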

The convergence and error analysis of the shifted Jacobi polynomial has been considered in [50, 51]. The Caputo fractional derivative of the shifted Jacobi polynomials satisfies the following estimate:

where \(\mathcal{C}\) is a positive generic constant and \(q=\max \{\mu ,\eta ,-1/2\}\). It was proved in [50, Theorem 3] that the expansion coefficients \(a_{i,j}\) in Eq. (43) satisfy the following estimate:

Finally, by [51, Theorem 3], we find that the truncation error of solutions of Eqs. (47) and (48) obtained by shifted Jacobi polynomial satisfies the following estimates:

4 Numerical solutions

In this section, we discuss three numerical examples of the time-fractional coupled Burgers’ equations to demonstrate the accuracy and applicability of the suggested methods. We compute the \(L_{2}\) and \(L_{\infty }\) error norms by the following formulae:

where

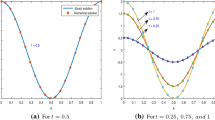

The proposed methods are examined up to \(\tau =1\), where the time step-size of the first algorithm is \(k=1/512\). In all figures, sub-figures (a) and (b) represent the approximate solutions and absolute errors of the SJSCM, respectively, and sub-figures (c) and (d) represent the approximate solutions and absolute errors of the non-polynomial B-spline method, respectively.
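Error norms of this kind can be computed from nodal values as in the Python sketch below. The normalization is an assumption: the discrete \(L_{2}\) norm is taken as \(\sqrt{h\sum_{i} e_{i}^{2}}\) and the \(L_{\infty }\) norm as \(\max_{i}\vert e_{i}\vert \), which are common choices but may differ from the definitions used for the tables. The sample data are hypothetical.

```python
import math

def error_norms(exact, approx, h):
    """Return (L2, Linf) of the nodal errors, with L2 = sqrt(h * sum(e_i^2)) (assumed scaling)."""
    errors = [abs(e - a) for e, a in zip(exact, approx)]
    l2 = math.sqrt(h * sum(err**2 for err in errors))
    linf = max(errors)
    return l2, linf

# Hypothetical data: u(x, 1) = exp(-x) (the exact solution of Example 3 at t = 1)
# and a copy perturbed by 1e-6 at every node
N = 10
h = 1.0 / (N + 1)
xs = [i * h for i in range(N + 2)]
exact = [math.exp(-x) for x in xs]
approx = [v + 1e-6 for v in exact]
l2, linf = error_norms(exact, approx, h)
print(l2, linf)
```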

Example 1

Consider the system introduced in Eqs. (1) and (2) with initial-boundary conditions:

where

The exact solution of this problem is \(u ( x,t ) =v ( x,t ) =t^{3} \sin ( e^{- x} ) \).

The \(L_{2}\) and \(L_{\infty }\) error norms and the approximate solutions and absolute error distributions of Example 1 are given in Tables 1 to 3 and Figs. 1 to 3, respectively, for the suggested methods with \(\alpha _{1}=\alpha _{2}=0.1, 0.4, 0.7\) and different values of N on \([0,1]\times [0,1]\). Tables 4 to 6 present the \(L_{2}\) and \(L_{\infty }\) error norms obtained while varying \(N \in \{6,8,10,12 \}\), with \(\alpha _{1}=\alpha _{2}=0.2, 0.4, 0.6\) and \(x \in [0,3]\). Figures 4 to 6 display the approximate solutions and absolute errors for \(u(x,t)=v(x,t)\) with \(\alpha _{1}=\alpha _{2}=0.2, 0.4, 0.6\), \(N=12\) on \([0,3]\times [0,1]\).

Figure 1. (a) and (b) represent approximate solution and absolute error distribution, respectively, using SJSCM. (c) and (d) represent approximate solution and absolute error distribution, respectively, using the non-polynomial B-spline method of Example 1 at \(\alpha _{1}=\alpha _{2}=0.1\), \(N=12\), \(0\leq x \leq 1\)

Figure 2. (a) and (b) represent approximate solution and absolute error distribution, respectively, using SJSCM. (c) and (d) represent approximate solution and absolute error distribution, respectively, using the non-polynomial B-spline method of Example 1 at \(\alpha _{1}=\alpha _{2}=0.4\), \(N=12\), \(0\leq x \leq 1\)

Figure 3. (a) and (b) represent approximate solution and absolute error distribution, respectively, using SJSCM. (c) and (d) represent approximate solution and absolute error distribution, respectively, using the non-polynomial B-spline method of Example 1 at \(\alpha _{1}=\alpha _{2}=0.7\), \(N=12\), \(0\leq x \leq 1\)

Figure 4. (a) and (b) represent approximate solution and absolute error distribution, respectively, using SJSCM. (c) and (d) represent approximate solution and absolute error distribution, respectively, using the non-polynomial B-spline method of Example 1 at \(\alpha _{1}=\alpha _{2}=0.2\), \(N=12\), \(0\leq x \leq 3\)

Figure 5. (a) and (b) represent approximate solution and absolute error distribution, respectively, using SJSCM. (c) and (d) represent approximate solution and absolute error distribution, respectively, using the non-polynomial B-spline method of Example 1 at \(\alpha _{1}=\alpha _{2}=0.4\), \(N=12\), \(0\leq x \leq 3\)

Figure 6. (a) and (b) represent approximate solution and absolute error distribution, respectively, using SJSCM. (c) and (d) represent approximate solution and absolute error distribution, respectively, using the non-polynomial B-spline method of Example 1 at \(\alpha _{1}=\alpha _{2}=0.6\), \(N=12\), \(0\leq x \leq 3\)

Example 2

Consider Eqs. (1) and (2) with initial-boundary conditions:

where

The exact solutions of this problem are \(u ( x,t ) =v ( x,t ) =\frac{t^{3}}{e^{-x}+2}\).

Example 2 is solved by the presented methods for two sets of parameters: \(\alpha _{1}=\alpha _{2}=0.01, 0.1, 0.5 \) on \([0, 1]\times [0,1]\) and \(\alpha _{1}=\alpha _{2}=0.05, 0.2, 0.4 \) on \([0, 3]\times [0,1]\). Tables 7 to 9 list the \(L_{2}\) and \(L_{\infty }\) error norms, and Figs. 7 to 9 illustrate the approximate solutions and the absolute errors of the suggested methods for the first set of parameters. The results obtained for the second set of parameters are shown in Tables 10 to 12 and Figs. 10 to 12. Notably, in Example 2 the approximate solutions obtained using SJSCM and the non-polynomial B-spline method are more accurate than those obtained using the method in [27].

Figure 7. (a) and (b) represent approximate solution and absolute error distribution, respectively, using SJSCM. (c) and (d) represent approximate solution and absolute error distribution, respectively, using the non-polynomial B-spline method of Example 2 at \(\alpha _{1}=\alpha _{2}=0.01\), \(N=11\), \(0\leq x \leq 1\)

Figure 8. (a) and (b) represent approximate solution and absolute error distribution, respectively, using SJSCM. (c) and (d) represent approximate solution and absolute error distribution, respectively, using the non-polynomial B-spline method of Example 2 at \(\alpha _{1}=\alpha _{2}=0.1\), \(N=11\), \(0\leq x \leq 1\)

Figure 9. (a) and (b) represent approximate solution and absolute error distribution, respectively, using SJSCM. (c) and (d) represent approximate solution and absolute error distribution, respectively, using the non-polynomial B-spline method of Example 2 at \(\alpha _{1}=\alpha _{2}=0.5\), \(N=11\), \(0\leq x \leq 1\)

Figure 10. (a) and (b) represent approximate solution and absolute error distribution, respectively, using SJSCM. (c) and (d) represent approximate solution and absolute error distribution, respectively, using the non-polynomial B-spline method of Example 2 at \(\alpha _{1}=\alpha _{2}=0.05\), \(N=11\), \(0\leq x \leq 3\)

Figure 11. (a) and (b) represent approximate solution and absolute error distribution, respectively, using SJSCM. (c) and (d) represent approximate solution and absolute error distribution, respectively, using the non-polynomial B-spline method of Example 2 at \(\alpha _{1}=\alpha _{2}=0.2\), \(N=11\), \(0\leq x \leq 3\)

Figure 12. (a) and (b) represent approximate solution and absolute error distribution, respectively, using SJSCM. (c) and (d) represent approximate solution and absolute error distribution, respectively, using the non-polynomial B-spline method of Example 2 at \(\alpha _{1}=\alpha _{2}=0.4\), \(N=11\), \(0\leq x \leq 3\)

A comparison of the maximum absolute errors \((L_{\infty })\) obtained via the proposed methods with the corresponding results obtained in [27] is displayed in Tables 13 and 14.

Example 3

Finally, consider Eqs. (1) and (2) with the initial-boundary conditions:

where

The exact solutions of Eqs. (1) and (2) are \(u ( x,t ) =v ( x,t ) =t^{6} e^{-x}\).

Example 3 is solved by both suggested algorithms on two space intervals. Firstly, for \(x\in [0,3]\) and \(t=1\), Tables 15 to 17 exhibit the \(L_{2}\) and \(L_{\infty }\) error norms for \(u(x,t)=v(x,t)\) at \(\alpha _{1}=\alpha _{2}=0.01, 0.1\), and 0.5 for various choices of N. The numerical results of the proposed methods become more precise as the number of grid points in both the space and time directions increases. Figures 13 to 15 show the numerical solutions and absolute error distributions for \(u(x,t)=v(x,t)\) at \(\alpha _{1}=\alpha _{2}=0.01, 0.1, 0.5\). Secondly, for \(x\in [0,1]\) and \(t=1\), the \(L_{2}\) and \(L_{\infty }\) error norms at \(\alpha _{1}=\alpha _{2}=0.2, 0.4\), and 0.5 are reported in Tables 18 to 20, and the corresponding approximate solutions and absolute error distributions are shown in Figs. 16 to 18.

Figure 13. (a) and (b) represent approximate solution and absolute error distribution, respectively, using SJSCM. (c) and (d) represent approximate solution and absolute error distribution, respectively, using the non-polynomial B-spline method of Example 3 at \(\alpha _{1}=\alpha _{2}=0.01\), \(0\leq x \leq 3\)

Figure 14. (a) and (b) represent approximate solution and absolute error distribution, respectively, using SJSCM. (c) and (d) represent approximate solution and absolute error distribution, respectively, using the non-polynomial B-spline method of Example 3 at \(\alpha _{1}=\alpha _{2}=0.1\), \(0\leq x \leq 3\)

Figure 15. (a) and (b) represent approximate solution and absolute error distribution, respectively, using SJSCM. (c) and (d) represent approximate solution and absolute error distribution, respectively, using the non-polynomial B-spline method of Example 3 at \(\alpha _{1}=\alpha _{2}=0.5\), \(0\leq x \leq 3\)

Figure 16. (a) and (b) represent approximate solution and absolute error distribution, respectively, using SJSCM. (c) and (d) represent approximate solution and absolute error distribution, respectively, using the non-polynomial B-spline method of Example 3 at \(\alpha _{1}=\alpha _{2}=0.2\), \(0\leq x \leq 1\)

Figure 17. (a) and (b) represent approximate solution and absolute error distribution, respectively, using SJSCM. (c) and (d) represent approximate solution and absolute error distribution, respectively, using the non-polynomial B-spline method of Example 3 at \(\alpha _{1}=\alpha _{2}=0.4\), \(0\leq x \leq 1\)

Figure 18. (a) and (b) represent approximate solution and absolute error distribution, respectively, using SJSCM. (c) and (d) represent approximate solution and absolute error distribution, respectively, using the non-polynomial B-spline method of Example 3 at \(\alpha _{1}=\alpha _{2}=0.6\), \(0\leq x \leq 1\)

Note

The computations associated with the experiments discussed above were performed in Wolfram Mathematica 12.2 on a PC with a 64-bit Windows OS and an Intel Core i7 processor (∼2.4 GHz). The non-polynomial B-spline algorithm takes 14.3906 s, 29.2656 s, and 29.7344 s at N = 5, N = 9, and N = 11, respectively; the SJSCM takes 1.1875 s, 24.75 s, and 77.2813 s at the same values of N.

5 Conclusion

In this paper, we solved the coupled TFBEs by two different methods. Firstly, we developed the non-polynomial B-spline method based on the L1-formula to approximate the Liouville–Caputo time-fractional derivative. The stability study using the Von Neumann method showed that the scheme is unconditionally stable. Secondly, we applied the shifted Jacobi spectral collocation method based on the operational matrix of fractional derivatives in the Liouville–Caputo sense with the aid of Jacobi–Gauss quadrature. From the tables and figures presented in Sect. 4, it is clear that the SJSCM is more accurate and stable than the non-polynomial B-spline method for all tested values of \(\alpha _{1}\), \(\alpha _{2}\), and N. We also note that the accuracy of the non-polynomial B-spline method increases as the values of \(\alpha _{1}\) and \(\alpha _{2}\) decrease. The validity of the presented methods is tested by solving three problems. The results obtained confirm the high precision of the methods presented.

Availability of data and materials

Not applicable.

Abbreviations

- NLTFBEs: Nonlinear time-fractional Burgers’ equations

- NLSTFBEs: Nonlinear space- and time-fractional Burgers’ equations

- SJSCM: Shifted Jacobi spectral collocation method

References

1. Podlubny, I.: Fractional Differential Equations: An Introduction to Fractional Derivatives, Fractional Differential Equations, to Methods of Their Solution and Some of Their Applications. Academic Press, San Diego (1999)

2. Hilfer, R., et al.: Applications of Fractional Calculus in Physics, vol. 35. World Scientific, Singapore (2000)

3. Miller, K.S., Ross, B.: An Introduction to the Fractional Calculus and Fractional Differential Equations. Wiley, New York (1993)

4. Kilbas, A.A., Srivastava, H.M., Trujillo, J.J.: Theory and Applications of Fractional Differential Equations, vol. 204. Elsevier, Amsterdam (2006)

5. Li, C., Zeng, F.: Numerical Methods for Fractional Calculus, vol. 24. CRC Press, Boca Raton (2015)

6. Oldham, K., Spanier, J.: The Fractional Calculus Theory and Applications of Differentiation and Integration to Arbitrary Order. Elsevier, Amsterdam (1974)

7. Magin, R.L.: Fractional Calculus in Bioengineering, Part 1, vol. 32. Begell House Inc., Danbury (2004)

8. Talaat, S., Danaf, E., Abdel Alaal, F.E.I.: Non-polynomial spline method for the solution of the dissipative wave equation. Int. J. Numer. Methods Heat Fluid Flow 19(8), 950–959 (2009)

9. Srivastava, H.M.: Diabetes and its resulting complications: mathematical modeling via fractional calculus. Publ. Health 4(3), 1–5 (2020)

10. Srivastava, H.M.: Fractional-order derivatives and integrals: introductory overview and recent developments. Kyungpook Math. J. 60(1), 73–116 (2020)

11. Ali, M.R., Hadhoud, A.R., Srivastava, H.M.: Solution of fractional Volterra–Fredholm integro-differential equations under mixed boundary conditions by using the HOBW method. Adv. Differ. Equ. 2019(1), 115 (2019)

12. Yang, X.-J., Gao, F., Srivastava, H.M.: Exact travelling wave solutions for the local fractional two-dimensional Burgers-type equations. Comput. Math. Appl. 73(2), 203–210 (2017)

13. Saad, K.M., Atangana, A., Baleanu, D.: New fractional derivatives with non-singular kernel applied to the Burgers equation. Chaos, Interdiscip. J. Nonlinear Sci. 28(6), 063109 (2018)

14. Yadav, S., Pandey, R.K.: Numerical approximation of fractional Burgers equation with Atangana–Baleanu derivative in Caputo sense. Chaos Solitons Fractals 133, 109630 (2020)

15. Saad, K.M., Al-Sharif, E.H.: Analytical study for time and time-space fractional Burgers’ equation. Adv. Differ. Equ. 2017(1), 300 (2017)

16. Mirzazadeh, M., Ayati, Z.: New homotopy perturbation method for system of Burgers equations. Alex. Eng. J. 55(2), 1619–1624 (2016)

17. Abazari, R., Borhanifar, A.: Numerical study of the solution of the Burgers and coupled Burgers equations by a differential transformation method. Comput. Math. Appl. 59(8), 2711–2722 (2010)

18. Ali, K.K., Raslan, K.R., El-Danaf, T.S.: Non-polynomial spline method for solving coupled Burgers equations. Comput. Methods Differ. Equ. 3(3), 218–230 (2015)

19. Shallal, M.A., Ali, K.K., Raslan, K.R., Taqi, A.H.: Septic b-spline collocation method for numerical solution of the coupled Burgers’ equations. Arab J. Basic Appl. Sci. 26(1), 331–341 (2019)

20. Kutluay, S., Ucar, Y.: Numerical solutions of the coupled Burgers’ equation by the Galerkin quadratic b-spline finite element method. Math. Methods Appl. Sci. 36(17), 2403–2415 (2013)

21. Chen, Y., An, H.-L.: Numerical solutions of coupled Burgers equations with time-and space-fractional derivatives. Appl. Math. Comput. 200(1), 87–95 (2008)

22. Jafarabadi, A., Shivanian, E.: Numerical simulation of nonlinear coupled Burgers’ equation through meshless radial point interpolation method. Eng. Anal. Bound. Elem. 95, 187–199 (2018)

23. Prakash, A., Verma, V., Kumar, D., Singh, J.: Analytic study for fractional coupled Burgers’ equations via Sumudu transform method. Nonlinear Eng. 7(4), 323–332 (2018)

24. Ahmed, H.F., Bahgat, M.S.M., Zaki, M.: Analytical approaches to space-and time-fractional coupled Burgers’ equations. Pramana 92(3), 1–14 (2019)

25. Liu, J., Hou, G.: Numerical solutions of the space-and time-fractional coupled Burgers equations by generalized differential transform method. Appl. Math. Comput. 217(16), 7001–7008 (2011)

26. Heydari, M.H., Avazzadeh, Z.: Numerical study of non-singular variable-order time fractional coupled Burgers’ equations by using the Hahn polynomials. Eng. Comput. (2020). https://doi.org/10.1007/s00366-020-01036-5

27. Albuohimad, B., Adibi, H.: On a hybrid spectral exponential Chebyshev method for time-fractional coupled Burgers equations on a semi-infinite domain. Adv. Differ. Equ. 2017(1), 85 (2017)

28. Veeresha, P., Prakasha, D.G.: A novel technique for \((2 + 1)\)-dimensional time-fractional coupled Burgers equations. Math. Comput. Simul. 166, 324–345 (2019)

29. Singh, J., Kumar, D., Swroop, R.: Numerical solution of time-and space-fractional coupled Burgers’ equations via homotopy algorithm. Alex. Eng. J. 55(2), 1753–1763 (2016)

30. Chen, Y., An, H.-L.: Numerical solutions of coupled Burgers equations with time-and space-fractional derivatives. Appl. Math. Comput. 200(1), 87–95 (2008)

31. Islam, M.N., Akbar, M.A.: New exact wave solutions to the space-time fractional-coupled Burgers equations and the space-time fractional foam drainage equation. Cogent Phys. 5(1), 1422957 (2018)

32. Prakash, A., Kumar, M., Sharma, K.K.: Numerical method for solving fractional coupled Burgers equations. Appl. Math. Comput. 260, 314–320 (2015)

33. Hussein, A.J.: A weak Galerkin finite element method for solving time-fractional coupled Burgers’ equations in two dimensions. Appl. Numer. Math. 156, 265–275 (2020)

34. Hussain, M., Haq, S., Ghafoor, A., Ali, I.: Numerical solutions of time-fractional coupled viscous Burgers’ equations using meshfree spectral method. Comput. Appl. Math. 39(1), 6 (2020)

35. Alsuyuti, M.M., Doha, E.H., Ezz-Eldien, S.S., Youssef, I.K.: Spectral Galerkin schemes for a class of multi-order fractional pantograph equations. J. Comput. Appl. Math. 384, 113157 (2021)

36. Bhrawy, A.H., Zaky, M.A., Alzaidy, J.F.: Two shifted Jacobi–Gauss collocation schemes for solving two-dimensional variable-order fractional Rayleigh–Stokes problem. Adv. Differ. Equ. 2016(1), 272 (2016)

37. Bhrawy, A.H., Zaky, M.A.: A method based on the Jacobi tau approximation for solving multi-term time–space fractional partial differential equations. J. Comput. Phys. 281, 876–895 (2015)

38. Bhrawy, A.H., Zaky, M.A.: Numerical simulation for two-dimensional variable-order fractional nonlinear cable equation. Nonlinear Dyn. 80(1–2), 101–116 (2015)

39. Doha, E.H., Bhrawy, A.H., Ezz-Eldien, S.S.: A new Jacobi operational matrix: an application for solving fractional differential equations. Appl. Math. Model. 36(10), 4931–4943 (2012)

40. Rashidinia, J., Mohammadi, R.: Non-polynomial cubic spline methods for the solution of parabolic equations. Int. J. Comput. Math. 85(5), 843–850 (2008)

41. Ramadan, M.A., El-Danaf, T.S., Abd Alaal, F.E.I.: Application of the non-polynomial spline approach to the solution of the Burgers’ equation. Open Appl. Math. J. 1(1), 15–20 (2007)

42. El-Danaf, T.S., Hadhoud, A.R.: Parametric spline functions for the solution of the one time fractional Burgers’ equation. Appl. Math. Model. 36(10), 4557–4564 (2012)

43. Alsuyuti, M.M., Doha, E.H., Ezz-Eldien, S.S., Bayoumi, B.I., Baleanu, D.: Modified Galerkin algorithm for solving multitype fractional differential equations. Math. Methods Appl. Sci. 42(5), 1389–1412 (2019)

44. Bhrawy, A.H., Doha, E.H., Ezz-Eldien, S.S., Van Gorder, R.A.: A new Jacobi spectral collocation method for solving \(1 + 1\) fractional Schrödinger equations and fractional coupled Schrödinger systems. Eur. Phys. J. Plus 129(12), 260 (2014)

45. Doha, E.H., Bhrawy, A.H., Baleanu, D., Ezz-Eldien, S.S.: On shifted Jacobi spectral approximations for solving fractional differential equations. Appl. Math. Comput. 219(15), 8042–8056 (2013)

46. Li, M., Ding, X., Xu, Q.: Non-polynomial spline method for the time-fractional nonlinear Schrödinger equation. Adv. Differ. Equ. 2018(1), 318 (2018)

47. Chen, S., Liu, F., Zhuang, P., Anh, V.: Finite difference approximations for the fractional Fokker–Planck equation. Appl. Math. Model. 33(1), 256–273 (2009)

48. Gil’, M.I.: Invertibility conditions for block matrices and estimates for norms of inverse matrices. Rocky Mt. J. Math. 33(4), 1323–1335 (2003)

49. Guo, B., Wang, L.: Jacobi approximations in non-uniformly Jacobi-weighted Sobolev spaces. J. Approx. Theory 128(1), 1–41 (2004)

50. Hafez, R.M., Youssri, Y.H.: Jacobi collocation scheme for variable-order fractional reaction–subdiffusion equation. Comput. Appl. Math. 37(4), 5315–5333 (2018)

51. Doha, E.H., Hafez, R.M., Youssri, Y.H.: Shifted Jacobi spectral-Galerkin method for solving hyperbolic partial differential equations. Comput. Math. Appl. 78(3), 889–904 (2019)

Acknowledgements

The authors express their gratitude to the anonymous referees for their helpful suggestions.

Funding

There is no external funding.

Contributions

All authors contributed equally. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Consent for publication

Not applicable.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hadhoud, A.R., Srivastava, H.M. & Rageh, A.A.M. Non-polynomial B-spline and shifted Jacobi spectral collocation techniques to solve time-fractional nonlinear coupled Burgers’ equations numerically. Adv Differ Equ 2021, 439 (2021). https://doi.org/10.1186/s13662-021-03604-5

DOI: https://doi.org/10.1186/s13662-021-03604-5