Abstract

In this study, we propose a hybrid spectral exponential Chebyshev method (HSECM) for solving time-fractional coupled Burgers equations (TFCBEs). The method is a spectral collocation method that uses exponential Chebyshev functions in space, combined with either a trapezoidal quadrature formula (TQF) or a finite difference method (FDM) for the time-fractional derivative. Several test examples demonstrate the efficiency and validity of the proposed method.


1 Introduction

Many computational problems in research areas such as mathematics, fluid dynamics, chemistry, biology, viscoelasticity, engineering and physics are posed on semi-infinite domains [1–9]. Consequently, many researchers have applied transformations to orthogonal polynomials that map \([ -1, 1]\) onto \([0, \infty)\) while preserving orthogonality [10–15].

Spectral methods are a computational approach that has become widely known over the last decade and has been studied by many researchers [16–26], especially in connection with fractional calculus [9, 27–38], an important branch of applied mathematics. Fractional differentiation and integration can be regarded as a generalization of ordinary differentiation and integration to non-integer order.

In this paper, we study coupled Burgers equations with time-fractional derivatives given by

on the semi-infinite domain \([0,\infty)\).

The coupled Burgers equations have recently been applied to different areas of science, in particular in physical problems such as the phenomena of turbulence flow through a shock wave traveling in a viscous fluid (see [39, 40]).

The study of coupled Burgers equations is very important because the system is a basic model of sedimentation or evolution of scaled volume concentrations of two sorts of particles in liquid suspensions or colloids under the impact of gravity [40]. It has been studied by many authors using various techniques [41–46].

In this paper, we introduce an exponential Chebyshev functions collocation method, based on orthogonal Chebyshev polynomials, to solve time-fractional coupled Burgers equations. The fractional derivative with respect to the time variable is defined in the Caputo sense and is discretized using either a trapezoidal quadrature formula (TQF) or a finite difference method (FDM).

The purpose of this paper is to show that the exponential Chebyshev method is efficient and applicable on unbounded domains for solutions with the steady-state property (\(u(\infty)=\mathit{constant}\)), i.e., solutions that are regular at ∞. Many problems in mathematical physics and astrophysics posed on a semi-infinite interval are related to diffusion-type equations such as the Burgers, KdV and heat equations. However, many methods based on polynomial bases, such as Legendre, Chebyshev and Laguerre spectral methods, as well as semi-analytic methods such as Adomian decomposition, variational iteration and differential transform methods, cannot reproduce the steady-state property \(u(\infty )=\mathit{constant}\). In this study we show that this difficulty can be overcome by the proposed method.

The error analysis of exponential Chebyshev functions expansion has also been investigated, which confirms the efficiency of the method.

2 Definitions and basic properties

In this section, we give some definitions and basic properties of fractional calculus and Chebyshev polynomials which are required for our subsequent development.

2.1 Definition of fractional calculus

Here we recall the definitions and basic results of fractional calculus; for more details, we refer to [32].

Definition 1

A real function \(u(t)\), \(t>0\) is said to be in the space \(C_{\mu}\), \(\mu\in\mathbb{R}\) if there exists a real number \(p>\mu\) such that \(u(t)=t^{p}u_{1}(t)\), where \(u_{1}(t)\in C(0,\infty)\), and it is said to be in the space \(C_{\mu}^{n}\) if and only if \(u^{(n)}\in C_{\mu}\), \(n\in\mathbb{N}\).

Definition 2

The Riemann-Liouville fractional integral operator of order \(\alpha>0\), of a function \(f\in C_{\mu}\), \(\mu\geq-1\), is defined as

where \(\Gamma(\cdot)\) is the well-known gamma function.

Definition 3

The fractional derivative of \(u(t)\) in the Caputo sense is defined as

for \(m-1<\alpha\leq m\), \(m\in\mathbb{N}\), \(t>0\) and \(u\in C_{-1}^{m}\). Also it can be rewritten in the following form:

As with integer-order differentiation, the Caputo fractional derivative is a linear operator:

where \(c_{1}\) and \(c_{2}\) are constants. In addition, the Caputo derivative has the following basic properties:

where c is constant, \(\lfloor\alpha\rfloor\) and \(\lceil\alpha\rceil\) are floor and ceiling functions, respectively, \(\mathbb{N}_{0}= \lbrace0,1,2,\ldots \rbrace\) and \(\mathbb{N}= \lbrace1,2,\ldots \rbrace\).
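As a worked instance of the power rule for the Caputo derivative, the following block applies it to the exact solution used later in Example 1. The operator symbol \(D^{\alpha}\) is an assumed notation, and the power rule is quoted as a standard result rather than copied from the formulas of this section.

```latex
\[
D^{\alpha} t^{\beta}
  = \frac{\Gamma(\beta+1)}{\Gamma(\beta+1-\alpha)}\, t^{\beta-\alpha},
  \qquad 0<\alpha\leq 1,\ \beta\geq 1,
  \qquad D^{\alpha} c = 0 ,
\]
\[
D^{\alpha}\bigl(t^{3}\sin(e^{-x})+1\bigr)
  = \frac{\Gamma(4)}{\Gamma(4-\alpha)}\, t^{3-\alpha}\sin(e^{-x})
  = \frac{6}{\Gamma(4-\alpha)}\, t^{3-\alpha}\sin(e^{-x}).
\]
```

For \(\alpha=1\) the right-hand side reduces to \(3t^{2}\sin(e^{-x})\), the ordinary time derivative.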

2.2 Exponential Chebyshev functions

The well-known Chebyshev polynomials of the first kind of degree n, defined on the interval \([-1,1]\), are given by

\[ T_{n}(s)=\cos(n\theta), \tag{5} \]

where \(s=\cos(\theta)\), and thus the following property is immediately obtained:

Also, we have the recurrence relation

\[ T_{n+1}(s)=2sT_{n}(s)-T_{n-1}(s),\quad n\geq1, \tag{6} \]

where \(T_{0}(s)=1\) and \(T_{1}(s)=s\). \(T_{n}(s)\) is the eigenfunction of the singular Sturm-Liouville problem

The Chebyshev polynomials of the first kind are orthogonal on the interval \([-1,1]\) with respect to the weight function

\[ w(s)=\frac{1}{\sqrt{1-s^{2}}}. \]

The analytic form of the first kind Chebyshev polynomials of degree n is given by

From Eq. (5), the zeros of \(T_{n}(s)\) are obtained as follows:

\[ s_{k}=\cos \biggl(\frac{(2k+1)\pi}{2n} \biggr),\quad k=0,1,\ldots,n-1. \tag{9} \]

Definition 4

Exponential Chebyshev functions

We can use an exponential transformation to obtain new functions defined on the semi-infinite interval. The nth exponential Chebyshev function can be defined by the one-to-one transformation

\[ s=1-2e^{-\frac{x}{L}},\quad L>0, \]

as

\[ E_{n}(x)=T_{n} \bigl(1-2e^{-\frac{x}{L}} \bigr). \tag{10} \]

According to (6) and (10), we may deduce the recurrence relation for \(E_{n}(x)\) in the form

\[ E_{n+1}(x)=2 \bigl(1-2e^{-\frac{x}{L}} \bigr)E_{n}(x)-E_{n-1}(x),\quad n\geq1, \tag{11} \]

with starting values \(E_{0}(x)=1\), \(E_{1}(x)=1-2e^{-\frac{x}{L}}\). The first few exponential Chebyshev functions of the first kind are as follows:

According to (7), \(E_{n}(x)\) is the nth eigenfunction of the singular Sturm-Liouville problem

Also, from formula (8), we can directly construct the nth exponential Chebyshev functions as

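The roots of \(E_{n}(x)\) are immediately obtained from (9) as follows:

\[ x_{k}=-L\ln \biggl(\frac{1-\cos (\frac{(2k+1)\pi}{2n} )}{2} \biggr),\quad k=0,1,\ldots,n-1. \tag{14} \]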
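A minimal Python sketch of the recurrence and of the roots, assuming the map \(s=1-2e^{-x/L}\) implied by the starting value \(E_{1}(x)=1-2e^{-x/L}\). The function names `exp_chebyshev` and `exp_chebyshev_roots` are illustrative and do not come from the paper.

```python
import numpy as np

def exp_chebyshev(n, x, L=1.0):
    """Evaluate E_0, ..., E_n at the points x with the three-term recurrence."""
    x = np.asarray(x, dtype=float)
    s = 1.0 - 2.0 * np.exp(-x / L)        # maps [0, inf) onto [-1, 1)
    E = np.empty((n + 1,) + x.shape)
    E[0] = 1.0
    if n >= 1:
        E[1] = s
    for k in range(1, n):
        E[k + 1] = 2.0 * s * E[k] - E[k - 1]
    return E

def exp_chebyshev_roots(n, L=1.0):
    """Roots of E_n: Chebyshev zeros pulled back by x = -L*ln((1 - s)/2)."""
    k = np.arange(n)
    s = np.cos((2 * k + 1) * np.pi / (2 * n))
    return np.sort(-L * np.log((1.0 - s) / 2.0))

roots = exp_chebyshev_roots(5, L=3.0)
residual = exp_chebyshev(5, roots, L=3.0)[5]   # E_5 evaluated at its own roots
```

The residual should vanish up to rounding, and all roots lie in \((0,\infty)\), which is why they can serve as interior collocation nodes.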

3 Function approximation

Let

which denotes a non-negative, integrable, real-valued function over the semi-infinite interval \(\Lambda=[0,\infty)\). Subsequently, we define

where

is the norm induced by the inner product of the space \(L^{2}_{\rho}(\Lambda)\),

It is easily seen that \(\{E_{j}(x) \}_{j\geq0}\) denotes a system which is mutually orthogonal under (15), i.e.,

The classical Weierstrass theorem implies that such a system is complete in the space \(L^{2}_{\rho}(\Lambda)\). Thus, for any function \(u(x)\in L^{2}_{\rho}(\Lambda)\), the following expansion holds:

where

If the expansion of \(u(x)\) in (16) is truncated after the mth term, it can be written as

Now, we can estimate an upper bound for function approximation in a special case. Firstly, the error function \(e_{m}(x)\) can be defined in the following form:

The completeness of the system \(\{E_{i}(x) \}_{i\geq0}\) is equivalent to the following property as m tends to infinity:

Accordingly, the \(L_{\infty}\) bound for \(e_{m}(x)\) will be

Lemma 1

The \(L_{\infty}\) and \(L_{\rho}\) errors for a function \(u\in L^{2}_{\rho}(\Lambda)\), defined by (19), satisfy the following relations:

Proof

The completeness of the system \(\{E_{i}(x) \}_{i\geq0}\) allows us to write the error as

Using the definition of \(\Vert \cdot \Vert _{\rho}\), one has

Now by using Eq. (17) the first relation can be proved. Also,

Consequently, (17) completes the proof. □

This lemma shows that the convergence of the exponential Chebyshev approximation depends on the function \(u(x)\). If the function \(u(x)\in L^{2}_{\rho}(\Lambda)\) is sufficiently regular, we can give an upper bound for the error of the approximation in these basis functions.

Theorem 1

Let \(u_{m}(x)\) be the approximation of \(u(x)\in L^{2}_{\rho}(\Lambda)\) obtained by (18), and let \(\mathcal{U}(s)=u (-L\ln(\frac{1-s}{2}) )\) be analytic on \((-1,1)\). Then an error bound for this approximation is given by

where \(M_{\infty}\geq2\max_{i} \vert \mathcal {U}^{(i)}(s)\vert , s\in(-1,1)\).

Proof

Defining \(x= -L\ln(\frac{1-s}{2})\) gives

Knowing that \(\mathcal{U}(s)\) is analytic, we have

Using the following properties of Chebyshev polynomials

yields

Now, assuming \(M_{\infty}\geq2\max_{i} \vert \mathcal {U}^{(i)}(s)\vert \), \(s\in(-1,1)\), and using (23), we get

Now, according to Lemma 1, we can prove the theorem as follows:

□

By the previous theorem, any real function in \(L^{2}_{\rho}(\Lambda)\) whose image under the transformation \(x=-L\ln(\frac{1-s}{2})\) is analytic has a convergent series expansion of the form (18). Furthermore, we can show that the error defined in (19) converges superlinearly in the sense defined below.

Definition 5

\(x_{m}\) tends to x̄ with superlinear convergence if there exist a positive sequence \(\lambda_{m}\longrightarrow0\) and an integer number N such that

Theorem 2

In Theorem 1, let \(M\geq M_{i}\) for any integer i; then the error converges superlinearly to zero.

Proof

Choosing the positive sequence

for Theorem 1 gives \(e_{m+1}\leq\lambda_{m} e_{m}\), and consequently, Definition 5 completes the proof. □

According to Theorem 2, any function \(u(x)\in L^{2}_{\rho}(\Lambda )\) that is analytic under the transformation \(x=-L\ln(\frac{1-s}{2})\) has a superlinearly convergent series of the form (16).
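The fast decay of the truncation error can be observed numerically. The sketch below is an illustration rather than the authors' code: it assumes the map \(s=1-2e^{-x/L}\), computes the expansion coefficients by Chebyshev-Gauss quadrature in the variable \(s\) (a choice made here for convenience), and uses the spatial profile \(\sin(e^{-x})+1\) from Example 1 with \(L=1\). The names `ecf_coefficients` and `ecf_eval` are hypothetical.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

def ecf_coefficients(u, m, L=1.0, n_quad=64):
    """Coefficients a_0..a_m of u in the exponential Chebyshev basis,
    computed with Chebyshev-Gauss quadrature in s = 1 - 2*exp(-x/L)."""
    k = np.arange(n_quad)
    theta = (2 * k + 1) * np.pi / (2 * n_quad)
    x = -L * np.log((1.0 - np.cos(theta)) / 2.0)  # quadrature nodes mapped to [0, inf)
    j = np.arange(m + 1)[:, None]
    T = np.cos(j * theta[None, :])                # T_j(s_k) = cos(j * theta_k)
    a = (2.0 / n_quad) * (T @ u(x))
    a[0] *= 0.5                                   # the j = 0 mode has twice the norm
    return a

def ecf_eval(a, x, L=1.0):
    """Evaluate the truncated expansion sum_j a_j E_j(x)."""
    s = 1.0 - 2.0 * np.exp(-np.asarray(x, dtype=float) / L)
    return C.chebval(s, a)

u = lambda x: np.sin(np.exp(-x)) + 1.0
x_test = np.linspace(0.0, 20.0, 401)
errs = [np.max(np.abs(u(x_test) - ecf_eval(ecf_coefficients(u, m), x_test)))
        for m in (2, 4, 6, 8)]
```

For this profile the mapped function \(\mathcal{U}(s)=\sin(\frac{1-s}{2})+1\) is entire, so the maximum errors in `errs` should drop by several orders of magnitude each time `m` increases by two.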

4 Spectral collocation method to solve TFCBEs

In this section, we discuss the spectral collocation method for solving the following time-fractional coupled Burgers equations:

where \(L_{1}\) and \(L_{2}\) are some derivative operators. The initial and boundary conditions are

The functions \(u(x,t)\) and \(v(x,t)\) are discretized in time \(t=t_{n}\), and then they can be expanded by the exponential Chebyshev functions as follows:

Also, the time-fractional derivative can be discretized by TQF and FDM as well.

4.1 Trapezoidal quadrature formula

Now we consider the following fractional differential equation:

which, by applying (4), converts to the Volterra integral equation

For the numerical computation of (28), the integral is replaced by TQF at point \(t_{n}\)

where \(g(s)=f(s,u(s))\) and \({\widetilde{g}_{n} }(s )\) is the piecewise linear interpolation of g with nodes and knots chosen at \(t_{j}\), \(j=0,1,2,\ldots,n\). After some elementary calculations, the right-hand side of (29) gives [47]

where

and \({\gamma_{j,n} ^{(\alpha)}}\) is a positive number bounded by (\(0 < {\gamma_{j,n} ^{(\alpha)}} \leq1 \)).

From (29) we immediately get

so that error bounds and orders of convergence for product integration follow from standard results of approximation theory. For a piecewise linear approximation to a smooth function \(g(t)\), the produced TQF is of second order [48].
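To make the quadrature concrete, here is a small Python sketch of the product trapezoidal rule for the Riemann-Liouville integral on a uniform grid. It assumes the weights are the standard ones from Diethelm, Ford and Freed [47], shifted so that the rule is evaluated at \(t_{n}\); whether they coincide symbol for symbol with (30) is an assumption. The names `tqf_weights` and `frac_integral_tqf` are illustrative.

```python
import math
import numpy as np

def tqf_weights(n, alpha):
    """Weights a_{j,n}, j = 0..n (n >= 1), of the product trapezoidal rule."""
    a = np.empty(n + 1)
    a[0] = (n - 1) ** (alpha + 1) - (n - 1 - alpha) * n ** alpha
    j = np.arange(1, n)
    a[1:n] = ((n - j + 1) ** (alpha + 1) + (n - j - 1) ** (alpha + 1)
              - 2.0 * (n - j) ** (alpha + 1))
    a[n] = 1.0
    return a

def frac_integral_tqf(g_vals, tau, alpha):
    """Approximate the order-alpha fractional integral of g at t_n = n*tau
    from the samples g_vals[j] = g(j*tau), j = 0..n."""
    n = len(g_vals) - 1
    scale = tau ** alpha / math.gamma(alpha + 2)   # the factor s_alpha
    return scale * float(np.dot(tqf_weights(n, alpha), g_vals))

def tqf_error(n, alpha=0.5):
    """Error at t = 1 for g(t) = t^2, whose fractional integral is known."""
    t = np.linspace(0.0, 1.0, n + 1)
    exact = math.gamma(3.0) / math.gamma(3.0 + alpha)
    return abs(frac_integral_tqf(t ** 2, 1.0 / n, alpha) - exact)

ratio = tqf_error(20) / tqf_error(40)
```

Because the rule integrates the piecewise linear interpolant exactly, it is exact for linear \(g\). For the smooth test function \(g(t)=t^{2}\), halving \(\tau\) should reduce the error by a factor close to four, consistent with second-order accuracy.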

Accordingly, the time-fractional derivative for Eqs. (25) can be converted to the following singular integro-partial differential equations:

Then TQF (30) together with (26) gives

where \(s_{\alpha}={\tau^{\alpha}}/{{\Gamma(\alpha+2 )}}\). From the above equations, the unknown coefficients \(a_{i}^{n}\) and \(b_{i}^{n}\), \(i=0,1,\ldots,m\), should be determined for any step of time. To do so, we use \(m-1\) collocation nodes \(x_{k}\), the roots of \(E_{m-1}(x)\), together with the boundary conditions as follows:

At each time step, the above equations form a nonlinear algebraic system with \(2m+2\) unknowns, which can be solved by a fixed-point iteration.

4.2 Finite difference approximations for time-fractional derivative

In this section, a fractional order finite difference approximation [27, 49] for the time-fractional partial differential equations is proposed.

Define \(t_{j}=j\tau\), \(j=0,1,2,\ldots,n\), where \(\tau={T}/{n}\). The time-fractional derivative term of order \(0< \alpha\leq1 \) with respect to time at \(t=t_{n}\) is approximated by the following scheme:

Similarly,

where

We apply this formula to discretize the time variable. The rate of convergence of this formula is \(O(\tau^{2-\alpha}) \).
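The following Python sketch illustrates a finite difference approximation of this type. It assumes the scheme is the standard L1 formula with weights \(b_{j}=(j+1)^{1-\alpha}-j^{1-\alpha}\), which is consistent with the stated rate \(O(\tau^{2-\alpha})\) but is not confirmed by the text here, and it is restricted to \(0<\alpha<1\). The names `caputo_l1` and `l1_error` are illustrative.

```python
import math
import numpy as np

def caputo_l1(u_vals, tau, alpha):
    """L1 approximation of the Caputo derivative (0 < alpha < 1) at t_n = n*tau,
    given the samples u_vals[j] = u(j*tau), j = 0..n."""
    u_vals = np.asarray(u_vals, dtype=float)
    n = len(u_vals) - 1
    j = np.arange(n)
    b = (j + 1.0) ** (1.0 - alpha) - j ** (1.0 - alpha)
    increments = u_vals[n - j] - u_vals[n - j - 1]   # u(t_{n-j}) - u(t_{n-j-1})
    return tau ** (-alpha) / math.gamma(2.0 - alpha) * float(np.dot(b, increments))

def l1_error(n, alpha=0.5):
    """Error at t = 1 for u(t) = t^3, using the power rule for the exact value."""
    t = np.linspace(0.0, 1.0, n + 1)
    exact = math.gamma(4.0) / math.gamma(4.0 - alpha)
    return abs(caputo_l1(t ** 3, 1.0 / n, alpha) - exact)

ratio = l1_error(40) / l1_error(80)
```

The formula is exact for linear functions of \(t\). For \(u(t)=t^{3}\) and \(\alpha=0.5\), the observed error ratio under halving of \(\tau\) should be near \(2^{2-\alpha}\), which is lower than the factor of four expected from TQF for smooth data.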

Accordingly, Eqs. (25), using the initial conditions, are converted to

Again, similar to the last subsection, we use \(m-1\) collocation nodes \(x_{k}\), which are the roots of \(E_{m-1}(x)\), together with the boundary conditions (35) and (36) to obtain the unknown coefficients \(a_{i}^{n}\) and \(b_{i}^{n}\) at any time step.

5 Numerical experiments

In this section, we present five examples to illustrate the numerical results.

Example 1

Consider the following time-fractional coupled Burgers equations:

with the initial conditions

and the boundary conditions

Also, \(f(x,t)\) and \(g(x,t)\) are given by

Exact solution for this problem is \(u(x,t)=v(x,t)=t^{3} \sin(e^{-x})+1\).

For this first problem, we explain the proposed method in more detail. First, we approximate \(u(x,t_{n})\), \(v(x,t_{n})\) and their derivatives by ECFs as follows:

For this problem, the operators \(L_{1}\) and \(L_{2}\) defined in (25) after substituting the collocation nodes \(x_{k}\) are obtained as follows:

Note that the values of \(E_{i}(x_{k})\) and its derivatives can be obtained from Eq. (13) as well.
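One way to obtain these values in practice is the chain rule applied to \(E_{i}(x)=T_{i}(s)\) with \(s=1-2e^{-x/L}\), for which \(ds/dx=(1-s)/L\). The sketch below takes this route through NumPy's `Chebyshev` class instead of the explicit formula (13); the function name `ecf_derivatives` is hypothetical.

```python
import numpy as np
from numpy.polynomial import Chebyshev

def ecf_derivatives(i, x, L=1.0):
    """Return E_i(x), E_i'(x) and E_i''(x) via the chain rule in s."""
    x = np.asarray(x, dtype=float)
    s = 1.0 - 2.0 * np.exp(-x / L)
    q = (1.0 - s) / L                      # ds/dx
    T = Chebyshev.basis(i)                 # T_i on the default domain [-1, 1]
    dT = T.deriv(1)(s)
    d2T = T.deriv(2)(s)
    E = T(s)
    Ex = q * dT
    Exx = (q / L) * ((1.0 - s) * d2T - dT)
    return E, Ex, Exx

# Check against the closed form E_2(x) = 1 - 8*exp(-x/L) + 8*exp(-2x/L).
L = 3.0
xk = np.array([0.2, 1.0, 4.0])
E2, E2x, E2xx = ecf_derivatives(2, xk, L)
E2x_exact = (8.0 / L) * np.exp(-xk / L) - (16.0 / L) * np.exp(-2.0 * xk / L)
E2xx_exact = -(8.0 / L**2) * np.exp(-xk / L) + (32.0 / L**2) * np.exp(-2.0 * xk / L)
```

Both derivatives decay like \(e^{-x/L}\) as \(x\to\infty\), so every basis function tends to a constant at infinity, in line with the steady-state property discussed in the Introduction.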

TQF implementation

Now TQF gives the following \(2m-2\) equations at any step of time \(t_{n}\)

where for this problem \(I_{u}(x_{k})=I_{v}(x_{k})=1\). Note that the right-hand sides of the above equations are known, since they were obtained at the previous time steps. The above equations together with the boundary conditions (35) and (36)

construct a system of nonlinear equations which can be solved by the Newton method (or fsolve command) to find the coefficients \(a_{j}^{n}\) and \(b_{j}^{n}\) at any step of time.

FDM implementation

After substituting the collocation nodes \(x_{k}\) in Eqs. (39) and (40), and knowing that \(I_{u}(x_{k})=I_{v}(x_{k})=1\), the following \(2m-2\) equations at any step of time \(t_{n}\) are generated as

Now these equations along with four boundary conditions that appear in Eqs. (41) and (42) give a nonlinear system of equations which can be solved by the Newton method (or fsolve command) to find the coefficients \(a_{j}^{n}\) and \(b_{j}^{n}\) at any step of time.

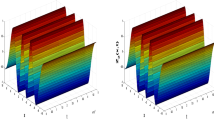

The maximum errors \(e_{m,\infty}(u)\) and \(e_{m,\infty}(v)\) obtained via the proposed methods are shown in Figure 1 with parameter \(L=3\). A comparison between TQF and FDM reveals that TQF approach is superior to FDM.

Example 2

We consider the time-fractional coupled Burgers equation with the initial condition

and the boundary conditions

where \(f(x,t)\) and \(g(x,t)\) are given by

Exact solution for this problem is \(u(x,t)=v(x,t)=\frac{t^{3}}{(e^{-x}+2)} \).

The maximum absolute errors for time-fractional coupled Burgers equation for this problem with (\(\alpha=0.4 \), \(\beta=0.4\), \(L=3\)) are reported in Tables 1 and 2.

Example 3

We consider the time-fractional coupled Burgers equation of the first example with the initial condition

and the boundary conditions

where \(f(x,t)\) and \(g(x,t)\) are given by

Exact solution of this problem is \(u(x,t)=v(x,t)=t^{6} e^{-x}+1\).

The maximum absolute errors for a time-fractional coupled Burgers equation for this problem are reported in Tables 3 and 4.

Example 4

Consider the following homogeneous TFCBEs:

with the initial and boundary conditions

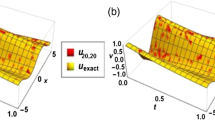

For this problem, only for the case \(\alpha=\beta=1\), the exact solution is \(u(x,t)=v(x,t)=\frac{2}{1+e^{-(x+t)}}\). Table 5 illustrates the maximum error for this case when \(\tau=1/64\). When \(\alpha=\beta=0.5\), we report the difference between the values of \(u_{7}\) and \(u_{6}\) at the final time \(T=1\) in Table 6. Also, the graph of \(u(x,t_{n})=v(x,t_{n})\), for different time steps, for this case is displayed in Figure 2.

Example 5

Consider the following homogeneous time-fractional Burgers equation [50, 51]:

with the following initial and boundary conditions:

where μ, σ, λ and ν are arbitrary constants. For this problem, the exact solution only exists in the case \(\alpha=\beta=1\) as follows:

We can compare the results obtained by the proposed method and three-term solution of the differential transform method (DTM) [50] for \(\alpha=\beta=1\). Figure 3 (left) displays the maximum error for these methods with \(\nu=1 \), \(\mu=-1\), \(\lambda=0\) and \(\sigma=-1\).

We can also compare our results with those of the variational iteration method (VIM) [52] for different α and β. Figure 3 (right) shows the results obtained by the proposed method and by VIM [52] for \(u(x,t)\) at the final time \(T=1\) with \(\alpha=\beta=0.5\).

6 Conclusion

In this paper we presented a numerical method for solving time-fractional coupled Burgers equations on a semi-infinite domain, using exponential Chebyshev functions in space and either TQF or FDM in time. The numerical results illustrate the validity and efficiency of the method and compare the maximum absolute errors of the spectral collocation method with TQF and with FDM. The technique can also be applied to other time-fractional partial differential equations.

References

Boyd, J: Chebyshev and Fourier Spectral Methods. Dover, New York (2000)

Bhrawy, A, Alhamed, Y, Baleanu, D, Al-Zahrani, A: New spectral techniques for systems of fractional differential equations using fractional-order generalized Laguerre orthogonal functions. Fract. Calc. Appl. Anal. 17(4), 1137-1157 (2014)

Garrappa, R, Popolizio, M: On the use of matrix functions for fractional partial differential equations. Math. Comput. Simul. 81, 1045-1056 (2011)

Noye, BJ, Dehghan, M: New explicit finite difference schemes for two-dimensional diffusion subject to specification of mass. Numer. Methods Partial Differ. Equ. 15, 521-534 (1999)

Bu, W, Ting, Y, Wu, Y, Yang, J: Finite difference/finite element method for two-dimensional space and time fractional Bloch-Torrey equations. J. Comput. Phys. 293, 264-279 (2015)

Choi, H, Kweon, J: A finite element method for singular solutions of the Navier-Stokes equations on a non-convex polygon. J. Comput. Appl. Math. 292, 342-362 (2016)

Parand, K, Abbasbandy, S, Kazem, S, Rezaei, A: An improved numerical method for a class of astrophysics problems based on radial basis functions. Phys. Scr. 83(1), 015011 (2011)

Guotao, W, Pei, K, Baleanu, D: Explicit iteration to Hadamard fractional integro-differential equations on infinite domain. Adv. Differ. Equ. 2016, 299 (2016)

Kumar, S, Kumar, A, Odibat, Z: A nonlinear fractional model to describe the population dynamics of two interacting species. Math. Method Appl. Sci. doi:10.1002/mma.4293

Guo, BY, Shen, J, Wang, Z: Chebyshev rational spectral and pseudospectral methods on a semi-infinite interval. Int. J. Numer. Methods Eng. 53, 65-84 (2002)

Sezer, M, Gülsu, M, Tanay, B: Rational Chebyshev collocation method for solving higher-order linear ordinary differential equations. Numer. Methods Partial Differ. Equ. 27, 1130-1142 (2010)

Ramadan, MA, Raslan, K, Danaf, TSE, Salam, MAAE: An exponential Chebyshev second kind approximation for solving high-order ordinary differential equations in unbounded domains, with application to Dawson’s integral. J. Egypt. Math. Soc., 1-9 (2016)

Ramadan, MA, Raslan, KR, Nassar, MA: An approximate solution of systems of high-order linear differential equations with variable coefficients by means of a rational Chebyshev collocation method. Electron. J. Math. Anal. Appl. 4(1), 86-98 (2016)

Bhrawy, AH, Abdelkawy, MA, Alzahrani, AA, Baleanu, D, Alzahrani, EO: A Chebyshev-Laguerre Gauss-Radau collocation scheme for solving time fractional sub-diffusion equation on a semi-infinite domain. Proc. Rom. Acad., Ser. A: Math. Phys. Tech. Sci. Inf. Sci. 16(4), 490-498 (2015)

Bhrawy, AH, Hafez, RM, Alzahrani, EO, Baleanu, D, Alzahrani, AA: Generalized Laguerre-Gauss-Radau scheme for the first order hyperbolic equations in a semi-infinite domain. Rom. Rep. Phys. 60(7-8), 918-934 (2015)

Kadem, A, Luchko, Y, Baleanu, D: Spectral method for solution of the fractional transport equation. Rep. Math. Phys. 66, 103-115 (2010)

Shamsi, M, Dehghan, M: Determination of a control function in three-dimensional parabolic equations by Legendre pseudospectral method. Numer. Methods Partial Differ. Equ. 28, 74-93 (2012)

Gottlieb, D, Orszag, S: Numerical analysis of spectral methods, Philadelphia (1977)

Hesthaven, J, Gottlieb, S, Gottlieb, D: Spectral methods for time-dependent problems, Cambridge (2007)

Canuto, C, Quarteroni, A, Hussaini, M, Zang, T: Spectral Methods in Fluid Dynamics. Prentice-Hall, Englewood Cliffs, NJ (1986)

Hussien, HS: A spectral Rayleigh-Ritz scheme for nonlinear partial differential systems of first order. J. Egypt. Math. Soc. 24, 373-380 (2016)

Mao, Z, Shen, J: Efficient spectral-Galerkin methods for fractional partial differential equations with variable coefficients. J. Comput. Phys. 307, 243-261 (2016)

Dehghan, M, Izadi, FF: The spectral collocation method with three different bases for solving a nonlinear partial differential equation arising in modeling of nonlinear waves. Math. Comput. Model. 53, 1865-1877 (2011)

Fakhrodin, M, Mohyud-Din, ST: A fractional-order Legendre collocation method for solving the Bagley-Torvik equations. Adv. Differ. Equ. 2016, 269 (2016)

Bhrawy, AH, Zaky, MA, Alzaidy, JF: Two shifted Jacobi-Gauss collocation schemes for solving two-dimensional variable-order fractional Rayleigh-Stokes problem. Adv. Differ. Equ. 2016, 272 (2016)

Elahe, S, Farahi, MH: An approximate method for solving fractional TBVP with state delay by Bernstein polynomials. Adv. Differ. Equ. 2016, 298 (2016)

Fangai, Z, Changpin, L: Numerical methods for fractional calculus, China (2015)

Debnath, L: Recent application of fractional calculus to science and engineering. Int. J. Math. Math. Sci. 54, 3413-3442 (2003)

Mainardi, F: Fractional calculus: some basic problems in continuum and statistical mechanics. In: Fractals and Fractional Calculus in Continuum Mechanics. International Centre for Mechanical Sciences, vol. 378, pp. 291-348. Springer, Wien (1997)

Richard, H: Numerical methods for fractional calculus, Germany (2011)

Oldham, KB, Spanier, J: The fractional calculus, New York (1974)

Podlubny, I: Fractional differential equations, San Diego, California (1999)

Singh, J, Kumar, D, Nieto, JJ: A reliable algorithm for local fractional Tricomi equation arising in fractal transonic flow. Entropy 18(6), 206 (2016)

Srivastava, HM, Kumar, D, Singh, J: An efficient analytical technique for fractional model of vibration equation. Appl. Math. Model. 45, 192-204 (2017)

Kumar, D, Singh, J, Baleanu, D: Numerical computation of a fractional model of differential-difference equation. J. Comput. Nonlinear Dyn. 11(6), 061004 (2016)

Kumar, D, Singh, J, Baleanu, D: A hybrid computational approach for Klein-Gordon equations on Cantor sets. Nonlinear Dyn. 87, 511-517 (2017)

Kumar, A, Kumar, S, Yan, S: Residual power series method for fractional diffusion equations. Fundam. Inform. 151, 213-230 (2017)

Anastassiou, GA, Argyros, IK, Kumar, S: Monotone convergence of extended iterative methods and fractional calculus with applications. Fundam. Inform. 151, 241-253 (2017)

Burgers, JM: A mathematical model illustrating the theory of turbulence. Adv. Appl. Mech. 1, 171-199 (1948)

Nee, J, Duan, J: Limit set of trajectories of the coupled viscous Burgers’ equations. Appl. Math. Lett. 11, 57-61 (1998)

Dehghan, M, Hamidi, A, Shakourifar, M: The solution of coupled Burgers’ equations using Adomian-Pade technique. Appl. Math. Comput. 189, 1034-1047 (2007)

Liu, J, Hou, G: Numerical solutions of the space- and time-fractional coupled Burgers equations by generalized differential transform method. Appl. Math. Comput. 217, 7001-7008 (2011)

Elzaki, SM: Exact solutions of coupled Burgers equation with time-and space-fractional derivative. Int. J. Appl. Math. 4(1), 99-105 (2015)

Singh, J, Kumar, D, Swroop, R: Numerical solution of time- and space-fractional coupled Burgers equations via homotopy algorithm. Alex. Eng. J. 55(2), 1753-1763 (2016)

Kumar, S, Kumar, A, Baleanu, D: Two analytical methods for time-fractional nonlinear coupled Boussinesq-Burger’s equations arises in propagation of shallow water waves. Nonlinear Dyn. 85(2), 699-715 (2016)

Bhrawy, AH, Zaky, MA, Baleanu, D: New numerical approximations for space-time fractional Burgers’ equations via a Legendre spectral-collocation method. Rom. Rep. Phys. 67(2), 340-349 (2015)

Diethelm, K, Ford, N, Freed, A: A predictor-corrector approach for the numerical solution of fractional differential equations. Nonlinear Dyn. 29, 3-22 (2002)

Linz, P: Analytical and numerical methods for Volterra equations, SIAM, Philadelphia, PA

Qinghua, F, Meng, F: Finite difference scheme with spatial fourth-order accuracy for a class of time fractional parabolic equations with variable coefficient. Adv. Differ. Equ. 2016, 305 (2016)

Kurulay, M: The approximate and exact solutions of the space- and time-fractional Burgers equations. Int. J. Recent Res. Appl. Stud. 3(3), 257-263 (2010)

Momani, S: Non-perturbative analytical solutions of the space- and time-fractional Burgers equations. Chaos Solitons Fractals 28, 930-937 (2006)

Inc, M: The approximate and exact solutions of the space- and time-fractional Burgers equations with initial conditions by variational iteration method. J. Math. Anal. Appl. 345, 476-484 (2008)


Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

BA and HA designed the study, developed the methodology, collected the data, performed the analysis and wrote the manuscript.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Albuohimad, B., Adibi, H. On a hybrid spectral exponential Chebyshev method for time-fractional coupled Burgers equations on a semi-infinite domain. Adv Differ Equ 2017, 85 (2017). https://doi.org/10.1186/s13662-017-1141-2
