Abstract

In this paper, we study the existence of multiple positive solutions for a fourth-order impulsive differential equation with integral boundary conditions and a deviating argument. The main tool is the fixed point theorem of Avery and Peterson. An example is given to illustrate the main results.


1 Introduction

The theory and applications of fourth-order ordinary differential equations form an important and growing area of investigation. A main motivation is the description of the deformations of an elastic beam by means of a fourth-order differential equation boundary value problem (BVP for short). Owing to their applications in physics, engineering, and material mechanics, fourth-order BVPs have received considerable attention; see [1–8].

Integral boundary conditions give rise to a very interesting and important class of BVPs, which includes two-point, three-point, multi-point, and nonlocal BVPs as special cases. Therefore, in recent years, increasing attention has been paid to fourth-order BVPs with integral boundary conditions [9–15]. In particular, we mention some recent results.

In [12], Ma studied the following fourth-order BVP:

where \(p, q \in L^{1}[0,1]\), and h and f are continuous. By applying the fixed point index in cones, Ma obtained the existence of at least one symmetric positive solution.

In 2015, the authors of [13] investigated the fourth-order differential equation with integral boundary conditions,

By using a novel technique and fixed point theories, they showed the existence and multiplicity of positive solutions.

Unlike [12] and [13], the author of [14] considered a class of fourth-order differential equations with advanced or delayed arguments,

subject to the boundary conditions

or

The existence of multiple positive solutions was obtained by using the fixed point theorem of Avery and Peterson.

In addition to deviating arguments, the authors of [15] took impulses into account and studied the following fourth-order BVP:

The boundary conditions above are special Sturm-Liouville integral boundary conditions, since \(ay(0)-by'(0)=ay(1)+by'(1)=\int _{0}^{1}g(s)y(s)\,ds\). Several existence and multiplicity results were derived by using inequality techniques and fixed point theories. For more work on BVPs for impulsive differential equations, see [16–19] and the references therein.

Motivated by the results mentioned above, we investigate the following fourth-order impulsive Sturm-Liouville integral BVP with a deviating argument:

subject to

where \(\xi,\eta>0\). Compared with [13–15], the boundary conditions (1.2) are general Sturm-Liouville integral boundary conditions in which \(g_{1}(s)\) and \(g_{2}(s)\) may be two different functions in \(L^{1}[0,1]\). As a result, the operator T has a more complicated expression, and suitable lower and upper bounds for the Green’s functions must be established (Lemma 2.2 and Lemma 2.4). Then, by using the fixed point theorem of Avery and Peterson, we obtain the existence and multiplicity of positive solutions.

In (1.2), \(t_{k}\) (\(k=1, 2, \ldots, m\)) are fixed impulse points with \(0=t_{0}< t_{1}< t_{2}<\cdots<t_{m}<t_{m+1}=1\), and \(\triangle x'_{t_{k}}=x'({t_{k}}^{+})-x'({t_{k}}^{-})\), where \(x'({t_{k}}^{+})\) and \(x'({t_{k}}^{-})\) denote the right-hand and left-hand limits of \(x'(t)\) at \(t=t_{k}\), respectively.
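To make the jump notation concrete, the following Python sketch estimates \(\triangle x'_{t_{k}}\) for a hypothetical test function that is continuous at \(t_{k}=\frac{1}{2}\) but whose derivative jumps by 3 there. It approximates the one-sided limits \(x'({t_{k}}^{\pm})\) by one-sided difference quotients with a small step h. The function `x`, the step size, and the jump value are illustrative assumptions, not part of problem (1.1) and (1.2).

```python
def x(t, tk=0.5, jump=3.0):
    # Hypothetical test function: continuous at tk, with x'(t) = 2t to the
    # left of tk and x'(t) = 2t + jump to the right of tk.
    return t * t + (jump * (t - tk) if t > tk else 0.0)


def derivative_jump(f, tk, h=1e-6):
    # One-sided difference quotients approximate x'(tk^+) and x'(tk^-);
    # their difference approximates the jump x'(tk^+) - x'(tk^-).
    right = (f(tk + h) - f(tk)) / h
    left = (f(tk) - f(tk - h)) / h
    return right - left


print(derivative_jump(x, 0.5))  # close to 3
```

For this test function the right quotient equals \(4+h\) and the left one \(1-h\), so the estimate differs from the exact jump 3 by about \(2h\).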

Throughout the paper, we assume that \(J=[0,1]\), \(J_{0}=(0,1) \backslash\{t_{1}, t_{2}, \ldots, t_{m}\}\), \(\mathbb{R}^{+}=[0,+\infty)\), \(J_{k}=(t_{k}, t_{k+1}]\), \(k=0, 1, \ldots, m-1\). Moreover, \(\alpha: J\rightarrow J\) is continuous, and the following conditions hold:

- (H1): \(f\in C (J\times\mathbb{R}^{+}\times\mathbb{R}^{+}, \mathbb{R}^{+})\), with \(f(t,u,v)>0\) for \(t\in J\), \(u>0\), and \(v>0\);

- (H2): h is a nonnegative continuous function defined on \((0,1)\), and h is not identically zero on any subinterval of \(J_{0}\);

- (H3): \(I_{k}:J\times\mathbb{R}^{+}\rightarrow\mathbb{R}^{+}\) is continuous with \(I_{k}(t,u)>0\) (\(k=1,2,\ldots,m\)) for all \(t\in J\) and \(u >0\);

- (H4): \(g_{0}, g_{1}, g_{2} \in L^{1}[0,1]\) are nonnegative and \(\gamma=\int_{0}^{1}g_{0}(s)\,ds \in(0,1)\).

2 Expression and properties of Green’s function

For \(v(t)\in C(J)\), we consider the equation

with boundary conditions (1.2).

We shall reduce BVP (2.1) and (1.2) to two second-order problems. To this end, by means of the transformation

we convert problem (2.1) and (1.2) into

and

Lemma 2.1

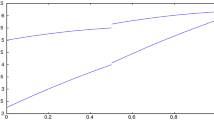

Let \(\lambda(t)\) be the solution of \(x''(t)=0\), \(x(0)=\xi\), \(x'(0)=1\), and let \(\mu(t)\) be the solution of \(y''(t)=0\), \(y(1)=\eta\), \(y'(1)=-1\); that is, \(\lambda(t)=t+\xi\) and \(\mu(t)=\eta+1-t\). Then \(\lambda(t)\) is strictly increasing on J and \(\lambda(t)>0\) on \((0,1]\), while \(\mu(t)\) is strictly decreasing on J and \(\mu(t)>0\) on \([0,1)\). For any \(v(t)\in C(J)\), the BVP (2.3) has a unique solution given by

where

and

where

Proof

Since λ and μ are two linearly independent solutions of the equation \(y''(t)=0\), we know that any solution of \(y''=v(t)\) can be represented by (2.5).

It is easy to check that the function defined by (2.5) is a solution of (2.3) if A and B are given by (2.9).

Conversely, we show that the function defined by (2.5) is a solution of (2.3) only if A and B are given by (2.9).

Let \(y(t)=(Fv)(t)+A\lambda(t)+B\mu(t)\) be a solution of (2.3). Then we have

and

Thus, by (2.8), we can obtain

Since

we have

Since

we have

From (2.11) and (2.12), we get

which shows that A and B are given by (2.9). □
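As a quick sanity check on Lemma 2.1, the sketch below (Python, exact rational arithmetic via `fractions`) evaluates the closed forms \(\lambda(t)=t+\xi\) and \(\mu(t)=\eta+1-t\) on a grid and checks the boundary data, monotonicity, and positivity stated in the lemma. It also evaluates \(\lambda'\mu-\lambda\mu'=1+\xi+\eta\). We assume that this constant is the \(\rho\) of the paper; that is consistent with the proof of Lemma 2.2 and with \(\rho=2\) for \(\xi=\eta=\frac{1}{2}\) in the Example, but the defining formula for \(\rho\) is not reproduced here.

```python
from fractions import Fraction


def lam(t, xi):
    # Solution of x'' = 0 with x(0) = xi, x'(0) = 1, i.e. lambda(t) = t + xi.
    return t + xi


def mu(t, eta):
    # Solution of y'' = 0 with y(1) = eta, y'(1) = -1, i.e. mu(t) = eta + 1 - t.
    return eta + 1 - t


def rho(xi, eta, t=Fraction(0)):
    # lambda'(t) * mu(t) - lambda(t) * mu'(t) with lambda' = 1, mu' = -1;
    # this equals mu(t) + lambda(t) = 1 + xi + eta (ASSUMED to be the paper's rho).
    return 1 * mu(t, eta) - lam(t, xi) * (-1)


xi = eta = Fraction(1, 2)
grid = [Fraction(k, 20) for k in range(21)]

assert lam(0, xi) == xi and mu(1, eta) == eta                          # boundary data
assert all(lam(p, xi) < lam(q, xi) for p, q in zip(grid, grid[1:]))   # increasing
assert all(mu(p, eta) > mu(q, eta) for p, q in zip(grid, grid[1:]))   # decreasing
assert all(lam(t, xi) > 0 and mu(t, eta) > 0 for t in grid)           # positive on all of J
assert len({rho(xi, eta, t) for t in grid}) == 1                      # rho is constant in t
print(rho(xi, eta))  # 2
```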

Assume that

- (H5): \(\Delta<0\), \(\alpha[\mu]<\rho\), \(\beta[\lambda]<\rho\).

Lemma 2.2

Denote \(e_{1}(t)=G_{1}(t,t)\), \(\hat{e}_{1}(t)=\frac{1}{\rho}(1-t)(t+\xi)\), for \(t\in J\). Let \(\kappa[v]=\int_{0}^{1} e_{1}(s)v(s)\,ds\) and \(\hat{\kappa}[v]=\int_{0}^{1} \hat{e}_{1}(s)v(s)\,ds\), for \(v \in C(J, \mathbb{R}^{+})\). If (H5) is satisfied, then the following results are true:

- (1) \(\hat{e}_{1}(s)\hat{e}_{1}(t)\leq G_{1}(t,s)\leq e_{1}(s)\), for \(t,s \in J\);

- (2) \(0\leq\underline{A} \hat{\kappa}[v]\leq A(v)\leq\overline{A} \kappa[v]\), for \(v \in C(J, \mathbb{R}^{+})\);

- (3) \(0\leq\underline{B} \hat{\kappa}[v] \leq B(v)\leq\overline{B} \kappa[v]\), for \(v \in C(J, \mathbb{R}^{+})\),

where

Proof

We first prove (1). Clearly, \(G_{1}(t,s)\leq e_{1}(s)\) for \(t,s\in J\).

Moreover, \(\hat{e}_{1}(s)\hat{e}_{1}(t)=\frac{1}{\rho^{2}}(1-s)(s+\xi)(1-t)(t+\xi)\), for \(s, t\in J\).

For \(0\leq s\leq t\leq1\), we notice that

It is easy to see that \(1-s\leq1\), \(1-t\leq\eta+1-t\), \(t+\xi\leq 1+\xi+\eta\), for \(s, t\in J\), \(\xi, \eta>0\), which implies

Hence, we have

Similarly, we can obtain

Next, we show that (2) and (3) hold. In view of (H5), for \(v \in C(J, \mathbb{R}^{+})\), we have

In the same way, we have \(B(v)\leq\overline{B} \kappa[v]\), \(B(v)\geq\underline{B}\hat{\kappa}[v]\), for \(v \in C(J,\mathbb {R}^{+})\). □
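The bounds in Lemma 2.2(1) can be checked numerically under one assumption: the explicit formula for \(G_{1}\) is not reproduced here, so the sketch below takes the standard form \(G_{1}(t,s)=\frac{1}{\rho}\lambda(\min\{t,s\})\mu(\max\{t,s\})\) with \(\rho=1+\xi+\eta\), which is consistent with the factors \(s+\xi\), \(\eta+1-t\), and \(1+\xi+\eta\) used in the proof above. Under this assumption it verifies \(\hat{e}_{1}(s)\hat{e}_{1}(t)\leq G_{1}(t,s)\leq e_{1}(s)\) exactly on a rational grid; this is a sanity check, not a proof.

```python
from fractions import Fraction


def G1(t, s, xi, eta):
    # ASSUMED form: lambda(min(t,s)) * mu(max(t,s)) / rho with
    # lambda(t) = t + xi, mu(t) = eta + 1 - t, rho = 1 + xi + eta.
    rho = 1 + xi + eta
    lo, hi = min(t, s), max(t, s)
    return (lo + xi) * (eta + 1 - hi) / rho


def e1(t, xi, eta):
    # e_1(t) = G_1(t, t), as in Lemma 2.2.
    return G1(t, t, xi, eta)


def e1_hat(t, xi, eta):
    # hat e_1(t) = (1 - t)(t + xi) / rho, as in Lemma 2.2.
    return (1 - t) * (t + xi) / (1 + xi + eta)


def check_lemma_2_2(xi, eta, n=40):
    """Exact check of hat e1(s) hat e1(t) <= G1(t,s) <= e1(s) on an (n+1)x(n+1) grid."""
    grid = [Fraction(k, n) for k in range(n + 1)]
    for t in grid:
        for s in grid:
            lower = e1_hat(s, xi, eta) * e1_hat(t, xi, eta)
            if not lower <= G1(t, s, xi, eta) <= e1(s, xi, eta):
                return False
    return True


print(check_lemma_2_2(Fraction(1, 2), Fraction(1, 2)))  # True
```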

Analogously to Lemma 2.1 in [12], we obtain the following result; we omit the proof.

Lemma 2.3

If (H4) holds, then for any \(y \in C(J)\), problem (2.4) has a unique solution x of the form

where

From (2.14) and (2.15), we can prove that \(H(t,s)\) and \(G_{2}(t,s)\) have the following properties.

Lemma 2.4

Let \(H(t,s)\) and \(G_{2}(t,s)\) be given as in Lemma 2.3. If (H4) holds, then the following results are true:

- (1) \(e_{2}(s)e_{2}(t)\leq G_{2}(t,s) \leq e_{2}(s)\), for \(t, s \in J\);

- (2) \(\frac{\Gamma}{1-\gamma}e_{2}(s)\leq H(t,s) \leq\frac{1}{1-\gamma}e_{2}(s)\), for \(t, s \in J\),

where

Proof

It is easy to see that (1) holds. We now prove (2):

and

□
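Lemma 2.4 can be illustrated in the same way, again under explicitly stated assumptions because the formulas of Lemma 2.3 are not reproduced here. The sketch takes \(G_{2}(t,s)=t(1-s)\) for \(t\leq s\) and \(G_{2}(t,s)=s(1-t)\) for \(s\leq t\), so that \(e_{2}(s)=s(1-s)\), and \(H(t,s)=G_{2}(t,s)+\frac{1}{1-\gamma}\int_{0}^{1}G_{2}(\tau,s)g_{0}(\tau)\,d\tau\) with \(\Gamma=\int_{0}^{1}e_{2}(\tau)g_{0}(\tau)\,d\tau\), a form commonly used for such integral conditions. The weight \(g_{0}\) is a hypothetical constant c, for which \(\gamma=c\), \(\Gamma=c/6\), and \(\int_{0}^{1}G_{2}(\tau,s)\,d\tau=\frac{s(1-s)}{2}\). Besides bounds (1) and (2), it checks a consequence of the type used to show \(TP\subset P\): for nonnegative y, a Riemann sum of \(u(t)=\int_{0}^{1}H(t,s)y(s)\,ds\) satisfies \(\min u\geq\Gamma\max u\).

```python
from fractions import Fraction


def G2(t, s):
    # ASSUMED: Green's function of -x'' = y with x(0) = x(1) = 0.
    return s * (1 - t) if s <= t else t * (1 - s)


def e2(s):
    return s * (1 - s)


def H(t, s, c):
    # ASSUMED: H = G2 + (1/(1-gamma)) * int_0^1 G2(tau,s) g0(tau) dtau with a
    # HYPOTHETICAL constant weight g0 = c, so the integral is c * s(1-s) / 2.
    gamma = c
    return G2(t, s) + c * e2(s) / (2 * (1 - gamma))


def check_lemma_2_4(c, n=30):
    gamma, Gamma = c, c / 6  # int g0 and int e2 * g0 for constant g0 = c
    grid = [Fraction(k, n) for k in range(n + 1)]
    for t in grid:
        for s in grid:
            if not e2(s) * e2(t) <= G2(t, s) <= e2(s):
                return False
            if not Gamma / (1 - gamma) * e2(s) <= H(t, s, c) <= e2(s) / (1 - gamma):
                return False
    return True


def cone_ratio(c, y, n=30):
    # Riemann sum of u(t) = int_0^1 H(t,s) y(s) ds for nonnegative y;
    # returns min u / max u, which the bounds above force to be >= Gamma.
    grid = [Fraction(k, n) for k in range(n + 1)]
    u = [sum(H(t, s, c) * y(s) for s in grid) / n for t in grid]
    return min(u) / max(u)


c = Fraction(1, 4)
print(check_lemma_2_4(c))                           # True
print(cone_ratio(c, lambda s: 1 + s * s) >= c / 6)  # True
```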

Lemma 2.5

Assume that (H1)-(H4) hold. Then problem (2.1) and (1.2) has a unique solution x given by

Lemma 2.6

Assume that (H3)-(H5) hold. Then, for \(v\in C(J,\mathbb{R}^{+})\), the unique solution x of problem (2.1) and (1.2) satisfies \(x(t)\geq0\) on J.

Proof

By Lemma 2.4, \(H(t,s)\geq0\) for \(t, s \in J\). Hence, by Lemma 2.5 together with Lemma 2.2 and (H3), we obtain

This completes the proof. □

3 Background materials and definitions

We now recall some definitions from the theory of cones in Banach spaces.

Definition 3.1

Let E be a real Banach space. A nonempty convex closed set \(P\subset E \) is said to be a cone if

- (i) \(ku\in P\) for all \(u\in P\) and all \(k\geq0\), and

- (ii) \(u, -u\in P\) implies \(u=0\).

Definition 3.2

On a cone P of a real Banach space E, a map Λ is said to be a nonnegative continuous concave functional if \(\Lambda :P\rightarrow\mathbb{R}^{+}\) is continuous and

\[\Lambda\bigl(tx+(1-t)y\bigr)\geq t\Lambda(x)+(1-t)\Lambda(y)\]

for all \(x, y \in P\) and \(t\in J\).

Similarly, a map φ is said to be a nonnegative continuous convex functional on P if φ: \(P\rightarrow\mathbb{R}^{+}\) is continuous and

\[\varphi\bigl(tx+(1-t)y\bigr)\leq t\varphi(x)+(1-t)\varphi(y)\]

for all \(x,y \in P\) and \(t\in J\).
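A discretized illustration of Definition 3.2: for functions sampled on a grid, the minimum over a fixed set of grid points behaves as a concave functional, and the maximum norm as a convex one. The sketch draws random nonnegative vectors and checks both inequalities with a small floating-point tolerance. The grid size, the index set (a stand-in for a subinterval such as \([\delta,1]\)), and the random sampling are illustrative assumptions.

```python
import random


def Lambda(x, idx):
    # Hypothetical discretized concave functional: minimum of the samples
    # x[i] over the index set idx (a stand-in for a subinterval of J).
    return min(x[i] for i in idx)


def sup_norm(x):
    # Discretized maximum norm, a convex functional.
    return max(abs(v) for v in x)


def check_definition_3_2(trials=1000, n=50, seed=0):
    """Randomly test the concavity/convexity inequalities of Definition 3.2."""
    rng = random.Random(seed)
    idx = range(n // 4, n)  # hypothetical "subinterval" of grid indices
    tol = 1e-12             # slack for floating-point rounding
    for _ in range(trials):
        # Nonnegative samples, mimicking elements of a cone of nonnegative functions.
        x = [rng.random() for _ in range(n)]
        y = [rng.random() for _ in range(n)]
        t = rng.random()
        z = [t * p + (1 - t) * q for p, q in zip(x, y)]
        if Lambda(z, idx) < t * Lambda(x, idx) + (1 - t) * Lambda(y, idx) - tol:
            return False
        if sup_norm(z) > t * sup_norm(x) + (1 - t) * sup_norm(y) + tol:
            return False
    return True


print(check_definition_3_2())  # True
```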

Definition 3.3

An operator is called completely continuous if it is continuous and maps bounded sets into precompact sets.

Let φ and Θ be nonnegative continuous convex functionals on P, Λ be a nonnegative continuous concave functional on P, and Ψ be a nonnegative continuous functional on P. Then for positive numbers a, b, c, and d, we define the following sets:

and

We will use the following fixed point theorem of Avery and Peterson to establish the existence of multiple positive solutions of problem (1.1) and (1.2).

Theorem 3.1

(See [20])

Let P be a cone in a real Banach space E. Let φ and Θ be nonnegative continuous convex functionals on P, Λ be a nonnegative continuous concave functional on P, and Ψ be a nonnegative continuous functional on P satisfying \(\Psi(kx)\leq k\Psi(x)\) for \(0 \leq k \leq1\), such that for some positive numbers M and d,

for all \(x\in\overline{P(\varphi,d)}\). Suppose

is completely continuous and there exist positive numbers a, b, and c with \(a< b\) such that

- (S1): \(\{x\in P(\varphi,\Theta,\Lambda,b,c,d):\Lambda(x)>b\} \neq \emptyset\) and \(\Lambda(Tx)>b\) for \(x\in P(\varphi,\Theta,\Lambda,b,c,d)\);

- (S2): \(\Lambda(Tx)>b\) for \(x\in P(\varphi,\Lambda,b,d)\) with \(\Theta(Tx)>c\);

- (S3): \(0 \notin R(\varphi,\Psi,a,d)\) and \(\Psi(Tx)< a\) for \(x\in R(\varphi,\Psi,a,d)\) with \(\Psi(x)=a\).

Then T has at least three fixed points \(x_{1}, x_{2}, x_{3} \in\overline{P(\varphi,d)}\), such that

and

4 Existence result for the case of \(\alpha(t) \geq t\) on J

Recall that the function \(h(t)\) in (1.1) satisfies (H2). We introduce the notation

Let \(X=C(J,\mathbb{R})\) be our Banach space with the maximum norm \(\lVert x\rVert=\max_{t\in J} \lvert x(t) \rvert\).

Set

where Γ is defined as in Lemma 2.4. We define the nonnegative continuous concave functional \(\Lambda=\Lambda_{1}\) on P by

where \(\delta\in(0,1)\) satisfies \(0<\delta<1-\delta<1\), that is, \(0<\delta<\frac{1}{2}\). Set \(J_{\delta_{1}}=[\delta,1]\).

Note that \(\Lambda_{1}(x) \leq\lVert x\rVert\). Put \(\Psi(x)=\Theta(x)=\varphi(x)=\lVert x\rVert\).
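The sets appearing in Theorem 3.1 are not written out above. The sketch below assumes the standard definitions from Avery and Peterson [20], namely \(P(\varphi,d)=\{x\in P:\varphi(x)<d\}\), \(P(\varphi,\Lambda,b,d)=\{x\in P:b\leq\Lambda(x),\ \varphi(x)\leq d\}\), \(P(\varphi,\Theta,\Lambda,b,c,d)=\{x\in P:b\leq\Lambda(x),\ \Theta(x)\leq c,\ \varphi(x)\leq d\}\), and \(R(\varphi,\Psi,a,d)=\{x\in P:a\leq\Psi(x),\ \varphi(x)\leq d\}\), with \(\Psi=\Theta=\varphi=\lVert\cdot\rVert\) as chosen here. Because the defining display for \(\Lambda_{1}\) is missing, it also assumes \(\Lambda_{1}(x)=\min_{t\in[\delta,1]}x(t)\). Functions are sampled on a grid, and membership in the cone P itself is not checked.

```python
def norm(x):
    # Discretized maximum norm; plays the role of Psi = Theta = phi.
    return max(abs(v) for v in x)


def Lambda1(x, ts, delta):
    # ASSUMED form: Lambda_1(x) = min over t in [delta, 1] of x(t), on grid ts.
    return min(v for v, t in zip(x, ts) if t >= delta)


# Standard Avery-Peterson sets (assumed), with Psi = Theta = phi = ||.||.
# Only the functional constraints are tested; membership in P is not.
def in_P_phi_d(x, d):
    return norm(x) < d


def in_P_phi_Lambda(x, ts, delta, b, d):
    return b <= Lambda1(x, ts, delta) and norm(x) <= d


def in_P_phi_Theta_Lambda(x, ts, delta, b, c, d):
    return b <= Lambda1(x, ts, delta) and norm(x) <= c and norm(x) <= d


def in_R(x, a, d):
    return a <= norm(x) <= d


ts = [k / 100 for k in range(101)]
delta = 0.25                    # hypothetical choice with 0 < delta < 1/2
a, b, c, d = 1, 1.5, 36, 2000   # constants taken from the Example

x_const = [2.0] * len(ts)       # constant function x(t) = 2
print(in_P_phi_Theta_Lambda(x_const, ts, delta, b, c, d),
      Lambda1(x_const, ts, delta) > b)  # True True
```

With the constants of the Example and the hypothetical \(\delta=\frac{1}{4}\), the constant function 2 satisfies the functional constraints of \(P(\varphi,\Theta,\Lambda,b,c,d)\) with \(\Lambda(x)>b\), which is the kind of element required by the nonemptiness part of (S1) (ignoring the cone constraint).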

Theorem 4.1

Let assumptions (H1)-(H5) hold and \(\alpha(t) \geq t \) on J. In addition, we assume that there exist positive constants a, b, c, d, ω, L with \(a< b\) such that

and

- (A1): \(f(t, u, v)\leq\frac{d}{\omega}\) for \((t, u, v)\in J \times[0,d] \times[0,d]\), and \(I_{k}(t,u)\leq\frac{d}{\omega}\) for \((t,u)\in J_{k} \times[0,d]\);

- (A2): \(f(t, u, v)\geq\frac{b}{L}\) for \((t, u, v)\in J_{\delta_{1}} \times[b,\frac{b}{\Gamma}]\times[b,\frac{b}{\Gamma}]\), and \(I_{k}(t,u)\geq\frac{b}{L}\) for \((t,u)\in (J_{\delta_{1}}\cap J_{k}) \times[b,\frac{b}{\Gamma}]\);

- (A3): \(f(t, u, v)\leq\frac{a}{\omega}\) for \((t, u, v)\in J \times[0,a] \times[0,a]\), and \(I_{k}(t,u)\leq\frac{a}{\omega}\) for \((t,u)\in J_{k} \times[0,a]\).

Then problem (1.1) and (1.2) has at least three positive solutions \(x_{1}\), \(x_{2}\), \(x_{3} \) satisfying \(\|x_{i}\|\leq d \), \(i=1, 2, 3\), and

and

Proof

For any \(x\in C(J,\mathbb{R}^{+})\), we define the operator T by

where \(hf_{x}(s)=h(s)f (s,x(s),x(\alpha(s)) )\). Then \(T:X \rightarrow X\), and problem (1.1) and (1.2) has a solution x if and only if x solves the operator equation \(x=Tx\).

We prove the existence of at least three fixed points of T by verifying that T satisfies the hypotheses of the Avery-Peterson fixed point theorem (Theorem 3.1).

From the definition of T, we can obtain

In view of (H1), (H2), Lemma 2.1, and Lemma 2.2, we have

So Tx is concave on J. From (4.3) and (4.4), combining with Lemma 2.4 and (H3), we can obtain

By (2) in Lemma 2.4, it follows that

On the other hand, from the properties of \(H(t,s)\), we have

This proves that \(TP\subset P\).

Now we prove that the operator \(T: P\rightarrow P\) is completely continuous. Let \(x\in\overline{P_{r}}\), so that \(\lVert x\rVert\leq r\). Since h and f are continuous, h is bounded on J and f is bounded on \(J\times[0,r]\times[0,r]\). Hence there exists a constant \(K>0\) such that \(\lVert Tx \rVert\leq K\), which proves that \(T\overline{P_{r}}\) is uniformly bounded. On the other hand, for \(t_{1}, t_{2} \in J\) there exists a constant \(L_{1} >0\) such that

This shows that \(T\overline{P_{r}}\) is equicontinuous on J. Together with the continuity of T, which follows from the continuity of f and \(I_{k}\), the Arzelà-Ascoli theorem shows that T is completely continuous.

Let \(x\in\overline{P(\varphi,d)}\), so that \(0\leq x(t)\leq d\) for \(t\in J\) and \(\lVert x \rVert\leq d\). Note that also \(0\leq x (\alpha (t) )\leq d\) for \(t\in J\), because \(0 \leq t \leq\alpha(t) \leq1\) on J. Hence

By (4.2), Lemma 2.2, Lemma 2.4, and (A1), we have

This shows that \(T :\overline{P(\varphi,d)}\rightarrow\overline {P(\varphi,d)}\).

To check condition (S1), we choose

Then

so

This proves that

Let \(b\leq x(t)\leq\frac{b}{\Gamma}\) for \(t\in[\delta,1]\). Since \(\delta\leq t \leq\alpha(t) \leq1\) for \(t\in[\delta,1]\), we also have \(b \leq x (\alpha(t) )\leq\frac{b}{\Gamma}\) on \([\delta,1]\). Hence

By (4.2), Lemma 2.2, Lemma 2.4, and (A2), we have

Thus condition (S1) holds.

Next, we prove that condition (S2) is satisfied. Take

Then

So condition (S2) holds.

Finally, we show that condition (S3) holds. Since \(\Psi(0)=0< a\), we have \(0\notin R(\varphi,\Psi,a,d)\). Suppose that \(x \in R(\varphi,\Psi,a,d)\) with \(\Psi(x)=\lVert x \rVert=a\).

Similarly, by (4.2), Lemma 2.2, Lemma 2.4, and (A3), we have

Hence condition (S3) is satisfied.

By Theorem 3.1, there exist at least three positive solutions \(x_{1}\), \(x_{2}\), \(x_{3}\) of problem (1.1) and (1.2) such that \(\lVert x_{i} \rVert\leq d\) for \(i= 1, 2, 3\),

and \(\lVert x_{3} \rVert < a\). This completes the proof. □

Example

We consider the following BVP:

with \(\alpha\in C(J,J)\), \(h(t)=Dt\), \(\alpha(t)\geq t\), \(\xi=\eta=\frac{1}{2}\), \(\rho=2\), and \(t_{1}=\frac{1}{12}\). It follows that \(\mu(t)=\frac{3}{2}-t\), \(\lambda(t)=t+\frac{1}{2}\), for \(t\in J\), and

Note that \(f\in C(\mathbb{R}^{+},\mathbb{R}^{+})\). For example, one can take \(\alpha(t)=\sqrt{t}\), which satisfies \(\alpha(t)\geq t\) on J.

In this case we have \(\gamma=\frac{1}{4}\), \(\Delta=-\frac{85}{36}\), \(\overline{A}=\frac{47}{170}\), \(\overline{B}=\frac{71}{170}\), \(\underline{A}=\frac{269}{6800}\), \(\underline{B}=\frac{457}{6800}\), \(l_{1}=\frac{11}{48}D\), \(\hat{l}_{1}=\frac{1}{12}D\), \(\Gamma=\frac{1}{24}\). Let \(a=1\), \(b=\frac{3}{2}\), \(c=36\), \(d\geq2000\), \(D=2784\). Then we can take \(\omega=250\) and \(L=1\). All assumptions of Theorem 4.1 are satisfied, so BVP (4.7) has at least three positive solutions.
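Several constants in the Example can be reproduced with exact rational arithmetic, under assumptions stated here because the Example's \(g_{0}\) and the formulas for \(\rho\) and \(e_{2}\) are not displayed: \(\rho=1+\xi+\eta\), \(e_{2}(s)=s(1-s)\), and a hypothetical constant weight \(g_{0}\equiv\frac{1}{4}\), which is one choice consistent with \(\gamma=\frac{1}{4}\) and \(\Gamma=\frac{1}{24}\). The sketch also evaluates the thresholds \(\frac{d}{\omega}\), \(\frac{b}{L}\), \(\frac{a}{\omega}\) of (A1)-(A3) for the stated constants (taking \(d=2000\)) and checks \(\alpha(t)=\sqrt{t}\geq t\) on a grid. Quantities such as \(\Delta\), \(\overline{A}\), and \(l_{1}\) are omitted because their definitions are not shown.

```python
import math
from fractions import Fraction as F

xi = eta = F(1, 2)
rho = 1 + xi + eta                  # assumed: rho = 1 + xi + eta


def lam(t):
    return t + xi                   # lambda(t) = t + xi (Lemma 2.1)


def mu(t):
    return eta + 1 - t              # mu(t) = eta + 1 - t (Lemma 2.1)


g0 = F(1, 4)                        # HYPOTHETICAL constant weight g_0
gamma = g0                          # gamma = int_0^1 g0(s) ds
Gamma = g0 * (F(1, 2) - F(1, 3))    # int_0^1 s(1-s) g0 ds, assuming e2(s) = s(1-s)

a, b, c, d = F(1), F(3, 2), F(36), F(2000)  # constants from the Example
omega, L = F(250), F(1)

print(rho, gamma, Gamma, b / Gamma)  # 2 1/4 1/24 36
print(d / omega, b / L, a / omega)   # 8 3/2 1/250

# alpha(t) = sqrt(t) satisfies alpha(t) >= t on a grid of J.
grid = [k / 100 for k in range(101)]
print(all(math.sqrt(t) >= t for t in grid))  # True
```

With these values \(\frac{b}{\Gamma}=36=c\), so the upper end of the interval \([b,\frac{b}{\Gamma}]\) in (A2) coincides with c.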

5 Existence result for the case of \(\alpha(t) \leq t\) on J

The cone P is defined as in (4.1). We define the nonnegative continuous concave functional \(\Lambda=\Lambda_{2}\) on P by

where \(\delta\in(0,1)\) satisfies \(0<\delta<1-\delta<1\). Set \(J_{\delta_{2}}=[0,1-\delta]\). Arguing as in the proof of Theorem 4.1, we obtain the following result.

Theorem 5.1

Let assumptions (H1)-(H5) hold and \(\alpha(t) \leq t \) on J. In addition, we assume that there exist positive constants a, b, c, d, ω, L with \(a< b\) such that (4.2) holds and

- (A1): \(f(t, u, v)\leq\frac{d}{\omega}\) for \((t, u, v)\in J \times[0,d] \times[0,d]\), and \(I_{k}(t,u)\leq\frac{d}{\omega}\) for \((t,u)\in J_{k} \times[0,d]\);

- (A2): \(f(t, u, v)\geq\frac{b}{L}\) for \((t, u, v)\in J_{\delta_{2}} \times[b,\frac{b}{\Gamma}]\times[b,\frac{b}{\Gamma}]\), and \(I_{k}(t,u)\geq\frac{b}{L}\) for \((t,u)\in (J_{\delta_{2}}\cap J_{k}) \times[b,\frac{b}{\Gamma}]\);

- (A3): \(f(t, u, v)\leq\frac{a}{\omega}\) for \((t, u, v)\in J \times[0,a] \times[0,a]\), and \(I_{k}(t,u)\leq\frac{a}{\omega}\) for \((t,u)\in J_{k} \times[0,a]\).

Then problem (1.1) and (1.2) has at least three positive solutions \(x_{1}\), \(x_{2}\), \(x_{3} \) satisfying \(\|x_{i}\|\leq d \), \(i=1, 2, 3\), and

and

References

Sun, J, Wang, X: Monotone positive solutions for an elastic beam equation with nonlinear boundary conditions. Math. Probl. Eng. 2011, Article ID 609189 (2011)

Yao, Q: Positive solutions of nonlinear beam equations with time and space singularities. J. Math. Anal. Appl. 374, 681-692 (2011)

O’Regan, D: Solvability of some fourth (and higher) order singular boundary value problems. J. Math. Anal. Appl. 161, 78-116 (1991)

Zhang, X: Existence and iteration of monotone positive solutions for an elastic beam equation with a corner. Nonlinear Anal., Real World Appl. 10, 2097-2103 (2009)

Bonanno, G, Di Bella, B: A boundary value problem for fourth-order elastic beam equations. J. Math. Anal. Appl. 343, 1166-1176 (2008)

Cabada, A, Tersian, S: Existence and multiplicity of solutions to boundary value problems for fourth-order impulsive differential equations. Bound. Value Probl. 2014, 105 (2014)

Amster, P, Alzate, PPC: A shooting method for a nonlinear beam equation. Nonlinear Anal. 68, 2072-2078 (2008)

Zhao, Y, Huang, L, Zhang, Q: Existence results for an impulsive Sturm-Liouville boundary value problems with mixed double parameters. Bound. Value Probl. 2015, 150 (2015)

Kang, P, Wei, Z, Xu, J: Positive solutions to fourth-order singular boundary value problems with integral boundary conditions in abstract spaces. Appl. Math. Comput. 206, 245-256 (2008)

Zhang, X, Feng, M: Positive solutions for classes of multi-parameter fourth-order impulsive differential equations with one-dimensional singular p-Laplacian. Bound. Value Probl. 2014, 112 (2014)

Lv, X, Wang, L, Pei, M: Monotone positive solution of a fourth-order BVP with integral boundary conditions. Bound. Value Probl. 2015, 172 (2015)

Ma, H: Symmetric positive solutions for nonlocal boundary value problems of fourth order. Nonlinear Anal. 68, 645-651 (2008)

Zhang, X, Feng, M: Positive solutions of singular beam equations with the bending term. Bound. Value Probl. 2015, 84 (2015)

Jankowski, T: Positive solutions for fourth-order differential equations with deviating arguments and integral boundary conditions. Nonlinear Anal. 73, 1289-1299 (2010)

Feng, M, Qiu, J: Multi-parameter fourth order impulsive integral boundary value problems with one-dimensional m-Laplacian and deviating arguments. J. Inequal. Appl. 2015, 64 (2015)

Afrouzi, GA, Hadjian, A, Radulescu, VD: Variational approach to fourth-order impulsive differential equations with two control parameters. Results Math. 65, 371-384 (2014)

Sun, JT, Chen, HB, Yang, L: Variational methods to fourth-order impulsive differential equations. J. Appl. Math. Comput. 35, 323-340 (2011)

Jankowski, T: Positive solutions for second order impulsive differential equations involving Stieltjes integral conditions. Nonlinear Anal. 74, 3775-3785 (2011)

Ding, W, Wang, Y: New result for a class of impulsive differential equation with integral boundary conditions. Commun. Nonlinear Sci. Numer. Simul. 18, 1095-1105 (2013)

Avery, RI, Peterson, AC: Three positive fixed points of nonlinear operators on ordered Banach spaces. Comput. Math. Appl. 42, 313-322 (2001)

Acknowledgements

The work is supported by Chinese Universities Scientific Fund (Project No. 2016 LX002). The authors would like to thank the anonymous referees very much for helpful comments and suggestions, which led to the improvement of the presentation and quality of the work.


Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors contributed equally to the writing of this paper. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Dou, J., Zhou, D. & Pang, H. Existence and multiplicity of positive solutions to a fourth-order impulsive integral boundary value problem with deviating argument. Bound Value Probl 2016, 166 (2016). https://doi.org/10.1186/s13661-016-0674-8
