Abstract

Seismic site characterization attempts to quantify seismic wave behavior at a specific location based on near-surface geophysical properties, for the purpose of mitigating damage caused by earthquakes. In recent years, techniques for estimating near-surface properties for site characterization using geophysical observations recorded at the surface have become an increasingly popular alternative to invasive methods. These observations include surface-wave phenomenology such as dispersion (velocity-frequency relationship) as well as, more recently, full seismic waveforms. Models of near-surface geophysical properties are estimated from these data via inversion, such that they reproduce the observed seismic observations. A wide range of inverse problems have been considered in site characterization, applying a variety of mathematical techniques for estimating the inverse solution. These problems vary with respect to seismic data type, algorithmic complexity, computational expense, physical dimension, and the ability to quantitatively estimate the uncertainty in the inverse solution. This paper presents a review of the common inversion strategies applied in seismic site characterization studies, with a focus on associated advantages/disadvantages as well as recent advancements.

Similar content being viewed by others

1 Introduction

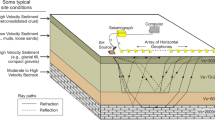

Seismic site characterization encompasses a variety of approaches to assess the hazards associated with earthquake ground shaking at a specific location, and is of key importance in mitigating damage caused by earthquakes. Site characterization generally requires estimation of the structure and geophysical properties of the shallow subsurface for the purpose of predicting seismic wave behavior. As seismic waves propagate into materials with lower seismic impedance (e.g., near-surface sediments and soils), the wave amplitude increases due to conservation of energy (Anderson et al. 1986; Anderson et al. 1996). Amplification of seismic waves also occurs at specific frequencies due to resonances within near-surface layers. Furthermore, larger-scale two- and three-dimensional (2D and 3D) structures such as sedimentary basins can trap and focus seismic energy, amplifying waves (e.g., Bard and Bouchon 1985; Campillo et al. 1989; Graves et al. 1998). Consequently, the geophysical properties at a site significantly affect the amplitude, frequency content (spectrum), and duration of ground motions during an earthquake. Greater knowledge of these properties (e.g., subsurface seismic velocities, density, and attenuation), including their spatial distribution, is therefore critical for understanding and predicting site-specific seismic hazards. Geophysical inversion provides a variety of approaches to estimate in situ sub-surface properties from surface observations, and often represents a convenient and economical alternative to invasive approaches (e.g., drilling and down-hole methods).

The general topic of geophysical inversion is considered in several texts (e.g., Parker 1994; Tarantola 2005; Menke 2018; Aster et al. 2018) and reviews in the literature (e.g., Jackson 1972; Parker 1977; Treitel and Lines2001; Sambridge and Mosegaard 2002). This paper presents a review of common inversion approaches for estimating geophysical models from seismic data for the purpose of site characterization. The paper is structured roughly in order of increasing complexity of the approaches reviewed. Many of the same inversion approaches considered here have been applied to estimate earth structure from local to global scales. Furthermore, these approaches have been applied to many other types of geophysical data (e.g., gravity, magnetic, electromagnetic). It is not intended, nor is it possible, for this paper to provide an exhaustive account of the seismic site characterization literature. It is also not the intention to promote one inversion approach over others, but rather to summarize for the reader the variety of techniques that are available (with a focus on recent advancements), and to consider some of the associated advantages/disadvantages.

The goals of this paper are to review the theory, assumptions, limitations, and practical application of inversion methods commonly used for seismic site assessment and characterization. Many factors in the inverse problem can influence the recovered model, and impact site characterization and predicted earthquake response. The range of issues considered here is broad and varies with respect to seismic data type, algorithmic complexity, computational expense, physical dimension, and the ability to quantitatively estimate the uncertainty in the inverse solution. Given the range of methods discussed here, this paper first provides a brief overview of some general aspects of inversion (applicable to all problems) in Section 2 before discussing specific inversion approaches in more detail. Most approaches considered here are based on recovering an optimal (best-fit) model of the shallow subsurface. Linearized and fully non-linear approaches to finding optimal one-dimensional (1D) models of subsurface structure are reviewed in Sections 3 and 4, respectively. As an alternative to methods that find a single optimal solution, Bayesian approaches which provide a probabilistic result are also reviewed in this paper in Section 5. Many studies in seismic site assessment are interested in more-complex 2D and 3D structures, such as sedimentary basins. Surface-wave tomography and full-waveform inversion (FWI) are two approaches for estimating 2D and 3D models that have recently become more applicable in site assessment studies, and are reviewed in Sections 6 and 7, respectively.

2 Theoretical overview

2.1 Models and data

Inversion can be defined as the estimation of the parameters of a postulated model that represents a physical system of interest, using observations of some process that interacts with the system (i.e., data). In the case of seismic site characterization, the physical system is the geophysical structure of the shallow subsurface, and the data are observations of seismic waves that interact with this structure. It is important to recognize that the model always represents an idealization of the actual physical system. For example, a model consisting of a 1D profile of seismic velocity assumes the subsurface is laterally homogeneous (and typically isotropic). An important issue in all inverse problems is whether the postulated model adequately represents the physical system. For example, assuming lateral homogeneity when the subsurface is actually laterally heterogeneous introduces modelling errors that can bias or preclude meaningful results. Such cases may necessitate more-complex 2D or 3D models of the system. Furthermore, within the context of site-specific seismic hazard assessment, such 2D or 3D models may ultimately be required to accurately predict the response of a site during an earthquake (e.g., Campillo et al. 1990; Trifunac2016).

In the case of a 1D profile, model parameters commonly represent the shear-wave velocity (VS) and compressional-wave velocity (VP) within discrete layers. Other parameters such as density (ρ) and attenuation are also considered. However, it is commonly accepted that the response of a site to an earthquake is predominantly influenced by the VS structure. In 2D or 3D models, parameters typically represent seismic velocities of discrete cells in a spatial grid. The number and sizes of layers or cells in the model are also issues in model selection. Depending on the particular approach, these spatial properties may also be considered as model parameters estimated in the inversion (e.g., the number and thicknesses of layers in a 1D scenario).

As mentioned above, the data considered in this review to constrain subsurface structure represent observations of seismic wave phenomenology (other types of geophysical data are sometimes used for site characterization, but seismic data are the most common and informative). Common seismic data include measurements of the dispersion (variation of phase or group velocity with frequency) and the horizontal-to-vertical spectral ratio (HVSR) of surface waves. Both of these data types can be extracted from ambient seismic noise, although controlled-source and earthquake recordings are also used. In some cases, surface-wave attenuation curves can be measured from multi-channel active-source recordings. Detailed discussions on the measurement and processing of the various data types here are included in other review papers in this issue. Furthermore, many site characterizations studies estimate near-surface structure using body-wave (active-source) imaging methods (e.g., Williams et al. 2000). These problems are formulated differently than those discussed in this review, and are considered in other review papers in this issue. In the case of tomographic inversion, the data typically represent the travel times of particular seismic phases. In FWI, rather than considering the data to be specific features of a recorded seismogram (such as wave amplitudes or travel times), the data are taken to be the seismogram itself, which contains more information (and associated complexities).

Many site characterization applications are based on estimating specific regulatory-based representations of near-surface structure (e.g., the travel-time averaged VS of the upper 30 m, known as VS30). Such site-characterization parameters can be extracted from seismic inversion results. However, it is worth noting that other studies estimate these site parameters directly, based on empirical relationships for surface-wave data at particular wavelengths (e.g., Martin and Diehl 2004; Albarello and Gargani2010), or other proxies such as surficial geology (e.g., Wills and Clahan 2006) and topography (e.g., Yong et al. 2012). As these approaches do not formally represent inversion, they are not considered further in this review, but see Yong (2016) and Savvaidis et al. (2018) for further discussion on this topic.

The near-surface attenuation properties of a site are of significant importance in seismic site assessment. A common technique for studying near-surface attenuation is via the amplitude spectra of earthquake recordings, which typically display a decrease in amplitude at high frequencies that is often modelled by a spectral decay factor κ. The path-corrected component of κ, called κ0, is believed to be a frequency-independent site-attenuation (and scattering) parameter (e.g., Pilz and Fäh 2017; Palmer and Atkinson 2020). Although useful, κ is typically estimated empirically, and does not represent the result of an inversion. Hence, this topic is not considered further here.

2.2 The forward problem

In order to consider an inverse problem, a solution must be available for the corresponding forward problem (also called the forward model or direct problem). For a given set of model parameters, the forward problem computes (predicts) the data that would be observed for this representation of the physical system (i.e., the forward problem simulates the physical processes that lead to the data). Mathematically, the forward problem represents a mapping from the model-parameter space to the data space, while the inverse problem represents the reverse mapping. Geophysical forward problems (predicting data for models) produce a unique solution that is generally stable in the sense that small changes to the model cause only small changes to the resulting data. In contrast, the inverse problem (estimating model parameters from data) is non-unique (more than one model can fit the data) and can be unstable (i.e., small changes to the data, such as errors, can cause large changes in the recovered model). Hence, solutions to inverse problems should always be appraised critically, and quantitative uncertainty estimation, when possible, is valuable.

To briefly consider the forward problem for the data types mentioned above, predicting surface-wave dispersion for laterally homogeneous models usually employs efficient numerical methods such as the Thomson-Haskell propagator-matrix (Thomson 1950; Haskell 1953; Knopoff 1964; Dunkin 1965; Gilbert and Backus 1966). For HVSR, a consensus for the cause of the shape of the spectral amplitude ratio has not been established (Lunedei and Malischewsky 2015; Molnar et al. 2018). As such, a variety of techniques have been used to solve the HVSR forward problem, with the various differences (or simplifications) in the underlying theories representing possible sources of error. Most 1D forward models are based on discrete layers with uniform geophysical properties within each layer. Model parameterizations that involve gradients (e.g., a power-law profile) can be parameterized in terms of multiple uniform sub-layers. Recently, spectral-element techniques have been developed to solve the 1D forward problem for more general profile forms including uniform layers, smoothly varying structure, or combinations thereof (e.g., Hawkins 2018). For tomographic inversion, the forward problem must determine the wavefront evolution or ray path for a particular seismic phase to accurately predict travel times (e.g., Rawlinson and Sambridge 2004). FWI requires computation of synthetic seismograms that accounts for complex heterogeneity and scattering effects. For this purpose, numerical approaches for solving the partial differential equations governing seismic wave propagation are typically employed (e.g., Bouchon et al. 1989; Virieux 1986; Komatitsch and Vilotte 1998; Akcelik et al.2003).

All inversion methods discussed in this paper incorporate an underlying approach to solving the corresponding forward problem, with associated assumptions that can impact the model solution. However, further discussion of the forward problem (unique to each data type and problem) is beyond the scope of this review.

2.3 Errors and misfit

The goal of most inversion approaches is to determine the set of model parameters for which the predicted data best fit the observed data. A perfect fit is generally not possible (or desirable) because of data errors, which include measurement errors on the observed data (e.g., due to instrument effects and competing seismic vibrations in the environment) and theory errors on the predicted data (due to simplified model parameterizations and approximate physics of the forward problem). A common measurement error in site characterization studies considering surface-wave dispersion is the misidentification, or interaction, of dominant modes in the data (O’Neill and Matsuoka 2005). Data errors (sum of measurement and theory errors) can be considered to represent all factors that cannot be modelled or accounted for in the inverse problem. Measurement errors are often considered to be statistical (i.e., aleatoric), whereas theory errors typically introduce systematic (i.e., epistemic) errors. In some cases, measurement errors can be characterized statistically from repeated sets of observed data. However, this approach does not apply to theory errors, the statistics of which are usually poorly known.

In many inversion approaches, the data misfit function is formulated based on knowledge or assumptions on the error processes. Considering the difference between observed and predicted data (called data residuals) to represent the errors, the misfit function can be derived by assuming a particular statistical distribution of the residuals given the model, which is then interpreted as the likelihood of the model. For example, the common assumption of Gaussian-distributed errors leads to an L2 misfit function (negative log-likelihood function) consisting of the sum of squared residuals, weighted by the inverse error covariance matrix. The Gaussian assumption is supported by the Central Limit Theorem, which states that the sum of a number of error processes tends to a Gaussian distribution regardless of the distributions of the individual processes. Further, L2 misfit minimization corresponds to least-squares methods, for which analytic solutions exist for linear and linearized problems. However, the assumption of Gaussian errors and least-squares misfit can be inappropriate for data sets containing outliers (data with improbably large errors). In such cases, the assumption of Lapalacian errors (i.e., a two-sided exponential distribution) is more robust. This assumption leads to an L1 misfit function based on the sum of absolute values of residuals, weighted by the square-root of the inverse covariance matrix. No analytic solution to L1 misfit minimization exists.

The L1 and L2 misfit functions mentioned above formally require knowledge of the error covariance matrix, which is often not available. However, under the assumption of independent, identically distributed (IID) errors (i.e., a diagonal covariance matrix with constant variance), the problem of misfit minimization is independent of the covariance matrix and unweighted misfit functions apply. This approach is commonly used for misfit minimization; however, it does not apply to parameter uncertainty estimation where error quantification is required. Furthermore, it should be noted that if the errors are not IID (common for geophysical data), the approach can lead to suboptimal (biased) solutions. In such cases, it may be preferable to estimate the error statistics from the data as part of the inverse problem. This requires variance estimation if the errors can be considered independent, or full covariance estimation for inter-dependent (e.g., serially correlated) errors. Variance/covariance can be estimated using non-parametric approaches where the residuals from an initial unweighted inversion are used to compute a diagonal or Topeplitz (band-diagonal) covariance matrix for a subsequent inversion. Alternatively, parametric approaches can be applied in non-linear (numerical) inversions by including the parameters for a model of the covariance matrix in the inversion.

Misfit functions can also be defined without a statistical foundation to serve the same purpose of quantifying the difference between observed and predicted data. For example, the optimal-transport metric has gained popularity as a misfit function in several seismological inverse problems (e.g., Métivier et al. 2016; Hedjazian et al. 2019). This misfit metric enhances certain desirable properties of the problem such as linearity of the relationship between data and model parameters, as well as the uniqueness of the problem. It is worth noting that several of the inversion strategies reviewed in this paper (particularly non-linear inversions) need not be based on specific assumptions on data errors.

Regardless of how the misfit function is defined, it can be interpreted as a hyper-surface in the model space (i.e., a multi-dimensional function over the parameters). The goal for many inverse methods is to estimate the optimal set of model parameters that represent the global minimum of the misfit surface. An important distinction is whether the inverse problem is linear, weakly non-linear, or strongly non-linear. Linear inverse problems have a single minimum of the misfit function over the parameter space. An idealization of such a misfit function is illustrated in Fig. 1a. In particular, for a linear inverse problem with Gaussian-distributed errors, the L2 misfit function represents the (negative) logarithm of a Gaussian likelihood function over the parameter space, and an analytic expression for this solution exists.

Most inverse problems in geophysics (including seismic site assessment and characterization) are non- linear. A rare example of a linear problem is the inversion of measured surface-wave attenuation curves to estimate near-surface P-wave and/or S-wave attenuation (described by dimensionless quality factor Q) where, for known velocity structure, the relationship between surface-wave attenuation and body-wave attenuation is linear (e.g., Xia et al. 2002b). In some cases, the non-linearity of an inverse problem may be weak, such that the problem can be solved via linearization, iteratively stepping down the local misfit gradient to the minimum (Section 3). For strongly non- linear problems, the misfit surface can be complex, potentially including multiple disconnected regions with low misfit (i.e., local minima) in the parameter space, as illustrated in Fig. 1b. Linearized inversions can fail (converge to local minima or diverge) for strongly non-linear problems, and non-linear approaches are required (Section 4).

Non-linear inversion in seismic site assessment and characterization comprises a diverse collection of methods. These include the downhill simplex method (DHS, Section 4.1), which moves roughly down the misfit gradient through geometric moves without computing derivatives of the hyper-surface; global search methods such as simulated annealing (SA) and genetic algorithms (GA, Section 4.2), which apply a directed random search based on natural optimization processes; and the neighborhood algorithm (NA, Section 4.3), which sequentially subdivides the parameter search space to converge to the solution. Large-scale problems such as tomography and FWI are typically based on linearization for practical (computational) reasons. An alternative to inversion methods that seek the best-fit model solution (a point estimate in the parameter space), Bayesian inversion is based on probabilistic (numerical) sampling over the parameter space to estimate properties of the posterior probability density (PPD), providing parameter estimates together with quantitative (non-linear) uncertainty analysis (Section 5).

2.4 Parameterization

The approach to parameterizing the model is another important issue in inversion. Adopting too few parameters (e.g., layers in a 1D problem or grid cells in 2D or 3D) can underfit the observed data, leaving model structure unresolved and biasing parameter estimates. Conversely, adopting too many parameters can overfit the data (i.e., fit the errors on the data), resulting in models with spurious (unconstrained) structural features. Furthermore, more model parameters typically means greater computation cost.

The inversion also depends on the form of the model represented by the parameterization. For example, as mentioned above, most 1D site-characterization studies consider the subsurface to be represented by a discrete stack of uniform layers, with discontinuities in geophysical properties at layer boundaries. However, some recent inversions consider gradient (smooth) structures represented by linear or power-law functions (e.g., Molnar et al. 2010) or, more generally, by polynomial basis functions (Gosselin et al. 2017). In some cases, these gradient-based parameterizations have been shown to characterize unconsolidated soils and sediments better than discrete layered models, although this is not universal for all sites.

Some approaches to parameterization, particularly for linearized inversions, include only the geophysical properties for a fixed discretization of the subsurface model, since solving for spatial parameters (e.g., layer thicknesses or cell sizes) can significantly increase the non-lineariy of the problem. In this approach, the model is typically over-parameterized with many layers/ grid cells that are below the spatial resolution of the data to provide flexibility for the solution. The resulting under-determined inversion can be constrained by regularization (described in Section 3) to explicitly control the data misfit and structure of the solution.

An alternative parameterization approach is to solve for the spatial properties of the model (individual layer thicknesses or cell sizes) as part of the problem. This is more often applied in non-linear approaches, where the increased non-linearity is of less concern. This approach typically assumes a small number of layers/cells to constrain model structure, but the issue of over- or under-parameterization is sometimes given little attention beyond qualitative practices such as progressively increasing the number of layers until the data misfit stops decreasing significantly. However, quantitative approaches to parameterization based on formal information criteria or by sampling probabilistically over the number of parameters have been applied in site-assessment inversion, and will be reviewed herein.

2.5 Practical considerations

The inversion approaches discussed in the following sections of this paper have various advantages and disadvantages. For instance, linearized inversions often require the fewest data predictions (forward solutions) and are the least computationally demanding. Bayesian inversion numerically samples the model probability over the parameter space, and can require tens to hundreds of thousands of data predictions. Consequently, Bayesian methods are typically the most computationally demanding, but also provide the most informative solutions. Non-linear optimizations generally fall somewhere between these extremes in terms of computational demand.

Forward solutions for 1D models in seismic site characterization are quite efficient such that inversions can generally be solved in a matter of seconds (for linearized methods) to minutes (for Bayesian methods) with serial algorithms on a desktop computer. In microtremor array methods for local site-assessment applications (e.g., estimating geophysical properties at a site over 10s to 100s metres depth), the 1D assumption is required only over the lateral extent of the seismometer array (also \(\sim \)10–100 m). For larger-scale studies (e.g., tomographic inversion and FWI for sedimentary basin structure), 2D or 3D parameterizations are generally required. In these cases, the computational demand of the forward problem is much greater and most approaches rely on linearized inversion. Bayesian methods have been applied to large-scale seismic inversions at significant computational expense (e.g., Bodin and Sambridge 2009; Bodin et al. 2012; Gosselin et al. 2021); however, to date, such methods have not been applied to site assessment.

Inverse methods are sometimes treated as a “black box,” with data as input and a model of earth structure as output, but with little consideration of the underlying processes. However, the output model can be non-physical or meaningless if the data are of poor quality or if the error assumptions or model parameterization do not apply. The observed data should be inspected (visually or otherwise) for quality control and, whenever possible, “sanity checks” applied to assess the reliability of model solutions. For example, in HVSR inversion, the peak frequency for the spectral-ratio curve is strongly linked to the depth of the largest seismic impedance contrast in the subsurface (e.g., the soil-bedrock interface), with a lower frequency indicating a deeper interface. If the observed HVSR data possess a low-frequency peak, then it is reasonable to expect the inversion solution to include a large change in seismic velocity at a deep interface. For all inverse problems, the agreement between the observed and predicted data should be examined to ensure a meaningful fit (but note that good data fit is a necessary but not sufficient condition for a meaningful model solution). Examining the data residuals after an inversion can also provide useful insight into the data-error statistics. Further discussion on “guidelines” and details pertinent to the processing and inversion of surface-wave data in particular is provided by Foti et al. (2011), Foti et al. (2018), and Vantassel and Cox (2021).

Due to the non-uniqueness and potential instability of inverse problems, it is important to assess the uncertainty of the model solution, when possible. Understanding and quantifying the uncertainties in estimated near-surface structure (and associated effects on site characterization) is of significant interest for engineering and planning purposes, and has been identified as a critical issue in seismic site characterization (Cornou et al. 2006). However, uncertainty estimation can be challenging for inverse problems, and many methods are approximate and/or qualitative. For instance, linearization errors as well as regularization schemes generally preclude quantitative uncertainty estimation for linearized inversions. Global-search methods are designed to locate the optimal solution, but not estimate uncertainties. However, Bayesian inversion methods can provide rigorous uncertainty quantification for non-linear problems, including seismic site assessment (e.g., Molnar et al. 2010; Dettmer et al. 2012; Gosselin et al. 2017). Furthermore, the estimated inversion uncertainties can be propagated into site-assessment analyses to characterize the site and predict the expected range of seismic amplification and resonance, representing a valuable result for engineers and planners (Molnar et al. 2013; Gosselin et al. 2018).

3 Linearized inversion

As mentioned in the previous section, although inverse problems associated with seismic site characterization are functionally non-linear, in some cases the analytic theory for linear inversion can be applied via local linearization and iteration. This section reviews common linearized inversion techniques and their application to site assessment.

Consider a non-linear inverse problem with vectors of model parameters m, observed data d, and predicted data d(m). Equating the observed data to those predicted for the model sought, and expanding the data functional (forward problem) about a starting model m0 to first order leads to

Here, A is the sensitivity or Jacobian matrix of partial derivatives, Aij = ∂di(m0)/∂m0j. Neglecting higher-order terms in the expansion linearizes the inverse problem about the initial model. Rearranging Eq. 1 and defining data and model perturbations δd = d −d(m0) and δm = m −m0, respectively, leads to a linear relation:

This is the fundamental relationship between changes in a proposed model and resulting changes in the forward-modelled data, which can be used to refine the initial model.

For some inverse problems, analytic expressions for the required partial derivatives are available, but in other cases they must be estimated numerically (e.g., via finite differences). The sensitivity matrix encapsulates the physics/geometry for the (linearized) problem, and can provide useful insight. For example, Xia et al. (1999) examined the sensitivity matrix for 1D linearized inversion of surface-wave dispersion data, and concluded quantitatively that dispersion data are significantly more sensitive to VS than VP or ρ. Furthermore, Xia et al. (2003) determined that, for a given frequency, higher-order modes are more sensitive than the fundamental mode to deeper structure, and thereby provide greater information for, and constraint on, such structure. Note that we discuss the sensitivity matrix within the context of a discrete, parameterized model. The continuous (and analytic) equivalent to the sensitivity matrix are often called the Fréchet derivatives (McGillivray and Oldenburg 1990).

As mentioned previously, the assumption of Gaussian-distributed errors for a linear inverse problem leads to an analytic solution. For Eq. 2, this assumption leads to a likelihood function

where

is the misfit (negative log-likelihood) function and C is the data error covariance matrix. The best-fit model perturbation δm (for the linearized problem) can be found by maximizing the likelihood or, equivalently, minimizing the misfit: setting ∂Φ(δm)/∂δm = 0 leads to

The model solution is given by m1 = m0 + δm. Since higher-order terms were neglected, this may not represent a satisfactory solution, but the procedure can be repeated iteratively until the data are fit appropriately and/or the parameters no longer change between iterations.

The linearized solution, Eq. 5, requires the matrix ATC− 1A to be well-conditioned. In practice, depending on the choice parameterization, inversions for seismic site characterization are often ill-conditioned (nearly singular), which leads to unstable inversions. The inversion can be stabilized using singular-value decomposition (e.g., Parolai et al. 2006) and/or by incorporating additional constraints on the model parameters independent of the data.

A common strategy to incorporate constraints for stability is regularization (Fig. 2). Rather than minimizing the data misfit Eq. 4, regularization considers a more general objective function that augments the data misfit with a model misfit term

where \(\delta \hat {\mathbf {m}}\) represents a preferred value for δm, R represents a weighting matrix (the regularization matrix), and β is a trade-off parameter determining the relative importance of the two terms. Minimizing Eq. 6 with respect to δm leads to the regularized solution which may be expressed by

Tradeoff between data misfit and regularization. Solid contours show the misfit surface with its minimum at the red star. Dashed contours show a hypothetical regularization surface with its minimum at the blue star (which does not fit the data). The regularized solution balances minimizing both functions. Adapted from Sambridge and Mosegaard (2002)

In Eq. 7, the term βRTR stabilizes the matrix inversion (cf. Eq. 5), given appropriate choices of β and R. The role of β as a trade-off parameter is clear: in the \(\lim \beta \!\rightarrow \! 0\) Eq. 7 simplifies to Eq. 5 which minimizes data misfit alone, while the \(\lim \beta \!\rightarrow \! \infty \) leads to \(\delta \mathbf {m} = \delta \hat {\mathbf {m}}\) which minimizes model misfit. The goal is to determine an appropriate value for β which provides an acceptable data misfit while stabilizing the inversion. If suitable knowledge of the data error covariance is available, β can be chosen to fit the data according to a statistical criterion (e.g., χ2 test). If not, a more subjective approach is to plot the data misfit versus the model misfit to determine a balance near the inflection point of this curve (the L-curve method, e.g., Hansen 1992).

The most common form of regularization used in (1D) near-surface seismic inversion sets R = I (the identity matrix) and \(\boldsymbol {\delta } \hat {\mathbf {m}} = \boldsymbol {0}\) (i.e., a preference for small linearized step size), such that Eq. 7 becomes

This solution adds β to the main diagonal of ATC− 1A to overcome ill-conditioning. For IID errors (i.e., C = σ2I), this method is often referred to as damped least squares. This regularization, favoring small δm, is consistent with local linearization which may only apply in a small region around the initial model.

Levenberg (1944) proposed a strategy for assigning β based on the number of linearized iterations such that β is larger for initial iterations (consistent with the local linear assumption) but gradually decreases for later iterations in order to better fit the observed data near convergence. Marquardt (1963) improved the inversion by specifying RTR to be the diagonal components of ATC− 1A such that the relative weighting of model parameters in the regularization is defined by information in the sensitivity matrix. The Levenburg-Marquart and damped least-squares methods have been used extensively in linearized inversion for 1D seismic site characterization (e.g., Xia et al. 1999; Xia et al. 2002a; Xia et al. 2003; Forbriger2003).

Alternatively, regularizations can be defined to represent first- or second-order spatial derivative operators applied to the parameters to minimize model gradients or roughness, respectively (Constable et al. 1987; Aster et al. 2018). These regularizations minimize complex structure, producing simple (flat or smooth) models. For these regularizations, the linearized problem, Eq. 2, is typically recast as

such that the inversion and regularization are formulated for the updated model rather than the model perturbation. Minimum-structure regularized inversions are often used in 2D and 3D engineering-scale problems in site assessment (considered in Sections 6 and 7).

Linearized inversion may fail by diverging, or converging to a local (rather than global) minimum, if the non-linearity of the problem is strong and/or the initial model is poor. Non-linear methods, discussed in the following section, are designed to overcome these problems. Compared to non-linear methods, linearized inversion typically requires fewer forward operations (data predictions) since they exploit misfit gradient information rather than employ directed random searches. Parolai et al. (2006) considered the inversion of surface-wave dispersion to estimate near-surface 1D VS structure using linearization as well as two non-linear methods (DHS and GA, discussed in Section 4). Similarly, Lu et al. (2016) compared linearization to SA. Both studies showed that, given a relatively accurate starting model, linearized inversion performed as well as non-linear methods. Some studies have considered multi-step hybrid inversions that initially apply a non-linear approach (e.g., GA) to estimate a good initial model for a subsequent linearized inversion (e.g., Picozzi and Albarello 2007; Lei et al. 2018). Further comparisons between linear and non-linear inversions of dispersion data for site characterization applications are given in Garofalo et al. (2016).

In linear inverse theory, the quality of the model solution can be assessed through the calculation of resolution and model covariance matrices. However, for linearized inversions, these measures suffer from linearization error, and regularization can preclude meaningful results; they do not appear to be commonly used in seismic site characterization.

4 Non-linear optimization

Linearized inversion methods considered in the previous section are sometimes referred to as local searches since, although they move efficiently downhill based on misfit gradient information, they typically remain close to the starting model and are prone to become trapped in local minima. As an alternative, non-linear search (optimization) methods are designed to widely search the space and (ideally) avoid sub-optimal solutions. A variety of non-linear optimization methods have been applied to geophysical inversion for site assessment. This includes DHS, global search methods of SA and GA, and NA, all of which are described in this section. The goal of these methods is to determine the set of model parameter values that minimizes the data misfit via numerical optimization; i.e., the various methods all solve the same problem, but apply different optimization schemes. NA has been most widely used in inversion for 1D seismic site characterization studies due to the availability of a user-friendly software implementation (geopsypack, Wathelet et al. 2020). For this reason, and because the original algorithm has been modified and improved for this application, the NA is reviewed in greater detail in Section 4.3. For a more technical discussion on some of the techniques reviewed in this section (for general applications in geophysics), as well as for further discussion on exploitation vs. exploration of the misfit hyper-surface in geophysical inverse methods, see Sambridge and Mosegaard (2002).

4.1 Downhill simplex

The DHS method (Nelder and Mead 1965) is an optimization method based on a geometric scheme for moving “downhill” in parameter space without calculating partial derivatives. DHS operates on a simplex (convex hull) of M + 1 models in an M-dimensional model parameter space, as illustrated in Fig. 3 for M = 3. Computing the misfit for each model of the simplex provides (limited) information on the local misfit gradient without derivative computations.

Geometrical steps of DHS illustrated for a 3D parameter space. Initial simplex of 4 models (a). Operations are applied to the model with the highest misfit including reflection (b), reflection plus expansion (c), and contraction (d). If all of these fail, a multiple contraction of M models towards the lowest-misfit model is applied (e)

The simplex undergoes a series of transformations in order to work its way downhill. Each model is ranked according to its misfit. The algorithm initially attempts to improve the model with the highest misfit by reflecting it through the face of the simplex (as shown in Fig. 3b). If this new model has the lowest misfit in the simplex, an extension by a factor of 2 in the same direction is attempted (Fig. 3c). If the extension further reduces the misfit the result is retained; otherwise it is not. If the model obtained by the reflection still has the highest misfit in the simplex, the reflection is rejected and a contraction by a factor of 2 towards the lowest-misfit model is attempted (Fig. 3d). If none of these steps decrease the misfit below the second highest in the simplex, then a multiple contraction by a factor of 2 in all dimensions toward the lowest-misfit model is performed (Fig. 3e). The above series of steps is repeated until convergence is achieved (generally based on the simplex shrinking to a point in model space) or a maximum number of iterations is reached.

The DHS method moves progressively downhill in misfit without relying on linearization, but as it has limited ability to move uphill (except potentially multiple contractions), it is prone to becoming trapped in local minima in the parameter space. To improve the confidence in finding the global minimum, it is recommended to run the procedure several times, initiated from different starting models.

The DHS algorithm has been widely used in 1D site characterization studies, particularly considering surface-wave dispersion data (e.g., Ohori et al. 2002; Parolai et al. 2006; Zomorodian and Hunaidi 2006). García-Jerez et al. (2016) developed a widely used software package (HV-Inv) that applies the DHS method (among other optional optimization methods) to invert HVSR data for shallow 1D velocity structure. The software also supports the joint inversion of HVSR and dispersion data. Baziw (2002) applied DHS to seismic cone penetration data, which measure seismic body waves rather than surface waves and are typically considered using simplified direct conversions to seismic velocities in soils (as opposed to formal inversions).

As mentioned above and similar to linearized inversion (Section 3), the DHS method is prone to converge to local minima in the parameter space. DHS has been combined with global search methods to exploit the advantages of each. In 1D site characterization studies considering HVSR and/or surface wave dispersion data, the DHS method has often been used in combination with an initial global search method (most-often SA, discussed in Section 4.2) to reduce the possibility of converging to a local minimum (e.g., Alfaro Castillo 2006; Poovarodom and Plalinyot 2013; García-Jerez et al. 2019; Maklad et al.2020).

4.2 Global search: simulated annealing and genetic algorithms

Global-search methods are designed to explore the parameter space widely, and explicitly include the ability to move uphill in misfit in order to escape from local minima in search of a better solution. Two widely used global-search methods in geophysics are SA and GA, which are both based on analogies of non-linear optimization processes that exist in nature.

SA is based on an analogy with the natural optimization process of thermodynamic annealing, by which crystals are grown and metals hardened (Van Laarhoven and Aarts 1987). The optimization algorithm consists of a series of iterations involving random perturbations of the unknown model parameters (representing the thermodynamic system) of \(\mathbf {m}\rightarrow \mathbf {m}^{\prime }\), with a resulting change to the data misfit function (analogous to free energy of the system) of \({\varPhi }(\mathbf {m})\rightarrow {\varPhi }(\mathbf {m}^{\prime })\). Perturbations that decrease the misfit are always accepted, while perturbations that increase misfit are sometimes accepted with an acceptance probability given by the Gibbs distribution of statistical mechanics

where \(\triangle {\varPhi }={\varPhi }(\mathbf {m}^{\prime })-{\varPhi }(\mathbf {m})\) represents the increase in misfit due to the perturbation and T is a control parameter (analogous to absolute temperature). According to this rule, perturbations that increase misfit are accepted with a conditional probability that decreases with increasing △Φ and decreasing T. Over the process of many such iterations the temperature T is gradually reduced from an initial high value (cooling/annealing the system in thermodynamic terms). Accepting some perturbations that increase Φ allows the algorithm to escape from local minima in search of a better solution. At early iterations (high T), the algorithm searches the parameter space in an essentially random manner. As T decreases, accepting increases in Φ becomes increasingly improbable, and the algorithm spends more time searching regions of low Φ, eventually converging to a solution which should approximate the global minimum.

The starting temperature, rate of reducing T, and the number and type of perturbations define the annealing schedule, which controls the efficiency and effectiveness of the algorithm. Adopting an annealing schedule that is too fast, i.e., decreases T too quickly or allows too few perturbations, can lead to sub-optimal solutions. Alternatively, adopting an annealing schedule that is overly cautious wastes computation time. Determining an appropriate annealing schedule is problem specific and generally requires some experimentation and familiarity with the inverse problem. SA can be related to Markov chain Monte Carlo (MCMC) methods (described for Bayesian inversion in Section 5), with the goal of optimization based on non-convergent sampling with decreasing T rather than probability estimation based on sampling to convergence at unit temperature.

SA has been used extensively in near-surface seismic studies, considering a wide range of seismic data types including surface-wave dispersion (e.g., Beaty et al. 2002; Yamanaka 2005; Pei et al. 2007; Lu et al. 2016), surface wave velocity spectra (e.g., Ryden and Park 2006), HVSR (e.g., García-Jerez et al. 2016), spectral ratios of direct S-waves from earthquakes (e.g., Dutta et al. 2009), and full seismic wavefields (e.g., Tran and Hiltunen 2012).

GA is based on an analogy to biological evolution according to the concept of “survival of the fittest.” GA simulates the genetic evolution of a collection (population) of models through many iterations (generations) to minimize their misfit. This is analogous to maximizing the population’s fitness to a specific ecological niche (Fogel et al. 1966; Holland et al. 1992; Sambridge and Mosegaard 2002). Each generation of models acts as parents for the next generation of (offspring) models through processes designed to mimic selection (pairing of parent models), crossover (recombination of parent genetic information in offspring), and mutation (random variations) in a manner that probabilistically favors models with lower misfits. To facilitate these processes, each model is normally coded as a string of parameters represented in binary (base 2) in terms of a pre-defined number of bits to represent a gene (and thereby discretizing the parameter space). A large variety of approaches to the selection, crossover, and mutation steps exist, which will not be discussed here. Like the annealing schedule for SA, these GA steps (and the tradeoff between their efficiency and robustness) are specific to the problem. GA has been widely used in near-surface seismic studies that invert surface-wave dispersion data (e.g., Yamanaka and Ishida 1996; Yamanaka 2005; Parolai et al. 2006), HVSR data (e.g., Fäh et al. 2001; Fäh et al. 2003), or both (e.g., Parolai et al.2005).

Global-search algorithms generally require subjective choices of tuning parameters that control algorithm performance (e.g., the annealing schedule in SA and the evolutionary steps in GA). These parameters are typically problem and data specific, making it challenging to know if the algorithm is properly tuned. Consequently, it is challenging to make rigorous and objective comparisons between techniques in terms of algorithm efficiency. In any case, Yamanaka (2005) compared several non-linear global-search techniques, including SA and GA, for inverting surface wave dispersion data to estimate shallow VS structure. They found that both techniques produced comparable results. In a similarly motivated study, Garofalo et al. (2016) compared results from several global-search and local-search techniques (including NA, SA, GA and linearized methods) for inverting surface-wave dispersion to estimate shallow VS structure. They found these methods all recovered similar velocity profiles over the depth range of data sensitivity. As mentioned in the previous section, Parolai et al. (2006) considered the inversion of surface-wave dispersion to estimate near-surface 1D VS structure at a site near Cologne, Germany, using linearized inversion as well as DHS and GA. They processed ambient seismic noise recorded on a 2D (100 m by 150 m L-shaped) array of 11 instruments to calculate Rayleigh-wave dispersion at 51 frequencies between 1 and 5 Hz (Fig. 4a). In their implementation of GA, genetic operations were applied to a population of 30 individual models and the inversion repeated numerous times with different random initial populations. The final optimal VS profile obtained from the linearized, DHS, and GA inversions are shown in Fig. 4b, with the associated predicted dispersion curves shown in Fig. 4a. The recovered VS profiles from the three inversion methods are very similar, and produce nearly identical predicted dispersion data. Furthermore, Parolai et al. (2006) showed that the estimated site response (calculated as the amplification spectra for vertically propagating SH waves) of the VS profiles obtained from various inversion strategies were consistent. These studies suggest that the inversion strategies are comparable in terms of recovering an optimal model (assuming a reasonable starting model for linearized inversion or DHS) when inverting high-quality surface-wave data for 1D structure. However, this assumes all other aspects of the inverse problem (i.e., forward physics, data error assumptions, model parameterization, etc.) are correct.

Examples of linearized, DHS, and GA inversions of surface-wave dispersion data. Measured phase-velocity dispersion data from ambient seismic noise recordings over a site near Cologne, Germany (a). Also shown in (a) are the predicted dispersion data for the final inversion solutions, which are nearly identical. The grey bounds around the measured data are estimated relative data errors. The final VS profiles obtained from the linearized, DHS, and GA inversions are shown in (b). Modified from Parolai et al. (2006)

The greatest advantage of global-search algorithms is that they are relatively insensitive to the starting/initial model, unlike linearized inversions. No global-search algorithm is guaranteed to converge to the global minimum in misfit within a finite number of steps, although they can be much more effective than linearization (at increased computational cost). Furthermore, there is no general approach to determine whether the solution obtained actually represents the global minimum, although confidence is increased if repeated runs of the algorithm (with different random initializations) produce similar results. As mentioned previously, several studies in site characterization have adopted global-search techniques (such as SA and GA) as a heuristic approach to determining a suitable initial model for a subsequent local search (e.g., linearized or DHS) inversion (e.g., Alfaro Castillo 2006; Picozzi and Albarello 2007; Poovarodom and Plalinyot 2013; Lei et al.2018; García-Jerez et al. 2019; Maklad et al. 2020). This provides greater assurance of a suitable initial model for local-search success, but, again, does not guarantee the global minimum-misfit solution.

4.3 Neighborhood algorithm

The NA is a popular optimization technique proposed by Sambridge (1999) with numerous applications in a wide range of fields, and in particular to the inversion of surface-wave properties for seismic site assessment (Wathelet et al. 2004).

Like SA and GA, the NA is based on a random search of the multi-dimensional parameter space for the minimum-misfit model. This is illustrated in Fig. 5 for a 2D optimization of the Rastrigin function (Fig. 4a, a non-convex function with multiple local minima often used as a performance test for optimization algorithms). The NA is initiated with a population of random models distributed over the parameter space (coloured circles in Fig. 5b), and tries to orient the random generation of subsequent new models towards regions of the space likely to provide the lowest misfit. This is achieved by forming a neighborhood approximation to the misfit surface. The parameter space is divided into Voronoi cells built from the model locations in the current population (generator points). Any location inside a Voronoi cell is closer to its generator point than to any other model in the population. A constant misfit is assigned to each cell, equal to the misfit of each generating point, leading to a nearest-neighbor interpolation of the misfit function. For example, the Voronoi cell geometry associated with the initial population (20 models represented by coloured circles) is shown in Fig. 5b. The algorithm progresses by randomly generating Ns new models inside only the Nr Voronoi cells with the lowest misfits. These are represented by open circles in Fig. 5b for Ns = 10 and Nr = 10. The data misfits and Voronoi geometry are updated to include these new models, as shown in Fig. 5c, where the coloured circles indicate all models (initial and new). Another set of Ns new models are generated within the Nr lowest-misfit cells (open circles in Fig. 5c), and new Voronoi cells are computed (Fig. 5d). The same process is repeated until convergence to an optimal solution. As described, the NA has only two tuning parameters, Ns and Nr, that control the algorithm behavior between exploration and optimization. Even if it is temporarily trapped in a local minima, NA can quickly evolve to other areas of the parameters space as demonstrated by Sambridge (1999).

Illustration of the NA for a 2D parameter space corresponding to the Rastrigin function. (a) A dense sampling of the Rastrigin function showing four minima. (b) 20 random models of an initial population (coloured circles) and the corresponding Voronoi geometry (solid lines). Ns = 10 new models (open circles in b) are generated randomly inside the Nr = 10 cells with the lowest misfit. (c) The new models are integrated into the Voronoi geometry and their misfits are computed, leading to a total population of 30 models (coloured circles). (d) This process is repeated to obtain 40 models

The NA implementation for surface-wave inversion proposed by Wathelet et al. (2004) was built around the original Fortran code provided by Sambridge (1999). Di Giulio et al. (2006) published one of the first applications of this inversion method to multiple ambient vibration (microtremor) arrays. The NA core was subsequently re-written in C++ by Wathelet (2008) to provide several improvements detailed below. This latter implementation has been widely used since 2008, with only minor modifications. Renalier et al. (2010) analyzed data from passive and active arrays at 10 documented sites in Europe to propose a parametrization strategy based on several repeated inversion trials with an increasing number of layers. With a database of 14 strong-motion sites in Europe, Di Giulio et al. (2012) addressed the parameterization issue following Akaike’s information criterion. Such quantitative approaches to parameterization based on formal information criteria are discussed further in the next section. Cox and Teague (2016) promoted parameterizations controlled by only a few tuning parameters: the number of layers and a layering ratio for VP and VS. This approach was further refined by Vantassel and Cox (2021). The original forward code (and inversion) focused on the phase velocity dispersion curves of Rayleigh waves. However, Love waves, group velocities (Roux et al. 2011), and Rayleigh-wave ellipticity (Hobiger et al. 2013) have also been considered.

Rickwood and Sambridge (2006) proposed an improved algorithm dedicated to parallel computing architectures that is particularly efficient when the computational cost of the forward problem is small. An open-source application dinver (distributed in geopsypack, Wathelet et al. 2020) implements a modified NA with a similar parallel structure. Unlike the algorithm proposed by Rickwood and Sambridge (2006), dinver was developed to minimize computer memory usage, which prevents distribution over multiple computing nodes (limiting the total number of CPUs that can be used simultaneously). Developed primarily for the inversion of dispersion curves and associated observables (autocorrelation and Rayleigh-wave ellipticity curves), dinver runs on a desktop computer with 4 or 8 cores in a reasonable computation time (a few minutes). Several other improvements of the original NA are implemented in dinver that are detailed below. Note that many of these improvements may also be applicable to other global-search techniques.

Wathelet (2008) noticed that, since the Voronoi geometry is not invariant to axis scales, the NA primarily explores parameters that the data are most sensitive to (i.e., VS of near-surface layers) and tends to neglect the variability of other parameters (VP or VS of deeper layers). With a lack of exploration of the deepest layers, the obtained population of models may suggest a better resolution beyond the usual wavelength (λ) rule of thumb: a maximum resolution depth between λ/3 and λ/2 (Cox and Teague 2016). Wathelet (2008) implemented dynamic parameter axis scaling that maintains the region of interest to an equal size in all dimensions to overcome this. Wathelet (2008) also implemented parameter conditions such as constraining Poisson’s ratio (e.g., from 0.25 to 0.5), avoiding multiple low-velocity zones, parameterizing interface depths or layer thicknesses, and limiting layer thicknesses to a minimum percentage of the total depth (e.g., 5%). Furthermore, Wathelet (2008) showed the advantage of parameterizing interface depths instead of layer thicknesses in Monte Carlo inversions of surface-wave data, as this avoids uncontrolled prior information. Specifically, for a model defined by a set of layers with randomly generated thicknesses, the depth distribution of the deepest layers tends to a normal distribution (as supported by the Central Limit Theorem). Consequently, this parameterization can introduce structure that is not supported by the data. In the NA, the generation of new models that fulfill all parameter conditions requires that the current population of models also fulfill these conditions. For very small Voronoi cells, precision errors can violate this assumption, leading to unpredictable results. This issue is solved by using discrete parameters (i.e., parameters can only take a predetermined discrete set of values), even though these geophysical parameters are physically continuous.

In the original NA, the sampling density around the best models is directly influenced by the total number of generated models, which is a subjective tuning parameter. With parameter discretization, there is a minimum distance between models that limits the sampling density. The algorithm is able to explore different regions of the parameter space once the sampling density limit is reached for current regions. Thus, the total number of models in the population controls only the exploration level, not the sampling density. This has the effect of improving overall exploration of the parameter space. For instance, in Fig. 5d, none of the Nr cells with the lowest misfit are located near the local minimum of the Rastrigin function in the upper right corner. This region of the parameter space is not explored unless there is a mechanism to control the sampling density. Figure 6 compares parameter exploration for continuous and discrete parameterizations distributed on a logarithmic scale (other scale distributions can also be considered). The comparison is based on the inversion of a synthetic dispersion curve between 2 and 20 Hz computed for the earth model given in Table 1. The inversion is run five times with the same 11-parameter model (4 layers). VS is allowed to vary from 50 to 3500 m/s, and interface-depths from 1 to 100 m for all layers. In Fig. 6a, the parameters are distributed continuously, while in Fig. 6b parameters are discrete. The figure shows improved relative exploration of the parameter space for discrete parameters.

The effect of parameterization on NA exploration. (a) Pseudo-continuous parameters distributed on a logarithmic scale (with a relative parameter precision of 10− 4%). (b) Discrete parameters distributed on a logarithmic scale (relative precision 1%). In both cases, an 11-dimensional model parameter space (4 layers with velocities VSi, VPi for i= 0–3 and interface depths Di for i= 0–2) is explored with 105 models. Plots show the relative range of sampled values in the final 50,000 generated models, normalized by the range sampled over the entire NA run, for five distinct inversion runs (gray lines connect points from the same run)

Figure 7 compares linear and logarithmic scalings for discrete parameters based on five repeated inversions of each for the same 11-parameter example. Figure 7a shows that the five NA inversion runs with linear parameter scaling are trapped in a local minimum, which is not the case for the inversions with logarithmic parameter scaling. For interface-depth parameters, the linear scale provides a higher probability of generating deep layers; conversely, the logarithmic scale provides higher probability for shallow layers. Figure 7b and d show that Vs structure below \(\sim \)30 m depth is not resolvable by the dispersion data. In Fig. 7b, all layer interfaces are found below \(\sim \)20 m depth, where the sensitivity of the dispersion data to Vs starts to diminish. Therefore, the variability (non-uniqueness) linked to the very shallow part is better explored in the logarithmic case. The conditions designed to avoid very thin layers, the data constraints, and a reasonable minimum depth imposed by the parameterization (1 m in this case for a minimum λ of 10 m) prevent the aggregation of all layers in the shallow part.

Comparison of linear and logarithmic discrete parameter scalings. (a) Misfit reduction versus the number of generated models for five runs (with distinct random initializations) for linear scaling (solid lines) and logarithmic scaling (dashed lines). (b) and (d) Vs profiles obtained with the five linear- and logarithmic-scaling inversion runs, respectively. The inset figures in (b) and (d) focus on the shallow part with velocity less than 300 m/s. (c) and (e) Corresponding predicted dispersion curves. The numbers of discrete parameter values is the same for both scales: 426, 323 and 452 for VSi, VPi and Di, respectively. The same misfit colour scale is used throughout. Models with the lowest misfits are shown on top of other models, hiding most of the models with high misfits. The black curve in (c) and (e) is the observed dispersion curve

The selection of the Nr best models for which the corresponding neighborhoods are sampled is based on the calculated misfit value. For most problems (as discussed in Section 2), this is defined by the L2 misfit normalized by the observed data uncertainties and by the number of data (Wathelet et al. 2004). Hence, a misfit value of unity indicates that the predicted data fit the observed data to (on average) one standard deviation. The NA algorithm can still be strongly influenced by small details in the observed data, and attempts to fit small scatters (even if they are assigned large standard deviations). Lomax and Snieder (1994) introduced the concept of an acceptable misfit level for GA inversions that implicitly considers only first-order details of the misfit function. Their inversion process was aimed at building as large an ensemble as possible of models that fit the observed data to a reasonable level. This represents a simplified view of data errors that transforms a Gaussian error distribution into a uniform distribution. This idea was also tested with the NA by Sambridge (2001).

Hollender et al. (2018) and Chmiel et al. (2021) used a flattened misfit at a smaller scale (for each frequency sample), as implemented in dinver. Compared to a usual misfit threshold value, this is a more demanding definition of an acceptable model as it requires all predicted data to be within one standard deviation of the observed data. However, as noted by Sambridge (2001), the algorithm looses part of the distance information contained in the misfit which can negatively affect convergence in high-dimensional parameter spaces. Generating models with identical misfit values has practical consequences for the way the NA behaves. Once all Nr Voronoi cells have an equal misfit value, all new models with the same misfit must also be included in the area of interest. Hence, dinver implements a dynamic Nr that increases each time a new good model (with identical misfit value) is found. This results in relatively uniform sampling (rather than oversampling) of the acceptable region of the parameter space. However, the population of models obtained does not necessarily reproduce the data uncertainty distribution. Interestingly, joint inversions of distinct observables such as dispersion data and HVSR result in an ensemble of models that are acceptable (i.e., within one standard deviation) with respect to both data types. This avoids the use of subjective weighting for each misfit component (i.e., data type) which is usually problem specific.

5 Bayesian inversion for probabilistic site characterization

Bayesian inference approaches to geophysical inversion are based on quantifying the PPD of earth models given observed data and prior information. Bayesian methods were first applied to active-source dispersion data by Schevenels et al. (2008) and Socco and Boiero (2008), and to ambient-noise (microtremor) dispersion by Foti et al. (2009). Furthermore, Cipta et al. (2018) applied a Bayesian inversion method to HVSR data. However, this section concentrates on a series of studies (Molnar et al. 2010; 2013; Dettmer et al. 2012; Gosselin et al. 2017; Gosselin et al. 2018) that developed a rigorous and quantitative overall approach, and which considered common microtremor dispersion data sets (with colocated invasive measurements) for direct comparison and evaluation.

To quantify model probability over the multi-dimensional parameter space, Bayesian inversions generally seek to compute statistical properties of the PPD. PPD properties of interest include parameter estimates, such as most-probable and mean values. More significantly, Bayesian inversion can quantify parameter uncertainties, which can be expressed as variances/covariances, credibility intervals, and (most notably) marginal probability densities. Since rigorous uncertainty analysis, rather than point estimation, is central to Bayesian inference, non-linear inversion and rigorous model selection assume greater significance. In the common case where appropriate earth and error models are not known a priori, they can be estimated from the data, as part of the inverse problem.

To avoid linearization errors in Bayesian inversion, non-linear parameter and uncertainty estimation is carried out numerically, typically employing Markov-chain Monte Carlo (MCMC) methods, as discussed in Section 5.1. MCMC draws random samples of the parameters from the PPD, such that statistical parameter/uncertainty estimates can be computed from the ensemble of samples. Efficient sampling strategies are key for practical inversion algorithms.

In terms of model selection, determining an appropriate earth-model parameterization is an important aspect of quantitative inversion. Over-parameterizing the model under-constrains parameters and can lead to spurious structure and to over-estimating parameter uncertainties. Conversely, under-parameterization can leave structure unresolved, biasing parameter estimates and under-estimating uncertainties. An objective approach to model selection is to choose the simplest parameterization that explains the data as quantified by the Bayesian information criterion (BIC), which is discussed in Section 5.2. Another approach is to marginalize over a set of possible parameterizations. Since this involves probabilistic sampling over models with different numbers of parameters (model dimensions), this approach is referred to as trans-dimensional (trans-D) inversion. Trans-D inversion, described in Section 5.3, has the advantage that the uncertainty in the parameterization is included in the parameter uncertainty estimates. Both of these model-selection approaches avoid subjective regularizations in the inversion, which can preclude meaningful uncertainty estimation.

Defining the data error model is another important component of rigorous uncertainty estimation. In many practical cases, the error distribution, including both measurement and theory errors, is not well known. The lack of specific information suggests that a simple distribution be assumed, with statistical parameters estimated from the data. Error models considered here are based on the assumption of Gaussian-distributed errors of unknown covariance, with the covariance matrix either estimated from data residuals or represented by an autoregressive (AR) process, as considered in Section 5.4.

In seismic site assessment, a number of studies have applied Bayesian methods to the inversion of Rayleigh-wave dispersion data derived from ambient seismic noise recorded at a small geophone array. The primary goal is to estimate the VS profile (VP and ρ profiles are also estimated in the inversion, but are of less significance and not considered here).

An important advantage of the Bayesian approach is that it is straightforward to propagate the uncertainty analysis for VS directly into uncertainties in site assessment factors, which represent the ultimate goal of the work. This cannot be accomplished with other approaches reviewed in this paper. Assessment factors of interest include VS30, which is used to categorize sites into classes according to the National Earthquake Hazards Reduction Program (NEHRP). VS30 is also used to predict peak ground velocity (PGV) and peak ground acceleration (PGA) amplification factors (relative to hard ground). However, amplification spectra calculated using the full VS profile generally provide better indicators of site amplification than VS30-based measures (Boore and Atkinson 2008). In Section 5.5, the inversion methods described in Sections 5.1–5.4 are illustrated for dispersion data from two distinct geological settings (Molnar et al. 2010), with comparisons to invasive measurements and calculation of probabilistic site assessments (Molnar et al. 2013).

5.1 Non-linear inversion: MCMC sampling

To briefly describe non-linear Bayesian inversion, let \({\mathscr{M}}\) represent the choice of model, with m the corresponding set of M unknown model parameters, and let d be N observed data. Assuming data and parameters to be random variables, they are related by Bayes’ theorem

In Eq. 10, \(P(\mathbf {m}|{\mathscr{M}})\) is the prior probability, representing available information for the model parameters (given a choice of model), independent of the data. \(P(\mathbf {d}|\mathbf {m},{\mathscr{M}})\), the conditional probability of d given m and \({\mathscr{M}}\), defines the data information. Interpreted as a function of d, this represents the residual error distribution. However, when d is considered fixed (once data are observed), the term is interpreted as the likelihood of the parameters, \({ L}(\mathbf {m}|{\mathscr{M}})\). The normalization term, \(P(\mathbf {d}|{\mathscr{M}})\), is referred to as Bayesian evidence. \(P(\mathbf {m}|\mathbf {d},{\mathscr{M}})\) defines the PPD, representing the state of parameter information given the data and prior. Henceforth, the dependence on model \({\mathscr{M}}\) is suppressed for simplicity when not required.

Non-linear Bayesian inversion is typically based on using MCMC methods to draw (dependent) random samples from the PPD while satisfying the requirement for reversibility of the Markov chain (Gilks et al. 1996; Sambridge and Mosegaard 2002). In particular, Metropolis-Hastings sampling constructs a Markov chain by applying a proposal density function \(Q(\mathbf {m}^{\prime }|\mathbf {m})\) to generate new parameters \(\mathbf {m}^{\prime }\) based only on the current values m, and accepting the proposed parameters as the next step in the chain with probability

The acceptance criterion is applied by drawing a uniform random number ξ on [0,1] and accepting the new parameters if \(\xi \le A(\mathbf {m}^{\prime }|\mathbf {m})\). If the proposed step is rejected, another copy of the current model is included in the Markov chain. Once convergent sampling is achieved, parameter estimates and uncertainties can be computed statistically from the ensemble of samples (omitting an initial burn-in stage while stationary sampling is established).

The choice of proposal density \(Q(\mathbf {m}^{\prime }|\mathbf {m})\) controls the efficiency of MCMC sampling. The goal is to achieve a well-mixed Markov chain that efficiently samples the parameter space, avoiding both small, ineffectual perturbations and high rejection rates. In Bayesian microtremor array inversion, Molnar et al. (2010) and Molnar et al. (2013) applied an efficient proposal density based on principal-component (PC) decomposition of the parameter covariance matrix (Dosso and Wilmut 2008). The PC decomposition provides both directions and length scales for effective parameter updates. Perturbations are applied in a rotated parameter space where the axes align with the dominant correlation directions (i.e., PC parameters are uncorrelated). The PC proposal is initiated from an analytic linearized estimate that is subsequently updated with a non-linear estimate from the on-going sampling (a diminishing adaptation). To achieve wide sampling over a potentially multi-modal parameter space, Gosselin et al. (2017) and Gosselin et al. (2018) applied the method of parallel tempering (Earl and Deem 2005; Dosso et al. 2012), which is based on a series of interacting Markov chains with successively relaxed likelihoods raised to powers 1/T ≤ 1, where T is referred to as the sampling temperature (similar to the temperature parameter in SA, discussed in Section 4.2). While only samples collected at T = 1 are unbiased and retained for analysis, probabilistic interchange between chains provides a robust and efficient sampling of the parameter space.

5.2 Model selection: Bayesian information criterion

As mentioned earlier, determining an appropriate model parameterization is an important aspect of Bayesian inference. In Eq. 10, the Bayesian evidence \(P(\mathbf {d}|{\mathscr{M}})\), which formally represents the conditional probability of the data for a particular model, can be considered the likelihood of the model given the data. Hence, a natural approach to model selection is to seek the model that maximizes the evidence. Unfortunately, the integral defining evidence is particularly challenging to evaluate numerically to sufficient precision (Chib 1995). Commonly, an asymptotic point estimate of evidence, the BIC, is applied, defined by (Schwarz 1978)

where \(\hat {\mathbf {m}}\) is the maximum-likelihood parameter estimate. Since the BIC approximates the negative of evidence, the model with the smallest BIC over a set of possible models is selected as the most appropriate choice. The first term on the right of Eq. 12 favors models with high likelihood (low data misfit); however, this is balanced by the second term which applies a penalty for additional parameters. Hence, minimizing the BIC provides the simplest model parameterization consistent with the resolving power of the data.

In Bayesian inversion for site assessment, Molnar et al. (2010) and Molnar et al. (2013) used the BIC to choose between models based on several fixed functional forms, including layered profiles and linear or power-law gradients (in all cases, layers thicknesses were unknowns included in the parameterization, as were the properties of an underlying semi-infinite basement). Gosselin et al. (2017) and Gosselin et al. (2018) considered depth-dependent model parameterizations in terms of Bernstein polynomials (BP) (Farouki and Rajan 1987; Quijano et al. 2016), which provide general (smooth) gradient forms. In this approach, a geophysical profile is represented as a B th-order BP in terms of B + 1 basis functions with the corresponding coefficients (weights) comprising the unknown parameters, as as well as gradient-layer thickness and basement properties. The BIC was applied to determine the polynomial order. While other sets of basis functions could be considered, BPs have the desirable property of optimal stability in regard to coefficient perturbations (as applied in MCMC sampling).

5.3 Model selection: Trans-D inversion

Trans-D inversion addresses model selection by sampling probabilistically over models with differing numbers of parameters (Green 1995; Malinverno 2002; Sambridge et al. 2006; Dettmer et al. 2010; Dosso et al. 2014). Let k index the choice from \(\mathcal {K}\) possible models; Bayes’ theorem for hierarchical models can be written (Green 1995)

In Eq. 13, P(k)P(mk|k) is the prior probability of the state (k,mk), P(d|k,mk) is interpreted as the likelihood L(k,mk), and P(k,mk|d) is the trans-D PPD. The PPD can be sampled numerically using the reversible-jump Markov chain Monte Carlo (rjMCMC) algorithm, which accepts a proposed transition between the current state (k,mk) and a proposed state \((k^{\prime },\mathbf {m}^{\prime }_{k^{\prime }})\) with a probability given by the Metropolis-Hastings-Green criterion (Green 1995)

where \(Q(k^{\prime },\mathbf {m}^{\prime }_{k^{\prime }}|k,\mathbf {m}_{k})\) is the proposal probability density and |J| is the Jacobian determinant for the transformation between parameter spaces (|J| = 1 for the rjMCMC algorithm described here).