Abstract

We study convex relaxations of nonconvex quadratic programs. We identify a family of so-called feasibility preserving convex relaxations, which includes the well-known copositive and doubly nonnegative relaxations, with the property that the convex relaxation is feasible if and only if the nonconvex quadratic program is feasible. We observe that each convex relaxation in this family implicitly induces a convex underestimator of the objective function on the feasible region of the quadratic program. This alternative perspective on convex relaxations enables us to establish several useful properties of the corresponding convex underestimators. In particular, if the recession cone of the feasible region of the quadratic program does not contain any directions of negative curvature, we show that the convex underestimator arising from the copositive relaxation is precisely the convex envelope of the objective function of the quadratic program, strengthening Burer’s well-known result on the exactness of the copositive relaxation in the case of nonconvex quadratic programs. We also present an algorithmic recipe for constructing instances of quadratic programs with a finite optimal value but an unbounded relaxation for a rather large family of convex relaxations including the doubly nonnegative relaxation.


1 Introduction

In this paper, we are interested in nonconvex quadratic programs that can be represented as follows:

\[ \text{(QP)} \qquad \min_{x \in \mathbb{R}^n} \left\{ q(x) : Ax = b,\ x \ge 0 \right\}, \]

where \(q: \mathbb {R}^n \rightarrow \mathbb {R}\) is given by

\[ q(x) = x^T Q x + 2 c^T x. \]

The parameters are a symmetric matrix \(Q \in \mathbb {R}^{n \times n}\), \(c \in \mathbb {R}^n\), \(A \in \mathbb {R}^{m \times n}\), and \(b \in \mathbb {R}^m\), and \(x \in \mathbb {R}^n\) is the decision variable. We denote the optimal value of (QP) by \(\ell ^* \in \mathbb {R}\cup \{-\infty \} \cup \{+\infty \}\), with the usual conventions that \(\ell ^* = -\infty \) if (QP) is unbounded below, and \(\ell ^* = +\infty \) if (QP) is infeasible. We denote the feasible region of (QP) by S, i.e.,

\[ S = \{ x \in \mathbb{R}^n : Ax = b,\ x \ge 0 \}. \tag{1} \]

Apart from being interesting in their own right, nonconvex quadratic programs also arise as subproblems in sequential quadratic programming algorithms and augmented Lagrangian methods for general nonlinear programming problems (see, e.g., [29]).

In this paper, we identify a family of convex relaxations of (QP) that includes the well-known copositive and doubly nonnegative relaxations, with the property that each convex relaxation in this family is feasible if and only if (QP) is feasible. We therefore refer to this family of convex relaxations as feasibility preserving relaxations. Our contributions are as follows:

1. For each feasibility preserving convex relaxation of (QP), we identify a decomposition of any feasible solution of the corresponding relaxation. This decomposition plays a central role in establishing several properties of the relaxation (see Lemma 2).

2. We present an alternative perspective on feasibility preserving convex relaxations of (QP). It is based on the observation that each such relaxation implicitly gives rise to a convex underestimator of the objective function \(q(\cdot )\) on S (see Proposition 1).

3. If the objective function \(q(\cdot )\) is convex over the feasible region S, we show that the convex underestimator arising from each feasibility preserving convex relaxation of (QP) agrees with \(q(\cdot )\) over S (see Lemma 4). We also investigate the implications of the absence of convexity on some convex relaxations, including the semidefinite relaxation (see Proposition 2).

4. Under the assumption that the recession cone of S does not contain any direction of negative curvature, we show that the convex underestimator arising from the copositive relaxation is in fact the convex envelope of \(q(\cdot )\) on S (see Proposition 3).

5. Under the assumption that S is nonempty and bounded, we show that the convex underestimator arising from the doubly nonnegative relaxation agrees with the objective function at every local minimizer of (QP) (see Proposition 4).

6. For any \(n \ge 5\), we present an algorithmic recipe to construct an instance of (QP) such that \(\ell ^*\) is finite but the lower bound arising from a rather large family of convex relaxations, including the doubly nonnegative relaxation, equals \(-\infty \) (see Proposition 5).

We next briefly review the related literature, with a focus on more recent studies. Semidefinite relaxations of (QP) arising from the “lifting” idea proposed in [34] have been studied extensively in the literature since the early 1990s. More recently, Burer [8] established that a rather large class of nonconvex optimization problems, which includes (QP) as a special case, admits an exact copositive relaxation, which unified, extended, and subsumed a number of similar results previously established for more specific classes of optimization problems. Burer’s result yields an explicit representation of a nonconvex optimization problem as a convex conic optimization problem, where the difficulty is now shifted to the copositive cone that does not admit a polynomial-time membership oracle (see, e.g., [28]). Nevertheless, this unifying result led to intensive research activity along two main directions. On the one hand, Burer’s result has been extended to larger classes of nonconvex optimization problems by introducing generalized notions of the copositive cone (see, e.g., [2,3,4, 6, 9, 11, 14, 16, 32], and also [24] for a recent geometric view of copositive reformulations). On the other hand, various tractable inner and outer approximations of the copositive cone have been proposed (see, e.g., [7, 12, 19, 25, 30, 31, 35]). In particular, by duality, Burer’s result implies that any tractable inner approximation of the copositive cone immediately gives rise to a convex relaxation of (QP).

The exactness of the copositive relaxation has been established for classes of nonconvex optimization problems more general than quadratic programs. Nevertheless, for quadratic programs, the smaller class that is the focus of this paper, our perspective yields two benefits. First, it allows us to establish several properties of a very large class of convex relaxations in a unified manner. Second, it pinpoints the relations between the structure of feasible solutions of convex relaxations and the behavior of the relaxations. In particular, this perspective allows us to introduce the concepts of feasibility preserving convex relaxations and induced convex underestimators, and to study a fairly general class of convex relaxations of (QP) within this framework. We identify several new properties and extend some of the earlier results in the literature. Through this perspective, we obtain a strengthening of Burer’s well-known copositive reformulation result for the special case of (QP). Finally, we identify a key property that enables us to construct an instance of (QP) with a finite optimal value but an unbounded convex relaxation, for a large family of relaxations including the doubly nonnegative relaxation.

This paper is organized as follows. We define our notation in Sect. 1.1. We review several results about nonconvex quadratic programs and introduce various convex cones in Sect. 2. Feasibility preserving convex relaxations and the corresponding convex underestimators are introduced in Sect. 3. Section 4 discusses the implications of convexity of the objective function of (QP) on convex relaxations. In Sect. 5, we study copositive relaxations and establish that the corresponding convex underestimator is in fact the convex envelope of the objective function under mild assumptions. In Sect. 6, we study properties of feasibility preserving convex relaxations for quadratic programs with bounded and unbounded feasible regions. Finally, we conclude the paper in Sect. 7.

1.1 Notation

We use \(\mathbb {R}^n, \mathbb {R}^n_+\), \(\mathbb {R}^{m \times n}\), and \(\mathcal{S}^n\) to denote the n-dimensional Euclidean space, the nonnegative orthant, the set of \(m \times n\) real matrices, and the space of \(n \times n\) real symmetric matrices, respectively. The vector of all ones and the identity matrix are denoted by e and I, respectively; their dimensions will always be clear from the context. We use 0 to denote the real number 0, the vector of all zeroes, and the matrix of all zeroes. The convex hull of a set is denoted by \(\text {conv}(\cdot )\). We reserve uppercase calligraphic letters to denote subsets of \(\mathcal{S}^n\). For an index set \({\mathbf {A}} \subseteq \{1,\ldots ,n\}\), we denote by \(|{\mathbf {A}}|\) the cardinality of \({\mathbf {A}}\). For \(x \in \mathbb {R}^n\), \(Q \in \mathcal{S}^n\), \({\mathbf {A}} \subseteq \{1,\ldots ,n\}\), and \({\mathbf {B}} \subseteq \{1,\ldots ,n\}\), we denote by \(x_{\mathbf {A}} \in \mathbb {R}^{|{\mathbf {A}}|}\) the subvector of x restricted to the indices in \({\mathbf {A}}\), and by \(Q_{{\mathbf {A}}{\mathbf {B}}}\) the submatrix of Q whose rows and columns are indexed by \({\mathbf {A}}\) and \({\mathbf {B}}\), respectively. Therefore, \(Q_{{\mathbf {A}}{\mathbf {A}}}\) denotes a principal submatrix of Q. We simply use \(x_j\) and \(Q_{ij}\) for singleton index sets. We also adopt Matlab-like notation. We use 1 : n to denote the index set \(\{1,\ldots ,n\}\). For \(x \in \mathbb {R}^n\) and \(y \in \mathbb {R}^m\), we denote by \([x;y] \in \mathbb {R}^{n+m}\) the column vector obtained by stacking x and y. For matrices in \(\mathcal{S}^{n+1}\), rows and columns are indexed using \(\{0,1,\ldots ,n\}\). We use superscripts to denote different elements in a set of vectors or matrices. For any \(U \in \mathbb {R}^{m \times n}\) and \(V \in \mathbb {R}^{m \times n}\), the trace inner product is denoted by

\[ \langle U, V \rangle = \text{trace}(U^T V) = \sum_{i=1}^m \sum_{j=1}^n U_{ij} V_{ij}. \]

For any \(x \in \mathbb {R}^n\) and \(Q \in \mathcal{S}^n\), note that \(x^T Q x = \langle Q, x x^T \rangle \). For \({\tilde{x}} \in \mathbb {R}^n_+\), we define the following index sets:

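For a closed convex cone \(\mathcal{K}\subseteq \mathcal{S}^n\), the dual cone, denoted by \(\mathcal{K}^*\), is given by

\[ \mathcal{K}^* = \{ V \in \mathcal{S}^n : \langle U, V \rangle \ge 0 \ \text{ for all } U \in \mathcal{K} \}. \tag{4} \]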

For any two convex cones \(\mathcal{K}_1\) and \(\mathcal{K}_2\), we have

2 Preliminaries

In this section, we review several results that will be useful for the subsequent exposition.

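Consider an instance of (QP). In addition to S given by (1), which denotes the feasible region of (QP), we define the following sets:

\[ L = \{ d \in \mathbb{R}^n : Ad = 0,\ d \ge 0 \}, \tag{5} \]

\[ L_0 = \{ d \in L : d^T Q d = 0 \}, \tag{6} \]

\[ L_\infty = \{ d \in L : d^T Q d < 0 \}. \tag{7} \]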

Note that L denotes the recession cone of S. \(L_0\) and \(L_\infty \) are subsets of L that consist of recession directions of zero and negative curvature, respectively.

If \(S = \emptyset \), then (QP) is infeasible and we define \(\ell ^* = +\infty \). Otherwise, if (QP) is unbounded below, we define \(\ell ^* = -\infty \). The following well-known result, which completely characterizes unbounded instances of (QP), reveals that (QP) is unbounded below if and only if \(q(\cdot )\) is unbounded below on a ray of S.

Lemma 1

[15, Theorem 3] (QP) is unbounded below if and only if \(S \ne \emptyset \) and at least one of the following two conditions holds:

1. \(L_\infty \ne \emptyset \).

2. There exist \({{{\tilde{x}}}} \in S\) and \({{{\tilde{d}}}} \in L_0\) such that \((Q {{{\tilde{x}}}} + c)^T {{{\tilde{d}}}} < 0\), where \(L_0\) is given by (6).
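Condition 1 of Lemma 1 can be checked numerically on small examples. The following Python sketch uses only the standard library. It tests whether a given direction d belongs to \(L_\infty\) (that is, \(d \ge 0\), \(Ad = 0\), and \(d^T Q d < 0\)) and evaluates q along a ray of S. The helper names `q` and `in_L_inf`, the tolerance, and the toy instance are hypothetical choices made for this illustration only.

```python
# Sketch (hypothetical helpers and toy data): condition 1 of Lemma 1.
# q(x) = x^T Q x + 2 c^T x and L_inf = {d in R^n : d >= 0, A d = 0, d^T Q d < 0}.

def q(Q, c, x):
    """Evaluate q(x) = x^T Q x + 2 c^T x for list-based vectors and matrices."""
    n = len(x)
    quad = sum(x[i] * Q[i][j] * x[j] for i in range(n) for j in range(n))
    return quad + 2 * sum(ci * xi for ci, xi in zip(c, x))


def in_L_inf(A, Q, d, tol=1e-12):
    """Return True if d >= 0, A d = 0 and d^T Q d < 0, up to the tolerance."""
    n = len(d)
    nonneg = all(di >= -tol for di in d)
    in_null = all(abs(sum(a * di for a, di in zip(row, d))) <= tol for row in A)
    curvature = sum(d[i] * Q[i][j] * d[j] for i in range(n) for j in range(n))
    return nonneg and in_null and curvature < -tol


# Toy instance: S = {x >= 0 : x_1 - x_2 = 0}, and q(x) = -2 x_1 x_2.
A = [[1.0, -1.0]]
Q = [[0.0, -1.0], [-1.0, 0.0]]
c = [0.0, 0.0]
x_feas = [0.0, 0.0]   # a feasible point of S
d = [1.0, 1.0]        # recession direction with d^T Q d = -2 < 0

# q along the ray {x_feas + t d : t >= 0}
values = [q(Q, c, [xi + t * di for xi, di in zip(x_feas, d)]) for t in (1, 10, 100)]
print(in_L_inf(A, Q, d), values)
```

Along this ray, q decreases like \(-2t^2\), which is consistent with (QP) being unbounded below for this instance.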

If S is nonempty and bounded, then \(-\infty< \ell ^* < +\infty \) and there exists \(x^* \in S\) such that \(q(x^*) = \ell ^*\). If S is an unbounded polyhedron and \(q(\cdot )\) is bounded below on S, then the well-known result of Frank and Wolfe [17] implies that the optimal value is attained, i.e., there exists \(x^* \in S\) such that \(q(x^*) = \ell ^*\). Therefore, in each of these two cases, we denote the set of optimal solutions of (QP) by

\[ S^* = \{ x \in S : q(x) = \ell^* \}. \tag{8} \]

We next recall two definitions (see, e.g., [33]). A function \(g: \mathbb {R}^n \rightarrow \mathbb {R}\) is said to be a convex underestimator of \(q(\cdot )\) on S if

\[ g(\cdot) \text{ is convex and } g(x) \le q(x) \ \text{ for all } x \in S. \]

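The pointwise supremum of all convex underestimators of \(q(\cdot )\) on S, denoted by \(f_S(\cdot )\), is called the convex (lower) envelope of \(q(\cdot )\) on S:

\[ f_S(x) = \sup \{ g(x) : g(\cdot) \text{ is a convex underestimator of } q(\cdot) \text{ on } S \}, \quad x \in \mathbb{R}^n. \tag{9} \]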

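Note that

\[ \ell^* \le f_S(\tilde{x}) \le q(\tilde{x}) \ \text{ for all } \tilde{x} \in S, \qquad f_S(\tilde{x}) = \ell^* \ \text{ for all } \tilde{x} \in \text{conv}(S^*). \tag{10} \]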

The first relation in (10) simply follows by combining \(f_S({\tilde{x}}) \le q({\tilde{x}})\) for each \({\tilde{x}} \in S\) with the observation that \(g(x) = \ell ^*\) is a convex function such that \(g(x) \le q(x)\) for each \(x \in S\), which implies that \(f_S({\tilde{x}}) \ge \ell ^*\) for each \({\tilde{x}} \in S\) by (9). The second relation in (10) follows from the first one and the convexity of \(f_S(\cdot )\).

2.1 Local minimizers

In this section, we review a characterization of the set of local minimizers of (QP).

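Consider an instance of (QP). Since all constraints of (QP) are linear, a constraint qualification holds at every feasible point. Therefore, if \({\tilde{x}}\) is a local minimizer of (QP), then there exist \({\tilde{y}} \in \mathbb {R}^m\) and \({\tilde{s}} \in \mathbb {R}^n\) such that the following KKT conditions are satisfied:

\[ A\tilde{x} = b, \quad \tilde{x} \ge 0, \quad Q\tilde{x} + c - A^T \tilde{y} - \tilde{s} = 0, \quad \tilde{s} \ge 0, \quad \tilde{x}_j \tilde{s}_j = 0,\ j = 1,\ldots,n. \]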

We remark that \({\tilde{y}}\) and \({\tilde{s}}\) are actually the Lagrange multipliers scaled by 1/2.
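To make the KKT conditions concrete, here is a minimal Python sketch that checks them for a candidate triple \((\tilde{x}, \tilde{y}, \tilde{s})\). Stationarity is written as \(Q\tilde{x} + c - A^T\tilde{y} - \tilde{s} = 0\), i.e., with the Lagrange multipliers scaled by 1/2. The function name, the tolerance, and the toy instance are our own assumptions, and the second order conditions are not checked.

```python
# Sketch (hypothetical helper): KKT conditions of (QP) at (x, y, s), where y and s
# are the Lagrange multipliers scaled by 1/2:
#   A x = b,  x >= 0,  Q x + c - A^T y - s = 0,  s >= 0,  x_j s_j = 0 for all j.

def satisfies_kkt(Q, c, A, b, x, y, s, tol=1e-9):
    n, m = len(x), len(b)
    primal_eq = all(abs(sum(A[i][j] * x[j] for j in range(n)) - b[i]) <= tol for i in range(m))
    primal_nonneg = all(xj >= -tol for xj in x)
    # Stationarity, componentwise: (Q x)_j + c_j - (A^T y)_j - s_j = 0
    stationary = all(
        abs(sum(Q[j][k] * x[k] for k in range(n)) + c[j]
            - sum(A[i][j] * y[i] for i in range(m)) - s[j]) <= tol
        for j in range(n)
    )
    dual_nonneg = all(sj >= -tol for sj in s)
    complementary = all(abs(xj * sj) <= tol for xj, sj in zip(x, s))
    return primal_eq and primal_nonneg and stationary and dual_nonneg and complementary


# Toy instance: minimize x_1^2 + x_2^2 over the unit simplex {x >= 0 : x_1 + x_2 = 1}.
Q = [[1.0, 0.0], [0.0, 1.0]]
c = [0.0, 0.0]
A = [[1.0, 1.0]]
b = [1.0]

print(satisfies_kkt(Q, c, A, b, [0.5, 0.5], [0.5], [0.0, 0.0]))   # the minimizer
# At the vertex (1, 0), stationarity and complementarity force y = 1 and s = (0, -1),
# so dual feasibility fails.
print(satisfies_kkt(Q, c, A, b, [1.0, 0.0], [1.0], [0.0, -1.0]))
```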

In addition, any local minimizer \({\tilde{x}}\) satisfies the following second order necessary conditions:

where

and \({\mathbf {Z}}(\cdot )\) is given by (3).

Conversely, any \({\tilde{x}} \in \mathbb {R}^n\) that satisfies the KKT conditions (11)–(15) and the second order necessary conditions (16) is, in fact, a local minimizer of (QP) (see, e.g., [21, 27]). Therefore, the conditions (11)–(16) provide a complete characterization of the set of all local minimizers of (QP).

2.2 Convex cones of interest

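Let us define the following cones in \(\mathcal{S}^{n}\):

\[ \mathcal{N}^n = \{ M \in \mathcal{S}^n : M_{ij} \ge 0,\ 1 \le i, j \le n \}, \]

\[ \mathcal{PSD}^n = \{ M \in \mathcal{S}^n : u^T M u \ge 0 \ \text{ for all } u \in \mathbb{R}^n \}, \]

\[ \mathcal{D}^n = \mathcal{PSD}^n \cap \mathcal{N}^n, \]

\[ \mathcal{CP}^n = \left\{ M \in \mathcal{S}^n : M = \textstyle\sum_{j=1}^k b^j (b^j)^T \text{ for some } b^j \in \mathbb{R}^n_+,\ j = 1,\ldots,k \right\}, \]

\[ \mathcal{COP}^n = \{ M \in \mathcal{S}^n : u^T M u \ge 0 \ \text{ for all } u \in \mathbb{R}^n_+ \}. \]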

Note that \(\mathcal{N}^{n}\) is the cone of componentwise nonnegative matrices, \(\mathcal{PSD}^{n}\) is the cone of positive semidefinite matrices, \(\mathcal{D}^n\) is the cone of doubly nonnegative matrices, \(\mathcal{CP}^n\) is the cone of completely positive matrices, and \(\mathcal{COP}^n\) is the cone of copositive matrices. Each of these cones is a proper convex cone. For \(\mathcal{K}\in \{\mathcal{N}^{n},\mathcal{PSD}^{n}\}\), we have \(\mathcal{K}^* = \mathcal{K}\), where \(\mathcal{K}^*\) is defined as in (4). On the other hand, the following relations can be easily established (see, e.g., [5]):

\[ (\mathcal{CP}^n)^* = \mathcal{COP}^n, \qquad (\mathcal{COP}^n)^* = \mathcal{CP}^n, \qquad (\mathcal{D}^n)^* = \mathcal{PSD}^n + \mathcal{N}^n. \]

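The following set of inclusions directly follows from the definitions:

\[ \mathcal{CP}^n \subseteq \mathcal{D}^n \subseteq \mathcal{PSD}^n \subseteq \mathcal{PSD}^n + \mathcal{N}^n \subseteq \mathcal{COP}^n. \tag{21} \]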

Furthermore, by [13],

\[ \mathcal{CP}^n = \mathcal{D}^n, \quad n \le 4. \tag{22} \]

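In addition, we define the following closed convex cone in \(\mathcal{S}^{n+1}\):

\[ \mathcal{P}^{n+1} = \{ Y \in \mathcal{PSD}^{n+1} : Y_{0j} \ge 0,\ j = 0, 1, \ldots, n \}, \tag{23} \]

i.e., \(\mathcal{P}^{n+1}\) is the cone of positive semidefinite matrices in \(\mathcal{S}^{n+1}\) with an additional nonnegativity constraint on the 0th row and 0th column. It is easy to verify that

\[ \mathcal{CP}^{n+1} \subseteq \mathcal{D}^{n+1} \subseteq \mathcal{P}^{n+1} \subseteq \mathcal{PSD}^{n+1}. \tag{24} \]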

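We finally define the following family of convex cones in \(\mathcal{S}^{n+1}\):

\[ \mathbb{K} = \{ \mathcal{K} \subseteq \mathcal{S}^{n+1} : \mathcal{K} \text{ is a closed convex cone and } \mathcal{CP}^{n+1} \subseteq \mathcal{K} \subseteq \mathcal{P}^{n+1} \}. \tag{25} \]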

3 Feasibility preserving convex relaxations

In this section, we introduce a family of convex relaxations of (QP) and establish several properties of such relaxations.

Consider the following family of optimization problems:

where

and \(\mathcal{K} \in {\mathbb {K}}\). The optimal value is denoted by \(\ell _\mathcal{K} \in \mathbb {R}\cup \{-\infty \} \cup \{+\infty \}\), with the usual aforementioned conventions.

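For any \(\mathcal{K} \in {\mathbb {K}}\), it is easy to verify that \((P(\mathcal{K}))\) is a convex relaxation of (QP). Indeed, \((P(\mathcal{K}))\) is a convex optimization problem, and \({{{\tilde{Y}}}} = [1;{{{\tilde{x}}}}][1;{{{\tilde{x}}}}]^T\) is a feasible solution of \((P(\mathcal{K}))\) for any \({\tilde{x}} \in S\), with the same objective function value \(q({{{\tilde{x}}}})\). Therefore, we immediately obtain

\[ \ell_\mathcal{K} \le \ell^*, \quad \mathcal{K} \in \mathbb{K}. \]

Furthermore,

\[ \ell_{\mathcal{K}_1} \le \ell_{\mathcal{K}_2}, \quad \mathcal{K}_1, \mathcal{K}_2 \in \mathbb{K},\ \mathcal{K}_2 \subseteq \mathcal{K}_1. \tag{29} \]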

For the specific choices of \(\mathcal{K} = \mathcal{CP}^{n+1}\) and \(\mathcal{K} = \mathcal{D}^{n+1}\), we will henceforth refer to the corresponding convex relaxation \((P(\mathcal{K}))\) as the copositive relaxation and doubly nonnegative relaxation, respectively.
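The relaxations in \(\mathbb{K}\) differ only in the cone \(\mathcal{K}\). As a rough illustration, the Python sketch below tests membership of small matrices in two of these cones. The first is \(\mathcal{D}^{n+1}\) (positive semidefinite and entrywise nonnegative). The second is \(\mathcal{P}^{n+1}\) (positive semidefinite with a nonnegative 0th row). The helper names, the tolerance, and the PSD test via symmetric Gaussian elimination are hypothetical choices. Membership in \(\mathcal{CP}^{n+1}\) is deliberately not tested.

```python
# Sketch (hypothetical helpers): membership tests for D^{n+1} and P^{n+1}, with
# rows/columns indexed 0..n. CP^{n+1} membership is not tested.

def is_psd(M, tol=1e-9):
    """PSD test by symmetric Gaussian elimination: all pivots must be >= 0, and a
    (numerically) zero pivot must have a zero remaining row."""
    n = len(M)
    W = [row[:] for row in M]  # work on a copy
    for k in range(n):
        pivot = W[k][k]
        if pivot < -tol:
            return False
        if pivot <= tol:
            if any(abs(W[k][j]) > tol for j in range(k + 1, n)):
                return False
            continue
        for i in range(k + 1, n):  # Schur complement update
            factor = W[i][k] / pivot
            for j in range(k + 1, n):
                W[i][j] -= factor * W[k][j]
    return True


def in_D(Y, tol=1e-9):
    """Doubly nonnegative: PSD and entrywise nonnegative."""
    return is_psd(Y, tol) and all(v >= -tol for row in Y for v in row)


def in_P(Y, tol=1e-9):
    """P^{n+1}: PSD with a nonnegative 0th row (hence 0th column, by symmetry)."""
    return is_psd(Y, tol) and all(v >= -tol for v in Y[0])


def outer(u, v):
    return [[ui * vj for vj in v] for ui in u]


def add(M, N):
    return [[a + b for a, b in zip(r, s)] for r, s in zip(M, N)]


# Y1 = [1; x][1; x]^T with x = (1, 0) lies in both cones.
Y1 = outer([1.0, 1.0, 0.0], [1.0, 1.0, 0.0])
# Y2 = Y1 + [0; d][0; d]^T with d = (1, -1) is PSD with a nonnegative 0th row,
# but has a negative entry, so it lies in P^3 and not in D^3.
Y2 = add(Y1, outer([0.0, 1.0, -1.0], [0.0, 1.0, -1.0]))
print(in_D(Y1), in_P(Y1), in_D(Y2), in_P(Y2))
```

The second matrix shows that \(\mathcal{P}^{n+1}\) can contain matrices excluded from \(\mathcal{D}^{n+1}\). This is why the doubly nonnegative relaxation is at least as tight as the relaxation arising from \(\mathcal{P}^{n+1}\).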

The next result establishes a useful property of \((P(\mathcal{K}))\) for any \(\mathcal{K} \in {\mathbb {K}}\) and forms the basis of our alternative perspective.

Lemma 2

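Let \(\mathcal{K} \in {\mathbb {K}}\), where \({\mathbb {K}}\) is given by (25). Then,

\[ \tilde{Y} = \begin{pmatrix} 1 & \tilde{x}^T \\ \tilde{x} & \tilde{X} \end{pmatrix} \in \mathcal{S}^{n+1} \tag{30} \]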

is a feasible solution of \((P(\mathcal{K}))\) if and only if

1. \({{{\tilde{Y}}}} \in \mathcal{K}\),

2. \({{{\tilde{x}}}} \in S\), and

3. there exist \({\tilde{d}}^j \in \mathbb {R}^n,~j = 1,\ldots ,k\) such that \({\tilde{X}} = {\tilde{x}} {\tilde{x}}^T + \sum \limits _{j = 1}^k {\tilde{d}}^j ({\tilde{d}}^j)^T\), where \(A {\tilde{d}}^j = 0\) for each \(j = 1,\ldots ,k\).

Proof

Let \(\mathcal{K} \in {\mathbb {K}}\) and \({{{\tilde{Y}}}}\) given by (30) be a feasible solution of \((P(\mathcal{K}))\). Clearly, \({{{\tilde{Y}}}} \in \mathcal{K}\). By (25), we have \({{{\tilde{x}}}} \in \mathbb {R}^n_+\) and \({{{\tilde{X}}}} - {\tilde{x}} {\tilde{x}}^T = {\tilde{D}} \in \mathcal{PSD}^n\), i.e., \({\tilde{D}} = \sum _{j = 1}^k {\tilde{d}}^j ({\tilde{d}}^j)^T\) for some \({\tilde{d}}^j \in \mathbb {R}^n,~j = 1,\ldots ,k\). Furthermore,

which implies that \(\Vert b - A{{{\tilde{x}}}}\Vert ^2 = \langle A^T A, {\tilde{D}} \rangle = 0\) since \(A^T A \in \mathcal{PSD}^n\) and \({\tilde{D}} \in \mathcal{PSD}^n\). It follows that \(A {{{\tilde{x}}}} = b\) and \(A {\tilde{d}}^j = 0\) for each \(j = 1,\ldots ,k\). The converse implication can be easily established. \(\square \)
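The decomposition in Lemma 2 can be verified directly on a given matrix. The standard-library sketch below extracts \(\tilde{x}\) and \(\tilde{D} = \tilde{X} - \tilde{x}\tilde{x}^T\) from \(\tilde{Y}\). It then checks conditions 2 and 3 of Lemma 2 through four tests: \(A\tilde{x} = b\), \(\tilde{x} \ge 0\), \(\tilde{D}\) positive semidefinite, and \(\langle A^T A, \tilde{D} \rangle = 0\). The last test is equivalent to \(A\tilde{d}^j = 0\) for all j, because \(\langle A^T A, \tilde{D}\rangle = \sum_j \Vert A\tilde{d}^j\Vert^2\). Condition 1 (\(\tilde{Y} \in \mathcal{K}\)) is not checked, and all names and data are hypothetical.

```python
# Sketch (hypothetical helpers, toy data): conditions 2 and 3 of Lemma 2 for a
# lifted matrix Y = [[1, x^T], [x, X]]. Condition 1 (Y in K) is not checked.

def is_psd(M, tol=1e-9):
    """PSD test by symmetric Gaussian elimination (zero pivots need zero rows)."""
    n = len(M)
    W = [row[:] for row in M]
    for k in range(n):
        pivot = W[k][k]
        if pivot < -tol:
            return False
        if pivot <= tol:
            if any(abs(W[k][j]) > tol for j in range(k + 1, n)):
                return False
            continue
        for i in range(k + 1, n):
            factor = W[i][k] / pivot
            for j in range(k + 1, n):
                W[i][j] -= factor * W[k][j]
    return True


def lemma2_conditions(Y, A, b, tol=1e-9):
    """x in S, D = X - x x^T PSD, and <A^T A, D> = sum_j ||A d^j||^2 = 0."""
    n = len(Y) - 1
    x = [Y[0][j + 1] for j in range(n)]
    D = [[Y[i + 1][j + 1] - x[i] * x[j] for j in range(n)] for i in range(n)]
    x_in_S = all(v >= -tol for v in x) and all(
        abs(sum(a * xj for a, xj in zip(row, x)) - bi) <= tol for row, bi in zip(A, b))
    # <A^T A, D> = sum over the rows a of A of a^T D a
    inner = sum(row[i] * D[i][j] * row[j] for row in A for i in range(n) for j in range(n))
    return x_in_S and is_psd(D, tol) and abs(inner) <= tol


def lift(x, ds):
    """Build [1; x][1; x]^T + sum_j [0; d^j][0; d^j]^T."""
    u = [1.0] + list(x)
    vs = [[0.0] + list(d) for d in ds]
    m = len(u)
    return [[u[i] * u[j] + sum(v[i] * v[j] for v in vs) for j in range(m)] for i in range(m)]


A = [[1.0, 1.0]]  # unit simplex {x >= 0 : x_1 + x_2 = 1}
b = [1.0]
print(lemma2_conditions(lift([0.5, 0.5], [[1.0, -1.0]]), A, b))  # True: A d = 0
print(lemma2_conditions(lift([0.5, 0.5], [[1.0, 1.0]]), A, b))   # False: A d = 2
```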

Since \((P(\mathcal{K}))\) is a convex relaxation of (QP), it follows that (QP) is infeasible if \((P(\mathcal{K}))\) is infeasible. The proof of Lemma 2 implies the following converse result.

Corollary 1

If (QP) is infeasible, then \((P(\mathcal{K}))\) is infeasible for any \(\mathcal{K} \in {\mathbb {K}}\), where \({\mathbb {K}}\) is given by (25).

Proof

The assertion immediately follows from Lemma 2. \(\square \)

In view of Corollary 1, any convex relaxation \((P(\mathcal{K}))\) of (QP) with \(\mathcal{K} \in {\mathbb {K}}\) will be referred to as a feasibility preserving relaxation.

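For any \(\mathcal{K} \in {\mathbb {K}}\), Lemma 2 implies that S is given by the following projection of the feasible region of \((P(\mathcal{K}))\):

\[ S = \left\{ \tilde{x} \in \mathbb{R}^n : \begin{pmatrix} 1 & \tilde{x}^T \\ \tilde{x} & \tilde{X} \end{pmatrix} \text{ is a feasible solution of } (P(\mathcal{K})) \text{ for some } \tilde{X} \in \mathcal{S}^n \right\}. \tag{31} \]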

For a given \(\mathcal{K} \in {\mathbb {K}}\), this observation motivates us to define the following optimization problem parametrized by \({{{\tilde{x}}}} \in S\):

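where \(\ell _\mathcal{K}({{{\tilde{x}}}}) \in \mathbb {R}\cup \{-\infty \} \cup \{+\infty \}\). Note that \((P(\mathcal{K},{{{\tilde{x}}}}))\) is a constrained version of \((P(\mathcal{K}))\). Similar to (29), we have

\[ \ell_{\mathcal{K}_1}(\tilde{x}) \le \ell_{\mathcal{K}_2}(\tilde{x}), \quad \tilde{x} \in S,\ \mathcal{K}_1, \mathcal{K}_2 \in \mathbb{K},\ \mathcal{K}_2 \subseteq \mathcal{K}_1. \tag{32} \]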

The following result establishes a useful property of the function \(\ell _\mathcal{K}(\cdot )\).

Proposition 1

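Given an instance of (QP), let \(\mathcal{K} \in {\mathbb {K}}\), where \({\mathbb {K}}\) is given by (25). Then, \(\ell _\mathcal{K}(\cdot )\) is a convex underestimator of \(q(\cdot )\) on S, i.e., \(\ell _\mathcal{K}(\cdot )\) is convex and

\[ \ell_\mathcal{K}(\tilde{x}) \le q(\tilde{x}), \quad \tilde{x} \in S. \tag{33} \]

Furthermore,

\[ \ell_\mathcal{K} = \inf_{\tilde{x} \in S} \ell_\mathcal{K}(\tilde{x}). \tag{34} \]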

Proof

Let \(\mathcal{K} \in {\mathbb {K}}\). The convexity of \(\ell _\mathcal{K}(\cdot )\) follows from the fact that the optimal value function of a convex optimization problem is convex as a function of the right-hand side parameter varying on a convex set (see, e.g., [33, Theorem 29.1]). For any \({{{\tilde{x}}}} \in S\), note that \({{{\tilde{Y}}}} = [1;{{{\tilde{x}}}}][1;{{{\tilde{x}}}}]^T \in \mathcal{S}^{n+1}\) is a feasible solution of \((P(\mathcal{K},{{{\tilde{x}}}}))\) with \(\langle {\widehat{Q}}, {{{\tilde{Y}}}} \rangle = q({{{\tilde{x}}}})\), which establishes (33). Therefore, \(\ell _\mathcal{K}(\cdot )\) is a convex underestimator of \(q(\cdot )\) on S.

We clearly have \(\ell _\mathcal{K} \le \ell _\mathcal{K}({\tilde{x}})\) for any \({{{\tilde{x}}}} \in S\), since \((P(\mathcal{K},{{{\tilde{x}}}}))\) is a constrained version of \((P(\mathcal{K}))\). If the optimal value of \((P(\mathcal{K}))\) is attained at some \(Y^* \in \mathcal{S}^{n+1}\), then \(Y^*\) is also an optimal solution of \((P(\mathcal{K},x^*))\), where \(x^* = Y^*_{0,1:n}\), proving (34). Otherwise, if \(Y^k \in \mathcal{S}^{n+1},~k = 1,2,\ldots \) is a sequence of feasible solutions of \((P(\mathcal{K}))\) such that \(\langle {{{\widehat{Q}}}}, Y^k \rangle \rightarrow \ell _\mathcal{K}\), then

\[ \ell_\mathcal{K} \le \ell_\mathcal{K}(x^k) \le \langle \widehat{Q}, Y^k \rangle, \quad k = 1, 2, \ldots, \]

where \(x^k = Y^k_{0,1:n}\), which implies that \(\ell _\mathcal{K}(x^k) \rightarrow \ell _\mathcal{K}\), also establishing (34) in this case. \(\square \)

For each \(\mathcal{K} \in {\mathbb {K}}\), Proposition 1 reveals that \(\ell _\mathcal{K}(\cdot )\) is a convex underestimator of \(q(\cdot )\) on S. By (21) and (24), the tightest and the weakest convex underestimators are given by \(\mathcal{K} = \mathcal{CP}^{n+1}\) and \(\mathcal{K} = \mathcal{P}^{n+1}\), respectively.

4 Role of convexity on feasibility preserving relaxations

In this section, we focus on the implications of convexity of the objective function in (QP) on feasibility preserving convex relaxations. We also consider the effect of the absence of convexity on the weakest convex relaxation in this family.

First, we focus on the instances of (QP) in which the objective function \(q(\cdot )\) is convex over the feasible region S. This condition is weaker than, and implied by, the convexity of \(q(\cdot )\) over \(\mathbb {R}^n\). The next lemma presents necessary and sufficient conditions for the convexity of \(q(\cdot )\) over S.

Lemma 3

Given an instance of (QP) with \(S \ne \emptyset \), the objective function \(q(\cdot )\) is convex over S if and only if Q is positive semidefinite on the null space of A, i.e.,

\[ d^T Q d \ge 0 \quad \text{for all } d \in \mathbb{R}^n \text{ such that } Ad = 0. \tag{35} \]

Proof

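Let \({\tilde{x}} \in S\), \({\tilde{y}} \in S\), and let \(\lambda \in [0,1]\). Let us define \({\tilde{d}} = {\tilde{y}} - {\tilde{x}}\) so that \(A {\tilde{d}} = 0\). The assertion immediately follows from the identity below together with \(\lambda \in [0,1]\):

\[ \lambda q(\tilde{x}) + (1 - \lambda) q(\tilde{y}) - q(\lambda \tilde{x} + (1 - \lambda) \tilde{y}) = \lambda (1 - \lambda)\, \tilde{d}^T Q \tilde{d}. \]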

\(\square \)
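The identity in the proof of Lemma 3 is easy to confirm numerically. The sketch below compares the convexity gap of q with \(\lambda(1-\lambda)\tilde{d}^T Q \tilde{d}\); the helper names and toy data are hypothetical. Both endpoints lie on the unit simplex, so \(\tilde{d} = \tilde{y} - \tilde{x}\) satisfies \(A\tilde{d} = 0\) for \(A = [1\ 1]\). The negative gap therefore shows that q is not convex over S for this instance.

```python
# Sketch (hypothetical helpers, toy data): the identity behind Lemma 3,
#   lam*q(x) + (1-lam)*q(y) - q(lam*x + (1-lam)*y) = lam*(1-lam) * d^T Q d,  d = y - x,
# for q(x) = x^T Q x + 2 c^T x. The linear term cancels, so the gap depends on Q and d only.

def q(Q, c, x):
    """Evaluate q(x) = x^T Q x + 2 c^T x."""
    n = len(x)
    quad = sum(x[i] * Q[i][j] * x[j] for i in range(n) for j in range(n))
    return quad + 2 * sum(ci * xi for ci, xi in zip(c, x))


def convexity_gap(Q, c, x, y, lam):
    z = [lam * xi + (1 - lam) * yi for xi, yi in zip(x, y)]
    return lam * q(Q, c, x) + (1 - lam) * q(Q, c, y) - q(Q, c, z)


def predicted_gap(Q, x, y, lam):
    d = [yi - xi for xi, yi in zip(x, y)]
    n = len(d)
    return lam * (1 - lam) * sum(d[i] * Q[i][j] * d[j] for i in range(n) for j in range(n))


Q = [[1.0, 2.0], [2.0, -3.0]]   # d^T Q d = -6 for d = (-1, 1)
c = [1.0, -1.0]
x, y = [1.0, 0.0], [0.0, 1.0]  # both lie on the simplex {x >= 0 : x_1 + x_2 = 1}
for lam in (0.1, 0.3, 0.5):
    print(lam, convexity_gap(Q, c, x, y, lam), predicted_gap(Q, x, y, lam))
```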

Under the assumption that the objective function \(q(\cdot )\) is convex over the feasible region S, the following lemma shows that the convex underestimator arising from any feasibility preserving convex relaxation coincides with the objective function \(q(\cdot )\) and is therefore exact.

Lemma 4

Given an instance of (QP) with \(S \ne \emptyset \), suppose that the objective function \(q(\cdot )\) is convex over S. Then, for any \(\mathcal{K} \in {\mathbb {K}}\),

\[ \ell_\mathcal{K}(\tilde{x}) = q(\tilde{x}), \quad \tilde{x} \in S. \]

Therefore, the convex relaxation \((P(\mathcal{K}))\) is exact, i.e., \(\ell _\mathcal{K} = \ell ^*\). Furthermore, for any optimal solution \(Y^* \in \mathcal{S}^{n+1}\) of \((P(\mathcal{K}))\), \(x^* = Y^*_{0,1:n} \in \mathbb {R}^n\) is an optimal solution of (QP).

Proof

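Let \({{{\tilde{x}}}} \in S\) and let \({{{\tilde{Y}}}} \in \mathcal{S}^{n+1}\) given by (30) be a feasible solution of \((P(\mathcal{K},{{{\tilde{x}}}}))\). By Lemma 2,

\[ \langle \widehat{Q}, \tilde{Y} \rangle = \langle Q, \tilde{X} \rangle + 2 c^T \tilde{x} = q(\tilde{x}) + \sum_{j=1}^k (\tilde{d}^j)^T Q \tilde{d}^j \ge q(\tilde{x}), \]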

where the inequality follows from (35) since \(q(\cdot )\) is convex over S and \(A {\tilde{d}}^j = 0\) for each \(j = 1,\ldots ,k\) by Lemma 2. By Proposition 1, we obtain \(\ell _\mathcal{K}({{{\tilde{x}}}}) = q({{{\tilde{x}}}})\), and \(\ell _\mathcal{K} = \ell ^*\). Finally, if \(Y^* \in \mathcal{S}^{n+1}\) is an optimal solution of \((P(\mathcal{K}))\), it follows from Proposition 1 and (35) that \(\ell ^* = \ell _\mathcal{K} = \langle {\widehat{Q}}, Y^* \rangle \ge q(x^*) = (x^*)^T Q x^* + 2 c^T x^* \ge \ell ^*\), where \(x^* = Y^*_{0,1:n}\), which implies that \(x^*\) is an optimal solution of (QP). \(\square \)
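The key step in the proof of Lemma 4 is that the lifted objective splits into \(q(\tilde{x})\) plus curvature terms along null-space directions. The sketch below checks this numerically. It assumes \(\widehat{Q} = \begin{pmatrix} 0 & c^T \\ c & Q \end{pmatrix}\), which is the unique symmetric matrix with \(\langle \widehat{Q}, [1;x][1;x]^T \rangle = q(x)\) for all x. The helper names are illustrative choices, as is the instance: a Q that is indefinite on \(\mathbb{R}^2\) but positive semidefinite on the null space of \(A = [1\ 1]\).

```python
# Sketch (assumed Q_hat = [[0, c^T], [c, Q]]; hypothetical helpers and data):
# <Q_hat, Y> = q(x) + sum_j (d^j)^T Q d^j for Y = [1; x][1; x]^T + sum_j [0; d^j][0; d^j]^T.

def q(Q, c, x):
    n = len(x)
    quad = sum(x[i] * Q[i][j] * x[j] for i in range(n) for j in range(n))
    return quad + 2 * sum(ci * xi for ci, xi in zip(c, x))


def q_hat(Q, c):
    """Q_hat = [[0, c^T], [c, Q]] as a list of lists."""
    return [[0.0] + list(c)] + [[c[i]] + list(Q[i]) for i in range(len(c))]


def lift(x, ds):
    """[1; x][1; x]^T + sum_j [0; d^j][0; d^j]^T."""
    u = [1.0] + list(x)
    vs = [[0.0] + list(d) for d in ds]
    m = len(u)
    return [[u[i] * u[j] + sum(v[i] * v[j] for v in vs) for j in range(m)] for i in range(m)]


def inner(U, V):
    """Trace inner product <U, V> = sum_ij U_ij V_ij."""
    return sum(a * b for ru, rv in zip(U, V) for a, b in zip(ru, rv))


def curvature(Q, d):
    return sum(d[i] * Q[i][j] * d[j] for i in range(len(d)) for j in range(len(d)))


Q = [[-1.0, -3.0], [-3.0, -1.0]]   # eigenvalues 2 and -4, so indefinite on R^2
c = [0.5, -0.5]
x = [0.25, 0.75]                   # feasible for A = [1, 1], b = [1]
ds = [[1.0, -1.0], [0.5, -0.5]]    # A d = 0; d = t(1, -1) gives d^T Q d = 4 t^2 >= 0

Y = lift(x, ds)
lhs = inner(q_hat(Q, c), Y)
rhs = q(Q, c, x) + sum(curvature(Q, d) for d in ds)
print(lhs, rhs, q(Q, c, x))
```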

By Lemma 4, the convex underestimator arising from any \(\mathcal{K} \in {\mathbb {K}}\) coincides with the objective function \(q(\cdot )\) over S. In addition, solving the convex relaxation \((P(\mathcal{K}))\) yields not only the optimal value \(\ell ^*\) of (QP) but also an optimal solution of (QP), whenever the optimal value of \((P(\mathcal{K}))\) is attained. We also highlight the role played by Lemma 2 (i.e., \(\mathcal{K} \subseteq \mathcal{P}^{n+1} \subseteq \mathcal{PSD}^{n+1}\)) in the proof of Lemma 4. In particular, alternative convex relaxations arising from, for instance, \(\mathcal{K} = \mathcal{N}^{n+1}\) need not be exact even if \(q(\cdot )\) is convex over S. We also remark that the choice \(\mathcal{K} = \mathcal{N}^{n+1}\) does not necessarily give rise to a feasibility preserving relaxation \((P(\mathcal{K}))\) unless one explicitly adds the constraint \(Ax = b\) to \((P(\mathcal{K}))\).

Clearly, if \(q(\cdot )\) is convex over \(\mathbb {R}^n\), then it is also convex over S. Under this more restrictive convexity assumption on \(q(\cdot )\), a similar result for the doubly nonnegative relaxation follows from [23, Lemma 2.7]. For the special case of standard quadratic programs, Gökmen and Yıldırım [18, Proposition 4] establish the exactness of the doubly nonnegative relaxation under the same assumption as in Lemma 4. Lemma 4 therefore subsumes and extends these results in two directions. First, the exactness result holds under the slightly weaker condition that \(q(\cdot )\) is convex over the feasible region. Second, it holds for any \(\mathcal{K} \in {\mathbb {K}}\), including the weakest relaxation in this family, arising from \(\mathcal{K} = \mathcal{P}^{n+1}\), where \(\mathcal{P}^{n+1}\) is given by (23).

We now turn to convex relaxations of instances of (QP) whose objective function is not convex over S. Our next result establishes that, in this case, the weakest convex relaxation, arising from \(\mathcal{K} = \mathcal{P}^{n+1}\), yields a trivial convex underestimator.

Proposition 2

Given an instance of (QP) with \(S \ne \emptyset \), suppose that the objective function \(q(\cdot )\) is not convex over S. Then, for \(\mathcal{K} = \mathcal{P}^{n+1}\), \(\ell _\mathcal{K} ({\tilde{x}}) = -\infty \) for all \({\tilde{x}} \in S\), and \(\ell _\mathcal{K} = -\infty \).

Proof

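Let \(\mathcal{K} = \mathcal{P}^{n+1}\) and let \({{{\tilde{x}}}} \in S\). By Lemma 3, there exists \({\tilde{d}} \in \mathbb {R}^n\) such that \(A {\tilde{d}} = 0\) and \({\tilde{d}}^T Q {\tilde{d}} < 0\). By Lemma 2, for any \(\lambda \ge 0\),

\[ \tilde{Y} = \begin{pmatrix} 1 & \tilde{x}^T \\ \tilde{x} & \tilde{x}\tilde{x}^T + \lambda \tilde{d}\tilde{d}^T \end{pmatrix} \in \mathcal{S}^{n+1} \]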

is a feasible solution of \((P(\mathcal{K},{{{\tilde{x}}}}))\) and \(\langle {\widehat{Q}}, {{{\tilde{Y}}}} \rangle = q({{{\tilde{x}}}}) + \lambda {{{\tilde{d}}}}^T Q {{{\tilde{d}}}} \rightarrow -\infty \) as \(\lambda \rightarrow +\infty \), establishing that \(\ell _\mathcal{K} ({\tilde{x}}) = -\infty \) for all \({\tilde{x}} \in S\). The last assertion follows from Proposition 1. \(\square \)
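The construction in the proof of Proposition 2 can be traced numerically. The sketch below uses toy data, hypothetical helper names, and the assumed form \(\widehat{Q} = \begin{pmatrix} 0 & c^T \\ c & Q \end{pmatrix}\). In it, the matrices \(\tilde{Y}(\lambda) = [1;\tilde{x}][1;\tilde{x}]^T + \lambda [0;\tilde{d}][0;\tilde{d}]^T\) are positive semidefinite with a nonnegative 0th row for every \(\lambda \ge 0\), while the objective decreases linearly in \(\lambda\).

```python
# Sketch (toy data; Q_hat = [[0, c^T], [c, Q]] is our assumed form): the ray used in
# the proof of Proposition 2. Y(lam) is a sum of two PSD rank-one terms for lam >= 0.

def lifted_ray(x, d, lam):
    """Y(lam) = [1; x][1; x]^T + lam [0; d][0; d]^T."""
    u = [1.0] + list(x)
    v = [0.0] + list(d)
    m = len(u)
    return [[u[i] * u[j] + lam * v[i] * v[j] for j in range(m)] for i in range(m)]


def objective(Q, c, Y):
    """<Q_hat, Y> with Q_hat = [[0, c^T], [c, Q]]."""
    n = len(c)
    Q_hat = [[0.0] + list(c)] + [[c[i]] + list(Q[i]) for i in range(n)]
    return sum(Q_hat[i][j] * Y[i][j] for i in range(n + 1) for j in range(n + 1))


Q = [[-1.0, 0.0], [0.0, -1.0]]   # q(x) = -||x||^2 is not convex over the simplex
c = [0.0, 0.0]
x = [0.5, 0.5]                   # feasible for A = [1, 1], b = [1]; q(x) = -0.5
d = [1.0, -1.0]                  # A d = 0 and d^T Q d = -2 < 0

for lam in (0, 10, 100, 1000):
    Y = lifted_ray(x, d, lam)
    # The 0th row of Y(lam) is (1, x^T) >= 0 for every lam, as membership in P^3 requires.
    print(lam, Y[0], objective(Q, c, Y))   # objective = -0.5 - 2 * lam
```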

By Lemma 4 and Proposition 2, it follows that the weakest convex relaxation arising from \(\mathcal{K} = \mathcal{P}^{n+1}\) is exact if the objective function \(q(\cdot )\) is convex over S, and provides no useful information otherwise. It is worth pointing out that Proposition 2 also holds for the weaker convex relaxation arising from \(\mathcal{K} = \mathcal{PSD}^{n+1}\). We remark, however, that the corresponding convex relaxation is not necessarily a feasibility preserving relaxation.

5 Copositive relaxation and convex envelope

Having established in Sect. 4 some properties of the weakest feasibility preserving relaxation, which arises from \(\mathcal{K} = \mathcal{P}^{n+1}\), we now focus on the strongest one, namely the copositive relaxation given by \(\mathcal{K} = \mathcal{CP}^{n+1}\). Note that Burer [8] already established the exactness of the copositive relaxation for a family of optimization problems that includes (QP) as a special case. In this section, for the specific class of quadratic programs, we establish the stronger result that the copositive relaxation in fact yields the convex envelope under a mild assumption on the directions of negative curvature.

Recall that Lemma 1 presents necessary and sufficient conditions for the unboundedness of the objective function \(q(\cdot )\) over S. We first focus on the condition \(L_\infty \ne \emptyset \), where \(L_\infty \) is given by (7). In this case, the following corollary reveals that the convex underestimator arising from any \(\mathcal{K} \in {\mathbb {K}}\) is the trivial one.

Corollary 2

Suppose that \(S \ne \emptyset \) and \(L_\infty \ne \emptyset \), where \(L_\infty \) is given by (7). Then, for any \({\tilde{x}} \in S\) and any \(\mathcal{K} \in {\mathbb {K}}\), we have \(\ell _\mathcal{K}({\tilde{x}}) = -\infty \), and \(\ell _\mathcal{K} = \ell ^* = -\infty \).

Proof

By (32), it suffices to prove the assertions for \(\mathcal{K} = \mathcal{CP}^{n+1}\), which directly follow from the hypothesis \(L_\infty \ne \emptyset \), Lemma 2, Proposition 1, and Lemma 1. \(\square \)

In light of Corollary 2, we henceforth make the following assumption.

Assumption 1

We assume that \(L_\infty = \emptyset \), i.e.,

\[ d^T Q d \ge 0, \quad d \in L. \]

Note that Assumption 1 is clearly satisfied if S is nonempty and bounded, since \(L = \{0\}\) in that case. If S is unbounded, Lemma 1 implies that (QP) may still be unbounded below under Assumption 1, namely when the second condition of Lemma 1 holds.

We next present the following decomposition result from [8], which outlines a useful property of the copositive relaxation (see also [2] for the equivalence between Burer’s original formulation and the simplified formulation \((P(\mathcal{K}))\) for \(\mathcal{K} = \mathcal{CP}^{n+1}\)).

Lemma 5

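[8, Lemma 2.2] Given an instance of (QP) with \(S \ne \emptyset \), let \({\tilde{x}} \in S\) and \(\mathcal{K} = \mathcal{CP}^{n+1}\). Then, \({\tilde{Y}} \in \mathcal{S}^{n+1}\) given by (30) is a feasible solution of \((P(\mathcal{K},{{{\tilde{x}}}}))\) if and only if there exist \({\tilde{x}}^j \in S\), \(j = 1,\ldots ,k_1\), \(\lambda _j \ge 0,~j = 1,\ldots ,k_1\), and \({\tilde{d}}^j \in L,~j = 1,\ldots ,k_2\), where L is given by (5), such that \(\sum \limits _{j=1}^{k_1} \lambda _j = 1\), and

\[ \tilde{Y} = \sum_{j=1}^{k_1} \lambda_j \begin{pmatrix} 1 \\ \tilde{x}^j \end{pmatrix} \begin{pmatrix} 1 \\ \tilde{x}^j \end{pmatrix}^T + \sum_{j=1}^{k_2} \begin{pmatrix} 0 \\ \tilde{d}^j \end{pmatrix} \begin{pmatrix} 0 \\ \tilde{d}^j \end{pmatrix}^T. \tag{36} \]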

Relying on this result, Burer [8] established the following well-known exactness result for a larger class of optimization problems that includes (QP) as a special case. We present this result for quadratic programs.

Theorem 1

[8, Theorem 2.6] For any instance of (QP) with \(S \ne \emptyset \), the copositive relaxation of (QP) arising from \(\mathcal{K} = \mathcal{CP}^{n+1}\) is exact, i.e., \(\ell _\mathcal{K} = \ell ^*\). In addition, if \(\ell _\mathcal{K}({{{\tilde{x}}}}) = \ell ^*\), then \({{{\tilde{x}}}} \in \text {conv}(S^*)\), where \(S^*\) is given by (8).

For the specific class of quadratic programs, we remark that the assumption \(S \ne \emptyset \) in Theorem 1 can be removed due to Corollary 1.

Next, we present our main result in this section, which shows that Lemma 5 can in fact be used to establish a stronger result on copositive relaxations of quadratic programs.

Proposition 3

Let \(\mathcal{K} = \mathcal{CP}^{n+1}\). For any instance of (QP) with \(S \ne \emptyset \) that satisfies Assumption 1, the convex underestimator \(\ell _\mathcal{K}(\cdot )\) is the convex envelope of \(q(\cdot )\) on S, i.e.,

\[ \ell_\mathcal{K}(\tilde{x}) = f_S(\tilde{x}), \quad \tilde{x} \in S, \]

where \(f_S(\cdot )\) denotes the convex envelope of \(q(\cdot )\) on S given by (9).

Proof

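Let \({\tilde{x}} \in S\). The feasible region of \((P(\mathcal{K},{{{\tilde{x}}}}))\) is nonempty, since \([1;{\tilde{x}}][1;{\tilde{x}}]^T\) is a feasible solution (see the proof of Proposition 1). Let \({\tilde{Y}} \in \mathcal{S}^{n+1}\) be an arbitrary feasible solution of \((P(\mathcal{K},{{{\tilde{x}}}}))\). By Lemma 5, \({\tilde{Y}}\) admits a decomposition given by (36), i.e.,

\[ \tilde{Y} = \sum_{j=1}^{k_1} \lambda_j \begin{pmatrix} 1 \\ \tilde{x}^j \end{pmatrix} \begin{pmatrix} 1 \\ \tilde{x}^j \end{pmatrix}^T + \sum_{j=1}^{k_2} \begin{pmatrix} 0 \\ \tilde{d}^j \end{pmatrix} \begin{pmatrix} 0 \\ \tilde{d}^j \end{pmatrix}^T, \]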

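where \({\tilde{x}}^j \in S\), \(j = 1,\ldots ,k_1\), \(\lambda _j \ge 0,~j = 1,\ldots ,k_1\), and \({\tilde{d}}^j \in L,~j = 1,\ldots ,k_2\), where L is given by (5), such that \(\sum \limits _{j=1}^{k_1} \lambda _j = 1\). Therefore,

\[ \begin{aligned} \langle \widehat{Q}, \tilde{Y} \rangle &= \sum_{j=1}^{k_1} \lambda_j q(\tilde{x}^j) + \sum_{j=1}^{k_2} (\tilde{d}^j)^T Q \tilde{d}^j \\ &\ge \sum_{j=1}^{k_1} \lambda_j q(\tilde{x}^j) \\ &\ge \sum_{j=1}^{k_1} \lambda_j f_S(\tilde{x}^j) \\ &\ge f_S\Big( \sum_{j=1}^{k_1} \lambda_j \tilde{x}^j \Big) \\ &= f_S(\tilde{x}), \end{aligned} \]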

where we used Assumption 1 in the second line, (9) in the third line, the convexity of \(f_S(\cdot )\) in the fourth line, and (36) in the last line. It follows that \(\ell _\mathcal{K}({{{\tilde{x}}}}) \ge f_S({{{\tilde{x}}}})\), which, together with (9) and Proposition 1, implies that \(\ell _\mathcal{K}({\tilde{x}}) = f_S({\tilde{x}})\). Therefore, \(\ell _\mathcal{K}(\cdot )\) is the convex envelope of \(q(\cdot )\) on S. \(\square \)

For the specific family of quadratic programs, Proposition 3 reveals that the copositive relaxation is not only exact, but also gives rise to the convex envelope \(f_S(\cdot )\) of \(q(\cdot )\) on S under Assumption 1. We note that the convex envelope of a quadratic function over a polytope was earlier characterized by an optimization problem in [1, Theorem 1]. Together with [8, Corollary 2.5], this result yields another proof of Proposition 3 under the assumption that S is nonempty and bounded. However, Proposition 3 generalizes this result to quadratic programs with an unbounded feasible region. Furthermore, we think that our perspective based on the optimization problem \((P(\mathcal{K},{{{\tilde{x}}}}))\) clearly pinpoints the role played by the convex underestimator arising from the copositive relaxation and highlights the usefulness of our view of convex relaxations from this perspective.
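A two-dimensional example illustrates Proposition 3. Take S to be the unit simplex in \(\mathbb{R}^2\), so that \(L = \{0\}\) and Assumption 1 holds, and take \(q(x) = -\Vert x \Vert^2\) (Q = −I, c = 0). Then \(S^* = \{e_1, e_2\}\) and \(\ell^* = -1\), and by (10), \(f_S(\tilde{x}) = -1\) at \(\tilde{x} = (1/2, 1/2) \in \text{conv}(S^*)\). The sketch below builds \(\tilde{Y} = \tfrac{1}{2}[1;e_1][1;e_1]^T + \tfrac{1}{2}[1;e_2][1;e_2]^T\). This matrix has the form in Lemma 5 (with \(k_1 = 2\), \(k_2 = 0\)), so it is feasible for \((P(\mathcal{CP}^3, \tilde{x}))\). Its objective value \(-1\) lies strictly below \(q(\tilde{x}) = -1/2\) and matches \(f_S(\tilde{x})\). The helper names and the assumed form of \(\widehat{Q}\) are ours.

```python
# Sketch (toy data; Q_hat = [[0, c^T], [c, Q]] is our assumed form): Proposition 3
# on the unit simplex in R^2 with q(x) = -||x||^2.

def q(Q, c, x):
    n = len(x)
    quad = sum(x[i] * Q[i][j] * x[j] for i in range(n) for j in range(n))
    return quad + 2 * sum(ci * xi for ci, xi in zip(c, x))


def lifted(points, weights):
    """sum_j w_j [1; x^j][1; x^j]^T, completely positive when x^j >= 0 and w_j >= 0."""
    m = len(points[0]) + 1
    Y = [[0.0] * m for _ in range(m)]
    for w, p in zip(weights, points):
        u = [1.0] + list(p)
        for i in range(m):
            for j in range(m):
                Y[i][j] += w * u[i] * u[j]
    return Y


def lifted_objective(Q, c, Y):
    """<Q_hat, Y> with Q_hat = [[0, c^T], [c, Q]]."""
    n = len(c)
    Q_hat = [[0.0] + list(c)] + [[c[i]] + list(Q[i]) for i in range(n)]
    return sum(Q_hat[i][j] * Y[i][j] for i in range(n + 1) for j in range(n + 1))


Q = [[-1.0, 0.0], [0.0, -1.0]]
c = [0.0, 0.0]
vertices = [[1.0, 0.0], [0.0, 1.0]]   # S* = {e_1, e_2}, l* = -1
Y = lifted(vertices, [0.5, 0.5])
x_tilde = Y[0][1:]                    # 0th row of Y, i.e., x_tilde = (0.5, 0.5)
print(x_tilde, lifted_objective(Q, c, Y), q(Q, c, x_tilde))  # [0.5, 0.5] -1.0 -0.5
```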

The following corollary summarizes the implications for any quadratic program.

Corollary 3

Let \(\mathcal{K} = \mathcal{CP}^{n+1}\). For any instance of (QP), \(\ell _\mathcal{K} = \ell ^*\). In addition, if \(-\infty< \ell ^* < +\infty \), then \(\ell _\mathcal{K}({{{\tilde{x}}}}) = \ell ^*\) if and only if \({{{\tilde{x}}}} \in \text {conv}(S^*)\), where \(S^*\) is given by (8).

Proof

The assertion follows from Proposition 3, Corollary 2, Corollary 1, Theorem 1, and (10). \(\square \)

By relying on (22), we close this section by discussing the implications of Proposition 3 in lower dimensions. To that end, we define the following subset of \({\mathbb {K}}\):

\[ {\mathbb {K}}_0 = \left\{ \mathcal{K} \in {\mathbb {K}} : \mathcal{K} \subseteq \mathcal{D}^{n+1} \right\}. \tag{37} \]

The following corollary extends Proposition 3 to any convex cone \(\mathcal{K} \in {\mathbb {K}}_0\) in lower dimensions.

Corollary 4

Let \(n \le 3\). For any instance of (QP) with \(S \ne \emptyset \) that satisfies Assumption 1 and any \(\mathcal{K} \in {\mathbb {K}}_0\), the convex underestimator \(\ell _\mathcal{K}(\cdot )\) is the convex envelope of \(q(\cdot )\) on S, i.e.,

\[ \ell_\mathcal{K}({\tilde{x}}) = f_S({\tilde{x}}), \quad {\tilde{x}} \in S, \]

where \(f_S(\cdot )\) denotes the convex envelope of \(q(\cdot )\) on S given by (9). Furthermore, this result also holds for \(n = 4\) if there exists \({\tilde{y}} \in \mathbb {R}^m\) such that \(A^T {\tilde{y}} = a \in \mathbb {R}^n_+\) and \(b^T {\tilde{y}} = 1\).

Proof

For \(n \le 3\), the result is an immediate corollary of Proposition 3 and (22) since \(\mathcal{K} = \mathcal{CP}^{n+1}\) for any \(\mathcal{K} \in {\mathbb {K}}_0\) and any \(n \le 3\).

Let \(n = 4\). We follow an argument similar to that in [8, Sect. 3]. By the hypothesis, there exists \({\tilde{y}} \in \mathbb {R}^m\) such that \(A^T {\tilde{y}} = a \in \mathbb {R}^n_+\) and \(b^T {\tilde{y}} = 1\). Therefore, for any \({\tilde{x}} \in S\), we have \(a^T {\tilde{x}} = {\tilde{y}}^T A {\tilde{x}} = {\tilde{y}}^T b = 1\).

Let \(\mathcal{K} \in {\mathbb {K}}_0\). If \(\mathcal{K} = \mathcal{CP}^{5}\), then the result follows from Proposition 3. Suppose that \(\mathcal{K} \ne \mathcal{CP}^{5}\). Let \({\tilde{x}} \in S\) and let \({\tilde{Y}} \in \mathcal{S}^{n+1}\) given by (30) be a feasible solution of \((P(\mathcal{K},{{{\tilde{x}}}}))\). By Lemma 2, \({\tilde{X}} = {\tilde{x}} {\tilde{x}}^T + {\tilde{D}}\), where \({\tilde{D}} = \sum \limits _{j = 1}^k {\tilde{d}}^j ({\tilde{d}}^j)^T\) and \(A {\tilde{d}}^j = 0\) for each \(j = 1,\ldots ,k\). Therefore,

\[ A {\tilde{X}} A^T = A {\tilde{x}} {\tilde{x}}^T A^T + \sum_{j=1}^k (A {\tilde{d}}^j)(A {\tilde{d}}^j)^T = b b^T, \]

which implies that \({\tilde{y}}^T A {\tilde{X}} A^T {\tilde{y}} = a^T {\tilde{X}} a = ({\tilde{y}}^T b)^2 = 1\). Since \(a^T {\tilde{x}} = 1\), we obtain \(1 = a^T {\tilde{X}} a = (a^T {\tilde{x}})^2 + a^T {\tilde{D}} a = 1 + a^T {\tilde{D}} a\), which implies that \(a^T {\tilde{D}} a = 0\). Since \({\tilde{D}} \in \mathcal{PSD}^{n}\), it follows that \({\tilde{D}} a = 0\). Therefore, \({\tilde{X}} a = {\tilde{x}} (a^T {\tilde{x}}) + {\tilde{D}} a = {\tilde{x}}\), and

\[ {\tilde{Y}} = \begin{bmatrix} 1 & {\tilde{x}}^T \\ {\tilde{x}} & {\tilde{X}} \end{bmatrix} = \begin{bmatrix} a^T {\tilde{X}} a & a^T {\tilde{X}} \\ {\tilde{X}} a & {\tilde{X}} \end{bmatrix} = \begin{bmatrix} a^T \\ I \end{bmatrix} {\tilde{X}} \begin{bmatrix} a^T \\ I \end{bmatrix}^T. \]

Furthermore, since \({\tilde{Y}} \in \mathcal{K}\) and \(\mathcal{K} \in {\mathbb {K}}_0\), it follows that \({\tilde{X}} \in \mathcal{D}^n\), which implies that \({\tilde{X}} \in \mathcal{CP}^n\) by (22) since \(n = 4\). Therefore, there exists a componentwise nonnegative matrix \(U \in \mathbb {R}^{n \times r}\) such that \({\tilde{X}} = U U^T\). Combining this with the last equation, we obtain

\[ {\tilde{Y}} = \begin{bmatrix} a^T U \\ U \end{bmatrix} \begin{bmatrix} a^T U \\ U \end{bmatrix}^T \in \mathcal{CP}^{n+1}, \]

since \(a \in \mathbb {R}^n_+\) and U is componentwise nonnegative. Therefore, every feasible solution of \((P(\mathcal{K},{{{\tilde{x}}}}))\) is completely positive, so the feasible region of \((P(\mathcal{K},{{{\tilde{x}}}}))\) is the same as that of the corresponding copositive relaxation. The result follows from Proposition 3. \(\square \)
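The key step in the \(n = 4\) case of Corollary 4 is that a nonnegative factorization \({\tilde{X}} = UU^T\) with \({\tilde{X}} a = {\tilde{x}}\) and \(a^T {\tilde{X}} a = 1\) lifts to a nonnegative factorization of \({\tilde{Y}}\) with factor \(W = [a^T U; U]\). The Python sketch below verifies this numerically; the random nonnegative `U` and `a` are hypothetical data, and the rescaling of `U` is only a device to enforce \(a^T {\tilde{X}} a = 1\).

```python
import numpy as np

def lift_factor(U, a):
    """Return W = [a^T U; U], which is nonnegative whenever U >= 0 and a >= 0."""
    return np.vstack([a @ U, U])

rng = np.random.default_rng(0)
n, r = 4, 6
U = rng.random((n, r))        # componentwise nonnegative factor (hypothetical data)
a = rng.random(n)             # a in R^n_+
U /= np.linalg.norm(a @ U)    # enforce a^T X a = ||U^T a||^2 = 1

X = U @ U.T
x = X @ a                     # plays the role of x~ = X~ a
W = lift_factor(U, a)
Y = W @ W.T                   # should equal [[1, x^T], [x, X]]
```

Because `W` is entrywise nonnegative, `Y = W @ W.T` is completely positive by construction, which is exactly why the feasible region collapses to that of the copositive relaxation.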

As observed in [8, Sect. 3], the assumption for the \(n = 4\) case is satisfied if, for instance, S is nonempty and bounded, or if a subset of the variables in (QP) is bounded.

Corollary 4 implies that, for any instance of (QP) that satisfies the assumptions of the corollary, the value of the convex envelope \(f_S({\tilde{x}})\) at any \({\tilde{x}} \in S\) can be computed to any prescribed precision in polynomial time, for instance by solving the doubly nonnegative relaxation, which is a semidefinite program. We remark that Locatelli [26] formulated a semidefinite programming problem for the computation of the value of the convex envelope of a quadratic form over a polytope for any value of n under the assumption that all vertices and all maximal convex faces of the polytope are known.

6 Bounded and unbounded feasible regions

In this section, we present several results for feasibility preserving convex relaxations of (QP) with bounded and unbounded feasible regions.

First, we focus on the relation between the boundedness of the feasible region S of (QP) and that of the feasible region of \((P(\mathcal{K}))\) for \(\mathcal{K} \in {\mathbb {K}}\). By (31), the boundedness of the latter set implies the boundedness of the former; equivalently, the unboundedness of S implies the unboundedness of the feasible region of \((P(\mathcal{K}))\). However, the converse implications do not hold in general for a given \(\mathcal{K} \in {\mathbb {K}}\). For instance, even if S is a nonempty polytope, Proposition 2 reveals that the feasible region of \((P(\mathcal{K}))\) can be unbounded for \(\mathcal{K} = \mathcal{P}^{n+1}\) if the objective function \(q(\cdot )\) is not convex over S.

On the other hand, under the stronger assumption that \(\mathcal{K} \in {\mathbb {K}}_0\), the aforementioned converse implications were established in [22, Lemma 2], i.e., the boundedness of S implies the boundedness of the feasible region of any such feasibility preserving convex relaxation \((P(\mathcal{K}))\). We state this result in a slightly different form below and give a proof that highlights the relation between the recession cone of S and that of the feasible region of \((P(\mathcal{K}))\).

Lemma 6

Let \(\mathcal{K} \in {\mathbb {K}}_0\), where \({\mathbb {K}}_0\) is given by (37). Then, S is unbounded if and only if the feasible region of \((P(\mathcal{K}))\) is unbounded.

Proof

Let \(\mathcal{K} \in {\mathbb {K}}_0\). By the preceding discussion, if S is unbounded, then the feasible region of \((P(\mathcal{K}))\) is unbounded. Conversely, suppose that the feasible region of \((P(\mathcal{K}))\) is unbounded. By Lemma 2, \(S \ne \emptyset \). Then, by [33, Theorem 8.4], the recession cone of the feasible region of \((P(\mathcal{K}))\), which is a nonempty closed convex set, contains a nonzero direction, i.e., there exists \(D \in \mathcal{K}\) such that \(D \ne 0\), \(D_{00} = 0\), and \(\langle {\widehat{A}}, D \rangle = 0\). Since \(D \in \mathcal{PSD}^{n+1}\), \(D_{00} = 0\) implies that \(D_{0,1:n} = 0\). Therefore, D is given by

\[ D = \begin{bmatrix} 0 & 0^T \\ 0 & {\tilde{D}} \end{bmatrix}, \]

where \({\tilde{D}} \in \mathcal{D}^n\) and \(\langle A^T A, {\tilde{D}} \rangle = 0\). Using a similar argument as in the proof of Lemma 2, it follows that \({\tilde{D}} = \sum \limits _{j = 1}^k {\tilde{d}}^j ({\tilde{d}}^j)^T\), where \(A {\tilde{d}}^j = 0\) for each \(j = 1,\ldots ,k\). Since \({\tilde{D}} \in \mathcal{N}^n \backslash \{0\}\), we have \({{{\tilde{d}}}} = {\tilde{D}} e \in \mathbb {R}^n_+ \backslash \{0\}\), and \({{{\tilde{d}}}} = \sum \limits _{j = 1}^k {\tilde{d}}^j ({\tilde{d}}^j)^T e = \sum \limits _{j = 1}^k (e^T {\tilde{d}}^j) {\tilde{d}}^j\). Therefore, \(A {{{\tilde{d}}}} = 0\), which implies that \({{{\tilde{d}}}} \in L\). Since \(S \ne \emptyset \) and \({{{\tilde{d}}}} \in L \backslash \{0\}\) is a recession direction of S, it follows that S is unbounded. \(\square \)

Under the assumption that \(\mathcal{K} \in {\mathbb {K}}_0\), Lemma 6 reveals that the corresponding convex relaxations preserve not only feasibility but also boundedness of the feasible region.

For any \(\mathcal{K} \in {\mathbb {K}}_0\), the proof of Lemma 6 gives a recipe for computing a recession direction of S by using any recession direction of the feasible region of \((P(\mathcal{K}))\). We next provide some insight into this process. Let \(B \in \mathbb {R}^{n \times k}\) be a matrix whose columns form a basis for the null space of A. For any \(\mathcal{K} \in {\mathbb {K}}_0\), the recession cone of the feasible region of \((P(\mathcal{K}))\), denoted by \(\mathcal{L}(\mathcal{K})\), then satisfies

\[ \mathcal{L}(\mathcal{K}) \subseteq \left\{ \begin{bmatrix} 0 & 0^T \\ 0 & B W B^T \end{bmatrix} : W \in \mathcal{PSD}^k,~ B W B^T \in \mathcal{N}^n \right\}. \]

As illustrated by the proof of Lemma 6, the nonnegativity condition on the submatrix \({\tilde{D}}\) is mainly responsible for the boundedness of the feasible region of \((P(\mathcal{K}))\) when S is bounded. In contrast, if \(\mathcal{K} = \mathcal{P}^{n+1}\), then this nonnegativity condition on \({\tilde{D}}\) is removed, which leads to a significantly larger set of recession directions \(\mathcal{L}(\mathcal{K})\). In particular, this observation plays a crucial role in the unfavorable result of Proposition 2, which applies whenever \(q(\cdot )\) is nonconvex over S, even if S is nonempty and bounded.
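The recipe from the proof of Lemma 6 maps a recession direction \([0, 0^T; 0, {\tilde{D}}]\) of the feasible region of \((P(\mathcal{K}))\) to the recession direction \({\tilde{d}} = {\tilde{D}} e\) of S. The Python sketch below assumes \(S = \{x \in \mathbb{R}^n_+ : Ax = b\}\) and uses a hypothetical constraint matrix; it builds \({\tilde{D}} = BWB^T\) from an orthonormal null-space basis B (computed here via an SVD, one choice among many) and applies the map.

```python
import numpy as np

def null_space_basis(A, tol=1e-10):
    """Columns form an orthonormal basis of {d : A d = 0}, computed from an SVD."""
    _, s, Vt = np.linalg.svd(A)
    rank = int(np.sum(s > tol))
    return Vt[rank:].T

def is_dnn(M, tol=1e-9):
    """Doubly nonnegative: symmetric, positive semidefinite, entrywise nonnegative."""
    return (np.allclose(M, M.T) and bool(np.all(M >= -tol))
            and np.linalg.eigvalsh(M).min() >= -tol)

def recession_direction_of_S(D_tilde, A, tol=1e-9):
    """Lemma 6 recipe: d = D_tilde e is a nonzero element of L = {d >= 0 : A d = 0}."""
    if not is_dnn(D_tilde, tol):
        raise ValueError("D_tilde must be doubly nonnegative")
    if abs(np.sum((A.T @ A) * D_tilde)) > tol:
        raise ValueError("<A^T A, D_tilde> must vanish")
    d = D_tilde @ np.ones(D_tilde.shape[0])   # d = D_tilde e
    if d.max() <= tol:
        raise ValueError("D_tilde must be nonzero")
    return d

A = np.array([[1.0, -1.0, 0.0]])   # hypothetical: S = {x >= 0 : x_1 - x_2 = b_1}
B = null_space_basis(A)            # 3 x 2 orthonormal basis of the null space of A
W = np.eye(B.shape[1])             # any W in PSD^2 with B W B^T >= 0 would do
D_tilde = B @ W @ B.T              # here the projector onto the null space of A

d = recession_direction_of_S(D_tilde, A)
```

For this data, `D_tilde` happens to be entrywise nonnegative, so the recipe applies. For \(\mathcal{K} = \mathcal{P}^{n+1}\), a matrix \(BWB^T\) with negative entries is also admissible, and \(BWB^T e\) then need not lie in \(\mathbb{R}^n_+\), which is the loss of the nonnegativity condition discussed above.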

6.1 Bounded feasible region

In this section, we focus on instances of (QP) for which S is a nonempty polytope. Under this assumption, Lemma 6 implies that the feasible region of \((P(\mathcal{K}))\) is nonempty and bounded for any \(\mathcal{K} \in {\mathbb {K}}_0\); hence, \(\ell _\mathcal{K} > -\infty \) and the optimal value of \((P(\mathcal{K}))\) is attained (see also [22]).

We first establish a technical result that identifies a useful property of the feasible solutions of \((P(\mathcal{K},{\tilde{x}}))\) for \(\mathcal{K} \in {\mathbb {K}}_0\). We then use this property to establish a result about local minimizers of (QP).

Lemma 7

Let \(\mathcal{K} \in {\mathbb {K}}_0\) and consider any instance of (QP), where S is a nonempty polytope. Let \({\tilde{x}} \in S\), and let \({\tilde{Y}} \in \mathcal{S}^{n+1}\) be a feasible solution of \((P(\mathcal{K},{\tilde{x}}))\) given by (30). Then, for any decomposition of \({{{\tilde{X}}}} = {\tilde{Y}}_{1:n,1:n}\) given by \({\tilde{X}} = {\tilde{x}} {\tilde{x}}^T + \sum \limits _{j = 1}^k {\tilde{d}}^j ({\tilde{d}}^j)^T\) (cf. Lemma 2), we have

\[ {\tilde{d}}^j_i = 0, \quad j = 1,\ldots ,k, \quad i \in {\mathbf {Z}}({\tilde{x}}), \]

where \({\mathbf {Z}}({\tilde{x}})\) is given by (3).

Proof

Suppose that S is a nonempty polytope. Let \({\tilde{x}} \in S\) and let \(\mathcal{K} \in {\mathbb {K}}_0\). Let \({\tilde{Y}} \in \mathcal{S}^{n+1}\) be a feasible solution of \(P(\mathcal{K},{\tilde{x}})\) given by (30) and let \({{{\tilde{X}}}} = {\tilde{Y}}_{1:n,1:n}\). By Lemma 2, there exist \({\tilde{d}}^j \in \mathbb {R}^n,~j = 1,\ldots ,k\), such that \({\tilde{X}} = {\tilde{x}} {\tilde{x}}^T + \sum \limits _{j = 1}^k {\tilde{d}}^j ({\tilde{d}}^j)^T\), where \(A {\tilde{d}}^j = 0\) for each \(j = 1,\ldots ,k\). Let us define \({\mathbf {P}} = {\mathbf {P}}({\tilde{x}})\) and \({\mathbf {Z}} = {\mathbf {Z}}({\tilde{x}})\), where \({\mathbf {P}}({\tilde{x}})\) and \({\mathbf {Z}}({\tilde{x}})\) are given by (2) and (3), respectively. Then, by permuting the rows and columns of \({{{\tilde{X}}}}\) if necessary, we obtain

where we used \({\tilde{x}}_{{\mathbf {Z}}} = 0\). Since \({\tilde{U}} \in \mathcal{D}^n\), it follows that

Therefore,

Since S is bounded, \(A {\tilde{d}} = 0\), and \({\tilde{d}} \ge 0\), it follows that \({\tilde{d}} = 0\). Therefore,

Since \({\tilde{U}} \in \mathcal{D}^n\), it follows that \(\sum \limits _{j = 1}^k {\tilde{d}}^j_{{\mathbf {Z}}} ({\tilde{d}}^j_{{\mathbf {Z}}})^T \in \mathcal{N}^{|{\mathbf {Z}}|}\), which, together with the last equality, implies that \({\tilde{d}}^j_{{\mathbf {Z}}} = 0\) for each \(j = 1,\ldots ,k\). The assertion follows. \(\square \)

This technical result enables us to establish the following property of convex underestimators.

Proposition 4

Let \(\mathcal{K} \in {\mathbb {K}}_0\) and consider any instance of (QP), where S is a nonempty polytope. Then, for any local minimizer \({\tilde{x}} \in S\) of (QP), we have

\[ \ell_\mathcal{K}({\tilde{x}}) = q({\tilde{x}}). \tag{38} \]

In particular, for any global minimizer \(x^* \in S\) of (QP), \(\ell _\mathcal{K}({x}^*) = q({x}^*) = \ell ^*\).

Proof

Let S be a nonempty polytope, \(\mathcal{K} \in {\mathbb {K}}_0\), and let \({\tilde{x}} \in S\) be a local minimizer of (QP). Let us define \({\mathbf {P}} = {\mathbf {P}}({\tilde{x}})\) and \({\mathbf {Z}} = {\mathbf {Z}}({\tilde{x}})\), where \({\mathbf {P}}({\tilde{x}})\) and \({\mathbf {Z}}({\tilde{x}})\) are given by (2) and (3), respectively. Let \({\tilde{Y}}\) given by (30) be a feasible solution of \((P(\mathcal{K},{{{\tilde{x}}}}))\). Then, by Lemma 2, there exist \({\tilde{d}}^j \in \mathbb {R}^n,~j = 1,\ldots ,k\), such that \({{{\tilde{X}}}} = {\tilde{Y}}_{1:n,1:n} = {\tilde{x}} {\tilde{x}}^T + \sum \limits _{j = 1}^k {\tilde{d}}^j ({\tilde{d}}^j)^T\), where \(A {\tilde{d}}^j = 0\) for each \(j = 1,\ldots ,k\). By Lemma 7, \({\tilde{d}}^j_i = 0\) for each \(j = 1,\ldots ,k\) and each \(i \in {\mathbf {Z}}\). Since \({\tilde{x}}\) is a local minimizer, there exist \({\tilde{y}} \in \mathbb {R}^m\) and \({\tilde{s}} \in \mathbb {R}^n\) such that (11)–(15) are satisfied. Therefore, for each \(j = 1,\ldots ,k\),

\[ \begin{aligned} ({\tilde{d}}^j)^T (Q {\tilde{x}} + c) &= ({\tilde{d}}^j)^T (A^T {\tilde{y}} + {\tilde{s}}) \\ &= (A {\tilde{d}}^j)^T {\tilde{y}} + ({\tilde{d}}^j)^T {\tilde{s}} \\ &= \sum_{i \in {\mathbf {P}}} {\tilde{s}}_i {\tilde{d}}^j_i + \sum_{i \in {\mathbf {Z}}} {\tilde{s}}_i {\tilde{d}}^j_i \\ &= 0, \end{aligned} \]

where we used (11) in the first equality, \(A {\tilde{d}}^j = 0\) in the third equality, and \({\tilde{s}}_i = 0\) for \(i \in {\mathbf {P}}\) by (13) together with \({\tilde{d}}^j_i = 0\) for \(i \in {\mathbf {Z}}\) in the last one. By (17), we have \({\tilde{d}}^j \in C_{{\tilde{x}}}\) for each \(j = 1,\ldots ,k\). Since \({\tilde{x}}\) is a local minimizer, \(({\tilde{d}}^j)^T Q {\tilde{d}}^j \ge 0\) for each \(j = 1,\ldots ,k\) by (16). Therefore,

\[ \langle {\widehat{Q}}, {\tilde{Y}} \rangle = q({\tilde{x}}) + \sum_{j=1}^k ({\tilde{d}}^j)^T Q {\tilde{d}}^j \ge q({\tilde{x}}) \]

for any feasible solution \({\tilde{Y}}\) of \((P(\mathcal{K},{{{\tilde{x}}}}))\). Therefore, \(\ell _\mathcal{K}({\tilde{x}}) \ge q({{{\tilde{x}}}})\). Combining this inequality with (33) yields (38). The second assertion follows from the fact that any global minimizer \(x^*\) of (QP) is also a local minimizer. \(\square \)

By Proposition 4, the convex underestimator \(\ell _\mathcal{K}(\cdot )\) agrees with \(q(\cdot )\) at each local minimizer of (QP) for any \(\mathcal{K} \in {\mathbb {K}}_0\). By Proposition 1,

\[ \ell_\mathcal{K}({\tilde{x}}) \le f_S({\tilde{x}}) \le q({\tilde{x}}), \quad {\tilde{x}} \in S, \]

where \(f_S(\cdot )\) denotes the convex envelope of \(q(\cdot )\) on S given by (9). Therefore, by Proposition 4, for any local minimizer \({\tilde{x}} \in S\) of (QP), we obtain \(\ell _\mathcal{K}({\tilde{x}}) = f_S({{{\tilde{x}}}}) = q({{{\tilde{x}}}})\) for any \(\mathcal{K} \in {\mathbb {K}}_0\). In contrast, for the copositive relaxation \(\mathcal{K} = \mathcal{CP}^{n+1}\), Proposition 3 shows that the relation \(\ell _\mathcal{K}({\tilde{x}}) = f_S({{{\tilde{x}}}})\) holds at every feasible solution \({\tilde{x}} \in S\), since Assumption 1 is automatically satisfied when S is bounded.

6.2 Unbounded feasible region

In this section, we consider instances of (QP) with an unbounded feasible region, with a primary focus on constructing instances with a finite optimal value that admit an unbounded convex relaxation.

Recall that if S is nonempty and bounded, then Lemma 6 implies \(-\infty < \ell _\mathcal{K} \le \ell ^*\) for any \(\mathcal{K} \in {\mathbb {K}}_0\), i.e., the lower bound arising from any such convex relaxation is finite. An interesting question is whether the relation \(-\infty < \ell _\mathcal{K} \le \ell ^*\) continues to hold for instances of (QP) with an unbounded feasible region and a finite optimal value. The following example, inspired by [10], gives a counterexample for \(n = 5\) and \(\mathcal{K} = \mathcal{D}^{n+1}\).

Example 1

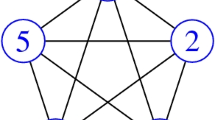

Consider the following instance of (QP) given by

Then, \(S \ne \emptyset \) since \(\left[ \begin{matrix}0&1&0&1&1 \end{matrix} \right] ^T \in S\). Furthermore, S is unbounded since \(\left[ \begin{matrix} 1&3&1&0&0 \end{matrix} \right] ^T \in L\). Note that \({Q} \in \mathcal{COP}^5\) since it is the well-known Horn matrix (see, e.g., [20]). Since \({c} \in \mathbb {R}^n_+\), it follows that \(q(x) = x^T {Q} x + 2 {c}^T x \ge 0\) for any \(x \in \mathbb {R}^n_+\), which implies that \(q(\cdot )\) is bounded below on \(S \subseteq \mathbb {R}^n_+\). Therefore, \(-\infty< \ell ^* < +\infty \).
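The two properties of the Horn matrix used in Example 1, copositivity without positive semidefiniteness, can be checked numerically. The Python sketch below assumes the standard form of the Horn matrix from the literature. The eigenvalue computation certifies that it is not positive semidefinite (so \(q(\cdot)\) is nonconvex), whereas random sampling of the nonnegative orthant is only a sanity check, not a proof, of copositivity.

```python
import numpy as np

# Standard Horn matrix (symmetric circulant with first row [1, -1, 1, 1, -1]).
H = np.array([[ 1, -1,  1,  1, -1],
              [-1,  1, -1,  1,  1],
              [ 1, -1,  1, -1,  1],
              [ 1,  1, -1,  1, -1],
              [-1,  1,  1, -1,  1]], dtype=float)

# Smallest eigenvalue; for this circulant matrix it equals 1 - sqrt(5) < 0, so H is not PSD.
min_eig = np.linalg.eigvalsh(H).min()

# Sample points of the nonnegative orthant and evaluate x^T H x for each row x.
rng = np.random.default_rng(1)
samples = rng.random((100_000, 5))
values = np.einsum("ij,jk,ik->i", samples, H, samples)
min_sampled = values.min()   # stays >= 0 up to rounding, consistent with copositivity
```

A negative eigenvalue together with nonnegative sampled values is exactly the situation in which the nonconvex objective is still bounded below on \(\mathbb{R}^n_+\).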

Let us consider the convex relaxation of (QP) obtained from \(\mathcal{K} = \mathcal{D}^6\). For each \({{{\tilde{x}}}} \in S\), we claim that \(\ell _\mathcal{K}({{{\tilde{x}}}}) = -\infty \), i.e., the convex underestimator \(\ell _\mathcal{K}(\cdot )\) is the trivial one despite the fact that \(-\infty< \ell ^* < +\infty \). Indeed, for any \({{{\tilde{x}}}} \in S\), consider

\[ {\tilde{Y}}(\lambda) = \begin{bmatrix} 1 & {\tilde{x}}^T \\ {\tilde{x}} & {\tilde{x}} {\tilde{x}}^T + \lambda {\tilde{D}} \end{bmatrix}, \quad \lambda \ge 0, \]

where

Note that \({\tilde{D}} \in \mathcal{D}^5\), \(\langle {A}^T {A}, {\tilde{D}} \rangle = 0\), and \(\langle {Q}, {\tilde{D}} \rangle = -1 < 0\). Therefore, by Lemma 2, \({{{\tilde{Y}}}}(\lambda )\) is a feasible solution of \((P(\mathcal{K},{{{\tilde{x}}}}))\) for any \(\lambda \ge 0\), and \(\langle {{{\widehat{Q}}}}, {{{\tilde{Y}}}}(\lambda ) \rangle = q({{{\tilde{x}}}}) + \lambda \langle {Q}, {\tilde{D}} \rangle \rightarrow -\infty \) as \(\lambda \rightarrow +\infty \), which establishes the claim. It follows that \(\ell _\mathcal{K} = -\infty \) by Proposition 1, whereas \(\ell ^*\) is finite.

Our next result generalizes the construction in Example 1 to any \(n \ge 5\) and any \(\mathcal{K}\in {\mathbb {K}}_0 \backslash \{\mathcal{CP}^{n+1}\}\) under an additional technical assumption.

Proposition 5

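Let \(\mathcal{K}\in {\mathbb {K}}_0 \backslash \{\mathcal{CP}^{n+1}\}\) be any cone that satisfies the following property:

\[ \text{there exists } D \in \mathcal{S}^n \text{ such that } {\tilde{D}} = \begin{bmatrix} 0 & 0^T \\ 0 & D \end{bmatrix} \in \mathcal{K} \backslash \mathcal{CP}^{n+1}. \tag{39} \]

Then, for any \(n \ge 5\), there exists an instance of (QP) such that \(-\infty< \ell ^* < +\infty \), whereas \(\ell _\mathcal{K}({{{\tilde{x}}}}) = -\infty \) for each \({{{\tilde{x}}}} \in S\), and \(\ell _\mathcal{K} = -\infty \).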

Proof

Let \(n \ge 5\) and let \(\mathcal{K}\in {\mathbb {K}}_0 \backslash \{\mathcal{CP}^{n+1}\}\) be such that (39) is satisfied. Since \({\tilde{D}} \not \in \mathcal{CP}^{n+1}\) by (39), it follows that \(D \not \in \mathcal{CP}^n\) (otherwise, \({\tilde{D}}\) would be completely positive). By (4), (19), and (20), there exists a matrix \(Q \in \mathcal{COP}^n\) such that \(\langle Q, D \rangle < 0\).

Choose any \(c \in \mathbb {R}^n_+\). Since \(Q \in \mathcal{COP}^n\) and \(c \in \mathbb {R}^n_+\), we have \(q(x) \ge 0\) for every \(x \in \mathbb {R}^n_+\), i.e., the objective function \(q(\cdot )\) is bounded below on the nonnegative orthant. Since \({\tilde{D}} \in \mathcal{K}\) and \(\mathcal{K}\subseteq \mathcal{D}^{n+1}\), it follows that \(D \in \mathcal{D}^n\); in particular, D is positive semidefinite, so there exist \(d^j \in \mathbb {R}^n,~j = 1,\ldots ,k\), such that \(D = \sum \limits _{j=1}^k d^j (d^j)^T\). Let \(A \in \mathbb {R}^{m \times n}\) be any matrix such that \(Ad^j = 0,~j = 1,\ldots ,k\). Finally, choose any \({\hat{x}} \in \mathbb {R}^n_+\) and define \(b = A{\hat{x}}\), so that \({\hat{x}} \in S\) and hence \(S \ne \emptyset \); consequently, \(-\infty< \ell ^* < +\infty \). A similar argument as in Example 1 reveals that \(\ell _\mathcal{K}({{{\tilde{x}}}}) = -\infty \) for each \({{{\tilde{x}}}} \in S\), and therefore, \(\ell _\mathcal{K} = -\infty \) by Proposition 1. \(\square \)

We remark that the proof of Proposition 5 yields an algorithmic recipe for constructing instances of (QP) with a finite optimal value but an unbounded convex relaxation for any \(n \ge 5\) and any \(\mathcal{K}\in {\mathbb {K}}_0 \backslash \{\mathcal{CP}^{n+1}\}\) that satisfies (39). In addition, the assumption \(n \ge 5\) is crucial in the construction since the relation (39) cannot be satisfied for \(n \le 4\) due to (22).
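The recipe in the proof of Proposition 5 can be sketched numerically for \(n = 5\) and \(\mathcal{K} = \mathcal{D}^6\). The Python sketch below is illustrative only. It takes the standard Horn matrix as Q and a hypothetical circulant doubly nonnegative matrix D with \(\langle Q, D \rangle = -1.25 < 0\), so that D is not completely positive. It then chooses A with rows spanning the null space of D (here D is positive definite, so A reduces to a zero row). The lifted data \({\widehat{A}} = [b^T b, -b^T A; -A^T b, A^T A]\) and \({\widehat{Q}} = [0, c^T; c, Q]\) are assumed forms, consistent with, but not confirmed by, the notation above.

```python
import numpy as np

def circulant(row):
    """Symmetric circulant matrix whose i-th row is `row` shifted right by i."""
    return np.array([np.roll(row, i) for i in range(len(row))], dtype=float)

def is_dnn(M, tol=1e-9):
    """Entrywise nonnegative and positive semidefinite."""
    return bool(np.all(M >= -tol)) and np.linalg.eigvalsh(M).min() >= -tol

def lifted_point(x, D, lam):
    """Y(lam) = [1; x][1; x]^T + lam * [[0, 0], [0, D]]."""
    v = np.concatenate(([1.0], x))
    Y = np.outer(v, v)
    Y[1:, 1:] += lam * D
    return Y

def A_hat(A, b):
    """Assumed lifted constraint matrix [[b^T b, -b^T A], [-A^T b, A^T A]]."""
    return np.block([[np.atleast_2d(b @ b), -(b @ A)[None, :]],
                     [-(A.T @ b)[:, None], A.T @ A]])

def Q_hat(Q, c):
    """Assumed lifted objective matrix [[0, c^T], [c, Q]]."""
    return np.block([[np.zeros((1, 1)), c[None, :]], [c[:, None], Q]])

n = 5
Q = circulant([1, -1, 1, 1, -1])      # Horn matrix: copositive, not PSD
D = circulant([1.75, 1, 0, 0, 1])     # hypothetical DNN matrix, min eigenvalue about 0.132
c = np.ones(n)                        # any c >= 0 keeps q bounded below on R^n_+

# A d^j = 0 for a factorization D = sum_j d^j (d^j)^T is equivalent to A D = 0.
w, V = np.linalg.eigh(D)
null = V[:, w < 1e-9]
A = null.T if null.shape[1] > 0 else np.zeros((1, n))
x_hat = np.ones(n)                    # any nonnegative point
b = A @ x_hat                         # makes x_hat feasible, so S is nonempty

results = []
for lam in (0.0, 1.0, 10.0, 100.0):
    Y = lifted_point(x_hat, D, lam)
    results.append((lam,
                    is_dnn(Y),                          # Y(lam) lies in D^6
                    float(np.sum(A_hat(A, b) * Y)),     # lifted equality residual
                    float(np.sum(Q_hat(Q, c) * Y))))    # q(x_hat) + lam * <Q, D>
```

The lifted objective decreases linearly in \(\lambda\), mirroring the argument of Example 1. Any other doubly nonnegative D that is not completely positive, together with a copositive Q satisfying \(\langle Q, D \rangle < 0\), could be substituted.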

7 Concluding remarks

In this paper, we considered a family of so-called feasibility preserving convex relaxations of nonconvex quadratic programs. By observing that each such relaxation implicitly gives rise to a convex underestimator, we established several properties of such relaxations. In particular, our results strengthen Burer’s well-known copositive representation result for the specific case of nonconvex quadratic programs.

We believe that our perspective in this paper is particularly useful since it sheds light on how a convex relaxation obtained in higher dimensions translates back into the original dimension of the quadratic program. Furthermore, our results clearly highlight the important role played by the directions in the null space of the constraint matrix. An interesting research direction is to extend this perspective to a larger class of nonconvex optimization problems that can be formulated as copositive or generalized copositive optimization problems.

References

[1] Anstreicher, K.M.: On convex relaxations for quadratically constrained quadratic programming. Math. Program. 136(2), 233–251 (2012). https://doi.org/10.1007/s10107-012-0602-3

[2] Arima, N., Kim, S., Kojima, M.: A quadratically constrained quadratic optimization model for completely positive cone programming. SIAM J. Optim. 23(4), 2320–2340 (2013). https://doi.org/10.1137/120890636

[3] Arima, N., Kim, S., Kojima, M.: Extension of completely positive cone relaxation to moment cone relaxation for polynomial optimization. J. Optim. Theory Appl. 168(3), 884–900 (2016). https://doi.org/10.1007/s10957-015-0794-9

[4] Bai, L., Mitchell, J.E., Pang, J.: On conic QPCCs, conic QCQPs and completely positive programs. Math. Program. 159(1–2), 109–136 (2016). https://doi.org/10.1007/s10107-015-0951-9

[5] Berman, A., Shaked-Monderer, N.: Completely Positive Matrices. World Scientific, Singapore (2003)

[6] Bomze, I.M., Cheng, J., Dickinson, P.J.C., Lisser, A.: A fresh CP look at mixed-binary QPs: new formulations and relaxations. Math. Program. 166(1–2), 159–184 (2017). https://doi.org/10.1007/s10107-017-1109-8

[7] Bundfuss, S., Dür, M.: An adaptive linear approximation algorithm for copositive programs. SIAM Journal on Optimization 20(1), 30–53 (2009)

[8] Burer, S.: On the copositive representation of binary and continuous nonconvex quadratic programs. Math. Program. 120(2), 479–495 (2009). https://doi.org/10.1007/s10107-008-0223-z

[9] Burer, S.: Copositive programming. In: Handbook on Semidefinite, Conic and Polynomial Optimization, pp. 201–218. Springer (2012)

[10] Burer, S., Anstreicher, K.M., Dür, M.: The difference between \(5 \times 5\) doubly nonnegative and completely positive matrices. Linear Algebra and its Applications 431(9), 1539–1552 (2009)

[11] Burer, S., Dong, H.: Representing quadratically constrained quadratic programs as generalized copositive programs. Oper. Res. Lett. 40(3), 203–206 (2012). https://doi.org/10.1016/j.orl.2012.02.001

[12] De Klerk, E., Pasechnik, D.V.: Approximation of the stability number of a graph via copositive programming. SIAM Journal on Optimization 12(4), 875–892 (2002)

[13] Diananda, P.H.: On non-negative forms in real variables some or all of which are non-negative. Mathematical Proceedings of the Cambridge Philosophical Society 58(1), 17–25 (1962). https://doi.org/10.1017/S0305004100036185

[14] Dickinson, P.J.C., Eichfelder, G., Povh, J.: Erratum to: On the set-semidefinite representation of nonconvex quadratic programs over arbitrary feasible sets. Optimization Letters 7(6), 1387–1397 (2013). https://doi.org/10.1007/s11590-013-0645-2

[15] Eaves, B.C.: On quadratic programming. Management Science 17(11), 698–711 (1971). https://doi.org/10.1287/mnsc.17.11.698

[16] Eichfelder, G., Povh, J.: On the set-semidefinite representation of nonconvex quadratic programs over arbitrary feasible sets. Optimization Letters 7(6), 1373–1386 (2013). https://doi.org/10.1007/s11590-012-0450-3

[17] Frank, M., Wolfe, P.: An algorithm for quadratic programming. Naval Research Logistics Quarterly 3(1–2), 95–110 (1956). https://doi.org/10.1002/nav.3800030109

[18] Gökmen, Y.G., Yıldırım, E.A.: On standard quadratic programs with exact and inexact doubly nonnegative relaxations. Mathematical Programming (2021). https://doi.org/10.1007/s10107-020-01611-0

[19] Gouveia, J., Pong, T.K., Saee, M.: Inner approximating the completely positive cone via the cone of scaled diagonally dominant matrices. Journal of Global Optimization 76, 383–405 (2020)

[20] Hall, M., Newman, M.: Copositive and completely positive quadratic forms. Mathematical Proceedings of the Cambridge Philosophical Society 59(2), 329–339 (1963)

[21] Jiaquan, L., Tiantai, S., Dingzhu, D.: On the necessary and sufficient condition of the local optimal solution of quadratic programming. Chinese Annals of Mathematics, Series B 3(5), 625–630 (1982)

[22] Kim, S., Kojima, M., Toh, K.: A Lagrangian-DNN relaxation: A fast method for computing tight lower bounds for a class of quadratic optimization problems. Math. Program. 156(1–2), 161–187 (2016). https://doi.org/10.1007/s10107-015-0874-5

[23] Kim, S., Kojima, M., Toh, K.C.: Doubly nonnegative relaxations are equivalent to completely positive reformulations of quadratic optimization problems with block-clique graph structures. Journal of Global Optimization 77(3), 513–541 (2020)

[24] Kim, S., Kojima, M., Toh, K.C.: A geometrical analysis on convex conic reformulations of quadratic and polynomial optimization problems. SIAM Journal on Optimization 30(2), 1251–1273 (2020)

[25] Lasserre, J.B.: New approximations for the cone of copositive matrices and its dual. Mathematical Programming 144(1–2), 265–276 (2014)

[26] Locatelli, M.: Computing the value of the convex envelope of quadratic forms over polytopes through a semidefinite program. Operations Research Letters 41(4), 370–372 (2013). https://doi.org/10.1016/j.orl.2013.04.004

[27] Majthay, A.: Optimality conditions for quadratic programming. Mathematical Programming 1(1), 359–365 (1971)

[28] Murty, K.G., Kabadi, S.N.: Some NP-complete problems in quadratic and nonlinear programming. Mathematical Programming 39(2), 117–129 (1987). https://doi.org/10.1007/BF02592948

[29] Nocedal, J., Wright, S.J.: Numerical Optimization, second edn. Springer, New York, NY, USA (2006)

[30] Parrilo, P.A.: Structured semidefinite programs and semialgebraic geometry methods in robustness and optimization. Ph.D. thesis, California Institute of Technology (2000)

[31] Pena, J., Vera, J., Zuluaga, L.F.: Computing the stability number of a graph via linear and semidefinite programming. SIAM Journal on Optimization 18(1), 87–105 (2007)

[32] Peña, J., Vera, J.C., Zuluaga, L.F.: Completely positive reformulations for polynomial optimization. Math. Program. 151(2), 405–431 (2015). https://doi.org/10.1007/s10107-014-0822-9

[33] Rockafellar, R.T.: Convex Analysis. Princeton Landmarks in Mathematics and Physics. Princeton University Press, Princeton (1970)

[34] Shor, N.Z.: Quadratic optimization problems. Soviet Journal of Computer and Systems Sciences 25(6), 1–11 (1987)

[35] Yıldırım, E.A.: On the accuracy of uniform polyhedral approximations of the copositive cone. Optimization Methods and Software 27(1), 155–173 (2012)

Acknowledgements

We are grateful to two anonymous reviewers for their insightful comments and suggestions, which considerably improved the manuscript.



Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yıldırım, E.A. An alternative perspective on copositive and convex relaxations of nonconvex quadratic programs. J Glob Optim 82, 1–20 (2022). https://doi.org/10.1007/s10898-021-01066-3


DOI: https://doi.org/10.1007/s10898-021-01066-3

Keywords

- Nonconvex quadratic programs

- Copositive relaxation

- Doubly nonnegative relaxation

- Convex relaxation

- Convex envelope