Abstract

In this study, we consider identification of parameters in a non-linear continuum-mechanical model of arteries by fitting the models response to clinical data. The fitting of the model is formulated as a constrained non-linear, non-convex least-squares minimization problem. The model parameters are directly related to the underlying physiology of arteries, and correctly identified they can be of great clinical value. The non-convexity of the minimization problem implies that incorrect parameter values, corresponding to local minima or stationary points may be found, however. Therefore, we investigate the feasibility of using a branch-and-bound algorithm to identify the parameters to global optimality. The algorithm is tested on three clinical data sets, in each case using four increasingly larger regions around a candidate global solution in the parameter space. In all cases, the candidate global solution is found already in the initialization phase when solving the original non-convex minimization problem from multiple starting points, and the remaining time is spent on increasing the lower bound on the optimal value. Although the branch-and-bound algorithm is parallelized, the overall procedure is in general very time-consuming.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The initiation and development of cardiovascular diseases has been associated with changes in the mechanical properties of the underlying arterial tissue [1, 2]. Measures reflecting arterial stiffness, such as the pulse wave velocity (PWV) [3], the stiffness index (\(\upbeta \)) [4], and the pressure-strain elastic modulus (Ep) [5], are often used to assess patients in the clinic [2, 6, 7]. The amount of information obtainable from these popular clinical measures is limited, however, since they typically average stiffness over the artery or assume a constant stiffness despite the distinctive non-linear stiffening behavior of the arterial wall [8]. Several research groups have tried to address these shortcomings and proposed methods to identify more realistic patient specific mechanical properties of arteries in vivo (in the living human) [9,10,11,12,13]. These studies use non-linear continuum-mechanical models and identify the material parameters by fitting the models to clinical data. The fitting is performed by solving an optimization problem; typically minimization of the non-linear least-squares differences of measured and predicted pressure-radius response.

Although these new methods offer more insight into the mechanical properties of arteries in vivo, they suffer a fundamental problem: the non-linear optimization problem to be solved is non-convex and generally possesses local solutions which are not the global solution. This becomes a problem when the new information is used to develop diagnostic tools and intervention criteria. Intrasubject comparison requires a set of parameters for each patient which is uniquely relatable to a ‘normal’ set for healthy individuals, and the global solution is a natural candidate.

The issue of non-convexity is commonly addressed by using a gradient-based optimization algorithm which finds a local solution in combination with multiple starting points [11,12,13]. The best local solution is then taken to be the global solution. Unfortunately, there is no guarantee that this heuristic method identifies the global solution. Furthermore, no estimate can be provided for the difference between the best local and the true global solution.

In biomedicine, simulated annealing, particle swarm and genetic algorithms are frequently used to solve optimization problems [14,15,16,17], but these algorithms are also of heuristic nature and do not necessarily identify the global solution. Deterministic global optimization methods such as branch-and-bound (B&B) on the other hand, guarantee that in finite time, a solution is found whose optimal value is at worst a user-specified value higher than that of the global solution [18].

B&B algorithms have been used previously to solve least-squares minimization problems to global optimality [19, 20]. These studies show that the algorithm’s efficiency strongly depends on the reformulation of the original optimization problem and benefits from a low number of unknown parameters and simple functional expressions. The parameter identification method in Gade et al. [13] exhibits these characteristics, thus making B&B a suitable candidate when searching for the global solution. General purpose global optimization solvers exist that can be used as black-box solvers [21,22,23,24], but efficient global optimization requires exploitation of problem-specific characteristics, something which is difficult without access to source code.

In this paper, we propose a B&B-type algorithm for the parameter identification method presented in Gade et al. [13] and explore the feasibility of computing global solutions for the mechanical properties of arteries in vivo. In particular, we compute global solutions for the clinical data of three subjects. For each data set we analyze four successively larger parameter regions around a candidate global solution to study the algorithms efficiency.

2 Parameter identification method

The parameter identification method is taken from Gade et al. [13]. For a thorough description and discussion, the reader is referred to the original paper.

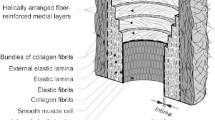

Unloaded (a) and loaded (b) state of an artery. The coordinates r, \(\theta \), and z of the cylindrical coordinate system are associated with the radial, circumferential, and axial direction, respectively. The thin dark-grey lines represent the collagen fibers embedded in the isotropic matrix which is colored in light grey

In the parameter identification method, an artery is treated as a homogeneous, incompressible, thin-walled cylinder which consists of an isotropic matrix with embedded collagen fibers. In the unloaded state, i.e. outside the human body, the artery has an inner radius \(R_\mathrm {i}\), a wall thickness H, and a length L, see Fig. 1a. In vivo, the artery is subjected to the blood pressure P from the inside and stretched to a length l which is invariant with respect to the changing blood pressure [25], see Fig. 1b. The stretch in the axial direction, i.e. \(\lambda _z\!=\!l/L\), results in an axial reaction force F. Furthermore, the inner radius and wall thickness in the loaded state are denoted with lower case letters, i.e. \(r_\mathrm {i}\) and h, and the cross-sectional area of the arterial wall \(A\!=\!2\pi r_\text {i}h+\pi h^2\) is constant because the arterial tissue is assumed to be incompressible and the axial stretch is constant. In this loaded state, two sets of stresses are calculated for an artery: equilibrium stresses and constitutively determined stresses.

By stating equilibrium in the circumferential and axial direction, the corresponding equilibrium stresses are calculated as, i.e. Laplace laws,

respectively. The axial reaction force cannot be measured in vivo and is estimated by assuming that the ratio between the axial and circumferential stresses is \(\gamma \!=\!0.59\) at the mean arterial blood pressure \({\bar{P}}\!=\!13.3~\text {kPa}\) [9]. Using Eqs. (1) and (2), the axial force is estimated as

where the inner radius \({\bar{r}}_\text {i}\) and the wall thickness \({\bar{h}}\) are associated with \({\bar{P}}\).

The second set of stresses is determined using the Holzapfel–Gasser–Ogden (HGO) constitutive model [26]. Following Gade et al. [13], the constitutively determined stresses are: in the circumferential direction

and in the axial direction

where \(c\!>\!0\) is a material constant describing the isotropic matrix, \(k_1,k_2\!>\!0\) are material constants associated with the embedded collagen fibers, and \(\beta \in [0,\pi /2]\) is the pitch angle of the symmetrically arranged collagen fibers relative to the circumferential direction, see Fig. 1a. Furthermore, \(I_4\) is the squared stretch along the collagen fibers calculated as

and \(\lambda _\theta \) is the stretch in the circumferential direction defined as the ratio of the loaded to the unloaded mid-wall circumference, i.e.

where H has been replaced by \(R_\mathrm {i}\), \(\lambda _z\), \(r_\mathrm {i}\), and h using that the wall volume is constant due to incompressibility.

The equilibrium stresses in Eqs. (1) and (2) are fully determined given a data set comprising time-resolved blood pressure (P) and inner radius \((r_\mathrm {i})\) measurements sampled n times and information about the cross-sectional area (A) of the arterial wall. The constitutively determined stresses in Eqs. (4) and (5), however, contain six unknown model parameters: the unloaded inner radius \(R_\text {i}\), the axial stretch \(\lambda _z\), the material constants c, \(k_1\), \(k_2\), and the angle \(\beta \). These parameters are determined by solving the following non-convex weighted least-squares minimization problem:

where \({{\mathbb {U}}}{{\mathbb {P}}}\) denotes upper problem in anticipation of the B&B algorithm, \(\varvec{\kappa }\!=\!\bigl (R_\text {i},\lambda _z,c,k_1,k_2,\beta \bigl )\) is the parameter vector, \(j\!=\!1,\dots ,n\) indicates a data point sample, and the superscripts L and U denote lower and upper bound, respectively. The weighting factor \(\psi \!=\!0.99\) is used to let the objective function be dominated by the circumferential stresses [13]. The non-linear inequality constraints in (\({{\mathbb {U}}}{{\mathbb {P}}}\)) are not present in the original optimization problem and are introduced to reduce the parameter space to obtain physiologically reasonable values, see the Discussion. The fitting ranges and the limits on the non-linear constraints are based on experimentally observed values [27,28,29,30] and summarized in Table 1.

The parameter identification method in Gade et al. [13] has been numerically validated and the \(95\%\) limits of agreement have been determined for each of the six parameters, see Table 2. These limits represent the interval around the identified value in which the true parameter is lying and are used to create the vicinity regions around a candidate global solution later on in the Sect. 5. For parameter \(k_2\) the difference of the identified and the correct value increases as the correct value increases. To compensate for this systematic error, the \(95\%\) limits of agreement are based on the ratio instead [13].

3 Global optimization approach

A B&B algorithm is used to solve the problem (\({{\mathbb {U}}}{{\mathbb {P}}}\)) to global optimality, meaning that the relative difference between the optimal values of the estimated and the true global solution is less than the tolerance \(\varepsilon \). The basic idea is to generate a sequence of non-increasing upper bounds \(U\!B\) and a sequence of non-decreasing lower bounds LB on the global solution. By successive subdivision of the parameter space along the B&B tree, \(\varepsilon \)-convergence is guaranteed in a finite number of iterations [18]. In each region of the parameter space, the original non-convex problem, i.e. the upper problem (\({{\mathbb {U}}}{{\mathbb {P}}}\)), is solved to local optimality and the upper bound is updated in case this local solution provides a lower value on the upper bound. Then the convex relaxation of the original problem, which will be referred to as the lower problem (\(\mathbb {LBP}\)), is solved to local, hence global optimality to establish a new lower bound on the global solution in the current region. If the lower bound is above the upper bound, the current region cannot contain the global solution and can therefore be excluded. Otherwise the current region is added to a list of active problems \({\mathcal {A}}\) which possibly contain the global optimum and the process is repeated until the relative difference between the upper and lower bound is less than the specified tolerance \(\varepsilon \).

3.1 Construction of convex relaxation

To facilitate the construction of the convex relaxation, the model equations (4) and (5) are not introduced in the objective function but enforced as constraints instead [19]. This makes the objective function convex in the auxiliary variables \(\sigma _{\theta \theta ,j}^\text {mod},\sigma _{zz,j}^\text {mod}\) which represent the model stresses and are introduced as additional unknowns to the optimization problem. The added constraints enforcing the model equations are non-linear due to the presence of bilinear, fractional, and componentwise convex terms. To facilitate the creation of convex relaxations of the model equations, the terms \(r_{\text {i},j}, R_\text {i},\lambda _z,\beta \) are replaced by scaled counterparts according to

where \(s\!=\!1000\) is a scaling factor chosen such that the magnitude of \({\tilde{R}}\) is similar to the other unknown parameters. After substitution of the scaled counterparts in the model equations, the following auxiliary variables are introduced:

where \(\rightarrow \) means an auxiliary variable replacing the term following the arrow. The auxiliary variables \(w_1\), \(w_2\), \(w_3\), \(w_5\), \(w_6\), \(w_7\), \(w_8\), \(w_9\), \(w_{12,j}\), \(w_{13,j}\) replace bilinear terms, \(w_4\) a fractional term, and \(w_{14,j}\) componentwise convex terms. For each of the bilinear, fractional, and componentwise convex auxiliary variables in Eq. (9), four inequality constraints are added to the optimization problem, see “Appendix A”. The auxiliary variables \(w_{10}\) and \(w_{11,j}\) are enforced as linear equality constraints and are introduced to decrease the total number of auxiliary variables. The resulting intermediate optimization problem is

where \({\varvec{{u}}}\!=\!\left[ \tilde{\varvec{\kappa }},\varvec{\sigma }^\text {mod}_{\theta \theta },\varvec{\sigma }^\text {mod}_{zz},{\varvec{{w}}}\right] \), \(\tilde{\varvec{\kappa }}\!=\!\left( {\tilde{R}},{\tilde{\lambda }}_z,c,k_1,k_2,{\tilde{\beta }}\right) \), and \({\varvec{{w}}}\) contains the auxiliary variables \(w_a\). Due to the introduction of auxiliary variables, the degree of non-linearity in the model equations has been greatly reduced. However, the model equations in (\(\mathbb {INP}\)) still have componentwise convex terms of the form \(x\exp \!\left( y\right) \) which are addressed according to “Appendix A.4”. For these terms, instead of introducing new auxiliary variables, the relaxation is incorporated directly into the model equations. The complete convex relaxation of (\({{\mathbb {U}}}{{\mathbb {P}}}\)) now reads

where

The non-linear inequality constraints present in (\({{\mathbb {U}}}{{\mathbb {P}}}\)) are enforced as simple box constraints on the corresponding (auxiliary) variables \({\tilde{R}}\), \(w_{11,j}\), and \(w_{14,j}\). The bounds on auxiliary variables \(w_{11,j}\), \(w_{12,j}\), and \(w_{13,j}\) are non-trivial due to the non-linear dependence on \({\tilde{R}}\), \({\tilde{\lambda }}_z\), and \({\tilde{\beta }}\), see “Appendix B”. Furthermore, tight bounds on the auxiliary variables representing the model stresses are added, see Sect. 4.1.

The lower bounding problem (\(\mathbb {LBP}\)) has in total \(16+6n\) variables and \(37+21n\) constraints. A comparison of the size of (\({{\mathbb {U}}}{{\mathbb {P}}}\)) and (\(\mathbb {LBP}\)) is provided in Table 3.

3.2 Branching method

A crucial choice for the efficiency of a B&B algorithm is the branching method, i.e. the selection of the parameter to branch on. The proposed strategy follows the ideas in Esposito and Floudas [19] and considers the deviations between the values of the auxiliary variables \(w_a\in {\varvec{{w}}}\) and the non-convex terms they replace expressed in terms of the six model parameters \(p\in \tilde{\varvec{\kappa }}\). The deviations are thus calculated as

where \(w_a^*\) denotes the value of the ath auxiliary variable at the solution of (\(\mathbb {LBP}\)) and \(w_a\!\left( \tilde{\varvec{\kappa }}^*\right) \) is the ath auxiliary variable expressed as an explicit function, see Eq. (9), of the model parameters and evaluated at the solution of (\(\mathbb {LBP}\)). The deviation of auxiliary variable \(w_1\) is for example defined as

In order to account for \(w_{14,j}\) being an exponent, the associated deviation is calculated using

instead.

Given the deviations in Eqs. (11) and (13), a region-reduction measure is introduced for every model parameter according to

where \(p^\mathrm {U},p^\mathrm {L}\) are the upper and lower bounds of parameter p in the current region and \(p^\mathrm {U,orig},p^\mathrm {L,orig}\) are the original upper and lower bounds. Finally, a total deviation \(\delta _{p}\) is calculated for every model parameter p by summing up all \(\delta _a\)’s in which this parameter is present and multiplying by the corresponding region reduction measure \(r_p\). The parameter which has the highest \(\delta _p\) is chosen to branch on and the corresponding region is bisected.

3.3 Proposed branch-and-bound algorithm

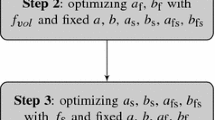

The rudimentary B&B algorithm described in the beginning of Sect. 3 is adapted to the properties of the problem at hand. In Gade et al. [13], the non-convex problem is solved from several starting points and the solution with the lowest error function value is taken to be the global solution. The same methodology is applied here when solving the upper problem for the first time. The supposedly good estimate of the global solution allows for not solving the upper problem until the lower bound reaches a user-specified threshold t.

The solution of both (\({{\mathbb {U}}}{{\mathbb {P}}}\)) and (\(\mathbb {LBP}\)) can be accelerated by using a good starting point. Since the upper problem is solved prior to the lower problem, little information about the current region is available. Therefore, when solving (\({{\mathbb {U}}}{{\mathbb {P}}}\)) the only criteria for choosing a starting point is whether it is feasible or not. For that purpose ten feasible or nearly feasible starting points are generated using Latin Hypercube Sampling [31] in the current parameter region. The starting point of the lower problem is determined by using the solution of the upper problem if available, otherwise it is generated using the same method as for the upper problem.

To speed up the B&B algorithm even further it is parallelized. Since it is not essential for the B&B algorithm that the sequence of lower bounds is always non-decreasing, it is possible to split the list of active problems \({\mathcal {A}}\) into \({\mathcal {A}}_1,\dots ,{\mathcal {A}}_v\), where v is the number of available CPUs, and assess them individually.Footnote 1 The individual assessment is done for a certain time T and then \({\mathcal {A}}_1,\dots ,{\mathcal {A}}_v\) are merged again into one list of active problems and the process is repeated until in less than 1000 active regions the lower bound is below a user-specified threshold t. From then on, the B&B algorithm is executed serially to make sure that the sequence of lower bounds is non-decreasing considering all active regions. The whole B&B algorithm is summarized in Algorithm 1.

3.4 Implementation

The proposed B&B algorithm is implemented in Matlab R2019b [32]. The upper problem (\({{\mathbb {U}}}{{\mathbb {P}}}\)) is solved using the function fmincon with the interior-point optimization algorithm. For numerical efficiency the model parameters c, \(k_1\), \(k_2\), and \(\beta \) are replaced in (\({{\mathbb {U}}}{{\mathbb {P}}}\)) by scaled counterparts [13],

where the superscribed tilde indicates a scaled parameter. The analytical gradient of the objective function, the analytical gradient of the non-linear inequality constraints, and the analytical Hessian of the Lagrangian are determined with Maple 2019.1 [33] and supplied to fmincon. To solve the upper problem from 10 starting points, the MultiStart-class feature is used.

The lower problem (\(\mathbb {LBP}\)) is solved using the Interior Point Optimizer (IPOPT) software package [34] which is interfaced to Matlab as mex code. Again, the analytical gradient of the objective function, the analytical gradient of the constraints, and the analytical Hessian of the Lagrangian are provided.

Although both optimization algorithms are based on the interior-point method, fmincon solves (\({{\mathbb {U}}}{{\mathbb {P}}}\)) and IPOPT (\(\mathbb {LBP}\)) more efficiently. The same stopping criteria are used for both algorithms: absolute change of objective function value must be less than \(10^{-10}\), and constraint violation must be less than \(10^{-8}\).

4 Material

The material for this study comes from the abdominal aorta of three healthy, non-smoking Caucasian males, representative for each age group. Subjects I, II, and III are 25, 41, and 69 years of age, respectively. The pressure-radius data is taken from Sonesson et al. [35] and for details about the data acquisition the reader is referred to that paper. From the raw data we create a pressure-radius curve consisting of \(n\!=\!18\) equidistant data points for each subject according to Stålhand [36], see Fig. 2. The total number of data points is chosen as small as possible, see the Discussion. Neither the deformed wall thickness nor the deformed wall cross-sectional area were recorded in Sonesson et al. [35]. The deformed wall cross-sectional area A is therefore estimated according to \(A\!=\!19.6\!+\!0.8\cdot \text {age}\), where A is in \(\text {mm}^2\) and age is in years [36].

4.1 Bounds on the auxiliary variables representing model stresses

The pressure-radius measurements show distinctive hysteresis, see Fig. 2. When the input data is converted into the Laplace stresses according to Eqs. (1) and (2), the hysteresis is still present, see Fig. 3. The constitutive model in Sect. 2 assumes a purely elastic material, however, and neglects hysteresis accordingly. The curves describing the model stresses in the circumferential and axial direction must, therefore, be (mostly) contained within the corresponding hysteresis loops if they are to be the best solution to the least-squares optimization problem. Individual lower and upper bounds on each auxiliary variable representing the model stresses are therefore introduced based on the hysteresis loops of the Laplace stresses. Afterwards the average difference between the upper and lower bounds, excluding the endpoints, is calculated and the stress ranges smaller than this average difference are symmetrically enlarged. The lower and upper limits for the endpoints are created by using twice the average stress difference. The resulting bounds on the auxiliary variables representing the circumferential and axial model stresses are shown in Fig. 3.

Bounds on the auxiliary variables representing the circumferential and axial model stresses for subject I. The black circles denote the pressure-radius measurements converted into Laplace stresses, the black lines are the hysteresis loops, and the red bars are the bounds for each auxiliary variable representing the model stresses. (Color figure online)

5 Results

The proposed B&B algorithm is applied to the clinical data and four successively increasing regions in the parameter space around a candidate global solution for each of the three subjects is investigated. The candidate global solutions are determined by solving (\({{\mathbb {U}}}{{\mathbb {P}}}\)) with 100 starting points generated with Latin Hypercube Sampling over the entire parameter space defined by the fitting ranges in Table 1. For subjects I, II, and III, respectively, \(81\%\), \(79\%\), and \(64\%\) of all starting points end up at the same solution with the lowest objective function value. The fact that the same solution is not always found demonstrates the non-convexity of the optimization problem and the associated existence of local solutions which are not the global solution. The candidate global solutions are summarized in Table 4. Around these candidate solutions four vicinity regions are established: \(1\%\), \(10\%\), \(100\%\), and \(500\%\) of the limits of agreement for each parameter, see Table 2. The vicinity regions respect the parameter boundaries in Table 1 and the explicit parameter spaces are summarized in Table 5. For each subject and each vicinity region, a stopping-tolerance in the range \(\varepsilon \!=\!0.01{-}0.095\) is required for the B&B algorithm, with larger tolerances for larger vicinity regions, see Table 6. The threshold t until the B&B algorithm is executed in parallel is put to \(90\%\) of the objective function value of the respective candidate global solution.

During the initialization step of the B&B algorithm, the respective candidate global solution is identified in every case and a better solution for (\({{\mathbb {U}}}{{\mathbb {P}}}\)) is never found. The qualitative progress of the difference between the upper and lower bound is similar for each subject in the same vicinity region, see Fig. 4. For many iterations, the relative difference is unity, i.e. \(LB\!=\!0\), followed by a comparably rapid drop until the lower bound has reached approximately \(90\%\) of the upper bound and the progress is substantially slowed down. The total amount of B&B iterations to achieve the required tolerance is large, especially for the two largest vicinity regions. Although each B&B iteration requires only a fraction of a second, the total CPU solution times are huge and are only manageable through parallelization, see Table 6.

6 Discussion

In all cases tested, the candidate global solution is identified during the initialization step of the B&B algorithm and no better solution is found, even after solving (\({{\mathbb {U}}}{{\mathbb {P}}}\)) several million times in the larger parameter regions. This is not an unusual behavior of deterministic global optimization methods which often require many iterations to verify that a promising solution found early in the process is indeed the global solution [18]. That no better solution of (\({{\mathbb {U}}}{{\mathbb {P}}}\)) is found highlights the successful combination of using multiple starting points, selection of starting points and the rescaling when solving (\({{\mathbb {U}}}{{\mathbb {P}}}\)) in the initialization step.

Initially a tolerance of \(\varepsilon \!=\!0.01\) was required for each subject and vicinity region. It was, however, soon realized that \(\varepsilon \!=\!0.01\) is not feasible for every optimization run, since the required computational power exceeded our available resources. An in-house cluster with 108 2.6 GHz cores and 576 GB RAM, as well as two personal computers, were running for approximately four months to solve all optimization runs with a combined CPU time of 5.4 years.Footnote 2 Especially the optimization runs for subject III lasted for a long time because the parameter space within each vicinity region is larger compared to the other two subjects. The increased parameter spaces for subject III are due to the higher \(k_2\)-value of the candidate global solution and the relative confidence interval for that parameter, see Sect. 2. The required tolerance is therefore lowered on an individual basis such that (\({{\mathbb {U}}}{{\mathbb {P}}}\)) is at least solved a few million times during which no better solution must be found.

Relative difference between lower and upper bound, \(\left( U\!B-LB\right) /U\!B\), during the solution process. The black, blue, and red lines correspond to subjects I, II, and III, respectively. The dash-dotted, dotted, dashed, and solid lines represent the vicinity regions \(1\%\), \(10\%\), \(100\%\), and \(500\%\), respectively. (Color figure online)

In its current form, the proposed B&B algorithm does not utilize parallelization once the lower bound has been lifted to \(90\%\) of the upper bound. This threshold is chosen for two reasons. First, the upper problem is solved again when the threshold has been passed. It is assumed that (\({{\mathbb {U}}}{{\mathbb {P}}}\)) becomes practically convex in the small parameter space of the respective active region and that a better solution is found in this state. The best solution of (\({{\mathbb {U}}}{{\mathbb {P}}}\)), i.e. the lowest upper bound, should be available throughout the B&B algorithm to exclude regions as early as possible and communication between the active regions split into parts is not provided. Second, when the list of active regions is split into several parts, the sequence of lower bounds will be different in each individually processed part. The more parts the list of active regions is split into and the longer these parts are processed individually, the more the lower bounds will diverge and the iteration number to achieve a certain tolerance will be overestimated. This overestimation has a step-like appearance where the tolerance progress is shifted towards higher B&B iterations. This is especially pronounced for subject III whose optimization runs have been parallelized the most, see Fig. 4. In order to get the correct tolerance progress towards the end of each optimization run, the B&B iterations are performed serially once the threshold is passed. Both aspects are of minor concern and the algorithm should be parallelized throughout if the goal is solely to identify mechanical properties of arteries with certification of global optimality.

Non-linear inequality constraints are added into the original optimization problem (\({{\mathbb {U}}}{{\mathbb {P}}}\)). Their main purpose is to reduce the parameter space to the physiological range. Circumferential stretches of \(0.8\!<\!\lambda _\theta \!<\!1.6\) are reported for the abdominal aorta [27, 37] and we take \(\lambda _\theta ^\text {L}\!=\!0.5\) and \(\lambda _\theta ^\text {U}\!=\!2\). The invariant \(I_4\) is a macroscopic quantity representing the squared stretch of collagen fibers. Hence, the upper bound on \(I_4\) can be related to the squared failure-stretch of collagen fibers and is estimated to be \(I_4^\text {U}\!=\!2\) [38, 39]. The lower limit on \(I_4\) is introduced to facilitate the construction of the lower bounding problem. Collagen fibers are, generally, considered to support tensile loads but buckle in compression [26]. Hence, the contribution of collagen in the constitutive model, cf. Sect. 2, should be omitted if \(I_4\!<\!1\). Due the difficulty in constructing a valid underestimator which is smooth at the transition from non-recruited \(\left( I_4\!<\!1\right) \) to recruited collagen fibers \(\left( I_4\!\ge \!1\right) \), collagen fibers are assumed to be always in extension. In order to test the validity of this assumption, the model parameters of the three subjects are identified with the original parameter identification method in Gade et al. [13] which does not restrict \(I_4\). Collagen fibers are recruited throughout the cardiac cycle in all cases, thus \(I_4\!\ge \!1\) appears to be a valid assumption. Lastly, the upper bound of the exponent in the exponential term appears explicitly in some constraints of (\(\mathbb {LBP}\)), i.e. \(\exp w_{14}^\text {U}\!=\!\exp \!\left[ k_2^\text {U}\left( I_4^\text {U}-1\right) ^2\right] \). Due to the high upper bound on \(k_2\) in Table 1, the exponential expression might become very large. To prevent numerical problems, the exponent is limited to 20.

The major determinant for the efficiency of a B&B-type algorithm is the creation of the convex underestimator. The convex underestimator must be as close as possible to the original function while the number of additional auxiliary variables and constraints should be kept to a minimum. Several alternative formulations of (\(\mathbb {LBP}\)) were examined, but the presented one turned out to be most efficient. In particular, the newly proposed underestimator for componentwise convex terms [40] gave a substantial speed-up compared to the more general \(\alpha \)-estimator [41]. Furthermore, the introduction of physiologically inspired auxiliary variables and the tight bounds on the auxiliary variables representing the model stresses based on the hysteresis loop, cf. Sect. 4.1, decrease the required computational time. With respect to the branching method, other variants were tested, in particular strategies 1–4 proposed in Adjiman et al. [42]: (S1) bisect the parameter which has the largest region reduction measure defined in Eq. (14); (S2) determine the auxiliary variable whose underestimator possesses the highest separation distance to the actual term it replaces. Bisect the involved parameter according to S1; (S3) Determine the auxiliary variable which differs the most from the actual term it replaces at the solution of (\(\mathbb {LBP}\)), cf. Eq. (11). Bisect the involved parameter according to S1; and (S4) calculate the deviation of each auxiliary variable according to S3. For every parameter, sum up all the deviations that the parameter is involved in and bisect the parameter with the highest sum. The branching method described in Sect. 3.2 was found to be superior, however. Branching on auxiliary variables is not used since updating all lower and upper bounds is non-trivial for branching on a member of \({\varvec{{u}}}\) compared to a member of \(\tilde{\varvec{\kappa }}\).

From an implementation point of view, one point is worth discussing. By looking at the total amount of variables and constraints of (\(\mathbb {LBP}\)), see Table 3, it is obvious that the amount of samples, i.e. the number of pressure-radius pairs, must be as low as possible. For the three investigated subjects in this study, \(n\!=\!18\) is found to be the lower limit to get a solution which is independent from the number of samples. For other subjects this number might be different.

Considering the clinical application, the proposed B&B algorithm appears to be impractical. The solution time is orders of magnitude too large, even if only the vicinity around a candidate global solution is explored and the process is massively parallelized. Furthermore, the smaller the relative tolerance is required to be, the longer the identification process will take since the B&B algorithm slows down substantially towards smaller relative differences between lower and upper bound. However, no better solution than the one identified during the initialization is found, even after solving (\({{\mathbb {U}}}{{\mathbb {P}}}\)) several million times. This indicates that the heuristic approach of using multiple starting points works well for this kind of optimization problem. The original parameter identification method in Gade et al. [13] with its comparably small computational requirements, therefore, provides a good estimate of the global solution if it is not even obtained.

Notes

Within each split part of \({\mathcal {A}}\) the sequence of lower bounds is non-decreasing.

More than half of the total time is required for the serial execution of the B&B algorithm, i.e. once the threshold t has been passed.

References

Tsamis, A., Krawiec, J.T., Vorp, D.A.: Elastin and collagen fibre microstructure of the human aorta in ageing and disease: a review. J. R. Soc. Interface 10(83), 20121004 (2013). https://doi.org/10.1098/rsif.2012.1004

Monica, E., Claudiu, S.: Arterial stiffness and hypertension: Which comes first? Maedica (Buchar) 12(3), 184–190 (2017)

Bramwell, J.C., Hill, A.V.: The velocity of the pulse wave in man. Proc. R. Soc. B Biol. Sci. 93(652), 298–306 (1922). https://doi.org/10.1098/rspb.1922.0022

Kawasaki, T., Sasayama, S., Yagi, S.I., Asakawa, T., Hirai, T.: Non-invasive assessment of the age related changes in stiffness of major branches of the human arteries. Cardiovasc. Res. 21(9), 678–687 (1987). https://doi.org/10.1093/cvrese/21.9.678

Peterson, L.H., Jensen, R.E., Parnell, J.: Mechanical properties of arteries in vivo. Circ. Res. 8(3), 622–639 (1960). https://doi.org/10.1161/01.RES.8.3.622

Laurent, S., Cockcroft, J., Van Bortel, L.M., Boutouyrie, P.H., Giannattasio, C., Hayoz, D., Pannier, B., Vlachopoulos, C., Wilkinson, I., Struijker-Boudier, H.A.J.: Expert consensus document on arterial stiffness: methodological issues and clinical applications. Eur. Heart J. 27(21), 2588–2605 (2006). https://doi.org/10.1093/eurheartj/ehl254

Mancia, G., De Backer, G., Dominiczak, A., Cifkova, R., Fagard, R., Germano, G., Grassi, G., Heagerty, A.M., Kjeldsen, S.E., Laurent, S., Narkiewicz, K.: 2007 guidelines for the management of arterial hypertension. Eur. Heart J. 28(12), 1462–1536 (2007). https://doi.org/10.1093/eurheartj/ehm236

Roach, M.R., Burton, A.C.: The reason for the shape of the distensibility curves of arteries. Can. J. Biochem. Physiol. 35(8), 681–690 (1957). https://doi.org/10.1139/o57-080

Schulze-Bauer, C.A.J., Holzapfel, G.A.: Determination of constitutive equations for human arteries from clinical data. J. Biomech. 36(2), 165–169 (2003)

Masson, I., Boutouyrie, P.H., Laurent, S., Humphrey, J.D., Zidi, M.: Characterization of arterial wall mechanical behavior and stresses from human clinical data. J. Biomech. 41(12), 2618–2627 (2008). https://doi.org/10.1016/j.jbiomech.2008.06.022

Smoljkić, M., Sloten, J.V., Segers, P., Famaey, N.: Non-invasive, energy-based assessment of patient-specific material properties of arterial tissue. Biomech. Model. Mechanobiol. 14(5), 1045–1056 (2015). https://doi.org/10.1007/s10237-015-0653-5

Wittek, A., Derwich, W., Karatolios, K., Fritzen, C.P., Vogt, S., Schmitz-Rixen, T., Blase, C.: A finite element updating approach for identification of the anisotropic hyperelastic properties of normal and diseased aortic walls from 4D ultrasound strain imaging. J. Mech. Behav. Biomed. Mater. 58, 122–138 (2016). https://doi.org/10.1016/j.jmbbm.2015.09.022

Gade, J.-L., Stålhand, J., Thore, C.-J.: An in vivo parameter identification method for arteries: numerical validation for the human abdominal aorta. Comput. Methods Biomech. Biomed. Eng. 22(4), 426–441 (2019). https://doi.org/10.1080/10255842.2018.1561878

Van Soest, A.J., Casius, L.J.R.: The merits of a parallel genetic algorithm in solving hard optimization problems. J. Biomech. Eng. 125(1), 141–146 (2003). https://doi.org/10.1115/1.1537735

Higginson, J.S., Neptune, R.R., Anderson, F.C.: Simulated parallel annealing within a neighborhood for optimization of biomechanical systems. J. Biomech. 38(9), 1938–1942 (2005). https://doi.org/10.1016/j.jbiomech.2004.08.010

Koh, B.I., Reinbolt, J.A., George, A.D., Haftka, R.T., Fregly, B.J.: Limitations of parallel global optimization for large-scale human movement problems. Med. Eng. Phys. 31(5), 515–521 (2009). https://doi.org/10.1016/j.medengphy.2008.09.010

Radcliffe, N.R., Easterling, D.R., Watson, L.T., Madigan, M.L., Bieryla, K.A.: Results of two global optimization algorithms applied to a problem in biomechanics. In: Spring Simulation Multiconference 2010, SpringSim’10, pp. 1–7 (2010). https://doi.org/10.1145/1878537.1878627

Horst, R., Tuy, H.: Global Optimization: Deterministic Approaches, 3rd edn. Springer, Berlin (1996). https://doi.org/10.1007/978-3-662-03199-5

Esposito, W.R., Floudas, C.A.: Global optimization in parameter estimation of nonlinear algebraic models via the error-in-variables approach. Ind. Eng. Chem. Res. 37(5), 1841–1858 (1998). https://doi.org/10.1021/ie970852g

Amaran, S., Sahinidis, N.V.: Global optimization of nonlinear least-squares problems by branch-and-bound and optimality constraints. Top 20(1), 154–172 (2012). https://doi.org/10.1007/s11750-011-0178-8

Sahinidis, N.V.: BARON: a general purpose global optimization software package. J. Glob. Optim. 8(2), 201–205 (1996). https://doi.org/10.1007/bf00138693

Löfberg, J.: YALMIP: a toolbox for modeling and optimization in MATLAB. In: Proceeding of IEEE International Symposium on Computer Aided Control Systems Design, pp. 284–289 (2004). https://doi.org/10.1109/cacsd.2004.1393890

Misener, R., Floudas, C.A.: ANTIGONE: algorithms for continuous/integer global optimization of nonlinear equations. J. Glob. Optim. 59(2–3), 503–526 (2014). https://doi.org/10.1007/s10898-014-0166-2

Gleixner, A., Eifler, L., Gally, T., Gamrath, G., Gemander, P., Gottwald, R.L., Hendel, G., Hojny, C., Koch, T., Miltenberger, M., Müller, B., Pfetsch, M.E., Puchert, C., Rehfeldt, D., Schlösser, F., Serrano, F., Shinano, Y., Viernickel, J.M., Vigerske, S., Weninger, D., Witt, J.T., Witzig, J.: The SCIP Optimization Suite 5.0. 61(December) (2017)

Schulze-Bauer, C.A.J., Mörth, C., Holzapfel, G.A.: Passive biaxial mechanical response of aged human iliac arteries. J. Biomech. Eng. 125(3), 395–406 (2003). https://doi.org/10.1115/1.1574331

Holzapfel, G.A., Gasser, T.C., Ogden, R.W.: A new constitutive framework for arterial wall mechanics and a comperative study of material models. J. Elast. 61(1), 1–48 (2000). https://doi.org/10.1023/A:1010835316564

Labrosse, M.R., Gerson, E.R., Veinot, J.P., Beller, C.J.: Axial prestretch and circumferential distensibility in biomechanics of abdominal aorta. J. Mech. Behav. Biomed. Mater. 17, 44–55 (2013). https://doi.org/10.1016/j.jmbbm.2012.08.004

Horny, L., Adamek, T., Gultova, E., Zitny, R., Vesely, J., Chlup, H., Konvickova, S.: Correlations between age, prestrain, diameter and atherosclerosis in the male abdominal aorta. J. Mech. Behav. Biomed. Mater. 4(8), 2128–2132 (2011). https://doi.org/10.1016/j.jmbbm.2011.07.011

Horny, L., Netusil, M., Daniel, M.: Limiting extensibility constitutive model with distributed fibre orientations and ageing of abdominal aorta. J. Mech. Behav. Biomed. Mater. 38, 39–51 (2014). https://doi.org/10.1016/j.jmbbm.2014.05.021

Schriefl, A.J., Zeindlinger, G., Pierce, D.M., Regitnig, P., Holzapfel, G.A.: Determination of the layer-specific distributed collagen fibre orientations in human thoracic and abdominal aortas and common iliac arteries. J. R. Soc. Interface 9(71), 1275–1286 (2012). https://doi.org/10.1098/rsif.2011.0727

Myers, R.H., Montgomery, D.C., Anderson-Cook, C.M.: Response Surface Methodology: Process and Product Optimization Using Designed Experiments. Wiley, New York (2009)

Matlab:Version 9.7.0 (R2019b). The MathWorks Inc., Natick (2019)

Maple: Version 2019.1. Maplesoft, a division of Waterloo Maple Inc., Waterloo (2019)

Wächter, A., Biegler, L.T.: On the implementation of an interior-point filter line-search algorithm for large-scale nonlinear programming. Math. Program. 106(1), 25–57 (2006). https://doi.org/10.1007/s10107-004-0559-y

Sonesson, B., Länne, T., Vernersson, E., Hansen, F.: Sex difference in the mechanical properties of the abdominal aorta in human beings. J. Vasc. Surg. 20(6), 959–969 (1994). https://doi.org/10.1016/0741-5214(94)90234-8

Stålhand, J.: Determination of human arterial wall parameters from clinical data. Biomech. Model. Mechanobiol. 8(2), 141–148 (2009). https://doi.org/10.1007/s10237-008-0124-3

Schriefl, A.J., Schmidt, T., Balzani, D., Sommer, G., Holzapfel, G.A.: Selective enzymatic removal of elastin and collagen from human abdominal aortas: uniaxial mechanical response and constitutive modeling. Acta Biomater. 17, 125–136 (2015). https://doi.org/10.1016/j.actbio.2015.01.003

Martufi, G., Gasser, T.C.: A constitutive model for vascular tissue that integrates fibril, fiber and continuum levels with application to the isotropic and passive properties of the infrarenal aorta. J. Biomech. 44(14), 2544–2550 (2011). https://doi.org/10.1016/j.jbiomech.2011.07.015

Hamedzadeh, A., Gasser, T.C., Federico, S.: On the constitutive modelling of recruitment and damage of collagen fibres in soft biological tissues. Eur. J. Mech. A/Solids 72(May), 483–496 (2018). https://doi.org/10.1016/j.euromechsol.2018.04.007

Najman, J., Bongartz, D., Mitsos, A.: Convex relaxations of componentwise convex functions. Comput. Chem. Eng. (2019). https://doi.org/10.1016/j.compchemeng.2019.106527

Maranas, C.D., Floudas, C.A.: Global minimum potential energy conformations of small molecules. J. Glob. Optim. 4(2), 135–170 (1994). https://doi.org/10.1007/BF01096720

Adjiman, C.S., Androulakis, I.P., Floudas, C.A.: A global optimization method, \(\alpha \)BB, for general twice-differentiabe constrained NLPs-II. Implementation and computational results. Comput. Chem. Eng. 22(9), 1159–1179 (1998). https://doi.org/10.1016/S0098-1354(98)00218-X

McCormick, G.P.: Computability of global solutions to factorable nonconvex programs: part I—convex underestimating problems. Math. Program. (1976). https://doi.org/10.1007/BF01580665

Al-Khayyal, F.A.: Jointly constrained bilinear programs and related problems: an overview. Comput. Math. Appl. 19(11), 53–62 (1990). https://doi.org/10.1016/0898-1221(90)90148-D

Maranas, C.D., Floudas, C.A.: Finding all solutions of nonlinearly constrained systems of equations. J. Glob. Optim. 7(2), 143–182 (1995). https://doi.org/10.1007/BF01097059

Zamora, J.M., Grossmann, I.E.: A branch and contract algorithm for problems with concave univariate, bilinear and linear fractional terms. J. Glob. Optim. 14(3), 217–249 (1999). https://doi.org/10.1023/A:1008312714792

Funding

Open access funding provided by Linköping University. This work was financially supported by the Swedish Research Council under Grant 621-2014-4165.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Code availability

All necessary information for code development is included in this published article.

Availability of data and material

All data analyzed during this study are included in this published article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original version of this article was revised: Funding information “Open access funding provided by Linköping University” has been added in the article.

Appendices

Convex relaxations

1.1 Bilinear term

A bilinear term of the form xy, \(\left( x,y\right) \in \left[ x^\text {L},x^\text {U}\right] \times \left[ y^\text {L},y^\text {U}\right] \subset {\mathbb {R}}^2\), is replaced by the auxiliary variable \(w_{xy}\) and the following four linear inequality constraints are added to replace the non-linear equality constraint \(w_{xy}\!=\!xy\):

The first two constraints represent the convex hull [43] and the latter two the concave hull [44].

1.2 Fractional term

A fractional term of the form \(xy^{-1}\), \(\left( x,y\right) \in \left[ x^\text {L},x^\text {U}\right] \times \left[ y^\text {L},y^\text {U}\right] \subset {\mathbb {R}}^2_{>0}\), is replaced by the auxiliary variable \(w_{xy}\) and the following four inequality constraints are added to replace the non-linear equality constraint \(w_{xy}\!=\!xy^{-1}\):

The first two convex constraints represent the convex hull [45] and the latter two linear constraints the concave hull [46].

1.3 Componentwise convex term \(xy^2\)

A componentwise convex term of the form \(xy^2\), \(\left( x,y\right) \in \left[ x^\text {L},x^\text {U}\right] \times \left[ y^\text {L},y^\text {U}\right] \subset {\mathbb {R}}^2_{\ge 0}\), is replaced by the auxiliary variable \(w_{xy}\) and the following four inequality constraints are added to replace the non-linear equality constraint \(w_{xy}\!=\!xy^{2}\):

The first two convex constraints represent the convex hull [40] and the latter two linear constraints the concave hull, whose creation is inspired by the ideas for a bilinear term [44].

1.4 Componentwise convex term \(x\exp y\)

A componentwise convex term of the form \(x\exp {y}\), \(\left( x,y\right) \in \left[ x^\text {L},x^\text {U}\right] \times \left[ y^\text {L},y^\text {U}\right] \subset {\mathbb {R}}^2_{\ge 0}\), is replaced by the auxiliary variable \(w_{xy}\) and the following four inequality constraints are added to replace the non-linear equality constraint \(w_{xy}\!=\!x\exp {y}\):

where

The first two convex constraints represent the convex hull [40] and the latter two linear constraints the concave hull, whose creation is inspired by the ideas for a bilinear term [44].

Bounds on auxiliary variables

In order to calculate the tightest possible bounds on the auxiliary variables, they are determined by writing them as explicit functions of the original model parameters \(\varvec{\kappa }\). For most auxiliary variables the bounds are trivial and in the following only the non-trivial bounds are shown.

The bounds on auxiliary variable \(w_{11,j}\!=\!{\tilde{r}}_j{\tilde{R}}\left( 1-{\tilde{\beta }}\right) +{\tilde{\lambda }}_z{\tilde{\beta }}-1\) are:

In addition, \(I_{4,j}^\text {L}-1\!\le \!w_{11,j}^\text {L},w_{11,j}^\text {U}\!\le \!I_{4,j}^\text {U}-1\) must hold.

The bounds on auxiliary variable \(w_{12,j}\!=\!k_1{\tilde{R}}\left( 1-{\tilde{\beta }}\right) \left[ {\tilde{r}}_j{\tilde{R}}\left( 1-{\tilde{\beta }}\right) +{\tilde{\lambda }}_z{\tilde{\beta }}-1\right] \) are:

The bounds on auxiliary variable \(w_{13,j}\!=\!k_1{\tilde{\lambda }}_z{\tilde{\beta }}\left[ {\tilde{r}}_j{\tilde{R}}\left( 1-{\tilde{\beta }}\right) +{\tilde{\lambda }}_z{\tilde{\beta }}-1\right] \) are:

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gade, JL., Thore, CJ. & Stålhand, J. Identification of mechanical properties of arteries with certification of global optimality. J Glob Optim 82, 195–217 (2022). https://doi.org/10.1007/s10898-021-01037-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10898-021-01037-8