Abstract

Modern particle size statistics uses many different statistical distributions, but these distributions are empirical approximations for theoretically unknown relationships. This also holds true for the famous RRSB (Rosin-Rammler-Sperling-Bennett) distribution. Based on the compound Poisson process, this paper introduces a simple stochastic model that leads to a general product form of particle mass distributions. The beauty of this product form is that its two factors characterize separately the two main components of samples of particles, namely, individual particle masses and total particle number. The RRSB distribution belongs to the class of distributions following the new model. Its simple product form can be a starting point for developing new particle mass distributions. The model is applied to the statistical analysis of samples of blast-produced fragments measured by hand, which enables a precise investigation of the mass-size relationship. This model-based analysis leads to plausible estimates of the mass and size factors and helps to understand the influence of blasting conditions on fragment-mass distributions.

1 Introduction

It has been 90 years since Rosin and Rammler published their article on the RRSB distribution for the mass of coal particles [1]. Here RRSB stands for Rosin-Rammler-Sperling-Bennett, the authors who developed the modern form of this distribution step by step [2]. The paper by Rosin and Rammler [1] was a great success, and the RRSB distribution is today standard in general particle size statistics. However, many other distributions have also been introduced and used since then. For example, many distributions concerning rock fragmentation by blasting have been developed, such as the Kuz-Ram formula [3, 4], the SveDeFo formula [5,6,7], the Kou-Rustan formula [8], the Chung and Katsabanis formula [9], the crush-zone formula (CZM) [10, 11], the two-component formula [12], the SweBrec distribution [13], the KCO (Kuznetsov-Cunningham-Ouchterlony) formula [14], the xP-Frag distribution [15], and the fragmentation-energy fan [16,17,18,19,20]. The corresponding literature is reviewed in [21, 22].

Although many distributions have been developed, most of them, including the RRSB distribution, are empirical or are approximations for theoretically unknown relationships, with little connection to material properties or crushing devices. The problem of finding suitable models that explain these forms is still open. Three notable exceptions are Kolmogorov [23], Brown and Wohletz [24], and Fowler and Scheu [25], who modelled the fragmentation process to different extents. The first introduced the lognormal distribution to particle statistics, and the others developed theories that lead to the RRSB and the gamma distribution, respectively.

The present paper introduces a general stochastic model that leads to a wide class of particle mass distributions. It does not model the fragmentation process but instead the structure of particle samples, the number and the sizes and masses of particles. The model does not employ ideas of fractal geometry and self-similarity, but it only operates with finite random variables. The model components can be observed and statistically analysed in particle samples. It may help to understand the appearance of the RRSB distribution as well as the Gates-Gaudin-Schuhmann (GGS) power-law distribution.

The model is based on the point process approach introduced by Stoyan and Unland [26]. According to this approach, the particles of a sample are assumed to be ordered with respect to size (some quantity of the nature of a length, for example a Feret diameter, see Sect. 2) and interpreted as a point process on the real axis. The new idea of the present paper is to mark the points with the corresponding particle masses. These mass marks are random, and their variability results to a large extent from fluctuations of particle shapes, which lead to random relations between particle size and mass.

This improved approach was inspired by the paper of Zhang et al. [27], which offers data of particle masses for single particles. The data came from blasting experiments in which the fragments larger than 20 mm were individually measured and weighed, while the fragments smaller than 20 mm were sieved.

The present paper first describes the stochastic model, which is closely related to the so-called compound Poisson process, a classical model of applied probability, see Sect. 2. Section 3 shows how known particle mass distributions may be classified as belonging to the model. The data from [27] are analysed statistically in Sect. 4 in the spirit of the model. Finally, the whole approach is discussed thoroughly.

2 The model including particle masses

This paper develops the theory in Stoyan and Unland [26] by including the particle masses into the theory. It uses the notation of that paper (which is in the same journal and in free access) and starts with its basic idea: to consider the particle sizes as points on the positive real line or x-axis.

Following the classical approach of sieving statistics, “sizes” are here one-dimensional geometrical characteristics of particles. In the example considered in Sect. 4, size is the maximum Feret diameter. In [26], where the particles were measured by means of image analysis (CPA), size was the diameter of the circle of equal projected area. And a third choice of size could be the diameter of a volume-equivalent sphere.

If arranged in increasing order, the size-points form a random sequence, which is interpreted as a one-dimensional point process. Following [26] we assume that this point process is an inhomogeneous Poisson process.

The notion of a Poisson process is explained in [26, 28, 29]. An inhomogeneous Poisson process is a random collection of points (in the case here considered: of particle sizes) on the positive x-axis. The numbers of points in disjoint intervals are independent, and the point density is controlled by a so-called intensity function \({\uplambda }_{0} \left( x \right)\), which here has the name size intensity. The random number N((a,b)) of points in the interval (a, b) has a Poisson distribution with mean

\(E\left( {N\left( {\left( {a,b} \right)} \right)} \right) = \int_{a}^{b} {\uplambda }_{0} \left( x \right){\text{d}}x, \qquad (1)\)

meaning that the probability \(p\left( n \right)\) to find n particles in (a,b) is

\(p\left( n \right) = \frac{{{\uplambda }^{n} }}{n!}\exp \left( { - {\uplambda }} \right), \quad n = 0, 1, 2, \ldots , \qquad (2)\)

where \({\uplambda } = E\left( {N\left( {\left( {a,b} \right)} \right)} \right)\).

The index “0” at \({\uplambda }_{0} \left( x \right)\) indicates that zero-dimensional numbers are counted. The shape of \({\uplambda }_{0} \left( x \right)\) depends on the process by which the particles were produced. In the present paper as well as in [26] the size intensity is decreasing in x since there are more small particles than large ones; of course, other forms are possible.
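The Poisson assumption can be illustrated by simulation. The following minimal sketch generates size points by thinning and compares the average number of points in an interval with the integral of the size intensity over that interval. The exponential form of `lam0`, the constants `K` and `ALPHA`, and the interval (100, 200) are assumptions chosen for illustration only; they are not estimates from the data of this paper.

```python
import math
import random

def simulate_sizes(intensity, upper, x_min, x_max, rng):
    """Simulate an inhomogeneous Poisson process on (x_min, x_max) by thinning.

    `upper` must be an upper bound of intensity(x) on the interval.
    """
    points = []
    x = x_min
    while True:
        # Candidate points come from a homogeneous process with rate `upper`.
        x += rng.expovariate(upper)
        if x >= x_max:
            return points
        # Keep each candidate with probability intensity(x) / upper.
        if rng.random() < intensity(x) / upper:
            points.append(x)

# Hypothetical decreasing size intensity (points per mm), illustration only.
K, ALPHA = 2.0, 0.02

def lam0(x):
    return K * math.exp(-ALPHA * x)

rng = random.Random(1)
a, b = 100.0, 200.0
# lam0 is decreasing, so lam0(a) bounds it on (a, b).
counts = [len(simulate_sizes(lam0, lam0(a), a, b, rng)) for _ in range(4000)]
mean_count = sum(counts) / len(counts)
# Closed-form integral of lam0 over (a, b), i.e. the Poisson mean.
expected = K / ALPHA * (math.exp(-ALPHA * a) - math.exp(-ALPHA * b))
print(round(mean_count, 2), round(expected, 2))
```

With enough replicates the two printed numbers should agree up to simulation noise.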

Now, in addition to particle size, also particle mass m (measured in grams) is included in the modelling. First, the cumulated mass of all particles which are smaller than x is considered. The mean of this random variable is denoted in [26] by \(\Lambda_{3} \left( x \right)\) called cumulative mass intensity function, considered as a function of x. The index “3” indicates that three-dimensional quantities are counted and added.

The mean \(\Lambda_{3} \left( x \right)\) can be calculated starting with the following statistical assumption: the masses of the particles in the point sequence (ordered with respect to size) are independent but, of course, not identically distributed (In point process statistics one speaks of “independent, location-dependent marking”). Indeed, for fixed size x the mass can be greatly variable due to variability of shape. It can happen that a particle of large size may have low mass because of a special shape. Fortunately, for the calculation of \(\Lambda_{3} \left( x \right)\) only the mean mass of particles of size x (and not the corresponding distribution) is needed, which is denoted by \({\upmu }\left( x \right)\).

This deterministic mean-mass function \(\mu \left( x \right)\) is an important model characteristic, with µ(0) = 0 and µ(∞) = ∞. Its form heavily depends on the shape of the particles considered. In the case of spherical particles of identical material, it holds

\(\mu \left( x \right) = \frac{\pi }{6}\rho x^{3}. \qquad (3)\)

Here \(\rho\) is density, e.g., in the unit of g/mm³, and x is the diameter. In this special case of identical particle shapes the masses for size x are constant, not random, given by (3). Although \(\mu \left( x \right)\) will typically increase monotonically with increasing x, other forms may also apply, for example when small particles have high density.

Both functions, the size intensity \(\lambda_{0} \left( x \right)\) and the mean-mass function \(\mu \left( x \right)\), determine \(\Lambda_{3} \left( x \right)\) as well as the corresponding derivative \(\lambda_{3} \left( x \right)\) with respect to x. Indeed, \(\lambda_{3} \left( x \right)\) satisfies the equation

\(\lambda_{3} \left( x \right) = \lambda_{0} \left( x \right) \cdot \mu \left( x \right). \qquad (4)\)

The product formula (4) can be proved as follows. Let \(\Delta x\) be the length of a small size interval. The probability that a particle size is present in the size interval \(\left( {x, x + \Delta x} \right)\) is approximately \(\lambda_{0} \left( x \right)\Delta x\). Consequently, the increment of \(\Lambda_{3} \left( x \right)\) in the size interval is approximately \(\lambda_{0} \left( x \right)\Delta x \cdot \mu \left( x \right)\), and it holds

\({\Lambda }_{3} \left( {x + \Delta x} \right) = {\Lambda }_{3} \left( x \right) + \lambda_{0} \left( x \right)\Delta x \cdot \mu \left( x \right)\).

Then

\({\Lambda }_{3} \left( {x + \Delta x} \right) - {\Lambda }_{3} \left( x \right) = \lambda_{0} \left( x \right) \cdot \mu \left( x \right)\Delta x,\)

and division by ∆x and the limit ∆x → 0 yields Eq. (4).

Note that \(\lambda_{3} \left( x \right)\) is fully determined by the mean-value functions \(\lambda_{0} \left( x \right)\) and µ(x), which is natural since \(\Lambda_{3} \left( x \right)\) is also a mean value.
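The product formula can also be checked numerically. The sketch below simulates the marked point process and compares the average cumulative mass in a size interval with the integral of \(\lambda_{0} \left( x \right)\mu \left( x \right)\) over that interval. The functions `lam0` and `mu`, their constants, and the uniform shape factor with mean 1 are hypothetical choices for illustration; any mark distribution with mean \(\mu \left( x \right)\) should give the same mean.

```python
import math
import random

# Hypothetical model ingredients, illustration only.
def lam0(x):            # size intensity (points per mm)
    return 2.0 * math.exp(-0.02 * x)

def mu(x):              # mean mass (g) of a particle of size x (mm)
    return 0.0004 * x ** 3

def cumulative_mass(a, b, rng):
    """One realisation of the total mass of particles with size in (a, b)."""
    total, x, upper = 0.0, a, lam0(a)   # lam0 is decreasing, so lam0(a) bounds it
    while True:
        x += rng.expovariate(upper)
        if x >= b:
            return total
        if rng.random() < lam0(x) / upper:           # thinning step
            # A random shape factor with mean 1 makes the mass mark random.
            total += mu(x) * rng.uniform(0.5, 1.5)

def integral(f, a, b, steps=2000):
    """Midpoint rule."""
    h = (b - a) / steps
    return h * sum(f(a + (i + 0.5) * h) for i in range(steps))

rng = random.Random(7)
a, b = 100.0, 200.0
runs = 4000
simulated = sum(cumulative_mass(a, b, rng) for _ in range(runs)) / runs
predicted = integral(lambda x: lam0(x) * mu(x), a, b)
print(round(simulated), round(predicted))
```

Only the mean of the marks enters the prediction, which mirrors the remark that the mass distribution for fixed size is not needed for \(\Lambda_{3} \left( x \right)\).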

3 The utility of Eq. (4)

3.1 Product-form of particle mass probability density

The cumulative mass intensity function \(\Lambda_{3} \left( x \right)\) is closely related to the cumulative particle mass distribution function \(Q_{3} \left( x \right)\),

\(Q_{3} \left( x \right) = \frac{{\Lambda_{3} \left( x \right)}}{{\Lambda_{3} \left( {x_{{{\text{max}}}} } \right)}} \quad {\text{for}} \quad 0 \le x \le x_{{{\text{max}}}},\)

if there is a finite maximum size \(x_{{{\text{max}}}}\). In the following we mainly consider the infinite case. Note that \(Q_{3} \left( x \right)\) here denotes the theoretical distribution function, whereas in the usual engineering literature it often denotes the empirical distribution function resulting from one (finite) particle sample.

Equation (4) implies that the corresponding mass density function \(q_{3} \left( x \right)\), the derivative of \(Q_{3} \left( x \right)\) with respect to x, is proportional to \(\lambda_{0} \left( x \right) \cdot \mu \left( x \right)\),

\(q_{3} \left( x \right) \propto \lambda_{0} \left( x \right) \cdot \mu \left( x \right). \qquad (6)\)

This has two important statistical consequences:

(a) The influences of the two determinants ‘frequency’ (given by \(\lambda_{0} \left( x \right)\)) and ‘size’ (given by \(\mu \left( x \right)\)) are separated and they appear as factors in (6).

(b) Many forms of \(\lambda_{0} \left( x \right)\) and \(\mu \left( x \right)\) may lead to particle mass distribution functions. Mathematically it is sufficient that the integral over \(\lambda_{0} \left( x \right)\mu \left( x \right)\) is finite.

Point (a) may help to find relations of \(Q_{3} \left( x \right)\) to material properties and crushing conditions, while (b) may direct the search for forms of \(Q_{3} \left( x \right)\).

3.2 Particle mass distributions following the model

Some well-known particle mass distribution functions can be obtained by suitable choices of \(\lambda_{0} \left( x \right)\) and \(\mu \left( x \right).\) This may inspire researchers to find new distributions in their applications.

3.2.1 The RRSB distribution

Assume

\(\lambda_{0} \left( x \right) = u \cdot \exp \left( { - bx^{n} } \right)\)

and

\(\mu \left( x \right) = v \cdot x^{m}\)

with positive parameters b, m, n, u and v in suitable dimensions. Both functions are plausible and convenient starting points, with decreasing number of particles and increasing mean mass of particles for increasing size x in a form similar to that in the case of spheres.

Then, if the model is accepted, the relation (6) yields that the particle mass probability density function \(q_{3} \left( x \right)\) is proportional to \(ax^{m} \cdot \exp \left( { - bx^{n} } \right)\), with \(a = uv\), that is,

\(q_{3} \left( x \right) \propto ax^{m} \cdot \exp \left( { - bx^{n} } \right). \qquad (7)\)

This relation is nearly the same result that Rosin and Rammler had obtained before the intervention of Karl Sperling (the “S” in “RRSB”), see Eq. (1) in [2].

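The standard form of the RRSB distribution is

\(Q_{3} \left( x \right) = 1 - \exp \left( { - \left( {x/x^{\prime}} \right)^{r} } \right) \quad {\text{for}} \quad x \ge 0, \qquad (8)\)

where r is a positive parameter (called ‘uniformity parameter’) and \(x^{\prime}\) a further positive parameter (called ‘characteristic size’). The corresponding mass density function \(q_{3} \left( x \right)\) is the derivative of \(Q_{3} \left( x \right)\), i.e.,

\(q_{3} \left( x \right) = \frac{r}{{x^{\prime}}}\left( {x/x^{\prime}} \right)^{r - 1} \exp \left( { - \left( {x/x^{\prime}} \right)^{r} } \right). \qquad (9)\)

The following choices of the parameters a, b, m, and n transform the relation (7) into (9):

\(a = r/x^{\prime r} , \quad b = 1/x^{\prime r} , \quad m = r - 1, \quad n = r.\)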
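The parameter matching can be verified numerically. The sketch below assumes the standard RRSB (Weibull) density with uniformity parameter r and characteristic size \(x^{\prime}\) and compares it with \(ax^{m} \exp \left( { - bx^{n} } \right)\), where a, b, m and n are obtained by comparing the two formulas term by term. The function names and the values r = 0.9 and \(x^{\prime}\) = 50 are illustrative assumptions.

```python
import math

def rrsb_density(x, r, x_char):
    """Standard RRSB (Weibull) mass density; x_char is the characteristic size."""
    t = x / x_char
    return (r / x_char) * t ** (r - 1) * math.exp(-t ** r)

def product_form_density(x, a, b, m, n):
    """Density of the form a * x**m * exp(-b * x**n)."""
    return a * x ** m * math.exp(-b * x ** n)

def rrsb_to_product_params(r, x_char):
    """Parameters (a, b, m, n) from a term-by-term comparison of both formulas."""
    return r / x_char ** r, x_char ** (-r), r - 1.0, r

r, x_char = 0.9, 50.0          # illustrative values, not fitted to any data
params = rrsb_to_product_params(r, x_char)
max_diff = max(
    abs(rrsb_density(x, r, x_char) - product_form_density(x, *params))
    for x in (1.0, 10.0, 50.0, 200.0)
)
print(max_diff)
```

The difference should vanish up to floating-point rounding for any positive r and \(x^{\prime}\).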

3.2.2 The Gates-Gaudin-Schuhmann distribution

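The Gates-Gaudin-Schuhmann distribution has the distribution function

\(Q_{3} \left( x \right) = \left( {\frac{x}{{x_{{{\text{max}}}} }}} \right)^{k} \quad {\text{for}} \quad 0 \le x \le x_{{{\text{max}}}},\)

where k is a positive parameter. The corresponding probability density function is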

\(q_{3} \left( x \right) = \frac{k}{{x_{{{\text{max}}}}^{k} }} \cdot x^{k - 1} = \frac{k}{{x_{{{\text{max}}}}^{k} }} \cdot x^{ - l} \cdot x^{k + l - 1 } \quad {\text{for}} \quad 0 \le x \le x_{{{\text{max}}}}\),

where an additional parameter l is introduced. Also, this function has the product form (6), and the term \(\frac{k}{{x_{{{\text{max}}}}^{k} }} \cdot x^{ - l}\) may play the role of \(\lambda_{0} \left( x \right)\).

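The situation is similar for the Gaudin-Meloy distribution [30], which in its standard form has the distribution function

\(Q_{3} \left( x \right) = 1 - \left( {1 - \frac{x}{{x_{{{\text{max}}}} }}} \right)^{k} \quad {\text{for}} \quad 0 \le x \le x_{{{\text{max}}}}.\)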

3.2.3 The Gamma distribution

The gamma distribution is not used as frequently as RRSB and GGS, perhaps because of the appearance of the gamma function. Its probability density function is

\(q_{3} \left( x \right) = \frac{{c^{p + 1} }}{{\Gamma \left( {p + 1} \right)}} \cdot x^{p} \cdot \exp \left( { - cx} \right) \quad {\text{for}} \quad x \ge 0,\)

with positive parameters c and p. It has the product form (6) with \(\lambda_{0} \left( x \right)\) proportional to exp(-cx) and µ(x) proportional to \(x^{p}\). Note that this is not RRSB for r = 1!

In order to demonstrate its importance, two quite different applications are mentioned here. It appeared in a statistical analysis of cubic quartz sand particles reported in [31], where the distribution is called Martin-Andreasen distribution. The shape parameter p has the fixed value 4, and the distribution is therefore a one-parameter distribution.

The gamma distribution plays an important role in [25] in which a mass distribution function with variable \(\phi = - {\text{log}}_{2} \left( x \right)\) was empirically found by statistical analysis of fragments from laboratory explosion of volcanic rocks, where x is particle size as in the present paper. This statistical result was then theoretically explained.

3.3 A problem of indefiniteness

When the model is accepted, it is natural to wish to determine the mean-mass function µ(x) and the size intensity function \(\lambda_{0} \left( x \right)\) when the density function \(q_{3} \left( x \right)\) is known. Unfortunately, the relation (6) shows that this wish cannot be fulfilled. When µ(x) is multiplied by some positive function z(x) and \(\lambda_{0} \left( x \right)\) is divided by the same function, the same \(q_{3} \left( x \right)\) is obtained.

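This idea can be used to construct suitable functions \(\lambda_{0} \left( x \right)\) and µ(x) for standard distributions. Consider, for example, the case of the RRSB distribution with r = 0.5. Then

\(q_{3} \left( x \right) = \frac{0.5}{{\sqrt {x^{\prime}} }} \cdot x^{ - 0.5} \cdot \exp \left( { - \left( {x/x^{\prime}} \right)^{0.5} } \right).\)

A choice of µ(x) as

\(\mu \left( x \right) \propto x^{ - 0.5}\)

would be nonsense since this \(\mu \left( x \right)\) is decreasing in x, which means ‘mass is decreasing in size’. However, a z(x) = \(sx^{3}\) would yield a µ(x) proportional to \(x^{2.5}\) and a

\(\lambda_{0} \left( x \right) \propto x^{ - 3} \cdot \exp \left( { - \left( {x/x^{\prime}} \right)^{0.5} } \right),\)

which makes sense.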
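The indefiniteness can be made concrete with a short numerical sketch for the RRSB case r = 0.5. It builds one factorisation with a decreasing mean-mass function and a second one rescaled by z(x) = s·x³, and both pairs give the same product. The constants `X_CHAR` and `S` and all function names are illustrative assumptions.

```python
import math

X_CHAR = 50.0   # illustrative characteristic size (mm)
S = 1e-4        # illustrative constant in z(x) = S * x**3

def lam0_naive(x):
    return math.exp(-math.sqrt(x / X_CHAR))

def mu_naive(x):
    # Decreasing in x, hence implausible as a mean mass.
    return 0.5 / math.sqrt(X_CHAR) * x ** -0.5

def z(x):
    return S * x ** 3

def lam0_rescaled(x):
    return lam0_naive(x) / z(x)

def mu_rescaled(x):
    # Proportional to x**2.5, hence increasing in x.
    return mu_naive(x) * z(x)

sizes = [5.0, 20.0, 80.0, 320.0]
products_naive = [lam0_naive(x) * mu_naive(x) for x in sizes]
products_rescaled = [lam0_rescaled(x) * mu_rescaled(x) for x in sizes]
for p, q in zip(products_naive, products_rescaled):
    print(p, q)
```

Since only the product is identified by \(q_{3} \left( x \right)\), additional information such as individually measured masses is needed to separate the two factors.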

4 Statistics for fragments of rock blasting

All data of particles (called here “fragments”) used in this section come from blasting experiments of nine granite cylinders with a diameter of 240 mm and a length or height of 300 mm [27]. There was one drill hole charged with explosive PETN at the drill hole bottom. The detailed parameters of the cylinders and the explosive charges are shown in Table 1. Figure 1 shows all fragments of the granite cylinders S1–S9 after blasting. The classical result of particle mass statistics is shown in Fig. 2: the empirical \({Q}_{3}\) distribution functions for the nine cylinders. The accumulated mass passings of S6–S9 are clearly larger than those of S1–S5.

Fig. 1 The fragments (particles) produced by blasting of granite cylinders (after [27])

Fig. 2 Empirical \({Q}_{3}\left(x\right),\) accumulated mass passing vs. fragment (particle) size x (based on the data in [27])

Now follows the analysis of the same data in the spirit of the present paper. Only the larger fragments are considered since the data for fragments smaller than 100 mm form unstructured dense clouds of points. Note that these data, size = maximum Feret diameter x (mm) and mass \(m\left(\mathrm{g}\right)\), were measured by hand [27]. Figure 3 presents the empirical relation between \(m\left(x\right)\) and x. Figure 3a shows the measurement data of mass and size of all fragments for the cylinders in different colours. Very impressive is the great variability of the masses for large fragments.

Fig. 3 Empirical mean-mass function µ(x) vs. particle size x, based on measurement data of [27]. a Scatterplot of all fragment sizes and masses. The colours refer to the nine cylinders. b Fragment mass vs. fragment size for all samples S1-S9. The solid curve represents the regression equation of the relation between mass and size

Before the statistical results are presented, the basic assumptions are considered: (1) the size points form Poisson processes and (2) the mass marks are independent. Both were tested with the best statistical tests available: the Poisson assumption with the \({\upchi }^{2}\) goodness-of-fit test as described in [26], Section 4.3.3 and end of 6.3, and independence with the phase-frequency test of Wallis and Moore [32]. In the latter the up-jumps and down-jumps in the series of masses are considered. While the critical z-value of the test for error probability \(\alpha =0.05\) is 1.96, the test statistics for all cases except S9 were lower than 1.56. Only for S9 was the value 2.03 obtained, which is lower than the critical value for \(\alpha =0.01\). The Poisson test is described in detail in [26] and was carried out in exactly the same way as there, of course with the corresponding intensity functions. While the critical \({\upchi }^{2}\) values are 2.9 and 19.0, the test statistics are all between 6.2 and 18.5 with the exception of S4, where it was 19.4, which is below the upper \({\upchi }^{2}\)-value for \(\alpha =0.01\). Thus, the basic assumptions are considered to be satisfied by these data.
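For orientation, the phase-frequency test can be sketched as follows. The code uses the normal approximation as it is commonly given in statistics handbooks, with mean (2n − 7)/3 and variance (16n − 29)/90 for the number of phases when the first and last phases are not counted; whether the computations reported above used exactly this variant, including the continuity correction, is an assumption. The function name and the example series are hypothetical.

```python
import math

def phase_frequency_z(series):
    """z statistic of the Wallis-Moore phase-frequency test (normal approximation).

    Assumes no ties between neighbouring values; zero differences are dropped.
    """
    n = len(series)
    signs = [1 if b > a else -1 for a, b in zip(series, series[1:]) if b != a]
    # A phase is a maximal run of successive differences with the same sign.
    phases = 1 + sum(1 for s, t in zip(signs, signs[1:]) if s != t)
    h = max(phases - 2, 0)            # the first and last phase are not counted
    mean_h = (2 * n - 7) / 3
    sd_h = math.sqrt((16 * n - 29) / 90)
    # Continuity correction of 0.5, often recommended for moderate n.
    return (abs(h - mean_h) - 0.5) / sd_h

# Hypothetical series of masses that alternates strictly up and down.
zigzag = [1, 3, 2, 4, 3, 5, 4, 6, 5, 7, 6, 8]
z_value = phase_frequency_z(zigzag)
print(round(z_value, 3))
```

A strictly alternating series such as `zigzag` has more phases than expected under independence, so its statistic exceeds the 5% critical value 1.96.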

Figure 3b shows the measurement data of masses and sizes of the fragments of all rock cylinders S1-S9 and the curve of the corresponding non-linear regression as an estimate of \(\mu \left( x \right)\). Note that this is an approximation for the mean function of a stochastic process and not the empirical form of a functional relationship. The corresponding estimation equation is of the cubic form \(\hat{\mu }\left( x \right) = c \cdot x^{3}\) with a fitted coefficient c.

Figure 4 shows all nine empirical mean-mass curves together with the datasets of mass measurement, which are interpreted as estimates of the corresponding mean-mass function µ(x) where the curves end at the largest measured fragment size. The corresponding regression parameters R-squared, RSE and coefficient c are collected in Table 2.

Figures 3 and 4 indicate that the fragment masses tend to increase with increasing size, which is consistent with common blast results, i.e., big fragments have larger masses. However, the variability of masses is great, and there is no monotone growth of masses with increasing size. It appears that the variability increases with increasing x. This is reasonable since the size and shape of a large fragment depend on several factors such as the material and length of stemming, the geometrical shape of the rock sample, the specific charge, etc. For example, as shown in Fig. 4 and Table 2, the curves of the four cylinders S6–S9 with full stemming have mostly low values of parameter c (0.00029, 0.00026, 0.00027 and 0.00036 for S6, S7, S8 and S9 respectively), while the curves of the five cylinders S1–S5 with partial steel stemming have relatively high values of parameter c (0.00038, 0.00044, 0.00043, 0.00023 and 0.00052 for S1, S2, S3, S4 and S5 respectively). This indicates that the stemming condition influences the relation between fragment size and fragment weight. In addition, Fig. 4 and Table 2 show that cylinder S7 has the smallest parameter c (= 0.00026) and the smallest maximum fragment mass (about 2000 g) among the four cylinders with sand stemming. The reason is that S7 has a higher specific charge than the other cylinders, meaning that specific charge affects the relation between fragment size and fragment weight. This result refines the measurement result for the accumulated mass passing vs. fragment size of the nine samples, as shown in Fig. 2. Figure 3 shows that the mean mass of all cylinders increases with increasing fragment size in the form of \(x^{3}\).

Now the estimates of size intensity \(\lambda_{0} \left( x \right)\) are presented. Remember that \(\lambda_{0} \left( x \right)\) is the density of size points on the x-axis at size x, note Eq. (1). As recommended and explained in [26], a kernel estimator was used for the estimation of \(\lambda_{0} \left( x \right)\). The estimator is

\(\hat{\lambda }_{0} \left( x \right) = \sum\limits_{i} {k\left( {x - x_{i} } \right)},\)

where the summation goes over all fragment sizes \(x_{i}\). The symbol \(k\left( \cdot \right)\) denotes the kernel function, which is taken as a Gaussian probability density function,

\(k\left( z \right) = \frac{1}{{\sqrt {2\pi } \sigma }}\exp \left( { - \frac{{z^{2} }}{{2\sigma^{2} }}} \right).\)

The parameter σ controls the smoothness of the estimate of the function \(\lambda_{0} \left( x \right)\). After experimentation we decided to choose σ = 30 mm.
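A minimal sketch of such a Gaussian kernel estimate of the size intensity is given below. It sums one Gaussian density with standard deviation σ per observed fragment size and applies no edge correction, which is an assumption and may differ from the estimator described in [26]. The fragment sizes in `sizes` are hypothetical values for illustration.

```python
import math

def gaussian_kernel(z, sigma):
    """Gaussian probability density with mean 0 and standard deviation sigma."""
    return math.exp(-0.5 * (z / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

def size_intensity_estimate(x, sizes, sigma=30.0):
    """Kernel estimate of the size intensity at x (points per mm)."""
    return sum(gaussian_kernel(x - xi, sigma) for xi in sizes)

# Hypothetical fragment sizes in mm, illustration only.
sizes = [102.0, 105.0, 110.0, 118.0, 121.0, 135.0, 150.0, 163.0, 190.0, 240.0]
grid = [float(x) for x in range(-200, 541)]        # 1 mm steps
values = [size_intensity_estimate(x, sizes) for x in grid]
# Each kernel integrates to 1, so the estimate integrates to the number of points.
total = sum(values) * 1.0
print(round(total, 3), len(sizes))
```

A larger σ gives a smoother curve but blurs local structure, which is why the bandwidth has to be chosen by experimentation.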

Figure 5a shows the empirical size intensities \(\lambda_{0} \left( x \right)\) for \(x \ge 100 {\text{mm}}\); for smaller x the values are very large because there are many small fragments. As expected, the curves decrease with increasing fragment size x. They look like decreasing exponential functions \(k \cdot \exp \left( { - \alpha x} \right)\), with similar \(\alpha\) in all cases and largest \(k\) for S6. Additionally, Fig. 5b shows again the curve for S6 plus the standard deviation of the errors. The standard deviation values were obtained by bootstrapping, following the ideas of [33]. This means that Poisson processes with the empirical \(\lambda_{0} \left( x \right)\) were simulated 1000 times and their intensity functions were re-estimated.
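The bootstrap step can be sketched as follows: Poisson processes whose intensity equals the kernel estimate are simulated, the intensity is re-estimated for each simulated sample, and the pointwise standard deviation is computed. This is a simplified sketch and not necessarily the exact procedure of [33]. It uses the fact that a Poisson process with intensity equal to a sum of n Gaussian kernels can be generated by drawing a Poisson number of points with mean n and placing each at a randomly chosen data point plus Gaussian noise. The data in `sizes` and the grid are hypothetical.

```python
import math
import random
import statistics

SIGMA = 30.0  # kernel bandwidth in mm, as chosen in the text

def estimate(x, sizes):
    """Gaussian kernel estimate of the size intensity at x."""
    c = SIGMA * math.sqrt(2.0 * math.pi)
    return sum(math.exp(-0.5 * ((x - xi) / SIGMA) ** 2) for xi in sizes) / c

def poisson(mean, rng):
    """Poisson variate: number of unit-rate exponential arrivals before `mean`."""
    count, t = 0, rng.expovariate(1.0)
    while t < mean:
        count += 1
        t += rng.expovariate(1.0)
    return count

def resample(sizes, rng):
    """One Poisson process whose intensity is the kernel estimate built from `sizes`."""
    n = poisson(len(sizes), rng)
    return [rng.choice(sizes) + rng.gauss(0.0, SIGMA) for _ in range(n)]

def bootstrap_sd(sizes, grid, replicates, rng):
    """Pointwise standard deviation of the re-estimated intensity on `grid`."""
    samples = []
    for _ in range(replicates):
        new_sizes = resample(sizes, rng)     # one simulated sample per replicate
        samples.append([estimate(x, new_sizes) for x in grid])
    return [statistics.stdev(column) for column in zip(*samples)]

rng = random.Random(3)
# Hypothetical fragment sizes in mm, illustration only.
sizes = [102.0, 105.0, 110.0, 118.0, 121.0, 135.0, 150.0, 163.0, 190.0, 240.0]
grid = [100.0, 150.0, 200.0, 250.0]
sd = bootstrap_sd(sizes, grid, 1000, rng)
for x, s in zip(grid, sd):
    print(x, round(estimate(x, sizes), 4), round(s, 4))
```

The printed columns are the size, the estimated intensity and its bootstrap standard deviation, which is the kind of error band described for S6.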

The above description indicates that for large x the mass-size relation of the granite fragments is similar to that of the case of a RRSB distribution.

5 Discussion

This paper is inspired by a quite unusual form of particle size statistics, where many large particles were measured individually by hand, both in size and in mass. This resulted in a unique data set and led to the new form of modelling the variability of particle samples and to a deeper understanding of particle mass distributions. Of course, it is not recommended to carry out such time-consuming measurements for daily use. For research purposes, they may, if applied to an interesting material, provide valuable information sometimes. Furthermore, when particle samples are sieved, very big particles that cannot be sieved should appear in the sieving report. And the total mass of the sample should always be reported.

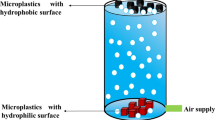

The model proposed in this paper is closely related to a classical model of applied probability, the so-called compound Poisson process. Figure 6 shows a sample of such a process, which is a standard model in financial mathematics. The points on the x-axis may be instants of insurance claims or of bankruptcies, while the jumps are the corresponding values of claim or loan. In the context of the present paper the points are particle sizes and the jump heights are particle masses. The points belong to a Poisson process and the jump heights are independent random variables. The compound Poisson process is then the cumulative sum of jumps as a function of x as shown in Fig. 6 (in classical applications x is time). The theory of the compound Poisson process is presented in detail in Last and Penrose [29]. This book gives formulas that characterize the variability of such processes. However, our Eq. (4), which belongs to the particular model with an inhomogeneous Poisson process and size-dependent mass distributions, cannot be found there.

Fig. 6 A piece of a sample of a compound Poisson process, in the particle size and mass interpretation. Here x is particle size and \(M\left(x\right)\) is the cumulative mass of all particles of size \(\le x\). \({x}_{n}\) and \({M}_{n}\) are the size and the mass of the n-th particle, respectively. Note that this is not a curve based on sieving results. The jump points are random particle sizes

Of a quite different nature is the model in [24], which also leads to the RRSB distribution. It uses physical ideas, in particular branching trees of cracks. There the exponent r is related to the fractal dimension of a self-similar branching tree of cracks.

Note that there is an interesting difference between the factors of the RRSB and the gamma distribution. For the gamma distribution, the size intensity \(\lambda_{0} \left( x \right)\) is proportional to exp(\(-\) cx) and the mean-mass function µ(x) is proportional to \(x^{p}\), with no connection between the two factors, whereas the factors of the RRSB distribution are coupled by the exponent r, which appears in both factors. This may mark the gamma distribution as belonging to a simpler model.

The mean-mass function µ(x) of both distributions, RRSB and gamma, is controlled by a power term, \(x^{r}\) and \(x^{p}\), and the empirical µ(x) in Sect. 4 has such a form. This is also the case for spherical particles as in Eq. (3). This leads to a recommendation: when searching for a new distribution for a particle sample, start with a mean-mass function proportional to a power of size x with positive exponent.

The size intensity \(\lambda_{0} \left( x \right)\) controls to a large extent the shape of \(q_{3} \left( x \right)\). For example, the existence of a maximum particle size \(x_{{{\text{max}}}}\) is indicated by \(\lambda_{0} \left( x \right) = 0\) for x > \(x_{{{\text{max}}}}\), see Fig. 5. Furthermore, if \(\lambda_{0} \left( x \right)\) has a pole at \(x = 0\) (i.e., \(\lambda_{0} \left( 0 \right) =\) ∞), then \(q_{3} \left( x \right)\) also has such a pole.

The exponent r in the RRSB distribution controls the variability of sizes and masses. Its value is frequently considered to be between 0.7 and 1.5. For the large fragments in Sect. 4 the parameter c plays a role similar to r. The values of c given in Table 2 are between 0.00023 and 0.00052. This large range of c values may depend on multiple factors such as stemming condition, specific charge, rock properties, etc. For example, the values of c for S1–S5 lie in a large range from 0.00023 to 0.00052, while the values of c for S6–S9 lie in a smaller range from 0.00026 to 0.00036. Since S4 has the shortest stemming length (meaning the highest gas ejection) among the five cylinders with steel stemming, S4 can be excluded from S1 to S5. Table 2 then shows that the values of c for S1, S2, S3 and S5 range from 0.00038 to 0.00052, all larger than the values of c for S6–S9. This result indicates that the partial steel stemming yields much higher c values than the full sand stemming. One of the main reasons for this result is that the partial steel stemming resulted in more gas ejection than the full sand stemming [27]. Considering that complex stemming conditions were used and only small rock cylinders were employed in the blasts of S1–S9, more blasts with measured particle sizes and weights are needed to further develop this stochastic model.

6 Conclusion

A general stochastic model, which is closely related to an established model of applied probability, the so-called compound Poisson process, is introduced in this paper for particle mass distributions. It explains the appearance of the RRSB distribution, of the gamma distribution and of the GGS and GM distributions. The model has the potential to lead to new particle mass distributions by adapted choices of the factors \(\lambda_{0} \left( x \right)\) and µ(x). Future investigations of these functions may shed new light on various fragmentation processes, in particular stemming.

References

Rosin, P., Rammler, E.: The laws governing the fineness of powdered coal. J Inst Fuel 7, 29–36 (1933)

Stoyan, D.: Weibull, RRSB or extreme-value theorists? Metrika 76, 153–159 (2013). https://doi.org/10.1007/s00184-011-0380-6

Kuznetsov, V.M.: The mean diameter of the fragments formed by blasting rock. Soviet Mining Sci 9, 144–148 (1973)

Cunningham, C.V.B.: The Kuz-Ram model for prediction of fragmentation from blasting. In Proceedings of 1st International Symposium on Rock Fragmentation by Blasting, Luleå University of Technology, Sweden (1983). pp. 439–453

Langefors, U., Kihlström, B.: The modern technique of rock blasting. Almqvist & Wiksell, Stockholm (1963)

Holmberg, R.: Charge calculations for bench blasting. SveDeFo Report DS 1974:4, Swedish Detonic Research Foundation, Stockholm (1974) [In Swedish]

Larsson, B.: Report on blasting of high and low benches—fragmentation from production blasts. In: Proceedings of Discussion Meeting BK74, Swedish Rock Construction Committee, Stockholm (1974), pp. 247–273 [In Swedish]

Kou, S.Q., Rustan, A.: Computerized design and result predictions of bench blasting. In: Proceedings of 4th International Symposium Rock Fragmentation by Blasting, Vienna, Austria (1993), pp. 263–271

Chung, S.H., Katsabanis, P.D.: Fragmentation prediction using improved engineering formulae. Int J Blast Frag 4, 198–207 (2000)

Kanchibotla, S.S., Valery, W., Morrell, S.: Modelling fines in blast fragmentation and its impact on crushing and grinding. In: Proceedings of Explo’99: A Conference on Rock Breaking, Kalgoorlie (1999). pp. 137–144

Thornton, D., Kanchibotla, S.S., Brunton, I.: Modelling the impact of rock mass and blast design variation on blast fragmentation. Int J Blast Frag 6, 169–188 (2002)

Djordjevic, N.: Two-component of blast fragmentation. In Proceedings of 6th international Symposium Rock Fragmentation by Blasting, South African Institute of Mining and Metallurgy, Johannesburg (1999). pp. 213–219

Ouchterlony, F.: The Swebrec function: linking fragmentation by blasting and crushing. Mining Techn (Trans. of the Inst. of Mining & Met. A) 114:A29–A44 (2005)

Ouchterlony, F.: What does the fragment size distribution of blasted rock look like? In: Proceedings of 3rd EFEE Conf. on Explosives and Blasting, Brighton, UK. pp 189–199 (2005).

Ouchterlony, F.: A common form for fragment size distributions from blasting and a derivation of a generalized Kuznetsov’s x50-equation. In: Proceedings of 9th International Symposium on Rock Fragmentation by Blasting. CRC Press/Balkema, The Netherlands, pp. 199–208 (2009)

Ouchterlony, F., Sanchidrián, J.A., Moser, P.: Percentile fragment size predictions for blasted rock and the fragmentation–energy fan. Rock Mech Rock Eng 50(4), 751–779 (2017)

Ouchterlony, F., Sanchidrián, J.A., Genç, Ö.: Advances on the fragmentation-energy fan concept and the Swebrec function in modeling drop weight testing. Minerals 11, 1262 (2021)

Ouchterlony, F., Sanchidrián, J.A.: The fragmentation-energy fan concept and the Swebrec function in modeling drop weight testing. Rock Mech Rock Eng 51, 3129–3156 (2018)

Segarra, P., Sanchidrián, J.A., Navarro, J., Castedo, R.: The fragmentation energy-fan model in quarry blasts. Rock Mech Rock Eng 51, 2175–2190 (2018)

Sanchidrián, J.A., Segarra, P., Ouchterlony, F., Gómez, S.: The influential role of specific charge vs. delay in full-scale blasting: a perspective through the fragment size-energy fan. Rock Mech Rock Eng 55, 4209–4236 (2022)

Ouchterlony, F., Sanchidrián, J.A.: A review of development of better prediction equations for blast fragmentation. J Rock Mech Geotechn Eng 11, 1094–1109 (2019)

Zhang, Z.X., Sanchidrián, J.A., Ouchterlony, F., Luukkanen, S.: Reduction of fragment size from mining to mineral processing—a review. Rock Mech Rock Eng 56, 747–778 (2023). https://doi.org/10.1007/s00603-022-03068-3

Kolmogorov, A. N.: Über das logarithmisch normale Verteilungsgesetz der Dimensionen der Teilchen bei Zerstückelung, Dokl. Akad. Nauk SSSR 31, 99–101 (1941). (Translated as ‘The logarithmically normal law of distribution of dimensions of particles when broken into small parts’, NASA technical translation NASA TT F-12,287. NASA, Washington, DC, June 1969)

Brown, W.K., Wohletz, K.H.: Derivation of the Weibull distribution based on physical principles and its connection to the Rosin-Rammler and lognormal distributions. J. Appl. Phys. 78, 2758–2763 (1995)

Fowler, A.C., Scheu, B.: A theoretical explanation of grain size distributions in explosive rock fragmentation. Proc. Roy. Soc. A472, 20150843 (2016)

Stoyan, D., Unland, G.: Point process statistics improves particle size analysis. Granul Matter (2022). https://doi.org/10.1007/s10035-022-01278-8

Zhang, Z.X., Qiao, Y., Chi, L.Y., Hou, D.F.: Experimental study of rock fragmentation under different stemming conditions in model blasting. Int J Rock Mech Min Sci 143, 104797 (2021)

Tijms, H.C.: A first course in stochastic models. J. Wiley & Sons, Chichester (2003)

Last, G., Penrose, M.: Lectures on the Poisson process. Cambridge University Press, Cambridge (2018)

Gaudin, A.M., Meloy, T.P.: Model and comminution distribution equation for single fracture. Trans. Am. Inst. Min. Metall. Pet. Eng. 223, 40–43 (1962)

Kouzov, P.A.: Principles of the analysis of the dispersal state of industrial dusts and grinded materials, 3rd edn. (In Russian). Khimiya, Leningrad (1983)

Sachs, L.: Applied statistics—a handbook of techniques. Springer, New York (1984)

Cowling, A., Hall, P., Phillips, M.J.: Bootstrap confidence regions for the intensity of a Poisson process. J Amer. Statist. Assoc. 91, 1516–1524 (1996)

Acknowledgements

The authors are grateful to Dr. Claus Bernhardt and Professor Georg Unland for helpful and constructive discussions on an earlier version of the paper. The constructive remarks of an anonymous reviewer improved the exposition and the statistical reasoning.

Funding

Open Access funding provided by University of Oulu including Oulu University Hospital.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Stoyan, D., Zhang, ZX. A stochastic model leading to various particle mass distributions including the RRSB distribution. Granular Matter 25, 67 (2023). https://doi.org/10.1007/s10035-023-01359-2

DOI: https://doi.org/10.1007/s10035-023-01359-2