Abstract

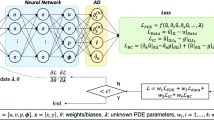

Physics-informed neural network (PINN) frameworks have emerged as a powerful technique for solving partial differential equations (PDEs), with the potential to integrate data. However, when applied to advection-based PDEs, PINNs face challenges such as parameter sensitivity in boundary condition enforcement and diminished learning capability caused by the ill-conditioned system that strong advection produces. In this study, we present a multiscale stabilized PINN formulation with a weakly imposed boundary condition (WBC) method, coupled with transfer learning, that can robustly model the advection-diffusion equation. To address the key challenges, we use an advection-flux-decoupling technique to prescribe the Dirichlet boundary conditions, which rectifies the imbalanced training observed in PINNs with conventional penalty and strong enforcement methods. A multiscale approach within the least-squares functional form of PINN is developed; it introduces a controllable stabilization term that can be regarded as a special form of Sobolev training and augments the learning capacity. The efficacy of the proposed method is demonstrated on a series of forward-modeling benchmark problems, and the results confirm the effectiveness of the proposed methodology.

Data availability statement

The research data associated with this paper are not publicly available due to the policy of the author’s affiliation. Data requests can be made to the corresponding author of this paper.

References

Hughes TJ, Cottrell JA, Bazilevs Y (2005) Isogeometric analysis: CAD, finite elements, NURBS, exact geometry and mesh refinement. Comput Methods Appl Mech Eng 194(39–41):4135–4195

Chen J-S, Hillman M, Chi S-W (2017) Meshfree methods: progress made after 20 years. J Eng Mech 143(4):04017001

Franca LP, Nesliturk A, Stynes M (1998) On the stability of residual-free bubbles for convection-diffusion problems and their approximation by a two-level finite element method. Comput Methods Appl Mech Eng 166(1–2):35–49

Prakash C, Patankar S (1985) A control volume-based finite-element method for solving the Navier–Stokes equations using equal-order velocity-pressure interpolation. Numer Heat Transf 8(3):259–280

Li J, Chen C (2003) Some observations on unsymmetric radial basis function collocation methods for convection-diffusion problems. Int J Numer Methods Eng 57(8):1085–1094

Laible J, Pinder G (1989) Least squares collocation solution of differential equations on irregularly shaped domains using orthogonal meshes. Numer Methods Partial Differ Equ 5(4):347–361

Yamamoto S, Daiguji H (1993) Higher-order-accurate upwind schemes for solving the compressible Euler and Navier–Stokes equations. Comput Fluids 22(2–3):259–270

Franca LP, Farhat C (1995) Bubble functions prompt unusual stabilized finite element methods. Comput Methods Appl Mech Eng 123(1–4):299–308

Brooks AN, Hughes TJ (1982) Streamline upwind/Petrov–Galerkin formulations for convection dominated flows with particular emphasis on the incompressible Navier–Stokes equations. Comput Methods Appl Mech Eng 32(1–3):199–259

Hughes TJ, Feijoo GR, Mazzei L, Quincy J-B (1998) The variational multiscale method—a paradigm for computational mechanics. Comput Methods Appl Mech Eng 166(1–2):3–24

Chen J-S, Hillman M, Rüter M (2013) An arbitrary order variationally consistent integration for Galerkin meshfree methods. Int J Numer Methods Eng 95(5):387–418

Huang T-H (2023) Stabilized and variationally consistent integrated meshfree formulation for advection-dominated problems. Comput Methods Appl Mech Eng 403:115698

Ten Eikelder M, Bazilevs Y, Akkerman I (2020) A theoretical framework for discontinuity capturing: Joining variational multiscale analysis and variation entropy theory. Comput Methods Appl Mech Eng 359:112664

Sze V, Chen Y-H, Yang T-J, Emer JS (2017) Efficient processing of deep neural networks: a tutorial and survey. Proc IEEE 105(12):2295–2329

Raissi M, Perdikaris P, Karniadakis GE (2019) Physics-informed neural networks: a deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J Comput Phys 378:686–707

Cai S, Mao Z, Wang Z, Yin M, Karniadakis GE (2021) Physics-informed neural networks (PINNs) for fluid mechanics: a review. Acta Mech Sin 37(12):1727–1738

Jin X, Cai S, Li H, Karniadakis GE (2021) NSFnets (Navier–Stokes flow nets): physics-informed neural networks for the incompressible Navier–Stokes equations. J Comput Phys 426:109951

Wandel N, Weinmann M, Neidlin M, Klein R (2022) Spline-PINN: approaching PDEs without data using fast, physics-informed Hermite-spline CNNs. Proc AAAI Conf Artif Intell 36(8):8529–8538

Mao Z, Jagtap AD, Karniadakis GE (2020) Physics-informed neural networks for high-speed flows. Comput Methods Appl Mech Eng 360:112789

Yang X, Zafar S, Wang J-X, Xiao H (2019) Predictive large-eddy-simulation wall modeling via physics-informed neural networks. Phys Rev Fluids 4(3):034602

He Q, Tartakovsky AM (2021) Physics-informed neural network method for forward and backward advection–dispersion equations. Water Resour Res 57(7):1

Jagtap AD, Kharazmi E, Karniadakis GE (2020) Conservative physics-informed neural networks on discrete domains for conservation laws: applications to forward and inverse problems. Comput Methods Appl Mech Eng 365:113028

Liu L, Liu S, Yong H, Xiong F, Yu T (2022) Discontinuity computing with physics-informed neural network. Preprint arXiv:2206.03864

He Q, Barajas-Solano D, Tartakovsky G, Tartakovsky AM (2020) Physics-informed neural networks for multiphysics data assimilation with application to subsurface transport. Adv Water Resour 141:103610

Wang S, Teng Y, Perdikaris P (2021) Understanding and mitigating gradient flow pathologies in physics-informed neural networks. SIAM J Sci Comput 43(5):A3055–A3081

Sukumar N, Srivastava A (2022) Exact imposition of boundary conditions with distance functions in physics-informed deep neural networks. Comput Methods Appl Mech Eng 389:114333

Rao C, Sun H, Liu Y (2021) Physics-informed deep learning for computational elastodynamics without labeled data. J Eng Mech 147(8):04021043

Peng P, Pan J, Xu H, Feng X (2022) RPINNs: rectified-physics informed neural networks for solving stationary partial differential equations. Comput Fluids 245:105583

Jacot A, Gabriel F, Hongler C (2018) Neural tangent kernel: convergence and generalization in neural networks. Adv Neural Inf Process Syst 31:1

Patel RG, Manickam I, Trask NA, Wood MA, Lee M, Tomas I, Cyr EC (2022) Thermodynamically consistent physics-informed neural networks for hyperbolic systems. J Comput Phys 449:110754

Mao Z, Meng X (2023) Physics-informed neural networks with residual/gradient-based adaptive sampling methods for solving PDEs with sharp solutions. Preprint arXiv:2302.08035

Kharazmi E, Zhang Z, Karniadakis GE (2019) Variational physics-informed neural networks for solving partial differential equations. Preprint arXiv:1912.00873

Kharazmi E, Zhang Z, Karniadakis GE (2021) hp-VPINNs: variational physics-informed neural networks with domain decomposition. Comput Methods Appl Mech Eng 374:113547

Ren X (2022) A multi-scale framework for neural network enhanced methods to the solution of partial differential equations. Preprint arXiv:2209.01717

Huang T-H, Wei H, Chen J-S, Hillman MC (2020) RKPM2D: an open-source implementation of nodally integrated reproducing kernel particle method for solving partial differential equations. Comput Part Mech 7(2):393–433

Liu WK, Jun S, Zhang YF (1995) Reproducing kernel particle methods. Int J Numer Methods Fluids 20(8–9):1081–1106

Baek J, Chen J-S, Susuki K (2022) A neural network-enhanced reproducing kernel particle method for modeling strain localization. Int J Numer Methods Eng 123(18):4422–4454

Chiu P-H, Wong JC, Ooi C, Dao MH, Ong Y-S (2022) CAN-PINN: a fast physics-informed neural network based on coupled-automatic-numerical differentiation method. Comput Methods Appl Mech Eng 395:114909

Liu L, Liu S, Xie H, Xiong F, Yu T, Xiao M, Liu L, Yong H (2022) Discontinuity computing using physics-informed neural networks. Available at SSRN 4224074

Tassi T, Zingaro A, Dede L (2021) A machine learning approach to enhance the SUPG stabilization method for advection-dominated differential problems. Preprint arXiv:2111.00260

Yadav S, Ganesan S (2022) AI-augmented stabilized finite element method. Preprint arXiv:2211.13418

Samaniego E, Anitescu C, Goswami S, Nguyen-Thanh VM, Guo H, Hamdia K, Zhuang X, Rabczuk T (2020) An energy approach to the solution of partial differential equations in computational mechanics via machine learning: concepts, implementation and applications. Comput Methods Appl Mech Eng 362:112790

Bai J, Jeong H, Batuwatta-Gamage C, Xiao S, Wang Q, Rathnayaka C, Alzubaidi L, Liu G-R, Gu Y (2022) An introduction to programming physics-informed neural network-based computational solid mechanics. Preprint arXiv:2210.09060

Bazilevs Y, Hughes TJ (2007) Weak imposition of Dirichlet boundary conditions in fluid mechanics. Comput Fluids 36(1):12–26

Vlassis NN, Sun W (2021) Sobolev training of thermodynamic-informed neural networks for interpretable elasto-plasticity models with level set hardening. Comput Methods Appl Mech Eng 377:113695

Yu J, Lu L, Meng X, Karniadakis GE (2022) Gradient-enhanced physics-informed neural networks for forward and inverse PDE problems. Comput Methods Appl Mech Eng 393:114823

DeVore R, Hanin B, Petrova G (2021) Neural network approximation. Acta Numer 30:327–444

Nguyen-Thanh VM, Zhuang X, Rabczuk T (2020) A deep energy method for finite deformation hyperelasticity. Eur J Mech A/Solids 80:103874

Rivera JA, Taylor JM, Omella AJ, Pardo D (2022) On quadrature rules for solving partial differential equations using neural networks. Comput Methods Appl Mech Eng 393:114710

Glorot X, Bengio Y (2010) Understanding the difficulty of training deep feedforward neural networks. In: JMLR workshop and conference proceedings, pp 249–256

Hillman M, Chen J-S (2016) Nodally integrated implicit gradient reproducing kernel particle method for convection dominated problems. Comput Methods Appl Mech Eng 299:381–400

Pan SJ, Yang Q (2009) A survey on transfer learning. IEEE Trans Knowl Data Eng 22(10):1345–1359

Ganin Y, Lempitsky V (2015) Unsupervised domain adaptation by backpropagation. In: International conference on machine learning, pp 1180–1189

He J, Li L, Xu J, Zheng C (2018) ReLU deep neural networks and linear finite elements. Preprint arXiv:1807.03973

Zhang L, Cheng L, Li H, Gao J, Yu C, Domel R, Yang Y, Tang S, Liu WK (2021) Hierarchical deep-learning neural networks: finite elements and beyond. Comput Mech 67:207–230

Chi S-W, Chen J-S, Hu H-Y, Yang JP (2013) A gradient reproducing kernel collocation method for boundary value problems. Int J Numer Methods Eng 93(13):1381–1402

Huang T-H, Chen J-S, Tupek MR, Koester JJ, Beckwith FN, Fang HE (2021) A variational multiscale immersed Meshfree method for heterogeneous material problems. Comput Mech 67(4):1059–1097

Hughes TJ, Franca LP, Hulbert GM (1989) A new finite element formulation for computational fluid dynamics: VIII. The Galerkin/least-squares method for advective-diffusive equations. Comput Methods Appl Mech Eng 73(2):173–189

Lin H, Atluri S (2000) Meshless local Petrov–Galerkin (MLPG) method for convection diffusion problems. Comput Model Eng Sci 1(2):45–60

Xu C, Cao BT, Yuan Y, Meschke G (2023) Transfer learning based physics-informed neural networks for solving inverse problems in engineering structures under different loading scenarios. Comput Methods Appl Mech Eng 405:115852

Yin M, Zheng X, Humphrey JD, Karniadakis GE (2021) Non-invasive inference of thrombus material properties with physics-informed neural networks. Comput Methods Appl Mech Eng 375:113603

Huang T-H, Chen J-S, Wei H, Roth MJ, Sherburn JA, Bishop JE, Tupek MR, Fang EH (2020) A MUSCL-SCNI approach for meshfree modeling of shock waves in fluids. Comput Part Mech 7(2):329–350

Hughes TJ, Wells GN (2005) Conservation properties for the Galerkin and stabilised forms of the advection-diffusion and incompressible Navier–Stokes equations. Comput Methods Appl Mech Eng 194(9–11):1141–1159

Acknowledgements

This study was supported by the National Science and Technology Council, NSTC (formerly Ministry of Science and Technology, MOST), Taiwan, under project contract number 109-2222-E-007-005-MY3. The author T.H. Huang is additionally supported by NSTC project contract numbers 112-2628-E-007-018 and 112-2221-E-007-028. We would also like to acknowledge the research suggestions of Prof. Wei-Fan Hu at National Central University and Prof. Yi-Ju Chou at National Taiwan University. The authors thank the anonymous reviewers for their comments and suggestions, which improved the quality of this work.

Ethics declarations

Conflict of interest

The corresponding author reports no conflict of interest.

Appendix

1.1 Weight normalization for the penalty method

To normalize the penalty parameters in the PINN, we perform a dimensional analysis of the loss function, taking \(u\) as the concentration field with SI unit \({\text{mol}}/{{\text{m}}}^{3}\), \({a}_{i}\) as the advection velocity vector component with SI unit \({\text{m}}/{\text{s}}\), and the diffusivity coefficient \(k\) with SI unit \({{\text{m}}}^{2}/{\text{s}}\). Here \({\text{mol}}\) denotes the amount of substance in moles, \({\text{m}}\) the meter, and \({\text{s}}\) the second. For dimensional consistency, all terms in the loss function in Eq. (10) must ultimately have the same units.
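The unit bookkeeping behind Eqs. (56)–(58) can be checked mechanically. The sketch below is a minimal illustration under stated assumptions, not the authors' derivation: it assumes pointwise mean-squared losses (no integration measure), the steady strong-form residual \(a_i u_{,i} - k u_{,ii}\) (source term omitted), a Dirichlet mismatch \(u - g\), a Neumann flux mismatch \(k u_{,n} - h\), and \(Pe = a L_c / k\). Under these assumptions \(\beta_D\) must carry units of 1/s² and \(\beta_N\) units of 1/m², and the scalings \((Pe\,k/L_c^2)^2\) and \(1/L_c^2\) are one dimensionally consistent choice; the paper's own normalization formulas are not reproduced here.

```python
from fractions import Fraction

# A dimension is an exponent vector over the base units (mol, m, s).
def dim(mol=0, m=0, s=0):
    return (Fraction(mol), Fraction(m), Fraction(s))

def mul(*dims):
    """Units of a product: exponents add."""
    return tuple(sum(d[i] for d in dims) for i in range(3))

def power(d, p):
    """Units of a power: exponents scale."""
    return tuple(x * p for x in d)

def inv(d):
    return power(d, -1)

U = dim(mol=1, m=-3)   # concentration u            [mol/m^3]
A = dim(m=1, s=-1)     # advection velocity a_i     [m/s]
K = dim(m=2, s=-1)     # diffusivity k              [m^2/s]
L = dim(m=1)           # characteristic length L_c  [m]
PE = dim()             # Peclet number, dimensionless

grad_u = mul(U, inv(L))                 # u_{,i}
lap_u = mul(U, inv(power(L, 2)))        # u_{,ii}
advective = mul(A, grad_u)              # a_i u_{,i}
diffusive = mul(K, lap_u)               # k u_{,ii}
assert advective == diffusive           # both residual terms share units

# Assumed pointwise squared mismatches in each loss term.
pde_sq = power(advective, 2)            # (a_i u_{,i} - k u_{,ii})^2
dirichlet_sq = power(U, 2)              # (u - g)^2
neumann_sq = power(mul(K, grad_u), 2)   # (k u_{,n} - h)^2

# Units the penalty parameters need so every term matches pde_sq.
beta_D = mul(pde_sq, inv(dirichlet_sq))
beta_N = mul(pde_sq, inv(neumann_sq))

# One dimensionally consistent scaling built from Pe, k and L_c (assumed).
scale_D = power(mul(PE, K, inv(power(L, 2))), 2)   # (Pe k / L_c^2)^2
scale_N = inv(power(L, 2))                         # 1 / L_c^2

print(beta_D == scale_D, beta_N == scale_N)  # True True
```

Since \(Pe\,k/L_c = a\) under the assumed definition of \(Pe\), the Dirichlet scale is simply \((a/L_c)^2\), the squared inverse advective time scale.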

The units of the equilibrium equation loss \({\Pi }_{S}\) are shown below.

where \({\text{O}}\left(\cdot \right)\) denotes the order of its argument. Similarly, the units of the Dirichlet and Neumann boundary condition losses can be expressed as:

Then, to enforce dimensional consistency among Eqs. (56)–(58), the penalty parameters \({\beta }_{D}\) and \({\beta }_{N}\) can be normalized by the Peclet number, the diffusivity coefficient, and the characteristic length \({L}_{c}\):

In the numerical tests, we then define the following penalty parameters by introducing the unitless coefficients \({\widehat{\beta }}_{D}\) and \({\widehat{\beta }}_{N}\):
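To illustrate how a unitless coefficient could enter a penalty-method loss, the sketch below assumes the steady 1D problem \(a u' - k u'' = 0\) on \([0, L_c]\) with Dirichlet data at both ends, mean-squared loss terms, \(Pe = a L_c / k\), and the scaling \(\beta_D = \widehat{\beta}_D (Pe\,k/L_c^2)^2\). That scaling is one dimensionally consistent choice, not necessarily the paper's formula. Central finite differences stand in for the automatic differentiation a PINN would use, `u` is any callable trial solution rather than a trained network, and `penalty_loss` is a hypothetical helper.

```python
import numpy as np

def penalty_loss(u, a, k, L_c, g0, g1, beta_hat_D, n=201, h=1e-4):
    """Penalty-method loss for a u' - k u'' = 0 on [0, L_c] (hypothetical helper).

    u is a callable trial solution; derivatives use central differences
    in place of automatic differentiation.
    """
    x = np.linspace(0.0, L_c, n)[1:-1]             # interior collocation points
    du = (u(x + h) - u(x - h)) / (2 * h)
    d2u = (u(x + h) - 2 * u(x) + u(x - h)) / h**2
    loss_pde = np.mean((a * du - k * d2u) ** 2)    # equilibrium (PDE) loss

    pe = a * L_c / k                               # Peclet number (assumed definition)
    beta_D = beta_hat_D * (pe * k / L_c**2) ** 2   # assumed normalization
    mismatch = np.array([u(0.0) - g0, u(L_c) - g1])
    loss_D = np.mean(mismatch**2)                  # Dirichlet penalty loss
    return loss_pde + beta_D * loss_D

a, k, L_c = 1.0, 0.01, 1.0   # Pe = 100
print(penalty_loss(lambda x: x / L_c, a, k, L_c, 0.0, 1.0, beta_hat_D=100.0))        # ≈ 1.0
print(penalty_loss(lambda x: x / L_c + 0.1, a, k, L_c, 0.0, 1.0, beta_hat_D=100.0))  # ≈ 2.0
```

The linear trial function satisfies both boundary values, so only the residual \(a = 1\) contributes; shifting it by 0.1 adds \(\beta_D \cdot 0.01 = 1\) with \(\beta_D = 100\) in this setup.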

1.2 Weight normalization for the weakly enforced boundary condition method

Performing the dimensional analysis on the two terms of the WBC formulation in Eq. (32), one obtains:

Then, by comparing Eqs. (63) and (64) with the equilibrium equation loss in Eq. (56), the dimensions of the two parameters \({C}_{a}\) and \({C}_{k}\) can be expressed in terms of the Peclet number, the diffusivity coefficient, and the characteristic length \({L}_{c}\):

Then, following the same logic as in Eqs. (59) and (60), one can introduce controllable unitless coefficients \({\widehat{C}}_{a}\) and \({\widehat{C}}_{k}\) to represent \({C}_{a}\) and \({C}_{k}\):

1.3 Influence of weight normalization on the penalty method and the WBC approach

The one-dimensional boundary layer problem in Sect. 2.3.1.1 is used to perform a parameter study of both the penalty method and the WBC method, and the results are shown in Fig. 32. First, we select four penalty parameters \({\widehat{\beta }}_{D}=0.01, 0.1, 1, 100\) for the penalty method (\({\widehat{\beta }}_{D}=100.0\) corresponds to the unnormalized parameter \({\beta }_{D}=2.5\times {10}^{5}\), which is large enough to impose the boundary condition). Without the WBC approach, the solution fails to converge for a Peclet number of 100 (the advection-dominated case). Second, we perform the same study for the WBC method with \({\widehat{C}}_{a}={\widehat{C}}_{k}=0.01, 0.1, 1\), compared against an improper choice \({\widehat{C}}_{a}={\widehat{C}}_{k}=100.0\) (corresponding to the unnormalized parameters \({C}_{a}={C}_{k}=2.5\times {10}^{5}\), large enough that the WBC behaves like the penalty method). The value \({\widehat{C}}_{a}={\widehat{C}}_{k}=100.0\) yields a non-converged solution for \(Pe=100\), while all other choices robustly capture the boundary layer. This confirms that proper normalization is necessary for the WBC approach.
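To make the \(Pe = 100\) difficulty concrete, the sketch below assumes that the Sect. 2.3.1.1 problem takes the common form \(a u' = k u''\) on \([0, 1]\) with \(u(0) = 0\) and \(u(1) = 1\); this is an assumption, since the paper's exact setup is not reproduced here. Its exact solution, with \(Pe = a/k\), is \(u(x) = (e^{Pe\,x} - 1)/(e^{Pe} - 1)\), written below in an overflow-safe form. The helper `relative_l2_error` (a hypothetical name) shows one way runs in a coefficient sweep could be scored against this reference.

```python
import numpy as np

def exact_boundary_layer(x, pe):
    """Exact solution of u' = u'' / pe on [0, 1] with u(0) = 0, u(1) = 1.

    Equivalent to (exp(pe*x) - 1) / (exp(pe) - 1), rewritten to avoid overflow.
    """
    return (np.exp(pe * (x - 1.0)) - np.exp(-pe)) / (1.0 - np.exp(-pe))

def relative_l2_error(u_pred, u_ref):
    """Discrete relative L2 error between a prediction and a reference."""
    return np.linalg.norm(u_pred - u_ref) / np.linalg.norm(u_ref)

x = np.linspace(0.0, 1.0, 2001)
for pe in (1.0, 10.0, 100.0):
    u = exact_boundary_layer(x, pe)
    # Fraction of the domain where u exceeds 1% of its outflow boundary value.
    layer_fraction = np.mean(u > 0.01)
    # Error of a naive linear guess u = x, which ignores the boundary layer.
    print(pe, round(layer_fraction, 3), round(relative_l2_error(x, u), 3))
```

For \(Pe = 100\) the solution is non-negligible only in a layer of width about \(\ln(100)/Pe \approx 0.046\) next to \(x = 1\), so a network must resolve a sharp feature occupying under 5% of the domain.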

1.4 Coefficient sensitivity analysis of the penalty parameter in WBC fine-tuning

This analysis investigates the effect of the penalty coefficient \({\gamma }_{D}\) in the WBC fine-tuning stage, provides a reasonable selection range, and demonstrates the balancing between the pre-trained solution and the Dirichlet boundary conditions mentioned in Sect. 3.1. The two-dimensional point source boundary layer problem in Sect. 2.3.1.2 is solved with different penalty coefficients on the boundary, and the results are shown in Fig. 33. Because the pre-trained model already captures the main feature of the system (advection), the solution is no longer sensitive to the penalty coefficient \({\gamma }_{D}\) of the second term, which regularizes the Dirichlet boundary condition. However, an excessively large penalty coefficient leads to oscillations in the result, as shown in Fig. 33d; therefore, a range of \({\gamma }_{D}\in \left[0, 1\right]\) is recommended.
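The balancing between the fitted interior solution and the Dirichlet data can be illustrated with a linear least-squares analogue. This is a toy stand-in, not the paper's network fine-tuning: it minimizes \(\|A\theta - b\|^2 + \gamma_D \|B\theta - g\|^2\), where the first term plays the role of the interior (PDE) fit and the second the boundary regularization. For this convex problem, increasing \(\gamma_D\) can only decrease the boundary mismatch and only increase the interior mismatch. The random matrices are placeholders, and the oscillations reported for large \(\gamma_D\) come from network training, which this toy does not capture.

```python
import numpy as np

def penalized_least_squares(A, b, B, g, gamma):
    """Minimize ||A th - b||^2 + gamma ||B th - g||^2 via the normal equations.

    Returns the interior (PDE-like) and boundary (BC-like) residual norms.
    """
    lhs = A.T @ A + gamma * (B.T @ B)
    rhs = A.T @ b + gamma * (B.T @ g)
    theta = np.linalg.solve(lhs, rhs)
    return np.linalg.norm(A @ theta - b), np.linalg.norm(B @ theta - g)

rng = np.random.default_rng(0)
A = rng.normal(size=(40, 6))   # placeholder for interior (PDE) equations
b = rng.normal(size=40)
B = rng.normal(size=(2, 6))    # placeholder for Dirichlet constraints
g = rng.normal(size=2)

gammas = [0.0, 0.1, 0.5, 1.0, 10.0, 1e4]
results = [penalized_least_squares(A, b, B, g, gam) for gam in gammas]
for gam, (r_pde, r_bc) in zip(gammas, results):
    print(f"gamma_D={gam:g}  PDE residual={r_pde:.4f}  BC residual={r_bc:.4f}")
```

Within a moderate range the trade-off changes gradually, which is consistent in spirit with the observation that a well pre-trained solution is insensitive to \(\gamma_D\).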

1.5 Numerical comparison of the proposed VMS-PINN with gradient-enhanced PINN (gPINN)

A numerical example is used to investigate the effect of the parameter \({c}_{VMS}\) in Eq. (47) and to compare VMS with the gPINN method [46]. The two-dimensional point source boundary layer problem (Sect. 2.3.1.2) is used with all numerical settings unchanged. The \({L}_{2}\) error norm during training is shown in Fig. 34; both gPINN and VMS are trained for 50,000 iterations. The gPINN in this example employs the loss function of [46], given in Eq. (69):

where \({\mathcal{L}}_{{g}_{{\varvec{x}}}}\) and \({\mathcal{L}}_{{g}_{t}}\) are the gradient losses with respect to space and time, weighted by a penalty parameter \(w=1.0\) following [46]. In this example, \({\mathcal{L}}_{{g}_{t}}\) is omitted since the problem is time independent. The results show that, under the same experimental setting, VMS converges better than gPINN, possibly because the weight of the VMS gradient regularization term is more suitably chosen.

Fig. 34 Numerical comparison of the proposed VMS method and the gradient-enhanced PINN (gPINN) formulation in [46]. Both methods are trained for 50,000 iterations.
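A gradient-enhanced loss of the kind used by gPINN [46] adds the mean squared spatial derivatives of the PDE residual to the standard residual loss. The sketch below is an illustration under stated assumptions, not the implementation behind Fig. 34. It takes the steady 2D residual \(r = \mathbf{a}\cdot\nabla u - k\Delta u - s\). It omits boundary terms and the time-gradient term, as in this example, and uses a single weight \(w = 1.0\) for \(\mathcal{L}_{g_x}\). It also replaces automatic differentiation with central finite differences on a callable trial solution `u`. The function names are hypothetical.

```python
import numpy as np

def residual(u, x, y, a, k, s, h=1e-3):
    """Steady advection-diffusion residual r = a . grad(u) - k lap(u) - s."""
    ux = (u(x + h, y) - u(x - h, y)) / (2 * h)
    uy = (u(x, y + h) - u(x, y - h)) / (2 * h)
    uxx = (u(x + h, y) - 2 * u(x, y) + u(x - h, y)) / h**2
    uyy = (u(x, y + h) - 2 * u(x, y) + u(x, y - h)) / h**2
    return a[0] * ux + a[1] * uy - k * (uxx + uyy) - s(x, y)

def gradient_enhanced_loss(u, x, y, a, k, s, w=1.0, h=1e-3):
    """Residual loss plus w times the spatial-gradient loss L_gx (no L_gt)."""
    r = lambda xx, yy: residual(u, xx, yy, a, k, s, h)
    r_x = (r(x + h, y) - r(x - h, y)) / (2 * h)   # d r / d x
    r_y = (r(x, y + h) - r(x, y - h)) / (2 * h)   # d r / d y
    loss_f = np.mean(r(x, y) ** 2)
    loss_gx = np.mean(r_x**2) + np.mean(r_y**2)
    return loss_f + w * loss_gx

# Usage on a grid of collocation points in the unit square.
xs, ys = np.meshgrid(np.linspace(0.0, 1.0, 21), np.linspace(0.0, 1.0, 21))
x, y = xs.ravel(), ys.ravel()
a, k = (1.0, 0.0), 0.01
s = lambda x, y: np.zeros_like(x)
print(gradient_enhanced_loss(lambda x, y: x + 0 * y, x, y, a, k, s))   # ≈ 1.0
print(gradient_enhanced_loss(lambda x, y: 0.5 * x**2 + 0 * y, x, y, a, k, s))
```

For \(u = x\) the residual is the constant 1, so the gradient term vanishes; for \(u = x^2/2\) the residual \(x - k\) varies in space and the gradient term contributes \(w \cdot 1\), which is the extra Sobolev-type penalty that distinguishes gPINN from a plain residual loss.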

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Hsieh, TY., Huang, TH. A multiscale stabilized physics informed neural networks with weakly imposed boundary conditions transfer learning method for modeling advection dominated flow. Engineering with Computers (2024). https://doi.org/10.1007/s00366-024-01981-5

DOI: https://doi.org/10.1007/s00366-024-01981-5