Abstract

Physics-Informed Neural Networks (PINN) are neural networks (NNs) that encode model equations, like Partial Differential Equations (PDE), as a component of the neural network itself. PINNs are nowadays used to solve PDEs, fractional equations, integral-differential equations, and stochastic PDEs. This novel methodology has arisen as a multi-task learning framework in which a NN must fit observed data while reducing a PDE residual. This article provides a comprehensive review of the literature on PINNs: while the primary goal of the study was to characterize these networks and their related advantages and disadvantages. The review also attempts to incorporate publications on a broader range of collocation-based physics informed neural networks, which stars form the vanilla PINN, as well as many other variants, such as physics-constrained neural networks (PCNN), variational hp-VPINN, and conservative PINN (CPINN). The study indicates that most research has focused on customizing the PINN through different activation functions, gradient optimization techniques, neural network structures, and loss function structures. Despite the wide range of applications for which PINNs have been used, by demonstrating their ability to be more feasible in some contexts than classical numerical techniques like Finite Element Method (FEM), advancements are still possible, most notably theoretical issues that remain unresolved.

Similar content being viewed by others

Explore related subjects

Find the latest articles, discoveries, and news in related topics.Avoid common mistakes on your manuscript.

1 Introduction

Deep neural networks have succeeded in tasks such as computer vision, natural language processing, and game theory. Deep Learning (DL) has transformed how categorization, pattern recognition, and regression tasks are performed across various application domains.

Deep neural networks are increasingly being used to tackle classical applied mathematics problems such as partial differential equations (PDEs) utilizing machine learning and artificial intelligence approaches. Due to, for example, significant nonlinearities, convection dominance, or shocks, some PDEs are notoriously difficult to solve using standard numerical approaches. Deep learning has recently emerged as a new paradigm of scientific computing thanks to neural networks’ universal approximation and great expressivity. Recent studies have shown deep learning to be a promising method for building meta-models for fast predictions of dynamic systems. In particular, NNs have proven to represent the underlying nonlinear input-output relationship in complex systems. Unfortunately, dealing with such high dimensional-complex systems are not exempt from the curse of dimensionality, which Bellman first described in the context of optimal control problems [15]. However, machine learning-based algorithms are promising for solving PDEs [19].

Indeed, Blechschmidt and Ernst [19] consider machine learning-based PDE solution approaches will continue to be an important study subject in the next years as deep learning develops in methodological, theoretical, and algorithmic developments. Simple neural network models, such as MLPs with few hidden layers, were used in early work for solving differential equations [89]. Modern methods, based on NN techniques, take advantage of optimization frameworks and auto-differentiation, like Berg and Nyström [16] that suggested a unified deep neural network technique for estimating PDE solutions. Furthermore, it is envisioned that DNN will be able to create an interpretable hybrid Earth system model based on neural networks for Earth and climate sciences [68].

Nowadays, the literature does not have a clear nomenclature for integrating previous knowledge of a physical phenomenon with deep learning. ‘Physics-informed,’ ‘physics-based,’ ‘physics-guided,’ and ‘theory-guided’ are often some used terms. Kim et al [80] developed the overall taxonomy of what they called informed deep learning, followed by a literature review in the field of dynamical systems. Their taxonomy is organized into three conceptual stages: (i) what kind of deep neural network is used, (ii) how physical knowledge is represented, and (iii) how physical information is integrated. Inspired by their work, we will investigate PINNs, a 2017 framework, and demonstrate how neural network features are used, how physical information is supplied, and what physical problems have been solved in the literature.

1.1 What the PINNs are

Physics–Informed Neural Networks (PINNs) are a scientific machine learning technique used to solve problems involving Partial Differential Equations (PDEs). PINNs approximate PDE solutions by training a neural network to minimize a loss function; it includes terms reflecting the initial and boundary conditions along the space-time domain’s boundary and the PDE residual at selected points in the domain (called collocation point). PINNs are deep-learning networks that, given an input point in the integration domain, produce an estimated solution in that point of a differential equation after training. Incorporating a residual network that encodes the governing physics equations is a significant novelty with PINNs. The basic concept behind PINN training is that it can be thought of as an unsupervised strategy that does not require labelled data, such as results from prior simulations or experiments. The PINN algorithm is essentially a mesh-free technique that finds PDE solutions by converting the problem of directly solving the governing equations into a loss function optimization problem. It works by integrating the mathematical model into the network and reinforcing the loss function with a residual term from the governing equation, which acts as a penalizing term to restrict the space of acceptable solutions.

PINNs take into account the underlying PDE, i.e. the physics of the problem, rather than attempting to deduce the solution based solely on data, i.e. by fitting a neural network to a set of state-value pairs. The idea of creating physics-informed learning machines that employ systematically structured prior knowledge about the solution can be traced back to earlier research by Owhadi [125], which revealed the promising technique of leveraging such prior knowledge. Raissi et al [141, 142] used Gaussian process regression to construct representations of linear operator functionals, accurately inferring the solution and providing uncertainty estimates for a variety of physical problems; this was then extended in [140, 145]. PINNs were introduced in 2017 as a new class of data-driven solvers in a two-part article [143, 144] published in a merged version afterwards in 2019 [146]. Raissi et al [146] introduce and illustrate the PINN approach for solving nonlinear PDEs, like Schrödinger, Burgers, and Allen–Cahn equations. They created physics-informed neural networks (PINNs) which can handle both forward problems of estimating the solutions of governing mathematical models and inverse problems, where the model parameters are learnt from observable data.

The concept of incorporating prior knowledge into a machine learning algorithm is not entirely novel. In fact Dissanayake and Phan-Thien [39] can be considered one of the first PINNs. This paper followed the results of the universal approximation achievements of the late 1980s, [65]; then in the early 90s several methodologies were proposed to use neural networks to approximate PDEs, like the work on constrained neural networks [89, 135] or [93]. In particular Dissanayake and Phan-Thien [39] employed a simple neural networks to approximate a PDE, where the neural network’s output was a single value that was designed to approximate the solution value at the specified input position. The network had two hidden layers, with 3, 5 or 10 nodes for each layer. The network loss function approximated the \(L^2\) error of the approximation on the interior and boundary of the domain using point-collocation. While, the loss is evaluated using a quasi-Newtonian approach and the gradients are evaluated using finite difference.

In Lagaris et al [89], the solution of a differential equation is expressed as a constant term and an adjustable term with unknown parameters, the best parameter values are determined via a neural network. However, their method only works for problems with regular borders. Lagaris et al [90] extends the method to problems with irregular borders.

As computing power increased during the 2000s, increasingly sophisticated models with more parameters and numerous layers became the standard Özbay et al [127]. Different deep model using MLP, were introduced, also using Radial Basis Function Kumar and Yadav [87].

Research into the use of NNs to solve PDEs continued to gain traction in the late 2010s, thanks to advancements in the hardware used to run NN training, the discovery of better practices for training NNs, and the availability of open-source packages, such as Tensorflow [55], and the availability of Automatic differentiation in such packages [129].

Finally, more recent advancements by Kondor and Trivedi [83], and Mallat [102], brought to Raissi et al [146] solution that extended previous notions while also introducing fundamentally new approaches, such as a discrete time-stepping scheme that efficiently leverages the predictive ability of neural networks [82]. The fact that the framework could be utilized directly by plugging it into any differential problem simplified the learning curve for beginning to use such, and it was the first step for many researchers who wanted to solve their problems with a Neural network approach [105]. The success of the PINNs can be seen from the rate at which Raissi et al [146] is cited, and the exponentially growing number of citations in the recent years (Fig. 1).

However, PINN is not the only NN framework utilized to solve PDEs. Various frameworks have been proposed in recent years, and, while not exhaustive, we have attempted to highlight the most relevant ones in this paragraph.

The Deep Ritz method (DRM) [42], where the loss is defined as the energy of the problem’s solution.

Then there approaches based on the Galerkin method, or Petrov–Galerkin method, where the loss is given by multiplying the residual by a test function, and when is the volumetric residual we have a Deep Galerkin Method (DGM) [160]. Whereas, when a Galerkin approach is used on collocation points the framework is a variant of PINNs, i.e. a hp-VPINN Kharazmi et al [78].

Within the a collocation based approach, i.e. PINN methodology [109, 146, 191], many other variants were proposed, as the variational hp-VPINN, as well as conservative PINN (CPINN)[71]. Another approach is physics-constrained neural networks (PCNNs) [97, 168, 200]. while PINNs incorporate both the PDE and its initial/boundary conditions (soft BC) in the training loss function, PCNNs, are “data-free” NNs, i.e. they enforce the initial/boundary conditions (hard BC) via a custom NN architecture while embedding the PDE in the training loss. This soft form technique is described in Raissi et al [146], where the term “physics-informed neural networks” was coined (PINNs).

Because there are more articles on PINN than any other specific variant, such as PCNN, hp-VPINN, CPINN, and so on, this review will primarily focus on PINN, with some discussion of the various variants that have emerged, that is, NN architectures that solve differential equations based on collocation points.

Finally, the acronym PINN will be written in its singular form rather than its plural form in this review, as it is considered representative of a family of neural networks of the same type.

Various review papers involving PINN have been published. About the potentials, limitations and applications for forward and inverse problems [74] for three-dimensional flows [20], or a comparison with other ML techniques [19]. An introductory course on PINNs that covers the fundamentals of Machine Learning and Neural Networks can be found from Kollmannsberger et al [82]. PINN is also compared against other methods that can be applied to solve PDEs, like the one based on the Feynman–Kac theorem [19]. Finally, PINNs have also been extended to solve integrodifferential equations (IDEs) [128, 193] or stochastic differential equations (SDEs) [189, 195].

Being able to learn PDEs, PINNs have several advantages over conventional methods. PINNs, in particular, are mesh-free methods that enable on-demand solution computation after a training stage, and they allow solutions to be made differentiable using analytical gradients. Finally, they provide an easy way to solve forward jointly and inverse problems using the same optimization problem. In addition to solving differential equations (the forward problem), PINNs may be used to solve inverse problems such as characterizing fluid flows from sensor data. In fact, the same code used to solve forward problems can be used to solve inverse problems with minimal modification. Moreover, in the context of inverse design, PDEs can also be enforced as hard constraints (hPINN) [101]. Indeed, PINNs can address PDEs in domains with very complicated geometries or in very high dimensions that are all difficult to numerically simulate as well as inverse problems and constrained optimization problems.

1.2 What is this Review About

In this survey, we focus on how PINNs are used to address different scientific computing problems, the building blocks of PINNs, the aspects related to learning theory, what toolsets are already available, future directions and recent trends, and issues regarding accuracy and convergence. According to different problems, we show how PINNs solvers have been customized in literature by configuring the depth, layer size, activation functions and using transfer learning.

This article can be considered as an extensive literature review on PINNs. It was mostly conducted by searching Scopus for the terms:

((physic* OR physical) W/2 (informed OR constrained) W/2 “neural network”)

The primary research question was to determine what PINNs are and their associated benefits and drawbacks. The research also focused on the outputs from the CRUNCH research group in the Division of Applied Mathematics at Brown University and then on the (Physics–Informed Learning Machines for Multiscale and Multiphysics Problems) PhILMs Center, which is a collaboration with the Pacific Northwest National Laboratory. In such a frame, the primary authors who have been involved in this literature research are Karniadakis G.E., Perdikaris P., and Raissi M. Additionally, the review considered studies that addressed a broader topic than PINNs, namely physics-guided neural networks, as well as physics-informed machine learning and deep learning.

Figure 1 summarizes what the influence of PINN is in today’s literature and applications.

A number of papers related to PINNs (on the right) addressed problems on which PINNs are applied (on the left) by year. PINN is having a significant impact in the literature and in scientific applications. The number of papers referencing Raissi or including PINN in their abstract title or keywords is increasing exponentially. The number of papers about PINN more than quintupled between 2019 and 2020, and there were twice as many new papers published in 2021 as there were in 2020. Since Raissi’s first papers on arXiv in 2019 [146], a boost in citations can be seen in late 2018 and 2019. On the left, we display a sample problem solved in that year by one of the articles, specifically a type of time-dependent equation. Some of the addressed problems to be solved in the first vanilla PINN, for example, were the Allen–Cahn equation, the Korteweg–de Vries equation, or the 1D nonlinear Shrödinger problem (SE). By the end of 2019 Mao et al [103] solved with the same PINN Euler equations (EE) that model high-speed aerodynamic flows. By the end of 2020 Jin et al [73] solved the incompressible Navier–Stokes equations (NSE). Finally, in 2021 Cai et al [22] coupled the Navier–Stokes equations with the corresponding temperature equation for analyzing heat flow convection (NSE+HE)

2 The Building Blocks of a PINN

Physically-informed neural networks can address problems that are described by few data, or noisy experiment observations. Because they can use known data while adhering to any given physical law specified by general nonlinear partial differential equations, PINNs can also be considered neural networks that deal with supervised learning problems [52]. PINNs can solve differential equations expressed, in the most general form, like:

defined on the domain \(\Omega \subset {\mathbb {R}}^d\) with the boundary \(\partial \Omega \). Where \(\varvec{}{z} := [x_1,\ldots ,x_{d-1};t]\) indicates the space-time coordinate vector, \(\varvec{}{u}\) represents the unknown solution, \(\gamma \) are the parameters related to the physics, \(\varvec{}{f}\) is the function identifying the data of the problem and \({\mathcal {F}}\) is the non linear differential operator. Finally, since the initial condition can actually be considered as a type of Dirichlet boundary condition on the spatio-temporal domain, it is possible to denote \({\mathcal {B}}\) as the operator indicating arbitrary initial or boundary conditions related to the problem and \(\varvec{}{g}\) the boundary function. Indeed, the boundary conditions can be Dirichlet, Neumann, Robin, or periodic boundary conditions.

Equation (1) can describe numerous physical systems including both forward and inverse problems. The goal of forward problems is to find the function \(\varvec{}{u}\) for every \(\varvec{}{z}\), where \(\gamma \) are specified parameters. In the case of the inverse problem, \(\gamma \) must also be determined from the data. The reader can find an operator based mathematical formulation of Eq. (1) in the work of Mishra and Molinaro [111].

In the PINN methodology, \(\varvec{}{u}(\varvec{}{z})\) is computationally predicted by a NN, parametrized by a set of parameters \(\theta \), giving rise to an approximation

where \(\hat{(\cdot )}_\theta \) denotes a NN approximation realized with \(\theta \).

In this context, where forward and inverse problems are analyzed in the same framework, and given that PINN can adequately solve both problems, we will use \(\theta \) to represent both the vector of all unknown parameters in the neural network that represents the surrogate model and unknown parameters \(\gamma \) in the case of an inverse problem.

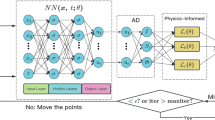

Physics-informed neural networks building blocks. PINNs are made up of differential equation residual (loss) terms, as well as initial and boundary conditions. The network’s inputs (variables) are transformed into network outputs (the field \(\varvec{}{u}\)). The network is defined by \(\theta \). The second area is the physics informed network, which takes the output field \(\varvec{}{u}\) and computes the derivative using the given equations. The boundary/initial condition is also evaluated (if it has not already been hard-encoded in the neural network), and also the labeled data observations are calculated (in case there is any data available). The final step with all of these residuals is the feedback mechanism, that minimizes the loss, using an optimizer, according to some learning rate in order to obtain the NN parameters \(\theta \)

In such a context, the NN must learn to approximate the differential equations through finding \(\theta \) that define the NN by minimizing a loss function that depends on the differential equation \({\mathcal {L}}_{\mathcal {F}}\), the boundary conditions \( {\mathcal {L}}_{\mathcal {B}}\), and eventually some known data \({\mathcal {L}}_{data}\), each of them adequately weighted:

To summarize, PINN can be viewed as an unsupervised learning approach when they are trained solely using physical equations and boundary conditions for forward problems; however, for inverse problems or when some physical properties are derived from data that may be noisy, PINN can be considered supervised learning methodologies.

In the following paragraph, we will discuss the types of NN used to approximate \(\varvec{}{u}(\varvec{}{z})\), how the information derived by \({\mathcal {F}}\) is incorporated in the model, and how the NN learns from the equations and additional given data.

Figure 2 summarizes all the PINN’s building blocks discussed in this section. PINN are composed of three components: a neural network, a physics-informed network, and a feedback mechanism. The first block is a NN, \(\hat{\varvec{}{u}}_\theta \), that accepts vector variables \(\varvec{}{z}\) from the Eq. (1) and outputs the filed value \(\varvec{}{u}\). The second block can be thought of PINN’s functional component, as it computes the derivative to determine the losses of equation terms, as well as the terms of the initial and boundary conditions of Eq. (2). Generally, the first two blocks are linked using algorithmic differentiation, which is used to inject physical equations into the NN during the training phase. Thus, the feedback mechanism minimizes the loss according to some learning rate, in order to fix the NN parameters vector \(\theta \) of the NN \(\hat{\varvec{}{u}}_\theta \). In the following, it will be clear from the context to what network we are referring to, whether the NN or the functional network that derives the physical information.

2.1 Neural Network Architecture

The representational ability of neural networks is well established. According to the universal approximation theorem, any continuous function can be arbitrarily closely approximated by a multi-layer perceptron with only one hidden layer and a finite number of neurons [17, 34, 65, 192]. While neural networks can express very complex functions compactly, determining the precise parameters (weights and biases) required to solve a specific PDE can be difficult [175]. Furthermore, identifying the appropriate artificial neural network (ANN) architecture can be challenging. There are two approaches: shallow learning networks, which have a single hidden layer and can still produce any non-linear continuous function, and deep neural networks (DNN), a type of ANN that uses more than two layers of neural networks to model complicated relationships [2]. Finally, the taxonomy of various Deep Learning architectures is still a matter of research [2, 117, 155]

The main architectures covered in the Deep Learning literature include fully connected feed-forward networks (called FFNN, or also FNN, FCNN, or FF–DNN), convolutional neural networks (CNNs), and recurrent neural networks (RNNs) [29, 92]. Other more recent architectures encompass Auto–Encoder (AE), Deep Belief Network (DBN), Generative Adversarial Network (GAN), and Bayesian Deep Learning (BDL) [5, 17].

Various PINN extensions have been investigated, based on some of these networks. An example is estimating the PINN solution’s uncertainty using Bayesian neural networks. Alternatively, when training CNNs and RNNs, finite-difference filters can be used to construct the differential equation residual in a PINN-like loss function. This section will illustrate all of these examples and more; first, we will define the mathematical framework for characterizing the various networks.

According to Caterini and Chang [25] a generic deep neural network with L layers, can be represented as the composition of L functions \(f_i(x_i,\theta _i)\) where \(x_i\) are known as state variables, and \(\theta _i\) denote the set of parameters for the i-th layer. So a function \(\varvec{}{u}(x)\) is approximated as

where each \(f_i\) is defined on two inner product spaces \(E_i\) and \(H_i\), being \(f_i\in E_i \times H_i\) and the layer composition, represented by \(\circ \), is to be read as \(f_2 \circ f_1 (x) = f_2(f_1(x))\).

Since Raissi et al [146] original vanilla PINN, the majority of solutions have used feed-forward neural networks. However, some researchers have experimented with different types of neural networks to see how they affect overall PINN performance, in particular with CNN, RNN, and GAN, and there seems there has been not yet a full investigation of other networks within the PINN framework. In this subsection, we attempt to synthesize the mainstream solutions, utilizing feed-forward networks, and all of the other versions. Table 1 contains a synthesis reporting the kind of neural network and the paper that implemented it.

2.1.1 Feed-forward Neural Network

A feed-forward neural network, also known as a multi-layer perceptron (MLP), is a collection of neurons organized in layers that perform calculations sequentially through the layers. Feed-forward networks, also known as multilayer perceptrons (MLP), are DNNs with several hidden layers that only move forward (no loopback). In a fully connected neural network, neurons in adjacent layers are connected, whereas neurons inside a single layer are not linked. Each neuron is a mathematical operation that applies an activation function to the weighted sum of it’s own inputs plus a bias factor [175]. Given an input \(x \in \Omega \) an MLP transforms it to an output, through a layer of units (neurons) which compose of affine-linear maps between units (in successive layers) and scalar non-linear activation functions within units, resulting in a composition of functions. So for MLP, it is possible to specify (3) as

equipping each \(E_i\) and \(H_i\) with the standard Euclidean inner product, i.e. \(E=H={\mathbb {R}}\) [26], and \(\alpha _i\) is a scalar (non-linear) activation function. The machine learning literature has studied several different activation functions, which we shall discuss later in this section. Equation (3) can also be rewritten in conformity with the notation used in Mishra and Molinaro [111]:

where for any \(1 \le k \le K\), it it is defined

Thus a neural network consists of an input layer, an output layer, and \((K-2)\) hidden layers.

2.1.2 FFNN Architectures

While the ideal DNN architecture is still an ongoing research; papers implementing PINN have attempted to empirically optimise the architecture’s characteristics, such as the number of layers and neurons in each layer. Smaller DNNs may be unable to effectively approximate unknown functions, whereas too large DNNs may be difficult to train, particularly with small datasets. Raissi et al [146] used different typologies of DNN, for each problem, like a 5-layer deep neural network with 100 neurons per layer, an DNN with 4 hidden layers and 200 neurons per layer or a 9 layers with 20 neuron each layer. Tartakovsky et al [170] empirically determine the feedforward network size, in particular they use three hidden layers and 50 units per layer, all with an hyperbolic tangent activation function. Another example of how the differential problem affects network architecture can be found in Kharazmi et al [78] for their hp-VPINN. The architecture is implemented with four layers and twenty neurons per layer, but for an advection equation with a double discontinuity of the exact solution, they use an eight-layered deep network. For a constrained approach, by utilizing a specific portion of the NN to satisfy the required boundary conditions, Zhu et al [199] use five hidden layers and 250 neurons per layer to constitute the fully connected neural network. Bringing the number of layers higher, in PINNeik [175], a DNN with ten hidden layers containing twenty neurons each is utilized, with a locally adaptive inverse tangent function as the activation function for all hidden layers except the final layer, which has a linear activation function. He et al [60] examines the effect of neural network size on state estimation accuracy. They begin by experimenting with various hidden layer sizes ranging from three to five, while maintaining a value of 32 neurons per layer. Then they set the number of hidden layers to three, the activation function to hyperbolic tangent, while varying the number of neurons in each hidden layer. Other publications have attempted to understand how the number of layers, neurons, and activation functions effect the NN’s approximation quality with respect to the problem to be solved, like [19].

2.1.3 Multiple FFNN

Although many publications employ a single fully connected network, a rising number of research papers have been addressing PINN with multiple fully connected network blended together, e.g. to approximate specific equation of a larger mathematical model. An architecture composed of five the feed-forward neural network is proposed by Haghighat et al [57]. In a two-phase Stefan problem, discussed later in this review and in Cai et al [22], a DNN is used to model the unknown interface between two different material phases, while another DNN describes the two temperature distributions of the phases. Instead of a single NN across the entire domain, Moseley et al [116] suggests using multiple neural networks, one for each subdomain. Finally, Lu et al [99] employed a pair of DNN, one for encoding the input space (branch net) and the other for encoding the domain of the output functions (trunk net). This architecture, known as DeepONet, is particularly generic because no requirements are made to the topology of the branch or trunk network, despite the fact that the two sub-networks have been implemented as FFNNs as in Lin et al [96].

2.1.4 Shallow Networks

To overcome some difficulties, various researchers have also tried to investigate shallower network solutions: these can be sparse neural networks, instead of fully connected architectures, or more likely single hidden layers as ELM (Extreme Learning Machine) [66]. When compared to the shallow architecture, more hidden layers aid in the modeling of complicated nonlinear relationships [155], however, using PINNs for real problems can result in deep networks with many layers associated with high training costs and efficiency issues. For this reason, not only deep neural networks have been employed for PINNs but also shallow ANN are reported in the literature. X–TFC, developed by Schiassi et al [153], employs a single-layer NN trained using the ELM algorithm. While PIELM [41] is proposed as a faster alternative, using a hybrid neural network-based method that combines two ideas from PINN and ELM. ELM only updates the weights of the outer layer, leaving the weights of the inner layer unchanged.

Finally, in Ramabathiran and Ramachandran [148] a Sparse, Physics-based, and partially Interpretable Neural Networks (SPINN) is proposed. The authors suggest a sparse architecture, using kernel networks, that yields interpretable results while requiring fewer parameters than fully connected solutions. They consider various kernels such as Radial Basis Functions (RBF), softplus hat, or Gaussian kernels, and apply their proof of concept architecture to a variety of mathematical problems.

2.1.5 Activation Function

The activation function has a significant impact on DNN training performance. ReLU, Sigmoid, Tanh are commonly used activations [168]. Recent research has recommended training an adjustable activation function like Swish, which is defined as \(x\cdot \text {Sigmoid}(\beta x)\) and \(\beta \) is a trainable parameter, and where \(\text {Sigmoid}\) is supposed to be a general sigmoid curve, an S-shaped function, or in some cases a logistic function. Among the most used activation functions there are logistic sigmoid, hyperbolic tangent, ReLu, and leaky ReLu. Most authors tend to use the infinitely differentiable hyperbolic tangent activation function \(\alpha (x) = \tanh (x)\) [60], whereas Cheng and Zhang [31] use a Resnet block to improve the stability of the fully-connected neural network (FC–NN). They also prove that Swish activation function outperforms the others in terms of enhancing the neural network’s convergence rate and accuracy. Nonetheless, because of the second order derivative evaluation, it is pivotal to choose the activation function in a PINN framework with caution. For example, while rescaling the PDE to dimensionless form, it is preferable to choose a range of [0, 1] rather than a wider domain, because most activation functions (such as Sigmoid, Tanh, Swish) are nonlinear near 0. Moreover the regularity of PINNs can be ensured by using smooth activation functions like as the sigmoid and hyperbolic tangent, allowing estimations of PINN generalization error to hold true [111].

2.1.6 Convolutional Neural Networks

Convolutional neural networks (ConvNet or CNN) are intended to process data in the form of several arrays, for example a color image made of three 2D arrays. CNNs usually have a number of convolution and pooling layers. The convolution layer is made up of a collection of filters (or kernels) that convolve across the full input rather than general matrix multiplication. The pooling layer instead performs subsampling, reducing the dimensionality.

For CNNs, according to Caterini and Chang [26], the layerwise function f can be written as

where \(\alpha \) is an elementwise nonlinearity, \(\Phi \) is the max-pooling map, and \({\mathcal {C}}\) the convolution operator.

It is worth noting that the convolution operation preserves translations and pooling is unaffected by minor data translations. This is applied to input image properties, such as corners, edges, and so on, that are translationally invariant, and will still be represented in the convolution layer’s output.

As a result, CNNs perform well with multidimensional data such as images and speech signals, in fact is in the domain of images that these networks have been used in a physic informed network framework.

For more on these topic the reader can look at LeCun et al [92], Chen et al [29], Muhammad et al [117], Aldweesh et al [2], Berman et al [17], Calin [23]

2.1.7 CNN Architectures

Because CNNs were originally created for image recognition, they are better suited to handling image-like data and may not be directly applicable to scientific computing problems, as most geometries are irregular with non-uniform grids; for example, Euclidean-distance-based convolution filters lose their invariance on non-uniform meshes.

A physics-informed geometry-adaptive convolutional neural network (PhyGeoNet) was introduced in Gao et al [48]. PhyGeoNet is a physics-informed CNN that uses coordinate transformation to convert solution fields from an irregular physical domain to a rectangular reference domain. Additionally, boundary conditions are strictly enforced making it a physics-constrained neural network.

Fang [44] observes that a Laplacian operator has been discretized in a convolution operation employing a finite-difference stencil kernel. Indeed, a Laplacian operator can be discretized using the finite volume approach, and the discretization procedure is equivalent to convolution. As a result, he enhances PINN by using a finite volume numerical approach with a CNN structure. He devised and implemented a CNN-inspired technique in which, rather than using a Cartesian grid, he computes convolution on a mesh or graph. Furthermore, rather than zero padding, the padding data serve as the boundary condition. Finally, Fang [44] does not use pooling because the data does not require compression.

2.1.8 Convolutional Encoder–Decoder Network

Autoencoders (AE) (or encoder–decoders) are commonly used to reduce dimensionality in a nonlinear way. It consists of two NN components: an encoder that translates the data from the input layer to a finite number of hidden units, and a decoder that has an output layer with the same number of nodes as the input layer [29].

For modeling stochastic fluid flows, Zhu et al [200] developed a physics-constrained convolutional encoder–decoder network and a generative model. Zhu et al [200] propose a CNN-based technique for solving stochastic PDEs with high-dimensional spatially changing coefficients, demonstrating that it outperforms FC–NN methods in terms of processing efficiency.

AE architecture of the convolutional neural network (CNN) is also used in Wang et al [177]. The authors propose a framework called a Theory-guided Auto–Encoder (TgAE) capable of incorporating physical constraints into the convolutional neural network.

Geneva and Zabaras [51] propose a deep auto-regressive dense encoder–decoder and the physics-constrained training algorithm for predicting transient PDEs. They extend this model to a Bayesian framework to quantify both epistemic and aleatoric uncertainty. Finally, Grubišić et al [54] also used an encoder–decoder fully convolutional neural network.

2.1.9 Recurrent Neural Networks

Recurrent neural network (RNN) is a further ANN type, where unlike feed-forward NNs, neurons in the same hidden layer are connected to form a directed cycle. RNNs may accept sequential data as input, making them ideal for time-dependent tasks [29]. The RNN processes inputs one at a time, using the hidden unit output as extra input for the next element [17]. An RNN’s hidden units can keep a state vector that holds a memory of previous occurrences in the sequence.

It is feasible to think of RNN in two ways: first, as a state system with the property that each state, except the first, yields an outcome; secondly, as a sequence of vanilla feedforward neural networks, each of which feeds information from one hidden layer to the next. For RNNs, according to Caterini and Chang [26], the layerwise function f can be written as

where \(\alpha \) is an elementwise nonlinearity (a typical choice for RNN is the tanh function), and where the hidden vector state h evolves according to a hidden-to-hidden weight matrix W, which starts from an input-to-hidden weight matrix U and a bias vector b.

RNNs have also been enhanced with several memory unit types, such as long short time memory (LSTM) and gated recurrent unit (GRU) [2]. Long short-term memory (LSTM) units have been created to allow RNNs to handle challenges requiring long-term memories, since LSTM units have a structure called a memory cell that stores information.

Each LSTM layer has a set of interacting units, or cells, similar to those found in a neural network. An LSTM is made up of four interacting units: an internal cell, an input gate, a forget gate, and an output gate. The cell state, controlled by the gates, can selectively propagate relevant information throughout the temporal sequence to capture the long short-term time dependency in a dynamical system [197].

The gated recurrent unit (GRU) is another RNN unit developed for extended memory; GRUs are comparable to LSTM, however they contain fewer parameters and are hence easier to train [17].

2.1.10 RNN Architectures

Viana et al [174] introduce, in the form of a neural network, a model discrepancy term into a given ordinary differential equation. Recurrent neural networks are seen as ideal for dynamical systems because they expand classic feedforward networks to incorporate time-dependent responses. Because a recurrent neural network applies transformations to given states in a sequence on a periodic basis, it is possible to design a recurrent neural network cell that does Euler integration; in fact, physics-informed recurrent neural networks can be used to perform numerical integration.

Viana et al [174] build recurrent neural network cells in such a way that specific numerical integration methods (e.g., Euler, Riemann, Runge–Kutta, etc.) are employed. The recurrent neural network is then represented as a directed graph, with nodes representing individual kernels of the physics-informed model. The graph created for the physics-informed model can be used to add data-driven nodes (such as multilayer perceptrons) to adjust the outputs of certain nodes in the graph, minimizing model discrepancy.

2.1.11 LSTM Architectures

Physicists have typically employed distinct LSTM networks to depict the sequence-to-sequence input-output relationship; however, these networks are not homogeneous and cannot be directly connected to one another. In Zhang et al [197] this relationship is expressed using a single network and a central finite difference filter-based numerical differentiator. Zhang et al [197] show two architectures for representation learning of sequence-to-sequence features from limited data that is augmented by physics models. The proposed networks is made up of two (\(PhyLSTM^2\)) or three (\(PhyLSTM^3\)) deep LSTM networks that describe state space variables, nonlinear restoring force, and hysteretic parameter. Finally, a tensor differentiator, which determines the derivative of state space variables, connects the LSTM networks.

Another approach is Yucesan and Viana [194] for temporal integration, that implement an LSTM using a previously introduced Euler integration cell.

2.1.12 Other Architectures for PINN

Apart from fully connected feed forward neural networks, convolutional neural networks, and recurrent neural networks, this section discusses other approaches that have been investigated. While there have been numerous other networks proposed in the literature, we discovered that only Bayesian neural networks (BNNs) and generative adversarial networks (GANs) have been applied to PINNs. Finally, an interesting application is to combine multiple PINNs, each with its own neural network.

2.1.13 Bayesian Neural Network

In the Bayesian framework, Yang et al [190] propose to use Bayesian neural networks (BNNs), in their B-PINNs, that consists of a Bayesian neural network subject to the PDE constraint that acts as a prior. BNN are neural networks with weights that are distributions rather than deterministic values, and these distributions are learned using Bayesian inference. For estimating the posterior distributions, the B-PINN authors use the Hamiltonian Monte Carlo (HMC) method and the variational inference (VI). Yang et al [190] find that for the posterior estimate of B-PINNs, HMC is more appropriate than VI with mean field Gaussian approximation.

They analyse also the possibility to use the Karhunen–Loève expansion as a stochastic process representation, instead of BNN. Although the KL is as accurate as BNN and considerably quicker, it cannot be easily applied to high-dimensional situations. Finally, they observe that to estimate the posterior of a Bayesian framework, KL–HMC or deep normalizing flow (DNF) models can be employed. While DNF is more computationally expensive than HMC, it is more capable of extracting independent samples from the target distribution after training. This might be useful for data-driven PDE solutions, however it is only applicable to low-dimensional problems.

2.1.14 GAN Architectures

In generative adversarial networks (GANs), two neural networks compete in a zero-sum game to deceive each other. One network generates and the other discriminates. The generator accepts input data and outputs data with realistic characteristics. The discriminator compares the real input data to the output of the generator. After training, the generator can generate new data that is indistinguishable from real data [17].

Yang et al [189] propose a new class of generative adversarial networks (PI–GANs) to address forward, inverse, and mixed stochastic problems in a unified manner. Unlike typical GANs, which rely purely on data for training, PI–GANs use automatic differentiation to embed the governing physical laws in the form of stochastic differential equations (SDEs) into the architecture of PINNs. The discriminator in PI–GAN is represented by a basic FFNN, while the generators are a combination of FFNNs and a NN induced by the SDE.

2.1.15 Multiple PINNs

A final possibility is to combine several PINNs, each of which could be implemented using a different neural network. Jagtap et al [71] propose a conservative physics-informed neural network (cPINN) on discrete domains. In this framework, the complete solution is recreated by patching together all of the solutions in each sub-domain using the appropriate interface conditions. This type of domain segmentation also allows for easy network parallelization, which is critical for obtaining computing efficiency. This method may be expanded to a more general situation, called by the authors as Mortar PINN, for connecting non-overlapping deconstructed domains. Moreover, the suggested technique may use totally distinct neural networks, in each subdomain, with various architectures to solve the same underlying PDE.

Stiller et al [167] proposes the GatedPINN architecture by incorporating conditional computation into PINN. This architecture design is composed of a gating network and set of PINNs, hereinafter referred to as “experts”; each expert solves the problem for each space-time point, and their results are integrated via a gating network. The gating network determines which expert should be used and how to combine them. We will use one of the expert networks as an example in the following section of this review.

2.2 Injection of Physical Laws

To solve a PDE with PINNs, derivatives of the network’s output with respect to the inputs are needed. Since the function \(\varvec{}{u}\) is approximated by a NN with smooth activation function, \(\hat{\varvec{}{u}}_\theta \), it can be differentiated. There are four methods for calculating derivatives: hand-coded, symbolic, numerical, and automatic. Manually calculating derivatives may be correct, but it is not automated and thus impractical [175].

Symbolic and numerical methods like finite differentiation perform very badly when applied to complex functions; automatic differentiation (AD), on the other hand, overcomes numerous restrictions as floating-point precision errors, for numerical differentiation, or memory intensive symbolic approach. AD use exact expressions with floating-point values rather than symbolic strings, there is no approximation error [175].

Automatic differentiation (AD) is also known as autodiff, or algorithmic differentiation, although it would be better to call it algorithmic differentiation since AD does not totally automate differentiation: instead of symbolically evaluating derivatives operations, AD performs an analytical evaluation of them.

Considering a function \(f:{\mathbb {R}}^n\rightarrow {\mathbb {R}}^m\) of which we want to calculate the Jacobian J, after calculating the graph of all the operations composing the mathematical expression, AD can then work in either forward or reverse mode for calculating the numerical derivative.

AD results being the main technique used in literature and used by all PINN implementations, in particular only Fang [44] use local fitting method approximation of the differential operator to solve the PDEs instead of automatic differentiation (AD). Moreover, by using local fitting method rather than employing automated differentiation, Fang is able to verify that a his PINN implementation has a convergence.

Essentially, AD incorporates a PDE into the neural network’s loss Eq. (2), where the differential equation residual is

and similarly the residual NN corresponding to boundary conditions (BC) or initial conditions (IC) is obtained by substituting \(\hat{\varvec{}{u}}_{\theta }\) in the second equation of (1), i.e.

Using these residuals, it is possible to assess how well an approximation \(u_{\theta }\) satisfies (1). It is worth noting that for the exact solution, u, the residuals are \(r_{\mathcal {F}}[u]=r_{\mathcal {B}}[u]=0\) [38].

In Raissi and Karniadakis [140], Raissi et al [146], the original formulation of the aforementioned differential equation residual, leads to the form of

In the deep learning framework, the principle of imposing physical constraints is represented by differentiating neural networks with respect to input spatiotemporal coordinates using the chain rule. In Mathews et al [106] the model loss functions are embedded and then further normalized into dimensionless form. The repeated differentiation, with AD, and composition of networks used to create each individual term in the partial differential equations results in a much larger resultant computational graph. As a result, the cumulative computation graph is effectively an approximation of the PDE equations [106].

The chain rule is used in automatic differentiation for several layers to compute derivatives hierarchically from the output layer to the input layer. Nonetheless, there are some situations in which the basic chain rule does not apply. Pang et al [128] substitute fractional differential operators with their discrete versions, which are subsequently incorporated into the PINNs’ loss function.

2.3 Model Estimation by Learning Approaches

The PINN methodology determines the parameters \(\theta \) of the NN, \(\hat{\varvec{}{u}}_\theta \), by minimizing a loss function, i.e.

where

The three terms of \({\mathcal {L}}\) refer to the errors in describing the initial \({\mathcal {L}}_{i}\) or boundary condition \({\mathcal {L}}_{b}\), both indicated as \({\mathcal {L}}_{\mathcal {B}}\), the loss respect the partial differential equation \({\mathcal {L}}_{{\mathcal {F}}}\), and the validation of known data points \({\mathcal {L}}_{data}\). Losses are usually defined in the literature as a sum, similar to the previous equations, however they can be considered as integrals

This formulation is not only useful for a theoretical study, as we will see in 2.4, but it is also implemented in a PINN package, NVIDIA Modulus [123], allowing for more effective integration strategies, such as sampling with higher frequency in specific areas of the domain to more efficiently approximate the integral losses.

Note that, if the PINN framework is employed as a supervised methodology, the neural network parameters are chosen by minimizing the difference between the observed outputs and the model’s predictions; otherwise, just the PDE residuals are taken into account.

As in Eq. (6) the first term, \({\mathcal {L}}_{\mathcal {F}}\), represents the loss produced by a mismatch with the governing differential equations \({\mathcal {F}}\) [60, 167]. It enforces the differential equation \({\mathcal {F}}\) at the collocation points, which can be chosen uniformly or unevenly over the domain \(\Omega \) of Eq. (1).

The remaining two losses attempt to fit the known data over the NN. The loss caused by a mismatch with the data (i.e., the measurements of \(\varvec{}{u}\)) is denoted by \({\mathcal {L}}_{data} (\theta )\). The second term typically forces \(\hat{\varvec{}{u}}_\theta \) to mach the measurements of \(\varvec{}{u}\) over provided points \((\varvec{}{z}, \varvec{}{u}^*)\), which can be given as synthetic data or actual measurements, and the weight \(\omega _d\) can account for the quality of such measurements.

The other term is the loss due to mismatch with the boundary or initial conditions, \({\mathcal {B}} (\hat{\varvec{}{u}}_\theta ) = \varvec{}{g}\) from Eq. (1).

Essentially, the training approach recovers the shared network parameters \(\theta \) from:

-

few scattered observations of \(\varvec{}{u}(\varvec{}{z})\), specifically \(\{\varvec{}{z}_{i}, \varvec{}{u}_i^*\}\), \(i = 1,\dots ,N_d\)

-

as well as a greater number of collocation points \(\{\varvec{}{z}_{i}, \varvec{}{r}_i = 0\}\), \(i = 1,\dots ,N_r\) for the residual,

The resulting optimization problem can be handled using normal stochastic gradient descent without the need for constrained optimization approaches by minimizing the combined loss function. A typical implementation of the loss uses a mean square error formulation [82], where:

enforces the PDE on a wide set of randomly selected collocation locations inside the domain, i.e. penalizes the difference between the estimated left-hand side of a PDE and the known right-hand side of a PDE [82]; other approaches may employ an integral definition of the loss [61]. As for the boundary and initial conditions, instead

whereas for the data points,

computes the error of the approximation u(t, x) at known data points. In the case of a forward problem, the data loss might also indicate the boundary and initial conditions, while in an inverse problem it refers to the solution at various places inside the domain [82].

In Raissi and Karniadakis [140], Raissi et al [146], original approach the overall loss (6) was formulated as

The gradients in \( {\mathcal {F}}\) are derived using automated differentiation. The resulting predictions are thus driven to inherit any physical attributes imposed by the PDE constraint [191]. The physics constraints are included in the loss function to enforce model training, which can accurately reflect latent system nonlinearity even when training data points are scarce [197].

2.3.1 Observations About the Loss

The loss \({\mathcal {L}}_{\mathcal {F}}(\theta )\) is calculated by utilizing automated differentiation (AD) to compute the derivatives of \(\hat{\varvec{}{u}}_\theta (\varvec{}{z})\) [60]. Most ML libraries, including TensorFlow and Pytorch, provide AD, which is mostly used to compute derivatives with respect to DNN weights (i.e. \(\theta \)). AD permits the PINN approach to implement any PDE and boundary condition requirements without numerically discretizing and solving the PDE [60].

Additionally, by applying PDE constraints via the penalty term \({\mathcal {L}}_{\mathcal {F}}(\theta )\), it is possible to use the related weight \(\omega _{\mathcal {F}}\) to account for the PDE model’s fidelity. To a low-fidelity PDE model, for example, can be given a lower weight. In general, the number of unknown parameters in \(\theta \) is substantially greater than the number of measurements, therefore regularization is required for DNN training [60].

By removing loss for equations from the optimization process (i.e., setting \(\omega _{\mathcal {F}}=0\)), neural networks could be trained without any knowledge of the underlying governing equations. Alternatively, supplying initial and boundary conditions for all dynamical variables would correspond to solving the equations directly with neural networks on a regular basis [106].

While it is preferable to enforce the physics model across the entire domain, the computational cost of estimating and reducing the loss function (6), while training, grows with the number of residual points [60]. Apart the number of residual points, also the position (distribution) of residual points are crucial parameters in PINNs because they can change the design of the loss function [103].

A deep neural network can reduce approximation error by increasing network expressivity, but it can also produce a large generalization error. Other hyperparameters, such as learning rate, number of iterations, and so on, can be adjusted to further control and improve this issue.

The addition of extra parameters layer by layer in a NN modifies the slope of the activation function in each hidden-layer, improving the training speed. Through the slope recovery term, these activation slopes can also contribute to the loss function [71].

2.3.2 Soft and Hard Constraint

BC constraints can be regarded as penalty terms (soft BC enforcement) [200], or they can be encoded into the network design (hard BC enforcement) [168]. Many existing PINN frameworks use a soft approach to constrain the BCs by creating extra loss components defined on the collocation points of borders. The disadvantages of this technique are twofold:

-

1.

satisfying the BCs accurately is not guaranteed;

-

2.

the assigned weight of BC loss might effect learning efficiency, and no theory exists to guide determining the weights at this time.

Zhu et al [199] address the Dirichlet BC in a hard approach by employing a specific component of the neural network to purely meet the specified Dirichlet BC. Therefore, the initial boundary conditions are regarded as part of the labeled data constraint.

When compared to the residual-based loss functions typically found in the literature, the variational energy-based loss function is simpler to minimize and so performs better [52]. Loss function can be constructed using collocation points, weighted residuals derived by the Galerkin–Method [76], or energy based. Alternative loss functions approaches are compared in Li et al [94], by using either only data-driven (with no physics model), a PDE-based loss, and an energy-based loss. They observe that there are advantages and disadvantages for both PDE-based and energy-based approaches. PDE-based loss function has more hyperparameters than the energy-based loss function. The energy-based strategy is more sensitive to the size and resolution of the training samples than the PDE-based strategy, but it is more computationally efficient.

2.3.3 Optimization Methods

The minimization process of the loss function is called training; in most of the PINN literature, loss functions are optimized using minibatch sampling using Adam and the limited-memory Broyden–Fletcher–Goldfarb–Shanno (L-BFGS) algorithm, a quasi-Newton optimization algorithm. When monitoring noisy data, Mathews et al [106] found that increasing the sample size and training only with L-BFGS achieved the optimum for learning.

For a moderately sized NN, such as one with four hidden layers (depth of the NN is 5) and twenty neurons in each layer (width of the NN is 20), we have over 1000 parameters to optimize. There are several local minima for the loss function, and the gradient-based optimizer will almost certainly become caught in one of them; finding global minima is an NP-hard problem [128].

The Adam approach, which combines adaptive learning rate and momentum methods, is employed in Zhu et al [199] to increase convergence speed, because stochastic gradient descent (SGD) hardly manages random collocation points, especially in 3D setup.

Yang et al [189] use Wasserstein GANs with gradient penalty (WGAN–GP) and prove that they are more stable than vanilla GANs, in particular for approximating stochastic processes with deterministic boundary conditions.

cPINN [71] allow to flexibly select network hyper-parameters such as optimization technique, activation function, network depth, or network width based on intuitive knowledge of solution regularity in each sub-domain. E.g. for smooth zones, a shallow network may be used, and a deep neural network can be used in an area where a complex nature is assumed.

He et al [60] propose a two-step training approach in which the loss function is minimized first by the Adam algorithm with a predefined stop condition, then by the L-BFGS-B optimizer. According to the aforementioned paper, for cases with a little amount of training data and/or residual points, L-BFGS-B, performs better with a faster rate of convergence and reduced computing cost.

Finally, let’s look at a practical examples for optimizing the training process; dimensionless and normalized data are used in DeepONet training to improve stability [96]. Moreover the governing equations in dimensionless form, including the Stokes equations, electric potential, and ion transport equations, are presented in DeepM &Mnets [21].

In terms of training procedure initialization, slow and fast convergence behaviors are produced by bad and good initialization, respectively, but Pang et al [128] reports a technique for selecting the most suitable one. By using a limited number of iterations, one can first solve the inverse problem. This preliminary solution has low accuracy due to discretization, sampling, and optimization errors. The optimized parameters from the low-fidelity problem can then be used as a suitable initialization.

2.4 Learning Theory of PINN

This final subsection provides most recent theoretical studies on PINN to better understand how they work and their potential limits. These investigations are still in their early stages, and much work remains to be done.

Let us start by looking at how PINN can approximate the true solution of a differential equation, similar to how error analysis is done a computational framework. In traditional numerical analysis, we approximate the true solution \(\varvec{}{u}(\varvec{}{z})\) of a problem with an approximation scheme that computes \(\hat{\varvec{}{u}}_\theta (\varvec{}{z})\). The main theoretical issue is to estimate the global error

Ideally, we want to find a set of parameters, \(\theta \), such that \({\mathcal {E}}=0\).

When solving differential equations using a numerical discretization technique, we are interested in the numerical method’s stability, consistency, and convergence [8, 150, 171].

In such setting, discretization’s error can be bound in terms of consistency and stability, a basic result in numerical analysis. The Lax–Richtmyer Equivalence Theorem is often referred to as a fundamental result of numerical analysis where roughly the convergence is ensured when there is consistency and stability.

When studying PINN to mimic this paradigm, the convergence and stability are related to how well the NN learns from physical laws and data. In this conceptual framework, we use a NN, which is a parameterized approximation of problem solutions modeled by physical laws. In this context, we will (i) introduce the concept of convergence for PINNs, (ii) revisit the main error analysis definitions in a statistical learning framework, and (iii) finally report results for the generalization error.

2.4.1 Convergence Aspects

The goal of a mathematical foundation for the PINN theory is to investigate the convergence of the computed \(\hat{\varvec{}{u}}_\theta (\varvec{}{z})\) to the solution of problem (1), \(\varvec{}{u}(\varvec{}{z})\).

Consider a NN configuration with coefficients compounded in the vector \(\varvec{}{\theta }\) and a cardinality equal to the number of coefficients of the NN, \(\#\varvec{}{\theta }\). In such setting, we can consider the hypothesis class

composed of all the predictors representing a NN whose number of coefficients of the architecture is n. The capacity of a PINN to be able to learn, is related to how big is n, i.e. the expressivity of \({\mathcal {H}}_n\).

In such setting, a theoretical issue, is to investigate, how dose the sequence of compute predictors, \({\hat{u}}_\theta \), converges to the solution of the physical problem (1)

A recent result in this direction was obtained by De Ryck et al [37] in which they proved that the difference \({\hat{u}}_{\theta (n)} - u\) converges to zero as the width of a predefined NN, with activation function tanh, goes to infinity.

Practically, the PINN requires choosing a network class \({\mathcal {H}}_n\) and a loss function given a collection of N-training data [157]. Since the quantity and quality of training data affect \({\mathcal {H}}_n\), the goal is to minimize the loss, by finding a \(u_{\theta ^*} \in {\mathcal {H}}_n\), by training the N using an optimization process. Even if \({\mathcal {H}}_n\) includes the exact solution u to PDEs and a global minimizer is established, there is no guarantee that the minimizer and the solution u will coincide. A first work related on PINN [157], the authors show that the sequence of minimizers \(\hat{\varvec{}{u}}_{\theta ^*}\) strongly converges to the solution of a linear second-order elliptic and parabolic type PDE.

2.4.2 Statistical Learning Error Analysis

The entire learning process of a PINN can be considered as a statistical learning problem, and it involves mathematical foundations aspects for the error analysis [88]. For a mathematical treatment of errors in PINN, it is important to take into account: optimization, generalization errors, and approximation error. It is worth noting that the last one is dependent on the architectural design.

Let be N collocation points on \({\bar{\Omega }} = \Omega \cup \partial \Omega \), a NN approximation realized with \(\theta \), denoted by \({\hat{u}}_\theta \), evaluated at points \(z_i\), whose exact value is \(h_i\). Following the notation of Kutyniok [88], the empirical risk is defined as

and represents how well the NN is able to predict the exact value of the problem. The empirical risk actually corresponds to the loss defined in 2.3, where \(\hat{\varvec{}{u}}_\theta = {\mathcal {F}} (\hat{\varvec{}{u}}_\theta (\varvec{}{z}_i))\) and \(h_i=\varvec{}{f}(\varvec{}{z}_i)\) and similarly for the boundaries.

A continuum perspective is the risk of using an approximator \({\hat{u}}_\theta \), calculated as follows:

where the distance between the approximation \({\hat{u}}_\theta \) and the solution u is obtained with the \(L^2\)-norm. The final approximation computed by the PINN, after a training process of DNN, is \({\hat{u}}_\theta ^*\). The main aim in error analysis, is to find suitable estimate for the risk of predicting u i.e. \({\mathcal {R}}[{\hat{u}}_\theta ^*]\).

The training process, uses gradient-based optimization techniques to minimize a generally non convex cost function. In practice, the algorithmic optimization scheme will not always find a global minimum. So the error analysis takes into account the optimization error defined as follows:

Because the objective function is nonconvex, the optimization error is unknown. Optimization frequently involves a variety of engineering methods and time-consuming fine-tuning, using, gradient-based optimization methods. Several stochastic gradient descent methods have been proposed,and many PINN use Adam and L-BFGS. Empirical evidence suggests that gradient-based optimization techniques perform well in different challenging tasks; however, gradient-based optimization might not find a global minimum for many ML tasks, such as for PINN, and this is still an open problem [157].

Moreover a measure of the prediction accuracy on unseen data in machine learning is the generalization error:

The generalization error measures how well the loss integral is approximated in relation to a specific trained neural network. One of the first paper focused with convergence of generalization error is Shin et al [157].

About the ability of the NN to approximate the exact solution, the approximation error is defined as

The approximation error is well studied in general, in fact we know that one layer neural network with a high width can evenly estimate a function and its partial derivative as shown by Pinkus [133].

Finally, as stated in Kutyniok [88], the global error between the trained deep neural network \({\hat{u}}_\theta ^*\) and the correct solution function u of problem (1), can so be bounded by the previously defined error in the following way

These considerations lead to the major research threads addressed in recent studies, which are currently being investigated for PINN and DNNs in general Kutyniok [88].

2.4.3 Error Analysis Results for PINN

About the approximating error, since it depends on the NN architecture, mathematical foundations results are generally discussed in papers deeply focused on this topic Calin [24], Elbrächter et al [43].

However, a first argumentation strictly related to PINN is reported in Shin et al [158]. One of the main theoretical results on \({\mathcal {E}}_A\), can be found in De Ryck et al [37]. They demonstrate that for a neural network with a tanh activation function and only two hidden layers, \({\hat{u}}_\theta \), may approximate a function u with a bound in a Sobolev space:

where N is the number of training points, \(c,C>0\) are constants independent of N and explicitly known, \(u\in W^{ s,\infty } ([0,1]^d)\). We remark that the NN has width \(N^d\), and \(\#\theta \) depends on both the number of training points N and the dimension of the problem d.

Formal findings for generalization errors in PINN are provided specifically for a certain class of PDE. In Shin et al [157] they provide convergence estimate for linear second-order elliptic and parabolic type PDEs, while in Shin et al [158] they extend the results to all linear problems, including hyperbolic equations. Mishra and Molinaro [113] gives an abstract framework for PINN on forward problem for PDEs, they estimate the generalization error by means of training error (empirical risk), and number of training points, such abstract framework is also addressed for inverse problems [111]. In De Ryck et al [38] the authors specifically address Navier–Stokes equations and show that small training error imply a small generalization error, by proving that

This estimate suffer from the curse of dimensionality (CoD), that is to say, in order to reduce the error by a certain factor, the number of training points needed and the size of the neural network, scales up exponentially.

Finally, a recent work [18] has proposed explicit error estimates and stability analyses for incompressible Navier–Stokes equations.

De Ryck and Mishra [36] prove that for a Kolmogorov type PDE (i.e. heat equation or Black–Scholes equation), the following inequality holds, almost always,

and is not dependant on the dimension of the problem d.

Finally, Mishra and Molinaro [112] investigates the radiative transfer equation, which is noteworthy for its high-dimensionality, with the radiative intensity being a function of 7 variables (instead of 3, as common in many physical problems). The authors prove also here that the generalization error is bounded by the training error and the number of training points, and the dimensional dependence is on a logarithmic factor:

The authors are able to show that PINN does not suffer from the dimensionality curse for this problem, observing that the training error does not depend on the dimension but only on the number of training points.

3 Differential Problems Dealt with PINNs

The first vanilla PINN [146] was built to solve complex nonlinear PDE equations of the form \(\varvec{}{u}_t + {\mathcal {F}}_{\varvec{}{x}}\varvec{}{u} = 0\), where \(\varvec{}{x}\) is a vector of space coordinates, t is a vector time coordinate, and \({\mathcal {F}}_{\varvec{}{x}}\) is a nonlinear differential operator with respect to spatial coordinates. First and mainly, the PINN architecture was shown to be capable of handling both forward and inverse problems. Eventually, in the years ahead, PINNs have been employed to solve a wide variety of ordinary differential equations (ODEs), partial differential equations (PDEs), Fractional PDEs, and integro-differential equations (IDEs), as well as stochastic differential equations (SDEs). This section is dedicated to illustrate where research has progressed in addressing various sorts of equations, by grouping equations according to their form and addressing the primary work in literature that employed PINN to solve such equation. All PINN papers dealing with ODEs will be presented first. Then, works on steady-state PDEs such as Elliptic type equations, steady-state diffusion, and the Eikonal equation are reported. The Navier–Stokes equations are followed by more dynamical problems such as heat transport, advection-diffusion-reaction system, hyperbolic equations, and Euler equations or quantum harmonic oscillator. Finally, while all of the previous PDEs can be addressed in their respective Bayesian problems, the final section provides insight into how uncertainly is addressed, as in stochastic equations.

3.1 Ordinary Differential Equations

ODEs can be used to simulate complex nonlinear systems which are difficult to model using simply physics-based models [91]. A typical ODE system is written as

where initial conditions can be specified as \({\mathcal {B}}(\varvec{}{u}(t))= \varvec{}{g}(t)\), resulting in an initial value problem or boundary value problem with \({\mathcal {B}}(\varvec{}{u}(\varvec{}{x})) = \varvec{}{g}(\varvec{}{x})\). A PINN approach is used by Lai et al [91] for structural identification, using Neural Ordinary Differential Equations (Neural ODEs). Neural ODEs can be considered as a continuous representation of ResNets (Residual Networks), by using a neural network to parameterize a dynamical system in the form of ODE for an initial value problem (IVP):

where f is the neural network parameterized by the vector \(\theta \).

The idea is to use Neural ODEs as learners of the governing dynamics of the systems, and so to structure of Neural ODEs into two parts: a physics-informed term and an unknown discrepancy term. The framework is tested using a spring-mass model as a 4-degree-of-freedom dynamical system with cubic nonlinearity, with also noisy measured data. Furthermore, they use experimental data to learn the governing dynamics of a structure equipped with a negative stiffness device [91].

Zhang et al [197] employ deep long short-term memory (LSTM) networks in the PINN approach to solve nonlinear structural system subjected to seismic excitation, like steel moment resistant frame and the single degree-of-freedom Bouc–Wen model, a nonlinear system with rate-dependent hysteresis [197]. In general they tried to address the problems of nonlinear equation of motion :

where \({\mathbf {g}}(t)={{\textbf {M}}}^{-1}{\mathbf {h}}(t)\) denotes the mass-normalized restoring force, being M the mass matrices; h the total nonlinear restoring force, and \(\varvec{\Gamma }\) force distribution vector.

Directed graph models can be used to directly implement ODE as deep neural networks [174], while using an Euler RNN for numerical integration.

In Nascimento et al [121] is presented a tutorial on how to use Python to implement the integration of ODEs using recurrent neural networks.

ODE-net idea is used in Tong et al [172] for creating Symplectic Taylor neural networks. These NNs consist of two sub-networks, that use symplectic integrators instead of Runge-Kutta, as done originally in ODE-net, which are based on residual blocks calculated with the Euler method. Hamiltonian systems as Lotka–Volterra, Kepler, and Hénon–Heiles systems are also tested in the aforementioned paper.

3.2 Partial Differential Equations

Partial Differential Equations are the building bricks of a large part of models that are used to mathematically describe physics phenomenologies. Such models have been deeply investigated and often solved with the help of different numerical strategies. Stability and convergence of these strategies have been deeply investigated in literature, providing a solid theoretical framework to approximately solve differential problems. In this Section, the application of the novel methodology of PINNs on different typologies of Partial Differential models is explored.

3.2.1 Steady State PDEs

In Kharazmi et al [76, 78], a general steady state problem is addressed as:

over the domain \(\Omega \subset {\mathbb {R}}^d\) with dimensions d and bounds \(\partial \Omega \). \({\mathcal {F}}_s\) typically contains differential and/or integro-differential operators with parameters \(\varvec{}{q}\) and \( f(\varvec{}{x})\) indicates some forcing term.

In particular an Elliptic equation can generally be written by setting

Tartakovsky et al [170] consider a linear

and non linear

diffusion equation with unknown diffusion coefficient K. The equation essentially describes an unsaturated flow in a homogeneous porous medium, where u is the water pressure and K(u) is the porous medium’s conductivity. It is difficult to measure K(u) directly, so Tartakovsky et al [170] assume that only a finite number of measurements of u are available.

Tartakovsky et al [170] demonstrate that the PINN method outperforms the state-of-the-art maximum a posteriori probability method. Moreover, they show that utilizing only capillary pressure data for unsaturated flow, PINNs can estimate the pressure-conductivity for unsaturated flow. One of the first novel approach, PINN based, was the variational physics-informed neural network (VPINN) introduced in Kharazmi et al [76], which has the advantage of decreasing the order of the differential operator through integration-by-parts. The authors tested VPINN with the steady Burgers equation, and on the two dimensional Poisson’s equation. VPINN Kharazmi et al [76] is also used to solve Schrödinger Hamiltonians, i.e. an elliptic reaction-diffusion operator [54].

In Haghighat et al [56] a nonlocal approach with the PINN framework is used to solve two-dimensional quasi-static mechanics for linear-elastic and elastoplastic deformation. They define a loss function for elastoplasticity, and the input variables to the feed-forward neural network are the displacements, while the output variables are the components of the strain tensor and stress tensor. The localized deformation and strong gradients in the solution make the boundary value problem difficult solve. The Peridynamic Differential Operator (PDDO) is used in a nonlocal approach with the PINN paradigm in Haghighat et al [56]. They demonstrated that the PDDO framework can capture stress and strain concentrations using global functions.

In Dwivedi and Srinivasan [41] the authors address different 1D-2D linear advection and/or diffusion steady-state problems from Berg and Nyström [16], by using their PIELM, a PINN combined with ELM (Extreme Learning Machine). A critical point is that the proposed PIELM only takes into account linear differential operators.

In Ramabathiran and Ramachandran [148] they consider linear elliptic PDEs, such as the solution of the Poisson equation in both regular and irregular domains, by addressing non-smoothness in solutions.

The authors in Ramabathiran and Ramachandran [148] propose a class of partially interpretable sparse neural network architectures (SPINN), and this architecture is achieved by reinterpreting meshless representation of PDE solutions.

Laplace–Beltrami Equation is solved on 3D surfaces, like complex geometries, and high dimensional surfaces, by discussing the relationship between sample size, the structure of the PINN, and accuracy [46].

The PINN paradigm has also been applied to Eikonal equations, i.e. are hyperbolic problems written as

where v is a velocity and u an unknown activation time. The Eikonal equation describes wave propagation, like the travel time of seismic wave [162, 175] or cardiac activation electrical waves [53, 151].

By implementing EikoNet, for solving a 3D Eikonal equation, Smith et al [162] find the travel-time field in heterogeneous 3D structures; however, the proposed PINN model is only valid for a single fixed velocity model, hence changing the velocity, even slightly, requires retraining the neural network. EikoNet essentially predicts the time required to go from a source location to a receiver location, and it has a wide range of applications, like earthquake detection.

PINN is also proved to outperform the first-order fast sweeping solution in accuracy tests [175], especially in the anisotropic model.