Abstract

We study multiplicative statistics for the eigenvalues of unitarily-invariant Hermitian random matrix models. We consider one-cut regular polynomial potentials and a large class of multiplicative statistics. We show that in the large matrix limit several associated quantities converge to limits which are universal in both the polynomial potential and the family of multiplicative statistics considered. In turn, such universal limits are described by the integro-differential Painlevé II equation, and in particular they connect the random matrix models considered with the narrow wedge solution to the KPZ equation at any finite time.


1 Introduction

Random matrix theory has proven over time to be a powerful modern tool in mathematics and physics. With widespread applications in areas such as engineering, statistical mechanics, probability and number theory, to mention only a few, its theory is rich and has been under intense development in the past thirty or so years. In a sense, much of the success of random matrix theory has been due to its exact solvability, or integrability, which turns random matrix models into touchstones for predicting and confirming complex phenomena in nature.

One of the most celebrated results in random matrix theory is the convergence of fluctuations of the largest eigenvalue towards the Tracy–Widom law \(F_{\textrm{GUE}}\). This result was first obtained by Tracy and Widom [71] for matrices from the Gaussian Unitary Ensemble (GUE); they also showed that \(F_{\textrm{GUE}}\) is expressible in terms of a particular solution to the Painlevé II equation (PII for short). Their findings sparked numerous advances in mathematics and physics, which began with the extension to several other matrix models but shortly afterwards spread beyond the realm of random matrices.

Starting with the celebrated Baik–Deift–Johansson Theorem [5], the distribution \(F_{\textrm{GUE}}\) has been identified as the limiting one-point distribution for the fluctuations of a wide range of different probabilistic models. One of the most ubiquitous of such models is the KPZ equation, introduced in the 1980s by Kardar, Parisi and Zhang. Despite numerous developments surrounding it, exactly solving it remained an outstanding open problem until the early 2010s, when four different groups of researchers [2, 29, 49, 70] independently found exact formulas for its so-called narrow wedge solution. Amongst these works, Amir et al. [2] found the one-point distribution for the height function of the KPZ solution, showing that it relates to a distribution found a little earlier by Johansson in a grand canonical Gaussian-type matrix model [57], and further characterizing it in terms of the integro-differential Painlevé II equation. The latter is an extension of the PII differential equation, and almost as an immediate consequence the authors of [2] also obtained that this one-point distribution, in the large time limit, converges to \(F_{\textrm{GUE}}\) itself.

Much inspired by [5, 56] and later by [2, 29, 49, 70], it has been realized that several stochastic growth models share an inherent connection with statistics of integrable point processes, formally established as an identity between a transformation of the growth model and statistics for the point process. To our knowledge, the very first instance of such a relation appears in the work of Borodin [16], which connects higher spin vertex models with Macdonald measures. By taking appropriate limits of this connection, it was later found that the KPZ equation is connected to the Airy\(_2\) point process [20], that ASEP is related to the discrete Laguerre ensemble, and that the stochastic six vertex model is connected in the same way to the Meixner ensemble, or yet the Krawtchouk ensemble [21].

As a common feature to these connections, the underlying correspondences establish that the so-called q-Laplace transform of the associated height function coincides with some multiplicative statistics of the point process. The latter, in turn, admits exact solvability, and it is widely believed that a long list of new insights on the growth models can be obtained by studying the corresponding multiplicative statistics of the point processes.

This program is already off to a great start for the KPZ equation: exploring its connection with the Airy\(_2\) point process, Corwin and Ghosal [37] were able to obtain bounds for the lower tail of the KPZ equation. Shortly afterwards, such bounds were improved by Cafasso and Claeys [27] with Riemann–Hilbert methods common in random matrix theory.

Our major goal is to take on the program of understanding multiplicative statistics for random particle systems, and carry out its detailed asymptotic analysis for one of the most inspiring models, namely eigenvalues of random matrices.

Statistics of eigenvalues of random matrices have been extensively studied in the past, notably in the context of the so-called linear statistics. In more recent times, statistics associated to Fisher-Hartwig singularities came to the spotlight, in particular due to their implications in connection with Gaussian Multiplicative Chaos, and are deeply understood to a quite high level of generality and precision; we refer the reader to [1, 6, 9, 30, 35, 44, 48, 72,73,74] for a non-exhaustive list of accomplishments in different directions. We consider a different type of statistics, inspired by the works in stochastic growth models and which motivate us to refer to them as multiplicative. Among other distinct features our family of multiplicative statistics has the key property that infinitely many singularities of its symbol are approaching the edge of the eigenvalue spectrum in a critical way.

In more concrete terms, we consider the Hermitian matrix model with an arbitrary one-cut regular polynomial potential V, and associate to it a general family of multiplicative statistics on its eigenvalues, indexed by a function Q satisfying certain natural regularity conditions. Our findings show that when the number of eigenvalues is large such multiplicative statistics become universal: in the large matrix limit they converge to a multiplicative statistics of the Airy\(_2\) point process which is independent of V and Q. This limiting statistics admits a characterization in terms of a particular solution to the integro-differential Painlevé II equation, and it is the same quantity that connects the KPZ equation and the Airy\(_2\) point process. So, in turn, we find that random matrix theory can recast the narrow wedge solution to the KPZ equation for finite time in a universal way.

The random matrix statistics that we study are associated to a deformed orthogonal polynomial ensemble, also indexed by Q, which we analyze. As we learn from earlier work of Borodin and Rains [22] which was recently rediscovered and greatly extended by Claeys and Glesner [36] (and which we also briefly explain later on), this deformed ensemble is a conditional ensemble of a marked process associated to the original random matrix model. We show that the correlation kernel for this point process converges to a kernel constructed out of the same solution to the integro-differential PII equation that appeared in [2]. This kernel is again universal in both V and Q, and turns out to be the kernel of the induced conditional process on the marked Airy\(_2\) point process. Naturally, there are orthogonal polynomials and their norming constants and recurrence coefficients associated to this deformed ensemble. With our approach we also obtain similar universality results for such quantities, showing that they are indeed universal in V and Q and also connect to the integro-differential PII in a neat way.

Beyond the concrete results, with this work we also hope to shed light on the rich structure underlying multiplicative statistics for eigenvalues of random matrices with singular symbols beyond the Fisher-Hartwig type. Much of the recent relevance of Painlevé equations is due to their appearance in random matrix theory, see [50] for an overview of several of these connections. There has been a growing recent interest in integro-differential Painlevé-type equations [24, 26, 28, 32, 58, 64], and our results place the integro-differential PII as a central universal object in random matrix theory as well.

We scale the multiplicative statistics to produce a critical behavior at a soft edge of the matrix model, and consequently the core of our asymptotic analysis lies within the construction of a local approximation to all the quantities near this critical point. Our main technical tool is the application of the Deift-Zhou nonlinear steepest descent method to the associated Riemann–Hilbert problem (RHP for short), and the mentioned local approximation is the so-called construction of a local parametrix. In our case, a novel feature is that this local parametrix construction is performed in two steps, first with the construction of a model problem varying with a large parameter, and second with the asymptotic analysis of this model problem. In the latter, a RHP recently studied by Cafasso and Claeys [27] (see also the subsequent works [28, 32]) which is related to the lower tail of the KPZ equation shows up, and it is this RHP that ultimately connects all of our considered quantities to the integro-differential PII.

The choice of scaling of our multiplicative statistics is natural and illustrative, but not exhaustive. As we point out later, with our approach it becomes clear that other scalings could also be analyzed, say for instance scaling around a bulk point, or yet soft/hard edge points with critical potentials, which indicates that other integrable systems extending the integro-differential PII may emerge.

2 Statement of Main Results

Let \(\Lambda ^{(n)} :=(\lambda _1<\ldots <\lambda _n)\) be an n-particle system with distribution

where \(Z_n\) is the partition function

The distribution (2.1) is the eigenvalue distribution of the unitarily-invariant random matrix model with potential function V [42, 66].

We associate to \(\Lambda ^{(n)}\) the multiplicative statistics

where \(\mathsf Z_n^Q(\mathsf s)\) is the partition function for the deformed model

and we denoted

When Q is linear, with a straightforward change of parameters \(\mathsf L^Q_n\) reduces to

where the expectation is over the set \(\mathcal X\) of configurations of points (that is, for us \(\mathcal X=\Lambda ^{(n)}\)) and \(q\in (0,1)\) should be viewed as a parameter of the model and, in general, \(\zeta \in \mathbb {C}\) is a free parameter. The expression (2.5) may be viewed as a transformation of the point process, where \(\zeta \in \mathbb {C}\) becomes the spectral variable of this transformation, and the matching \(\zeta ={{\,\mathrm{\mathrm e}\,}}^{-\mathsf s}\) motivates the distinguished role of \(\mathsf s\) in (2.3). In the context of random particle systems, this particular multiplicative statistics is associated to the notion of a q-Laplace transform [17, 19,20,21] that we already mentioned in the Introduction, and it has been one of the key quantities in several outstanding recent progresses in asymptotics for random particle systems [27, 37, 55].
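As a rough numerical illustration of the statistics just introduced, the following Python sketch estimates \(\mathsf L_n^Q(\mathsf s)\) by Monte Carlo. Everything specific in it is an assumption made for illustration only: the Gaussian potential \(V(x)=x^2/2\) (shifted so that the right endpoint of the spectrum sits at the origin), the linear choice \(Q(x)=-\mathsf t x\), the form \(\sigma _n(x)=(1+{{\,\mathrm{\mathrm e}\,}}^{-\mathsf s-n^{2/3}Q(x)})^{-1}\) of the deformation factor, and all function names.

```python
import numpy as np

def sample_shifted_gue_eigenvalues(n, rng):
    """Eigenvalues of an n x n GUE matrix with density ~ exp(-n Tr H^2 / 2),
    shifted by -2 so that the limiting spectrum is [-4, 0]."""
    a = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
    h = (a + a.conj().T) / (2.0 * np.sqrt(n))
    return np.linalg.eigvalsh(h) - 2.0

def sigma_n(x, s, n, Q):
    """Assumed factor 1 / (1 + exp(-s - n^{2/3} Q(x))), evaluated stably."""
    y = s + n ** (2.0 / 3.0) * Q(x)
    return np.exp(-np.logaddexp(0.0, -y))

def estimate_L(samples, s, n, Q):
    """Monte Carlo average of prod_j sigma_n(lambda_j) over eigenvalue samples."""
    return float(np.mean([np.prod(sigma_n(lam, s, n, Q)) for lam in samples]))

rng = np.random.default_rng(0)
n, t = 40, 1.0
Q = lambda x: -t * x  # hypothetical linear Q: Q'(0) = -t, positive on (-inf, 0)
samples = [sample_shifted_gue_eigenvalues(n, rng) for _ in range(200)]
for s in (-1.0, 0.0, 1.0):
    print(s, estimate_L(samples, s, n, Q))
```

The same eigenvalue samples are reused for every value of \(\mathsf s\), so the printed estimates are monotone in \(\mathsf s\) by construction; the Monte Carlo error itself is not controlled in this sketch.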

We work under the following assumptions.

Assumption 2.1

(i) The potential V is a nonconstant real polynomial of even degree and positive leading coefficient, and its equilibrium measure \(\mu _V\) is one-cut regular; we refer to Sect. 8.1 below for the precise definitions. Performing a shift on the variable, without loss of generality we assume that the right-most endpoint of \({{\,\textrm{supp}\,}}\mu _V\) is at the origin, so that

$$\begin{aligned} {{\,\textrm{supp}\,}}\mu _V=[-a,0], \end{aligned}$$

for some \(a>0\).

(ii) The function Q is real-valued over the real line, and analytic on a neighborhood of the real axis. We also assume that it changes sign at the right-most endpoint of \({{\,\textrm{supp}\,}}\mu _V\), with

$$\begin{aligned} Q(x)>0 \text { on }(-\infty ,0), \quad Q(x)<0 \text { on } (0,\infty ), \end{aligned} \qquad (2.6)$$

with \(x=0\) being a simple zero of Q. A particular role is played by the negative value \(Q'(0)\), so we set

$$\begin{aligned} \mathsf t:=-Q'(0)>0. \end{aligned} \qquad (2.7)$$

Although \(\mathsf t\) in Assumption 2.1-(ii) will have the interpretation of time, we stress that in this paper it will be kept fixed within a compact subset of \((0,+\infty )\) rather than being made large or small.

For our results and throughout the whole work, we also talk about uniformity of several error terms with respect to \(\mathsf t\) in the sense that we now explain. Because Q is analytic on a neighborhood of the real axis, analytic continuation shows that it is completely determined by its derivatives \(Q^{(k)}(0)\), \(k\ge 0\). When we say that some error is uniform in \(\mathsf t\) within a certain range, we mean uniform when we vary Q as a function of \(-Q'(0)=\mathsf t\) while keeping all other derivatives \(Q^{(k)}(0)\), \(k\ge 2\), fixed.

The conditions in Assumption 2.1-(i) are standard in random matrix theory and are known to hold when, say, V is a convex function [69]. The one-cut assumption is made just for ease of presentation, as it simplifies the Riemann–Hilbert analysis at the technical level considerably. On the other hand, the regularity condition is used substantially in our arguments, but is standard in the random matrix theory literature and holds true generically [62]. Most of our results are of local nature near the right-most endpoint of \({{\,\textrm{supp}\,}}\mu _V\) and could be shown to hold true for multi-cut potentials near regular endpoints as well, with appropriate but non-essential modifications.

Assumption 2.1-(ii) should be seen as specifying enough regularity on the multiplicative statistics, here indexed by this factor Q. Because of condition (ii), we have the pointwise convergence

which means that the introduction of the factor \(\sigma _n\) in the original weight \({{\,\mathrm{\mathrm e}\,}}^{-nV}\) has the effect of producing an interpolation between this original weight and its cut-off version \(\chi _{(-\infty ,0)}{{\,\mathrm{\mathrm e}\,}}^{-nV}\), where from here onward \(\chi _J\) is the characteristic function of a set J. Comparing the Euler-Lagrange conditions on the equilibrium problem induced by the weights \({{\,\mathrm{\mathrm e}\,}}^{-nV}\) and \(\chi _{(-\infty ,0)}{{\,\mathrm{\mathrm e}\,}}^{-nV}\), the observation we just made heuristically indicates that the factor \(\sigma _n\) does not change the global behavior of eigenvalues. This may also be rigorously confirmed as an immediate consequence of our analysis, but we do not elaborate on this point.

On the other hand, introducing a local coordinate u near the origin via the relation \(z=-u/n^{2/3}\), the approximation

goes through, and we see that there is a competition between the term \(\mathsf s\) and Q(z) that affects the local behavior of the weight at the scale \(\mathcal {O}(n^{-2/3})\) near the origin, which is the same scale for nontrivial fluctuations of eigenvalues around the same point. The main results that we are about to state concern obtaining the asymptotic behavior as \(n\rightarrow \infty \) of several quantities of the model, and in particular they showcase how this term Q affects the local scaling regime of the eigenvalues near the origin and leads to connections with the integro-differential Painlevé II equation as already mentioned.
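The two regimes just described, namely the pointwise convergence of \(\sigma _n\) to a cut-off at fixed x and the nontrivial profile at the scale \(n^{-2/3}\), can be checked numerically. The sketch below assumes the form \(\sigma _n(z)=(1+{{\,\mathrm{\mathrm e}\,}}^{-\mathsf s-n^{2/3}Q(z)})^{-1}\) and uses a hypothetical cubic Q with \(Q'(0)=-\mathsf t\); under these assumptions the local profile in the coordinate \(z=-u/n^{2/3}\) is \((1+{{\,\mathrm{\mathrm e}\,}}^{-\mathsf s-\mathsf t u})^{-1}\).

```python
import numpy as np

def sigma_n(z, s, n, Q):
    """Assumed factor 1 / (1 + exp(-s - n^{2/3} Q(z))), evaluated stably."""
    y = s + n ** (2.0 / 3.0) * Q(z)
    return np.exp(-np.logaddexp(0.0, -y))

t, s = 0.7, 0.3
Q = lambda x: -t * x * (1.0 + x ** 2)  # hypothetical Q: simple zero at 0, Q'(0) = -t

# Fixed x: sigma_n tends to 1 for x < 0 and to 0 for x > 0.
for n in (10, 10**3, 10**6):
    print(n, sigma_n(-0.1, s, n, Q), sigma_n(0.1, s, n, Q))

# Local scale z = -u / n^{2/3}: sigma_n(z) approaches 1 / (1 + exp(-s - t u)).
u = np.linspace(-5.0, 5.0, 11)
local_limit = 1.0 / (1.0 + np.exp(-s - t * u))

def local_error(n):
    """Sup-norm distance on the grid u between sigma_n and its local profile."""
    return float(np.max(np.abs(sigma_n(-u / n ** (2.0 / 3.0), s, n, Q) - local_limit)))

for n in (10**3, 10**6, 10**9):
    print(n, local_error(n))
```

For this particular Q the discrepancy at the local scale comes only from the cubic term, so it decays like \(n^{-4/3}\); for a general Q with \(Q''(0)\ne 0\) one would expect the slower rate \(n^{-2/3}\).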

A central object in this paper is the multiplicative statistics

where the expectation is over the Airy\(_2\) point process with random configuration of points \(\{\mathfrak {a}_j\}\) [68]. Expectations over the Airy\(_2\) point process with respect to other meaningful symbols have been considered in the recent past, see for instance [25, 31, 34]. The quantity \(\mathsf L^{{{\,\textrm{Ai}\,}}}\) admits two remarkable characterizations, which are also of particular interest to us. The first is the formulation via a Fredholm determinant, namely

where \(\mathbb {K}^{\textrm{Ai}}_T\) is the integral operator on \(L^2(-s,\infty )\) acting with the finite temperature (or Fermi-type) deformation of the Airy kernel \(\mathsf K_T^{\textrm{Ai}}\), defined by

The term ‘temperature’ stems from the connection between the KPZ equation and random polymer models. Despite the name finite temperature, the parameter T here corresponds to the time in the KPZ equation, see (2.12) below. The Fredholm determinant \(\det \left( \mathbb {I}-\mathbb {K}^\mathrm{{Ai}}_T \right) \) appeared for the first time in the work of Johansson [57] as the limiting process of a grand canonical (that is, when the number of particles/size of matrix is also random) version of a Gaussian random matrix model, and interpolates between the classical Airy kernel when \(T\rightarrow +\infty \) and the Gumbel distribution when \(T\rightarrow 0^+\) with s scaled appropriately. In [57, Remark 1.13] Johansson already raises the question of whether a related classical (that is, not grand canonical) matrix model has limiting local statistics that interpolate between Gumbel and Tracy–Widom, as a feature similar to \(\det \left( \mathbb {I}-\mathbb {K}^\mathrm{{Ai}}_T \right) \). Since then, other works have found \(\det \left( \mathbb {I}-\mathbb {K}^\mathrm{{Ai}}_T \right) \) to be the limiting distribution for fluctuations around the largest particle of a point process [11, 39, 41, 64]. In common, these works consider specific models rather than obtaining \(\det \left( \mathbb {I}-\mathbb {K}^\mathrm{{Ai}}_T \right) \) as the universal limit for a whole family of particle systems. Finite-temperature type distributions extending \(\det \left( \mathbb {I}-\mathbb {K}^\mathrm{{Ai}}_T \right) \) have also appeared in the past, see for instance [18, 38, 54].
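A Fredholm determinant of this type can be approximated numerically by a Nyström-type quadrature discretization. The sketch below is only illustrative and rests on an assumed form of the kernel, \(\mathsf K_T^{\textrm{Ai}}(u,v)=\int _{\mathbb {R}} w(T^{1/3}\zeta ){{\,\textrm{Ai}\,}}(u+\zeta ){{\,\textrm{Ai}\,}}(v+\zeta )\,\textrm{d}\zeta \) with the Fermi factor \(w(x)=(1+{{\,\mathrm{\mathrm e}\,}}^{-x})^{-1}\), which reduces to the classical Airy kernel when \(T\rightarrow +\infty \). The truncation lengths, node counts and function names are ad hoc choices, tuned for T of order one.

```python
import numpy as np
from scipy.special import airy

def gauss_legendre(a, b, m):
    """Gauss-Legendre nodes and weights on the interval [a, b]."""
    x, w = np.polynomial.legendre.leggauss(m)
    return 0.5 * (b - a) * x + 0.5 * (b + a), 0.5 * (b - a) * w

def finite_temperature_airy_det(s, T, m_u=80, m_zeta=600, u_len=25.0, zeta_lo=-40.0):
    """Approximate det(I - K_T) on L^2(-s, infinity) for the ASSUMED kernel
    K_T(u, v) = int w(T^{1/3} zeta) Ai(u + zeta) Ai(v + zeta) d zeta,
    with w(x) = 1 / (1 + exp(-x)). Both integrals are truncated."""
    u, wu = gauss_legendre(-s, -s + u_len, m_u)
    zeta, wz = gauss_legendre(zeta_lo, s + 12.0, m_zeta)
    fermi = np.exp(-np.logaddexp(0.0, -T ** (1.0 / 3.0) * zeta))
    A = airy(u[:, None] + zeta[None, :])[0]          # Ai(u_i + zeta_k)
    K = (A * (wz * fermi)) @ A.T                     # quadrature in zeta
    M = np.sqrt(wu)[:, None] * K * np.sqrt(wu)[None, :]
    return float(np.linalg.det(np.eye(m_u) - M))

for s in (-1.0, 0.0, 1.0):
    print(s, finite_temperature_airy_det(s, T=1.0))
```

Since the interval \((-s,\infty )\) grows with s, the computed values should decrease in s. For small T the kernel decays slowly in u, and the truncation length `u_len` would need to be increased accordingly.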

Another characterization of \(\mathsf L^{{{\,\textrm{Ai}\,}}}\) is via a Tracy–Widom type formula that relates it to the integro-differential PII. It reads

where \(\Phi \) solves the integro-differential Painlevé II equation

with boundary value

for any \(\delta >0\). This characterization has been obtained in the already mentioned work by Amir et al. [2], in connection with the narrow wedge solution to the KPZ equation, and following the work [20] by Borodin and Gorin has the interpretation that we now describe. For \(\mathcal H(X,T)\) being the Hopf-Cole solution to the KPZ equation with narrow wedge initial data at the space-time point (X, T), introduce the rescaled random variable

Based on the previous works [2, 29, 49, 70], in [20] the identity

between the height function of the KPZ equation and the multiplicative statistics \(\mathsf L^{{{\,\textrm{Ai}\,}}}(s,T)\) is identified. This is an instance of the matching formulas relating growth processes with determinantal point processes that we already mentioned in the Introduction. One of the key aspects of this representation is that the Airy\(_2\) point process is determinantal, and consequently its statistics can be studied using techniques from exactly solvable/integrable models. Indeed Eq. (2.12) is the starting point taken by Cafasso and Claeys [27], who then connected \(\mathsf L^{{{\,\textrm{Ai}\,}}}(s,T)\) to a RHP that will also play a major role for us. Recently, Cafasso et al. [28] also obtained an independent proof of the representation (2.10), extending it to more general multiplicative statistics of the Airy\(_2\) point process. Other proofs and extensions of this integro-differential equation have also been recently found in related contexts [24, 26, 58]. Also, by exploiting (2.12) the tail behavior of the KPZ equation has become rigorously accessible in various asymptotic regimes [27, 28, 32, 37].

As a first result, we prove that the multiplicative statistics \(\mathsf L^{{{\,\textrm{Ai}\,}}}\) is the universal limit of \(\mathsf L_n^Q(\mathsf s)\).

Theorem 2.2

Suppose that V and Q satisfy Assumptions 2.1 and fix \(\mathsf s_0>0\) and \(\mathsf t_0\in (0,1)\). For a constant \(\mathsf c_V>0\) that depends solely on V, and any \(\nu \in (0,2/3)\), the asymptotic estimate

holds true uniformly for \(\mathsf s\ge -\mathsf s_0\) and \(\mathsf t_0\le \mathsf t\le 1/\mathsf t_0\).

The constant \(\mathsf c_V\) can be determined from (8.2) and (8.5) below, albeit in an implicit form as it depends on the associated equilibrium measure for V. It is the first derivative of a conformal map near the origin, which is constructed out of the equilibrium measure for V. Ultimately, we make a conformal change of variables of the form \(\zeta \approx \mathsf c_V z n^{2/3}\), which in turn identifies

In light of (2.9), this explains the evaluation \(s=-\mathsf s\mathsf c_V/\mathsf t\) and \(T=\mathsf t^3/\mathsf c_V^3\) on the right-hand side of (2.13).
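The bookkeeping behind this evaluation can be checked with a few lines of arithmetic. The sketch below assumes that, after the change of variables \(\zeta \approx \mathsf c_V z n^{2/3}\), the exponent in the deformation factor reads \(-\mathsf s+\mathsf t\zeta /\mathsf c_V\), and that the corresponding exponent on the Airy side is \(T^{1/3}(s+\zeta )\); the numerical values, including the one used for \(\mathsf c_V\), are arbitrary placeholders and are not computed from any potential.

```python
import math

def airy_parameters(s, t, c_V):
    """Map (s, t) to the arguments (S, T) = (-s c_V / t, t^3 / c_V^3)."""
    return -s * c_V / t, (t / c_V) ** 3

s, t, c_V = 0.4, 1.3, 0.8  # arbitrary illustrative values; c_V is a placeholder
S, T = airy_parameters(s, t, c_V)

def exponents(zeta):
    """Return the Airy-side exponent T^{1/3} (S + zeta) and the
    matrix-side exponent -s + t zeta / c_V, which should coincide."""
    return T ** (1.0 / 3.0) * (S + zeta), -s + t * zeta / c_V

for zeta in (-2.0, 0.0, 1.5):
    print(zeta, *exponents(zeta))
```

The two printed columns agree up to rounding, which is the identity \(T^{1/3}(S+\zeta )=-\mathsf s+\mathsf t\zeta /\mathsf c_V\) for \(S=-\mathsf s\mathsf c_V/\mathsf t\) and \(T=\mathsf t^3/\mathsf c_V^3\).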

Findings in random matrix theory surrounding the Tracy–Widom distribution have inspired an enormous development in the KPZ universality theory. One of the major developments surrounding the KPZ equation can be phrased by saying that the fluctuations of the height function for the narrow wedge solution coincide, in the large time limit, with the \(\beta =2\) Tracy–Widom law from random matrix theory. Theorem 2.2 says that the connection between random matrix theory and the KPZ equation can be recast already at any finite time, and not only for Gaussian models but also universally in V and Q. A similar connection exists [8] between the solution of the KPZ equation in half-space under the Robin boundary condition and the Airy\(_1\) point process which, in turn, in the large time limit relates this KPZ solution to GOE matrices.

We emphasize that the error term in (2.13) is not in sharp form. In Sect. 3.1 we explain how this term arises from our techniques. We do not have indications regarding whether the true optimal error would be \(\mathcal {O}(n^{-2/3})\) (or of any polynomial order) or if it should involve, say, logarithmic corrections.

Our next results concern limiting asymptotic formulas for the matrix model underlying \(\mathsf L_n^Q\), starting with the partition function \(\mathsf Z_n^Q\) from (2.2). The quantities \(Z_n\) and \(\mathsf Z_n^{Q}\) are Hankel determinants associated to the symbols \({{\,\mathrm{\mathrm e}\,}}^{-nV}\) and \(\sigma _{n}{{\,\mathrm{\mathrm e}\,}}^{-nV}\). As mentioned earlier, a great deal of fine asymptotic information is known for a large class of symbols with so-called Fisher-Hartwig singularities. This class includes symbols with singularities of root type and jump discontinuities, and greatly extends the symbol \({{\,\mathrm{\mathrm e}\,}}^{-nV}\). We briefly review such results in a moment. However, the perturbation \(\sigma _n\) produces a wildly varying term in the symbol \(\sigma _n {{\,\mathrm{\mathrm e}\,}}^{-nV}\); in fact, as \(n\rightarrow \infty \) there are infinitely many simple poles of \(\sigma _n\) accumulating on the real axis, and to our knowledge not much is known of Hankel determinants associated to such symbols.

Under certain technical conditions, Bleher and Its [15] obtained a full asymptotic expansion for \(\log Z_n\) in inverse powers of \(n^2\), computing explicitly the very first high order terms, see also [10, 13, 14, 23, 51] for important early work obtaining similar results under different technical conditions. Thanks to several recent contributions valid in various degrees of generality [9, 30, 52, 72], much more detailed information than (2.14) is known. In particular, to our knowledge a detailed asymptotic analysis of \(Z_n\) for general regular one-cut potentials without any further technical assumptions has been completed by Berestycki, Webb and Wong [9], including lower order terms up to the constant. This asymptotic formula can also be read off from a more general result by Charlier [30, Theorem 1.1 and Remark 1.4], which under our conditions coincides with the result of [9] and reads as

where the constants \(\textbf{e}_1^V,\textbf{e}_2^V\) and \(\textbf{e}_4^V\) depend on V in an explicit manner.

As an immediate corollary to Theorem 2.2 we obtain some terms in the asymptotic expansion of the deformed partition function (2.4).

Corollary 2.3

Suppose V and Q satisfy Assumptions 2.1 and fix \(\mathsf s_0>0\) and \(\mathsf t_0>0\). For any \(\nu \in (0,2/3)\), the deformed partition function \(\mathsf Z_n^Q\) admits an expansion of the form

which is valid uniformly for \(\mathsf s\ge -\mathsf s_0\) and \(\mathsf t_0\le \mathsf t\le 1/\mathsf t_0\), and the coefficients \(\textbf{e}_1^V\), \(\textbf{e}_2^V\) and \(\textbf{e}_4^V\) are as in (2.14).

Proof

Follows from the expansion (2.14), Theorem 2.2 and (2.3). \(\square \)

The order of the error in (2.15) is not \(\mathcal {O}(\log n/n)\) as in (2.14) but weaker and not sharp. This phenomenon can be traced back to the fact that \(\sigma _n\) has infinitely many poles accumulating on the real axis as \(n\rightarrow \infty \), see the discussion in Sect. 3.1 below. A similar error order was obtained in [15, Theorem 9.1 and Equation (9.68)], in a transitional regime from a one-cut to two-cut potential, and where the role played here by \(\mathsf L^{{{\,\textrm{Ai}\,}}}\) is replaced by the GUE Tracy–Widom distribution itself.

From the general theory of unitarily invariant random matrix models, it is known that the density appearing in (2.3) admits a determinantal form. Setting

this means that the identity

holds true for a function of two variables \(\mathsf K^Q_n(x,y)\) satisfying certain properties, known as the correlation kernel of the eigenvalue density on the left-hand side. The correlation kernel is not unique, but in the present setup it may be taken to be the Christoffel-Darboux kernel for the orthogonal polynomials for the weight \(\omega _n\), as we introduce in detail in (9.3), and whenever we talk about \(\mathsf K_n^Q\) we mean this Christoffel-Darboux kernel. In particular, \(\mathsf K^Q_n=\mathsf K^Q_n(\cdot \mid \mathsf s)\) does depend on both Q and \(\mathsf s\).

Our second result proves universality of the kernel \(\mathsf K_n^Q\), showing that its limit depends solely on \(\mathsf s\) and \(\mathsf t=-Q'(0)\), but not on other aspects of Q, and relates to the integro-differential PII. For its statement, it is convenient to introduce the new set of variables

With \(\Phi (\xi )=\Phi (\xi \mid \mathsf S,\mathsf T)\) being the solution to the integro-differential Painlevé II equation in (2.11) and the variables \(\mathsf s,\mathsf t\) and \(\mathsf S,\mathsf T\) related by (2.17), we set

and introduce the kernel

Theorem 2.4

Assume that V and Q satisfy Assumptions 2.1 and fix \(\mathsf s_0>0\) and \(\mathsf t_0\in (0,1)\). With

the estimate

holds true uniformly for u, v in compacts of \(\mathbb {R}\), and uniformly for \(\mathsf s\ge -\mathsf s_0\) and \(\mathsf t_0\le \mathsf t\le 1/\mathsf t_0\).

In the recent work [36], Claeys and Glesner developed a general framework for certain conditional point processes, which in particular yields a probabilistic interpretation of the kernel \(\mathsf K_n^Q\) as we now explain. For a point process \(\Lambda \), we add a mark 0 to a point \(\lambda \in \Lambda \) with probability \(\sigma _n(\lambda )\) and a mark 1 with complementary probability \(1-\sigma _n(\lambda )\). This induces a decomposition of the point process \(\Lambda =\Lambda _0\cup \Lambda _1\), where \(\Lambda _j\) is the set of eigenvalues with mark j. We then consider the induced point process \(\widehat{\Lambda }\) obtained from \(\Lambda \) upon conditioning that \(\Lambda _1=\emptyset \), that is, that all points have mark 0.

When applied to the eigenvalue point process \(\Lambda =\Lambda ^{(n)}\) induced by the distribution (2.1), the theory developed in [36] shows that \(\widehat{\Lambda }^{(n)}\) is a determinantal point process with correlation kernel proportional to \(\omega _n(x)^{1/2}\omega _n(y)^{1/2}\mathsf K_n^Q(x,y)\) which, in turn, generates the same point process as the left-hand side of (2.19), see [36, Sections 4 and 5]. A comparison of the RHP that characterizes the kernel \(\mathsf K_\infty \) (see Sect. 5.1 below, in particular (5.16)) with the discussion in [36, Section 5.2] shows that \(\mathsf K_\infty \) is a (renormalized) correlation kernel for the marked point process \(\widehat{\{\mathfrak a_k\}}\) of the Airy\(_2\) point process \(\{\mathfrak a_k\}\) with the marking function \((1+{{\,\mathrm{\mathrm e}\,}}^{-\mathsf s+\mathsf t\lambda })^{-1}\). So Theorem 2.4 assures that the conditional process on the marked eigenvalues converges, at the level of rescaled correlation kernels, to the conditional process on the marked Airy\(_2\) point process.

We also obtain asymptotics for the norming constant \(\upgamma ^{(n,Q)}_{n-1}(\mathsf s)\) for the \({(n-1)}\)-th monic orthogonal polynomial for the weight \(\omega _n(x\mid \mathsf s)\) (see (9.2) for the definition), showing that its first correction term depends again solely on \(\mathsf s,\mathsf t\), and also relates to the integro-differential Painlevé II equation.

Theorem 2.5

Suppose that V and Q satisfy Assumptions 2.1 and fix \(\mathsf s_0>0\) and \(\mathsf t_0\in (0,1)\). The norming constant has asymptotic behavior

uniformly for \(\mathsf s\ge -\mathsf s_0\) and \(\mathsf t_0\le \mathsf t\le 1/\mathsf t_0\), where \(\mathsf p(\mathsf s,\mathsf t)=\mathsf P(\mathsf S,\mathsf T)\), and the function \(\mathsf P\) relates to the solution \(\Phi \) from (2.11) via

Our approach also yields asymptotic formulas for the orthogonal polynomials and their recurrence coefficients, and relates them to the integro-differential Painlevé II equation as well, but for the sake of brevity we do not state them.

3 About our Approach: Issues and Extensions

3.1 Issues to be overcome

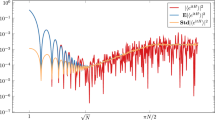

Our main tool for obtaining all of our results is the Fokas–Its–Kitaev [53] Riemann–Hilbert Problem (RHP) for orthogonal polynomials (OPs for short) that encodes the correlation kernel \(\mathsf K_n^Q\), the norming constants \(\upgamma ^{(n,Q)}_{n-1}(\mathsf s)^2\) and ultimately also the multiplicative statistics \(\mathsf L_n^Q\), and its asymptotic analysis via the Deift-Zhou nonlinear steepest descent method [45, 47]. The overall arc of this asymptotic analysis is the usual one, summarized in the diagram in Fig. 1, and we now comment on its major steps.

Starting with the RHP for OPs that we name \(\textbf{Y}\), in the first step we transform \(\textbf{Y}\mapsto \textbf{T}\) with the introduction of the g-function (or, equivalently, the \(\phi \)-function), and this is done with the help of the equilibrium measure for V that accounts only for the part \({{\,\mathrm{\mathrm e}\,}}^{-nV}\) of the weight \(\omega _n\). In the second step, we open lenses with a transformation \(\textbf{T}\mapsto \textbf{S}\) as usual.

The third step is the construction of the global parametrix \(\textbf{G}\). In our case, in this construction we also have to account for the perturbation \(\sigma _n\) of the weight \({{\,\mathrm{\mathrm e}\,}}^{-nV}\), so a Szegö function-type construction is used.

The fourth step is the construction of local parametrices at the endpoints \(z=-a,0\) of \({{\,\textrm{supp}\,}}\mu _V\), with the goal of approximating all the quantities locally near these endpoints. This is accomplished by, first, considering a change of variables \(z\mapsto \zeta =n^{2/3}\psi (z)\), with a conformal map \(\psi \) chosen appropriately for each endpoint, and, then, constructing the solution to a model RHP \({\varvec{\Phi }}(\zeta )\) in the \(\zeta \)-plane. Following these steps, the local parametrix at the left edge \(z=-a\) of \({{\,\textrm{supp}\,}}\mu _V\) is standard and utilizes Airy functions.

The construction of the local parametrix at the right edge \(z=0\) is, however, a lot more involved. As we mentioned earlier, the factor \(\sigma _n\) affects asymptotics of local statistics near the origin. In fact, the weight \(\sigma _n\) has singularities at the points of the form

This means that for \(|\mathsf s|\ll n^{2/3}\) there are infinitely many poles of \(\sigma _n\) accumulating near the real axis. As such, in this case the perturbed weight \(\omega _n\) fails, for large n, to be analytic in any fixed neighborhood of the origin. If we were to consider only \(\mathsf s\rightarrow +\infty \) fast enough, we could still push the standard RHP analysis further with the aid of Airy local parametrices, at the cost of a worse error estimate. However, when \(\mathsf s=\mathcal {O}(1)\) the poles accumulate too fast towards the real axis, and a different asymptotic analysis is required; in particular, a new local parametrix is needed.
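The accumulation of poles can be made concrete with a minimal numerical sketch. It assumes, for illustration only, that the perturbation has the Fermi-type form \(\sigma _n(z)=(1+{\mathrm e}^{-\mathsf s-n^{2/3}Q(z)})^{-1}\) and that Q is replaced by its linearization \(Q(z)=-\mathsf t z\) near the origin; neither formula is quoted from the displays of this paper, and the function names are hypothetical. Under these assumptions the poles sit at \(z_k=(\mathsf s+(2k+1)\pi \textrm{i})/(\mathsf t n^{2/3})\).

```python
import math

def poles_in_disk(n, s, t, radius):
    """Poles z_k = (s + (2k+1)*pi*i) / (t * n**(2/3)) with |z_k| < radius.

    Assumed toy model: sigma_n(z) = 1 / (1 + exp(-s - n**(2/3) * Q(z)))
    with the linearization Q(z) = -t * z (an illustration, not the paper's Q).
    """
    scale = t * n ** (2.0 / 3.0)
    # For |2k+1| * pi / scale >= radius the pole is outside the disk.
    kmax = int(radius * scale / math.pi) + 1
    poles = []
    for k in range(-kmax - 1, kmax + 1):
        z = complex(s, (2 * k + 1) * math.pi) / scale
        if abs(z) < radius:
            poles.append(z)
    return poles

def distance_to_real_axis(n, t):
    """Distance from the real axis to the nearest pole in the toy model."""
    return math.pi / (t * n ** (2.0 / 3.0))

if __name__ == "__main__":
    for n in (10**3, 10**6):
        count = len(poles_in_disk(n, s=0.0, t=1.0, radius=0.1))
        print(n, count, distance_to_real_axis(n, 1.0))
```

In this toy model the number of poles in a fixed disk grows like \(n^{2/3}\) when \(\mathsf s\) is bounded, while for \(\mathsf s\) comparable to \(n^{2/3}\) the poles have real part of order one and leave a small fixed disk around the origin, in line with the dichotomy described above.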

When changing coordinates \(z\mapsto \zeta \) near \(z=0\), the model problem \({\varvec{\Phi }}={\varvec{\Phi }}_n\) obtained is then n-dependent. This is so because the jump of the local parametrix involves \(\sigma _n\), and consequently, in the process of changing variables, the resulting model problem has a jump that involves a transformation of \(\sigma _n\) itself. This is in contrast with usual constructions with, say, Airy, Bessel or Painlevé-type parametrices, where the jumps can be made piecewise constant, or homogeneous, in the z-plane and, hence, also remain piecewise constant in the \(\zeta \)-plane. As we learned after finishing the first version of this paper, somewhat similar issues have occurred, for instance, in [40, 60], although we handle this issue here in a different and independent manner. Another feature of the RHP for the model problem \({\varvec{\Phi }}_n\) is that its jump is not analytic on the whole plane; instead it is analytic only in a disk that grows with n, and for a fixed n we can only ensure that its jump matrix is \(C^\infty \) in a neighborhood of the jump contour.

All in all, this means that carrying out the asymptotic analysis of \({\varvec{\Phi }}_n(\zeta )\) as \(n\rightarrow \infty \) is also needed. As we said, the jump for \({\varvec{\Phi }}_n\) involves a transformation of \(\sigma _n\), so it ultimately also depends on the function Q from (2.16). But it turns out that, as \(n\rightarrow \infty \), we have the convergence \({\varvec{\Phi }}_n\rightarrow {\varvec{\Phi }}_0\) in an appropriate sense, where \({\varvec{\Phi }}_0\) is independent of Q. This limiting \({\varvec{\Phi }}_0\) is the solution to a RHP that appeared recently in connection with the KPZ equation [27] and which was later shown to connect with the integro-differential PII in the recent work of Claeys et al. [28, 32]. For this reason we term it the id-PII RHP.

With the construction of the global and local parametrices, the asymptotic analysis is concluded in the usual way, by patching them together and obtaining a new RHP for a matrix function \(\textbf{R}\). This matrix \(\textbf{R}\), in turn, solves a RHP whose jump is asymptotically close to the identity, and consequently \(\textbf{R}\) can be found perturbatively.

After concluding this asymptotic analysis, we undress the transformations \(\textbf{R}\mapsto \cdots \mapsto \textbf{Y}\) and obtain asymptotic expressions for the wanted quantities. For the kernel \(\mathsf K_n^Q\) and the norming constant \(\upgamma ^{(n,Q)}_{n-1}(\mathsf s)^2\), after this undressing Theorems 2.4 and 2.5 follow in a standard manner.

However, to obtain (2.13) quite some extra work is needed. When dealing with statistics of matrix models via OPs, one of the usual approaches is to extract the needed information via the partition function and its relation with the norming constants via a product formula, see for instance (9.6) below. Usually this is accomplished via some differential identity or with a careful estimate of each term in the product formula, see for instance the works [4, 13, 15, 59] and their references for explorations along these lines. By virtue of the relation (2.3) this was in fact our original attempt, but several technical issues arise. Instead, in the end we express \(\mathsf L_n^Q\) directly as a weighted double integral of \(\mathsf K_n^Q(x,x\mid \mathsf s)\) in the variables x and \(\mathsf s\); this is done in Proposition 9.1 below. The x-integral takes place over the whole real line, which means that when we undress \(\textbf{R}\mapsto \textbf{Y}\) we obtain a formula for \(\mathsf L_n^Q\) involving the global and all the local parametrices. The integral in \(\mathsf s\) extends to \(+\infty \), which is one of the main reasons why in our main statements we also keep track of uniformity of errors when \(\mathsf s\rightarrow +\infty \). We then have to estimate the double integral, accounting for the exponential decay of most of the terms but also for exact cancellations of some other terms. Ultimately, the whole analysis leads to a leading contribution coming solely from a portion of the integral that arises from the model problem \({\varvec{\Phi }}_n\). With a further asymptotic analysis of the latter integral we obtain an integral solely of \({{\varvec{\Phi }}}_0\), which then yields Theorem 2.2.

The convergence \({\varvec{\Phi }}_n\rightarrow {{\varvec{\Phi }}}_0\) is treated as a separate issue, and to achieve it we need several pieces of information about this id-PII parametrix \({{\varvec{\Phi }}}_0\). As a final outcome, we obtain that \({\varvec{\Phi }}_n\) is close to \({{\varvec{\Phi }}}_0\) with an error term of the form \(\mathcal {O}(n^{-\nu })\), for any \(\nu \in (0,2/3)\). But, largely due to the non-analyticity of the jump matrix for \({\varvec{\Phi }}_n\), we are not able to achieve a sharp order \(\mathcal {O}(n^{-2/3})\) unless further conditions are placed on Q. This non-optimal error explains the appearance of the same error order in (2.13). In the course of this asymptotic analysis we rely substantially on [28]. Among other needed information, we also borrow from the same work the connection of \({{\varvec{\Phi }}}_0\) with the integro-differential PII. In the same work, the authors actually show that \({{\varvec{\Phi }}}_0\) relates to particular solutions to the KdV equation that reduce to the integro-differential PII. As such, Theorems 2.4 and 2.5 could be phrased in terms of a solution to the KdV rather than to the integro-differential PII. We opt to phrase them with the latter because this formulation encodes that all self-similarities have already been accounted for.

If the jump matrix for \({\varvec{\Phi }}_n\) were piecewise analytic on the whole plane and not merely \(C^\infty \), we could deform \({\varvec{\Phi }}_n\) to a family of RHPs considered in [28]. With this in mind, the analysis of the convergence \({\varvec{\Phi }}_n\rightarrow {{\varvec{\Phi }}}_0\) is inspired by several aspects of the aforementioned work but, as we already said, here we are forced to work under different conditions on the jump matrix. In particular, one could adapt the methods in [28] to actually prove that \({\varvec{\Phi }}_n\) also relates to an n-dependent solution to the integro-differential PII. Consequently, with a careful inspection of our work one could show that Theorems 2.2, 2.4 and 2.5 admit versions with n-dependent leading terms. For instance, relating the norming constant \(\upgamma ^{(n,Q)}_{n-1}(\mathsf s)^2\) with the model problem \({\varvec{\Phi }}_n\) one could obtain an asymptotic formula of the form

where the n-dependent function \(\mathsf p_n\) is obtained from \({\varvec{\Phi }}_n\) and relates to an n-dependent solution \(\Phi _n\) to the integro-differential PII. In fact, with standard arguments one could improve the formula above to a full asymptotic expansion in powers of \(n^{-1/3}\), with bounded but n-dependent coefficients. Underlying our arguments there is the statement that \(\mathsf p_n=\mathsf p+\mathcal {O}(n^{-\nu })\) for any \(\nu \in (0,2/3)\), which then yields Theorem 2.5. As a drawback, however, although one could potentially improve (2.20) and also obtain the term of order \(n^{-2/3}\) explicitly, it is not possible to obtain the \(\mathcal {O}(n^{-1})\) term in (2.20) unless one improves the error \(\mathcal {O}(n^{-\nu })\) in the convergence \({\varvec{\Phi }}_n\rightarrow {{\varvec{\Phi }}}_0\) to a sharp error \(\mathcal {O}(n^{-2/3})\).

3.2 Possible extensions

Most of our approach may be extended to potentials V for which the equilibrium measure \(\mu _V\) is critical, and also under different conditions on Q as we now explain.

Apart from technical adaptations in several steps of the RHP for OPs which are nowadays well understood, our analysis carries over to potentials V for which the equilibrium measure \(\mu _V\) is regular but multicut, with the same conditions on Q when \(\mu _V\) has the origin as its right-most endpoint.

When, say, the density of \(\mu _V\) vanishes to a higher power at a soft edge and/or Q changes sign with an arbitrary odd vanishing order at the same soft edge, we need to replace the power \(n^{2/3}\) in \(\sigma _n\) by another appropriate power to modify the local statistics near this point in a non-trivial critical manner. Once this is done, the asymptotic analysis of the RHP for OPs that we perform carries over mostly with minor modifications, and the only major issue to overcome is the construction of a new local parametrix \(\widetilde{{\varvec{\Phi }}}_n\) near this soft edge point and its corresponding asymptotic analysis. In this case, we expect that \(\widetilde{{\varvec{\Phi }}}_n\rightarrow \widetilde{{\varvec{\Phi }}}_0\) for a new function \(\widetilde{{\varvec{\Phi }}}_0\). It is relatively simple to write a RHP that should be satisfied by this \(\widetilde{{\varvec{\Phi }}}_0\), and we expect it to be related to the KdV hierarchy [33] but with nonstandard initial data. It would be interesting to see if the particular solutions obtained this way reduce to integro-differential hierarchies of Painlevé equations, in the same spirit as the recent works [26, 58].

One could also consider statistics similar to (2.3) with a Q that vanishes at a bulk point of \({{\,\textrm{supp}\,}}\mu _V\). We do expect that most of our work carries through to this situation, at least when we impose V to be again one-cut regular and Q to vanish quadratically at a point inside \({{\,\textrm{supp}\,}}\mu _V\). The main issue that should arise is again in the construction of the local parametrix near this point, and its corresponding asymptotic analysis. This model should lead to multiplicative statistics of the Sine kernel (and the higher order generalizations of it). Similar considerations carry over to hard-edge models, leading to multiplicative statistics of the Bessel process. To our knowledge, such multiplicative statistics of Bessel and Sine have not been considered in the literature so far. However, finite temperature versions of the Sine and Bessel kernels have appeared, see for instance [11, 12, 39, 57].

3.3 Organization of the paper

The paper is organized in two parts. In the first part, we deal with a family of RHPs \({\varvec{\Phi }}_\tau \) that contains the model RHP \({\varvec{\Phi }}_n\) needed in the asymptotic analysis of OPs. In Sect. 4 we introduce \({\varvec{\Phi }}_\tau \) formally. In Sects. 5 and 6 we discuss the RHP \({{\varvec{\Phi }}}_0\), which is a particular case of \({\varvec{\Phi }}_\tau \), and review several of its properties, translating results from [27, 28] to our notation and needs. In Sect. 7 we prove the convergence \({\varvec{\Phi }}_\tau \rightarrow {{\varvec{\Phi }}}_0\), and of related quantities, in the appropriate sense. The latter section contains all the results needed for the asymptotic analysis of the RHP for OPs, and concludes the first part of this paper.

The second part of the paper is focused on the asymptotic analysis of the RHP for OPs. In Sect. 8 we discuss several aspects that relate to the equilibrium measure. In Sect. 9 we introduce the Christoffel–Darboux kernel \(\mathsf K_n^Q\) and related quantities, and display how they relate to the RHP for OPs. In particular, in Proposition 9.1 we write \(\mathsf L_n^Q\) directly as an integral of the kernel \(\mathsf K_n^Q\), a result which may be of independent interest. In Sect. 10 we perform the asymptotic analysis of the RHP for the OPs. In Sect. 11 we use the conclusions from Sects. 7 and 10 to prove Theorems 2.4 and 2.5. Also in Sect. 11, using the results from Sects. 7 and 10 and assuming additional technical estimates, we give the proof of Theorem 2.2. These remaining technical estimates are also ultimately a consequence of the RHP analysis, but their proofs are rather cumbersome and are postponed to Sect. 12.

For the remainder of the paper it is convenient to denote

so \(\textbf{E}_{jk}\) is the \(2\times 2\) matrix whose (j, k)-entry equals 1 and all other entries are zero. With this notation, the Pauli matrices, for instance, take the form

In particular, for any reasonably regular scalar function f, the spectral calculus yields

These notations will be used extensively in the coming sections.
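As a sanity check on this notation, the following minimal sketch builds the matrix units and verifies some standard identities. It assumes the usual conventions \(\varvec{\sigma }_1=\textbf{E}_{12}+\textbf{E}_{21}\), \(\varvec{\sigma }_2=-\textrm{i}\textbf{E}_{12}+\textrm{i}\textbf{E}_{21}\), \(\varvec{\sigma }_3=\textbf{E}_{11}-\textbf{E}_{22}\) and reads the spectral calculus as \(f(\varvec{\sigma }_3)=f(1)\textbf{E}_{11}+f(-1)\textbf{E}_{22}\); these are standard facts taken as assumptions here rather than quotations of the displayed formulas, and all helper names are hypothetical.

```python
def unit(j, k):
    """Matrix unit E_jk: 1 in the (j, k) entry (1-based), 0 elsewhere."""
    return [[1 if (r, c) == (j - 1, k - 1) else 0 for c in range(2)]
            for r in range(2)]

def add(A, B):
    return [[A[r][c] + B[r][c] for c in range(2)] for r in range(2)]

def scale(c0, A):
    return [[c0 * A[r][c] for c in range(2)] for r in range(2)]

def matmul(A, B):
    return [[sum(A[r][m] * B[m][c] for m in range(2)) for c in range(2)]
            for r in range(2)]

E11, E12, E21, E22 = unit(1, 1), unit(1, 2), unit(2, 1), unit(2, 2)

# Pauli matrices written in terms of the matrix units (assumed convention).
sigma1 = add(E12, E21)
sigma2 = add(scale(-1j, E12), scale(1j, E21))
sigma3 = add(E11, scale(-1, E22))

def f_of_sigma3(f):
    """Spectral calculus for the diagonal matrix sigma3 = diag(1, -1)."""
    return add(scale(f(1), E11), scale(f(-1), E22))

if __name__ == "__main__":
    print(matmul(E12, E21) == E11)                      # E_12 E_21 = E_11
    print(matmul(sigma1, sigma2) == scale(1j, sigma3))  # sigma1 sigma2 = i sigma3
    print(f_of_sigma3(lambda u: u * u) == matmul(sigma3, sigma3))
```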

4 A Model Riemann–Hilbert Problem

In this section we discuss a model Riemann–Hilbert Problem that will be used in the construction of a local parametrix in the asymptotic analysis for the orthogonal polynomials. As such, this model problem plays a central role in obtaining all our major results.

4.1 The model problem

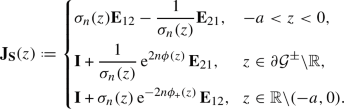

Set

orienting \(\mathsf \Sigma _0\) from the origin to \(\infty \), and the remaining arcs from \(\infty \) to the origin, see Fig. 2.

Fig. 2 The contours \(\mathsf \Sigma _0,\mathsf \Sigma _1,\mathsf \Sigma _2\) and \(\mathsf \Sigma _3\) in (4.1) that constitute \(\mathsf \Sigma \)

The model RHP we are about to introduce depends on a function \(\mathsf h:\mathsf \Sigma \rightarrow \mathbb {C}\) used to describe its jump. For the moment we assume

These conditions are present only to ensure that the RHP below is well posed; they are far from optimal, but enough for our purposes. Later on we will impose more conditions on this function \(\mathsf h\), tailored to our later needs regarding the asymptotic analysis of OPs.

The associated RHP asks for finding a \(2\times 2\) matrix-valued function \({\varvec{\Phi }}\) with the following properties.

- \({\varvec{\Phi }}\)-1.:

-

The matrix \({\varvec{\Phi }}={\varvec{\Phi }}(\cdot \mid \mathsf h):\mathbb {C}{\setminus } \mathsf \Sigma \rightarrow \mathbb {C}^{2\times 2}\) is analytic.

- \({\varvec{\Phi }}\)-2.:

-

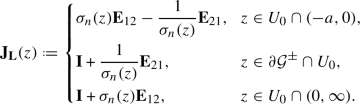

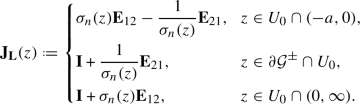

Along the interior of the arcs of \(\mathsf \Sigma \) the function \({\varvec{\Phi }}\) admits continuous boundary values \({\varvec{\Phi }}_\pm \) related by \({\varvec{\Phi }}_+(\zeta )={\varvec{\Phi }}_-(\zeta )\textbf{J}_{{\varvec{\Phi }}}(\zeta )\), \(\zeta \in \mathsf \Sigma \), with

(4.2)

(4.2) - \({\varvec{\Phi }}\)-3.:

-

As \(\zeta \rightarrow \infty \),

$$\begin{aligned} {\varvec{\Phi }}(\zeta )=\left( \textbf{I}+\frac{1}{\zeta }{\varvec{\Phi }}^{(1)}+\mathcal {O}(1/\zeta ^{2})\right) \zeta ^{\varvec{\sigma }_3/4}\textbf{U}_0^{-1} {{\,\mathrm{\mathrm e}\,}}^{-\frac{2}{3}\zeta ^{3/2}\varvec{\sigma }_3}, \end{aligned}$$(4.3)where

$$\begin{aligned} \textbf{U}_0:=\frac{1}{\sqrt{2}} \begin{pmatrix} 1 &{} \textrm{i}\\ \textrm{i}&{} 1 \end{pmatrix} \end{aligned}$$(4.4)and \({\varvec{\Phi }}^{(1)}={\varvec{\Phi }}^{(1)}(\mathsf h)\) is a matrix that depends on the choice of function \(\mathsf h\) but it is independent of \(\zeta \).

- \({\varvec{\Phi }}\)-4.:

-

The matrix \({\varvec{\Phi }}\) remains bounded as \(\zeta \rightarrow 0\).

Given \(\mathsf h\), it is not at all obvious that the RHP above has a solution and how to describe it. We study this model problem when \(\mathsf h=\mathsf h_\tau \) depends on an additional large parameter \(\tau \), in a way that appears naturally in the asymptotic analysis of the orthogonal polynomials mentioned earlier. For large values of \(\tau \), we then prove that the solution \({\varvec{\Phi }}\) exists and is asymptotically close to a model RHP that appeared recently [27] and that we discuss in a moment.
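A small check on the normalization at infinity: the constant matrix \(\textbf{U}_0\) in (4.4) is unitary with unit determinant, and the diagonal factors \(\zeta ^{\varvec{\sigma }_3/4}\) and \({\mathrm e}^{-\frac{2}{3}\zeta ^{3/2}\varvec{\sigma }_3}\) in (4.3) also have determinant one, so the leading term in (4.3) has determinant one. The sketch below verifies the statement about \(\textbf{U}_0\) numerically; the helper names are hypothetical.

```python
import math

S = 1.0 / math.sqrt(2.0)
# U_0 as in (4.4): (1/sqrt(2)) * [[1, i], [i, 1]].
U0 = [[S, S * 1j], [S * 1j, S]]

def matmul(A, B):
    return [[sum(A[r][m] * B[m][c] for m in range(2)) for c in range(2)]
            for r in range(2)]

def det(A):
    return A[0][0] * A[1][1] - A[0][1] * A[1][0]

def conj_transpose(A):
    return [[A[c][r].conjugate() for c in range(2)] for r in range(2)]

def max_abs_diff(A, B):
    return max(abs(A[r][c] - B[r][c]) for r in range(2) for c in range(2))

IDENTITY = [[1.0, 0.0], [0.0, 1.0]]

if __name__ == "__main__":
    print(abs(det(U0) - 1.0))                                   # close to 0
    print(max_abs_diff(matmul(U0, conj_transpose(U0)), IDENTITY))  # close to 0
```

In particular \(\textbf{U}_0^{-1}\) is simply the conjugate transpose of \(\textbf{U}_0\).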

4.2 The model RHP with admissible data

We need to consider the model problem \({\varvec{\Phi }}={\varvec{\Phi }}(\cdot \mid \mathsf h)\) with functions \(\mathsf h=\mathsf h_\tau \) satisfying certain properties, which are formally introduced in the next definition.

Definition 4.1

We call a function \(\mathsf h_\tau :\mathsf \Sigma \rightarrow \mathbb {C}\) admissible if it is of the form

where \(\mathsf H\) is defined on a neighborhood \(\mathcal S\) of \(\mathsf \Sigma \) and satisfies the following properties.

- (i) The function \(\mathsf H\) is independent of \(\tau \) and \(\mathsf s\), of class \(C^\infty \) on \(\mathcal S\) and real-valued along \(\mathbb {R}\).

- (ii) \(\mathsf H\) is analytic on a disk \(D_\delta (0)\subset \mathcal S\) centered at the origin, and its unique zero on \(D_\delta (0)\) is at \(\zeta =0\), with

$$\begin{aligned} \mathsf t:=-\mathsf H'(0)>0. \end{aligned}$$

- (iii) There exist constants \(\eta ,\widehat{\eta }>0\) for which

$$\begin{aligned} {{\,\textrm{Re}\,}}\mathsf H(w)>\eta |w| \quad \text {for } w\in \mathsf \Sigma _1\cup \mathsf \Sigma _2\cup \mathsf \Sigma _3, \end{aligned}$$

and

$$\begin{aligned} -\widehat{\eta }w^{3/2-\epsilon }<\mathsf H(w)<-\eta w \quad \text {for } w\in \mathsf \Sigma _0, \end{aligned}$$

for some \(\epsilon \in (0,1/2]\).

Conditions (i)–(ii), and also the bounds in (iii) involving \(\eta \), are natural in our setup. The bound \(\mathsf H(w)>-\widehat{\eta }w^{3/2-\epsilon }\) is present for technical reasons, and it plays a role only in the proof of Lemma 7.4, allowing us to write certain estimates in a cleaner manner. It could be removed, at the cost of slightly more complicated error terms in that lemma. For our purposes, namely to use \({\varvec{\Phi }}={\varvec{\Phi }}(\cdot \mid \mathsf h_\tau )\) as a local parametrix with an appropriate \(\mathsf h_{\tau }\), this condition is satisfied anyway (this will be shown in Proposition 8.3), so we include it in our definition here as well, as it simplifies our analysis.

In the course of the analysis for the RHP for the orthogonal polynomials discussed in Sect. 9, the function \(\mathsf H\) will be a transformation of the function Q appearing in the deformed weight (2.16), and the parameter \(\mathsf t\) that we defined here will play the same role as the one in the definition (2.7).

Given an admissible \(\mathsf h_\tau \), we denote

We are interested in the asymptotic analysis for \({\varvec{\Phi }}_\tau \) as \(\tau \rightarrow +\infty \) with \(\mathsf s\ge -\mathsf s_0\), for any \(\mathsf s_0>0\), and with \(\mathsf t>0\) kept within a compact subset of the positive axis.

We now explain in an ad hoc manner the appearance of a RHP for the integro-differential equation, which also relates to the KPZ equation. Definition 4.1-(ii) gives that \(\mathsf H\) has an expansion of the form

This means that any admissible function \(\mathsf h_\tau \) satisfies

In particular, the convergence

holds true uniformly in compacts as \(\tau \rightarrow \infty \). This indicates that the solution \({\varvec{\Phi }}_\tau \) should converge to the solution

of the model problem obtained from \(\mathsf h_0\). The RHP-\({{\varvec{\Phi }}}_0\) relates to the integro-differential PII and is a rescaled version of an RHP that appears in the description of the narrow wedge solution to the KPZ equation, as we discuss in the next section in detail.
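The convergence of the admissible data can be illustrated with a minimal sketch. It assumes that admissible functions have the scaling form \(\mathsf h_\tau (\zeta )=\mathsf s+\tau \mathsf H(\zeta /\tau )\) and that the limit is \(\mathsf h_0(\zeta )=\mathsf s-\mathsf t\zeta \); both formulas are assumptions consistent with the expansion \(\mathsf H(w)=-\mathsf t w+\mathcal {O}(w^2)\), not quotations of the displays. The toy choice \(\mathsf H(w)=-\mathsf t w+c\,w^2\), with a hypothetical constant c, is only meant to mimic \(\mathsf H\) near the origin and is not claimed to be admissible on the whole contour.

```python
import cmath
import math

def H(w, t=1.0, c=0.5):
    """Toy model for H near the origin: H(0) = 0, H'(0) = -t (c is hypothetical)."""
    return -t * w + c * w * w

def h_tau(zeta, tau, s, t=1.0, c=0.5):
    """Assumed scaling form h_tau(zeta) = s + tau * H(zeta / tau)."""
    return s + tau * H(zeta / tau, t, c)

def h_0(zeta, s, t=1.0):
    """Assumed limit h_0(zeta) = s - t * zeta."""
    return s - t * zeta

def sup_error(tau, s=0.3, radius=5.0, samples=360):
    """Maximum of |h_tau - h_0| over sample points on the circle |zeta| = radius."""
    worst = 0.0
    for k in range(samples):
        zeta = radius * cmath.exp(2j * math.pi * k / samples)
        worst = max(worst, abs(h_tau(zeta, tau, s) - h_0(zeta, s)))
    return worst

if __name__ == "__main__":
    for tau in (10.0, 100.0, 1000.0):
        print(tau, sup_error(tau))
```

For this toy model the difference is exactly \(c\,\zeta ^2/\tau \), so the error on \(|\zeta |\le R\) is \(|c|R^2/\tau \): uniform on compacts, of order \(1/\tau \), and not uniform on the whole plane.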

5 The RHP for the Integro-Differential PII

For the choice

the corresponding solution of the RHP–\({\varvec{\Phi }}\)

appeared for the first time in the work of Cafasso and Claeys [27] (this is the RHP-\(\Psi \) in Section 2 therein) in connection with the narrow wedge solution to the KPZ equation, as we explain in a moment in Sect. 5.1. To avoid confusion with the related quantities that we are about to introduce, we term it the KPZ RHP. By virtue of the identity

which follows from (4.6) and (5.1), we also have the correspondence

and we refer to \({{\varvec{\Phi }}}_0\) as the id-PII RHP. For the record, we state the existence of \({{\varvec{\Phi }}}_0\) formally as a result.

Proposition 5.1

For any \(\mathsf s\in \mathbb {R}\) and any \(\mathsf t>0\), the solution \({{\varvec{\Phi }}}_0\) exists and is unique. Furthermore, for any fixed \(\mathsf s_0>0\) and \(\mathsf t_0\in (0,1)\), the solution \({{\varvec{\Phi }}}_{0,+}(\zeta )\) remains bounded for \(\zeta \) in compacts of \(\mathbb {R}\) and \(\mathsf s\ge -\mathsf s_0\), \(\mathsf t_0\le \mathsf t\le 1/\mathsf t_0\).

Proof

It is a consequence of [27, Section 2] that the solution \({{\varvec{\Phi }}}^{\mathrm {\scriptscriptstyle (KPZ)}}(\cdot \mid s,T)\) exists and is unique, for any \(s\in \mathbb {R}\) and \(T>0\), and from the correspondence (5.2) the existence and uniqueness of \({{\varvec{\Phi }}}_0\) is thus granted.

For the boundedness, we start from the representation

which follows from the \(L^p\) theory of RHPs (see [43]). The jump matrix admits an analytic continuation to any neighborhood of the real axis, and this analytic continuation remains bounded in compacts, also uniformly for \(\mathsf s\ge -\mathsf s_0\) and \(\mathsf t_0\le \mathsf t\le 1/\mathsf t_0\) (see for instance (5.9) for the exact expression). With these observations in mind, the claimed boundedness follows from standard arguments. We skip additional details, but refer to the proof of Theorem 7.1, in particular (7.16) et seq., for similar arguments in a more involved context. \(\square \)

In this section we collect several results on \({{\varvec{\Phi }}}_0\) that were obtained in [27, 28] and which will be needed later.

But before proceeding, a word of caution. As we said, the RHP–\({{\varvec{\Phi }}}^{\mathrm {\scriptscriptstyle (KPZ)}}\) appeared first in [27], but was also studied in the subsequent work [28]. The meanings for the variables s, x and t in these two works are not consistent, but we need results from both of them. Comparing to the work [27] by Cafasso and Claeys, the correspondence is

This correspondence is consistent with (5.2). On the other hand, when comparing to the subsequent work [28] by Cafasso, Claeys and Ruzza, the correspondence between notations is

where \(\mathsf T,\mathsf S\) are as in (2.17).

In our asymptotic analysis, the most convenient choice of variables to work with is the choice \((\mathsf s,\mathsf t)\) and the correspondence \((\mathsf S,\mathsf T)\) from (2.17) that we have already been using, and which leads to the RHP \({{\varvec{\Phi }}}_0\) as we introduced. Nevertheless, we will need to collect results from both mentioned works, and when the need arises we refer to the correspondences of variables (5.3)–(5.4).

On the other hand, when making correspondence with integrable systems, in particular the integro-differential Painlevé II equation, it is more convenient to work with the variables \(\mathsf S\) and \(\mathsf T\) as in (2.17).

5.1 Properties of the id-PII parametrix

In this section we describe many of the findings from [27, 28], in a way suitably adapted to our notation and needs. In particular the connection of \({{\varvec{\Phi }}}_0\) introduced in (4.7) with the integro-differential Painlevé II equation is described in this section.

For

the identity

was shown in [27, Theorem 2.1] and will also be useful for us. With (5.2) we now rewrite this identity in terms of \({{\varvec{\Phi }}}_0\). With the principal branch of the argument, set

This function relates to \({{\varvec{\Delta }}}^{\mathrm {\scriptscriptstyle (KPZ)}}\) in (5.5) via

and (5.6) rewrites as

For further reference, it is now convenient to state the RHP for \({{\varvec{\Phi }}}_0\) explicitly.

- \({{\varvec{\Phi }}}_0\)-1. The matrix \({{\varvec{\Phi }}}_0:\mathbb {C}{\setminus } \mathsf \Sigma \rightarrow \mathbb {C}^{2\times 2}\) is analytic.

- \({{\varvec{\Phi }}}_0\)-2. Along the interior of the arcs of \(\mathsf \Sigma \) the function \({{\varvec{\Phi }}}_0\) admits continuous boundary values \({{\varvec{\Phi }}}_{0,\pm }\) related by \({{\varvec{\Phi }}}_{0,+}(\zeta )={{\varvec{\Phi }}}_{0,-}(\zeta )\textbf{J}_{{{\varvec{\Phi }}}_0}(\zeta )\), \(\zeta \in \mathsf \Sigma \), with

(5.9)

- \({{\varvec{\Phi }}}_0\)-3. As \(\zeta \rightarrow \infty \),

$$\begin{aligned} {{\varvec{\Phi }}}_0(\zeta )=\left( \textbf{I}+\mathcal {O}(1/\zeta )\right) \zeta ^{\varvec{\sigma }_3/4}\textbf{U}_0^{-1} {{\,\mathrm{\mathrm e}\,}}^{-\frac{2}{3}\zeta ^{3/2}\varvec{\sigma }_3}, \end{aligned}$$ (5.10)

where we recall that \(\textbf{U}_0\) is given in (4.4) and the principal branches of the roots are used.

- \({{\varvec{\Phi }}}_0\)-4. The matrix \({{\varvec{\Phi }}}_0\) remains bounded as \(\zeta \rightarrow 0\).

To compare with [28] we perform a transformation of this RHP. All the calculations that follow already take into account the correspondence (5.4) between the notation in the mentioned work and our notation.

With \({{\varvec{\Delta }}}_0\) as in (5.7) and introducing

we transform

Then \({\varvec{\Psi }}_0\) satisfies the following RHP.

- \({\varvec{\Psi }}_0\)-1. The matrix \({\varvec{\Psi }}_0:\mathbb {C}{\setminus } \mathbb {R}\rightarrow \mathbb {C}^{2\times 2}\) is analytic.

- \({\varvec{\Psi }}_0\)-2. Along \(\mathbb {R}\) the function \({\varvec{\Psi }}_0\) admits continuous boundary values \({\varvec{\Psi }}_{0,\pm }\) related by

$$\begin{aligned} {\varvec{\Psi }}_{0,+}(\xi )={\varvec{\Psi }}_{0,-}(\xi ) \left( \textbf{I}+\frac{1}{1+{{\,\mathrm{\mathrm e}\,}}^{\xi }} \textbf{E}_{12}\right) , \quad \xi \in \mathbb {R}. \end{aligned}$$

- \({\varvec{\Psi }}_0\)-3. For any \(\delta \in (0,2\pi /3)\), as \(\xi \rightarrow \infty \) the matrix \({\varvec{\Psi }}_0\) has the following asymptotic behavior,

$$\begin{aligned} {\varvec{\Psi }}_0(\xi ) &= \left( \textbf{I}+\mathcal {O}(1/\xi )\right) \xi ^{\varvec{\sigma }_3/4}\textbf{U}_0^{-1} {{\,\mathrm{\mathrm e}\,}}^{-\mathsf t^{-3/2}\left( \frac{2}{3}\xi ^{3/2}+\mathsf s\xi ^{1/2}\right) \varvec{\sigma }_3} \\ &\quad \times {\left\{ \begin{array}{ll} \textbf{I}, &{} |\arg \xi |\le \pi -\delta , \\ \textbf{I}\pm \textbf{E}_{21}, &{} \pi -\delta<\pm \arg \xi <\pi . \end{array}\right. } \end{aligned}$$ (5.12)

This RHP is the same RHP considered in [28, page 1120] (in fact, the keen reader will notice a sign difference between the last term in the right-hand side of (4.3) and the corresponding term in [28, page 1120], but the latter is a typo) with the choice \(\sigma (r)=(1+{{\,\mathrm{\mathrm e}\,}}^{-r})^{-1}\) therein and the correspondence of variables (5.4).
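The exponent \(\mathsf t^{-3/2}\left( \frac{2}{3}\xi ^{3/2}+\mathsf s\xi ^{1/2}\right) \) in (5.12) is what one expects from the exponent \(\frac{2}{3}\zeta ^{3/2}\) in (5.10) under a linear change of variables. The sketch below assumes the relation \(\zeta =(\xi +\mathsf s)/\mathsf t\) between the two spectral variables, which is our reading of the omitted transformation and not a quotation of it. Under this assumption the binomial expansion gives \(\frac{2}{3}\zeta ^{3/2}=\mathsf t^{-3/2}\left( \frac{2}{3}\xi ^{3/2}+\mathsf s\xi ^{1/2}+\frac{\mathsf s^2}{4}\xi ^{-1/2}+\cdots \right) \), so the two exponents differ by a term that vanishes as \(\xi \rightarrow +\infty \). The function names are hypothetical.

```python
def exact_phase(xi, s, t):
    """(2/3) * zeta**(3/2) with the assumed relation zeta = (xi + s) / t."""
    return (2.0 / 3.0) * ((xi + s) / t) ** 1.5

def two_term_phase(xi, s, t):
    """Exponent appearing in (5.12): t**(-3/2) * ((2/3) xi**(3/2) + s xi**(1/2))."""
    return t ** -1.5 * ((2.0 / 3.0) * xi ** 1.5 + s * xi ** 0.5)

def remainder(xi, s, t):
    return exact_phase(xi, s, t) - two_term_phase(xi, s, t)

def predicted_remainder(xi, s, t):
    """Next term of the binomial expansion: s**2 / (4 * t**(3/2) * sqrt(xi))."""
    return s * s / (4.0 * t ** 1.5 * xi ** 0.5)

if __name__ == "__main__":
    for xi in (1e2, 1e4, 1e6):
        print(xi, remainder(xi, 2.0, 1.5), predicted_remainder(xi, 2.0, 1.5))
```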

As a consequence, and with the change of variables \((\mathsf s,\mathsf t)\mapsto (\mathsf S,\mathsf T)\) from (2.17), we obtain that for some functions \(\mathsf Q=\mathsf Q(\mathsf S,\mathsf T),\mathsf R=\mathsf R(\mathsf S,\mathsf T), \mathsf P=\mathsf P(\mathsf S,\mathsf T)\) and

the asymptotic behavior (5.12) improves to

Stressing that the correspondence (5.4) is in place, the functions \(\mathsf P\) and \(\mathsf Q\) satisfy the relation [28, Equation (3.14)]

Furthermore, from [28, Equations (3.12),(3.16), Theorem 1.3 and Corollary 1.4] we see that \({\varvec{\Psi }}_0\) takes the form

where \(\Phi =\Phi (\xi \mid \mathsf S,\mathsf T)\) solves the NLS equation with potential \(2\partial _\mathsf S\mathsf P\),

In addition, \(\mathsf P\) and \(\Phi \) are related through the identity (2.21) which, in turn, implies that \(\Phi \) is the solution to the integro-differential Painlevé II equation in (2.11).

It is convenient to write some quantities of \({{\varvec{\Phi }}}_0\) directly in terms of the just introduced functions. The first identity we need for later is

which follows from (5.11) and (5.15) after a straightforward calculation, accounting also that \(\det {{\varvec{\Phi }}}_0=\det {\varvec{\Psi }}_0\equiv 1\).

The second relation we need is an improvement of the asymptotics of \({{\varvec{\Phi }}}_0\) in (5.10). With the coefficients

and the functions \(\mathsf q,\mathsf r,\mathsf p\) in (5.13), introduce

After some cumbersome but straightforward calculations, the asymptotics (5.14) improves (5.10) to

6 Bounds on the id-PII RHP

We need to obtain certain asymptotic bounds on \({{\varvec{\Phi }}}_0\) in different regimes. These bounds will be used later to show that the model problem \({\varvec{\Phi }}_\tau \) converges, as \(\tau \rightarrow +\infty \), to \({{\varvec{\Phi }}}_0\), as already indicated in (4.5) et seq. We split the necessary estimates into the next subsections, depending on the regime we are in.

In what follows, for a matrix-valued function \(\textbf{M}=(\textbf{M}_{jk})\) and a contour \(\Sigma \subset \mathbb {C}\), we also use the pointwise matrix norm

and the matrix \(L^p\) norm (possibly also with \(p=\infty \))

where the measure is always understood to be the arc-length measure. In particular, for any two given matrices \(\textbf{M}_1\) and \(\textbf{M}_2\) the inequality

is satisfied. Similar straightforward inequalities involving \(L^1,L^2\) and \(L^\infty \) and the pointwise norm (6.1) also hold, and will be used without further mention. Sometimes we also write
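To illustrate the kind of inequality meant here, the following sketch takes the entrywise-sum norm \(|\textbf{M}|=\sum _{j,k}|\textbf{M}_{jk}|\) as the pointwise norm; this is one common choice made here as an assumption, and it is not claimed to be the definition in (6.1). With this choice \(|\textbf{M}_1\textbf{M}_2|\le |\textbf{M}_1||\textbf{M}_2|\) pointwise, and consequently \(\Vert \textbf{M}_1\textbf{M}_2\Vert _{L^2}\le \Vert \textbf{M}_1\Vert _{L^\infty }\Vert \textbf{M}_2\Vert _{L^2}\). The sample matrices and the Riemann-sum discretization are hypothetical.

```python
import cmath
import math

def pointwise_norm(M):
    """Entrywise-sum norm |M| = sum_{j,k} |M_jk| (assumed choice of norm)."""
    return sum(abs(entry) for row in M for entry in row)

def matmul(A, B):
    return [[sum(A[r][m] * B[m][c] for m in range(2)) for c in range(2)]
            for r in range(2)]

def lp_norm(F, xs, dx, p):
    """Riemann-sum approximation of the L^p norm of x -> |F(x)| on the grid xs."""
    if p == math.inf:
        return max(pointwise_norm(F(x)) for x in xs)
    return (sum(pointwise_norm(F(x)) ** p for x in xs) * dx) ** (1.0 / p)

def M1(x):
    # Bounded, oscillatory sample matrix.
    return [[1.0, cmath.exp(1j * x)], [0.0, 1.0]]

def M2(x):
    # Rapidly decaying sample matrix.
    d = math.exp(-x * x)
    return [[d, d * x], [d * x, d]]

def product(x):
    return matmul(M1(x), M2(x))

DX = 0.01
XS = [-5.0 + DX * k for k in range(1001)]

if __name__ == "__main__":
    lhs = lp_norm(product, XS, DX, 2)
    rhs = lp_norm(M1, XS, DX, math.inf) * lp_norm(M2, XS, DX, 2)
    print(lhs, rhs, lhs <= rhs)
```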

to identify that possible convergences are taking place in various norms simultaneously.

6.1 The singular regime

The first asymptotic regime we consider is

where \(\mathsf t_0\in (0,1)\) is any given value, and \(\mathsf s_0=\mathsf s_0(\mathsf t_0)>0\) will be made sufficiently large depending on \(\mathsf t_0>0\), but independent of \(\mathsf t\) within the range above. With (5.4) in mind, this is a particular case of the singular regime in [28].

For this asymptotic regime, we need the following result.

Proposition 6.1

For any \(\mathsf t_0\in (0,1)\) there exist \(\mathsf s_0=\mathsf s_0(\mathsf t_0)>0\), \(M=M(\mathsf t_0)>0\) and \(\eta =\eta (\mathsf t_0)>0\) such that the inequalities

hold true for any \(\mathsf s\ge \mathsf s_0\) and any \(\mathsf t\in [\mathsf t_0,1/\mathsf t_0]\).

The proof of Proposition 6.1 is a recollection of the analysis in [28], so before going into the details we need to review some further notions from their work.

Introduce

This is the matrix appearing in [28, Equation (2.5)]. With the correspondence of variables (5.4) in mind, when we combine our identity (5.11) with [28, Equation (2.8)], we obtain the equality

The exact form of the matrix \(\textbf{Y}\) is not important for us, but we can interpret this last equality as a defining identity for \(\textbf{Y}\). What is important for us is that \(\textbf{Y}\) is analytic off the real axis, with a jump matrix \(\textbf{J}_{\textbf{Y}}\) on \(\mathbb {R}\) which admits an analytic continuation to a neighborhood of the axis.

The small norm theory for \(\textbf{Y}\) in our regime of interest was carried out in [28, Lemma 5.1 and Section 5.2]. As a consequence, we obtain that for any \(\mathsf t_0>0\) there exist \(M=M(\mathsf t_0)>0,\mathsf s_0=\mathsf s_0(\mathsf t_0)>0,\eta =\eta (\mathsf t_0)>0\) such that the inequalities

hold for any \(\mathsf s\ge \mathsf s_0\) and any \(\mathsf t\in [\mathsf t_0,1/\mathsf t_0]\). Also as a consequence of the small norm theory, we obtain the expression

We combine this last identity with the fact that \(\textbf{J}_{\textbf{Y}}\) admits an analytic continuation in a neighborhood of \(\mathbb {R}\), and learn that there exists \(M=M(\mathsf t_0)>0\) for which

for every \(\zeta \in \mathbb {C}\), \(\mathsf s\ge \mathsf s_0\) and \(\mathsf t\in [\mathsf t_0,1/\mathsf t_0]\).

Proof (Proof of Proposition 6.1)

For \(\zeta \in \mathsf \Sigma _0=(0,\infty )\), we use (6.5) and the definition of \({{\varvec{\Phi }}}_\textrm{Ai}\) in

Using the bound (6.6), the continuity and the known asymptotics as \(\zeta \rightarrow \infty \) of the Airy function and its derivative, the claim along \(\mathsf \Sigma _0\) follows.

The claim for \(\zeta \in \mathsf \Sigma _j\) with \(j=1,2,3\) follows in exactly the same explicit manner; we skip the details. \(\square \)

6.2 The non-asymptotic regime

In the non-asymptotic regime, we fix any \(\mathsf t_0\in (0,1)\) and \(\mathsf s_0>0\) and seek bounds on certain entries of \({{\varvec{\Phi }}}_0\) which are valid uniformly within the range

For the next result, we recall the matrix norm introduced in (6.1).

Proposition 6.2

Fix any values \(\mathsf t_0\in (0,1)\) and \(\mathsf s_0>0\). There exist \(M=M(\mathsf s_0,\mathsf t_0)>0\) and \(\eta =\eta (\mathsf s_0,\mathsf t_0)>0\) for which the estimates

hold true uniformly for \(|\mathsf s|\le \mathsf s_0\) and \(\mathsf t_0\le \mathsf t\le \mathsf t_0^{-1}\).

Proof

The asymptotic behavior as \(\zeta \rightarrow \infty \) in the RHP–\({{\varvec{\Phi }}}_0\) is valid uniformly up to the boundary values \({{\varvec{\Phi }}}_{0,\pm }\) as well, and also uniformly when the parameters \(\mathsf s\) and \(\mathsf t\) vary within compact sets, implying that

Combined with the continuity of the boundary value \({{\varvec{\Phi }}}_{0,+}\) with respect to both \(\zeta \) and also \(\mathsf s,\mathsf t\), the first estimate follows. The remaining estimates are completely analogous. \(\square \)

7 Asymptotic Analysis for the Model Problem with Admissible Data

We now carry out the asymptotic analysis as \(\tau \rightarrow +\infty \) of \({\varvec{\Phi }}_\tau \) introduced in (4.5). For that, we fix \(\mathsf s_0>0\) and \(\mathsf t_0\in (0,1)\) and work under the assumption that

Throughout this section, \(\mathsf h_\tau \) always denotes an admissible function in the sense of Definition 4.1, and \({\varvec{\Phi }}_\tau \) is the solution to the associated RHP.

We also speak of uniformity of error terms in the parameter \(\mathsf t\) ranging over a compact interval \(K\subset (0,\infty )\), by which we mean the following. The solution \({\varvec{\Phi }}_\tau \) depends on the parameter \(\mathsf t\) via the derivative \(\mathsf H'(0)=-\mathsf t\), see Definition 4.1. We view \(\mathsf H=\mathsf H_\mathsf t\) as varying with \(\mathsf t\) while keeping all the remaining derivatives \(\mathsf H^{(k)}(0)\), \(k\ne 1\), fixed. By analyticity this determines \(\mathsf H\) uniquely on \(D_\delta (0)\), but not outside this disk. We then consider \(\mathsf H_\mathsf t\) outside \(D_\delta (0)\) to be any extension from \(D_\delta (0)\) that satisfies Definition 4.1 with the additional requirement that the constants \(\eta , \widehat{\eta }\) and \(\epsilon \) in (iii) may depend on \(K\) but are independent of \(\mathsf t\in K\). Of course, to each \(\mathsf H_\mathsf t\) extended this way there corresponds a solution \({\varvec{\Phi }}_\tau \) of the associated RHP. By uniformity in \(\mathsf t\in K\) we mean that the error may depend on \(K\) and the corresponding values \(\eta , \widehat{\eta }\) and \(\epsilon \), but is valid for any \({\varvec{\Phi }}_\tau \) obtained with an extension \(\mathsf H_\mathsf t\) constructed under this requirement.

The asymptotic analysis itself makes use of somewhat standard arguments and objects in the RHP literature. Some consequences of this asymptotic analysis will be needed later, and we now state them.

The first such consequence is the existence of a solution with asymptotic formulas relating quantities of interest with the corresponding quantities in the id-PII RHP.

Theorem 7.1

Fix an admissible function \(\mathsf h_\tau \) in the sense of Definition 4.1. There exists \(\tau _0=\tau _0(\mathsf s_0,\mathsf t_0)>0\) such that, for any \(\tau \ge \tau _0\) and any \(\mathsf s,\mathsf t\) as in (7.1), the RHP for \({\varvec{\Phi }}(\cdot \mid \mathsf h_\tau )\) admits a unique solution \({\varvec{\Phi }}={\varvec{\Phi }}_\tau \) as in (4.5).

Furthermore, for any \(\kappa \in (0,1)\), the coefficient \({\varvec{\Phi }}^{(1)}={\varvec{\Phi }}^{(1)}_\tau \) in the asymptotic condition (4.3) satisfies

where \({\varvec{\Phi }}^{(1)}_0\) is as in (5.17) and the error term is uniform for \(\mathsf s,\mathsf t\) as in (7.1). Also, still for \(\kappa \in (0,1)\) the asymptotic formula

holds true uniformly for \(x\in \mathsf \Sigma \) with \(|x|\le \tau ^{(1-\kappa )/2}\), and uniformly for \(\mathsf s,\mathsf t\) as in (7.1).

The second consequence connects the solution \({\varvec{\Phi }}_\tau \) directly with the statistics \(\mathsf Q\). For its statement, set

Theorem 7.2

Fix \(a,b>0\), \(\mathsf s_0>0\) and \(\mathsf t_0\in (0,1)\). For any \(\kappa \in (0,1)\), the estimate

holds as \(\tau \rightarrow +\infty \), uniformly for \(\mathsf s\ge -\mathsf s_0\) and \(\mathsf t_0\le \mathsf t\le 1/\mathsf t_0\).

For the proof of these results, we compare \({\varvec{\Phi }}_\tau \) with the solution \({{\varvec{\Phi }}}_0\) of the id-PII RHP via the Deift–Zhou nonlinear steepest descent method. The required asymptotic analysis itself is carried out in Sect. 7.1, and the proofs of Theorems 7.1 and 7.2 are completed in Sect. 7.2.

7.1 Asymptotic analysis

For \({{\varvec{\Phi }}}_0\) as introduced in (5.2) and whose properties were discussed in Sect. 5.1, we perform the transformation

Then \(\varvec{\Psi }_\tau \) satisfies the following RHP.

- \(\varvec{\Psi }_\tau \)-1. The matrix \(\varvec{\Psi }_\tau :\mathbb {C}{\setminus } \mathsf \Sigma \rightarrow \mathbb {C}^{2\times 2}\) is analytic.

- \(\varvec{\Psi }_\tau \)-2. Along the interior of the arcs of \(\mathsf \Sigma \) the function \(\varvec{\Psi }_\tau \) admits continuous boundary values \(\varvec{\Psi }_{\tau ,\pm }\) related by \(\varvec{\Psi }_{\tau ,+}(\zeta )=\varvec{\Psi }_{\tau ,-}(\zeta )\textbf{J}_{\varvec{\Psi }_\tau }(\zeta )\), \(\zeta \in \mathsf \Sigma \). With

$$\begin{aligned} \lambda _0(\zeta ):=\frac{1}{1+{{\,\mathrm{\mathrm e}\,}}^{-\mathsf h_0(\zeta )}}, \quad \lambda _\tau (\zeta ):=\frac{1}{1+{{\,\mathrm{\mathrm e}\,}}^{-\mathsf h_\tau (\zeta )}}, \end{aligned}$$

where \(\mathsf h_0\) is as in (4.6), the jump matrix \(\textbf{J}_{\varvec{\Psi }_\tau }\) is

(7.5)

- \(\varvec{\Psi }_\tau \)-3. For \({\varvec{\Phi }}_\tau ^{(1)}\) and \({{\varvec{\Phi }}}_0^{(1)}\) the residues at \(\infty \) of \({\varvec{\Phi }}_\tau \) and \({{\varvec{\Phi }}}_0\), respectively, the matrix \(\varvec{\Psi }_\tau \) has the asymptotic behavior

$$\begin{aligned} \varvec{\Psi }_\tau (\zeta )=\textbf{I}+\frac{1}{\zeta }({\varvec{\Phi }}_\tau ^{(1)}-{{\varvec{\Phi }}}_0^{(1)})+\mathcal {O}(1/\zeta ^2)\qquad \text {as}\quad \zeta \rightarrow \infty . \end{aligned}\tag{7.6}$$

- \(\varvec{\Psi }_\tau \)-4. The matrix \(\varvec{\Psi }_\tau \) remains bounded as \(\zeta \rightarrow 0\).
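As a consistency check on condition \(\varvec{\Psi }_\tau \)-3, the following sketch assumes that the transformation (7.4) has the form \(\varvec{\Psi }_\tau ={\varvec{\Phi }}_\tau {{\varvec{\Phi }}}_0^{-1}\), and that \({\varvec{\Phi }}_\tau \) and \({{\varvec{\Phi }}}_0\) share the same explicit right factor in their behavior as \(\zeta \rightarrow \infty \), denoted here by the placeholder symbol \(\textbf{A}(\zeta )\). Both are assumptions made for illustration, since neither (7.4) nor (4.3) is reproduced in this section. Under them the common factor cancels:

$$\begin{aligned} \varvec{\Psi }_\tau (\zeta )&=\left( \textbf{I}+\frac{{\varvec{\Phi }}_\tau ^{(1)}}{\zeta }+\mathcal {O}(\zeta ^{-2})\right) \textbf{A}(\zeta )\,\textbf{A}(\zeta )^{-1}\left( \textbf{I}+\frac{{{\varvec{\Phi }}}_0^{(1)}}{\zeta }+\mathcal {O}(\zeta ^{-2})\right) ^{-1}\\ &=\left( \textbf{I}+\frac{{\varvec{\Phi }}_\tau ^{(1)}}{\zeta }+\mathcal {O}(\zeta ^{-2})\right) \left( \textbf{I}-\frac{{{\varvec{\Phi }}}_0^{(1)}}{\zeta }+\mathcal {O}(\zeta ^{-2})\right) \\ &=\textbf{I}+\frac{1}{\zeta }\left( {\varvec{\Phi }}_\tau ^{(1)}-{{\varvec{\Phi }}}_0^{(1)}\right) +\mathcal {O}(\zeta ^{-2}),\qquad \zeta \rightarrow \infty . \end{aligned}$$

This agrees with (7.6), and it shows why controlling \(\varvec{\Psi }_\tau \) near \(\infty \) is enough to compare the coefficients \({\varvec{\Phi }}_\tau ^{(1)}\) and \({{\varvec{\Phi }}}_0^{(1)}\).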

The next step is to verify that the jump matrix decays to the identity in the appropriate norms. The terms in the jump that come from \({{\varvec{\Phi }}}_0\) are precisely the ones we already estimated in Sects. 6.1 and 6.2, so it remains to estimate the terms involving the \(\lambda \)-functions. The basic needed estimate is the following lemma.

Lemma 7.3

Fix \(\nu \in (0,1/2)\) and \(\mathsf t_0\in (0,1)\). The estimate

holds true uniformly for \(|\zeta |\le \tau ^\nu \) and uniformly for \(\mathsf t_0\le \mathsf t\le 1/\mathsf t_0\), where the error term is independent of \(\mathsf s\in \mathbb {R}\).

Proof

Definition 4.1 of admissibility of \(\mathsf h_\tau \) ensures that, for \(\tau \) sufficiently large, we can expand the term \(\mathsf H(\zeta /\tau )\) in a power series near the origin and obtain the expansion

valid uniformly for \(|\zeta |\le \tau ^\nu \), \(\mathsf t_0\le \mathsf t\le 1/\mathsf t_0\), and with error independent of \(\mathsf s\in \mathbb {R}\). Recalling that \(\mathsf h_0(\zeta )=\mathsf s-\mathsf t\zeta \), the proof is complete. \(\square \)
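The mechanism behind Lemma 7.3 can be sketched as follows, assuming that \(\mathsf h_\tau (\zeta )=\mathsf s+\tau \mathsf H(\zeta /\tau )\) with \(\mathsf H(0)=0\) and \(\mathsf H'(0)=-\mathsf t\). This form is inferred from the surrounding estimates and is not restated in this section, so it should be read as an assumption. For \(|\zeta |\le \tau ^\nu \) and \(\tau \) large, the point \(\zeta /\tau \) lies in \(D_\delta (0)\) because \(\tau ^{\nu -1}\rightarrow 0\), and a Taylor expansion gives

$$\begin{aligned} \tau \mathsf H\left( \frac{\zeta }{\tau }\right)&=\tau \left( -\mathsf t\,\frac{\zeta }{\tau }+\mathcal {O}\left( \frac{\zeta ^2}{\tau ^2}\right) \right) =-\mathsf t\zeta +\mathcal {O}\left( \frac{\zeta ^2}{\tau }\right) ,\\ \mathsf h_\tau (\zeta )-\mathsf h_0(\zeta )&=\tau \mathsf H\left( \frac{\zeta }{\tau }\right) +\mathsf t\zeta =\mathcal {O}\left( \tau ^{2\nu -1}\right) ,\qquad |\zeta |\le \tau ^\nu . \end{aligned}$$

The exponent \(2\nu -1\) is negative because \(\nu <1/2\), and \(\mathsf s\) cancels in the difference, which is consistent with the error being independent of \(\mathsf s\). With the choice \(\nu =(1-\kappa )/2\) made in the proof of Theorem 7.1, the error takes the form \(\mathcal {O}(\tau ^{-\kappa })\).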

We are now able to prove the appropriate convergence of \(\textbf{J}_{\varvec{\Psi }_\tau }\) to the identity matrix. We split the analysis into three lemmas, corresponding to different pieces of the contour \(\mathsf \Sigma \). In the results that follow we use the matrix norm notations introduced in (6.1)–(6.3).

Lemma 7.4

Fix \(\mathsf t_0\in (0,1)\), \(\mathsf s_0>0\) and \(\nu \in (0,1/2)\). There exist \(\tau _0=\tau _0(\mathsf t_0,\mathsf s_0,\nu )>0\), \(M=M(\mathsf t_0,\mathsf s_0,\nu )>0\) and \(\eta =\eta (\mathsf t_0,\mathsf s_0,\nu )>0\) for which the inequality

holds true for any \(\tau \ge \tau _0\), \(\mathsf s\ge -\mathsf s_0\) and \(\mathsf t\in [\mathsf t_0,1/\mathsf t_0]\).

Proof

Because both \(\mathsf h_\tau \) and \(\mathsf h_0\) are real-valued along the real line, the inequality

is immediate. For \(0\le \zeta \le \tau ^\nu \), we then use Lemma 7.3 and the explicit expression for \(\mathsf h_0\) in (4.6) and obtain

For \(\zeta \ge \tau ^\nu \), we instead use that both \(\mathsf h_\tau \) and \(\mathsf h_0\) are real-valued along the positive axis and write

From Definition 4.1-(iii) we bound \({{\,\mathrm{\mathrm e}\,}}^{-\tau \mathsf H(\zeta /\tau )}\le {{\,\mathrm{\mathrm e}\,}}^{\widehat{\eta }\zeta ^{3/2-\epsilon }}\) and simplify the last inequality to

for a new value \(\tilde{\eta }>0\).

Recall that \(\textbf{J}_{\varvec{\Psi }_\tau }\) was given in (7.5). We use (7.7) and (7.8) in combination with Propositions 6.1 and 6.2 to get the existence of a value \(\tau _0>0\) for which

where \(\eta >0\) and \(M>0\) may depend on \(\mathsf s_0,\mathsf t_0\) and \(\nu \in (0,1/2)\), but are independent of \(\mathsf s\ge -\mathsf s_0\) and \(\mathsf t_0\le \mathsf t\le 1/\mathsf t_0\). After appropriately changing the values of \(\tilde{\eta },\eta ,M\), and having in mind that \(\alpha <3/2\), the result follows from this inequality. \(\square \)
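To see how closeness of \(\mathsf h_\tau \) and \(\mathsf h_0\) on the real line can transfer to the \(\lambda \)-functions, one elementary route is the mean value theorem applied to \(\sigma (x):=1/(1+\mathrm e^{-x})\). This is offered only as an illustration and is not necessarily the inequality used in the proof above; the symbol \(\sigma \) is introduced here solely for this remark. Since \(\sigma '=\sigma (1-\sigma )\in (0,1/4]\) on \(\mathbb {R}\), and \(\lambda _\tau =\sigma \circ \mathsf h_\tau \), \(\lambda _0=\sigma \circ \mathsf h_0\),

$$\begin{aligned} |\lambda _\tau (\zeta )-\lambda _0(\zeta )|=|\sigma (\mathsf h_\tau (\zeta ))-\sigma (\mathsf h_0(\zeta ))|\le \frac{1}{4}\,|\mathsf h_\tau (\zeta )-\mathsf h_0(\zeta )|,\qquad \zeta \in \mathbb {R}. \end{aligned}$$

For real \(\zeta \) with \(|\zeta |\le \tau ^\nu \), the right-hand side is then controlled by Lemma 7.3. The bound uses that \(\mathsf h_\tau \) and \(\mathsf h_0\) are real-valued, so it does not apply as stated on the non-real arcs of \(\mathsf \Sigma \).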

Next, we prove the analogous result along the pieces of \(\mathsf \Sigma \) which are not on the real line.

Lemma 7.5

Fix \(\mathsf t_0\in (0,1)\), \(\mathsf s_0>0\) and \(\nu \in (0,1/2)\). There exist \(\tau _0=\tau _0(\mathsf t_0,\mathsf s_0,\nu )>0\), \(M=M(\mathsf t_0,\mathsf s_0,\nu )>0\) and \(\eta =\eta (\mathsf t_0,\mathsf s_0,\nu )>0\), for which the inequality

holds true for any \(\tau \ge \tau _0\), \(\mathsf s\ge -\mathsf s_0\) and \(\mathsf t\in [\mathsf t_0,1/\mathsf t_0]\).

Proof

Write

From Lemma 7.3, we estimate for \(0\le |\zeta |\le \tau ^\nu \),

where the implicit error term is independent of \(\mathsf s\) and uniform for \(\mathsf t\in [\mathsf t_0,1/\mathsf t_0]\). On the other hand, from the explicit form of \(\mathsf h_0\) and Definition 4.1-(iii),

We combine this inequality with Propositions 6.1 and 6.2 and use them on (7.5). The conclusion is that there exist \(M>0\), \(\eta _1,\eta _2>0\) and \(\tau _0>0\), depending on \(\nu ,\mathsf t_0,\mathsf s_0\), for which the inequality

is valid for every \(\tau \ge \tau _0,\) \(\mathsf s\ge -\mathsf s_0\) and \(\mathsf t\in [\mathsf t_0,1/\mathsf t_0]\). The definition (4.1) of the contours \(\mathsf \Sigma _1\) and \(\mathsf \Sigma _3\) ensures that \({{\,\textrm{Re}\,}}\zeta ^{3/2}>0\) and \({{\,\textrm{Re}\,}}\zeta <0\) on these contours. After possibly changing the values of the constants \(\eta _1,\eta _2\) and \(M\), the result follows. \(\square \)

Finally, we handle the jump on the negative axis.

Lemma 7.6

Fix \(\mathsf t_0\in (0,1)\), \(\mathsf s_0>0\) and \(\nu \in (0,1/2)\). There exist \(\tau _0=\tau _0(\mathsf t_0,\mathsf s_0,\nu )>0, M=M(\mathsf t_0,\mathsf s_0,\nu )>0\) and \(\eta =\eta (\mathsf t_0,\mathsf s_0,\nu )>0\) for which the inequality

holds true for any \(\tau \ge \tau _0\), \(\mathsf s\ge -\mathsf s_0\) and \(\mathsf t\in [\mathsf t_0,1/\mathsf t_0]\).

Proof

The initial step is to rewrite the last line of (7.5) as

The identities

are trivial, and because \(\mathsf h_0\) and \(\mathsf h_\tau \) are real-valued along \(\mathsf \Sigma _2=(-\infty ,0)\), these equalities give

For \(|\zeta |\le \tau ^\nu \) we use Lemma 7.3 and estimate

whereas for \(\zeta \le -\tau ^\nu \) we use instead the definition of \(\mathsf h_0\) in (4.6) and Definition 4.1-(iii) and write

We combine these two inequalities with Propositions 6.1 and 6.2, and apply them to (7.11). As a result, we learn that there exist \(M>0,\eta>0,\tau _0>0\) for which the estimate

is valid for any \(\mathsf s\ge -\mathsf s_0,\) \(\mathsf t\in [\mathsf t_0,1/\mathsf t_0]\), \(\tau \ge \tau _0\). After possibly changing the values of \(\eta ,M\), the result follows from standard arguments. \(\square \)

Now that we have controlled the asymptotic behavior of the jump matrix \(\textbf{J}_{\varvec{\Psi }_\tau }\), we are ready to obtain small norm estimates for \(\varvec{\Psi }_{\tau }\) itself. We summarize these estimates in the next result. For that, we recall the matrix norm notations introduced in (6.1), (6.2), (6.3).

Theorem 7.7

Fix \(\mathsf t_0\in (0,1)\) and \(\mathsf s_0>0\). There exists \(\tau _0=\tau _0(\mathsf t_0,\mathsf s_0)>0\) for which the solution \(\varvec{\Psi }_\tau \) uniquely exists for any \(\tau \ge \tau _0\) and any \(\mathsf s\ge -\mathsf s_0, \mathsf t\in [\mathsf t_0,1/\mathsf t_0]\). Furthermore, it satisfies the following asymptotic properties.

Its boundary value \(\varvec{\Psi }_{\tau ,-}\) exists along \(\mathsf \Sigma \), and satisfies the estimate

for any \(\kappa \in (0,1)\), where the error term, for a given \(\kappa \), is uniform for \(\mathsf s\ge -\mathsf s_0\) and \(\mathsf t\in [\mathsf t_0,1/\mathsf t_0]\).

For \(\tau \) sufficiently large, the solution \(\varvec{\Psi }_\tau \) admits the representation

Still for \(\tau \) sufficiently large, \(\varvec{\Psi }_\tau \) satisfies

Proof

The small norm estimates provided by Lemmas 7.4, 7.5 and 7.6 allow us to apply the small norm theory for Riemann–Hilbert problems (see for instance [43, 47]), and the claims follow with standard methods. We stress that for this statement we only need the \(L^2\) and \(L^\infty \) estimates from the aforementioned lemmas, but the \(L^1\) estimates provided by them will be useful later. \(\square \)
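For orientation, the small norm theory invoked in the proof can be sketched in generic form. The formulas below are the textbook statements for an RHP normalized to \(\textbf{I}\) at \(\infty \), and are not claimed to coincide in every detail with (7.13) and (7.14). The notation \(\mathcal C_-\) for the minus boundary value of the Cauchy operator on \(\mathsf \Sigma \) and the integration variable \(\xi \) are introduced here for this remark only. If \(\varvec{\Psi }_{\tau ,+}=\varvec{\Psi }_{\tau ,-}\textbf{J}_{\varvec{\Psi }_\tau }\) on \(\mathsf \Sigma \) and \(\varvec{\Psi }_\tau \rightarrow \textbf{I}\) at \(\infty \), then

$$\begin{aligned} \varvec{\Psi }_\tau (\zeta )&=\textbf{I}+\frac{1}{2\pi \mathrm i}\int _{\mathsf \Sigma }\frac{\varvec{\Psi }_{\tau ,-}(\xi )\left( \textbf{J}_{\varvec{\Psi }_\tau }(\xi )-\textbf{I}\right) }{\xi -\zeta }\,\mathrm d\xi ,\qquad \zeta \in \mathbb {C}{\setminus }\mathsf \Sigma ,\\ \varvec{\Psi }_{\tau ,-}-\textbf{I}&=\mathcal C_-\left[ (\varvec{\Psi }_{\tau ,-}-\textbf{I})(\textbf{J}_{\varvec{\Psi }_\tau }-\textbf{I})\right] +\mathcal C_-\left[ \textbf{J}_{\varvec{\Psi }_\tau }-\textbf{I}\right] . \end{aligned}$$

The second identity is a linear equation for \(\varvec{\Psi }_{\tau ,-}-\textbf{I}\) in \(L^2(\mathsf \Sigma )\). The operator \(f\mapsto \mathcal C_-[f(\textbf{J}_{\varvec{\Psi }_\tau }-\textbf{I})]\) has norm at most a constant times the \(L^\infty \) norm of \(\textbf{J}_{\varvec{\Psi }_\tau }-\textbf{I}\), while the inhomogeneous term is bounded by a constant times its \(L^2\) norm. Once the \(L^\infty \) norm is small enough, a Neumann series gives existence, uniqueness and an \(L^2\) bound for \(\varvec{\Psi }_{\tau ,-}-\textbf{I}\), which is why only the \(L^2\) and \(L^\infty \) estimates enter at this stage. Expanding the Cauchy kernel as \(\zeta \rightarrow \infty \), and assuming enough decay of the integrand to justify it, the coefficient of \(1/\zeta \) is

$$\begin{aligned} -\frac{1}{2\pi \mathrm i}\int _{\mathsf \Sigma }\varvec{\Psi }_{\tau ,-}(\xi )\left( \textbf{J}_{\varvec{\Psi }_\tau }(\xi )-\textbf{I}\right) \mathrm d\xi , \end{aligned}$$

and bounding such an integral is one place where \(L^1\) control of \(\textbf{J}_{\varvec{\Psi }_\tau }-\textbf{I}\) naturally enters.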

7.2 Proof of main results of the section

We are ready to prove the main results of this section.

Proof of Theorem 7.1

Throughout the proof we set \(\nu =(1-\kappa )/2\).

The matrix \({{\varvec{\Phi }}}_0\) always exists, whereas Theorem 7.7 provides the existence of \(\varvec{\Psi }_{\tau }\) for \(\tau \) sufficiently large. From the relation (7.4) we obtain the claimed existence of \({\varvec{\Phi }}_\tau \).

Comparing (7.6) with (7.14) we obtain the identity