Abstract

Explicitly considering fail-safety within design optimization is computationally very expensive, since every possible failure has to be considered. This requires solving one finite element model per failure and iteration. In topology optimization, one cannot identify potentially failing structural members at the beginning of the optimization. Hence, a generic failure shape is applied to every possible location inside the design domain. In the current paper, the maximum stress is considered as optimization objective to be minimized, since failure is typically driven by the occurring stresses and thus of more practical relevance than the compliance. Due to the local nature of stresses, it is presumed that the optimization is more sensitive to the choice of the failure shape than compliance-based optimization. Therefore, various failure shapes, sizes and different numbers of failure cases are investigated and compared on the basis of a general load-path-based evaluation scheme. Instead of explicitly considering fail-safety, redundant structures are obtained at much less computational cost by controlling the maximum length scale. A common and easy to implement maximum length scale approach is employed and fail-safe properties are determined and compared against the explicit fail-safe approach.

Similar content being viewed by others

1 Introduction

During the design process of an aircraft, components with multiple and redundant load path are often required due to safety reasons. If for example one load path fails, e.g. caused by a fatigue crack or an accident, the component should still be able to carry a certain amount of the design load. This ensures a safe operation of the aircraft and a safe landing can be performed. A structure that fulfills these requirements is also called fail-safe.

For shape optimization and sizing optimization, fail-safe requirements can directly be incorporated as a constraint in the design optimization as originally proposed by Sun et al. (1976) for truss structures. The optimization requires one finite element simulation for each failed load path (for instance, for each member of a truss structure), which results in extremely large computational cost. For topology optimization, the question arises what to consider as a load path or structural member, as these evolve only during optimization. First work incorporating fail-safety into topology optimization was carried out by Jansen et al. (2014). Their approach is characterized by a local damage model, also referred to as failure patch approach. A simple square failure is applied to each possible location in the design domain resulting in nearly as many failure cases as finite elements, what makes this approach computationally very expensive. The objective is to minimize the worst-case compliance of all failure cases with respect to a volume constraint. Since the computationally cost is so high, Zhou and Fleury (2016) proposed to only use as much failure patches as needed to cover the design domain with no gap and no overlap. To further decrease the computational cost, Wang et al. (2020) selected the active failure patches based on a von Mises stress criterion and used a stabilized optimality criterion update scheme. Ambrozkiewicz and Kriegesmann (2018) chose to use actual load paths as failure patches instead of a regular grid, which significantly reduces computational costs. Still, the change of the structure and, consequently, of the failure scenarios considered penalizes the convergence of the optimization. Therefore, they proposed to embed the approach into a shape optimization (Ambrozkiewicz and Kriegesmann 2020).

All mentioned approaches for incorporating fail-safe requirements into topology optimization consider the compliance as objective function. However, when failure of a structure is considered, the maximum stress is of more practical relevance as already mentioned by Zhou and Fleury (2016). Stress-based topology optimization can be traced back around 30 years, where the singularity phenomenonFootnote 1 was first encountered by Kirsch (1990) in truss optimization. Since then, many contributions have been published dealing with different aspects of stress-based optimizations. Just to name a few, Yang and Chen (1996) first proposed to use a global stress measure, such as the maximum stress, which can be approximated for differentiation. Later, Le et al. (2009) described a practical and computational efficient method to perform stress minimization and stress constraint optimization. Recently, da Silva et al. (2019) addressed the problem of stress evaluation at jagged boundaries by introducing a method to limit the stresses oscillation and to ensure stress accuracy. A comprehensive overview of papers dealing with stresses in topology optimization can, for instance, be found in Le et al. (2009) and Holmberg et al. (2013).

Since explicitly considering fail-safety as optimization constraint results in a high computational effort, it seems natural to look for alternatives. And there are alternative and computationally less expensive approaches to obtain redundant structures, e.g. the approach recently published by Wu et al. (2018). They defined little subsets of the design space, mostly similar to the density filter (Bourdin 2001; Bruns and Tortorelli 2001), and then applied a volume constraint to these subsets. This approach is also known as local volume constraint approach. It forces material to be evenly distributed inside the design domain and was originally designed to create bone like infill structure, but could also be used to generate multiple load path and fail-safe designs. Another approach is to limit maximum member size, which enforces bigger material agglomeration to split up and thus, creating redundant load path. Contributions to maximum length scale methods were first given by Guest (2009), later by Lazarov and Wang (2017) or Carstensen and Guest (2018) and recently by Fernandez et al. (2019) and Fernández et al. (2020). Common to all is, they can be classified as indirect approaches in terms of generating fail-safe and multiple load path designs.

To the best of our knowledge, fail-safe design has not been optimized for stresses in a continuum topology optimization setting. Thus, and since stresses are crucial for failure to occur, the novel contribution of this paper is to consider the maximum stress as objective function following the failure patch approach of (Jansen et al. 2014). In difference to compliance-base optimization, the shape of the failure patches becomes highly relevant, as it may introduce singularities. Therefore, as a novel contribution, the influence of the patch shape is studied. The significance and arbitrariness of choosing a patch shape motivates the development of a general evaluation scheme. Inspired by Ambrozkiewicz and Kriegesmann (2018, 2020) and Gamache et al. (2018), a procedure based on image processing techniques is developed to identify structural members, i.e. struts and nodes. These structural struts and nodes are interpreted as load paths and can thus be considered as general failure cases. By cutting away each strut and node separately and evaluating the worst failure case, general and comparable fail-safe properties are obtained, independent of the actual optimization setup. Additionally, the existing local volume constraint approach by Wu et al. (2018) is implemented for a stress objective and compared against the proposed stress-based fail-safe approach. The empirical comparison is carried out to evaluate, if implicit optimized multiple load path designs can compete with explicit optimized fail-safe designs, which has not been done before.

This paper is organized as follows: first the optimization problem will be set up in Section 2. All relevant techniques applied, such as the variable filter methods, stiffness and stress interpolation schemes are recapitulated in Appendices A to C. The sensitivity analysis will be conducted in the Appendix D. Section 3 recapitulates the local volume constraint approach by Wu et al. (2018). In Section 4, a general evaluation scheme for fail-safe topologies will be proposed. In Sections 5 and 6, numerical results optimized with the proposed stress-based fail-safe optimization (FSO) will be shown and discussed on two different problems. Section 7 contains numerical results of an alternative way of obtaining fail-safe or multiple load path design, which are compared to the fail-safe optimized ones. In the end, a conclusion is drawn in Section 8.

2 Stress-based fail-safe optimization

To achieve a fail-safe design, our optimization algorithm is based on the failure patch approach proposed by Jansen et al. (2014). A finite element model discretized by a regular mesh with quadrilateral continuum elements and linear isotropic material is considered.

In contrast to the failure patch approach by Jansen et al. (2014), our objective is to minimize the worst-case von Mises stress qKS. The optimization problem reads:

where n is the number of elements, m is the number of failure cases, γ is the KS-factor or aggregation parameter and \(q^{(i)}_{j}\) is the j th relaxed elemental stresses of failure case i (for details see Appendix C). The maximum volume of all elements in the design domain is V0, the actual volume is V and α defines the volume fraction. For each element, one relative density is stored in ρ. Note, even though there are m × n elemental stresses to be aggregated, only n design variables (also referred to as relative densities) are needed. Failure cases are modeled by manipulating the corresponding entries in the stiffness matrix K (for details see Appendix B). The force vector is denoted as f, K(i) and u(i) are the stiffness matrix and displacement vector of failure case i respectively. Together, they represent the state equation for each failure case. Within this contribution, a volume fraction of α = 0.4 is chosen for all examples.

To avoid numerical difficulties in calculating the exponential function of large numbers, the alternative form (Wrenn 1989; Poon and Martins 2007) of the KS-function is used:

where, q0 is treated as constant for differentiation. The alternative form (2) is equal to the common one (1) and gives the same results, subjected to round off errors (Wrenn 1989). This can also be shown by simple mathematical reformulation:

As in the original failure patch approach (Jansen et al. 2014), the aggregation parameter γ is updated every 10 iterations. Based on numerical studies, a proper setting is found to be γ = 10/q0 (see Section 6.1). The initial aggregation parameter is determined in the same manner. Choosing a proper factor for the aggregation parameter’s update is essential for a stable and accurate stress-based FSO. Similar to the p-norm, an increasing aggregation parameter leads to a better approximation of q0, but it also increases gradient oscillations and, in worst case, this can result in a diverging optimization (Yang and Chen 1996; Verbart et al. 2017). With a too low aggregation parameter on the other hand, the worst failure case is not captured and is thus ignored by the optimizer, resulting in a less fail-safe topology. This trade-off is also described by Verbart et al. (2017).

Jansen et al. (2014) already pointed out that “the designs changes strongly during the optimization” and requires an aggregation parameter update every 10 iterations. Following this recommendation and to provide mesh independencyFootnote 2 the aggregation parameter is updated with respect to a constant factor for the exponential argument including the overall maximum stress q0. Note, it is not the aggregation parameter itself which is updated to a predefined value, but the exponentials argument, i.e. the product of the aggregation parameter γ and the relaxed von Mises stress \(q^{(i)}_{j}\). An alternative way of achieving mesh independency is described by Verbart et al. (2017) using the lower bound form of the KS-function.

Design variables are filtered and projected (see Appendix A). Element stiffnesses are penalized following the SIMP approach using projected variables (see Appendix B) where stresses are interpolated using the RAMP interpolation (see Appendix C). The gradient of the objective function (1), (2) is given in Appendix D. The optimization process is summarized in Fig. 1.

As a gradient-based iterative optimization algorithm, the method of moving asymptotes (MMA) is applied (Svanberg 1987). We use standard settings with external move limits, allowing an absolute change per design variable of ± 0.1. The internal move limit parameters are set to asyinit = 0.01, asyincr = 1.2, and asydecr = 0.7. The iterations are limited to a maximum of 500 for the FSO. The local volume constraint optimization required 1000 to 1500 iterations. If not stated otherwise, the projection parameter β is increased every 100 iterations and the aggregation parameter γ in (1), (2) is updated every 10 iterations. All other parameters are kept constant during the entire optimization, the objective and constraint value are both normalized at the beginning and scaled by a factor of 100.

3 Multiple-load-path design by maximum member size constraint

A simple and easy to implement method to create redundant designs with multiple load path is the local volume constraint approach by Wu et al. (2018). The stress-based optimization problem is formulated as follows:

where qKS is the aggregated von Mises stress considering only the elemental stresses qj in (2), φ is the local volume fraction and v is the aggregated local volume. The local volume is calculated per element and aggregated by the p-mean as follows:

with Le representing all elements inside the test region of element e. The test region is similar to the element neighborhood (see, e.g. the density filter in (9)) and can be of any shape, e.g. circular, annular or elliptic. If not noted otherwise, a circular test region as in (9) is chosen but with a different radius. To ensure equal maximum length scale over the whole design domain, each local volume is divided by the maximum of all test region volumes \(v_{max} = {\max \limits } \left ({\sum }^{L_{e}}_{k=1} v_{k}(\boldsymbol {\rho })\right ) \forall L_{e}\). The aggregation parameter is set to p = 16 and kept constant during optimization. Also here, a trade-off between a stable optimization and an accurate constraint approximation has to be made. If a higher aggregation parameter is chosen, the local volume constraint is enforced more strictly, but the problem might become unstable. For the designs in Section 7, an aggregation parameter of p = 16 turned out to give good convergence and acceptable results and is thus not further increased.

Considering a circular test region, the maximum length scale can be controlled by either changing the radius in (9) or by varying the local volume fraction φ. For a local volume fraction φ ≈ 1, the test region’s size is equal to the maximum length scale (2R = smax). This correlation is, e.g. utilized in the maximum length scale approach by Fernandez et al. (2019). Contrary to that, with a given R and a user defined maximum length scale smax, it is also possible to calculate the required volume fraction analytically by simple geometric evaluation. For that the integral form of a circle’s area can be used:

Evaluating (6) on the interval [−s/2,s/2] and dividing it by the circle’s area gives the local volume fraction φ. Figure 2 shows the local volume fraction for different maximum length scale and radii.

The maximum member size of an elliptic test region is determined straightforwardly using the analytical expression for an ellipse. Assuming that the minor axis w of the ellipse is controlling the maximum length scale and oriented along the x-axis. The following equation can be used to determine the local volume fraction for a chosen maximum length scale.

where, h is the major axis. Evaluating (7) on the interval [0,w] and dividing it by ellipse’s area gives the local volume fraction φ. Figure 3 shows the local volume fraction for different maximum length scale and radii.

Design variables are filtered and projected (see Appendix A). Element stiffnesses are penalized following the SIMP approach using projected variables (see Appendix B) where stresses are interpolated using the RAMP interpolation (see Appendix C). The gradient of the objective function (4) is given in Appendix D. The optimization algorithm and its settings are equal to the once of the stress-based optimization described in Section 2. The optimization process is similar to the one depicted in Fig. 1 but without the parallelized block.

4 Evaluation of fail-safe properties based on actual load paths

The results of a fail-safe topology optimization depends on the size and shape of the failure patch and of the failure patch density, i.e. the number of all possible failure locations considered (Zhou and Fleury 2016; Ambrozkiewicz and Kriegesmann 2018). When comparing two designs that are obtained with, for instance, a rectangular and circular failure patch, the question arises: how can we decide which design is better? Generally speaking, a design is considered as better if the maximum stress is lower for all possible failure scenarios. But which failure shape, size and density should be taken into account for the comparison? Each design is optimal for the failures defined during its optimization and hence, less or far from optimal for any other failure shape, size and density.

To circumvent this problem and to be able to compare designs of different optimizations with different failure patch settings, an evaluation based on actual load path is proposed in this section. Treating actual load, i.e. structural struts and nodes, as possible failing load paths is close to the intention of requiring a redundant design in regulation guidelines.

4.1 Load path identification based on image processing

The load paths are identified based on image processing techniques slightly similar to Gamache et al. (2018) and inspired by Ambrozkiewicz and Kriegesmann (2020). While the above mentioned approaches require clustering all elements to nodes and struts, here, we only need to define failure patches cutting through an entire strut or node.

For that, the continuous density field is first transferred to a binary field and then skeletonized, with which the location of structural nodes can easily be identified as the branch points (see Fig. 4). By subtracting the branch points from the skeleton, structural struts are left over as pixel lines. These pixel lines can then be clustered and the corresponding midpoint and major/minor axis are calculated. By that, the location of all nodes and struts and the orientation of all struts are defined. The size of the cut is determined incrementally until a certain amount of void elements is reached.

As shown in Fig. 5, all struts are cut and all nodes are taken away completely. Struts and nodes right of the dashed line are not taken into account, since they are outside of the damaged area, where no damage has been considered during optimization. Furthermore, to avoid removing too big areas, the maximum node size is limited to 10% of the maximum model dimension.

4.2 Extended cut procedure to avoid singularities

In some cases, a load path cut causes very high stress in medium density elements or in very thin struts, which do not contribute to the structural integrity. Removing such elements actually decreases the maximum stress, and they should therefore not be considered for a fail-safe evaluation. To bypass these numerical artefacts, an extended cut procedure is developed, testing for potential stress reducing further cuts. Figure 6 shows how this extended cut procedure works. The maximum stressed element is removed iteratively by setting its density to 0 as long as it reduces the maximum stress. To assure that only very small dispensable struts or elements are cut, the process is stopped after a predefined maximum number of element deletions, or if three element deletions in a row lead to a stress increase. In both cases, the configuration with the lowest stress is chosen as final worst-case stress. Note, to avoid that unloaded struts vanish in stress plots, transparency has been applied to each element according to its density. Due to the very low density of elements E19211 and E23891, they appear very opaque, but still they exhibit the highest stress and are thus removed during the extended cut procedure.

4.3 Fail-safe measure and stress scale

Fail-safe properties are expressed in terms of a fail-safe factor \(FSF=\tfrac {q_{max}}{q_{FOD}}\), which is simply the ratio of the maximum worst-case von Mises stress based on a load path failure and the maximum von Mises stress of the undamaged or free of damage (FOD) design qFOD. Note, the maximum von Mises stress is approximated by the KS function during the optimization, but when discussing the numerical results the true maximum von Mises stress is considered. Beside the fail-safe factor FSF, the stress of the undamaged design qFOD and the worst-case stress qmax is provided for each design. Also, the elements removed by the extended cut procedure (see above) are highlighted with a red ×.

When comparing designs of different optimization setups, big differences in maximum stresses occur. To avoid featureless stress plots, the stress scale is truncated at the lowest maximum stress for each example, i.e. cantilever beam and L-shaped beam.

5 Numerical results for stress-based fail-safe optimization of the cantilever beam

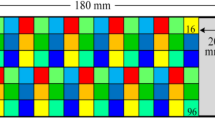

The proposed stress-based FSO is investigated on the well-known cantilever example (see Fig. 7). For all shown results, following parameters are chosen if not stated otherwise. The design domain is discretized with 360 by 120 square unit sized elements. Nodes on the left hand side are clamped and in the middle of the right hand side a unit load is distributed among 13 nodes to avoid stress concentrations. Material properties are set to a Young’s modulus of E0 = 1 and a Poisson’s ratio of ν = 0.3. The overall volume is constraint to α = 40% and a filter radius of R = 6 is applied. In the following, the cantilever example is optimized for different failure patch numbers, sizes and shapes. The optimized designs are evaluated based on their actual load paths. Note, the blue area in Fig. 7 is free of any damage. During optimization and postprocessing, no failure is considered in the marked area. As reference, an optimized cantilever beam without considering failure cases during the optimization is depicted in Fig. 8. It can be observed, if the marked node is removed, the maximum stress increases dramatically and will probably lead to catastrophic failure of the beam. As expected and anticipating the following investigations, the fail-safe properties can be improved significantly by explicitly considering failure during the optimization no matter which parameters are chosen. For the sake of completeness, a mesh independency study is performed in Appendix F. Here, the fail-safe optimized results are discussed.

5.1 Influence of the failure patch density

Following the approach of Jansen et al. (2014) and moving the failure patch element by element through the design space results in a very high number of failure cases to be evaluated in each iteration. Here, our main motivation is to reduce the computational cost by reducing the number of failure patches. Zhou and Fleury (2016) already pointed out that using as many failure patches as needed to cover the design domain without gap and overlap “is sufficient for achieving an applicable solution”. Of course, if fewer failure patches are considered, there will always be a failure patch that results in a higher worst-case stress. But since we are evaluating the fail-safe performance based on actual load paths, an “applicable solution” is sufficient. Thus, in this section, different failure patch densities are investigated with respect to their load path failure.

In order to keep the computing time manageable, the following investigation on failure patch density is performed on a lower resolution (180 by 60 square elements), similar to the original approach (Jansen et al. 2014). Also, the filter radius is halved R = 3. The optimization for a high failure patch density and an aggregation parameter update of γ = 10/q0 turned out to be unstable, supposedly due to very localized and switching stress peaks (as will be explained in the following). Thus, to further stabilize the optimization, the aggregation parameter is updated every 10 iterations to γ = 8/q0.

Different numbers of square failure patches are investigated by varying the failure increment. The failure increment is the step size, by which the failure is moved through the entire design domain (see Fig. 7). The failure size of 12 defines a 12 by 12 element wide square failure patch. Starting with a failure size of 12 and a failure increment of 12, leading to a total number of 60 failure patches. The failure patch density is then doubled by letting the failure patches overlap by half (failure increment = 6), resulting in 216 failure patches. Finally, all possible failure positions (failure increment = 1), giving 6860 failure patches, are investigated.

The results given in Fig. 9 show that the worst-case stress qmax, evaluated based on load paths, increases with increasing failure patch density. Additionally, the secondary load path is getting thinner and is even disappearing in some places for the highest number of failure patches. Both observations can be traced back to the local nature of stresses. Failure cases leaving over just few elements, e.g. on a main load path, result in high maximum stresses. Decreasing the failure increment will worsen this situation. Bearing in mind that the KS-approximation can be interpreted as a weighted average, it comes clear, the more failure patches leave over just a few elements between failure patch and boundary, the less other failure patches will influence the outcome. In the end, this results in a design more similar to the optimization without failure, rather than in a fail-safe design. Only in the clamped corners the optimization always provides redundant load paths.

With increasing failure patch density, unresolved spots or medium density struts emerge. Also, a slight asymmetry can be observed. Again, this is the result of very localized high stresses which magnify small numerical inaccuracies. In turn, this leads to an alternating stress peak location and by that to gradient switching. In the end, this results in an unstable or even diverging optimization. Furthermore, this effect is amplified by an increasing aggregation parameter, which is the reason for a reduction to γ = 8/q0 for this investigation.

Evaluating fail-safe properties, the FSF appears to be lowest for a failure patch number of 216 (Fig. 9 middle), even though the worst-case stress qmax is higher compared to the lowest number of failure patches. This is due to the higher stress of the undamaged structure qFOD. Beside the FSF, which is defined as a ratio between undamaged and worst-case stress, the actual worst-case stress qmax is considered to be more important. Even though the worst-case stress evaluated with square failure patches at every possible location is naturally worst for the lowest number of failure patches (Jansen et al. 2014; Zhou and Fleury 2016; Ambrozkiewicz and Kriegesmann 2018), the worst-case stress based on the proposed load path evaluation is lowest. Thus, from a practical point of view, it is sufficient to use as many failure patches as needed to cover the design domain without gap and overlap. Also Zhou and Fleury (2016) recommended a low number of failure cases for practical applications. Thus, in the following only failure patches without gap and overlap are considered.

5.2 Influence of the failure size

Figure 10 depicts final topologies and worst-case failure for different failure patch sizes. The failure size considered during optimization is indicated with orange squares. The actual worst-case load path failure is marked in red.

As already observed by Jansen et al. (2014), the failure patch size can influence the final topology strongly. A too small failure patch size can lead to a non-fail-safe design without redundant load path (see Fig. 10 top). It can generally be said that the distance between primary and secondary load path is equal to the failure size.

The lowest worst-case stress qmax and best FSF are both achieved by a medium failure size of 30. Interestingly, there is no big difference between failure size 24 and 30. Considering the biggest failure size 40, the straight struts between primary and secondary load path are somewhat intuitive. Considering a low stress or lightweight design, it becomes more and more favorable to approach a truss like structure. In truss structures the struts are only loaded along their longitudinal axis and can thus be better utilized compared to thick bars transferring bending loads. It is also observed that the structural nodes are preferably placed at the corner of failure patches, since these areas are never completely removed during optimization. By that, the design is obviously controlled by the size and placement of the failure patches. Considering nodes as possible failure might be regarded as very conservative and can lead to bad worst-case stress, as can be observed for big failure size (40). However, considering a medium failure size (24 and 30) structural nodes are not always the worst-case failure.

5.3 Influence of the failure shape

Figure 11 depicts the results for different failure shapes. Since stress singularities introduced by square failure patches create high stresses, circular and blurred failure patches are examined. Not overlapping square and circle failure shapes of failure size 24 are considered together with overlapping circles without gap or unremoved elements. Additional to these three configurations the failure patches got through a filter step (9) to blur the edges.Footnote 3 The failure considered in the optimization is indicated in orange. Actual worst-case load path failures, taken into account for post processing only, are marked in red. Elements removed by the extended cut procedure are marked with a red ×.

As can be observed, there is no big difference in all undamaged stresses qFOD. However, both the worst-case stress qmax and the FSF differ quit a lot, ranging from 0.687 to 1.101 and 1.87 to 3.02 respectively. Examining the worst-case failure in the blurred-square optimized design, reveals the location of the failure node. It is placed right between four failure patches and never removed completely during optimization. Such nodes also occur for square or circle failure patches, but are usually not the worst-case failure. For overlapping circle failure patches, diagonal struts are preferable placed between failure patches and never removed completely during optimization, whereas nodes can be placed more freely and are preferably placed in regions where no overlap occurs.

To our surprise, there is no advantage in using different failure shapes. A simple square failure shape gives the best fail-safe properties by using a low number of failure patches. Reducing the number of stress singularities by using circular blurred failure shapes did not have any advantageous effect on the optimization convergence or results for the failure patch density considered.

6 Numerical results for stress-based fail-safe optimization of the L-beam

In this section, the proposed stress-based FSO is applied to the well-known L-beam example (see Fig. 12) often used as benchmark for stress-based topology optimization. The design domain is discretized with 300 by 300 square unit sized elements. Nodes on the top edge are clamped and at the right tip a unit load is distributed among 16 nodes to avoid stress concentrations. Material properties are set to Young’s modulus of E0 = 1 and Poisson’s ratio of ν = 0.3. The overall volume is constraint to α = 40% and a filter radius of R = 6 is applied.

6.1 Stress-based optimization without failures

Stress optimized designs are usually expected to avoid stress singularities. The optimization algorithm’s ability to capture these stress singularities can be controlled by increasing the aggregation parameter. A too high aggregation parameter on the other hand causes numerical instabilities and thus one has to make a trade-off, as discussed in Section 2. Figure 13 depicts the results for different aggregation parameter updates. The aggregation parameter γ is updated to 5/q0, 10/q0, 15/q0 and 20/q0. For this example, i.e. without considering failure cases, higher aggregation parameter can be used without running into numerical instabilities. A stress-based FSO is less robust in terms of numerical stability and thus a lower update factor has to be chosen. As can be observed, an aggregation parameter updated to γ = 10/q0 is sufficient to circumvent the re-entrant corner and avoids numerical instabilities during the FSO.

Table 1 lists the actually achieved maximum aggregation parameters γmax and the corresponding KS approximation qKS for all designs depicted in Fig. 13. Considering an aggregation parameter update of γ = 10/q0, the KS approximation is roughly 50% above the true maximum stress. For an aggregation parameter update of γ = 20/q0, the difference is still 20%. Again, since we are aiming at minimizing the stress, this difference and the actual approximated value are of minor importance. The focus is set on a good compromise between a stable optimization and stress singularity recognition.

6.2 Influence of the failure size

Figure 14 shows results for different failure sizes. Similar to the cantilever example (see Fig. 10), small failure sizes are not able to create redundant load path. On the other hand, a too big failure size causes one big node in the middle, being the secondary load path for both the inner (at the re-entrant corner) and outer (left bottom corner) load path. Also, the maximum stress of the undamaged structure is mostly similar for all failure sizes.

All depicted designs exhibit a stress singularity at the re-entrant corner even though the undamaged structure is considered in the optimization by empty failure patches. The reason can be found by investigating the optimization setup: The worst-case stress qmax, with respect to the optimized square failure patch, is in the best case (failure size 40) 1.64 times higher than the undamaged stress qFOD. Additionally, there are other failure patches creating higher stresses in other locations than at the re-entrant corner. Remember, the KS-aggregation in (1) is a weighted average, which is dominated by the worst-case failure. With that in mind, the re-entrant corner can obviously not be detected or circumvented as long as there are other failure cases creating higher stresses than the one at the re-entrant corner. The authors conducted a multi objective optimization additionally taking into account the undamaged stress field (see Appendix E). Applying a high weighing factor to the undamaged stress field yields a design in which the re-entrant corner is avoided, while the design is less redundant and shows worse fail-safe performance.

Both the worst-case stress qmax and the FSF are lowest for the biggest failure size and mainly driven by the shape of the notch revealed by removing the structural node at the re-entrant corner (compare failure size 30 and 40 in Fig. 14). For the cantilever beam example treating nodes as possible failure seemed to be too conservative in some cases. Contrary to that, for the L-beam example, considering only nodes and struts as possible failure seems to be too optimistic. The cuts shown in Fig. 15 starting at the re-entrant corner and running into an adjacent hole are not identified as possible failure, even though they appear to be more realistic than a failure of the whole node. Still, the proposed approach considers more realistic failures that the patch-based approach.

6.3 Influence of the failure shape

Numerical results for different failure shapes are given in Fig. 16. The failure size and increment is 30, except for the overlapping circles. For overlapping circles, a bigger failure size is chosen such that diagonal failures barely touch each other. Blurred square and circle failure shapes as well as the simple circle failure shape show deficiencies in splitting up the primary load path especially in diagonally oriented members and at the re-entrant corner. The optimized design obtained with overlapping blurred circular failures avoids the stress singularity at the re-entrant corner. However, the FSF and the worst-case stress qmax = 1.29 are higher compared to a simple square optimized design (qmax = 1.11 see also Fig. 14). Both, the lowest worst-case stress qmax and the lowest FSF are obtained for a square shaped failure patch optimized design. The lowest undamaged stress qFOD = 0.366 is naturally obtained for the design circumventing the re-entrant corner.

7 Fail-safe evaluation of alternative multiple load path designs

In the following section, multiple load path designs are obtained by using maximum length scale techniques. This approach requires a fraction of the computational cost of the previously discussed FSO. Therefore, the fail-safe performance of designs obtained with a maximum length scale approach are investigated. Parameters are chosen such that at least two redundant load path are obtained. Different configurations are tested and the most representative results are compared against the best performing fail-safe optimized design.

For all examples depicted below (cantilever and L-beam) the filter radius R = 6, the global volume fraction α = 0.4 and the stress aggregation is chosen identically to the above investigated FSO. The projection parameter β is updated in every iteration, starting at the first iteration with β0 = 1, which is then multiplied by a constant factor of approximately 1.007 in order to reach a value of βmax = 16 after 400 iterations. From there on β is kept constant. Hence, in the first 400 iterations there is a small error in the gradient. However, this small error is deemed acceptable and does not penalize the convergence.

Two different test regions, also referred to as element neighborhood, are applied. First, the classical circular test region and second, the so called anisotropic filter, which increases the cross connections in areas of high anisotropic stresses. The anisotropic filter consists of two elliptic test region per element, one rotated by 90∘. Both test regions are described in the original approach by Wu et al. (2018) and are indicated with a green circle or ellipses in Fig. 17. Note that the local volume constraint implicitly imposes an upper bound on the global volume resulting in a not fully utilized global volume fraction, which was already observed by Wu et al. (2018). Table 2 summarizes the local volume parameter and lists the actual global volume fractions α∗ achieved at the end of the optimization.

7.1 Numerical results for the cantilever example

Stress optimized and length scale–controlled designs for the cantilever example are depicted in Fig. 17. The first design (a) is obtained with a circular test region and the remaining designs (b–d) are obtained with the anisotropic filter of different local volume fractions and ellipse radii (see Table 2). The stress scale is truncated at the best worst-case stress of the fail-safe optimized designs (compare to Fig. 10).

Comparing the obtained multiple load path designs (see Fig. 17) to a cantilever design optimized without failure (see Fig. 8), it is observed that the worst-case stress and FSF can be improved by the maximum length scale approach. Despite comparing all multiple load path designs to the best fail-safe design in Figs. 10 and 11, the best alternative multiple load path design (d) is still showing a 3.9 times higher worst-case stress. The maximum undamaged stress is superior to the ones obtained for the fail-safe optimized designs, which widens the gap between qmax and qFOD and results in a very high FSF. The FSF is approximately factor 10 higher compared to the fail-safe optimized designs.

The computational cost for deriving the designs in Fig. 17 is approximately 2 h on a single CPU and the best design in Figs. 10 and 11 need approximately 12 h on 16 CPUs. It is obvious that stress-based FSO is very computationally expensive, but there is a big difference in the worst-case stresses and fail-safe performance.

7.2 Numerical results for the L-beam example

Stress optimized and length scale–controlled designs for the L-beam example are depicted in Fig. 18. The first design (a) is obtained with a circular test region and all others (b–d) are obtained with the anisotropic filter of different local volume fractions and ellipse radii (see Table 2). The stress scale is truncated at the best worst-case stress of the fail-safe optimized designs (compare to Fig. 14).

For the following comparison, designs with redundant load path from Figs. 14 and 16 are considered only. Comparing multiple load path designs in Fig. 18 to the ones from Figs. 14 and 16, it is observed that the worst-case stress qmax is much lower for the fail-safe optimized designs. On the other hand, the undamaged stress qFOD is slightly lower for the length scale optimized designs, which is a results of avoiding the re-entrant corner. In conclusion, the fail-safe performance is quite detrimental for the length scale optimized designs due to the very high worst-case stress and FSF.

7.3 General remarks

Although the undamaged stress of the length scale optimized designs is lower, the high worst-case stress based on the load path evaluation is quite detrimental for a fail-safe application. This might be due to the following reasons. Firstly, fail-safeness is obviously not explicitly considered in the optimization. Secondly, since a stress-based optimization thickens structural members to decrease stresses, local volume constraints restrict forming big members. Thus, even if a stress objective is chosen, the optimizer cannot place material where it might be needed. Thirdly, in stress-based FSO the secondary load path is usually thicker than the primary one, since the available height or width is smaller and thus the moment of inertia is increase by increasing the thickness to lower the stress (compare Figs. 11 and 16). This freedom is not given for a length scale optimization.

Summarized, the computational benefits of using maximum length scale control causes a significant loss in performance compared to the explicit stress-based FSO. Moreover, the maximum length scale optimization requires much heuristic parameter tuning which in practice might results in running countless optimizations until a good parameter setting is found without guaranteeing a good fail-safe performance.

8 Conclusion

A major drawback of the original failure patch approach is that it requires an excessive amount of failure cases to be calculated per iteration. To overcome this burden, it is proposed to only use as many failure patches as needed to cover the design domain without gap and overlap. Conceding that the most critical failure case of all regularly shaped failure patches may be missed, this is irrelevant for practical applications. In practice, it is more meaningful to consider the failure of actual load path. Thus, a novel evaluation scheme, based on actual load path is proposed to characterize fail-safe designs. By applying the proposed evaluation scheme, it could be shown, that it is sufficient to use only few failure patches in the stress-based FSO to generate well performing fail-safe designs. At the same time, considering fewer failure patches reduced the computational cost significantly.

The proposed load-path-based evaluation scheme is a general and universal procedure, which can be applied to all kinds of lattice-like topologies to achieve comparable results. This is especially important for stress-based optimization, where the underlying failure shape supposedly influences the outcome of the optimization. Surprisingly, the investigation of different failure shapes did not show any significant influence compared to the simple square failure patch. Different failure patch sizes and shapes of course lead to slightly different designs, but avoiding stress singularities by using round or even blurred failure patches did not improve the convergence behavior nor the fail-safe properties.

Controlling the maximum length scale also allows deriving designs with redundant load paths. Although the undamaged stress is lower, the very high worst-case stress is quite detrimental for a fail-safe application. Still, the worst-case stress is lower compared to a simple stress optimized design. The computational cost of the maximum length scale approach seems much lower than the explicit approach. Nevertheless, it involves much more heuristic parameter tuning than the explicit approach, which in practice requires to run many optimizations to find a good parameter setting. In difference to that, the assumptions required for the explicit approach (failure shape and size) are more reasonable and easier to justify.

Notes

The singularity phenomenon is only one of the three often mentioned challenges related to stress-based topology optimization. The two other challenges are namely the local nature of stresses and the highly nonlinear design response (see Le et al. 2009, da Silva et al. 2019 and Holmberg et al. 2013 among others).

A mesh independency study has been performed. For more details, see Appendix F.

To generate blurred failure patches, a full density field (ρ = 1) is generated and the elemental densities of the considered sharp-edge failure patch are set to zero. This density field is then filtered with the variable filter applying a filter radius of 33% of the failure size. Intermediate densities (considering a threshold of 0.98) are stored and multiplied with the projected density field to scale the elemental stiffness matrix (see Appendix B).

References

Ambrozkiewicz O, Kriegesmann B (2018) Adaptive strategies for fail-safe topology optimization. In: EngOpt 2018 - 6th international conference on engineering optimization. https://doi.org/10.1007/978-3-319-97773-7_19, Lisbon, Portugal, pp 200–211

Ambrozkiewicz O, Kriegesmann B (2020) Density-based shape optimization for fail-safe design. J Comput Des Eng 7(0):1–15. https://doi.org/10.1093/jcde/qwaa044

Bendsoe MP, Sigmund O (2004) Topology optimization: theory, methods and applications, 2nd edn. Springer-Verlag, Berlin Heidelberg. https://doi.org/10.1007/978-3-662-05086-6

Bourdin B (2001) Filters in topology optimization. Int J Numer Methods Eng 50(9):2143–2158. https://doi.org/10.1002/nme.116

Bruns TE, Tortorelli DA (2001) Topology optimization of non-linear elastic structures and compliant mechanisms. Comput Methods Appl Mech Eng 190(26):3443–3459. https://doi.org/10.1016/S0045-7825(00)00278-4

Carstensen JV, Guest JK (2018) Projection-based two-phase minimum and maximum length scale control in topology optimization. Struct Multidiscip Optim 58(5):1845–1860. https://doi.org/10.1007/s00158-018-2066-4

Clausen A, Andreassen E (2017) On filter boundary conditions in topology optimization. Struct Multidiscip Optim 56(5):1147–1155. https://doi.org/10.1007/s00158-017-1709-1

Fernandez E, Collet M, Alarcon P, Bauduin S, Duysinx P (2019) An aggregation strategy of maximum size constraints in density-based topology optimization. Struct Multidiscip Optim 60(5):2113–2130. https://doi.org/10.1007/s00158-019-02313-8

Fernández E, Kk Yang, Koppen S, Alarcon P, Bauduin S, Duysinx P (2020) Imposing minimum and maximum member size, minimum cavity size, and minimum separation distance between solid members in topology optimization. Comput Methods Appl Mech Eng 368:113,157. https://doi.org/10.1016/j.cma.2020.113157

Gamache JF, Vadean A, Noirot-Nerin E, Beaini D, Achiche S (2018) Image-based truss recognition for density-based topology optimization approach. Struct Multidiscip Optim, pp 1–13. https://doi.org/10.1007/s00158-018-2028-x

Guest JK (2009) Imposing maximum length scale in topology optimization. Struct Multidiscip Optim 37(5):463–473. https://doi.org/10.1007/s00158-008-0250-7

Holmberg E, Torstenfelt B, Klarbring A (2013) Stress constrained topology optimization. Struct Multidisc Optim 48:33–47. https://doi.org/10.1007/s00158-012-0880-7

Jansen M, Lombaert G, Schevenels M, Sigmund O (2014) Topology optimization of fail-safe structures using a simplified local damage model. Struct Multidiscip Optim 49(4):657–666. https://doi.org/10.1007/s00158-013-1001-y

Kirsch U (1990) On singular topologies in optimum structural design. Struct Optim 2(3):133–142. https://doi.org/10.1007/BF01836562

Lazarov BS, Wang F (2017) Maximum length scale in density based topology optimization. Comput Methods Appl Mech Eng 318:826–844. https://doi.org/10.1016/j.cma.2017.02.018

Le C, Norato J, Bruns T, Ha C, Tortorelli D (2009) Stress-based topology optimization for continua. Struct Multidiscip Optim 41(4):605–620. https://doi.org/10.1007/s00158-009-0440-y

Poon NMK, Martins JRRA (2007) An adaptive approach to constraint aggregation using adjoint sensitivity analysis. Struct Multidiscip Optim 34(1):61–73. https://doi.org/10.1007/s00158-006-0061-7

da Silva GA, Beck AT, Sigmund O (2019) Stress-constrained topology optimization considering uniform manufacturing uncertainties. Comput Methods Appl Mech Eng 344:512–537. https://doi.org/10.1016/j.cma.2018.10.020

Stolpe M, Svanberg K (2001) An alternative interpolation scheme for minimum compliance topology optimization. Struct Multidiscip Optim 22(2):116–124. https://doi.org/10.1007/s001580100129

Sun PF, Arora JS, Jr H EJ (1976) Fail-safe optimal design of structures. Eng Optim 2 (1):43–53. publisher: Taylor & Francis _eprint: https://doi.org/10.1080/03052157608960596

Svanberg K (1987) The method of moving asymptotes - a new method for structural optimization. Int J Numer Methods Eng 24(2):359–373. https://doi.org/10.1002/nme.1620240207

Verbart A, Langelaar M, Keulen Fv (2017) A unified aggregation and relaxation approach for stress-constrained topology optimization. Struct Multidiscip Optim 55(2):663–679. https://doi.org/10.1007/s00158-016-1524-0

Wang F, Lazarov BS, Sigmund O (2011) On projection methods, convergence and robust formulations in topology optimization. Struct Multidiscip Optim 43(6):767–784. https://doi.org/10.1007/s00158-010-0602-y

Wang H, Liu J, Wen G, Xie YM (2020) The robust fail-safe topological designs based on the von Mises stress. Finite Elements in Anal Des 171:103,376. https://doi.org/10.1016/j.finel.2019.103376

Wrenn GA (1989) An Indirect Method for Numerical Optimization using the Kreisselmeir-Steinhauser function. National Aeronautics and Space Administration Office of Management. Scientific and Technical Information Division, google-Books-ID: LjsCAAAAIAAJ

Wu J, Aage N, Westermann R, Sigmund O (2018) Infill optimization for additive manufacturing—approaching bone-like porous structures. IEEE Trans Vis Comput Graph 24 (2):1127–1140. https://doi.org/10.1109/TVCG.2017.2655523

Xu S, Cai Y, Cheng G (2010) Volume preserving nonlinear density filter based on heaviside functions. Struct Multidiscip Optim 41(4):495–505. https://doi.org/10.1007/s00158-009-0452-7

Yang RJ, Chen CJ (1996) Stress-based topology optimization. Struct Optim 12(2-3):98–105. https://doi.org/10.1007/BF01196941

Zhou M, Fleury R (2016) Fail-safe topology optimization. Struct Multidiscip Optim, pp 1–19. https://doi.org/10.1007/s00158-016-1507-1

Acknowledgements

The authors thankfully acknowledge the helpful reviewer comments. We thank Krister Svanberg for providing his MATLAB implementation of the MMA.

Funding

Open Access funding enabled and organized by Projekt DEAL. This research is funded by the German Research Foundation (reference number KR 4914/3-1).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Responsible Editor: Jianbin Du

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Replication of results

The author states that the paper contains all information necessary to reproduce the results.

Appendices

Appendix A: Recapitulation of two-step variable filter

We implement the classical density filter together with a projection filter (Xu et al. 2010; Wang et al. 2011). The density filter, first proposed by Bourdin (2001) and Bruns and Tortorelli (2001), averages the density of element e according to the weighted distance in a circular neighborhood Ne. The neighborhood elements are defined as:

where R = 0 is the filter radius, xj are the centroid coordinates of element j and xe are the centroid coordinates of element e. The filtered density for each element \(\tilde {\rho }_{e}\) is then calculated as follows:

where \(w\left (\mathbf {x}_{j} \right ) = R-\left \| \mathbf {x}_{j} - \mathbf {x}_{e} \right \|\) is a conic weighting function, vj is the volume and ρj is the density of element j.

To avoid boundary effects, Clausen and Andreassen (2017) suggested to extend the design space with zero density elements. To save memory, the denominator in (9) can simply be set to the maximum of all element neighborhoods \(\max \limits \left ({\sum }_{j=1}^{N_{e}} w\left (\mathbf {x}_{j}\right ) v_{j} \right ) \forall N_{e}\). By that, the design space is virtual extended for elements close to the boundary. This modification is however not applied to elements near boundary conditions or load introduction.

Due to the averaging effect of the density filter, a second filter, a so-called projection filter, is applied to obtain black and white designs. A common approach to project the filtered densities was first proposed by Xu et al. (2010) and can be simplified to (Wang et al. 2011):

with β the projection parameter, controlling the nonlinearity. With η ∈ [0, 1] the inflection point can be shifted and is also referred to as threshold. A close to linear projection is achieved for β = 1 and η = 0.5. For \(\beta \to \ \ \infty \) the projection functions becomes the step function. Usually, the projection parameter is increased through a continuation scheme to avoid numerical instabilities. Especially in stress-based topology optimization, choosing a proper projection parameter and continuation is crucial and can influence the final outcome strongly. If not noted otherwise, the initial β0 = 1 and it is increase every 100 iterations by a factor of 2 until βmax = 16 is reached.

Appendix B: Stiffness interpolation and fast model assembly

The stiffness is interpolated by utilizing the solid isotropic material with penalization (SIMP) scheme. It interpolates the Young’s modulus of each element E0 acc. to the element’s relative projected density as follows:

where Ee is the interpolated Young’s modulus of element e. The penalization factor is p and Emin is a small number to avoid a singular stiffness matrix. For all later shown results, we have chosen E0 = 1, Emin = 10− 9 and p = 3.

The interpolated Young’s modulus is then used to scale the elemental stiffness matrix \(\mathbf {k}_{e}^{0}\):

where \(\mathbf {k}_{e}^{0}\) is the elemental stiffness matrix for unit Young’s modulus. The scaled elemental stiffness matrix ke is then used to assemble the global stiffness matrix K. This is done once per iteration.

Note, K represents the global stiffness matrix of the undamaged density field. To avoid an entire assembly of K(i) for each failure case, only affected entries are modified. If N is the complete set of all element indices, then all elements belonging to failure i are represented by P(i) ⊂ N. The stiffness matrix of failure i becomes

Still, a fail-safe optimization requires solving of one state (1) per failure case, which makes this approach computational expensive. But since these state equations are independent of each other, assembling and solving can be parallelized straightforwardly (compare to Fig. 1).

Appendix C: Stress interpolation

Elemental stresses are calculated at the element’s centroid assuming a linear elastic material model and a plane stress state as follows:

where Ce is the constitutive matrix for isotropic material, Be is the strain-displacement matrix and ue is the elemental displacement vector. The von Mises stresses in (1) are then calculated according to the simple formula:

where σe,11, σe,22 and σe,12 are the components of the stress tensor for a plane stress state. Similar to stiffness interpolation, elemental stresses are penalized with respect to elemental densities to avoid the singularity phenomenon (Le et al. 2009). Herein, we use the RAMP interpolation scheme (Stolpe and Svanberg 2001) with a penalization factor of p = − 0.5.

Appendix D: Sensitivity analysis

Gradient-based optimization algorithms require the derivatives of the objective and constraint function. According to common practice, the partial derivatives of the KS aggregated von Mises stress (2) with respect to the elemental densities are determined using the chain rule and the adjoint method (Bendsoe and Sigmund 2004). Starting by subtracting the equilibrium constraint (Ku −f = 0) multiplied by Lagrangian multiplier λ(i) from the objective function, namely the KS aggregated von Mises stress, yields:

Differentiating this expression with respect to the projected design variables \(\bar {\rho }_{e}\) yields:

Following derivatives result from the KS aggregation:

Equation (18) can be rearranged, isolating \({\delta \mathbf {u}^{(i)}\left .\left (\boldsymbol {\rho }\right )\right )}/\) \({\delta \bar {\rho }_{j}^{(i)}}\):

Requesting the term inside curly brackets to vanish yields the adjoint system:

Since the above given derivatives are derived with respect to the projected density \(\bar {\rho }_{e}\) the derivative with respect to the design variables ρe needs to be determined as well.

Appendix E: Multi-objective fail-safe analysis

Since the L-beam designs obtained by explicitly considering fail-safeness during optimization do not avoid the stress singularity at the re-entrant corner, a multi-objective optimization is carried out. The objective function in (1) is replaced by:

where stresses of the undamaged stress field \(q_{j}^{FOD}\) are aggregated independent of the fail-safe stresses \(q_{j}^{(i)}\) i.e. with a different aggregation parameter γFOD.

The aggregation parameter of the undamaged stress field γFOD is updated to \(\gamma ^{FOD}=10/q_{0}^{FOD}\). The update is performed simultaneously to γ. The weighting factor κ∗ = κqKS/qFOD takes into account a constant factor κ and the ratio of the fail-safe aggregated stress and the undamaged stress. This results in a normalization with respect to the undamaged stress field. The weighting factor is updated in every iteration to assure a constant ratio.

Results for different κ are depicted in Fig. 19. It is observed that the undamaged stress field is dominating the optimization for increasing κ, showing a convergence towards an optimization without failure patches (compare to Fig. 13).

Appendix F: Mesh independency

Figure 20 depicts designs for different mesh resolutions obtained with and without considering failure patches during optimization. The model dimensions are set to 180 by 60 and elements sizes are set to 1, 2/3 and 1/2 respectively (from top to bottom). As in Section 5.1 the aggregation parameter is updated to γ = 8/q0 every 10 iterations. All remaining settings are equal to the ones chosen in Section 5.1.

The designs obtained without failure do not differ. The fail-safe optimized designs are almost equal except the inner structure, i.e. supporting struts and redundant load path. These differences are considered to be minor and it is concluded that mesh independency is assured for the proposed approach.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kranz, M., Lüdeker, J.K. & Kriegesmann, B. An empirical study on stress-based fail-safe topology optimization and multiple load path design. Struct Multidisc Optim 64, 2113–2134 (2021). https://doi.org/10.1007/s00158-021-02969-1

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00158-021-02969-1