Abstract

In this paper, following the studies in Amorim et al. (Partial Differ Equ Appl 4, 36, 2023), we consider some new aspects of the motion of the director field of a nematic liquid crystal submitted to a magnetic field and to a laser beam. In particular, we study the existence and partial orbital stability of special standing waves, in the spirit of Cazenave and Lions (Commun Math Phys 85:549–561, 1982) and Hadj Selem et al. (Milan J Math 82:273–295, 2014) and we present some numerical simulations.


1 Introduction and Main Results

A great number of technological applications related to data display and nonlinear optics use thin films of nematic liquid crystals (cf. [7] for the general theory of nematic liquid crystals). In such devices, the local direction of the optical axis of the liquid crystal is represented by a unit vector \(\textbf{n}(x,t)\), called the director, which may be modified by the application of an electric or magnetic field. The interaction of a light beam with the dynamics of the director \(\textbf{n}(x,t)\) under a magnetic field helps to improve device performance.

In this paper we consider the model introduced in [1] to describe the motion of the director field of a nematic liquid crystal submitted to an external constant strong magnetic field \(\textbf{H}\), with intensity \(H \in {\mathbb {R}}\), and also to a laser beam, assuming some simplifications and approximations motivated by previous experiments and models (cf. [2, 3, 20, 21], for magneto-optic experiments, and [16, 24] for the simplified director field equation). The system under consideration reads

where i is the imaginary unit, u(x, t) is a complex-valued function representing the wave function associated with the laser beam in the presence of the magnetic field \(\textbf{H}\) orthogonal to the director field, \( \rho \in {\mathbb {R}}\) measures the angle of the director field with the x axis, \(v=\rho _{x}\), and \(a,H \in {\mathbb {R}}, b > 0\) are given constants, with initial data

and where the function \(\sigma (v)\) is given by

where \(\alpha \ge \beta > 0\) are elastic constants of the liquid crystal, cf. [16], and

where \(\chi _a > 0\) is the anisotropy of the magnetic susceptibility, cf. [20].

In the quasilinear case \(\alpha > \beta , \alpha \simeq \beta \), the existence of a global weak solution to the Cauchy problem for the system (1.1) with the initial data (1.2), in suitable spaces, was studied in [1] by applying the compensated compactness method introduced in [22] to the system regularised with a physical viscosity, together with the vanishing viscosity method (cf. also [8, 9] for two examples of this technique applied to related short-wave–long-wave systems).

In Sect. 2 we prove, in the general case (\(\lambda \ge 0\)), a local-in-time existence and uniqueness theorem for classical solutions of the Cauchy problem (1.1), (1.2), by applying Theorem 6 in [19]. For this purpose, we need to introduce some function spaces and recall several well-known results:

Let A be the linear operator defined in \(L^2({\mathbb {R}})\) by

where \(D(A)= \big \{ u \in X | Au \in L^2 ({\mathbb {R}}) \big \}\), with

We also define the norm \(\Vert u\Vert _X^2 = \Vert u_x\Vert _2^2 +\Vert xu\Vert _2^2 \), for \(u\in X\), denoting by \(\Vert .\Vert _p\) the norm \(\Vert .\Vert _{L^p({\mathbb {R}})}\). It can be proved, cf. [23], that if \(u\in X_1=\big \{ u | xu, u_x \in L^2 ({\mathbb {R}}) \big \}\), then \(u\in L^2({\mathbb {R}})\) with

and so \(X=X_1\), and it is not difficult to prove that the injection of X in \(L^q({\mathbb {R}}), 2\le q < + \infty \), is compact (cf. [11]).
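The \(L^2\) bound quoted from [23] is of Heisenberg type. A standard form of such a bound, given here as an illustration rather than as the exact omitted display, is \(\Vert u\Vert _2^2 \le 2\Vert u_x\Vert _2\Vert xu\Vert _2\). It follows from \(\int |u|^2 dx = -2\,{\mathcal {R}}e \int x\bar{u} u_x\, dx\) and the Cauchy–Schwarz inequality, with equality for Gaussians. The minimal Python sketch below checks it numerically. The grid, the test functions and the helper name `heisenberg_ratio` are our own assumptions, and the norms are approximated by finite differences and Riemann sums on a truncated interval.

```python
import numpy as np

def heisenberg_ratio(u, x):
    """Return ||u||_2^2 / (2 ||u_x||_2 ||x u||_2) for samples of u on the uniform grid x."""
    h = x[1] - x[0]
    ux = np.gradient(u, h)                             # centred finite differences
    mass = np.sum(np.abs(u) ** 2) * h                  # ||u||_2^2 (Riemann sum)
    grad = np.sqrt(np.sum(np.abs(ux) ** 2) * h)        # ||u_x||_2
    moment = np.sqrt(np.sum(np.abs(x * u) ** 2) * h)   # ||x u||_2
    return mass / (2.0 * grad * moment)

x = np.linspace(-20.0, 20.0, 8001)
gaussian = np.exp(-x**2 / 2)                      # equality case of the inequality
modulated = (1 + x**2) ** -2 * np.exp(1j * x)     # complex-valued, not Gaussian
print(heisenberg_ratio(gaussian, x))              # approximately 1.0
print(heisenberg_ratio(modulated, x))             # approximately 0.72
```

A ratio close to 1 for the Gaussian and strictly below 1 for the modulated profile is what the inequality predicts.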

Moreover, it can also be proved (cf. [4, Lemma 9.2.1]) that A is self-adjoint in \(L^2({\mathbb {R}})\), that \((Au,u) \le 0\) for all \(u\in D(A)\), and (cf. [14]),

We can now state the first result that will be proved in Sect. 2:

Theorem 1.1

Let \((u_0,\rho _0,\rho _1)\in D(A)\times H^3\times H^2\) and \(\lambda \ge 0\). Then there exists \( T^* = T^*(u_0,\rho _0,\rho _1) > 0\) such that, for all \(T < T^*\), there exists a unique solution \((u,\rho )\) to the Cauchy problem (1.1), (1.2) with \(u\in C ( [0,T]; D(A) )\cap C^1( [0,T]; L^2 )\) and \( \rho \in C ( [0,T]; H^3 )\cap C^1( [0,T]; H^2 ) \cap C^2 ( [0,T]; H^1)\).

As is well known, in the quasilinear case the local solution in general blows up in finite time. In Sect. 3, by deriving suitable estimates, we prove the following result in the semilinear case (\(\alpha = \beta \)):

Theorem 1.2

Let \((u_0,\rho _0,\rho _1)\in D(A)\times H^3\times H^2\) and \(\lambda =0\). Then there exists a unique global-in-time solution \((u,\rho )\) to the Cauchy problem (1.1), (1.2), with \(u\in C ( [0,+\infty ); D(A) )\cap C^1( [0,+\infty ); L^2 )\) and \( \rho \in C ( [0,+\infty ); H^3 )\cap C^1( [0,+\infty ); H^2 ) \cap C^2 ( [0,+\infty ); H^1)\).

In the special case of initial data with compact support, we will prove in Sect. 4 the following result:

Theorem 1.3

Assuming the hypotheses of Theorem 1.2, consider the particular case where

Then, for each \( t > 0 \) and \( \varepsilon > 0 \), there exists a \(\delta = \delta ( t,\varepsilon ,\Vert u_0\Vert _{H^1} ) > 0 \), such that

where \(B( 0, \delta ) = \big \{ x\in {\mathbb {R}}| |x| < \delta \big \} \).

The proof of this result follows a technique introduced in [6] in the case of the nonlinear Schrödinger equation.

In Sect. 5, which contains the main result in the paper, we study the existence and possible partial orbital stability of the standing waves for the system (1.1) with \(a=-1\) (attractive case) and \(\lambda \ge 0 \). These solutions are of the form

and the system (1.1) takes the form (we fix \(\alpha =1\), without loss of generality):

We can rewrite this system as a scalar equation

where \(\rho (f)\) is the solution to \( - \rho _{xx}- \lambda (\rho _x^3)_x + b\rho = f\). It is not difficult to prove that, if \(f \in L^2\), there exists a unique \(\rho \in H^2\) satisfying this equation. This allows one, for instance, to prove that \(\rho (|u|^2) u^2 \in L^1\) provided that \(u\in X\). Now, to find nontrivial solutions of this equation belonging to D(A), the domain of the linear operator defined by (1.5), we will closely follow the technique introduced in [11] for the Gross–Pitaevskii equation. More precisely, we consider the energy functional defined in X by (with \(\int . dx = \int _{\mathbb {R}}. dx\)):

and we seek to solve the following constrained minimization problem for a prescribed \(c>0\):

We start by proving the following result which corresponds to Lemma 1.2 in [11].

Theorem 1.4

We have:

i) The energy functional \({\mathcal {E}}\) is \(C^1\) on X, viewed as a real space.

ii) The mapping \(c \rightarrow {\mathcal {I}}_c \) is continuous.

iii) Any minimizing sequence of \({\mathcal {I}}_c\) is relatively compact in X and so, if \(\{u_n\}_{n\in {\mathbb {N}}} \subset X\) is a corresponding minimizing sequence, then there exists \(u\in X\) such that \(\Vert u\Vert ^2_2 = c^2\) and \(\lim _ {n\rightarrow +\infty } u_n = u\) in X. Moreover, \(u(x)= u(|x|)\) is radially decreasing and satisfies (1.13) for some \(\mu \in {\mathbb {R}}\).

To prove this result we follow the ideas in [11] and introduce the real space \( {\tilde{X}}= \big \{w=(u,v) \in X\times X\big \}\), for real-valued u and v, with norm

and observe that if \(u =u_1 + i u_2\), with \(u_1={\mathcal {R}}e\, u, u_2={\mathcal {I}}m\, u\), Eq. (1.13) can be written in system form:

with \(w=(u_1,u_2)\in {\tilde{X}}, u_1={\mathcal {R}}e\, u, u_2={\mathcal {I}}m\, u\).

In the new space \({\tilde{X}}\), the functional defined in (1.14) takes the form, for \(w=(u_1,u_2)\in {\tilde{X}}\) and \(|w|^4=(|u_1|^2 + |u_2|^2)^2\),

and, for all \(c>0\), we introduce

and the sets

Following [5] and [11], we introduce the following definition:

Definition: The set \({\mathcal {Z}}_c\) is said to be stable if \({\mathcal {Z}}_c \ne \varnothing \) and for all \(\varepsilon > 0\), there exists \(\delta > 0\) such that, for all \(w_0=({u_1}_0,{u_2}_0) \in {\tilde{X}} \), we have, for all \(t\ge 0\),

where \(\psi (x,t) = (u_1(x,t),u_2(x,t))\) corresponds to the solution \( u(x,t) = u_1(x,t) + i u_2(x,t) \) of the first equation in the Cauchy problem (1.1),(1.2), with initial data \( u_0(x) = {u_1}_0(x) + i {u_2}_0(x) \) and where \(\rho (x,t)= \rho (|u(x,t)|^2)(x,t)\) satisfies

This corresponds to the hypothesis \(\rho _{tt} \simeq 0\), cf. [2, 3, 20]. The local existence and uniqueness in X for the corresponding Cauchy problem for the Schrödinger equation is a consequence of Theorem 3.5.1 in [4]. Global existence of such a solution \(\psi (t)\) is easily obtained if its initial datum is close to \({\mathcal {Z}}_c\). Indeed, denote by T the maximal time of existence and suppose that \({\mathcal {Z}}_c\) is stable at least up to time T. Using the stability at time T, we see that \(\psi (T)\) is uniformly bounded in \({\tilde{X}}\). Therefore, we can apply the local existence result with initial data \(\psi (T)\). This contradicts the maximality of T and yields global existence.

Proceeding as in the proof of Theorem 1.3 (see in particular (5.9)), we can show that

where C is a constant not depending on t. So, if w is stable, we derive, in the conditions of the definition,

We point out that, if \(w=(u_1,u_2)\in {\mathcal {Z}}_c\), then there exists a Lagrange multiplier \(\mu \in {\mathbb {R}}\) such that w satisfies (1.17), that is \(u = u_1 + i u_2\) satisfies (1.13).

We will prove the following result which is a variant of Theorem 2.1 in [11]:

Theorem 1.5

The functional \(\tilde{{\mathcal {E}}}\) is \(C^1\) in \({\tilde{X}}\) and we have

i) For all \(c>0, {\mathcal {I}}_c = \mathcal {{{\tilde{I}}}}_c, {\mathcal {Z}}_c \ne \varnothing \) and \({\mathcal {Z}}_c\) is stable.

ii) For all \(w\in {\mathcal {Z}}_c, |w| \in {\mathcal {W}}_c\).

iii) \({\mathcal {Z}}_c = \big \{ e^{i\theta }u, \theta \in {\mathbb {R}}\big \}\), with u real being a minimizer of (1.15).

The proof of this result is similar to that of Theorem 2.1 in [11]; we repeat some parts of the original proof for the sake of completeness. Next, in Sect. 6, again closely following [11], we prove a bifurcation result asserting, in particular, that all solutions of the minimisation problem (1.15) belong to a bifurcation branch starting from the point \((\lambda _0,0)\) (in the plane \((\mu ,u)\)), where \(\lambda _0\) is the first eigenvalue of the operator \(-\partial _{xx} +H^2 x^2\).

Proposition 1.6

The point \((\lambda _0,0)\) is a bifurcation point for (1.13) in the plane \((\mu ,u)\) where \(-\mu \in {\mathbb {R}}^+\) and \(u\in X\). The branch issued from this point is unbounded in the \(\mu \) direction (it exists for all \(-\mu >\lambda _0\)). Moreover solutions to (1.13) belonging to this branch are in fact minimizers of problem (1.15).

As already mentioned, the proof of this proposition closely follows that of [11, Theorem 3.1]. An important ingredient, which is also of independent interest, is the following uniqueness result.

Proposition 1.7

There exists a unique radial positive solution to (1.13) such that \(\lim _{r\rightarrow \infty } u(r)=0\).

The proof of this proposition is strongly inspired by [15].

Finally, in Sect. 7 we present some numerical simulations illustrating the behaviour of the standing waves according to the intensity of the magnetic field \(\textbf{H}\), and also the limit as the Lagrange multiplier \(-\mu \) approaches the bifurcation value \(\lambda _0\).

2 Local Existence in the General Case

In order to prove Theorem 1.1, let us introduce the Riemann invariants associated to the second equation in the system (1.1),

where \(w=\rho _t, v=\rho _x\). We derive

Noticing that

is one-to-one and smooth, we have \(v=f^{-1}(l-r)=v(l,r)\) and, for classical solutions, the Cauchy problem (1.1), (1.2) is equivalent to the system

with initial data (cf. (1.5), (1.8)),

In order to apply Kato’s theorem (cf. [19, Thm. 6]) to obtain the existence and uniqueness of a local in time strong solution, cf. Theorem 1.1, for the corresponding Cauchy problem, we need to pass to real spaces, introducing the variables

Now, we can pass to the proof of Theorem 1.1:

With \( ({u_{1}}_{0},{u_{2}}_{0}) = (u_1(.,0),u_2(.,0))\), let

and

The initial value problem (2.2), (2.3) can be written in the form

Let us take

(the condition \(\rho _0 \in H^3({\mathbb {R}})\) will be used later). We now set \( Z = (L^2({\mathbb {R}}))^2\times (L^2({\mathbb {R}}))^3\) and \(S=((1-A)I)^2\times ((1-\Delta )I)^3\), which is an isomorphism \(S:Y \rightarrow Z\). Furthermore, we denote by \(W_R\) the open ball in Y of radius R centered at the origin and by \(G(Z,1,\omega )\) the set of linear operators \(\Lambda :\,D(\Lambda )\subset Z\rightarrow Z\) such that:

- \(-\Lambda \) generates a \(C_0\)-semigroup \(\{e^{-t\Lambda }\}_{t\in {\mathbb {R}}_+}\);

- for all \(t\ge 0\), \(\Vert e^{-t\Lambda }\Vert \le e^{\omega t}\), where, for all \(U\in W_R\),

$$\begin{aligned} \omega =\frac{1}{2} \sup _{x\in {\mathbb {R}}}\Big \Vert \frac{\partial }{\partial x}a(\rho ,l,r)\Big \Vert \le c(R), \quad c: [0,+\infty [\rightarrow [0,+\infty [ \text { continuous, and } \end{aligned}$$

$$\begin{aligned} a(\rho ,l,r)=\left[ \begin{array}{ccc} 0&{}0&{}0\\ 0&{}-\sqrt{\alpha +3\lambda v^2}&{}0\\ 0&{}0&{}\sqrt{\alpha +3\lambda v^2} \end{array}\right] . \end{aligned}$$
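The nonzero entries of \(a(\rho ,l,r)\) are the characteristic speeds \(\pm \sqrt{\alpha +3\lambda v^2}\). This suggests (our assumption, since the displays defining \(\sigma \) and the Riemann invariants are not reproduced here) that \(\sigma '(v)=\alpha +3\lambda v^2\) and that the map inverted in \(v=f^{-1}(l-r)\) is, up to the paper's normalization, \(f(v)=\int _0^v\sqrt{\alpha +3\lambda s^2}\,ds\). Since \(f'(v)\ge \sqrt{\alpha }>0\), f is one-to-one and \(|f(v)|\ge \sqrt{\alpha }|v|\), so bisection on \([-|y|/\sqrt{\alpha },|y|/\sqrt{\alpha }]\) recovers v from \(y=f(v)\). A hedged Python sketch, with hypothetical helper names `f` and `f_inv`:

```python
import math

def f(v, alpha, lam):
    """f(v) = int_0^v sqrt(alpha + 3*lam*s^2) ds in closed form (alpha > 0, lam >= 0)."""
    if lam == 0.0:
        return math.sqrt(alpha) * v
    k = math.sqrt(3.0 * lam)
    root = math.sqrt(alpha + 3.0 * lam * v * v)
    return 0.5 * v * root + alpha / (2.0 * k) * math.asinh(k * v / math.sqrt(alpha))

def f_inv(y, alpha, lam, tol=1e-13):
    """Solve f(v) = y by bisection; |f(v)| >= sqrt(alpha)|v| bounds the root."""
    bound = abs(y) / math.sqrt(alpha)
    lo, hi = -bound, bound
    while hi - lo > tol * max(1.0, bound):
        mid = 0.5 * (lo + hi)
        if f(mid, alpha, lam) < y:
            lo = mid      # f is increasing, so the root lies to the right of mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

alpha, lam = 1.0, 0.2
v = 1.5
print(f_inv(f(v, alpha, lam), alpha, lam))   # recovers v up to the bisection tolerance
```

Bisection is chosen over Newton here only for robustness; any monotone root finder would do, since f is strictly increasing.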

By the properties of the operator A (cf. Sect. 1) and following [19, Section 12], we derive

and it is easy to see that g verifies, for fixed \(T>0\), \(\Vert g(t,U(t))\Vert _Y\le \theta _R\), \(t\in [0,T]\), \(U\in C([0,T];W_R)\).

For \((\rho ,l,r)\) in a ball \({\tilde{W}}\) in \((H^2({\mathbb {R}}))^3\), we set (see [19, (12.6)]), with [., .] denoting the commutator matrix operator,

We now introduce the operator \(B(U)\in {\mathcal {L}}(Z)\), \(U=(F_1,F_2,\rho ,l,r) \in W_R\), by

In [19, Section 12], Kato proved that for \((\rho ,l,r)\in {\tilde{W}}\) we have

Hence, we easily derive

Now, it is easy to see that conditions (7.1)–(7.7) in Section 7 of [19] are satisfied, so we can apply Theorem 6 in [19] and obtain the result stated in Theorem 1.1, with \( \rho \in C ( [0,T]; H^2 )\cap C^1( [0,T]; H^1 ) \cap C^2 ( [0,T]; L^2)\).

To obtain the required regularity for \(\rho \), it is enough to remark that, since \(\rho _x=v, \rho _t = w, \rho _0 \in H^3, v_0={\rho _0}_x\in H^2, w_0 =\rho _1 \in H^2\), we deduce \(\rho _x =v \in C ( [0,T]; H^2 ), \rho _t=w \in C ( [0,T]; H^2 )\), which completes the proof of Theorem 1.1.

3 Global Existence in the Semilinear Case

Now, we consider the semilinear case, that is when \( \alpha = \beta \) and so \(\lambda = 0\).

We now pass to the proof of Theorem 1.2. For the unique local-in-time solution \((u,\rho )\) to the Cauchy problem (1.1), (1.2), defined on the interval \([0,T^*[\), \(T^* > 0\), and obtained in Theorem 1.1, we easily deduce the following conservation laws (cf. [1]) in the case \(\lambda \ge 0, \alpha >0 \):

Applying the Gagliardo–Nirenberg inequality to the term \(|\frac{a}{2} \int |u(x,t)|^{4}\;dx|\) and since \(b>0\) we easily derive (cf. [1]), for \(t\in [0,T^*[\),

We continue with the proof of Theorem 1.2, in the semilinear case, that is \(\lambda =0\). We have, for \(t\in [0,T^*[,\)

Next we estimate \(\Vert Au(t)\Vert _2,\Vert \rho _{xt}(t)\Vert _2\) and \(\Vert \rho _{xx}\Vert _2\). For \(\lambda = 0\), the system (2.2) reads

with initial data (2.3). To simplify, we assume \(\alpha =\beta =b=1\).

Recall that we have, since \(\lambda =0\),

From (3.5), we derive

and so

and a similar estimate for \(l_x.\) We deduce, with \(c_4(t)\) being a positive, increasing and continuous function,

Moreover, we derive from (3.5), formally,

\(\le c_5\int |u_t|^2 dx\), and hence

We deduce from (3.5),

We have by (3.5),

and so, formally,

by (3.9) and (1.8). But, by (3.6), we derive

and so, by (3.7) and (3.10), we deduce

and similarly

We conclude that

with \(c_{11}(t)\) being a positive, increasing and continuous function of \(t \ge 0\). This completes the proof of Theorem 1.2 (the formal computations above can be justified by a suitable smoothing procedure).

4 Special Case of Initial Data with Compact Support

We assume the hypotheses of Theorem 1.2, that is, we consider the semilinear case (\(\lambda = 0\)) and, without loss of generality, we take \(\alpha = \beta = b = |a| = 1\). We also assume that the initial data satisfy (1.9) for some \( d > 0 \). Following [6, Section 2], if we take a real-valued \( \phi \in W^{1,\infty } ({\mathbb {R}})\) and u is the solution of the Schrödinger equation in (1.1), we easily obtain

We derive

where

Moreover, from the wave equation in (1.1) with \(\lambda = 0\), we deduce, for \(t \ge 0\),

We assume

We have, by the Gagliardo–Nirenberg inequality and (4.1),

Now, with

we deduce, from (4.2), (4.4) and with

Hence, if we define

we derive, by (4.7), (4.8) and (4.1),

Now, we fix \( t > 0 \) and \( \varepsilon > 0 \) and assume that the initial data verifies (1.9). We introduce the set \( C = {\mathbb {R}}{\setminus } (D + B( 0, \delta ))\), \(\delta \) to be chosen, and the function \( \phi \in W^{1,\infty } ({\mathbb {R}}), \) real valued, verifying (4.3), \(\phi = 0\) in D, \(\phi =1\) in C and \( \Vert \phi _x\Vert _\infty =\frac{1}{ \delta } \). We have \(f(0)=0\), \(\phi u_0 = 0\), and so, by (4.9), we easily obtain

and now we can choose \(\delta \) such that (1.10) is satisfied. This concludes the proof of Theorem 1.3.
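The cut-off used in this proof can be written explicitly. Assuming, for illustration only, that D is the interval \([-d,d]\) (the precise form of (1.9) is not reproduced here), one admissible choice is \(\phi (x)=\min \{1,\textrm{dist}(x,D)/\delta \}\): it vanishes on D, equals 1 on \(C={\mathbb {R}}{\setminus } (D + B( 0, \delta ))\) and has Lipschitz constant \(1/\delta \). The Python sketch below (the helper name `cutoff` is ours) checks these three properties on a grid.

```python
import numpy as np

def cutoff(x, d, delta):
    """phi(x) = min(1, dist(x, [-d, d]) / delta), a Lipschitz cut-off with constant 1/delta."""
    dist = np.maximum(0.0, np.abs(x) - d)   # distance from x to D = [-d, d]
    return np.minimum(1.0, dist / delta)

d, delta = 1.0, 0.25
x = np.linspace(-3.0, 3.0, 6001)
phi = cutoff(x, d, delta)
slope = np.max(np.abs(np.diff(phi)) / np.diff(x))   # discrete Lipschitz constant
print(slope)                                          # approximately 1 / delta = 4
```

Since \(\phi u_0=0\) whenever u_0 is supported in D, the first term of the estimate vanishes, and the factor \(\Vert \phi _x\Vert _\infty =1/\delta \) is what ties the choice of \(\delta \) to t and \(\varepsilon \).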

5 Existence and Partial Stability of Standing Waves

We consider the system (1.1) in the attractive case \(a =-1\) and, without loss of generality, we assume that \(\alpha =1\). We want to study the existence and behaviour of standing waves of the system (1.1), that is, solutions of the form (1.11). As we have seen in the introduction, this system can be rewritten as the scalar equation (1.13). Following the technique introduced in [11] for the Gross–Pitaevskii equation, we consider the energy functional defined in X by (1.14). Recall that \( X \subset L^q ({\mathbb {R}}), 2 \le q < + \infty \), with compact injection, and that the norm in X is equivalent to the following norm (which, by abuse of notation, we also denote by \(\Vert . \Vert _{X}\))

We now pass to the proof of Theorem 1.4, which is a variant of Lemma 1.2 in [11], whose proof we closely follow. Let \(\{u_n\}\) be a minimizing sequence of \({\mathcal {E}}\) defined by (1.14) in X (real), that is

defined by (1.15). Multiplying the equation satisfied by \(\rho (|u|^2)\) by u and integrating by parts, we find

Using Young’s inequality, we get, for a constant C depending on b (we allow this constant to change from line to line),

So using the two previous lines, we get that

From Hölder’s inequality, we obtain that, for some constant \({\tilde{C}}>0\),

and, by Gagliardo–Nirenberg inequality,

Hence, reasoning as in the proof of (1.1) in [11, Lemma 1.2], we derive, for each \(\varepsilon > 0\) and \(u\in X\) such that \(\Vert u\Vert _2^2 = c^2\),

and so, for \(u\in X\) such that \(\Vert u\Vert _2^2 = c^2\), we deduce

and we can choose \(\varepsilon \) such that \(1- \varepsilon ^2 (\frac{1}{4}+\frac{{\tilde{C}}}{2} ) > 0\).

Hence, the minimizing sequence is bounded in X and there exists a subsequence \({\{u_n}\}\) such that \(u_n \rightharpoonup u\) in X (weakly). Recalling that the injection of X in \(L^4({\mathbb {R}})\) is compact, we derive

Moreover, by lower semi-continuity, we deduce

On the other hand, we have, setting \(f:= \rho (|u|^2) - \rho (|u_n|^2):=\rho - \rho _n\),

Notice using Young’s inequality that

So proceeding as in (5.2), we can show that

Using this last estimate, we deduce that

since \( |u_n|^2 \rightarrow |u|^2\) in \(L^2({\mathbb {R}})\).

Hence, u is a minimizer of (1.14), that is

We conclude that \( {\mathcal {E}}(u_n) \rightarrow {\mathcal {E}}(u)\) and so

We derive that \(u\in X\). We denote by \( u^ {\star }\) the Schwarz rearrangement of the real function u, (cf. [18] for the definition and general properties). We know that

The Pólya–Szegő inequality asserts that, for any \(f\in W^{1,p}\) with \(p\in [1,\infty ]\),

Moreover, by [11], we have

By [12, Theorem 6.3] (see also [13]), we know that

provided that \(G(t)= \int _0^t g (s) ds\) and \(g: {\mathbb {R}}_+ \rightarrow {\mathbb {R}}_+\) is such that

where \(K>0\), \(l>1\) and \(s\ge 0\). We want to apply this result for \(G(s)=\rho (s^2) s^2\). So \(g(s)= (\rho (s^2))_s s^2 +2\,s \rho (s^2) \). Observe that \((\rho (s^2))_s :=f \) is the solution to \(- f_{xx} - 3\lambda ((\rho (s^2))_x^2 f_x )_x +bf =2\,s\). Using the maximum principle, we can show that \(g: {\mathbb {R}}_+ \rightarrow {\mathbb {R}}_+\). On the other hand, by standard elliptic regularity theory, we have that \(|\rho (s^2)|, |\rho (s^2)_s|\le C(s+s^2 )\), for any \(s>0\). So, [12, Theorem 6.3] yields that

Combining all the previous inequalities, we see that \({\mathcal {E}} (u^{\star }) < {\mathcal {E}} (u)\) unless \(u= u^{\star }\) a.e., which proves that the minimizers of (1.14) are non-negative and radially decreasing. This completes the proof of Theorem 1.4.
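On a symmetric uniform grid, the Schwarz rearrangement can be approximated by placing the values of |u|, sorted in decreasing order, at the grid points sorted by increasing |x|. The Python sketch below (a discretization of our own, not taken from [18] or [11]) checks two properties used above: the \(L^p\) norms are unchanged, and \(\int x^2|u|^2dx\) does not increase, which in the discrete setting is just the rearrangement inequality. The gradient bound (Pólya–Szegő) is not checked here.

```python
import numpy as np

def discrete_rearrangement(u, x):
    """Approximate Schwarz rearrangement of |u| on a symmetric uniform grid x."""
    order = np.argsort(np.abs(x), kind="stable")   # grid indices from the centre outwards
    values = np.sort(np.abs(u))[::-1]              # values of |u| in decreasing order
    ustar = np.empty_like(values)
    ustar[order] = values                          # largest values closest to x = 0
    return ustar, order

x = np.linspace(-10.0, 10.0, 2001)
u = np.exp(-(x - 3.0) ** 2) + 0.5 * np.exp(-(x + 4.0) ** 2 / 2)   # off-centre, two bumps
ustar, order = discrete_rearrangement(u, x)
print(np.sum(x**2 * ustar**2) <= np.sum(x**2 * u**2))   # True
```

Pairing the increasing weights \(x^2\) with the decreasing values \(|u|^2\) minimizes the discrete sum, which is why the moment term can only decrease.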

We now pass to the proof of Theorem 1.5, which follows the lines of the proof of Theorem 2.1 in [11]. For the sake of completeness, and to make the argument easier to follow, we repeat some parts of that proof.

We recall (cf. [5]) that, to prove orbital stability, it is enough to prove that \({\mathcal {Z}}_c \ne \varnothing \) and that any sequence \(\big \{w_n=(u_n,v_n)\big \} \subset {\tilde{X}}\) such that \(\Vert w_n\Vert _2^2 \rightarrow c^2\) and \(\tilde{{\mathcal {E}}}(w_n) \rightarrow \tilde{{\mathcal {I}}}_c\) is relatively compact in \({\tilde{X}}\). By the computations in the proof of Theorem 1.4, the sequence \(\{w_n\}\) is bounded in \({\tilde{X}}\), so we can assume that there exist a subsequence, still denoted by \(\{w_n\}\), and \(w=(u,v) \in {\tilde{X}}\) such that \(w_n \rightharpoonup w\) weakly in \({\tilde{X}}\), that is, \(u_n \rightharpoonup u, v_n \rightharpoonup v\) in X. Hence, there exists a subsequence, still denoted by \(\{w_n\}\), such that there exists

Now, we introduce \(\varrho _n = |w_n| = ( u_n^2 + v_n^2 )^\frac{1}{2} \), which belongs to X. Following the proof of [11, Theorem 2.1], we have

\({\varrho _n}_x = \frac{u_n {u_n}_x + v_n {v_n}_x}{(u_n^2 + v_n^2)^\frac{1}{2} }\), if \(u_n^2 + v_n^2 > 0\), and \({\varrho _n}_x = 0\), otherwise.

We deduce

Hence, we derive as in [11, Theorem 2.1],

and

Applying Theorem 1.4 with \(c_n = \Vert \varrho _n\Vert _2\), we obtain

Hence, by (5.14) and (5.16), we derive

and so, by (5.13) and (5.17), we get

We can rewrite this last line as

Now, by (5.15), (5.17) and iii) in Theorem 1.4, we conclude that there exists \(\varrho \in X\) such that \(\varrho _n \rightarrow \varrho \) in X and \(\Vert \varrho \Vert _2^2 = c^2 , {\mathcal {E}} (\varrho ) = {\mathcal {I}}_c.\) Moreover \(\varrho \in H^2({\mathbb {R}}) \subset C^1({\mathbb {R}})\) is a solution of (1.13) and \(\varrho > 0\). We prove that \(\varrho =(u^2 + v^2)^{\frac{1}{2}} \) just as in the proof of Theorem 2.1 in [11, p. 279].

Finally, we prove that \(\Vert {w_n}_x\Vert _2^2\rightarrow \Vert w_x\Vert _2^2 \). By (5.19), we have \(\lim _{n \rightarrow \infty } \Vert {w_n}_x\Vert _2^2 = \lim _{n \rightarrow \infty } \Vert {\varrho _n}_x\Vert _2^2\), and \(\Vert {\varrho _n}_x\Vert _2^2 \rightarrow \Vert \varrho _x\Vert _2^2\) since \(\varrho _n \rightarrow \varrho \) in X. Hence, by weak lower semicontinuity, \(\Vert w_x\Vert _2^2 \le \lim _{n \rightarrow \infty } \Vert {w_n}_x\Vert _2^2 = \Vert \varrho _x\Vert _2^2.\) But it is easy to see that

because \( (u u_x + v v_x)^2 \le (u^2 + v^2) (|u_x|^2 + |v_x|^2)\). Hence, \(\Vert {w_n}_x\Vert _2^2 \rightarrow \Vert w_x\Vert _2^2\). We also have \( w_n \rightharpoonup w\) weakly in \( {{\tilde{X}}} \); in particular, by compactness, \( w_n \rightarrow w\) in \((L^2({\mathbb {R}}))^2 \cap (L^4({\mathbb {R}}))^2.\)
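The pointwise inequality used above is the Cauchy–Schwarz inequality in \({\mathbb {R}}^2\); it gives \(|(|w|)_x|\le |w_x|\) wherever \(|w|>0\). Its discrete analogue, \(\big ||w_{j+1}|-|w_j|\big |\le |w_{j+1}-w_j|\), is the reverse triangle inequality, so with forward differences the bound \(\Vert \varrho _x\Vert _2\le \Vert w_x\Vert _2\) holds exactly on any grid. A short Python check, with a hypothetical helper name and test profiles of our own choosing:

```python
import numpy as np

def gradient_norms(u, v, h):
    """Return (||(|w|)_x||_2^2, ||w_x||_2^2) for w = (u, v), using forward differences."""
    modulus = np.hypot(u, v)                             # |w| = (u^2 + v^2)^{1/2}
    grad_mod = np.sum(np.diff(modulus) ** 2) / h         # sum of (diff / h)^2 * h
    grad_w = np.sum(np.diff(u) ** 2 + np.diff(v) ** 2) / h
    return grad_mod, grad_w

x = np.linspace(-8.0, 8.0, 3201)
h = x[1] - x[0]
envelope = np.exp(-x**2)
a, b = gradient_norms(envelope * np.cos(2 * x), envelope * np.sin(2 * x), h)
print(a <= b)   # True: the rotating phase makes the inequality strict
```

Equality requires w to keep a fixed direction in \({\mathbb {R}}^2\), for instance v = 0 and u of constant sign.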

Since \(\mathcal {{\tilde{E}}}(w_n) \rightarrow \mathcal {{\tilde{I}}}_c = \mathcal {{\tilde{E}}}(w)\), we derive that \(\int x^2|w_n|^2dx \rightarrow \int x^2|w|^2dx\) and so \(\Vert w_n\Vert _{{{\tilde{X}}}}^2 \rightarrow \Vert w \Vert _{{{\tilde{X}}}}^2\). We conclude that \(w_n \rightarrow w\) in \( {{\tilde{X}}}\), which completes the proof of Theorem 1.5.

Remark 5.1

We would like to remark that in the semilinear case, namely when \(\lambda =0\), some arguments can be simplified. Indeed, by applying the Fourier transform to the equation defining \(\rho (|u|^2)\), we can solve this equation explicitly and derive

The energy functional is then given by:

We can directly use (5.20) to obtain an estimate on \(\rho \). To prove the symmetry of minimizers, we can use Proposition 3.2 in [17], noting that \((|u|^2)^{\star } = |u^{\star }|^2\), to deduce that

6 Bifurcation Structure

This section is devoted to the study of the bifurcation structure of solutions to the minimization problem (1.15); namely, we prove Proposition 1.6. We begin by proving a Pohozaev identity, which is also of independent interest.

Lemma 6.1

(Pohozaev identity) Let \(u\in X\) be a solution to (1.13). Then we have

Proof

To simplify notation, we set \(\rho := \rho (|u|^2)\). Multiplying the Eq. (1.13) by xu and integrating by parts, we get

On the other hand, multiplying the equation by u and integrating by parts, we get

So combining the two previous lines, we find

\(\square \)

Let us denote by \(u_c\) a function achieving the minimum in problem (1.15) and by \(\mu _c\) its Lagrange multiplier. We also denote by \(\lambda _0\) the first eigenvalue of the harmonic oscillator \(-\partial _{xx}+H^2 x^2 \). We will show that \(\mu _c\) converges to \(-\lambda _0\) as the mass c goes to 0.
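For the harmonic oscillator, the spectrum is \(\{(2n+1)|H|\}_{n\ge 0}\), so \(\lambda _0=|H|\), with normalized ground state \(u_0(x)=(|H|/\pi )^{1/4}e^{-|H|x^2/2}\). Assuming that the norm \(\Vert \cdot \Vert _X\) in the proof below is the equivalent norm \(\Vert u\Vert _X^2=\Vert u_x\Vert _2^2+H^2\Vert xu\Vert _2^2\) (the display in Sect. 5 is not reproduced here), this Gaussian satisfies \(\Vert u_0\Vert _2=1\) and \(\Vert u_0\Vert _X^2=\lambda _0\). The Python sketch below checks both facts for H > 0 with a second-order finite-difference discretization on a truncated interval with Dirichlet ends; the helper names and grid sizes are our own choices.

```python
import numpy as np

def first_eigenvalue(H, L=8.0, n=1200):
    """Smallest eigenvalue of -d^2/dx^2 + H^2 x^2 on (-L, L), Dirichlet ends, 3-point stencil."""
    x = np.linspace(-L, L, n + 2)[1:-1]          # interior nodes
    h = x[1] - x[0]
    off = -np.ones(n - 1) / h**2
    T = np.diag(2.0 / h**2 + H**2 * x**2) + np.diag(off, 1) + np.diag(off, -1)
    return np.linalg.eigvalsh(T)[0], x

def gaussian_norms(H, x):
    """Return (||u0||_2^2, ||u0_x||_2^2 + H^2 ||x u0||_2^2) for the normalized Gaussian u0."""
    h = x[1] - x[0]
    u0 = (H / np.pi) ** 0.25 * np.exp(-H * x**2 / 2)
    ux = np.gradient(u0, h)
    mass = np.sum(u0**2) * h
    quad = np.sum(ux**2) * h + H**2 * np.sum((x * u0) ** 2) * h
    return mass, quad

H = 2.0
lam0, x = first_eigenvalue(H)
mass, quad = gaussian_norms(H, x)
print(lam0, mass, quad)   # approximately 2.0, 1.0, 2.0
```

For H < 0, replace H by |H| in both helpers.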

Proposition 6.2

We have

Proof

First, we show that \(-\mu _c \le \lambda _0\). Multiplying the equation satisfied by \(u_c\) by \(u_c\) and integrating by parts, we get

Thus, we deduce that

Let \(u_0\) be the normalized eigenfunction associated with \(\lambda _0\), namely \(\Vert u_0\Vert _{X}^2 =\lambda _0\) and \(\Vert u_0\Vert _2=1\). We set \(v_c =cu_0\). Since \(u_c\) is a minimiser of problem (1.15), we have \(E(u_c)\le E (v_c)\) and

This proves that \(-\mu _c \le \lambda _0\).

Using Pohozaev’s identity (see Lemma 6.1) and (6.1), we have

So, recalling that \(-\mu _c \le \lambda _0\), we have for a constant \(M>0\) not depending on c that

Notice that, integrating by parts and using radial coordinates,

In the last inequality, we used that \(u_r \le 0\). So, by (5.3), we obtain, for some constant M not depending on c,

The Gagliardo–Nirenberg’s inequality (5.4) and Young’s inequality then imply that

We have, by definition of \(\lambda _0\),

Then, using (5.3) and Gagliardo–Nirenberg’s inequality (5.4), we deduce that, for some constant k not depending on c,

Taking \(c\rightarrow 0\), the result follows.

\(\square \)

Adapting the proof of Proposition 6.7 in [15], we can prove the uniqueness of the positive solution to (1.13), namely Proposition 1.7.

Proof of Proposition 1.7

We denote by \(u(r,\alpha _1)\) the radial solution to (1.13) such that \(u(0,\alpha _1)=\alpha _1\). Suppose that there exist two numbers \(0<\alpha _1<{\tilde{\alpha }}_1\) such that \(u(r,\alpha _1)\) and \(u(r,{\tilde{\alpha }}_1)\) are two positive radial solutions decaying to 0 at infinity. To simplify notation, we set \(u(r)=u(r,\alpha _1)\) and \(\eta (r)=u(r,{\tilde{\alpha }}_1)\). Let \(\psi = \eta - u \). In the following, we denote by \(u'= \partial _r u\). Then \(\psi \) satisfies

Multiplying the previous equation by u and multiplying (1.13) by \(\psi \), taking the difference and integrating by parts, we find

Observe that the left-hand side goes to 0 as \(r\rightarrow \infty \), whereas, if we assume that \(\eta (r)>u(r)\) for all \(r\ge 0\), the right-hand side converges to a negative constant. Hence there exists \(\gamma _1\) such that \(\eta (\gamma _1)=u(\gamma _1)\) (by the maximum principle, we can show that \(\rho (|u|^2) -\rho (|\eta |^2)<0\)).

Next, we will show that it is in fact the only intersection point between u and \(\eta \). Indeed, suppose by contradiction that there exists \(\gamma _2>\gamma _1\) such that

This implies that

Let \(\xi \) be a solution to

In fact, we can think of \(\xi \) as \(u^\prime \) noticing that \((\rho (|u|^2) u )_x= u^\prime (\rho (|u|^2) + u \partial _u (\rho (|u|^2)))\). Let

Observe that the function \(u\rightarrow \rho (|u|^2) u\) is convex. Indeed \(\partial _{uu}(\rho (|u|^2) u)= u\partial _{uu} \rho (|u|^2) + 2 \partial _u \rho (|u|^2)\) where \(\partial _u \rho (|u|^2) :=f\) is the solution to

and, \(\partial _{uu}\rho (|u|^2)=g\) is the solution to

By the maximum principle, we see that \(f\ge 0\) and \(g\ge 0\) (since by comparison principle we can show that \(\rho (t x_1)\le t \rho (x_1)\) which implies, using once more comparison principle that \(\rho (t x_1 +(1-t) x_2)\le t \rho (x_1) + (1-t)\rho (x_2)\), for all \(x_1,x_2\ge 0\) and \(t\in [0,1]\)). Using this and the convexity of \(u^p\), we see that \(\chi (r)>0\) when \(r \in (\gamma _1 ,\gamma _2)\). Taking the difference of (6.2) multiplied by \(\xi \) and (6.3) multiplied by \(\psi \) and integrating by parts on \([\gamma _1,r]\), we find

Taking \(r=\gamma _2\) in the previous identity, we get

This is a contradiction since the left-hand side is strictly negative while the right-hand side is strictly positive. This establishes that u and \(\eta \) intersect exactly once.

Finally, we show that \(\eta \) has to change sign. Suppose by contradiction that

This implies that \(\psi (r)<0\) for \(r\in (\gamma _1,\infty )\), \(\psi ^\prime (\gamma _1)<0\) and \(\psi (\gamma _1)=0\). Since \(u,u^\prime \) and \(u''\) go to 0 as \(r\rightarrow \infty \) (and the same for \(\eta \)), we see that the left-hand side of (6.4) goes to 0 taking \(r\rightarrow \infty \) whereas the right-hand side converges to a positive constant. Therefore, \(\eta \) cannot be positive everywhere and consequently u is the unique positive radial solution to our equation. \(\square \)

We are finally in a position to prove our bifurcation result, Proposition 1.6.

Proof of Proposition 1.6

Since \(\lambda _0\) is a simple eigenvalue, we can apply standard bifurcation results (see for instance [10, Theorem 2.1]) to deduce that \((\lambda _0,0)\) is indeed a bifurcation point and that the branch is unique provided that we are sufficiently close to the bifurcation point. Next, Proposition 6.2 guarantees that the minimizer of (1.15) \(u_c\) actually belongs to this branch at least for \(c>0\) small enough. Finally, we use our uniqueness result Proposition 1.7 to see that the set \(\{u_c,c>0\}\) is convex and therefore included in the bifurcating branch. \(\square \)

7 Numerical Simulations

In this section we perform some numerical simulations to illustrate our results. We investigate the limit \(\mu \rightarrow -\lambda _0\) mentioned in the previous section, and analyse the behaviour of standing waves with the variation of the intensity of the magnetic field \(\textbf{H}\).

7.1 Numerical Method

Our first goal is to numerically approximate the standing waves (1.11), according to the system (1.12). Following [11], we use a shooting method. However, in the present case, the director field angle \(\rho = \rho (|u|^2)\) acts as an additional potential-type term that depends on u itself. For this reason, we perform a Picard iteration and look for a fixed point u of the operator \(\varphi \mapsto \Phi (\varphi )\), where \(\Phi (\varphi )\) is the solution of

with \(\rho (\varphi )\) solving

and with boundary conditions \(u(0) = u_0>0\), \(\rho (0) = \rho _0 >0\), \(u(\infty ) =u'(0) = \rho (\infty )= \rho '(0) = 0\).

According to the results in the previous sections, we look for a real-valued u that is even, smooth, vanishing at infinity, strictly positive and decreasing in |x|. For convenience, we denote the class of functions satisfying these conditions by \({\mathcal {V}}\). Although no result gives a similar structure for \(\rho (x)\), it is natural to assume that \(\rho \) satisfies the same hypotheses as u, at least for small \(\lambda \), and so we look for \(u,\rho \in {\mathcal {V}}\).

We now describe our procedure in more detail. First, equations (7.1) and (7.2) can be recast as a first-order system:

with boundary conditions \(u(0) = u_0>0\), \(\rho (0)=\rho _0>0\), \(v(0)=w(0)=0\), and \(\varphi \in {\mathcal {V}}\).

At each stage of the Picard iteration we need, for a given \(\varphi \in {\mathcal {V}}\), to find \((u,w,\rho ,v)\) solving (7.3). As mentioned, we employ a shooting method, which we now describe. Suppose that we have computed \(\rho (\varphi ), v(\varphi )\) and wish to compute u, w. The idea is to adjust the initial value \(u(0)=u_0\) so that \(u(\infty ) =0\). Following [11], \(u_0\) should satisfy \(u_0 = \sup \{ \beta>0 : u(x;\beta )>0 \text { for all } x>0\}\), where \(u(x;\beta )\) is the solution of (7.1) with \(u(0) =\beta \) and \(u'(0)=0\). At each step of the shooting method, we look for \(u_0\) in an interval \([a_n,b_n]\). We set \(u_{0,n} = (a_n+b_n)/2\) and solve the first two equations of (7.3) with \(w(0) = 0\), using an explicit Euler scheme (which is sufficient for our purposes). If u attains negative values for some x, the initial value is too large, so we set \(a_{n+1} = a_n\), \(b_{n+1} = u_{0,n}\), thus decreasing \(u_{0,n+1}\). Conversely, if u(x) is increasing at some point (so that it does not belong to the class \({\mathcal {V}}\)), the initial value is too small, so we set \(a_{n+1}=u_{0,n}\) and \(b_{n+1} = b_n\), which increases \(u_{0,n+1}\).
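The bisection procedure just described can be sketched in Python for the simplest case \(\rho = 0\). This is the equation \(u_{xx} - H^2 x^2 u + |u|^2 u = \mu u\) that is used to initialise the Picard iteration, written for real u as \(u' = w\), \(w' = (\mu + H^2x^2)u - u^3\). It is a minimal sketch under stated assumptions, not the authors' code. The function names, the initial bracket \([0,5]\) and the 60 bisection steps are illustrative choices. The grid (\(dx = 0.002\), 3000 points) and the values \(H=1\), \(\mu =-0.8\) are borrowed from the Fig. 1 setting for illustration only.

```python
# Minimal sketch (not the authors' code): bisection shooting for the rho = 0
# problem u'' = (mu + H^2 x^2) u - u^3, u(0) = beta, u'(0) = 0, on x >= 0.

NEGATIVE, INCREASING, ACCEPTED = -1, 1, 0  # outcomes of one trial integration


def integrate(beta, mu, H, dx=0.002, n=3000):
    """Explicit Euler for u' = w, w' = (mu + H^2 x^2) u - u^3 from x = 0.

    Stops at the first grid point where u < 0 or w > 0 and returns the
    profile computed so far together with the event that occurred.
    """
    u, w = beta, 0.0
    profile = [u]
    for j in range(n - 1):
        x = j * dx
        # Simultaneous update: both right-hand sides use the values at x_j.
        u, w = u + dx * w, w + dx * ((mu + H**2 * x**2) * u - u**3)
        profile.append(u)
        if u < 0.0:
            return profile, NEGATIVE    # u(0) was too large
        if w > 0.0:
            return profile, INCREASING  # u(0) was too small (u leaves V)
    return profile, ACCEPTED            # no event before the end of the grid


def shoot(mu, H, a=0.0, b=5.0, iters=60, **grid):
    """Bisection for u(0) in the bracket [a, b] (the bracket is an assumption)."""
    for _ in range(iters):
        beta = 0.5 * (a + b)
        _, event = integrate(beta, mu, H, **grid)
        if event == NEGATIVE:
            b = beta   # move the upper end down: next midpoint decreases
        elif event == INCREASING:
            a = beta   # move the lower end up: next midpoint increases
        else:
            break      # profile stayed in V on the whole grid
    return 0.5 * (a + b)


if __name__ == "__main__":
    u0 = shoot(mu=-0.8, H=1.0)
    print(f"estimated u(0) = {u0:.8f}")
```

Slightly lowering the returned value should make the profile turn upward, and slightly raising it should make the profile cross zero. This is exactly the threshold characterisation of \(u_0\) used above.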

The procedure to compute \(\rho \) and v is similar, except that the dependence of \(\rho \) on its initial value \(\rho (0)\) is reversed. In each iteration of the shooting method, the value of \(\rho (0)\) is increased when \(\rho \) becomes negative, and decreased when \(\rho \) starts to increase.
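The reversed rule for \(\rho (0)\) amounts to swapping the two cases of the bisection update. A minimal sketch follows. The helper `update_bracket` and its arguments are hypothetical names introduced here. The sketch assumes that exactly one of the two events (the profile becoming negative, or starting to increase) has been detected.

```python
def update_bracket(a, b, mid, became_negative, inverse=False):
    """One bisection update of the bracket [a, b] around the shooting value.

    became_negative: True if the trial profile went below zero, False if it
    started to increase instead (one of the two events is assumed to occur).
    inverse=False is the rule for u(0): a negative profile means the initial
    value was too large. inverse=True is the rule for rho(0), where the
    dependence is reversed and a negative profile means it was too small.
    """
    too_large = became_negative != inverse
    return (a, mid) if too_large else (mid, b)
```

With `inverse=True`, a negative \(\rho \) moves the lower end up, so the next midpoint \(\rho (0)\) increases, as stated above.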

Note that, in each iteration of the shooting method, the equation for v in (7.3) contains a nonlinear term when \(\lambda \ne 0\). The discretized equation reads

and so we use a Newton method at each step to approximately solve for \(v_{j+1}\).
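The discretized v-equation is not reproduced here, so the following sketch only illustrates the structure of such a step. It is a scalar Newton solve for the unknown \(v_{j+1}\), with the previous value \(v_j\) as the initial guess. The residual in the hypothetical helper `implicit_step` is a backward-Euler step with a cubic term weighted by `lam`. It is a placeholder, not the actual equation of (7.3).

```python
def newton(G, dG, v0, tol=1e-12, max_iter=50):
    """Newton's method for the scalar equation G(v) = 0, starting from v0."""
    v = v0
    for _ in range(max_iter):
        step = G(v) / dG(v)
        v -= step
        if abs(step) < tol:
            return v
    raise RuntimeError("Newton's method did not converge")


def implicit_step(v_j, dx, lam, s):
    """Solve the HYPOTHETICAL implicit update
        v_{j+1} = v_j + dx * (s - lam * v_{j+1}**3)
    for v_{j+1}. The cubic term stands in for the nonlinear term of the
    actual v-equation, which is not reproduced here."""
    def G(v):
        return v - v_j - dx * (s - lam * v**3)

    def dG(v):
        return 1.0 + 3.0 * dx * lam * v**2  # positive for lam >= 0

    # The previous grid value is a natural initial guess for Newton's method.
    return newton(G, dG, v0=v_j)
```

Because the step size is small, \(v_j\) is already close to \(v_{j+1}\), and Newton's method typically converges in a few iterations.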

As a starting point for the Picard iteration, we take \(u^{(0)} \in {\mathcal {V}}\) to be the solution with \(\rho =0\), that is, \(u^{(0)}\in {\mathcal {V}}\) solves \(u_{xx} - H^2 x^2 u + |u|^2 u = \mu u\). With this initial guess in hand, we compute \(u^{(1)}(x), w^{(1)}(x)\), and so on, using the shooting method, according to

where \(\rho (u^{(n-1)})\) solves

(also using the shooting method), with boundary conditions \(u(0) = u_0>0\), \(\rho (0)=\rho _0>0\), \(v(0)=w(0)=0\).
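The outer Picard loop can be sketched independently of the inner shooting solves. In the hypothetical helper below, `Phi` stands for the map \(\varphi \mapsto \Phi (\varphi )\) (compute \(\rho (\varphi )\), then shoot for u), acting on lists of grid values. All iterates are kept, which is convenient for plotting them as in Fig. 1. The optional stopping tolerance is an assumption; the text only reports a fixed number of iterations.

```python
def picard(Phi, phi0, n_iter=15, tol=None):
    """Fixed-point (Picard) iteration phi_n = Phi(phi_{n-1}) on grid values.

    Returns the list of all iterates [phi_0, phi_1, ...]. If tol is given,
    stops early once the maximum pointwise change falls below tol.
    """
    iterates = [list(phi0)]
    for _ in range(n_iter):
        new = list(Phi(iterates[-1]))
        change = max(abs(p - q) for p, q in zip(new, iterates[-1]))
        iterates.append(new)
        if tol is not None and change < tol:
            break
    return iterates
```

With `n_iter=15` and `tol=None`, the helper performs the same number of iterations as reported for Fig. 1.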

7.2 Numerical Results

Fig. 1. Numerical approximation of the standing wave u(x) and the director field angle \(\rho (x)\), solutions to (1.12), computed using a shooting method and Picard iteration (Picard iterates shown as dashed lines). Parameters are \(H=1,\mu =-0.8,\lambda =0.1, b=1\)

In Fig. 1, we plot the standing wave u(x) and the director field angle \(\rho (x)\) calculated according to the procedure described previously. The dashed lines correspond to the iterations of the Picard method. For this simulation, we have used a spatial step \(dx = 0.002\) (corresponding to 3000 spatial points) and 15 Picard iterations.

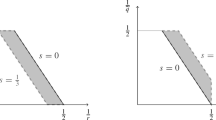

Next, we illustrate the result of Proposition 6.2. First, it is easy to check that \(u^*(x) = e^{-\frac{H}{2}x^2}\) is the first eigenfunction of the harmonic oscillator \(-\partial _{xx} + H^2 x^2\), with eigenvalue \(\lambda _0=H\). Note that, in our notation, the parameter \(-\mu \) plays the role of \(\lambda _0\). As in [11], and in accordance with Proposition 6.2, we verify numerically that the \(L^2\) norm of \(u_\mu \) goes to zero as \(\mu \rightarrow -\lambda _0^+\). Taking \(H=\lambda _0=2\), we show in Fig. 2 the numerical solutions of (1.12) for several values of \(\mu \) approaching \(-\lambda _0\) from above. The solutions appear to converge to zero, although the convergence is very slow. In Fig. 3, we show how the \(L^2\) norm of \(u=u_\mu \) varies as the Lagrange multiplier \(\mu \) tends to \(-\lambda _0\). Our numerical tests indicate that the \(L^2\) norm of \(u_\mu \) does converge to zero, albeit slowly, in accordance with Proposition 6.2.
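For completeness, here is the short computation behind the eigenfunction claim. Differentiating \(u^*\) twice gives

\[
(u^*)'(x) = -Hx\,u^*(x), \qquad (u^*)''(x) = \left(H^2x^2 - H\right)u^*(x),
\]

so that

\[
-(u^*)'' + H^2x^2\,u^* = H\,u^* .
\]

Since \(u^*\) is positive, it is the eigenfunction associated with the lowest eigenvalue, which is therefore \(\lambda _0 = H\). Moreover, \(\Vert u^*\Vert _2^2 = \int _{{\mathbb {R}}} e^{-Hx^2}\,dx = \sqrt{\pi /H}\).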

Fig. 2. Numerical approximation of the standing wave u(x) and the director field angle \(\rho (x)\), solutions to (1.12), with \(\mu \rightarrow -\lambda _0\). Parameters are \(H=\lambda _0 =2,\lambda =0.1, b=1\)

Fig. 3. The norm \(\Vert u\Vert _2^2\) as a function of the Lagrange multiplier \(\mu \) as \(\mu \rightarrow -\lambda _0 = - H =-2\), in \(\log \)-\(\log \) scale. The values of \(\mu \) are as in Fig. 2, but here \(\mu \) ranges from \(-1.9\) to \(-1.9999153\), taking 60 values (left). Right: zoom on the last 15 values of \(\mu \)
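The computed profiles are even and sampled on a half-line grid. The quantity \(\Vert u\Vert _2^2\) plotted in Fig. 3 can therefore be approximated by doubling a trapezoidal integral over \([0,L]\). The sketch below assumes a uniform grid \(x_j = j\,dx\) and that u is negligible beyond the last grid point. The function name is hypothetical. The Gaussian \(u^*\), whose squared norm is \(\sqrt{\pi /H}\), gives a convenient sanity check.

```python
import math


def l2_norm_squared_even(u, dx):
    """Approximate ||u||_2^2 = 2 * int_0^L u(x)^2 dx for an even profile.

    u holds samples at x_j = j * dx, j = 0..N; the half-line is truncated
    at L = N * dx (u is assumed negligible beyond it). Composite trapezoidal
    rule on [0, L], doubled by symmetry.
    """
    sq = [v * v for v in u]
    integral = dx * (0.5 * sq[0] + sum(sq[1:-1]) + 0.5 * sq[-1])
    return 2.0 * integral


if __name__ == "__main__":
    H, dx = 2.0, 0.002
    gauss = [math.exp(-0.5 * H * (j * dx) ** 2) for j in range(3001)]
    print(l2_norm_squared_even(gauss, dx), math.sqrt(math.pi / H))
```

For smooth, rapidly decaying integrands such as this Gaussian, the trapezoidal rule is extremely accurate. The two printed numbers should therefore agree to many digits.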

Next, we investigate numerically the behaviour of the standing wave as the intensity H of the magnetic field varies. It turns out that, for each set of parameters that we analyzed, there is a maximum (relatively small) value of H beyond which our numerical method diverges. This may be related to the observation that the solution u (and \(\rho \)) is very sensitive to perturbations of u(0). Any arbitrarily small perturbation of the value u(0) found by the shooting method produces a solution which, numerically at least, quickly blows up exponentially. The desired solution thus appears to be unstable in this sense, and the effect is more pronounced for larger values of H. Nevertheless, in Fig. 4 we show the behaviour of the solution for H between 0 and 2, which reveals the general trend. In particular, the director field angle becomes more concentrated around the origin as H increases.
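The following heuristic shows why the sensitivity grows with H. It rests on an assumption: that for large x the term \(H^2x^2u\) dominates all other terms in the equation for u, since the \(\rho \)-dependent terms of (7.1) are not reproduced here. Under this assumption, a small perturbation \(\delta \) of the solution approximately satisfies

\[
\delta '' \approx H^2x^2\,\delta , \qquad \text{so that} \qquad \delta (x) \approx C_+\,e^{\frac{H}{2}x^2} + C_-\,e^{-\frac{H}{2}x^2}
\]

up to algebraic prefactors. The decaying profile itself behaves like \(e^{-\frac{H}{2}x^2}\), so an error of relative size \(\varepsilon \) in u(0) generically excites the growing mode. The ratio \(|\delta /u| \sim \varepsilon \, e^{Hx^2}\) then becomes of order one near

\[
x_* \approx \sqrt{\frac{\ln (1/\varepsilon )}{H}} .
\]

Even with \(\varepsilon \) at the level of double-precision round-off (\(\varepsilon \approx 10^{-16}\), so \(\ln (1/\varepsilon ) \approx 36.8\)), \(x_*\) shrinks like \(H^{-1/2}\). This is consistent with the loss of accuracy observed for larger H.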

Fig. 4. Numerical approximation of the standing wave u(x) and the director field angle \(\rho (x)\), solutions to (1.12), with varying magnetic field intensity H. Parameters are \(\mu =0.2\), \(\lambda =0.1,\) \(b=2\)

References

[1] Amorim, P., Dias, J.P., Martins, A.F.: On the motion of the director field of a nematic liquid crystal submitted to a magnetic field and a laser beam. Partial Differ. Equ. Appl. 4, 36 (2023)

[2] Baqer, S., Frantzeskakis, D.J., Horikis, T.P., Houdeville, C., Marchant, T.R., Smyth, N.F.: Nematic dispersive shock waves from nonlocal to local. Appl. Sci. 11, 4736 (2021)

[3] Baqer, S., Smyth, N.F.: Modulation theory and resonant regimes for dispersive shock waves in nematic liquid crystals. Physica D 403, 132334 (2020)

[4] Cazenave, T.: Semilinear Schrödinger Equations. Courant Lecture Notes in Mathematics, vol. 10. CIMS and AMS, Providence (2003)

[5] Cazenave, T., Lions, P.L.: Orbital stability of standing waves for some nonlinear Schrödinger equations. Commun. Math. Phys. 85, 549–561 (1982)

[6] Correia, S.: Finite speed of disturbance for the nonlinear Schrödinger equation. Proc. R. Soc. Edinb. Sect. A 149, 1405–1419 (2019)

[7] De Gennes, P.G., Prost, J.: The Physics of Liquid Crystals, vol. 83. Oxford University Press, Oxford (1993)

[8] Dias, J.P., Figueira, M., Frid, H.: Vanishing viscosity with short wave-long wave interactions for systems of conservation laws. Arch. Ration. Mech. Anal. 196, 981–1010 (2010)

[9] Dias, J.P., Figueira, M., Oliveira, F.: On the Cauchy problem describing an electron-phonon interaction. Chin. Ann. Math. 32(B), 483–496 (2011)

[10] Hadj Selem, F.: Radial solutions with prescribed numbers of zeros for the nonlinear Schrödinger equation with harmonic potential. Nonlinearity 24, 1795–1819 (2011)

[11] Hadj Selem, F., Hajaiej, H., Markowich, P.A., Trabelsi, S.: Variational approach to the orbital stability of standing waves of the Gross–Pitaevskii equation. Milan J. Math. 82, 273–295 (2014)

[12] Hajaiej, H.: Cases of equality and strict inequality in the extended Hardy–Littlewood inequalities. Proc. R. Soc. Edinb. Sect. A 135, 643–661 (2005)

[13] Hajaiej, H., Stuart, C.A.: Symmetrization inequalities for composition operators of Carathéodory type. Proc. Lond. Math. Soc. (3) 87(2), 396–418 (2003)

[14] Hirose, M., Ohta, M.: Structure of positive radial solutions to scalar field equations with harmonic potential. J. Differ. Equ. 178, 519–540 (2002)

[15] Hirose, M., Ohta, M.: Uniqueness of positive solutions to scalar field equations with harmonic potential. Funkcial. Ekvac. 50(1), 67–100 (2007)

[16] Hunter, J.K., Saxton, R.: Dynamics of director fields. SIAM J. Appl. Math. 51, 1498–1521 (1991)

[17] Jaming, P.: On the Fourier transform of the symmetric decreasing rearrangements. Ann. Inst. Fourier 61, 53–77 (2011)

[18] Lieb, E.H., Loss, M.: Analysis. Graduate Studies in Mathematics, vol. 14, 2nd edn. AMS, Providence (2001)

[19] Kato, T.: Quasi-linear Equations of Evolution, with Applications to Partial Differential Equations. Lecture Notes in Mathematics, vol. 448, pp. 25–70. Springer, New York (1975)

[20] Martins, A.F., Esnault, P., Volino, F.: Measurements of viscoelastic coefficients of main-chain nematic polymers by an NMR technique. Phys. Rev. Lett. 57, 1745–1748 (1986)

[21] Motoc, C., Iacobescu, G.: Magneto-Optic effects in nematic liquid crystal doped with dazo-dyes. Mod. Phys. Lett. B 20, 1015–1022 (2006)

[22] Shearer, J., Serre, D.: Convergence with physical viscosity for nonlinear elasticity. Unpublished preprint (1993)

[23] Weinstein, M.I.: Nonlinear Schrödinger equations and sharp interpolation estimates. Commun. Math. Phys. 87, 567–576 (1983)

[24] Zhang, P., Zheng, Y.: Rarefactive solutions to a nonlinear variational wave equation of liquid crystals. Commun. PDE 26, 381–419 (2001)

Acknowledgements

P. Amorim was partially supported by Conselho Nacional de Pesquisa (CNPq) Grant No. 308101/2019-7 and by the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior - Brasil (CAPES) - Finance Code 001. J.-B. Casteras and J.P. Dias were supported by the Fundação para a Ciência e a Tecnologia (FCT) through the grant UIDB/04561/2020. J.P. Dias is indebted to R. Carles, H. Frid, E. Ducla Soares, A. Farinha Martins and L. Sanchez for important suggestions and comments. The authors would also like to thank the referees for their useful comments.

Funding

Open access funding provided by FCT|FCCN (b-on).


Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Amorim, P., Casteras, JB. & Dias, JP. On the Existence and Partial Stability of Standing Waves for a Nematic Liquid Crystal Director Field Equations. Milan J. Math. 92, 143–167 (2024). https://doi.org/10.1007/s00032-024-00395-8


DOI: https://doi.org/10.1007/s00032-024-00395-8