Abstract

Purpose

The main purpose of the present study was to evaluate the predictive validity of medical school admissions data, preclinical and clinical achievement data, postgraduate evaluations, and United States Medical Licensing Examination (USMLE) Step 1 and Step 2 scores for performance on Step 3.

Methods

A total of 321 physicians (178 men, 55.4%; 143 women, 44.6%) participated. The independent variables were admissions data, medical school preclinical achievement data, medical school clerkship clinical performance data, postgraduate clinical performance data, and USMLE Step 1 and Step 2 scores; Step 3 score was the dependent variable in a backward multiple regression. An analysis of covariance was also conducted with Step 3 scores as the dependent variable, Step 2 scores as the covariate, and residency program (two levels: narrowly focused vs. broadly focused) as the independent variable.
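The backward regression procedure can be illustrated with a minimal sketch. This is not the authors' analysis: the data are synthetic, the variable names (`step2`, `pgy1`, `noise_a`, `noise_b`) are hypothetical, and the removal rule (drop the predictor with the smallest |t| until all remaining |t| values reach 2.0) is an assumed stand-in for whatever criterion the study's statistical software applied.

```python
import numpy as np

def ols_t_stats(X, y):
    """Fit OLS with an intercept; return coefficients and t statistics (intercept first)."""
    n = X.shape[0]
    A = np.column_stack([np.ones(n), X])          # prepend intercept column
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)  # least-squares coefficients
    resid = y - A @ beta
    dof = n - A.shape[1]                          # residual degrees of freedom
    sigma2 = resid @ resid / dof                  # residual variance estimate
    cov = sigma2 * np.linalg.inv(A.T @ A)         # coefficient covariance matrix
    return beta, beta / np.sqrt(np.diag(cov))

def backward_eliminate(X, y, names, t_min=2.0):
    """Start from the full model and drop the weakest predictor until all |t| >= t_min.

    t_min is an assumed threshold chosen for illustration only.
    """
    keep = list(range(X.shape[1]))
    while keep:
        _, t = ols_t_stats(X[:, keep], y)
        abs_t = np.abs(t[1:])                     # ignore the intercept
        weakest = int(np.argmin(abs_t))
        if abs_t[weakest] >= t_min:
            break                                 # every remaining predictor passes
        keep.pop(weakest)
    return [names[i] for i in keep]

# Synthetic data only: two informative predictors and two pure-noise predictors.
rng = np.random.default_rng(0)
n = 321                                           # sample size matching the study
names = ["step2", "noise_a", "pgy1", "noise_b"]   # hypothetical labels
X = rng.normal(size=(n, 4))
y = 0.6 * X[:, 0] + 0.2 * X[:, 2] + rng.normal(scale=0.5, size=n)

selected = backward_eliminate(X, y, names)
print(selected)
```

Starting from the full model and removing one variable at a time is what distinguishes backward elimination from forward selection; the retained set is the "most parsimonious combination" under the chosen removal rule.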

Results

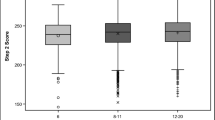

The regression analyses yielded an optimal model with the most parsimonious combination of independent variables (multiple R = 0.686, R² = 0.470, p < 0.001): Step 2 (β = 0.606) + verbal reasoning (β = 0.125) + PGY1 (β = 0.161) + Year 3 (β = 0.157). Candidates in broadly focused residencies scored higher on Step 3 than those in narrowly focused residencies (F = 5.17, p < 0.05).
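Written out as an equation, and assuming the reported β values are standardized coefficients (the abstract does not say so explicitly), the model can be sketched as follows. The symbols $z_{\cdot}$ denote standardized scores and are notation introduced here, not taken from the source.

$$
\hat{z}_{\text{Step 3}} = 0.606\, z_{\text{Step 2}} + 0.125\, z_{\text{verbal reasoning}} + 0.161\, z_{\text{PGY1}} + 0.157\, z_{\text{Year 3}}
$$

$$
R^2 = (0.686)^2 \approx 0.47
$$

Under this reading, the four predictors together account for roughly 47% of the variance in Step 3 scores, with Step 2 carrying by far the largest weight.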

Conclusions

The optimal regression model supports the predictive validity of Step 2, verbal reasoning, PGY1 assessment, and Year 3 average for Step 3 performance. Candidates in broadly focused residencies scored higher on Step 3 than those in narrowly focused residencies. These results provide evidence for the validity of Step 3 as a measure of clinical competence.

Ethics declarations

This study was approved by the Institutional Review Board at Wake Forest University School of Medicine.

Cite this article

Violato, C., Shen, E. & Gao, H. Does Step 3 of the United States Medical Licensing Exam Measure Clinical Competence? A Predictive Validity Study. Med.Sci.Educ. 26, 317–322 (2016). https://doi.org/10.1007/s40670-016-0263-6

DOI: https://doi.org/10.1007/s40670-016-0263-6