Abstract

In this article, a new explicit group iterative scheme is developed for the solution of the two-dimensional fractional Rayleigh–Stokes problem for a heated generalized second-grade fluid. The proposed scheme is based on the high-order compact Crank–Nicolson finite difference method and consists of three-level finite difference approximations. The stability and convergence of the proposed method are studied using the matrix analysis method. Finally, numerical examples are provided to show the accuracy of the proposed method.

1 Introduction

Fractional calculus has gained attention because of its applications in engineering, physics, and chemistry [1–5]. Fractional differential equations can represent more complex models, but they are usually difficult to solve analytically. Researchers have therefore turned to numerical methods, e.g., the finite element method, spectral methods, and the finite difference method, to solve these equations [6–22]. The finite difference method is relatively simple to implement, which is why it appears more often in the literature on fractional differential equations.

In this paper, we consider the two-dimensional (2D) Rayleigh–Stokes problem for a heated generalized second-grade fluid with fractional derivative and a nonhomogeneous term, of the form:

with initial and boundary conditions

where \(0< \gamma < 1 \), \(\Omega = \{ (x,y) | 0\leq x \leq L, 0\leq y \leq L \} \).
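For orientation, the block below writes out the form in which this problem is usually stated in the literature cited here (e.g., [25, 27]). It is a sketch under assumptions: the symbols \(f\) (source term), \(w_{0}\) (initial datum), and \(g\) (boundary datum) are names chosen for illustration, and \({}_{0}D_{t}^{1-\gamma }\) denotes the Riemann–Liouville fractional derivative of order \(1-\gamma \).

```latex
\[
\frac{\partial w(x,y,t)}{\partial t}
  = {}_{0}D_{t}^{1-\gamma}\left(
      \frac{\partial^{2} w(x,y,t)}{\partial x^{2}}
    + \frac{\partial^{2} w(x,y,t)}{\partial y^{2}}\right)
  + \frac{\partial^{2} w(x,y,t)}{\partial x^{2}}
  + \frac{\partial^{2} w(x,y,t)}{\partial y^{2}}
  + f(x,y,t),
  \qquad (x,y)\in\Omega,\ 0<t\le T,
\]
\[
w(x,y,0) = w_{0}(x,y), \quad (x,y)\in\Omega,
\qquad
w(x,y,t) = g(x,y,t), \quad (x,y)\in\partial\Omega,\ 0<t\le T.
\]
```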

The Rayleigh–Stokes problem has gained attention in recent years. It plays a vital role in describing the dynamic behavior of some non-Newtonian fluids, and the fractional derivative in the model is used to capture the viscoelastic behavior of the flow [23, 24].

Several numerical methods have been presented in the literature for the solution of the fractional Rayleigh–Stokes problem. For example, Chen et al. [25] solved the problem using explicit and implicit finite difference methods and established stability and convergence by Fourier analysis. The convergence order of both schemes is \(O(\tau +\Delta x^{2} +\Delta y^{2})\). Hafez et al. [26] solved the Rayleigh–Stokes problem using a Jacobi spectral Galerkin method. Their method is efficient and generalizes easily to multiple dimensions; its advantages are reasonable accuracy and relatively few degrees of freedom. Mohebbi et al. [27] used a higher-order implicit finite difference scheme for the two-dimensional Rayleigh–Stokes problem and discussed its convergence and stability by Fourier analysis. The convergence order of their scheme is shown to be \(O(\tau +\Delta x^{4} +\Delta y^{4}) \).

High-order schemes produce more accurate results, but they converge more slowly because the algorithm becomes more complex. Since explicit group methods reduce algorithm complexity [28–31], we propose the use of an explicit group method for the solution of the two-dimensional Rayleigh–Stokes problem for a heated generalized second-grade fluid. The main purpose of this article is to solve the two-dimensional Rayleigh–Stokes problem with the high-order explicit group method (HEGM).

The paper is arranged as follows. In Sect. 2, we give the formulation of the high-order compact explicit group scheme, and its stability is discussed in Sect. 3. In Sect. 4, the convergence of the proposed scheme is discussed. In Sect. 5, some numerical examples are presented with discussion, and finally, the conclusion is presented in Sect. 6.

2 The group explicit scheme

First, let us define the following notations:

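where \(\Delta x = \Delta y= h = \frac{L}{M}\) is the space step and \(\tau = \Delta t= \frac{T}{N}\) is the time step. The operators \(\delta _{x}^{2}\) and \(\delta _{y}^{2}\), which act on the three-point stencil [32], satisfy

\[ \frac{\partial ^{2} w}{\partial x^{2}} \bigg|_{i,j}^{k} = \frac{\delta _{x}^{2}}{h^{2} (1+\frac{1}{12}\delta _{x}^{2} )} w_{i,j}^{k} + O(h^{4}) \]

and

\[ \frac{\partial ^{2} w}{\partial y^{2}} \bigg|_{i,j}^{k} = \frac{\delta _{y}^{2}}{h^{2} (1+\frac{1}{12}\delta _{y}^{2} )} w_{i,j}^{k} + O(h^{4}). \]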
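The fourth-order accuracy of the compact three-point approximation can be checked numerically. The sketch below is only an illustration under assumptions: it uses the test function \(u(x)=\sin x\) in one dimension, and the function names are hypothetical. It measures the residual of the relation \(\delta ^{2}u/h^{2} = (1+\frac{1}{12}\delta ^{2})u'' + O(h^{4})\), which is the compact relation with the operator \((1+\frac{1}{12}\delta ^{2})\) multiplied through.

```python
import math


def compact_residual(h, x=1.0):
    """Residual of the compact relation for u(x) = sin(x) at the point x.

    Compares  delta^2 u / h^2  with  (1 + delta^2 / 12) u'',
    where delta^2 is the three-point central difference operator.
    """
    def u(s):
        return math.sin(s)

    def d2u(s):
        return -math.sin(s)  # exact second derivative of sin

    central = (u(x + h) - 2.0 * u(x) + u(x - h)) / h**2
    # (1 + delta^2/12) v_i = (v_{i+1} + 10 v_i + v_{i-1}) / 12
    averaged = (d2u(x + h) + 10.0 * d2u(x) + d2u(x - h)) / 12.0
    return abs(central - averaged)


def observed_order(h):
    """Estimate the order from the residuals at step h and h/2."""
    return math.log2(compact_residual(h) / compact_residual(h / 2.0))


order = observed_order(0.1)
print(f"observed order of the compact relation: {order:.3f}")
```

Halving the step should reduce the residual by a factor close to 16, so the printed estimate is expected to be close to 4.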

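The relationship between the Grünwald–Letnikov and Riemann–Liouville fractional derivatives is defined as [27, 33]

\[ {}_{0}D_{t}^{1-\gamma } f(t) = \frac{1}{\tau ^{1-\gamma }} \sum_{k=0}^{[\frac{t}{\tau }]} \omega _{k}^{1-\gamma } f(t-k\tau ) + O(\tau ^{p}), \]

where \(\omega _{k}^{1-\gamma } \) are the coefficients of the generating function, that is, \(\omega (z, \gamma )= \sum_{k=0}^{\infty }\omega _{k}^{\gamma }z^{k} \). We consider \(\omega (z, \gamma )= (1-z)^{\gamma }\) for first-order accuracy (\(p=1\)), so the coefficients are \(\omega _{0}^{\gamma }=1\) and

\[ \omega _{k}^{\gamma } = \biggl(1-\frac{\gamma +1}{k} \biggr) \omega _{k-1}^{\gamma }, \quad k\geq 1. \]

Let \(\eta _{l}=\omega _{l}^{1-\gamma } \), then

\[ \eta _{0}=1, \qquad \eta _{l} = \biggl(1-\frac{2-\gamma }{l} \biggr) \eta _{l-1}, \quad l\geq 1. \]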
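The coefficients of \((1-z)^{\gamma }\) can be generated with the standard recurrence \(\omega _{0}=1\), \(\omega _{k}=(1-\frac{\gamma +1}{k})\omega _{k-1}\). The following minimal sketch computes them; the function name `gl_weights` and the value \(\gamma =0.5\) are illustrative assumptions, not taken from the paper.

```python
def gl_weights(alpha, n):
    """Return [w_0, ..., w_n], the Taylor coefficients of (1 - z)**alpha."""
    weights = [1.0]
    for k in range(1, n + 1):
        # Recurrence: w_k = (1 - (alpha + 1) / k) * w_{k-1}
        weights.append((1.0 - (alpha + 1.0) / k) * weights[-1])
    return weights


gamma = 0.5                       # illustrative value with 0 < gamma < 1
eta = gl_weights(1.0 - gamma, 3)  # eta_l = omega_l^{1-gamma}
print(eta)                        # [1.0, -0.5, -0.125, -0.0625]
```

Because each weight is obtained from the previous one, the full set of coefficients for \(N\) time levels costs \(O(N)\) operations and can be computed once before time stepping.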

From (5) we can obtain the following:

Using (3), (4), (7), (8), and (1), we have

Multiplying both sides by \(\tau (1+\frac{1}{12}\delta _{x}^{2}) (1+\frac{1}{12}\delta _{y}^{2})\), we have

After simplifying and rearranging, we get the Crank–Nicolson (C–N) high-order compact scheme

where

Applying (8) to the group of four points (as shown in Fig. 1) will result in the following \(4\times 4\) system:

where

The matrix (9) is inverted to get the high-order compact explicit group equation

where

Figure 1 shows grid points on the x–y plane with mesh size \(m=10\), where the groups of four points are computed using (10) and the remaining points are computed using (8).
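The grouping strategy can be sketched in code. The block below is an illustration under stated assumptions, not the scheme's actual coefficients: `partition_groups` assumes the groups are laid out as non-overlapping \(2\times 2\) blocks starting at the point \((1,1)\), and `invert_group_matrix` assumes a symmetric \(4\times 4\) coupling matrix with hypothetical entries `a` (diagonal), `b` (edge neighbours), and `c` (diagonal neighbour), as would arise from a symmetric nine-point stencil with \(\Delta x=\Delta y\). The point of the sketch is that such a matrix can be inverted once, in closed form, so each group update becomes an explicit formula.

```python
def partition_groups(m):
    """Split interior points (i, j), 1 <= i, j <= m - 1, into 2x2 groups.

    Returns (groups, rest). Each group lists the points
    (i, j), (i+1, j), (i+1, j+1), (i, j+1); rest holds ungrouped points.
    """
    last = m - 1
    groups, grouped = [], set()
    for j in range(1, last, 2):
        for i in range(1, last, 2):
            block = [(i, j), (i + 1, j), (i + 1, j + 1), (i, j + 1)]
            groups.append(block)
            grouped.update(block)
    rest = [(i, j) for j in range(1, m) for i in range(1, m)
            if (i, j) not in grouped]
    return groups, rest


def group_matrix(a, b, c):
    """Circulant 4x4 matrix with first row (a, b, c, b) (hypothetical entries)."""
    row = [a, b, c, b]
    return [[row[(col - r) % 4] for col in range(4)] for r in range(4)]


def invert_group_matrix(a, b, c):
    """Closed-form inverse of group_matrix(a, b, c) via its eigenvalues."""
    l0, l1, l2 = a + 2 * b + c, a - c, a - 2 * b + c  # l1 is a double eigenvalue
    ia = (1 / l0 + 2 / l1 + 1 / l2) / 4
    ib = (1 / l0 - 1 / l2) / 4
    ic = (1 / l0 - 2 / l1 + 1 / l2) / 4
    return group_matrix(ia, ib, ic)


def matvec(matrix, vector):
    """Multiply a small dense matrix by a vector."""
    return [sum(m_rc * v for m_rc, v in zip(row, vector)) for row in matrix]


groups, rest = partition_groups(10)
A = group_matrix(4.0, -1.0, -0.25)          # illustrative coefficients only
A_inv = invert_group_matrix(4.0, -1.0, -0.25)
rhs = [1.0, 2.0, 3.0, 4.0]                  # illustrative right-hand side
solution = matvec(A_inv, rhs)               # explicit update of one group
print(len(groups), len(rest))
```

With mesh size \(m=10\) there are \(9\times 9\) interior points, so under this layout 16 groups cover 64 points and the remaining 17 points lie on the last row and column.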

3 Stability of the proposed method

First we recall the following lemma.

Lemma 1

([34])

The coefficients \(\eta _{l}\) satisfy the following relations:
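Properties of this kind can be checked numerically. The sketch below assumes the first-order weights \(\eta _{l}=\omega _{l}^{1-\gamma }\) of \((1-z)^{1-\gamma }\) and tests sign and partial-sum properties that are standard for them (\(\eta _{1}=\gamma -1\), \(\eta _{l}<0\) for \(l\geq 1\), and positive partial sums that decrease toward zero). Whether these coincide exactly with the relations listed in Lemma 1 is an assumption; the function names are hypothetical.

```python
def eta_coefficients(gamma, n):
    """Return [eta_0, ..., eta_n] for the generating function (1 - z)**(1 - gamma)."""
    eta = [1.0]
    for l in range(1, n + 1):
        eta.append((1.0 - (2.0 - gamma) / l) * eta[-1])
    return eta


def check_properties(gamma, n=2000):
    """Check standard sign and partial-sum properties of the weights."""
    eta = eta_coefficients(gamma, n)
    partial_sums, total = [], 0.0
    for value in eta:
        total += value
        partial_sums.append(total)
    return {
        "eta_1_equals_gamma_minus_1": abs(eta[1] - (gamma - 1.0)) < 1e-14,
        "negative_for_l_ge_1": all(v < 0.0 for v in eta[1:]),
        "partial_sums_positive": all(s > 0.0 for s in partial_sums),
        "last_partial_sum": partial_sums[-1],
    }


for gamma in (0.25, 0.5, 0.75):
    print(gamma, check_properties(gamma))
```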

The stability of the proposed method is analyzed using the matrix analysis method. From (9), we obtain

Proposition 1

The high-order explicit group scheme (12) is unconditionally stable.

Proof

Let \(w_{i,j}^{k}\) and \(W_{i,j}^{k}\) be the approximate and exact solutions, respectively, for (1), and let \(\epsilon _{i,j}^{k}=W_{i,j}^{k}-w_{i,j}^{k}\) denote the error at time level k. Then from (11),

where

From (11) we know

where I is the identity matrix and E is the matrix with unity values along each diagonal immediately below and above the main diagonal. Let \(\rho _{1},\rho _{2} \), and \(\rho _{3} \) represent the maximum eigenvalues for \(M_{1}\), \(N_{1}\), and \(P_{1}\), respectively, then

From (12), when \(k=0\),

Supposing

we will prove it for \(s=k+1\). Indeed, from (12)

So, using matrix analysis via mathematical induction, we proved that the proposed method is unconditionally stable. □
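Eigenvalue bounds for matrices built from \(I\) and \(E\) rest on the spectrum of \(E\). For the \(n\times n\) matrix \(E\) with ones on the first sub- and super-diagonals, the eigenvalues are known to be \(2\cos (\frac{k\pi }{n+1})\), \(k=1,\dots,n\), so they lie strictly inside \((-2,2)\). The sketch below verifies this numerically; the size \(n=8\) and the function names are illustrative assumptions, and the block does not reproduce the paper's matrices \(M_{1}\), \(N_{1}\), \(P_{1}\).

```python
import math


def apply_E(v):
    """Multiply by E: ones on the first sub- and super-diagonal, zeros elsewhere."""
    n = len(v)
    return [(v[i - 1] if i > 0 else 0.0) + (v[i + 1] if i < n - 1 else 0.0)
            for i in range(n)]


def eigenpair(n, k):
    """k-th eigenvalue and eigenvector of the n x n matrix E, k = 1..n."""
    theta = k * math.pi / (n + 1)
    return 2.0 * math.cos(theta), [math.sin((i + 1) * theta) for i in range(n)]


def max_residual(n):
    """Largest entry of E v - lambda v over all n eigenpairs."""
    worst = 0.0
    for k in range(1, n + 1):
        lam, v = eigenpair(n, k)
        Ev = apply_E(v)
        worst = max(worst, max(abs(Ev[i] - lam * v[i]) for i in range(n)))
    return worst


print(max_residual(8))
print([round(eigenpair(8, k)[0], 4) for k in range(1, 9)])
```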

4 Convergence of the proposed method

Let us denote the truncation errors for the group of four points \(w_{i,j}^{k+\frac{1}{2}}, w_{i+1,j}^{k+\frac{1}{2}}, w_{i+1,j+1}^{k+ \frac{1}{2}}, w_{i,j+1}^{k+\frac{1}{2}}\) by \(e_{i,j}^{k+\frac{1}{2}},e_{i+1,j}^{k+\frac{1}{2}},e_{i+1,j+1}^{k+ \frac{1}{2}}\), \(e_{i,j+1}^{k+\frac{1}{2}}\), respectively. Let \(R^{k+\frac{1}{2}}=\{R_{1,1}^{k+\frac{1}{2}}, R_{1,2}^{k+\frac{1}{2}}, \dots,R_{1,\frac{m-1}{4}}^{k+\frac{1}{2}},R_{2,1}^{k+\frac{1}{2}},R_{2,2}^{k+ \frac{1}{2}},\dots, R_{\frac{m-1}{4},\frac{m-1}{4}}^{k+\frac{1}{2}} \}\), where \(R_{i,j}^{k+\frac{1}{2}}=\{e_{i,j}^{k+\frac{1}{2}},e_{i+1,j}^{k+ \frac{1}{2}},e_{i+1,j+1}^{k+\frac{1}{2}},e_{i,j+1}^{k+\frac{1}{2}}\}\) and \(i,j=1,2,\dots,\frac{m-1}{4}\). Then from (8) we have

where φ is a constant.

Define the error as

By substituting (19) into (11) and using \(E^{0}=0\), we get

Proposition 2

Suppose \(E^{k+1}\) \((k=0,1,\dots,N)\) satisfy (20), then

Proof

We will use mathematical induction. When \(k=0\),

Assume that

then for \(s=k+1\),

where \(\phi = \frac{81 h^{2}-324 (\gamma \tau ^{\gamma }+\tau )-4.5 s_{1}(\gamma +1) +2}{79 h^{2}+348 (\tau ^{\gamma }+\tau )} \) and \(\phi \in (0,1)\), so

□

Theorem 1

The high-order explicit group scheme (10) is convergent with the order of convergence \(O(\tau + h^{4})\).

Proof

From (18), we have

Hence, we proved that the high-order explicit group scheme (10) is convergent with the order of convergence \(O(\tau + h^{4}) \). □

5 Numerical experiments and discussion

In this section, three numerical experiments are run in Mathematica on a Core i7 Duo 3.40 GHz machine with 4 GB RAM running Windows 7. Successive over-relaxation (SOR) is used as the acceleration technique, with relaxation factor \(\omega =1.8\) and convergence tolerance \(\zeta =10^{-5}\) for the maximum error \((L_{\infty })\). The \(C_{1}\)- and \(C_{2}\)-orders of convergence, for the space and time variables respectively, are calculated using [34]

where h, τ and \(L_{\infty }\) represent the space-step, the time-step, and the infinity norm, respectively.
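An experimental order of convergence is commonly obtained by comparing the maximum errors at two step sizes. The sketch below assumes the usual definitions, \(C_{1}\text{-order}=\log _{2} (L_{\infty }(2h,\tau )/L_{\infty }(h,\tau ) )\) and \(C_{2}\text{-order}=\log _{2} (L_{\infty }(h,2\tau )/L_{\infty }(h,\tau ) )\); that these match the formulas taken from [34] is an assumption. The error values are made up for illustration and are not results from the paper.

```python
import math


def observed_order(error_coarse, error_fine, refinement=2.0):
    """Order p such that error ~ C * step**p, from errors at two step sizes.

    error_coarse is the maximum error at the larger step and error_fine
    the error after dividing that step by `refinement`.
    """
    return math.log(error_coarse / error_fine) / math.log(refinement)


# Hypothetical errors: halving h cuts the error 16-fold (fourth order in space),
# halving tau cuts it 2-fold (first order in time).
c1_order = observed_order(1.6e-3, 1.0e-4)
c2_order = observed_order(2.0e-2, 1.0e-2)
print(round(c1_order, 6), round(c2_order, 6))
```

When testing the spatial order, the time step should be small enough that the temporal error does not dominate, and vice versa.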

The following three numerical experiments are considered:

Example 1

([27])

where \(0< x,y<1\), with initial and boundary conditions

and with the exact solution

Example 2

([27])

where \(0< x,y<1\), with initial and boundary conditions

and with the exact solution

Example 3

where \(0< x,y<1\), with initial and boundary conditions

and with the exact solution

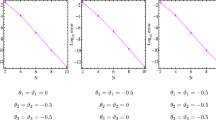

Tables 1 to 6 compare the execution time, error, and number of iterations of the standard point (C–N) method and the HEGM. Relative to the C–N point method, the HEGM reduces the execution time by (5–35)%, (7–35)%, (10–25)%, (8–18)%, (12.5–28.48)%, and (21.29–42.24)% in Tables 1 to 6, respectively. Tables 7 and 8 show the maximum errors and CPU times for different values of γ for Examples 1 and 2, respectively. Table 9 shows the maximum error for different values of the relaxation factor ω. Tables 10 to 14 report the orders of convergence of the HEGM in the space and time variables; the experimental orders agree with the theoretical order of convergence. Figures 2 to 5 show 3D graphs of the exact and approximate solutions of Examples 1 and 2, which indicate that the proposed method is effective and reliable. Figures 6 and 7 compare the execution times of FEG (HEGM) and SP (C–N) for Examples 1 and 2, respectively, and show that the HEGM requires less execution time than the C–N method. Figures 8 and 9 show the maximum error of the HEGM when \(\gamma =0.5\) and \(\tau =\frac{1}{20}\) for Examples 1 and 2, respectively. The computational effort is shown in Tables 16 and 17; the HEGM requires fewer operations than the high-order Crank–Nicolson finite difference method.
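The role of the relaxation factor can be illustrated with a minimal point-SOR sketch. It is an illustration under assumptions, not the solver used in the experiments: the model system is the tridiagonal matrix \(\operatorname{tridiag}(-1,2,-1)\) rather than the HEGM equations, the stopping test is the maximum change between successive iterates, and all names are hypothetical. The values \(\omega =1.8\) and tolerance \(10^{-5}\) follow the text.

```python
import math


def sor_solve(b, omega=1.8, tol=1e-5, max_iter=10_000):
    """Solve tridiag(-1, 2, -1) x = b by point SOR; return (x, iterations)."""
    n = len(b)
    x = [0.0] * n
    for iteration in range(1, max_iter + 1):
        max_change = 0.0
        for i in range(n):
            left = x[i - 1] if i > 0 else 0.0
            right = x[i + 1] if i < n - 1 else 0.0
            gauss_seidel = (b[i] + left + right) / 2.0
            new_value = (1.0 - omega) * x[i] + omega * gauss_seidel
            max_change = max(max_change, abs(new_value - x[i]))
            x[i] = new_value
        if max_change < tol:  # stop on the maximum-norm change
            return x, iteration
    raise RuntimeError("SOR did not converge")


n = 19
x_true = [math.sin(math.pi * (i + 1) / (n + 1)) for i in range(n)]
b = [2.0 * x_true[i]
     - (x_true[i - 1] if i > 0 else 0.0)
     - (x_true[i + 1] if i < n - 1 else 0.0)
     for i in range(n)]

x_sor, it_sor = sor_solve(b, omega=1.8)
x_gs, it_gs = sor_solve(b, omega=1.0)  # omega = 1 reduces to Gauss-Seidel
print(it_sor, it_gs)
```

On this model problem the over-relaxed iteration needs far fewer sweeps than Gauss–Seidel, which is the effect the relaxation factor is meant to provide.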

Figure 2. Exact solution for Example 1

Figure 3. Approximate solution for Example 1 when \(h = \tau = \frac{1}{30}\)

Figure 4. Exact solution for Example 2

Figure 5. Approximate solution for Example 2 when \(h = \tau = \frac{1}{30}\)

Figure 6. Execution time (in s) for different mesh sizes when \(\gamma =0.75\) for Example 1

Figure 7. Execution time (in s) for different mesh sizes when \(\gamma =0.75\) for Example 2

6 Conclusion

In this paper, we have solved two-dimensional fractional Rayleigh–Stokes problem for a heated generalized second-grade fluid using the HEGM. The \(C_{2}\)-order of convergence shows that the theoretical order of convergence agrees with the experimental order of convergence. The proposed method reduces execution time and computational complexity as compared to the high-order compact Crank–Nicolson finite difference scheme. We proved the unconditional stability using the matrix analysis method; moreover, the proposed method is convergent.

Availability of data and materials

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

References

[1] Miller, K.S., Ross, B.: An introduction to the fractional calculus and fractional differential equations (1993)

[2] Podlubny, I.: Fractional Differential Equations. Mathematics in Science and Engineering, vol. 198. Academic Press, San Diego (1999)

[3] Khan, M.A., Ali, N.H.M.: High-order compact scheme for the two-dimensional fractional Rayleigh–Stokes problem for a heated generalized second-grade fluid. Adv. Differ. Equ. 2020(1), 1 (2020)

[4] Saadatmandi, A., Dehghan, M.: A new operational matrix for solving fractional-order differential equations. Comput. Math. Appl. 59(3), 1326–1336 (2010)

[5] Dehghan, M., Manafian, J., Saadatmandi, A.: Solving nonlinear fractional partial differential equations using the homotopy analysis method. Numer. Methods Partial Differ. Equ. 26(2), 448–479 (2010)

[6] Liu, Q., Liu, F., Turner, I., Anh, V.: Finite element approximation for a modified anomalous subdiffusion equation. Appl. Math. Model. 35(8), 4103–4116 (2011)

[7] Li, X., Xu, C.: A space-time spectral method for the time fractional diffusion equation. SIAM J. Numer. Anal. 47(3), 2108–2131 (2009)

[8] Abbaszadeh, M., Dehghan, M.: A finite-difference procedure to solve weakly singular integro partial differential equation with space-time fractional derivatives. Eng. Comput., 1–10 (2020)

[9] Abbaszadeh, M., Dehghan, M.: A reduced order finite difference method for solving space-fractional reaction-diffusion systems: the Gray–Scott model. Eur. Phys. J. Plus 134(12), 620 (2019)

[10] Dehghan, M., Abbaszadeh, M.: A finite element method for the numerical solution of Rayleigh–Stokes problem for a heated generalized second grade fluid with fractional derivatives. Eng. Comput. 33(3), 587–605 (2017)

[11] Hendy, A.S., Zaky, M.A.: Global consistency analysis of \(L_{1}\)-Galerkin spectral schemes for coupled nonlinear space-time fractional Schrödinger equations. Appl. Numer. Math. (2020)

[12] Zaky, M.A., Hendy, A.S., Macías-Díaz, J.E.: Semi-implicit Galerkin–Legendre spectral schemes for nonlinear time-space fractional diffusion–reaction equations with smooth and nonsmooth solutions. J. Sci. Comput. 82(1), 1–27 (2020)

[13] Zaky, M.A.: Recovery of high order accuracy in Jacobi spectral collocation methods for fractional terminal value problems with non-smooth solutions. J. Comput. Appl. Math. 357, 103–122 (2019)

[14] Zaky, M.A.: An accurate spectral collocation method for nonlinear systems of fractional differential equations and related integral equations with nonsmooth solutions. Appl. Numer. Math. (2020)

[15] Zaky, M.A.: An improved tau method for the multi-dimensional fractional Rayleigh–Stokes problem for a heated generalized second grade fluid. Comput. Math. Appl. 75(7), 2243–2258 (2018)

[16] Yanbing, Y., Ahmed, M.S., Lanlan, Q., Runzhang, X.: Global well-posedness of a class of fourth-order strongly damped nonlinear wave equations. Opusc. Math. 39(2), 297 (2019)

[17] Ali, U., Abdullah, F.A., Mohyud-Din, S.T.: Modified implicit fractional difference scheme for 2D modified anomalous fractional sub-diffusion equation. Adv. Differ. Equ. 2017(1), 1 (2017)

[18] Khan, M.A., Ali, N.H.M.: Fourth-order compact iterative scheme for the two-dimensional time fractional sub-diffusion equations. Math. Stat. 8(2A), 52–57 (2020)

[19] Salama, F.M., Ali, N.H.M., Abd Hamid, N.N.: Efficient hybrid group iterative methods in the solution of two-dimensional time fractional cable equation. Adv. Differ. Equ. 2020(1), 1 (2020)

[20] Abbaszadeh, M., Dehghan, M.: Investigation of the Oldroyd model as a generalized incompressible Navier–Stokes equation via the interpolating stabilized element free Galerkin technique. Appl. Numer. Math. 150, 274–294 (2020)

[21] Mirzaei, D., Dehghan, M.: New implementation of MLBIE method for heat conduction analysis in functionally graded materials. Eng. Anal. Bound. Elem. 36(4), 511–519 (2012)

[22] Dehghan, M.: Three-level techniques for one-dimensional parabolic equation with nonlinear initial condition. Appl. Math. Comput. 151(2), 567–579 (2004)

[23] Tan, W.-C., Xu, M.-Y.: The impulsive motion of flat plate in a generalized second grade fluid. Mech. Res. Commun. 29(1), 3–9 (2002)

[24] Fetecau, C., Jamil, M., Fetecau, C., Vieru, D.: The Rayleigh–Stokes problem for an edge in a generalized Oldroyd-b fluid. Z. Angew. Math. Phys. 60(5), 921–933 (2009)

[25] Chen, C.-M., Liu, F., Burrage, K., Chen, Y.: Numerical methods of the variable-order Rayleigh–Stokes problem for a heated generalized second grade fluid with fractional derivative. IMA J. Appl. Math. 78(5), 924–944 (2013)

[26] Hafez, R.M., Zaky, M.A., Abdelkawy, M.A.: Jacobi spectral Galerkin method for distributed-order fractional Rayleigh–Stokes problem for a generalized second grade fluid. Front. Phys. 7, 240 (2020). https://doi.org/10.3389/fphy

[27] Mohebbi, A., Abbaszadeh, M., Dehghan, M.: Compact finite difference scheme and RBF meshless approach for solving 2D Rayleigh–Stokes problem for a heated generalized second grade fluid with fractional derivatives. Comput. Methods Appl. Mech. Eng. 264, 163–177 (2013)

[28] Kew, L.M., Ali, N.H.M.: New explicit group iterative methods in the solution of three dimensional hyperbolic telegraph equations. J. Comput. Phys. 294, 382–404 (2015)

[29] Ali, N.H.M., Saeed, A.M.: Preconditioned modified explicit decoupled group for the solution of steady state Navier–Stokes equation. Appl. Math. Inf. Sci. 7(5), 1837 (2013)

[30] Ali, N.H.M., Kew, L.M.: New explicit group iterative methods in the solution of two dimensional hyperbolic equations. J. Comput. Phys. 231(20), 6953–6968 (2012)

[31] Balasim, A.T., Ali, N.H.M.: Group Iterative Methods for the Solution of Two-Dimensional Time-Fractional Diffusion Equation. AIP Conference Proceedings, vol. 1750. AIP, New York (2016)

[32] Cui, M.: Compact finite difference method for the fractional diffusion equation. J. Comput. Phys. 228(20), 7792–7804 (2009)

[33] Yuste, S.B.: Weighted average finite difference methods for fractional diffusion equations. J. Comput. Phys. 216(1), 264–274 (2006)

[34] Abbaszadeh, M., Mohebbi, A.: A fourth-order compact solution of the two-dimensional modified anomalous fractional sub-diffusion equation with a nonlinear source term. Comput. Math. Appl. 66(8), 1345–1359 (2013)

Acknowledgements

All the financial aid for publishing this paper is provided by the Fundamental Research Grant Scheme (FRGS) of Prof. Norhashidah Mohd Ali and Universiti Sains Malaysia.

Funding

The authors acknowledge the Fundamental Research Grant Scheme (FRGS) (203, PMATHS, 6711805) for the support of this work.

Author information

Contributions

The main idea of this article was proposed by MAK, NHMA and NNAH. MAK prepared the manuscript initially and performed all the steps of the proofs in this research. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Khan, M.A., Ali, N.H.M. & Hamid, N.N.A. A new fourth-order explicit group method in the solution of two-dimensional fractional Rayleigh–Stokes problem for a heated generalized second-grade fluid. Adv Differ Equ 2020, 598 (2020). https://doi.org/10.1186/s13662-020-03061-6

DOI: https://doi.org/10.1186/s13662-020-03061-6