Abstract

In this paper, we solve the two-dimensional modified anomalous fractional sub-diffusion equation using a modified implicit finite difference approximation. The stability and convergence of the proposed scheme are analyzed by the Fourier series method. We show that the scheme is unconditionally stable and that the approximate solution converges to the exact solution. A numerical example is given to show the application and feasibility of the proposed scheme.


1 Introduction

There has been much interest over the last two decades in fractional calculus and its applications. Comprehensive treatments of fractional calculus and its applications can be found in Miller and Ross [1], Oldham and Spanier [2], Podlubny [3] and Samko et al. [4]. Many researchers have used numerical methods to solve biological and fluid dynamics models and have investigated the stability and convergence of these methods; see [5–9]. The modified anomalous sub-diffusion equation was proposed to describe processes that become less anomalous as time progresses. It does so by including a secondary fractional time derivative acting on the diffusion operator [10–13].

Many researchers have solved this problem with different methods. Li and Wang [14] proposed an improved efficient difference method for the modified anomalous sub-diffusion equation with a nonlinear source term. They used:

- the weighted and shifted Grünwald-Letnikov formula for the Riemann-Liouville fractional derivative,
- a compact difference for the space derivative, and
- a second-order interpolation formula for the nonlinear source term.

Dehghan et al. [15] used a finite difference scheme for the Riemann-Liouville fractional derivative and the Legendre spectral element method for the space component. To obtain a semi-discrete scheme, they integrated both sides of the equation. For the full discretization, they applied the Legendre spectral element method to the one- and two-dimensional modified anomalous sub-diffusion equation. Liu et al. [13] presented a new implicit numerical method for the modified anomalous sub-diffusion equation with a nonlinear source term in a bounded domain, and analyzed its stability and convergence by a new energy method. Cao et al. [16] studied the implicit midpoint method and constructed a new numerical scheme for the modified fractional sub-diffusion equation with a nonlinear source term. Ding and Li [17] used a second-order approximation of the Riemann-Liouville fractional derivative, constructed two kinds of novel numerical schemes, and discussed their stability, convergence and solvability by the Fourier method. Many other authors have developed high-order difference schemes for the modified anomalous sub-diffusion equation, applied the Grünwald-Letnikov definition to the Riemann-Liouville fractional derivative, and discussed stability and convergence [11, 18, 19].

In this paper, we develop a fully numerical modified implicit difference method for the modified anomalous fractional sub-diffusion equation. It applies the discretized form of the Riemann-Liouville integral operator and the backward difference formula for the partial derivative with respect to time. We also analyze the stability and convergence of the modified scheme by the Fourier series method.

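We consider the following modified anomalous fractional sub-diffusion equation [18]:

\[\frac{\partial u(x,y,t)}{\partial t}=\left(A\frac{\partial^{1-\alpha}}{\partial t^{1-\alpha}}+B\frac{\partial^{1-\beta}}{\partial t^{1-\beta}}\right)\left(\frac{\partial^{2}u(x,y,t)}{\partial x^{2}}+\frac{\partial^{2}u(x,y,t)}{\partial y^{2}}\right)+f(x,y,t),\quad 0<x,y<L,\ 0<t\leq T,\]

subject to the initial and boundary conditions

\[u(x,y,0)=\varphi(x,y),\quad 0\leq x,y\leq L,\]

\[u(0,y,t)=\varphi_{1}(y,t),\quad u(L,y,t)=\varphi_{2}(y,t),\quad u(x,0,t)=\varphi_{3}(x,t),\quad u(x,L,t)=\varphi_{4}(x,t),\quad 0<t\leq T.\]

Here f, φ, \(\varphi_{1}\), \(\varphi_{2}\), \(\varphi_{3}\) and \(\varphi_{4}\) are known functions and A, B are constants. The operators \(\frac{\partial ^{1-\alpha}}{\partial t^{1-\alpha}}\) and \(\frac{\partial^{1-\beta }}{\partial t^{1-\beta}}\) are the Riemann-Liouville fractional derivatives of orders \(1-\alpha\) and \(1-\beta\), respectively, defined by [3, 20]

\[\frac{\partial^{1-\gamma}u(x,y,t)}{\partial t^{1-\gamma}}=\frac{\partial}{\partial t}\left[{}_{0}D_{t}^{-\gamma}u(x,y,t)\right],\quad \gamma=\alpha,\beta.\]

Here

\[{}_{0}D_{t}^{-\gamma}u(x,y,t)=\frac{1}{\Gamma(\gamma)}\int_{0}^{t}\frac{u(x,y,s)}{(t-s)^{1-\gamma}}\,ds\]

is the Riemann-Liouville integral of fractional order \(\gamma\), with \(0<\alpha, \beta <1\).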

The following two lemmas will be used in this paper [20].

Lemma 1

If \(u(t) \in C^{1} [0,T]\), then

where \(|R_{k}^{\gamma}|\leq C b_{k}^{(\gamma)}\tau\).
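The formula of Lemma 1 is not reproduced in this text. The Python sketch below therefore makes an assumption: it uses the standard right-endpoint rectangle rule for the Riemann-Liouville integral,

\(\frac{1}{\Gamma(\gamma)}\int_{0}^{t_{k}}(t_{k}-s)^{\gamma-1}u(s)\,ds\approx\frac{\tau^{\gamma}}{\Gamma(1+\gamma)}\sum_{j=0}^{k-1}b_{j}^{(\gamma)}u(t_{k-j})\), with \(b_{j}^{(\gamma)}=(j+1)^{\gamma}-j^{\gamma}\).

This rule has a first-order error for \(u\in C^{1}[0,T]\). The sketch compares the approximation with the exact value \(t^{1+\gamma}/\Gamma(2+\gamma)\) for \(u(t)=t\) at \(t=1\); halving τ should roughly halve the error. The function names are our own.

```python
import math
import numpy as np


def rl_weights(gamma, k):
    """Return b_j = (j+1)**gamma - j**gamma for j = 0, ..., k-1."""
    j = np.arange(k, dtype=float)
    return (j + 1.0) ** gamma - j ** gamma


def rl_integral(u_values, gamma, tau):
    """Right-endpoint approximation of the Riemann-Liouville integral of order gamma at t_k = k*tau.

    u_values[m] holds u(t_m) for m = 0, ..., k; u(t_0) does not enter the sum.
    """
    k = len(u_values) - 1
    b = rl_weights(gamma, k)
    recent_first = np.asarray(u_values, dtype=float)[k:0:-1]  # u(t_k), u(t_{k-1}), ..., u(t_1)
    return tau ** gamma / math.gamma(1.0 + gamma) * float(np.dot(b, recent_first))


gamma = 0.5
exact = 1.0 / math.gamma(2.0 + gamma)  # integral of order gamma of u(t) = t, evaluated at t = 1
errs = []
for n in (100, 200, 400):
    t = np.linspace(0.0, 1.0, n + 1)
    errs.append(abs(rl_integral(t, gamma, 1.0 / n) - exact))
print(errs)
```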

Lemma 2

The coefficients \(b_{k}^{(\gamma)}=(k+1)^{\gamma}-k^{\gamma}\) (\(k=0,1,2,\ldots\)) satisfy the following properties:

- (i) \(b_{0}^{(\gamma)}=1\), \(b_{k}^{(\gamma)}>0\), \(k=0,1,2,\ldots\).

- (ii) \(b_{k-1}^{(\gamma)}>b_{k}^{(\gamma)}\), \(k=1,2,\ldots\).

- (iii) There exists a positive constant \(C>0\) such that \(\tau\leq C b_{k}^{(\gamma)} \tau^{\gamma}\), \(k=1, 2,\ldots\).

- (iv) \(\sum_{j=0}^{k}b_{j}^{(\gamma)} \tau^{\gamma}=(k+1)^{\gamma}\tau^{\gamma}\leq T^{\gamma}\), \(k=0,1,\ldots,N-1\).
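The properties of Lemma 2 can be checked numerically in a quick Python sketch. It assumes \(b_{k}^{(\gamma)}=(k+1)^{\gamma}-k^{\gamma}\) and \(T=N\tau\).

- For property (iii), the sketch returns the smallest admissible constant \(C=\max_{1\le k\le N-1}\tau^{1-\gamma}/b_{k}^{(\gamma)}\). The mean value theorem gives \(b_{k}^{(\gamma)}\geq\gamma(k+1)^{\gamma-1}\), so this constant never exceeds \(T^{1-\gamma}/\gamma\), whatever N is.
- Property (iv) is checked in the form \(\sum_{j=0}^{k}b_{j}^{(\gamma)}\tau^{\gamma}=((k+1)\tau)^{\gamma}\leq T^{\gamma}\) for \(k\le N-1\).

The helper names are hypothetical.

```python
import numpy as np


def rl_weights(gamma, n):
    """b_k = (k+1)**gamma - k**gamma for k = 0, ..., n-1."""
    k = np.arange(n, dtype=float)
    return (k + 1.0) ** gamma - k ** gamma


def check_lemma2(gamma, n_steps, t_final=1.0):
    """Assert properties (i), (ii) and (iv); return the constant needed in (iii)."""
    tau = t_final / n_steps
    b = rl_weights(gamma, n_steps)
    assert b[0] == 1.0 and np.all(b > 0.0)             # (i)
    assert np.all(np.diff(b) < 0.0)                    # (ii): strictly decreasing
    partial = np.cumsum(b) * tau ** gamma              # (iv): telescoping partial sums
    k = np.arange(n_steps)
    assert np.allclose(partial, ((k + 1) * tau) ** gamma)
    assert np.all(partial <= t_final ** gamma + 1e-12)
    # (iii): smallest C with tau <= C * b_k * tau**gamma for k = 1, ..., N-1
    return float(np.max(tau / (b[1:] * tau ** gamma)))


for g in (0.25, 0.5, 0.75):
    print(g, check_lemma2(g, 100), check_lemma2(g, 1000))
```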

2 Modified implicit difference approximation

In this section, we develop a modified implicit difference scheme for the modified anomalous fractional sub-diffusion equation (1)-(3). For the discretization of the Riemann-Liouville fractional derivative, we use the definition in (4)-(5), and we replace the second-order space derivatives by central difference approximations. The grid is defined as follows:

- in the x-direction, the grid points are \(x_{i}=i\Delta x\), \(i=1,2,\ldots,M_{x}-1\), with \(\Delta x=\frac{L}{M_{x}}\);
- in the y-direction, they are \(y_{j}=j\Delta y\), \(j=1,2,\ldots,M_{y}-1\), with \(\Delta y=\frac{L}{M_{y}}\);
- the time levels are \(t_{k}=k\tau\), \(k=1,2,\ldots,N\), where \(\tau=\frac{T}{N}\).

Let \(u_{i,j}^{k}\) be the numerical approximation to \(u(x_{i},y_{j},t_{k})\). By applying (4) and (5) to equation (1), we obtain

To discretize equation (9), we apply:

- Lemma 1 to the Riemann-Liouville integral operator,
- the central difference approximation to the second-order space derivatives, and
- the backward difference approximation to the partial derivative with respect to time.

This gives

Here,

and

From the above, we obtain the following modified implicit difference scheme for the modified anomalous fractional sub-diffusion equation (1)-(3), together with its initial and boundary conditions:

where \(i=1,2,\ldots,M_{x}-1\), \(j=1,2,\ldots,M_{y}-1\) and \(k=1,2,\ldots,N-1\), with
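Scheme (13) itself is not reproduced in this text, so the following Python sketch rests on an assumption. We suppose that combining the right-endpoint weights \(b_{j}^{(\gamma)}=(j+1)^{\gamma}-j^{\gamma}\) with a backward difference in time yields the update

\(u^{k}-u^{k-1}=c_{\alpha}\big[\delta^{2}u^{k}+\sum_{j=1}^{k-1}(b_{j}^{(\alpha)}-b_{j-1}^{(\alpha)})\delta^{2}u^{k-j}\big]+c_{\beta}\big[\delta^{2}u^{k}+\sum_{j=1}^{k-1}(b_{j}^{(\beta)}-b_{j-1}^{(\beta)})\delta^{2}u^{k-j}\big]+\tau f^{k}\),

where \(c_{\alpha}=A\tau^{\alpha}/\Gamma(1+\alpha)\), \(c_{\beta}=B\tau^{\beta}/\Gamma(1+\beta)\) and \(\delta^{2}\) is the five-point discrete Laplacian. This may differ in indexing from (13).

The test problem is hypothetical and is not Example 1: \(u=t^{2}\sin(\pi x)\sin(\pi y)\) on \([0,1]^{2}\) with zero boundary data. Its source term follows from \(\frac{\partial^{1-\gamma}}{\partial t^{1-\gamma}}t^{2}=\frac{2}{\Gamma(2+\gamma)}t^{1+\gamma}\). All function names are our own.

```python
import math
import numpy as np


def rl_weights(gamma, n):
    """b_j = (j+1)**gamma - j**gamma for j = 0, ..., n-1."""
    j = np.arange(n, dtype=float)
    return (j + 1.0) ** gamma - j ** gamma


def laplacian_2d(m, h):
    """Five-point Laplacian on the (m-1) x (m-1) interior nodes with zero Dirichlet data."""
    n = m - 1
    d1 = (np.diag(-2.0 * np.ones(n)) + np.diag(np.ones(n - 1), 1)
          + np.diag(np.ones(n - 1), -1)) / h ** 2
    eye = np.eye(n)
    return np.kron(d1, eye) + np.kron(eye, d1)


def solve_test_problem(alpha, beta, a_coef, b_coef, m, n_steps):
    """Return E_inf at t = 1 for the hypothetical solution u = t^2 sin(pi x) sin(pi y) on [0, 1]^2."""
    h, tau = 1.0 / m, 1.0 / n_steps
    x = np.linspace(0.0, 1.0, m + 1)[1:-1]                  # interior nodes (same in x and y)
    shape = np.outer(np.sin(np.pi * x), np.sin(np.pi * x)).ravel()
    lap = laplacian_2d(m, h)
    ca = a_coef * tau ** alpha / math.gamma(1.0 + alpha)
    cb = b_coef * tau ** beta / math.gamma(1.0 + beta)
    ba, bb = rl_weights(alpha, n_steps), rl_weights(beta, n_steps)
    system = np.eye(lap.shape[0]) - (ca * ba[0] + cb * bb[0]) * lap
    u = np.zeros(lap.shape[0])                              # initial condition u(x, y, 0) = 0
    lap_hist = []                                           # lap_hist[q - 1] stores delta^2 u^q
    for k in range(1, n_steps + 1):
        t = k * tau
        # source term chosen so that t^2 sin(pi x) sin(pi y) solves the continuous equation
        f = (2.0 * t + 4.0 * math.pi ** 2 * (a_coef * t ** (1 + alpha) / math.gamma(2 + alpha)
                                             + b_coef * t ** (1 + beta) / math.gamma(2 + beta))) * shape
        # memory term: sum_{j=1}^{k-1} [c_a (b_j - b_{j-1}) + c_b (b_j - b_{j-1})] delta^2 u^{k-j}
        memory = np.zeros_like(u)
        for j in range(1, k):
            memory += (ca * (ba[j] - ba[j - 1]) + cb * (bb[j] - bb[j - 1])) * lap_hist[k - j - 1]
        u = np.linalg.solve(system, u + memory + tau * f)
        lap_hist.append(lap @ u)
    return float(np.max(np.abs(u - shape)))                 # exact solution at t = 1 equals shape


for n_steps in (10, 20, 40):
    print(n_steps, solve_test_problem(0.5, 0.5, 1.0, 1.0, 10, n_steps))
```

Each time step solves a linear system with the same symmetric positive definite matrix \(I-(c_{\alpha}+c_{\beta})\delta^{2}\); a larger computation would factor it once. The memory sum makes the total work grow like \(N^{2}\). Because Δx = 1/10 is fixed here, the \(O((\Delta x)^{2})\) spatial error eventually limits how far \(E_{\infty}\) can fall as τ is refined.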

3 Stability of the modified implicit scheme

In this section, we investigate the stability of the modified implicit numerical scheme using the Fourier series method. Let \(U_{i,j}^{k}\) be the approximate solution of (13); then

where \(i=1,2,\ldots,M_{x}-1\), \(j=1,2,\ldots,M_{y}-1\) and \(k=1,2,\ldots ,N-1\).

Next, the error is defined as

where \(e_{i,j}^{k}\) satisfies (16) and

The boundary and initial conditions for the error are given by

We define the following grid functions for \(k=1,2,\ldots,N\):

Then \(e^{k} (x,y)\) can be expanded in a Fourier series as

where

From the definition of the \(l^{2}\) norm and Parseval’s equality, we have

Suppose that

where \(\sigma_{1}=2\pi l_{1}/L\) and \(\sigma_{2}=2\pi l_{2}/L\). Substituting (24) into (18), we obtain

where

Proposition 1

If \(\lambda^{k}\) (\(k=1,2,\ldots,N\)) satisfy (25), then \(|\lambda ^{k} |\leq|\lambda^{0}|\).

Proof

We use mathematical induction. For \(k=1\), (25) gives

Since \(\mu_{1}, \mu_{2}\geq0\) and \(b_{0}^{(\alpha)}=b_{0}^{(\beta)}=1\), it follows that

Now assume that

Since \(0<\alpha, \beta<1\), (25) and Lemma 2 give

This completes the proof of Proposition 1 by induction. □
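Equation (25) is not reproduced in this text. As an assumption, suppose the scheme has the form obtained from the right-endpoint weights \(b_{j}^{(\gamma)}=(j+1)^{\gamma}-j^{\gamma}\) and a backward difference in time. Substituting a single Fourier mode \(\lambda^{k}e^{i(\sigma_{1}x+\sigma_{2}y)}\) then gives our own derivation below, which may differ in form from (25):

\((1+\mu_{1}+\mu_{2})\lambda^{k}=\lambda^{k-1}+\sum_{j=1}^{k-1}\big[\mu_{1}(b_{j-1}^{(\alpha)}-b_{j}^{(\alpha)})+\mu_{2}(b_{j-1}^{(\beta)}-b_{j}^{(\beta)})\big]\lambda^{k-j}\).

The coefficients are \(\mu_{1}=\frac{A\tau^{\alpha}}{\Gamma(1+\alpha)}\kappa\) and \(\mu_{2}=\frac{B\tau^{\beta}}{\Gamma(1+\beta)}\kappa\), with \(\kappa=\frac{4}{(\Delta x)^{2}}\sin^{2}\frac{\sigma_{1}\Delta x}{2}+\frac{4}{(\Delta y)^{2}}\sin^{2}\frac{\sigma_{2}\Delta y}{2}\geq0\). Every coefficient on the right is non-negative by Lemma 2, which is the mechanism behind Proposition 1.

The Python sketch below (hypothetical helper names) iterates this recurrence and checks \(|\lambda^{k}|\leq|\lambda^{0}|\) numerically, including for very large μ.

```python
import math
import numpy as np


def rl_weights(gamma, n):
    """b_j = (j+1)**gamma - j**gamma for j = 0, ..., n-1."""
    j = np.arange(n, dtype=float)
    return (j + 1.0) ** gamma - j ** gamma


def mode_mu(coef, gamma, tau, sigma1, sigma2, dx, dy):
    """Assumed mu = coef * tau^gamma / Gamma(1+gamma) * kappa for the Fourier mode (sigma1, sigma2)."""
    kappa = (4.0 / dx ** 2) * math.sin(sigma1 * dx / 2) ** 2 + (4.0 / dy ** 2) * math.sin(sigma2 * dy / 2) ** 2
    return coef * tau ** gamma / math.gamma(1.0 + gamma) * kappa


def amplitudes(mu1, mu2, alpha, beta, n_steps, lam0=1.0):
    """Iterate the assumed amplitude recurrence and return lambda^0, ..., lambda^N."""
    ba, bb = rl_weights(alpha, n_steps), rl_weights(beta, n_steps)
    da, db = ba[:-1] - ba[1:], bb[:-1] - bb[1:]   # b_{j-1} - b_j >= 0 for j = 1, ..., N-1
    lam = [lam0]
    for k in range(1, n_steps + 1):
        memory = sum((mu1 * da[j - 1] + mu2 * db[j - 1]) * lam[k - j] for j in range(1, k))
        lam.append((lam[k - 1] + memory) / (1.0 + mu1 + mu2))
    return np.array(lam)


# Example: mode l1 = 3, l2 = 5 on [0, 1]^2 with A = B = 1 (hypothetical parameters).
tau, dx = 0.01, 0.1
mu1 = mode_mu(1.0, 0.3, tau, 2 * math.pi * 3, 2 * math.pi * 5, dx, dx)
mu2 = mode_mu(1.0, 0.8, tau, 2 * math.pi * 3, 2 * math.pi * 5, dx, dx)
lam = amplitudes(mu1, mu2, 0.3, 0.8, 100)
print(mu1, mu2, np.max(np.abs(lam)))
```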

Proposition 1 and equation (23) imply that the solution of (13) satisfies

which proves that the modified implicit difference scheme (13) is unconditionally stable.
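The stability argument above passes from the \(l^{2}\) norm of a grid function to the sum of its squared Fourier coefficients via Parseval's equality. As an illustration only, the discrete analogue can be checked with NumPy's FFT. Normalising the coefficients by \(1/(M_{x}M_{y})\) gives

\(\Delta x\Delta y\sum_{i,j}|e_{i,j}|^{2}=\Delta x\Delta y\,M_{x}M_{y}\sum_{l_{1},l_{2}}|\xi(l_{1},l_{2})|^{2}\),

which equals \(L^{2}\sum|\xi|^{2}\) when \(M_{x}\Delta x=M_{y}\Delta y=L\). The periodic DFT here stands in for the Fourier series of the piecewise-constant grid function used above, and the helper name is hypothetical.

```python
import numpy as np


def parseval_norms(e, dx, dy):
    """Return the discrete l2 norm of e and the same norm computed from its DFT coefficients."""
    mx, my = e.shape
    xi = np.fft.fft2(e) / (mx * my)          # Fourier coefficients normalised by 1/(Mx*My)
    norm_grid = np.sqrt(dx * dy * np.sum(np.abs(e) ** 2))
    norm_coef = np.sqrt(dx * dy * mx * my * np.sum(np.abs(xi) ** 2))
    return norm_grid, norm_coef


rng = np.random.default_rng(0)
ng, nc = parseval_norms(rng.standard_normal((9, 9)), 0.1, 0.1)
print(ng, nc)
```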

4 Convergence of the modified implicit scheme

In this section, we analyze the convergence of the modified implicit scheme, following an approach similar to that of Section 3.

Expanding the exact solution \(u(x_{i}, y_{j}, t_{k})\) in a Taylor series, we obtain the truncation error of the modified implicit scheme as

with \(i=1,2,\ldots,M_{x}-1\), \(j=1,2,\ldots,M_{y}-1\), \(k=1,2,\ldots,N\).

From (1), we have

Since i, j and k take only finitely many values, there is a positive constant \(C_{1}\), independent of i, j and k, such that

with \(i=1,2,\ldots,M_{x}-1\), \(j=1,2,\ldots,M_{y}-1\), \(k=1,2,\ldots,N\). The error is defined as

From (31), we have

Subtracting (35) from (13), we obtain the error equation

with error boundary conditions

and the initial condition

Next, we define the following grid functions for \(k=1,2,\ldots,N\):

and

\(i=1,2,\ldots,M_{x}-1\), \(j=1,2,\ldots,M_{y}-1\), \(k=1,2,\ldots,N\).

Here, \(E^{k}(x,y)\) and \(R^{k}(x,y)\) can be expanded in Fourier series as

where

From the definition of the \(l^{2}\) norm and Parseval’s equality, we have

and

Based on the above, suppose that

respectively. Substituting (47) and (48) into (36) gives

where \(\mu_{1}\) and \(\mu_{2}\) are as defined in Section 3.

Proposition 2

Let \(\xi^{k}\) (\(k=1,2,\ldots,N\)) be the solution of (49). Then there is a positive constant \(C_{2}\) such that

Proof

From \(E^{0}=0\) and (43), we have

From (44) and (46), there is a positive constant \(C_{2}\) such that

We again use mathematical induction. For \(k=1\), (49) and (50) give

Since \(\mu_{1}, \mu_{2}\geq0\), (51) yields

Now suppose that

As \(0<\alpha, \beta<1\), from (48), (50) and Lemma 2, we have

Since \(\mu_{1}, \mu_{2}\geq0\) and, by Lemma 2, \(1-b_{k-1}^{(\gamma)}\geq0\) (\(\gamma=\alpha,\beta\)) for all k, we obtain

This completes the proof of Proposition 2 by induction. □

Theorem 1

The modified implicit difference scheme is \(l^{2}\) convergent and the order of convergence is \(O(\tau+\tau(\Delta x)^{2}+\tau(\Delta y)^{2})\).

Proof

In view of Proposition 2, (45), (46) and (55), we have

Since \(k \tau\leq T\), it follows that

where \(C=C_{1} C_{2} T L\).

This completes the proof of the theorem. □

5 Numerical experiments

In this section, we solve a numerical example to test the theoretical analysis. The maximum errors between the numerical and exact solutions are compared with those reported in the cited references. The maximum error is defined as

Example 1

Consider the following two-dimensional modified anomalous fractional sub-diffusion equation [18]:

where

subject to the initial and boundary conditions

The exact solution is given by

The developed modified implicit scheme is applied to problem (59)-(61). Table 1 shows the errors \(E_{\infty}\) at \(T=1.0\) for the space step size \(\Delta x=\Delta y=\frac{1}{10}\) and for various values of τ. Note that the time step τ is defined by \(\tau =\frac{T}{N}\).
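A temporal convergence order can be read off a table of \(E_{\infty}\) values computed for successive time steps using the observed order \(p_{i}=\log(E_{i}/E_{i+1})/\log(\tau_{i}/\tau_{i+1})\). For a scheme that is first order in time, this should be close to 1 while the time error dominates the spatial error.

The Python sketch below (hypothetical helper names) computes \(E_{\infty}\) as the largest nodal difference, together with the observed orders. Its usage example feeds in synthetic errors, not the values in Table 1.

```python
import math
import numpy as np


def max_error(u_num, u_exact):
    """E_inf: largest absolute nodal difference between numerical and exact solutions."""
    return float(np.max(np.abs(np.asarray(u_num, dtype=float) - np.asarray(u_exact, dtype=float))))


def observed_orders(taus, errors):
    """Observed orders log(E_i / E_{i+1}) / log(tau_i / tau_{i+1}) for consecutive rows."""
    return [math.log(errors[i] / errors[i + 1]) / math.log(taus[i] / taus[i + 1])
            for i in range(len(taus) - 1)]


# Synthetic errors that decay exactly like 3 * tau (not taken from Table 1).
taus = [1 / 10, 1 / 20, 1 / 40]
orders = observed_orders(taus, [3.0 * t for t in taus])
print(orders)
```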

The numerical results in Tables 1 and 2, obtained for various values of the time step size τ and of α and β, are consistent with our theoretical analysis.

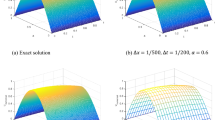

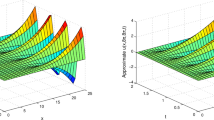

Figures 1 and 2 compare the numerical solution of equation (59) with the exact solution for \(\alpha=0.25, 0.75\) and \(\beta=0.45, 0.85\), respectively, at \(y=0.1\) and \(T=1.0\). The numerical solution is in excellent agreement with the exact solution, which is consistent with our theoretical analysis.

6 Conclusion

A modified implicit difference scheme for the two-dimensional modified anomalous fractional sub-diffusion equation has been described in this paper. The modified scheme has low complexity and low computational cost, and it is easy to implement. Using the Fourier series method, we have shown that the scheme is unconditionally stable and convergent, with convergence order \(O(\tau +\tau(\Delta x)^{2}+\tau(\Delta y)^{2})\). The scheme has been applied to a test example, and the results have been presented graphically and numerically. For this example, a comparison of the proposed scheme with the numerical methods in the cited references shows that the scheme is feasible and accurate. This technique can also be extended to explicit and Crank-Nicolson methods and applied to other types of fractional differential equations.

References

Miller, KS, Ross, B: An Introduction to the Fractional Calculus and Fractional Differential Equations. Wiley, New York (1993)

Oldham, KB, Spanier, J: Fractional Calculus. Academic Press, New York (1974)

Podlubny, I: Fractional Differential Equations. Academic Press, New York (1999)

Samko, SG, Kilbas, AA, Marichev, OI: Fractional Integrals and Derivatives: Theory and Applications. Gordon & Breach, Yverdon (1993)

Hameed, M, Khan, AA, Ellahi, R, Raza, M: Study of magnetic and heat transfer on the peristaltic transport of a fractional second grade fluid in a vertical tube. Int. J. Eng. Sci. Technol. 18(3), 496-502 (2015)

Shirvan, KM, Ellahi, R, Mirzakhanlari, S, Mamourian, M: Enhancement of heat transfer and heat exchanger effectiveness in a double pipe heat exchanger filled with porous media: numerical simulation and sensitivity analysis of turbulent fluid flow. Appl. Therm. Eng. 109, 761-774 (2016)

Sheikholeslami, M, Zaigham Zia, QM, Ellahi, R: Influence of induced magnetic field on free convection of nanofluid considering Koo-Kleinstreuer (KKL) correlation. Appl. Sci. 6(11), 324 (2016)

Shirvan, KM, Mamourian, M, Mirzakhanlari, S, Ellahi, R, Vafai, K: Numerical investigation and sensitivity analysis of effective parameters on combined heat transfer performance in a porous solar cavity receiver by response surface methodology. Int. J. Heat Mass Transf. 105, 811-825 (2017)

Nawaz, M, Zeeshan, A, Ellahi, R, Abbasbandy, S, Rashidi, S: Joules heating effects on stagnation point flow over a stretching cylinder by means of genetic algorithm and Nelder-Mead method. Int. J. Numer. Methods Heat Fluid Flow 25, 665-684 (2015)

Gang, G, Kun, L, Yuhui, W: Exact solutions of a modified fractional diffusion equation in the finite and semi-infinite domains. Physica A 417, 193-201 (2015)

Mohebbi, A, Abbaszadeh, M, Dehghan, M: A high-order and unconditionally stable scheme for the modified anomalous fractional sub-diffusion equation with a nonlinear source term. J. Comput. Phys. 240, 36-48 (2013)

Liu, Q, Liu, F, Turner, I, Anh, V: Finite element approximation for a modified anomalous subdiffusion equation. Appl. Math. Model. 35, 4103-4116 (2011)

Liu, F, Yang, C, Burrage, K: Numerical method and analytical technique of the modified anomalous subdiffusion equation with a nonlinear source term. J. Comput. Appl. Math. 231(1), 160-176 (2009)

Li, Y, Wang, D: Improved efficient difference method for the modified anomalous sub-diffusion equation with a nonlinear source term. Int. J. Comput. Math. 94, 821-840 (2017)

Dehghan, M, Abbaszadeh, M, Mohebbi, A: Legendre spectral element method for solving time fractional modified anomalous sub-diffusion equation. Appl. Math. Model. 40, 3635-3654 (2016)

Cao, X, Xianxian, C, Wen, L: The implicit midpoint method for the modified anomalous sub-diffusion equation with a nonlinear source term. J. Comput. Appl. Math. 318, 199-210 (2017)

Ding, H, Li, C: High-order compact difference schemes for the modified anomalous subdiffusion equation. Numer. Methods Partial Differ. Equ. 32, 213-242 (2016)

Abbaszadeh, M, Mohebbi, A: A fourth-order compact solution of the two-dimensional modified anomalous fractional sub-diffusion equation with a nonlinear source term. Comput. Math. Appl. 66, 1345-1359 (2013)

Wang, Z, Vong, S: Compact difference schemes for the modified anomalous fractional sub-diffusion equation and the fractional diffusion-wave equation. J. Comput. Phys. 277, 1-15 (2014)

Yang, Q: Novel analytical and numerical methods for solving fractional dynamical systems. PhD by Publication, Queensland University of Technology (2010)

Chen, CM, Liu, F, Burrage, K, Chen, Y: Numerical method of the variable-order Rayleigh-Stocks’ problem for a heated generalized second grade fluid with fractional derivative. IMA J. Appl. Math. 1-21 (2005)

Chen, CM, Liu, F, Turner, I, Anh, V: Numerical methods with fourth-order spatial accuracy for variable-order nonlinear Stokes’ first problem for a heated generalized second grade fluid. Comput. Math. Appl. 62, 971-986 (2011)

Hu, Z, Zhang, L: Implicit compact difference schemes for the fractional cable equation. Appl. Math. Model. 36, 4027-4043 (2012)

Inc, M, Cavlak, E, Bayram, M: An approximate solution of fractional cable equation by homotopy analysis method. Bound. Value Probl. 2014, 58 (2014)

Shirvan, KM, Mamourian, M, Mirzakhanlari, S, Ellahi, R: Numerical investigation of heat exchanger effectiveness in a double pipe heat exchanger filled with nanofluid: a sensitivity analysis by response surface methodology. Powder Technol. 313, 99-111 (2017)

Ma, LL, Liu, DB: An implicit difference approximation for fractional cable equation in high-dimensional case. J. Liao. Tech. Univ. Nat. Sci. 4, 024 (2014)

Lin, Y, Jiang, W: Numerical method for Stokes’ first problem for a heated generalized second grade fluid with fractional derivatives. Numer. Methods Partial Differ. Equ. 27, 1599-1609 (2011)

Liu, F, Yang, Q, Turner, I: Two new implicit numerical methods for the fractional cable equation. J. Comput. Nonlinear Dyn. 6(1), 011009 (2010)

Liu, J, Li, H, Liu, Y: A new fully discrete finite difference/ element approximation for fractional cable equation. J. Appl. Math. Comput. 52(1), 345-361 (2016)

Sweilam, NH, Assiri, TA: Non-standard Crank-Nicholson method for solving the variable order fractional cable equation. Appl. Math. Inf. Sci. 9(2), 943-951 (2015)

Tan, W, Masuoka, T: Stokes first problem for a second grade fluid in a porous half-space with heated boundary. Int. J. Non-Linear Mech. 40, 515-522 (2005)

Zhai, S, Feng, X, He, Y: An unconditionally stable compact ADI method for 3D time-fractional convection-diffusion equation. J. Comput. Phys. 269, 138-155 (2014)

Zhuang, P, Liu, F, Anh, V, Turner, I: New solution and analytical techniques of the implicit numerical methods for the anomalous sub-diffusion equation. SIAM J. Numer. Anal. 46(2), 1079-1095 (2008)

Zhuang, P, Liu, F, Turner, I, Anh, V: Galerkin finite element method and error analysis for the fractional cable equation. Numer. Algorithms 72, 447-466 (2016)

Acknowledgements

Umair Ali would like to thank The World Academy of Science (TWAS) and Universiti Sains Malaysia for the award of TWAS-USM postgraduate Fellowship. The authors gratefully acknowledge that this research was partially supported by the Research Creativity and Management Office, Universiti Sains Malaysia under the Fundamental Research Grant Scheme (203/PMATHS/6711570).


Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors contributed equally to the writing of this paper. All authors read and approved the final manuscript.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Ali, U., Abdullah, F.A. & Mohyud-Din, S.T. Modified implicit fractional difference scheme for 2D modified anomalous fractional sub-diffusion equation. Adv Differ Equ 2017, 185 (2017). https://doi.org/10.1186/s13662-017-1192-4


DOI: https://doi.org/10.1186/s13662-017-1192-4