Abstract

Considering stochastic perturbations by white and color noise, we introduce a Markov switched stochastic Nicholson-type delay system with patch structure. By constructing a traditional Lyapunov function, we show that the solutions of the system are positive, do not explode to infinity in finite time, and are ultimately bounded. We then estimate the ultimate bound, the moments, and the sample Lyapunov exponent. Finally, we present a numerical example to illustrate the theoretical results.


1 Introduction

Motivated by models of marine protected areas and of B-cell chronic lymphocytic leukemia [1], in which the mortality rate is perturbed by environmental white noise, Yi and Liu [2] and Wang et al. [3] proposed the following stochastic Nicholson-type delay system with patch structure:

where \(i\in I:=\{1,2,\ldots,n\}\), \(x_{i}(t)\) is the size of the population at time t, \(a_{i}\) is the per capita daily adult death rate, \(p_{i}\) is the maximum per capita daily egg production, \(\frac{1}{\gamma _{i}}\) is the size at which the population reproduces at its maximum rate, \(\tau_{i}\) is the generation time, \(b_{ij}\) (\(i\neq j\)) is the migration coefficient from compartment i to compartment j, and \(B_{i}(t)\) are independent Brownian motions with \(B_{i}(0)=0\), so that \(\sigma_{i}\,dB_{i}(t)\) represents white noise of intensity \(\sigma _{i}^{2}\). The scalar Nicholson blowflies delay differential equation originated in [4, 5]; Berezansky et al. [6] surveyed the main results on it and posed several open problems, which have since attracted many researchers [7–18]. Stochastic system (1.1) can be regarded as a generalization of the deterministic Nicholson blowflies model.

In practice, a system is subject not only to white noise but also to color noise. One type of color noise is the so-called telegraph noise, which causes the system to switch from one environmental regime to another [19]; it is usually modeled by a continuous-time Markov chain that describes the switching between two or more regimes. To the best of our knowledge, few researchers have considered the Markov switched stochastic Nicholson-type delay system with patch structure. This prompts us to propose the following stochastic system:

with initial conditions

where \(\xi(t)\) (\(t\geq0\)) is a continuous-time irreducible Markov chain taking values in a finite state space \({S}=\{1,2,\ldots, N\}\) with invariant distribution \(\pi=(\pi_{k}, k\in{ S})\), and its generator \(Q=(\nu_{ij})_{N\times N}\) satisfies

Here \(\nu_{ij}\geq0\) is the transition rate from i to j for \(i, j\in{S}\) with \(i\neq j\), and \(\nu_{ii}=-\sum_{j\neq i}\nu_{ij}\), so that \(\sum_{j=1}^{N}\nu_{ij}=0\) for each \(i\in{S}\). The processes \(B_{i}(t)\) (\(i\in I\)) are independent Brownian motions with \(B_{i}(0)=0\), and they are independent of the Markov chain \(\xi(t)\). For \(i,j\in I\) and \(k\in {S}\), the parameters \(\tau_{i}\), \(a_{i}(k)\), and \(\gamma_{i}(k)\) are positive, and \(b_{ij}(k)\), \(p_{i}(k)\), and \(\sigma_{i}^{2}(k)\) are nonnegative constants. Since system (1.2) describes population dynamics under both white and color noise, it is important to determine whether its solution

remains positive (never becomes negative),

does not explode to infinity in finite time, and

is ultimately bounded in mean.

It is equally important to estimate its moments and its sample Lyapunov exponent.

In this paper, we address these problems one by one. In Sect. 2, we prove the existence and uniqueness of a global positive solution of (1.2)–(1.3). In Sect. 3, we study its ultimate boundedness in mean, its moments, and its sample Lyapunov exponent. In Sect. 4, we present an example with numerical simulations to illustrate the theoretical results. Finally, Sect. 5 gives a brief conclusion.

2 Preliminary results

In this section, we introduce some basic definitions and lemmas needed for the proofs of the main results. Unless otherwise specified, \((\varOmega,\{\mathcal{F}_{t}\}_{t\geq 0},\mathcal{P})\) is a complete probability space with filtration \(\{ \mathcal{F}_{t}\}_{t\geq0}\) satisfying the usual conditions (i.e., it is right continuous, and \(\mathcal{F}_{0}\) contains all \(\mathcal{P}\)-null sets). Let \(B_{i}(t)\) (\(i\in I\)) be independent standard Brownian motions defined on this probability space. For simplicity, in the following sections, we use the following notation:

Let \(p\geq1\) be such that for each \(i\in I\), \(A_{i}(p,\xi(t))>0\), and \(C_{i}(p,\xi(t))\) is bounded, where

It is easy to see that \(A_{i}(1,\xi(t))=a_{i}(\xi(t))>0\) for \(i\in I\), so by continuity (and since S is finite) we can find \({p>1}\) such that \(A_{i}(p,\xi(t))>0\). For \(\alpha,\beta>0\) and \(p>1\), consider the function \(F(x):=-\alpha x^{p}+\beta x^{p-1}\) on \(\mathbb{R}_{+}\). Since \(F'(x)=x^{p-2}(\beta(p-1)-\alpha p x)\), \(F(x)\) increases on \([0, \frac{\beta(p-1)}{\alpha p}]\) and decreases on \([\frac{\beta(p-1)}{\alpha p}, +\infty)\). Also, \(F(0)=F(\frac{\beta}{\alpha})=0\), \(\lim_{x\rightarrow+\infty} F(x)=-\infty\), and \(\max_{x\in\mathbb {R}_{+}}F(x)=F(\frac{\beta(p-1)}{\alpha p})=\frac{\beta}{p}(\frac{\beta(p-1)}{\alpha p})^{p-1}\) is finite. Taking \(\alpha=A_{i}(p,\xi(t))\) and \(\beta=\frac{p_{i}(\xi(t))}{e\gamma_{i}(\xi(t))}\), we conclude that \(C_{i}(p,\xi(t))=pF(\frac{ p_{i}(\xi(t))(p-1)}{ep \gamma_{i}(\xi (t))A_{i}(p,\xi(t))})\) is bounded, because \(\xi(t)\) takes only finitely many values.
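As a quick numerical sanity check of the maximum of F (not part of the original argument), the sketch below compares a grid search with the closed form \(F(x^{*})=\frac{\beta}{p}(x^{*})^{p-1}\), \(x^{*}=\frac{\beta(p-1)}{\alpha p}\). The values alpha = 0.5, beta = 1.2, p = 2.5 are arbitrary illustrative choices, not quantities from the paper; the function names are hypothetical.

```python
import numpy as np

def F(x, alpha, beta, p):
    """F(x) = -alpha x^p + beta x^(p-1) on x >= 0 (alpha, beta > 0, p > 1)."""
    return -alpha * x**p + beta * x ** (p - 1)

def F_max(alpha, beta, p):
    """Return the maximizer x* = beta(p-1)/(alpha p) and the maximum (beta/p) x*^(p-1)."""
    x_star = beta * (p - 1) / (alpha * p)
    return x_star, (beta / p) * x_star ** (p - 1)

# Illustrative values only (not taken from the paper).
alpha, beta, p = 0.5, 1.2, 2.5
x_star, f_star = F_max(alpha, beta, p)
grid = np.linspace(0.0, 10.0, 200_001)
print(x_star, f_star, F(grid, alpha, beta, p).max())
# p * F(x*) simplifies to beta * x*^(p-1): this is the form taken by C_i(p, k).
print(p * f_star, beta * x_star ** (p - 1))
```

The identity \(pF(x^{*})=\beta (x^{*})^{p-1}\) shows directly that \(C_{i}(p,k)\) is a finite number for each regime k.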

Definition 2.1

(see [20])

System (1.2) is said to be ultimately bounded in mean if there is a positive constant L independent of initial conditions (1.3) such that \(\limsup_{t\rightarrow\infty}E|x(t)|\leq L\).

Lemma 2.1

For \(A\in\mathbb{R}\) and \(B\in\mathbb{R}_{+}\), we have \(\frac {Ax^{2}+Bx}{1+x^{2}}\leq G(A,B)\) for \(x\in\mathbb{R}\), where

Proof

By Lemma 1.2 of [21] the result easily follows, so we omit the proof. □
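Since the explicit form of \(G(A,B)\) is not reproduced here, the following sketch uses one admissible choice that we derive ourselves (an assumption, not necessarily the G of [21]): requiring \((A-\lambda)x^{2}+Bx-\lambda\leq0\) for all real x forces \(\lambda>A\) and a nonpositive discriminant, which gives the smallest bound \(\lambda=\frac{A+\sqrt{A^{2}+B^{2}}}{2}\). The code checks this numerically on a grid for a few illustrative (A, B) pairs; `G_candidate` is a hypothetical name.

```python
import numpy as np

def G_candidate(A, B):
    """Smallest constant with (A x^2 + B x)/(1 + x^2) <= G for all real x (B >= 0).

    Our own derivation; the G(A, B) of [21] may be written differently.
    """
    return 0.5 * (A + np.sqrt(A * A + B * B))

x = np.linspace(-50.0, 50.0, 1_000_001)
for A, B in [(-2.0, 1.0), (1.0, 3.0), (0.0, 2.0)]:
    ratio = (A * x**2 + B * x) / (1.0 + x**2)
    # Grid maximum versus the closed-form bound.
    print(A, B, ratio.max(), G_candidate(A, B))
```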

Lemma 2.2

For any given initial conditions (1.3), there exists a unique solution \(x(t)=(x_{1}(t),\ldots,x_{n}(t))\) of system (1.2) on \([0,\infty)\), which remains in \(\mathbb{R}^{n}_{+}\) with probability one; that is, \(x(t)\in\mathbb{R}^{n}_{+}\) for all \(t\geq0\) almost surely.

Proof

Because all coefficients of system (1.2) are locally Lipschitz continuous, for any given initial condition (1.3), there exists a unique maximal local solution \(x(t)\) on \([-\tau, \nu_{e})\), where \(\nu _{e}\) is the explosion time.

First, we prove that \(x(t)\) is positive on \([0, \nu_{e})\) almost surely. For \(t\in[0,\tau]\), system (1.2) with initial conditions (1.3) becomes a system of linear stochastic differential equations:

where \(\alpha_{i}(\xi(t))=p_{i}(\xi(t))\varphi_{i}(t-\tau_{i})e^{-\gamma_{i}(\xi (t))\varphi_{i}(t-\tau_{i})}\geq0\) a.s., \(t\in[0,\tau]\). From the stochastic comparison theorem [22], \(x_{i}(t)\geq I_{i}(t)\) a.s. for \(t\in[0,\tau]\), where \(I_{i}(t)\) (\(i\in I\)) are the solutions of the stochastic differential equations

For \(t\in[0,\tau]\), system (2.2) has the explicit solutions

where \(\eta_{i}(t)=-\int_{0}^{t}\bigl(a_{i}(\xi(s))+\sum_{j=1,j\neq i}^{n}b_{ij}(\xi(s))+\frac{\sigma^{2}_{i}(\xi(s))}{2}\bigr)\,ds+\int_{0}^{t}\sigma_{i}(\xi(s))\,dB_{i}(s)\). Hence, for \(t\in[0,\tau]\) and \(i\in I\), we have \(x_{i}(t)\geq I_{i}(t)>0\) a.s.
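To illustrate the explicit solution, including the Itô correction \(+\frac{\sigma_{i}^{2}}{2}\) inside the drift part of the exponent and the fact that \(I_{i}(t)\) is positive because it is an exponential, the sketch below compares the closed form with an Euler–Maruyama approximation of \(dI=-c(\xi)I\,dt+\sigma(\xi)I\,dB\) along one Brownian path, where c stands for \(a_{i}+\sum_{j\neq i}b_{ij}\). The two-regime path and all numbers are hypothetical; the regime is held constant on each time step, so the closed form is exact on the grid.

```python
import numpy as np

def compare_em_with_exact(T=1.0, dt=1e-4, x0=1.0, seed=1):
    """Closed-form solution vs Euler-Maruyama for dI = -c(xi) I dt + sigma(xi) I dB."""
    rng = np.random.default_rng(seed)
    n = int(round(T / dt))
    # Hypothetical regime path: regime 0 on the first half, regime 1 afterwards.
    regimes = np.where(np.arange(n) < n // 2, 0, 1)
    c = np.array([0.5, 1.2])    # stands for a_i + sum_{j != i} b_ij in each regime
    sig = np.array([0.8, 1.0])  # stands for sigma_i in each regime
    dB = rng.standard_normal(n) * np.sqrt(dt)
    # eta(T): sum over steps of -(c + sig^2/2) dt + sig dB (Ito correction +sig^2/2)
    eta = np.sum(-(c[regimes] + 0.5 * sig[regimes] ** 2) * dt + sig[regimes] * dB)
    exact = x0 * np.exp(eta)    # strictly positive for every path
    em = x0
    for r, db in zip(regimes, dB):
        em = em + (-c[r] * em) * dt + sig[r] * em * db
    return exact, em

exact, em = compare_em_with_exact()
print(exact, em, abs(em / exact - 1.0))
```

With the opposite sign of the \(\frac{\sigma^{2}}{2}\) term, the closed form would differ from the Euler–Maruyama value by a factor of roughly \(e^{\sigma^{2}T}\), which the relative error makes visible.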

Using the same method, we have \(x_{i}(t)>0\) a.s. for \(t\in[\tau, 2\tau]\), \(i\in I\). Repeating this procedure, we also have \(x_{i}(t)>0\) (\(i\in I\)) a.s. on \([m\tau, (m+1)\tau]\) for any integer \(m\geq1\). Thus the unique solution \(x(t)\) of system (1.2) with initial conditions (1.3) is positive almost surely for \(t\in[0, \nu_{e})\).

Next, we prove that \(x(t)\) exists globally. Let \(m_{0}\geq1\) be sufficiently large such that \(\max_{-\tau\leq t\leq0}\varphi_{i}(t)< m_{0}\), \(i\in I\). For every integer \(m\geq m_{0}\), define the stopping time

where throughout this paper, \(\inf\emptyset:=\infty\). Clearly, \(\nu _{m}\) is nondecreasing in m. Set \(\nu_{\infty}=\lim_{m\rightarrow\infty}\nu_{m}\); then \(\nu_{\infty}\leq\nu_{e}\) a.s. If we can show that \(\nu_{\infty}=\infty\) a.s., then \(\nu_{e}=\infty\) and \(x(t)\in\mathbb{R}^{n}_{+}\) for all \(t\geq0\) a.s. To this end, define \(V(x)=\sum_{i=1}^{n}(x_{i}-1-\ln x_{i})\) for \(x\in\mathbb{R}^{n}_{+}\). For \(t\in[0, \nu_{m}\wedge T)\), Itô's formula gives

where \(m\geq m_{0}\) and \(T>0\) are arbitrary, and

In the last inequality, we used the fact that \(\sup_{x\geq 0}xe^{-x}=\frac{1}{e}\). For any \(m\geq m_{0}\), integrating both sides of (2.3) from 0 to \(\nu_{m}\wedge T\) and taking expectations yields

Since for every \(\omega\in\{\nu_{m}\leq T\}\) at least one of the components \(x_{i}(\nu_{m},\omega)\) (\(i\in I\)) equals m, we have \(V(x(\nu _{m}\wedge T))\geq m-1-\ln m\) on this event. Then from (2.5) it follows that

where \(I_{\{\nu_{m}\leq T\}}\) is the indicator function of \(\{\nu_{m}\leq T\}\). Letting \(m\rightarrow\infty\) gives \(\lim_{m\rightarrow\infty}P\{ \nu_{m}\leq T\}=0\), and hence \(P\{\nu_{\infty}\leq T\}=0\). Since \(T>0\) is arbitrary, we must have \(P\{\nu_{\infty}<\infty\}=0\). So \(P\{\nu_{\infty}=\infty\}=1\) as required, which completes the proof of Lemma 2.2. □
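The argument rests on two properties of V: each term \(u-1-\ln u\) is nonnegative, and it equals \(m-1-\ln m\), which tends to infinity, when a component reaches m. Assuming (2.5) yields a bound of the form \(EV(x(\nu_{m}\wedge T))\leq V(x(0))+KT\) for some constant K (the display is not reproduced here, so K below is a hypothetical placeholder), the resulting estimate \(P\{\nu_{m}\leq T\}\leq\frac{V(x(0))+KT}{m-1-\ln m}\) can be tabulated as follows. The constant initial data of Example 4.1 are used only as a sample input; the function names are illustrative.

```python
import math

def V(x):
    """Lyapunov function V(x) = sum_i (x_i - 1 - ln x_i) on the positive orthant."""
    return sum(xi - 1.0 - math.log(xi) for xi in x)

def explosion_bound(m, V0, K, T):
    """(V0 + K T) / (m - 1 - ln m), assuming E V(x(nu_m ^ T)) <= V0 + K T."""
    return (V0 + K * T) / (m - 1.0 - math.log(m))

V0 = V([1.1, 1.0, 0.9])  # initial data of Example 4.1 at s = 0 (sample input)
K, T = 1.0, 10.0         # hypothetical constants for illustration
for m in (10, 100, 1000, 10**6):
    print(m, explosion_bound(m, V0, K, T))
```

Because \(m-1-\ln m\) is increasing for \(m>1\) and unbounded, the bound decreases to zero, which is exactly why \(P\{\nu_{m}\leq T\}\rightarrow0\).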

Remark 2.1

Without color noise (i.e., \(\xi(t)\equiv\) constant), system (1.2) reduces to the stochastic Nicholson-type delay system with white noise studied in [2, 3]. Without migration (i.e., \(b_{ij}(\xi(t))\equiv 0\), \(i,j\in I\)), system (1.2) is a direct extension of n stochastic Nicholson's blowflies delay differential equations and includes the stochastic models in [21, 23]; moreover, the restrictive conditions \(a_{i}>\frac{\sigma_{i}^{2}}{2}\) (\(i\in I\)) imposed in [23, 24] for the existence and uniqueness of a global positive solution are not needed here. Thus Lemma 2.2 generalizes and improves Lemma 2.2 in [23, 24], Lemma 2.2 in [3], Theorem 2.1 in [21], and Theorem 2.1 in [2].

3 Main results

Lemma 2.2 shows that the solution of the Markov switched stochastic Nicholson-type delay system (1.2) with initial conditions (1.3) remains in \(\mathbb{R}^{n}_{+}\) almost surely and does not explode to infinity in finite time. This property allows us to study more complicated dynamic behavior of system (1.2). In this section, we address the remaining problems: estimating the ultimate bound in mean, the time average of the pth moment, and the sample Lyapunov exponent of system (1.2).

Theorem 3.1

For any given initial conditions (1.3), the solution \(x(t)=(x_{1}(t),\ldots,x_{n}(t))\) of system (1.2) has the property

where \(a=\min_{i\in I}\{a_{i}^{-}\}\) and \(c=\max_{i\in I}\{(\frac{p_{i}}{e\gamma _{i}})^{+} \}\); that is, system (1.2) is ultimately bounded in mean.

Proof

By Lemma 2.2 the global solution \(x(t)\) of (1.2) is positive for all \(t\geq0\) with probability one. It follows from (1.2) and the fact \(\sup_{x\geq0}xe^{-x}=\frac{1}{e}\) that

which, together with Itô’s formula, implies that

Integrating both sides of (3.3) from 0 to t and then taking expectations, we have

This implies

Since \(x_{i}(t)>0\), we have \(E|x(t)|=E\sqrt{\sum_{i=1}^{n}x_{i}^{2}(t)}\leq E(\sum_{i=1}^{n}x_{i}(t))\), and hence \(\limsup_{t\rightarrow\infty}E|x(t)|\leq\frac {nc}{a}\), which is the required statement (3.1). This completes the proof. □
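For the data of Example 4.1, the bound \(\frac{nc}{a}\) can be evaluated directly. The sketch assumes the usual convention \(f^{-}=\min_{k\in S}f(k)\) and \(f^{+}=\max_{k\in S}f(k)\) (the notation display is not reproduced here). Under that assumption \(a=0.1\) and \(c=\frac{1.5}{e}\), so \(\frac{nc}{a}=\frac{45}{e}\approx16.55\). The function name is hypothetical.

```python
import math

# Example 4.1: entry k-1 of each row is the value in regime k, k in S = {1, 2, 3}.
a = [[0.2, 0.25, 0.3], [0.1, 0.15, 0.2], [0.13, 0.18, 0.22]]
p = [[1.5, 1.6, 1.7], [1.4, 1.5, 1.6], [1.3, 1.4, 1.5]]
gamma = [[1.0, 1.5, 2.0], [2.0, 2.5, 3.0], [1.5, 2.0, 2.5]]

def ultimate_mean_bound(a, p, gamma):
    """n*c/a with a = min_i min_k a_i(k) and c = max_i max_k p_i(k)/(e gamma_i(k))."""
    n = len(a)
    a_low = min(min(row) for row in a)
    c_up = max(
        pk / (math.e * gk)
        for p_row, g_row in zip(p, gamma)
        for pk, gk in zip(p_row, g_row)
    )
    return n * c_up / a_low

print(ultimate_mean_bound(a, p, gamma))
```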

Theorem 3.2

The solution \(x(t)=(x_{1}(t),\ldots,x_{n}(t))\) of (1.2) with initial conditions (1.3) satisfies

where \(A(\xi(t))=-\min_{i\in I}A_{i}(2,\xi(t))\), \(B(\xi(t))= \sqrt{ \sum_{i=1}^{n}\frac{p_{i}^{2}(\xi(t))}{e^{2}\gamma^{2}_{i}(\xi(t))}}\), \(G(\xi (t))=G(A(\xi(t)),B(\xi(t)))\), and \(H_{i}(\xi(t))=a_{i}(\xi(t))+\sum_{j=1,j\neq i}^{n}b_{ij}(\xi(t))+\frac{1}{2}\sigma_{i}^{2}(\xi(t))\), \(i\in I\).

Proof

In view of Itô’s formula, Young’s inequality, and the fact \(\sup_{x\geq0}xe^{-x}=\frac{1}{e}\), from (1.2) it follows that

Since the Markov chain \(\xi(t)\) has the invariant distribution \(\pi=(\pi _{k}, k\in{ S})\), this implies

Using Itô’s formula, the Young and Cauchy inequalities, and the fact \(\sup_{x\geq0}xe^{-x}=\frac{1}{e}\) again, from (1.2) and Lemma 2.1 we get that

where \(M_{i}(t)=2\int_{0}^{t}\frac{\sigma_{i}(\xi(s))\,x_{i}^{2}(s)}{1+|x(s)|^{2}}\,dB_{i}(s)\), \(i\in I\).

Meanwhile, the exponential martingale inequality (Theorem 1.7.4 of [25]) implies that, for every \(l>0\),

Using the convergence of \(\sum_{l=1}^{\infty}\frac{1}{l^{2}}\) and the Borel–Cantelli lemma (Lemma 1.2.4 of [25]), we obtain that there exists a set \(\varOmega_{0}\in\mathcal{F}\) with \(P(\varOmega_{0})=1\) and a random integer \(l_{0}=l_{0}(\omega)\) such that, for every \(\omega\in \varOmega_{0}\),

for all \(0\leq t\leq l\), \(l\geq l_{0}\), \(i\in I\). Substituting (3.9) into (3.8), for any \(\omega\in\varOmega_{0}\), \(l\geq l_{0}\), \(0< l-1\leq t\leq l\), we have

Letting \(l\rightarrow\infty\) and recalling that the Markov chain \(\xi (t)\) has the invariant distribution \(\pi=(\pi_{k}, k\in{ S})\), we get that

By Itô’s formula, from system (1.2) we obtain that

which, together with the strong law of large numbers for martingales (Theorem 1.3.4 of [25]) and the invariant distribution of the Markov chain \(\xi(t)\), implies

The proof is completed. □
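The quantities \(H_{i}(k)\) defined in Theorem 3.2 are expected to enter the Lyapunov-exponent estimate through their stationary averages \(\sum_{k\in S}\pi_{k}H_{i}(k)\), since the proof passes to the limit using the invariant distribution. For the data of Example 4.1, with \(\pi=(\frac{2}{11},\frac{26}{77},\frac{37}{77})\), these averages are plain arithmetic on the stated parameters, as sketched below. The G-dependent part of the theorem is not evaluated, because the definition of \(A_{i}(p,k)\) is not reproduced here.

```python
import numpy as np

pi = np.array([2 / 11, 26 / 77, 37 / 77])  # invariant distribution of Example 4.1
# Rows: patches i = 1, 2, 3; columns: regimes k = 1, 2, 3.
a = np.array([[0.2, 0.25, 0.3], [0.1, 0.15, 0.2], [0.13, 0.18, 0.22]])
sigma = np.array([[0.8, 0.9, 1.0], [0.7, 0.8, 0.9], [0.9, 1.0, 1.1]])
b = np.zeros((3, 3, 3))  # b[i, j, k] = b_ij(k); the diagonal stays zero
b[0, 1] = [0.3, 0.4, 0.5]
b[0, 2] = [0.2, 0.3, 0.4]
b[1, 0] = [0.3, 0.4, 0.5]
b[1, 2] = [0.1, 0.2, 0.3]
b[2, 0] = [0.2, 0.3, 0.4]
b[2, 1] = [0.1, 0.2, 0.3]

H = a + b.sum(axis=1) + 0.5 * sigma**2  # H[i, k] = H_i(k)
avg_H = H @ pi                          # sum_k pi_k H_i(k) for each patch i
print(np.round(avg_H, 4))
```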

Remark 3.1

Under the conditions \(\alpha>\frac{\sigma^{2}}{2}\) in [23, 24], \(2(a_{1}+b_{2})-\sigma_{1}^{2}-(b_{1}+b_{2})\theta>0\) and \(2(a_{2}+b_{1})-\sigma _{2}^{2}-(b_{1}+b_{2})/\theta>0\) in [2], and \(\lambda_{\max }^{+}(-DA-A^{T}D+D)<0\) in [3], the authors of [23, 24], [2], and [3], respectively, estimated the ultimate boundedness, moments, and Lyapunov exponents of related stochastic Nicholson-type models. In contrast, the estimates in Theorems 3.1 and 3.2 of this paper require no such a priori conditions: they depend only on the system parameters and, in Theorem 3.2, on the invariant distribution π of the Markov chain \(\xi(t)\). In particular, they also apply to the case without migration considered in [21]. Therefore Theorems 3.1 and 3.2 generalize and improve the corresponding results in [2, 3, 21, 23, 24]. Indeed, the stochastic models of [2, 3, 23, 24] involve only white noise, not color noise, and here the existence of global positive solutions and the estimates of their ultimate boundedness, moments, and Lyapunov exponents are obtained without the restrictive conditions listed above. Although Zhu et al. [21] considered both white and color noise in the stochastic Nicholson's blowflies model, their model is a scalar equation, and its initial condition \(\varphi(s)\in C([-\tau ,0],(0,+\infty))\) is more restrictive than the initial conditions (1.3) in this paper. Consequently, the Markov switched stochastic Nicholson-type delay system with patch structure considered in this paper includes the models of [2, 3, 21, 23, 24] as special cases with \(n=1,2\) and, for the white-noise models, a constant Markov chain \(\xi(t)\).

4 An example and its numerical simulations

In this section, we give an example with simulations to illustrate our main results.

Example 4.1

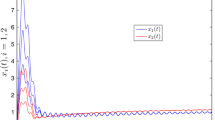

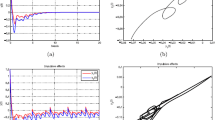

We choose \({S}=\{1,2,3\}\), the generator \(P=\begin{pmatrix}-9&4&5\\2&-5&3\\2&2&-4\end{pmatrix}\), and the following parameters, where each vector lists the values in regimes 1, 2, 3: \(a_{1}=[0.2,0.25,0.3]\), \(a_{2}=[0.1,0.15,0.2]\), \(a_{3}=[0.13,0.18,0.22]\), \(b_{12}=[0.3,0.4,0.5]\), \(b_{13}=[0.2,0.3,0.4]\), \(b_{21}=[0.3,0.4,0.5]\), \(b_{23}=[0.1,0.2,0.3]\), \(b_{31}=[0.2,0.3,0.4]\), \(b_{32}=[0.1,0.2,0.3]\), \(p_{1}=[1.5,1.6,1.7]\), \(p_{2}=[1.4,1.5,1.6]\), \(p_{3}=[1.3,1.4,1.5]\), \(\gamma _{1}=[1,1.5,2]\), \(\gamma_{2}=[2,2.5,3]\), \(\gamma_{3}=[1.5,2,2.5]\), \(\sigma _{1}=[0.8,0.9,1]\), \(\sigma_{2}=[0.7,0.8,0.9]\), \(\sigma_{3}=[0.9,1,1.1]\), \(\tau =1\), and initial conditions \(\varphi_{1}(s)=1.1\), \(\varphi_{2}(s)=1\), \(\varphi _{3}(s)=0.9\), \(s\in[-1,0]\). Then the irreducible Markov chain \(\xi(t)\) has the unique stationary distribution \(\pi=(\frac{2}{11},\frac{26}{77},\frac{37}{77})\approx(0.1818,0.3377,0.4805)\). It follows from Lemma 2.2 that system (1.2) has a unique global solution \(x(t)=(x_{1}(t), x_{2}(t), x_{3}(t))\), which remains in \(\mathbb{R}^{3}_{+}\) with probability one, as shown in Fig. 1(a). Following the numerical methods for stochastic differential equations in [26, 27], we use the following discrete scheme to simulate Example 4.1:

where \(i=1,2,3\), \(\Delta t=0.01\), \(k=\tau/\Delta t=100\) is the delay measured in time steps, \(\xi_{n}\in{S}\) (Fig. 1(b)) is a 3-state Markov chain with generator P, and \(\{ U_{i}^{n}\}\) is a sequence of mutually independent random variables with \(E U_{i}^{n}=0\) and \(E (U_{i}^{n})^{2}=1\), independent of the Markov chain \(\xi_{n}\).
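A minimal Python sketch of such a scheme is given below. Because the display of (4.1) is not reproduced here, the drift is an assumption: patch i loses individuals at rate \(a_{i}(k)+\sum_{j\neq i}b_{ij}(k)\), receives \(\sum_{j\neq i}b_{ji}(k)x_{j}\) from the other patches (consistent with \(b_{ij}\) being the migration coefficient from compartment i to compartment j), and has the Nicholson birth term \(p_{i}x_{i}(t-\tau)e^{-\gamma_{i}x_{i}(t-\tau)}\). The Markov chain is advanced with the first-order one-step transition matrix \(I+P\Delta t\), which is a valid stochastic matrix here because \(9\Delta t<1\); \(U_{i}^{n}\) is taken standard normal, and the chain is started in regime 1. The function `simulate` and its arguments are illustrative names.

```python
import numpy as np

# Example 4.1 data: arrays are indexed [patch i, regime k] (0-based).
P_gen = np.array([[-9.0, 4.0, 5.0], [2.0, -5.0, 3.0], [2.0, 2.0, -4.0]])
a = np.array([[0.2, 0.25, 0.3], [0.1, 0.15, 0.2], [0.13, 0.18, 0.22]])
p = np.array([[1.5, 1.6, 1.7], [1.4, 1.5, 1.6], [1.3, 1.4, 1.5]])
gamma = np.array([[1.0, 1.5, 2.0], [2.0, 2.5, 3.0], [1.5, 2.0, 2.5]])
sigma = np.array([[0.8, 0.9, 1.0], [0.7, 0.8, 0.9], [0.9, 1.0, 1.1]])
b = np.zeros((3, 3, 3))  # b[i, j, k] = b_ij(k)
b[0, 1], b[0, 2] = [0.3, 0.4, 0.5], [0.2, 0.3, 0.4]
b[1, 0], b[1, 2] = [0.3, 0.4, 0.5], [0.1, 0.2, 0.3]
b[2, 0], b[2, 1] = [0.2, 0.3, 0.4], [0.1, 0.2, 0.3]
phi = np.array([1.1, 1.0, 0.9])  # constant initial functions on [-1, 0]

def simulate(T=20.0, dt=0.01, tau=1.0, seed=0):
    """Euler-Maruyama sketch for the assumed form of (4.1); returns (t, x, xi)."""
    rng = np.random.default_rng(seed)
    k = int(round(tau / dt))          # delay measured in steps (k = 100 here)
    steps = int(round(T / dt))
    trans = np.eye(3) + P_gen * dt    # first-order one-step transition matrix
    x = np.empty((steps + k + 1, 3))
    x[: k + 1] = phi                  # row r holds x at time (r - k) * dt
    xi = np.zeros(steps + 1, dtype=int)  # regime index, starting in regime 1
    for n in range(steps):
        s = xi[n]
        cur, lag = x[n + k], x[n]     # x(t_n) and x(t_n - tau)
        out_rate = a[:, s] + b[:, :, s].sum(axis=1)  # death plus emigration
        inflow = b[:, :, s].T @ cur                  # sum_j b_ji x_j (assumed)
        birth = p[:, s] * lag * np.exp(-gamma[:, s] * lag)
        drift = -out_rate * cur + inflow + birth
        noise = sigma[:, s] * cur * np.sqrt(dt) * rng.standard_normal(3)
        x[n + k + 1] = cur + drift * dt + noise
        xi[n + 1] = rng.choice(3, p=trans[s])
    t = np.arange(steps + 1) * dt
    return t, x[k:], xi
```

Calling `t, x, xi = simulate()` and plotting the columns of `x` against `t` gives sample paths of the kind reported in Fig. 1. Replacing `trans` by the matrix exponential of `P_gen * dt` (for example via `scipy.linalg.expm`) would give exact one-step transition probabilities instead of the first-order approximation.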

Fig. 1 Numerical solutions of the Markov switched stochastic Nicholson-type delay system with patch structure (4.1) with initial values \(\varphi_{1}(s)=1.1\), \(\varphi_{2}(s)=1\), \(\varphi_{3}(s)=0.9\), \(s\in[-1,0]\)
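The stationary distribution quoted in Example 4.1 can be reproduced by solving \(\pi P=0\) together with \(\sum_{k}\pi_{k}=1\); exact arithmetic gives \(\pi=(\frac{2}{11},\frac{26}{77},\frac{37}{77})\). A least-squares solve of the stacked linear system is one simple way to do this in NumPy; the function name below is illustrative.

```python
import numpy as np

# Generator P of Example 4.1; each row of a generator sums to zero.
P_gen = np.array([[-9.0, 4.0, 5.0], [2.0, -5.0, 3.0], [2.0, 2.0, -4.0]])

def stationary_distribution(gen):
    """Solve pi @ gen = 0 with sum(pi) = 1 for an irreducible generator."""
    n = gen.shape[0]
    # Balance equations gen^T pi = 0 stacked with the normalisation row.
    A = np.vstack([gen.T, np.ones(n)])
    rhs = np.zeros(n + 1)
    rhs[-1] = 1.0
    pi, *_ = np.linalg.lstsq(A, rhs, rcond=None)
    return pi

pi = stationary_distribution(P_gen)
print(np.round(pi, 4))
```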

Furthermore, from Theorems 3.1 and 3.2 we have the following estimates:

5 Conclusions

This paper studies n coupled Nicholson’s blowflies models under perturbations by white and color noise. Using a traditional Lyapunov function, we show that the solution of the Markov switched stochastic Nicholson-type delay system with patch structure remains positive and does not explode in finite time. We also estimate its ultimate bound, pth moment, and Lyapunov exponent. From Remarks 2.1 and 3.1 we find that these results extend and improve some results in [2, 3, 21, 23, 24, 28, 29]. In future work, inspired by recent stochastic models in [30, 31], we will study further dynamic behavior of the system, such as persistence and extinction.

References

[1] Berezansky, L., Idels, L., Troib, L.: Global dynamics of Nicholson-type delay systems with applications. Nonlinear Anal., Real World Appl. 12, 436–445 (2011)

[2] Yi, X., Liu, G.: Analysis of stochastic Nicholson-type delay system with patch structure. Appl. Math. Lett. 96, 223–229 (2019)

[3] Wang, W., Shi, C., Chen, W.: Stochastic Nicholson-type delay differential system. Int. J. Control (2019). https://doi.org/10.1080/00207179.2019.1651941

[4] Nicholson, A.J.: An outline of the dynamics of animal populations. Aust. J. Zool. 2, 9–65 (1954)

[5] Gurney, W.S.C., Blythe, S.P., Nisbet, R.M.: Nicholson’s blowflies revisited. Nature 287, 17–21 (1980)

[6] Berezansky, L., Braverman, E., Idels, L.: Nicholson’s blowflies differential equations revisited: main results and open problems. Appl. Math. Model. 34, 1405–1417 (2010)

[7] Chen, W., Liu, B.: Positive almost periodic solution for a class of Nicholson’s blowflies model with multiple time-varying delays. J. Comput. Appl. Math. 235, 2090–2097 (2011)

[8] Wang, W.: Positive periodic solutions of delayed Nicholson’s blowflies models with a nonlinear density-dependent mortality term. Appl. Math. Model. 36, 4708–4713 (2012)

[9] Liu, B.: Global exponential stability of positive periodic solutions for a delayed Nicholson’s blowflies model. J. Math. Anal. Appl. 412, 212–221 (2014)

[10] Yao, L.: Dynamics analysis of Nicholson’s blowflies models with a nonlinear density-dependent mortality. Appl. Math. Model. 64, 185–195 (2018)

[11] Jian, S.: Global exponential stability of non-autonomous Nicholson-type delay systems. Nonlinear Anal., Real World Appl. 13, 790–793 (2012)

[12] Wang, L.: Almost periodic solution for Nicholson’s blowflies model with patch structure and linear harvesting terms. Appl. Math. Model. 37, 2153–2165 (2013)

[13] Liu, X., Meng, J.: The positive almost periodic solution for Nicholson-type delay systems with linear harvesting term. Appl. Math. Model. 36, 3289–3298 (2012)

[14] Liu, P., Zhang, L., Liu, S., et al.: Global exponential stability of almost periodic solutions for Nicholson’s blowflies system with nonlinear density-dependent mortality terms and patch structure. Math. Model. Anal. 22(4), 484–502 (2017)

[15] Faria, T.: Periodic solutions for a non-monotone family of delayed differential equations with applications to Nicholson systems. J. Differ. Equ. 263, 509–533 (2017)

[16] Caetano, D., Faria, T.: Stability and attractivity for Nicholson systems with time-dependent delays. Electron. J. Qual. Theory Differ. Equ. 2017, Article ID 63 (2017)

[17] Xu, Y.: New stability theorem for periodic Nicholson’s model with mortality term. Appl. Math. Lett. 94, 59–65 (2019)

[18] Long, X., Gong, S.: New results on stability of Nicholson’s blowflies equation with multiple pairs of time-varying delays. Appl. Math. Lett. 100, Article ID 106027 (2020)

[19] Luo, Q., Mao, X.: Stochastic population dynamics under regime switching. J. Math. Anal. Appl. 334, 69–84 (2007)

[20] Bahar, A., Mao, X.: Stochastic delay population dynamics. Int. J. Pure Appl. Math. 11, 377–399 (2004)

[21] Zhu, Y., Wang, K., Ren, Y., Zhuang, Y.: Stochastic Nicholson’s blowflies delay differential equation with regime switching. Appl. Math. Lett. 94, 187–195 (2019)

[22] Ikeda, N., Watanabe, S.: Stochastic Differential Equations and Diffusion Processes. North-Holland, Amsterdam (1981)

[23] Wang, W., Wang, L., Chen, W.: Stochastic Nicholson’s blowflies delayed differential equations. Appl. Math. Lett. 87, 20–26 (2019)

[24] Wang, W., Chen, W.: Stochastic delay differential neoclassical growth model. Adv. Differ. Equ. 2019, Article ID 355 (2019)

[25] Mao, X.: Stochastic Differential Equations and Applications. Horwood, Chichester (1997)

[26] Higham, D.J.: An algorithmic introduction to numerical simulation of stochastic differential equations. SIAM Rev. 43, 525–546 (2001)

[27] Ray, A., Chowdhury, A.R., Ghosh, D.: Effect of noise on chaos synchronization in time-delayed systems: numerical and experimental observations. Physica A 392, 4837–4849 (2013)

[28] Wang, W., Chen, W.: Stochastic Nicholson-type delay system with regime switching. Syst. Control Lett. 136, Article ID 104603 (2020)

[29] Wang, W., Chen, W.: Persistence and extinction of Markov switched stochastic Nicholson’s blowflies delayed differential equation. Int. J. Biomath. 13(3), Article ID 2050015 (2020)

[30] Liu, S., Zhang, L., Xing, Y.: Dynamics of a stochastic heroin epidemic model. J. Comput. Appl. Math. 351, 260–269 (2019)

[31] Zhang, L., Liu, S., Zhang, X.: Asymptotic behavior of a stochastic virus dynamics model with intracellular delay and humoral immunity. J. Appl. Anal. Comput. 9(4), 1425–1442 (2019)

Acknowledgements

Not applicable.

Availability of data and materials

Data sharing not applicable to this paper as no data sets were generated or analyzed during the current study.

Funding

This work was supported by the Natural Scientific Research Fund of Zhejiang Province of China (Grant No. LY18A010019), Shanghai Talent Development Fund (Grant No. 2017128) and “Xulun” Scholar Plan of Shanghai Lixin University of Accounting and Finance.

Author information


Contributions

The authors declare that the study was realized in collaboration with the same responsibility. All authors read and approved the final version of the manuscript.


Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, W., Deng, G. & Chen, W. Markov switched stochastic Nicholson-type delay system with patch structure. Adv Differ Equ 2020, 258 (2020). https://doi.org/10.1186/s13662-020-02721-x


DOI: https://doi.org/10.1186/s13662-020-02721-x