Abstract

The Zhang neural network (ZNN) has become a benchmark solver for various time-varying problems. In this paper, leveraging a novel design formula, a noise-tolerant continuous-time ZNN (NTCTZNN) model is developed and analyzed for the time-varying Lyapunov equation; in a noiseless environment it inherits the exponential convergence rate of the classical CTZNN. Theoretical results show that for a time-varying Lyapunov equation with constant noise or time-varying linear noise, the residual error of the proposed NTCTZNN model converges to zero, no matter how large the noise is. For a time-varying Lyapunov equation with quadratic noise, the residual error of the proposed NTCTZNN model converges to a constant that is inversely proportional to a design parameter. These results indicate that the proposed NTCTZNN model has a stronger anti-noise capability than the traditional CTZNN. Beyond that, for potential digital hardware realization, a discrete-time version of the NTCTZNN model (NTDTZNN) is proposed on the basis of the Euler forward difference. Lastly, the efficacy and accuracy of the proposed NTCTZNN and NTDTZNN models are illustrated by numerical examples.

1 Introduction

Owing to the important role that the time-varying Lyapunov equation plays in a broad spectrum of areas, research on algorithms for it has grown rapidly. Many numerical methods and neural dynamics have been proposed to solve this problem and its time-invariant version; see, e.g., [1–6]. Let \(t_{\mathrm{0}}\in \mathbb{R}\) and \(t_{\mathrm{f}}\in \mathbb{R}\) denote the initial and final time instants of the solving process, respectively. The time-varying Lyapunov equation can be expressed as

$$ A(t)^{\top }X+XA(t)=B(t),\quad t\in [t_{\mathrm{0}},t_{\mathrm{f}}], $$ (1)

where \(A(t)\in \mathbb{R}^{n\times n}\) and \(B(t)\in \mathbb{R}^{n\times n}\) are smoothly time-varying matrix signals, and \(X\in \mathbb{R}^{n\times n}\) is the unknown matrix to be determined. In this paper, we compute the solution of problem (1) online in real time, and the solution set of (1) is assumed to be nonempty throughout.

Since Hopfield's seminal paper [7], neural networks, and recurrent neural networks in particular, have been widely used to solve various time-invariant and time-varying problems. The Zhang neural network (ZNN) is an important subclass of recurrent neural networks and was first proposed by Zhang Yunong in March 2001 [1]. In recent years, ZNN has generally been regarded as a benchmark solver for various dynamic systems that arise in practice. Examples include robot kinematic control, the pendulum system [8] and the synchronization of chaotic sensor systems [9].

The ZNN is based on a simple ordinary differential equation (ODE), namely the linear test equation used to analyze the stability of numerical methods for initial value problems. Under this equation, each component of an indefinite error function tends to zero exponentially. This property enables ZNN to track the time-dependent solution of time-varying problems in an error-free manner.

ZNN has been studied intensively in the literature as its range of applications has grown, and many more efficient variants have been presented. For example, for potential digital hardware realization, several multi-step discrete-time ZNNs have been designed in [10–13]. To accelerate convergence, Chen et al. [9] proposed a super-exponential ZNN with a time-varying design parameter. Moreover, based on deliberately designed activation functions, many continuous-time ZNNs with the finite-time convergence property have been presented [14, 15].
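For reference, the classical ZNN design formula mentioned above applies the linear test equation to every entry of the error function \(e(t)\). For problem (1), this error function is \(e(t)=A(t)^{\top }X+XA(t)-B(t)\). In its standard form with a design parameter \(\gamma >0\), the formula and its solution read as follows. The second line adds a constant noise \(a\neq 0\) to the same formula; it is included only to illustrate why the classical design is sensitive to noise.

$$\begin{aligned}& \dot{e}(t)=-\gamma e(t)\quad \Longrightarrow \quad e(t)=e(0)\exp (-\gamma t)\to 0\quad (t\to \infty ), \\& \dot{e}(t)=-\gamma e(t)+a\quad \Longrightarrow \quad e(t)=\frac{a}{\gamma }+\Bigl(e(0)-\frac{a}{\gamma }\Bigr)\exp (-\gamma t)\to \frac{a}{\gamma }\neq 0\quad (t\to \infty ). \end{aligned}$$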

Although the above ZNNs have achieved remarkable progress in solving various time-varying or future problems, they are sensitive to noise and prone to large errors in a noisy environment. Noise, however, is ubiquitous in real life and cannot be ignored completely. For example, in background extraction from surveillance video with missing and noisy data [16, 17], the observed data are often contaminated with additive Gaussian noise. ZNNs with anti-noise properties have therefore drawn increasing attention from researchers in recent years.

To the best of our knowledge, Jin et al. [18] were the first to design an integration-enhanced ZNN formula, which they applied to real-time-varying matrix inversion with additive constant noise. Guo et al. [19] then proposed a modified ZNN formula to solve a time-varying nonlinear equation with additive harmonic noise, and analyzed its convergence with an ingenious Lyapunov function. Xiao et al. [20] presented a new noise-tolerant and predefined-time ZNN model for time-dependent matrix inversion with dynamic bounded gradually disappearing noise or dynamic bounded non-disappearing noise. In their model, the sign function plays an important role in proving convergence and robustness.

However, none of the three noise-tolerant ZNNs [18–20] above can completely eliminate time-varying linear noise, which is unbounded and differs from the noise considered in those studies. Hence there is still room for improvement. In this paper, we use a novel design formula to construct a noise-tolerant continuous-time ZNN (termed NTCTZNN). It solves the time-varying Lyapunov equation when the equation is contaminated with linear noise. The new ZNN is immune to linear noise and can completely counteract its negative impact. Moreover, for potential digital hardware realization, a discrete-time version of the NTCTZNN model is proposed on the basis of the Euler forward difference. A detailed theoretical analysis of the proposed NTCTZNN and its discrete version is also given.

In a nutshell, the contributions of this paper can be summarized as follows.

A novel noise-tolerant continuous-time Zhang neural network (termed NTCTZNN) with a double-integral term is proposed for solving the time-varying Lyapunov equation.

The proposed ZNN model is guaranteed to converge to the solution of the time-varying Lyapunov equation when there is no noise and when there is constant noise or time-varying linear noise.

A discrete-time version of the NTCTZNN model is proposed based on the Euler forward difference.

Numerical results, including comparisons with existing ZNN models, are presented to verify the theoretical results.

The remainder of this paper is organized as follows. In Sect. 2, a novel noise-tolerant continuous-time ZNN (termed NTCTZNN) model is designed for the time-varying Lyapunov equation, and its convergence results are rigorously discussed. Section 3 describes the discrete version of the NTCTZNN (termed NTDTZNN), and proves its global convergence. Section 4 presents some numerical results to verify the efficiency of the NTCTZNN and the NTDTZNN. Finally, Sect. 5 concludes the paper with future research directions.

2 NTCTZNN model and its convergence

In this section, we shall design a novel noise-tolerant continuous-time Zhang neural network (NTCTZNN) for the time-varying Lyapunov equation and prove its convergence.

First, we recall the integration-enhanced design formula proposed by Jin et al. [18]:

$$ \dot{e}(t)=-\gamma e(t)-\lambda \int _{0}^{t}e(\tau )\,d\tau , $$ (2)

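where \(\gamma >0\) and \(\lambda >0\) are two design parameters. Setting \(e(t)=A(t)^{\top }X+XA(t)-B(t)\) in (2), we obtain the following noise-tolerant continuous-time ZNN model for the time-varying Lyapunov equation:

$$\begin{aligned}& A(t)^{\top }\dot{X}(t)+\dot{X}(t)A(t) \\& \quad =-\gamma \bigl(A(t)^{\top }X(t)+X(t)A(t)-B(t) \bigr)-\lambda \int _{0}^{t} \bigl(A(\tau )^{\top }X(\tau )+X(\tau )A(\tau )-B(\tau ) \bigr)\,d\tau \\& \qquad {}- \bigl(\dot{A}(t)^{\top }X(t)+X(t)\dot{A}(t)-\dot{B}(t) \bigr)+n(t), \end{aligned}$$ (3)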

where \(n(t)\in \mathbb{R}^{n\times n}\) denotes an unknown additive noise. The noise-tolerant ZNN model (3) has the following convergence property.

Lemma 2.1

The noise-tolerant ZNN model (3) converges globally to a theoretical solution of problem (1), no matter how large the unknown matrix-form constant noise is. Now consider the unknown matrix-form time-varying linear noise \(n(t)=at\), where \(a\in \mathbb{R}^{n\times n}\) is a constant matrix. In its presence, the model converges towards a theoretical solution of problem (1), and the limit of the steady-state residual error is bounded above by \(\|a\|/\lambda \).

Proof

See Theorems 1–3 in [18]. □
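Lemma 2.1 can be illustrated numerically on a single entry of the error. The sketch below assumes that, entrywise, the error of model (3) obeys \(\dot{e}=-\gamma e-\lambda \int _{0}^{t}e\,d\tau +n(t)\). It rewrites the integral as an auxiliary state \(p\) with \(\dot{p}=e\) and integrates with a small forward-Euler step. The function name `simulate_ieznn`, the step size and the noise levels are illustrative choices, not taken from the paper.

```python
def simulate_ieznn(gamma, lam, noise, e0=1.0, t_end=40.0, dt=1e-3):
    """Forward-Euler integration of one error entry under the assumed dynamics
    de/dt = -gamma*e - lam*p + n(t),  dp/dt = e   (p = running integral of e).
    Returns e(t_end)."""
    e, p, t = e0, 0.0, 0.0
    for _ in range(int(round(t_end / dt))):
        de = -gamma * e - lam * p + noise(t)
        # Simultaneous update, so p uses the old value of e (explicit Euler).
        e, p, t = e + dt * de, p + dt * e, t + dt
    return e


gamma, lam = 5.0, 2.0
print(simulate_ieznn(gamma, lam, lambda t: 0.0))       # noise-free: approximately 0
print(simulate_ieznn(gamma, lam, lambda t: 4.0))       # constant noise: approximately 0
print(simulate_ieznn(gamma, lam, lambda t: 3.0 * t))   # n = a t with a = 3: approximately a/lam = 1.5
```

With \(\gamma =5\) and \(\lambda =2\), the constant noise is rejected. The linear noise \(n(t)=3t\), however, leaves a steady-state error close to \(a/\lambda =1.5\), which matches the bound in Lemma 2.1.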

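To further improve the noise tolerance of the continuous-time ZNN model (3), we present a novel design formula with a double-integral term:

$$ \dot{e}(t)=-\gamma e(t)-\lambda \int _{0}^{t}e(\tau )\,d\tau -\mu \int _{0}^{t}du \int _{0}^{u}e(v)\,dv, $$ (4)

where \(\mu >0\) is an additional design parameter.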

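Setting \(e(t)=A(t)^{\top }X+XA(t)-B(t)\) in the design formula (4), we obtain a new noise-tolerant continuous-time ZNN model for the time-varying Lyapunov equation:

$$\begin{aligned}& A(t)^{\top }\dot{X}(t)+\dot{X}(t)A(t) \\& \quad =-\gamma \bigl(A(t)^{\top }X(t)+X(t)A(t)-B(t) \bigr)-\lambda \int _{0}^{t} \bigl(A(\tau )^{\top }X(\tau )+X(\tau )A(\tau )-B(\tau ) \bigr)\,d\tau \\& \qquad {}-\mu \int _{0}^{t}du \int _{0}^{u} \bigl(A(v)^{\top }X(v)+X(v)A(v)-B(v) \bigr)\,dv \\& \qquad {}- \bigl(\dot{A}(t)^{\top }X(t)+X(t)\dot{A}(t)-\dot{B}(t) \bigr)+n(t). \end{aligned}$$ (5)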

Setting

the integral and differential equation (5) can be written as the following system of differential equations:

For practical computation, the above matrix-form system of differential equations must be transformed into vector form. Using the Kronecker product ⊗ and the vec operator vec, we obtain the NTCTZNN in vector form:

The design formula (4) can also be used to solve the time-varying linear matrix equation and the time-varying Sylvester equation, as follows.

- (1)

Consider the time-varying linear matrix equation

$$ A(t)X=B(t), $$

where \(A(t)\in \mathbb{R}^{m\times n}\), \(B(t)\in \mathbb{R}^{m\times p}\) and \(X\in \mathbb{R}^{n\times p}\). Applying the design formula (4) to this time-varying linear matrix equation, we have

$$\begin{aligned}& \hat{A}(t)\operatorname{vec}\bigl(\dot{X}(t)\bigr) \\& \quad =-\gamma \bigl(\hat{A}(t)\operatorname{vec}\bigl(X(t)\bigr)-\operatorname{vec} \bigl(B(t)\bigr) \bigr)- \lambda \int _{0}^{t} \bigl(\hat{A}(\tau ) \operatorname{vec}\bigl(X(\tau )\bigr)-\operatorname{vec}\bigl(B( \tau )\bigr) \bigr)\,d\tau \\& \qquad{} -\mu \int _{0}^{t}du \int _{0}^{u} \bigl(\hat{A}(v)\operatorname{vec} \bigl(X(v)\bigr)- \operatorname{vec}\bigl(B(v)\bigr) \bigr)\,dv- \bigl( \dot{\hat{A}}(t)\operatorname{vec}\bigl(X(t)\bigr) \\& \qquad {}-\operatorname{vec}\bigl( \dot{B}(t)\bigr) \bigr)+\operatorname{vec}\bigl( n(t)\bigr), \end{aligned}$$ (6)

where \(\hat{A}(t)=I_{p}\otimes A(t)\).

- (2)

Consider the time-varying Sylvester equation

$$ A_{1}(t)X+XA_{2}(t)=B(t), $$

where \(A_{1}(t)\in \mathbb{R}^{m\times m}\), \(A_{2}(t)\in \mathbb{R}^{n\times n}\), \(B(t)\in \mathbb{R}^{m\times n}\) and \(X\in \mathbb{R}^{m\times n}\). Applying the design formula (4) to this time-varying Sylvester equation, we have

$$\begin{aligned}& \hat{A}(t)\operatorname{vec}\bigl(\dot{X}(t)\bigr) \\& \quad =\operatorname{vec} \biggl(-\gamma \bigl(A_{1}(t)X(t)+X(t)A_{2}(t)-B(t) \bigr) \\& \qquad {}- \lambda \int _{0}^{t} \bigl(A_{1}(\tau )X( \tau )+X(\tau )A_{2}(\tau )-B(\tau ) \bigr)\,d\tau \\& \qquad {}-\mu \int _{0}^{t}du \int _{0}^{u} \bigl(A_{1}(v)X(v)+X(v)A_{2}(v)-B(v) \bigr)\,dv \\& \qquad {}- \bigl(\dot{A}_{1}(t)X(t)+X(t)\dot{A}_{2}(t)- \dot{B}(t) \bigr)+ n(t) \biggr), \end{aligned}$$ (7)

where \(\hat{A}(t)=I_{n}\otimes A_{1}(t)+A_{2}(t)^{\top }\otimes I_{m}\).
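The Kronecker-product forms used for the vector form of (5) and in (6) and (7) rest on the standard identity \(\operatorname{vec}(PXQ)=(Q^{\top }\otimes P)\operatorname{vec}(X)\) for the column-stacking vec operator. Below is a short NumPy check of the three operators on random test matrices; the helper names are hypothetical.

```python
import numpy as np


def vec(M):
    """Column-stacking vec operator (Fortran order)."""
    return M.reshape(-1, order="F")


def linear_operator(A, p):
    """Matrix of X -> A X for X with p columns: vec(A X) = (I_p kron A) vec(X)."""
    return np.kron(np.eye(p), A)


def sylvester_operator(A1, A2):
    """Matrix of X -> A1 X + X A2, i.e. I_n kron A1 + A2^T kron I_m, as in (7)."""
    m, n = A1.shape[0], A2.shape[0]
    return np.kron(np.eye(n), A1) + np.kron(A2.T, np.eye(m))


def lyapunov_operator(A):
    """Matrix of X -> A^T X + X A (the Sylvester case A1 = A^T, A2 = A)."""
    return sylvester_operator(A.T, A)


rng = np.random.default_rng(0)
m, n, p = 3, 4, 2
A, X = rng.standard_normal((m, n)), rng.standard_normal((n, p))
print(np.allclose(vec(A @ X), linear_operator(A, p) @ vec(X)))

A1, A2 = rng.standard_normal((m, m)), rng.standard_normal((n, n))
Y = rng.standard_normal((m, n))
print(np.allclose(vec(A1 @ Y + Y @ A2), sylvester_operator(A1, A2) @ vec(Y)))

Al, Z = rng.standard_normal((n, n)), rng.standard_normal((n, n))
print(np.allclose(vec(Al.T @ Z + Z @ Al), lyapunov_operator(Al) @ vec(Z)))
```

All three checks print `True`. The Lyapunov operator is simply the Sylvester operator with \(A_{1}=A^{\top }\) and \(A_{2}=A\).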

Assumption 2.1

To ensure the convergence of the residual error \(\|e(t)\|\) generated by NTCTZNN (5), the design parameters γ, λ and μ are required to satisfy \(\gamma >0\), \(\lambda >0\), \(\mu >0\), and all the roots of the polynomial

lie in the open left half-plane.

Remark 2.1

If we set \(\gamma =3\), \(\lambda =2\), \(\mu =1\), three roots of (8) are \(-0.7849 + 1.3071\mathrm{i}\), \(-0.7849 - 1.3071\mathrm{i}\), −0.4302. So Assumption 2.1 holds with \(\gamma =3\), \(\lambda =2\), \(\mu =1\).
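The root computation in Remark 2.1 can be reproduced with a few lines of plain Python. The sketch assumes that (8) is the cubic \(s^{3}+\gamma s^{2}+\lambda s+\mu \), the same polynomial as characteristic equation (10). Under that assumption, its roots for \(\gamma =3\), \(\lambda =2\), \(\mu =1\) are approximately \(-2.3247\) and \(-0.3376\pm 0.5623\mathrm{i}\). The values quoted above (\(-0.4302\), \(-0.7849\pm 1.3071\mathrm{i}\)) are their reciprocals, that is, the roots of the reversed polynomial \(\mu s^{3}+\lambda s^{2}+\gamma s+1\). Reversing the coefficients maps each root \(s\) to \(1/s\) and preserves the sign of its real part, so both readings confirm that Assumption 2.1 holds. The function names are hypothetical.

```python
import cmath


def cubic_roots(b, c, d):
    """Roots of the monic cubic s^3 + b*s^2 + c*s + d with real coefficients.

    One real root is located by bisection (a real cubic always has one); the
    other two come from the deflated quadratic s^2 + p*s + q."""
    def f(s):
        return ((s + b) * s + c) * s + d

    bound = 1.0 + max(abs(b), abs(c), abs(d))  # Cauchy bound: every root has |s| < bound
    lo, hi = -bound, bound                      # hence f(lo) < 0 < f(hi)
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if f(lo) * f(mid) <= 0:
            hi = mid
        else:
            lo = mid
    r = 0.5 * (lo + hi)
    p = b + r                                   # s^3 + b s^2 + c s + d = (s - r)(s^2 + p s + q)
    q = c + r * p
    disc = cmath.sqrt(p * p - 4 * q)
    return [complex(r), (-p + disc) / 2, (-p - disc) / 2]


def hurwitz_cubic(b, c, d):
    """Routh-Hurwitz test: all roots in the open left half-plane iff b > 0, d > 0 and b*c > d."""
    return b > 0 and d > 0 and b * c > d


gamma, lam, mu = 3.0, 2.0, 1.0
roots = cubic_roots(gamma, lam, mu)      # assumed form of (8): s^3 + gamma s^2 + lam s + mu
print(roots)                             # approx. -2.3247 and -0.3376 +/- 0.5623i
print(all(z.real < 0 for z in roots), hurwitz_cubic(gamma, lam, mu))   # True True

# Reversed polynomial mu s^3 + lam s^2 + gamma s + 1, divided by mu; its roots are 1/s.
print(cubic_roots(lam / mu, gamma / mu, 1.0 / mu))   # approx. -0.4302 and -0.7849 +/- 1.3071i
```

For a cubic, the Routh-Hurwitz inequalities give the same verdict without computing any roots. For example, \(\gamma =5\), \(\lambda =2\), \(\mu =1\) (the values used in Sect. 4) also satisfies them.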

Depending on the type of the noise \(n(t)\), we divide the convergence analysis of NTCTZNN (5) into the following four cases.

Case 1: If the unknown noise \(n(t)=0\in \mathbb{R}^{n\times n}\), we have the following convergence result.

Theorem 2.1

When \(n(t)=0\) and γ, λ and μ satisfy Assumption 2.1, the residual error \(\|e(t)\|\) generated by NTCTZNN (5) converges to zero globally and exponentially.

Proof

Set

and let \(e_{ij}(t)\), \(\varepsilon _{ij}(t)\), \(\dot{\varepsilon }_{ij}(t)\), \(\ddot{\varepsilon }_{ij}(t)\) and \(\dddot{\varepsilon }_{ij}(t)\) be the ijth elements of \(e(t)\), \(\varepsilon (t)\), \(\dot{\varepsilon }(t)\), \(\ddot{\varepsilon }(t)\) and \(\dddot{\varepsilon }(t)\), respectively. Then the ijth subsystem of the dynamical system (5) can be written as

whose characteristic equation is

The discriminant of the cubic equation (10) is defined as

According to Fan's formulas [21], if \(\gamma ^{2}=3\lambda \) and \(\gamma \lambda =9\mu \), Eq. (10) has a real triple root, denoted by \(s_{1}\), which is negative by Assumption 2.1. In this case the general solution of the third-order ordinary differential equation (9) is

where \(c_{1ij}\), \(c_{2ij}\), \(c_{3ij}\) are three constants determined by the initial conditions. Then, differentiating the above equation twice, we have

The matrix form error \(e(t)\) is

where \(c_{1}=(c_{1ij})\in \mathbb{R}^{n\times n}\), \(c_{2}=(c_{2ij})\in \mathbb{R}^{n\times n}\), \(c_{3}=(c_{3ij})\in \mathbb{R}^{n\times n}\). Then

The conclusion of this theorem follows from the above inequality and \(s_{1}<0\).

If \(\Delta >0\), Eq. (10) has a real root and two complex conjugate roots, denoted by

where \(\mathrm{i}=\sqrt{-1}\) denotes the imaginary unit. Since γ, λ and μ satisfy Assumption 2.1, we have \(s_{1}<0\), \(\alpha <0\). From the above analysis, the general solution of the third-order ordinary differential equation (9) is

where \(c_{1ij}\), \(c_{2ij}\), \(c_{3ij}\) are three constants determined by the initial conditions. Then, differentiating the above equation twice, we have

The matrix form error \(e(t)\) is

where \(c_{1}=(c_{1ij})\in \mathbb{R}^{n\times n}\), \(c_{2}=(c_{2ij})\in \mathbb{R}^{n\times n}\), \(c_{3}=(c_{3ij})\in \mathbb{R}^{n\times n}\). Then

The conclusion of this theorem follows from the above inequality, \(s_{1}<0\) and \(\alpha <0\).

If \(\Delta =0\), all roots of Eq. (10) are real and one of them is a double root; we denote them by \(s_{1}\) and \(s_{2}=s_{3}\). The general solution of the third-order ordinary differential equation (9) is

where \(c_{1ij}\), \(c_{2ij}\), \(c_{3ij}\) are three constants determined by the initial conditions. Then, differentiating the above equation twice, we have

The matrix form error \(e(t)\) is

where \(c_{1}=(c_{1ij})\in \mathbb{R}^{n\times n}\), \(c_{2}=(c_{2ij})\in \mathbb{R}^{n\times n}\), \(c_{3}=(c_{3ij})\in \mathbb{R}^{n\times n}\). Then

The conclusion of this theorem follows from the above inequality, \(s_{1}<0\) and \(s_{2}<0\).

If \(\Delta <0\), Eq. (10) has three distinct real roots, denoted by \(s_{1}\), \(s_{2}\), \(s_{3}\). The general solution of the third-order ordinary differential equation (9) is

where \(c_{1ij}\), \(c_{2ij}\), \(c_{3ij}\) are three constants determined by the initial conditions. Then, differentiating the above equation twice, we have

The matrix form error \(e(t)\) is

where \(c_{1}=(c_{1ij})\in \mathbb{R}^{n\times n}\), \(c_{2}=(c_{2ij})\in \mathbb{R}^{n\times n}\), \(c_{3}=(c_{3ij})\in \mathbb{R}^{n\times n}\). Then

The conclusion of this theorem follows from the above inequality, \(s_{1}<0\), \(s_{2}<0\) and \(s_{3}<0\). □
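The four cases in the proof are distinguished by Fan's discriminant [21] of the cubic \(s^{3}+\gamma s^{2}+\lambda s+\mu \) in (10). The discriminant itself is not reproduced above, so the sketch below uses the standard form of Fan's (Shengjin's) formulas for a monic cubic. The auxiliary quantities are named \(P\), \(Q\), \(R\) here; these are hypothetical names chosen to avoid clashing with the matrices \(A\), \(B\). They are \(P=\gamma ^{2}-3\lambda \), \(Q=\gamma \lambda -9\mu \), \(R=\lambda ^{2}-3\gamma \mu \), with \(\Delta =Q^{2}-4PR\). The triple-root test \(P=Q=0\) is exactly the condition \(\gamma ^{2}=3\lambda \), \(\gamma \lambda =9\mu \) used in the first case.

```python
def classify_cubic(gamma, lam, mu, tol=1e-12):
    """Classify the roots of s^3 + gamma*s^2 + lam*s + mu with Fan's discriminant.

    P, Q, R are illustrative names for Fan's auxiliary quantities."""
    P = gamma**2 - 3 * lam
    Q = gamma * lam - 9 * mu
    R = lam**2 - 3 * gamma * mu
    if abs(P) <= tol and abs(Q) <= tol:      # gamma^2 = 3 lam and gamma lam = 9 mu
        return "triple real root"            # the root is -gamma/3
    delta = Q**2 - 4 * P * R
    if delta > tol:
        return "one real root and a complex-conjugate pair"
    if delta < -tol:
        return "three distinct real roots"
    return "one simple and one double real root"


# (s+2)^3, s^3+5s^2+2s+1, (s+1)(s+2)(s+3), (s+1)^2 (s+2)
for params in [(6, 12, 8), (5, 2, 1), (6, 11, 6), (4, 5, 2)]:
    print(params, classify_cubic(*params))
```

Each branch corresponds to one case of the proof: the triple root \(s_{1}\), the pair \(\alpha \pm \beta \mathrm{i}\) with a real \(s_{1}\), the double root \(s_{2}=s_{3}\), and three distinct real roots.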

Case 2: If the unknown noise \(n(t)\) is a constant noise \(n(t)=a\in \mathbb{R}^{n\times n}\), we have the following convergence result.

Theorem 2.2

No matter how large the unknown constant noise \(n(t)=a=(a_{ij})\in \mathbb{R}^{n\times n}\) is, the residual error \(\|e(t)\|\) generated by NTCTZNN (5) for problem (1) converges to zero.

Proof

Obviously, the NTCTZNN (5) can be decoupled into \(n^{2}\) differential equations:

Taking the Laplace transform of both sides of (11), we have

where \(\varepsilon _{ij}(s)\) is the image function (Laplace transform) of \(e_{ij}(t)\). From (12), we have

The three poles of its transfer function, \(s_{1}\), \(s_{2}\) and \(s_{3}\), lie in the open left half-plane because γ, λ and μ satisfy Assumption 2.1. Thus the system (12) is stable and the final value theorem applies. That is,

This completes the proof. □
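Assume the design formula (4) has the entrywise form \(\dot{e}_{ij}=-\gamma e_{ij}-\lambda \int _{0}^{t}e_{ij}\,d\tau -\mu \int _{0}^{t}du\int _{0}^{u}e_{ij}\,dv+n_{ij}(t)\), which is the form consistent with (6) and (7). Under that assumption, the Laplace-domain computation behind Theorems 2.2–2.4 can be written out for one entry as a worked sketch. Here \(\varepsilon _{ij}(s)\) is the image function of \(e_{ij}(t)\), as in the proof above. \(\mathcal{N}_{ij}(s)\) is a symbol introduced only for this sketch and denotes the image function of \(n_{ij}(t)\). In every case, the initial-value contribution \(s^{3}e_{ij}(0)/(s^{3}+\gamma s^{2}+\lambda s+\mu )\) tends to 0 as \(s\to 0\), so it is dropped in the last three lines.

$$\begin{aligned}& s\varepsilon _{ij}(s)-e_{ij}(0)=-\gamma \varepsilon _{ij}(s)-\frac{\lambda }{s}\varepsilon _{ij}(s)-\frac{\mu }{s^{2}}\varepsilon _{ij}(s)+\mathcal{N}_{ij}(s), \\& \lim_{t\to \infty }e_{ij}(t)=\lim_{s\to 0}s\varepsilon _{ij}(s)=\lim_{s\to 0}\frac{s^{3} (e_{ij}(0)+\mathcal{N}_{ij}(s) )}{s^{3}+\gamma s^{2}+\lambda s+\mu }, \\& n_{ij}=a_{ij}:\quad \mathcal{N}_{ij}=\frac{a_{ij}}{s}\ \Longrightarrow \ \lim_{t\to \infty }e_{ij}(t)=\lim_{s\to 0}\frac{a_{ij}s^{2}}{s^{3}+\gamma s^{2}+\lambda s+\mu }=0, \\& n_{ij}=a_{ij}t+b_{ij}:\quad \mathcal{N}_{ij}=\frac{a_{ij}}{s^{2}}+\frac{b_{ij}}{s}\ \Longrightarrow \ \lim_{t\to \infty }e_{ij}(t)=\lim_{s\to 0}\frac{a_{ij}s+b_{ij}s^{2}}{s^{3}+\gamma s^{2}+\lambda s+\mu }=0, \\& n_{ij}=a_{ij}t^{2}+b_{ij}t+c_{ij}:\quad \mathcal{N}_{ij}=\frac{2a_{ij}}{s^{3}}+\frac{b_{ij}}{s^{2}}+\frac{c_{ij}}{s}\ \Longrightarrow \ \lim_{t\to \infty }e_{ij}(t)=\frac{2a_{ij}}{\mu }. \end{aligned}$$

Under this assumption, the quadratic-noise limit is \(2a_{ij}/\mu \), which is inversely proportional to the design parameter μ.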

Case 3: If the unknown noise \(n(t)\) is a time-varying linear noise \(n(t)=at+b\in \mathbb{R}^{n\times n}\), we have the following convergence result.

Theorem 2.3

No matter how large the unknown linear noise \(n(t)=at+b=(a_{ij}t+b_{ij})\in \mathbb{R}^{n\times n}\) is, the residual error \(\|e(t)\|\) generated by NTCTZNN (5) for problem (1) converges to zero.

Proof

Similar to the proof of Theorem 2.2, we have

This completes the proof. □

Case 4: If the unknown noise \(n(t)\) is a time-varying quadratic noise \(n(t)=at^{2}+bt+c\in \mathbb{R}^{n\times n}\), we have the following convergence result.

Theorem 2.4

For the unknown quadratic noise \(n(t)=at^{2}+bt+c=(a_{ij}t^{2}+b_{ij}t+c_{ij})\in \mathbb{R}^{n \times n}\), we have

Proof

Similar to the proof of Theorem 2.3, we have

This completes the proof. □
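The four noise cases can also be checked numerically on one entry of the error. The sketch below assumes the entrywise error dynamics \(\dot{e}=-\gamma e-\lambda \int e-\mu \iint e+n(t)\), carrying the two integrals as auxiliary states \(p\) and \(q\). It integrates them with a small forward-Euler step and uses the parameter values \(\gamma =5\), \(\lambda =2\), \(\mu =1\) of Sect. 4. The function name, initial error and step size are illustrative choices.

```python
def simulate_ntctznn(gamma, lam, mu, noise, e0=1.0, t_end=80.0, dt=1e-3):
    """Forward-Euler integration of one error entry under the assumed dynamics
    de/dt = -gamma*e - lam*p - mu*q + n(t),  dp/dt = e,  dq/dt = p,
    where p and q are the single and double integrals of e. Returns e(t_end)."""
    e, p, q, t = e0, 0.0, 0.0, 0.0
    for _ in range(int(round(t_end / dt))):
        de = -gamma * e - lam * p - mu * q + noise(t)
        # Simultaneous (explicit Euler) update of all three states.
        e, p, q, t = e + dt * de, p + dt * e, q + dt * p, t + dt
    return e


gamma, lam, mu = 5.0, 2.0, 1.0
cases = {
    "zero": lambda t: 0.0,
    "constant": lambda t: 1.0,
    "linear": lambda t: t + 1.0,
    "quadratic": lambda t: t**2 + t + 1.0,   # a_ij = 1
}
for name, noise in cases.items():
    print(name, simulate_ntctznn(gamma, lam, mu, noise))
```

The final values should approach 0 for zero, constant and linear noise. For the quadratic noise with \(a_{ij}=1\), they should approach \(2a_{ij}/\mu =2\), in line with the Laplace-domain limits under the same assumption. A long horizon is used because the dominant roots of \(s^{3}+5s^{2}+2s+1\) have real part of only about \(-0.19\), so the transient decays slowly.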

3 NTDTZNN and its convergence

For potential digital hardware realization, we shall present a noise-tolerant discrete-time ZNN (NTDTZNN) model for problem (1) and prove its global convergence.

We use the Euler forward difference to discretize the term \(\dot{X}(t)\) in NTCTZNN (5) and get the following NTDTZNN model:

where \(\tau >0\) is the sampling gap.
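The NTDTZNN formula (13) is not reproduced in this text, so the following is only a hypothetical sketch of the idea described above. It applies the Euler forward difference \(X_{k+1}=X_{k}+\tau \dot{X}_{k}\) to the vector form of (5) and replaces the two integrals by running rectangle-rule sums. The rectangle-rule replacement is an assumption; the paper's discretization of the integral terms may differ. The matrices \(A(t)\) and \(B(t)\), the function names and the parameter values are illustrative only. In particular, \(\gamma =6\), \(\lambda =12\), \(\mu =8\) are chosen so that all roots of \(s^{3}+\gamma s^{2}+\lambda s+\mu \) equal \(-2\); they are not the values of Remark 3.1.

```python
import numpy as np


def vec(M):
    return M.reshape(-1, order="F")          # column-stacking vec operator


def lyap_op(A):
    """Matrix of X -> A^T X + X A, i.e. I kron A^T + A^T kron I."""
    I = np.eye(A.shape[0])
    return np.kron(I, A.T) + np.kron(A.T, I)


# Hypothetical smooth test data (not the paper's examples) with exact derivatives.
def A_of(t):
    return np.array([[2 + 0.5 * np.sin(t), 0.5 * np.cos(t)],
                     [0.5 * np.cos(t), 2 + 0.5 * np.cos(t)]])


def dA_of(t):
    return np.array([[0.5 * np.cos(t), -0.5 * np.sin(t)],
                     [-0.5 * np.sin(t), -0.5 * np.sin(t)]])


def B_of(t):
    return np.array([[np.sin(t), np.cos(t)], [np.cos(t), -np.sin(t)]])


def dB_of(t):
    return np.array([[np.cos(t), -np.sin(t)], [-np.sin(t), -np.cos(t)]])


def ntdtznn_sketch(gamma, lam, mu, tau, t_end, noise):
    """Euler-forward sketch of the NTCTZNN; returns the final residual norm."""
    X = np.zeros((2, 2))
    S1 = np.zeros((2, 2))   # rectangle-rule stand-in for the single integral of e
    S2 = np.zeros((2, 2))   # rectangle-rule stand-in for the double integral of e
    steps = int(round(t_end / tau))
    for k in range(steps):
        t = k * tau
        A, dA, B, dB = A_of(t), dA_of(t), B_of(t), dB_of(t)
        e = A.T @ X + X @ A - B
        S1 += tau * e
        S2 += tau * S1
        rhs = -gamma * e - lam * S1 - mu * S2 - (dA.T @ X + X @ dA - dB) + noise(t)
        Xdot = np.linalg.solve(lyap_op(A), vec(rhs)).reshape((2, 2), order="F")
        X = X + tau * Xdot                    # Euler forward difference
    t = steps * tau
    A, B = A_of(t), B_of(t)
    return np.linalg.norm(A.T @ X + X @ A - B)   # Frobenius norm


# Linear noise n(t) = t + 1 added to every entry.
print(ntdtznn_sketch(6.0, 12.0, 8.0, 1e-3, 10.0, lambda t: t + 1.0))
```

With these choices, the printed residual norm is small despite the linear noise. Setting `mu=0` in the same sketch reduces it to the single-integral design, whose residual should instead settle near \(a/\lambda \) per entry, as in Lemma 2.1.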

Lemma 3.1

NTDTZNN (13) can be written as

Proof

From (13), we have

in which the second and fourth equalities follow from the Euler forward difference. The above equality can then be rewritten as (14). This completes the proof. □

Theorem 3.1

Consider the linear noise \(n_{k}=ak+b\in \mathbb{R}^{n\times n}\). The limit of the residual error \(\|e_{k}\|\) generated by NTDTZNN (13) is \(\mathcal{O}(\tau ^{2})\) if and only if the parameters γ, λ, μ and τ satisfy

Proof

Obviously, equality (14) also holds for k, that is,

Similarly, equality (17) also holds for k, that is,

Setting \(\bar{e}_{k}=e_{k}-\mathcal{O}(\tau ^{2})\), equality (19) can be rewritten as

The characteristic equation of (20) is

If all roots of characteristic equation (21) have moduli less than 1, NTDTZNN (13) is stable. By the Jury stability criterion [22], the roots of characteristic equation (21) lie inside the unit circle if and only if the four inequalities in (15) hold. The proof is completed. □
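The unit-circle condition checked with the Jury criterion can also be tested mechanically. The coefficients of (21) are not reproduced in this text, so the sketch below is a generic Schur-Cohn (Jury-type) reduction rather than a check of (15) itself. The function name `schur_stable` is hypothetical. It is illustrated on the simplest forward-Euler ZNN recursion \(e_{k+1}=(1-\tau \gamma )e_{k}\), whose characteristic polynomial \(z-(1-\tau \gamma )\) is stable exactly when \(0<\tau \gamma <2\).

```python
def schur_stable(coeffs):
    """True iff every root of c[0] z^n + c[1] z^(n-1) + ... + c[n] lies strictly
    inside the unit circle (real coefficients, c[0] != 0).

    Schur-Cohn (Jury) reduction: if |c[n]| >= |c[0]|, the polynomial is not stable;
    otherwise it is stable iff the degree-(n-1) polynomial with coefficients
    c[0]*c[k] - c[n]*c[n-k], k = 0..n-1, is stable."""
    c = [float(x) for x in coeffs]
    while len(c) > 1:
        lead, const = c[0], c[-1]
        if abs(const) >= abs(lead):
            return False
        n = len(c) - 1
        c = [lead * c[k] - const * c[n - k] for k in range(n)]
    return True


# Forward-Euler ZNN e_{k+1} = (1 - tau*gamma) e_k: characteristic polynomial z - (1 - tau*gamma).
gamma = 5.0
for tau in (0.1, 0.3, 0.5):
    print(tau, schur_stable([1.0, -(1.0 - tau * gamma)]))   # True, True, False
```

Each reduction step preserves the number of roots inside the unit disk, by Rouché's theorem applied on \(|z|=1\). This is why one scalar comparison per degree suffices, which mirrors the row-by-row structure of the Jury table.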

Remark 3.1

If we set \(\gamma =5\), \(\lambda =2\), \(\mu =1\) and \(\tau =0.1\), it is easy to check that the four inequalities in (15) hold. If we set \(\gamma =5\), \(\lambda =2\), \(\mu =1\) and \(\tau =0.01\), it is easy to check that the fourth inequality in (15) does not hold.

Following a similar procedure, we can deduce the discrete form of the continuous-time ZNN in [18] for problem (1), which is denoted by NTDTZNN-p, as follows:

Corollary 3.1

NTDTZNN-p (22) with constant noise \(n(t)=a\) is convergent if and only if the parameters γ, λ and τ satisfy

Proof

Equality (22) can be written as

which also holds for k, that is,

Subtracting the above two equalities, we have

That is,

whose characteristic equation is

By the Jury stability criterion [22] again, the roots of characteristic equation (24) lie inside the unit circle if and only if the three inequalities in (23) hold. The proof is completed. □

Remark 3.2

If we set \(\gamma =5\), \(\lambda =2\) and \(\tau =0.1\), it is easy to check that the three inequalities in (23) hold.

4 Numerical results

In this section, two simulation examples are presented to substantiate the validity and fast convergence of NTCTZNN (5) and NTDTZNN (13). For comparison, the following models are also applied to the time-varying Lyapunov equation: the CTZNN model in [8] (denoted by CTZNN), the NTCTZNN in [18] (denoted by NTCTZNN-p) and NTDTZNN-p (22).

By some simple manipulations, the CTZNN model in [8] for problem (1) is

and the NTCTZNN model in [18] for problem (1) is

In the following experiments, we set \(\gamma =5\), \(\lambda =2\), \(\mu =1\), the sampling gap \(\tau =0.1\), and

Example 4.1

Consider the following time-varying Lyapunov equation:

where

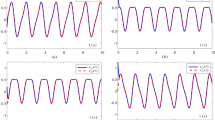

Firstly, we consider the zero noise \(n(t)=0\) and the constant noise \(n(t)=1\). The numerical results are plotted in Fig. 1.

Fig. 1 Numerical results of Example 4.1 (zero noise and constant noise)

Secondly, we consider the linear noise \(n(t)=t+1\) and the quadratic noise \(n(t)=t^{2}+t+1\). The numerical results are plotted in Fig. 2.

Fig. 2 Numerical results of Example 4.1 (linear noise and quadratic noise)

The numerical results depicted in Figs. 1 and 2 indicate the following.

(1) For zero noise, CTZNN is the first model to start oscillating and NTCTZNN the last; all three tested models solve Example 4.1 with high accuracy.

(2) For constant noise, CTZNN fails to solve Example 4.1, whereas the other two models solve it successfully. Again, NTCTZNN-p starts oscillating earlier than NTCTZNN.

(3) For linear noise, both CTZNN and NTCTZNN-p fail to solve Example 4.1, whereas NTCTZNN solves it successfully.

(4) For quadratic noise, none of the three tested models works well. The accuracy of NTCTZNN is about 1, which is in accordance with Theorem 2.4 (\(a_{ij}=1\), \(\mu =1\)).

Overall, this example indicates that

$$ \text{NTCTZNN}\succ \text{NTCTZNN-p}\succ \text{CTZNN}, $$

where \(A\succ B\) denotes that model A performs better than model B.

Example 4.2

Consider the following time-varying Lyapunov equation:

where

We use NTDTZNN (13), and NTDTZNN-p (22) for comparison, to solve this problem with constant noise \(n(t)=1\) or linear noise \(n(t)=t+1\). The numerical results are depicted in Fig. 3. They show that the Res generated by NTDTZNN decreases in an oscillatory manner. At the final time, 50 s, the Res generated by NTDTZNN is about \(10^{-9}\) under both types of noise, which indicates that NTDTZNN solves this problem with high accuracy. With constant noise, NTDTZNN-p reaches an accuracy of about \(10^{-7}\), a little worse than that of NTDTZNN. With linear noise, however, NTDTZNN-p fails to solve this problem, which is in accordance with Corollary 3.1.

Fig. 3 Numerical results of Example 4.2

5 Conclusions

In this paper, a novel noise-tolerant continuous-time ZNN (NTCTZNN) model and its discrete-time form (NTDTZNN) have been designed to solve the time-varying Lyapunov equation. It has been proved that the residual error of NTCTZNN converges to zero under constant and time-varying linear noise and remains bounded under quadratic noise. It has also been proved that NTDTZNN attains an \(\mathcal{O}(\tau ^{2})\) residual error under linear noise when its parameters satisfy (15). Numerical results for the two proposed models substantiate their efficiency in solving the time-varying Lyapunov equation.

In the future, we shall further improve NTCTZNN by introducing triple integrals to enhance its robustness to quadratic noise and study delayed ZNNs based on the theoretical results obtained in [23–28].

References

[1] Zhang, Y.N., Jiang, D.C., Wang, J.: A recurrent neural network for solving Sylvester equation with time-varying coefficients. IEEE Trans. Neural Netw. 13(5), 1053–1063 (2002)

[2] Xie, L., Liu, Y.J., Yang, H.Z.: Gradient based and least squares based iterative algorithms for matrix equations \(AXB+CX^{\top }D=F\). Comput. Math. Appl. 59(11), 3500–3507 (2010)

[3] Hajarian, M.: Developing biCOR and CORS methods for coupled Sylvester-transpose and periodic Sylvester matrix equations. Appl. Math. Model. 39(9), 6073–6084 (2015)

[4] Sun, M., Wang, Y.J., Liu, J.: Two modified least-squares iterative algorithms for the Lyapunov matrix equations. Adv. Differ. Equ. 2019, 305 (2019)

[5] Sun, M., Liu, J.: Noise-tolerant continuous-time Zhang neural networks for time-varying Sylvester tensor equations. Adv. Differ. Equ. 2019, 465 (2019)

[6] Sun, M., Wang, Y.J.: The conjugate gradient methods for solving the generalized periodic Sylvester matrix equations. J. Appl. Math. Comput. 60, 413–434 (2019)

[7] Hopfield, J.J.: Neural networks and physical systems with emergent collective computational abilities. Proc. Natl. Acad. Sci. USA 79(8), 2554–2558 (1982)

[8] Zhang, Y.N., Huang, H.C., Yang, M., Li, J.: Discrete-time formulation, control, solution and verification of pendulum systems with zeroing neural dynamics. Theor. Comp. Sci. (2019, in press)

[9] Chen, D.C., Li, S., Wu, Q.: Rejecting chaotic disturbances using a super-exponential-zeroing neurodynamic approach for synchronization of chaotic sensor systems. Sensors 19, 74 (2019)

[10] Jin, L., Zhang, Y.N.: Discrete-time Zhang neural network for online time-varying nonlinear optimization with application to manipulator motion generation. IEEE Trans. Neural Netw. Learn. Syst. 26(7), 1525–1531 (2015)

[11] Sun, M., Tian, M.Y., Wang, Y.J.: Discrete-time Zhang neural networks for time-varying nonlinear optimization. Discrete Dyn. Nat. Soc. 2019, Article ID 4745759 (2019)

[12] Sun, M., Wang, Y.J.: General five-step discrete-time Zhang neural network for time-varying nonlinear optimization. Bull. Malays. Math. Sci. Soc. 43, 1741–1760 (2020)

[13] Sun, M., Liu, J.: General six-step discrete-time Zhang neural network for time-varying tensor absolute value equations. Discrete Dyn. Nat. Soc. 2019, Article ID 4861912 (2019)

[14] Xiao, L., Liao, B.L., Jin, J., Liu, R.B., Yang, X., Ding, L.: A finite-time convergent dynamic system for solving online simultaneous linear equations. Int. J. Comput. Math. 94(9), 1778–1786 (2017)

[15] Xiao, L.: A finite-time convergent Zhang neural network and its application to real-time matrix square root finding. Neural Comput. Appl. 31(Suppl 2), S793–S800 (2019)

[16] Sun, M., Wang, Y.J., Liu, J.: Generalized Peaceman–Rachford splitting method for multiple-block separable convex programming with applications to robust PCA. Calcolo 54(1), 77–94 (2017)

[17] Sun, H.C., Liu, J., Sun, M.: A proximal fully parallel splitting method for stable principal component pursuit. Math. Probl. Eng. 2017, Article ID 9674528 (2017)

[18] Jin, L., Zhang, Y.N.: Integration-enhanced Zhang neural network for real-time-varying matrix inversion in the presence of various kinds of noises. IEEE Trans. Neural Netw. Learn. Syst. 27(12), 2615–2627 (2016)

[19] Guo, D.S., Li, S., Stanimirovic, P.S.: Analysis and application of modified ZNN design with robustness against harmonic noise. IEEE Trans. Ind. Inform. (2019, in press)

[20] Xiao, L., Zhang, Y.S., Dai, J.H., Chen, K., Yang, S., Li, W.B., Liao, B.L., Ding, L., Li, J.C.: A new noise-tolerant and predefined-time ZNN model for time-dependent matrix inversion. Neural Netw. 117, 124–134 (2019)

[21] Fan, S.J.: A new extracting formula and a new distinguishing means on the one variable cubic equation. J. Hainan Normal University (Natural Science) 2(2), 91–98 (1989)

[22] Jury, E.I.: A note on the modified stability table for linear discrete time systems. IEEE Trans. Circuits Syst. 38(2), 221–223 (1991)

[23] Yang, X., Wen, S.G., Liu, Z.F., Li, C., Huang, C.X.: Dynamic properties of foreign exchange complex network. Mathematics 7(9), 832 (2019)

[24] Hu, H., Yi, T., Zou, X.: On spatial-temporal dynamics of a Fisher-KPP equation with a shifting environment. Proc. Am. Math. Soc. 148(1), 213–221 (2020)

[25] Long, X., Gong, S.H.: New results on stability of Nicholson’s blowflies equation with multiple pairs of time-varying delays. Appl. Math. Lett. 100, 106027 (2020)

[26] Huang, C.X., Qiao, Y.C., Huang, L.H., Agarwal, R.P.: Dynamical behaviors of a food-chain model with stage structure and time delays. Adv. Differ. Equ. 2018(1), 186 (2018)

[27] Huang, C.X., Cao, J., Wen, F.H., Yang, X.G.: Stability analysis of SIR model with distributed delay on complex networks. PLoS ONE 11(8), e0158813 (2016)

[28] Wang, F., Yao, Z.: Approximate controllability of fractional neutral differential systems with bounded delay. Fixed Point Theory 17(2), 495–508 (2016)

Acknowledgements

The authors thank the anonymous reviewer for the valuable comments and suggestions that have helped them in improving the paper.

Availability of data and materials

Please contact the authors for data requests.

Funding

This work is supported by the National Natural Science Foundation of China and Shandong Province (No. 11671228, 11601475, ZR2016AL05), and the PhD research startup foundation of Zaozhuang University.

Author information

Contributions

The first author posed the problem and proved the main results, and the second author carried out the numerical experiments. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that there are no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sun, M., Liu, J. A novel noise-tolerant Zhang neural network for time-varying Lyapunov equation. Adv Differ Equ 2020, 116 (2020). https://doi.org/10.1186/s13662-020-02571-7

DOI: https://doi.org/10.1186/s13662-020-02571-7