Abstract

This article deals with the fractional multi-dimensional Burgers equation, in which the derivatives are understood in the Caputo sense. An approximate analytical solution of the problem is obtained by the homotopy perturbation method (HPM). Furthermore, a convergence analysis and an error estimate for the HPM are established.


1 Introduction

Fractional calculus, a generalization of differentiation and integration to arbitrary order, has proven to be an excellent tool for modeling real-world problems in science and engineering (for more details, see [23]). Furthermore, several publications ([1, 10], and [31]) suggest that fractional operators act as memory functions, which makes them a powerful tool for describing processes whose state depends not only on the current conditions but also on the entire history. For these reasons, fractional calculus has attracted many researchers. Applications of fractional calculus arise in physics, biology, finance, and fluid mechanics; see [7, 15, 16, 26], and [32].

The Burgers equation is a simplified form of the Navier–Stokes equations. It is well known that the Navier–Stokes equations describe the physics of many phenomena of scientific and engineering interest. They are used, for example, in weather prediction [22] and to model fluid flow in a pipe [11], air flow around an aircraft wing [9], and the discharge of granular silos [28, 33]. The Burgers equation appears frequently in applied mathematics, for instance in acoustic transmission, shock waves, and gas dynamics (see [6, 8], and [18]). The classical Burgers equation has the following form:

where ε, η are constants. As shown in [13, 17], and [27], analytical solutions of the Burgers equation can be obtained by the Bäcklund transformation method, the tanh–coth method, the Hopf–Cole transformation, and the separation of variables method.
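For reference, we assume the classical equation is written as below, with ε weighting the convective term and η the viscous term (an assumption consistent with the constants in Theorem 4.2). For ε ≠ 0 and η > 0, the Hopf–Cole transformation mentioned above reduces it to the linear heat equation:

```latex
u_t + \varepsilon\, u\, u_x = \eta\, u_{xx}, \qquad
u = -\frac{2\eta}{\varepsilon}\,\frac{\varphi_x}{\varphi}, \qquad
\varphi_t = \eta\, \varphi_{xx}.
```

For example, with ε = η = 1 the heat-equation solution \(\varphi(x,t)=(1+t)^{-1/2}\exp\bigl(-x^{2}/(4(1+t))\bigr)\) gives \(u(x,t)=x/(1+t)\), which satisfies \(u(x,0)=x\).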

In 2006, Momani [21] generalized the one-dimensional Burgers equation by replacing the time and space derivatives with Caputo fractional derivatives, and obtained analytical solutions of the resulting equation by the Adomian decomposition method.

In 2014, Gomez [14] considered the Burgers equation with Jumarie's modified Riemann–Liouville fractional derivative and obtained its solution by the fractional complex transform.

In 2017, Saad and Al-Sharif [25] applied the variational iteration method to solve the time and space–time fractional Burgers equations with various initial conditions.

In this paper, we study the n-dimensional Burgers equation in which the time and space derivatives are replaced by Caputo fractional derivatives; we call the resulting equation the fractional multi-dimensional Burgers equation. The homotopy perturbation method (HPM) has been applied successfully to many linear and nonlinear differential equations; see [2,3,4,5, 19, 29]. It is an effective method that yields solutions without any linearization assumption [12, 20]. Therefore, we use the HPM to obtain an approximate analytical solution of the fractional multi-dimensional Burgers equation.

The rest of the paper is organized as follows. Section 2 recalls the definitions and properties of fractional integration and differentiation. Section 3 describes how the HPM is applied to the fractional multi-dimensional Burgers equation. The existence and uniqueness of the solution of the fractional Burgers equation and the convergence analysis of the HPM are established in Sects. 4 and 5, respectively. Approximate analytical solutions of the fractional multi-dimensional Burgers equation in one, two, and three dimensions are given in Sect. 6, and Sect. 7 concludes the article.

2 Fractional calculus

In this section, we give the definitions and some properties of fractional-order integration and differentiation used throughout this paper.

Definition 2.1

([24])

The Riemann–Liouville fractional integral operator of order \(0<\alpha <1\) for a function \(f:[0,\infty )\rightarrow \mathbb{R}\) is defined by

where Γ denotes the gamma function.
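In its standard form, which we assume is the one intended in Definition 2.1, the operator reads as follows (with \(J^{0}f=f\) by convention):

```latex
J^{\alpha} f(t) = \frac{1}{\Gamma(\alpha)} \int_{0}^{t} (t-\tau)^{\alpha-1} f(\tau)\, d\tau, \qquad t > 0.
```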

Some properties of the Riemann–Liouville fractional integral operator are as follows: for any \(\alpha ,\rho \geq 0\) and \(\beta >-1\),
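The standard properties of this type are \(J^{\alpha}J^{\rho}f=J^{\alpha+\rho}f\) and \(J^{\alpha}t^{\beta}=\frac{\Gamma(\beta+1)}{\Gamma(\beta+\alpha+1)}t^{\beta+\alpha}\). The minimal Python sketch below (hypothetical helper names, for illustration only) checks the power rule numerically. The substitution \(u=(t-\tau)^{\alpha}\) removes the weak singularity of the kernel, giving \(J^{\alpha}f(t)=\frac{1}{\Gamma(\alpha+1)}\int_{0}^{t^{\alpha}}f(t-u^{1/\alpha})\,du\), which a plain midpoint rule then handles well.

```python
import math


def rl_integral(f, alpha, t, n=20000):
    """Approximate the Riemann-Liouville integral J^alpha f(t), alpha > 0, t > 0.

    Uses the substitution u = (t - tau)^alpha, so that
    J^alpha f(t) = 1/Gamma(alpha + 1) * int_0^{t^alpha} f(t - u^(1/alpha)) du,
    and then a composite midpoint rule with n subintervals.
    """
    upper = t ** alpha
    h = upper / n
    total = 0.0
    for k in range(n):
        u = (k + 0.5) * h
        total += f(t - u ** (1.0 / alpha))
    return total * h / math.gamma(alpha + 1.0)


def rl_power_exact(alpha, beta, t):
    """Closed form J^alpha t^beta = Gamma(beta+1)/Gamma(beta+alpha+1) * t^(beta+alpha), beta > -1."""
    return math.gamma(beta + 1.0) / math.gamma(beta + alpha + 1.0) * t ** (beta + alpha)


# Compare the quadrature with the power rule for a few orders.
for alpha, beta in [(0.5, 2.0), (0.3, 1.0), (0.8, 0.5)]:
    approx = rl_integral(lambda s, b=beta: s ** b, alpha, 1.2)
    print(f"alpha={alpha}, beta={beta}: {approx:.8f} vs {rl_power_exact(alpha, beta, 1.2):.8f}")
```

Applying the closed form twice also reproduces the semigroup property on monomials, since the intermediate gamma factors cancel.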

Definition 2.2

([24])

The Caputo fractional derivative operator of order \(0<\alpha <1 \) for a function \(f:[0, \infty )\rightarrow \mathbb{R}\) is given by
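For reference, the standard Caputo derivative of order \(0<\alpha<1\), assumed here to be the form intended in Definition 2.2, is the Riemann–Liouville integral of order \(1-\alpha\) applied to \(f'\); its factor \(\Gamma(1-\alpha)\) is the one that appears in the proof of Lemma 4.1:

```latex
D^{\alpha} f(t) = \frac{1}{\Gamma(1-\alpha)} \int_{0}^{t} (t-\tau)^{-\alpha} f'(\tau)\, d\tau = J^{1-\alpha} f'(t).
```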

We next give some properties of the Caputo derivative.

Lemma 2.3

Let f be a continuous function on \([0,a]\) with \(a>0\), and let \(0<\alpha \leq 1\) and \(\beta >\alpha -1\). Then
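Two standard Caputo identities of the kind used alongside Lemma 2.3 (and, together with \(J^{\alpha}\), in Sect. 3) are \(D^{\alpha}t^{\beta}=\frac{\Gamma(\beta+1)}{\Gamma(\beta-\alpha+1)}t^{\beta-\alpha}\) for \(\beta>0\), with \(D^{\alpha}c=0\) for constants, and \(J^{\alpha}D^{\alpha}f(t)=f(t)-f(0)\); we assume these are the properties the lemma refers to. The Python sketch below (hypothetical helper names) evaluates the Caputo integral numerically via the substitution \(u=(t-\tau)^{1-\alpha}\), which turns it into \(\frac{1}{\Gamma(2-\alpha)}\int_{0}^{t^{1-\alpha}}f'(t-u^{1/(1-\alpha)})\,du\), and compares the result with the power rule.

```python
import math


def caputo(fprime, alpha, t, n=20000):
    """Approximate the Caputo derivative D^alpha f(t) for 0 < alpha < 1, given f'.

    Uses the substitution u = (t - tau)^(1 - alpha), so that
    D^alpha f(t) = 1/Gamma(2 - alpha) * int_0^{t^(1-alpha)} f'(t - u^(1/(1-alpha))) du,
    and then a composite midpoint rule with n subintervals.
    """
    upper = t ** (1.0 - alpha)
    h = upper / n
    total = 0.0
    for k in range(n):
        u = (k + 0.5) * h
        total += fprime(t - u ** (1.0 / (1.0 - alpha)))
    return total * h / math.gamma(2.0 - alpha)


def caputo_power_exact(alpha, beta, t):
    """Power rule D^alpha t^beta = Gamma(beta+1)/Gamma(beta-alpha+1) * t^(beta-alpha) for beta > 0;
    the Caputo derivative of a constant (beta = 0) is zero."""
    if beta == 0:
        return 0.0
    return math.gamma(beta + 1.0) / math.gamma(beta - alpha + 1.0) * t ** (beta - alpha)


# f(t) = t^2 has f'(t) = 2t; compare the quadrature with the power rule at t = 1.
print(caputo(lambda s: 2.0 * s, 0.5, 1.0), caputo_power_exact(0.5, 2.0, 1.0))
```

Both printed values are approximately 1.5045, that is, \(2/\Gamma(5/2)\).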

3 The idea of the HPM

Let \(\varOmega \subseteq \mathbb{R}^{n}\) be an open and bounded domain, and let \(0< T \leq \infty \). To illustrate the HPM, consider the fractional Burgers equation: for any \((\vec{x},t)\in \varOmega \times (0,T]\),

with the initial condition

where \(\vec{x}=(x_{1},x_{2},\ldots ,x_{n})\in \varOmega \), ε and η are constants, \(g(\vec{x})\) is a given function, \(D_{t}^{\alpha }\) denotes the Caputo fractional derivative with respect to t of order \(\alpha \in (0,1]\), and \(D_{x_{i}}^{\beta }\) denotes the Caputo fractional derivative with respect to \(x_{i}\), for \(i=1,2,\ldots ,n\), of order \(\beta \in (\frac{1}{2},1]\).
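Written out, and assuming the signs implied by \(f(u)=-u\sum_{i=1}^{n}D_{x_{i}}^{\beta}u\) and by the constants \(\eta L_{4}+\varepsilon L_{5}\) in Theorem 4.2, problem (2)–(3) takes the following form (a hedged reconstruction; for \(\beta=1\), \(D_{x_{i}}^{2\beta}\) reduces to \(\partial^{2}/\partial x_{i}^{2}\)):

```latex
\begin{aligned}
&D_t^{\alpha}u(\vec{x},t)+\varepsilon\,u(\vec{x},t)\sum_{i=1}^{n}D_{x_i}^{\beta}u(\vec{x},t)
 =\eta\sum_{i=1}^{n}D_{x_i}^{2\beta}u(\vec{x},t), && (\vec{x},t)\in\varOmega\times(0,T],\\
&u(\vec{x},0)=g(\vec{x}), && \vec{x}\in\varOmega .
\end{aligned}
```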

Applying the HPM technique, we first construct the homotopy function v by

and v satisfies the following:

where \(0\leq p\leq 1\) is an embedding parameter and \(\tilde{u}_{0}( \vec{x},t)\) is an initial approximation of the solution of Equation (4), which can be chosen freely. Equation (4) becomes

From Equation (5), we see that

In the HPM, the solution \(v(\vec{x},t;p)\) is expressed as an infinite series

Substituting Equation (6) into Equation (5) and equating the coefficients of like powers of p, we obtain the iterative procedure in the following form:

or, equivalently,

Applying the Riemann–Liouville integral operator \(J_{t}^{\alpha }\) to both sides of Equation (7) and using Lemma 2.3, we get

Since the solution \(v(\vec{x},t;p)\) is assumed to have the power-series form

as p tends to 1, v converges to the solution u of problem (2) with initial condition (3), that is,

which is the analytical solution of problem (2) with initial condition (3).
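The construction of this section can be summarized as follows. This sketch assumes a standard He-type homotopy with embedding parameter p. Equating powers of p and applying \(J_{t}^{\alpha}\) with \(J_{t}^{\alpha}D_{t}^{\alpha}w=w-w(\vec{x},0)\), using \(v_{0}(\vec{x},0)=g(\vec{x})\) and \(v_{k}(\vec{x},0)=0\) for \(k\geq 1\), gives:

```latex
\begin{aligned}
&(1-p)\bigl[D_t^{\alpha}v-D_t^{\alpha}\tilde{u}_0\bigr]
 +p\Bigl[D_t^{\alpha}v+\varepsilon\,v\sum_{i=1}^{n}D_{x_i}^{\beta}v-\eta\sum_{i=1}^{n}D_{x_i}^{2\beta}v\Bigr]=0,
 \qquad v=\sum_{k=0}^{\infty}p^{k}v_k,\\
&v_0=g+\tilde{u}_0-\tilde{u}_0(\vec{x},0),\\
&v_1=-J_t^{\alpha}\Bigl[D_t^{\alpha}\tilde{u}_0+\varepsilon\,v_0\sum_{i=1}^{n}D_{x_i}^{\beta}v_0-\eta\sum_{i=1}^{n}D_{x_i}^{2\beta}v_0\Bigr],\\
&v_k=-J_t^{\alpha}\Bigl[\varepsilon\sum_{j=0}^{k-1}v_j\sum_{i=1}^{n}D_{x_i}^{\beta}v_{k-1-j}-\eta\sum_{i=1}^{n}D_{x_i}^{2\beta}v_{k-1}\Bigr],\quad k\geq 2,\\
&u(\vec{x},t)=\lim_{p\to 1}v(\vec{x},t;p)=\sum_{k=0}^{\infty}v_k(\vec{x},t).
\end{aligned}
```

With the choice \(\tilde{u}_{0}=0\), this gives \(v_{0}=g\), and the formula for \(k\geq 2\) then also covers \(k=1\).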

4 Existence and uniqueness

In this section, we apply the Banach fixed point theorem to show that the fractional multi-dimensional Burgers equation (2) with initial condition (3) has a unique solution. We first introduce the Banach space \(C(\overline{\varOmega } \times [0,T])\) with

with its norm
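Presumably this is the supremum norm below; since \(\overline{\varOmega}\) is compact, the space is a Banach space under it provided \(T<\infty\):

```latex
C(\overline{\varOmega}\times[0,T])=\bigl\{u:\overline{\varOmega}\times[0,T]\to\mathbb{R}\;\big|\;u\text{ continuous}\bigr\},
\qquad
\Vert u\Vert=\max_{(\vec{x},t)\in\overline{\varOmega}\times[0,T]}\vert u(\vec{x},t)\vert .
```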

Lemma 4.1

If \(u(\vec{x},t)\) and its partial derivatives are continuous on \(\overline{\varOmega }\times [0,T]\), then \(D_{t}^{ \alpha }u(\vec{x},t)\), \(D_{x_{i}}^{\beta }u(\vec{x},t)\), and \(D_{x_{i}}^{2\beta }u(\vec{x},t)\) are bounded for all \(i=1,2,\ldots ,n\).

Proof

Let \(M_{1}=\max_{0\leq \tau \leq t\leq T}\vert t- \tau \vert ^{-\alpha }\). We will show that \(D_{t}^{\alpha }\) is bounded.

Consider

Since there is a positive constant \(L_{1}\) such that \(\max_{\vec{x}\in \overline{\varOmega }}\vert u(\vec{x},0)\vert \leq L _{1}\Vert u\Vert \), we obtain

where \(L_{2}=\frac{M_{1}}{\varGamma (1-\alpha )}+L_{1}\) is a constant. Similarly, one can show that \(\Vert D_{x_{i}}^{\beta }u( \vec{x},t)\Vert \leq L_{3}\Vert u\Vert \) and \(\Vert D_{x_{i}}^{2 \beta }u(\vec{x},t)\Vert \leq L_{4}\Vert u\Vert \) for all \(i=1,2,\ldots ,n\), where \(L_{3}\) and \(L_{4}\) are positive constants. □

The following theorem deals with the existence and uniqueness of the solution of the fractional multi-dimensional Burgers equation (2) with initial condition (3).

Theorem 4.2

Let \(f(u(\vec{x},t))= -u(\vec{x},t)\sum_{i=1} ^{n}D_{x_{i}}^{\beta }u(\vec{x},t)\) satisfy the Lipschitz condition with the Lipschitz constant \(L_{5}\), and let \(M_{2}= \max_{0\leq \tau \leq t\leq T}\vert t-\tau \vert ^{\alpha -1}\). If \(\frac{1}{\varGamma (\alpha )}(\eta L_{4}+\varepsilon L_{5})M_{2}T<1\), then problem (2) with initial condition (3) has a unique solution u on \(\overline{\varOmega }\times [0,T]\).

Proof

We define an operator \(F:C(\overline{\varOmega }\times [0,T]) \rightarrow C(\overline{\varOmega }\times [0,T])\) by

We will show that F is a contraction mapping. For any \(u,v\in C(\overline{\varOmega }\times [0,T])\), we have

By the assumption of the theorem, F is a contraction mapping. Hence, by the Banach fixed point theorem, the fractional multi-dimensional Burgers equation (2) with initial condition (3) has a unique continuous solution u on \(\overline{\varOmega }\times [0,T]\). □
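A sketch of the contraction estimate, assuming \(\varepsilon,\eta\geq 0\), that F is the integral form of problem (2)–(3) obtained by applying \(J_{t}^{\alpha}\), and that \(L_{4}\) bounds the whole sum over i. The kernel \((t-\tau)^{\alpha-1}\) is bounded by \(M_{2}\) and the length of integration by T:

```latex
\begin{aligned}
(Fu)(\vec{x},t)&=g(\vec{x})+J_t^{\alpha}\Bigl[\eta\sum_{i=1}^{n}D_{x_i}^{2\beta}u+\varepsilon f(u)\Bigr](\vec{x},t),\\
\bigl|(Fu)(\vec{x},t)-(Fv)(\vec{x},t)\bigr|
&\leq\frac{1}{\Gamma(\alpha)}\int_{0}^{t}(t-\tau)^{\alpha-1}
 \Bigl(\eta\Bigl|\sum_{i=1}^{n}D_{x_i}^{2\beta}(u-v)\Bigr|+\varepsilon\bigl|f(u)-f(v)\bigr|\Bigr)(\vec{x},\tau)\,d\tau\\
&\leq\frac{1}{\Gamma(\alpha)}(\eta L_{4}+\varepsilon L_{5})M_{2}T\,\Vert u-v\Vert .
\end{aligned}
```

Alternatively, using \(\int_{0}^{t}(t-\tau)^{\alpha-1}\,d\tau=t^{\alpha}/\alpha\) instead of a pointwise bound on the kernel yields the sufficient condition \((\eta L_{4}+\varepsilon L_{5})T^{\alpha}/\Gamma(\alpha+1)<1\).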

5 Convergence analysis and error estimation

The convergence of the HPM series to the solution of the fractional Burgers equation and an error estimate for the HPM are given in the following two theorems.

Theorem 5.1

Let \(v_{n}(\vec{x},t)\) be the function in the Banach space \(C(\overline{\varOmega }\times [0,T])\) defined by Equation (10) for each \(n\in \mathbb{N}\). The infinite series \(\sum_{k=0} ^{\infty }v_{k}(\vec{x},t)\) converges to the solution u of Equation (2) if there exists a constant \(0<\zeta <1\) such that \(\Vert v_{n}\Vert \leq \zeta \Vert v_{n-1}\Vert \) for all \(n\in \mathbb{N}\).

Proof

Let \(\{S_{n}\}_{n=0}^{\infty }\) be the sequence of partial sums of the series \(\sum_{k=0}^{\infty }v_{k}( \vec{x},t)\), defined by

We will show that \(\{S_{n}\}_{n=0}^{\infty }\) is a Cauchy sequence in the Banach space \(C(\overline{\varOmega }\times [0,T])\). For all \(n,m\in \mathbb{N}\) with \(n\geq m\), we have

Since \(0<\zeta <1\), we have \(1-\zeta ^{n-m}<1\). Hence,

Since \(u_{0}(\vec{x},t)\) is bounded,

Thus, \(\{S_{n}\}_{n=0}^{\infty }\) is a Cauchy sequence in the Banach space \(C(\overline{\varOmega }\times [0,T])\); consequently, the series \(\sum_{k=0}^{\infty }v_{k}(\vec{x},t)\) converges to u. □
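Reading the hypothesis in norm, \(\Vert v_{k}\Vert\leq\zeta\Vert v_{k-1}\Vert\), so that \(\Vert v_{k}\Vert\leq\zeta^{k}\Vert v_{0}\Vert\), the estimates behind this proof can be written as the following chain (a sketch; here \(v_{0}=S_{0}\) is the leading term):

```latex
\Vert S_{n}-S_{m}\Vert=\Bigl\Vert\sum_{k=m+1}^{n}v_{k}\Bigr\Vert
\leq\sum_{k=m+1}^{n}\zeta^{k}\Vert v_{0}\Vert
=\frac{\zeta^{m+1}\bigl(1-\zeta^{n-m}\bigr)}{1-\zeta}\Vert v_{0}\Vert
\leq\frac{\zeta^{m+1}}{1-\zeta}\Vert v_{0}\Vert
\xrightarrow[m\to\infty]{}0 .
```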

Next, we estimate the error incurred by truncating the series solution.

Theorem 5.2

The maximum absolute error of the series solution, defined in Equation (10), is estimated as

Proof

For \(n,m\in \mathbb{N}\) with \(n\geq m\), from Equation (11), we have

By Theorem 5.1, \(S_{n}\) converges to \(u(\vec{x},t)\) as \(n\rightarrow \infty \), so the above inequality becomes

Since \(0<\zeta <1\), we obtain \(1-\zeta ^{n-m}<1\). Hence, the above inequality becomes

The proof is completed. □
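Letting \(n\to\infty\) in an estimate of the form \(\Vert S_{n}-S_{m}\Vert\leq\frac{\zeta^{m+1}(1-\zeta^{n-m})}{1-\zeta}\Vert v_{0}\Vert\) gives the bound \(\Vert u-S_{m}\Vert\leq\frac{\zeta^{m+1}}{1-\zeta}\Vert v_{0}\Vert\). As a hedged numerical illustration, assume that Example 1 with \(\alpha=\beta=1\) is \(u_{t}+uu_{x}=u_{xx}\), \(u(x,0)=x\). Then the HPM terms are \(v_{k}=x(-t)^{k}\), the exact solution is \(x/(1+t)\), and on \([0,1]\times[0,T]\) with \(T<1\) one may take \(\zeta=T\) and \(\Vert v_{0}\Vert=1\). The Python sketch below (hypothetical helper names) compares the observed maximum error of \(S_{m}\) with this bound.

```python
def partial_sum(x, t, m):
    """S_m = sum_{k=0}^{m} x * (-t)^k, the HPM partial sum assumed for Example 1 with alpha = beta = 1."""
    return sum(x * (-t) ** k for k in range(m + 1))


def exact(x, t):
    """Closed-form solution x / (1 + t) of u_t + u u_x = u_xx with u(x, 0) = x."""
    return x / (1.0 + t)


def max_error(m, T, n_grid=101):
    """Maximum of |u - S_m| over a uniform n_grid x n_grid mesh of [0, 1] x [0, T]."""
    err = 0.0
    for i in range(n_grid):
        x = i / (n_grid - 1)
        for j in range(n_grid):
            t = T * j / (n_grid - 1)
            err = max(err, abs(exact(x, t) - partial_sum(x, t, m)))
    return err


def error_bound(m, zeta, v0_norm=1.0):
    """Truncation bound zeta^(m+1) / (1 - zeta) * ||v_0||."""
    return zeta ** (m + 1) / (1.0 - zeta) * v0_norm


T = 0.5
for m in range(1, 8):
    print(m, max_error(m, T), error_bound(m, zeta=T))
```

For T = 0.5 the observed error is \(T^{m+1}/(1+T)\), attained at x = 1, t = T, while the bound is \(T^{m+1}/(1-T)\), so here the estimate is pessimistic by the factor \((1+T)/(1-T)=3\).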

6 Application of HPM to the fractional multi-dimensional Burgers equations

In this section, approximate analytical solutions of the fractional one-, two-, and three-dimensional Burgers equations are obtained by the HPM. Throughout this section, we choose \(\tilde{u}_{0}(\vec{x},t)=0\).

Example 1

Consider the fractional one-dimensional Burgers equation: for any \((x,t)\in (0,1)\times (0,T]\),

with an initial condition \(u(x,0)=x\) for any \(x \in [0,1]\).

By the HPM, the homotopy function v satisfies the following equation:

Substituting Equation (9) and the initial condition into Equation (15) and equating the coefficients of like powers of p, we obtain the iterative procedure

and so on. From the above equations and Equation (8), we obtain

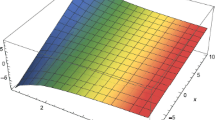

and so on; the remaining terms are obtained in the same way. Thus, by the HPM, the approximate analytical solution u of problem (14) with the initial condition \(u(x,0)=x\) is

In the particular case \(\alpha =1\) and \(\beta =1\), the approximate solution of Equation (14) takes the closed form

which is the same analytical solution as in [30].
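To make the iterative procedure concrete, here is a minimal Python sketch for the special situation \(\beta=1\) with linear initial data \(u(\vec{x},0)=x_{1}+\cdots+x_{n}\), as in Examples 1–3. It assumes the equation has the form \(D_{t}^{\alpha}u+\varepsilon u\sum_{i}\partial_{x_{i}}u=\eta\sum_{i}\partial_{x_{i}}^{2}u\) with \(\tilde{u}_{0}=0\). Under these assumptions every HPM term has the form \(v_{k}=c_{k}(x_{1}+\cdots+x_{n})t^{k\alpha}\), the diffusion term vanishes, and the recursion reduces to \(c_{k}=-\varepsilon n\frac{\Gamma((k-1)\alpha+1)}{\Gamma(k\alpha+1)}\sum_{j=0}^{k-1}c_{j}c_{k-1-j}\). The function names are hypothetical, and the general-β case treated in the paper is not covered.

```python
import math


def hpm_coefficients(alpha, dim=1, eps=1.0, n_terms=10):
    """Coefficients c_k of v_k = c_k * s * t**(k * alpha), where s = x_1 + ... + x_dim.

    Assumes beta = 1, linear initial data u(x, 0) = s and u0_tilde = 0, so the
    diffusion term vanishes and only the convective term drives the recursion.
    """
    c = [1.0]  # v_0 = s
    for k in range(1, n_terms):
        # Cauchy product from the nonlinear term sum_j v_j * sum_i d/dx_i v_{k-1-j}.
        conv = sum(c[j] * c[k - 1 - j] for j in range(k))
        # J_t^alpha maps t^((k-1) alpha) to Gamma((k-1) alpha + 1) / Gamma(k alpha + 1) * t^(k alpha).
        factor = math.gamma((k - 1) * alpha + 1.0) / math.gamma(k * alpha + 1.0)
        c.append(-eps * dim * factor * conv)
    return c


def hpm_solution(s, t, alpha, dim=1, eps=1.0, n_terms=10):
    """Truncated HPM series sum_k c_k * s * t**(k * alpha)."""
    c = hpm_coefficients(alpha, dim, eps, n_terms)
    return sum(ck * s * t ** (k * alpha) for k, ck in enumerate(c))
```

For example, `hpm_coefficients(1.0, dim=2)` starts 1, -2, 4, -8, that is, the geometric series of \((x+y)/(1+2t)\), which converges for \(t<1/2\); for \(\alpha<1\) the same call returns the fractional coefficients under the stated assumptions.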

Example 2

Consider the fractional two-dimensional Burgers equation: for any \((x,y,t)\in (0,1)\times (0,1)\times (0,T]\),

with the initial condition \(u(x,y,0)=x+y\) for \((x,y) \in [0,1] \times [0,1]\).

As in the previous example, the functions \(v_{k}(x,y,t)\) are obtained from the iterative procedure with \(u(x,y,0)=x+y\) as follows:

and so on. Thus, the approximate analytical solution u of problem (16) is

In the special case \(\alpha =1\) and \(\beta =1\), the approximate analytical solution of Equation (16) with the initial condition \(u(x,y,0)=x+y\) takes the following form:

which is the same analytical solution as in [30].

Example 3

Consider the fractional three-dimensional Burgers equation: for any \((x,y, z,t)\in (0,1)\times (0,1)\times (0,1)\times (0,T]\),

with the initial condition \(u(x,y,z,0)=x+y+z\) for \((x,y,z) \in [0,1] \times [0,1] \times [0,1]\). As in Example 2, the functions \(v_{k}(x,y,z,t)\) are obtained from the iterative procedure with \(u(x,y,z,0)=x+y+z\) as follows:

and so on. Hence, the approximate analytical solution u of problem (17) with the initial condition \(u(x,y,z,0)=x+y+z\) is

For the case \(\alpha =1\) and \(\beta =1\), the analytical solution u is given by

which is the same as in [30].
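More generally, assuming the n-dimensional case with \(\alpha=\beta=1\) reads \(u_{t}+u\sum_{i=1}^{n}u_{x_{i}}=\sum_{i=1}^{n}u_{x_{i}x_{i}}\) with \(u(\vec{x},0)=x_{1}+\cdots+x_{n}\), every HPM term is linear in the \(x_{i}\), the diffusion term vanishes, and the series is geometric:

```latex
u(\vec{x},t)=\sum_{k=0}^{\infty}(-nt)^{k}\,(x_1+\cdots+x_n)=\frac{x_1+\cdots+x_n}{1+nt},
\qquad 0\leq t<\tfrac{1}{n}.
```

The closed form satisfies the equation for all \(t\geq 0\), since \(u_{t}=-n\,u/(1+nt)\) and \(u\sum_{i}u_{x_{i}}=n\,u/(1+nt)\); for n = 3 it is \((x+y+z)/(1+3t)\).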

7 Conclusion

In this article, we consider the fractional n-dimensional Burgers equation with Caputo fractional derivatives and an initial condition. We establish the existence and uniqueness of its solution by the Banach fixed point theorem. We then obtain approximate analytical solutions of the fractional Burgers equation in one, two, and three dimensions by the HPM. The examples indicate that the HPM is a simple and effective procedure for this problem.

References

Al-rabtah, A., Erturk, V.S., Momani, S.: Solutions of a fractional oscillator by using differential transform method. Comput. Math. Appl. 59(3), 1356–1362 (2010)

Biazar, J., Eslami, M.: A new homotopy perturbation method for solving systems of partial differential equations. Comput. Math. Appl. 62(1), 225–234 (2011)

Biazar, J., Eslami, M., Aminikhah, H.: Application of homotopy perturbation method for systems of Volterra integral equations of the first kind. Chaos Solitons Fractals 42(5), 3020–3026 (2009)

Biazar, J., Ghazvini, H.: Exact solutions for nonlinear Burgers’ equation by homotopy perturbation method. Numer. Methods Partial Differ. Equ. 25(4), 833–842 (2009)

Biazar, J., Ghazvini, H., Eslami, M.: He’s homotopy perturbation method for systems of integro-differential equations. Chaos Solitons Fractals 39(3), 1253–1258 (2009)

Burgers, J.M.: The Non-Linear Diffusion Equation: Asymptotic Solutions and Statistical Problems. Springer, New York (1974)

Cartea, A., del-Castillo-Negrete, D.: Fractional diffusion models of option prices in markets with jumps. Phys. A, Stat. Mech. Appl. 374(2), 749–763 (2007)

Cole, J.D.: On a quasi-linear parabolic equation occurring in aerodynamics. Q. Appl. Math. 9(3), 225–236 (1951)

Das, A.: Detailed study of complex flow fields of aerodynamical configurations by using numerical methods. Sadhana 19(3), 361–399 (1994)

Du, M., Wang, Z., Hu, H.: Measuring memory with the order of fractional derivative. Sci. Rep. 3(1), 3431 (2013)

Dutta, P., Saha, S.K., Nandi, N.: Numerical study on flow separation in 90° pipe bend under high Reynolds number by \(k-\varepsilon \) modelling. Int. J. Eng. Sci. Technol. 19(2), 904–910 (2016)

El-Sayed, A.M.A., Elsaid, A., Hammad, D.: A reliable treatment of homotopy perturbation method for solving the nonlinear Klein–Gordon equation of arbitrary (fractional) orders. J. Appl. Math. 2012, Article ID 581481 (2012)

Fletcher, C.A.J.: Generating exact solutions of the two-dimensional Burgers’ equations. Int. J. Numer. Methods Fluids 3(3), 213–216 (1983)

Gomez S., C.A.: A note on the exact solution for the fractional Burgers equation. Int. J. Pure Appl. Math. 93(2), 229–232 (2014)

Hilfer, R.: Applications of Fractional Calculus in Physics. World Scientific, Singapore (2000)

Kulish, V.V., Lage, J.L.: Application of fractional calculus to fluid mechanics. J. Fluids Eng. 124(3), 803 (2002)

Kythe, P.K., Puri, P., Schaferkotter, M.R.: Partial Differential Equations and Mathematica. CRC Press, Boca Raton (1997)

Lombard, B., Matignon, D., Le Gorrec, Y.: A fractional Burgers equation arising in nonlinear acoustics: theory and numerics. IFAC Proc. Vol. 43(23), 406–411 (2013)

Matinfar, M., Saeidy, M., Eslami, M.: Solving a system of linear and nonlinear fractional partial differential equations using homotopy perturbation method. Int. J. Nonlinear Sci. Numer. Simul. 14(7–8), 471–478 (2013)

Mohyud-Din, S.T., Noor, M.A.: Homotopy perturbation method for solving partial differential equations. Z. Naturforschung A 64(3–4), 157–170 (2009)

Momani, S.: Non-perturbative analytical solutions of the space- and time-fractional Burgers equations. Chaos Solitons Fractals 28(4), 930–937 (2006)

Muller, A., Kopera, M.A., Marras, S., Wilcox, L.C., Isaac, T., Giraldo, F.X.: Strong scaling for numerical weather prediction at petascale with the atmospheric model NUMA. Int. J. High Perform. Comput. Appl. 33(2), 411–426 (2019)

Oldham, K.B., Spanier, J.: The Fractional Calculus: Theory and Applications of Differentiation and Integration to Arbitrary Order. Academic Press, San Diego (1974)

Podlubny, I.: Fractional Differential Equations. Academic Press, San Diego (1999)

Saad, K.M., Al-Sharif, E.H.: Analytical study for time and time-space fractional Burgers’ equation. Adv. Differ. Equ. 2017, 300 (2017)

Sarwar, S., Zahid, M.A., Iqbal, S.: Mathematical study of fractional-order biological population model using optimal homotopy asymptotic method. Int. J. Biomath. 9(6), 1650081 (2016)

Srivastava, V.K., Tamsir, M., Ashutosh: Generating exact solution of three dimensional coupled unsteady nonlinear generalized viscous Burgers’ equations. Int. J. Math. Sci. 5(3), 1–13 (2013)

Staron, L., Lagree, P.Y., Popinet, S.: Continuum simulation of the discharge of the granular silo. Eur. Phys. J. E 37(1), 5 (2014)

Sweilam, N.H., Khader, M.M.: Exact solutions of some coupled nonlinear partial differential equations using the homotopy perturbation method. Comput. Math. Appl. 58(11–12), 2134–2141 (2009)

Taghizadeh, N., Akbari, M., Ghelichzadeh, A.: Exact solution of Burgers equations by homotopy perturbation method and reduced differential transformation method. Aust. J. Basic Appl. Sci. 5(5), 580–589 (2011)

Tarasov, V.E.: Generalized memory: fractional calculus approach. Fractal Fract. 2(4), 23 (2018)

Zakariya, Y., Afolabi, Y., Nuruddeen, R., Sarumi, I.: Analytical solutions to fractional fluid flow and oscillatory process models. Fractal Fract. 2(2), 18 (2018)

Zugliano, A., Artoni, R., Santomaso, A., Primavera, A.: Numerical simulation of granular solids’ behaviour: interaction with gas. In: Proc. of the COMSOL Conference, Milan (2009)

Acknowledgements

This research was funded by King Mongkut’s University of Technology North Bangkok, contract no. KMUTMB-61-GOV-03-44, and this research was partially supported by the Centre of Excellence in Mathematics, PERDO, Commission on Higher Education, Ministry of Education, Thailand.



Contributions

PSr and PSa developed the Burgers equation. WS and PSa proposed the research idea for solving the modified Burgers equation. PSr wrote this paper. All authors contributed to editing and revising the manuscript. All authors read and approved the final manuscript.


Ethics declarations

Competing interests

The authors declare that they have no competing interests.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Sripacharasakullert, P., Sawangtong, W. & Sawangtong, P. An approximate analytical solution of the fractional multi-dimensional Burgers equation by the homotopy perturbation method. Adv Differ Equ 2019, 252 (2019). https://doi.org/10.1186/s13662-019-2197-y
