Abstract

This paper is concerned with the global fixed-time synchronization issue for semi-Markovian jumping neural networks with time-varying delays. A novel state-feedback controller, which includes integral terms and time-varying delay terms, is designed to realize the fixed-time synchronization goal between the drive system and the response system. By applying the Lyapunov functional approach and matrix inequality analysis technique, the fixed-time synchronization conditions are addressed in terms of linear matrix inequalities (LMIs). Finally, two numerical examples are provided to illustrate the feasibility of the proposed control scheme and the validity of theoretical results.


1 Introduction

In the past decades, neural networks (NNs) have found extensive applications in many areas, such as pattern recognition, computer vision, speech synthesis, and artificial intelligence; see [1–3]. This wide range of applications has attracted considerable attention to the dynamical behavior of such networks. Up to now, many significant results on NNs have been reported; see [4–9] and the references therein.

Synchronization, which means that the dynamical behaviors of coupled systems reach the same state, is a fundamental phenomenon in networks. Considerable attention has been devoted to the synchronization of NNs, and some effective synchronization criteria have been established in the literature [10–15]. Via sliding mode control, the synchronization problem for complex-valued neural networks was addressed in [12]. Reference [14] studies the impulsive stabilization and impulsive synchronization of discrete-time delayed neural networks. By adopting a periodically intermittent control scheme, the exponential lag synchronization issue for neural networks with mixed delays was treated in [15]. It should be pointed out that most of these synchronization criteria are based on Lyapunov stability theory, which is defined over an infinite-time interval. From a practical perspective, however, it is preferable to achieve synchronization within a finite-time interval, because in that case an upper bound on the synchronization time can be calculated. Hence, it is significant to study the finite-time synchronization of NNs. In [16], the finite-time robust synchronization issue for memristive neural networks was discussed. By utilizing discontinuous controllers, the finite-time synchronization issue for coupled neural networks was addressed in [17]. Under a sampled-data control scheme, some finite-time synchronization criteria for inertial memristive neural networks were established in [18].

For finite-time synchronization, the settling time depends heavily on the initial conditions, so different initial conditions may lead to different convergence times. Moreover, the initial conditions may be unavailable in practice. To overcome these shortcomings, a new concept named fixed-time synchronization was first introduced in [19]. Further results on the fixed-time synchronization problem can be found in [20–25]. By designing a sliding mode controller, the fixed-time synchronization issue for complex dynamical networks was addressed in [21]. Robust fixed-time synchronization for uncertain complex-valued neural networks with discontinuous activation functions was studied in [23]. Furthermore, the fixed-time synchronization issue for delayed memristor-based recurrent neural networks was investigated in [25].

As is well known, time delay is inevitable in the process of information transmission because of the finite speed of signal transmission. Time delays often cause systems to become unstable or oscillatory. Thus, considering the synchronization of NNs with delays is meaningful. Since the delay is not always constant, the synchronization of NNs with time-varying delays has become a subject of great interest for many scholars. Finite-time and fixed-time synchronization analysis for inertial memristive neural networks with time-varying delays was addressed in [26]. Reference [27] also presents an intensive study of the fixed-time synchronization issue for memristor-based BAM neural networks with time-varying discrete delays. In [28], the authors studied the synchronization control problem for chaotic neural networks with time-varying and distributed delays. Moreover, robust extended dissipativity criteria for discrete-time uncertain neural networks with time-varying delays were investigated in [29].

By introducing a Markovian process into NN systems, a new network model is obtained. Up to now, the study of Markovian jumping NNs, especially their global finite-time synchronization, has received wide attention, and a number of results have been developed, such as finite-time synchronization [30], robust control [31], exponential synchronization [32], and state estimation [33]. However, the sojourn time of a Markovian process obeys an exponential distribution, which forces the transition rates to be constant and limits the applicability of Markovian processes. In contrast, the sojourn time of a semi-Markovian process may obey other probability distributions, such as the Weibull or Gaussian distribution, which gives semi-Markovian processes a wider range of applications. Hence, the investigation of semi-Markovian jumping NNs is of great theoretical value and practical significance, and it has been conducted in [34–38]. In [34], the finite-time \(H_{\infty }\) synchronization for complex networks with semi-Markov jump topology was investigated by adopting a suitable Lyapunov function and the LMI approach. In [36], the exponential stability issue for semi-Markovian jump generalized neural networks with interval time-varying delays was addressed. In [38], improved stability and stabilization results for the stochastic synchronization of continuous-time semi-Markovian jump NNs with time-varying delays were also obtained. However, to the best of our knowledge, little attention has been paid to the fixed-time synchronization issue for semi-Markovian jumping NNs. This motivates us to study the fixed-time synchronization of semi-Markovian jumping NNs with time-varying delays.

Motivated by the aforementioned discussions, we aim to achieve fixed-time synchronization for semi-Markovian jumping NNs with time-varying delays. By applying the Lyapunov functional approach, the fixed-time synchronization conditions are presented in terms of LMIs. The main contributions of this paper are as follows:

(1) A novel state-feedback controller, which includes double-integral terms, is designed to ensure the fixed-time synchronization, which can further improve the effectiveness of the convergence.

(2) A new formula for calculating the settling time for semi-Markovian jumping nonlinear system is developed; see Theorem 3.2.

(3) The time-varying delays and semi-Markovian processes are introduced in the construction of the NNs models.

(4) The fixed-time synchronization conditions are addressed in terms of LMIs.

The rest of this article is arranged as follows. Some preliminaries and the model description are given in Sect. 2. In Sect. 3, we present the main results, where the fixed-time synchronization conditions are derived under different nonlinear controllers. In Sect. 4, two examples are presented to illustrate the correctness of our main results. Section 5 concludes the paper.

Notation

R represents the set of real numbers. \(R^{n} \) denotes the n-dimensional Euclidean space, and \(R^{n\times n}\) denotes the set of all \(n\times n \) real matrices. For a column vector \(x=(x_{1},x_{2}, \ldots,x_{n})^{T} \in R^{n}\), the superscript T represents the transpose operator. \(X< Y\) (\(X>Y\)) means that \(X-Y\) is negative (positive) definite. \(\mathscr{E}\) stands for mathematical expectation. \(\Gamma V(x(t),r(t),t)\) denotes the infinitesimal generator of \(V(x(t),r(t),t)\). For a real matrix \(P=(p_{ij})_{n\times n}\), \(|P|=(|p_{ij}|)_{n\times n}\), and \(\lambda_{\min }(P)\) and \(\lambda_{ \max }(P)\) denote the minimum and maximum eigenvalues of P, respectively. ∗ stands for the symmetric terms in a symmetric block matrix. \(\|x\|\) stands for the Euclidean norm of the vector x, i.e., \(\|x\|=(x^{T}x)^{\frac{1}{2}}\). Matrices, if their dimensions are not explicitly stated, are assumed to have compatible dimensions for the algebraic operations.

2 Preliminaries and model description

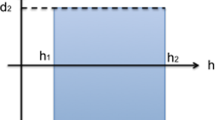

Let \((\Omega,\mathscr{F},\{\mathscr{F}_{t}\}_{t\geq 0},\mathcal{P})\) be a complete probability space whose filtration \(\{\mathscr{F}_{t}\}_{t\geq 0}\) satisfies the usual conditions, that is, it is right continuous and increasing while \(\mathscr{F}_{0}\) contains all \(\mathcal{P}\)-null sets. Here Ω is the sample space, \(\mathscr{F}\) is the σ-algebra of events and \(\mathcal{P}\) is the probability measure defined on \(\mathscr{F}\). Let \(\{r(t),t\geq 0\}\) be a continuous-time semi-Markovian process taking values in a finite state space \(S=\{1,2,3,\ldots,N\}\). The evolution of the semi-Markovian process \(r(t)\) obeys the following probability transitions:

where \(h>0\), \(\lim_{h\rightarrow 0}\frac{o(h)}{h}=0\), \(\pi_{rk}(h)\geq 0 \) (\(r,k\in S\), \(r\neq k\)) is the transition rate from mode r to k and for any state or mode, it satisfies
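The two displays referred to in this passage are not reproduced in this version of the text. As an assumption, based on the standard formulation of semi-Markovian jump systems (see [37]) and on the relation \(\Delta \pi_{rr}=-\sum_{k=1,k\neq r}^{N}\Delta \pi_{rk}\) used in the proof of Theorem 3.1, they would take the following form:

```latex
% Assumed standard form; not copied from the original displays.
\Pr\{r(t+h)=k \mid r(t)=r\} =
\begin{cases}
  \pi_{rk}(h)\,h + o(h),     & r \neq k,\\[2pt]
  1 + \pi_{rr}(h)\,h + o(h), & r = k,
\end{cases}
\qquad
\pi_{rr}(h) = -\sum_{k=1,\,k\neq r}^{N} \pi_{rk}(h).
```

Under this reading, each row of the transition-rate matrix sums to zero for every sojourn time h.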

Remark 2.1

It is worth noting that in a continuous-time semi-Markovian process, the transition rate \(\pi _{rk}(h)\) is time-varying and depends on the sojourn time h. Meanwhile, the sojourn time h may obey, for example, the Weibull distribution [39]. If the sojourn time h follows the exponential distribution, then the transition rate \(\pi _{rk}(h)=\pi _{rk}\) is a constant, and the continuous-time semi-Markovian process reduces to a continuous-time Markovian process. On the other hand, the transition rate \(\pi_{rk}(h)\) is bounded, that is, \(\underline{\pi }_{rk}\leq \pi_{rk}(h)\leq \overline{ \pi }_{rk}\), where \(\underline{\pi }_{rk}\) and \(\overline{\pi }_{rk}\) are known constant scalars. Hence \(\pi_{rk}(h)\) can be written as \(\pi_{rk}(h)=\pi_{rk}+\Delta \pi_{rk}\), where \(\pi_{rk}=\frac{1}{2}(\underline{ \pi }_{rk}+\overline{\pi }_{rk})\) and \(| \Delta \pi_{rk}| \leq \kappa_{rk}\) with \(\kappa_{rk}=\frac{1}{2}(\overline{\pi }_{rk}-\underline{ \pi }_{rk})\); see [37].
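The decomposition of a bounded transition rate into a nominal value and an uncertainty radius can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `nominal_rate_and_radius` and the numerical bounds are hypothetical, and the radius is taken as half the interval width so that it is nonnegative.

```python
def nominal_rate_and_radius(pi_lower, pi_upper):
    """Return (pi_rk, kappa_rk) for a rate known to lie in [pi_lower, pi_upper].

    pi_rk is the midpoint of the interval and kappa_rk is half its width,
    so that every admissible rate satisfies |rate - pi_rk| <= kappa_rk.
    """
    if pi_lower > pi_upper:
        raise ValueError("lower bound exceeds upper bound")
    pi_nominal = 0.5 * (pi_lower + pi_upper)
    kappa = 0.5 * (pi_upper - pi_lower)
    return pi_nominal, kappa


# Hypothetical bounds for one off-diagonal rate (illustrative values only).
pi_12, kappa_12 = nominal_rate_and_radius(1.0, 2.0)

# Every sampled rate in [1.0, 2.0] deviates from pi_12 by at most kappa_12.
samples = [1.0 + 0.1 * i for i in range(11)]
all_within = all(abs(s - pi_12) <= kappa_12 + 1e-12 for s in samples)
```

The pair returned here plays the role of \(\pi_{rk}\) and \(\kappa_{rk}\) in the matrix conditions of Sect. 3.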

The model we consider in this paper is the neural networks model with semi-Markovian jumping parameters. The dynamical behavior of the drive system is described as the following stochastic differential equation:

and the corresponding response system is

where \(\{r(t),t\geq 0\}\) is the continuous-time semi-Markovian process and \(r(t)\) stands for the evolution of the mode at time t. \({x(t)}=(x_{1}(t),x_{2}(t),\ldots,x_{n}(t))^{T} \in R^{n}\) and \({y(t)}=(y_{1}(t),y_{2}(t),\ldots, y_{n}(t))^{T} \in R^{n}\) denote the state vectors of the drive and response systems at time t, with \(x_{i}(t)\) and \(y_{i}(t)\) the states of the ith neuron; \(D(r(t))\in R^{n\times n}\) is a positive-definite diagonal matrix; \(A(r(t)) \in R^{n\times n}\) and \(B(r(t))\in R^{n\times n}\) are matrices with real values in mode \(r(t)\); \({f(x(t))}=(f_{1}(x_{1}(t)),f _{2}(x_{2}(t)),\ldots,f_{n}(x_{n}(t)))^{T}\in R^{n}\) is the neuronal activation function; \(I=(I_{1},I_{2},\ldots,I _{n})^{T}\) denotes the external input vector, with \(I_{i}\) the input on the ith neuron; \(u(t)=(u_{1}(t),u_{2}(t),\ldots,u_{n}(t))^{T}\) stands for the control input, which will be designed later. \(\psi (t)=(\psi _{1}(t),\psi _{2}(t),\ldots,\psi _{n}(t))^{T}\in \mathcal{C}([-\tau,0];R^{n})\) and \(\phi (t)=(\phi _{1}(t),\phi _{2}(t),\ldots,\phi _{n}(t))^{T}\in \mathcal{C}([-\tau,0];R^{n})\) are the initial conditions of systems (1) and (2), respectively.

Variable \(\tau (t)\) denotes the time-varying delay function, and it is assumed to satisfy

where \(\tau >0\) and  are known constants.


For notation simplicity, we replace \(D(r(t))\), \(A(r(t))\), \(B(r(t))\) with \(D_{r}\), \(A_{r}\) and \(B_{r}\), respectively, for \(r(t)=r\in S\). Then the neural networks models can be rewritten as follows:

For the purpose of this paper, we suppose that the activation function \(f_{i}(\cdot)\) satisfies the following assumption:

- (\(H_{1}\)): For any \(x_{i}{(t)}\), \(y_{i} {(t)}\in R \), \({f_{i}}(\cdot)\) satisfies

$$\bigl\vert f_{i}\bigl(y_{i}(t)\bigr)-f_{i} \bigl(x_{i}(t)\bigr) \bigr\vert \leq \mu _{i} \bigl\vert y_{i}(t)-x_{i}(t) \bigr\vert \quad \mbox{and}\quad \bigl\vert f_{i}(\cdot) \bigr\vert \leq Q_{i}, $$

where \(\mu_{i}>0\) and \(Q_{i}>0\) are both known constants.

Let \(e_{i}(t)=y_{i}(t)-x_{i}(t)\) be the synchronization error, then the error dynamics system can be expressed as

where \({e(t)=(e_{1}(t),e_{2}(t),\ldots,e_{n}(t))^{T}}\), \(g_{i}(e_{i}(t))=f_{i}(y_{i}(t))-f_{i}(x_{i}(t))\), \(g_{i}(e_{i}(t- \tau (t)))=f_{i}(y_{i}(t-\tau (t)))-f_{i}(x_{i}(t-\tau (t)))\), and \(\varphi_{i}(t)= \phi_{i}(t)-\psi_{i}(t)\) is the initial condition of the error system.

Remark 2.2

From assumption (\(H_{1}\)), we can conclude that \(g_{i}(\cdot)\) is also continuous and bounded, then

where \(H_{i}\) is a known positive constant.

Before proceeding to our main results, some basic definitions and lemmas are introduced.

Definition 2.1

The neural network system (3) is said to be synchronized with the system (4) in finite time, if for any initial condition \({\varphi (t)}\), \(-\tau \leq t\leq 0\), there exists a settling time function \(T_{\varphi }=T(\varphi)\), such that

Moreover, if there exists a constant \(T_{\max }>0\), such that \(T_{\varphi }\leq T_{\max }\), then the neural network system (3) is said to be synchronized onto system (4) in fixed time. \(T_{\max }\) is called the synchronization settling time.

Lemma 2.1

([40])

Given any scalar ε and matrix \(S\in R^{n\times n}\), the following inequality:

holds for any symmetric positive-definite matrix \(W\in R^{n\times n}\).
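The displayed inequality of Lemma 2.1 is not reproduced in this version of the text. A form consistent with the terms \(\frac{\kappa_{rk}^{2}}{4}W_{rk}+(P_{k}-P_{r})W_{rk}^{-1}(P_{k}-P_{r})\) appearing in \(\widetilde{\Omega }\) is sketched below as an assumption, together with a completion-of-squares argument showing why such an inequality holds:

```latex
% Assumed form of Lemma 2.1 (W = W^T > 0, \varepsilon a real scalar, S square):
\varepsilon\,(S + S^{T}) \;\le\; \varepsilon^{2} W + S W^{-1} S^{T}.

% Justification by completing the square:
\varepsilon^{2} W + S W^{-1} S^{T} - \varepsilon\,(S + S^{T})
  = \bigl(\varepsilon W^{1/2} - S W^{-1/2}\bigr)
    \bigl(\varepsilon W^{1/2} - S W^{-1/2}\bigr)^{T} \;\ge\; 0.
```

With the symmetric choice \(S=P_{k}-P_{r}\), \(\varepsilon =\frac{1}{2}\Delta \pi_{rk}\) and \(|\Delta \pi_{rk}|\leq \kappa_{rk}\), this form would give \(\Delta \pi_{rk}(P_{k}-P_{r})\leq \frac{\kappa_{rk}^{2}}{4}W_{rk}+(P_{k}-P_{r})W_{rk}^{-1}(P_{k}-P_{r})\).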

Lemma 2.2

([41])

For any constant vector \(x\in R^{n}\) and \(0< c< l\), the following norm equivalence holds:
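Since the display of Lemma 2.2 is not reproduced here, the sketch below assumes the usual form of the norm equivalence, \((\sum_{i}|x_{i}|^{l})^{1/l}\leq (\sum_{i}|x_{i}|^{c})^{1/c}\leq n^{\frac{1}{c}-\frac{1}{l}}(\sum_{i}|x_{i}|^{l})^{1/l}\) for \(0<c<l\), and checks it numerically on random vectors. The function names and the exponents 0.75 and 1.5 are illustrative choices.

```python
import random


def p_norm(x, p):
    """(sum_i |x_i|^p)^(1/p); a quasi-norm when 0 < p < 1."""
    return sum(abs(xi) ** p for xi in x) ** (1.0 / p)


def check_norm_equivalence(x, c, l):
    """Check ||x||_l <= ||x||_c <= n^(1/c - 1/l) * ||x||_l for 0 < c < l."""
    n = len(x)
    lower = p_norm(x, l)
    middle = p_norm(x, c)
    upper = n ** (1.0 / c - 1.0 / l) * lower
    tol = 1e-9 * max(1.0, upper)  # slack for floating-point rounding
    return lower <= middle + tol and middle <= upper + tol


random.seed(0)
ok = all(
    check_norm_equivalence([random.uniform(-5.0, 5.0) for _ in range(4)], 0.75, 1.5)
    for _ in range(1000)
)
```

A check of this kind only illustrates the inequality on samples; it is not a substitute for the proof in [41].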

Lemma 2.3

Let \(U\in R^{n\times n}\) be a symmetric matrix, and let \(x\in R^{n}\), then the following inequality holds:
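The display of Lemma 2.3 is not reproduced here. Assuming it is the standard eigenvalue bound \(\lambda_{\min }(U)x^{T}x\leq x^{T}Ux\leq \lambda_{\max }(U)x^{T}x\), the following sketch checks it for random symmetric \(2\times 2\) matrices, for which the eigenvalues have a closed form. All names are illustrative.

```python
import math
import random


def eig_sym_2x2(a, b, d):
    """Return (lambda_min, lambda_max) of the symmetric matrix [[a, b], [b, d]]."""
    mean = 0.5 * (a + d)
    radius = math.hypot(0.5 * (a - d), b)
    return mean - radius, mean + radius


def rayleigh_bounds_hold(a, b, d, x1, x2, tol=1e-9):
    """Check lambda_min * x^T x <= x^T U x <= lambda_max * x^T x for U = [[a, b], [b, d]]."""
    lam_min, lam_max = eig_sym_2x2(a, b, d)
    quad = a * x1 * x1 + 2.0 * b * x1 * x2 + d * x2 * x2
    norm_sq = x1 * x1 + x2 * x2
    # Relative slack for floating-point rounding.
    slack = tol * max(1.0, abs(lam_min), abs(lam_max)) * max(1.0, norm_sq)
    return lam_min * norm_sq - slack <= quad <= lam_max * norm_sq + slack


random.seed(1)
all_ok = all(
    rayleigh_bounds_hold(*(random.uniform(-3.0, 3.0) for _ in range(5)))
    for _ in range(1000)
)
```

In the proofs of Sect. 3, a bound of this type is what allows quadratic forms such as \(e^{T}(t)P_{r}e(t)\) to be compared with \(\|e(t)\|^{2}\).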

Lemma 2.4

([42])

Suppose there exists a continuous nonnegative function \(V(e(t)): R^{n}\rightarrow R_{+}\cup \{0\}\), such that

(1) \(V(e(t))>0\) for \(e(t)\neq 0\), \(V(e(t))=0\Leftrightarrow e(t)=0\);

(2) for given constants \(\alpha >0\), \(\beta >0\), \(0<\rho <1\), and \(\upsilon >1\), any solution \(e(t)\) satisfies the following inequality:

$$\begin{aligned} \begin{aligned} D^{+}V\bigl(e(t)\bigr)\leq -\alpha V^{\rho }\bigl(e(t)\bigr)-\beta V^{\upsilon }\bigl(e(t)\bigr), \end{aligned} \end{aligned}$$

then the error system (5) is globally fixed-time stable for any initial conditions \(\varphi (t)\), and it satisfies

with the settling time estimated as
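The settling-time display is not reproduced here. The bound commonly associated with a condition of this type is \(T_{\max }=\frac{1}{\alpha (1-\rho )}+\frac{1}{\beta (\upsilon -1)}\); the sketch below takes that form as an assumption and compares it with the exact settling time of the scalar comparison system \(\dot{V}=-\alpha V^{\rho }-\beta V^{\upsilon }\), computed by quadrature. Function names and parameter values are illustrative.

```python
import math


def fixed_time_bound(alpha, beta, rho, upsilon):
    """Assumed bound 1/(alpha*(1-rho)) + 1/(beta*(upsilon-1))."""
    return 1.0 / (alpha * (1.0 - rho)) + 1.0 / (beta * (upsilon - 1.0))


def settling_time(v0, alpha, beta, rho, upsilon, s_min=-60.0, n=20000):
    """Time for dV/dt = -alpha*V**rho - beta*V**upsilon to drive V from v0 to 0.

    Uses T = integral_0^{v0} dV / (alpha*V**rho + beta*V**upsilon) with the
    substitution V = exp(s) and the composite trapezoidal rule on
    [s_min, log(v0)]; the part below exp(s_min) is negligible.
    """
    s_max = math.log(v0)
    h = (s_max - s_min) / n

    def integrand(s):
        return 1.0 / (
            alpha * math.exp((rho - 1.0) * s) + beta * math.exp((upsilon - 1.0) * s)
        )

    total = 0.5 * (integrand(s_min) + integrand(s_max))
    for i in range(1, n):
        total += integrand(s_min + i * h)
    return total * h


alpha, beta, rho, upsilon = 1.0, 1.0, 0.5, 2.0  # illustrative values
bound = fixed_time_bound(alpha, beta, rho, upsilon)
times = [settling_time(v0, alpha, beta, rho, upsilon) for v0 in (0.1, 10.0, 1.0e6)]
```

For these values the settling time increases with \(V(0)\) but stays below the bound no matter how large \(V(0)\) is, which is the defining feature of fixed-time stability.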

Lemma 2.5

([43])

Suppose there exists a continuous nonnegative function \(V(e(t)): R^{n}\rightarrow R_{+}\cup \{0\}\), such that

(1) \(V(e(t))>0\) for \(e(t)\neq 0\), \(V(e(t))=0\Leftrightarrow e(t)=0\);

(2) for some α, \(\beta >0\), \(\rho =1-\frac{1}{2p}\), \(\upsilon =1+\frac{1}{2p}\), \(p>1\), any solution \(e(t)\) satisfies

$$\begin{aligned} \begin{aligned} D^{+}V\bigl(e(t)\bigr)\leq -\alpha V^{\rho }\bigl(e(t)\bigr)-\beta V^{\upsilon }\bigl(e(t)\bigr), \end{aligned} \end{aligned}$$

then the error system (5) is globally fixed-time stable, and the settling time is bounded by
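The bound itself is not reproduced in this version of the text. For the symmetric exponents \(\rho =1-\frac{1}{2p}\), \(\upsilon =1+\frac{1}{2p}\), the substitution \(z=V^{\frac{1}{2p}}\) turns \(\int_{0}^{\infty }\frac{dV}{\alpha V^{\rho }+\beta V^{\upsilon }}\) into \(2p\int_{0}^{\infty }\frac{dz}{\alpha +\beta z^{2}}=\frac{\pi p}{\sqrt{\alpha \beta }}\), so the sketch below assumes the bound \(\frac{\pi p}{\sqrt{\alpha \beta }}\) and compares it with the general two-term estimate \(\frac{1}{\alpha (1-\rho )}+\frac{1}{\beta (\upsilon -1)}\), also taken as an assumption. Function names are hypothetical.

```python
import math


def general_bound(alpha, beta, p):
    """Two-term estimate 1/(alpha*(1-rho)) + 1/(beta*(upsilon-1)) with
    rho = 1 - 1/(2p) and upsilon = 1 + 1/(2p); equals 2p/alpha + 2p/beta."""
    rho = 1.0 - 1.0 / (2.0 * p)
    upsilon = 1.0 + 1.0 / (2.0 * p)
    return 1.0 / (alpha * (1.0 - rho)) + 1.0 / (beta * (upsilon - 1.0))


def symmetric_bound(alpha, beta, p):
    """Estimate pi*p/sqrt(alpha*beta) available for the symmetric exponents."""
    return math.pi * p / math.sqrt(alpha * beta)


# Illustrative parameter sets (alpha, beta, p); not taken from the examples.
cases = [(1.0, 1.0, 2.0), (0.5, 4.0, 1.5), (3.0, 0.2, 5.0)]
always_tighter = all(symmetric_bound(a, b, p) < general_bound(a, b, p) for a, b, p in cases)
```

By the arithmetic-geometric mean inequality, \(\frac{2p}{\alpha }+\frac{2p}{\beta }\geq \frac{4p}{\sqrt{\alpha \beta }}>\frac{\pi p}{\sqrt{\alpha \beta }}\), so under these assumptions the symmetric estimate is the less conservative one.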

Lemma 2.6

([44])

Suppose that there exists a positive-definite, continuously differentiable function \(V(t)\) which satisfies

where \(\alpha >0\), \(0<\rho <1\) are two constants. Then we have \(\lim_{t\rightarrow {T^{*}}} V(t)=0\), and \(V(t)\equiv 0\), \(\forall t\geq {T^{*}}\), with the settling time \(T^{*}\) estimated as

3 Main results

In this section, fixed-time synchronization conditions between systems (3) and (4) are developed. For this purpose, we adopt the following discontinuous feedback controller:

where \(0<\rho <1\), \(\upsilon >1\), \(\lambda_{i1}\), \(\lambda_{i2}\), \(\lambda_{i3}\), \(\lambda_{i4}\), \(i=1,2\), are the parameters to be designed later.

Theorem 3.1

Under assumption (\(H_{1}\)), for given scalars \(0<\alpha <1\) and \(\beta >1\), if there exist symmetric positive-definite matrices \(P_{r}\), \(W_{rk}\), such that

where \(\widetilde{\Omega }=\sum_{k=1}^{N}\pi_{rk}P_{k}+\sum_{k=1,k \neq r}^{N} [\frac{\kappa_{rk}^{2}}{4}W_{rk}+(P_{k}-P_{r})W_{rk} ^{-1}(P_{k}-P_{r}) ]\), then the drive system (3) is synchronized onto the response system (4) in fixed time.

Proof

Consider the following Lyapunov functional:

For simplicity, we write \(V(t)\) and \(\mathscr{L}V(t)\) for \(V(e(t),t,r)\) and \(\mathscr{L}V(e(t),t,r)\), respectively. By the Itô formula, we have

where Δt is a small positive number. Hence, for every \(r(t)={r}\in S\), it can be deduced that

Considering \(\pi_{rk}(h)=\pi_{rk}+\Delta \pi_{rk}\), \(\Delta \pi_{{rr}}=-\sum_{k=1,k\neq r}^{N}\Delta \pi_{rk}\) and applying Lemma 2.1, we obtain

Then calculating the derivative of \(V(t)\) along the trajectory of (5), we have

Based on assumption (\(H_{1}\)), we get

Substituting the controller (6) into (10) yields

By the condition (7), (11) can be rewritten as the following inequality:

In view of Lemmas 2.2 and 2.3, we derive that

According to (8), one obtains

Then, taking the expectation on both sides of (12), we can get

As is well known, for any \(t>0\), \(\mathscr{E}[(V(t))^{ \frac{1+\rho }{2}}]=(\mathscr{E}[V(t)])^{\frac{1+\rho }{2}}\) and \(\mathscr{E}[(V(t))^{\frac{1+\upsilon }{2}}]= (\mathscr{E}[V(t)])^{\frac{1+ \upsilon }{2}}\), then we have the following inequality:

By Lemma 2.4, we know that the error system (5) is globally fixed-time stable. And the settling time is estimated as

Hence, under the controller (6), the fixed-time synchronization conditions are derived. The proof is completed. □

Remark 3.1

The function \(f_{i}(\cdot)\) chosen in this paper is continuous and bounded by a constant \(G_{i}\). This is a special condition on \(f_{i}(\cdot)\); boundedness is not necessary in general. In this paper, we assume the bound \(G_{i}\) in order to estimate the parameters accurately. In other continuous cases, only the condition \(|f_{i}(y_{i}(t))-f_{i}(x_{i}(t))|\leq \mu _{i}|y_{i}(t)-x_{i}(t)|\) is needed. Since \(\frac{|f_{i}(y_{i}(t))-f_{i}(x_{i}(t))|}{|y_{i}(t)-x_{i}(t)|}\leq \mu _{i}\) whenever \(y_{i}(t)\neq x_{i}(t)\), the value of \(\mu _{i}\) is determined by the choice of the activation function \(f_{i}(\cdot)\).

Remark 3.2

To the best of our knowledge, in the current literature on the synchronization issue for NNs, only a part of the matrices in the network systems and Lyapunov functionals are distinct for different system modes. Hence, the network systems and the Lyapunov functional in this paper are more general than those in existing results (such as [24, 26]). Meanwhile, inspired by [33], double-integral terms are introduced into the Lyapunov functional to deal with the adverse effect caused by the integral terms that include the semi-Markovian jumping parameters. The following theorem is established to show the advantage of this approach.

In the following, fixed-time synchronization conditions between systems (3) and (4) are given in terms of LMIs. For this purpose, we adopt the following discontinuous feedback controller, which includes integral terms:

Theorem 3.2

Under assumption (\(H_{1}\)), for given scalars \(0<\alpha <1\) and \(\beta >1\), if there exist symmetric positive-definite matrices \(P_{r}\), \(W_{rk}\), and \(K_{r}\), symmetric matrix \(K\geq 0\), such that

where \(\overline{\Omega }=K_{r}+\sum_{k=1}^{N}\pi_{rk}P_{k}+ \sum_{k=1,k\neq r}^{N} [\frac{\kappa_{rk}^{2}}{4}W_{rk}+(P_{k}-P _{r})W_{rk}^{-1}(P_{k}-P_{r}) ]+\tau K\),

then the drive system (3) is synchronized onto the response system (4) in fixed time.

Proof

Consider the following Lyapunov functional:

For \(V_{1}(t)\), based on (8) and (9), we have

Calculating the derivatives of \(V_{2}(t)\) and \(V_{3}(t)\) along the trajectory of (5) yields

and

Combining (16)–(18), we acquire

Based on assumption (\(H_{1}\)) and the error system (5), we have

Under the condition of the Theorem, we have the following inequality:

Substituting (13) into (19), we can obtain

By the conditions (14) and (15), then employing Lemma 2.3, we have

According to Lemma 2.2, we get

thus

Thus, (20) can be rewritten as

According to the conditions given in (15), then, based on Lemma 2.2, we get

where \(0<\rho <1\), \(\upsilon >1\), \(\lambda_{i3}\), \(\lambda_{i4}>0\).

Taking the expectation on both sides of (21) yields

It is easily known that \(\mathscr{E}[(V(t))^{\frac{1+\rho }{2}}]=(\mathscr{E}[V(t)])^{\frac{1+\rho }{2}}\) and \(\mathscr{E}[(V(t))^{\frac{1+\upsilon }{2}}]=(\mathscr{E}[V(t)])^{\frac{1+\upsilon }{2}}\), then (22) can be rewritten as

Together with Lemma 2.4 and (23), we conclude that the error system (5) is globally fixed-time stable, and the settling time is estimated as

Hence, the fixed-time synchronization conditions are addressed in terms of LMIs. The proof is completed. □

Remark 3.3

To the best of our knowledge, many existing works on the fixed-time synchronization of NNs, see [25, 27], state their conditions in terms of algebraic inequalities. Compared with the approach used in [25], the fixed-time synchronization conditions obtained in Theorem 3.2 are expressed in terms of LMIs, which can be solved with the LMI toolbox in Matlab. It should be mentioned that the condition (14) cannot be solved directly as an LMI, because \(\overline{\Omega }\) contains the nonlinear term \(\sum_{k=1,k\neq r}^{N}(P_{k}-P_{r})W_{rk}^{-1}(P_{k}-P_{r})\). To overcome this difficulty, it is necessary to construct the diagonal matrix \(\operatorname{diag}\{\sum_{k=1,k\neq r}^{N}(P_{k}-P_{r})W_{rk}^{-1}(P_{k}-P_{r}), 0\}\). Then, utilizing the bounds on the transition rate \(\pi _{rk}(h)\) and the Schur complement lemma, as in [37], the matrix inequalities are turned into linear matrix inequalities, which can be solved numerically.
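The Schur complement step mentioned in this remark can be sketched as follows. The symbols \(\Xi_{r}\), \(M_{r}\) and \(\mathcal{W}_{r}\) are hypothetical shorthand introduced only for this illustration: \(\Xi_{r}\) stands for the part of the matrix condition that is already affine in the unknowns, and the block layout is an assumption rather than the exact LMI used by the authors.

```latex
% Hypothetical shorthand for a fixed mode r:
M_r = \bigl[\, P_1 - P_r,\ \ldots,\ P_{r-1} - P_r,\ P_{r+1} - P_r,\ \ldots,\ P_N - P_r \,\bigr],
\qquad
\mathcal{W}_r = \operatorname{diag}\{ W_{r1}, \ldots, W_{r,r-1}, W_{r,r+1}, \ldots, W_{rN} \}.

% Since each P_k is symmetric, the nonlinear term factors as
\sum_{k=1,\,k\neq r}^{N} (P_k - P_r)\, W_{rk}^{-1}\, (P_k - P_r) = M_r\, \mathcal{W}_r^{-1} M_r^{T}.

% Schur complement (valid because \mathcal{W}_r > 0):
\Xi_r + M_r\, \mathcal{W}_r^{-1} M_r^{T} < 0
\quad\Longleftrightarrow\quad
\begin{bmatrix} \Xi_r & M_r \\ \ast & -\mathcal{W}_r \end{bmatrix} < 0 .
```

The right-hand block inequality is linear in \(P_{k}\) and \(W_{rk}\), which is what makes it suitable for standard LMI solvers.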

Corollary 3.1

Suppose that the conditions in Theorem 3.2 hold. Under the controller (13), the drive system (3) is synchronized with the response system (4) in fixed time, and the settling time is estimated as

with \(p>1\).

Corollary 3.2

Under assumption (\(H_{1}\)), for given scalars \(0<\rho <1\), the drive system (3) is synchronized onto the response system (4) in a finite-time interval based on the following controller:

if there exist symmetric positive-definite matrices \(P_{r}\), \(W_{rk}\), such that

where \(\widetilde{\Omega }=\sum_{k=1}^{N}\pi_{rk}P_{k}+\sum_{k=1,k \neq r}^{N} [\frac{\kappa_{rk}^{2}}{4}W_{rk}+(P_{k}-P_{r})W_{rk} ^{-1}(P_{k}-P_{r}) ]\).

Meanwhile, the settling time is estimated as

Remark 3.4

Compared with the finite-time synchronization conditions obtained in [30], more conditions are needed to achieve fixed-time synchronization. Finite-time synchronization requires only a term of the form \(-V^{\rho }(t)\), \(0<\rho <1\), whereas fixed-time synchronization requires the two terms \(-V^{\rho }(t)\) (\(0<\rho <1\)) and \(-V^{\upsilon }(t)\) (\(\upsilon >1\)). Similar to the results in [30], the settling time \(T^{*}\) of the finite-time synchronization obtained in Corollary 3.2 depends on the initial condition \(V(0)\). When \(V(0)\) is very large, \(T^{*}\) may become too large to be reasonable in practical applications. In contrast, the bound \(T_{\max }\) on the settling time of the fixed-time synchronization obtained in Theorem 3.2 is independent of the initial conditions. Thus, an upper bound on the settling time can be evaluated in advance by selecting appropriate control input parameters and semi-Markovian jumping parameters.
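The contrast drawn in this remark can be illustrated numerically. The sketch below assumes the standard estimates \(T^{*}=\frac{V^{1-\rho }(0)}{\alpha (1-\rho )}\) for the finite-time case and \(T_{\max }=\frac{1}{\alpha (1-\rho )}+\frac{1}{\beta (\upsilon -1)}\) for the fixed-time case (the corresponding displays are not reproduced in this version of the text); the function names and parameter values are illustrative.

```python
def finite_time_estimate(v0, alpha, rho):
    """Assumed finite-time estimate V(0)**(1-rho) / (alpha*(1-rho))."""
    return v0 ** (1.0 - rho) / (alpha * (1.0 - rho))


def fixed_time_estimate(alpha, beta, rho, upsilon):
    """Assumed fixed-time estimate 1/(alpha*(1-rho)) + 1/(beta*(upsilon-1))."""
    return 1.0 / (alpha * (1.0 - rho)) + 1.0 / (beta * (upsilon - 1.0))


alpha, beta, rho, upsilon = 1.0, 1.0, 0.5, 2.0  # illustrative values
initial_values = [1.0, 100.0, 10000.0]

# The finite-time estimate grows without bound as V(0) increases ...
finite = [finite_time_estimate(v0, alpha, rho) for v0 in initial_values]
# ... while the fixed-time estimate does not involve V(0) at all.
fixed = fixed_time_estimate(alpha, beta, rho, upsilon)
```

With these values the finite-time estimate grows like \(2\sqrt{V(0)}\), whereas the fixed-time estimate stays constant.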

4 Numerical examples

Example 1

In this section, we present two examples to demonstrate the correctness of Theorem 3.2.

Consider a 2-dimensional semi-Markovian jumping neural network system. The parameters are chosen as follows:

The scalars used in this example are chosen as follows. The activation function is taken as \(f(t)=\tanh (t)\), thus \(\mu_{1}=\mu_{2}=1\) and \(G_{1}=G_{2}=1\). We take \(\rho =0.5\) and \(\upsilon =2\). The time-varying delay function is assumed to be \(\tau (t)=0.5+0.5\cos (t)\), the initial values are \({x(t)}=(e^{3t},e^{3t})^{T}\) and \({y(t)}=(\sin (3t),\tanh (3t))^{T}\), and \(I=(0,0)^{T}\). We can easily see that the upper bound of the delay is \(\tau =1\).
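The delay bounds for this example can be checked with a short script. This is a minimal sketch: the names `tau`, `tau_dot`, `tau_max` and `tau_dot_max` are hypothetical, and the bound on the derivative of the delay is included only because such a bound is commonly required for time-varying delays; the symbol used for it in the original text is not recoverable here.

```python
import math


def tau(t):
    """Time-varying delay of Example 1."""
    return 0.5 + 0.5 * math.cos(t)


def tau_dot(t):
    """Derivative of the delay with respect to t."""
    return -0.5 * math.sin(t)


# Sample t over [0, 20], which covers several periods of the cosine.
grid = [i * 1e-3 for i in range(20001)]
tau_min = min(tau(t) for t in grid)
tau_max = max(tau(t) for t in grid)
tau_dot_max = max(tau_dot(t) for t in grid)
```

The sampled maximum of the delay equals 1, in agreement with \(\tau =1\), the delay stays nonnegative, and the sampled maximum of its derivative is approximately 0.5.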

The transition rates for each mode are given as follows:

For mode 1

For mode 2

Then we can get the parameters \(\pi_{rk}\), \(\kappa_{rk}\), where \(r,k \in S=\{1,2\}\).

Through simple computations, we have

Meanwhile, the parameters of the controller are chosen as follows:

For mode 1

For mode 2

and the settling time \(T_{\max }\) can be calculated as 4.45.

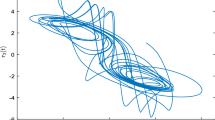

Based on the values given above, the first and second state trajectories of the systems (3) and (4) are displayed in Fig. 1 and Fig. 2, respectively. The trajectories of the corresponding synchronization error system are depicted in Fig. 3. Hence, the simulation supports the correctness of Theorem 3.2.

Figure 1 State trajectory of variables \(x_{1}(t)\) and \(y_{1}(t)\) under the controller (13)

Figure 2 State trajectory of variables \(x_{2}(t)\) and \(y_{2}(t)\) under the controller (13)

Figure 3 The trajectories of the synchronization error \(e_{1}(t)\) and \(e_{2}(t)\) under the controller (13)

Example 2

Consider a 3-dimensional semi-Markovian jumping neural network system. The parameters are chosen as follows:

The activation function and the time-varying delay function are taken to be the same as in Example 1. The initial conditions are chosen as \({x(t)}=(3e^{2t},3e^{2t},3e^{2t})^{T}\), \({y(t)}=(3\sin (2t),3\sin (2t),3\tanh (2t))^{T}\), and \(I=(0,0,0)^{T}\). The relevant parameters are \(\mu_{1}=\mu_{2}=\mu _{3}=1\), \(G_{1}=G_{2}=G_{3}=1\), \(\rho =0.5\), \(\upsilon =2.0\).

The transition rates for each mode are given as follows.

For mode 1

For mode 2

Then we can get the parameters \(\pi_{rk}\), \(\kappa_{rk}\), where \(r, k \in S=\{1,2\}\),

Through simple computations, we have

For mode 1

For mode 2

and the settling time \(T_{\max }\) is evaluated as 4.45.

Under controller (13), the first, second and third state trajectories of the systems (3) and (4) are plotted in Figs. 4, 5, and 6, respectively. Moreover, Fig. 7 shows the trajectories of the corresponding synchronization error system. The numerical simulation supports Theorem 3.2.

Figure 4 State trajectory of variables \(x_{1}(t)\) and \(y_{1}(t)\) under the controller (13)

Figure 5 State trajectory of variables \(x_{2}(t)\) and \(y_{2}(t)\) under the controller (13)

Figure 6 State trajectory of variables \(x_{3}(t)\) and \(y_{3}(t)\) under the controller (13)

Figure 7 The trajectories of the synchronization errors \(e_{1}(t)\), \(e_{2}(t)\), and \(e_{3}(t)\) under the controller (13)

5 Conclusion

In this paper, the fixed-time synchronization issue for semi-Markovian jumping neural networks with time-varying delays is discussed. A novel state-feedback controller, which includes double-integral terms and time-varying delay terms, is designed. Based on the linear matrix inequality (LMI) technique and the Lyapunov functional method, some effective conditions are established to guarantee the fixed-time synchronization of the neural networks. Moreover, an upper bound of the settling time can be explicitly evaluated. To a certain extent, the results obtained in this paper improve on previous works. More complex settings, such as discontinuous functions, stochastic disturbances, and fixed-time synchronization for complex dynamical networks, will be taken into consideration in future research.

References

Nitta, T.: Orthogonality of decision boundaries in complex-valued neural networks. Neural Comput. 16, 73–97 (2004)

Yu, D., Deng, L., Seide, F.: The deep tensor neural network with applications to large vocabulary speech recognition. IEEE Trans. Audio Speech Lang. Process. 21, 388–396 (2012)

Wang, D.: Pattern recognition: neural networks in perspective. IEEE Expert 8, 52–60 (2002)

Abdurahman, A., Jiang, H., Teng, Z.: Finite-time synchronization for memristor-based neural networks with time-varying delays. Neural Netw. 69, 20–28 (2015)

Yu, J., Hu, C., Jiang, H.: Projective synchronization for fractional neural networks. Neural Netw. 49, 87–95 (2014)

Ying, W., Yan, J., Cui, B.: Lag synchronization of neural network and its application in secure communication. Appl. Res. Comput. 27, 3456–3457 (2010)

Jun, D., Chen, A.: Exponential synchronization of a class of neural network on time scales. J. Xiangnan Univ. 29, 6–12 (2008)

Li, H., Liao, H., Huang, H.: Synchronization of uncertain chaotic systems based on neural network and sliding mode control. Acta Phys. Sin. 60, 020512 (2011)

Hu, J., Cao, J., Alofi, A.: Pinning synchronization of coupled inertial delayed neural networks. Cogn. Neurodyn. 9, 341–350 (2015)

Lang, J., Zhang, Y., Zhang, B.: Event-Triggered Network-Based Synchronization of Delayed Neural Networks. Elsevier, Amsterdam (2016)

Michalak, A., Nowakowski, A.: Finite-time stability and finite-time synchronization of neural network-dual approach. J. Franklin Inst. 354, 8513–8528 (2017)

Hao, Z., Wang, X., Lin, X.: Synchronization of complex-valued neural network with sliding mode control. J. Franklin Inst. 353, 345–358 (2016)

Peng, X., Wu, H., Song, K.: Non-fragile chaotic synchronization for discontinuous neural networks with time-varying delays and random feedback gain uncertainties. Neurocomputing 273, 89–100 (2018)

Chen, W., Lu, X., Zheng, W.: Impulsive stabilization and impulsive synchronization of discrete-time delayed neural networks. IEEE Trans. Neural Netw. Learn. Syst. 26, 734–748 (2017)

Hu, C., Yu, J., Jiang, H.: Exponential lag synchronization for neural networks with mixed delays via periodically intermittent control. Chaos 20, 023108 (2010)

Zhao, H., Li, L., Peng, H.: Finite-time robust synchronization of memristive neural network with perturbation. Neural Process. Lett. 47, 509–533 (2018)

Shen, J., Cao, J.: Finite-time synchronization of coupled neural networks via discontinuous controllers. Cogn. Neurodyn. 5, 373–385 (2011)

Huang, D., Jiang, M., Jian, J.: Finite-time synchronization of inertial memristive neural networks with time-varying delays via sampled-date control. Neurocomputing 266, 527–539 (2017)

Polyakov, A.: Nonlinear feedback design for fixed-time stabilization of linear control systems. IEEE Trans. Autom. Control 57, 2106–2110 (2012)

Hu, C., Yu, J., Chen, Z.: Fixed-time stability of dynamical systems and fixed-time synchronization of coupled discontinuous neural networks. Neural Netw. 89, 74–83 (2017)

Khanzadeh, A., Pourgholi, M.: Fixed-time sliding mode controller design for synchronization of complex dynamical networks. Nonlinear Dyn. 88, 2637–2649 (2017)

Zuo, Z.: Non-singular fixed-time terminal sliding mode control of non-linear systems. IET Control Theory Appl. 9, 545–552 (2014)

Ding, X., Cao, J., Alsaedi, A.: Robust fixed-time synchronization for uncertain complex-valued neural networks with discontinuous activation functions. Neural Netw. 90, 42–55 (2017)

Wang, L., Zeng, Z., Hu, J.: Controller design for global fixed-time synchronization of delayed neural networks with discontinuous activations. Neural Netw. 87, 122–131 (2017)

Cao, J., Li, R.: Fixed-time synchronization of delayed memristor-based recurrent neural networks. Sci. China Inf. Sci. 60, 032201 (2017)

Wei, R., Cao, J., Alsaedi, A.: Finite-time and fixed-time synchronization analysis of inertial memristive neural networks with time-varying delays. Cogn. Neurodyn. 12, 121–134 (2018)

Chen, C., Li, L., Peng, H.: Fixed-time synchronization of memristor-based BAM neural networks with time-varying discrete delay. Neural Netw. 96, 47–54 (2017)

Li, T., Song, A., Fei, S.: Synchronization control of chaotic neural networks with time-varying and distributed delays. Nonlinear Anal. 71, 2372–2384 (2009)

Wu, H., Zhang, H., Li, R.: Finite-time synchronization of chaotic neural networks with mixed time-varying delays and stochastic disturbance. Memet. Comput. 7, 231–241 (2015)

Ren, H., Deng, F., Peng, Y.: Finite time synchronization of Markovian jumping stochastic complex dynamical systems with mix delays via hybrid control strategy. Neurocomputing 272, 683–693 (2018)

Xiong, J., Lam, J.: Robust H2 control of Markovian jump systems with uncertain switching probabilities. Int. J. Syst. Sci. 40, 255–265 (2009)

Chandrasekar, A., Rakkiyappan, R., Rihan, F.: Exponential synchronization of Markovian jumping neural networks with partly unknown transition probabilities via stochastic sampled-data control. Neurocomputing 133, 385–398 (2014)

Wu, H., Wang, L., Wang, Y., Niu, P., Fang, B.: Exponential state estimation for Markovian jumping neural networks with mixed time-varying delays and discontinuous activation functions. Int. J. Mach. Learn. Cybern. 7, 641–652 (2016)

Shen, H., Park, J., Wu, Z.: Finite time \(H_{\infty}\) synchronization for complex networks with semi-Markov jump topology. Commun. Nonlinear Sci. Numer. Simul. 24, 40–51 (2015)

Pradeep, C., Yang, C., Murugesu, R., Rakkiyappan, R.: An event-triggered synchronization of semi-Markov jump neural networks with time-varying delays based on generalized free-weighting-matrix approach. Math. Comput. Simul. (2017). https://doi.org/10.1016/j.matcom.2017.11.001

Huang, J., Shi, Y.: Stochastic stability and robust stabilization of semi-Markov jump linear systems. Int. J. Robust Nonlinear Control 23, 2028–2043 (2013)

Liu, X., Yu, X.: Finite-time \(H_{\infty}\) control for linear systems with semi-Markovian switching. Nonlinear Dyn. 85, 1–12 (2016)

Li, F., Shi, P., Wu, L.: State estimation and sliding mode control for semi-Markovian jump systems. Automatica 51, 385–393 (2015)

Huang, J., Shi, Y.: Stochastic stability of semi-Markov jump linear systems: an LMI approach. In: Proceedings of the IEEE Conference on Decision and Control, vol. 413, pp. 4668–4673. IEEE Comput. Soc., Los Alamitos (2015)

Xiong, J., Lam, J.: Robust H2 control of Markovian jump systems with uncertain switching probabilities. Int. J. Syst. Sci. 40, 255–265 (2009)

Hardy, G., Littlewood, J., Polya, G.: Inequalities. Cambridge Mathematical Library, Cambridge (1934)

Polyakov, A.: Nonlinear feedback design for fixed-time stabilization of linear control systems. IEEE Trans. Autom. Control 57, 2106–2110 (2012)

Levant, A.: On fixed and finite time stability in sliding mode control. In: Proceedings of the IEEE Conference on Decision and Control, pp. 4260–4265. IEEE Comput. Soc., Los Alamitos (2013)

Tang, Y.: Terminal sliding mode control for rigid robots. Automatica 34, 51–56 (1998)

Acknowledgements

The authors would like to thank the Editors and the Reviewers for their insightful and constructive comments, which have helped to enrich the content and improve the presentation of the results in this paper.

Availability of data and materials

Not applicable.

Funding

This work was jointly supported by the Natural Science Foundation of Hebei Province of China (A2018203288), the Postgraduate Innovation Project of Hebei Province of China (CXZZSS2018048), and the High Level Talent Project of Hebei Province of China (C2015003054).

Author information

Contributions

All authors contributed equally to the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Zhao, W., Wu, H. Fixed-time synchronization of semi-Markovian jumping neural networks with time-varying delays. Adv Differ Equ 2018, 213 (2018). https://doi.org/10.1186/s13662-018-1666-z
