Abstract

In this paper, an effective numerical method is proposed for solving Cauchy type singular Fredholm integral equations (CSFIEs) of the first kind. A collocation technique based on Bernstein polynomials is used to approximate the solution in the various cases of CSFIEs. Because the problem is transformed into a system of linear algebraic equations, the approach is computationally simple and attractive. The approximate solution is then obtained in truncated series form by using the matrix form of the method. Convergence and error analyses of the method are also discussed. Finally, numerical experiments demonstrate the validity, accuracy and efficiency of the proposed method.


1 Introduction

The concept of the principal value of a Cauchy type singular integral equation is well known. Equations of this kind arise in many branches of engineering and science, such as fracture mechanics [1] and aerodynamics [2], and occur in a variety of mixed boundary value problems of mathematical physics [2–4]. The Cauchy singular integral equation has the form

where \(y(t)\) is the unknown function, \(g(s)\) is a given function and \(k(s,t)\) is a given kernel [5]. When \(k(s,t)=0\), equation (1) reduces to the following equation:

Equation (2) is the airfoil equation of aerodynamics. Its complete analytical solution in each of the different cases is given in [6]; we denote it by

where \(j=1,2,3,4\) corresponds to Cases (i), (ii), (iii) and (iv), respectively.

Case (i). The solution is bounded at both end points \(s = \pm1\),

provided that

Case (ii). The solution is unbounded at both end points \(s = \pm1\),

where

Case (iii). The solution is bounded at the end point \(s = 1\), but unbounded at \(s = -1\),

Case (iv). The solution is bounded at the end point \(s = -1\), but unbounded at \(s = 1\),

Because the integrands of CSFIEs are singular, these equations are difficult to solve analytically. At the same time, their wide range of applications gives each of the above cases particular significance, while relatively little work has been done on CSFIEs. It is therefore important to be able to approximate the solutions of CSFIEs by numerical methods.

Several numerical methods have previously been introduced for the Cauchy singular integral equation, such as the cubic spline method [7], Gaussian quadrature combined with an overdetermined system [4], the generalized inverses technique [8], the iteration method [9], Bernstein polynomials [10], the Jacobi polynomials technique [11], the rational functions method [12], Chebyshev polynomials [13] and the Nyström method [14]. Liu et al. [15] devised a collocation scheme for a certain Cauchy singular integral equation based on superconvergence analysis. Panja and Mandal [16] used the Daubechies scale function to solve integral equations of the second kind with a Cauchy type kernel. More recently, other effective numerical methods have been proposed for approximating the solution of Cauchy type singular integral equations, such as the Bernstein polynomials method [17], Legendre polynomials [18], the differential transform technique [19] and the reproducing kernel method [20]. Dezhbord et al. [21] also presented the reproducing kernel Hilbert space method for solving equation (2).

In recent years, several types of matrix collocation methods have been proposed for solving singular integral and singular integro-differential equations (see [22, 23]). In the present paper, we use a different collocation method for CSFIEs. Bernstein polynomials have many useful properties, such as positivity, continuity, a recursion relation, symmetry, partition of unity of the basis set over \([a,b]\), uniform approximation, differentiability and integrability, and they have therefore been used in collocation methods [24–27]. Moreover, expanding functions in Bernstein polynomials gives good numerical results and favorable convergence behavior.

In this work, we use a matrix collocation method based on Bernstein polynomials to solve CSFIEs of the first kind. The rest of this paper is organized as follows. Section 2 recalls some definitions concerning the Bernstein polynomials and the collocation form used for solving CSFIEs. In Section 3, the singularity is removed by transforming the main equation into equivalent integral equations. Section 4 describes the numerical method based on Bernstein polynomials. An error analysis of the proposed method is given in Section 5. In Section 6, numerical results for several examples are compared with the exact solutions.

2 Preliminaries

2.1 The Bernstein polynomials

The Bernstein polynomials of degree n are defined by

\[B_{i,n}(s)=\binom{n}{i}s^{i}(1-s)^{n-i},\quad i=0,1,\dots,n,\ 0\le s\le1.\]

By using the binomial expansion, they can be written as

\[B_{i,n}(s)=\sum_{k=0}^{n-i}(-1)^{k}\binom{n}{i}\binom{n-i}{k}s^{i+k}.\]

Also, the Bernstein polynomials of degree n on the interval \([a,b]\) are [25]

\[B_{i,n}(s)=\binom{n}{i}\frac{(s-a)^{i}(b-s)^{n-i}}{(b-a)^{n}},\quad i=0,1,\dots,n.\]

2.2 Collocation method

For each of Cases (i), (ii), (iii) and (iv) of (2), we seek an approximate solution in the form of a truncated Bernstein polynomial series:

where \(x_{j,i}\), \(i=0,1,\dots,n\), \(j=1,2,3,4\), are the unknown Bernstein coefficients.
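As a minimal sketch of the basis used above (the function names are ours and hypothetical), the Python code below evaluates \(B_{i,n}\) on a general interval \([a,b]\), checks it against the binomial (power) form on \([0,1]\), and evaluates a truncated series \(\sum_{i}x_{i}B_{i,n}(s)\) together with its derivative from the standard formula \(x_n'(s)=\frac{n}{b-a}\sum_{i=0}^{n-1}(x_{i+1}-x_{i})B_{i,n-1}(s)\). The derivative is included because Section 3 replaces a difference quotient by \(x'(s)\) at \(t=s\).

```python
from math import comb


def bernstein(i, n, s, a=-1.0, b=1.0):
    """B_{i,n}(s) = C(n,i) (s-a)^i (b-s)^(n-i) / (b-a)^n on [a, b]."""
    return comb(n, i) * (s - a) ** i * (b - s) ** (n - i) / (b - a) ** n


def bernstein_power_form(i, n, u):
    """Same basis on [0, 1], from the binomial expansion of (1-u)^(n-i)."""
    return sum((-1) ** k * comb(n, i) * comb(n - i, k) * u ** (i + k)
               for k in range(n - i + 1))


def series(coeffs, s, a=-1.0, b=1.0):
    """Truncated Bernstein series x_n(s) = sum_i coeffs[i] * B_{i,n}(s)."""
    n = len(coeffs) - 1
    return sum(c * bernstein(i, n, s, a, b) for i, c in enumerate(coeffs))


def series_derivative(coeffs, s, a=-1.0, b=1.0):
    """x_n'(s) = n/(b-a) * sum_{i<n} (x_{i+1} - x_i) * B_{i,n-1}(s)."""
    n = len(coeffs) - 1
    return n / (b - a) * sum((coeffs[i + 1] - coeffs[i]) * bernstein(i, n - 1, s, a, b)
                             for i in range(n))
```

A convenient sanity check is linear precision: on \([-1,1]\) with \(n=5\), the coefficients \(x_i=-1+2i/5\) reproduce \(x_n(s)=s\), so the derivative is identically 1.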

3 Removing the singularity of equation (2)

Following the analytical solutions of equation (2), the approximate solutions can be represented by the following relations [21]:

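where \(x(s)\) is a well-behaved function on \([-1,1]\), and the weight functions for the corresponding cases are, respectively,

\[\omega_{1}(s)=\sqrt{1-s^{2}},\quad\omega_{2}(s)=\frac{1}{\sqrt{1-s^{2}}},\quad\omega_{3}(s)=\sqrt{\frac{1-s}{1+s}},\quad\omega_{4}(s)=\sqrt{\frac{1+s}{1-s}}.\]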

Now, to remove the singular term, we convert equation (2) into equivalent integral equations. For the unknown function \(x(s)\) in equation (12), the following cases arise:

Case (i). By using (3), (12) and (13), \(y_{1}(s)\) can be represented in the form

Substituting (14) into equation (2), we have

The singular integral exists in the sense of the Cauchy principal value, and we have

Thus, the singular term has been removed and equation (15) is transformed into

Case (ii). The solution \(y_{2}(s)\) can be represented in the form

Substituting (18) into equation (2), we have

In the sense of the Cauchy principal value,

Thus, the singular term has been removed and equation (19) is transformed into

Case (iii). The solution \(y_{3}(s)\) can be represented in the form

Substituting (22) into equation (2), we get

also, from (16), we get

so equation (23) is converted into

Case (iv). The solution \(y_{4}(s)\) can be represented in the form

By substituting (26) into equation (2), we have

also, from (20), we get

so equation (27) is transformed into

In each of equations (17), (21), (25) and (29), the quotient \(\frac{x(t)-x(s)}{t-s}\) is taken to be \(x'(s)\) when \(t=s\). Since this quotient is bounded for smooth x, the singularity of equation (2) has been removed. The remaining integrals are computed with the Gauss-Chebyshev quadrature rule.
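The following Python sketch illustrates the singularity removal numerically. It rests on assumptions that are ours, not the paper's: equation (2) is taken in the normalized form \(\frac{1}{\pi}\,\mathrm{PV}\int_{-1}^{1}\frac{y(t)}{t-s}\,dt=g(s)\), and the weights are the standard choices \(\omega_1=\sqrt{1-s^2}\), \(\omega_2=1/\sqrt{1-s^2}\), \(\omega_3=\sqrt{(1-s)/(1+s)}\), \(\omega_4=\sqrt{(1+s)/(1-s)}\). Under these assumptions \(\frac{1}{\pi}\,\mathrm{PV}\int_{-1}^{1}\frac{\omega_j(t)}{t-s}\,dt\) equals \(-s\), \(0\), \(-1\) and \(1\) for \(j=1,2,3,4\), so each Cauchy integral splits into a regular integral of the difference quotient plus a multiple of \(x(s)\). Case (i) uses the second-kind Gauss-Chebyshev rule; for Cases (ii) to (iv) the sketch writes \(\omega_j=p_j(t)/\sqrt{1-t^2}\) and uses the first-kind rule. For Cases (iii) and (iv) this is our own choice and differs from the second-kind rule used in the paper. The helper names are hypothetical.

```python
import math

# Assumption: (2) reads (1/pi) PV int_{-1}^{1} y(t)/(t-s) dt = g(s), with y = omega_j * x.
# (1/pi) PV int omega_j(t)/(t-s) dt equals -s, 0, -1, +1 for j = 1, 2, 3, 4;
# this is the coefficient c_j(s) of x(s) once the singular part is subtracted.
C = {1: lambda s: -s, 2: lambda s: 0.0, 3: lambda s: -1.0, 4: lambda s: 1.0}

# omega_j = P[j](t) / sqrt(1 - t^2) for j = 2, 3, 4 (first-kind Chebyshev weight)
P = {2: lambda t: 1.0, 3: lambda t: 1.0 - t, 4: lambda t: 1.0 + t}


def cheb1(N):
    """Gauss-Chebyshev, first kind: int f(t)/sqrt(1-t^2) dt ~ sum w * f(t)."""
    return [(math.cos((2 * k - 1) * math.pi / (2 * N)), math.pi / N)
            for k in range(1, N + 1)]


def cheb2(N):
    """Gauss-Chebyshev, second kind: int sqrt(1-t^2) f(t) dt ~ sum w * f(t)."""
    h = math.pi / (N + 1)
    return [(math.cos(k * h), h * math.sin(k * h) ** 2) for k in range(1, N + 1)]


def regularized(j, x, dx, s, N=4):
    """(1/pi) PV int omega_j(t) x(t)/(t-s) dt, evaluated with no singular integrand.

    x and dx are callables for x and x'; the quotient (x(t)-x(s))/(t-s) is
    replaced by x'(s) if a quadrature node coincides with s.
    """
    def quotient(t):
        return dx(s) if abs(t - s) < 1e-14 else (x(t) - x(s)) / (t - s)

    if j == 1:
        total = sum(w * quotient(t) for t, w in cheb2(N))
    else:
        total = sum(w * P[j](t) * quotient(t) for t, w in cheb1(N))
    return total / math.pi + C[j](s) * x(s)
```

With \(N=4\) both rules are exact for polynomials of degree at most 7, so for polynomial x of moderate degree the result is exact up to rounding. For example, with \(x(t)=t^{3}-t\) the Case (i) value is \(-s^{4}+\frac{3}{2}s^{2}-\frac{3}{8}\), which can be confirmed by hand from \(\frac{1}{\pi}\int_{-1}^{1}\sqrt{1-t^{2}}\,dt=\frac12\) and \(\frac{1}{\pi}\int_{-1}^{1}t^{2}\sqrt{1-t^{2}}\,dt=\frac18\).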

4 Description of the technique

First, we rewrite equations (17), (21), (25), and (29) in the following form:

where

and the quotient \(\frac{x(t)-x(s)}{t-s}\) is replaced by \(x'(s)\) when \(t=s\). Using (31) and substituting the collocation points \(s_{m}\), defined by

into (30), we get

Using (11) and (31), we obtain

and

We use the Gauss-Chebyshev quadrature rule of the first kind for the integral in Case (ii) and the Gauss-Chebyshev quadrature rule of the second kind for the integrals in the other cases. Equation (33) can then be written for all cases as follows:

where

For simplicity, we write equations (35) as follows:

Hence, the matrix form (37) can be written separately for each case of (2) as

where

and

After solving the systems (38) for Cases (i), (ii), (iii) and (iv), the unknown coefficients \(x_{j,i}\) are determined, and substituting \(x_{j,i}\), \(i=0,1,\dots,n\), \(j=1,2,3,4\), into (11) gives approximate solutions of (17), (21), (25) and (29). The approximate solutions of (2) in all cases are therefore

\[y_{j,n}(s)=\omega_{j}(s)\sum_{i=0}^{n}x_{j,i}B_{i,n}(s),\quad j=1,2,3,4.\]

5 Error estimation analysis

In this section, we give an error analysis of the presented method based on Bernstein polynomials, using an interpolation polynomial [26].

Theorem 1

Let f be a function in \(C^{n+1}[-1,1]\) and let \(p_{n}\) be a polynomial of degree \(\le n\) that interpolates f at \(n+1\) distinct points \(s_{0},s_{1},\dots,s_{n} \in[-1,1]\). Then for each \(s\in[-1,1]\) there exists a point \(\xi_{s} \in[-1,1]\) such that

\[f(s)-p_{n}(s)=\frac{f^{(n+1)}(\xi_{s})}{(n+1)!}\prod_{i=0}^{n}(s-s_{i}).\]

Let \(\omega_{j} f\), \(j=1,2,3,4\), be the exact solution of equation (2) and let \(p_{n}\) be the interpolation polynomial of f. If f is sufficiently smooth, we can write \(f=p_{n}+R_{n}\), where \(R_{n}\) is the error function

\[R_{n}(s)=\frac{f^{(n+1)}(\xi_{s})}{(n+1)!}\prod_{i=0}^{n}(s-s_{i}).\]

If \(y_{n}=\omega_{j} x_{n}\), \(j=1,2,3,4\), is the Bernstein polynomial series solution of (2) given by Cases (i), (ii), (iii) and (iv) of (11), then \(y_{n}\) satisfies (2) at the nodes. Hence the Bernstein coefficient vectors of \(x_{n}\) and \(p_{n}\), denoted \(\mathbf{X}_{j}\) and \(\bar{\mathbf{X}}_{j}\), are the solutions of \(\mathbf{A}_{j} \mathbf{X}_{j}=\mathbf{G}\) and \(\mathbf{A}_{j} \bar{\mathbf{X}}_{j}=\mathbf{G}+\triangle{\mathbf{G}}\), respectively, where

where \(i=0,1,\dots,n\).
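The remainder formula of Theorem 1 can be checked numerically. The Python sketch below interpolates a smooth test function at \(n+1\) nodes and verifies the pointwise bound \(|f(s)-p_n(s)|\le\frac{\max|f^{(n+1)}|}{(n+1)!}\prod_{i=0}^{n}|s-s_i|\). The test function \(f(s)=e^{s}\), the Chebyshev nodes and \(n=5\) are illustrative choices of ours, not the paper's collocation points, and the function names are hypothetical.

```python
import math


def lagrange_eval(nodes, values, s):
    """Evaluate the interpolation polynomial p_n through (nodes, values) at s."""
    total = 0.0
    for i, (si, fi) in enumerate(zip(nodes, values)):
        li = 1.0
        for k, sk in enumerate(nodes):
            if k != i:
                li *= (s - sk) / (si - sk)
        total += fi * li
    return total


def remainder_bound(nodes, s, deriv_max):
    """Theorem 1 bound with |f^(n+1)(xi_s)| replaced by deriv_max; len(nodes) = n+1."""
    prod = 1.0
    for sk in nodes:
        prod *= s - sk
    return deriv_max / math.factorial(len(nodes)) * abs(prod)


def max_error_and_bound(n=5, samples=401):
    """Illustrative check: f = exp on [-1, 1], Chebyshev nodes, max|f^(n+1)| = e."""
    nodes = [math.cos((2 * i + 1) * math.pi / (2 * (n + 1))) for i in range(n + 1)]
    values = [math.exp(t) for t in nodes]
    bound_holds = True
    max_err = 0.0
    for k in range(samples):
        s = -1 + 2 * k / (samples - 1)
        err = abs(math.exp(s) - lagrange_eval(nodes, values, s))
        max_err = max(max_err, err)
        if err > remainder_bound(nodes, s, math.e) + 1e-14:  # small rounding slack
            bound_holds = False
    return max_err, bound_holds
```

For Chebyshev nodes the product term is at most \(2^{-n}\) in absolute value, so with \(n=5\) the maximum error is below \(e/(6!\cdot 2^{5})\approx1.2\times10^{-4}\).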

In the following theorem, we present an upper bound of the absolute errors for our method.

Theorem 2

Assume that \(x(s)\) and \(f(s)\) are the Bernstein polynomial series solution and the exact solution of (17), respectively, so that \(y(s)=\omega_{1}(s) x(s)\) and \(\omega_{1}(s) f(s)\) are the Bernstein polynomial series solution and the exact solution of equation (2). Let \(p_{n}(s)\) denote the interpolation polynomial of \(f(s)\). If \(\mathbf{A}_{1}, \mathbf{X}_{1}, \bar{\mathbf{X}}_{1}, \mathbf{G}\) and \(\triangle\mathbf{G}\) are defined as above, and \(f(s)\) is sufficiently smooth, then

where \(\smash{ \max_{-1 \leq s \leq1} \vert \omega_{1}(s) \vert } \leq M_{1}\) and \(\smash{ \max_{0 \leq i \leq n} \vert x_{1,i}-\bar{x}_{1,i} \vert }\leq M_{2}\).

Proof

Taking into account the given assumptions, we have

where

This completes the proof. □

The same reasoning yields analogous theorems for Cases (ii), (iii) and (iv).
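The constant \(M_2\) in Theorem 2 bounds the change in the coefficient vector caused by \(\triangle\mathbf{G}\). A standard way to estimate it (our addition, not necessarily the estimate used in the proof) follows from \(\bar{\mathbf{X}}_1-\mathbf{X}_1=\mathbf{A}_1^{-1}\triangle\mathbf{G}\), which gives \(\max_i|x_{1,i}-\bar{x}_{1,i}|\le\|\mathbf{A}_1^{-1}\|_\infty\|\triangle\mathbf{G}\|_\infty\). The Python sketch below computes this bound; the helper names and the small test matrix are hypothetical.

```python
def solve(A, rhs):
    """Solve A z = rhs by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [r] for row, r in zip(A, rhs)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (M[r][n] - sum(M[r][c] * z[c] for c in range(r + 1, n))) / M[r][r]
    return z


def inverse_inf_norm(A):
    """||A^{-1}||_inf (maximum absolute row sum), built column by column."""
    n = len(A)
    cols = [solve(A, [1.0 if r == k else 0.0 for r in range(n)]) for k in range(n)]
    return max(sum(abs(cols[k][r]) for k in range(n)) for r in range(n))


def coefficient_shift_bound(A, dG):
    """Upper bound for max_i |x_i - xbar_i| when A x = G and A xbar = G + dG."""
    return inverse_inf_norm(A) * max(abs(d) for d in dG)
```

In practice \(\triangle\mathbf{G}\) is unknown, so the useful part is that the bound scales with \(\|\mathbf{A}_1^{-1}\|_\infty\), which can be computed once the collocation matrix has been assembled.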

6 Numerical experiments

In this section, the following examples illustrate the performance of the presented method in solving CSFIEs and the accuracy of the technique. The examples have been solved by our method with \(n=5\) and \(N=4\), and the results are shown in the tables and figures. In Example 1, the results were computed with a program written in Mathematica 11.0 and are compared with solutions computed by Chebyshev polynomial approximation.
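To make the procedure of Section 4 concrete, here is a compact Python sketch of the collocation pipeline for Case (i) with \(n=5\) and \(N=4\). It is a sketch under stated assumptions, not the authors' Mathematica program: (2) is taken as \(\frac{1}{\pi}\,\mathrm{PV}\int_{-1}^{1}\frac{y(t)}{t-s}\,dt=g(s)\), so the Case (i) equation reads \(\frac{1}{\pi}\int_{-1}^{1}\sqrt{1-t^{2}}\,\frac{x(t)-x(s)}{t-s}\,dt-s\,x(s)=g(s)\); the collocation points \(s_m\) are hypothetical equally spaced interior points, which may differ from those defined in Section 4; and the integral uses the second-kind Gauss-Chebyshev rule, as in Section 4 for this case. All function names are hypothetical.

```python
import math
from math import comb


def bernstein(i, n, s):
    """B_{i,n}(s) on [-1, 1]."""
    return comb(n, i) * (1 + s) ** i * (1 - s) ** (n - i) / 2 ** n


def bernstein_dx(i, n, s):
    """Derivative of B_{i,n} on [-1, 1]: (n/2) (B_{i-1,n-1}(s) - B_{i,n-1}(s))."""
    left = bernstein(i - 1, n - 1, s) if i > 0 else 0.0
    right = bernstein(i, n - 1, s) if i < n else 0.0
    return n / 2 * (left - right)


def solve(A, rhs):
    """Gaussian elimination with partial pivoting."""
    m = len(A)
    M = [list(row) + [r] for row, r in zip(A, rhs)]
    for col in range(m):
        piv = max(range(col, m), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, m):
            f = M[r][col] / M[col][col]
            for c in range(col, m + 1):
                M[r][c] -= f * M[col][c]
    z = [0.0] * m
    for r in range(m - 1, -1, -1):
        z[r] = (M[r][m] - sum(M[r][c] * z[c] for c in range(r + 1, m))) / M[r][r]
    return z


def collocate_case1(g, n=5, N=4):
    """Bernstein coefficients x_0..x_n for Case (i), under the assumed formulation
    (1/pi) int sqrt(1-t^2) (x(t)-x(s))/(t-s) dt - s x(s) = g(s)."""
    h = math.pi / (N + 1)
    quad = [(math.cos(k * h), h * math.sin(k * h) ** 2) for k in range(1, N + 1)]
    points = [-1 + 2 * (m + 1) / (n + 2) for m in range(n + 1)]  # hypothetical s_m
    A = []
    for s in points:
        row = []
        for i in range(n + 1):
            acc = 0.0
            for t, w in quad:
                if abs(t - s) < 1e-14:  # node meets collocation point: use B'(s)
                    acc += w * bernstein_dx(i, n, s)
                else:
                    acc += w * (bernstein(i, n, t) - bernstein(i, n, s)) / (t - s)
            row.append(acc / math.pi - s * bernstein(i, n, s))
        A.append(row)
    return solve(A, [g(s) for s in points])


def y_approx(coeffs, s):
    """y_{1,n}(s) = sqrt(1 - s^2) * sum_i x_i B_{i,n}(s)."""
    n = len(coeffs) - 1
    return math.sqrt(1 - s * s) * sum(c * bernstein(i, n, s) for i, c in enumerate(coeffs))
```

As a test pair, checked by hand under the assumed normalization, \(x(s)=s^{3}-s\) satisfies the Case (i) equation above with \(g(s)=-s^{4}+\frac32 s^{2}-\frac38\). Because this x lies in the span of the degree-5 Bernstein basis and the quadrature is exact for the resulting degree-4 difference quotients, `collocate_case1` recovers it up to rounding error.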

Example 1

Consider the Cauchy type singular Fredholm integral equation of the first kind given by

where \(g(s)=s^{4} + 5s^{3} + 2s^{2} +s - \frac{11}{8}\).
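A solution bounded at both end points (Case (i)) exists only under a solvability condition on g. In its standard form for the airfoil equation, which we assume is the condition stated for Case (i) in the introduction, it reads \(\frac{1}{\pi}\int_{-1}^{1}\frac{g(t)}{\sqrt{1-t^{2}}}\,dt=0\). Assuming Example 1 is an instance of (2), the short Python sketch below checks this for the given g with a 4-point first-kind Gauss-Chebyshev rule, which is exact for polynomials of degree at most 7. The function names are hypothetical.

```python
import math


def chebyshev_mean(g, N=4):
    """(1/pi) * integral of g(t)/sqrt(1-t^2) over [-1, 1] by the N-point
    first-kind Gauss-Chebyshev rule (exact for polynomials of degree <= 2N-1)."""
    nodes = [math.cos((2 * k - 1) * math.pi / (2 * N)) for k in range(1, N + 1)]
    return sum(g(t) for t in nodes) / N


def g_example1(s):
    """Right-hand side of Example 1."""
    return s**4 + 5 * s**3 + 2 * s**2 + s - 11 / 8
```

Expanding g in Chebyshev polynomials gives \(g=\frac18T_4+\frac54T_3+\frac32T_2+\frac{19}{4}T_1\), with no \(T_0\) term, so the condition holds exactly and the computed value is zero up to rounding.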

This equation has the following exact solutions in the four cases:

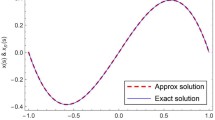

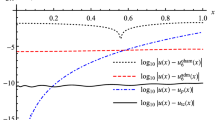

Figures 1, 3, 5 and 7 plot the exact and approximate solutions for \(n=5\) and \(N=4\) in all cases; the approximate solutions are in good agreement with the exact solutions. The errors of the presented method are plotted in Figures 2, 4, 6 and 8, and they are compared with those of Chebyshev polynomial approximations for all cases in Tables 1, 2, 3 and 4.

Example 2

Suppose we have the following CSFIE:

where \(g(s)=-s^{4} + \frac{3}{2}s^{2} - \frac{3}{8}\).

This equation has the following exact solutions in the four cases:

Figures 9, 11, 13 and 15 plot the exact and approximate solutions for \(n=5\) and \(N=4\) in all cases; the approximate solutions are in good agreement with the exact solutions. The errors of the presented method are plotted in Figures 10, 12, 14 and 16 and listed for all cases in Tables 5, 6, 7 and 8.

7 Conclusion

Other numerical methods for singular integral equations with a Cauchy kernel have been introduced previously; in this work we have presented a new, efficient approach. It is a simple way to obtain approximate solutions of the different cases of the singular integral equation with Cauchy kernel, based on a collocation method with Bernstein polynomials. The singularity of the equation is removed in all cases, and the remaining integrals are approximated by quadrature when the system of linear equations is formed. Comparison with the exact solutions in the numerical examples shows that the approximate solutions are close to the exact solutions in all cases.

References

Erdogan, F, Gupta, GD, Cook, TS: Numerical solution of singular integral equations. In: Sih, GC (ed.) Methods of Analysis and Solutions of Crack Problems: Recent Developments in Fracture Mechanics Theory and Methods of Solving Crack Problems, pp. 368-425. Springer, Dordrecht (1973)

Ladopoulos, E: Singular Integral Equations: Linear and Non-linear Theory and Its Applications in Science and Engineering. Springer, New York (2013)

Gakhov, F: Boundary Value Problems. Dover, New York (1990)

Kim, S: Solving singular integral equations using Gaussian quadrature and overdetermined system. Comput. Math. Appl. 35(10), 63-71 (1998)

Golberg, M: Introduction to the numerical solution of Cauchy singular integral equations. In: Numerical Solution of Integral Equations, pp. 183-308. Springer, Berlin (1990)

Lifanov, IK: Singular Integral Equations and Discrete Vortices. VSP, Utrecht (1996)

Jen, E, Srivastav, R: Cubic splines and approximate solution of singular integral equations. Math. Comput. 37(156), 417-423 (1981)

Kim, S: Numerical solutions of Cauchy singular integral equations using generalized inverses. Comput. Math. Appl. 38(5), 183-195 (1999)

Mennouni, A, Guedjiba, S: A note on solving Cauchy integral equations of the first kind by iterations. Appl. Math. Comput. 217(18), 7442-7447 (2011)

Mandal, B, Bhattacharya, S: Numerical solution of some classes of integral equations using Bernstein polynomials. Appl. Math. Comput. 190(2), 1707-1716 (2007)

Karczmarek, P, Pylak, D, Sheshko, MA: Application of Jacobi polynomials to approximate solution of a singular integral equation with Cauchy kernel. Appl. Math. Comput. 181(1), 694-707 (2006)

Yaghobifar, M, Long, NN, Eshkuvatov, Z: Analytical solutions of characteristic singular integral equations in the class of rational functions. Int. J. Contemp. Math. Sci. 5(56), 2773-2779 (2010)

Eshkuvatov, Z, Long, NN, Abdulkawi, M: Approximate solution of singular integral equations of the first kind with Cauchy kernel. Appl. Math. Lett. 22(5), 651-657 (2009)

De Bonis, MC, Laurita, C: Nyström method for Cauchy singular integral equations with negative index. J. Comput. Appl. Math. 232(2), 523-538 (2009)

Liu, D, Zhang, X, Wu, J: A collocation scheme for a certain Cauchy singular integral equation based on the superconvergence analysis. Appl. Math. Comput. 219(10), 5198-5209 (2013)

Panja, M, Mandal, B: Solution of second kind integral equation with Cauchy type kernel using Daubechies scale function. J. Comput. Appl. Math. 241, 130-142 (2013)

Setia, A: Numerical solution of various cases of Cauchy type singular integral equation. Appl. Math. Comput. 230, 200-207 (2014)

Setia, A, Sharma, V, Liu, Y: Numerical solution of Cauchy singular integral equation with an application to a crack problem. Neural Parallel Sci. Comput. 23, 387-392 (2015)

Abdulkawi, M: Solution of Cauchy type singular integral equations of the first kind by using differential transform method. Appl. Math. Model. 39(8), 2107-2118 (2015)

Beyrami, H, Lotfi, T, Mahdiani, K: A new efficient method with error analysis for solving the second kind Fredholm integral equation with Cauchy kernel. J. Comput. Appl. Math. 300, 385-399 (2016)

Dezhbord, A, Lotfi, T, Mahdiani, K: A new efficient method for cases of the singular integral equation of the first kind. J. Comput. Appl. Math. 296, 156-169 (2016)

Yüzbaşı, Ş, Sezer, M: A numerical method to solve a class of linear integro-differential equations with weakly singular kernel. Math. Methods Appl. Sci. 35(6), 621-632 (2012)

Yüzbaşı, Ş, Karaçayır, M: A Galerkin-like approach to solve high-order integrodifferential equations with weakly singular kernel. Kuwait J. Sci. 43(2), 106-120 (2016)

Işik, OR, Sezer, M, Güney, Z: Bernstein series solution of a class of linear integro-differential equations with weakly singular kernel. Appl. Math. Comput. 217(16), 7009-7020 (2011)

Yousefi, S, Behroozifar, M, Dehghan, M: Numerical solution of the nonlinear age-structured population models by using the operational matrices of Bernstein polynomials. Appl. Math. Model. 36(3), 945-963 (2012)

Yüzbaşı, Ş: A collocation method based on Bernstein polynomials to solve nonlinear Fredholm-Volterra integro-differential equations. Appl. Math. Comput. 273, 142-154 (2016). doi:10.1016/j.amc.2015.09.091

Yüzbaşı, Ş: Numerical solutions of fractional Riccati type differential equations by means of the Bernstein polynomials. Appl. Math. Comput. 219(11), 6328-6343 (2013)

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors read and approved the final manuscript.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Seifi, A., Lotfi, T., Allahviranloo, T. et al. An effective collocation technique to solve the singular Fredholm integral equations with Cauchy kernel. Adv Differ Equ 2017, 280 (2017). https://doi.org/10.1186/s13662-017-1339-3

DOI: https://doi.org/10.1186/s13662-017-1339-3