Abstract

In this paper, we present a reduced high-order compact finite difference scheme for the numerical solution of parabolic equations. The fourth-order compact finite difference scheme (CFDS4) attains high accuracy for the numerical solution of parabolic equations, but its computational efficiency still needs to be improved. Our approach combines CFDS4 with the proper orthogonal decomposition (POD) technique to improve its computational efficiency. The proposed method is validated on four test problems. The numerical solutions are compared with the exact solutions and with the solutions obtained by CFDS4. The comparison shows that our method greatly improves the computational efficiency of CFDS4 without a significant loss in accuracy for solving parabolic equations.

1 Introduction

Many problems in physical phenomena, engineering equipment, and living organisms, such as the diffusion of gas, the infiltration of liquid, the conduction of heat, and the spread of impurities in semiconductor materials, can be described by parabolic equations [1,2,3]. In addition, systems related to parabolic equations can be used to describe phase separation in materials science [4], and a class of abstract control systems governed by parabolic equations has been developed [5]. Linear and nonlinear boundary value problems have also been analyzed [6]. Thus, it is of great value to study the solutions of these equations. In practical engineering calculations, however, exact solutions are not easy to obtain directly, and one has to compute numerical solutions by various numerical methods. Therefore, the study of numerical solutions of parabolic equations is of great theoretical and practical value. Several types of numerical methods have been developed for the numerical simulation of parabolic problems. For example, the authors in [7] used two-level finite difference schemes to solve a one-dimensional parabolic equation. Zhou et al. proposed a finite element method to solve a parabolic equation [8]. In [9], a finite volume method for parabolic equations is presented. Zhang et al. provided a Crank–Nicolson-type difference scheme to solve equations with a spatially variable coefficient [10].

Among the methods mentioned above, the finite difference scheme is regarded as one of the most effective methods for solving partial differential equations because of its simple form and its ease of understanding and programming. In the last decade, the high-order compact finite difference scheme (CFDS) has been implemented for the numerical solution of various types of partial differential equations, such as the Rosenau-regularized long wave (RLW) equation [11], integro-differential equations [12], Burgers’ equation [13], the Helmholtz equation [14], the Navier–Stokes equations [15], Schrödinger equations [16], the Poisson equation [17], and the sine-Gordon equation [18]. Although the high-order CFDS can produce highly accurate solutions, it usually needs either a fine spatial discretization or extended finite difference stencils together with a small time step, which makes the calculations computationally expensive. In general, computational accuracy and computational efficiency are the two important factors in evaluating a numerical algorithm. Though the CFDS achieves a satisfactory numerical solution and a fast rate of convergence, its heavy computational load causes difficulties in practical applications. Therefore, it is necessary to develop a high-order CFDS that not only reduces the computational load and saves computational time in actual engineering problems, but also retains a sufficiently accurate solution. In recent years, reduced models based on proper orthogonal decomposition (POD) have attracted more and more attention in the field of computational mechanics [19, 20]. POD, also known as Karhunen–Loève decomposition (KLD), principal component analysis (PCA), or singular value decomposition (SVD), provides a powerful technique to reduce a large number of interdependent variables to a much smaller number of uncorrelated variables while retaining as much as possible of the variation in the original variables [21,22,23,24]. Thus, we can use the POD technique to greatly reduce the computational cost.

In the past decades, POD has been widely applied to numerical methods to construct reduced models. For example, Luo and Sun combined POD with the finite difference method, the finite element method, and the finite volume method to solve parabolic equations [25,26,27,28], the Navier–Stokes equations [29, 30], Sobolev equations [31], the viscoelastic wave equation [32], hyperbolic equations [33], and Burgers’ equation [34]. Dehghan and Abbaszadeh proposed POD with an upwind local radial basis functions-differential quadrature method to solve the compressible Euler equation [35], applied POD to the element-free Galerkin method to simulate two-dimensional solute transport problems [36], and combined POD with the discrete empirical interpolation method [37]. Zhang proposed a fast and efficient meshless method based on POD for transient heat conduction problems [38] and convection-diffusion problems [19]. The authors in [39] gave Carleman estimates for singular parabolic equations. However, to the best of our knowledge, there are no published results in which POD is used to reduce the CFDS4 for parabolic equations. The main goal of this paper is to construct a numerical algorithm that has high computational accuracy and efficiency for solving parabolic equations. Thus, the focus of this paper is on combining the CFDS4 with the POD method, yielding the reduced scheme R-CFDS4, to solve parabolic equations.

The paper is organized as follows. The CFDS4 for 1D parabolic equations is derived in Sect. 2. In Sect. 3, the R-CFDS4 based on POD for 1D parabolic equations is developed. In Sect. 4, the error estimates between the R-CFDS4 solutions and the exact solutions are given. In Sect. 5, a reduced alternating direction implicit fourth-order compact finite difference scheme (R-ADI-CFDS4) based on POD for 2D parabolic equations is developed. In Sect. 6, some numerical examples are presented to demonstrate the efficiency and reliability of the algorithm. Section 7 provides the main conclusions.

2 The fourth-order compact FDS for 1D parabolic equation

Firstly, we consider the following 1D initial-boundary value problem \(\mathrm{P} _{1}\):

where a is a positive constant, \(f(x,t)\) and \(\varphi (x)\) are two given sufficiently smooth functions. Let h be the spatial step increment in the x-direction and τ be the time step increment, and then write \({x_{j}} = jh \) (\(j = 0,1,2, \ldots ,J\)), \({t_{n}} = n\tau \) (\(n = 0,1,2, \ldots ,N\)), \(u_{j}^{n} \approx u({x_{j}},{t_{n}})\).

Next, we derive the CFDS4 for problem \(\mathrm{P}_{1}\), noting the following Taylor formulas:

Adding Eq. (2) to Eq. (3), we get

Let \(v(x,t) = \frac{{{\partial ^{2}}u}}{{\partial {x^{2}}}}\), then Eq. (4) can be rewritten as follows:

In Eq. (5), if we choose \(t = {t_{n + {\frac{1 }{2}}}}\), then we obtain

Noting that Eq. (1) and \(v(x,t) = \frac{{{\partial ^{2}}u}}{ {\partial {x^{2}}}}\), we get

For the first-order derivative of time, using the center difference quotient formula, we get

Thus, substituting Eq. (8) into Eq. (7), we obtain

Similarly to Eq. (2) and Eq. (3), we have the following formulas:

and

By adding Eq. (10) to Eq. (11), we can get the following formula:

Substituting Eq. (9) and Eq. (12) into Eq. (6), we get the formula

Let \(r = \frac{{a\tau }}{{{h^{2}}}}\). Using the numerical solution \(u_{j}^{n}\) to replace the exact solution \(u({x_{j}}, {t_{n}})\) and ignoring higher-order terms, we get

Finally, considering the initial and boundary conditions Eq. (1), we obtain the compact difference formula for problem \(\mathrm{P}_{1}\):

The scheme in Eq. (15) is unconditionally stable [40], and its error is of order \(O({\tau ^{2}} + {h^{4}})\), that is, second order in time and fourth order in space.
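The time stepping of this section can be illustrated with a minimal sketch. It assumes the usual fourth-order compact, Crank–Nicolson-type form \((1 - 6r)(u_{j-1}^{n+1} + u_{j+1}^{n+1}) + (10 + 12r)u_{j}^{n+1} = (1 + 6r)(u_{j-1}^{n} + u_{j+1}^{n}) + (10 - 12r)u_{j}^{n} + \tau f_{j}^{n}\) with homogeneous Dirichlet boundary values; both the exact arrangement of the scheme and the boundary data are assumptions here, and the names `thomas` and `cfds4_heat` are illustrative only.

```python
import math

def thomas(lower, diag, upper, rhs):
    """Solve a tridiagonal system with constant bands (Thomas algorithm)."""
    n = len(rhs)
    c = [0.0] * n          # modified upper band
    d = [0.0] * n          # modified right-hand side
    c[0] = upper / diag
    d[0] = rhs[0] / diag
    for i in range(1, n):
        denom = diag - lower * c[i - 1]
        c[i] = upper / denom
        d[i] = (rhs[i] - lower * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x

def cfds4_heat(a, length, J, tau, N, phi, f):
    """March u_t = a u_xx + f on [0, length]; u = 0 at both ends is assumed."""
    h = length / J
    r = a * tau / h**2
    x = [j * h for j in range(J + 1)]
    u = [phi(xj) for xj in x]
    u[0] = u[J] = 0.0
    for n in range(N):
        t_half = (n + 0.5) * tau                      # source evaluated at t_{n+1/2}
        fh = [f(xj, t_half) for xj in x]
        rhs = [(1 + 6 * r) * (u[j - 1] + u[j + 1]) + (10 - 12 * r) * u[j]
               + tau * (fh[j - 1] + 10 * fh[j] + fh[j + 1])
               for j in range(1, J)]
        u = [0.0] + thomas(1 - 6 * r, 10 + 12 * r, 1 - 6 * r, rhs) + [0.0]
    return x, u

# Usage: u_t = u_xx on [0, pi] with u(x, 0) = sin x, whose solution is exp(-t) sin x
x, u = cfds4_heat(1.0, math.pi, 50, 0.01, 100, math.sin, lambda xj, t: 0.0)
err = max(abs(uj - math.exp(-1.0) * math.sin(xj)) for xj, uj in zip(x, u))
print("max error at t = 1:", err)
```

Each step costs one tridiagonal solve of order \(J - 1\), which is the per-step cost the reduced scheme aims to lower.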

3 A reduced fourth-order compact FDS for 1D parabolic equation

In the scheme above, only three points are used to achieve high accuracy. However, to reach high accuracy with the scheme in Eq. (15), the CFDS4 usually needs a fine spatial discretization or a small temporal step, which leads to long computational times in large real-life engineering problems. Hence, a key issue for engineering problems is how to build a reduced model that is highly efficient while retaining sufficiently high accuracy compared with the scheme in Eq. (15).

POD is an effective approach that can not only reduce the computational time significantly but also preserve the high accuracy of the algorithm. The main idea of POD is to generate a group of optimal bases from the ensemble of snapshots. The reduced CFDS4 is then constructed from the optimal basis. In this paper, the snapshots are collected from numerical solutions of CFDS4. In practical problems, since the future development of many natural phenomena is closely related to previous results, one may instead choose data from numerical experiments or observations as the snapshots. Therefore, in this section, we first obtain the optimal basis from the snapshots and then use the optimal basis to derive an R-CFDS4 for parabolic equations.

The set of snapshots \(\{ {u_{j}^{{n_{i}}}} \}_{i = 1} ^{d}\) (\(j = 1, 2, \ldots ,J - 1\), \(1 \le {n_{1}} < {n_{2}} < \cdots < n_{d} \le N \)) can be written as a \(( {J - 1} ) \times d\) matrix A as follows:

Applying the singular value decomposition (SVD) on matrix A, we obtain

where \({\boldsymbol{U}} = {{\boldsymbol{U}}_{ ( {J - 1} ) \times ( {J - 1} )}}\) and \({\boldsymbol{V}} = {{\boldsymbol{V}}_{d \times d}}\) are all orthogonal matrices, \({{\boldsymbol{D}}_{r}} = \operatorname{diag}({\lambda _{1}},{\lambda _{2}}, \ldots ,{\lambda _{r}})\). The matrix \({\boldsymbol{U}} = ( {{\boldsymbol{\theta }}_{1}},{{\boldsymbol{\theta }}_{2}}, \ldots ,{{\boldsymbol{\theta }} _{J - 1}})\) contains the orthogonal eigenvectors to \({\boldsymbol{A}}{{\boldsymbol{A}} ^{\mathrm{T}}}\). The singular values \({\lambda _{i}} \) (\({i = 1,2, \ldots ,r} \)) satisfy the relation of \({\lambda _{1}} \ge {\lambda _{2}} \ge \cdots \ge {\lambda _{r}} > 0\). Denote d columns of \({{\boldsymbol{A}} _{ ( {J-1} ) \times d}}\) by \({{\boldsymbol{\alpha }} ^{i}}={ ( {u_{1}^{{n_{i}}},u_{2}^{{n_{i}}}, \ldots ,u_{J-1}^{{n_{i}}}} )^{\mathrm{T}}}\) (\(i = 1,2, \ldots ,d\)), and define a projection \({P_{M}}\) by

where \(0 < M \le d\) and \(( \cdot , \cdot )\) is the inner product of vectors. Then there exists the following result [41, 42]:

where \(\| \cdot \|_{2}\) is the standard Euclidean norm of a vector. Thus \(\{ {{\boldsymbol{{\theta }}_{l}}} \}_{l = 1}^{M}\) is a group of optimal bases, and \({\boldsymbol{\theta }} = ({{\boldsymbol{\theta }}_{1}},{{\boldsymbol{\theta }} _{2}}, \ldots ,{{\boldsymbol{\theta }}_{M}})\) is a matrix constructed from the orthonormal eigenvectors such that \({{\boldsymbol{\theta }}^{T}}{\boldsymbol{\theta }} = {\boldsymbol{I}}\) (\({\boldsymbol{I}}\) is the identity matrix of order M).
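The construction of the optimal basis can be sketched with NumPy as follows. The snapshot data (three decaying sine modes) and the function name `pod_basis` are hypothetical and serve only as an illustration. The check uses the Eckart–Young property of the SVD: the spectral norm of \(\boldsymbol{A} - \boldsymbol{\theta }\boldsymbol{\theta }^{T}\boldsymbol{A}\) equals the \((M+1)\)-th singular value of \(\boldsymbol{A}\), so every snapshot is approximated at least that well.

```python
import numpy as np

def pod_basis(A, M):
    """Return the first M left singular vectors of A and all singular values."""
    U, s, _ = np.linalg.svd(A, full_matrices=False)
    return U[:, :M], s

# Hypothetical snapshot matrix: (J - 1) interior nodes, d snapshots
J, d, M = 50, 20, 2
x = np.linspace(0.0, np.pi, J + 1)[1:-1]
times = 0.1 * np.arange(1, d + 1)
A = np.column_stack([
    sum(np.exp(-k**2 * t) * np.sin(k * x) / k for k in (1, 2, 3))
    for t in times
])

theta, s = pod_basis(A, M)

# Projection P_M applied to all snapshots at once, and its spectral-norm error
residual = A - theta @ (theta.T @ A)
proj_err = np.linalg.norm(residual, 2)
column_errs = np.linalg.norm(residual, axis=0)   # error of each single snapshot
print("projection error:", proj_err, " next singular value:", s[M])
```

Here `s[M]` is the \((M+1)\)-th singular value because Python indices start at zero.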

In the following, we construct a reduced CFDS4 for problem \(\mathrm{P}_{1}\). Equation (15) can be rewritten as follows:

Let \({{\boldsymbol{u}}^{n}} = { ( {u_{1}^{n},u_{2}^{n}, \ldots ,u_{J - 1} ^{n}} )^{\mathrm{T}}}\), then we can rewrite Eq. (21) as the following matrix form:

where

and

\(f_{j}^{n} = f({x_{j - 1}},{t_{n + { \frac{1 }{2}}}}) + 10f( {x_{j}},{t_{n + { \frac{1 }{2}}}}) + f({x_{j + 1}},{t_{n + { \frac{1 }{2}}}})\) (\(j = 1,2, \ldots ,J - 1\)).

Substituting

for \({{\boldsymbol{u}}^{n}}\) in Eq. (22) and noting that \(\boldsymbol{\theta ^{T}}\boldsymbol{\theta } = \boldsymbol{I}\), we obtain the R-CFDS4 as follows:

where

After \(\boldsymbol{\beta ^{n}}\) is obtained from Eq. (24), one gets the POD optimal solution \(\boldsymbol{u^{*n}} = \boldsymbol{\theta }\boldsymbol{\beta ^{n}}\). From the above derivation, it can be seen that CFDS4 needs to solve a linear system with a \(( {J - 1} ) \times ( {J - 1} )\) coefficient matrix in each time step, while the R-CFDS4 in Eq. (24) only needs a system with an \(M \times M\) coefficient matrix. Usually \(J \gg M\), which means that R-CFDS4 requires much less computational time than CFDS4 within each time step. Thus, R-CFDS4 can save a lot of computational time over the whole process of solving parabolic equations.
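A minimal sketch of the reduction step is given below. It assumes the full scheme is written as `A_mat @ u_next = B_mat @ u` with \(f = 0\) and homogeneous Dirichlet data, and it projects this system onto the POD basis (a Galerkin projection). The matrices `A_mat` and `B_mat`, the test initial condition, and the snapshot spacing are assumptions made for illustration, and the paper's Eq. (24) may arrange \(\boldsymbol{K_{1}}\) and \(\boldsymbol{K_{2}}\) differently.

```python
import numpy as np

def cfds4_matrices(J, r):
    """Tridiagonal matrices of the assumed scheme A_mat u^{n+1} = B_mat u^n."""
    n = J - 1
    off = np.eye(n, k=1) + np.eye(n, k=-1)
    A_mat = (10 + 12 * r) * np.eye(n) + (1 - 6 * r) * off
    B_mat = (10 - 12 * r) * np.eye(n) + (1 + 6 * r) * off
    return A_mat, B_mat

J, tau, N, M = 50, 0.01, 200, 3
h = np.pi / J
x = h * np.arange(1, J)
A_mat, B_mat = cfds4_matrices(J, tau / h**2)

# Full run of order J - 1; every 10th step is stored as a snapshot
u = np.sin(x) + 0.5 * np.sin(2 * x)
full = [u]
for n in range(N):
    u = np.linalg.solve(A_mat, B_mat @ u)
    full.append(u)
snapshots = np.column_stack(full[10::10])

# POD basis: first M left singular vectors of the snapshot matrix
theta = np.linalg.svd(snapshots, full_matrices=False)[0][:, :M]

# Reduced M x M system and its time stepping
A_red = theta.T @ A_mat @ theta
B_red = theta.T @ B_mat @ theta
beta = theta.T @ full[0]
for n in range(N):
    beta = np.linalg.solve(A_red, B_red @ beta)

u_reduced = theta @ beta                      # lift back to the grid
gap = np.max(np.abs(u_reduced - full[-1]))
print("max difference between full and reduced solutions:", gap)
```

The reduced loop only ever solves a system of order M, while the lift `theta @ beta` is needed only at the times where the solution is actually inspected.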

4 The error analysis of a reduced fourth-order FDS for 1D parabolic problem

Theorem 1

Let \(\boldsymbol{u^{n}}\) be the solution vector of Eq. (15) and \(\boldsymbol{u^{*n}}\) be the solution vector of Eq. (24). If the snapshots \(\{ {u_{i}^{{n_{l}}}} \}_{l = 1}^{d}\) are uniformly chosen from \(\{ {u_{i}^{n}} \}_{n = 1}^{N}\) and \({ \Vert \boldsymbol{{K_{1}}} \Vert _{2}} \le \frac{1}{2}\), \({ \Vert \boldsymbol{{K_{2}}} \Vert _{2}} \le \frac{1}{2}\), then

where \({ \Vert {\boldsymbol{K_{1}}} \Vert _{2}}\) and \({ \Vert {\boldsymbol{K_{2}}} \Vert _{2}}\) are the 2-norms of the matrices \(\boldsymbol{K_{1}}\) and \(\boldsymbol{K_{2}}\), respectively.

Proof

There are two cases in Eq. (26). The first case is \(n = {n_{l}}\) (\(l = 1,2, \ldots ,d\)). Using the definition (Eq. (19))

and Eq. (23) and Eq. (24), we have that \({{\boldsymbol{u}}^{*}} ^{{n_{l}}} = {P_{M}}({{\boldsymbol{u}}^{{n_{l}}}})\). Applying Eq. (20), we have the following conclusion:

The second case is \(n \ne {n_{l}}\). Let \({t_{n}} \in ( {{t _{{n_{l}}}},{t_{{n_{l + 1}}}}} )\). Comparing Eq. (22) with Eq. (24), we can write Eq. (24) in a form similar to Eq. (22) as follows:

Subtracting Eq. (29) from Eq. (22), we obtain that

We let \(\frac{c}{2} = \max \{ {{{ \Vert {{{\boldsymbol{K}}_{1}}} \Vert } _{2}},{{ \Vert {{{\boldsymbol{K}}_{2}}} \Vert }_{2}}} \}\), then

Summing Eq. (31) from \({n_{l}}\), \({n_{l}} + 1, \ldots ,n - 1\), we get

By the discrete Gronwall lemma, we get

If \({ \Vert {\boldsymbol{K_{1}}} \Vert _{2}} \le \frac{1}{2}\) and \({ \Vert {\boldsymbol{K_{2}}} \Vert _{2}} \le \frac{1}{2}\), \(\{ {u_{i}^{{n_{l}}}} \}_{l = 1}^{d}\) are uniformly chosen from \(\{ {u_{i}^{n}} \}_{n = 1}^{N}\), which implies \(n - {n_{l}} \le N/d\), then we obtain from the above inequality that

Combining the above two cases yields Eq. (26), which completes the proof of Theorem 1. □

Theorem 2

Under the assumption of Theorem 1, let \({u_{j} ^{*n}}\) be the solution of the R-CFDS4 and \(u ( {{x_{j}},{t_{n}}} )\) be the exact solution of problem (\(\mathrm{P}_{1}\)). Then there are the following error estimates:

Proof

Let \(u_{j}^{n}\) be the solution of the CFDS4, then

since

Meanwhile, according to Theorem 1 and vector norm, we get

Thus, we can obtain Eq. (34). □
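The structure of this argument is a triangle inequality. The following is a sketch of that step in the notation of the theorem, not a reproduction of the original displays; it assumes the second-order-in-time, fourth-order-in-space accuracy of CFDS4 and uses the fact that a single component of a vector is bounded by its 2-norm.

```latex
\[
\bigl| u(x_{j},t_{n}) - u_{j}^{*n} \bigr|
\le \bigl| u(x_{j},t_{n}) - u_{j}^{n} \bigr| + \bigl| u_{j}^{n} - u_{j}^{*n} \bigr|
\le O(\tau^{2} + h^{4}) + \bigl\| \boldsymbol{u}^{n} - \boldsymbol{u}^{*n} \bigr\|_{2}.
\]
```

The last norm is the quantity bounded in Theorem 1.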

5 A reduced fourth-order compact FDS for 2D parabolic problem and its error analysis

In this section, we first construct an alternating direction implicit fourth-order compact finite difference scheme (ADI-CFDS4) for 2D parabolic equations, then develop the R-ADI-CFDS4 and provide error estimates between the R-ADI-CFDS4 solutions and the exact solutions, in a way similar to the 1D case.

Let \(\varOmega \subset {R^{2}}\) be a bounded domain. Consider the following initial-boundary value problem (\(\mathrm{P}_{2}\)):

Partition the domain \(\varOmega \times (0,T)\) with \({x_{i}} = i{h_{x}} \) (\(i = 0,1,2, \ldots ,L\)), \({y_{j}} = j{h_{y}}\) (\(j = 0,1,2, \ldots ,J\)), and \({t_{n}} = n\tau \) (\(n = 0,1,2, \ldots ,N\)), where τ is temporal step increment, \({h_{x}}\) and \({h_{y}}\) are the spatial step increments on x-direction and y-direction, respectively.

Let \(u_{i,j}^{n} \approx u({x_{i}},{y_{j}},{t_{n}})\); \({r_{1}} = \frac{ \tau }{{h_{x}^{2}}}\), \({r_{2}} = \frac{\tau }{{h_{y}^{2}}}\)

and

Then the ADI-CFDS4 of problem (\(\mathrm{P}_{2}\)) can be described as follows:

In Eq. (41), \(1 \le i \le L - 1\), \(1 \le j \le J - 1 \), \(0 \le n \le N - 1\).

The difference scheme is unconditionally stable [40], and the error is as follows:

Let \(u_{l}^{n} = u_{i,j}^{n}\), \(f_{l}^{n} = f_{i,j}^{n}\) (\(l = ( {i - 1} ) \cdot J + j\), \(m = L \cdot J\), \(1 \le l \le m\), \(1 \le j \le J\), \(1 \le i \le L\), \(1 \le n \le N\)). Using methods similar to those in Sect. 3, we obtain the POD basis \(\boldsymbol{\theta } = {\boldsymbol{\theta } _{m \times M}}\) such that \(\boldsymbol{\theta ^{T}}\boldsymbol{\theta } = {\boldsymbol{I}_{M \times M}}\). Then the corresponding vector formulation of Eq. (41) can be written as follows:

where \(\boldsymbol{u^{n}} = {(u_{1}^{n},u_{2}^{n},u_{3}^{n}, \ldots ,u_{m} ^{n})^{T}}\), \(\boldsymbol{F^{n}} = {(f_{1}^{n},f_{2}^{n},f_{3}^{n}, \ldots ,f _{m}^{n})^{T}}\), and \(\bar{\boldsymbol{u}}^{\boldsymbol{n}} = {(\bar{u}_{1}^{n},\bar{u} _{2}^{n},\bar{u}_{3}^{n}, \ldots ,\bar{u}_{m}^{n})^{T}}\). The matrices \(\boldsymbol{K_{1}}\) and \(\boldsymbol{K_{2}}\) have the same form as in Eq. (21) except for their order. If \(\boldsymbol{u^{n}}\), \(\bar{\boldsymbol{u}}^{\boldsymbol{n}}\), and \(\boldsymbol{F^{n}}\) of Eq. (38) are replaced by \(\boldsymbol{u^{*n}} = {\boldsymbol{\theta } _{m \times M}}{( \boldsymbol{\alpha ^{n}})_{M \times 1}}\), \(\bar{\boldsymbol{u}}^{\boldsymbol{n}} = {\boldsymbol{\theta } _{m \times M}}{(\bar{\boldsymbol{\alpha }}^{\boldsymbol{n}})_{M \times 1}}\), \(\boldsymbol{F^{n}} = {\boldsymbol{\theta } _{m \times M}}(\bar{\boldsymbol{F}}^{\boldsymbol{n}})_{M \times 1}\) (\(n = 0,1,2, \ldots ,N\)), respectively, then we obtain the R-ADI-CFDS4 as follows:

where \(n = 0,1,2,3, \ldots ,N\), \(\boldsymbol{\alpha ^{0}} = \boldsymbol{\theta ^{T}} \boldsymbol{u^{0}} = \boldsymbol{\theta ^{T}} \boldsymbol{(u_{1}^{0},u_{2}^{0}, \ldots ,u_{m}^{0})^{T}}\).

After solving \({\alpha ^{n}}\) from Eq. (44), one gets the POD optimal solutions \(\boldsymbol{u^{*n}} = \boldsymbol{\theta } \boldsymbol{\alpha ^{n}} \) (\(n = 0,1,2, \ldots ,N\)) of problem (\(\mathrm{P}_{2}\)).
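How 2D grid values become snapshot vectors, and how a POD solution is mapped back to the grid, can be sketched as follows. The small grid, the node coordinates, and the helper `flat_index` are hypothetical. The flattening is taken here as \(l = (i - 1) \cdot J + j\), that is, the j index runs fastest, which is the ordering NumPy's row-major `reshape` produces for an \(L \times J\) array. The snapshots are samples of the exact solution quoted in Example 3, not ADI-CFDS4 output.

```python
import numpy as np

L, J, d, M = 6, 4, 8, 1          # hypothetical small grid: i = 1..L, j = 1..J

def flat_index(i, j):
    """1-based index l of node (i, j) with the j index running fastest."""
    return (i - 1) * J + j

xs = np.pi * np.arange(1, L + 1) / (L + 1)
ys = np.pi * np.arange(1, J + 1) / (J + 1)
X, Y = np.meshgrid(xs, ys, indexing="ij")    # X[i-1, j-1] = x_i, Y[i-1, j-1] = y_j

# Each snapshot is a 2D field flattened into a vector of length m = L * J
times = 0.1 * np.arange(1, d + 1)
A = np.column_stack([
    (np.exp(-2 * t) * np.sin(X) * np.sin(Y)).reshape(-1) for t in times
])

theta = np.linalg.svd(A, full_matrices=False)[0][:, :M]   # POD basis, m x M
alpha = theta.T @ A[:, 0]                  # reduced coefficients of one snapshot
field = (theta @ alpha).reshape(L, J)      # POD solution back on the (i, j) grid
```

Because this separable test field has rank one, a single basis vector already reproduces every snapshot up to rounding; snapshots from an actual solver would generally need more.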

Theorem 3

Let \(u_{i,j}^{*n}\) be the components of the POD solutions \(\boldsymbol{u^{*n}} = \boldsymbol{\theta }\boldsymbol{\alpha ^{n}} \) (\(n = 0,1,2, \ldots ,N\)) of Eq. (44), let \(u_{i,j}^{n}\) be the solutions of Eq. (43), and let \(u ( {{x_{i}},{y_{j}},{t_{n}}} )\) be the exact solution of problem (\(\mathrm{P}_{2}\)). If \({ \Vert {\boldsymbol{K_{1}}} \Vert _{2}} \le \frac{1}{2}\), \({ \Vert {\boldsymbol{K_{2}}} \Vert _{2}} \le \frac{1}{2}\), and the snapshots are uniformly chosen from \(\{ {u_{i,j}^{n}} \} _{n = 1}^{N}\), then we conclude that

6 Numerical examples

In this section, we use four test problems to demonstrate the advantages of the R-CFDS4 for 1D parabolic equations and the R-ADI-CFDS4 for 2D parabolic equations. Our algorithm is implemented in MATLAB R2017a running on a desktop with an Intel Core i7 4790 CPU at 2.93 GHz and 7.98 GB of memory.

Example 1

Consider 1D parabolic equation (\(\mathrm{SP} _{1}\))

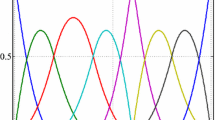

The exact solution is \(u(x,t) = \exp ( - t)\sin (x)\). We take the numerical solutions of CFDS4 with \(h=0.02\pi \), \(\tau =0.01\) at \(t = 0.1,0.2,0.3, \ldots ,2\) as snapshots. Computation gives the eigenvalue \({\lambda _{6}} \le 6 \times {10^{ - 16}}\). The number of optimal bases is chosen following [31]. By the estimate in Theorem 1, as long as the first five or more eigenvectors of the matrix \({\boldsymbol{A}}{{\boldsymbol{A}}^{\mathrm{T}}}\) are chosen as the optimal basis, the R-CFDS4 satisfies the desired accuracy requirement. The numerical solutions of R-CFDS4 and the difference between CFDS4 and R-CFDS4 at \(t=0.4,0.8,1.2,1.6,2.0\) are drawn in Fig. 1, which shows that the results of CFDS4 are in very good agreement with those of R-CFDS4. The errors of CFDS4 and R-CFDS4 at several points and the computational time are reported in Table 1 and Table 2. They indicate that the R-CFDS4 is more efficient than the CFDS4 while retaining sufficient accuracy for the 1D parabolic equation. In Fig. 2, the errors of CFDS4 and R-CFDS4 are almost identical. The difference between the CFDS4 and R-CFDS4 is also drawn, which shows that R-CFDS4 achieves almost the same accuracy as CFDS4 with the same nodes and step sizes. The differences between the CFDS4 and R-CFDS4 with five optimal bases are no more than \(3 \times {10^{ - 15}}\) in Fig. 1. In Fig. 3 and Table 3, the R-CFDS4 solution based on five POD bases at different times and the details of the computational time at \(t=6\) are given, which shows that the R-CFDS4 can be extended to a time interval longer than the one on which the snapshots were collected.
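One common way to fix the number of bases is to truncate where the singular values of the snapshot matrix fall below a tolerance. The sketch below illustrates that rule; the helper `choose_rank`, the relative tolerance, and the three-mode snapshot field are assumptions for illustration and are not the data of this example. It works with the singular values of \(\boldsymbol{A}\), whose squares are the eigenvalues of \({\boldsymbol{A}}{{\boldsymbol{A}}^{\mathrm{T}}}\).

```python
import numpy as np

def choose_rank(singular_values, tol):
    """Smallest M with sigma_{M+1} <= tol * sigma_1 (all values kept if none is)."""
    s = np.asarray(singular_values, dtype=float)
    below = np.nonzero(s <= tol * s[0])[0]
    return int(below[0]) if below.size else len(s)

# Hypothetical snapshots on the grid h = 0.02*pi at t = 0.1, 0.2, ..., 2
h = 0.02 * np.pi
x = h * np.arange(1, 50)
times = 0.1 * np.arange(1, 21)
A = np.column_stack([
    sum(np.exp(-k**2 * t) * np.sin(k * x) for k in (1, 2, 3)) for t in times
])

s = np.linalg.svd(A, compute_uv=False)     # singular values, largest first
M = choose_rank(s, 1e-10)
print("number of POD bases kept:", M)
```

For this hypothetical field exactly three modes are present, so the rule keeps three bases; snapshots from an actual CFDS4 run can carry additional small components and therefore a larger M.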

To show that Eq. (34) is fourth-order accurate in space and second-order accurate in time, we first fix \(\tau =0.0002\) and then reduce h by a factor of 2 each time. The data in Table 4 clearly show that Eq. (34) is fourth-order accurate in space, since the maximal error is reduced by a factor of about \(2^{4}\) each time. Similarly, the second-order accuracy in time of Eq. (34) is confirmed, because the maximal error is reduced by a factor of about \(2^{2}\) each time at \(h=0.0025\pi \) in Table 5.
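The link between the reduction factor and the order can be made explicit. In the sketch below, \(E(\cdot )\) denotes the maximal error for a given step size, p the observed spatial order, and q the observed temporal order; this notation is introduced here for illustration only.

```latex
\[
p \approx \log_{2} \frac{E(h)}{E(h/2)}, \qquad
\frac{E(h)}{E(h/2)} \approx 2^{4} = 16 \;\Rightarrow\; p \approx 4, \qquad
\frac{E(\tau)}{E(\tau/2)} \approx 2^{2} = 4 \;\Rightarrow\; q \approx 2 .
\]
```

The spatial ratio is only meaningful when τ is small enough that the temporal error does not dominate, and vice versa, which is why one step size is held fixed while the other is halved.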

Example 2

Consider 1D parabolic equation (\(\mathrm{SP} _{2}\))

The exact solution is \(u(x,t) = x({x^{2}} + {e^{t}})\). We take the numerical solutions of CFDS4 with \(h=0.02\), \(\tau =0.01\) at \(t = 0.05,0.1,0.15, \ldots ,1\) as snapshots. We choose the first ten vectors in \(\boldsymbol{U}\) as the optimal basis to ensure the desired accuracy. Computation shows that the eigenvalue \({\lambda _{11}} \le 2 \times {10^{ - 13}}\). As in Example 1, the numerical solutions of R-CFDS4 and the difference between R-CFDS4 and CFDS4 at \(t=0.2,0.4,0.6,0.8,1.0\) are drawn in Fig. 4, where the results of CFDS4 are in excellent agreement with those of R-CFDS4. The errors of CFDS4 and R-CFDS4 are shown in Table 6 and plotted in Fig. 5; the two are essentially identical. Furthermore, comparing the CPU time of the R-CFDS4 with that of the CFDS4 in Table 6 and Table 7 clearly shows the advantage of R-CFDS4 in computational efficiency. The differences between the CFDS4 and R-CFDS4 with ten optimal bases are no more than \(8 \times {10^{ - 10}}\) in Fig. 4. In Fig. 6 and Table 8, the R-CFDS4 solution based on ten POD bases at different times and the details of the computational time at \(t=5\) are given, which shows that the R-CFDS4 can be extended to a time interval longer than the one on which the snapshots were collected.

To check whether the convergence order is consistent with the theoretical results, τ is fixed at 0.0025 and h is reduced by a factor of 2 each time. The maximal error in Table 9 is reduced by a factor of about \(2^{4}\) each time, which confirms the fourth-order accuracy in space of Eq. (34). Similarly, with h fixed at 0.0025, the maximal error is reduced by a factor of about \(2^{2}\) each time in Table 10, which indicates that the R-CFDS4 is second-order accurate in time.

Example 3

Consider the 2D parabolic equation (\(\mathrm{SP} _{3}\))

where \(\varOmega = \{ (x,y);0 \le x \le \pi ,0 \le y \le \pi \} \), ∂Ω denotes the boundary of Ω.

In this example, the exact solution is \(u(x,y,t) = {e^{ - 2t}}\sin x \sin y\). We take the numerical solutions of ADI-CFDS4 with \({h_{x}}= {h_{y}}= 0.02\pi \), \(\tau = 0.02\) at \(t = 0.1,0.2,0.3, \ldots ,2\) as snapshots. Computation shows that the eigenvalue \({\lambda _{6}} \le 2 \times {10^{ - 15}}\). As in Example 1, we choose five POD bases for the computation of the 2D parabolic equation. The numerical solutions of R-ADI-CFDS4 and the difference between R-ADI-CFDS4 and ADI-CFDS4 are drawn in Fig. 7 and Fig. 8. The numerical solutions of R-ADI-CFDS4 are in excellent agreement with those of ADI-CFDS4. In Table 13 and Table 14, Error 1 is the error of R-ADI-CFDS4 and Error 2 is the error of ADI-CFDS4. The errors of ADI-CFDS4 and R-ADI-CFDS4 at several points and the computational time are compared in Table 13 and Table 14, which indicates that R-ADI-CFDS4 saves computational time compared with ADI-CFDS4 while retaining the accuracy of ADI-CFDS4. In Fig. 9 and Fig. 7, it can be seen that the numerical solution of R-ADI-CFDS4 is almost identical to that of ADI-CFDS4. In Fig. 8, the error of ADI-CFDS4 is depicted, which shows the sufficient accuracy of ADI-CFDS4. The differences between the ADI-CFDS4 and R-ADI-CFDS4 with five optimal bases are no more than \(3 \times {10^{ - 14}}\) in Fig. 9 and Fig. 7. In Table 15, the details of the computational time at \(t=4\) are given, which shows the advantage of R-ADI-CFDS4.

To verify the convergence order of Eq. (42), we fix τ at 0.0001 in Table 11. The maximal error is reduced by a factor of about \(2^{4}\) each time, which shows that the R-ADI-CFDS4 is fourth-order accurate in space. Similarly, the data in Table 12 confirm the second-order accuracy in time of R-ADI-CFDS4.

Example 4

Consider the 2D parabolic equation (\(\mathrm{SP} _{4}\))

\(\varOmega = \{ (x,y);0 \le x \le \pi ,0 \le y \le \pi \} \), ∂Ω denotes the boundary of Ω. The exact solution is \(u(x,y,t) = {e^{ - t}}\sin x\sin y\). We take the numerical solutions of ADI-CFDS4 with \({h_{x}}={h_{y}}= 0.02\pi \), \(\tau = 0.02\) at \(t = 0.1,0.2,0.3, \ldots ,2\) as snapshots. Computation shows that the eigenvalue \({\lambda _{6}} \le 7 \times {10^{ - 15}}\). The numerical solutions of R-ADI-CFDS4 and the difference between R-ADI-CFDS4 and ADI-CFDS4 are drawn in Fig. 10. The approximate solutions of R-ADI-CFDS4 are in excellent agreement with those of ADI-CFDS4. Error 1 and Error 2 are the same as in Example 3. The errors at several points and the computational time are listed in Table 16 and Table 17, which show the high efficiency and reliability of R-ADI-CFDS4 compared with ADI-CFDS4. The differences between the ADI-CFDS4 and R-ADI-CFDS4 with five optimal bases are no more than \(4 \times {10^{ - 14}}\) in Fig. 10 and Fig. 11. The R-ADI-CFDS4 solution based on five POD bases at \(t=4\) is given in Fig. 11, while the details of the computational time at \(t=4\) are listed in Table 18. Meanwhile, the error of ADI-CFDS4 at \(t=2\) is shown in Fig. 12.

The convergence order of R-ADI-CFDS4 is also verified. In Table 19, we first fix τ at 0.0001. Next, \(h_{x}\) and \(h_{y}\) are reduced by a factor of 2 each time; the R-ADI-CFDS4 is fourth-order accurate in space, since the maximal error is reduced by a factor of about \(2^{4}\) each time. Then, in Table 20, we take \(h_{x}=h_{y}=0.02\pi \) and reduce τ by a factor of 2 each time; the R-ADI-CFDS4 is second-order accurate in time, since the maximal error is reduced by a factor of about \(2^{2}\) each time.

7 Conclusions

In this paper, we developed a reduced fourth-order compact difference scheme for solving parabolic equations. The efficiency and accuracy of the proposed algorithm were examined on two 1D problems and two 2D problems. The numerical examples illustrate that the fourth-order compact difference scheme coupled with the POD technique not only keeps high computational accuracy, but also brings significant savings in computational time for solving parabolic equations. In the future, we plan to extend our algorithm to more complicated parabolic equations in three dimensions.

References

Qiu, T.Q., Tien, C.L.: Short-pulse laser heating on metals. Int. J. Heat Mass Transf. 35, 719–726 (1992)

Faghri, A., Zhang, Y.: Transport Phenomena in Multiphase Systems. Academic Press, Boston (2006)

Tzou, D.Y., Dai, W.: Thermal lagging in multi-carrier systems. Int. J. Heat Mass Transf. 52, 1206–1213 (2009)

Enyi, C.D.: Robust exponential attractors for a parabolic–hyperbolic phase-field system. Bound. Value Probl. 2018, 146 (2018)

Grabowski, P.: Small-gain theorem for a class of abstract parabolic systems. Opusc. Math. 38, 651–680 (2018)

Papageorgiou, N.S., Radulescu, V.D., Repovs, D.D.: Nonlinear Analysis—Theory and Methods. Springer, Berlin (2019)

Martín-Vaquero, J., Sajavičius, S.: The two-level finite difference schemes for the heat equation with nonlocal initial condition. Appl. Math. Comput. 342, 166–177 (2019)

Zhou, C., Zou, Y., Chai, S., Zhang, Q., Zhu, H.: Weak Galerkin mixed finite element method for heat equation. Appl. Numer. Math. 123, 180–199 (2018)

Hermeline, F.: A finite volume method for the approximation of diffusion operators on distorted meshes. J. Comput. Phys. 160, 481–499 (2000)

Pu, Z., Hai, P.: The error analysis of Crank–Nicolson-type difference scheme for fractional subdiffusion equation with spatially variable coefficient. Bound. Value Probl. 2017, 15 (2017)

Wang, H., Wang, J., Li, S.: A new conservative nonlinear high-order compact finite difference scheme for the general Rosenau-RLW equation. Bound. Value Probl. 2015, 77 (2015)

Zhao, J., Corless, R.: Compact finite difference method for integro-differential equations. Appl. Math. Comput. 177, 271–288 (2006)

Liao, W.: An implicit fourth-order compact finite difference scheme for one-dimensional Burgers’ equation. Appl. Math. Comput. 206, 755–764 (2008)

Sutmann, G.: Compact finite difference schemes of sixth order for the Helmholtz equation. J. Comput. Appl. Math. 203, 15–31 (2007)

Shukla, R., Tatineni, M., Zhong, X.: Very high-order compact finite difference schemes on non-uniform grids for incompressible Navier–Stokes equations. J. Comput. Phys. 224, 1064–1094 (2007)

Gao, Z., Xie, S.: Fourth-order alternating direction implicit compact finite difference schemes for two-dimensional Schrödinger equations. Appl. Numer. Math. 61, 593–614 (2011)

Sutmann, G., Steffen, B.: High-order compact solvers for the three-dimensional Poisson equation. J. Comput. Appl. Math. 187, 142–170 (2006)

Mohebbi, A., Dehghan, M.: High-order solution of one-dimensional sine-Gordon equation using compact finite difference and DIRKN methods. Math. Comput. Model. 51, 537–549 (2010)

Zhang, P., Zhang, X., Xiang, H., Song, L.: A fast and stabilized meshless method for the convection-dominated convection-diffusion problems. Numer. Heat Transf., Part A, Appl. 70, 420–431 (2016)

Luo, Z., Chen, G.: Proper Orthogonal Decomposition Methods for Partial Differential Equations. Academic Press, New York (2018)

Liang, Y.C., Lee, H.P., Lim, S.P., Lin, W.Z., Lee, K.H., Wu, C.G.: Proper orthogonal decomposition and its applications—part I: theory. J. Sound Vib. 252, 527–544 (2002)

Rathinam, M., Petzold, L.: A new look at proper orthogonal decomposition. SIAM J. Numer. Anal. 41, 1893–1925 (2003)

Kerschen, G., Golinval, J., Vakakis, A., Bergman, L.: The method of proper orthogonal decomposition for dynamical characterization and order reduction of mechanical systems: an overview. Nonlinear Dyn. 41, 147–169 (2005)

Luo, Z.: A POD-based reduced-order TSCFE extrapolation iterative format for two-dimensional heat equations. Bound. Value Probl. 2015, 59 (2015)

Sun, P., Luo, Z., Zhou, Y.: Some reduced finite difference schemes based on a proper orthogonal decomposition technique for parabolic equations. Appl. Numer. Math. 60, 154–164 (2010)

Luo, Z., Li, H., Sun, P.: A reduced-order Crank–Nicolson finite volume element formulation based on POD method for parabolic equations. Appl. Math. Comput. 219, 5887–5900 (2013)

An, J., Luo, Z., Li, H., Sun, P.: Reduced-order extrapolation spectral-finite difference scheme based on POD method and error estimation for three-dimensional parabolic equation. Front. Math. China 10, 1025–1040 (2015)

Luo, Z., Li, H., Sun, P.: A reduced-order Crank–Nicolson finite volume element formulation based on POD method for parabolic equations. Appl. Math. Comput. 219, 5887–5900 (2013)

Luo, Z., Chen, J., Navon, I.M., Yang, X.: Mixed finite element formulation and error estimates based on proper orthogonal decomposition for the nonstationary Navier–Stokes equations. SIAM J. Numer. Anal. 47, 1–19 (2008)

Luo, Z., Li, H., Sun, P., Gao, J.: A reduced-order finite difference extrapolation algorithm based on POD technique for the non-stationary Navier–Stokes equations. Appl. Math. Model. 37, 5464–5473 (2013)

Luo, Z., Teng, F.: A reduced-order extrapolated finite difference iterative scheme based on POD method for 2D Sobolev equation. Appl. Math. Comput. 329, 374–383 (2018)

Luo, Z., Teng, F.: An optimized SPDMFE extrapolation approach based on the POD technique for 2D viscoelastic wave equation. Bound. Value Probl. 2017, 6 (2017)

Luo, Z., Jin, S., Chen, J.: A reduced-order extrapolation central difference scheme based on POD for two-dimensional fourth-order hyperbolic equations. Appl. Math. Comput. 289, 396–408 (2016)

Luo, Z., Yang, X., Zhou, Y.: A reduced finite difference scheme based on singular value decomposition and proper orthogonal decomposition for Burgers equation. Appl. Math. Comput. 229, 97–107 (2016)

Dehghan, M., Abbaszadeh, M.: An upwind local radial basis functions-differential quadrature (RBF-DQ) method with proper orthogonal decomposition (POD) approach for solving compressible Euler equation. Eng. Anal. Bound. Elem. 92, 244–256 (2018)

Dehghan, M., Abbaszadeh, M.: A reduced proper orthogonal decomposition (POD) element free Galerkin (POD-EFG) method to simulate two-dimensional solute transport problems and error estimate. Appl. Numer. Math. 126, 92–112 (2018)

Dehghan, M., Abbaszadeh, M.: A combination of proper orthogonal decomposition discrete empirical interpolation method (POD-DEIM) and meshless local RBF-DQ approach for prevention of groundwater contamination. Comput. Math. Appl. 75, 1390–1412 (2018)

Zhang, X., Xiang, H.: A fast meshless method based on proper orthogonal decomposition for the transient heat conduction problems. Int. J. Heat Mass Transf. 84, 729–739 (2015)

Fragnelli, G., Mugnai, D.: Carleman estimates for singular parabolic equations with interior degeneracy and non-smooth coefficients. Adv. Nonlinear Anal. 6, 61–84 (2017)

Hua, D., Li, X.: Numerical Method and Program Realization of Differential Equation. Publishing House of Electronics Industry, Beijing (2016) (in Chinese)

Luo, Z., Chen, J., Zhu, J., Wang, R.: An optimizing reduced order FDS for the tropical Pacific Ocean reduced gravity model. Int. J. Numer. Methods Fluids 55, 143–161 (2007)

Luo, Z., Wang, R., Zhu, J.: Finite difference scheme based on proper orthogonal decomposition for the nonstationary Navier–Stokes equations. Sci. China Ser. A, Math. 50, 1186–1196 (2007)

Acknowledgements

The authors express their sincere thanks to the anonymous reviewers for their valuable suggestions and corrections, which improved the quality of this paper.

Availability of data and materials

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

Funding

This work is financially supported by the Academic Mainstay Foundation of Hubei Province of China (No. D20171202) and the National Natural Science Foundation of China (Grant No. 11826208).

Author information

Contributions

All authors contributed equally and significantly in writing this article. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Appendix: Stability analysis

To study the stability of our scheme, we assume \(f=0\). Equation (22) can be written as \(\boldsymbol{A}{\boldsymbol{u}^{k + 1}} = \boldsymbol{B}{\boldsymbol{u}^{k}}\), where

Using the standard eigenvalue formula, the eigenvalues of \(\boldsymbol{A}\) and \(\boldsymbol{B}\) can be written as follows:

and

It follows that the eigenvalues \({\lambda _{j}}\) of \({\boldsymbol{A}^{ - 1}} \boldsymbol{B}\) are

The condition \(\vert {{\lambda _{j}}} \vert \le 1\) is equivalent to

For \(r>0\), the following inequality holds:

Because \(r = \frac{{a\tau }}{{{h^{2}}}}>0 \), we can conclude that \(\vert {{\lambda _{j}}} \vert \le 1\) and \(\rho ({A^{ - 1}}B) \le 1\). From that, we can deduce that Eq. (15) is unconditionally stable.
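The bound on the spectral radius can also be checked numerically. The explicit matrices are not reproduced in this appendix, so the following minimal sketch assumes the standard Crank–Nicolson-type fourth-order compact scheme for \(u_{t} = a u_{xx}\) with homogeneous Dirichlet boundary conditions. Under that assumption, \(\boldsymbol{A}\) and \(\boldsymbol{B}\) are symmetric tridiagonal Toeplitz matrices with diagonal entries \(5/6 \pm r\) and off-diagonal entries \(1/12 \mp r/2\). The function names and these matrix entries are illustrative assumptions and may differ from the exact form of the scheme analysed above.

```python
import math


def amplification_factors(r, n):
    """Eigenvalues of A^{-1} B for the ASSUMED compact Crank-Nicolson matrices.

    Assumption (not taken from the paper):
      A = tridiag(1/12 - r/2, 5/6 + r, 1/12 - r/2)
      B = tridiag(1/12 + r/2, 5/6 - r, 1/12 + r/2)
    Both are symmetric tridiagonal Toeplitz matrices of size n, so they share
    eigenvectors and have eigenvalues diag + 2 * offdiag * cos(j*pi/(n+1)).
    """
    factors = []
    for j in range(1, n + 1):
        c = math.cos(j * math.pi / (n + 1))
        lam_a = (5.0 / 6.0 + r) + 2.0 * (1.0 / 12.0 - r / 2.0) * c
        lam_b = (5.0 / 6.0 - r) + 2.0 * (1.0 / 12.0 + r / 2.0) * c
        # Shared eigenvectors: the eigenvalue of A^{-1} B is the ratio.
        factors.append(lam_b / lam_a)
    return factors


def spectral_radius(r, n):
    """Largest modulus among the eigenvalues of A^{-1} B."""
    return max(abs(lam) for lam in amplification_factors(r, n))


if __name__ == "__main__":
    # r = a * tau / h**2; sweep small and large mesh ratios.
    for r in (0.01, 0.1, 1.0, 10.0, 1000.0):
        print(f"r = {r:8.2f}  rho(A^-1 B) = {spectral_radius(r, 50):.6f}")
```

With \(s_{j} = \sin ^{2} ( j\pi /(2(n+1)) )\), the ratio computed in this sketch equals \((1 - s_{j}/3 - 2rs_{j})/(1 - s_{j}/3 + 2rs_{j})\). For \(r>0\) its modulus is below one, because \(1 - s_{j}/3 \ge 2/3\) and \(2rs_{j} > 0\). This agrees with the conclusion that no restriction on \(r\) is needed.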

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Xu, B., Zhang, X. A reduced fourth-order compact difference scheme based on a proper orthogonal decomposition technique for parabolic equations. Bound Value Probl 2019, 130 (2019). https://doi.org/10.1186/s13661-019-1243-8
